Published: Aug 8, 2024 by Isaac Johnson

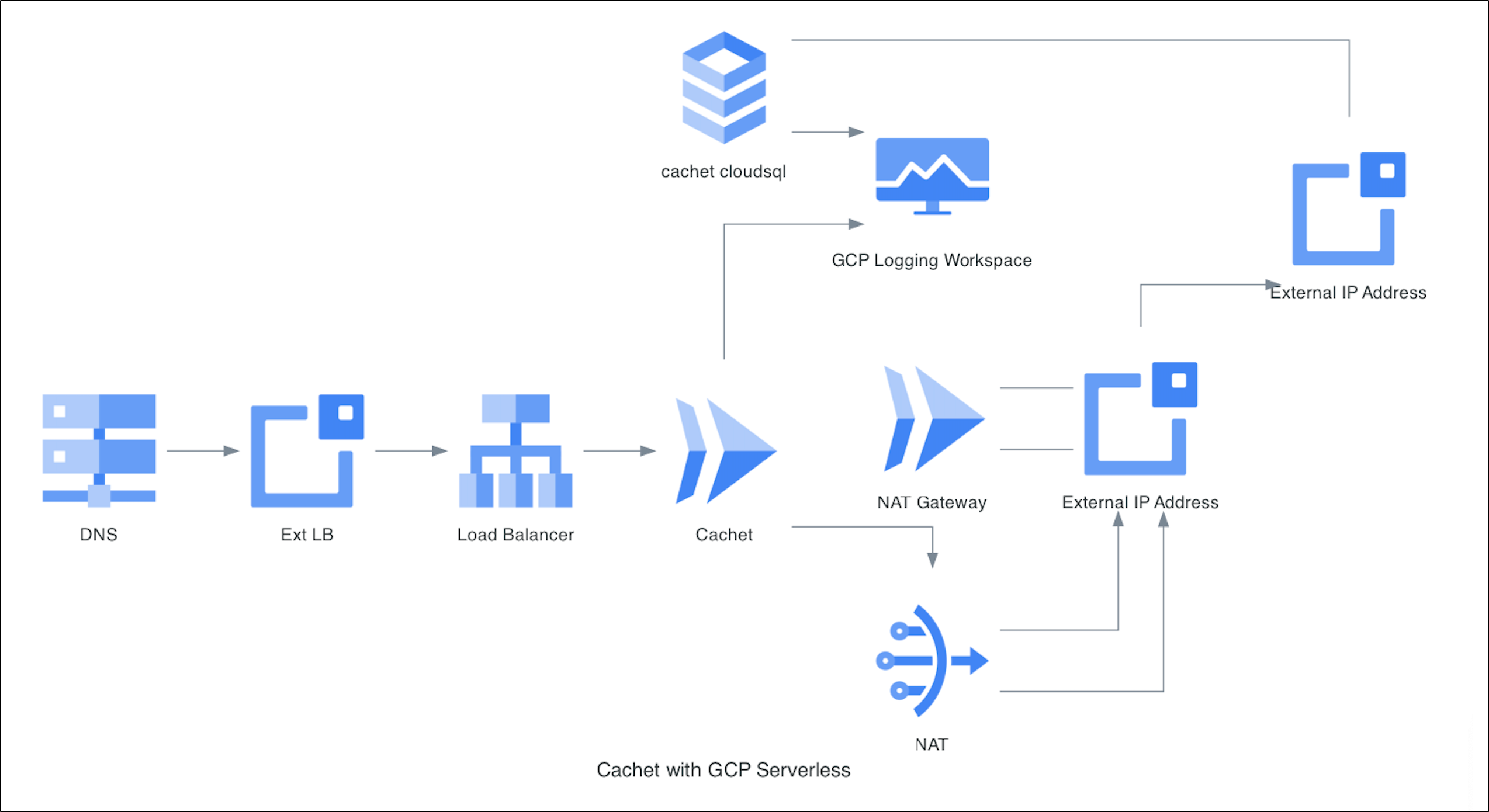

Last time we looked at Cachet running in a local Kubernetes cluster and on Docker. Today we’ll build out a proper cloud-native solution in GCP using CloudRun, CloudSQL, and ALBs with CloudDNS.

We’ll also look at costs and some other options, including Datadog, Github, and Aiven.io free-tier databases.

Getting Started

I’m quite interested in setting up Cachet in GCP as a serverless CloudRun service backed by CloudSQL.

Let’s start with a CloudSQL instance that can be our backend.

First, I need to log in and set my project if I haven’t done that lately:

$ gcloud auth login

$ gcloud config set project myanthosproject2

I’ll then launch using the cheapest micro size.

$ gcloud sql instances create cachet-psql --database-version POSTGRES_15 --region us-central1 --tier db-f1-micro --activation-policy always

API [sqladmin.googleapis.com] not enabled on project [myanthosproject2]. Would you like to enable and retry (this will take a few minutes)? (y/N)? y

Enabling service [sqladmin.googleapis.com] on project [myanthosproject2]...

Operation "operations/acat.p2-511842454269-957451b0-411c-4d9d-a178-5eead3ca8537" finished successfully.

Creating Cloud SQL instance for POSTGRES_15...done.

Created [https://sqladmin.googleapis.com/sql/v1beta4/projects/myanthosproject2/instances/cachet-psql].

NAME DATABASE_VERSION LOCATION TIER PRIMARY_ADDRESS PRIVATE_ADDRESS STATUS

cachet-psql POSTGRES_15 us-central1-a db-f1-micro 34.172.121.6 - RUNNABLE

You’ll note that it prompts to enable the API if I haven’t enabled it already.

I’ll need a password for the postgres user to do the rest of the work, so let’s set one:

$ gcloud sql users set-password postgres --instance cachet-psql --password DKe8d9d03DKd

Updating Cloud SQL user...done.

Let’s now connect as that user:

$ gcloud sql connect cachet-psql --user postgres

Allowlisting your IP for incoming connection for 5 minutes...done.

ERROR: (gcloud.sql.connect) Psql client not found. Please install a psql client and make sure it is in PATH to be able to connect to the database instance.

Ah, I need a local psql client

builder@LuiGi:~/Workspaces/cachethq-Docker$ sudo apt update && sudo apt install postgresql-client

[sudo] password for builder:

Hit:1 https://dl.yarnpkg.com/debian stable InRelease

Hit:2 http://archive.ubuntu.com/ubuntu jammy InRelease

Hit:3 https://packages.microsoft.com/repos/azure-cli jammy InRelease

Get:4 http://archive.ubuntu.com/ubuntu jammy-updates InRelease [128 kB]

Get:5 http://security.ubuntu.com/ubuntu jammy-security InRelease [129 kB]

Get:6 https://packages.cloud.google.com/apt cloud-sdk InRelease [1616 B]

Get:7 http://archive.ubuntu.com/ubuntu jammy-backports InRelease [127 kB]

Get:8 http://archive.ubuntu.com/ubuntu jammy-updates/main amd64 Packages [1900 kB]

Get:9 https://packages.cloud.google.com/apt cloud-sdk/main amd64 Packages [3142 kB]

Get:10 http://security.ubuntu.com/ubuntu jammy-security/main amd64 Packages [1679 kB]

Get:11 http://archive.ubuntu.com/ubuntu jammy-updates/main Translation-en [338 kB]

Get:12 http://archive.ubuntu.com/ubuntu jammy-updates/restricted amd64 Packages [2257 kB]

Get:13 http://archive.ubuntu.com/ubuntu jammy-updates/restricted Translation-en [388 kB]

Get:14 http://archive.ubuntu.com/ubuntu jammy-updates/universe amd64 Packages [1109 kB]

Get:15 http://archive.ubuntu.com/ubuntu jammy-updates/universe Translation-en [259 kB]

Get:16 https://packages.cloud.google.com/apt cloud-sdk/main all Packages [1490 kB]

Get:17 http://security.ubuntu.com/ubuntu jammy-security/main Translation-en [280 kB]

Get:18 http://security.ubuntu.com/ubuntu jammy-security/restricted amd64 Packages [2178 kB]

Get:19 http://security.ubuntu.com/ubuntu jammy-security/restricted Translation-en [374 kB]

Get:20 http://security.ubuntu.com/ubuntu jammy-security/universe amd64 Packages [887 kB]

Get:21 http://security.ubuntu.com/ubuntu jammy-security/universe Translation-en [173 kB]

Fetched 16.8 MB in 3s (5682 kB/s)

Reading package lists... Done

Building dependency tree... Done

Reading state information... Done

36 packages can be upgraded. Run 'apt list --upgradable' to see them.

W: https://dl.yarnpkg.com/debian/dists/stable/InRelease: Key is stored in legacy trusted.gpg keyring (/etc/apt/trusted.gpg), see the DEPRECATION section in apt-key(8) for details.

Reading package lists... Done

Building dependency tree... Done

Reading state information... Done

The following additional packages will be installed:

libpq5 postgresql-client-14 postgresql-client-common

Suggested packages:

postgresql-14 postgresql-doc-14

The following NEW packages will be installed:

libpq5 postgresql-client postgresql-client-14 postgresql-client-common

0 upgraded, 4 newly installed, 0 to remove and 36 not upgraded.

Need to get 1404 kB of archives.

After this operation, 4615 kB of additional disk space will be used.

Do you want to continue? [Y/n] Y

Get:1 http://archive.ubuntu.com/ubuntu jammy-updates/main amd64 libpq5 amd64 14.12-0ubuntu0.22.04.1 [149 kB]

Get:2 http://archive.ubuntu.com/ubuntu jammy/main amd64 postgresql-client-common all 238 [29.6 kB]

Get:3 http://archive.ubuntu.com/ubuntu jammy-updates/main amd64 postgresql-client-14 amd64 14.12-0ubuntu0.22.04.1 [1223 kB]

Get:4 http://archive.ubuntu.com/ubuntu jammy/main amd64 postgresql-client all 14+238 [3292 B]

Fetched 1404 kB in 1s (2680 kB/s)

Selecting previously unselected package libpq5:amd64.

(Reading database ... 150601 files and directories currently installed.)

Preparing to unpack .../libpq5_14.12-0ubuntu0.22.04.1_amd64.deb ...

Unpacking libpq5:amd64 (14.12-0ubuntu0.22.04.1) ...

Selecting previously unselected package postgresql-client-common.

Preparing to unpack .../postgresql-client-common_238_all.deb ...

Unpacking postgresql-client-common (238) ...

Selecting previously unselected package postgresql-client-14.

Preparing to unpack .../postgresql-client-14_14.12-0ubuntu0.22.04.1_amd64.deb ...

Unpacking postgresql-client-14 (14.12-0ubuntu0.22.04.1) ...

Selecting previously unselected package postgresql-client.

Preparing to unpack .../postgresql-client_14+238_all.deb ...

Unpacking postgresql-client (14+238) ...

Setting up postgresql-client-common (238) ...

Setting up libpq5:amd64 (14.12-0ubuntu0.22.04.1) ...

Setting up postgresql-client-14 (14.12-0ubuntu0.22.04.1) ...

update-alternatives: using /usr/share/postgresql/14/man/man1/psql.1.gz to provide /usr/share/man/man1/psql.1.gz (psql.1.gz) in auto mode

Setting up postgresql-client (14+238) ...

Processing triggers for man-db (2.10.2-1) ...

Processing triggers for libc-bin (2.35-0ubuntu3.8) ...

builder@LuiGi:~/Workspaces/cachethq-Docker$ which psql

/usr/bin/psql

Let’s try that gcloud command again, then create our database and user and grant that user permissions on the new database:

$ gcloud sql connect cachet-psql --user postgres

Allowlisting your IP for incoming connection for 5 minutes...done.

Connecting to database with SQL user [postgres].Password:

psql (14.12 (Ubuntu 14.12-0ubuntu0.22.04.1), server 15.7)

WARNING: psql major version 14, server major version 15.

Some psql features might not work.

SSL connection (protocol: TLSv1.3, cipher: TLS_AES_256_GCM_SHA384, bits: 256, compression: off)

Type "help" for help.

postgres=> CREATE DATABASE cachet;

CREATE DATABASE

postgres=> CREATE USER cachet_user WITH PASSWORD 'DKdoeDkdieoD0083D';

CREATE ROLE

postgres=> GRANT ALL PRIVILEGES ON DATABASE cachet TO cachet_user;

GRANT

postgres=> exit

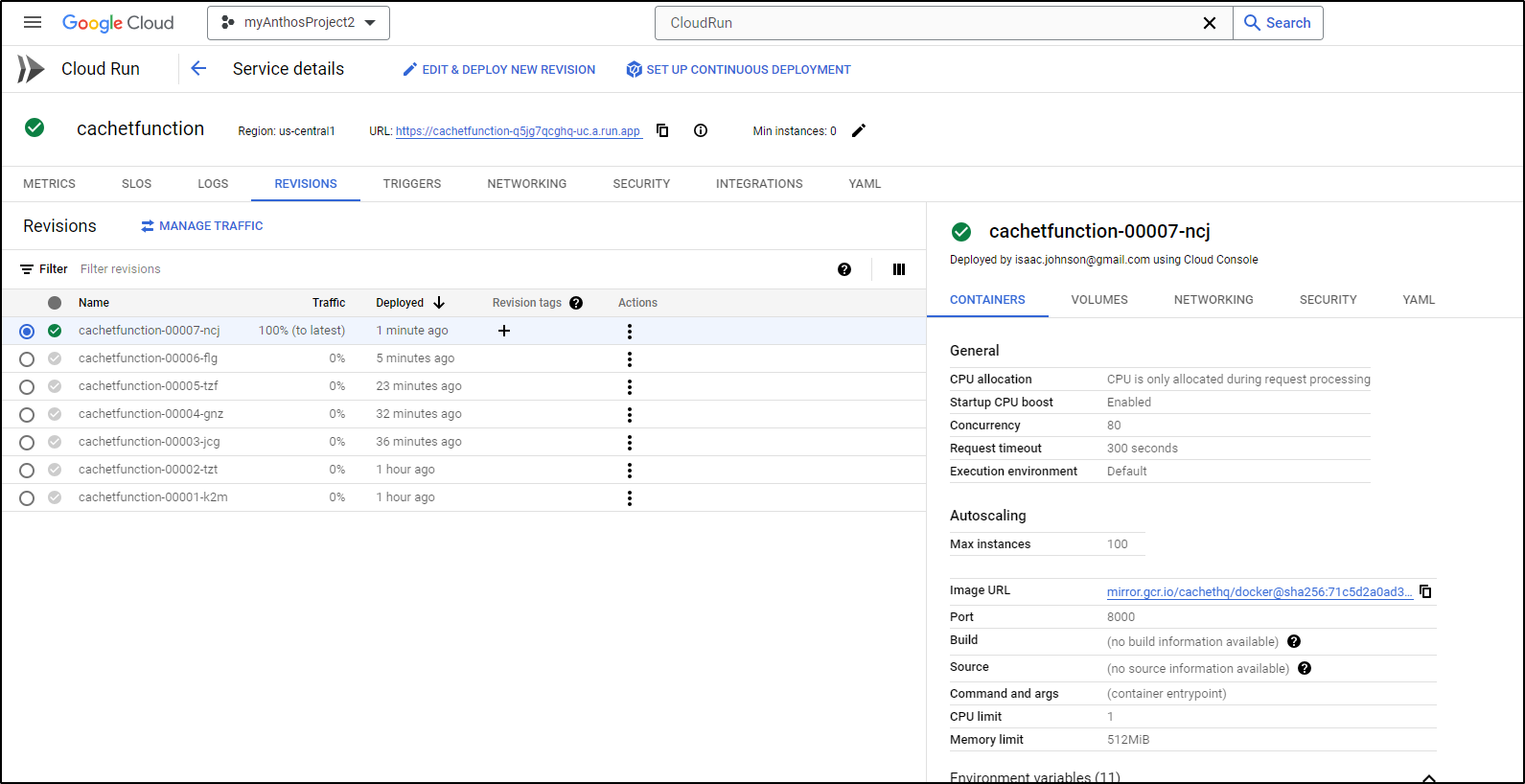

Next, I need to create the CloudRun service with these settings:

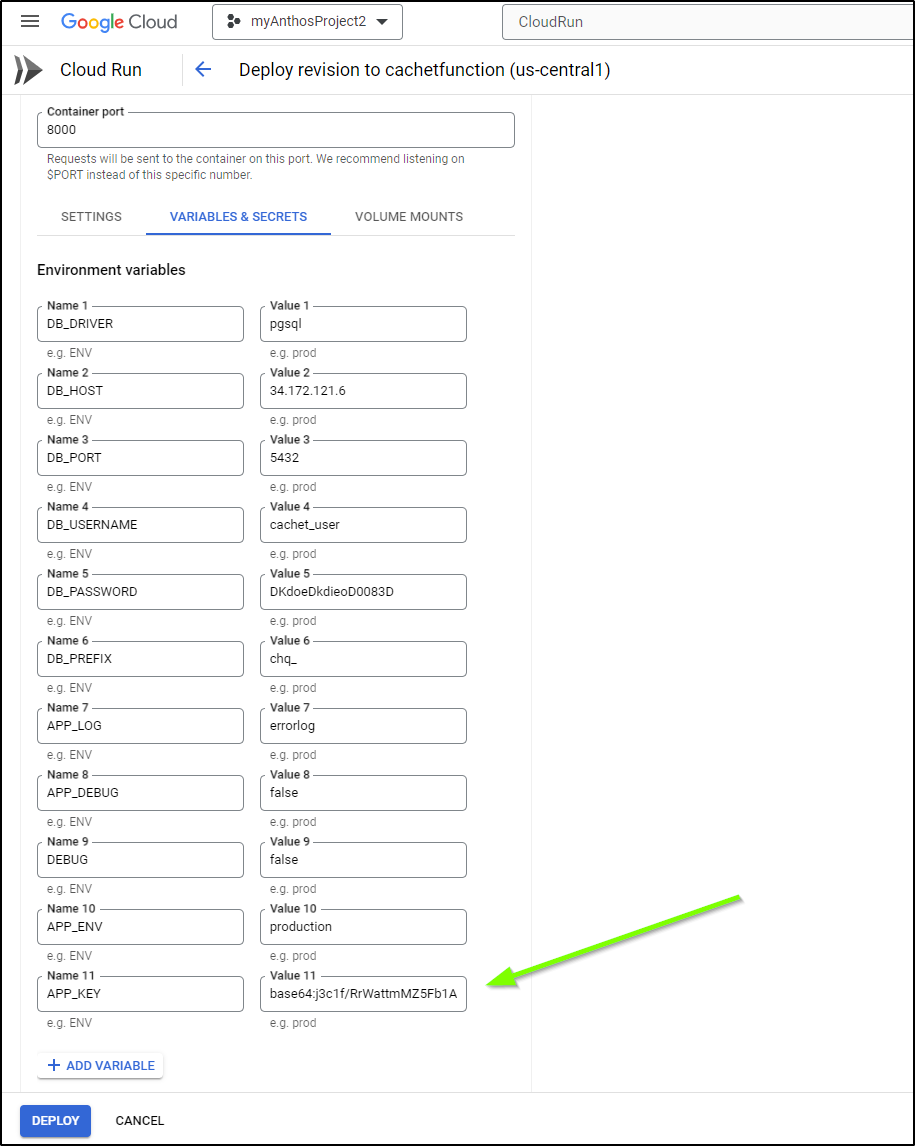

$ gcloud run deploy cachetfunction --image cachethq/docker:latest --platform managed --region us-central1 --allow-unauthenticated --set-env-vars=DB_DRIVER=pgsql,DB_HOST=34.172.121.6,DB_PORT=5432,DB_USERNAME=cachet_user,DB_PASSWORD=DKdoeDkdieoD0083D,DB_PREFIX=chq_,APP_LOG=errorlog,APP_DEBUG=false,DEBUG=false,APP_ENV=production --port 8000
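That `--set-env-vars` value is one long comma-separated string, which is easy to get wrong. A minimal sketch, assuming you keep the settings in a Python dict: the hypothetical `build_env_flag` helper joins them and, if any value itself contains a comma, switches to gcloud's alternate-delimiter syntax (`^DELIM^`, described under `gcloud topic escaping`).

```python
"""Build a gcloud --set-env-vars value from a dict (illustrative helper)."""


def build_env_flag(env: dict[str, str], alt_delim: str = "@") -> str:
    """Return the value for `gcloud run deploy --set-env-vars=...`.

    gcloud splits list flags on commas; if any value contains a comma we
    prefix the string with ^<delim>^ so gcloud splits on <delim> instead.
    """
    pairs = [f"{key}={value}" for key, value in env.items()]
    if any("," in value for value in env.values()):
        if any(alt_delim in pair for pair in pairs):
            raise ValueError(f"alternate delimiter {alt_delim!r} appears in a value")
        return f"^{alt_delim}^" + alt_delim.join(pairs)
    return ",".join(pairs)


if __name__ == "__main__":
    # Non-secret settings from the deploy above; keep DB_PASSWORD out of
    # source control and inject it separately at deploy time.
    settings = {
        "DB_DRIVER": "pgsql",
        "DB_HOST": "34.172.121.6",
        "DB_PORT": "5432",
        "DB_USERNAME": "cachet_user",
        "DB_PREFIX": "chq_",
        "APP_ENV": "production",
    }
    print(build_env_flag(settings))
```

Building the string in code mostly buys repeatability; for secrets, Cloud Run's Secret Manager integration is arguably the better home than a plain variable.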

Deploying container to Cloud Run service [cachetfunction] in project [myanthosproject2] region [us-central1]

X Deploying new service...

- Creating Revision...

. Routing traffic...

✓ Setting IAM Policy...

Deployment failed

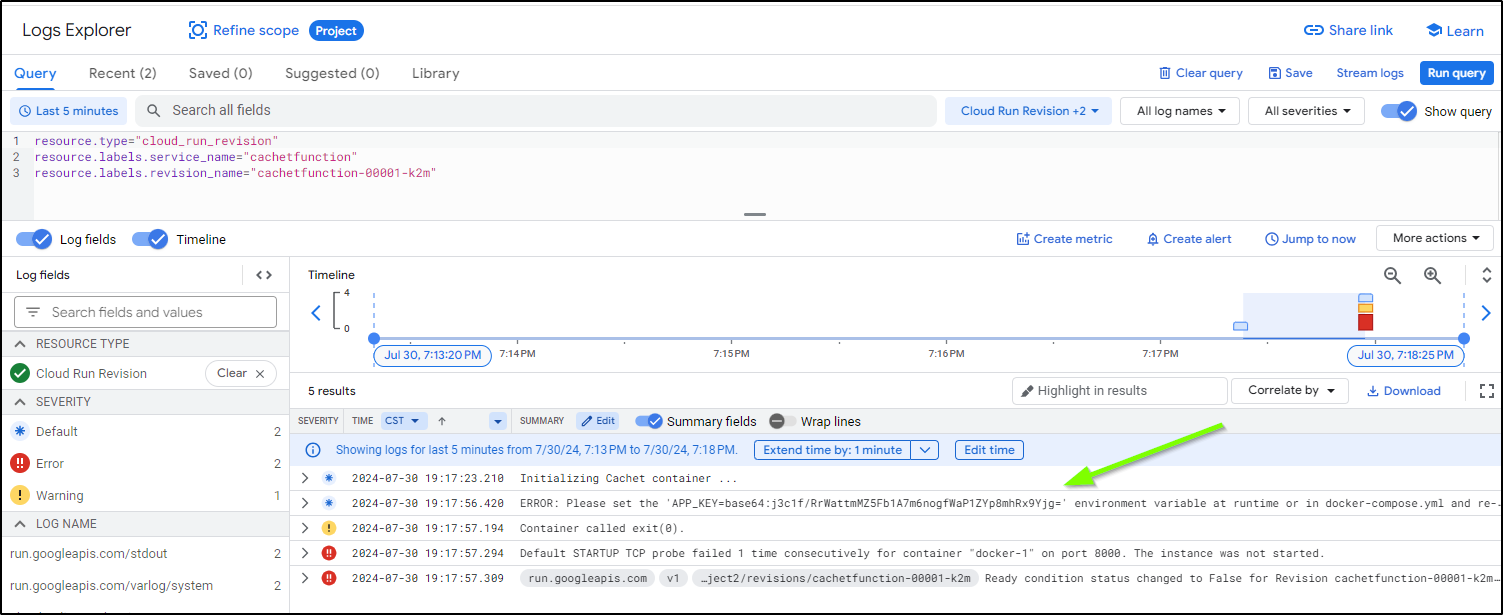

ERROR: (gcloud.run.deploy) Revision 'cachetfunction-00001-k2m' is not ready and cannot serve traffic. The user-provided container failed to start and listen on the port defined provided by the PORT=8000 environment variable. Logs for this revision might contain more information.

Logs URL: https://console.cloud.google.com/logs/viewer?project=myanthosproject2&resource=cloud_run_revision/service_name/cachetfunction/revision_name/cachetfunction-00001-k2m&advancedFilter=resource.type%3D%22cloud_run_revision%22%0Aresource.labels.service_name%3D%22cachetfunction%22%0Aresource.labels.revision_name%3D%22cachetfunction-00001-k2m%22

For more troubleshooting guidance, see https://cloud.google.com/run/docs/troubleshooting#container-failed-to-start

The likely culprit is the missing APP_KEY, so I edited the service to set APP_KEY under “Variables & Secrets”:
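Cachet is a Laravel application, and Laravel's `php artisan key:generate` produces an `APP_KEY` of the form `base64:` followed by 32 random bytes, base64-encoded (the length the default AES-256-CBC cipher expects). If you don't have PHP handy, a minimal Python sketch that produces a key in that same format follows; `make_app_key` is a hypothetical helper name, not part of Cachet.

```python
"""Generate a Laravel-style APP_KEY: 'base64:' + 32 random bytes."""
import base64
import secrets


def make_app_key(num_bytes: int = 32) -> str:
    """Return 'base64:<...>' built from cryptographically secure random bytes.

    32 bytes matches the key length AES-256-CBC expects.
    """
    raw = secrets.token_bytes(num_bytes)
    return "base64:" + base64.b64encode(raw).decode("ascii")


if __name__ == "__main__":
    print(make_app_key())
```

Treat the generated key like a password; rotating it later will invalidate anything Cachet encrypted with the old one.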

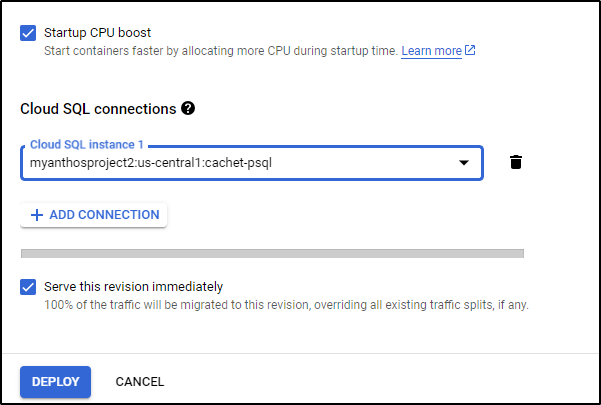

I’m also going to add a CloudSQL Connection when I deploy

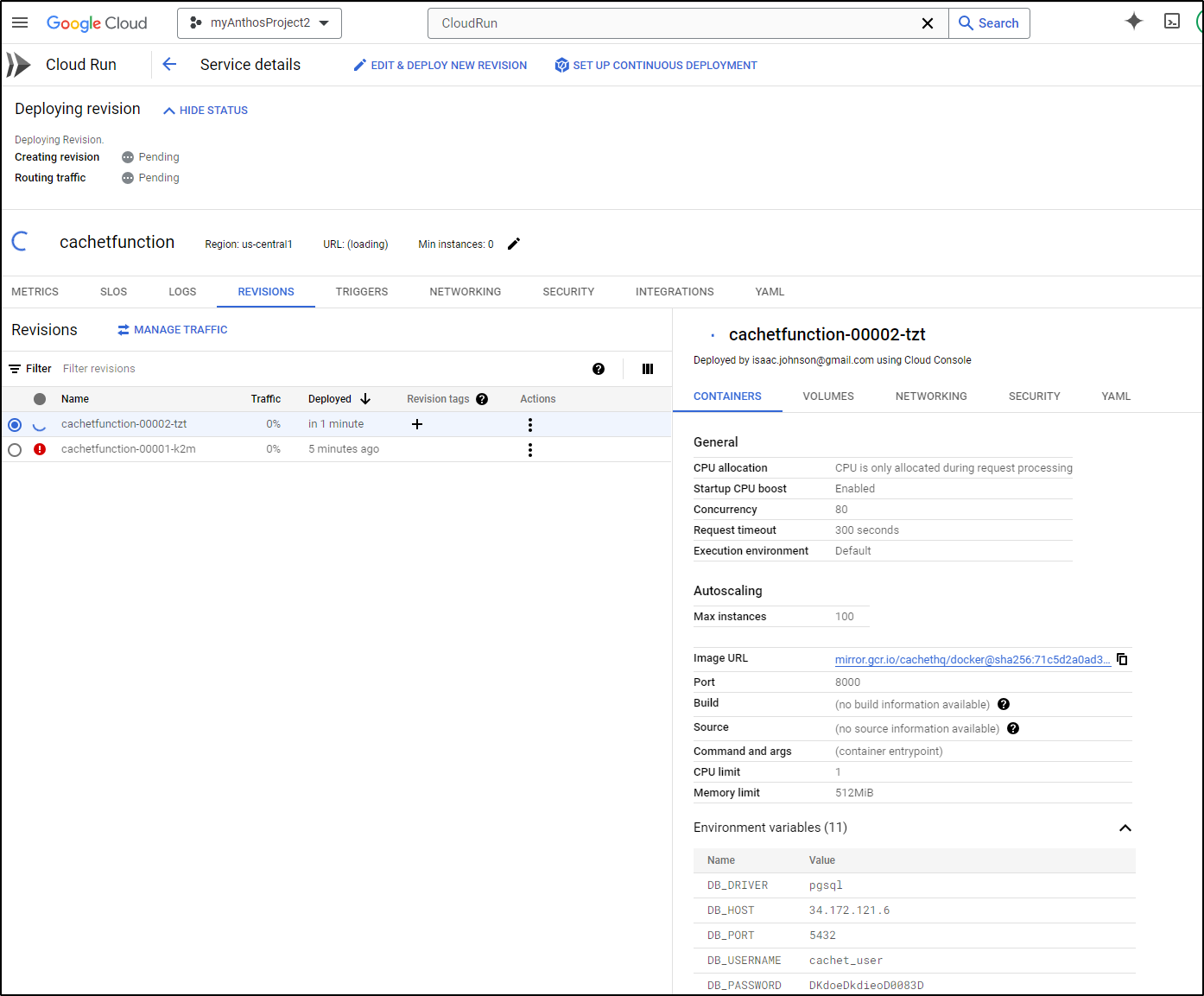

I’ll now deploy it

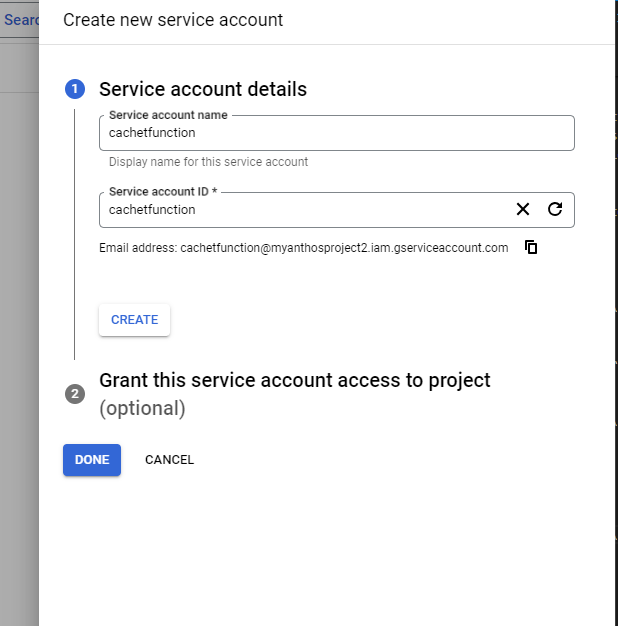

It took a bit of futzing including setting a unique service account

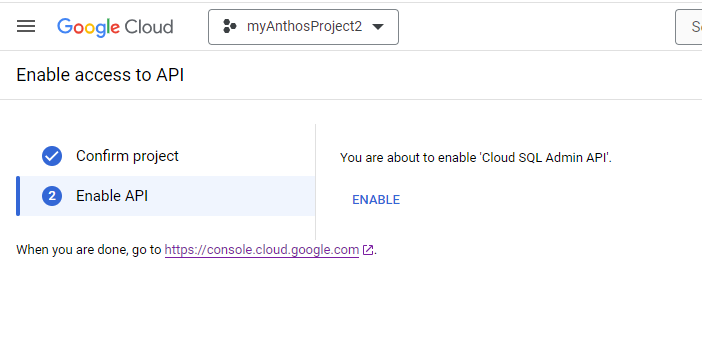

Enabling the Cloud SQL Admin API

I also just opened up the PostgresQL CloudSQL instance to 0.0.0.0\0 to get past connectivity issues and lastly, to perform migrations, indeed I needed to use the postgres user and password

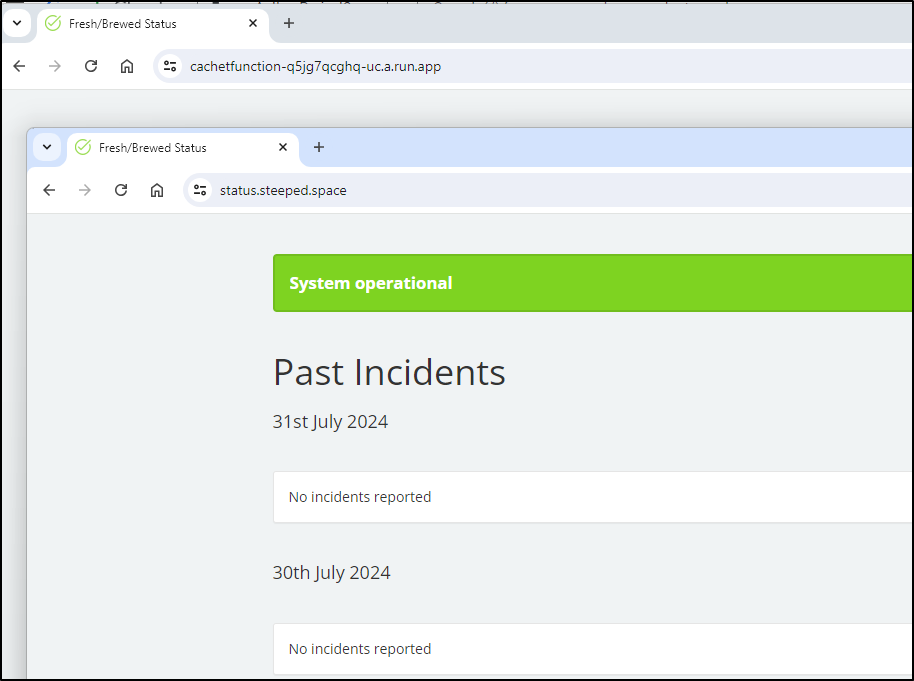

But I have it running now

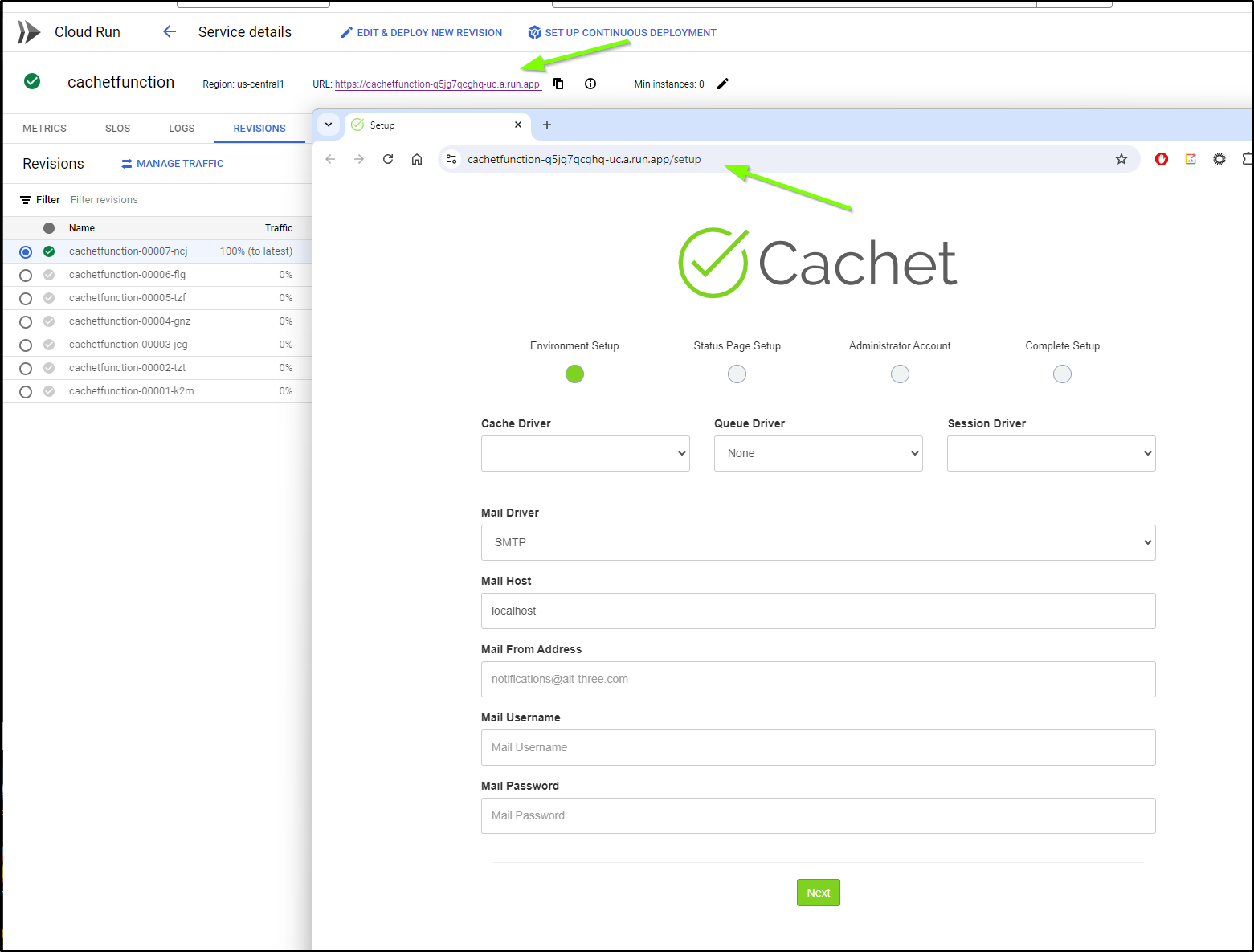

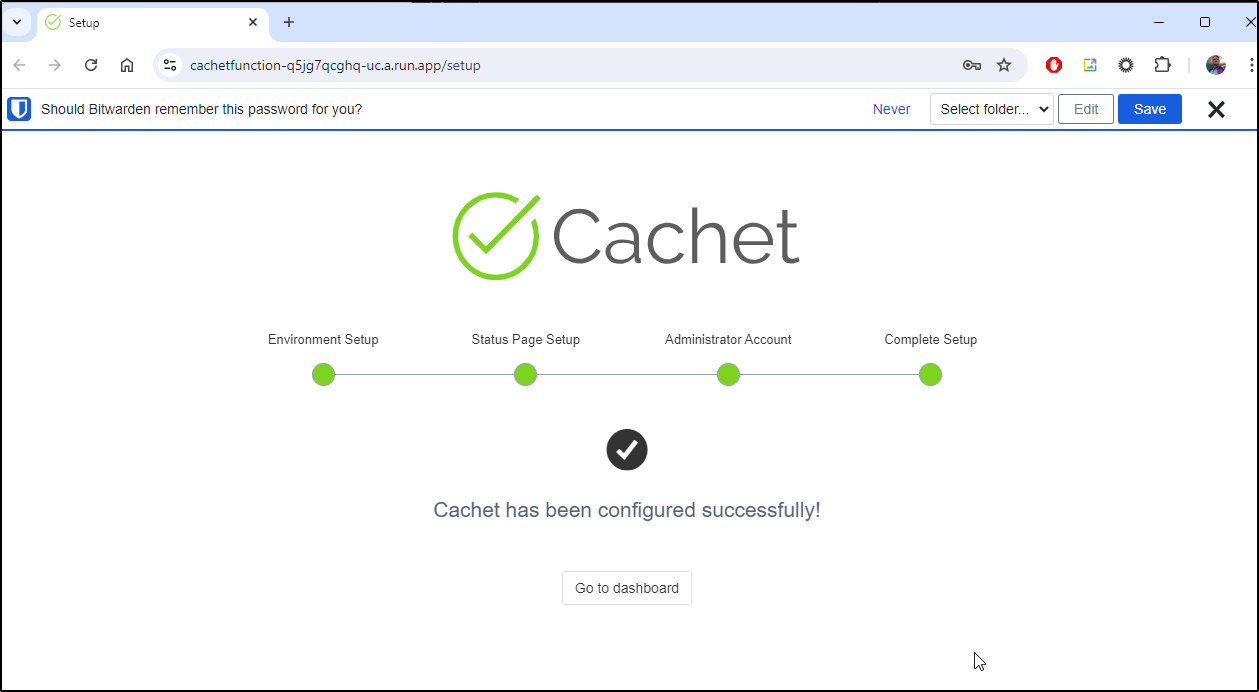

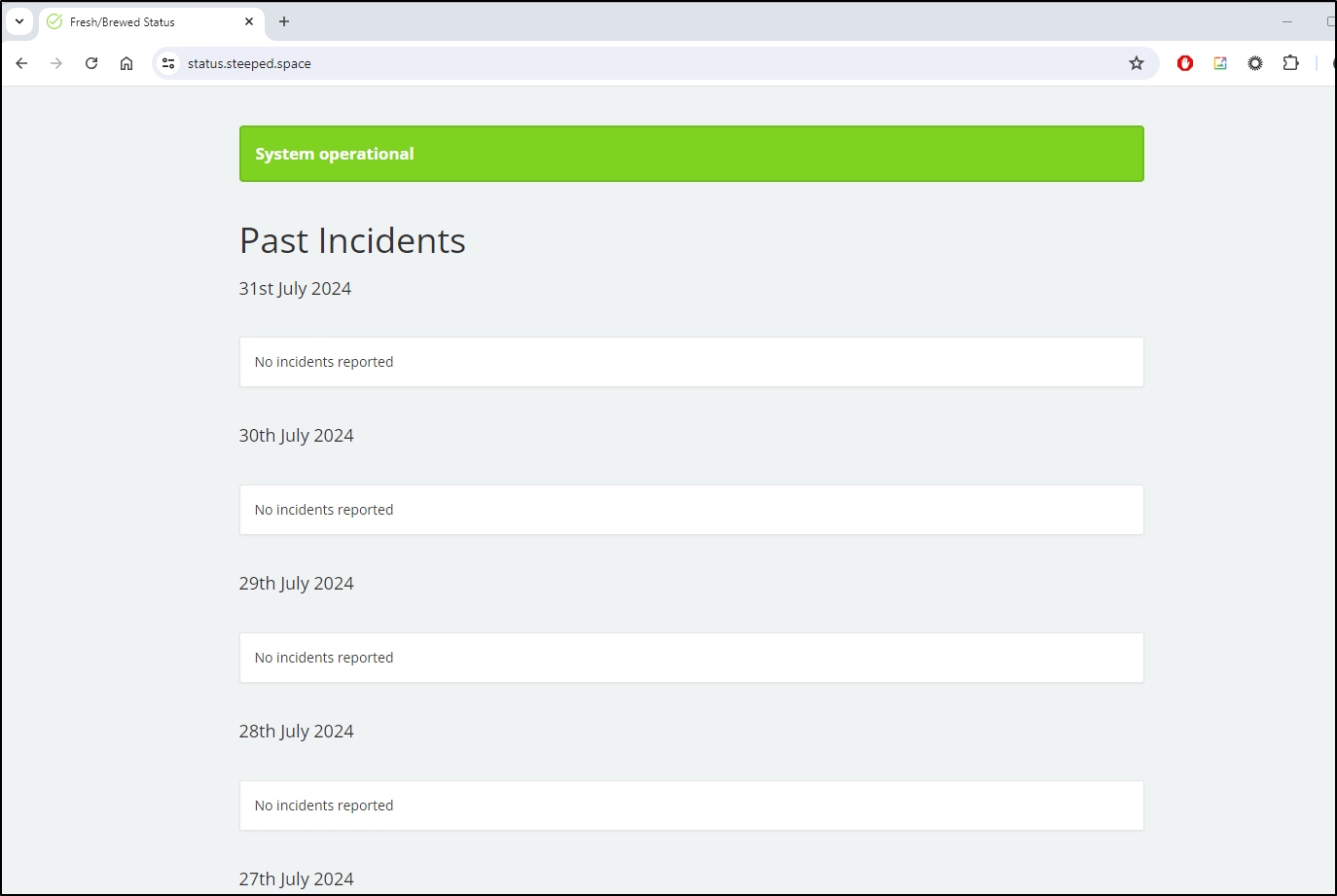

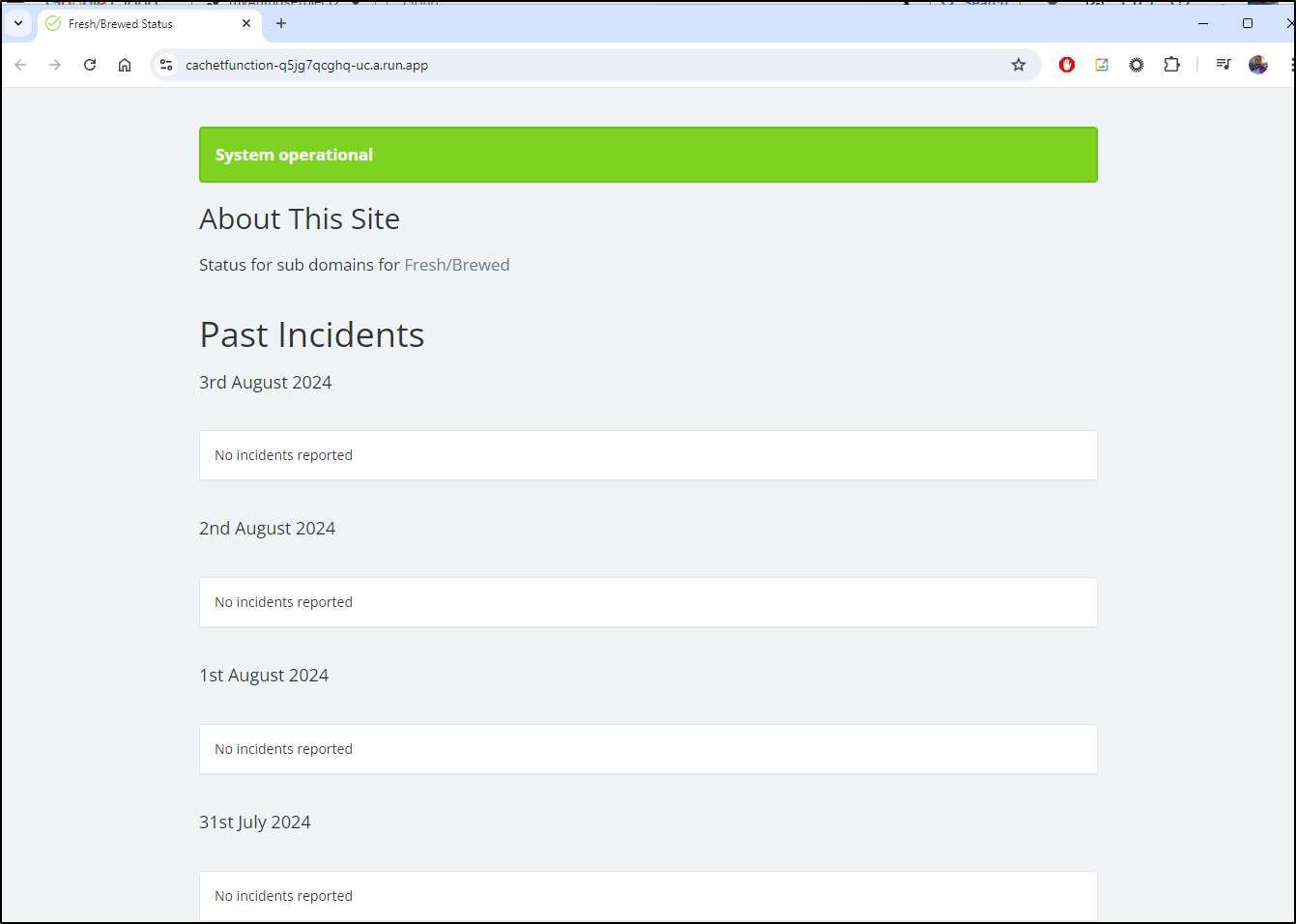

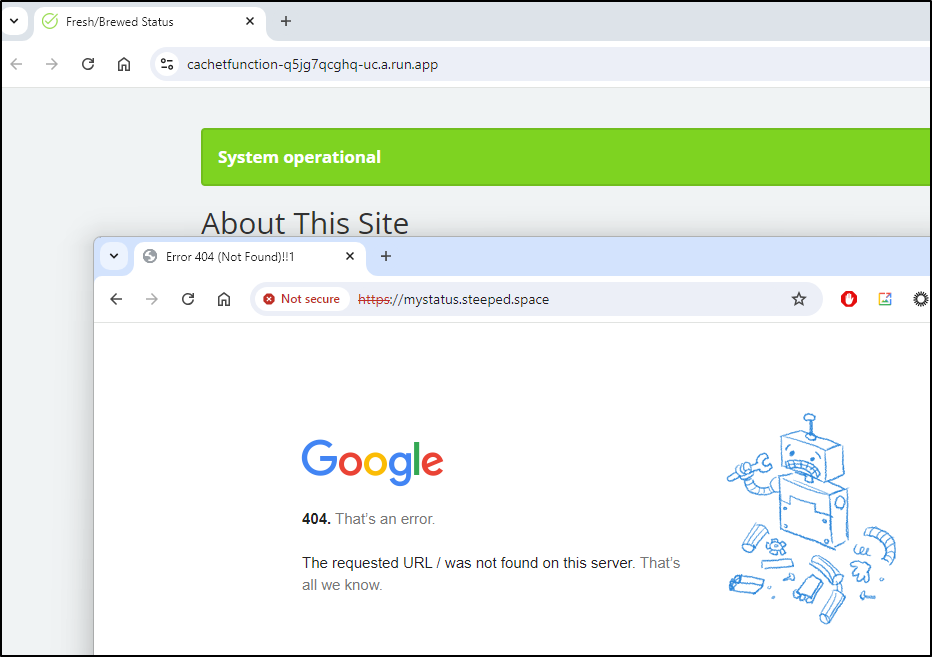

I have yet to setup a Loadbalancer and forwarder, but I can now access using the Direct URL

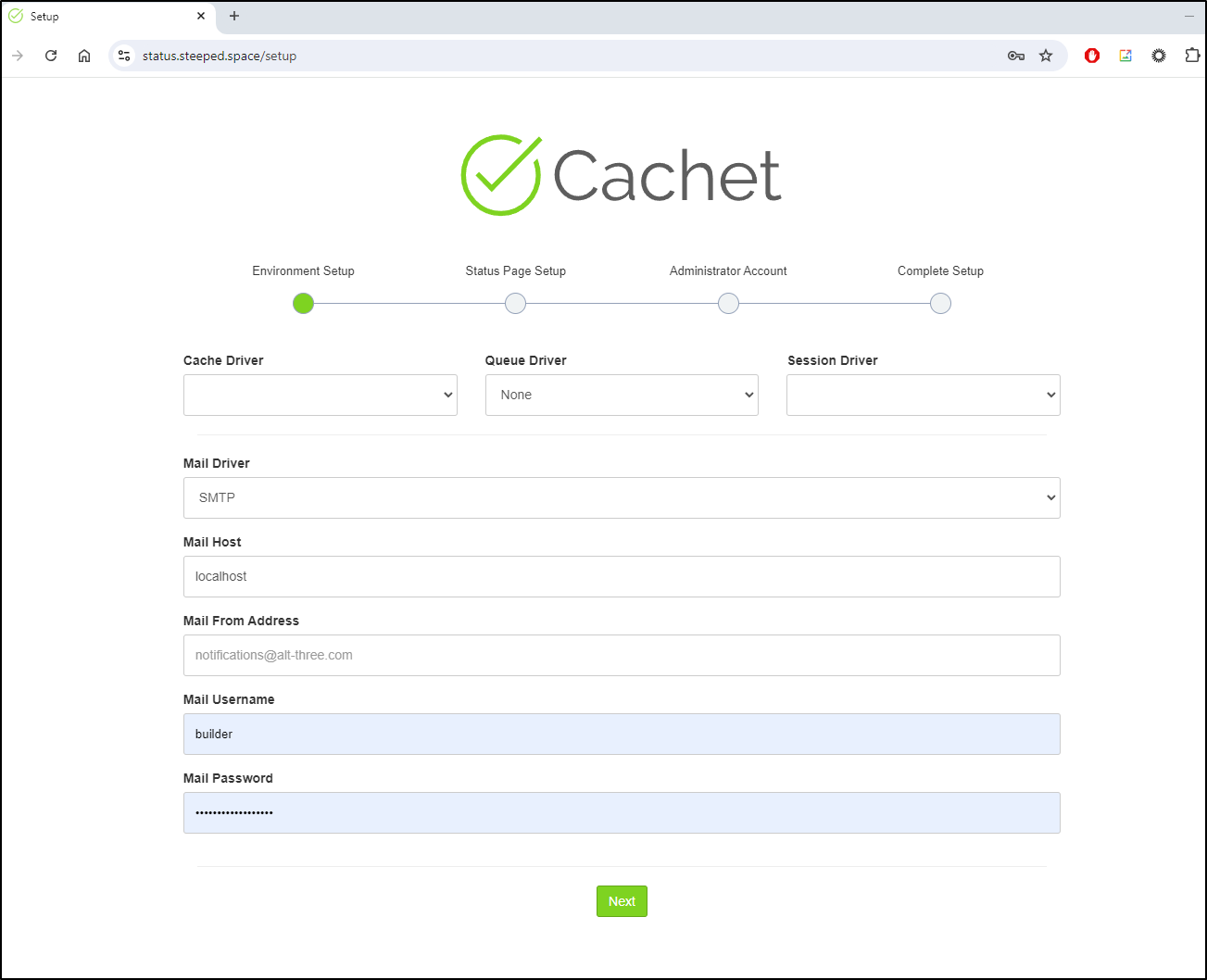

I can walk through setup

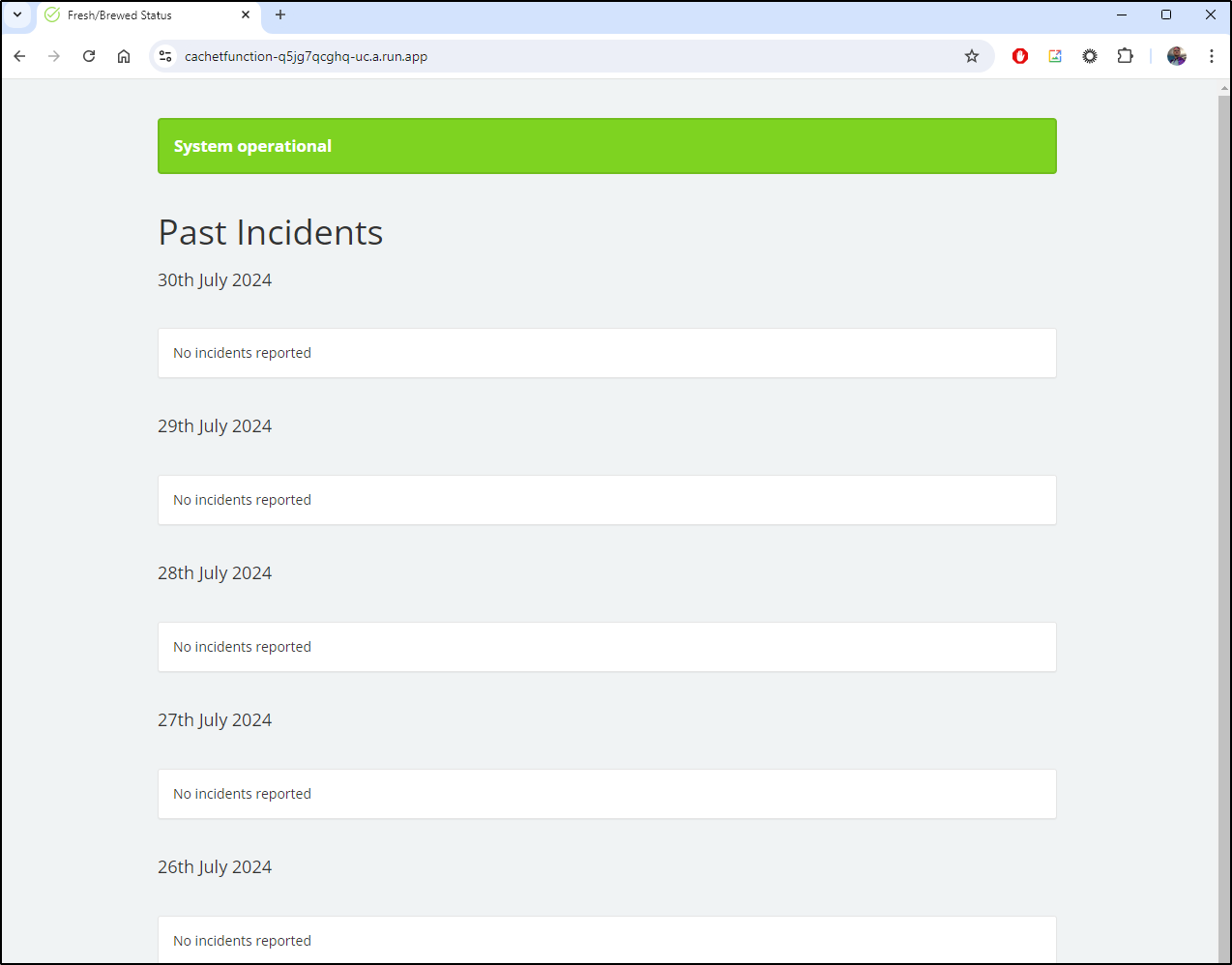

I can now see it live

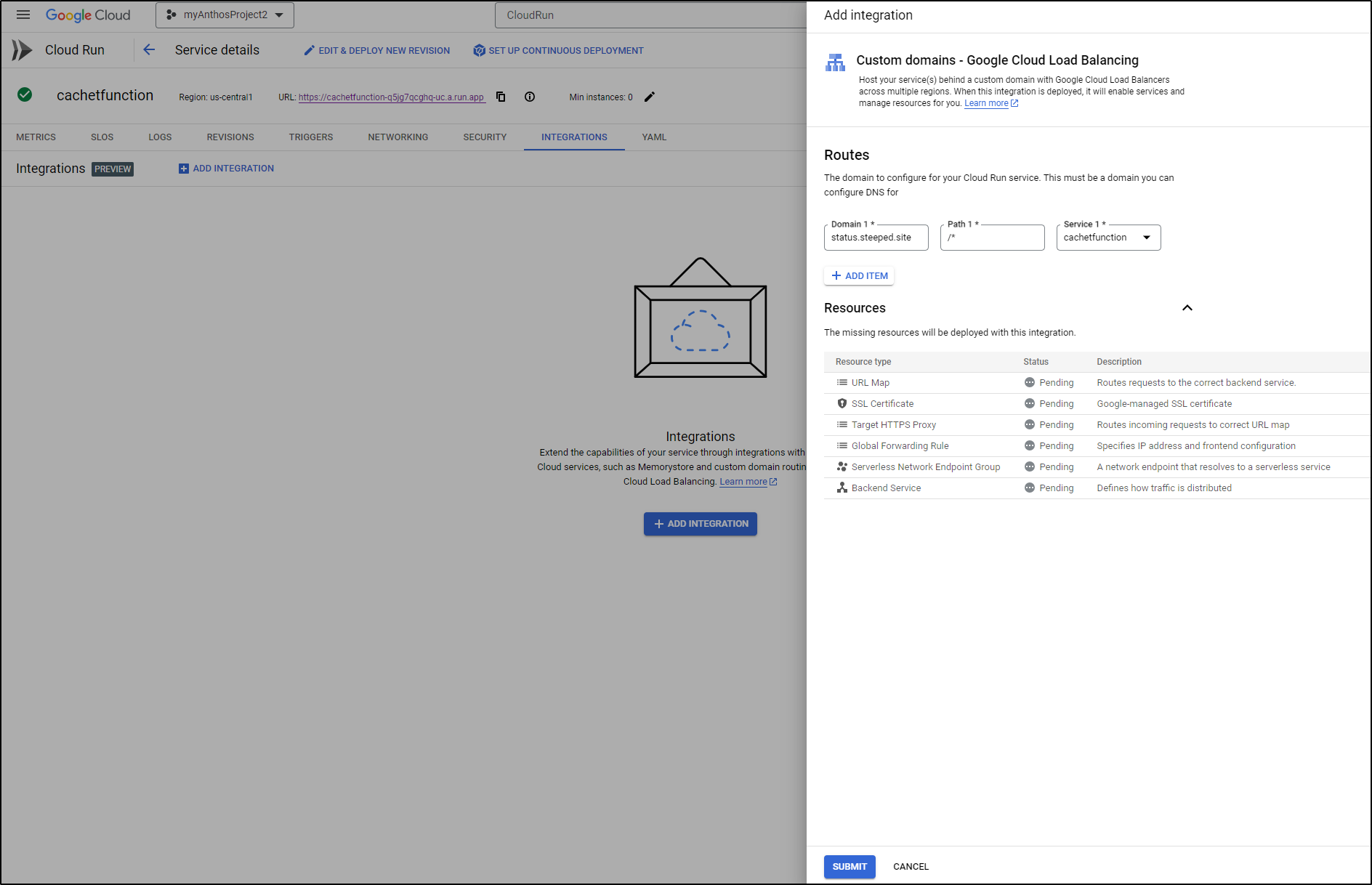

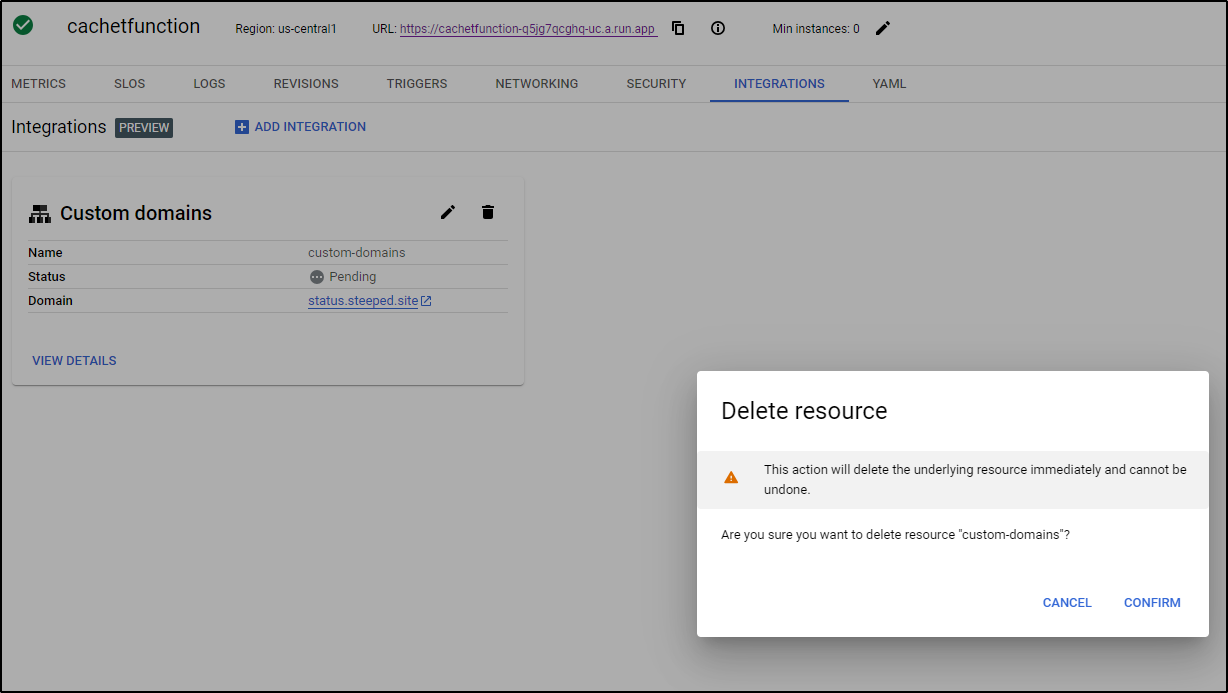

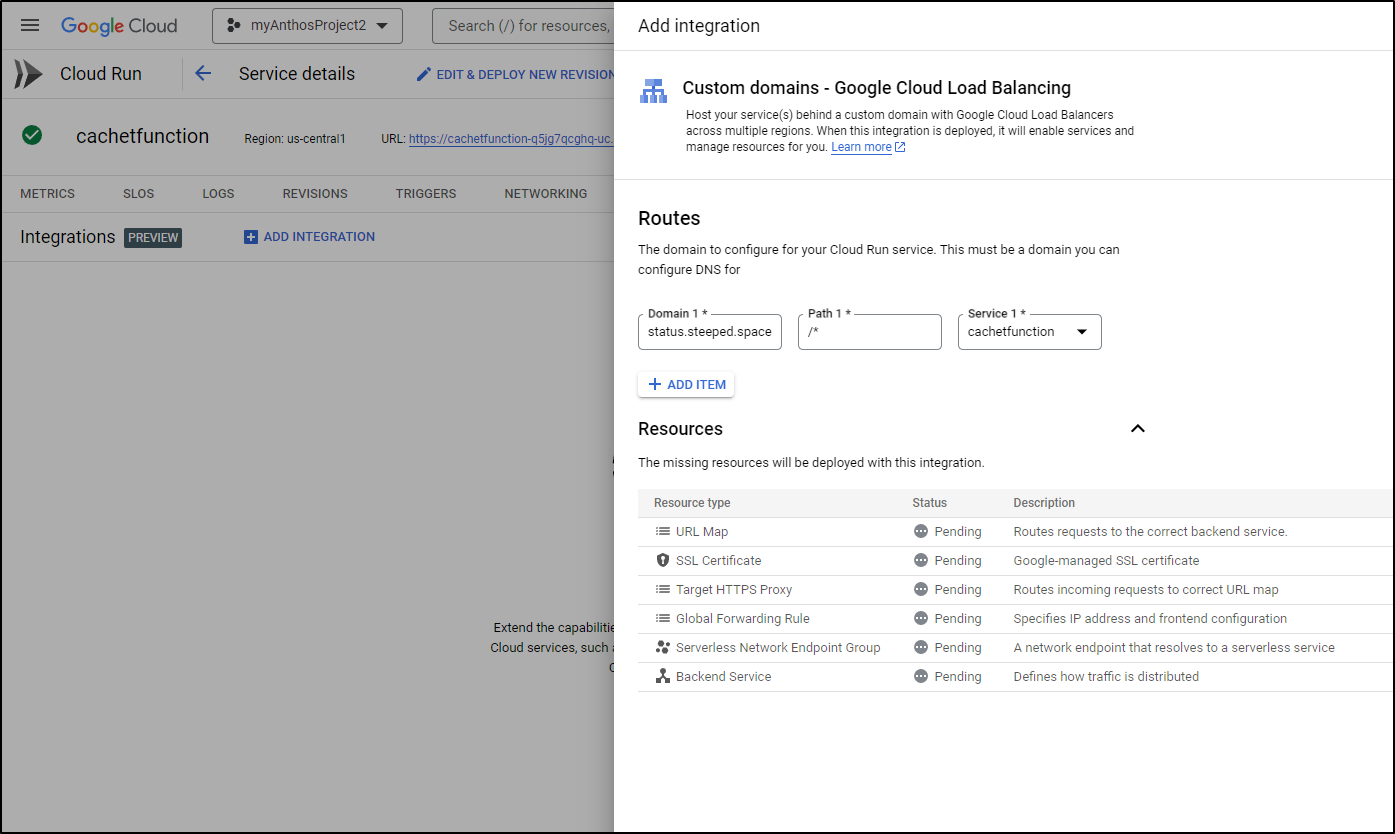

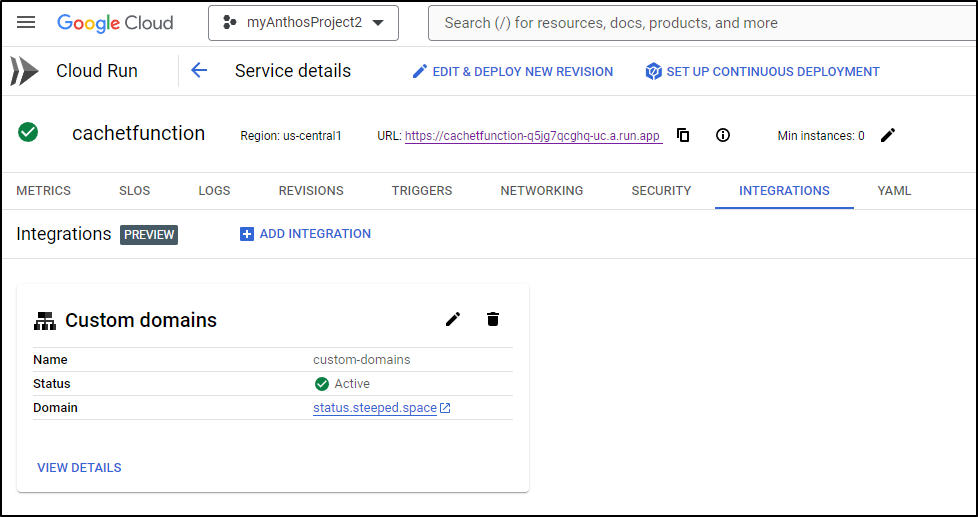

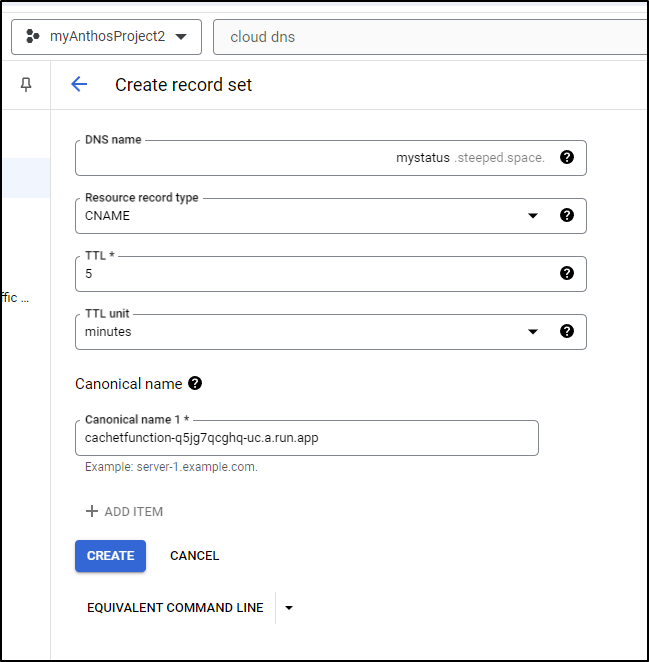

Next, I will use the Integrations tab to add a GCP Load Balancer directly to my CloudRun service

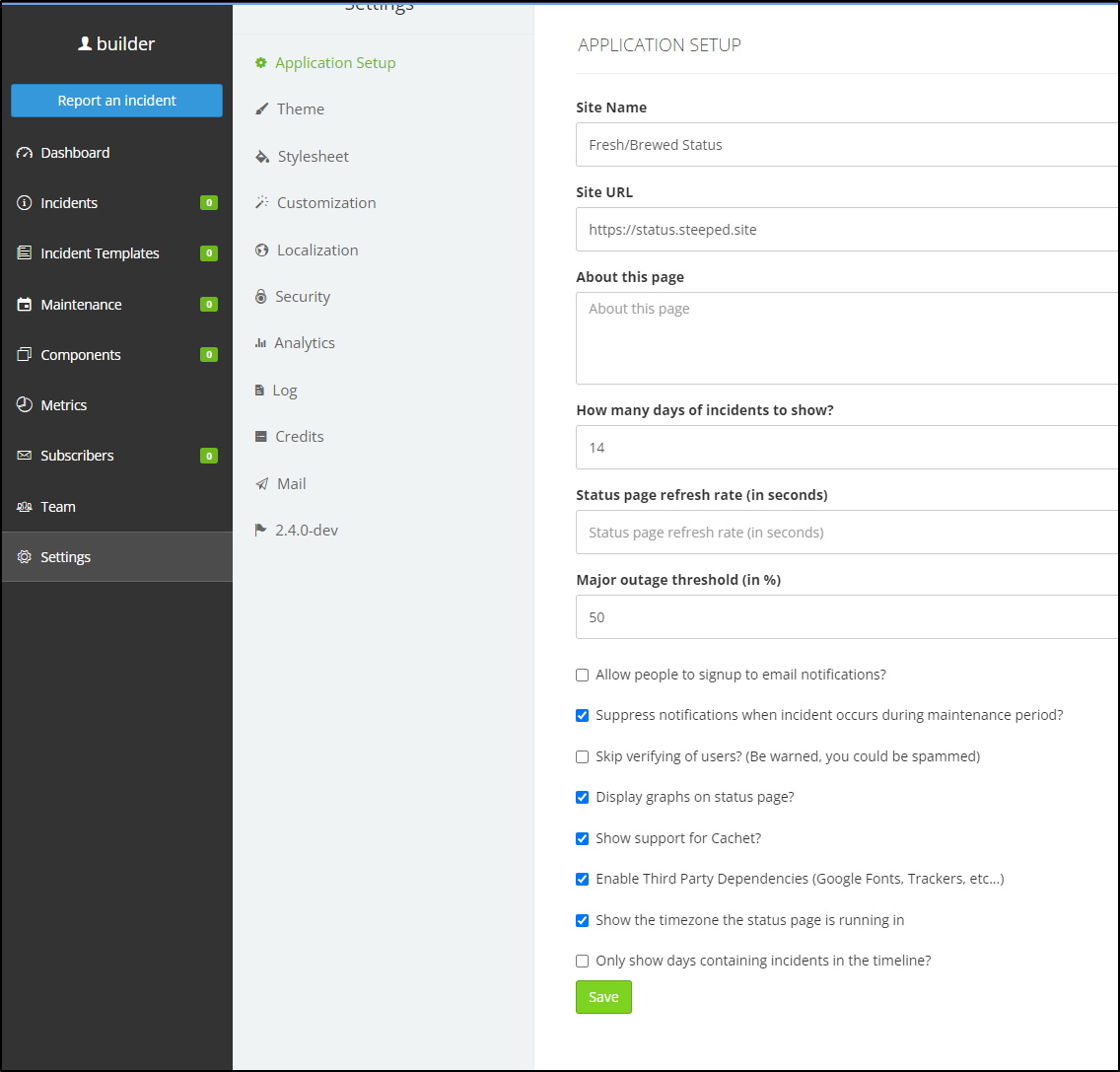

The first time I did this, as you see above, I typoed and wrote ‘status.steeped.site’ instead of ‘status.steeped.space’

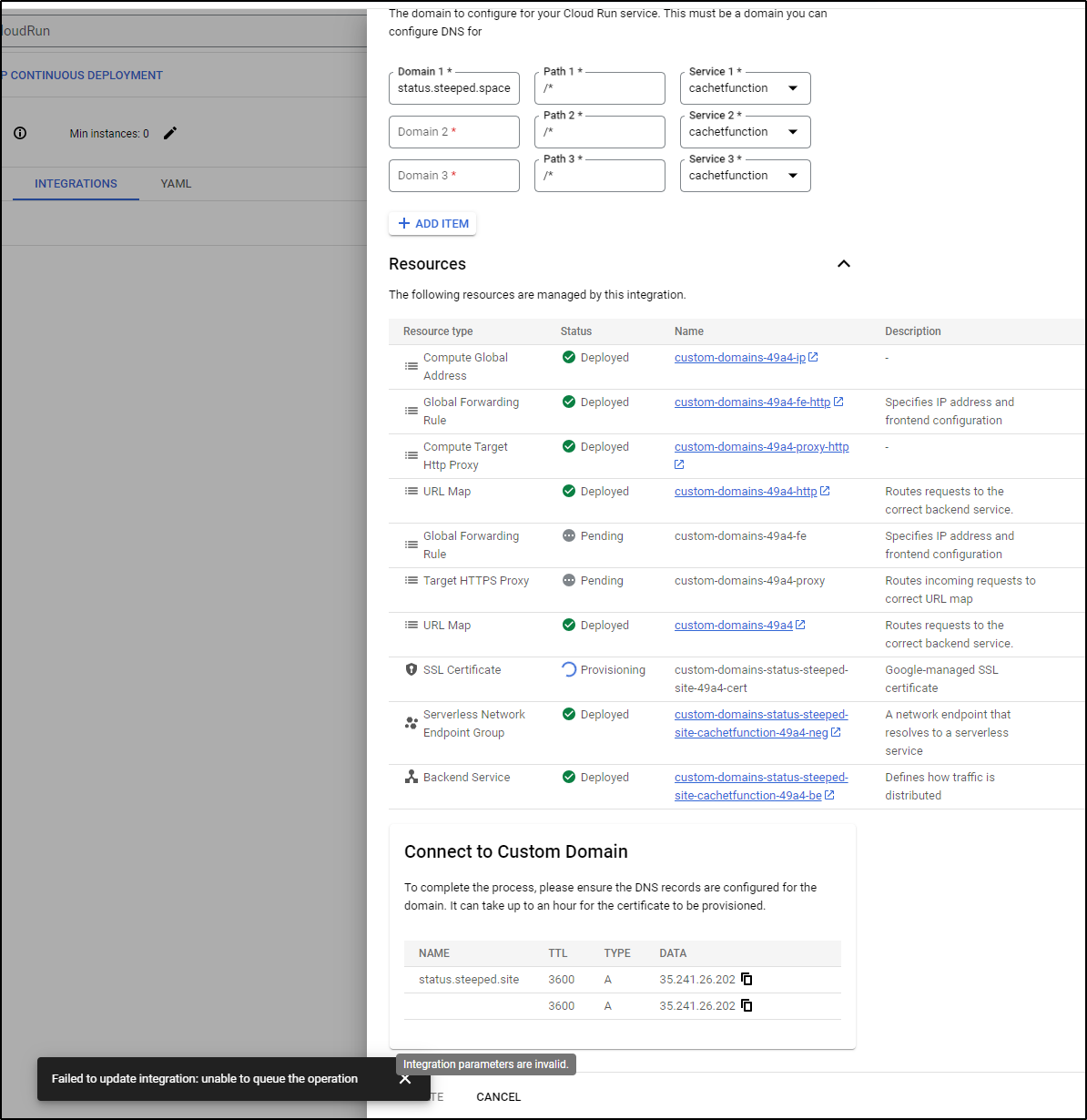

Editing didn’t work; instead, it hung in provisioning.

So I removed the integration altogether and tried again, this time with the right DNS name

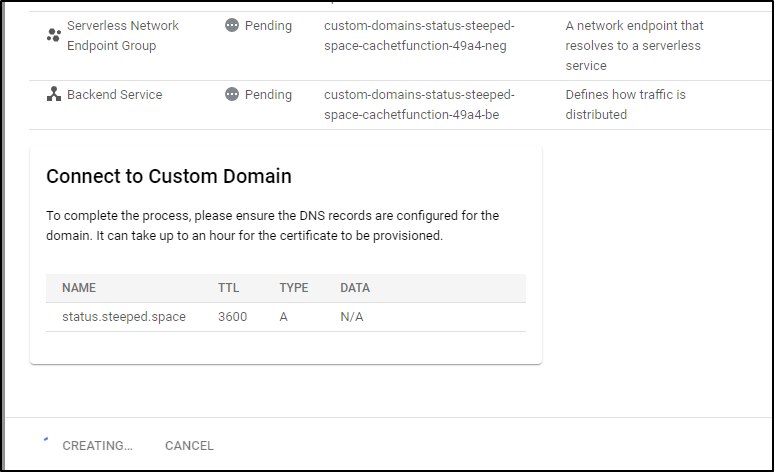

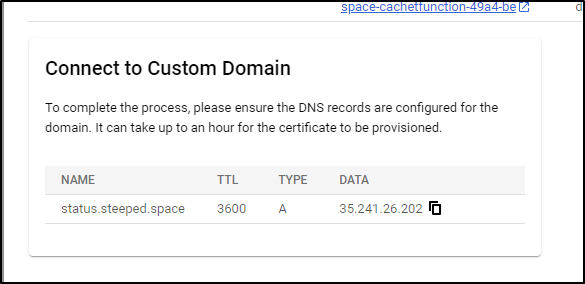

One thing to watch in the lower right is the “Connect to Custom Domain” window.

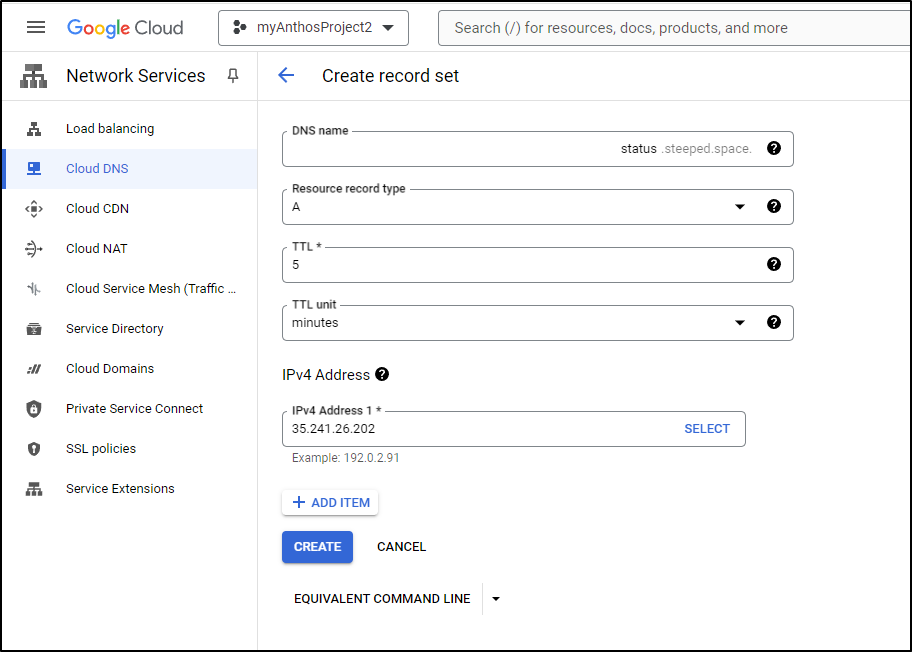

When it populates the “Data” with the destination for the A Record, then we need to create that.

Once set, I see it active

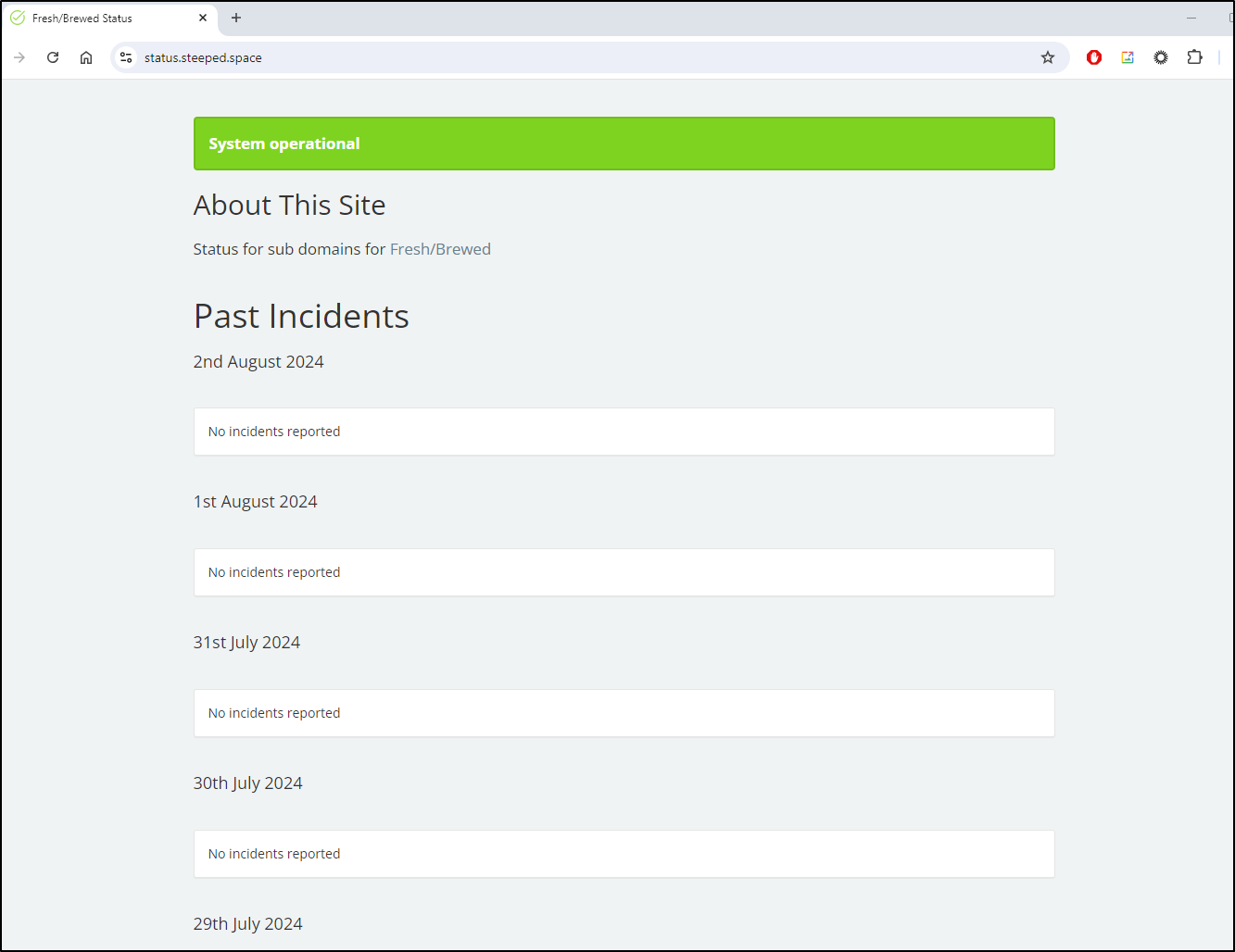

Now that it’s complete, we can access the site on status.steeped.space

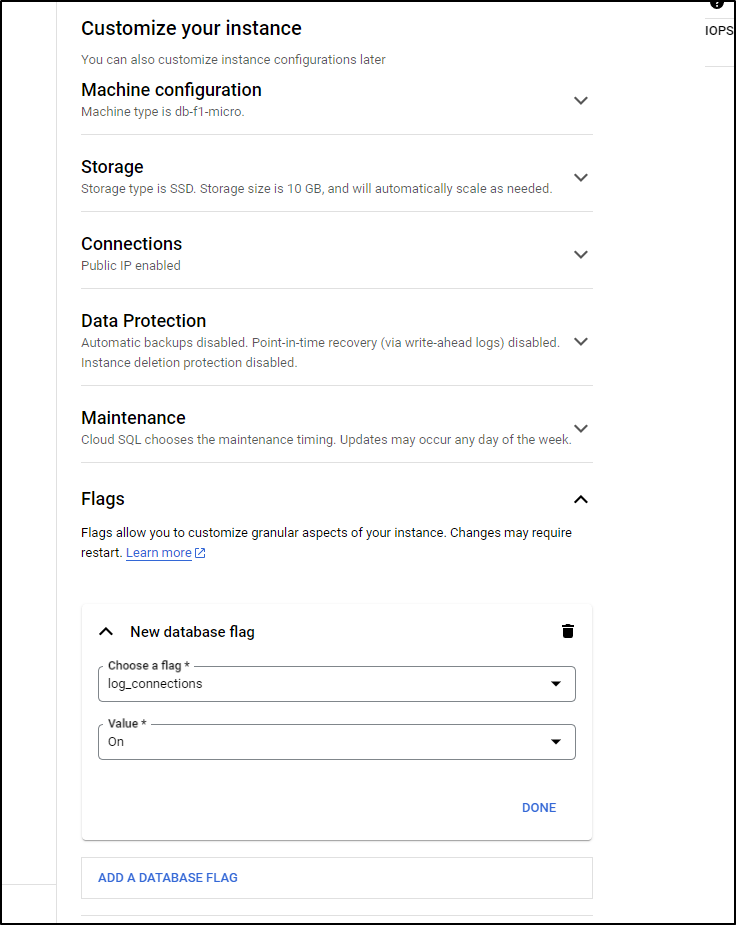

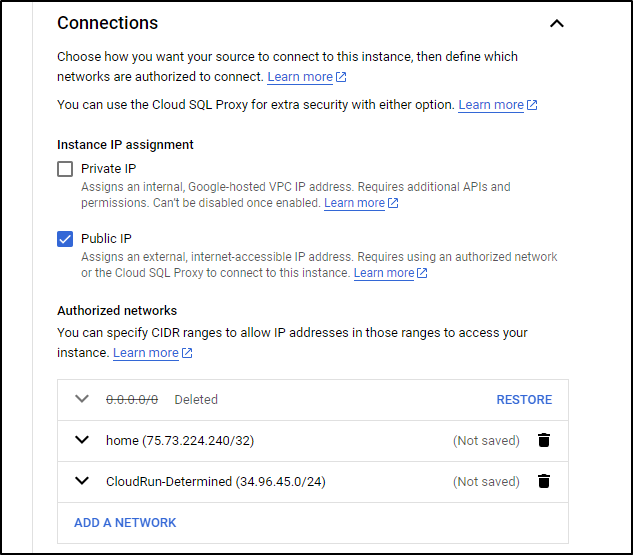

Database corrections

While I wait on that, let’s log connections so we can reduce that expansive CIDR we allowed to access CloudSQL.

I’ll go to Overview and Edit Configuration on the CloudSQL Database then go to Database Flags to set a new flag. The flag we want is ‘log_connections” and to set it to “on”

Which I can add in the CloudDNS “Add Record Set” window

Or, of course, I could the gcloud cli

gcloud dns --project=myanthosproject2 record-sets create status.steeped.space. --zone="steepedspace" --type="A" --ttl="300" --rrdatas="35.241.26.202"

While GCP won’t show me the IP address that is used by CloudRun behind the scenes, I can always go to the database itself to ask:

postgres=> SELECT

pid,

usename AS user,

client_addr AS ip_address,

application_name,

state

FROM pg_stat_activity;

pid | user | ip_address | application_name | state

-------+---------------+---------------+------------------+--------

30 | cloudsqladmin | | |

16738 | postgres | 34.96.45.245 | | idle

29 | | | |

17122 | postgres | 75.73.224.240 | psql | active

37 | cloudsqladmin | 127.0.0.1 | cloudsqlagent | idle

17129 | cloudsqladmin | 127.0.0.1 | cloudsqlagent | idle

19 | | | |

18 | | | |

28 | | | |

(9 rows)
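Eyeballing that table works for a handful of rows. A minimal sketch of the same filtering in Python, assuming you've pasted psql's default aligned output into a string: it keeps only rows whose client address is neither loopback nor one you list as your own (the `known` set is an assumption you fill in, and `external_clients` is a hypothetical helper).

```python
"""Pick out unexpected client IPs from psql's pg_stat_activity output."""
import ipaddress


def external_clients(psql_output: str, known: set[str]) -> dict[str, set[str]]:
    """Map each non-loopback, non-known client IP to the users seen from it.

    Expects psql's aligned format: columns separated by '|'; the header,
    the '----' separator, and the '(N rows)' footer are skipped.
    """
    clients: dict[str, set[str]] = {}
    for line in psql_output.splitlines():
        cols = [c.strip() for c in line.split("|")]
        if len(cols) < 3 or not cols[0].isdigit():
            continue  # header, separator, footer, or blank line
        user, addr = cols[1], cols[2]
        if not addr:
            continue  # background processes have no client address
        if ipaddress.ip_address(addr).is_loopback or addr in known:
            continue
        clients.setdefault(addr, set()).add(user)
    return clients


SAMPLE = """\
  pid  |     user      |  ip_address   | application_name | state
-------+---------------+---------------+------------------+--------
    30 | cloudsqladmin |               |                  |
 16738 | postgres      | 34.96.45.245  |                  | idle
 17122 | postgres      | 75.73.224.240 | psql             | active
    37 | cloudsqladmin | 127.0.0.1     | cloudsqlagent    | idle
"""

if __name__ == "__main__":
    # 75.73.224.240 is the workstation running psql in the output above.
    print(external_clients(SAMPLE, known={"75.73.224.240"}))
```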

I know that 75.73.224.240 is my own machine, so the CloudRun connection must be the one coming from 34.96.45.245

$ nslookup 34.96.45.245

245.45.96.34.in-addr.arpa name = 245.45.96.34.bc.googleusercontent.com.

Authoritative answers can be found from:

I’ll now tweak my connection rules

And both URLs come up fine

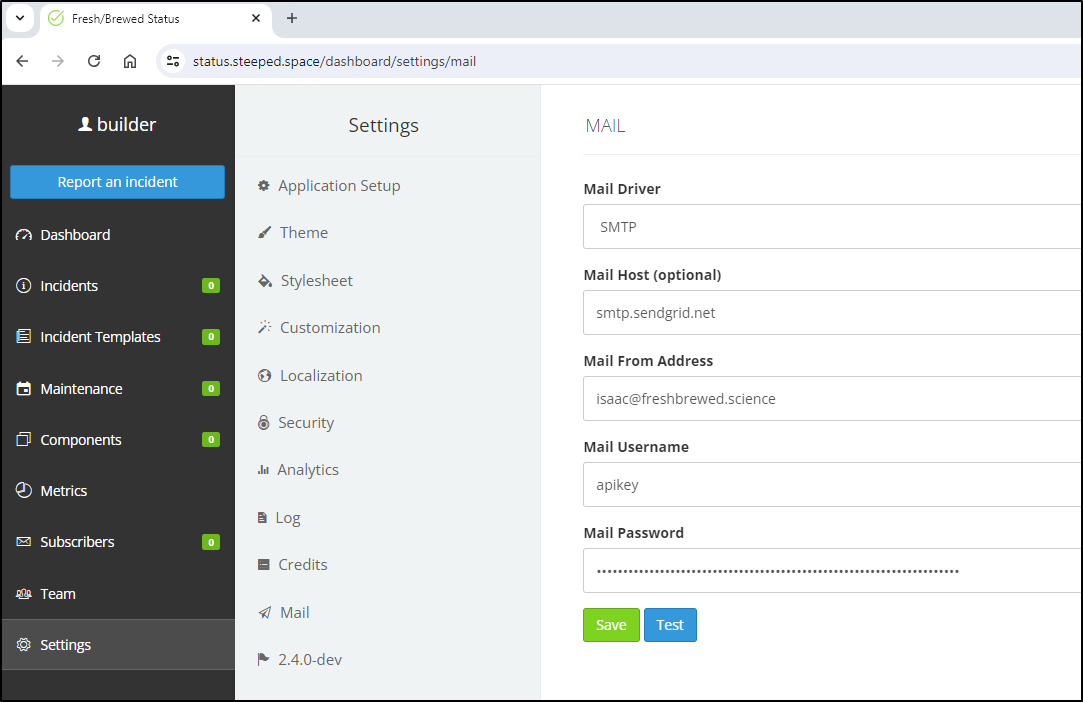

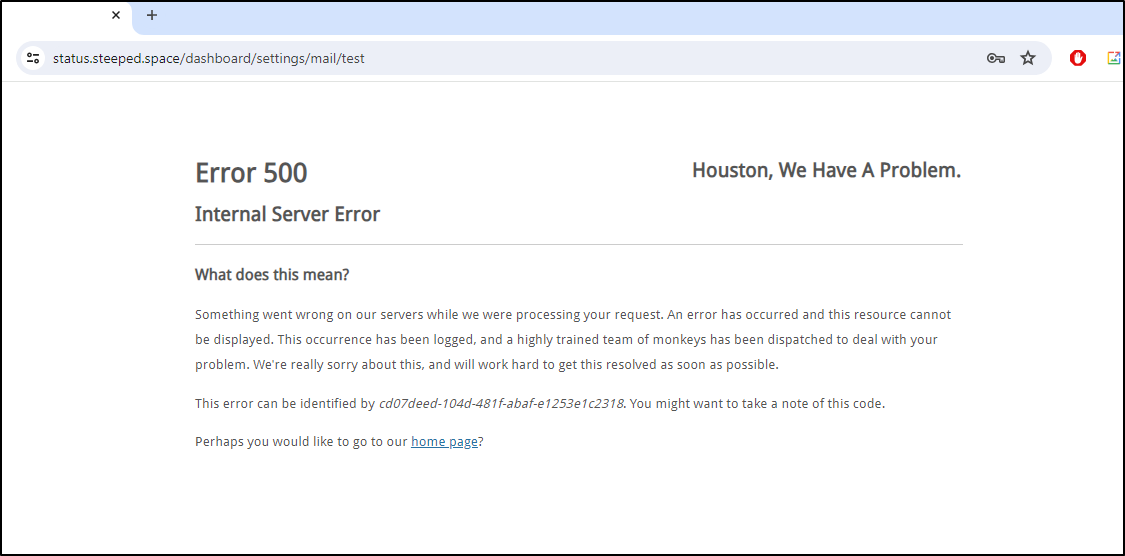

Last test for email

I’ll try one last time to use Sendgrid with this image, though I don’t expect it to work

Nope

I also tried Google (smtp.gmail.com) and my mail.gandi.net, but all of them failed.

For now, I’ll disable email signup and fix the Site URL while I’m there

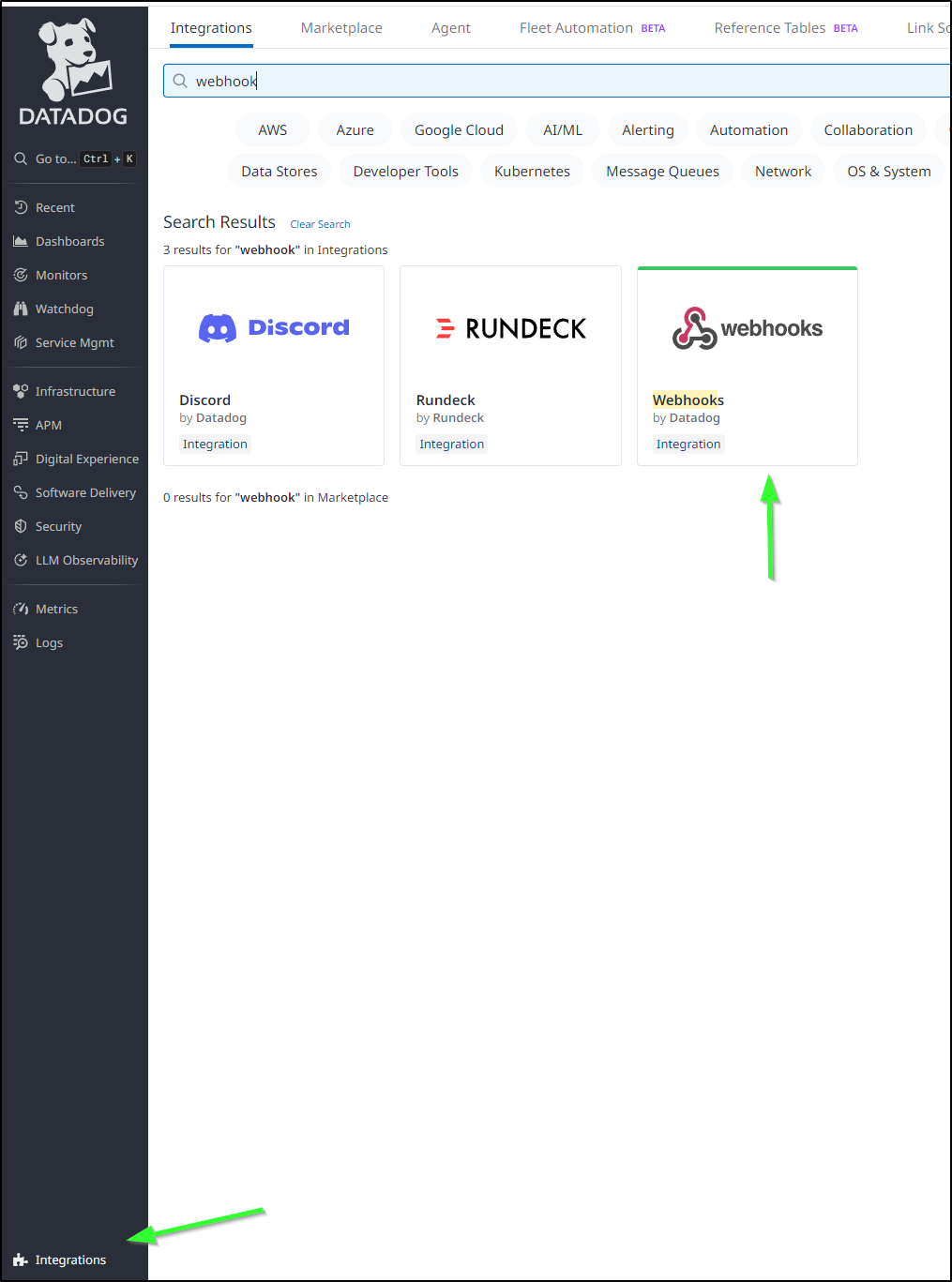

Updating Datadog

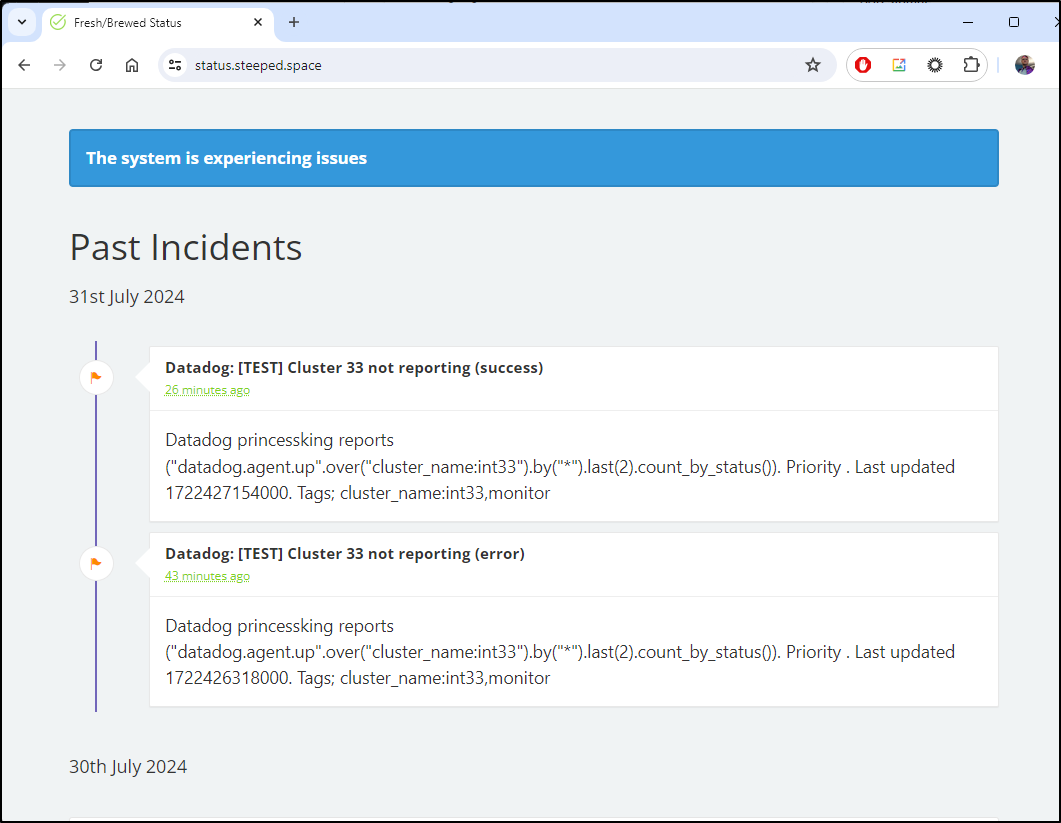

Now that I have a new status, let’s make sure to update our Datadog monitors as well

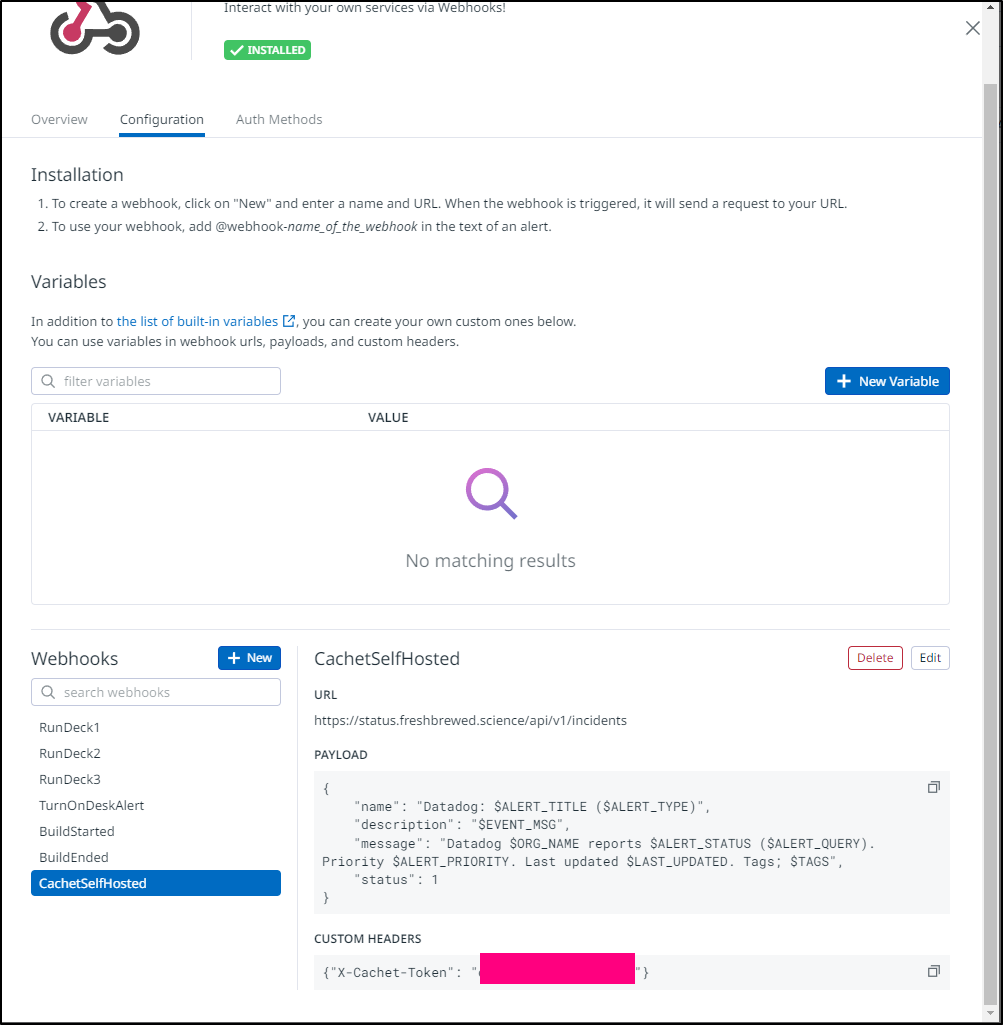

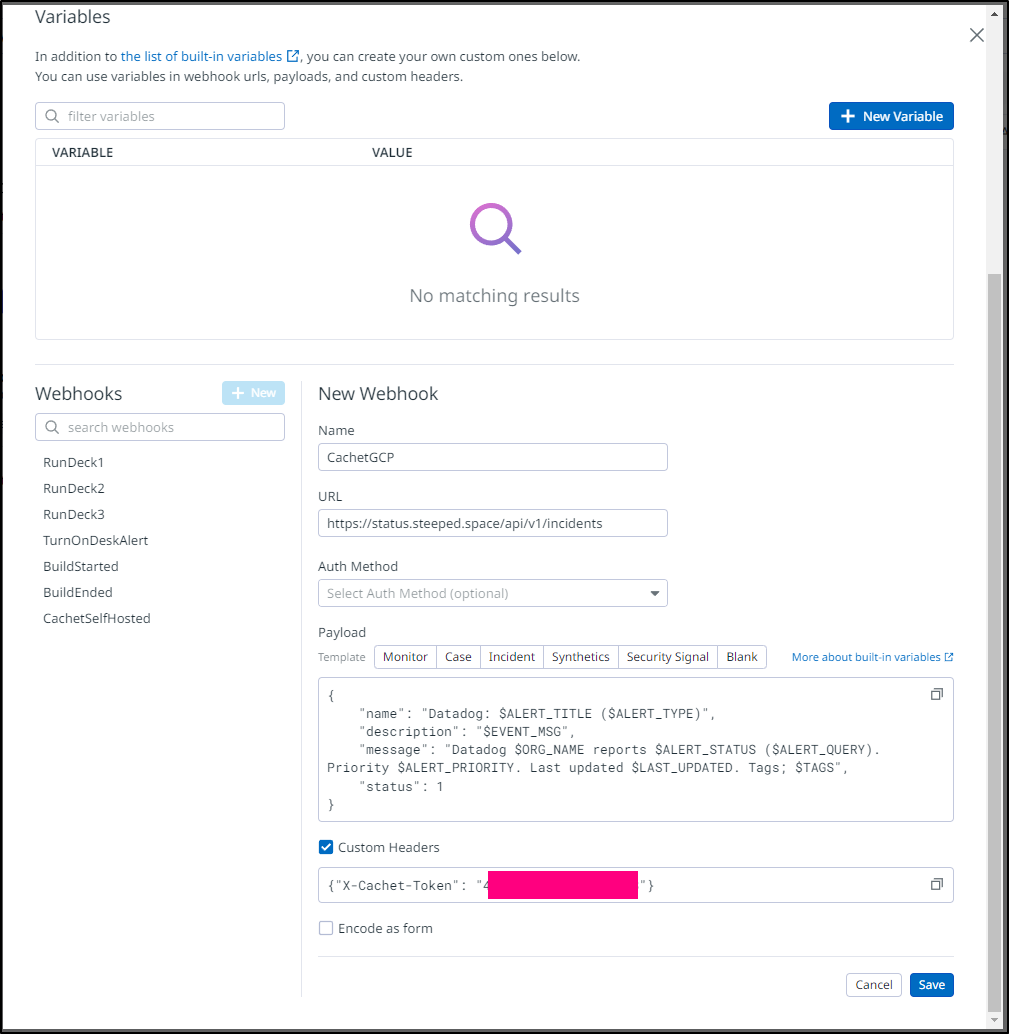

We do this in Integrations/Webhooks

I can see my current self-hosted instance there, with the payload:

{

"name": "Datadog: $ALERT_TITLE ($ALERT_TYPE)",

"description": "$EVENT_MSG",

"message": "Datadog $ORG_NAME reports $ALERT_STATUS ($ALERT_QUERY). Priority $ALERT_PRIORITY. Last updated $LAST_UPDATED. Tags; $TAGS",

"status": 1

}

I’ll then add a Webhook to the new URL https://status.freshbrewed.science/api/v1/incidents
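Under the hood, that webhook is an authenticated POST to Cachet's v1 incidents endpoint. A minimal sketch with Python's standard library that builds (but does not send) an equivalent request; the `X-Cachet-Token` header and the numeric `status` (1 = Investigating) follow my reading of the Cachet v1 API docs, the token is a placeholder, and `incident_request` is a hypothetical helper.

```python
"""Build (but don't send) a Cachet v1 incident POST like the Datadog webhook."""
import json
import urllib.request


def incident_request(base_url: str, token: str, name: str, message: str,
                     status: int = 1, visible: int = 1) -> urllib.request.Request:
    """Return an unsent POST request for <base_url>/api/v1/incidents.

    status: Cachet incident status code (1 = Investigating).
    """
    body = json.dumps({
        "name": name,
        "message": message,
        "status": status,
        "visible": visible,
    }).encode("utf-8")
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/api/v1/incidents",
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "X-Cachet-Token": token,  # your Cachet API token (placeholder here)
        },
    )


if __name__ == "__main__":
    req = incident_request("https://status.freshbrewed.science", "PLACEHOLDER_TOKEN",
                           "Datadog: test alert", "Triggered from a manual test")
    print(req.get_method(), req.full_url)
    # urllib.request.urlopen(req) would actually send it.
```

Building the request separately from sending it makes it easy to inspect the payload before pointing it at a live status page.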

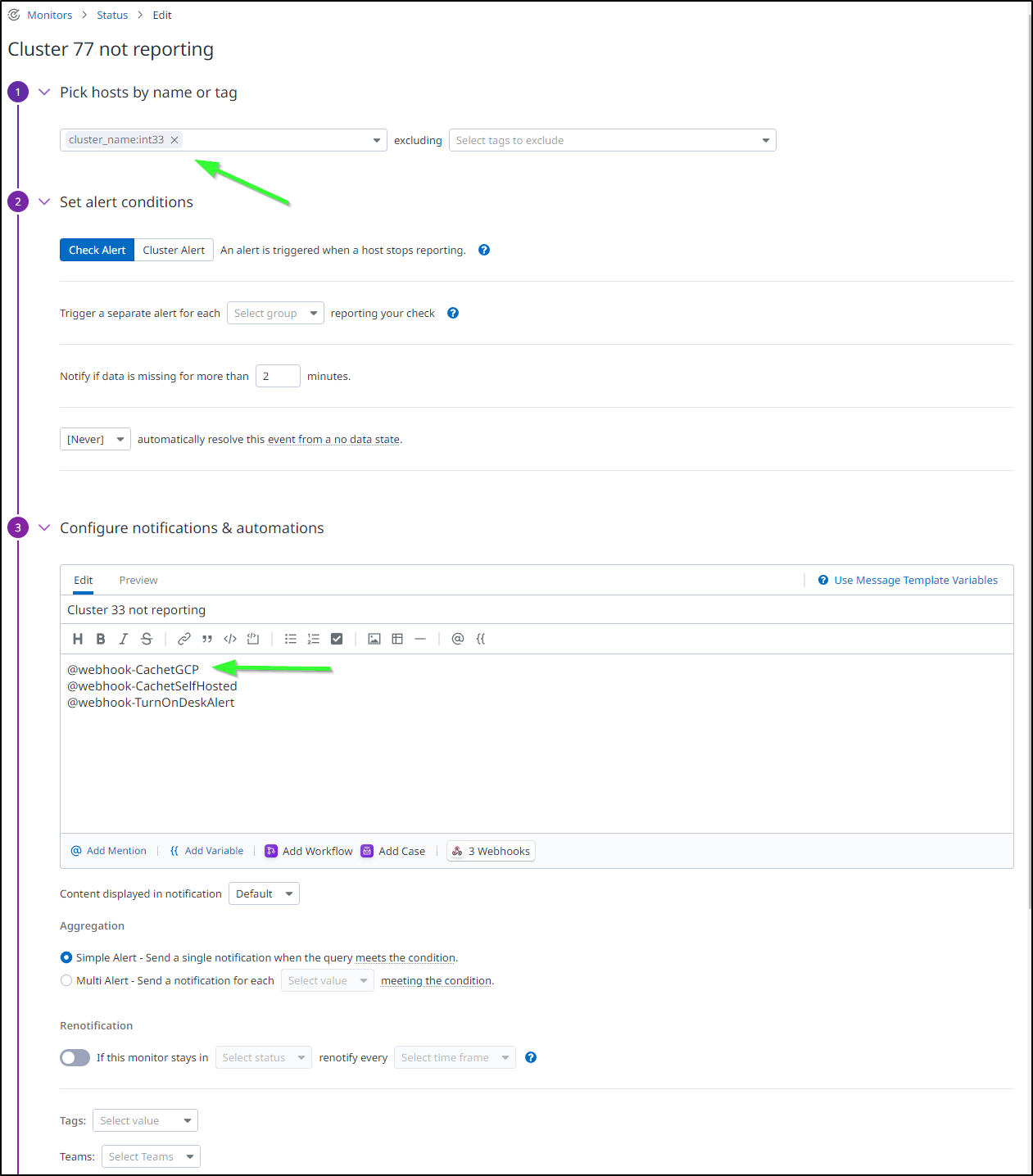

Since I want to display when the primary cluster is down, let’s edit that monitor.

For some reason, I cannot seem to change the Monitor name, but I can fix the cluster and notification endpoints

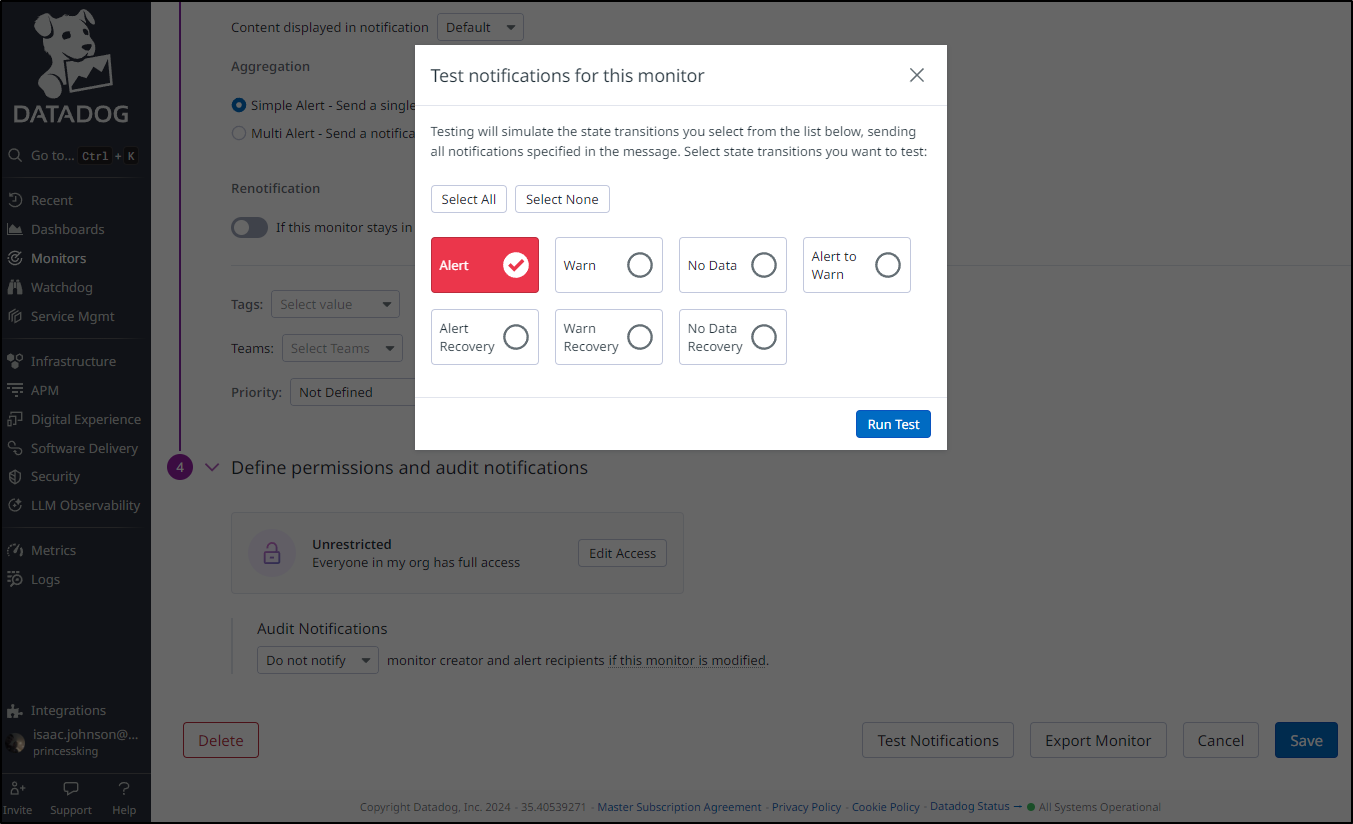

I’ll run a quick test

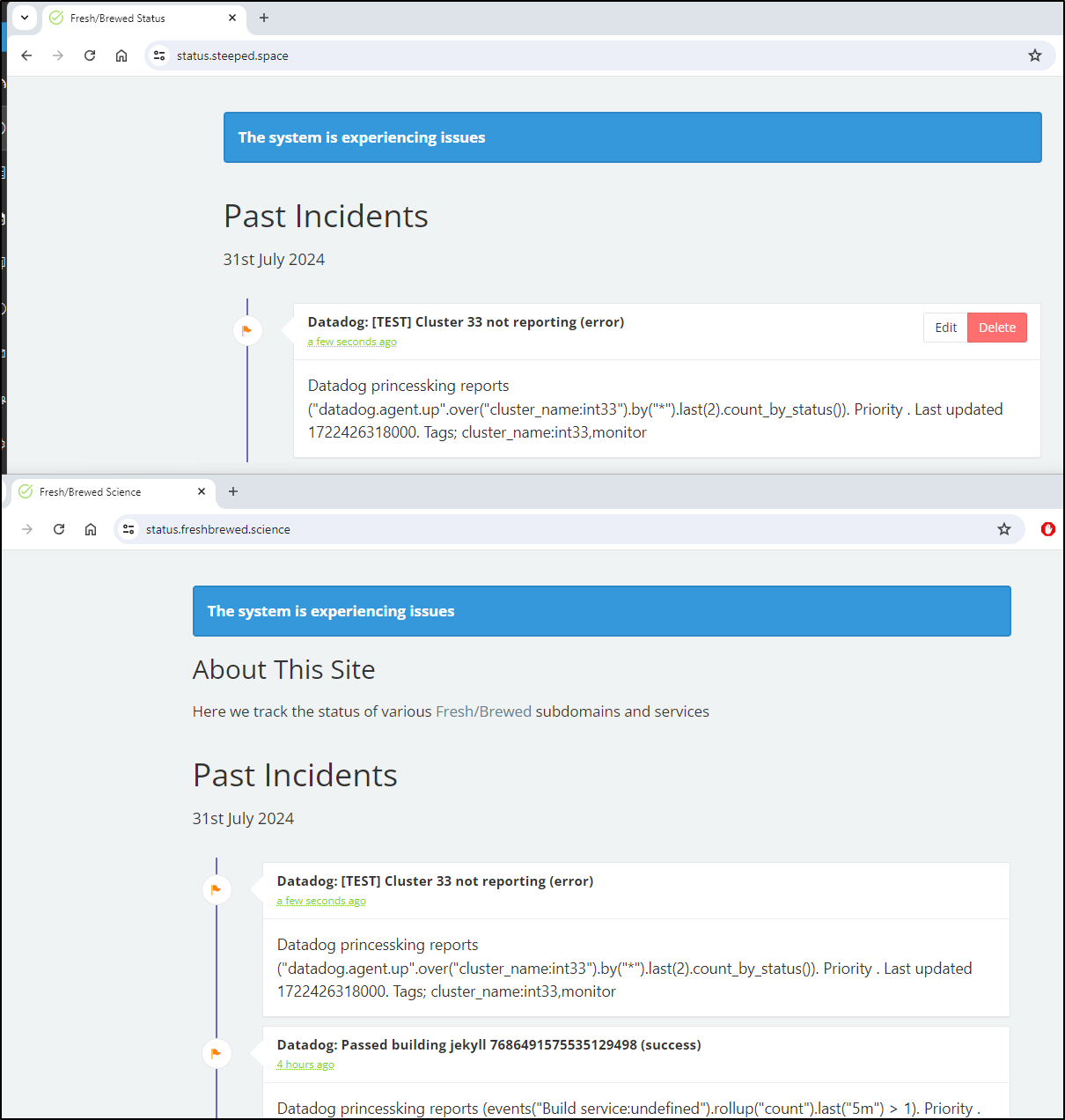

I can see both were triggered

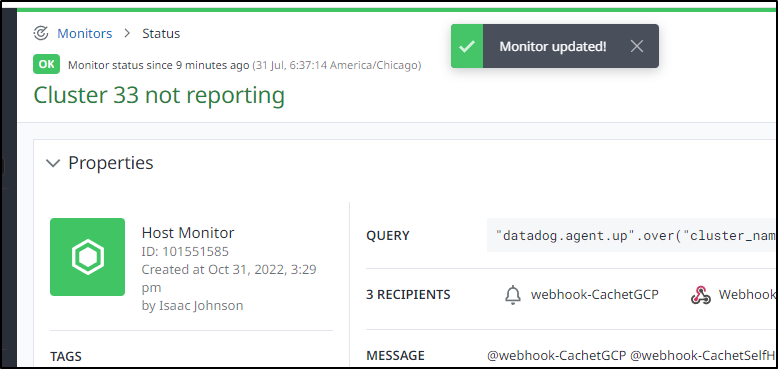

And when I saved it, I did see the Monitor name change

CloudRun Static egress

Let’s add a static egress IP so we can lock down CloudSQL

I see I have a default network already

$ gcloud compute networks list

NAME SUBNET_MODE BGP_ROUTING_MODE IPV4_RANGE GATEWAY_IPV4

default AUTO REGIONAL

Next, I’ll create a Subnet I can use there

$ gcloud compute networks subnets create myinternal --range=10.1.0.0/24 --network=default --region=us-central1

Created [https://www.googleapis.com/compute/v1/projects/myanthosproject2/regions/us-central1/subnetworks/myinternal].

NAME REGION NETWORK RANGE STACK_TYPE IPV6_ACCESS_TYPE INTERNAL_IPV6_PREFIX EXTERNAL_IPV6_PREFIX

myinternal us-central1 default 10.1.0.0/24 IPV4_ONLY

$ gcloud compute networks subnets create myinternal2 --range=10.2.0.0/28 --network=default --region=us-central1

Created [https://www.googleapis.com/compute/v1/projects/myanthosproject2/regions/us-central1/subnetworks/myinternal2].

NAME REGION NETWORK RANGE STACK_TYPE IPV6_ACCESS_TYPE INTERNAL_IPV6_PREFIX EXTERNAL_IPV6_PREFIX

myinternal2 us-central1 default 10.2.0.0/28 IPV4_ONLY
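The second subnet is a /28 because, as I understand the Serverless VPC Access requirements, a connector built on an existing subnet needs a dedicated /28 (which likely also explains why the connector on the /24 `myinternal` subnet later shows up in an ERROR state). A quick sanity check with Python's `ipaddress` module; `connector_subnet_ok` is a hypothetical helper.

```python
"""Sanity-check a subnet range before using it for a VPC Access connector."""
import ipaddress


def connector_subnet_ok(cidr: str, existing: list[str]) -> bool:
    """True if `cidr` is a /28 that overlaps none of the `existing` ranges."""
    net = ipaddress.ip_network(cidr)  # raises ValueError if host bits are set
    if net.prefixlen != 28:
        return False
    return not any(net.overlaps(ipaddress.ip_network(e)) for e in existing)


if __name__ == "__main__":
    in_use = ["10.1.0.0/24"]  # the myinternal range from the output above
    print(connector_subnet_ok("10.2.0.0/28", in_use))  # the myinternal2 range
    print(connector_subnet_ok("10.1.0.0/24", []))      # wrong size
```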

Next, I need a serverless VPC Access Connector created:

$ gcloud compute networks vpc-access connectors create cloudrunaccesscon2 --region=us-central1 --subnet-project=myanthosproject2 --subnet=myinternal2

Create request issued for: [cloudrunaccesscon2]

Waiting for operation [projects/myanthosproject2/locations/us-central1/operations/60f11959-94de-4075-bdd3-51f97514bf7b] to complete...done.

Created connector [cloudrunaccesscon2].

And a Cloud Router to host the NAT gateway (this worries me on cost):

$ gcloud compute routers create myvpcrouter \

--network=default \

--region=us-central1

Creating router [myvpcrouter]...done.

NAME REGION NETWORK

myvpcrouter us-central1 default

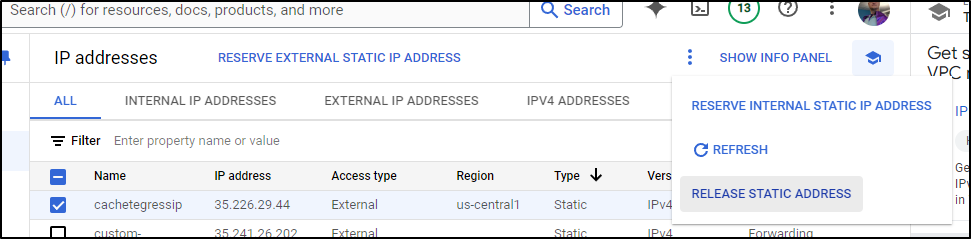

I need to create an egress IP now

$ gcloud compute addresses create cachetegressip --region=us-central1

Created [https://www.googleapis.com/compute/v1/projects/myanthosproject2/regions/us-central1/addresses/cachetegressip].

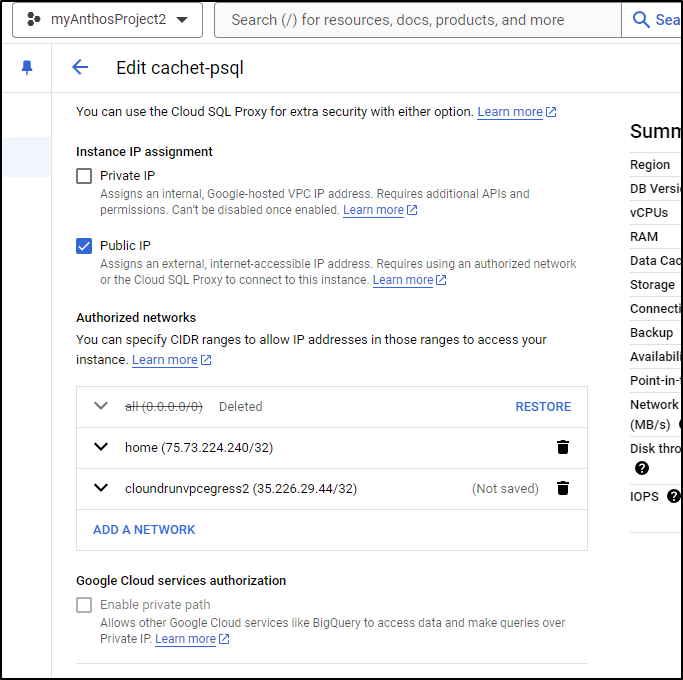

I can get that IP right now so we can whitelist it for CloudSQL

$ gcloud compute addresses describe cachetegressip --region=us-central1

address: 35.226.29.44

addressType: EXTERNAL

creationTimestamp: '2024-07-31T05:19:30.657-07:00'

description: ''

id: '2121319053917656381'

kind: compute#address

labelFingerprint: 42WmSpB8rSM=

name: cachetegressip

networkTier: PREMIUM

region: https://www.googleapis.com/compute/v1/projects/myanthosproject2/regions/us-central1

selfLink: https://www.googleapis.com/compute/v1/projects/myanthosproject2/regions/us-central1/addresses/cachetegressip

status: RESERVED

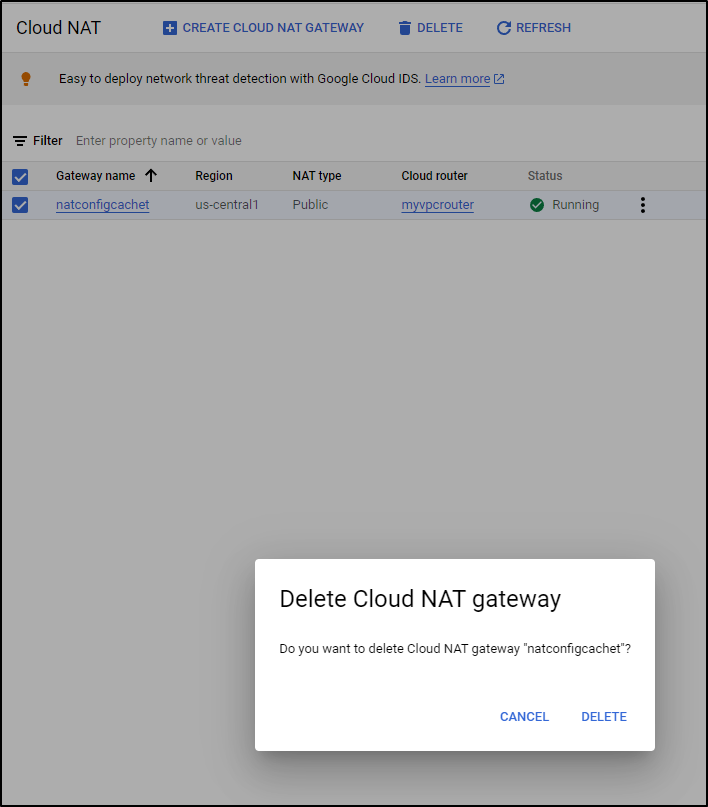

Next, we need to create a NAT gateway configuration so we can use the static IP we just created

$ gcloud compute routers nats create natconfigcachet \

--router=myvpcrouter \

--region=us-central1 \

--nat-custom-subnet-ip-ranges=myinternal2 \

--nat-external-ip-pool=cachetegressip

Creating NAT [natconfigcachet] in router [myvpcrouter]...done.

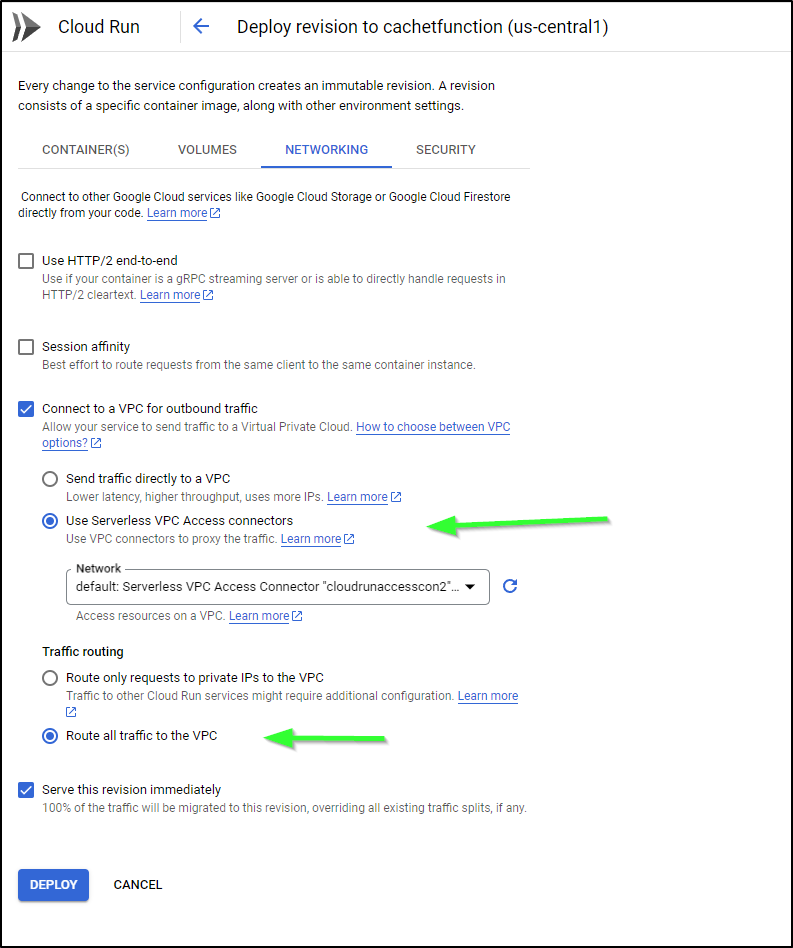

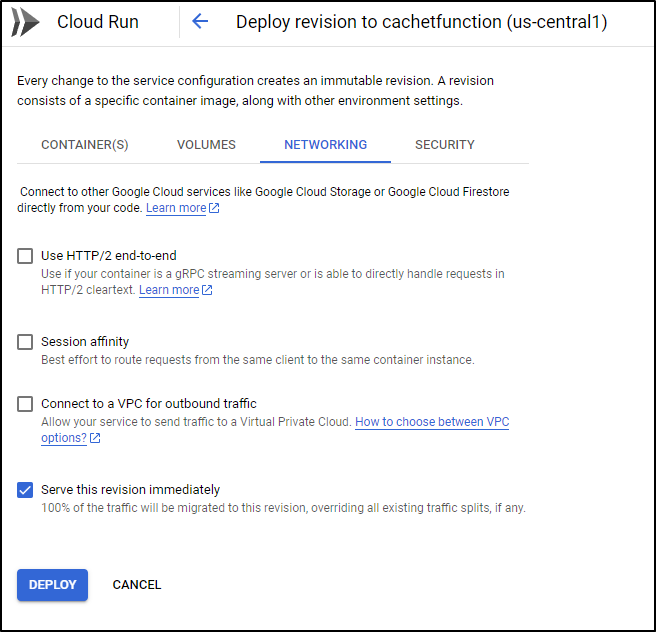

There is a guide covering the gcloud commands to launch a new deploy; however, since we already have a working, configured instance, I’ll just do it in the Cloud Console.

We’ll find the settings we want under “Networking”

I refreshed the page and then checked the CloudSQL stats table

postgres=> SELECT

pid,

usename AS user,

client_addr AS ip_address,

application_name,

state

FROM pg_stat_activity;

pid | user | ip_address | application_name | state

-------+---------------+---------------+------------------+---------------------

30 | cloudsqladmin | | |

18961 | postgres | 35.226.29.44 | | idle in transaction

29 | | | |

37 | cloudsqladmin | 127.0.0.1 | cloudsqlagent | idle

18337 | postgres | 75.73.224.240 | psql | active

18967 | cloudsqladmin | 127.0.0.1 | cloudsqlagent | idle

19 | | | |

18 | | | |

28 | | | |

(9 rows)

postgres=>

This line clearly shows it is indeed using the egress IP

18961 | postgres | 35.226.29.44 | | idle in transaction

I can now drop the 0.0.0.0/0 CIDR and use the VPC Egress IP
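To make that allowlist change repeatable, a minimal sketch: normalize each allowed address to a CIDR (bare IPs become /32s), refuse catch-all ranges such as 0.0.0.0/0, and emit a value for `gcloud sql instances patch cachet-psql --authorized-networks=...`. To my understanding that flag replaces the whole list rather than appending, so include every range you still need. `authorized_networks` is a hypothetical helper, and the /24 cut-off is an arbitrary choice for this sketch.

```python
"""Turn allowed client addresses into a Cloud SQL authorized-networks value."""
import ipaddress


def authorized_networks(entries: list[str]) -> str:
    """Return a comma-separated CIDR list, rejecting overly broad ranges.

    Bare IPs become /32s. Anything with a prefix shorter than /24 (an
    arbitrary cut-off) is refused so a "temporary" 0.0.0.0/0 can't return.
    """
    cidrs = []
    for entry in entries:
        net = ipaddress.ip_network(entry, strict=True)  # bare IP -> /32
        if net.prefixlen < 24:
            raise ValueError(f"{entry} is too broad for an allowlist")
        cidrs.append(str(net))
    return ",".join(dict.fromkeys(cidrs))  # de-duplicate, keep order


if __name__ == "__main__":
    # The CloudRun static egress IP reserved above.
    print(authorized_networks(["35.226.29.44"]))
```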

Lastly, a quick refresh shows it’s working

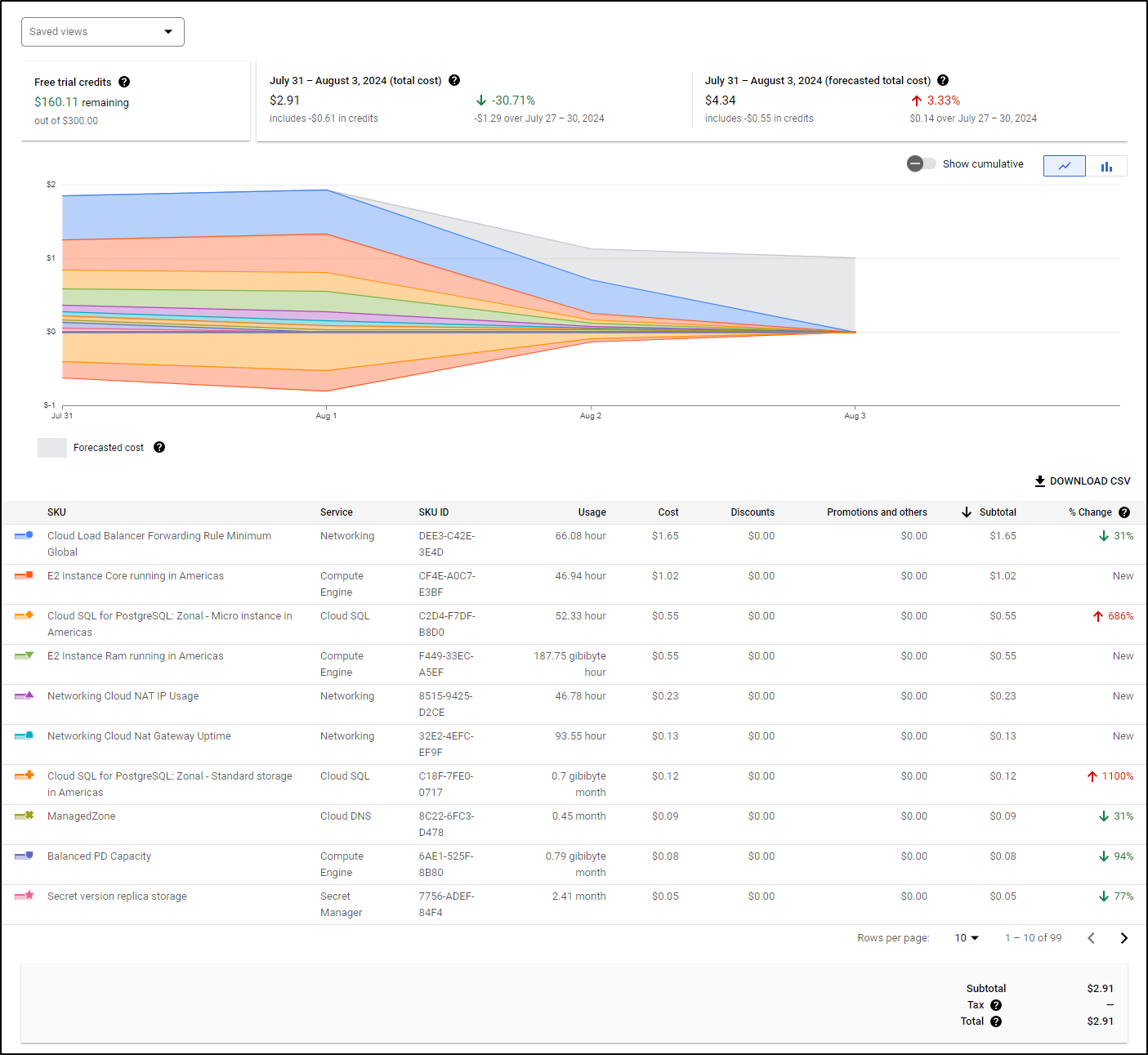

Costs

Frankly, that’s a lot of “stuff” for a serverless instance with a micro-sized CloudSQL

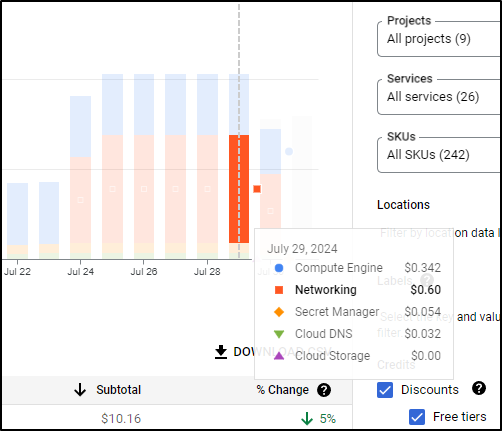

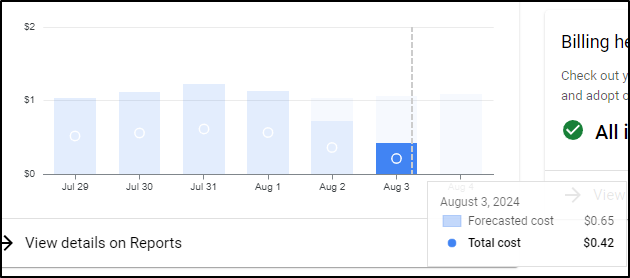

Just doing a spot check on yesterday (before I added the NAT gateway), I can see I’m running at $1.028 a day

That puts this instance at nearly US$32/mo.

I checked one more time a few days later

Even with one day spiking to $1.25 and the next dropping to $0.75, we are still hovering around US$1/day
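The monthly projection is just average daily spend times the number of days. A small sketch of that arithmetic using the daily figures quoted above; the default of 365/12 (about 30.4) days per month is my assumption, so a 31-day month comes out a little higher.

```python
"""Project monthly spend from daily GCP billing spot checks."""
from statistics import mean


def monthly_estimate(daily_costs: list[float], days: float = 365 / 12) -> float:
    """Average the sampled daily costs (USD) and scale to a month."""
    return mean(daily_costs) * days


if __name__ == "__main__":
    print(f"{monthly_estimate([1.028]):.2f}")           # single spot check
    print(f"{monthly_estimate([1.028], days=31):.2f}")  # a 31-day month
    print(f"{monthly_estimate([1.25, 0.75]):.2f}")      # the spike/dip pair
```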

Alternatives

Let’s be fair: nothing is ever truly free. There are options, though.

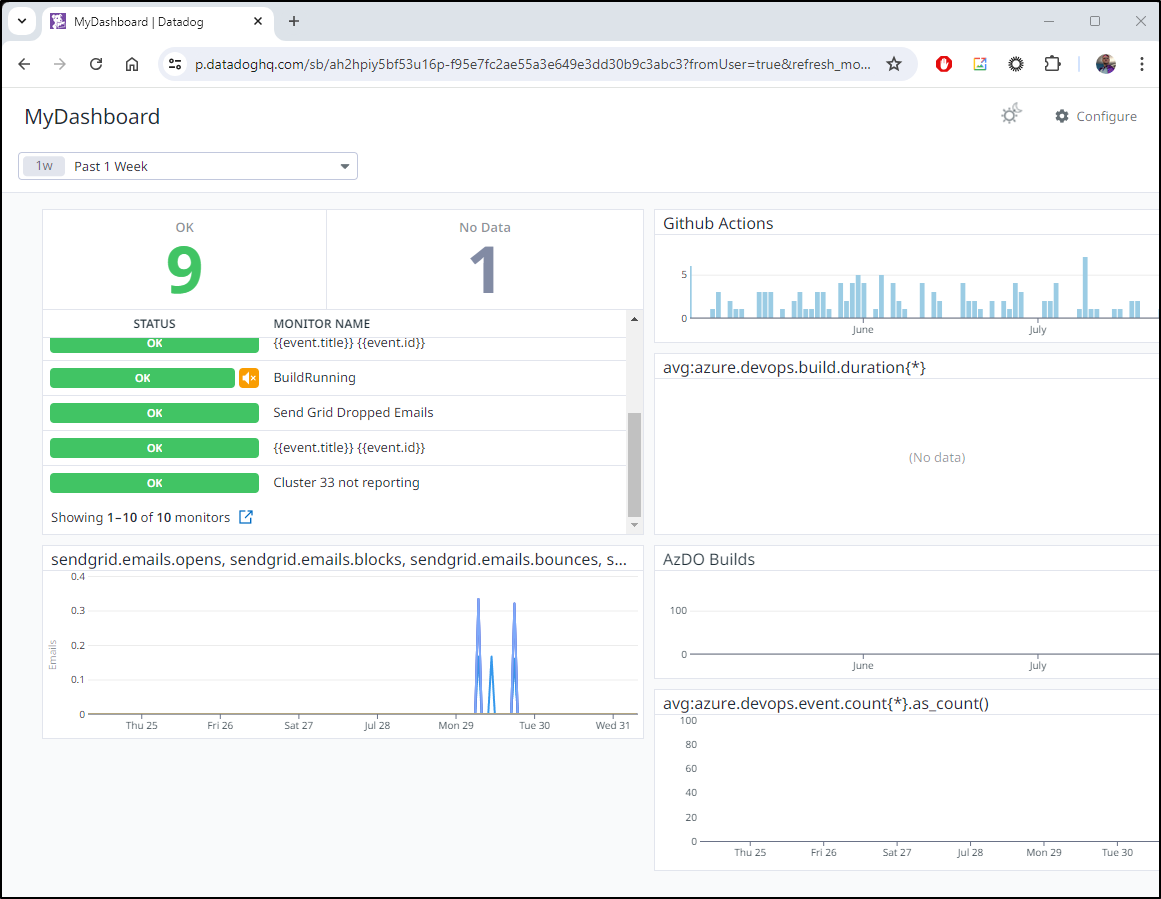

Datadog

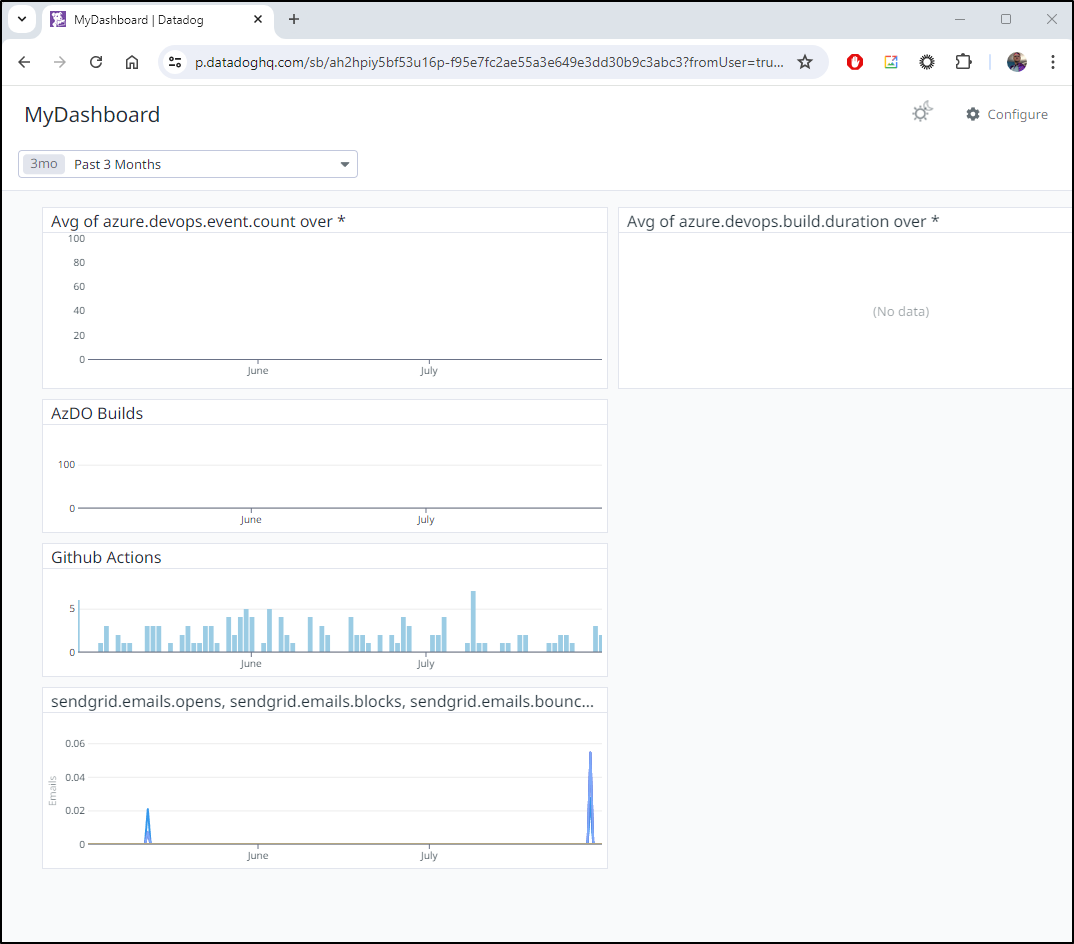

Consider a simple Datadog Public Dashboard

Right now it just shows AzDO and Github builds co-mingled with a status on Sendgrid issues

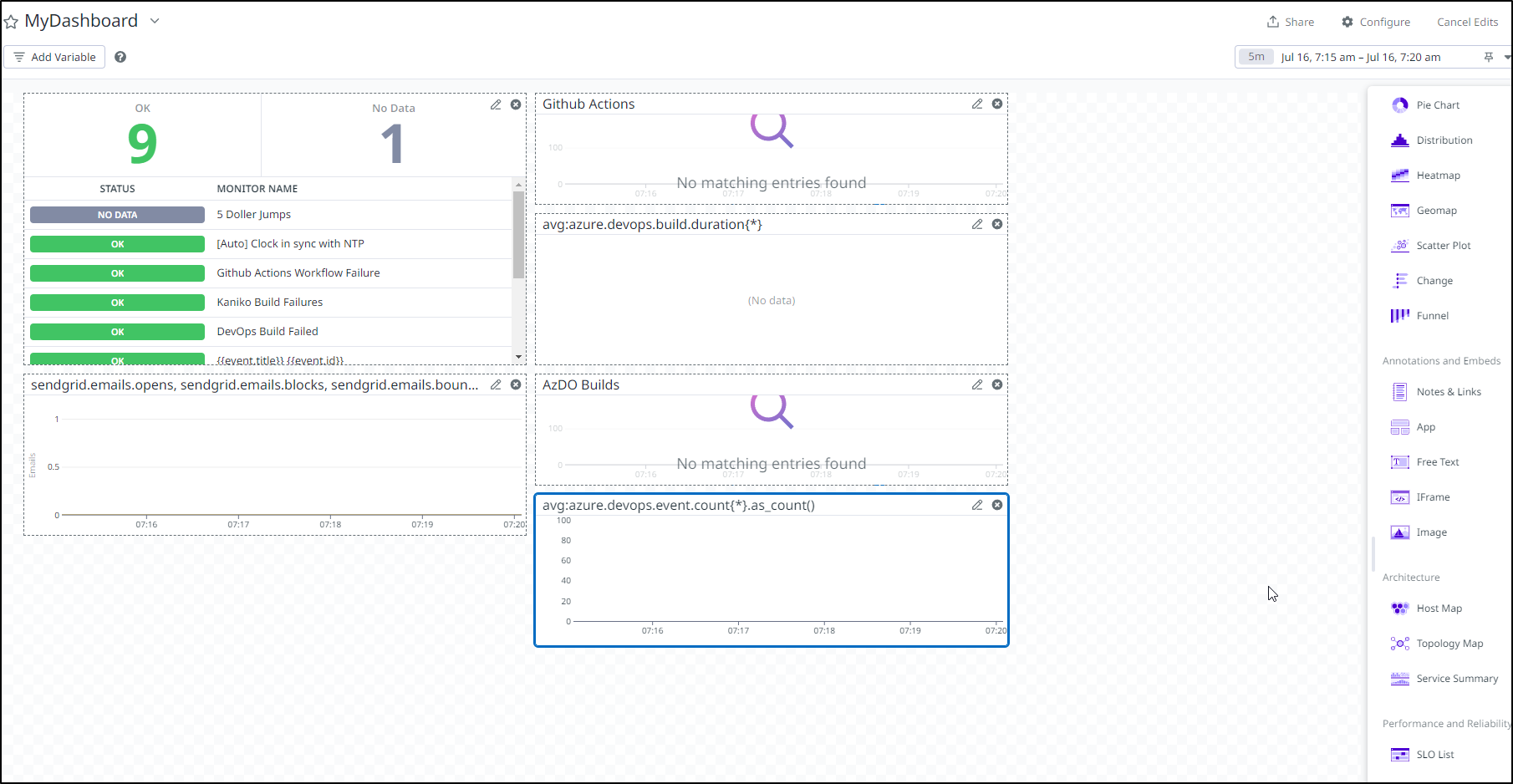

But I can easily change that up to show Monitor statuses

Now I have a nice Public URL with my monitors front and center

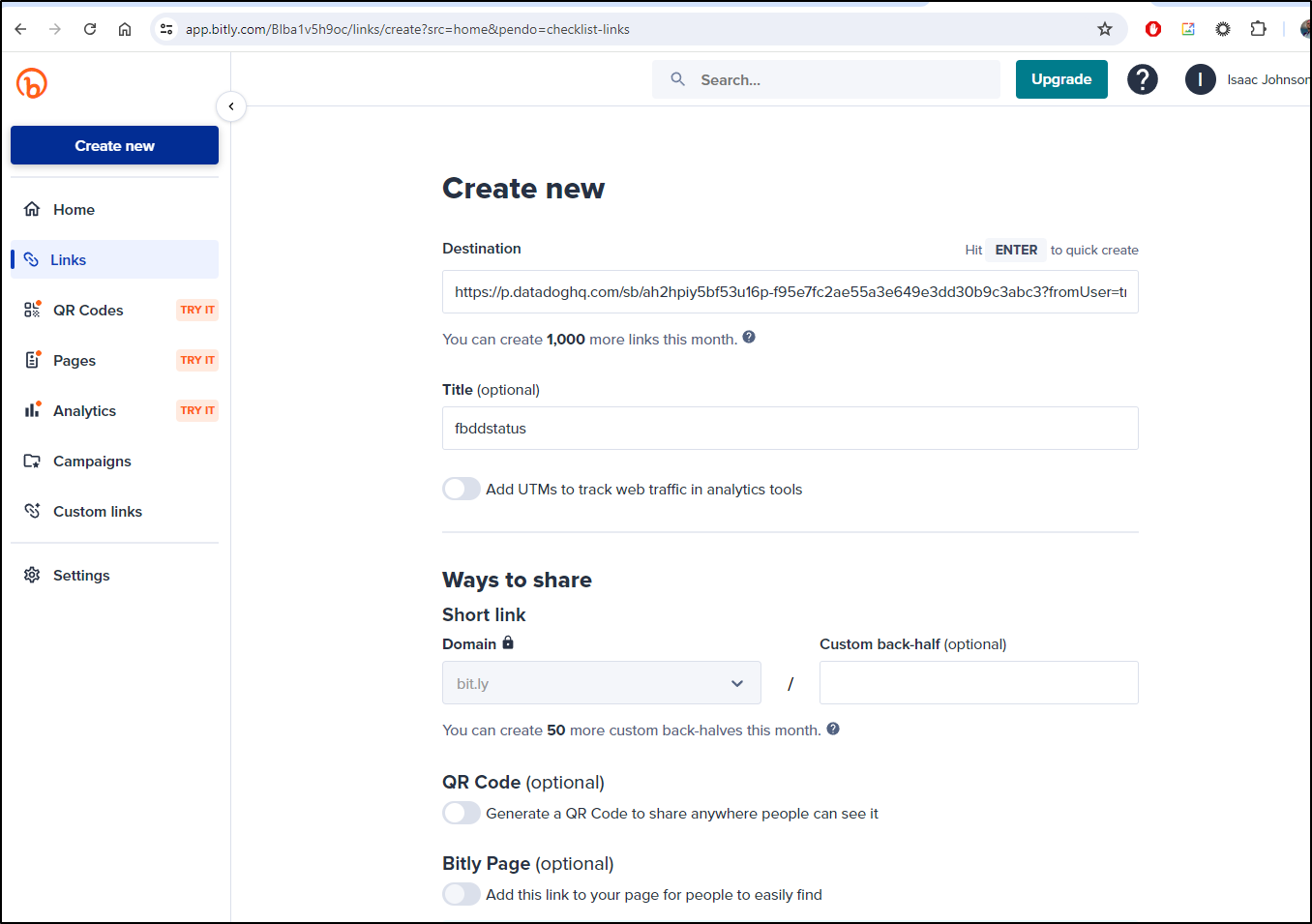

That’s a pretty long URL, so I can use something like bit.ly to shorten it

This means we have a basic forwarding link now at https://bit.ly/4drr1p1.

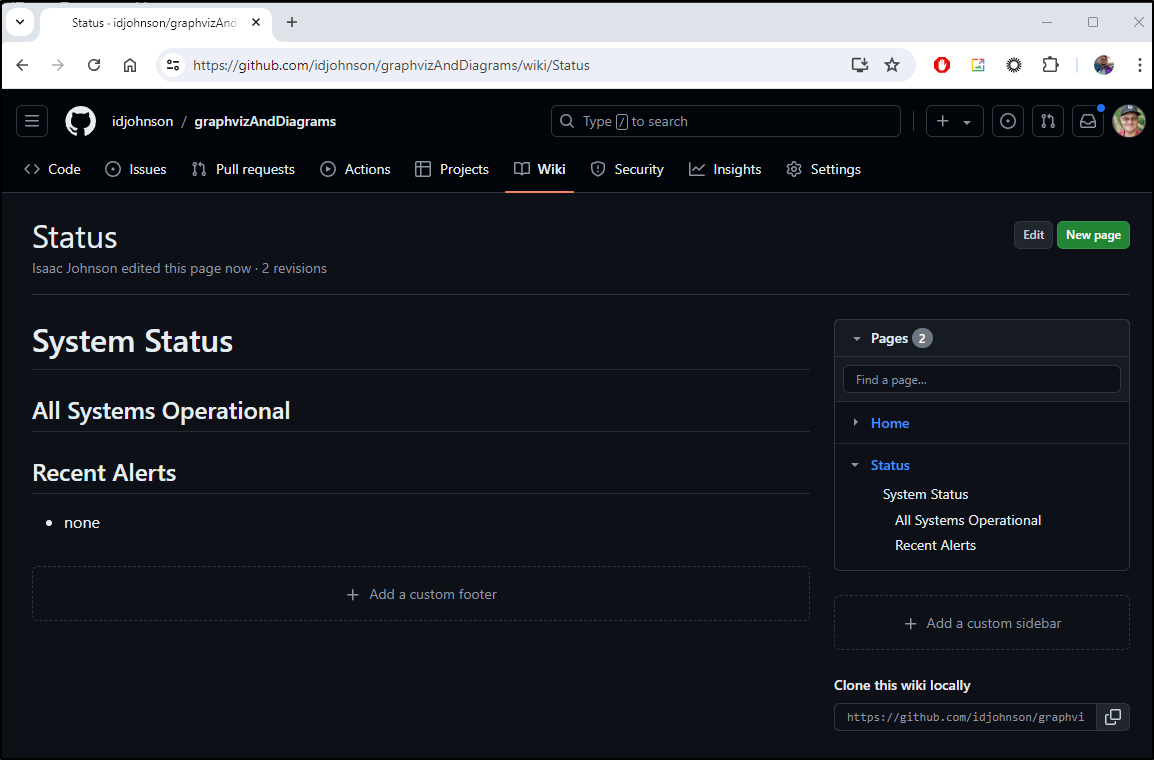

Github

One could just use a basic Github page to keep announcements in an external place. I borrowed the Wiki on one of my public repos here

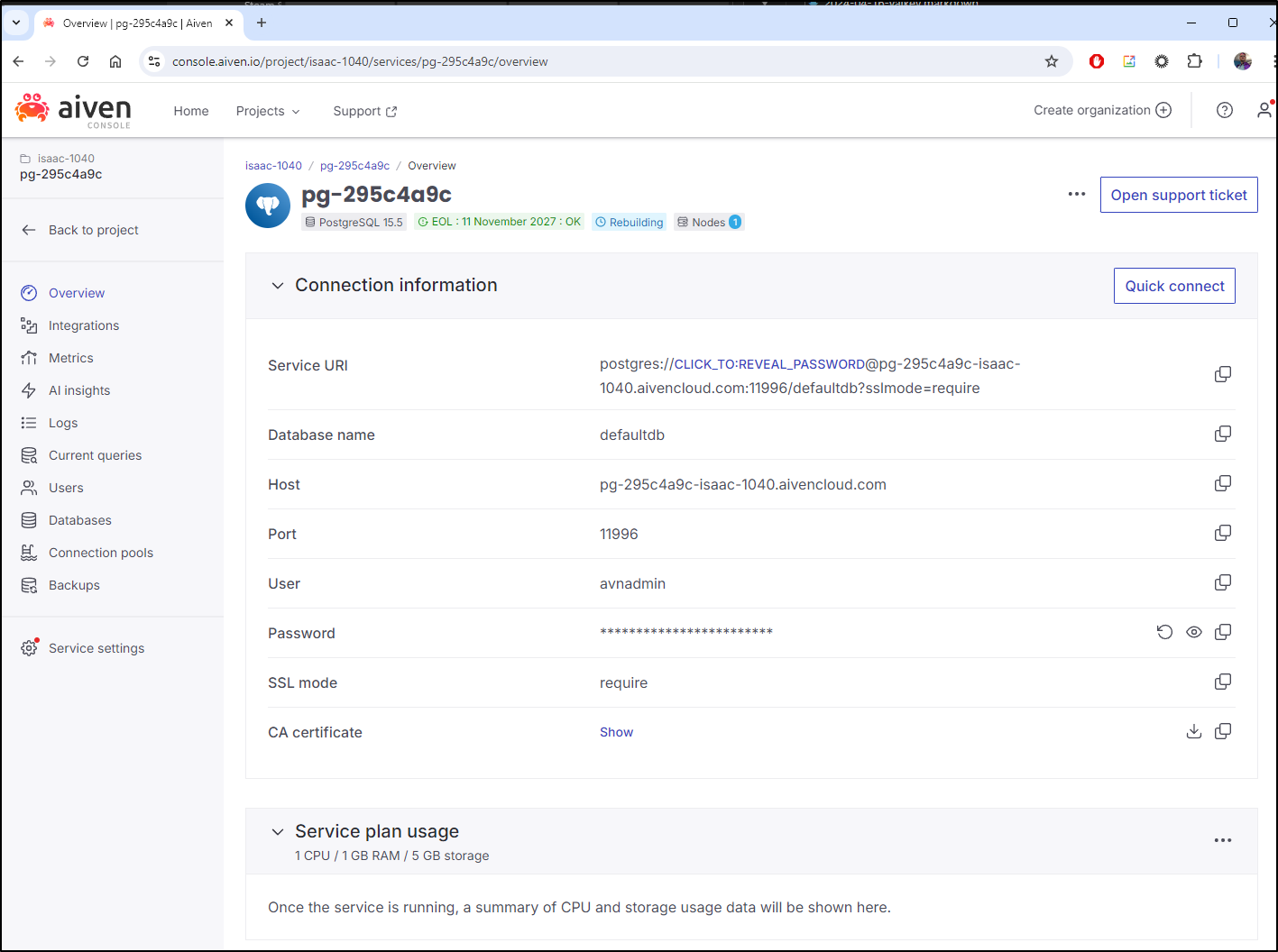

Using Aiven Free-tier PostgreSQL

A whole lot of my daily costs come from setting up a full networking layer to connect to the CloudSQL instance. I fully admit I might be doing that wrong; there has to be a private connector that can just use IAM.

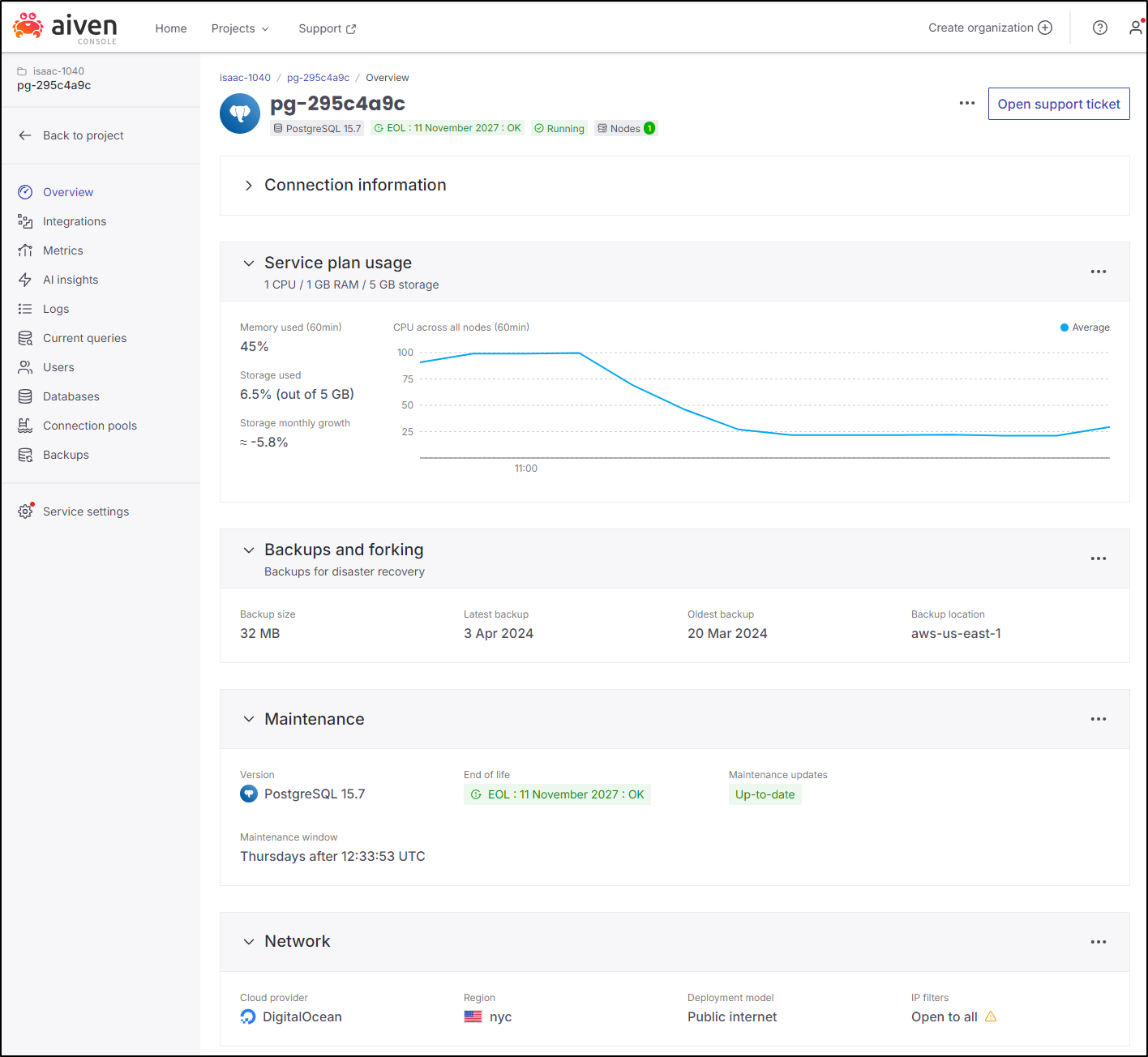

Regardless, I’m going to try to save money by getting rid of the CloudSQL instance and switching to a free-tier Aiven PostgreSQL database

In my CloudRun service, I’ll first disable the VPC egress, which is really what is costing me (I knew that NAT appliance would add up)

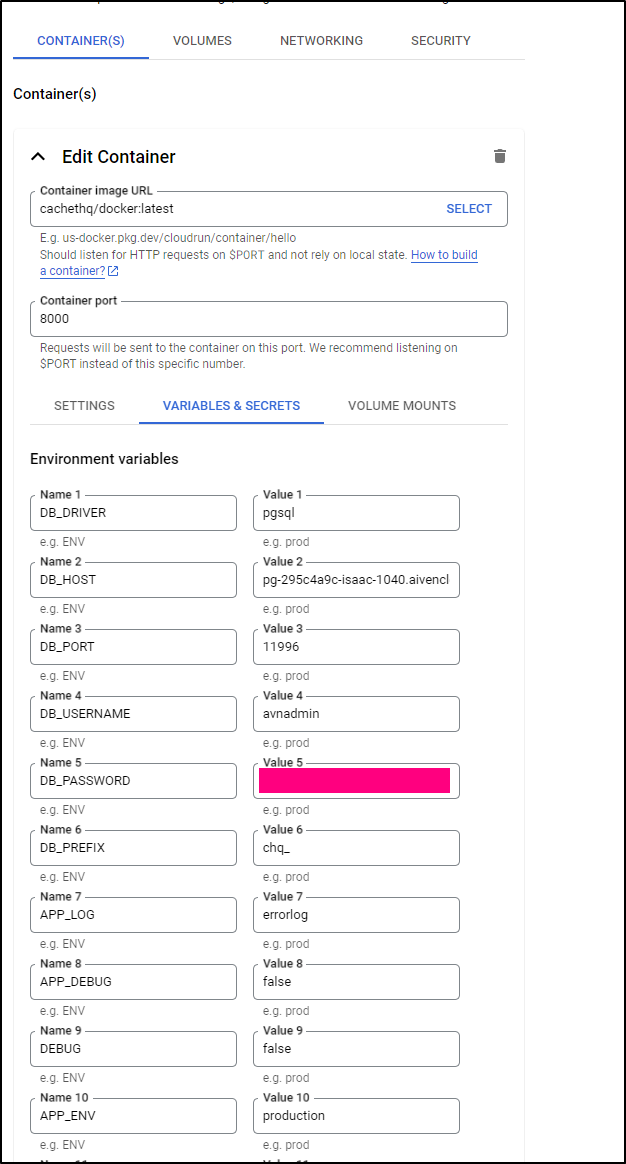

I’ll change my Cachet Cloud Run instance to use it by way of container variables
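Aiven hands you a single service URI (of the form `postgres://avnadmin:PASSWORD@HOST:PORT/defaultdb?sslmode=require`), while the container wants separate variables. A minimal sketch that splits one into the `DB_*` variables used in the deploy above; `DB_DATABASE` is my assumption about the image's variable for the database name, `cachet_db_env` is a hypothetical helper, and the host in the example is made up.

```python
"""Split a PostgreSQL service URI into Cachet-style DB_* variables."""
from urllib.parse import unquote, urlsplit


def cachet_db_env(uri: str) -> dict[str, str]:
    """Return DB_* settings parsed from postgres://user:pass@host:port/db."""
    parts = urlsplit(uri)
    if parts.scheme not in ("postgres", "postgresql"):
        raise ValueError(f"unexpected scheme: {parts.scheme!r}")
    return {
        "DB_DRIVER": "pgsql",
        "DB_HOST": parts.hostname or "",
        "DB_PORT": str(parts.port or 5432),
        "DB_USERNAME": unquote(parts.username or ""),
        "DB_PASSWORD": unquote(parts.password or ""),
        "DB_DATABASE": parts.path.lstrip("/"),  # assumed variable name
    }


if __name__ == "__main__":
    # Hypothetical URI; use the real one from the Aiven console.
    demo = "postgres://avnadmin:s3cret@pg-example.aivencloud.com:12345/defaultdb?sslmode=require"
    for key, value in cachet_db_env(demo).items():
        print(f"{key}={'***' if key == 'DB_PASSWORD' else value}")
```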

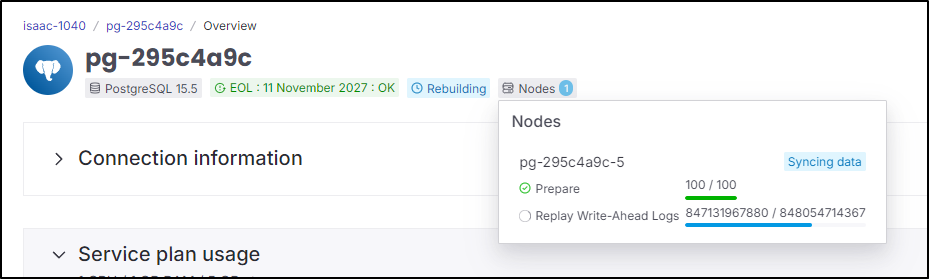

I’ve had it powered off so long, it needs a moment to replay the WAL files

When I deployed, GCP asked whether I wanted to remove the VPC connector and redeploy, which I confirmed.

Now when I refresh, I get to set it up again

And moments later I have an operational site

And like a big happy family, we see Aiven (Finland) uses Digital Ocean Droplets (NYC) with backups to AWS us-east-1 (Virginia, I believe).

I’m going to remove the Cloud NAT now

And while it is just pennies for the reserved IP, I don’t need it now so I’ll release that

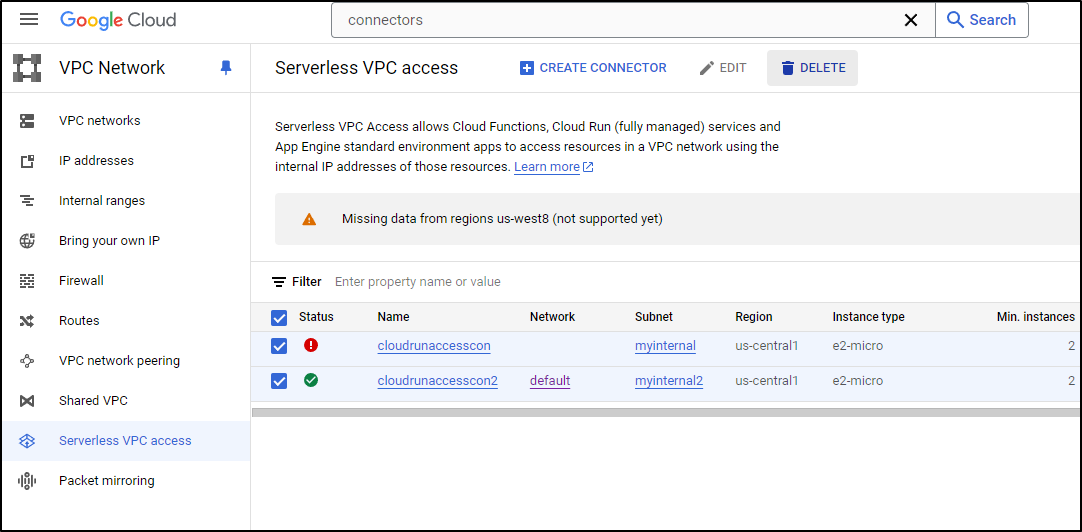

I have some VPC Access connectors I can ditch now

$ gcloud compute networks vpc-access connectors list --region=us-central1

CONNECTOR_ID REGION NETWORK IP_CIDR_RANGE SUBNET SUBNET_PROJECT MACHINE_TYPE MIN_INSTANCES MAX_INSTANCES MIN_THROUGHPUT MAX_THROUGHPUT STATE

cloudrunaccesscon us-central1 myinternal myanthosproject2 e2-micro 2 10 200 1000 ERROR

cloudrunaccesscon2 us-central1 default myinternal2 myanthosproject2 e2-micro 2 10 200 1000 READY

Which I can see and remove from the UI as well

They seemed to hang, but when I checked via the CLI I saw they were being removed

$ gcloud compute networks vpc-access connectors list --region=us-central1

CONNECTOR_ID REGION NETWORK IP_CIDR_RANGE SUBNET SUBNET_PROJECT MACHINE_TYPE MIN_INSTANCES MAX_INSTANCES MIN_THROUGHPUT MAX_THROUGHPUT STATE

cloudrunaccesscon us-central1 myinternal myanthosproject2 e2-micro 2 10 200 1000 DELETING

cloudrunaccesscon2 us-central1 default myinternal2 myanthosproject2 e2-micro 2 10 200 1000 DELETING

Let’s not forget the Router we created for VPC access - that can be removed

$ gcloud compute routers list --regions=us-central1

NAME REGION NETWORK

myvpcrouter us-central1 default

$ gcloud compute routers delete myvpcrouter --region=us-central1

The following routers will be deleted:

- [myvpcrouter] in [us-central1]

Do you want to continue (Y/n)? Y

Deleted [https://www.googleapis.com/compute/v1/projects/myanthosproject2/regions/us-central1/routers/myvpcrouter].

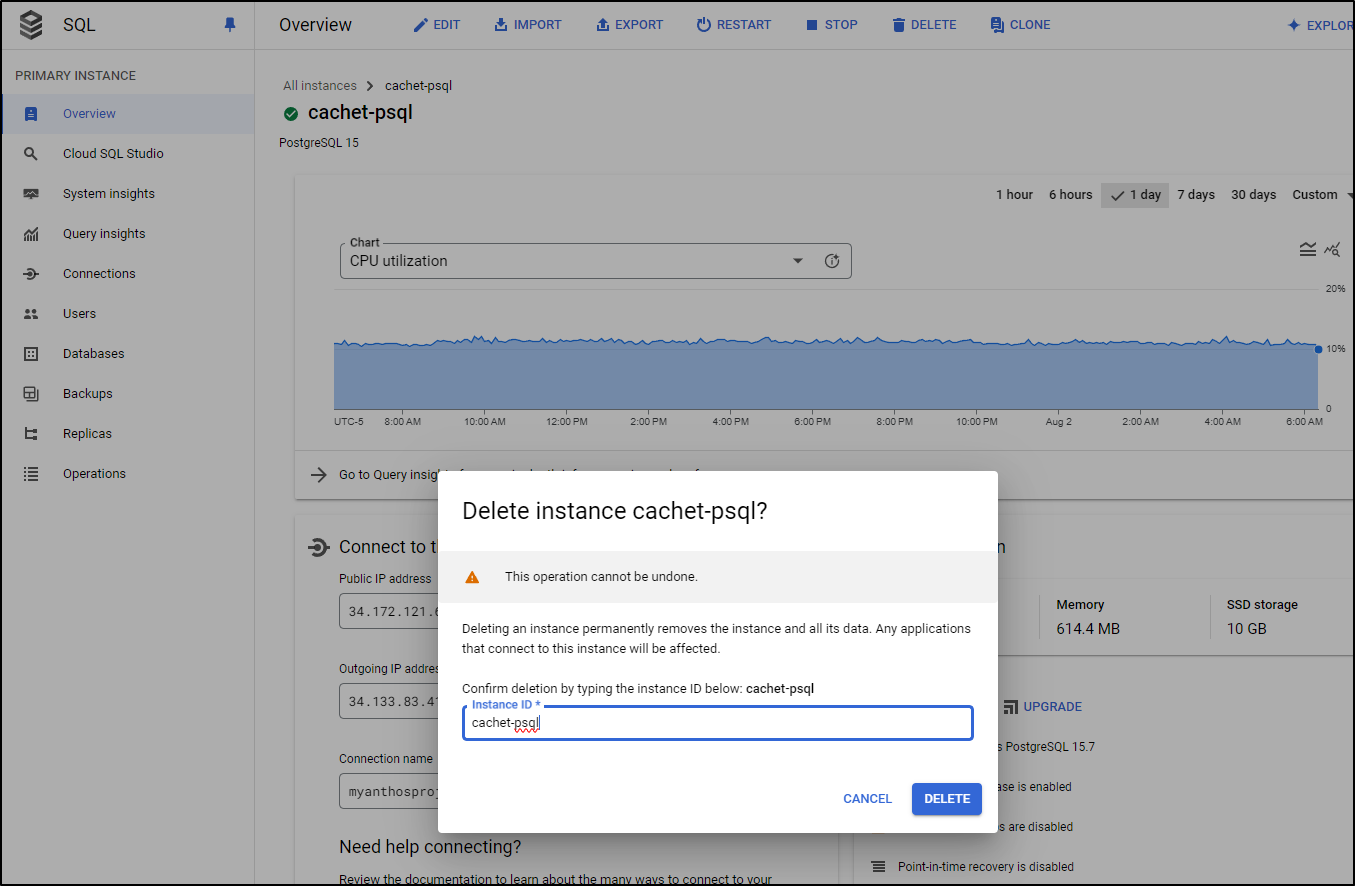

And now that we are live using Aiven, let me do away with the CloudSQL instance as well

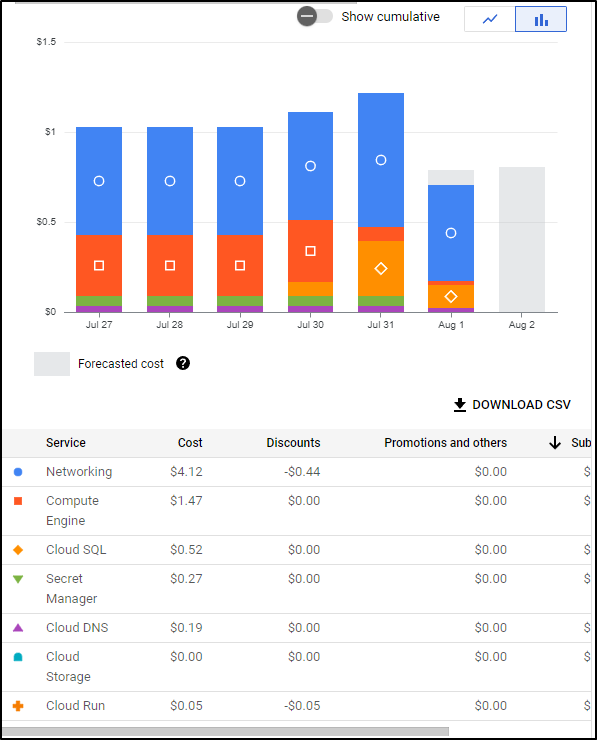

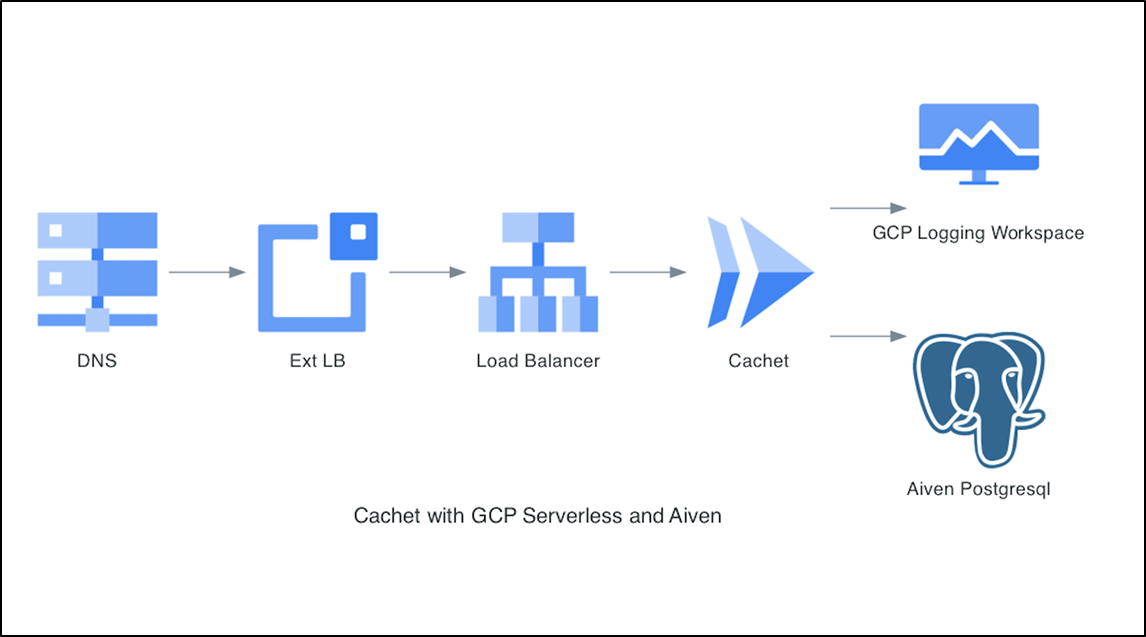

From an Infrastructure perspective, this greatly reduces costs and complexity:

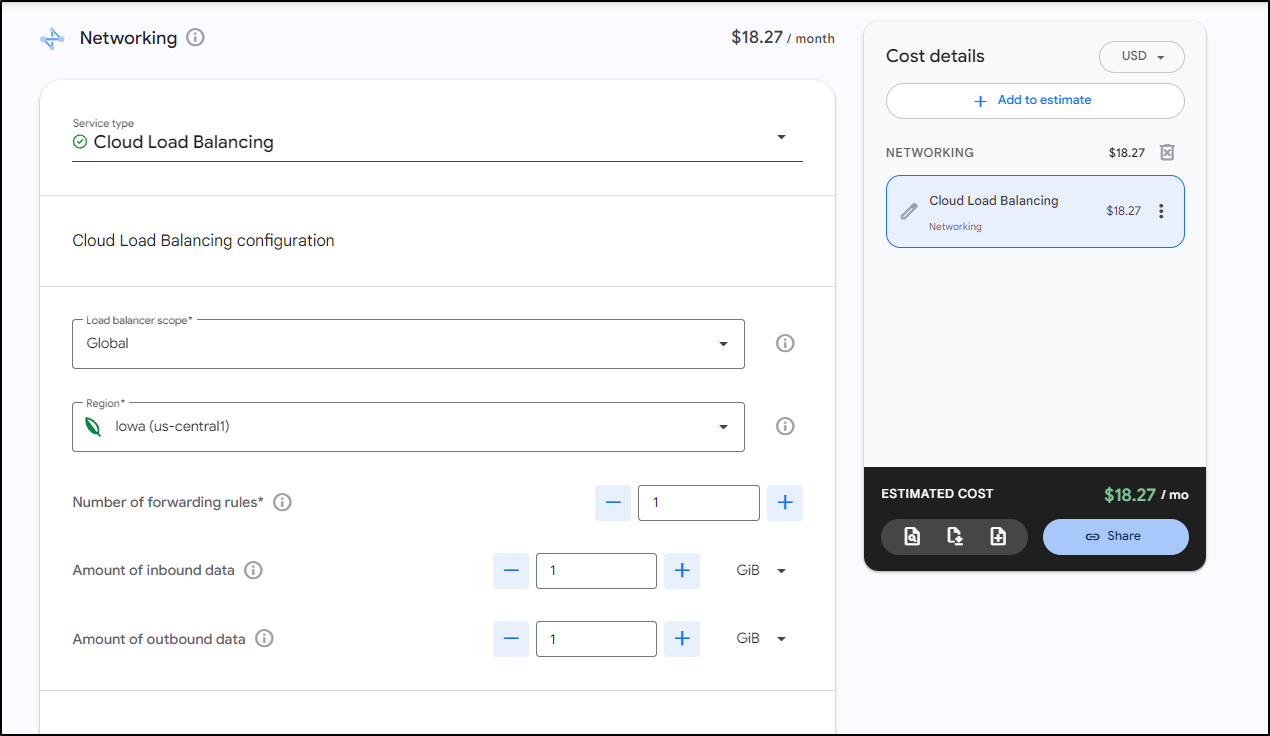

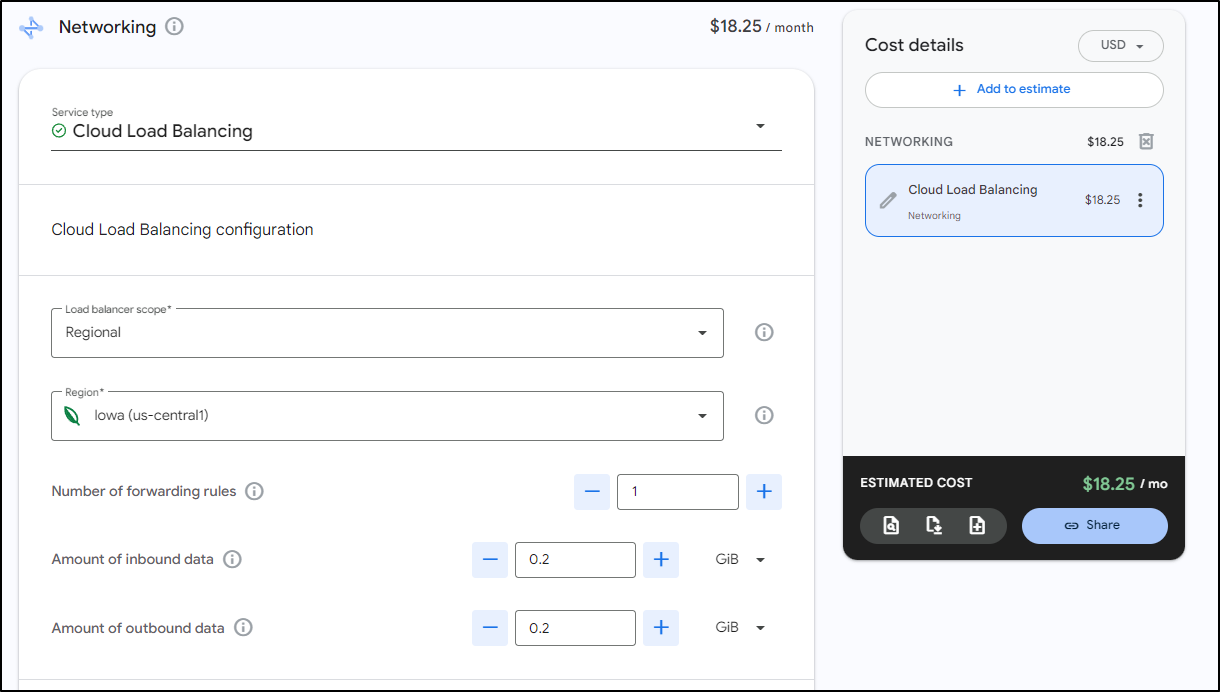

After a day, I went back to check costs and saw that the real cost driver is the Load Balancer.

Setting all other costs aside, whether it’s global or regional with little data, I’m on deck for at least US$18 a month for a GCP Load Balancer. The cost doesn’t really change much up to 4 forwarding rules, and even pushing 100 GB only brings it to $19.

Now, if I can live with just a generic name, I could do away with the LB.

I was a bit curious if I could just use a CNAME to keep it simple

Sadly, that didn’t work

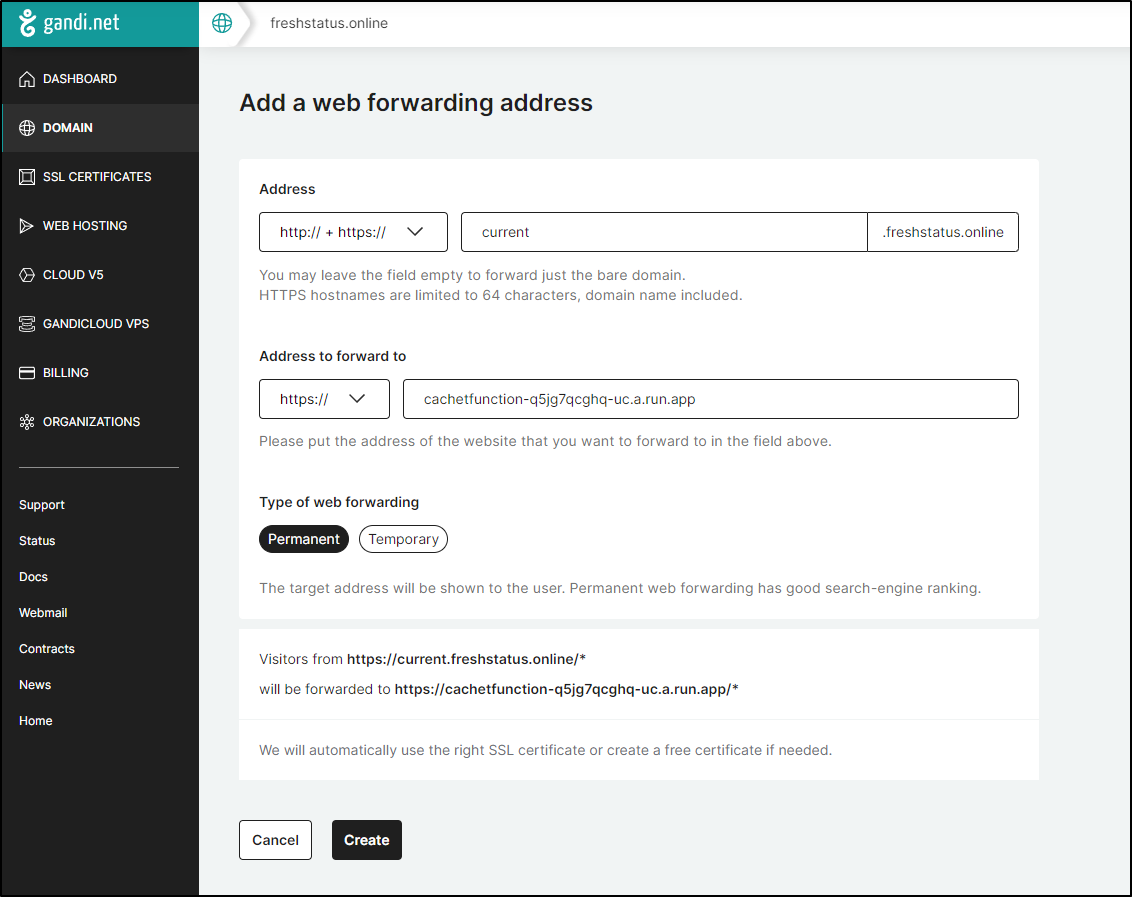

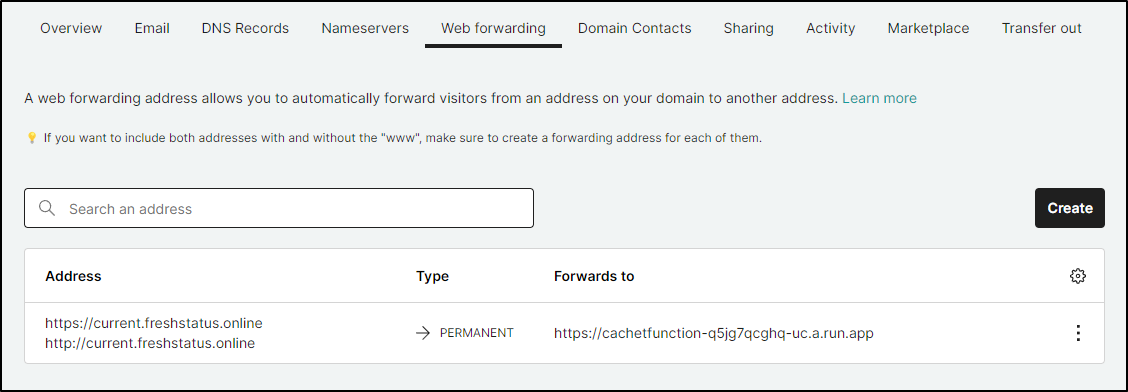

My last idea was to use a Web Forwarding address from my DNS provider. It only works if the domain stays on Gandi DNS, so I registered a domain to test, which created:

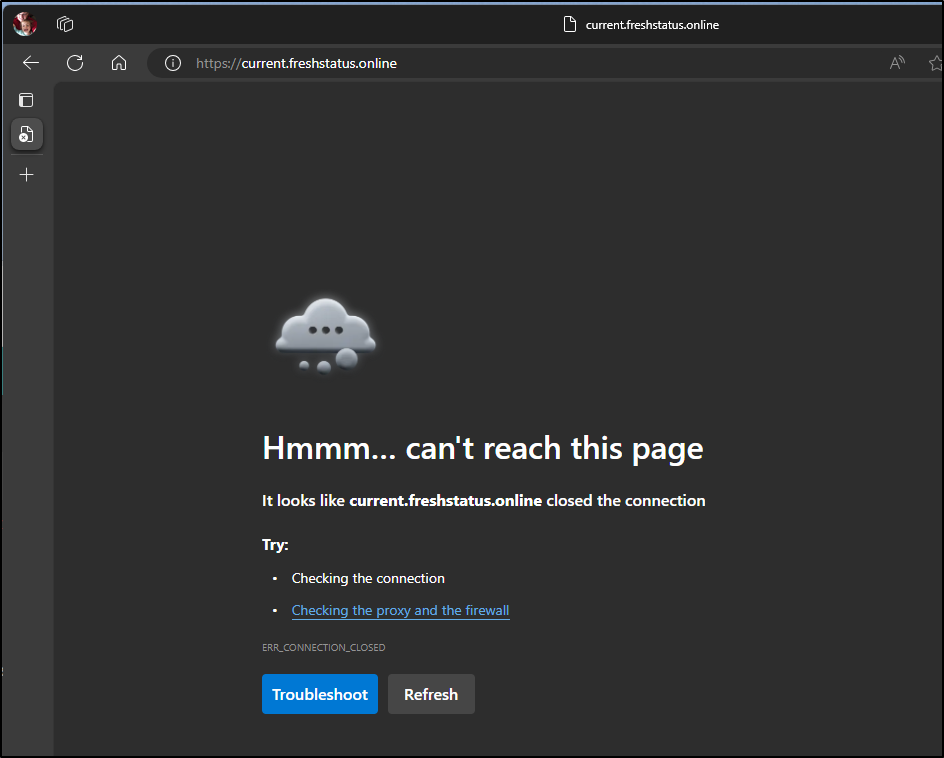

But that didn’t seem to work

Summary

Today we built out a few cloud-native options for hosting Cachet in GCP. Our first path included everything we would need: a PostgreSQL CloudSQL database, a CloudRun serverless app, and a load balancer with a DNS name served by CloudDNS. We then secured it with a CloudRun static egress IP, which required a Cloud Router with a NAT gateway and a VPC Access Connector. While this worked to limit our egress from CloudRun (and thus limit access to the CloudSQL public IP), our costs were a bit higher than I would like for a single app: around $32 a month.

One alternative I then tried was moving the database to a free-tier at Aiven.io and tearing down the extra networking. But the Ingress Application Load Balancer that serves the Domain would still run us $18/mo. So it’s hard to see how I do this for under $15/mo using CloudRun - IF I need a nice DNS name

That said, if one can make do with a URL like https://cachetfunction-q5jg7qcghq-uc.a.run.app/ or a local database (I do see SQLite as an option, though Cloud Run’s container filesystem is in-memory, so the data won’t persist across instance restarts), we might squeeze it cheaper.

The other alternatives we looked at were a Datadog public dashboard and a Github Wiki status page in Markdown - both of which could suffice. I’m pretty sure we could even use a Github action to build a status page using Github pages on a schedule. But we’ll save all that for next time.