Published: Jul 30, 2024 by Isaac Johnson

I really planned to try and find the love for Backstage - I did. Others clearly like it. I just cannot find a redeeming quality. Sure, when set up in IIS or Nginx manually by engineers, it does work. But it’s just so manual and painful to manage. For something so hip, I just don’t get it.

Today, we will try and get this working. The first title of this post nearly a month ago was “Backstage in Kubernetes”. But we’ll see to what end I am successful there.

If you want the TLDR, save yourself the headache and buy a SaaS offering of Backstage or use something a bit better like Configure8.

Regardless, let’s put on our big girl pants and do this…

Installation

We first add the helm repo and update

$ helm repo add backstage https://backstage.github.io/charts

"backstage" has been added to your repositories

$ helm repo update

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "backstage" chart repository

...Successfully got an update from the "datadog" chart repository

...Successfully got an update from the "nicholaswilde" chart repository

...Successfully got an update from the "grafana" chart repository

...Successfully got an update from the "bitnami" chart repository

Update Complete. ⎈Happy Helming!⎈

I can now install and pass a few settings to my helm deploy. I pulled the GH token from AKV inline

$ helm install backstage -n backstage --create-namespace --set backstage.extraEnvVars[0].name=NODE_ENV --set backstage.extraEnvVars[0].value=development --set backstage.extraEnvVars[1].name=GITHUB_TOKEN --set backstage.extraEnvVars[1].value=`az keyvault secret show --name github-token-acrtasks --vault-name idjakv --subscription Pay-As-You-Go | jq -r .value | tr -d '\n'` backstage/backstage

NAME: backstage

LAST DEPLOYED: Tue Jul 9 16:16:14 2024

NAMESPACE: backstage

STATUS: deployed

REVISION: 1

TEST SUITE: None
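The long chain of --set flags above can also live in a values file, which tends to be easier to diff and re-run. A minimal sketch, assuming the token is already exported as GITHUB_TOKEN (for example from the az keyvault call above). The write_values helper and the temp file are illustrative names, not part of the chart:

```shell
#!/bin/sh
set -eu

# Hypothetical helper: writes the same keys the --set flags used
# (backstage.extraEnvVars) into a values file.
# $1 = output path, $2 = token value
write_values() {
  cat > "$1" <<EOF
backstage:
  extraEnvVars:
    - name: NODE_ENV
      value: development
    - name: GITHUB_TOKEN
      value: "$2"
EOF
}

# mktemp creates the file readable only by the current user,
# which matters because the token lands on disk.
VALUES_FILE="$(mktemp)"
write_values "$VALUES_FILE" "${GITHUB_TOKEN:-changeme}"
echo "wrote $VALUES_FILE"

# With helm installed and the repo added, the install would then be:
#   helm install backstage -n backstage --create-namespace \
#     -f "$VALUES_FILE" backstage/backstage
```

The "changeme" fallback is only there so the sketch runs without a token set; a real run should fail fast instead.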

It would seem Backstage is running

$ kubectl get pods -n backstage

NAME READY STATUS RESTARTS AGE

backstage-5cbc9ccf79-c6fqv 1/1 Running 0 75s

We can port-forward to the service to access the UI

$ kubectl port-forward svc/backstage -n backstage 7007:7007

Forwarding from 127.0.0.1:7007 -> 7007

Forwarding from [::1]:7007 -> 7007

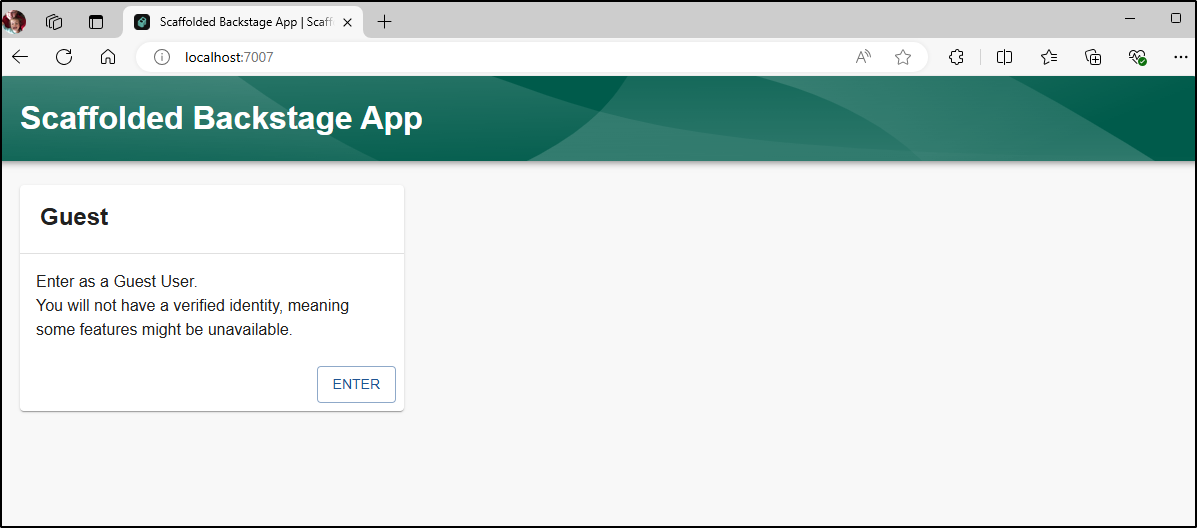

Handling connection for 7007

Handling connection for 7007

Handling connection for 7007

Handling connection for 7007

Handling connection for 7007

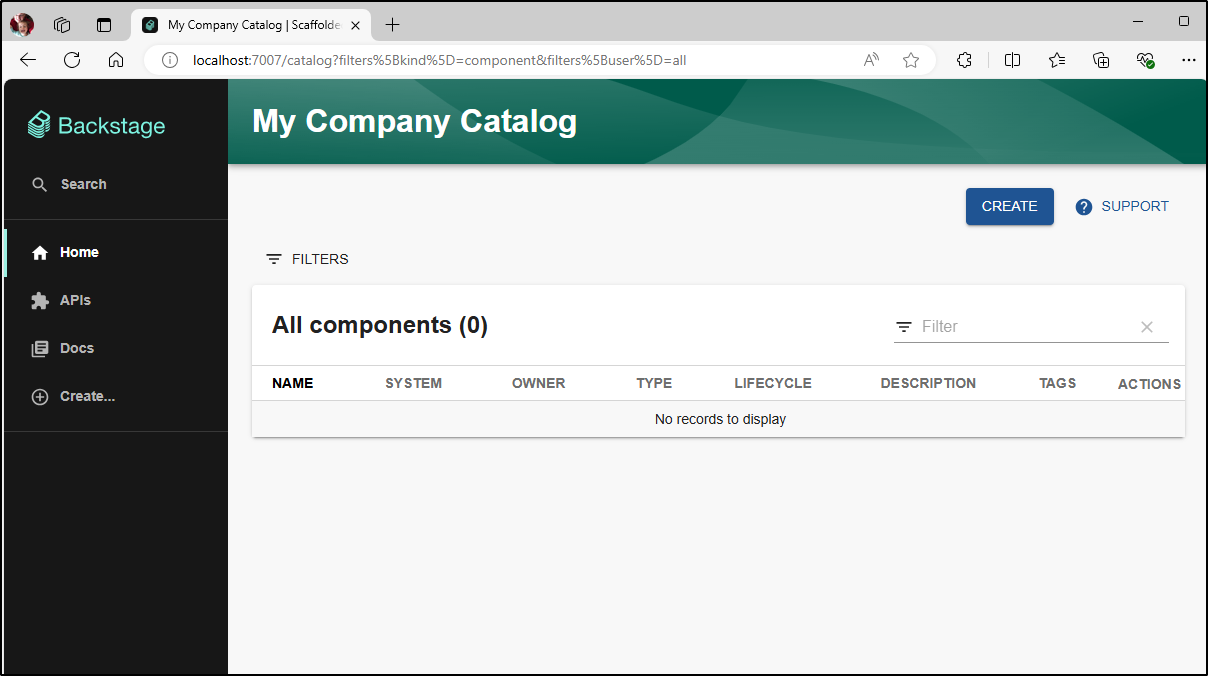

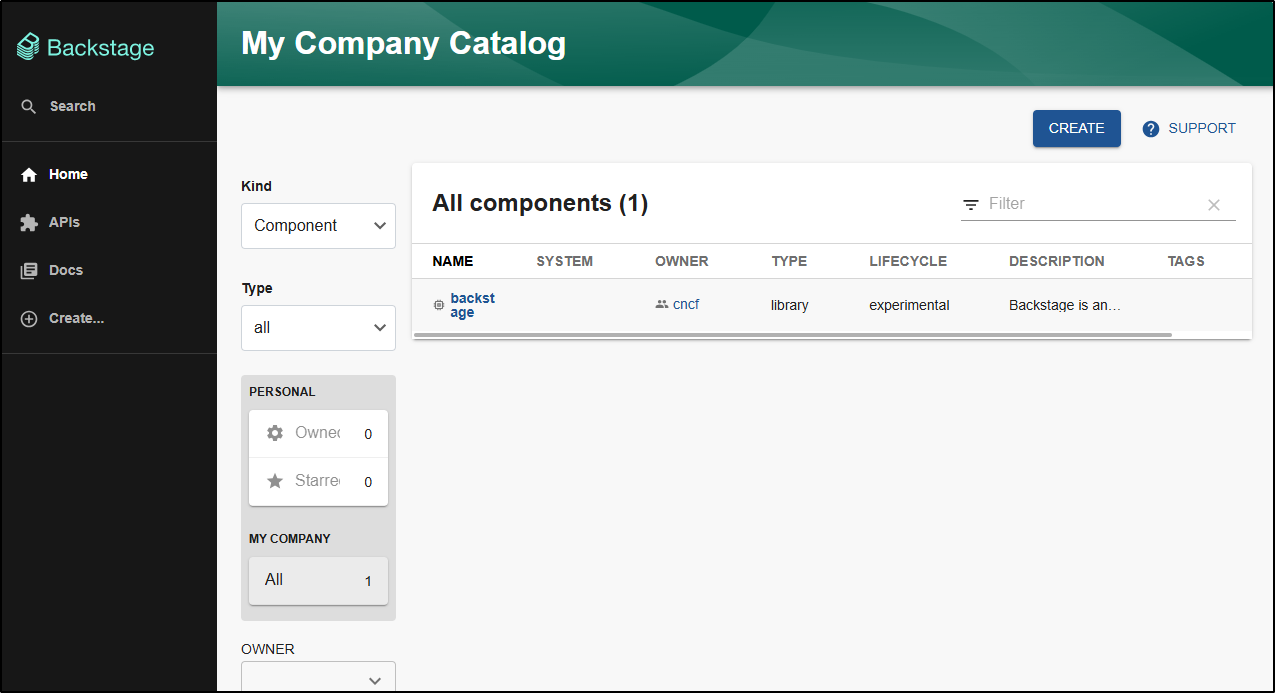

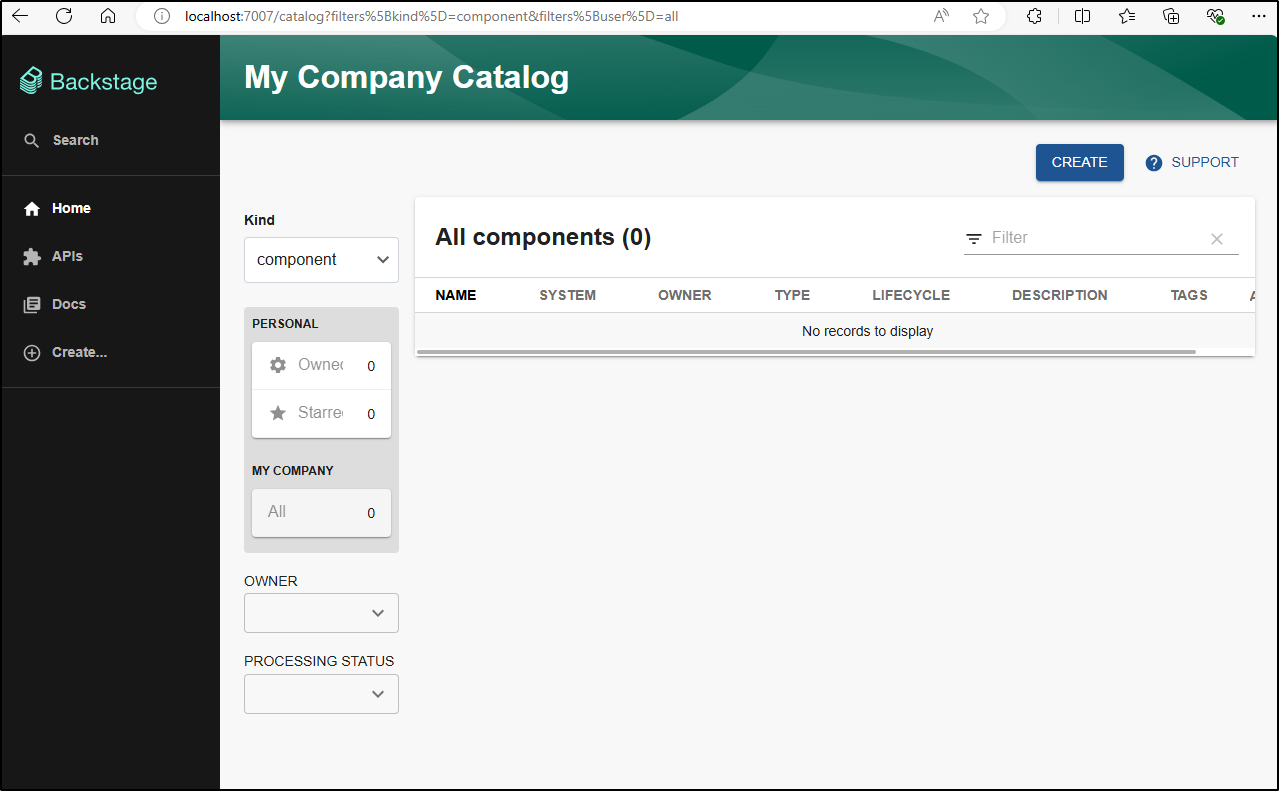

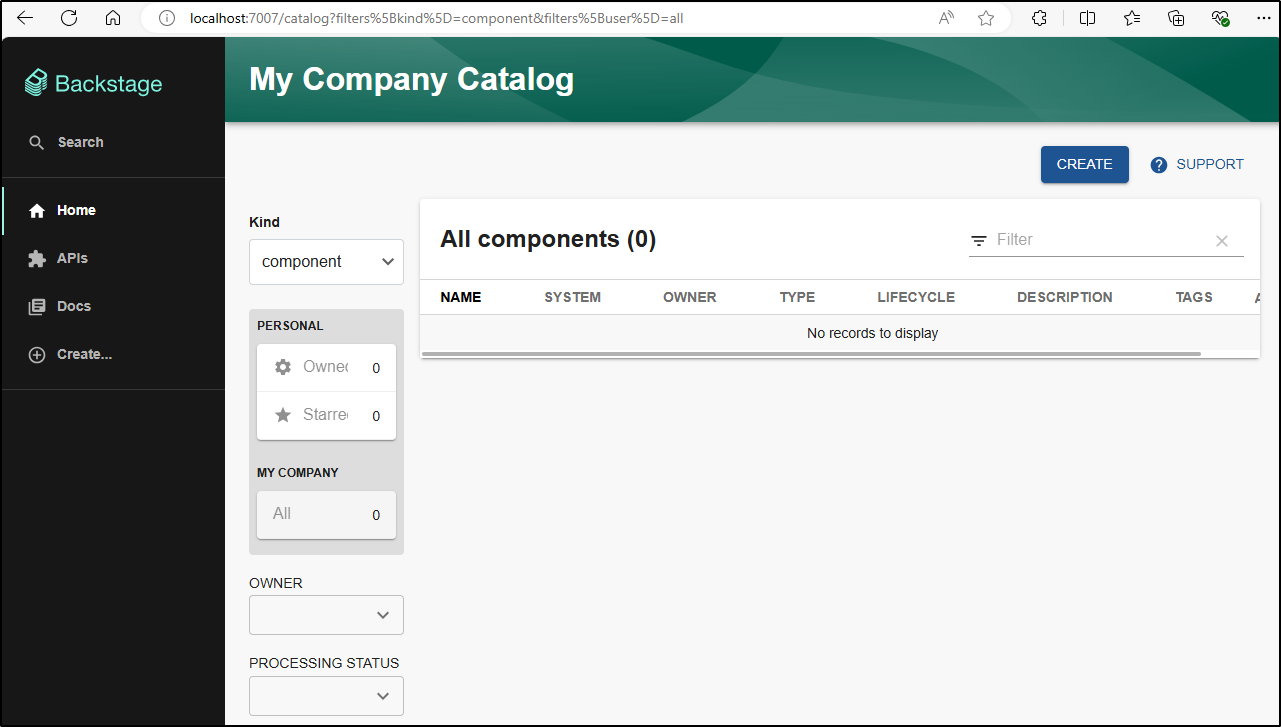

Here we have an empty catalogue

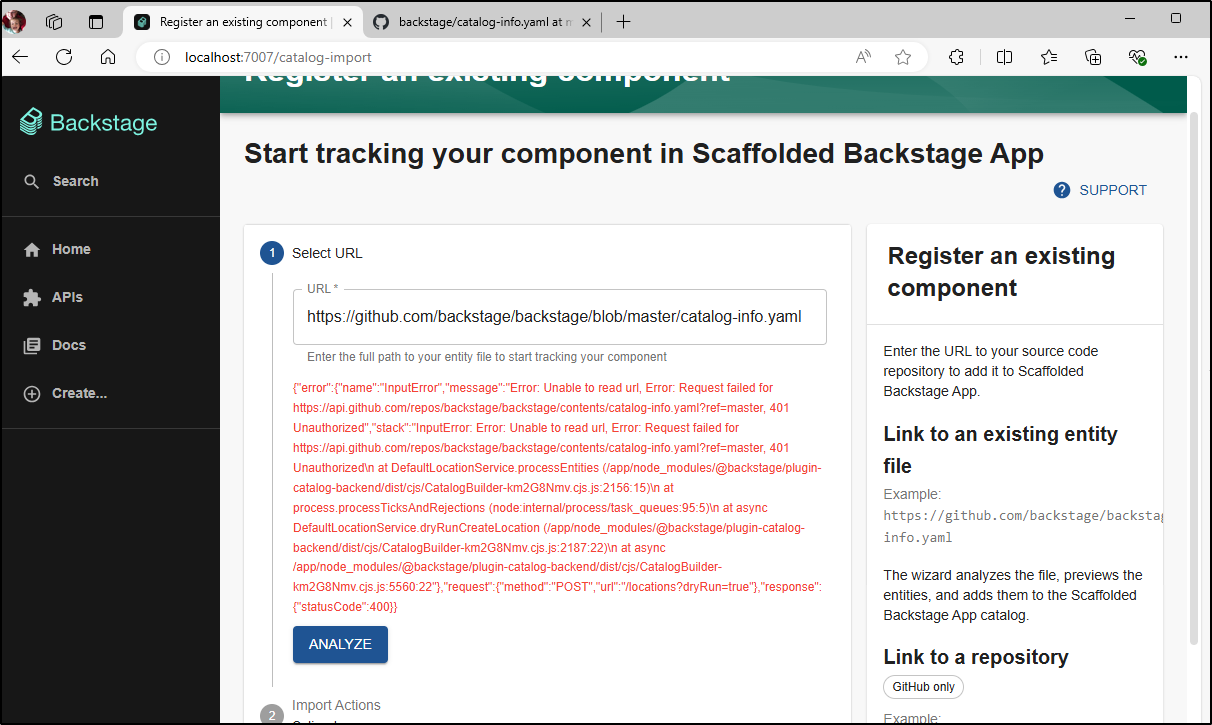

I was rejected from even the standard sample catalogue, which makes me question my GH token

I swapped tokens

$ helm upgrade backstage -n backstage --create-namespace --set backstage.extraEnvVars[0].name=NODE_ENV --set backstage.extraEnvVars[0].value=development --set backstage.extraEnvVars[1].name=GITHUB_TOKEN --set backstage.extraEnvVars[1].value=`az keyvault secret show --name GithubToken-MyFull90d --vault-name idjakv --subscription Pay-As-You-Go | jq -r .value | tr -d '\n'` backstage/backstage

Release "backstage" has been upgraded. Happy Helming!

NAME: backstage

LAST DEPLOYED: Tue Jul 9 16:27:35 2024

NAMESPACE: backstage

STATUS: deployed

REVISION: 3

TEST SUITE: None

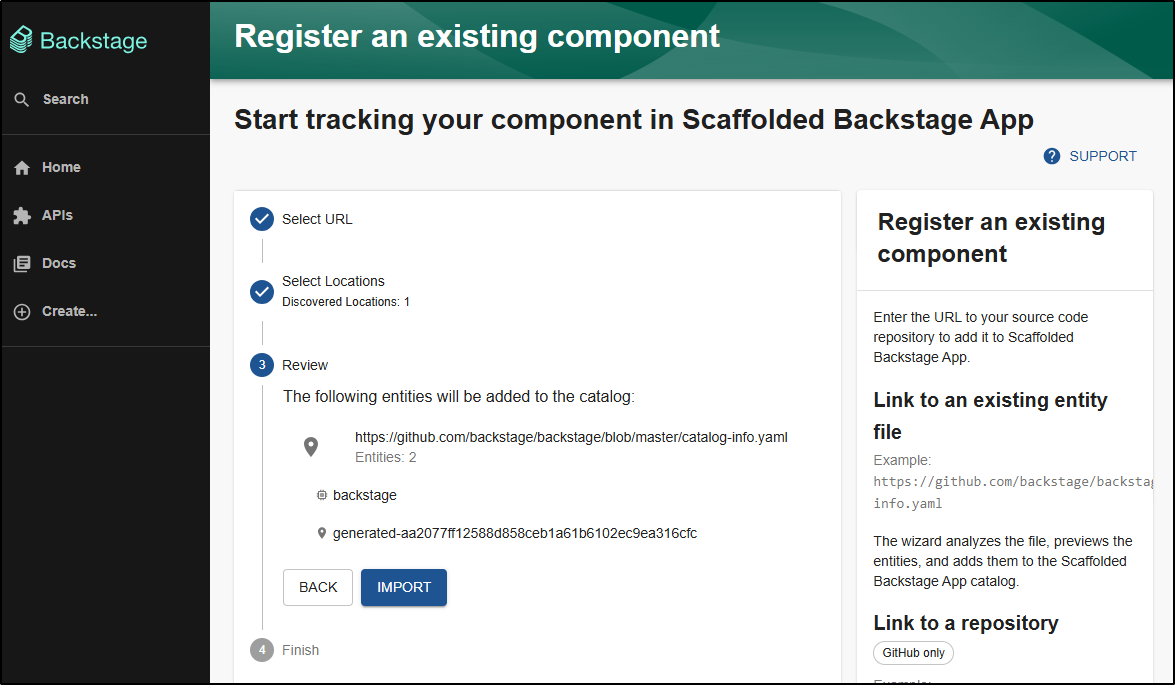

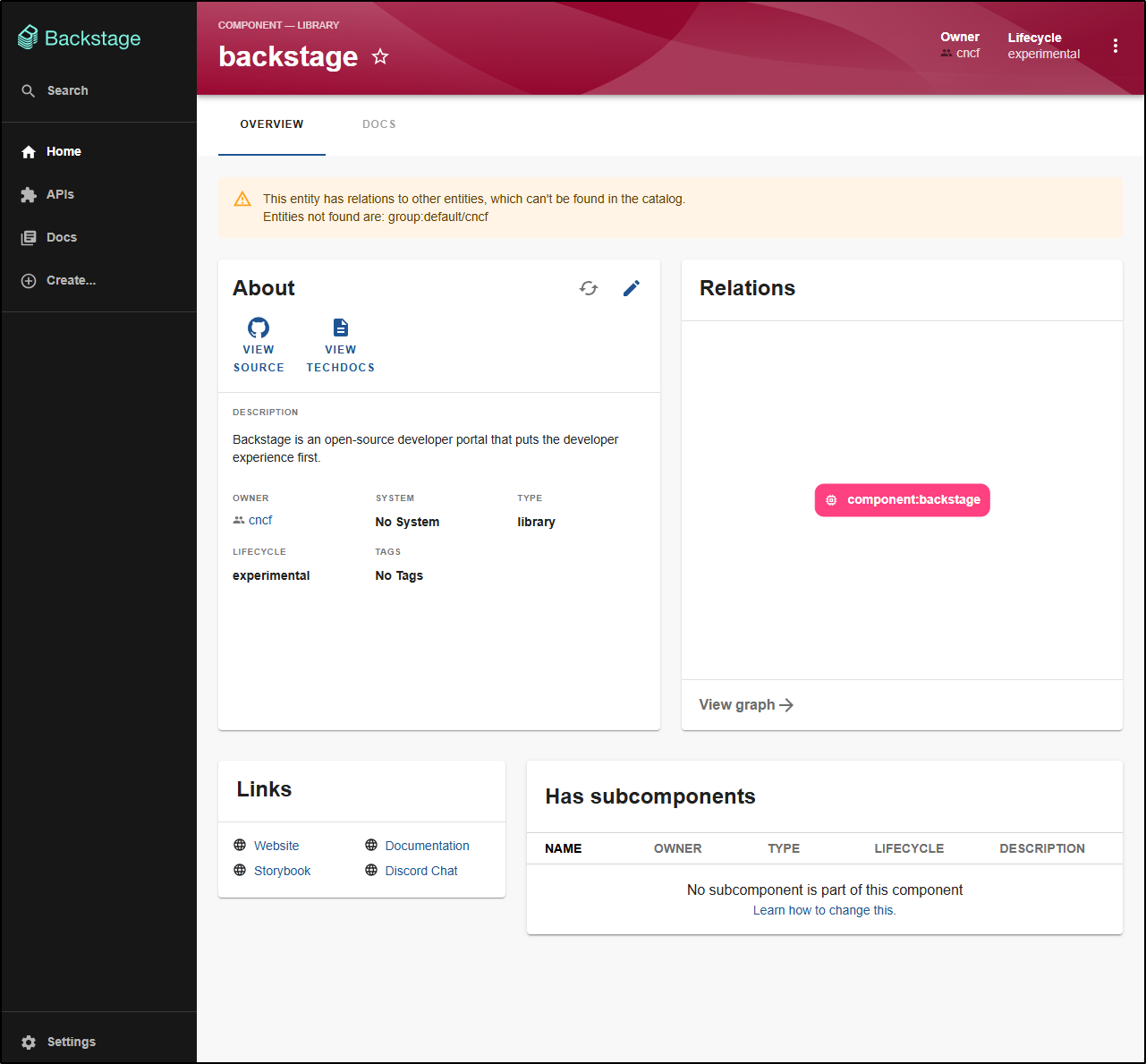

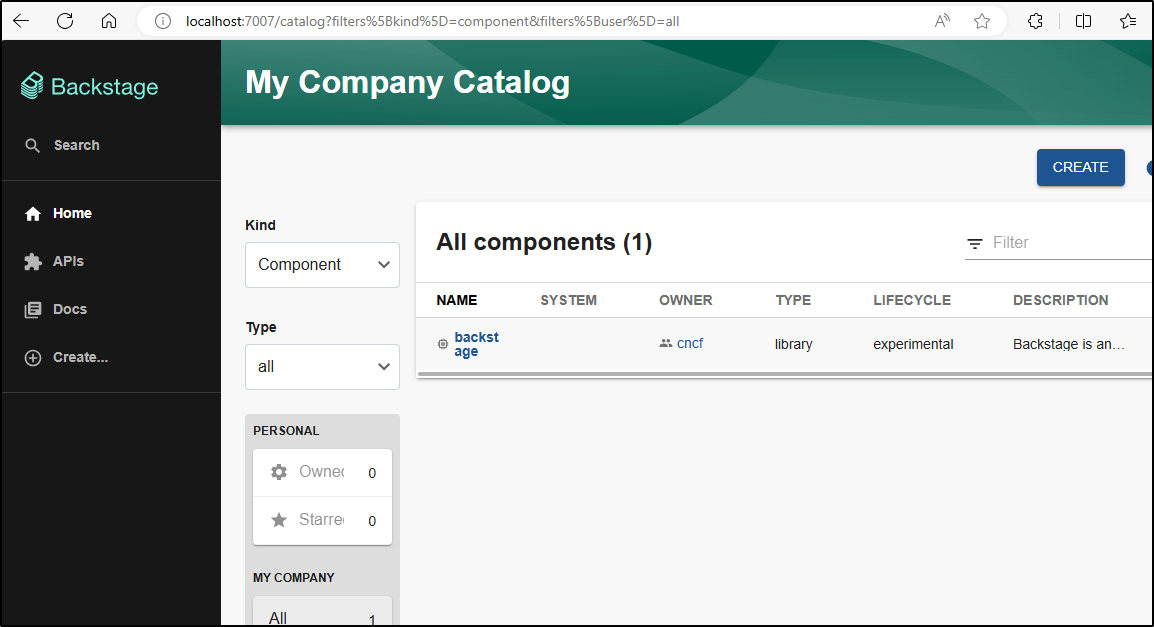

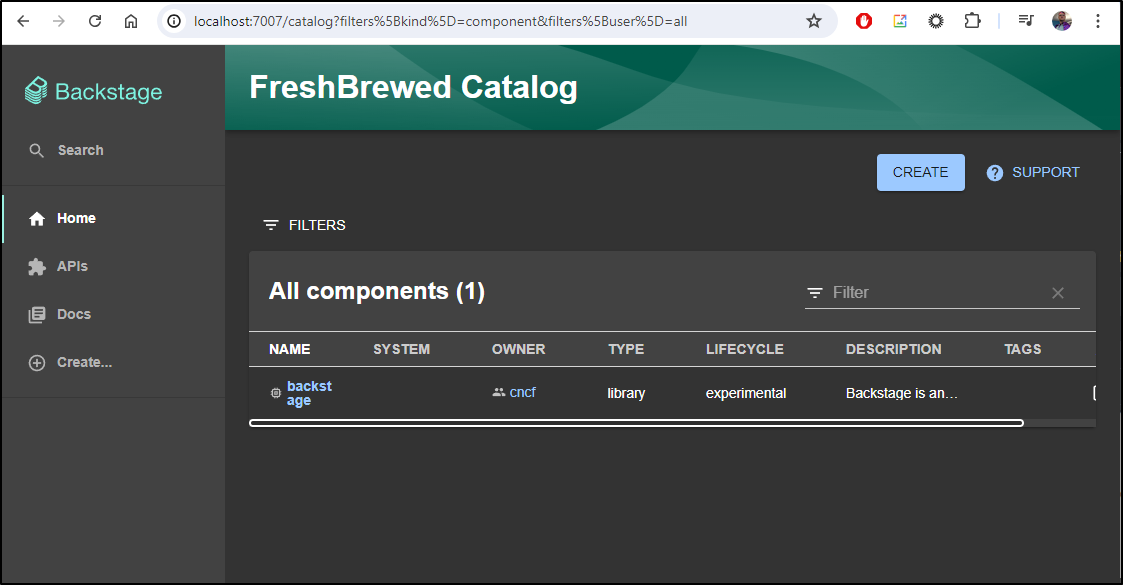

That worked a bit better

We can now see that component in the list

It also appears in the main page

I see no PVCs, so it makes me question persistence

builder@LuiGi:~/Workspaces/samples$ kubectl get pvc -n backstage

No resources found in backstage namespace.

builder@LuiGi:~/Workspaces/samples$ kubectl get pods -n backstage

NAME READY STATUS RESTARTS AGE

backstage-76fddf564b-2gqkb 1/1 Running 0 3m38s

builder@LuiGi:~/Workspaces/samples$ kubectl delete pod backstage-76fddf564b-2gqkb -n backstage

pod "backstage-76fddf564b-2gqkb" deleted

builder@LuiGi:~/Workspaces/samples$ kubectl get pods -n backstage

NAME READY STATUS RESTARTS AGE

backstage-76fddf564b-7bhwt 1/1 Running 0 5s

Indeed, that rotation wiped my import

I figured I would pivot to enabling PostgreSQL

$ helm delete backstage -n backstage

$ helm install backstage -n backstage --create-namespace --set backstage.extraEnvVars[0].name=NODE_ENV --set backstage.extraEnvVars[0].value=development --set backstage.extraEnvVars[1].name=GITHUB_TOKEN --set backstage.extraEnvVars[1].value=`az keyvault secret show --name GithubToken-MyFull90d --vault-name idjakv --subscription Pay-As-You-Go | jq -r .value | tr -d '\n'` --set postgresql.enabled=true backstage/backstage

I’ll again add the component

Let’s rotate the pod

$ kubectl get pods -n backstage

NAME READY STATUS RESTARTS AGE

backstage-6db5b7784c-scr7t 1/1 Running 0 7m5s

backstage-postgresql-0 1/1 Running 1 (3m39s ago) 7m5s

$ kubectl delete pod backstage-6db5b7784c-scr7t -n backstage

pod "backstage-6db5b7784c-scr7t" deleted

$ kubectl get pods -n backstage

NAME READY STATUS RESTARTS AGE

backstage-postgresql-0 1/1 Running 1 (4m21s ago) 7m47s

backstage-6db5b7784c-x4j28 1/1 Running 0 27s

But it didn’t stay

$ kubectl create configmap my-app-config -n backstage --from-file=app-config.extra.yaml=/home/builder/Workspaces/samples/app-config.yaml

configmap/my-app-config created

$ cat app-config.yaml

app:
  title: Fresh/Brewed Backstage App
  baseUrl: http://localhost:3000
organization:
  name: FreshBrewed
backend:
  baseUrl: http://localhost:7007
  listen:
    port: 7007
  csp:
    connect-src: ["'self'", 'http:', 'https:']
  cors:
    origin: http://localhost:3000
    methods: [GET, HEAD, PATCH, POST, PUT, DELETE]
    credentials: true
  database:
    client: better-sqlite3
    connection: ':memory:'
integrations:
  github:
    - host: github.com
      token: ${GITHUB_TOKEN}
auth:
  providers:
    guest: {}
catalog:
  import:
    entityFilename: catalog-info.yaml
    pullRequestBranchName: backstage-integration
  rules:
    - allow: [Component, System, API, Resource, Location]
  locations:
    - type: url
      target: https://github.com/backstage/backstage/blob/master/catalog-info.yaml
      rules:
        - allow: [ Template ]
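The database block above still points at better-sqlite3 with an in-memory connection, so if this file is what the backend loads, registered components will not survive a pod restart even with the PostgreSQL pod running. A sketch of the PostgreSQL form of that block, following the Backstage docs. It assumes the chart exposes POSTGRES_HOST, POSTGRES_PORT, POSTGRES_USER and POSTGRES_PASSWORD to the pod when postgresql.enabled=true, which is worth confirming in the pod's environment before relying on those names:

```shell
#!/bin/sh
set -eu

# Write a database override as its own app-config fragment.
# The quoted 'EOF' keeps the ${...} placeholders literal so Backstage,
# not the shell, substitutes them at startup.
DB_FRAGMENT="$(mktemp)"
cat > "$DB_FRAGMENT" <<'EOF'
backend:
  database:
    client: pg
    connection:
      host: ${POSTGRES_HOST}
      port: ${POSTGRES_PORT}
      user: ${POSTGRES_USER}
      password: ${POSTGRES_PASSWORD}
EOF

cat "$DB_FRAGMENT"
```

The fragment could replace the database section of app-config.yaml before the ConfigMap is created.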

I can then install (only after removing the prior)

$ helm delete backstage -n backstage

release "backstage" uninstalled

$ helm install backstage -n backstage --create-namespace --set backstage.extraEnvVars[0].name=NODE_ENV --set backstage.extraEnvVars[0].value=development --set backstage.extraEnvVars[1].name=GITHUB_TOKEN --set backstage.extraEnvVars[1].value=`az keyvault secret show --name GithubToken-MyFull90d --vault-name idjakv --subscription Pay-As-You-Go | jq -r .value | tr -d '\n'` --set postgresql.enabled=true --set backstage.extraAppConfig[0].filename=app-config.extra.yaml --set backstage.extraAppConfig[0].configMapRef=my-app-config backstage/backstage

NAME: backstage

LAST DEPLOYED: Tue Jul 9 16:58:25 2024

NAMESPACE: backstage

STATUS: deployed

REVISION: 1

TEST SUITE: None

However that didn’t work.

The problem was that my CM was errant

$ kubectl port-forward svc/backstage -n backstage 7007:7007

Forwarding from 127.0.0.1:7007 -> 7007

Forwarding from [::1]:7007 -> 7007

Handling connection for 7007

Handling connection for 7007

E0709 18:21:26.870026 69611 portforward.go:409] an error occurred forwarding 7007 -> 7007: error forwarding port 7007 to pod bde32cfcfed1df71f82d86a2b92bdaeb6c0df610db2dff0ebe4ba1f3b62b099f, uid : failed to execute portforward in network namespace "/var/run/netns/cni-ee327fa4-6dff-58a3-99b6-aa4b27927259": failed to connect to localhost:7007 inside namespace "bde32cfcfed1df71f82d86a2b92bdaeb6c0df610db2dff0ebe4ba1f3b62b099f", IPv4: dial tcp4 127.0.0.1:7007: connect: connection refused IPv6 dial tcp6: address localhost: no suitable address found

error: lost connection to pod
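A connection refused on the forwarded port usually means nothing in the pod is listening yet, so the pod logs are the next place to look. A small sketch of the checks that apply here. The run helper and DRY_RUN switch are hypothetical and only print the commands unless DRY_RUN=0, and it assumes the Deployment is named backstage like the Service:

```shell
#!/bin/sh
set -eu

NS="backstage"
DRY_RUN="${DRY_RUN:-1}"

# Print the command in dry-run mode, otherwise execute it.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

diagnose() {
  # Is the container restarting or stuck before it listens on 7007?
  run kubectl get pods -n "$NS" -l app.kubernetes.io/component=backstage
  # Startup errors (bad config, failed plugin) should show up in the backend logs.
  run kubectl logs -n "$NS" deploy/backstage --tail=50
  # Confirm what actually ended up in the ConfigMap.
  run kubectl get configmap my-app-config -n "$NS" -o yaml
}

diagnose
```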

I updated my ConfigMap to put in the token, and added the app baseUrl and the techdocs block

$ kubectl get cm my-app-config -n backstage -o yaml

apiVersion: v1
data:
  app-config.extra.yaml: |+
    app:
      baseUrl: http://localhost:7007
    organization:
      name: FreshBrewed
    techdocs:
      builder: 'local' # Alternatives - 'external'
      generator:
        runIn: 'docker'
        # dockerImage: my-org/techdocs # use a custom docker image
        # pullImage: true # or false to disable automatic pulling of image (e.g. if custom docker login is required)
      publisher:
        type: 'local' # Alternatives - 'googleGcs' or 'awsS3' or 'azureBlobStorage' or 'openStackSwift'. Read documentation for using alternatives.
    backend:
      baseUrl: http://localhost:7007
      listen:
        port: 7007
      csp:
        connect-src: ["'self'", 'http:', 'https:']
      cors:
        origin: http://localhost:3000
        methods: [GET, HEAD, PATCH, POST, PUT, DELETE]
        credentials: true
      database:
        client: better-sqlite3
        connection: ':memory:'
    integrations:
      github:
        - host: github.com
          token: ghp_Rxxxxxxxxxxxxxxxxxxxxxxxxxxxxxo
    auth:
      providers:
        guest: {}
    catalog:
      import:
        entityFilename: catalog-info.yaml
        pullRequestBranchName: backstage-integration
      rules:
        - allow: [Component, System, API, Resource, Location]
      locations:
        - type: url
          target: https://github.com/backstage/backstage/blob/master/catalog-info.yaml
          rules:
            - allow: [ Template ]

kind: ConfigMap
metadata:
  creationTimestamp: "2024-07-09T21:57:12Z"
  name: my-app-config
  namespace: backstage
  resourceVersion: "5149239"
  uid: a43a678a-82e7-40c9-82c9-e8403dd18174
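Pasting the token into the ConfigMap works, but it leaves the token readable to anyone who can read ConfigMaps in the namespace. One alternative sketch keeps token: ${GITHUB_TOKEN} in the config and stores the value in a Secret. The Secret name backstage-github is made up for this example, and wiring it in through backstage.extraEnvVarsSecrets is an assumption about the chart's values, so verify it against helm show values backstage/backstage first:

```shell
#!/bin/sh
set -eu

# Hypothetical Secret name; anything works as long as the chart values match.
SECRET_NAME="backstage-github"

# Render a Secret manifest. stringData avoids manual base64 encoding.
# $1 = output path, $2 = token value
write_secret() {
  cat > "$1" <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: ${SECRET_NAME}
  namespace: backstage
type: Opaque
stringData:
  GITHUB_TOKEN: "$2"
EOF
}

SECRET_FILE="$(mktemp)"
write_secret "$SECRET_FILE" "${GITHUB_TOKEN:-changeme}"
echo "wrote $SECRET_FILE"

# Apply it, then reference the Secret from the release (assumed values key):
#   kubectl apply -f "$SECRET_FILE"
#   helm upgrade backstage -n backstage --reuse-values \
#     --set "backstage.extraEnvVarsSecrets[0]=${SECRET_NAME}" backstage/backstage
```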

Now it works

$ kubectl port-forward svc/backstage -n backstage 7007:7007

Forwarding from 127.0.0.1:7007 -> 7007

Forwarding from [::1]:7007 -> 7007

Handling connection for 7007

Handling connection for 7007

Handling connection for 7007

Handling connection for 7007

Handling connection for 7007

Handling connection for 7007

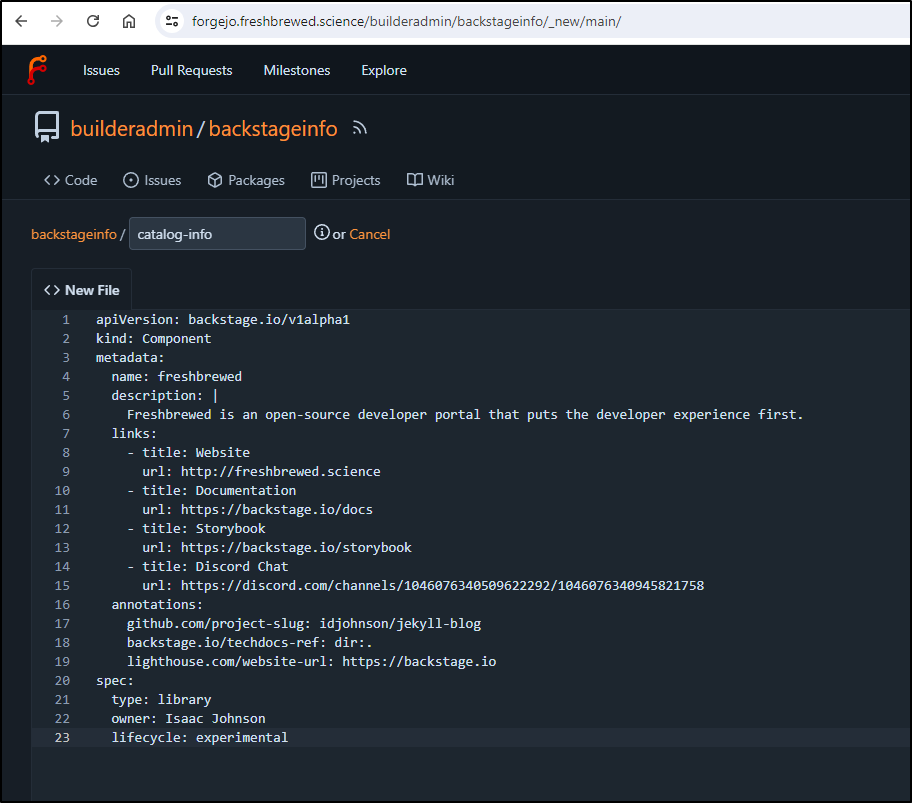

I really tried to use Forgejo, but it wouldn’t take

So I just moved to GitHub here.

apiVersion: backstage.io/v1alpha1
kind: Component
metadata:
  name: freshbrewed
  description: |
    Freshbrewed is an open-source developer portal that puts the developer experience first.
  links:
    - title: Website
      url: http://freshbrewed.science
    - title: Documentation
      url: https://backstage.io/docs
    - title: Storybook
      url: https://backstage.io/storybook
    - title: Discord Chat
      url: https://discord.com/channels/1046076340509622292/1046076340945821758
  annotations:
    github.com/project-slug: idjohnson/jekyll-blog
    backstage.io/techdocs-ref: dir:.
    lighthouse.com/website-url: https://backstage.io
spec:
  type: library
  owner: Isaac Johnson
  lifecycle: experimental

And added it to the ConfigMap

catalog:
  import:
    entityFilename: catalog-info.yaml
    pullRequestBranchName: backstage-integration
  rules:
    - allow: [Component, System, API, Resource, Location]
  locations:
    - type: url
      target: https://github.com/idjohnson/backstageInfo/blob/main/backstage-info.yaml
      rules:
        - allow: [ Template ]

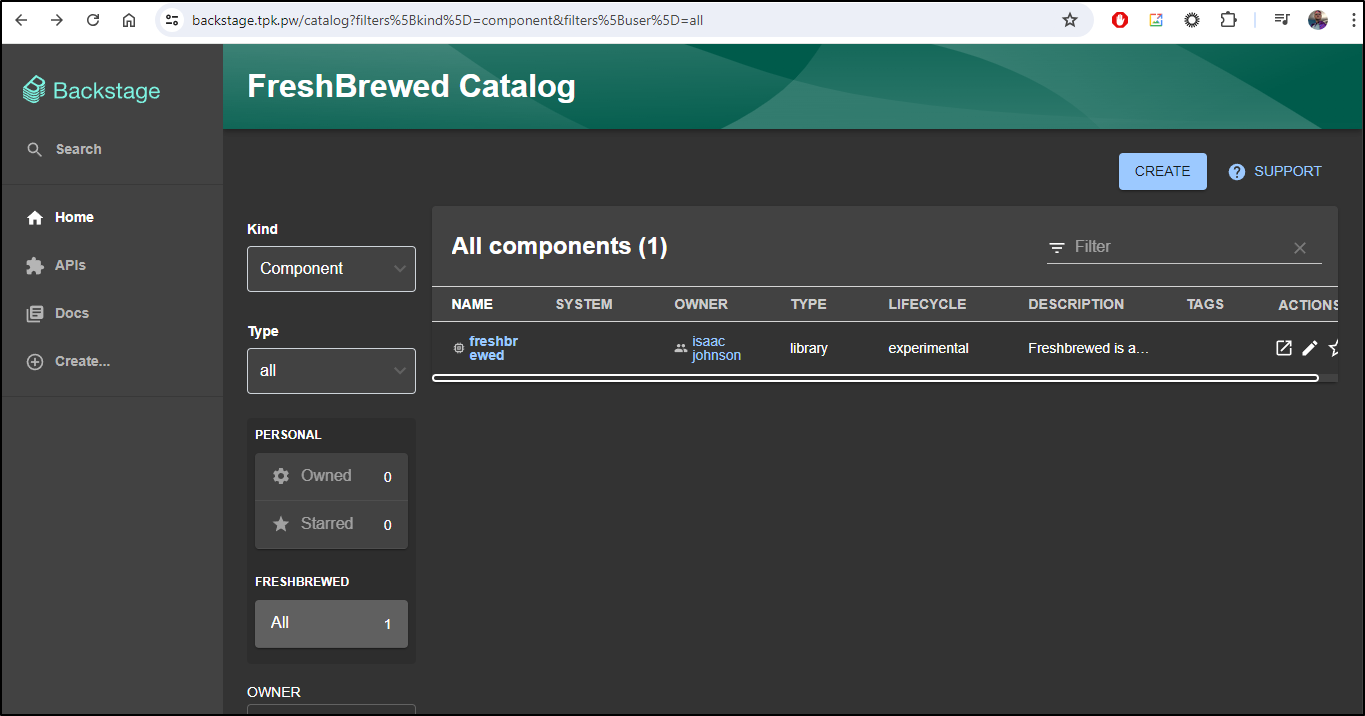

Then it loaded just fine

$ kubectl delete pods -l "app.kubernetes.io/component=backstage" -n backstage

pod "backstage-8486fb8f64-xh8mb" deleted

$ kubectl port-forward svc/backstage -n backstage 7007:7007

Forwarding from 127.0.0.1:7007 -> 7007

Forwarding from [::1]:7007 -> 7007

Handling connection for 7007

Handling connection for 7007

Handling connection for 7007

Handling connection for 7007

I can get the svc for backstage

$ kubectl get svc -n backstage backstage -o yaml

apiVersion: v1
kind: Service
metadata:
  annotations:
    meta.helm.sh/release-name: backstage
    meta.helm.sh/release-namespace: backstage
  creationTimestamp: "2024-07-09T21:58:46Z"
  labels:
    app.kubernetes.io/component: backstage
    app.kubernetes.io/instance: backstage
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/name: backstage
    helm.sh/chart: backstage-1.9.5
  name: backstage
  namespace: backstage
  resourceVersion: "5141287"
  uid: 31efdb5a-9112-427a-9bdb-5cb74279fd31
spec:
  clusterIP: 10.43.104.142
  clusterIPs:
  - 10.43.104.142
  internalTrafficPolicy: Cluster
  ipFamilies:
  - IPv4
  ipFamilyPolicy: SingleStack
  ports:
  - name: http-backend
    port: 7007
    protocol: TCP
    targetPort: backend
  selector:
    app.kubernetes.io/component: backstage
    app.kubernetes.io/instance: backstage
    app.kubernetes.io/name: backstage
  sessionAffinity: None
  type: ClusterIP
status:
  loadBalancer: {}

Production

$ kubectl create ns backstage

namespace/backstage created

$ kubectl apply -f ./mycm.yaml -n backstage

configmap/my-app-config created

$ helm install backstage -f ./values.yaml -n backstage backstage/backstage

NAME: backstage

LAST DEPLOYED: Tue Jul 9 19:52:20 2024

NAMESPACE: backstage

STATUS: deployed

REVISION: 1

TEST SUITE: None

I’ll add an Azure DNS entry

$ az account set --subscription "Pay-As-You-Go" && az network dns record-set a add-record -g idjdnsrg -z tpk.pw -a 75.73.224.240 -n backstage

{
  "ARecords": [
    {
      "ipv4Address": "75.73.224.240"
    }
  ],
  "TTL": 3600,
  "etag": "0956cdc4-1592-4c7d-a8a4-f8bbc6ace334",
  "fqdn": "backstage.tpk.pw.",
  "id": "/subscriptions/d955c0ba-13dc-44cf-a29a-8fed74cbb22d/resourceGroups/idjdnsrg/providers/Microsoft.Network/dnszones/tpk.pw/A/backstage",
  "name": "backstage",
  "provisioningState": "Succeeded",
  "resourceGroup": "idjdnsrg",
  "targetResource": {},
  "type": "Microsoft.Network/dnszones/A"
}

I can create an Ingress

$ cat backstage.ingress.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    cert-manager.io/cluster-issuer: azuredns-tpkpw
    ingress.kubernetes.io/ssl-redirect: "true"
    kubernetes.io/ingress.class: nginx
    kubernetes.io/tls-acme: "true"
    nginx.ingress.kubernetes.io/ssl-redirect: "true"
    nginx.org/websocket-services: backstage
  name: backstage-ingress
spec:
  rules:
  - host: backstage.tpk.pw
    http:
      paths:
      - backend:
          service:
            name: backstage
            port:
              number: 7007
        path: /
        pathType: Prefix
  tls:
  - hosts:
    - backstage.tpk.pw
    secretName: backstage-tls

$ kubectl apply -f ./backstage.ingress.yaml -n backstage

ingress.networking.k8s.io/backstage-ingress created

When the cert is satisfied we can test

$ kubectl get cert -n backstage

NAME READY SECRET AGE

backstage-tls True backstage-tls 85s

I can then test

Auth

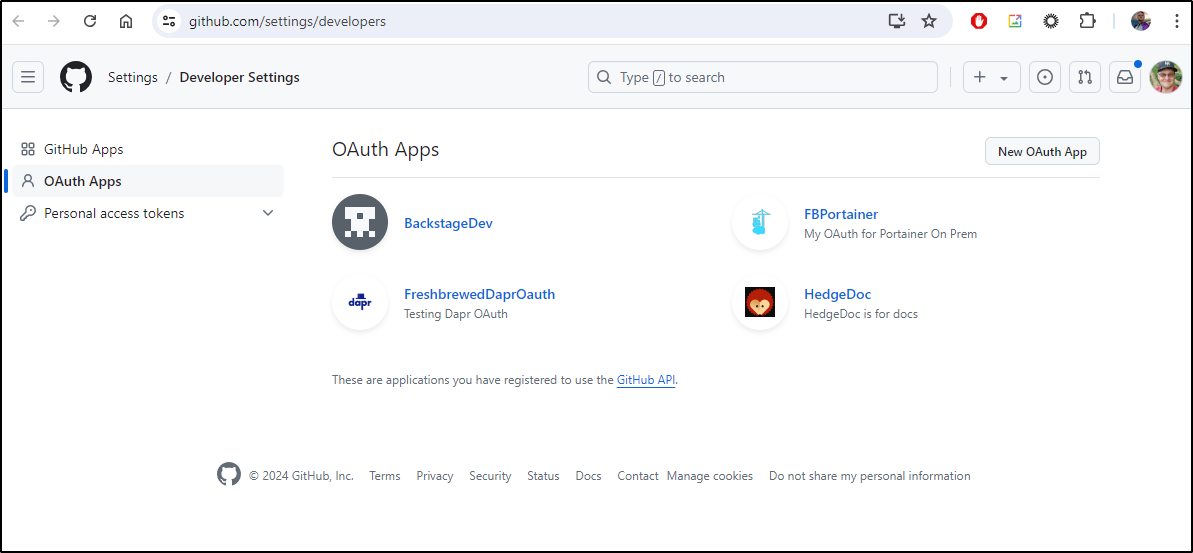

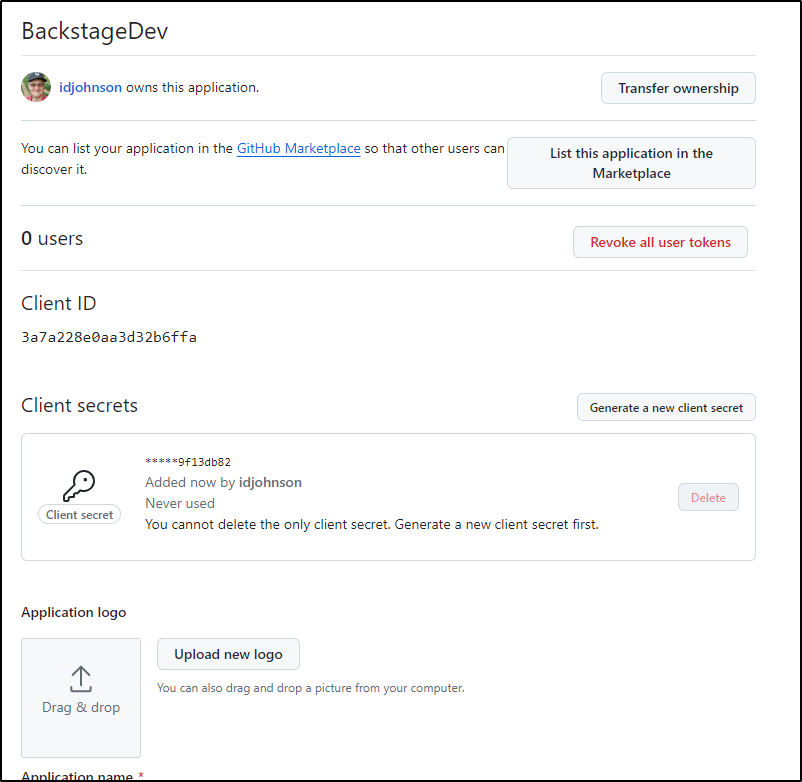

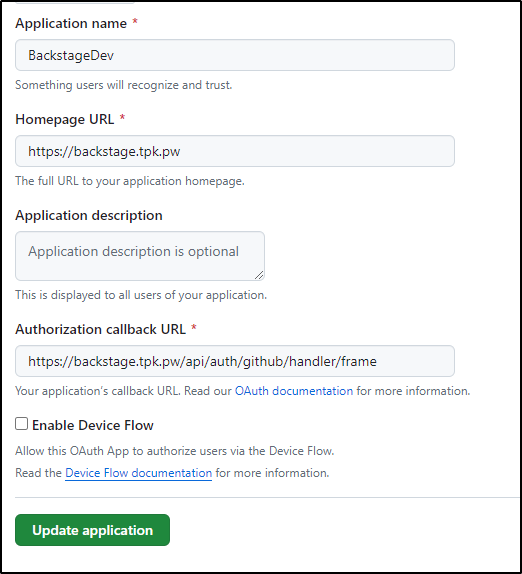

Let’s set up GitHub Auth in GitHub Developer Settings

I’ll need the Client ID and Secret (which is shown once)

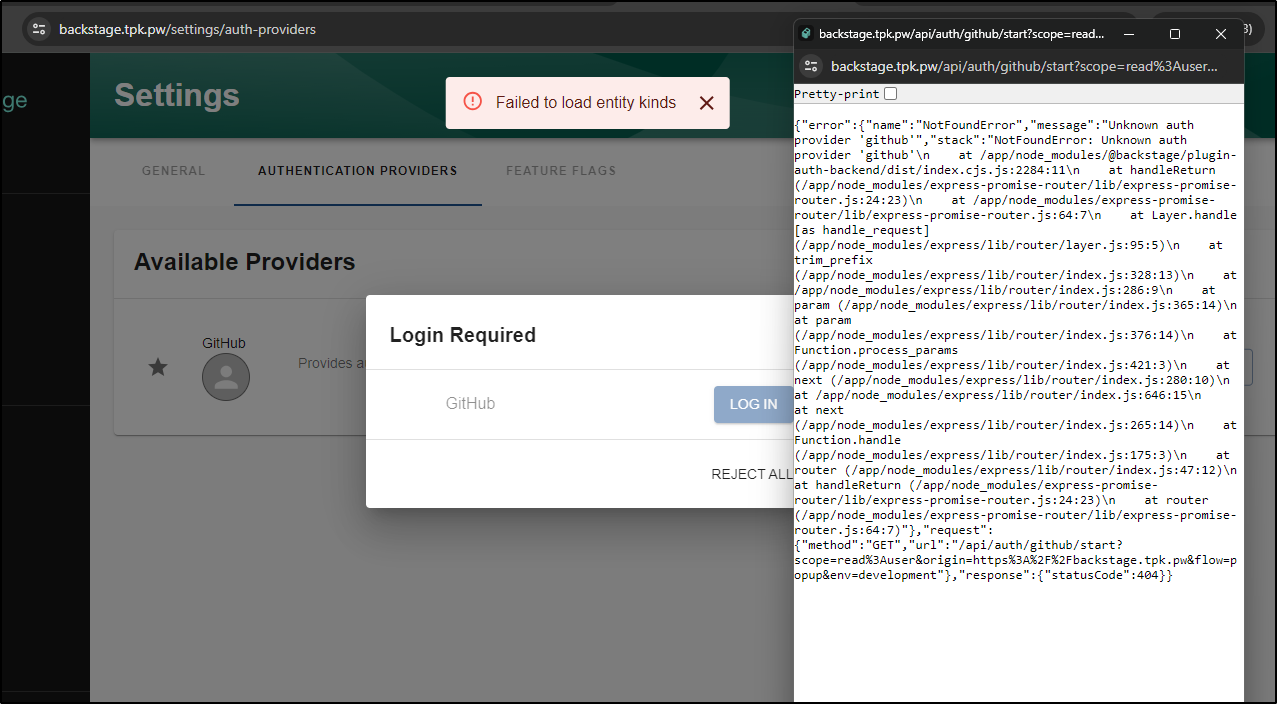

I believe I have the right Auth values

Testing a few times, I couldn’t get Auth to work
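For comparison, this is roughly how the Backstage docs enable the GitHub provider in app-config. Treat it as a sketch, not a known-good fix for this setup: the AUTH_GITHUB_CLIENT_ID and AUTH_GITHUB_CLIENT_SECRET variable names follow the docs' convention and would still need to be injected into the pod, the key under github has to match auth.environment, and the sign-in resolver shown is only one of the available options:

```shell
#!/bin/sh
set -eu

# Write the auth block as a separate app-config fragment.
# Quoted 'EOF' keeps ${...} literal for Backstage to resolve at startup.
AUTH_FRAGMENT="$(mktemp)"
cat > "$AUTH_FRAGMENT" <<'EOF'
auth:
  environment: development
  providers:
    github:
      development:
        clientId: ${AUTH_GITHUB_CLIENT_ID}
        clientSecret: ${AUTH_GITHUB_CLIENT_SECRET}
        signIn:
          resolvers:
            - resolver: usernameMatchingUserEntityName
EOF

cat "$AUTH_FRAGMENT"

# The OAuth app's callback URL in GitHub Developer Settings would then be
#   https://backstage.tpk.pw/api/auth/github/handler/frame
# assuming the backend is served from that host.
```

Two things commonly trip this up: the resolver above only signs in users that already exist as User entities in the catalog, and the sign-in page has to offer GitHub, which is a frontend code change and so may need a custom image rather than the stock one.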

Docker

I tried a lot to get it to build and run in Docker

$ yarn install --frozen-lockfile

$ NODE_OPTIONS=--max-old-space-size=8192 yarn tsc

$ NODE_OPTIONS=--max-old-space-size=8192 yarn build:backend --config ../../app-config.yaml

But I also tried

$ NODE_OPTIONS=--max-old-space-size=8192 yarn build:backend --config ./app-config.yaml

as that is where I saw the app-config.

I would build

$ docker image build . -f packages/backend/Dockerfile --tag backstage

But all the runs crashed

Loading config from MergedConfigSource{FileConfigSource{path="/app/app-config.yaml"}, EnvConfigSource{count=0}}

{"level":"info","message":"Found 2 new secrets in config that will be redacted","service":"backstage"}

{"level":"info","message":"Listening on :7007","service":"rootHttpRouter"}

{"level":"info","message":"Plugin initialization started: 'auth', 'app', 'catalog', 'devtools', 'kubernetes', 'permission', 'proxy', 'scaffolder', 'search', 'techdocs', 'signals', 'notifications'","service":"backstage","type":"initialization"}

{"level":"info","message":"[HPM] Proxy created: /circleci/api -> https://circleci.com/api/v1.1","plugin":"proxy","service":"backstage"}

{"level":"info","message":"[HPM] Proxy rewrite rule created: \"^/api/proxy/circleci/api/?\" ~> \"/\"","plugin":"proxy","service":"backstage"}

{"level":"info","message":"[HPM] Proxy created: /jenkins/api -> http://localhost:8080","plugin":"proxy","service":"backstage"}

{"level":"info","message":"[HPM] Proxy rewrite rule created: \"^/api/proxy/jenkins/api/?\" ~> \"/\"","plugin":"proxy","service":"backstage"}

{"level":"info","message":"[HPM] Proxy created: /travisci/api -> https://api.travis-ci.com","plugin":"proxy","service":"backstage"}

{"level":"info","message":"[HPM] Proxy rewrite rule created: \"^/api/proxy/travisci/api/?\" ~> \"/\"","plugin":"proxy","service":"backstage"}

{"level":"info","message":"[HPM] Proxy created: /newrelic/apm/api -> https://api.newrelic.com/v2","plugin":"proxy","service":"backstage"}

{"level":"info","message":"[HPM] Proxy rewrite rule created: \"^/api/proxy/newrelic/apm/api/?\" ~> \"/\"","plugin":"proxy","service":"backstage"}

{"level":"info","message":"[HPM] Proxy created: /newrelic/api -> https://api.newrelic.com","plugin":"proxy","service":"backstage"}

{"level":"info","message":"[HPM] Proxy rewrite rule created: \"^/api/proxy/newrelic/api/?\" ~> \"/\"","plugin":"proxy","service":"backstage"}

{"level":"info","message":"[HPM] Proxy created: /pagerduty -> https://api.pagerduty.com","plugin":"proxy","service":"backstage"}

{"level":"info","message":"[HPM] Proxy rewrite rule created: \"^/api/proxy/pagerduty/?\" ~> \"/\"","plugin":"proxy","service":"backstage"}

{"level":"info","message":"[HPM] Proxy created: /buildkite/api -> https://api.buildkite.com/v2/","plugin":"proxy","service":"backstage"}

{"level":"info","message":"[HPM] Proxy rewrite rule created: \"^/api/proxy/buildkite/api/?\" ~> \"/\"","plugin":"proxy","service":"backstage"}

{"level":"info","message":"[HPM] Proxy created: /sentry/api -> https://sentry.io/api/","plugin":"proxy","service":"backstage"}

{"level":"info","message":"[HPM] Proxy rewrite rule created: \"^/api/proxy/sentry/api/?\" ~> \"/\"","plugin":"proxy","service":"backstage"}

{"level":"info","message":"[HPM] Proxy created: /ilert -> https://api.ilert.com","plugin":"proxy","service":"backstage"}

{"level":"info","message":"[HPM] Proxy rewrite rule created: \"^/api/proxy/ilert/?\" ~> \"/\"","plugin":"proxy","service":"backstage"}

{"level":"info","message":"[HPM] Proxy created: /airflow -> https://your.airflow.instance.com/api/v1","plugin":"proxy","service":"backstage"}

{"level":"info","message":"[HPM] Proxy rewrite rule created: \"^/api/proxy/airflow/?\" ~> \"/\"","plugin":"proxy","service":"backstage"}

{"level":"info","message":"[HPM] Proxy created: /gocd -> https://your.gocd.instance.com/go/api","plugin":"proxy","service":"backstage"}

{"level":"info","message":"[HPM] Proxy rewrite rule created: \"^/api/proxy/gocd/?\" ~> \"/\"","plugin":"proxy","service":"backstage"}

{"level":"info","message":"[HPM] Proxy created: /dynatrace -> https://your.dynatrace.instance.com/api/v2","plugin":"proxy","service":"backstage"}

{"level":"info","message":"[HPM] Proxy rewrite rule created: \"^/api/proxy/dynatrace/?\" ~> \"/\"","plugin":"proxy","service":"backstage"}

{"level":"info","message":"[HPM] Proxy created: /stackstorm -> https://your.stackstorm.instance.com/api","plugin":"proxy","service":"backstage"}

{"level":"info","message":"[HPM] Proxy rewrite rule created: \"^/api/proxy/stackstorm/?\" ~> \"/\"","plugin":"proxy","service":"backstage"}

{"level":"info","message":"[HPM] Proxy created: /puppetdb -> https://your.puppetdb.instance.com","plugin":"proxy","service":"backstage"}

{"level":"info","message":"[HPM] Proxy rewrite rule created: \"^/api/proxy/puppetdb/?\" ~> \"/\"","plugin":"proxy","service":"backstage"}

{"level":"info","message":"registered additional routes for catalogModuleUnprocessedEntities","plugin":"catalog","service":"backstage"}

{"level":"info","message":"Initializing Kubernetes backend","plugin":"kubernetes","service":"backstage"}

{"level":"info","message":"action=LoadingCustomResources numOfCustomResources=0","plugin":"kubernetes","service":"backstage"}

{"level":"info","message":"Configuring \"database\" as KeyStore provider","plugin":"auth","service":"backstage"}

{"level":"info","message":"Creating Local publisher for TechDocs","plugin":"techdocs","service":"backstage"}

{"level":"info","message":"Starting scaffolder with the following actions enabled github:actions:dispatch, github:autolinks:create, github:deployKey:create, github:environment:create, github:issues:label, github:repo:create, github:repo:push, github:webhook, publish:github, publish:github:pull-request, github:pages:enable, fetch:plain, fetch:plain:file, fetch:template, debug:log, debug:wait, catalog:register, catalog:fetch, catalog:write, fs:delete, fs:rename","plugin":"scaffolder","service":"backstage"}

{"level":"info","message":"Added ToolDocumentCollatorFactory collator factory for type tools","plugin":"search","service":"backstage"}

{"level":"info","message":"Added DefaultCatalogCollatorFactory collator factory for type software-catalog","plugin":"search","service":"backstage"}

{"level":"info","message":"Added DefaultTechDocsCollatorFactory collator factory for type techdocs","plugin":"search","service":"backstage"}

{"level":"info","message":"Performing database migration","plugin":"catalog","service":"backstage"}

/app/packages/backend-app-api/dist/index.cjs.js:1680

throw new errors.ForwardedError(

^

ForwardedError: Plugin 'app' startup failed; caused by Error: Cannot find module 'example-app/package.json'

Require stack:

- /app/packages/backend-plugin-api/dist/cjs/paths-D7KGMZeP.cjs.js

- /app/packages/backend-plugin-api/dist/index.cjs.js

- /app/packages/backend-app-api/dist/index.cjs.js

- /app/packages/backend-defaults/dist/index.cjs.js

- /app/packages/backend/dist/index.cjs.js

at /app/packages/backend-app-api/dist/index.cjs.js:1680:19

at async /app/packages/backend-app-api/dist/index.cjs.js:1679:11

at async Promise.all (index 1)

... 2 lines matching cause stack trace ...

at async BackstageBackend.start (/app/packages/backend-app-api/dist/index.cjs.js:1762:5) {

cause: Error: Cannot find module 'example-app/package.json'

Require stack:

- /app/packages/backend-plugin-api/dist/cjs/paths-D7KGMZeP.cjs.js

- /app/packages/backend-plugin-api/dist/index.cjs.js

- /app/packages/backend-app-api/dist/index.cjs.js

- /app/packages/backend-defaults/dist/index.cjs.js

- /app/packages/backend/dist/index.cjs.js

at Module._resolveFilename (node:internal/modules/cjs/loader:1140:15)

at Function.resolve (node:internal/modules/helpers:188:19)

at Object.resolvePackagePath (/app/packages/backend-plugin-api/dist/cjs/paths-D7KGMZeP.cjs.js:18:27)

at Object.createRouter (/app/plugins/app-backend/dist/cjs/router-9HkxMeSl.cjs.js:238:39)

at Object.init [as func] (/app/plugins/app-backend/dist/alpha.cjs.js:56:39)

at /app/packages/backend-app-api/dist/index.cjs.js:1679:33

at async Promise.all (index 1)

at async #doStart (/app/packages/backend-app-api/dist/index.cjs.js:1633:5)

at async BackendInitializer.start (/app/packages/backend-app-api/dist/index.cjs.js:1562:5)

at async BackstageBackend.start (/app/packages/backend-app-api/dist/index.cjs.js:1762:5) {

code: 'MODULE_NOT_FOUND',

requireStack: [

'/app/packages/backend-plugin-api/dist/cjs/paths-D7KGMZeP.cjs.js',

'/app/packages/backend-plugin-api/dist/index.cjs.js',

'/app/packages/backend-app-api/dist/index.cjs.js',

'/app/packages/backend-defaults/dist/index.cjs.js',

'/app/packages/backend/dist/index.cjs.js'

]

}

}

Node.js v18.20.4

Summary

I literally sat on this post for a month. I kept trying to get myself fired up to try again and each time I just sat there and stared at the prompt.

I mean, you can put backstage on a VM and I could do that, but I really don’t want to - I don’t like it. I cannot explain it. I mean, IF I wanted to build out an API Dashboard I would far prefer to build out something like Configure8.

I know multiple developers in companies I respect who think it’s the bee’s knees and swear by it. They have multiple developers building out backends and just get so excited. I also know people who get passionate about Jenkins. So yeah…

I tried - I did. But I’m just not willing to deal with apps that cannot modernize to containers. I just lack the patience for VM-based apps. When Backstage has a working Helm Chart, then I might try again. Or a decent working snap package. Otherwise, lest I poop in your cereal bowl - if you like it - great, you must see some value I am blind to. I just don’t like it.