Published: Jul 23, 2024 by Isaac Johnson

It's July of 2024 and I’m dealing with a paper flight ticket checked against paper manifests, and I was one of the lucky few. Many people dealt with much worse, including being stuck in hub cities with no hotels and no car rentals.

It was on the plane that I started to stew about my own systems and alerts. While I focused on my employer's needs on Friday, later in the weekend I revisited my Uptime Kuma, PagerDuty, and infrastructure Markdown pages.

Reviewing

Coming home, I realized my office was a mess. Most often when I let things get away from me, whether I'm too busy with kiddos, or work, or whatever (insert lousy excuse here), it also means my infrastructure has suffered.

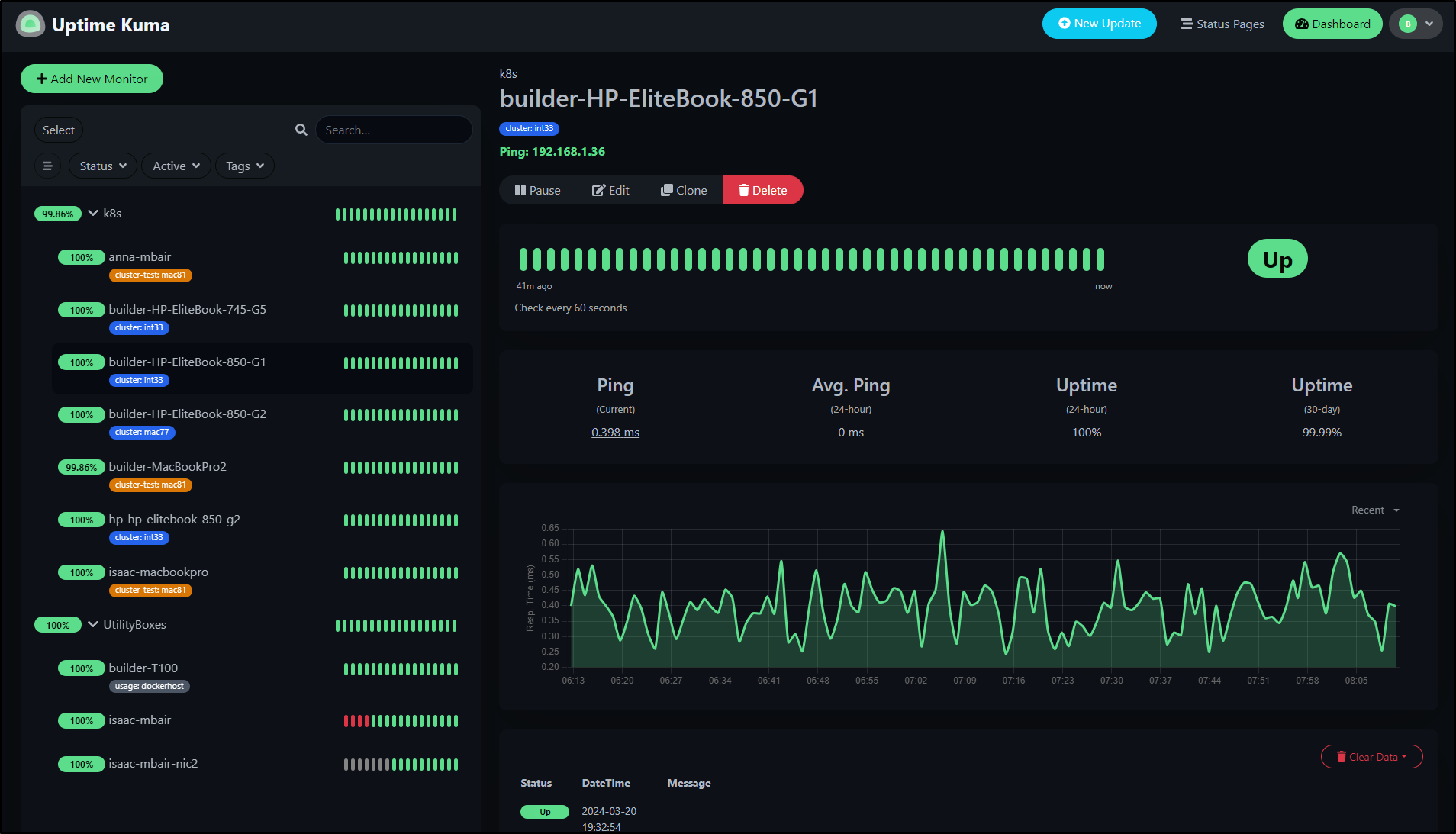

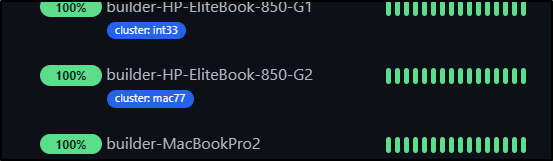

Looking at Uptime, I noticed one host was disabled, the nodes on the former Mac77 cluster were misaligned, and overall it was not accurate.

My first stab was to get Uptime lined up

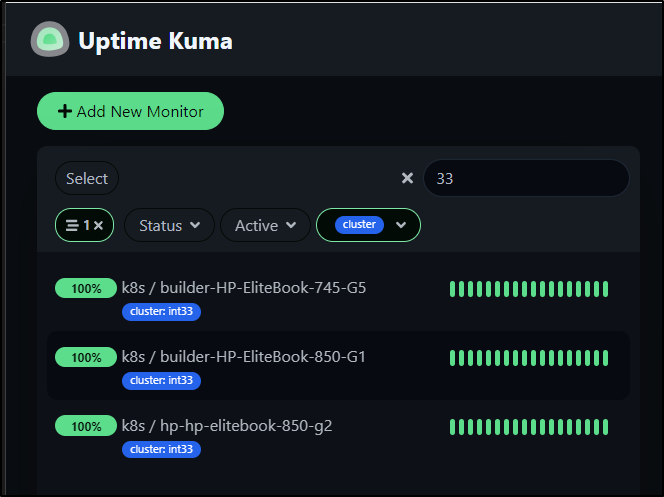

This is not perfect, but at least I can view the current Int33 (production) nodes

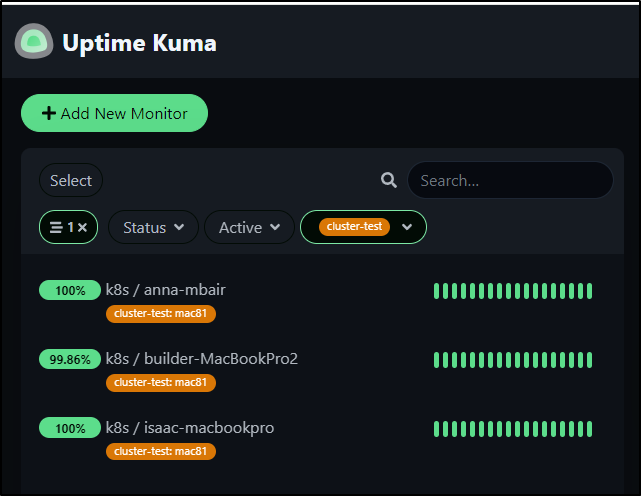

and the test cluster nodes

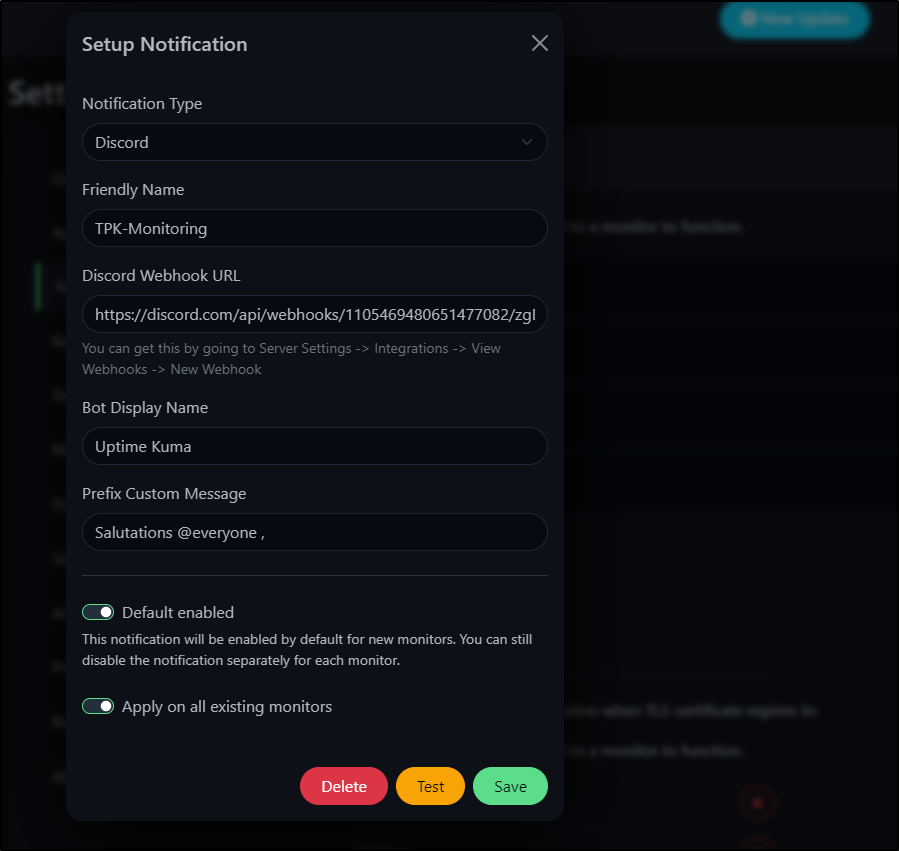

I need to double check the monitors are in place and functional

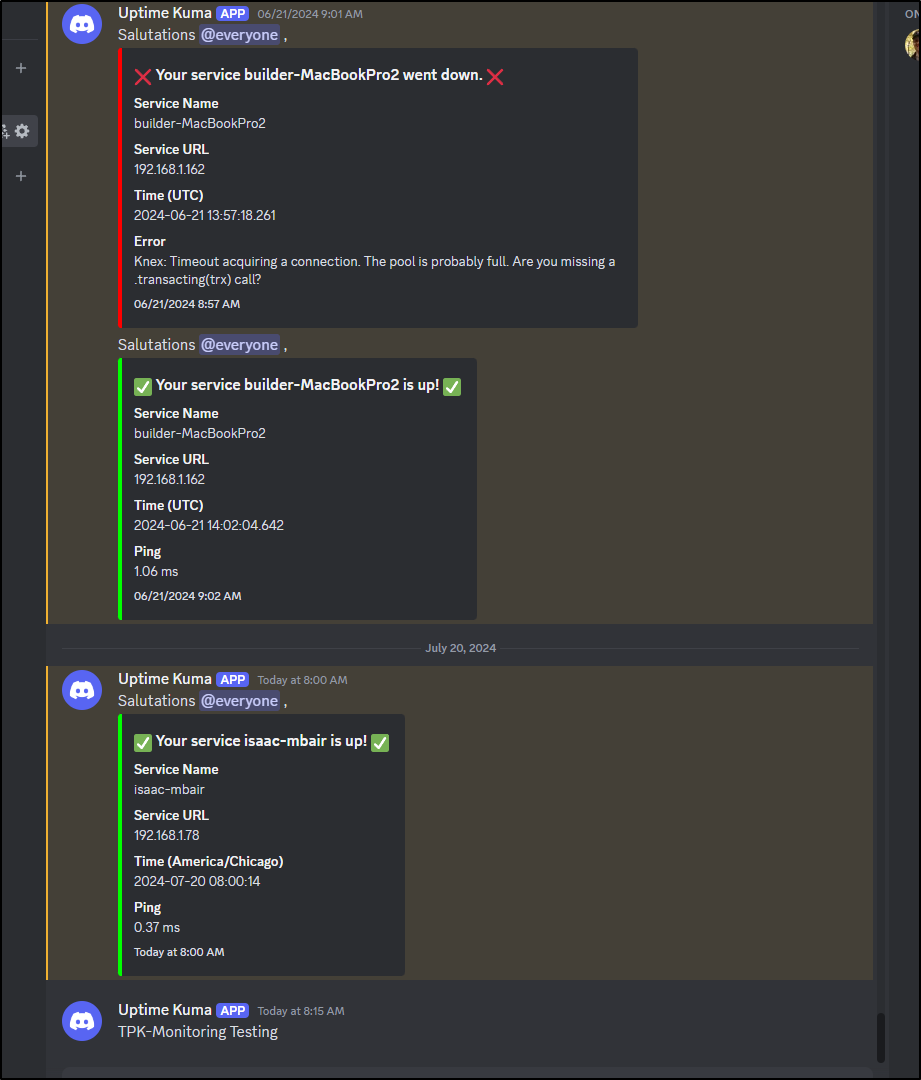

I’ll quickly test Discord

I can see Discord is just quiet, but the monitors are working

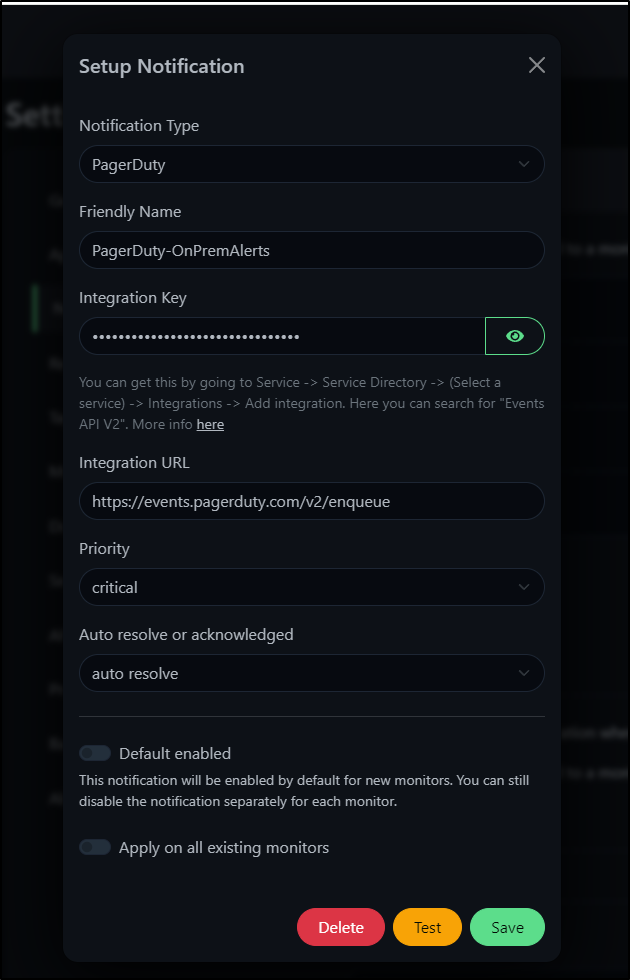

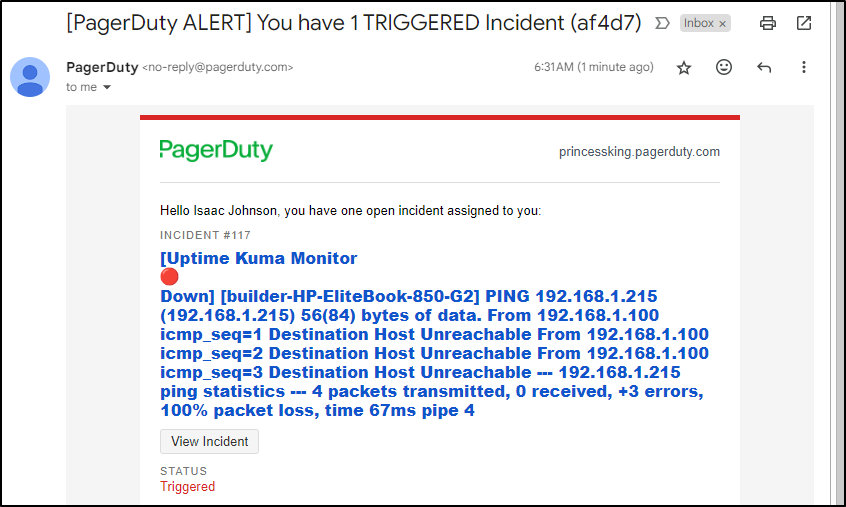

I tested PagerDuty next

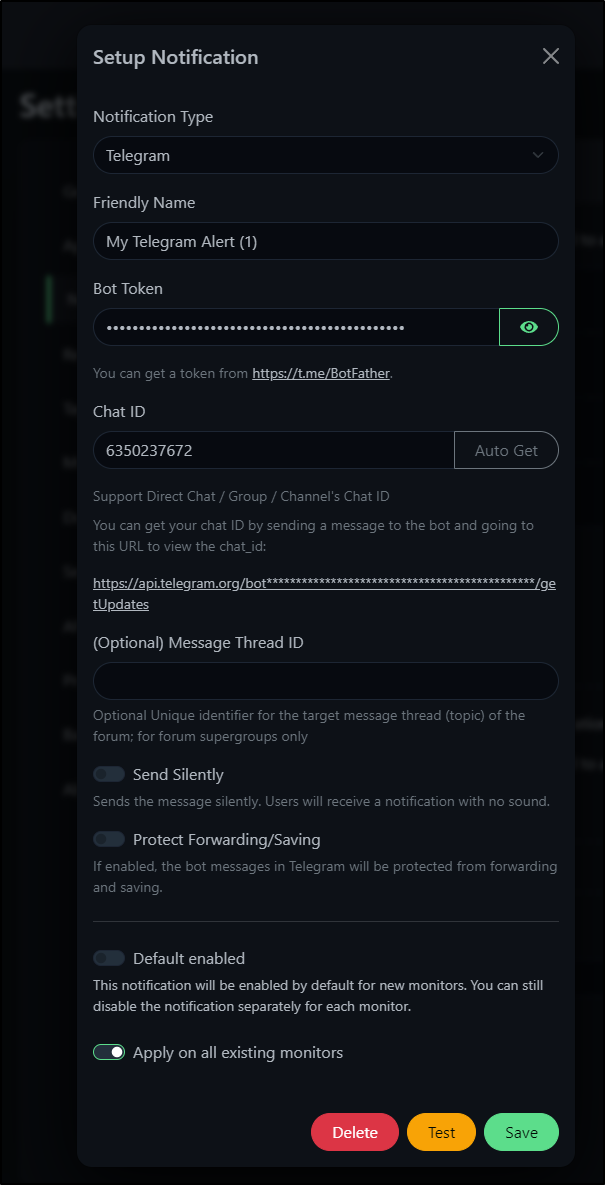

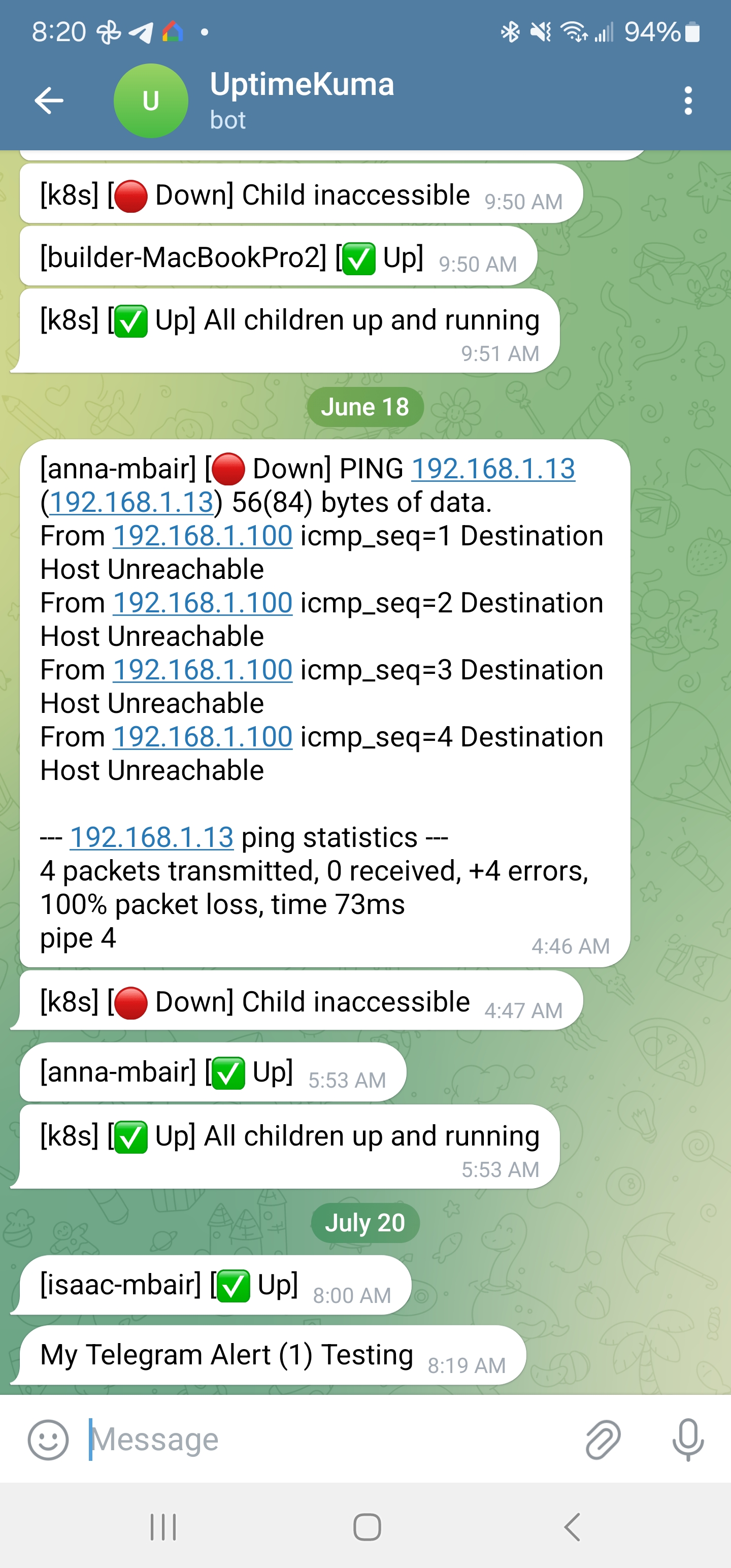

And Telegram

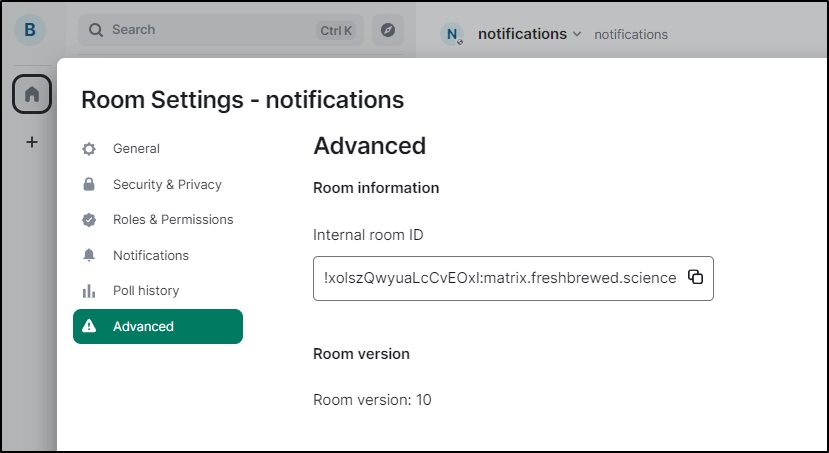

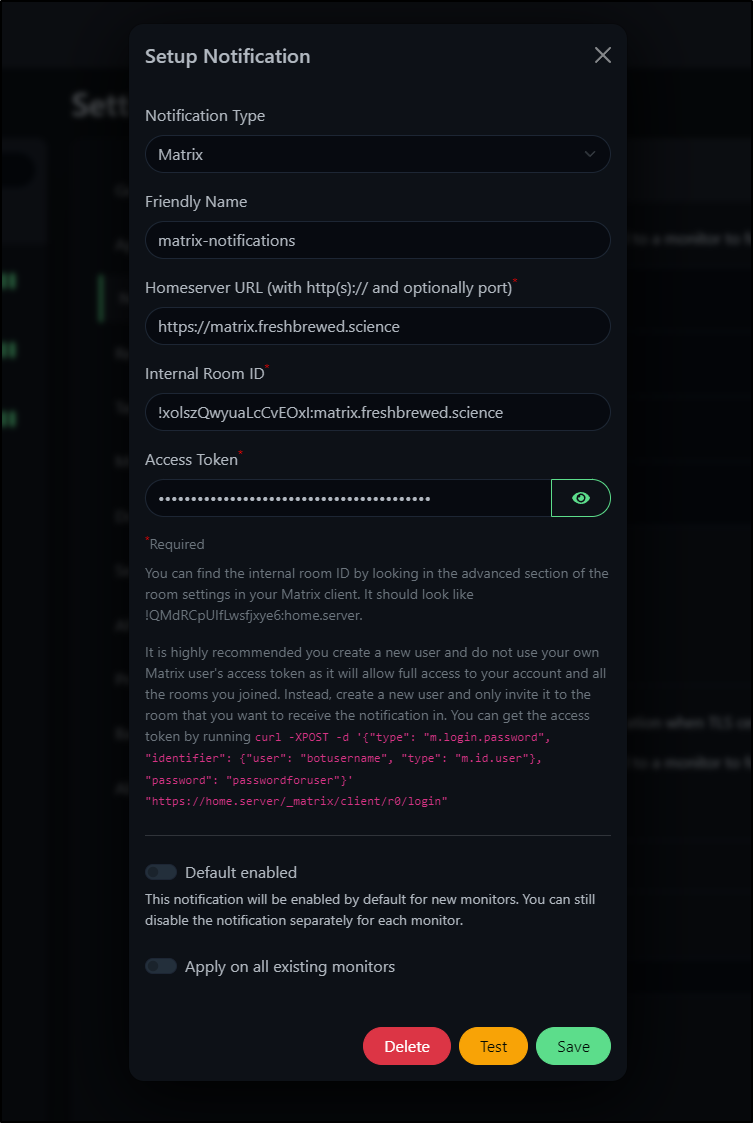

However, what didn’t work was Matrix. The configured room was still on the old instance.

It’s a public room, so I just need the Room ID, which we can get from Advanced (other clients will show it in settings or details)

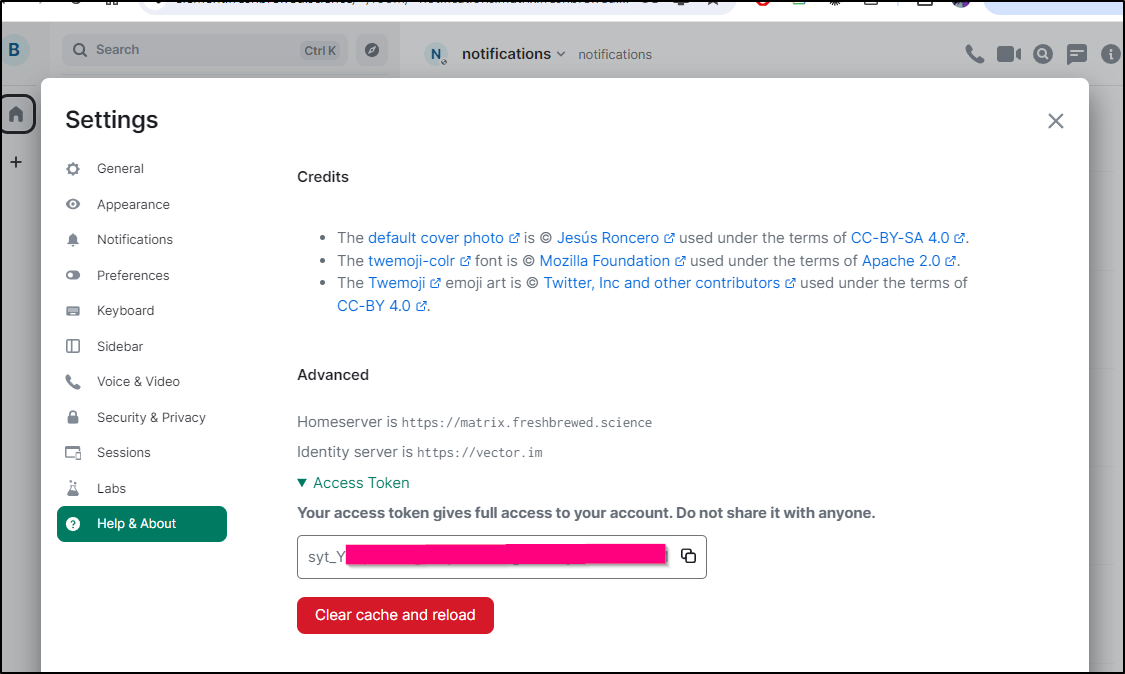

I also need the access token, which I can pull from Help and About

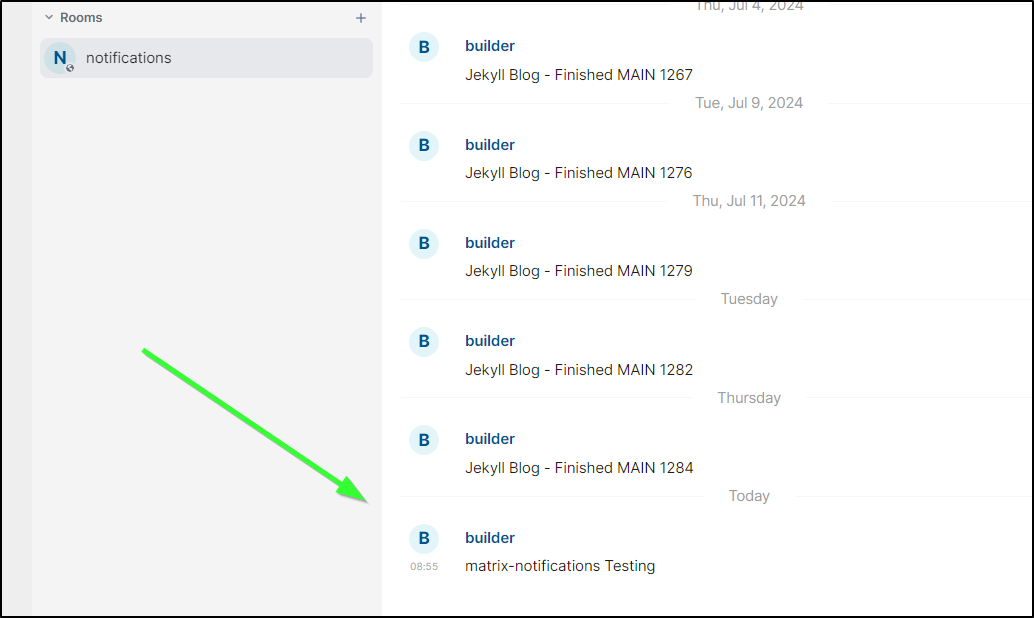

I can now test

and see it posted.
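The same kind of test post can be made without a client. Below is a minimal sketch, assuming the standard Matrix client-server `send` endpoint. The homeserver URL, room ID, and token are placeholders rather than real values, and `build_matrix_message` is a hypothetical helper name. It only builds the request; the actual send is left commented out.

```python
import json
import urllib.parse
import urllib.request
import uuid


def build_matrix_message(homeserver, room_id, access_token, text, txn_id=None):
    """Build (but do not send) a PUT request that posts a text message to a room."""
    # The transaction ID lets the server de-duplicate retries of the same message.
    txn_id = txn_id or uuid.uuid4().hex
    # Room IDs contain '!' and ':' so they must be percent-encoded in the path.
    path = "/_matrix/client/v3/rooms/{}/send/m.room.message/{}".format(
        urllib.parse.quote(room_id, safe=""),
        urllib.parse.quote(txn_id, safe=""),
    )
    body = json.dumps({"msgtype": "m.text", "body": text}).encode("utf-8")
    return urllib.request.Request(
        homeserver.rstrip("/") + path,
        data=body,
        method="PUT",
        headers={
            "Authorization": "Bearer " + access_token,
            "Content-Type": "application/json",
        },
    )


if __name__ == "__main__":
    # All three values below are placeholders (assumptions), not a real room or token.
    req = build_matrix_message(
        "https://matrix.example.org",
        "!abc123:example.org",
        "PLACEHOLDER_TOKEN",
        "Uptime Kuma test",
    )
    print(req.get_method(), req.full_url)
    # To actually send it: urllib.request.urlopen(req, timeout=10)
```

Keeping the request construction separate from the send makes it easy to check the URL and body before a real token is involved.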

This time I decided not to have a separate room for Server monitors but just co-mingle with blog updates

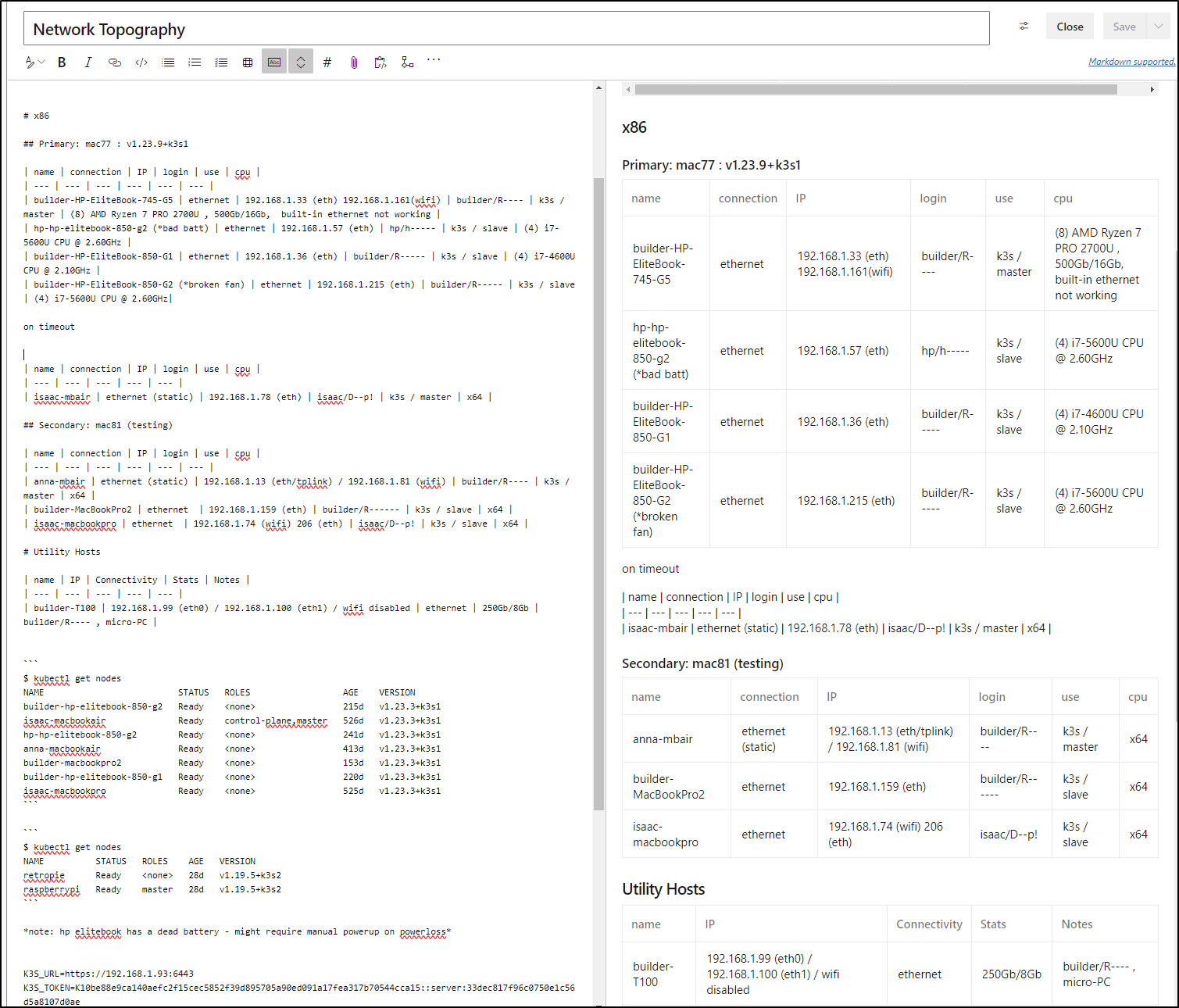

Topography

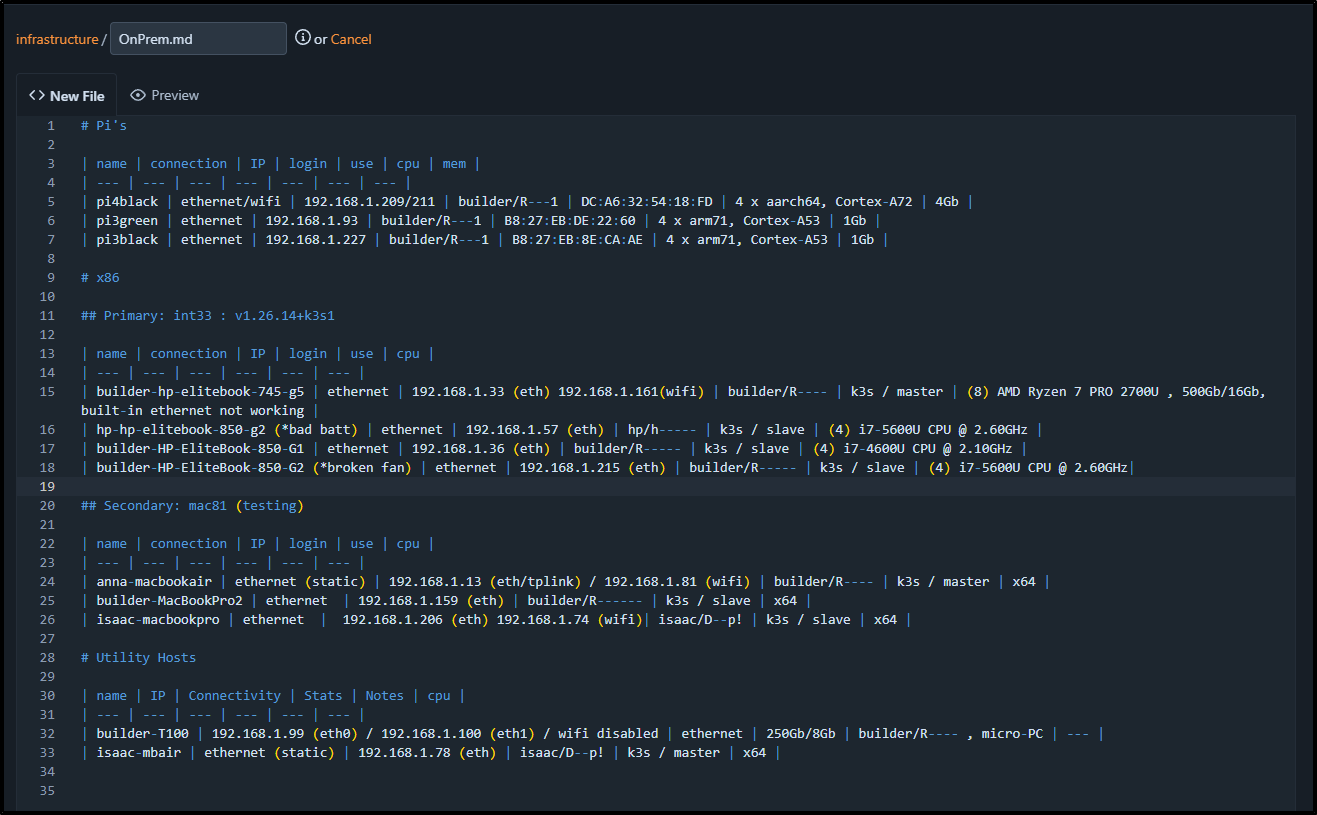

I know I’ve demo’ed many documentation suites. The source of truth, to this day, remains my Azure DevOps Wiki.

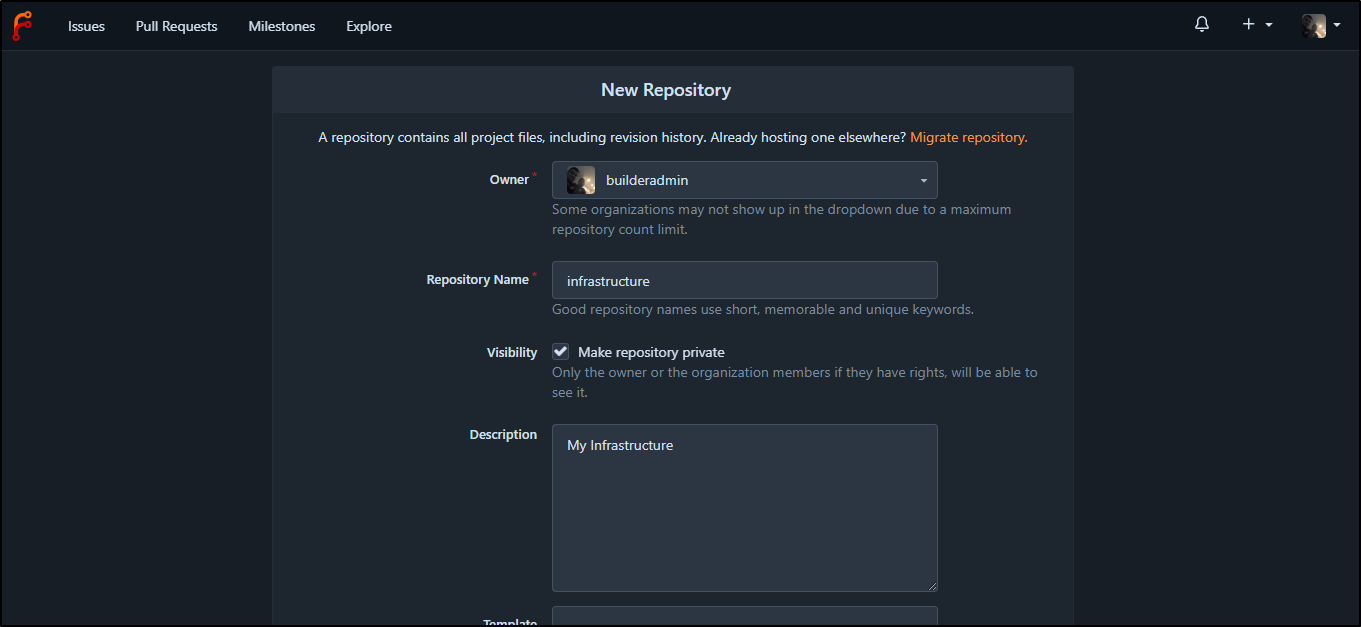

I think it’s about time to move it to a Git repo instead.

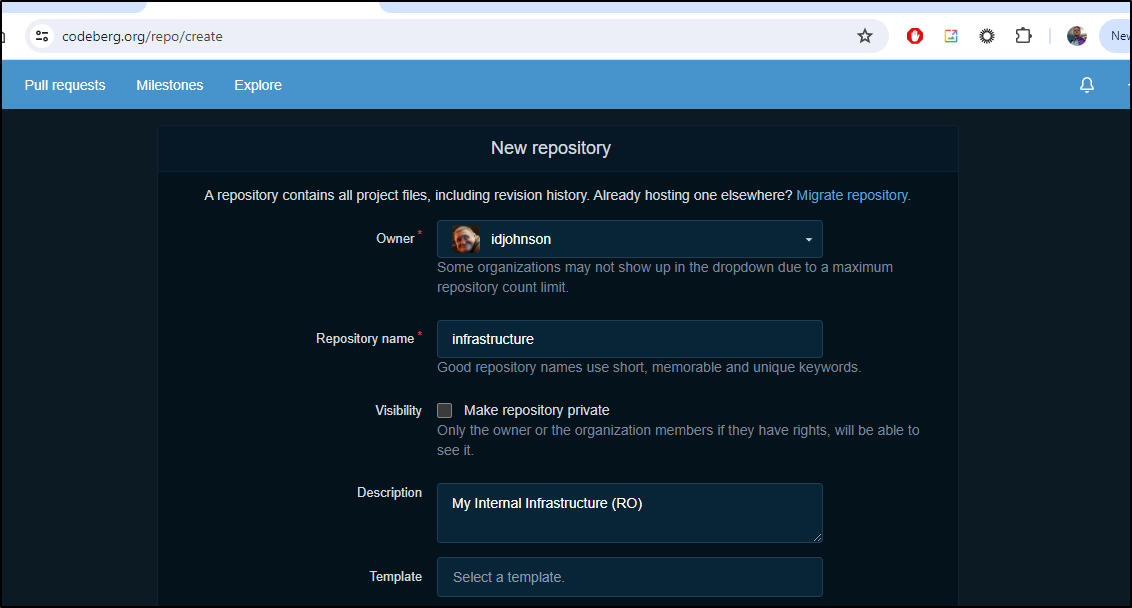

I’ll create a new one in Forgejo

I’ll clean up the markdown, something like:

# Pi's

| name | connection | IP | login | MAC | cpu | mem |
| --- | --- | --- | --- | --- | --- | --- |
| pi4black | ethernet/wifi | 192.168.1.209/211 | builder/R---1 | DC:A6:32:54:18:FD | 4 x aarch64, Cortex-A72 | 4Gb |
| pi3green | ethernet | 192.168.1.93 | builder/R---1 | B8:27:EB:DE:22:60 | 4 x armv7l, Cortex-A53 | 1Gb |
| pi3black | ethernet | 192.168.1.227 | builder/R---1 | B8:27:EB:8E:CA:AE | 4 x armv7l, Cortex-A53 | 1Gb |
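Once the inventory lives in Git, a small check can catch rows that drift out of shape. This is only a sketch of the idea: `split_row` and `check_table` are hypothetical helpers, and it only verifies that each row has as many cells as the header, not that the values themselves are right.

```python
def split_row(line):
    """Split one Markdown table row into trimmed cell strings."""
    return [cell.strip() for cell in line.strip().strip("|").split("|")]


def check_table(lines):
    """Return (header, body); raise ValueError if a row's width differs from the header."""
    # Blank lines are ignored so loosely spaced tables can still be checked.
    rows = [split_row(line) for line in lines if line.strip()]
    header, separator, body = rows[0], rows[1], rows[2:]
    # A separator row may only contain dashes, colons and spaces.
    if any(set(cell) - set("-: ") for cell in separator):
        raise ValueError("second row is not a separator row")
    for number, row in enumerate(body, start=1):
        if len(row) != len(header):
            raise ValueError(
                f"row {number} has {len(row)} cells, expected {len(header)}"
            )
    return header, body


if __name__ == "__main__":
    # Made-up sample rows, not the real inventory.
    sample = [
        "| name | connection | IP |",
        "| --- | --- | --- |",
        "| examplehost | ethernet | 192.0.2.10 |",
    ]
    header, body = check_table(sample)
    print(len(header), "columns,", len(body), "rows")
```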

What this reminds me of is that builder-HP-EliteBook-850-G2 (.215) is offline, and not on purpose. Uptime still showed it as part of mac77…

I’ll look up the bash history of its sister, builder-HP-EliteBook-850-G1, to get the node token and exact install version

builder@builder-HP-EliteBook-850-G1:~$ history | tail -n 10

155 sudo /usr/local/bin/k3s-uninstall.sh

156 sudo /usr/local/bin/k3s-agent-uninstall.sh

157 sudo reboot

158 nslookup harbor.freshbrewed.science

159 curl -sfL https://get.k3s.io | INSTALL_K3S_VERSION="v1.26.14%2Bk3s1" K3S_URL=https://192.168.1.33:6443 K3S_TOKEN=K10ef48ebf2c2adb1da135c5fd0ad3fa4966ac35da5d6d941de7ecaa52d48993605::server:ed854070980c4560bbba49f8217363d4 sh

160 curl -sfL https://get.k3s.io | INSTALL_K3S_VERSION="v1.26.14%2Bk3s1" K3S_URL=https://192.168.1.33:6443 K3S_TOKEN=K10ef48ebf2c2adb1da135c5fd0ad3fa4966ac35da5d6d941de7ecaa52d48993605::server:ed854070980c4560bbba49f8217363d4 sh -

161 history

162 exit

163 history

164 history | tail -n 10
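The join command is the useful part of that history. As a rough sketch, the version, server URL, and token can be pulled out of such a line, with `%2B` decoded back to the `+` form that version tags normally use. `parse_k3s_join` is a hypothetical helper, and the token in the demo is a shortened placeholder.

```python
import re
from urllib.parse import unquote

# Matches KEY=value or KEY="value" for the three variables of interest.
_VAR = re.compile(r'\b(INSTALL_K3S_VERSION|K3S_URL|K3S_TOKEN)=("?)([^"\s]+)\2')


def parse_k3s_join(line):
    """Return the k3s install variables found in one shell history line."""
    found = {name: value for name, _quote, value in _VAR.findall(line)}
    if "INSTALL_K3S_VERSION" in found:
        # %2B is the URL-encoded form of '+'.
        found["INSTALL_K3S_VERSION"] = unquote(found["INSTALL_K3S_VERSION"])
    return found


if __name__ == "__main__":
    # Same shape as the history entry, but with a placeholder token.
    line = (
        '160 curl -sfL https://get.k3s.io | INSTALL_K3S_VERSION="v1.26.14%2Bk3s1" '
        "K3S_URL=https://192.168.1.33:6443 K3S_TOKEN=PLACEHOLDER::server:TOKEN sh -"
    )
    for key, value in parse_k3s_join(line).items():
        print(key, "=", value)
```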

I can actually see that I haven’t used this host in some time; it still has the join from the old 78 box

150 sudo mount -t nfs 192.168.1.129:/volume1/k3snfs /mnt/nfs/k3snfs

151 curl -sfL https://get.k3s.io | INSTALL_K3S_VERSION=v1.25.16+k3s4 K3S_URL=https://192.168.1.78:6443 K3S_TOKEN=K107d8e80976d8e1258a502cc802d2ad6c4c35cc2f16a36161e32417e87738014a8::server:581be6c9da1c56ea3d8d5d776979585a sh -

152 lsb_release -a

153 cat /proc/cpuinfo

154 uptime

155 sudo apt-get update && sudo apt-get upgrade -y

156 date

157 ps -ef | grep k3s

158 lsb_release -a

159 hsitory

160 history

161 nslookup demo2.staging.consoloservices.com

162 sudo su -

163 exit

164 history
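The G1 history tries both `k3s-uninstall.sh` and `k3s-agent-uninstall.sh`. k3s places the former on server nodes and the latter on agents, so a node normally has only one of them. The sketch below simply reports whichever exists; the function name and the injectable `exists` check are illustrative choices, not part of k3s.

```python
import os

# Paths as they appear in the shell history above.
UNINSTALL_SCRIPTS = (
    "/usr/local/bin/k3s-uninstall.sh",        # present on server nodes
    "/usr/local/bin/k3s-agent-uninstall.sh",  # present on agent nodes
)


def find_uninstall_script(candidates=UNINSTALL_SCRIPTS, exists=os.path.exists):
    """Return the first candidate path that exists, or None if neither is found."""
    for path in candidates:
        if exists(path):
            return path
    return None


if __name__ == "__main__":
    script = find_uninstall_script()
    print(script or "no k3s uninstall script found")
```

Passing `exists` as a parameter keeps the check testable on a machine that has no k3s install at all.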

I’ll uninstall:

builder@builder-HP-EliteBook-850-G2:~$ sudo /usr/local/bin/k3s-uninstall.sh

[sudo] password for builder:

sudo: /usr/local/bin/k3s-uninstall.sh: command not found

builder@builder-HP-EliteBook-850-G2:~$ sudo /usr/local/bin/k3s-agent-uninstall.sh

[sudo] password for builder:

+ id -u

+ [ 0 -eq 0 ]

+ /usr/local/bin/k3s-killall.sh

+ [ -s /etc/systemd/system/k3s-agent.service ]

+ basename /etc/systemd/system/k3s-agent.service

+ systemctl stop k3s-agent.service

Failed to allocate directory watch: Too many open files

+ [ -x /etc/init.d/k3s* ]

+ killtree 5973 6205 6250 6805 7520 7609 7650 7768 8086 8218 8483 8503 8791 9015 9485 9583 9774 9819 18963 20456 31745 183775 184169 184312 184369 184775 185509 185729 185936 186204 186854 186885 187143 187705 188451 188633 189780 190328 191248 191333 196024 254661 794318 1949763 2423120 2423235 2908165 2908665

+ kill -9 5973 5994 6512 6205 6234 6883 6250 6271 11292 11540 11798 11987 6805 6830 7110 7173 7520 7568 7609 7659 7890 7650 7691 7863 7979 7768 7800 10637 10727 8086 8110 11909 13143 13151 8218 8241 11080 8483 8526 9894 12984 12985 12986 11884 12565 12609 8503 8552 8791 8813 9015 9060 9485 9552 13422 13696 13697 13698 13699 13700 13701 42050 97186 9583 9605 9808 12972 12973 12974 11830 12426 12577 9774 9859 13828 9819 9870 18963 18984 19015 20456 20477 21102 31745 31767 32401 32470 32471 32473 32474 32475 183775 183795 184169 184187 184842 184895 184312 184361 184679 184369 184397 185030 185100 3893932 3893933 3893934 3893935 184775 184798 185565 185509 185530 185729 185750 185936 185957 186204 186236 186854 186904 187881 187941 187994 188000 186885 186928 187143 187162 188804 188824 188826 189223 187705 187726 188451 188474 188543 188633 188656 189243 189254 189255 189256 189257 189780 189803 189954 190328 190348 191881 191934 191248 191267 191333 191353 191910 196024 196063 196193 254661 254684 794318 794340 795426 795449 1949763 1949786 2423120 2423142 2423521 2423536 2423235 2423257 2423557 2908165 2908188 2908665 2908688 2908860

+ do_unmount_and_remove /run/k3s

+ set +x

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/fe4dedab8877cf3dfc9841b560feb18c5c55ef2cf56cb0248b8fb22bbe109a68/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/fb3a9cc86db5024c646338a188ce018c823a4498915deb880adfc20c7dc5a4d7/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/f767fd9e19648920dce7a13b5fcdd7aa39ca5fbbdfad46a97b42fcb370bc8ba1/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/f3f6552e8d28f734083963f0deadabe443e1c312caa851ce3f91db21e4933bbf/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/f3a325cad1ad42ce03fb1105185a9c7f233c90ec24621919c336e5dc6b7f3c23/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/f2dac9d92c034d7aafe1d10d389f6c8aa3820cd31281f804571fe1384515a29b/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/f043ec675d04e5eb1ea90a10cce8a65d474c5c0d6a0a5b3eeb6f5a6cc0345405/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/ed33c9f70a3959a89ac95993e6a1fe3c23d964dd237707b8e0d3a7ae37dbceaf/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/eb69b88f17393cd316dd9a3e19c697cfa8b47d8162dd7d0fc23d2b06b50a28a6/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/e907b8e58f6003558cb85b4d63168f7167a88df4ca2ffe0f89b7b35020a6ff06/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/e8997dd728e3caad8d5e7066ce49e3acbddd72d3f1ec8d12c019a760c960faef/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/e82a429bff348c03e7a622ff55f89fa5642338b8c4824e5059b31cad313377eb/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/e56d5c2b0d23215fbf68fea14813fcaeb609ad7c09e1e15101e4baf759b78334/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/e4c153869ce82f19bc69d255f8d40ad135568a70c95a152f49f05546c2e0e838/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/e311992ed9c6916f4b447db4bae6168d8c17d213a0b176ad8c90bf8c848d2f08/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/e2cbd0a7ed2753af81b1b56d9cf683cda3ba8a4e000cd1cedde117504f234489/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/e1fb73d486cecbb5b55b12b0d5903415910f2badd8d4d1a2f09340ff370e18ea/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/e12a64ed977549327067aede6349cfa0aff8e1df180a16e28d0b67455bde06ae/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/dd8d1b583bf15ca0a6357f9a5c2b9a7d37dfb78dbbdfd2c2a8c2239152fc959e/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/dce6bee8ee97f1dd6dc1c7d43ce3b29f4a120389d634ab98d2fc2cb36d43b47d/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/d880083f8be164c7359ca4249a0e6f716cd768fe8e3515d86a8f4c9278d58fb7/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/d3945ad7219930d6dc1a50311bb4f9709471df5829c73c0ce8087a123608b60d/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/cd598ad86ded522522e66d18230f638a3264cb08d225d6ee00b2cc5766f7a836/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/cbd9d354a0989b95f92027b1396c718c952c962dadf7bcacba31994de2cdc12f/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/cb1a169f97b325e6973881566f5517be54b0b081fc1c3946c7953d6778240b26/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/c9c4bc708817990d580865612e7d589941b5fc4a671ee5725f6fefea3f55fd3c/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/c7555dbb954c76ddf7827016f213a9b0ad8c7a384eaffb2433d731948cd104df/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/c6d8d0d4448b3ec8e5806a4b0a77e5f86084088fae4c4e28aead97cbb37d98e6/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/c0916e663a19eb9fec1c0c4f7cd6f4a3bff95e47470cdf5ec93c43b12ade9fd1/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/b59531db70ff848d41f2c9997a21f47d7b2cf2c25d530c7d387be510dd4c5667/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/aa8a66b65cd88e2ab778c64242fdde767423e6a358d1ab69cad0eb585ff291c9/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/a52d99e89030477f8857310b17692cf271275536215248ab3e1e80eaa7b9d7a0/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/a51c2f8d90c107fc97328276f03e2c718a53f353c420de951d27adbba4ccf10c/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/a3bd5e29b3f879078ea8c3bee432bcd774417b3315a128e72c7ca09626c4268a/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/9d6731f76f5f623188bb7cc9a5ebf8d8ce0f522f4f6aebf40cb989e6d20b99cd/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/95c5c2b9263eb647fda36e432a601068f02cba133661cca30edf7d05da7aab32/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/8bb9e278f360fb9b0074ce484d028d8610cc7b078762aabcafaccdd8d7e64cd6/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/8831826881fbb5d190ed13098fe396793179bb7cf470279217a6b4b4fc9165a7/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/868ff3f5e90dd3b94c871346bdaf09ae9f7190a87bae1507d2fe3dd5ab12d255/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/816edbf3bca99cf09b4f0622c2ae6832fa9fe263ccfb63e558d48bff1d7a6782/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/79b74931721d7bd45c7cf388213eb050c45dfc0a796388985e7e3a0fe53096c1/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/77e49745a8a290e9865ce088f5650b1a5d2bf3568d70c00835038d1944c94494/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/75bed7eda95b700fcb362b8a46f8d18c326655a0f8109615639b36b1e347b7d2/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/73f8e8b008eecc4b1f954d9f80a60af6182721e8baffdc93eacc3cc902d166cc/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/712da56fb7b048ebe1f11eeb08e3cea8397e23f39cd88487db45f28983bb6606/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/701a234d13efb5b331a6f34c0a53e02e1fc15bb225ee6e432c9ce4c0855cc47d/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/6e9199576509fda15bc3f14a1d054185f094cf66c71fa3a95838a72972932b93/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/6d05ec5b5b33a3366ccc798783eb1cacdde62661c9f8dca9a4d4dce84881f7a0/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/6c79984c15a2c083328e9f49bb1d78be76e6423c5f08db9fe233665cdd26e3e1/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/6ab9e34b325ba083b6f52b2f1e34953ecd21a8ac94e432e1ce581834da5a60d9/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/69ddf10681eee7cef798515c0c3df69ab17faef966580cbfc8e5ab9ada85574b/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/660e9a1c41950a63d95ca9adc09659a31918151ee032f494b5490f42a7b0533a/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/65547e97c30b9aff1eff9cc8256ad351ee08686c57115a3f4e919973dfad9ea0/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/65367e461a2009f4f33e1fa896b4f97d01f4d0910f7f5d840db05222cdda36d4/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/5c5e31a8602d147c355e3a87e3f062303ed697f01a5807ff8727ae0e0b63cfb0/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/5bedcea94d074338f642ab75098929317d3884d945db5696454562a71a2cc778/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/5bda1e2dbede096ac191696d03d479514abf6afe4bc512f5518bbb63f7fb2d56/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/5b5c729734b39b15c76427ce13472a7007764c35183a385249d5c0ce3c40da7d/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/584f3cb9033e1a3633e3ebf3454e9e334445c3360039f8a63ae113c3918d3849/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/553a6ecc9d837409e3782a737fa263567fb4dc065f4f1c4762bcd4b4c6661396/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/521d8d5a7526079490496f1df668742e61f5ffcdaba88c7bb114d0464efb8e39/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/5172b07488e47c94081706d4585eb95273f03643d6f2e0f64609e40ab36e266c/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/4f4ae3efd259c512f49c10f940f0976d5fc448489f42feb281dbe98c6fd53092/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/4e98b10fcbf118d5973c8821db223a036fa841defab1cc5a431a54e376290e0a/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/4e0cc60f83f8a4140eff185f6ee8cac65dcce6c69a4282a451ffe0339b3855c2/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/46d74a644b3fd3854ce4afc4b1f4f67a79df78bada84b0c64a7eb0c2c33fb779/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/4643ab66b520a86589d1abd2da8c90130e3ba0ea9d413c8abfa897b2f53933a8/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/45f553a4e1e02f7a5dcc8885438cbeff9f52ed4ee9998afb72b97c8ce2ac86b9/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/447f13ff8f0bf460c91c4a9e464da17955c9adb372271fb08fbff2a594042125/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/435e27b3ca3c96df0b202bca9fb100839beda482d89edcc0cdd223adf59434e4/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/3fb71c77749bdf28b1919262c880ef8bfadaad118ba728a61fb4c28a6d6bbdeb/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/3d8f2ddf4f340e160adfbff162776a560840c4c93f07939fe4a8205909000d1e/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/3d42edee63d7728f6ec6171730d2713ef89b31c87eca55eed118c3cdec30992c/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/3c4b2dd46060c8d071a23e6df744ce2f098405fbb423f990bbeca1420ea8e6bd/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/392e652301aceb3690d05a80e9631be4b4fe7049a9c2b17a0a5d06df52e25fb4/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/3220e065a21a4d528309817f87dd5677160cb0bf870457283a99d27a77e76abe/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/2d5b3fdb2413250f6aeeef2563738ea6fd5b4b191a1853de0668d0ef5ce895ad/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/273fe7d5f7aa0b91103a763137c5cbb1692268c1b403c429c9d281041de3cd34/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/24d19ebd534ed3041bf7eedad329cee995a7162d91cbaf159f7dbc422a225a0b/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/1ffac8fc0caaead096812eaf1ed8840b46801120c9e8a4aeecf606fcef6f4b88/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/1e7a1f15428b882a13119e4cd6356def19ba77d216c1381ad5931f9b297b0c69/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/17baeb5568072e0e8a3418834569f86d7aa97a93e4632575dafb821db4614977/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/16c300fd9e8c05495a7eadf46085f4e83255f40c6725614707b449da9305aecb/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/16661c4b4b52bc87c70000fa8c946d6ccfc2a4017df3d15d5ddeccd35abf9266/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/108d6207306f129d6b01bf5dc431438c894de5cd0d204d32d1bf018a9749d339/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/087b56473f3ba20d206cb4ddda3638865e11e0f6a8da65bee605ec7ff135d5fa/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/07b65a1522863381591feaad701fbd9a355894c5b6ce4374eca85b074241526c/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.runtime.v2.task/k8s.io/04a87f40e144e697d395c346578fcfc66debfcdba9ada333bffab20fb10d4d6c/rootfs

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.grpc.v1.cri/sandboxes/fb3a9cc86db5024c646338a188ce018c823a4498915deb880adfc20c7dc5a4d7/shm

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.grpc.v1.cri/sandboxes/f3f6552e8d28f734083963f0deadabe443e1c312caa851ce3f91db21e4933bbf/shm

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.grpc.v1.cri/sandboxes/f043ec675d04e5eb1ea90a10cce8a65d474c5c0d6a0a5b3eeb6f5a6cc0345405/shm

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.grpc.v1.cri/sandboxes/eb69b88f17393cd316dd9a3e19c697cfa8b47d8162dd7d0fc23d2b06b50a28a6/shm

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.grpc.v1.cri/sandboxes/e82a429bff348c03e7a622ff55f89fa5642338b8c4824e5059b31cad313377eb/shm

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.grpc.v1.cri/sandboxes/e56d5c2b0d23215fbf68fea14813fcaeb609ad7c09e1e15101e4baf759b78334/shm

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.grpc.v1.cri/sandboxes/e4c153869ce82f19bc69d255f8d40ad135568a70c95a152f49f05546c2e0e838/shm

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.grpc.v1.cri/sandboxes/e311992ed9c6916f4b447db4bae6168d8c17d213a0b176ad8c90bf8c848d2f08/shm

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.grpc.v1.cri/sandboxes/e1fb73d486cecbb5b55b12b0d5903415910f2badd8d4d1a2f09340ff370e18ea/shm

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.grpc.v1.cri/sandboxes/e12a64ed977549327067aede6349cfa0aff8e1df180a16e28d0b67455bde06ae/shm

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.grpc.v1.cri/sandboxes/dd8d1b583bf15ca0a6357f9a5c2b9a7d37dfb78dbbdfd2c2a8c2239152fc959e/shm

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.grpc.v1.cri/sandboxes/d880083f8be164c7359ca4249a0e6f716cd768fe8e3515d86a8f4c9278d58fb7/shm

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.grpc.v1.cri/sandboxes/d3945ad7219930d6dc1a50311bb4f9709471df5829c73c0ce8087a123608b60d/shm

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.grpc.v1.cri/sandboxes/cb1a169f97b325e6973881566f5517be54b0b081fc1c3946c7953d6778240b26/shm

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.grpc.v1.cri/sandboxes/c9c4bc708817990d580865612e7d589941b5fc4a671ee5725f6fefea3f55fd3c/shm

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.grpc.v1.cri/sandboxes/c6d8d0d4448b3ec8e5806a4b0a77e5f86084088fae4c4e28aead97cbb37d98e6/shm

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.grpc.v1.cri/sandboxes/c0916e663a19eb9fec1c0c4f7cd6f4a3bff95e47470cdf5ec93c43b12ade9fd1/shm

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.grpc.v1.cri/sandboxes/b59531db70ff848d41f2c9997a21f47d7b2cf2c25d530c7d387be510dd4c5667/shm

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.grpc.v1.cri/sandboxes/aa8a66b65cd88e2ab778c64242fdde767423e6a358d1ab69cad0eb585ff291c9/shm

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.grpc.v1.cri/sandboxes/a52d99e89030477f8857310b17692cf271275536215248ab3e1e80eaa7b9d7a0/shm

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.grpc.v1.cri/sandboxes/a3bd5e29b3f879078ea8c3bee432bcd774417b3315a128e72c7ca09626c4268a/shm

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.grpc.v1.cri/sandboxes/8831826881fbb5d190ed13098fe396793179bb7cf470279217a6b4b4fc9165a7/shm

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.grpc.v1.cri/sandboxes/868ff3f5e90dd3b94c871346bdaf09ae9f7190a87bae1507d2fe3dd5ab12d255/shm

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.grpc.v1.cri/sandboxes/75bed7eda95b700fcb362b8a46f8d18c326655a0f8109615639b36b1e347b7d2/shm

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.grpc.v1.cri/sandboxes/73f8e8b008eecc4b1f954d9f80a60af6182721e8baffdc93eacc3cc902d166cc/shm

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.grpc.v1.cri/sandboxes/701a234d13efb5b331a6f34c0a53e02e1fc15bb225ee6e432c9ce4c0855cc47d/shm

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.grpc.v1.cri/sandboxes/6e9199576509fda15bc3f14a1d054185f094cf66c71fa3a95838a72972932b93/shm

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.grpc.v1.cri/sandboxes/6d05ec5b5b33a3366ccc798783eb1cacdde62661c9f8dca9a4d4dce84881f7a0/shm

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.grpc.v1.cri/sandboxes/6c79984c15a2c083328e9f49bb1d78be76e6423c5f08db9fe233665cdd26e3e1/shm

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.grpc.v1.cri/sandboxes/660e9a1c41950a63d95ca9adc09659a31918151ee032f494b5490f42a7b0533a/shm

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.grpc.v1.cri/sandboxes/65547e97c30b9aff1eff9cc8256ad351ee08686c57115a3f4e919973dfad9ea0/shm

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.grpc.v1.cri/sandboxes/65367e461a2009f4f33e1fa896b4f97d01f4d0910f7f5d840db05222cdda36d4/shm

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.grpc.v1.cri/sandboxes/5c5e31a8602d147c355e3a87e3f062303ed697f01a5807ff8727ae0e0b63cfb0/shm

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.grpc.v1.cri/sandboxes/5bedcea94d074338f642ab75098929317d3884d945db5696454562a71a2cc778/shm

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.grpc.v1.cri/sandboxes/5b5c729734b39b15c76427ce13472a7007764c35183a385249d5c0ce3c40da7d/shm

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.grpc.v1.cri/sandboxes/521d8d5a7526079490496f1df668742e61f5ffcdaba88c7bb114d0464efb8e39/shm

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.grpc.v1.cri/sandboxes/5172b07488e47c94081706d4585eb95273f03643d6f2e0f64609e40ab36e266c/shm

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.grpc.v1.cri/sandboxes/4e0cc60f83f8a4140eff185f6ee8cac65dcce6c69a4282a451ffe0339b3855c2/shm

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.grpc.v1.cri/sandboxes/46d74a644b3fd3854ce4afc4b1f4f67a79df78bada84b0c64a7eb0c2c33fb779/shm

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.grpc.v1.cri/sandboxes/447f13ff8f0bf460c91c4a9e464da17955c9adb372271fb08fbff2a594042125/shm

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.grpc.v1.cri/sandboxes/3c4b2dd46060c8d071a23e6df744ce2f098405fbb423f990bbeca1420ea8e6bd/shm

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.grpc.v1.cri/sandboxes/392e652301aceb3690d05a80e9631be4b4fe7049a9c2b17a0a5d06df52e25fb4/shm

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.grpc.v1.cri/sandboxes/3220e065a21a4d528309817f87dd5677160cb0bf870457283a99d27a77e76abe/shm

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.grpc.v1.cri/sandboxes/2d5b3fdb2413250f6aeeef2563738ea6fd5b4b191a1853de0668d0ef5ce895ad/shm

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.grpc.v1.cri/sandboxes/273fe7d5f7aa0b91103a763137c5cbb1692268c1b403c429c9d281041de3cd34/shm

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.grpc.v1.cri/sandboxes/16c300fd9e8c05495a7eadf46085f4e83255f40c6725614707b449da9305aecb/shm

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.grpc.v1.cri/sandboxes/16661c4b4b52bc87c70000fa8c946d6ccfc2a4017df3d15d5ddeccd35abf9266/shm

sh -c 'umount -f "$0" && rm -rf "$0"' /run/k3s/containerd/io.containerd.grpc.v1.cri/sandboxes/04a87f40e144e697d395c346578fcfc66debfcdba9ada333bffab20fb10d4d6c/shm

+ do_unmount_and_remove /var/lib/rancher/k3s

+ set +x

+ do_unmount_and_remove /var/lib/kubelet/pods

+ set +x

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/fa775510-be07-4636-b953-f634e3ae075a/volumes/kubernetes.io~projected/kube-api-access-7xg7j

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/f6b018d8-cbf6-4535-983e-1cec410624de/volume-subpaths/token-service-private-key/core/2

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/f6b018d8-cbf6-4535-983e-1cec410624de/volume-subpaths/secret-key/core/1

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/f6b018d8-cbf6-4535-983e-1cec410624de/volume-subpaths/config/core/0

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/f6b018d8-cbf6-4535-983e-1cec410624de/volumes/kubernetes.io~secret/token-service-private-key

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/f6b018d8-cbf6-4535-983e-1cec410624de/volumes/kubernetes.io~secret/secret-key

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/e27f43d5-abbe-4f5e-ad28-2ffd8eca124a/volumes/kubernetes.io~secret/inline-config-sources

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/e27f43d5-abbe-4f5e-ad28-2ffd8eca124a/volumes/kubernetes.io~secret/init

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/e27f43d5-abbe-4f5e-ad28-2ffd8eca124a/volumes/kubernetes.io~secret/config

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/e27f43d5-abbe-4f5e-ad28-2ffd8eca124a/volumes/kubernetes.io~projected/kube-api-access-zv7nr

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/e0cd82c8-245e-4a4d-90e7-090f0a12d6a5/volumes/kubernetes.io~projected/kube-api-access-4dkpg

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/cac6de75-786e-4e70-931f-081ab2cf6399/volumes/kubernetes.io~projected/kube-api-access-6b5dp

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/c6af5ae5-c84e-445e-8f89-c15dd4c86952/volume-subpaths/default-config/my-redis-release-redis-cluster/2

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/c6af5ae5-c84e-445e-8f89-c15dd4c86952/volumes/kubernetes.io~projected/kube-api-access-whbk7

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/c6af5ae5-c84e-445e-8f89-c15dd4c86952/volumes/kubernetes.io~nfs/pvc-a501783c-2469-47ce-904d-76320807016b

umount.nfs: /var/lib/kubelet/pods/c6af5ae5-c84e-445e-8f89-c15dd4c86952/volumes/kubernetes.io~nfs/pvc-a501783c-2469-47ce-904d-76320807016b: device is busy

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/becb37ad-89fa-4fa8-9216-9fdb57bc0733/volumes/kubernetes.io~projected/kube-api-access-h7fh4

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/b99889eb-9bca-4bf9-8197-64ea752981bb/volumes/kubernetes.io~projected/kube-api-access-n48tm

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/a88099a1-a61e-4bd0-9ab9-b62cd3f47e0e/volumes/kubernetes.io~secret/kubernetes-dashboard-certs

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/a88099a1-a61e-4bd0-9ab9-b62cd3f47e0e/volumes/kubernetes.io~projected/kube-api-access-sstn9

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/a70353ac-cfe9-4501-b5b1-c44b02368529/volume-subpaths/installinfo/cluster-agent/3

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/a70353ac-cfe9-4501-b5b1-c44b02368529/volumes/kubernetes.io~projected/kube-api-access-4rz8p

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/9cc9258c-2804-44df-b20d-63f55ca179b5/volumes/kubernetes.io~projected/kube-api-access-ghg2d

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/93c5d920-f02a-4a6d-ae9d-6e908d9f48ab/volumes/kubernetes.io~projected/kube-api-access-cjss7

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/93c5d920-f02a-4a6d-ae9d-6e908d9f48ab/volumes/kubernetes.io~nfs/pvc-0b1e898a-3dc3-4ee0-8fee-80dc6d264ecf

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/9317b1cd-c6ff-4e18-a5c8-d49467f7737f/volumes/kubernetes.io~projected/kube-api-access-9prqb

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/9035c941-a582-4b66-b3f1-227569ee8b60/volumes/kubernetes.io~secret/inline-config-sources

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/9035c941-a582-4b66-b3f1-227569ee8b60/volumes/kubernetes.io~secret/init

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/9035c941-a582-4b66-b3f1-227569ee8b60/volumes/kubernetes.io~secret/config

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/9035c941-a582-4b66-b3f1-227569ee8b60/volumes/kubernetes.io~projected/kube-api-access-9t7lm

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/8d0f525f-0bd3-4522-9772-2a2263ea63ec/volume-subpaths/config/noisedash/2

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/8d0f525f-0bd3-4522-9772-2a2263ea63ec/volumes/kubernetes.io~projected/kube-api-access-l2jr6

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/8b12b76b-e2c2-4124-a916-f209830f0614/volume-subpaths/sysprobe-config/system-probe/5

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/8b12b76b-e2c2-4124-a916-f209830f0614/volume-subpaths/sysprobe-config/process-agent/11

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/8b12b76b-e2c2-4124-a916-f209830f0614/volume-subpaths/sysprobe-config/init-config/4

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/8b12b76b-e2c2-4124-a916-f209830f0614/volume-subpaths/sysprobe-config/agent/9

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/8b12b76b-e2c2-4124-a916-f209830f0614/volume-subpaths/installinfo/agent/1

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/8b12b76b-e2c2-4124-a916-f209830f0614/volumes/kubernetes.io~projected/kube-api-access-pts4k

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/8352322a-6bad-4740-8782-ffa027848daa/volumes/kubernetes.io~projected/kube-api-access-m2cq6

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/80d97708-50cc-4a97-9667-6f34e28a8fdb/volumes/kubernetes.io~projected/kube-api-access-rv8lx

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/80d97708-50cc-4a97-9667-6f34e28a8fdb/volumes/kubernetes.io~nfs/nfs-client-root

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/75a67830-19fa-4ebb-b909-8b2269aef65a/volumes/kubernetes.io~secret/inline-config-sources

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/75a67830-19fa-4ebb-b909-8b2269aef65a/volumes/kubernetes.io~secret/init

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/75a67830-19fa-4ebb-b909-8b2269aef65a/volumes/kubernetes.io~secret/config

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/75a67830-19fa-4ebb-b909-8b2269aef65a/volumes/kubernetes.io~projected/kube-api-access-cwg52

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/75320730-ee46-4890-b0ae-fff3df3e5c0d/volumes/kubernetes.io~projected/kube-api-access-5lvbx

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/64f1b8c9-7d81-4576-baee-b9a4f532da38/volumes/kubernetes.io~projected/kube-api-access-t24fw

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/60ee77f7-72e2-40fe-9d34-5cd3147f7efc/volumes/kubernetes.io~projected/kube-api-access-6cjcl

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/5e9dc501-b8ce-41d6-a88b-ab0894fe6872/volumes/kubernetes.io~projected/kube-api-access-gw5ll

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/5d7dfff9-f932-4b5f-b9d4-a2a2989cff8e/volumes/kubernetes.io~projected/kube-api-access-ncxpw

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/5abdabd1-56ac-4de9-97d8-5b532040c095/volume-subpaths/installinfo/agent/0

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/5abdabd1-56ac-4de9-97d8-5b532040c095/volumes/kubernetes.io~projected/kube-api-access-nf7pj

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/4d4a6118-d36f-47b1-8047-bd87310c2f11/volume-subpaths/portal-config/portal/0

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/474664dd-fac0-4e3d-8b23-ed2521c32ccf/volume-subpaths/jobservice-config/jobservice/0

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/474664dd-fac0-4e3d-8b23-ed2521c32ccf/volumes/kubernetes.io~nfs/pvc-25e2c0fe-5c7b-4bd2-8443-49659d6fff98

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/3d4c15e0-e7ab-4aba-be39-bf412e927611/volumes/kubernetes.io~projected/kube-api-access-nqknx

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/3a85fcad-0656-4ca6-a668-b9261a2dcc60/volume-subpaths/config-volume/codex/2

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/3a85fcad-0656-4ca6-a668-b9261a2dcc60/volumes/kubernetes.io~projected/kube-api-access-wxmqx

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/3a85fcad-0656-4ca6-a668-b9261a2dcc60/volumes/kubernetes.io~nfs/pvc-324c5400-9e0a-45e2-86f5-3f4f468e9c83

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/3a85fcad-0656-4ca6-a668-b9261a2dcc60/volumes/kubernetes.io~nfs/pvc-231f0c71-8e0a-4700-b6b6-2e191d5fde67

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/39609496-f950-4f85-b749-a6e6f8ba87cd/volume-subpaths/registry-htpasswd/registry/1

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/39609496-f950-4f85-b749-a6e6f8ba87cd/volume-subpaths/registry-config/registryctl/2

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/39609496-f950-4f85-b749-a6e6f8ba87cd/volume-subpaths/registry-config/registryctl/1

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/39609496-f950-4f85-b749-a6e6f8ba87cd/volume-subpaths/registry-config/registry/2

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/39609496-f950-4f85-b749-a6e6f8ba87cd/volumes/kubernetes.io~secret/registry-htpasswd

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/39609496-f950-4f85-b749-a6e6f8ba87cd/volumes/kubernetes.io~nfs/pvc-5bdd84b3-2fe1-4916-aad6-9c66782e9c09

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/321a06f2-676f-4315-abd0-1bb38ef46e4e/volumes/kubernetes.io~projected/kube-api-access-kv4zc

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/2be19e54-a0d0-4089-99fd-e7d3603f51b2/volumes/kubernetes.io~projected/kube-api-access-r9ljh

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/2acda116-5667-4d30-bc1a-edab9c3ee9cc/volumes/kubernetes.io~projected/kube-api-access-2wftr

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/29bcbdc4-109c-403d-aa6e-1d1ff1881d1f/volumes/kubernetes.io~projected/kube-api-access-65v5n

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/285d43f3-d71e-44d8-a0a8-ed3e683a3d84/volumes/kubernetes.io~projected/kube-api-access-8nmf2

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/26feb8e2-184c-4236-ae37-b894d0832a64/volumes/kubernetes.io~secret/signingkey

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/26feb8e2-184c-4236-ae37-b894d0832a64/volumes/kubernetes.io~secret/secrets

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/26feb8e2-184c-4236-ae37-b894d0832a64/volumes/kubernetes.io~projected/kube-api-access-k6nzw

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/2265657c-c939-4d87-8370-518ccb003f8e/volumes/kubernetes.io~projected/kube-api-access-bvh22

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/2265657c-c939-4d87-8370-518ccb003f8e/volumes/kubernetes.io~nfs/pvc-a2d796d9-a5e3-4429-95f6-f4f1e8c222e8

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/21c9cef9-6b32-49e4-9fab-4574e8c945bd/volumes/kubernetes.io~secret/settings

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/21c9cef9-6b32-49e4-9fab-4574e8c945bd/volumes/kubernetes.io~projected/kube-api-access-7q4vr

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/1d188b01-f2fe-4a1e-8b0a-11e1a052e204/volumes/kubernetes.io~projected/kube-api-access-vptjq

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/1c4d772f-7967-4706-8ca9-3d888b159730/volumes/kubernetes.io~projected/kube-api-access-6h267

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/17a09cc5-8ff2-4557-a3dd-7de449d51c50/volumes/kubernetes.io~projected/kube-api-access-5l996

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/17a09cc5-8ff2-4557-a3dd-7de449d51c50/volumes/kubernetes.io~downward-api/namespace-volume

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/091d5ce0-d974-4dcf-a45e-d8183118c2ad/volumes/kubernetes.io~secret/cert

sh -c 'umount -f "$0" && rm -rf "$0"' /var/lib/kubelet/pods/091d5ce0-d974-4dcf-a45e-d8183118c2ad/volumes/kubernetes.io~projected/kube-api-access-kwrhl

+ do_unmount_and_remove /var/lib/kubelet/plugins

+ set +x

+ do_unmount_and_remove /run/netns/cni-

+ set +x

sh -c 'umount -f "$0" && rm -rf "$0"' /run/netns/cni-fc656d2d-39b5-9c4b-4a29-c3009151a317

sh -c 'umount -f "$0" && rm -rf "$0"' /run/netns/cni-f69c3155-7087-1275-dd26-e4b33072a588

sh -c 'umount -f "$0" && rm -rf "$0"' /run/netns/cni-f55fdfc5-57ea-710e-7050-ae6b1c049dae

sh -c 'umount -f "$0" && rm -rf "$0"' /run/netns/cni-e92a2e26-c60d-5c42-18a5-6826608c283d

sh -c 'umount -f "$0" && rm -rf "$0"' /run/netns/cni-e4473e7a-54c0-db4f-2521-b606276aca91

sh -c 'umount -f "$0" && rm -rf "$0"' /run/netns/cni-e3532799-ccff-29d6-2a42-1628825e5847

sh -c 'umount -f "$0" && rm -rf "$0"' /run/netns/cni-d90005f9-87f9-4ece-543a-25a8e67dc728

sh -c 'umount -f "$0" && rm -rf "$0"' /run/netns/cni-d89d4e15-6a8e-af59-e523-042c16e2df70

sh -c 'umount -f "$0" && rm -rf "$0"' /run/netns/cni-d248831d-5e0a-767d-09e6-c342d2f7896c

sh -c 'umount -f "$0" && rm -rf "$0"' /run/netns/cni-cfd70d99-48a1-2d33-e015-ad9e5a01c041

sh -c 'umount -f "$0" && rm -rf "$0"' /run/netns/cni-c888b5cb-7b10-a7d3-5002-42f54ac6e4f7

sh -c 'umount -f "$0" && rm -rf "$0"' /run/netns/cni-c6c27118-f694-74f4-690b-49e0dd433afc

sh -c 'umount -f "$0" && rm -rf "$0"' /run/netns/cni-c28dc64d-3ca1-4892-64c4-d14da5e38c1f

sh -c 'umount -f "$0" && rm -rf "$0"' /run/netns/cni-bed02af4-8d6f-5581-2c65-f6143e088046

sh -c 'umount -f "$0" && rm -rf "$0"' /run/netns/cni-bcd4a3ea-cb36-6c4f-461e-b693c8c65020

sh -c 'umount -f "$0" && rm -rf "$0"' /run/netns/cni-b8b8c30c-4351-c0de-1037-336893919151

sh -c 'umount -f "$0" && rm -rf "$0"' /run/netns/cni-b688e075-551d-f71e-2923-cd7290a80c73

sh -c 'umount -f "$0" && rm -rf "$0"' /run/netns/cni-b611c4dd-4ab0-da01-da92-c58a10695315

sh -c 'umount -f "$0" && rm -rf "$0"' /run/netns/cni-a9d73069-5fb5-f6f7-699c-f9d3bf9f2b54

sh -c 'umount -f "$0" && rm -rf "$0"' /run/netns/cni-8c25d735-2403-1995-a515-254608746edc

sh -c 'umount -f "$0" && rm -rf "$0"' /run/netns/cni-8a69beb4-5d35-f6ce-7003-a5a7fcba8fd9

sh -c 'umount -f "$0" && rm -rf "$0"' /run/netns/cni-881beb52-4ad9-e03e-4755-2c80c01ea16e

sh -c 'umount -f "$0" && rm -rf "$0"' /run/netns/cni-7578e970-57bc-2445-a704-9e289ede05b6

sh -c 'umount -f "$0" && rm -rf "$0"' /run/netns/cni-6fe04390-4350-b9f8-9162-785534629e1d

sh -c 'umount -f "$0" && rm -rf "$0"' /run/netns/cni-6e232049-d938-d987-9594-bb804a990899

sh -c 'umount -f "$0" && rm -rf "$0"' /run/netns/cni-69d0f804-2bdf-d30f-67c0-b76ff8e03573

sh -c 'umount -f "$0" && rm -rf "$0"' /run/netns/cni-6861f229-9f6b-b771-69fc-1179e4fe32fb

sh -c 'umount -f "$0" && rm -rf "$0"' /run/netns/cni-6624f920-f277-672e-a238-e6c5fabbf1d4

sh -c 'umount -f "$0" && rm -rf "$0"' /run/netns/cni-5e6184ff-fe5a-92d5-94b3-8aaca064755e

sh -c 'umount -f "$0" && rm -rf "$0"' /run/netns/cni-53b73460-a0e7-6812-f34c-e01937c89a5b

sh -c 'umount -f "$0" && rm -rf "$0"' /run/netns/cni-4f6e0de8-01fe-b2c0-1a79-d59634468f18

sh -c 'umount -f "$0" && rm -rf "$0"' /run/netns/cni-4ab87c3f-4f3a-cda8-55bc-9df5b211946a

sh -c 'umount -f "$0" && rm -rf "$0"' /run/netns/cni-4aab75ce-33d5-0527-5c69-2d4344c70dd6

sh -c 'umount -f "$0" && rm -rf "$0"' /run/netns/cni-3b2a237e-6508-17da-e8e9-d22e79a81ebd

sh -c 'umount -f "$0" && rm -rf "$0"' /run/netns/cni-3b0dc353-b815-8742-5517-c24ae8e1088f

sh -c 'umount -f "$0" && rm -rf "$0"' /run/netns/cni-367d8616-361d-82a7-6ff5-e9cced7859a8

sh -c 'umount -f "$0" && rm -rf "$0"' /run/netns/cni-2e537cf6-37ba-9c54-cd08-2cb5ade99478

sh -c 'umount -f "$0" && rm -rf "$0"' /run/netns/cni-2bdb1259-6ce7-98d8-2165-a6bb2fba1ad7

sh -c 'umount -f "$0" && rm -rf "$0"' /run/netns/cni-2b5310fe-9d6d-f808-6850-255dbcf7b785

sh -c 'umount -f "$0" && rm -rf "$0"' /run/netns/cni-22bfe770-6240-bc0e-f267-baf94edb923e

sh -c 'umount -f "$0" && rm -rf "$0"' /run/netns/cni-21c1449d-e003-8a4b-f627-0c50840aba7b

sh -c 'umount -f "$0" && rm -rf "$0"' /run/netns/cni-1df33f5d-2f45-5f25-561d-40c3343fca6d

sh -c 'umount -f "$0" && rm -rf "$0"' /run/netns/cni-187135d4-4b0b-ece1-ac3a-dd0085316222

sh -c 'umount -f "$0" && rm -rf "$0"' /run/netns/cni-173ce3b0-d980-945d-4ff7-337ae167a948

sh -c 'umount -f "$0" && rm -rf "$0"' /run/netns/cni-10c62a3b-eec2-78a0-932f-bb295d3bacfb

sh -c 'umount -f "$0" && rm -rf "$0"' /run/netns/cni-0e12f254-fb3e-2574-53b2-2af49247a919

sh -c 'umount -f "$0" && rm -rf "$0"' /run/netns/cni-0312f755-4cf4-079a-d122-8a5febfd8f11

+ ip netns show

+ grep cni-

+ xargs -r -t -n 1 ip netns delete

+ remove_interfaces

+ ip link show

+ grep master cni0

+ read ignore iface ignore

+ iface=veth1b7d36e3

+ [ -z veth1b7d36e3 ]

+ ip link delete veth1b7d36e3

+ read ignore iface ignore

+ iface=veth00dfd6a3

+ [ -z veth00dfd6a3 ]

+ ip link delete veth00dfd6a3

+ read ignore iface ignore

+ iface=vethd1f5348a

+ [ -z vethd1f5348a ]

+ ip link delete vethd1f5348a

+ read ignore iface ignore

+ iface=vetha02d9da0

+ [ -z vetha02d9da0 ]

+ ip link delete vetha02d9da0

Cannot find device "vetha02d9da0"

+ read ignore iface ignore

+ iface=veth9f5e2b4d

+ [ -z veth9f5e2b4d ]

+ ip link delete veth9f5e2b4d

Cannot find device "veth9f5e2b4d"

+ read ignore iface ignore

+ iface=veth895f557e

+ [ -z veth895f557e ]

+ ip link delete veth895f557e

Cannot find device "veth895f557e"

+ read ignore iface ignore

+ iface=veth7bced95c

+ [ -z veth7bced95c ]

+ ip link delete veth7bced95c

Cannot find device "veth7bced95c"

+ read ignore iface ignore

+ iface=veth1b235c3b

+ [ -z veth1b235c3b ]

+ ip link delete veth1b235c3b

Cannot find device "veth1b235c3b"

+ read ignore iface ignore

+ iface=veth492b13cb

+ [ -z veth492b13cb ]

+ ip link delete veth492b13cb

Cannot find device "veth492b13cb"

+ read ignore iface ignore

+ iface=veth19161500

+ [ -z veth19161500 ]

+ ip link delete veth19161500

Cannot find device "veth19161500"

+ read ignore iface ignore

+ iface=veth5cb41667

+ [ -z veth5cb41667 ]

+ ip link delete veth5cb41667

Cannot find device "veth5cb41667"

+ read ignore iface ignore

+ iface=veth4e8c198b

+ [ -z veth4e8c198b ]

+ ip link delete veth4e8c198b

Cannot find device "veth4e8c198b"

+ read ignore iface ignore

+ iface=vethc1c98865

+ [ -z vethc1c98865 ]

+ ip link delete vethc1c98865

Cannot find device "vethc1c98865"

+ read ignore iface ignore

+ iface=veth71d49b4f

+ [ -z veth71d49b4f ]

+ ip link delete veth71d49b4f

Cannot find device "veth71d49b4f"

+ read ignore iface ignore

+ iface=vethb015c62d

+ [ -z vethb015c62d ]

+ ip link delete vethb015c62d

Cannot find device "vethb015c62d"

+ read ignore iface ignore

+ iface=veth4cd08fc8

+ [ -z veth4cd08fc8 ]

+ ip link delete veth4cd08fc8

Cannot find device "veth4cd08fc8"

+ read ignore iface ignore

+ iface=veth3352f9c0

+ [ -z veth3352f9c0 ]

+ ip link delete veth3352f9c0

Cannot find device "veth3352f9c0"

+ read ignore iface ignore

+ iface=vethc34b856a

+ [ -z vethc34b856a ]

+ ip link delete vethc34b856a

Cannot find device "vethc34b856a"

+ read ignore iface ignore

+ iface=veth607231b0

+ [ -z veth607231b0 ]

+ ip link delete veth607231b0

Cannot find device "veth607231b0"

+ read ignore iface ignore

+ iface=vethd1d0e9a1

+ [ -z vethd1d0e9a1 ]

+ ip link delete vethd1d0e9a1

Cannot find device "vethd1d0e9a1"

+ read ignore iface ignore

+ iface=veth2d24920d

+ [ -z veth2d24920d ]

+ ip link delete veth2d24920d

Cannot find device "veth2d24920d"

+ read ignore iface ignore

+ iface=vethd87a9238

+ [ -z vethd87a9238 ]

+ ip link delete vethd87a9238

Cannot find device "vethd87a9238"

+ read ignore iface ignore

+ iface=vethf5a08b5e

+ [ -z vethf5a08b5e ]

+ ip link delete vethf5a08b5e

Cannot find device "vethf5a08b5e"

+ read ignore iface ignore

+ iface=vethf4e57c9b

+ [ -z vethf4e57c9b ]

+ ip link delete vethf4e57c9b

Cannot find device "vethf4e57c9b"

+ read ignore iface ignore

+ iface=vethce27cb78

+ [ -z vethce27cb78 ]

+ ip link delete vethce27cb78

Cannot find device "vethce27cb78"

+ read ignore iface ignore

+ ip link delete cni0

+ ip link delete flannel.1

+ ip link delete flannel-v6.1

Cannot find device "flannel-v6.1"

+ ip link delete kube-ipvs0

Cannot find device "kube-ipvs0"

+ ip link delete flannel-wg

Cannot find device "flannel-wg"

+ ip link delete flannel-wg-v6

Cannot find device "flannel-wg-v6"

+ command -v tailscale

+ [ -n ]

+ rm -rf /var/lib/cni/

+ iptables-save

+ grep -v KUBE-

+ + iptables-restore

grep -iv flannel

+ grep -v CNI-

+ ip6tables-save

+ grep -v KUBE-

+ + grep -iv flannel

ip6tables-restore

+ grep -v CNI-

+ command -v systemctl

/usr/bin/systemctl

+ systemctl disable k3s-agent

Removed /etc/systemd/system/multi-user.target.wants/k3s-agent.service.

+ systemctl reset-failed k3s-agent

Failed to reset failed state of unit k3s-agent.service: Unit k3s-agent.service not loaded.

+ systemctl daemon-reload

+ command -v rc-update

+ rm -f /etc/systemd/system/k3s-agent.service

+ rm -f /etc/systemd/system/k3s-agent.service.env

+ trap remove_uninstall EXIT

+ [ -L /usr/local/bin/kubectl ]

+ rm -f /usr/local/bin/kubectl

+ [ -L /usr/local/bin/crictl ]

+ rm -f /usr/local/bin/crictl

+ [ -L /usr/local/bin/ctr ]

+ rm -rf /etc/rancher/k3s

+ rm -rf /run/k3s

+ rm -rf /run/flannel

+ rm -rf /var/lib/rancher/k3s

+ rm -rf /var/lib/kubelet

...

The uninstall kept getting stuck on an old pod that still had an NFS mount

root@builder-HP-EliteBook-850-G2:/var/lib/kubelet/pods/c6af5ae5-c84e-445e-8f89-c15dd4c86952/volumes/kubernetes.io~nfs#
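One way to spot this kind of straggler before running the uninstall is to list the NFS mounts that still live under the kubelet directory. This is only a sketch and not part of the k3s scripts: the function name `list_kubelet_nfs_mounts` is hypothetical, and it assumes the Linux `/proc/mounts` layout (device, mount point, filesystem type, options).

```shell
#!/bin/sh
# Hypothetical helper (not from k3s): print the mount points of NFS
# filesystems under a given prefix. Reads /proc/mounts-style lines on stdin:
#   <device> <mountpoint> <fstype> <options> <dump> <pass>
list_kubelet_nfs_mounts() {
    prefix="${1:-/var/lib/kubelet}"
    awk -v prefix="$prefix" '
        # fstype must be "nfs" or "nfs4"; mount point must start with the prefix
        $3 ~ /^nfs4?$/ && index($2, prefix) == 1 { print $2 }
    '
}

# Usage on a node (commented out so the snippet has no side effects):
#   list_kubelet_nfs_mounts < /proc/mounts
```

Anything it prints is still mounted. A process, or an interactive shell, whose working directory sits inside one of those paths is one common reason for a `device is busy` error.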

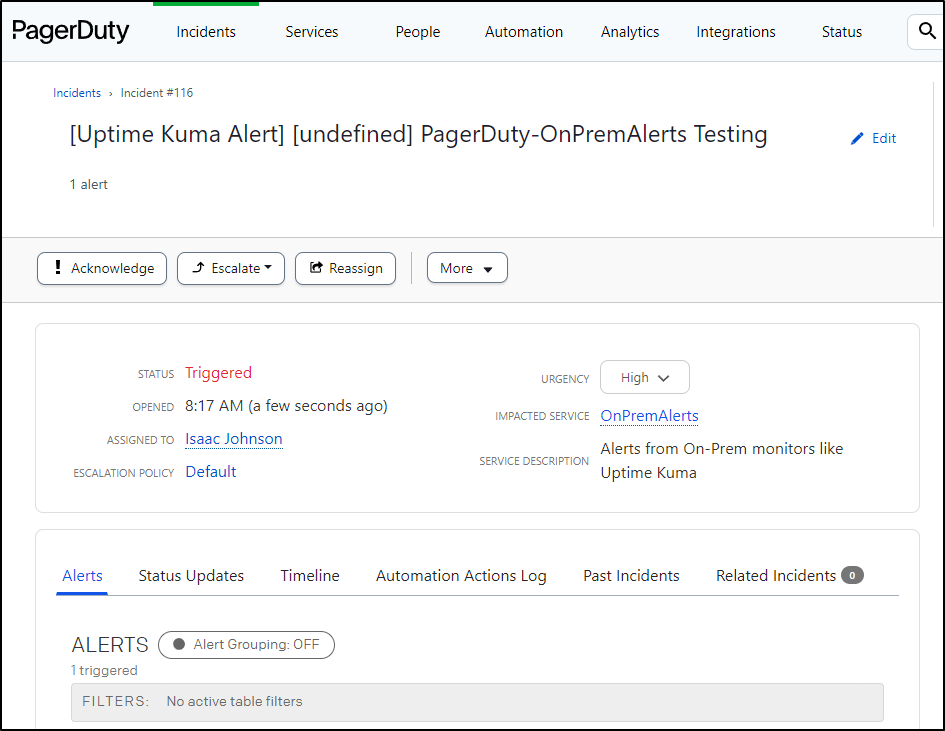

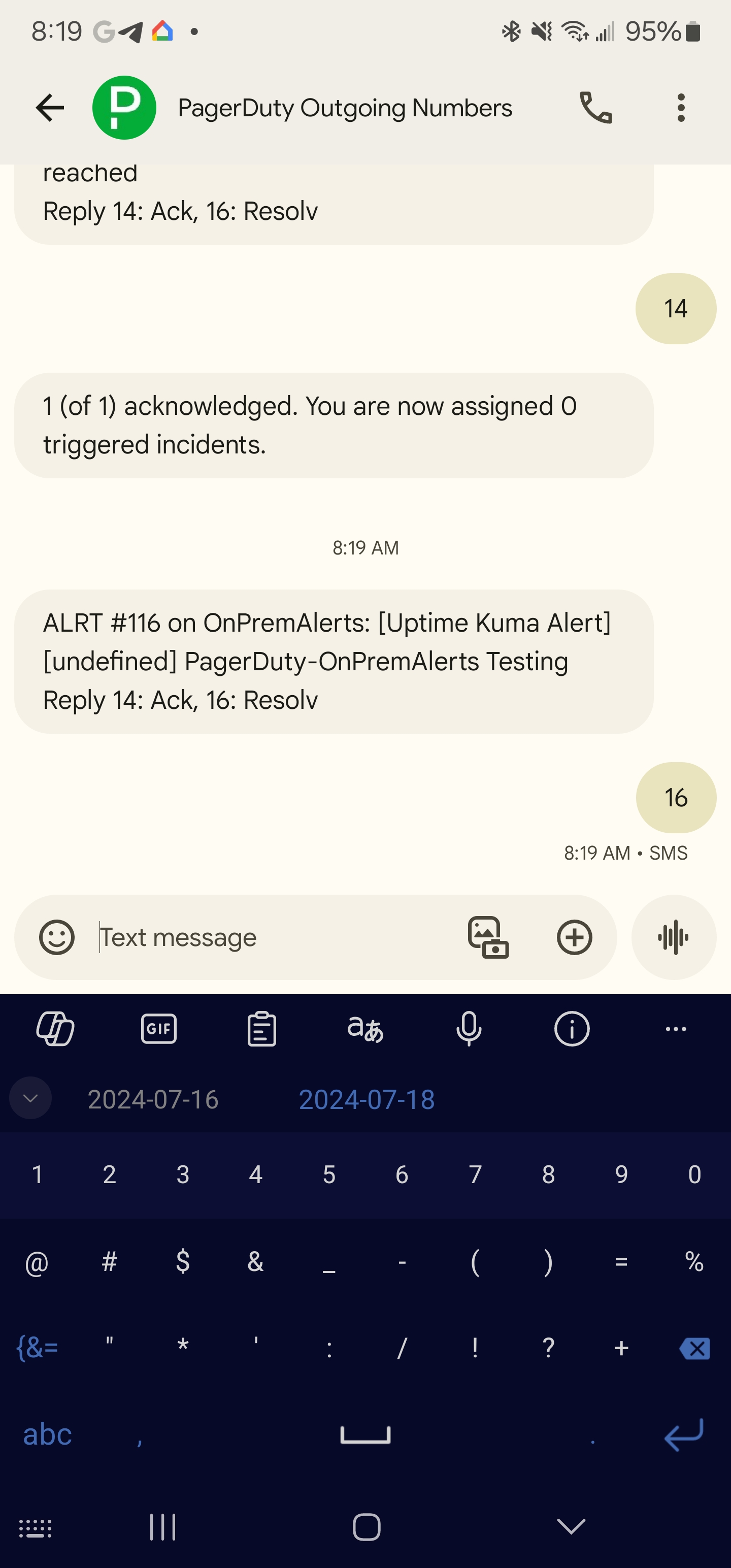

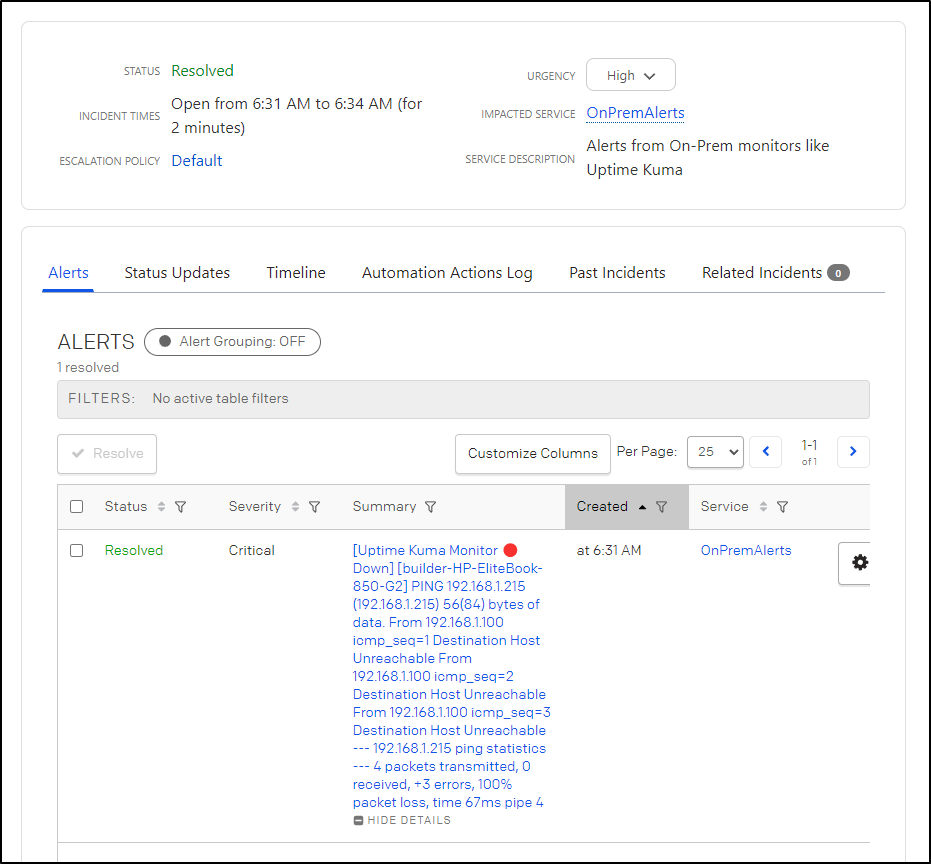

When I bounced the host, it was good to see I got a proper PagerDuty alert

But by the time I went to check it, it had already been resolved

After reboot, the uninstall completed without issue

builder@builder-HP-EliteBook-850-G2:~$ sudo /usr/local/bin/k3s-agent-uninstall.sh

[sudo] password for builder:

+ id -u

+ [ 0 -eq 0 ]

+ /usr/local/bin/k3s-killall.sh

+ [ -s /etc/systemd/system/k3s*.service ]

+ [ -x /etc/init.d/k3s* ]

+ killtree

+ kill -9

+ do_unmount_and_remove /run/k3s

+ set +x

+ do_unmount_and_remove /var/lib/rancher/k3s

+ set +x

+ do_unmount_and_remove /var/lib/kubelet/pods

+ set +x

+ do_unmount_and_remove /var/lib/kubelet/plugins

+ set +x

+ do_unmount_and_remove /run/netns/cni-

+ set +x

+ ip netns show

+ grep cni-

+ xargs -r -t -n 1 ip netns delete

+ remove_interfaces

+ ip link show

+ grep master cni0

+ read ignore iface ignore

+ ip link delete cni0

Cannot find device "cni0"

+ ip link delete flannel.1

Cannot find device "flannel.1"

+ ip link delete flannel-v6.1

Cannot find device "flannel-v6.1"

+ ip link delete kube-ipvs0

Cannot find device "kube-ipvs0"

+ ip link delete flannel-wg

Cannot find device "flannel-wg"

+ ip link delete flannel-wg-v6

Cannot find device "flannel-wg-v6"

+ command -v tailscale

+ [ -n ]

+ rm -rf /var/lib/cni/

+ iptables-save

+ grep -v KUBE-

+ grep -v CNI-

+ + iptables-restore

grep -iv flannel

+ ip6tables-save

+ grep -v KUBE-

+ grep+ ip6tables-restore

-v CNI-

+ grep -iv flannel

+ command -v systemctl

/usr/bin/systemctl

+ systemctl disable k3s-agent

Failed to disable unit: Unit file k3s-agent.service does not exist.

+ systemctl reset-failed k3s-agent

Failed to reset failed state of unit k3s-agent.service: Unit k3s-agent.service not loaded.

+ systemctl daemon-reload

+ command -v rc-update

+ rm -f /etc/systemd/system/k3s-agent.service

+ rm -f /etc/systemd/system/k3s-agent.service.env

+ trap remove_uninstall EXIT

+ [ -L /usr/local/bin/kubectl ]

+ [ -L /usr/local/bin/crictl ]

+ [ -L /usr/local/bin/ctr ]

+ rm -rf /etc/rancher/k3s

+ rm -rf /run/k3s

+ rm -rf /run/flannel

+ rm -rf /var/lib/rancher/k3s

+ rm -rf /var/lib/kubelet

+ rm -f /usr/local/bin/k3s

+ rm -f /usr/local/bin/k3s-killall.sh

+ type yum

+ type rpm-ostree

+ type zypper

+ remove_uninstall

+ rm -f /usr/local/bin/k3s-agent-uninstall.sh

I can now install and add this host to the main int33 pool

builder@builder-HP-EliteBook-850-G2:~$ curl -sfL https://get.k3s.io | INSTALL_K3S_VERSION="v1.26.14%2Bk3s1" K3S_URL=https://192.168.1.33:6443 K3S_TOKEN=K10ef48ebf2c2adb1da135c5fd0ad3fa4966ac35da5d6d941de7ecaa52d48993605::server:ed854070980c4560bbba49f8217363d4 sh -

[INFO] Using v1.26.14%2Bk3s1 as release

[INFO] Downloading hash https://github.com/k3s-io/k3s/releases/download/v1.26.14%2Bk3s1/sha256sum-amd64.txt

[INFO] Downloading binary https://github.com/k3s-io/k3s/releases/download/v1.26.14%2Bk3s1/k3s

[INFO] Verifying binary download

[INFO] Installing k3s to /usr/local/bin/k3s

[INFO] Skipping installation of SELinux RPM

[INFO] Creating /usr/local/bin/kubectl symlink to k3s

[INFO] Creating /usr/local/bin/crictl symlink to k3s

[INFO] Skipping /usr/local/bin/ctr symlink to k3s, command exists in PATH at /usr/bin/ctr

[INFO] Creating killall script /usr/local/bin/k3s-killall.sh

[INFO] Creating uninstall script /usr/local/bin/k3s-agent-uninstall.sh

[INFO] env: Creating environment file /etc/systemd/system/k3s-agent.service.env

[INFO] systemd: Creating service file /etc/systemd/system/k3s-agent.service

[INFO] systemd: Enabling k3s-agent unit

Created symlink /etc/systemd/system/multi-user.target.wants/k3s-agent.service → /etc/systemd/system/k3s-agent.service.

[INFO] systemd: Starting k3s-agent

And see that it is up

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

builder-hp-elitebook-850-g1 Ready <none> 142d v1.26.14+k3s1

builder-hp-elitebook-745-g5 Ready control-plane,master 142d v1.26.14+k3s1

hp-hp-elitebook-850-g2 Ready <none> 139d v1.26.14+k3s1

builder-hp-elitebook-850-g2 Ready <none> 36s v1.26.14+k3s1

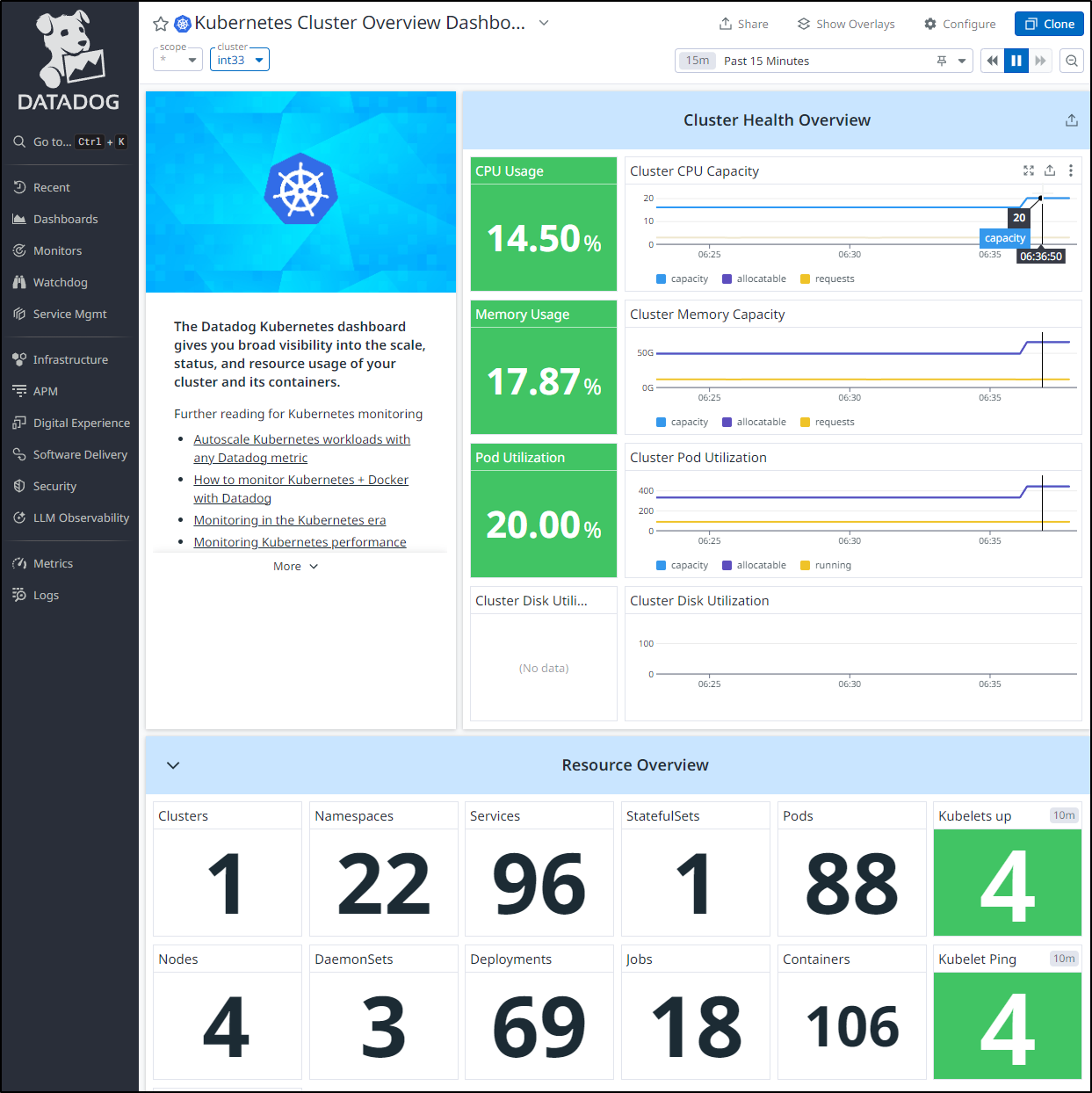

Another indicator is the Datadog dashboard, where I can see the node count has gone to 4 and the capacity has increased
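The same check can be scripted rather than eyeballed. The sketch below counts nodes whose STATUS column is exactly `Ready`; the function name `count_ready_nodes` is hypothetical, and it assumes the column order shown above (NAME, STATUS, ROLES, AGE, VERSION).

```shell
#!/bin/sh
# Hypothetical helper: count nodes whose STATUS column is exactly "Ready".
# Expects `kubectl get nodes --no-headers` output on stdin
# (columns: NAME STATUS ROLES AGE VERSION).
count_ready_nodes() {
    awk '$2 == "Ready" { n++ } END { print n + 0 }'
}

# Usage against a live cluster (commented out; needs kubectl and a kubeconfig):
#   kubectl get nodes --no-headers | count_ready_nodes
```

Note that a cordoned node reports `Ready,SchedulingDisabled` and is deliberately not counted by this exact match.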

DR Wiki

I’ll also want to take a moment to set a destination sync in Codeberg for DR.

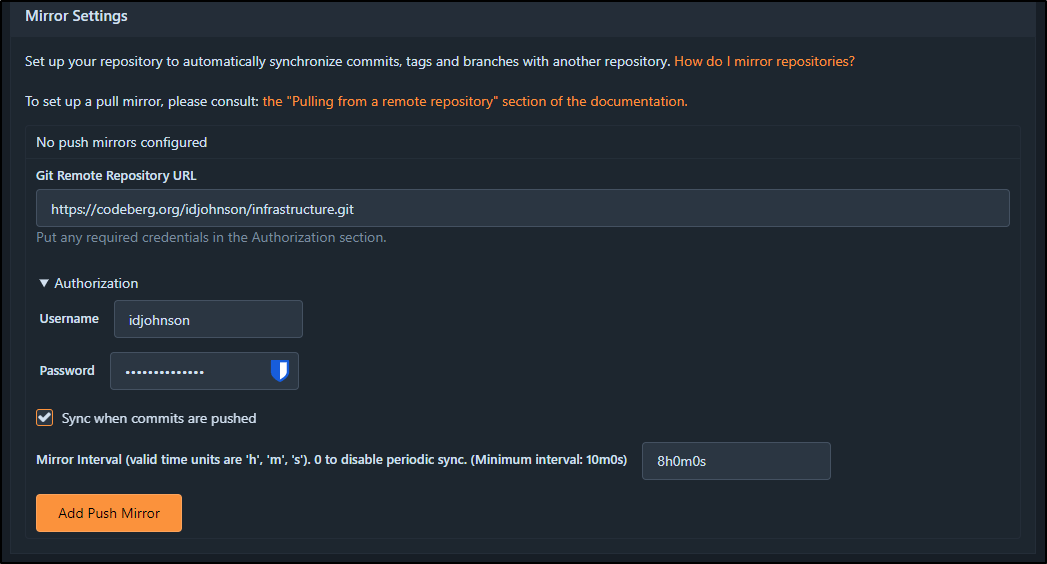

Now back in Forgejo, I’ll set it as a destination.

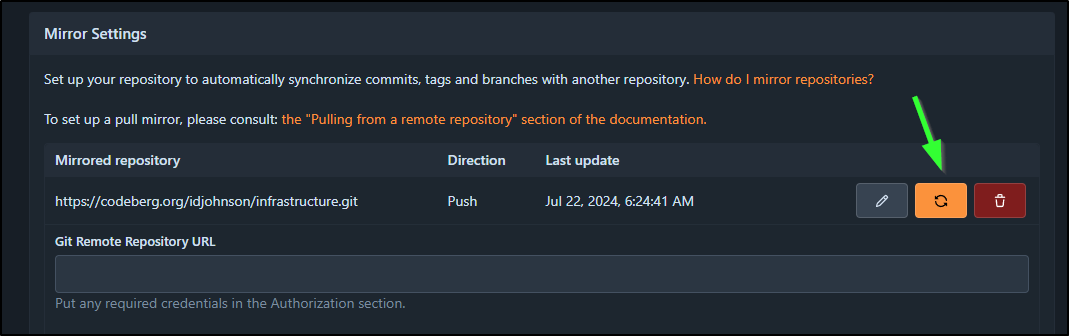

I’ll force a push

And now you can see the live update.

To be honest, the only thing I stewed on is my own password hints. They don’t give away the length or the passwords themselves, just a letter to give me a mental reminder. I do worry that this is bad InfoSec and that I should link to an AKV (Azure Key Vault) or something similar instead.

Form

I feel embarrassed to even admit this, but I also had the suspicion something was amiss with my feedback form. I hadn’t gotten any feedback in some time and it seemed fishy.

I have noted my keybase ID and email so if someone were to really want to reach me, it’s there.

I tested the Feedback form and nothing happened.

Once I dug in, I found two PATs had expired behind the scenes. The first is my ghtoken-narrowReadOnlWFTest, a narrowly scoped PAT just for triggering the GitHub workflow. It has to be narrowly scoped because it’s in plain text in the app.c3f9f951.js file linked from the HTML page.

The second is My90DayGHIssueWriter, which is used in the actual GitHub Actions flow to write GitHub Issues into my repo. That said, if only that one expires, I would not get issues but I would still get all the email notifications and Discord updates, so it would be annoying, but not horrid.

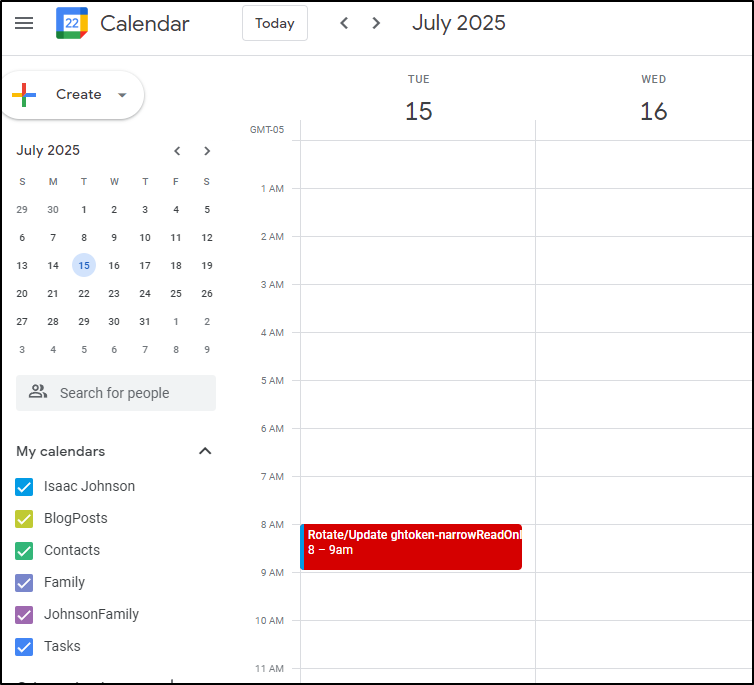

Once I updated the PATs and tested the form, I added a calendar reminder so I don’t forget to deal with it next time

I did the same for the 90Day issue writer PAT.
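For picking the reminder date, a couple of small date helpers are enough. This is a minimal sketch: the function names are hypothetical, the dates in the usage comments are made up for illustration, and it assumes GNU `date` with its `-d` option (Linux coreutils), which the BSD/macOS `date` does not share.

```shell
#!/bin/sh
# Hypothetical helpers; they rely on GNU date's -d option (Linux coreutils).

# Print the date (YYYY-MM-DD) that falls $2 days after the date in $1.
date_plus_days() {
    date -u -d "$1 +$2 days" +%Y-%m-%d
}

# Print whole days from $1 until $2; the result is negative once $2 has passed.
days_until() {
    from=$(date -u -d "$1" +%s)
    to=$(date -u -d "$2" +%s)
    echo $(( (to - from) / 86400 ))
}

# Example (illustrative dates): a 90-day PAT created on 2024-07-21
#   date_plus_days 2024-07-21 90                    # expiry date
#   date_plus_days 2024-07-21 83                    # reminder a week earlier
#   days_until "$(date -u +%Y-%m-%d)" 2024-10-19    # days left from today
```

Running `days_until` from a scheduled job and alerting when the result drops below a threshold would cover the case where the calendar reminder itself gets ignored.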

Summary

This was not too long of a blog post, I admit. But I wanted to talk through some of the care/love/feeding one has to do as an SRE. Frankly, these kinds of activities really translate to the kind of role I play in my professional life - ensuring we keep the lights on.

Regular checks of alerting systems should be key - you don’t want to test that dusty fire extinguisher the first time your kitchen catches fire - in much the same way you don’t want to hope your monitors work the first time you have an outage.

In bigger systems with proper teams, we also see the need to check the monitors for:

- Active users (remove departed employees, add new ones)

- Active systems (remove deprecated systems, add new ones)

- Notification channels (ensure the right Teams/Slack channels, and clear old ones)
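The "active systems" check in particular lends itself to a small script. The sketch below compares a list of hosts that exist with a list of hosts that have a monitor. The function name `monitor_drift`, the output labels and both input files are hypothetical; how you export each list depends on your tooling.

```shell
#!/bin/sh
# Hypothetical helper: compare the hosts that exist with the hosts that have
# a monitor. $1 = file of real hosts, $2 = file of monitored hosts
# (one hostname per line in each).
monitor_drift() {
    actual=$(mktemp) && monitored=$(mktemp) || return 1
    # comm needs sorted input; force the C locale so sort and comm agree
    LC_ALL=C sort -u "$1" > "$actual"
    LC_ALL=C sort -u "$2" > "$monitored"
    # comm -23: lines only in the first file; comm -13: only in the second
    LC_ALL=C comm -23 "$actual" "$monitored" | sed 's/^/MISSING-MONITOR /'
    LC_ALL=C comm -13 "$actual" "$monitored" | sed 's/^/STALE-MONITOR /'
    rm -f "$actual" "$monitored"
}

# Usage (commented out; both files are assumed to exist):
#   kubectl get nodes --no-headers | awk '{ print $1 }' > nodes.txt
#   monitor_drift nodes.txt monitored-hosts.txt
```

An empty result means the two lists agree; any line it prints is either a host without a monitor or a monitor pointing at a host that no longer exists.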

One complication I see in the real world happens when using Terraform for setting up APMs and Alerts. I leaned into it because Code always beats Click-Ops, but I’ve also seen it get WAY too complicated.

My guidance would be that if you use IaC like Spacelift, OpenTofu or IBM (Hashi) Terraform for provisioning, you keep your code real dumb. I mean, make it long and obvious.

The worst thing you can do is make it over-engineered to the point your SREs, Ops and Front-line support people cannot make heads or tails of it and just use the UI - because they will and dammit they should if you block them from setting alerts.

One strategy you can use is to move the Monitoring and Alerting Terraform to new projects (workspaces). This way you still have proper slow and detailed PR reviews on real infrastructure (especially in controlled environments), but you give more freedom (read: speed) to the monitoring projects.

It does mean using more ‘data’ objects to pull things in instead of the simpler ‘module’ or ‘resource’ references, but the benefit is you KISS on the monitoring code and still get Git traceability on every change.

Let’s cover the 3 requirements on alerts as code:

- Can we see who changed alerts and when - do we understand what those alerts mean?

- Can we review those changes, or ensure others reviewed those changes on controlled (production) environments?

- Can we tie changes to justifications for change (tickets) - that is, can we explain not only who and when, but why a change occurred?
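If the alerts live in Git, the first and third questions can be answered from the commit log. The sketch below flags commits whose subject carries no ticket key. It makes several assumptions: the function name `commits_missing_ticket` is hypothetical, the Jira-style key pattern (`OPS-123`) is only an example convention, and the `monitoring/` path in the usage comment is made up.

```shell
#!/bin/sh
# Hypothetical check: print commits whose subject has no ticket key.
# Expects tab-separated lines on stdin: <hash> <author> <date> <subject>
# The key pattern (e.g. OPS-123) is an assumed convention, not a standard.
commits_missing_ticket() {
    awk -F '\t' '$4 !~ /[A-Z][A-Z0-9]+-[0-9]+/ { print }'
}

# Usage in a repo (commented out; %x09 emits a tab between the fields):
#   git log --date=short --format='%h%x09%an%x09%ad%x09%s' -- monitoring/ \
#       | commits_missing_ticket
```

The unfiltered `git log` output already shows who changed what and when; the filter only highlights changes that cannot be tied back to a justification.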

Anything more than that, such as heavy use of modules, nested code or complicated loops, should only be used if the full team is on board and finds it more readable. I have realized that the older I get, the more I classify a lot of that stuff as ‘resume driven development’ or ‘hipster code’. But when I am in leadership roles, I must trust the team, and they set the tone.

For instance, years back I was on a team at TR - some of the smartest and best folks I ever managed (tip of the hat to Robert, Meena, and Anthony, to name a few) and they loved Python – I did not. I would write tickets to create our Lambdas in NodeJS and they would change them back. While we joked about it - I changed - I had to admit that my team’s desires were more important than the languages I liked. Even today I ask my colleagues their preferred language and write to their spec.

Lastly, and I cannot get into specifics because of the nature of the work, this past Friday the folks that fought the blaze into the early hours and got our systems live again were released from those duties mid-morning. New people who had not been on call came online, and it was only fitting to tell the tired and battle-worn to get some sleep and take the day.

Your SREs and Ops cannot run on red all the time. It’s also very hard to let go of something you managed through - they may desire to stay through to the end. But sometimes we have to call it and let someone else take over. Considering this crazy weekend, I might now be mixing CrowdStrike with Presidential announcements - but regardless, it’s important to hand off and let others take over.

I am also proud of my leadership at work. Many, sounding like absolute wrecks from having been up all night, chose to lavish praise on the workers and ensure everyone got the accolades they so rightly deserved. I’ll just wrap by saying I came away from this massive CrowdStrike outage with pride and a heart filled with love.