Published: Jul 11, 2024 by Isaac Johnson

Today we’ll pick up where we left off last time on triggering AWX (Ansible) jobs with a variety of tools. In last week’s post, we covered Rundeck, AWS SQS and Lambdas, and Azure DevOps webhooks.

Today we’ll look at a few solutions in GCP including Pubsub to CloudRun which then triggers AWX. We’ll build the function in Python and look at it both from the Function and CloudRun perspective. We will take this even further by using GCP EventArc to tie it to a Datadog channel for automation via Datadog. We’ll show a full run with my “5 Dollar job” alert job based on cost metrics.

We’ll also look at Azure Event Hubs to an Azure Function that can trigger AWX. We’ll switch to NodeJS for that function and tie it to a Github CICD workflow.

Next, we zip over to Google again and look at Pubsub to Dapr to AWX. I’ll walk through how to set up GCP SAs and tie that into a Dapr Component. We’ll first test using a modified Node Subscriber based on a tutorial example, and lastly we’ll start fresh and build a CSharp subscriber.

Pubsub to CloudRun to AWX

In the Cloud Native realm, we can look to Google’s Pubsub which can easily tie to a serverless CloudRun, which in turn could call AWX.

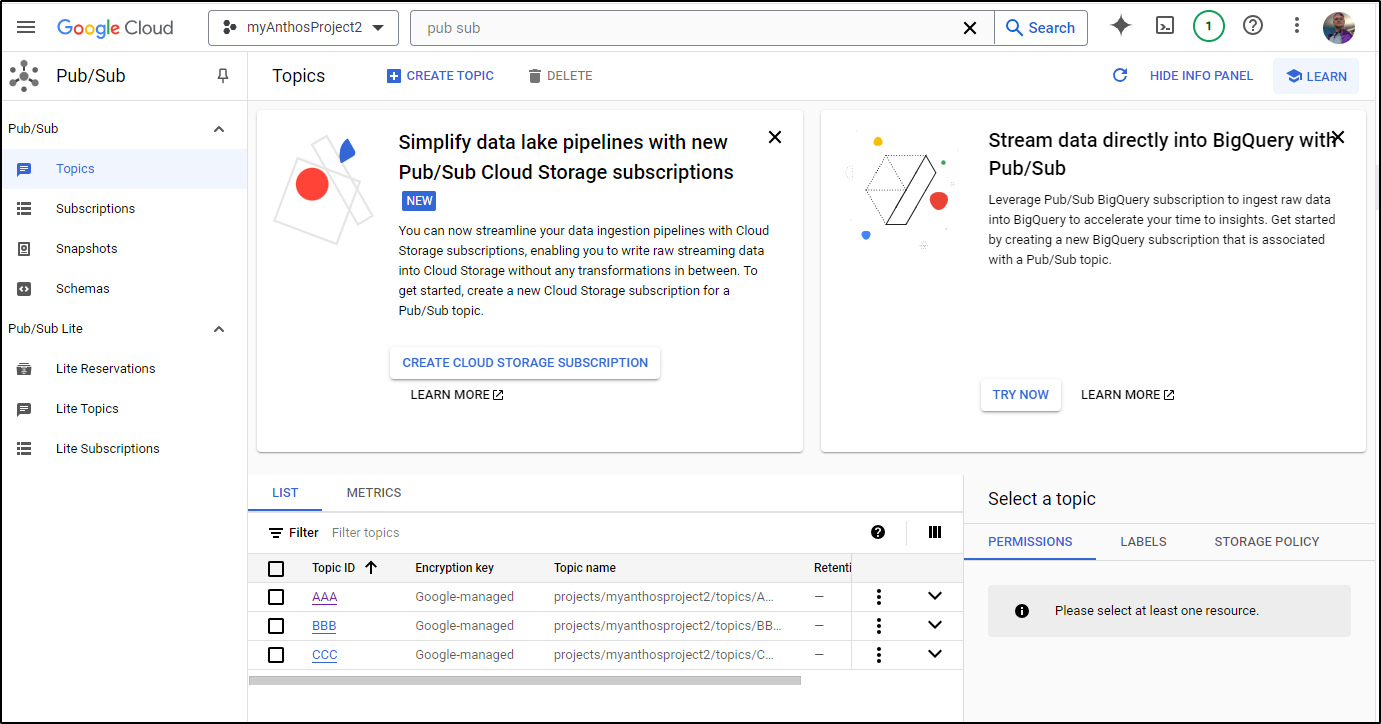

I have some Pubsub topics already in my GCP Project:

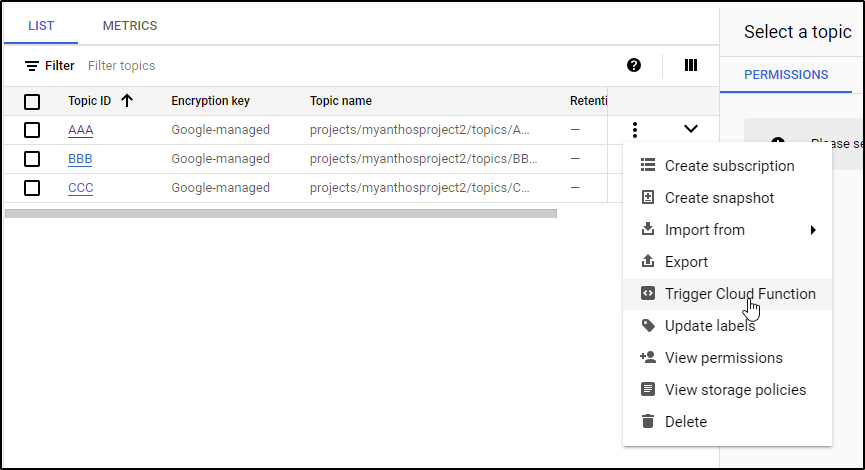

I can use the “…” menu to create a new function.

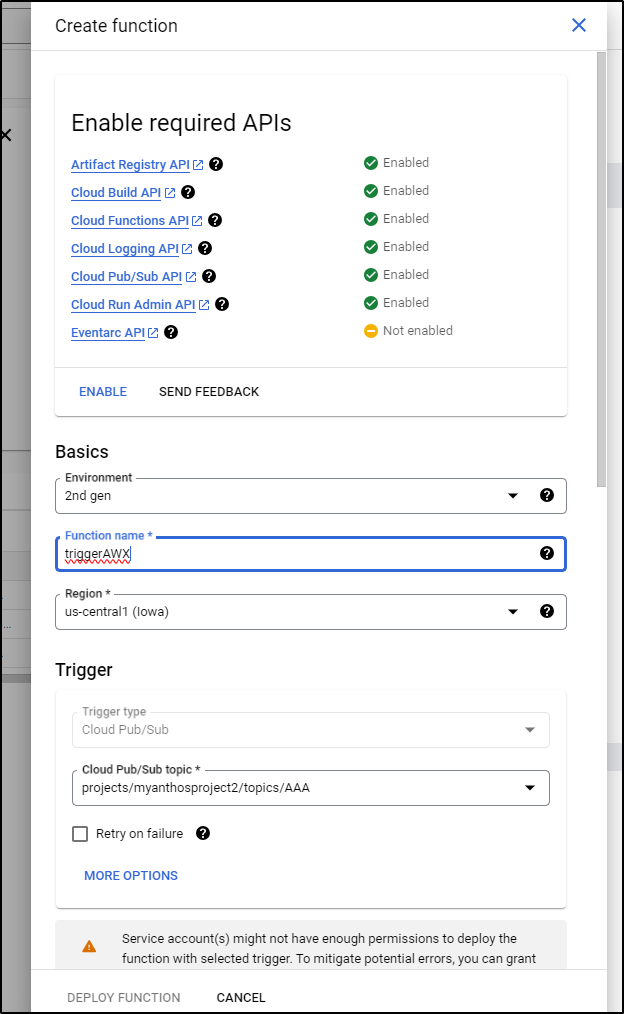

In the top half, we can enable APIs (if they need to be enabled) as well as name the function

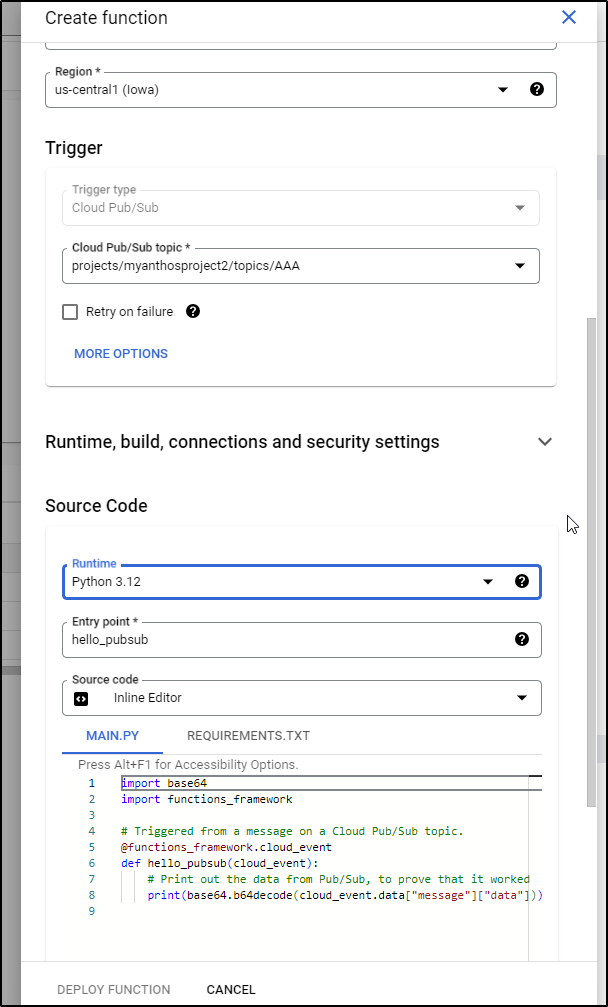

I’ll grant permissions and switch from NodeJS to Python in the bottom code pane

I’ll update the code to the following:

    import base64
    import functions_framework
    import requests

    # Triggered from a message on a Cloud Pub/Sub topic.
    @functions_framework.cloud_event
    def hello_pubsub(cloud_event):
        # Print out the data from Pub/Sub, to prove that it worked
        print(base64.b64decode(cloud_event.data["message"]["data"]))

        url = 'https://rundeck.tpk.pw/api/47/webhook/xsAWiKkuypPlfWDvjmKztDM3Cnn4w4i4#New_Hookt'
        data = {'nothing': 'nothing'}
        response = requests.post(url, json=data)
        print(response.status_code)

    print('Loading function')

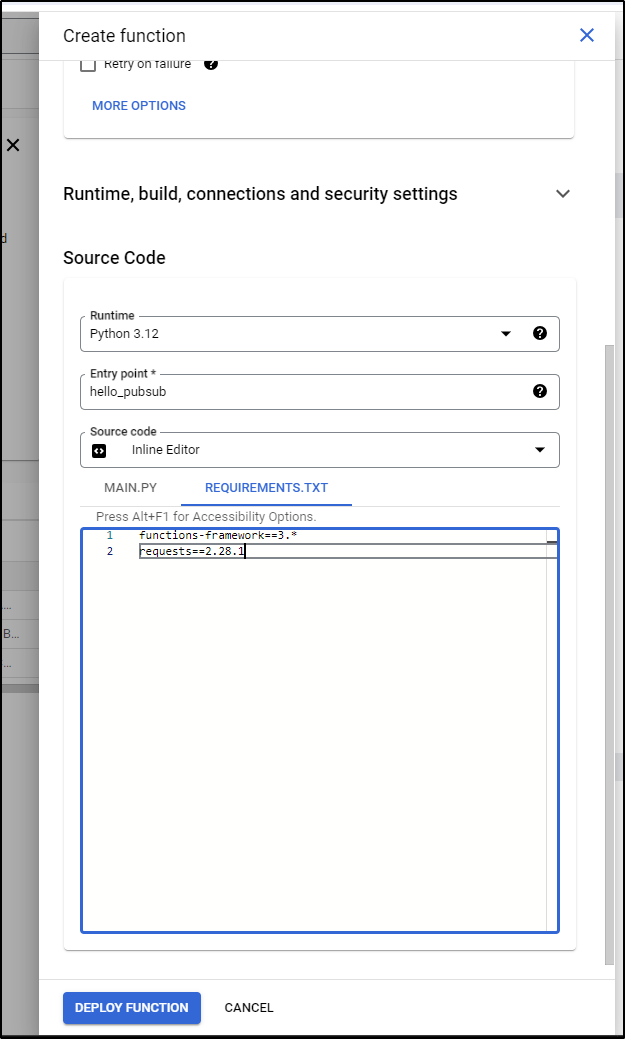

I’ll then update the requirements.txt

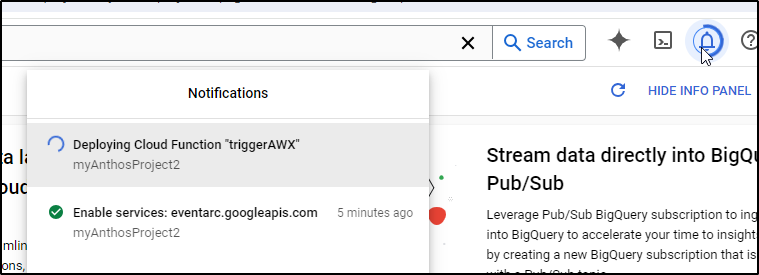

I can now see it deploying:

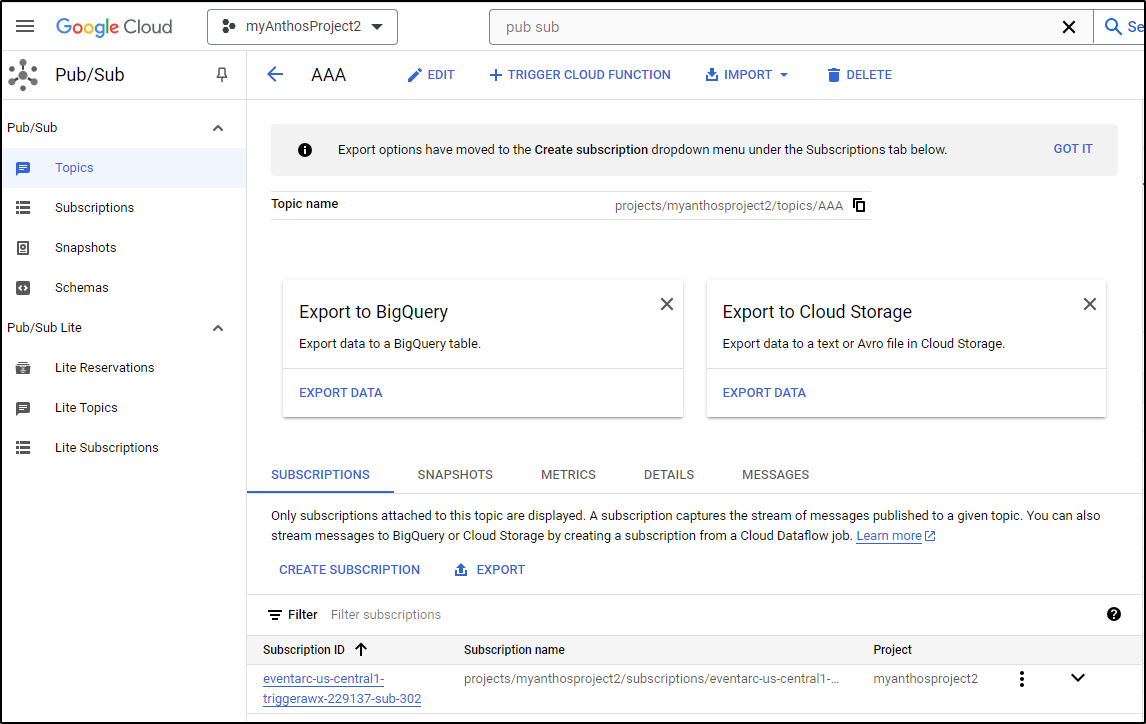

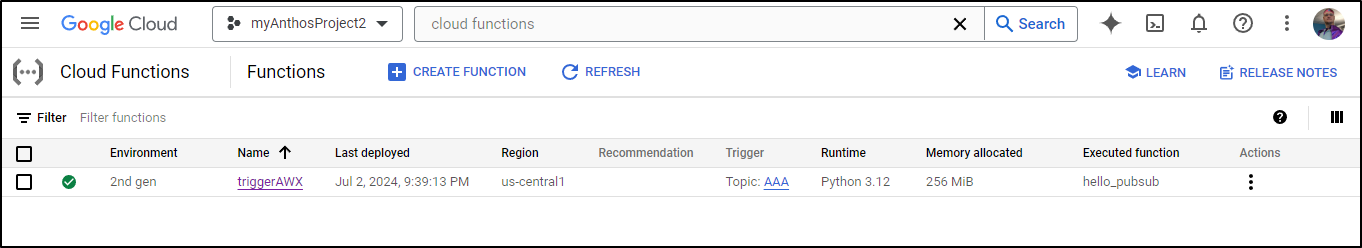

And see the TriggerAWX function tied to the Pub/Sub topic AAA

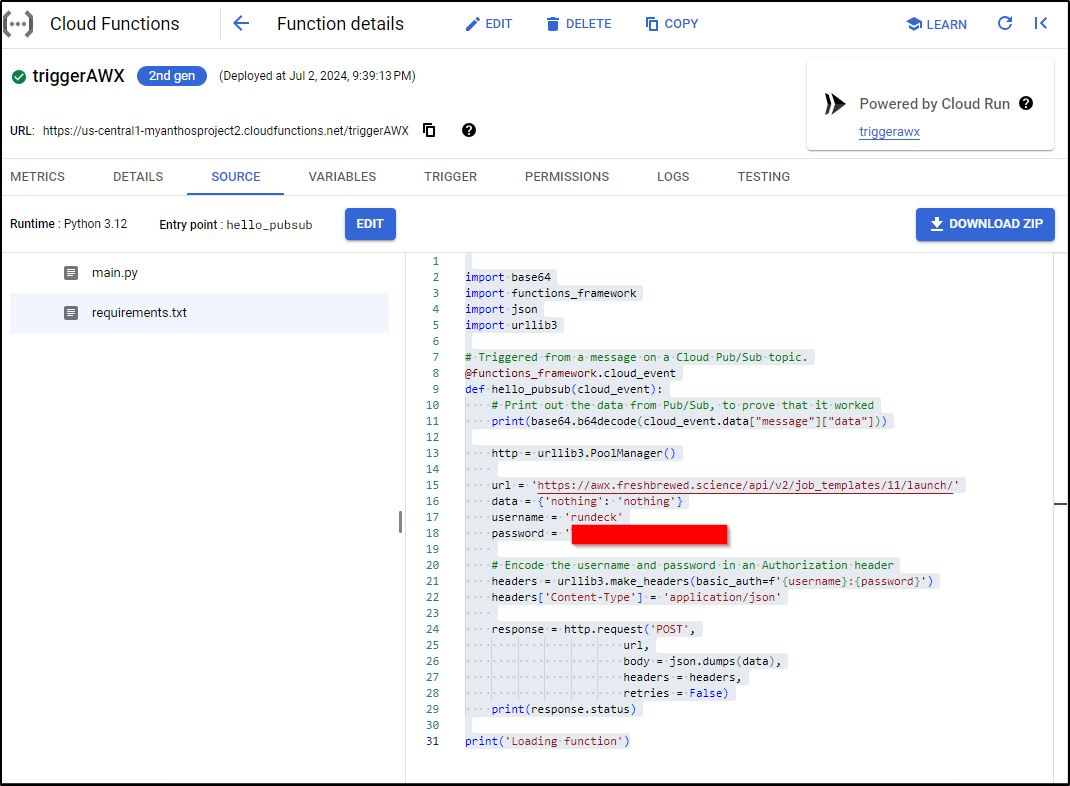

I then updated my function to call AWX directly, using the following Python:

    import base64
    import functions_framework
    import json
    import urllib3

    # Triggered from a message on a Cloud Pub/Sub topic.
    @functions_framework.cloud_event
    def hello_pubsub(cloud_event):
        # Print out the data from Pub/Sub, to prove that it worked
        print(base64.b64decode(cloud_event.data["message"]["data"]))

        http = urllib3.PoolManager()
        url = 'https://awx.freshbrewed.science/api/v2/job_templates/11/launch/'
        data = {'nothing': 'nothing'}
        username = 'rundeck'
        password = 'ThisIsNotMyPassword'

        # Encode the username and password in an Authorization header
        headers = urllib3.make_headers(basic_auth=f'{username}:{password}')
        headers['Content-Type'] = 'application/json'

        response = http.request('POST',
                                url,
                                body=json.dumps(data),
                                headers=headers,
                                retries=False)
        print(response.status)

    print('Loading function')

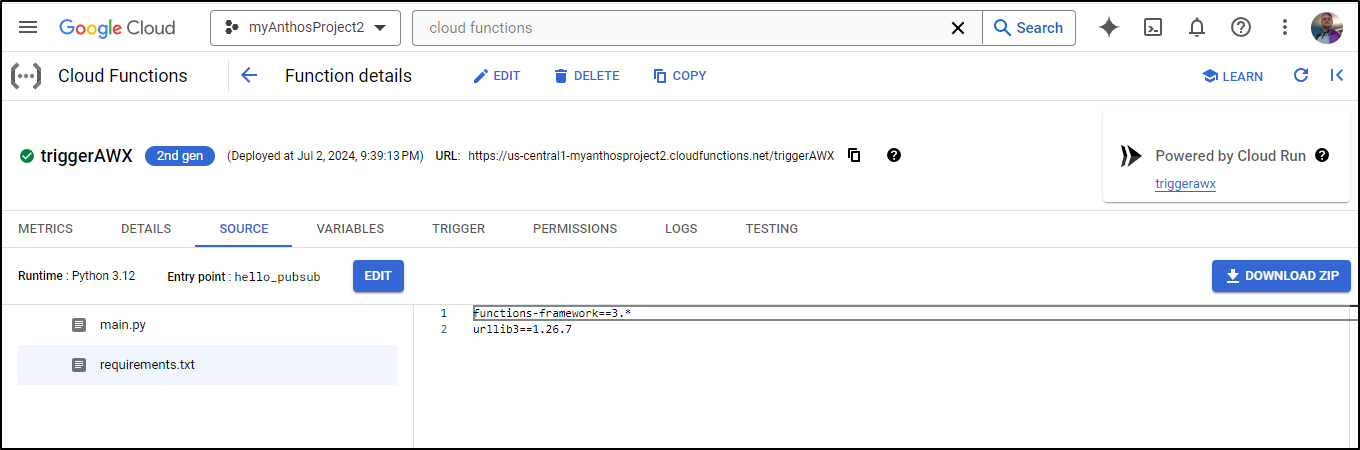
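Hardcoding the AWX password in the function source is fine for a demo, but one alternative is to read the credentials from environment variables set on the function. The following is only a minimal sketch: the variable names `AWX_USERNAME` and `AWX_PASSWORD` and the helper `build_awx_headers` are assumptions, not part of the function shown above. It uses only the standard library, so the resulting dict could be handed to `urllib3` or any other HTTP client.

```python
import base64
import os


def build_awx_headers(env=os.environ):
    """Build Basic auth headers for an AWX API call.

    AWX_USERNAME and AWX_PASSWORD are hypothetical variable names
    chosen for this sketch.
    """
    username = env["AWX_USERNAME"]
    password = env["AWX_PASSWORD"]
    # Basic auth is "username:password", base64-encoded
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    return {
        "Authorization": f"Basic {token}",
        "Content-Type": "application/json",
    }
```

Inside the function, `headers = build_awx_headers()` would then replace the `urllib3.make_headers(...)` call and the two hardcoded variables.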

I should point out that at any point I can edit and save this CloudRun function using the Cloud Console:

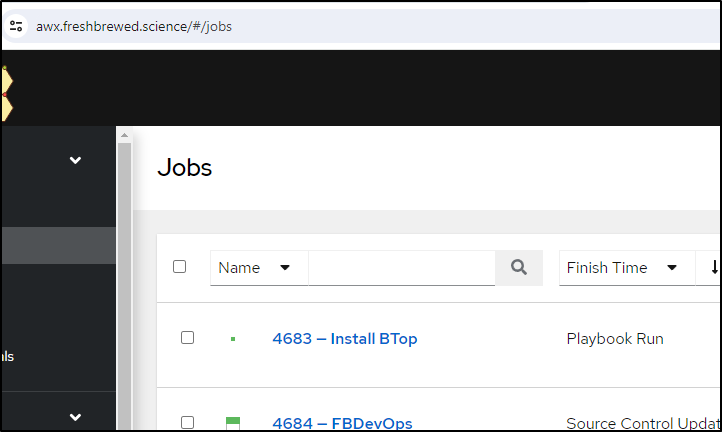

Here we can see a test of Pubsub to CloudRun to AWX directly. The logs and AWX both show the invocation, but the metrics did not update immediately:

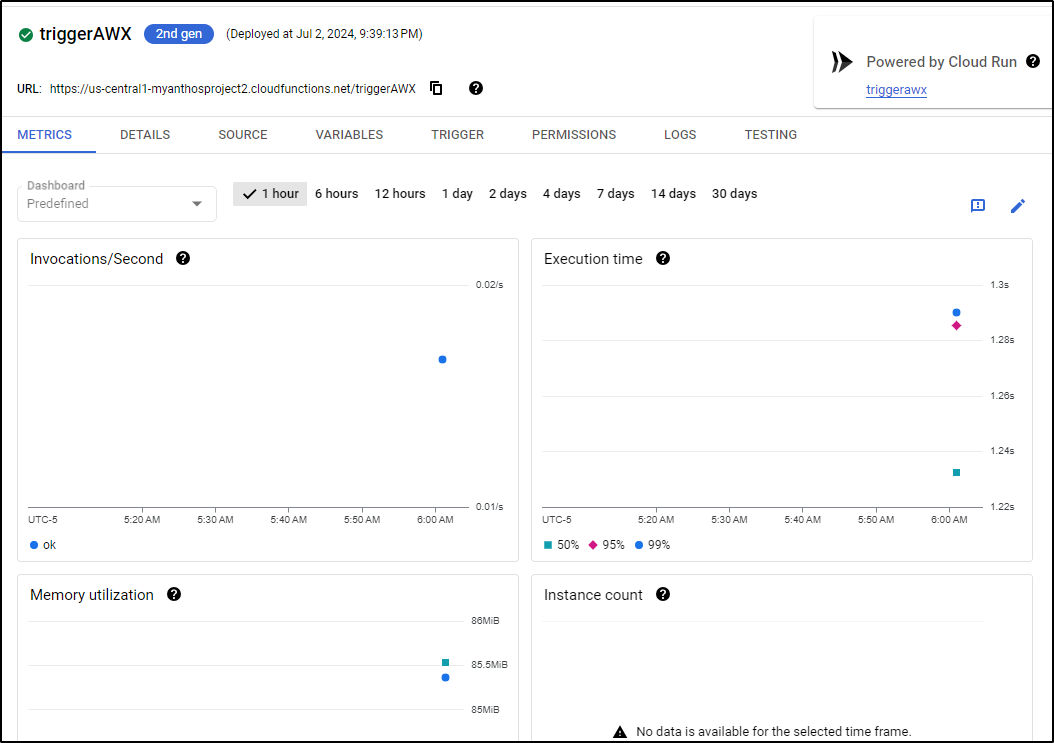

But a few minutes later, I did see results in the Metrics for that run:

Note: I’m not entirely sure why my logs are in CST (not CDT) and my metrics are two hours ahead of CDT.

We can now test the full flow of sending a pubsub message and properly triggering a CloudFunction to invoke an AWX Job:

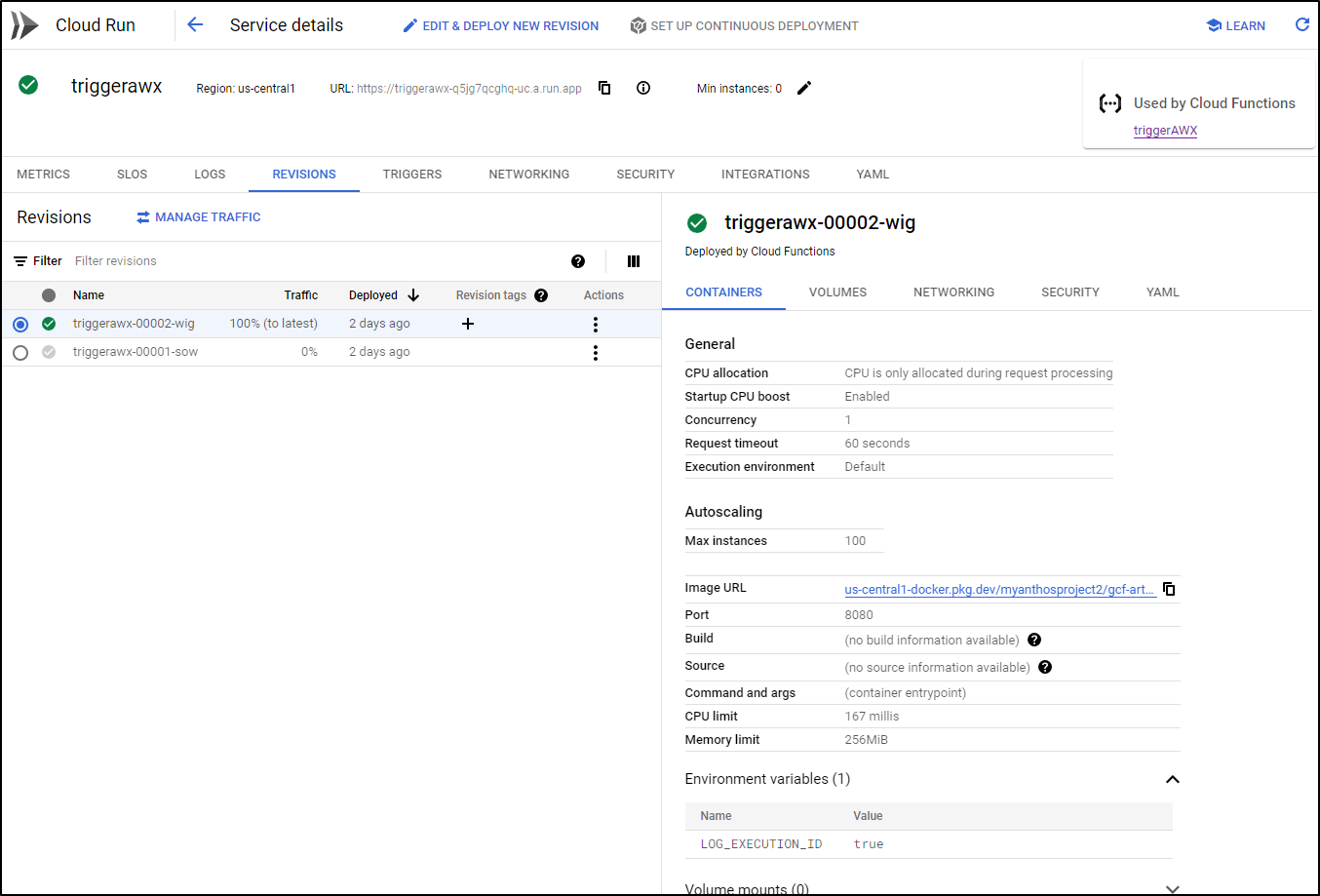

Functions and Cloud Run

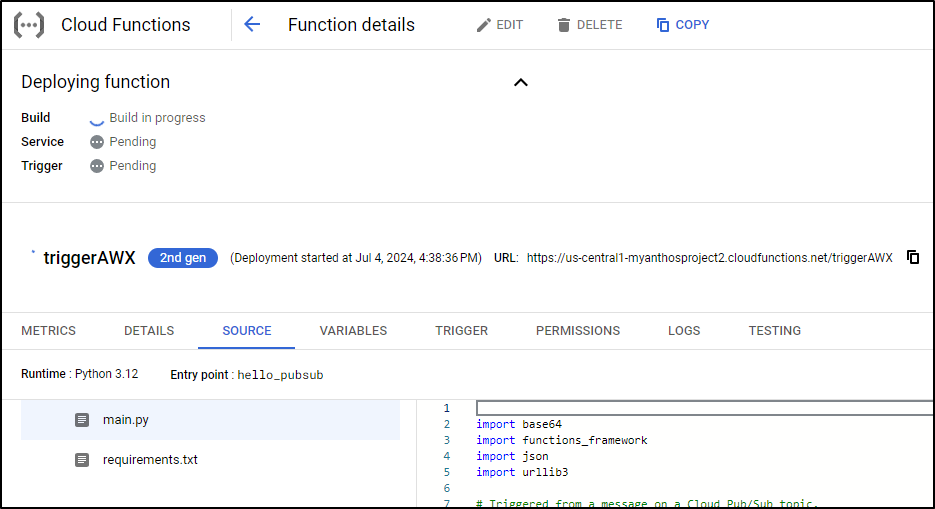

What is clear when we look at Cloud Functions is that I have a 2nd Gen function based on Python 3.12 and it’s tied to the Pubsub topic AAA:

As a function, I can edit its source or requirements and redeploy

But as you can see in the upper right there, we have a hint that this is also a CloudRun function.

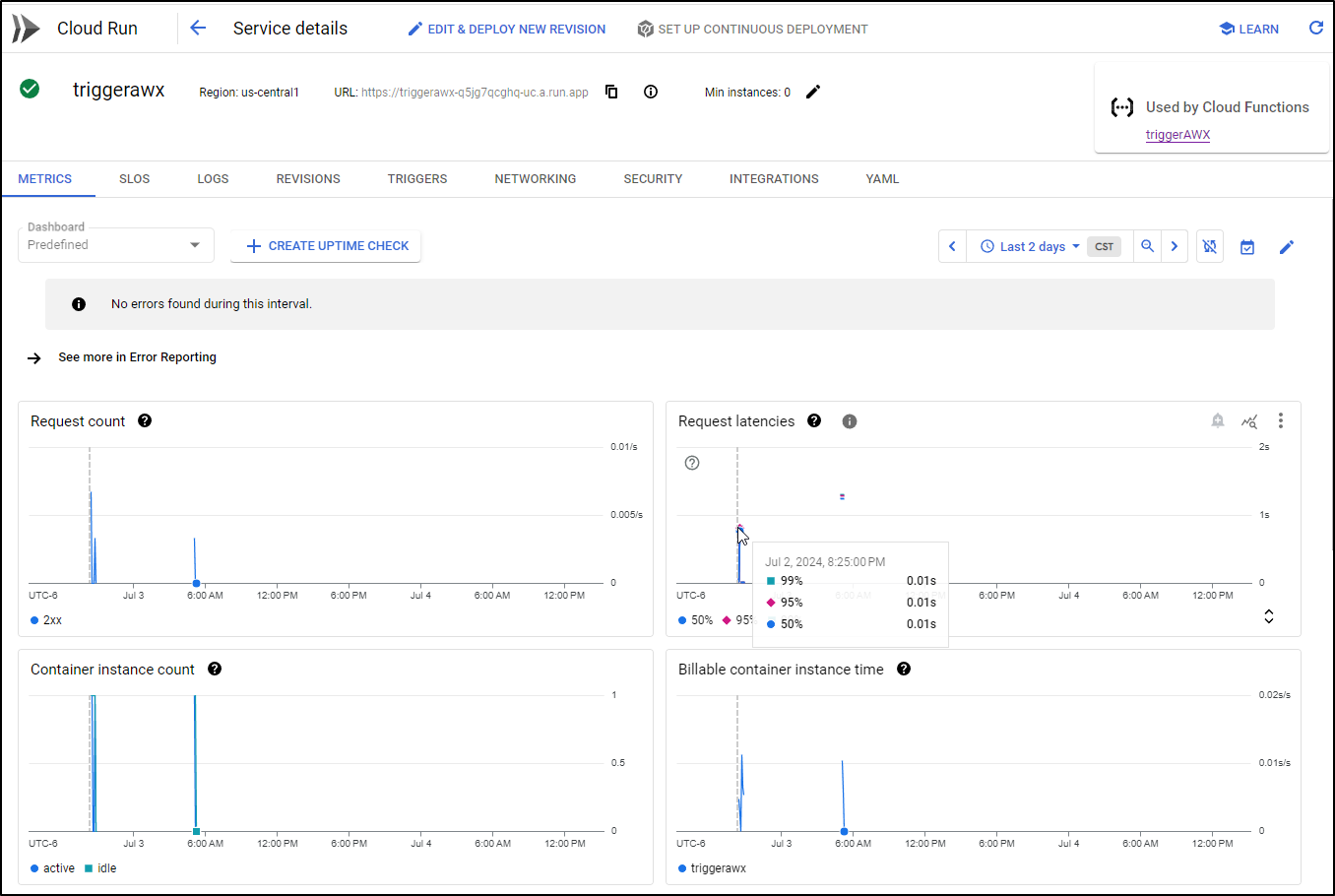

There we can see Metrics like billable time, instances and latencies

From the “Revisions” area in Cloud Run, we can see the actual runnable versions (the broken, non-compilable intermediate versions I was banging out earlier do not show, just those that built into a runnable image)

Datadog to GCP CloudRun via Eventarc Channels

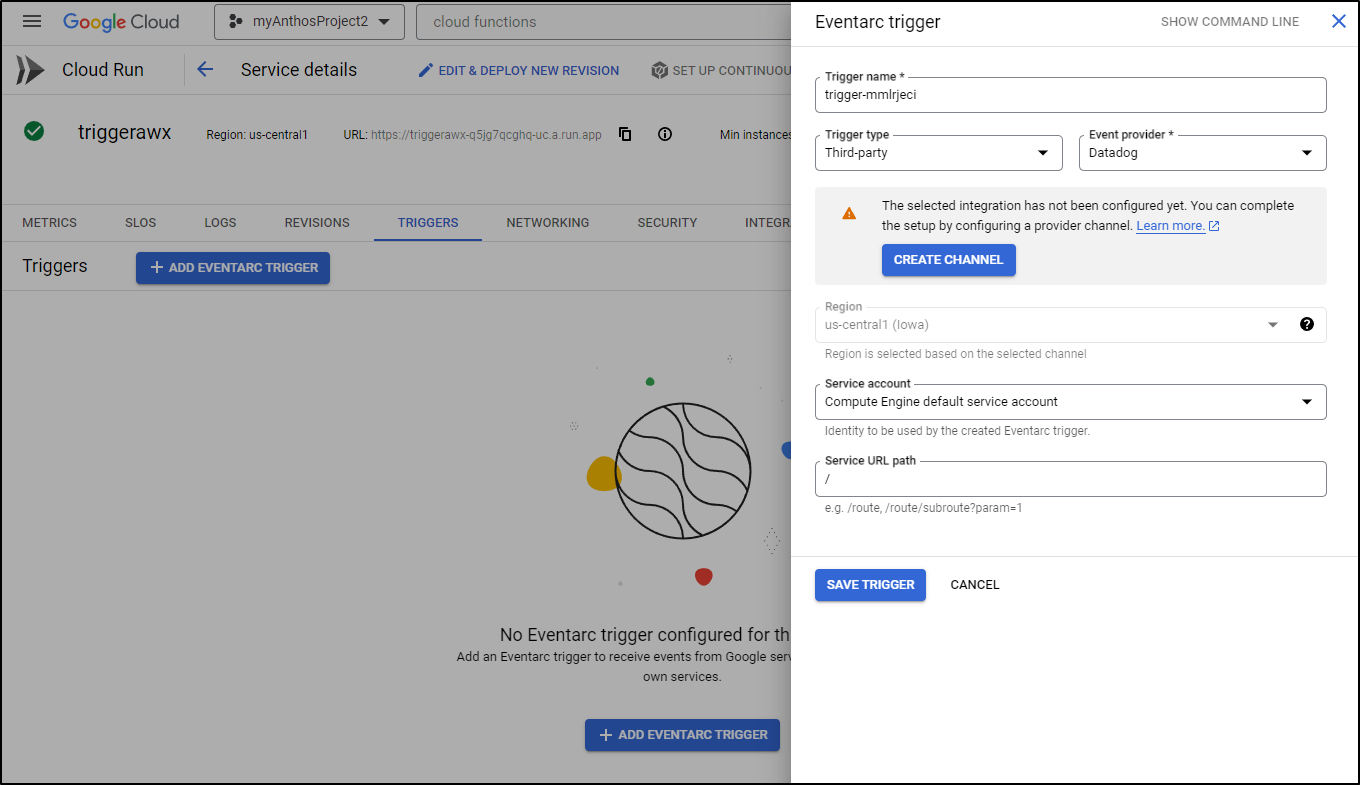

What dawned on me is that I could actually kick this Cloud Run function off via an Eventarc connection to Datadog

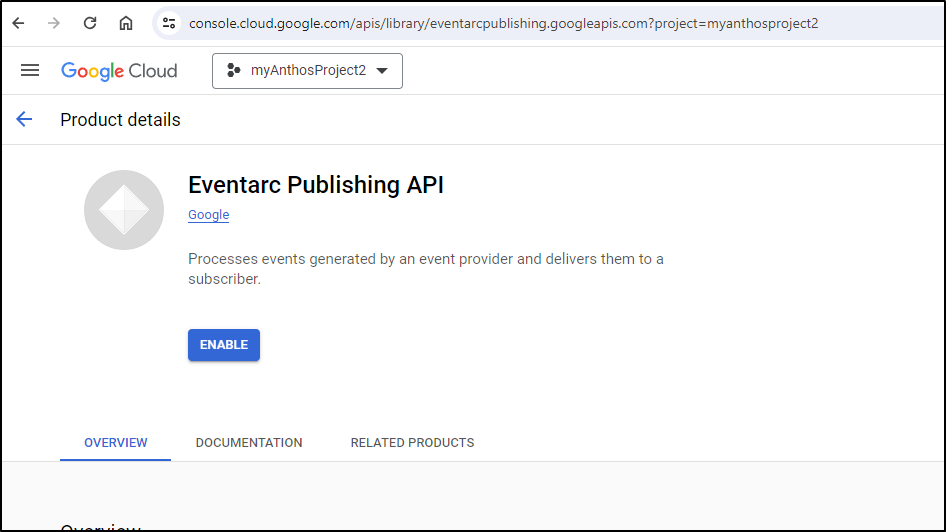

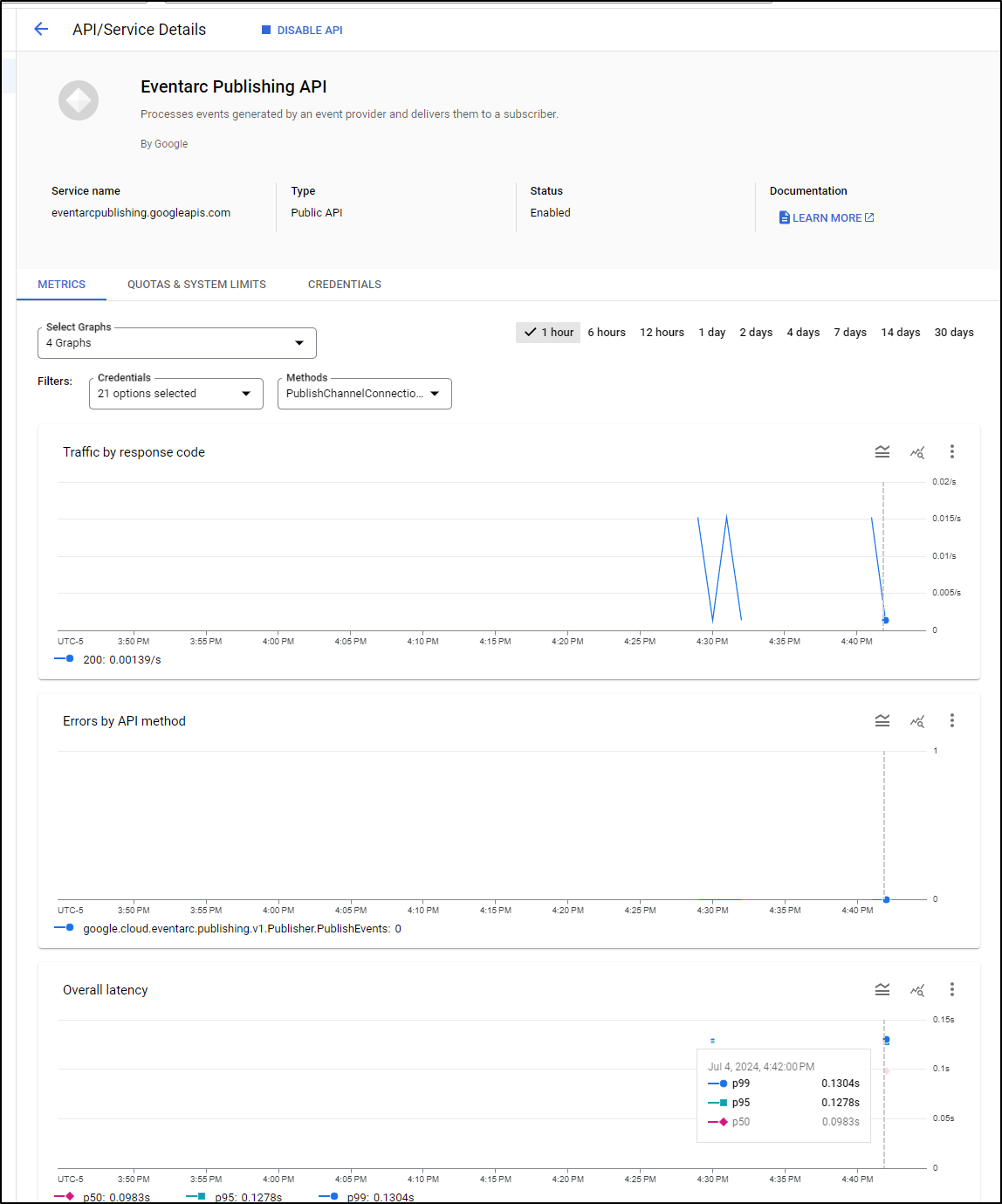

I first need to enable the Eventarc Publishing API

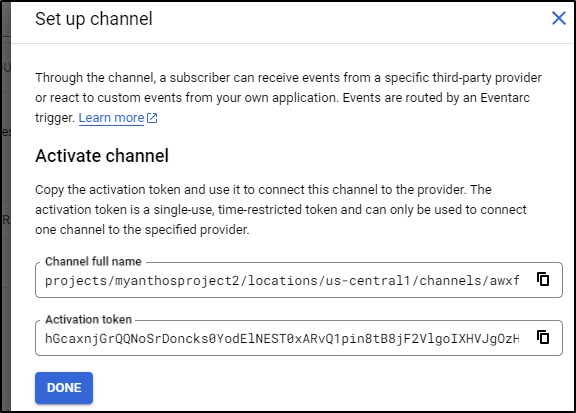

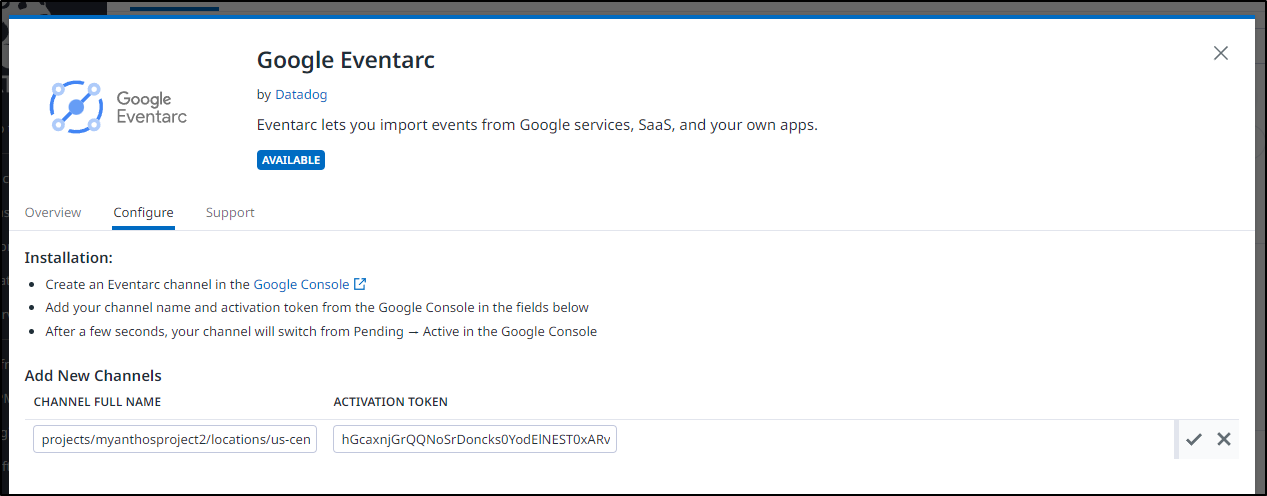

I can then create the channel, which provides me with a short-lived activation token

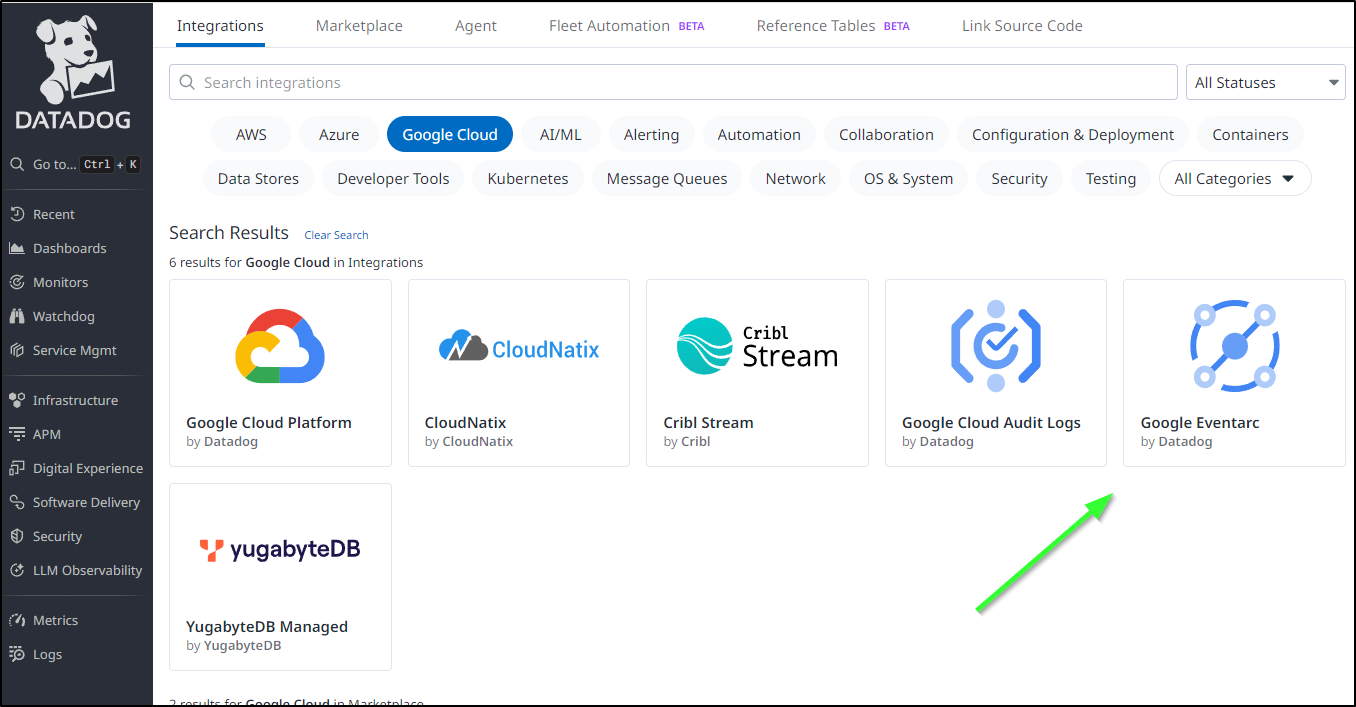

Back in Datadog, I can go to Integrations and find Eventarc

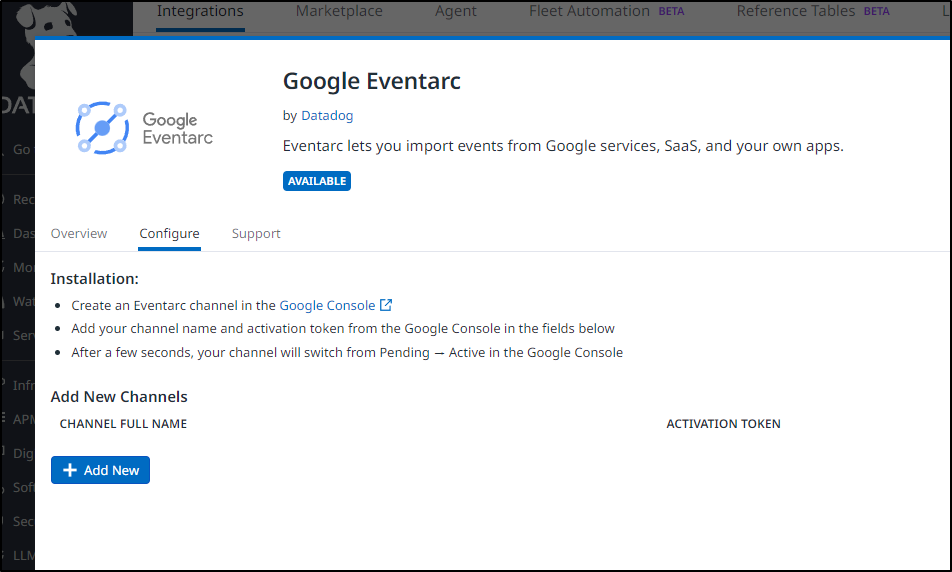

In configure, I can add a new channel

I’ll paste in the details and click the check mark

I now have an Eventarc destination I can use in Datadog

So let’s think of an example: a detected jump in AWS costs could be a good case for running an AWX Ansible playbook that empties some temp storage or powers down VMs.

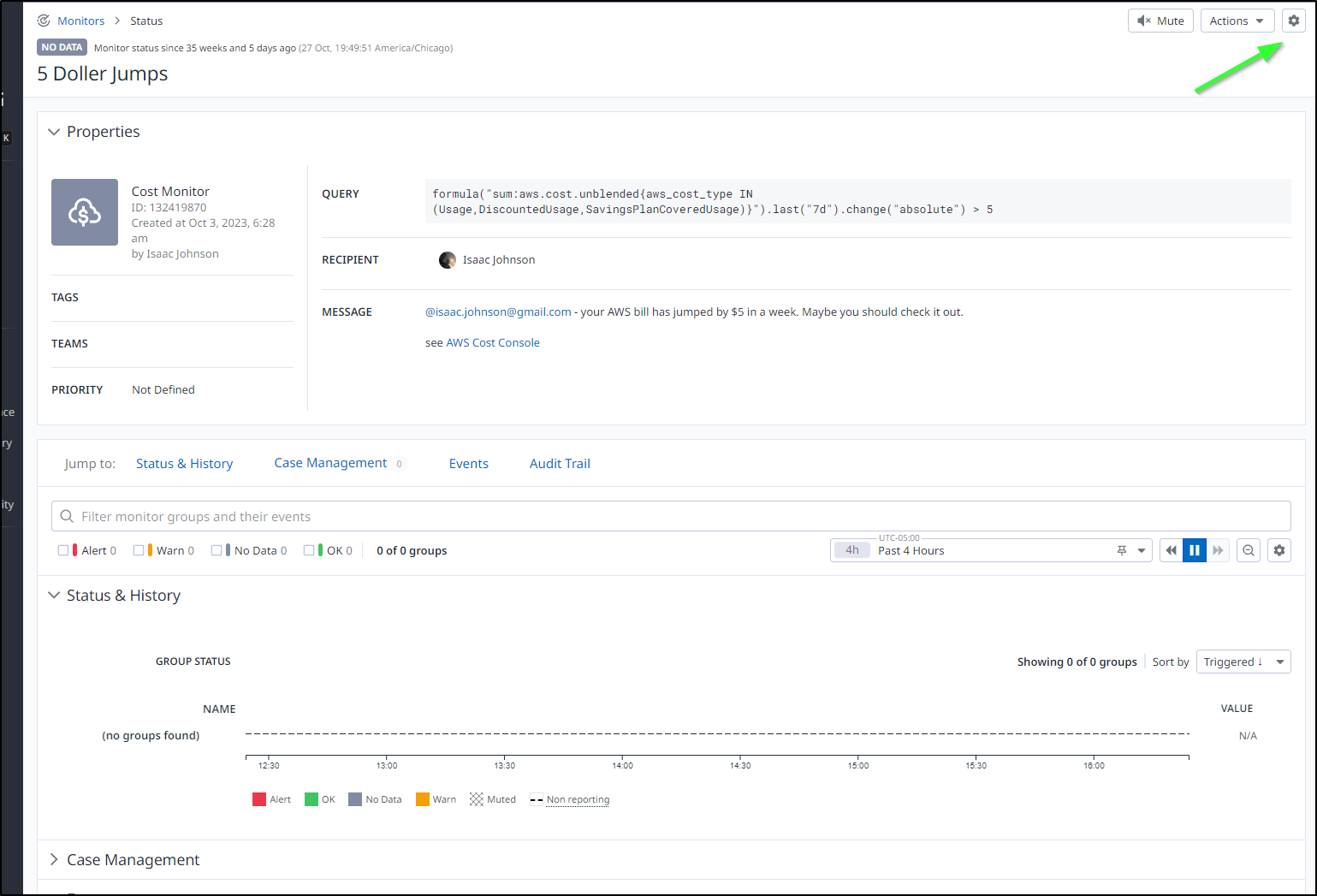

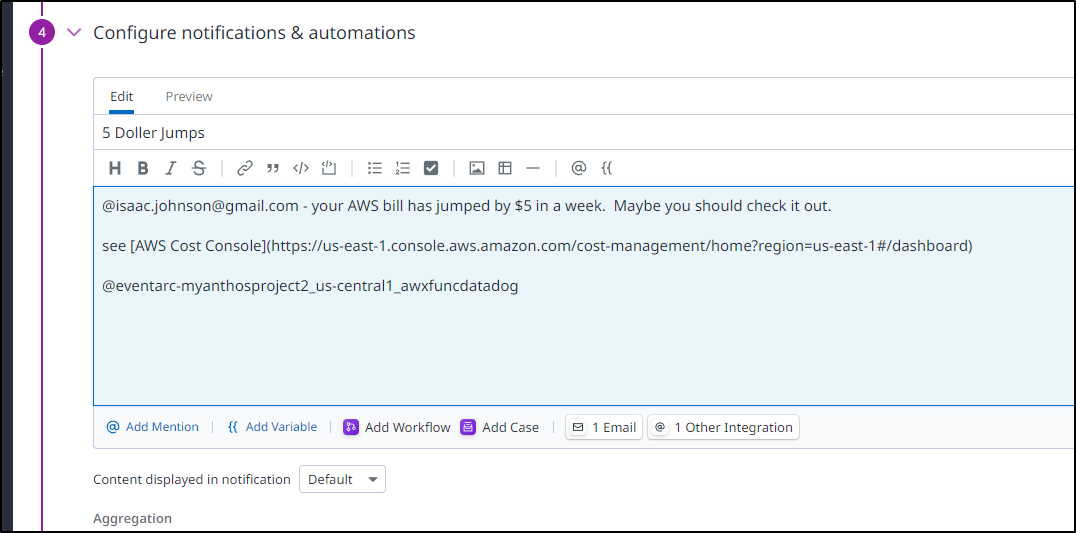

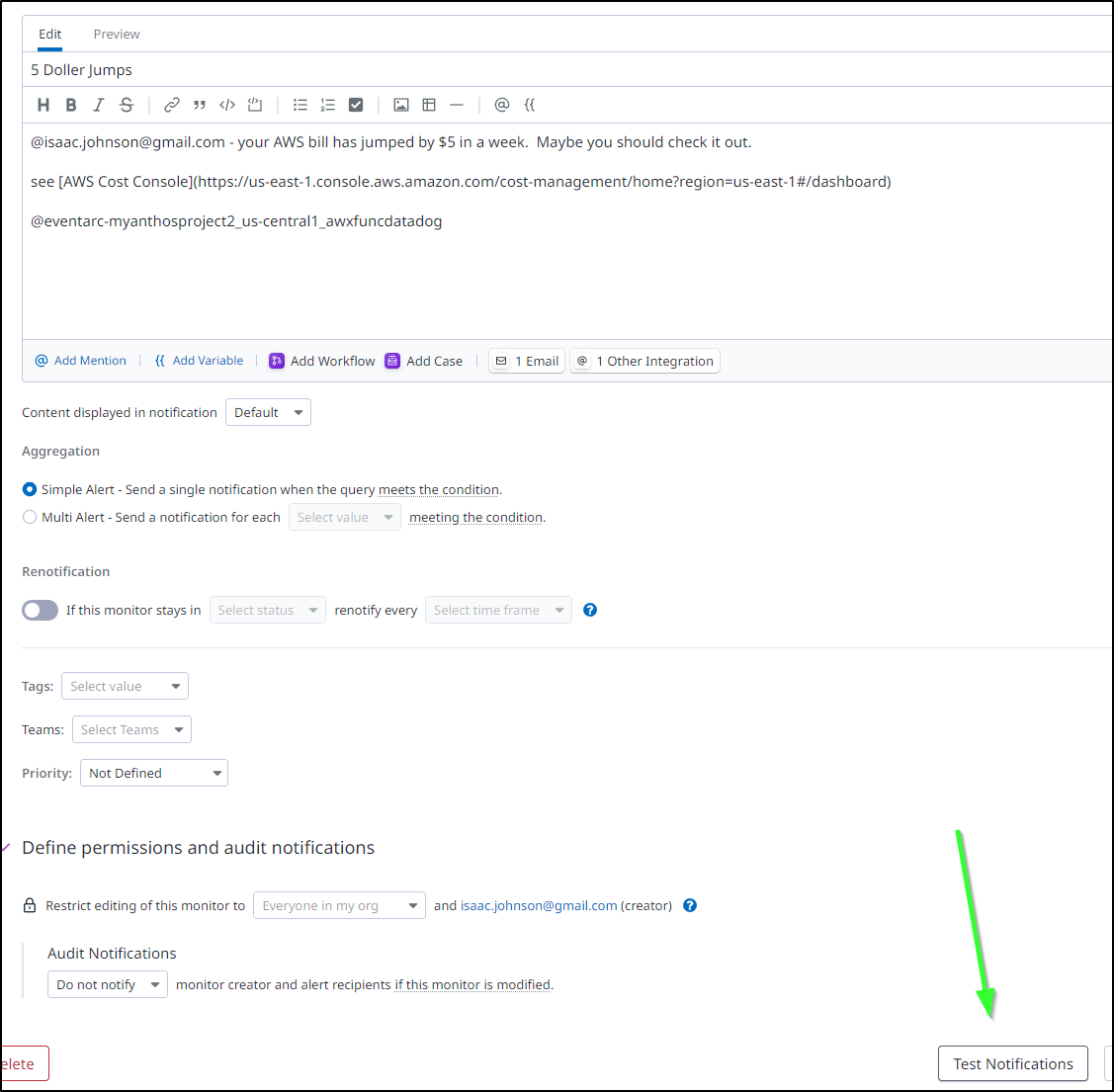

I could edit my ‘5 Dollar Jumps’ monitor

In the message block, I can then use @ to bring up the destinations which shows my GCP Eventarc channel. I’ll select it

Then use “Test Notifications” at the bottom to give it a try

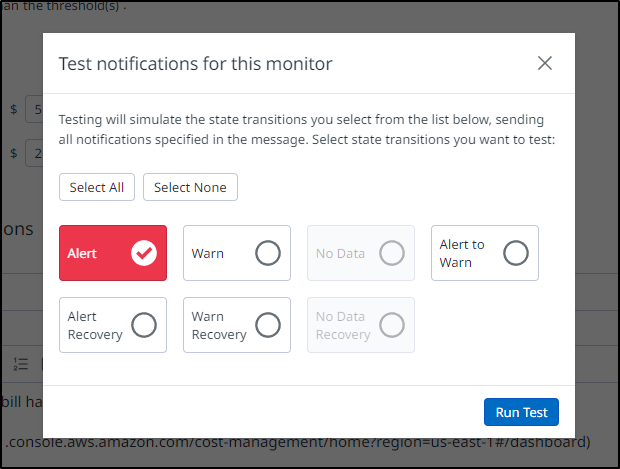

I’ll pick “Alert” and click “Run Test”

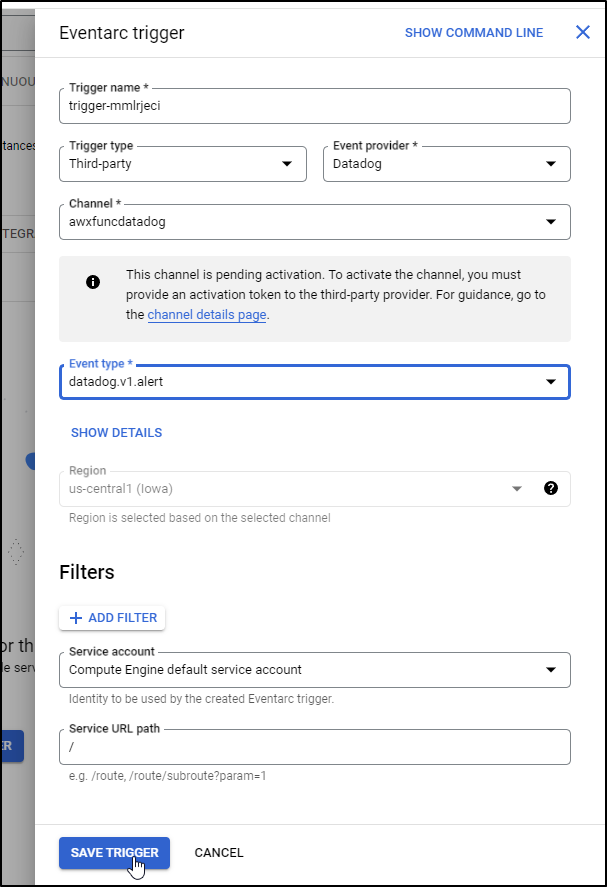

Oops - I forgot to save my Eventarc trigger, so let’s do that now. Note, we can see the ‘datadog.v1.alert’ listed as an option since I had clicked the Run Test already.

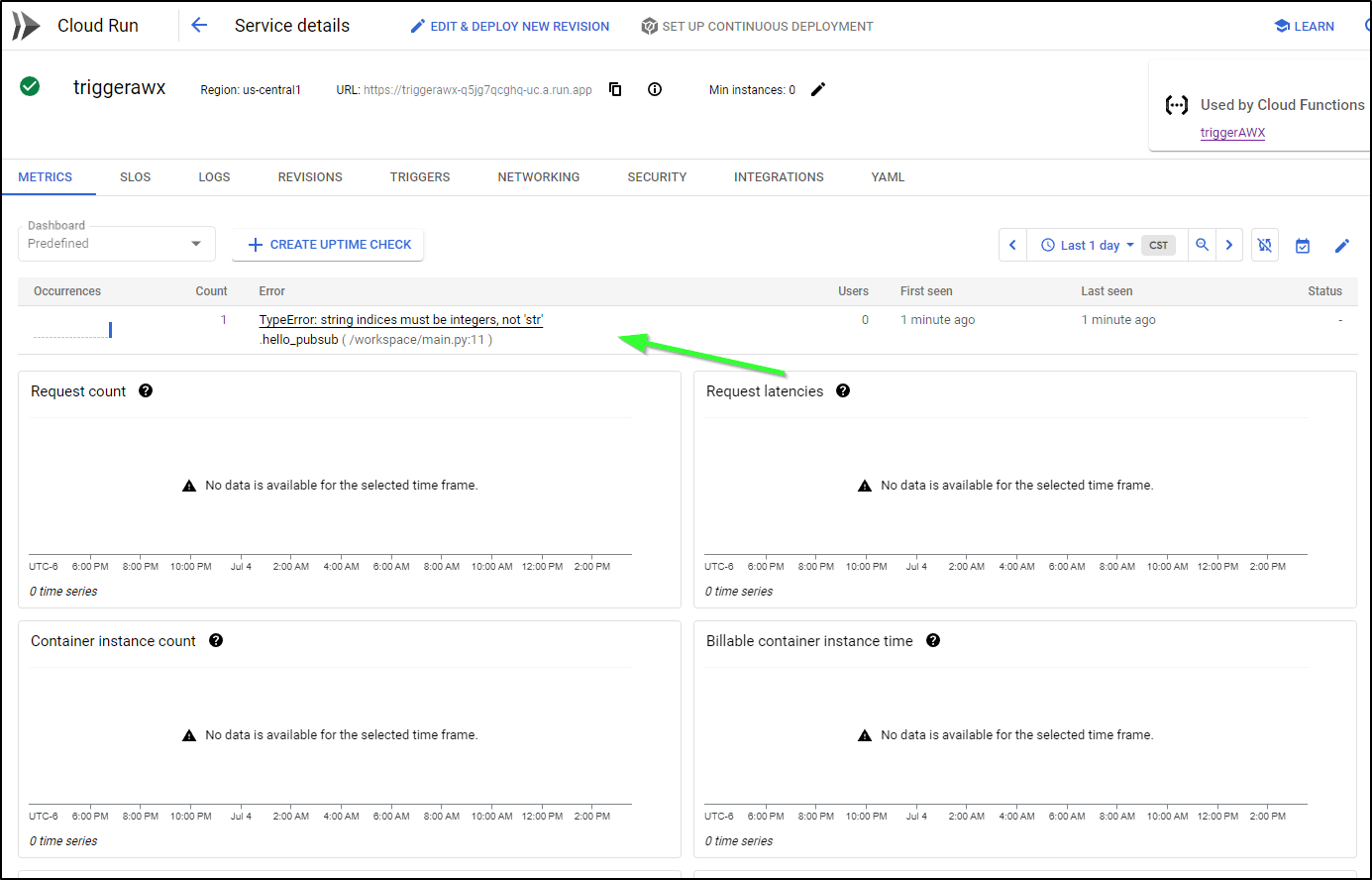

While this did invoke the function, the fact that my function expects a pubsub message meant it did not complete successfully

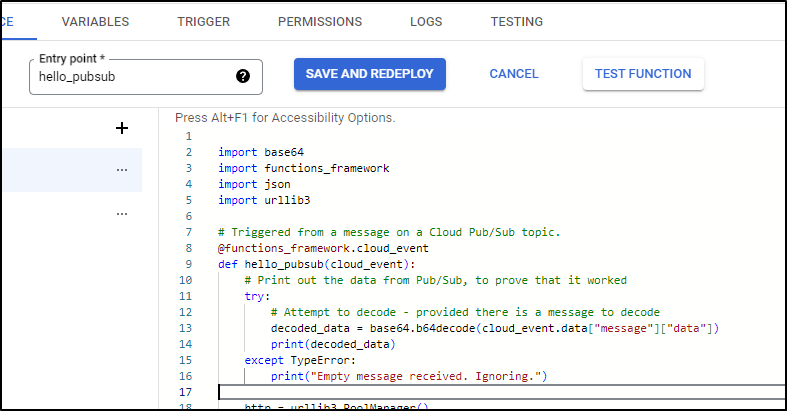

So that we can accept payloads that don’t come from Pubsub, I’ll wrap the decode step in a try/except block

    import base64
    import functions_framework
    import json
    import urllib3

    # Triggered from a message on a Cloud Pub/Sub topic.
    @functions_framework.cloud_event
    def hello_pubsub(cloud_event):
        # Print out the data from Pub/Sub, to prove that it worked
        try:
            # Attempt to decode - provided there is a message to decode
            decoded_data = base64.b64decode(cloud_event.data["message"]["data"])
            print(decoded_data)
        except TypeError:
            print("Empty message received. Ignoring.")
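One way to make the decode step tolerant of more event shapes is to pull it into a small helper. This is a sketch under the assumption that `cloud_event.data` is either a dict shaped like a Pub/Sub message (`{"message": {"data": <base64>}}`) or something else entirely; the helper name `extract_pubsub_payload` is hypothetical. Besides `TypeError`, it also catches `KeyError`, which a dict without a `message` key would raise, and `binascii.Error` for payloads that are not valid base64.

```python
import base64
import binascii


def extract_pubsub_payload(data):
    """Return the decoded Pub/Sub message bytes, or None for any other event shape."""
    try:
        return base64.b64decode(data["message"]["data"])
    except (KeyError, TypeError, binascii.Error):
        # KeyError: a dict without the expected keys
        # TypeError: data is None, a string, or otherwise not a nested dict
        # binascii.Error: the payload is not valid base64
        return None
```

In the function body, `payload = extract_pubsub_payload(cloud_event.data)` could then be followed by a simple `if payload is None:` branch before carrying on to the AWX call.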

Updating will build and publish

I can do a live test to see that indeed it calls the function and it handles empty messages:

After I stopped recording, I did check and it invoked the AWX job

I can also see invocations in the metrics for Eventarc

Azure EventHub to Function to AWX

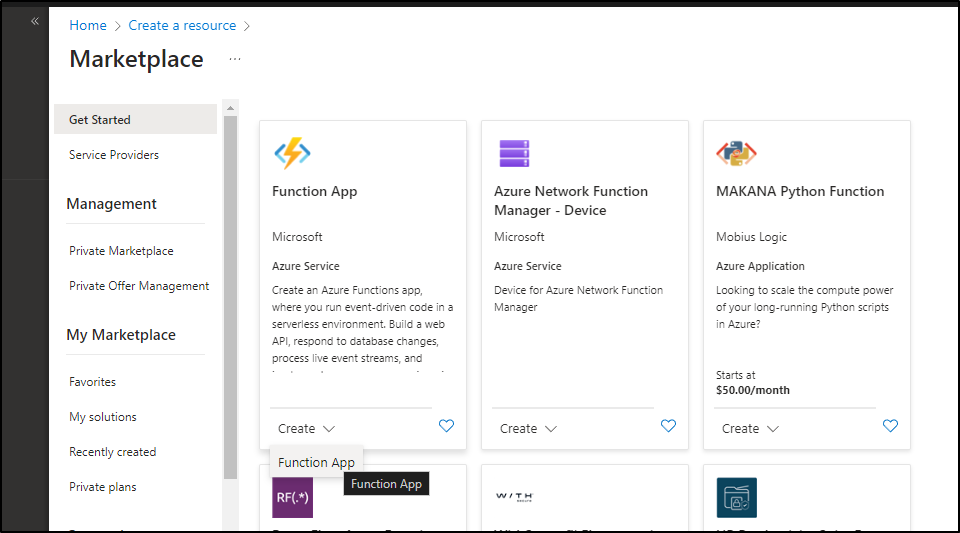

Similar to GCP, we could use Azure to accomplish the same thing using Azure Event Hubs to trigger an Azure Function that could call AWX

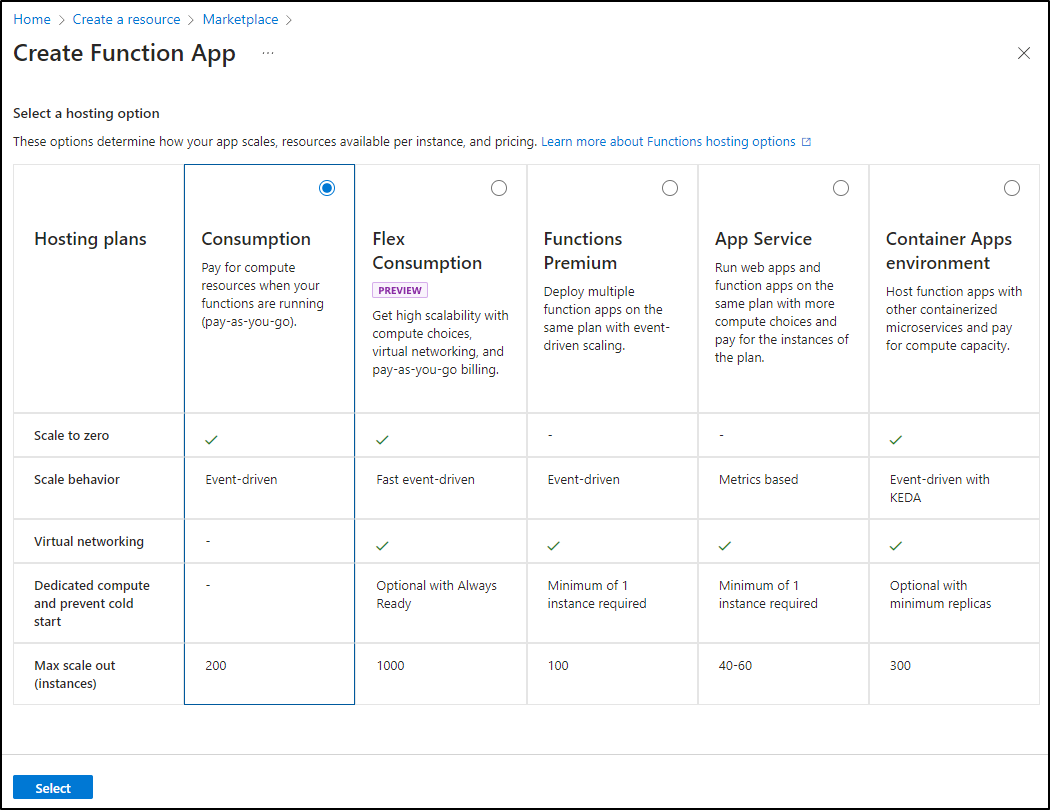

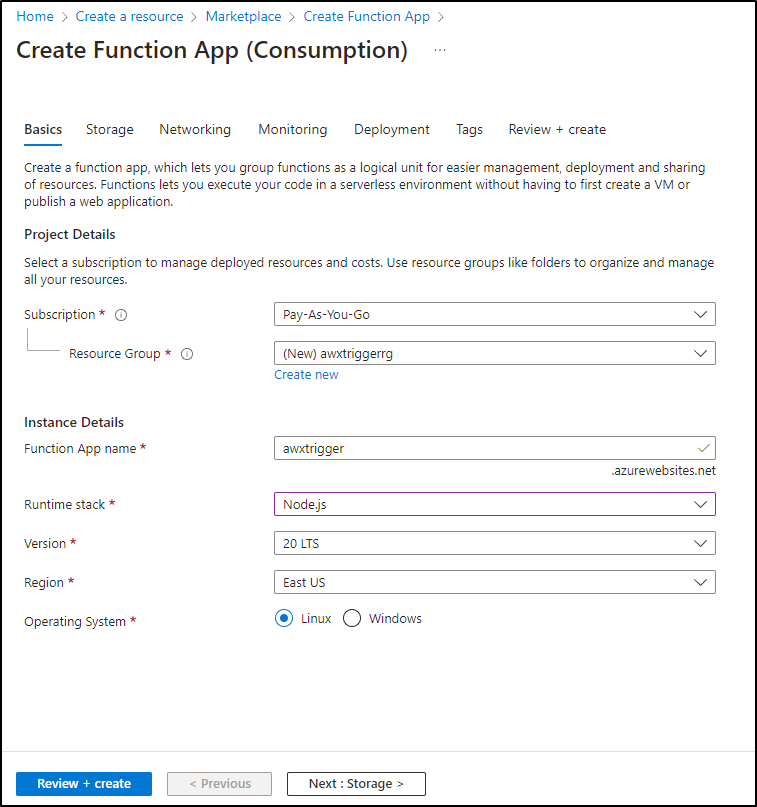

I could easily see going two routes on this - either Consumption based or using a KEDA based Container Apps environment. I’ll start simple and use the Consumption plan

I already showed examples in Python so let’s use NodeJS this time. I’ll also create a Resource Group to make costing easier to manage (and cleanup if I desire)

The next real choice is about public access: whether I want this function reachable from anywhere or just from inside Azure. For the moment, I’ll enable public access (which is the default)

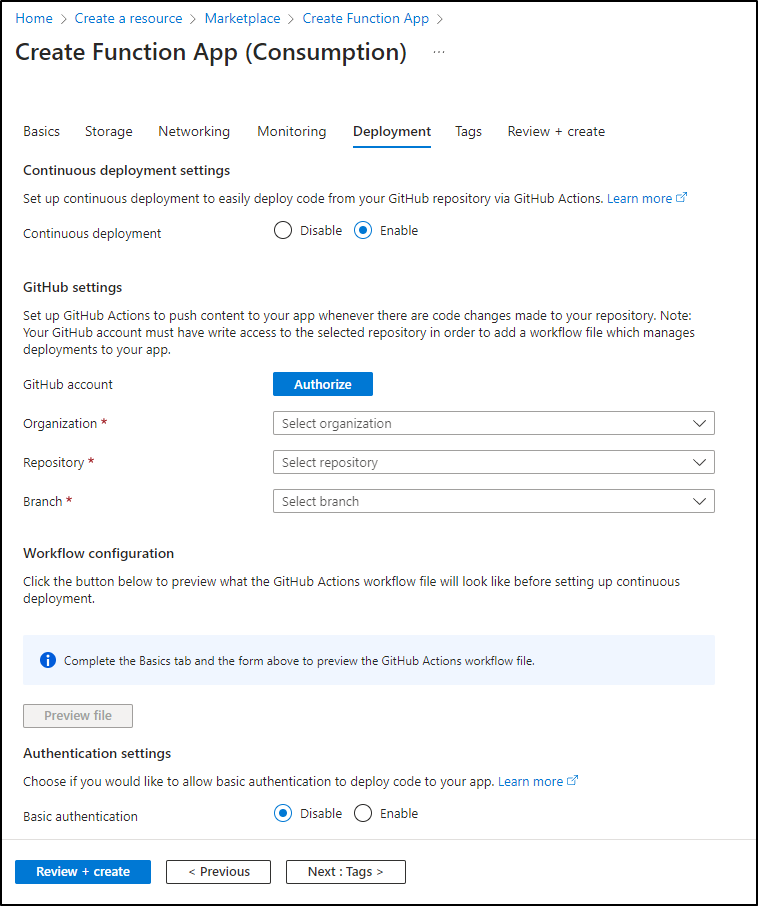

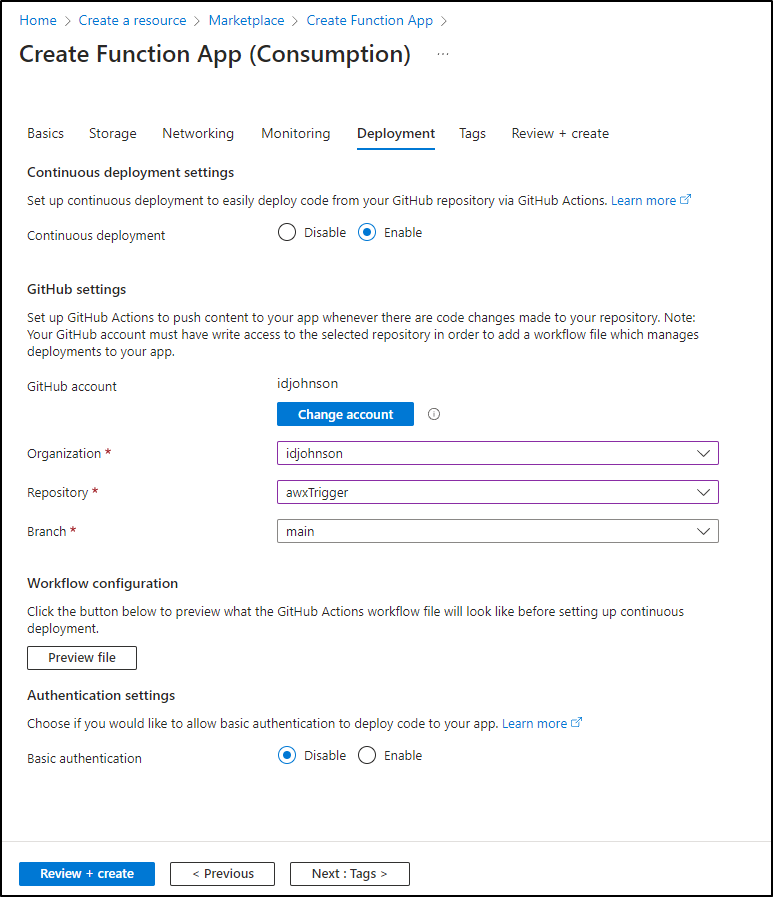

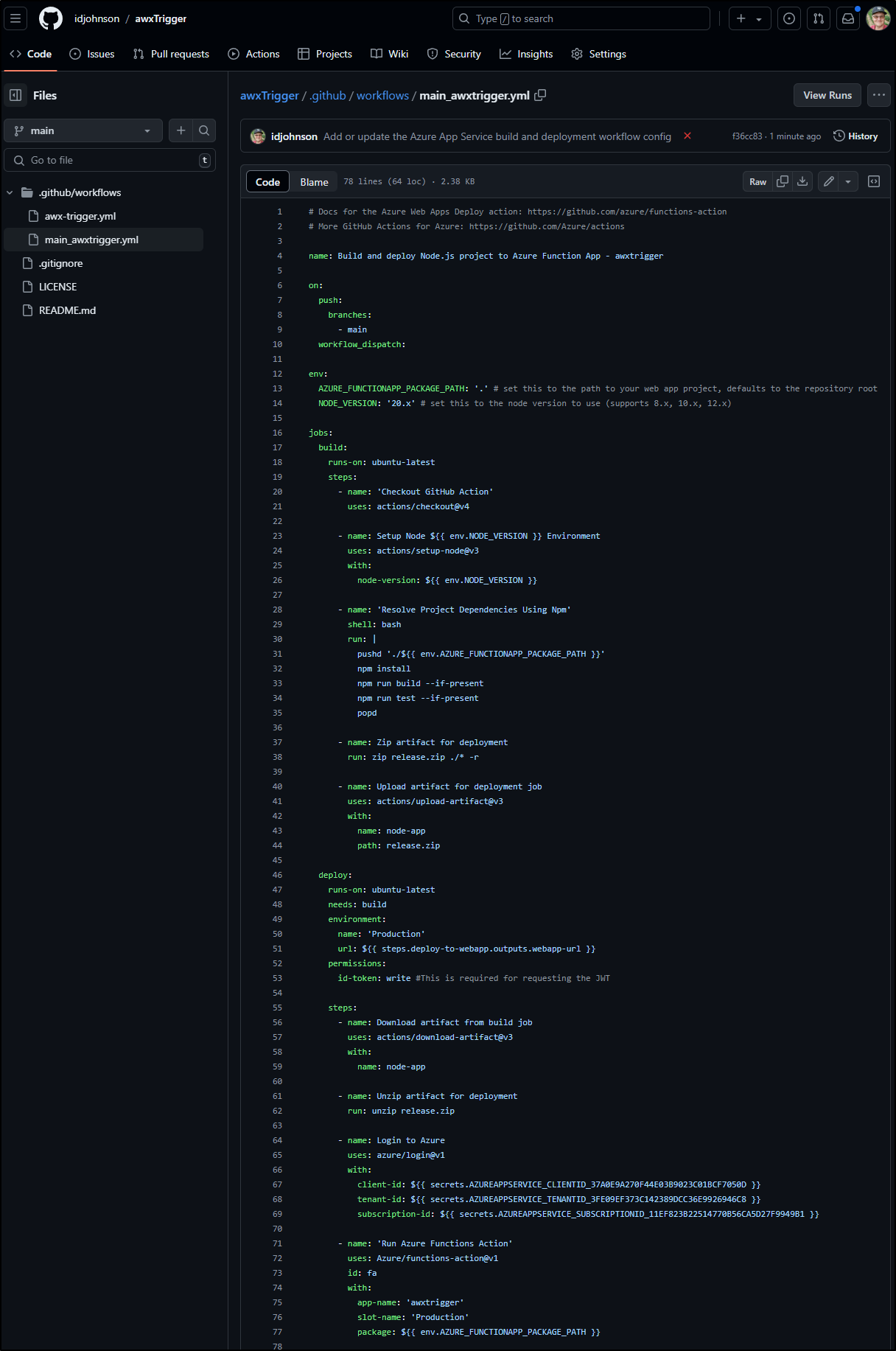

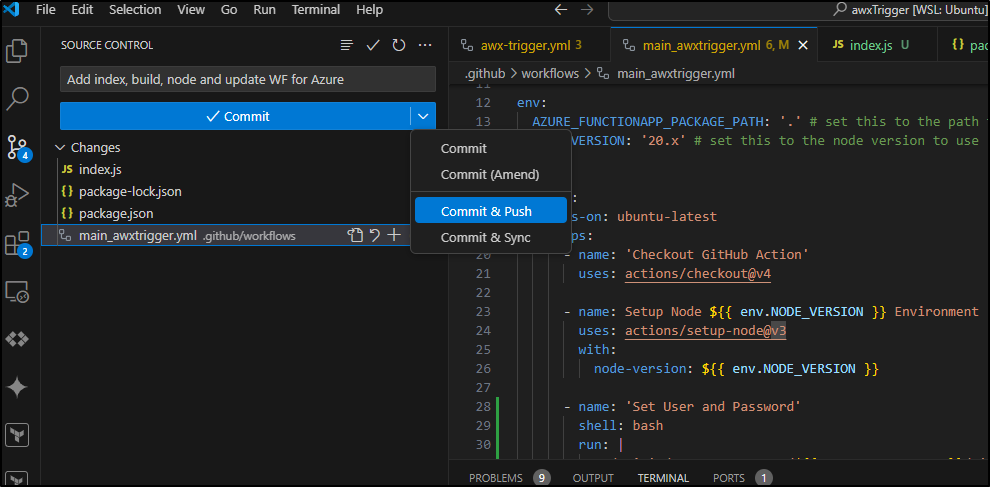

Next, we have the choice of CICD via Github. I generally like to build and push so I’m going to head this route and Authorize to Github

I’ll opt to put it in the same public AWXTrigger repo we used earlier for GH Workflow driven updates

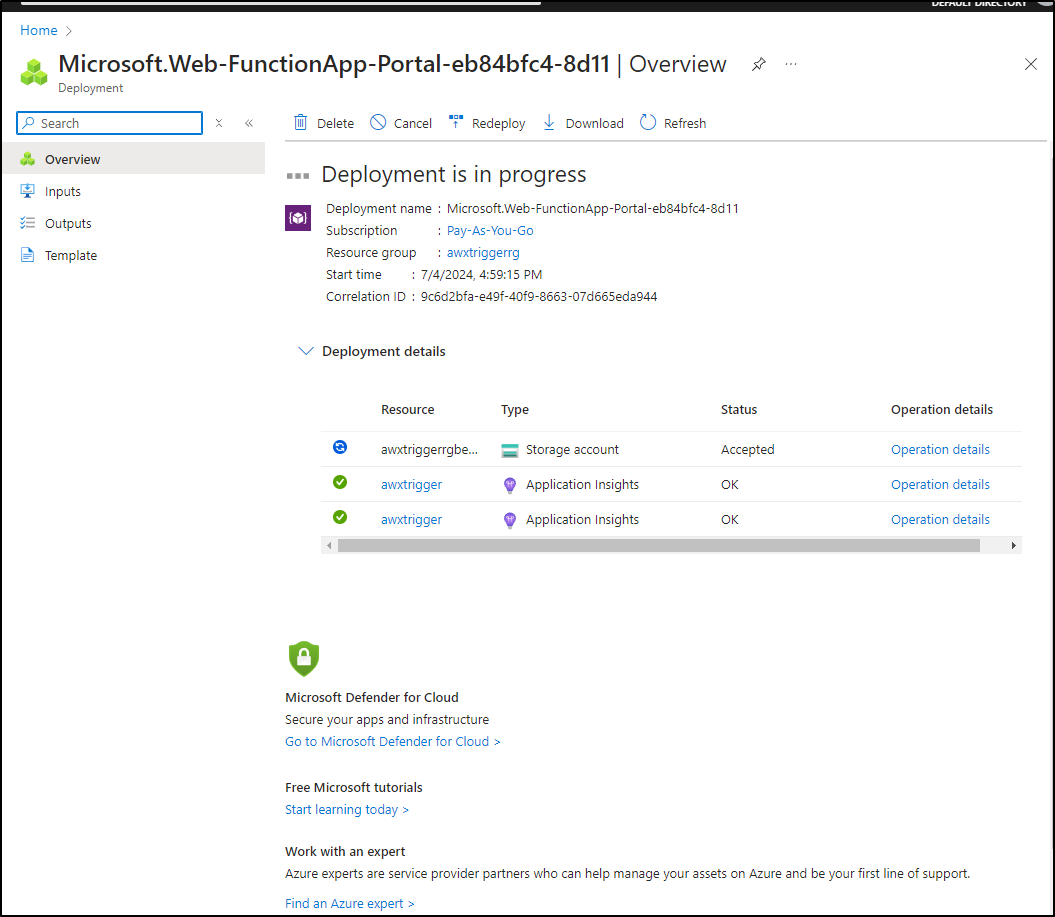

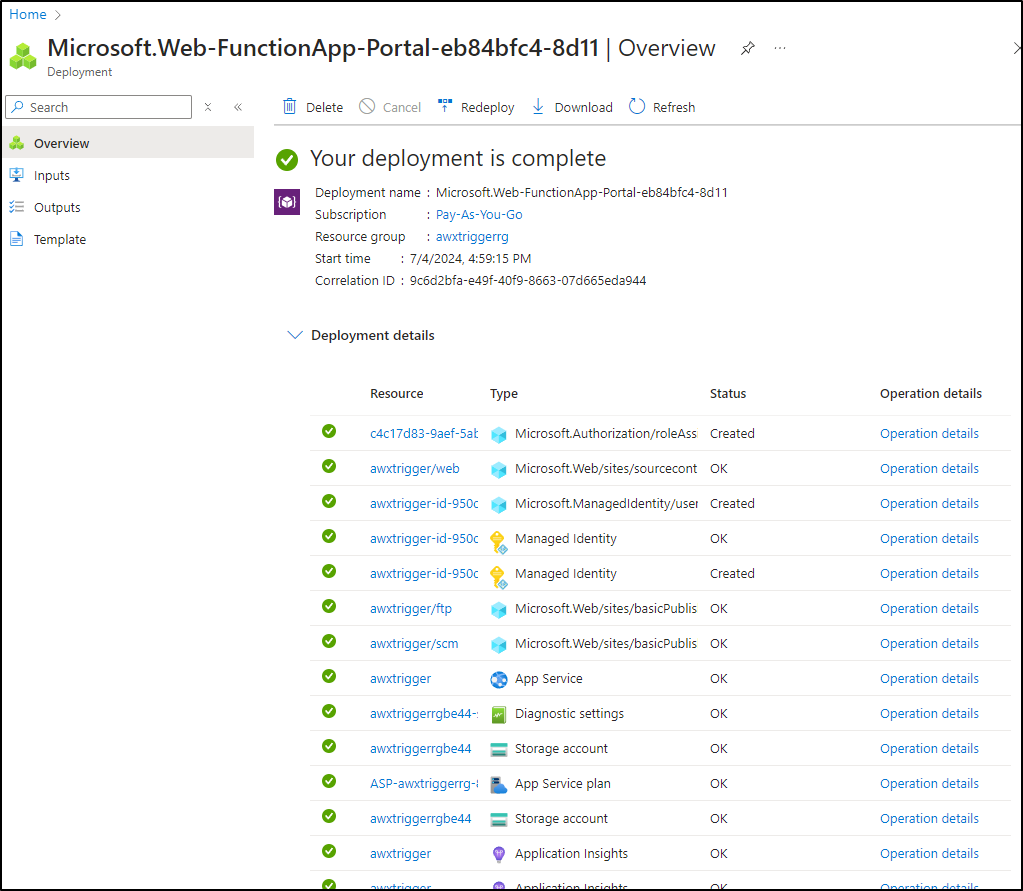

Azure will now kick in and create the storage account, App Service plan, and App Insights (monitoring)

I should note that as it goes along, we’ll see the Resource list populate with each item

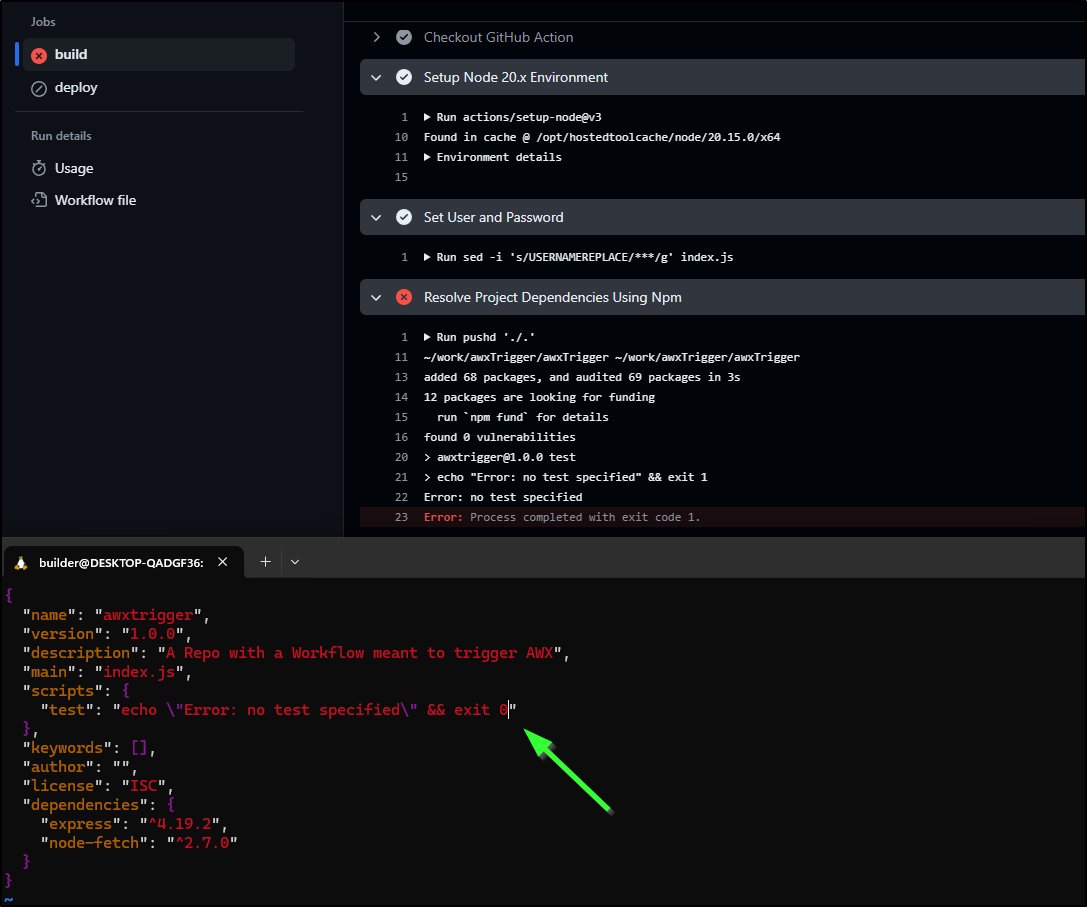

The only thing added to my repo was a basic NPM build workflow file, nothing more

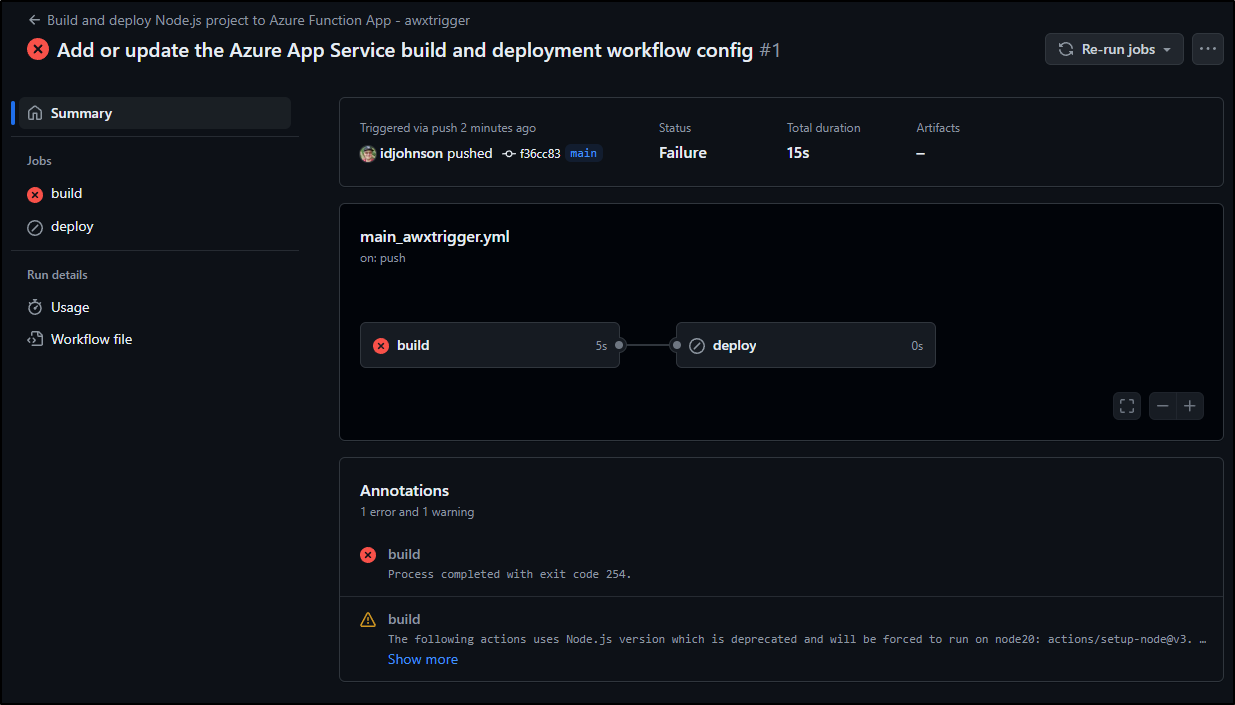

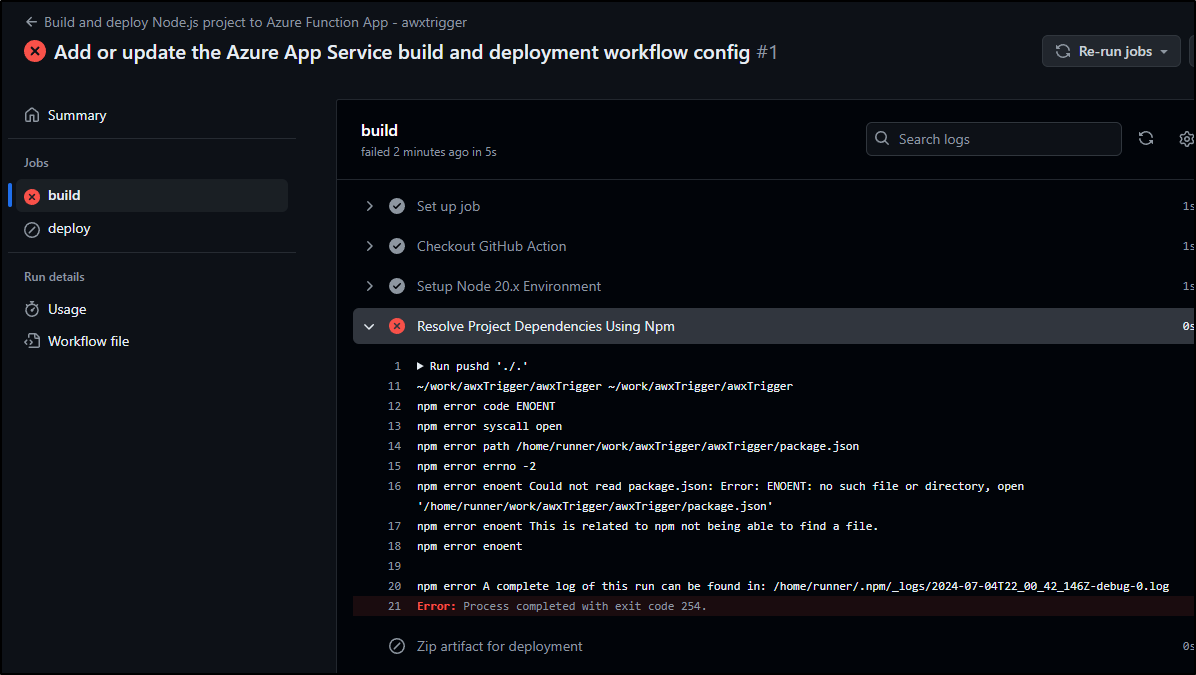

This, of course, fails as there exists no NodeJS code as of yet

I’ll now pull the changes locally and update my local env to Node 20.

builder@DESKTOP-QADGF36:~/Workspaces/awxTrigger$ git pull

remote: Enumerating objects: 8, done.

remote: Counting objects: 100% (8/8), done.

remote: Compressing objects: 100% (4/4), done.

remote: Total 5 (delta 0), reused 0 (delta 0), pack-reused 0

Unpacking objects: 100% (5/5), 1.46 KiB | 497.00 KiB/s, done.

From https://github.com/idjohnson/awxTrigger

f74f014..f36cc83 main -> origin/main

Updating f74f014..f36cc83

Fast-forward

.github/workflows/main_awxtrigger.yml | 78 ++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

1 file changed, 78 insertions(+)

create mode 100644 .github/workflows/main_awxtrigger.yml

builder@DESKTOP-QADGF36:~/Workspaces/awxTrigger$ nvm list

-> v10.22.1

v12.22.11

v14.18.1

v16.14.2

v17.6.0

v18.17.1

v18.18.2

default -> 10.22.1 (-> v10.22.1)

iojs -> N/A (default)

unstable -> N/A (default)

node -> stable (-> v18.18.2) (default)

stable -> 18.18 (-> v18.18.2) (default)

lts/* -> lts/iron (-> N/A)

lts/argon -> v4.9.1 (-> N/A)

lts/boron -> v6.17.1 (-> N/A)

lts/carbon -> v8.17.0 (-> N/A)

lts/dubnium -> v10.24.1 (-> N/A)

lts/erbium -> v12.22.12 (-> N/A)

lts/fermium -> v14.21.3 (-> N/A)

lts/gallium -> v16.20.2 (-> N/A)

lts/hydrogen -> v18.18.2

lts/iron -> v20.9.0 (-> N/A)

builder@DESKTOP-QADGF36:~/Workspaces/awxTrigger$ nvm install lst/iron

Version 'lst/iron' not found - try `nvm ls-remote` to browse available versions.

builder@DESKTOP-QADGF36:~/Workspaces/awxTrigger$ nvm install 20.9.0

Downloading and installing node v20.9.0...

Downloading https://nodejs.org/dist/v20.9.0/node-v20.9.0-linux-x64.tar.xz...

###################################################################################################################################### 100.0%

Computing checksum with sha256sum

Checksums matched!

Now using node v20.9.0 (npm v10.1.0)

builder@DESKTOP-QADGF36:~/Workspaces/awxTrigger$ nvm use 20.9.0

Now using node v20.9.0 (npm v10.1.0)

I just need to now build out the NodeJS app.

First I’ll create the npm structure

builder@DESKTOP-QADGF36:~/Workspaces/awxTrigger$ npm init -y

Wrote to /home/builder/Workspaces/awxTrigger/package.json:

{

"name": "awxtrigger",

"version": "1.0.0",

"description": "A Repo with a Workflow meant to trigger AWX",

"main": "index.js",

"scripts": {

"test": "echo \"Error: no test specified\" && exit 1"

},

"keywords": [],

"author": "",

"license": "ISC"

}

and add express and fetch

builder@DESKTOP-QADGF36:~/Workspaces/awxTrigger$ npm install --save express node-fetch

added 70 packages, and audited 71 packages in 2s

15 packages are looking for funding

run `npm fund` for details

found 0 vulnerabilities

npm notice

npm notice New minor version of npm available! 10.1.0 -> 10.8.1

npm notice Changelog: https://github.com/npm/cli/releases/tag/v10.8.1

npm notice Run npm install -g npm@10.8.1 to update!

npm notice

I could just use the plain text user and password:

$ cat index.js


const express = require('express');

const fetch = require('node-fetch');

const app = express();

const PORT = 8080;

// Middleware to parse JSON body

app.use(express.json());

// Route handler for POST requests

app.post('/', async (req, res) => {

try {

// Replace with your actual username and password

const username = 'someuser';

const password = 'somepassword';

// Make a POST request to the AWX job template launch endpoint

const response = await fetch('https://awx.freshbrewed.science/api/v2/job_templates/11/launch/', {

method: 'POST',

headers: {

'Content-Type': 'application/json',

},

body: JSON.stringify({ username, password }),

});

// Get the response status code

const statusCode = response.status;

// Send the status code as the response

res.status(statusCode).send(`AWX Response code: ${statusCode}`);

} catch (error) {

console.error('Error:', error.message);

res.status(500).send('Internal server error');

}

});

// Start the server

app.listen(PORT, () => {

console.log(`AWX Trigger server listening on port ${PORT}`);

});

But then the plain password would be saved in a public Github repo which would be very boneheaded of me.

Instead, I’ll put in a placeholder for USERNAME and PASSWORD

$ cat index.js

const express = require('express');

const fetch = require('node-fetch');

const app = express();

const PORT = 8080;

// Middleware to parse JSON body

app.use(express.json());

// Route handler for GET requests

app.get('/', async (req, res) => {

try {

// Replace with your actual username and password

const username = 'USERNAMEREPLACE';

const password = 'PASSWORDREPLACE';

// Make a POST request to the AWX job template launch endpoint

const response = await fetch('https://awx.freshbrewed.science/api/v2/job_templates/11/launch/', {

method: 'POST',

headers: {

'Content-Type': 'application/json',

},

body: JSON.stringify({ username, password }),

});

// Get the response status code

const statusCode = response.status;

// Send the status code as the response

res.status(statusCode).send(`AWX Response code: ${statusCode}`);

} catch (error) {

console.error('Error:', error.message);

res.status(500).send('Internal server error');

}

});

// Start the server

app.listen(PORT, () => {

console.log(`AWX Trigger server listening on port ${PORT}`);

});

Then I added a step to replace the user and password inline at build time

    jobs:
      build:
        runs-on: ubuntu-latest
        steps:
          - name: 'Checkout GitHub Action'
            uses: actions/checkout@v4

          - name: Setup Node $ Environment
            uses: actions/setup-node@v3
            with:
              node-version: $

          - name: 'Set User and Password'
            shell: bash
            run: |
              sed -i 's/USERNAMEREPLACE/$/g' index.js
              sed -i 's/PASSWORDREPLACE/$/g' index.js
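One caveat with a `sed` substitution like this is that it can misbehave if the injected value contains characters that are special to `sed`, such as `/` or `&`. As a hedged alternative, the same placeholder swap can be sketched in Python, which treats the values as literal strings. The function name `inject_credentials` and the sample values are hypothetical, and the sketch does not escape quote characters, so a value containing `'` would still break the JavaScript string literal.

```python
def inject_credentials(source, username, password):
    """Replace the USERNAMEREPLACE / PASSWORDREPLACE placeholders literally."""
    return (
        source.replace("USERNAMEREPLACE", username)
              .replace("PASSWORDREPLACE", password)
    )


# Example with made-up values; note the "/" and "&" survive untouched
snippet = "const username = 'USERNAMEREPLACE';\nconst password = 'PASSWORDREPLACE';"
print(inject_credentials(snippet, "rundeck", "p/ss&word"))
```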

In testing, I ran into some issues with node-fetch that required me to downgrade to the prior major version (v2). This is likely because node-fetch v3 is ESM-only and cannot be loaded with `require`.

builder@DESKTOP-QADGF36:~/Workspaces/awxTrigger$ npm install --save node-fetch@2

added 3 packages, removed 5 packages, changed 1 package, and audited 69 packages in 812ms

12 packages are looking for funding

run `npm fund` for details

found 0 vulnerabilities

But now I can run the express app

builder@DESKTOP-QADGF36:~/Workspaces/awxTrigger$ node index.js

AWX Trigger server listening on port 8080

I get a 401 (unauthorized) as I have the boilerplate creds in there

I’ll push the whole lot up to Github

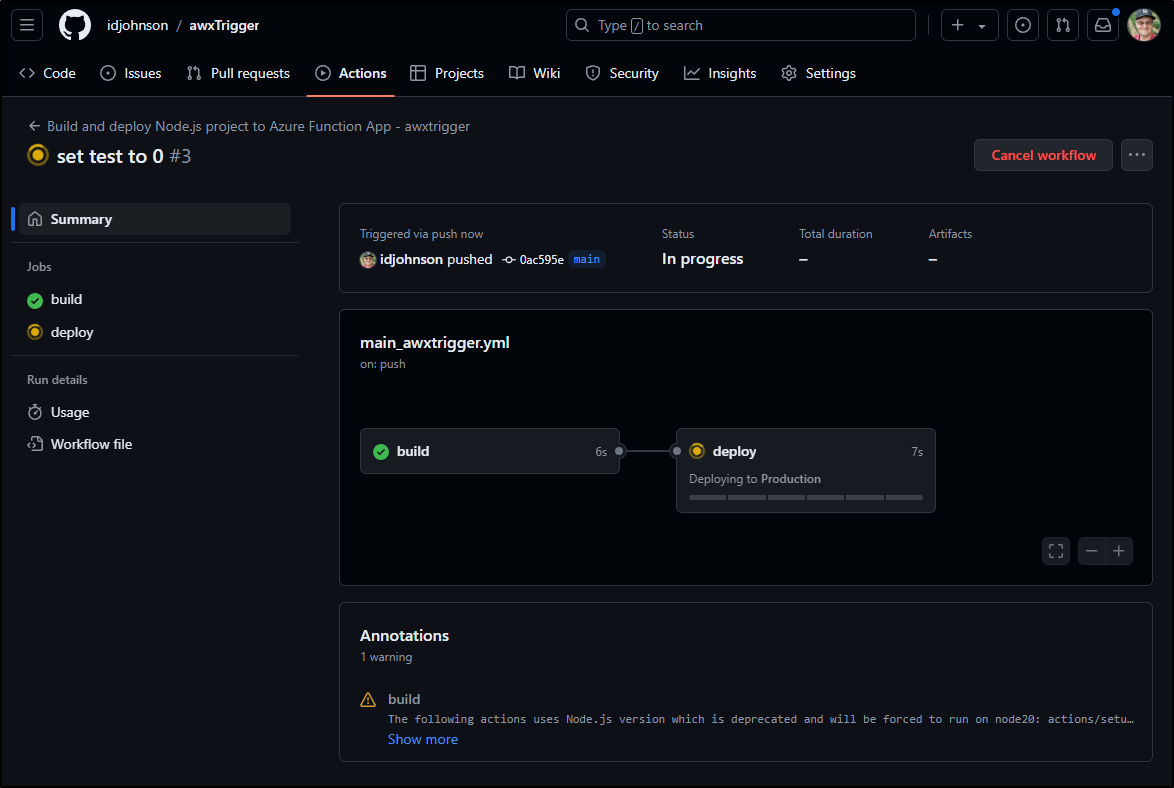

It only failed because the default npm test script exits with “1”. I’ll quickly change that to 0

We now pass the build stage and are moving on to Production deploys

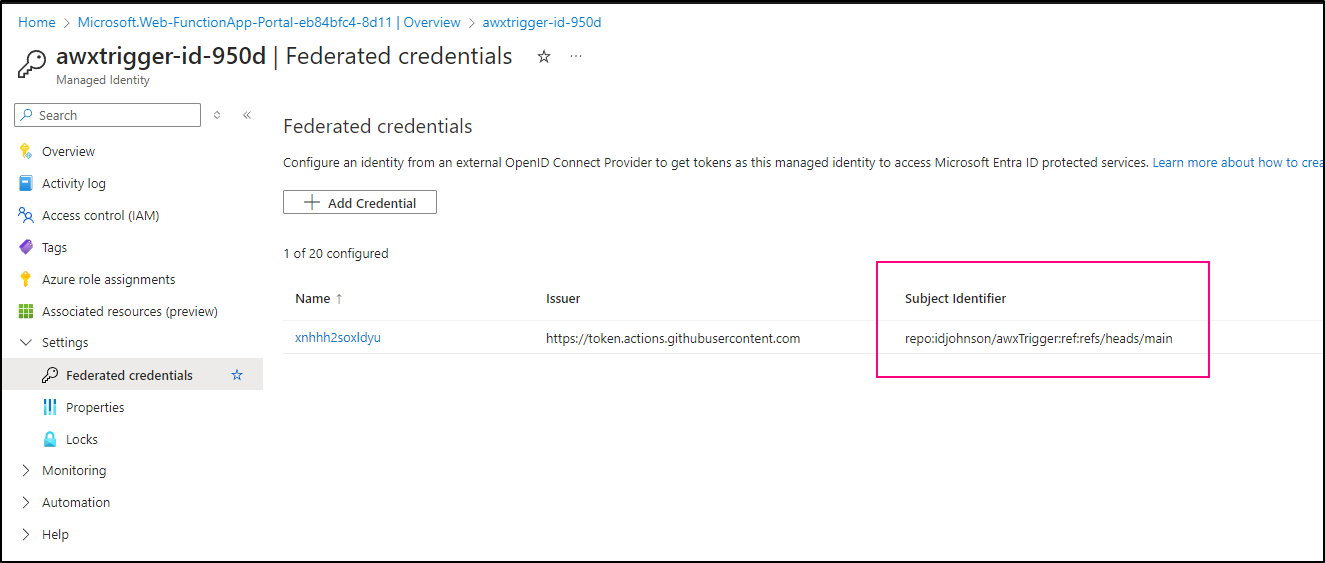

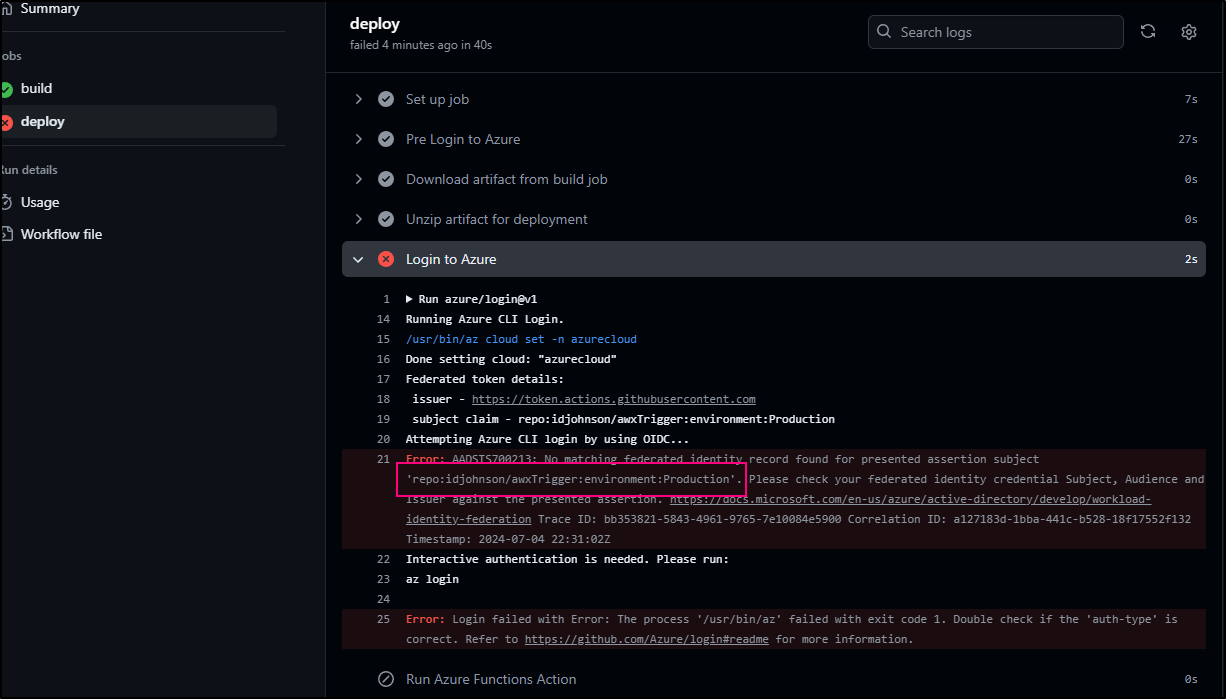

As onboarded, the setup Azure generated has a bit of a mistake (or limitation) in its OIDC configuration.

The Subject Identifier in the OIDC Federated Credential profile was scoped to just ‘refs/heads/main’

Whereas our build is attempting to use ‘environment:Production’
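For context, the subject claim that GitHub places in its OIDC token follows a fixed format, and the federated credential in Azure has to match it exactly. A small sketch of the two shapes involved here (the helper name `github_oidc_subject` is hypothetical; the repo name is taken from the `git pull` output above):

```python
def github_oidc_subject(repo, *, branch=None, environment=None):
    """Build the GitHub Actions OIDC subject claim for a branch or an environment.

    When a job references an environment, GitHub uses the environment
    form of the subject rather than the branch form.
    """
    if environment is not None:
        return f"repo:{repo}:environment:{environment}"
    if branch is not None:
        return f"repo:{repo}:ref:refs/heads/{branch}"
    raise ValueError("either branch or environment is required")


# What the federated credential was scoped to
print(github_oidc_subject("idjohnson/awxTrigger", branch="main"))
# What the deploy job presents
print(github_oidc_subject("idjohnson/awxTrigger", environment="Production"))
```

Because the two strings differ, a credential scoped only to the branch form will not match a job that runs in the Production environment.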

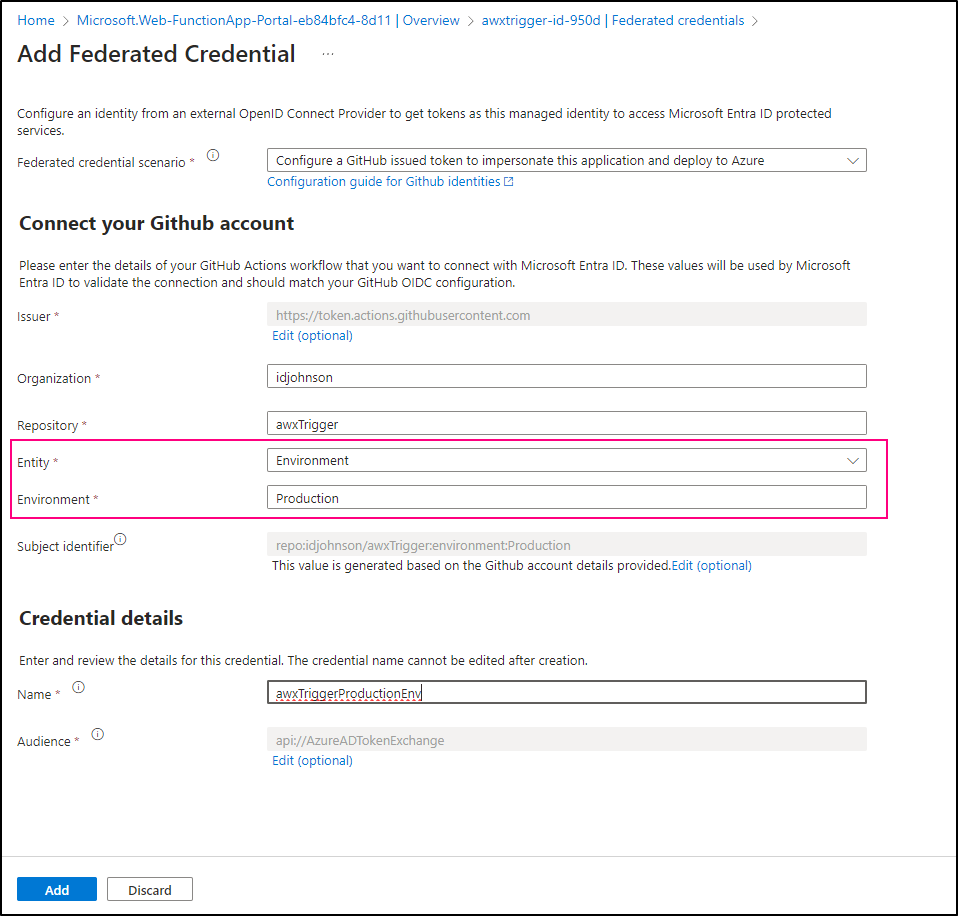

I should be able to add that Entity to the federated credential

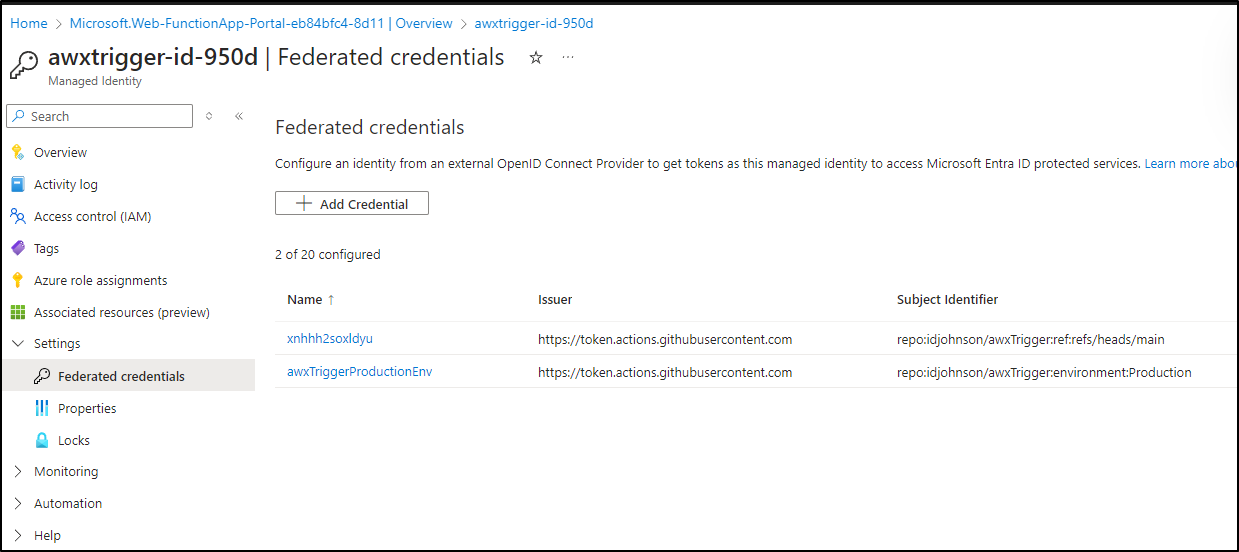

Now we see both

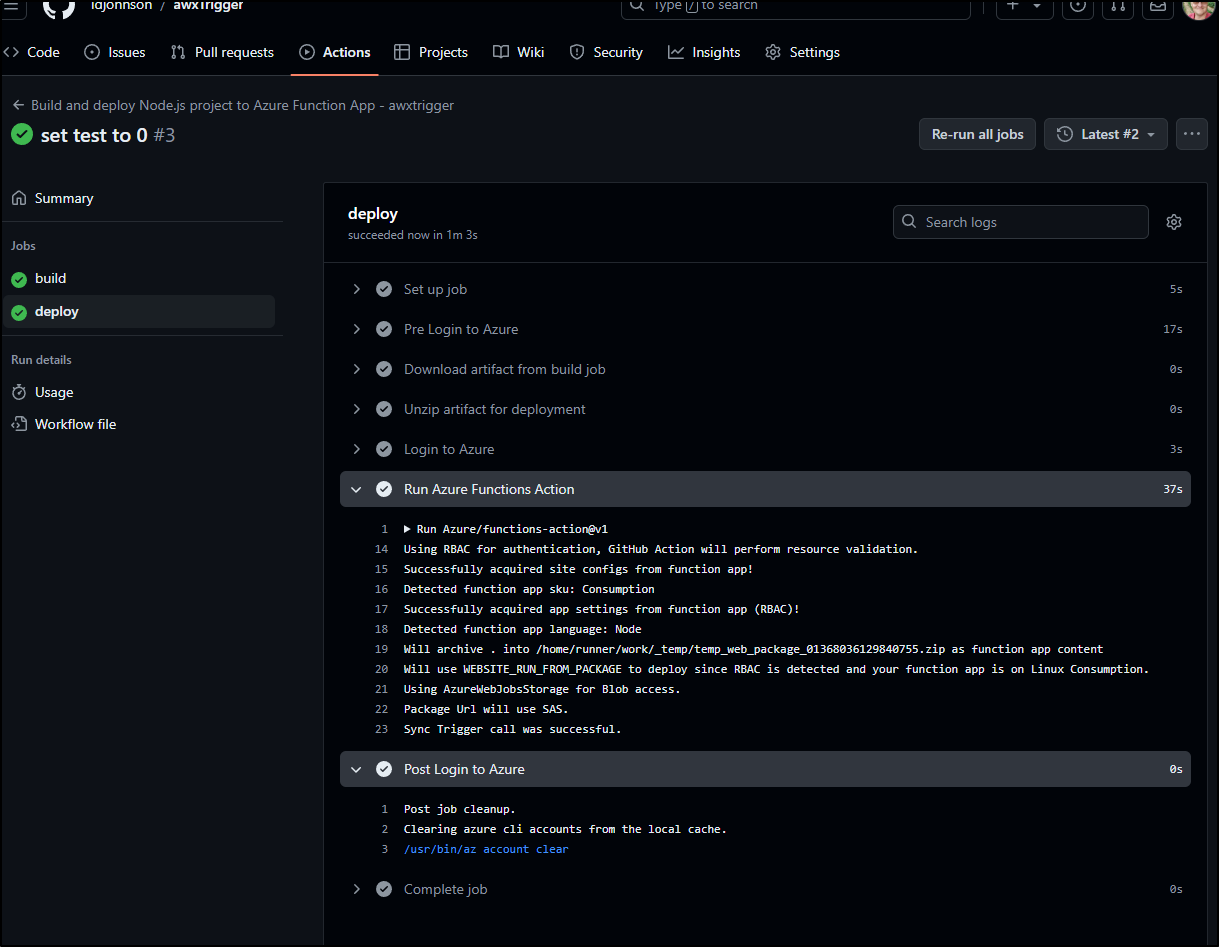

This time a re-deploy of the “deploy” stage seems to get past our authentication issue

GCP Pubsub to Dapr to AWX

We can tie Dapr to SQS, Pubsub and the like. We can then “receive” events via the Dapr sidecar to our containers that could in turn trigger AWX

Let’s start by creating a new .NET web app in a fresh directory

builder@DESKTOP-QADGF36:~/Workspaces$ cd csharpPubSub/

builder@DESKTOP-QADGF36:~/Workspaces/csharpPubSub$ ls

builder@DESKTOP-QADGF36:~/Workspaces/csharpPubSub$ dotnet new web

The template "ASP.NET Core Empty" was created successfully.

Processing post-creation actions...

Running 'dotnet restore' on /home/builder/Workspaces/csharpPubSub/csharpPubSub.csproj...

Determining projects to restore...

Restored /home/builder/Workspaces/csharpPubSub/csharpPubSub.csproj (in 75 ms).

Restore succeeded.

I’ll then build out the files. This is essentially a first pass based on the csharp-subscriber from the Dapr quickstarts

builder@DESKTOP-QADGF36:~/Workspaces/csharpPubSub$ git add -A

builder@DESKTOP-QADGF36:~/Workspaces/csharpPubSub$ git commit -m "Initial"

[main (root-commit) dbeadb6] Initial

9 files changed, 555 insertions(+)

create mode 100644 .dockerignore

create mode 100644 .gitignore

create mode 100644 Dockerfile

create mode 100644 Program.cs

create mode 100644 Properties/launchSettings.json

create mode 100644 appsettings.Development.json

create mode 100644 appsettings.json

create mode 100644 csharpPubSub.csproj

create mode 100644 launchSettings.json

I want to ultimately tie this to GCP Pubsub, so I’ll hardcode the topics AAA, BBB and CCC

using Dapr;

var builder = WebApplication.CreateBuilder(args);

var app = builder.Build();

// Dapr configurations

app.UseCloudEvents();

app.MapSubscribeHandler();

//app.MapGet("/", () => "Hello World!");

app.MapPost("/AAA", [Topic("pubsub", "AAA")] (ILogger<Program> logger, MessageEvent item) => {

Console.WriteLine($"{item.MessageType}: {item.Message}");

return Results.Ok();

});

app.MapPost("/BBB", [Topic("pubsub", "BBB")] (ILogger<Program> logger, MessageEvent item) => {

Console.WriteLine($"{item.MessageType}: {item.Message}");

return Results.Ok();

});

app.MapPost("/CCC", [Topic("pubsub", "CCC")] (ILogger<Program> logger, Dictionary<string, string> item) => {

Console.WriteLine($"{item["messageType"]}: {item["message"]}");

return Results.Ok();

});

app.Run();

internal record MessageEvent(string MessageType, string Message);

I can do dotnet restore

builder@DESKTOP-QADGF36:~/Workspaces/csharpPubSub$ dotnet restore

Determining projects to restore...

Restored /home/builder/Workspaces/csharpPubSub/csharpPubSub.csproj (in 6.97 sec).

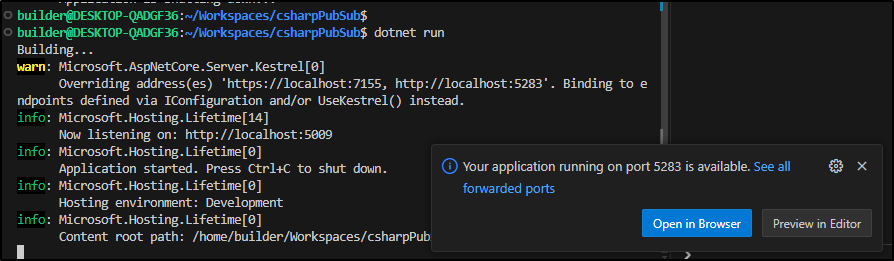

Then dotnet run to see if it launches

Because our Dockerfile expects “/out”

# Build runtime image

FROM mcr.microsoft.com/dotnet/aspnet:6.0

WORKDIR /app

COPY /out .

ENTRYPOINT ["dotnet", "csharp-subscriber.dll"]

We should build into that folder

$ dotnet build -o ./out

Microsoft (R) Build Engine version 17.0.0+c9eb9dd64 for .NET

Copyright (C) Microsoft Corporation. All rights reserved.

Determining projects to restore...

All projects are up-to-date for restore.

csharpPubSub -> /home/builder/Workspaces/csharpPubSub/out/csharpPubSub.dll

Build succeeded.

0 Warning(s)

0 Error(s)

Time Elapsed 00:00:00.91

I can now build a new docker image

builder@DESKTOP-QADGF36:~/Workspaces/csharpPubSub$ docker build -t idjohnson/fbdaprpubsubtest:0.1 .

[+] Building 4.6s (8/8) FINISHED

=> [internal] load build definition from Dockerfile 0.0s

=> => transferring dockerfile: 38B 0.0s

=> [internal] load .dockerignore 0.0s

=> => transferring context: 35B 0.0s

=> [internal] load metadata for mcr.microsoft.com/dotnet/aspnet:6.0 0.3s

=> [1/3] FROM mcr.microsoft.com/dotnet/aspnet:6.0@sha256:6111a90354ba2a4db3160b12783ae8d7a3fcef17236 3.6s

=> => resolve mcr.microsoft.com/dotnet/aspnet:6.0@sha256:6111a90354ba2a4db3160b12783ae8d7a3fcef17236 0.0s

=> => sha256:6111a90354ba2a4db3160b12783ae8d7a3fcef172367490857030aa6ac4b0394 1.79kB / 1.79kB 0.0s

=> => sha256:6dddcfc5a9f94298b7e2d8b506ab93e6538e81eaba40089202b3acc757833167 1.37kB / 1.37kB 0.0s

=> => sha256:685f1699d128626b55749821199854a51bd5573a1583c2d4f53c2535d964514e 2.34kB / 2.34kB 0.0s

=> => sha256:76956b537f14770ffd78afbe4f17016b2794c4b9b568325e8079089ea5c4e8cd 31.42MB / 31.42MB 1.6s

=> => sha256:fc8838649f7854a26cad9c6f1a737a4fce824a59e31307266883fcdaed03f14d 15.17MB / 15.17MB 1.3s

=> => sha256:6dfac5b7885b4a345722d97eca748c3224cdca57b987eed3d57e13657ef68fb6 31.65MB / 31.65MB 1.8s

=> => sha256:96cf3ab9787310d28894995444abef3943543dd25e7e8fb9be98c37e1a0ebc14 154B / 154B 1.5s

=> => sha256:eeb1d06798fd0c32b8f9d08c26dd0cfbb71bd4d2e69a4ace026e6a662f7a81df 9.47MB / 9.47MB 2.0s

=> => extracting sha256:76956b537f14770ffd78afbe4f17016b2794c4b9b568325e8079089ea5c4e8cd 0.9s

=> => extracting sha256:fc8838649f7854a26cad9c6f1a737a4fce824a59e31307266883fcdaed03f14d 0.3s

=> => extracting sha256:6dfac5b7885b4a345722d97eca748c3224cdca57b987eed3d57e13657ef68fb6 0.4s

=> => extracting sha256:96cf3ab9787310d28894995444abef3943543dd25e7e8fb9be98c37e1a0ebc14 0.0s

=> => extracting sha256:eeb1d06798fd0c32b8f9d08c26dd0cfbb71bd4d2e69a4ace026e6a662f7a81df 0.2s

=> [internal] load build context 0.0s

=> => transferring context: 1.38MB 0.0s

=> [2/3] WORKDIR /app 0.5s

=> [3/3] COPY /out . 0.0s

=> exporting to image 0.0s

=> => exporting layers 0.0s

=> => writing image sha256:8338266180a3f8194d09a07ba8fccb0b2c7a86c84b00b59e55e3b350699e413d 0.0s

=> => naming to docker.io/idjohnson/fbdaprpubsubtest:0.1 0.0s

builder@DESKTOP-QADGF36:~/Workspaces/csharpPubSub$

Then push to docker hub

builder@DESKTOP-QADGF36:~/Workspaces/csharpPubSub$ docker push idjohnson/fbdaprpubsubtest:0.1

The push refers to repository [docker.io/idjohnson/fbdaprpubsubtest]

39433df7f844: Pushed

67937990f858: Pushed

93675e9744b0: Pushed

b75ddd1cb6cc: Pushed

2097f23a4e18: Pushed

644f095d4c24: Pushed

a2375faae132: Pushed

0.1: digest: sha256:b6ff2b440926a523136874631957f98a0b1a31d5b3dc92c09789ecd81ec74dbd size: 1787

Kubernetes

At present, my cluster with Dapr just has a statestore (set to redis)

$ kubectl get component

NAME AGE

statestore 19d

The setup for a GCP Pubsub component has quite a few fields

While we covered this a couple years ago, let’s walk through the IAM process again

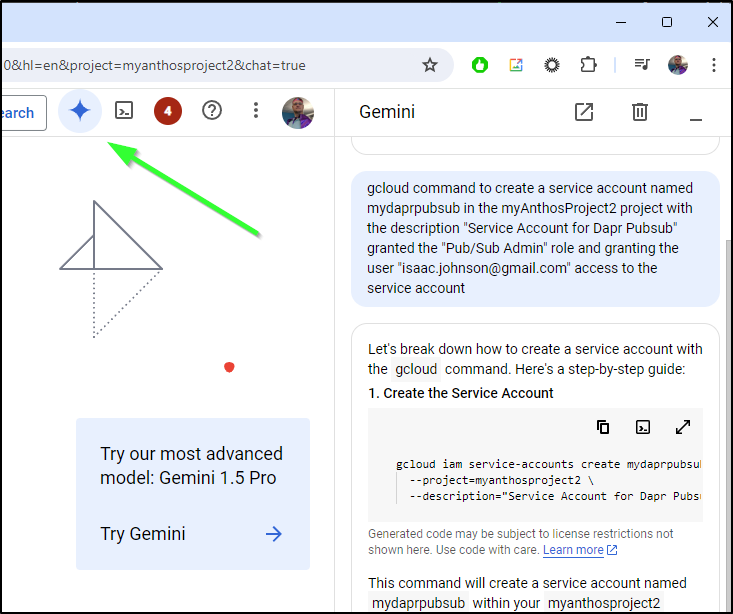

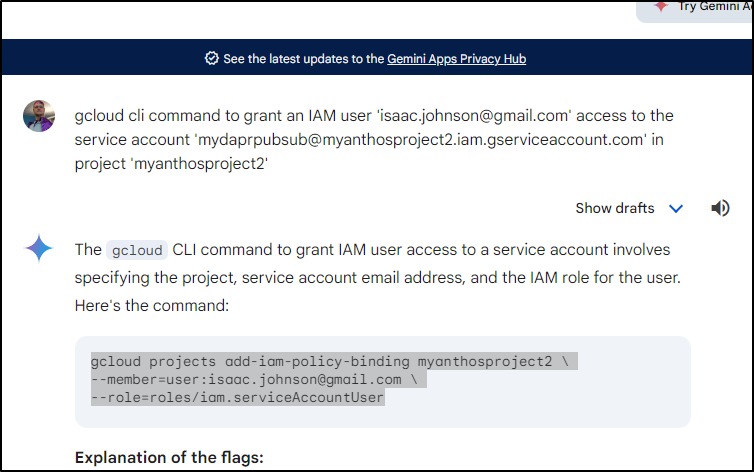

One difference from two years ago is that we have some AI tools like Gemini to help automate some of the work

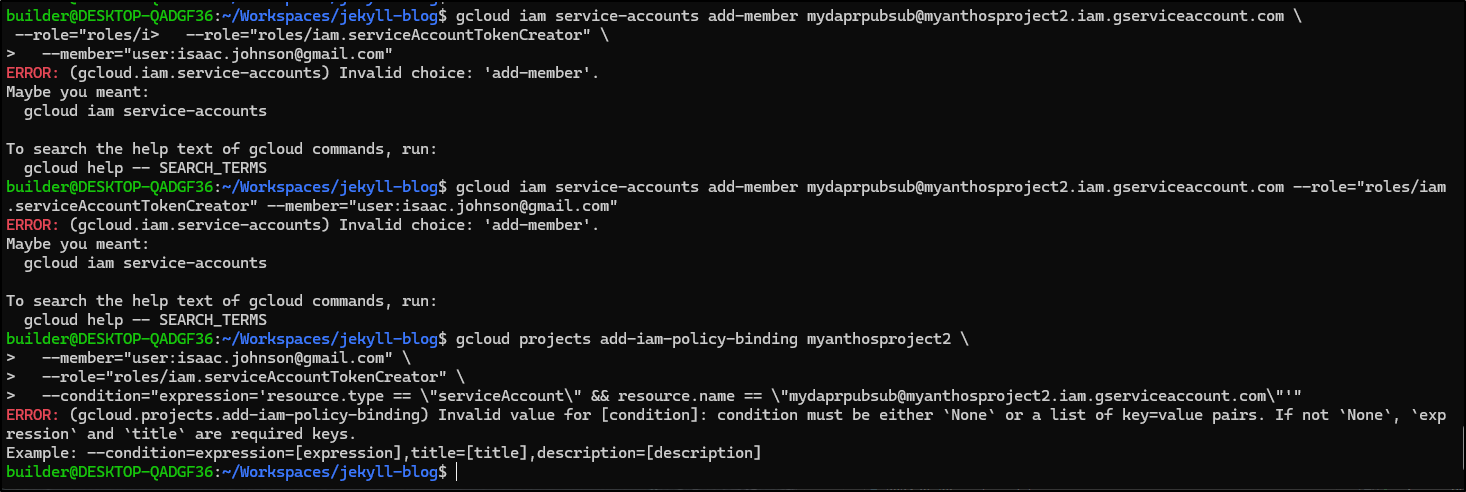

On the command line, we can do the steps we used the UI for in the past

First, we create the SA

$ gcloud auth login

Your browser has been opened to visit:

... snip ...

# Create a Service account

$ gcloud iam service-accounts create mydaprpubsub \

> --project=myanthosproject2 \

> --description="Service Account for Dapr Pubsub"

Created service account [mydaprpubsub].

Let’s then add to the Pub/Sub admin role

$ gcloud projects add-iam-policy-binding myanthosproject2 \

> --member="serviceAccount:mydaprpubsub@myanthosproject2.iam.gserviceaccount.com" \

> --role="roles/pubsub.admin"

Updated IAM policy for project [myanthosproject2].

bindings:

- members:

- serviceAccount:service-511842454269@gcp-sa-aiplatform.iam.gserviceaccount.com

role: roles/aiplatform.serviceAgent

... snip ...

And grant ourselves access… however Gemini is wrong

Oddly, Gemini on the web did get it right

$ gcloud projects add-iam-policy-binding myanthosproject2 --member=user:isaac.johnson@gmail.com --role=roles/iam.serviceAccountUser

Updated IAM policy for project [myanthosproject2].

bindings:

- members:

- serviceAccount:service-511842454269@gcp-sa-aiplatform.iam.gserviceaccount.com

role: roles/aiplatform.serviceAgent

... snip ...

We now need to create the JSON key and download it

$ gcloud iam service-accounts keys create mydaprpubsub-key.json --iam-account=mydaprpubsub@myanthosproject2.iam.gserviceaccount.com --project=myanthosproject2

created key [e2aa6a01e499c57f7c20a7631332ae21dcfe63b5] of type [json] as [mydaprpubsub-key.json] for [mydaprpubsub@myanthosproject2.iam.gserviceaccount.com]

Again, I’m not going to belabour the usage of AI tooling, but none of the Gemini outputs worked, so I referred to the official IAM documentation for the proper commands.

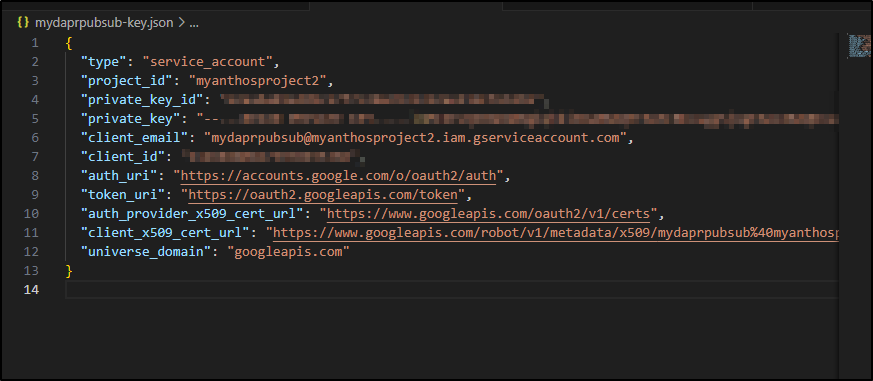

The key ends up looking like this:

I didn’t really want to take the time to copy and paste, as I had before, to complete all the fields the component requires, so I wrote a bash script to do it for me.

GCP SA JSON to Pubsub Kubernetes Dapr Component YAML:

$ cat ./daprPubSub.sh

#!/bin/bash

set +x

# Check if there are exactly two arguments provided

if [ $# -ne 2 ]; then

echo "Error: Please provide exactly two arguments."

echo "daprPubSub.sh [ source sa json ] [ output Dapr component YAML ]"

exit 1

fi

export SRCFILE="$1"

export OUTFILE="$2"

export SATYPE=`cat $SRCFILE | jq -r '.type'`

export SAPROJ=`cat $SRCFILE | jq -r '.project_id'`

export SAPRIVID=`cat $SRCFILE | jq -r '.private_key_id'`

export SAPRIVKEY=`cat $SRCFILE | jq -r '.private_key'`

export SACLIENTE=`cat $SRCFILE | jq -r '.client_email'`

export SACLIENTID=`cat $SRCFILE | jq -r '.client_id'`

export SAAUTHURI=`cat $SRCFILE | jq -r '.auth_uri'`

export SATOKENURI=`cat $SRCFILE | jq -r '.token_uri'`

export SAAUTHCERTU=`cat $SRCFILE | jq -r '.auth_provider_x509_cert_url'`

export SACLIENTCERT=`cat $SRCFILE | jq -r '.client_x509_cert_url'`

export SAUNIVDOM=`cat $SRCFILE | jq -r '.universe_domain'`

# Create Dapr formatted file

cat <<EOF > $OUTFILE

apiVersion: dapr.io/v1alpha1

kind: Component

metadata:

name: pubsub

namespace: default

spec:

type: pubsub.gcp.pubsub

version: v1

metadata:

- name: type

value: $SATYPE

- name: projectId

value: $SAPROJ

- name: privateKeyId

value: $SAPRIVID

- name: clientEmail

value: $SACLIENTE

- name: clientId

value: "$SACLIENTID"

- name: authUri

value: $SAAUTHURI

- name: tokenUri

value: $SATOKENURI

- name: authProviderX509CertUrl

value: $SAAUTHCERTU

- name: clientX509CertUrl

value: $SACLIENTCERT

- name: privateKey

value: |

EOF

echo "$SAPRIVKEY" | sed 's/^/ &/' >> $OUTFILE

cat <<EOT >> $OUTFILE

- name: disableEntityManagement

value: "false"

- name: enableMessageOrdering

value: "false"

EOT
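For comparison, the same transformation can be sketched in Python using only the standard library. This is not the script I ran, just an illustration of the mapping under the same assumptions as the bash version: the metadata names mirror the ones above, while the function name `render_component` and the exact indentation are choices made for this sketch. Values are emitted through `json.dumps`, which produces double-quoted strings that are also valid YAML scalars.

```python
import json
import textwrap

# (Dapr metadata name, key in the service account JSON)
FIELDS = [
    ("type", "type"),
    ("projectId", "project_id"),
    ("privateKeyId", "private_key_id"),
    ("clientEmail", "client_email"),
    ("clientId", "client_id"),
    ("authUri", "auth_uri"),
    ("tokenUri", "token_uri"),
    ("authProviderX509CertUrl", "auth_provider_x509_cert_url"),
    ("clientX509CertUrl", "client_x509_cert_url"),
]


def render_component(sa, name="pubsub", namespace="default"):
    """Render a Dapr pubsub.gcp.pubsub Component YAML from a parsed SA key dict."""
    lines = [
        "apiVersion: dapr.io/v1alpha1",
        "kind: Component",
        "metadata:",
        f"  name: {name}",
        f"  namespace: {namespace}",
        "spec:",
        "  type: pubsub.gcp.pubsub",
        "  version: v1",
        "  metadata:",
    ]
    for dapr_name, sa_key in FIELDS:
        lines.append(f"  - name: {dapr_name}")
        lines.append(f"    value: {json.dumps(sa[sa_key])}")
    # The private key is multi-line, so it goes in a YAML literal block
    lines.append("  - name: privateKey")
    lines.append("    value: |")
    lines.append(textwrap.indent(sa["private_key"].rstrip("\n"), "      "))
    for flag in ("disableEntityManagement", "enableMessageOrdering"):
        lines.append(f"  - name: {flag}")
        lines.append('    value: "false"')
    return "\n".join(lines) + "\n"
```

Usage would look something like `render_component(json.load(open("mydaprpubsub-key.json")))`, with the returned string written to `daprcomponent.yaml`.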

I can now run that and see the output

$ ./daprPubSub.sh mydaprpubsub-key.json daprcomponent.yaml

$ cat daprcomponent.yaml

apiVersion: dapr.io/v1alpha1

kind: Component

metadata:

name: pubsub

namespace: default

spec:

type: pubsub.gcp.pubsub

version: v1

metadata:

- name: type

value: service_account

... snip ...

I can then load the PubSub component

$ kubectl apply -f ./daprcomponent.yaml

component.dapr.io/pubsub created

$ kubectl get components

NAME AGE

statestore 19d

pubsub 5s

Testing with the node subscriber

Looking back at our prior Dapr write-up from 2022 we can see we tested with NodeJS Subscribers and the react form.

Since that time, my Harbor had to be reset so I’ll need to rebuild and push the node-subscriber

builder@DESKTOP-QADGF36:~/Workspaces/quickstarts/tutorials/pub-sub/node-subscriber$ docker build -t harbor.freshbrewed.science/freshbrewedprivate/pubsub-node-subscriber:gcp1 .

[+] Building 24.6s (10/10) FINISHED

=> [internal] load build definition from Dockerfile 0.0s

=> => transferring dockerfile: 145B 0.0s

=> [internal] load .dockerignore 0.0s

=> => transferring context: 2B 0.0s

=> [internal] load metadata for docker.io/library/node:17-alpine 21.1s

=> [auth] library/node:pull token for registry-1.docker.io 0.0s

=> [1/4] FROM docker.io/library/node:17-alpine@sha256:76e638eb0d73ac5f0b76d70df3ce1ddad941ac63595d44092b625e2cd557ddbf 0.0s

=> [internal] load build context 0.0s

=> => transferring context: 2.04kB 0.0s

=> CACHED [2/4] WORKDIR /usr/src/app 0.0s

=> [3/4] COPY . . 0.0s

=> [4/4] RUN npm install 3.1s

=> exporting to image 0.1s

=> => exporting layers 0.1s

=> => writing image sha256:f256abd36888039e23ea76e3c177d43921e86217673fe12ec907497d68db911a 0.0s

=> => naming to harbor.freshbrewed.science/freshbrewedprivate/pubsub-node-subscriber:gcp1 0.0s

builder@DESKTOP-QADGF36:~/Workspaces/quickstarts/tutorials/pub-sub/node-subscriber$ docker push harbor.freshbrewed.science/freshbrewedprivate/pubsub-node-subscriber:gcp1

The push refers to repository [harbor.freshbrewed.science/freshbrewedprivate/pubsub-node-subscriber]

61ff6d029830: Pushed

8f29e7db898a: Pushed

be03214d51e8: Pushed

e6a74996eabe: Pushed

db2e1fd51a80: Pushed

19ebba8d6369: Pushed

4fc242d58285: Pushed

gcp1: digest: sha256:69f158e4cecd80bfc4edb857bc040a1cf73147b9bcd9540aa80b2e9f487a9743 size: 1784

As well as the React Form

builder@DESKTOP-QADGF36:~/Workspaces/quickstarts/tutorials/pub-sub/react-form$ docker build -t harbor.freshbrewed.science/freshbrewedprivate/pubsub-react-form:gcp2 .

[+] Building 53.7s (9/9) FINISHED

=> [internal] load build definition from Dockerfile 0.0s

=> => transferring dockerfile: 153B 0.0s

=> [internal] load .dockerignore 0.0s

=> => transferring context: 52B 0.0s

=> [internal] load metadata for docker.io/library/node:17-alpine 4.7s

=> [internal] load build context 0.0s

=> => transferring context: 1.49MB 0.0s

=> [1/4] FROM docker.io/library/node:17-alpine@sha256:76e638eb0d73ac5f0b76d70df3ce1ddad941ac63595d44092b625e2cd557ddbf 0.0s

=> CACHED [2/4] WORKDIR /usr/src/app 0.0s

=> [3/4] COPY . . 0.0s

=> [4/4] RUN npm run build 44.3s

=> exporting to image 4.5s

=> => exporting layers 4.5s

=> => writing image sha256:b79f0318f0e68503831c3b81ad9050695826b9d7de700758a832fc9ba3ea7188 0.0s

=> => naming to harbor.freshbrewed.science/freshbrewedprivate/pubsub-react-form:gcp2 0.0s

builder@DESKTOP-QADGF36:~/Workspaces/quickstarts/tutorials/pub-sub/react-form$ docker push harbor.freshbrewed.science/freshbrewedprivate/pubsub-react-form:gcp2

The push refers to repository [harbor.freshbrewed.science/freshbrewedprivate/pubsub-react-form]

3a32c88366aa: Pushed

46f767b124fe: Pushed

be03214d51e8: Mounted from freshbrewedprivate/pubsub-node-subscriber

e6a74996eabe: Mounted from freshbrewedprivate/pubsub-node-subscriber

db2e1fd51a80: Mounted from freshbrewedprivate/pubsub-node-subscriber

19ebba8d6369: Mounted from freshbrewedprivate/pubsub-node-subscriber

4fc242d58285: Mounted from freshbrewedprivate/pubsub-node-subscriber

gcp2: digest: sha256:4b39950245cc25427f58f5ff587b19390761819307a34109779f49ab7f3e683b size: 1788

Since I’m using my Harbor CR, the test cluster needs the Docker Pull Secret. If you use Dockerhub, you won’t need that

builder@DESKTOP-QADGF36:~/Workspaces/quickstarts/tutorials/pub-sub/deploy$ kubectl apply -f ./myharborreg.yaml

secret/myharborreg created

I can now deploy the Node Subscriber and react form

builder@DESKTOP-QADGF36:~/Workspaces/quickstarts/tutorials/pub-sub/deploy$ kubectl apply -f ./node-subscriber.yaml

deployment.apps/node-subscriber created

builder@DESKTOP-QADGF36:~/Workspaces/quickstarts/tutorials/pub-sub/deploy$ kubectl apply -f ./react-form.yaml

service/react-form created

deployment.apps/react-form created

While I wait a beat for the pods to come up, let’s review the code

The Node Subscriber really just changed app.js to use the hardcoded “AAA” and “BBB” topics

//

// Copyright 2021 The Dapr Authors

// Licensed under the Apache License, Version 2.0 (the "License");

// you may not use this file except in compliance with the License.

// You may obtain a copy of the License at

// http://www.apache.org/licenses/LICENSE-2.0

// Unless required by applicable law or agreed to in writing, software

// distributed under the License is distributed on an "AS IS" BASIS,

// WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

// See the License for the specific language governing permissions and

// limitations under the License.

//

const express = require('express');

const bodyParser = require('body-parser');

const app = express();

// Dapr publishes messages with the application/cloudevents+json content-type

app.use(bodyParser.json({ type: 'application/*+json' }));

const port = 3000;

app.get('/dapr/subscribe', (_req, res) => {

res.json([

{

pubsubname: "pubsub",

topic: "AAA",

route: "AAA"

},

{

pubsubname: "pubsub",

topic: "BBB",

route: "BBB"

}

]);

});

app.post('/AAA', (req, res) => {

console.log("AAA: ", req.body.data.message);

res.sendStatus(200);

});

app.post('/BBB', (req, res) => {

console.log("BBB: ", req.body.data.message);

res.sendStatus(200);

});

app.listen(port, () => console.log(`Node App listening on port ${port}!`));
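The hardcoded subscription list above could also be generated from an array of topic names. This is only a minimal sketch of that idea: buildSubscriptions is a hypothetical helper (not part of the Dapr quickstart), and it assumes the same "pubsub" component name and route-per-topic convention used in the listing.

```javascript
// Hypothetical helper: derive the /dapr/subscribe payload from topic names.
// Each topic is routed to a POST handler with the same name, as in app.js above.
function buildSubscriptions(pubsubname, topics) {
  return topics.map((topic) => ({ pubsubname, topic, route: topic }));
}

const subscriptions = buildSubscriptions('pubsub', ['AAA', 'BBB']);
console.log(JSON.stringify(subscriptions, null, 2));
```

In app.js, the result could be returned from the /dapr/subscribe handler with res.json(subscriptions), so adding a topic would only mean adding a name to the array and a matching POST route.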

For the React Form, I changed MessageForm.js so the pick list offers the topics AAA, BBB, and CCC:

//

// Copyright 2021 The Dapr Authors

// Licensed under the Apache License, Version 2.0 (the "License");

// you may not use this file except in compliance with the License.

// You may obtain a copy of the License at

// http://www.apache.org/licenses/LICENSE-2.0

// Unless required by applicable law or agreed to in writing, software

// distributed under the License is distributed on an "AS IS" BASIS,

// WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

// See the License for the specific language governing permissions and

// limitations under the License.

//

import React from 'react';

export class MessageForm extends React.Component {

constructor(props) {

super(props);

this.state = this.getInitialState();

}

handleInputChange = (event) => {

const target = event.target;

const value = target.value;

const name = target.name;

console.log(`Setting ${name} to ${value}`)

this.setState({

[name]: value

});

}

handleSubmit = (event) => {

fetch('/publish', {

headers: {

'Accept': 'application/json',

'Content-Type': 'application/json'

},

method:"POST",

body: JSON.stringify(this.state),

});

event.preventDefault();

this.setState(this.getInitialState());

}

getInitialState = () => {

return {

messageType: "AAA",

message: ""

};

}

render() {

return (

<div className="col-12 col-md-9 col-xl-8 py-md-3 pl-md-5 bd-content">

<form onSubmit={this.handleSubmit}>

<div className="form-group">

<label>Select Message Type</label>

<select className="custom-select custom-select-lg mb-3" name="messageType" onChange={this.handleInputChange} value={this.state.messageType}>

<option value="AAA">AAA</option>

<option value="BBB">BBB</option>

<option value="CCC">CCC</option>

</select>

</div>

<div className="form-group">

<label>Enter message</label>

<textarea className="form-control" id="exampleFormControlTextarea1" rows="3" name="message" onChange={this.handleInputChange} value={this.state.message} placeholder="Enter message here"></textarea>

</div>

<button type="submit" className="btn btn-primary">Submit</button>

</form>

</div>

);

}

}

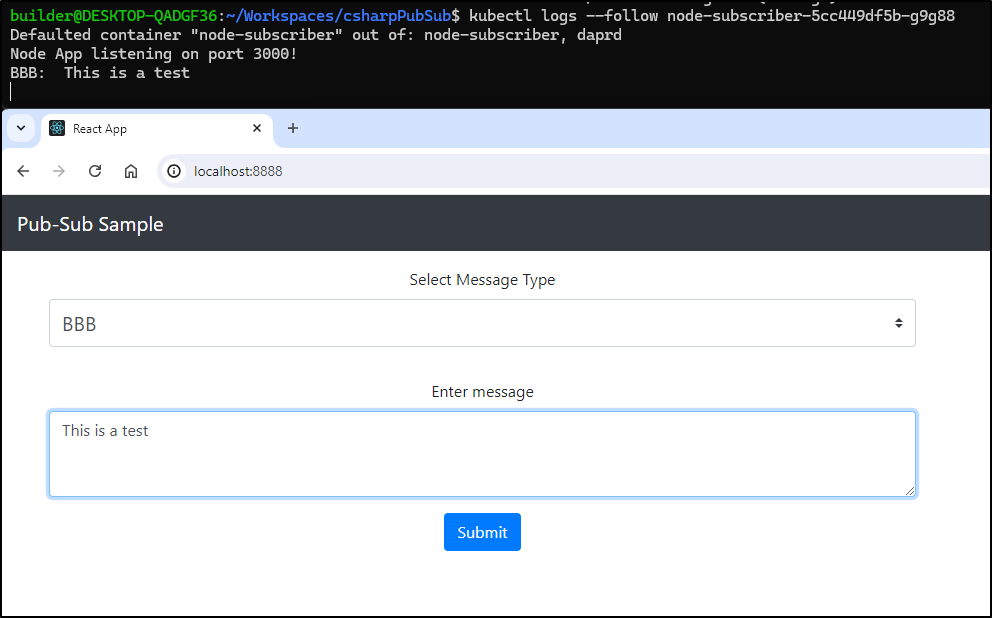

I can now port-forward and test

$ kubectl port-forward react-form-55fbd77df9-9tbsz 8888:8080

Forwarding from 127.0.0.1:8888 -> 8080

Forwarding from [::1]:8888 -> 8080

Handling connection for 8888

Handling connection for 8888

We can watch it immediately fetch messages - so fast that I have to kill the deployment just to see some results in the cloud console

And as you would expect, provided we format the message as Dapr compliant JSON, we can post messages right from GCP Cloud Console
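To make “Dapr compliant JSON” a bit more concrete, here is a minimal sketch that wraps a form-style payload in a CloudEvents envelope. The field names are copied from the sample message shown near the end of this post; buildDaprEnvelope is a hypothetical helper and the 'gcp-console' source label is an assumption, so treat this as an illustration rather than the definitive format.

```javascript
const { randomUUID } = require('crypto');

// Hypothetical helper: build an envelope shaped like the messages the
// subscriber in this post receives. `source` is an assumed, free-form label.
function buildDaprEnvelope(topic, message, source = 'gcp-console') {
  return {
    specversion: '1.0',
    type: 'com.dapr.event.sent',
    source,
    id: randomUUID(),
    time: new Date().toISOString(),
    datacontenttype: 'application/json',
    pubsubname: 'pubsub',
    topic,
    // The subscriber reads req.body.data.message, so the payload lives under "data"
    data: { messageType: topic, message },
  };
}

const envelope = buildDaprEnvelope('BBB', 'hello from the console');
console.log(JSON.stringify(envelope));
```

The printed JSON is what one would paste into the message body when publishing from the Cloud Console.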

Tying in AWX

We’ve shown we can trigger the Node-Subscriber using the web form as well as Pub/Sub directly, but how might we add a call to AWX?

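First, I’ll add the https library. (Node already ships https as a core module, so this install is optional; require('https') resolves to the built-in either way.)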

builder@DESKTOP-QADGF36:~/Workspaces/quickstarts/tutorials/pub-sub/node-subscriber$ npm install --save https

added 1 package, and audited 67 packages in 509ms

12 packages are looking for funding

run `npm fund` for details

found 0 vulnerabilities

Then I can update app.js to check for an “AWX:” prefix in the message and parse out the job template ID. I’ll add a call using the https library to the AWX REST endpoint to launch the template.

For the time being, I’ll leave the auth hardcoded in (but I could turn those into variables passed by Kubernetes).

//
// Copyright 2021 The Dapr Authors
// Licensed under the Apache License, Version 2.0 (the "License");
// you may not use this file except in compliance with the License.
// You may obtain a copy of the License at
// http://www.apache.org/licenses/LICENSE-2.0
// Unless required by applicable law or agreed to in writing, software
// distributed under the License is distributed on an "AS IS" BASIS,
// WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
// See the License for the specific language governing permissions and
// limitations under the License.
//

const https = require('https');
const express = require('express');
const bodyParser = require('body-parser');

const app = express();
// Dapr publishes messages with the application/cloudevents+json content-type
app.use(bodyParser.json({ type: 'application/*+json' }));

const port = 3000;

// AWX user and pass
const username = 'rundeck';
const password = 'ReallyNotMyPassword';

// The launch endpoint is POSTed a small placeholder JSON body
const postData = JSON.stringify({
  nothing: 'nothing'
});

app.get('/dapr/subscribe', (_req, res) => {
  res.json([
    {
      pubsubname: "pubsub",
      topic: "AAA",
      route: "AAA"
    },
    {
      pubsubname: "pubsub",
      topic: "BBB",
      route: "BBB"
    }
  ]);
});

app.post('/AAA', (req, res) => {
  console.log("AAA: ", req.body.data.message);
  res.sendStatus(200);
});

app.post('/BBB', (req, res) => {
  console.log("BBB: ", req.body.data.message);
  const inputString = req.body.data.message;

  // Guard against a missing or non-string message before checking the prefix
  if (typeof inputString === 'string' && inputString.startsWith("AWX:")) {
    // Extract everything after the ":"
    const AWXJOB = inputString.slice(4); // 4 is the length of "AWX:"
    // Print to console
    console.log("AWX JOB", AWXJOB);
    console.log("AWX PATH", `/api/v2/job_templates/${AWXJOB}/launch/`);

    // Set up the request options
    const options = {
      hostname: 'awx.freshbrewed.science',
      port: 443, // HTTPS port
      path: `/api/v2/job_templates/${AWXJOB}/launch/`,
      method: 'POST',
      headers: {
        'Content-Type': 'application/json',
        'Authorization': `Basic ${Buffer.from(`${username}:${password}`).toString('base64')}`,
        'Content-Length': Buffer.byteLength(postData)
      }
    };

    // Make the request (named awxReq/awxRes so they don't shadow the Express req/res)
    const awxReq = https.request(options, (awxRes) => {
      console.log(`Response code: ${awxRes.statusCode}`);
      // Headers are an object; stringify them so they don't print as "[object Object]"
      console.log(`Response headers: ${JSON.stringify(awxRes.headers)}`);
      // Handle the response data if needed
      awxRes.on('data', (chunk) => {
        // Process the response data here
        console.log("got some data back");
      });
    });

    awxReq.on('error', (error) => {
      console.error('Error:', error.message);
    });

    // Send the POST data
    awxReq.write(postData);
    awxReq.end();
  } else {
    console.log("String does not start with 'AWX:'");
  }

  res.sendStatus(200);
});

app.listen(port, () => console.log(`Node App listening on port ${port}!`));
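If I wanted to move the credentials out of the source, the parsing and request setup could be pulled into two small functions. This is a sketch under some assumptions: parseAwxJob and buildLaunchOptions are hypothetical helpers, the AWX_HOST, AWX_USERNAME and AWX_PASSWORD environment variable names are made up for illustration, and it assumes job templates are referenced by numeric ID (as in “AWX:7”).

```javascript
// Hypothetical helper: pull a numeric job template ID out of an "AWX:<id>" message.
// Returns null for anything else, so stray text never reaches the URL path.
function parseAwxJob(message) {
  if (typeof message !== 'string') return null;
  const match = /^AWX:(\d+)$/.exec(message.trim());
  return match ? match[1] : null;
}

// Hypothetical helper: build https.request() options from environment variables.
// The variable names are assumptions; they could be injected from a Kubernetes Secret.
function buildLaunchOptions(jobId, env = process.env) {
  const { AWX_HOST = 'awx.freshbrewed.science', AWX_USERNAME, AWX_PASSWORD } = env;
  if (!AWX_USERNAME || !AWX_PASSWORD) {
    throw new Error('AWX_USERNAME and AWX_PASSWORD must be set');
  }
  const token = Buffer.from(`${AWX_USERNAME}:${AWX_PASSWORD}`).toString('base64');
  return {
    hostname: AWX_HOST,
    port: 443,
    path: `/api/v2/job_templates/${jobId}/launch/`,
    method: 'POST',
    headers: {
      'Content-Type': 'application/json',
      Authorization: `Basic ${token}`,
    },
  };
}

const jobId = parseAwxJob('AWX:7');
const options = buildLaunchOptions(jobId, { AWX_USERNAME: 'rundeck', AWX_PASSWORD: 'example' });
console.log(options.path);
```

Restricting the job to digits is a design choice of this sketch, not something the listing above does; it keeps arbitrary message text from being spliced into the request path.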

Since the auth is baked in, I’ll leave this one in my private CR as harbor.freshbrewed.science/freshbrewedprivate/pubsub-node-subscriber:gcp6. I was testing a lot, so I built a quick one-liner to bump the label with each round:

$ export NSLBL=gcp6 && docker build -t harbor.freshbrewed.science/freshbrewedprivate/pubsub-node-subscriber:$NSLBL . && docker push harbor.freshbrewed.science/freshbrewedprivate/pubsub-node-subscriber:$NSLBL && sed -i "s/pubsub-node-subscriber:.*/pubsub-node-subscriber:$NSLBL/" ../deploy/node-subscriber.yaml && kubectl apply -f ../deploy/node-subscriber.yaml

[+] Building 22.4s (10/10) FINISHED

=> [internal] load build definition from Dockerfile 0.0s

=> => transferring dockerfile: 37B 0.0s

=> [internal] load .dockerignore 0.0s

=> => transferring context: 2B 0.0s

=> [internal] load metadata for docker.io/library/node:17-alpine 20.9s

=> [auth] library/node:pull token for registry-1.docker.io 0.0s

=> [internal] load build context 0.0s

=> => transferring context: 37.36kB 0.0s

=> [1/4] FROM docker.io/library/node:17-alpine@sha256:76e638eb0d73ac5f0b76d70df3ce1ddad941ac63595d44092b625e2cd557ddbf 0.0s

=> CACHED [2/4] WORKDIR /usr/src/app 0.0s

=> [3/4] COPY . . 0.1s

=> [4/4] RUN npm install 1.1s

=> exporting to image 0.1s

=> => exporting layers 0.1s

=> => writing image sha256:5a0e07b5faa9c20dc70e22fe9e36089adf437afbd0f2161c3a0415890a0dd225 0.0s

=> => naming to harbor.freshbrewed.science/freshbrewedprivate/pubsub-node-subscriber:gcp6 0.0s

The push refers to repository [harbor.freshbrewed.science/freshbrewedprivate/pubsub-node-subscriber]

9c24c6423743: Pushed

70ad9960702a: Pushed

be03214d51e8: Layer already exists

e6a74996eabe: Layer already exists

db2e1fd51a80: Layer already exists

19ebba8d6369: Layer already exists

4fc242d58285: Layer already exists

gcp6: digest: sha256:8e992ed493fbdfd2427f50c16d5ebf43fdc5086ea6787b0eead41cbdcf8c91ba size: 1785

deployment.apps/node-subscriber configured

Let’s test the flow by sending a normal message, then one with our AWX keyword and see that it triggers an AWX Job:

I’ll use the following to watch the Node Subscriber logs:

$ kubectl logs --follow `kubectl get pods -l "dapr.io/app-id=node-subscriber" -o jsonpath="{.items[0].metadata.name}"`

CSharp

Let’s now take that container we built and use it. You are welcome to use mine from Docker Hub.

I need to double check the port. I’ll add back my GET so I can view a Hello World.

Note: The rest of this is in a public repository you can reference here

using Dapr;

var builder = WebApplication.CreateBuilder(args);

var app = builder.Build();

// Dapr configurations

app.UseCloudEvents();

app.MapSubscribeHandler();

app.MapGet("/", () => "Hello World!");

app.MapPost("/AAA", [Topic("pubsub", "AAA")] (ILogger<Program> logger, MessageEvent item) => {

Console.WriteLine($"{item.MessageType}: {item.Message}");

return Results.Ok();

});

app.MapPost("/BBB", [Topic("pubsub", "BBB")] (ILogger<Program> logger, MessageEvent item) => {

Console.WriteLine($"{item.MessageType}: {item.Message}");

return Results.Ok();

});

app.MapPost("/CCC", [Topic("pubsub", "CCC")] (ILogger<Program> logger, Dictionary<string, string> item) => {

Console.WriteLine($"{item["messageType"]}: {item["message"]}");

return Results.Ok();

});

app.Run();

internal record MessageEvent(string MessageType, string Message);

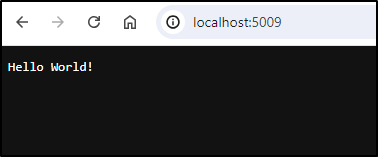

I then ran with dotnet run

$ dotnet run

Building...

warn: Microsoft.AspNetCore.Server.Kestrel[0]

Overriding address(es) 'https://localhost:7155, http://localhost:5283'. Binding to endpoints defined via IConfiguration and/or UseKestrel() instead.

info: Microsoft.Hosting.Lifetime[14]

Now listening on: http://localhost:5009

info: Microsoft.Hosting.Lifetime[0]

Application started. Press Ctrl+C to shut down.

info: Microsoft.Hosting.Lifetime[0]

Hosting environment: Development

info: Microsoft.Hosting.Lifetime[0]

Content root path: /home/builder/Workspaces/csharpPubSub/

I’m pretty sure that is 5009 which matches the app settings, but I can check to be certain

At this point, my Dockerfile is still a basic runtime-only image that copies in a prebuilt output folder:

# Build runtime image

FROM mcr.microsoft.com/dotnet/aspnet:6.0

WORKDIR /app

COPY /out .

ENTRYPOINT ["dotnet", "csharp-subscriber.dll"]

I’d rather build and run in a container, so I switched to a multi-stage Dockerfile:

# Use the official .NET 6.0 SDK image as the base

FROM mcr.microsoft.com/dotnet/sdk:6.0 AS build

# Set the working directory inside the container

WORKDIR /app

# Copy the project files from your local machine to the container

COPY . .

# Restore dependencies

RUN dotnet restore

# Build the application

RUN dotnet publish -c Release -o /app/publish

# Create a new stage for the runtime image

FROM mcr.microsoft.com/dotnet/aspnet:6.0 AS runtime

# Copy the published application from the build stage

COPY --from=build /app/publish .

# Expose the port your application listens on (adjust if needed)

EXPOSE 5009

# Set the entry point to run your application

ENTRYPOINT ["dotnet", "csharpPubSub.dll"]

I built and pushed as idjohnson/fbdaprpubsubtest:0.5

I then used it in a Kubernetes deployment

$ cat deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: daprpubsub
  labels:
    app: daprpubsub
spec:
  replicas: 1
  selector:
    matchLabels:
      app: daprpubsub
  template:
    metadata:
      labels:
        app: daprpubsub
      annotations:
        dapr.io/enabled: "true"
        dapr.io/app-id: "daprpubsub"
        dapr.io/app-port: "5009"
    spec:
      containers:
      - name: daprpubsub
        image: idjohnson/fbdaprpubsubtest:0.5
        ports:
        - containerPort: 5009
        imagePullPolicy: Always

$ kubectl apply -f ./deployment.yaml

deployment.apps/daprpubsub created

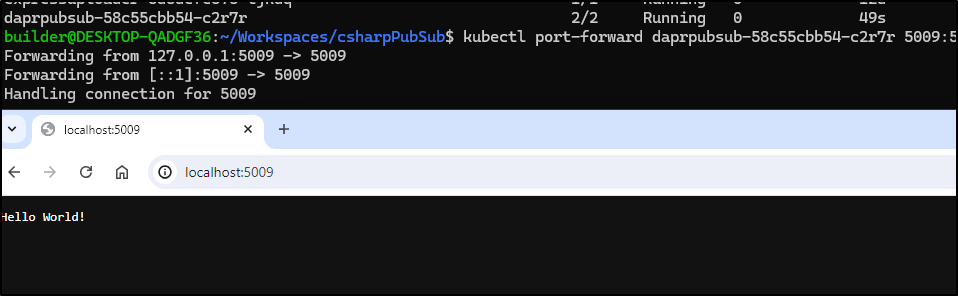

I can do a quick test

$ kubectl port-forward daprpubsub-58c55cbb54-c2r7r 5009:5009

Forwarding from 127.0.0.1:5009 -> 5009

Forwarding from [::1]:5009 -> 5009

Handling connection for 5009

I can now test pushing a message to one of my PubSub topics

I created a subscription that could push the BBB topic over to my container

apiVersion: dapr.io/v2alpha1
kind: Subscription
metadata:
  name: bbb-to-daprpubsub
spec:
  topic: BBB
  routes:
    default: /BBB
  pubsubname: pubsub
scopes:
- daprpubsub

I tried one run using an “Order”

$ cat Program.cs

using System.Text.Json.Serialization;

using Dapr;

var builder = WebApplication.CreateBuilder(args);

var app = builder.Build();

// Dapr will send serialized event object vs. being raw CloudEvent

app.UseCloudEvents();

// needed for Dapr pub/sub routing

app.MapSubscribeHandler();

if (app.Environment.IsDevelopment()) {app.UseDeveloperExceptionPage();}

app.MapGet("/", () => "Hello World!");

// Dapr subscription in [Topic] routes the BBB topic to this route

app.MapPost("/BBB", [Topic("pubsub", "BBB")] (Order order) => {

Console.WriteLine("Subscriber received : " + order);

return Results.Ok(order);

});

await app.RunAsync();

public record Order([property: JsonPropertyName("orderId")] int OrderId);

It could parse the Dapr message from the React Form (idjohnson/fbdaprpubsubtest:0.7)

$ kubectl logs daprpubsub-7dfd659496-8ggms --follow

Defaulted container "daprpubsub" out of: daprpubsub, daprd

warn: Microsoft.AspNetCore.Server.Kestrel[0]

Overriding address(es) 'http://+:80'. Binding to endpoints defined via IConfiguration and/or UseKestrel() instead.

info: Microsoft.Hosting.Lifetime[14]

Now listening on: http://localhost:5009

info: Microsoft.Hosting.Lifetime[0]

Application started. Press Ctrl+C to shut down.

info: Microsoft.Hosting.Lifetime[0]

Hosting environment: Production

info: Microsoft.Hosting.Lifetime[0]

Content root path: /

Subscriber received : Order { OrderId = 0 }

Subscriber received : Order { OrderId = 0 }

Subscriber received : Order { OrderId = 0 }

Subscriber received : Order { OrderId = 0 }

Subscriber received : Order { OrderId = 0 }

Subscriber received : Order { OrderId = 0 }

Subscriber received : Order { OrderId = 0 }

Subscriber received : Order { OrderId = 0 }

Subscriber received : Order { OrderId = 0 }

Subscriber received : Order { OrderId = 0 }

Subscriber received : Order { OrderId = 0 }

Subscriber received : Order { OrderId = 0 }

But it didn’t really know what the “order” was: the form’s payload has no orderId field, so every OrderId came through as the default 0.

I pivoted back to the message block

using System.Text.Json.Serialization;

using Dapr;

var builder = WebApplication.CreateBuilder(args);

var app = builder.Build();

// Dapr will send serialized event object vs. being raw CloudEvent

app.UseCloudEvents();

// needed for Dapr pub/sub routing

app.MapSubscribeHandler();

if (app.Environment.IsDevelopment()) {app.UseDeveloperExceptionPage();}

app.MapGet("/", () => "Hello World!");

// Dapr subscription in [Topic] routes the BBB topic to this route

app.MapPost("/BBB", [Topic("pubsub", "BBB")] (ILogger<Program> logger, MessageEvent item) => {

Console.WriteLine($"{item.MessageType}: {item.Message}");

return Results.Ok();

});

await app.RunAsync();

internal record MessageEvent(string MessageType, string Message);

which worked dandy:

Next, I want to update it to actually trigger AWX. I’ll be using some environment variables now in the code

using System;
using System.Net.Http;
using System.Text;
using System.Text.Json.Serialization;
using System.Threading.Tasks;
using Microsoft.AspNetCore.Builder;
using Microsoft.AspNetCore.Http;
using Microsoft.Extensions.Logging;
using Dapr;

var builder = WebApplication.CreateBuilder(args);
var app = builder.Build();

// Dapr will send serialized event object vs. being raw CloudEvent
app.UseCloudEvents();

// needed for Dapr pub/sub routing
app.MapSubscribeHandler();

if (app.Environment.IsDevelopment()) {app.UseDeveloperExceptionPage();}

app.MapGet("/", () => "Hello World!");

// Dapr subscription in [Topic] routes the BBB topic to this route
app.MapPost("/BBB", [Topic("pubsub", "BBB")] async (ILogger<Program> logger, MessageEvent item) =>
{
    Console.WriteLine($"{item.MessageType}: {item.Message}");

    // Guard against a missing message before checking the prefix
    if (item.Message != null && item.Message.StartsWith("AWX:"))
    {
        string AWXJOB = item.Message.Substring(4); // Extract the text after "AWX:"
        Console.WriteLine($"JOB FOUND: {AWXJOB}");

        // Construct the URL; AWX_BASEURI is expected to end with ".../job_templates/"
        string baseUrl = Environment.GetEnvironmentVariable("AWX_BASEURI");
        string launchUrl = $"{baseUrl}{AWXJOB}/launch/";

        // Create an HttpClient with basic authentication
        using var client = new HttpClient();

        // Retrieve username and password from environment variables
        string username = Environment.GetEnvironmentVariable("AWX_USERNAME");
        string password = Environment.GetEnvironmentVariable("AWX_PASSWORD");

        // UTF-8 keeps non-ASCII characters in the password intact
        var credentials = Convert.ToBase64String(Encoding.UTF8.GetBytes($"{username}:{password}"));
        client.DefaultRequestHeaders.Add("Authorization", $"Basic {credentials}");

        // Make the POST request
        HttpResponseMessage response = await client.PostAsync(launchUrl, null);

        // Print the response code
        Console.WriteLine($"Response Code: {response.StatusCode}");
    }
    else
    {
        Console.WriteLine("No AWX job found.");
    }

    return Results.Ok();
});

await app.RunAsync();

internal record MessageEvent(string MessageType, string Message);

These values can then be supplied through the deployment YAML.

First, this expects the password (which I wasn’t about to check in to GitHub).

We can create that with a kubectl command:

$ kubectl create secret generic awxpassword --from-literal=AWX_PASSWORD='SomeAWXPassword'

secret/awxpassword created

Then apply the deployment YAML, which also carries the Dapr Subscription:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: daprpubsub
  labels:
    app: daprpubsub
spec:
  replicas: 1
  selector:
    matchLabels:
      app: daprpubsub
  template:
    metadata:
      labels:
        app: daprpubsub
      annotations:
        dapr.io/enabled: "true"
        dapr.io/app-id: "daprpubsub"
        dapr.io/app-port: "5009"
    spec:
      containers:
      - name: daprpubsub
        image: idjohnson/fbdaprpubsubtest:1.0
        ports:
        - containerPort: 5009
        imagePullPolicy: IfNotPresent
        env:
        - name: AWX_BASEURI
          value: "https://awx.freshbrewed.science/api/v2/job_templates/"
        - name: AWX_USERNAME
          value: "rundeck"
        - name: AWX_PASSWORD
          valueFrom:
            secretKeyRef:
              name: awxpassword
              key: AWX_PASSWORD
---
apiVersion: dapr.io/v2alpha1
kind: Subscription
metadata:
  name: bbb-to-daprpubsub
spec:
  topic: BBB
  routes:
    default: /BBB
  pubsubname: pubsub
scopes:
- daprpubsub

Just a quick note: if you have been following along and built and deployed the Node subscriber, you’ll want to remove it. Otherwise both subscribers will receive the BBB message and you’ll double up on AWX job launches.

$ kubectl delete deployment node-subscriber

deployment.apps "node-subscriber" deleted

I’ll apply the new deployment and watch the pod

builder@DESKTOP-QADGF36:~/Workspaces/csharpPubSub$ kubectl apply -f ./deployment.yaml

deployment.apps/daprpubsub created

subscription.dapr.io/bbb-to-daprpubsub created

builder@DESKTOP-QADGF36:~/Workspaces/csharpPubSub$ kubectl logs --follow `kubectl get pods -l "app=daprpubsub" -o jsonpath="{.items[0].metadata.name}"`

Defaulted container "daprpubsub" out of: daprpubsub, daprd

warn: Microsoft.AspNetCore.Server.Kestrel[0]

Overriding address(es) 'http://+:80'. Binding to endpoints defined via IConfiguration and/or UseKestrel() instead.

info: Microsoft.Hosting.Lifetime[14]

Now listening on: http://localhost:5009

info: Microsoft.Hosting.Lifetime[0]

Application started. Press Ctrl+C to shut down.

info: Microsoft.Hosting.Lifetime[0]

Hosting environment: Production

info: Microsoft.Hosting.Lifetime[0]

Content root path: /

We can now watch the full flow in action:

You saw me use the React form, but one could push a message to pub/sub directly as well

{"data":{"message":"AWX:7","messageType":"BBB"},"datacontenttype":"application/json","id":"78a072f7-0f35-439b-9cca-e45ccc7ca8a1","pubsubname":"pubsub","source":"react-form","specversion":"1.0","time":"2024-07-08T23:35:13Z","topic":"BBB","traceid":"00-00000000000000000000000000000000-0000000000000000-00","traceparent":"00-00000000000000000000000000000000-0000000000000000-00","tracestate":"","type":"com.dapr.event.sent"}
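A third route is to publish through the Dapr sidecar’s HTTP API, which lets Dapr build the envelope for you. Below is a minimal sketch that only describes the request rather than sending it. buildPublishRequest is a hypothetical helper; port 3500 is Dapr’s default HTTP port, and I’m assuming the same "pubsub" component name and message shape used throughout this post.

```javascript
// Hypothetical helper: describe the POST an app would send to its Dapr sidecar.
// Inside a Dapr-enabled pod, DAPR_HTTP_PORT holds the sidecar's HTTP port.
function buildPublishRequest(topic, message, daprPort = process.env.DAPR_HTTP_PORT || '3500') {
  return {
    url: `http://localhost:${daprPort}/v1.0/publish/pubsub/${topic}`,
    method: 'POST',
    headers: { 'Content-Type': 'application/json' },
    // Same payload shape the React form submits
    body: JSON.stringify({ messageType: topic, message }),
  };
}

const request = buildPublishRequest('BBB', 'AWX:7', '3500');
console.log(request.method, request.url);
console.log(request.body);
```

The returned fields could be handed to any HTTP client running next to a sidecar; nothing is sent here, so the sketch runs without a cluster.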

I pushed the repo up so you can copy it as you desire: https://github.com/idjohnson/csharpPubSub.

Summary

We banged out a lot of examples today using Python, NodeJS and Dotnet. We looked at GCP Pubsub driving a CloudRun Function, then used Eventarc to tie that function to a Datadog alert.

We looked at Azure Event Hubs with a NodeJS function deployed via a GitHub workflow and OIDC. We then wrapped up by returning to GCP Pubsub, this time leveraging the Dapr framework to trigger NodeJS and CSharp subscribers from a React Form.

Hopefully these two posts cover at least some of the ways we can trigger AWX Jobs remotely.