Published: Jul 4, 2024 by Isaac Johnson

Today we are going to talk about a couple of open-source apps I recently came across: Dufs and Pastefy. Pastefy falls into the same camp as OpenGist or Pastebin. It’s just a quick, simple way to share code snippets and other textual content with a shareable URL. Since it lets us password-protect an unlisted link and give it an expiry, it can also serve as a quick way to share secrets. We’ll load it into Kubernetes, expose it with TLS and give it a try.

Dufs, while sounding like a brew from The Simpsons, actually stands for “distinctive utility file server” and is meant to be a very lightweight file server written in Rust. It’s easy to run from Docker, and thus easy for us to convert to Kubernetes and serve up. We’ll set it up and then look at how we might use it as a backend for Kubernetes job outputs.

Pastefy

As mentioned, Pastefy is an open-source alternative to Gist or Pastebin. It’s a Java app with a JavaScript (Vue) front end. It’s under the umbrella of InteraApps and seems to be a partnership between Jeff and Julian G.

Pastefy Setup

To get started, we can clone the GitHub repo and build it locally:

```
builder@LuiGi:~/Workspaces$ git clone https://github.com/interaapps/pastefy.git
Cloning into 'pastefy'...
remote: Enumerating objects: 3299, done.
remote: Counting objects: 100% (1001/1001), done.
remote: Compressing objects: 100% (320/320), done.
remote: Total 3299 (delta 570), reused 920 (delta 496), pack-reused 2298
Receiving objects: 100% (3299/3299), 4.08 MiB | 4.67 MiB/s, done.
Resolving deltas: 100% (1710/1710), done.
builder@LuiGi:~/Workspaces$ cd pastefy/
builder@LuiGi:~/Workspaces/pastefy$ ls
CODE_OF_CONDUCT.md Dockerfile README.md deployment docker-compose.yml
CONTRIBUTING.md LICENSE backend docker-compose.override.yml frontend
```

Now build it locally

```
builder@LuiGi:~/Workspaces/pastefy$ docker build -t pastefy:test .
[+] Building 99.0s (19/19) FINISHED docker:default
=> [internal] load build definition from Dockerfile 0.1s
=> => transferring dockerfile: 672B 0.0s
=> [internal] load metadata for docker.io/library/openjdk:8-jre-slim 5.1s
=> [internal] load metadata for docker.io/library/node:19.9.0-alpine3.17 5.1s
=> [internal] load metadata for docker.io/library/maven:3.6.0-jdk-8-slim 5.1s
=> [internal] load .dockerignore 0.1s
=> => transferring context: 2B 0.0s
=> [frontend 1/6] FROM docker.io/library/node:19.9.0-alpine3.17@sha256:3152fa8e36c952a792809026b21bfa5312e8f65f60202d28cae3256228da1519 8.4s
=> => resolve docker.io/library/node:19.9.0-alpine3.17@sha256:3152fa8e36c952a792809026b21bfa5312e8f65f60202d28cae3256228da1519 0.1s
=> => sha256:3152fa8e36c952a792809026b21bfa5312e8f65f60202d28cae3256228da1519 1.43kB / 1.43kB 0.0s
=> => sha256:f235f83c2e851c9d4165263842ece4d8ee80bd029634874b25d1ba3f3dba7564 1.16kB / 1.16kB 0.0s
=> => sha256:c34f4a4112ef86ffd844aa57f764d0fe0cab970193ee40e841ff1d0def56eb94 6.73kB / 6.73kB 0.0s
=> => sha256:3f97a19e6e8eedc596d8cbac43598f02b7c9656749a7f8c662f77208ed93c26c 2.34MB / 2.34MB 0.6s
=> => sha256:68c8e37007377cf0219777bcf496a8d4a5d11fc4a3748e32f87a87ec1e8fcfa3 48.15MB / 48.15MB 5.5s
=> => sha256:f56be85fc22e46face30e2c3de3f7fe7c15f8fd7c4e5add29d7f64b87abdaa09 3.37MB / 3.37MB 0.8s
=> => sha256:9a695742ba42c6b31e87aa8ed51e9dc70cfc9f5df5fb0f57db51967da2b55bf0 451B / 451B 0.8s
=> => extracting sha256:f56be85fc22e46face30e2c3de3f7fe7c15f8fd7c4e5add29d7f64b87abdaa09 0.1s
=> => extracting sha256:68c8e37007377cf0219777bcf496a8d4a5d11fc4a3748e32f87a87ec1e8fcfa3 2.2s
=> => extracting sha256:3f97a19e6e8eedc596d8cbac43598f02b7c9656749a7f8c662f77208ed93c26c 0.1s
=> => extracting sha256:9a695742ba42c6b31e87aa8ed51e9dc70cfc9f5df5fb0f57db51967da2b55bf0 0.0s
=> [internal] load build context 0.2s
=> => transferring context: 1.24MB 0.1s
=> [build 1/6] FROM docker.io/library/maven:3.6.0-jdk-8-slim@sha256:8aba145d88d61eaef0492b3a9c8026f8c3102f698de6d8242143ae147205095a 12.8s
=> => resolve docker.io/library/maven:3.6.0-jdk-8-slim@sha256:8aba145d88d61eaef0492b3a9c8026f8c3102f698de6d8242143ae147205095a 0.1s
=> => sha256:8aba145d88d61eaef0492b3a9c8026f8c3102f698de6d8242143ae147205095a 2.37kB / 2.37kB 0.0s
=> => sha256:a8e5c230aa78e4ad1a094c449c68627288005520d7787d8e2bcc00cf77631df7 2.20kB / 2.20kB 0.0s
=> => sha256:c203892a812465093fc3599b59bd234af6a02167899d1a8ec38ebb812738c254 6.99kB / 6.99kB 0.0s
=> => sha256:27833a3ba0a545deda33bb01eaf95a14d05d43bf30bce9267d92d17f069fe897 22.50MB / 22.50MB 3.3s
=> => sha256:16d944e3d00df7bedd2f9a6aa678132a1fb785f7d56b16fdf24c22d5c7c3b7a1 454.89kB / 454.89kB 1.1s
=> => sha256:9019de9fce5f74bfb1abcbec71bbcb5c81916617cc4697889772607263281abc 247B / 247B 1.3s
=> => sha256:9b053055f644af725a07611b2e29b1d9aa52bf8bd04db344ca086794cbe523cb 131B / 131B 1.5s
=> => sha256:1c80aca6b8ec87a87c2200276a5e098ed72ca708e8befa0b8951e019be98a90c 67.55MB / 67.55MB 9.9s
=> => extracting sha256:27833a3ba0a545deda33bb01eaf95a14d05d43bf30bce9267d92d17f069fe897 1.2s
=> => sha256:a63811f09e7c893cfd4764ed370e3ac47831f7351460814b51fedf6ca88b837e 3.96MB / 3.96MB 4.0s
=> => sha256:f88ce8d36c867a6a2f429d29502963d458fb3b0361e1110e649d68366143ac83 9.09MB / 9.09MB 5.2s
=> => extracting sha256:16d944e3d00df7bedd2f9a6aa678132a1fb785f7d56b16fdf24c22d5c7c3b7a1 0.0s
=> => extracting sha256:9019de9fce5f74bfb1abcbec71bbcb5c81916617cc4697889772607263281abc 0.0s
=> => extracting sha256:9b053055f644af725a07611b2e29b1d9aa52bf8bd04db344ca086794cbe523cb 0.0s
=> => sha256:a603a47619812e4395a114c02333ee989a12f8a7485d632a951f97dcd9879ff7 751B / 751B 5.4s
=> => sha256:f315d92acca3b65ac5fa25a04e72473d1b5bfd8052c5ff7582f14464ab454e38 361B / 361B 5.6s
=> => extracting sha256:1c80aca6b8ec87a87c2200276a5e098ed72ca708e8befa0b8951e019be98a90c 1.6s
=> => extracting sha256:a63811f09e7c893cfd4764ed370e3ac47831f7351460814b51fedf6ca88b837e 0.1s
=> => extracting sha256:f88ce8d36c867a6a2f429d29502963d458fb3b0361e1110e649d68366143ac83 0.1s
=> => extracting sha256:a603a47619812e4395a114c02333ee989a12f8a7485d632a951f97dcd9879ff7 0.0s
=> => extracting sha256:f315d92acca3b65ac5fa25a04e72473d1b5bfd8052c5ff7582f14464ab454e38 0.0s
=> [stage-2 1/2] FROM docker.io/library/openjdk:8-jre-slim@sha256:53186129237fbb8bc0a12dd36da6761f4c7a2a20233c20d4eb0d497e4045a4f5 13.9s
=> => resolve docker.io/library/openjdk:8-jre-slim@sha256:53186129237fbb8bc0a12dd36da6761f4c7a2a20233c20d4eb0d497e4045a4f5 0.1s
=> => sha256:53186129237fbb8bc0a12dd36da6761f4c7a2a20233c20d4eb0d497e4045a4f5 549B / 549B 0.0s
=> => sha256:285c61a1e5e6b7b3709729b69558670148c5fdc6eb7104fae7dd370042c51430 1.16kB / 1.16kB 0.0s
=> => sha256:85b121affeddcffc7bc6618140bb0285ad1257bd318676ddc67816863c0686c0 7.47kB / 7.47kB 0.0s
=> => sha256:1efc276f4ff952c055dea726cfc96ec6a4fdb8b62d9eed816bd2b788f2860ad7 31.37MB / 31.37MB 10.1s
=> => sha256:a2f2f93da48276873890ac821b3c991d53a7e864791aaf82c39b7863c908b93b 1.58MB / 1.58MB 6.1s
=> => sha256:1a2de4cc94315f2ba5015e6781672aa8e0b1456a4d488694bb5f016d8f59fa70 210B / 210B 6.2s
=> => sha256:d2421c7a4bbfc037d7fb487893cc5fe145e329dfb39b5ee6557016bf6c34072d 41.70MB / 41.70MB 11.5s
=> => extracting sha256:1efc276f4ff952c055dea726cfc96ec6a4fdb8b62d9eed816bd2b788f2860ad7 2.0s
=> => extracting sha256:a2f2f93da48276873890ac821b3c991d53a7e864791aaf82c39b7863c908b93b 0.1s
=> => extracting sha256:1a2de4cc94315f2ba5015e6781672aa8e0b1456a4d488694bb5f016d8f59fa70 0.0s
=> => extracting sha256:d2421c7a4bbfc037d7fb487893cc5fe145e329dfb39b5ee6557016bf6c34072d 0.8s
=> [frontend 2/6] COPY frontend/package*.json ./app/ 0.2s
=> [frontend 3/6] RUN npm --legacy-peer-deps --prefix app install 12.6s
=> [build 2/6] COPY backend/src /home/app/src 0.4s
=> [build 3/6] COPY backend/pom.xml /home/app 0.1s
=> [frontend 4/6] COPY frontend app 0.1s
=> [frontend 5/6] RUN npm run --prefix app build --prod 15.4s
=> [build 4/6] COPY --from=frontend backend/src/main/resources/static /home/app/src/main/resources/static 0.1s
=> [build 5/6] RUN mvn -f /home/app/pom.xml clean package 56.4s
=> [stage-2 2/2] COPY --from=build /home/app/target/backend.jar /usr/local/lib/backend.jar 0.1s
=> exporting to image 0.1s
=> => exporting layers 0.1s
=> => writing image sha256:73d7fcd5987fecd512abf2be524b7d11eca0ac042c5613113c600e6c26edc284 0.0s
=> => naming to docker.io/library/pastefy:test 0.0s

What's Next?
1. Sign in to your Docker account → docker login
2. View a summary of image vulnerabilities and recommendations → docker scout quickview
```

I’ll switch the docker-compose.yml to use my local image:

```
builder@LuiGi:~/Workspaces/pastefy$ vi docker-compose.yml
builder@LuiGi:~/Workspaces/pastefy$ git diff docker-compose.yml
diff --git a/docker-compose.yml b/docker-compose.yml
index 2d79f6c..dba0fbf 100644
--- a/docker-compose.yml
+++ b/docker-compose.yml
@@ -15,7 +15,7 @@ services:
   pastefy:
     depends_on:
       - db
-    image: interaapps/pastefy:latest
+    image: pastefy:test
     ports:
       - "9999:80"
```

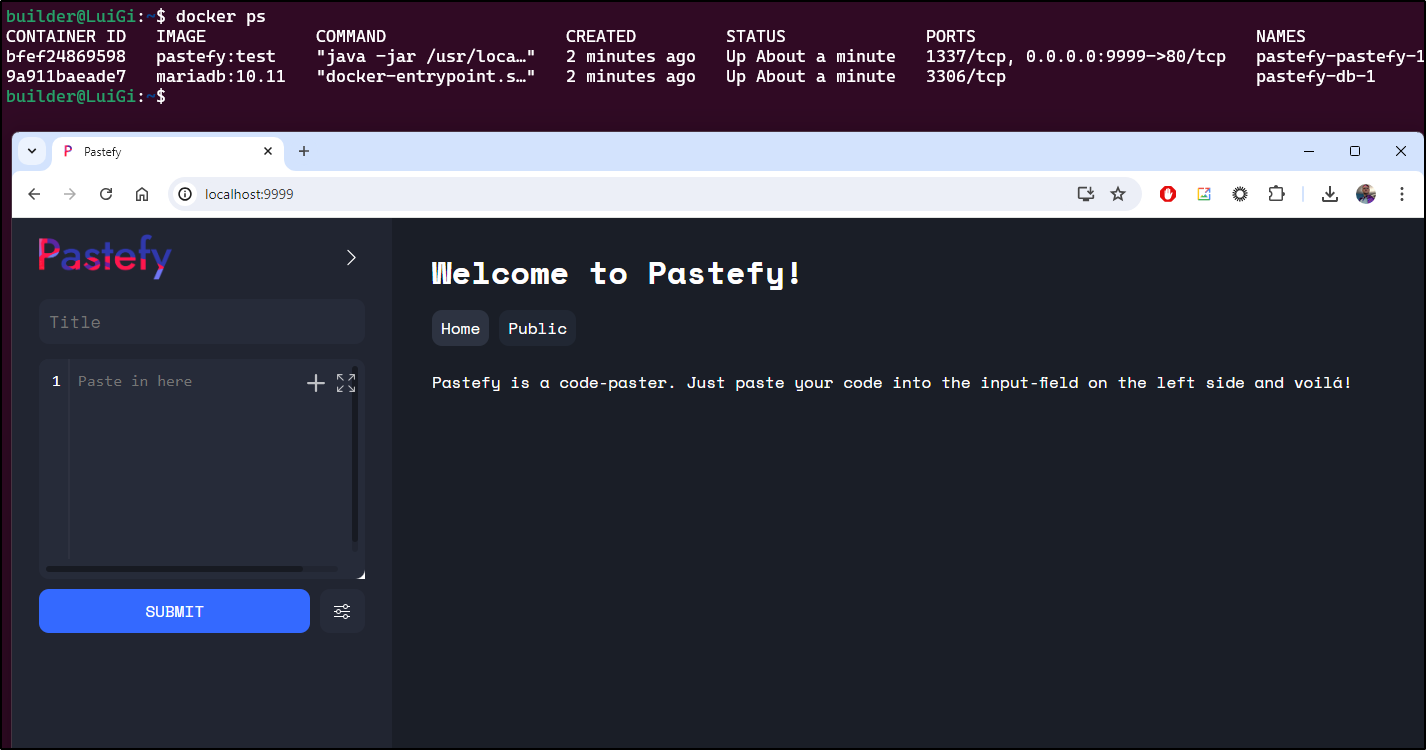

The first time I launched it, Pastefy crashed because it failed to connect to the database. I think this was simply because I had no local MariaDB 10 image, so the database took too long to come up.
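That first failure looks like a startup race: Pastefy tried to reach the database before MariaDB was accepting connections. Docker Compose can guard against this with a `healthcheck` on the database plus `depends_on` using `condition: service_healthy`. Another option, sketched here under the assumption that you control the app’s entrypoint, is to poll the database’s TCP port before starting the app. The `wait_for_port` helper is a hypothetical name of mine, not part of Pastefy.

```python
import socket
import time


def wait_for_port(host: str, port: int, timeout: float = 60.0, interval: float = 1.0) -> bool:
    """Poll until a TCP connection to host:port succeeds or the timeout expires.

    Returns True as soon as the port accepts a connection, False on timeout.
    """
    deadline = time.monotonic() + timeout
    while True:
        try:
            # A successful connect means something is listening on the port.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            # Refused, unresolvable or timed out: the database is not ready yet.
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)
```

For example, `wait_for_port("db", 3306, timeout=120)` would wait up to two minutes for the Compose `db` service. An open port only means MariaDB is listening, not that the `pastefy` database has finished initializing, so a real healthcheck is the more thorough option.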

The second time I ran docker-compose up, it worked:

```
builder@LuiGi:~/Workspaces/pastefy$ docker-compose up
[+] Running 2/0
 ✔ Container pastefy-db-1 Created 0.0s
 ✔ Container pastefy-pastefy-1 Created 0.0s
Attaching to db-1, pastefy-1
db-1 | 2024-06-23 14:12:25+00:00 [Note] [Entrypoint]: Entrypoint script for MariaDB Server 1:10.11.8+maria~ubu2204 started.
db-1 | 2024-06-23 14:12:26+00:00 [Warn] [Entrypoint]: /sys/fs/cgroup/name=systemd:/docker/9a911baeade7c4074413b7eed5685beeef6e8d564fe49c2e883ac356e6c142ed
db-1 | 14:misc:/docker/9a911baeade7c4074413b7eed5685beeef6e8d564fe49c2e883ac356e6c142ed
db-1 | 13:rdma:/docker/9a911baeade7c4074413b7eed5685beeef6e8d564fe49c2e883ac356e6c142ed
db-1 | 12:pids:/docker/9a911baeade7c4074413b7eed5685beeef6e8d564fe49c2e883ac356e6c142ed
db-1 | 11:hugetlb:/docker/9a911baeade7c4074413b7eed5685beeef6e8d564fe49c2e883ac356e6c142ed
db-1 | 10:net_prio:/docker/9a911baeade7c4074413b7eed5685beeef6e8d564fe49c2e883ac356e6c142ed
db-1 | 9:perf_event:/docker/9a911baeade7c4074413b7eed5685beeef6e8d564fe49c2e883ac356e6c142ed
db-1 | 8:net_cls:/docker/9a911baeade7c4074413b7eed5685beeef6e8d564fe49c2e883ac356e6c142ed
db-1 | 7:freezer:/docker/9a911baeade7c4074413b7eed5685beeef6e8d564fe49c2e883ac356e6c142ed
db-1 | 6:devices:/docker/9a911baeade7c4074413b7eed5685beeef6e8d564fe49c2e883ac356e6c142ed
db-1 | 5:memory:/docker/9a911baeade7c4074413b7eed5685beeef6e8d564fe49c2e883ac356e6c142ed
db-1 | 4:blkio:/docker/9a911baeade7c4074413b7eed5685beeef6e8d564fe49c2e883ac356e6c142ed
db-1 | 3:cpuacct:/docker/9a911baeade7c4074413b7eed5685beeef6e8d564fe49c2e883ac356e6c142ed
db-1 | 2:cpu:/docker/9a911baeade7c4074413b7eed5685beeef6e8d564fe49c2e883ac356e6c142ed
db-1 | 1:cpuset:/docker/9a911baeade7c4074413b7eed5685beeef6e8d564fe49c2e883ac356e6c142ed
db-1 | 0::/docker/9a911baeade7c4074413b7eed5685beeef6e8d564fe49c2e883ac356e6c142ed/memory.pressure not writable, functionality unavailable to MariaDB
db-1 | 2024-06-23 14:12:26+00:00 [Note] [Entrypoint]: Switching to dedicated user 'mysql'
db-1 | 2024-06-23 14:12:26+00:00 [Note] [Entrypoint]: Entrypoint script for MariaDB Server 1:10.11.8+maria~ubu2204 started.
pastefy-1 | SLF4J: Failed to load class "org.slf4j.impl.StaticLoggerBinder".
pastefy-1 | SLF4J: Defaulting to no-operation (NOP) logger implementation
pastefy-1 | SLF4J: See http://www.slf4j.org/codes.html#StaticLoggerBinder for further details.
db-1 | 2024-06-23 14:12:26+00:00 [Note] [Entrypoint]: MariaDB upgrade not required
db-1 | 2024-06-23 14:12:26 0 [Note] Starting MariaDB 10.11.8-MariaDB-ubu2204 source revision 3a069644682e336e445039e48baae9693f9a08ee as process 1
db-1 | 2024-06-23 14:12:26 0 [Note] InnoDB: Compressed tables use zlib 1.2.11
db-1 | 2024-06-23 14:12:26 0 [Note] InnoDB: Number of transaction pools: 1
db-1 | 2024-06-23 14:12:26 0 [Note] InnoDB: Using crc32 + pclmulqdq instructions
db-1 | 2024-06-23 14:12:26 0 [Note] mariadbd: O_TMPFILE is not supported on /tmp (disabling future attempts)
db-1 | 2024-06-23 14:12:26 0 [Note] InnoDB: Using liburing
db-1 | 2024-06-23 14:12:26 0 [Note] InnoDB: Initializing buffer pool, total size = 128.000MiB, chunk size = 2.000MiB
db-1 | 2024-06-23 14:12:26 0 [Note] InnoDB: Completed initialization of buffer pool
db-1 | 2024-06-23 14:12:26 0 [Note] InnoDB: File system buffers for log disabled (block size=4096 bytes)
db-1 | 2024-06-23 14:12:26 0 [Note] InnoDB: End of log at LSN=46950
db-1 | 2024-06-23 14:12:26 0 [Note] InnoDB: 128 rollback segments are active.
db-1 | 2024-06-23 14:12:26 0 [Note] InnoDB: Setting file './ibtmp1' size to 12.000MiB. Physically writing the file full; Please wait ...
db-1 | 2024-06-23 14:12:26 0 [Note] InnoDB: File './ibtmp1' size is now 12.000MiB.
db-1 | 2024-06-23 14:12:26 0 [Note] InnoDB: log sequence number 46950; transaction id 14
db-1 | 2024-06-23 14:12:26 0 [Note] InnoDB: Loading buffer pool(s) from /var/lib/mysql/ib_buffer_pool
db-1 | 2024-06-23 14:12:26 0 [Note] Plugin 'FEEDBACK' is disabled.
db-1 | 2024-06-23 14:12:26 0 [Note] InnoDB: Buffer pool(s) load completed at 240623 14:12:26
db-1 | 2024-06-23 14:12:26 0 [Warning] You need to use --log-bin to make --expire-logs-days or --binlog-expire-logs-seconds work.
db-1 | 2024-06-23 14:12:26 0 [Note] Server socket created on IP: '0.0.0.0'.
db-1 | 2024-06-23 14:12:26 0 [Note] Server socket created on IP: '::'.
db-1 | 2024-06-23 14:12:26 0 [Note] mariadbd: ready for connections.
db-1 | Version: '10.11.8-MariaDB-ubu2204' socket: '/run/mysqld/mysqld.sock' port: 3306 mariadb.org binary distribution
pastefy-1 | Jun 23, 2024 2:12:28 PM org.javawebstack.httpserver.HTTPServer start
pastefy-1 | INFO: HTTP-Server started on port 80
```

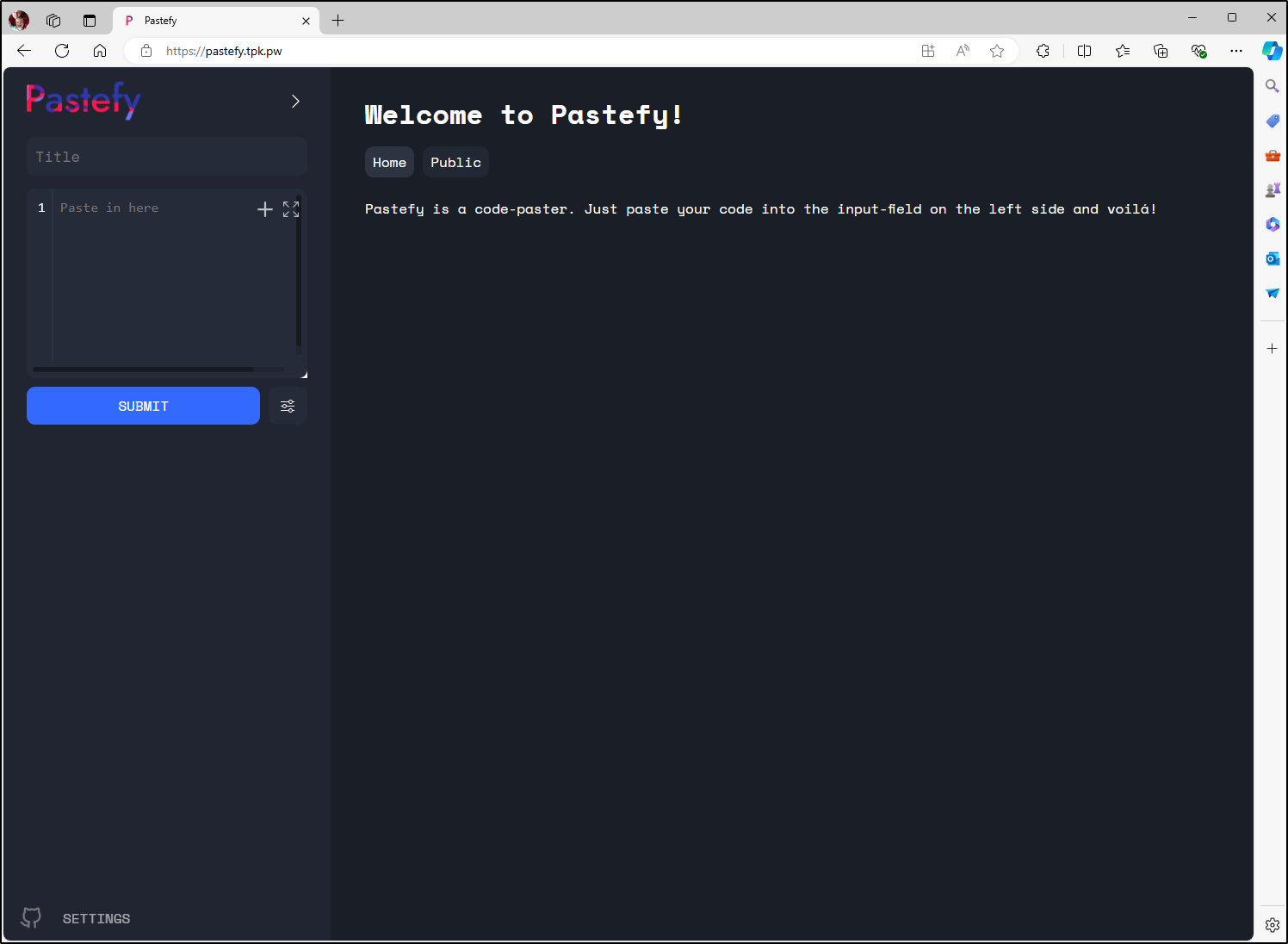

I can now see it running on port 9999

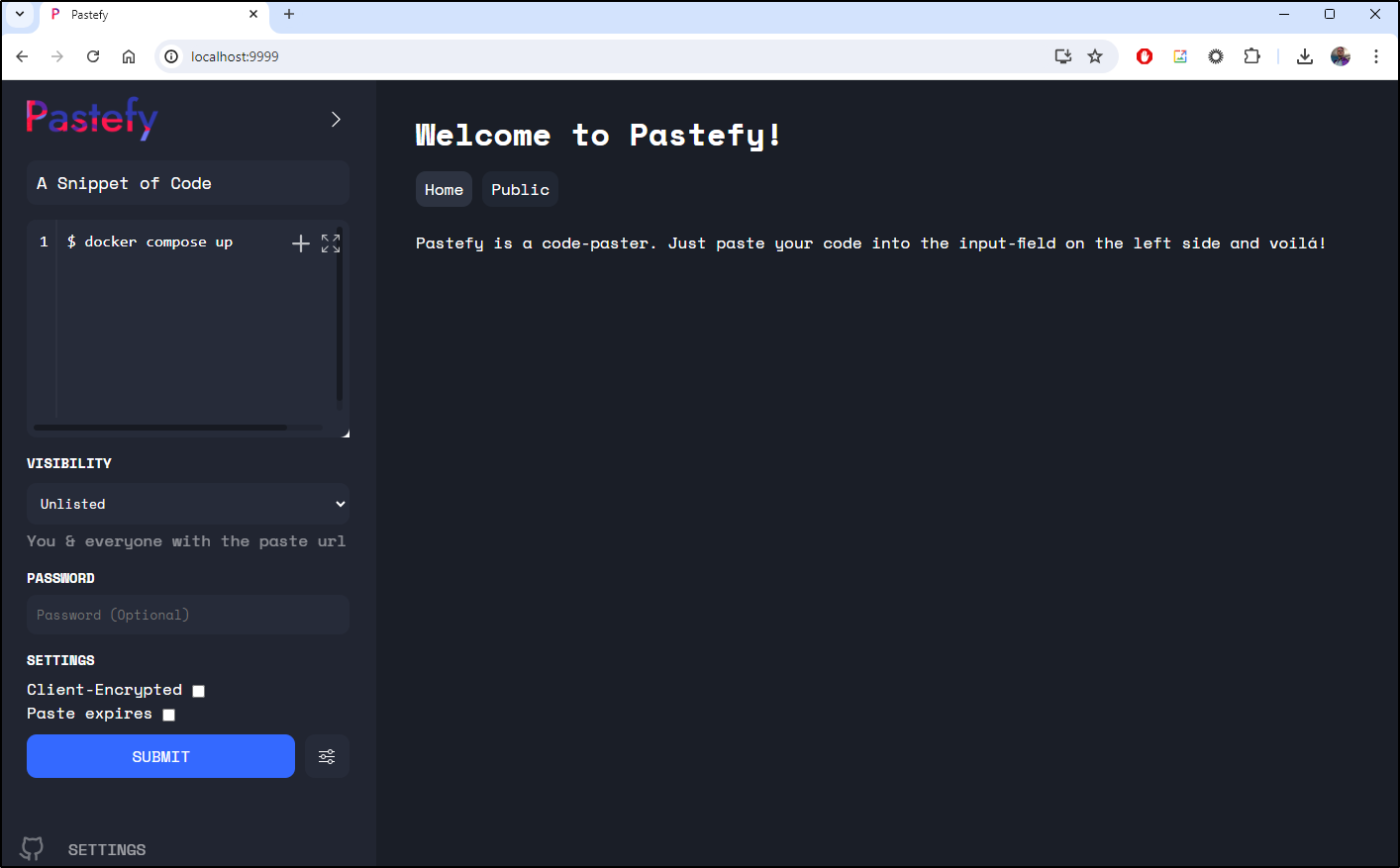

I can then create an unlisted paste

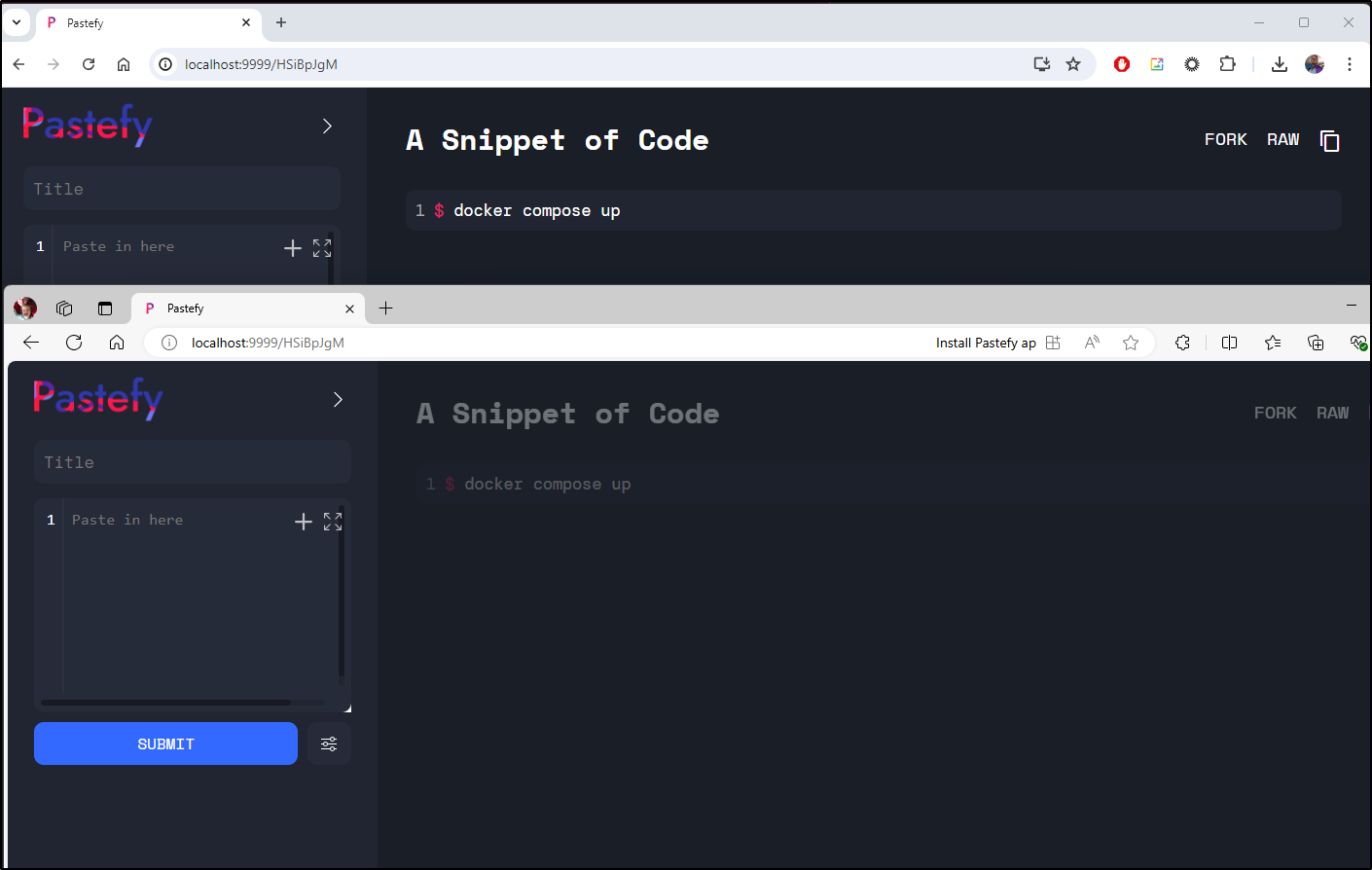

When I submit, I can see the URL at the top; it is also copied to my clipboard at the same time. I tested the link in another browser.

Because I didn’t launch with -d, I can just press Ctrl-C to shut down the Docker Compose session:

```
pastefy-1 | Jun 23, 2024 2:12:28 PM org.javawebstack.httpserver.HTTPServer start
pastefy-1 | INFO: HTTP-Server started on port 80
^CGracefully stopping... (press Ctrl+C again to force)
[+] Stopping 2/2
 ✔ Container pastefy-pastefy-1 Stopped 0.7s
 ✔ Container pastefy-db-1 Stopped 0.8s
canceled
```

I want to try this in Kubernetes next, but I’m really distrustful of OPPs, so I’ll push out my own local container build:

```
$ docker push idjohnson/pastefy:test
The push refers to repository [docker.io/idjohnson/pastefy]
425b494f9d24: Pushed
b66078cf4b41: Mounted from library/openjdk
cd5a0a9f1e01: Mounted from library/openjdk
eafe6e032dbd: Mounted from library/openjdk
92a4e8a3140f: Mounted from library/openjdk
test: digest: sha256:4c2088a66e8c197de59fc4d6db9864a6478fd66be9484eb1b67a0ef321c59e0d size: 1371
```

I created an A record for pastefy.tpk.pw.

The author provides a YAML manifest we can use as a basis:

```
builder@LuiGi:~/Workspaces/pastefy/deployment$ cat prod.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: pastefy-prod
  labels:
    app: pastefy-prod
spec:
  selector:
    matchLabels:
      app: pastefy-prod
  template:
    metadata:
      labels:
        app: pastefy-prod
    spec:
      containers:
        - name: pastefy-prod
          image: 'idjohnson/pastefy:test'
          imagePullPolicy: Always
          ports:
            - containerPort: 80
          envFrom:
            - configMapRef:
                name: pastefy-env
  revisionHistoryLimit: 1
---
kind: Service
apiVersion: v1
metadata:
  name: pastefy-prod
spec:
  ports:
    - protocol: TCP
      port: 80
      targetPort: 80
  selector:
    app: pastefy-prod
```

I don’t see a MariaDB instance in the manifest, nor any configuration for its database.

Let’s solve that by applying a YAML manifest that creates a database in the namespace:

```
$ cat db2.yaml
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: mariadb-pvc
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: mariadb-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      app: mariadb
  template:
    metadata:
      labels:
        app: mariadb
    spec:
      containers:
        - name: mariadb
          image: mariadb:10
          env:
            - name: MYSQL_ROOT_PASSWORD
              value: "MyG@@Password"
            - name: MYSQL_USER
              value: "MyGoodUser"
            - name: MYSQL_PASSWORD
              value: "MyG@@dPassword"
            - name: MYSQL_DATABASE
              value: "pastefy"
          ports:
            - containerPort: 3306
          volumeMounts:
            - name: mariadb-storage
              mountPath: /var/lib/mysql
      volumes:
        - name: mariadb-storage
          persistentVolumeClaim:
            claimName: mariadb-pvc
---
apiVersion: v1
kind: Service
metadata:
  name: mariadb-service
spec:
  type: ClusterIP
  ports:
    - port: 3306
  selector:
    app: mariadb
```

Here we can create a namespace and launch our MariaDB database

```
$ kubectl create ns pastefy
namespace/pastefy created
$ kubectl apply -f ./db2.yaml -n pastefy
persistentvolumeclaim/mariadb-pvc created
deployment.apps/mariadb-deployment created
service/mariadb-service created
```

Let’s look at the settings (despite the .yaml name, this is a KEY=VALUE env-style file):

```
$ cat settings.yaml
http.server.port=1337
HTTP_SERVER_CORS=*
DATABASE_DRIVER=mysql
DATABASE_NAME=pastefy
DATABASE_USER=MyGoodUser
DATABASE_PASSWORD=MyG@@dPassword
DATABASE_HOST=mariadb-service
DATABASE_PORT=3306
SERVER_NAME=https://pastefy.tpk.pw
# Optional
# PASTEFY_INFO_CUSTOM_LOGO=https://urltoimage
PASTEFY_INFO_CUSTOM_NAME=Pastefy
PASTEFY_INFO_CUSTOM_FOOTER=WEBSITE=https://example.org,SEPERATED BY COMMA=https://example.org
# Requires login for read and creation of pastes
PASTEFY_LOGIN_REQUIRED=false
# Login-requirements for specific access types
PASTEFY_LOGIN_REQUIRED_CREATE=false
# This will disable the raw mode as well for browser users
PASTEFY_LOGIN_REQUIRED_READ=false
# Check the encryption checkbox by default
PASTEFY_ENCRYPTION_DEFAULT=false
# Requires every new account being accepted by an administrator
PASTEFY_GRANT_ACCESS_REQUIRED=false
# Allows /paste route listing all pastes
PASTEFY_LIST_PASTES=false
# Makes /app/stats public
PASTEFY_PUBLIC_STATS=false
# Disables public pastes section
PASTEFY_PUBLIC_PASTES=false

$ kubectl create configmap -n pastefy pastefy-env --from-file=settings.yaml
configmap/pastefy-env created
```

Now I’ll test Pastefy with those settings:

```
$ kubectl apply -f ./prod-dep.yaml -n pastefy
deployment.apps/pastefy-prod created
service/pastefy-prod created
```

I can see pods running

```
$ kubectl get pods -n pastefy
NAME                                  READY   STATUS    RESTARTS   AGE
mariadb-deployment-7cc794cfcd-4kzs8   1/1     Running   0          18m
pastefy-prod-65cc745864-8b4wn         1/1     Running   0          36s
```

However, the logs suggest the SQL driver setting never arrived (note the driver name “none”):

```
$ kubectl logs pastefy-prod-65cc745864-8b4wn -n pastefy
org.javawebstack.orm.wrapper.SQLDriverNotFoundException: SQL Driver none not found!
    at org.javawebstack.orm.wrapper.SQLDriverFactory.getDriver(SQLDriverFactory.java:34)
    at de.interaapps.pastefy.Pastefy.setupModels(Pastefy.java:170)
    at de.interaapps.pastefy.Pastefy.<init>(Pastefy.java:85)
    at de.interaapps.pastefy.Pastefy.main(Pastefy.java:312)
SLF4J: Failed to load class "org.slf4j.impl.StaticLoggerBinder".
SLF4J: Defaulting to no-operation (NOP) logger implementation
SLF4J: See http://www.slf4j.org/codes.html#StaticLoggerBinder for further details.
Exception in thread "Timer-0" java.lang.NullPointerException
    at de.interaapps.pastefy.Pastefy$2.run(Pastefy.java:300)
    at java.util.TimerThread.mainLoop(Timer.java:555)
    at java.util.TimerThread.run(Timer.java:505)
Jun 24, 2024 12:45:39 AM org.javawebstack.httpserver.HTTPServer start
INFO: HTTP-Server started on port 80
```

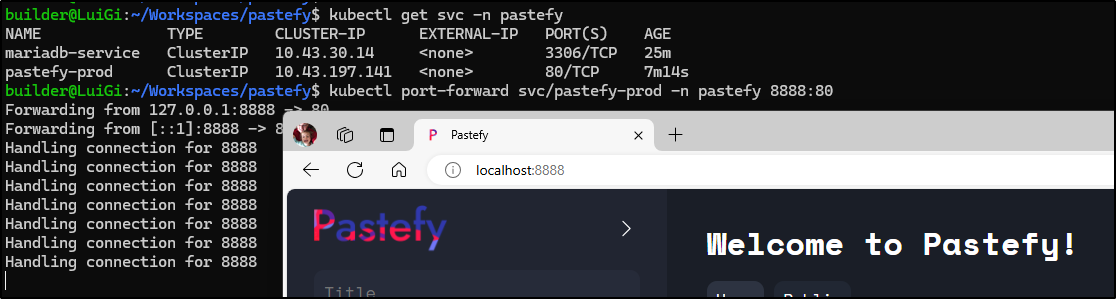

Checking that, we can see it is functioning. Here are the services:

```
$ kubectl get svc -n pastefy
NAME              TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)    AGE
mariadb-service   ClusterIP   10.43.30.14     <none>        3306/TCP   25m
pastefy-prod      ClusterIP   10.43.197.141   <none>        80/TCP     7m14s
```

I can then create the ingress

```
$ cat ./ingress.yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    cert-manager.io/cluster-issuer: azuredns-tpkpw
    ingress.kubernetes.io/ssl-redirect: "true"
    kubernetes.io/ingress.class: nginx
    kubernetes.io/tls-acme: "true"
    nginx.ingress.kubernetes.io/ssl-redirect: "true"
  name: pastefy-ingress
spec:
  rules:
    - host: pastefy.tpk.pw
      http:
        paths:
          - backend:
              service:
                name: pastefy-prod
                port:
                  number: 80
            path: /
            pathType: Prefix
  tls:
    - hosts:
        - pastefy.tpk.pw
      secretName: pastefy-tls

$ kubectl apply -f ./ingress.yaml -n pastefy
ingress.networking.k8s.io/pastefy-ingress created
```

Once the certificate is satisfied:

```
$ kubectl get cert -n pastefy
NAME          READY   SECRET        AGE
pastefy-tls   True    pastefy-tls   90s
```

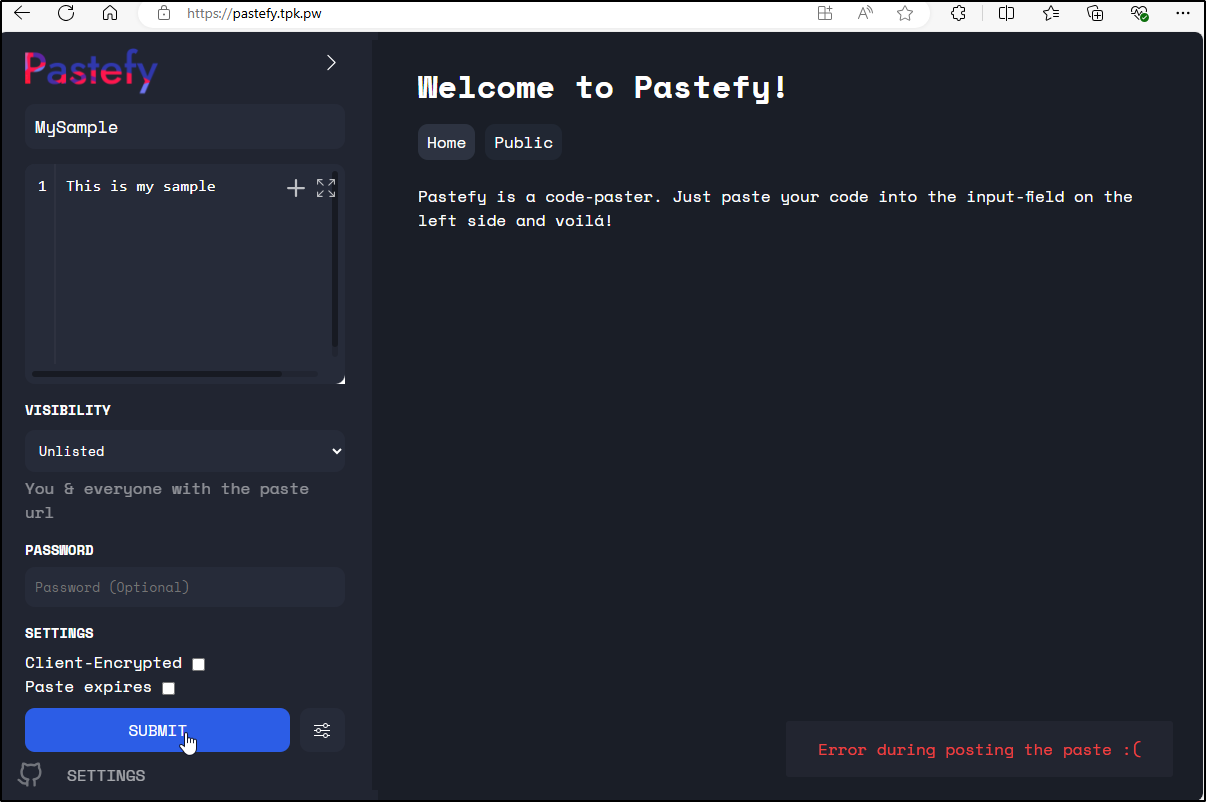

It’s now live at pastefy.tpk.pw

However, I keep seeing Java errors in the logs:

```
$ kubectl logs pastefy-prod-65cc745864-8b4wn -n pastefy
org.javawebstack.orm.wrapper.SQLDriverNotFoundException: SQL Driver none not found!
    at org.javawebstack.orm.wrapper.SQLDriverFactory.getDriver(SQLDriverFactory.java:34)
    at de.interaapps.pastefy.Pastefy.setupModels(Pastefy.java:170)
    at de.interaapps.pastefy.Pastefy.<init>(Pastefy.java:85)
    at de.interaapps.pastefy.Pastefy.main(Pastefy.java:312)
SLF4J: Failed to load class "org.slf4j.impl.StaticLoggerBinder".
SLF4J: Defaulting to no-operation (NOP) logger implementation
SLF4J: See http://www.slf4j.org/codes.html#StaticLoggerBinder for further details.
Exception in thread "Timer-0" java.lang.NullPointerException
    at de.interaapps.pastefy.Pastefy$2.run(Pastefy.java:300)
    at java.util.TimerThread.mainLoop(Timer.java:555)
    at java.util.TimerThread.run(Timer.java:505)
Jun 24, 2024 12:45:39 AM org.javawebstack.httpserver.HTTPServer start
INFO: HTTP-Server started on port 80
java.lang.NullPointerException
    at de.interaapps.pastefy.controller.PasteController.getPaste(PasteController.java:167)
    at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
    at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
    at java.lang.reflect.Method.invoke(Method.java:498)
    at org.javawebstack.httpserver.router.RouteBinder$BindMapper.invoke(RouteBinder.java:254)
    at org.javawebstack.httpserver.router.RouteBinder$BindHandler.handle(RouteBinder.java:300)
    at org.javawebstack.httpserver.HTTPServer.execute(HTTPServer.java:339)
    at org.javawebstack.httpserver.HTTPServer$HttpHandler.handle(HTTPServer.java:415)
    at org.eclipse.jetty.server.handler.HandlerWrapper.handle(HandlerWrapper.java:127)
    at org.eclipse.jetty.server.Server.handle(Server.java:516)
    at org.eclipse.jetty.server.HttpChannel.lambda$handle$1(HttpChannel.java:400)
    at org.eclipse.jetty.server.HttpChannel.dispatch(HttpChannel.java:645)
    at org.eclipse.jetty.server.HttpChannel.handle(HttpChannel.java:392)
    at org.eclipse.jetty.server.HttpConnection.onFillable(HttpConnection.java:277)
    at org.eclipse.jetty.io.AbstractConnection$ReadCallback.succeeded(AbstractConnection.java:311)
    at org.eclipse.jetty.io.FillInterest.fillable(FillInterest.java:105)
    at org.eclipse.jetty.io.ChannelEndPoint$1.run(ChannelEndPoint.java:104)
    at org.eclipse.jetty.util.thread.strategy.EatWhatYouKill.runTask(EatWhatYouKill.java:338)
    at org.eclipse.jetty.util.thread.strategy.EatWhatYouKill.doProduce(EatWhatYouKill.java:315)
    at org.eclipse.jetty.util.thread.strategy.EatWhatYouKill.tryProduce(EatWhatYouKill.java:173)
    at org.eclipse.jetty.util.thread.strategy.EatWhatYouKill.run(EatWhatYouKill.java:131)
    at org.eclipse.jetty.util.thread.ReservedThreadExecutor$ReservedThread.run(ReservedThreadExecutor.java:409)
    at org.eclipse.jetty.util.thread.QueuedThreadPool.runJob(QueuedThreadPool.java:883)
    at org.eclipse.jetty.util.thread.QueuedThreadPool$Runner.run(QueuedThreadPool.java:1034)
    at java.lang.Thread.run(Thread.java:750)
java.lang.NullPointerException
    at de.interaapps.pastefy.controller.PasteController.getPaste(PasteController.java:167)
    at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
    at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
    at java.lang.reflect.Method.invoke(Method.java:498)
    at org.javawebstack.httpserver.router.RouteBinder$BindMapper.invoke(RouteBinder.java:254)
    at org.javawebstack.httpserver.router.RouteBinder$BindHandler.handle(RouteBinder.java:300)
    at org.javawebstack.httpserver.HTTPServer.execute(HTTPServer.java:339)
    at org.javawebstack.httpserver.HTTPServer$HttpHandler.handle(HTTPServer.java:415)
    at org.eclipse.jetty.server.handler.HandlerWrapper.handle(HandlerWrapper.java:127)
    at org.eclipse.jetty.server.Server.handle(Server.java:516)
    at org.eclipse.jetty.server.HttpChannel.lambda$handle$1(HttpChannel.java:400)
    at org.eclipse.jetty.server.HttpChannel.dispatch(HttpChannel.java:645)
    at org.eclipse.jetty.server.HttpChannel.handle(HttpChannel.java:392)
    at org.eclipse.jetty.server.HttpConnection.onFillable(HttpConnection.java:277)
    at org.eclipse.jetty.io.AbstractConnection$ReadCallback.succeeded(AbstractConnection.java:311)
    at org.eclipse.jetty.io.FillInterest.fillable(FillInterest.java:105)
    at org.eclipse.jetty.io.ChannelEndPoint$1.run(ChannelEndPoint.java:104)
    at org.eclipse.jetty.util.thread.strategy.EatWhatYouKill.runTask(EatWhatYouKill.java:338)
    at org.eclipse.jetty.util.thread.strategy.EatWhatYouKill.doProduce(EatWhatYouKill.java:315)
    at org.eclipse.jetty.util.thread.strategy.EatWhatYouKill.tryProduce(EatWhatYouKill.java:173)
    at org.eclipse.jetty.util.thread.strategy.EatWhatYouKill.run(EatWhatYouKill.java:131)
    at org.eclipse.jetty.util.thread.ReservedThreadExecutor$ReservedThread.run(ReservedThreadExecutor.java:409)
    at org.eclipse.jetty.util.thread.QueuedThreadPool.runJob(QueuedThreadPool.java:883)
    at org.eclipse.jetty.util.thread.QueuedThreadPool$Runner.run(QueuedThreadPool.java:1034)
    at java.lang.Thread.run(Thread.java:750)
```

Let me pivot to setting the ConfigMap from key/value pairs:

```
$ kubectl delete cm pastefy-env -n pastefy
configmap "pastefy-env" deleted
$ kubectl create configmap -n pastefy pastefy-env \
  --from-literal=http.server.port=1337 \
  --from-literal=HTTP_SERVER_CORS='*' \
  --from-literal=DATABASE_DRIVER=mysql \
  --from-literal=DATABASE_NAME=pastefy \
  --from-literal=DATABASE_USER=MyGoodUser \
  --from-literal=DATABASE_PASSWORD='MyG@@dPassword' \
  --from-literal=DATABASE_HOST=mariadb-service \
  --from-literal=DATABASE_PORT=3306 \
  --from-literal=SERVER_NAME=https://pastefy.tpk.pw \
  --from-literal=PASTEFY_INFO_CUSTOM_NAME=Pastefy \
  --from-literal=PASTEFY_INFO_CUSTOM_FOOTER='WEBSITE=https://example.org,SEPERATED BY COMMA=https://example.org' \
  --from-literal=PASTEFY_LOGIN_REQUIRED=false \
  --from-literal=PASTEFY_LOGIN_REQUIRED_CREATE=false \
  --from-literal=PASTEFY_LOGIN_REQUIRED_READ=false \
  --from-literal=PASTEFY_ENCRYPTION_DEFAULT=false \
  --from-literal=PASTEFY_GRANT_ACCESS_REQUIRED=false
configmap/pastefy-env created
```
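A likely reason the first ConfigMap didn’t take: `kubectl create configmap --from-file=settings.yaml` stores the entire file under a single key named `settings.yaml`, so `envFrom` never exposes a `DATABASE_DRIVER` variable, which would fit the “SQL Driver none not found!” error. kubectl also has `--from-env-file=settings.yaml`, which parses `KEY=VALUE` lines into separate keys, much like the `--from-literal` list above. The Python below is only a simplified illustration of that difference (my own `parse_env_file` and `from_file` helpers), not kubectl’s actual parser.

```python
def parse_env_file(text: str) -> dict[str, str]:
    """Simplified KEY=VALUE parsing, similar in spirit to --from-env-file.

    Blank lines and lines starting with '#' are skipped; each remaining line is
    split on the first '=' only, so values may themselves contain '='.
    """
    data: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")
        if not sep:
            raise ValueError(f"line is not KEY=VALUE: {raw!r}")
        data[key] = value
    return data


def from_file(name: str, text: str) -> dict[str, str]:
    """What --from-file produces: one key (the file name) holding the whole file."""
    return {name: text}


SETTINGS = """\
DATABASE_DRIVER=mysql
DATABASE_HOST=mariadb-service
# Optional
PASTEFY_INFO_CUSTOM_FOOTER=WEBSITE=https://example.org,SEPERATED BY COMMA=https://example.org
"""

print(sorted(from_file("settings.yaml", SETTINGS)))  # ['settings.yaml']
print(sorted(parse_env_file(SETTINGS)))  # ['DATABASE_DRIVER', 'DATABASE_HOST', 'PASTEFY_INFO_CUSTOM_FOOTER']
```

Splitting on the first `=` matters here: the `PASTEFY_INFO_CUSTOM_FOOTER` value contains more `=` signs and must survive intact.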

I debugged for a while before scrapping my locally built container and moving on to the InteraApps image.

I’m not sure what was wrong with my container, but the author’s image worked once I switched to port 1337:

```
$ cat prod-dep.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: pastefy-prod
  labels:
    app: pastefy-prod
spec:
  selector:
    matchLabels:
      app: pastefy-prod
  template:
    metadata:
      labels:
        app: pastefy-prod
    spec:
      containers:
        - name: pastefy-prod
          image: 'interaapps/pastefy:latest'
          imagePullPolicy: Always
          ports:
            - containerPort: 1337
          envFrom:
            - configMapRef:
                name: pastefy-env
  revisionHistoryLimit: 1
---
kind: Service
apiVersion: v1
metadata:
  name: pastefy-prod
spec:
  ports:
    - protocol: TCP
      port: 80
      targetPort: 1337
  selector:
    app: pastefy-prod
```
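The working setup depends on three numbers agreeing: the port the app listens on (`http.server.port=1337` in the ConfigMap), the `containerPort`, and the Service’s `targetPort`. The Service forwards to whatever `targetPort` says (defaulting to `port` when omitted), while `containerPort` is largely informational, so a stale `targetPort` is the mismatch that actually breaks traffic. Here is a small consistency check, assuming the manifests are already loaded into Python dicts (for example with a YAML library, not shown); `check_ports` is a hypothetical helper, and it can only compare the manifests with each other, not see which port the process really binds.

```python
def check_ports(deployment: dict, service: dict) -> list[str]:
    """Return a message for each Service targetPort that matches no containerPort."""
    container_ports = set()
    for c in deployment["spec"]["template"]["spec"]["containers"]:
        for p in c.get("ports", []):
            container_ports.add(p["containerPort"])
            if "name" in p:
                container_ports.add(p["name"])  # targetPort may reference a named port
    problems = []
    for p in service["spec"]["ports"]:
        target = p.get("targetPort", p["port"])  # targetPort defaults to port
        if target not in container_ports:
            problems.append(f"Service port {p['port']} -> targetPort {target} matches no containerPort")
    return problems


# Trimmed-down versions of the manifests above.
deployment = {"spec": {"template": {"spec": {"containers": [
    {"name": "pastefy-prod", "ports": [{"containerPort": 1337}]}]}}}}
good_service = {"spec": {"ports": [{"protocol": "TCP", "port": 80, "targetPort": 1337}]}}
stale_service = {"spec": {"ports": [{"protocol": "TCP", "port": 80, "targetPort": 80}]}}

print(check_ports(deployment, good_service))   # []
print(check_ports(deployment, stale_service))  # ['Service port 80 -> targetPort 80 matches no containerPort']
```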

We can see a test:

Dufs

Dufs is a simple file server we can use to serve up arbitrary content. It seems to have been created by Sigoden, but the GitHub profile offers no details, so it’s hard to say much other than that whoever Sigoden is, they sure commit an awful lot of open-source code.

The Dufs project has been active for at least two years and seems to release fairly regularly.

Setup

The project suggests this Docker command to whip up a Dufs server:

```
$ docker run -v `pwd`:/data -p 5000:5000 --rm sigoden/dufs /data -A
```

Let’s turn that into a standard Kubernetes YAML manifest with Service, Deployment and PVC sections

```
$ cat ./dufs.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: dufs-app
spec:
  replicas: 1
  selector:
    matchLabels:
      app: dufs
  template:
    metadata:
      labels:
        app: dufs
    spec:
      containers:
        - name: dufs-container
          image: sigoden/dufs
          ports:
            - containerPort: 5000
          volumeMounts:
            - name: dufs
              mountPath: /data
      volumes:
        - name: dufs
          persistentVolumeClaim:
            claimName: dufs-pvc
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: dufs-pvc
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
---
apiVersion: v1
kind: Service
metadata:
  name: dufs-service
spec:
  selector:
    app: dufs
  ports:
    - protocol: TCP
      port: 80
      targetPort: 5000
```

I can then apply it:

```
$ kubectl apply -f ./dufs.yaml
deployment.apps/dufs-app created
persistentvolumeclaim/dufs-pvc created
service/dufs-service created
```

Since I know I’ll want to test externally, I’ll take a moment to drop an A record in Route53

```
$ cat r53-dufs.json
{
  "Comment": "CREATE dufs fb.s A record ",
  "Changes": [
    {
      "Action": "CREATE",
      "ResourceRecordSet": {
        "Name": "dufs.freshbrewed.science",
        "Type": "A",
        "TTL": 300,
        "ResourceRecords": [
          {
            "Value": "75.73.224.240"
          }
        ]
      }
    }
  ]
}

$ aws route53 change-resource-record-sets --hosted-zone-id Z39E8QFU0F9PZP --change-batch file://r53-dufs.json
{
    "ChangeInfo": {
        "Id": "/change/C0711640R4M7338VAMA5",
        "Status": "PENDING",
        "SubmittedAt": "2024-06-26T21:20:42.340Z",
        "Comment": "CREATE dufs fb.s A record "
    }
}
```
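Hand-writing the change batch is fine for one record. If you create these often, a small generator keeps the JSON consistent. This is a sketch using only the standard library; `a_record_change_batch` is a hypothetical helper that mirrors the structure of r53-dufs.json, and its `Comment` wording is my own.

```python
import json


def a_record_change_batch(name: str, ip: str, ttl: int = 300, action: str = "CREATE") -> dict:
    """Build a Route 53 change batch containing a single A record change."""
    return {
        "Comment": f"{action} {name} A record",
        "Changes": [
            {
                "Action": action,  # CREATE, UPSERT or DELETE
                "ResourceRecordSet": {
                    "Name": name,
                    "Type": "A",
                    "TTL": ttl,
                    "ResourceRecords": [{"Value": ip}],
                },
            }
        ],
    }


batch = a_record_change_batch("dufs.freshbrewed.science", "75.73.224.240")
print(json.dumps(batch, indent=2))
```

Redirect the output to a file and pass it to `aws route53 change-resource-record-sets --change-batch file://...` as before. Passing `action="UPSERT"` makes a rerun update the record instead of failing because it already exists.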

I can then use that hostname in an Ingress for the service:

```
$ cat dufs.ingress.yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    cert-manager.io/cluster-issuer: letsencrypt-prod
    ingress.kubernetes.io/ssl-redirect: "true"
    kubernetes.io/ingress.class: nginx
    kubernetes.io/tls-acme: "true"
    nginx.ingress.kubernetes.io/ssl-redirect: "true"
    nginx.org/websocket-services: dufs-service
  name: dufs
spec:
  rules:
    - host: dufs.freshbrewed.science
      http:
        paths:
          - backend:
              service:
                name: dufs-service
                port:
                  number: 80
            path: /
            pathType: ImplementationSpecific
  tls:
    - hosts:
        - dufs.freshbrewed.science
      secretName: dufs-tls

$ kubectl apply -f ./dufs.ingress.yaml
ingress.networking.k8s.io/dufs created
```

Once the certificate is issued:

```
$ kubectl get cert dufs-tls
NAME       READY   SECRET     AGE
dufs-tls   True    dufs-tls   1m
```

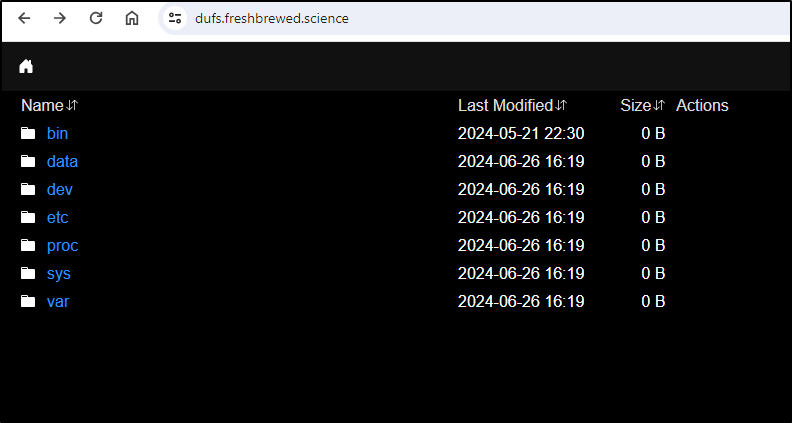

We can browse the files now

However, it seems to show the whole container filesystem.

We can fix that by serving just the /data folder, and pass -A to allow all operations (such as uploads):

```
apiVersion: apps/v1
kind: Deployment
metadata:
  name: dufs-app
spec:
  replicas: 1
  selector:
    matchLabels:
      app: dufs
  template:
    metadata:
      labels:
        app: dufs
    spec:
      containers:
        - name: dufs-container
          image: sigoden/dufs
          command: ["dufs", "/data", "-A"]
          ports:
            - containerPort: 5000
          volumeMounts:
            - name: dufs
              mountPath: /data
      volumes:
        - name: dufs
          persistentVolumeClaim:
            claimName: dufs-pvc
```

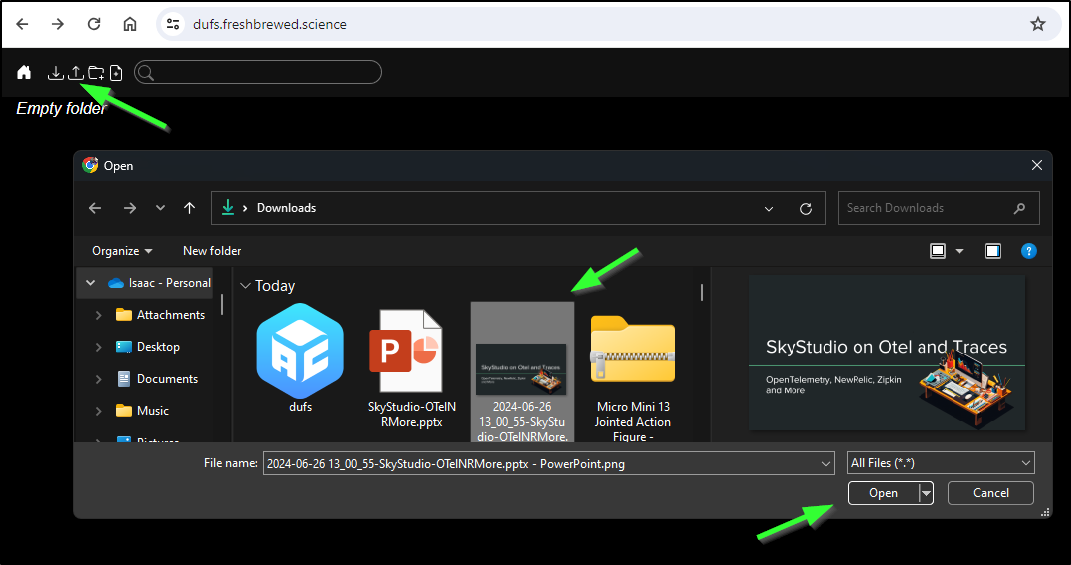

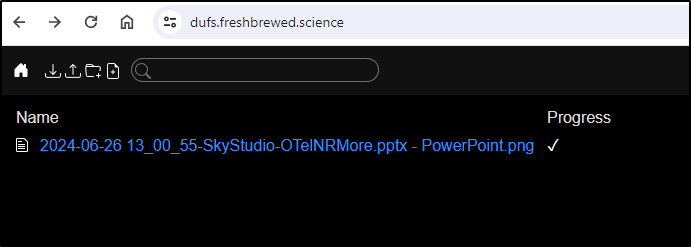

I can now upload an image

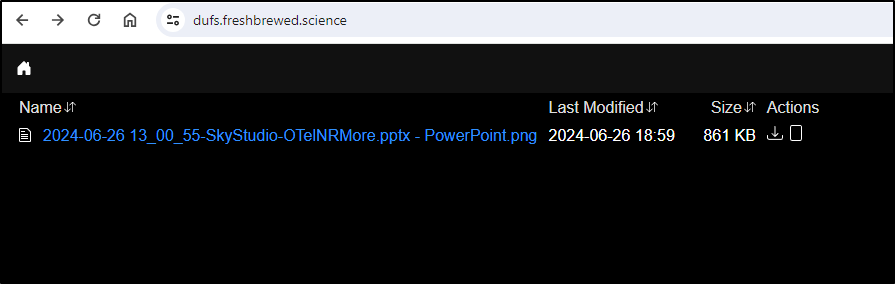

And we can see it’s now there
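The browser upload works because `-A` enables uploads. The Dufs README also documents uploading with `curl -T`, which sends an HTTP PUT to the target path, so a job could push its output the same way. Here is a minimal standard-library sketch, assuming Dufs accepts a PUT of the raw file body at the destination path with no authentication configured; `dufs_upload` and the example path are hypothetical names of mine.

```python
import urllib.parse
import urllib.request


def dufs_upload(base_url: str, remote_path: str, data: bytes, timeout: float = 30.0) -> int:
    """PUT raw bytes to base_url/remote_path and return the HTTP status code.

    urlopen raises urllib.error.HTTPError for 4xx/5xx responses, e.g. when
    the server refuses uploads because it was started without -A.
    """
    # Percent-encode the path (spaces become %20) but keep '/' separators.
    url = base_url.rstrip("/") + "/" + urllib.parse.quote(remote_path.lstrip("/"))
    req = urllib.request.Request(url, data=data, method="PUT")
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return resp.status
```

A call such as `dufs_upload("https://dufs.example.com", "jobs/nodes.txt", b"...")` would place the file under /data/jobs on the PVC, assuming the server still runs with `-A`.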

I can then edit the deployment and remove “-A”:

```
spec:
  progressDeadlineSeconds: 600
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      app: dufs
  strategy:
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%
    type: RollingUpdate
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: dufs
    spec:
      containers:
      - command:
        - dufs
        - /data
        image: sigoden/dufs
        imagePullPolicy: Always
        name: dufs-container
        ports:
        - containerPort: 5000
          protocol: TCP
        resources: {}
        terminationMessagePath: /dev/termination-log
        terminationMessagePolicy: File
        volumeMounts:
        - mountPath: /data
          name: dufs
      dnsPolicy: ClusterFirst
      restartPolicy: Always
      schedulerName: default-scheduler
      securityContext: {}
      terminationGracePeriodSeconds: 30
      volumes:
      - name: dufs
        persistentVolumeClaim:
          claimName: dufs-pvc
```

Now I can see that only download is available.
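Editing the Deployment by hand works, but the same toggle can be done non-interactively with a JSON patch against the container's `command`. This is a minimal sketch, assuming bash, the `dufs-app` Deployment above with its single container at index 0, and the dufs flags `--allow-upload` and `-A` (`--allow-all`) from the Dufs README; the function names and the mode names `readonly`, `upload`, and `all` are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: map a permission level to the dufs container command.
dufs_command_json() {
  case "$1" in
    readonly) echo '["dufs","/data"]' ;;                  # browse and download only
    upload)   echo '["dufs","/data","--allow-upload"]' ;; # also accept uploads
    all)      echo '["dufs","/data","-A"]' ;;             # allow all operations
    *) echo "unknown mode: $1" >&2; return 1 ;;
  esac
}

# Replace the container command with a JSON patch; changing the pod
# template makes the Deployment roll out a new pod.
set_dufs_mode() {
  local cmd
  cmd="$(dufs_command_json "$1")" || return 1
  kubectl patch deployment "${2:-dufs-app}" --type=json \
    -p "[{\"op\":\"replace\",\"path\":\"/spec/template/spec/containers/0/command\",\"value\":${cmd}}]"
}
```

Usage: `set_dufs_mode readonly` reproduces the hand edit above, and `set_dufs_mode all` restores uploads.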

We can see the image is now hosted at https://dufs.freshbrewed.science/2024-06-26%2013_00_55-SkyStudio-OTelNRMore.pptx%20-%20PowerPoint.png.
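The spaces in the uploaded filename show up as `%20` in that link. If you want to build such links from a script, a small percent-encoder is enough. This is a sketch assuming bash and ASCII filenames like the one above; `urlencode_path` is a hypothetical helper that keeps RFC 3986 unreserved characters and `/` as-is and percent-encodes everything else.

```shell
#!/usr/bin/env bash
# Hypothetical helper: percent-encode a file path for use in a dufs URL.
# Letters, digits, . _ ~ - and / are kept; anything else becomes %XX.
urlencode_path() {
  local LC_ALL=C            # byte-wise handling and ASCII ranges in the pattern
  local s="$1" out="" c i
  for (( i = 0; i < ${#s}; i++ )); do
    c="${s:i:1}"
    case "$c" in
      [a-zA-Z0-9._~/-]) out+="$c" ;;
      *) printf -v c '%%%02X' "'$c"; out+="$c" ;;  # "'x" yields the char code of x
    esac
  done
  printf '%s\n' "$out"
}
```

For example, `echo "https://dufs.freshbrewed.science/$(urlencode_path 'my file.png')"` produces a link ending in `my%20file.png`.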

I wanted to try copying a file directly with ‘kubectl cp’, but that relies on ‘tar’ inside the target container, and the dufs container lacks bash, sh and tar:

```
$ kubectl cp ./test.txt dufs-app-7f48d7d7bc-26pws:/data/test.txt
error: Internal error occurred: error executing command in container: failed to exec in container: failed to start exec "2ac3f7848dc0961ba73399572b83cf550ade2da012315dcb5c504fb206cf4d77": OCI runtime exec failed: exec failed: unable to start container process: exec: "tar": executable file not found in $PATH: unknown
```
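Since Dufs is itself an HTTP file server, another route is to skip `kubectl cp` and upload over HTTP: the Dufs README documents uploads as a plain PUT (`curl -T`). That only works while uploads are enabled (`-A` or `--allow-upload`), so treat this as a sketch for the upload-enabled configuration, assuming bash and `curl`. `dufs_put` and the `DUFS_URL` override are hypothetical names.

```shell
#!/usr/bin/env bash
# Hypothetical helper: upload a local file to a dufs server with an HTTP PUT.
# Requires the server to run with -A or --allow-upload.
dufs_put() {
  local file="$1"
  local dest="${2:-$(basename "$file")}"   # remote path; must already be URL-safe
  local base="${DUFS_URL:-https://dufs.freshbrewed.science}"

  [ -f "$file" ] || { echo "no such file: $file" >&2; return 1; }
  # curl -T sends the file body as a PUT to the target URL;
  # -f makes curl exit non-zero on HTTP errors, e.g. when uploads are disabled.
  curl -fsS -T "$file" "${base%/}/${dest#/}"
}
```

Usage: `dufs_put ./test.txt` would place the file at the root of the share. Leaving uploads open on a public host is risky, so pairing this with the auth options in the Dufs README is worth considering.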

My second idea was to use a manifest to create a temporary pod that mounts the same PVC, upload a file, then delete the pod:

```
$ cat dufs2.yaml
apiVersion: v1
kind: Pod
metadata:
  name: ubuntu-pod
spec:
  containers:
  - name: ubuntu
    image: ubuntu
    command: ["sleep", "36400"]
    volumeMounts:
    - mountPath: /data
      name: data-volume
  volumes:
  - name: data-volume
    persistentVolumeClaim:
      claimName: dufs-pvc
  restartPolicy: Never
```

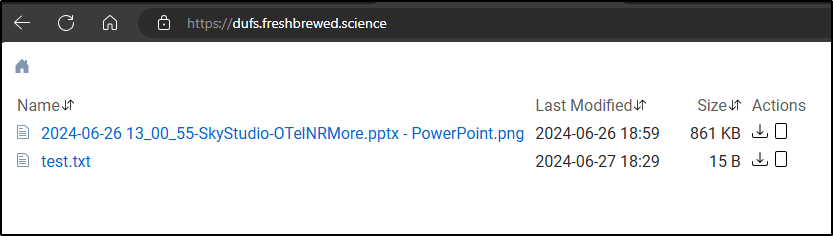

I launched it, copied a file, then removed the pod:

```
$ kubectl apply -f ./dufs2.yaml
pod/ubuntu-pod created
$ kubectl cp ./test.txt ubuntu-pod:/data/test.txt
$ kubectl delete -f ./dufs2.yaml
pod "ubuntu-pod" deleted
```

This seemed to work
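The launch, copy and delete steps can be wrapped in one function. Above, the `kubectl cp` ran right after `apply` and worked; adding a `kubectl wait` in between avoids racing the pod startup. This is a sketch assuming bash and the `dufs2.yaml` manifest above (pod name `ubuntu-pod`); `copy_via_helper_pod` is hypothetical. One caveat worth checking: if `dufs-pvc` is `ReadWriteOnce`, the helper pod generally needs to land on the same node as the dufs pod for the mount to succeed.

```shell
#!/usr/bin/env bash
# Hypothetical helper: copy a local file into the dufs PVC through a
# short-lived pod whose image has tar (which kubectl cp needs).
copy_via_helper_pod() {
  local file="$1"
  local manifest="${2:-./dufs2.yaml}"
  local pod="ubuntu-pod"        # must match metadata.name in the manifest
  local rc=0

  kubectl apply -f "$manifest" || return 1
  # Block until the pod is Ready so kubectl cp does not race the container start.
  if kubectl wait --for=condition=Ready "pod/${pod}" --timeout=120s; then
    kubectl cp "$file" "${pod}:/data/$(basename "$file")" || rc=1
  else
    rc=1
  fi
  # Always clean up the helper pod, even if the copy failed.
  kubectl delete -f "$manifest" || rc=1
  return "$rc"
}
```

Usage: `copy_via_helper_pod ./test.txt` mirrors the three commands above.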

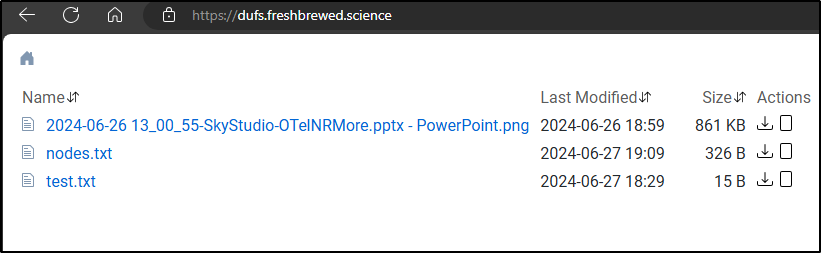

Next, I created a manifest for a Job that lists the cluster's nodes and saves the output to the same PVC:

```
$ cat test.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: kubectl-job-sa
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: node-list-role
rules:
- apiGroups: [""]
  resources: ["nodes"]
  verbs: ["list"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: node-list-role-binding
subjects:
- kind: ServiceAccount
  name: kubectl-job-sa
  namespace: default
roleRef:
  kind: ClusterRole
  name: node-list-role
  apiGroup: rbac.authorization.k8s.io
---
apiVersion: batch/v1
kind: Job
metadata:
  name: kubectl-job
spec:
  template:
    spec:
      serviceAccountName: kubectl-job-sa
      containers:
      - name: kubectl-container
        image: bitnami/kubectl:latest
        command: ["/bin/sh", "-c"]
        args:
        - |
          kubectl get nodes > /data/nodes.txt
        volumeMounts:
        - name: data-volume
          mountPath: /data
      volumes:
      - name: kubectl-config
        configMap:
          name: kubectl-config
      - name: data-volume
        persistentVolumeClaim:
          claimName: dufs-pvc
      restartPolicy: Never
  backoffLimit: 4
```

```
$ kubectl apply -f ./test.yaml
serviceaccount/kubectl-job-sa created
Warning: resource clusterroles/node-list-role is missing the kubectl.kubernetes.io/last-applied-configuration annotation which is required by kubectl apply. kubectl apply should only be used on resources created declaratively by either kubectl create --save-config or kubectl apply. The missing annotation will be patched automatically.
clusterrole.rbac.authorization.k8s.io/node-list-role configured
clusterrolebinding.rbac.authorization.k8s.io/node-list-role-binding created
job.batch/kubectl-job created
```
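Since the warning shows `node-list-role` already existed and was only patched, it can be worth confirming the binding actually grants what the Job needs before relying on it. A sketch assuming bash and that your own kubeconfig user may impersonate ServiceAccounts (a cluster admin typically can); `can_sa_list_nodes` is a hypothetical name, while `kubectl auth can-i` with `--as` is standard kubectl.

```shell
#!/usr/bin/env bash
# Hypothetical helper: check whether the Job's ServiceAccount may list nodes
# by impersonating it with kubectl auth can-i.
can_sa_list_nodes() {
  local sa="${1:-kubectl-job-sa}"
  local ns="${2:-default}"
  # Prints "yes" or "no" for the impersonated ServiceAccount.
  kubectl auth can-i list nodes --as="system:serviceaccount:${ns}:${sa}"
}
```

Usage: `can_sa_list_nodes` should print `yes` once the ClusterRoleBinding above is in place.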

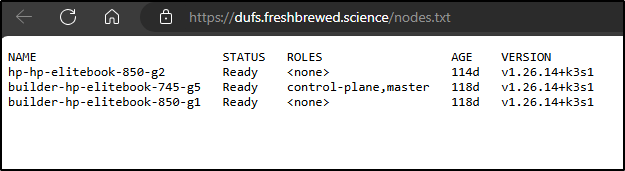

The job completed successfully:

```
$ kubectl get jobs
NAME          COMPLETIONS   DURATION   AGE
kubectl-job   1/1           5s         35s
```

I can then view the output (nodes.txt) in Dufs.
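To go from job to published output in one step, the apply can be followed by a wait on the Job's `complete` condition and a fetch of the file through Dufs. This is a sketch assuming bash, the `test.yaml` manifest above, and that Dufs serves the PVC root at the site root so `/data/nodes.txt` appears as `/nodes.txt`; `run_node_report` and the `DUFS_URL` override are hypothetical. Because re-applying an unchanged manifest does not re-run a finished Job, the previous run is deleted first.

```shell
#!/usr/bin/env bash
# Hypothetical helper: (re)run the node-listing Job and print the file it wrote.
run_node_report() {
  local manifest="${1:-./test.yaml}"
  local base="${DUFS_URL:-https://dufs.freshbrewed.science}"

  # Remove any previous run; a completed Job is not re-run by re-applying it.
  kubectl delete job kubectl-job --ignore-not-found || return 1
  kubectl apply -f "$manifest" || return 1
  # Wait until the Job reports its Complete condition.
  kubectl wait --for=condition=complete job/kubectl-job --timeout=120s || return 1
  # Dufs serves /data at the site root, so /data/nodes.txt is at /nodes.txt.
  curl -fsS "${base%/}/nodes.txt"
}
```

Usage: `run_node_report` prints the same node list the Job saved to the PVC.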

Summary

We took a look at two useful open-source apps today: Pastefy and Dufs. The first is a simple solution for sharing pastes. While it can run in Docker, we launched it in Kubernetes and verified we could share password-protected pastes. I might keep it around as a basic way to share a secret.

Dufs, as a lightweight file server, is really quite handy. I like that I could quickly drop files into the path to expose them, and that it was easy to turn uploads on and off. The advanced section of the GitHub README covers limiting paths and setting up user accounts, for those who want to see what more Dufs can do.

You can reach my Pastefy at https://pastefy.tpk.pw/ and Dufs at https://dufs.freshbrewed.science/ as long as I still have them hosted.