Published: Apr 4, 2024 by Isaac Johnson

Today we’ll check out a couple of interesting open-source containerized apps. Siyuan is a small interactive notebooking app. I see it as a rich collaboration space akin to Google Wave or Jupyter. As you’ll see below, it works great in Docker, but not so much in Kubernetes.

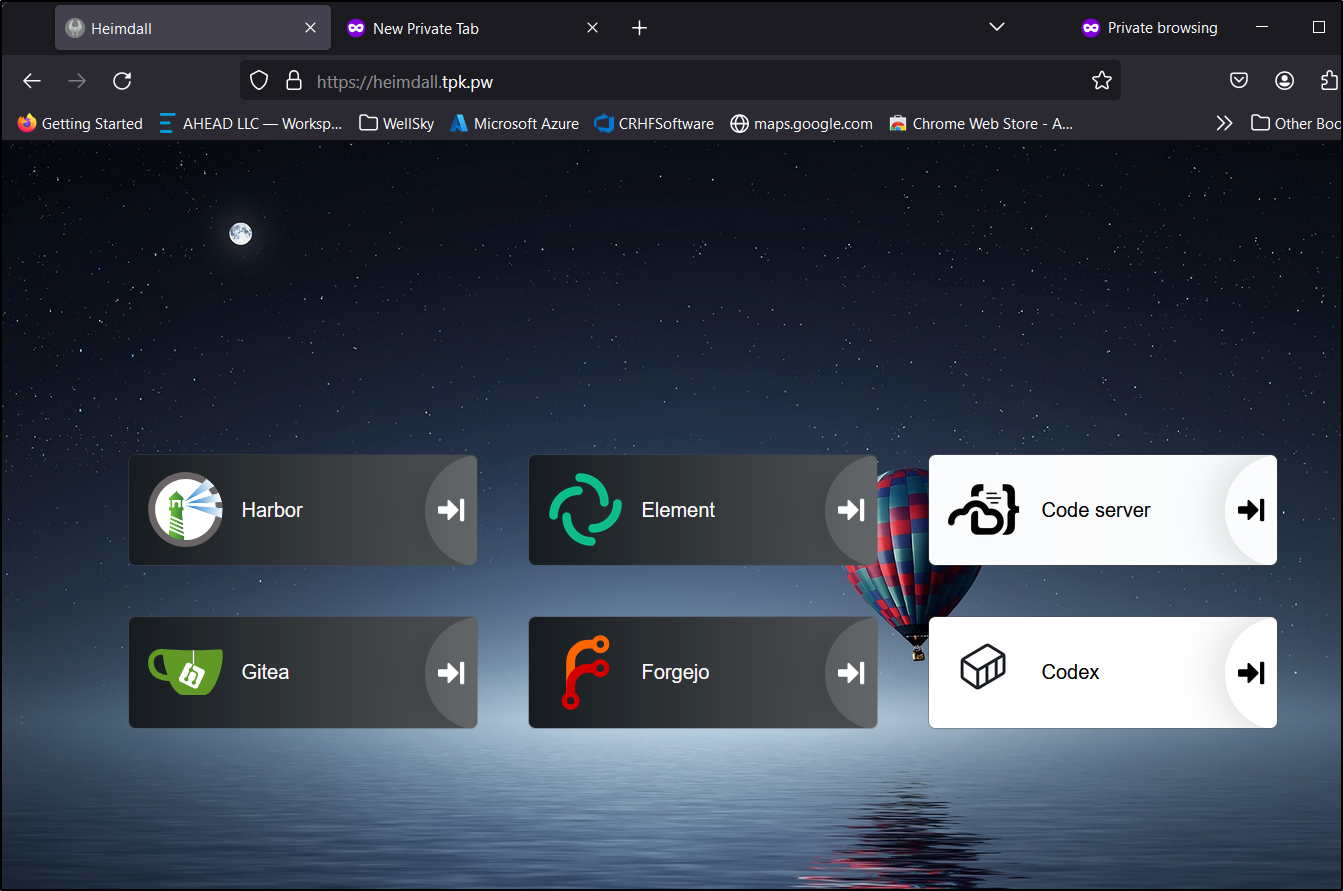

Heimdall is a slick single-purpose app for hosting bookmarks. As we’ll see, it’s quite easy to set up and configure.

Let’s dig in.

Siyuan

I’m not sure whether it’s best described as a shareable Google Docs or a Google Wave kind of app, but Siyuan bills itself as “a privacy-first personal knowledge management system that supports complete offline usage, as well as end-to-end encrypted data sync.”

I started by creating an A record in my Azure DNS zone:

```
$ az account set --subscription "Pay-As-You-Go" && az network dns record-set a add-record -g idjdnsrg -z tpk.pw -a 75.73.224.240 -n siyuan
{
  "ARecords": [
    {
      "ipv4Address": "75.73.224.240"
    }
  ],
  "TTL": 3600,
  "etag": "7da12fc5-25e6-42c7-a1f9-4844fc18c3b0",
  "fqdn": "siyuan.tpk.pw.",
  "id": "/subscriptions/d955c0ba-13dc-44cf-a29a-8fed74cbb22d/resourceGroups/idjdnsrg/providers/Microsoft.Network/dnszones/tpk.pw/A/siyuan",
  "name": "siyuan",
  "provisioningState": "Succeeded",
  "resourceGroup": "idjdnsrg",
  "targetResource": {},
  "type": "Microsoft.Network/dnszones/A"
}
builder@LuiGi17:~/Workspaces/siyuan$
```

I first tried building and launching it natively in Kubernetes:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: siyuan-service
spec:
  selector:
    app: siyuan
  ports:
    - protocol: TCP
      port: 6806
      targetPort: 6806
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: siyuan-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      app: siyuan
  template:
    metadata:
      labels:
        app: siyuan
    spec:
      containers:
        - name: siyuan
          image: b3log/siyuan
          command: ["--workspace=/siyuan/workspace/", "--accessAuthCode=freshbrewed"]
          ports:
            - containerPort: 6806
          volumeMounts:
            - name: siyuan-volume
              mountPath: /siyuan/workspace
      volumes:
        - name: siyuan-volume
          persistentVolumeClaim:
            claimName: siyuan-pvc
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: siyuan-pvc
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: managed-nfs-storage
  resources:
    requests:
      storage: 1Gi
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: siyuan-ingress
  annotations:
    ingress.kubernetes.io/ssl-redirect: "true"
    kubernetes.io/ingress.class: nginx
    kubernetes.io/tls-acme: "true"
    nginx.ingress.kubernetes.io/ssl-redirect: "true"
    nginx.org/websocket-services: siyuan-service
    cert-manager.io/cluster-issuer: azuredns-tpkpw
spec:
  rules:
    - host: siyuan.tpk.pw
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: siyuan-service
                port:
                  number: 6806
  tls:
    - hosts:
        - siyuan.tpk.pw
      secretName: siyuan-tls
```

A quick apply

```
$ kubectl apply -f kubernetes.yaml
service/siyuan-service created
deployment.apps/siyuan-deployment created
persistentvolumeclaim/siyuan-pvc created
ingress.networking.k8s.io/siyuan-ingress created
```

But regardless of PVC type, it just would not stay up.

```
Events:
  Type     Reason     Age                    From               Message
  ----     ------     ----                   ----               -------
  Normal   Scheduled  14s                    default-scheduler  Successfully assigned default/siyuan-deployment-55b8497997-svpjd to hp-hp-elitebook-850-g2
  Normal   Pulled     14s                    kubelet            Successfully pulled image "b3log/siyuan" in 531.280821ms (531.288602ms including waiting)
  Normal   Pulled     13s                    kubelet            Successfully pulled image "b3log/siyuan" in 515.675314ms (515.686786ms including waiting)
  Normal   Pulling    0s (x3 over 14s)       kubelet            Pulling image "b3log/siyuan"
  Normal   Pulled     <invalid>              kubelet            Successfully pulled image "b3log/siyuan" in 536.552001ms (536.559363ms including waiting)
  Normal   Created    <invalid> (x3 over 14s)  kubelet          Created container siyuan
  Warning  Failed     <invalid> (x3 over 14s)  kubelet          Error: failed to create containerd task: failed to create shim task: OCI runtime create failed: runc create failed: unable to start container process: exec: "--accessAuthCode=freshbrewed": executable file not found in $PATH: unknown
  Warning  BackOff    <invalid> (x3 over 12s)  kubelet          Back-off restarting failed container siyuan in pod siyuan-deployment-55b8497997-svpjd_default(6de23568-7959-400b-940f-f16dd501e2a5)
```

Even an `emptyDir` volume failed:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: siyuan-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      app: siyuan
  template:
    metadata:
      labels:
        app: siyuan
    spec:
      containers:
        - name: siyuan
          image: b3log/siyuan
          command: ["--workspace=/mnt/workspace/", "--accessAuthCode=freshbrewed"]
          ports:
            - containerPort: 6806
          volumeMounts:
            - name: datadir
              mountPath: /mnt/workspace
      volumes:
        - name: datadir
          emptyDir: {}
        - name: siyuan-volume
          persistentVolumeClaim:
            claimName: siyuan-pvc
```

with these events:

```
Events:
  Type     Reason     Age                From               Message
  ----     ------     ----               ----               -------
  Normal   Scheduled  22s                default-scheduler  Successfully assigned default/siyuan-deployment-664bbf575d-ld56v to hp-hp-elitebook-850-g2
  Normal   Pulled     22s                kubelet            Successfully pulled image "b3log/siyuan" in 538.498717ms (538.507397ms including waiting)
  Normal   Pulled     20s                kubelet            Successfully pulled image "b3log/siyuan" in 510.623522ms (510.636937ms including waiting)
  Normal   Pulling    8s (x3 over 22s)   kubelet            Pulling image "b3log/siyuan"
  Normal   Pulled     7s                 kubelet            Successfully pulled image "b3log/siyuan" in 519.929014ms (519.940438ms including waiting)
  Normal   Created    7s (x3 over 22s)   kubelet            Created container siyuan
  Warning  Failed     7s (x3 over 22s)   kubelet            Error: failed to create containerd task: failed to create shim task: OCI runtime create failed: runc create failed: unable to start container process: exec: "--workspace=/mnt/workspace/": stat --workspace=/mnt/workspace/: no such file or directory: unknown
  Warning  BackOff    7s (x3 over 20s)   kubelet            Back-off restarting failed container siyuan in pod siyuan-deployment-664bbf575d-ld56v_default(bda5fa8b-ee3e-4cb5-95bf-4ed40437de53)
```

Via Dockerhost

Let’s pivot to running via Docker.

I’ll create a fresh directory

```
builder@builder-T100:~$ mkdir siyuan
builder@builder-T100:~$ cd siyuan/
builder@builder-T100:~/siyuan$ pwd
/home/builder/siyuan
builder@builder-T100:~/siyuan$ mkdir workspace
builder@builder-T100:~/siyuan$ ls
workspace
builder@builder-T100:~/siyuan$ pwd
/home/builder/siyuan
```

I can then launch with Docker

```
builder@builder-T100:~/siyuan$ docker run -d -v /home/builder/siyuan/workspace:/mnt/workspace -p 6806:6806 b3log/siyuan --workspace=/mnt/workspace --accessAuthCode=nottherealauthcode
Unable to find image 'b3log/siyuan:latest' locally
latest: Pulling from b3log/siyuan
4abcf2066143: Already exists
497ade521f16: Pull complete
de3448679987: Pull complete
ff5a5b61bdbb: Pull complete
Digest: sha256:1e609b730937dc77210fbcc97bd27bcb9f885d3ddefd41a1d0e76a5349db9281
Status: Downloaded newer image for b3log/siyuan:latest
4f683a96127697b0e5ae6c44ab69d2835be80bdcf0ed67a68d25625d7c066044
```
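The `docker run` above uses Docker's default restart policy (`no`), so the container won't come back on its own after the host reboots. If you want it to, the same container can be described in a Compose file. This is a sketch rather than what I ran: the `siyuan` service name and the `restart: unless-stopped` policy are my assumptions, and the auth code is the same placeholder. Compose's `command` overrides only the image's `CMD`, so it behaves like the arguments after the image name in `docker run`.

```yaml
# docker-compose.yml (hypothetical), placed in /home/builder/siyuan
services:
  siyuan:
    image: b3log/siyuan
    # Passed to the image's entrypoint, like the trailing args of `docker run`
    command: ["--workspace=/mnt/workspace", "--accessAuthCode=nottherealauthcode"]
    ports:
      - "6806:6806"
    volumes:
      # Same host folder as the docker run bind mount
      - /home/builder/siyuan/workspace:/mnt/workspace
    # Assumption: restart after crashes and host reboots unless manually stopped
    restart: unless-stopped
```

It would be started with `docker compose up -d` from that directory.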

I now need to expose it via Kubernetes to make it externally reachable

```yaml
---
apiVersion: v1
kind: Endpoints
metadata:
  name: siyuan-service
subsets:
  - addresses:
      - ip: 192.168.1.100
    ports:
      - name: siyuan
        port: 6806
        protocol: TCP
---
apiVersion: v1
kind: Service
metadata:
  name: siyuan-service
spec:
  clusterIP: None
  clusterIPs:
    - None
  internalTrafficPolicy: Cluster
  ipFamilies:
    - IPv4
    - IPv6
  ipFamilyPolicy: RequireDualStack
  ports:
    - name: siyuan
      port: 6806
      protocol: TCP
      targetPort: 6806
  sessionAffinity: None
  type: ClusterIP
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: siyuan-ingress
  annotations:
    ingress.kubernetes.io/ssl-redirect: "true"
    kubernetes.io/ingress.class: nginx
    kubernetes.io/tls-acme: "true"
    nginx.ingress.kubernetes.io/ssl-redirect: "true"
    nginx.org/websocket-services: siyuan-service
    cert-manager.io/cluster-issuer: azuredns-tpkpw
spec:
  rules:
    - host: siyuan.tpk.pw
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: siyuan-service
                port:
                  number: 6806
  tls:
    - hosts:
        - siyuan.tpk.pw
      secretName: siyuan-tls
```
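If you reproduce this on a newer cluster, note that recent Kubernetes releases deprecate the `Endpoints` API in favor of `EndpointSlice`. The same pointer to the Docker host can be expressed as an EndpointSlice tied to the selector-less `siyuan-service` through the `kubernetes.io/service-name` label. This is a sketch I haven't applied here: the `siyuan-service-1` name is illustrative, while the IP and port are the ones from my Docker host. The Service and Ingress would stay as they are; only the `Endpoints` object is replaced.

```yaml
apiVersion: discovery.k8s.io/v1
kind: EndpointSlice
metadata:
  # Illustrative name; by convention prefixed with the Service name
  name: siyuan-service-1
  labels:
    # Ties this slice to the selector-less Service of the same name
    kubernetes.io/service-name: siyuan-service
addressType: IPv4
ports:
  # Name should match the Service port name ("siyuan")
  - name: siyuan
    port: 6806
    protocol: TCP
endpoints:
  # The Docker host running the Siyuan container
  - addresses:
      - "192.168.1.100"
```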

Which I’ll swap in

```
builder@LuiGi17:~/Workspaces/siyuan$ kubectl delete -f kubernetes.yaml
service "siyuan-service" deleted
deployment.apps "siyuan-deployment" deleted
persistentvolumeclaim "siyuan-pvc" deleted
ingress.networking.k8s.io "siyuan-ingress" deleted
builder@LuiGi17:~/Workspaces/siyuan$ kubectl apply -f ./kubernetes-w-docker.yaml
endpoints/siyuan-service created
service/siyuan-service created
ingress.networking.k8s.io/siyuan-ingress created
builder@LuiGi17:~/Workspaces/siyuan$
```

This worked!

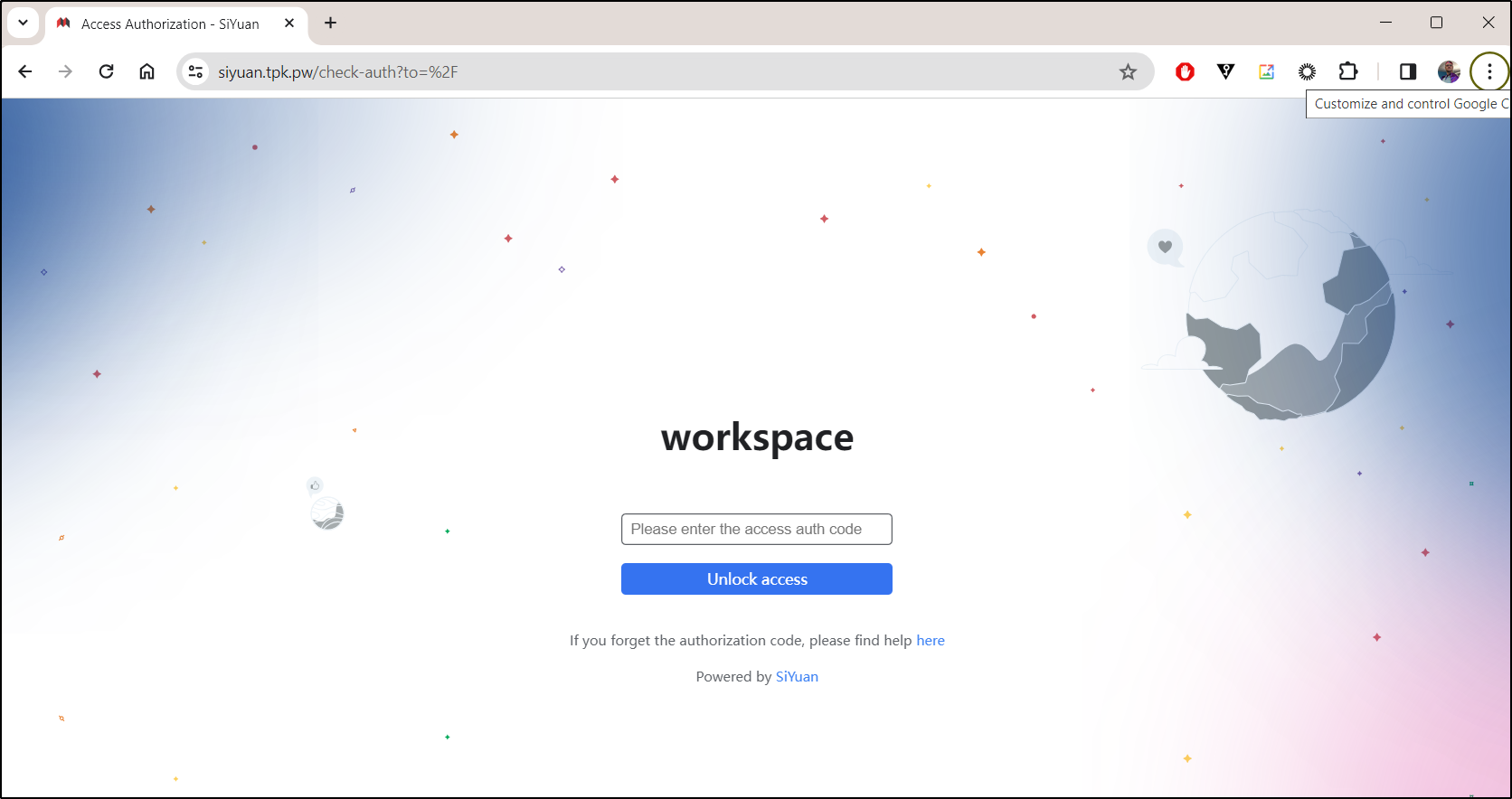

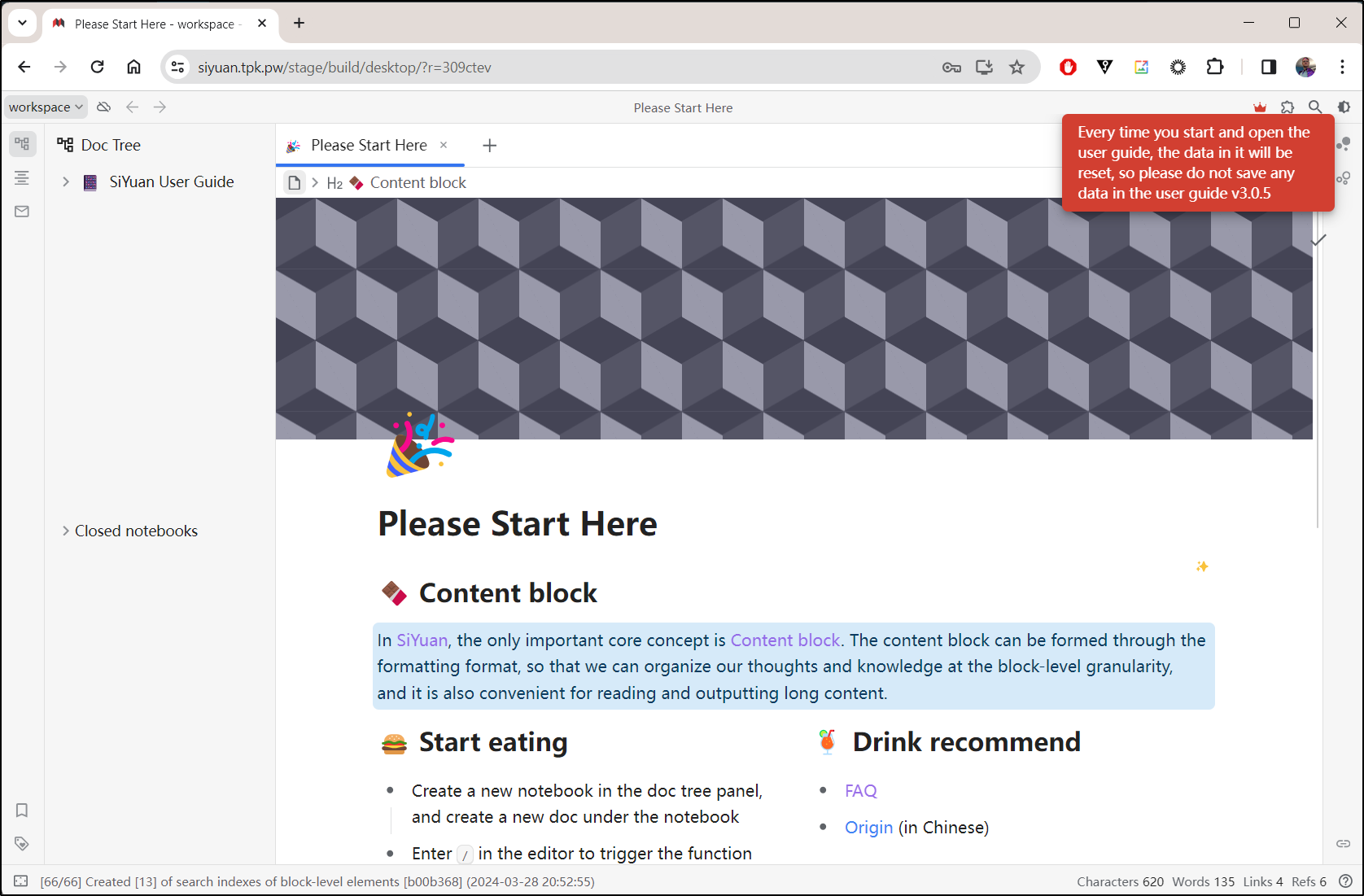

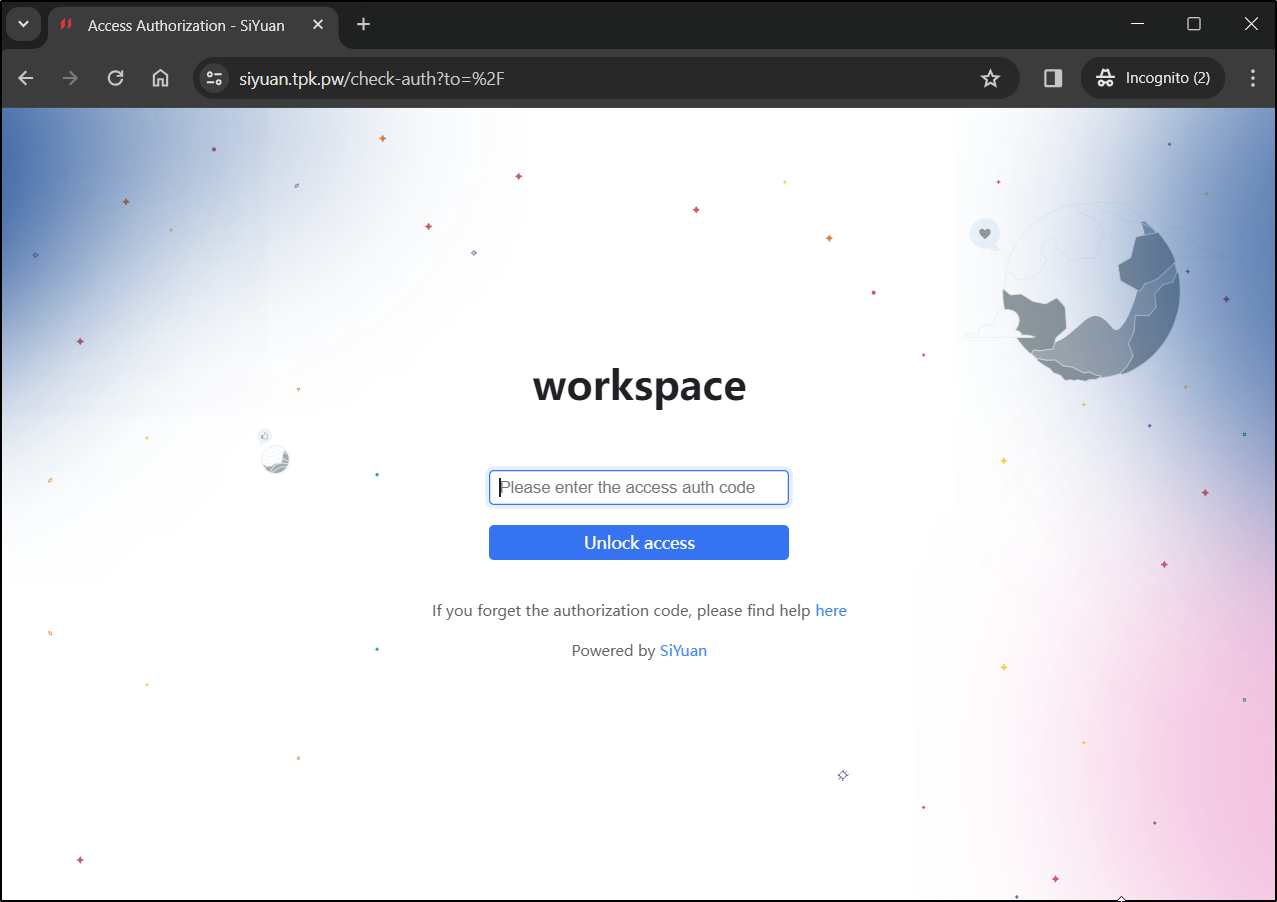

Once I typed my unlock code and hit enter, I had Siyuan unlocked and running

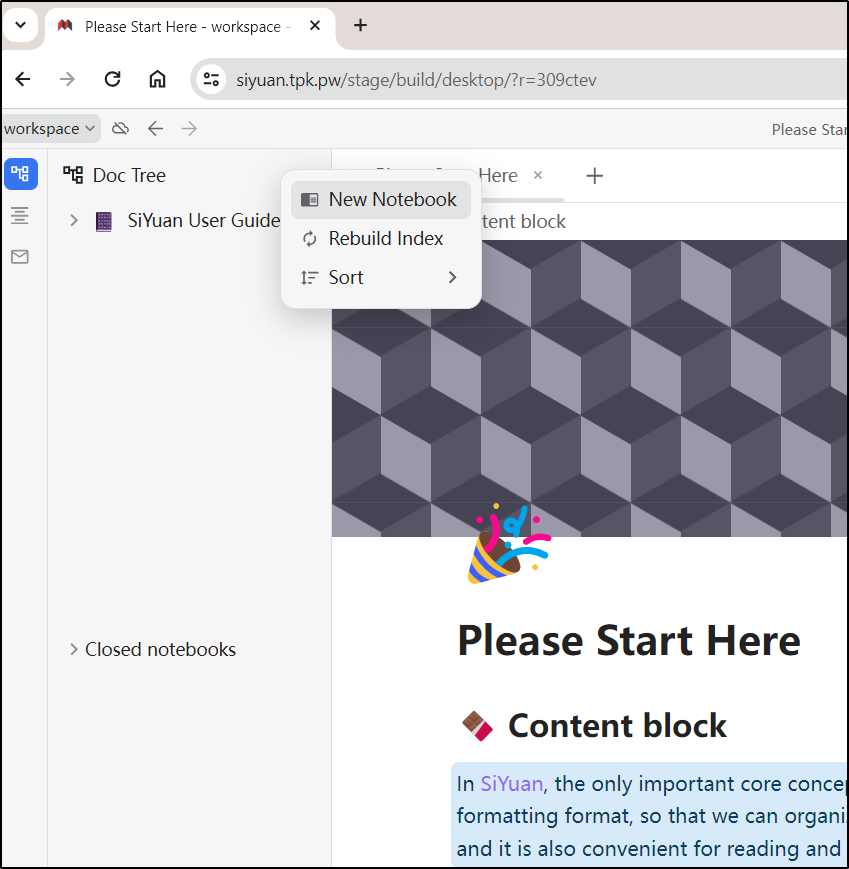

I’ll start by creating a new Notebook

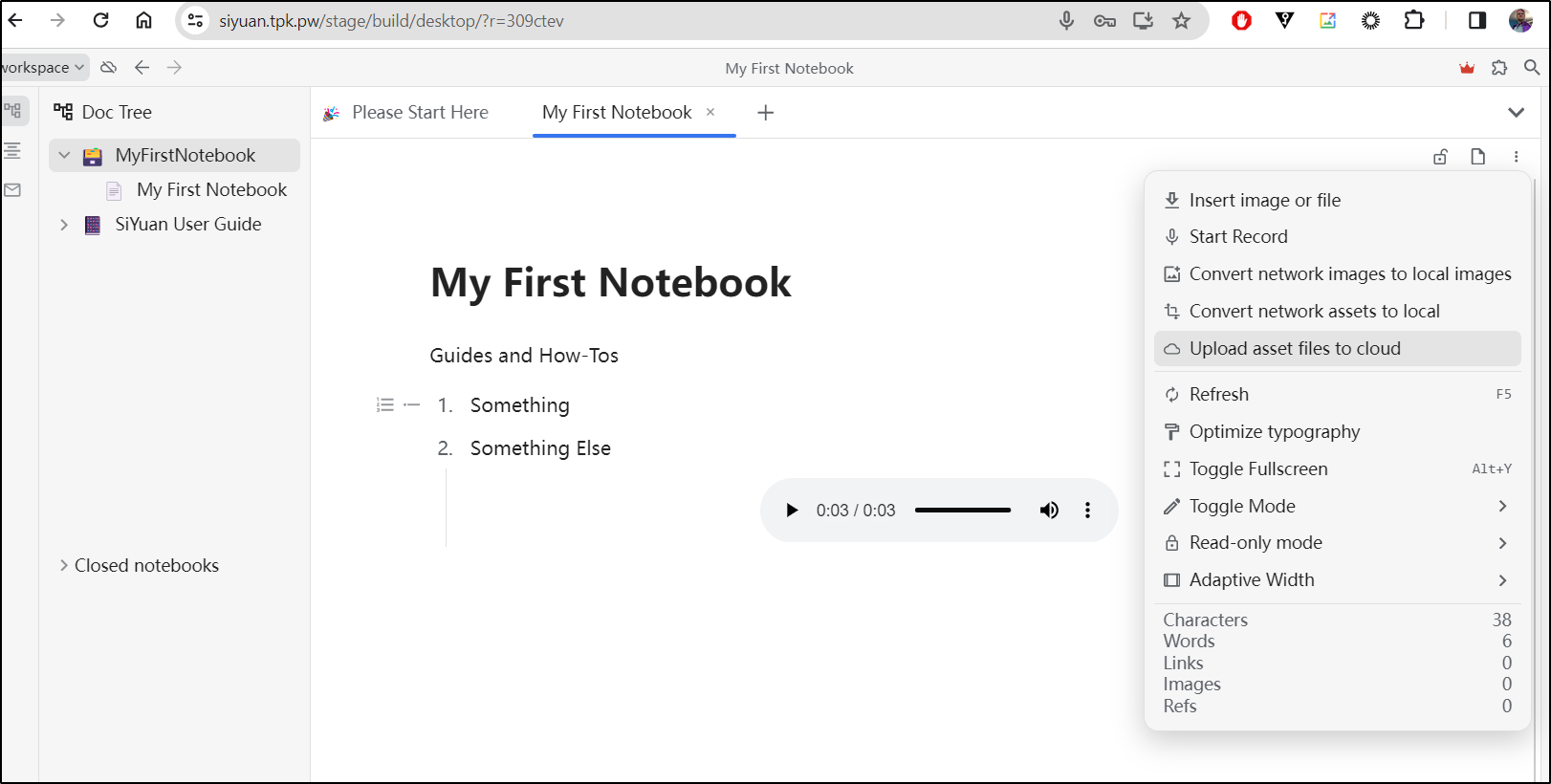

I can make a list and even record some audio inline

The unlock code is required any time we want to access the notebooks, which makes it more like a private Google Docs.

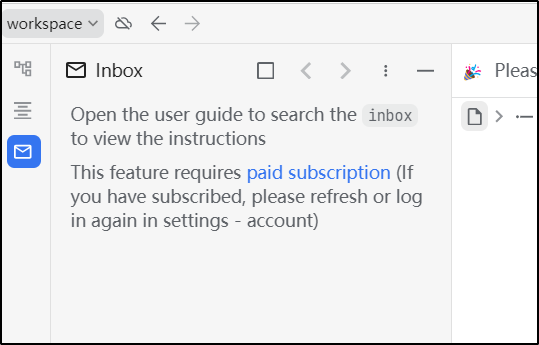

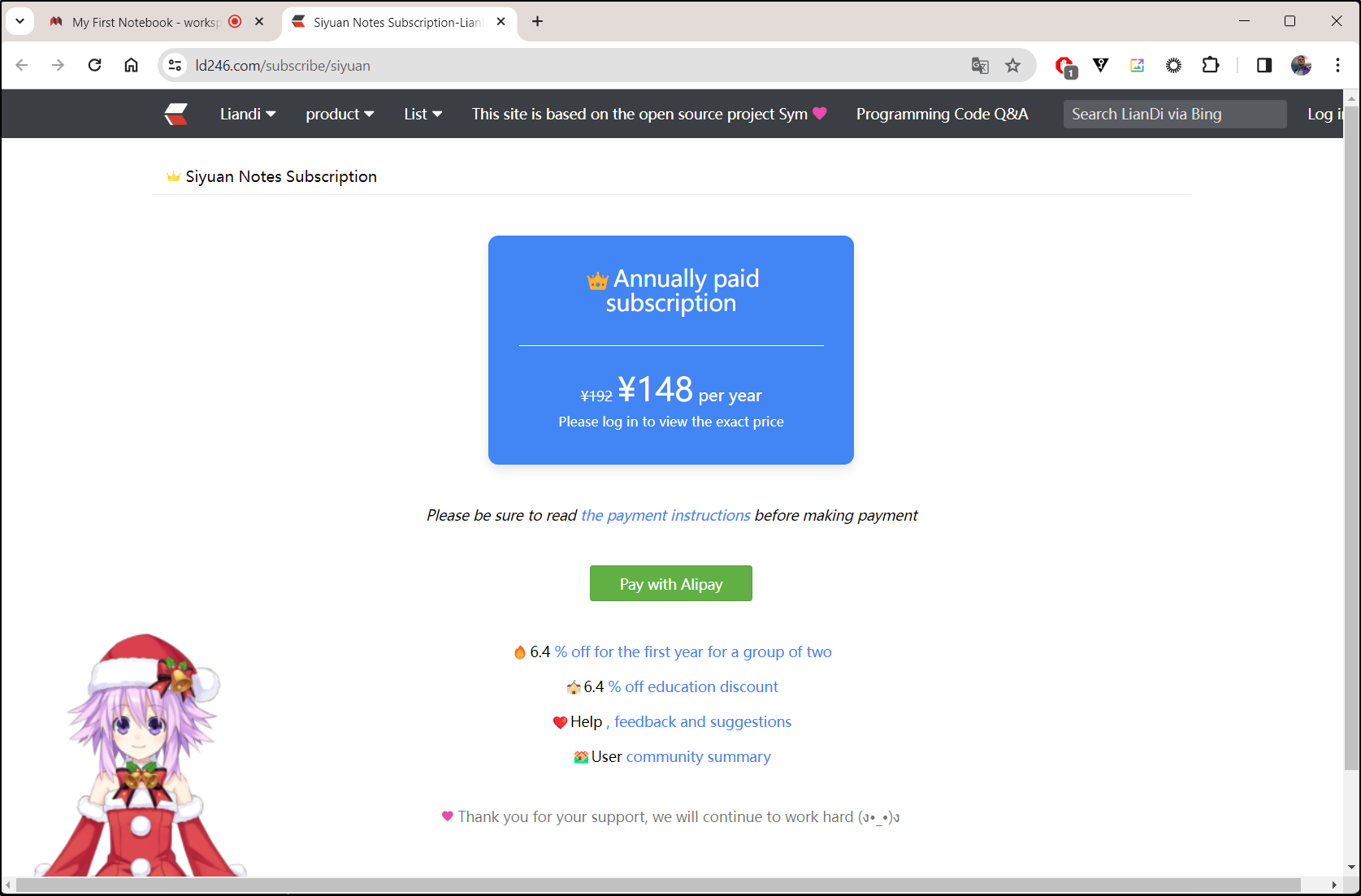

There are some features behind a paywall like email notifications

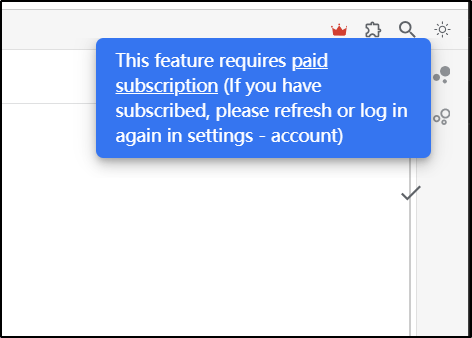

or uploading assets to the cloud

Those subscriptions are paid through Alipay (I used Google Translate on the page):

It’s a pretty fair deal at 148 yuan a year (roughly US$20/year). I would see this as modern shareware.

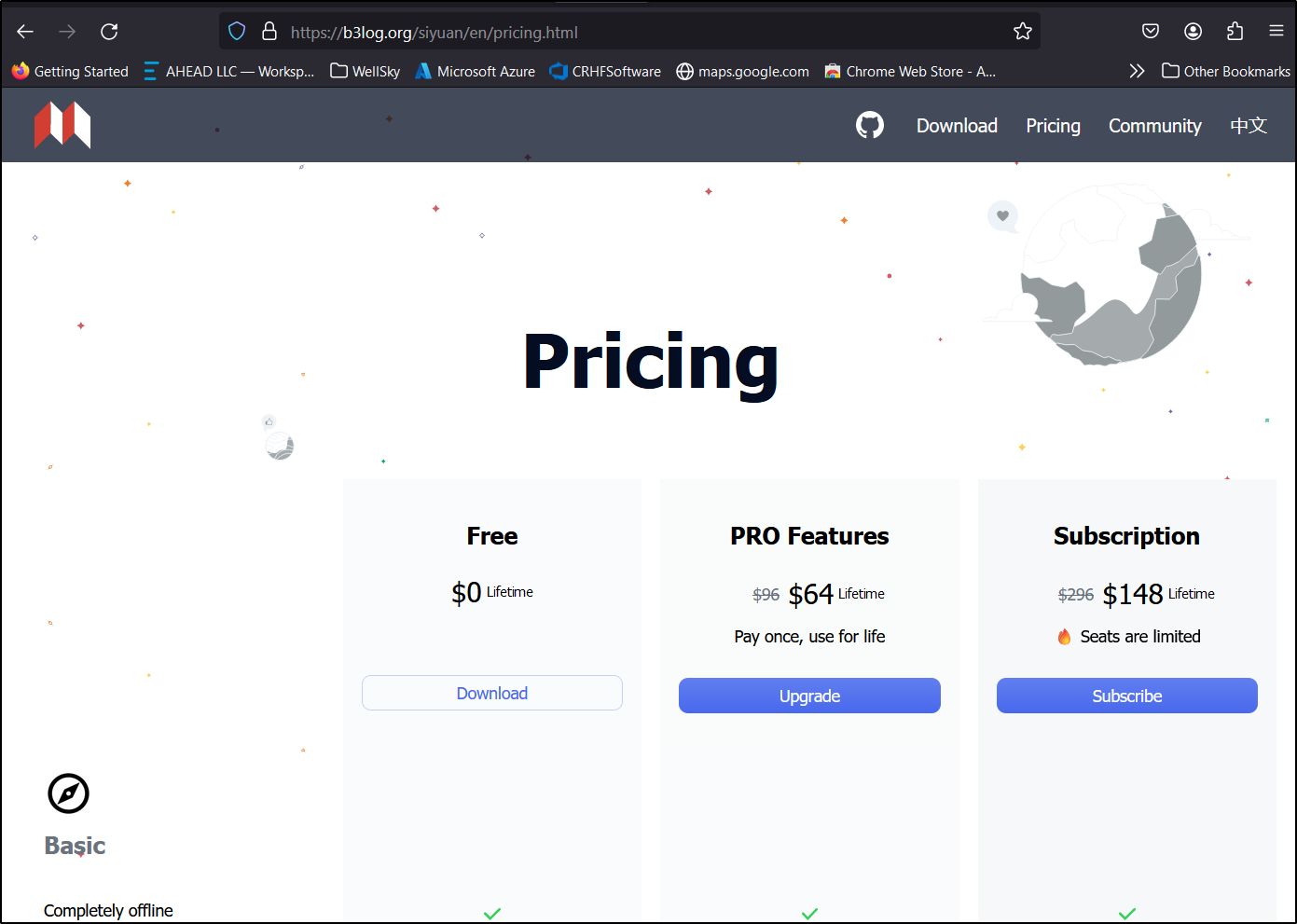

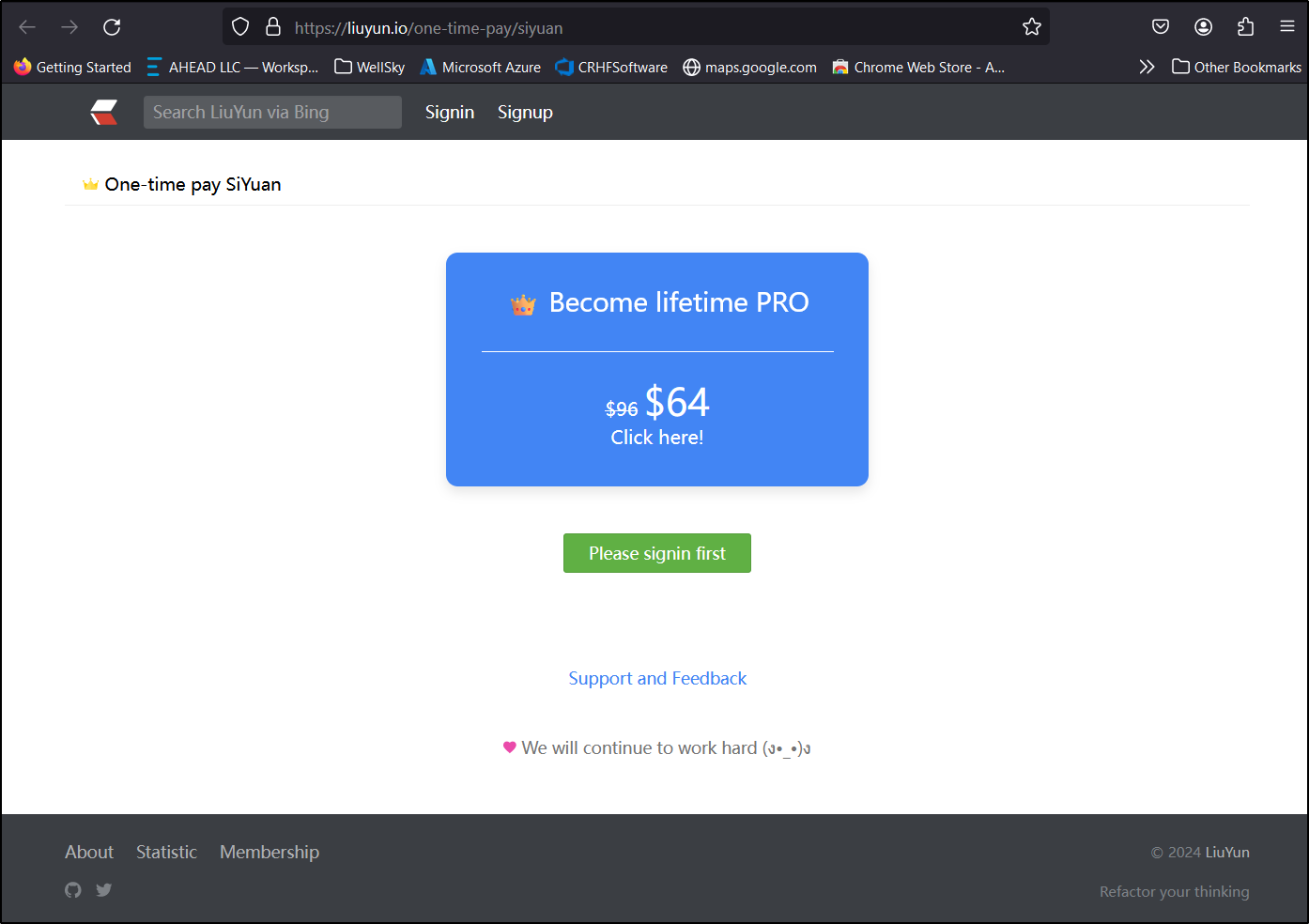

The website, however, has slightly different pricing, and it also kicks us over to the Liuyun.io site.

That too seems reasonable: US$20/year or US$64 for a lifetime license.

More Kubernetes tests

It was bothering me that the native K8s deployment didn’t work, so I created a quick test deployment just to check that the folder exists:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: siyuan-deployment-test
spec:
  replicas: 1
  selector:
    matchLabels:
      app: siyuantest
  template:
    metadata:
      labels:
        app: siyuantest
    spec:
      initContainers:
        - name: init-siyuan
          image: busybox
          command: ["sh", "-c", "mkdir -p /siyuan/workspace && pwd && ls -ltra /siyuan/"]
          volumeMounts:
            - name: siyuan-volume
              mountPath: /siyuan/workspace
      containers:
        - name: siyuan
          image: b3log/siyuan
          command: ["--workspace=/siyuan/workspace", "--accessAuthCode=xxxxx12345"]
          ports:
            - containerPort: 6806
          volumeMounts:
            - name: siyuan-volume
              mountPath: /siyuan/workspace
      volumes:
        - name: siyuan-volume
          emptyDir: {}
```

The init container ran, but the main container still crashed.

```
$ kubectl describe pod siyuan-deployment-test-57d48bb46c-m7z2q | tail -n16
Events:
  Type     Reason     Age                    From               Message
  ----     ------     ----                   ----               -------
  Normal   Scheduled  7m53s                  default-scheduler  Successfully assigned default/siyuan-deployment-test-57d48bb46c-m7z2q to hp-hp-elitebook-850-g2
  Normal   Pulling    7m53s                  kubelet            Pulling image "busybox"
  Normal   Pulled     7m52s                  kubelet            Successfully pulled image "busybox" in 508.991117ms (508.999921ms including waiting)
  Normal   Created    7m52s                  kubelet            Created container init-siyuan
  Normal   Started    7m52s                  kubelet            Started container init-siyuan
  Normal   Pulled     7m50s                  kubelet            Successfully pulled image "b3log/siyuan" in 518.753632ms (518.767669ms including waiting)
  Normal   Pulled     7m49s                  kubelet            Successfully pulled image "b3log/siyuan" in 509.945551ms (509.954747ms including waiting)
  Normal   Pulled     7m32s                  kubelet            Successfully pulled image "b3log/siyuan" in 588.482233ms (588.489833ms including waiting)
  Normal   Pulling    7m2s (x4 over 7m51s)   kubelet            Pulling image "b3log/siyuan"
  Normal   Pulled     7m1s                   kubelet            Successfully pulled image "b3log/siyuan" in 572.98755ms (573.003191ms including waiting)
  Normal   Created    7m1s (x4 over 7m50s)   kubelet            Created container siyuan
  Warning  Failed     7m1s (x4 over 7m50s)   kubelet            Error: failed to create containerd task: failed to create shim task: OCI runtime create failed: runc create failed: unable to start container process: exec: "--workspace=/siyuan/workspace": stat --workspace=/siyuan/workspace: no such file or directory: unknown
  Warning  BackOff    2m49s (x24 over 7m49s) kubelet            Back-off restarting failed container siyuan in pod siyuan-deployment-test-57d48bb46c-m7z2q_default(096b4f89-edb7-42f8-aa01-ac2e8c37ac8d)
```

However, the init container tells me the folder exists

```
builder@LuiGi17:~/Workspaces/siyuan$ kubectl logs siyuan-deployment-test-57d48bb46c-m7z2q
Defaulted container "siyuan" out of: siyuan, init-siyuan (init)
builder@LuiGi17:~/Workspaces/siyuan$ kubectl logs siyuan-deployment-test-57d48bb46c-m7z2q init-siyuan
/
total 12
drwxrwxrwx    2 root     root          4096 Mar 28 13:19 workspace
drwxr-xr-x    1 root     root          4096 Mar 28 13:19 ..
drwxr-xr-x    3 root     root          4096 Mar 28 13:19 .
```

On my working docker instance, it seems fine

```
builder@builder-T100:~/siyuan$ ls -ltra workspace/
total 24
drwxrwxr-x  3 builder builder 4096 Mar 28 07:42 ..
-rw-------  1 builder builder    0 Mar 28 07:45 .lock
drwxr-xr-x  4 builder builder 4096 Mar 28 07:45 temp
drwxr-xr-x 12 builder builder 4096 Mar 28 07:57 data
drwxr-xr-x  3 builder builder 4096 Mar 28 08:05 history
drwxrwxr-x  6 builder builder 4096 Mar 28 08:05 .
drwxr-xr-x  3 builder builder 4096 Mar 28 08:06 conf
```
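Reading those events again, the `no such file or directory` is not about the workspace folder at all: runc is trying to execute `--workspace=/siyuan/workspace` itself as the program. In Kubernetes, a container's `command` replaces the image's `ENTRYPOINT`, while `args` replaces its `CMD`, and the flags after the image name in my working `docker run` were passed as `CMD`. The manifest below is an untested sketch of the likely fix: the same Deployment as my first attempt, with `command` changed to `args`. It assumes the image's `ENTRYPOINT` is what launches Siyuan, which the working Docker command implies. The Docker run wrote its files as `builder`, so if the pod then trips over volume permissions, a `securityContext` (for example `fsGroup`) may also be needed; I haven't verified that.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: siyuan-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      app: siyuan
  template:
    metadata:
      labels:
        app: siyuan
    spec:
      containers:
        - name: siyuan
          image: b3log/siyuan
          # args (not command): the image's ENTRYPOINT is kept and these flags
          # replace its CMD, like the trailing arguments of `docker run`
          args: ["--workspace=/siyuan/workspace/", "--accessAuthCode=freshbrewed"]
          ports:
            - containerPort: 6806
          volumeMounts:
            - name: siyuan-volume
              mountPath: /siyuan/workspace
      volumes:
        - name: siyuan-volume
          persistentVolumeClaim:
            claimName: siyuan-pvc
```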

Heimdall

Let’s move on to a completely different tool, Heimdall. While the LinuxServer.io group has created a nice container bundle, the app itself was created by Chris Hunt of Coventry, Warwickshire, England.

The group has a guide for launching it with Docker; however, I would rather run it natively in Kubernetes if I have a choice.

To do so, we first need to create an A Record in our DNS hosted zone

```
$ az account set --subscription "Pay-As-You-Go" && az network dns record-set a add-record -g idjdnsrg -z tpk.pw -a 75.73.224.240 -n heimdall
{
  "ARecords": [
    {
      "ipv4Address": "75.73.224.240"
    }
  ],
  "TTL": 3600,
  "etag": "4927a598-8b98-48a5-b381-e918fc4fcc66",
  "fqdn": "heimdall.tpk.pw.",
  "id": "/subscriptions/d955c0ba-13dc-44cf-a29a-8fed74cbb22d/resourceGroups/idjdnsrg/providers/Microsoft.Network/dnszones/tpk.pw/A/heimdall",
  "name": "heimdall",
  "provisioningState": "Succeeded",
  "resourceGroup": "idjdnsrg",
  "targetResource": {},
  "type": "Microsoft.Network/dnszones/A"
}
```

Then we can apply a Kubernetes YAML manifest

```
$ cat heimdall.yaml
```

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: heimdall-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      app: heimdall
  template:
    metadata:
      labels:
        app: heimdall
    spec:
      containers:
        - name: heimdall
          image: lscr.io/linuxserver/heimdall:latest
          env:
            - name: PUID
              value: "1000"
            - name: PGID
              value: "1000"
            - name: TZ
              value: "Etc/UTC"
          ports:
            - containerPort: 443
          volumeMounts:
            - name: config-volume
              mountPath: /config
      volumes:
        - name: config-volume
          persistentVolumeClaim:
            claimName: heimdall-pvc
---
apiVersion: v1
kind: Service
metadata:
  name: heimdall-service
spec:
  selector:
    app: heimdall
  ports:
    - protocol: TCP
      port: 443
      targetPort: 443
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: heimdall-pvc
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
  storageClassName: local-path
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: heimdall-ingress
  annotations:
    ingress.kubernetes.io/ssl-redirect: "true"
    kubernetes.io/ingress.class: nginx
    kubernetes.io/tls-acme: "true"
    nginx.ingress.kubernetes.io/ssl-redirect: "true"
    nginx.org/websocket-services: heimdall-service
    cert-manager.io/cluster-issuer: azuredns-tpkpw
spec:
  rules:
    - host: heimdall.tpk.pw
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: heimdall-service
                port:
                  number: 6806
  tls:
    - hosts:
        - heimdall.tpk.pw
      secretName: heimdall-tls
```

```
$ kubectl apply -f heimdall.yaml
deployment.apps/heimdall-deployment created
service/heimdall-service created
persistentvolumeclaim/heimdall-pvc created
ingress.networking.k8s.io/heimdall-ingress created
```

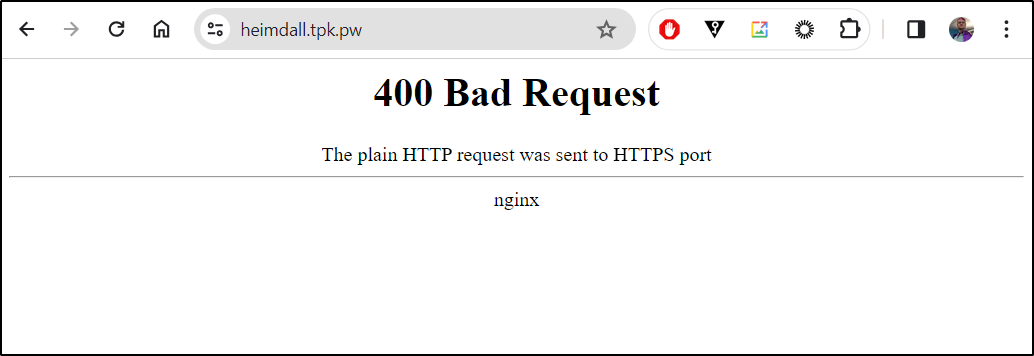

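That did not load. (Looking back, the Ingress backend in that manifest also still pointed at port 6806, a leftover from the Siyuan manifest.) However, when I swapped everything to the HTTP port (80):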

```
$ cat heimdall.yaml
```

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: heimdall-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      app: heimdall
  template:
    metadata:
      labels:
        app: heimdall
    spec:
      containers:
        - name: heimdall
          image: lscr.io/linuxserver/heimdall:latest
          env:
            - name: PUID
              value: "1000"
            - name: PGID
              value: "1000"
            - name: TZ
              value: "Etc/UTC"
          ports:
            - containerPort: 80
          volumeMounts:
            - name: config-volume
              mountPath: /config
      volumes:
        - name: config-volume
          persistentVolumeClaim:
            claimName: heimdall-pvc
---
apiVersion: v1
kind: Service
metadata:
  name: heimdall-service
spec:
  selector:
    app: heimdall
  ports:
    - protocol: TCP
      port: 80
      targetPort: 80
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: heimdall-pvc
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
  storageClassName: local-path
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: heimdall-ingress
  annotations:
    ingress.kubernetes.io/ssl-redirect: "true"
    kubernetes.io/ingress.class: nginx
    kubernetes.io/tls-acme: "true"
    nginx.ingress.kubernetes.io/ssl-redirect: "true"
    nginx.org/websocket-services: heimdall-service
    cert-manager.io/cluster-issuer: azuredns-tpkpw
spec:
  rules:
    - host: heimdall.tpk.pw
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: heimdall-service
                port:
                  number: 80
  tls:
    - hosts:
        - heimdall.tpk.pw
      secretName: heimdall-tls
```

```
builder@LuiGi17:~/Workspaces/siyuan$ kubectl delete -f heimdall.yaml
deployment.apps "heimdall-deployment" deleted
service "heimdall-service" deleted
persistentvolumeclaim "heimdall-pvc" deleted
ingress.networking.k8s.io "heimdall-ingress" deleted
builder@LuiGi17:~/Workspaces/siyuan$ kubectl apply -f heimdall.yaml
deployment.apps/heimdall-deployment created
service/heimdall-service created
persistentvolumeclaim/heimdall-pvc created
ingress.networking.k8s.io/heimdall-ingress created
```

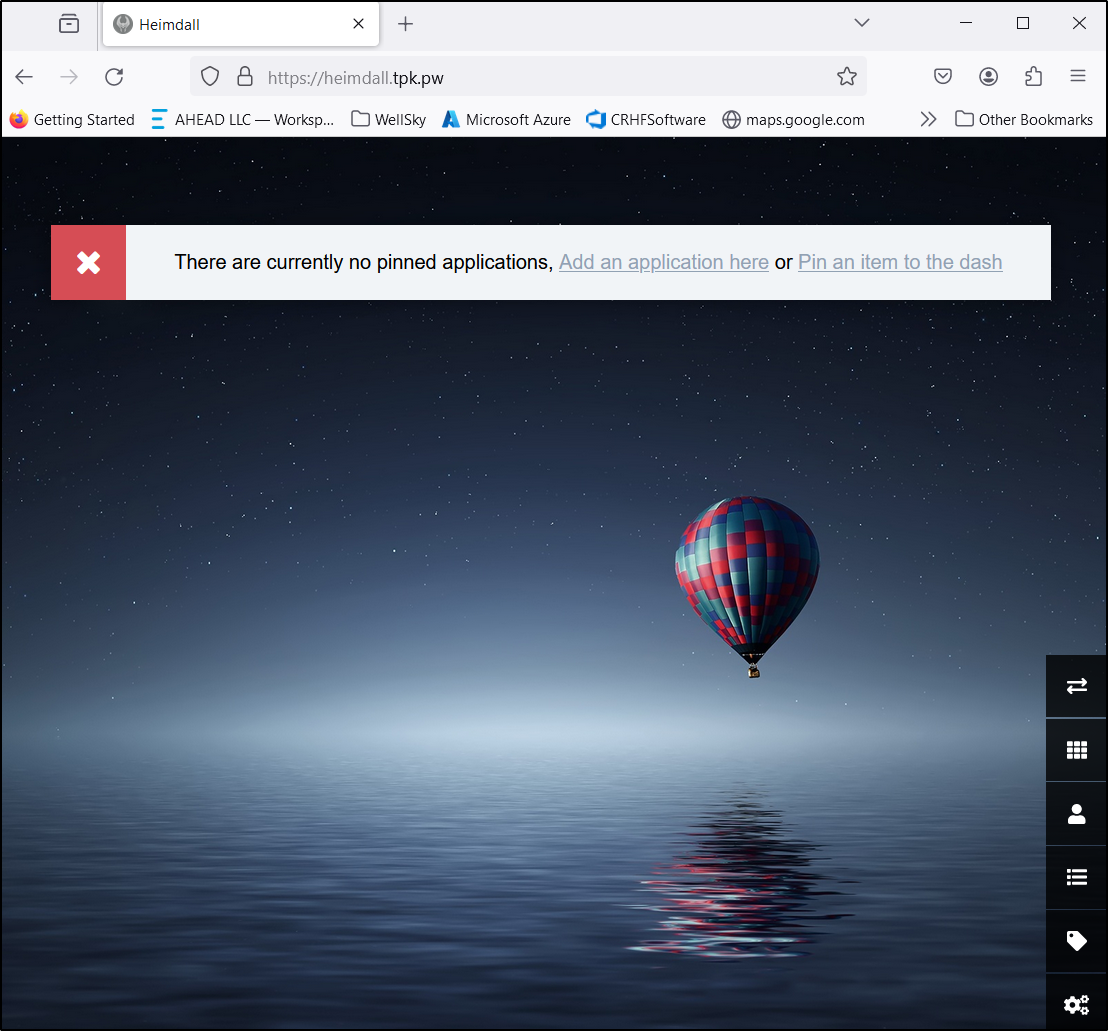

which worked just fine

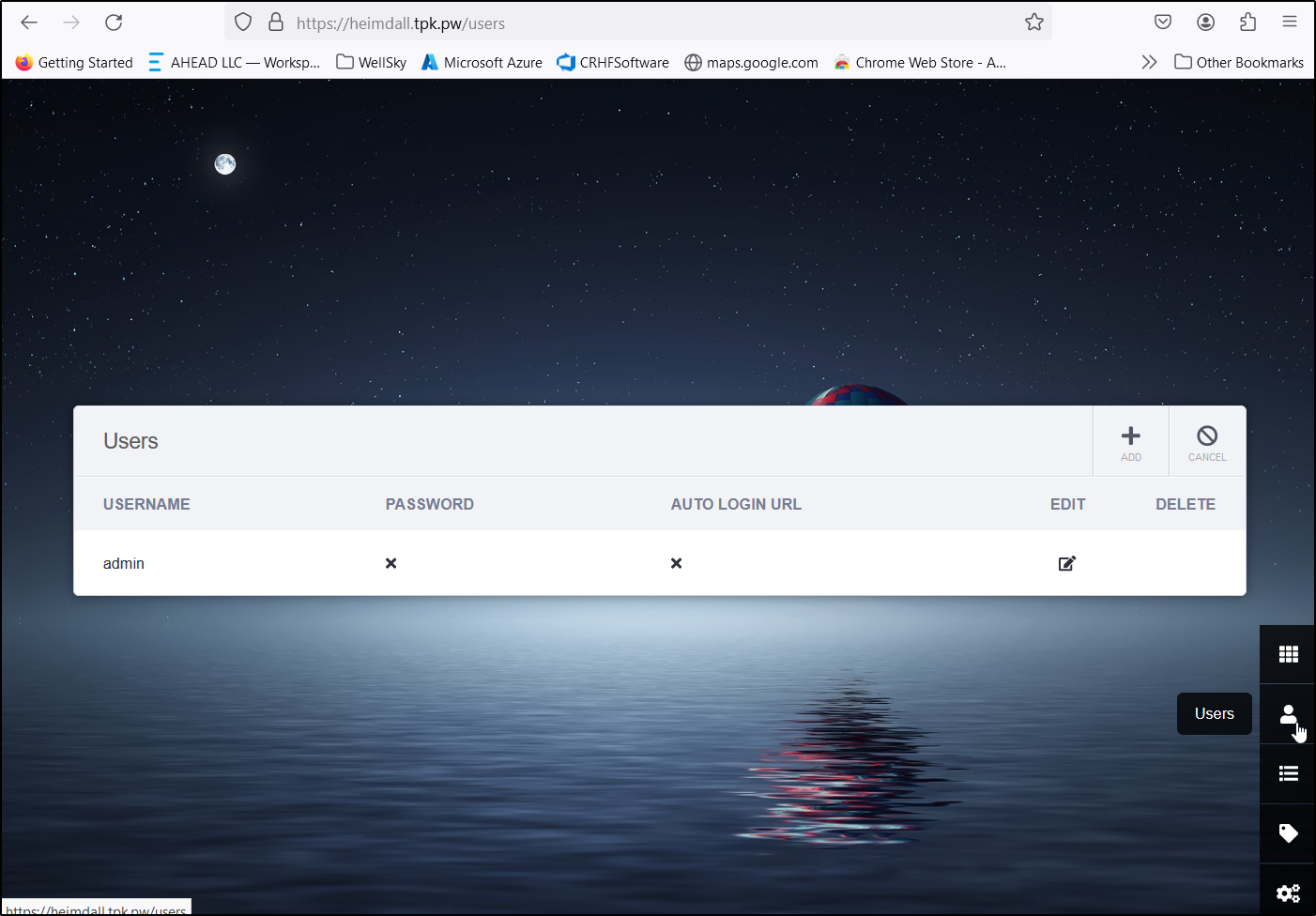

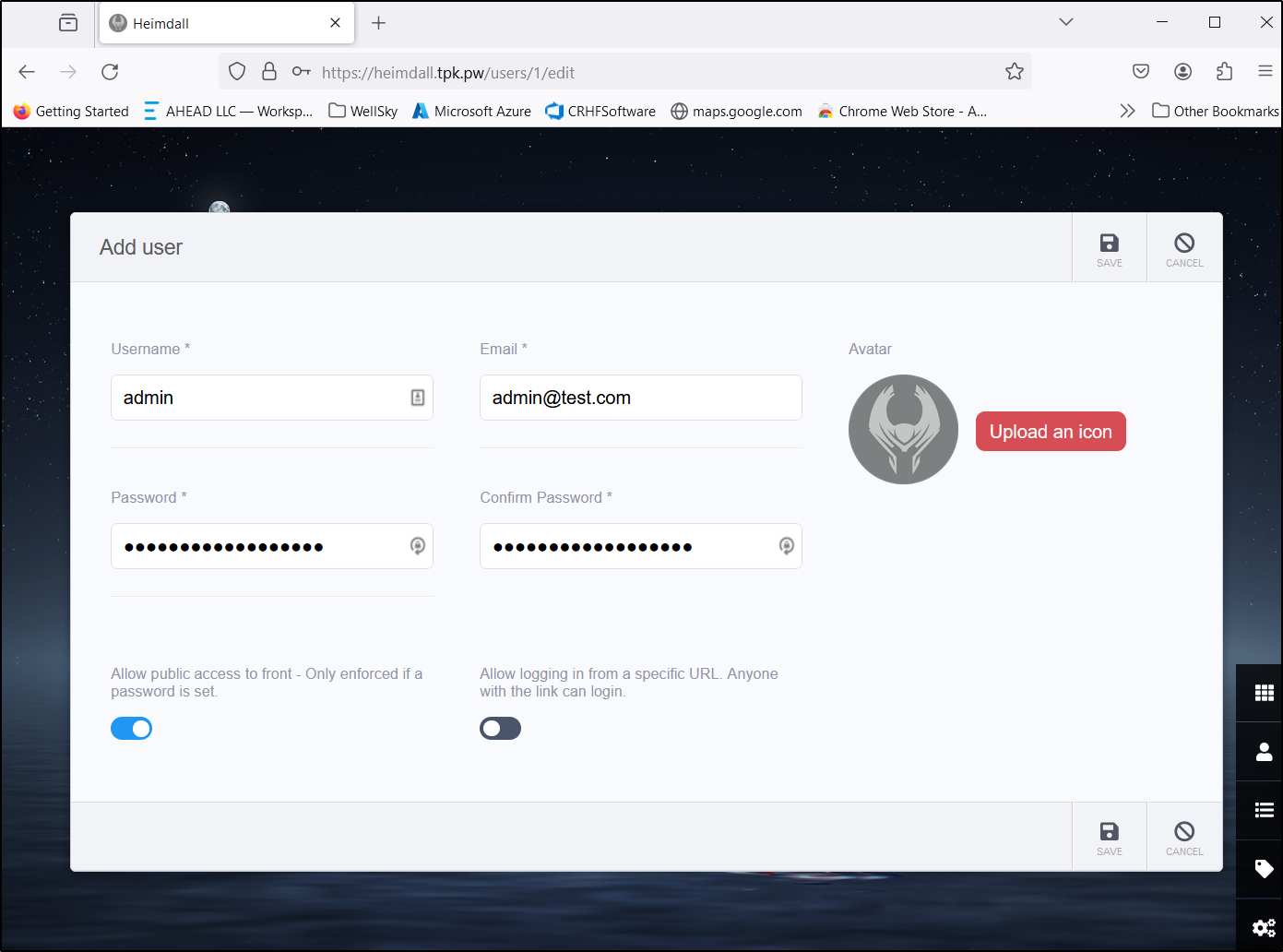
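For reference, the LinuxServer image serves HTTPS on 443, while ingress-nginx proxies to backends over plain HTTP by default. If you would rather keep the container on 443, the community ingress-nginx controller can be told to speak HTTPS to the pod with its `nginx.ingress.kubernetes.io/backend-protocol` annotation. This is a sketch I have not tested: it assumes that controller and the port-443 Deployment and Service from the first manifest, and I've trimmed the annotations to the ones relevant here. As far as I know, ingress-nginx does not verify backend certificates by default, so the image's self-signed certificate should be accepted.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: heimdall-ingress
  annotations:
    kubernetes.io/ingress.class: nginx
    cert-manager.io/cluster-issuer: azuredns-tpkpw
    nginx.ingress.kubernetes.io/ssl-redirect: "true"
    # Proxy to the pod over HTTPS instead of plain HTTP (ingress-nginx annotation)
    nginx.ingress.kubernetes.io/backend-protocol: "HTTPS"
spec:
  rules:
    - host: heimdall.tpk.pw
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: heimdall-service
                port:
                  # Must match the Service port (443 in the first manifest)
                  number: 443
  tls:
    - hosts:
        - heimdall.tpk.pw
      secretName: heimdall-tls
```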

By default, you are logged in as the admin user, which has no password. I’ll correct that right away by going to Users and editing the admin user.

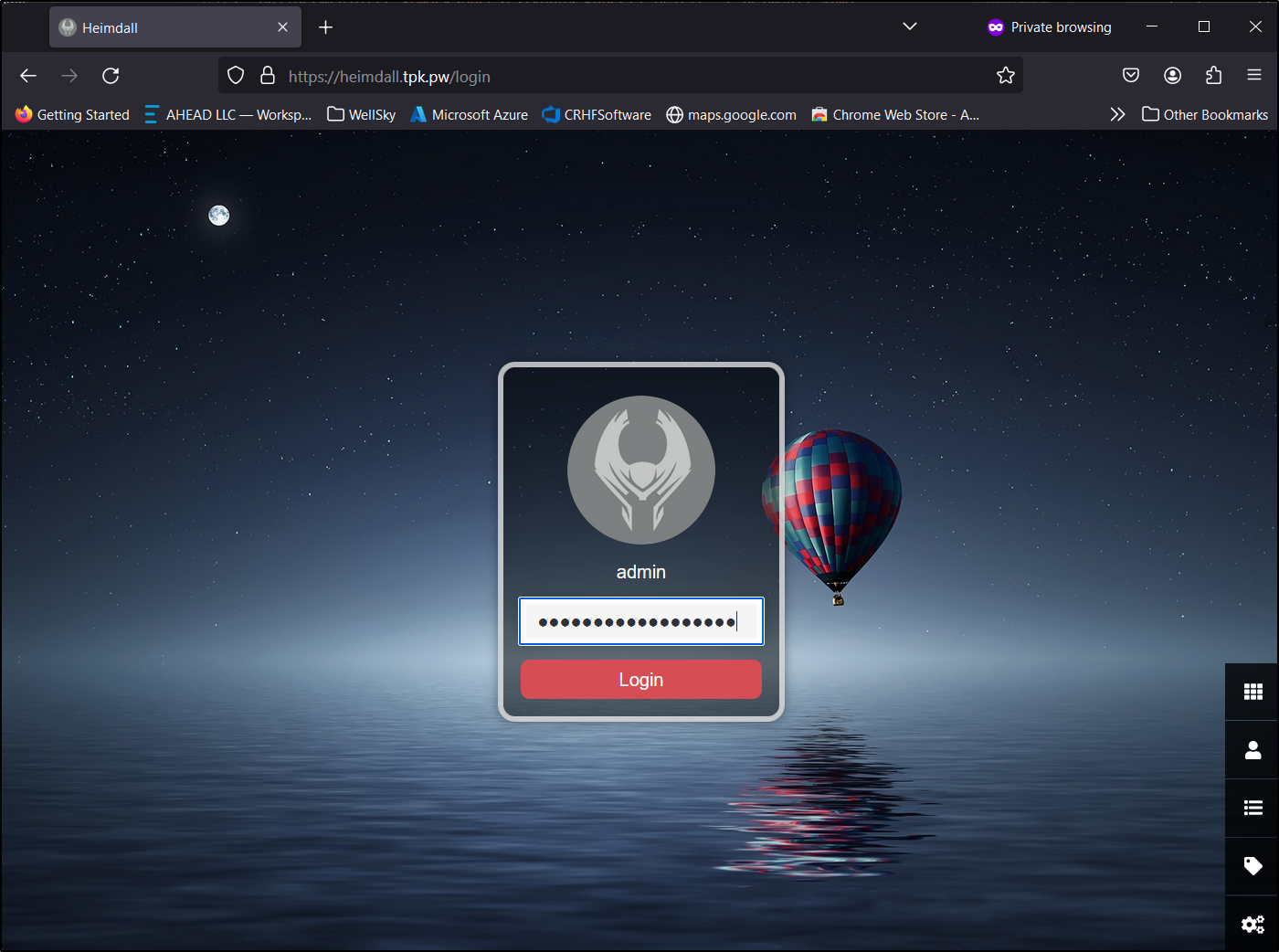

I’ll allow public access, but still set a password

While I can view the front page, I now have to log in to edit.

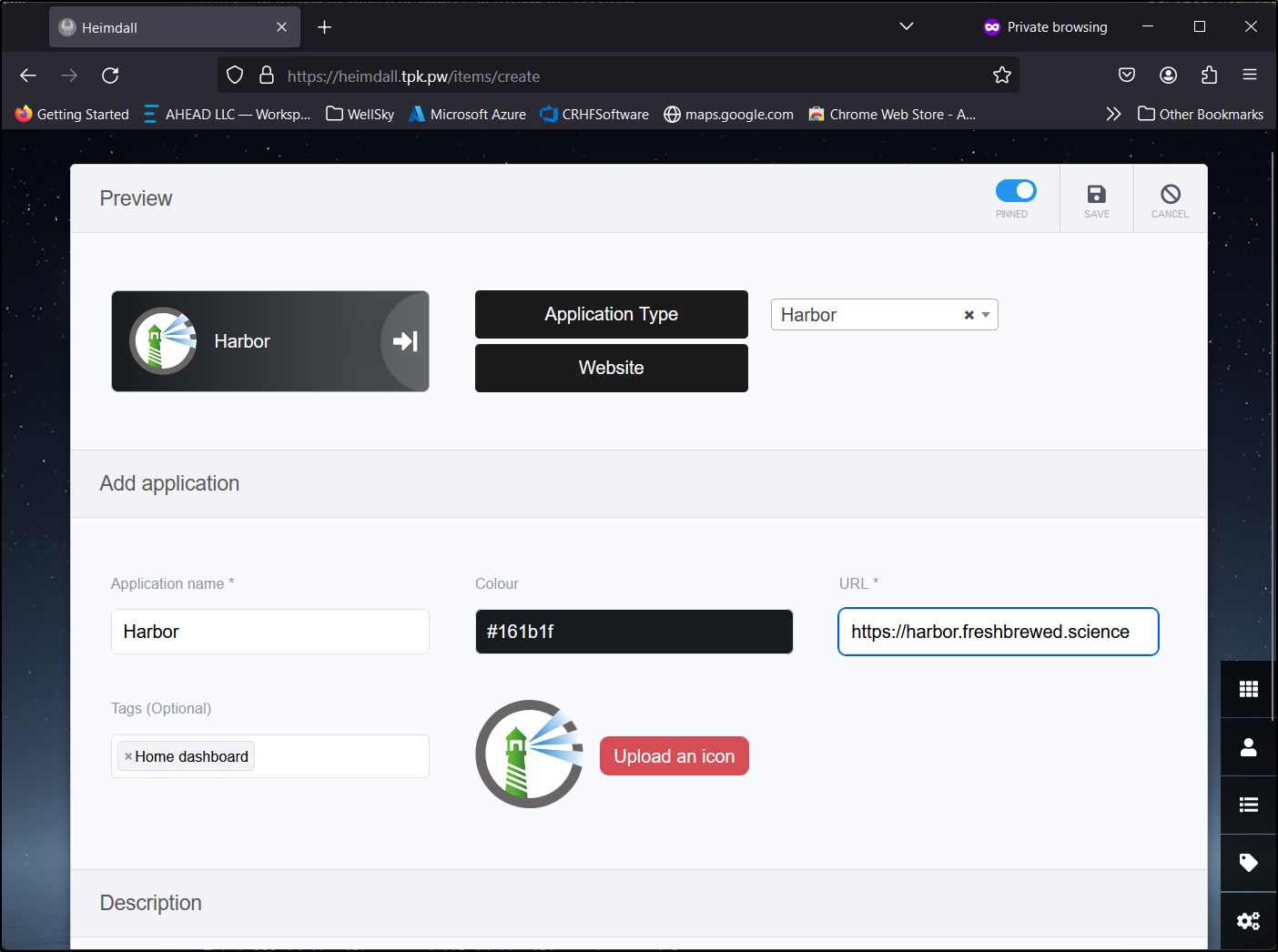

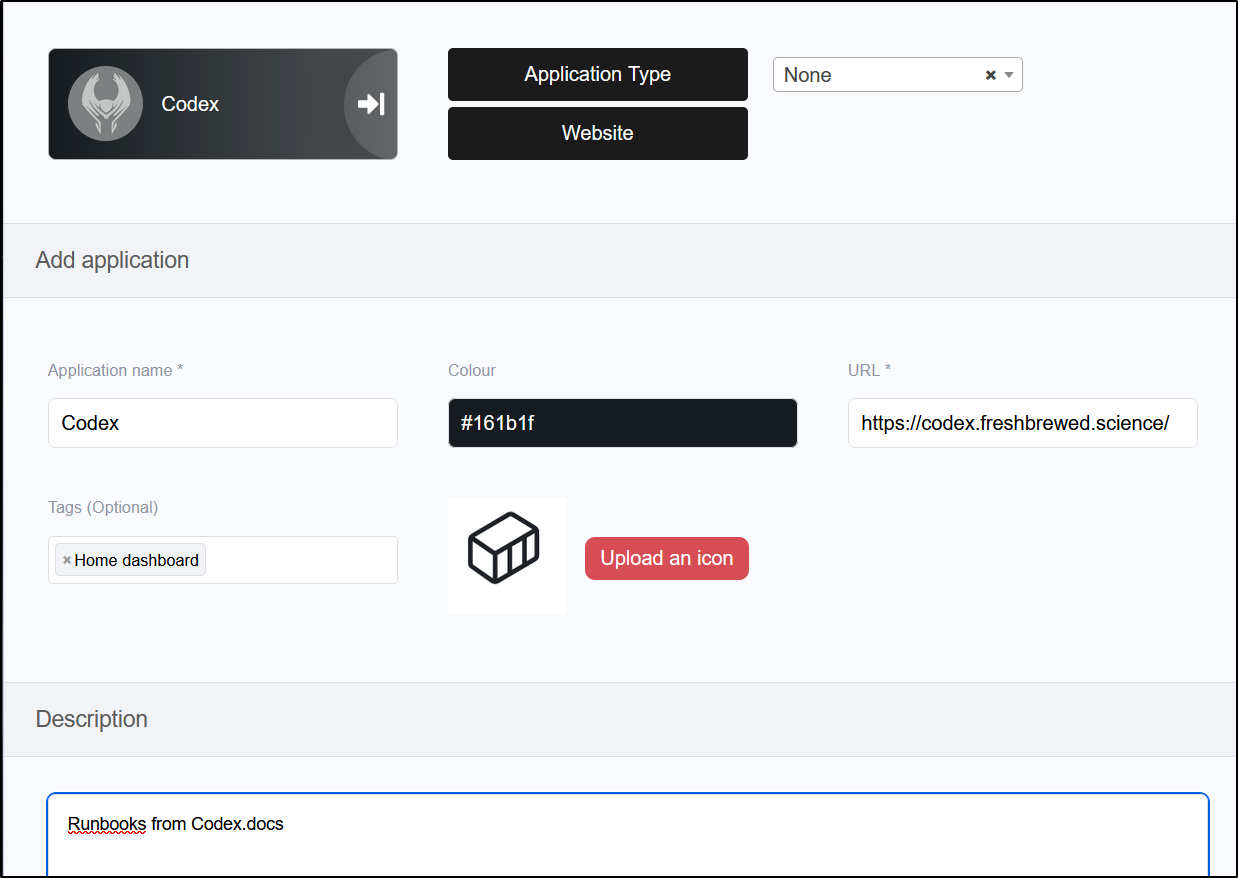

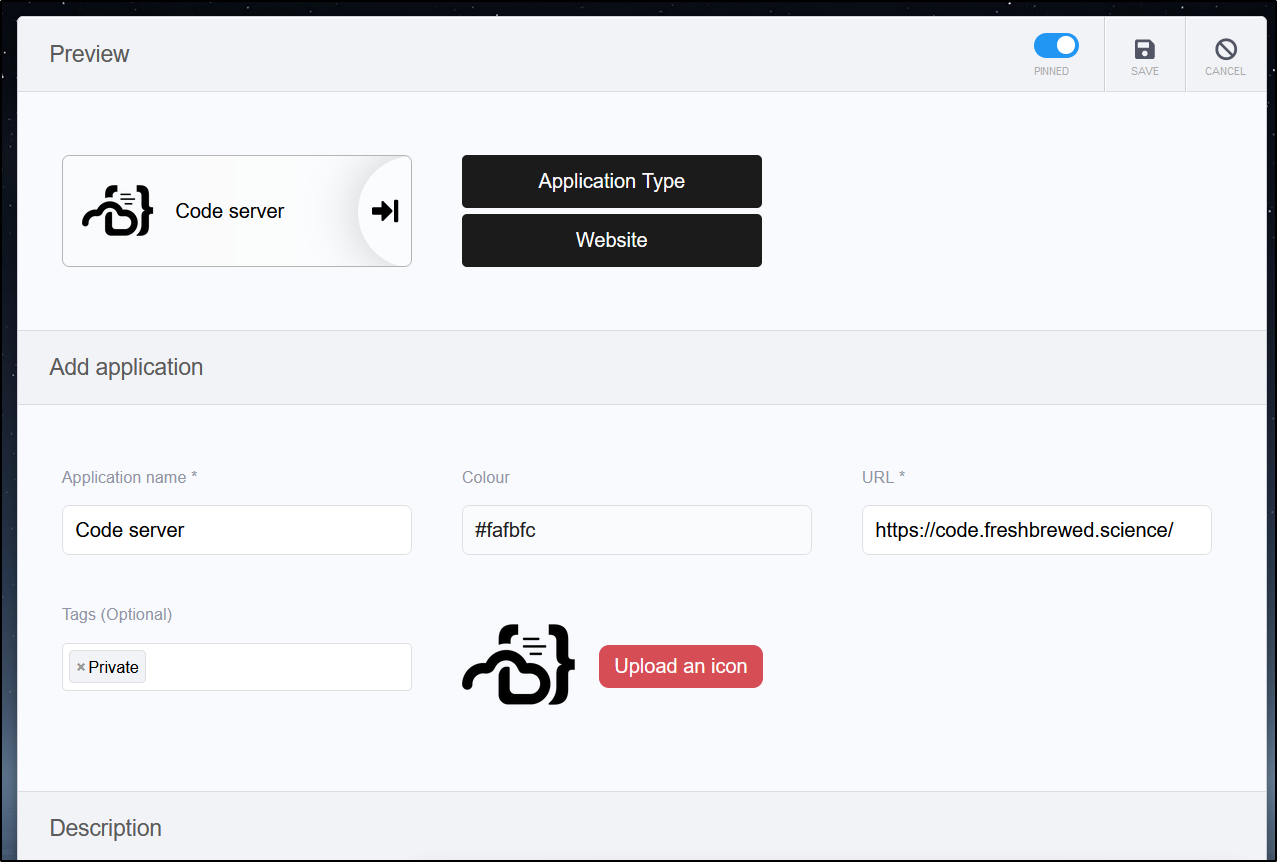

It has a surprisingly large predefined list of apps. Here I’ll add a link to our Harbor instance.

Only one required me to upload an icon

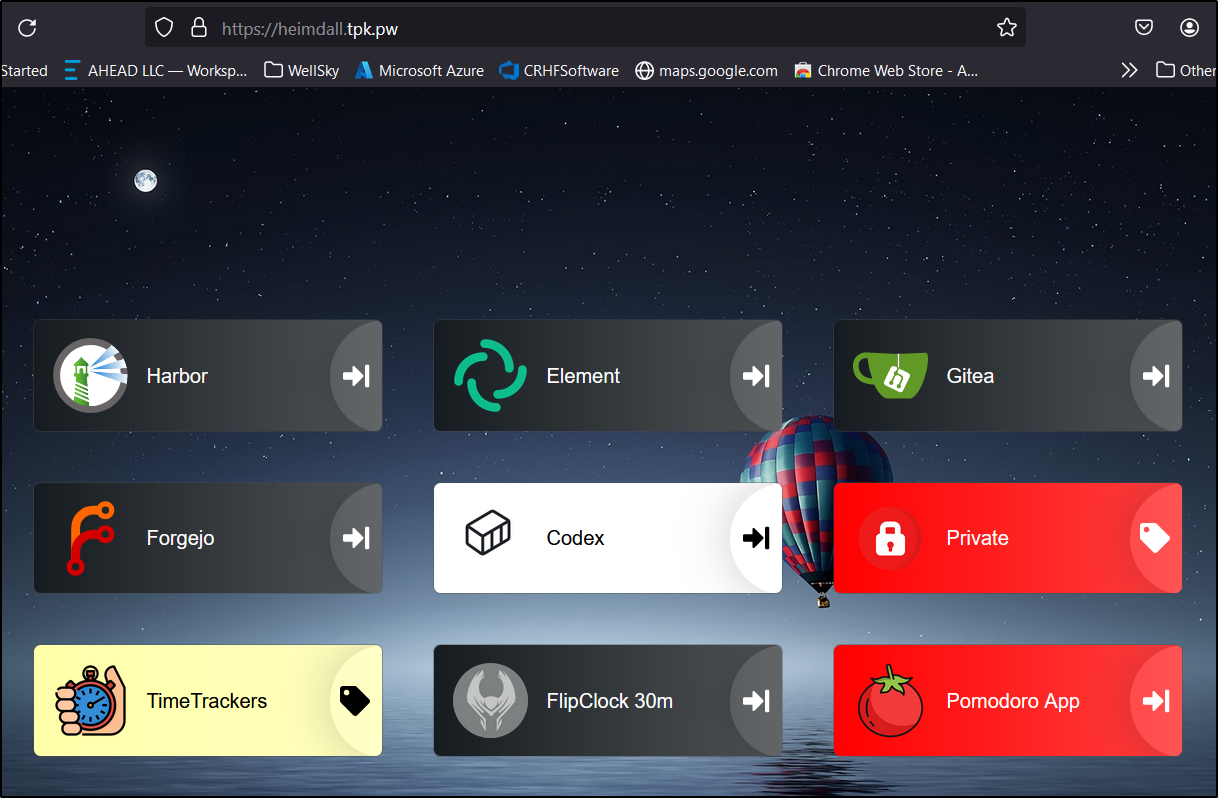

I now have a nice little landing page at https://heimdall.tpk.pw/ of some of the apps I serve out of Kubernetes

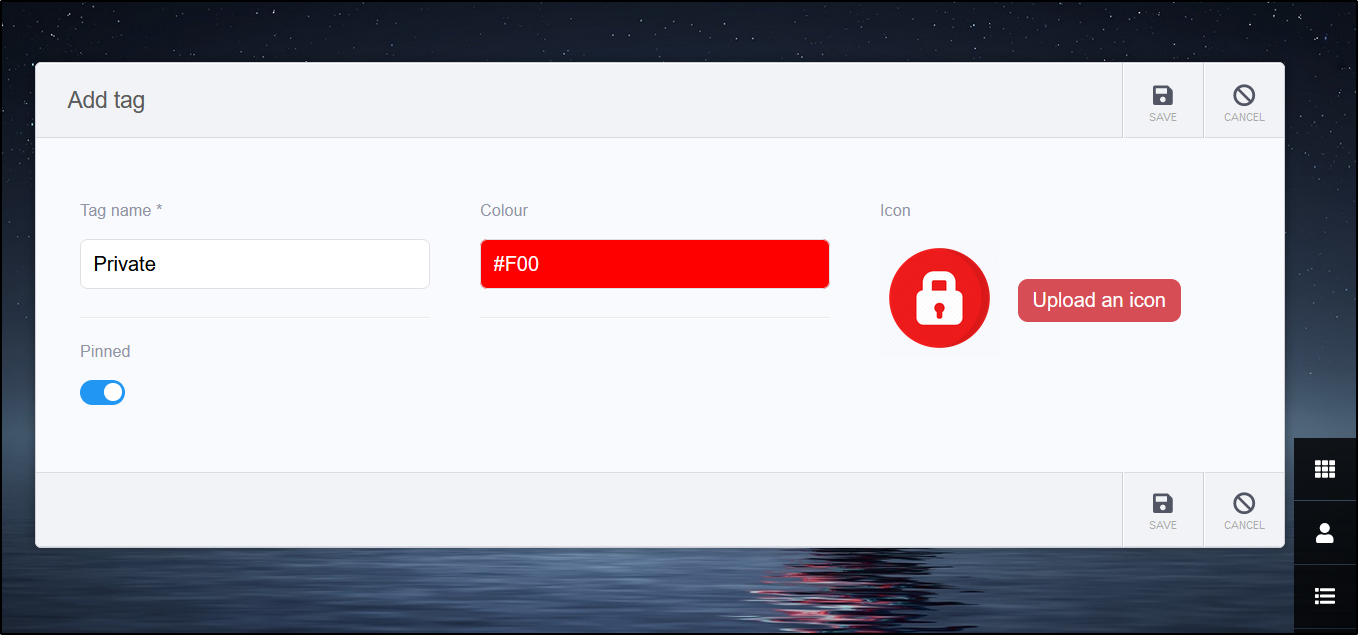

Tags

We can add new tags.

For instance, perhaps I want a tag to denote private/non-public endpoints

I can then swap tags on an application to move it into private

By the time I was done, I had a few apps, though I am not sure I’ll keep the layout as it seems a bit busy.

Summary

Today we explored a pretty good documentation app in Siyuan. It has some promising features and we’ll have to see if I keep it longer than MkDocs or Codex. It was easy to fire up in Docker but not so much in Kubernetes.

Heimdall is an interesting bookmarking site. I don’t have to keep the name heimdall.tpk.pw. It might make more sense as “applications.freshbrewed.science” or similar. It was very easy to fire up in Kubernetes and has a surprisingly large list of predefined icons/apps.