Published: Mar 26, 2024 by Isaac Johnson

Darktable is like a cozy digital workshop where photographers can fine-tune their images with care. Think of it as an open-source Photoshop for those who appreciate the artistry of pixels.

Recently, I embarked on a journey to explore Darktable’s fascinating world. I decided to take a peek behind the curtain, both in the Docker realm and aboard the Azure Kubernetes Service (AKS) ship.

But that’s not all! My adventure didn’t stop there. I wandered into the land of productivity and discovered four delightful Pomodoro apps, each neatly packaged in its own container. These little timekeepers are perfect for those moments when you need a gentle nudge to stay focused.

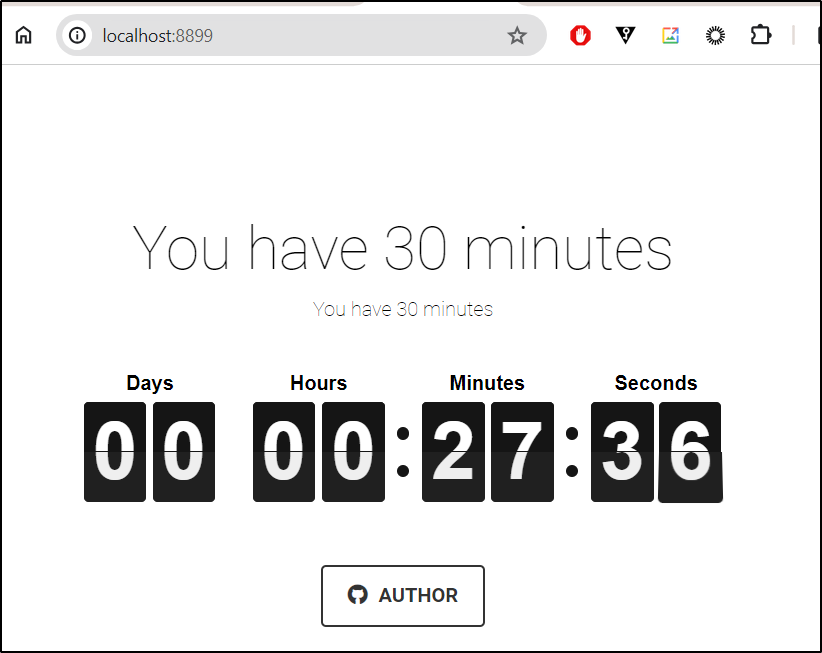

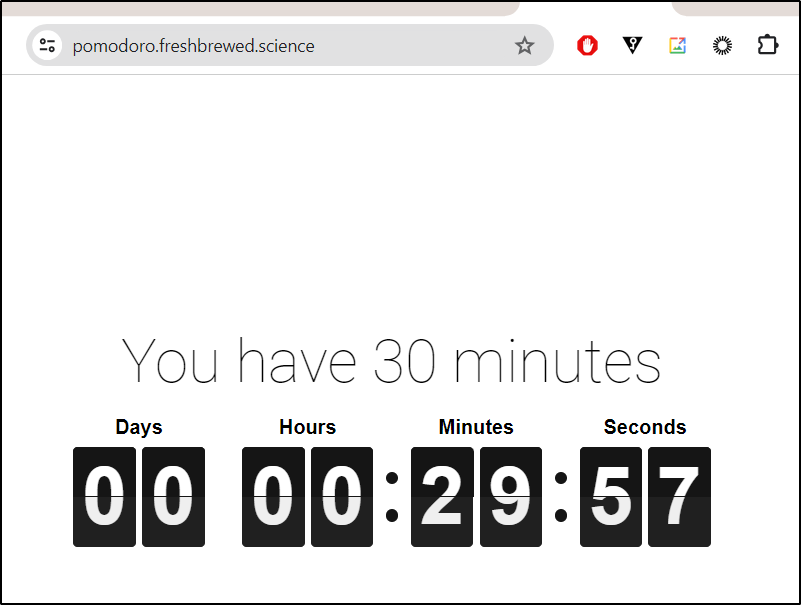

And as a cherry on top, I whipped up my very own 30-minute flip clock—a humble creation that ticks away, reminding me to get my butt in gear and complete my tasks.

Let’s dig in with Darktable first.

To be upfront, I asked Copilot to warm up my rather dry intro. I even toned it down a bit, but I wanted to be upfront about that.

Darktable

While Darktable is designed to run as a desktop app, there is a containerized version available from the LinuxServer group.

We can launch it using:

docker run -d \
  --name=darktable \
  --security-opt seccomp=unconfined `#optional` \
  -e PUID=1000 \
  -e PGID=1000 \
  -e TZ=Etc/UTC \
  -p 4100:3000 \
  -p 4101:3001 \
  -v /home/builder/darktable:/config \
  --restart unless-stopped \
  lscr.io/linuxserver/darktable:latest

For instance:

$ docker run -d \
  --name=darktable \
  --security-opt seccomp=unconfined `#optional` \
  -e PUID=1000 \
  -e PGID=1000 \
  -e TZ=Etc/UTC \
  -p 4100:3000 \
  -p 4101:3001 \
  -v /home/builder/darktable:/config \
  --restart unless-stopped \
  lscr.io/linuxserver/darktable:latest

Unable to find image 'lscr.io/linuxserver/darktable:latest' locally

latest: Pulling from linuxserver/darktable

0e656ed1867e: Pull complete

972670059f53: Pull complete

3e59f3bd8101: Pull complete

0f3f97e9f995: Pull complete

a5b7109ec0b6: Pull complete

6df50db3ea97: Pull complete

74398f9e3860: Pull complete

251d02121add: Pull complete

a4284ce93216: Pull complete

bed244832bd7: Pull complete

86992bb9f161: Pull complete

Digest: sha256:bbe472d2a12a677d0ba596a1ee098b26b29cdef3f95e4cf9f06d3007f4c2ef75

Status: Downloaded newer image for lscr.io/linuxserver/darktable:latest

1389df4117a84f4350967306473c5f32f2c1418711969953516fa2049a572408

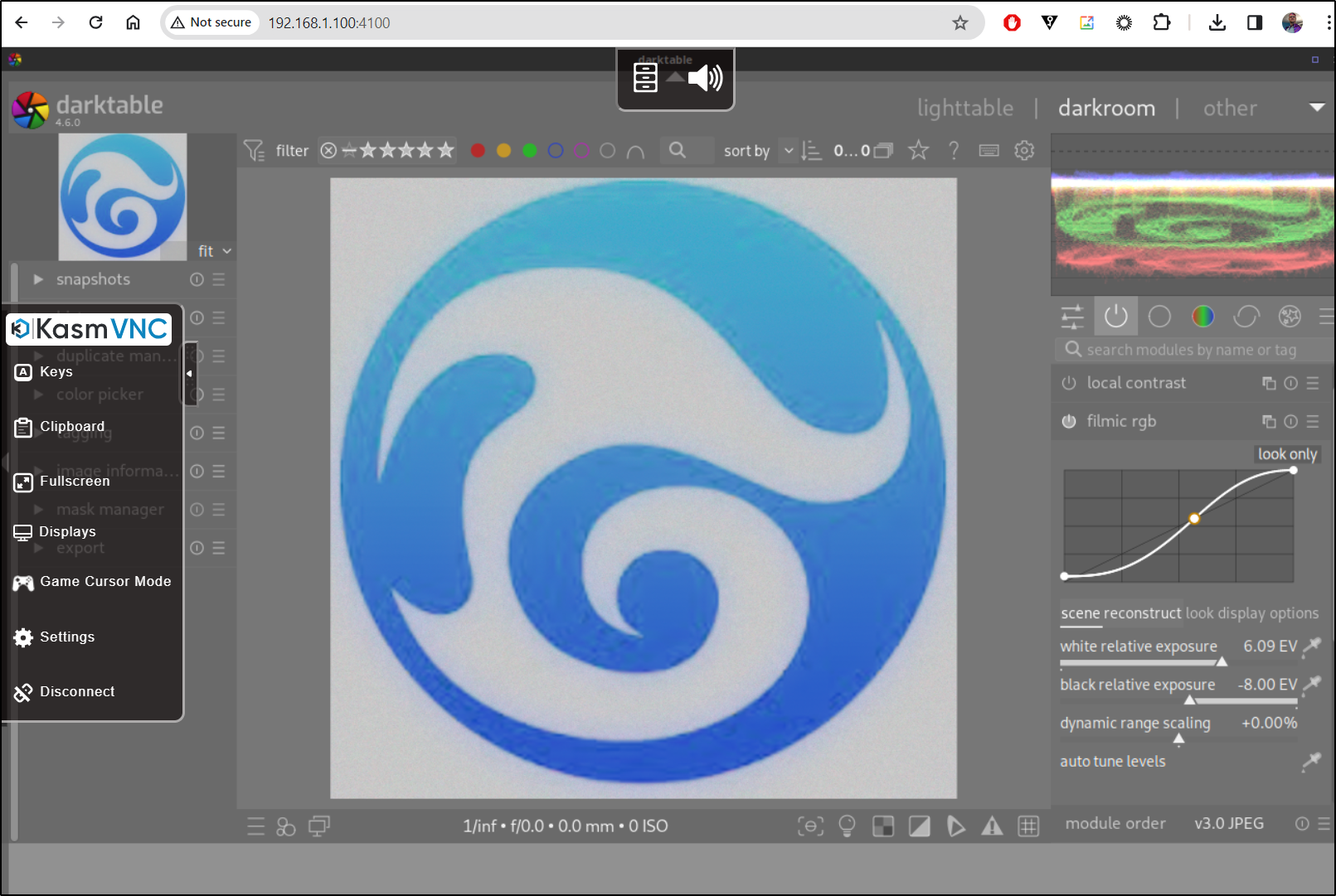

We can now see the app on port 4100. One thing I didn’t realize is that this containerized version is really just streaming the desktop app over VNC. Moving to the left will show the VNC controls.

Let’s play around a bit with images. I’ll admit I’m not a photographer so it’s mostly just me messing around:

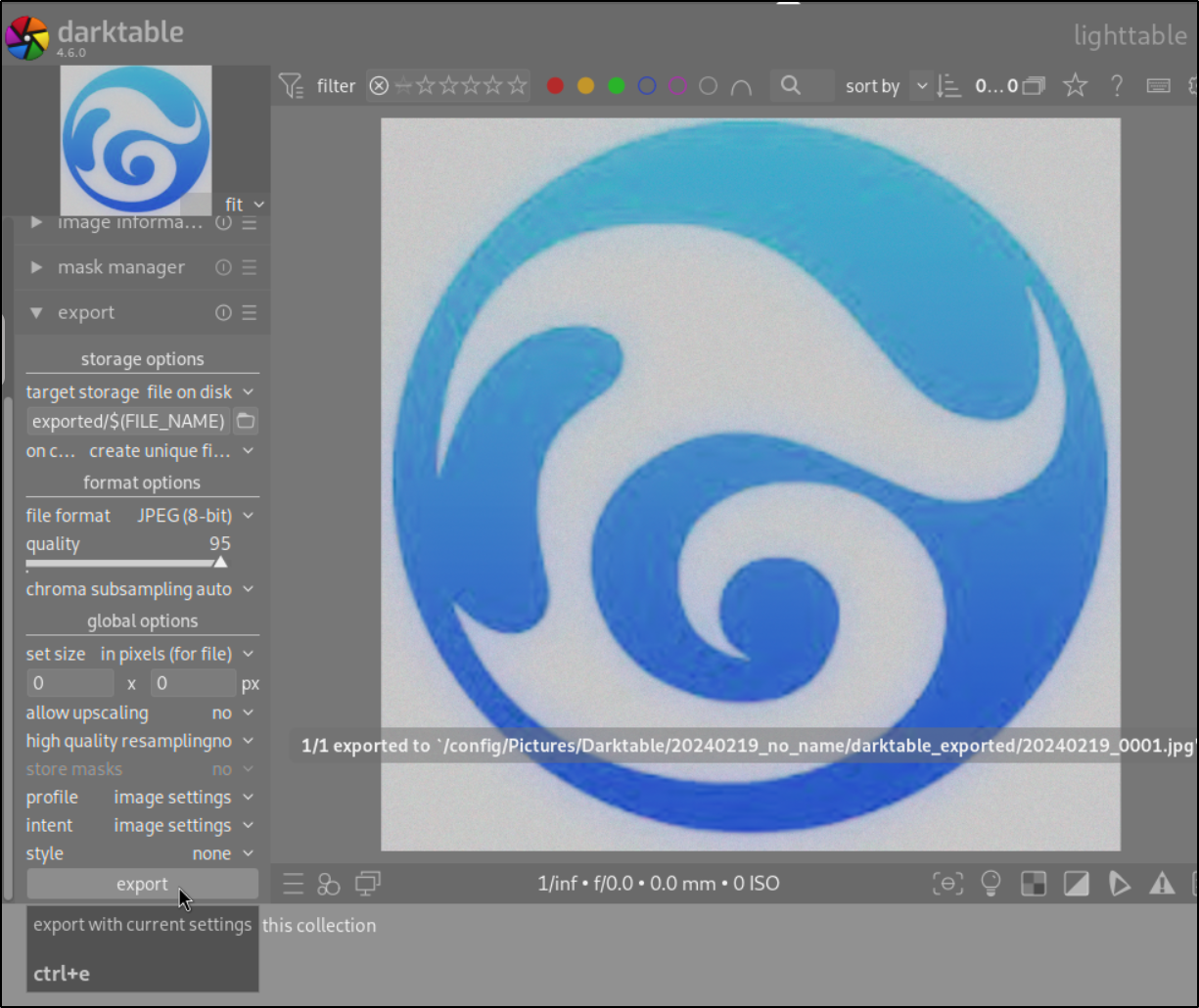

I can use export to dump a modified file to a local folder

I can exec into the container to see the images

builder@builder-T100:~$ docker exec -it darktable /bin/sh

root@1389df4117a8:/# ls

app bin boot command config defaults dev docker-mods etc home init kasmbins kasminit kclient lib lib64 lsiopy mnt opt package proc root run sbin srv sys tmp usr var

root@1389df4117a8:/# cd config/

root@1389df4117a8:/config# cd Pictures/

root@1389df4117a8:/config/Pictures# cd Darktable/

root@1389df4117a8:/config/Pictures/Darktable# ls

20240219_no_name

root@1389df4117a8:/config/Pictures/Darktable# cd 20240219_no_name/

root@1389df4117a8:/config/Pictures/Darktable/20240219_no_name# ls

20240219_0001.png 20240219_0001.png.xmp darktable_exported

root@1389df4117a8:/config/Pictures/Darktable/20240219_no_name#

However, because I mounted the “config” directory when I launched the container, I can always see and copy the files from the local mount:

builder@builder-T100:~/darktable$ ls Pictures/Darktable/20240219_no_name/

20240219_0001.png 20240219_0001.png.xmp darktable_exported

Kubernetes

Let’s fire up an AKS cluster to try in Azure

I’ll get fresh creds for my SP and create a Resource Group

$ az group create -n idjakstest219 --location centralus

{

"id": "/subscriptions/8defc61d-657a-453d-a6ff-cb9f91289a61/resourceGroups/idjakstest219",

"location": "centralus",

"managedBy": null,

"name": "idjakstest219",

"properties": {

"provisioningState": "Succeeded"

},

"tags": null,

"type": "Microsoft.Resources/resourceGroups"

}

$ az ad sp create-for-rbac -n idjaksupg01sp --skip-assignment --output json > my_sp.json

WARNING: Option '--skip-assignment' has been deprecated and will be removed in a future release.

WARNING: Found an existing application instance: (id) 34fede26-2ae7-4ccd-9c29-3bc220b9784a. We will patch it.

I’ll then set the env vars for those creds and fire up an AKS instance

$ export SP_PASS=`cat my_sp.json | jq -r .password`

$ export SP_ID=`cat my_sp.json | jq -r .appId`

$ az aks create --resource-group idjakstest219 --name idjtestaks219 --location centralus --node-count 3 --enable-cluster-autoscaler --min-count 2 --max-count 4 --generate-ssh-keys --network-plugin azure --network-policy azure --service-principal $SP_ID --client-secret $SP_PASS

docker_bridge_cidr is not a known attribute of class <class 'azure.mgmt.containerservice.v2023_10_01.models._models_py3.ContainerServiceNetworkProfile'> and will be ignored

{

"aadProfile": null,

"addonProfiles": null,

"agentPoolProfiles": [

...snip...

Now I just need my Kube creds and we can check to see if our nodes are up

$ az aks get-credentials -n idjtestaks219 -g idjakstest219 --admin

Merged "idjtestaks219-admin" as current context in /home/builder/.kube/config

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

aks-nodepool1-31068033-vmss000000 Ready agent 4m39s v1.27.7

aks-nodepool1-31068033-vmss000001 Ready agent 4m46s v1.27.7

aks-nodepool1-31068033-vmss000002 Ready agent 4m33s v1.27.7

I converted the docker invocation over to a Kubernetes manifest

$ cat darktable.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: darktable
spec:
  replicas: 1
  selector:
    matchLabels:
      app: darktable
  template:
    metadata:
      labels:
        app: darktable
    spec:
      containers:
      - name: darktable
        image: lscr.io/linuxserver/darktable:latest
        env:
        - name: TZ
          value: "Etc/UTC"
        ports:
        - containerPort: 3000
        - containerPort: 3001
        volumeMounts:
        - mountPath: /config
          name: config-volume
      volumes:
      - name: config-volume
        persistentVolumeClaim:
          claimName: darktable-pvc
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: darktable-pvc
spec:
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
---
apiVersion: v1
kind: Service
metadata:
  name: darktableservice
spec:
  selector:
    app: darktable
  ports:
  - name: appone
    protocol: TCP
    port: 3000
    targetPort: 3000
  - name: apptwo
    protocol: TCP
    port: 3001
    targetPort: 3001
  type: LoadBalancer

Then apply

$ kubectl apply -f ./darktable.yaml

deployment.apps/darktable created

persistentvolumeclaim/darktable-pvc created

service/darktableservice created

We can quickly see our service is up

$ kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

darktableservice LoadBalancer 10.0.66.23 52.185.88.50 3000:31097/TCP,3001:32175/TCP 36s

kubernetes ClusterIP 10.0.0.1 <none> 443/TCP 8m45s

We now have a working Darktable in Azure, albeit wide open for all to use

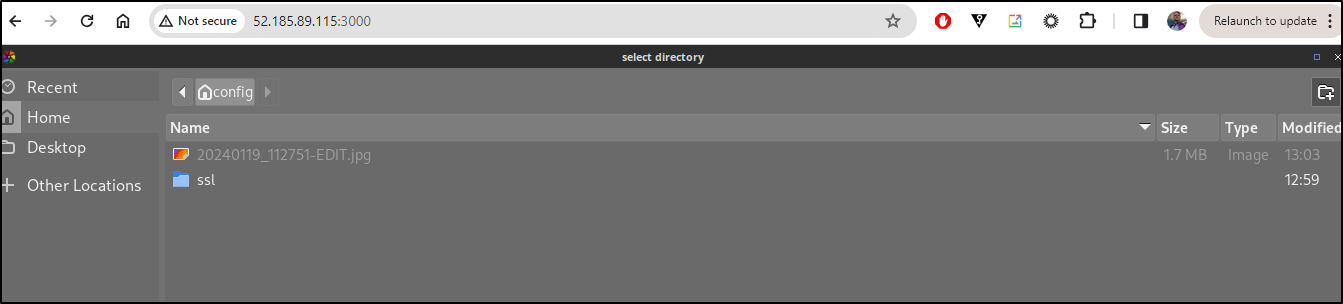

We can use kubectl commands to copy files

$ ls -ltra /mnt/c/Users/isaac/Downloads/20240119_112751-EDIT.jpg

-rwxrwxrwx 1 builder builder 1671985 Jan 23 06:52 /mnt/c/Users/isaac/Downloads/20240119_112751-EDIT.jpg

$ kubectl cp /mnt/c/Users/isaac/Downloads/20240119_112751-EDIT.jpg darktable-f9679c48d-vg8zd:/config/20240119_112751-EDIT.jpg
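The pod name in that command changes every time the Deployment recreates the pod. A minimal sketch of looking it up first, assuming the `app=darktable` label from the manifest above; `copy_to_darktable` is a hypothetical helper name, not something from the image or from kubectl:

```shell
# Hypothetical helper: copy a local file into /config on the current Darktable pod.
# Assumes the pods carry the label app=darktable, as in darktable.yaml.
copy_to_darktable() {
  local src="$1"
  local pod
  # Ask the API server for the name of the first pod matching the label.
  pod="$(kubectl get pods -l app=darktable -o jsonpath='{.items[0].metadata.name}')" || return 1
  if [ -z "$pod" ]; then
    echo "no darktable pod found" >&2
    return 1
  fi
  # kubectl cp <local-path> <pod>:<remote-path>
  kubectl cp "$src" "${pod}:/config/$(basename "$src")"
}

# Usage:
# copy_to_darktable /mnt/c/Users/isaac/Downloads/20240119_112751-EDIT.jpg
```

Wrapping the lookup in a function keeps the copy step the same after a pod restart.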

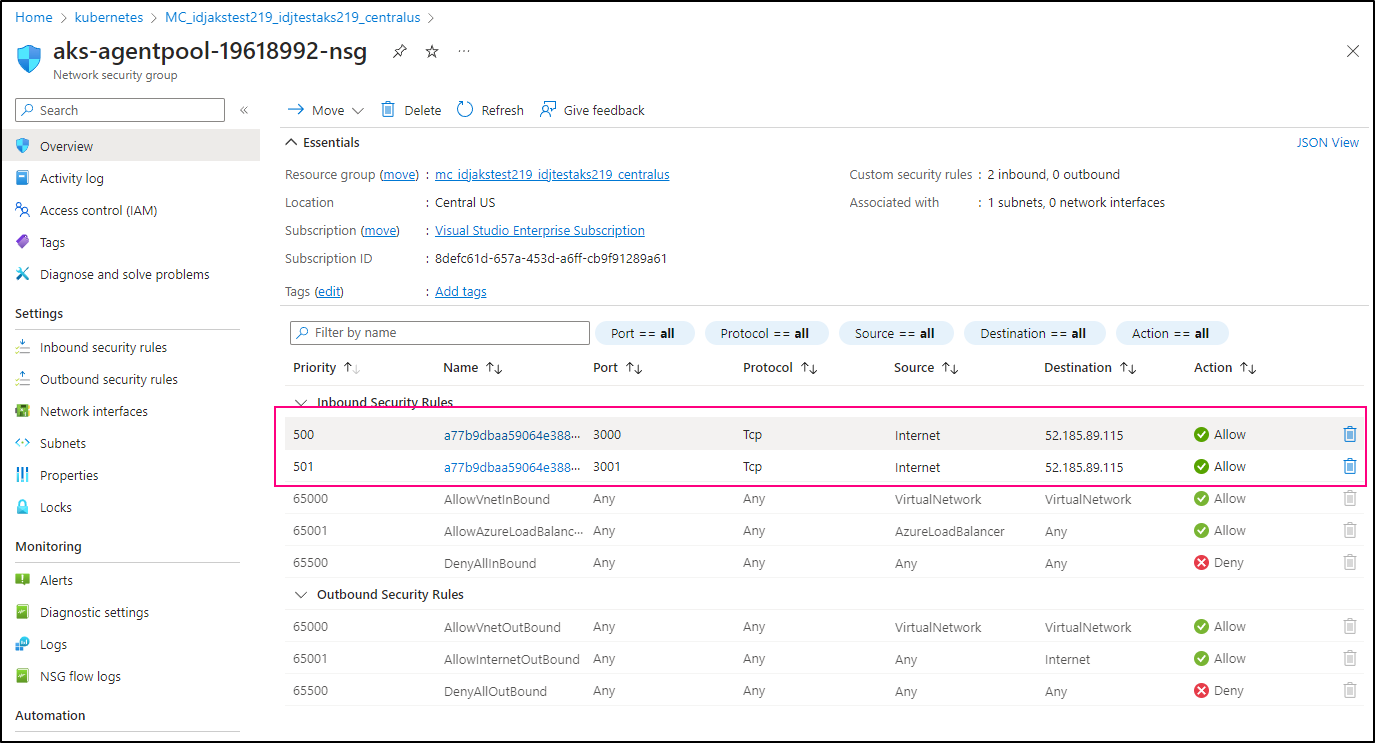

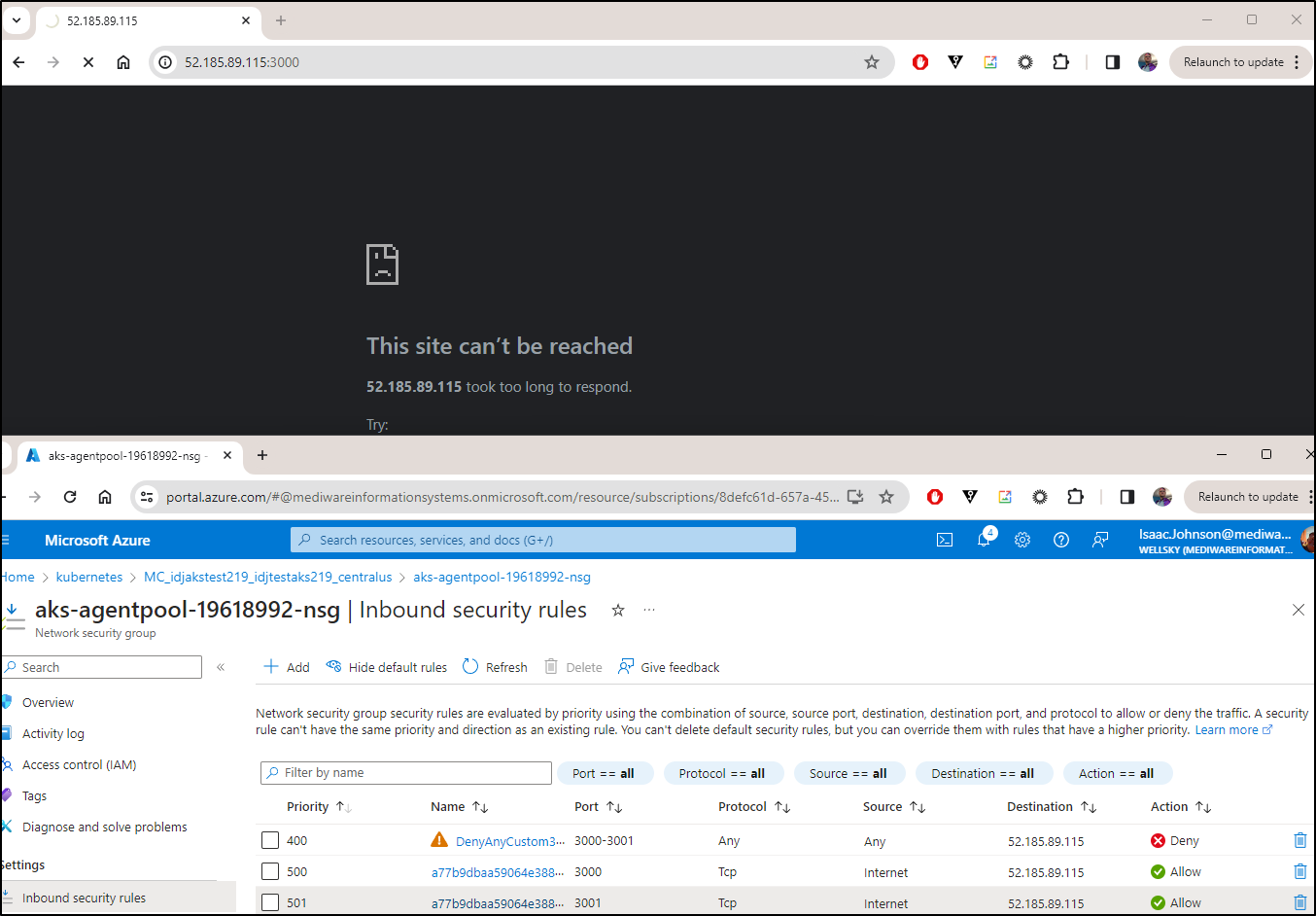

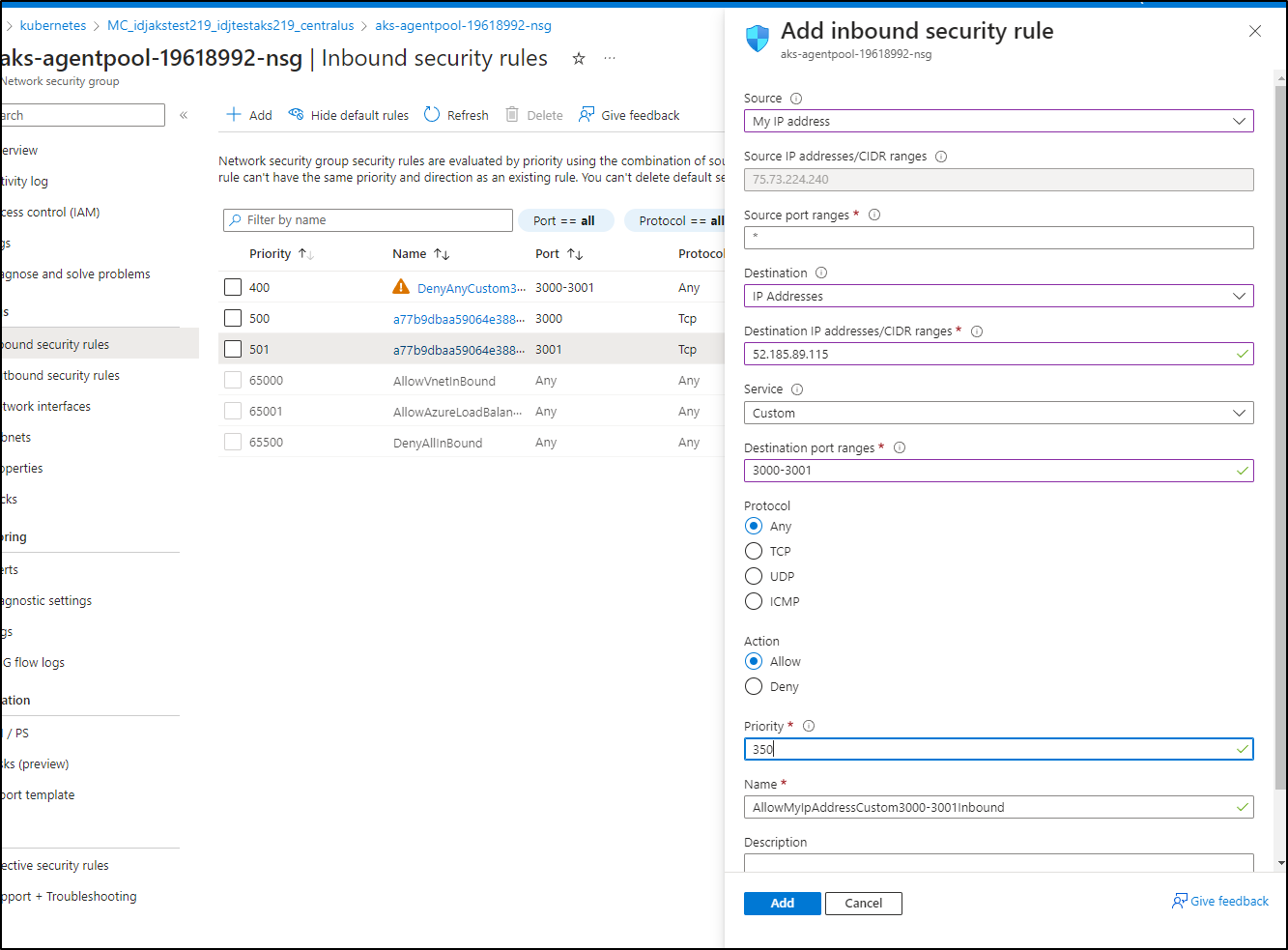

Because this is within the VNet created for AKS, we can always limit at the NSG level

The goal is to stop allowing “Internet” as a source to those ports.

I’ll add a Deny to all and see that it does indeed block traffic

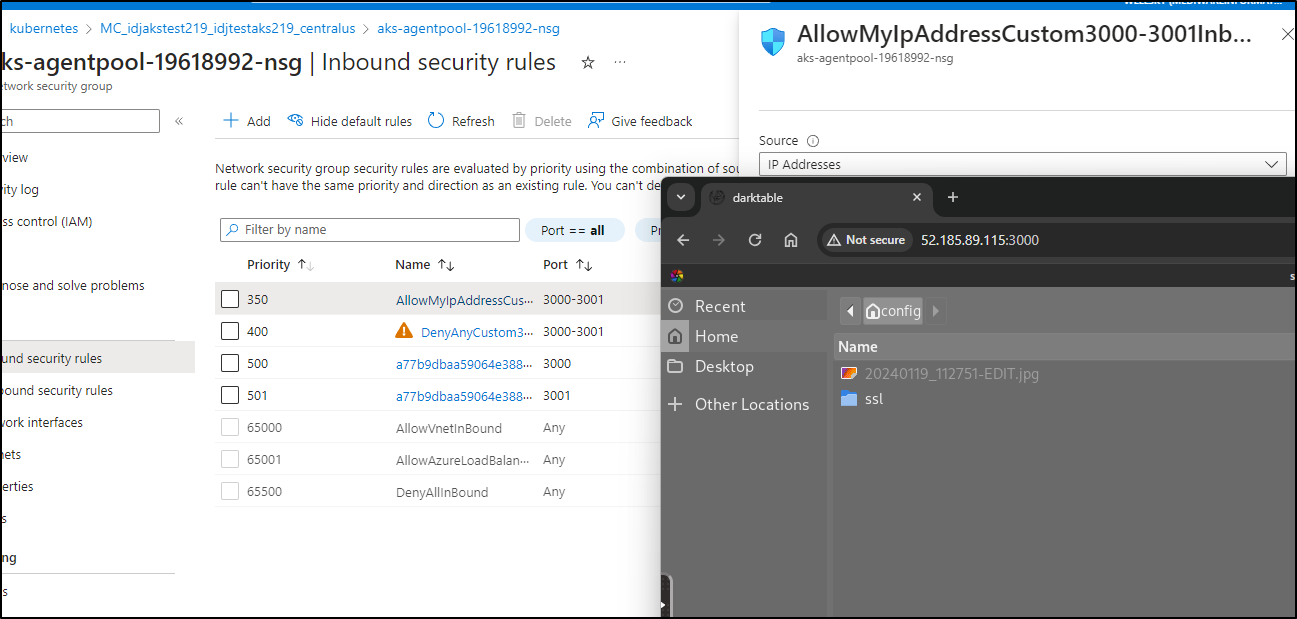

Then I’ll add a rule ahead of it for my IP address

And verify it works

I opted to create rules ahead of those automatically created by AKS so as not to modify auto-created objects.
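The same two rules could be scripted instead of clicked through in the portal. This is a rough sketch only: the resource group, NSG name, rule names, priorities and source IP below are all placeholders, and `nsg_rule` is a hypothetical wrapper around `az network nsg rule create`. NSG rules are evaluated from the lowest priority number upward, so both numbers need to be lower than the priority of the AKS-generated rule.

```shell
# Hypothetical wrapper: create an inbound TCP rule for the Darktable ports.
# $1 = resource group holding the NSG, $2 = NSG name, $3 = rule name,
# $4 = priority (lower numbers are evaluated first), $5 = Allow|Deny, $6 = source
nsg_rule() {
  az network nsg rule create \
    --resource-group "$1" \
    --nsg-name "$2" \
    --name "$3" \
    --priority "$4" \
    --direction Inbound \
    --access "$5" \
    --protocol Tcp \
    --source-address-prefixes "$6" \
    --destination-port-ranges 3000 3001
}

# Usage (placeholder values):
# nsg_rule "$NODE_RG" "$NSG_NAME" DenyDarktableAll   310 Deny  Internet
# nsg_rule "$NODE_RG" "$NSG_NAME" AllowDarktableMyIp 300 Allow 203.0.113.10/32
```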

Pom app

I found this nifty Pomodoro app, pomodoreApp, that really hasn’t been touched in 6 years.

My first step was to clone the repo and build an image

builder@DESKTOP-QADGF36:~/Workspaces$ git clone https://github.com/temaEmelyan/pomodoreApp.git

Cloning into 'pomodoreApp'...

remote: Enumerating objects: 2452, done.

remote: Total 2452 (delta 0), reused 0 (delta 0), pack-reused 2452

Receiving objects: 100% (2452/2452), 1.75 MiB | 6.53 MiB/s, done.

Resolving deltas: 100% (1147/1147), done.

builder@DESKTOP-QADGF36:~/Workspaces$ cd pomodoreApp/

builder@DESKTOP-QADGF36:~/Workspaces/pomodoreApp$ docker build -t idjohnson/pomodoreapp:latest .

[+] Building 5.7s (7/7) FINISHED

=> [internal] load build definition from Dockerfile 0.0s

=> => transferring dockerfile: 254B 0.0s

=> [internal] load .dockerignore 0.0s

=> => transferring context: 2B 0.0s

=> [internal] load metadata for docker.io/library/openjdk:8-jdk-alpine 2.3s

=> [auth] library/openjdk:pull token for registry-1.docker.io 0.0s

=> [internal] load build context 0.4s

=> => transferring context: 2B 0.2s

=> [1/2] FROM docker.io/library/openjdk:8-jdk-alpine@sha256:94792824df2df33402f201713f932b58cb9de94a0cd524164a0f2283343547b3 2.4s

=> => resolve docker.io/library/openjdk:8-jdk-alpine@sha256:94792824df2df33402f201713f932b58cb9de94a0cd524164a0f2283343547b3 0.0s

=> => sha256:94792824df2df33402f201713f932b58cb9de94a0cd524164a0f2283343547b3 1.64kB / 1.64kB 0.0s

=> => sha256:44b3cea369c947527e266275cee85c71a81f20fc5076f6ebb5a13f19015dce71 947B / 947B 0.0s

=> => sha256:a3562aa0b991a80cfe8172847c8be6dbf6e46340b759c2b782f8b8be45342717 3.40kB / 3.40kB 0.0s

=> => sha256:e7c96db7181be991f19a9fb6975cdbbd73c65f4a2681348e63a141a2192a5f10 2.76MB / 2.76MB 0.3s

=> => sha256:f910a506b6cb1dbec766725d70356f695ae2bf2bea6224dbe8c7c6ad4f3664a2 238B / 238B 0.1s

=> => sha256:c2274a1a0e2786ee9101b08f76111f9ab8019e368dce1e325d3c284a0ca33397 70.73MB / 70.73MB 1.6s

=> => extracting sha256:e7c96db7181be991f19a9fb6975cdbbd73c65f4a2681348e63a141a2192a5f10 0.4s

=> => extracting sha256:f910a506b6cb1dbec766725d70356f695ae2bf2bea6224dbe8c7c6ad4f3664a2 0.0s

=> => extracting sha256:c2274a1a0e2786ee9101b08f76111f9ab8019e368dce1e325d3c284a0ca33397 0.5s

=> ERROR [2/2] ADD target/pomodoreApp-*.jar app.jar 0.1s

------

> [2/2] ADD target/pomodoreApp-*.jar app.jar:

------

lstat /var/lib/docker/tmp/buildkit-mount2742879026/target: no such file or directory

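It seems the author intended to build the JAR locally and then add it to the image as a second step; the Dockerfile expects target/pomodoreApp-*.jar to already exist.

I fixed that by changing the Dockerfile to a multi-stage build that runs Maven inside the container: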

# Use maven:3.6.0-jdk-8-alpine as a parent image

FROM maven:3.6.0-jdk-8-alpine AS build

# Set the working directory in the container to /app

WORKDIR /app

# Copy the pom.xml file to download dependencies

COPY pom.xml .

# Download the dependencies

RUN mvn dependency:go-offline -B

# Copy the rest of the application

COPY src /app/src

# Package the application

RUN mvn package

# Use openjdk:8-jdk-alpine for the runtime

FROM openjdk:8-jdk-alpine

# Copy the jar file from the build stage

COPY --from=build /app/target/*.jar /app.jar

# Set the JAVA_OPTS environment variable

ENV JAVA_OPTS=""

# Set the POMO_PROFILE environment variable

ENV POMO_PROFILE="default"

# Run the jar file when the container launches

ENTRYPOINT exec java $JAVA_OPTS -jar /app.jar --spring.profiles.active=$POMO_PROFILE

I launched it locally

builder@LuiGi17:~/Workspaces/pomodoreApp$ docker run -p 8899:8080 pomodoreapp

. ____ _ __ _ _

/\\ / ___'_ __ _ _(_)_ __ __ _ \ \ \ \

( ( )\___ | '_ | '_| | '_ \/ _` | \ \ \ \

\\/ ___)| |_)| | | | | || (_| | ) ) ) )

' |____| .__|_| |_|_| |_\__, | / / / /

=========|_|==============|___/=/_/_/_/

:: Spring Boot :: (v2.1.0.RELEASE)

2024-02-21 00:29:31.538 INFO 1 --- [ main] com.temelyan.pomoapp.WebApplicationKt : Starting WebApplicationKt v0.0.1 on 137be41a6d81 with PID 1 (/app.jar started by root in /)

2024-02-21 00:29:31.540 INFO 1 --- [ main] com.temelyan.pomoapp.WebApplicationKt : The following profiles are active: default

2024-02-21 00:29:32.399 INFO 1 --- [ main] .s.d.r.c.RepositoryConfigurationDelegate : Bootstrapping Spring Data repositories in DEFAULT mode.

2024-02-21 00:29:32.483 INFO 1 --- [ main] .s.d.r.c.RepositoryConfigurationDelegate : Finished Spring Data repository scanning in 75ms. Found 4 repository interfaces.

... snip ...

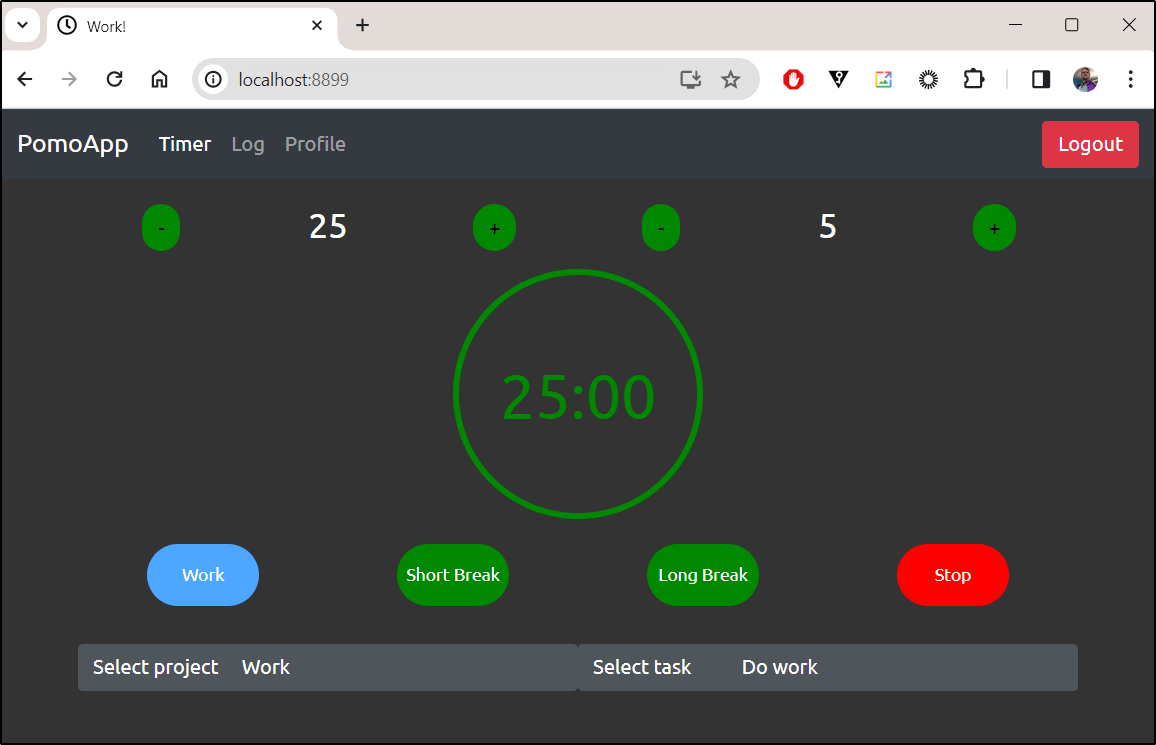

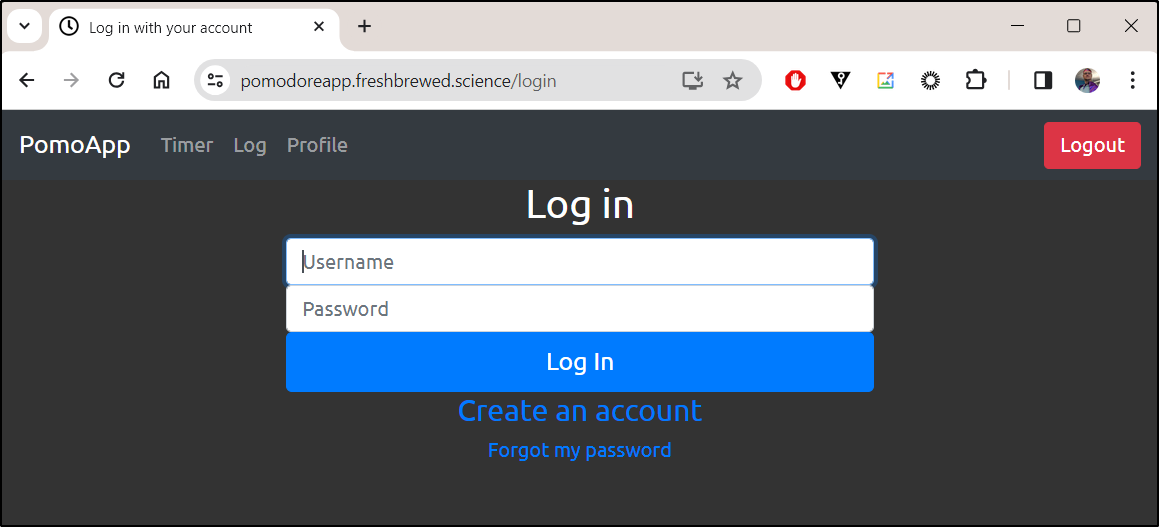

Then I could create an account and then login

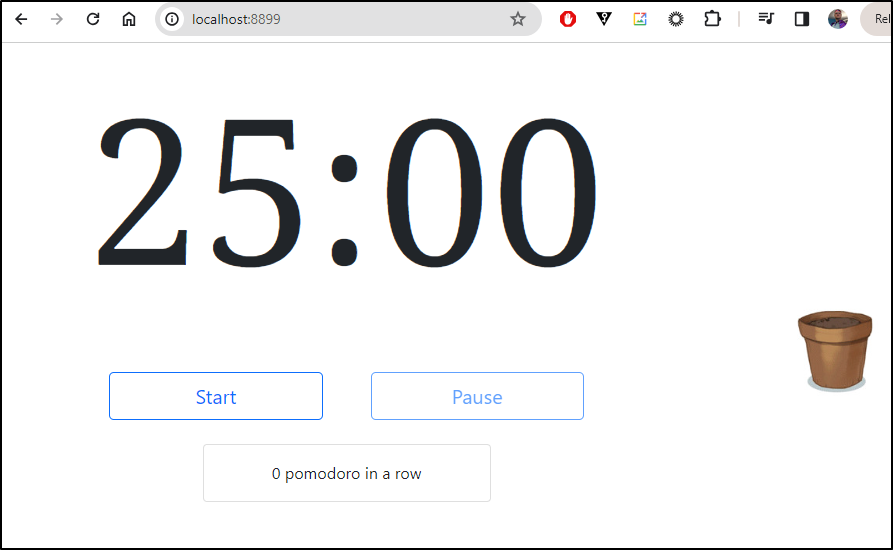

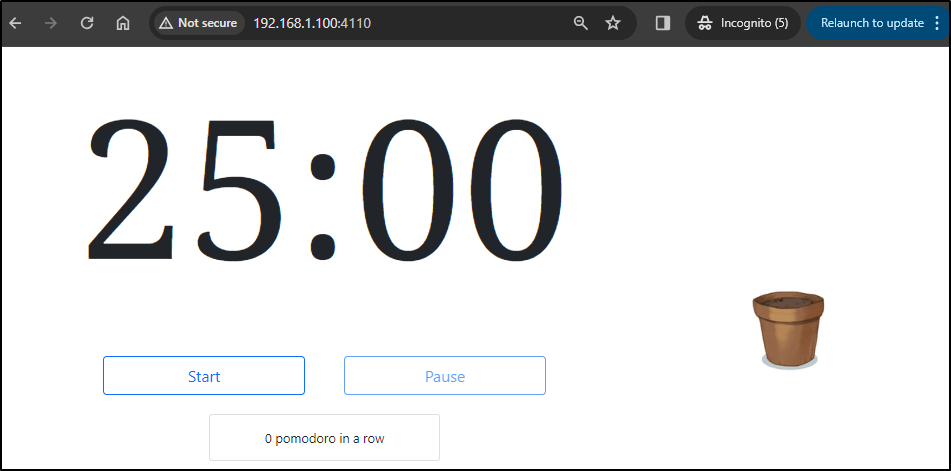

Here we can see it in action:

To share it with others, and to have an image to use later in Kubernetes, I pushed it to Docker Hub:

builder@LuiGi17:~/Workspaces/pomodoreApp$ docker tag pomodoreapp idjohnson/pomodoreapp:latest

builder@LuiGi17:~/Workspaces/pomodoreApp$ docker push idjohnson/pomodoreapp:latest

The push refers to repository [docker.io/idjohnson/pomodoreapp]

9e0750b29bf3: Pushed

ceaf9e1ebef5: Mounted from library/openjdk

9b9b7f3d56a0: Mounted from library/openjdk

f1b5933fe4b5: Mounted from library/openjdk

latest: digest: sha256:c664875c4306d9e1ccf23eacbbad35af364b5e7b4c196412587ea6d3dbe89ddc size: 1159

$ cat r53-pomodoreapp.json
{
  "Comment": "CREATE pomodoreapp fb.s A record ",
  "Changes": [
    {
      "Action": "CREATE",
      "ResourceRecordSet": {
        "Name": "pomodoreapp.freshbrewed.science",
        "Type": "A",
        "TTL": 300,
        "ResourceRecords": [
          {
            "Value": "75.73.224.240"
          }
        ]
      }
    }
  ]
}

$ aws route53 change-resource-record-sets --hosted-zone-id Z39E8QFU0F9PZP --change-batch file://r53-pomodoreapp.json
{
  "ChangeInfo": {
    "Id": "/change/C022151920S3D9S930G2",
    "Status": "PENDING",
    "SubmittedAt": "2024-02-21T00:49:12.169Z",
    "Comment": "CREATE pomodoreapp fb.s A record "
  }
}

I can now deploy into Kubernetes using the following manifest YAML

$ cat k8s.yaml

apiVersion: v1
kind: Service
metadata:
  name: pomodoreapp
spec:
  selector:
    app: pomodoreapp
  ports:
  - protocol: TCP
    port: 80
    targetPort: 8080
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: pomodoreapp
spec:
  replicas: 1
  selector:
    matchLabels:
      app: pomodoreapp
  template:
    metadata:
      labels:
        app: pomodoreapp
    spec:
      containers:
      - name: pomodoreapp
        image: idjohnson/pomodoreapp:latest
        ports:
        - containerPort: 8080
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: pomodoreapp
  annotations:
    cert-manager.io/cluster-issuer: letsencrypt-prod
    kubernetes.io/ingress.class: nginx
    kubernetes.io/tls-acme: "true"
    nginx.ingress.kubernetes.io/proxy-read-timeout: "3600"
    nginx.ingress.kubernetes.io/proxy-send-timeout: "3600"
    ingress.kubernetes.io/proxy-body-size: "0"
    ingress.kubernetes.io/ssl-redirect: "true"
    nginx.ingress.kubernetes.io/proxy-body-size: "0"
    nginx.ingress.kubernetes.io/ssl-redirect: "true"
    nginx.org/client-max-body-size: "0"
    nginx.org/proxy-connect-timeout: "3600"
    nginx.org/proxy-read-timeout: "3600"
spec:
  rules:
  - host: pomodoreapp.freshbrewed.science
    http:
      paths:
      - pathType: Prefix
        path: "/"
        backend:
          service:
            name: pomodoreapp
            port:
              number: 80
  tls:
  - hosts:
    - pomodoreapp.freshbrewed.science
    secretName: pomodoreapp-tls

Which I can deploy

$ kubectl apply -f ./k8s.yaml

service/pomodoreapp created

deployment.apps/pomodoreapp created

ingress.networking.k8s.io/pomodoreapp created

And soon test

However, without a PVC, resetting the pod

builder@LuiGi17:~/Workspaces/pomodoreApp$ kubectl get pods | grep pomod

pomodoreapp-848774b4b4-spmjf 1/1 Running 0 4m27s

builder@LuiGi17:~/Workspaces/pomodoreApp$ kubectl delete pod -l app=pomodoreapp

pod "pomodoreapp-848774b4b4-spmjf" deleted

builder@LuiGi17:~/Workspaces/pomodoreApp$ kubectl get pods | grep pomod

pomodoreapp-848774b4b4-mpk54 1/1 Running 0 4s

would lose the data

I modified the Dockerfile to switch from the ‘default’ profile to the ‘develop’ profile, which uses Hibernate:

# Set the POMO_PROFILE environment variable

ENV POMO_PROFILE="develop"
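Because the ENTRYPOINT expands `$POMO_PROFILE` when the container starts, the profile can also be chosen at run time without rebuilding the image. A minimal sketch, assuming the local image name `pomodoreapp` and the host port 8899 used earlier; `run_pomo` is a hypothetical helper:

```shell
# Hypothetical helper: start the app with a chosen Spring profile.
# $1 = profile name; falls back to "default" when omitted.
run_pomo() {
  local profile="${1:-default}"
  # -e overrides the ENV value baked into the image for this container only.
  docker run -d -p 8899:8080 -e POMO_PROFILE="$profile" pomodoreapp
}

# Usage:
# run_pomo develop
```

In Kubernetes the equivalent would be an `env` entry on the container, which leaves the image itself profile-agnostic.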

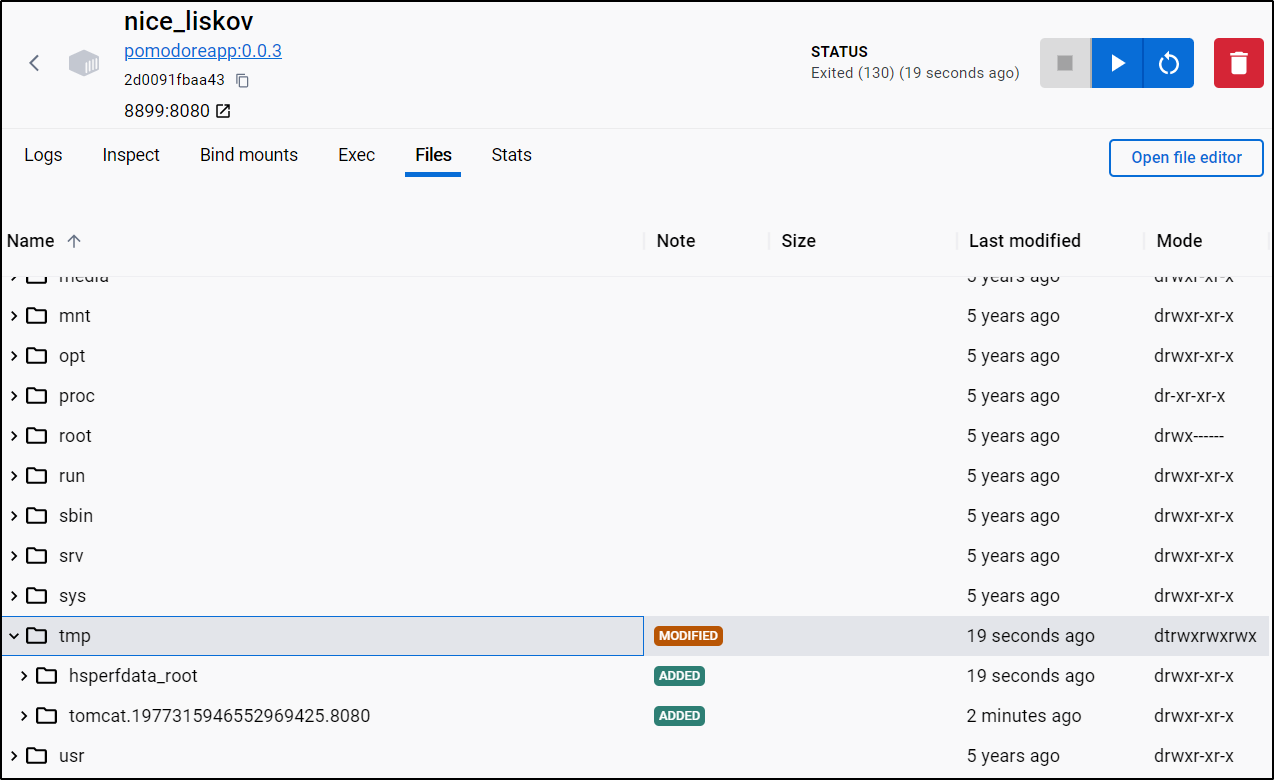

I then tested locally to verify where the files lived, and updated the manifest to use the new container and leverage a PVC:

---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pomodoreapppvc
spec:
  accessModes:
  - ReadWriteOnce
  storageClassName: managed-nfs-storage
  resources:
    requests:
      storage: 2Gi
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: pomodoreapp
spec:
  replicas: 1
  selector:
    matchLabels:
      app: pomodoreapp
  template:
    metadata:
      labels:
        app: pomodoreapp
    spec:
      containers:
      - name: pomodoreapp
        image: idjohnson/pomodoreapp:0.0.3
        ports:
        - containerPort: 8080
        volumeMounts:
        - name: pomodoreappstorage
          mountPath: /tmp
      volumes:
      - name: pomodoreappstorage
        persistentVolumeClaim:
          claimName: pomodoreapppvc

Then we can apply it

$ kubectl apply -f ./k8s.yaml

service/pomodoreapp unchanged

persistentvolumeclaim/pomodoreapppvc created

deployment.apps/pomodoreapp configured

ingress.networking.k8s.io/pomodoreapp unchanged

We see a PVC now and the pod cycle

$ kubectl get pods -l app=pomodoreapp

NAME READY STATUS RESTARTS AGE

pomodoreapp-6f66d8bf7c-59rk2 1/1 Running 0 43s

$ kubectl get pvc | grep pom

pomodoreapppvc Bound pvc-a9caba52-d222-4886-ac97-78435071afed 2Gi RWO managed-nfs-storage 59s

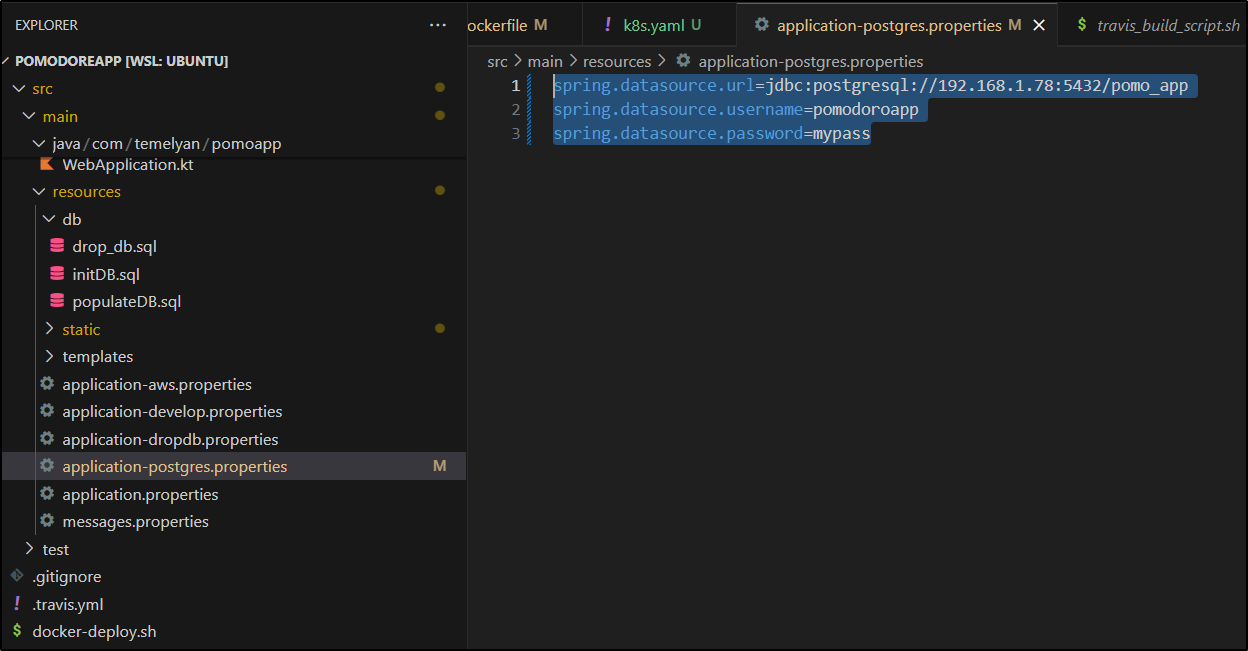

Next I tried PostgreSQL (0.0.5), pointing the datasource at my database server:

spring.datasource.url=jdbc:postgresql://192.168.1.78:5432/pomo_app

spring.datasource.username=pomodoroapp

spring.datasource.password=mypass

Then created a DB

postgres@isaac-MacBookAir:~$ createuser --pwprompt pomodoroapp

Enter password for new role:

Enter it again:

postgres@isaac-MacBookAir:~$ createdb pomo_app

postgres@isaac-MacBookAir:~$ exit

I’ll grant permissions

isaac@isaac-MacBookAir:~$ sudo su - postgres

[sudo] password for isaac:

postgres@isaac-MacBookAir:~$ psql

psql (12.17 (Ubuntu 12.17-0ubuntu0.20.04.1))

Type "help" for help.

postgres=# GRANT ALL ON ALL TABLES IN SCHEMA public TO pomodoroapp;

GRANT

postgres=# grant all privileges on database pomo_app to pomodoroapp;

GRANT

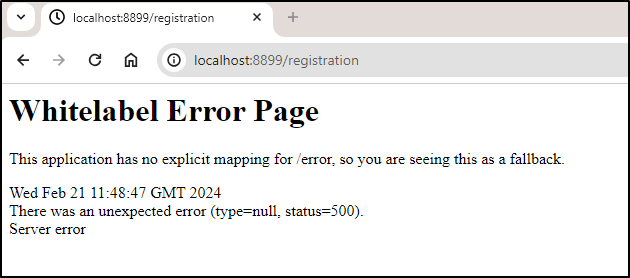

But I saw errors in the logs, and it crashed when I tried to register a user

2024-02-21 02:56:16.470 ERROR 1 --- [nio-8080-exec-4] o.h.engine.jdbc.spi.SqlExceptionHelper : ERROR: relation "users" does not exist

Position: 129

2024-02-21 02:56:16.478 ERROR 1 --- [nio-8080-exec-4] o.a.c.c.C.[.[.[/].[dispatcherServlet] : Servlet.service() for servlet [dispatcherServlet] in context with path [] threw exception [Request processing failed; nested exception is org.springframework.dao.InvalidDataAccessResourceUsageException: could not extract ResultSet; SQL [n/a]; nested exception is org.hibernate.exception.SQLGrammarException: could not extract ResultSet] with root cause

org.postgresql.util.PSQLException: ERROR: relation "users" does not exist

Position: 129

at org.postgresql.core.v3.QueryExecutorImpl.receiveErrorResponse(QueryExecutorImpl.java:2433) ~[postgresql-42.2.2.jre7.jar!/:42.2.2.jre7]

at org.postgresql.core.v3.QueryExecutorImpl.processResults(QueryExecutorImpl.java:2178) ~[postgresql-42.2.2.jre7.jar!/:42.2.2.jre7]

On login

at org.springframework.dao.support.PersistenceExceptionTranslationInterceptor.invoke(PersistenceExceptionTranslationInterceptor.java:139) ~[spring-tx-5.1.2.RELEASE.jar!/:5.1.2.RELEASE]

... 84 common frames omitted

Caused by: org.postgresql.util.PSQLException: ERROR: relation "users" does not exist

Position: 129

at org.postgresql.core.v3.QueryExecutorImpl.receiveErrorResponse(QueryExecutorImpl.java:2433) ~[postgresql-42.2.2.jre7.jar!/:42.2.2.jre7]

at org.postgresql.core.v3.QueryExecutorImpl.processResults(QueryExecutorImpl.java:2178) ~[postgresql-42.2.2.jre7.jar!/:42.2.2.jre7]

And a crash on registration

I’ve used this same PostgreSQL DB for Firefly and others, so I suspect the app code rather than the database.
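One detail in the transcript above may still be worth ruling out: the `postgres=#` prompt suggests the `GRANT ... ON ALL TABLES` statement ran while connected to the default `postgres` database, so it would not have touched anything in `pomo_app`. A minimal sketch of running the same grant against a named database follows; `grant_tables` is a hypothetical helper. On its own this is unlikely to explain a missing `users` relation, since a grant only affects tables that already exist.

```shell
# Hypothetical helper: grant table privileges inside a specific database.
# $1 = database name, $2 = role name
grant_tables() {
  # -d selects the database to connect to; -c runs one statement and exits.
  psql -d "$1" -c "GRANT ALL ON ALL TABLES IN SCHEMA public TO $2;"
}

# Usage (as the postgres OS user):
# grant_tables pomo_app pomodoroapp
```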

For now, I’ll create a PR for the author and just take the persistence as a current gap to fill in later.

You are welcome to fork my fork as well or just get my latest container from Dockerhub.

Simple Pom App

I started looking for a more basic one that didn’t require authentication or a database.

I found a few on Dockerhub. About a year back, Eugene Parkhomenko published a very lightweight one that looks to run Puma (i.e., Ruby).

builder@DESKTOP-QADGF36:~/Workspaces/idjohnson-pomodoreapp$ docker run -p 8899:80 eugeneparkhom/pomodoro:1.0.48

/docker-entrypoint.sh: /docker-entrypoint.d/ is not empty, will attempt to perform configuration

/docker-entrypoint.sh: Looking for shell scripts in /docker-entrypoint.d/

/docker-entrypoint.sh: Launching /docker-entrypoint.d/10-listen-on-ipv6-by-default.sh

10-listen-on-ipv6-by-default.sh: info: Getting the checksum of /etc/nginx/conf.d/default.conf

10-listen-on-ipv6-by-default.sh: info: /etc/nginx/conf.d/default.conf differs from the packaged version

/docker-entrypoint.sh: Sourcing /docker-entrypoint.d/15-local-resolvers.envsh

/docker-entrypoint.sh: Launching /docker-entrypoint.d/20-envsubst-on-templates.sh

/docker-entrypoint.sh: Launching /docker-entrypoint.d/30-tune-worker-processes.sh

/docker-entrypoint.sh: Configuration complete; ready for start up

2024/02/21 12:33:59 [notice] 1#1: using the "epoll" event method

2024/02/21 12:33:59 [notice] 1#1: nginx/1.25.2

2024/02/21 12:33:59 [notice] 1#1: built by gcc 12.2.0 (Debian 12.2.0-14)

2024/02/21 12:33:59 [notice] 1#1: OS: Linux 5.15.133.1-microsoft-standard-WSL2

2024/02/21 12:33:59 [notice] 1#1: getrlimit(RLIMIT_NOFILE): 1048576:1048576

2024/02/21 12:33:59 [notice] 1#1: start worker processes

2024/02/21 12:33:59 [notice] 1#1: start worker process 28

2024/02/21 12:33:59 [notice] 1#1: start worker process 29

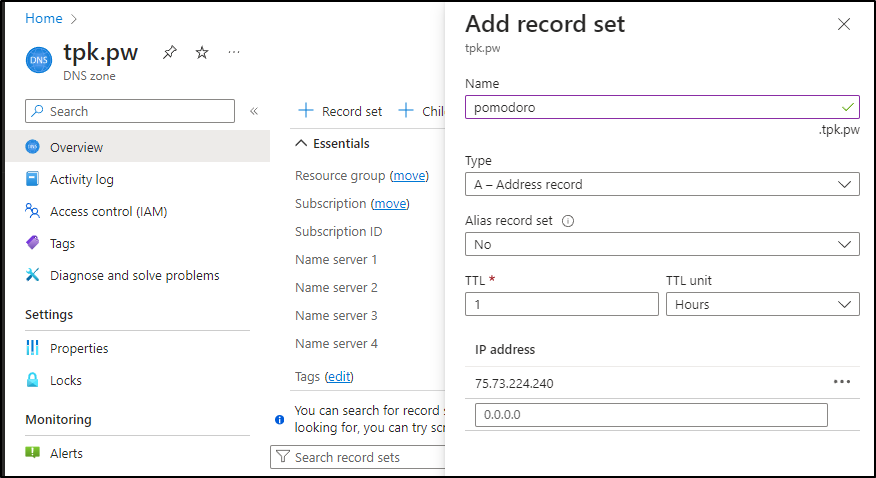

You’ve seen me use AWS Route53 so often that it’s a bit rote, so before I move on to Kubernetes, let’s use Azure DNS this time. I’ll add an A record there.

I can then create a quick k8s manifest

$ cat pomo-new.yaml

apiVersion: v1
kind: Service
metadata:
  name: pomosimple
spec:
  selector:
    app: pomosimple
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: pomosimple
spec:
  replicas: 1
  selector:
    matchLabels:
      app: pomosimple
  template:
    metadata:
      labels:
        app: pomosimple
    spec:
      containers:
      - name: pomosimple
        image: eugeneparkhom/pomodoro:1.0.48
        ports:
        - containerPort: 80
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: pomosimple
  annotations:
    cert-manager.io/cluster-issuer: letsencrypt-prod
    kubernetes.io/ingress.class: nginx
    kubernetes.io/tls-acme: "true"
    ingress.kubernetes.io/ssl-redirect: "true"
    nginx.ingress.kubernetes.io/ssl-redirect: "true"
spec:
  rules:
  - host: pomodoro.tpk.pw
    http:
      paths:
      - pathType: Prefix
        path: "/"
        backend:
          service:
            name: pomosimple
            port:
              number: 80
  tls:
  - hosts:
    - pomodoro.tpk.pw
    secretName: pomosimple-tls

Apply it

$ kubectl apply -f ./pomo-new.yaml

service/pomosimple created

deployment.apps/pomosimple created

ingress.networking.k8s.io/pomosimple created

Sadly, while valid, it seems Let’s Encrypt (LE) didn’t like the cert request. I gave it 15 minutes, then pivoted.

builder@DESKTOP-QADGF36:~/Workspaces/idjohnson-pomodoreapp$ kubectl get CertificateRequest | tail -n1

pomosimple-tls-v8nnp True False letsencrypt-prod system:serviceaccount:cert-manager:cert-manager 9m

...

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal cert-manager.io 8m12s cert-manager-certificaterequests-approver Certificate request has been approved by cert-manager.io

Normal OrderCreated 8m12s cert-manager-certificaterequests-issuer-acme Created Order resource default/pomosimple-tls-v8nnp-1715295675

Normal OrderPending 8m12s cert-manager-certificaterequests-issuer-acme Waiting on certificate issuance from order default/pomosimple-tls-v8nnp-1715295675: ""
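When an order sits in pending like this, the reason usually sits further down the chain of cert-manager resources (Certificate, CertificateRequest, Order, Challenge). A small sketch that lists each level in one namespace; `cert_chain` is a hypothetical helper and assumes the cert-manager CRDs are installed in the cluster:

```shell
# Hypothetical helper: list the cert-manager resource chain in one namespace.
# $1 = namespace (defaults to "default")
cert_chain() {
  local ns="${1:-default}"
  local kind
  for kind in certificate certificaterequest order challenge; do
    echo "== ${kind} =="
    kubectl get "$kind" -n "$ns" -o wide
  done
}

# Usage:
# cert_chain default
```

A `kubectl describe` on whichever Challenge is stuck is then a reasonable next step.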

Then I realized that my ClusterIssuer (prod) is set to use a Route53 DNS-01 solver, not the NGINX HTTP-01 solver, which would explain the stalled order for a zone hosted in Azure DNS

$ kubectl get clusterissuer letsencrypt-prod -o yaml

apiVersion: cert-manager.io/v1
kind: ClusterIssuer
metadata:
  name: letsencrypt-prod
spec:
  acme:
    email: isaac.johnson@gmail.com
    preferredChain: ""
    privateKeySecretRef:
      name: letsencrypt-prod
    server: https://acme-v02.api.letsencrypt.org/directory
    solvers:
    - dns01:
        route53:

... snip ...

I left my old NGINX one as letsencrypt-prod-old:

$ kubectl get clusterissuer letsencrypt-prod-old -o yaml
apiVersion: cert-manager.io/v1
kind: ClusterIssuer
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"cert-manager.io/v1","kind":"ClusterIssuer","metadata":{"annotations":{},"name":"letsencrypt-prod-old"},"spec":{"acme":{"email":"isaac.johnson@gmail.com","privateKeySecretRef":{"name":"letsencrypt-prod"},"server":"https://acme-v02.api.letsencrypt.org/directory","solvers":[{"http01":{"ingress":{"class":"nginx"}}}]}}}
  creationTimestamp: "2022-07-26T02:19:47Z"
  generation: 1
  name: letsencrypt-prod-old
  resourceVersion: "1074"
  uid: 014e8f01-a737-403c-bcef-e96d4c009cbd
spec:
  acme:
    email: isaac.johnson@gmail.com
    preferredChain: ""
    privateKeySecretRef:
      name: letsencrypt-prod
    server: https://acme-v02.api.letsencrypt.org/directory
    solvers:
    - http01:
        ingress:
          class: nginx
status:
  acme:
    lastRegisteredEmail: isaac.johnson@gmail.com
    uri: https://acme-v02.api.letsencrypt.org/acme/acct/646879196
  conditions:
  - lastTransitionTime: "2022-07-26T02:19:48Z"
    message: The ACME account was registered with the ACME server
    observedGeneration: 1
    reason: ACMEAccountRegistered
    status: "True"
    type: Ready

Let’s fix that

builder@DESKTOP-QADGF36:~/Workspaces/idjohnson-pomodoreapp$ kubectl delete -f ./pomo-new.yaml

service "pomosimple" deleted

deployment.apps "pomosimple" deleted

ingress.networking.k8s.io "pomosimple" deleted

builder@DESKTOP-QADGF36:~/Workspaces/idjohnson-pomodoreapp$ sed -i /letsencrypt-prod/letsencrypt-prod-old/g ./pomo-new.yaml

sed: -e expression #1, char 20: extra characters after command

builder@DESKTOP-QADGF36:~/Workspaces/idjohnson-pomodoreapp$ sed -i 's/letsencrypt-prod/letsencrypt-prod-old/g' ./pomo-new.yaml

builder@DESKTOP-QADGF36:~/Workspaces/idjohnson-pomodoreapp$ cat pomo-new.yaml

apiVersion: v1
kind: Service
metadata:
  name: pomosimple
spec:
  selector:
    app: pomosimple
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: pomosimple
spec:
  replicas: 1
  selector:
    matchLabels:
      app: pomosimple
  template:
    metadata:
      labels:
        app: pomosimple
    spec:
      containers:
      - name: pomosimple
        image: eugeneparkhom/pomodoro:1.0.48
        ports:
        - containerPort: 80
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: pomosimple
  annotations:
    cert-manager.io/cluster-issuer: letsencrypt-prod-old
    kubernetes.io/ingress.class: nginx
    kubernetes.io/tls-acme: "true"
    ingress.kubernetes.io/ssl-redirect: "true"
    nginx.ingress.kubernetes.io/ssl-redirect: "true"
spec:
  rules:
  - host: pomodoro.tpk.pw
    http:
      paths:
      - pathType: Prefix
        path: "/"
        backend:
          service:
            name: pomosimple
            port:
              number: 80
  tls:
  - hosts:
    - pomodoro.tpk.pw
    secretName: pomosimple-tls

builder@DESKTOP-QADGF36:~/Workspaces/idjohnson-pomodoreapp$ kubectl apply -f ./pomo-new.yaml

service/pomosimple created

deployment.apps/pomosimple created

ingress.networking.k8s.io/pomosimple created

That doesn’t seem to work for me either

$ kubectl get cert | tail -n1

pomosimple-tls False pomosimple-tls 91m
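A note on the failed `sed` call earlier in this section: without the leading `s`, sed reads `/letsencrypt-prod/` as an address and the following `l` as the command, which is why it complained about extra characters at char 20. The corrected substitution works, but running it twice would produce `letsencrypt-prod-old-old`. A sketch of a variant that rewrites only the annotation value, assuming GNU sed (for `-i` without a suffix); `set_cluster_issuer` is a hypothetical helper:

```shell
# Hypothetical helper: point an Ingress manifest at a different ClusterIssuer.
# $1 = manifest path, $2 = new issuer name
set_cluster_issuer() {
  # Keep the annotation key (group 1) and replace whatever value follows it.
  # Leading indentation is untouched because the match starts at the key.
  sed -i "s|\(cert-manager.io/cluster-issuer:\).*|\1 $2|" "$1"
}

# Usage:
# set_cluster_issuer ./pomo-new.yaml letsencrypt-prod-old
```

Because the whole value is replaced rather than a substring, repeating the call leaves the file unchanged.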

Azure Cluster Issuer

Let’s just take a moment and do this the right way.

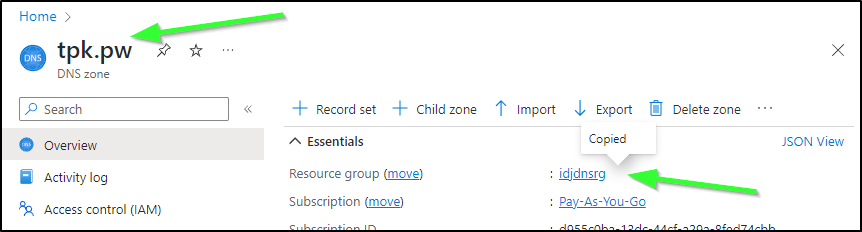

I’ll need my Zone and Resource Group information

# just come up with a name that is valid and unique

$ AZURE_CERT_MANAGER_NEW_SP_NAME=idjcertmanagerk3s

$ AZURE_DNS_ZONE_RESOURCE_GROUP=idjdnsrg

$ AZURE_DNS_ZONE=tpk.pw

We’ll then create the SP and capture the relevant details: ID and password, tenant, and subscription

$ DNS_SP=$(az ad sp create-for-rbac --name $AZURE_CERT_MANAGER_NEW_SP_NAME --output json)

WARNING: The output includes credentials that you must protect. Be sure that you do not include these credentials in your code or check the credentials into your source control. For more information, see https://aka.ms/azadsp-cli

$ AZURE_CERT_MANAGER_SP_APP_ID=$(echo $DNS_SP | jq -r '.appId')

$ AZURE_CERT_MANAGER_SP_PASSWORD=$(echo $DNS_SP | jq -r '.password')

$ AZURE_TENANT_ID=$(echo $DNS_SP | jq -r '.tenant')

$ AZURE_SUBSCRIPTION_ID=$(az account show --output json | jq -r '.id')

When I saw the following error

$ DNS_ID=$(az network dns zone show --name $AZURE_DNS_ZONE --resource-group $AZURE_DNS_ZONE_RESOURCE_GROUP --query "id" --output tsv)

ERROR: (ResourceGroupNotFound) Resource group 'idjdnsrg' could not be found.

Code: ResourceGroupNotFound

Message: Resource group 'idjdnsrg' could not be found.

It clued me in to the fact that I had the wrong subscription set

$ az account set --subscription Pay-As-You-Go
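A small guard would catch this before any lookups run. The sketch below compares the active subscription name with the expected one; `require_subscription` is a hypothetical helper, and "Pay-As-You-Go" is simply the subscription name used above:

```shell
# Hypothetical guard: fail fast when the active subscription is not the expected one.
# $1 = expected subscription name
require_subscription() {
  local expected="$1"
  local current
  # az account show reports the subscription the CLI is currently using.
  current="$(az account show --query name --output tsv)" || return 1
  if [ "$current" != "$expected" ]; then
    echo "active subscription is '$current', expected '$expected'" >&2
    return 1
  fi
}

# Usage:
# require_subscription "Pay-As-You-Go" || exit 1
```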

I then resumed the steps to add the right permission

builder@DESKTOP-QADGF36:~/Workspaces/idjohnson-pomodoreapp$ AZURE_CERT_MANAGER_SP_APP_ID=$(echo $DNS_SP | jq -r '.appId')

builder@DESKTOP-QADGF36:~/Workspaces/idjohnson-pomodoreapp$ AZURE_CERT_MANAGER_SP_PASSWORD=$(echo $DNS_SP | jq -r '.password')

builder@DESKTOP-QADGF36:~/Workspaces/idjohnson-pomodoreapp$ AZURE_TENANT_ID=$(echo $DNS_SP | jq -r '.tenant')

builder@DESKTOP-QADGF36:~/Workspaces/idjohnson-pomodoreapp$ AZURE_SUBSCRIPTION_ID=$(az account show --output json | jq -r '.id')

builder@DESKTOP-QADGF36:~/Workspaces/idjohnson-pomodoreapp$ DNS_ID=$(az network dns zone show --name $AZURE_DNS_ZONE --resource-group $AZURE_DNS_ZONE_RESOURCE_GROUP --query "id" --output tsv)

builder@DESKTOP-QADGF36:~/Workspaces/idjohnson-pomodoreapp$ az role assignment create --assignee $AZURE_CERT_MANAGER_SP_APP_ID --role "DNS Zone Contributor" --scope $DNS_ID

{

"condition": null,

"conditionVersion": null,

"createdBy": null,

I’ll need to set the secret

$ kubectl create secret generic azuredns-config --from-literal=client-secret=$AZURE_CERT_MANAGER_SP_PASSWORD

secret/azuredns-config created

I’ll get my non-secret outputs

builder@DESKTOP-QADGF36:~/Workspaces/idjohnson-pomodoreapp$ echo "AZURE_CERT_MANAGER_SP_APP_ID: $AZURE_CERT_MANAGER_SP_APP_ID"

AZURE_CERT_MANAGER_SP_APP_ID: 7d420c37-5aa9-434b-9c65-cd3e3066ec00

builder@DESKTOP-QADGF36:~/Workspaces/idjohnson-pomodoreapp$ echo "AZURE_SUBSCRIPTION_ID: $AZURE_SUBSCRIPTION_ID"

AZURE_SUBSCRIPTION_ID: d955c0ba-13dc-44cf-a29a-8fed74cbb22d

builder@DESKTOP-QADGF36:~/Workspaces/idjohnson-pomodoreapp$ echo "AZURE_TENANT_ID: $AZURE_TENANT_ID"

AZURE_TENANT_ID: 28c575f6-ade1-4838-8e7c-7e6d1ba0eb4a

builder@DESKTOP-QADGF36:~/Workspaces/idjohnson-pomodoreapp$ echo "AZURE_DNS_ZONE: $AZURE_DNS_ZONE"

AZURE_DNS_ZONE: tpk.pw

builder@DESKTOP-QADGF36:~/Workspaces/idjohnson-pomodoreapp$ echo "AZURE_DNS_ZONE_RESOURCE_GROUP: $AZURE_DNS_ZONE_RESOURCE_GROUP"

AZURE_DNS_ZONE_RESOURCE_GROUP: idjdnsrg

Which I can now use to build out the ClusterIssuer

builder@DESKTOP-QADGF36:~/Workspaces/idjohnson-pomodoreapp$ cat clusterIssuer.yaml

apiVersion: cert-manager.io/v1
kind: ClusterIssuer
metadata:
  name: azuredns-tpkpw
spec:
  acme:
    email: isaac.johnson@gmail.com
    preferredChain: ""
    privateKeySecretRef:
      name: azuredns-tpkpw
    server: https://acme-v02.api.letsencrypt.org/directory
    solvers:
    - dns01:
        azureDNS:
          clientID: 7d420c37-5aa9-434b-9c65-cd3e3066ec00
          clientSecretSecretRef:
            # The following is the secret we created in Kubernetes. Issuer will use this to present challenge to Azure DNS.
            name: azuredns-config
            key: client-secret
          subscriptionID: d955c0ba-13dc-44cf-a29a-8fed74cbb22d
          tenantID: 28c575f6-ade1-4838-8e7c-7e6d1ba0eb4a
          resourceGroupName: idjdnsrg
          hostedZoneName: tpk.pw
          # Azure Cloud Environment, default to AzurePublicCloud
          environment: AzurePublicCloud

builder@DESKTOP-QADGF36:~/Workspaces/idjohnson-pomodoreapp$ kubectl apply -f ./clusterIssuer.yaml

clusterissuer.cert-manager.io/azuredns-tpkpw created

Now apply

builder@DESKTOP-QADGF36:~/Workspaces/idjohnson-pomodoreapp$ kubectl delete -f ./pomo-new.yaml

service "pomosimple" deleted

deployment.apps "pomosimple" deleted

ingress.networking.k8s.io "pomosimple" deleted

builder@DESKTOP-QADGF36:~/Workspaces/idjohnson-pomodoreapp$ vi pomo-new.yaml

builder@DESKTOP-QADGF36:~/Workspaces/idjohnson-pomodoreapp$ cat pomo-new.yaml | grep azure

cert-manager.io/cluster-issuer: azuredns-tpkpw

builder@DESKTOP-QADGF36:~/Workspaces/idjohnson-pomodoreapp$ kubectl apply -f ./pomo-new.yaml

service/pomosimple created

deployment.apps/pomosimple created

ingress.networking.k8s.io/pomosimple created

But sadly that didn’t work

pomosimple-tls False pomosimple-tls 81m
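When there are a lot of certificates, it can help to filter that output down to the ones that are not ready. A small sketch, assuming rows shaped like `kubectl get cert --no-headers` output (NAME, READY, SECRET, AGE); `not_ready_certs` is a hypothetical helper name and the sample rows are copied from the outputs in this post.

```shell
# Hypothetical helper: reads certificate rows on stdin (NAME READY SECRET AGE)
# and prints the names whose READY column is anything other than "True".
not_ready_certs() {
  awk '$2 != "True" { print $1 }'
}

# Sample rows taken from this post; prints: pomosimple-tls
printf '%s\n' \
  'pomosimple-tls False pomosimple-tls 81m' \
  'planereport-tls True planereport-tls 60d' | not_ready_certs
```

Against a live cluster this would be `kubectl get cert --no-headers | not_ready_certs`.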

I realized that for a ClusterIssuer, the secret we created actually needs to live in the cert-manager namespace, where cert-manager itself runs.

The cert-manager logs showed it could not find the secret

$ kubectl logs cert-manager-64d9bc8b74-kpkjk -n cert-manager | grep pomo | tail -n5

E0221 14:56:34.830884 1 controller.go:166] cert-manager/challenges "msg"="re-queuing item due to error processing" "error"="error getting azuredns client secret: secret \"azuredns-config\" not found" "key"="default/pomosimple-tls-qfg77-3398271211-3929770327"

E0221 15:01:54.831738 1 controller.go:166] cert-manager/challenges "msg"="re-queuing item due to error processing" "error"="error getting azuredns client secret: secret \"azuredns-config\" not found" "key"="default/pomosimple-tls-qfg77-3398271211-3929770327"

E0221 15:12:34.833248 1 controller.go:166] cert-manager/challenges "msg"="re-queuing item due to error processing" "error"="error getting azuredns client secret: secret \"azuredns-config\" not found" "key"="default/pomosimple-tls-qfg77-3398271211-3929770327"

E0221 15:33:54.835468 1 controller.go:166] cert-manager/challenges "msg"="re-queuing item due to error processing" "error"="error getting azuredns client secret: secret \"azuredns-config\" not found" "key"="default/pomosimple-tls-qfg77-3398271211-3929770327"

E0221 16:03:54.836060 1 controller.go:166] cert-manager/challenges "msg"="re-queuing item due to error processing" "error"="error getting azuredns client secret: secret \"azuredns-config\" not found" "key"="default/pomosimple-tls-qfg77-3398271211-3929770327"

I then created the secret in the cert-manager namespace

$ kubectl create secret generic azuredns-config -n cert-manager --from-literal=client-secret=$AZURE_CERT_MANAGER_SP_PASSWORD

secret/azuredns-config created
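The same secret could be written declaratively so the namespace is explicit. This is a sketch under my own assumptions rather than what I ran: `render_azuredns_secret` is a hypothetical helper, and it base64-encodes the value into `data` so special characters in the password do not need YAML quoting.

```shell
# Hypothetical helper: prints a Secret manifest for the Azure DNS client secret,
# placed in the cert-manager namespace. $1 = service principal password.
render_azuredns_secret() {
  # Encode without a trailing newline and strip any line wrapping from base64
  encoded="$(printf '%s' "$1" | base64 | tr -d '\n')"
  cat <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: azuredns-config
  namespace: cert-manager
type: Opaque
data:
  client-secret: ${encoded}
EOF
}
```

Usage would look like `render_azuredns_secret "$AZURE_CERT_MANAGER_SP_PASSWORD" | kubectl apply -f -`. Quoting the variable matters if the password contains spaces or shell metacharacters.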

And this worked

$ kubectl get cert | tail -n1

planereport-tls True planereport-tls 60d

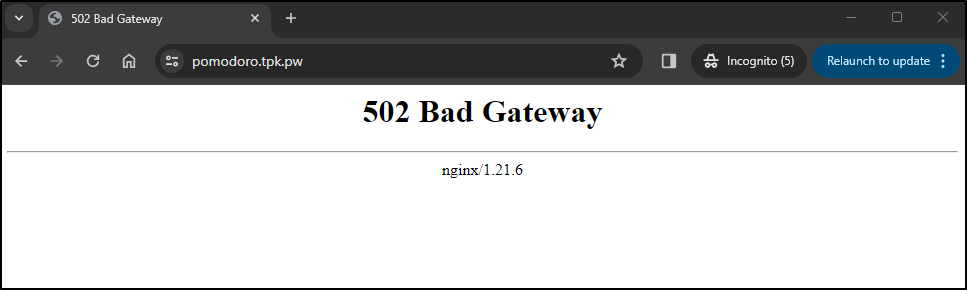

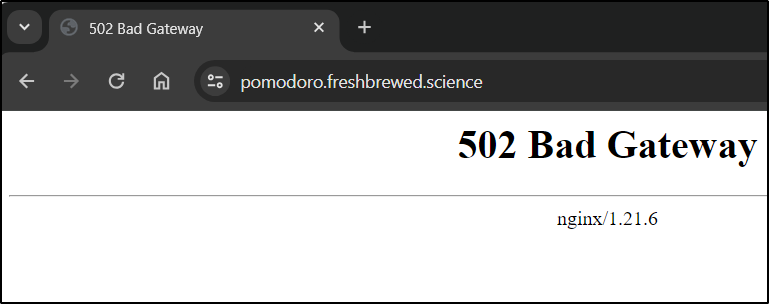

Nginx would now serve the domain, but not the app

I saw 502 errors in the Nginx logs

10.42.0.36 - - [21/Feb/2024:23:03:55 +0000] "GET / HTTP/1.1" 502 559 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/121.0.0.0 Safari/537.36" "-"

10.42.0.36 - - [21/Feb/2024:23:03:55 +0000] "POST /api/actions/runner.v1.RunnerService/FetchTask HTTP/1.1" 200 2 "-" "connect-go/1.2.0-dev (go1.20.6)" "-"

2024/02/21 23:03:55 [error] 24#24: *4 upstream sent invalid header: "\x20..." while reading response header from upstream, client: 10.42.0.36, server: pomodoro.tpk.pw, request: "GET / HTTP/1.1", upstream: "http://10.42.2.229:80/", host: "pomodoro.tpk.pw"

10.42.0.36 - - [21/Feb/2024:23:03:55 +0000] "GET / HTTP/1.1" 502 559 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/121.0.0.0 Safari/537.36" "-"

2024/02/21 23:03:56 [error] 24#24: *4 upstream sent invalid header: "\x20..." while reading response header from upstream, client: 10.42.0.36, server: pomodoro.tpk.pw, request: "GET / HTTP/1.1", upstream: "http://10.42.2.229:80/", host: "pomodoro.tpk.pw"

I even tried adding a data block to my Nginx controller’s ConfigMap to see if that would get past the errors

$ kubectl get cm nginx-ingress-release-nginx-ingress -o yaml

apiVersion: v1
data:
  enable-underscores-in-headers: "true"
  ignore-invalid-headers: "false"
kind: ConfigMap

The manifest I was applying at this point:

$ cat pomo-new.yaml

apiVersion: v1
kind: Service
metadata:
  name: pomosimple
spec:
  selector:
    app: pomosimple
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: pomosimple
spec:
  replicas: 1
  selector:
    matchLabels:
      app: pomosimple
  template:
    metadata:
      labels:
        app: pomosimple
    spec:
      containers:
      - name: pomosimple
        image: eugeneparkhom/pomodoro:1.0.48
        ports:
        - containerPort: 80
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: pomosimple
  annotations:
    cert-manager.io/cluster-issuer: azuredns-tpkpw
    kubernetes.io/ingress.class: nginx
    kubernetes.io/tls-acme: "true"
    ingress.kubernetes.io/ssl-redirect: "true"
    nginx.ingress.kubernetes.io/ssl-redirect: "true"
    nginx.org/websocket-services: pomosimple
spec:
  rules:
  - host: pomodoro.tpk.pw
    http:
      paths:
      - pathType: ImplementationSpecific
        path: "/"
        backend:
          service:
            name: pomosimple
            port:
              number: 80
  tls:
  - hosts:
    - pomodoro.tpk.pw
    secretName: pomosimple-tls

I can launch it directly on my Docker host

builder@builder-T100:~$ sudo docker run -p 4110:80 --name pomodorosimp -d eugeneparkhom/pomodoro:1.0.48

Unable to find image 'eugeneparkhom/pomodoro:1.0.48' locally

1.0.48: Pulling from eugeneparkhom/pomodoro

52d2b7f179e3: Pull complete

fd9f026c6310: Pull complete

055fa98b4363: Pull complete

96576293dd29: Pull complete

a7c4092be904: Pull complete

e3b6889c8954: Pull complete

da761d9a302b: Pull complete

574ca9675103: Pull complete

a893268991ce: Pull complete

f208e5a1feec: Pull complete

Digest: sha256:dd07a4e830caf50e8a29f8479aa8cfe79a1ec6c08d4426c7fc54b20d895b4659

Status: Downloaded newer image for eugeneparkhom/pomodoro:1.0.48

0b2f15589778b595b741d6d9d23e75c671424da9c28ea5536346e9bdeaf81664

and test

But when I try and forward traffic there

$ cat pom-new2.yaml

---
apiVersion: v1
kind: Endpoints
metadata:
  name: pomo-external-ip
subsets:
- addresses:
  - ip: 192.168.1.100
  ports:
  - name: pomosimp
    port: 4110
    protocol: TCP
---
apiVersion: v1
kind: Service
metadata:
  name: pomo-external-ip
spec:
  clusterIP: None
  clusterIPs:
  - None
  internalTrafficPolicy: Cluster
  ipFamilies:
  - IPv4
  - IPv6
  ipFamilyPolicy: RequireDualStack
  ports:
  - name: pomo
    port: 80
    protocol: TCP
    targetPort: 8095
  sessionAffinity: None
  type: ClusterIP
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: pomosimple
  annotations:
    cert-manager.io/cluster-issuer: azuredns-tpkpw
    kubernetes.io/ingress.class: nginx
    kubernetes.io/tls-acme: "true"
    ingress.kubernetes.io/ssl-redirect: "true"
    nginx.ingress.kubernetes.io/ssl-redirect: "true"
spec:
  rules:
  - host: pomodoro.tpk.pw
    http:
      paths:
      - pathType: ImplementationSpecific
        path: "/"
        backend:
          service:
            name: pomo-external-ip
            port:
              number: 80
  tls:
  - hosts:
    - pomodoro.tpk.pw
    secretName: pomosimple-tls

$ kubectl apply -f ./pom-new2.yaml

endpoints/pomo-external-ip created

service/pomo-external-ip created

ingress.networking.k8s.io/pomosimple configured

It doesn’t work.

I then decided to pivot to a Freshbrewed URL just to rule out Azure DNS issues

$ cat pomo-new.yaml

apiVersion: v1
kind: Endpoints
metadata:
  name: pomodoro-external-ip
subsets:
- addresses:
  - ip: 192.168.1.100
  ports:
  - name: pomodoro
    port: 4110
    protocol: TCP
---
apiVersion: v1
kind: Service
metadata:
  name: pomodoro-external-ip
spec:
  clusterIP: None
  clusterIPs:
  - None
  internalTrafficPolicy: Cluster
  ipFamilies:
  - IPv4
  - IPv6
  ipFamilyPolicy: RequireDualStack
  ports:
  - name: pomodoro
    port: 80
    protocol: TCP
    targetPort: 4110
  sessionAffinity: None
  type: ClusterIP
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    cert-manager.io/cluster-issuer: letsencrypt-prod
    kubernetes.io/ingress.class: nginx
    kubernetes.io/tls-acme: "true"
    nginx.ingress.kubernetes.io/proxy-read-timeout: "3600"
    nginx.ingress.kubernetes.io/proxy-send-timeout: "3600"
    nginx.org/websocket-services: pomodoro-external-ip
  generation: 1
  labels:
    app.kubernetes.io/instance: pomodoroingress
  name: pomodoroingress
spec:
  rules:
  - host: pomodoro.freshbrewed.science
    http:
      paths:
      - backend:
          service:
            name: pomodoro-external-ip
            port:
              number: 80
        path: /
        pathType: ImplementationSpecific
  tls:
  - hosts:
    - pomodoro.freshbrewed.science
    secretName: pomodoro-tls

$ kubectl apply -f pomo-new.yaml

endpoints/pomodoro-external-ip created

service/pomodoro-external-ip created

ingress.networking.k8s.io/pomodoroingress created

STILL no go

I fired up a VNC pod and verified the app is reachable from inside the cluster at 192.168.1.100 on port 4110.

I guess this is just one of those apps that isn’t going to work with Nginx Ingress.
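For reference, the external-backend pattern from the last two attempts boils down to an Endpoints object and a selector-less Service that share a name. The Kubernetes docs note that the port name on the endpoints side should match the Service port name, which was not the case in `pom-new2.yaml` (`pomosimp` vs `pomo`, with a `targetPort` of 8095). The sketch below keeps those aligned. `render_external_backend` and the `web` port name are hypothetical, and this is not a fix for the 502s here, since the Freshbrewed attempt already had matching names and still failed.

```shell
# Hypothetical helper: prints an Endpoints object plus a selector-less headless
# Service pointing at an external host. $1 = name, $2 = IP, $3 = port on that host.
render_external_backend() {
  cat <<EOF
apiVersion: v1
kind: Endpoints
metadata:
  name: $1
subsets:
- addresses:
  - ip: $2
  ports:
  - name: web
    port: $3
    protocol: TCP
---
apiVersion: v1
kind: Service
metadata:
  name: $1
spec:
  clusterIP: None
  ports:
  - name: web
    port: 80
    protocol: TCP
    targetPort: $3
EOF
}

# Same name, IP and port as the Docker host test above
render_external_backend pomodoro-external-ip 192.168.1.100 4110
```

Generating both objects from one set of arguments keeps the name and ports from drifting apart between the two documents.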

Another Pomo app

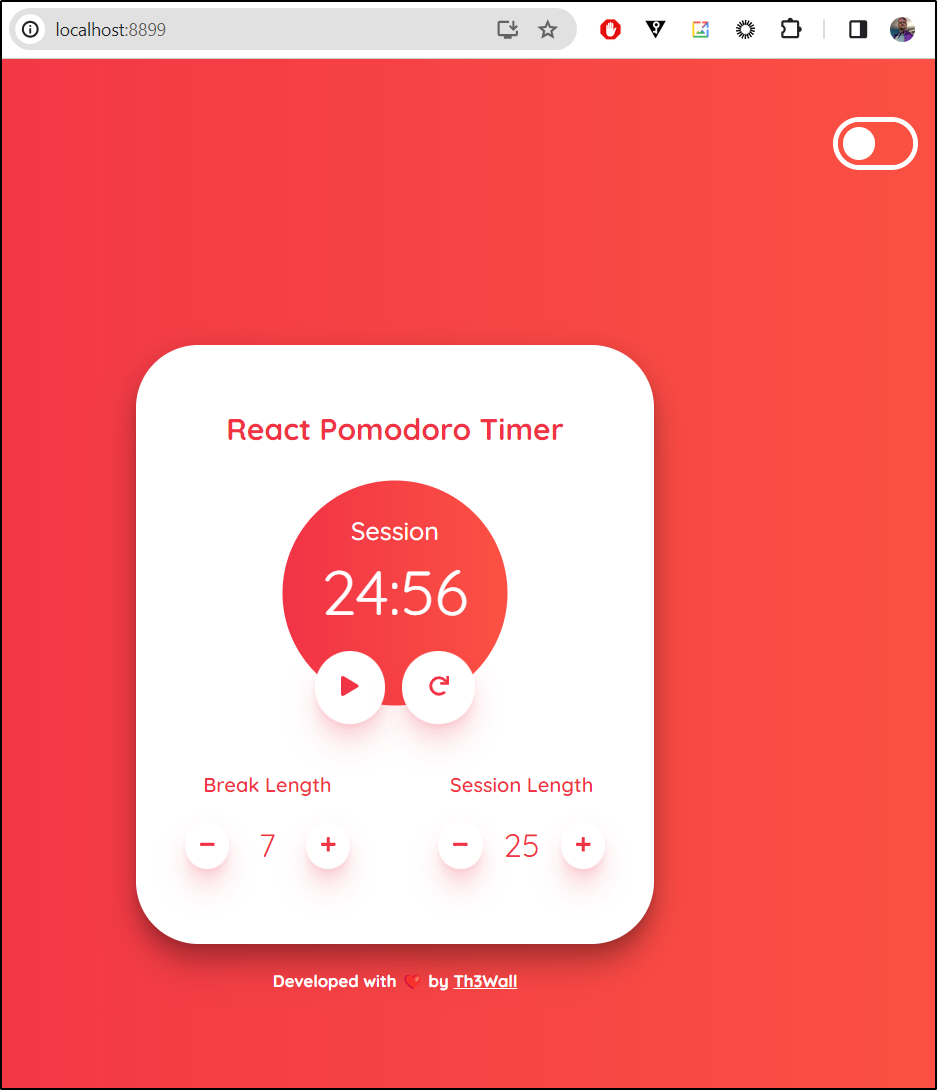

I found another promising app. This time just a straightforward ReactJS app developed by Davide Mandelli at https://github.com/Th3Wall/react-pomodoro-clock.git

I cloned the repo then created a working Dockerfile that would build and expose it with Nginx on port 80

$ cat Dockerfile

# Use an official Node runtime as a parent image

FROM node:14-alpine

# Set the working directory in the container to /app

WORKDIR /app

# Copy package.json and package-lock.json to the working directory

COPY package*.json ./

# Install the application dependencies

RUN npm install

# Copy the rest of the application to the working directory

COPY . .

# Build the application

RUN npm run build

# Use an official Nginx runtime as a parent image for the runtime stage

FROM nginx:stable-alpine

# Copy the build output to replace the default Nginx contents.

COPY --from=0 /app/build /usr/share/nginx/html

# Expose port 80 on the container

EXPOSE 80

# Start Nginx

CMD ["nginx", "-g", "daemon off;"]

To test, I built and ran:

$ docker run -p 8899:80 pomonginxtest

/docker-entrypoint.sh: /docker-entrypoint.d/ is not empty, will attempt to perform configuration

/docker-entrypoint.sh: Looking for shell scripts in /docker-entrypoint.d/

/docker-entrypoint.sh: Launching /docker-entrypoint.d/10-listen-on-ipv6-by-default.sh

10-listen-on-ipv6-by-default.sh: info: Getting the checksum of /etc/nginx/conf.d/default.conf

10-listen-on-ipv6-by-default.sh: info: Enabled listen on IPv6 in /etc/nginx/conf.d/default.conf

/docker-entrypoint.sh: Launching /docker-entrypoint.d/20-envsubst-on-templates.sh

/docker-entrypoint.sh: Launching /docker-entrypoint.d/30-tune-worker-processes.sh

/docker-entrypoint.sh: Configuration complete; ready for start up

2024/02/22 00:23:45 [notice] 1#1: using the "epoll" event method

2024/02/22 00:23:45 [notice] 1#1: nginx/1.24.0

2024/02/22 00:23:45 [notice] 1#1: built by gcc 12.2.1 20220924 (Alpine 12.2.1_git20220924-r4)

2024/02/22 00:23:45 [notice] 1#1: OS: Linux 5.15.133.1-microsoft-standard-WSL2

2024/02/22 00:23:45 [notice] 1#1: getrlimit(RLIMIT_NOFILE): 1048576:1048576

2024/02/22 00:23:45 [notice] 1#1: start worker processes

2024/02/22 00:23:45 [notice] 1#1: start worker process 30

2024/02/22 00:23:45 [notice] 1#1: start worker process 31

2024/02/22 00:23:45 [notice] 1#1: start worker process 32

2024/02/22 00:23:45 [notice] 1#1: start worker process 33

2024/02/22 00:23:45 [notice] 1#1: start worker process 34

2024/02/22 00:23:45 [notice] 1#1: start worker process 35

2024/02/22 00:23:45 [notice] 1#1: start worker process 36

which worked

I can tag that image or just build with my dockerhub tag and push

builder@LuiGi17:~/Workspaces/react-pomodoro-clock$ docker build -t idjohnson/pomonginx:latest .

[+] Building 1.3s (16/16) FINISHED docker:default

=> [internal] load build definition from Dockerfile 0.1s

=> => transferring dockerfile: 739B 0.0s

=> [internal] load .dockerignore 0.0s

=> => transferring context: 2B 0.0s

=> [internal] load metadata for docker.io/library/nginx:stable-alpine 1.0s

=> [internal] load metadata for docker.io/library/node:14-alpine 1.1s

=> [auth] library/nginx:pull token for registry-1.docker.io 0.0s

=> [auth] library/node:pull token for registry-1.docker.io 0.0s

=> [stage-0 1/6] FROM docker.io/library/node:14-alpine@sha256:434215b487a329c9e867202ff89e704d3a75e554822e07f3e0c 0.0s

=> [internal] load build context 0.0s

=> => transferring context: 3.60kB 0.0s

=> [stage-1 1/2] FROM docker.io/library/nginx:stable-alpine@sha256:6845649eadc1f0a5dacaf5bb3f01b480ce200ae1249114 0.0s

=> CACHED [stage-0 2/6] WORKDIR /app 0.0s

=> CACHED [stage-0 3/6] COPY package*.json ./ 0.0s

=> CACHED [stage-0 4/6] RUN npm install 0.0s

=> CACHED [stage-0 5/6] COPY . . 0.0s

=> CACHED [stage-0 6/6] RUN npm run build 0.0s

=> CACHED [stage-1 2/2] COPY --from=0 /app/build /usr/share/nginx/html 0.0s

=> exporting to image 0.0s

=> => exporting layers 0.0s

=> => writing image sha256:e1adcf22b678dd3e1b78304a5da26b7c378444498f5911cc2261a0520d882f52 0.0s

=> => naming to docker.io/idjohnson/pomonginx:latest 0.0s

What's Next?

View a summary of image vulnerabilities and recommendations → docker scout quickview

builder@LuiGi17:~/Workspaces/react-pomodoro-clock$ docker push idjohnson/pomonginx:latest

The push refers to repository [docker.io/idjohnson/pomonginx]

a2cc9cece689: Pushed

41f8f6af7bd8: Mounted from library/nginx

729d247f77b3: Mounted from library/nginx

eb96d7c5ddb8: Mounted from library/nginx

571e16e56ac6: Mounted from library/nginx

69f5264c89b0: Mounted from library/nginx

cbe466e5e6d0: Mounted from library/nginx

f4111324080c: Mounted from library/nginx

latest: digest: sha256:c89b447224f9784dca14e2122c23e0025d398741e38e82907c14d42f4c4deb27 size: 1992

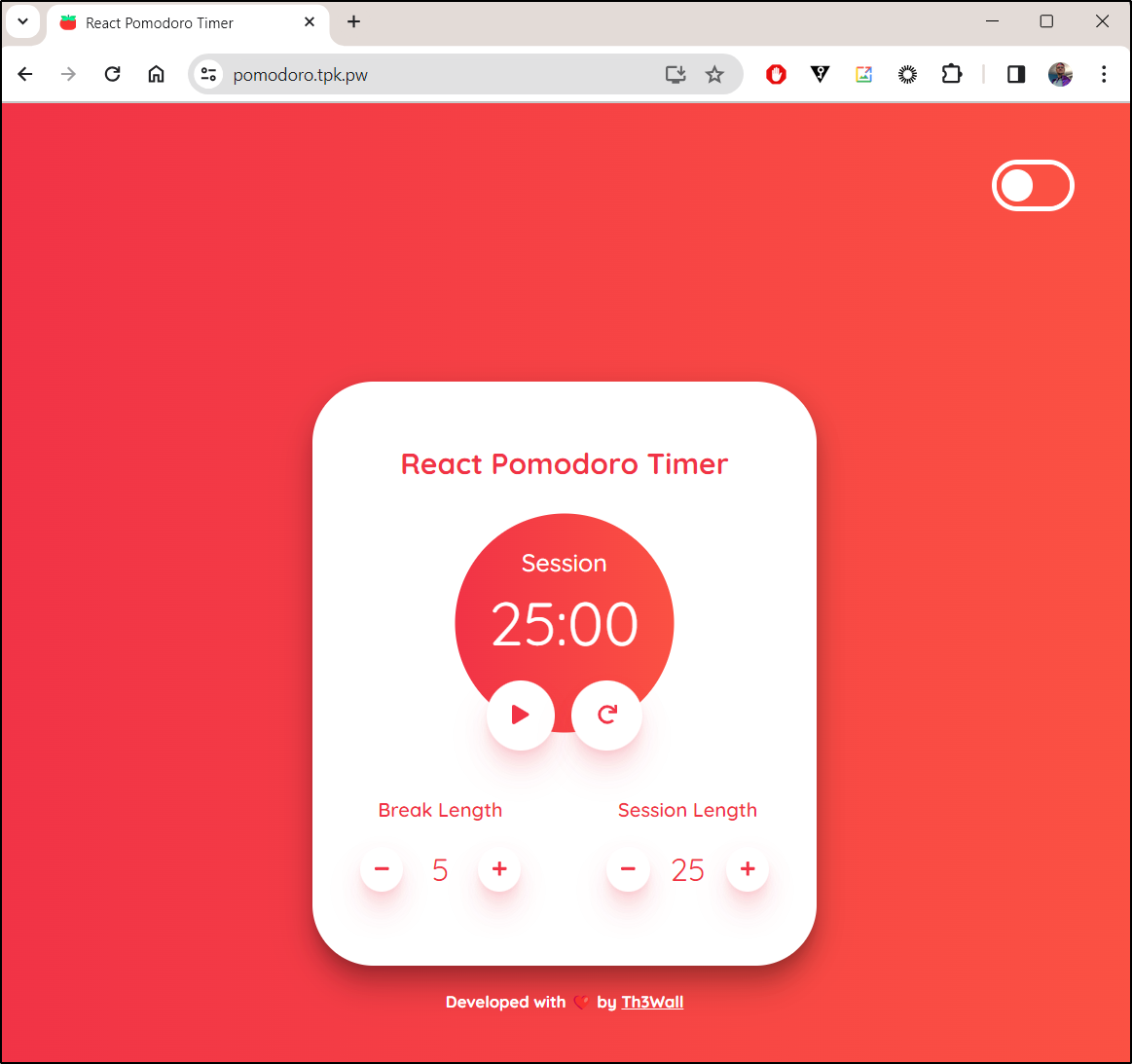

I’ll now delete the prior Pomo and launch this one on the same Azure DNS entry

builder@LuiGi17:~/Workspaces/react-pomodoro-clock$ kubectl delete -f ./k8s.yaml

service "pomosimple" deleted

deployment.apps "pomosimple" deleted

ingress.networking.k8s.io "pomosimple" deleted

builder@LuiGi17:~/Workspaces/react-pomodoro-clock$ cat k8s.yaml

apiVersion: v1
kind: Service
metadata:
  name: pomosimple
spec:
  selector:
    app: pomosimple
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: pomosimple
spec:
  replicas: 1
  selector:
    matchLabels:
      app: pomosimple
  template:
    metadata:
      labels:
        app: pomosimple
    spec:
      containers:
      - name: pomosimple
        image: idjohnson/pomonginx:latest
        ports:
        - containerPort: 80
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: pomosimple
  annotations:
    cert-manager.io/cluster-issuer: azuredns-tpkpw
    kubernetes.io/ingress.class: nginx
    kubernetes.io/tls-acme: "true"
    ingress.kubernetes.io/ssl-redirect: "true"
    nginx.ingress.kubernetes.io/ssl-redirect: "true"
    nginx.org/websocket-services: pomosimple
spec:
  rules:
  - host: pomodoro.tpk.pw
    http:
      paths:
      - pathType: ImplementationSpecific
        path: "/"
        backend:
          service:
            name: pomosimple
            port:
              number: 80
  tls:
  - hosts:
    - pomodoro.tpk.pw
    secretName: pomosimple-tls

builder@LuiGi17:~/Workspaces/react-pomodoro-clock$ kubectl apply -f k8s.yaml

service/pomosimple created

deployment.apps/pomosimple created

ingress.networking.k8s.io/pomosimple created

Success!

It’s now running just fine at https://pomodoro.tpk.pw/ so, like before, I’ll drop the author a PR

Flipdown

I liked this Flipdown clock from https://github.com/PButcher/flipdown.git

I created a Dockerfile

$ cat Dockerfile

# Use an official Node runtime as a parent image

FROM node:14-alpine

# Set the working directory in the container to /app

WORKDIR /app

# Copy package.json and package-lock.json to the working directory

COPY package*.json ./

# Install the application dependencies

RUN npm install

# Copy the rest of the application to the working directory

COPY . .

# Build the application

RUN npm run build

# Use an official Nginx runtime as a parent image for the runtime stage

FROM nginx:stable-alpine

# Copy the build output to replace the default Nginx contents.

COPY --from=0 /app/example /usr/share/nginx/html

# Expose port 80 on the container

EXPOSE 80

# Start Nginx

CMD ["nginx", "-g", "daemon off;"]

and tweaked the main.js to do just 30 minutes

$ cat example/js/main.js

document.addEventListener('DOMContentLoaded', () => {

  // Unix timestamp (in seconds) to count down to
  var twoDaysFromNow = (new Date().getTime() / 1000) + (1800) + 1;

  // Set up FlipDown
  var flipdown = new FlipDown(twoDaysFromNow)

    // Start the countdown
    .start()

    // Do something when the countdown ends
    .ifEnded(() => {
      console.log('The countdown has ended!');
    });

  // Toggle theme
  var interval = setInterval(() => {
    let body = document.body;
    body.classList.toggle('light-theme');
    body.querySelector('#flipdown').classList.toggle('flipdown__theme-dark');
    body.querySelector('#flipdown').classList.toggle('flipdown__theme-light');
  }, 900000);

  // Show version number
  var ver = document.getElementById('ver');
  ver.innerHTML = flipdown.version;
});
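The only real change there is the arithmetic: `1800` is 30 minutes in seconds, added to the current Unix time along with a 1-second pad, and the `900000` on the theme toggle is 15 minutes in milliseconds. The `twoDaysFromNow` variable name no longer describes what it holds. As a quick sanity check of the same math in shell (a sketch; `countdown_target` is a hypothetical helper, not part of FlipDown):

```shell
# Hypothetical helper: target Unix timestamp for a countdown.
# $1 = current time in seconds, $2 = countdown length in minutes.
countdown_target() {
  # minutes * 60 seconds, plus the same 1-second pad used in main.js
  echo $(( $1 + $2 * 60 + 1 ))
}

countdown_target "$(date +%s)" 30   # 30 minutes (1800 seconds) from now
countdown_target 1708560000 30      # prints 1708561801
```

Swapping the 30 for 25 gives a classic Pomodoro interval, which in `main.js` would mean replacing `1800` with `1500`.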

A quick build and test showed a pretty nice page I could keep in the corner of my monitors

$ docker build -t flipclock:pomo . && docker run -p 8899:80 flipclock:pomo

I pushed a tagged build up to dockerhub

$ docker push idjohnson/flippomo:latest

The push refers to repository [docker.io/idjohnson/flippomo]

a6b7fee171d2: Pushed

41f8f6af7bd8: Mounted from idjohnson/pomonginx

729d247f77b3: Mounted from idjohnson/pomonginx

eb96d7c5ddb8: Mounted from idjohnson/pomonginx

571e16e56ac6: Mounted from idjohnson/pomonginx

69f5264c89b0: Mounted from idjohnson/pomonginx

cbe466e5e6d0: Mounted from idjohnson/pomonginx

f4111324080c: Mounted from idjohnson/pomonginx

latest: digest: sha256:ff979d20e87718589ebc41ad91b5bae592cfc28454ba21fa324e165e302f72f2 size: 1989

I then tagged the local flipclock:pomo image and pushed that as well

builder@LuiGi17:~/Workspaces/flipdown$ docker tag flipclock:pomo idjohnson/flipclock:pomo

builder@LuiGi17:~/Workspaces/flipdown$ docker push idjohnson/flipclock:pomo

The push refers to repository [docker.io/idjohnson/flipclock]

fa8002c0a165: Pushed

41f8f6af7bd8: Mounted from idjohnson/flippomo

729d247f77b3: Mounted from idjohnson/flippomo

eb96d7c5ddb8: Mounted from idjohnson/flippomo

571e16e56ac6: Mounted from idjohnson/flippomo

69f5264c89b0: Mounted from idjohnson/flippomo

cbe466e5e6d0: Mounted from idjohnson/flippomo

f4111324080c: Mounted from idjohnson/flippomo

Now I’ll relaunch it and test

$ cat k8s.yaml

apiVersion: v1
kind: Service
metadata:
  name: flippomo
spec:
  selector:
    app: flippomo
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: flippomo
spec:
  replicas: 1
  selector:
    matchLabels:
      app: flippomo
  template:
    metadata:
      labels:
        app: flippomo
    spec:
      containers:
      - name: flippomo
        image: idjohnson/flipclock:pomo
        ports:
        - containerPort: 80
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: flippomo
  annotations:
    cert-manager.io/cluster-issuer: letsencrypt-prod
    kubernetes.io/ingress.class: nginx
    kubernetes.io/tls-acme: "true"
    ingress.kubernetes.io/ssl-redirect: "true"
    nginx.ingress.kubernetes.io/ssl-redirect: "true"
    nginx.org/websocket-services: flippomo
spec:
  rules:
  - host: pomodoro.freshbrewed.science
    http:
      paths:
      - pathType: ImplementationSpecific
        path: "/"
        backend:
          service:
            name: flippomo
            port:
              number: 80
  tls:
  - hosts:
    - pomodoro.freshbrewed.science
    secretName: pomodoro-tls

$ kubectl apply -f ./k8s.yaml

service/flippomo created

deployment.apps/flippomo created

ingress.networking.k8s.io/flippomo created

Summary

We covered a lot today. We looked at DarkTable in Docker and on AKS. We explored its features as an interesting VNC-based application - kind of like an open-source version of Citrix.

I then found myself in need of some decent Pomodoro timers for productivity. I tried the 6-year-old pomodoreApp, which wasn’t elegant but was perfectly functional. I tried a newer lightweight one by Eugene Parkhomenko. I could get that to work locally, but not behind my Nginx ingress controller.

We then tried a ReactJS app from https://github.com/Th3Wall/react-pomodoro-clock.git created by Davide Mandelli. Lightweight and simple, this really fit the bill (and I’ve been using it since). Lastly, I took the example flipdown clock from https://github.com/PButcher/flipdown.git and tweaked it into a container deployable to Kubernetes. This is just a 30-minute countdown that resets on refresh. Usable for pomodoro, meetings and even half-hour conference breaks.