Published: Feb 8, 2024 by Isaac Johnson

Today, let’s check out a couple of open-source apps, namely CodeX Docs and OpenDocMan. CodeX is the name of a group of open-source engineers and an overall grouping of their packages. OpenDocMan is a fairly old, PHP-driven document management system created by Stephen Lawrence Jr. While there hasn’t been a release in the GitHub repo since March 2021, I do see active merges, so perhaps a new release is coming. In our writeup, we’ll be using the latest from the main (`master`) branch.

CodeX Docs

I know of CodeX from their Editor.js, but they also make a server-side implementation.

I found out about CodeX from a MariusHosting post a while back.

Docker Installation

Let’s start with the Docker implementation

I need to create some folders first

builder@builder-T100:~$ mkdir codex

builder@builder-T100:~$ cd codex/

builder@builder-T100:~/codex$ ls

builder@builder-T100:~/codex$ mkdir uploads

builder@builder-T100:~/codex$ mkdir db

I’ll then need to make a docs-config.yaml. I based it off the one in their Github repo minus the S3 block.

builder@builder-T100:~/codex$ cat docs-config.yaml

port: 3000

host: "localhost"

uploads:

driver: "local"

local:

path: "./uploads"

frontend:

title: "CodeX Docs"

description: "Free Docs app powered by Editor.js ecosystemt"

startPage: ""

misprintsChatId: "12344564"

yandexMetrikaId: ""

carbon:

serve: ""

placement: ""

menu:

- "Guides"

- title: "CodeX"

uri: "https://codex.so"

auth:

password: mypassword

secret: shhdonttell

hawk:

# frontendToken: "123"

# backendToken: "123"

database:

driver: local # you can change database driver here. 'mongodb' or 'local'

local:

path: ./db

Now I can launch it on my Docker host:

$ sudo docker run -p 8095:3000 -v /home/builder/codex/uploads:/usr/src/app/uploads -v /home/builder/codex/db:/usr/src/app/db -v /home/builder/codex/docs-config.yaml:/usr/src/app/docs-config.yaml --name codex -d ghcr.io/codex-team/codex.docs:latest

Unable to find image 'ghcr.io/codex-team/codex.docs:latest' locally

latest: Pulling from codex-team/codex.docs

3d2430473443: Pull complete

b60fa0ff74d7: Pull complete

dc7a390288bd: Pull complete

33306f9c18eb: Pull complete

754a8e26ef57: Pull complete

be09c7a20f05: Pull complete

7894e5316a09: Pull complete

af17abb97ba1: Pull complete

33513ffd9fe3: Pull complete

Digest: sha256:fc932818383a59193669ae46953bcc6f1baafee1a659a1200508c69aed0dbad5

Status: Downloaded newer image for ghcr.io/codex-team/codex.docs:latest

c194e587da2e59a17e4e2b0f8bad263217a9bb87808be3852e2bed1769f74062
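If you would rather script that launch than retype it, here is a minimal Python sketch that assembles the same `docker run` arguments. `codex_docker_run_args` is a hypothetical helper of my own; the paths, port mapping and image come from the command above, and I’m assuming `/usr/src/app` stays the app directory inside the image.

```python
import shlex


def codex_docker_run_args(base_dir: str, host_port: int = 8095,
                          image: str = "ghcr.io/codex-team/codex.docs:latest") -> list:
    """Build the `docker run` argument list for CodeX Docs (hypothetical helper)."""
    app_dir = "/usr/src/app"  # assumed app directory inside the image
    # Host paths -> container paths, matching the -v flags used above
    mounts = [
        (f"{base_dir}/uploads", f"{app_dir}/uploads"),
        (f"{base_dir}/db", f"{app_dir}/db"),
        (f"{base_dir}/docs-config.yaml", f"{app_dir}/docs-config.yaml"),
    ]
    args = ["docker", "run", "-p", f"{host_port}:3000"]  # CodeX listens on 3000
    for host_path, container_path in mounts:
        args += ["-v", f"{host_path}:{container_path}"]
    args += ["--name", "codex", "-d", image]
    return args


if __name__ == "__main__":
    # Print a copy-pasteable command line
    print(shlex.join(codex_docker_run_args("/home/builder/codex")))
```

Passing the list to `subprocess.run(args, check=True)` (with `sudo` prepended if your user isn’t in the docker group) should launch the same container.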

Since I want to try this remotely right away, I’ll create an A record:

$ cat r53-codex.json

{

"Comment": "CREATE codex fb.s A record ",

"Changes": [

{

"Action": "CREATE",

"ResourceRecordSet": {

"Name": "codex.freshbrewed.science",

"Type": "A",

"TTL": 300,

"ResourceRecords": [

{

"Value": "75.73.224.240"

}

]

}

}

]

}

$ aws route53 change-resource-record-sets --hosted-zone-id Z39E8QFU0F9PZP --change-batch file://r53-codex.json

{

"ChangeInfo": {

"Id": "/change/C0369074QL16RY8M5PF3",

"Status": "PENDING",

"SubmittedAt": "2024-01-10T00:50:58.985Z",

"Comment": "CREATE codex fb.s A record "

}

}
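The change batch is just JSON, so it is easy to generate. A minimal sketch, assuming you only need a single A record; `a_record_change_batch` is a hypothetical helper, and the name, IP and TTL below are the ones used above. Note that `CREATE` fails if the record already exists, while `UPSERT` creates or updates it.

```python
import json


def a_record_change_batch(name: str, ip: str, ttl: int = 300,
                          action: str = "CREATE") -> dict:
    """Return a Route 53 change batch for one A record (hypothetical helper).

    action may be CREATE, UPSERT or DELETE; CREATE fails if the record exists.
    """
    return {
        "Comment": f"{action} {name} A record",
        "Changes": [
            {
                "Action": action,
                "ResourceRecordSet": {
                    "Name": name,
                    "Type": "A",
                    "TTL": ttl,
                    "ResourceRecords": [{"Value": ip}],
                },
            }
        ],
    }


if __name__ == "__main__":
    batch = a_record_change_batch("codex.freshbrewed.science", "75.73.224.240")
    # Redirect to r53-codex.json and pass it with --change-batch file://r53-codex.json
    print(json.dumps(batch, indent=2))
```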

This first pass will just front the traffic to port 8095 on the Docker host:

$ cat ingress.docker.codex.yaml

---

apiVersion: v1

kind: Endpoints

metadata:

name: codex-external-ip

subsets:

- addresses:

- ip: 192.168.1.100

ports:

- name: codex

port: 8095

protocol: TCP

---

apiVersion: v1

kind: Service

metadata:

name: codex-external-ip

spec:

clusterIP: None

clusterIPs:

- None

internalTrafficPolicy: Cluster

ipFamilies:

- IPv4

- IPv6

ipFamilyPolicy: RequireDualStack

ports:

- name: codex

port: 80

protocol: TCP

targetPort: 8095

sessionAffinity: None

type: ClusterIP

---

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

annotations:

cert-manager.io/cluster-issuer: letsencrypt-prod

kubernetes.io/ingress.class: nginx

kubernetes.io/tls-acme: "true"

nginx.ingress.kubernetes.io/proxy-read-timeout: "3600"

nginx.ingress.kubernetes.io/proxy-send-timeout: "3600"

nginx.org/websocket-services: codex-external-ip

generation: 1

labels:

app.kubernetes.io/instance: codexingress

name: codexingress

spec:

rules:

- host: codex.freshbrewed.science

http:

paths:

- backend:

service:

name: codex-external-ip

port:

number: 80

path: /

pathType: ImplementationSpecific

tls:

- hosts:

- codex.freshbrewed.science

secretName: codex-tls

I then applied it:

$ kubectl apply -f ingress.docker.codex.yaml

endpoints/codex-external-ip created

service/codex-external-ip created

ingress.networking.k8s.io/codexingress created
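The trick here is that the Service has no selector, so Kubernetes won’t manage its endpoints; the Endpoints object with the same name fills that role and points at the Docker host. A minimal Python sketch that generates the pair as JSON (which `kubectl apply -f` also accepts); `external_service_manifests` is a hypothetical helper, and I’ve dropped the dual-stack settings for brevity.

```python
import json


def external_service_manifests(name: str, ip: str, target_port: int,
                               service_port: int = 80,
                               port_name: str = "http") -> list:
    """Selector-less Service plus a same-named Endpoints object (hypothetical helper)."""
    endpoints = {
        "apiVersion": "v1",
        "kind": "Endpoints",
        "metadata": {"name": name},  # must match the Service name exactly
        "subsets": [{
            "addresses": [{"ip": ip}],  # the host outside the cluster
            "ports": [{"name": port_name, "port": target_port, "protocol": "TCP"}],
        }],
    }
    service = {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            # No "selector" key: Kubernetes leaves the Endpoints to us
            "clusterIP": "None",
            "ports": [{"name": port_name, "port": service_port,
                       "protocol": "TCP", "targetPort": target_port}],
        },
    }
    return [endpoints, service]


if __name__ == "__main__":
    items = external_service_manifests("codex-external-ip", "192.168.1.100", 8095,
                                       port_name="codex")
    # kubectl understands a v1 List, so this output can be piped to `kubectl apply -f -`
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": items}, indent=2))
```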

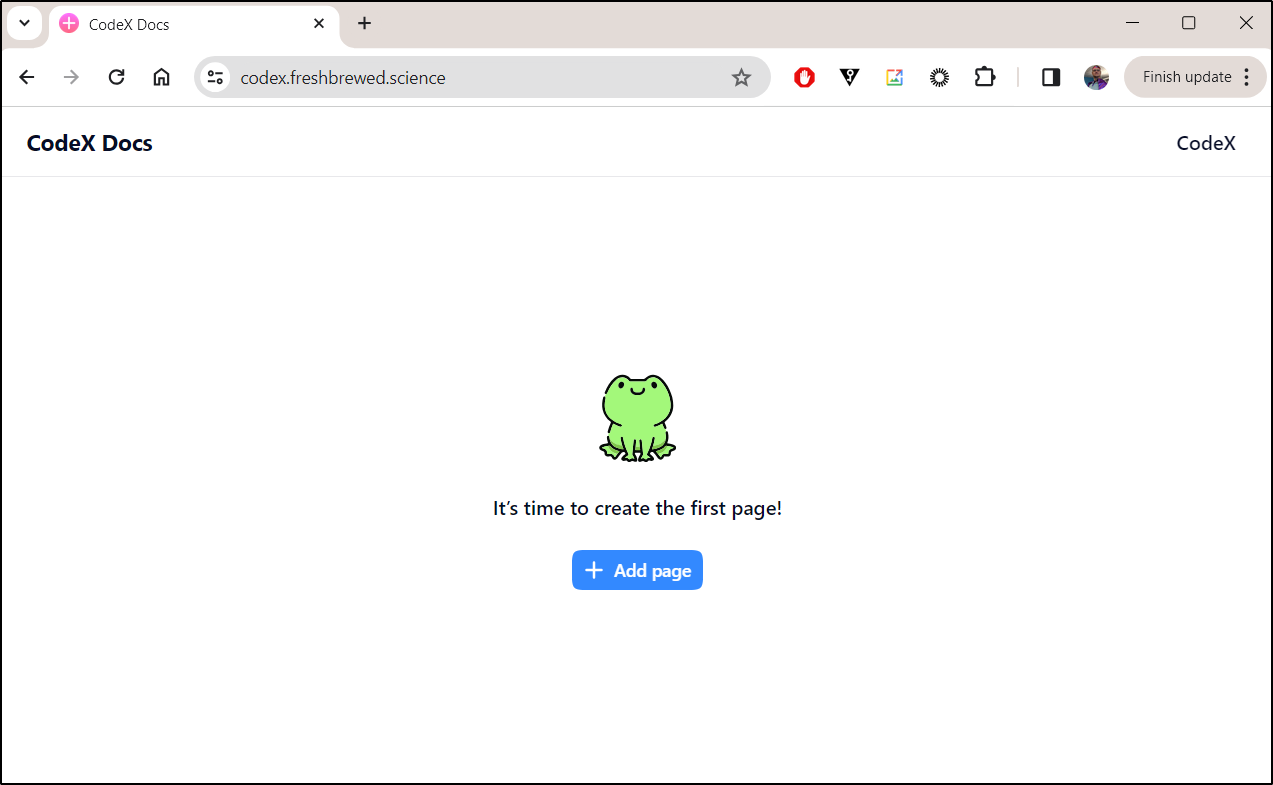

I can now see the live site

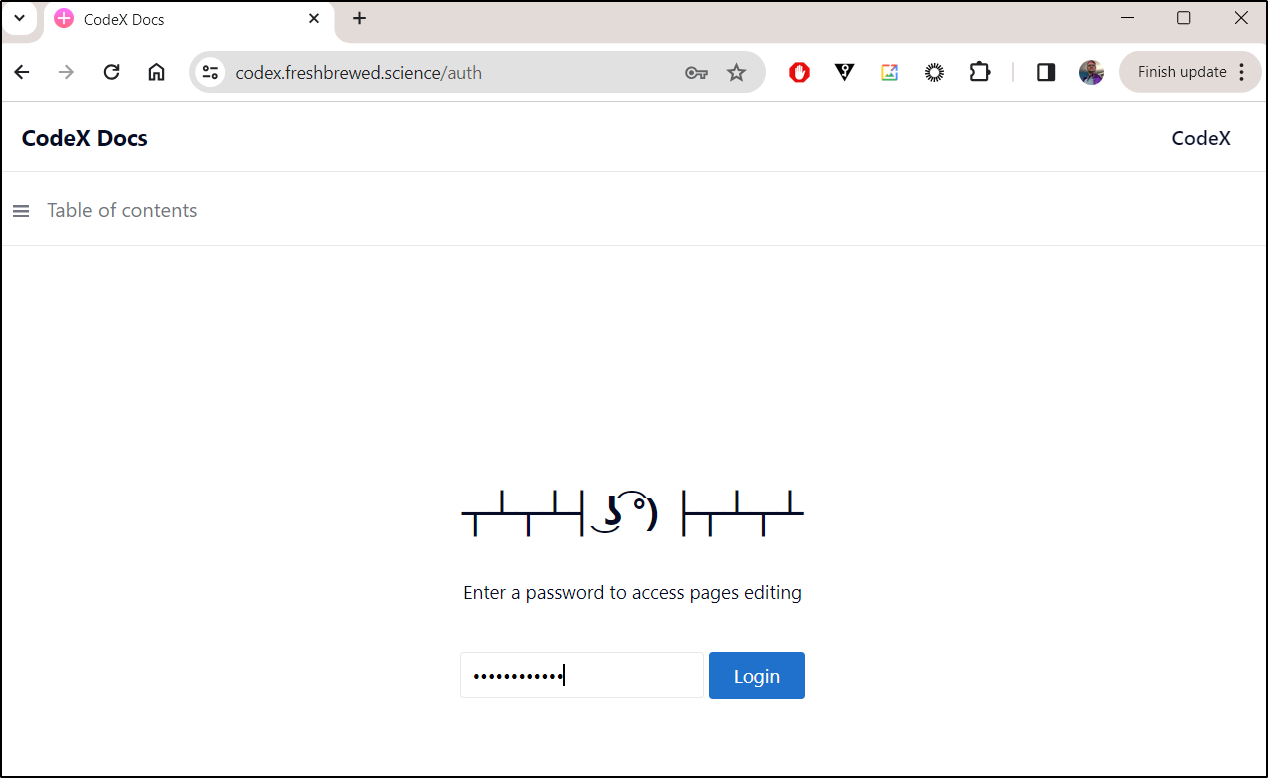

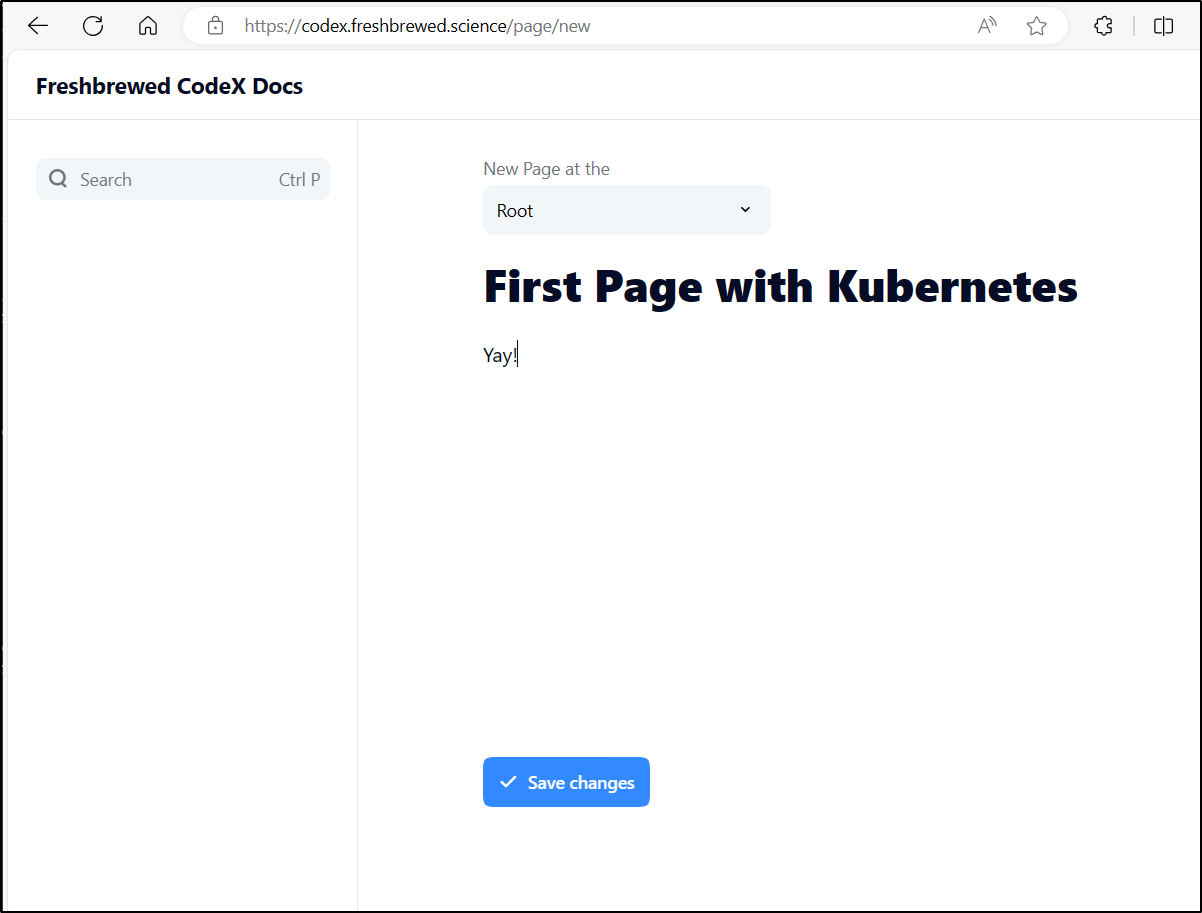

To add a page, one has to enter a password

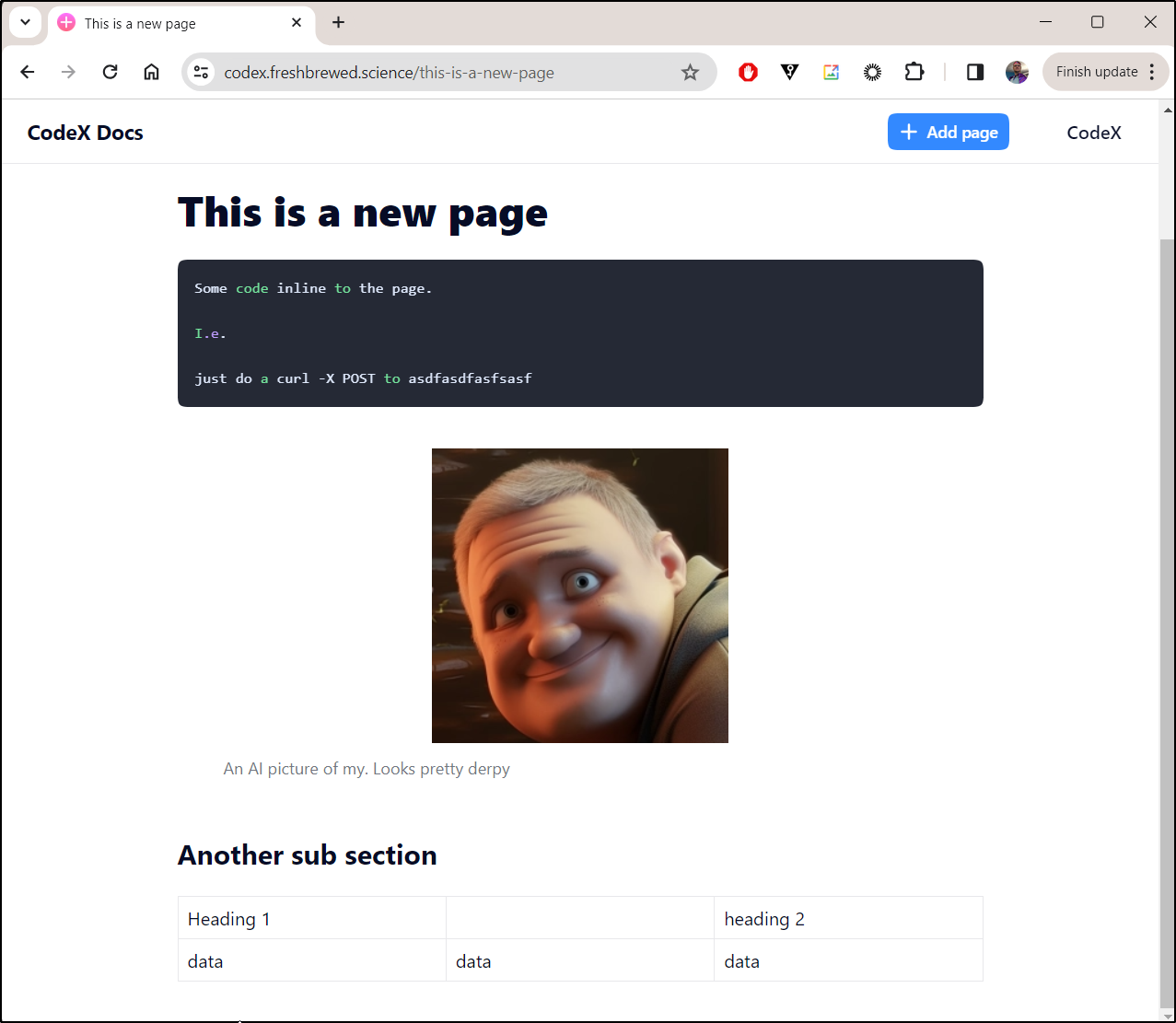

I can now start to add content with the password (mypassword) entered

Let’s work out the first page together.

I now have this page setup

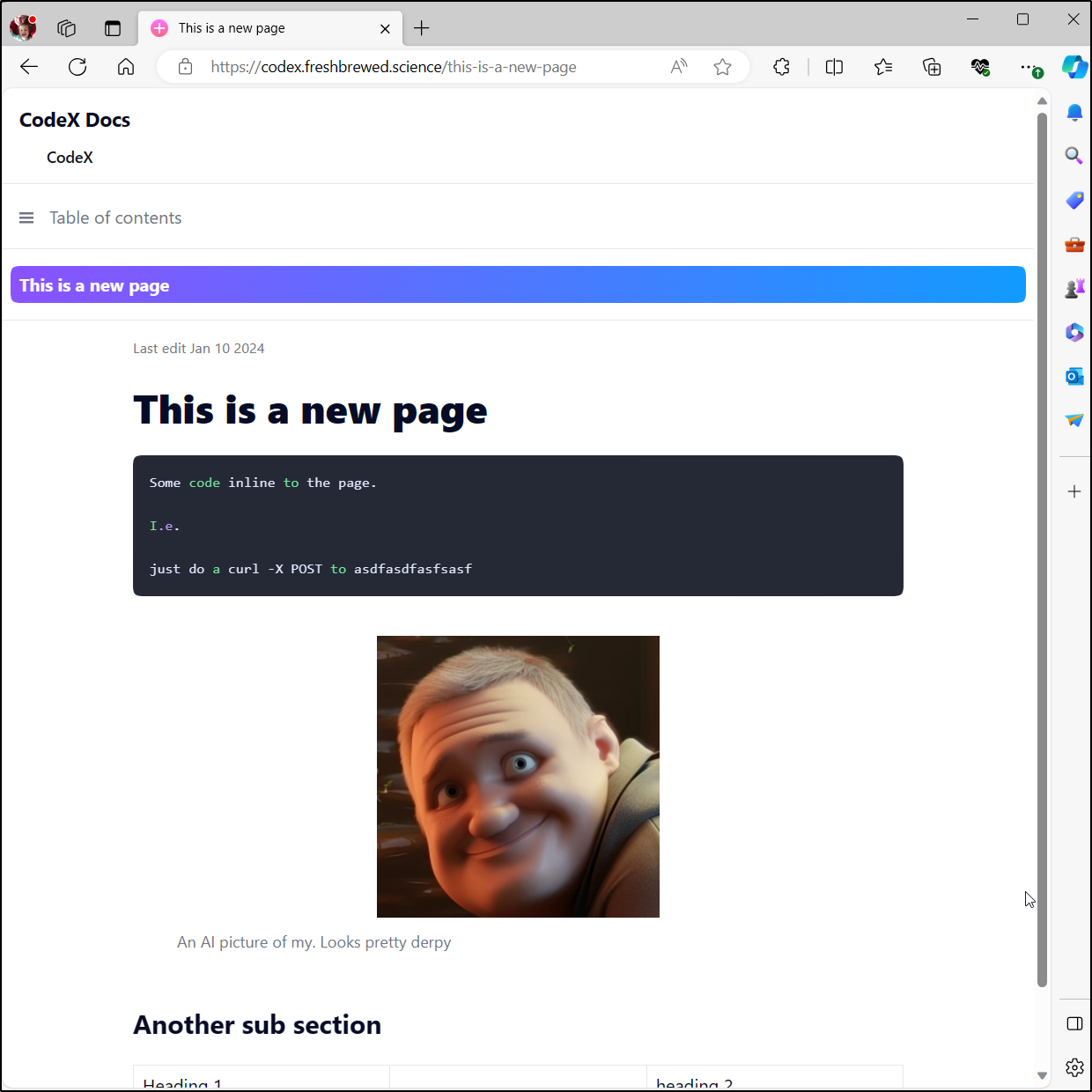

If we hop to a different browser to check what non-logged-in users see, we can see it here:

Native Kubernetes

I’ll start by taking my docs-config.yaml and creating a ConfigMap out of it:

builder@LuiGi17:~/Workspaces/codex$ vi docs-config.yaml

builder@LuiGi17:~/Workspaces/codex$ kubectl create configmap codex-config --from-file=./docs-config.yaml --dry-run=client -o yaml >> k8s.deployment.yaml

builder@LuiGi17:~/Workspaces/codex$ vi k8s.deployment.yaml
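`kubectl create configmap --from-file` uses the file’s base name as the data key and its contents as the value, which is why the Deployment can later mount it back with `subPath: docs-config.yaml`. A rough Python equivalent, assuming a UTF-8 text file (kubectl would put non-UTF-8 content under `binaryData` instead); `configmap_from_file` is a hypothetical helper.

```python
from pathlib import Path


def configmap_from_file(name: str, path: str) -> dict:
    """Rough equivalent of `kubectl create configmap NAME --from-file=PATH`.

    Hypothetical helper: assumes a UTF-8 text file. The data key is the
    file's base name, which is what the Deployment's subPath refers to.
    """
    file_path = Path(path)
    return {
        "apiVersion": "v1",
        "kind": "ConfigMap",
        "metadata": {"name": name},
        "data": {file_path.name: file_path.read_text(encoding="utf-8")},
    }
```

For example, `configmap_from_file("codex-config", "./docs-config.yaml")` yields a ConfigMap with a single `docs-config.yaml` key.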

Next, I need to add some PVCs for app uploads and the db volume:

---

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

name: codex-db

spec:

accessModes:

- ReadWriteOnce

storageClassName: managed-nfs-storage

resources:

requests:

storage: 5Gi

---

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

name: codex-uploads

spec:

accessModes:

- ReadWriteOnce

storageClassName: managed-nfs-storage

resources:

requests:

storage: 5Gi

I’ll also need a Deployment, a Service and, lastly, an Ingress.

All in, the file now looks like this:

---

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

name: codex-db

spec:

accessModes:

- ReadWriteOnce

storageClassName: managed-nfs-storage

resources:

requests:

storage: 5Gi

---

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

name: codex-uploads

spec:

accessModes:

- ReadWriteOnce

storageClassName: managed-nfs-storage

resources:

requests:

storage: 10Gi

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: codex

spec:

replicas: 2

selector:

matchLabels:

app: codex

template:

metadata:

labels:

app: codex

spec:

containers:

- name: codex

image: ghcr.io/codex-team/codex.docs:latest

ports:

- containerPort: 3000

volumeMounts:

- name: db

mountPath: /usr/src/app/db

- name: uploads

mountPath: /usr/src/app/uploads

- name: config-volume

mountPath: /usr/src/app/docs-config.yaml

subPath: docs-config.yaml

volumes:

- name: db

persistentVolumeClaim:

claimName: codex-db

- name: uploads

persistentVolumeClaim:

claimName: codex-uploads

- name: config-volume

configMap:

name: codex-config

---

apiVersion: v1

kind: Service

metadata:

name: codex

spec:

selector:

app: codex

ports:

- protocol: TCP

port: 80

targetPort: 3000

---

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

annotations:

cert-manager.io/cluster-issuer: letsencrypt-prod

kubernetes.io/ingress.class: nginx

kubernetes.io/tls-acme: "true"

nginx.ingress.kubernetes.io/proxy-read-timeout: "3600"

nginx.ingress.kubernetes.io/proxy-send-timeout: "3600"

labels:

app.kubernetes.io/instance: codex

name: codexingress

spec:

rules:

- host: codex.freshbrewed.science

http:

paths:

- backend:

service:

name: codex

port:

number: 80

path: /

pathType: ImplementationSpecific

tls:

- hosts:

- codex.freshbrewed.science

secretName: codex-tls

---

apiVersion: v1

data:

docs-config.yaml: |

port: 3000

host: "localhost"

uploads:

driver: "local"

local:

path: "./uploads"

frontend:

title: "Freshbrewed CodeX Docs"

description: "Free Docs app powered by Editor.js ecosystemt"

startPage: ""

misprintsChatId: "12344564"

yandexMetrikaId: ""

carbon:

serve: ""

placement: ""

menu:

- "Guides"

- title: "CodeX"

uri: "https://codex.so"

auth:

password: Darfdarf01

secret: chucklestheclown1234

hawk:

# frontendToken: "123"

# backendToken: "123"

database:

driver: local # you can change database driver here. 'mongodb' or 'local'

local:

path: ./db

kind: ConfigMap

metadata:

creationTimestamp: null

name: codex-config

I’ll start by removing the Docker version

$ kubectl delete -f ingress.docker.codex.yaml

endpoints "codex-external-ip" deleted

service "codex-external-ip" deleted

ingress.networking.k8s.io "codexingress" deleted

Then I applied the new one:

$ kubectl apply -f ingress.all.codex.yaml

persistentvolumeclaim/codex-db created

persistentvolumeclaim/codex-uploads created

deployment.apps/codex created

service/codex created

ingress.networking.k8s.io/codexingress created

configmap/codex-config created

I can see it is already running, with two pods:

$ kubectl get pods | grep codex

codex-5bdb5ccf57-zctqd 1/1 Running 0 3m9s

codex-5bdb5ccf57-t4qqt 1/1 Running 0 3m9s
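Rather than `grep`, a label selector plus JSON output is a bit more robust. A small sketch that summarizes the output of `kubectl get pods -l app=codex -o json`; `pod_status` is a hypothetical helper, and it only roughly mirrors kubectl’s STATUS column: a pod being deleted still reports phase `Running`, and kubectl shows it as `Terminating` because `metadata.deletionTimestamp` is set.

```python
import json


def pod_status(pod_list_json: str) -> dict:
    """Map pod name -> status from `kubectl get pods -o json` (hypothetical helper).

    Roughly mirrors kubectl's STATUS column for the simple cases: a pod
    with a deletionTimestamp is shown as Terminating even though its
    phase is still Running.
    """
    pod_list = json.loads(pod_list_json)
    status = {}
    for item in pod_list.get("items", []):
        meta = item["metadata"]
        if meta.get("deletionTimestamp"):
            status[meta["name"]] = "Terminating"
        else:
            status[meta["name"]] = item.get("status", {}).get("phase", "Unknown")
    return status
```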

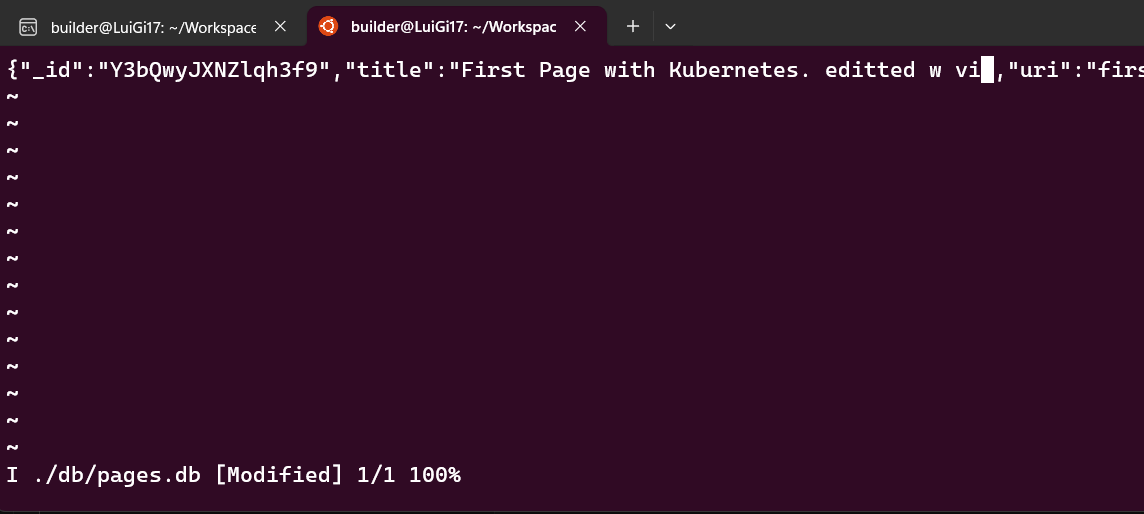

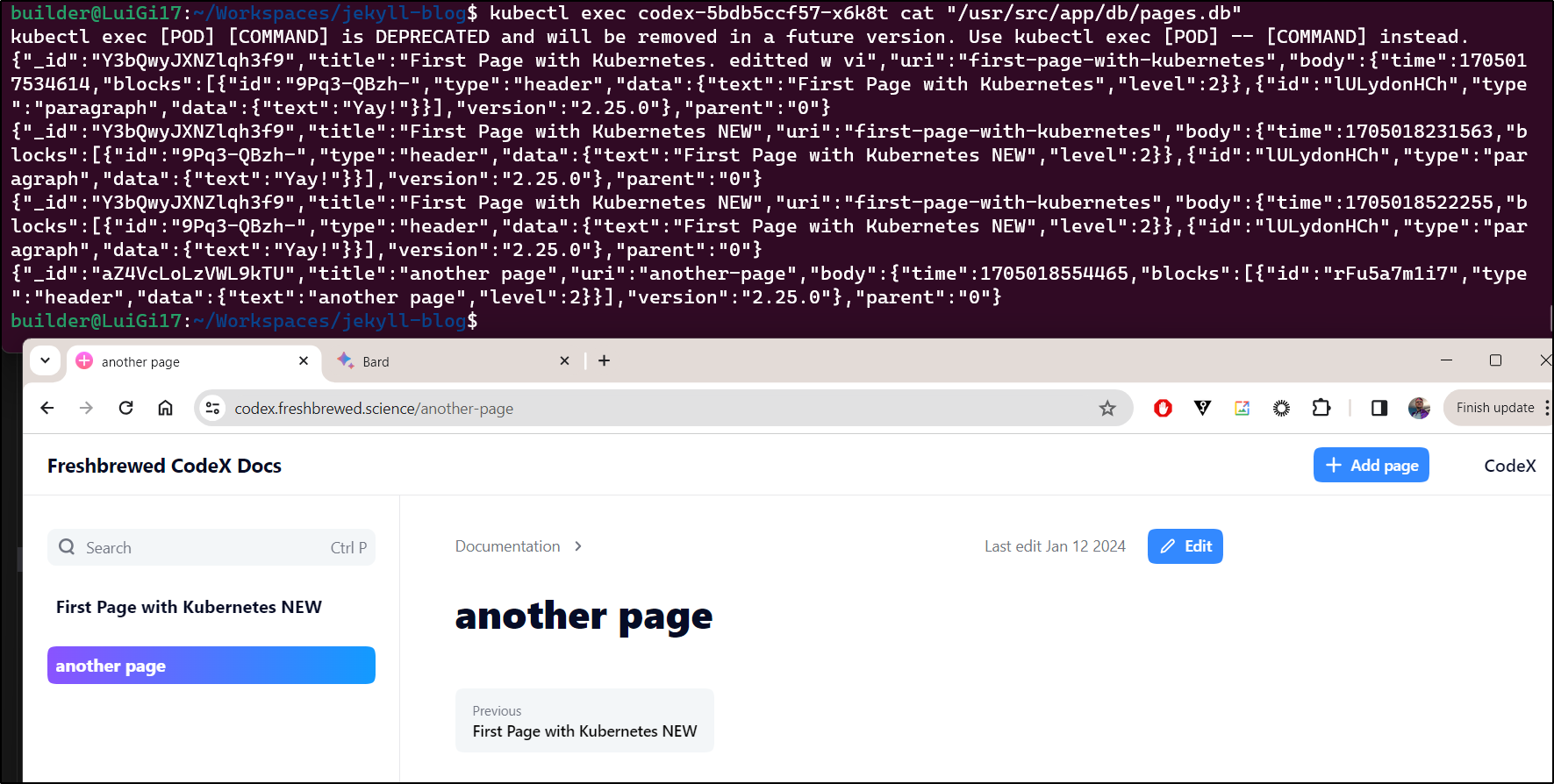

I can jump into one and see the format of the page database file:

$ kubectl exec -it codex-5bdb5ccf57-zctqd -- /bin/sh

/usr/src/app # ls

db docs-config.yaml package.json uploads

dist node_modules public yarn.lock

/usr/src/app # cat ./db/pages.db

{"_id":"Y3bQwyJXNZlqh3f9","title":"First Page with Kubernetes","uri":"first-page-with-kubernetes","body":{"time":1705017534614,"blocks":[{"id":"9Pq3-QBzh-","type":"header","data":{"text":"First Page with Kubernetes","level":2}},{"id":"lULydonHCh","type":"paragraph","data":{"text":"Yay!"}}],"version":"2.25.0"},"parent":"0"}

I tried editing the file with vi, and I could then go to the other pod and see the change there:

builder@LuiGi17:~/Workspaces/jekyll-blog$ kubectl exec -it codex-5bdb5ccf57-t4qqt -- /bin/sh

/usr/src/app # ls

db docs-config.yaml package.json uploads

dist node_modules public yarn.lock

/usr/src/app # ls uploads

/usr/src/app # ls db

aliases.db files.db pages.db pagesOrder.db

/usr/src/app # ls -l db

total 12

-rw-r--r-- 1 root root 126 Jan 11 23:58 aliases.db

-rw-r--r-- 1 root root 0 Jan 11 23:56 files.db

-rw-r--r-- 1 root root 339 Jan 12 00:02 pages.db

-rw-r--r-- 1 root root 67 Jan 11 23:58 pagesOrder.db

/usr/src/app # cat ./db/pages

cat: can't open './db/pages': No such file or directory

/usr/src/app # cat ./db/pages.db

{"_id":"Y3bQwyJXNZlqh3f9","title":"First Page with Kubernetes. editted w vi","uri":"first-page-with-kubernetes","body":{"time":1705017534614,"blocks":[{"id":"9Pq3-QBzh-","type":"header","data":{"text":"First Page with Kubernetes","level":2}},{"id":"lULydonHCh","type":"paragraph","data":{"text":"Yay!"}}],"version":"2.25.0"},"parent":"0"}

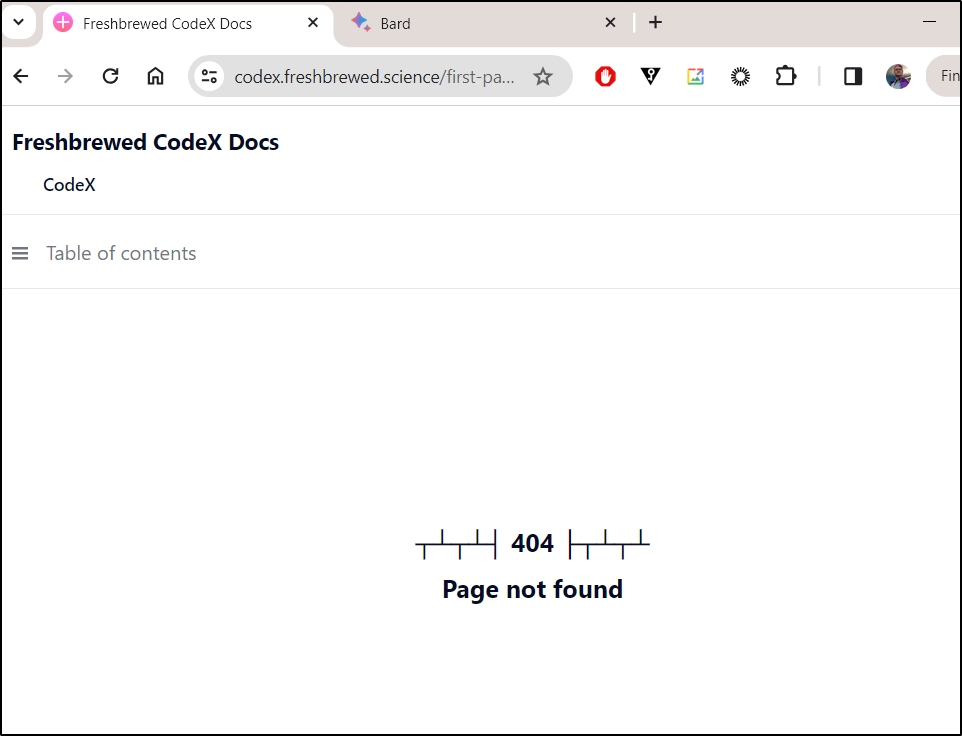

However, on refresh, I would get either a 404 or a cached copy of the old page

I rotated the pods

$ kubectl delete pods -l app=codex

pod "codex-5bdb5ccf57-zctqd" deleted

pod "codex-5bdb5ccf57-t4qqt" deleted

but it was still showing me the previous version of the page

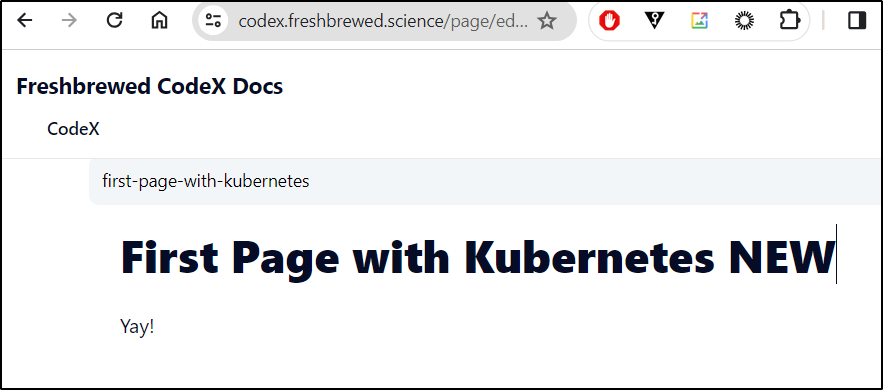

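I then edited the page in the UI.

However, every other reload or so still showed the old page. Perhaps this isn’t an app that handles multiple replicas well; my guess is that each pod keeps its own in-memory copy of the local database, so the replicas drift apart.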

I decided to scale down to avoid that issue

$ kubectl scale deployment/codex --replicas=1

deployment.apps/codex scaled

$ kubectl get pods -l app=codex

NAME READY STATUS RESTARTS AGE

codex-5bdb5ccf57-x6k8t 1/1 Running 0 4m38s

codex-5bdb5ccf57-xsj44 1/1 Terminating 0 4m38s

Checking the pod, I do see the edit

$ kubectl exec codex-5bdb5ccf57-x6k8t cat "/usr/src/app/db/pages.db"

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

{"_id":"Y3bQwyJXNZlqh3f9","title":"First Page with Kubernetes. editted w vi","uri":"first-page-with-kubernetes","body":{"time":1705017534614,"blocks":[{"id":"9Pq3-QBzh-","type":"header","data":{"text":"First Page with Kubernetes","level":2}},{"id":"lULydonHCh","type":"paragraph","data":{"text":"Yay!"}}],"version":"2.25.0"},"parent":"0"}

{"_id":"Y3bQwyJXNZlqh3f9","title":"First Page with Kubernetes NEW","uri":"first-page-with-kubernetes","body":{"time":1705018231563,"blocks":[{"id":"9Pq3-QBzh-","type":"header","data":{"text":"First Page with Kubernetes NEW","level":2}},{"id":"lULydonHCh","type":"paragraph","data":{"text":"Yay!"}}],"version":"2.25.0"},"parent":"0"}
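Notice the file now holds two lines with the same `_id`. That looks like NeDB’s append-only format, where a later line replaces an earlier one with the same `_id`, which also fits the theory that each replica only sees what it loaded into memory. Assuming those semantics (including NeDB’s `"$$deleted": true` tombstone lines), a small sketch that collapses the file to the current version of each page; `latest_docs` is a hypothetical helper.

```python
import json


def latest_docs(db_text: str) -> dict:
    """Collapse an append-only NeDB-style file to the current document per _id.

    Assumed semantics: one JSON document per line, a later line with the same
    _id replaces the earlier one, and {"$$deleted": true} lines remove it.
    """
    docs = {}
    for line in db_text.splitlines():
        line = line.strip()
        if not line:
            continue
        doc = json.loads(line)
        if "_id" not in doc:  # e.g. index metadata lines
            continue
        if doc.get("$$deleted"):
            docs.pop(doc["_id"], None)
        else:
            docs[doc["_id"]] = doc
    return docs
```

Reading `db/pages.db` and calling `latest_docs` on its text should leave only the “NEW” title for the page above.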

Small images would upload, but I got errors when trying a 3MB image.

I’m going to tweak the ingress with some new annotations

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

annotations:

cert-manager.io/cluster-issuer: letsencrypt-prod

kubernetes.io/ingress.class: nginx

kubernetes.io/tls-acme: "true"

nginx.ingress.kubernetes.io/proxy-read-timeout: "3600"

nginx.ingress.kubernetes.io/proxy-send-timeout: "3600"

ingress.kubernetes.io/proxy-body-size: "0"

ingress.kubernetes.io/ssl-redirect: "true"

nginx.ingress.kubernetes.io/proxy-body-size: "0"

nginx.ingress.kubernetes.io/ssl-redirect: "true"

nginx.org/client-max-body-size: "0"

nginx.org/proxy-connect-timeout: "3600"

nginx.org/proxy-read-timeout: "3600"

Then re-apply it (I find that with NGINX, a delete followed by an apply works better):

$ kubectl delete ingress codexingress

ingress.networking.k8s.io "codexingress" deleted

$ kubectl apply -f ./ingress.all.codex.yaml

persistentvolumeclaim/codex-db unchanged

persistentvolumeclaim/codex-uploads unchanged

deployment.apps/codex configured

service/codex unchanged

ingress.networking.k8s.io/codexingress created

configmap/codex-config configured

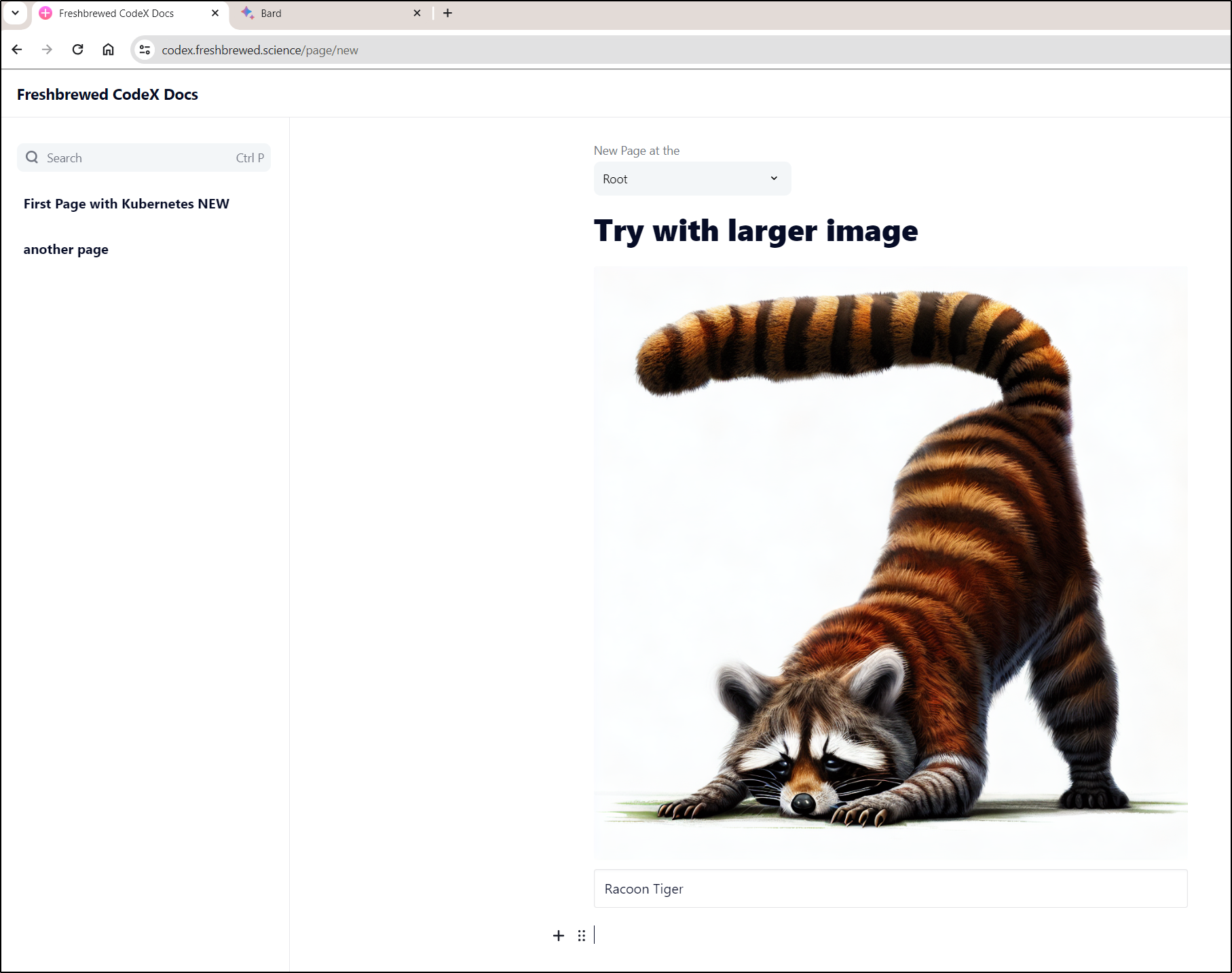

This time it worked great
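The 3MB failure fits NGINX’s default `client_max_body_size` of 1m, which is also the ingress-nginx default for `proxy-body-size`; setting it to 0 disables the check. The `nginx.org/` annotations are read by the NGINX Inc. controller rather than ingress-nginx, so setting both covers either one. A minimal sketch of the size rule (helper names are hypothetical; it only handles plain bytes plus k and m suffixes):

```python
import re

_NGINX_UNITS = {"": 1, "k": 1024, "m": 1024 * 1024}


def nginx_size_to_bytes(value: str) -> int:
    """Parse an nginx size such as "1m", "512k" or "0" (hypothetical helper)."""
    match = re.fullmatch(r"(\d+)([km]?)", value.strip().lower())
    if match is None:
        raise ValueError(f"unsupported size: {value!r}")
    number, unit = match.groups()
    return int(number) * _NGINX_UNITS[unit]


def body_allowed(body_bytes: int, client_max_body_size: str = "1m") -> bool:
    """Would nginx accept a request body of body_bytes? A limit of 0 disables the check."""
    limit = nginx_size_to_bytes(client_max_body_size)
    return limit == 0 or body_bytes <= limit
```

Keep in mind the request body of an upload is a little larger than the file itself because of the multipart encoding.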

And we can see the larger image

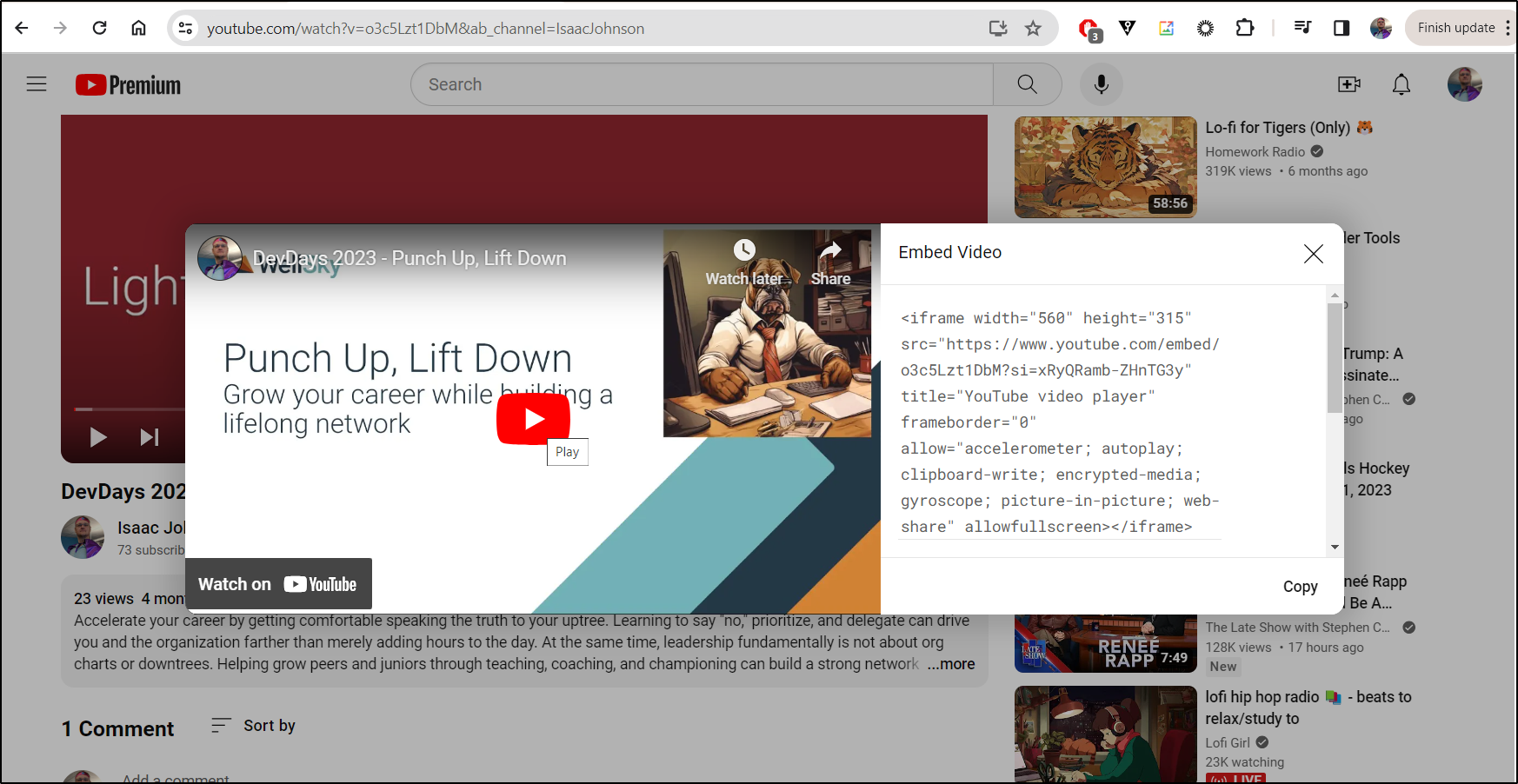

If we want to embed a video, such as one from YouTube, we can grab its embed link

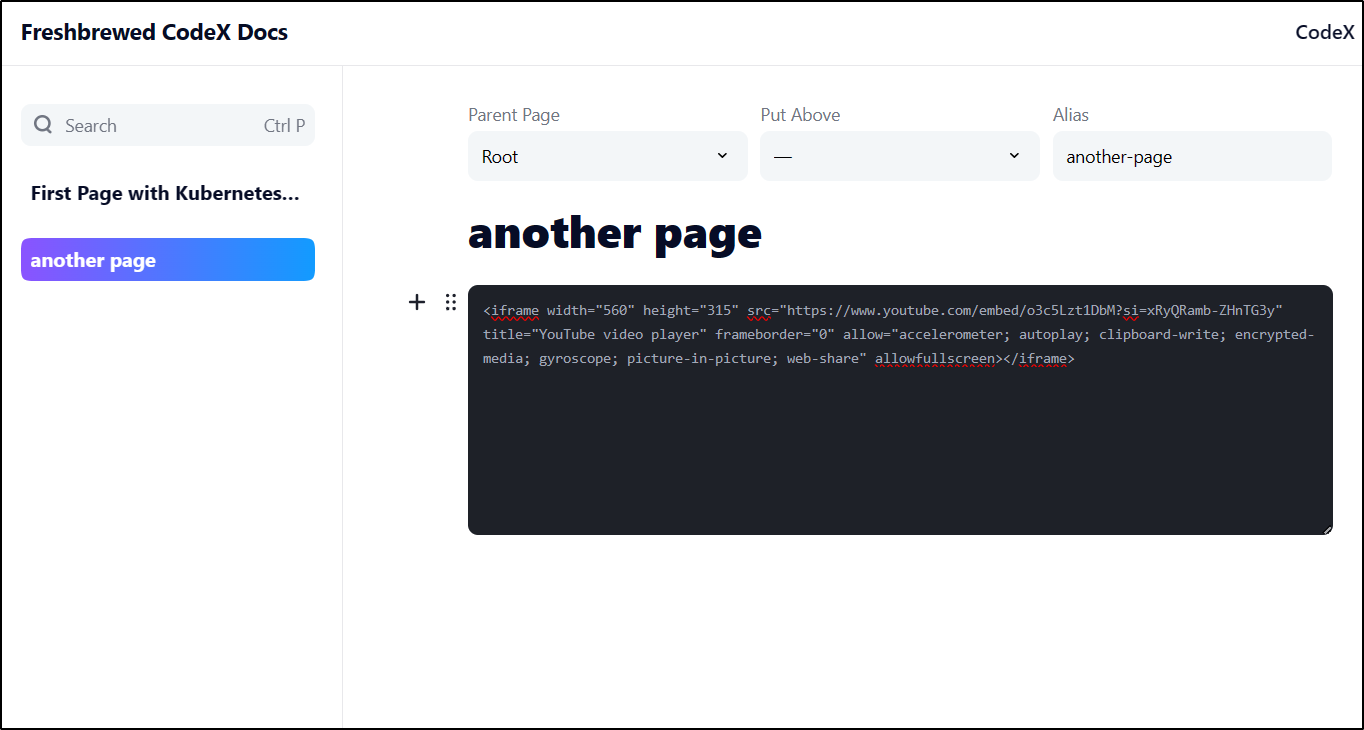

Now I can add it to a CodeX Page

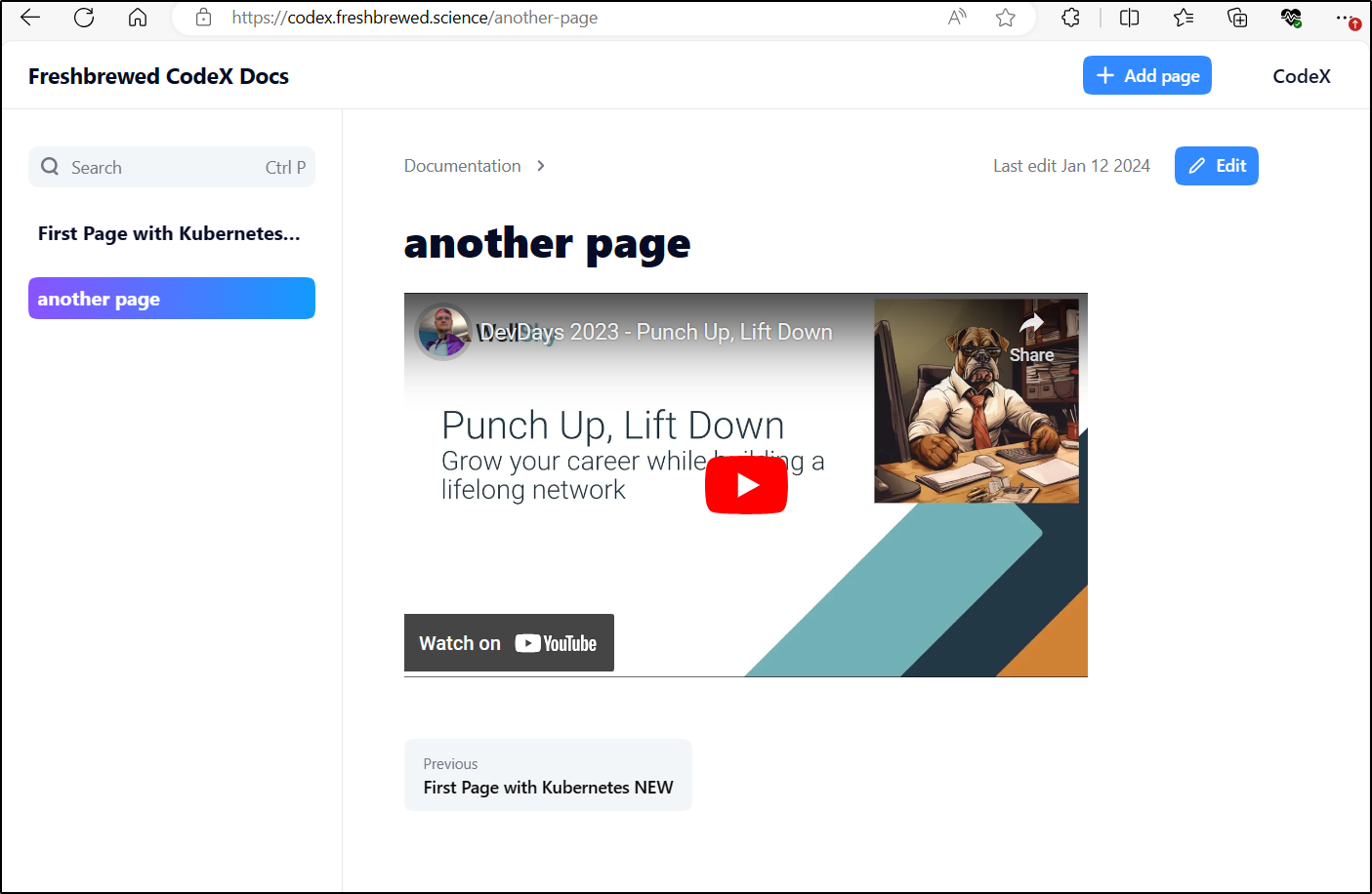

and we can see the video and play it

OpenDocMan

First, I just have to call it out: the name of this tool really just makes me think of people yelling.

Setup

Let’s first pull down the latest code from their Git repo:

builder@DESKTOP-QADGF36:~/Workspaces$ git clone https://github.com/opendocman/opendocman.git

Cloning into 'opendocman'...

remote: Enumerating objects: 12738, done.

remote: Counting objects: 100% (1709/1709), done.

remote: Compressing objects: 100% (913/913), done.

remote: Total 12738 (delta 727), reused 1684 (delta 725), pack-reused 11029

Receiving objects: 100% (12738/12738), 9.55 MiB | 18.53 MiB/s, done.

Resolving deltas: 100% (5669/5669), done.

At first, I just tried a docker compose up:

builder@DESKTOP-QADGF36:~/Workspaces/opendocman$ docker compose up

[+] Running 10/10

⠿ db Pulled 6.1s

⠿ 527f5363b98e Pull complete 1.6s

⠿ bda22cfb6e45 Pull complete 1.7s

⠿ 17bea36fca0c Pull complete 2.0s

⠿ e30c36a0d4a4 Pull complete 2.1s

⠿ 8f06f892ff54 Pull complete 2.2s

⠿ 11e00e2f44db Pull complete 4.2s

⠿ 041d97613a4d Pull complete 4.3s

⠿ bd5b12e516b7 Pull complete 4.3s

⠿ aeb489c6fb29 Pull complete 4.4s

[+] Building 98.4s (15/15) FINISHED

=> [internal] load build definition from Dockerfile 0.0s

=> => transferring dockerfile: 952B 0.0s

=> [internal] load .dockerignore 0.0s

=> => transferring context: 2B 0.0s

=> [internal] load metadata for docker.io/library/php:7.4-apache 2.6s

=> [ 1/10] FROM docker.io/library/php:7.4-apache@sha256:c9d7e608f73832673479770d66aacc8100011ec751d1905ff63fae3fe2e0ca6d 6.6s

=> => resolve docker.io/library/php:7.4-apache@sha256:c9d7e608f73832673479770d66aacc8100011ec751d1905ff63fae3fe2e0ca6d 0.0s

=> => sha256:18b3497ee7f2099a90b66c23a0bc3d5261b12bab367263e1b40e9b004c39e882 3.04kB / 3.04kB 0.0s

=> => sha256:20a3732f422b7b28dcf99e8597f093a8c135efca62ff0dc02a2d92d916369413 12.51kB / 12.51kB 0.0s

=> => sha256:c428f1a494230852524a2a5957cc5199c36c8b403305e0e877d580bd0ec9e763 226B / 226B 0.1s

=> => sha256:156740b07ef8a632f9f7bea4e57e4ee5541ade376adf9169351a1265382e39de 91.63MB / 91.63MB 2.8s

=> => sha256:c9d7e608f73832673479770d66aacc8100011ec751d1905ff63fae3fe2e0ca6d 1.86kB / 1.86kB 0.0s

=> => sha256:a603fa5e3b4127f210503aaa6189abf6286ee5a73deeaab460f8f33ebc6b64e2 31.41MB / 31.41MB 1.2s

=> => sha256:fb5a4c8af82f00730b7427e47bda7f76cea2e2b9aea421750bc9025aface98d8 270B / 270B 0.3s

=> => sha256:25f85b498fd5bfc6cce951513219fe480850daba71e6e997741e984d18483971 19.25MB / 19.25MB 1.8s

=> => sha256:9b233e420ac7bbca645bb82c213029762acf1742400c076360dc303213c309d5 475B / 475B 1.4s

=> => extracting sha256:a603fa5e3b4127f210503aaa6189abf6286ee5a73deeaab460f8f33ebc6b64e2 1.0s

=> => sha256:fe42347c4ecfc90333acd9cad13912387eea39d13827a25cfa78727fa5d200e9 514B / 514B 1.5s

=> => sha256:d14eb2ed1e17ae00f5fcb44b0d562e2867c401c20372829e2cf443fc409342fa 10.76MB / 10.76MB 2.1s

=> => sha256:66d98f73acb62e86c0c226f9eedcbc7eda305df0c1e171ca5caf81cb8b1c40cb 491B / 491B 1.9s

=> => sha256:d2c43c5efbc861f83ee6565c7102ca660d6f35e158324fbb042de5017e43afe8 10.20MB / 10.20MB 2.6s

=> => sha256:ab590b48ea476386dd7b07c34de9eff7cf2103c4668ade985fe31e59f15deee8 2.46kB / 2.46kB 2.2s

=> => sha256:80692ae2d067c8358112c56490a2a97f69ef395fd8f7662a31498644c9a813ef 246B / 246B 2.6s

=> => extracting sha256:c428f1a494230852524a2a5957cc5199c36c8b403305e0e877d580bd0ec9e763 0.0s

=> => sha256:05e465aaa99a358add4acecdade8f39843089069f31fea0201533d3a09a98c9a 892B / 892B 2.7s

=> => extracting sha256:156740b07ef8a632f9f7bea4e57e4ee5541ade376adf9169351a1265382e39de 2.0s

=> => extracting sha256:fb5a4c8af82f00730b7427e47bda7f76cea2e2b9aea421750bc9025aface98d8 0.0s

=> => extracting sha256:25f85b498fd5bfc6cce951513219fe480850daba71e6e997741e984d18483971 0.4s

=> => extracting sha256:9b233e420ac7bbca645bb82c213029762acf1742400c076360dc303213c309d5 0.0s

=> => extracting sha256:fe42347c4ecfc90333acd9cad13912387eea39d13827a25cfa78727fa5d200e9 0.0s

=> => extracting sha256:d14eb2ed1e17ae00f5fcb44b0d562e2867c401c20372829e2cf443fc409342fa 0.1s

=> => extracting sha256:66d98f73acb62e86c0c226f9eedcbc7eda305df0c1e171ca5caf81cb8b1c40cb 0.0s

=> => extracting sha256:d2c43c5efbc861f83ee6565c7102ca660d6f35e158324fbb042de5017e43afe8 0.3s

=> => extracting sha256:ab590b48ea476386dd7b07c34de9eff7cf2103c4668ade985fe31e59f15deee8 0.0s

=> => extracting sha256:80692ae2d067c8358112c56490a2a97f69ef395fd8f7662a31498644c9a813ef 0.0s

=> => extracting sha256:05e465aaa99a358add4acecdade8f39843089069f31fea0201533d3a09a98c9a 0.0s

=> [internal] load build context 0.3s

=> => transferring context: 21.83MB 0.3s

=> [ 2/10] RUN apt-get update && apt-get install --no-install-recommends -y apt-utils vim git openssl ssl-cert sendmail 85.2s

=> [ 3/10] COPY src/main/resources/php.ini /usr/local/etc/php/conf.d 0.0s

=> [ 4/10] RUN a2enmod rewrite 0.5s

=> [ 5/10] RUN a2ensite default-ssl 0.6s

=> [ 6/10] RUN a2enmod ssl 0.7s

=> [ 7/10] COPY . /var/www/html 0.1s

=> [ 8/10] RUN usermod -u 1000 www-data 0.5s

=> [ 9/10] COPY src/main/resources/*.sh / 0.0s

=> [10/10] RUN chmod 755 /*.sh 0.6s

=> exporting to image 0.7s

=> => exporting layers 0.6s

=> => writing image sha256:efcf28f6ed40b01a78bc8e20f2b3e630769acb93be72af6a5b628ca2675a0ef7 0.0s

=> => naming to docker.io/library/opendocman-app 0.0s

[+] Running 6/5

⠿ Network opendocman_default Created 0.2s

⠿ Volume "opendocman_odm-db-data" Created 0.0s

⠿ Volume "opendocman_odm-files-data" Created 0.0s

⠿ Volume "opendocman_odm-docker-configs" Created 0.0s

⠿ Container opendocman-db-1 Created 0.0s

⠿ Container opendocman-app-1 Created 0.1s

Attaching to opendocman-app-1, opendocman-db-1

opendocman-db-1 | 2024-01-12 12:55:08+00:00 [Note] [Entrypoint]: Entrypoint script for MariaDB Server 1:10.4.32+maria~ubu2004 started.

opendocman-db-1 | 2024-01-12 12:55:08+00:00 [Note] [Entrypoint]: Switching to dedicated user 'mysql'

opendocman-db-1 | 2024-01-12 12:55:08+00:00 [Note] [Entrypoint]: Entrypoint script for MariaDB Server 1:10.4.32+maria~ubu2004 started.

opendocman-db-1 | 2024-01-12 12:55:09+00:00 [Note] [Entrypoint]: Initializing database files

Error response from daemon: driver failed programming external connectivity on endpoint opendocman-app-1 (09f1b366d19dbd6d248fd954e61b34a689f9d690bd844dbea55757e2571480bb): Bind for 0.0.0.0:80 failed: port is already allocated

But the default port 80 was clearly already in use on my host.

I changed the docker-compose file to use different ports

$ git diff docker-compose.yml

diff --git a/docker-compose.yml b/docker-compose.yml

index ebeffce..01aad0b 100644

--- a/docker-compose.yml

+++ b/docker-compose.yml

@@ -18,8 +18,8 @@ services:

depends_on:

- db

ports:

- - 80:80

- - 443:443

+ - 8080:80

+ - 8443:443

- 9000:9000

hostname: odm.local

environment:
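If you aren’t sure which host ports are free before editing the compose file, a quick bind test will tell you. A minimal sketch; the helper names are hypothetical, it only checks the machine it runs on, and as an unprivileged user on Linux, ports below 1024 will typically report as unavailable even when nothing is listening.

```python
import socket


def port_is_free(port: int, host: str = "0.0.0.0") -> bool:
    """True if a TCP socket can bind host:port right now (hypothetical helper).

    Only checks this machine; unprivileged users typically cannot bind ports
    below 1024 on Linux, so those report False even when nothing is listening.
    """
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        try:
            sock.bind((host, port))
        except OSError:
            return False
    return True


def first_free_port(candidates, host: str = "0.0.0.0"):
    """Return the first bindable port from candidates, or None."""
    for port in candidates:
        if port_is_free(port, host):
            return port
    return None


if __name__ == "__main__":
    print("HTTP :", first_free_port([80, 8080, 8081]))
    print("HTTPS:", first_free_port([443, 8443, 8444]))
```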

Then I launched it:

$ docker compose up

[+] Running 2/2

⠿ Container opendocman-db-1 Recreated 3.2s

⠿ Container opendocman-app-1 Recreated 0.1s

Attaching to opendocman-app-1, opendocman-db-1

opendocman-db-1 | 2024-01-12 13:26:32+00:00 [Note] [Entrypoint]: Entrypoint script for MariaDB Server 1:10.4.32+maria~ubu2004 started.

opendocman-db-1 | 2024-01-12 13:26:32+00:00 [Note] [Entrypoint]: Switching to dedicated user 'mysql'

opendocman-db-1 | 2024-01-12 13:26:32+00:00 [Note] [Entrypoint]: Entrypoint script for MariaDB Server 1:10.4.32+maria~ubu2004 started.

opendocman-db-1 | 2024-01-12 13:26:32+00:00 [Note] [Entrypoint]: MariaDB upgrade not required

opendocman-db-1 | 2024-01-12 13:26:32 0 [Note] Starting MariaDB 10.4.32-MariaDB-1:10.4.32+maria~ubu2004 source revision c4143f909528e3fab0677a28631d10389354c491 as process 1

opendocman-db-1 | 2024-01-12 13:26:32 0 [Note] InnoDB: Using Linux native AIO

opendocman-db-1 | 2024-01-12 13:26:32 0 [Note] InnoDB: Mutexes and rw_locks use GCC atomic builtins

opendocman-db-1 | 2024-01-12 13:26:32 0 [Note] InnoDB: Uses event mutexes

opendocman-db-1 | 2024-01-12 13:26:32 0 [Note] InnoDB: Compressed tables use zlib 1.2.11

opendocman-db-1 | 2024-01-12 13:26:32 0 [Note] InnoDB: Number of pools: 1

opendocman-db-1 | 2024-01-12 13:26:32 0 [Note] InnoDB: Using SSE2 crc32 instructions

opendocman-db-1 | 2024-01-12 13:26:32 0 [Note] mysqld: O_TMPFILE is not supported on /tmp (disabling future attempts)

opendocman-db-1 | 2024-01-12 13:26:32 0 [Note] InnoDB: Initializing buffer pool, total size = 256M, instances = 1, chunk size = 128M

opendocman-db-1 | 2024-01-12 13:26:32 0 [Note] InnoDB: Completed initialization of buffer pool

opendocman-db-1 | 2024-01-12 13:26:32 0 [Note] InnoDB: If the mysqld execution user is authorized, page cleaner thread priority can be changed. See the man page of setpriority().

opendocman-db-1 | 2024-01-12 13:26:32 0 [Note] InnoDB: 128 out of 128 rollback segments are active.

opendocman-db-1 | 2024-01-12 13:26:32 0 [Note] InnoDB: Creating shared tablespace for temporary tables

opendocman-db-1 | 2024-01-12 13:26:32 0 [Note] InnoDB: Setting file './ibtmp1' size to 12 MB. Physically writing the file full; Please wait ...

opendocman-db-1 | 2024-01-12 13:26:32 0 [Note] InnoDB: File './ibtmp1' size is now 12 MB.

opendocman-db-1 | 2024-01-12 13:26:32 0 [Note] InnoDB: 10.4.32 started; log sequence number 60961; transaction id 20

opendocman-db-1 | 2024-01-12 13:26:32 0 [Note] InnoDB: Loading buffer pool(s) from /var/lib/mysql/ib_buffer_pool

opendocman-db-1 | 2024-01-12 13:26:32 0 [Note] Plugin 'FEEDBACK' is disabled.

opendocman-db-1 | 2024-01-12 13:26:32 0 [Note] InnoDB: Buffer pool(s) load completed at 240112 13:26:32

opendocman-db-1 | 2024-01-12 13:26:32 0 [Note] Server socket created on IP: '::'.

opendocman-db-1 | 2024-01-12 13:26:32 0 [Note] Reading of all Master_info entries succeeded

opendocman-db-1 | 2024-01-12 13:26:32 0 [Note] Added new Master_info '' to hash table

opendocman-db-1 | 2024-01-12 13:26:32 0 [Note] mysqld: ready for connections.

opendocman-db-1 | Version: '10.4.32-MariaDB-1:10.4.32+maria~ubu2004' socket: '/var/run/mysqld/mysqld.sock' port: 3306 mariadb.org binary distribution

opendocman-app-1 | [Fri Jan 12 13:26:35.086561 2024] [ssl:warn] [pid 16] AH01909: odm.local:443:0 server certificate does NOT include an ID which matches the server name

opendocman-app-1 | [Fri Jan 12 13:26:35.100267 2024] [ssl:warn] [pid 16] AH01909: odm.local:443:0 server certificate does NOT include an ID which matches the server name

opendocman-app-1 | [Fri Jan 12 13:26:35.102010 2024] [mpm_prefork:notice] [pid 16] AH00163: Apache/2.4.54 (Debian) PHP/7.4.33 OpenSSL/1.1.1n configured -- resuming normal operations

opendocman-app-1 | [Fri Jan 12 13:26:35.102037 2024] [core:notice] [pid 16] AH00094: Command line: '/usr/sbin/apache2 -D FOREGROUND'

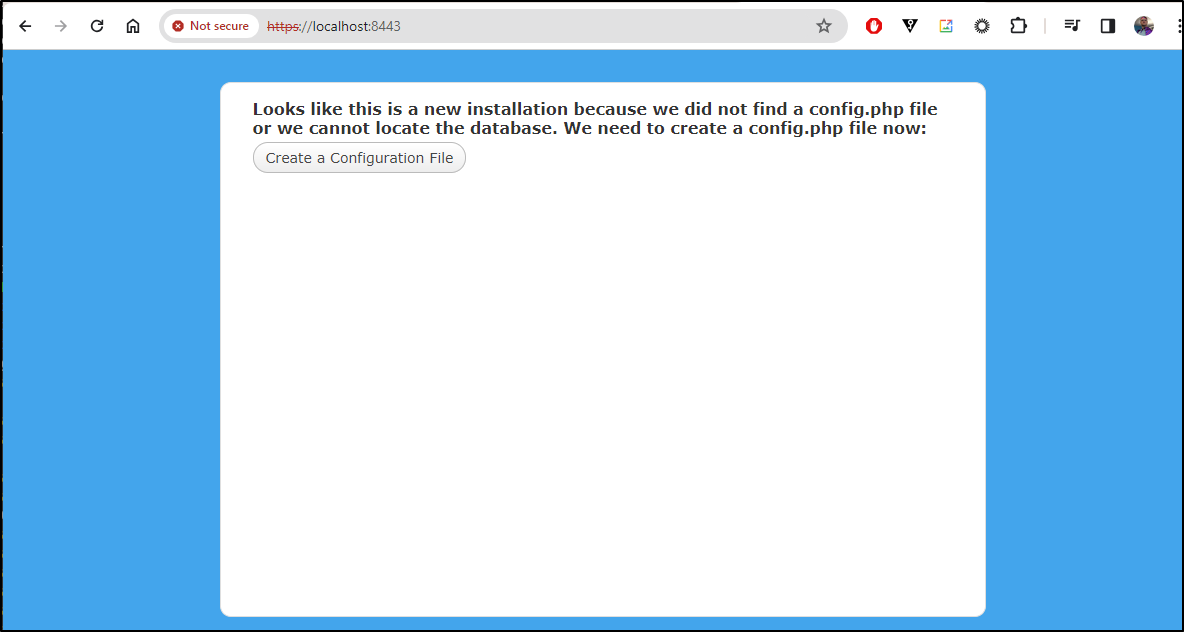

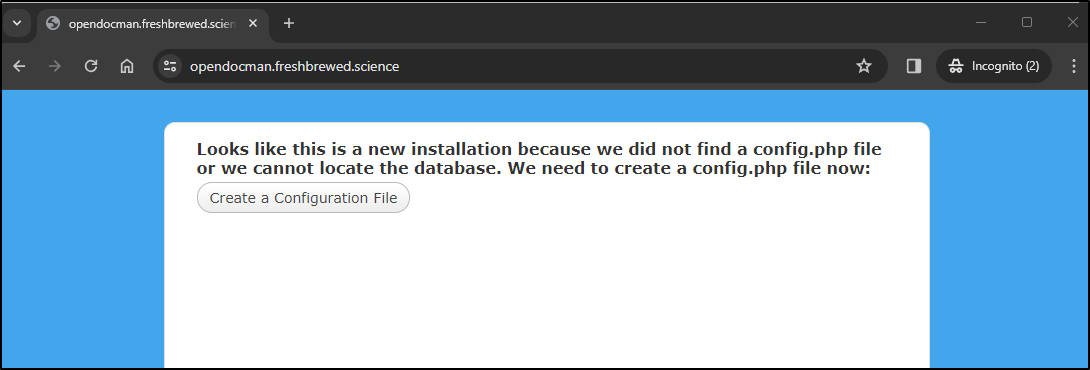

This worked

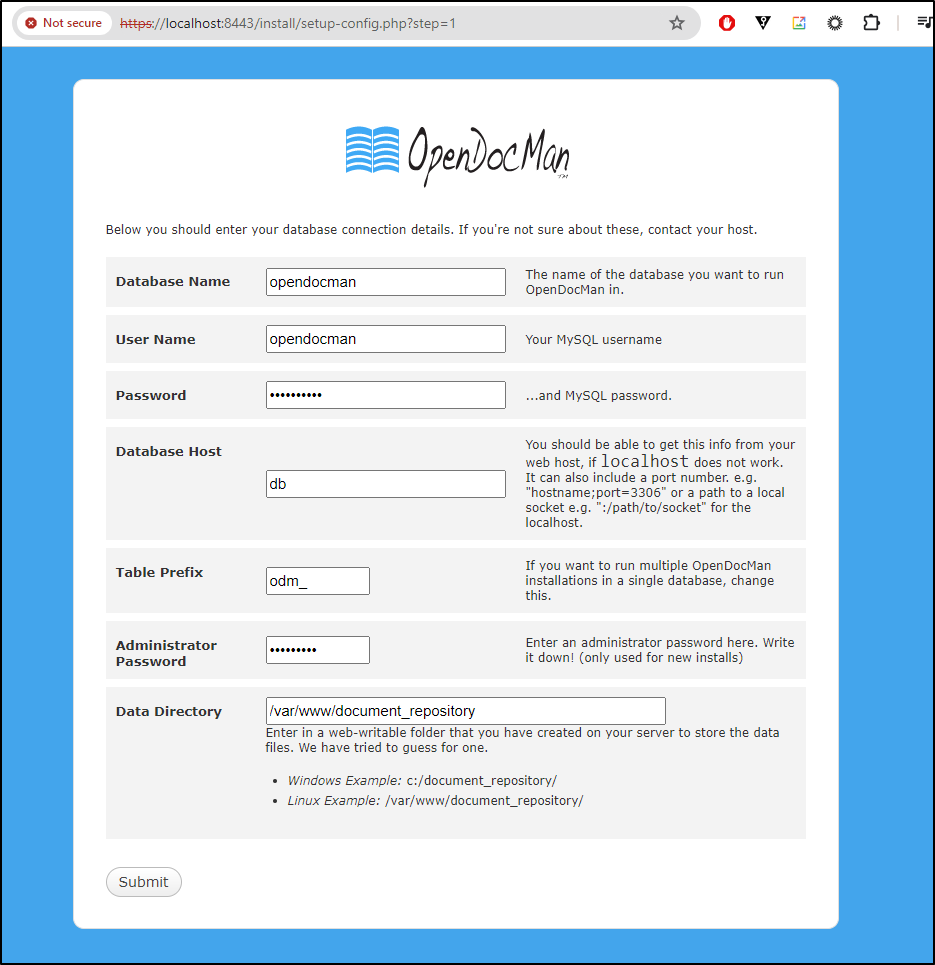

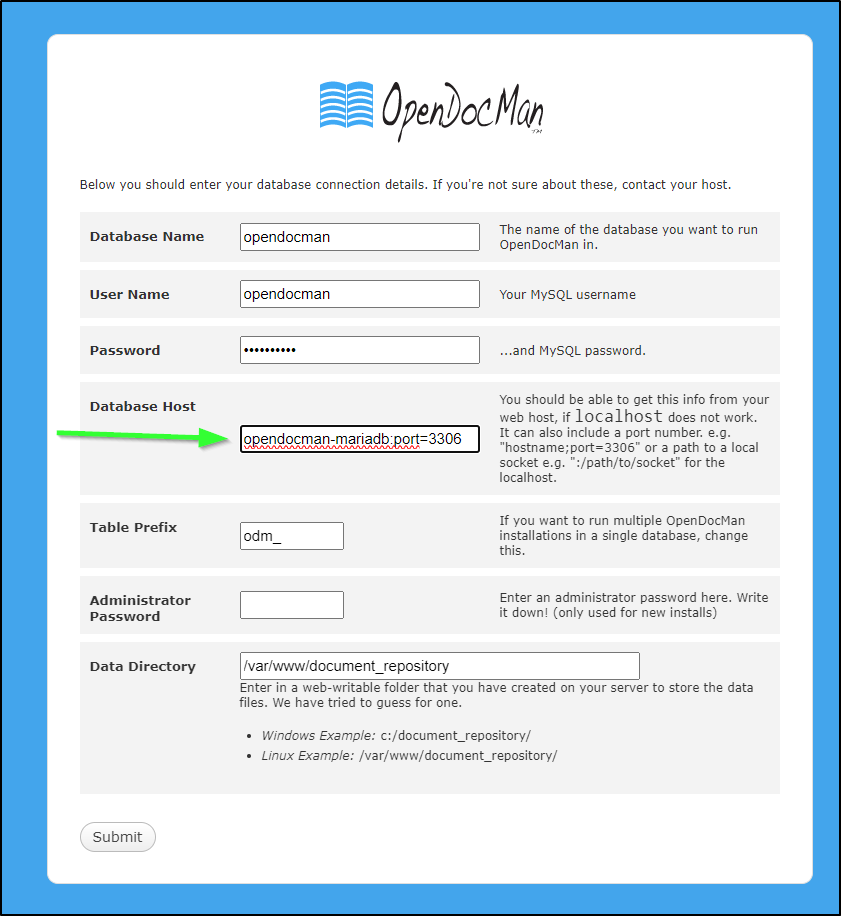

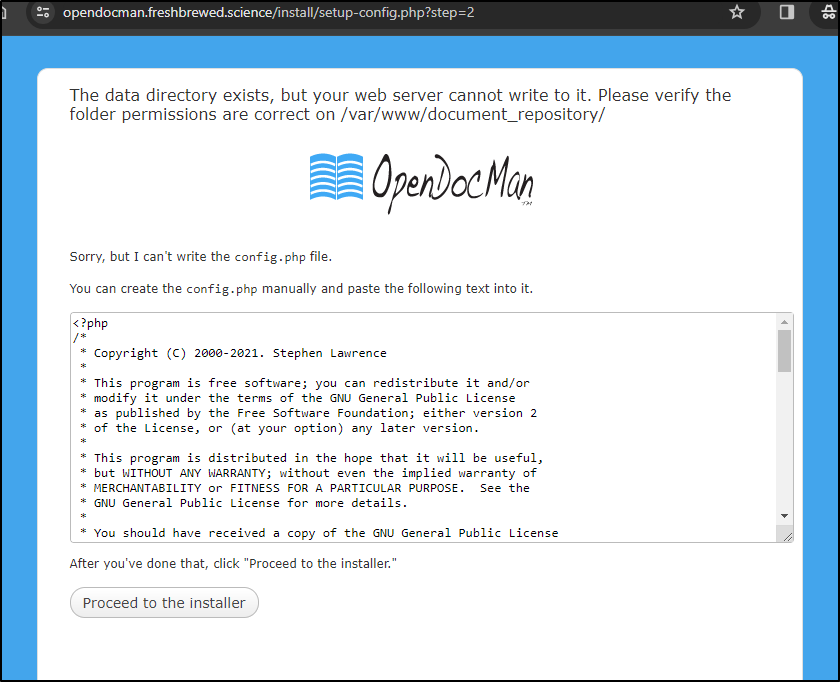

I clicked “Create Configuration” which brought me to a setup config page

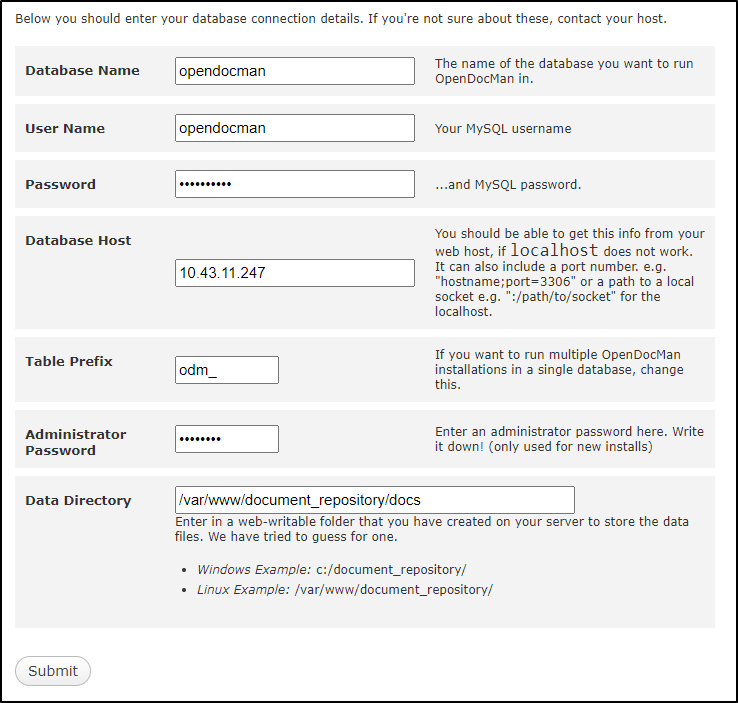

In the next page, I left defaults except for setting an “Administrator Password”

The last step was “Run the Install”

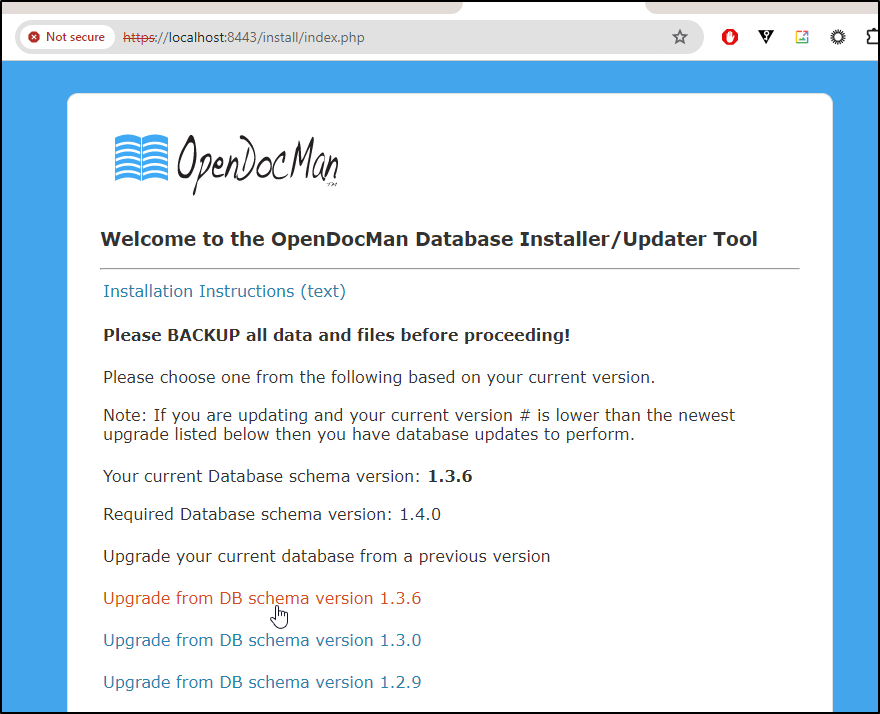

The next page linked to a Readme and what I assume are upgrade schema steps - I clicked on the one that matched my detected database

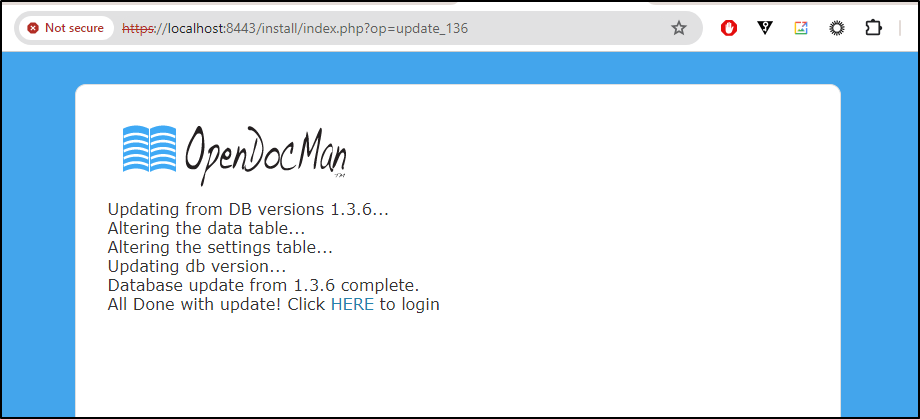

It seems to have done something

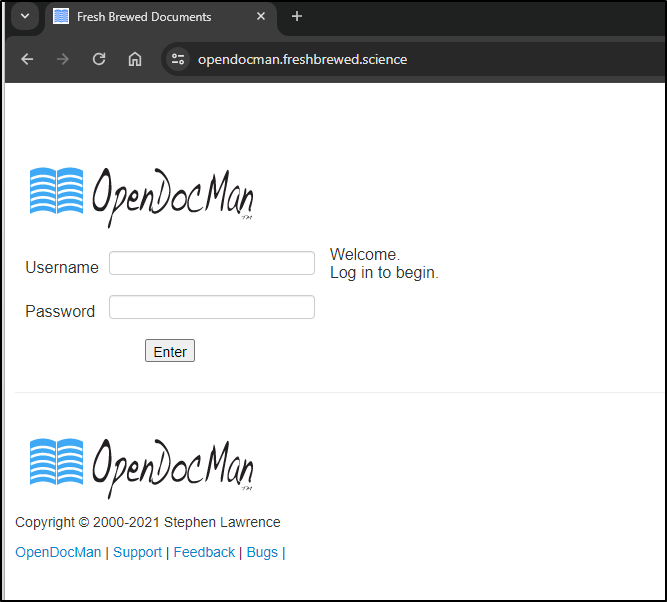

Clicking the “Click Here” brings me to the login page

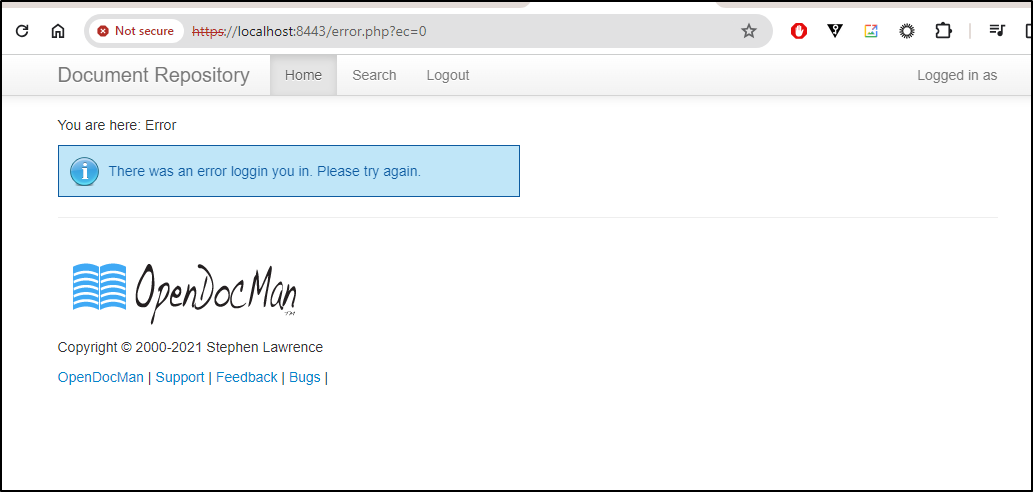

I tried logging in as “Administrator” with the password I had set, but got an error page. Following the docs, I found the username is supposed to be ‘admin’, but even that gave me an error.

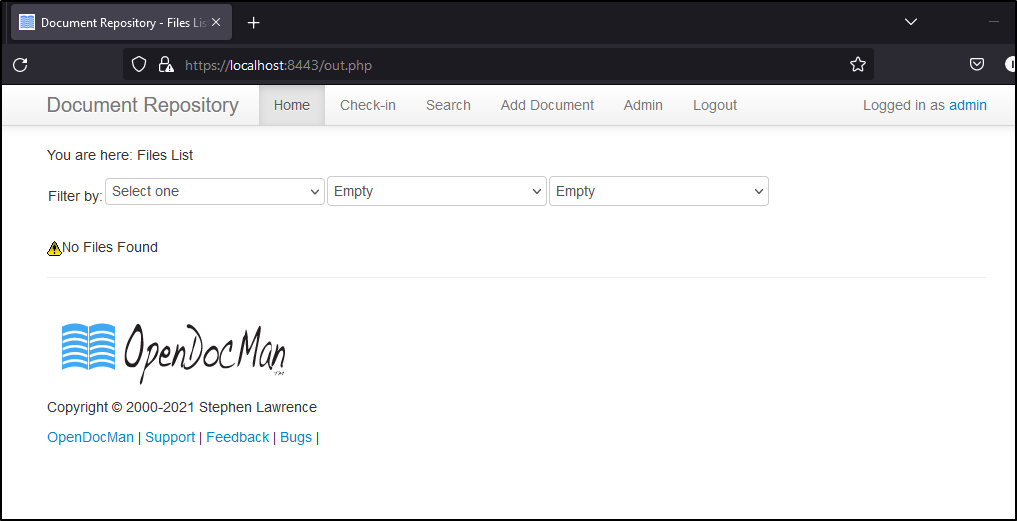

Oddly, entering ‘admin’ with no password seemed to work

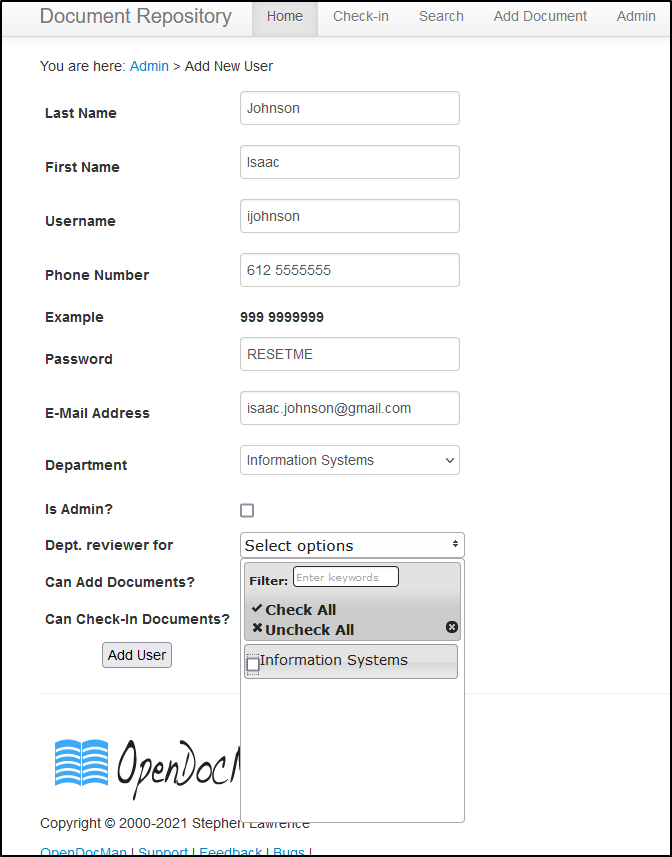

We can create a non-admin user and choose the Departments for which they are a reviewer

Or just make them an admin

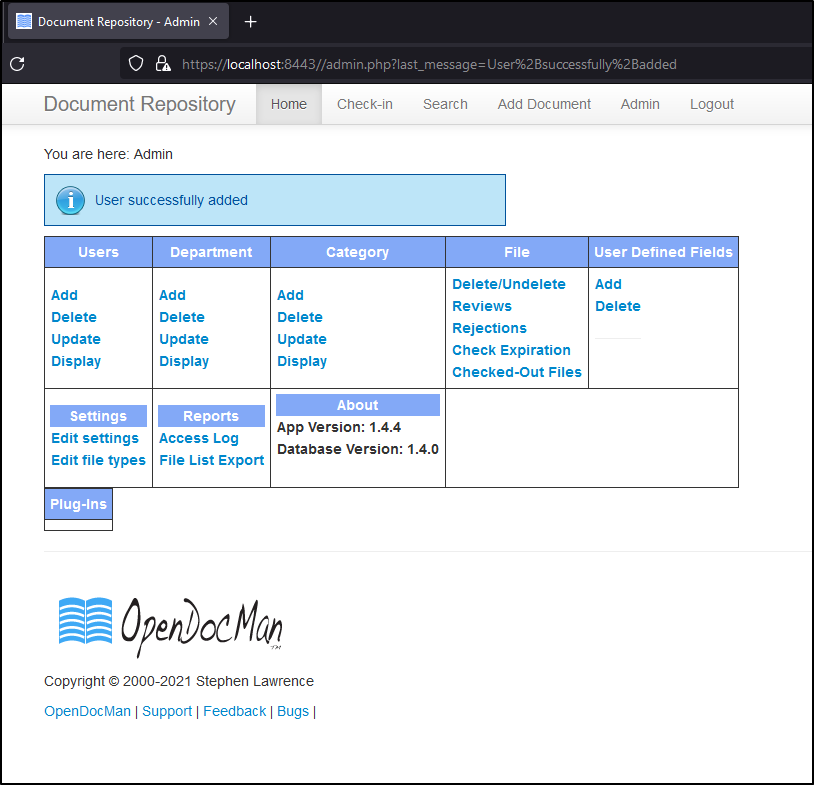

I see a blinky blue box indicating the user was added successfully

I can now edit the admin user and set a password as well

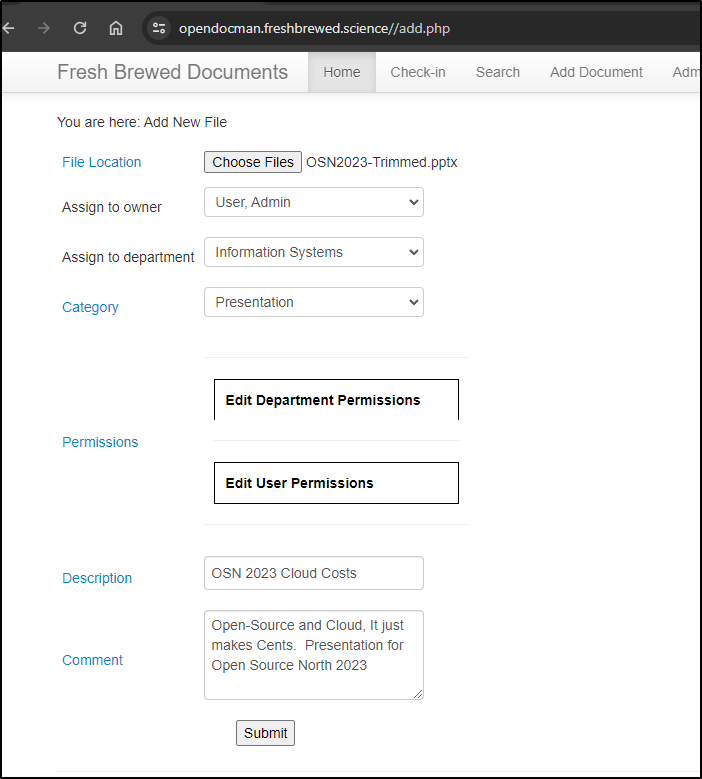

Adding Documents

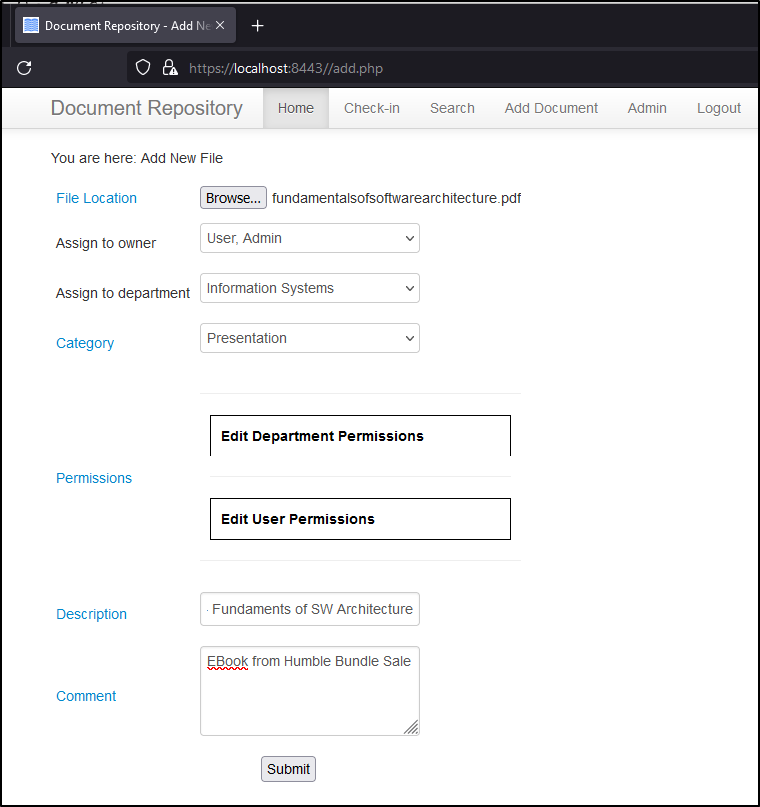

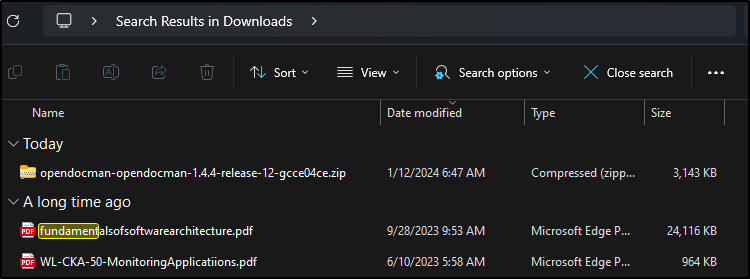

Let’s add a Document. I’ll start with a PDF

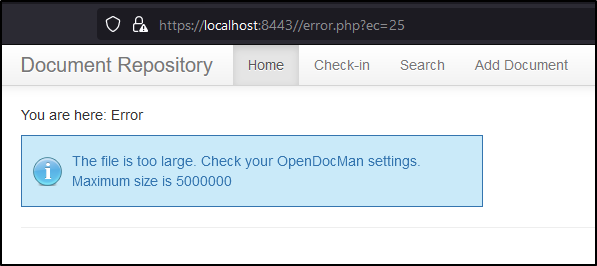

I got a size alert

It seems 24MB is too big

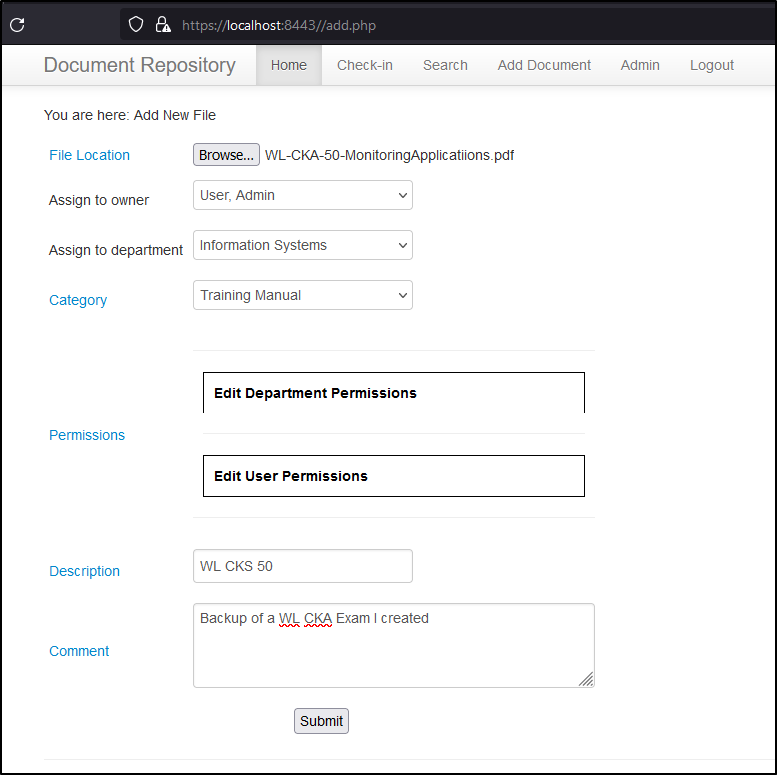

I’ll try a smaller doc

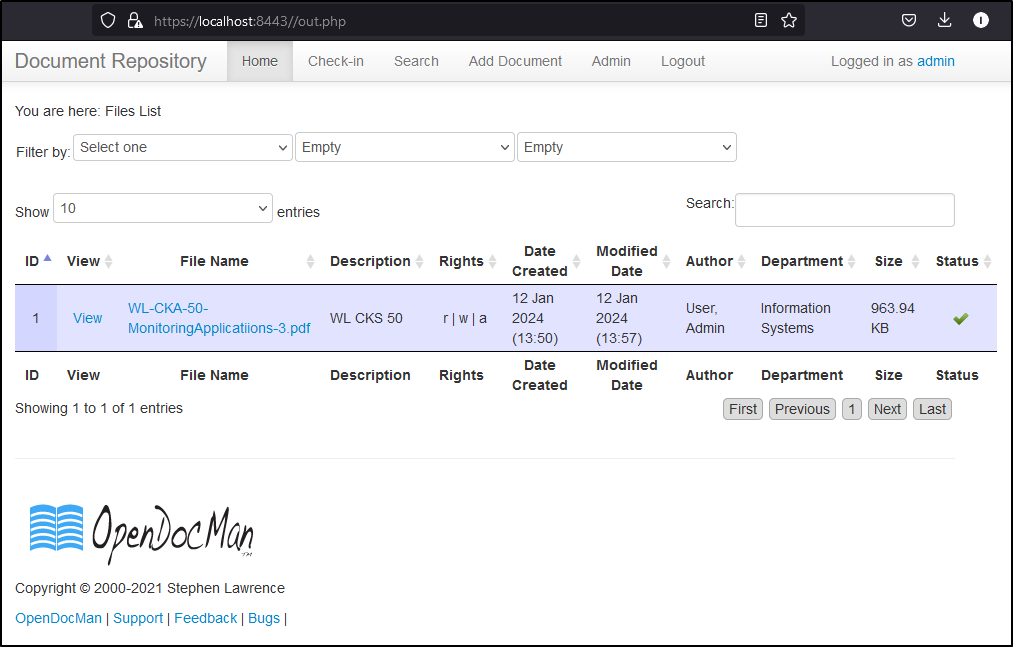

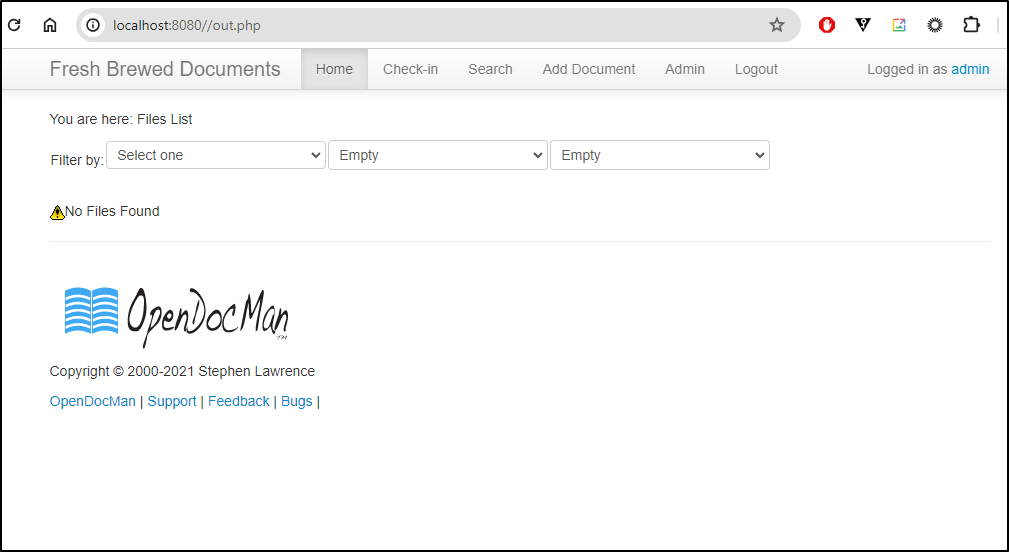

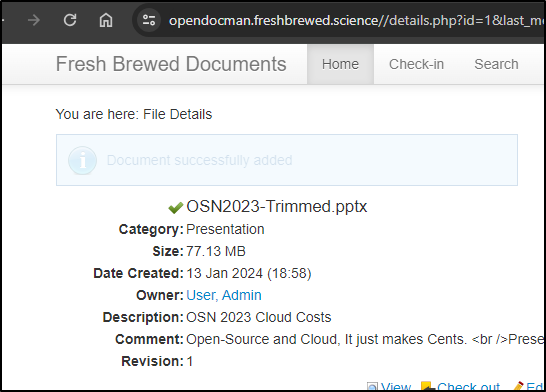

That worked

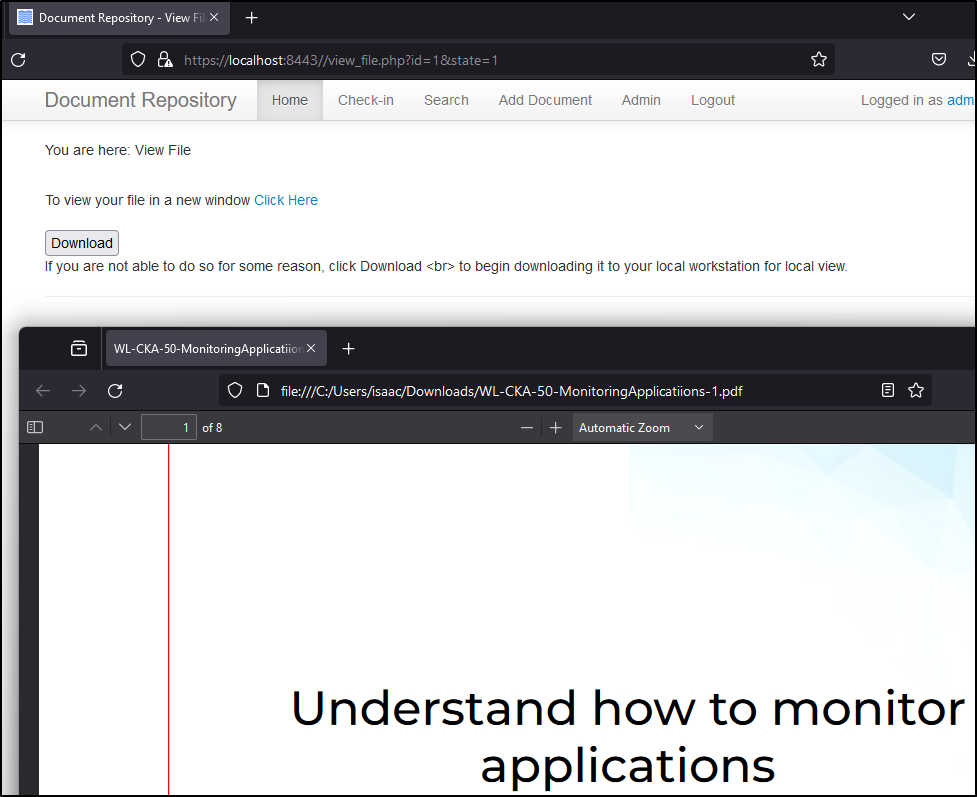

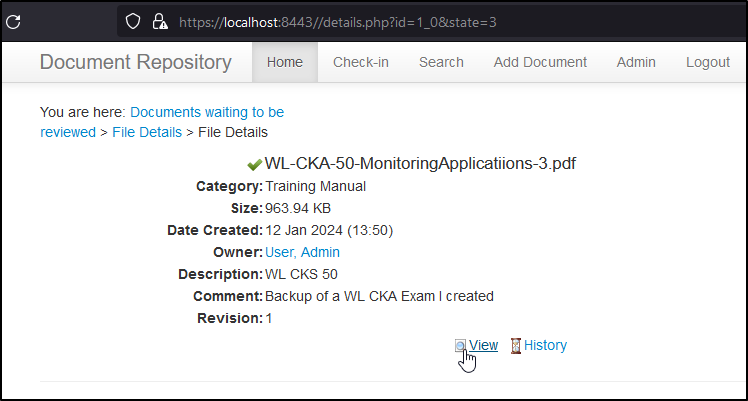

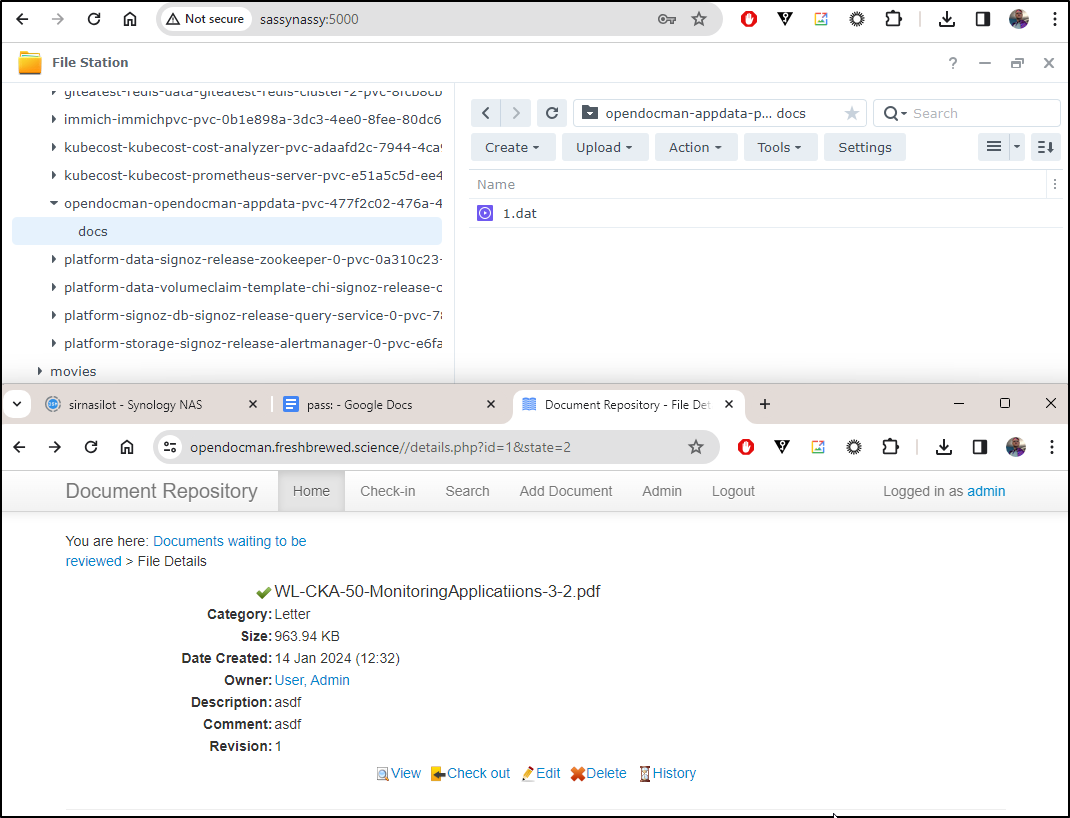

I can choose to “View” it and open it in a browser, or download it (with the Download button)

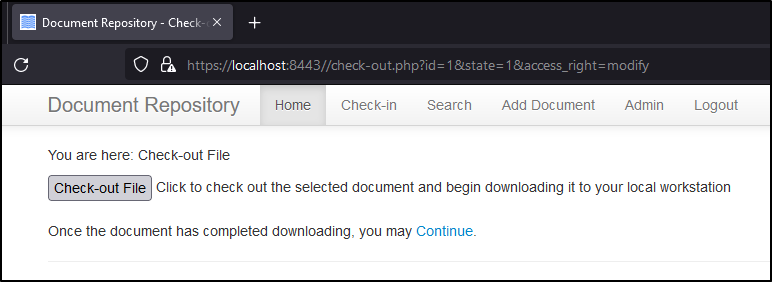

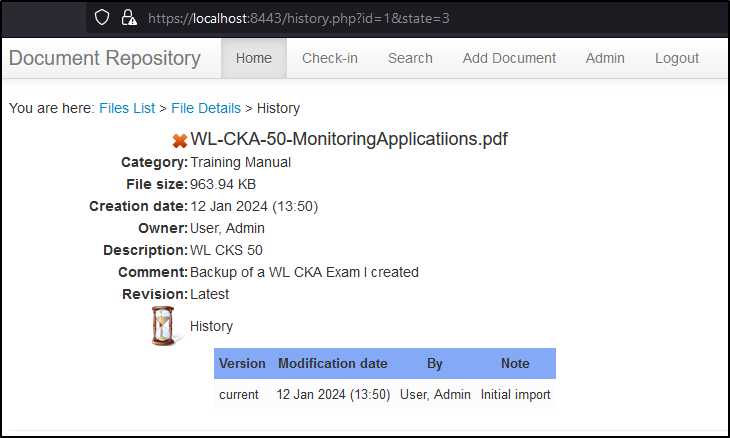

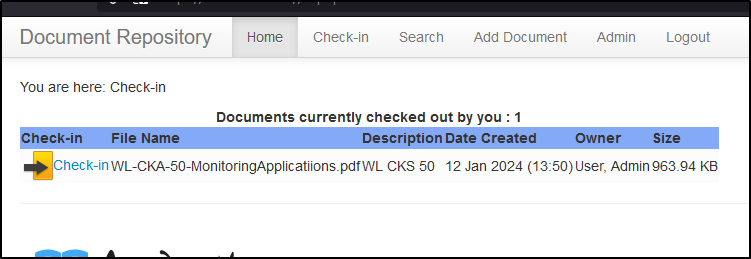

I can also “Check Out” the file

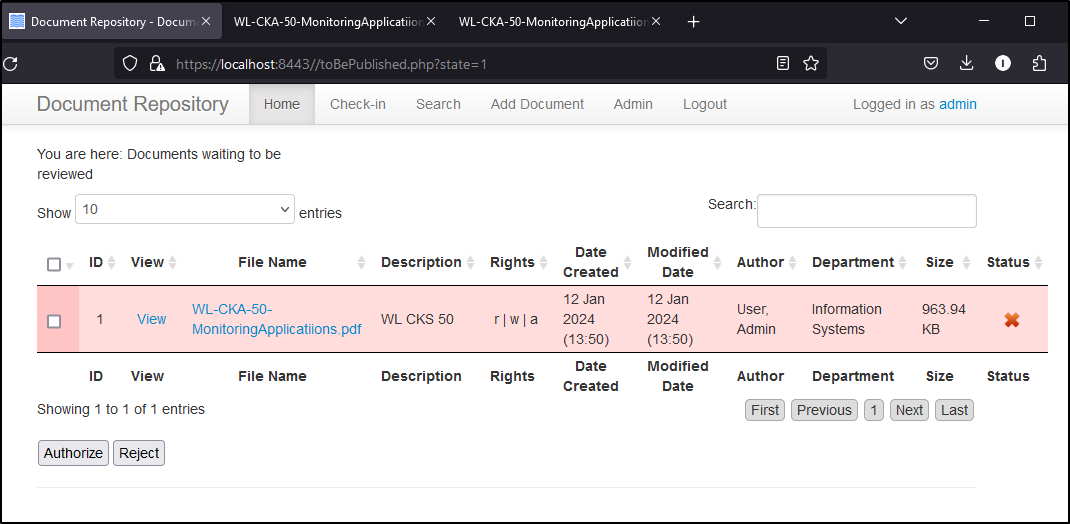

When I “Check Out” the file, it now shows up as a “Document waiting to be reviewed”

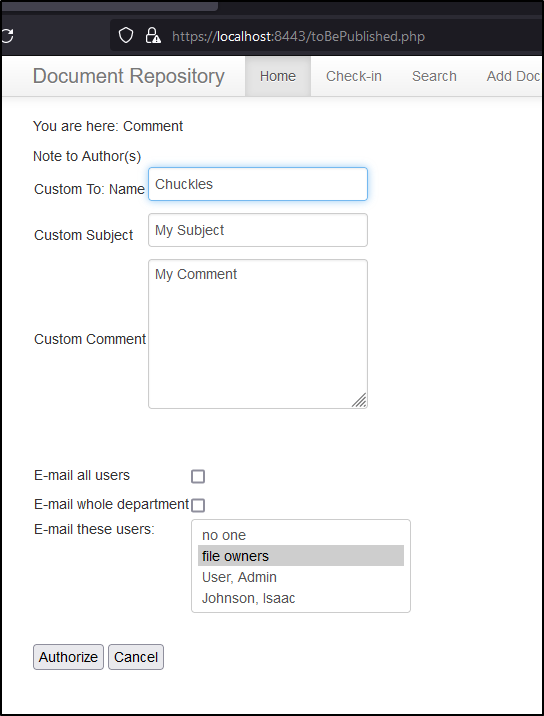

I’ll see what “Authorize” does

I don’t really see anything different in the history

Let me “Check in” the file

I’m prompted to select what I assume is the revised version

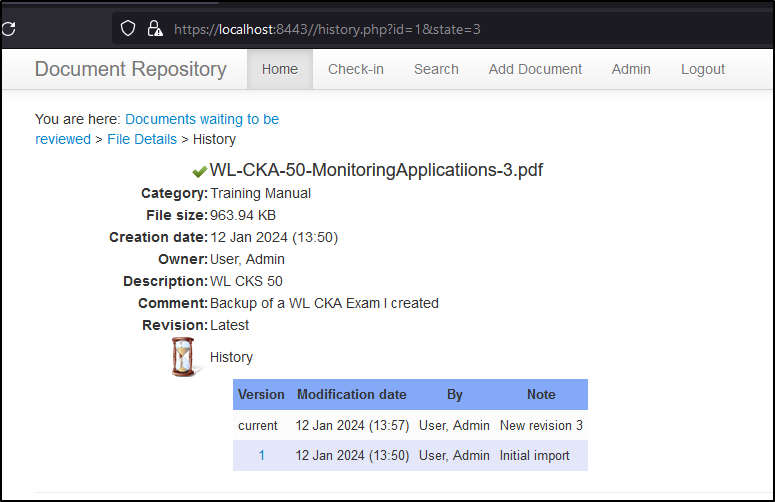

We can now see that in the history

I can always go back to the older version and see the “before” as well

Document sizes

I can change the value in Settings if I really want to upload a 24MB file

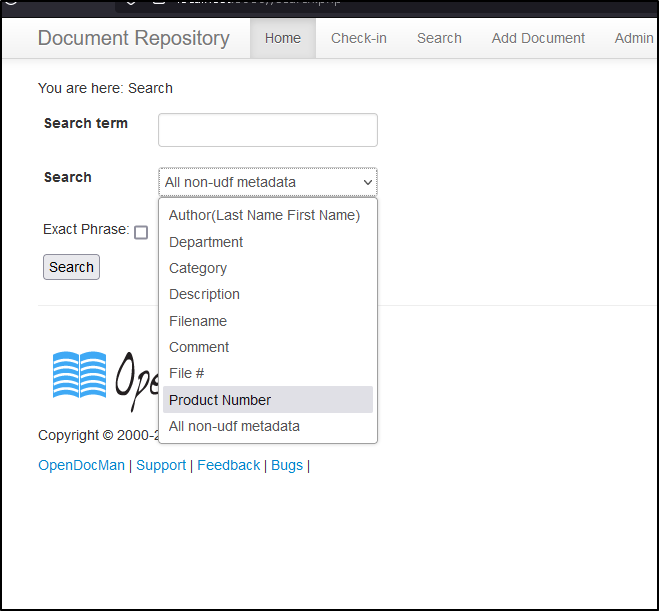
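Raising the limit in Settings may not be the whole story: the image copies in its own php.ini (the `COPY src/main/resources/php.ini` build step), and PHP’s `upload_max_filesize` and `post_max_size` cap uploads too. PHP’s built-in defaults are 2M and 8M, though that php.ini may override them. A minimal sketch of how the three limits combine, assuming all are non-zero; `app_limit_bytes` stands in for the OpenDocMan setting, whose exact units I haven’t verified, and the helper names are hypothetical.

```python
_PHP_UNITS = {"k": 1024, "m": 1024 ** 2, "g": 1024 ** 3}


def php_size_to_bytes(value: str) -> int:
    """Parse PHP shorthand like "2M" or "512K" (case-insensitive, powers of 1024)."""
    value = value.strip().lower()
    if value and value[-1] in _PHP_UNITS:
        return int(value[:-1]) * _PHP_UNITS[value[-1]]
    return int(value)


def effective_upload_limit(upload_max_filesize: str, post_max_size: str,
                           app_limit_bytes: int) -> int:
    """The smallest of PHP's two limits and the app's own limit.

    Assumes all three are non-zero; app_limit_bytes is a stand-in for the
    OpenDocMan setting (hypothetical name and units).
    """
    return min(php_size_to_bytes(upload_max_filesize),
               php_size_to_bytes(post_max_size),
               app_limit_bytes)
```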

Search

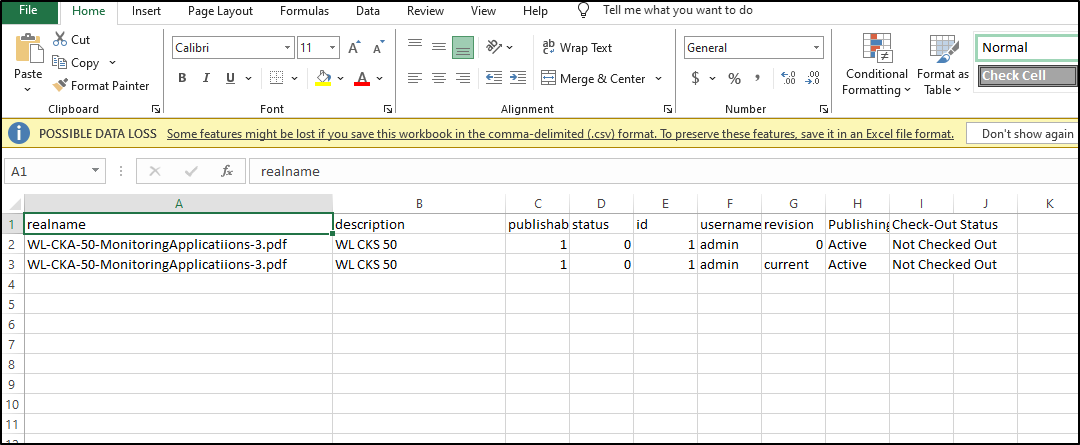

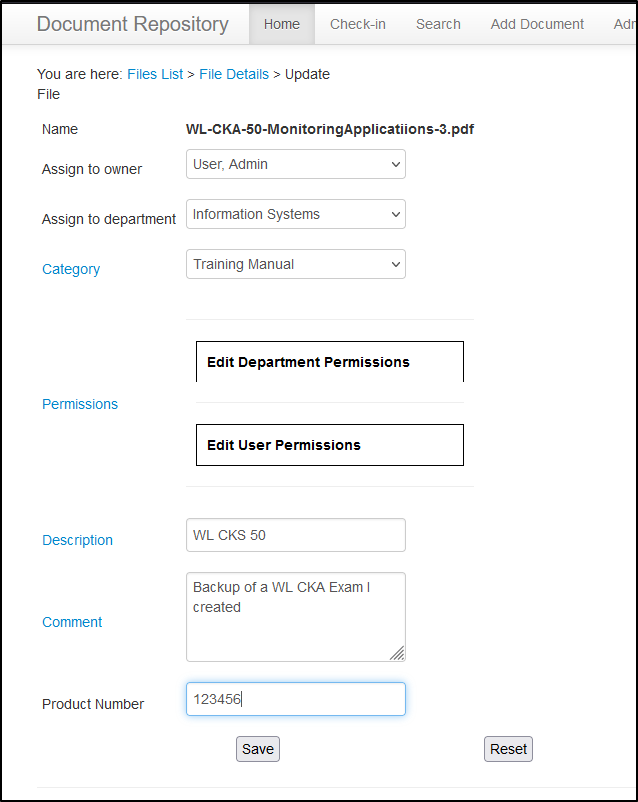

Let’s review the one Document I have there:

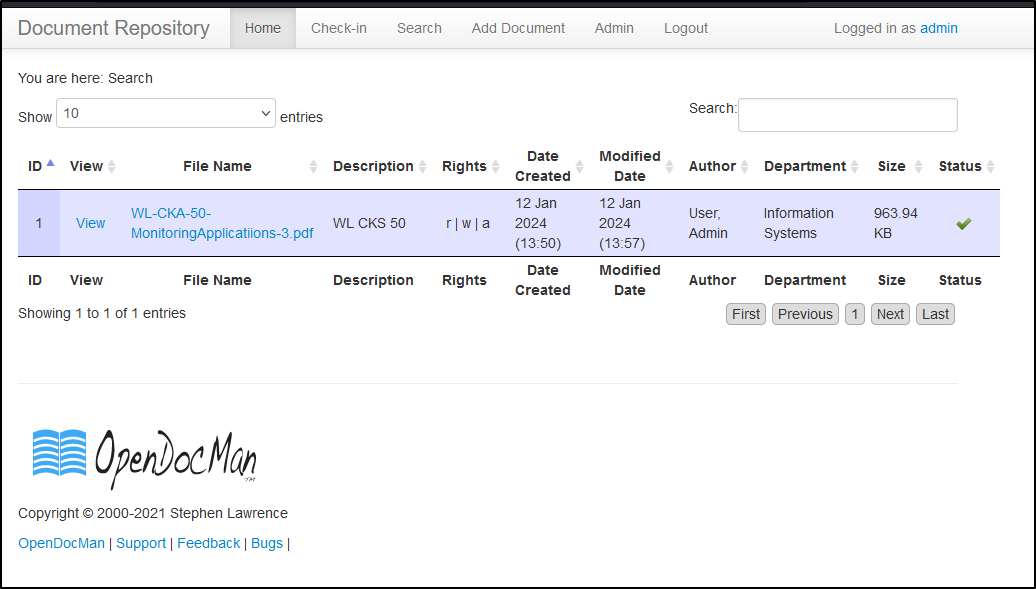

Its filename is “WL-CKA-50-MonitoringApplicatiions-3.pdf”

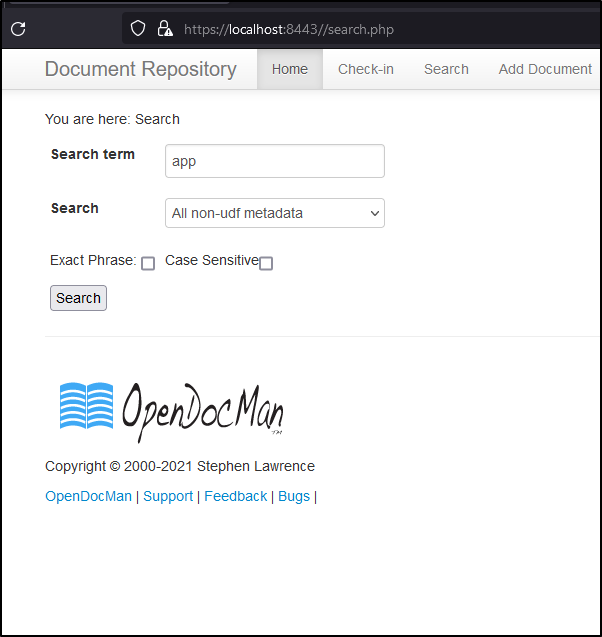

If I search for “app”

It will come back with the results

Searching for a “File #” of “1” and an “Author” of “Admin” also worked

Reports

We can view things like the access log

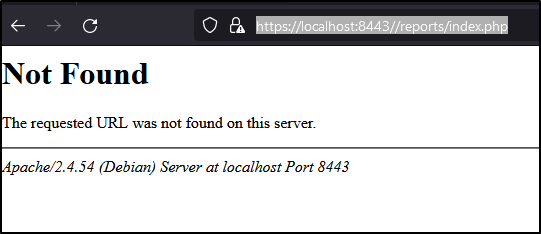

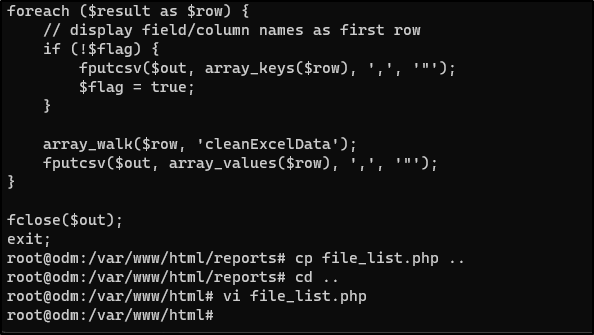

There is a “File List Export” that tries to load /reports/file_list.php. That file exists on the container and the permissions are fine, but something is not letting the webserver load it, and it just redirects to https://localhost:8443//reports/index.php

Just because I was nosy, I hopped onto the container, verified the file and its permissions, then moved it out of “/reports” and updated the PHP to remove all the ../ references in the code

Now, if I manually go to https://localhost:8443/file_list.php I get a nice CSV export

User Defined Fields

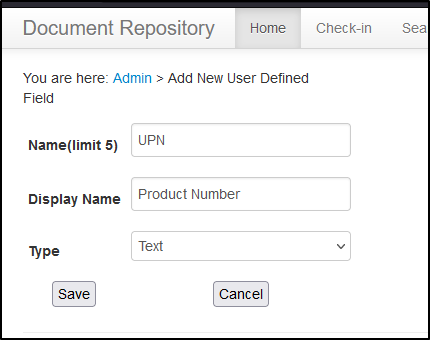

Maybe we wish to collect just a bit more information, such as an OID or Product Number.

Let’s add a “UPN” field of type text

Now when I edit the file, I can set the Product Number

Moreover, that is a field I can use in search

For my use case, where I deliver content organized over “sequences”, this is particularly useful.

Kubernetes

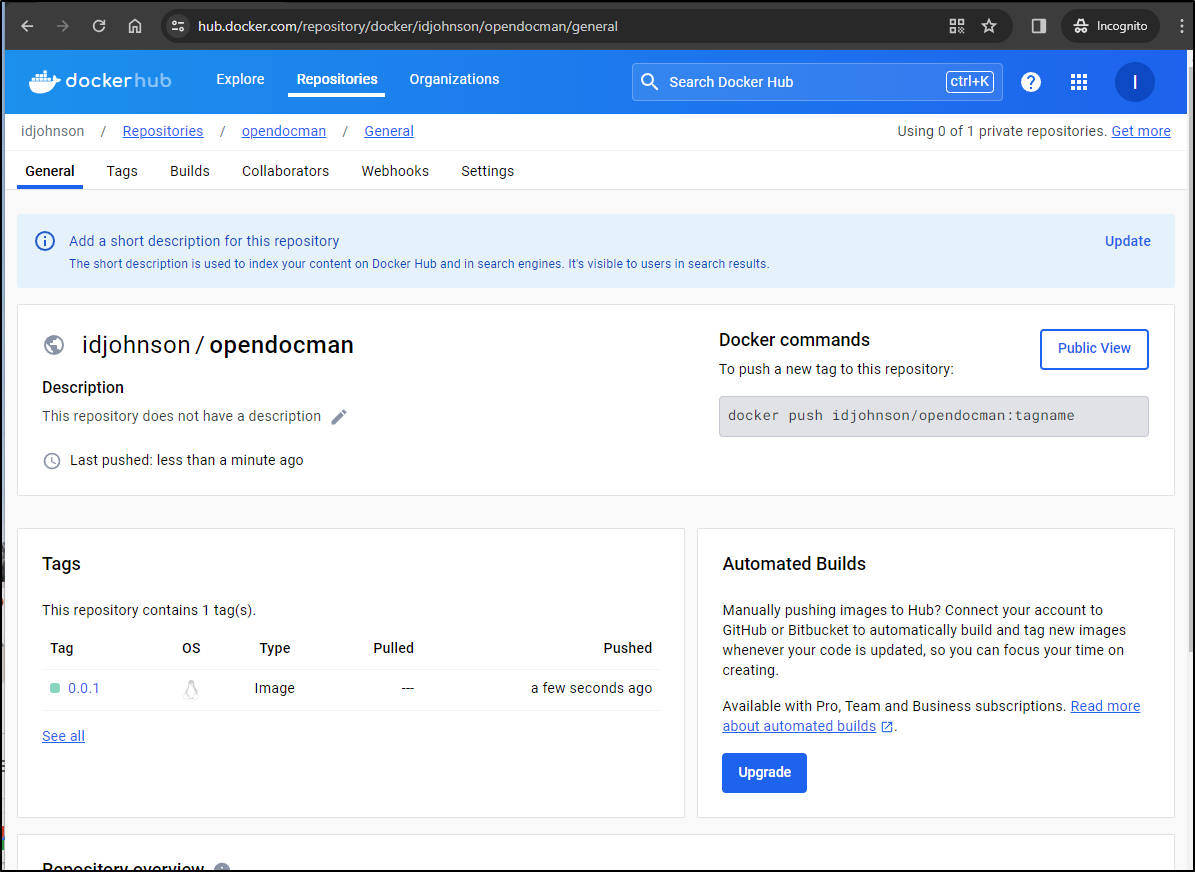

Let’s help build out a chart others can use. I didn’t see a Kubernetes setup in the open-source repo, so I made one.

I’ll first need to build the container

builder@DESKTOP-QADGF36:~/Workspaces/opendocman$ docker build -t opendocman:0.0.1 .

[+] Building 3.7s (15/15) FINISHED

=> [internal] load build definition from Dockerfile 0.0s

=> => transferring dockerfile: 958B 0.0s

=> [internal] load .dockerignore 0.0s

=> => transferring context: 2B 0.0s

=> [internal] load metadata for docker.io/library/php:7.4-apache 1.6s

=> [ 1/10] FROM docker.io/library/php:7.4-apache@sha256:c9d7e608f73832673479770d66aacc8100011ec751d1905ff63fae3fe2e0ca6d 0.0s

=> [internal] load build context 0.4s

=> => transferring context: 21.85MB 0.2s

=> CACHED [ 2/10] RUN apt-get update && apt-get install --no-install-recommends -y apt-utils vim git openssl ssl-cert sen 0.0s

=> CACHED [ 3/10] COPY src/main/resources/php.ini /usr/local/etc/php/conf.d 0.0s

=> CACHED [ 4/10] RUN a2enmod rewrite 0.0s

=> CACHED [ 5/10] RUN a2ensite default-ssl 0.0s

=> CACHED [ 6/10] RUN a2enmod ssl 0.0s

=> [ 7/10] COPY . /var/www/html 0.2s

=> [ 8/10] RUN usermod -u 1000 www-data 0.3s

=> [ 9/10] COPY src/main/resources/*.sh / 0.0s

=> [10/10] RUN chmod 755 /*.sh 0.5s

=> exporting to image 0.3s

=> => exporting layers 0.2s

=> => writing image sha256:67262f5c3c4f6b9c3985d12c2823953e59d9c59c252e3a14ce5d514d0b3caa05 0.0s

=> => naming to docker.io/library/opendocman:0.0.1

and then push it somewhere we can use:

builder@DESKTOP-QADGF36:~/Workspaces/opendocman$ docker tag opendocman:0.0.1 idjohnson/opendocman:0.0.1

builder@DESKTOP-QADGF36:~/Workspaces/opendocman$ docker push idjohnson/opendocman:0.0.1


The push refers to repository [docker.io/idjohnson/opendocman]

776731236c1f: Pushed

c206b9961e6d: Pushed

1cc6d3498ff1: Pushed

076bc433eeaa: Pushed

decc2a821dea: Pushed

d4a15729e799: Pushed

94e69dd6db42: Pushed

578889f1a856: Pushed

847f3212b72e: Pushed

3d33242bf117: Mounted from library/php

529016396883: Mounted from library/php

5464bcc3f1c2: Mounted from library/php

28192e867e79: Mounted from library/php

d173e78df32e: Mounted from library/php

0be1ec4fbfdc: Mounted from library/php

30fa0c430434: Mounted from library/php

a538c5a6e4e0: Mounted from library/php

e5d40f64dcb4: Mounted from library/php

44148371c697: Mounted from library/php

797a7c0590e0: Mounted from library/php

f60117696410: Mounted from library/php

ec4a38999118: Mounted from library/php

0.0.1: digest: sha256:3b86de62f84e104191c3d44ab4abc00303086a6acc15dedcde47d9e1a7ae89e0 size: 4911

I now have a public container for consumption https://hub.docker.com/repository/docker/idjohnson/opendocman/general.
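Docker expands short image names behind the scenes, which is why the build above reports `docker.io/library/opendocman:0.0.1` and the push goes to `docker.io/idjohnson/opendocman`. A simplified sketch of that normalization; it skips some edge cases of the full reference grammar, and `normalize_image` is a hypothetical helper.

```python
def normalize_image(ref: str) -> str:
    """Expand a short image reference roughly the way Docker does.

    Simplified sketch: ignores some edge cases of the full reference grammar.
    """
    name, _, digest = ref.partition("@")
    tag = ""
    # A ':' after the last '/' is a tag; one before it is a registry port.
    colon = name.rfind(":")
    if colon > name.rfind("/"):
        name, tag = name[:colon], name[colon + 1:]
    first, _, rest = name.partition("/")
    if not rest:
        name = f"docker.io/library/{first}"  # official-image namespace
    elif "." not in first and ":" not in first and first != "localhost":
        name = f"docker.io/{name}"  # first part is a Docker Hub user, not a registry
    if digest:
        return f"{name}:{tag}@{digest}" if tag else f"{name}@{digest}"
    return f"{name}:{tag or 'latest'}"
```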

I built out a chart. I had planned to use MariaDB 10.3, but it was too old and no longer hosted by Bitnami:

builder@DESKTOP-QADGF36:~/Workspaces/opendocman/helm$ helm dependency update ./opendocman

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "zabbix-community" chart repository

...Successfully got an update from the "jfelten" chart repository

...Successfully got an update from the "azure-samples" chart repository

...Successfully got an update from the "opencost" chart repository

...Successfully got an update from the "kuma" chart repository

...Successfully got an update from the "rhcharts" chart repository

...Successfully got an update from the "novum-rgi-helm" chart repository

...Unable to get an update from the "freshbrewed" chart repository (https://harbor.freshbrewed.science/chartrepo/library):

failed to fetch https://harbor.freshbrewed.science/chartrepo/library/index.yaml : 404 Not Found

...Unable to get an update from the "myharbor" chart repository (https://harbor.freshbrewed.science/chartrepo/library):

failed to fetch https://harbor.freshbrewed.science/chartrepo/library/index.yaml : 404 Not Found

...Successfully got an update from the "nfs" chart repository

...Successfully got an update from the "kiwigrid" chart repository

...Successfully got an update from the "openfunction" chart repository

...Successfully got an update from the "longhorn" chart repository

...Successfully got an update from the "portainer" chart repository

...Successfully got an update from the "lifen-charts" chart repository

...Successfully got an update from the "gitea-charts" chart repository

...Successfully got an update from the "rook-release" chart repository

...Successfully got an update from the "nginx-stable" chart repository

...Successfully got an update from the "signoz" chart repository

...Successfully got an update from the "datadog" chart repository

...Successfully got an update from the "openzipkin" chart repository

...Successfully got an update from the "ananace-charts" chart repository

...Successfully got an update from the "rancher-latest" chart repository

...Successfully got an update from the "crossplane-stable" chart repository

...Successfully got an update from the "uptime-kuma" chart repository

...Successfully got an update from the "grafana" chart repository

...Successfully got an update from the "newrelic" chart repository

...Successfully got an update from the "gitlab" chart repository

...Unable to get an update from the "epsagon" chart repository (https://helm.epsagon.com):

Get "https://helm.epsagon.com/index.yaml": dial tcp: lookup helm.epsagon.com on 172.22.64.1:53: server misbehaving

...Successfully got an update from the "ngrok" chart repository

...Successfully got an update from the "confluentinc" chart repository

...Successfully got an update from the "dapr" chart repository

...Successfully got an update from the "actions-runner-controller" chart repository

...Successfully got an update from the "kube-state-metrics" chart repository

...Successfully got an update from the "btungut" chart repository

...Successfully got an update from the "adwerx" chart repository

...Successfully got an update from the "akomljen-charts" chart repository

...Successfully got an update from the "makeplane" chart repository

...Successfully got an update from the "hashicorp" chart repository

...Successfully got an update from the "sonarqube" chart repository

...Successfully got an update from the "ingress-nginx" chart repository

...Successfully got an update from the "kubecost" chart repository

...Successfully got an update from the "jetstack" chart repository

...Successfully got an update from the "open-telemetry" chart repository

...Successfully got an update from the "elastic" chart repository

...Successfully got an update from the "castai-helm" chart repository

...Successfully got an update from the "sumologic" chart repository

...Successfully got an update from the "harbor" chart repository

...Successfully got an update from the "argo-cd" chart repository

...Successfully got an update from the "incubator" chart repository

...Successfully got an update from the "prometheus-community" chart repository

...Successfully got an update from the "bitnami" chart repository

Update Complete. ⎈Happy Helming!⎈

Error: can't get a valid version for repositories mariadb. Try changing the version constraint in Chart.yaml

Checking the available versions:

builder@DESKTOP-QADGF36:~/Workspaces/opendocman/helm$ helm search repo bitnami/mariadb --versions

NAME CHART VERSION APP VERSION DESCRIPTION

bitnami/mariadb 15.0.1 11.2.2 MariaDB is an open source, community-developed ...

bitnami/mariadb 15.0.0 11.2.2 MariaDB is an open source, community-developed ...

bitnami/mariadb 14.1.4 11.1.3 MariaDB is an open source, community-developed ...

bitnami/mariadb 14.1.3 11.1.3 MariaDB is an open source, community-developed ...

bitnami/mariadb 14.1.2 11.1.3 MariaDB is an open source, community-developed ...

bitnami/mariadb 14.1.1 11.1.2 MariaDB is an open source, community-developed ...

bitnami/mariadb 14.1.0 11.1.2 MariaDB is an open source, community-developed ...

bitnami/mariadb 14.0.3 11.1.2 MariaDB is an open source, community-developed ...

bitnami/mariadb 14.0.2 11.1.2 MariaDB is an open source, community-developed ...

bitnami/mariadb 14.0.1 11.1.2 MariaDB is an open source, community-developed ...

bitnami/mariadb 14.0.0 11.1.2 MariaDB is an open source, community-developed ...

bitnami/mariadb 13.1.3 11.0.3 MariaDB is an open source, community-developed ...

bitnami/mariadb 13.1.2 11.0.3 MariaDB is an open source, community-developed ...

bitnami/mariadb 13.1.1 11.0.3 MariaDB is an open source, community-developed ...

bitnami/mariadb 13.1.0 11.0.3 MariaDB is an open source, community-developed ...

bitnami/mariadb 13.0.5 11.0.3 MariaDB is an open source, community-developed ...

bitnami/mariadb 13.0.4 11.0.3 MariaDB is an open source, community-developed ...

bitnami/mariadb 13.0.3 11.0.3 MariaDB is an open source, community-developed ...

bitnami/mariadb 13.0.2 11.0.2 MariaDB is an open source, community-developed ...

bitnami/mariadb 13.0.1 11.0.2 MariaDB is an open source, community-developed ...

bitnami/mariadb 13.0.0 11.0.2 MariaDB is an open source, community-developed ...

bitnami/mariadb 12.2.9 10.11.4 MariaDB is an open source, community-developed ...

bitnami/mariadb 12.2.8 10.11.4 MariaDB is an open source, community-developed ...

bitnami/mariadb 12.2.7 10.11.4 MariaDB is an open source, community-developed ...

bitnami/mariadb 12.2.5 10.11.4 MariaDB is an open source, community-developed ...

bitnami/mariadb 12.2.4 10.11.3 MariaDB is an open source, community-developed ...

bitnami/mariadb 12.2.3 10.11.3 MariaDB is an open source, community-developed ...

bitnami/mariadb 12.2.2 10.11.3 MariaDB is an open source, community-developed ...

bitnami/mariadb 12.2.1 10.11.3 MariaDB is an open source, community-developed ...

bitnami/mariadb 12.1.6 10.11.2 MariaDB is an open source, community-developed ...

bitnami/mariadb 12.1.5 10.11.2 MariaDB is an open source, community-developed ...

bitnami/mariadb 12.1.4 10.11.2 MariaDB is an open source, community-developed ...

bitnami/mariadb 12.1.3 10.11.2 MariaDB is an open source, community-developed ...

bitnami/mariadb 12.1.2 10.11.2 MariaDB is an open source, community-developed ...

bitnami/mariadb 12.1.1 10.11.2 MariaDB is an open source, community-developed ...

bitnami/mariadb 12.0.0 10.11.2 MariaDB is an open source, community-developed ...

bitnami/mariadb 11.5.7 10.6.12 MariaDB is an open source, community-developed ...

bitnami/mariadb 11.5.6 10.6.12 MariaDB is an open source, community-developed ...

bitnami/mariadb 11.5.5 10.6.12 MariaDB is an open source, community-developed ...

bitnami/mariadb 11.5.4 10.6.12 MariaDB is an open source, community-developed ...

bitnami/mariadb 11.5.3 10.6.12 MariaDB is an open source, community-developed ...

bitnami/mariadb 11.5.2 10.6.12 MariaDB is an open source, community-developed ...

bitnami/mariadb 11.5.1 10.6.12 MariaDB is an open source, community-developed ...

bitnami/mariadb 11.5.0 10.6.12 MariaDB is an open source, community-developed ...

bitnami/mariadb 11.4.7 10.6.12 MariaDB is an open source, community-developed ...

bitnami/mariadb 11.4.6 10.6.12 MariaDB is an open source, community-developed ...

bitnami/mariadb 11.4.5 10.6.11 MariaDB is an open source, community-developed ...

bitnami/mariadb 11.4.4 10.6.11 MariaDB is an open source, community-developed ...

bitnami/mariadb 11.4.3 10.6.11 MariaDB is an open source, community-developed ...

bitnami/mariadb 11.4.2 10.6.11 MariaDB is an open source, community-developed ...

bitnami/mariadb 11.4.1 10.6.11 MariaDB is an open source, community-developed ...

bitnami/mariadb 11.4.0 10.6.11 MariaDB is an open source, community-developed ...

bitnami/mariadb 11.3.5 10.6.11 MariaDB is an open source, community-developed ...

bitnami/mariadb 11.3.4 10.6.10 MariaDB is an open source, community-developed ...

bitnami/mariadb 11.3.3 10.6.10 MariaDB is an open source, community-developed ...

bitnami/mariadb 11.3.2 10.6.10 MariaDB is an open source, community-developed ...

bitnami/mariadb 11.3.1 10.6.10 MariaDB is an open source, community-developed ...

bitnami/mariadb 11.3.0 10.6.9 MariaDB is an open source, community-developed ...

bitnami/mariadb 11.2.2 10.6.9 MariaDB is an open source, community-developed ...

bitnami/mariadb 11.2.1 10.6.9 MariaDB is an open source, community-developed ...

bitnami/mariadb 11.2.0 10.6.9 MariaDB is an open source, community-developed ...

bitnami/mariadb 11.1.8 10.6.9 MariaDB is an open source, community-developed ...

bitnami/mariadb 11.1.7 10.6.8 MariaDB is an open source, community-developed ...

bitnami/mariadb 11.1.6 10.6.8 MariaDB is an open source, community-developed ...

bitnami/mariadb 11.1.5 10.6.8 MariaDB is an open source, community-developed ...

bitnami/mariadb 11.1.4 10.6.8 MariaDB is an open source, community-developed ...

bitnami/mariadb 11.1.3 10.6.8 MariaDB is an open source, community-developed ...

bitnami/mariadb 11.1.2 10.6.8 MariaDB is an open source, community-developed ...

bitnami/mariadb 11.1.1 10.6.8 MariaDB is an open source, community-developed ...

bitnami/mariadb 11.1.0 10.6.8 MariaDB is an open source, community-developed ...

bitnami/mariadb 11.0.14 10.6.8 MariaDB is an open source, community-developed ...

bitnami/mariadb 11.0.13 10.6.8 MariaDB is an open source, community-developed ...

bitnami/mariadb 11.0.12 10.6.8 MariaDB is an open source, community-developed ...

bitnami/mariadb 11.0.11 10.6.8 MariaDB is an open source, community-developed ...

bitnami/mariadb 11.0.10 10.6.8 MariaDB is an open source, community-developed ...

bitnami/mariadb-galera 11.0.2 11.2.2 MariaDB Galera is a multi-primary database clus...

bitnami/mariadb-galera 11.0.1 11.2.2 MariaDB Galera is a multi-primary database clus...

bitnami/mariadb-galera 11.0.0 11.2.2 MariaDB Galera is a multi-primary database clus...

bitnami/mariadb-galera 10.1.3 11.1.3 MariaDB Galera is a multi-primary database clus...

bitnami/mariadb-galera 10.1.2 11.1.3 MariaDB Galera is a multi-primary database clus...

bitnami/mariadb-galera 10.1.1 11.1.2 MariaDB Galera is a multi-primary database clus...

bitnami/mariadb-galera 10.1.0 11.1.2 MariaDB Galera is a multi-primary database clus...

bitnami/mariadb-galera 10.0.3 11.1.2 MariaDB Galera is a multi-primary database clus...

bitnami/mariadb-galera 10.0.2 11.1.2 MariaDB Galera is a multi-primary database clus...

bitnami/mariadb-galera 10.0.1 11.1.2 MariaDB Galera is a multi-primary database clus...

bitnami/mariadb-galera 10.0.0 11.1.2 MariaDB Galera is a multi-primary database clus...

bitnami/mariadb-galera 9.2.1 11.0.3 MariaDB Galera is a multi-primary database clus...

bitnami/mariadb-galera 9.2.0 11.0.3 MariaDB Galera is a multi-primary database clus...

bitnami/mariadb-galera 9.1.3 11.0.3 MariaDB Galera is a multi-primary database clus...

bitnami/mariadb-galera 9.1.2 11.0.3 MariaDB Galera is a multi-primary database clus...

bitnami/mariadb-galera 9.1.1 11.0.3 MariaDB Galera is a multi-primary database clus...

bitnami/mariadb-galera 9.1.0 11.0.3 MariaDB Galera is a multi-primary database clus...

bitnami/mariadb-galera 9.0.5 11.0.3 MariaDB Galera is a multi-primary database clus...

bitnami/mariadb-galera 9.0.4 11.0.3 MariaDB Galera is a multi-primary database clus...

bitnami/mariadb-galera 9.0.3 11.0.3 MariaDB Galera is a multi-primary database clus...

bitnami/mariadb-galera 9.0.2 11.0.3 MariaDB Galera is a multi-primary database clus...

bitnami/mariadb-galera 9.0.1 11.0.2 MariaDB Galera is a multi-primary database clus...

bitnami/mariadb-galera 9.0.0 11.0.2 MariaDB Galera is a multi-primary database clus...

bitnami/mariadb-galera 8.2.9 10.11.4 MariaDB Galera is a multi-primary database clus...

bitnami/mariadb-galera 8.2.8 10.11.4 MariaDB Galera is a multi-primary database clus...

bitnami/mariadb-galera 8.2.7 10.11.4 MariaDB Galera is a multi-primary database clus...

bitnami/mariadb-galera 8.2.6 10.11.4 MariaDB Galera is a multi-primary database clus...

bitnami/mariadb-galera 8.2.5 10.11.4 MariaDB Galera is a multi-primary database clus...

bitnami/mariadb-galera 8.2.4 10.11.3 MariaDB Galera is a multi-primary database clus...

bitnami/mariadb-galera 8.2.3 10.11.3 MariaDB Galera is a multi-primary database clus...

bitnami/mariadb-galera 8.2.2 10.11.3 MariaDB Galera is a multi-primary database clus...

bitnami/mariadb-galera 8.2.1 10.11.3 MariaDB Galera is a multi-primary database clus...

bitnami/mariadb-galera 8.1.2 10.11.2 MariaDB Galera is a multi-primary database clus...

bitnami/mariadb-galera 8.1.1 10.11.2 MariaDB Galera is a multi-primary database clus...

bitnami/mariadb-galera 8.0.1 10.11.2 MariaDB Galera is a multi-primary database clus...

bitnami/mariadb-galera 8.0.0 10.11.2 MariaDB Galera is a multi-primary database clus...

bitnami/mariadb-galera 7.5.5 10.6.12 MariaDB Galera is a multi-primary database clus...

bitnami/mariadb-galera 7.5.4 10.6.12 MariaDB Galera is a multi-primary database clus...

bitnami/mariadb-galera 7.5.3 10.6.12 MariaDB Galera is a multi-primary database clus...

bitnami/mariadb-galera 7.5.2 10.6.12 MariaDB Galera is a multi-primary database clus...

bitnami/mariadb-galera 7.5.1 10.6.12 MariaDB Galera is a multi-primary database clus...

bitnami/mariadb-galera 7.5.0 10.6.12 MariaDB Galera is a multi-primary database clus...

bitnami/mariadb-galera 7.4.15 10.6.12 MariaDB Galera is a multi-primary database clus...

bitnami/mariadb-galera 7.4.14 10.6.12 MariaDB Galera is a multi-primary database clus...

bitnami/mariadb-galera 7.4.13 10.6.11 MariaDB Galera is a multi-primary database clus...

bitnami/mariadb-galera 7.4.12 10.6.11 MariaDB Galera is a multi-primary database clus...

bitnami/mariadb-galera 7.4.11 10.6.11 MariaDB Galera is a multi-primary database clus...

bitnami/mariadb-galera 7.4.10 10.6.11 MariaDB Galera is a multi-primary database clus...

bitnami/mariadb-galera 7.4.9 10.6.11 MariaDB Galera is a multi-primary database clus...

bitnami/mariadb-galera 7.4.8 10.6.11 MariaDB Galera is a multi-primary database clus...

bitnami/mariadb-galera 7.4.7 10.6.10 MariaDB Galera is a multi-primary database clus...

bitnami/mariadb-galera 7.4.6 10.6.10 MariaDB Galera is a multi-primary database clus...

bitnami/mariadb-galera 7.4.5 10.6.10 MariaDB Galera is a multi-primary database clus...

bitnami/mariadb-galera 7.4.4 10.6.10 MariaDB Galera is a multi-primary database clus...

bitnami/mariadb-galera 7.4.3 10.6.10 MariaDB Galera is a multi-primary database clus...

bitnami/mariadb-galera 7.4.2 10.6.9 MariaDB Galera is a multi-primary database clus...

bitnami/mariadb-galera 7.4.1 10.6.9 MariaDB Galera is a multi-primary database clus...

bitnami/mariadb-galera 7.4.0 10.6.9 MariaDB Galera is a multi-primary database clus...

bitnami/mariadb-galera 7.3.13 10.6.9 MariaDB Galera is a multi-primary database clus...

bitnami/mariadb-galera 7.3.12 10.6.9 MariaDB Galera is a multi-primary database clus...

bitnami/mariadb-galera 7.3.11 10.6.8 MariaDB Galera is a multi-primary database clus...

bitnami/mariadb-galera 7.3.10 10.6.8 MariaDB Galera is a multi-primary database clus...

bitnami/mariadb-galera 7.3.9 10.6.8 MariaDB Galera is a multi-primary database clus...

bitnami/mariadb-galera 7.3.8 10.6.8 MariaDB Galera is a multi-primary database clus...

bitnami/mariadb-galera 7.3.7 10.6.8 MariaDB Galera is a multi-primary database clus...

bitnami/mariadb-galera 7.3.6 10.6.8 MariaDB Galera is a multi-primary database clus...

bitnami/mariadb-galera 7.3.5 10.6.8 MariaDB Galera is a multi-primary database clus...

bitnami/mariadb-galera 7.3.4 10.6.8 MariaDB Galera is a multi-primary database clus...

bitnami/mariadb-galera 7.3.2 10.6.8 MariaDB Galera is a multi-primary database clus...
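Scanning that list by eye works, but the same answers can be pulled out of the `helm search repo bitnami/mariadb --versions` output with a little `awk`. This is a sketch rather than part of the original chart work: the function names are hypothetical, and both rely on helm listing chart versions newest-first, as it does above.

```shell
# Hypothetical helpers: read `helm search repo bitnami/mariadb --versions` on stdin.
# Both assume helm lists chart versions newest-first.

# Print the newest mariadb chart whose APP VERSION starts with $1.
# Pass a trailing dot (e.g. "10.6.") so "10.6" does not also match "10.60".
# Returns non-zero when nothing matches.
latest_chart_for_app() {
  awk -v prefix="$1" '
    $1 == "bitnami/mariadb" && index($3, prefix) == 1 { print $2; found = 1; exit }
    END { exit !found }
  '
}

# Print the oldest mariadb chart version still in the repo index
# (mariadb-galera rows are skipped because $1 must match exactly).
oldest_chart() {
  awk '$1 == "bitnami/mariadb" { v = $2 } END { if (v == "") exit 1; print v }'
}
```

Piping the list above into `latest_chart_for_app 10.6.` should print 11.5.7, and `oldest_chart` should print 11.0.10, the oldest chart still published.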

Once I updated the dependency version to 11.0.10, the update went through:

$ helm dependency...

...snip...

Update Complete. ⎈Happy Helming!⎈

Saving 1 charts

Downloading mariadb from repo https://charts.bitnami.com/bitnami

Deleting outdated charts

I then installed:

builder@DESKTOP-QADGF36:~/Workspaces/opendocman/helm$ helm install opendocman -n opendocman --create-namespace ./opendocman

NAME: opendocman

LAST DEPLOYED: Sat Jan 13 10:55:56 2024

NAMESPACE: opendocman

STATUS: deployed

REVISION: 1

NOTES:

1. Get the application URL by running these commands:

export POD_NAME=$(kubectl get pods --namespace opendocman -l "app.kubernetes.io/name=opendocman,app.kubernetes.io/instance=opendocman" -o jsonpath="{.items[0].metadata.name}")

export CONTAINER_PORT=$(kubectl get pod --namespace opendocman $POD_NAME -o jsonpath="{.spec.containers[0].ports[0].containerPort}")

echo "Visit http://127.0.0.1:8080 to use your application"

kubectl --namespace opendocman port-forward $POD_NAME 8080:$CONTAINER_PORT

I could see the pods come up, as well as the PVCs:

builder@DESKTOP-QADGF36:~/Workspaces/opendocman/helm$ kubectl get pods -n opendocman

NAME READY STATUS RESTARTS AGE

opendocman-7fb7dd8874-rhrgh 1/1 Running 0 88s

opendocman-mariadb-0 1/1 Running 0 88s

builder@DESKTOP-QADGF36:~/Workspaces/opendocman/helm$ kubectl get pvc -n opendocman

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

opendocman-appconfig Bound pvc-befd6f61-8fdc-4678-93c1-0fd7b7408454 1Gi RWO local-path 93s

opendocman-appdata Bound pvc-d9e99e02-65a3-47ec-8252-2e5734da6da8 5Gi RWO local-path 93s

data-opendocman-mariadb-0 Bound pvc-308c6263-a291-4052-a45c-7da3729e5c7c 8Gi RWO local-path 93s
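Rather than re-running `kubectl get pods` until everything shows Running, a small helper could block until the release's pods report Ready and only then start the port-forward. A sketch with a hypothetical function name; it assumes pods carry the `app.kubernetes.io/instance=opendocman` label from the chart NOTES selector. Bitnami's MariaDB subchart sets the same instance label, so `opendocman-mariadb-0` is covered too.

```shell
# Hypothetical helper: wait for every pod in the release, then forward the service.
forward_opendocman() {
  ns=opendocman
  # Selects both the app pod and opendocman-mariadb-0 via the shared instance label
  kubectl wait --for=condition=Ready pod \
    -l app.kubernetes.io/instance=opendocman \
    -n "$ns" --timeout=180s || return 1
  # local 8080 -> service port 80
  kubectl port-forward -n "$ns" svc/opendocman 8080:80
}
```

If the wait times out, the function returns non-zero without starting the forward, which makes a stuck pod obvious.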

I can now port-forward and run through the same setup again:

builder@DESKTOP-QADGF36:~/Workspaces/opendocman/helm$ kubectl get svc -n opendocman

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

opendocman-mariadb ClusterIP 10.43.87.85 <none> 3306/TCP 114s

opendocman ClusterIP 10.43.171.23 <none> 80/TCP 114s

builder@DESKTOP-QADGF36:~/Workspaces/opendocman/helm$ kubectl port-forward -n opendocman svc/opendocman 8080:80

Forwarding from 127.0.0.1:8080 -> 80

Forwarding from [::1]:8080 -> 80

Handling connection for 8080

Handling connection for 8080

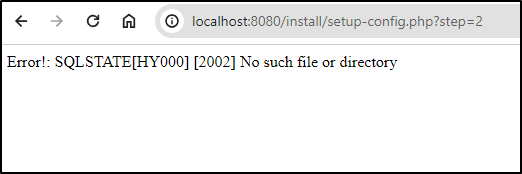

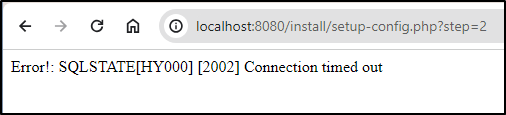

This time, though, I saw an error.

I tried the service name, but it timed out.

Hopping onto the container, I could see MariaDB was not letting us in, whether I used the service name, the service IP, or the pod IP:

builder@DESKTOP-QADGF36:~$ kubectl exec -it opendocman-7fb7dd8874-rhrgh -n opendocman -- /bin/bash

root@opendocman-7fb7dd8874-rhrgh:/var/www/html# which mysql

/usr/bin/mysql

root@opendocman-7fb7dd8874-rhrgh:/var/www/html# mysql --host=opendocman-mariadb --user=opendocman --password=opendocman opendocman

ERROR 1045 (28000): Access denied for user 'opendocman'@'10.42.3.138' (using password: YES)

root@opendocman-7fb7dd8874-rhrgh:/var/www/html# mysql --host=10.43.87.85 --user=opendocman --password=opendocman opendocman

ERROR 1045 (28000): Access denied for user 'opendocman'@'10.42.3.138' (using password: YES)

root@opendocman-7fb7dd8874-rhrgh:/var/www/html# mysql --host=10.42.3.140 --user=opendocman --password=opendocman opendocman

ERROR 1045 (28000): Access denied for user 'opendocman'@'10.42.3.138' (using password: YES)

root@opendocman-7fb7dd8874-rhrgh:/var/www/html# mysql --host=10.42.3.140 --user=opendocman --password=opendocman opendocman --port=3306

ERROR 1045 (28000): Access denied for user 'opendocman'@'10.42.3.138' (using password: YES)

root@opendocman-7fb7dd8874-rhrgh:/var/www/html# mysql --host=10.43.87.85 --user=opendocman --password=opendocman opendocman --port=3306

ERROR 1045 (28000): Access denied for user 'opendocman'@'10.42.3.138' (using password: YES)
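When a Bitnami MariaDB install rejects a password like this, it helps to check what the subchart actually stored. A sketch assuming the Bitnami defaults: a secret named `<release>-mariadb` (here `opendocman-mariadb`) holding `mariadb-password` and `mariadb-root-password` keys. The function name is hypothetical.

```shell
# Hypothetical helper: print a credential the Bitnami MariaDB subchart stored.
# Assumption: secret "opendocman-mariadb" with keys "mariadb-password"
# (for the auth.username user) and "mariadb-root-password".
mariadb_secret() {
  key="${1:-mariadb-password}"
  kubectl get secret opendocman-mariadb -n opendocman \
    -o jsonpath="{.data.${key}}" | base64 -d
}
```

If `mariadb_secret` (or `mariadb_secret mariadb-root-password`) prints something other than the password given to the installer, or prints nothing, the `auth` values most likely never reached the subchart.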

I fought for a while to get the dependency chart to work. My Chart.yaml looked like this:

```yaml
apiVersion: v2
name: opendocman
description: A Helm chart for Kubernetes
type: application
version: 0.1.0
appVersion: "1.16.0"
dependencies:
- name: mariadb
  version: 11.0.14
  repository: https://charts.bitnami.com/bitnami
  tags:
  - mysql
  - database
  - mariadb
  - bitnami
  values:
    mariadb:
      allowRootFromRemote: true
      auth:
        rootPassword: opendocman
        user: opendocman
        password: opendocman
        database: opendocman
```

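I had to do two things. First, I moved the values out of Chart.yaml (Helm does not read a `values` key there) and into my main values.yaml, using `username` rather than `user`, which is the key the Bitnami chart expects: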

```yaml
mariadb:
  allowRootFromRemote: true
  auth:
    rootPassword: opendocman
    username: opendocman
    password: opendocman
    database: opendocman
```

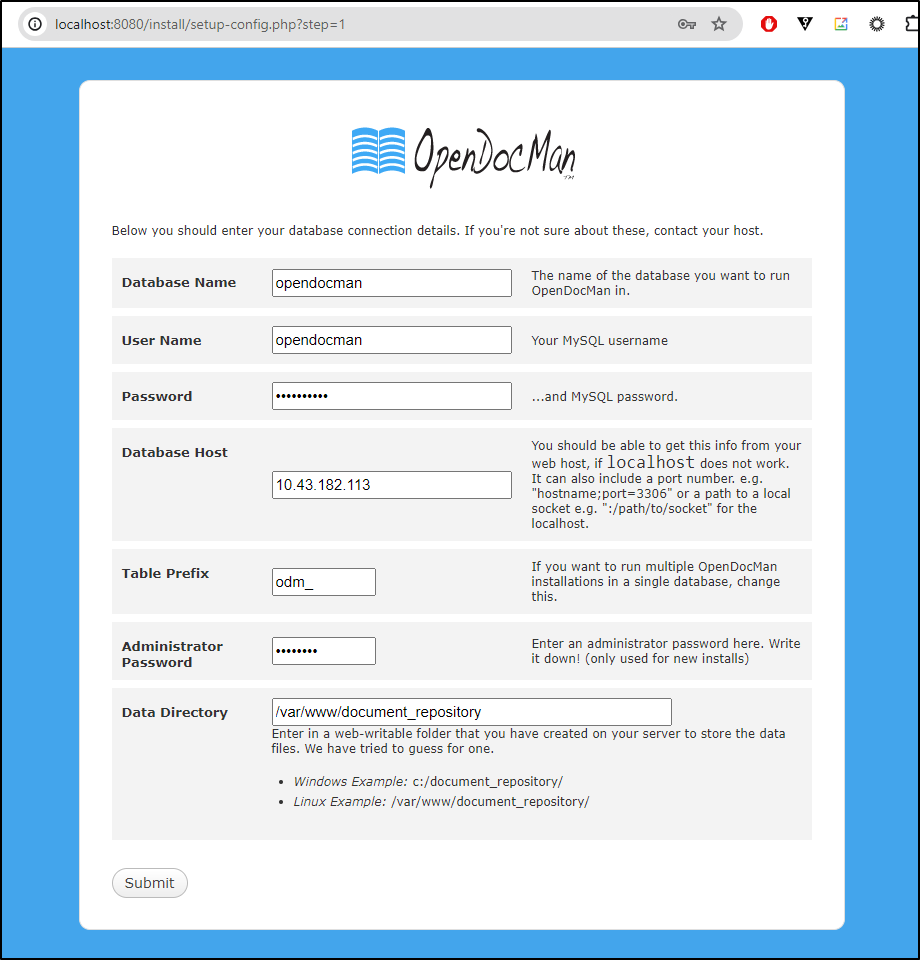

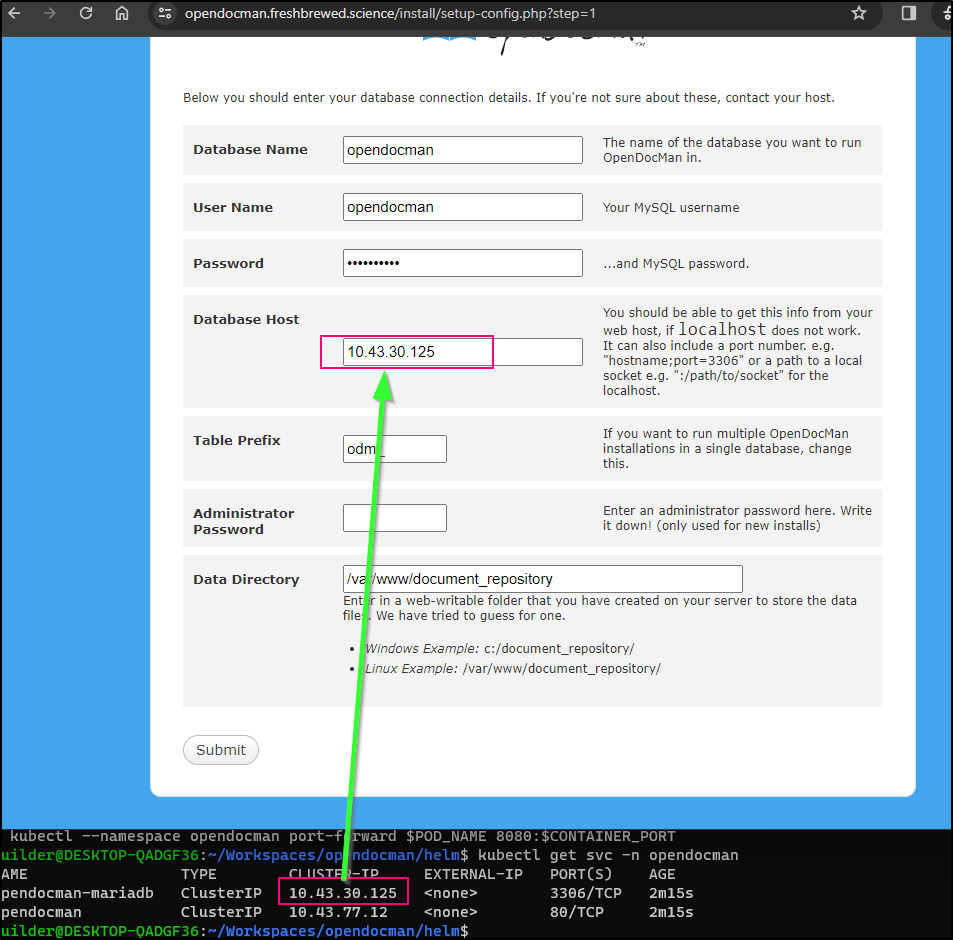
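Helm passes a subchart only the values nested under its name (plus any `global` values), which is why the settings have to live under `mariadb:` in the parent's values.yaml. The same keys can also be supplied at install time; a sketch with a hypothetical `install_opendocman` wrapper:

```shell
# Hypothetical wrapper: install the chart and hand MariaDB credentials to the
# "mariadb" subchart through the parent's "mariadb." value prefix.
install_opendocman() {
  if [ -z "$1" ]; then
    echo "usage: install_opendocman DB_PASSWORD" >&2
    return 1
  fi
  # --set-string keeps an all-digit password from being parsed as a number
  helm install opendocman -n opendocman --create-namespace \
    --set mariadb.auth.username=opendocman \
    --set mariadb.auth.database=opendocman \
    --set-string mariadb.auth.password="$1" \
    --set-string mariadb.auth.rootPassword="$1" \
    ./opendocman
}
```

Note that `--set` and `--set-string` treat commas as separators, so a password containing commas would need escaping; keeping it in values.yaml avoids that.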

Also, the hostname that ultimately worked was the ClusterIP of the MariaDB service. While the installer suggested the form `hostname;port=3306`, that didn’t seem to work for me.

$ helm install opendocman -n opendocman --create-namespace ./opendocman

NAME: opendocman

LAST DEPLOYED: Sat Jan 13 12:07:44 2024

NAMESPACE: opendocman

STATUS: deployed

REVISION: 1

NOTES:

1. Get the application URL by running these commands:

export POD_NAME=$(kubectl get pods --namespace opendocman -l "app.kubernetes.io/name=opendocman,app.kubernetes.io/instance=opendocman" -o jsonpath="{.items[0].metadata.name}")

export CONTAINER_PORT=$(kubectl get pod --namespace opendocman $POD_NAME -o jsonpath="{.spec.containers[0].ports[0].containerPort}")

echo "Visit http://127.0.0.1:8080 to use your application"

kubectl --namespace opendocman port-forward $POD_NAME 8080:$CONTAINER_PORT

$ kubectl get svc -n opendocman

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

opendocman-mariadb ClusterIP 10.43.182.113 <none> 3306/TCP 70s

opendocman ClusterIP 10.43.1.82 <none> 80/TCP 70s
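The MariaDB ClusterIP in that listing (10.43.182.113) differs from the first install's 10.43.87.85, because a new one is assigned whenever the service is recreated. Rather than copying it by hand, a sketch like the following could look it up and test a login from inside the app pod, just like the manual `mysql` attempts earlier. The `check_db_from_app` name is hypothetical, and it assumes the Deployment is named `opendocman`, as the pod names suggest.

```shell
# Hypothetical helper: find the MariaDB ClusterIP, then try a login from the
# OpenDocMan pod (its image has the mysql client, per `which mysql` earlier).
# $1 is the database password.
check_db_from_app() {
  ns=opendocman
  ip="$(kubectl get svc opendocman-mariadb -n "$ns" -o jsonpath='{.spec.clusterIP}')" || return 1
  [ -n "$ip" ] || return 1
  echo "MariaDB ClusterIP: $ip"
  kubectl exec -n "$ns" deploy/opendocman -- \
    mysql --host="$ip" --user=opendocman --password="$1" -e 'SELECT 1' opendocman
}
```

The printed IP is the value to paste into the installer's database host field.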

$ kubectl port-forward -n opendocman svc/opendocman 8080:80

Forwarding from 127.0.0.1:8080 -> 80

Forwarding from [::1]:8080 -> 80

Handling connection for 8080

Handling connection for 8080

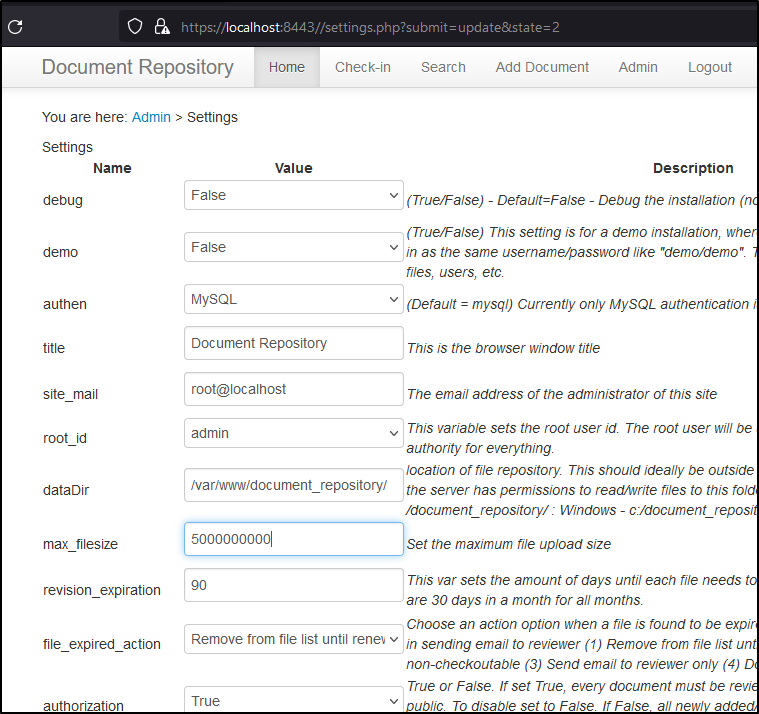

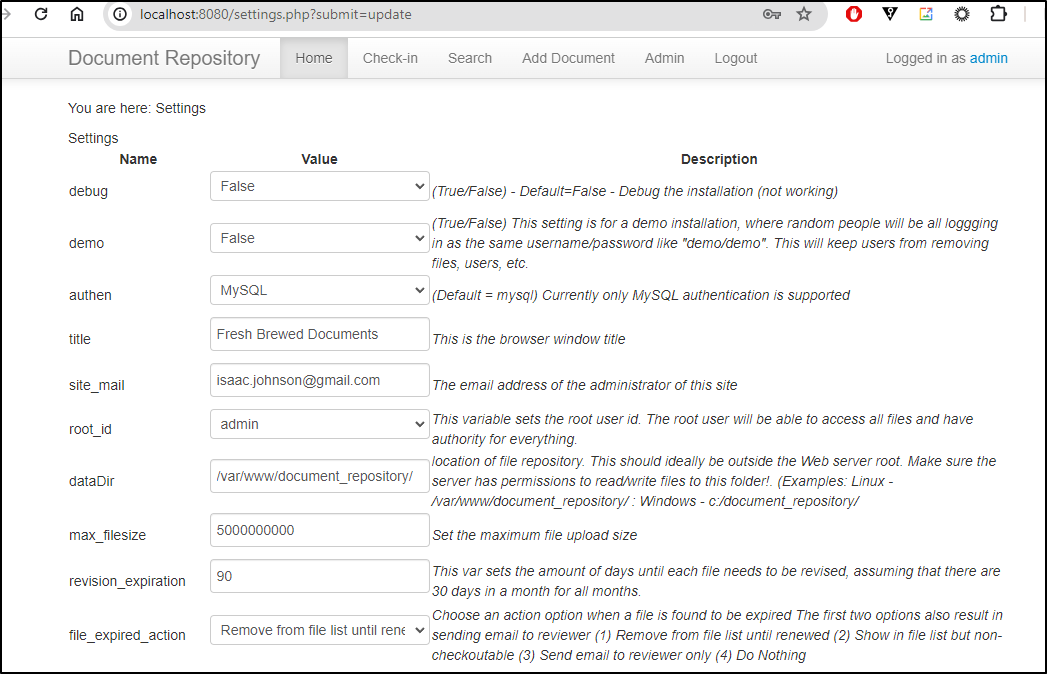

Once the configuration was done, I was prompted to edit any further settings. I tweaked the name and upped the max_filesize.

We can now see our title

Next, I’ll add an Ingress, starting with a Route53 A record:

$ cat r53-opendocman.json

```json
{
  "Comment": "CREATE opendocman fb.s A record ",
  "Changes": [
    {
      "Action": "CREATE",
      "ResourceRecordSet": {
        "Name": "opendocman.freshbrewed.science",
        "Type": "A",
        "TTL": 300,
        "ResourceRecords": [
          {
            "Value": "75.73.224.240"
          }
        ]
      }
    }
  ]
}
```

$ aws route53 change-resource-record-sets --hosted-zone-id Z39E8QFU0F9PZP --change-batch file://r53-opendocman.json

```json
{
  "ChangeInfo": {
    "Id": "/change/C06215622Y0749TQNY0UG",
    "Status": "PENDING",
    "SubmittedAt": "2024-01-13T18:16:16.977Z",
    "Comment": "CREATE opendocman fb.s A record "
  }
}
```
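Since the change batch is the same for every app apart from the name and IP, it can be generated. A sketch with a hypothetical `r53_a_record` function; it swaps `CREATE` for `UPSERT`, a standard Route53 action that creates the record or overwrites an existing one, so re-running it does not fail when the record already exists.

```shell
# Hypothetical helper: print a Route53 change batch for one A record.
# Usage: r53_a_record NAME IP [TTL]   (TTL defaults to 300)
r53_a_record() {
  name="$1"; ip="$2"; ttl="${3:-300}"
  cat <<EOF
{
  "Comment": "UPSERT $name A record",
  "Changes": [
    {
      "Action": "UPSERT",
      "ResourceRecordSet": {
        "Name": "$name",
        "Type": "A",
        "TTL": $ttl,
        "ResourceRecords": [ { "Value": "$ip" } ]
      }
    }
  ]
}
EOF
}
```

For example, `r53_a_record opendocman.freshbrewed.science 75.73.224.240 > r53-opendocman.json` produces a file for the same `aws route53 change-resource-record-sets` call.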

My chart does have an ingress block we can use. However, I’ll just do it outside the chart this time.

$ cat opendocman.yaml

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    cert-manager.io/cluster-issuer: letsencrypt-prod
    ingress.kubernetes.io/proxy-body-size: "0"
    ingress.kubernetes.io/ssl-redirect: "true"
    kubernetes.io/ingress.class: nginx
    kubernetes.io/tls-acme: "true"
    nginx.ingress.kubernetes.io/proxy-body-size: "0"
    nginx.ingress.kubernetes.io/proxy-read-timeout: "3600"
    nginx.ingress.kubernetes.io/proxy-send-timeout: "3600"
    nginx.ingress.kubernetes.io/ssl-redirect: "true"
    nginx.org/client-max-body-size: "0"
    nginx.org/proxy-connect-timeout: "3600"
    nginx.org/proxy-read-timeout: "3600"
  labels:
    app.kubernetes.io/instance: opendocman
  name: opendocmaningress
  namespace: opendocman
spec:
  rules:
  - host: opendocman.freshbrewed.science
    http:
      paths:
      - backend:
          service:
            name: opendocman
            port:
              number: 80
        path: /
        pathType: ImplementationSpecific
  tls:
  - hosts:
    - opendocman.freshbrewed.science
    secretName: opendocman-tls
```

$ kubectl apply -f opendocman.yaml -n opendocman

ingress.networking.k8s.io/opendocmaningress created
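Before opening the site, one could wait for cert-manager to finish issuing the certificate and then make a verified HTTPS request. A sketch with a hypothetical function name; it assumes cert-manager's ingress-shim named the Certificate after the Ingress's `secretName` (`opendocman-tls`).

```shell
# Hypothetical check: wait for the certificate, then fetch the site over HTTPS.
check_opendocman_tls() {
  kubectl wait --for=condition=Ready certificate/opendocman-tls \
    -n opendocman --timeout=300s || return 1
  # curl verifies the TLS chain by default; -f turns HTTP errors (>= 400) into failures
  curl -sSf -o /dev/null https://opendocman.freshbrewed.science/
}
```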

We can now reach our OpenDocMan with proper TLS

Because I created the Ingress outside the chart, I can tear down and redo the release (for instance, to switch storage classes) while leaving the Ingress in place:

$ helm delete opendocman -n opendocman && kubectl delete pvc -n opendocman data-mariadb-0 & kubectl delete pvc -n opendocman data-opendocman-mariadb-0 &

[1] 31984

[2] 31985

$ kubectl get pvc -n opendocman

No resources found in opendocman namespace.
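The one-liner above backgrounds both deletes. A sequential sketch of the same teardown (hypothetical function name) only needs to remove the StatefulSet's PVC shown in the earlier listing. Helm deletes the PVCs it templated itself (appconfig and appdata), but PVCs created from a StatefulSet's `volumeClaimTemplates` are not part of the release.

```shell
# Hypothetical teardown: uninstall the release, then remove the MariaDB PVC.
teardown_opendocman() {
  ns=opendocman
  helm uninstall opendocman -n "$ns" || return 1
  # kubectl delete waits for the object to be gone by default (--wait=true),
  # and --ignore-not-found makes a second run harmless
  kubectl delete pvc data-opendocman-mariadb-0 -n "$ns" --ignore-not-found
}
```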

I’ll now reinstall with a different StorageClass. PVC modifications are one of the few things that don’t work well with `helm upgrade`, since a bound PVC’s storageClassName can’t be changed in place. In my experience they require a manual removal and replacement.

I’ll now install with my managed NFS storage class

$ helm install opendocman -n opendocman --create-namespace --set appconfig.storageClassName=managed-nfs-storage --set appdata.storageClassName=managed-nfs-storage --set appdata.size=10Gi ./opendocman

NAME: opendocman

LAST DEPLOYED: Sat Jan 13 12:42:37 2024

NAMESPACE: opendocman

STATUS: deployed

REVISION: 1

NOTES:

1. Get the application URL by running these commands:

export POD_NAME=$(kubectl get pods --namespace opendocman -l "app.kubernetes.io/name=opendocman,app.kubernetes.io/instance=opendocman" -o jsonpath="{.items[0].metadata.name}")

export CONTAINER_PORT=$(kubectl get pod --namespace opendocman $POD_NAME -o jsonpath="{.spec.containers[0].ports[0].containerPort}")

echo "Visit http://127.0.0.1:8080 to use your application"

kubectl --namespace opendocman port-forward $POD_NAME 8080:$CONTAINER_PORT

One needs to move fast here: until setup is complete, anyone who can reach the site could run the configuration and set a password.

As before, we’ll use the Cluster IP for the MariaDB service

I’ll try a large file.

It took a long time, but it did upload.

I made one last change to the Helm chart. I found some of my StorageClasses just didn’t play nicely with the PHP backend, so I added an initContainer to fix file ownership:

```yaml
initContainers:
- name: docpermfix
  image: busybox:latest
  command: ["sh","-c","chown -R 1000:1000 /var/www/html/docker-configs && chown -R 1000:1000 /var/www/document_repository"]
  resources:
    limits:
      cpu: "1"
      memory: 1Gi
  volumeMounts:
  - name: appdata
    mountPath: /var/www/document_repository
  - name: appconfig
    mountPath: /var/www/html/docker-configs
```

But that didn’t work, at least for me. I ended up creating a subfolder for uploads that I could chown instead:

```yaml
initContainers:
- name: docpermfix
  image: busybox:latest
  command: ["sh","-c","mkdir -p /var/www/document_repository/docs && chown -R 1000:1000 /var/www/document_repository/docs"]
  resources:
    limits:
      cpu: "1"
      memory: 1Gi
  volumeMounts:
  - name: appdata
    mountPath: /var/www/document_repository
```

This ensured we could use durable storage, at least for the documents:
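To confirm the initContainer’s `chown` actually stuck on the NFS-backed volume, one could check the folder’s numeric owner from the running app container. A sketch with hypothetical function names; it assumes the Deployment is named `opendocman` and that the app image has a `stat` supporting `-c`.

```shell
# Hypothetical check: print the numeric UID:GID of the docs folder in the pod.
docs_owner() {
  kubectl exec -n opendocman deploy/opendocman -- \
    stat -c '%u:%g' /var/www/document_repository/docs
}

# Succeeds when the owner matches $1 (default 1000:1000, the IDs used in the initContainer).
docs_owner_ok() {
  [ "$(docs_owner)" = "${1:-1000:1000}" ]
}
```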

$ helm install opendocman -n opendocman --create-namespace --set appconfig.storageClassName=local-path --set appdata.storageClassName=managed-nfs-storage --set appdata.size=10Gi ./opendocman

NAME: opendocman

LAST DEPLOYED: Sun Jan 14 06:54:12 2024

NAMESPACE: opendocman

STATUS: deployed

REVISION: 1

NOTES:

1. Get the application URL by running these commands:

export POD_NAME=$(kubectl get pods --namespace opendocman -l "app.kubernetes.io/name=opendocman,app.kubernetes.io/instance=opendocman" -o jsonpath="{.items[0].metadata.name}")

export CONTAINER_PORT=$(kubectl get pod --namespace opendocman $POD_NAME -o jsonpath="{.spec.containers[0].ports[0].containerPort}")

echo "Visit http://127.0.0.1:8080 to use your application"

kubectl --namespace opendocman port-forward $POD_NAME 8080:$CONTAINER_PORT

I then used the “docs” subfolder in the OpenDocMan config.

And we can see the files on the durable NAS back end here.

I put the Helm chart into a forked repo for you to use: https://github.com/idjohnson/opendocman/tree/create-helm-charts/helm. I also opened a PR against the original repo in case the owner wants it.
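Trying the chart from the fork should follow the same steps used above. A sketch with a hypothetical function name, assuming the chart sits at `helm/opendocman` on the `create-helm-charts` branch, matching the `./opendocman` path used from the `helm` folder earlier.

```shell
# Hypothetical helper: clone the fork, pull the MariaDB dependency, install.
# The body runs in a subshell so the `cd` does not change the caller's directory.
install_from_fork() (
  git clone --branch create-helm-charts https://github.com/idjohnson/opendocman.git &&
  cd opendocman/helm &&
  helm dependency update ./opendocman &&
  helm install opendocman -n opendocman --create-namespace ./opendocman
)
```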

Summary

I was initially wary of CodeX, as it was founded by Peter Savchenko (or his Twitter page, if you use that tool). I try to stay apolitical in this blog; personally, however, I avoid all things from Russia due to the invasion of Ukraine. That said, judging from their Instagram, it seems to be a global, non-political group. Their VK site suggests they still hold regular CodeX meetups in St. Petersburg.

With regard to OpenDocMan, it appears the original author has pivoted to “Logical Arts LLC” and actively develops a commercial version now called “SecureDocMan”. Their “About Us” page says: “SecureDocMan is part of Logical Arts LLC, an Internet solutions provider based out of California. Logical Arts LLC began back in 2002 as a provider of real estate solutions, specializing in listing data management. At the same time, we began developing our first document management software project, OpenDocMan, which we still actively develop and support”.

I found that both tools fill a niche. I particularly like OpenDocMan for treating files as versioned deliverables. If I had to deliver sequences for a lab again, this would be a nice hand-off. I could also see it used by companies receiving work from subcontractors, who could upload a completed “thing” for review. I do wish it had a better backend, or an easy way to dump all the files for backup. Currently, the files end up as .DAT files on the backend.