Published: Jan 18, 2024 by Isaac Johnson

I actually came across Mattermost while exploring an Open-Source deployment toolset. While the toolset didn’t work out, I found Mattermost a compelling offering. It’s a self-hosted toolset that can run in Docker, Kubernetes, Ubuntu or the Cloud (via canned Bitnami marketplace items in AWS, Azure and GCP).

We’ll explore Docker and Kubernetes then touch on “cloud” via the Bitnami stack in Azure.

Mattermost in Docker

Let’s start with the simple Docker version. I can pull and run the “preview” image on my Docker host.

builder@builder-T100:~$ docker run --name mattermost-preview -d --publish 8065:8065 mattermost/mattermost-preview

Unable to find image 'mattermost/mattermost-preview:latest' locally

latest: Pulling from mattermost/mattermost-preview

af107e978371: Already exists

161c4a1c9e47: Pull complete

068ec7aef1c0: Pull complete

84cb495413f5: Pull complete

4b9c266e38bb: Pull complete

abf3132b55e3: Pull complete

61755c2bbafa: Pull complete

dcc6b87767cd: Pull complete

844bb6ff3ef2: Pull complete

ee304895c401: Pull complete

1a107841a059: Pull complete

69b04a3aa964: Pull complete

0ea1f902e042: Pull complete

d36a0d8fbcc1: Pull complete

e33efdf41c62: Pull complete

18db8bc0c846: Pull complete

7d95738fb467: Pull complete

e4db57eba801: Pull complete

5961482dbd04: Pull complete

a1263977511e: Pull complete

98c090492600: Pull complete

57b9b92b3921: Pull complete

62fdbdd4379c: Pull complete

Digest: sha256:cc133a74724fc1e106d035ac1e6345ce1ba295faaca47612b634fba84f106ccb

Status: Downloaded newer image for mattermost/mattermost-preview:latest

c4f698e8b3778a3998d641df224f1d09a2f0c58fae8924cac148feda62d4cbe4

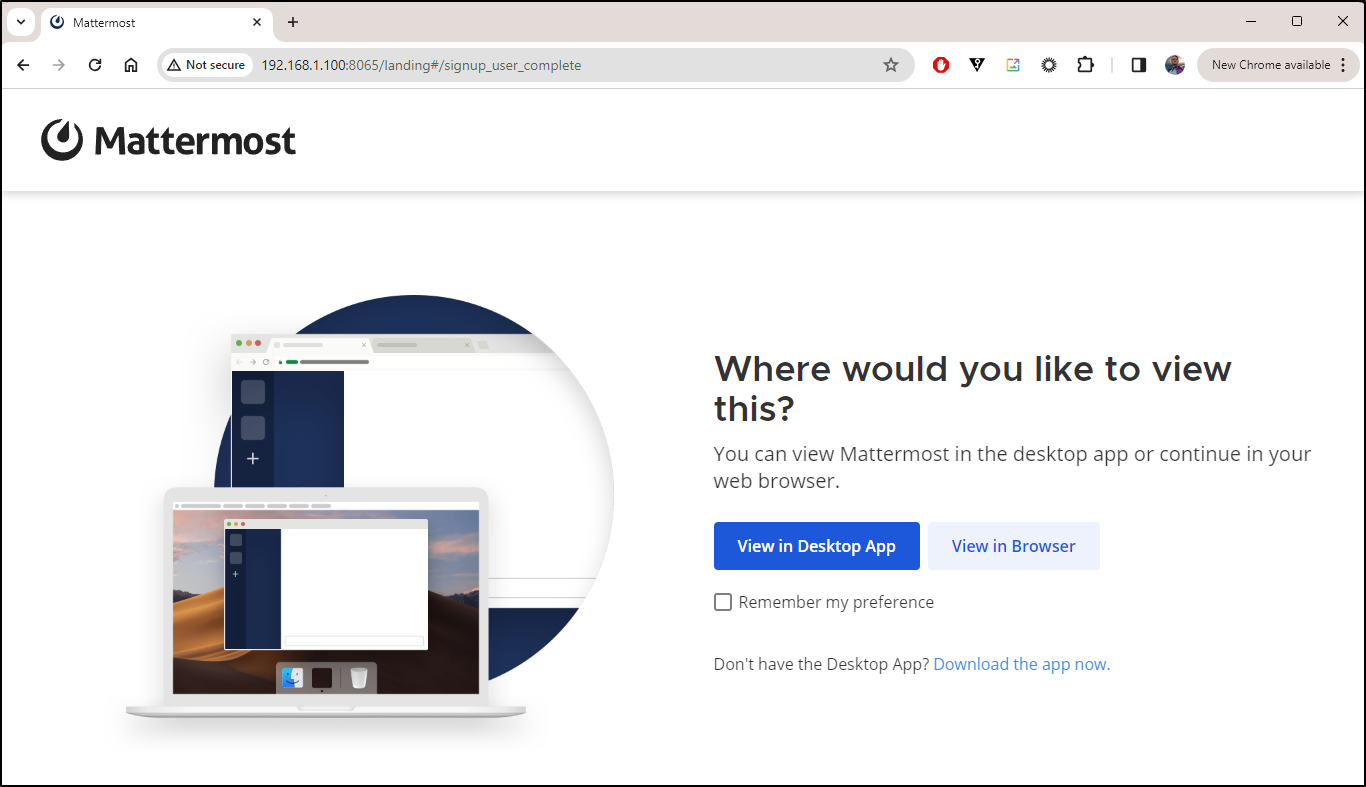

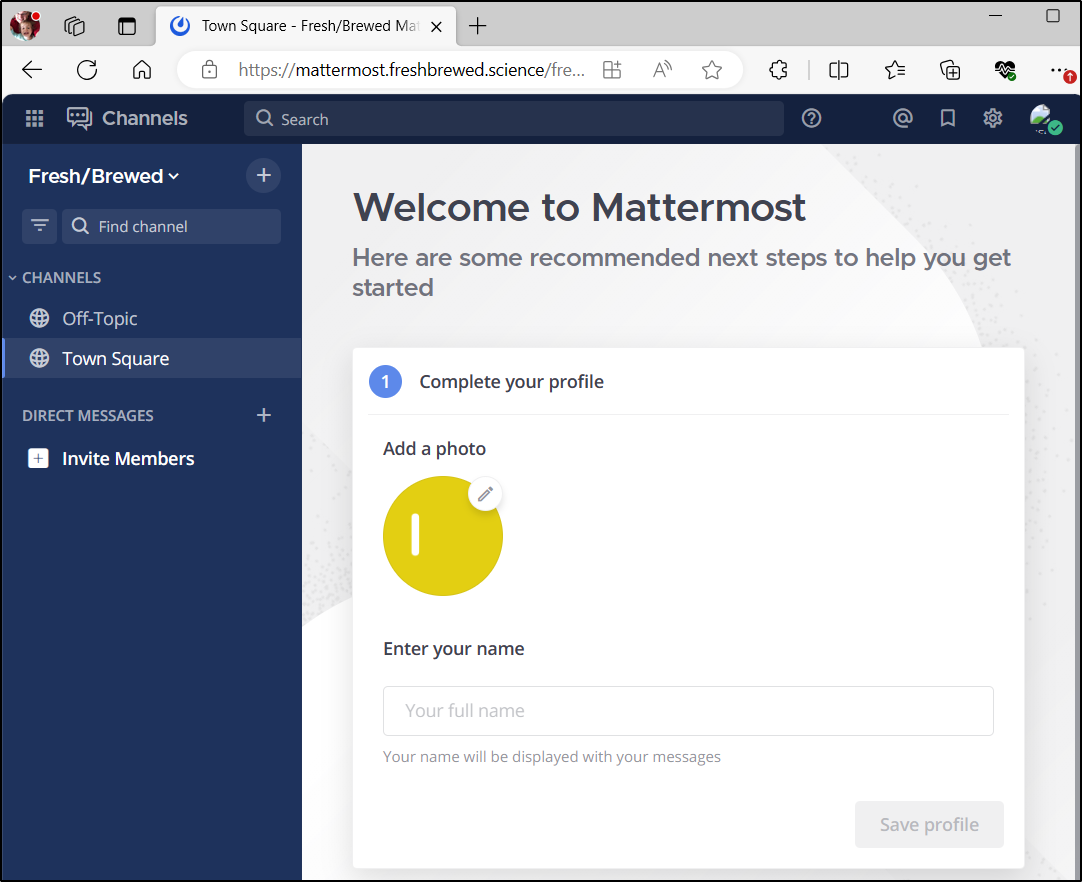

On port 8065, I can now see it show up in the browser

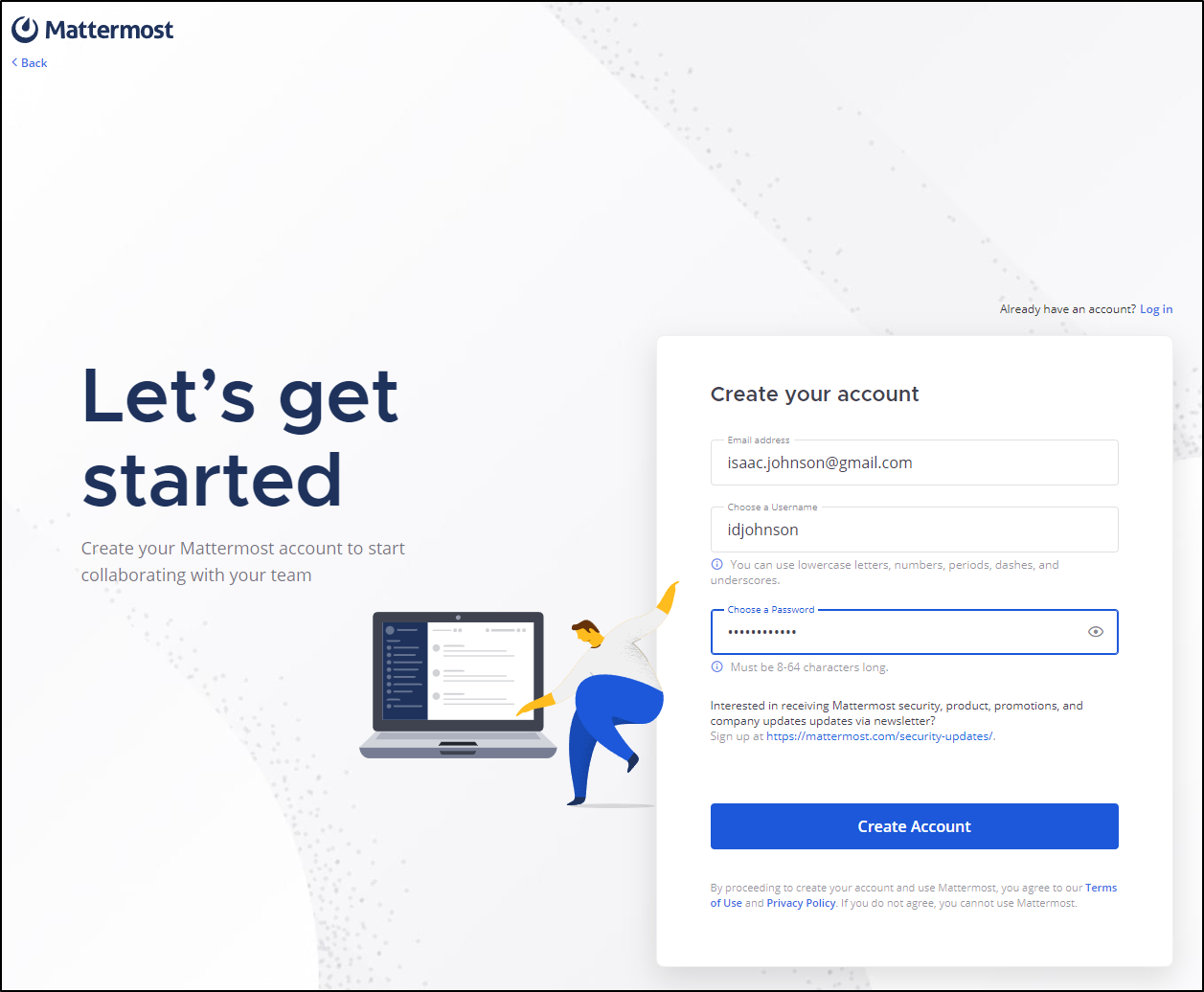

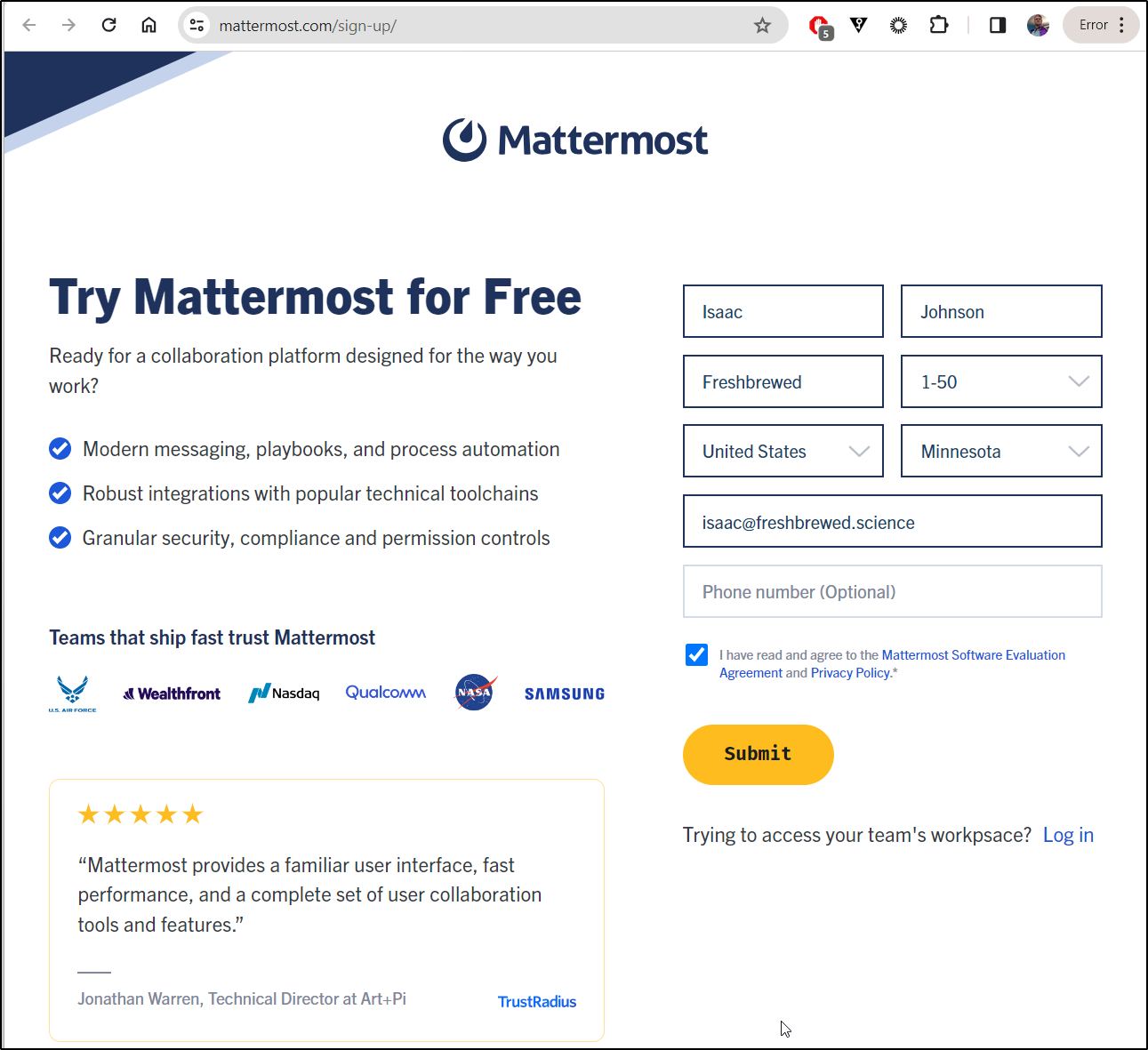

I chose to use the webapp and it wanted a user signup

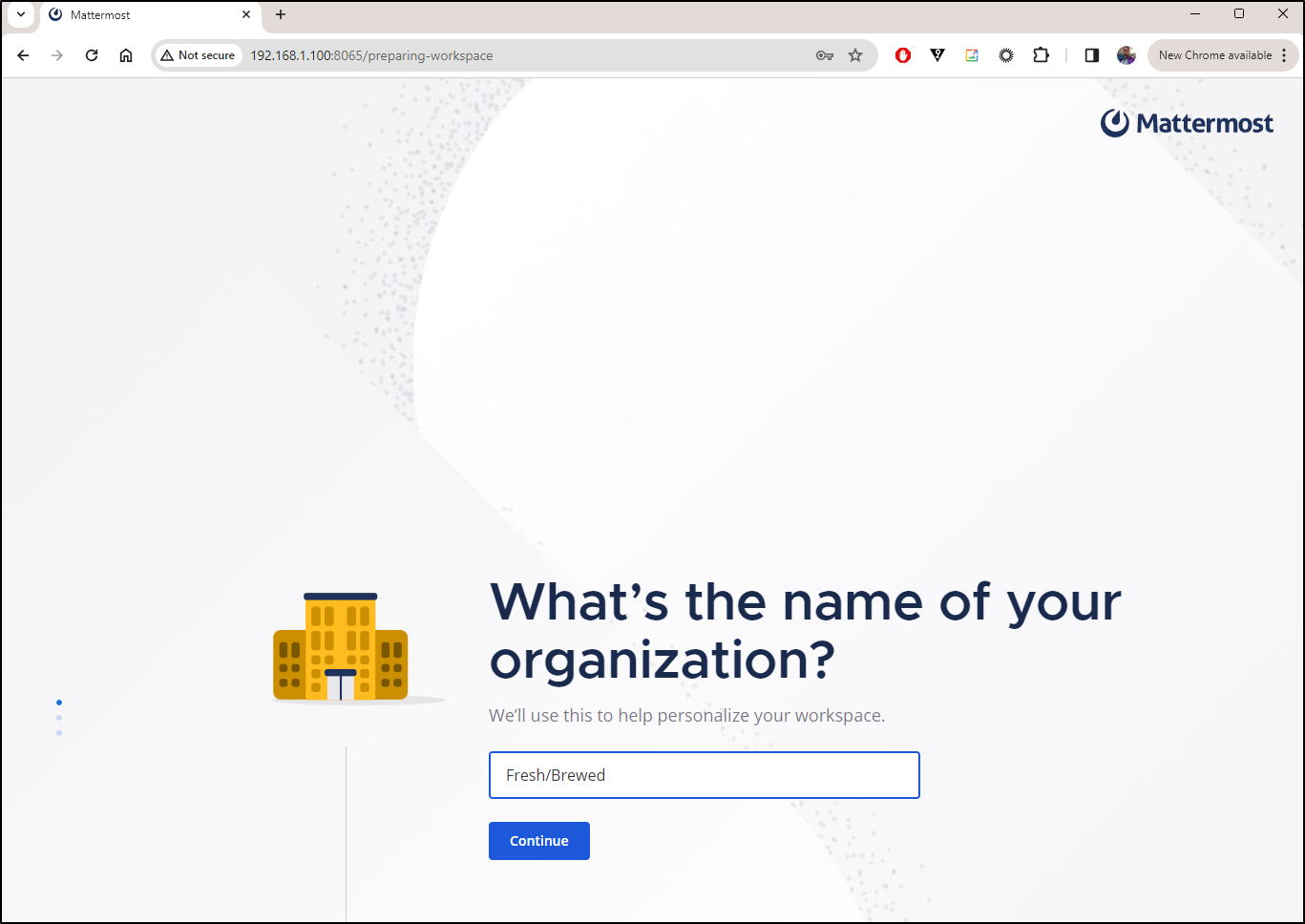

Next, I created an Organization

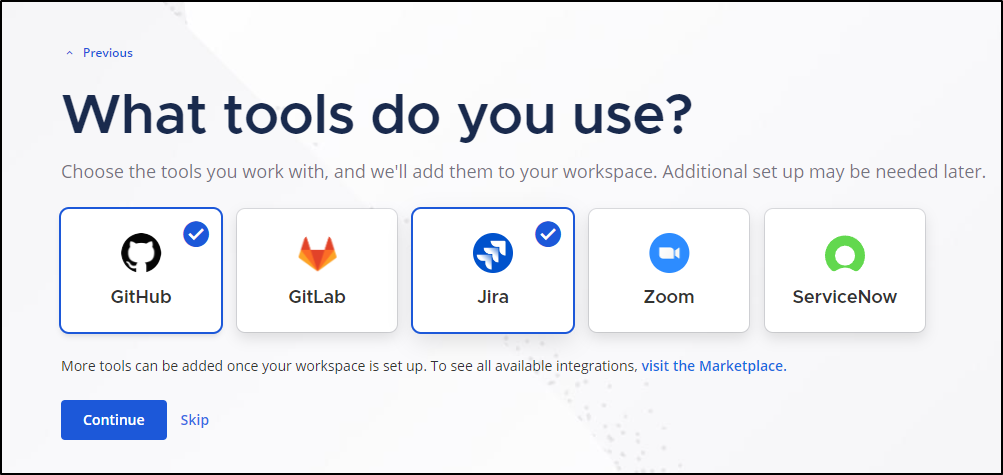

I was prompted on tools we use

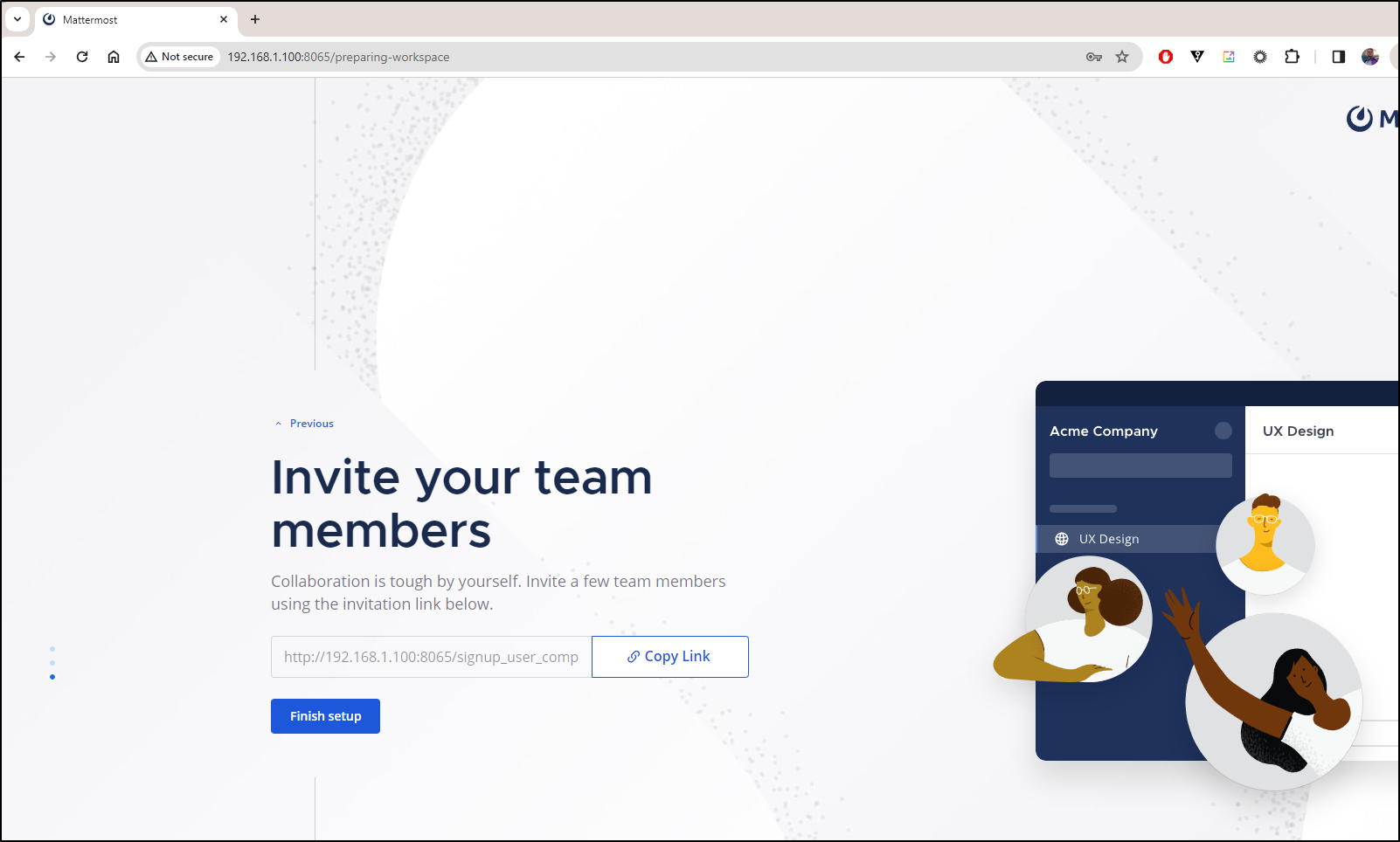

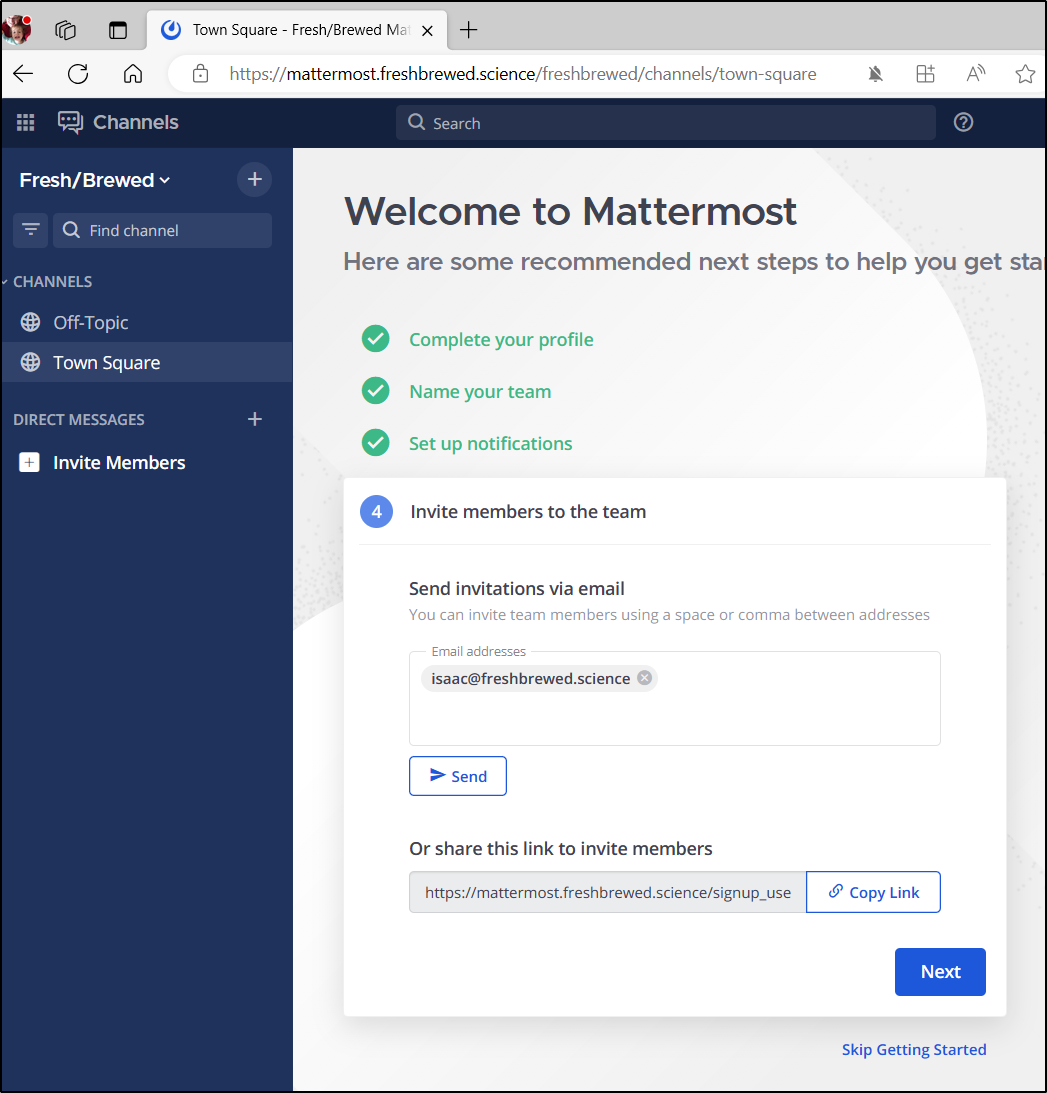

It then gave me a link others could use (I guess provided they are in my network; http://192.168.1.100:8065/signup_user_complete/?id=ccd8n8hd3t8htg7i3pagf7jr4a)

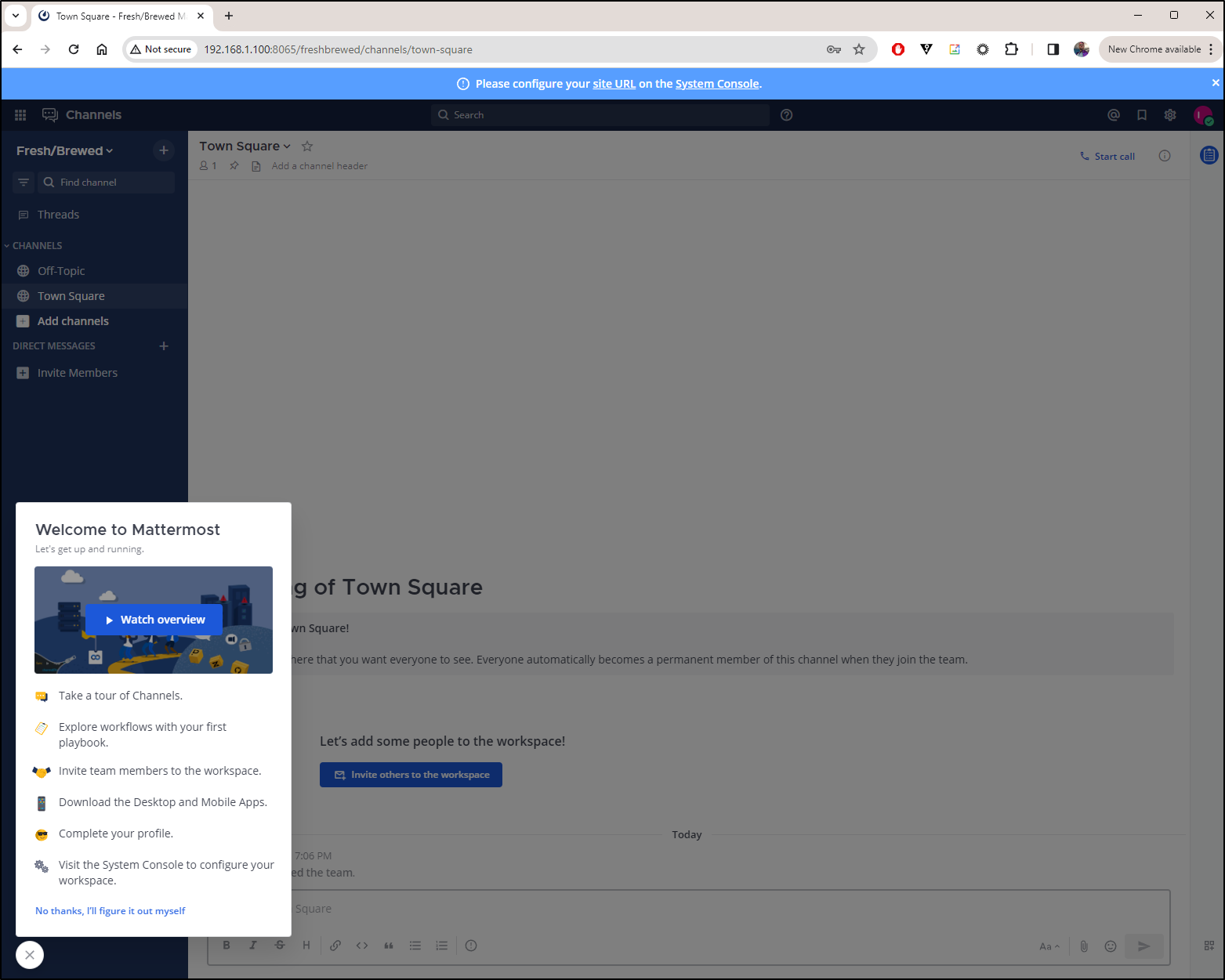

I then landed in something very Slack-like

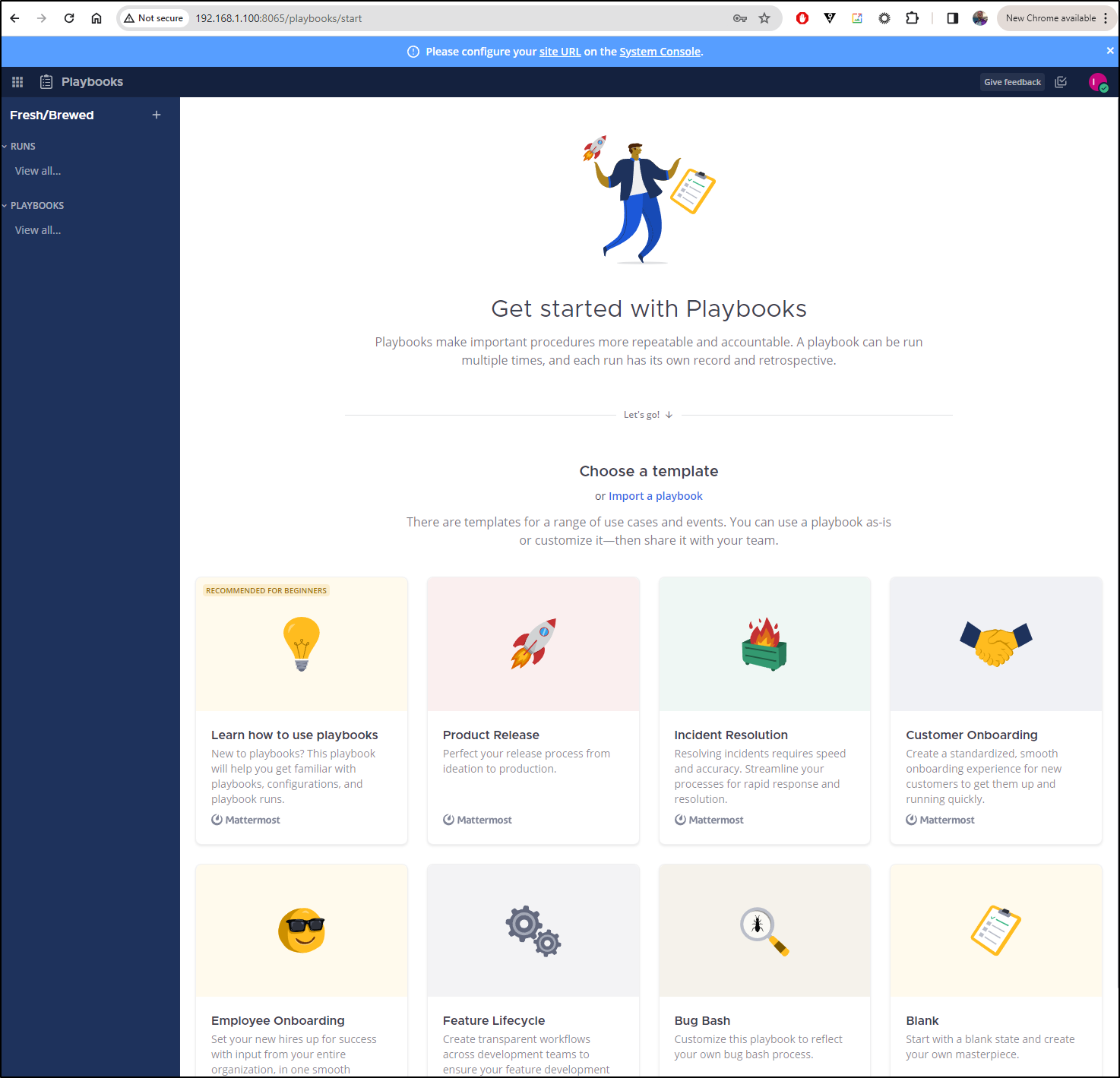

I figured I would start with Playbooks. I picked “Incident Resolution” from the list mostly because I dig the dumpster fire icon

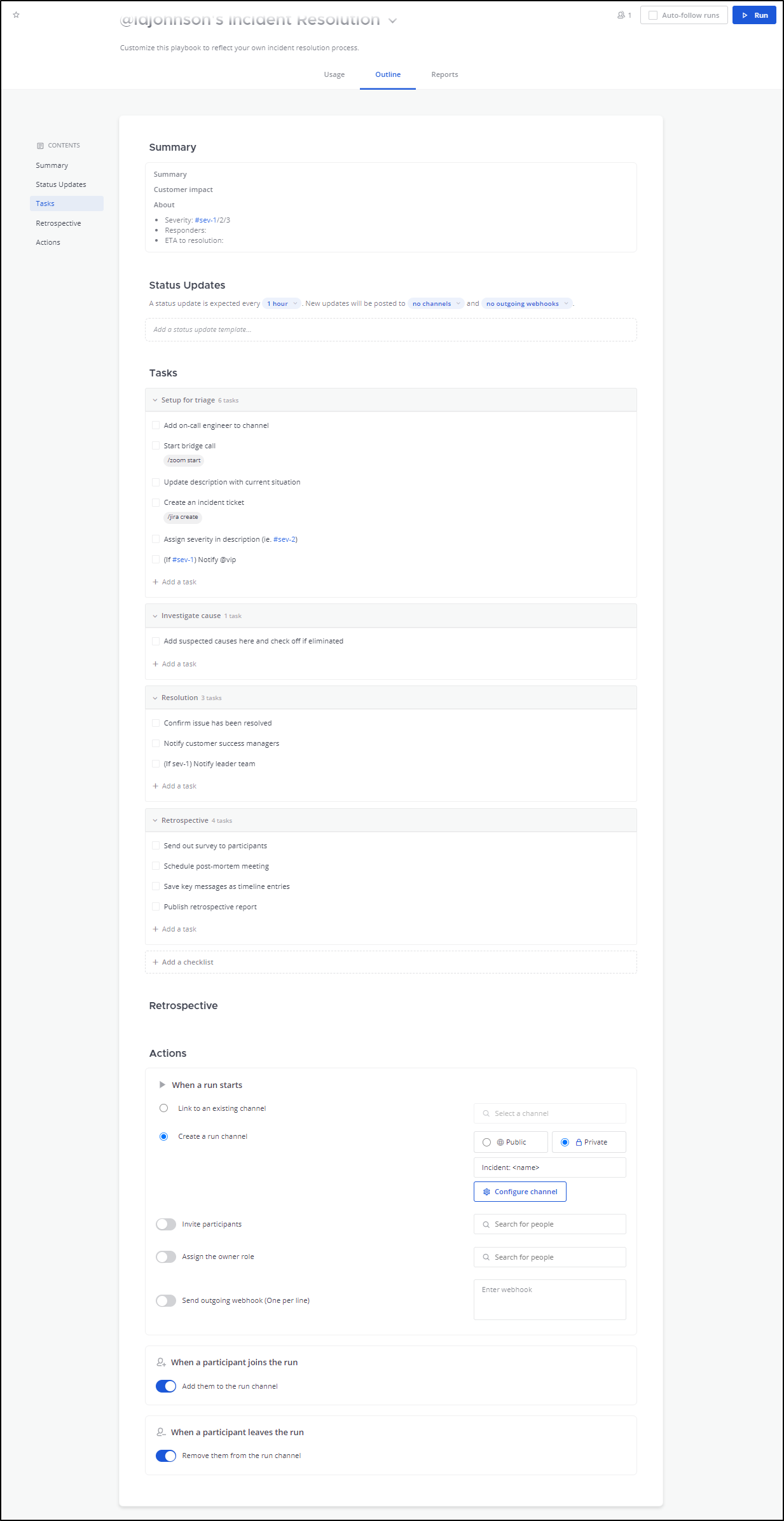

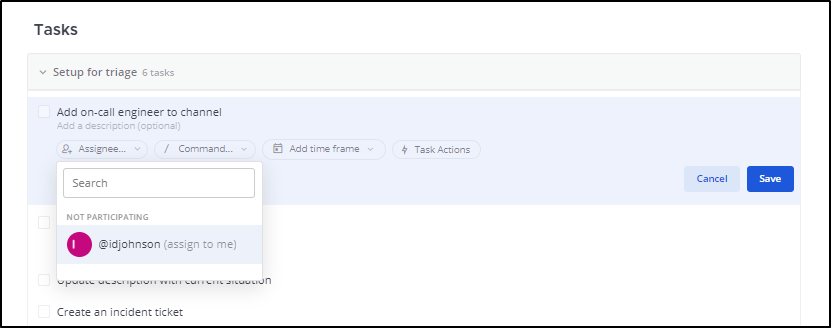

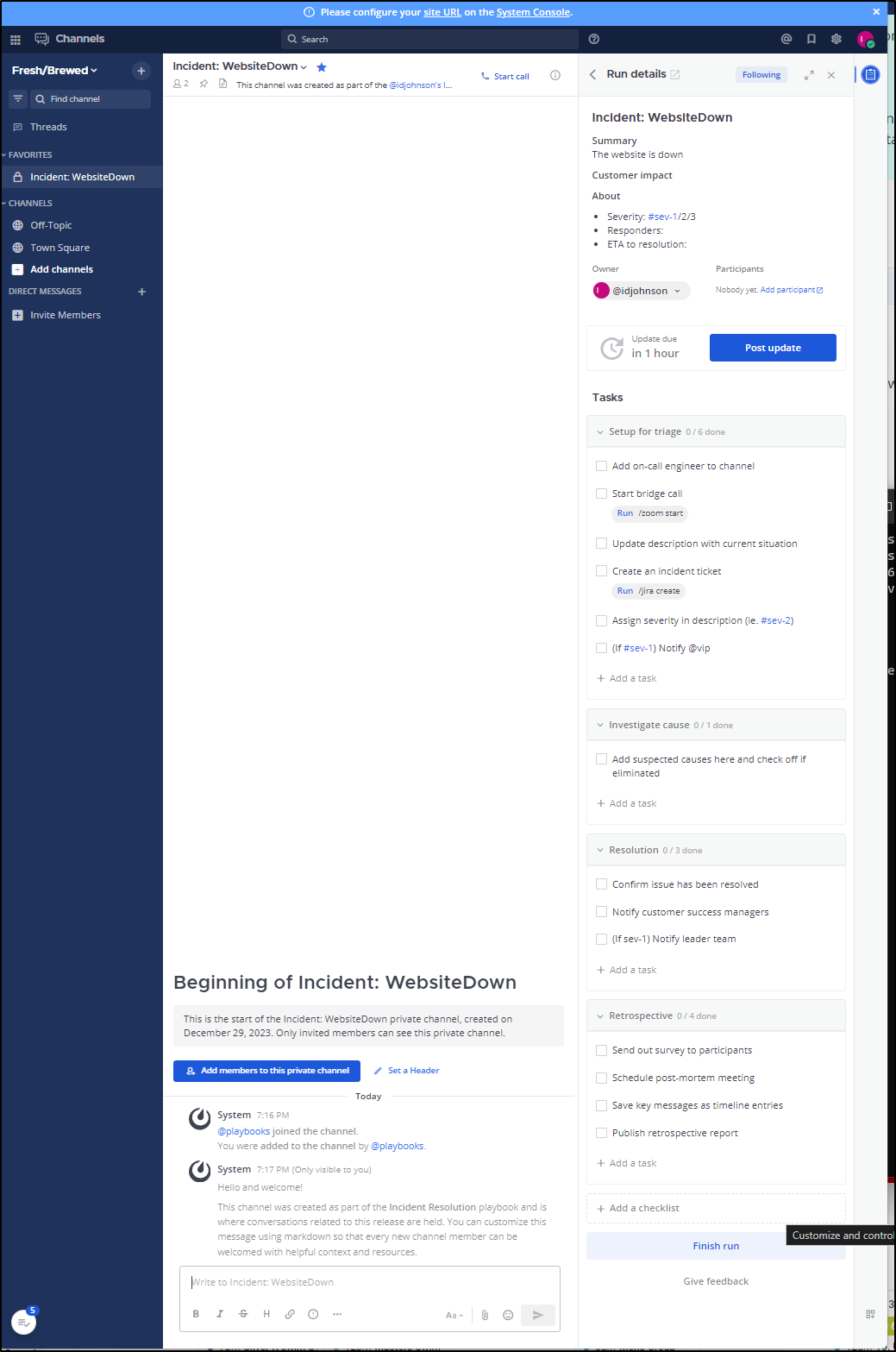

There is a lot to unpack here:

Some features are clearly free, like adding on-call from a pick list of users

Others, like “Add a Timeframe” pop up a “pay me” dialogue

There is some legitimately cool stuff here such as auto-creating a channel on Incident and providing a Welcome message to those jumping in to help

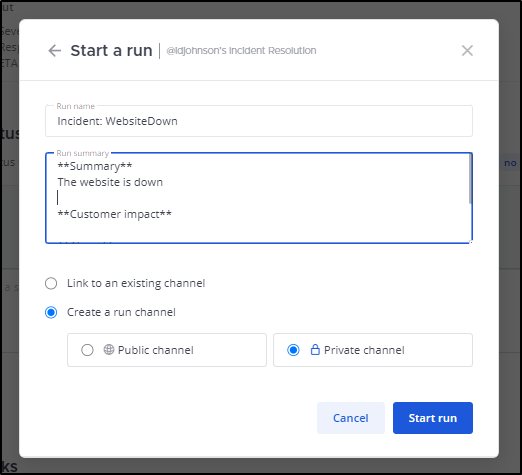

Let’s try a test

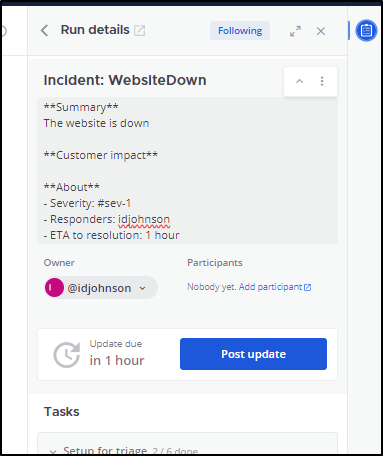

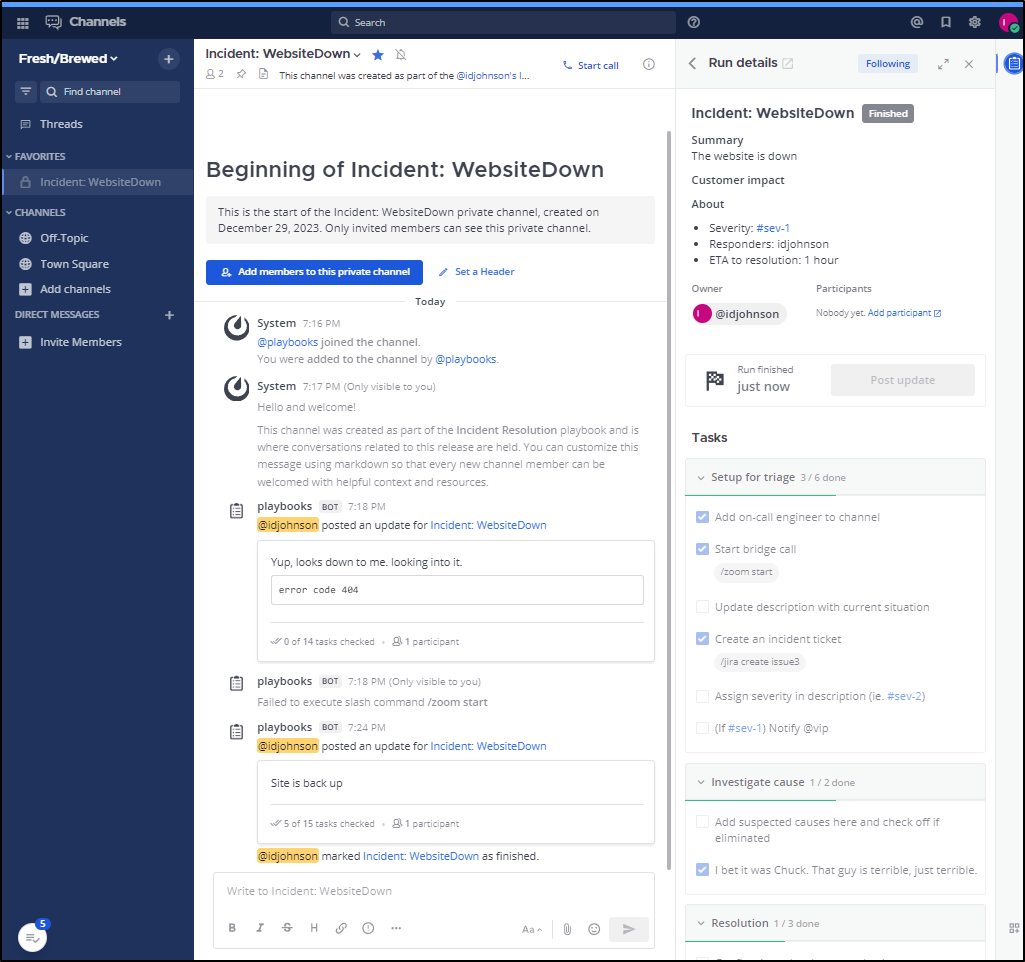

I immediately see I was added to the channel and updates are due within the hour

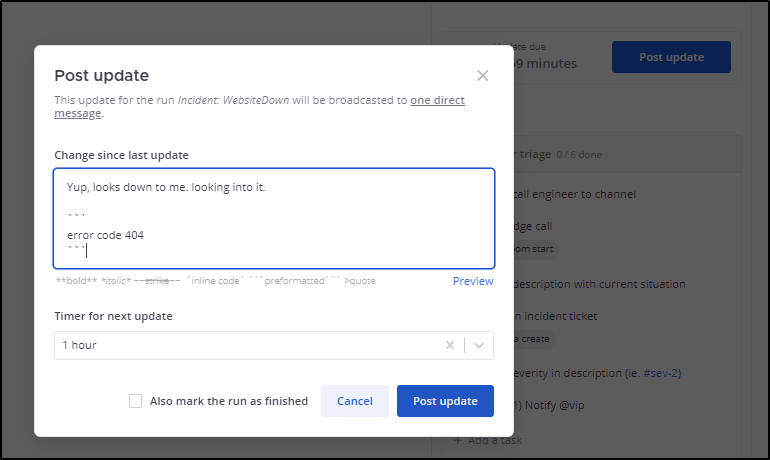

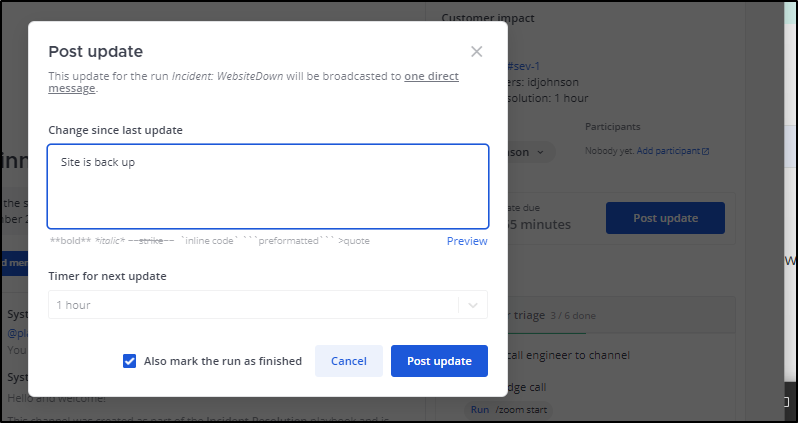

I can now post an update

I can click in the upper right to change settings, like severity or incident commander

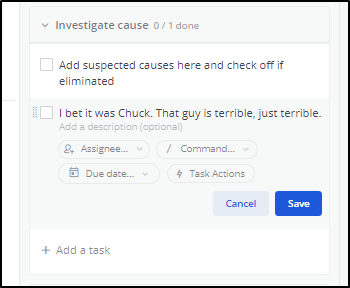

We can add in ideas that we can discuss and check off

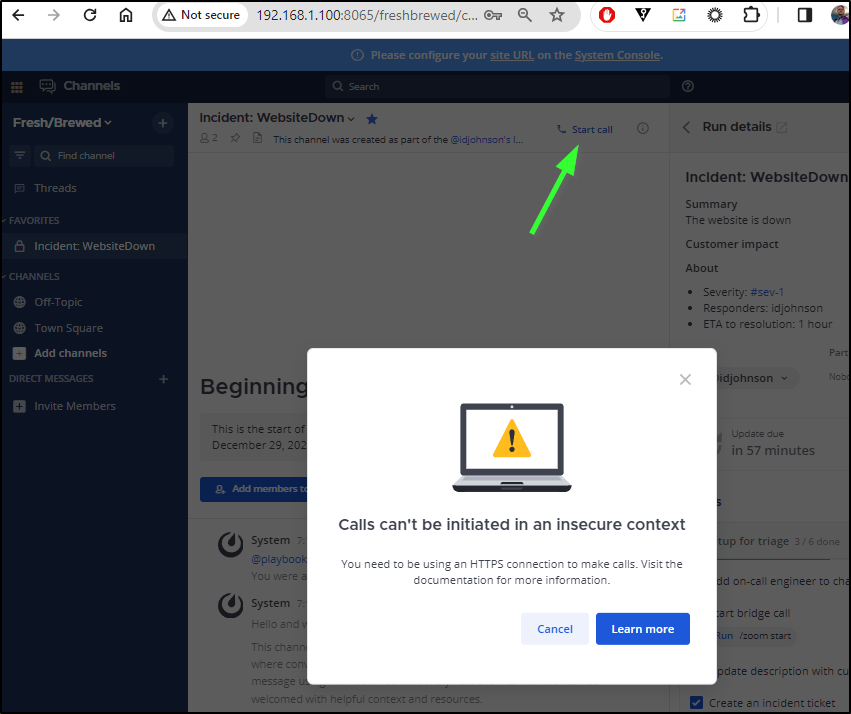

It wasn’t keen on me starting a call since we were on plain HTTP

I can post an update that the problem is wrapped up

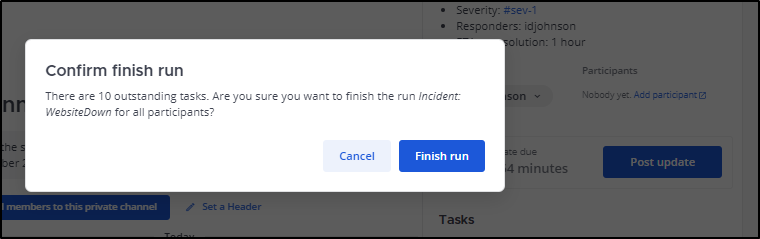

It will want confirmation about the unfinished tasks first

And now we see it is completed

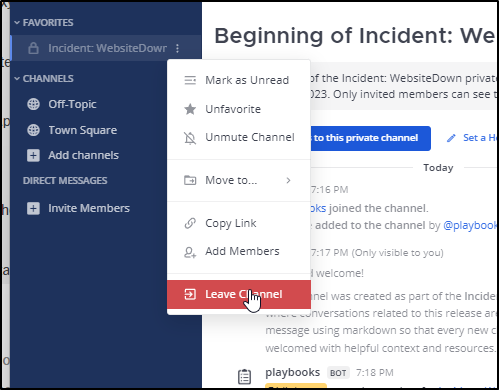

I can now leave the channel

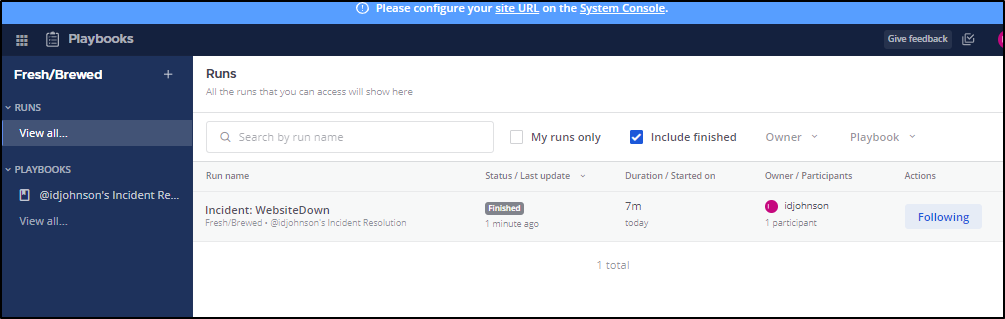

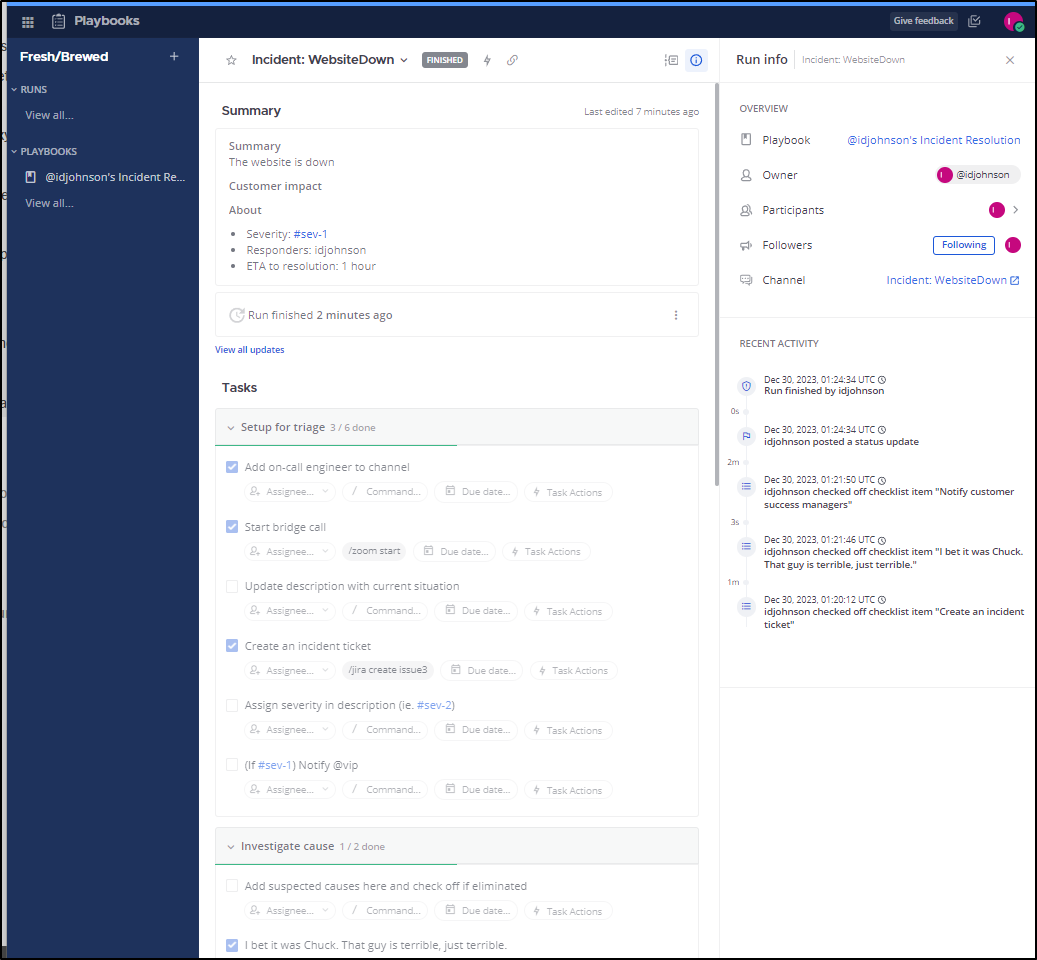

Note, even though I left the channel I can still go back to review what happened. If we go to Playbooks, we can view finished playbooks

And from there get a breakdown of what happened and when (and by whom)

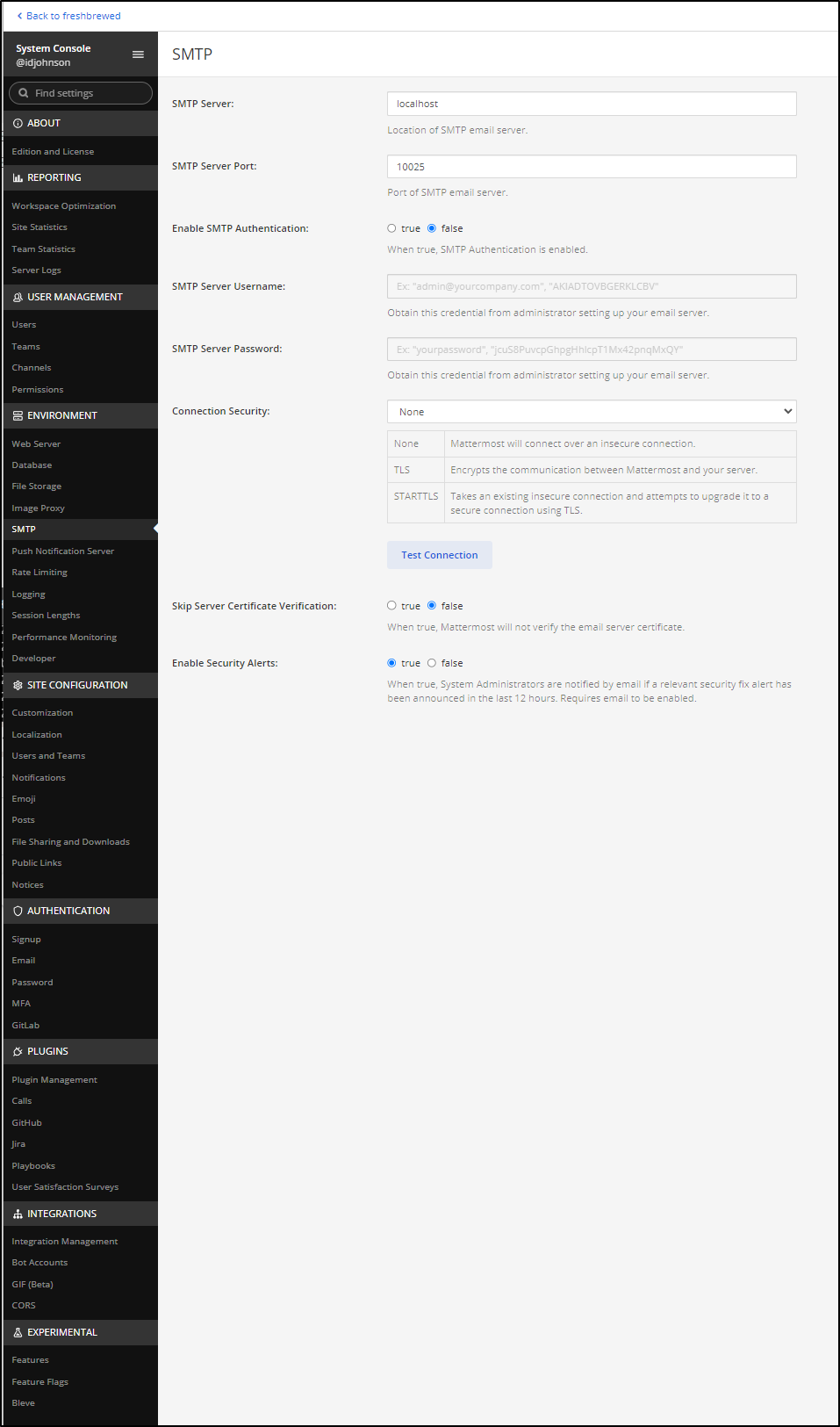

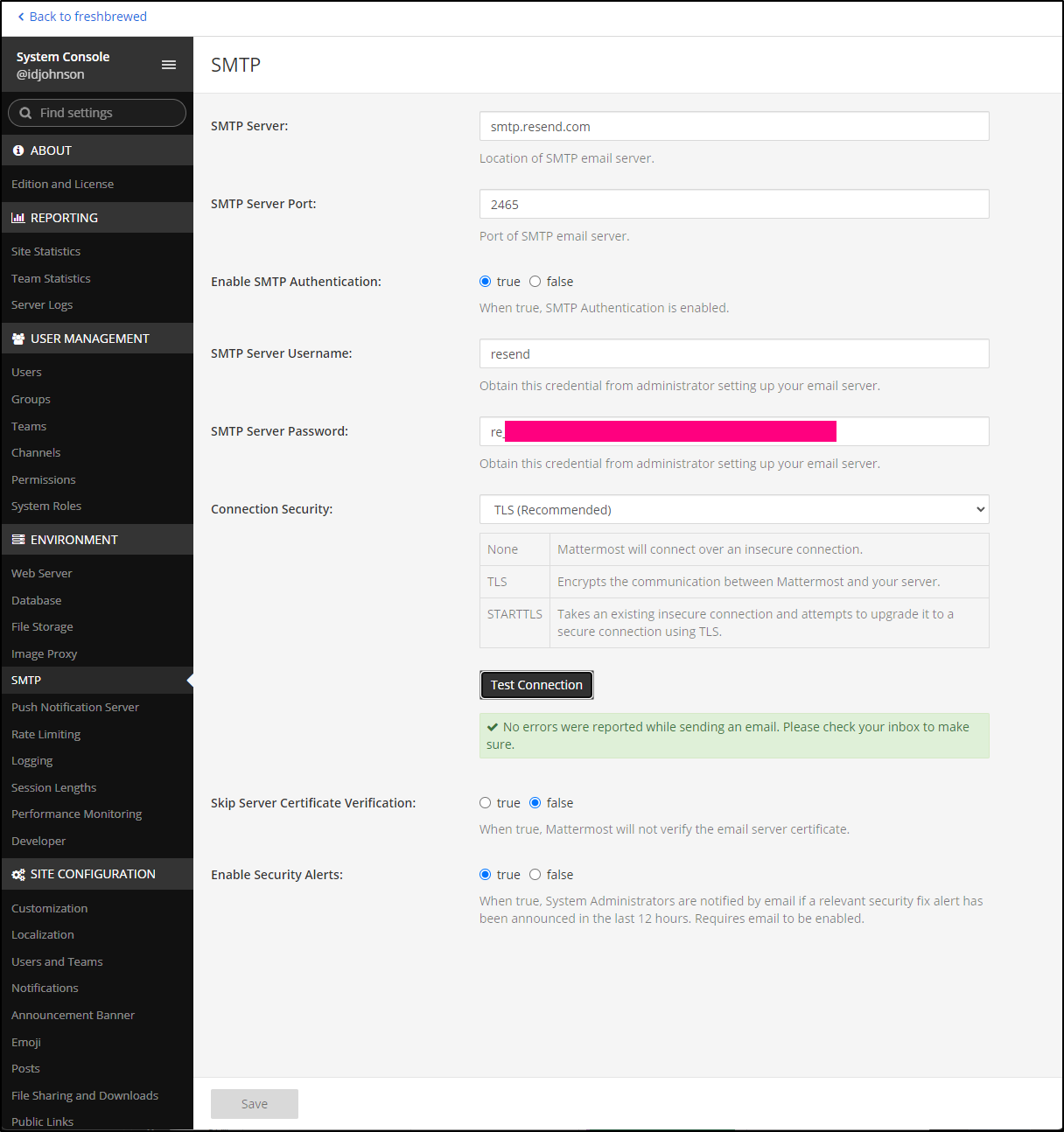

System settings

If we pop over to the system console, it’s a very long left-hand Navigation

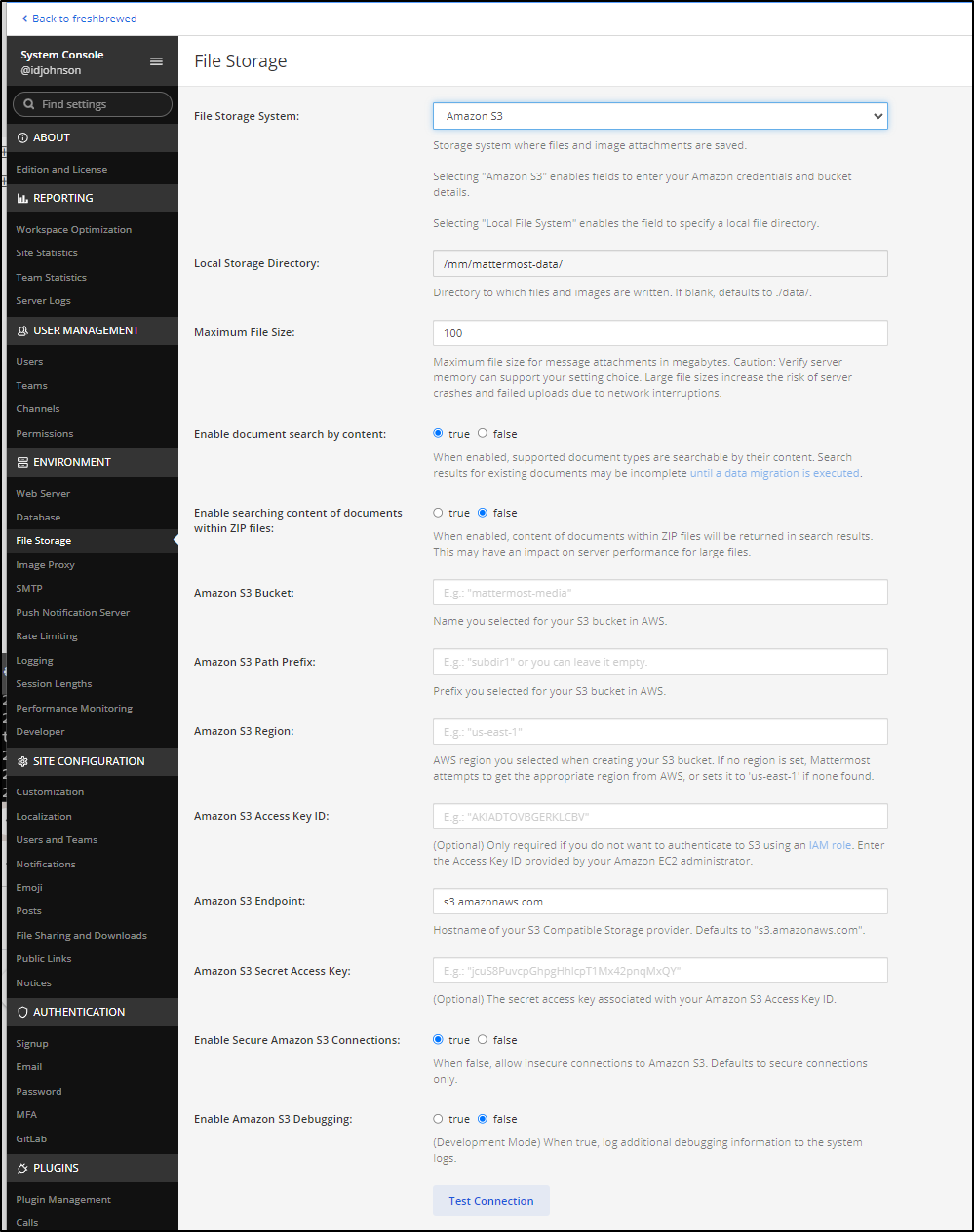

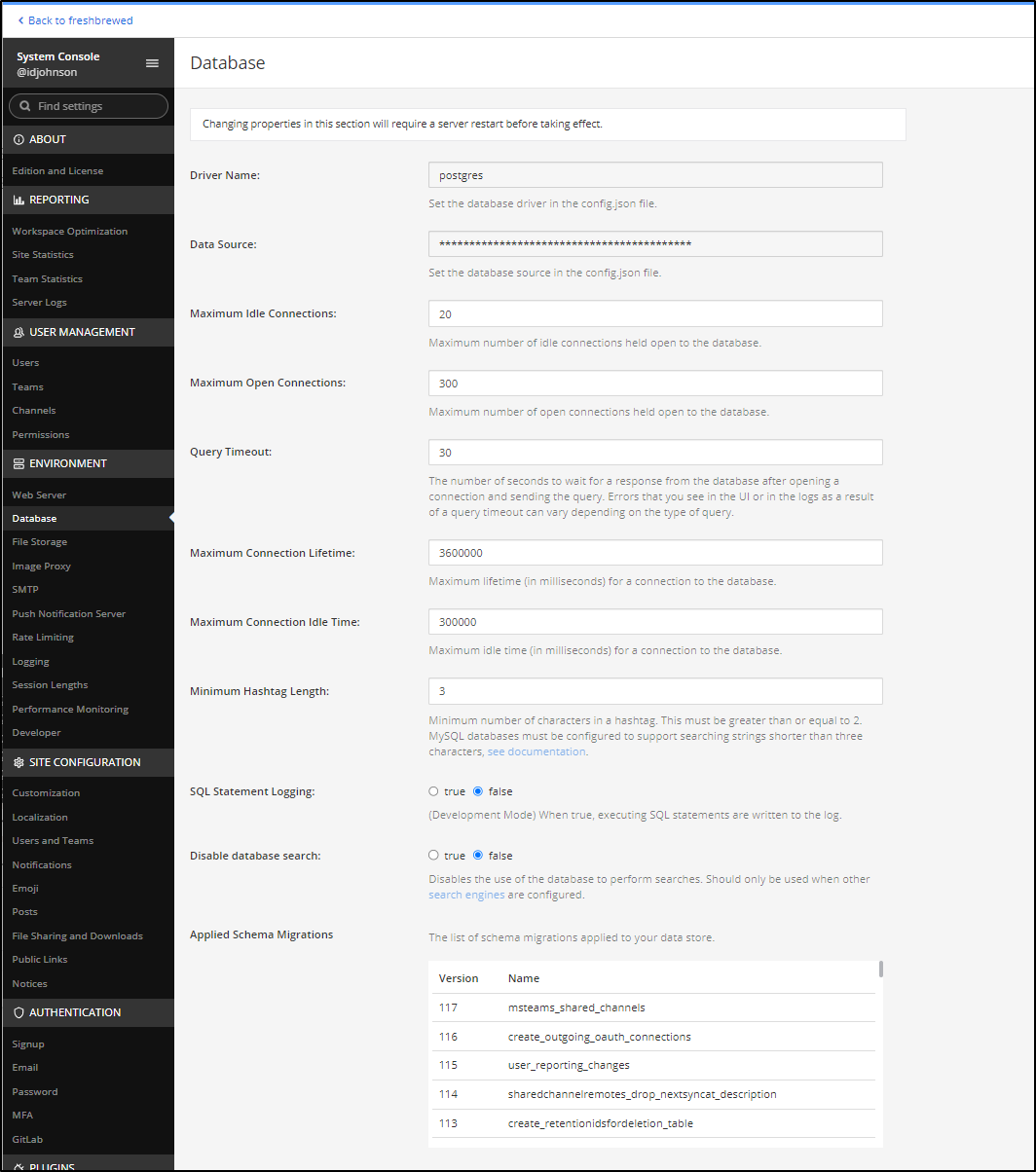

Some things, like file storage are just local file system or S3

However, I could always point it at a GCS HMAC endpoint (see example), or at MinIO, either backed by a GCS bucket to expose Google Cloud Storage or running on my NAS.

The docs suggest we have other choices but I have yet to see them

There are some settings, like the database, that are only changeable via files on the host.

I’ve had my fun but I think I’ll stop this instance in Docker to try Kubernetes instead

builder@builder-T100:~$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

c4f698e8b377 mattermost/mattermost-preview "/bin/sh -c ./docker…" 8 hours ago Up 8 hours 5432/tcp, 0.0.0.0:8065->8065/tcp, :::8065->8065/tcp mattermost-preview

builder@builder-T100:~$ docker stop mattermost-preview

mattermost-preview

builder@builder-T100:~$ docker rm mattermost-preview

mattermost-preview

Kubernetes

We can follow their Kubernetes Install Guide which involves an operator, database setup, configuration files then instantiation of the operator.

While I know the ingress won’t sort itself out in my test K8s cluster, I want to see what this does first.

We’ll first create a namespace and install the operator

$ kubectl create ns mattermost-operator

namespace/mattermost-operator created

$ kubectl apply -n mattermost-operator -f https://raw.githubusercontent.com/mattermost/mattermost-operator/master/docs/mattermost-operator/mattermost-operator.yaml

customresourcedefinition.apiextensions.k8s.io/clusterinstallations.mattermost.com created

customresourcedefinition.apiextensions.k8s.io/mattermostrestoredbs.mattermost.com created

customresourcedefinition.apiextensions.k8s.io/mattermosts.installation.mattermost.com created

serviceaccount/mattermost-operator created

clusterrole.rbac.authorization.k8s.io/mattermost-operator created

clusterrolebinding.rbac.authorization.k8s.io/mattermost-operator created

service/mattermost-operator created

deployment.apps/mattermost-operator created

I’m going to use a utility box for the database for now

builder@builder-HP-EliteBook-745-G5:~$ sudo -u postgres psql

psql (12.17 (Ubuntu 12.17-0ubuntu0.20.04.1))

Type "help" for help.

postgres=# create database mattermost;

CREATE DATABASE

postgres=# create user mattermost with encrypted password 'matterpass';

CREATE ROLE

postgres=# grant all privileges on database mattermost to mattermost;

GRANT

postgres=# \q

By default, Postgres is on port 5432, but we can validate that

$ pg_lsclusters

Ver Cluster Port Status Owner Data directory Log file

12 main 5432 online postgres /var/lib/postgresql/12/main /var/log/postgresql/postgresql-12-main.log

I can now create a secret using that host, user, pass and db

$ echo postgres://mattermost:matterpass@192.168.1.33:5432/mattermost | base64 -w 0

cG9zdGdyZXM6Ly9tYXR0ZXJtb3N0Om1hdHRlcnBhc3NAMTkyLjE2OC4xLjMzOjU0MzIvbWF0dGVybW9zdAo=

I’ll now use that in the database settings we will need

$ cat ./my-postgres-connection.yaml

apiVersion: v1

data:

  DB_CONNECTION_CHECK_URL: cG9zdGdyZXM6Ly9tYXR0ZXJtb3N0Om1hdHRlcnBhc3NAMTkyLjE2OC4xLjMzOjU0MzIvbWF0dGVybW9zdAo=

  DB_CONNECTION_STRING: cG9zdGdyZXM6Ly9tYXR0ZXJtb3N0Om1hdHRlcnBhc3NAMTkyLjE2OC4xLjMzOjU0MzIvbWF0dGVybW9zdAo=

  MM_SQLSETTINGS_DATASOURCEREPLICAS: cG9zdGdyZXM6Ly9tYXR0ZXJtb3N0Om1hdHRlcnBhc3NAMTkyLjE2OC4xLjMzOjU0MzIvbWF0dGVybW9zdAo=

kind: Secret

metadata:

  name: my-postgres-connection

type: Opaque

For S3 items, we need an access key and a secret key.

$ cat my-s3-iam-access-key.yaml

apiVersion: v1

data:

  accesskey: QUtJQUFBQkJDQ0RERUVGRkdHSEgK

  secretkey: QUJDRGFiY2RFRkdIZWZnaEhJSmhpaktMTWtsbU5PUG5vcFFSU3Fycwo=

kind: Secret

metadata:

  name: my-s3-iam-access-key

type: Opaque

I need to create a namespace and add both the database and AWS secrets there

builder@DESKTOP-QADGF36:~/Workspaces/mattermost$ kubectl create ns mattermost

namespace/mattermost created

builder@DESKTOP-QADGF36:~/Workspaces/mattermost$ kubectl apply -f my-s3-iam-access-key.yaml -n mattermost

secret/my-s3-iam-access-key created

builder@DESKTOP-QADGF36:~/Workspaces/mattermost$ kubectl apply -f my-postgres-connection.yaml -n mattermost

secret/my-postgres-connection created

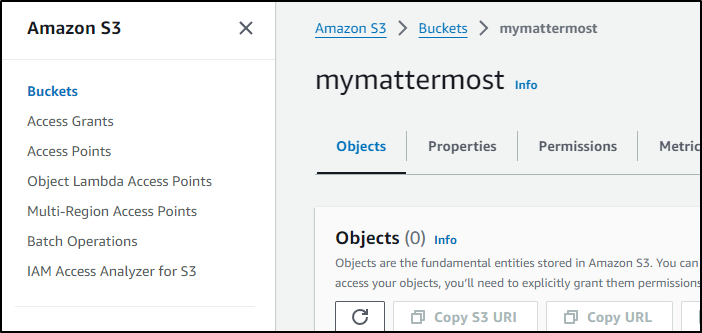

Then, I’ll create an S3 bucket to use for storage

$ aws s3 mb s3://mymattermost

make_bucket: mymattermost

Because I had only assumed which AWS account I was using, I also double-checked in the AWS console that it was created

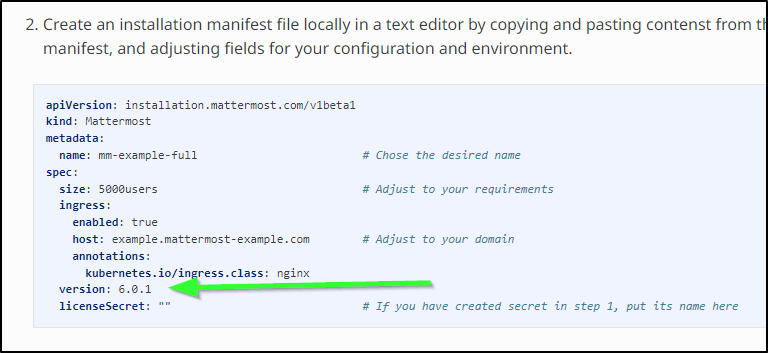

We can now update the mattermost-installation yaml to reference the PG connection, AWS Secrets and our bucket name

$ cat mattermost-installation.yaml

apiVersion: installation.mattermost.com/v1beta1

kind: Mattermost

metadata:

  name: mm-freshbrewed

spec:

  size: 1000users # Adjust to your requirements

  ingress:

    enabled: true

    host: mattermost.freshbrewed.science

    annotations:

      kubernetes.io/ingress.class: nginx

  version: 6.0.1

  licenseSecret: ""

  database:

    external:

      secret: my-postgres-connection

  fileStore:

    external:

      url: s3.amazonaws.com

      bucket: mymattermost

      secret: my-s3-iam-access-key

  mattermostEnv:

  - name: MM_FILESETTINGS_AMAZONS3SSE

    value: "true"

  - name: MM_FILESETTINGS_AMAZONS3SSL

    value: "true"

Now just apply it and hope the operator takes care of the rest

$ kubectl apply -n mattermost -f mattermost-installation.yaml

mattermost.installation.mattermost.com/mm-freshbrewed created

We can check the status

$ kubectl -n mattermost get mm -w

NAME STATE IMAGE VERSION ENDPOINT

mm-freshbrewed reconciling

I gave it a good 30m to see if something would come up

All I got was

$ kubectl describe mm -n mattermost

Name: mm-freshbrewed

Namespace: mattermost

Labels: <none>

Annotations: <none>

API Version: installation.mattermost.com/v1beta1

Kind: Mattermost

Metadata:

Creation Timestamp: 2023-12-30T19:26:26Z

Generation: 2

Resource Version: 10235440

UID: 8cd3b1d2-55cb-42a8-9ba9-2a506b245fe4

Spec:

Database:

External:

Secret: my-postgres-connection

Elastic Search:

File Store:

External:

Bucket: mymattermost

Secret: my-s3-iam-access-key

URL: s3.amazonaws.com

Image: mattermost/mattermost-enterprise-edition

Image Pull Policy: IfNotPresent

Ingress:

Annotations:

kubernetes.io/ingress.class: nginx

Enabled: true

Host: mattermost.freshbrewed.science

Ingress Name:

Mattermost Env:

Name: MM_FILESETTINGS_AMAZONS3SSE

Value: true

Name: MM_FILESETTINGS_AMAZONS3SSL

Value: true

Pod Extensions:

Probes:

Liveness Probe:

Readiness Probe:

Replicas: 2

Scheduling:

Resources:

Limits:

Cpu: 2

Memory: 4Gi

Requests:

Cpu: 150m

Memory: 256Mi

Version: 6.0.1

Status:

Observed Generation: 2

Replicas: 2

State: reconciling

Events: <none>

Looking into the crashed pods

$ kubectl get pods -n mattermost

NAME READY STATUS RESTARTS AGE

mm-freshbrewed-669697fd97-lzhxl 0/1 CrashLoopBackOff 12 (2m26s ago) 39m

mm-freshbrewed-669697fd97-trhqp 0/1 CrashLoopBackOff 12 (110s ago) 39m

I noticed the error:

Message: Error: failed to load configuration: failed to initialize: failed to create Configurations table: parse "postgres://mattermost:matterpass@192.168.1.33:5432/mattermost\n": net/url: invalid control character in URL

Their examples had newlines, which is why I went with them. Let’s strip out the newline with tr -d '\n'

builder@DESKTOP-QADGF36:~/Workspaces/mattermost$ echo postgres://mattermost:matterpass@192.168.1.33:5432/mattermost | tr -d '\n' | base64 -w 0

cG9zdGdyZXM6Ly9tYXR0ZXJtb3N0Om1hdHRlcnBhc3NAMTkyLjE2OC4xLjMzOjU0MzIvbWF0dGVybW9zdA==builder@DESKTOP-QADGF36:~/Workspaces/mattermost$

builder@DESKTOP-QADGF36:~/Workspaces/mattermost$

builder@DESKTOP-QADGF36:~/Workspaces/mattermost$ echo cG9zdGdyZXM6Ly9tYXR0ZXJtb3N0Om1hdHRlcnBhc3NAMTkyLjE2OC4xLjMzOjU0MzIvbWF0dGVybW9zdA== | base64 --decode

postgres://mattermost:matterpass@192.168.1.33:5432/mattermostbuilder@DESKTOP-QADGF36:~/Workspaces/mattermost$
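The trailing newline comes from plain echo, which appends one before the text reaches base64. As a rough sketch of the same fix outside the shell (the helper name encode_for_secret is made up for illustration, not part of any Mattermost or Kubernetes tooling), this Python snippet encodes the connection string from above both ways and strips whitespace before encoding:

```python
import base64

# Connection string taken from the post.
CONN = "postgres://mattermost:matterpass@192.168.1.33:5432/mattermost"


def encode_for_secret(value: str) -> str:
    """Base64-encode a value for a Secret's data field, stripping surrounding whitespace first."""
    return base64.b64encode(value.strip().encode("utf-8")).decode("ascii")


# Appending "\n" mimics what plain `echo` sends down the pipe.
with_newline = base64.b64encode((CONN + "\n").encode("utf-8")).decode("ascii")
without_newline = encode_for_secret(CONN + "\n")

print(with_newline)     # ends in "Ao=", the encoded newline that broke URL parsing
print(without_newline)  # ends in "A==", the value without the newline
```

Kubernetes Secrets also accept a stringData field that takes plain, unencoded strings, which sidesteps the encoding step entirely. I have not tried that with this operator.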

I’ll apply and rotate the pods

builder@DESKTOP-QADGF36:~/Workspaces/mattermost$ kubectl apply -f my-postgres-connection.yaml -n mattermost

secret/my-postgres-connection configured

builder@DESKTOP-QADGF36:~/Workspaces/mattermost$ kubectl delete pods -l app=mattermost -n mattermost

pod "mm-freshbrewed-669697fd97-cbtkm" deleted

pod "mm-freshbrewed-669697fd97-rpvtx" deleted

builder@DESKTOP-QADGF36:~/Workspaces/mattermost$ kubectl get pods -n mattermost

NAME READY STATUS RESTARTS AGE

mm-freshbrewed-669697fd97-qflsj 0/1 Running 0 9s

mm-freshbrewed-669697fd97-7hlth 0/1 CrashLoopBackOff 1 (3s ago) 9s

Still seems unhappy

$ kubectl get pods -n mattermost

NAME READY STATUS RESTARTS AGE

mm-freshbrewed-669697fd97-7hlth 0/1 CrashLoopBackOff 1 (13s ago) 19s

mm-freshbrewed-669697fd97-qflsj 0/1 Error 1 (11s ago) 19s

I see a new error…

$ kubectl logs mm-freshbrewed-669697fd97-7hlth -n mattermost

Defaulted container "mattermost" out of: mattermost, init-check-database (init)

Error: failed to load configuration: failed to initialize: failed to create Configurations table: pq: no pg_hba.conf entry for host "192.168.1.159", user "mattermost", database "mattermost", SSL on

That one is actually my fault. I neglected to add all CIDRs for IPv4. My pg_hba.conf showed

# "local" is for Unix domain socket connections only

local all all peer

# IPv4 local connections:

host all all 127.0.0.1/32 md5

Which I changed to

# IPv4 local connections:

host all all 0.0.0.0/0 md5

Then a quick bounce of the database

$ sudo service postgresql restart
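Opening pg_hba.conf to 0.0.0.0/0 is the blunt fix. A narrower range that still covers the cluster nodes would also work. Here is a small sketch using Python’s standard ipaddress module to check which candidate entries would match the client address from the error above. The 192.168.1.0/24 range is a hypothetical narrower choice for illustration, not something taken from the actual setup:

```python
import ipaddress


def matches(client_ip: str, cidr: str) -> bool:
    """Return True if client_ip falls inside the given CIDR range."""
    return ipaddress.ip_address(client_ip) in ipaddress.ip_network(cidr)


# Client address copied from the pq error message in the pod logs.
CLIENT = "192.168.1.159"

# Candidate pg_hba.conf ADDRESS values; the /24 is an assumed, narrower alternative.
for cidr in ["127.0.0.1/32", "192.168.1.0/24", "0.0.0.0/0"]:
    print(f"{cidr:<16} matches {CLIENT}: {matches(CLIENT, cidr)}")
```

Only the original 127.0.0.1/32 entry fails to match, which lines up with the "no pg_hba.conf entry" error.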

And then the pods

builder@DESKTOP-QADGF36:~/Workspaces/mattermost$ kubectl get pods -n mattermost

NAME READY STATUS RESTARTS AGE

mm-freshbrewed-669697fd97-7hlth 0/1 CrashLoopBackOff 5 (117s ago) 5m1s

mm-freshbrewed-669697fd97-qflsj 0/1 CrashLoopBackOff 5 (93s ago) 5m1s

builder@DESKTOP-QADGF36:~/Workspaces/mattermost$ kubectl delete pods -l app=mattermost -n mattermost

pod "mm-freshbrewed-669697fd97-7hlth" deleted

pod "mm-freshbrewed-669697fd97-qflsj" deleted

$ kubectl get pods -n mattermost

NAME READY STATUS RESTARTS AGE

mm-freshbrewed-669697fd97-khrjq 1/1 Running 0 31s

mm-freshbrewed-669697fd97-fdf84 1/1 Running 0 31s

I now see

$ kubectl -n mattermost get mm

NAME STATE IMAGE VERSION ENDPOINT

mm-freshbrewed stable mattermost/mattermost-enterprise-edition 6.0.1 mattermost.freshbrewed.science

I can see it created an ingress (albeit not working since this is a Traefik cluster without proper ingress setup)

$ kubectl get ingress --all-namespaces

NAMESPACE NAME CLASS HOSTS ADDRESS PORTS AGE

plane-ns my-plane-ingress nginx plane.example.com,plane-minio.example.com 80 13d

disabledtest mytest-pyk8sservice traefik pytestapp.local 192.168.1.13,192.168.1.159,192.168.1.206 80, 443 29h

mattermost mm-freshbrewed <none> mattermost.freshbrewed.science 80 8m42s

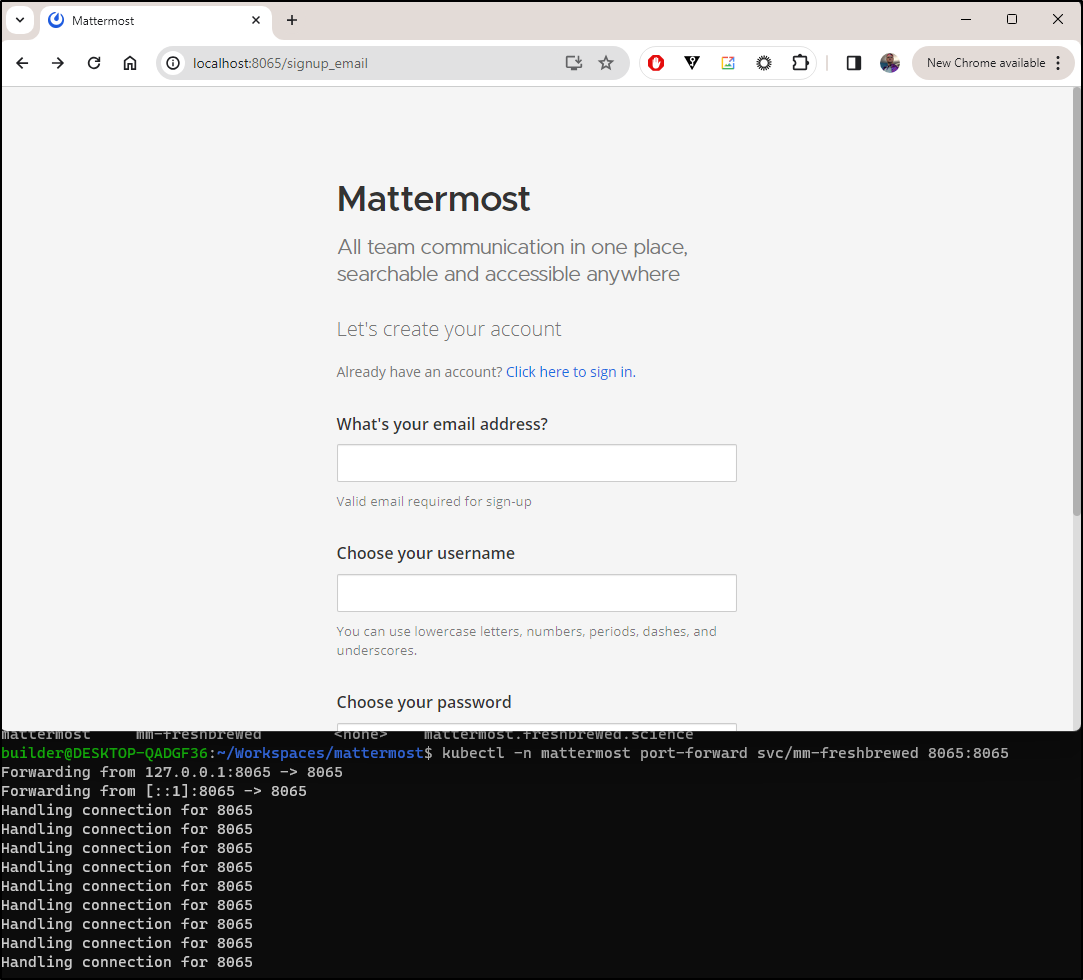

That said, I can port-forward to the service to get it set up

$ kubectl -n mattermost port-forward svc/mm-freshbrewed 8065:8065

Forwarding from 127.0.0.1:8065 -> 8065

Forwarding from [::1]:8065 -> 8065

Handling connection for 8065

Handling connection for 8065

Handling connection for 8065

Production Cluster

I felt mostly safe launching this into my production cluster. With my main cluster, we can get a real ingress and test with SSL.

My first thought was to use a solid HA NAS for the database. The docs suggest I can use MySQL (MariaDB) so I tried that first

I created a user on sirnasilot

MariaDB [(none)]> create user 'mmuser'@'%' identified by 'M4tt3rM0st@@@@';

Query OK, 0 rows affected (0.081 sec)

I then made the database and granted the user access

MariaDB [(none)]> create database mattermost;

Query OK, 1 row affected (0.010 sec)

MariaDB [(none)]> grant all privileges on mattermost.* to 'mmuser'@'%';

Query OK, 0 rows affected (0.502 sec)

MariaDB [(none)]> GRANT ALTER, CREATE, DELETE, DROP, INDEX, INSERT, SELECT, UPDATE, REFERENCES ON mattermost.* TO 'mmuser'@'%';

Query OK, 0 rows affected (0.198 sec)

The examples I saw out there suggested a DB String like:

mysql://mmuser:really_secure_password@tcp(127.0.0.1:3306)/mattermost?charset=utf8mb4,utf8&writeTimeout=30s

I switched users mostly because I didn’t know how to escape the @ in the password. So the new string might look like

mysql://matteruser:M4tttt3r-m0st@tcp(192.168.1.117:3306)/mattermost?charset=utf8mb4,utf8&writeTimeout=30s

I base64’ed it and set it as a password in the YAML my-database.yaml
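On escaping the @: in URL-style connection strings, reserved characters in the user and password are normally percent-encoded, so @ becomes %40. The sketch below shows that for a postgres:// style URL using Python’s standard library. The function name and the pairing of this user with this host are illustrative only, and I have not confirmed that the MySQL DSN form above, or the operator, accepts percent-encoded passwords:

```python
from urllib.parse import quote, unquote, urlsplit


def build_postgres_url(user: str, password: str, host: str, port: int, database: str) -> str:
    """Assemble a postgres:// URL, percent-encoding the user and password."""
    return (
        f"postgres://{quote(user, safe='')}:{quote(password, safe='')}"
        f"@{host}:{port}/{database}"
    )


# Illustrative values: the password with the awkward @ characters from above.
url = build_postgres_url("mmuser", "M4tt3rM0st@@@@", "192.168.1.33", 5432, "mattermost")
print(url)

# Round trip: the parser sees exactly one userinfo/host separator,
# and decoding the password gives back the original.
parts = urlsplit(url)
print(parts.hostname, parts.port, unquote(parts.password))
```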

Ingress

Like normal, we need to quickly create an A Record for this host

$ cat r53-mattermost.yaml

{

"Comment": "CREATE mattermost fb.s A record ",

"Changes": [

{

"Action": "CREATE",

"ResourceRecordSet": {

"Name": "mattermost.freshbrewed.science",

"Type": "A",

"TTL": 300,

"ResourceRecords": [

{

"Value": "75.73.224.240"

}

]

}

}

]

}

$ aws route53 change-resource-record-sets --hosted-zone-id Z39E8QFU0F9PZP --change-batch file://r53-mattermost.yaml

{

"ChangeInfo": {

"Id": "/change/C03923801VZBK306NNJIW",

"Status": "PENDING",

"SubmittedAt": "2023-12-31T02:53:24.419Z",

"Comment": "CREATE mattermost fb.s A record "

}

}
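If you create these records often, the change batch can be generated rather than hand-edited. This is a minimal sketch, assuming the same JSON shape as the file above. The function name is made up for illustration, and it defaults to UPSERT (a valid Route 53 action that creates the record or updates an existing one) instead of the CREATE used above:

```python
import ipaddress
import json


def a_record_change(name: str, ip: str, action: str = "UPSERT", ttl: int = 300) -> dict:
    """Build a Route 53 change batch for a single A record."""
    ipaddress.IPv4Address(ip)  # raises ValueError if ip is not a valid IPv4 address
    return {
        "Comment": f"{action} {name} A record",
        "Changes": [
            {
                "Action": action,
                "ResourceRecordSet": {
                    "Name": name,
                    "Type": "A",
                    "TTL": ttl,
                    "ResourceRecords": [{"Value": ip}],
                },
            }
        ],
    }


batch = a_record_change("mattermost.freshbrewed.science", "75.73.224.240")
print(json.dumps(batch, indent=2))
```

The printed JSON can be saved to a file and passed to the same aws route53 change-resource-record-sets command with --change-batch file://.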

Operator install

I’ll set up the operator

builder@LuiGi17:~/Workspaces/mattermost$ kubectl create ns mattermost-operator

namespace/mattermost-operator created

builder@LuiGi17:~/Workspaces/mattermost$ kubectl apply -n mattermost-operator -f https://raw.githubusercontent.com/mattermost/mattermost-operator/master/docs/mattermost-operator/mattermost-operator.yaml

customresourcedefinition.apiextensions.k8s.io/clusterinstallations.mattermost.com created

customresourcedefinition.apiextensions.k8s.io/mattermostrestoredbs.mattermost.com created

customresourcedefinition.apiextensions.k8s.io/mattermosts.installation.mattermost.com created

serviceaccount/mattermost-operator created

clusterrole.rbac.authorization.k8s.io/mattermost-operator created

clusterrolebinding.rbac.authorization.k8s.io/mattermost-operator created

service/mattermost-operator created

deployment.apps/mattermost-operator created

Installing

I created the AWS secret

$ kubectl apply -f /home/builder/Workspaces/mattermost/my-s3-iam-access-key.yaml -n mattermost

secret/my-s3-iam-access-key created

$ kubectl apply -f /home/builder/Workspaces/mattermost/my-database.yaml -n mattermost

secret/mattermost-db-secret created

Lastly, we bring it all together to install

$ kubectl apply -f /home/builder/Workspaces/mattermost/mattermost-installation.yaml -n mattermost

mattermost.installation.mattermost.com/mm-freshbrewed created

I will spare you the many attempts to get MariaDB working. I ended up going back to Postgres as I could not get MariaDB auth to work.

$ kubectl apply -f mattermost-installation.yaml -n mattermost

mattermost.installation.mattermost.com/mm-freshbrewed configured

$ kubectl get pods -n mattermost

NAME READY STATUS RESTARTS AGE

mm-freshbrewed-f495b6754-n7rld 1/1 Running 0 64s

mm-freshbrewed-f495b6754-fnwmh 1/1 Running 0 38s

Operator Issues with NGINX Ingress

I fought TLS for a while. It seems the Operator just will not let me set the TLS block. Moreover, if I create an Ingress manually, it’s overwritten. If I delete the errant one, it’s immediately restored.

In the end, I created the MM Installation without Ingress enabled:

$ cat mattermost-installation.yaml

apiVersion: installation.mattermost.com/v1beta1

kind: Mattermost

metadata:

  name: mm-freshbrewed

spec:

  size: 1000users # Adjust to your requirements

  ingress:

    enabled: false

    host: mattermost.freshbrewed.science

    annotations:

      cert-manager.io/issuer: "letsencrypt-prod"

      cert-manager.io/cluster-issuer: "letsencrypt-prod"

      kubernetes.io/ingress.class: "nginx"

      ingress.kubernetes.io/ssl-redirect: "true"

      nginx.ingress.kubernetes.io/ssl-redirect: "true"

  version: 6.0.1

  licenseSecret: ""

  database:

    external:

      secret: my-postgres-connection

  fileStore:

    external:

      url: s3.amazonaws.com

      bucket: mymattermost

      secret: my-s3-iam-access-key

  mattermostEnv:

  - name: MM_FILESETTINGS_AMAZONS3SSE

    value: "true"

  - name: MM_FILESETTINGS_AMAZONS3SSL

    value: "true"

Then applied the Ingress with TLS manually afterward

$ cat t.o

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

  annotations:

    cert-manager.io/cluster-issuer: letsencrypt-prod

    ingress.kubernetes.io/ssl-redirect: "true"

    kubernetes.io/ingress.class: nginx

    nginx.ingress.kubernetes.io/proxy-body-size: 1000M

    nginx.ingress.kubernetes.io/ssl-redirect: "true"

  labels:

    app: mattermost

  name: mm-freshbrewed

  namespace: mattermost

spec:

  rules:

  - host: mattermost.freshbrewed.science

    http:

      paths:

      - backend:

          service:

            name: mm-freshbrewed

            port:

              number: 8065

        path: /

        pathType: ImplementationSpecific

  tls:

  - hosts:

    - mattermost.freshbrewed.science

    secretName: mattermost-tls

$ kubectl apply -f t.o -n mattermost

ingress.networking.k8s.io/mm-freshbrewed created

We can now see it come up (spoiler - for a while… seems after some amount of time, it comes back and removes our ingress)

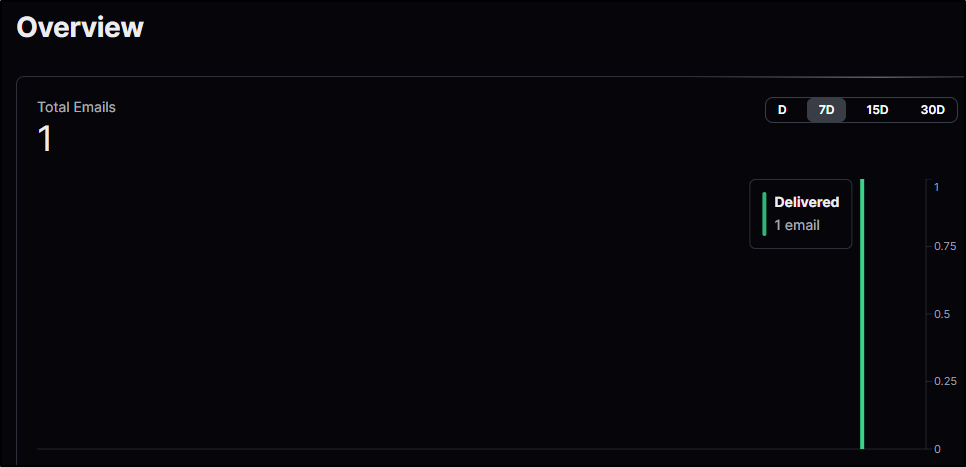

I’m curious if emails went out as I did not set up SMTP just yet

Checking a day later, still no email so I would assume SMTP needs to be set first.

Note: When you do set up SMTP, you have to “save” before you “test”, otherwise the “test” button tests the last saved settings.

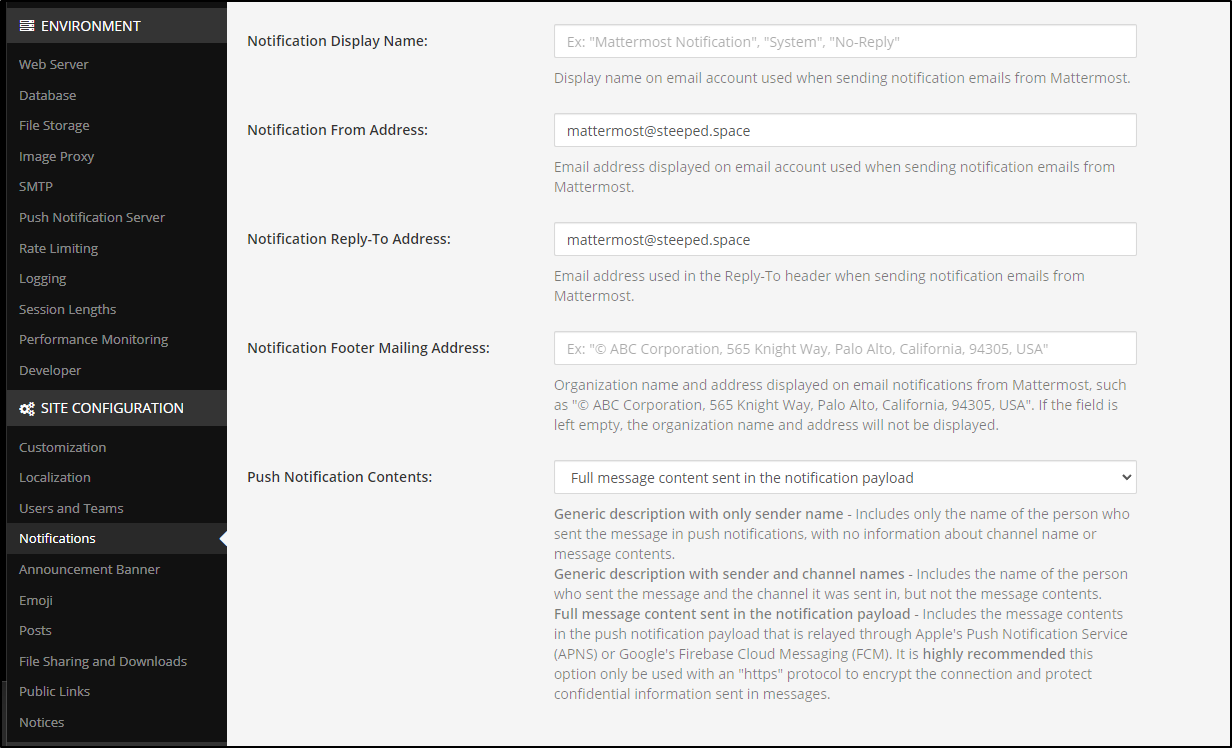

I fixed the “From” address in Notification settings

Then fired a fresh invite. I could see in Resend a note that it was sent

And this time it was delivered

I kept searching for “calls” and couldn’t find it. I knew I saw a “call” feature in the Docker version. Upon looking at the docs, it seems that is a 7.0 feature.

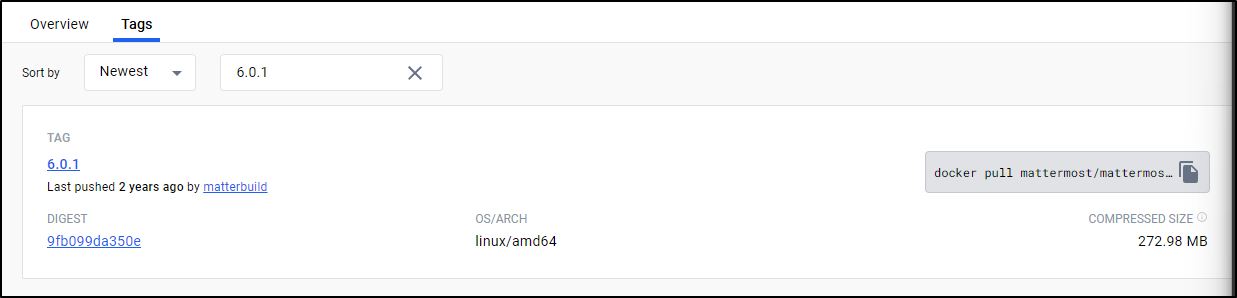

Looking at the tags in Dockerhub, I think we are quite a bit behind as they are building version 9.4.0 Release Candidates and we went with 6.0.1.

It’s surprising to me that their public Install docs show such a dated version.

And on Dockerhub:

I plan to give ‘release-8.1’ a try

$ kubectl get mattermost -n mattermost

NAME STATE IMAGE VERSION ENDPOINT

mm-freshbrewed stable mattermost/mattermost-enterprise-edition 6.0.1 not available

$ kubectl edit mattermost -n mattermost

mattermost.installation.mattermost.com/mm-freshbrewed edited

$ kubectl get mattermost -n mattermost

NAME STATE IMAGE VERSION ENDPOINT

mm-freshbrewed reconciling mattermost/mattermost-enterprise-edition 6.0.1 not available

$ kubectl get mattermost -n mattermost

NAME STATE IMAGE VERSION ENDPOINT

mm-freshbrewed stable mattermost/mattermost-enterprise-edition release-8.1 not available

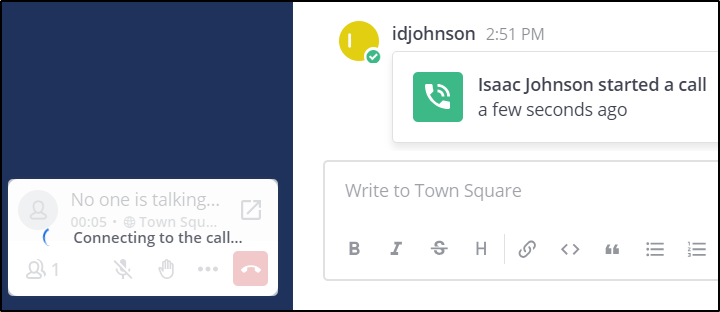

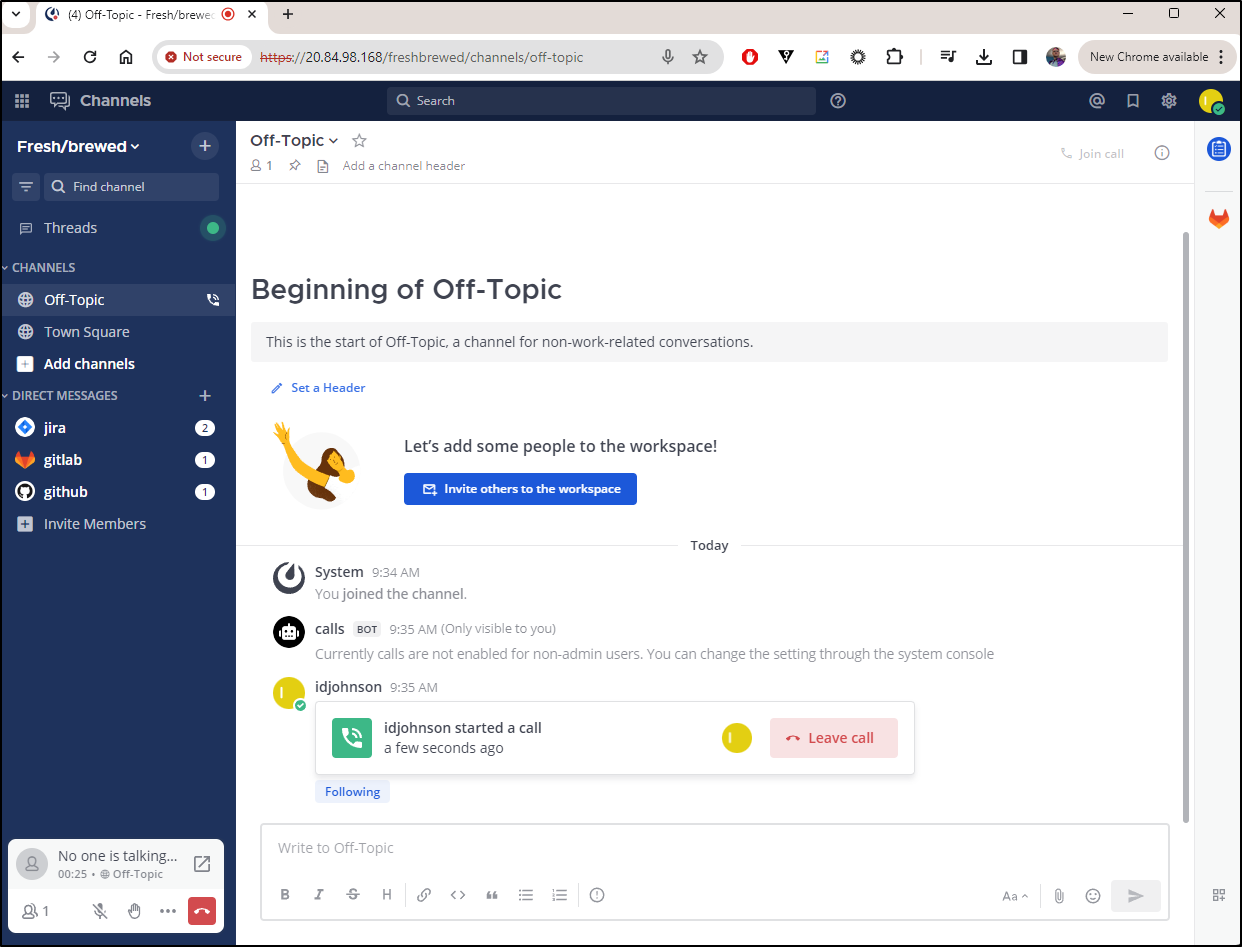

I tried to start a call

But it kept erroring

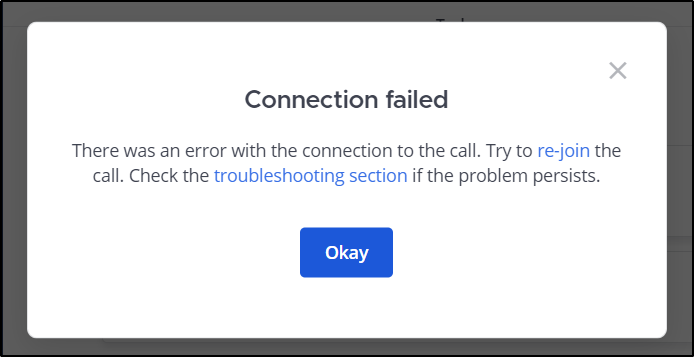

There seem to be some nuances around forwarding TCP and UDP traffic. I even tried to forward both

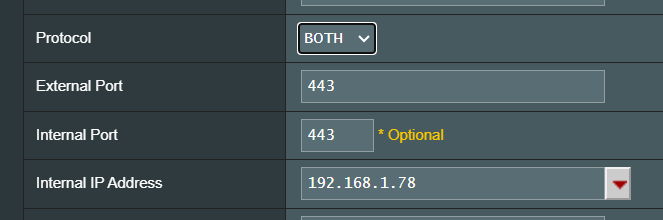

Another issue is that after a while, something erases my Ingress in the namespace. It’s almost like the operator catches up and deletes it

$ kubectl get ingress -n mattermost

No resources found in mattermost namespace.

This is a bit of a deal-breaker for me - I can’t just keep re-adding the Ingress, and the operator doesn’t properly set up annotations for TLS. I’m not going to host a service with logins on HTTP.

Removal

Removing Mattermost is easy.

We check the ‘mattermost’ install in our namespace (or all namespaces)

$ kubectl get mattermost -n mattermost

NAME STATE IMAGE VERSION ENDPOINT

mm-freshbrewed stable mattermost/mattermost-enterprise-edition release-8.1 not available

Then just delete it

$ kubectl delete mattermost mm-freshbrewed -n mattermost

mattermost.installation.mattermost.com "mm-freshbrewed" deleted

We can easily verify the workloads are gone

$ kubectl delete mattermost -n mattermost

error: resource(s) were provided, but no name was specified

$ kubectl get pods -n mattermost

No resources found in mattermost namespace.

$ kubectl get svc -n mattermost

No resources found in mattermost namespace.

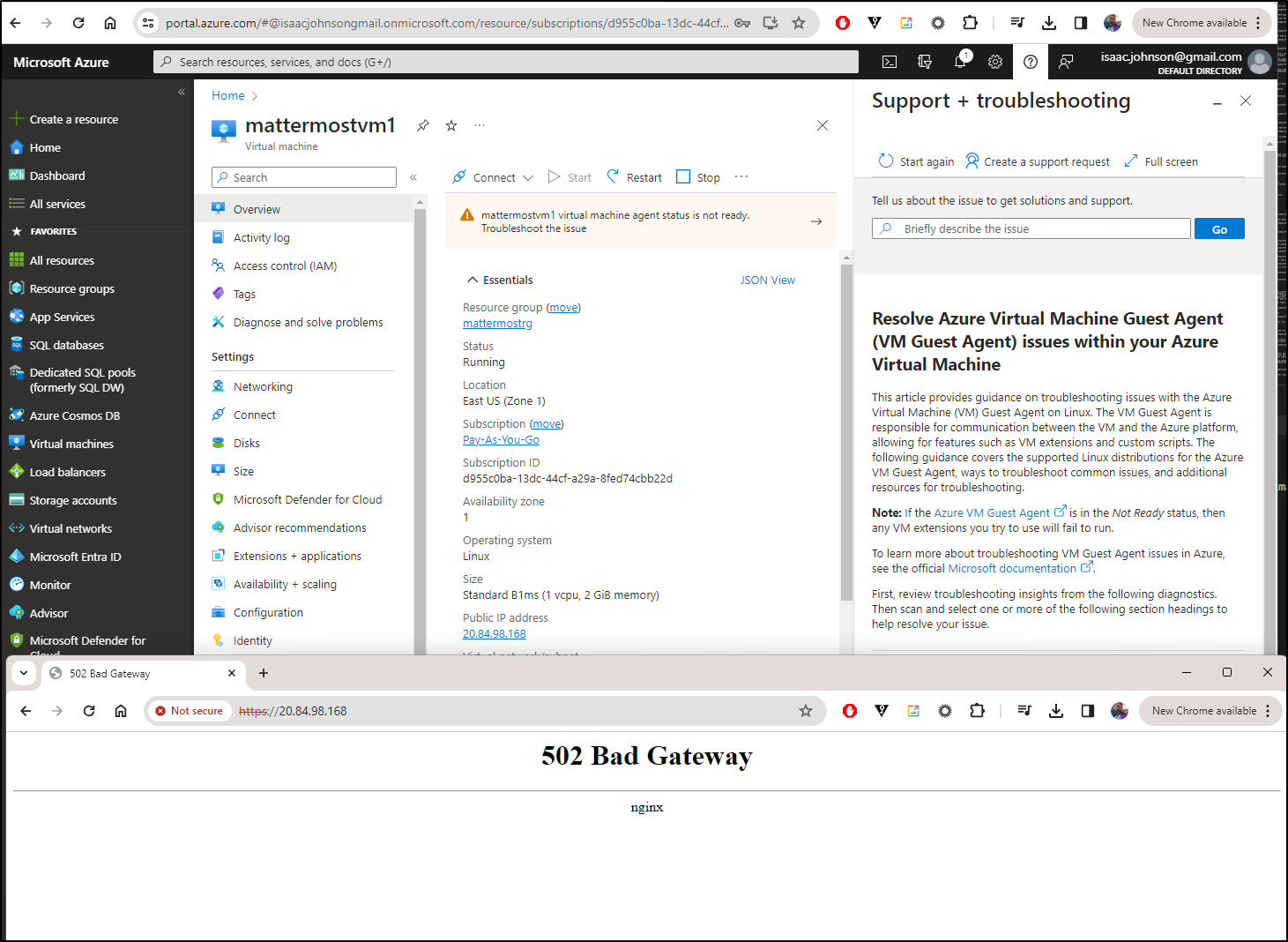

Cloud

Let’s try the Cloud offering

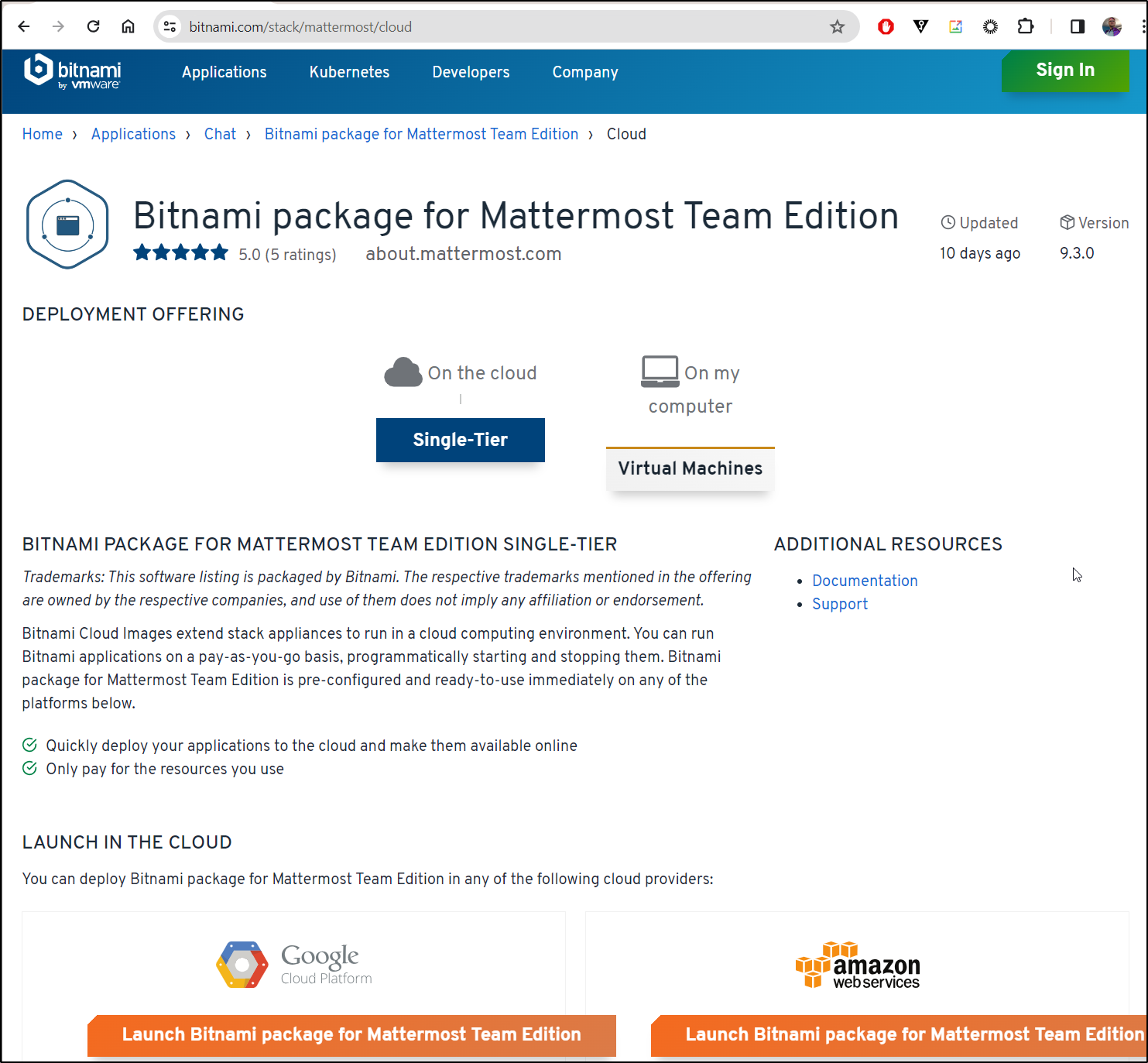

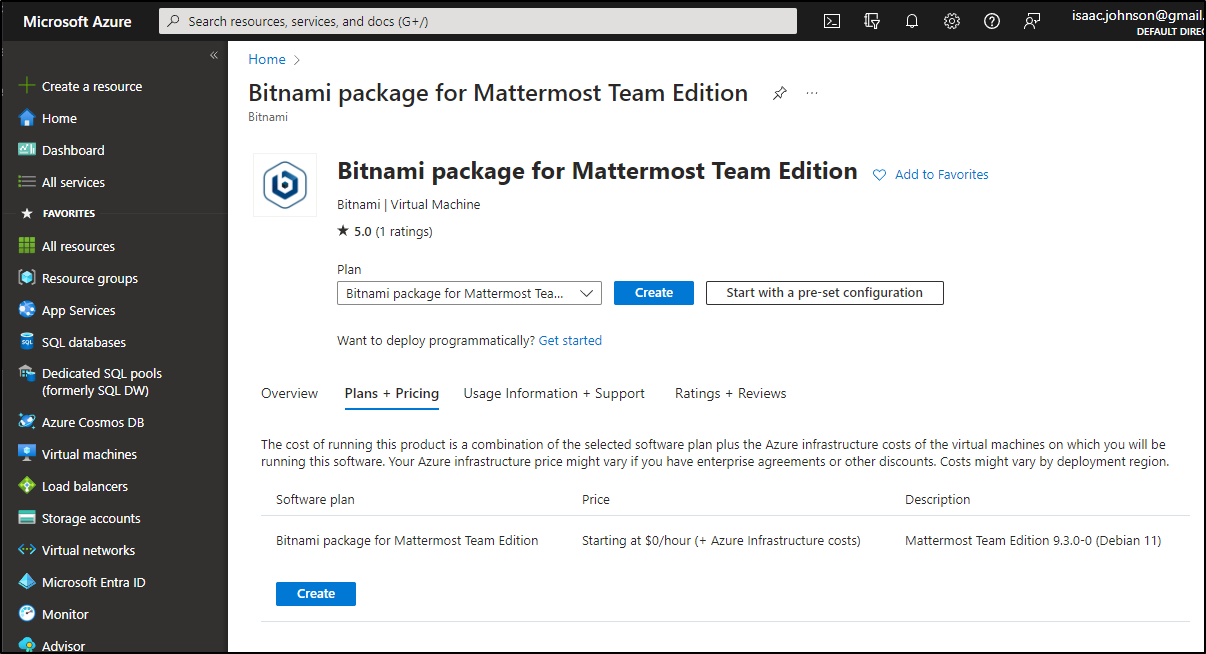

I was surprised to find that it just directs me to a Bitnami deploy

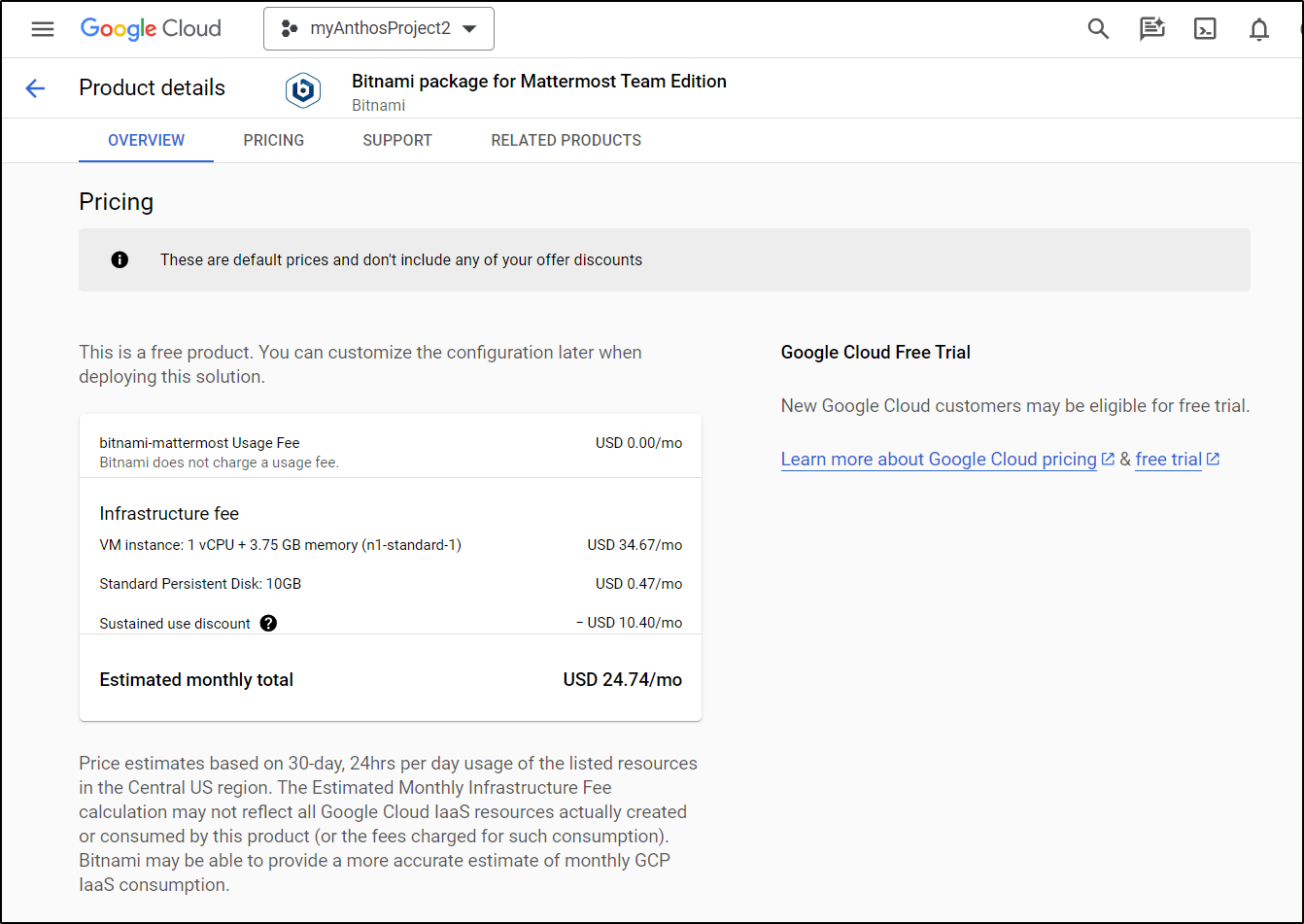

Which I can fire up in GCP

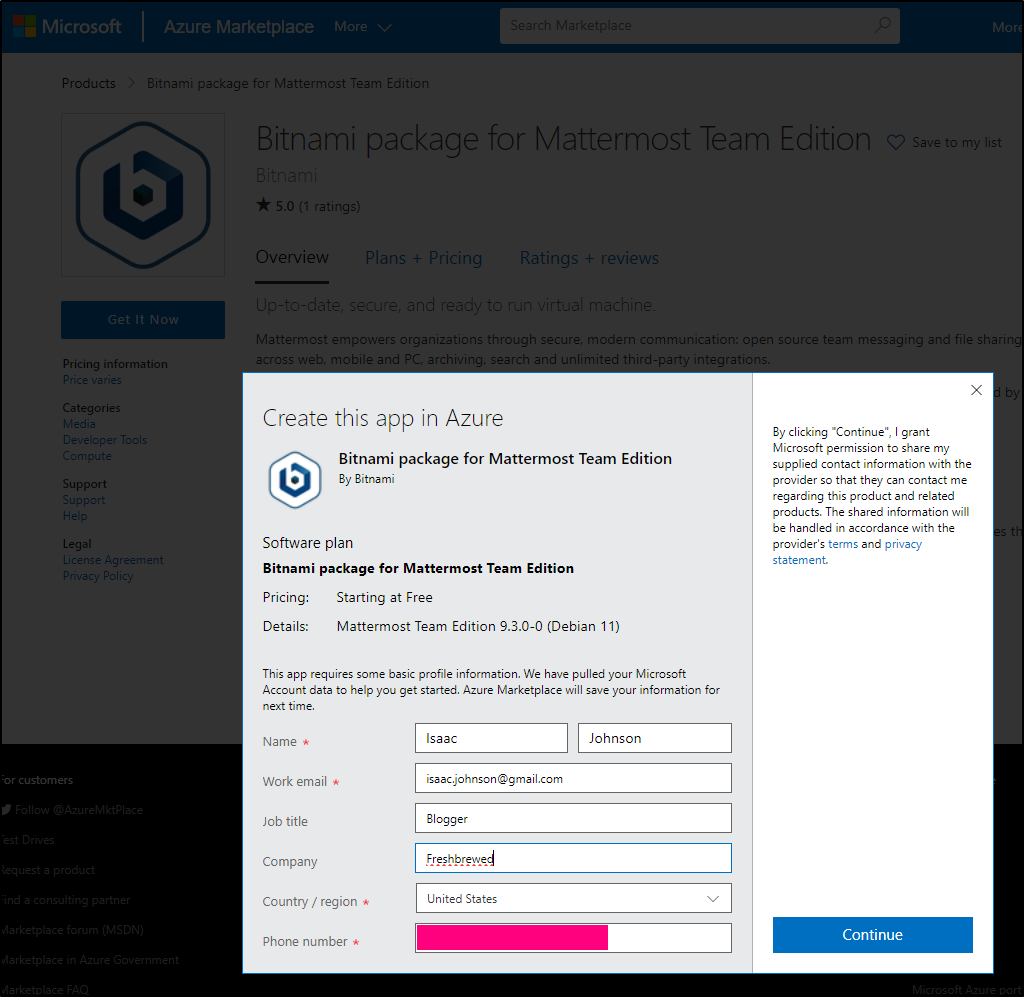

or Azure

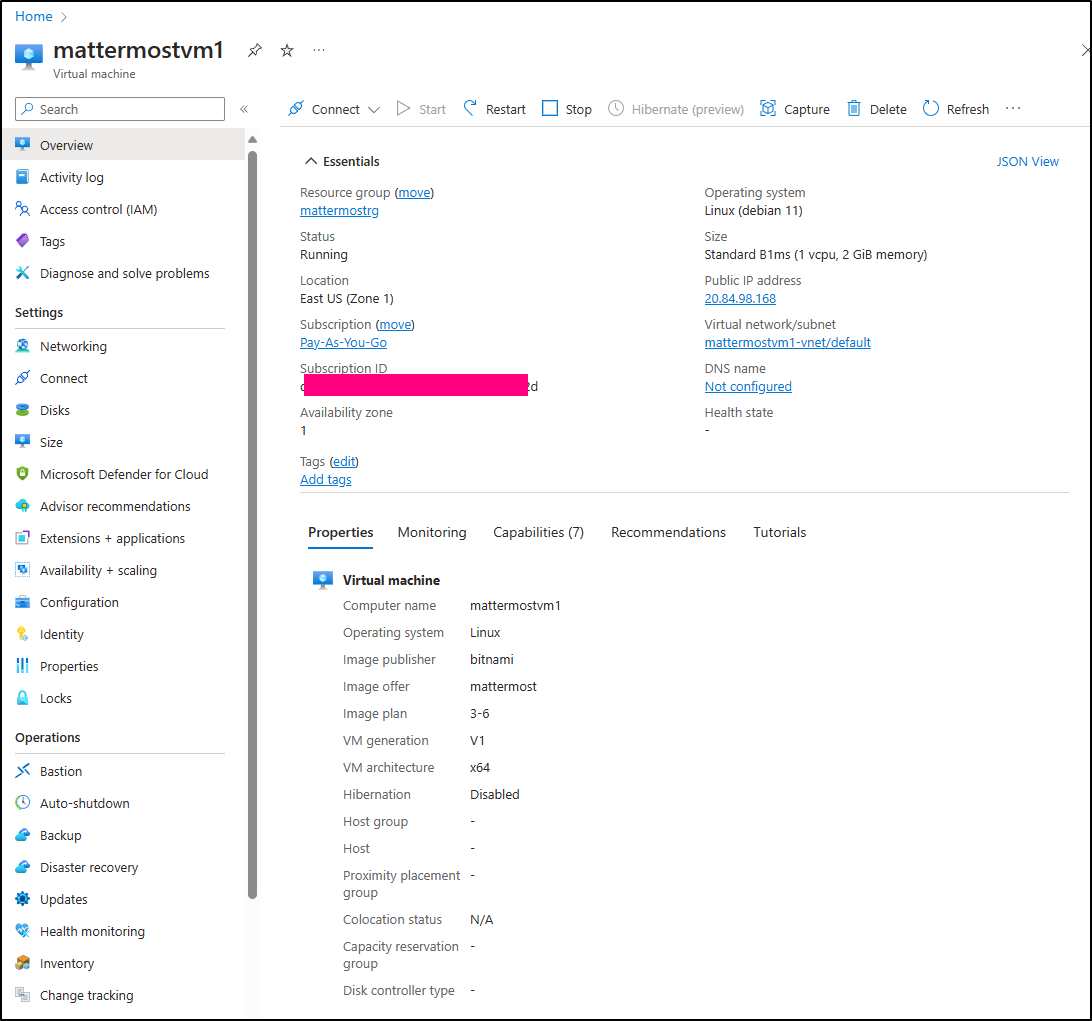

In Azure, I can confirm the cost is just the VM as the package from Bitnami is $0/hour

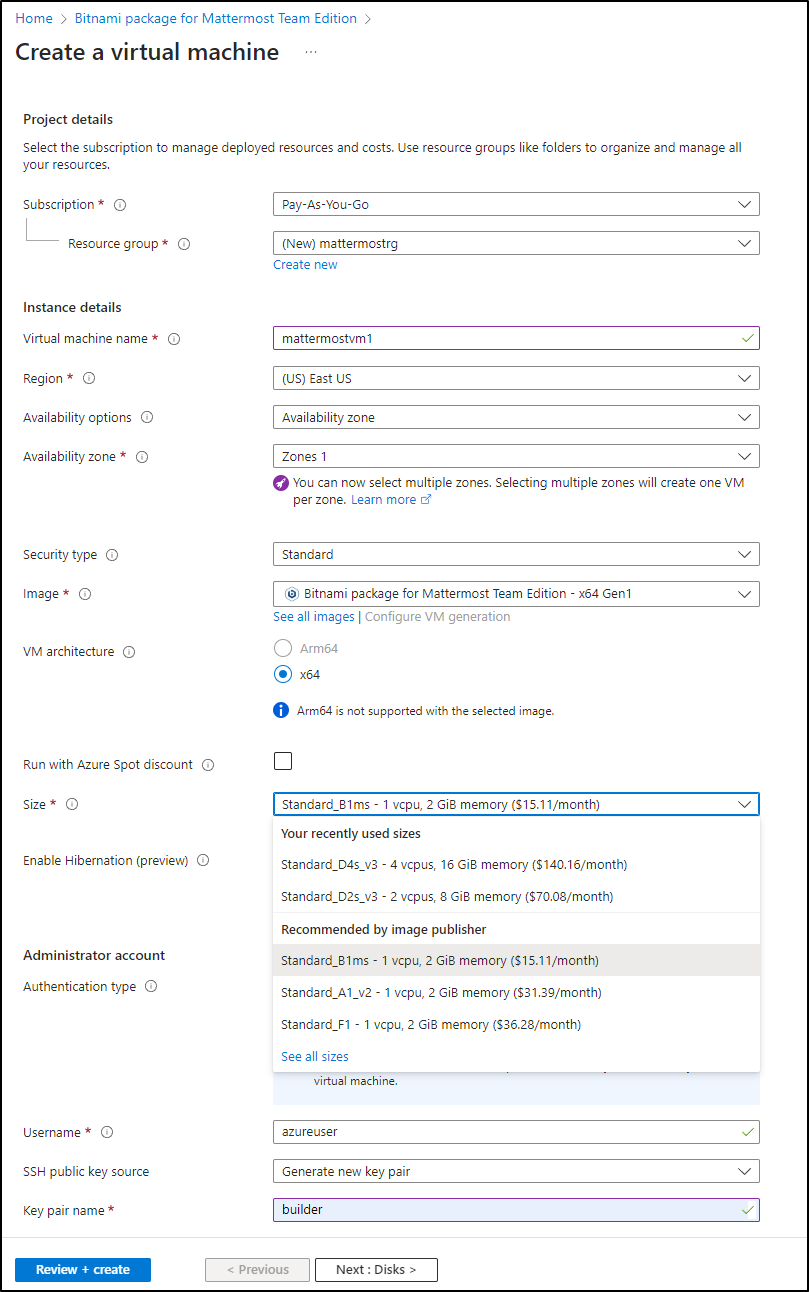

When creating, I’ll store this in a new Resource Group (mattermostrg). I can also pick from a few VM sizes. I’ll go with Standard_B1ms at $15.11 a month

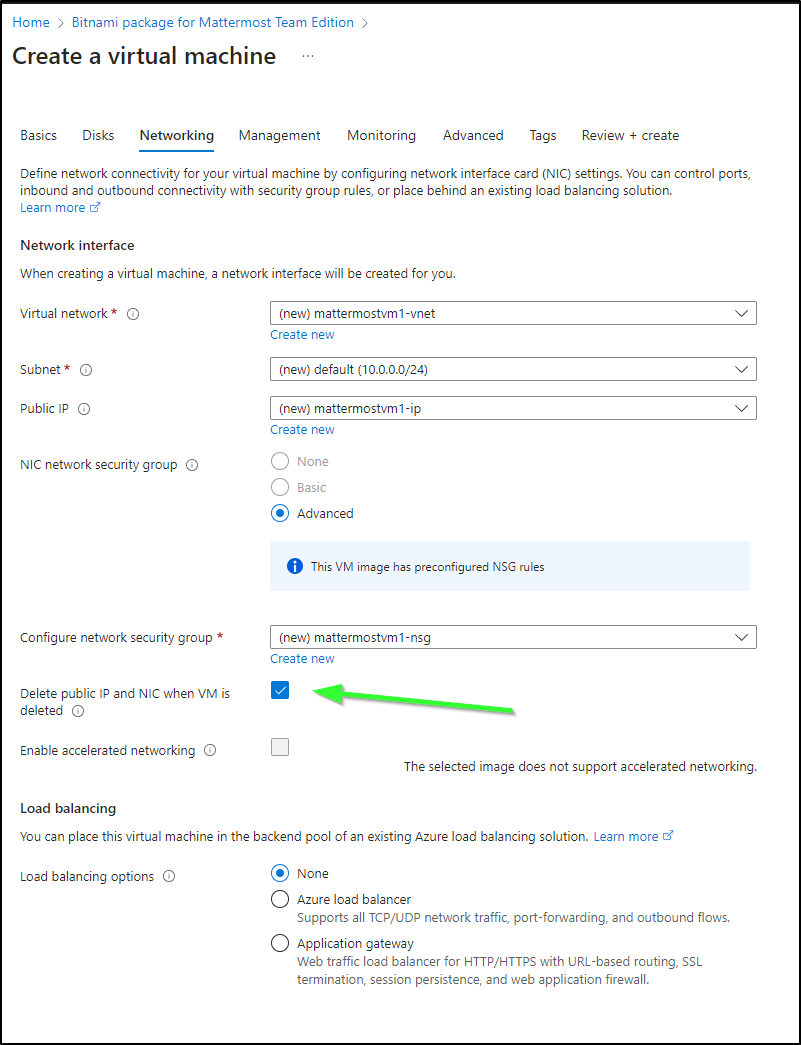

I did say to delete the Public IP if the VM goes away. A real HA setup would leave the default, unchecked

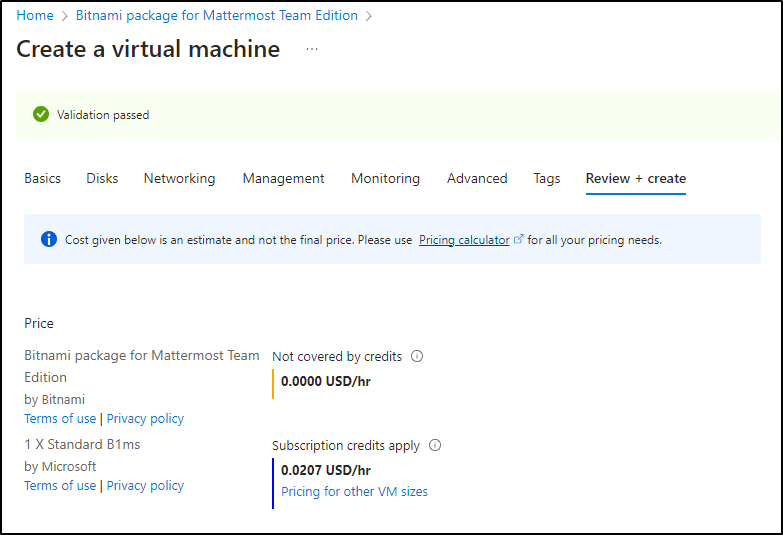

In my review and create we can see it will be just over 2c an hour for the VM and probably a little bit more for network and disk, but that seems reasonable.
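As a quick sanity check on that hourly figure, the monthly price quoted above can be converted to cents per hour. This sketch assumes a 730-hour month (365 days × 24 hours ÷ 12), which is my assumption rather than a number from the Azure portal:

```python
# Assumption: an average month is 365 * 24 / 12 = 730 hours.
HOURS_PER_MONTH = 365 * 24 / 12

monthly_usd = 15.11  # Standard_B1ms price quoted in the portal above
hourly_usd = monthly_usd / HOURS_PER_MONTH

print(f"{hourly_usd * 100:.2f} cents/hour")  # prints "2.07 cents/hour"
```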

On create, it will prompt me to save the PEM

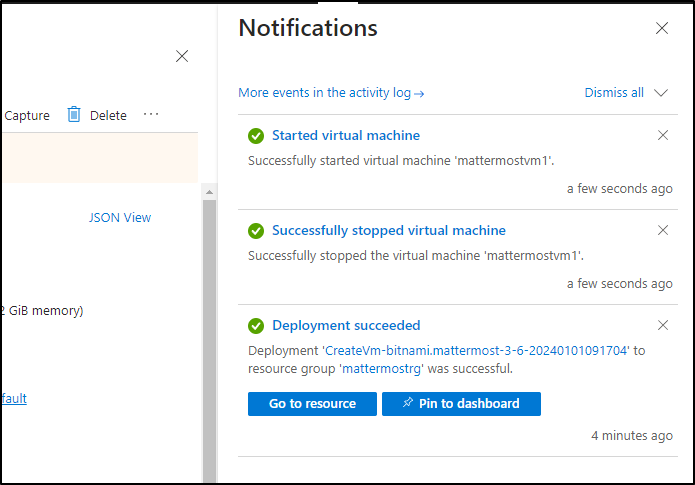

I got an error on first launch

I figured I would try the universal fix of turning it off and on again

When that completed, I was in the middle of logging in

$ ssh -i /home/builder/.ssh/builder.pem azureuser@20.84.98.168

Linux mattermostvm1 5.10.0-26-cloud-amd64 #1 SMP Debian 5.10.197-1 (2023-09-29) x86_64

The programs included with the Debian GNU/Linux system are free software;

the exact distribution terms for each program are described in the

individual files in /usr/share/doc/*/copyright.

Debian GNU/Linux comes with ABSOLUTELY NO WARRANTY, to the extent

permitted by applicable law.

___ _ _ _

| _ |_) |_ _ _ __ _ _ __ (_)

| _ \ | _| ' \/ _` | ' \| |

|___/_|\__|_|_|\__,_|_|_|_|_|

*** Welcome to the Bitnami package for Mattermost Team Edition 9.3.0-0 ***

*** Documentation: https://docs.bitnami.com/azure/apps/mattermost/ ***

*** https://docs.bitnami.com/azure/ ***

*** Bitnami Forums: https://github.com/bitnami/vms/ ***

bitnami@mattermostvm1:~$

when the site came up. So we will just assume that, given the small size of the host, it just takes some time to start

This time the call worked

Sharing is kind of interesting in that I see a browser notification that I’m sharing that browser but in Mattermost, it’s a small live icon. Both let me stop sharing.

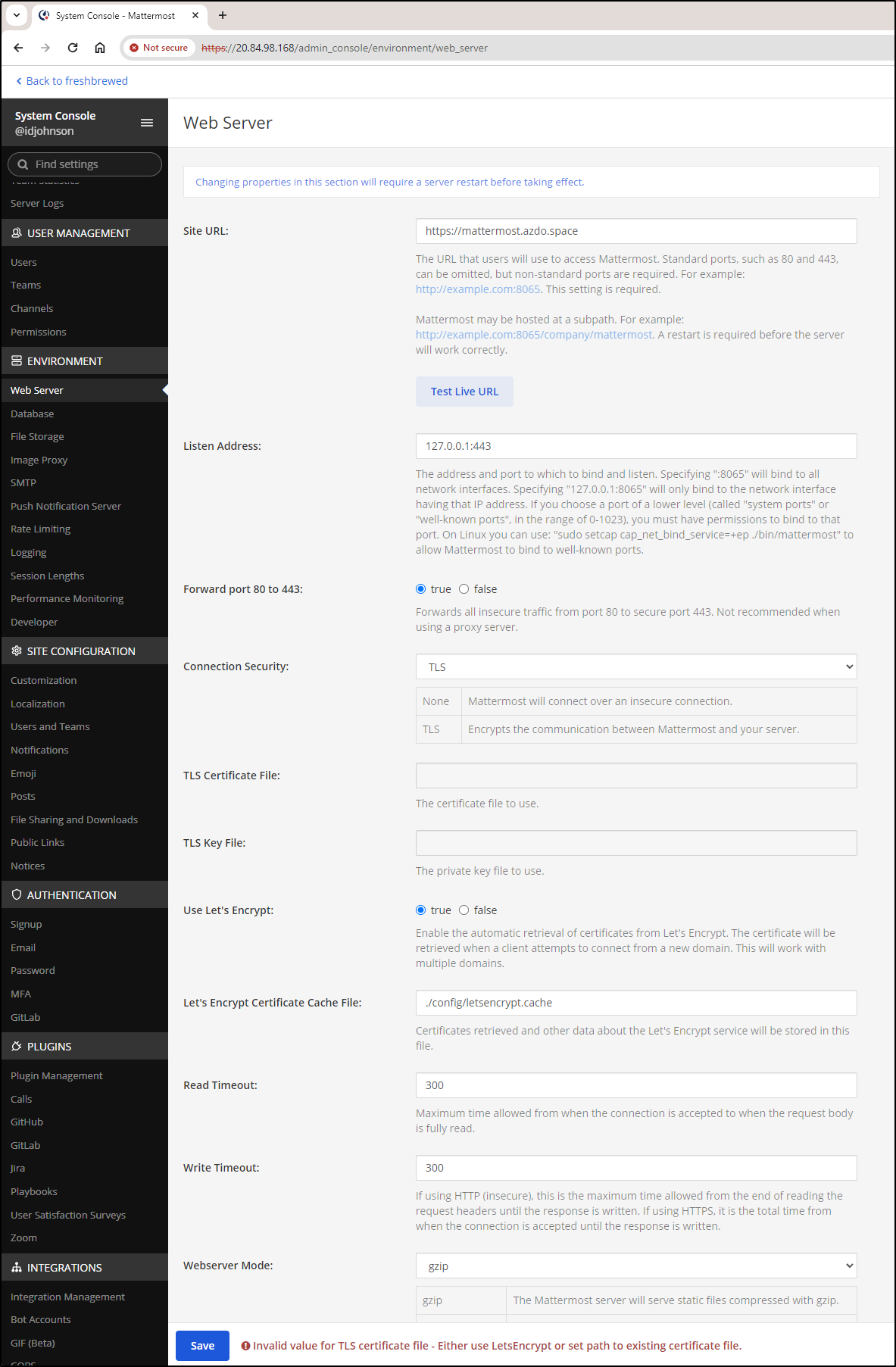

Let’s try to set up Let’s Encrypt (LE) and a domain name. I created a quick A record for azdo.space

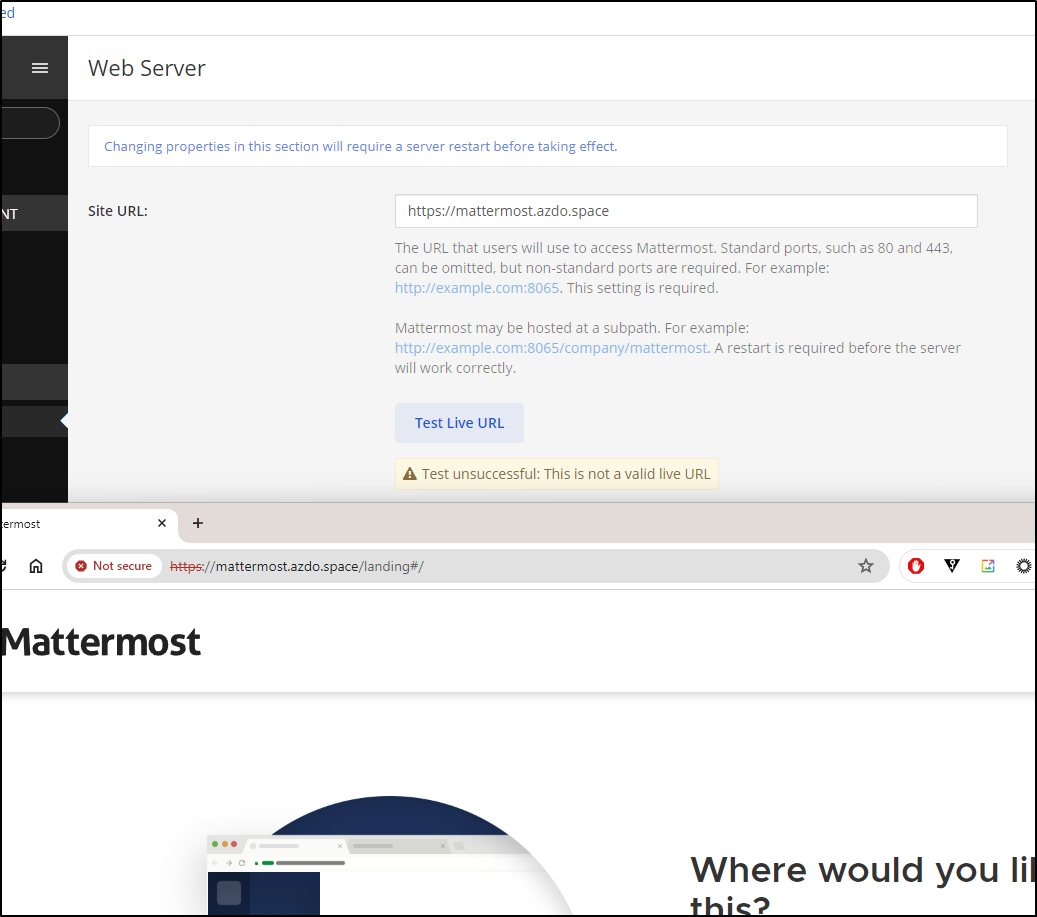

I saved it, but I’m not certain it took effect.

I can see it resolve, but the “Test Live URL” says it’s not valid

Bitnami steps to check services do not seem to work

bitnami@mattermostvm1:~$ sudo /opt/bitnami/ctlscript.sh status

Control file '/etc/gonit/gonitrc' does not exists

Even if I create the missing rc file

bitnami@mattermostvm1:~$ sudo /opt/bitnami/ctlscript.sh status mattermost

Cannot find any running daemon to contact. If it is running, make sure you are pointing to the right pid file (/var/run/gonit.pid)

There is no service either for systemctl

bitnami@mattermostvm1:~$ sudo systemctl restart mattermost

Failed to restart mattermost.service: Unit mattermost.service not found.

However, I can always do the old offnonagain

bitnami@mattermostvm1:~$ sudo reboot now

bitnami@mattermostvm1:~$ Connection to 20.84.98.168 closed by remote host.

Connection to 20.84.98.168 closed.

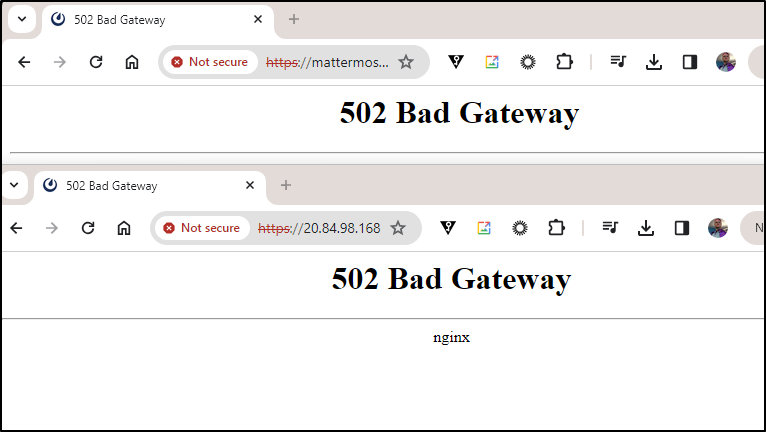

I gave it a solid 30m and still nothing

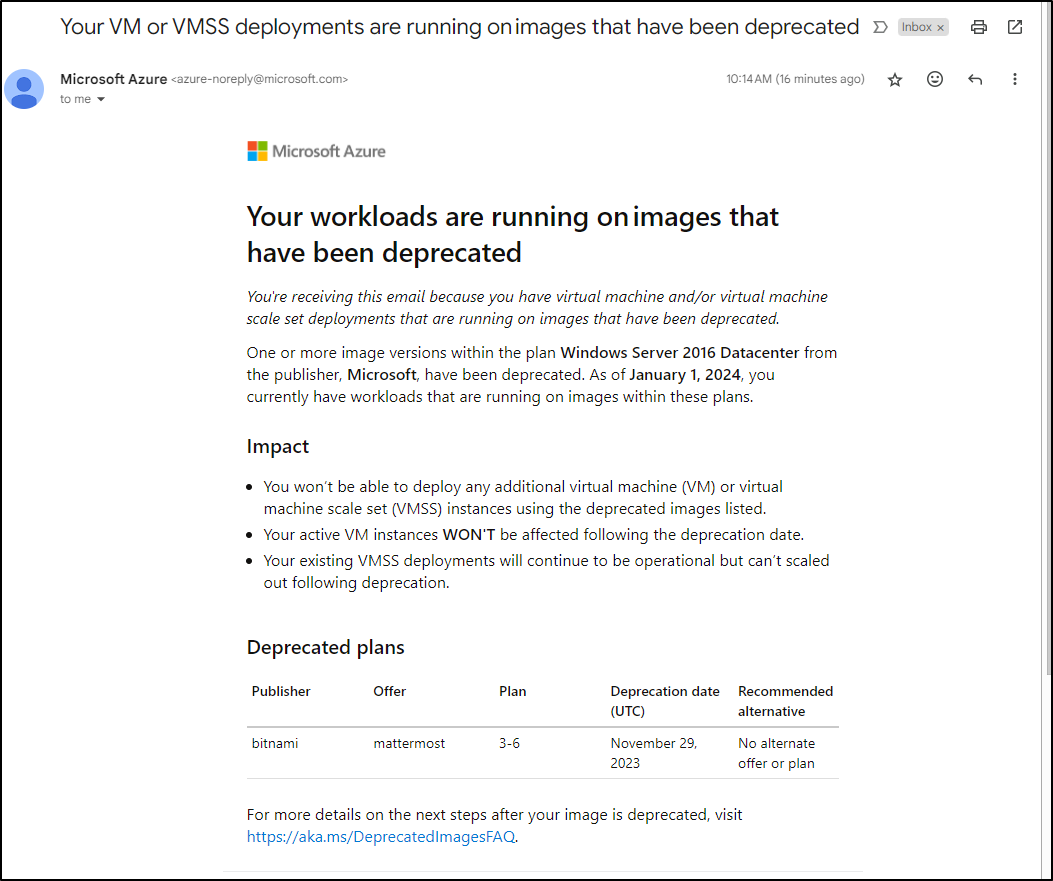

While waiting for it to come up, I was surprised to see an email from Azure suggesting this image was built on Windows 2016 DC

I wonder if it’s just a mistake as I can see the OS is Debian and SSH worked without issue

I went back onto the host and checked to see what was listening on 8065. I dove into the Nginx conf until I figured out Nginx just proxies everything over to Mattermost locally

bitnami@mattermostvm1:/var/log$ cat /opt/bitnami/nginx/conf/server_blocks/mattermost-https-server-block.conf

upstream backend {

server 127.0.0.1:8065;

}

proxy_cache_path /opt/bitnami/nginx/tmp/cache levels=1:2 keys_zone=mattermost_cache:10m max_size=3g inactive=120m use_temp_path=off;

server {

# Port to listen on, can also be set in IP:PORT format

listen 443 ssl default_server;

root /opt/bitnami/mattermost;

# Catch-all server block

# See: https://nginx.org/en/docs/http/server_names.html#miscellaneous_names

server_name _;

ssl_certificate bitnami/certs/server.crt;

ssl_certificate_key bitnami/certs/server.key;

ssl_session_timeout 1d;

# Enable TLS versions (TLSv1.3 is required upcoming HTTP/3 QUIC).

ssl_protocols TLSv1.2 TLSv1.3;

# Enable TLSv1.3's 0-RTT. Use $ssl_early_data when reverse proxying to

# prevent replay attacks.

#

# @see: https://nginx.org/en/docs/http/ngx_http_ssl_module.html#ssl_early_data

ssl_early_data on;

ssl_ciphers 'ECDHE-ECDSA-AES256-GCM-SHA384:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-CHACHA20-POLY1305:ECDHE-RSA-CHACHA20-POLY1305:ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES256-SHA384:ECDHE-RSA-AES256-SHA384:ECDHE-ECDSA-AES128-SHA256:ECDHE-RSA-AES128-SHA256';

ssl_prefer_server_ciphers on;

ssl_session_cache shared:SSL:50m;

# HSTS (ngx_http_headers_module is required) (15768000 seconds = 6 months)

add_header Strict-Transport-Security max-age=15768000;

# OCSP Stapling ---

# fetch OCSP records from URL in ssl_certificate and cache them

#ssl_stapling on;

#ssl_stapling_verify on;

location ~ /api/v[0-9]+/(users/)?websocket$ {

proxy_set_header Upgrade $http_upgrade;

proxy_set_header Connection "upgrade";

client_max_body_size 50M;

proxy_set_header Host $http_host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header X-Forwarded-Proto $scheme;

proxy_set_header X-Frame-Options SAMEORIGIN;

proxy_buffers 256 16k;

proxy_buffer_size 16k;

client_body_timeout 60;

send_timeout 300;

lingering_timeout 5;

proxy_connect_timeout 90;

proxy_send_timeout 300;

proxy_read_timeout 90s;

proxy_http_version 1.1;

proxy_pass http://backend;

}

location / {

client_max_body_size 50M;

proxy_set_header Connection "";

proxy_set_header Host $http_host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header X-Forwarded-Proto $scheme;

proxy_set_header X-Frame-Options SAMEORIGIN;

proxy_buffers 256 16k;

proxy_buffer_size 16k;

proxy_read_timeout 600s;

proxy_cache mattermost_cache;

proxy_cache_revalidate on;

proxy_cache_min_uses 2;

proxy_cache_use_stale timeout;

proxy_cache_lock on;

proxy_http_version 1.1;

proxy_pass http://backend;

}

include "/opt/bitnami/nginx/conf/bitnami/*.conf";

}

And nothing is listening on 8065 now

bitnami@mattermostvm1:/var/log$ sudo netstat -tulpn | grep LISTEN

tcp 0 0 0.0.0.0:443 0.0.0.0:* LISTEN 686/nginx: master p

tcp 0 0 127.0.0.1:3306 0.0.0.0:* LISTEN 704/mysqld

tcp 0 0 0.0.0.0:80 0.0.0.0:* LISTEN 686/nginx: master p

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 1589/sshd: /usr/sbi

tcp6 0 0 :::33060 :::* LISTEN 704/mysqld

tcp6 0 0 :::22 :::* LISTEN 1589/sshd: /usr/sbi
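Rather than reading netstat output by eye, the same check can be scripted by attempting a TCP connection to the backend port that Nginx proxies to. This is a minimal sketch with a made-up helper name. It only tells you whether something accepts connections on the port, not whether Mattermost itself is healthy:

```python
import socket


def is_listening(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers connection refused, timeouts and unreachable hosts.
        return False


if __name__ == "__main__":
    # 8065 is the upstream port from the Nginx server block above.
    print("mattermost backend listening:", is_listening("127.0.0.1", 8065))
```

Run on the VM in the state shown above, this should report False, since nothing is bound to 8065.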

Doing a start hung at the Mattermost step

bitnami@mattermostvm1:/etc/init.d$ sudo /etc/init.d/bitnami start

## 2024-01-01 16:38:32+00:00 ## INFO ## Running /opt/bitnami/var/init/pre-start/010_wait_waagent...

## 2024-01-01 16:38:32+00:00 ## INFO ## Running /opt/bitnami/var/init/pre-start/020_wait_until_mnt_mounted...

## 2024-01-01 16:38:32+00:00 ## INFO ## Running /opt/bitnami/var/init/pre-start/030_resize_fs...

resize2fs 1.46.2 (28-Feb-2021)

The filesystem is already 7831547 (4k) blocks long. Nothing to do!

## 2024-01-01 16:38:32+00:00 ## INFO ## Running /opt/bitnami/var/init/pre-start/040_hostname...

## 2024-01-01 16:38:32+00:00 ## INFO ## Running /opt/bitnami/var/init/pre-start/050_swap_file...

## 2024-01-01 16:38:32+00:00 ## INFO ## Running /opt/bitnami/var/init/pre-start/060_get_default_passwords...

## 2024-01-01 16:38:32+00:00 ## INFO ## Starting services...

2024-01-01T16:38:32.748Z - info: Saving configuration info to disk

2024-01-01T16:38:32.975Z - info: Performing service start operation for nginx

nginx 16:38:33.14 INFO ==> nginx is already running

2024-01-01T16:38:33.146Z - info: Performing service start operation for mysql

mysql 16:38:33.28 INFO ==> mysql is already running

2024-01-01T16:38:33.282Z - info: Performing service start operation for mattermost

I tried again

bitnami@mattermostvm1:/opt/bitnami$ sudo /opt/bitnami/ctlscript.sh start

Starting services..

Job for bitnami.service failed because the control process exited with error code.

See "systemctl status bitnami.service" and "journalctl -xe" for details.

bitnami@mattermostvm1:/opt/bitnami$ journalctl -xe

Jan 01 16:47:04 mattermostvm1 systemd[1]: This usually indicates unclean termination of a previous run, or service implementation deficiencies.

Jan 01 16:47:04 mattermostvm1 systemd[1]: bitnami.service: Found left-over process 689 (nginx) in control group while starting unit. Ignoring.

Jan 01 16:47:04 mattermostvm1 systemd[1]: This usually indicates unclean termination of a previous run, or service implementation deficiencies.

Jan 01 16:47:04 mattermostvm1 systemd[1]: bitnami.service: Found left-over process 690 (nginx) in control group while starting unit. Ignoring.

Jan 01 16:47:04 mattermostvm1 systemd[1]: This usually indicates unclean termination of a previous run, or service implementation deficiencies.

Jan 01 16:47:04 mattermostvm1 systemd[1]: bitnami.service: Found left-over process 704 (mysqld) in control group while starting unit. Ignoring.

Jan 01 16:47:04 mattermostvm1 systemd[1]: This usually indicates unclean termination of a previous run, or service implementation deficiencies.

Jan 01 16:47:04 mattermostvm1 bitnami[2408]: ## 2024-01-01 16:47:04+00:00 ## INFO ## Running /opt/bitnami/var/init/pre-start/010_wait_waagent...

Jan 01 16:47:04 mattermostvm1 systemd[1]: Starting LSB: bitnami init script...

░░ Subject: A start job for unit bitnami.service has begun execution

░░ Defined-By: systemd

░░ Support: https://www.debian.org/support

░░

░░ A start job for unit bitnami.service has begun execution.

░░

░░ The job identifier is 485.

Jan 01 16:47:04 mattermostvm1 bitnami[2408]: ## 2024-01-01 16:47:04+00:00 ## INFO ## Running /opt/bitnami/var/init/pre-start/020_wait_until_mnt_mounted...

Jan 01 16:47:04 mattermostvm1 bitnami[2408]: ## 2024-01-01 16:47:04+00:00 ## INFO ## Running /opt/bitnami/var/init/pre-start/030_resize_fs...

Jan 01 16:47:04 mattermostvm1 bitnami[2408]: resize2fs 1.46.2 (28-Feb-2021)

Jan 01 16:47:04 mattermostvm1 bitnami[2408]: The filesystem is already 7831547 (4k) blocks long. Nothing to do!

Jan 01 16:47:04 mattermostvm1 bitnami[2408]: ## 2024-01-01 16:47:04+00:00 ## INFO ## Running /opt/bitnami/var/init/pre-start/040_hostname...

Jan 01 16:47:04 mattermostvm1 bitnami[2408]: ## 2024-01-01 16:47:04+00:00 ## INFO ## Running /opt/bitnami/var/init/pre-start/050_swap_file...

Jan 01 16:47:04 mattermostvm1 bitnami[2408]: ## 2024-01-01 16:47:04+00:00 ## INFO ## Running /opt/bitnami/var/init/pre-start/060_get_default_passwords...

Jan 01 16:47:04 mattermostvm1 bitnami[2404]: ## 2024-01-01 16:47:04+00:00 ## INFO ## Starting services...

Jan 01 16:47:05 mattermostvm1 bitnami[2478]: 2024-01-01T16:47:05.236Z - info: Saving configuration info to disk

Jan 01 16:47:05 mattermostvm1 bitnami[2478]: 2024-01-01T16:47:05.472Z - info: Performing service start operation for nginx

Jan 01 16:47:05 mattermostvm1 bitnami[2478]: nginx 16:47:05.65 INFO ==> nginx is already running

Jan 01 16:47:05 mattermostvm1 bitnami[2478]: 2024-01-01T16:47:05.656Z - info: Performing service start operation for mysql

Jan 01 16:47:05 mattermostvm1 bitnami[2478]: mysql 16:47:05.79 INFO ==> mysql is already running

Jan 01 16:47:05 mattermostvm1 bitnami[2478]: 2024-01-01T16:47:05.798Z - info: Performing service start operation for mattermost

Jan 01 16:47:15 mattermostvm1 dhclient[440]: XMT: Solicit on eth0, interval 120540ms.

Jan 01 16:49:15 mattermostvm1 dhclient[440]: XMT: Solicit on eth0, interval 112850ms.

Jan 01 16:49:45 mattermostvm1 bitnami[2478]: 2024-01-01T16:49:45.042Z - error: Unable to perform start operation Export start for mattermost failed with exit code 1

Jan 01 16:49:45 mattermostvm1 bitnami[2707]: ## 2024-01-01 16:49:45+00:00 ## INFO ## Running /opt/bitnami/var/init/post-start/010_detect_new_account...

Jan 01 16:49:45 mattermostvm1 bitnami[2707]: ## 2024-01-01 16:49:45+00:00 ## INFO ## Running /opt/bitnami/var/init/post-start/020_bitnami_agent_extra...

Jan 01 16:49:45 mattermostvm1 bitnami[2707]: ## 2024-01-01 16:49:45+00:00 ## INFO ## Running /opt/bitnami/var/init/post-start/030_bitnami_agent...

Jan 01 16:49:45 mattermostvm1 bitnami[2707]: ## 2024-01-01 16:49:45+00:00 ## INFO ## Running /opt/bitnami/var/init/post-start/040_update_welcome_file...

Jan 01 16:49:45 mattermostvm1 bitnami[2707]: ## 2024-01-01 16:49:45+00:00 ## INFO ## Running /opt/bitnami/var/init/post-start/050_bitnami_credentials_file...

Jan 01 16:49:45 mattermostvm1 bitnami[2707]: ## 2024-01-01 16:49:45+00:00 ## INFO ## Running /opt/bitnami/var/init/post-start/060_clean_metadata...

Jan 01 16:49:45 mattermostvm1 systemd[1]: bitnami.service: Control process exited, code=exited, status=1/FAILURE

░░ Subject: Unit process exited

░░ Defined-By: systemd

░░ Support: https://www.debian.org/support

░░

░░ An ExecStart= process belonging to unit bitnami.service has exited.

░░

░░ The process' exit code is 'exited' and its exit status is 1.

Jan 01 16:49:45 mattermostvm1 systemd[1]: bitnami.service: Failed with result 'exit-code'.

░░ Subject: Unit failed

░░ Defined-By: systemd

░░ Support: https://www.debian.org/support

░░

░░ The unit bitnami.service has entered the 'failed' state with result 'exit-code'.

Jan 01 16:49:45 mattermostvm1 sudo[2395]: pam_unix(sudo:session): session closed for user root

Jan 01 16:49:45 mattermostvm1 systemd[1]: bitnami.service: Unit process 686 (nginx) remains running after unit stopped.

Jan 01 16:49:45 mattermostvm1 systemd[1]: bitnami.service: Unit process 689 (nginx) remains running after unit stopped.

Jan 01 16:49:45 mattermostvm1 systemd[1]: bitnami.service: Unit process 690 (nginx) remains running after unit stopped.

Jan 01 16:49:45 mattermostvm1 systemd[1]: bitnami.service: Unit process 704 (mysqld) remains running after unit stopped.

Jan 01 16:49:45 mattermostvm1 systemd[1]: Failed to start LSB: bitnami init script.

░░ Subject: A start job for unit bitnami.service has failed

░░ Defined-By: systemd

░░ Support: https://www.debian.org/support

░░

░░ A start job for unit bitnami.service has finished with a failure.

░░

░░ The job identifier is 485 and the job result is failed.

Jan 01 16:49:45 mattermostvm1 systemd[1]: bitnami.service: Consumed 32.682s CPU time.

░░ Subject: Resources consumed by unit runtime

░░ Defined-By: systemd

░░ Support: https://www.debian.org/support

░░

░░ The unit bitnami.service completed and consumed the indicated resources.

bitnami@mattermostvm1:/opt/bitnami$ cat /opt/bitnami/var/init/post-start/060_clean_metadata

#!/bin/sh

# Copyright VMware, Inc.

# SPDX-License-Identifier: APACHE-2.0

#

# Bitnami Clean metadata files after initialization completes

#

. /opt/bitnami/scripts/init/functions

run_once_globally_check "clean_metadata"

if [ $? -ne 0 ]; then

exit 0

fi

# remove metadata password

set_stored_data metadata_applications_password ""

set_stored_data metadata_system_pass…

$ sudo systemctl disable apache2.service

Failed to disable unit: Unit file apache2.service does not exist.
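The journal dump above is long, and the only line that names the failing step is the "Unable to perform start operation" entry. A rough sketch of narrowing the output, assuming the unit is `bitnami.service` as shown in the log; the helper name `only_failures` is hypothetical.

```shell
#!/bin/sh
# Keep only lines that mention an error or a failure (case-insensitive).
# Reads from stdin so it can sit at the end of any log pipeline.
only_failures() {
    grep -i -E 'error|fail'
}

# Usage on the VM: restrict the journal to the one unit, then filter.
#   journalctl -u bitnami.service --no-pager --since "15 minutes ago" | only_failures
```

This only surfaces what the wrapper reported. It does not explain why Mattermost itself exited with code 1; that would need the application's own log.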

Cleanup

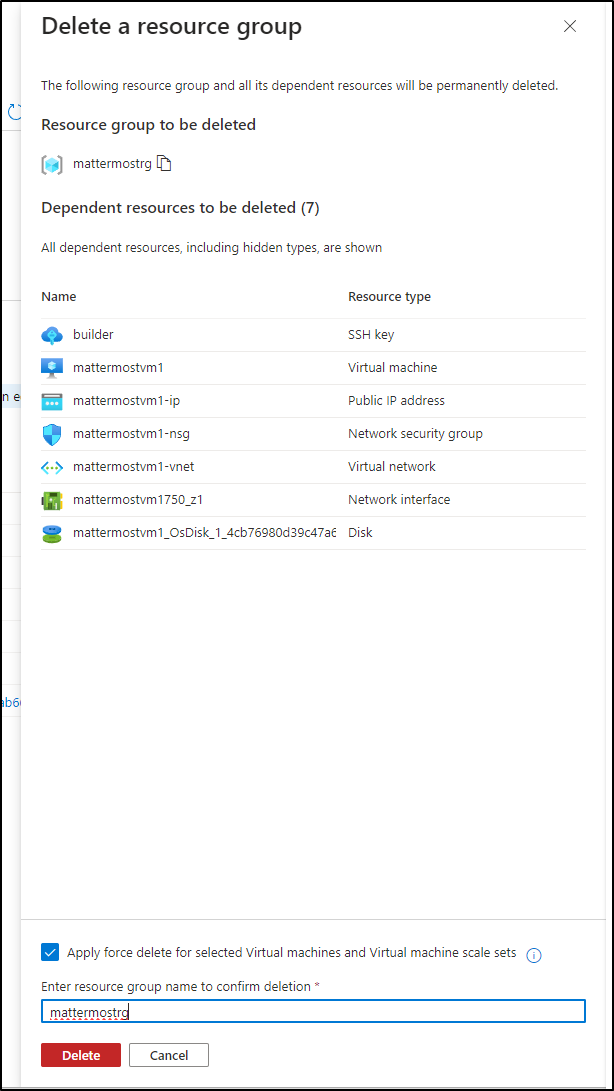

I’m done messing with this Azure host. I deleted it by just removing the Resource Group
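Removing the Resource Group can also be done from the Azure CLI. This is only a sketch: the group name `mattermost-rg` is a placeholder (the real name is not given here), and the `delete_rg` wrapper with its dry-run default is an assumption added so nothing gets deleted by accident.

```shell
#!/bin/sh
# Hypothetical resource group name; substitute the group that holds the VM.
RG="${RG:-mattermost-rg}"

# Print the command by default; set DRY_RUN=0 to really run it.
# --yes skips the confirmation prompt, --no-wait returns before deletion finishes.
delete_rg() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "az group delete --name $1 --yes --no-wait"
    else
        az group delete --name "$1" --yes --no-wait
    fi
}

delete_rg "$RG"
```

Deleting the group removes everything inside it (VM, disk, NIC, public IP), which is why a throwaway group per experiment keeps cleanup simple.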

Comparison with Matrix Synapse

I can see video calls work with Element (Matrix). I’ve written about Matrix Synapse and Element, which provides Web and Mobile clients for Matrix. It’s part of the Fediverse. While the Fediverse and Fediramp are slightly more complicated to think through, basically we are creating a sort of global decentralized platform. The fact is, it is really easy to provide the chat, meeting room, and video call features in something that is open and free.

Here is a terrible visage of my unkempt mug over holiday break calling myself using Element on Android and Element on the web using different accounts:

While in the call, I can see screenshare works there too

So, if the video calling feature really is a deal-breaker, one can use something like Zoom, Element, Teams, Meet (or whatever Google calls their chat service this month).

Pricing

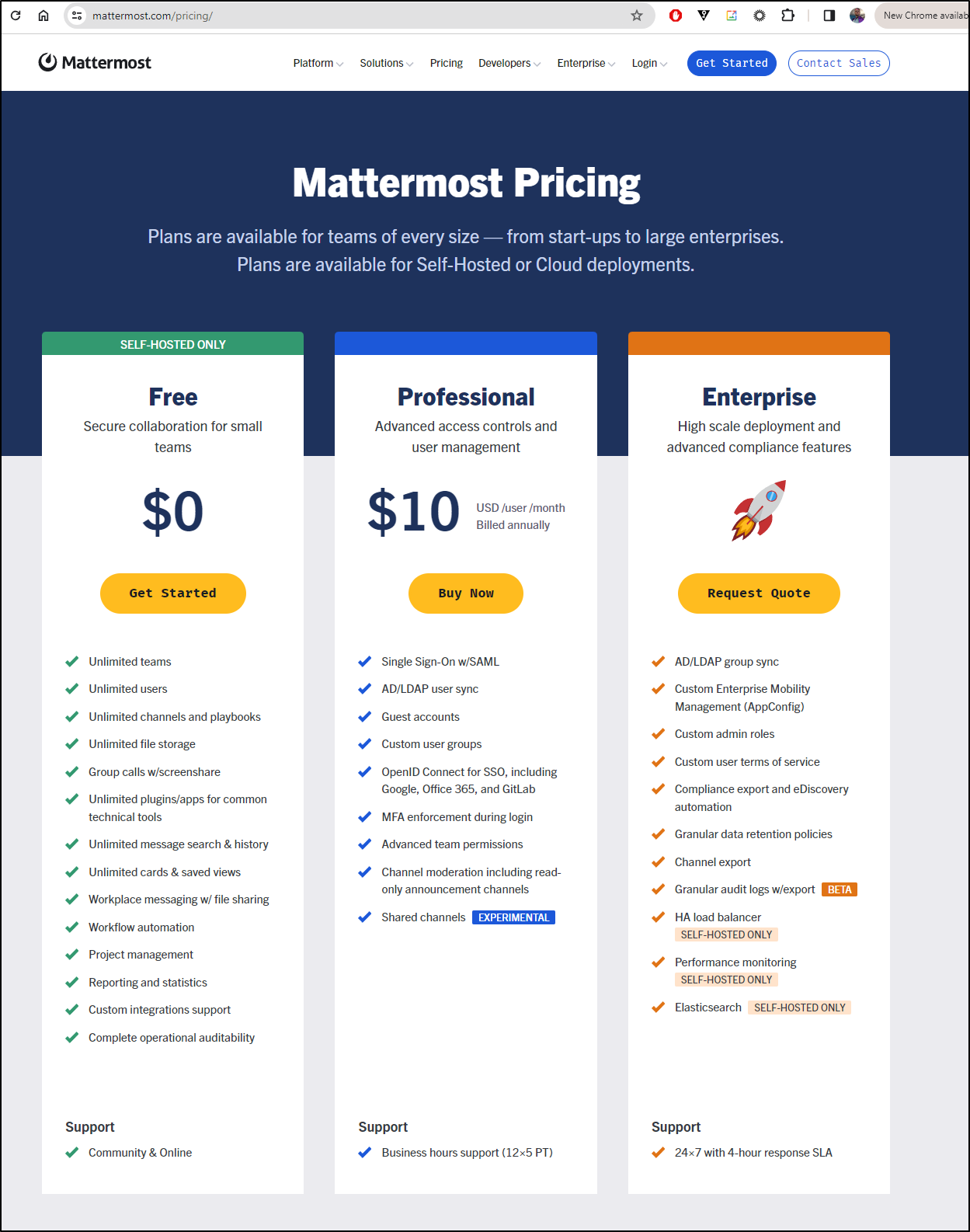

The current pricing is $120/user/year for Professional and “ask me” for Enterprise

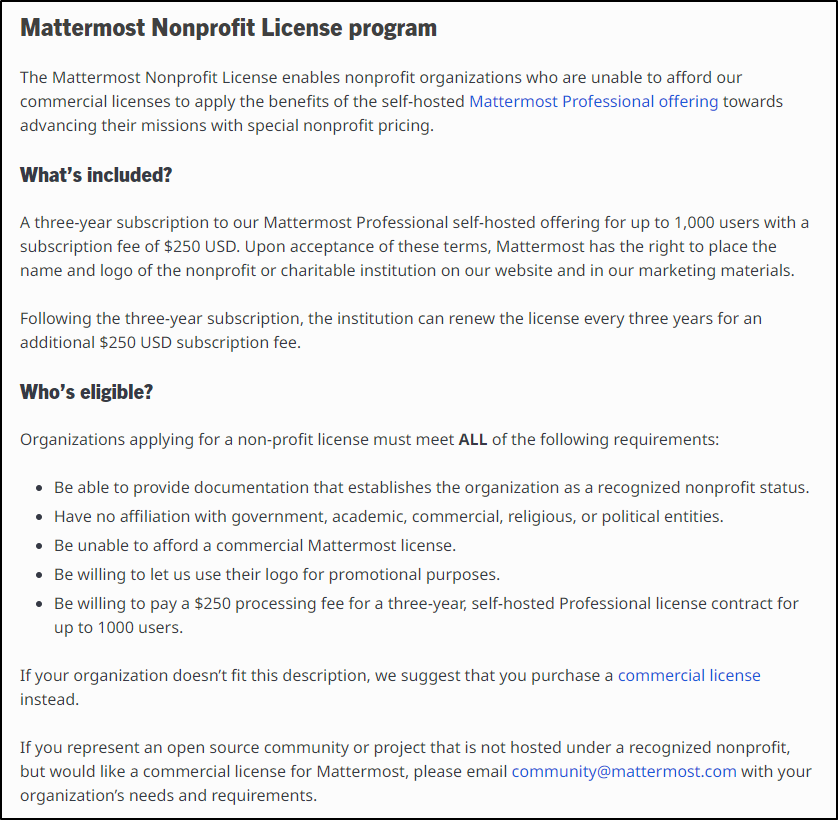

I should note they do have a non-profit program that will grant a 3-year license for $250:

Company

Mattermost was founded in 2016 by Corey Hulen and Ian Tien. It offers a suite of workflow-centric tools that integrate team messaging, audio and screen share, workflow automation and project management. Corey and Ian both worked at Microsoft between 2004 and 2008, and both have led successful prior startups.

Mattermost is headquartered in Palo Alto, California, and operates as a remote-first company with a global team of 100 to 250 (Pitchbook says 159, which lines up with LinkedIn).

Summary

It’s an interesting product. I expected some form of SaaS/Cloud, but really it is just a host-it-yourself software product that links to some canned marketplace items in cloud providers.

It has a lot going for it with playbooks. I can see a full implementation being great for an Ops or SRE team. The ability to direct the flow seems perfect for a NOC.

For me, however, I’m not wild about the limited Kubernetes deployment. I might come back for a Helm chart or an updated Operator with better Ingress support.