Published: Jan 8, 2024 by Isaac Johnson

Some folks at CommitGo (the company that provides Gitea Cloud) asked me why I thought Gitea ate my repositories.

In my Maintenance post last month I stated that it “dropped support for MySQL”, yet I am clearly using it with MySQL today.

I wanted to run some tests to either confirm I was in error or show that there really was a Helm chart issue. Let’s see (honestly, I have no idea what I’ll find as I write this).

Set up the first

I know I wrote my first Gitea post and its immediate follow-up in early August 2023.

Based on that, it’s quite likely I used Helm chart v9.1.0.

To test this, I’ll create a new database and a new Route53 A record.

```
$ cat r53-giteatest.json
{
  "Comment": "CREATE giteatest fb.s A record ",
  "Changes": [
    {
      "Action": "CREATE",
      "ResourceRecordSet": {
        "Name": "giteatest.freshbrewed.science",
        "Type": "A",
        "TTL": 300,
        "ResourceRecords": [
          {
            "Value": "75.73.224.240"
          }
        ]
      }
    }
  ]
}
$ aws route53 change-resource-record-sets --hosted-zone-id Z39E8QFU0F9PZP --change-batch file://r53-giteatest.json
{
    "ChangeInfo": {
        "Id": "/change/C08354533QL8OS83DJ4LY",
        "Status": "PENDING",
        "SubmittedAt": "2024-01-06T21:52:22.963Z",
        "Comment": "CREATE giteatest fb.s A record "
    }
}
```
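If you create throwaway records like this often, generating the change batch saves hand-editing JSON. Below is a minimal sketch that assumes a POSIX shell and inputs needing no JSON escaping. route53_a_record_batch is a hypothetical helper name, and it emits only the change-batch fields already used above:

```shell
#!/bin/sh
# Hypothetical helper: print a Route53 change batch that CREATEs one A record.
# Arguments: record name, IPv4 address, optional TTL (default 300), optional comment.
# Values are inserted verbatim (no JSON escaping), so avoid quotes and backslashes.
route53_a_record_batch() {
  local name="$1" ip="$2" ttl="${3:-300}" comment="${4:-CREATE $1 A record}"
  cat <<EOF
{
  "Comment": "${comment}",
  "Changes": [
    {
      "Action": "CREATE",
      "ResourceRecordSet": {
        "Name": "${name}",
        "Type": "A",
        "TTL": ${ttl},
        "ResourceRecords": [
          { "Value": "${ip}" }
        ]
      }
    }
  ]
}
EOF
}

# Example (not run here):
#   route53_a_record_batch giteatest.freshbrewed.science 75.73.224.240 > r53-giteatest.json
#   aws route53 change-resource-record-sets --hosted-zone-id Z39E8QFU0F9PZP \
#     --change-batch file://r53-giteatest.json
```

Route53 rejects a CREATE for a record that already exists. Swapping the action to UPSERT would let the same batch be re-run safely.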

Next, I’ll create a database in MySQL (MariaDB, actually) for this instance. I don’t need to create a new user, since the existing gitea user will do.

```
ijohnson@sirnasilot:~$ mysql -u root -p
Enter password:
Welcome to the MariaDB monitor.  Commands end with ; or \g.
Your MariaDB connection id is 1415693
Server version: 10.11.2-MariaDB Source distribution

Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

MariaDB [(none)]> CREATE DATABASE giteatestdb CHARACTER SET 'utf8mb4' COLLATE 'utf8mb4_unicode_ci';
Query OK, 1 row affected (0.001 sec)

MariaDB [(none)]> GRANT ALL PRIVILEGES ON giteatestdb.* TO 'gitea'@'%';
Query OK, 0 rows affected (0.066 sec)

MariaDB [(none)]> FLUSH PRIVILEGES;
Query OK, 0 rows affected (0.115 sec)

MariaDB [(none)]> \q
Bye
```
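Since these steps get repeated for every test database, they can be scripted. Below is a minimal sketch with a hypothetical helper, gitea_db_sql, that prints the same three statements so they can be piped into the mysql client. It assumes the user already exists, as it does here. FLUSH PRIVILEGES is kept only to mirror the session above; a GRANT statement takes effect on its own.

```shell
#!/bin/sh
# Hypothetical helper: print the SQL used above to create a utf8mb4 database for
# Gitea and grant an existing user full access to it. It does not create the user.
# Arguments: database name, user name, optional host pattern (default '%').
gitea_db_sql() {
  local db="$1" user="$2" host="${3:-%}"
  cat <<EOF
CREATE DATABASE ${db} CHARACTER SET 'utf8mb4' COLLATE 'utf8mb4_unicode_ci';
GRANT ALL PRIVILEGES ON ${db}.* TO '${user}'@'${host}';
FLUSH PRIVILEGES;
EOF
}

# Example (not run here):
#   gitea_db_sql giteatestdb gitea | mysql -u root -p
```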

I want to replicate the original behavior, so I’ll first install as I did on Aug 3, without specifying the database block.

```
$ cat first.values.yaml
gitea:
  admin:
    username: "builder"
    password: "ThisIsNotMyPassowrd!"
    email: "isaac.johnson@gmail.com"
  config:
    actions:
      ENABLED: true
    server:
      DOMAIN: giteatest.freshbrewed.science
      ROOT_URL: https://giteatest.freshbrewed.science/
  metrics:
    enabled: true
global:
  storageClass: "managed-nfs-storage"
ingress:
  annotations:
    cert-manager.io/cluster-issuer: letsencrypt-prod
    ingress.kubernetes.io/proxy-body-size: "0"
    ingress.kubernetes.io/ssl-redirect: "true"
    kubernetes.io/ingress.class: nginx
    nginx.ingress.kubernetes.io/proxy-body-size: "0"
    nginx.ingress.kubernetes.io/proxy-read-timeout: "600"
    nginx.ingress.kubernetes.io/proxy-send-timeout: "600"
    nginx.ingress.kubernetes.io/ssl-redirect: "true"
    nginx.org/client-max-body-size: "0"
    nginx.org/proxy-connect-timeout: "600"
    nginx.org/proxy-read-timeout: "600"
  enabled: true
  hosts:
    - host: giteatest.freshbrewed.science
      paths:
        - path: /
          pathType: ImplementationSpecific
  tls:
    - hosts:
        - giteatest.freshbrewed.science
      secretName: giteatest-tls
```

I’ll check which chart versions are available:

```
$ helm search repo gitea-charts -l
NAME                CHART VERSION  APP VERSION   DESCRIPTION
gitea-charts/gitea  10.0.2         1.21.3        Gitea Helm chart for Kubernetes
gitea-charts/gitea  10.0.1         1.21.2        Gitea Helm chart for Kubernetes
gitea-charts/gitea  10.0.0         1.21.2        Gitea Helm chart for Kubernetes
gitea-charts/gitea  9.6.1          1.21.1        Gitea Helm chart for Kubernetes
gitea-charts/gitea  9.6.0          1.21.0        Gitea Helm chart for Kubernetes
gitea-charts/gitea  9.5.1          1.20.5        Gitea Helm chart for Kubernetes
gitea-charts/gitea  9.5.0          1.20.5        Gitea Helm chart for Kubernetes
gitea-charts/gitea  9.4.0          1.20.4        Gitea Helm chart for Kubernetes
gitea-charts/gitea  9.3.0          1.20.3        Gitea Helm chart for Kubernetes
gitea-charts/gitea  9.2.1          1.20.3        Gitea Helm chart for Kubernetes
gitea-charts/gitea  9.2.0          1.20.3        Gitea Helm chart for Kubernetes
gitea-charts/gitea  9.1.0          1.20.2        Gitea Helm chart for Kubernetes
gitea-charts/gitea  9.0.4          1.20.1        Gitea Helm chart for Kubernetes
gitea-charts/gitea  9.0.3          1.20-nightly  Gitea Helm chart for Kubernetes
gitea-charts/gitea  9.0.2          1.20.0        Gitea Helm chart for Kubernetes
gitea-charts/gitea  9.0.1          1.20.0        Gitea Helm chart for Kubernetes
gitea-charts/gitea  9.0.0          1.20.0        Gitea Helm chart for Kubernetes
```

My first install attempt failed. The chart uses a hard-coded PVC name (gitea-shared-storage), so two releases of this chart can’t coexist in the same namespace:

```
$ helm install giteatest -f first.values.yaml gitea-charts/gitea --version 9.1.0
Error: INSTALLATION FAILED: rendered manifests contain a resource that already exists. Unable to continue with install: PersistentVolumeClaim "gitea-shared-storage" in namespace "default" exists and cannot be imported into the current release: invalid ownership metadata; annotation validation error: key "meta.helm.sh/release-name" must equal "giteatest": current value is "gitea"
```

I could, however, install it into a new namespace:

```
$ kubectl create ns giteatest
namespace/giteatest created
$ helm install -n giteatest giteatest -f first.values.yaml gitea-charts/gitea --version 9.1.0
NAME: giteatest
LAST DEPLOYED: Sat Jan 6 16:12:57 2024
NAMESPACE: giteatest
STATUS: deployed
REVISION: 1
NOTES:
1. Get the application URL by running these commands:
  https://giteatest.freshbrewed.science/
```

It took a while, but it eventually came up.

Next, I’ll recreate the second values file I used, the one with MySQL backing:

```
$ cat second.values.yaml
gitea:
  admin:
    username: "builder"
    password: "ThisIsNotMyPassowrd!"
    email: "isaac.johnson@gmail.com"
  config:
    actions:
      ENABLED: true
    database:
      DB_TYPE: mysql
      HOST: 192.168.1.117
      NAME: giteatestdb
      USER: gitea
      PASSWD: MyDatabasePassword
      SCHEMA: gitea
    mailer:
      ENABLED: true
      FROM: isaac@freshbrewed.science
      MAILER_TYPE: smtp
      SMTP_ADDR: smtp.sendgrid.net
      SMTP_PORT: 465
      USER: apikey
      PASSWD: SG.sadfsadfasdfasdfsadfasdfasdfasdfasdfsadfsafsadfsadf
    openid:
      ENABLE_OPENID_SIGNIN: false
      ENABLE_OPENID_SIGNUP: false
    server:
      DOMAIN: giteatest.freshbrewed.science
      ROOT_URL: https://giteatest.freshbrewed.science/
    service:
      DISABLE_REGISTRATION: true
  metrics:
    enabled: true
postgresql:
  enabled: false
global:
  storageClass: "managed-nfs-storage"
ingress:
  annotations:
    cert-manager.io/cluster-issuer: letsencrypt-prod
    ingress.kubernetes.io/proxy-body-size: "0"
    ingress.kubernetes.io/ssl-redirect: "true"
    kubernetes.io/ingress.class: nginx
    nginx.ingress.kubernetes.io/proxy-body-size: "0"
    nginx.ingress.kubernetes.io/proxy-read-timeout: "600"
    nginx.ingress.kubernetes.io/proxy-send-timeout: "600"
    nginx.ingress.kubernetes.io/ssl-redirect: "true"
    nginx.org/client-max-body-size: "0"
    nginx.org/proxy-connect-timeout: "600"
    nginx.org/proxy-read-timeout: "600"
  enabled: true
  hosts:
    - host: giteatest.freshbrewed.science
      paths:
        - path: /
          pathType: ImplementationSpecific
  tls:
    - hosts:
        - giteatest.freshbrewed.science
      secretName: giteatest-tls
```

I’ll then upgrade my existing installation:

```
$ helm upgrade -n giteatest giteatest -f second.values.yaml gitea-charts/gitea --version 9.1.0
Release "giteatest" has been upgraded. Happy Helming!
NAME: giteatest
LAST DEPLOYED: Sat Jan 6 16:24:21 2024
NAMESPACE: giteatest
STATUS: deployed
REVISION: 2
NOTES:
1. Get the application URL by running these commands:
  https://giteatest.freshbrewed.science/
$ helm list -n giteatest
NAME       NAMESPACE  REVISION  UPDATED                                  STATUS    CHART        APP VERSION
giteatest  giteatest  2         2024-01-06 16:24:21.165969144 -0600 CST  deployed  gitea-9.1.0  1.20.2
```

I can clearly see the chart is stuck: the configure-gitea init container is dialing port 5432, which is PostgreSQL, not MySQL (3306).

```
Every 2.0s: kubectl logs giteatest-696f9967c7-2944x -n giteatest --container configure-gitea      LuiGi17: Sat Jan 6 17:00:04 2024

==== BEGIN GITEA CONFIGURATION ====
2024/01/06 22:55:57 .../cli@v1.22.13/app.go:410:RunAsSubcommand() [I] PING DATABASE postgresschema
2024/01/06 22:55:57 cmd/migrate.go:33:runMigrate() [I] AppPath: /usr/local/bin/gitea
2024/01/06 22:55:57 cmd/migrate.go:34:runMigrate() [I] AppWorkPath: /data
2024/01/06 22:55:57 cmd/migrate.go:35:runMigrate() [I] Custom path: /data/gitea
2024/01/06 22:55:57 cmd/migrate.go:36:runMigrate() [I] Log path: /data/log
2024/01/06 22:55:57 cmd/migrate.go:37:runMigrate() [I] Configuration file: /data/gitea/conf/app.ini
2024/01/06 22:55:57 cmd/migrate.go:40:runMigrate() [F] Failed to initialize ORM engine: dial tcp 192.168.1.117:5432: connect: connection refused
Gitea migrate might fail due to database connection...This init-container will try again in a few seconds
```
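The key clue is buried in the "dial tcp" target. Below is a small, hypothetical filter (db_target_from_log) that pulls that address out of the init-container log and names the engine the port normally belongs to. It assumes the default ports (5432 for PostgreSQL, 3306 for MySQL/MariaDB) and the log wording shown above.

```shell
#!/bin/sh
# Hypothetical diagnostic: read configure-gitea log text on stdin, take the first
# "dial tcp <host>:<port>" target, and name the engine that port usually belongs to.
# Only default ports are recognized; anything else is reported as "unknown".
db_target_from_log() {
  local addr port
  # grep -o isolates "dial tcp 192.168.1.117:5432:"; sed drops the prefix and trailing colon.
  addr=$(grep -o 'dial tcp [^ ]*' | head -n 1 | sed -e 's/^dial tcp //' -e 's/:$//')
  port=${addr##*:}
  case "$port" in
    5432) echo "$addr PostgreSQL" ;;
    3306) echo "$addr MySQL/MariaDB" ;;
    *) echo "${addr:-none} unknown" ;;
  esac
}

# Example (not run here):
#   kubectl logs <pod> -n giteatest --container configure-gitea | db_target_from_log
```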

The pods:

```
$ kubectl get pods -n giteatest
NAME READY STATUS RESTARTS AGE
giteatest-redis-cluster-3 1/1 Running 0 47m
giteatest-postgresql-ha-postgresql-0 1/1 Running 0 47m
giteatest-redis-cluster-1 1/1 Running 0 47m
giteatest-redis-cluster-0 1/1 Running 0 47m
giteatest-redis-cluster-2 1/1 Running 0 47m
giteatest-postgresql-ha-pgpool-c5fcbc984-spz2d 1/1 Running 0 47m
giteatest-postgresql-ha-postgresql-1 1/1 Running 0 47m
giteatest-postgresql-ha-postgresql-2 1/1 Running 0 47m
giteatest-5cd8756994-ppw5n 1/1 Running 0 47m
giteatest-redis-cluster-5 0/1 CrashLoopBackOff 14 (4m51s ago) 47m
giteatest-696f9967c7-2944x 0/1 Init:CrashLoopBackOff 11 (4m41s ago) 36m
giteatest-redis-cluster-4 0/1 CrashLoopBackOff 14 (4m29s ago) 47m
```

I waited for a while, but no go:

```
$ kubectl get pods -n giteatest
NAME READY STATUS RESTARTS AGE
giteatest-redis-cluster-3 1/1 Running 0 88m
giteatest-redis-cluster-1 1/1 Running 0 88m
giteatest-redis-cluster-0 1/1 Running 0 88m
giteatest-redis-cluster-2 1/1 Running 0 88m
giteatest-5cd8756994-ppw5n 1/1 Running 0 88m
giteatest-postgresql-ha-postgresql-2 1/1 Running 0 39m
giteatest-postgresql-ha-postgresql-1 1/1 Running 0 37m
giteatest-postgresql-ha-postgresql-0 1/1 Running 0 36m
giteatest-postgresql-ha-pgpool-7b56775876-zn72s 1/1 Running 2 (34m ago) 39m
giteatest-redis-cluster-4 0/1 CrashLoopBackOff 22 (4m21s ago) 88m
giteatest-redis-cluster-5 0/1 CrashLoopBackOff 12 (2m45s ago) 39m
giteatest-67c6bc69bf-bn8t2 0/1 Init:CrashLoopBackOff 12 (2m54s ago) 39m
```

I rotated the pod, and still no go:

```
$ kubectl logs giteatest-5cd8756994-g9tmk -n giteatest --container configure-gitea
==== BEGIN GITEA CONFIGURATION ====
2024/01/06 23:45:29 cmd/migrate.go:33:runMigrate() [I] AppPath: /usr/local/bin/gitea
2024/01/06 23:45:29 cmd/migrate.go:34:runMigrate() [I] AppWorkPath: /data
2024/01/06 23:45:29 cmd/migrate.go:35:runMigrate() [I] Custom path: /data/gitea
2024/01/06 23:45:29 cmd/migrate.go:36:runMigrate() [I] Log path: /data/log
2024/01/06 23:45:29 cmd/migrate.go:37:runMigrate() [I] Configuration file: /data/gitea/conf/app.ini
2024/01/06 23:45:29 .../cli@v1.22.13/app.go:410:RunAsSubcommand() [I] PING DATABASE postgresschema
2024/01/06 23:45:29 cmd/migrate.go:40:runMigrate() [F] Failed to initialize ORM engine: dial tcp 192.168.1.117:5432: connect: connection refused
Gitea migrate might fail due to database connection...This init-container will try again in a few seconds
```

I removed it and reinstalled, but got the same error:

```
builder@LuiGi17:~/Workspaces/giteaTest$ kubectl logs giteatest-696f9967c7-f77kd -n giteatest
Defaulted container "gitea" out of: gitea, init-directories (init), init-app-ini (init), configure-gitea (init)
Error from server (BadRequest): container "gitea" in pod "giteatest-696f9967c7-f77kd" is waiting to start: PodInitializing
builder@LuiGi17:~/Workspaces/giteaTest$ kubectl logs giteatest-696f9967c7-f77kd -n giteatest --container configure-gitea
==== BEGIN GITEA CONFIGURATION ====
2024/01/06 23:48:40 cmd/migrate.go:33:runMigrate() [I] AppPath: /usr/local/bin/gitea
2024/01/06 23:48:40 .../cli@v1.22.13/app.go:410:RunAsSubcommand() [I] PING DATABASE postgresschema
2024/01/06 23:48:40 cmd/migrate.go:34:runMigrate() [I] AppWorkPath: /data
2024/01/06 23:48:40 cmd/migrate.go:35:runMigrate() [I] Custom path: /data/gitea
2024/01/06 23:48:40 cmd/migrate.go:36:runMigrate() [I] Log path: /data/log
2024/01/06 23:48:40 cmd/migrate.go:37:runMigrate() [I] Configuration file: /data/gitea/conf/app.ini
2024/01/06 23:48:40 cmd/migrate.go:40:runMigrate() [F] Failed to initialize ORM engine: dial tcp 192.168.1.117:5432: connect: connection refused
Gitea migrate might fail due to database connection...This init-container will try again in a few seconds
```

It took a bit to work out, but the lack of a StatefulSet clued me in. I often write my articles days to weeks ahead of their publish date, so it was feasible that I had not used chart 9.1.0 at all, but rather chart 8.3.0, before that August date.
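The pod names in this post show the difference. The 8.3.0 pod is giteatest-0 (StatefulSet-style), while the 9.1.0 pods look like giteatest-696f9967c7-2944x (Deployment-style). The authoritative check is the pod's owner, e.g. `kubectl get pod <name> -n giteatest -o jsonpath='{.metadata.ownerReferences[0].kind}'`, which reports StatefulSet or ReplicaSet (for Deployment pods). For a quick glance at a pod list, here is a hypothetical name-only heuristic:

```shell
#!/bin/sh
# Hypothetical heuristic: guess a pod's controller from its name alone.
#   StatefulSet pods end in an ordinal:        giteatest-0
#   Deployment pods end in <hash>-<5 chars>:   giteatest-696f9967c7-2944x
# This only inspects names; the pod's ownerReferences are the real answer.
guess_pod_controller() {
  if printf '%s\n' "$1" | grep -Eq -- '-[0-9]+$'; then
    echo StatefulSet
  elif printf '%s\n' "$1" | grep -Eq -- '-[a-z0-9]+-[a-z0-9]{5}$'; then
    echo Deployment
  else
    echo unknown
  fi
}
```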

I reinstalled, this time with version 8.3.0.

We can see it building out the database:

```
$ kubectl logs giteatest-0 -n giteatest --container configure-gitea | tail -n 10
2024/01/07 00:09:50 models/db/engine.go:125:SyncAllTables() [I] [SQL] CREATE TABLE IF NOT EXISTS `repo_archiver` (`id` BIGINT(20) PRIMARY KEY AUTO_INCREMENT NOT NULL, `repo_id` BIGINT(20) NULL, `type` INT NULL, `status` INT NULL, `commit_id` VARCHAR(40) NULL, `created_unix` BIGINT(20) NOT NULL) ENGINE=InnoDB DEFAULT CHARSET utf8mb4 ROW_FORMAT=DYNAMIC [] - 2.107675941s
2024/01/07 00:09:53 models/db/engine.go:125:SyncAllTables() [I] [SQL] CREATE UNIQUE INDEX `UQE_repo_archiver_s` ON `repo_archiver` (`repo_id`,`type`,`commit_id`) [] - 3.462872998s
2024/01/07 00:09:58 models/db/engine.go:125:SyncAllTables() [I] [SQL] CREATE INDEX `IDX_repo_archiver_repo_id` ON `repo_archiver` (`repo_id`) [] - 4.842962589s
2024/01/07 00:10:02 models/db/engine.go:125:SyncAllTables() [I] [SQL] CREATE INDEX `IDX_repo_archiver_created_unix` ON `repo_archiver` (`created_unix`) [] - 3.384260182s
2024/01/07 00:10:03 models/db/engine.go:125:SyncAllTables() [I] [SQL] CREATE TABLE IF NOT EXISTS `attachment` (`id` BIGINT(20) PRIMARY KEY AUTO_INCREMENT NOT NULL, `uuid` VARCHAR(40) NULL, `repo_id` BIGINT(20) NULL, `issue_id` BIGINT(20) NULL, `release_id` BIGINT(20) NULL, `uploader_id` BIGINT(20) DEFAULT 0 NULL, `comment_id` BIGINT(20) NULL, `name` VARCHAR(255) NULL, `download_count` BIGINT(20) DEFAULT 0 NULL, `size` BIGINT(20) DEFAULT 0 NULL, `created_unix` BIGINT(20) NULL) ENGINE=InnoDB DEFAULT CHARSET utf8mb4 ROW_FORMAT=DYNAMIC [] - 1.492965432s
2024/01/07 00:10:08 models/db/engine.go:125:SyncAllTables() [I] [SQL] CREATE UNIQUE INDEX `UQE_attachment_uuid` ON `attachment` (`uuid`) [] - 4.665334558s
2024/01/07 00:10:13 models/db/engine.go:125:SyncAllTables() [I] [SQL] CREATE INDEX `IDX_attachment_repo_id` ON `attachment` (`repo_id`) [] - 5.058815625s
2024/01/07 00:10:17 models/db/engine.go:125:SyncAllTables() [I] [SQL] CREATE INDEX `IDX_attachment_issue_id` ON `attachment` (`issue_id`) [] - 4.003172323s
2024/01/07 00:10:22 models/db/engine.go:125:SyncAllTables() [I] [SQL] CREATE INDEX `IDX_attachment_release_id` ON `attachment` (`release_id`) [] - 4.707358064s
2024/01/07 00:10:27 models/db/engine.go:125:SyncAllTables() [I] [SQL] CREATE INDEX `IDX_attachment_uploader_id` ON `attachment` (`uploader_id`) [] - 5.437581687s
```
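Tailing the last 10 lines only shows the most recent statements. Below is a hypothetical filter, migration_progress, that summarizes the schema sync instead. It assumes the SyncAllTables() log format shown above and simply counts CREATE TABLE and CREATE [UNIQUE] INDEX statements while remembering the last table created.

```shell
#!/bin/sh
# Hypothetical filter: summarize Gitea's schema sync from configure-gitea logs on stdin.
# Assumes lines like "...SyncAllTables() [I] [SQL] CREATE TABLE IF NOT EXISTS `name` (...)".
migration_progress() {
  awk '
    /SyncAllTables\(\).*CREATE TABLE IF NOT EXISTS/ {
      tables++
      # Extract the backtick-quoted table name right after the statement keywords.
      match($0, /CREATE TABLE IF NOT EXISTS `[^`]+`/)
      name = substr($0, RSTART, RLENGTH)
      sub(/^CREATE TABLE IF NOT EXISTS `/, "", name)
      sub(/`$/, "", name)
      last = name
    }
    /SyncAllTables\(\).*CREATE (UNIQUE )?INDEX/ { indexes++ }
    END { printf "tables=%d indexes=%d last_table=%s\n", tables, indexes, last }
  '
}

# Example (not run here):
#   kubectl logs giteatest-0 -n giteatest --container configure-gitea | migration_progress
```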

Then we see the pods come up:

```
$ kubectl get pods -n giteatest
NAME                                  READY   STATUS    RESTARTS   AGE
giteatest-memcached-b4dd9ccfd-6fbbj   1/1     Running   0          133m
giteatest-0                           1/1     Running   0          133m
```

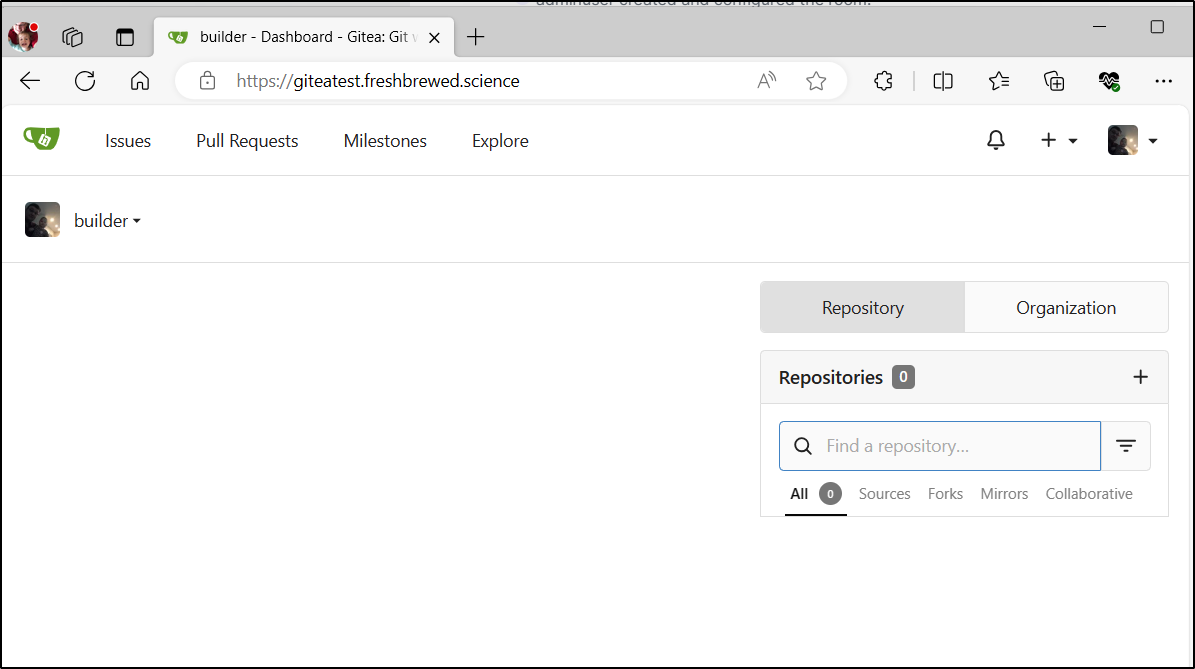

At this point we have a working Gitea installation using the 8.x charts.

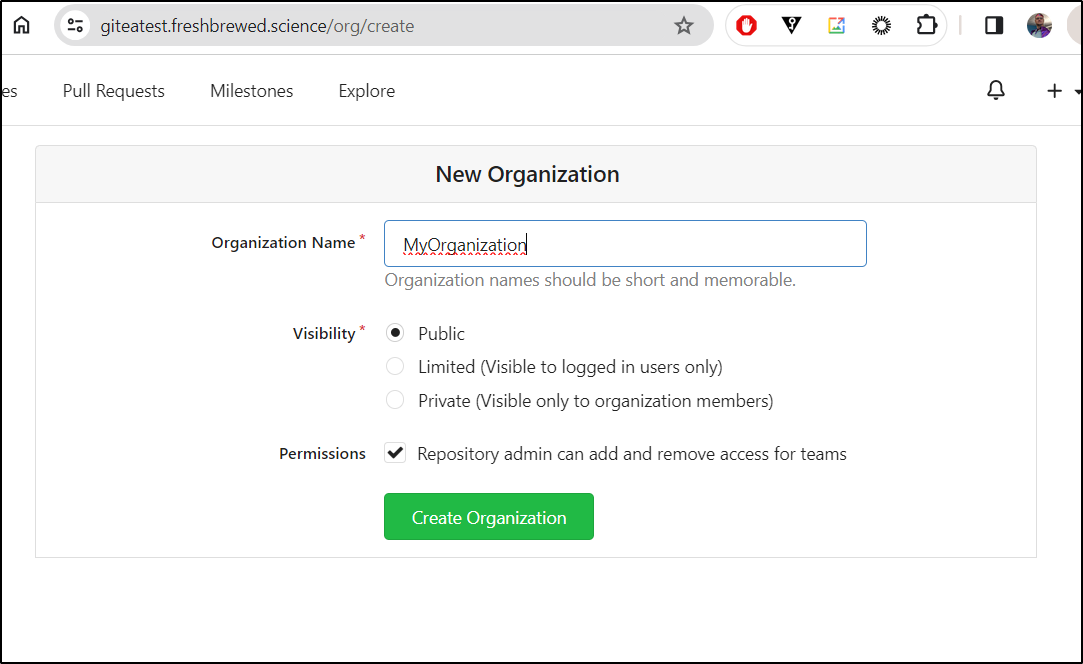

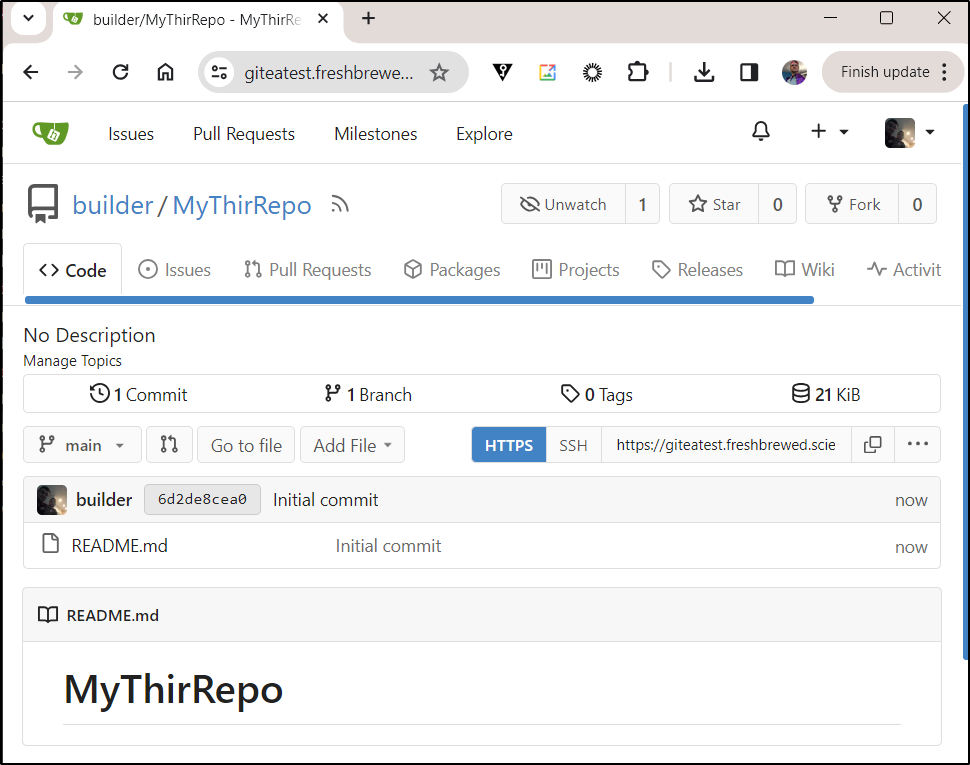

I’ll now create an organization

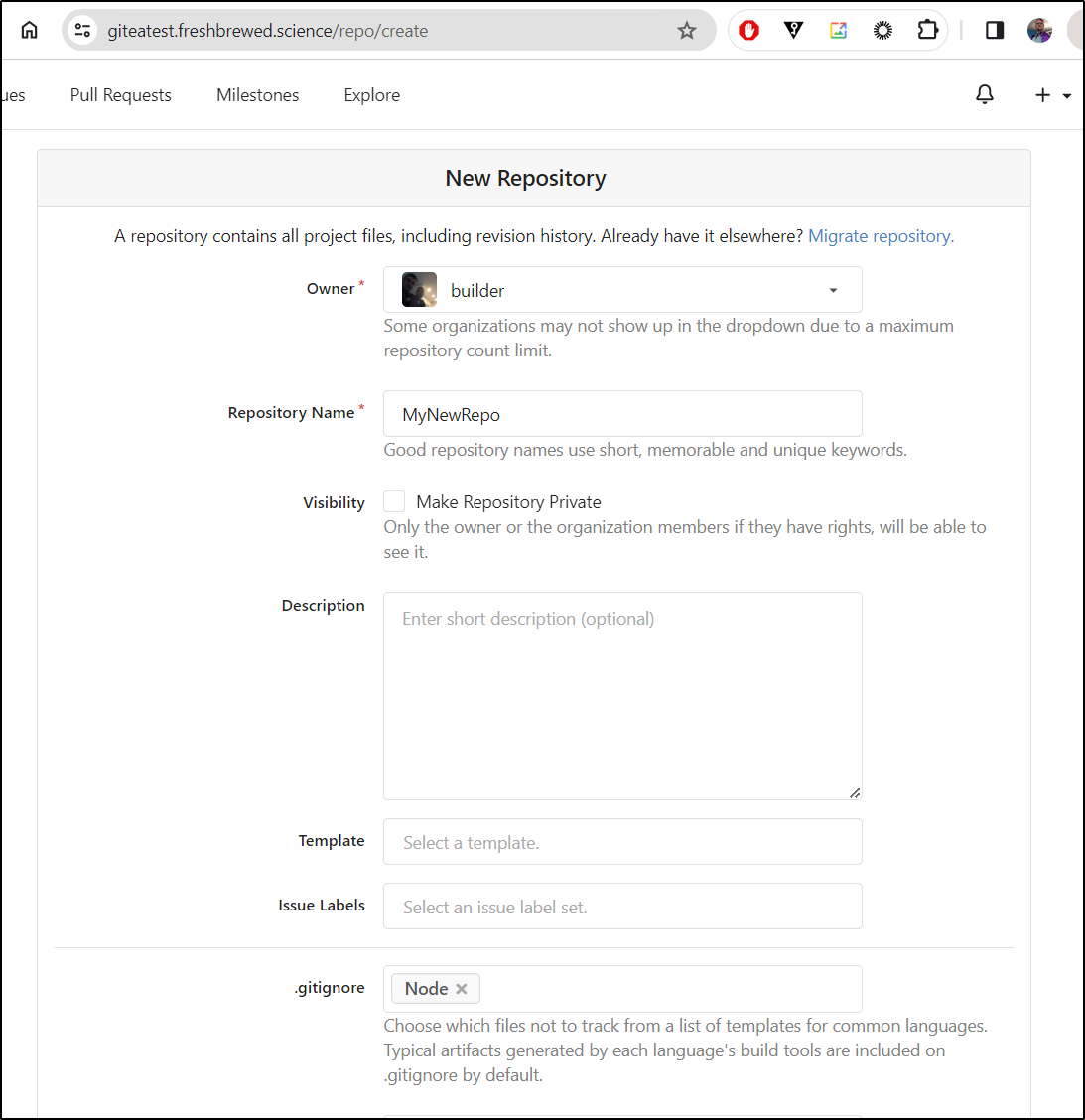

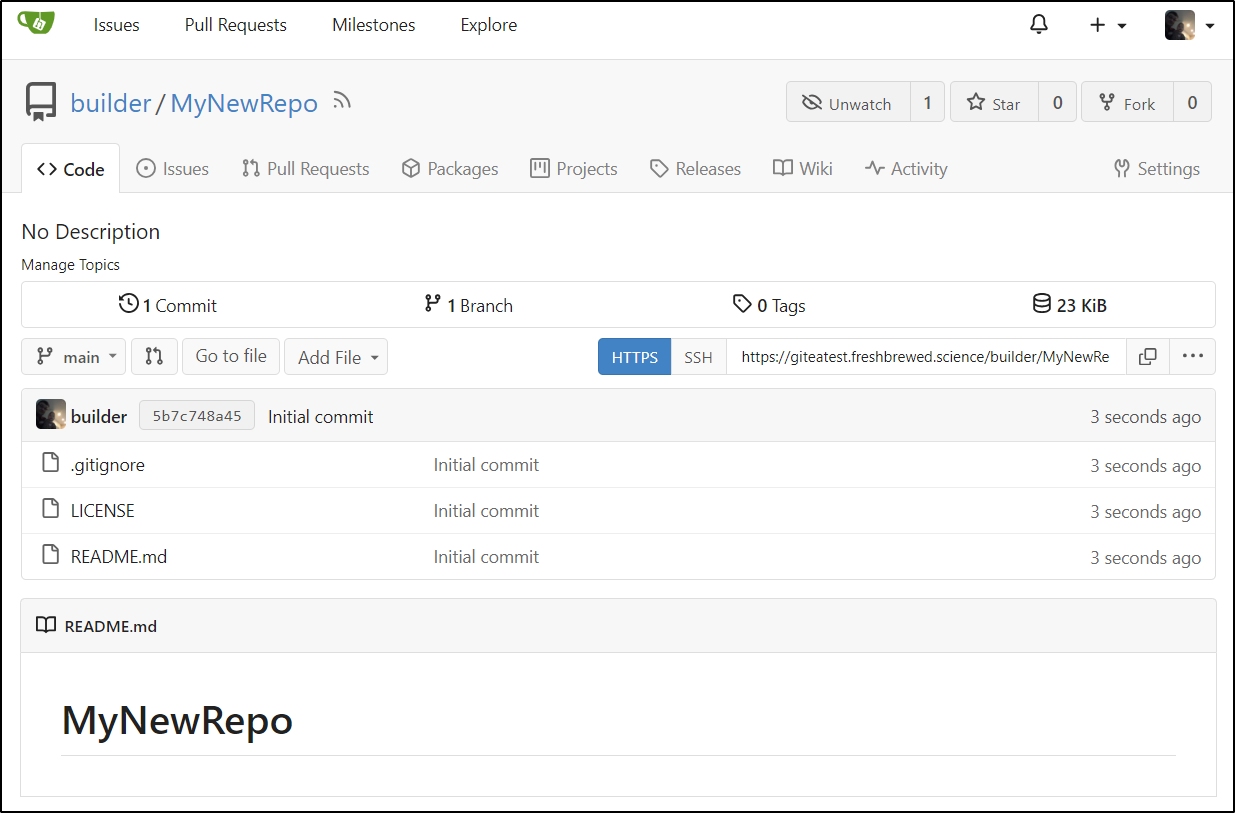

Creating a new repository

We can see some files in the repo

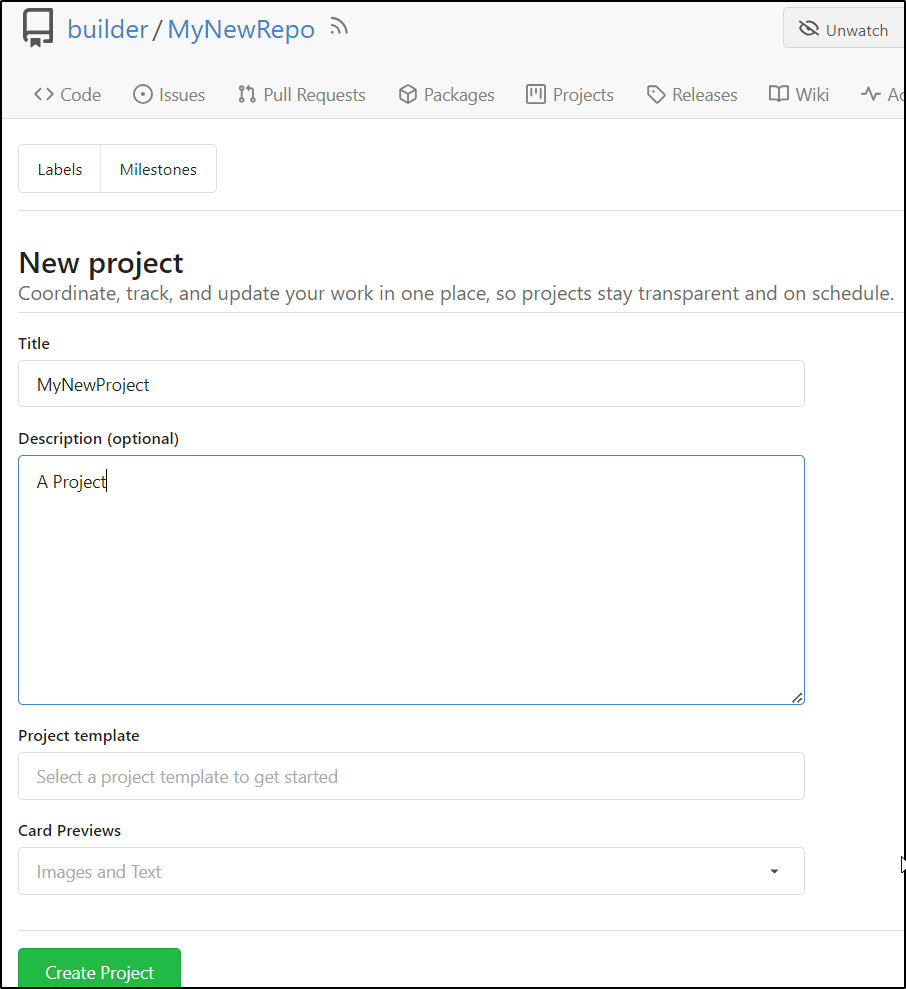

I’ll now create a new project

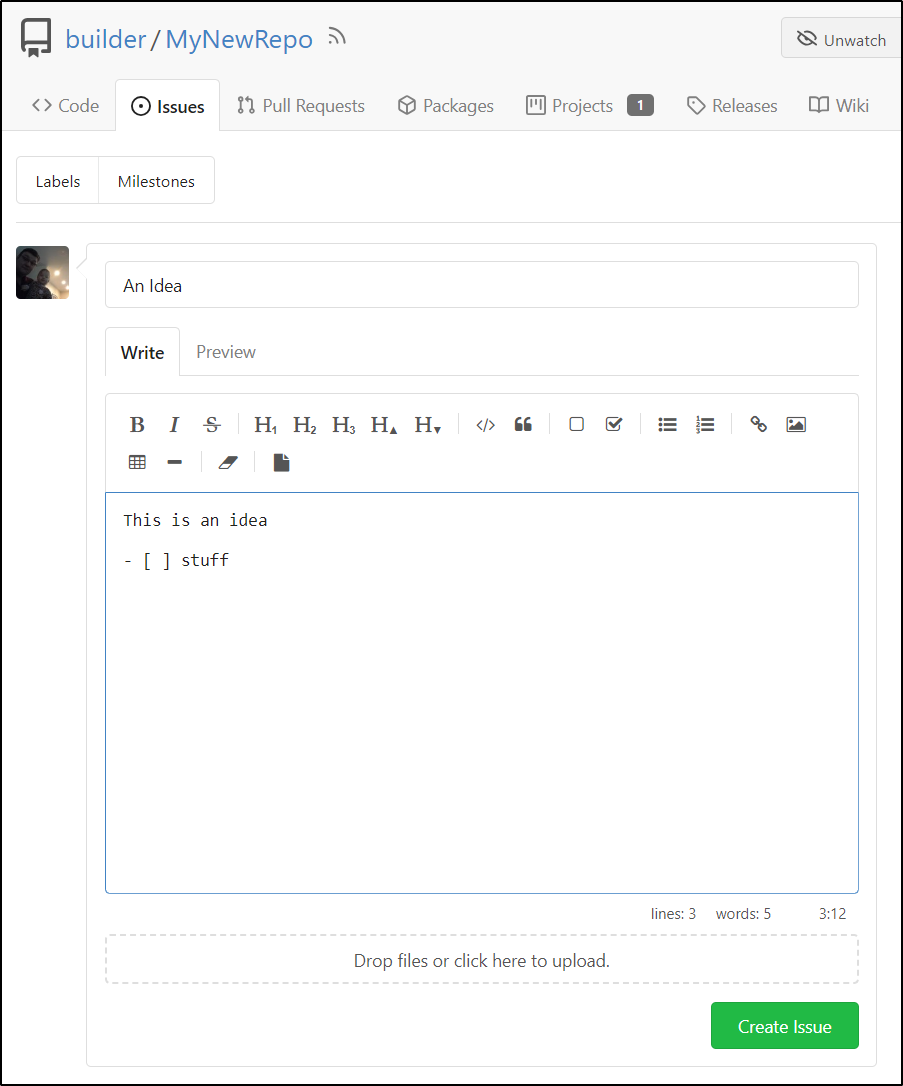

Now we can create an issue in there

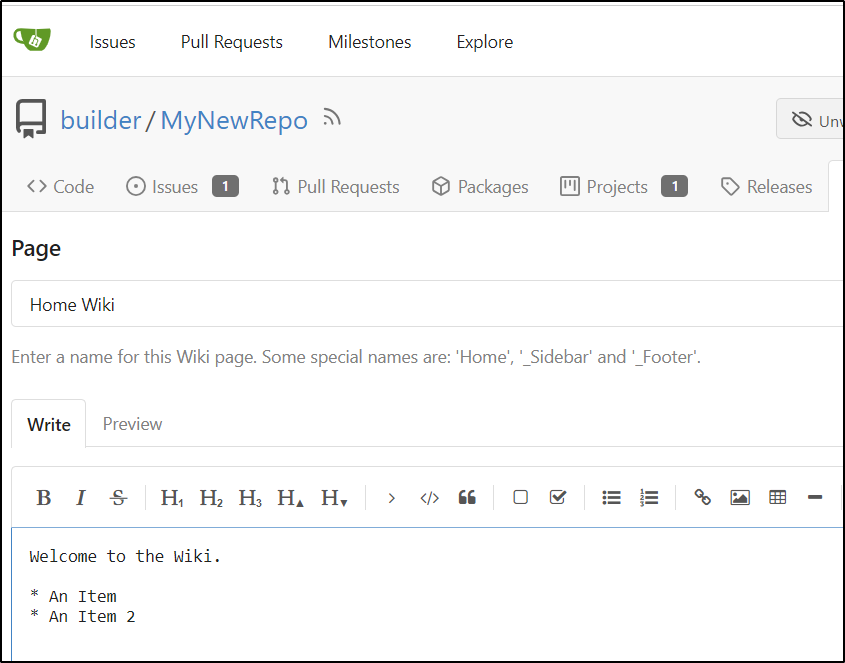

Next, I’ll create a wiki

We can now see it running in the namespace

```
$ helm list -n giteatest
NAME       NAMESPACE  REVISION  UPDATED                                  STATUS    CHART        APP VERSION
giteatest  giteatest  1         2024-01-06 18:05:08.744255236 -0600 CST  deployed  gitea-8.3.0  1.19.3
```

Upgrading to 9.x

We can see the upgrade to 9.1.0 crash the existing install.

The configure-gitea container is trying to initialize PostgreSQL:

```
$ kubectl logs giteatest-78d4768db4-m8nrf -n giteatest --container configure-gitea
==== BEGIN GITEA CONFIGURATION ====
2024/01/07 02:47:10 cmd/migrate.go:33:runMigrate() [I] AppPath: /usr/local/bin/gitea
2024/01/07 02:47:10 cmd/migrate.go:34:runMigrate() [I] AppWorkPath: /data
2024/01/07 02:47:10 cmd/migrate.go:35:runMigrate() [I] Custom path: /data/gitea
2024/01/07 02:47:10 cmd/migrate.go:36:runMigrate() [I] Log path: /data/log
2024/01/07 02:47:10 cmd/migrate.go:37:runMigrate() [I] Configuration file: /data/gitea/conf/app.ini
2024/01/07 02:47:10 .../cli@v1.22.13/app.go:410:RunAsSubcommand() [I] PING DATABASE postgresschema
2024/01/07 02:47:10 cmd/migrate.go:40:runMigrate() [F] Failed to initialize ORM engine: pq: unknown response for startup: 'Y'
Gitea migrate might fail due to database connection...This init-container will try again in a few seconds
```

Here is the upgrade I ran:

```
$ helm upgrade --install -n giteatest giteatest -f ./second.values.yaml gitea-charts/gitea --version 9.1.0
Error: UPGRADE FAILED: create: failed to create: Internal error occurred: failed calling webhook "rancher.cattle.io": failed to call webhook: Post "https://rancher-webhook.cattle-system.svc:443/v1/webhook/mutation?timeout=10s": EOF
$ helm upgrade --install -n giteatest giteatest -f ./second.values.yaml gitea-charts/gitea --version 9.1.0
Release "giteatest" has been upgraded. Happy Helming!
NAME: giteatest
LAST DEPLOYED: Sat Jan 6 21:00:50 2024
NAMESPACE: giteatest
STATUS: deployed
REVISION: 3
NOTES:
1. Get the application URL by running these commands:
  https://giteatest.freshbrewed.science/
```

I realized I might solve it by explicitly disabling postgresql-ha, a new subchart that the 9.x chart added and enables by default:

```
$ cat second.values.yaml | grep -C 5 ^postgres
    DISABLE_REGISTRATION: true
  metrics:
    enabled: true
postgresql:
  enabled: false
postgresql-ha:
  enabled: false
global:
  storageClass: "managed-nfs-storage"
```
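A pre-upgrade guard along these lines could flag the missing setting before Helm touches the release. This is a sketch under stated assumptions. check_external_db_values is a hypothetical helper. It assumes every chart major of 9 or later enables postgresql-ha by default, which is what this post observed with 9.1.0. It also only understands block-style YAML with postgresql-ha as a top-level key, like the values files here. It does not look at the redis-cluster subchart that also showed up with 9.x.

```shell
#!/bin/sh
# Hypothetical pre-upgrade guard. Assumes Gitea chart majors >= 9 enable the
# postgresql-ha subchart by default, so a values file pointing Gitea at an
# external MySQL should contain "postgresql-ha:" with "enabled: false" under it.
# Arguments: chart version (e.g. 9.1.0), path to the values file.
check_external_db_values() {
  local version="$1" values="$2" major
  major=${version%%.*}
  # Pre-9 charts have no postgresql-ha subchart, so there is nothing to check.
  if [ "$major" -lt 9 ]; then
    return 0
  fi
  # Track whether we are inside the top-level postgresql-ha block and look for enabled: false.
  if awk '
    /^postgresql-ha:/ { inblock = 1; next }
    /^[^[:space:]]/   { inblock = 0 }
    inblock && /^[[:space:]]+enabled:[[:space:]]*false/ { found = 1 }
    END { exit !found }
  ' "$values"; then
    return 0
  fi
  echo "WARN: chart $version: $values does not disable postgresql-ha" >&2
  return 1
}

# Example (not run here):
#   check_external_db_values 9.1.0 ./second.values.yaml && \
#     helm upgrade --install -n giteatest giteatest -f ./second.values.yaml gitea-charts/gitea --version 9.1.0
```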

Now when I upgraded, I ran into trouble:

```
$ helm upgrade --install -n giteatest giteatest -f ./second.values.yaml gitea-charts/gitea --version 9.1.0
Error: UPGRADE FAILED: create: failed to create: Internal error occurred: failed calling webhook "rancher.cattle.io": failed to call webhook: Post "https://rancher-webhook.cattle-system.svc:443/v1/webhook/mutation?timeout=10s": EOF
builder@LuiGi17:~/Workspaces/giteaTest$ helm upgrade --install -n giteatest giteatest -f ./second.values.yaml gitea-charts/gitea --version 9.1.0
Release "giteatest" has been upgraded. Happy Helming!
NAME: giteatest
LAST DEPLOYED: Sat Jan 6 21:00:50 2024
NAMESPACE: giteatest
STATUS: deployed
REVISION: 3
NOTES:
1. Get the application URL by running these commands:
  https://giteatest.freshbrewed.science/
```

This, too, landed in the trash:

```
$ kubectl get pods -n giteatest
NAME READY STATUS RESTARTS AGE
giteatest-redis-cluster-3 0/1 Running 0 25m
giteatest-redis-cluster-4 0/1 Running 0 25m
giteatest-redis-cluster-5 0/1 Running 0 25m
giteatest-redis-cluster-0 0/1 CrashLoopBackOff 9 (4m35s ago) 25m
giteatest-redis-cluster-1 0/1 CrashLoopBackOff 9 (4m23s ago) 25m
giteatest-78d4768db4-m8nrf 0/1 Init:CrashLoopBackOff 9 (4m29s ago) 25m
giteatest-redis-cluster-2 0/1 CrashLoopBackOff 9 (4m16s ago) 25m
giteatest-b459b8d4-8dnrz 0/1 CrashLoopBackOff 3 (18s ago) 9m4s
```

Let me reduce the number of Redis cluster nodes:

```
redis-cluster:
  cluster:
    nodes: 2
```

And upgrade (spoiler alert: you need at least 3, as I’ll discover shortly):

```
$ helm upgrade --install -n giteatest giteatest -f ./second.values.yaml gitea-charts/gitea --version 9.1.0
Release "giteatest" has been upgraded. Happy Helming!
NAME: giteatest
LAST DEPLOYED: Sat Jan 6 21:15:04 2024
NAMESPACE: giteatest
STATUS: deployed
REVISION: 4
NOTES:
1. Get the application URL by running these commands:
  https://giteatest.freshbrewed.science/
```

The Redis instances keep crashing:

```
$ kubectl logs giteatest-redis-cluster-0 -n giteatest
redis-cluster 03:17:29.43
redis-cluster 03:17:29.43 Welcome to the Bitnami redis-cluster container
redis-cluster 03:17:29.43 Subscribe to project updates by watching https://github.com/bitnami/containers
redis-cluster 03:17:29.43 Submit issues and feature requests at https://github.com/bitnami/containers/issues
redis-cluster 03:17:29.43
redis-cluster 03:17:29.44 INFO ==> ** Starting Redis setup **
redis-cluster 03:17:29.46 WARN ==> You set the environment variable ALLOW_EMPTY_PASSWORD=yes. For safety reasons, do not use this flag in a production environment.
redis-cluster 03:17:29.46 INFO ==> Initializing Redis
redis-cluster 03:17:29.47 INFO ==> Setting Redis config file
Changing old IP 10.42.1.73 by the new one 10.42.1.73
Changing old IP 10.42.3.24 by the new one 10.42.3.25
redis-cluster 03:17:29.55 INFO ==> ** Redis setup finished! **
1:C 07 Jan 2024 03:17:29.582 # oO0OoO0OoO0Oo Redis is starting oO0OoO0OoO0Oo
1:C 07 Jan 2024 03:17:29.582 # Redis version=7.0.12, bits=64, commit=00000000, modified=0, pid=1, just started
1:C 07 Jan 2024 03:17:29.582 # Configuration loaded
1:M 07 Jan 2024 03:17:29.582 * monotonic clock: POSIX clock_gettime
1:M 07 Jan 2024 03:17:29.588 * Node configuration loaded, I'm 5b0dc9aa45a1ab5c11104bfee62ef4633a994431
[Redis 7.0.12 (00000000/0) 64 bit ASCII-art banner: running in cluster mode, port 6379, PID 1, https://redis.io]
1:M 07 Jan 2024 03:17:29.589 # Server initialized
1:M 07 Jan 2024 03:17:29.591 # Unable to obtain the AOF file appendonly.aof.1.base.rdb length. stat: Permission denied
```

I added enabled: false to the values to disable Redis for a moment:

```
redis-cluster:
  enabled: false
  cluster:
    nodes: 2
```

Then I removed the old volumes:

```
$ ./yyy
persistentvolumeclaim "redis-data-giteatest-redis-cluster-1" deleted
persistentvolumeclaim "redis-data-giteatest-redis-cluster-0" deleted
persistentvolumeclaim "redis-data-giteatest-redis-cluster-5" deleted
persistentvolumeclaim "redis-data-giteatest-redis-cluster-2" deleted
persistentvolumeclaim "redis-data-giteatest-redis-cluster-3" deleted
persistentvolumeclaim "redis-data-giteatest-redis-cluster-4" deleted
```
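The contents of ./yyy aren’t shown. Below is a hypothetical equivalent, redis_pvc_cleanup, that prints one kubectl delete pvc command per StatefulSet ordinal, or runs them when RUN=1. It uses the redis-data-<release>-redis-cluster-<n> naming visible in the output above, and the node count is an input you supply. Deleting these PVCs throws away the Redis data, which is the point here.

```shell
#!/bin/sh
# Hypothetical helper: print (default) or execute (RUN=1) the PVC deletions for a
# Bitnami redis-cluster StatefulSet with ordinals 0..nodes-1.
# Arguments: Helm release name, namespace, number of nodes that were deployed.
redis_pvc_cleanup() {
  local release="$1" namespace="$2" nodes="$3" i=0 pvc
  while [ "$i" -lt "$nodes" ]; do
    pvc="redis-data-${release}-redis-cluster-${i}"
    if [ "${RUN:-0}" = "1" ]; then
      kubectl delete pvc "$pvc" -n "$namespace"
    else
      echo "kubectl delete pvc $pvc -n $namespace"
    fi
    i=$((i + 1))
  done
}

# Example (not run here): review first, then execute.
#   redis_pvc_cleanup giteatest giteatest 6
#   RUN=1 redis_pvc_cleanup giteatest giteatest 6
```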

Then I set it back:

```
redis-cluster:
  enabled: true
  cluster:
    nodes: 2
```

And upgraded with helm again.

As it’s in a non-stop crash loop, let’s force a bounce:

```
redis: 2024/01/07 03:30:07 cluster.go:1760: getting command info: dial tcp: lookup giteatest-redis-cluster-headless.giteatest.svc.cluster.local: no such host
redis: 2024/01/07 03:30:07 cluster.go:1760: getting command info: dial tcp: lookup giteatest-redis-cluster-headless.giteatest.svc.cluster.local: no such host
2024/01/07 03:30:07 .../queue/base_redis.go:37:newBaseRedisGeneric() [W] Redis is not ready, waiting for 1 second to retry: dial tcp: lookup giteatest-redis-cluster-headless.giteatest.svc.cluster.local: no such host
redis: 2024/01/07 03:30:08 cluster.go:1760: getting command info: dial tcp: lookup giteatest-redis-cluster-headless.giteatest.svc.cluster.local: no such host
redis: 2024/01/07 03:30:08 cluster.go:1760: getting command info: dial tcp: lookup giteatest-redis-cluster-headless.giteatest.svc.cluster.local: no such host
2024/01/07 03:30:08 .../queue/base_redis.go:37:newBaseRedisGeneric() [W] Redis is not ready, waiting for 1 second to retry: dial tcp: lookup giteatest-redis-cluster-headless.giteatest.svc.cluster.local: no such host
redis: 2024/01/07 03:30:09 cluster.go:1760: getting command info: dial tcp: lookup giteatest-redis-cluster-headless.giteatest.svc.cluster.local: no such host
redis: 2024/01/07 03:30:09 cluster.go:1760: getting command info: dial tcp: lookup giteatest-redis-cluster-headless.giteatest.svc.cluster.local: no such host
2024/01/07 03:30:09 .../queue/base_redis.go:37:newBaseRedisGeneric() [W] Redis is not ready, waiting for 1 second to retry: dial tcp: lookup giteatest-redis-cluster-headless.giteatest.svc.cluster.local: no such host
2024/01/07 03:30:10 ...les/queue/manager.go:108:]() [E] Failed to create queue "mail": dial tcp: lookup giteatest-redis-cluster-headless.giteatest.svc.cluster.local: no such host
2024/01/07 03:30:10 ...ces/mailer/mailer.go:419:NewContext() [F] Unable to create mail queue
builder@LuiGi17:~/Workspaces/giteaTest$ kubectl get pods -n giteatest
NAME READY STATUS RESTARTS AGE
giteatest-77dd975468-t985b 0/1 CrashLoopBackOff 6 (4m30s ago) 11m
giteatest-redis-cluster-0 0/1 Running 0 2m32s
giteatest-redis-cluster-1 0/1 Running 0 2m32s
giteatest-b459b8d4-8dnrz 0/1 CrashLoopBackOff 9 (2m23s ago) 33m
builder@LuiGi17:~/Workspaces/giteaTest$ kubectl delete pod giteatest-77dd975468-t985b -n giteatest
pod "giteatest-77dd975468-t985b" deleted
```

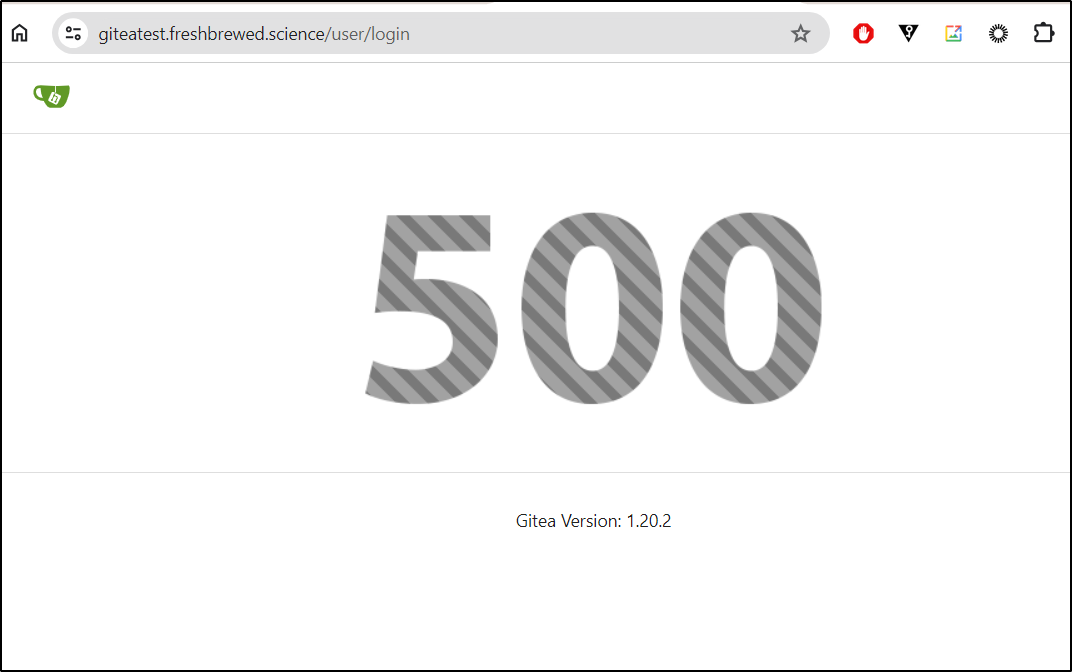

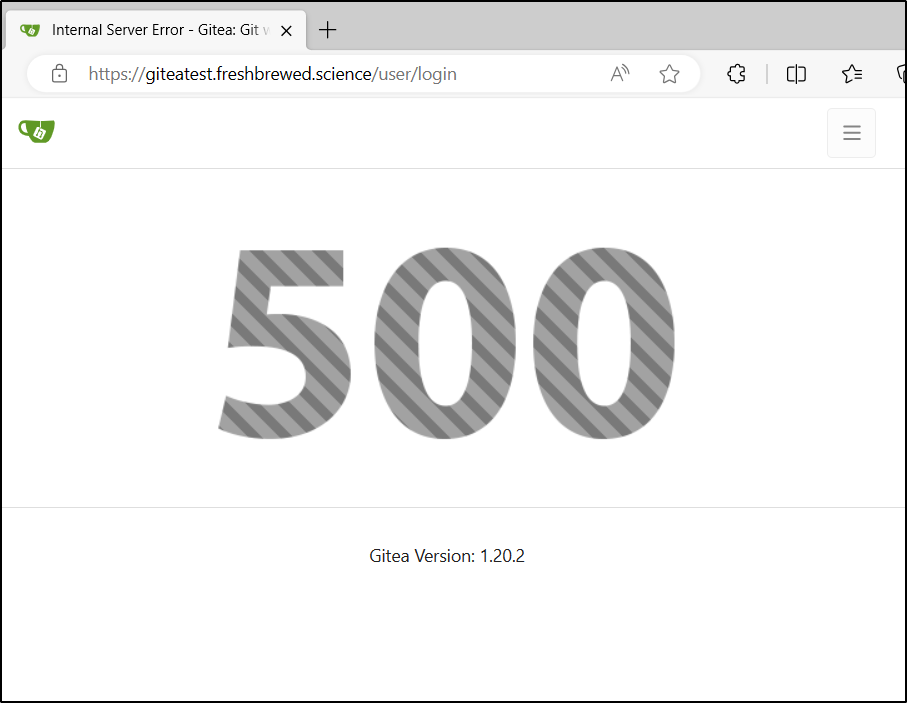

However, I get a 500 error:

I can see the pod is running:

```
$ kubectl get pods -n giteatest
NAME                        READY   STATUS    RESTARTS      AGE
giteatest-redis-cluster-0   0/1     Running   0             9m9s
giteatest-redis-cluster-1   0/1     Running   0             9m9s
giteatest-b459b8d4-8dnrz    1/1     Running   10 (9m ago)   40m
```

Then I rotate the pod:

```
$ kubectl delete pod giteatest-b459b8d4-8dnrz -n giteatest
pod "giteatest-b459b8d4-8dnrz" deleted
```

Even with the rotated pod, I got 500s

```
$ kubectl get pods -n giteatest
NAME                        READY   STATUS    RESTARTS   AGE
giteatest-redis-cluster-0   0/1     Running   0          10m
giteatest-redis-cluster-1   0/1     Running   0          10m
giteatest-b459b8d4-gjmtz    1/1     Running   0          86s
```

I’ll then try an upgrade to chart 9.5.1:

```
$ helm upgrade --install -n giteatest giteatest -f ./second.values.yaml gitea-charts/gitea --version 9.5.1
Release "giteatest" has been upgraded. Happy Helming!
NAME: giteatest
LAST DEPLOYED: Sat Jan 6 21:44:08 2024
NAMESPACE: giteatest
STATUS: deployed
REVISION: 9
NOTES:
1. Get the application URL by running these commands:
  https://giteatest.freshbrewed.science/
```

After realizing Redis needs 3 for a quorum:

```
$ kubectl get pods -n giteatest
NAME                         READY   STATUS             RESTARTS      AGE
giteatest-redis-cluster-0    0/1     Running            0             19m
giteatest-76c588f857-r87jd   1/1     Running            0             7m6s
giteatest-redis-cluster-1    0/1     CrashLoopBackOff   6 (64s ago)   7m5s
```

I fixed that, cleaned the PVCs, and upgraded back to enabled:

```
$ helm upgrade --install -n giteatest giteatest -f ./second.values.yaml gitea-charts/gitea
Release "giteatest" has been upgraded. Happy Helming!
NAME: giteatest
LAST DEPLOYED: Sat Jan 6 21:51:28 2024
NAMESPACE: giteatest
STATUS: deployed
REVISION: 10
NOTES:
1. Get the application URL by running these commands:
  https://giteatest.freshbrewed.science/
```

I gave it a full 12 hours to see if the system would correct itself. However, the next day Redis was still crashing endlessly:

```
$ kubectl get pods -n giteatest
NAME                         READY   STATUS             RESTARTS          AGE
giteatest-redis-cluster-0    0/1     Running            0                 12h
giteatest-6c6d8d8b48-6c4rl   1/1     Running            0                 12h
giteatest-redis-cluster-1    0/1     CrashLoopBackOff   151 (3m40s ago)   12h
```

In this case, it was due to my reducing the cluster to 2 nodes when Redis clearly wants at least 3:

```
Node giteatest-redis-cluster-1.giteatest-redis-cluster-headless not ready, waiting for all the nodes to be ready...
Waiting 0s before querying node ip addresses
*** ERROR: Invalid configuration for cluster creation.
*** Redis Cluster requires at least 3 master nodes.
*** This is not possible with 2 nodes and 1 replicas per node.
*** At least 6 nodes are required.
The cluster was already created, the nodes should have recovered it
redis-server "${ARGS[@]}"
```
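The error spells out the sizing rule. Redis Cluster needs at least 3 masters, and each master brings its replicas along, so the minimum node count is 3 * (replicas + 1). With 1 replica per master, that is 6. Below is a small sketch of that arithmetic using hypothetical helper names:

```shell
#!/bin/sh
# Minimum redis-cluster node count for a given replicas-per-master value,
# following the rule in the error above: at least 3 masters, each with its replicas.
redis_min_nodes() {
  local replicas="$1"
  echo $((3 * (replicas + 1)))
}

# Succeeds (exit 0) when a nodes/replicas pair satisfies that rule.
redis_cluster_shape_ok() {
  local nodes="$1" replicas="$2"
  [ "$nodes" -ge "$(redis_min_nodes "$replicas")" ]
}

# Example:
#   redis_min_nodes 1            # 6
#   redis_cluster_shape_ok 2 1   # fails, as in the error above
```

By the same rule, nodes: 3 only passes when cluster.replicas is 0, so it is worth checking which replicas value the chart version you deploy passes to the redis-cluster subchart.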

I’ll consider this a bit of user error and up the value:

```
builder@DESKTOP-QADGF36:/tmp/gitea$ helm get values giteatest -n giteatest -o yaml > values.yaml
builder@DESKTOP-QADGF36:/tmp/gitea$ vi values.yaml
builder@DESKTOP-QADGF36:/tmp/gitea$ helm get values giteatest -n giteatest -o yaml > values.yaml.bak
builder@DESKTOP-QADGF36:/tmp/gitea$ diff -C 3 values.yaml values.yaml.bak
*** values.yaml 2024-01-07 10:27:12.621786060 -0600
--- values.yaml.bak 2024-01-07 10:27:19.541776882 -0600
***************
*** 61,65 ****
    enabled: false
  redis-cluster:
    cluster:
!     nodes: 3
    enabled: true
--- 61,65 ----
    enabled: false
  redis-cluster:
    cluster:
!     nodes: 2
    enabled: true
```

Then upgrade to make it live

```
$ helm upgrade --install -n giteatest giteatest -f ./values.yaml gitea-charts/gitea
Release "giteatest" has been upgraded. Happy Helming!
NAME: giteatest
LAST DEPLOYED: Sun Jan 7 10:27:53 2024
NAMESPACE: giteatest
STATUS: deployed
REVISION: 11
NOTES:
1. Get the application URL by running these commands:
  https://giteatest.freshbrewed.science/
```

I scrubbed the PVCs and did manage to get them back up:

```
$ kubectl get pods -n giteatest
NAME                         READY   STATUS    RESTARTS   AGE
giteatest-redis-cluster-0    1/1     Running   0          38s
giteatest-redis-cluster-1    1/1     Running   0          38s
giteatest-redis-cluster-2    1/1     Running   0          38s
giteatest-6c6d8d8b48-wbd4z   1/1     Running   0          38s
```

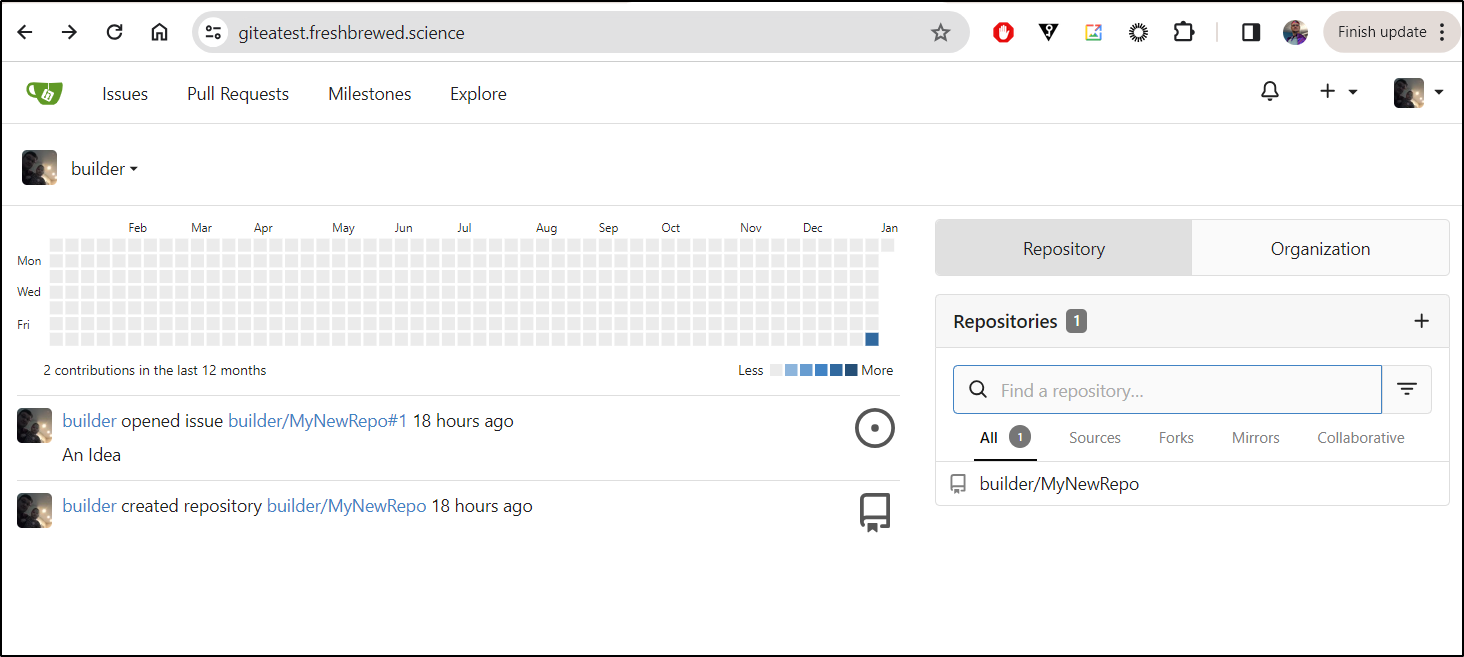

I can log in:

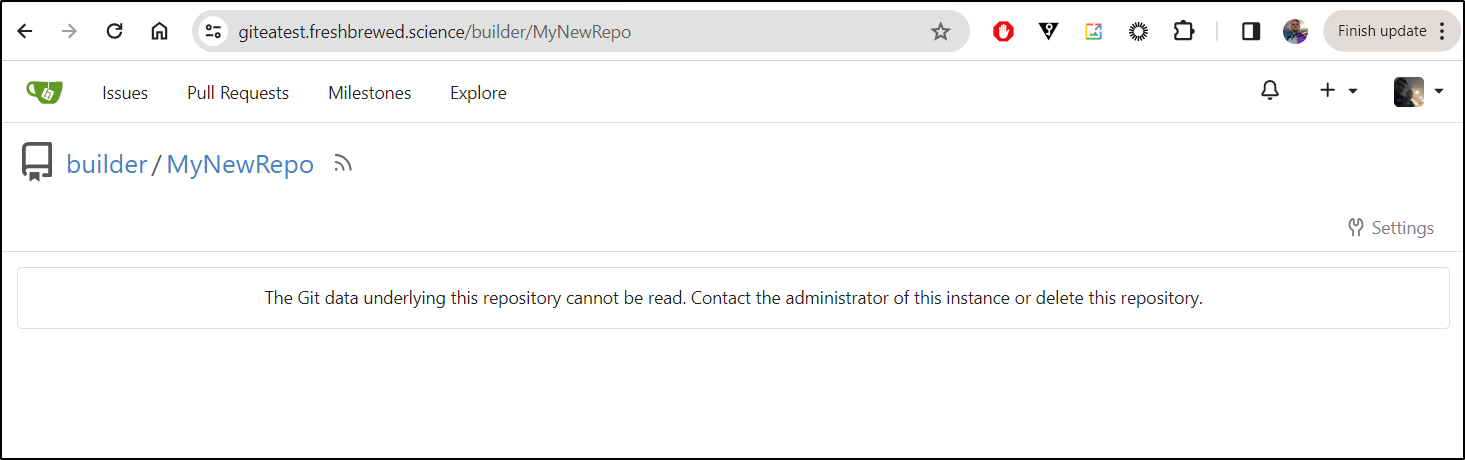

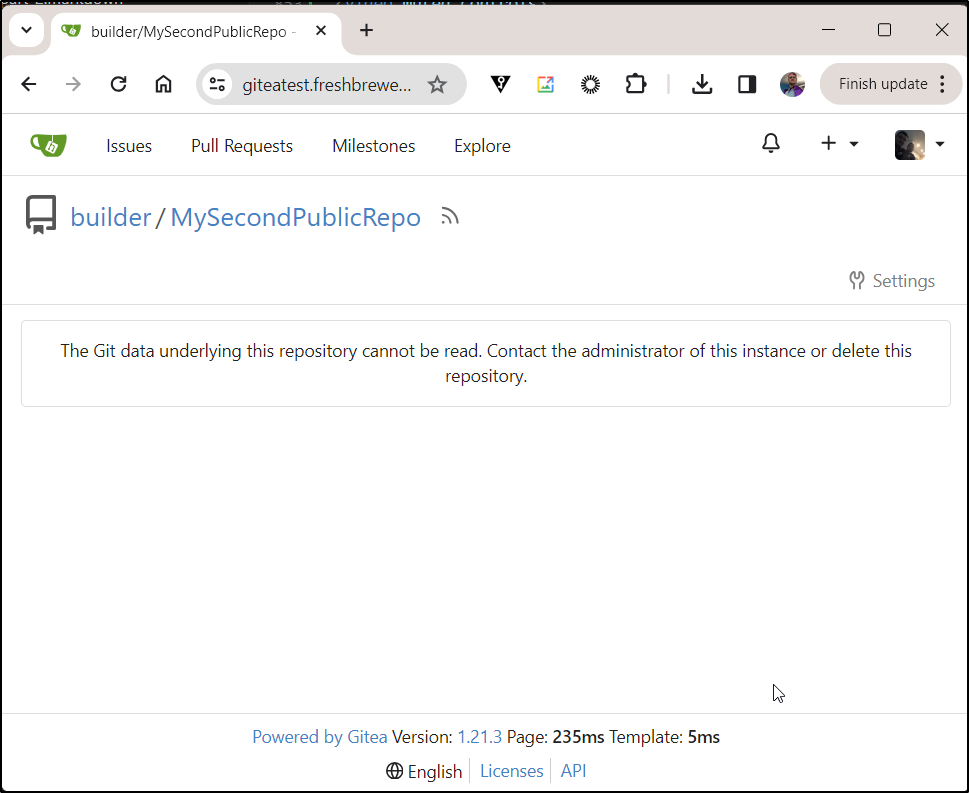

But the data cannot be read

You can see how our data is now corrupted

Do it again

Let’s do it all over and see whether, knowing all these things, it’s possible to go from an 8.x chart to a 9.x chart without losing our data.

```
$ helm delete -n giteatest giteatest
These resources were kept due to the resource policy:
[PersistentVolumeClaim] gitea-shared-storage

release "giteatest" uninstalled
```

I’ll delete the namespace to ensure all Secrets, ConfigMaps, and PVCs are removed:

```
$ kubectl delete ns giteatest
namespace "giteatest" deleted
```

I’ll make a brand-new MariaDB database:

```
ijohnson@sirnasilot:~$ mysql -u root -p
Enter password:
Welcome to the MariaDB monitor.  Commands end with ; or \g.
Your MariaDB connection id is 1459177
Server version: 10.11.2-MariaDB Source distribution

Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

MariaDB [(none)]> CREATE DATABASE giteatestdb2 CHARACTER SET 'utf8mb4' COLLATE 'utf8mb4_unicode_ci';
Query OK, 1 row affected (0.001 sec)

MariaDB [(none)]> grant all privileges on giteatestdb2.* to 'gitea'@'%';
Query OK, 0 rows affected (0.185 sec)

MariaDB [(none)]> FLUSH PRIVILEGES;
Query OK, 0 rows affected (0.002 sec)

MariaDB [(none)]> \q
Bye
```

I’ll form a new values.yaml to use:

```
$ cat chart8.values.yaml
gitea:
  admin:
    username: "builder"
    password: "ThisIsNotMyPassowrd!"
    email: "isaac.johnson@gmail.com"
  config:
    actions:
      ENABLED: true
    database:
      DB_TYPE: mysql
      HOST: 192.168.1.117:3306
      NAME: giteatestdb2
      USER: gitea
      PASSWD: "MyDatabasePassword"
    mailer:
      ENABLED: true
      FROM: isaac@freshbrewed.science
      MAILER_TYPE: smtp
      SMTP_ADDR: smtp.sendgrid.net
      SMTP_PORT: 465
      USER: apikey
      PASSWD: SG.sadfsadfasdfasdfsadfasdfasdfasdfasdfsadfsafsadfsadf
    openid:
      ENABLE_OPENID_SIGNIN: false
      ENABLE_OPENID_SIGNUP: false
    server:
      DOMAIN: giteatest.freshbrewed.science
      ROOT_URL: https://giteatest.freshbrewed.science/
    service:
      DISABLE_REGISTRATION: true
  metrics:
    enabled: true
postgresql:
  enabled: false
global:
  storageClass: "managed-nfs-storage"
ingress:
  annotations:
    cert-manager.io/cluster-issuer: letsencrypt-prod
    ingress.kubernetes.io/proxy-body-size: "0"
    ingress.kubernetes.io/ssl-redirect: "true"
    kubernetes.io/ingress.class: nginx
    nginx.ingress.kubernetes.io/proxy-body-size: "0"
    nginx.ingress.kubernetes.io/proxy-read-timeout: "600"
    nginx.ingress.kubernetes.io/proxy-send-timeout: "600"
    nginx.ingress.kubernetes.io/ssl-redirect: "true"
    nginx.org/client-max-body-size: "0"
    nginx.org/proxy-connect-timeout: "600"
    nginx.org/proxy-read-timeout: "600"
  enabled: true
  hosts:
    - host: giteatest.freshbrewed.science
      paths:
        - path: /
          pathType: ImplementationSpecific
  tls:
    - hosts:
        - giteatest.freshbrewed.science
      secretName: giteatest-tls
```

Then install with --create-namespace to create the replacement giteatest namespace on the fly:

$ helm upgrade --install -n giteatest --create-namespace giteatest -f ./chart8.values.yaml gitea-charts/gitea --version 8.3.0

Release "giteatest" does not exist. Installing it now.

coalesce.go:175: warning: skipped value for memcached.initContainers: Not a table.

NAME: giteatest

LAST DEPLOYED: Sun Jan 7 14:59:53 2024

NAMESPACE: giteatest

STATUS: deployed

REVISION: 1

NOTES:

1. Get the application URL by running these commands:

https://giteatest.freshbrewed.science/
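The `gitea.config` block in the values file is not a Kubernetes setting: the chart writes each sub-map out as a section of Gitea's own `app.ini`, so `database:` becomes `[database]` with `DB_TYPE`, `HOST` and so on beneath it. The Python sketch below only illustrates that mapping with the standard `configparser`; the chart does this with its own init scripts, and `render_app_ini` and `ini_value` are hypothetical helpers that assume a flat section-to-keys dict.

```python
import configparser
import io


def ini_value(value) -> str:
    """Format a YAML scalar the way app.ini expects (booleans as true/false)."""
    if isinstance(value, bool):
        return "true" if value else "false"
    return str(value)


def render_app_ini(config: dict) -> str:
    """Render a {section: {KEY: value}} mapping as app.ini-style text."""
    # interpolation=None keeps values containing '%' (e.g. passwords) verbatim
    parser = configparser.ConfigParser(interpolation=None)
    parser.optionxform = str  # keep Gitea's UPPER_CASE keys instead of lowercasing them
    for section, settings in config.items():
        parser[section] = {key: ini_value(value) for key, value in settings.items()}
    out = io.StringIO()
    parser.write(out)
    return out.getvalue()


if __name__ == "__main__":
    # Non-secret subset of gitea.config from chart8.values.yaml
    print(render_app_ini({
        "actions": {"ENABLED": True},
        "database": {"DB_TYPE": "mysql", "HOST": "192.168.1.117:3306",
                     "NAME": "giteatestdb2", "USER": "gitea"},
        "service": {"DISABLE_REGISTRATION": True},
    }))
```

Seeing it this way makes it clearer why a chart upgrade that changes how this file is generated, or which backing services it points at, can leave the same database in a different state.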

I can see it slowly coming up. The first start always takes a while, as it sets up the MySQL database.

$ kubectl get pods -n giteatest

NAME                                  READY   STATUS     RESTARTS   AGE
giteatest-memcached-b4dd9ccfd-qwbm4   1/1     Running    0          69s
giteatest-0                           0/1     Init:2/3   0          69s

I can watch its progress by checking the logs of the configure-gitea init container:

$ kubectl logs giteatest-0 -n giteatest --container configure-gitea | tail -n10

2024/01/07 21:05:08 models/db/engine.go:125:SyncAllTables() [I] [SQL] CREATE INDEX `IDX_user_updated_unix` ON `user` (`updated_unix`) [] - 5.751395031s

2024/01/07 21:05:14 models/db/engine.go:125:SyncAllTables() [I] [SQL] CREATE INDEX `IDX_user_last_login_unix` ON `user` (`last_login_unix`) [] - 5.701633752s

2024/01/07 21:05:17 models/db/engine.go:125:SyncAllTables() [I] [SQL] CREATE TABLE IF NOT EXISTS `repo_archiver` (`id` BIGINT(20) PRIMARY KEY AUTO_INCREMENT NOT NULL, `repo_id` BIGINT(20) NULL, `type` INT NULL, `status` INT NULL, `commit_id` VARCHAR(40) NULL, `created_unix` BIGINT(20) NOT NULL) ENGINE=InnoDB DEFAULT CHARSET utf8mb4 ROW_FORMAT=DYNAMIC [] - 3.242440889s

2024/01/07 21:05:23 models/db/engine.go:125:SyncAllTables() [I] [SQL] CREATE UNIQUE INDEX `UQE_repo_archiver_s` ON `repo_archiver` (`repo_id`,`type`,`commit_id`) [] - 5.293209084s

2024/01/07 21:05:28 models/db/engine.go:125:SyncAllTables() [I] [SQL] CREATE INDEX `IDX_repo_archiver_repo_id` ON `repo_archiver` (`repo_id`) [] - 5.201385662s

2024/01/07 21:05:34 models/db/engine.go:125:SyncAllTables() [I] [SQL] CREATE INDEX `IDX_repo_archiver_created_unix` ON `repo_archiver` (`created_unix`) [] - 5.601427875s

2024/01/07 21:05:37 models/db/engine.go:125:SyncAllTables() [I] [SQL] CREATE TABLE IF NOT EXISTS `attachment` (`id` BIGINT(20) PRIMARY KEY AUTO_INCREMENT NOT NULL, `uuid` VARCHAR(40) NULL, `repo_id` BIGINT(20) NULL, `issue_id` BIGINT(20) NULL, `release_id` BIGINT(20) NULL, `uploader_id` BIGINT(20) DEFAULT 0 NULL, `comment_id` BIGINT(20) NULL, `name` VARCHAR(255) NULL, `download_count` BIGINT(20) DEFAULT 0 NULL, `size` BIGINT(20) DEFAULT 0 NULL, `created_unix` BIGINT(20) NULL) ENGINE=InnoDB DEFAULT CHARSET utf8mb4 ROW_FORMAT=DYNAMIC [] - 3.359156594s

2024/01/07 21:05:41 models/db/engine.go:125:SyncAllTables() [I] [SQL] CREATE UNIQUE INDEX `UQE_attachment_uuid` ON `attachment` (`uuid`) [] - 4.593176072s

2024/01/07 21:05:47 models/db/engine.go:125:SyncAllTables() [I] [SQL] CREATE INDEX `IDX_attachment_repo_id` ON `attachment` (`repo_id`) [] - 5.726498245s

2024/01/07 21:05:52 models/db/engine.go:125:SyncAllTables() [I] [SQL] CREATE INDEX `IDX_attachment_issue_id` ON `attachment` (`issue_id`) [] - 4.901474326s
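Each `SyncAllTables()` line ends with how long that statement took, and the ten lines shown span 21:05:08 to 21:05:52, with most `CREATE INDEX` statements taking roughly 5 seconds apiece against this MariaDB instance. That adds up quickly across a full schema. A minimal Python sketch for totalling those timings from a saved log; the regex assumes the trailing ` - <seconds>s` suffix seen above, and `sql_timings` and `summarize` are hypothetical helpers.

```python
import re
import sys

# Matches the trailing " - 5.751395031s" timing on Gitea's [SQL] log lines
DURATION = re.compile(r"\[SQL\].* - (\d+(?:\.\d+)?)s\s*$")


def sql_timings(lines):
    """Return the per-statement durations (seconds) found in the log lines."""
    timings = []
    for line in lines:
        match = DURATION.search(line)
        if match:
            timings.append(float(match.group(1)))
    return timings


def summarize(timings):
    """One-line summary: statement count, total and average seconds."""
    if not timings:
        return "no timed SQL statements found"
    total = sum(timings)
    return f"{len(timings)} statements, {total:.1f}s total, {total / len(timings):.2f}s average"


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python3 sqltimes.py configure.log  (file names are placeholders)
    with open(sys.argv[1], encoding="utf-8") as log:
        print(summarize(sql_timings(log)))
```

To capture the whole run rather than the last ten lines, redirect the full output of the same `kubectl logs ... --container configure-gitea` command to a file first.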

This time the database setup took much longer, only wrapping up after 31 minutes:

$ kubectl get pods -n giteatest

NAME                                  READY   STATUS     RESTARTS   AGE
giteatest-memcached-b4dd9ccfd-qwbm4   1/1     Running    0          31m
giteatest-0                           1/1     Running    0          31m

Let’s log in and set things up, including creating a new repo and putting some content into it.

Now we can upgrade, appending additions.txt to the end of the values file:

$ cat additions.txt

postgresql-ha:
  enabled: false
redis-cluster:
  cluster:
    nodes: 3
  enabled: true
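Appending additions.txt works here because its two top-level keys, `postgresql-ha` and `redis-cluster`, are new to chart8.values.yaml. Helm also accepts `-f` more than once, giving precedence to the right-most file and merging nested maps key by key. The Python sketch below approximates that layering on plain dicts; `merge_values` is a hypothetical helper, not Helm's code, and it simply replaces lists and scalars outright, which matches my understanding of Helm's behaviour without being a full reimplementation.

```python
from copy import deepcopy


def merge_values(base: dict, override: dict) -> dict:
    """Merge override into a copy of base: nested maps merge, anything else is replaced."""
    merged = deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = merge_values(merged[key], value)
        else:
            merged[key] = deepcopy(value)
    return merged


# Trimmed-down subset of chart8.values.yaml
chart8_values = {
    "postgresql": {"enabled": False},
    "global": {"storageClass": "managed-nfs-storage"},
}

# Contents of additions.txt
additions = {
    "postgresql-ha": {"enabled": False},
    "redis-cluster": {"cluster": {"nodes": 3}, "enabled": True},
}

if __name__ == "__main__":
    print(merge_values(chart8_values, additions))
```

Either way, the result keeps the external MySQL settings and the disabled PostgreSQL from the original file while switching on the three-node redis-cluster.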

So this shows that upgrading from chart 8.3.0 to 9.1.0 with an external MySQL database falls down. It could be due to database migrations or to the new redis-cluster HA setup being swapped in.

This leaves the existing repos broken, along with any outstanding clones:

$ git pull

fatal: Could not read from remote repository.

Please make sure you have the correct access rights

and the repository exists.

However, I can still use this instance to create new repos.

Summary

What we have worked through, twice actually, shows that the Gitea chart, when used with an external MariaDB/MySQL database, had some form of breaking change between 8.3.0 and 9.x.

Admins should always back up data, especially before major chart updates. There is a backup and restore process one can use that would have sorted this out (simply unzip the backup in the container).
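Gitea's built-in backup is `gitea dump`, which writes a zip archive; assuming that is the process meant here, a quick check that an archive actually holds a database dump and the repositories is cheap insurance before unzipping it into a fresh container. This is a minimal Python sketch using the standard `zipfile` module. The expected entry names (`gitea-db.sql`, `repos/`) are assumptions based on recent Gitea dumps, so compare them against an archive you produced yourself; `missing_entries` is a hypothetical helper, and it checks only the layout, not whether the SQL restores cleanly into MySQL.

```python
import zipfile

# Assumed entries in a `gitea dump` archive; verify against your own dump
EXPECTED = ("gitea-db.sql", "repos/")


def missing_entries(dump, expected=EXPECTED):
    """Return the expected entries that are absent from the dump zip.

    `dump` may be a path or a binary file-like object. Names ending in "/"
    are treated as directories: any member under that prefix counts.
    """
    with zipfile.ZipFile(dump) as archive:
        names = archive.namelist()
    missing = []
    for entry in expected:
        if entry.endswith("/"):
            present = any(name.startswith(entry) for name in names)
        else:
            present = entry in names
        if not present:
            missing.append(entry)
    return missing
```

An empty list from `missing_entries("gitea-dump.zip")` (the file name is a placeholder) means the archive at least has the pieces a restore needs.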

That said, I have confidence in the backup and restore process, so I won’t test that here. As always, I love feedback and welcome challenges. I went back and made a note