Published: Nov 28, 2023 by Isaac Johnson

I recently saw a release announcement for Docusaurus 3.0. It’s a static-site generator for documentation, open-sourced by Meta, and it seemed worth checking out.

Installation

Let’s start with the simplest usage: we’ll use npx to scaffold a site.

First, I’ll make a directory and ensure I’m using a recent Node.js:

```
builder@LuiGi17:~/Workspaces$ mkdir docuSite
builder@LuiGi17:~/Workspaces$ cd docuSite/
builder@LuiGi17:~/Workspaces/docuSite$ nvm list
       v14.21.3
       v16.20.2
       v18.18.1
->     v18.18.2
        v20.8.0
default -> node (-> v20.8.0)
iojs -> N/A (default)
unstable -> N/A (default)
node -> stable (-> v20.8.0) (default)
stable -> 20.8 (-> v20.8.0) (default)
lts/* -> lts/iron (-> N/A)
lts/argon -> v4.9.1 (-> N/A)
lts/boron -> v6.17.1 (-> N/A)
lts/carbon -> v8.17.0 (-> N/A)
lts/dubnium -> v10.24.1 (-> N/A)
lts/erbium -> v12.22.12 (-> N/A)
lts/fermium -> v14.21.3
lts/gallium -> v16.20.2
lts/hydrogen -> v18.18.2
lts/iron -> v20.9.0 (-> N/A)
```
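Docusaurus 3 requires Node.js 18 or newer, which is why the active version in that `nvm list` output matters. As a minimal sketch (the `meetsMinimumNode` helper is hypothetical, not something Docusaurus ships), a few lines of Node can guard a setup script:

```javascript
// Hypothetical helper: does a Node.js version string meet a minimum major
// version? Docusaurus 3 needs Node.js 18 or newer.
function meetsMinimumNode(version, minMajor = 18) {
  // Accepts "v18.18.2" (process.version style) or "18.18.2".
  const match = /^v?(\d+)\.(\d+)\.(\d+)/.exec(version);
  if (!match) {
    throw new Error(`Unrecognized Node.js version: ${version}`);
  }
  return Number(match[1]) >= minMajor;
}

// Check the runtime that is executing this script.
if (meetsMinimumNode(process.version)) {
  console.log(`Node.js ${process.version} is new enough for Docusaurus 3.`);
} else {
  console.error(`Node.js ${process.version} is too old for Docusaurus 3; try "nvm use 18" or newer.`);
  process.exitCode = 1;
}

module.exports = { meetsMinimumNode };
```

Under the v18.18.2 selected above this check passes; switching to v16 with nvm would trip it.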

I’ll then run the `create-docusaurus` initializer via npx, using the `classic` template:

```
builder@LuiGi17:~/Workspaces/docuSite$ npx create-docusaurus@latest my-fb-website classic
Need to install the following packages:
  create-docusaurus@3.0.0
Ok to proceed? (y) Y
```

When the installer finishes, it lists the commands we can run:

```
294 packages are looking for funding
  run `npm fund` for details

found 0 vulnerabilities
[SUCCESS] Created my-fb-website.
[INFO] Inside that directory, you can run several commands:

  `npm start`
    Starts the development server.

  `npm run build`
    Bundles your website into static files for production.

  `npm run serve`
    Serves the built website locally.

  `npm run deploy`
    Publishes the website to GitHub pages.

We recommend that you begin by typing:

  `cd my-fb-website`
  `npm start`

Happy building awesome websites!
```

I hopped into the directory and started it:

```
builder@LuiGi17:~/Workspaces/docuSite$ cd my-fb-website/
builder@LuiGi17:~/Workspaces/docuSite/my-fb-website$ ls
README.md  babel.config.js  blog  docs  docusaurus.config.js  node_modules  package-lock.json  package.json  sidebars.js  src  static
builder@LuiGi17:~/Workspaces/docuSite/my-fb-website$ npm start

> my-fb-website@0.0.0 start
> docusaurus start

[INFO] Starting the development server...
[SUCCESS] Docusaurus website is running at: http://localhost:3000/

✔ Client
  Compiled successfully in 7.40s

client (webpack 5.89.0) compiled successfully
```

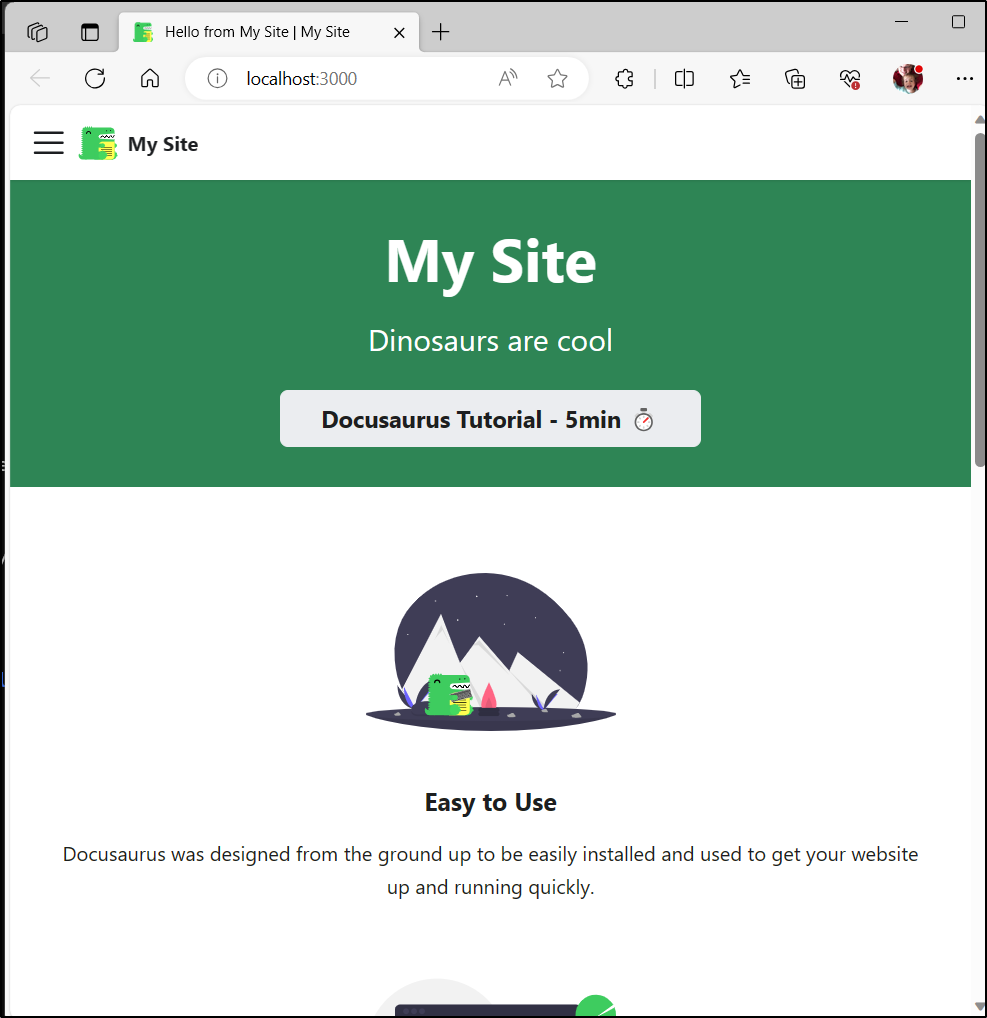

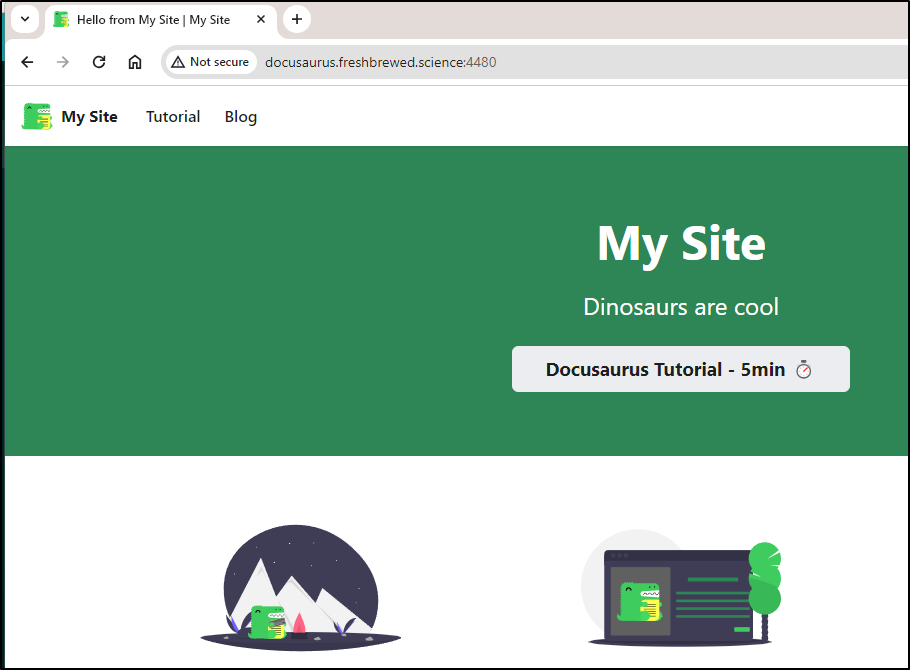

That fired up a sample site:

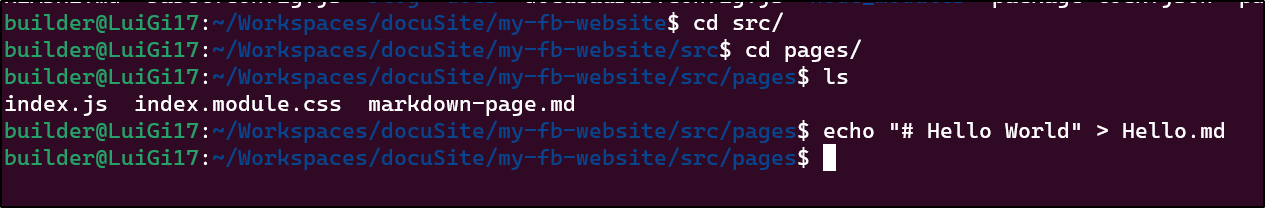

I can now create a page

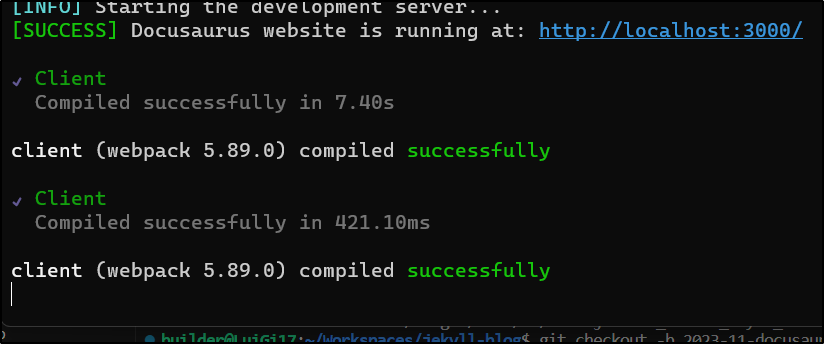

I see the app auto-reloads when it detected a change:

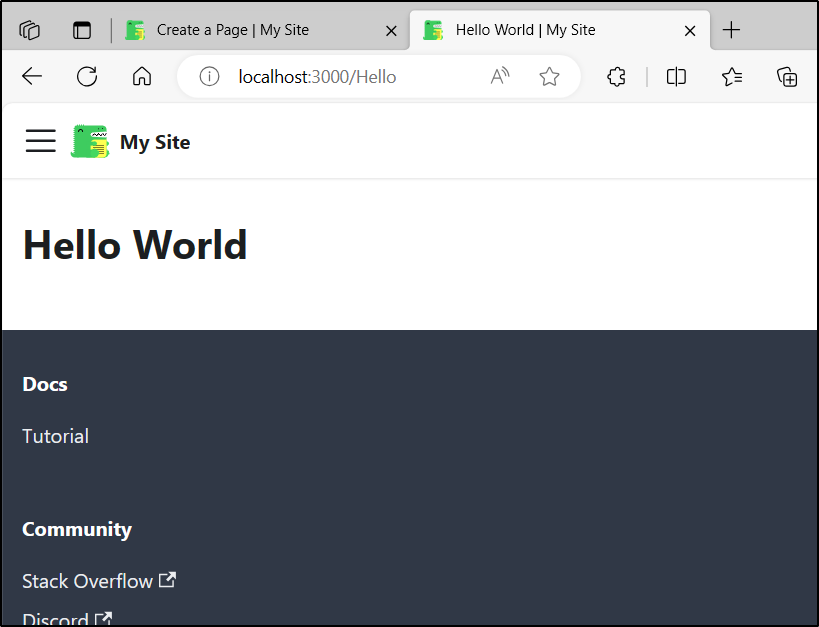

I can see that echo '# Hello World' > Hello.md indeed created a “Hello” page:

We can also create a React page, perhaps in a subfolder

builder@LuiGi17:~/Workspaces/docuSite/my-fb-website/src/pages$ mkdir pubs

builder@LuiGi17:~/Workspaces/docuSite/my-fb-website/src/pages$ cd pubs

builder@LuiGi17:~/Workspaces/docuSite/my-fb-website/src/pages/pubs$ vi pub01.js

builder@LuiGi17:~/Workspaces/docuSite/my-fb-website/src/pages/pubs$ cat pub01.js

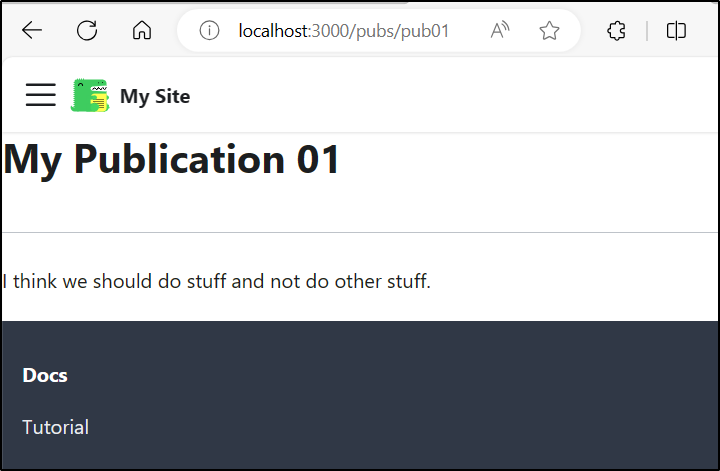

import React from 'react';

import Layout from '@theme/Layout';

export default function MyReactPage() {

return (

<Layout>

<h1>My Publication 01</h1>

<hr/>

<p>I think we should do stuff and not do other stuff.</p>

</Layout>

);

}

As one would expect, this triggered a rebuild in the running app, and we could go right to the new page at `/pubs/pub01`:

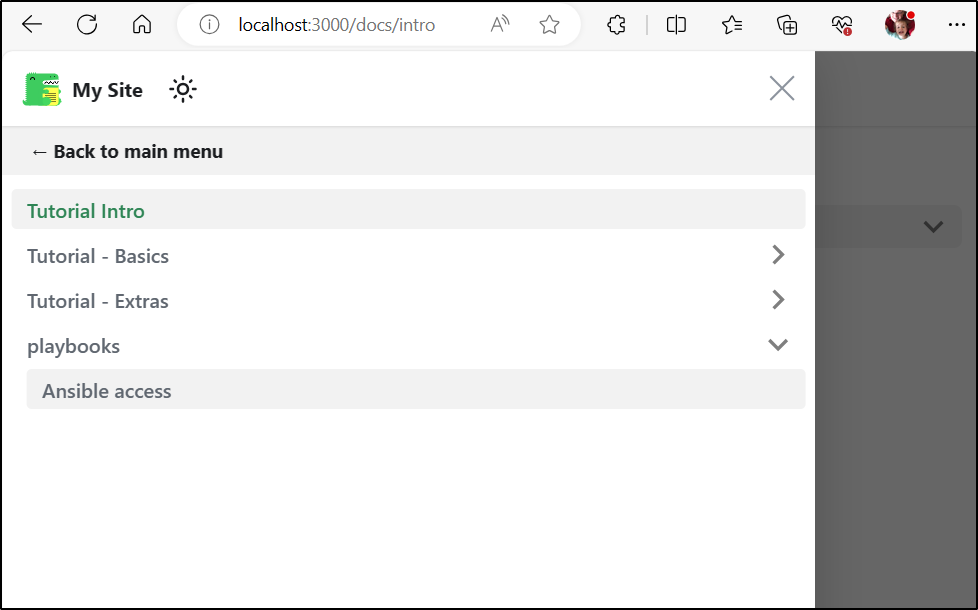
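Docusaurus maps files under `src/pages` to URLs by their path, which is why `pubs/pub01.js` is reachable without any registration step. The sketch below approximates that mapping; `pageRoute` is a hypothetical helper, and it assumes the default `/` base path and models only the default exclusions for `_`-prefixed and `*.test.*` files:

```javascript
// Simplified sketch of file-based routing for src/pages (not Docusaurus code).
// Assumptions: default base path "/", and only the "_" prefix and *.test.*
// exclusion rules are modeled.
const path = require("node:path");

function pageRoute(relativeFile) {
  // Normalize OS-specific separators to forward slashes.
  const posixPath = relativeFile.split(path.sep).join("/");
  const segments = posixPath.split("/");

  // Files or folders starting with "_" and test files do not become pages.
  if (segments.some((s) => s.startsWith("_")) || /\.test\.[jt]sx?$/.test(posixPath)) {
    return null;
  }

  // Drop the extension (.js, .jsx, .ts, .tsx, .md, .mdx).
  const withoutExt = posixPath.replace(/\.(jsx?|tsx?|mdx?)$/, "");
  // An "index" file maps to its folder's route.
  const route = withoutExt.replace(/(^|\/)index$/, "");
  return "/" + route;
}

module.exports = { pageRoute };
```

For example, `pageRoute("pubs/pub01.js")` returns `"/pubs/pub01"` and `pageRoute("pubs/index.js")` returns `"/pubs"`.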

Docs are similar to pages, but they also show up in the left-hand sidebar navigation.

Say, for instance, we wanted to build a library of runbooks for our organization:

builder@LuiGi17:~/Workspaces/docuSite/my-fb-website/docs$ mkdir playbooks

builder@LuiGi17:~/Workspaces/docuSite/my-fb-website/docs$ cd playbooks/

builder@LuiGi17:~/Workspaces/docuSite/my-fb-website/docs/playbooks$ vi ansible.md

builder@LuiGi17:~/Workspaces/docuSite/my-fb-website/docs/playbooks$ cat ansible.md

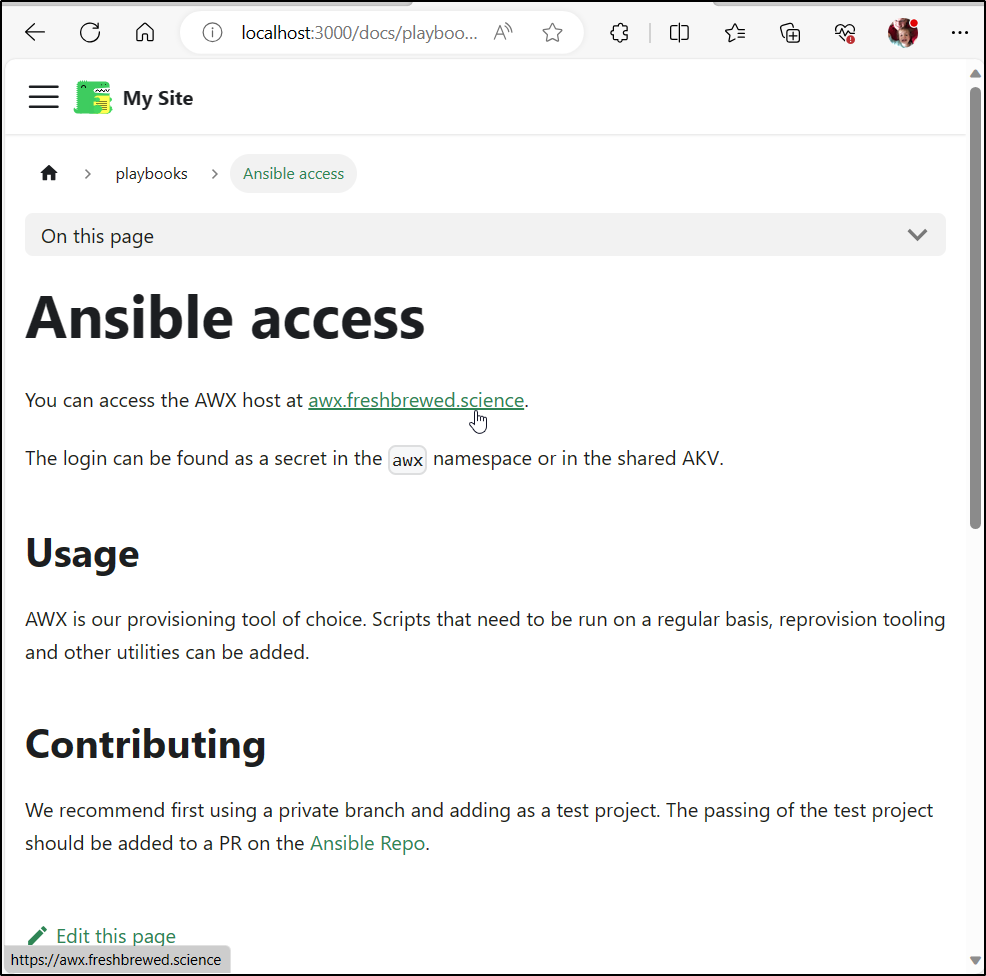

# Ansible access

You can access the AWX host at [awx.freshbrewed.science](https://awx.freshbrewed.science).

The login can be found as a secret in the `awx` namespace or in the shared AKV.

## Usage

AWX is our provisioning tool of choice. Scripts that need to be run on a regular basis, reprovision tooling and other utilities can be added.

## Contributing

We recommend first using a private branch and adding as a test project. The passing of the test project should be added to a PR on the [Ansible Repo](https://github.com/idjohnson/ansible).

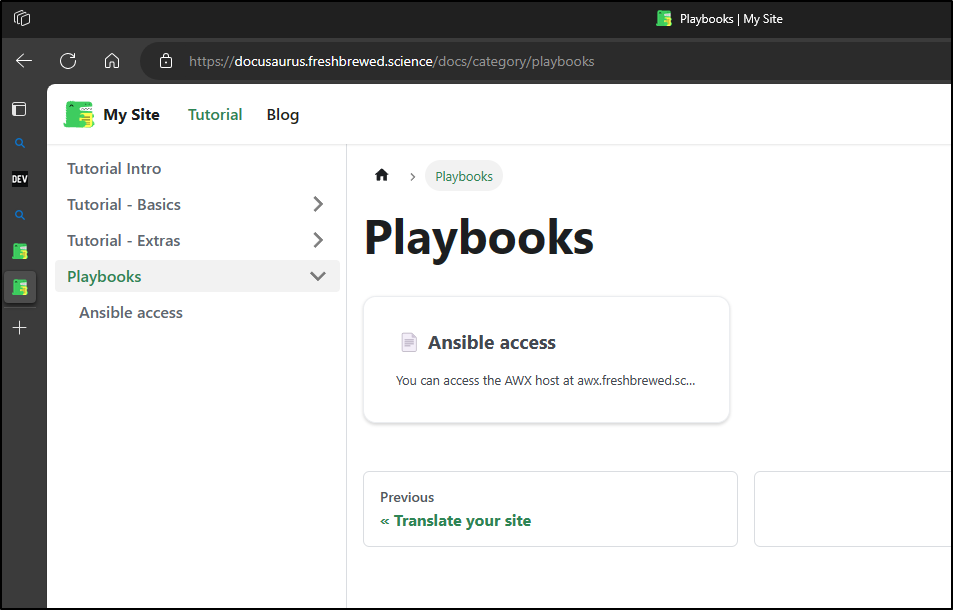

I can now see it refreshed in my left-hand nav, which I think is a pretty functional start.

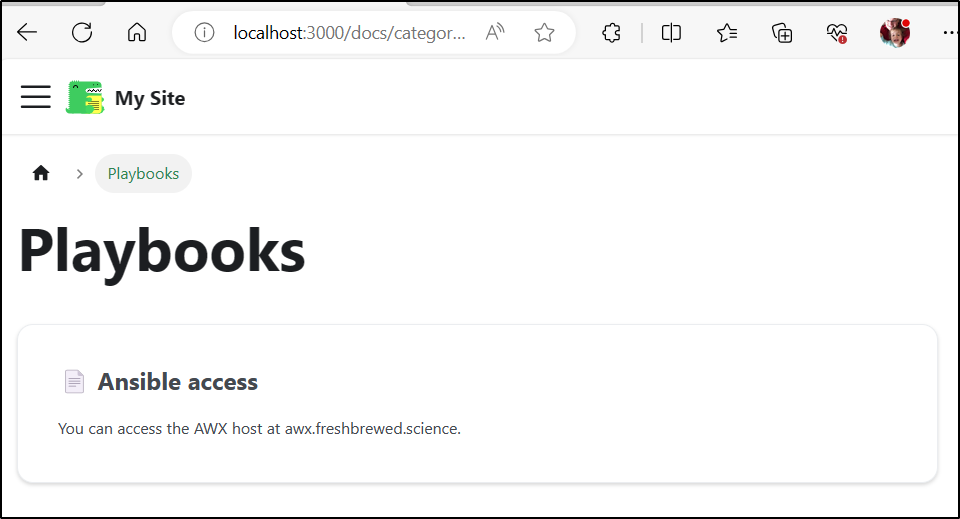

I made the Playbooks area a bit nicer by adding a `_category_.json` file to generate an index page:

```
builder@LuiGi17:~/Workspaces/docuSite/my-fb-website/docs/playbooks$ cat _category_.json
{
  "label": "Playbooks",
  "position": 4,
  "link": {
    "type": "generated-index"
  }
}
```

We now see a landing page:

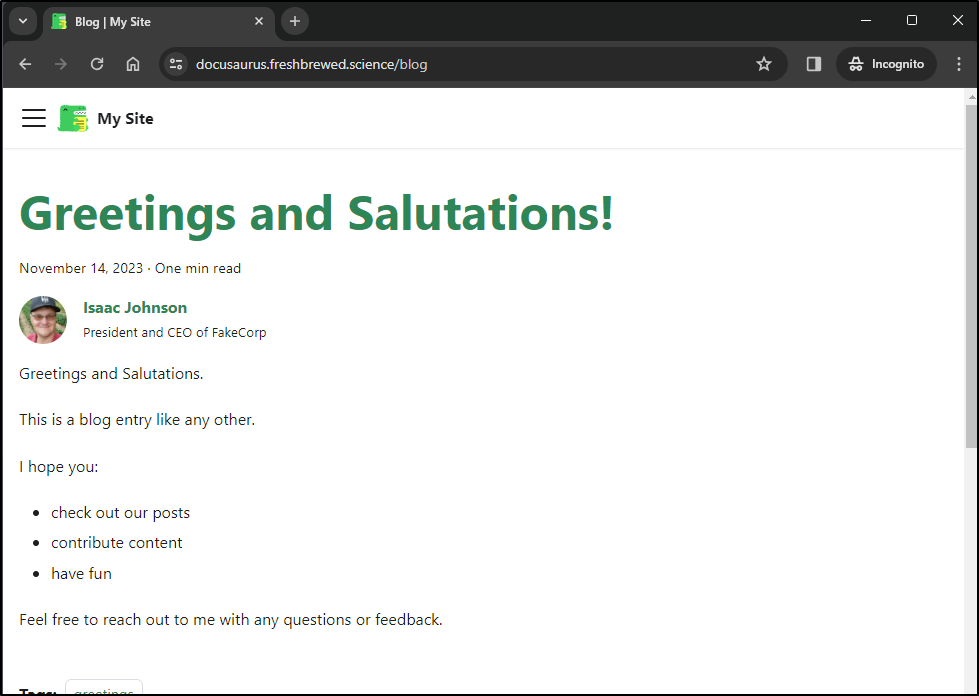
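If you end up with many runbook folders, generating that file can be scripted. A minimal sketch, assuming Node 18+; `writeCategory` is a hypothetical helper that writes the same fields shown above, plus an optional `description` for the generated index page:

```javascript
// Hypothetical helper: write a _category_.json into a docs subfolder so the
// sidebar gets a label, a position, and a generated index page.
const fs = require("node:fs");
const path = require("node:path");

function writeCategory(dir, label, position, description) {
  const link = { type: "generated-index" };
  if (description) {
    link.description = description; // optional text shown on the index page
  }
  const category = { label, position, link };
  const file = path.join(dir, "_category_.json");
  fs.writeFileSync(file, JSON.stringify(category, null, 2) + "\n");
  return file;
}

module.exports = { writeCategory };
```

Calling `writeCategory("docs/playbooks", "Playbooks", 4)` from the site root would reproduce the file above.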

Blog

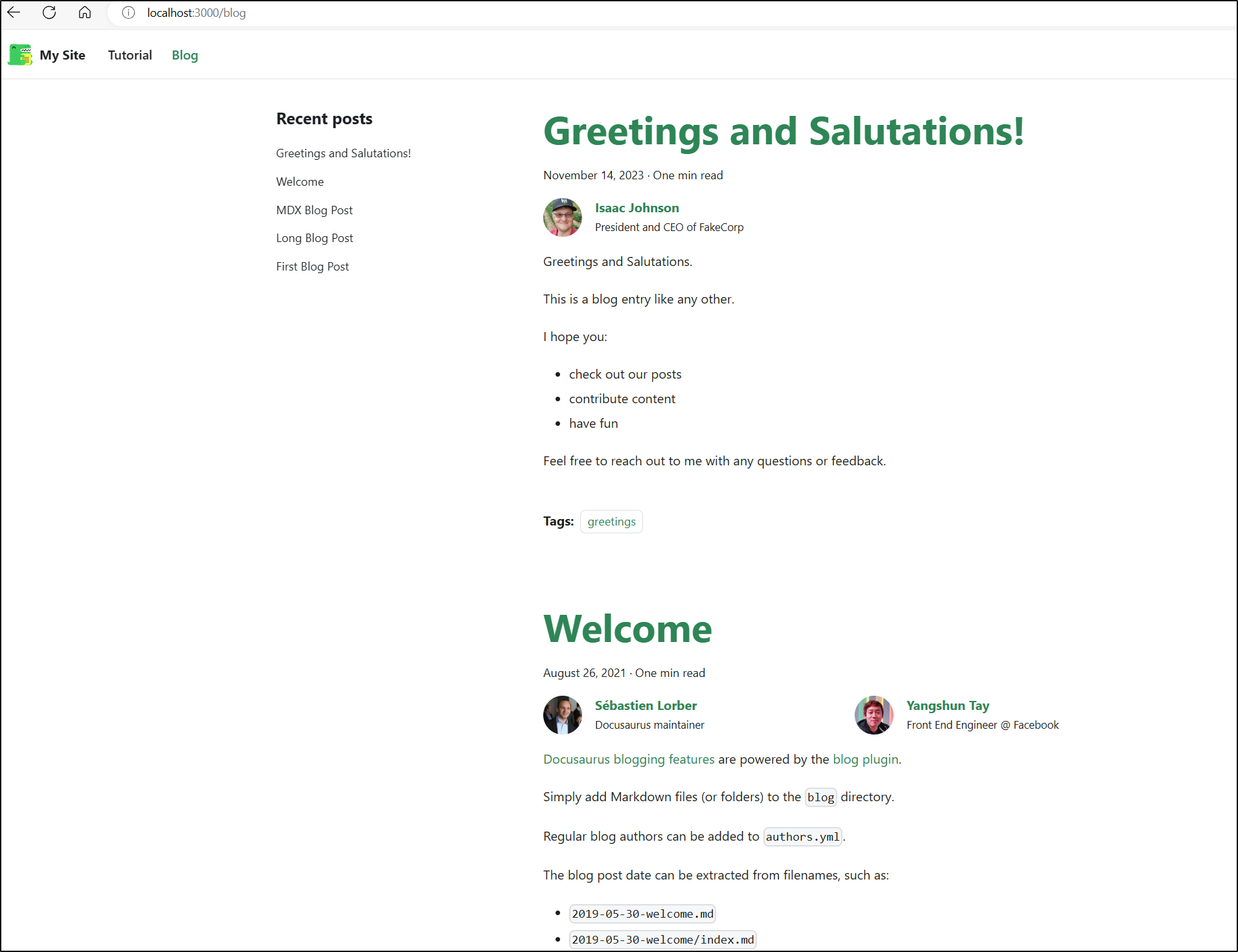

We can also use this for a blog. Let’s add a quick entry:

```
builder@LuiGi17:~/Workspaces/docuSite/my-fb-website/blog$ ls
2019-05-28-first-blog-post.md  2019-05-29-long-blog-post.md  2021-08-01-mdx-blog-post.mdx  2021-08-26-welcome  authors.yml
builder@LuiGi17:~/Workspaces/docuSite/my-fb-website/blog$ vi 2023-11-14-salutations.md
builder@LuiGi17:~/Workspaces/docuSite/my-fb-website/blog$ cat 2023-11-14-salutations.md
---
slug: greetings
title: Greetings and Salutations!
authors:
  - name: Isaac Johnson
    title: President and CEO of FakeCorp
    url: https://github.com/idjohnson
    image_url: https://avatars.githubusercontent.com/u/6699477?v=4
tags: [greetings]
---

Greetings and Salutations.

This is a blog entry like any other.

I hope you:
- check out our posts
- contribute content
- have fun

Feel free to reach out to me with any questions or feedback.
```

Unlike the docs or pages, the Blog will add to the top

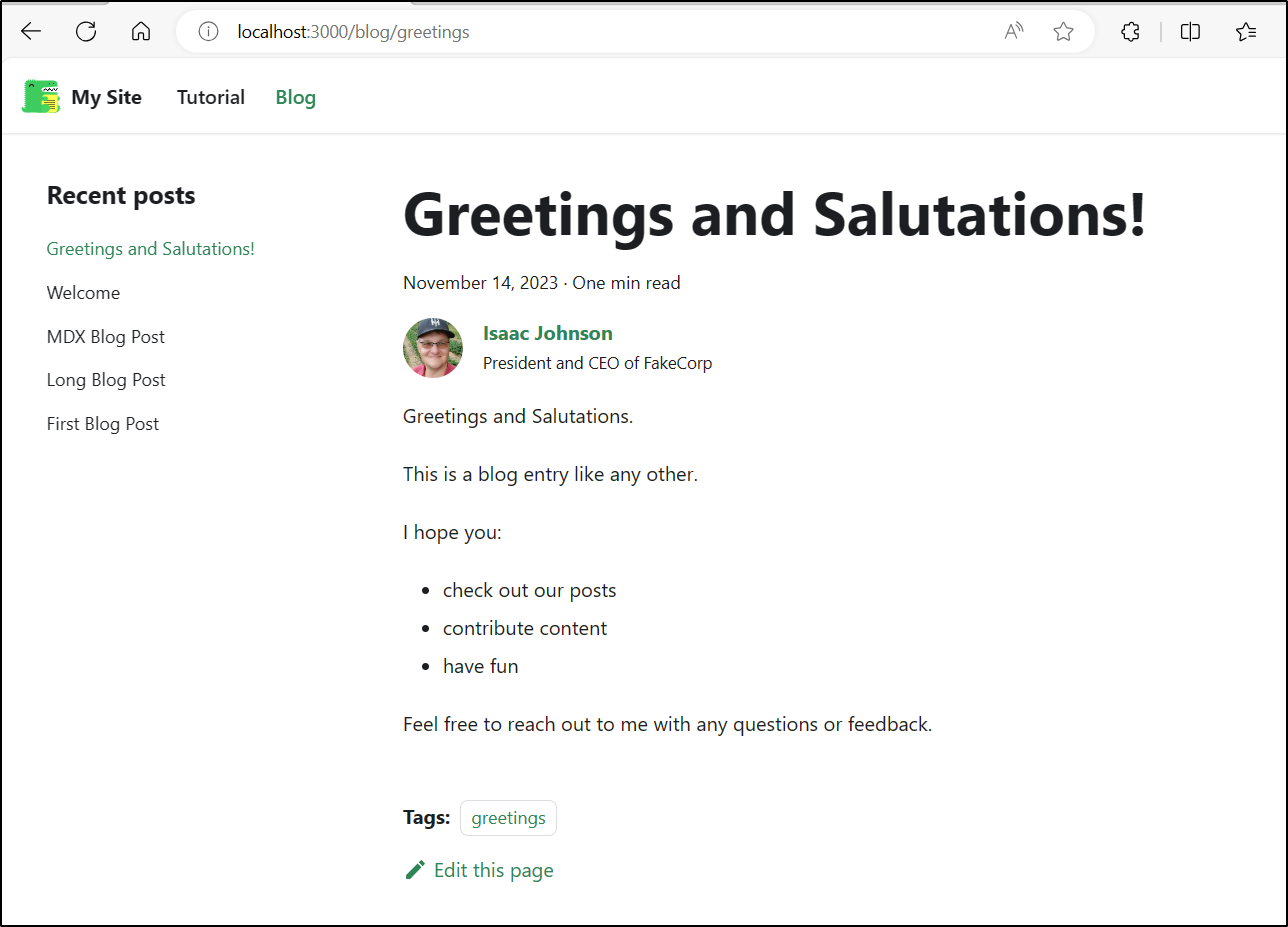

Though I have the ability to directly to an entry if I want which would be useful for linking

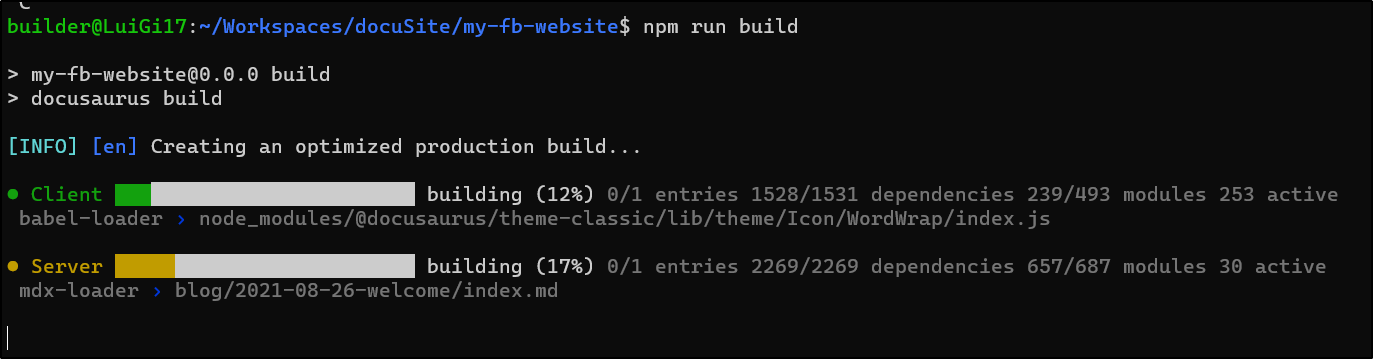

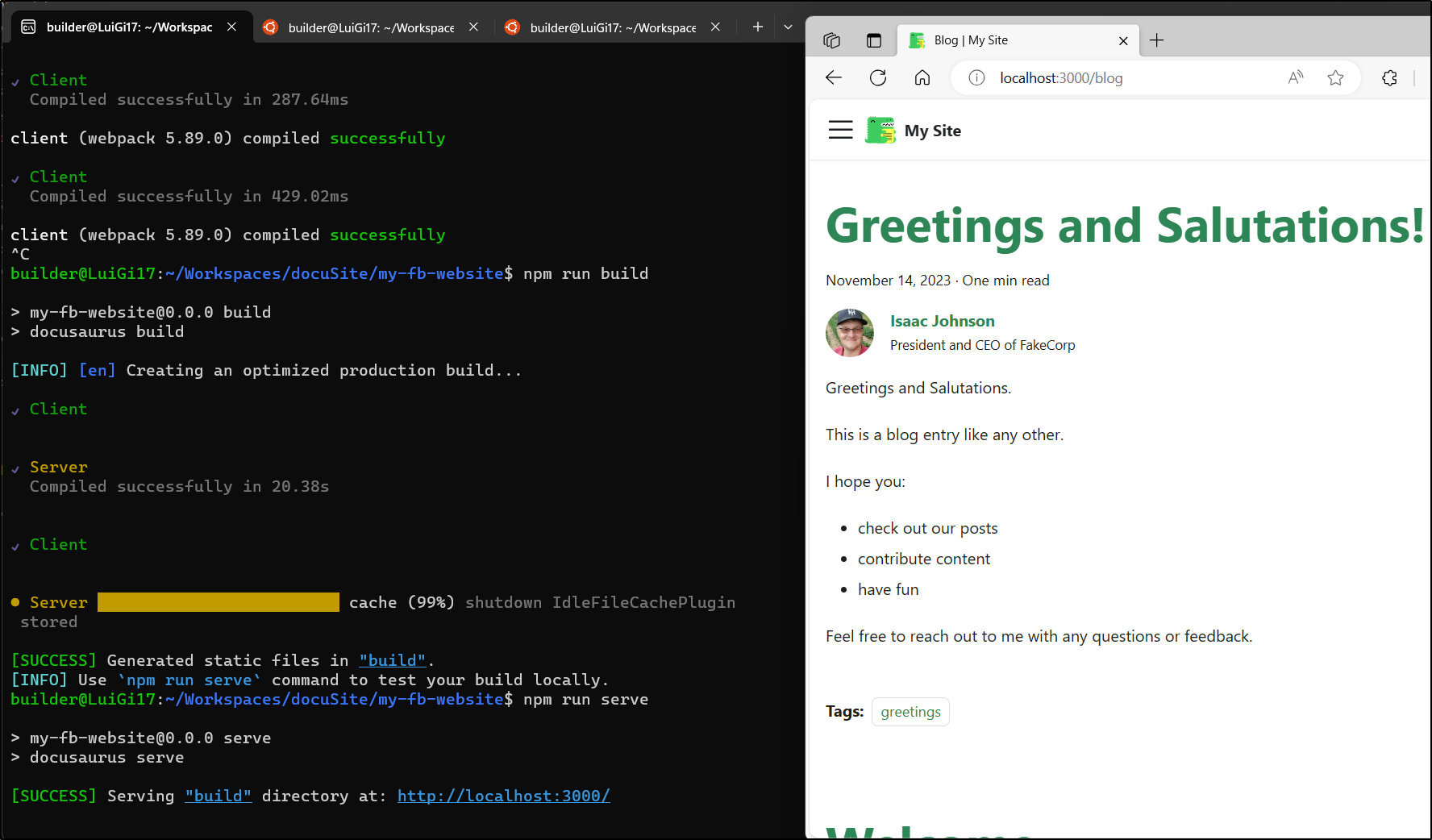
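The URL an entry gets depends on its filename and front matter: the date prefix in `2023-11-14-salutations.md` supplies the post date, and the `slug: greetings` front matter overrides the default date-based path. As a simplified sketch of that rule (the `blogPostUrl` helper is hypothetical and assumes the default `/blog` route base path), this shows which URL to expect before sharing a link:

```javascript
// Simplified sketch of how a blog post URL relates to its filename and front
// matter. Assumptions: default "/blog" base path and a "YYYY-MM-DD-name"
// file (.md/.mdx) or folder name. Not Docusaurus code.
function blogPostUrl(fileName, frontMatter = {}, routeBasePath = "/blog") {
  const match = /^(\d{4})-(\d{2})-(\d{2})-(.+?)(\.mdx?)?$/.exec(fileName);
  if (!match) {
    throw new Error(`Expected a YYYY-MM-DD-name file, got: ${fileName}`);
  }
  const [, year, month, day, name] = match;
  // An explicit front matter slug wins over the date-based path.
  if (frontMatter.slug) {
    return `${routeBasePath}/${frontMatter.slug.replace(/^\//, "")}`;
  }
  return `${routeBasePath}/${year}/${month}/${day}/${name}`;
}

module.exports = { blogPostUrl };
```

With the front matter above, `blogPostUrl("2023-11-14-salutations.md", { slug: "greetings" })` returns `"/blog/greetings"`; without the slug, the sketch returns `"/blog/2023/11/14/salutations"`.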

Building the static site

This is really meant to be “built” into a static site.

When I run `npm run build`, I can see it pull everything together:

```
$ npm run build

> my-fb-website@0.0.0 build
> docusaurus build

[INFO] [en] Creating an optimized production build...

✔ Client
✔ Server
  Compiled successfully in 20.38s

✔ Client
● Server █████████████████████████ cache (99%) shutdown IdleFileCachePlugin
 stored

[SUCCESS] Generated static files in "build".
[INFO] Use `npm run serve` command to test your build locally.
```

I can then run `npm run serve` to serve the static site. Unlike the dev server from earlier, this is static: it will not rebuild if we change files. It’s mostly useful for validating the generated static site locally.

When the time comes, we can deploy to GitHub Pages. As it stands, though, it won’t work yet:

```
builder@LuiGi17:~/Workspaces/docuSite/my-fb-website$ npm run deploy

> my-fb-website@0.0.0 deploy
> docusaurus deploy

[WARNING] When deploying to GitHub Pages, it is better to use an explicit "trailingSlash" site config.
Otherwise, GitHub Pages will add an extra trailing slash to your site urls only on direct-access (not when navigation) with a server redirect.
This behavior can have SEO impacts and create relative link issues.

[INFO] Deploy command invoked...
Error: Please set the GIT_USER environment variable, or explicitly specify USE_SSH instead!
    at Command.deploy (/home/builder/Workspaces/docuSite/my-fb-website/node_modules/@docusaurus/core/lib/commands/deploy.js:70:19)
[INFO] Docusaurus version: 3.0.0
Node version: v18.18.2
builder@LuiGi17:~/Workspaces/docuSite/my-fb-website$
```
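Both messages point at configuration. As a sketch, assuming a project site hosted at `https://<org>.github.io/<project>/` (the org and project names are placeholders, and `githubPagesFields` is a hypothetical helper), these are the `docusaurus.config.js` fields that `docusaurus deploy` relies on, including an explicit `trailingSlash` (either `true` or `false` satisfies the warning; `false` is used here):

```javascript
// Hypothetical helper returning the GitHub Pages-related fields to merge into
// the existing config object in docusaurus.config.js. "org" and "project"
// are placeholders for your GitHub user/org and repository name.
function githubPagesFields(org, project) {
  return {
    url: `https://${org}.github.io`, // site origin on GitHub Pages
    baseUrl: `/${project}/`, // project sites are served under /<project>/
    organizationName: org, // GitHub user or org that owns the repo
    projectName: project, // repository name
    deploymentBranch: "gh-pages", // branch that `docusaurus deploy` pushes to
    trailingSlash: false, // an explicit value silences the warning above
  };
}

module.exports = { githubPagesFields };
```

With those fields in place, credentials come from the environment: `GIT_USER=<github-username> npm run deploy` over HTTPS, or `USE_SSH=true npm run deploy` if you push over SSH.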

Dockerfile

If we want to expose this using Docker, we need to tweak `package.json` so the `start` and `serve` scripts bind to all interfaces (`--host 0.0.0.0`) instead of only localhost:

```
builder@LuiGi17:~/Workspaces/docuSite/my-fb-website$ cat package.json | grep 0.0.0.0
    "start": "docusaurus start --host 0.0.0.0",
    "serve": "docusaurus serve --host 0.0.0.0",
```

We can then create a multi-stage Dockerfile with targets for both development and production. I found this in a blog article on dev.to, just to give proper credit.

```
builder@LuiGi17:~/Workspaces/docuSite/my-fb-website$ cat Dockerfile
## Base ########################################################################
# Use a larger node image to do the build for native deps (e.g., gcc, python)
FROM node:lts as base

# Reduce npm log spam and colour during install within Docker
ENV NPM_CONFIG_LOGLEVEL=warn
ENV NPM_CONFIG_COLOR=false

# We'll run the app as the `node` user, so put it in their home directory
WORKDIR /home/node/app
# Copy the source code over
COPY --chown=node:node . /home/node/app/

## Development #################################################################
# Define a development target that installs devDeps and runs in dev mode
FROM base as development
WORKDIR /home/node/app
# Install (not ci) with dependencies, and for Linux vs. Linux Musl (which we use for -alpine)
RUN npm install
# Switch to the node user vs. root
USER node
# Expose port 3000
EXPOSE 3000
# Start the app in debug mode so we can attach the debugger
CMD ["npm", "start"]

## Production ##################################################################
# Also define a production target which doesn't use devDeps
FROM base as production
WORKDIR /home/node/app
COPY --chown=node:node --from=development /home/node/app/node_modules /home/node/app/node_modules
# Build the Docusaurus app
RUN npm run build

## Deploy ######################################################################
# Use a stable nginx image
FROM nginx:stable-alpine as deploy
WORKDIR /home/node/app
# Copy what we've installed/built from production
COPY --chown=node:node --from=production /home/node/app/build /usr/share/nginx/html/
```

I can build it:

```
builder@LuiGi17:~/Workspaces/docuSite/my-fb-website$ docker build --target development -t docs:dev .
[+] Building 74.5s (11/11) FINISHED                                   docker:default
 => [internal] load build definition from Dockerfile 0.1s
 => => transferring dockerfile: 1.61kB 0.0s
 => [internal] load .dockerignore 0.1s
 => => transferring context: 2B 0.0s
 => [internal] load metadata for docker.io/library/node:lts 2.6s
 => [auth] library/node:pull token for registry-1.docker.io 0.0s
 => [base 1/3] FROM docker.io/library/node:lts@sha256:5f21943fe97b24ae1740da6d7b9c56ac43fe3495acb47c1b232b0a352b02a25c 62.7s
 => => resolve docker.io/library/node:lts@sha256:5f21943fe97b24ae1740da6d7b9c56ac43fe3495acb47c1b232b0a352b02a25c 0.0s
 => => sha256:5f21943fe97b24ae1740da6d7b9c56ac43fe3495acb47c1b232b0a352b02a25c 1.21kB / 1.21kB 0.0s
 => => sha256:cb7cd40ba6483f37f791e1aace576df449fc5f75332c19ff59e2c6064797160e 2.00kB / 2.00kB 0.0s
 => => sha256:b5288ff94366c7c413f66f967629c66876f22de02bae58f0c0cba2fa42eb292b 7.52kB / 7.52kB 0.0s
 => => sha256:325c5bf4c2f26c11380501bec4b6eef8a3ea35b554aa1b222cbcd1e1fe11ae1d 64.13MB / 64.13MB 21.2s
 => => sha256:8457fd5474e70835e4482983a5662355d892d5f6f0f90a27a8e9f009997e8196 49.58MB / 49.58MB 21.7s
 => => sha256:13baa2029dde87a21b87127168a0fb50a007c07da6b5adc8864e1fe1376c86ff 24.05MB / 24.05MB 11.4s
 => => sha256:7e18a660069fd7f87a7a6c49ddb701449bfb929c066811777601d36916c7f674 211.06MB / 211.06MB 51.5s
 => => sha256:c30e0acec6d561f6ec4e8d3ccebdeedbe505923a613afe3df4a6ea0a40f2240a 3.37kB / 3.37kB 21.3s
 => => sha256:6b06d99eb2a57636421f94780405fdc53fc8159cf7991fca61edb9a4565b234f 47.84MB / 47.84MB 37.8s
 => => extracting sha256:8457fd5474e70835e4482983a5662355d892d5f6f0f90a27a8e9f009997e8196 3.1s
 => => sha256:a3e4c4d8a88e065a9b9ed4634fafd6b3064ea8a673efbf8ecc046038c73363ef 2.21MB / 2.21MB 22.9s
 => => sha256:9dbab0291c0e40e9c4172533b5c8c74c1f7b24b50c6973b45c687d1d5f086679 452B / 452B 23.1s
 => => extracting sha256:13baa2029dde87a21b87127168a0fb50a007c07da6b5adc8864e1fe1376c86ff 0.8s
 => => extracting sha256:325c5bf4c2f26c11380501bec4b6eef8a3ea35b554aa1b222cbcd1e1fe11ae1d 3.5s
 => => extracting sha256:7e18a660069fd7f87a7a6c49ddb701449bfb929c066811777601d36916c7f674 7.5s
 => => extracting sha256:c30e0acec6d561f6ec4e8d3ccebdeedbe505923a613afe3df4a6ea0a40f2240a 0.0s
 => => extracting sha256:6b06d99eb2a57636421f94780405fdc53fc8159cf7991fca61edb9a4565b234f 2.7s
 => => extracting sha256:a3e4c4d8a88e065a9b9ed4634fafd6b3064ea8a673efbf8ecc046038c73363ef 0.1s
 => => extracting sha256:9dbab0291c0e40e9c4172533b5c8c74c1f7b24b50c6973b45c687d1d5f086679 0.0s
 => [internal] load build context 6.6s
 => => transferring context: 271.88MB 6.4s
 => [base 2/3] WORKDIR /home/node/app 0.3s
 => [base 3/3] COPY --chown=node:node . /home/node/app/ 2.7s
 => [development 1/2] WORKDIR /home/node/app 0.1s
 => [development 2/2] RUN npm install 2.5s
 => exporting to image 3.3s
 => => exporting layers 3.2s
 => => writing image sha256:be3e9fdcf98f6ebb6e862c80256d51bef42b469f087470a4a1a49a0baecbd3a4 0.0s
 => => naming to docker.io/library/docs:dev 0.0s

What's Next?
  View a summary of image vulnerabilities and recommendations → docker scout quickview
```

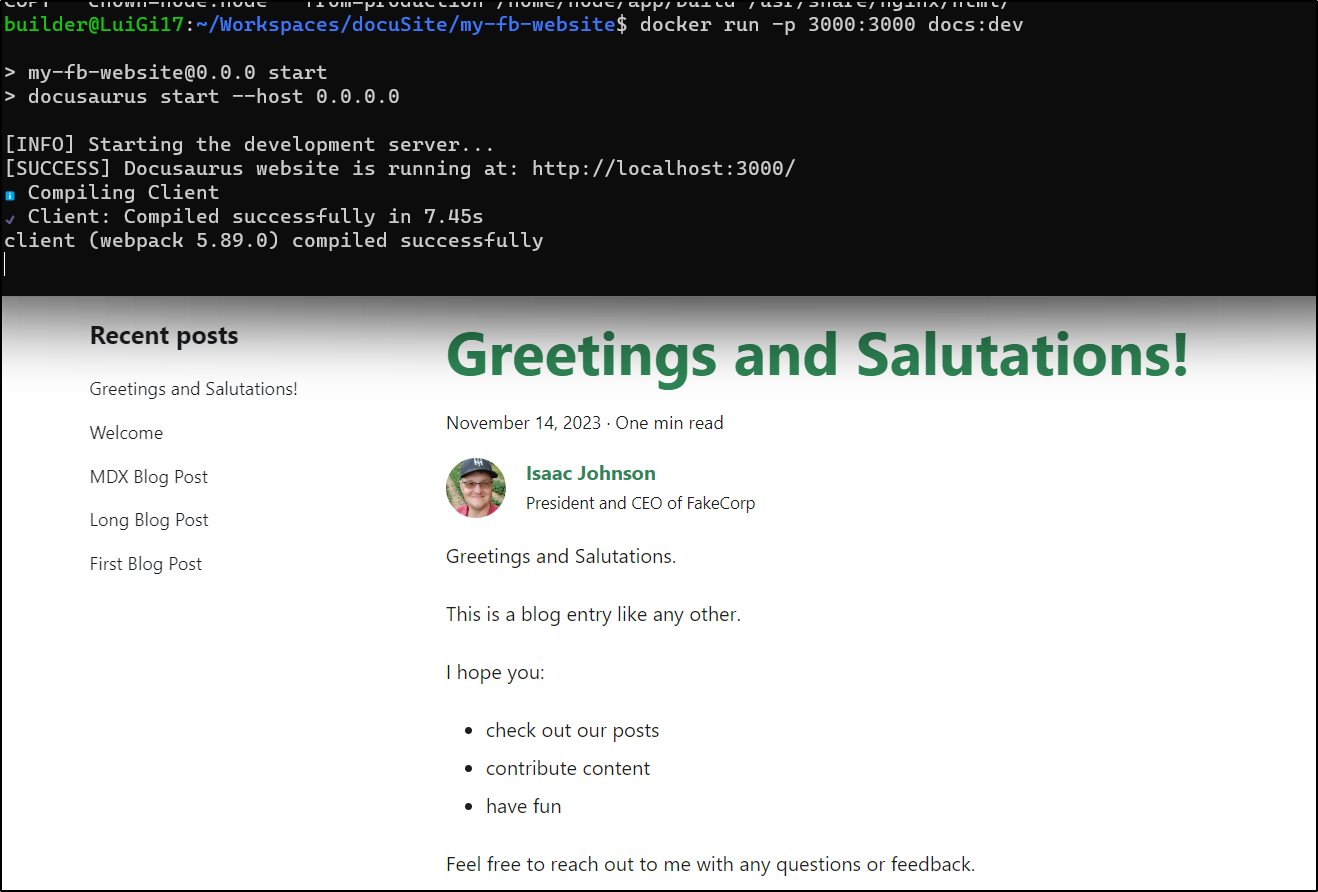

Then run it:

```
$ docker run -p 3000:3000 docs:dev

> my-fb-website@0.0.0 start
> docusaurus start --host 0.0.0.0

[INFO] Starting the development server...
[SUCCESS] Docusaurus website is running at: http://localhost:3000/

ℹ Compiling Client
✔ Client: Compiled successfully in 7.45s
client (webpack 5.89.0) compiled successfully
```
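The `-p 3000:3000` mapping only works because the dev server now listens on `0.0.0.0` (note the `--host 0.0.0.0` in the start command above). Inside a container, a server bound only to `localhost` accepts connections from within that container alone, so Docker’s port forwarding can’t reach it. If you would rather script the `package.json` change than hand-edit it, here is a minimal sketch; `addHostFlag` is a hypothetical helper that returns a patched copy and skips scripts that already pass `--host`:

```javascript
// Hypothetical helper: return a copy of a parsed package.json whose start and
// serve scripts pass "--host 0.0.0.0", so the server listens on all
// interfaces inside a container instead of only on localhost.
function addHostFlag(pkg, scriptNames = ["start", "serve"], host = "0.0.0.0") {
  const updated = { ...pkg, scripts: { ...pkg.scripts } };
  for (const name of scriptNames) {
    const command = updated.scripts[name];
    // Only touch scripts that exist and don't already choose a host.
    if (command && !/--host\b/.test(command)) {
      updated.scripts[name] = `${command} --host ${host}`;
    }
  }
  return updated;
}

module.exports = { addHostFlag };
```

To apply it, parse `package.json` with `JSON.parse`, pass the object through `addHostFlag`, and write it back with `JSON.stringify(pkg, null, 2)`.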

As you can see in the Dockerfile, the real difference between the “Development” and “Production” targets is whether we run the live dev server or build static files (which the `deploy` stage then copies into an nginx image).


Kubernetes

Of course, I’ll want to host this somewhere other than my local Docker. I could run the container on a dedicated Docker host and serve it from there, or I could create a Kubernetes Deployment that I update. One advantage of Kubernetes is that I can later take the docs folder and turn it into a ConfigMap mount that I update on the fly.

Let’s start with the Docker host path first, then work our way to K8s.

I’ll build with a more proper tag (I could just retag the existing image, but I moved hosts, so I need to rebuild anyhow):

```
$ docker build --target development -t docusaurus:dev01 .
[+] Building 29.9s (10/10) FINISHED
 => [internal] load build definition from Dockerfile 0.0s
 => => transferring dockerfile: 1.62kB 0.0s
 => [internal] load .dockerignore 0.0s
 => => transferring context: 2B 0.0s
 => [internal] load metadata for docker.io/library/node:lts 1.8s
 => [base 1/3] FROM docker.io/library/node:lts@sha256:5f21943fe97b24ae1740da6d7b9c56ac43fe3495acb47c 11.5s
 => => resolve docker.io/library/node:lts@sha256:5f21943fe97b24ae1740da6d7b9c56ac43fe3495acb47c1b232b 0.0s
 => => sha256:cb7cd40ba6483f37f791e1aace576df449fc5f75332c19ff59e2c6064797160e 2.00kB / 2.00kB 0.0s
 => => sha256:5f21943fe97b24ae1740da6d7b9c56ac43fe3495acb47c1b232b0a352b02a25c 1.21kB / 1.21kB 0.0s
 => => sha256:b5288ff94366c7c413f66f967629c66876f22de02bae58f0c0cba2fa42eb292b 7.52kB / 7.52kB 0.0s
 => => sha256:8457fd5474e70835e4482983a5662355d892d5f6f0f90a27a8e9f009997e8196 49.58MB / 49.58MB 1.0s
 => => sha256:13baa2029dde87a21b87127168a0fb50a007c07da6b5adc8864e1fe1376c86ff 24.05MB / 24.05MB 3.0s
 => => sha256:325c5bf4c2f26c11380501bec4b6eef8a3ea35b554aa1b222cbcd1e1fe11ae1d 64.13MB / 64.13MB 3.8s
 => => sha256:7e18a660069fd7f87a7a6c49ddb701449bfb929c066811777601d36916c7f674 211.06MB / 211.06MB 4.8s
 => => extracting sha256:8457fd5474e70835e4482983a5662355d892d5f6f0f90a27a8e9f009997e8196 1.2s
 => => extracting sha256:13baa2029dde87a21b87127168a0fb50a007c07da6b5adc8864e1fe1376c86ff 0.4s
 => => sha256:c30e0acec6d561f6ec4e8d3ccebdeedbe505923a613afe3df4a6ea0a40f2240a 3.37kB / 3.37kB 3.5s
 => => sha256:6b06d99eb2a57636421f94780405fdc53fc8159cf7991fca61edb9a4565b234f 47.84MB / 47.84MB 5.6s
 => => extracting sha256:325c5bf4c2f26c11380501bec4b6eef8a3ea35b554aa1b222cbcd1e1fe11ae1d 1.5s
 => => sha256:a3e4c4d8a88e065a9b9ed4634fafd6b3064ea8a673efbf8ecc046038c73363ef 2.21MB / 2.21MB 4.1s
 => => sha256:9dbab0291c0e40e9c4172533b5c8c74c1f7b24b50c6973b45c687d1d5f086679 452B / 452B 4.2s
 => => extracting sha256:7e18a660069fd7f87a7a6c49ddb701449bfb929c066811777601d36916c7f674 4.0s
 => => extracting sha256:c30e0acec6d561f6ec4e8d3ccebdeedbe505923a613afe3df4a6ea0a40f2240a 0.0s
 => => extracting sha256:6b06d99eb2a57636421f94780405fdc53fc8159cf7991fca61edb9a4565b234f 1.2s
 => => extracting sha256:a3e4c4d8a88e065a9b9ed4634fafd6b3064ea8a673efbf8ecc046038c73363ef 0.1s
 => => extracting sha256:9dbab0291c0e40e9c4172533b5c8c74c1f7b24b50c6973b45c687d1d5f086679 0.0s
 => [internal] load build context 1.4s
 => => transferring context: 1.36MB 1.2s
 => [base 2/3] WORKDIR /home/node/app 1.7s
 => [base 3/3] COPY --chown=node:node . /home/node/app/ 0.1s
 => [development 1/2] WORKDIR /home/node/app 0.0s
 => [development 2/2] RUN npm install 11.3s
 => exporting to image 3.4s
 => => exporting layers 3.4s
 => => writing image sha256:06a14264f55ed413fce19ccd6e60d0244af8222aefbae06925e0c727e9a706e3 0.0s
 => => naming to docker.io/library/docusaurus:dev01 0.0s
```

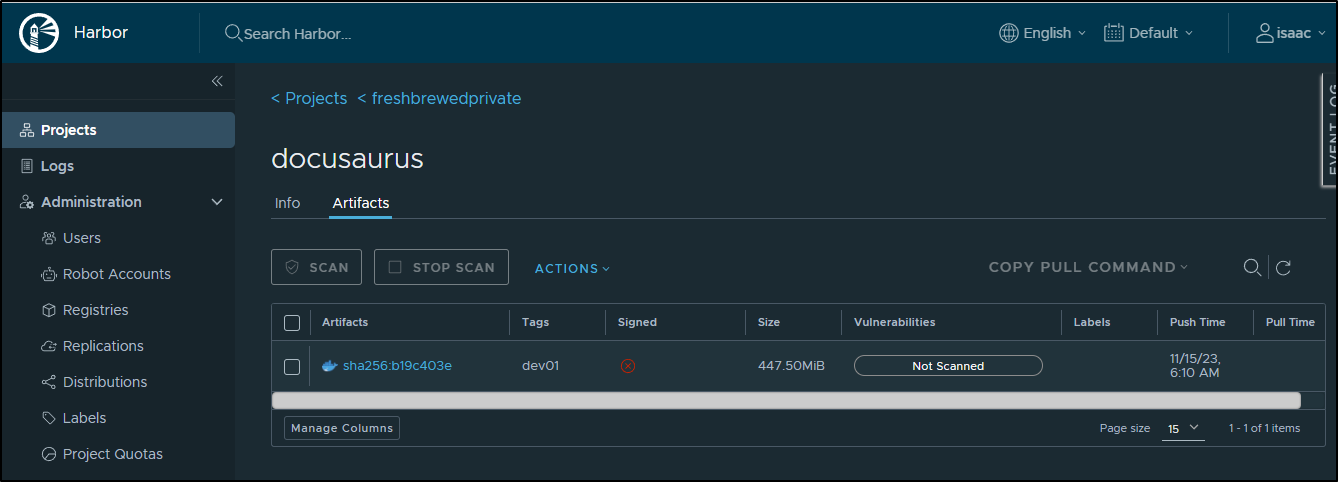

I’ll tag it for my Harbor container registry (CR):

```
$ docker tag docusaurus:dev01 harbor.freshbrewed.science/freshbrewedprivate/docusaurus:dev01
```

Then push it:

```
$ docker push harbor.freshbrewed.science/freshbrewedprivate/docusaurus:dev01
The push refers to repository [harbor.freshbrewed.science/freshbrewedprivate/docusaurus]
fdf4034f00a3: Pushed
5f70bf18a086: Mounted from freshbrewedprivate/pubsub-python-subscriber
aca7e72fa4e4: Pushed
0966a7a329d3: Pushed
722019bfb882: Pushed
de9aa01d609b: Pushed
fb9679ec7cc7: Pushed
ccd778ffcca1: Pushed
12b956927ba2: Pushed
266def75d28e: Pushed
29e49b59edda: Pushed
1777ac7d307b: Pushed
dev01: digest: sha256:b19c403e337e056d7dc2a5f6093330d7c6ae5a57e7be1d8e51c6c6c28ca05159 size: 2839
```

If you don’t have a Harbor CR to use, you could use Docker Hub or any other container registry. As it stands, the image is about 450 MB.

Next, I’ll hop over to my container runner box (a little SkyBarium Mini PC, if you’re curious, that already runs a few containerized services):

```
builder@builder-T100:~$ docker ps
CONTAINER ID   IMAGE                           COMMAND                  CREATED        STATUS                 PORTS                                       NAMES
1f3ada372a43   darthsim/imgproxy:v3.8.0        "imgproxy"               9 days ago     Up 9 days (healthy)    8080/tcp                                    supabase-imgproxy
26e4f801ed15   timberio/vector:0.28.1-alpine   "/usr/local/bin/vect…"   9 days ago     Up 9 days (healthy)                                                supabase-vector
8ed93813eeca   rundeck/rundeck:4.17.1          "/tini -- docker-lib…"   3 weeks ago    Up 3 weeks             0.0.0.0:5440->4440/tcp, :::5440->4440/tcp   rundeck4
1700cf832175   louislam/uptime-kuma:1.23.3     "/usr/bin/dumb-init …"   3 weeks ago    Up 3 weeks (healthy)   0.0.0.0:3101->3001/tcp, :::3101->3001/tcp   uptime-kuma-1233b
767c9377f238   rundeck/rundeck:3.4.6           "/tini -- docker-lib…"   6 months ago   Up 3 months            0.0.0.0:4440->4440/tcp, :::4440->4440/tcp   rundeck2
```

Oddly, I found that when I built this container fresh, it wanted to write to a directory it didn’t have permission to write to:

```
builder@builder-T100:~$ docker run -p 4480:3000 --name docusaurustest harbor.freshbrewed.science/freshbrewedprivate/docusaurus:dev01

> my-fb-website@0.0.0 start
> docusaurus start --host 0.0.0.0

[INFO] Starting the development server...
[Error: EACCES: permission denied, mkdir '/home/node/app/.docusaurus'] {
  errno: -13,
  code: 'EACCES',
  syscall: 'mkdir',
  path: '/home/node/app/.docusaurus'
}
[INFO] Docusaurus version: 3.0.0
Node version: v20.9.0
npm notice
npm notice New minor version of npm available! 10.1.0 -> 10.2.3
npm notice Changelog: <https://github.com/npm/cli/releases/tag/v10.2.3>
npm notice Run `npm install -g npm@10.2.3` to update!
npm notice
```

I tried a volume, but that didn’t solve it either:

```
builder@builder-T100:~$ docker volume create docutest01
docutest01
builder@builder-T100:~$ docker run -p 4480:3000 -v docutest01:/home/node/app --name docusaurustest harbor.freshbrewed.science/freshbrewedprivate/docusaurus:dev01

> my-fb-website@0.0.0 start
> docusaurus start --host 0.0.0.0

[INFO] Starting the development server...
[Error: EACCES: permission denied, mkdir '/home/node/app/.docusaurus'] {
  errno: -13,
  code: 'EACCES',
  syscall: 'mkdir',
  path: '/home/node/app/.docusaurus'
}
[INFO] Docusaurus version: 3.0.0
Node version: v20.9.0
npm notice
npm notice New minor version of npm available! 10.1.0 -> 10.2.3
npm notice Changelog: <https://github.com/npm/cli/releases/tag/v10.2.3>
npm notice Run `npm install -g npm@10.2.3` to update!
npm notice
```

I cannot explain why the fresh builds turfed the directory permissions.

After some local debugging, I fixed it by adding a `RUN chown -R node:node /home/node/` line to the Dockerfile:

```
$ cat Dockerfile
## Base ########################################################################
# Use a larger node image to do the build for native deps (e.g., gcc, python)
FROM node:lts as base

ENV GROUP_ID=1000 \
    USER_ID=1000

# Reduce npm log spam and colour during install within Docker
ENV NPM_CONFIG_LOGLEVEL=warn
ENV NPM_CONFIG_COLOR=false

#USER root
#RUN addgroup --gid $GROUP_ID node
#RUN adduser -D --uid $USER_ID -G node node

# We'll run the app as the `node` user, so put it in their home directory
WORKDIR /home/node/app
# Copy the source code over
COPY --chown=node:node . /home/node/app/

## Development #################################################################
# Define a development target that installs devDeps and runs in dev mode
FROM base as development
WORKDIR /home/node/app
# Install (not ci) with dependencies, and for Linux vs. Linux Musl (which we use for -alpine)
RUN npm install
# Switch to the node user vs. root
RUN chown -R node:node /home/node/
USER node
# Expose port 3000
EXPOSE 3000
# Start the app in debug mode so we can attach the debugger
CMD ["npm", "start"]

## Production ##################################################################
# Also define a production target which doesn't use devDeps
FROM base as production
WORKDIR /home/node/app
COPY --chown=node:node --from=development /home/node/app/node_modules /home/node/app/node_modules
# Build the Docusaurus app
RUN npm run build

## Deploy ######################################################################
# Use a stable nginx image
FROM nginx:stable-alpine as deploy
WORKDIR /home/node/app
# Copy what we've installed/built from production
COPY --chown=node:node --from=production /home/node/app/build /usr/share/nginx/html/
```
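One plausible cause, offered as an assumption rather than a confirmed diagnosis: `WORKDIR` creates `/home/node/app` owned by root, and `COPY --chown` changes the ownership of the copied files but not of that already-existing directory. If a `.docusaurus` folder was already in the build context (the first build sent 271.88MB, which suggests a working copy where the site had already run), Docusaurus never needed to `mkdir` it; a fresh checkout (the second host sent only 1.36MB) has none, so the `node` user hits EACCES. The recursive `chown` sidesteps that either way. To check a directory from inside the container, a small diagnostic like this one (the `checkWritable` helper is hypothetical) reports its owner and whether the current user can create entries in it:

```javascript
// Hypothetical diagnostic: report who owns a directory and whether the current
// process can create entries in it (what `mkdir .docusaurus` needs).
const fs = require("node:fs");

function checkWritable(dir) {
  const stat = fs.statSync(dir);
  let writable = true;
  try {
    // Creating an entry needs write plus search (execute) permission on the dir.
    fs.accessSync(dir, fs.constants.W_OK | fs.constants.X_OK);
  } catch {
    writable = false;
  }
  return {
    dir,
    ownerUid: stat.uid,
    // process.getuid is unavailable on Windows, hence the guard.
    processUid: typeof process.getuid === "function" ? process.getuid() : null,
    writable,
  };
}

module.exports = { checkWritable };
```

For a one-off without any script, `docker run --rm --entrypoint ls <image> -ld /home/node/app` shows the same ownership information.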

I pushed from my local PC, then pulled the image down on the Docker host to test it. The first `docker run` collided with the old test container, so I removed that before trying again:

```
builder@DESKTOP-QADGF36:~/Workspaces/MyFbWebsite$ docker tag docs:dev3 harbor.freshbrewed.science/freshbrewedprivate/docusaurus:dev03
builder@DESKTOP-QADGF36:~/Workspaces/MyFbWebsite$ docker push harbor.freshbrewed.science/freshbrewedprivate/docusaurus:dev03
The push refers to repository [harbor.freshbrewed.science/freshbrewedprivate/docusaurus]
d46521f7d43a: Pushed
368221442350: Pushed
5f70bf18a086: Layer already exists
743e79bd500d: Pushed
0966a7a329d3: Layer already exists
722019bfb882: Layer already exists
de9aa01d609b: Layer already exists
fb9679ec7cc7: Layer already exists
ccd778ffcca1: Layer already exists
12b956927ba2: Layer already exists
266def75d28e: Layer already exists
29e49b59edda: Layer already exists
1777ac7d307b: Layer already exists
dev03: digest: sha256:74a012b652f8678cf2347d931f91d9116403ced43ce912255bbf366475a9280f size: 3051
```

```
builder@builder-T100:~$ docker pull harbor.freshbrewed.science/freshbrewedprivate/docusaurus:dev03
dev03: Pulling from freshbrewedprivate/docusaurus
8457fd5474e7: Already exists
13baa2029dde: Already exists
325c5bf4c2f2: Already exists
7e18a660069f: Already exists
c30e0acec6d5: Already exists
6b06d99eb2a5: Already exists
a3e4c4d8a88e: Already exists
9dbab0291c0e: Already exists
a15d89572979: Already exists
4ca29620b88a: Pull complete
4f4fb700ef54: Pull complete
a270b5bb1db2: Pull complete
8b3f35950d09: Pull complete
Digest: sha256:74a012b652f8678cf2347d931f91d9116403ced43ce912255bbf366475a9280f
Status: Downloaded newer image for harbor.freshbrewed.science/freshbrewedprivate/docusaurus:dev03
harbor.freshbrewed.science/freshbrewedprivate/docusaurus:dev03
builder@builder-T100:~$ docker run -p 4480:3000 --name docusaurustest harbor.freshbrewed.science/freshbrewedprivate/docusaurus:dev03
docker: Error response from daemon: Conflict. The container name "/docusaurustest" is already in use by container "1a83d89299de89053f5d2cc185be1eddb43adfe356804fda6a5cbc31ed229109". You have to remove (or rename) that container to be able to reuse that name.
See 'docker run --help'.
builder@builder-T100:~$ docker stop docusaurustest && docker rm docusaurustest
docusaurustest
docusaurustest
builder@builder-T100:~$ docker run -p 4480:3000 --name docusaurustest harbor.freshbrewed.science/freshbrewedprivate/docusaurus:dev03

> my-fb-website@0.0.0 start
> docusaurus start --host 0.0.0.0

[INFO] Starting the development server...
[SUCCESS] Docusaurus website is running at: http://localhost:3000/

ℹ Compiling Client
```

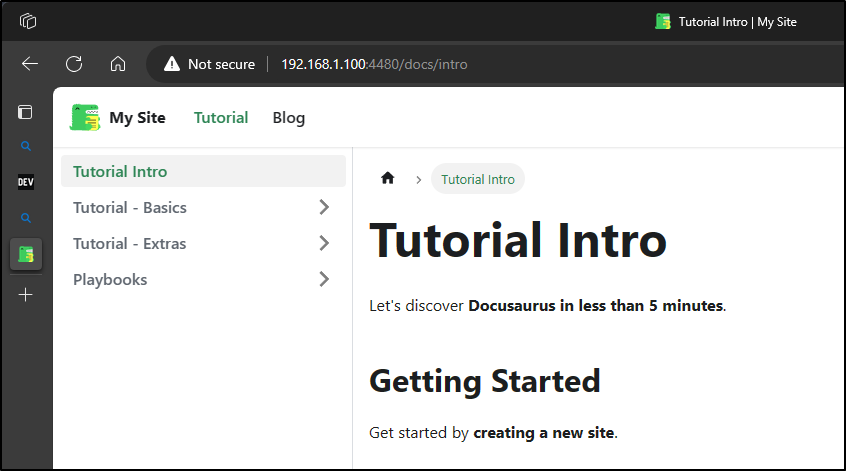

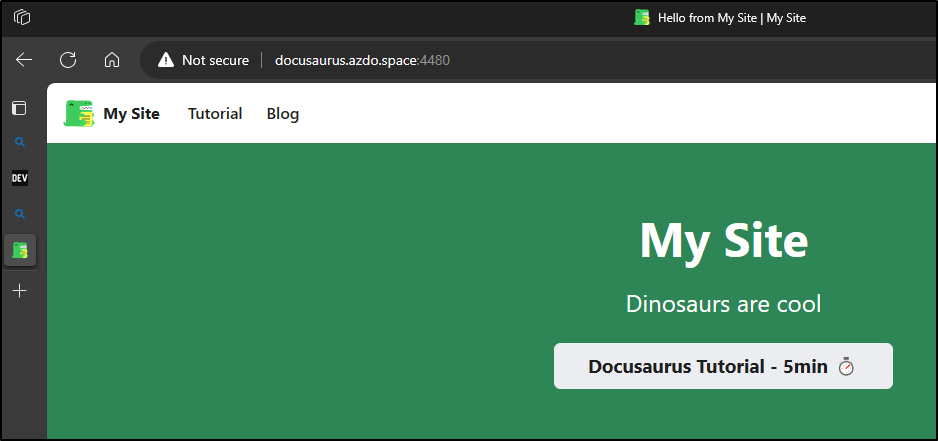

I can see it now works.

Since I want to run this as a daemon with auto-restart, I’ll add those flags (`-d --restart=always`) and fire it up again:

```
builder@builder-T100:~$ docker run -d --restart=always -p 4480:3000 --name docusaurustest harbor.freshbrewed.science/freshbrewedprivate/docusaurus:dev03
897c57fac5b92ed58262b42fa44b7bd146335bead5ce021931ef2dca839f9106
```

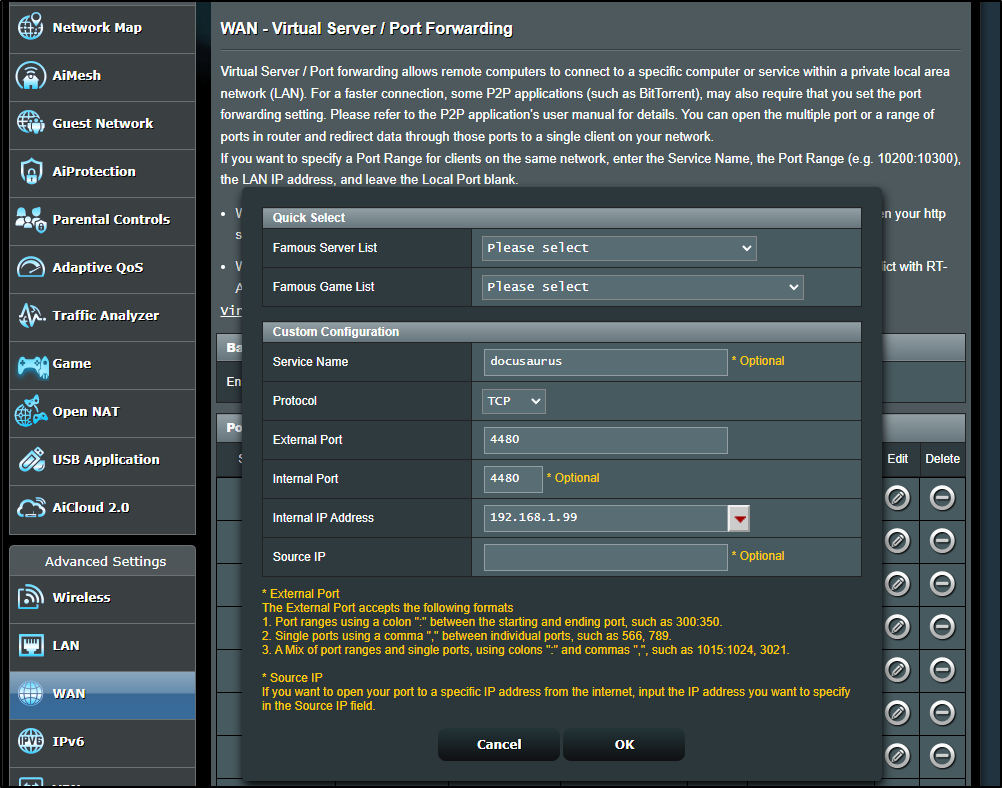

Ingress

So I have a site I can reach on an internal IP (192.168.1.100). I could punch a hole through my firewall so it can be reached externally:

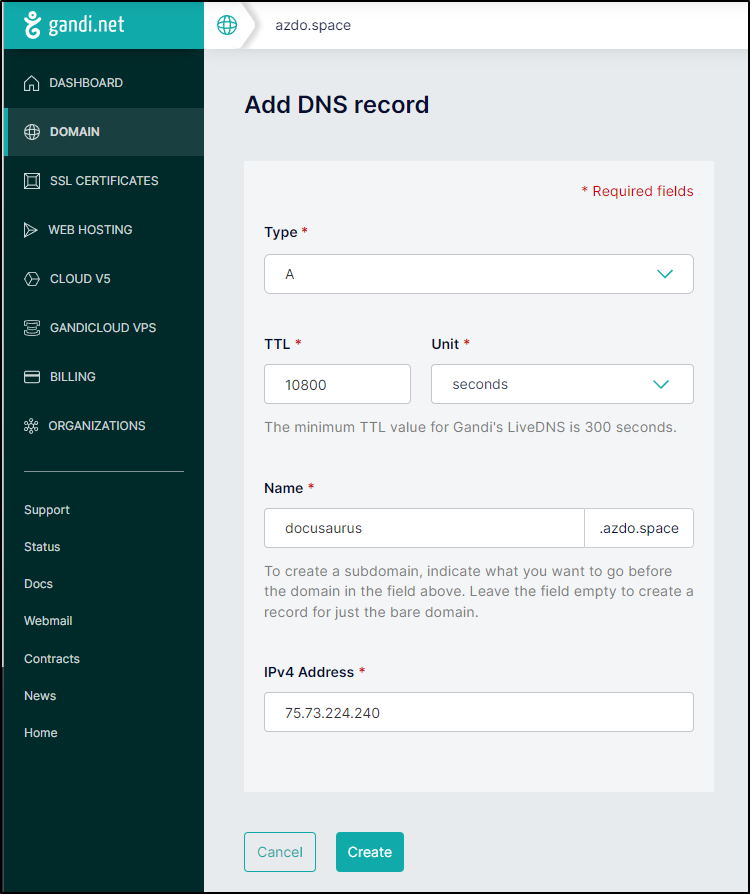

Then I’ll add an A record so we can use a DNS name instead of an IP:

```
$ cat r53-docusaurus.json
{
  "Comment": "CREATE docusaurus fb.s A record ",
  "Changes": [
    {
      "Action": "CREATE",
      "ResourceRecordSet": {
        "Name": "docusaurus.freshbrewed.science",
        "Type": "A",
        "TTL": 300,
        "ResourceRecords": [
          {
            "Value": "75.73.224.240"
          }
        ]
      }
    }
  ]
}

$ aws route53 change-resource-record-sets --hosted-zone-id Z39E8QFU0F9PZP --change-batch file://r53-docusaurus.json
{
    "ChangeInfo": {
        "Id": "/change/C061509636E0M7UMLHQZ8",
        "Status": "PENDING",
        "SubmittedAt": "2023-11-15T12:49:47.991Z",
        "Comment": "CREATE docusaurus fb.s A record "
    }
}
```
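Route 53 rejects a `CREATE` for a record that already exists, so re-running the command above after an IP change would fail; `UPSERT` creates the record or updates it in place. A small sketch for generating the change batch (the `aRecordChangeBatch` helper is hypothetical, and it defaults to `UPSERT` rather than the `CREATE` used above):

```javascript
// Hypothetical helper: build a Route 53 change batch for one A record, in the
// same shape as r53-docusaurus.json. UPSERT creates or updates the record;
// CREATE fails if the record already exists.
function aRecordChangeBatch(name, ip, { ttl = 300, action = "UPSERT", comment } = {}) {
  // Basic IPv4 sanity check: four dot-separated octets in 0-255.
  const octets = ip.split(".");
  if (octets.length !== 4 || !octets.every((o) => /^\d{1,3}$/.test(o) && Number(o) <= 255)) {
    throw new Error(`Not an IPv4 address: ${ip}`);
  }
  return {
    Comment: comment ?? `${action} ${name} A record`,
    Changes: [
      {
        Action: action,
        ResourceRecordSet: {
          Name: name,
          Type: "A",
          TTL: ttl,
          ResourceRecords: [{ Value: ip }],
        },
      },
    ],
  };
}

module.exports = { aRecordChangeBatch };
```

Write `JSON.stringify(batch, null, 2)` to a file and pass it to the same `aws route53 change-resource-record-sets --hosted-zone-id <zone-id> --change-batch file://<file>` command.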

We can test it at http://docusaurus.freshbrewed.science:4480/.

We could also create an A record in any other DNS system, e.g. a quick A record in Gandi, which also works.

Now, most people like TLS. It isn’t strictly required, but the world sure loves ‘https’ instead of ‘http’ even for documents.

Kubernetes Ingress to external endpoints for TLS

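We can sort that out by defining a Kubernetes Endpoints object that points at the Docker host and mapping a Service to it, so the cluster’s ingress controller can front it with a cert-manager issued TLS certificate.

We can bundle the Ingress, Service and Endpoints into one YAML file: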

```
$ cat docuIngressSvcEndpoint.yaml
---
apiVersion: v1
kind: Endpoints
metadata:
  name: docusaurus-external-ip
subsets:
- addresses:
  - ip: 192.168.1.100
  ports:
  - name: docusaur
    port: 4480
    protocol: TCP
---
apiVersion: v1
kind: Service
metadata:
  name: docusaurus-external-ip
spec:
  clusterIP: None
  clusterIPs:
  - None
  internalTrafficPolicy: Cluster
  ipFamilies:
  - IPv4
  - IPv6
  ipFamilyPolicy: RequireDualStack
  ports:
  - name: docusaur
    port: 80
    protocol: TCP
    targetPort: 4480
  sessionAffinity: None
  type: ClusterIP
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    cert-manager.io/cluster-issuer: letsencrypt-prod
    kubernetes.io/ingress.class: nginx
    kubernetes.io/tls-acme: "true"
    nginx.ingress.kubernetes.io/proxy-read-timeout: "3600"
    nginx.ingress.kubernetes.io/proxy-send-timeout: "3600"
    nginx.org/websocket-services: docusaurus-external-ip
  generation: 1
  labels:
    app.kubernetes.io/instance: docusaurusingress
  name: docusaurusingress
spec:
  rules:
  - host: docusaurus.freshbrewed.science
    http:
      paths:
      - backend:
          service:
            name: docusaurus-external-ip
            port:
              number: 80
        path: /
        pathType: ImplementationSpecific
  tls:
  - hosts:
    - docusaurus.freshbrewed.science
    secretName: docusaurus-tls
```

Once applied:

```
$ kubectl apply -f docuIngressSvcEndpoint.yaml
endpoints/docusaurus-external-ip created
service/docusaurus-external-ip created
ingress.networking.k8s.io/docusaurusingress created
```

I now have a TLS-secured endpoint, and one that doesn’t require a port in the URL.
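This works because a Service with no `selector` gets no automatically managed endpoints; Kubernetes instead pairs it with the `Endpoints` object of the same name, matching ports by name (`docusaur` above). As a sketch of that pattern (the `externalServiceManifests` helper and its defaults are hypothetical), the generator below builds a minimal pair wrapped in a `kind: List`, which `kubectl apply -f -` accepts as JSON. It deliberately omits the headless and dual-stack settings from the YAML above, so it produces a plain ClusterIP Service:

```javascript
// Hypothetical generator: a selector-less Service plus a manual Endpoints
// object pointing at a host outside the cluster (e.g. a Docker host).
function externalServiceManifests({ name, ip, port, servicePort = 80, portName = "http" }) {
  const endpoints = {
    apiVersion: "v1",
    kind: "Endpoints",
    // Must match the Service name exactly for Kubernetes to associate them.
    metadata: { name },
    subsets: [
      {
        addresses: [{ ip }],
        // Port name must match the Service port name below.
        ports: [{ name: portName, port, protocol: "TCP" }],
      },
    ],
  };
  const service = {
    apiVersion: "v1",
    kind: "Service",
    metadata: { name },
    spec: {
      // No selector: Kubernetes will not manage endpoints for this Service.
      type: "ClusterIP",
      ports: [{ name: portName, port: servicePort, protocol: "TCP", targetPort: port }],
    },
  };
  // A v1 List lets both objects travel as a single JSON document.
  return { apiVersion: "v1", kind: "List", items: [endpoints, service] };
}

module.exports = { externalServiceManifests };
```

For example: `node -e 'console.log(JSON.stringify(require("./external-svc.js").externalServiceManifests({ name: "docusaurus-external-ip", ip: "192.168.1.100", port: 4480 })))' | kubectl apply -f -`, where `external-svc.js` is whatever file you saved the sketch in. The Docker host still has to be reachable from the cluster nodes for traffic to flow.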

Deployment

We routed via an external endpoint to a Dockerized image on a host. Ideally, though, I would deploy with YAML or Helm and not have to hop onto a PC to rotate the image on every update.

Let’s create a deployment instead.

I’ll remove my prior resources:

```
$ kubectl delete -f docuIngressSvcEndpoint.yaml
endpoints "docusaurus-external-ip" deleted
service "docusaurus-external-ip" deleted
ingress.networking.k8s.io "docusaurusingress" deleted
```

Then I’ll create a new Deployment and Service to use with the Ingress:

```
$ cat docuIngressSvcEndpoint2.yaml
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: docusaurus-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      app: docusaurus
  template:
    metadata:
      labels:
        app: docusaurus
    spec:
      containers:
      - name: docusaurus-container
        image: harbor.freshbrewed.science/freshbrewedprivate/docusaurus:dev03
        ports:
        - containerPort: 3000
      imagePullSecrets:
      - name: myharborreg
---
apiVersion: v1
kind: Service
metadata:
  name: docusaurus-service
spec:
  selector:
    app: docusaurus
  ports:
    - protocol: TCP
      port: 80
      targetPort: 3000
  type: ClusterIP
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    cert-manager.io/cluster-issuer: letsencrypt-prod
    kubernetes.io/ingress.class: nginx
    kubernetes.io/tls-acme: "true"
    nginx.ingress.kubernetes.io/proxy-read-timeout: "3600"
    nginx.ingress.kubernetes.io/proxy-send-timeout: "3600"
  generation: 1
  labels:
    app.kubernetes.io/instance: docusaurusingress
  name: docusaurusingress
spec:
  rules:
  - host: docusaurus.freshbrewed.science
    http:
      paths:
      - backend:
          service:
            name: docusaurus-service
            port:
              number: 80
        path: /
        pathType: ImplementationSpecific
  tls:
  - hosts:
    - docusaurus.freshbrewed.science
    secretName: docusaurus-tls
```

Then apply it:

```
$ kubectl apply -f docuIngressSvcEndpoint2.yaml
deployment.apps/docusaurus-deployment created
service/docusaurus-service created
ingress.networking.k8s.io/docusaurusingress created
```

At this point, it might be hard to tell the difference, but I can see pods running in the cluster:

```
$ kubectl get pods -l app=docusaurus
NAME                                     READY   STATUS    RESTARTS   AGE
docusaurus-deployment-7dfcb7cff5-4jpll   1/1     Running   0          3m33s
$ kubectl get rs -l app=docusaurus
NAME                               DESIRED   CURRENT   READY   AGE
docusaurus-deployment-7dfcb7cff5   1         1         1       4m19s
```

And, of course, the TLS-secured endpoint now routes to the ReplicaSet’s pods instead of the external endpoint.

Dynamic content

Because this is an app and not a static deployment, we could do some fun things.

Say we had some markdown for service status that we updated often:

builder@DESKTOP-QADGF36:~/Workspaces/MyFbWebsite$ cd status
builder@DESKTOP-QADGF36:~/Workspaces/MyFbWebsite/status$ ls
builder@DESKTOP-QADGF36:~/Workspaces/MyFbWebsite/status$ vi servers.md
builder@DESKTOP-QADGF36:~/Workspaces/MyFbWebsite/status$ kubectl get nodes >> servers.md
builder@DESKTOP-QADGF36:~/Workspaces/MyFbWebsite/status$ cat servers.md
# Server status
NAME                          STATUS   ROLES                  AGE    VERSION
builder-hp-elitebook-850-g2   Ready    <none>                 469d   v1.23.9+k3s1
builder-hp-elitebook-850-g1   Ready    <none>                 477d   v1.23.9+k3s1
hp-hp-elitebook-850-g2        Ready    <none>                 477d   v1.23.9+k3s1
isaac-macbookair              Ready    control-plane,master   477d   v1.23.9+k3s1

I might want that exposed via Docusaurus.

I can take that folder and turn it into a configmap:

builder@DESKTOP-QADGF36:~/Workspaces/MyFbWebsite/status$ cd ..
builder@DESKTOP-QADGF36:~/Workspaces/MyFbWebsite$ kubectl create configmap mystatus --from-file=status/ -o yaml > createcm.yaml
builder@DESKTOP-QADGF36:~/Workspaces/MyFbWebsite$ cat createcm.yaml
apiVersion: v1
data:
  servers.md: |
    # Server status
    NAME                          STATUS   ROLES                  AGE    VERSION
    builder-hp-elitebook-850-g2   Ready    <none>                 469d   v1.23.9+k3s1
    builder-hp-elitebook-850-g1   Ready    <none>                 477d   v1.23.9+k3s1
    hp-hp-elitebook-850-g2        Ready    <none>                 477d   v1.23.9+k3s1
    isaac-macbookair              Ready    control-plane,master   477d   v1.23.9+k3s1
kind: ConfigMap
metadata:
  creationTimestamp: "2023-11-15T13:24:00Z"
  name: mystatus
  namespace: default
  resourceVersion: "267298787"
  uid: 21265254-0415-4a33-8032-5b9743109048

$ kubectl get cm | grep mystatus
mystatus   1      62s

Now I can add that to our deployment:

$ cat docuIngressSvcEndpoint2.yaml
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: docusaurus-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      app: docusaurus
  template:
    metadata:
      labels:
        app: docusaurus
    spec:
      containers:
      - name: docusaurus-container
        image: harbor.freshbrewed.science/freshbrewedprivate/docusaurus:dev03
        ports:
        - containerPort: 3000
        volumeMounts:
        - name: mystatus-volume
          mountPath: /home/node/app/docs/mystatus
      imagePullSecrets:
      - name: myharborreg
      volumes:
      - name: mystatus-volume
        configMap:
          name: mystatus
---

And apply it:

$ kubectl apply -f docuIngressSvcEndpoint2.yaml
deployment.apps/docusaurus-deployment configured
service/docusaurus-service unchanged
ingress.networking.k8s.io/docusaurusingress unchanged

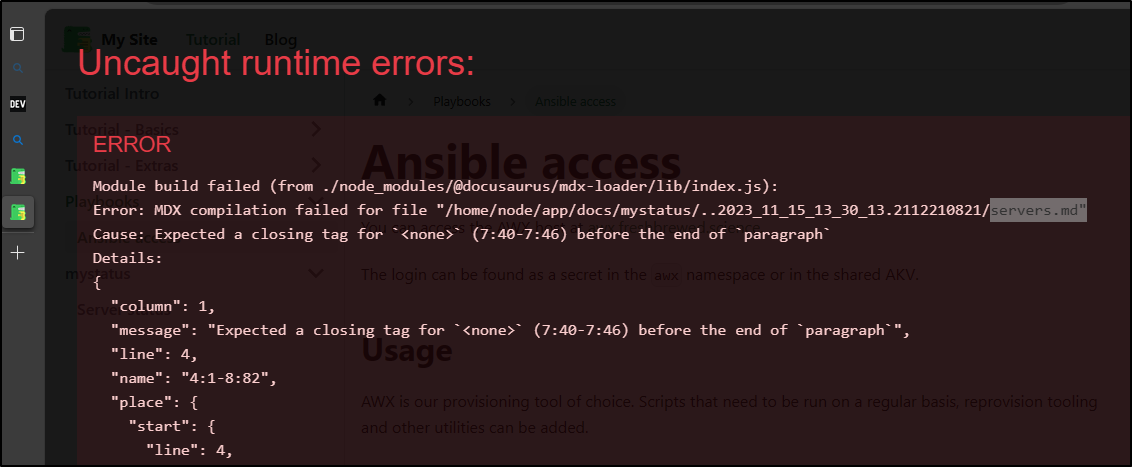

One minor issue is that Docusaurus doesn’t like bad tags. Docusaurus 3 parses Markdown files as MDX, so the `<none>` in the ROLES column is read as an unclosed JSX tag. We can’t have the ‘none’ show up like that in the MD:

# Server status
NAME                          STATUS   ROLES                  AGE    VERSION
builder-hp-elitebook-850-g2   Ready    <none>                 469d   v1.23.9+k3s1
builder-hp-elitebook-850-g1   Ready    <none>                 477d   v1.23.9+k3s1
hp-hp-elitebook-850-g2        Ready    <none>                 477d   v1.23.9+k3s1
isaac-macbookair              Ready    control-plane,master   477d   v1.23.9+k3s1

I’ll swap the angle brackets for asterisks and recreate the CM:

builder@DESKTOP-QADGF36:~/Workspaces/MyFbWebsite/status$ echo -e "# Servers\n\n" > servers.md
builder@DESKTOP-QADGF36:~/Workspaces/MyFbWebsite/status$ kubectl get nodes | sed 's/[<>]/*/g' >> servers.md
builder@DESKTOP-QADGF36:~/Workspaces/MyFbWebsite/status$ cd ..
builder@DESKTOP-QADGF36:~/Workspaces/MyFbWebsite$ kubectl create configmap mystatus --from-file=status/
error: failed to create configmap: configmaps "mystatus" already exists
builder@DESKTOP-QADGF36:~/Workspaces/MyFbWebsite$ kubectl delete cm mystatus && kubectl create configmap mystatus --from-file=status/
configmap "mystatus" deleted
configmap/mystatus created
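The `sed` swap turns `<none>` into `*none*`, which Markdown then renders as an italic “none”. An alternative is to wrap the table in a fenced code block: MDX treats fenced content as literal text, so `<none>` survives untouched and the columns stay aligned in a monospace font. Below is a minimal sketch of that approach as a hypothetical `to_status_md` helper.

```shell
# Hypothetical helper: turn tabular command output into a Markdown page whose
# table sits inside a code fence, so MDX does not parse "<none>" as a JSX tag.
# Usage: kubectl get nodes | to_status_md Servers > status/servers.md
to_status_md() {
  title="$1"
  printf '# %s\n\n' "$title"
  printf '%s\n' '```'
  cat            # copy stdin (the table) through unchanged
  printf '%s\n' '```'
}
```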

Then I forced a pod rotation to pick up the updated CM:

$ kubectl delete pod docusaurus-deployment-54d4865d77-s7npg
pod "docusaurus-deployment-54d4865d77-s7npg" deleted

I actually found this didn’t work; I kept getting crazy crash messages:

$ kubectl logs docusaurus-deployment-54d4865d77-wm277

> my-fb-website@0.0.0 start
> docusaurus start --host 0.0.0.0

[INFO] Starting the development server...
[SUCCESS] Docusaurus website is running at: http://localhost:3000/
ℹ Compiling Client
[ERROR] MDX loader can't read MDX metadata file "/home/node/app/.docusaurus/docusaurus-plugin-content-docs/default/site-docs-mystatus-2023-11-15-13-49-42-1029384323-servers-md-81a.json". Maybe the isMDXPartial option function was not provided?
[Error: ENOENT: no such file or directory, open '/home/node/app/.docusaurus/docusaurus-plugin-content-docs/default/site-docs-mystatus-2023-11-15-13-49-42-1029384323-servers-md-81a.json'] {
  errno: -2,
  code: 'ENOENT',
  syscall: 'open',
  path: '/home/node/app/.docusaurus/docusaurus-plugin-content-docs/default/site-docs-mystatus-2023-11-15-13-49-42-1029384323-servers-md-81a.json'
}
[INFO] Docusaurus version: 3.0.0
Node version: v20.9.0
npm notice
npm notice New minor version of npm available! 10.1.0 -> 10.2.3
npm notice Changelog: <https://github.com/npm/cli/releases/tag/v10.2.3>
npm notice Run `npm install -g npm@10.2.3` to update!

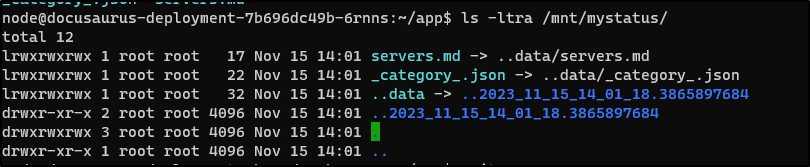

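In testing, I found that Kubernetes mounts a ConfigMap volume as symlinks into a hidden, timestamped directory (the `2023-11-15-13-49-42-1029384323` in the path above reflects it), and Docusaurus unfortunately picks those up.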
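To see what that layout looks like without a cluster, the sketch below recreates it under stated assumptions: the kubelet writes the real files into a hidden timestamped directory, points a `..data` symlink at it, and exposes each key as a symlink through `..data`. The directory name reuses the timestamp from the error above, and `make_cm_layout` is a hypothetical name. A recursive scan that does not skip dot-directories finds `servers.md` twice, once as the plain symlink and once under the timestamped path, which illustrates how a tool walking the docs folder can end up holding the timestamped path seen in the error message.

```shell
# Hypothetical reproduction of a ConfigMap volume's on-disk layout (no subPath).
make_cm_layout() {
  root="$1"
  ts="..2023_11_15_13_49_42.1029384323"   # timestamp taken from the error above
  mkdir -p "$root/$ts"
  printf '# Servers\n' > "$root/$ts/servers.md"   # the real file
  ln -s "$ts" "$root/..data"                      # ..data -> timestamped dir
  ln -s "..data/servers.md" "$root/servers.md"    # key -> ..data/key
}

# Usage: list every *.md entry, the way a recursive docs scan might
#   make_cm_layout /tmp/mystatus && find /tmp/mystatus -name '*.md'
```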

I added a _category_.json file and recreated the CM

builder@DESKTOP-QADGF36:~/Workspaces/MyFbWebsite$ cat status/_category_.json
{
  "label": "Status",
  "position": 5,
  "link": {
    "type": "generated-index"
  }
}
builder@DESKTOP-QADGF36:~/Workspaces/MyFbWebsite$ cat status/servers.md
# Servers

NAME                          STATUS   ROLES                  AGE    VERSION
builder-hp-elitebook-850-g1   Ready    *none*                 477d   v1.23.9+k3s1
hp-hp-elitebook-850-g2        Ready    *none*                 477d   v1.23.9+k3s1
isaac-macbookair              Ready    control-plane,master   477d   v1.23.9+k3s1
builder-hp-elitebook-850-g2   Ready    *none*                 469d   v1.23.9+k3s1

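Then I changed the deployment spec to mount the specific files with `subPath`, which fixed the issue. Note that files mounted via `subPath` don’t receive ConfigMap updates, so the pod still needs a restart to pick up new content: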

spec:
  progressDeadlineSeconds: 600
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      app: docusaurus
  strategy:
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%
    type: RollingUpdate
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: docusaurus
    spec:
      containers:
      - image: harbor.freshbrewed.science/freshbrewedprivate/docusaurus:dev03
        imagePullPolicy: IfNotPresent
        name: docusaurus-container
        ports:
        - containerPort: 3000
          protocol: TCP
        resources: {}
        terminationMessagePath: /dev/termination-log
        terminationMessagePolicy: File
        volumeMounts:
        - mountPath: /home/node/app/docs/mystatus/servers.md
          name: config-volume
          subPath: servers.md
        - mountPath: /home/node/app/docs/mystatus/_category_.json
          name: config-volume
          subPath: _category_.json
      dnsPolicy: ClusterFirst
      imagePullSecrets:
      - name: myharborreg
      restartPolicy: Always
      schedulerName: default-scheduler
      securityContext: {}
      terminationGracePeriodSeconds: 30
      volumes:
      - configMap:
          defaultMode: 420
          name: mytestconfig
        name: config-volume

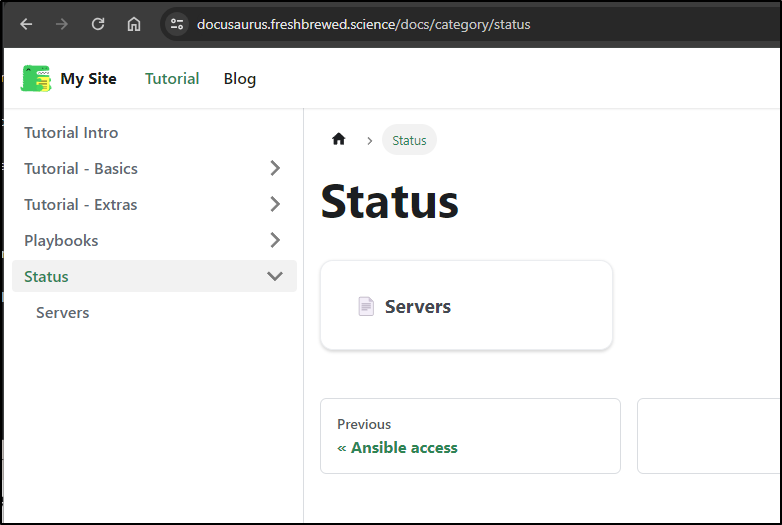

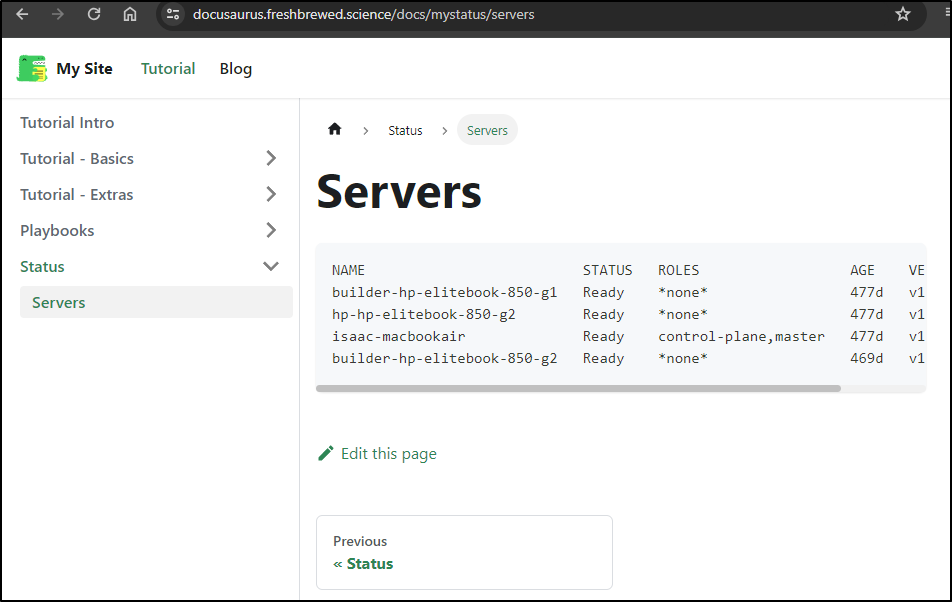

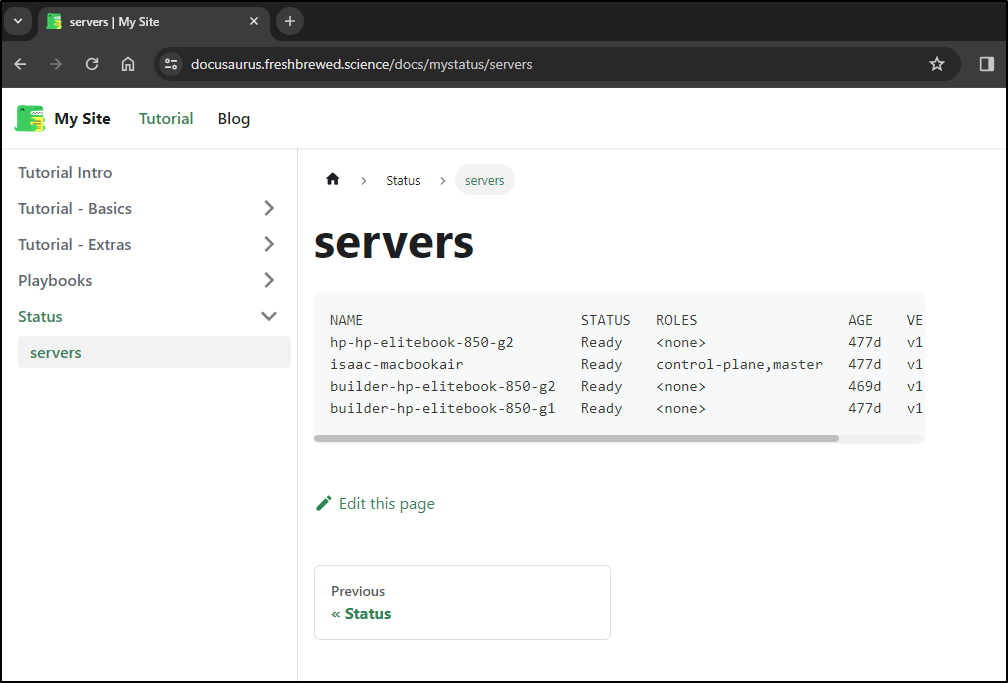

Now we can see status entries

And details

I could likely then create a cronjob that would update the configmap.

Auto-updating with a Kubernetes CronJob

First, I’ll want a role that can view nodes, update configmaps and bounce pods:

$ cat mydocuwatcherrole.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: docuwatcher
  namespace: default
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: docuwatcherrole
rules:
- apiGroups: [""]
  resources: ["nodes"]
  verbs: ["list"]
- apiGroups: [""]
  resources: ["configmaps"]
  verbs: ["list", "create", "delete"]
- apiGroups: [""]
  resources: ["pods"]
  verbs: ["list", "delete"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: docuwatcher-binding
subjects:
- kind: ServiceAccount
  name: docuwatcher
  namespace: default
roleRef:
  kind: ClusterRole
  name: docuwatcherrole
  apiGroup: rbac.authorization.k8s.io
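Because this is a ClusterRole, the service account can delete ConfigMaps and pods in every namespace. A tighter variant, sketched below as a hypothetical `grant_docuwatcher` function, moves the ConfigMap and pod rules into a namespaced Role with the same verbs. The names `docuwatcher-ns` are assumptions, and the original ClusterRole would then only need its `nodes` rule, since nodes are cluster-scoped and can’t be granted through a Role.

```shell
# Hypothetical least-privilege variant: ConfigMap and pod permissions are
# limited to one namespace via a Role, with the same verbs as docuwatcherrole.
# Assumes the docuwatcher ServiceAccount lives in that same namespace.
# Usage: grant_docuwatcher default
grant_docuwatcher() {
  ns="${1:-default}"
  kubectl apply -f - <<EOF
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: docuwatcher-ns
  namespace: ${ns}
rules:
- apiGroups: [""]
  resources: ["configmaps"]
  verbs: ["list", "create", "delete"]
- apiGroups: [""]
  resources: ["pods"]
  verbs: ["list", "delete"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: docuwatcher-ns
  namespace: ${ns}
subjects:
- kind: ServiceAccount
  name: docuwatcher
  namespace: ${ns}
roleRef:
  kind: Role
  name: docuwatcher-ns
  apiGroup: rbac.authorization.k8s.io
EOF
}
```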

Then I’ll create a cronjob that will update the configmap dynamically and bounce the docusaurus pod:

$ cat myTestCron.yaml
apiVersion: batch/v1
kind: CronJob
metadata:
  name: kubectl-cronjob2
spec:
  schedule: "*/1 * * * *"
  jobTemplate:
    spec:
      template:
        spec:
          serviceAccountName: docuwatcher
          containers:
          - name: kubectl-container
            image: alpine
            command:
            - "/bin/sh"
            - "-c"
            - >
              apk add --no-cache curl &&
              curl -LO https://storage.googleapis.com/kubernetes-release/release/$(curl -s https://storage.googleapis.com/kubernetes-release/release/stable.txt)/bin/linux/amd64/kubectl &&
              chmod +x kubectl &&
              mv kubectl /usr/local/bin/ &&
              mkdir /tmp/mystatus && cp -f /mnt/mystatus/*_category_.json /tmp/mystatus &&
              printf '%s\n' '# servers' '' '```' > /tmp/mystatus/servers.md && kubectl get nodes >> /tmp/mystatus/servers.md && printf '%s\n' '```' '' >> /tmp/mystatus/servers.md &&
              kubectl delete cm mytestconfig && kubectl create configmap mytestconfig --from-file=/tmp/mystatus/ && kubectl delete pods -l app=docusaurus
            volumeMounts:
            - mountPath: /mnt/mystatus/servers.md
              name: config-volume
              subPath: servers.md
            - mountPath: /mnt/mystatus/_category_.json
              name: config-volume
              subPath: _category_.json
          volumes:
          - configMap:
              name: mytestconfig
            name: config-volume
          restartPolicy: OnFailure

I can now see it run

$ kubectl get pods | tail -n 10
gitea-redis-cluster-2                          1/1     Running            0               8d
gitea-59cd799bf9-qpprj                         1/1     Running            0               8d
my-nexus-repository-manager-56d6fdd884-wfhkl   1/1     Running            0               7d12h
new-jekyllrunner-deployment-6t44l-ljvmr        2/2     Running            0               24h
new-jekyllrunner-deployment-6t44l-z8kf7        2/2     Running            0               4h9m
new-jekyllrunner-deployment-6t44l-6hjtf        2/2     Running            0               4h
gitea-redis-cluster-5                          0/1     CrashLoopBackOff   10 (11s ago)    26m
docusaurus-deployment-677b66659b-69tcw         1/1     Terminating        0               71m
docusaurus-deployment-677b66659b-fzjb6         1/1     Running            0               7s
kubectl-cronjob2-28334357-lw6dg                0/1     Completed          0               11s

I can then apply the role, then the cronjob

$ kubectl apply -f mydocuwatcherrole.yaml
serviceaccount/docuwatcher created
clusterrole.rbac.authorization.k8s.io/docuwatcherrole created
clusterrolebinding.rbac.authorization.k8s.io/docuwatcher-binding created

$ kubectl apply -f myTestCron.yaml
cronjob.batch/kubectl-cronjob2 created
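Rather than waiting for the next minute boundary, a one-off Job can be created from the CronJob’s template with `kubectl create job --from=cronjob/...`. The sketch below does that, waits for completion and prints the logs; `run_cron_now` and the `-manual-` name suffix are hypothetical.

```shell
# Hypothetical helper: run kubectl-cronjob2 once, right now, and show its output.
run_cron_now() {
  job="kubectl-cronjob2-manual-$(date +%s)"
  # Copy the CronJob's jobTemplate into a standalone Job
  kubectl create job "$job" --from=cronjob/kubectl-cronjob2 || return 1
  # Block until the Job completes (or give up after 3 minutes)
  kubectl wait --for=condition=complete "job/$job" --timeout=180s || return 1
  # Print the logs of the Job's pod
  kubectl logs "job/$job"
}
```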

We can see the status in the job’s logs:

$ kubectl logs kubectl-cronjob2-28334360-7dkgt
fetch https://dl-cdn.alpinelinux.org/alpine/v3.18/main/x86_64/APKINDEX.tar.gz
fetch https://dl-cdn.alpinelinux.org/alpine/v3.18/community/x86_64/APKINDEX.tar.gz
(1/7) Installing ca-certificates (20230506-r0)
(2/7) Installing brotli-libs (1.0.9-r14)
(3/7) Installing libunistring (1.1-r1)
(4/7) Installing libidn2 (2.3.4-r1)
(5/7) Installing nghttp2-libs (1.57.0-r0)
(6/7) Installing libcurl (8.4.0-r0)
(7/7) Installing curl (8.4.0-r0)
Executing busybox-1.36.1-r2.trigger
Executing ca-certificates-20230506-r0.trigger
OK: 12 MiB in 22 packages
  % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                 Dload  Upload   Total   Spent    Left  Speed
100 47.5M  100 47.5M    0     0  42.7M      0  0:00:01  0:00:01 --:--:-- 42.8M
configmap "mytestconfig" deleted
configmap/mytestconfig created
pod "docusaurus-deployment-677b66659b-k2qtq" deleted
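The job log above shows the delete, create and pod-delete steps all succeeding. Two refinements are worth considering, sketched here as a hypothetical `refresh_status` function for the job’s script: rendering the ConfigMap with `--dry-run=client -o yaml` and piping it to `kubectl apply`, so the ConfigMap is updated in place instead of briefly not existing, and `kubectl rollout restart` so the Deployment replaces its pods rather than having them deleted by label. Both assume more RBAC than the ClusterRole above grants (at least `get` and `patch` on configmaps, and `get` and `patch` on `deployments` in the `apps` API group), and the sketch assumes kubectl is already present in the image instead of being downloaded on every run.

```shell
# Hypothetical replacement for the inline script in myTestCron.yaml.
# Assumes kubectl is on the PATH and the service account may list nodes,
# get/patch/create configmaps, and get/patch deployments.
# Usage inside the job: refresh_status /mnt/mystatus/_category_.json /tmp/mystatus
refresh_status() {
  category="$1"
  workdir="$2"
  mkdir -p "$workdir" || return 1
  cp -f "$category" "$workdir/_category_.json" || return 1
  # Capture the node list first so a failed call doesn't publish an empty page
  nodes=$(kubectl get nodes) || return 1
  # Fence the table so MDX leaves "<none>" alone
  printf '# servers\n\n```\n%s\n```\n' "$nodes" > "$workdir/servers.md"
  # Render the ConfigMap client-side and apply it, updating it in place
  kubectl create configmap mytestconfig --from-file="$workdir/" \
    --dry-run=client -o yaml | kubectl apply -f - || return 1
  # Let the Deployment roll its pods instead of deleting them by label
  kubectl rollout restart deployment/docusaurus-deployment
}
```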

I can see the auto-generated CM now

$ kubectl get cm mytestconfig -o yaml
apiVersion: v1
data:
  _category_.json: |
    {
      "label": "Status",
      "position": 5,
      "link": {
        "type": "generated-index"
      }
    }
  servers.md: |+
    # servers

    ```
    NAME                          STATUS   ROLES                  AGE    VERSION
    hp-hp-elitebook-850-g2        Ready    <none>                 477d   v1.23.9+k3s1
    isaac-macbookair              Ready    control-plane,master   477d   v1.23.9+k3s1
    builder-hp-elitebook-850-g2   Ready    <none>                 469d   v1.23.9+k3s1
    builder-hp-elitebook-850-g1   Ready    <none>                 477d   v1.23.9+k3s1
    ```

kind: ConfigMap
metadata:
  creationTimestamp: "2023-11-15T15:32:04Z"
  name: mytestconfig
  namespace: default
  resourceVersion: "267367093"
  uid: 32e1aa72-8e6c-4dfe-8d7c-41bd21fe5cb5

Let’s do a quick demo of adding some new data, like configmaps, to the status page

Summary

We started by using npx to create a new Docusaurus instance locally and walked through some examples such as docs and blog entries. We then moved on to building static files before showing how to containerize the site with Docker. Once we had a functional Dockerfile, we experimented with exposing it directly on a Docker host (and forwarding a DNS name to a port) before routing through Kubernetes to handle TLS. Lastly, we showed a working Kubernetes Deployment that pulls the container from a private container registry (CR) and runs it as a ReplicaSet, and then how we might serve dynamic content from a ConfigMap updated by a Kubernetes CronJob.

We didn’t dig too much into Docusaurus plugins, themes or other styling tweaks. As it stands, it’s a very solid, easy-to-use framework. We could turn it into a GitHub workflow that updates the site, GitOps with Argo, or a deployment with Azure DevOps. We may circle back and do this in a follow-up article later.

Overall, I’m impressed with the simplicity and maturity of the framework. It was easy to set up and use, and it’s a good one to keep in our toolbelts for Markdown-driven documentation sites.