Published: Nov 16, 2023 by Isaac Johnson

I saw a blog article a while back showing how to install Code Server on a NAS for remote development. I thought it might be fun to try something similar and expose it through Kubernetes.

Installation

If we look at the Docker image from linuxserver.io’s library, we can see a rather straightforward Docker Compose file:

---
version: "2.1"
services:
  code-server:
    image: lscr.io/linuxserver/code-server:latest
    container_name: code-server
    environment:
      - PUID=1000
      - PGID=1000
      - TZ=Etc/UTC
      - PASSWORD=password #optional
      - HASHED_PASSWORD= #optional
      - SUDO_PASSWORD=password #optional
      - SUDO_PASSWORD_HASH= #optional
      - PROXY_DOMAIN=code-server.my.domain #optional
      - DEFAULT_WORKSPACE=/config/workspace #optional
    volumes:
      - /path/to/appdata/config:/config
    ports:
      - 8443:8443
    restart: unless-stopped

We can turn that into a pretty straightforward Deployment and PersistentVolumeClaim YAML:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: code-server-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      app: code-server
  template:
    metadata:
      labels:
        app: code-server
    spec:
      containers:
        - name: code-server-container
          image: lscr.io/linuxserver/code-server:latest
          ports:
            - containerPort: 8443
          env:
            - name: PUID
              value: "1000"
            - name: PGID
              value: "1000"
            - name: PASSWORD
              value: "TestPassword01"
            - name: SUDO_PASSWORD
              value: "TestPassword02"
            - name: TZ
              value: "Etc/UTC"
          volumeMounts:
            - name: code-server-config
              mountPath: /config
      volumes:
        - name: code-server-config
          persistentVolumeClaim:
            claimName: code-server-pvc
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: code-server-pvc
spec:
  storageClassName: local-path
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 5Gi
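The manifest above keeps both passwords in plain text inside the Deployment. As a minimal sketch of one alternative (not what this post actually deployed), the helper below writes a Secret manifest that the container could reference instead. The Secret name `code-server-auth`, the function name and the output file name are all assumptions made for illustration.

```shell
# Sketch: write a Secret manifest holding the two code-server passwords.
# "code-server-auth" is a hypothetical name, not part of the original manifests.
# Caveat: passwords containing double quotes or backslashes would need
# escaping before being placed inside the YAML string.
write_auth_secret() {
  # $1 = web UI password, $2 = sudo password, $3 = output file
  cat > "$3" <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: code-server-auth
type: Opaque
stringData:
  PASSWORD: "$1"
  SUDO_PASSWORD: "$2"
EOF
}
```

Usage would look something like `write_auth_secret 'TestPassword01' 'TestPassword02' code-server-auth.yaml` followed by `kubectl apply -f code-server-auth.yaml`. In the Deployment, the `value:` under `PASSWORD` and `SUDO_PASSWORD` would then each become a `valueFrom.secretKeyRef` pointing at `name: code-server-auth` and the matching `key`.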

I’ll launch it in the test Kubernetes cluster

$ kubectl apply -f test-codeserver.yaml
deployment.apps/code-server-deployment created
persistentvolumeclaim/code-server-pvc created

I soon see it running

$ kubectl get pods -l app=code-server
NAME                                      READY   STATUS    RESTARTS   AGE
code-server-deployment-66d98759fc-t2vdl   1/1     Running   0          47s

I’ll port-forward

$ kubectl port-forward code-server-deployment-66d98759fc-t2vdl 8443:8443
Forwarding from 127.0.0.1:8443 -> 8443
Forwarding from [::1]:8443 -> 8443
Handling connection for 8443
Handling connection for 8443

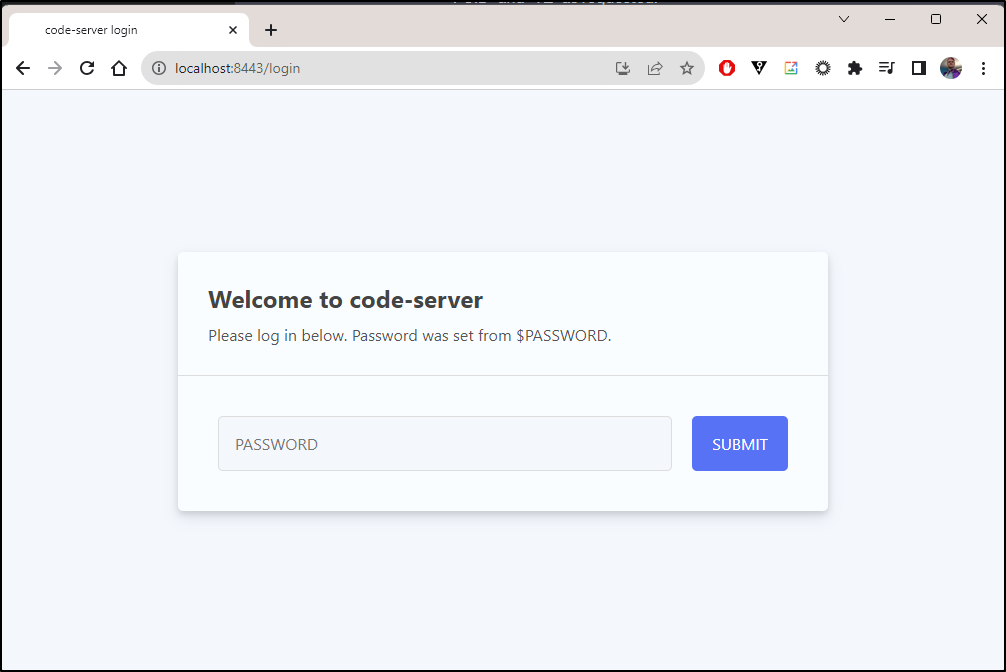

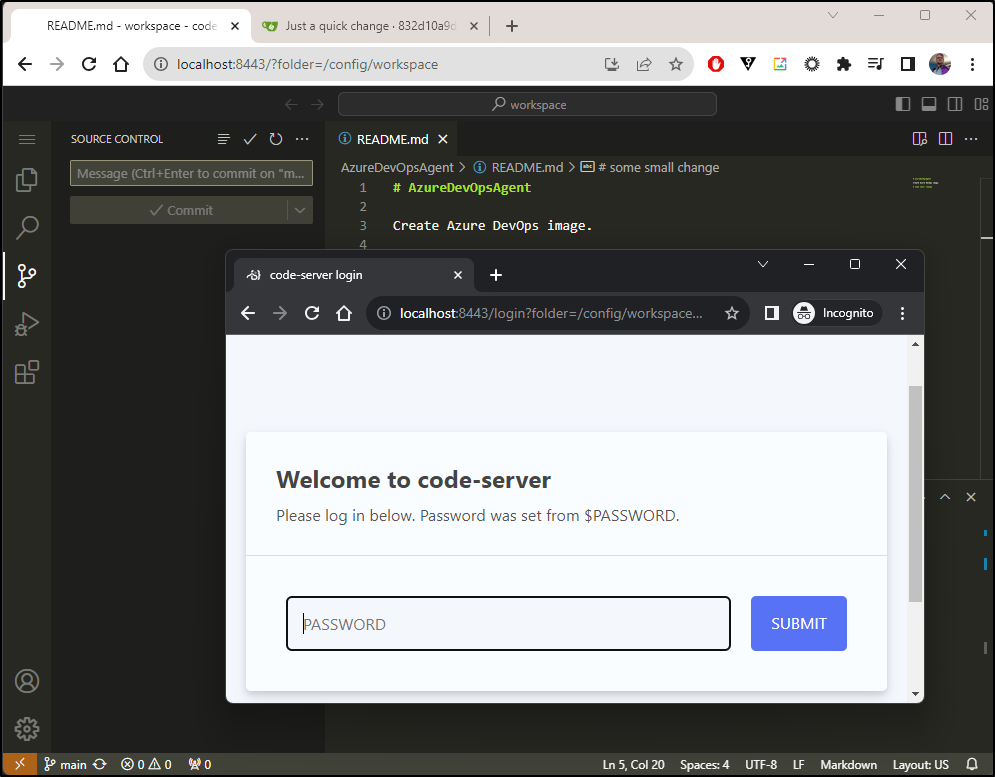

I am prompted for the password

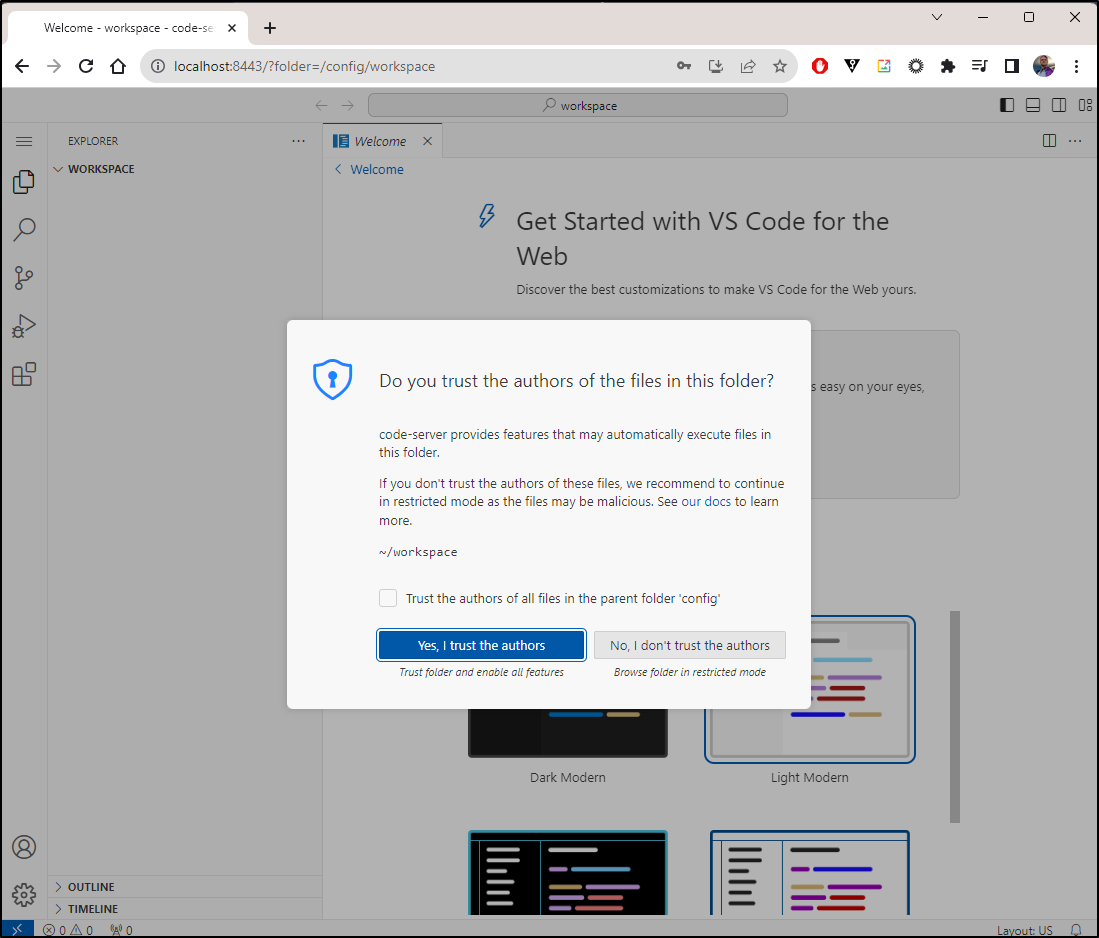

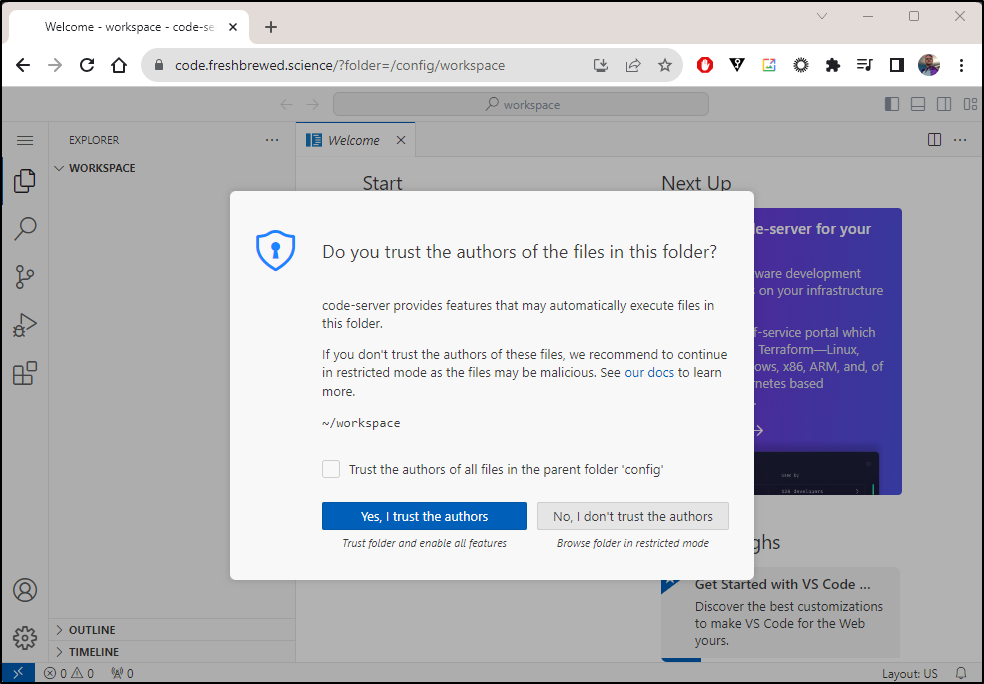

Then whether I trust the authors

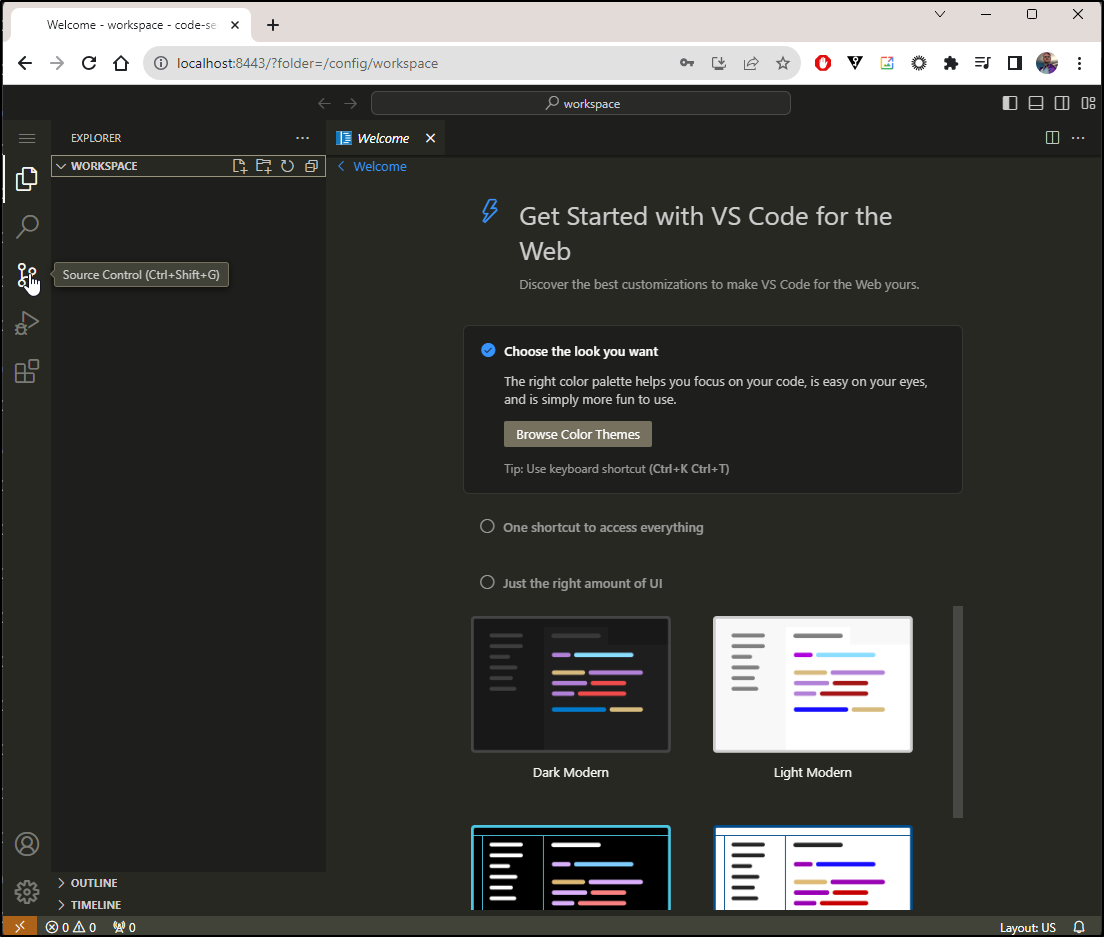

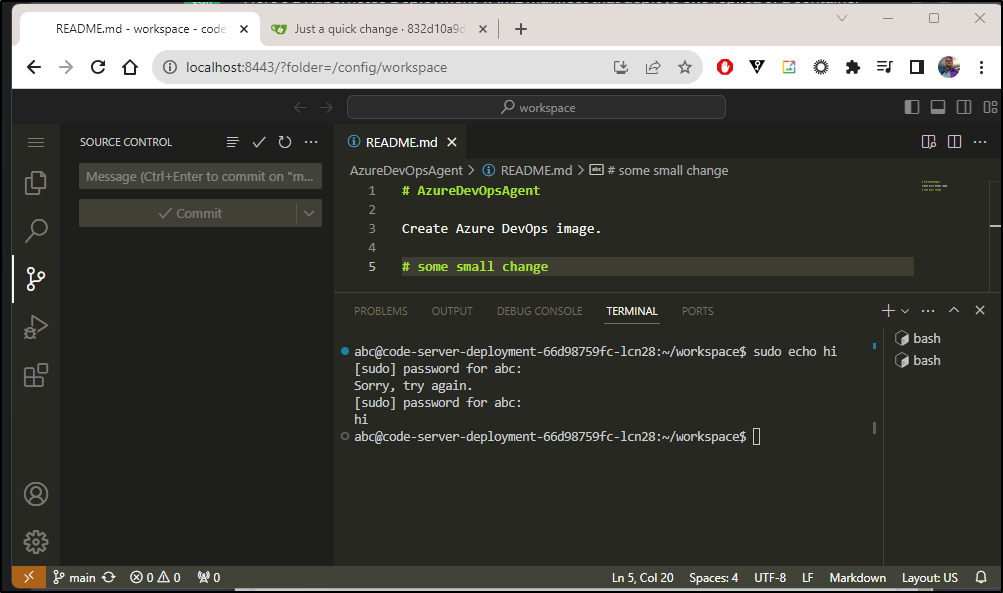

I picked a theme then went to source control

Let’s try a couple of ways to engage with Git

Here I will use the terminal to clone a repo in Gitea, but then the UI to create a commit and push:

My next test is object permanence; let’s kill the pod and ensure things were saved in the PVC
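The persistence test can be sketched on the command line roughly as follows. This is an assumption about how one might script it, not a record of the exact commands used here. The function name is hypothetical, and it relies on the `app=code-server` label and the `code-server-deployment` name from the manifest.

```shell
# Sketch: delete the running pod, then wait for the Deployment to replace it.
# $1 = namespace (defaults to "default", where the test manifest was applied)
restart_code_server() {
  # The Deployment's ReplicaSet recreates the pod with the same PVC attached.
  kubectl delete pod -n "${1:-default}" -l app=code-server
  # Block until the replacement pod is reported as available.
  kubectl rollout status deployment/code-server-deployment \
    -n "${1:-default}" --timeout=120s
}
```

After `restart_code_server` returns, port-forwarding to the new pod and reopening the workspace should show the cloned repo still present under `/config` if the PVC did its job.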

My final test was to ensure the sudo password was actually the one I specified and not just the login one:

I was slightly worried it was just remote sharing (like VNC), so I fired up an incognito window and indeed it asked for a password, so it is handling session management.

If I use this in Prod, I might need more space. This blog’s Git repo is nearly 6GB already.

$ du -chs ./jekyll-blog
5.8G    ./jekyll-blog
5.8G    total

I’m kind of stumped on what to do about that, to be honest. I don’t really want to purge old articles, but as of this writing I have 50 pages of blog entries going back to 2019 and the rendered static site is nearly 4GB.

Moving to production

Next, let’s create a quick A record in Route53

$ cat r53-codeserver.json
{
  "Comment": "CREATE code fb.s A record ",
  "Changes": [
    {
      "Action": "CREATE",
      "ResourceRecordSet": {
        "Name": "code.freshbrewed.science",
        "Type": "A",
        "TTL": 300,
        "ResourceRecords": [
          {
            "Value": "75.73.224.240"
          }
        ]
      }
    }
  ]
}

$ aws route53 change-resource-record-sets --hosted-zone-id Z39E8QFU0F9PZP --change-batch file://r53-codeserver.json
{
  "ChangeInfo": {
    "Id": "/change/C0135361308ECV801OVU",
    "Status": "PENDING",
    "SubmittedAt": "2023-11-03T12:46:41.994Z",
    "Comment": "CREATE code fb.s A record "
  }
}
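The change comes back as PENDING, so it can be worth confirming the record has propagated before asking cert-manager for a certificate. A small sketch, assuming `dig` is installed locally (the function name is hypothetical):

```shell
# Sketch: block until Route53 reports the change as INSYNC, then resolve the name.
# $1 = the "Id" value from ChangeInfo, $2 = the hostname to look up
wait_for_record() {
  # "wait resource-record-sets-changed" polls GetChange until the status is INSYNC.
  aws route53 wait resource-record-sets-changed --id "$1"
  # Print the A record as seen by the local resolver.
  dig +short "$2" A
}
```

For the record above, that would be `wait_for_record /change/C0135361308ECV801OVU code.freshbrewed.science`, which should eventually print the IP from the JSON file.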

I’ll now change the storage class (and bump the claim to 20Gi), add a Service and, lastly, an Ingress, all in a single YAML file

$ cat prod-codeserver.yaml
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: code-server-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      app: code-server
  template:
    metadata:
      labels:
        app: code-server
    spec:
      containers:
        - name: code-server-container
          image: lscr.io/linuxserver/code-server:latest
          ports:
            - containerPort: 8443
          env:
            - name: PUID
              value: "1000"
            - name: PGID
              value: "1000"
            - name: PASSWORD
              value: "MyPasswordHere!"
            - name: SUDO_PASSWORD
              value: "MyOtherPasswordHere!"
            - name: TZ
              value: "America/Chicago"
          volumeMounts:
            - name: code-server-config
              mountPath: /config
      volumes:
        - name: code-server-config
          persistentVolumeClaim:
            claimName: code-server-pvc
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: code-server-pvc
spec:
  storageClassName: managed-nfs-storage
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 20Gi
---
apiVersion: v1
kind: Service
metadata:
  name: codeserver-service
spec:
  ports:
    - name: http
      port: 80
      protocol: TCP
      targetPort: 8443
  selector:
    name: code-server
  type: ClusterIP
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    cert-manager.io/cluster-issuer: letsencrypt-prod
    kubernetes.io/ingress.class: nginx
    kubernetes.io/tls-acme: "true"
    ingress.kubernetes.io/proxy-body-size: "0"
    ingress.kubernetes.io/ssl-redirect: "true"
    nginx.ingress.kubernetes.io/proxy-read-timeout: "3600"
    nginx.ingress.kubernetes.io/proxy-send-timeout: "3600"
    nginx.org/websocket-services: code-service
    nginx.ingress.kubernetes.io/proxy-body-size: "0"
    nginx.ingress.kubernetes.io/ssl-redirect: "true"
    nginx.org/client-max-body-size: "0"
    nginx.org/proxy-connect-timeout: "3600"
    nginx.org/proxy-read-timeout: "3600"
  labels:
    app.kubernetes.io/instance: codeingress
  name: codeingress
spec:
  rules:
    - host: code.freshbrewed.science
      http:
        paths:
          - backend:
              service:
                name: codeserver-service
                port:
                  number: 80
            path: /
            pathType: ImplementationSpecific
  tls:
    - hosts:
        - code.freshbrewed.science
      secretName: code-tls

I’ll create a namespace and launch the whole lot into it

$ kubectl create ns codeserver
namespace/codeserver created

$ kubectl apply -f prod-codeserver.yaml -n codeserver
deployment.apps/code-server-deployment created
persistentvolumeclaim/code-server-pvc created
service/codeserver-service created
ingress.networking.k8s.io/codeingress created

I can see the pod, PVC and service came up pretty quickly

$ kubectl get pods -n codeserver
NAME                                     READY   STATUS    RESTARTS   AGE
code-server-deployment-888945468-fl7fz   1/1     Running   0          37s

$ kubectl get svc -n codeserver
NAME                 TYPE        CLUSTER-IP    EXTERNAL-IP   PORT(S)   AGE
codeserver-service   ClusterIP   10.43.2.208   <none>        80/TCP    41s

$ kubectl get pvc -n codeserver
NAME              STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS          AGE
code-server-pvc   Bound    pvc-675caa85-7a57-42bd-9f0f-82f785fd1ef4   20Gi       RWO            managed-nfs-storage   92s

But something isn’t quite right with the Ingress. It has no address, and the certificate took several minutes to become ready

$ kubectl get ingress -n codeserver
NAME          CLASS    HOSTS                      ADDRESS   PORTS     AGE
codeingress   <none>   code.freshbrewed.science             80, 443   46s

$ kubectl get cert -n codeserver
NAME       READY   SECRET     AGE
code-tls   False   code-tls   68s

$ kubectl get cert -n codeserver
NAME       READY   SECRET     AGE
code-tls   True    code-tls   11m
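Rather than re-running `kubectl get cert` until READY flips to True, the wait can be scripted. This is a minimal sketch that assumes cert-manager's Certificate resource exposes the usual `Ready` condition. The function names and the 15 minute default are illustrative choices, not something from the original run.

```shell
# Sketch: wait for a cert-manager Certificate to report Ready.
# $1 = certificate name, $2 = namespace, $3 = optional timeout (default 15m)
wait_for_cert() {
  kubectl wait --for=condition=Ready "certificate/$1" -n "$2" --timeout="${3:-15m}"
}

# Sketch: list the intermediate ACME objects when a certificate seems stuck.
# $1 = namespace
cert_debug() {
  kubectl get certificaterequests,orders,challenges -n "$1"
}
```

Here that would be `wait_for_cert code-tls codeserver`, with `cert_debug codeserver` as a first stop if it times out.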

I saw a couple of issues: the Ingress had a few too many annotations, and the Service selector should have used “app”, not “name”, to match the pod labels:

---
apiVersion: v1
kind: Service
metadata:
  name: codeserver-service
spec:
  ports:
    - name: http
      port: 80
      protocol: TCP
      targetPort: 8443
  selector:
    app: code-server
  type: ClusterIP
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    cert-manager.io/cluster-issuer: letsencrypt-prod
    ingress.kubernetes.io/proxy-body-size: "0"
    ingress.kubernetes.io/ssl-redirect: "true"
    kubernetes.io/ingress.class: nginx
    nginx.ingress.kubernetes.io/proxy-body-size: "0"
    nginx.ingress.kubernetes.io/proxy-read-timeout: "3600"
    nginx.ingress.kubernetes.io/proxy-send-timeout: "3600"
    nginx.ingress.kubernetes.io/ssl-redirect: "true"
    nginx.org/client-max-body-size: "0"
    nginx.org/proxy-connect-timeout: "3600"
    nginx.org/proxy-read-timeout: "3600"
  labels:
    app.kubernetes.io/name: code
  name: codeingress
spec:
  rules:
    - host: code.freshbrewed.science
      http:
        paths:
          - backend:
              service:
                name: codeserver-service
                port:
                  number: 80
            path: /
            pathType: ImplementationSpecific
  tls:
    - hosts:
        - code.freshbrewed.science
      secretName: code-tls

I’ll reapply

$ kubectl apply -f prod-codeserver.yaml -n codeserver
deployment.apps/code-server-deployment unchanged
persistentvolumeclaim/code-server-pvc unchanged
service/codeserver-service configured
ingress.networking.k8s.io/codeingress configured

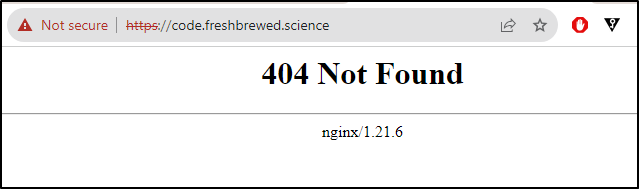

That looks much better
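A selector mismatch like the “name” versus “app” one is easy to miss because the Service still gets created without complaint. It just has no pods behind it. One way to spot this, sketched here with a hypothetical helper name, is to ask for the Service's endpoints and see whether any pod IPs come back:

```shell
# Sketch: print the pod IPs currently backing a Service.
# An empty result usually means the selector matches no ready pods.
# $1 = service name, $2 = namespace
service_endpoints() {
  kubectl get endpoints "$1" -n "$2" \
    -o jsonpath='{.subsets[*].addresses[*].ip}'
}
```

With the original selector, `service_endpoints codeserver-service codeserver` would be expected to print nothing. After the fix it should print the pod's IP. Running `kubectl get pods -n codeserver --show-labels` alongside it shows which labels the selector needs to match.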

I might have issues with Nginx, since it seems Code Server needs websockets

I’ll delete the existing Ingress and add the “websocket-services” annotation

$ kubectl delete ingress codeingress -n codeserver
ingress.networking.k8s.io "codeingress" deleted

$ cat prod-codeserver.yaml | tail -n40
      targetPort: 8443
  selector:
    app: code-server
  type: ClusterIP
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    cert-manager.io/cluster-issuer: letsencrypt-prod
    ingress.kubernetes.io/proxy-body-size: "0"
    ingress.kubernetes.io/ssl-redirect: "true"
    kubernetes.io/ingress.class: nginx
    nginx.ingress.kubernetes.io/proxy-body-size: "0"
    nginx.ingress.kubernetes.io/proxy-read-timeout: "3600"
    nginx.ingress.kubernetes.io/proxy-send-timeout: "3600"
    nginx.ingress.kubernetes.io/ssl-redirect: "true"
    nginx.org/client-max-body-size: "0"
    nginx.org/proxy-connect-timeout: "3600"
    nginx.org/proxy-read-timeout: "3600"
    nginx.org/websocket-services: codeserver-service
  labels:
    app.kubernetes.io/name: code
  name: codeingress
spec:
  rules:
    - host: code.freshbrewed.science
      http:
        paths:
          - backend:
              service:
                name: codeserver-service
                port:
                  number: 80
            path: /
            pathType: ImplementationSpecific
  tls:
    - hosts:
        - code.freshbrewed.science
      secretName: code-tls

Then re-apply

$ kubectl apply -f prod-codeserver.yaml -n codeserver
deployment.apps/code-server-deployment unchanged
persistentvolumeclaim/code-server-pvc unchanged
service/codeserver-service unchanged
ingress.networking.k8s.io/codeingress created

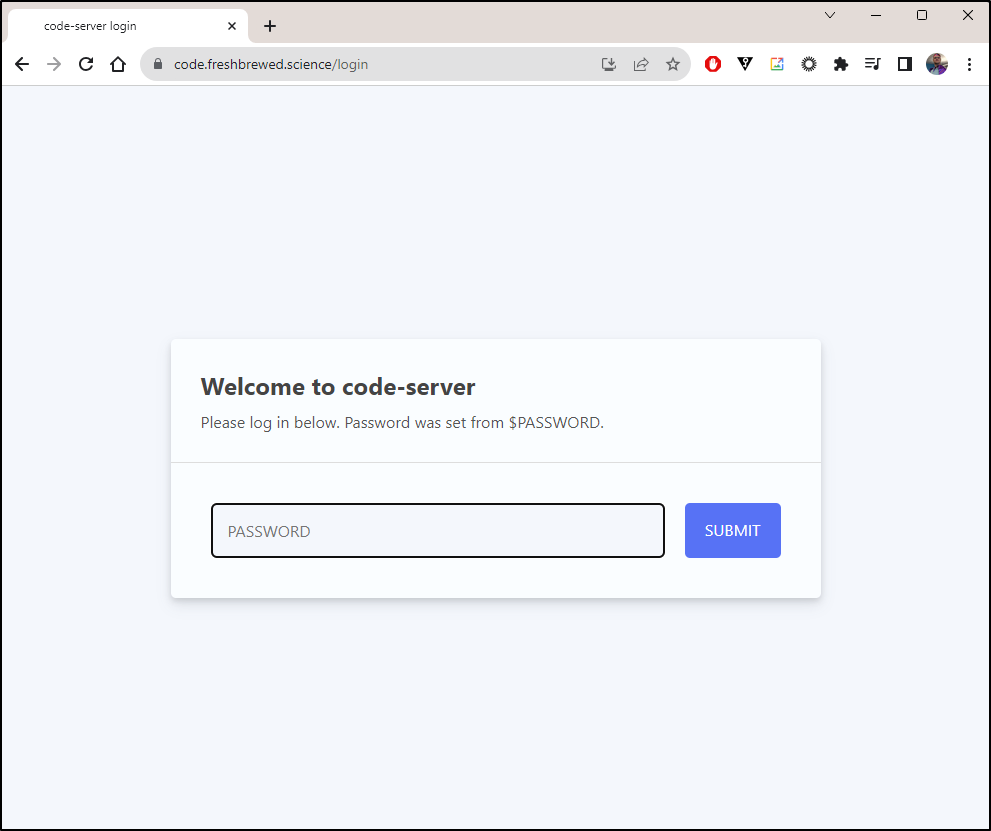

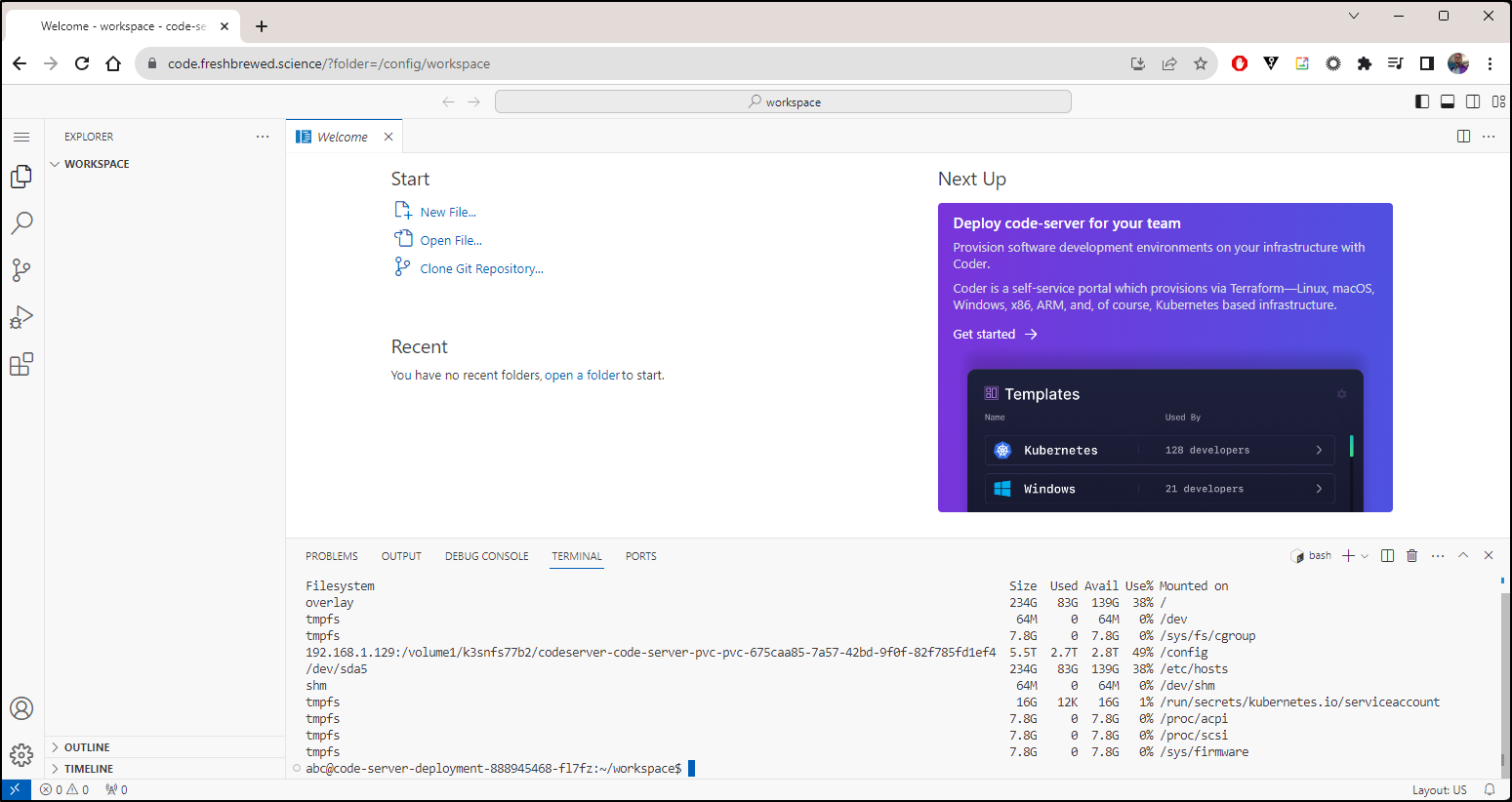

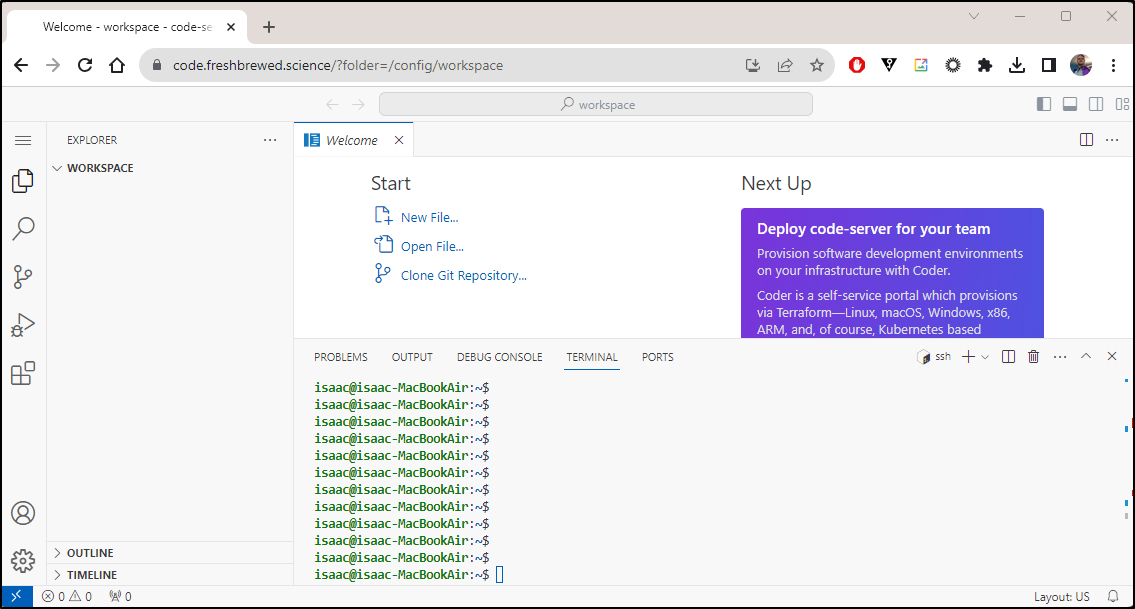

Thankfully that worked:

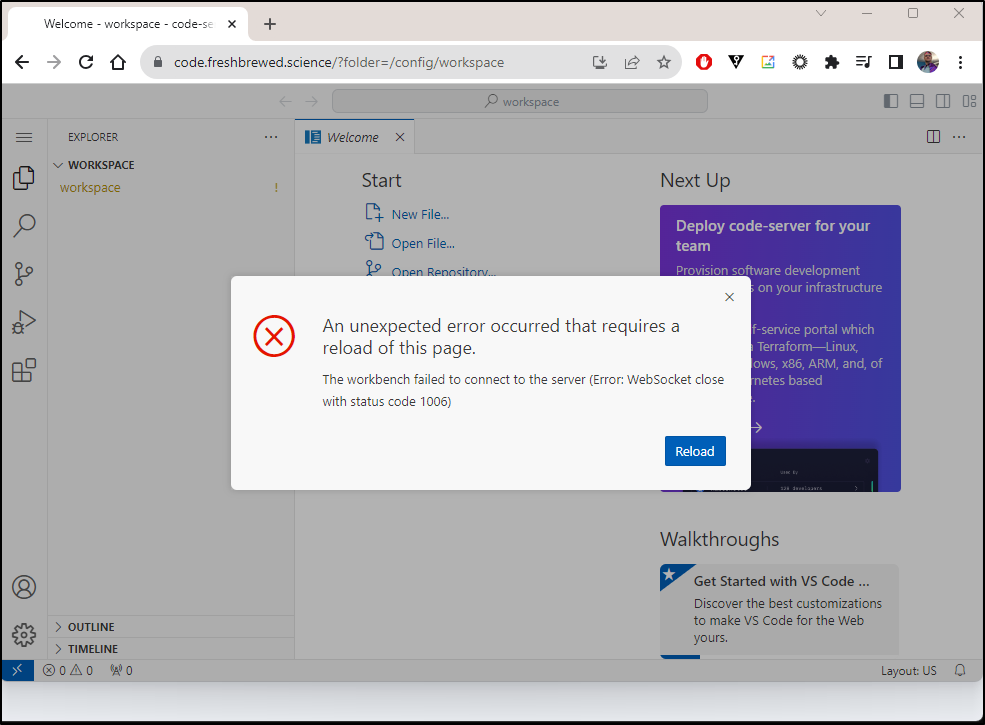

I noted that it doesn’t show just the PVC claim size

Rather, it’s just exposing the host node’s stats:

builder@builder-HP-EliteBook-850-G2:~$ df -h
Filesystem      Size  Used Avail Use% Mounted on
udev            7.8G     0  7.8G   0% /dev
tmpfs           1.6G  9.0M  1.6G   1% /run
/dev/sda5       234G   83G  139G  38% /
tmpfs           7.8G     0  7.8G   0% /dev/shm
tmpfs           5.0M  4.0K  5.0M   1% /run/lock
... snip ...
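Since `df` reports the filesystem behind the mount rather than the claim, and many provisioners do not enforce the requested size as a hard quota (an assumption worth verifying for a given storage class), a rough way to compare actual usage against the 20Gi request is to measure the directory itself. The helper name below is hypothetical:

```shell
# Sketch: report how much space a directory actually uses.
# $1 = directory to measure (defaults to /config, the PVC mount in the pod)
config_usage() {
  # du prints "<size><TAB><path>"; keep only the human-readable size.
  du -sh "${1:-/config}" | cut -f1
}
```

Run from the Code Server terminal, `config_usage` gives a number that can be compared by eye against the claim size shown by `kubectl get pvc`.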

Summary

We tried the containerized linuxserver.io image for Code Server in a test Kubernetes cluster. Once verified, we moved on to creating it in a production cluster with ingress. The only real issues encountered were a mismatched Service selector and enabling websockets on the Nginx ingress (something those using Traefik won’t encounter).

I think Code Server is a great utility to have in one’s arsenal for working on code. A fun side note is that because this runs in the on-prem cluster, we can use it as a nice little remote terminal for accessing on-prem servers as well.