Published: Oct 5, 2023 by Isaac Johnson

This summer Datadog came out with Cloud Cost Management as a new feature in its Observability suite. Today we’ll set up Cloud Costs with AWS and Azure and see what we can accomplish. We’ll ingest some real data, look at dashboards, and even set up monitors and alerts.

Setup

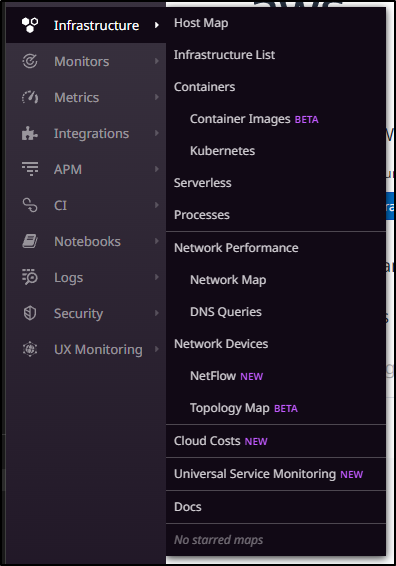

We’ll find the new Cloud Cost Management under Infrastructure

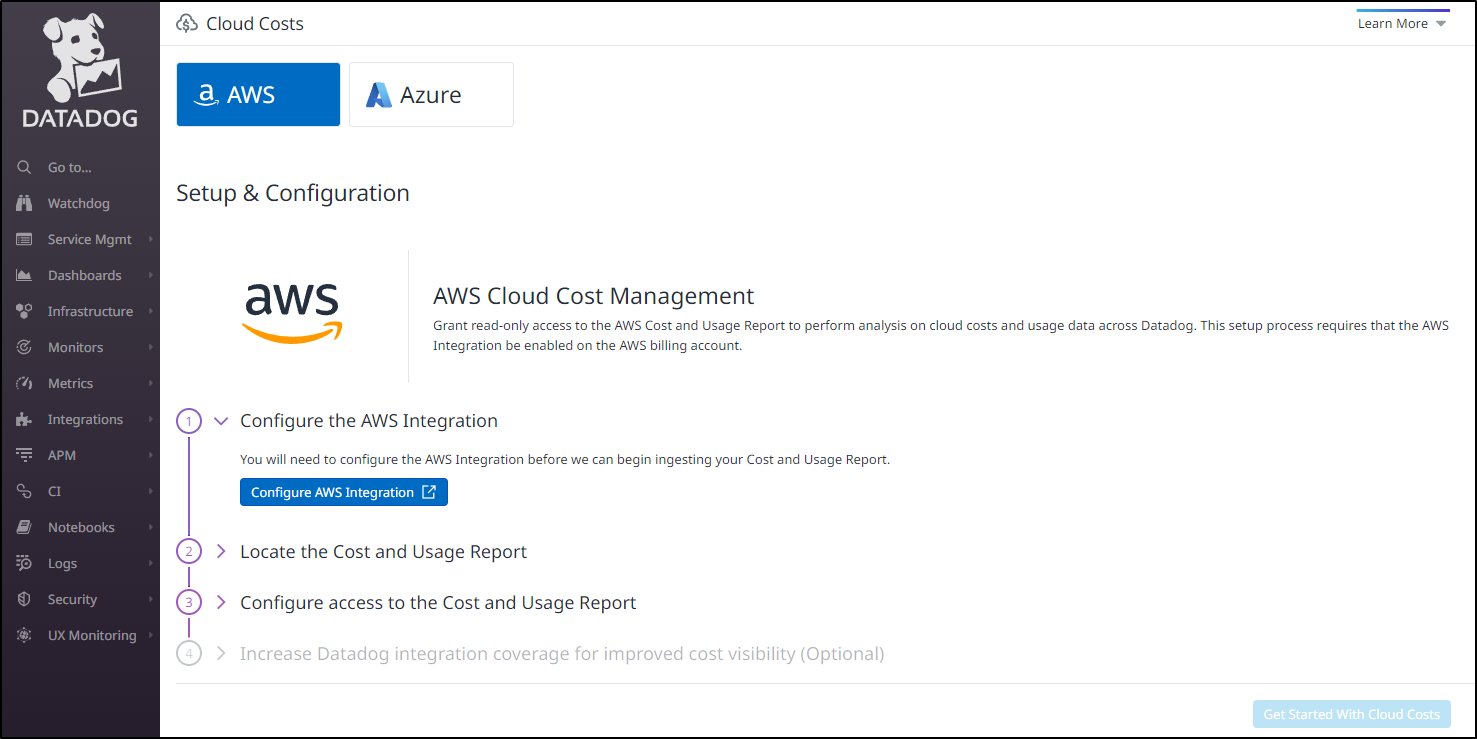

Our next step is to set up AWS or Azure.

AWS

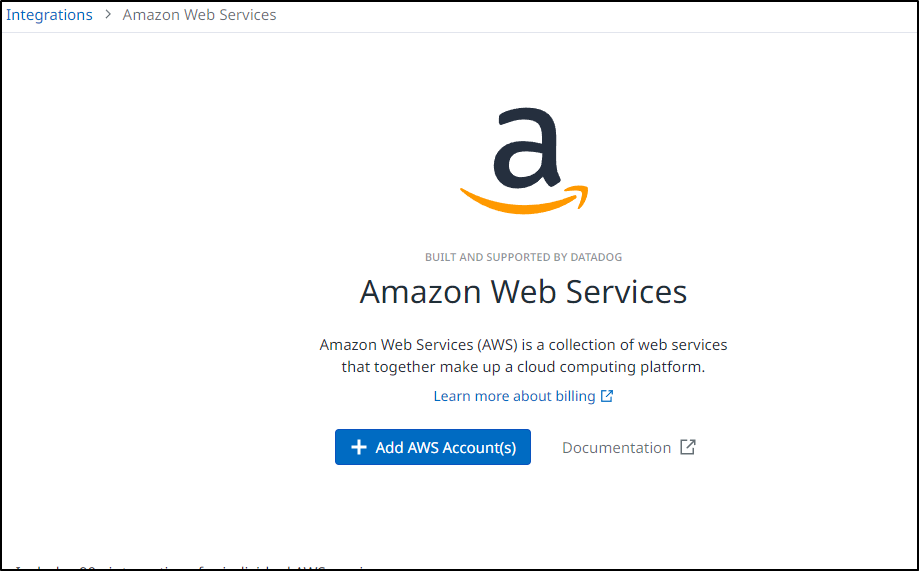

We’ll next add an AWS account

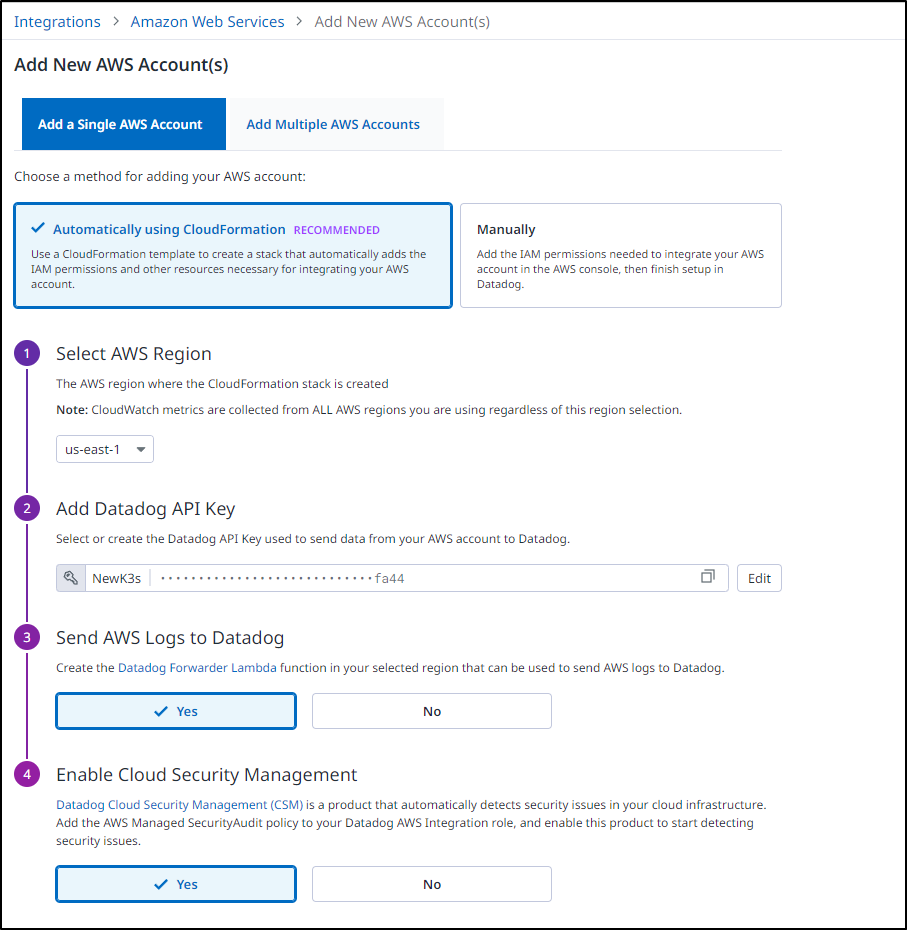

Let’s look at some of the options

We can add one or multiple accounts. In my case, I just want to monitor one.

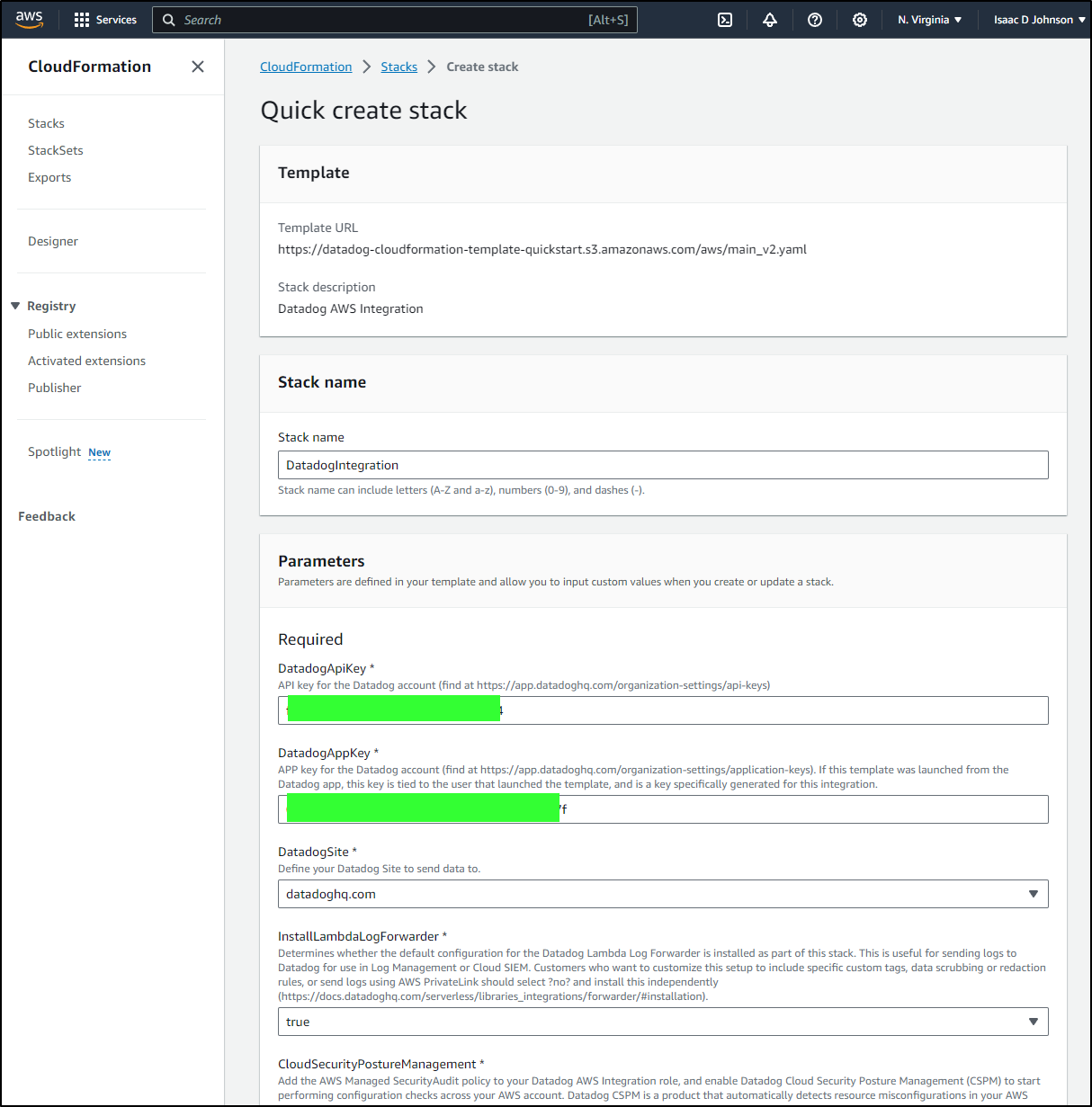

We can select an existing DD API key or create a new one.

Lastly, we can opt in (or not) to Lambda Log Forwarders and Cloud Security Management.

Clicking the “Launch CloudFormation” button kicks us over to the AWS console.

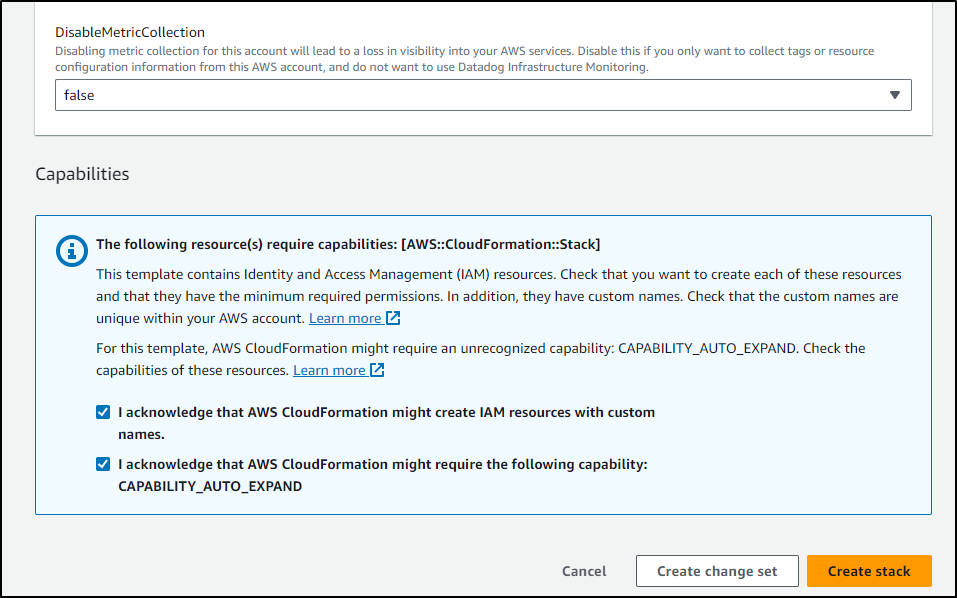

We then click “Create stack” to launch

which creates it

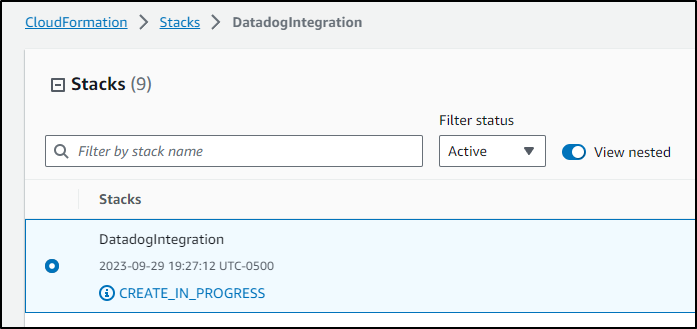

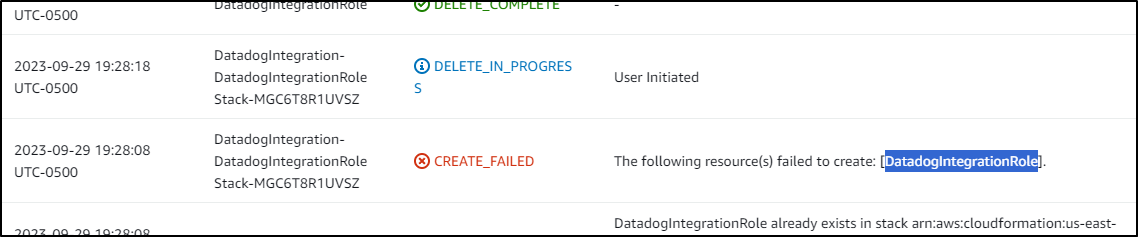

It seems I must already have had the role, as the stack failed with an “already exists” error.

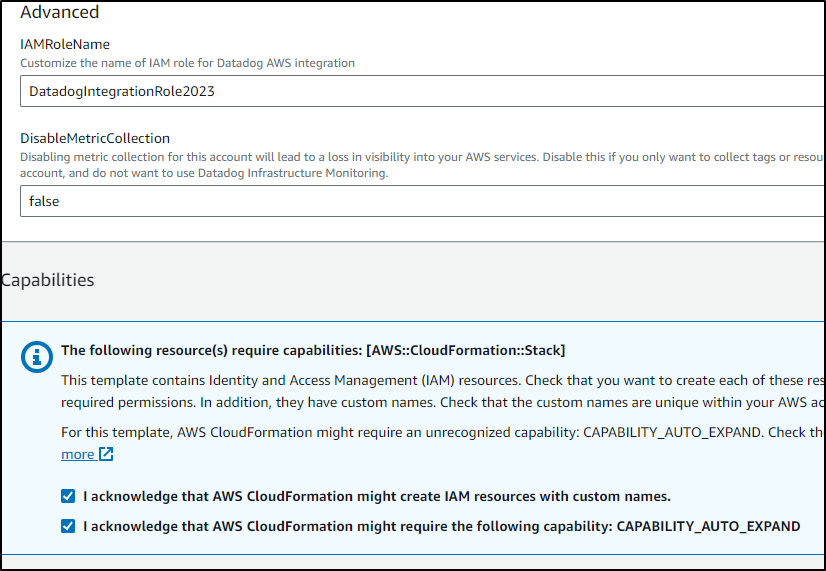

I tried again, this time with a name that was definitely new.

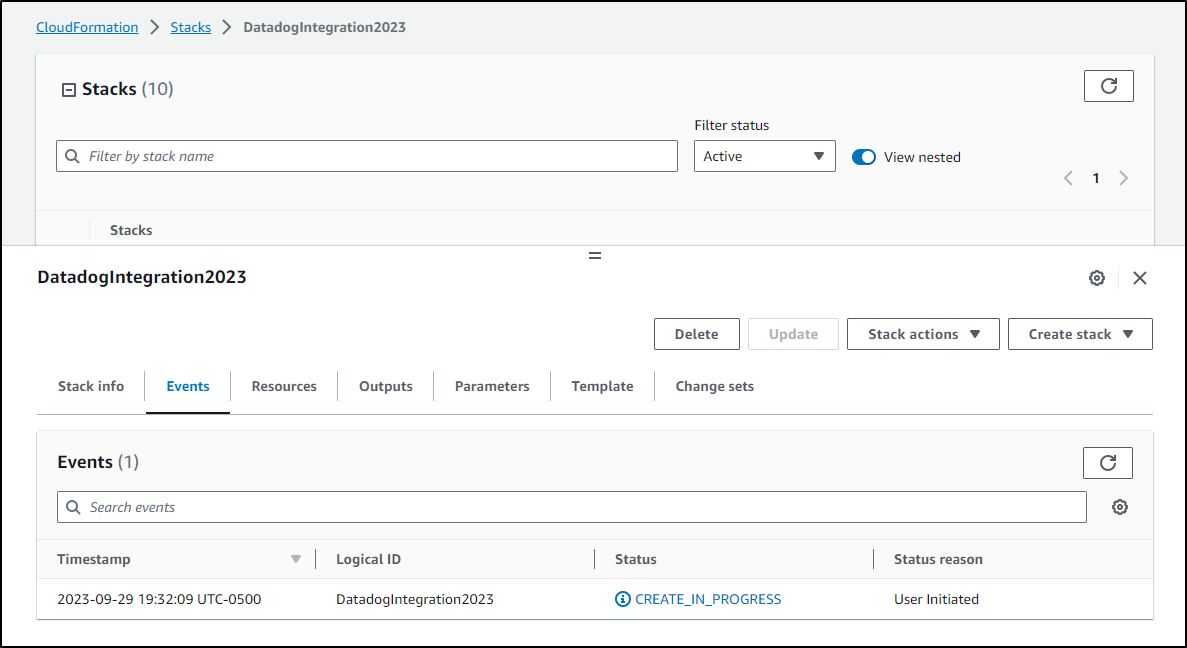

Which launched

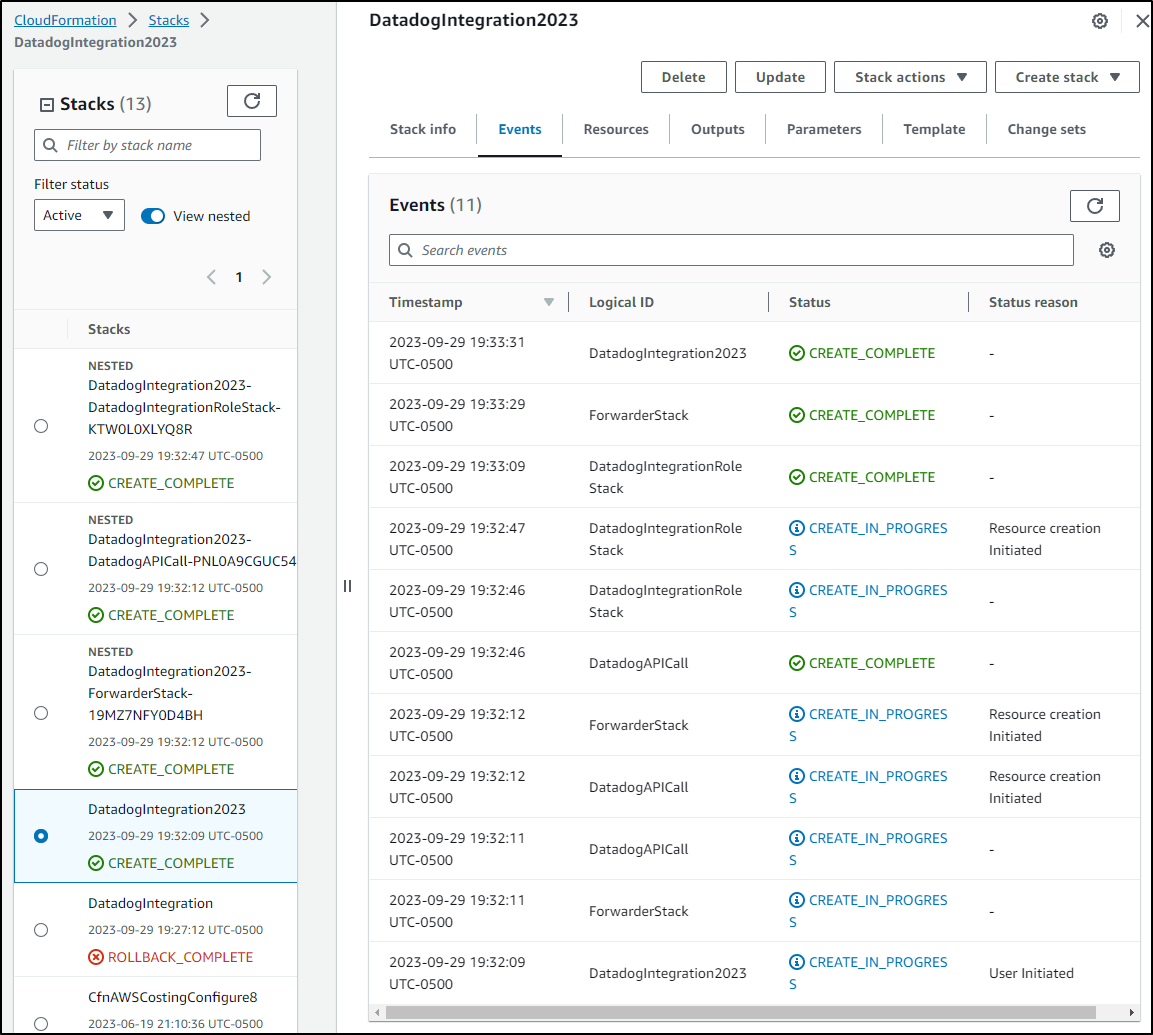

I could watch as it created the resources

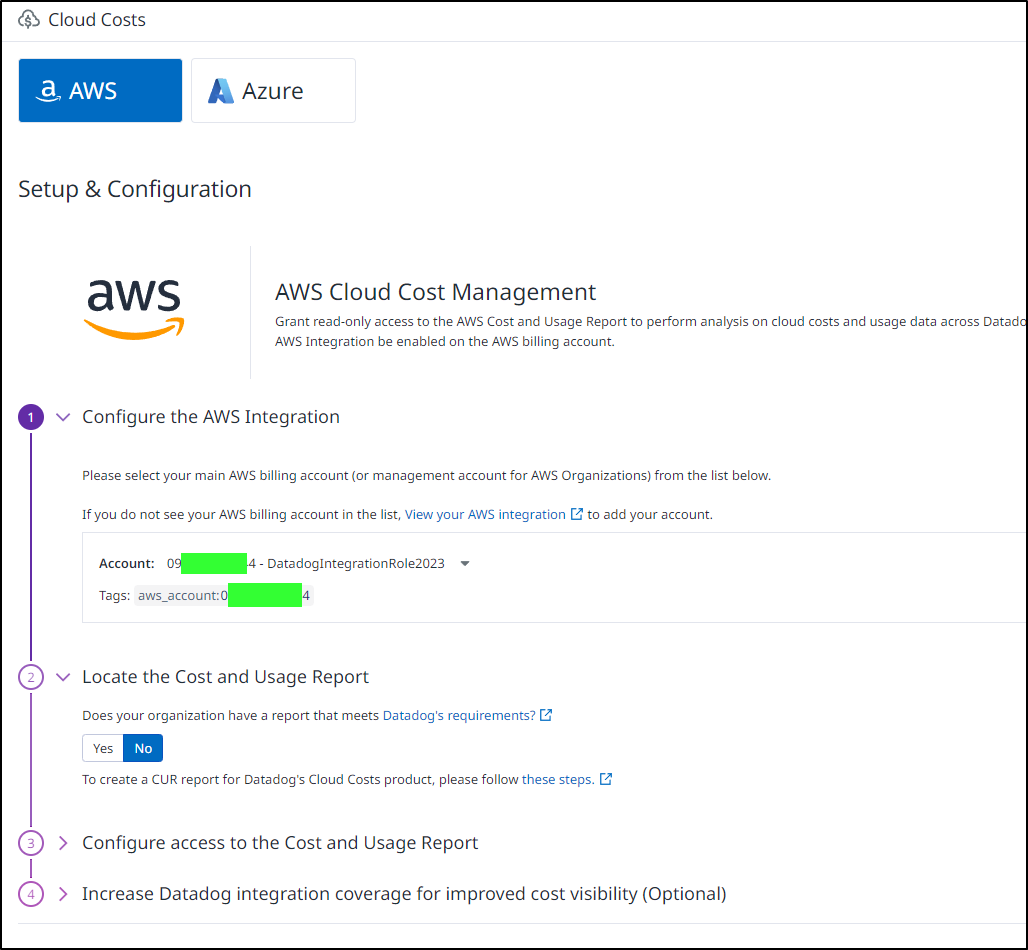

This meant I now had AWS added, but I still needed a CUR (Cost and Usage Report) in an S3 bucket for this to work.

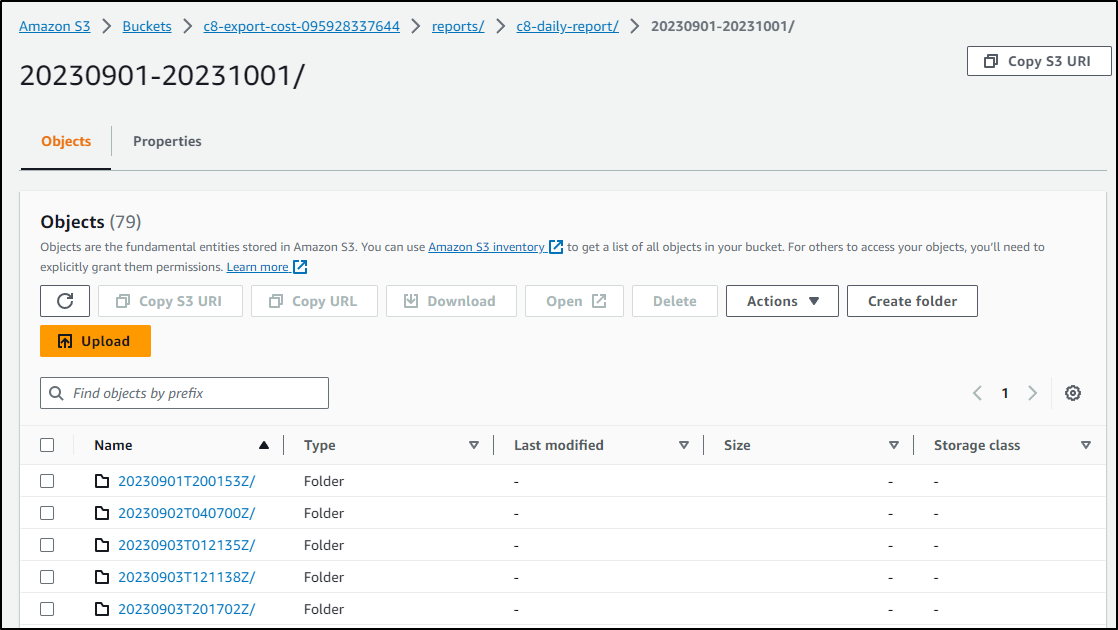

I was about to follow the guide on creating a CUR when I realized we had already done this for Configure8.

I’ll try that now

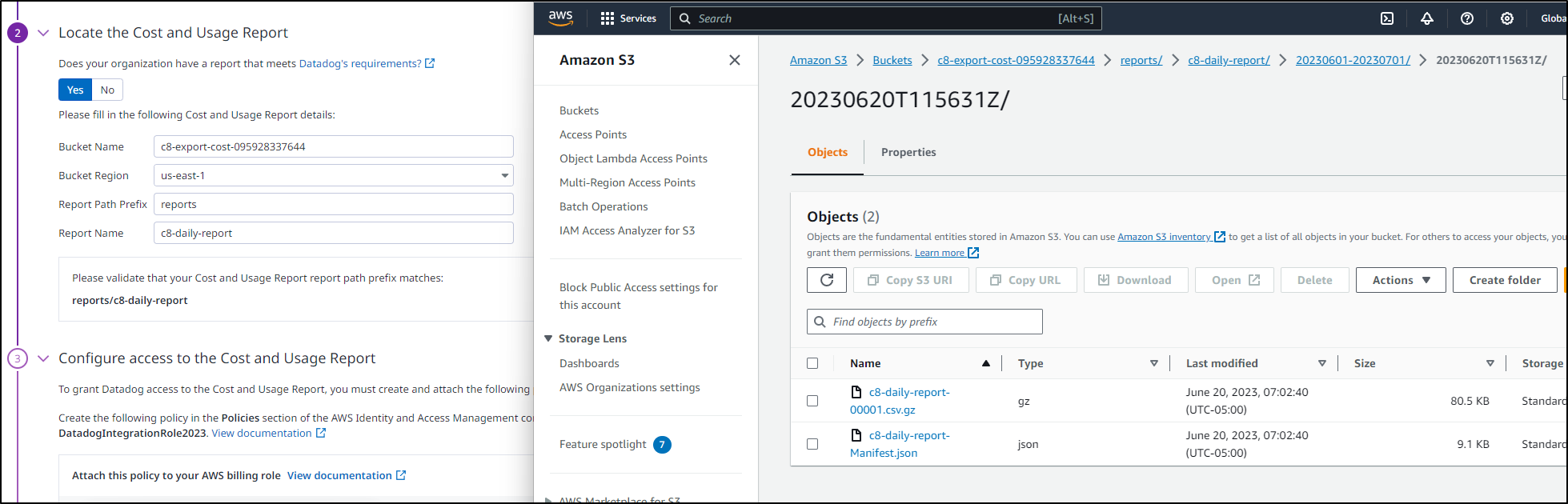

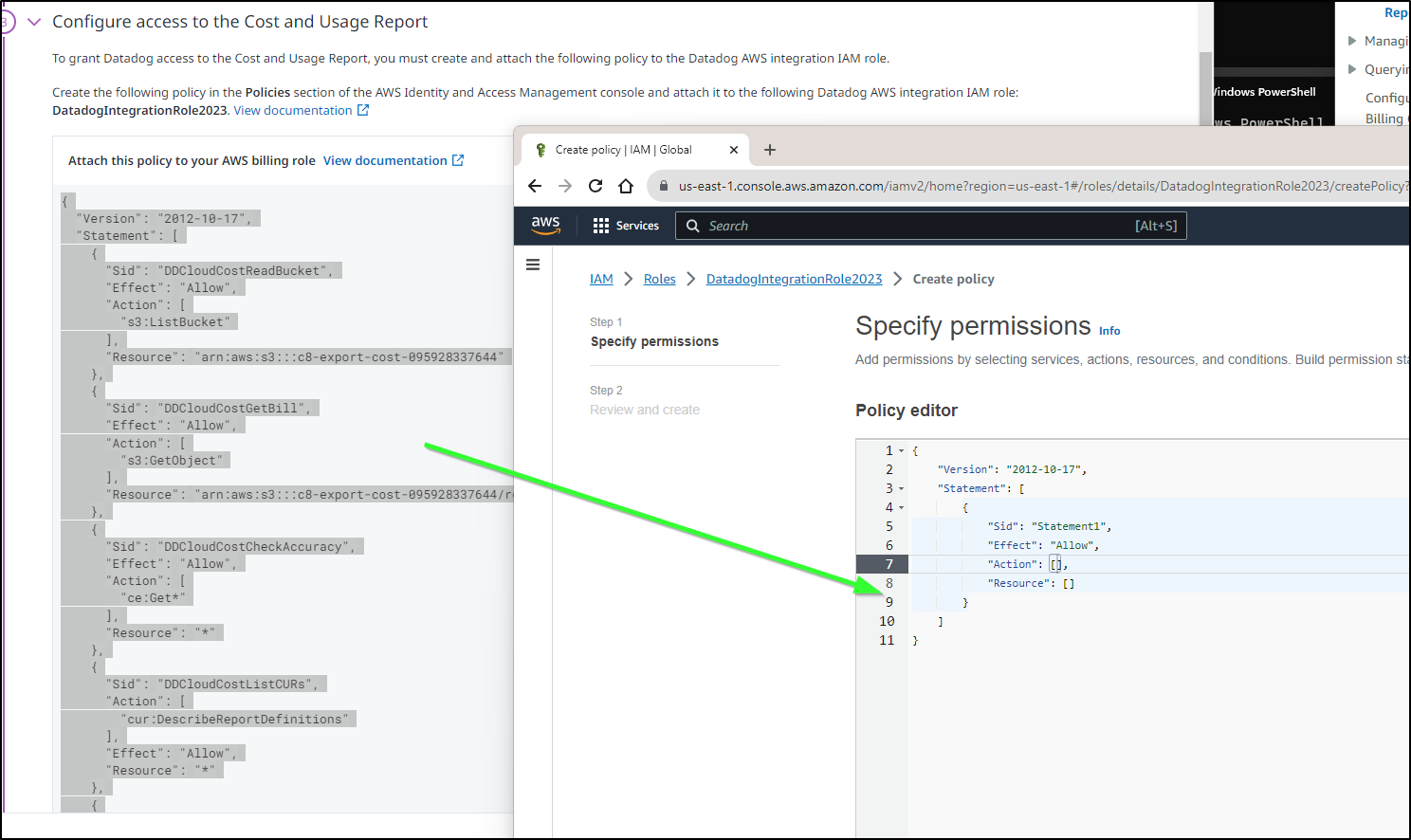

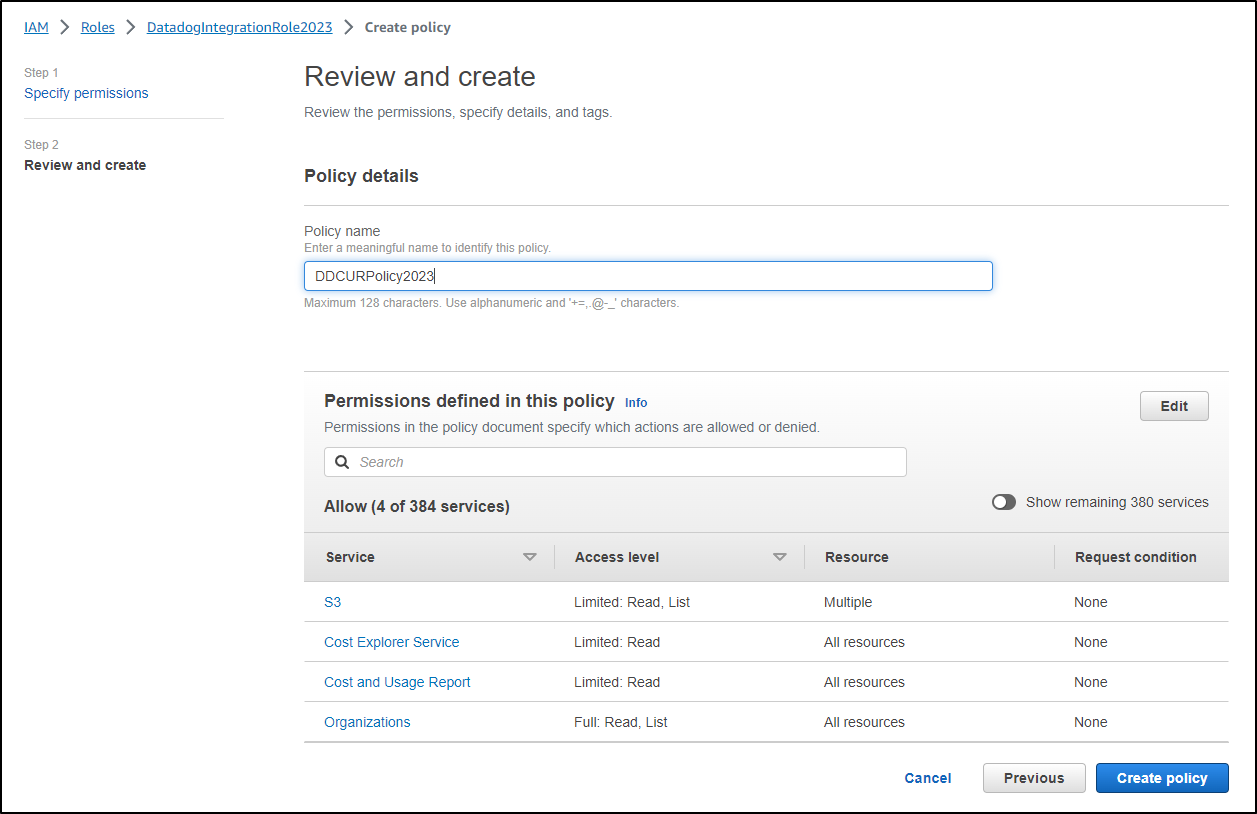

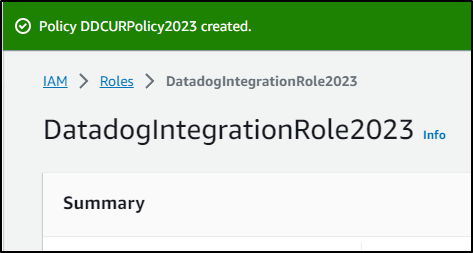

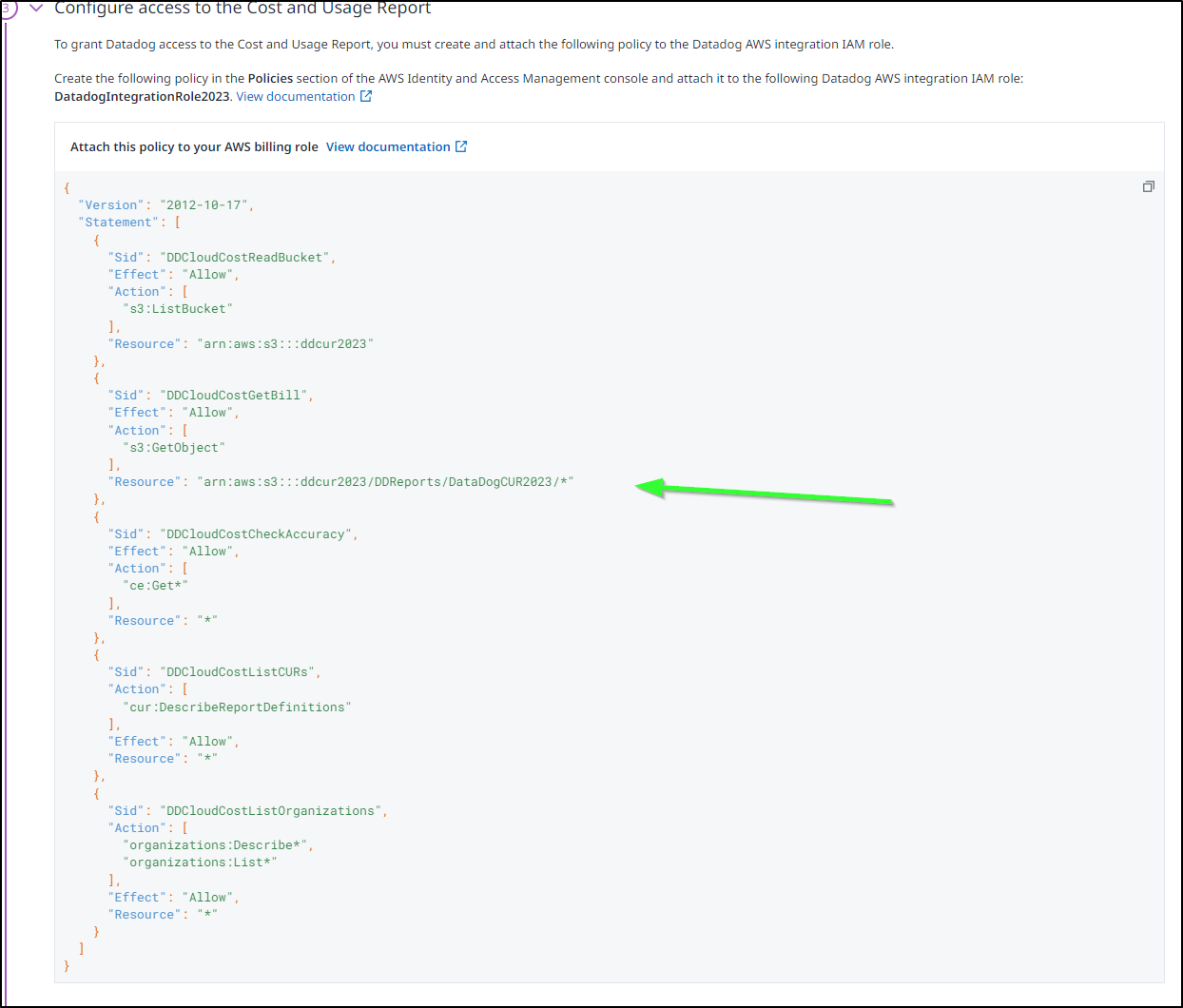

I need to create a policy on the role. I could create a standalone policy and then attach it to the DataDogIntegration2023 role, but I decided to do it inline instead.

I’ll then click “Create policy”, which creates it.
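For readers who prefer to paste JSON into the inline policy editor, here is a minimal sketch of the kind of read-only policy the integration role needs for a CUR bucket. It is modeled loosely on the permissions Datadog documents for Cloud Cost Management, but the action list here is an assumption: Datadog’s docs may list additional actions, so check them before relying on this. The bucket, prefix, and report names in the example are hypothetical.

```python
import json


def build_cur_read_policy(bucket: str, prefix: str, report_name: str) -> dict:
    """Build an inline IAM policy that lets an integration role read a CUR bucket.

    Assumption: list the bucket, read the report objects, and describe CUR
    definitions. Verify against Datadog's current documentation.
    """
    # Strip stray slashes so the object ARN never contains "//".
    clean_prefix = prefix.strip("/")
    object_path = f"{clean_prefix}/{report_name}/*" if clean_prefix else f"{report_name}/*"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ListCurBucket",
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": f"arn:aws:s3:::{bucket}",
            },
            {
                "Sid": "ReadCurObjects",
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": f"arn:aws:s3:::{bucket}/{object_path}",
            },
            {
                "Sid": "DescribeCurDefinitions",
                "Effect": "Allow",
                "Action": ["cur:DescribeReportDefinitions"],
                "Resource": "*",
            },
        ],
    }


if __name__ == "__main__":
    # Hypothetical names, for illustration only.
    policy = build_cur_read_policy("my-cur-bucket", "cur/", "datadog-hourly")
    print(json.dumps(policy, indent=2))
```

Because the role gets a new bucket later on, regenerating the policy with the new bucket name is all that changes.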

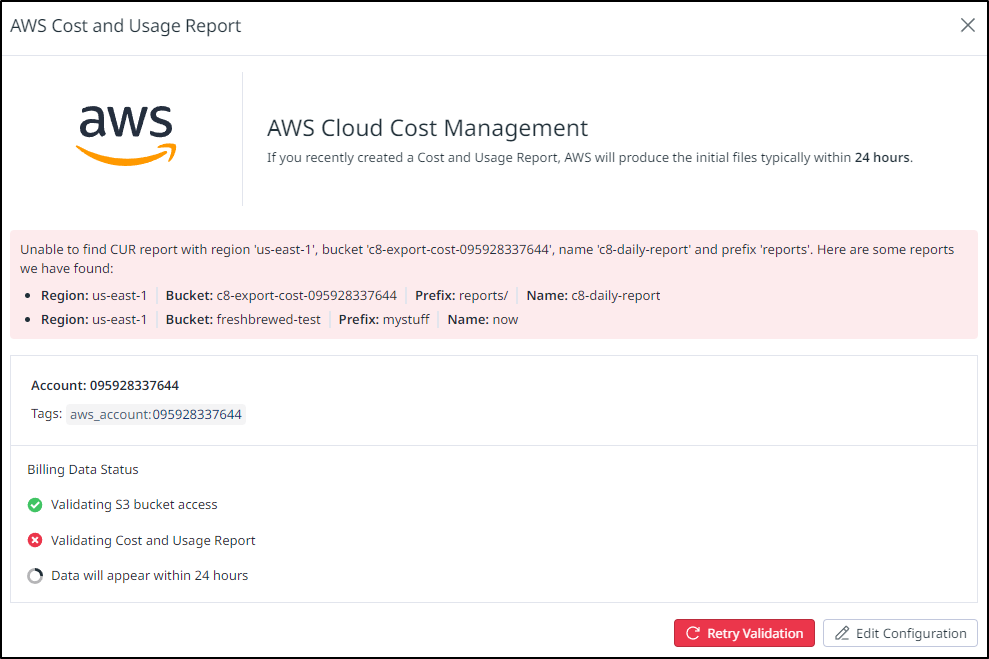

In Datadog I clicked “Get Started with Cloud Costs” to check all the configurations.

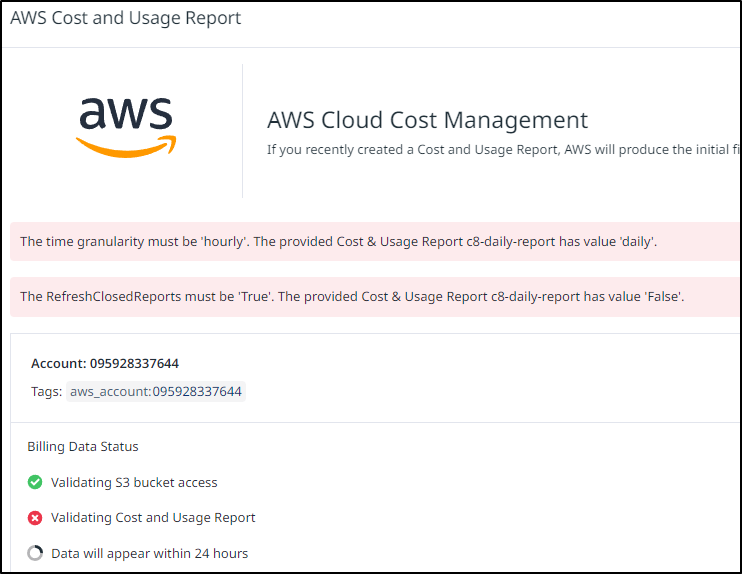

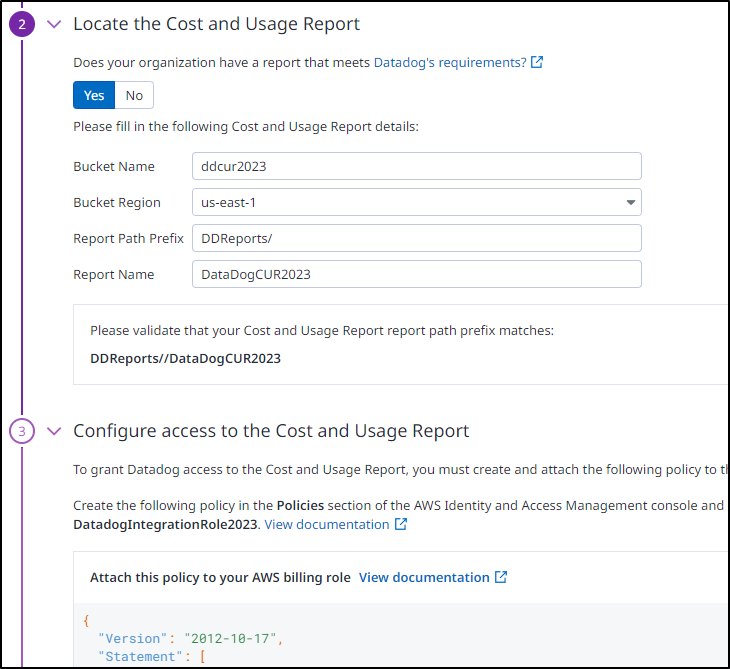

Here it found I needed a slash on my prefix:

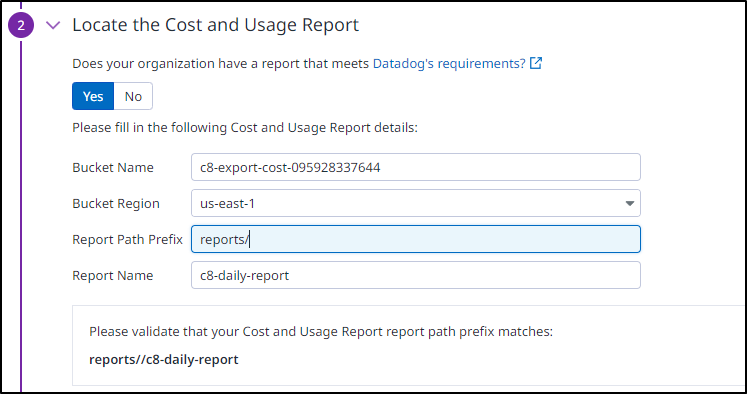

I changed it per the settings (though the “Validate” box looks funny)

and then tried again. This time it didn’t like the daily granularity or the Refresh setting that was set.

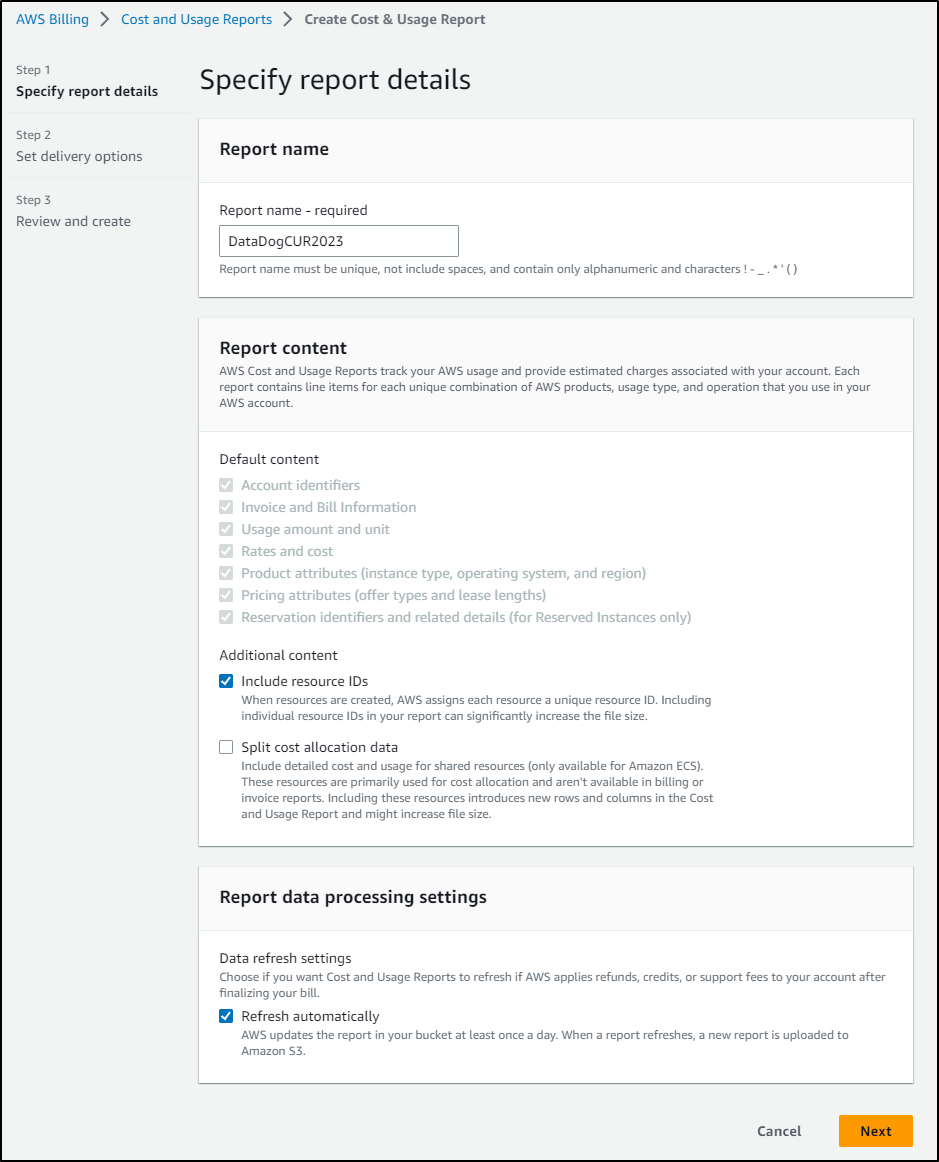

Let’s just create a new one

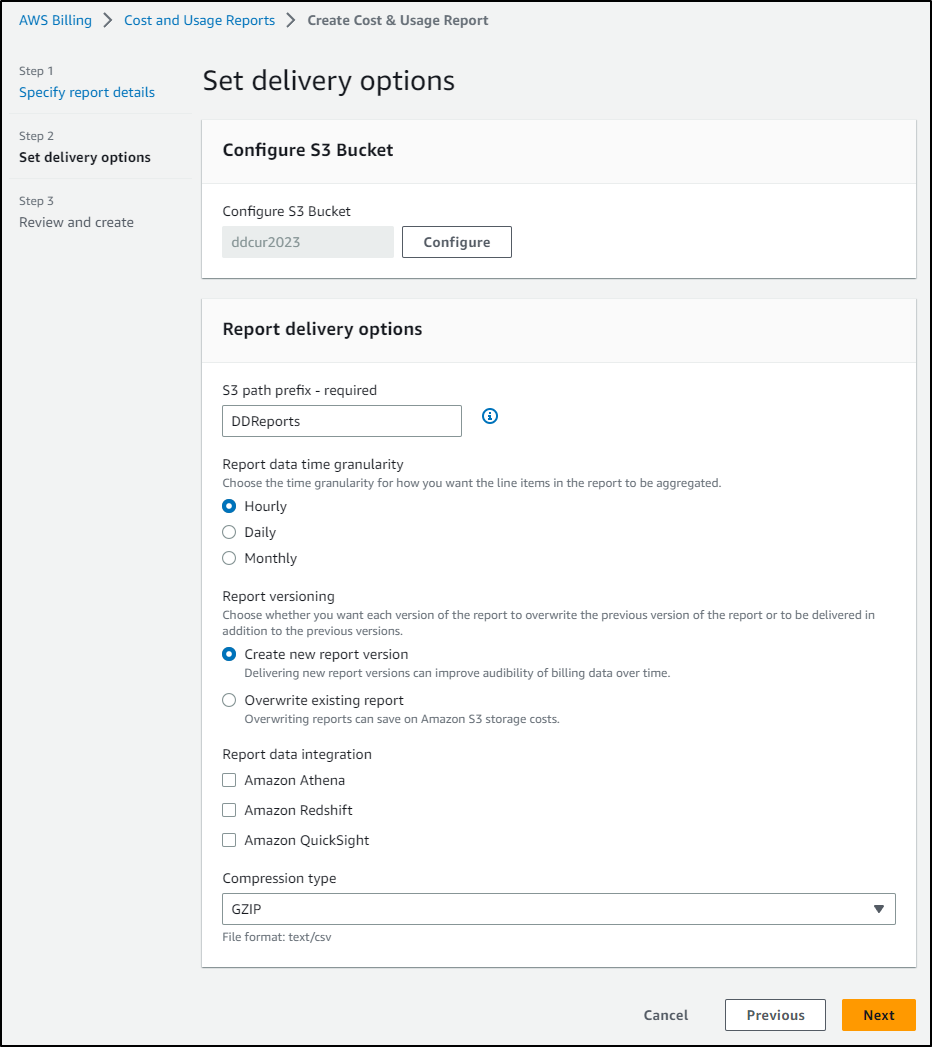

I’ll set it to compress to gzip, hourly granularity and to use a new bucket
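Since the existing report was rejected for its settings, a quick pre-flight check can save a round trip through the “Get Started” validator. This is a minimal sketch that only encodes the two requirements stated here: hourly granularity and GZIP compression. It uses the `TimeUnit` and `Compression` field names from the AWS CUR `ReportDefinition` structure; it deliberately leaves out the Refresh setting, since it isn’t clear which value Datadog expected. The report names are hypothetical.

```python
def check_cur_definition(definition: dict) -> list:
    """Return a list of problems with a CUR report definition.

    Only checks granularity and compression; the Refresh setting is not checked.
    """
    problems = []
    time_unit = definition.get("TimeUnit")
    if time_unit != "HOURLY":
        problems.append(f"TimeUnit is {time_unit!r}; expected 'HOURLY'")
    compression = definition.get("Compression")
    if compression != "GZIP":
        problems.append(f"Compression is {compression!r}; expected 'GZIP'")
    return problems


if __name__ == "__main__":
    # Hypothetical report definitions, shaped like the CUR API's ReportDefinition.
    old_report = {"ReportName": "configure8-cur", "TimeUnit": "DAILY", "Compression": "GZIP"}
    new_report = {"ReportName": "datadog-hourly", "TimeUnit": "HOURLY", "Compression": "GZIP"}
    for report in (old_report, new_report):
        print(report["ReportName"], check_cur_definition(report) or "OK")
```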

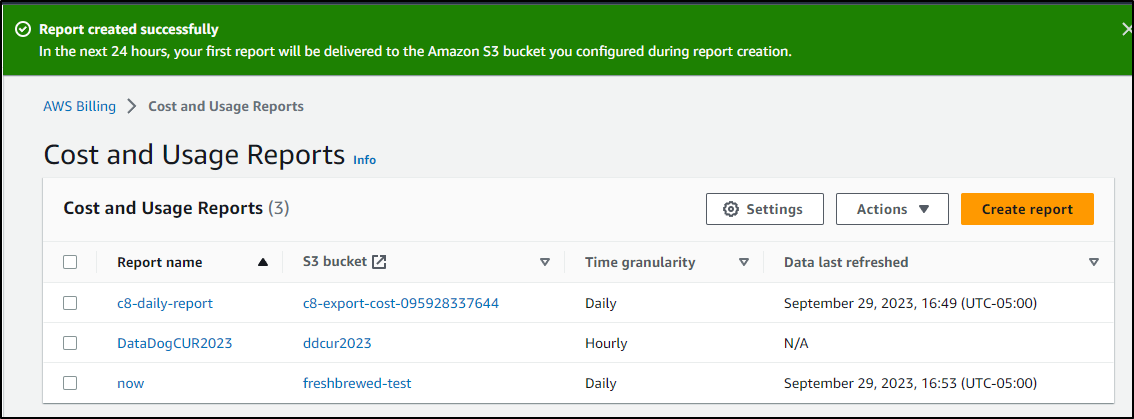

I now have a new one I can use

Once it was set in the configuration, I also needed to update the IAM policy, since it’s a different bucket.

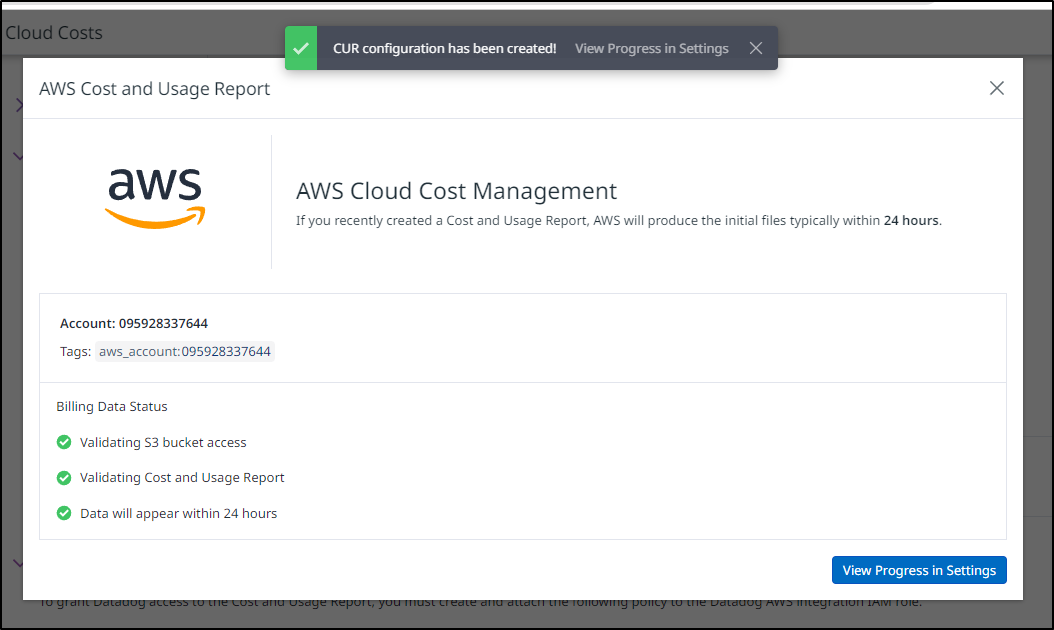

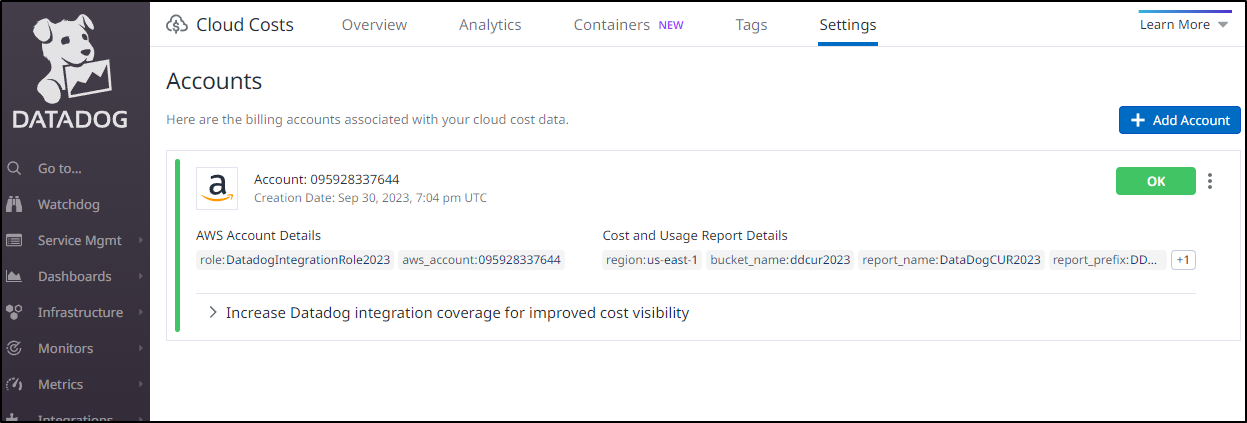

I clicked Getting Started, then removed the slash from the prefix and tried again. This time we finally got all greens.

I can now view AWS costs in Cost Explorer

Azure

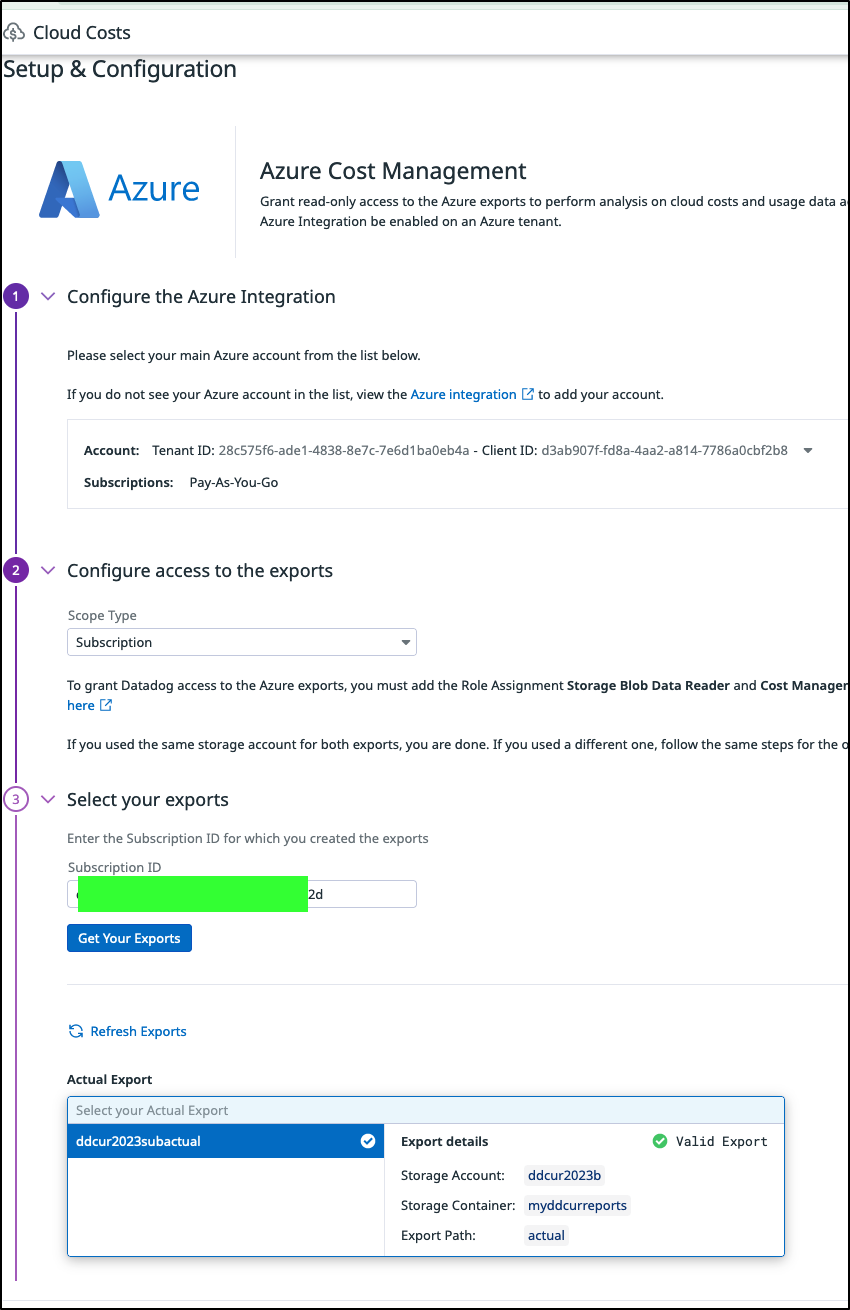

Let’s now add Azure.

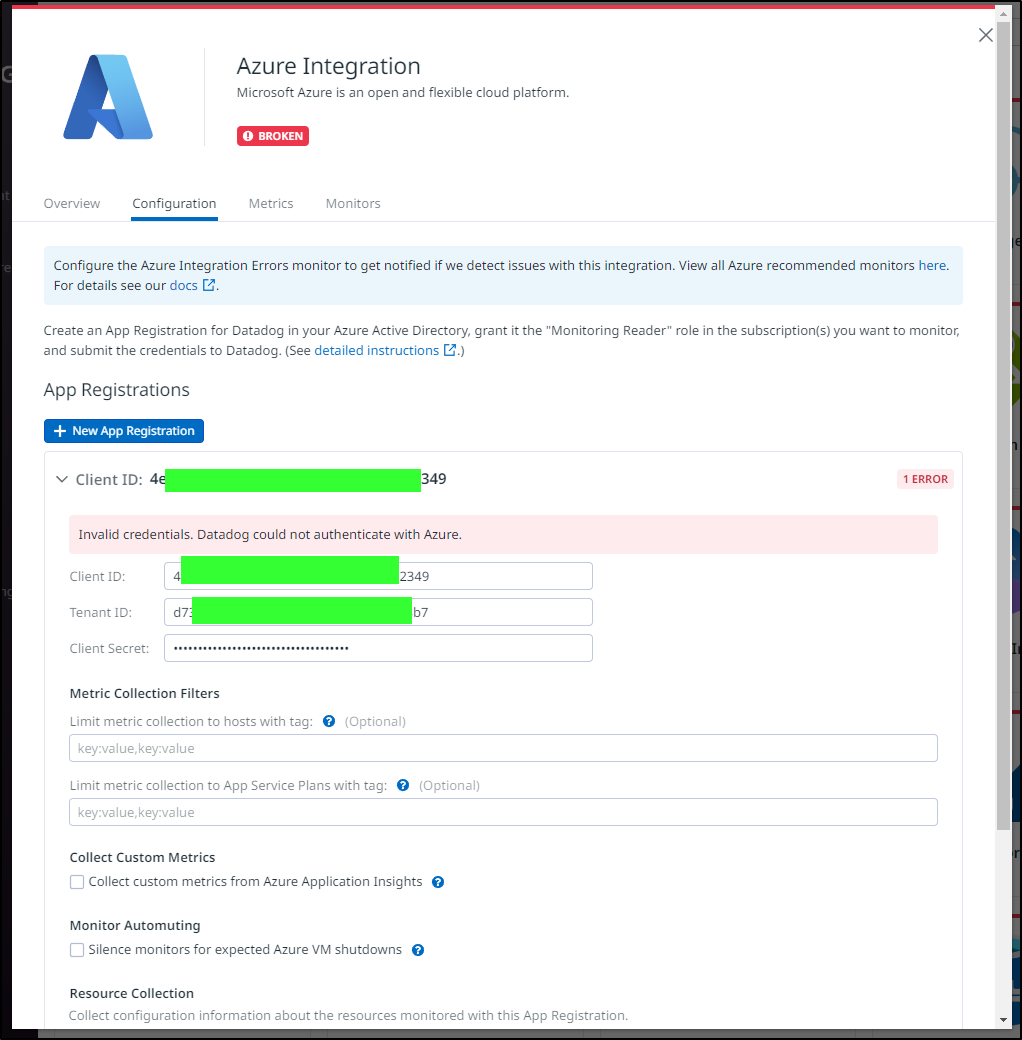

The Azure entry I found was a very old VSE that was no longer valid. This meant I needed to jump over to ‘Azure Integrations’ to add one

It had already prefilled the old subscription, so I needed to clear all of that out.

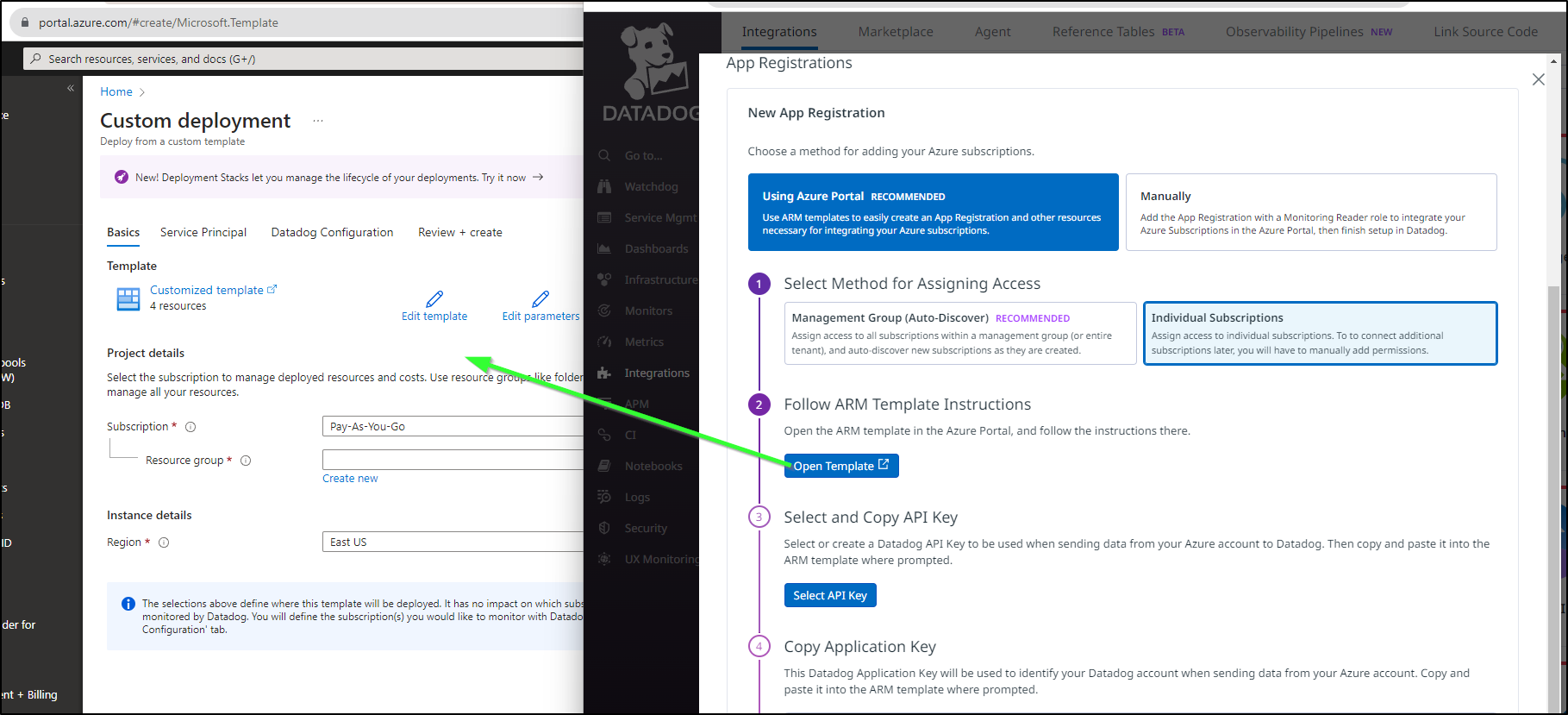

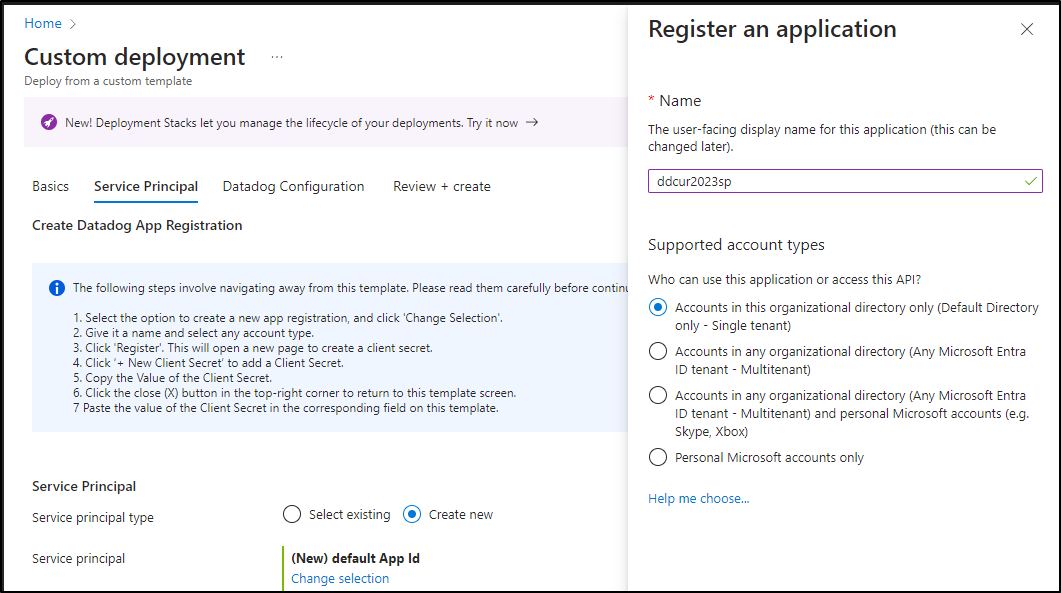

I almost always create SPs manually because, well, I like to do it that way. But this time I’ll try the ARM wizard

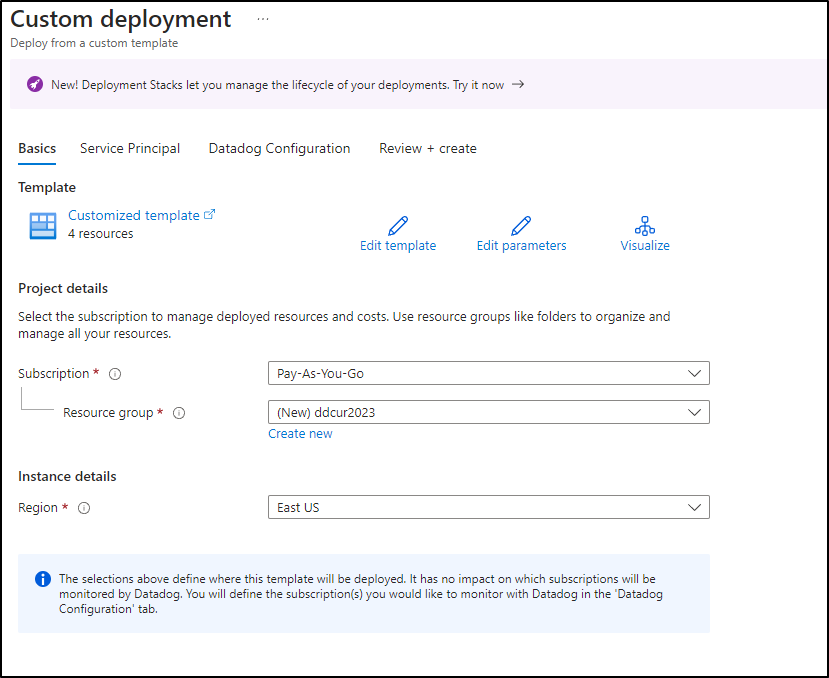

I’ll create a new Resource Group

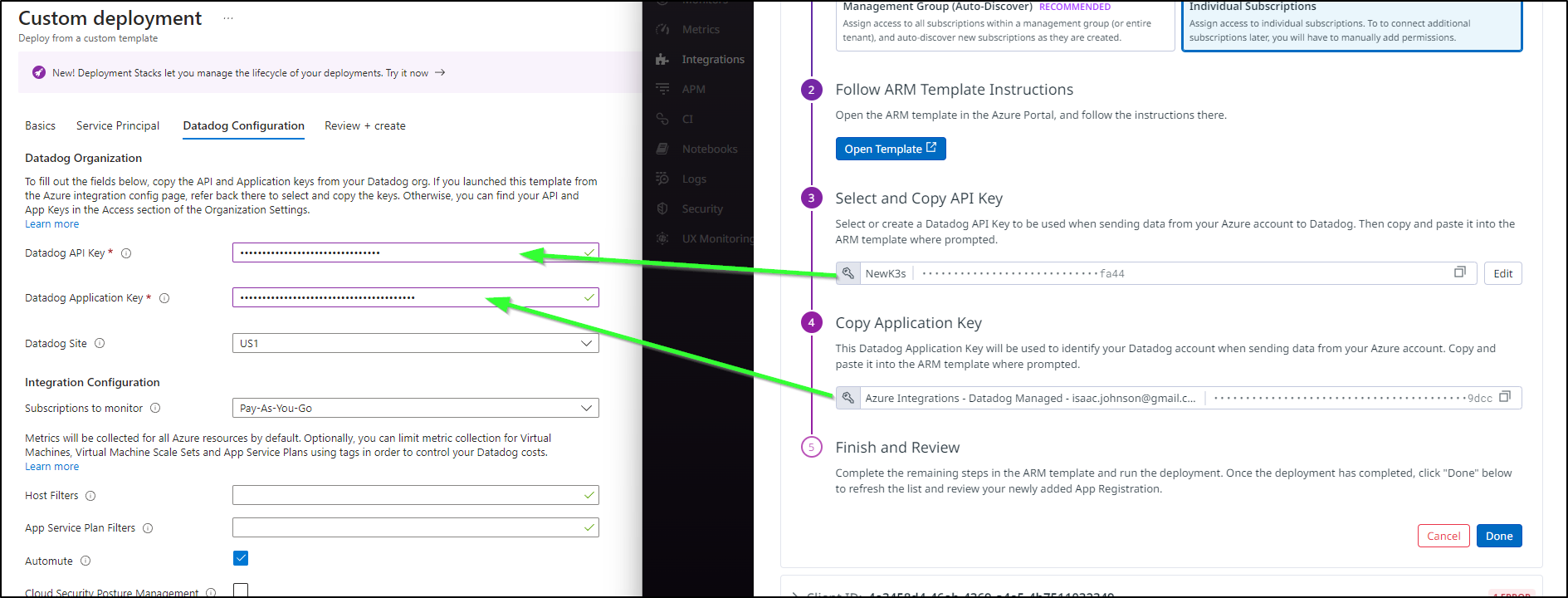

I then need to copy a couple of values over

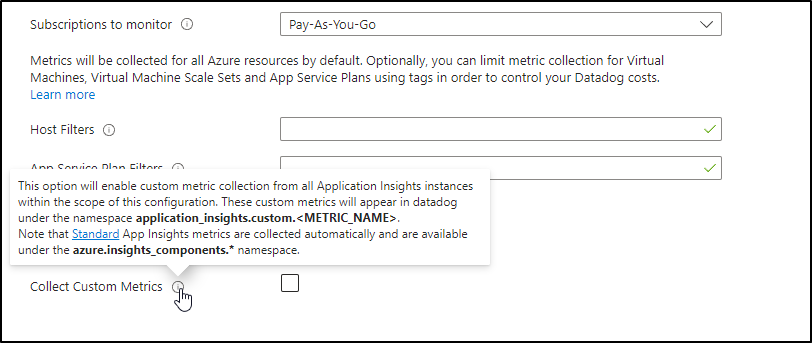

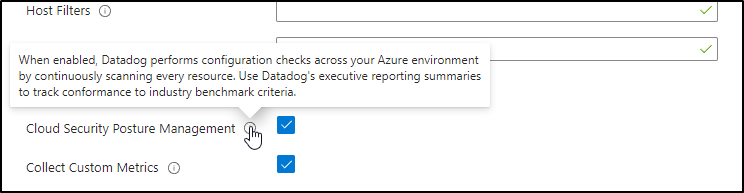

We can optionally enable custom metrics, which essentially consume the App Insights metrics and expose them in Datadog. I could see some pretty awesome uses for that, so I’ll enable it. Those who are cost conscious should be aware this will increase your metrics usage.

The other thing I plan to enable is Cloud Security Posture Management, part of Datadog’s Cloud Security Management offering. I heard some good things about it at a recent event and would love to explore it further.

In the Service Principal tab, you’ll want to at least give the SP a name. I narrowed the scope to just my Org Directory.

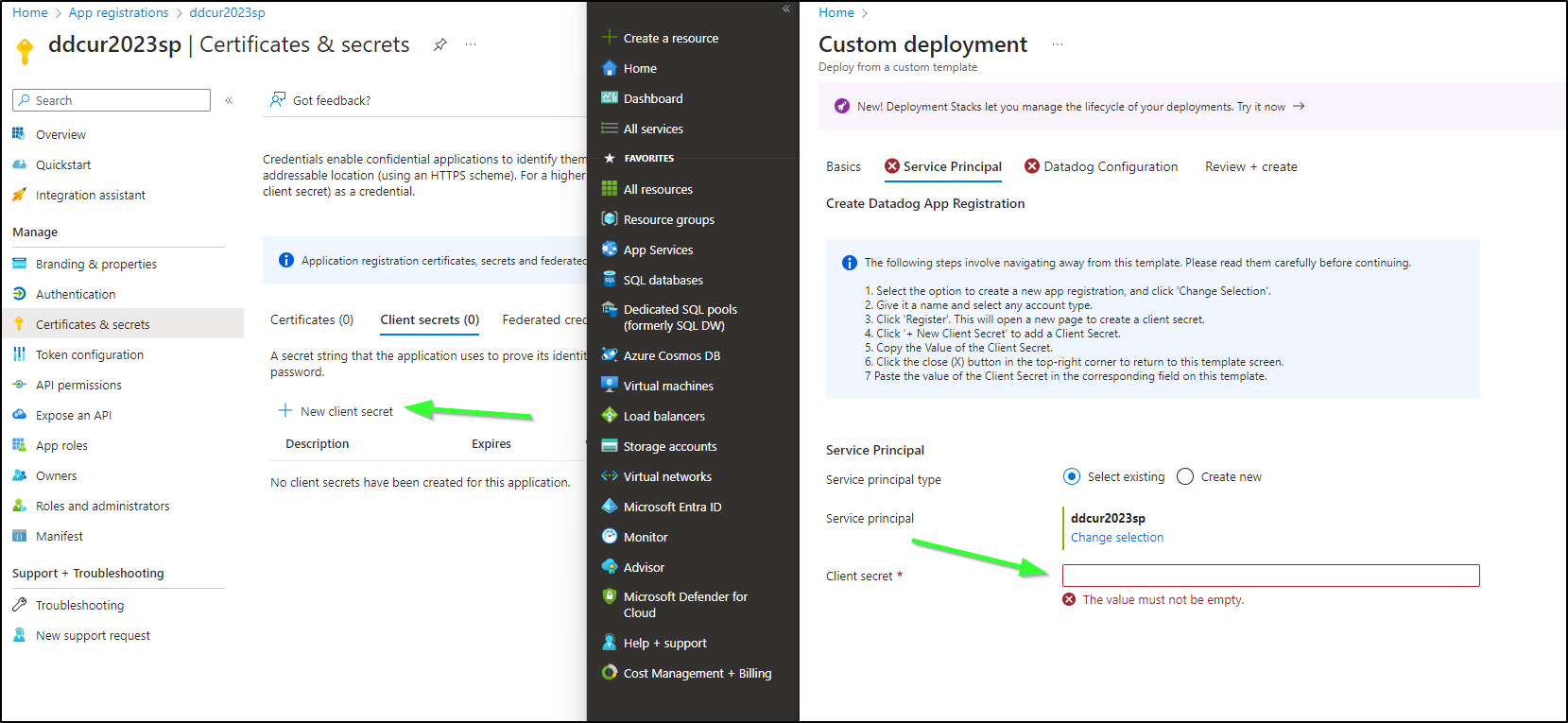

Clicking register popped me out of the template (which was a bit annoying since I lost my progress), but it did get me to the App Registration (SP) so I could create a Client Secret which I would need when going back.

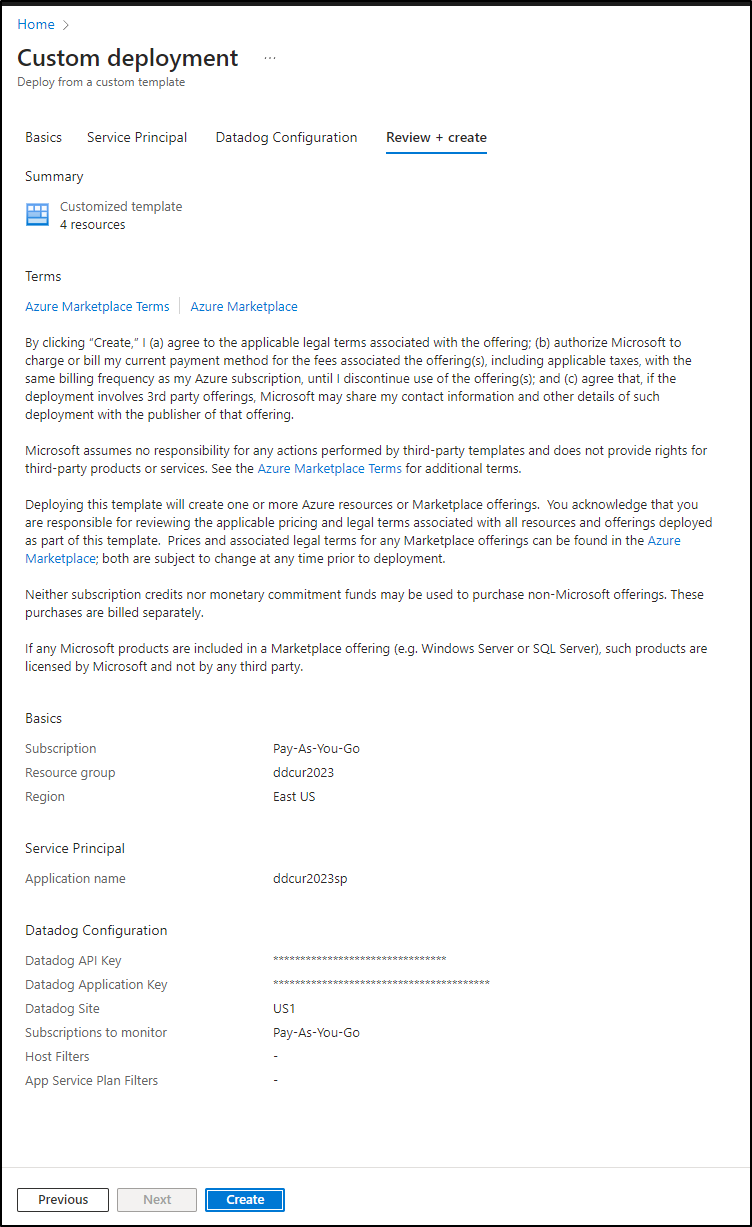

I now had all the pieces and could click create

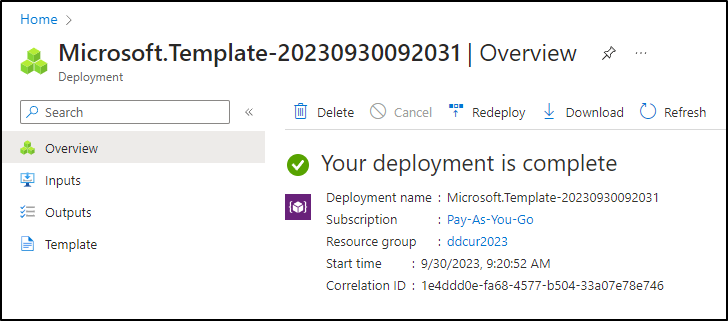

Once the deployment was complete

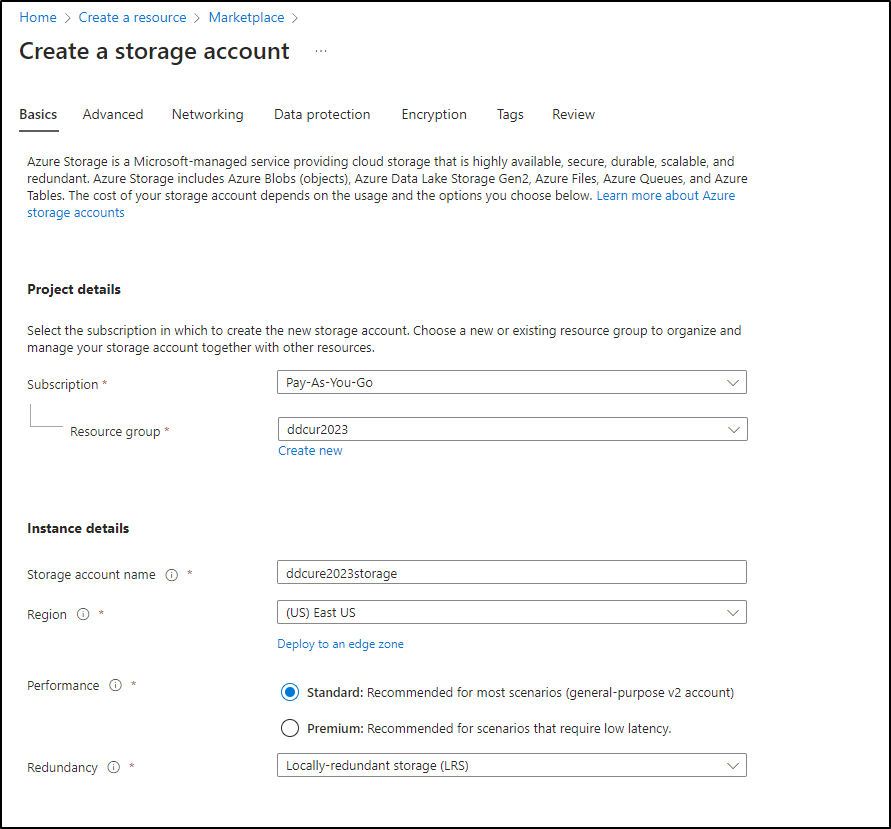

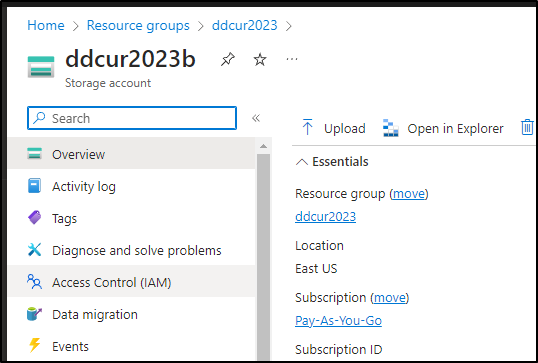

I realized I would need a storage account for the reports we will create in a moment. I created one in the same resource group we just made.

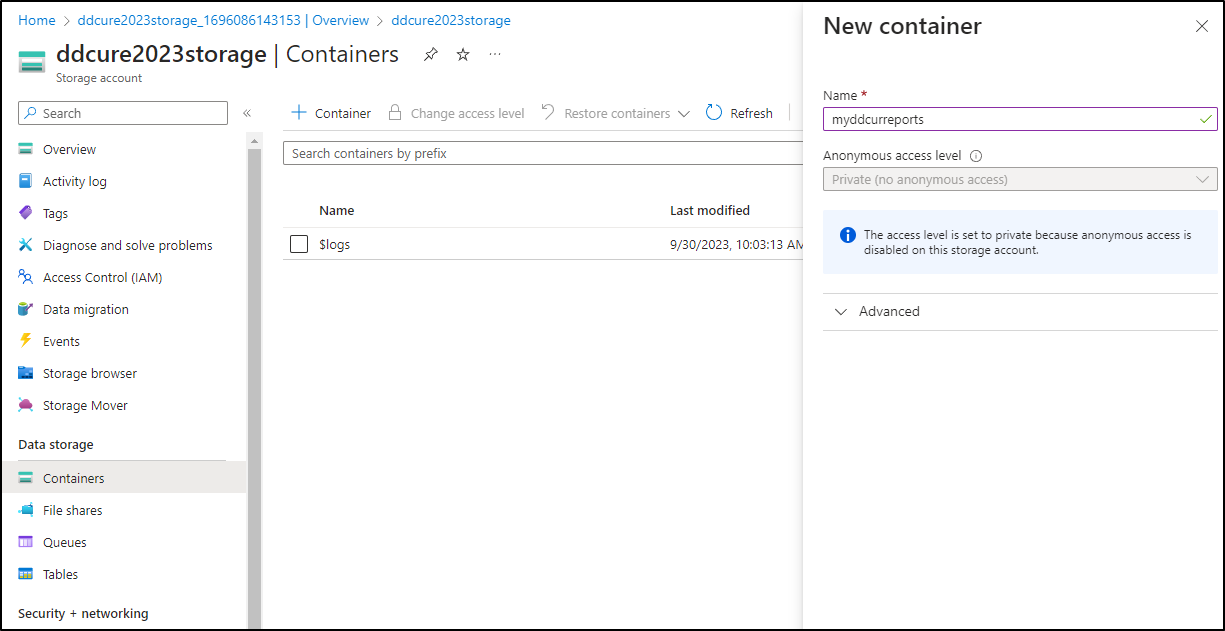

I then created a container in the account to hold the reports.

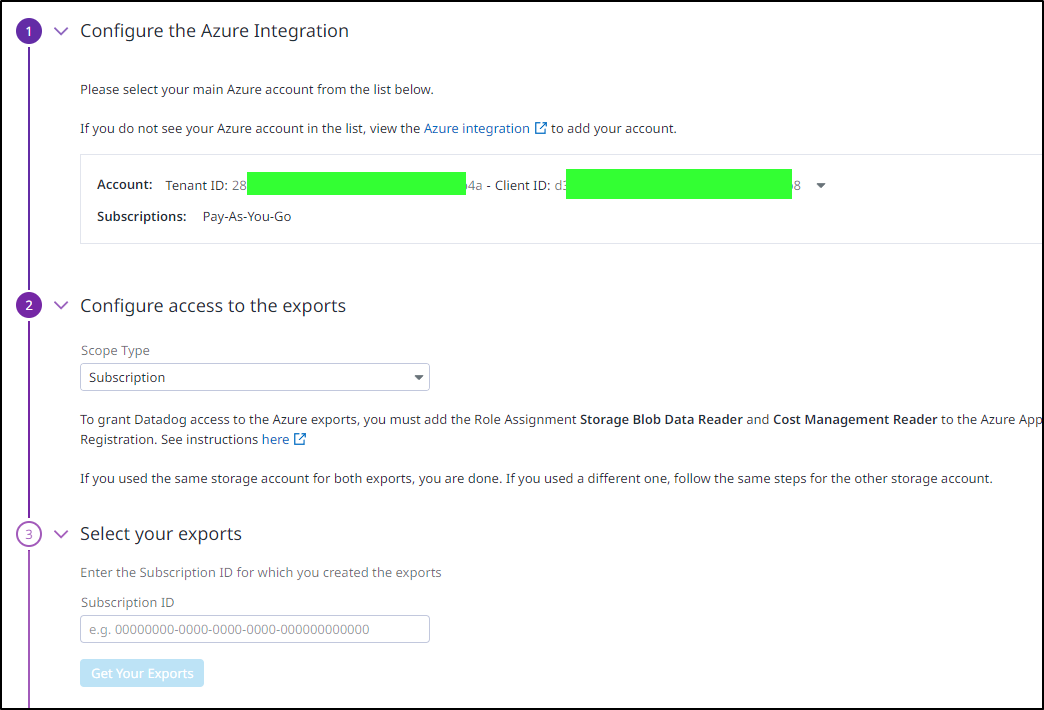

Now that I have a tenant, I want to pull in subscription-level costs. I’ll want to add Storage Blob Data Reader and Cost Management Reader role assignments to the SP.

I left the defaults for all the storage account settings, save for switching the redundancy to LRS, as it only holds reports.

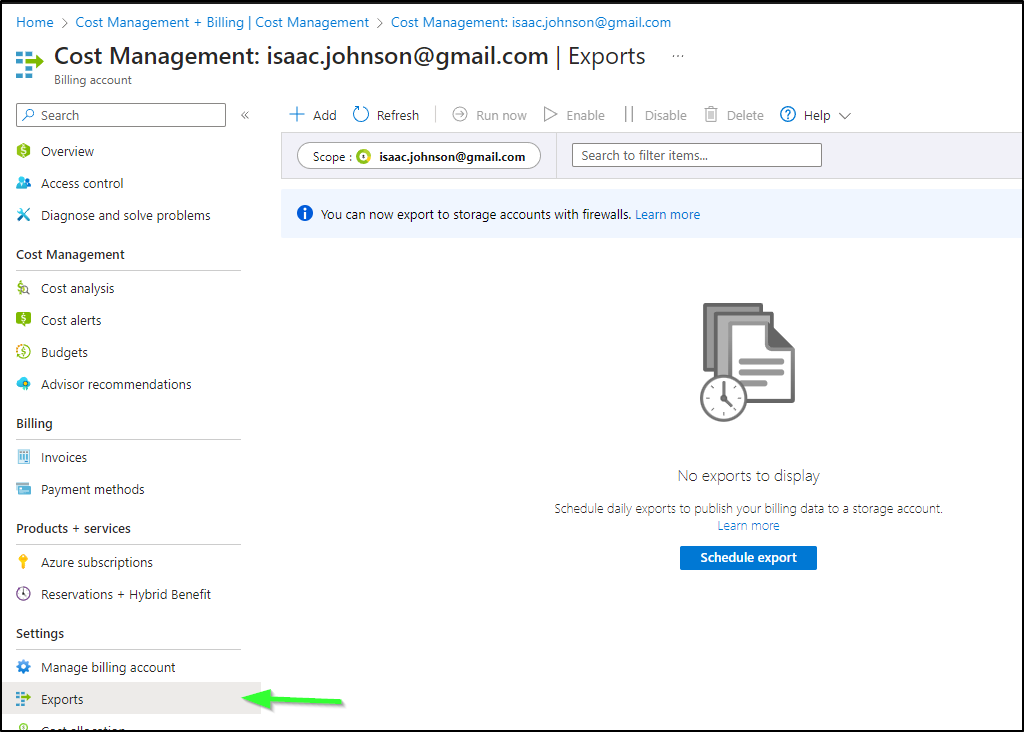

Next, I’ll create Cost Management exports under the Cost Management + Billing area.

Here I used the Storage Account I created and set “reports” as the directory into which to save reports.

STOP! Did you see the mistake? Clearly I did not. In fact, I had a slew of steps detailing the usage of this report, but those steps were wrong.

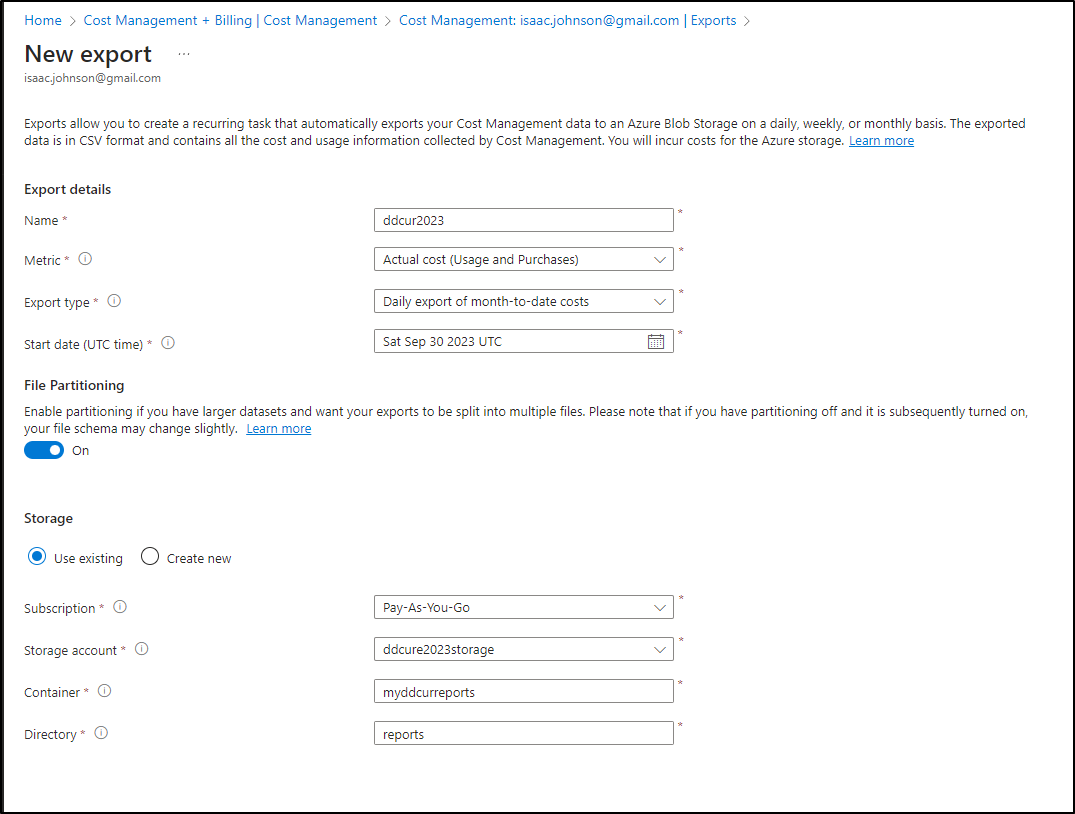

The “Scope”, shown in that prior screenshot, showed my email address, meaning we were creating a cost report for the billing entity and not the subscription or resource group. Because my “Billing Account” is a personal one, not an EA (Enterprise Agreement), this got me blocked later on.

I needed to change the “Scope” to my subscription:

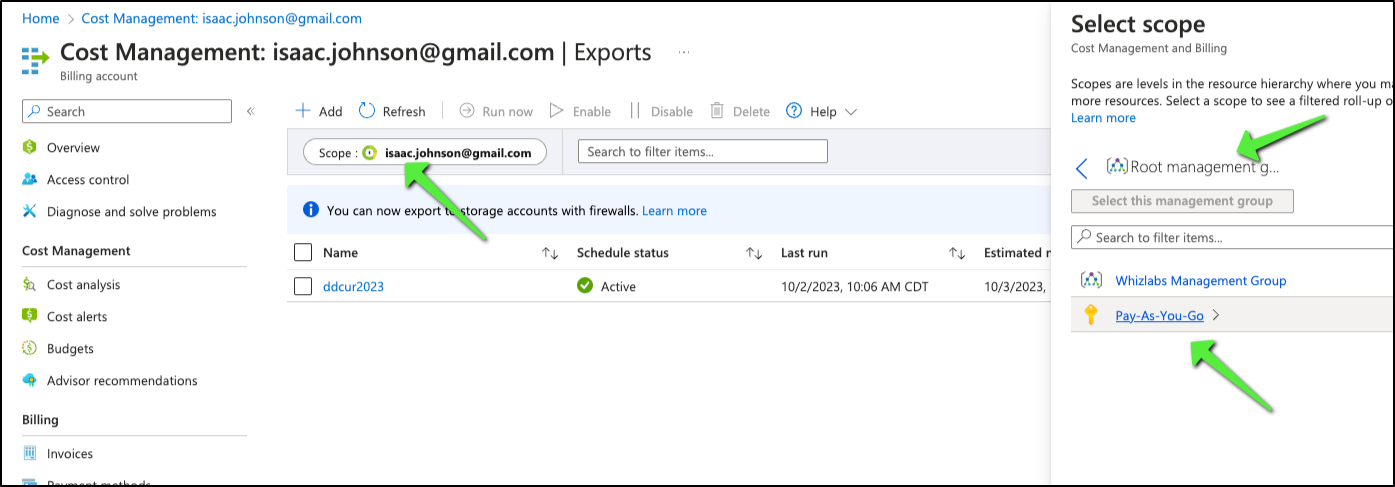

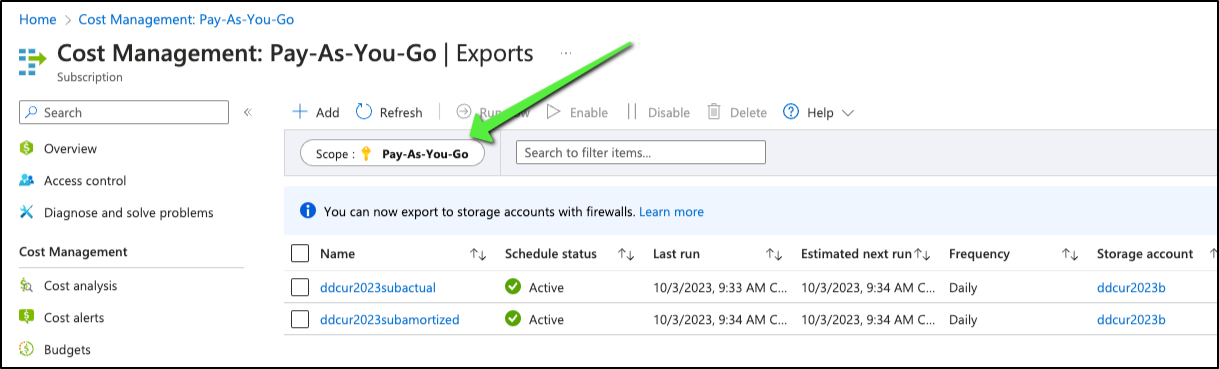

Then create both an Amortized and an Actual cost export.

Yes! You need both; Datadog asks for each of them later.
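To make the scope fix and the “you need both” point concrete, here is a minimal sketch that builds a subscription scope and one export body per cost type. The body is shaped after the Azure Cost Management Exports REST API (`definition.type` of `ActualCost` or `AmortizedCost`, delivery to a storage account container and folder), but treat the exact field set as an assumption and check it against the API version you call. The schedule block is omitted here, and the subscription ID, storage account resource ID, and container name are hypothetical; only the “reports” folder comes from the steps above.

```python
def export_scope(subscription_id: str) -> str:
    """Subscription scope, rather than the billing-account scope used at first."""
    return f"/subscriptions/{subscription_id}"


def build_export_body(cost_type: str, storage_account_id: str,
                      container: str, folder: str) -> dict:
    """Build one export definition; Datadog needs an Actual and an Amortized one."""
    if cost_type not in ("ActualCost", "AmortizedCost"):
        raise ValueError(f"unsupported cost type: {cost_type}")
    return {
        "properties": {
            "format": "Csv",
            "deliveryInfo": {
                "destination": {
                    "resourceId": storage_account_id,
                    "container": container,
                    "rootFolderPath": folder,
                }
            },
            "definition": {
                "type": cost_type,
                "timeframe": "MonthToDate",
                "dataSet": {"granularity": "Daily"},
            },
        }
    }


if __name__ == "__main__":
    # Hypothetical IDs for illustration only.
    scope = export_scope("00000000-0000-0000-0000-000000000000")
    account = scope + "/resourceGroups/dd-rg/providers/Microsoft.Storage/storageAccounts/ddcostreports"
    for kind in ("ActualCost", "AmortizedCost"):
        body = build_export_body(kind, account, "exports", "reports")
        print(scope, body["properties"]["definition"]["type"])
```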

When created, click “Run Now” to generate an initial report.

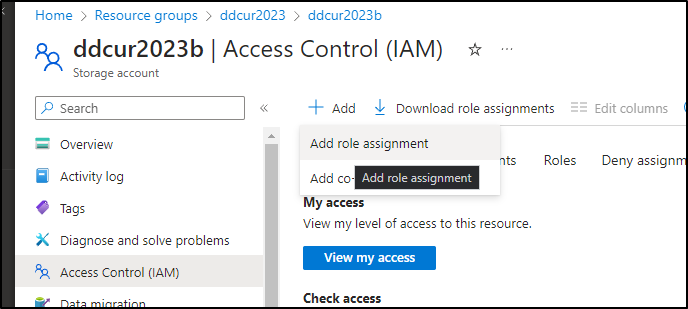

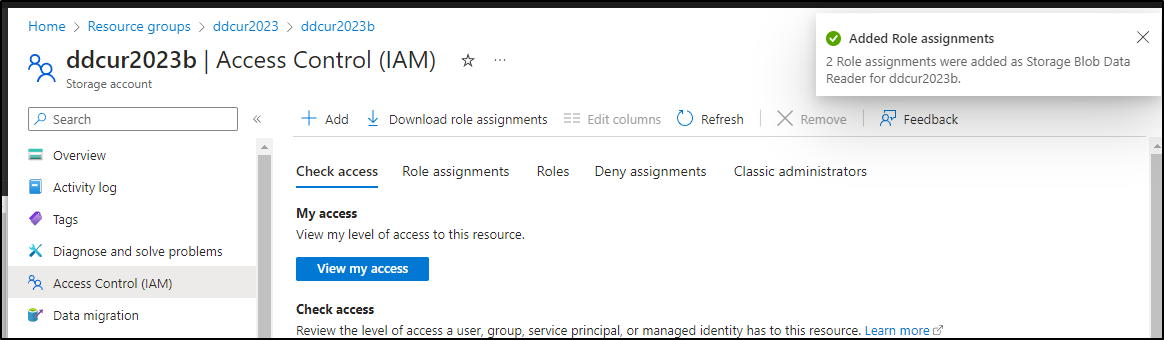

While we wait on that first report, we can go take care of an IAM change by finding that new Storage Account, and going to the IAM section

There, we will add a new Role Assignment

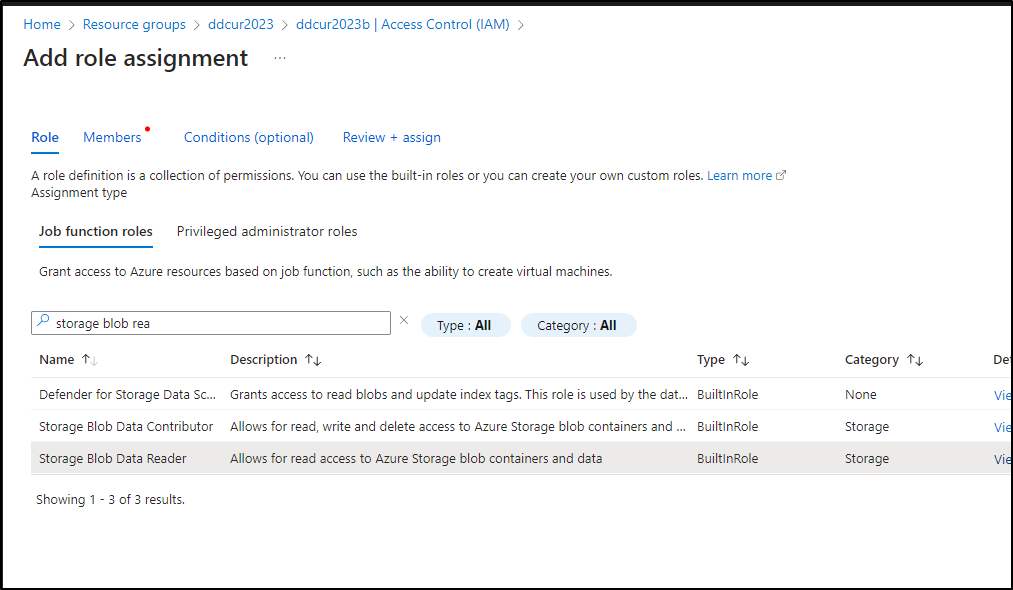

I’ll pick “Storage Blob Data Reader”

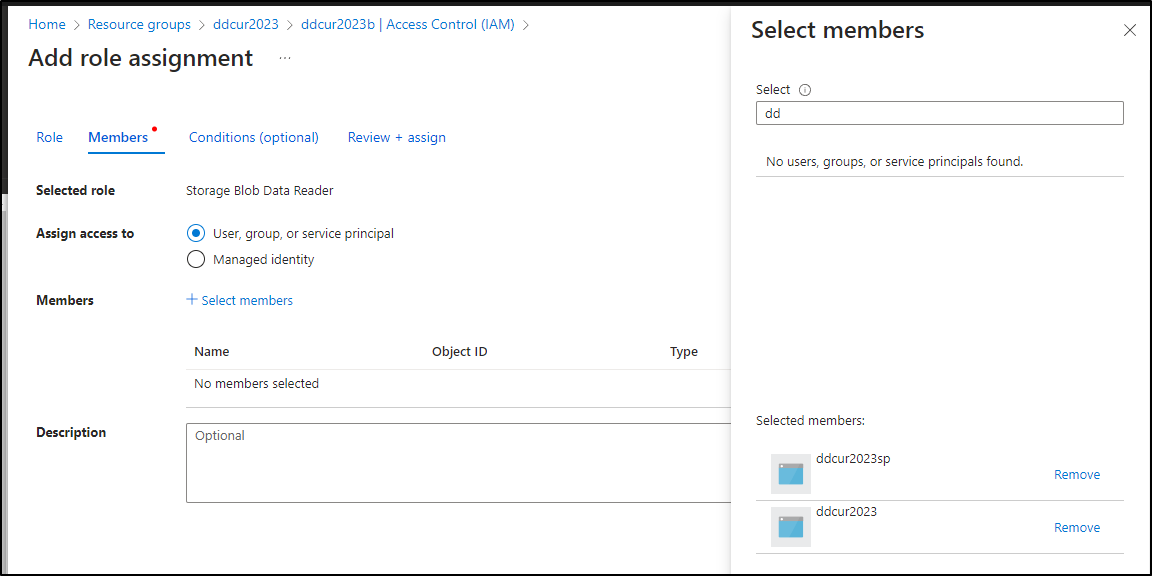

I’ll then add my SP. I might have created two, so I’m adding both for good measure.

I’ll then click through to finish the assignment.

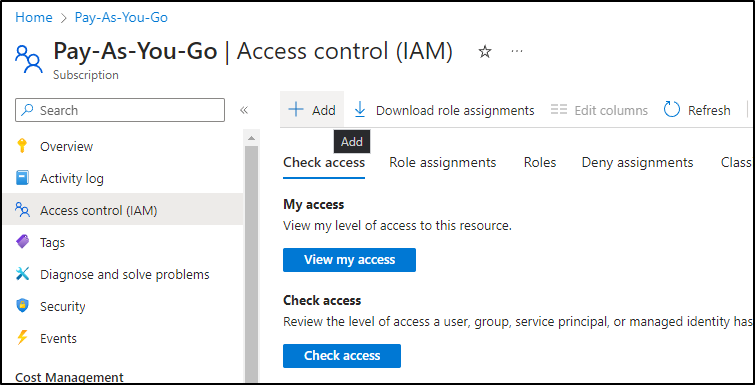

The other thing I need to do is add “Cost Management Reader” for the SP(s) to my sub. I can go to IAM on the subscription and click “Add” and select Role Assignment.

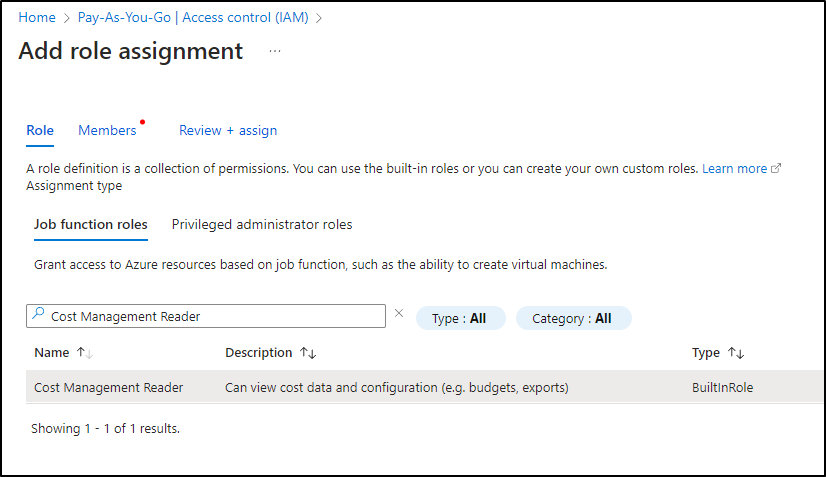

I’ll select Cost Management Reader and click next

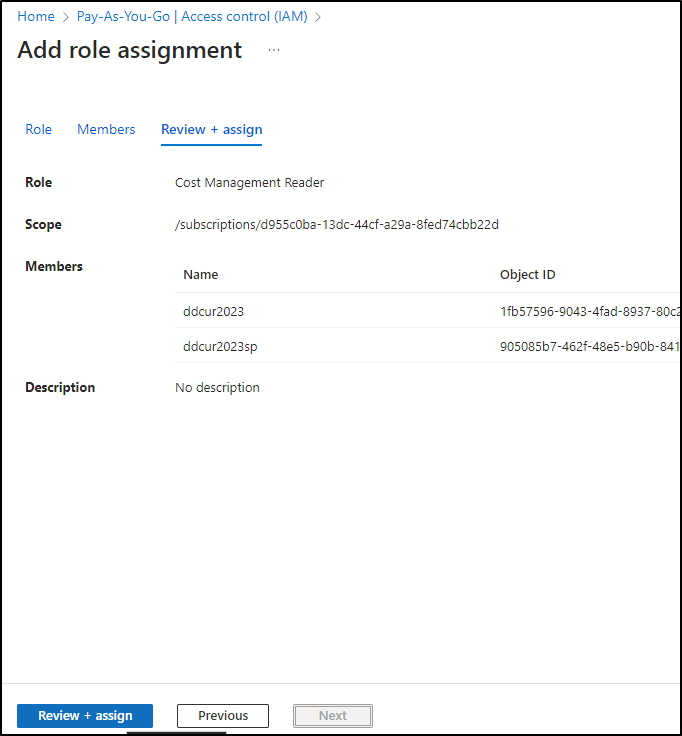

and as before, I’ll add the SP(s) for the reports
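The two role assignments above can also be scripted instead of clicked through. This is a minimal sketch that generates `az role assignment create` commands (using its `--assignee`, `--role`, and `--scope` options) for each SP: Storage Blob Data Reader on the storage account and Cost Management Reader on the subscription. The app IDs, subscription ID, resource group, and storage account name are hypothetical, and the sketch only prints the commands; you could pass each list to `subprocess.run` once you have checked them.

```python
import shlex


def role_assignment_commands(sp_app_ids, subscription_id, resource_group, storage_account):
    """Return one `az` argv list per role assignment, per service principal."""
    sub_scope = f"/subscriptions/{subscription_id}"
    storage_scope = (
        f"{sub_scope}/resourceGroups/{resource_group}"
        f"/providers/Microsoft.Storage/storageAccounts/{storage_account}"
    )
    commands = []
    for app_id in sp_app_ids:
        # Read access to the exported report blobs.
        commands.append(["az", "role", "assignment", "create", "--assignee", app_id,
                         "--role", "Storage Blob Data Reader", "--scope", storage_scope])
        # Read access to subscription-level cost data.
        commands.append(["az", "role", "assignment", "create", "--assignee", app_id,
                         "--role", "Cost Management Reader", "--scope", sub_scope])
    return commands


if __name__ == "__main__":
    # Hypothetical values; two SPs, matching the "I might have created two" case.
    cmds = role_assignment_commands(
        ["11111111-1111-1111-1111-111111111111", "22222222-2222-2222-2222-222222222222"],
        "00000000-0000-0000-0000-000000000000", "dd-rg", "ddcostreports")
    for cmd in cmds:
        print(shlex.join(cmd))
```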

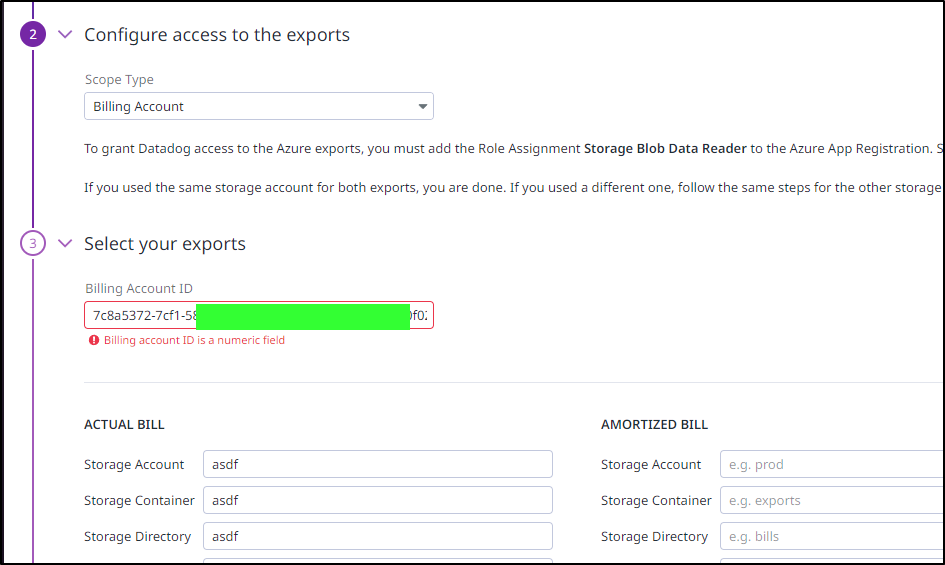

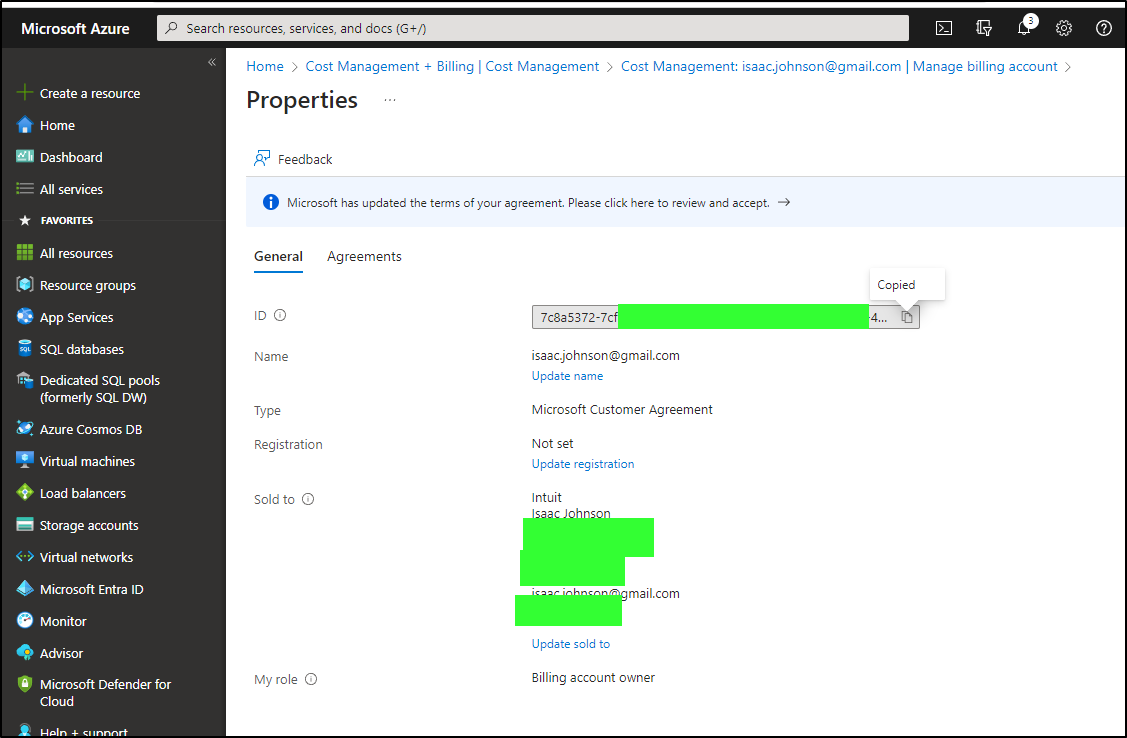

Back in my Datadog window, I’ll now enter the Billing Account ID for the reports

I’m blocked from the Billing Account option because it appears Datadog assumes an Enterprise Agreement, which uses a numeric billing account ID.

Individual (non-EA) accounts instead get a GUID.

Now when I pick Subscription, I can see those reports - these are the two we created earlier
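The mismatch above comes down to the shape of the ID. As a rough sketch, a check like the following tells the two formats apart before you paste an ID into the form. It is a heuristic built only on the observation here (EA IDs are numeric, individual accounts get a GUID), not an official rule; other Azure billing account types may use other formats, which it simply reports as “other”.

```python
import uuid


def classify_billing_account_id(account_id: str) -> str:
    """Heuristic: numeric IDs look EA-style; GUIDs look like individual accounts."""
    candidate = account_id.strip()
    if candidate.isdigit():
        return "numeric (EA-style)"
    try:
        uuid.UUID(candidate)
    except ValueError:
        return "other"
    return "guid"


if __name__ == "__main__":
    # Hypothetical IDs for illustration only.
    for value in ("12345678", "0f8fad5b-d9cb-469f-a165-70867728950e"):
        print(value, classify_billing_account_id(value))
```

If the result is “guid”, picking the Subscription option, as above, is the route that worked here.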

Reviewing Costs

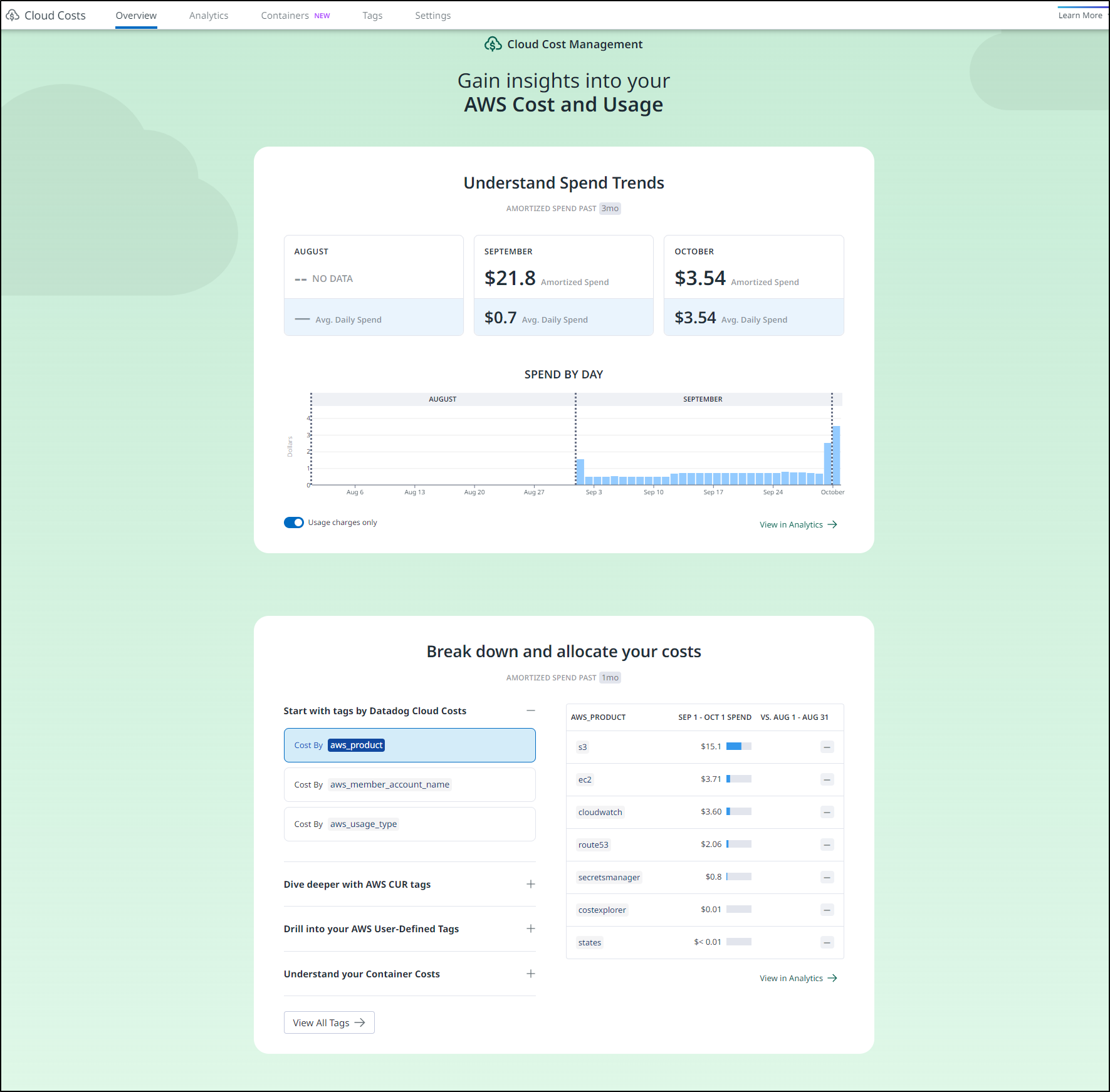

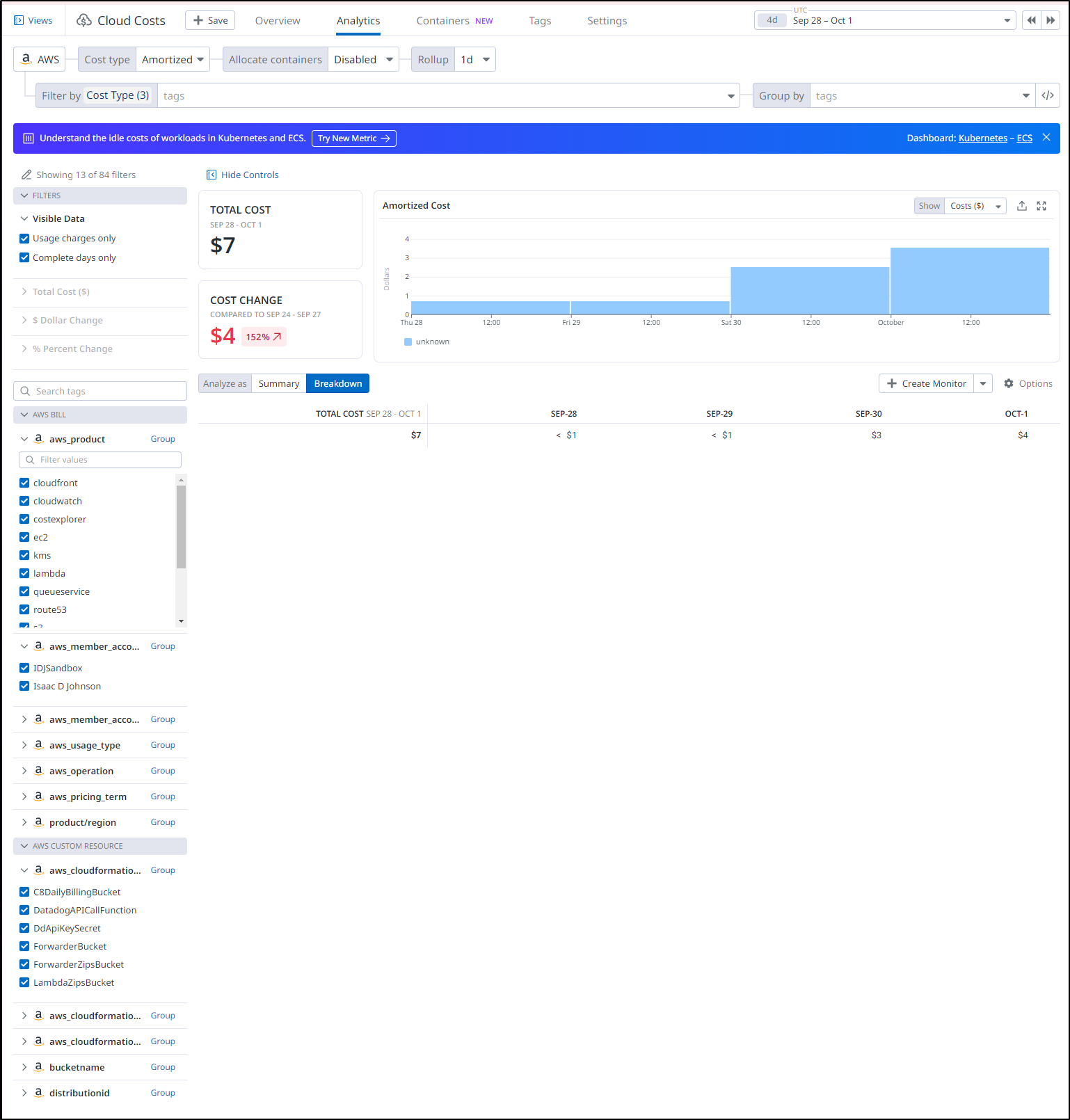

Let’s review what we have with AWS

I can “Analyze” which brings me into a Datadog window that feels very familiar

We can start to explore and figure out where the extra costs are originating

We can see it was from a large metrics ingestion from CloudWatch, very likely related to putting together the cost data. It spiked for the first couple of days.

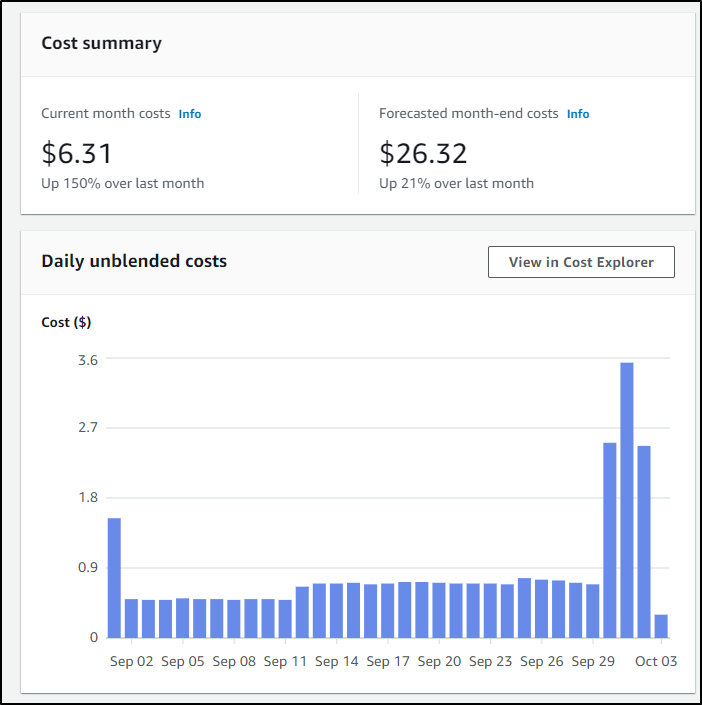

I immediately hopped into AWS Cost Management to see if we were growing. I still feel the burn of that huge Azure bill from last month.

It appears to be settling down

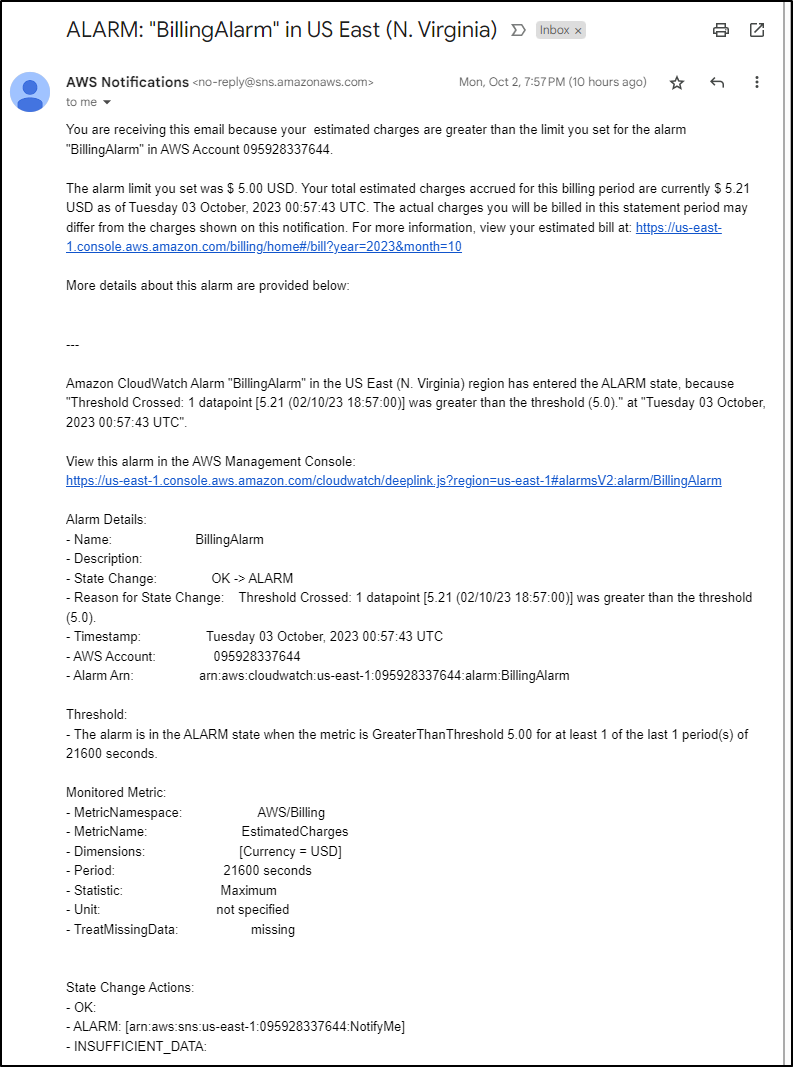

That said, I did get a billing alarm yesterday

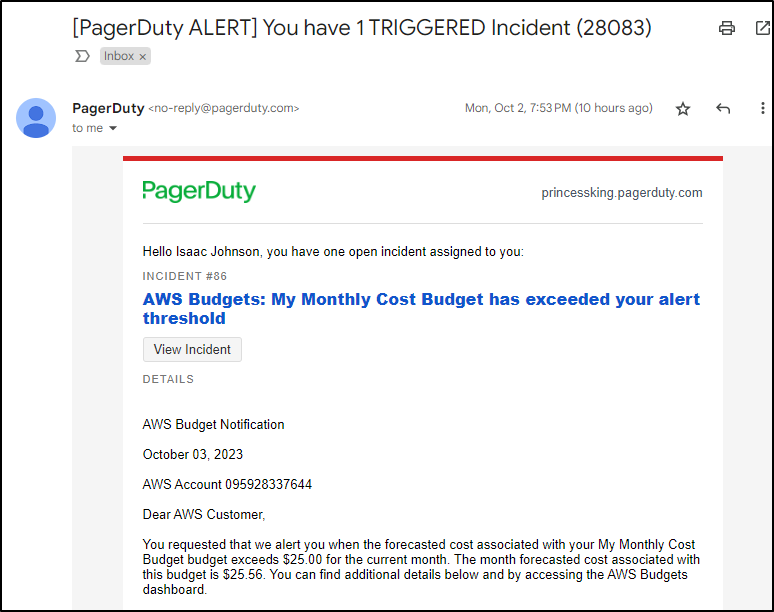

which triggered PagerDuty

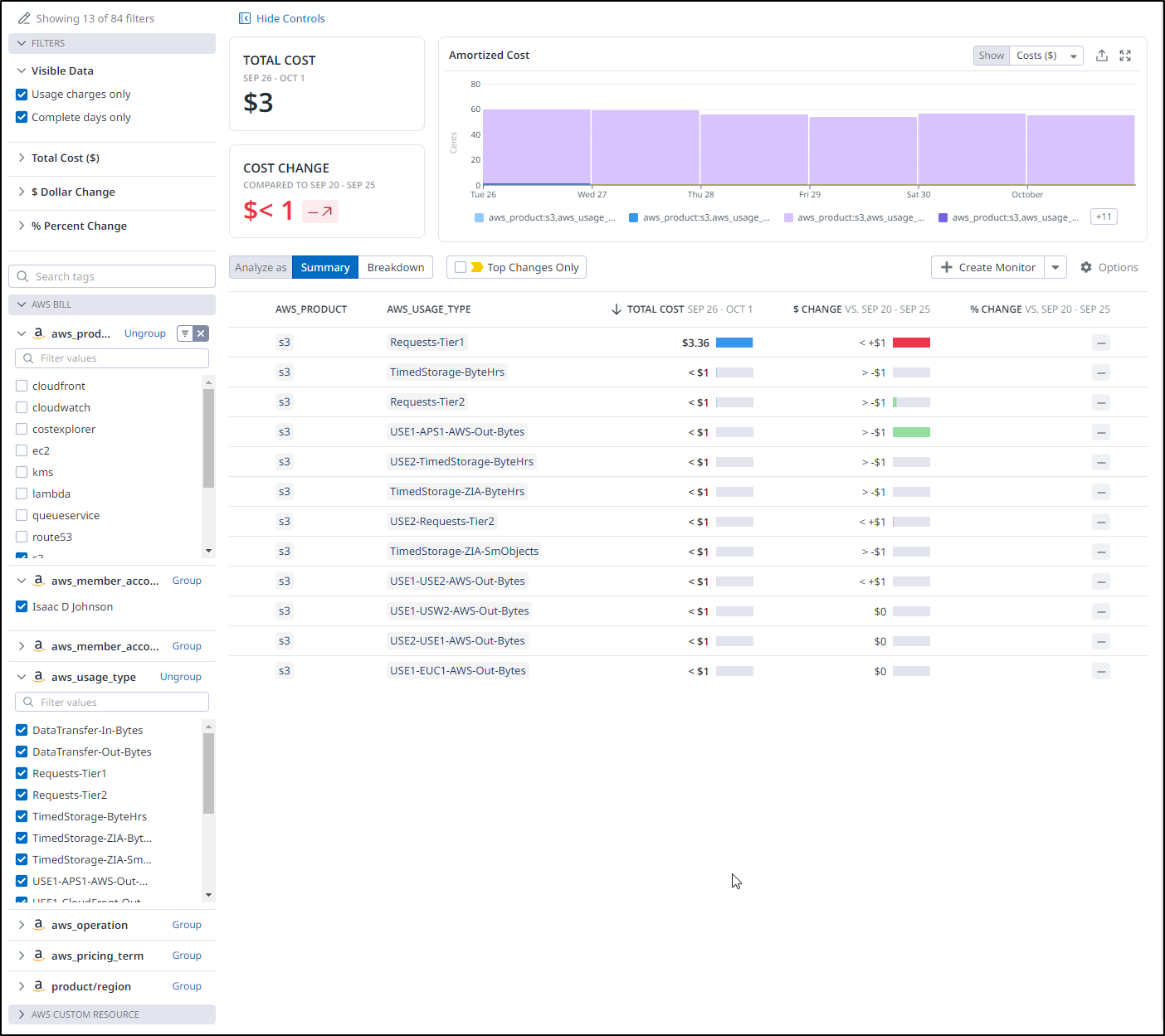

Something I can do in Datadog that I cannot over in AWS is to group by Usage Type, then get a summary with comparison over a period of time.

For instance, I can look at my second biggest cost, S3 usage, and see where those costs come from and how they compare to the prior week.
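To show what that grouped, week-over-week view computes, here is a minimal sketch over raw cost line items. It assumes each record is a `(usage_type, day, cost)` tuple and that “this week” is the seven days ending on a given date; Datadog’s own time windows and aggregation may differ. The usage types and amounts in the example are made up.

```python
from collections import defaultdict
from datetime import date, timedelta


def compare_weeks(records, end):
    """Group costs by usage type and compare the week ending `end` with the week before.

    Returns {usage_type: (prior_week, current_week, change)}.
    """
    current_start = end - timedelta(days=6)
    prior_end = current_start - timedelta(days=1)
    prior_start = prior_end - timedelta(days=6)
    totals = defaultdict(lambda: [0.0, 0.0])  # [prior, current]
    for usage_type, day, cost in records:
        if current_start <= day <= end:
            totals[usage_type][1] += cost
        elif prior_start <= day <= prior_end:
            totals[usage_type][0] += cost
        # Anything outside the two weeks is ignored.
    return {u: (p, c, c - p) for u, (p, c) in totals.items()}


if __name__ == "__main__":
    # Made-up S3 line items for illustration.
    items = [
        ("TimedStorage-ByteHrs", date(2023, 10, 2), 1.0),
        ("TimedStorage-ByteHrs", date(2023, 10, 9), 2.5),
        ("Requests-Tier1", date(2023, 10, 10), 0.5),
    ]
    for usage, (prior, current, delta) in compare_weeks(items, date(2023, 10, 14)).items():
        print(f"{usage}: {prior:.2f} -> {current:.2f} ({delta:+.2f})")
```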

Monitors and Alerts

Since we are in Datadog, we can also leverage monitors and alerts.

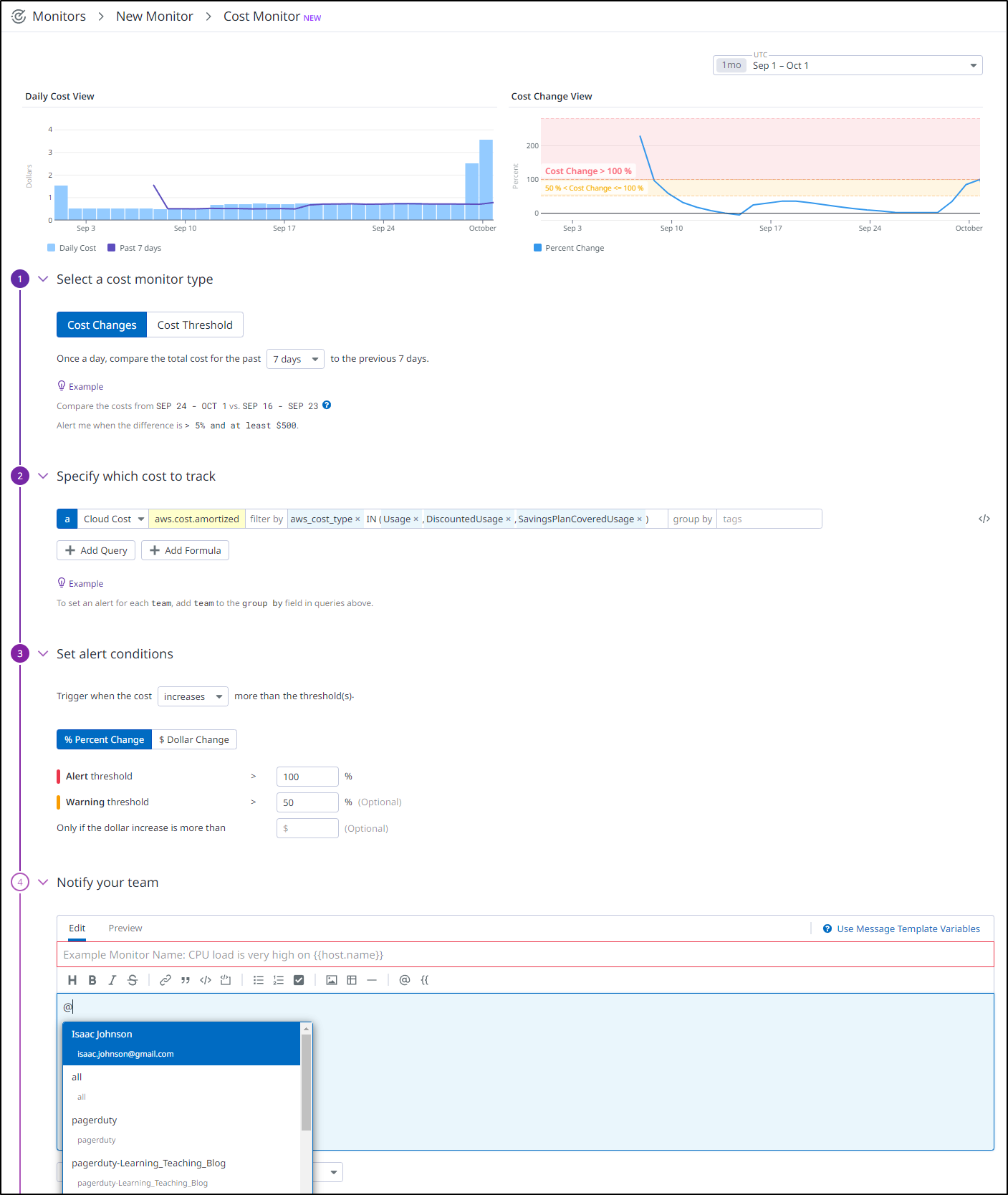

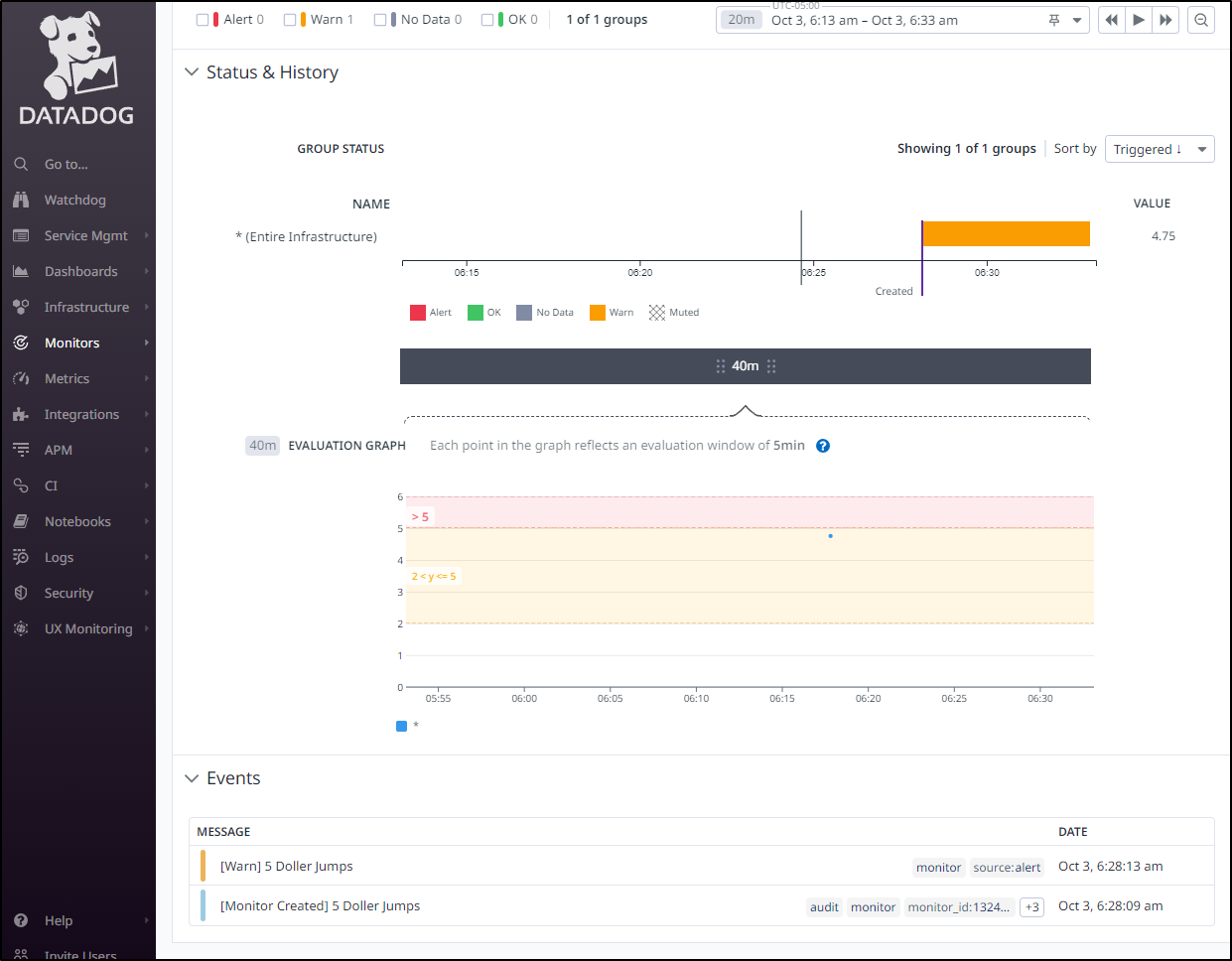

For instance, we can create a Cost Monitor to compare 7d of rolling data to see if the Amortized costs are trending in the wrong direction

At the bottom of the image above you can see I can send to emails, PagerDuty - anything I have tied as a Notification Channel in Datadog.

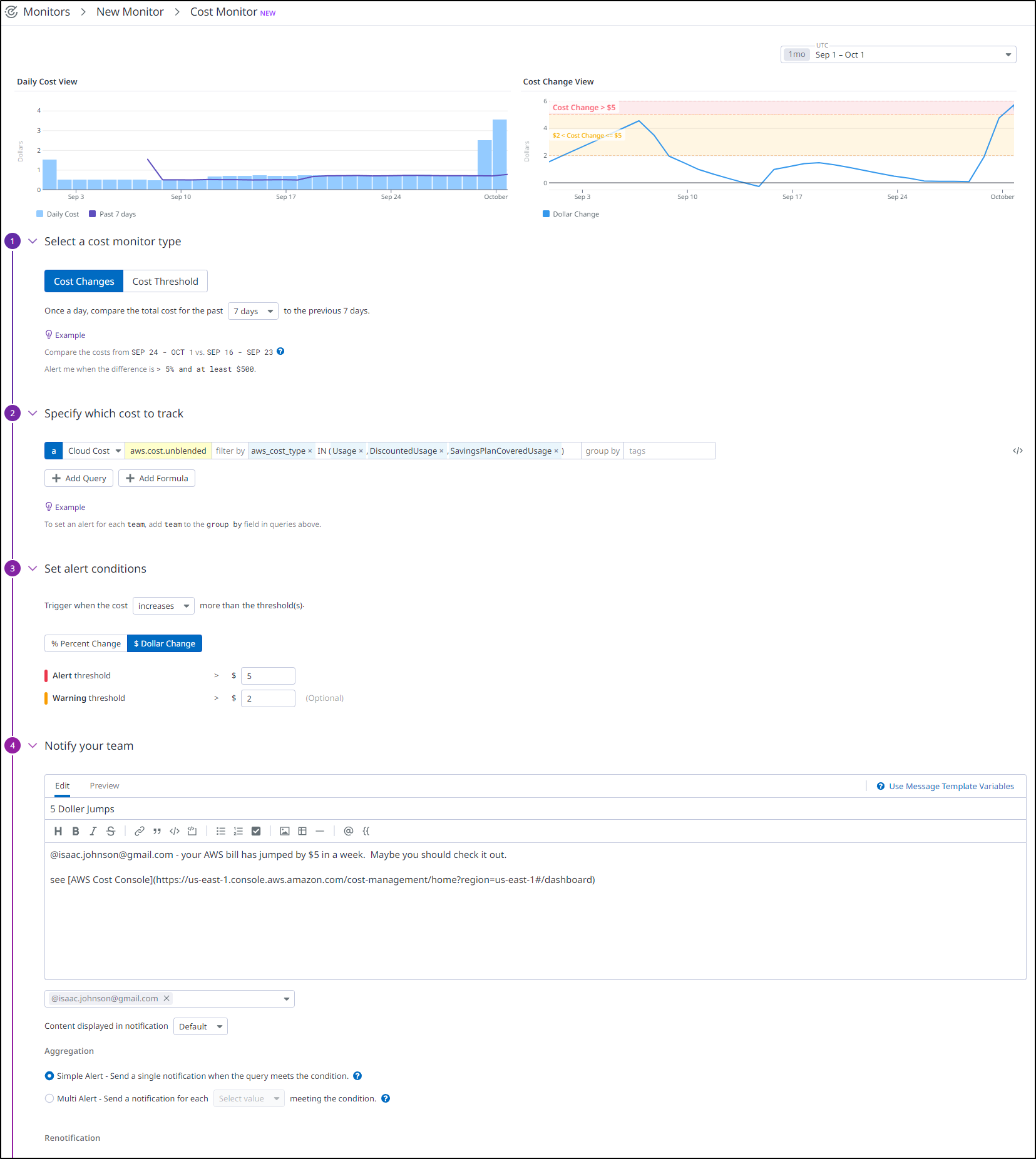

For instance, I’ll create one that emails me when there is a greater than $5 spike in the bill.

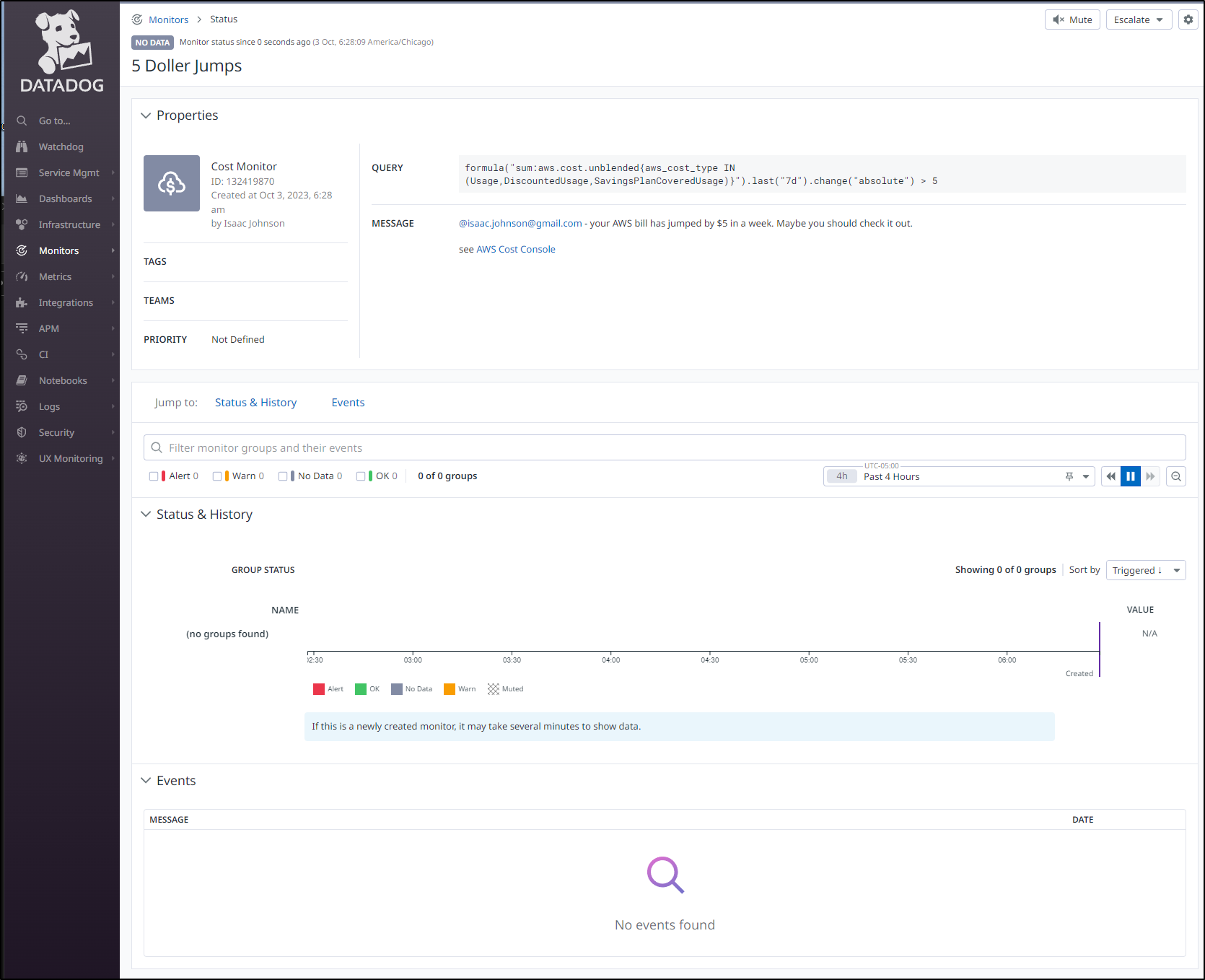

I can now see the new “5 Doller Jumps” Monitor in my monitors

```
formula("sum:aws.cost.unblended{aws_cost_type IN (Usage,DiscountedUsage,SavingsPlanCoveredUsage)}").last("7d").change("absolute") > 5
```
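To reason about when that monitor fires, here is a rough model of the condition in plain Python. It assumes that `.last("7d").change("absolute")` means the total of the most recent seven days minus the total of the seven days before them; that interpretation is an assumption, so check Datadog’s cost monitor documentation for the exact window semantics. The daily cost lists in the example are made up.

```python
def absolute_change(daily_costs, window=7):
    """Sum of the last `window` days minus the sum of the `window` days before that."""
    if len(daily_costs) < 2 * window:
        raise ValueError("need at least two full windows of daily costs")
    current = sum(daily_costs[-window:])
    previous = sum(daily_costs[-2 * window:-window])
    return current - previous


def should_alert(daily_costs, threshold=5.0, window=7):
    """Mirror the monitor's `> 5` condition under the assumption above."""
    return absolute_change(daily_costs, window) > threshold


if __name__ == "__main__":
    steady = [1.0] * 14                # flat spend: no alert
    jump = [1.0] * 7 + [2.0] * 7       # $7 more this week: alert
    print(should_alert(steady), should_alert(jump))
```

Note the threshold is strict: a change of exactly $5 does not trip it.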

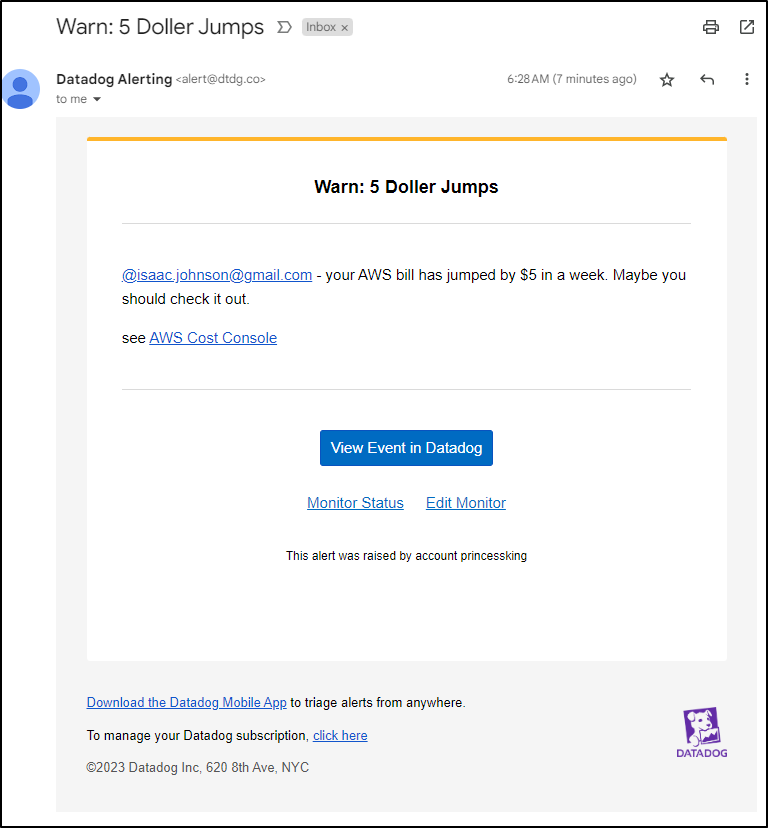

When the alert triggered, I could see it hit my inbox

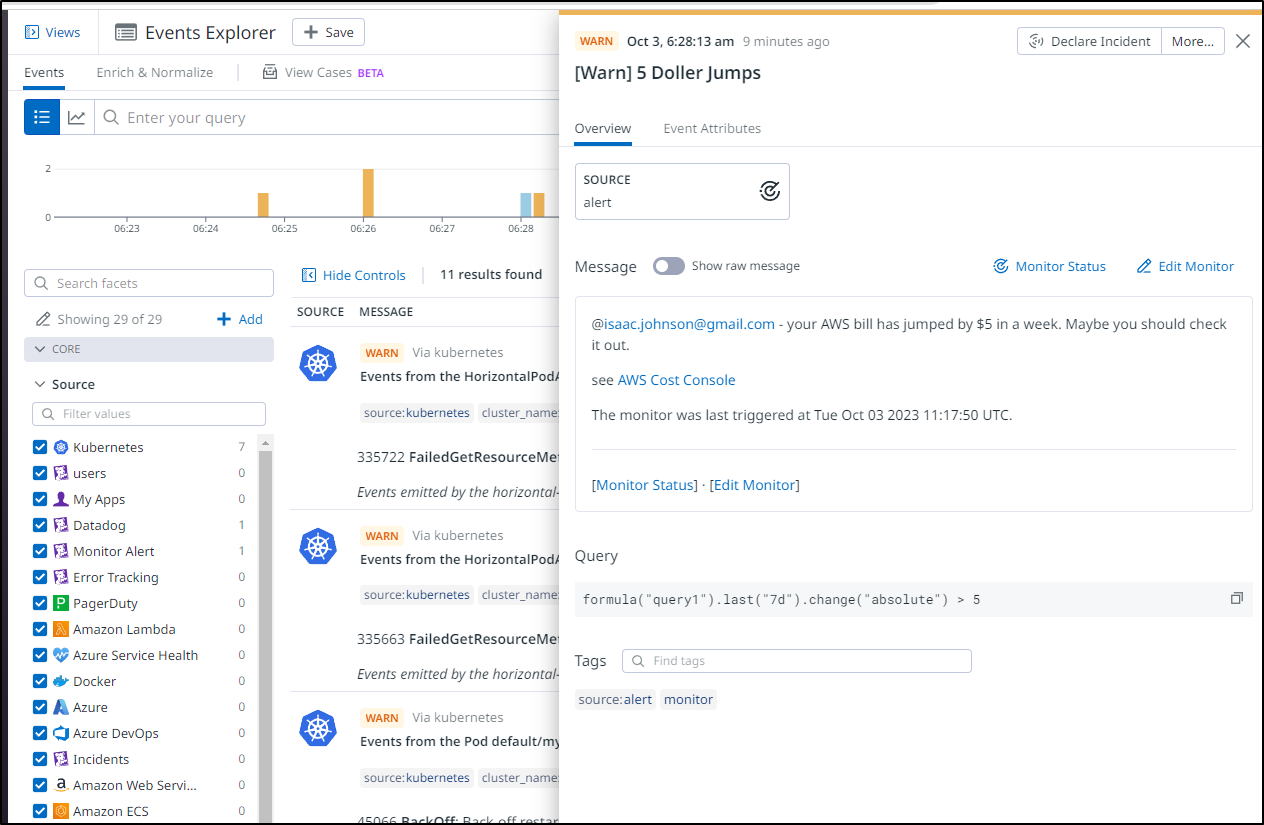

and I can click the link in the email to AWS or to Datadog events

This gives me another advantage in that I can see the history of events for this monitor. That is, if I started to notice a trend over time, or that I spiked on certain months or days, that would all become clear looking at the events.

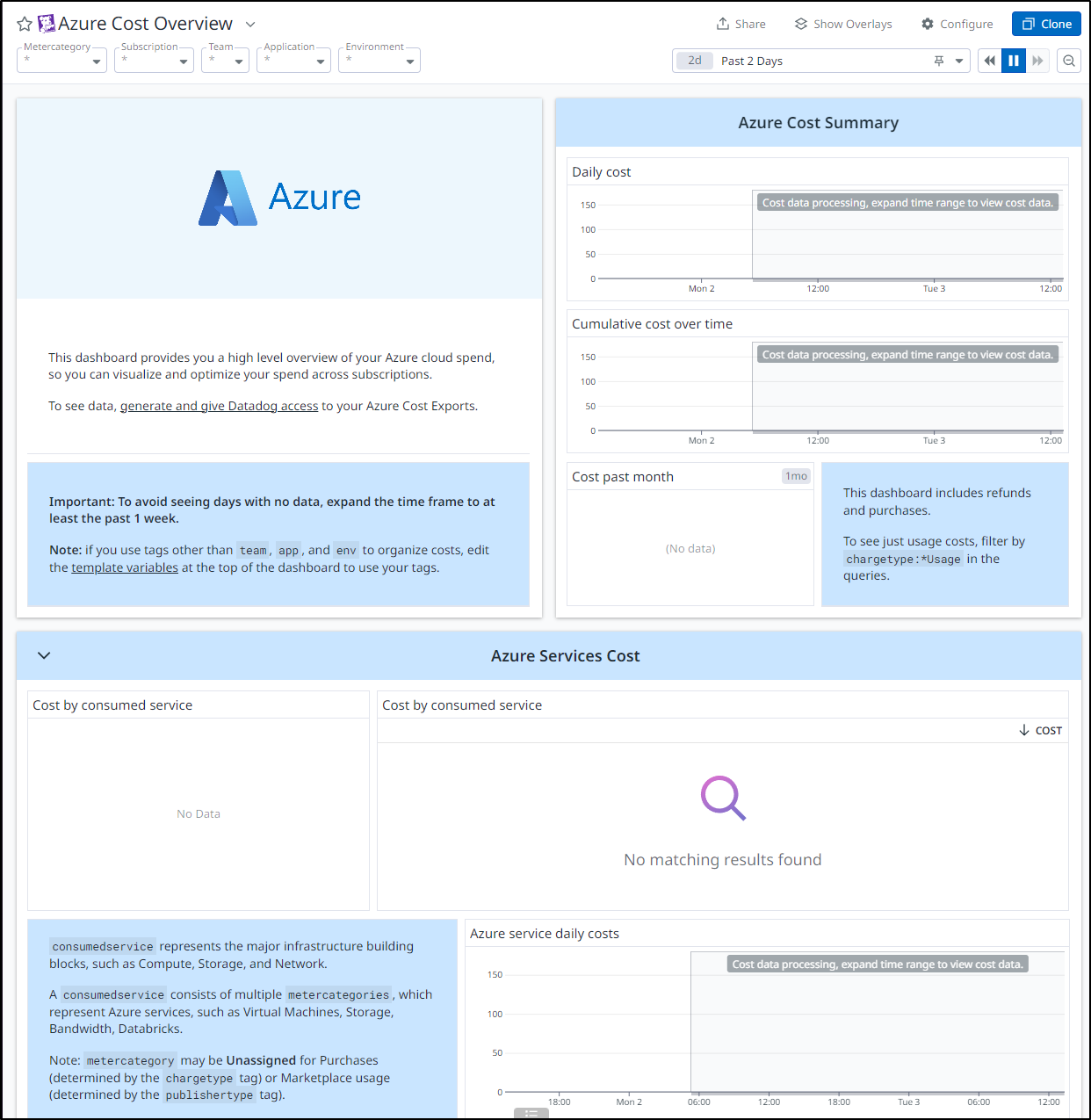

Azure

I want to point out that Datadog helped me fix my Azure ingestion error on the day of publication, so we’ll have to come back to Azure Cloud Costs.

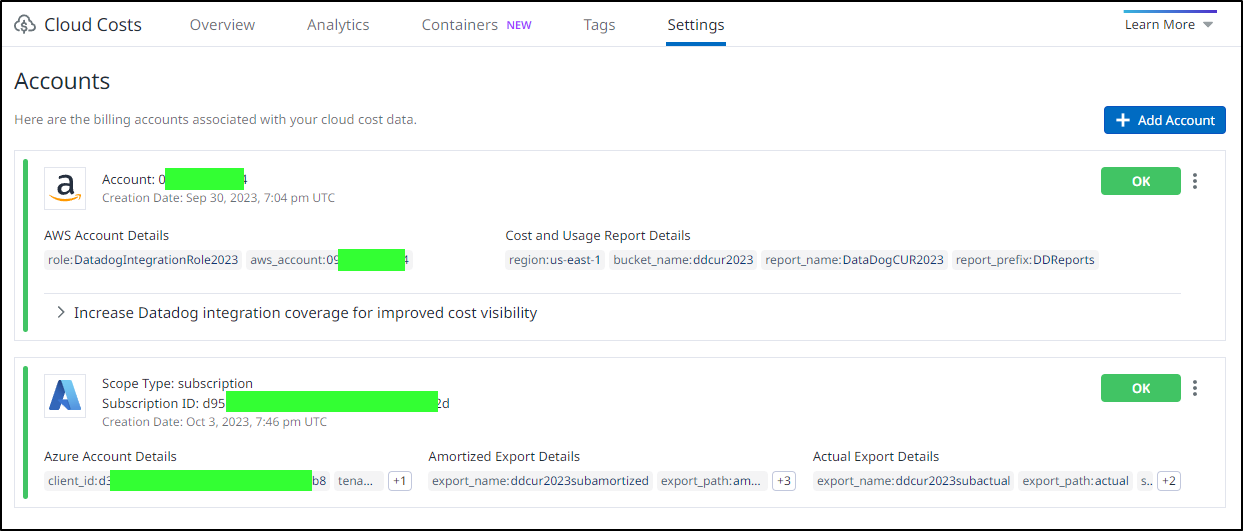

That said, I can see it listed as an account:

And just as with AWS, there are Azure Cost dashboards as well.

It’s just blank for me until I get some data

Dashboards

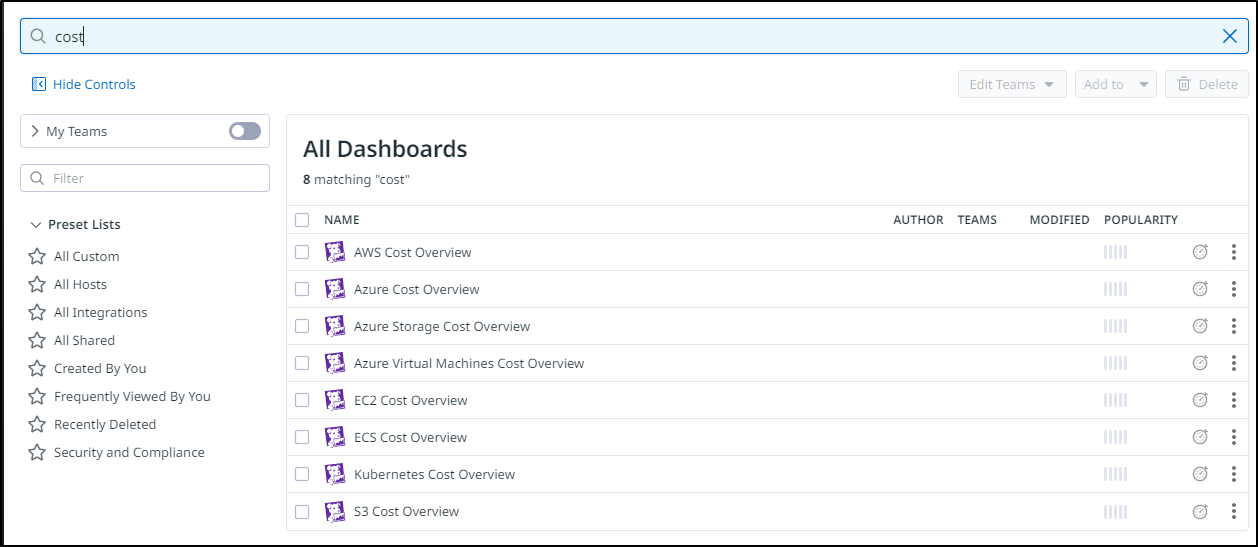

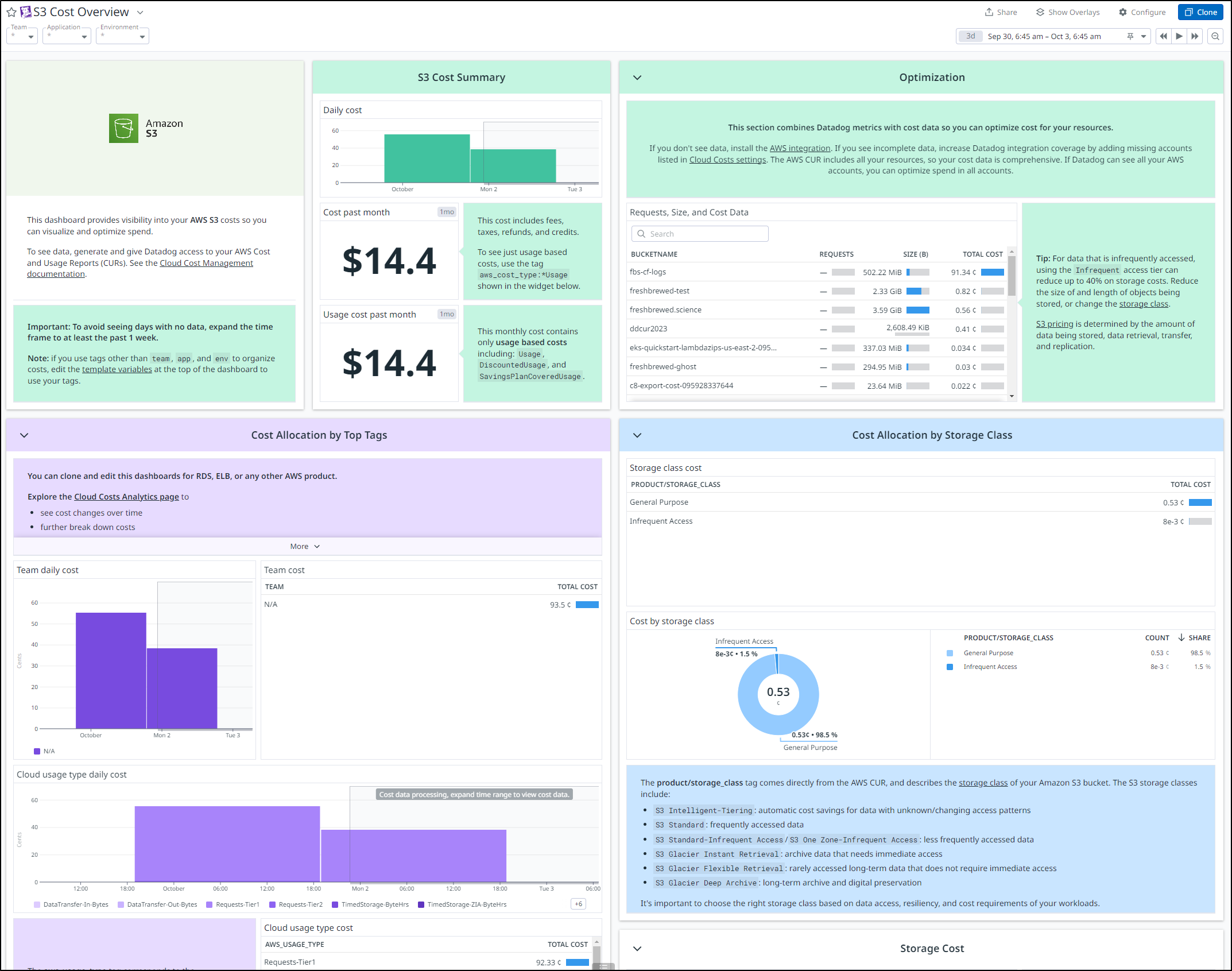

You had to assume Datadog had already built out some slick prebaked dashboards, and indeed they had.

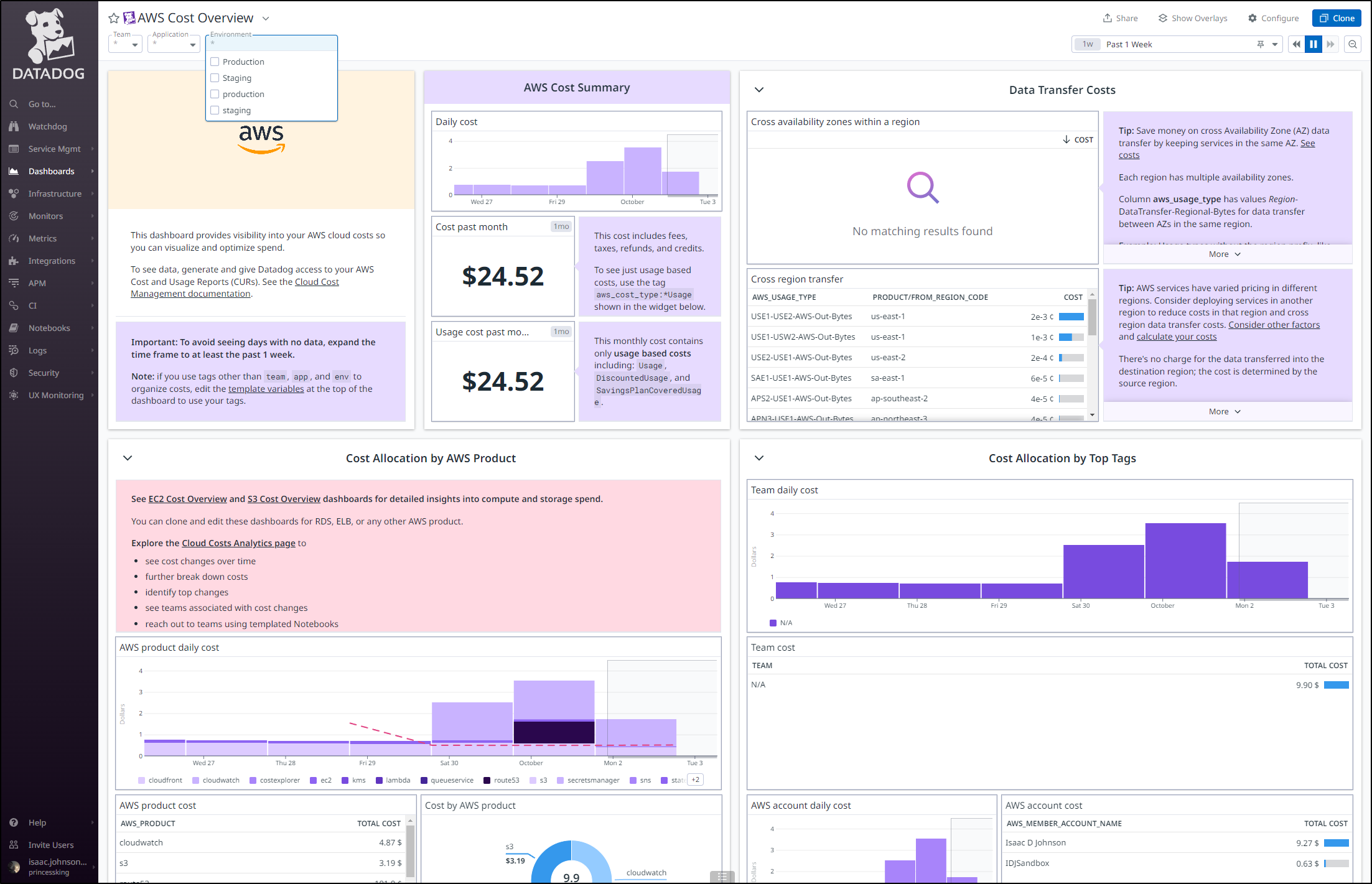

Here is the AWS one:

What you see here is a whole lot of advice along with the numbers. It reminds me of a lot of ‘cost optimization’ products I’ve used recently.

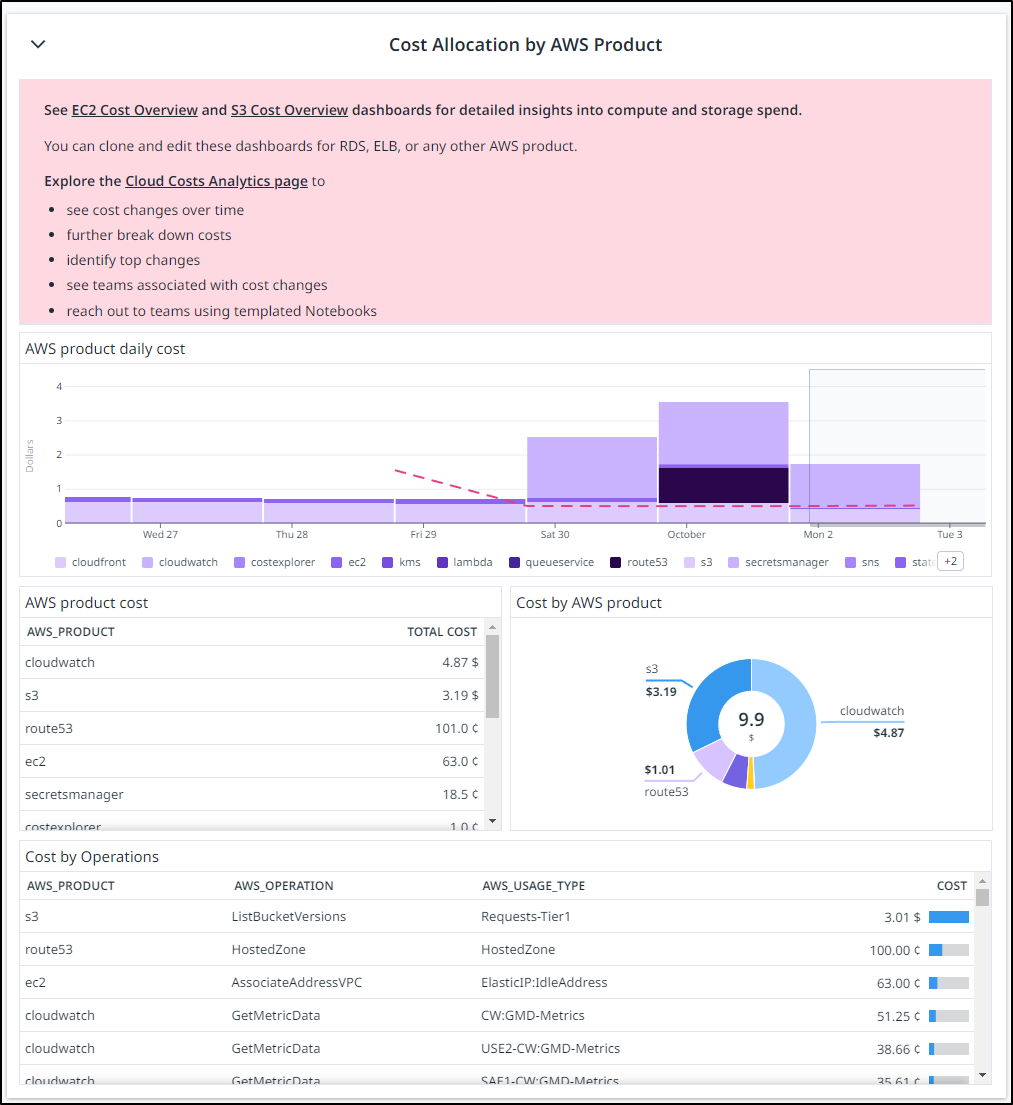

In the bottom left we can see a breakdown (based on my time selector and filters) of costs by product:

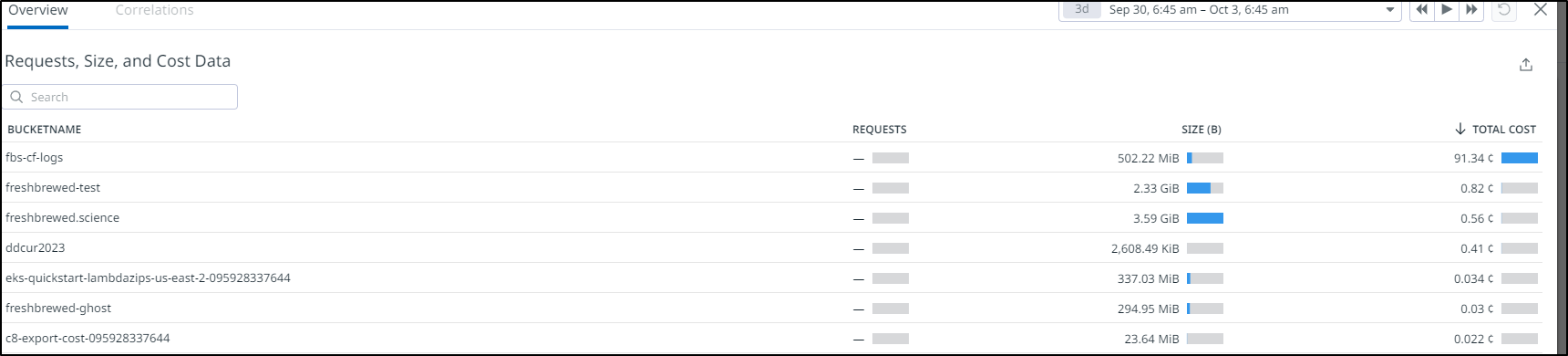

Since S3 is my larger cost, let’s look at the “S3 Cost Overview” dashboard.

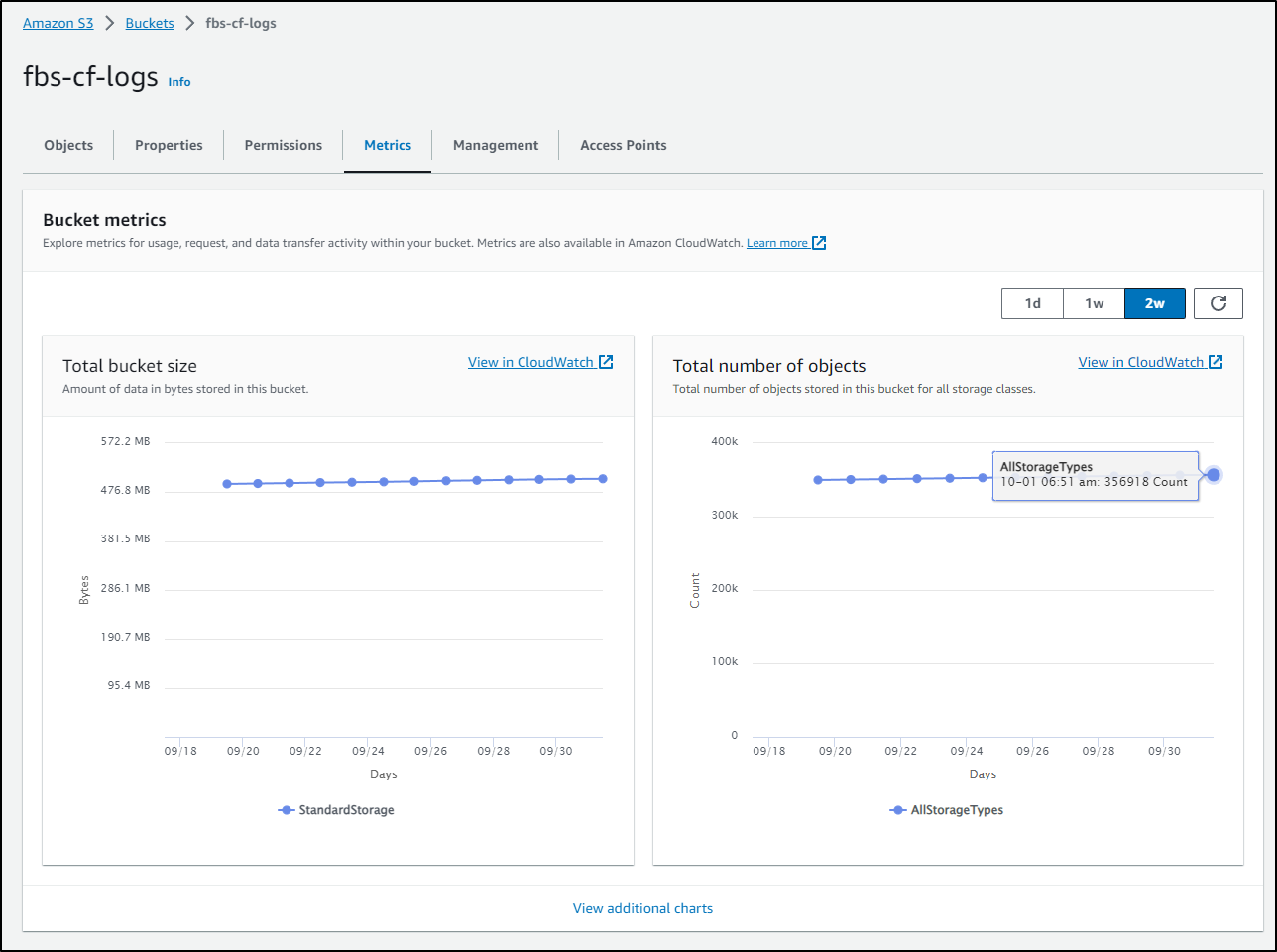

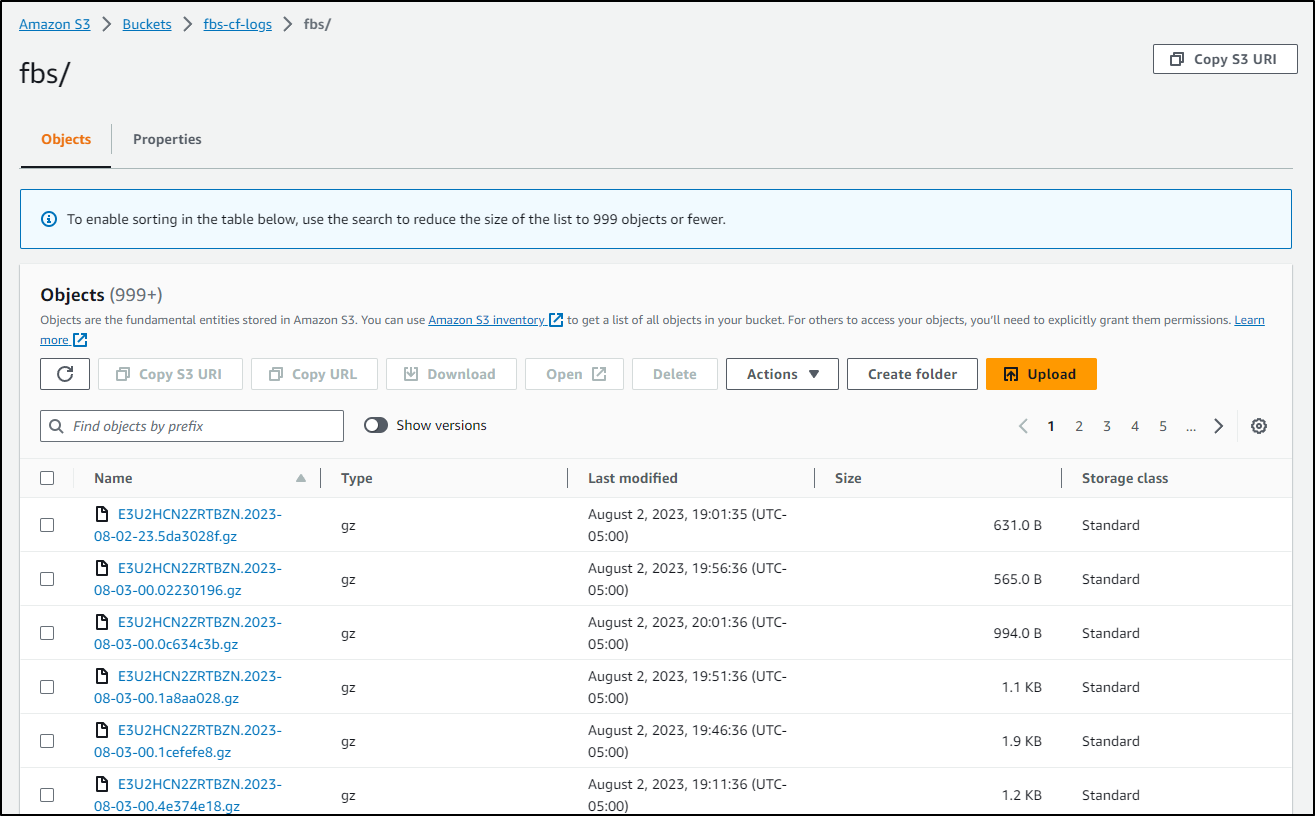

That surprised me a bit: my ‘cf-logs’ bucket was costing me a lot more than the two backend sites hosted in AWS.

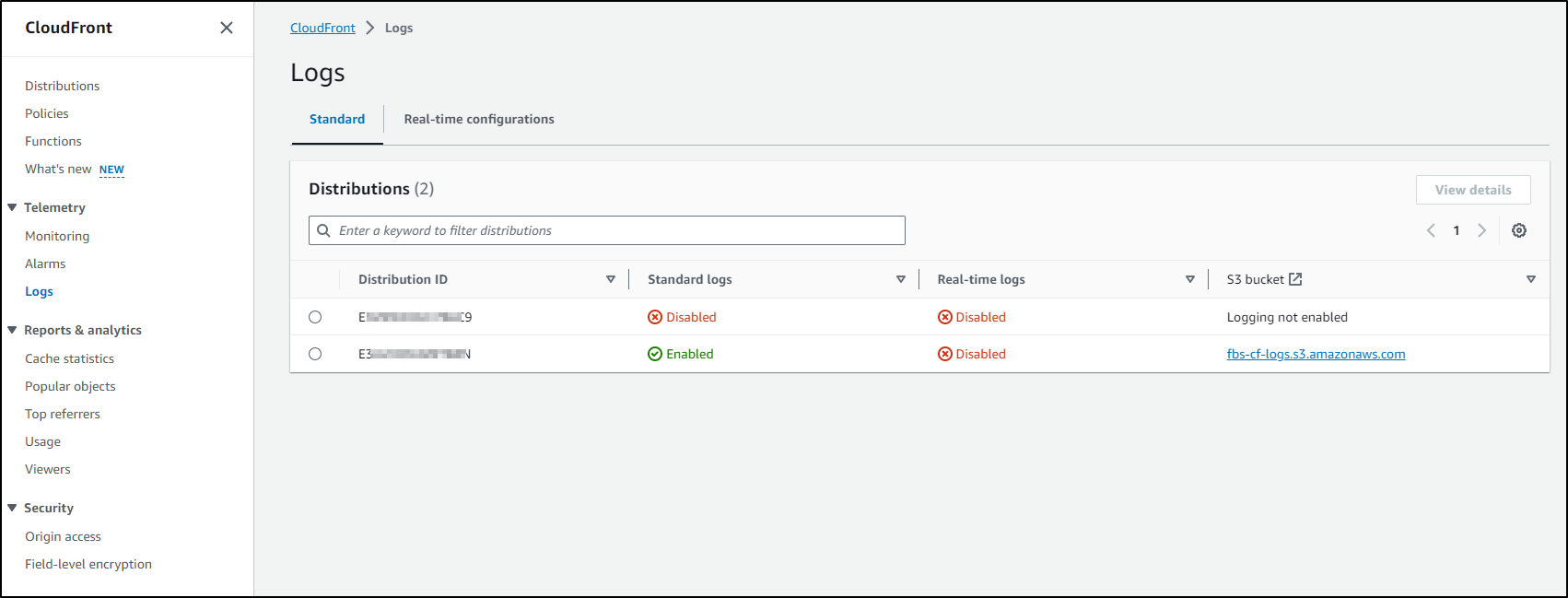

I can double check, but that bucket is used by CloudFront for logs

And it’s been growing ever since Dec 2021 when it was created.

It just stores old logs I’ll never look at

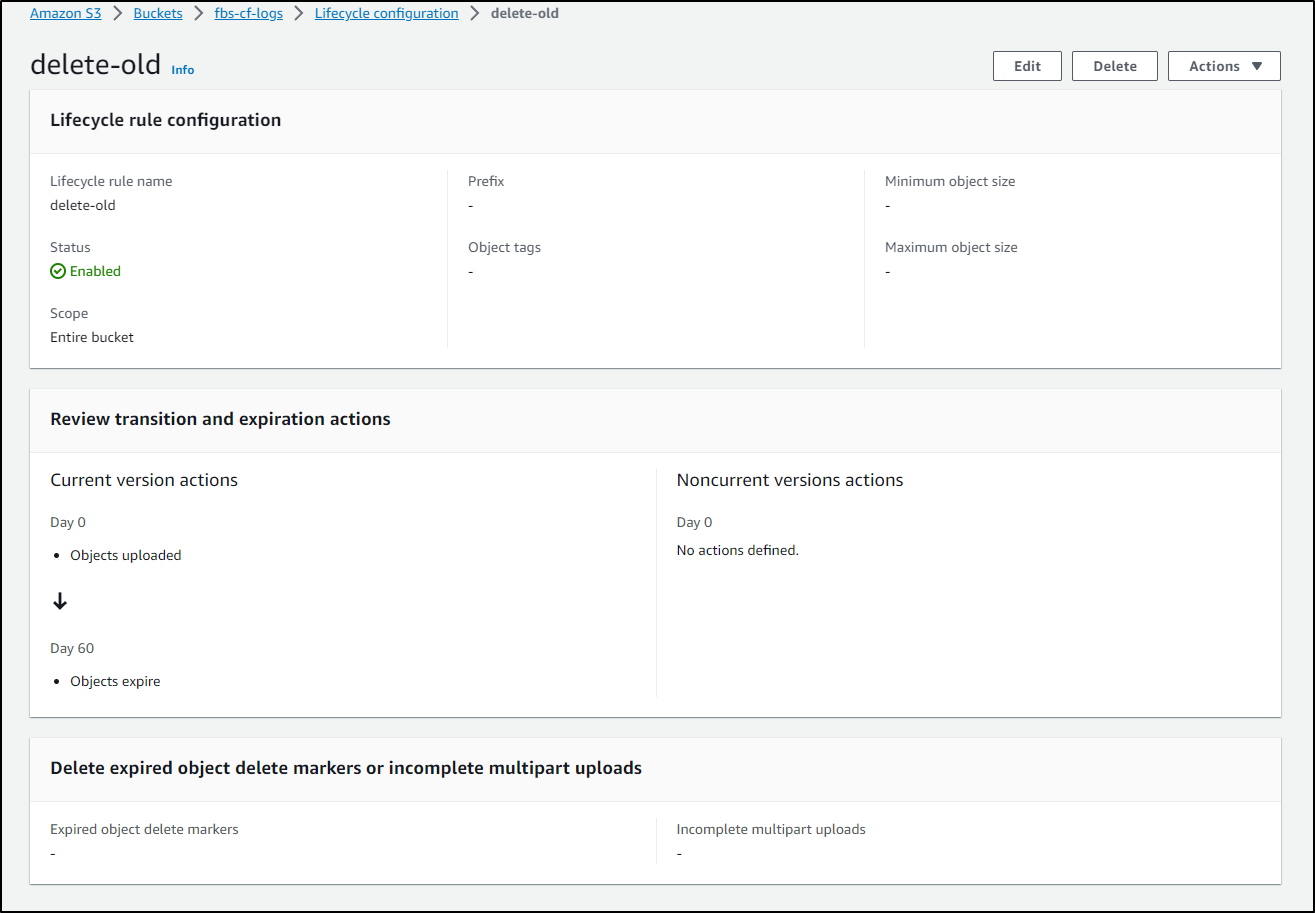

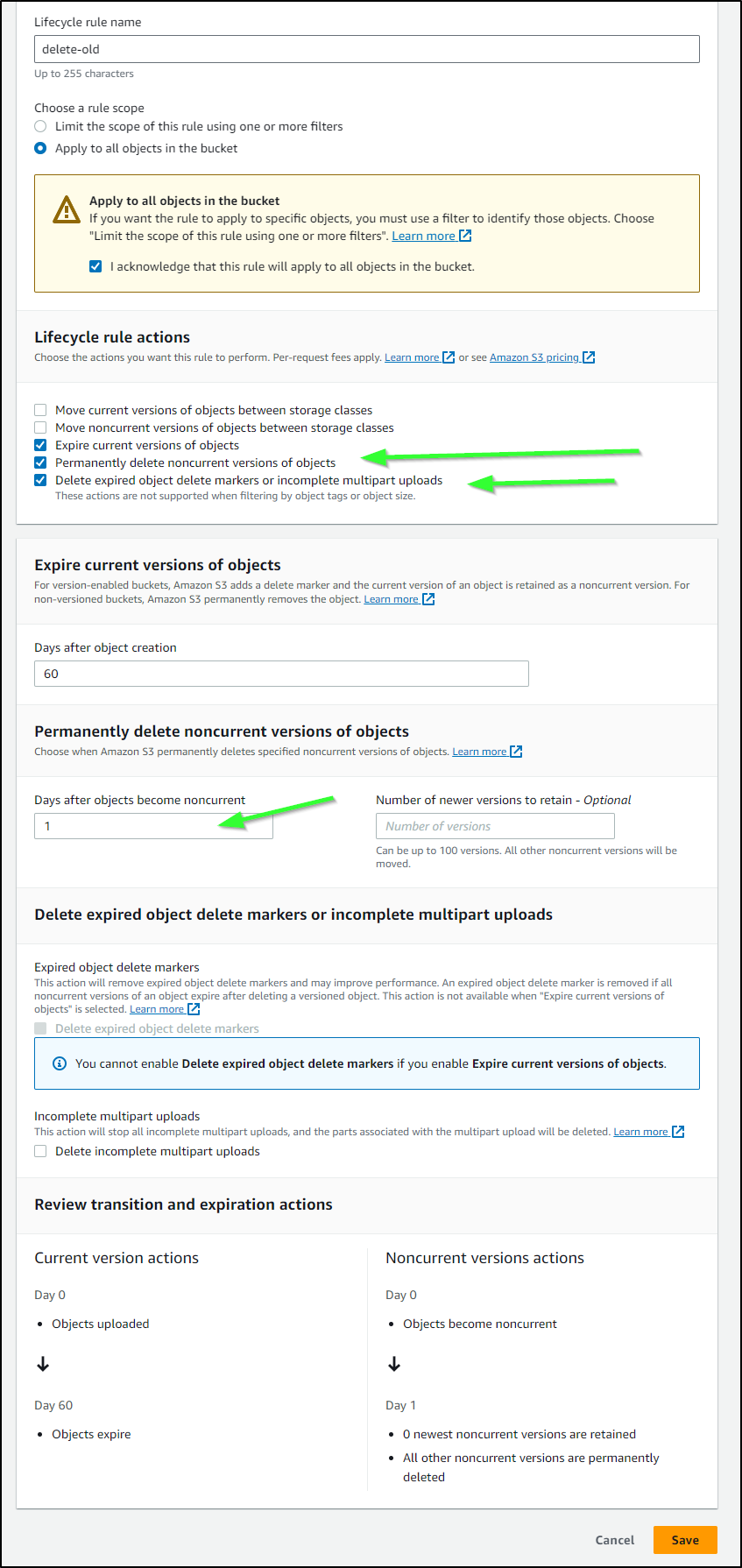

And looking into the Lifecycle policy, I can see the issue - we expire them after 60d but don’t actually delete them!

I’ll update the policy
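One common reason “expired” objects keep costing money is bucket versioning: on a versioned bucket, an `Expiration` action only adds a delete marker, and the old data lingers as noncurrent versions until a `NoncurrentVersionExpiration` action removes it. Whether that is what happened with ‘cf-logs’ is an assumption, since the bucket’s versioning state isn’t shown. Under that assumption, a minimal sketch of a corrected lifecycle configuration looks like this; the rule ID and the one-day noncurrent window are hypothetical choices.

```python
import json


def cf_logs_lifecycle(days_to_expire=60, noncurrent_days=1):
    """Lifecycle rules that expire log objects and then purge the old versions.

    Assumes the bucket is versioned; on an unversioned bucket the
    NoncurrentVersionExpiration action simply has nothing to act on.
    """
    return {
        "Rules": [
            {
                "ID": "expire-and-purge-cloudfront-logs",  # hypothetical rule name
                "Filter": {"Prefix": ""},                  # whole bucket
                "Status": "Enabled",
                # Current versions become noncurrent (delete marker added) after 60 days.
                "Expiration": {"Days": days_to_expire},
                # Noncurrent versions are then permanently deleted.
                "NoncurrentVersionExpiration": {"NoncurrentDays": noncurrent_days},
            }
        ]
    }


if __name__ == "__main__":
    print(json.dumps(cf_logs_lifecycle(), indent=2))
```

The printed JSON can be saved to a file for `aws s3api put-bucket-lifecycle-configuration --lifecycle-configuration file://...`, or the dict passed as `LifecycleConfiguration` to boto3’s `put_bucket_lifecycle_configuration`. Either call replaces the bucket’s entire lifecycle configuration, so include any other rules you want to keep.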

It can take a few days to have them start to change, but we’ll circle back on that later.

Summary

My outstanding threads with Datadog support on Azure were resolved too late to demonstrate Azure costs in this writeup. Additionally, I’ll be keeping a close eye on CloudWatch usage, as the timing of the spike lines up with the Datadog CUR CloudFormation launch. If my CloudWatch usage continues to grow, I may need to pull that back.

As we saw, I was able to find and fix some waste in S3. Any time a tool immediately saves me money, that makes it a winner for me. That said, I would like to see setup and onboarding improved. When we use Datadog with cloud metrics and log ingestion, it’s much, much simpler. I would love to see a one-click CloudFormation template or blueprint, something straightforward.

For me to use this as a full replacement for my current setup, I would also need GCP support (which is coming).

Addendum

I am a fan of Datadog. Most of their new features work great.

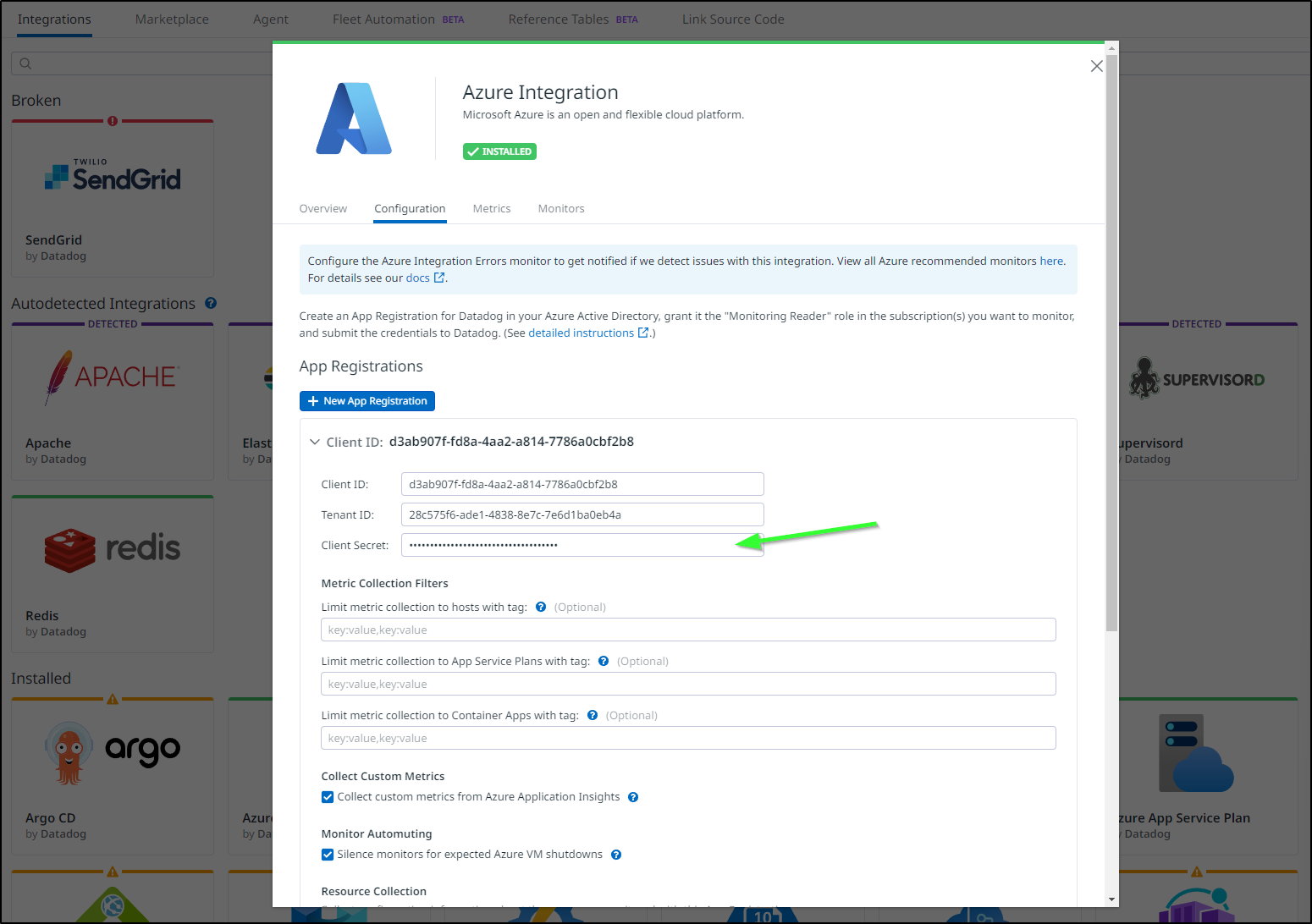

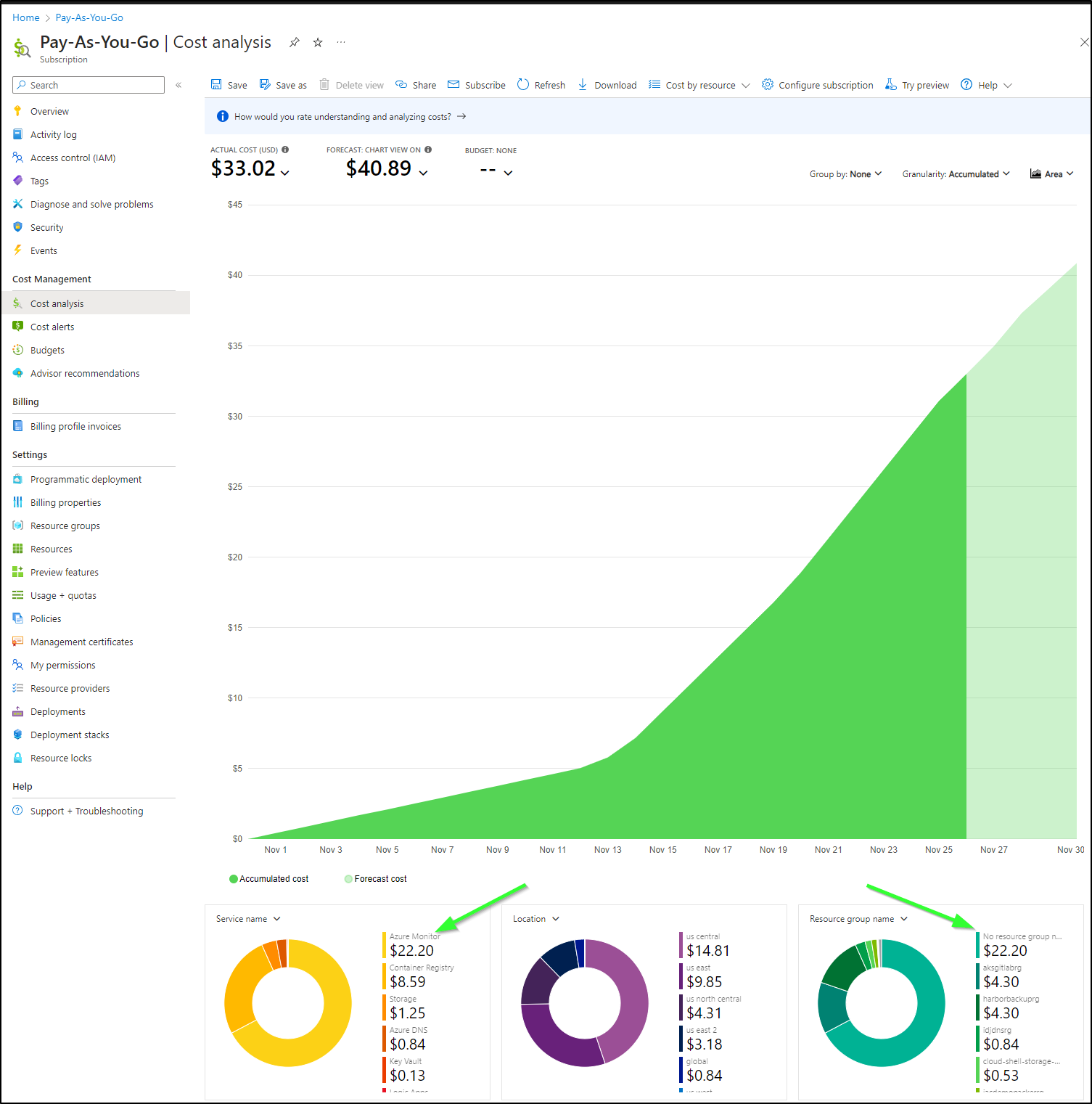

However, this one cost me a lot, and I have been fighting the ingestion ever since. My Azure bills spiked from $10 to upwards of $45.

The one way I was able to stop it was to go to the Azure integration and deliberately invalidate the client secret.

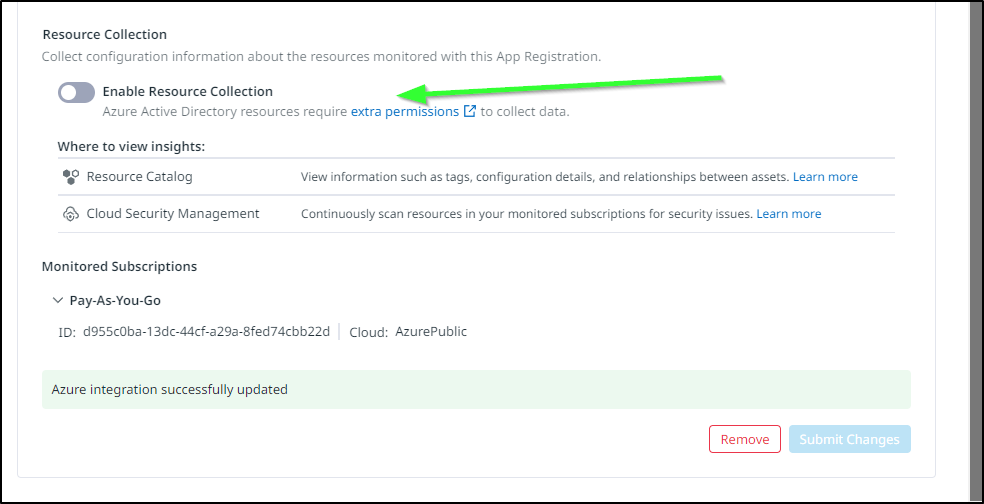

I also disabled the Enable Resource Collection section

I’m fairly certain it is the cause of these massive metrics spikes (“Native Metric Queries”).

If I cannot stop the cost overrun, I’ll end up having to shut down this subscription, and I would much, much prefer not to do that.

I already shut down the AWS integration when it spiked.