Published: Sep 12, 2023 by Isaac Johnson

Last week we got started with React and Next.js, building a basic website by hand. Today we’ll extend that to containerization, GitHub Actions and deployments to both Docker and Kubernetes. We’ll explore three artifact stores along the way: Docker Hub, a private Harbor container registry (CR) and lastly GHCR (GitHub Container Registry).

Let’s get started!

Build with pnpm

Before moving on to a production Docker build, I should run `pnpm build` to check that my files are in good order:

builder@DESKTOP-QADGF36:~/Workspaces/nodesite/freshbrewed-app$ pnpm build

> freshbrewed-app@0.1.0 build /home/builder/Workspaces/nodesite/freshbrewed-app

> next build

- info Creating an optimized production build

- info Compiled successfully

Failed to compile.

./src/app/blog/page.tsx

23:72 Error: `'` can be escaped with `&apos;`, `&lsquo;`, `&#39;`, `&rsquo;`. react/no-unescaped-entities

./src/app/layout.tsx

21:15 Warning: Using `<img>` could result in slower LCP and higher bandwidth. Consider using `<Image />` from `next/image` to automatically optimize images. This may incur additional usage or cost from your provider. See: https://nextjs.org/docs/messages/no-img-element @next/next/no-img-element

info - Need to disable some ESLint rules? Learn more here: https://nextjs.org/docs/basic-features/eslint#disabling-rules

- info Linting and checking validity of types . ELIFECYCLE Command failed with exit code 1.

It seems we need to clean up `./src/app/blog/page.tsx` by escaping the apostrophe in “I'll” (line 23, column 72):

builder@DESKTOP-QADGF36:~/Workspaces/nodesite/freshbrewed-app$ git diff src/app/blog/

diff --git a/src/app/blog/page.tsx b/src/app/blog/page.tsx

index a9207b7..bca6091 100644

--- a/src/app/blog/page.tsx

+++ b/src/app/blog/page.tsx

@@ -20,7 +20,7 @@ const page = () => {

<main className="flex flex-col gap-2">

<h1 className="mb-0 text-zinc-100 font-bold">Blogs 📚</h1>

<p className="mb-0 text-zinc-400 font-semibold leading-none">

- All the blogs that I have written can be found here, mostly I'll try

+ All the blogs that I have written can be found here, mostly I'll try

to put here all the cool tips and triks in frontend development and my

learnings and experiments or rather anything which seems cool to me

</p>
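Why do the `-` and `+` lines above look identical? Each entity the lint rule suggests renders as an apostrophe or a curly quote. Once the diff is displayed as HTML, the change most likely disappears. The small TypeScript sketch below makes this concrete. The `renderEntities` helper is hypothetical and only decodes the four entities from the error message; it is not a general HTML decoder.

```typescript
// The four replacements suggested by react/no-unescaped-entities for `'`.
const apostropheEntities: Record<string, string> = {
  "&apos;": "'",
  "&lsquo;": "\u2018",
  "&#39;": "'",
  "&rsquo;": "\u2019",
};

// Replace only the entities above; any other entity is left untouched.
function renderEntities(text: string): string {
  return text.replace(/&(apos|lsquo|#39|rsquo);/g, (match) => apostropheEntities[match] ?? match);
}

console.log(renderEntities("mostly I&apos;ll try")); // mostly I'll try
```

In the JSX itself you would write `I&apos;ll` (or `{"I'll"}`), and the rendered page reads exactly as before.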

I won’t cover every linting issue. That said, I needed to install clsx, framer-motion and tailwind-merge:

$ pnpm install --save clsx


Packages: +1

+

Progress: resolved 346, reused 337, downloaded 1, added 1, done

dependencies:

+ clsx 2.0.0

Done in 2.1s

$ pnpm install --save tailwind-merge

Packages: +1

+

Progress: resolved 347, reused 338, downloaded 1, added 1, done

dependencies:

+ tailwind-merge 1.14.0

Done in 2.1s

$ pnpm install --save framer-motion

Packages: +3

+++

Progress: resolved 350, reused 341, downloaded 1, added 3, done

dependencies:

+ framer-motion 10.16.4

Done in 2.2s
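I don’t show where the app uses them. In Tailwind projects, though, `clsx` and `tailwind-merge` are often combined into a small class-name helper. `clsx` joins class names conditionally. `tailwind-merge` also resolves conflicting Tailwind utilities (for example `p-2` vs `p-4`). As a dependency-free illustration of the `clsx` half only, here is a hypothetical, simplified stand-in written in TypeScript. It is not the library’s actual implementation.

```typescript
// Hypothetical, simplified stand-in for clsx: joins truthy class names.
// Accepts strings, falsy values, and objects mapping class name -> condition.
type ClassValue = string | false | null | undefined | Record<string, boolean>;

function joinClasses(...values: ClassValue[]): string {
  const out: string[] = [];
  for (const value of values) {
    if (!value) continue; // skip false, null, undefined and ""
    if (typeof value === "string") {
      out.push(value);
    } else {
      // Object form: include each key whose condition is true.
      for (const [name, enabled] of Object.entries(value)) {
        if (enabled) out.push(name);
      }
    }
  }
  return out.join(" ");
}

const isActive = true;
console.log(joinClasses("text-zinc-400", isActive && "font-bold", { hidden: false, "mb-0": true }));
// text-zinc-400 font-bold mb-0
```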

Then I made a few more modifications so that `pnpm build` completes without error:

$ pnpm build

> freshbrewed-app@0.1.0 build /home/builder/Workspaces/nodesite/freshbrewed-app

> next build

- info Creating an optimized production build

- info Compiled successfully

./src/app/layout.tsx

21:15 Warning: Using `<img>` could result in slower LCP and higher bandwidth. Consider using `<Image />` from `next/image` to automatically optimize images. This may incur additional usage or cost from your provider. See: https://nextjs.org/docs/messages/no-img-element @next/next/no-img-element

info - Need to disable some ESLint rules? Learn more here: https://nextjs.org/docs/basic-features/eslint#disabling-rules

- info Linting and checking validity of types

- info Collecting page data

[ ] - info Generating static pages (0/9)(node:12132) ExperimentalWarning: buffer.Blob is an experimental feature. This feature could change at any time

(Use `node --trace-warnings ...` to show where the warning was created)

- info Generating static pages (9/9)

- info Finalizing page optimization

Route (app) Size First Load JS

┌ ○ / 5.17 kB 83.6 kB

├ ○ /blog 304 B 117 kB

├ ● /blog/[slug] 176 B 84.4 kB

├ ├ /blog/hello

├ └ /blog/test

├ ○ /favicon.ico 0 B 0 B

├ ○ /fun 140 B 78.6 kB

└ ○ /guestbook 139 B 78.6 kB

+ First Load JS shared by all 78.5 kB

├ chunks/431-82c16d9f92a293e1.js 26.1 kB

├ chunks/e0f3dcf3-c6e4db7d5451056b.js 50.5 kB

├ chunks/main-app-86ec00818d1758f2.js 221 B

└ chunks/webpack-50a9d2c8e3c6225d.js 1.68 kB

Route (pages) Size First Load JS

─ ○ /404 181 B 76.5 kB

+ First Load JS shared by all 76.3 kB

├ chunks/framework-510ec8ffd65e1d01.js 45.1 kB

├ chunks/main-fb9b6470ef9183d4.js 29.4 kB

├ chunks/pages/_app-991576dc368ea245.js 195 B

└ chunks/webpack-50a9d2c8e3c6225d.js 1.68 kB

○ (Static) automatically rendered as static HTML (uses no initial props)

● (SSG) automatically generated as static HTML + JSON (uses getStaticProps)

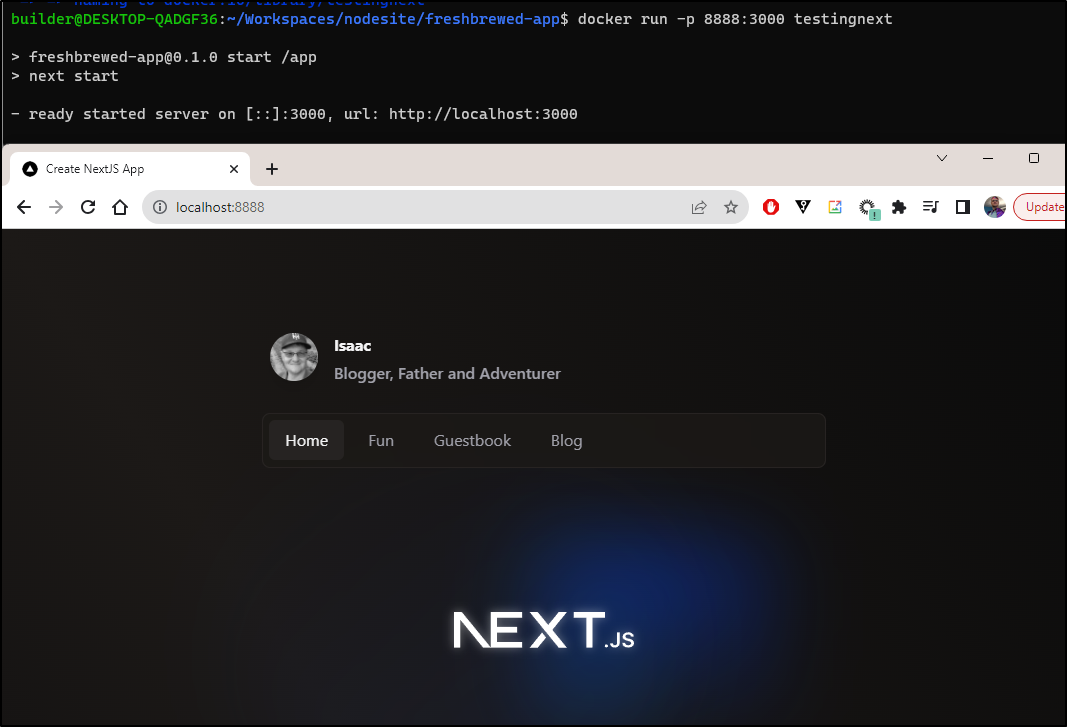

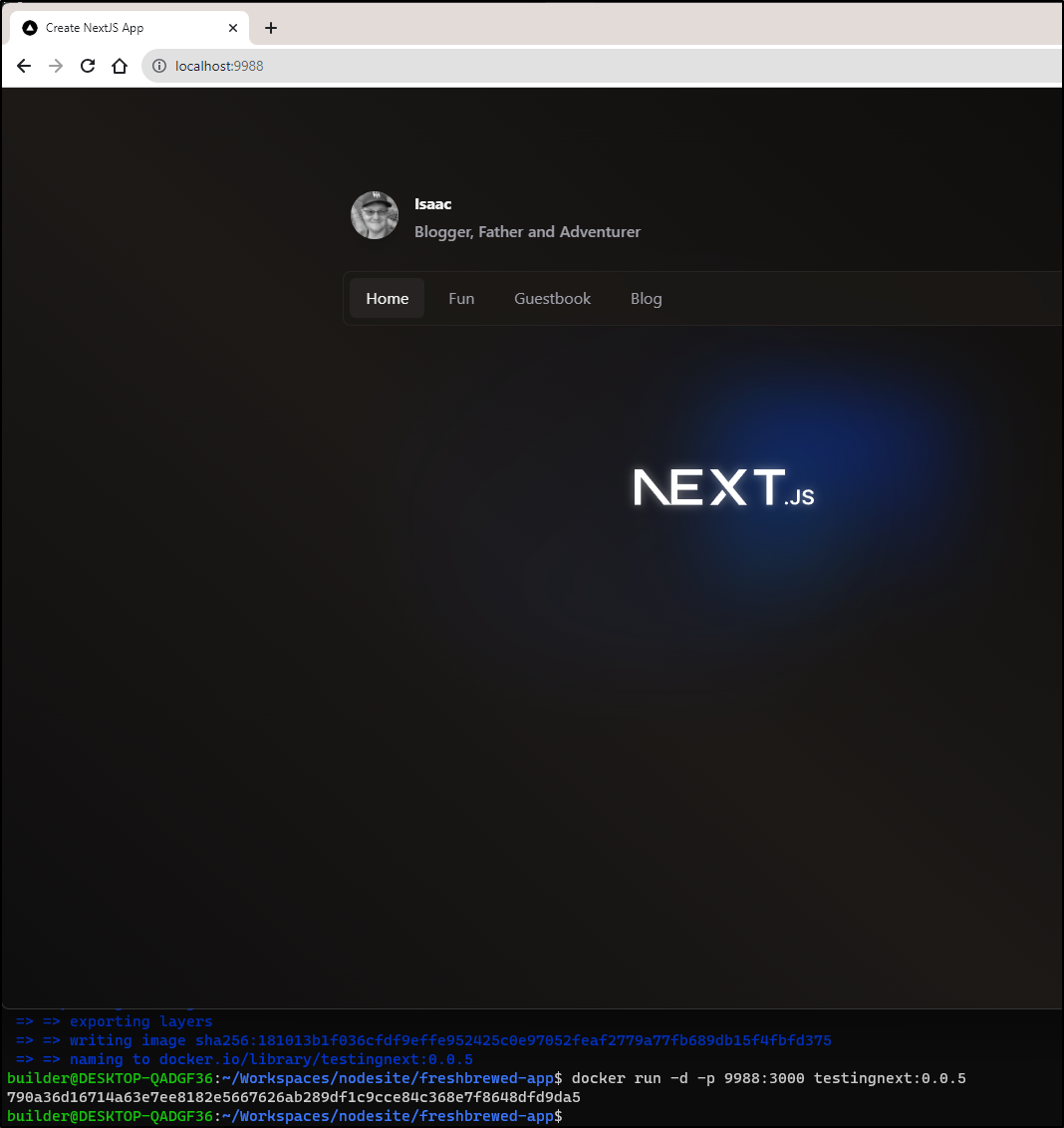

We can test the production build:

$ pnpm start

> freshbrewed-app@0.1.0 start /home/builder/Workspaces/nodesite/freshbrewed-app

> next start

- ready started server on [::]:3000, url: http://localhost:3000

We can see it in action:

Dockerfile

Now we can start on the Dockerfile, which I’ll create at the project root (above `src`).

```dockerfile
# pull official base image
FROM node:16.14.2-alpine

# set working directory
WORKDIR /app

# add `/app/node_modules/.bin` to $PATH
ENV PATH /app/node_modules/.bin:$PATH

# install app dependencies
COPY package.json ./
COPY pnpm-lock.yaml ./
COPY tsconfig.json ./
# pnpm 9+ no longer supports Node 16, so pin the 8.x line used in this post
RUN npm install -g pnpm@8
RUN pnpm install

# add app
COPY . ./
RUN pnpm build

# start app
CMD ["pnpm", "start"]
```

I can now build a tagged image

builder@DESKTOP-QADGF36:~/Workspaces/nodesite/freshbrewed-app$ docker build -t testingnext .

[+] Building 73.1s (15/15) FINISHED

=> [internal] load build definition from Dockerfile 0.0s

=> => transferring dockerfile: 38B 0.0s

=> [internal] load .dockerignore 0.0s

=> => transferring context: 2B 0.0s

=> [internal] load metadata for docker.io/library/node:16.14.2-alpine 1.5s

=> [auth] library/node:pull token for registry-1.docker.io 0.0s

=> [internal] load build context 0.7s

=> => transferring context: 5.02MB 0.6s

=> [1/9] FROM docker.io/library/node:16.14.2-alpine@sha256:28bed508446db2ee028d08e76fb47b935defa26a84986ca050d2596ea67fd506 0.0s

=> CACHED [2/9] WORKDIR /app 0.0s

=> [3/9] COPY package.json ./ 0.0s

=> [4/9] COPY pnpm-lock.yaml ./ 0.0s

=> [5/9] COPY tsconfig.json ./ 0.0s

=> [6/9] RUN npm install -g pnpm 2.2s

=> [7/9] RUN pnpm install 18.2s

=> [8/9] COPY . ./ 5.0s

=> [9/9] RUN pnpm build 37.0s

=> exporting to image 7.5s

=> => exporting layers 7.5s

=> => writing image sha256:039587923b4fd7d874be11f1ce24203c78ad0976cdcb60d3eea670fcad0cc985 0.0s

=> => naming to docker.io/library/testingnext 0.0s

builder@DESKTOP-QADGF36:~/Workspaces/nodesite/freshbrewed-app$

I can test it by running the container with a different host port mapped (just to be sure it’s Docker serving the site).
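Rather than eyeballing a browser, a script can poll the mapped port until Next.js answers. Below is a minimal TypeScript sketch of that idea, with these assumptions:

- The container was started with something like `docker run -p 9988:3000 testingnext`. Port 9988 is the host port used later in this post; the exact command used here isn’t shown.
- The script runs on the host with a `fetch` available. Node 18+ provides one globally, even though the image itself uses Node 16.

The `waitForHttpOk` helper is hypothetical. The fetch function is passed in so the polling logic can be exercised without a live server.

```typescript
// Minimal response shape we rely on; the global fetch Response satisfies it.
type Fetcher = (url: string) => Promise<{ status: number }>;

const sleep = (ms: number) => new Promise<void>((resolve) => setTimeout(resolve, ms));

// Poll `url` until it returns HTTP 200 or we run out of attempts.
// Connection errors (container still booting) count as a failed attempt.
async function waitForHttpOk(
  url: string,
  fetcher: Fetcher,
  attempts = 10,
  delayMs = 1000,
): Promise<boolean> {
  for (let i = 0; i < attempts; i++) {
    try {
      const res = await fetcher(url);
      if (res.status === 200) return true;
    } catch {
      // Not listening yet; fall through and retry.
    }
    if (i < attempts - 1) await sleep(delayMs);
  }
  return false;
}
```

Usage against the real container would look like `waitForHttpOk("http://localhost:9988/", (u) => fetch(u)).then((ok) => console.log(ok ? "up" : "down"))`.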

Running in Kubernetes

I’ll show this a few different ways.

Dockerhub

Let’s say you don’t have a self-hosted container registry (CR) or a secured cloud one, and are instead using a “free” one like Docker Hub.

Docker Hub requires that we namespace our images (in my case, under `idjohnson`). We only need to tag and push (if it’s been a while, you might need to run `docker login` first).
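The only thing that really changes between registries is the image reference. When no registry host is given, Docker assumes Docker Hub (`docker.io`); the push output below confirms this for `idjohnson/testingnext`. Here is a small TypeScript sketch that builds the three references used in this post. The helpers are illustrative, not a Docker API. The GHCR reference is the one I guess at later from the workflow logs.

```typescript
// Parts of an image reference: [registry/]namespace/name:tag
interface ImageRef {
  registry?: string; // omitted => Docker Hub (docker.io)
  namespace: string;
  name: string;
  tag: string;
}

// Short form, as you would type it for docker tag / docker push.
function formatRef({ registry, namespace, name, tag }: ImageRef): string {
  const path = `${namespace}/${name}:${tag}`;
  return registry ? `${registry}/${path}` : path;
}

// Fully qualified form, showing where an unqualified ref actually goes.
function canonicalRef(ref: ImageRef): string {
  return formatRef({ ...ref, registry: ref.registry ?? "docker.io" });
}

const refs: ImageRef[] = [
  { namespace: "idjohnson", name: "testingnext", tag: "0.0.1" },
  { registry: "harbor.freshbrewed.science", namespace: "freshbrewedprivate", name: "testingnext", tag: "0.0.1" },
  { registry: "ghcr.io", namespace: "idjohnson", name: "nodesite", tag: "main" },
];

for (const r of refs) console.log(formatRef(r), "->", canonicalRef(r));
```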

builder@DESKTOP-QADGF36:~/Workspaces/nodesite/freshbrewed-app$ docker images | head -n10

REPOSITORY TAG IMAGE ID CREATED SIZE

testingnext latest 039587923b4f 4 minutes ago 1.57GB

simple-static-test latest d068b46cd082 9 days ago 142MB

<none> <none> 5129defec14e 9 days ago 142MB

harbor.freshbrewed.science/freshbrewedprivate/coboladder 0.0.3 0677d41bc8a0 3 weeks ago 930MB

registry.gitlab.com/isaac.johnson/dockerwithtests2 latest 35d7a213e3e2 4 weeks ago 1.02GB

idjohnson/azdoagent 0.1.0 6593aa12f6c1 5 weeks ago 1.23GB

azdoagenttest 0.1.0 6593aa12f6c1 5 weeks ago 1.23GB

idjohnson/azdoagent 0.0.1 75a3ccb4c979 5 weeks ago 911MB

azdoagenttest 0.0.1 75a3ccb4c979 5 weeks ago 911MB

builder@DESKTOP-QADGF36:~/Workspaces/nodesite/freshbrewed-app$ docker tag testingnext:latest idjohnson/testingnext:0.0.1

builder@DESKTOP-QADGF36:~/Workspaces/nodesite/freshbrewed-app$ docker push idjohnson/testingnext:0.0.1

The push refers to repository [docker.io/idjohnson/testingnext]

ea70412760a3: Pushed

d5e795f29fd5: Pushing [===========================> ] 350MB/626.3MB

0e20664dc028: Pushing [=============================> ] 441.5MB/737.9MB

c986a441510f: Pushed

a23eeb46f872: Pushed

6da8aeca0ea2: Pushed

89a9ebe45556: Pushed

38f00bde5453: Pushed

9c8958a02c6e: Mounted from library/node

b5a53db2b893: Mounted from library/node

cdb4a052fad7: Mounted from library/node

4fc242d58285: Mounted from library/node

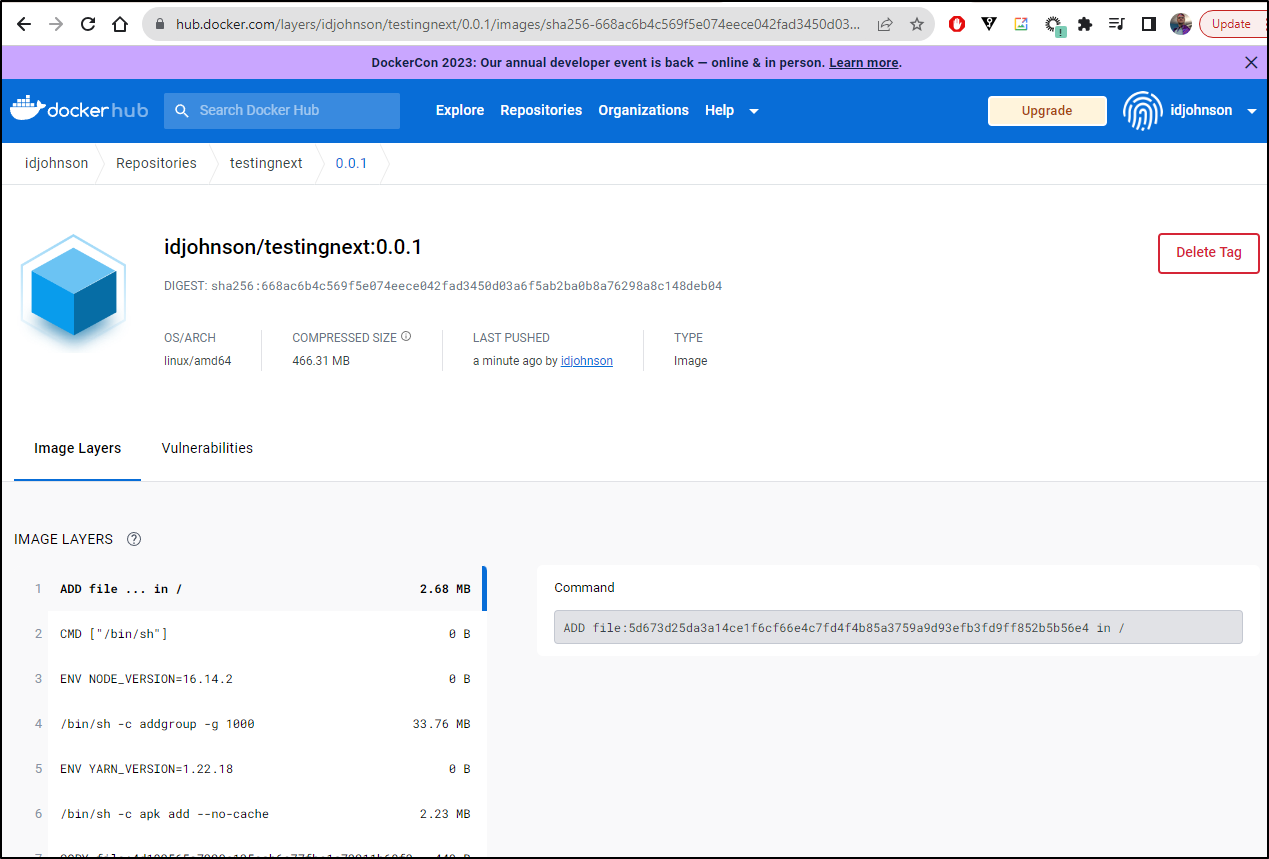

I can see it up there now

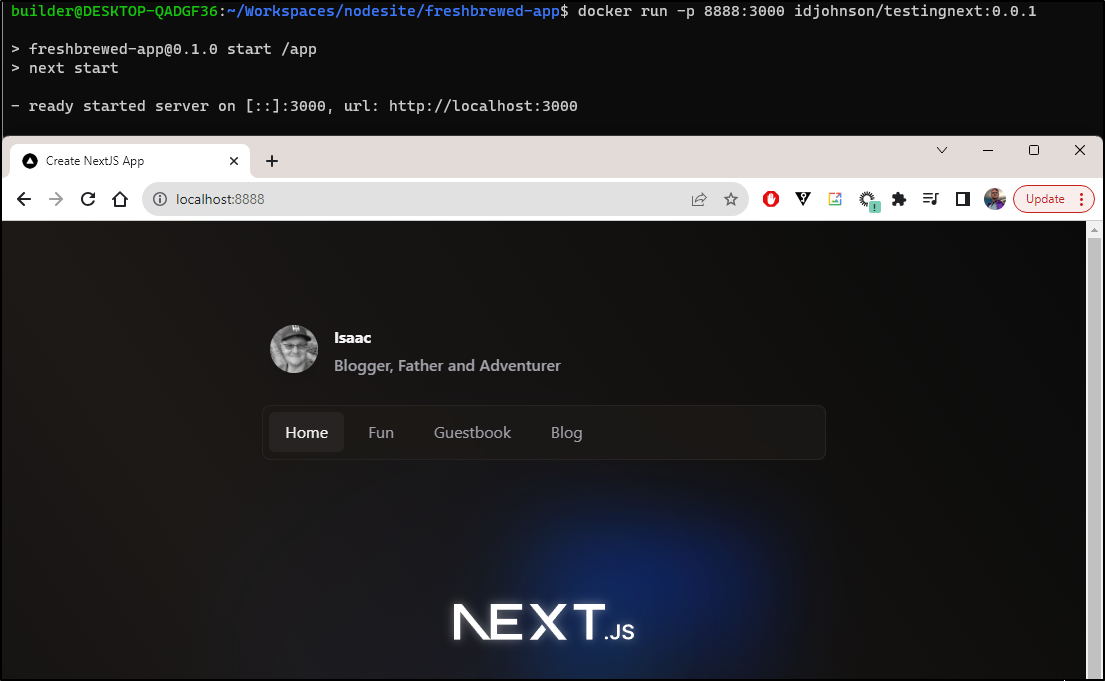

And as basic as it may seem, we can now pull and run that image from anywhere

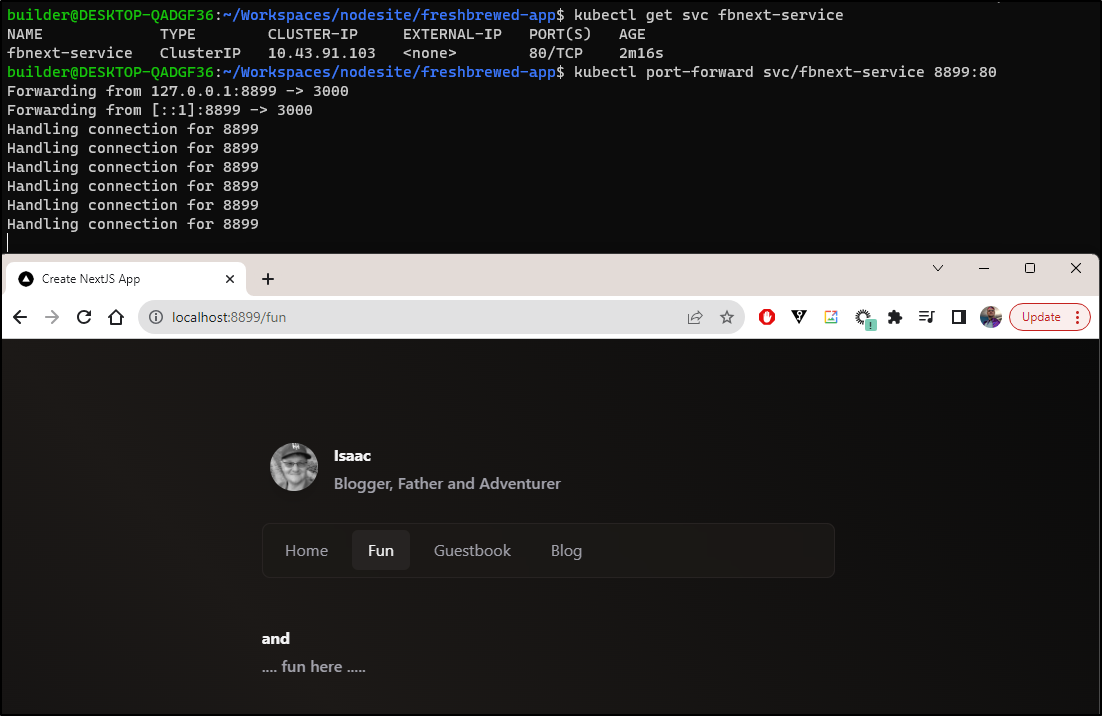

To launch it in Kubernetes, we can create a Deployment and a Service (the Service is what an Ingress would route to):

builder@DESKTOP-QADGF36:~/Workspaces/nodesite/freshbrewed-app$ cat deploy/k8sRS.yaml

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: fbnext-deployment
spec:
  replicas: 3 # You can adjust the number of replicas as needed
  selector:
    matchLabels:
      app: fbnext-app
  template:
    metadata:
      labels:
        app: fbnext-app
    spec:
      containers:
      - name: fbnext-container
        image: idjohnson/testingnext:0.0.1
        ports:
        - containerPort: 3000
---
apiVersion: v1
kind: Service
metadata:
  name: fbnext-service
spec:
  selector:
    app: fbnext-app
  ports:
    - protocol: TCP
      port: 80
      targetPort: 3000
  type: ClusterIP
```
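The key wiring in that manifest is label matching. The Deployment’s `selector.matchLabels` must match the pod template’s labels, and the Service’s `selector` picks up pods by that same label. As a hedged sketch, the same two objects can be built in TypeScript and emitted as a JSON `List`, which `kubectl apply -f -` also accepts. The `buildManifests` and `selects` helpers and the consistency check are my own additions, not part of the original setup.

```typescript
type Labels = Record<string, string>;

// Build the Deployment + Service pair from the YAML above as plain objects.
function buildManifests(image: string, replicas = 3) {
  const labels: Labels = { app: "fbnext-app" };
  const deployment = {
    apiVersion: "apps/v1",
    kind: "Deployment",
    metadata: { name: "fbnext-deployment" },
    spec: {
      replicas,
      selector: { matchLabels: labels },
      template: {
        metadata: { labels },
        spec: {
          containers: [{ name: "fbnext-container", image, ports: [{ containerPort: 3000 }] }],
        },
      },
    },
  };
  const service = {
    apiVersion: "v1",
    kind: "Service",
    metadata: { name: "fbnext-service" },
    spec: {
      selector: labels,
      ports: [{ protocol: "TCP", port: 80, targetPort: 3000 }],
      type: "ClusterIP",
    },
  };
  return { deployment, service };
}

// True if every key/value in `selector` is present in `labels`.
function selects(selector: Labels, labels: Labels): boolean {
  return Object.entries(selector).every(([k, v]) => labels[k] === v);
}

const { deployment, service } = buildManifests("idjohnson/testingnext:0.0.1");
if (!selects(service.spec.selector, deployment.spec.template.metadata.labels)) {
  throw new Error("Service selector does not match the pod labels");
}
// Pipe this output to `kubectl apply -f -`.
console.log(JSON.stringify({ apiVersion: "v1", kind: "List", items: [deployment, service] }, null, 2));
```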

I’ll launch this three-replica Deployment in a sample cluster:

builder@DESKTOP-QADGF36:~/Workspaces/nodesite/freshbrewed-app$ kubectl apply -f deploy/k8sRS.yaml

deployment.apps/fbnext-deployment created

service/fbnext-service created

builder@DESKTOP-QADGF36:~/Workspaces/nodesite/freshbrewed-app$ kubectl get pods | grep fbnext

fbnext-deployment-c6dcf67db-hxhtf 0/1 ContainerCreating 0 8s

fbnext-deployment-c6dcf67db-xzbqm 0/1 ContainerCreating 0 8s

fbnext-deployment-c6dcf67db-hh96d 0/1 ContainerCreating 0 8s

After a bit (about a minute and a half here), all three are running:

builder@DESKTOP-QADGF36:~/Workspaces/nodesite/freshbrewed-app$ kubectl get pods | grep fbnext

fbnext-deployment-c6dcf67db-hxhtf 0/1 ContainerCreating 0 38s

fbnext-deployment-c6dcf67db-xzbqm 0/1 ContainerCreating 0 38s

fbnext-deployment-c6dcf67db-hh96d 0/1 ContainerCreating 0 38s

builder@DESKTOP-QADGF36:~/Workspaces/nodesite/freshbrewed-app$ kubectl get pods | grep fbnext

fbnext-deployment-c6dcf67db-hxhtf 0/1 ContainerCreating 0 63s

fbnext-deployment-c6dcf67db-hh96d 0/1 ContainerCreating 0 63s

fbnext-deployment-c6dcf67db-xzbqm 1/1 Running 0 63s

builder@DESKTOP-QADGF36:~/Workspaces/nodesite/freshbrewed-app$ kubectl get pods | grep fbnext

fbnext-deployment-c6dcf67db-hh96d 0/1 ContainerCreating 0 71s

fbnext-deployment-c6dcf67db-xzbqm 1/1 Running 0 71s

fbnext-deployment-c6dcf67db-hxhtf 1/1 Running 0 71s

builder@DESKTOP-QADGF36:~/Workspaces/nodesite/freshbrewed-app$ kubectl get pods | grep fbnext

fbnext-deployment-c6dcf67db-xzbqm 1/1 Running 0 98s

fbnext-deployment-c6dcf67db-hxhtf 1/1 Running 0 98s

fbnext-deployment-c6dcf67db-hh96d 1/1 Running 0 98s

And that is now working just dandy

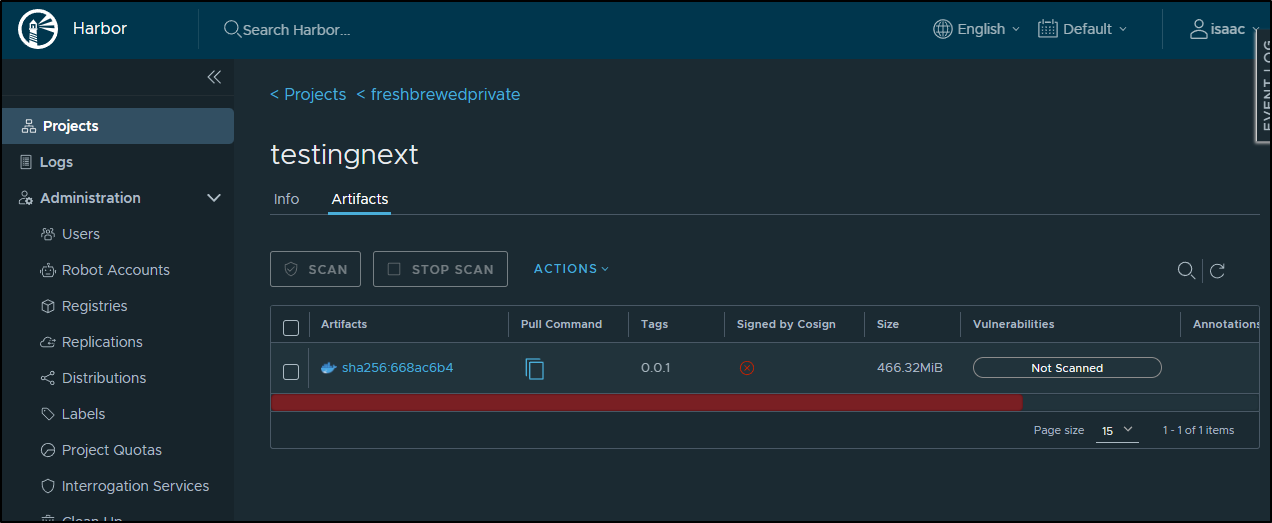
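Instead of re-running `kubectl get pods` until everything shows Running, `kubectl rollout status deployment/fbnext-deployment` waits for the rollout to finish. If you’d rather script the check yourself, here is a rough TypeScript sketch. It parses the default `kubectl get pods` columns (NAME, READY, STATUS, RESTARTS, AGE) as shown above. The helper names are my own.

```typescript
interface PodRow { name: string; ready: number; total: number; status: string }

// Parse lines like "fbnext-deployment-c6dcf67db-xzbqm 1/1 Running 0 98s".
// The header line is skipped because its READY column is not "n/m".
function parsePods(output: string): PodRow[] {
  return output
    .split("\n")
    .map((line) => line.trim().split(/\s+/))
    .filter((cols) => cols.length >= 3 && /^\d+\/\d+$/.test(cols[1] ?? ""))
    .map(([name, readyCol, status]) => {
      const [ready, total] = readyCol!.split("/").map(Number);
      return { name: name!, ready: ready!, total: total!, status: status! };
    });
}

// Every pod must be Running with all of its containers ready.
function allReady(output: string): boolean {
  const pods = parsePods(output);
  return pods.length > 0 && pods.every((p) => p.status === "Running" && p.ready === p.total);
}
```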

Harbor

Now let’s use a private CR, like Harbor, ACR, ECR or GaaaaRRR!

Seriously, I will never stop finding the name of Google’s GCR replacement, Artifact Registry (GAR), ridiculous.

Okay, on to a secured CR. The same rules apply: log in if needed, then tag and push.

builder@DESKTOP-QADGF36:~/Workspaces/nodesite/freshbrewed-app$ docker tag testingnext:latest harbor.freshbrewed.science/freshbrewedprivate/testingnext:0.0.1

builder@DESKTOP-QADGF36:~/Workspaces/nodesite/freshbrewed-app$ docker push harbor.freshbrewed.science/freshbrewedprivate/testingnext:0.0.1

The push refers to repository [harbor.freshbrewed.science/freshbrewedprivate/testingnext]

ea70412760a3: Pushed

d5e795f29fd5: Pushed

0e20664dc028: Pushed

c986a441510f: Pushed

a23eeb46f872: Pushed

6da8aeca0ea2: Pushed

89a9ebe45556: Pushed

38f00bde5453: Pushed

9c8958a02c6e: Pushed

b5a53db2b893: Pushed

cdb4a052fad7: Pushed

4fc242d58285: Mounted from freshbrewedprivate/pubsub-node-subscriber

0.0.1: digest: sha256:668ac6b4c569f5e074eece042fad3450d03a6f5ab2ba0b8a76298a8c148deb04 size: 2835

The syntax for creating a Docker registry secret is:

```shell
kubectl create secret docker-registry secret-tiger-docker \
  --docker-email=tiger@acme.example \
  --docker-username=tiger \
  --docker-password=pass1234 \
  --docker-server=my-registry.example:5000
```
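Under the hood, that command stores a base64-encoded JSON document in the secret’s `.dockerconfigjson` key. Here is a TypeScript sketch of that shape using the same example values. My understanding is that the layout uses an `auths` map keyed by registry host, with `auth` being base64 of `username:password`. Treat the exact fields as an approximation of what kubectl writes, not a spec. The sketch uses the global `btoa` (Node 16+), which only handles Latin-1 text; that is fine for these ASCII values.

```typescript
// Shape of a .dockerconfigjson payload: one entry per registry host under "auths".
function dockerConfigJson(server: string, username: string, password: string, email: string): string {
  const config = {
    auths: {
      [server]: {
        username,
        password,
        email,
        auth: btoa(`${username}:${password}`), // base64("user:pass")
      },
    },
  };
  return JSON.stringify(config);
}

// Wrap it in a Secret manifest; values under `data` must themselves be base64.
const secret = {
  apiVersion: "v1",
  kind: "Secret",
  metadata: { name: "secret-tiger-docker" },
  type: "kubernetes.io/dockerconfigjson",
  data: {
    ".dockerconfigjson": btoa(
      dockerConfigJson("my-registry.example:5000", "tiger", "pass1234", "tiger@acme.example"),
    ),
  },
};

console.log(JSON.stringify(secret, null, 2));
```

This is also why the masked YAML below only shows one opaque `.dockerconfigjson` value: the credentials are inside it.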

I generally keep one handy in another cluster, so I can export it and pull it into this one:

$ kubectl get secret myharborreg -o yaml > myharborreg

$ cat myharborreg | sed 's/xx.*==/*****masked****/g'

apiVersion: v1

data:

.dockerconfigjson: *****masked****

kind: Secret

metadata:

annotations:

field.cattle.io/projectId: local:p-rc2tg

kubectl.kubernetes.io/last-applied-configuration: |

{"apiVersion":"v1","data":{".dockerconfigjson":"*****masked****"},"kind":"Secret","metadata":{"annotations":{},"creationTimestamp":"2022-06-17T14:24:31Z","name":"myharborreg","namespace":"default","resourceVersion":"731133","uid":"24928f04-cb8a-483e-b44f-aeaa72ef27f2"},"type":"kubernetes.io/dockerconfigjson"}

creationTimestamp: "2022-07-26T12:02:20Z"

name: myharborreg

namespace: default

resourceVersion: "91447233"

uid: 42fd210b-ce02-4cb0-9e10-90add56ce136

type: kubernetes.io/dockerconfigjson

Then I hop to the sample cluster and apply it

$ kubectx mac81

Switched to context "mac81".

$ kubectl apply -f myharborreg

secret/myharborreg created
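The exported YAML carries server-managed fields from the source cluster (`uid`, `resourceVersion`, `creationTimestamp`). It also carries tool annotations such as Rancher’s `field.cattle.io/projectId` and `kubectl.kubernetes.io/last-applied-configuration`. The apply above succeeded anyway, but stripping those before copying a secret between clusters keeps the target clean. Below is a TypeScript sketch operating on the object form (for example, the output of `kubectl get secret myharborreg -o json`). Which fields to drop is my own choice, not an official list.

```typescript
interface K8sObject {
  apiVersion: string;
  kind: string;
  metadata: Record<string, unknown> & { annotations?: Record<string, string> };
  [key: string]: unknown;
}

// Fields the API server assigns; they belong to the source cluster.
const SERVER_FIELDS = ["uid", "resourceVersion", "creationTimestamp", "managedFields", "generation"];
// Annotations written by tools in the source cluster.
const DROP_ANNOTATIONS = ["kubectl.kubernetes.io/last-applied-configuration", "field.cattle.io/projectId"];

// Return a copy that is safe to apply in another cluster (input is not mutated).
function portable(obj: K8sObject): K8sObject {
  const metadata: K8sObject["metadata"] = { ...obj.metadata };
  for (const f of SERVER_FIELDS) delete metadata[f];
  if (metadata.annotations) {
    const annotations = { ...metadata.annotations };
    for (const a of DROP_ANNOTATIONS) delete annotations[a];
    if (Object.keys(annotations).length > 0) metadata.annotations = annotations;
    else delete metadata.annotations;
  }
  return { ...obj, metadata };
}
```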

I can then update the image and add an `imagePullSecrets` block so the pods can pull the private image:

$ cat deploy/k8sRS.yaml

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: fbnext-deployment
spec:
  replicas: 3 # You can adjust the number of replicas as needed
  selector:
    matchLabels:
      app: fbnext-app
  template:
    metadata:
      labels:
        app: fbnext-app
    spec:
      containers:
      - name: fbnext-container
        image: harbor.freshbrewed.science/freshbrewedprivate/testingnext:0.0.1
        ports:
        - containerPort: 3000
      imagePullSecrets:
      - name: myharborreg
---
apiVersion: v1
kind: Service
metadata:
  name: fbnext-service
spec:
  selector:
    app: fbnext-app
  ports:
    - protocol: TCP
      port: 80
      targetPort: 3000
  type: ClusterIP
```

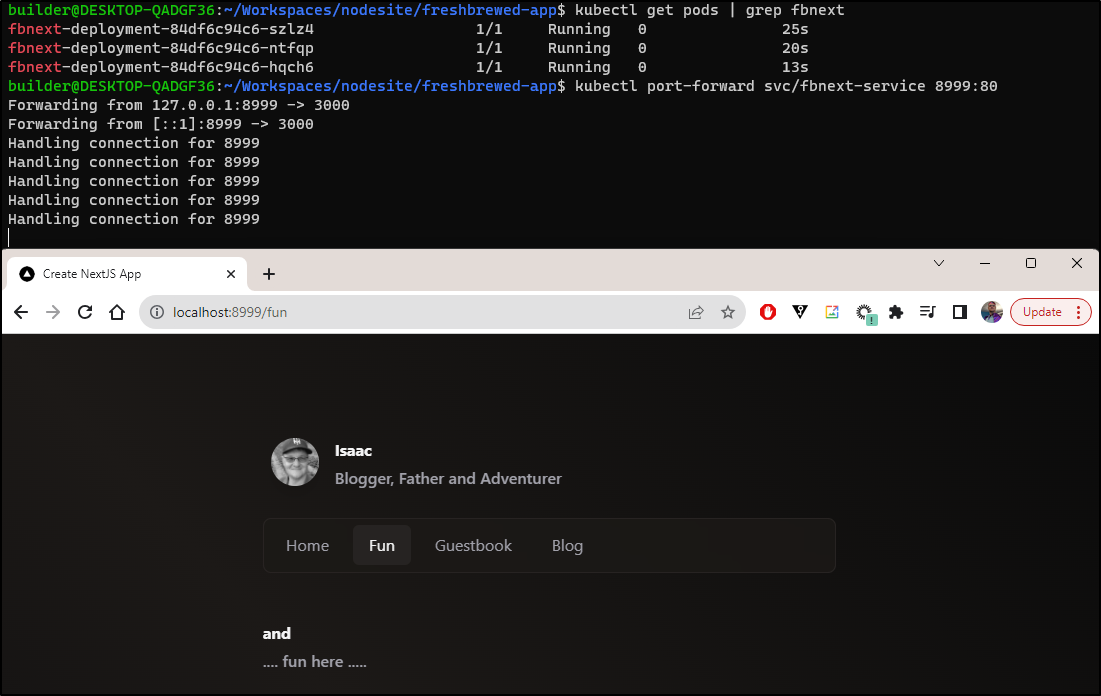

Applying the updated file reconfigures the deployment to use the new image:

builder@DESKTOP-QADGF36:~/Workspaces/nodesite/freshbrewed-app$ kubectl apply -f deploy/k8sRS.yaml

deployment.apps/fbnext-deployment configured

service/fbnext-service unchanged

builder@DESKTOP-QADGF36:~/Workspaces/nodesite/freshbrewed-app$ kubectl get pods | grep fbnext

fbnext-deployment-c6dcf67db-xzbqm 1/1 Running 0 16m

fbnext-deployment-c6dcf67db-hxhtf 1/1 Running 0 16m

fbnext-deployment-84df6c94c6-szlz4 1/1 Running 0 6s

fbnext-deployment-c6dcf67db-hh96d 1/1 Terminating 0 16m

fbnext-deployment-84df6c94c6-ntfqp 0/1 Pending 0 1s

Since this is my own container registry, local to my network and without rate limiting, the rollout goes much faster.

builder@DESKTOP-QADGF36:~/Workspaces/nodesite/freshbrewed-app$ kubectl get pods | grep fbnext

fbnext-deployment-84df6c94c6-szlz4 1/1 Running 0 25s

fbnext-deployment-84df6c94c6-ntfqp 1/1 Running 0 20s

fbnext-deployment-84df6c94c6-hqch6 1/1 Running 0 13s

That works just as well

However, I’m not thrilled with the size of my Docker image. The Dockerfile was quick and dirty, and as a result the image is a hefty 466.32 MB compressed (1.57 GB locally, according to `docker images`).

First, I’ll pause to push the version we’ve tested up to GitHub.

Dockerfile optimization

First, a pro tip on debugging.

I kept getting the entry point wrong:

builder@DESKTOP-QADGF36:~/Workspaces/nodesite/freshbrewed-app$ docker run -p 9988:3000 testingnext:0.0.2

node:internal/modules/cjs/loader:936

throw err;

^

Error: Cannot find module '/app/server.js'

at Function.Module._resolveFilename (node:internal/modules/cjs/loader:933:15)

at Function.Module._load (node:internal/modules/cjs/loader:778:27)

at Function.executeUserEntryPoint [as runMain] (node:internal/modules/run_main:77:12)

at node:internal/main/run_main_module:17:47 {

code: 'MODULE_NOT_FOUND',

requireStack: []

}

builder@DESKTOP-QADGF36:~/Workspaces/nodesite/freshbrewed-app$ docker run -p 9988:3000 testingnext:0.0.2

node:internal/modules/cjs/loader:936

throw err;

^

Error: Cannot find module '/app/pnpm'

at Function.Module._resolveFilename (node:internal/modules/cjs/loader:933:15)

at Function.Module._load (node:internal/modules/cjs/loader:778:27)

at Function.executeUserEntryPoint [as runMain] (node:internal/modules/run_main:77:12)

at node:internal/main/run_main_module:17:47 {

code: 'MODULE_NOT_FOUND',

requireStack: []

}

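I needed to debug the container interactively, but of course you can’t exec into a container that has already crashed.

The workaround is to temporarily swap the Dockerfile’s last instruction for one that keeps the container alive (here, tailing `/dev/null`):

```dockerfile
ENTRYPOINT ["tail", "-f", "/dev/null"]
#CMD ["pnpm", "start"]
```

Then you can run it detached and exec into a shell. Note that Alpine images ship `/bin/sh`, not `/bin/bash`, as my first attempt shows: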

builder@DESKTOP-QADGF36:~/Workspaces/nodesite/freshbrewed-app$ docker run -d -p 9988:3000 testingnext:0.0.2

0f31cc6e25ae8b4baf8f90d3b1fdd254a951da38af0166c12d1a0d8b2106c86c

builder@DESKTOP-QADGF36:~/Workspaces/nodesite/freshbrewed-app$ docker exec -it 0f31cc6e25ae8b4baf8f90d3b1fdd254a951da38af0166c12d1a0d8b2106c86c /bin/bash

OCI runtime exec failed: exec failed: unable to start container process: exec: "/bin/bash": stat /bin/bash: no such file or directory: unknown

builder@DESKTOP-QADGF36:~/Workspaces/nodesite/freshbrewed-app$ docker exec -it 0f31cc6e25ae8b4baf8f90d3b1fdd254a951da38af0166c12d1a0d8b2106c86c /bin/sh

/app $ ls

app font-manifest.json middleware-manifest.json next-font-manifest.json public webpack-runtime.js

app-paths-manifest.json functions-config-manifest.json middleware-react-loadable-manifest.js pages server-reference-manifest.js

chunks middleware-build-manifest.js next-font-manifest.js pages-manifest.json server-reference-manifest.json

/app $

It took a few iterations, but the working multi-stage Dockerfile ultimately looked like this:

```dockerfile
FROM node:16.14.2-alpine AS BUILD_IMAGE
WORKDIR /app
COPY package.json pnpm-lock.yaml ./
# pnpm 9+ no longer supports Node 16, so pin the 8.x line used in this post
RUN npm install -g pnpm@8

# install dependencies
RUN pnpm install
COPY . .

# build
RUN pnpm build

# remove dev dependencies
RUN pnpm prune --production

FROM node:16.14.2-alpine
WORKDIR /app
RUN npm install -g pnpm@8

# copy from build image
COPY --from=BUILD_IMAGE /app/package.json ./package.json
COPY --from=BUILD_IMAGE /app/node_modules ./node_modules
COPY --from=BUILD_IMAGE /app/.next ./.next
COPY --from=BUILD_IMAGE /app/public ./public

EXPOSE 3000

# ENTRYPOINT ["tail", "-f", "/dev/null"]
CMD ["pnpm", "start"]
```

I could then launch it and see it serving on the mapped port.

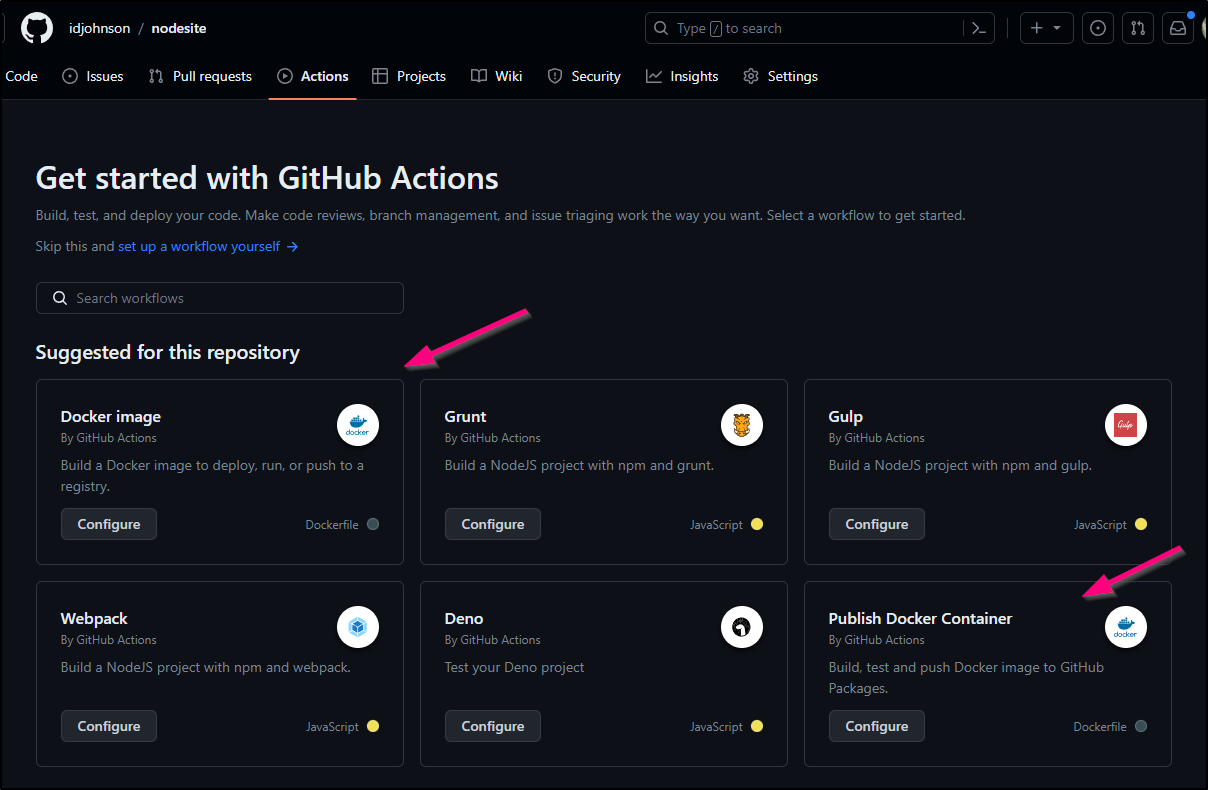

GitHub Actions

Let’s start from a basic Docker image GitHub Actions template.

I debated which way to go: build and push to my own CR, or use GitHub Packages.

To try something new, I’ll start with GitHub Packages.

This is the YAML it created automatically:

```yaml
name: Docker

# This workflow uses actions that are not certified by GitHub.
# They are provided by a third-party and are governed by
# separate terms of service, privacy policy, and support
# documentation.

on:
  schedule:
    - cron: '33 2 * * *'
  push:
    branches: [ "main" ]
    # Publish semver tags as releases.
    tags: [ 'v*.*.*' ]
  pull_request:
    branches: [ "main" ]

env:
  # Use docker.io for Docker Hub if empty
  REGISTRY: ghcr.io
  # github.repository as <account>/<repo>
  IMAGE_NAME: ${{ github.repository }}

jobs:
  build:

    runs-on: ubuntu-latest
    permissions:
      contents: read
      packages: write
      # This is used to complete the identity challenge
      # with sigstore/fulcio when running outside of PRs.
      id-token: write

    steps:
      - name: Checkout repository
        uses: actions/checkout@v3

      # Install the cosign tool except on PR
      # https://github.com/sigstore/cosign-installer
      - name: Install cosign
        if: github.event_name != 'pull_request'
        uses: sigstore/cosign-installer@6e04d228eb30da1757ee4e1dd75a0ec73a653e06 #v3.1.1
        with:
          cosign-release: 'v2.1.1'

      # Workaround: https://github.com/docker/build-push-action/issues/461
      - name: Setup Docker buildx
        uses: docker/setup-buildx-action@79abd3f86f79a9d68a23c75a09a9a85889262adf

      # Login against a Docker registry except on PR
      # https://github.com/docker/login-action
      - name: Log into registry ${{ env.REGISTRY }}
        if: github.event_name != 'pull_request'
        uses: docker/login-action@28218f9b04b4f3f62068d7b6ce6ca5b26e35336c
        with:
          registry: ${{ env.REGISTRY }}
          username: ${{ github.actor }}
          password: ${{ secrets.GITHUB_TOKEN }}

      # Extract metadata (tags, labels) for Docker
      # https://github.com/docker/metadata-action
      - name: Extract Docker metadata
        id: meta
        uses: docker/metadata-action@98669ae865ea3cffbcbaa878cf57c20bbf1c6c38
        with:
          images: ${{ env.REGISTRY }}/${{ env.IMAGE_NAME }}

      # Build and push Docker image with Buildx (don't push on PR)
      # https://github.com/docker/build-push-action
      - name: Build and push Docker image
        id: build-and-push
        uses: docker/build-push-action@ac9327eae2b366085ac7f6a2d02df8aa8ead720a
        with:
          context: .
          push: ${{ github.event_name != 'pull_request' }}
          tags: ${{ steps.meta.outputs.tags }}
          labels: ${{ steps.meta.outputs.labels }}
          cache-from: type=gha
          cache-to: type=gha,mode=max

      # Sign the resulting Docker image digest except on PRs.
      # This will only write to the public Rekor transparency log when the Docker
      # repository is public to avoid leaking data. If you would like to publish
      # transparency data even for private images, pass --force to cosign below.
      # https://github.com/sigstore/cosign
      - name: Sign the published Docker image
        if: ${{ github.event_name != 'pull_request' }}
        env:
          # https://docs.github.com/en/actions/security-guides/security-hardening-for-github-actions#using-an-intermediate-environment-variable
          TAGS: ${{ steps.meta.outputs.tags }}
          DIGEST: ${{ steps.build-and-push.outputs.digest }}
        # This step uses the identity token to provision an ephemeral certificate
        # against the sigstore community Fulcio instance.
        run: echo "${TAGS}" | xargs -I {} cosign sign --yes {}@${DIGEST}
```
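The last step signs by digest rather than by tag. `metadata-action` outputs one tag per line, and `xargs -I {}` turns each line into `cosign sign --yes <tag>@<digest>`. Pinning the digest ties the signature to the exact image that was just built, even if a tag is moved later. Below is the same expansion in TypeScript, purely to illustrate what the shell pipeline produces. The function name is mine, and the digest in the test is a made-up placeholder.

```typescript
// Mirror of: echo "${TAGS}" | xargs -I {} cosign sign --yes {}@${DIGEST}
// One command per non-empty line of the TAGS output.
function cosignCommands(tags: string, digest: string): string[] {
  return tags
    .split("\n")
    .map((t) => t.trim())
    .filter((t) => t.length > 0)
    .map((t) => `cosign sign --yes ${t}@${digest}`);
}
```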

I like most of this, but not the cron schedule; I don’t need daily builds.

I removed the schedule block and saved.

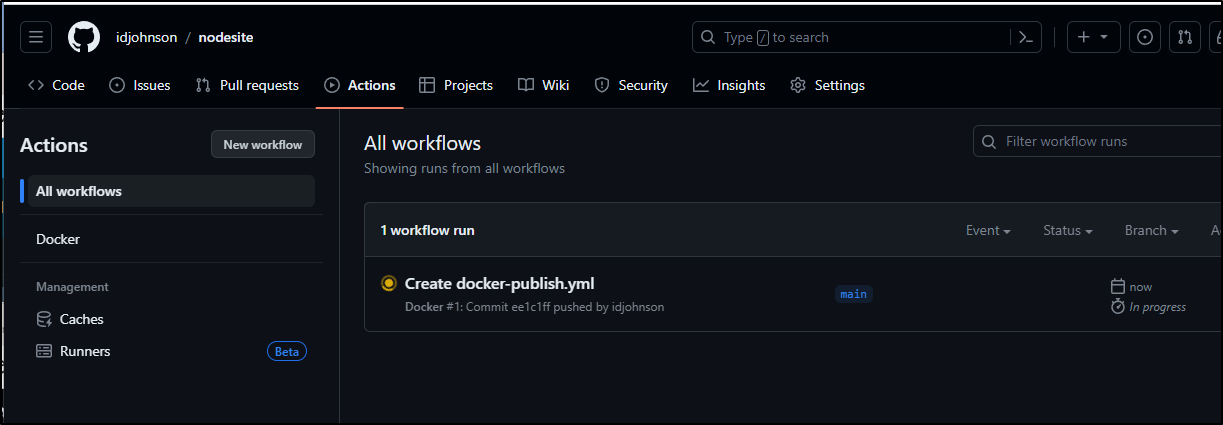

It initially gave me an error about a missing workflows folder, but after a refresh it showed the GitHub Action building.

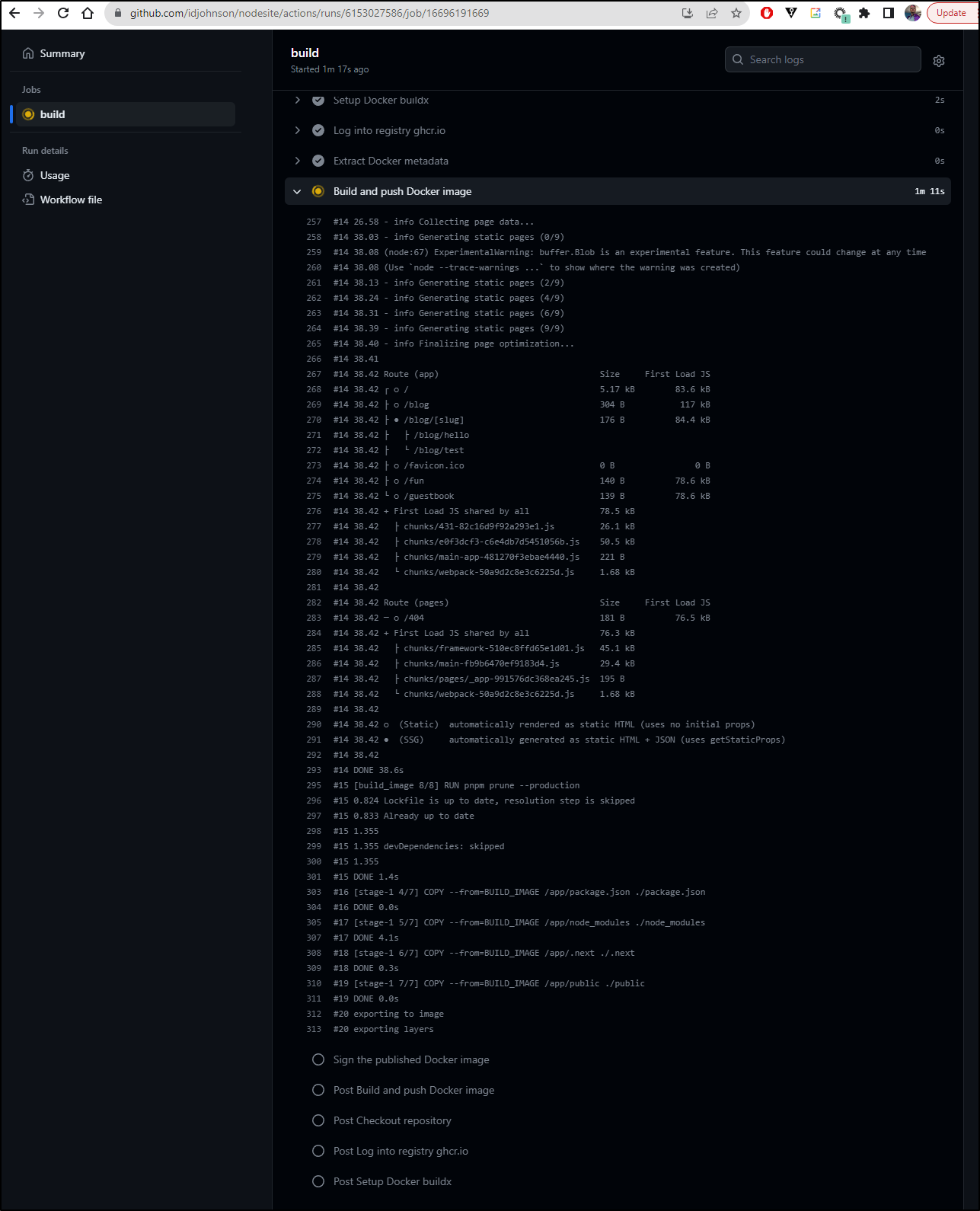

I watched it build, albeit a bit slowly

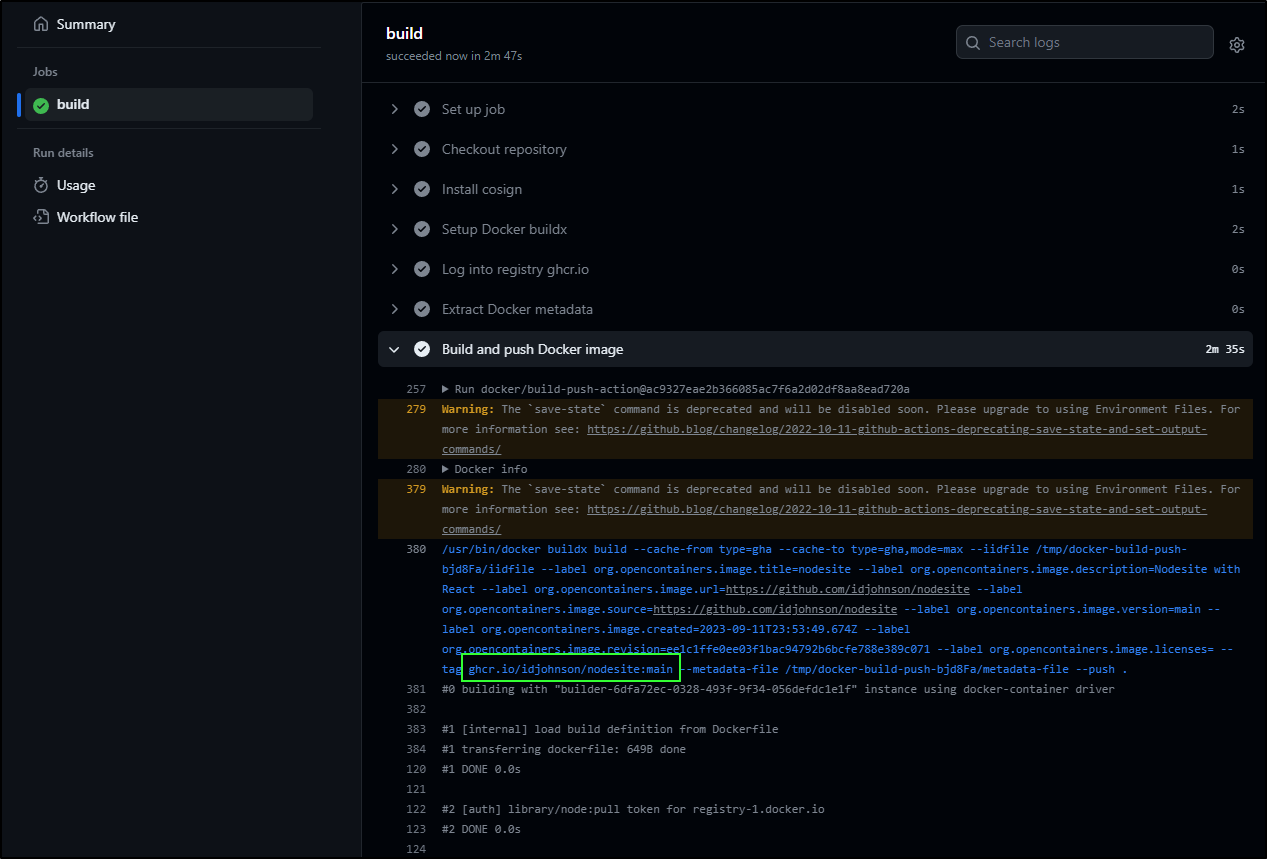

Based on the logs, my guess is that it stored the image as `ghcr.io/idjohnson/nodesite:main`.

A quick pull shows it works just dandy
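The `main` tag fits how `docker/metadata-action` tags images by default:

- a push to a branch gets the branch name;
- a git tag push gets the tag name;
- a pull request gets `pr-<number>`, though this workflow doesn’t push on PRs.

Below is a simplified TypeScript model of just those three cases. It is not the action’s real implementation. It infers the event from the ref alone, ignores `latest` and custom `tags:` rules, and the replacement of invalid characters such as `/` is my assumption.

```typescript
// Simplified model of metadata-action's default tag for common GITHUB_REF values:
// refs/heads/main, refs/tags/v1.2.3, refs/pull/7/merge
function defaultTag(ref: string): string | undefined {
  const branch = ref.match(/^refs\/heads\/(.+)$/);
  // Assumption: characters not allowed in a Docker tag become "-".
  if (branch) return branch[1]!.replace(/[^\w.-]/g, "-");

  const gitTag = ref.match(/^refs\/tags\/(.+)$/);
  if (gitTag) return gitTag[1];

  const pr = ref.match(/^refs\/pull\/(\d+)\//);
  if (pr) return `pr-${pr[1]}`;

  return undefined; // other ref types are out of scope for this sketch
}

// Assumed image name, taken from the guess above.
const image = "ghcr.io/idjohnson/nodesite";
console.log(`${image}:${defaultTag("refs/heads/main")}`); // ghcr.io/idjohnson/nodesite:main
```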

CD to Kubernetes

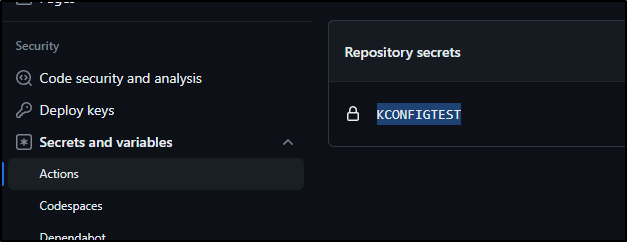

I’ll set up a base64-encoded kubeconfig as a GitHub Actions secret.

This is a demo cluster which, while functional, now fails TLS verification because its external IP keeps changing (thanks, Xfinity). That’s why the kubectl commands below pass `--insecure-skip-tls-verify`.

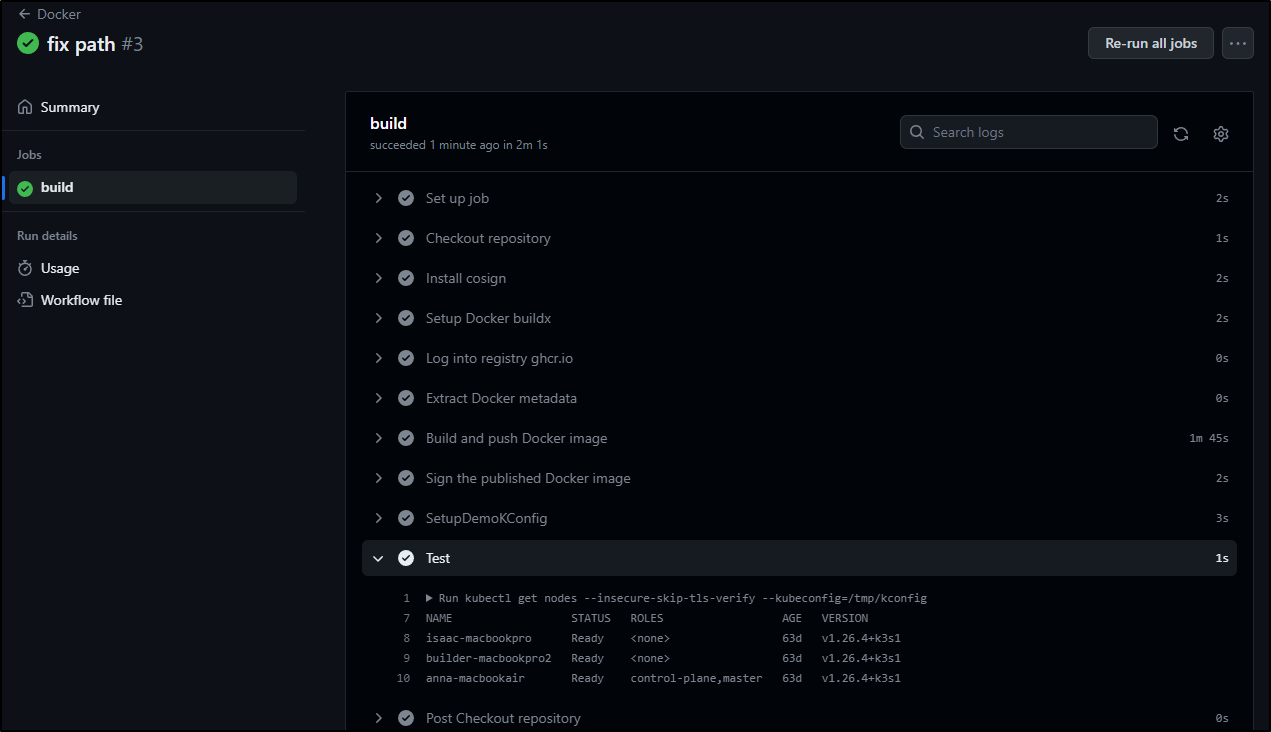
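GitHub secrets are plain strings. The kubeconfig is base64-encoded on the way in and decoded in the workflow with `base64 --decode`. Below is the round trip sketched in TypeScript with the global `btoa`/`atob`, handling UTF-8 explicitly. Reading the real file (for example `~/.kube/config`) is left out, and the function names are hypothetical.

```typescript
// Encode text (UTF-8) as a single-line base64 string suitable for a secret value.
function encodeSecret(text: string): string {
  const bytes = new TextEncoder().encode(text);
  let binary = "";
  for (let i = 0; i < bytes.length; i++) binary += String.fromCharCode(bytes[i]!);
  return btoa(binary);
}

// Inverse operation, equivalent to `base64 --decode` in the workflow step.
function decodeSecret(encoded: string): string {
  const binary = atob(encoded.trim()); // tolerate a trailing newline from copy/paste
  const bytes = Uint8Array.from(binary, (c) => c.charCodeAt(0));
  return new TextDecoder().decode(bytes);
}
```

Keeping the encoded value on one line avoids surprises when the workflow echoes it into `base64 --decode`.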

Now I just need to add steps at the end of the workflow file to decode the kubeconfig and use it:

```yaml
      - name: SetupDemoKConfig
        run: |
          echo $ | base64 --decode > /tmp/kconfig
          kubectl get nodes --insecure-skip-tls-verify --kubeconfig=/tmp/kconfig

          # Deploy
          kubectl apply -f ./deploy/k8sRSGH.yaml --insecure-skip-tls-verify --kubeconfig=/tmp/kconfig

      - name: Test
        run: |
          kubectl get nodes --insecure-skip-tls-verify --kubeconfig=/tmp/kconfig
```

I also added a basic deployment file at `deploy/k8sRSGH.yaml`.

The second step (`Test`) just shows that the decoded kubeconfig in `/tmp` persists between steps of the same job.

Running kubectl locally confirms that the kubeconfig worked and `fbnextgh-deployment` is up:

builder@DESKTOP-QADGF36:~/Workspaces/nodesite/freshbrewed-app$ kubectl get deployments

NAME READY UP-TO-DATE AVAILABLE AGE

ngrok 1/1 1 1 63d

vote-front-azure-vote-1688994153 1/1 1 1 63d

vote-back-azure-vote-1688994153 1/1 1 1 63d

my-opentelemetry-collector 1/1 1 1 51d

homarr-deployment 1/1 1 1 50d

gitea-memcached 1/1 1 1 56d

nginx 1/1 1 1 63d

zipkin 1/1 1 1 51d

act-runner 2/2 2 2 56d

nfs-subdir-external-provisioner 1/1 1 1 37d

fbnext-deployment 3/3 3 3 4d13h

fbnextgh-deployment 3/3 3 3 117s

builder@DESKTOP-QADGF36:~/Workspaces/nodesite/freshbrewed-app$ kubectl get pods | grep fbnextgh

fbnextgh-deployment-5d7564f6c9-bljht 1/1 Running 0 2m8s

fbnextgh-deployment-5d7564f6c9-8h9hz 1/1 Running 0 2m8s

fbnextgh-deployment-5d7564f6c9-jnq7p 1/1 Running 0 2m8s

Summary

We built an initial Dockerfile which, while working, produced a far too large image. We pushed that image to Docker Hub and to Harbor and ran it in a sample Kubernetes cluster, then optimized the Dockerfile over a few iterations with a multi-stage build. Lastly, we used GitHub Actions to build and push to the GitHub Container Registry (ghcr.io), then used the image both locally with Docker and in a workflow step that deploys to a Kubernetes cluster.

If we wanted to go the last mile, we would deploy to my production cluster and create a routable hello-world URL with an NGINX ingress. I don’t really want this up as a site in its current form, so I’ll save that for a later date.