Published: Jun 23, 2023 by Isaac Johnson

The topic of AKS upgrades has come up a few times for me lately: questions around how to upgrade, what is affected, and how to check and rotate Service Principal credentials. I figured it might be nice to just write it up for all.

We will cover AKS upgrades via the CLI, Portal and Terraform (using Terraform Cloud). We’ll run some live experiments and cover many different upgrade scenarios.

Creating an AKS cluster

Let’s just create a cluster. To keep it simple, we’ll use the AZ CLI

Resource Group

First, I create a Resource Group

$ az login

$ az account set --subscription 2f0469bd-d00c-4411-85e6-369915da6e54

$ az group create --name showAKSUpgrade --location centralus

Service Principal

Next, I’ll want to create a Service Principal (SP) (aka App Registration) I can use for the cluster

$ az ad sp create-for-rbac -n idjaksupg01sp --skip-assignment --output json

Option '--skip-assignment' has been deprecated and will be removed in a future release.

The output includes credentials that you must protect. Be sure that you do not include these credentials in your code or check the credentials into your source control. For more information, see https://aka.ms/azadsp-cli

{

"appId": "12c57d86-8cc1-4da4-b0ff-b38dc97ab02d",

"displayName": "idjaksupg01sp",

"password": "dGhpcyBpcyBhIHBhc3N3b3JkIGJ1dCBub3QgdGhlIG9uZSB0aGF0IGlzIHJlYWwK",

"tenant": "da4e9527-0d2b-4842-a1f2-f47cb15531de"

}

$ export SP_PASS="dGhpcyBpcyBhIHBhc3N3b3JkIGJ1dCBub3QgdGhlIG9uZSB0aGF0IGlzIHJlYWwK"

$ export SP_ID="12c57d86-8cc1-4da4-b0ff-b38dc97ab02d"

Generally, I save the SP creds into a file and use that for env vars

$ az ad sp create-for-rbac -n idjaksupg01sp --skip-assignment --output json > my_sp.json

$ cat my_sp.json | jq -r .appId

12c57d86-8cc1-4da4-b0ff-b38dc97ab02d

I can then set the ID and PASS on the fly

$ export SP_PASS=`cat my_sp.json | jq -r .password`

$ export SP_ID=`cat my_sp.json | jq -r .appId`

Whether you save the output to a JSON file or set the values manually, the next steps assume SP_ID and SP_PASS are set.

Create Cluster

Next, I’ll create an autoscaling cluster

$ az aks create --resource-group showAKSUpgrade --name idjaksup01 --location centralus --node-count 3 --enable-cluster-autoscaler --min-count 2 --max-count 4 --generate-ssh-keys --network-plugin azure --network-policy azure --service-principal $SP_ID --client-secret $SP_PASS

Once created, I can list it

$ az aks list -o table

Name Location ResourceGroup KubernetesVersion CurrentKubernetesVersion ProvisioningState Fqdn

---------- ---------- --------------- ------------------- -------------------------- ------------------- -----------------------------------------------------------------

idjaksup01 centralus showAKSUpgrade 1.25.6 1.25.6 Succeeded idjaksup01-showaksupgrade-8defc6-2940zsw6.hcp.centralus.azmk8s.io

Finding your SP ID

Assuming you are coming back to a cluster, how might you find the Service Principal ID it uses?

One easy way is to use the AZ CLI

$ az aks show -n idjaksup01 -g showAKSUpgrade --query servicePrincipalProfile.clientId -o tsv

12c57d86-8cc1-4da4-b0ff-b38dc97ab02d

Rotating a Cred

Assuming we want to expire and rotate the Service Principal client secret, we first need the ID of the SP the cluster uses

$ export SP_ID=`az aks show --resource-group showAKSUpgrade --name idjaksup01 --query servicePrincipalProfile.clientId -o tsv`

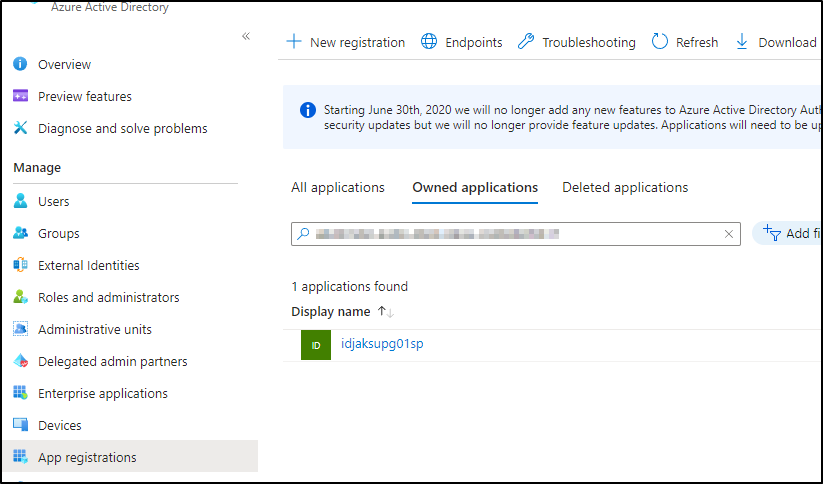

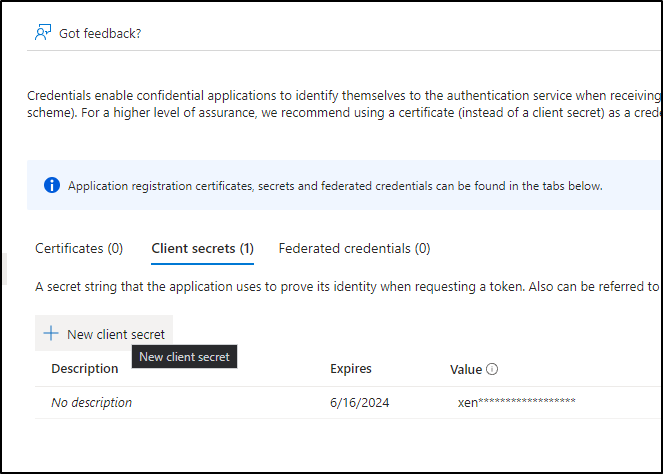

Alternatively, you can go to AAD and find your App Registration

Then create a new secret under secrets to use (you can also see the expiry on former secrets)

Applying to the Cluster

Let’s now apply the new SP Secret to the cluster

I’ll rotate and apply as two steps

$ export SP_NEW_SECRET=`az ad app credential reset --id "$SP_ID" --query password -o tsv`

$ az aks update-credentials -g showAKSUpgrade -n idjaksup01 --reset-service-principal --service-principal "$SP_ID" --client-secret "$SP_NEW_SECRET"

This step will take a while so be patient.

Verification

We can check our secrets by fetching AAD details from a CSI pod.

We first want to log in to the cluster

$ az aks get-credentials -g showAKSUpgrade -n idjaksup01 --admin

Merged "idjaksup01-admin" as current context in /home/builder/.kube/config

Then let’s find one of the CSI pods

$ kubectl get pods -l app=csi-azuredisk-node -n kube-system

NAME READY STATUS RESTARTS AGE

csi-azuredisk-node-88sxc 3/3 Running 0 3d13h

csi-azuredisk-node-hw95j 3/3 Running 0 3d13h

We can now fetch and check the SP ID and PASS

$ kubectl exec -it --container azuredisk -n kube-system csi-azuredisk-node-88sxc -- cat /etc/kubernetes/azure.json

{

"cloud": "AzurePublicCloud",

"tenantId": "da4e9527-0d2b-4842-a1f2-f47cb15531de",

"subscriptionId": "2f0469bd-d00c-4411-85e6-369915da6e54",

"aadClientId": "12c57d86-8cc1-4da4-b0ff-b38dc97ab02d",

"aadClientSecret": "bm90IG15IHNlY3JldCwgYnV0IHRoYW5rcyBmb3IgY2hlY2tpbmcK",

"resourceGroup": "MC_showAKSUpgrade_idjaksup01_centralus",

"location": "centralus",

"vmType": "vmss",

"subnetName": "aks-subnet",

"securityGroupName": "aks-agentpool-25858899-nsg",

"vnetName": "aks-vnet-25858899",

"vnetResourceGroup": "",

"routeTableName": "aks-agentpool-25858899-routetable",

"primaryAvailabilitySetName": "",

"primaryScaleSetName": "aks-nodepool1-28899529-vmss",

"cloudProviderBackoffMode": "v2",

"cloudProviderBackoff": true,

"cloudProviderBackoffRetries": 6,

"cloudProviderBackoffDuration": 5,

"cloudProviderRateLimit": true,

"cloudProviderRateLimitQPS": 10,

"cloudProviderRateLimitBucket": 100,

"cloudProviderRateLimitQPSWrite": 10,

"cloudProviderRateLimitBucketWrite": 100,

"useManagedIdentityExtension": false,

"userAssignedIdentityID": "",

"useInstanceMetadata": true,

"loadBalancerSku": "Standard",

"disableOutboundSNAT": false,

"excludeMasterFromStandardLB": true,

"providerVaultName": "",

"maximumLoadBalancerRuleCount": 250,

"providerKeyName": "k8s",

"providerKeyVersion": ""

}

The values above we care about are

"aadClientId": "12c57d86-8cc1-4da4-b0ff-b38dc97ab02d",

"aadClientSecret": "bm90IG15IHNlY3JldCwgYnV0IHRoYW5rcyBmb3IgY2hlY2tpbmcK",

AKS Upgrades

We can upgrade a cluster two ways.

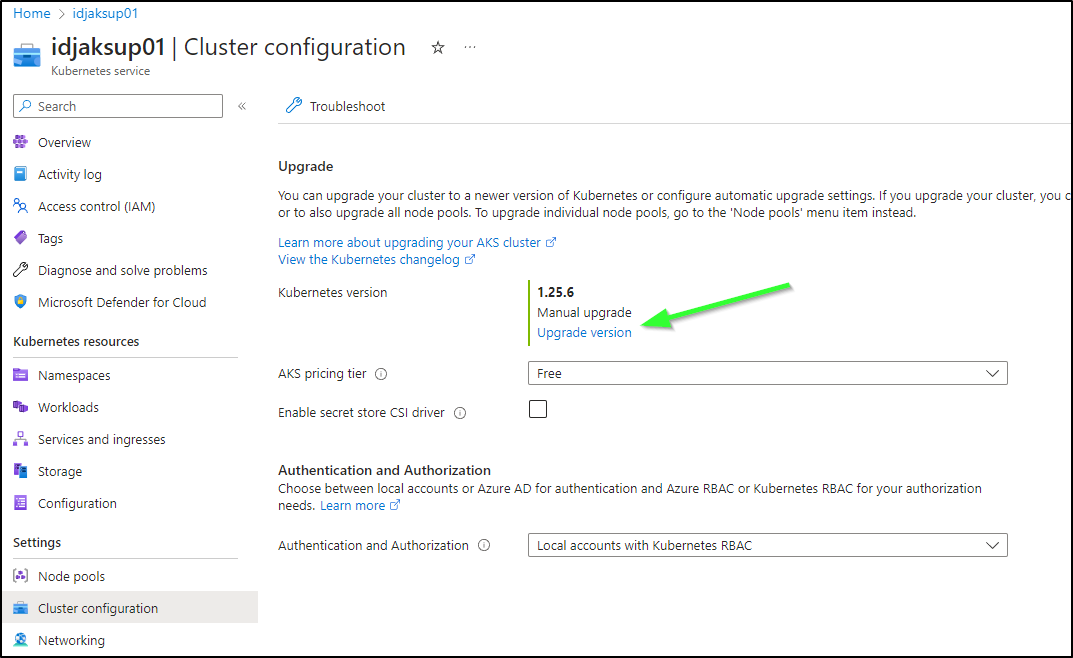

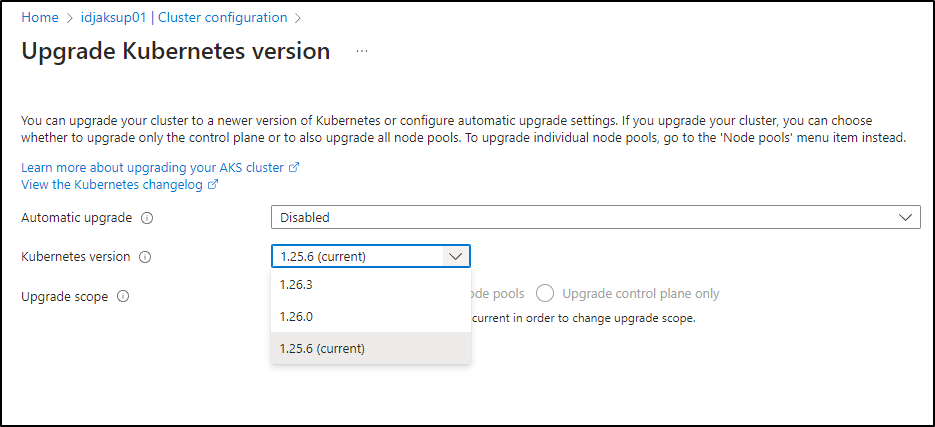

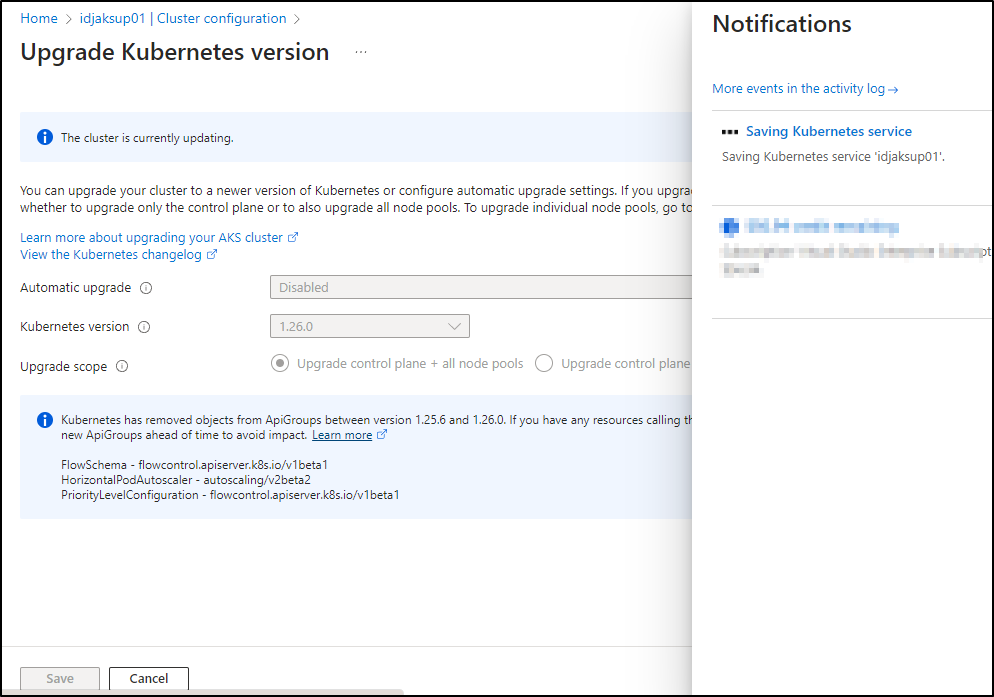

The first is using the Azure Portal. We’ll look it up under “Cluster configuration” in “Settings”

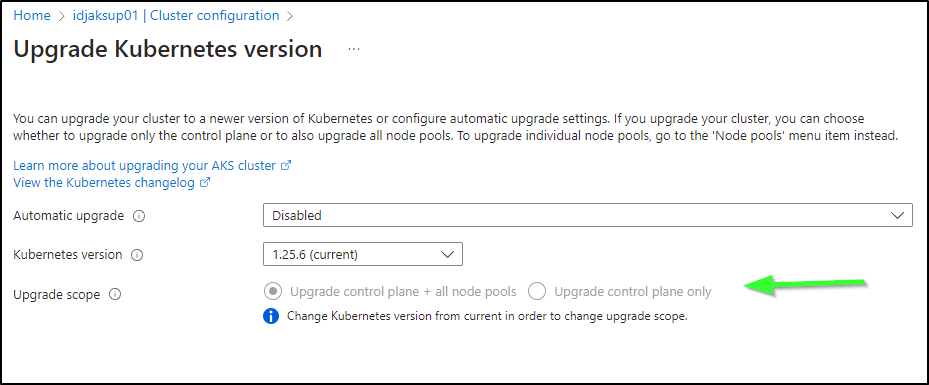

If you have multiple node pools or manual ones, you can choose to do the Control Plane (master nodes) first.

Clicking the drop down shows all the versions we can go to presently (not all that are out there). AKS can upgrade one minor release at a time (for example, 1.25.x to 1.26.x); it cannot skip a minor version
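To make the one-minor-version rule concrete, here is a minimal shell sketch of a pre-flight check. The function name `is_allowed_upgrade` is hypothetical (it is not part of the AZ CLI), and it assumes plain MAJOR.MINOR.PATCH strings; `az aks get-upgrades` remains the authoritative source for what a given cluster can actually move to.

```shell
# Hypothetical helper, not an AZ CLI feature.
# Returns 0 when moving from $1 to $2 keeps the same major version and
# either raises the patch within the same minor, or moves to exactly the
# next minor. Returns 1 otherwise (skipped minor, downgrade, no change).
is_allowed_upgrade() {
  local cur new cur_major cur_minor cur_patch new_major new_minor new_patch
  cur=$1
  new=$2

  # Split "1.25.6" into 1 / 25 / 6 using parameter expansion only.
  cur_major=${cur%%.*}; cur=${cur#*.}
  cur_minor=${cur%%.*}; cur_patch=${cur#*.}
  new_major=${new%%.*}; new=${new#*.}
  new_minor=${new%%.*}; new_patch=${new#*.}

  # A different major version is out of scope for this sketch.
  [ "$cur_major" -eq "$new_major" ] || return 1

  if [ "$new_minor" -eq "$cur_minor" ]; then
    # Same minor: only a newer patch counts as an upgrade.
    [ "$new_patch" -gt "$cur_patch" ]
  else
    # Different minor: it must be exactly the next one.
    [ "$new_minor" -eq $((cur_minor + 1)) ]
  fi
}
```

For example, `is_allowed_upgrade 1.25.6 1.26.3` succeeds, while `is_allowed_upgrade 1.24.9 1.26.0` fails because it skips 1.25.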

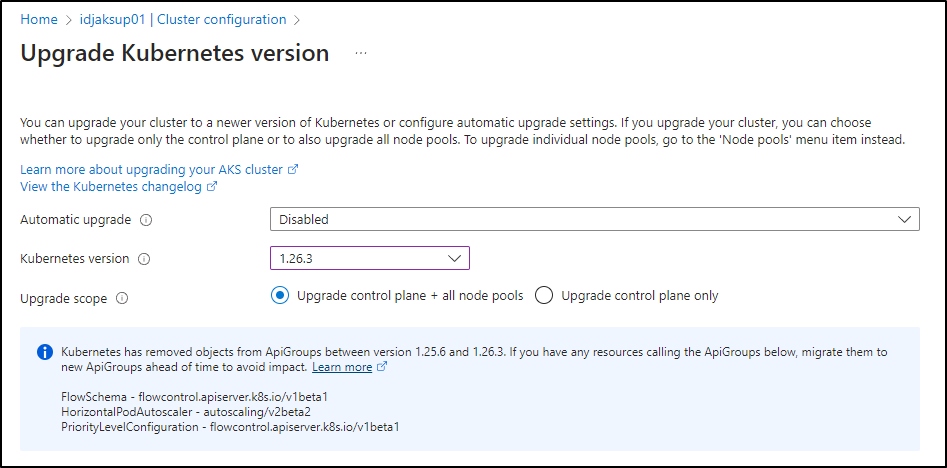

Azure will tell us what to watch out for; namely, that a few deprecated APIs are being removed

Kubernetes has removed objects from ApiGroups between version 1.25.6 and 1.26.3. If you have any resources calling the ApiGroups below, migrate them to new ApiGroups ahead of time to avoid impact.

FlowSchema - flowcontrol.apiserver.k8s.io/v1beta1

HorizontalPodAutoscaler - autoscaling/v2beta2

PriorityLevelConfiguration - flowcontrol.apiserver.k8s.io/v1beta1
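If your manifests live in files (a Git repo, rendered Helm templates), one way to look for these before upgrading is a plain text search. This is only a sketch: the function name `find_removed_apis` is made up for illustration, the list holds just the API versions named in the warning above, and a text match can also hit comments, so treat any hit as a prompt to look closer.

```shell
# API versions the Portal warning lists as removed between 1.25.6 and 1.26.3.
# One fixed string per line; extend the list for other upgrade paths.
REMOVED_API_VERSIONS='autoscaling/v2beta2
flowcontrol.apiserver.k8s.io/v1beta1'

# Hypothetical helper: print "file:line:text" for every line in the given
# files that mentions one of the removed API versions.
# Exits non-zero when nothing matches (standard grep behavior).
find_removed_apis() {
  # -F  treat each pattern as a fixed string, not a regex
  # -n  print line numbers
  # -H  always print the file name, even for a single file
  grep -FnH -e "$REMOVED_API_VERSIONS" "$@"
}
```

Pass it the manifest files to check, for instance `find_removed_apis *.yaml`.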

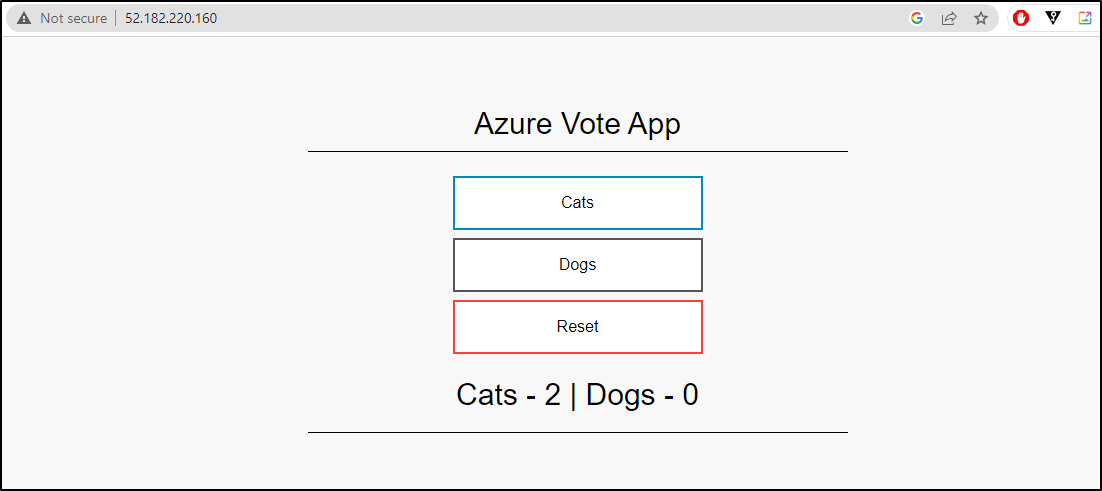

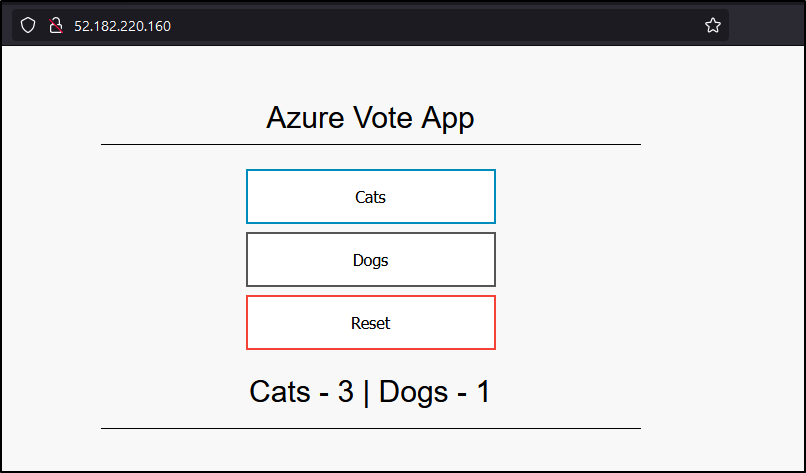

As I want to see some effects, let’s pause for a moment and install a sample app

Azure Vote App

Note: this ultimately did not create an App with deprecated APIs, but is a nice app we can use to watch our upgrades

I’ll add the Sample Repo and update, in case I haven’t yet

$ helm repo add azure-samples https://azure-samples.github.io/helm-charts/

"azure-samples" already exists with the same configuration, skipping

$ helm repo update

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "kube-state-metrics" chart repository

...Successfully got an update from the "myharbor" chart repository

...Successfully got an update from the "freshbrewed" chart repository

...Successfully got an update from the "longhorn" chart repository

...Successfully got an update from the "actions-runner-controller" chart repository

...Successfully got an update from the "adwerx" chart repository

...Successfully got an update from the "confluentinc" chart repository

...Successfully got an update from the "kuma" chart repository

...Successfully got an update from the "hashicorp" chart repository

...Successfully got an update from the "dapr" chart repository

...Successfully got an update from the "akomljen-charts" chart repository

...Successfully got an update from the "kubecost" chart repository

...Successfully got an update from the "opencost" chart repository

...Successfully got an update from the "sumologic" chart repository

...Successfully got an update from the "zabbix-community" chart repository

...Successfully got an update from the "sonarqube" chart repository

...Successfully got an update from the "castai-helm" chart repository

...Successfully got an update from the "jfelten" chart repository

...Successfully got an update from the "azure-samples" chart repository

...Successfully got an update from the "nginx-stable" chart repository

...Successfully got an update from the "novum-rgi-helm" chart repository

...Successfully got an update from the "kiwigrid" chart repository

...Successfully got an update from the "rhcharts" chart repository

...Successfully got an update from the "epsagon" chart repository

...Successfully got an update from the "datadog" chart repository

...Successfully got an update from the "lifen-charts" chart repository

...Successfully got an update from the "rook-release" chart repository

...Successfully got an update from the "elastic" chart repository

...Successfully got an update from the "argo-cd" chart repository

...Successfully got an update from the "harbor" chart repository

...Successfully got an update from the "signoz" chart repository

...Successfully got an update from the "crossplane-stable" chart repository

...Successfully got an update from the "newrelic" chart repository

...Successfully got an update from the "incubator" chart repository

...Successfully got an update from the "grafana" chart repository

...Successfully got an update from the "uptime-kuma" chart repository

...Successfully got an update from the "gitlab" chart repository

...Successfully got an update from the "bitnami" chart repository

...Successfully got an update from the "ngrok" chart repository

...Successfully got an update from the "open-telemetry" chart repository

...Successfully got an update from the "rancher-latest" chart repository

...Successfully got an update from the "prometheus-community" chart repository

Update Complete. ⎈Happy Helming!⎈

Next I’ll add the vote app

$ helm install azure-samples/azure-vote --generate-name

NAME: azure-vote-1687260329

LAST DEPLOYED: Tue Jun 20 06:25:30 2023

NAMESPACE: default

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

The Azure Vote application has been started on your Kubernetes cluster.

Title: Azure Vote App

Vote 1 value: Cats

Vote 2 value: Dogs

The externally accessible IP address can take a minute or so to provision. Run the following command to monitor the provisioning status. Once an External IP address has been provisioned, brows to this IP address to access the Azure Vote application.

kubectl get service -l name=azure-vote-front -w

I can now get the LB IP and view the app

$ kubectl get service -l name=azure-vote-front

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

azure-vote-front LoadBalancer 10.0.217.17 52.182.220.160 80:30425/TCP 24s

Let’s now add an HPA on the back end

$ kubectl get deployment

NAME READY UP-TO-DATE AVAILABLE AGE

vote-back-azure-vote-1687260329 1/1 1 1 76s

vote-front-azure-vote-1687260329 1/1 1 1 76s

$ kubectl autoscale deployment vote-back-azure-vote-1687260329 --cpu-percent=50 --min=3 --max=10

horizontalpodautoscaler.autoscaling/vote-back-azure-vote-1687260329 autoscaled

I was hoping for a good demo of deprecated APIs, but it seems AKS v1.25 was already set to use the new APIs

builder@DESKTOP-QADGF36:~$ kubectl api-resources | grep autosc

horizontalpodautoscalers hpa autoscaling/v2 true HorizontalPodAutoscaler

builder@DESKTOP-QADGF36:~$ kubectl api-resources | grep flow

flowschemas flowcontrol.apiserver.k8s.io/v1beta2 false FlowSchema

prioritylevelconfigurations flowcontrol.apiserver.k8s.io/v1beta2 false PriorityLevelConfiguration

builder@DESKTOP-QADGF36:~$ kubectl api-resources | grep priority

prioritylevelconfigurations flowcontrol.apiserver.k8s.io/v1beta2 false PriorityLevelConfiguration

priorityclasses pc scheduling.k8s.io/v1 false PriorityClass

To ensure we have a deprecated API involved, I’ll manually create the HPA for the Front end using YAML

$ cat avf.hpa.yaml

apiVersion: autoscaling/v2beta2

kind: HorizontalPodAutoscaler

metadata:

  name: vote-front-azure-vote-1687260329

  namespace: default

spec:

  maxReplicas: 10

  metrics:

  - resource:

      name: cpu

      target:

        averageUtilization: 50

        type: Utilization

    type: Resource

  minReplicas: 3

  scaleTargetRef:

    apiVersion: apps/v1

    kind: Deployment

    name: vote-front-azure-vote-1687260329

$ kubectl apply -f avf.hpa.yaml

Warning: autoscaling/v2beta2 HorizontalPodAutoscaler is deprecated in v1.23+, unavailable in v1.26+; use autoscaling/v2 HorizontalPodAutoscaler

horizontalpodautoscaler.autoscaling/vote-front-azure-vote-1687260329 created

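Only, AKS caught it and used the newer API! The API server converts between the versions it serves, so the object reads back as autoscaling/v2 by default.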

$ kubectl get hpa vote-front-azure-vote-1687260329

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE

vote-front-azure-vote-1687260329 Deployment/vote-front-azure-vote-1687260329 0%/50% 3 10 3 47s

$ kubectl get hpa vote-front-azure-vote-1687260329 -o yaml

apiVersion: autoscaling/v2

kind: HorizontalPodAutoscaler

metadata:

annotations:

... snip ...

What about the other APIs?

Flow schema is good (at v1beta2 not v1beta1)

$ kubectl get flowschema --all-namespaces

NAME PRIORITYLEVEL MATCHINGPRECEDENCE DISTINGUISHERMETHOD AGE MISSINGPL

exempt exempt 1 <none> 3d14h False

probes exempt 2 <none> 3d14h False

system-leader-election leader-election 100 ByUser 3d14h False

endpoint-controller workload-high 150 ByUser 3d14h False

workload-leader-election leader-election 200 ByUser 3d14h False

system-node-high node-high 400 ByUser 3d14h False

system-nodes system 500 ByUser 3d14h False

kube-controller-manager workload-high 800 ByNamespace 3d14h False

kube-scheduler workload-high 800 ByNamespace 3d14h False

kube-system-service-accounts workload-high 900 ByNamespace 3d14h False

service-accounts workload-low 9000 ByUser 3d14h False

global-default global-default 9900 ByUser 3d14h False

catch-all catch-all 10000 ByUser 3d14h False

$ kubectl get flowschema system-leader-election -o yaml

apiVersion: flowcontrol.apiserver.k8s.io/v1beta2

kind: FlowSchema

metadata:

annotations:

As is priority level

$ kubectl get PriorityLevelConfiguration

NAME TYPE ASSUREDCONCURRENCYSHARES QUEUES HANDSIZE QUEUELENGTHLIMIT AGE

catch-all Limited 5 <none> <none> <none> 3d14h

exempt Exempt <none> <none> <none> <none> 3d14h

global-default Limited 20 128 6 50 3d14h

leader-election Limited 10 16 4 50 3d14h

node-high Limited 40 64 6 50 3d14h

system Limited 30 64 6 50 3d14h

workload-high Limited 40 128 6 50 3d14h

workload-low Limited 100 128 6 50 3d14h

$ kubectl get PriorityLevelConfiguration global-default -o yaml

apiVersion: flowcontrol.apiserver.k8s.io/v1beta2

kind: PriorityLevelConfiguration

metadata:

annotations:

...

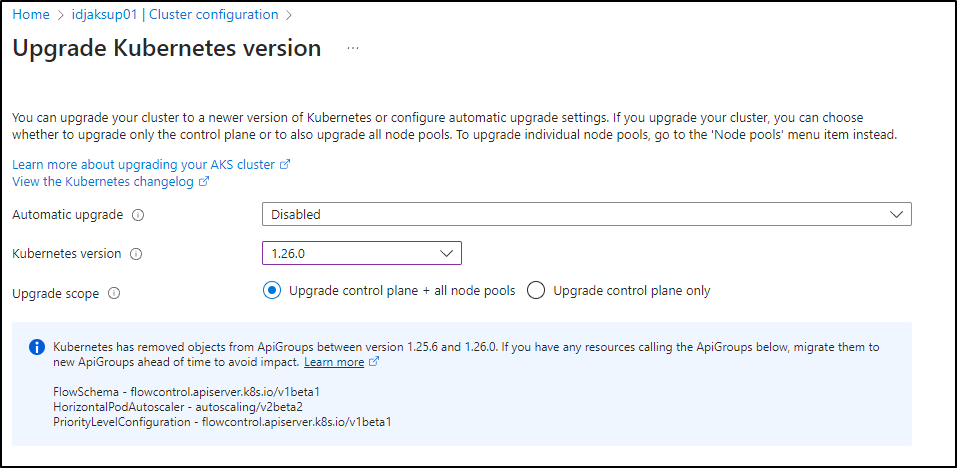

Upgrading

Regardless of not finding a good way to create deprecated APIs, let’s complete the upgrade

I’ll go to 1.26.0 so I can use the CLI for the next upgrade

Saving starts the upgrade

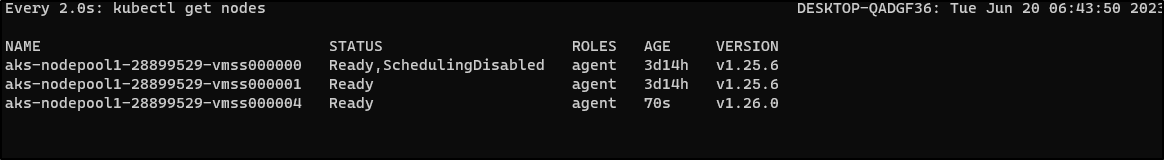

We can then see it start to rotate in new nodes and move workloads.

At no point during the process does our app actually go down

I panicked when Chrome started to get an internal server error, but I couldn’t find errors in the Kubernetes logs, and firing up an alternate browser showed things were fine
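A single browser is not the most reliable witness here. A small probe loop gives a count instead. The sketch below is one assumed way to do it, not something from the upgrade tooling: the function name `count_failures` and the `PROBE_INTERVAL` variable are hypothetical, and the curl line in the comment uses the vote app’s external IP from earlier.

```shell
# Hypothetical helper: run a command N times and print how many runs failed.
#   $1      number of attempts
#   $2...   the command to run (its output is discarded)
# PROBE_INTERVAL sets the pause between attempts in seconds (default 1).
count_failures() {
  local attempts failures i
  attempts=$1
  shift
  failures=0
  i=0
  while [ "$i" -lt "$attempts" ]; do
    # Any non-zero exit status counts as a failed probe.
    if ! "$@" >/dev/null 2>&1; then
      failures=$((failures + 1))
    fi
    i=$((i + 1))
    sleep "${PROBE_INTERVAL:-1}"
  done
  echo "$failures"
}

# Example: probe the front end for about two minutes while the upgrade runs.
# curl -f turns HTTP errors (such as a 500) into a non-zero exit status.
#   count_failures 120 curl -fsS --max-time 2 http://52.182.220.160/
```

A result of 0 means every probe succeeded; anything else is worth lining up against the node rotation events.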

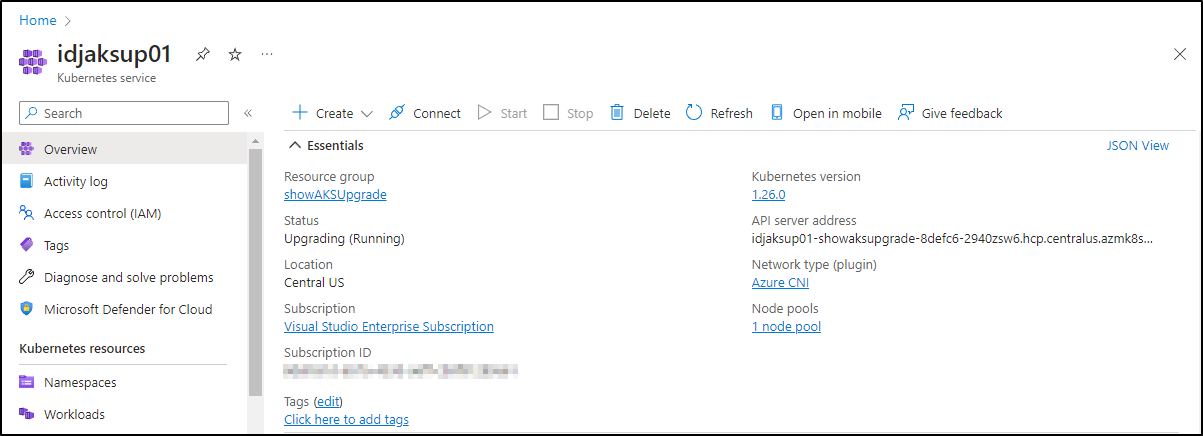

And we can see it is done both in the Azure Portal

Control Plane:

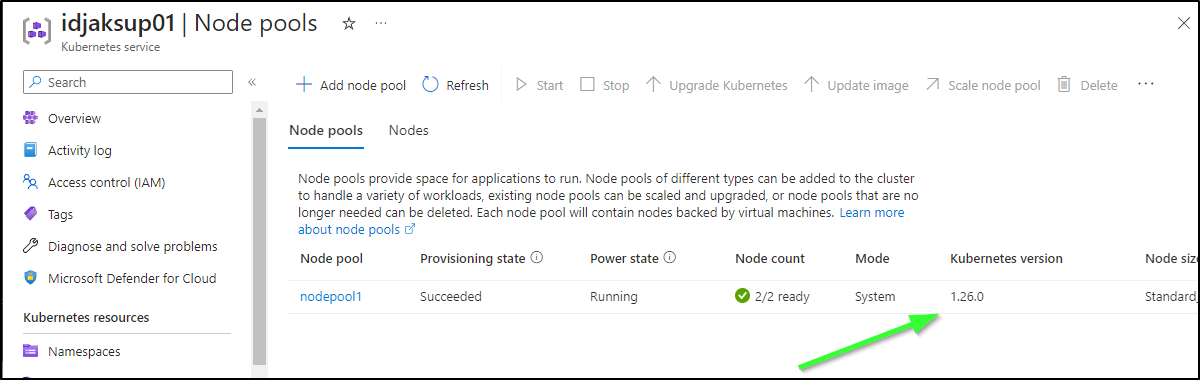

Node Pools:

As well as on the command line

$ az aks list -o table

Name Location ResourceGroup KubernetesVersion CurrentKubernetesVersion ProvisioningState Fqdn

---------- ---------- --------------- ------------------- -------------------------- ------------------- -----------------------------------------------------------------

idjaksup01 centralus showAKSUpgrade 1.26.0 1.26.0 Succeeded idjaksup01-showaksupgrade-8defc6-2940zsw6.hcp.centralus.azmk8s.io

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

aks-nodepool1-28899529-vmss000000 Ready agent 9m14s v1.26.0

aks-nodepool1-28899529-vmss000001 Ready agent 5m35s v1.26.0

Using CLI

Let’s use the CLI this time.

We can ask for what is available

$ az aks get-upgrades -g showAKSUpgrade -n idjaksup01 -o table

Name ResourceGroup MasterVersion Upgrades

------- --------------- --------------- ----------

default showAKSUpgrade 1.26.0 1.26.3

It does warn of blips and confirms I want to do both…

$ az aks upgrade -g showAKSUpgrade -n idjaksup01 --kubernetes-version 1.26.3

Kubernetes may be unavailable during cluster upgrades.

Are you sure you want to perform this operation? (y/N): y

Since control-plane-only argument is not specified, this will upgrade the control plane AND all nodepools to version 1.26.3. Continue? (y/N): y

\ Running ..

While that is running, we can use kubectl get events -w to watch events

15m Warning FailedGetResourceMetric horizontalpodautoscaler/vote-front-azure-vote-1687260329 failed to get cpu utilization: unable to get metrics for resource cpu: unable to fetch metrics from resource metrics API: the server is currently unable to handle the request (get pods.metrics.k8s.io)

15m Warning FailedComputeMetricsReplicas horizontalpodautoscaler/vote-front-azure-vote-1687260329 invalid metrics (1 invalid out of 1), first error is: failed to get cpu resource metric value: failed to get cpu utilization: unable to get metrics for resource cpu: unable to fetch metrics from resource metrics API: the server is currently unable to handle the request (get pods.metrics.k8s.io)

5m11s Warning FailedGetResourceMetric horizontalpodautoscaler/vote-front-azure-vote-1687260329 failed to get cpu utilization: did not receive metrics for any ready pods

5m11s Warning FailedComputeMetricsReplicas horizontalpodautoscaler/vote-front-azure-vote-1687260329 invalid metrics (1 invalid out of 1), first error is: failed to get cpu resource metric value: failed to get cpu utilization: did not receive metrics for any ready pods

0s Normal Starting node/aks-nodepool1-28899529-vmss000001

0s Warning FailedGetResourceMetric horizontalpodautoscaler/vote-front-azure-vote-1687260329 failed to get cpu utilization: unable to get metrics for resource cpu: unable to fetch metrics from resource metrics API: the server is currently unable to handle the request (get pods.metrics.k8s.io)

0s Warning FailedComputeMetricsReplicas horizontalpodautoscaler/vote-front-azure-vote-1687260329 invalid metrics (1 invalid out of 1), first error is: failed to get cpu resource metric value: failed to get cpu utilization: unable to get metrics for resource cpu: unable to fetch metrics from resource metrics API: the server is currently unable to handle the request (get pods.metrics.k8s.io)

0s Normal Starting node/aks-nodepool1-28899529-vmss000000

You can watch it happen here if you want to see the process:

No Upgrades

If you are at the latest, it’s worth noting the “upgrades” field will be null

$ az aks get-upgrades -g showAKSUpgrade -n idjaksup01 -o table

Table output unavailable. Use the --query option to specify an appropriate query. Use --debug for more info.

$ az aks get-upgrades -g showAKSUpgrade -n idjaksup01

{

"agentPoolProfiles": null,

"controlPlaneProfile": {

"kubernetesVersion": "1.26.3",

"name": null,

"osType": "Linux",

"upgrades": null

},

... snip ...

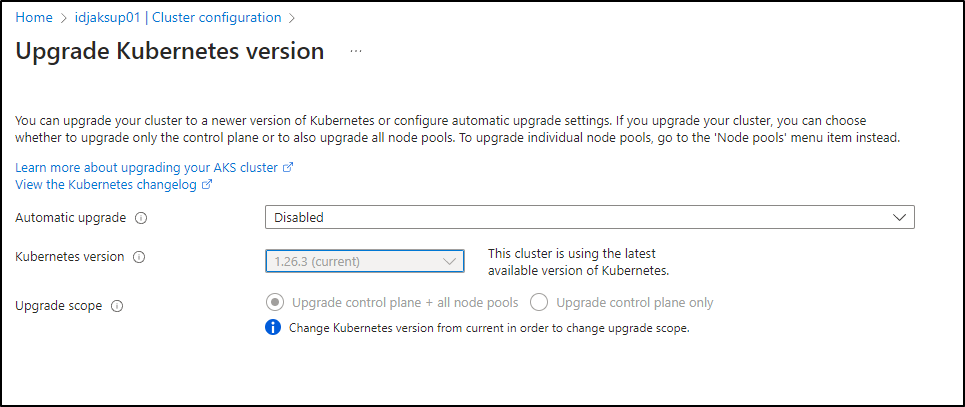

And the portal will say the same

Terraform

First, we’ll need to know what versions we can use in a region. I’ll be using “East US”, so let’s check what is supported there presently

$ az aks get-versions --location eastus --output table

KubernetesVersion Upgrades

------------------- -----------------------

1.27.1(preview) None available

1.26.3 1.27.1(preview)

1.26.0 1.26.3, 1.27.1(preview)

1.25.6 1.26.0, 1.26.3

1.25.5 1.25.6, 1.26.0, 1.26.3

1.24.10 1.25.5, 1.25.6

1.24.9 1.24.10, 1.25.5, 1.25.6

Since I want to test upgrades, I’ll choose the lowest version (1.24.9).

I’ll set that in a variable

variable "kubernetes_version" {

  type = string

  default = "1.24.9"

  description = "The specific version of Kubernetes to use."

}

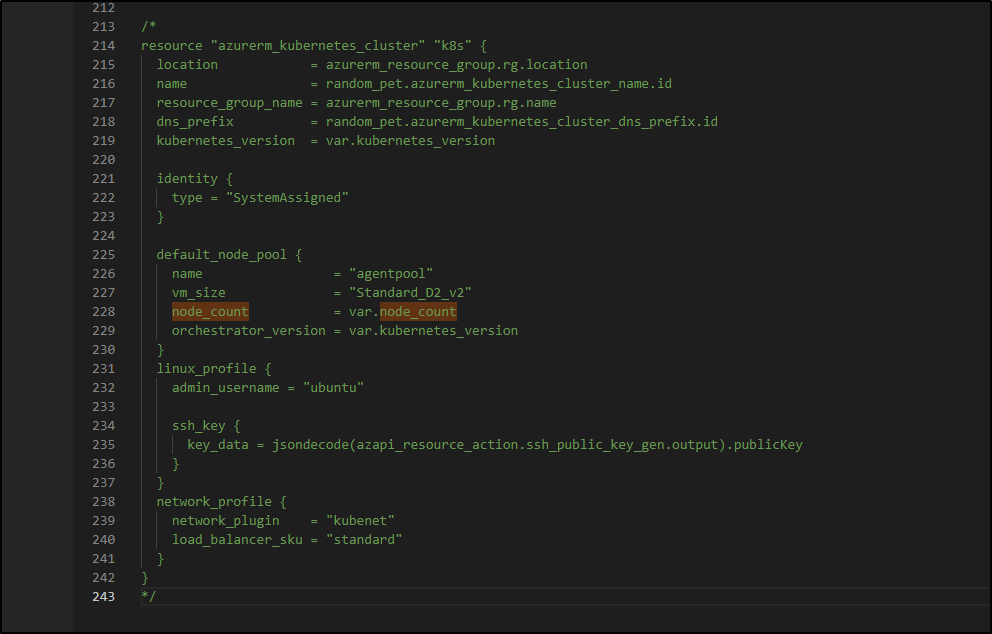

Which I can use in my Kubernetes TF

resource "azurerm_kubernetes_cluster" "k8s" {

  location = azurerm_resource_group.rg.location

  name = random_pet.azurerm_kubernetes_cluster_name.id

  resource_group_name = azurerm_resource_group.rg.name

  dns_prefix = random_pet.azurerm_kubernetes_cluster_dns_prefix.id

  kubernetes_version = var.kubernetes_version

  identity {

    type = "SystemAssigned"

  }

  default_node_pool {

    name = "agentpool"

    vm_size = "Standard_D2_v2"

    node_count = var.node_count

  }

  linux_profile {

    admin_username = "ubuntu"

    ssh_key {

      key_data = jsondecode(azapi_resource_action.ssh_public_key_gen.output).publicKey

    }

  }

  network_profile {

    network_plugin = "kubenet"

    load_balancer_sku = "standard"

  }

}

I wanted to quickly sanity-check the VM sizes in the region in case Standard_D2_v2 is no longer available

$ az vm list-sizes --location eastus -o table | grep Standard_D2_v2

8 7168 Standard_D2_v2 2 1047552 102400

8 7168 Standard_D2_v2_Promo 2 1047552 102400

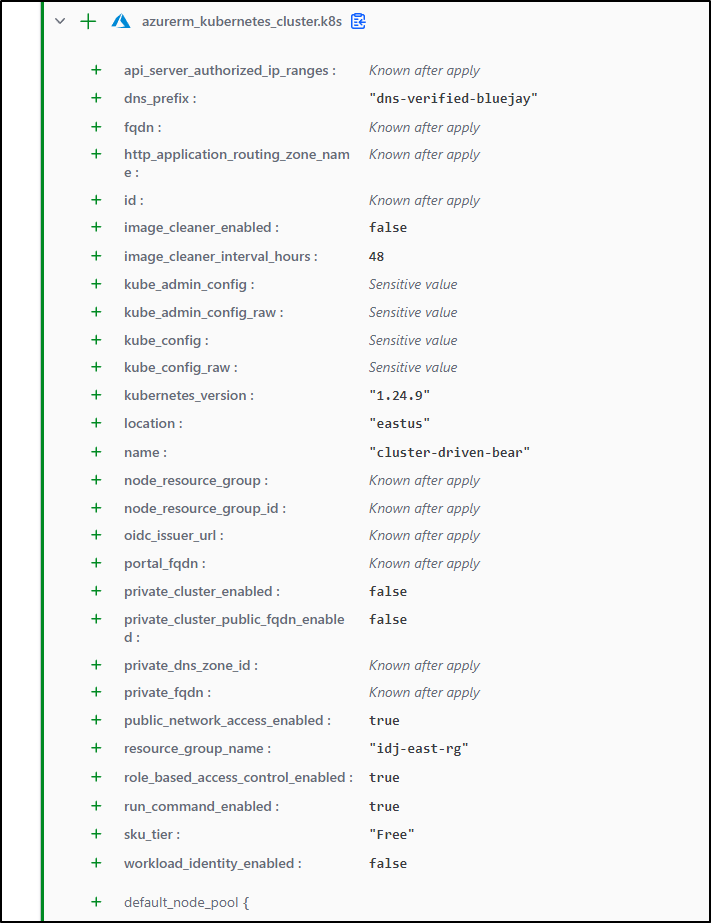

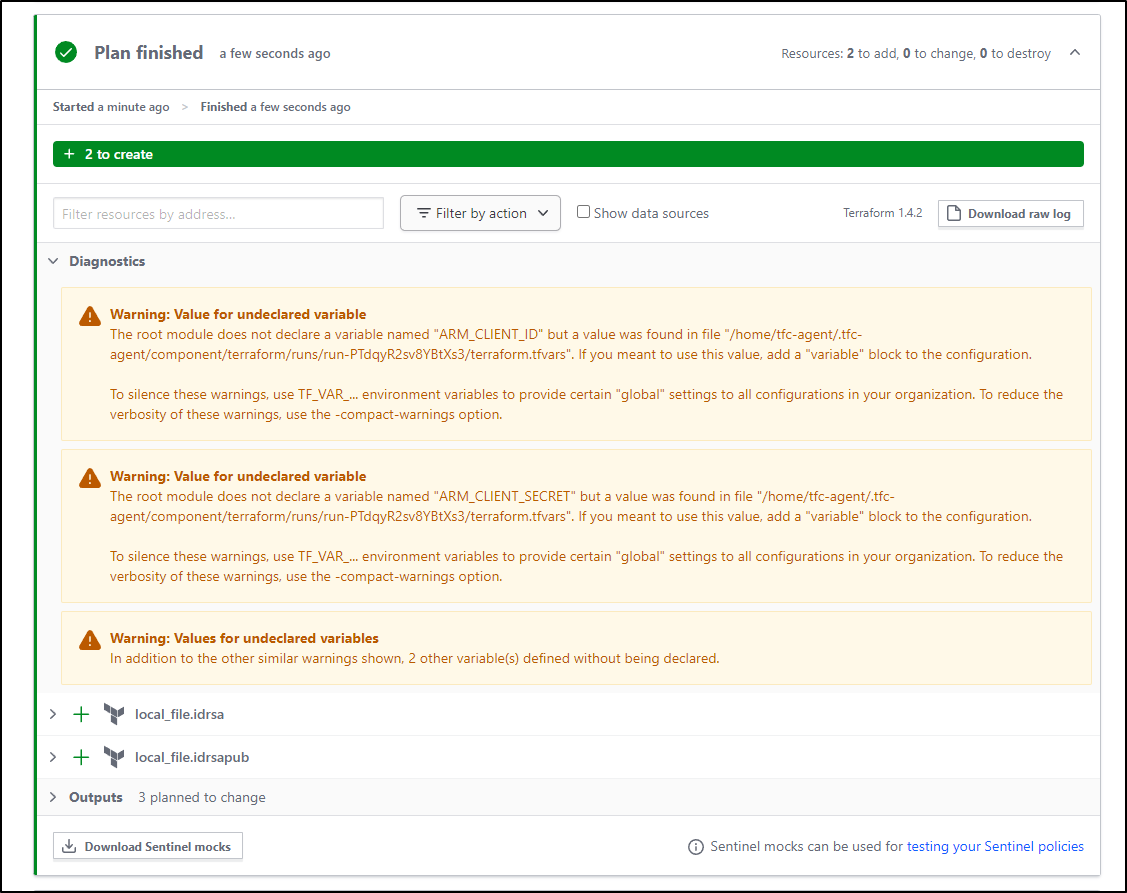

I now have a TF Cloud plan to run

The first time through I made a typo in the version (“1.24.9 ” with a trailing space). If you use a malformed version, you’ll get an error such as

Failure sending request: StatusCode=0 -- Original Error: Code="BadRequest" Message="Client Error: error parsing version(1.24.9 ) as semver: Invalid characters in version"
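A cheap guard against this kind of typo is to validate the string before it ever reaches a plan. The following shell sketch is illustrative rather than anything AKS or Terraform provides; `is_plain_version` is a hypothetical helper that accepts only digits-and-dots versions such as 1.24.9.

```shell
# Hypothetical helper: return 0 only when $1 is exactly MAJOR.MINOR.PATCH
# made of digits, with no whitespace, suffix, or other characters.
is_plain_version() {
  case "$1" in
    # Reject anything containing a character other than a digit or a dot
    # (this catches trailing spaces and suffixes like "(preview)").
    *[!0-9.]*) return 1 ;;
  esac
  # Digits and dots only: require exactly three non-empty numeric fields.
  printf '%s\n' "$1" | grep -Eq '^[0-9]+\.[0-9]+\.[0-9]+$'
}
```

With this, `is_plain_version "1.24.9"` succeeds and `is_plain_version "1.24.9 "` fails, which would have caught the trailing space before the apply did.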

While it is still creating in TF Cloud, I can see it exists in Azure already

$ az aks list -o table

Name Location ResourceGroup KubernetesVersion CurrentKubernetesVersion ProvisioningState Fqdn

------------------- ---------- --------------- ------------------- -------------------------- ------------------- --------------------------------------------------

cluster-driven-bear eastus idj-east-rg 1.24.9 1.24.9 Creating dns-verified-bluejay-q17kaixm.hcp.eastus.azmk8s.io

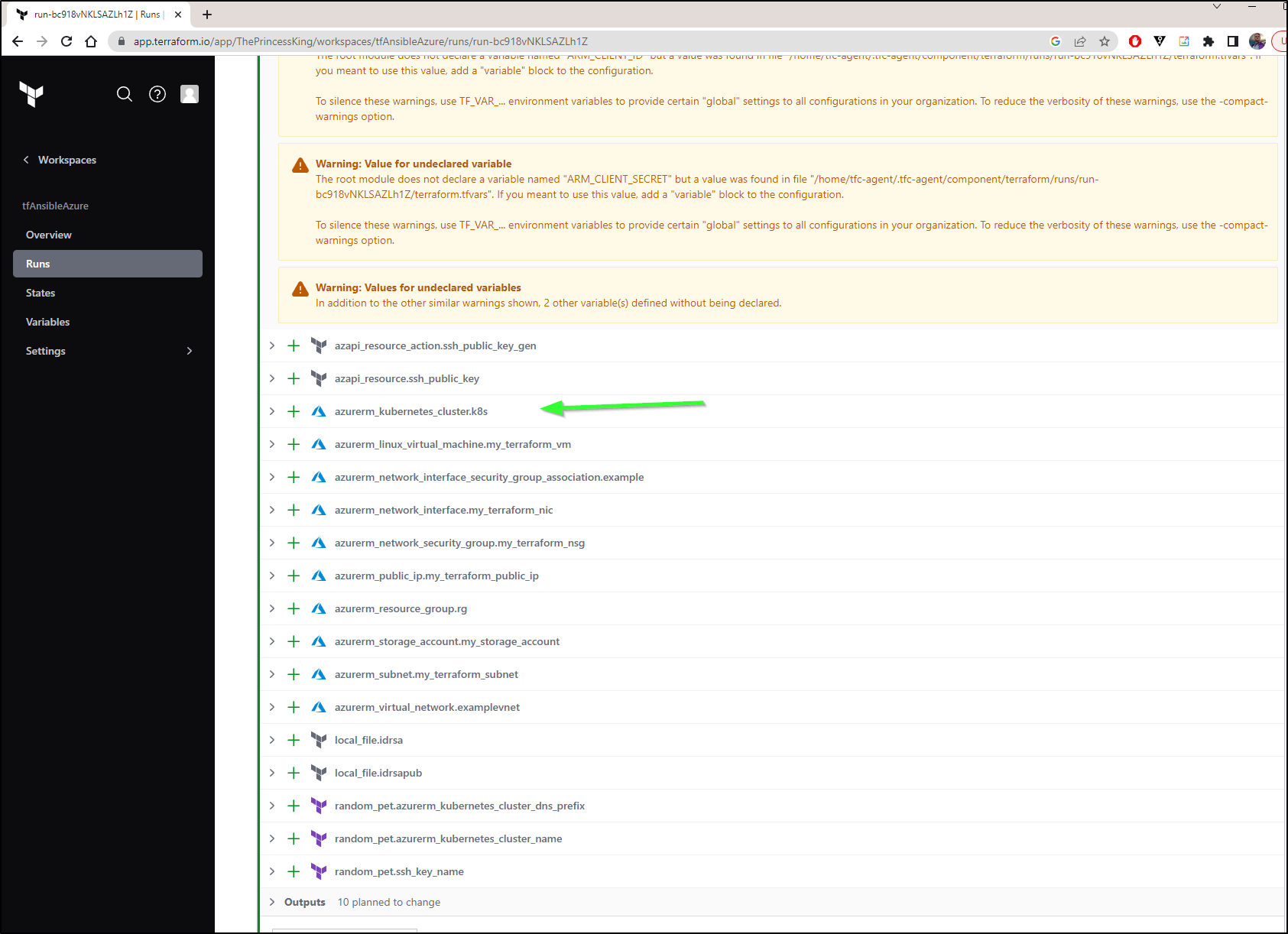

And when the run is done, I see that in TF Cloud as well

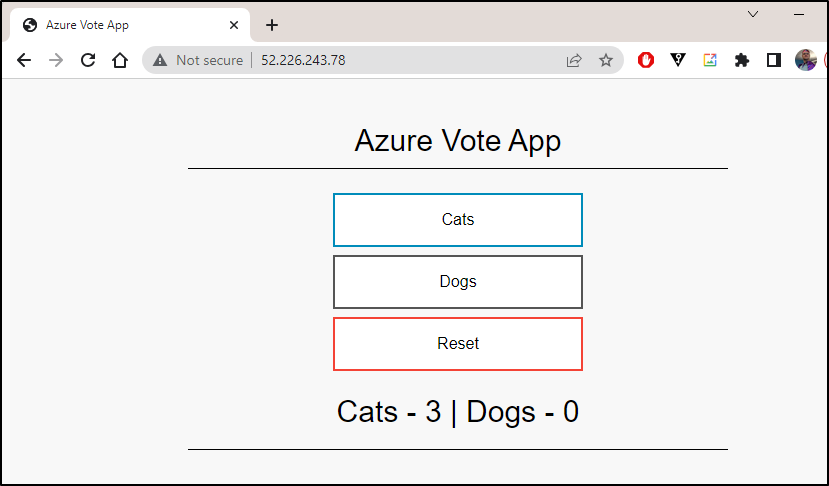

I’ll now add an app so we can see some data

$ az aks get-credentials -n cluster-driven-bear -g idj-east-rg --admin

Merged "cluster-driven-bear-admin" as current context in /home/builder/.kube/config

$ helm install azure-samples/azure-vote --generate-name

NAME: azure-vote-1687520242

LAST DEPLOYED: Fri Jun 23 06:37:23 2023

NAMESPACE: default

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

The Azure Vote application has been started on your Kubernetes cluster.

Title: Azure Vote App

Vote 1 value: Cats

Vote 2 value: Dogs

The externally accessible IP address can take a minute or so to provision. Run the following command to monitor the provisioning status. Once an External IP address has been provisioned, brows to this IP address to access the Azure Vote application.

kubectl get service -l name=azure-vote-front -w

$ kubectl get service -l name=azure-vote-front

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

azure-vote-front LoadBalancer 10.0.133.63 52.226.243.78 80:30747/TCP 33s

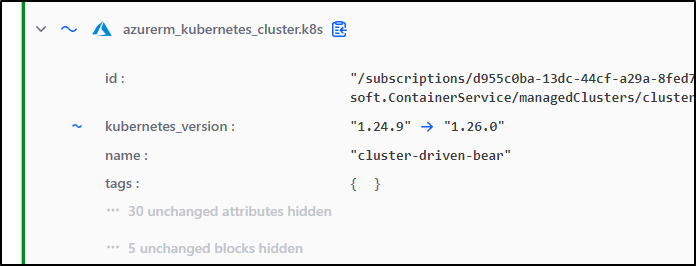

Upgrade Scenario 1: Upgrading with TF

Let’s say we want to upgrade using Terraform.

I’ll first try something that should fail: upgrading more than one minor version up

$ git diff

diff --git a/variables.tf b/variables.tf

index 2e05a73..5524567 100644

--- a/variables.tf

+++ b/variables.tf

@@ -29,7 +29,7 @@ variable "msi_id" {

variable "kubernetes_version" {

type = string

- default = "1.24.9"

+ default = "1.26.0"

description = "The specific version of Kubernetes to use."

}

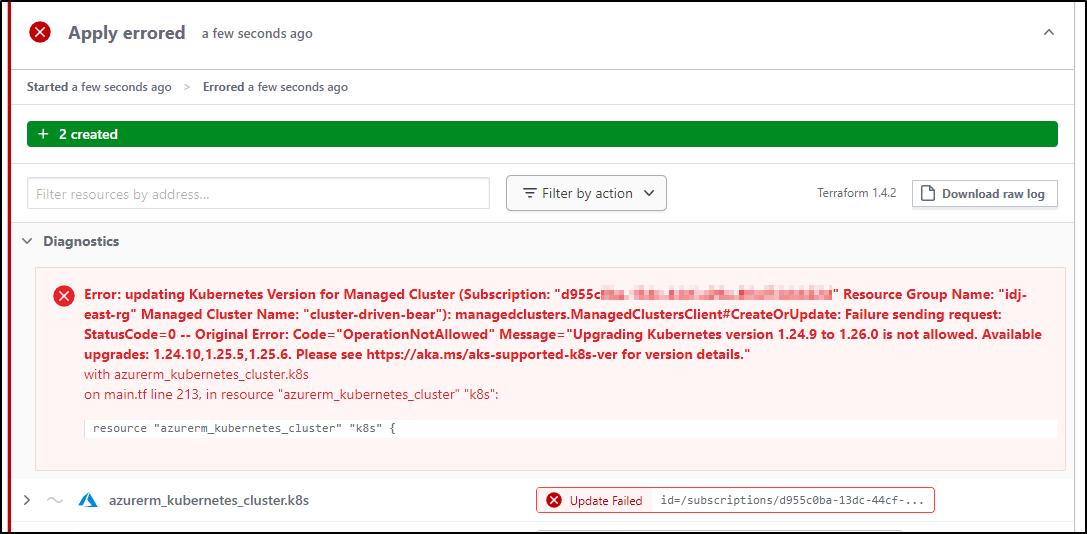

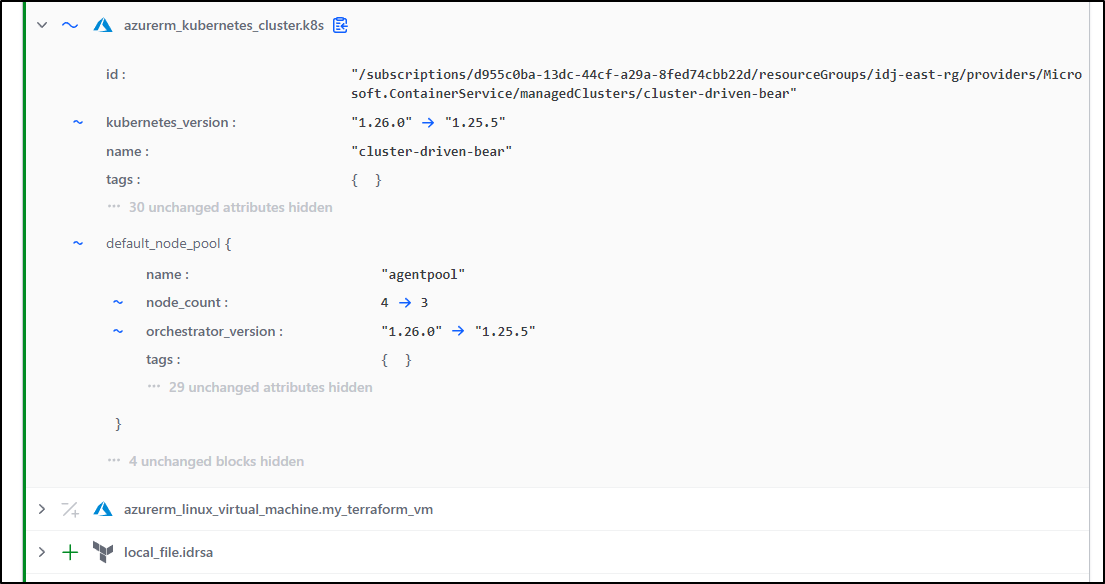

The plan thinks this will work

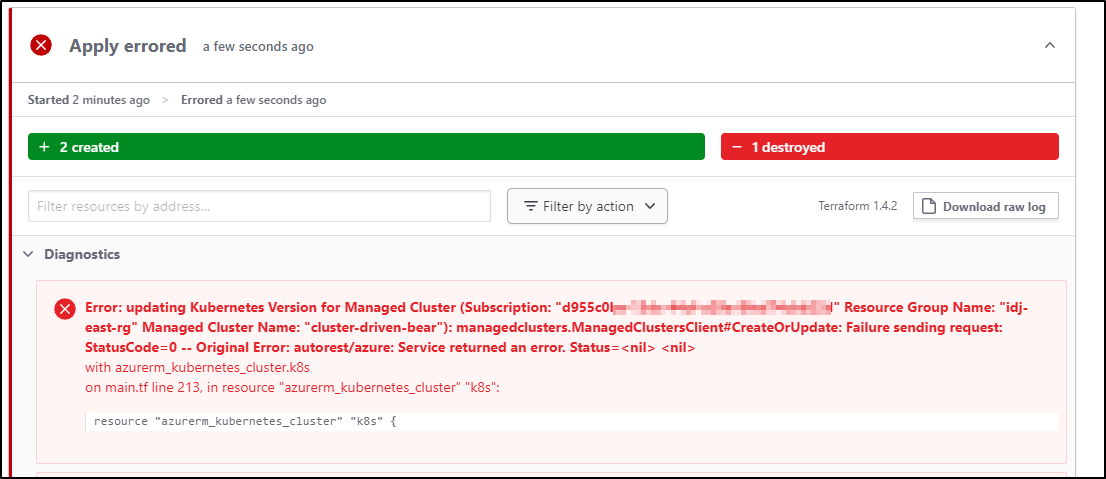

However, the apply catches it and errors, as I would expect

Applying with a valid version works, but it only updated the Control Plane. Because I had not called out the node pool versions, I had to upgrade the node pools via the Portal.

We can do the node pools at the same time using orchestrator_version

$ git diff

diff --git a/main.tf b/main.tf

index f3a3c5e..4de9093 100644

--- a/main.tf

+++ b/main.tf

@@ -222,9 +222,10 @@ resource "azurerm_kubernetes_cluster" "k8s" {

}

default_node_pool {

- name = "agentpool"

- vm_size = "Standard_D2_v2"

- node_count = var.node_count

+ name = "agentpool"

+ vm_size = "Standard_D2_v2"

+ node_count = var.node_count

+ orchestrator_version = var.kubernetes_version

}

linux_profile {

admin_username = "ubuntu"

In fact, since the node pools had already been upgraded via the Portal, setting this now didn’t show anything to change in the plan

Upgrade Scenario 2: Upgrading outside of TF

The other way we can go about this is to do our upgrades in the Portal, or otherwise outside of Terraform, and then update Terraform to match (and get our state updated for us). That would effectively be a no-op plan and apply.

The first check is to upgrade via the portal:

and see what happens when we don’t adjust the terraform to match.

Here I’ll update a setting on a VM, not touching anything with the AKS cluster

$ git diff

diff --git a/main.tf b/main.tf

index 4de9093..0f95986 100644

--- a/main.tf

+++ b/main.tf

@@ -165,7 +165,7 @@ resource "azurerm_linux_virtual_machine" "my_terraform_vm" {

computer_name = "myvm"

admin_username = "azureuser"

- disable_password_authentication = true

+ disable_password_authentication = false

admin_ssh_key {

username = "azureuser"

$ git add main.tf

$ git commit -m "non AKS Change"

[clone_with_github d73c343] non AKS Change

1 file changed, 1 insertion(+), 1 deletion(-)

$ git push

Enumerating objects: 5, done.

Counting objects: 100% (5/5), done.

Delta compression using up to 16 threads

Compressing objects: 100% (3/3), done.

Writing objects: 100% (3/3), 303 bytes | 303.00 KiB/s, done.

Total 3 (delta 2), reused 0 (delta 0)

remote: Resolving deltas: 100% (2/2), completed with 2 local objects.

To https://github.com/idjohnson/tfAnsibleAzure.git

a5eab90..d73c343 clone_with_github -> clone_with_github

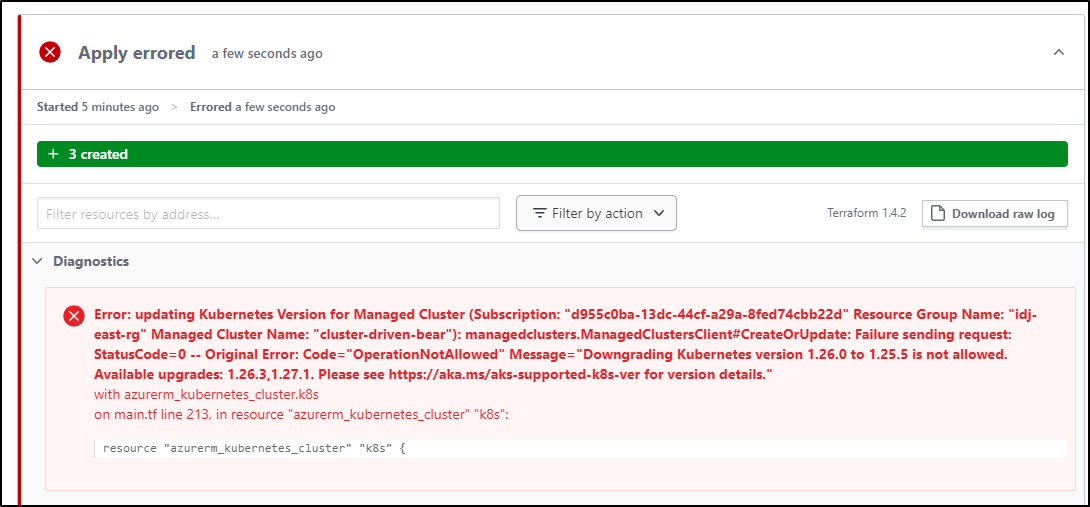

Sadly, it appears it wants to downgrade (but this should be blocked on apply)

Which it did (though sort of an odd error)

I think the strange error was because the plan kicked off while the cluster was not done upgrading. Doing it a second time gave me a more reasonable error message: “Downgrading Kubernetes version 1.26.0 to 1.25.5 is not allowed. Available upgrades: 1.26.3,1.27.1. Please see https://aka.ms/aks-supported-k8s-ver for version details.”

That said, I can update Terraform to match the new portal versions and it’s happy again.
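Since the plan itself does not flag a downgrade, a pre-check in the pipeline can. As a sketch (the function name `classify_version_change` is hypothetical, and it assumes plain MAJOR.MINOR.PATCH versions), compare the live cluster version with the one pinned in Terraform before applying:

```shell
# Hypothetical helper: print "upgrade", "downgrade", or "same" for a move
# from $1 (version running on the cluster) to $2 (version pinned in Terraform).
classify_version_change() {
  local live desired field part_live part_desired
  live=$1
  desired=$2
  # Compare major, then minor, then patch; the first difference decides.
  for field in major minor patch; do
    part_live=${live%%.*}
    part_desired=${desired%%.*}
    if [ "$part_desired" -gt "$part_live" ]; then
      echo upgrade
      return 0
    fi
    if [ "$part_desired" -lt "$part_live" ]; then
      echo downgrade
      return 0
    fi
    # Drop the field just compared and move on to the next one.
    live=${live#*.}
    desired=${desired#*.}
  done
  echo same
}

# Example wiring (the live version comes from the cluster, not from state):
#   live=$(az aks show -g idj-east-rg -n cluster-driven-bear --query kubernetesVersion -o tsv)
#   classify_version_change "$live" "1.25.5"
```

For the case above, `classify_version_change 1.26.0 1.25.5` prints `downgrade`, which is the signal to update the Terraform variable rather than apply.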

Cleanup

Assuming you don’t want to keep the cluster from our CLI steps, you can delete the cluster to save money

$ az aks delete -g showAKSUpgrade -n idjaksup01

Are you sure you want to perform this operation? (y/n): y

- Running ..

As well as the group

$ az group delete --resource-group showAKSUpgrade

Are you sure you want to perform this operation? (y/n): y

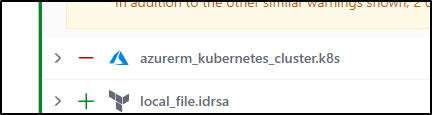

In Terraform, we comment it out to remove

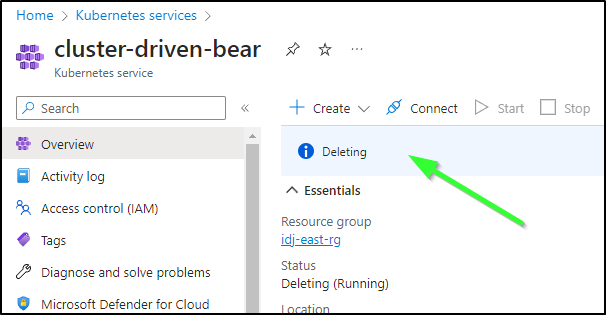

which should remove it via TF Cloud

And we can see it get removed via the Azure Portal as well

Summary

We did two AKS upgrades, showed how to find the Service Principal ID and rotate its secret, and attempted to load some deprecated APIs. While we weren’t able to really show deprecation handling (AKS will upgrade the object for us, I might add, as we saw with the HPA), we did see the full upgrade flow.

We also tested how this works with Terraform using Terraform Cloud. We tested a few scenarios including trying to upgrade too far, not updating the versions after portal upgrade and more.

Hopefully this guide assuages any fears one might have about Azure Kubernetes Service and its upgrade process.