Published: Jun 15, 2023 by Isaac Johnson

I had a note to check out Frappe Cloud and ERPNext. ERPNext is an open-source Enterprise Resource Planning suite that is meant to be easy to install and host. We will try to set it up and explore the cloud offering as well.

We will try the Helm chart in Kubernetes first, then the Docker install with VS Code, and lastly the cloud offering.

Helm install

Add the Helm repo

$ helm repo add frappe https://helm.erpnext.com

WARNING: Kubernetes configuration file is group-readable. This is insecure. Location: /home/builder/.kube/config

WARNING: Kubernetes configuration file is world-readable. This is insecure. Location: /home/builder/.kube/config

"frappe" has been added to your repositories

Helm Repo update

$ helm repo update

WARNING: Kubernetes configuration file is group-readable. This is insecure. Location: /home/builder/.kube/config

WARNING: Kubernetes configuration file is world-readable. This is insecure. Location: /home/builder/.kube/config

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "backstage" chart repository

...Successfully got an update from the "frappe" chart repository

...Successfully got an update from the "bitnami" chart repository

...Successfully got an update from the "deliveryhero" chart repository

Update Complete. ⎈Happy Helming!⎈

The chart will want our Storage Class name, so let’s fetch that

$ kubectl get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

local-path (default) rancher.io/local-path Delete WaitForFirstConsumer false 10d
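Before installing, it can help to look at which persistence settings the chart exposes. The following is a minimal sketch: the helper name chart_persistence_values is made up here, and it assumes the chart keeps these settings under a top-level persistence key (which the persistence.worker.storageClass flag used in this post suggests).

```shell
# Print the persistence section of a chart's default values.
# Usage: chart_persistence_values <repo/chart>
# Assumption: the values file has a top-level "persistence:" key.
chart_persistence_values() {
  helm show values "$1" | grep -n -A 12 '^persistence:'
}

# Example (needs the repo added as shown above):
#   chart_persistence_values frappe/erpnext
```

If the chart asks for a ReadWriteMany volume, the storage class passed in has to support that mode.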

We can now install using helm

$ helm upgrade --install frappe-bench --namespace erpnext --create-namespace frappe/erpnext --set persistence.worker.storageClass=local-path

WARNING: Kubernetes configuration file is group-readable. This is insecure. Location: /home/builder/.kube/config

WARNING: Kubernetes configuration file is world-readable. This is insecure. Location: /home/builder/.kube/config

Release "frappe-bench" does not exist. Installing it now.

NAME: frappe-bench

LAST DEPLOYED: Sat Jun 10 21:11:02 2023

NAMESPACE: erpnext

STATUS: deployed

REVISION: 1

NOTES:

Frappe/ERPNext Release deployed

Release Name: frappe-bench-erpnext

Wait for the pods to start.

To create sites and other resources, refer:

https://github.com/frappe/helm/blob/main/erpnext/README.md

Frequently Asked Questions:

https://helm.erpnext.com/faq

We can now see services in the namespace

$ kubectl get svc -n erpnext

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

frappe-bench-redis-queue-headless ClusterIP None <none> 6379/TCP 17m

frappe-bench-redis-cache-headless ClusterIP None <none> 6379/TCP 17m

frappe-bench-redis-socketio-headless ClusterIP None <none> 6379/TCP 17m

frappe-bench-erpnext-socketio ClusterIP 10.43.253.10 <none> 9000/TCP 17m

frappe-bench-erpnext-gunicorn ClusterIP 10.43.166.50 <none> 8000/TCP 17m

frappe-bench-mariadb ClusterIP 10.43.81.56 <none> 3306/TCP 17m

frappe-bench-redis-cache-master ClusterIP 10.43.220.193 <none> 6379/TCP 17m

frappe-bench-erpnext ClusterIP 10.43.243.212 <none> 8080/TCP 17m

frappe-bench-redis-queue-master ClusterIP 10.43.95.27 <none> 6379/TCP 17m

frappe-bench-redis-socketio-master ClusterIP 10.43.188.248 <none> 6379/TCP 17m

We can see the pods created

$ kubectl get pod -n erpnext

NAME READY STATUS RESTARTS AGE

frappe-bench-redis-cache-master-0 1/1 Running 0 17m

frappe-bench-redis-socketio-master-0 1/1 Running 0 17m

frappe-bench-redis-queue-master-0 1/1 Running 0 17m

frappe-bench-mariadb-0 1/1 Running 0 17m

frappe-bench-erpnext-worker-s-5598db657c-xw5x5 0/1 Pending 0 17m

frappe-bench-erpnext-nginx-79d8b9db9d-vhlr2 0/1 Pending 0 17m

frappe-bench-erpnext-worker-l-9457499bf-xtj86 0/1 Pending 0 17m

frappe-bench-erpnext-worker-d-c7f58cbb7-vt76x 0/1 Pending 0 17m

frappe-bench-erpnext-socketio-84c7d76fb9-7pdtx 0/1 Pending 0 17m

frappe-bench-erpnext-conf-bench-20230610211102-c686x 0/1 Pending 0 17m

frappe-bench-erpnext-gunicorn-65f5575bc5-ktkns 0/1 Pending 0 17m

frappe-bench-erpnext-scheduler-775ddbc67d-7trm2 0/1 Pending 0 17m
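When most pods sit in Pending like this, the scheduler usually records why. A small sketch for narrowing that down follows; the helper names pending_pods and pod_events are my own, and the namespace is the one used in this post.

```shell
# List only the pods that are still Pending in a namespace.
# Usage: pending_pods <namespace>
pending_pods() {
  kubectl get pods -n "$1" --field-selector=status.phase=Pending
}

# Print just the Events section from the description of one pod.
# Usage: pod_events <namespace> <pod-name>
pod_events() {
  kubectl describe pod "$2" -n "$1" | sed -n '/^Events:/,$p'
}

# Example (needs access to the cluster):
#   pending_pods erpnext
#   pod_events erpnext frappe-bench-erpnext-gunicorn-65f5575bc5-ktkns
```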

Let’s see what PVCs were requested

$ kubectl get pvc -n erpnext

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

frappe-bench-erpnext Pending local-path 20m

data-frappe-bench-mariadb-0 Bound pvc-0eb3baf3-d49f-4008-b0e4-8af609c4a3b2 8Gi RWO local-path 20m

Looking at the PVC details, we can see it’s due to the provisioner only supporting ReadWriteOnce (RWO), while the claim asks for ReadWriteMany (RWX)

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal WaitForFirstConsumer 9m23s persistentvolume-controller waiting for first consumer to be created before binding

Normal Provisioning <invalid> (x8 over 9m22s) rancher.io/local-path_local-path-provisioner-957fdf8bc-lldmr_57a194f4-4fd7-4223-9093-dcb9fc320a9c External provisioner is provisioning volume for claim "erpnext/frappe-bench-erpnext"

Warning ProvisioningFailed <invalid> (x8 over 9m22s) rancher.io/local-path_local-path-provisioner-957fdf8bc-lldmr_57a194f4-4fd7-4223-9093-dcb9fc320a9c failed to provision volume with StorageClass "local-path": Only support ReadWriteOnce access mode

Normal ExternalProvisioning <invalid> (x83 over 9m22s) persistentvolume-controller waiting for a volume to be created, either by external provisioner "rancher.io/local-path" or manually created by system administrator

with the PVC spec

$ kubectl get pvc frappe-bench-erpnext -n erpnext -o yaml | tail -n 10

spec:
  accessModes:
  - ReadWriteMany
  resources:
    requests:
      storage: 8Gi
  storageClassName: local-path
  volumeMode: Filesystem
status:
  phase: Pending
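To confirm this kind of mismatch without reading the full YAML, something like the following could be used. It is a minimal sketch; the helper names pvc_access_modes and pvc_events are my own, and the namespace and claim names are the ones from this post.

```shell
# Print the access modes a PVC is requesting.
# Usage: pvc_access_modes <namespace> <pvc-name>
pvc_access_modes() {
  kubectl get pvc "$2" -n "$1" -o jsonpath='{.spec.accessModes}'
}

# Show the events recorded for a PVC; provisioning failures show up here.
# Usage: pvc_events <namespace> <pvc-name>
pvc_events() {
  kubectl get events -n "$1" \
    --field-selector "involvedObject.kind=PersistentVolumeClaim,involvedObject.name=$2"
}

# Example (needs access to the cluster):
#   pvc_access_modes erpnext frappe-bench-erpnext
#   pvc_events erpnext frappe-bench-erpnext
```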

I see plenty of volumes

$ kubectl get pv -n erpnext

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pvc-fe8b7fa3-a3a5-4b56-bde0-a2396686c69f 8Gi RWO Delete Bound default/data-backstage-postgresql-0 local-path 7d10h

pvc-d83ee42f-648e-4319-bb6b-dd937c3b6ccf 10Gi RWO Delete Bound kube-logging/data-es-cluster-0 local-path 7d5h

pvc-2caba020-aeb4-4138-9e5f-1a0f9d2ce3a7 10Gi RWO Delete Bound kube-logging/data-es-cluster-1 local-path 7d5h

pvc-0d816603-4186-4b04-a5b3-2077379b1a67 10Gi RWO Delete Bound kube-logging/data-es-cluster-2 local-path 7d5h

pvc-cb82315d-d3fd-4d2d-978b-b3b89d9ec6d8 10Gi RWO Delete Bound default/data-loki-write-0 local-path 7d5h

pvc-d5e51f3e-9282-48a8-9cc3-9e2ec3662bb2 10Gi RWO Delete Bound default/data-loki-write-2 local-path 7d5h

pvc-daf8405a-3303-4390-9b2a-753816e0ca2a 10Gi RWO Delete Bound default/data-loki-write-1 local-path 7d5h

pvc-aad6dc45-2446-468f-9a67-27bbcec439b6 10Gi RWO Delete Bound default/data-loki-backend-1 local-path 7d5h

pvc-becf1805-9674-43a7-80f2-56a45da6b769 10Gi RWO Delete Bound default/data-loki-backend-2 local-path 7d5h

pvc-cc3aba07-ece7-4123-85c4-3a22e5ca6f50 10Gi RWO Delete Bound default/data-loki-backend-0 local-path 7d5h

I changed clusters to one with a different storage class

$ helm upgrade --install frappe-bench --namespace erpnext --create-namespace frappe/erpnext --set persistence.worker.storageClass=managed-nfs-storage

WARNING: Kubernetes configuration file is group-readable. This is insecure. Location: /home/builder/.kube/config

WARNING: Kubernetes configuration file is world-readable. This is insecure. Location: /home/builder/.kube/config

Release "frappe-bench" does not exist. Installing it now.

NAME: frappe-bench

LAST DEPLOYED: Sat Jun 10 21:32:41 2023

NAMESPACE: erpnext

STATUS: deployed

REVISION: 1

NOTES:

Frappe/ERPNext Release deployed

Release Name: frappe-bench-erpnext

Wait for the pods to start.

To create sites and other resources, refer:

https://github.com/frappe/helm/blob/main/erpnext/README.md

Frequently Asked Questions:

https://helm.erpnext.com/faq

This time it started to launch pods

$ kubectl get pods -n erpnext

NAME READY STATUS RESTARTS AGE

frappe-bench-erpnext-gunicorn-7755c76b48-m5h7t 0/1 ContainerCreating 0 105s

frappe-bench-erpnext-scheduler-798bf5dc55-b8tq8 0/1 ContainerCreating 0 105s

frappe-bench-erpnext-nginx-7bd486cb49-l2vb8 0/1 ContainerCreating 0 105s

frappe-bench-erpnext-worker-l-c5dbcf5d-vgnm4 0/1 ContainerCreating 0 105s

frappe-bench-erpnext-worker-s-f6d596f7d-fxlxt 0/1 ContainerCreating 0 105s

frappe-bench-erpnext-conf-bench-20230610213241-vk98s 0/1 Init:0/1 0 105s

frappe-bench-redis-queue-master-0 1/1 Running 0 105s

frappe-bench-redis-cache-master-0 1/1 Running 0 105s

frappe-bench-redis-socketio-master-0 1/1 Running 0 105s

frappe-bench-erpnext-socketio-7d6978bb8-fcq4l 0/1 CrashLoopBackOff 3 (19s ago) 104s

frappe-bench-erpnext-worker-d-5bf6fc687b-zb2zr 0/1 CrashLoopBackOff 3 (7s ago) 104s

After a while

$ kubectl get pods -n erpnext

NAME READY STATUS RESTARTS AGE

frappe-bench-redis-queue-master-0 1/1 Running 0 8m40s

frappe-bench-redis-cache-master-0 1/1 Running 0 8m40s

frappe-bench-redis-socketio-master-0 1/1 Running 0 8m40s

frappe-bench-erpnext-nginx-7bd486cb49-l2vb8 1/1 Running 0 8m40s

frappe-bench-erpnext-gunicorn-7755c76b48-m5h7t 1/1 Running 0 8m40s

frappe-bench-erpnext-scheduler-798bf5dc55-b8tq8 1/1 Running 0 8m40s

frappe-bench-erpnext-conf-bench-20230610213241-vk98s 0/1 Completed 0 8m40s

frappe-bench-erpnext-worker-s-f6d596f7d-fxlxt 1/1 Running 2 (2m21s ago) 8m40s

frappe-bench-erpnext-worker-l-c5dbcf5d-vgnm4 1/1 Running 2 (2m23s ago) 8m40s

frappe-bench-erpnext-worker-d-5bf6fc687b-zb2zr 1/1 Running 6 (4m44s ago) 8m39s

frappe-bench-erpnext-socketio-7d6978bb8-fcq4l 0/1 CrashLoopBackOff 6 (67s ago) 8m39s

I restarted the pod and it came up

$ kubectl get pods -l app.kubernetes.io/instance=frappe-bench-socketio -n erpnext

NAME READY STATUS RESTARTS AGE

frappe-bench-erpnext-socketio-7d6978bb8-mtv9n 1/1 Running 0 17s
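One way to do that kind of restart is a rollout restart of the owning Deployment. This is only a sketch: the helper name restart_deployment is made up, and the Deployment name frappe-bench-erpnext-socketio is an assumption inferred from the pod name above.

```shell
# Restart all pods of a Deployment and wait for the rollout to settle.
# Usage: restart_deployment <namespace> <deployment-name>
restart_deployment() {
  kubectl rollout restart "deployment/$2" -n "$1" &&
    kubectl rollout status "deployment/$2" -n "$1" --timeout=120s
}

# Example (needs access to the cluster):
#   restart_deployment erpnext frappe-bench-erpnext-socketio
```

Deleting the crashing pod directly would have a similar effect, since the Deployment recreates it.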

I can now port-forward

$ kubectl port-forward svc/frappe-bench-erpnext -n erpnext 8080:8080

Forwarding from 127.0.0.1:8080 -> 8080

Forwarding from [::1]:8080 -> 8080

Handling connection for 8080

Handling connection for 8080

Setting up a website

We’ll create a password for the user

$ kubectl create secret -n erpnext generic mariadb-root-password --from-literal=password=freshbrewedpassword

secret/mariadb-root-password created

We just need a batch job

$ cat createSite.yaml

apiVersion: batch/v1
kind: Job
metadata:
  name: create-erp-example-com
spec:
  backoffLimit: 0
  template:
    spec:
      securityContext:
        supplementalGroups: [1000]
      containers:
        - name: create-site
          image: frappe/erpnext:v14.27.1
          args: ["new"]
          imagePullPolicy: IfNotPresent
          volumeMounts:
            - name: sites-dir
              mountPath: /home/frappe/frappe-bench/sites
          env:
            - name: "SITE_NAME"
              value: erpnext-example.svc.cluster.local
            - name: "DB_ROOT_USER"
              value: root
            - name: "MYSQL_ROOT_PASSWORD"
              valueFrom:
                secretKeyRef:
                  name: mariadb-root-password
                  key: password
            - name: "ADMIN_PASSWORD"
              value: super_secret_password
            - name: "INSTALL_APPS"
              value: "erpnext"
      restartPolicy: Never
      volumes:
        - name: sites-dir
          persistentVolumeClaim:
            claimName: frappe-bench-erpnext
            readOnly: false

$ kubectl apply -f createSite.yaml -n erpnext

job.batch/create-erp-example-com created

I actually worked on the job for a while.

It seems “new” hasn’t been part of the bench command, as far as I can see, for a while.

What was closer was

apiVersion: batch/v1
kind: Job
metadata:
  name: create-erp-example-com
spec:
  backoffLimit: 0
  template:
    spec:
      securityContext:
        supplementalGroups: [1000]
      containers:
        - name: create-site
          image: frappe/erpnext-worker:v13
          args: ["bench","new-site","--db-root-username $DB_ROOT_USER","--db-root-password $MYSQL_ROOT_PASSWORD","--db-type mariadb","--admin-password $ADMIN_PASSWORD","--install-app $INSTALL_APPS","$SITE_NAME"]
          imagePullPolicy: IfNotPresent
          volumeMounts:
            - name: sites-dir
              mountPath: /home/frappe/frappe-bench/sites
          env:
            - name: "SITE_NAME"
              value: erpnext-example.svc.cluster.local
            - name: "DB_ROOT_USER"
              value: root
            - name: "MYSQL_ROOT_PASSWORD"
              valueFrom:
                secretKeyRef:
                  name: mariadb-root-password
                  key: password
            - name: "ADMIN_PASSWORD"
              value: super_secret_password
            - name: "INSTALL_APPS"
              value: "erpnext"
      restartPolicy: Never
      volumes:
        - name: sites-dir
          persistentVolumeClaim:
            claimName: frappe-bench-erpnext
            readOnly: false

The errors to the DB had me trying commands on a worker directly:

frappe@frappe-bench-erpnext-worker-s-f6d596f7d-fxlxt:~/frappe-bench$ mysql --host=10.43.121.34 --port=3306 --user=root --password=freshbrewedpassword

ERROR 2002 (HY000): Can't connect to MySQL server on '10.43.121.34' (115)

I realized that frappe installed a MariaDB service:

$ kubectl get svc frappe-bench-mariadb -o yaml

apiVersion: v1
kind: Service
metadata:
  annotations:
    meta.helm.sh/release-name: frappe-bench
    meta.helm.sh/release-namespace: erpnext
  creationTimestamp: "2023-06-11T02:45:04Z"
  labels:
    app.kubernetes.io/component: primary
    app.kubernetes.io/instance: frappe-bench
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/name: mariadb
    helm.sh/chart: mariadb-11.4.2
  name: frappe-bench-mariadb
  namespace: erpnext
  resourceVersion: "156653268"
  uid: 665d20c7-014c-4ac3-8c77-1240af1d22a9
spec:
  clusterIP: 10.43.121.34
  clusterIPs:
  - 10.43.121.34
  internalTrafficPolicy: Cluster
  ipFamilies:
  - IPv4
  ipFamilyPolicy: SingleStack
  ports:
  - name: mysql
    port: 3306
    protocol: TCP
    targetPort: mysql
  selector:
    app.kubernetes.io/component: primary
    app.kubernetes.io/instance: frappe-bench
    app.kubernetes.io/name: mariadb
  sessionAffinity: None
  type: ClusterIP
status:
  loadBalancer: {}

But no actual mariadb instance

$ kubectl get pods -l app.kubernetes.io/name=mariadb

No resources found in erpnext namespace.

Let’s create a MariaDB to solve this

$ cat frappeMariaDb.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: mariadb-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      app.kubernetes.io/component: primary
      app.kubernetes.io/instance: frappe-bench
      app.kubernetes.io/name: mariadb
  template:
    metadata:
      labels:
        app.kubernetes.io/component: primary
        app.kubernetes.io/instance: frappe-bench
        app.kubernetes.io/name: mariadb
    spec:
      containers:
        - name: mariadb
          image: mariadb
          ports:
            - containerPort: 3306
          env:
            #- name: MARIADB_RANDOM_ROOT_PASSWORD
            - name: MARIADB_ALLOW_EMPTY_ROOT_PASSWORD
              value: "0" # if it is 1 and root_password is set, root_password takes precedence
            - name: MARIADB_ROOT_PASSWORD
              value: freshbrewedpassword

$ kubectl apply -f frappeMariaDb.yaml

deployment.apps/mariadb-deployment created

$ kubectl get pods -l app.kubernetes.io/name=mariadb

NAME READY STATUS RESTARTS AGE

mariadb-deployment-7f64864f49-n6bvd 1/1 Running 0 61s

Interestingly, I can reach the pod directly

frappe@frappe-bench-erpnext-worker-s-f6d596f7d-fxlxt:~/frappe-bench$ mysql --host=10.42.1.221 --port=3306 --user=root --password=freshbrewedpassword

Welcome to the MariaDB monitor. Commands end with ; or \g.

Your MariaDB connection id is 3

Server version: 11.0.2-MariaDB-1:11.0.2+maria~ubu2204 mariadb.org binary distribution

Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

MariaDB [(none)]> \q

but not via the service

frappe@frappe-bench-erpnext-worker-s-f6d596f7d-fxlxt:~/frappe-bench$ mysql --host=10.43.121.34 --port=3306 --user=root --password=freshbrewedpassword

^C

frappe@frappe-bench-erpnext-worker-s-f6d596f7d-fxlxt:~/frappe-bench$ mysql --host=frappe-bench-mariadb --port=3306 --user=root --password=freshbrewedpassword

ERROR 2002 (HY000): Can't connect to MySQL server on 'frappe-bench-mariadb' (115)

frappe@frappe-bench-erpnext-worker-s-f6d596f7d-fxlxt:~/frappe-bench$ mysql --host=frappe-bench-mariadb.erpnext.svc.cluster.local --port=3306 --user=root --password=freshbrewedpassword

ERROR 2002 (HY000): Can't connect to MySQL server on 'frappe-bench-mariadb.erpnext.svc.cluster.local' (115)

Even though that should work, since the pod carries the labels the service selects on

$ kubectl get pods -l app.kubernetes.io/name=mariadb,app.kubernetes.io/instance=frappe-bench,app.kubernetes.io/name=mariadb

NAME READY STATUS RESTARTS AGE

mariadb-deployment-7f64864f49-n6bvd 1/1 Running 0 4m23s
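One possible explanation, offered as a guess rather than a confirmed diagnosis: the service uses targetPort: mysql, which is a port name, while the Deployment above declares containerPort: 3306 without a name. A named targetPort only resolves if the pod has a container port with that name, so the service may end up with no usable endpoints even though the labels match. The sketch below checks the endpoints and, if they are empty, names the port; both helper names are made up here.

```shell
# List the pod IPs a Service currently routes to. Empty output means the
# Service has no usable endpoints.
# Usage: service_endpoints <namespace> <service-name>
service_endpoints() {
  kubectl get endpoints "$2" -n "$1" -o jsonpath='{.subsets[*].addresses[*].ip}'
}

# Give the first container port of a Deployment the name "mysql", so that a
# Service with targetPort "mysql" can resolve it (JSON Patch "add").
# Usage: name_first_port_mysql <namespace> <deployment-name>
name_first_port_mysql() {
  kubectl patch deployment "$2" -n "$1" --type=json \
    -p '[{"op":"add","path":"/spec/template/spec/containers/0/ports/0/name","value":"mysql"}]'
}

# Example (needs access to the cluster):
#   service_endpoints erpnext frappe-bench-mariadb
#   name_first_port_mysql erpnext mariadb-deployment
```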

My next issue was that it didn’t like the out-of-the-box MariaDB setup

$ kubectl logs create-erp-example-com-btmzs

For key collation_server. Expected value utf8mb4_unicode_ci, found value utf8mb4_general_ci

================================================================================

Creation of your site - erpnext-example.svc.cluster.local failed because MariaDB is not properly

configured. If using version 10.2.x or earlier, make sure you use the

the Barracuda storage engine.

Please verify the settings above in MariaDB's my.cnf. Restart MariaDB. And

then run `bench new-site erpnext-example.svc.cluster.local` again.

================================================================================

It took a few tries, but I managed to work out the following YAML for a MariaDB configured the way Frappe ERPNext would like it

apiVersion: apps/v1
kind: Deployment
metadata:
  name: mariadb-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      app.kubernetes.io/component: primary
      app.kubernetes.io/instance: frappe-bench
      app.kubernetes.io/name: mariadb
  template:
    metadata:
      labels:
        app.kubernetes.io/component: primary
        app.kubernetes.io/instance: frappe-bench
        app.kubernetes.io/name: mariadb
    spec:
      containers:
        - name: mariadb
          image: mariadb
          ports:
            - containerPort: 3306
          env:
            #- name: MARIADB_RANDOM_ROOT_PASSWORD
            - name: MARIADB_ALLOW_EMPTY_ROOT_PASSWORD
              value: "0" # if it is 1 and root_password is set, root_password takes precedence
            - name: MARIADB_ROOT_PASSWORD
              value: freshbrewedpassword
          volumeMounts:
            - name: configmap
              mountPath: /etc/mysql/mariadb.conf.d
            # NOTE: the official mariadb image keeps its data in /var/lib/mysql,
            # so this mount may not actually hold the database files
            - name: data
              mountPath: /var/lib/mariadb
      volumes:
        - name: configmap
          configMap:
            name: frappe-mariadb-cnf
        - name: data
          persistentVolumeClaim:
            claimName: frappe-mariadb-data-pvc
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: frappe-mariadb-data-pvc
spec:
  storageClassName: local-path
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 8Gi
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: frappe-mariadb-cnf
  labels:
    app.kubernetes.io/component: primary
    app.kubernetes.io/instance: frappe-bench
    app.kubernetes.io/name: mariadb
data:
  my.cnf: |-
    [mysqld]
    character-set-client-handshake = FALSE
    character-set-server = utf8mb4
    collation-server = utf8mb4_unicode_ci
    [mysql]
    default-character-set = utf8mb4
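To check that the server actually picked up these settings before re-running the site job, the collation can be queried from inside the container. This is a sketch under a few assumptions: the helper name show_server_collation is made up, the image ships the mariadb client binary, and MARIADB_ROOT_PASSWORD is set in the container as in the Deployment above.

```shell
# Ask the running MariaDB server which server collation it is using.
# Usage: show_server_collation <namespace> <deployment-name>
# The inner command runs in the container, so $MARIADB_ROOT_PASSWORD is
# expanded there, not on the local machine.
show_server_collation() {
  kubectl exec -n "$1" "deploy/$2" -- sh -c \
    'mariadb -uroot -p"$MARIADB_ROOT_PASSWORD" -e "SHOW VARIABLES LIKE \"collation_server\""'
}

# Example (needs access to the cluster):
#   show_server_collation erpnext mariadb-deployment
```

According to the error above, Frappe expects the value utf8mb4_unicode_ci here.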

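The create job had also been updated to pass in the parameters the way the newer bench worker would like. Note the switch from $VAR to $(VAR): Kubernetes itself expands only the $(VAR) form in args, with no shell involved, which is likely why the earlier attempt left a literal '$SITE_NAME' directory under sites (visible in the listing further down)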

$ cat createSite.yaml

apiVersion: batch/v1
kind: Job
metadata:
  name: create-erp-example2-com
spec:
  backoffLimit: 0
  template:
    spec:
      securityContext:
        supplementalGroups: [1000]
      containers:
        - name: create-site
          image: frappe/erpnext-worker:v13
          args: ["bench","new-site","--db-host $(DB_HOST)","--db-root-username $(DB_ROOT_USER)","--db-root-password $(MYSQL_ROOT_PASSWORD)","--db-type mariadb","--admin-password $(ADMIN_PASSWORD)","--install-app $(INSTALL_APPS)","$(SITE_NAME)"]
          imagePullPolicy: IfNotPresent
          volumeMounts:
            - name: sites-dir
              mountPath: /home/frappe/frappe-bench/sites
          env:
            - name: "SITE_NAME"
              value: erpnext-example2.svc.cluster.local
            - name: "DB_HOST"
              value: "10.42.1.225"
            - name: "DB_ROOT_USER"
              value: root
            - name: "MYSQL_ROOT_PASSWORD"
              valueFrom:
                secretKeyRef:
                  name: mariadb-root-password
                  key: password
            - name: "ADMIN_PASSWORD"
              value: super_secret_password
            - name: "INSTALL_APPS"
              value: "erpnext"
      restartPolicy: Never
      volumes:
        - name: sites-dir
          persistentVolumeClaim:
            claimName: frappe-bench-erpnext
            readOnly: false
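The DB_HOST value in this job is a pod IP, and pod IPs change whenever the pod is recreated, so it has to be looked up again each time. A small sketch for doing that follows; the helper name mariadb_pod_ip is made up, and it assumes exactly one pod carries the app.kubernetes.io/name=mariadb label.

```shell
# Print the IP of the first pod labelled as MariaDB in a namespace.
# Usage: mariadb_pod_ip <namespace>
mariadb_pod_ip() {
  kubectl get pods -n "$1" -l app.kubernetes.io/name=mariadb \
    -o jsonpath='{.items[0].status.podIP}'
}

# Example (needs access to the cluster):
#   mariadb_pod_ip erpnext
```

A working service name would be the sturdier choice for DB_HOST, since the site configuration stores whatever host is passed in.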

This time, after a sufficient amount of time had passed, it completed

$ kubectl get jobs

NAME COMPLETIONS DURATION AGE

frappe-bench-erpnext-conf-bench-20230610213241 1/1 6m43s 3d17h

create-erp-example2-com 1/1 4m55s 6m14s

$ kubectl logs create-erp-example2-com-5bf9w

Installing frappe...

Updating DocTypes for frappe : [========================================] 100%

Updating country info : [========================================] 100%

Installing erpnext...

Updating DocTypes for erpnext : [========================================] 100%

Updating customizations for Address

Updating customizations for Contact

*** Scheduler is disabled ***
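Rather than polling the job list by hand, the wait and the log check can be combined. This is a minimal sketch; the helper name wait_for_job is made up and the 600s default timeout is an arbitrary choice.

```shell
# Block until a Job reports the "complete" condition, then print its logs.
# Usage: wait_for_job <namespace> <job-name> [timeout]
wait_for_job() {
  kubectl wait --for=condition=complete "job/$2" -n "$1" --timeout="${3:-600s}" &&
    kubectl logs "job/$2" -n "$1"
}

# Example (needs access to the cluster):
#   wait_for_job erpnext create-erp-example2-com
```

A job that fails never reaches the complete condition, so in that case the wait runs until the timeout.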

I can see the site was created, but am at a loss on how best to serve via port-forward

builder@LuiGi17:~/Workspaces/jekyll-blog$ kubectl exec -it frappe-bench-erpnext-nginx-7bd486cb49-l2vb8 -- /bin/bash

frappe@frappe-bench-erpnext-nginx-7bd486cb49-l2vb8:~/frappe-bench$ ls -ltra

total 32

drwxr-xr-x 3 frappe frappe 4096 Jun 9 16:57 config

-rw-r--r-- 1 frappe frappe 346 Jun 9 16:57 patches.txt

drwxr-xr-x 7 frappe frappe 4096 Jun 9 16:57 .

drwxr-xr-x 6 frappe frappe 4096 Jun 9 16:58 env

drwxr-xr-x 4 frappe frappe 4096 Jun 9 16:59 apps

drwxr-xr-x 1 frappe frappe 4096 Jun 9 17:00 ..

drwxr-xr-x 2 frappe frappe 4096 Jun 11 02:51 logs

drwxrwxrwx 6 frappe frappe 4096 Jun 14 20:15 sites

frappe@frappe-bench-erpnext-nginx-7bd486cb49-l2vb8:~/frappe-bench$ cd sites/

frappe@frappe-bench-erpnext-nginx-7bd486cb49-l2vb8:~/frappe-bench/sites$ ls

'$SITE_NAME' assets erpnext-example.svc.cluster.local

apps.txt common_site_config.json erpnext-example2.svc.cluster.local

frappe@frappe-bench-erpnext-nginx-7bd486cb49-l2vb8:~/frappe-bench/sites$ cat erpnext-example2.svc.cluster.local/

.test_log locks/ private/ site_config.json

error-snapshots/ logs/ public/

frappe@frappe-bench-erpnext-nginx-7bd486cb49-l2vb8:~/frappe-bench/sites$ cat erpnext-example2.svc.cluster.local/site_config.json

{
 "db_host": "10.42.1.225",
 "db_name": "_986265b1f1ec718c",
 "db_password": "XxZgQ1Mieycd3szX",
 "db_type": "mariadb",
 "user_type_doctype_limit": {
  "employee_self_service": 10
 }
}

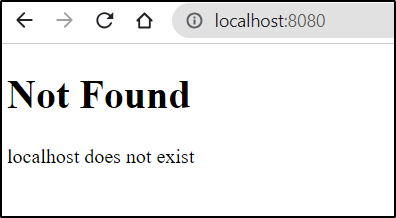

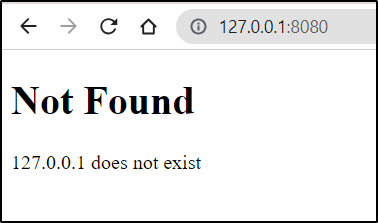

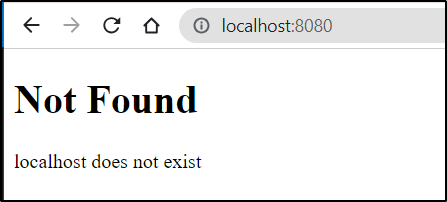

The trouble shows up when port-forwarding

$ kubectl port-forward svc/frappe-bench-erpnext 8080:8080

Forwarding from 127.0.0.1:8080 -> 8080

Forwarding from [::1]:8080 -> 8080

Nginx seems stuck on looking for a site named localhost or 127.0.0.1, rather than serving whichever site exists.
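Frappe usually picks the site to serve from the host name of the request, so a request to localhost would only match a site called localhost. One way to test an existing site through the port-forward, without creating another one, might be to send its name as the Host header. This is a sketch; the helper name curl_site is made up, and whether the chart’s Nginx passes the header through unchanged is an assumption.

```shell
# Request the forwarded port while presenting a site name as the Host header,
# and print only the HTTP status code.
# Usage: curl_site <site-name> [local-port]
curl_site() {
  curl -sS -o /dev/null -w '%{http_code}\n' -H "Host: $1" "http://127.0.0.1:${2:-8080}/"
}

# Example (with the port-forward running in another terminal):
#   curl_site erpnext-example2.svc.cluster.local
```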

So I tried creating a site named localhost

$ cat createLocalhostSite.yaml

apiVersion: batch/v1
kind: Job
metadata:
  name: create-erp-localhost
spec:
  backoffLimit: 0
  template:
    spec:
      securityContext:
        supplementalGroups: [1000]
      containers:
        - name: create-site
          image: frappe/erpnext-worker:v13
          args: ["bench","new-site","--db-host $(DB_HOST)","--db-root-username $(DB_ROOT_USER)","--db-root-password $(MYSQL_ROOT_PASSWORD)","--db-type mariadb","--admin-password $(ADMIN_PASSWORD)","--install-app $(INSTALL_APPS)","$(SITE_NAME)"]
          imagePullPolicy: IfNotPresent
          volumeMounts:
            - name: sites-dir
              mountPath: /home/frappe/frappe-bench/sites
          env:
            - name: "SITE_NAME"
              value: localhost
            - name: "DB_HOST"
              value: "10.42.1.225"
            - name: "DB_ROOT_USER"
              value: root
            - name: "MYSQL_ROOT_PASSWORD"
              valueFrom:
                secretKeyRef:
                  name: mariadb-root-password
                  key: password
            - name: "ADMIN_PASSWORD"
              value: super_secret_password
            - name: "INSTALL_APPS"
              value: "erpnext"
      restartPolicy: Never
      volumes:
        - name: sites-dir
          persistentVolumeClaim:
            claimName: frappe-bench-erpnext
            readOnly: false

$ kubectl apply -f createLocalhostSite.yaml

job.batch/create-erp-localhost created

When that finished

$ kubectl logs create-erp-localhost-vr4zd

Installing frappe...

Updating DocTypes for frappe : [========================================] 100%

Updating country info : [========================================] 100%

Installing erpnext...

Updating DocTypes for erpnext : [========================================] 100%

Updating customizations for Address

Updating customizations for Contact

*** Scheduler is disabled ***

Now when I port forward I get an error

$ kubectl port-forward svc/frappe-bench-erpnext 8080:8080

Forwarding from 127.0.0.1:8080 -> 8080

Forwarding from [::1]:8080 -> 8080

Handling connection for 8080

Handling connection for 8080

Nginx didn’t give me any clues

$ kubectl logs frappe-bench-erpnext-nginx-7bd486cb49-l2vb8

PROXY_READ_TIMEOUT defaulting to 120

CLIENT_MAX_BODY_SIZE defaulting to 50m

127.0.0.1 - - [11/Jun/2023:02:58:39 +0000] "GET / HTTP/1.1" 404 111 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/114.0.0.0 Safari/537.36"

127.0.0.1 - - [11/Jun/2023:02:58:46 +0000] "GET / HTTP/1.1" 404 111 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/114.0.0.0 Safari/537.36"

127.0.0.1 - - [11/Jun/2023:02:58:46 +0000] "GET /favicon.ico HTTP/1.1" 404 111 "http://localhost:8080/" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/114.0.0.0 Safari/537.36"

127.0.0.1 - - [11/Jun/2023:02:59:10 +0000] "GET / HTTP/1.1" 404 111 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/114.0.0.0 Safari/537.36"

127.0.0.1 - - [11/Jun/2023:02:59:16 +0000] "GET / HTTP/1.1" 404 111 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/114.0.0.0 Safari/537.36"

127.0.0.1 - - [11/Jun/2023:02:59:16 +0000] "GET /favicon.ico HTTP/1.1" 404 111 "http://127.0.0.1:8080/" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/114.0.0.0 Safari/537.36"

127.0.0.1 - - [11/Jun/2023:03:04:37 +0000] "GET / HTTP/1.1" 404 111 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/114.0.0.0 Safari/537.36"

127.0.0.1 - - [14/Jun/2023:20:24:43 +0000] "GET / HTTP/1.1" 404 111 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/114.0.0.0 Safari/537.36"

127.0.0.1 - - [14/Jun/2023:20:24:44 +0000] "GET /favicon.ico HTTP/1.1" 404 111 "http://localhost:8080/" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/114.0.0.0 Safari/537.36"

127.0.0.1 - - [14/Jun/2023:20:24:54 +0000] "GET / HTTP/1.1" 404 111 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/114.0.0.0 Safari/537.36"

127.0.0.1 - - [14/Jun/2023:20:24:54 +0000] "GET /favicon.ico HTTP/1.1" 404 111 "http://127.0.0.1:8080/" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/114.0.0.0 Safari/537.36"

127.0.0.1 - - [14/Jun/2023:20:30:27 +0000] "GET / HTTP/1.1" 404 111 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/114.0.0.0 Safari/537.36"

127.0.0.1 - - [14/Jun/2023:20:31:02 +0000] "GET / HTTP/1.1" 404 111 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/114.0.0.0 Safari/537.36"

127.0.0.1 - - [14/Jun/2023:20:31:07 +0000] "GET / HTTP/1.1" 404 111 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/114.0.0.0 Safari/537.36"

127.0.0.1 - - [14/Jun/2023:20:43:26 +0000] "GET / HTTP/1.1" 500 141 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/114.0.0.0 Safari/537.36"

127.0.0.1 - - [14/Jun/2023:20:43:59 +0000] "GET / HTTP/1.1" 500 141 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/114.0.0.0 Safari/537.36"

127.0.0.1 - - [14/Jun/2023:20:45:25 +0000] "GET / HTTP/1.1" 500 141 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/114.0.0.0 Safari/537.36"

127.0.0.1 - - [14/Jun/2023:20:45:29 +0000] "GET / HTTP/1.1" 500 141 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/114.0.0.0 Safari/537.36"

However, no matter what I did, I would get 500s in the Nginx logs

127.0.0.1 - - [15/Jun/2023:22:20:35 +0000] "GET /apps.txt HTTP/1.1" 500 141 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/114.0.0.0 Safari/537.36"

127.0.0.1 - - [15/Jun/2023:22:27:29 +0000] "GET /apps.txt HTTP/1.1" 500 141 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/114.0.0.0 Safari/537.36"

127.0.0.1 - - [15/Jun/2023:22:27:37 +0000] "GET /assets/ HTTP/1.1" 404 185 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/114.0.0.0 Safari/537.36"

127.0.0.1 - - [15/Jun/2023:22:27:41 +0000] "GET /erpnext/ HTTP/1.1" 301 169 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/114.0.0.0 Safari/537.36"

127.0.0.1 - - [15/Jun/2023:22:27:47 +0000] "GET /index.html HTTP/1.1" 301 169 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/114.0.0.0 Safari/537.36"

127.0.0.1 - - [15/Jun/2023:22:28:03 +0000] "GET /index HTTP/1.1" 500 141 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/114.0.0.0 Safari/537.36"

127.0.0.1 - - [15/Jun/2023:22:28:04 +0000] "GET / HTTP/1.1" 500 141 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/114.0.0.0 Safari/537.36"

127.0.0.1 - - [15/Jun/2023:22:28:23 +0000] "\x16\x03\x01\x02\x00\x01\x00\x01\xFC\x03\x03\xE0\xF3\xF3,\xC7\x85\x850m\x10jF\xB6\x99\xAF\x1FC-;\x88\xB1\x86\xBD\x16\xE5\xF3w" 400 157 "-" "-"

127.0.0.1 - - [15/Jun/2023:22:28:23 +0000] "\x16\x03\x01\x02\x00\x01\x00\x01\xFC\x03\x03\xEE\xCE\x96\xC0rG\xC0\xB3\x9D\xEF\xC2]{y\x15\xAF\x89nF0\x84=\x1A\xF2\xC4z\xBDr\x0ETX\xDD \x88zf\xDC\xC9\xE6o\x0B:\x95\xDBg\x221e\xB6\x8F\x8D\xB70\xFA\x83\x15\xA0j\x1A\xD1\x94\xDB\xCE\xEA\x19\x00 ::\x13\x01\x13\x02\x13\x03\xC0+\xC0/\xC0,\xC00\xCC\xA9\xCC\xA8\xC0\x13\xC0\x14\x00\x9C\x00\x9D\x00/\x005\x01\x00\x01\x93" 400 157 "-" "-"

127.0.0.1 - - [15/Jun/2023:22:28:23 +0000] "\x16\x03\x01\x02\x00\x01\x00\x01\xFC\x03\x03\xFA\xFA\xCBU\x97\xD3~\xF6\xAAJ\xC1F\xA1{\x9F`\x0B\xFA\xC2\xAB\xE2\xC1]6!(\xA8\x9F\xA6\xB0.O \x82l=M\x18%\xE7K\x87\x1E\x8A\x92?M\xADp\x8E\xFBo1\xD4Lg\xD6p(\xF4\xE6\xA0\x1F\xE3\x97\x00 jj\x13\x01\x13\x02\x13\x03\xC0+\xC0/\xC0,\xC00\xCC\xA9\xCC\xA8\xC0\x13\xC0\x14\x00\x9C\x00\x9D\x00/\x005\x01\x00\x01\x93zz\x00\x00\x00" 400 157 "-" "-"

127.0.0.1 - - [15/Jun/2023:22:28:23 +0000] "\x16\x03\x01\x02\x00\x01\x00\x01\xFC\x03\x03.\xD4\x8F\xB32\x5C%\xABu\x1C\xEF\x7F\x99\xFBC\xEBT\xDE`0^'\xDD\xAE\x1A1\xB6@@\xB4\x8B\xDF \x0Fy\xA0\x04\xFDu'\xBFIsZ\xBD\xB4\xBC\x866V\x87#\xBF" 400 157 "-" "-"
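Two things may be worth noting about these logs. The 400 entries that start with \x16\x03\x01 are TLS handshakes, meaning the browser tried https against the plain-http port. And since Nginx only proxies to the backend, the reason for a 500 is more likely to show up in the gunicorn logs. The sketch below pulls those; the helper name backend_logs is made up, and the Deployment name frappe-bench-erpnext-gunicorn is an assumption inferred from the pod names above.

```shell
# Print the most recent log lines of a Deployment's pod.
# Usage: backend_logs <namespace> <deployment-name> [line-count]
backend_logs() {
  kubectl logs "deploy/$2" -n "$1" --tail="${3:-50}"
}

# Example (needs access to the cluster):
#   backend_logs erpnext frappe-bench-erpnext-gunicorn
```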

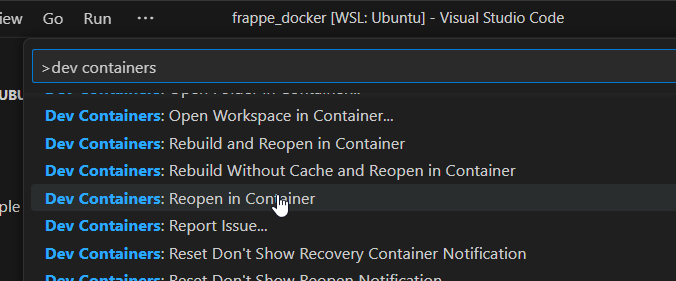

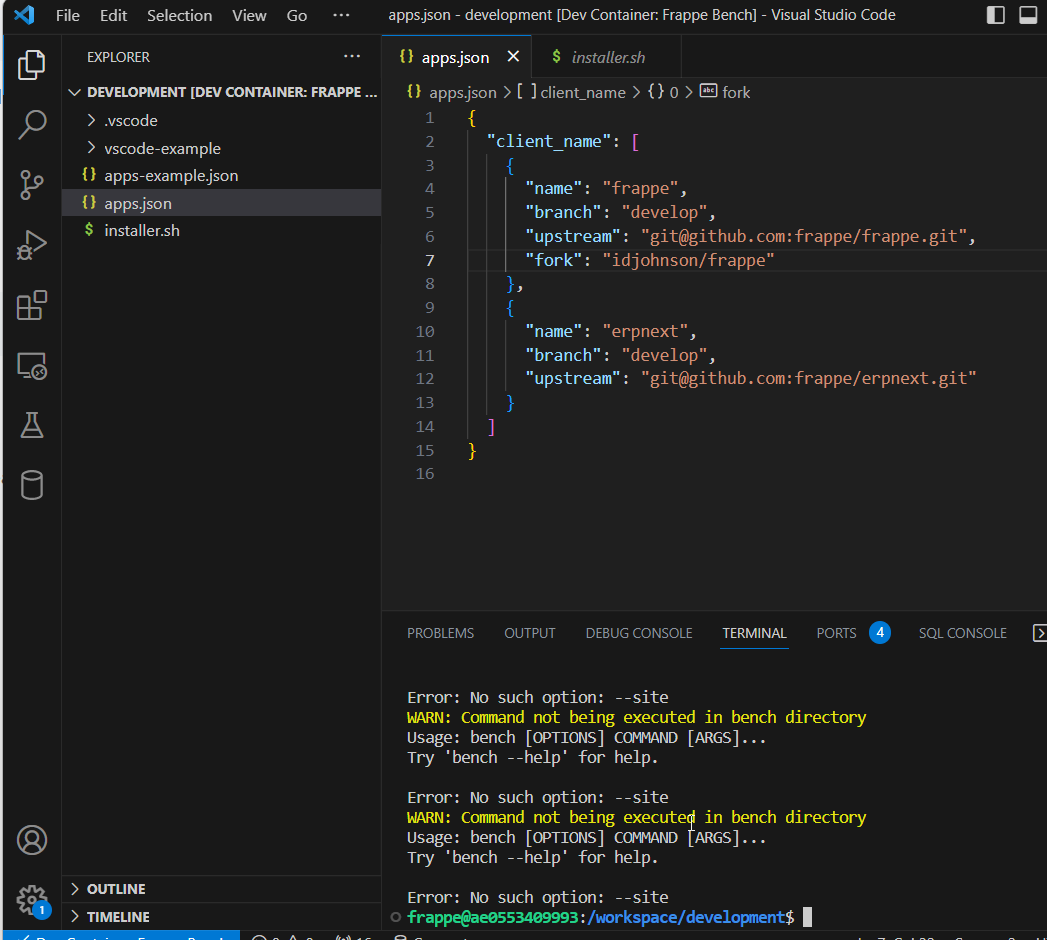

I pivoted and tried the Docker install

The docs speak about “Remote Containers”, but that VS Code feature is now called Dev Containers

But even following the guide, it crashed and didn’t work

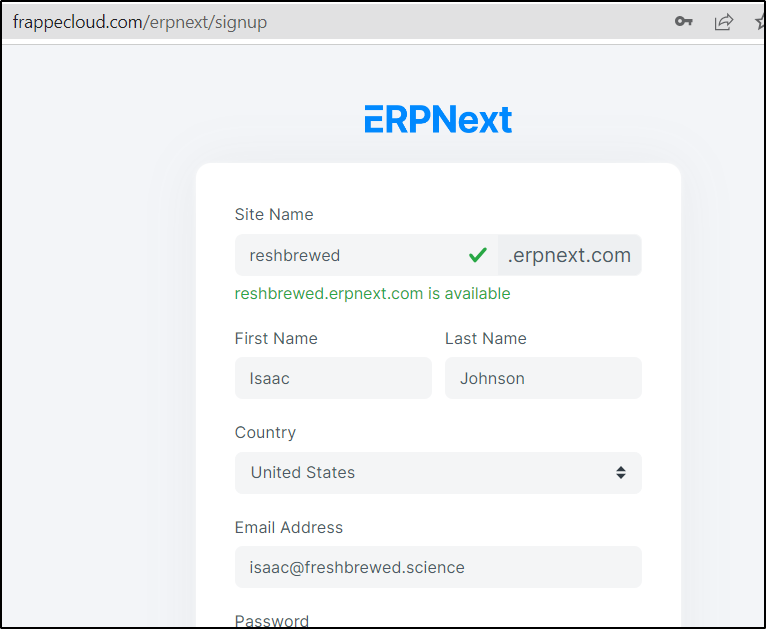

Cloud hosting

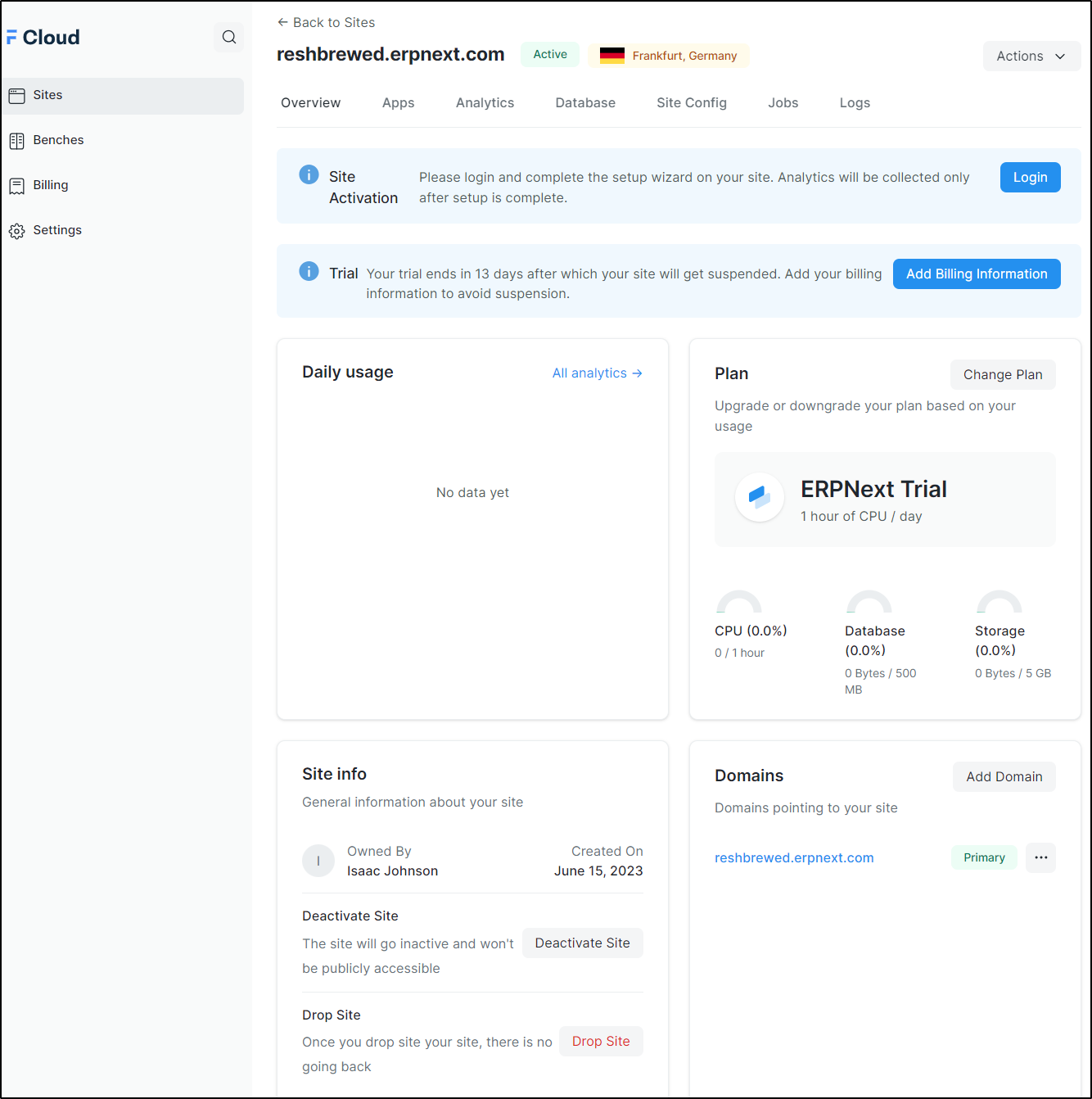

I had spent far too much time trying to get this working. I finally pivoted to the cloud-hosted offering

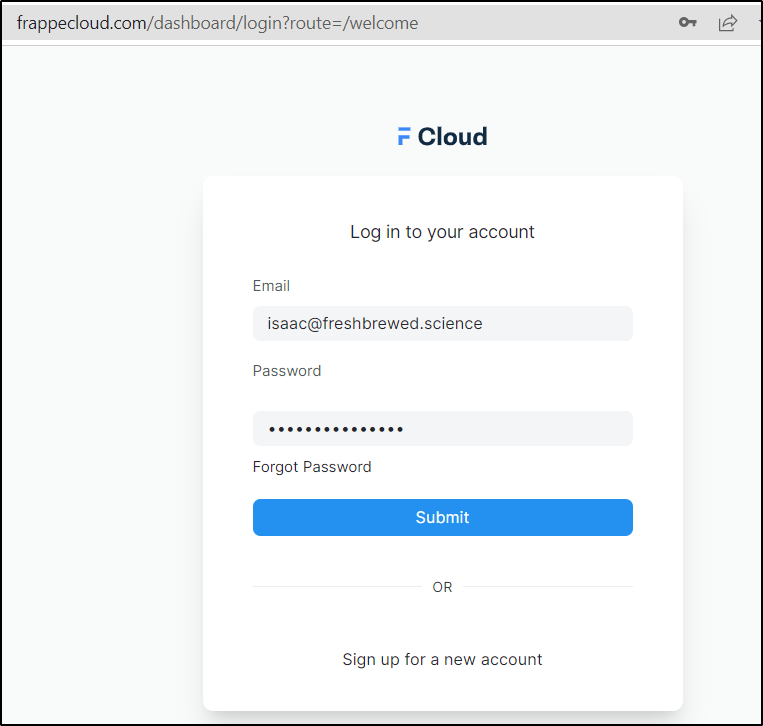

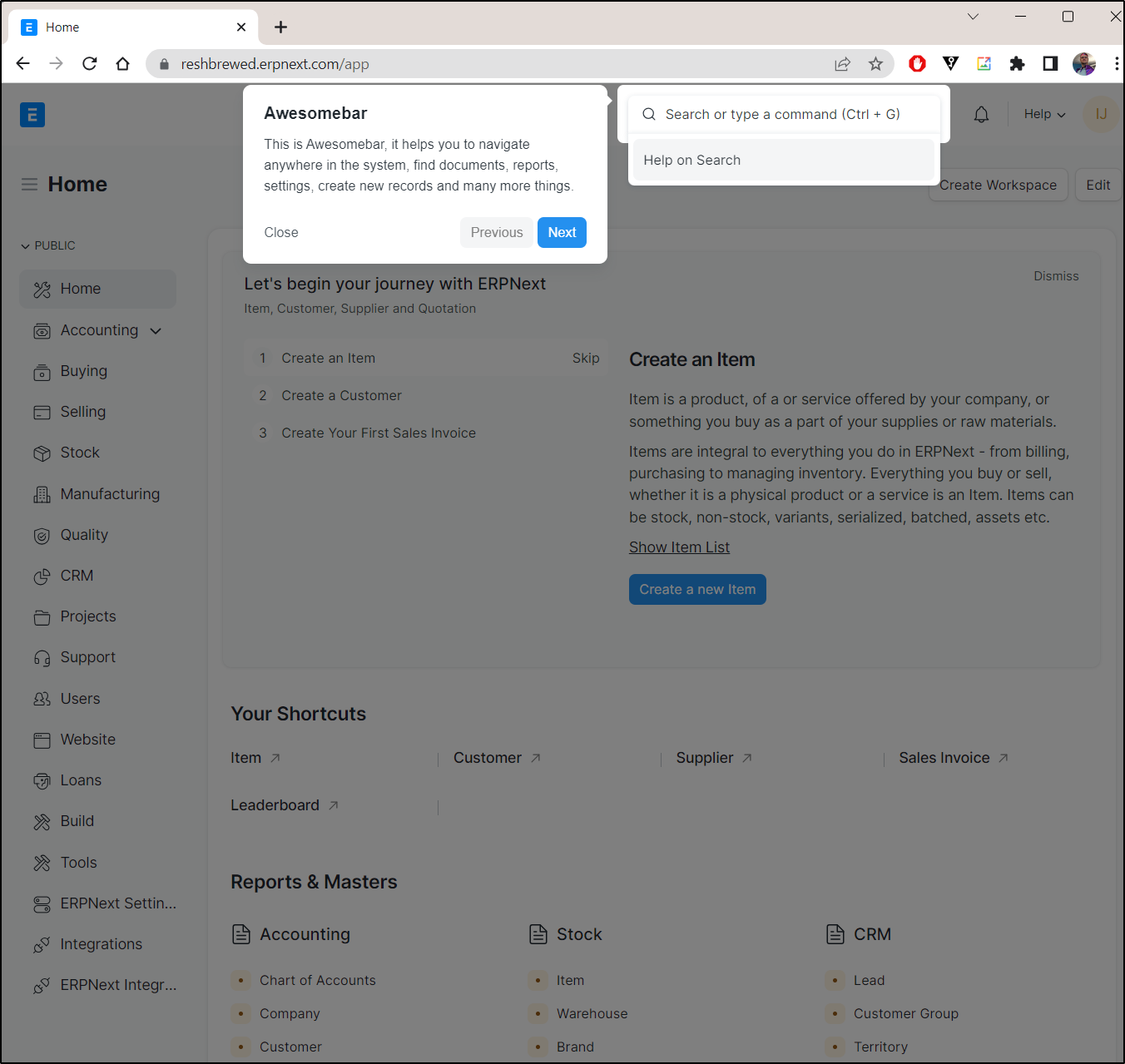

I could then log in

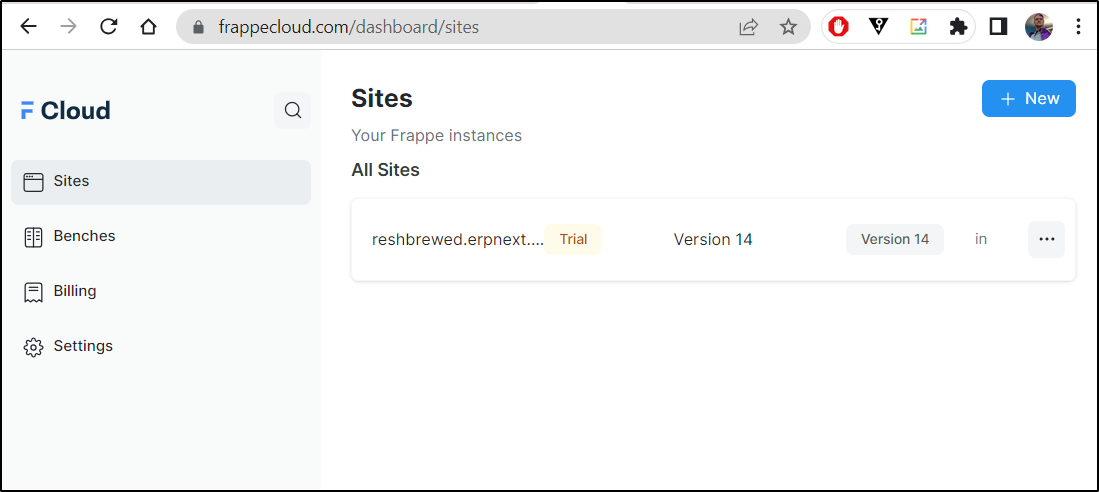

We can now see some sites in the dashboard

We can see some details

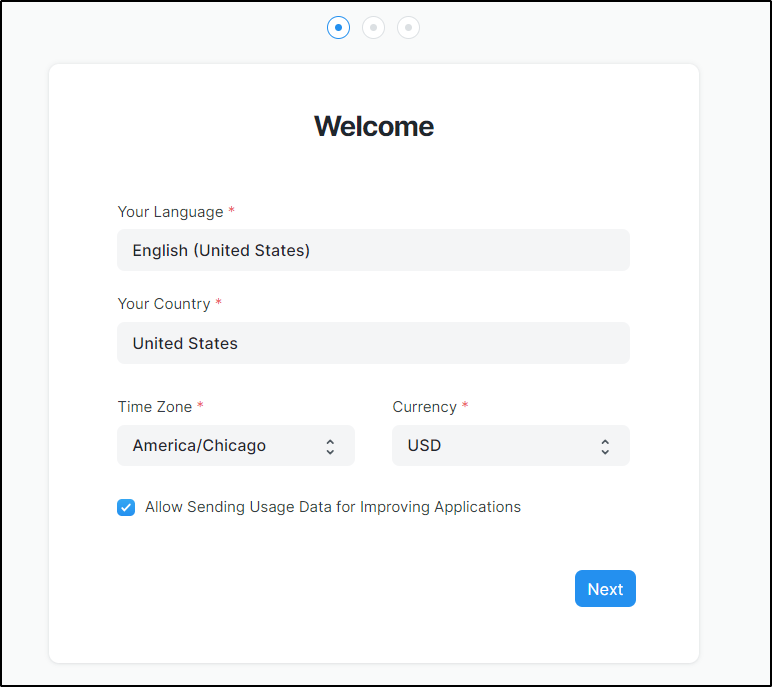

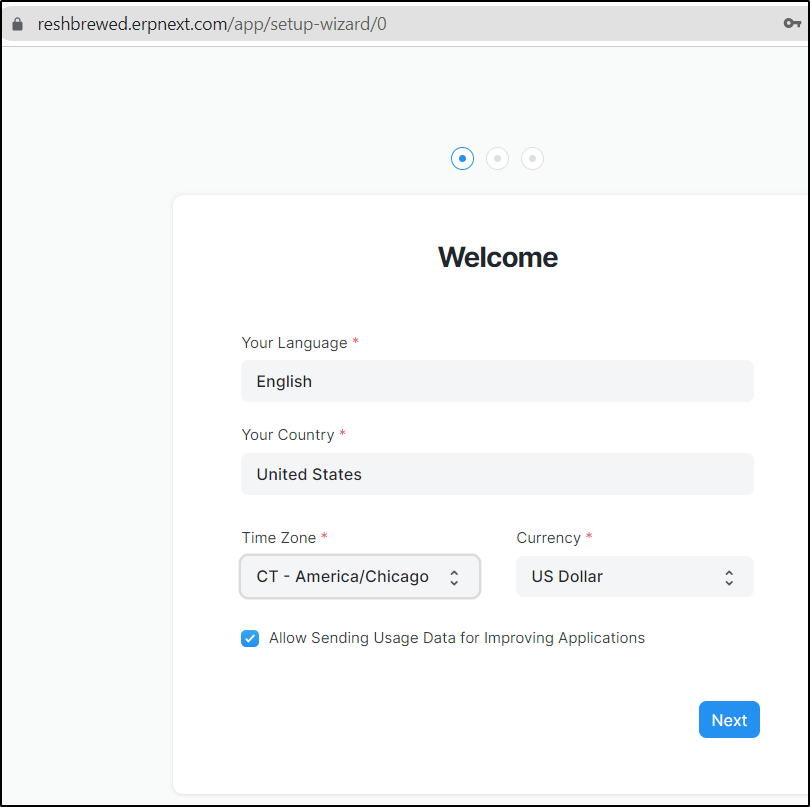

I can log in and set details

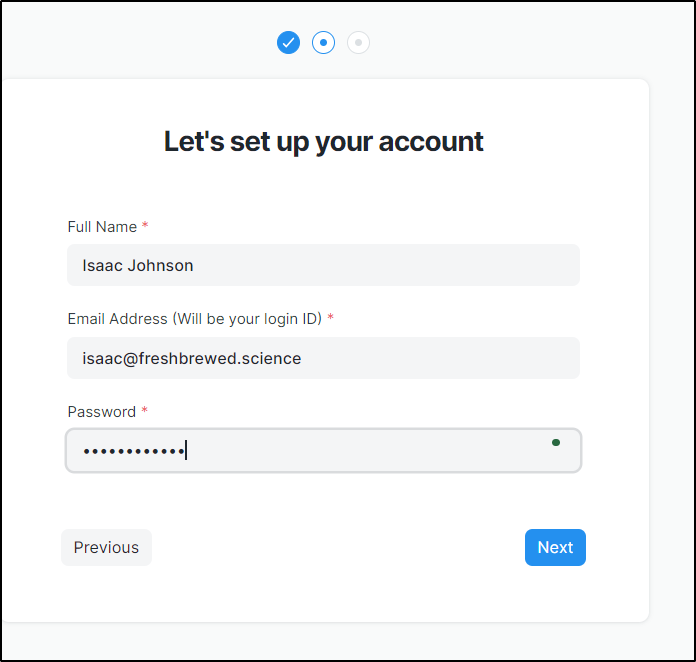

I then create a user account

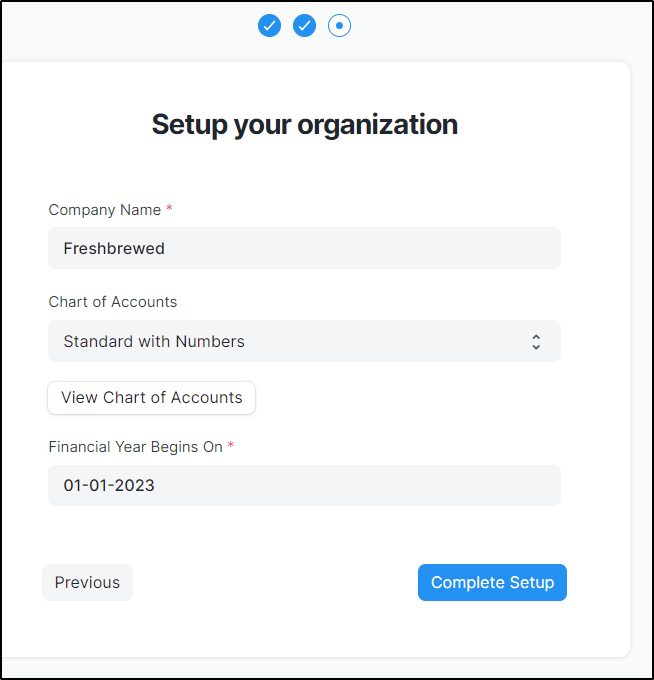

Then we set up our Organization

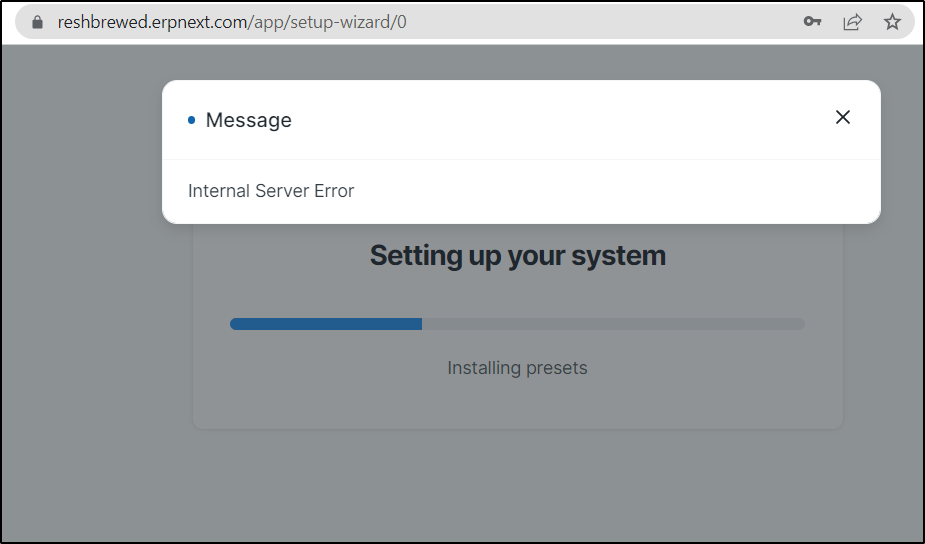

And it then… fails

We can now log in

It failed the first time, but went through on a second try

That kicked off the wizard again

And on the second attempt, it works

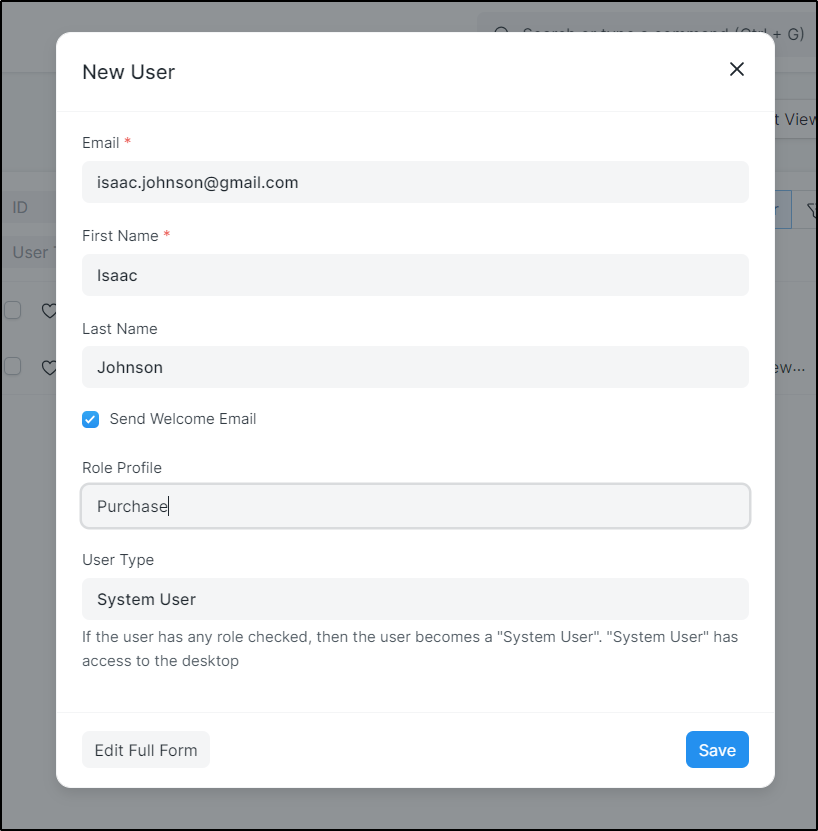

I’ll try adding a user

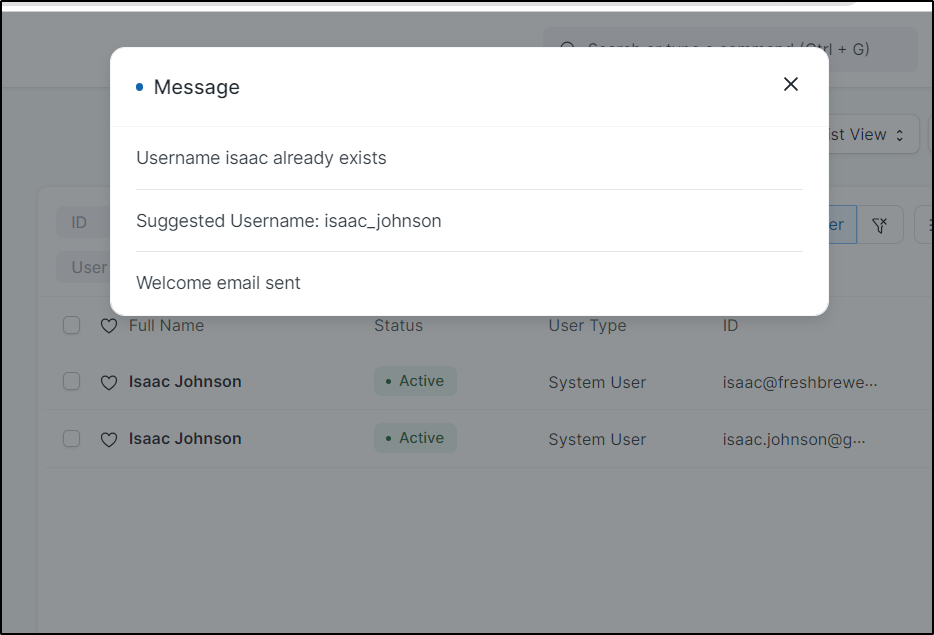

We can see some details and it claims a welcome email was sent

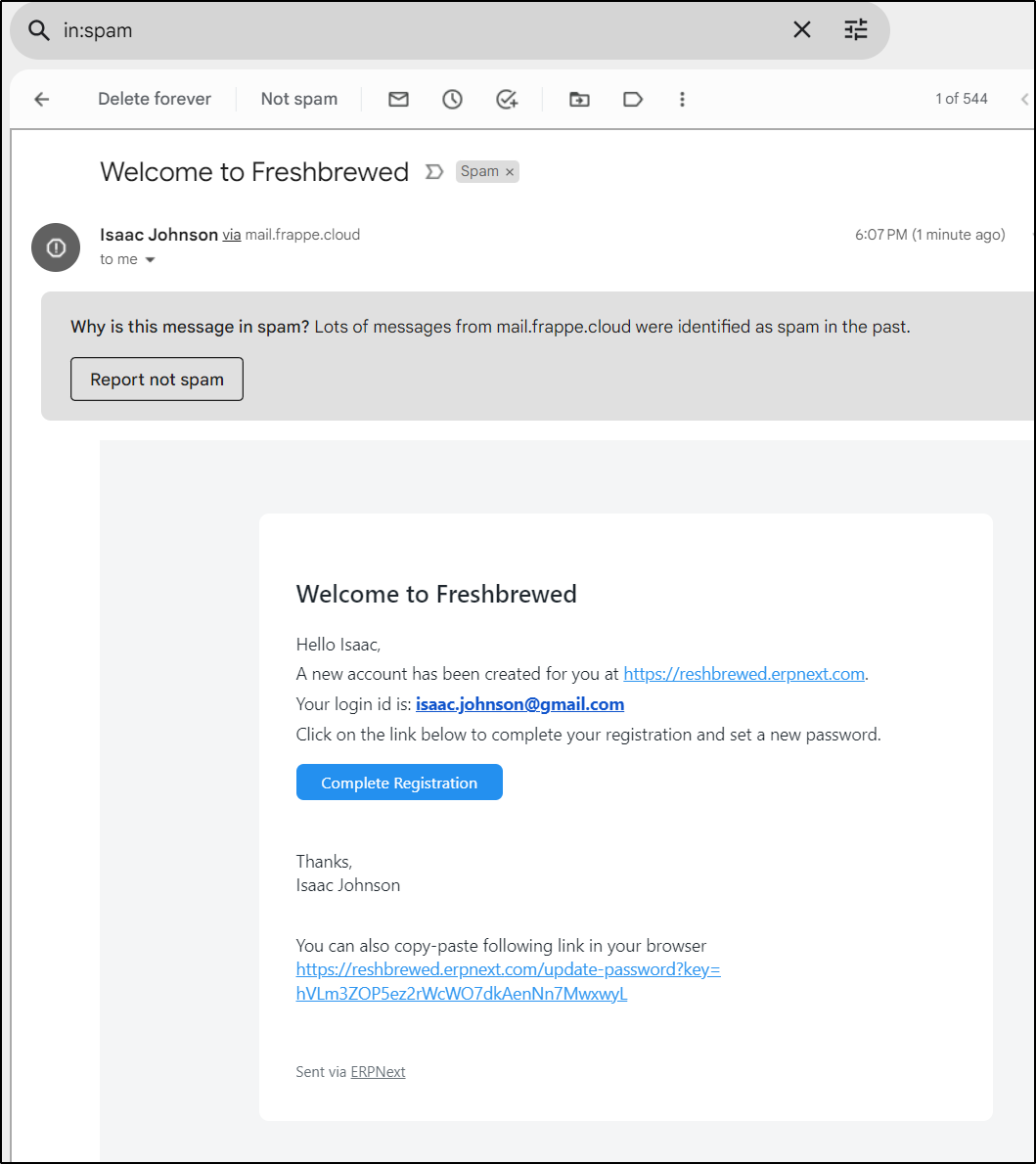

and I did find it in spam in my gmail

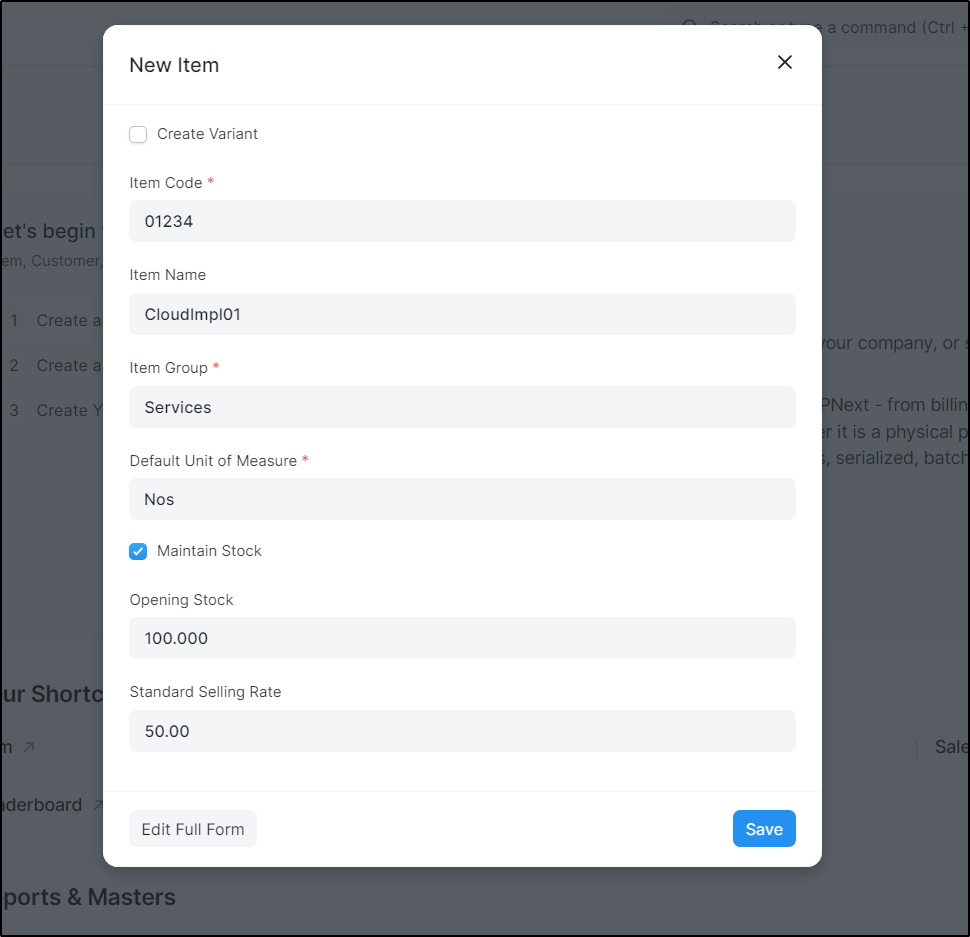

We can create an item to sell

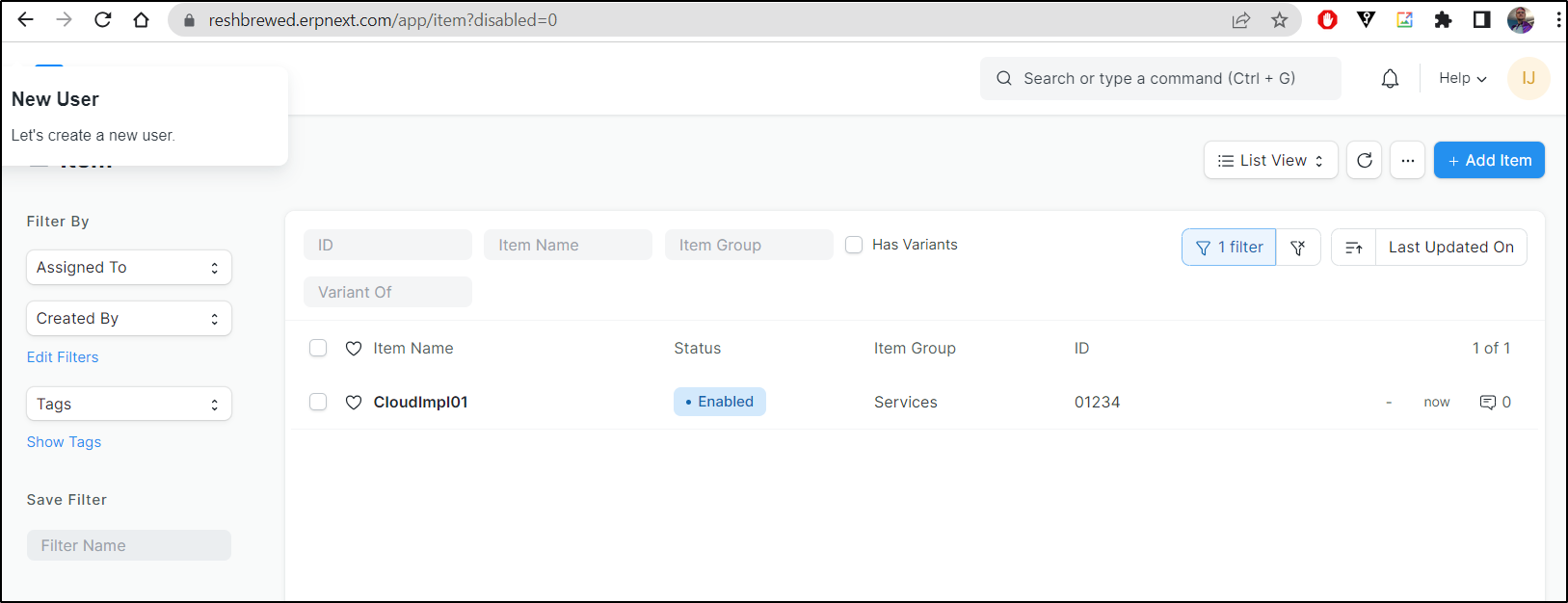

and see it’s available in our items

I’ll stop here. I’m certain we can create customers and sales plans and reports. There are a lot of features throughout this app.

Summary

I fought to set this up in a cloud-centric way. Kubernetes seemed to fail, even with a lot of cajoling. Docker didn’t work, and even the Docker instructions used out-of-date VS Code terms, which made me suspicious.

I got the cloud version going, but it seems a tad buggy and I’m not sure I would be confident storing all my data in such a system.

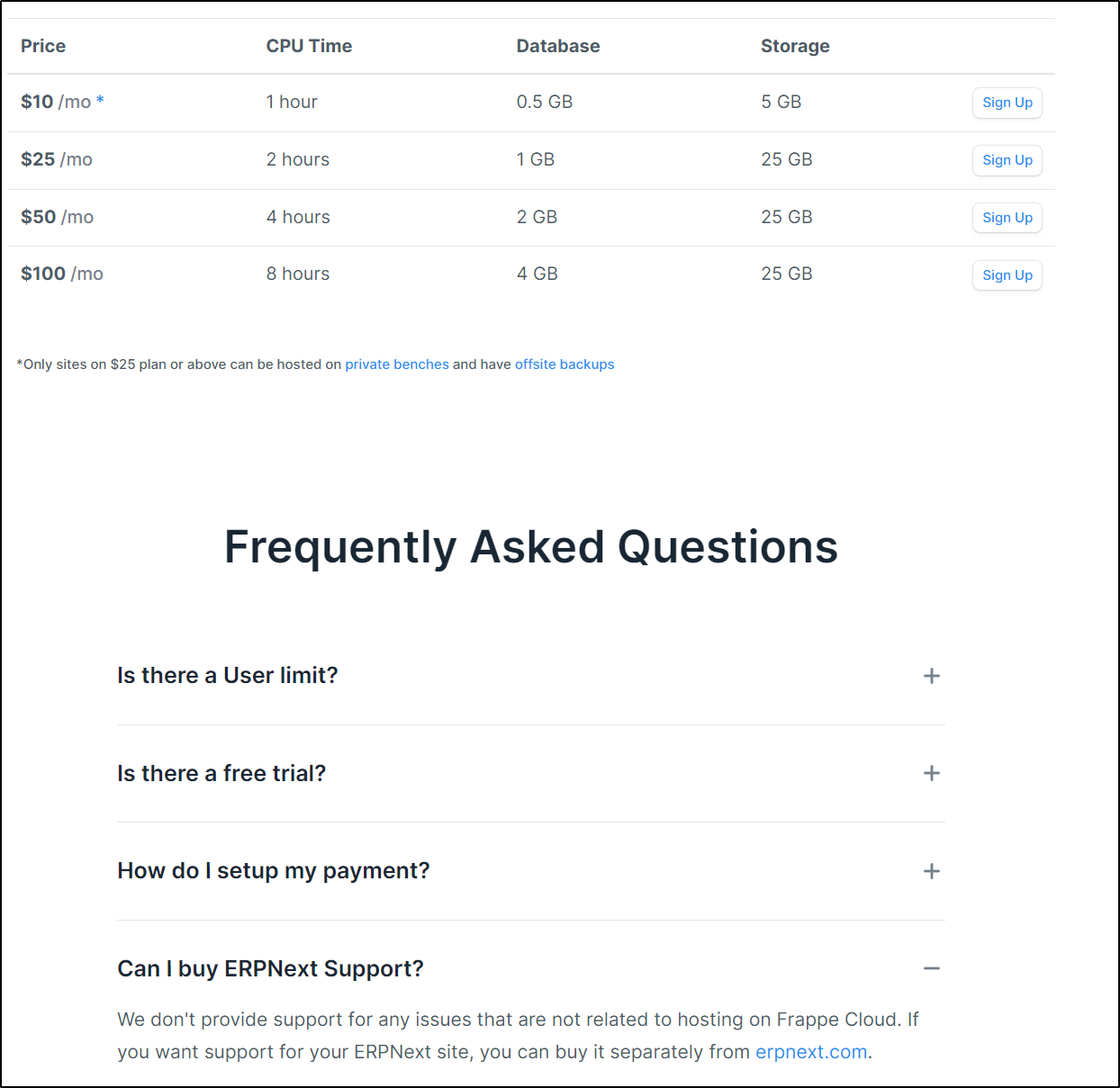

The host, Frappe Cloud, seems to be more about cloud hosting.

Even the FAQ suggests that questions about ERPNext go to Erpnext.com and not to them.

But going there just links back to creating instances on Frappe Cloud.

If you read their full story here, you see that the founder, Rushabh Mehta, basically created it for his family’s business and had it open-sourced in 2009/2010. But he then pivoted to turn ERPNext into what he hopes is “like Wordpress like tool for ERPs”.

I might try again doing it in Kubernetes in the future. I think they really want to lean into being a SaaS. It might just be that they don’t spend a lot of energy on Kubernetes implementations, as they are focused on their cloud offering.