Published: Jun 9, 2023 by Isaac Johnson

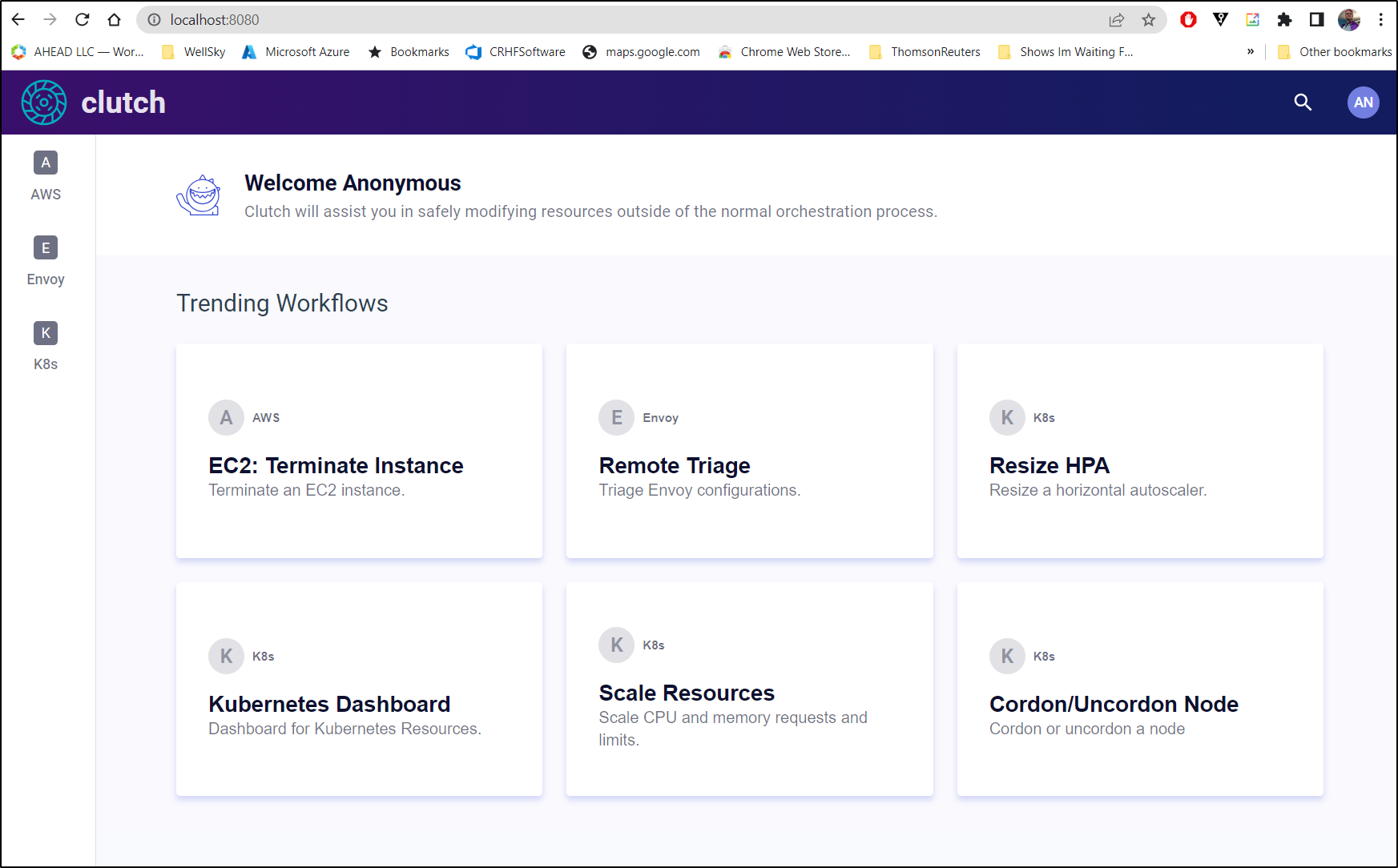

I found out about Lyft Clutch when searching for something completely different.

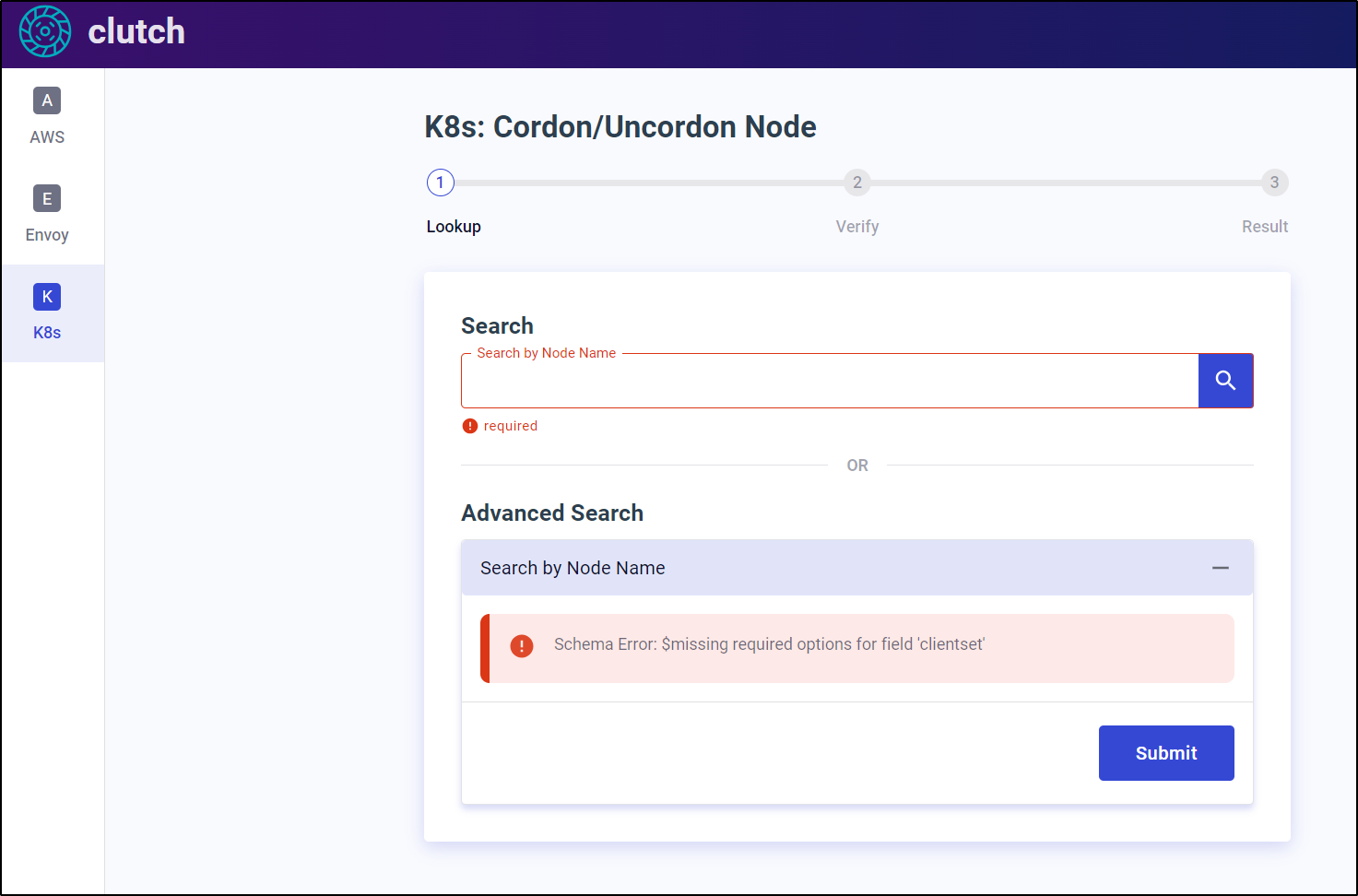

The documentation seemed a bit strange to me. It talks about development extensibility, changing the app to do more things, which is neat, but it glosses over concepts like “clientset” that seem to be all over the place.

The only “clientset” I could figure out was “in-cluster”, which seemed to refer to the cluster the pod itself runs in.

The configuration doc covers a little bit of the clutch-config.yaml but jumps right into changing up TypeScript.

Installation

It couldn’t be easier to fire up Clutch. You just need Docker installed.

```
$ docker run --rm -p 8080:8080 -it lyft/clutch:latest
Unable to find image 'lyft/clutch:latest' locally
latest: Pulling from lyft/clutch
2445dbf7678f: Pull complete
f291067d32d8: Pull complete
b4d2855a0c0e: Pull complete
08654e6ec81e: Pull complete
Digest: sha256:759fbe6cf0803899bcc8a59fc9f5d1c66f86975d2d037aa6b9c4d969880c3d84
Status: Downloaded newer image for lyft/clutch:latest
2023-06-09T18:07:41.250Z INFO gateway/gateway.go:86 using configuration {"file": "clutch-config.yaml"}
2023-06-09T18:07:41.251Z INFO gateway/gateway.go:125 registering service {"serviceName": "clutch.service.project"}
2023-06-09T18:07:41.251Z INFO gateway/gateway.go:125 registering service {"serviceName": "clutch.service.aws"}
...
```

There isn’t much we can do without configuration files, but you get the idea.

Because I ran with -it, I can just press Ctrl-C to stop the Docker container:

```
^C2023-06-09T18:23:01.895Z DEBUG gateway/gateway.go:340 server shutdown gracefully
```

Kubernetes installation

It’s just as easy to fire it up in Kubernetes. There is a working example manifest in the Clutch repo that we can apply directly:

```
$ kubectl apply -f https://raw.githubusercontent.com/lyft/clutch/main/examples/kubernetes/clutch.yaml
namespace/clutch created
serviceaccount/clutch created
clusterrole.rbac.authorization.k8s.io/lyft:clutch created
clusterrolebinding.rbac.authorization.k8s.io/lyft:clutch created
configmap/clutch-config created
service/clutch created
deployment.apps/clutch created
```

I can see a service has been created in the clutch namespace:

```
$ kubectl get svc/clutch -n clutch
NAME     TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)           AGE
clutch   ClusterIP   10.43.107.217   <none>        80/TCP,8080/TCP   91s
```

I can then fire up a port-forward. The service listens on port 80 and forwards to the container's port 8080:

```
$ kubectl port-forward svc/clutch -n clutch 8080:80
Forwarding from 127.0.0.1:8080 -> 8080
Forwarding from [::1]:8080 -> 8080
Handling connection for 8080
Handling connection for 8080
```

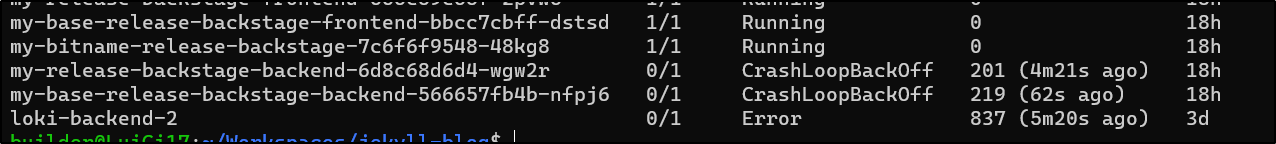

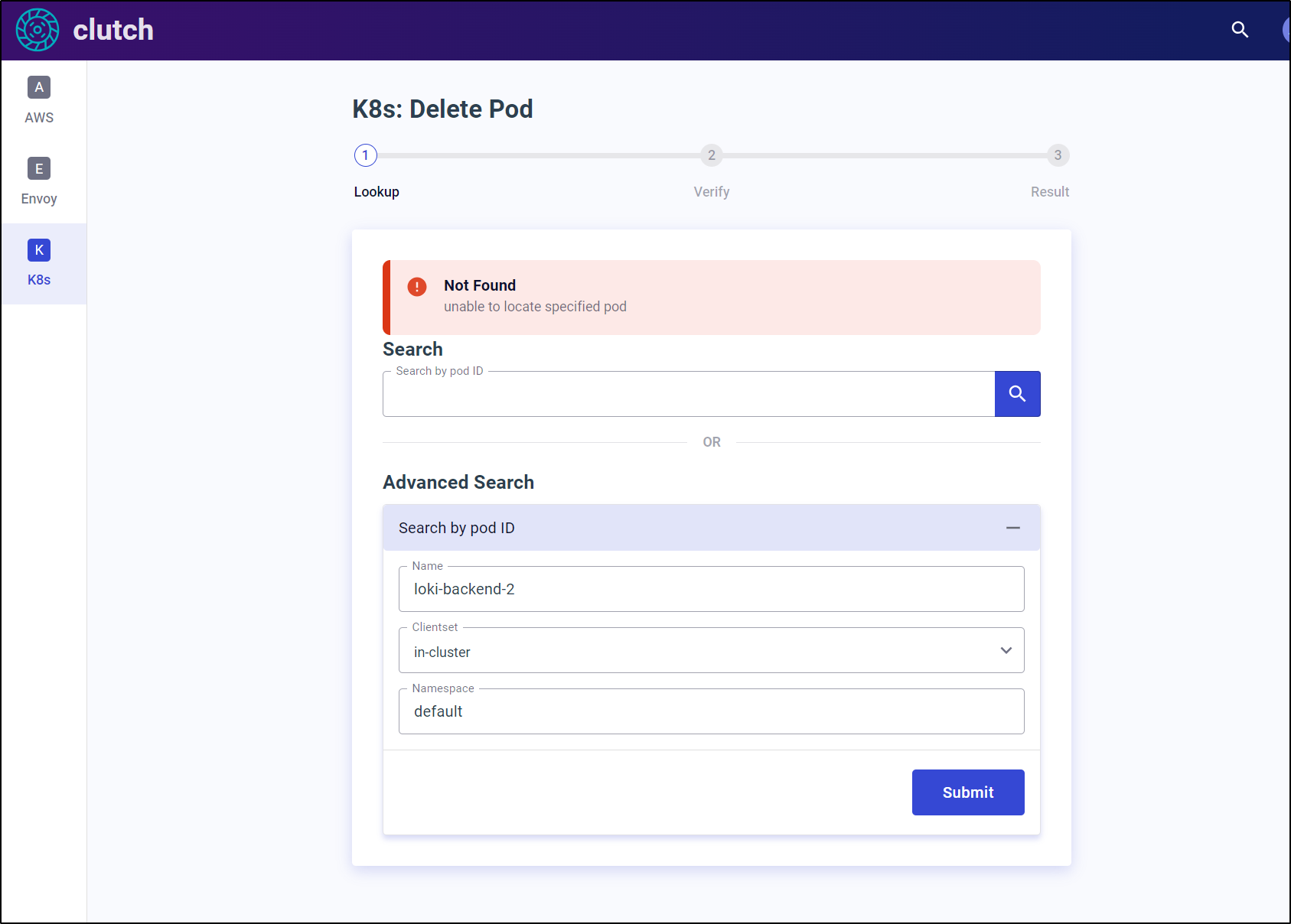

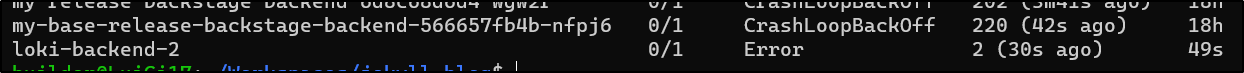

Let’s say we have a pod that needs to be rotated.

That loki pod is in a bad way. Let’s terminate it.

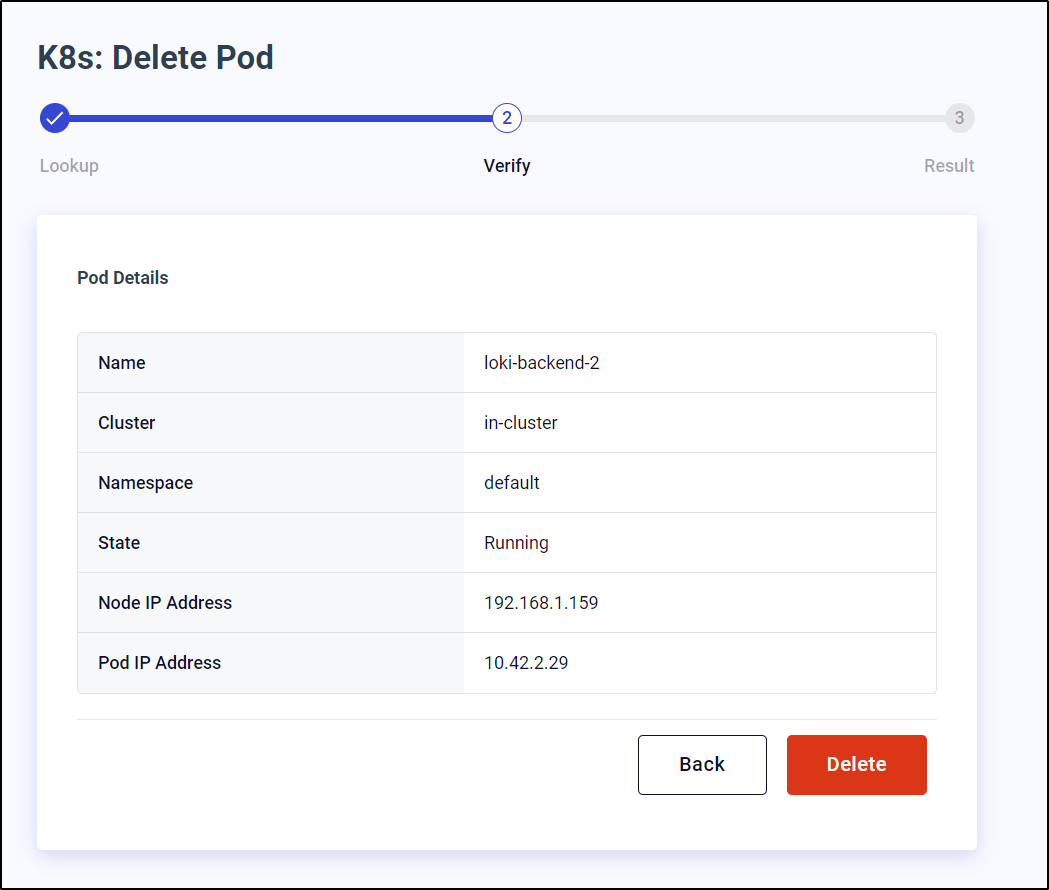

First, under “Delete Pod”, we search for the pod in question.

Next, we verify that we want to delete it.

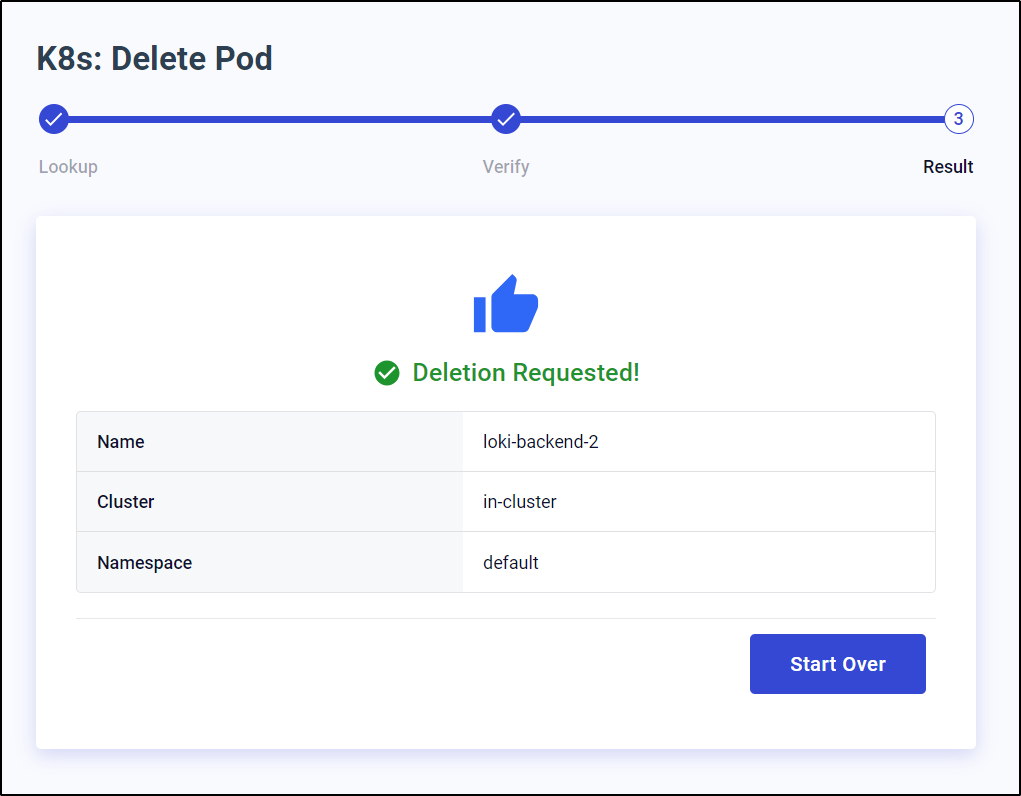

Then we delete it.

I can see it was removed (and the ReplicaSet replaced it with an equally messed-up pod).

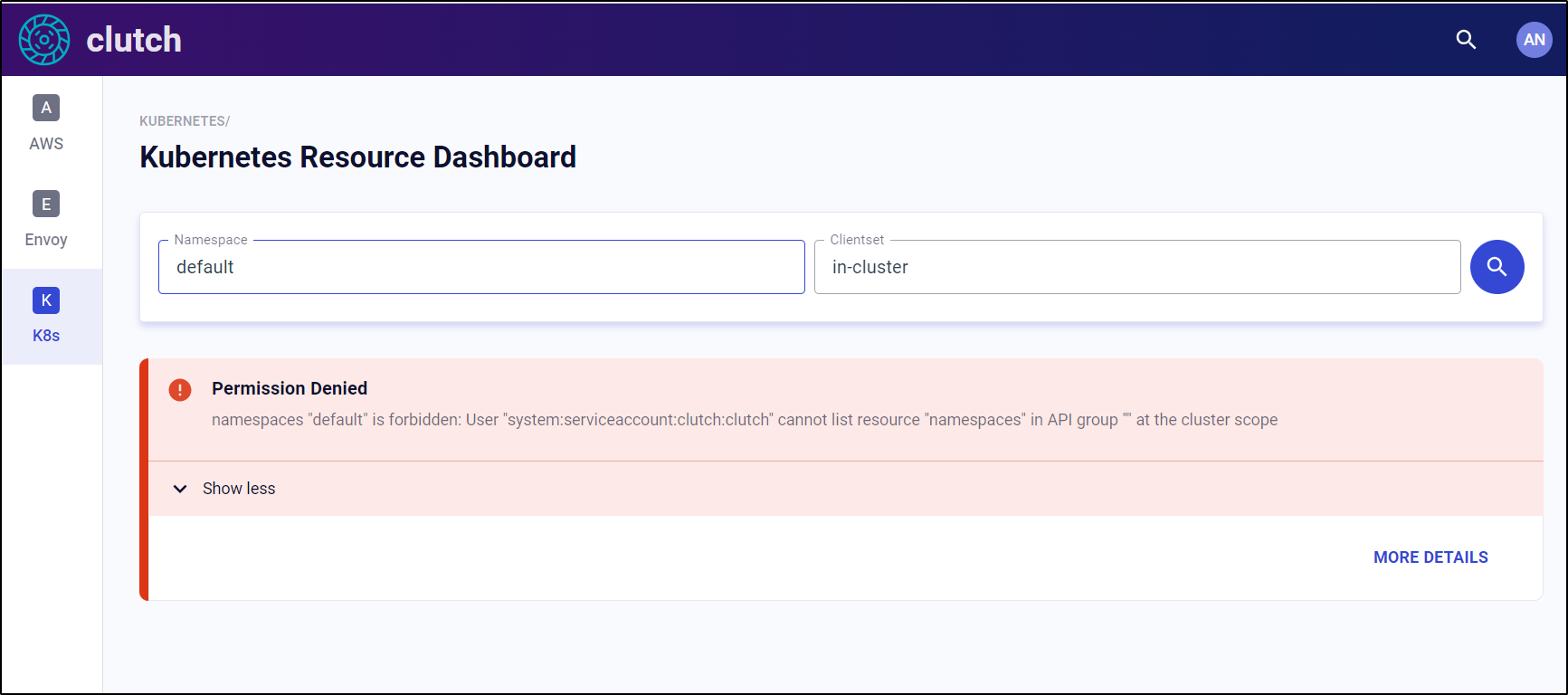

Dashboard

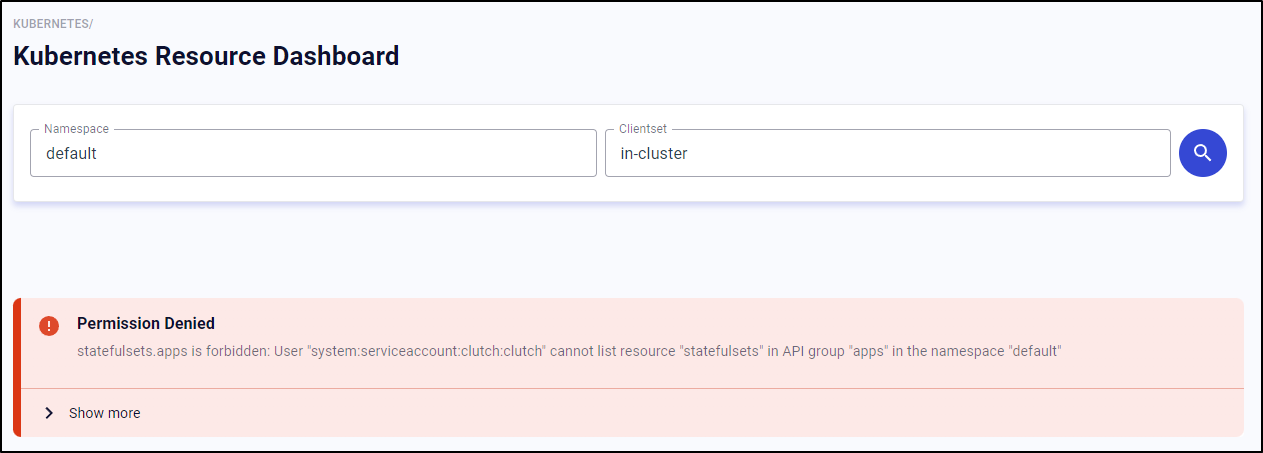

My first issue with the dashboard was that some RBAC permissions needed to be set.

As we can see, namespaces are missing from the “lyft:clutch” ClusterRole:

```
$ kubectl get clusterrolebinding lyft:clutch
NAME          ROLE                      AGE
lyft:clutch   ClusterRole/lyft:clutch   26m

$ kubectl get clusterrolebinding lyft:clutch -o yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"rbac.authorization.k8s.io/v1","kind":"ClusterRoleBinding","metadata":{"annotations":{},"name":"lyft:clutch"},"roleRef":{"apiGroup":"rbac.authorization.k8s.io","kind":"ClusterRole","name":"lyft:clutch"},"subjects":[{"kind":"ServiceAccount","name":"clutch","namespace":"clutch"}]}
  creationTimestamp: "2023-06-09T18:27:25Z"
  name: lyft:clutch
  resourceVersion: "411272"
  uid: 3147b022-ac2c-4685-a9d5-d492417612ce
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: lyft:clutch
subjects:
- kind: ServiceAccount
  name: clutch
  namespace: clutch

$ kubectl get clusterrole lyft:clutch
NAME          CREATED AT
lyft:clutch   2023-06-09T18:27:25Z

$ kubectl get clusterrole lyft:clutch -o yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"rbac.authorization.k8s.io/v1","kind":"ClusterRole","metadata":{"annotations":{},"name":"lyft:clutch"},"rules":[{"apiGroups":[""],"resources":["pods","pods/status"],"verbs":["get","list","watch","update"]},{"apiGroups":[""],"resources":["pods"],"verbs":["delete"]},{"apiGroups":["autoscaling"],"resources":["horizontalpodautoscalers","horizontalpodautoscalers/status"],"verbs":["get","list","watch"]},{"apiGroups":["autoscaling"],"resources":["horizontalpodautoscalers"],"verbs":["patch","update"]},{"apiGroups":["extensions","apps"],"resources":["deployments","deployments/scale","deployments/status"],"verbs":["get","list","watch"]},{"apiGroups":["extensions","apps"],"resources":["deployments/scale"],"verbs":["patch","update"]},{"apiGroups":["extensions","apps"],"resources":["deployments"],"verbs":["update"]}]}
  creationTimestamp: "2023-06-09T18:27:25Z"
  name: lyft:clutch
  resourceVersion: "411271"
  uid: 71dd7b5c-5c95-46f4-9e45-4c02a97de2a2
rules:
- apiGroups:
  - ""
  resources:
  - pods
  - pods/status
  verbs:
  - get
  - list
  - watch
  - update
- apiGroups:
  - ""
  resources:
  - pods
  verbs:
  - delete
- apiGroups:
  - autoscaling
  resources:
  - horizontalpodautoscalers
  - horizontalpodautoscalers/status
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - autoscaling
  resources:
  - horizontalpodautoscalers
  verbs:
  - patch
  - update
- apiGroups:
  - extensions
  - apps
  resources:
  - deployments
  - deployments/scale
  - deployments/status
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - extensions
  - apps
  resources:
  - deployments/scale
  verbs:
  - patch
  - update
- apiGroups:
  - extensions
  - apps
  resources:
  - deployments
  verbs:
  - update
```

Let’s correct that by adding namespaces to the first rule and try again:

```
$ kubectl get clusterrole "lyft:clutch" -o yaml > cr_clutch.yaml
$ kubectl get clusterrole "lyft:clutch" -o yaml > cr_clutch.yaml.bak
$ vi cr_clutch.yaml
$ diff cr_clutch.yaml.bak cr_clutch.yaml
16a17
>   - namespaces
$ diff -C 5 cr_clutch.yaml.bak cr_clutch.yaml
*** cr_clutch.yaml.bak 2023-06-09 14:51:48.145799219 -0500
--- cr_clutch.yaml 2023-06-09 14:52:10.316738397 -0500
***************
*** 12,21 ****
--- 12,22 ----
  - apiGroups:
    - ""
    resources:
    - pods
    - pods/status
+   - namespaces
    verbs:
    - get
    - list
    - watch
    - update
$ kubectl apply -f ./cr_clutch.yaml
clusterrole.rbac.authorization.k8s.io/lyft:clutch configured
```
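Hand-editing the exported YAML works, but note that namespaces went into the first rule, which also carries the update verb, so the service account can now update namespaces too. A more surgical alternative is sketched below in Python, assuming the role was exported with kubectl get clusterrole lyft:clutch -o json to a file: it builds an RFC 6902 JSON Patch that appends a separate read-only rule for namespaces, and only when no existing rule already covers it. The script name ns_patch.py, the file name and the function names are hypothetical; the printed patch would be applied with kubectl patch clusterrole lyft:clutch --type=json -p "$(python3 ns_patch.py cr_clutch.json)".

```python
import json
import sys

READ_VERBS = ["get", "list", "watch"]


def covers(rule, group, resource, verb):
    """True if a single RBAC rule grants verb on group/resource ('*' wildcards count)."""
    def has(key, value):
        values = rule.get(key) or []
        return value in values or "*" in values

    return has("apiGroups", group) and has("resources", resource) and has("verbs", verb)


def namespace_read_patch(cluster_role):
    """JSON Patch ops granting read-only access to namespaces; [] if already granted."""
    rules = cluster_role.get("rules") or []
    missing = [v for v in READ_VERBS
               if not any(covers(r, "", "namespaces", v) for r in rules)]
    if not missing:
        return []
    # A separate rule, so namespaces do not inherit the "update" verb of the pods rule
    new_rule = {"apiGroups": [""], "resources": ["namespaces"], "verbs": missing}
    if not cluster_role.get("rules"):
        # "/rules/-" only appends to an existing array; otherwise set the whole list
        return [{"op": "add", "path": "/rules", "value": [new_rule]}]
    return [{"op": "add", "path": "/rules/-", "value": new_rule}]


if __name__ == "__main__" and len(sys.argv) == 2:
    # Hypothetical usage: python3 ns_patch.py cr_clutch.json
    with open(sys.argv[1]) as f:
        print(json.dumps(namespace_read_patch(json.load(f))))
```

Keeping read access in its own rule is a least-privilege choice: the pods rule stays exactly as shipped, and the patch is a no-op on a role that already allows reading namespaces.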

Applying the updated ClusterRole just surfaced more missing permissions.

I would normally work through every missing permission, but for the purpose of getting things done, I’ll just grant the Clutch service account (SA) cluster-admin:

```
$ cat clutch-admin.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: clutch-admin
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: clutch
  namespace: clutch
$ kubectl apply -f clutch-admin.yaml
clusterrolebinding.rbac.authorization.k8s.io/clutch-admin created
```
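Cluster-admin is fine for a lab, but to work back toward least privilege one option is to remove the clutch-admin binding and ask the API server which actions the SA still lacks, using kubectl auth can-i with impersonation (--as). A minimal sketch in Python, assuming kubectl is on the PATH and that your own kubeconfig user is allowed to impersonate service accounts. The (verb, resource) list is a hypothetical guess at what the Clutch screens touch, not a list taken from the Clutch docs, and the function names are made up for illustration.

```python
import subprocess

# Assumed identity of the SA from the example manifest (namespace "clutch", name "clutch")
SA_USER = "system:serviceaccount:clutch:clutch"

# Hypothetical (verb, resource) pairs to probe; adjust to the Clutch features you use
CHECKS = [
    ("list", "namespaces"),
    ("delete", "pods"),
    ("patch", "horizontalpodautoscalers.autoscaling"),
    ("update", "nodes"),
]


def can_i_cmd(verb, resource, user=SA_USER):
    """kubectl auth can-i, impersonating the given user via the global --as flag."""
    return ["kubectl", "auth", "can-i", verb, resource, f"--as={user}"]


def audit(checks=CHECKS, run=subprocess.run):
    """Map each (verb, resource) pair to True/False from kubectl's printed yes/no."""
    results = {}
    for verb, resource in checks:
        # No check=True: a denial is reported through a non-zero exit status
        proc = run(can_i_cmd(verb, resource), capture_output=True, text=True)
        results[(verb, resource)] = proc.stdout.strip() == "yes"
    return results


def missing(results):
    """Pairs the service account is not allowed to perform."""
    return [pair for pair, allowed in results.items() if not allowed]
```

After kubectl delete clusterrolebinding clutch-admin, print(missing(audit())) lists the gaps to add to the lyft:clutch ClusterRole one rule at a time.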

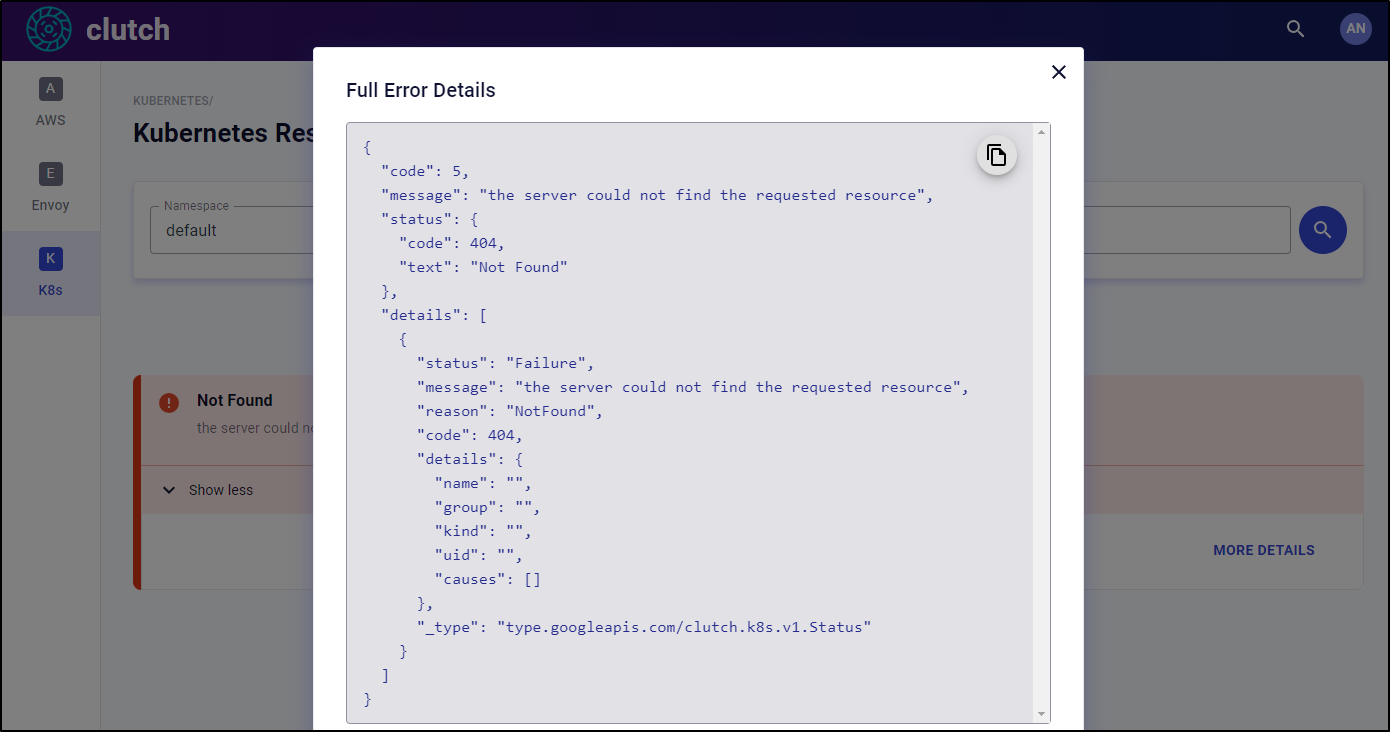

Now that I’m past the RBAC errors, it seems the dashboard could not find the requested resource, which makes me wonder even more what kind of dashboard this is meant to be.

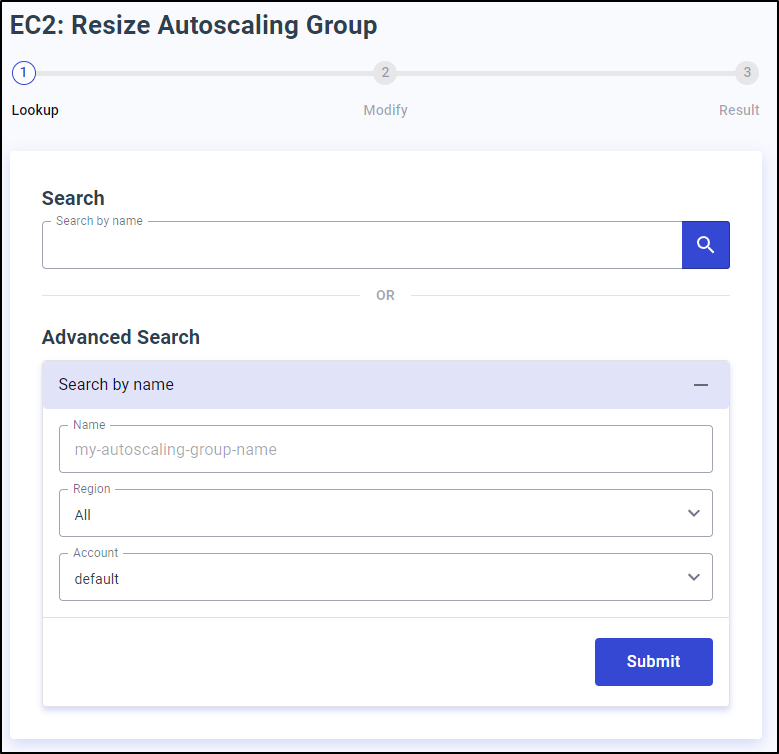

HPA Scaling

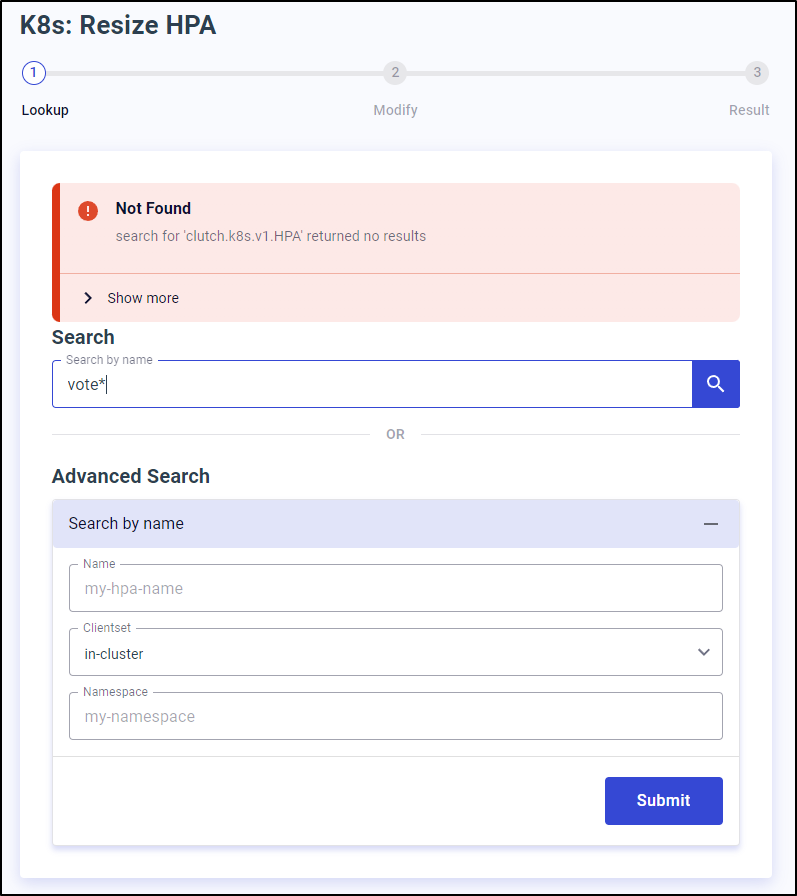

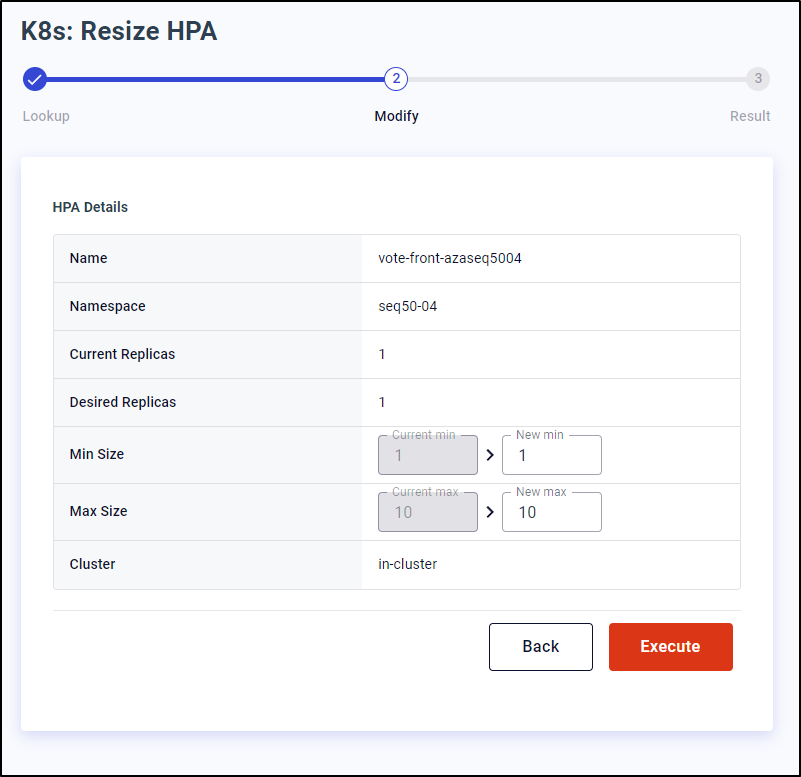

I wanted to try out HPA scaling, so I created a quick HorizontalPodAutoscaler for a deployment:

```
$ kubectl get deployments
NAME                    READY   UP-TO-DATE   AVAILABLE   AGE
vote-back-azaseq5004    1/1     1            1           5d19h
vote-front-azaseq5004   1/1     1            1           5d19h
$ kubectl autoscale deployment vote-front-azaseq5004 --cpu-percent=50 --min=1 --max=10
horizontalpodautoscaler.autoscaling/vote-front-azaseq5004 autoscaled
$ kubectl get hpa
NAME                    REFERENCE                          TARGETS   MINPODS   MAXPODS   REPLICAS   AGE
vote-front-azaseq5004   Deployment/vote-front-azaseq5004   0%/50%    1         10        1          70s
```
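The 0%/50% under TARGETS is the current average CPU utilization against the 50% target. The Kubernetes documentation describes the core HPA rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), skipped when the ratio is within a tolerance (0.1 by default), with the result kept between minReplicas and maxReplicas, which is why the min and max values that Clutch lets you edit bound what the autoscaler can do. A simplified Python sketch follows; the function name is hypothetical, and it deliberately ignores the scale-down stabilization window, unready pods and missing metrics that the real controller also handles.

```python
import math


def desired_replicas(current_replicas, current_util, target_util,
                     min_replicas, max_replicas, tolerance=0.1):
    """Simplified HPA rule: ceil(current * current_util / target_util), clamped to [min, max]."""
    ratio = current_util / target_util
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to target: leave replicas alone
    else:
        desired = math.ceil(current_replicas * ratio)
    # The controller never goes below minReplicas or above maxReplicas
    return max(min_replicas, min(max_replicas, desired))
```

For the HPA above, desired_replicas(1, 0, 50, 1, 10) returns 1: the raw calculation gives 0, but minReplicas keeps one pod running.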

Searching seems pointless, as it doesn’t work with POSIX patterns, regex, or even a single word…

It seems to only match the exact name.

That said, it did find the HPA in a unique namespace.

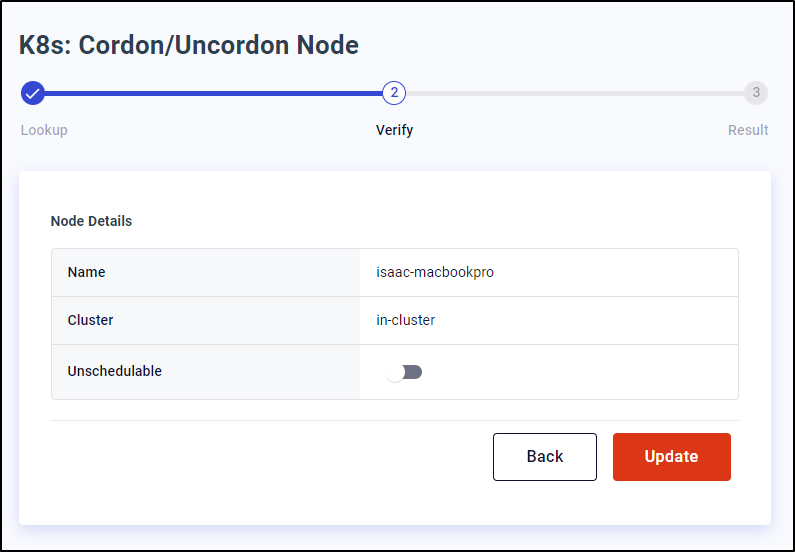

Cordon

Cordon also seemed to work, provided I typed the exact node name.
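Because both the HPA search and Cordon wanted the exact name, one workaround is to resolve a partial name or regex to exact names on the command line first, then paste the result into Clutch. A minimal Python sketch, assuming kubectl is on the PATH; kubectl get nodes -o name prints lines of the form node/&lt;name&gt;. The helper names are hypothetical, as are any node names used with them.

```python
import re
import subprocess


def node_names(run=subprocess.run):
    """Exact node names from `kubectl get nodes -o name` (lines look like 'node/<name>')."""
    out = run(["kubectl", "get", "nodes", "-o", "name"],
              capture_output=True, text=True, check=True).stdout
    return [line.split("/", 1)[1] for line in out.splitlines() if "/" in line]


def resolve(names, pattern):
    """Names matching a regex via re.search, so a plain word also matches as a substring."""
    rx = re.compile(pattern)
    return [n for n in names if rx.search(n)]
```

For example, resolve(node_names(), "worker") would return every node whose name contains "worker", ready to copy into the Cordon form.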

Envoy

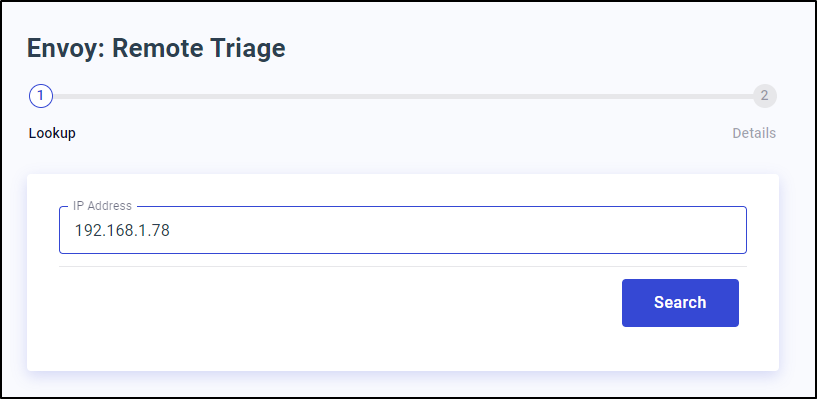

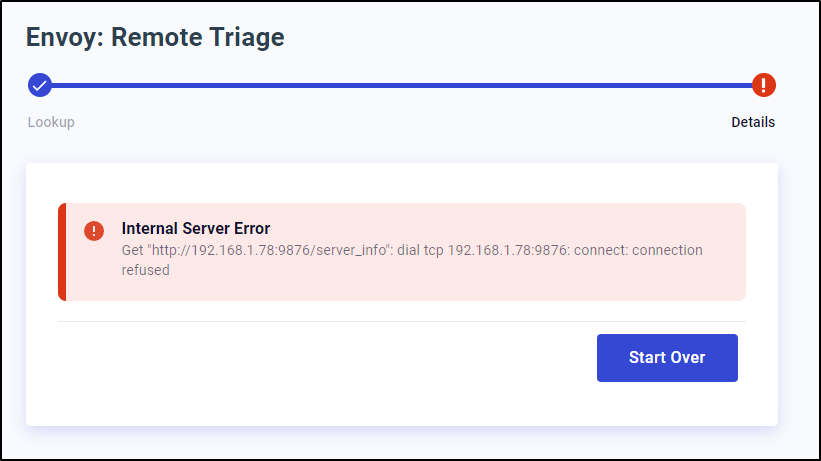

There is an Envoy Remote Triage feature, which seems to try to connect to an IP, specifically on port 9876 at /server_info.

I think it would have been handier as a way to test generic network connections. I would have found that way more useful.
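A generic connectivity check is easy to sketch outside Clutch. The Python below, assuming only the standard library, first tests whether a TCP connection to host:port can be opened at all, then fetches Envoy's admin /server_info and returns its version and state fields. Port 9876 is the default here only because that is what the triage screen targeted in this test; the real admin port is whatever your Envoy configuration sets. The function names are hypothetical.

```python
import json
import socket
import urllib.request


def tcp_reachable(host, port, timeout=2.0):
    """Generic connectivity check: can a TCP connection to host:port be opened?"""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, timed out, DNS failure, unreachable network, ...
        return False


def envoy_server_info(host, port=9876, timeout=2.0):
    """(version, state) from an Envoy admin /server_info endpoint, or None if the port is closed."""
    if not tcp_reachable(host, port, timeout):
        return None
    with urllib.request.urlopen(f"http://{host}:{port}/server_info", timeout=timeout) as resp:
        info = json.load(resp)
    return info.get("version"), info.get("state")
```

tcp_reachable on its own covers the "generic network connection" case for any service, not just Envoy.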

AWS

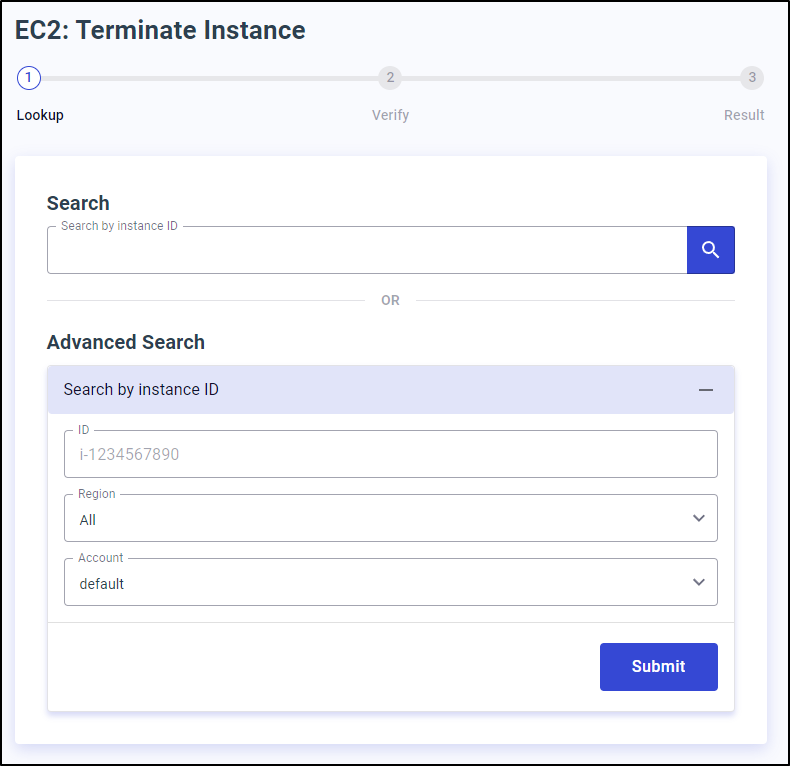

The two features I skipped were AWS-specific. I didn’t feel the need to create an AWS EKS cluster with proper IAM rights just to see if I could terminate EC2 hosts or change ASGs. I trust this tool would do just fine at that.

Summary

First, I asked Midjourney to imagine a “Lyft Clutch”, and it went in a whole different direction. I wanted to share the results (I especially like the lower left; I’d get that for my daughters).

This is a handy little utility service. I could see putting it in a Utils namespace or keeping it active in Dev and QA namespaces to handle manual scaling. In my past experience, whenever developers wanted Kube-dash installed, it was usually specifically to scale ReplicaSets and tweak HPAs.

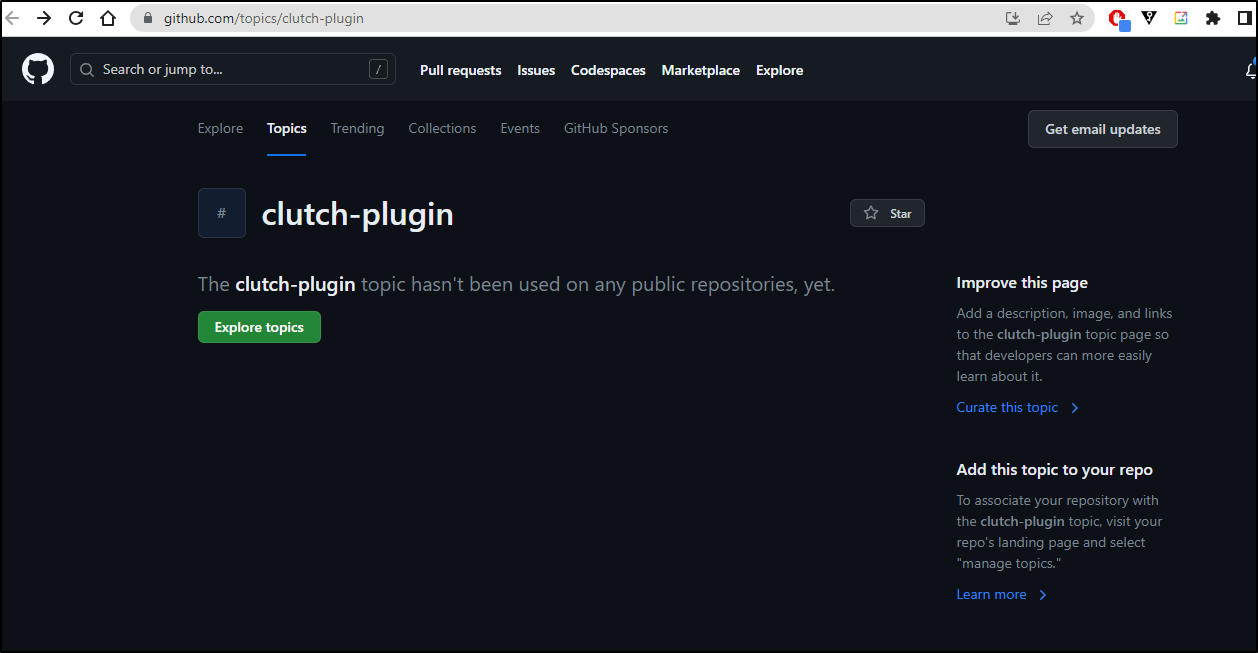

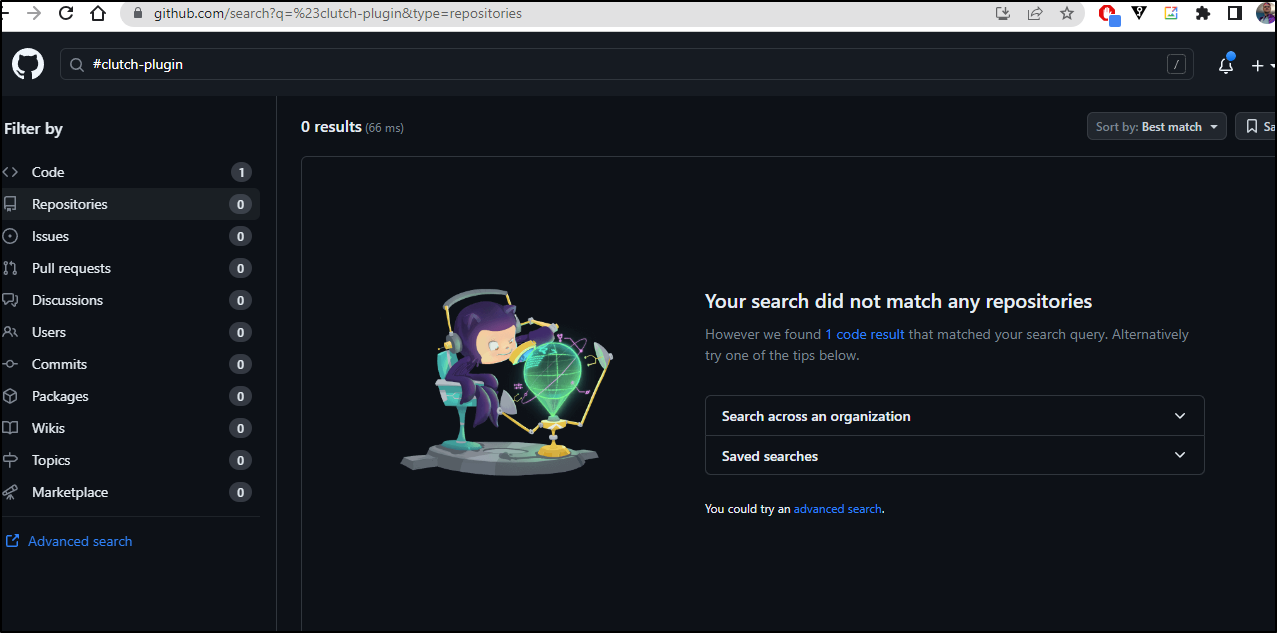

They suggest tagging your repo with “Clutch-plugin”, and I found exactly zero GitHub repos that matched.

I found the original announcement from 2020 here, which actually originates from a post by Daniel Hochman in a Collabnix repo.

I saw that after nine years he left Lyft and is now with Filmhub. Sometimes a cool open-source project fizzles when the creator behind it moves on to a new gig; perhaps that is what happened here.