Published: May 31, 2023 by Isaac Johnson

A lot of the time I need to handle growing logs. More often than not, I’ll use suites like DataDog to ingest logs, but when I need to store them directly in the cloud, I have to go another route. In this blog we’ll set up an Azure Storage account, then use Fluentd, Loki, logrotate with BlobFuse, and Cribl to store metrics and logs there.

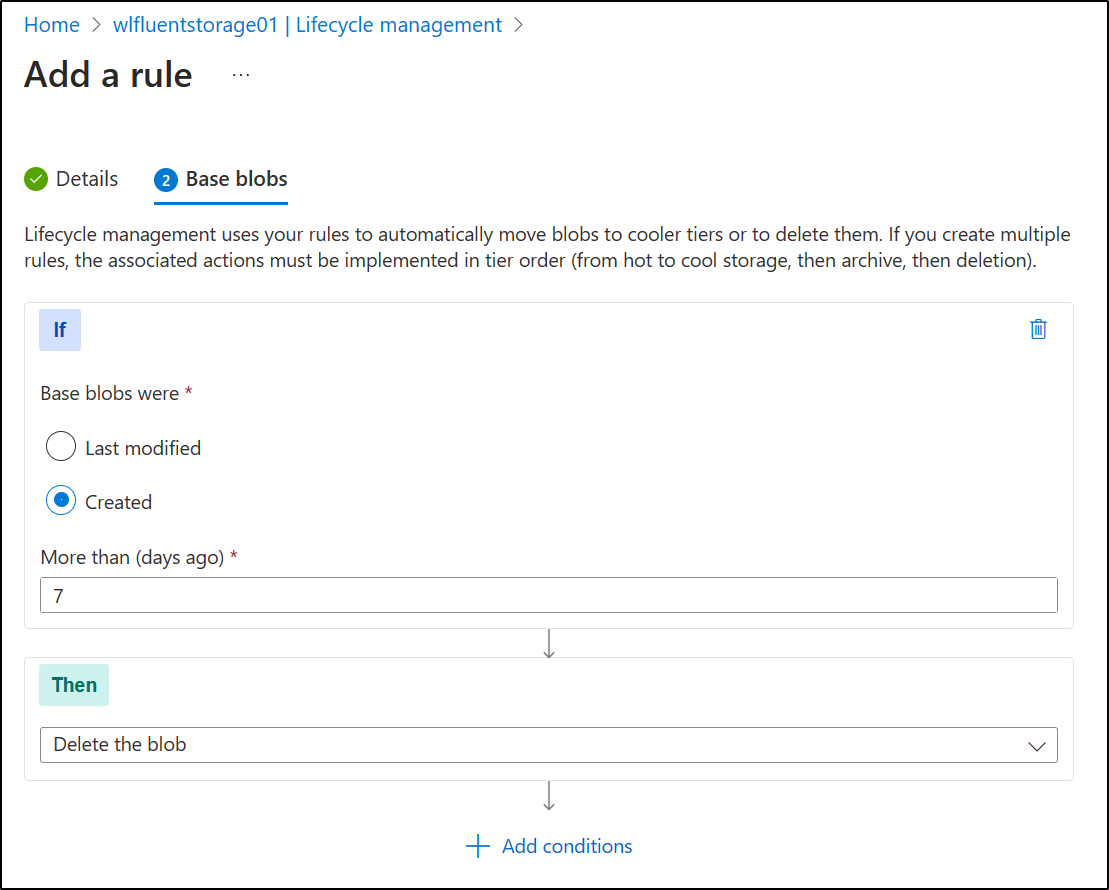

Lastly, we address the storage lifecycle policy to minimize costs.

Create Azure Storage

Let’s first create a resource group for the storage account

$ az account set --subscription "Pay-As-You-Go"

$ az group create -n wlstorageaccrg --location centralus

{
  "id": "/subscriptions/d955c0ba-13dc-44cf-a29a-8fed74cbb22d/resourceGroups/wlstorageaccrg",
  "location": "centralus",
  "managedBy": null,
  "name": "wlstorageaccrg",
  "properties": {
    "provisioningState": "Succeeded"
  },
  "tags": null,
  "type": "Microsoft.Resources/resourceGroups"
}

Then we can create a Storage account

$ az storage account create -n wlfluentstorage01 -g wlstorageaccrg --location centralus --sku Standard_LRS

The behavior of this command has been altered by the following extension: storage-preview

{
  "accessTier": "Hot",
  "allowBlobPublicAccess": true,
  "allowCrossTenantReplication": null,
  "allowSharedKeyAccess": null,
  "allowedCopyScope": null,
  "azureFilesIdentityBasedAuthentication": null,
  ... snip ...
  "statusOfPrimary": "available",
  "statusOfSecondary": null,
  "storageAccountSkuConversionStatus": null,
  "tags": {},
  "type": "Microsoft.Storage/storageAccounts"
}
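Storage account names are global across Azure and must be 3 to 24 characters of lowercase letters and digits, so a bad name otherwise only shows up as a failed create. A small pre-check can catch that early. This is a minimal sketch; valid_storage_account_name is a hypothetical helper, and it cannot check uniqueness (az storage account check-name does that).

```shell
#!/bin/sh
# Hypothetical helper: checks a name against the Azure Storage account naming
# rules (3-24 characters, lowercase ASCII letters and digits only).
# It does NOT check global uniqueness.
valid_storage_account_name() {
  name="$1"
  len=${#name}
  # Length must be between 3 and 24 characters.
  [ "$len" -ge 3 ] && [ "$len" -le 24 ] || return 1
  # LC_ALL=C so that [a-z] means plain ASCII lowercase.
  printf '%s' "$name" | LC_ALL=C grep -Eq '^[a-z0-9]+$'
}

for n in wlfluentstorage01 WL_Storage; do
  if valid_storage_account_name "$n"; then echo "$n: ok"; else echo "$n: invalid"; fi
done
```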

Now we can create a container to use

$ az storage container create --account-name wlfluentstorage01 --name k8sstorage

There are no credentials provided in your command and environment, we will query for account key for your storage account.

It is recommended to provide --connection-string, --account-key or --sas-token in your command as credentials.

You also can add `--auth-mode login` in your command to use Azure Active Directory (Azure AD) for authorization if your login account is assigned required RBAC roles.

For more information about RBAC roles in storage, visit https://docs.microsoft.com/azure/storage/common/storage-auth-aad-rbac-cli.

In addition, setting the corresponding environment variables can avoid inputting credentials in your command. Please use --help to get more information about environment variable usage.

{
  "created": true
}
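Container names follow different rules from account names: 3 to 63 characters of lowercase letters, digits and hyphens, starting with a letter or digit, with every hyphen sitting between two letters or digits. A sketch of the same kind of pre-check, again with a hypothetical function name:

```shell
#!/bin/sh
# Hypothetical helper: checks a blob container name against the naming rules
# (3-63 chars; lowercase letters, digits, hyphens; starts with a letter or
# digit; no leading, trailing or doubled hyphens).
valid_container_name() {
  name="$1"
  len=${#name}
  [ "$len" -ge 3 ] && [ "$len" -le 63 ] || return 1
  # Runs of [a-z0-9] joined by single hyphens: "k8s-storage" passes,
  # "-abc", "abc-", "a--b" and "K8s" fail.
  printf '%s' "$name" | LC_ALL=C grep -Eq '^[a-z0-9]+(-[a-z0-9]+)*$'
}

valid_container_name k8sstorage && echo "k8sstorage: ok"
```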

For the next step, we will want the storage account name, account key and container name.

We just created them, but if we wanted to see them, we could use

$ az storage account show -n wlfluentstorage01 -g wlstorageaccrg --query "[name,primaryEndpoints.blob]" -o tsv

wlfluentstorage01

https://wlfluentstorage01.blob.core.windows.net/

This shows the storage account name and its primary blob endpoint.

We use a different command for the storage account access key

$ az storage account keys list -n wlfluentstorage01 -g wlstorageaccrg --query "[0].value" -o tsv

XvWnqWALiT6gViACfS9RjmgPRdgqJ0cxJi0MKnCwLJJbud/zsErCUK0rB89ZiLlC/MtV2JtH6aRt+AStE/qDYg==
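Since this key ends up in several later commands and files, one option is to read it into a shell variable rather than pasting it around. A minimal sketch wrapping the same query; read_storage_key and STORAGE_KEY are hypothetical names, and a logged-in Azure CLI is assumed.

```shell
#!/bin/sh
# Hypothetical wrapper around the key lookup shown above.
# $1 = storage account name, $2 = resource group
read_storage_key() {
  az storage account keys list -n "$1" -g "$2" --query "[0].value" -o tsv
}

# Usage:
#   STORAGE_KEY="$(read_storage_key wlfluentstorage01 wlstorageaccrg)"
```

Anything that receives the key as a command-line argument can still expose it through shell history or process listings, so this is a convenience rather than real protection.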

Bitnami Fluentd Chart

Let’s add the Bitnami repo if it isn’t there already

$ helm repo add bitnami https://charts.bitnami.com/bitnami

"bitnami" already exists with the same configuration, skipping

$ helm repo update

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "zabbix-community" chart repository

...Successfully got an update from the "confluentinc" chart repository

...Successfully got an update from the "opencost" chart repository

...Successfully got an update from the "myharbor" chart repository

...Successfully got an update from the "freshbrewed" chart repository

...Successfully got an update from the "kube-state-metrics" chart repository

...Successfully got an update from the "adwerx" chart repository

...Successfully got an update from the "uptime-kuma" chart repository

...Successfully got an update from the "jfelten" chart repository

...Successfully got an update from the "kuma" chart repository

...Successfully got an update from the "dapr" chart repository

...Successfully got an update from the "rhcharts" chart repository

...Successfully got an update from the "novum-rgi-helm" chart repository

...Successfully got an update from the "castai-helm" chart repository

...Successfully got an update from the "kubecost" chart repository

...Successfully got an update from the "epsagon" chart repository

...Successfully got an update from the "harbor" chart repository

...Successfully got an update from the "hashicorp" chart repository

...Successfully got an update from the "longhorn" chart repository

...Successfully got an update from the "elastic" chart repository

...Successfully got an update from the "lifen-charts" chart repository

...Successfully got an update from the "nginx-stable" chart repository

...Successfully got an update from the "sonarqube" chart repository

...Successfully got an update from the "rook-release" chart repository

...Successfully got an update from the "open-telemetry" chart repository

...Successfully got an update from the "ngrok" chart repository

...Successfully got an update from the "signoz" chart repository

...Successfully got an update from the "argo-cd" chart repository

...Successfully got an update from the "datadog" chart repository

...Successfully got an update from the "grafana" chart repository

...Successfully got an update from the "gitlab" chart repository

...Successfully got an update from the "prometheus-community" chart repository

...Successfully got an update from the "incubator" chart repository

...Successfully got an update from the "actions-runner-controller" chart repository

...Successfully got an update from the "azure-samples" chart repository

...Successfully got an update from the "bitnami" chart repository

...Successfully got an update from the "sumologic" chart repository

...Successfully got an update from the "rancher-latest" chart repository

...Successfully got an update from the "newrelic" chart repository

...Successfully got an update from the "crossplane-stable" chart repository

Update Complete. ⎈Happy Helming!⎈

We can now install Fluentd with the Azure Values

$ helm install my-fluent-az-storage bitnami/fluentd --set plugins.azure-storage-append-blob.enabled=true --set plugins.azure-storage-append-blob.storageAccountName=wlfluentstorage01 --set plugins.azure-storage-append-blob.storageAccountKey=XvWnqWALiT6gViACfS9RjmgPRdgqJ0cxJi0MKnCwLJJbud/zsErCUK0rB89ZiLlC/MtV2JtH6aRt+AStE/qDYg== --set plugins.azure-storage-append-blob.containerName=k8sstorage

NAME: my-fluent-az-storage

LAST DEPLOYED: Mon May 29 14:28:58 2023

NAMESPACE: wldemo2

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

CHART NAME: fluentd

CHART VERSION: 5.8.2

APP VERSION: 1.16.1

** Please be patient while the chart is being deployed **

To verify that Fluentd has started, run:

kubectl get all -l "app.kubernetes.io/name=fluentd,app.kubernetes.io/instance=my-fluent-az-storage"

Logs are captured on each node by the forwarder pods and then sent to the aggregator pods. By default, the aggregator pods send the logs to the standard output.

You can see all the logs by running this command:

kubectl logs -l "app.kubernetes.io/component=aggregator"

You can mount your own configuration files to the aggregators and the forwarders. For example, this is useful if you want to forward the aggregated logs to Elasticsearch or another service.

I tried several tricks to get it to write logs, but none ever showed up in the container.

YAML

Let’s go another route.

I’ll remove the Helm deploy

$ helm delete my-fluent-az-storage

release "my-fluent-az-storage" uninstalled

I’ll encode the account name and key as base64 so I can use them in a Secret. Using echo -n keeps echo’s trailing newline out of the encoded values, and base64 -w 0 disables line wrapping.

$ echo -n XvWnqWALiT6gViACfS9RjmgPRdgqJ0cxJi0MKnCwLJJbud/zsErCUK0rB89ZiLlC/MtV2JtH6aRt+AStE/qDYg== | base64 -w 0

WHZXbnFXQUxpVDZnVmlBQ2ZTOVJqbWdQUmRncUowY3hKaTBNS25Dd0xKSmJ1ZC96c0VyQ1VLMHJCODlaaUxsQy9NdFYySnRINmFSdCtBU3RFL3FEWWc9PQ==

$ echo -n wlfluentstorage01 | base64

d2xmbHVlbnRzdG9yYWdlMDE=

Then create a Secret to apply

$ cat createSecret.yaml

apiVersion: v1
kind: Secret
metadata:
  name: azure-secret
type: Opaque
data:
  account-name: d2xmbHVlbnRzdG9yYWdlMDE=
  account-key: WHZXbnFXQUxpVDZnVmlBQ2ZTOVJqbWdQUmRncUowY3hKaTBNS25Dd0xKSmJ1ZC96c0VyQ1VLMHJCODlaaUxsQy9NdFYySnRINmFSdCtBU3RFL3FEWWc9PQ==

$ kubectl apply -f ./createSecret.yaml

secret/azure-secret created
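Plain echo appends a newline, and base64 will happily encode it into the Secret value. A minimal sketch of encoding without it; b64_secret_value is a hypothetical helper, and the kubectl alternative in the comments avoids manual encoding entirely (STORAGE_ACCOUNT and STORAGE_KEY are assumed variables).

```shell
#!/bin/sh
# Encode a value for a Secret's "data:" field.
# printf '%s' emits the bytes as-is (no trailing newline, unlike echo),
# and tr removes the line wrapping and final newline that base64 adds.
b64_secret_value() {
  printf '%s' "$1" | base64 | tr -d '\n'
}

b64_secret_value wlfluentstorage01; echo   # prints d2xmbHVlbnRzdG9yYWdlMDE=

# Alternative: let kubectl do the encoding from literal values.
#   kubectl create secret generic azure-secret \
#     --from-literal=account-name="$STORAGE_ACCOUNT" \
#     --from-literal=account-key="$STORAGE_KEY"
```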

Next, I create a ConfigMap with the Fluentd configuration, which uses the same account values

$ cat ./fluentCM.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: fluentd-config
data:
  fluent.conf: |
    <source>
      @type tail
      path /var/log/containers/*.log
      pos_file /var/log/fluentd-containers.log.pos
      tag kubernetes.*
      read_from_head true
      <parse>
        @type json
        time_format %Y-%m-%dT%H:%M:%S.%NZ
      </parse>
    </source>
    <match kubernetes.**>
      @type azurestorageappendblob
      azure_storage_account wlfluentstorage01
      azure_storage_access_key XvWnqWALiT6gViACfS9RjmgPRdgqJ0cxJi0MKnCwLJJbud/zsErCUK0rB89ZiLlC/MtV2JtH6aRt+AStE/qDYg==
      azure_container_name k8sstorage
      path logs/%Y/%m/%d/
      time_slice_format %Y%m%d-%H%M%S
      buffer_chunk_limit 256m
    </match>

$ kubectl apply -f ./fluentCM.yaml

configmap/fluentd-config created

Next, let’s create the Fluentd DaemonSet

$ cat fluentDS.yaml

apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: fluentd
spec:
  selector:
    matchLabels:
      app: fluentd
  template:
    metadata:
      labels:
        app: fluentd
    spec:
      containers:
      - name: fluentd
        image: fluent/fluentd-kubernetes-daemonset:v1.16.1-debian-azureblob-1.2
        env:
        - name: AZURE_STORAGE_ACCOUNT_NAME
          valueFrom:
            secretKeyRef:
              name: azure-secret
              key: account-name
        - name: AZURE_STORAGE_ACCOUNT_KEY
          valueFrom:
            secretKeyRef:
              name: azure-secret
              key: account-key
        volumeMounts:
        - name: varlogcontainers
          mountPath: /var/log/containers/
          readOnly: true
        - name: config-volume
          mountPath: /fluentd/etc/
        resources:
          limits:
            memory: 500Mi
        securityContext:
          privileged: true
        livenessProbe:
          exec:
            # exec probes do not run through a shell, so wrap the pipeline in /bin/sh -c
            command:
            - /bin/sh
            - -c
            - cat /tmp/fluentd-buffers.flb | grep -v '^\s*$' > /dev/null 2>&1 || exit 1; exit 0;
          initialDelaySeconds: 30
          periodSeconds: 10
      volumes:
      - name: varlogcontainers
        hostPath:
          path: /var/log/containers
      - name: config-volume
        configMap:
          name: fluentd-config

$ kubectl apply -f fluentDS.yaml

daemonset.apps/fluentd created

Let’s try Loki

$ cat loki-values2.yaml

loki:
  storage:
    azure:
      account_key: XvWnqWALiT6gViACfS9RjmgPRdgqJ0cxJi0MKnCwLJJbud/zsErCUK0rB89ZiLlC/MtV2JtH6aRt+AStE/qDYg==
      account_name: wlfluentstorage01
      container_name: k8sstorage
  auth_enabled: false

Then we can install

$ helm install --values loki-values2.yaml loki grafana/loki

NAME: loki

LAST DEPLOYED: Tue May 30 06:48:33 2023

NAMESPACE: default

STATUS: deployed

REVISION: 1

NOTES:

***********************************************************************

Welcome to Grafana Loki

Chart version: 5.5.11

Loki version: 2.8.2

***********************************************************************

Installed components:

* grafana-agent-operator

* gateway

* read

* write

* backend

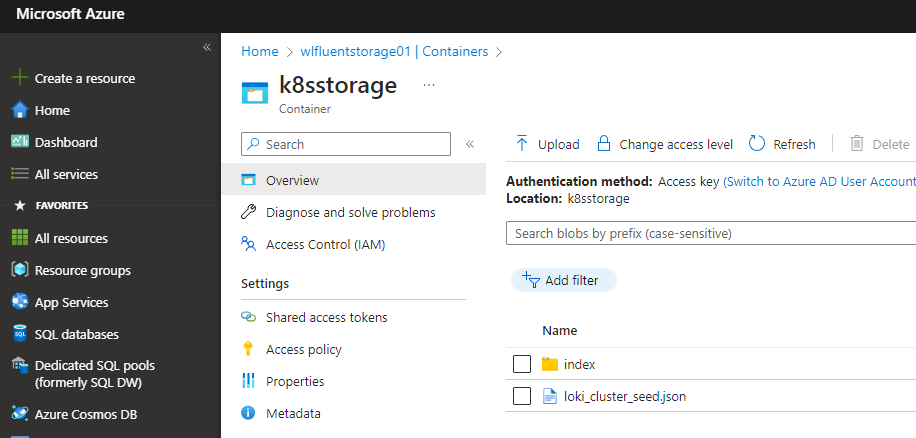

Almost immediately, I can see Loki writing data to Azure Storage

To get logs into Loki, I need Promtail, configured to push to the Loki gateway

$ cat promtail-values.yaml

config:
  clients:
  - url: http://loki-gateway.default.svc.cluster.local:80/loki/api/v1/push
  logLevel: info
  serverPort: 3101
  lokiAddress: http://loki-gateway.default.svc.cluster.local:80/loki/api/v1/push

$ helm upgrade --install -f promtail-values.yaml promtail grafana/promtail

Store logs to Azure Storage

Maybe my goal is to just get logs to Azure Storage, regardless of how.

For this, I can use BlobFuse v1

I’ll first set up the Microsoft apt repository

builder@builder-T100:/etc/filebeat$ sudo wget https://packages.microsoft.com/config/ubuntu/20.04/packages-microsoft-prod.deb

--2023-05-31 17:43:25-- https://packages.microsoft.com/config/ubuntu/20.04/packages-microsoft-prod.deb

Resolving packages.microsoft.com (packages.microsoft.com)... 13.90.21.104

Connecting to packages.microsoft.com (packages.microsoft.com)|13.90.21.104|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 3690 (3.6K) [application/octet-stream]

Saving to: ‘packages-microsoft-prod.deb’

packages-microsoft-prod.deb 100%[============================================================================>] 3.60K --.-KB/s in 0s

2023-05-31 17:43:25 (352 MB/s) - ‘packages-microsoft-prod.deb’ saved [3690/3690]

builder@builder-T100:/etc/filebeat$ sudo dpkg -i packages-microsoft-prod.deb

Selecting previously unselected package packages-microsoft-prod.

(Reading database ... 190512 files and directories currently installed.)

Preparing to unpack packages-microsoft-prod.deb ...

Unpacking packages-microsoft-prod (1.0-ubuntu20.04.1) ...

Setting up packages-microsoft-prod (1.0-ubuntu20.04.1) ...

builder@builder-T100:/etc/filebeat$ sudo apt-get update

Hit:1 http://us.archive.ubuntu.com/ubuntu focal InRelease

Hit:2 https://download.docker.com/linux/ubuntu focal InRelease

Get:3 http://us.archive.ubuntu.com/ubuntu focal-updates InRelease [114 kB]

Get:4 http://security.ubuntu.com/ubuntu focal-security InRelease [114 kB]

Get:5 https://packages.microsoft.com/ubuntu/20.04/prod focal InRelease [3,611 B]

Get:6 http://us.archive.ubuntu.com/ubuntu focal-backports InRelease [108 kB]

Get:7 https://packages.microsoft.com/ubuntu/20.04/prod focal/main arm64 Packages [41.0 kB]

Get:8 https://packages.microsoft.com/ubuntu/20.04/prod focal/main all Packages [2,521 B]

Get:9 https://packages.microsoft.com/ubuntu/20.04/prod focal/main amd64 Packages [201 kB]

Get:10 http://us.archive.ubuntu.com/ubuntu focal-updates/main amd64 Packages [2,611 kB]

Get:11 https://packages.microsoft.com/ubuntu/20.04/prod focal/main armhf Packages [14.4 kB]

Get:12 http://security.ubuntu.com/ubuntu focal-security/main amd64 DEP-11 Metadata [59.9 kB]

Get:13 http://security.ubuntu.com/ubuntu focal-security/universe amd64 DEP-11 Metadata [95.5 kB]

Get:14 http://security.ubuntu.com/ubuntu focal-security/multiverse amd64 DEP-11 Metadata [940 B]

Hit:15 http://apt.postgresql.org/pub/repos/apt focal-pgdg InRelease

Get:16 http://us.archive.ubuntu.com/ubuntu focal-updates/main i386 Packages [836 kB]

Get:17 http://us.archive.ubuntu.com/ubuntu focal-updates/main Translation-en [439 kB]

Get:18 http://us.archive.ubuntu.com/ubuntu focal-updates/main amd64 DEP-11 Metadata [275 kB]

Get:19 http://us.archive.ubuntu.com/ubuntu focal-updates/main amd64 c-n-f Metadata [16.8 kB]

Get:20 http://us.archive.ubuntu.com/ubuntu focal-updates/universe amd64 Packages [1,067 kB]

Get:21 http://us.archive.ubuntu.com/ubuntu focal-updates/universe i386 Packages [730 kB]

Get:22 http://us.archive.ubuntu.com/ubuntu focal-updates/universe amd64 DEP-11 Metadata [409 kB]

Get:23 http://us.archive.ubuntu.com/ubuntu focal-updates/multiverse amd64 DEP-11 Metadata [944 B]

Get:24 http://us.archive.ubuntu.com/ubuntu focal-backports/main amd64 DEP-11 Metadata [7,996 B]

Get:25 http://us.archive.ubuntu.com/ubuntu focal-backports/universe amd64 DEP-11 Metadata [30.5 kB]

Fetched 7,177 kB in 2s (3,938 kB/s)

Reading package lists... Done

N: Skipping acquire of configured file 'main/binary-i386/Packages' as repository 'http://apt.postgresql.org/pub/repos/apt focal-pgdg InRelease' doesn't support architecture 'i386'

Then I can install BlobFuse

builder@builder-T100:/etc/filebeat$ sudo apt-get install blobfuse

Reading package lists... Done

Building dependency tree

Reading state information... Done

The following packages were automatically installed and are no longer required:

gir1.2-goa-1.0 libfprint-2-tod1 libfwupdplugin1 libxmlb1

Use 'sudo apt autoremove' to remove them.

The following NEW packages will be installed:

blobfuse

0 upgraded, 1 newly installed, 0 to remove and 25 not upgraded.

Need to get 10.1 MB of archives.

After this operation, 35.6 MB of additional disk space will be used.

Get:1 https://packages.microsoft.com/ubuntu/20.04/prod focal/main amd64 blobfuse amd64 1.4.5 [10.1 MB]

Fetched 10.1 MB in 1s (14.0 MB/s)

Selecting previously unselected package blobfuse.

(Reading database ... 190520 files and directories currently installed.)

Preparing to unpack .../blobfuse_1.4.5_amd64.deb ...

Unpacking blobfuse (1.4.5) ...

Setting up blobfuse (1.4.5) ...

I’ll create a directory to use as the mount point

builder@builder-T100:/etc/filebeat$ sudo mkdir /var/azurelogs

builder@builder-T100:/etc/filebeat$ sudo chown builder /var/azurelogs

And we need a temp dir as well

builder@builder-T100:/etc/filebeat$ sudo mkdir -p /mnt/resource/blobfusetmp

builder@builder-T100:/etc/filebeat$ sudo chown builder /mnt/resource/blobfusetmp

Then create an Azure configuration for my storage account

builder@builder-T100:/etc/filebeat$ vi /home/builder/azure.cfg

builder@builder-T100:/etc/filebeat$ cat /home/builder/azure.cfg

accountName wlfluentstorage01

accountKey XvWnqWALiT6gViACfS9RjmgPRdgqJ0cxJi0MKnCwLJJbud/zsErCUK0rB89ZiLlC/MtV2JtH6aRt+AStE/qDYg==

containerName k8sstorage
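This file holds the account key in plain text, so it is worth making it readable only by its owner. A sketch of writing the same three settings with mode 600; write_blobfuse_cfg and STORAGE_KEY are hypothetical names.

```shell
#!/bin/sh
# Hypothetical helper: write a BlobFuse v1 config with the same three keys
# used above, readable and writable only by the owner.
# $1 = output path, $2 = account name, $3 = account key, $4 = container
write_blobfuse_cfg() {
  (
    umask 077   # a newly created file gets mode 600 from the start
    printf 'accountName %s\naccountKey %s\ncontainerName %s\n' "$2" "$3" "$4" > "$1"
  )
  chmod 600 "$1"   # umask does not affect a file that already existed
}

# Usage:
#   write_blobfuse_cfg /home/builder/azure.cfg wlfluentstorage01 "$STORAGE_KEY" k8sstorage
```

Setting the umask inside a subshell means the key is never written to a world-readable file, even briefly, and the caller’s umask is left alone.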

Lastly, mount it

builder@builder-T100:/etc/filebeat$ sudo blobfuse /var/azurelogs --tmp-path=/mnt/resource/blobfusetmp --config-file=/home/builder/azure.cfg -o attr_timeout=240 -o entry_timeout=240 -o negative_timeout=120

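Interestingly, I found that I could write files as root, but not with sudo. Two things are going on here. First, the BlobFuse mount was made by root (through sudo) without -o allow_other, so FUSE only lets root access it, which is why a plain ls as builder is denied. Second, in sudo echo "hi" > file, the redirection is performed by builder’s own shell before sudo runs, so the write is still attempted as builder.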

builder@builder-T100:/etc/filebeat$ ls /var/azurelogs

ls: cannot access '/var/azurelogs': Permission denied

builder@builder-T100:/etc/filebeat$ sudo ls /var/azurelogs

index loki_cluster_seed.json

builder@builder-T100:/etc/filebeat$ sudo echo "hi" > /var/azurelogs/test.txt

-bash: /var/azurelogs/test.txt: Permission denied

builder@builder-T100:/etc/filebeat$ sudo mkdir /var/azurelogs/test

builder@builder-T100:/etc/filebeat$ sudo echo "hi" > /var/azurelogs/test/test.txt

-bash: /var/azurelogs/test/test.txt: Permission denied

# as root

builder@builder-T100:/etc/filebeat$ sudo su -

root@builder-T100:~# echo "hi" > /var/azurelogs/test/test.txt

root@builder-T100:~#
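Because the > redirection is handled by the calling shell rather than by sudo, the usual workaround is to let a privileged process open the file, for example by piping into sudo tee. A minimal sketch; write_privileged and the SUDO override are hypothetical, and the override only exists so the helper can be tried without sudo.

```shell
#!/bin/sh
# Write stdin to file $1 from inside the privileged process, so the file is
# opened by (sudo) tee instead of by the unprivileged calling shell.
# SUDO defaults to "sudo"; setting SUDO= (empty) runs tee directly.
write_privileged() {
  ${SUDO-sudo} tee "$1" > /dev/null   # discard tee's copy on stdout
}

# Usage:
#   echo "hi" | write_privileged /var/azurelogs/test/test.txt
```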

Now, in logrotate, let’s rotate files into the mount. I’ll use daily here; hourly is also possible, but only if logrotate itself is run hourly, since cron normally runs it once a day.

builder@builder-T100:/etc/logrotate.d$ sudo vi azurelogs

builder@builder-T100:/etc/logrotate.d$ cat azurelogs

/var/log/auth.log {
    su root root
    rotate 7
    daily
    compress
    dateext
    dateformat .%Y-%m-%d
    postrotate
        cp /var/log/auth.log.$(date +%Y-%m-%d) /tmp/auth.log.$(date +%Y-%m-%d)
        chown root:root /tmp/auth.log.$(date +%Y-%m-%d)
        cp /tmp/auth.log.$(date +%Y-%m-%d) /var/azurelogs/auth.log-$(date +%Y%m%d)
    endscript
}
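The postrotate block can also live in a small script, which makes it easier to test outside logrotate. The sketch below assumes the same dateext naming as above. Since logrotate runs it as root, the copy through /tmp and the chown appear unnecessary and are left out. The script path and function name are hypothetical, and both date stamps come from a single date call, so a run at midnight cannot mix two different days.

```shell
#!/bin/sh
# Hypothetical postrotate helper, e.g. installed as /usr/local/bin/ship-rotated-log.
# Copies today's rotated file (<log>.<YYYY-MM-DD>, as produced by "dateext"
# plus "dateformat .%Y-%m-%d") into the BlobFuse mount as <name>-<YYYYMMDD>.
ship_rotated_log() {
  log="$1"    # e.g. /var/log/auth.log
  dest="$2"   # e.g. /var/azurelogs
  # One date call for both formats, e.g. "2023-05-31 20230531".
  now=$(date '+%Y-%m-%d %Y%m%d')
  day=${now% *}
  stamp=${now#* }
  src="$log.$day"
  if [ ! -f "$src" ]; then
    echo "ship_rotated_log: $src not found" >&2
    return 1
  fi
  cp "$src" "$dest/$(basename "$log")-$stamp"
}

# When run as a script with two arguments, do the copy.
if [ "$#" -eq 2 ]; then
  ship_rotated_log "$1" "$2"
fi
```

In the logrotate file, the postrotate section would then be a single line such as /usr/local/bin/ship-rotated-log /var/log/auth.log /var/azurelogs, adjusted to wherever the script is installed.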

Lastly, restart rsyslog

$ sudo service rsyslog restart

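I can now test. Note that tee without -a overwrites /var/log/auth.log, which is why the rotated copy below is only 3 bytes.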

root@builder-T100:~# echo hi | tee /var/log/auth.log

hi

root@builder-T100:~# logrotate --force /etc/logrotate.d/azurelogs

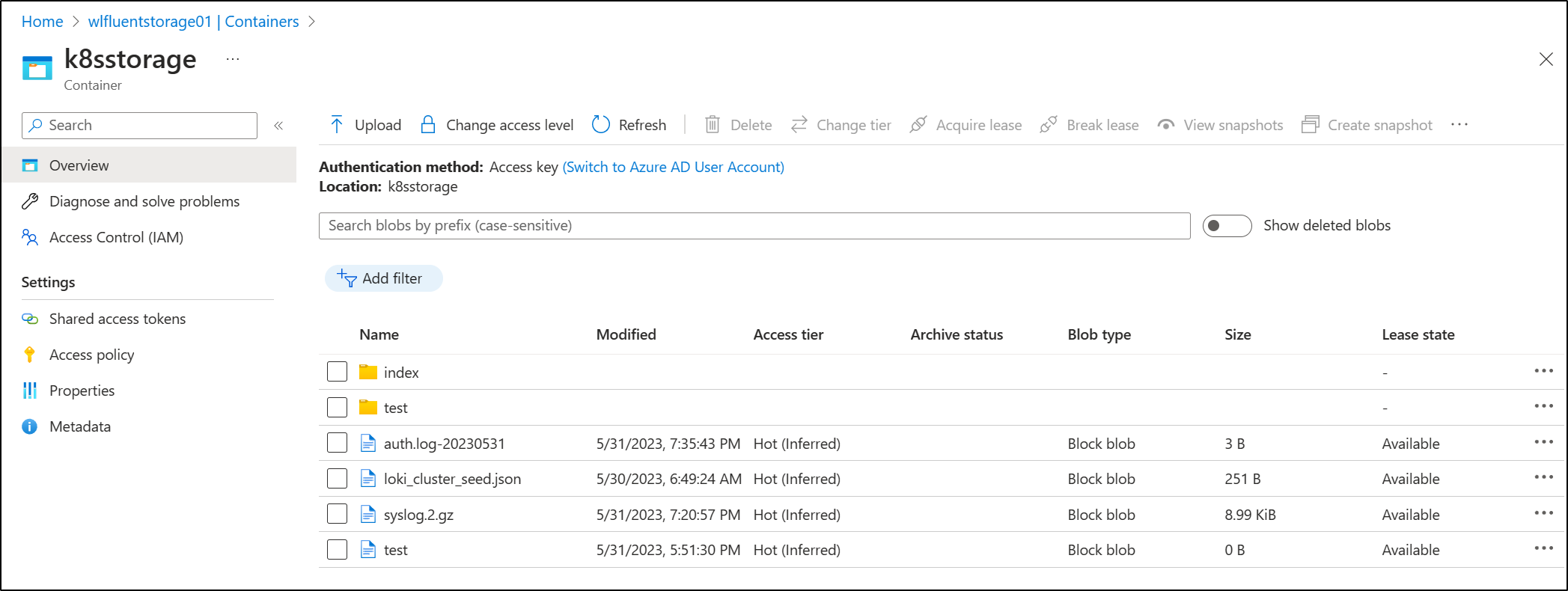

root@builder-T100:~# ls -l /var/azurelogs/

total 8

-rwxrwx--- 1 root root 3 May 31 19:35 auth.log-20230531

drwxrwx--- 2 root root 4096 Dec 31 1969 index

-rwxrwx--- 1 root root 251 May 30 06:49 loki_cluster_seed.json

-rwxrwx--- 1 root root 9204 May 31 19:20 syslog.2.gz

drwxrwx--- 2 root root 4096 May 31 17:54 test

root@builder-T100:~#

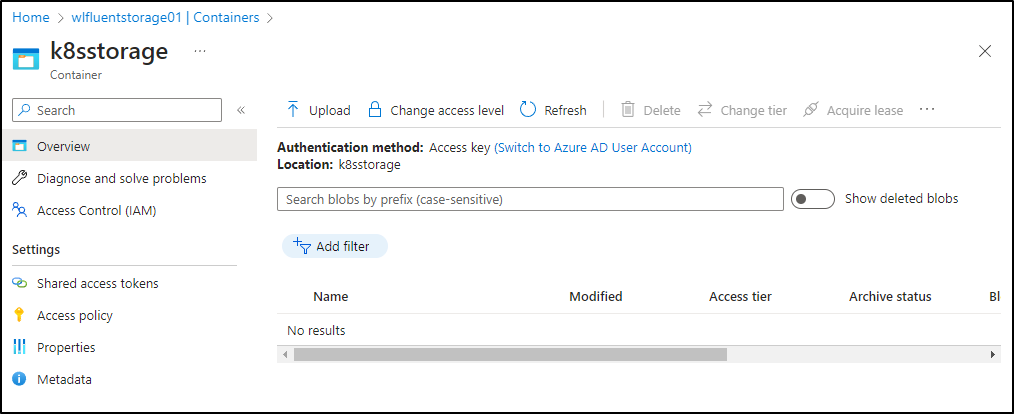

I can see that in Azure as well

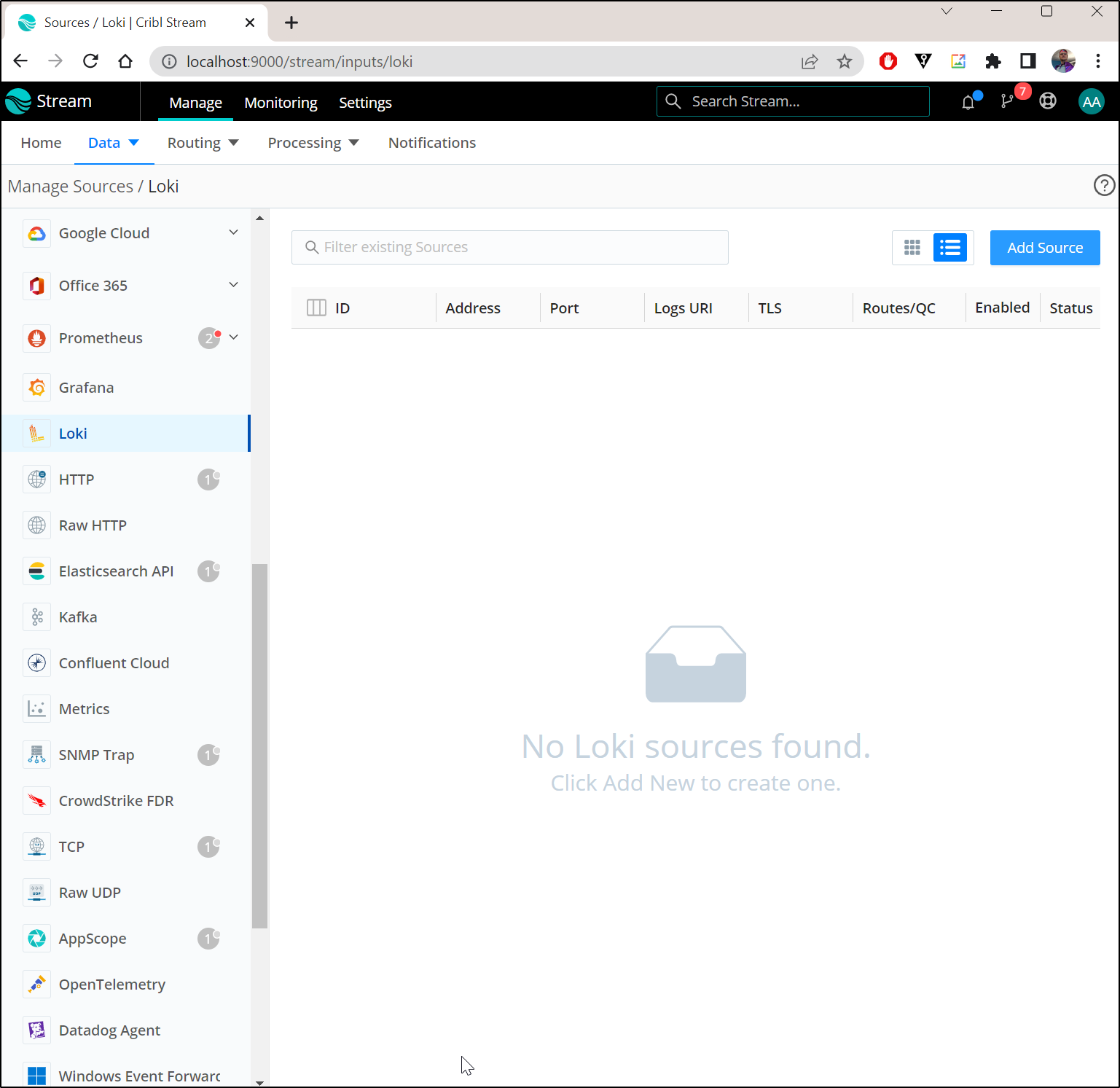

Cribl

I’ll first add the Helm repo

$ helm repo add cribl https://criblio.github.io/helm-charts/

"cribl" already exists with the same configuration, skipping

I can then install using my preferred storage class

$ helm install logstream-leader cribl/logstream-leader --set config.scName='managed-nfs-storage'

NAME: logstream-leader

LAST DEPLOYED: Wed May 31 19:46:02 2023

NAMESPACE: default

STATUS: deployed

REVISION: 1

TEST SUITE: None

I’ll wait for it to come up

$ kubectl get pods -l app.kubernetes.io/instance=logstream-leader

NAME READY STATUS RESTARTS AGE

logstream-leader-77967db6cd-bj88r 1/1 Running 0 95s

I’ll now port-forward

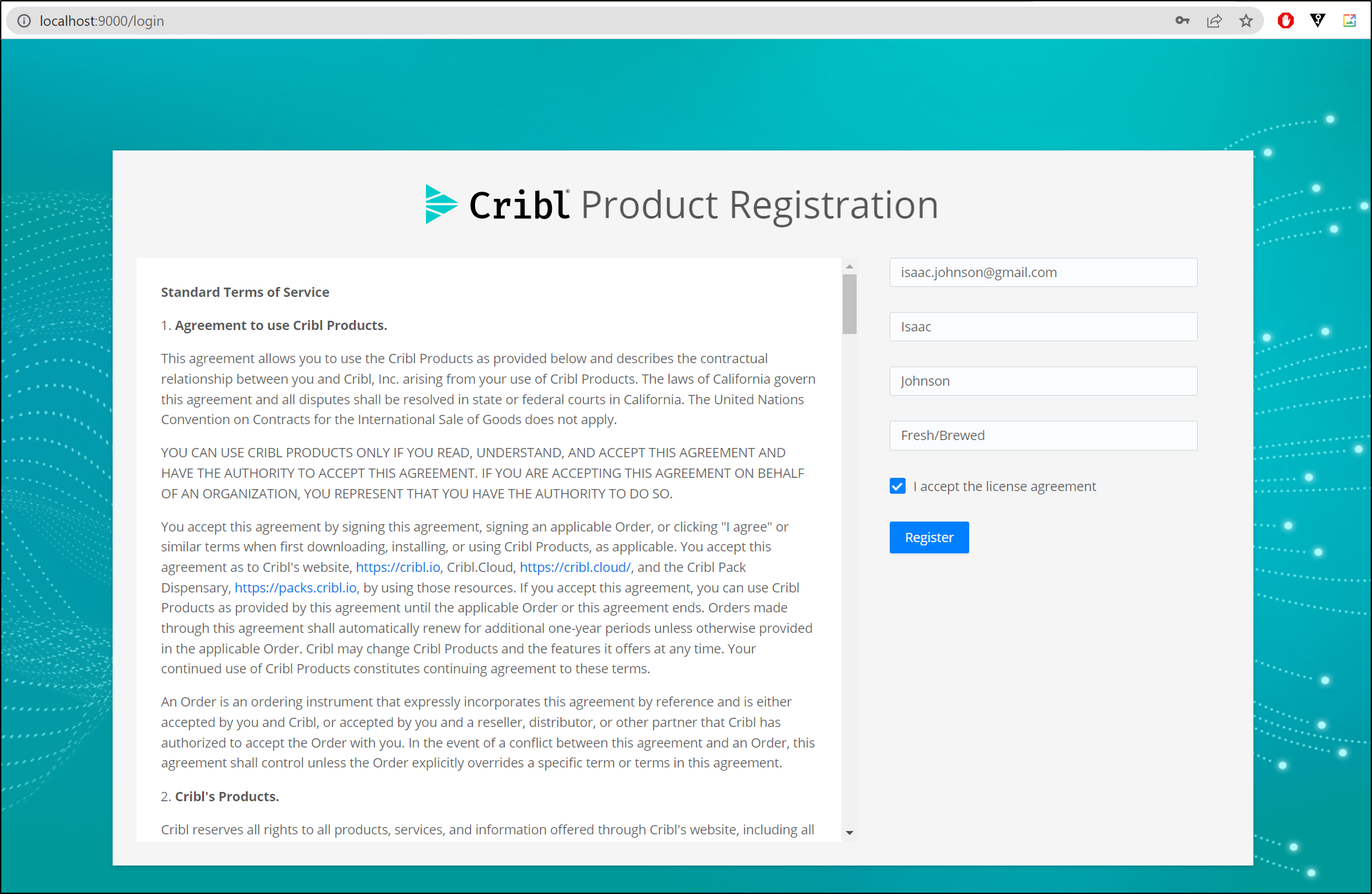

$ kubectl port-forward svc/logstream-leader 9000:9000

Forwarding from 127.0.0.1:9000 -> 9000

Forwarding from [::1]:9000 -> 9000

Handling connection for 9000

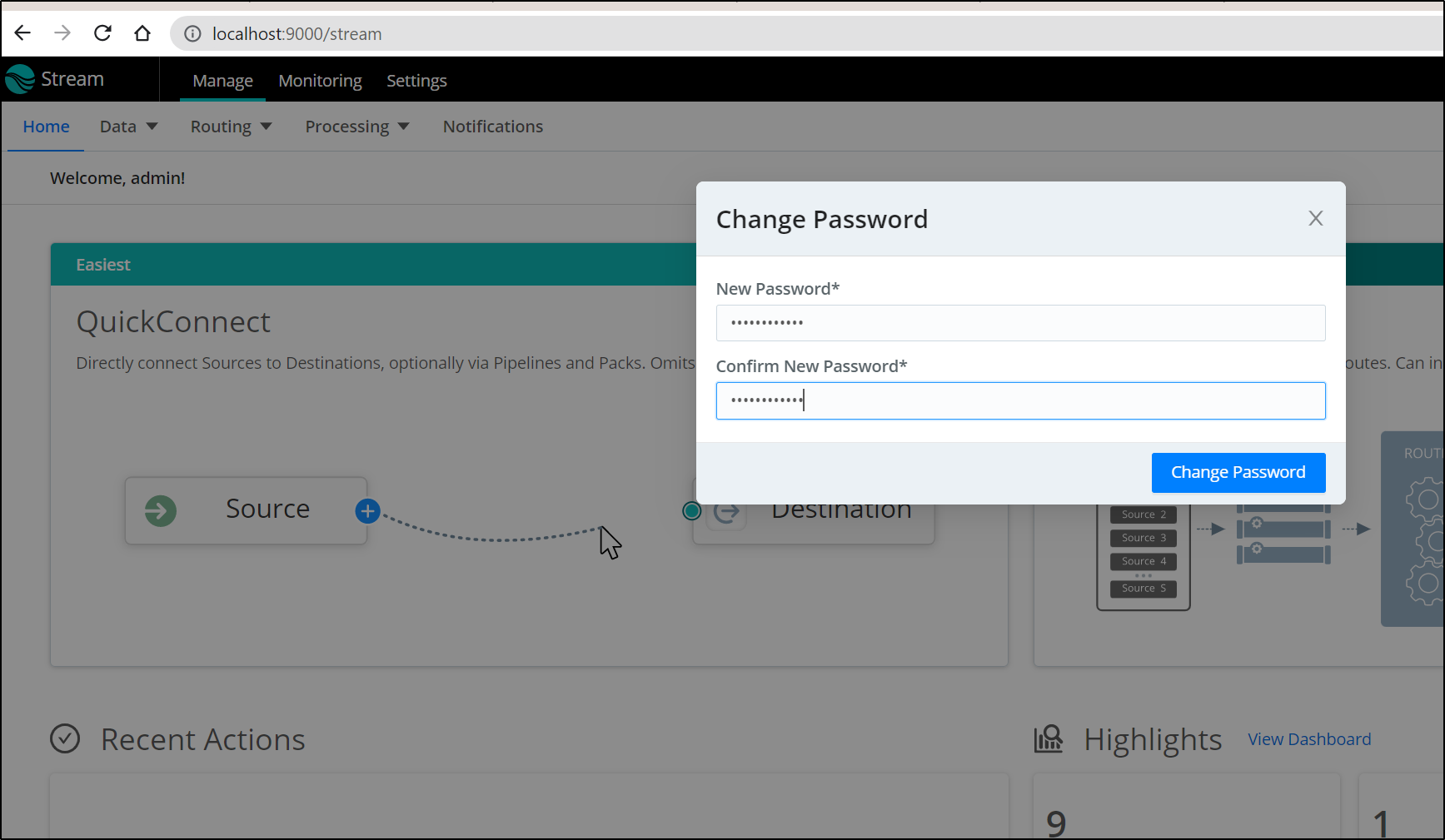

I log in with the default “admin/admin” credentials, accept the EULA and immediately set a new password for admin

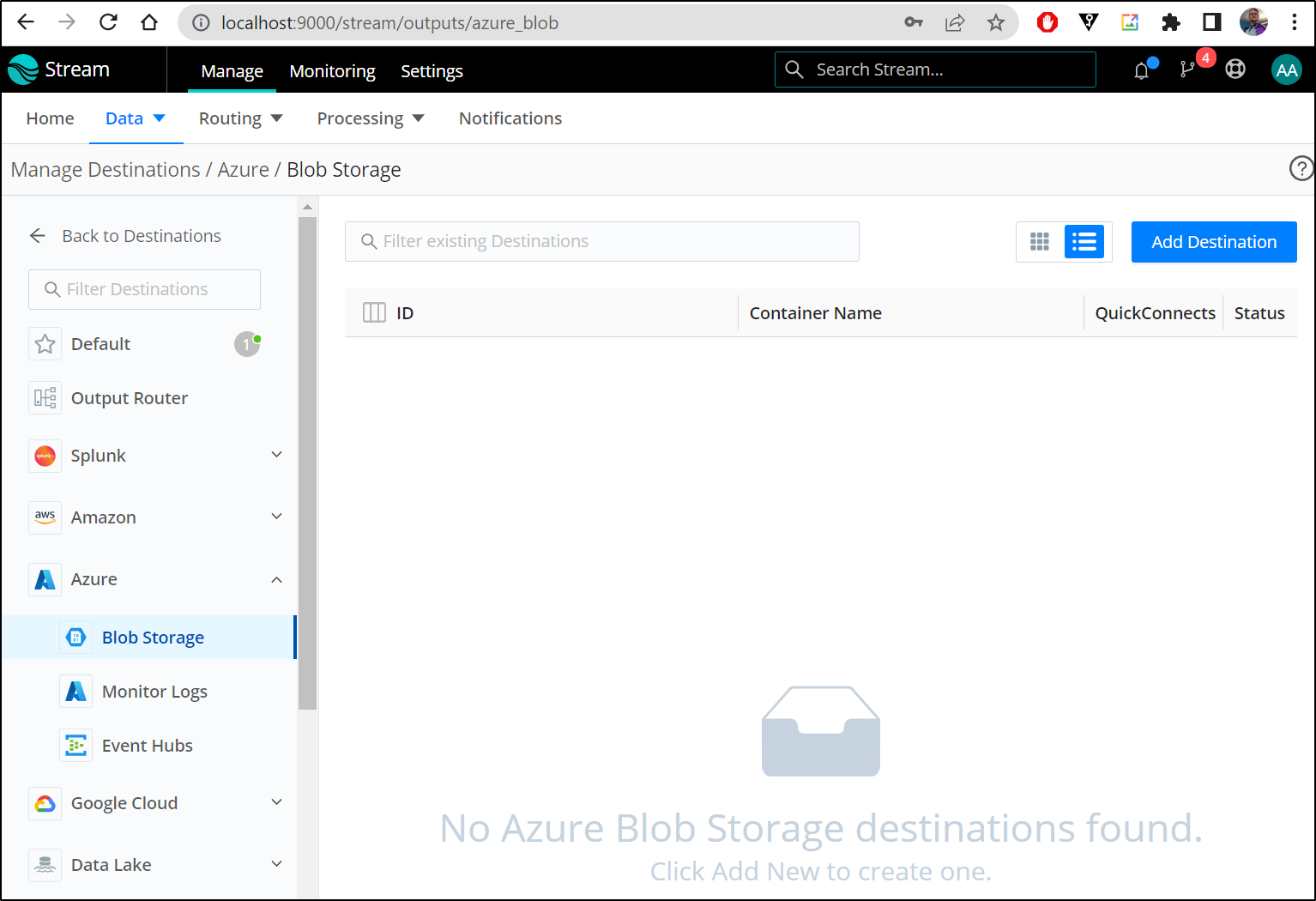

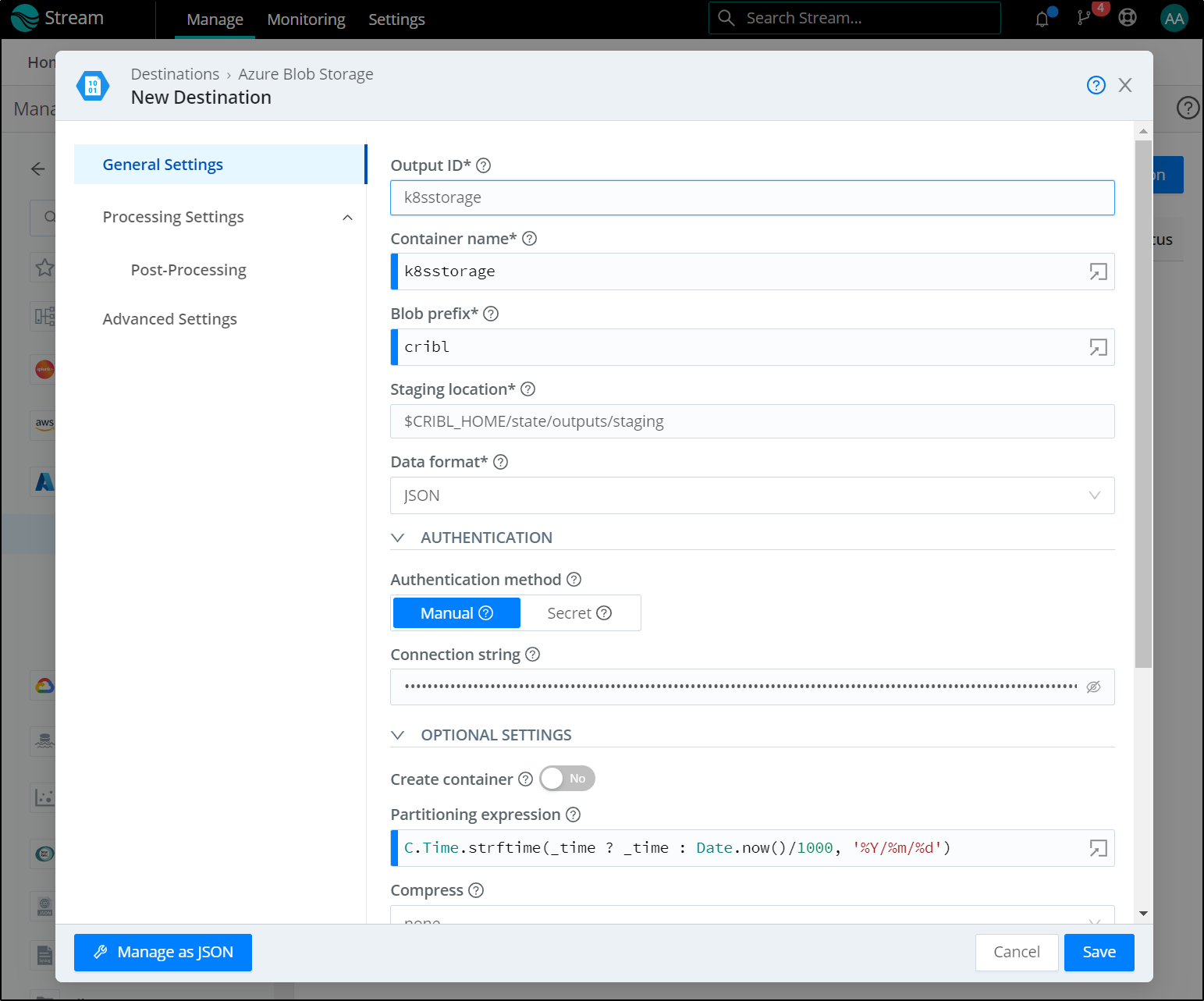

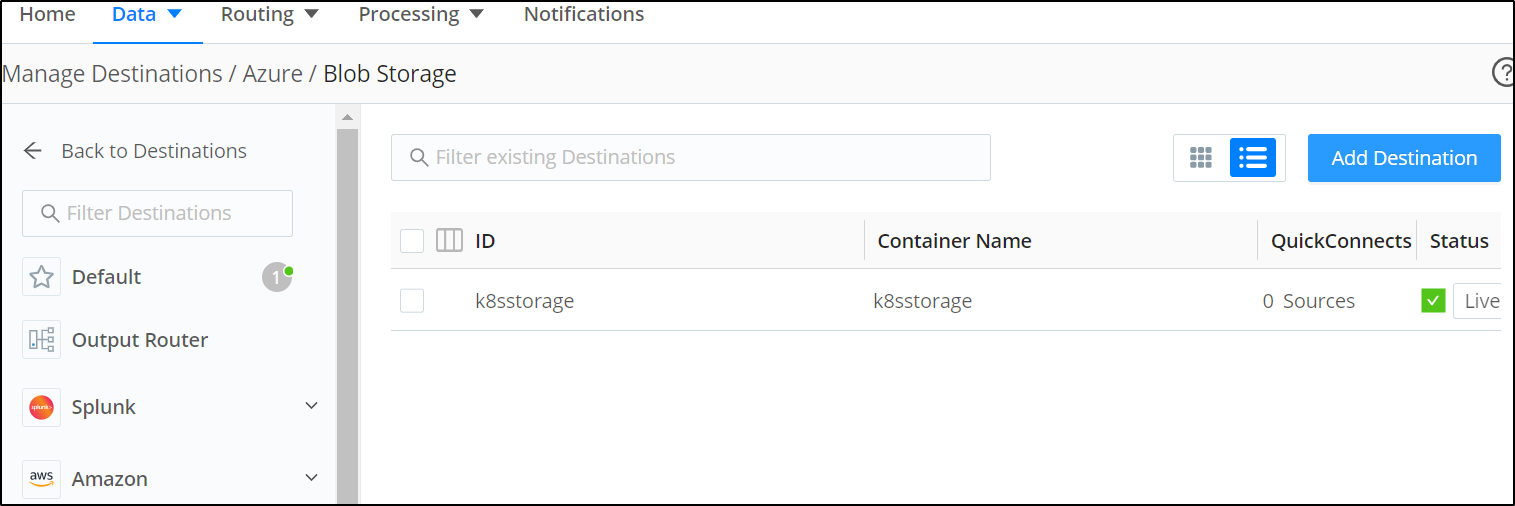

I’ll add a destination for Azure Blob Storage

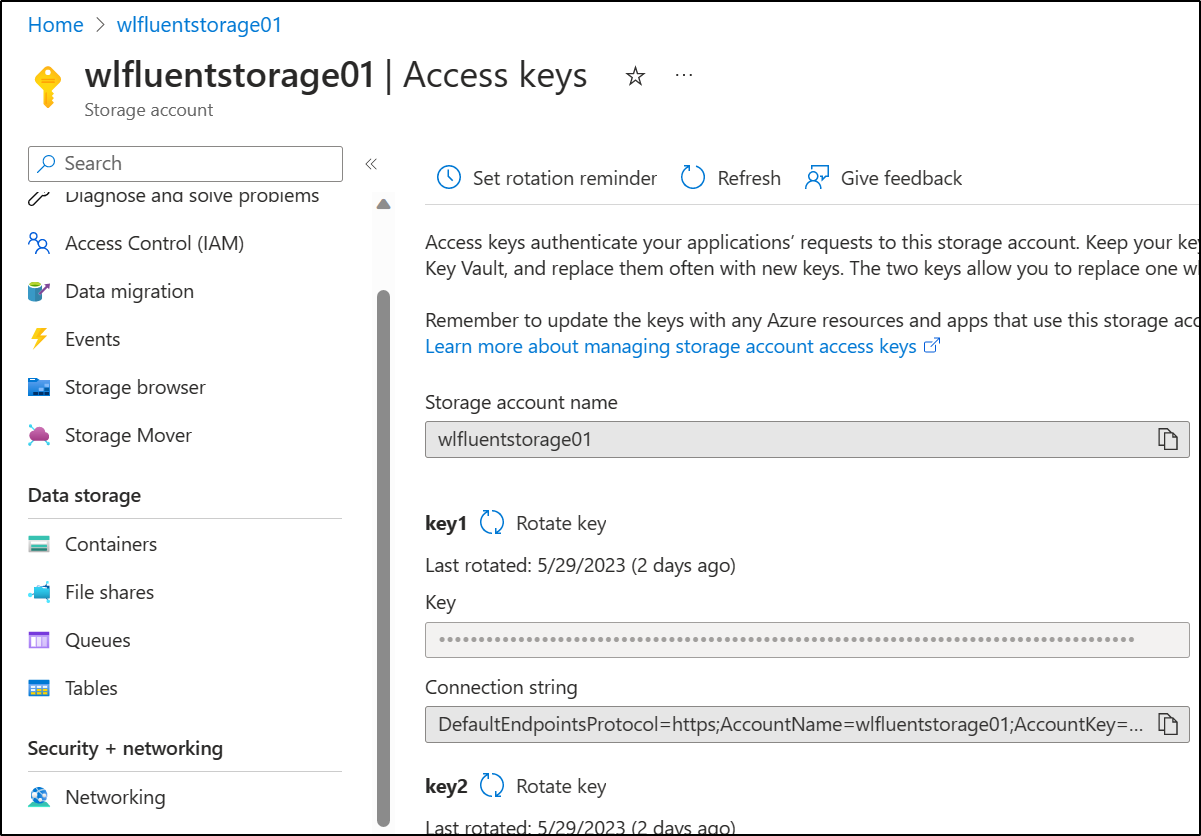

This needs a connection string, which we can copy from the Access keys area of the storage account. We enter it here, and can then see the destination saved.

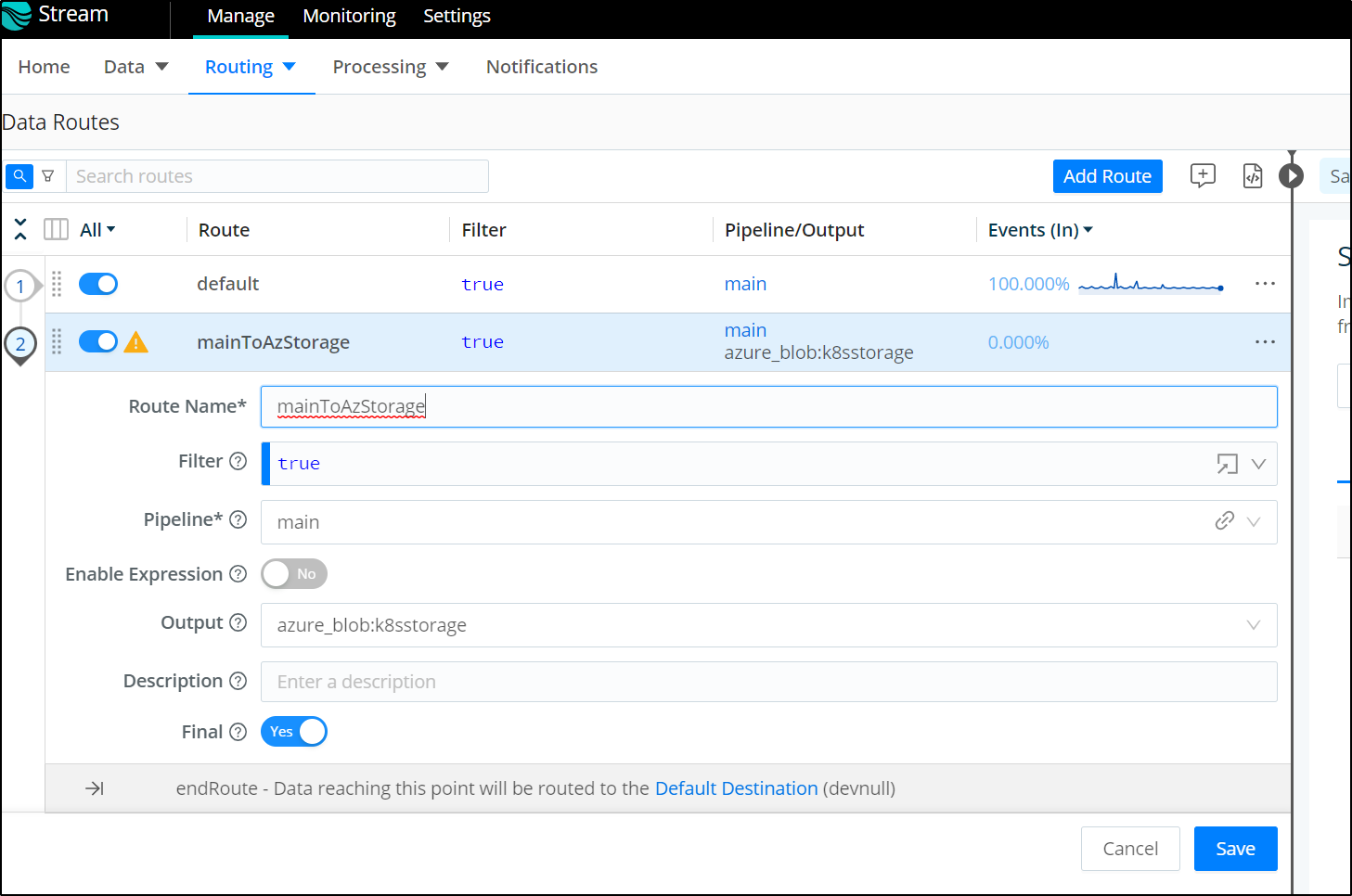
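For reference, the connection string has a standard shape built from the account name and key. A sketch of assembling it by hand; storage_connection_string is a hypothetical helper, and the commented az command asks Azure for the same string directly.

```shell
#!/bin/sh
# Hypothetical helper: build an Azure Storage connection string from the
# account name ($1) and account key ($2), for the public Azure cloud.
storage_connection_string() {
  printf 'DefaultEndpointsProtocol=https;AccountName=%s;AccountKey=%s;EndpointSuffix=core.windows.net\n' "$1" "$2"
}

# The Azure CLI can print it as well:
#   az storage account show-connection-string -n wlfluentstorage01 -g wlstorageaccrg \
#     --query connectionString -o tsv
```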

I can then create a Route to use it

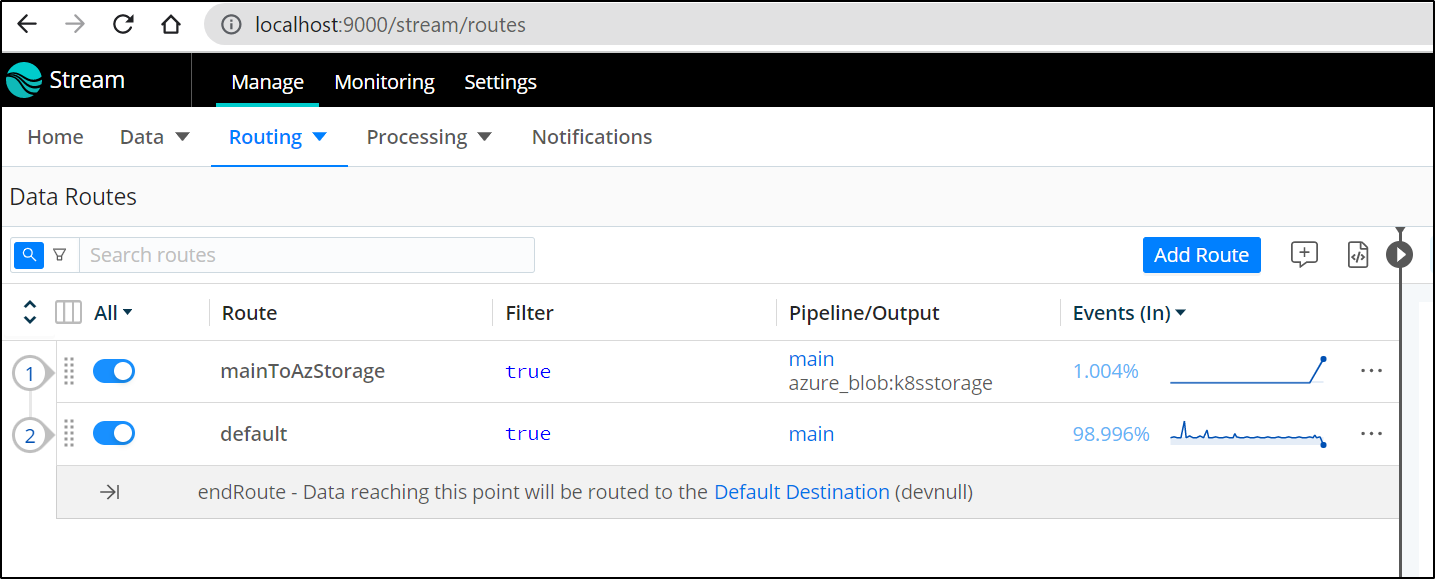

I’ll then move the route to the first slot

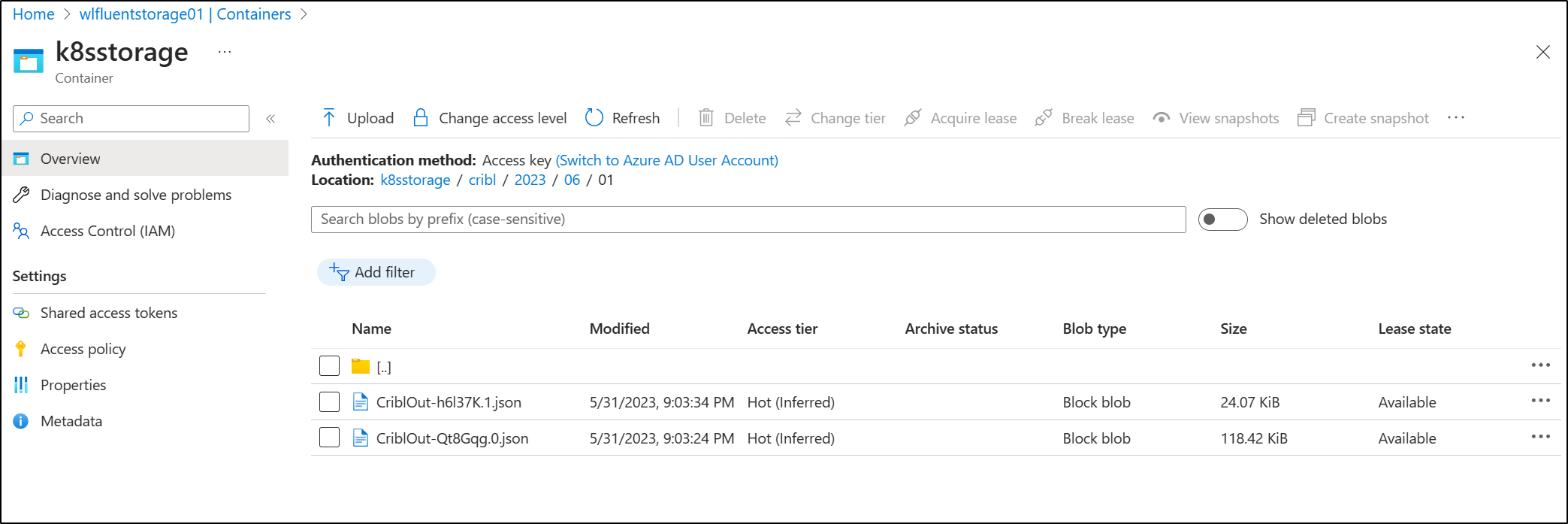

After enabling some basic inputs, I can see Cribl data written to the Azure Storage container.

I could add other inputs, but we’ll save that for later.

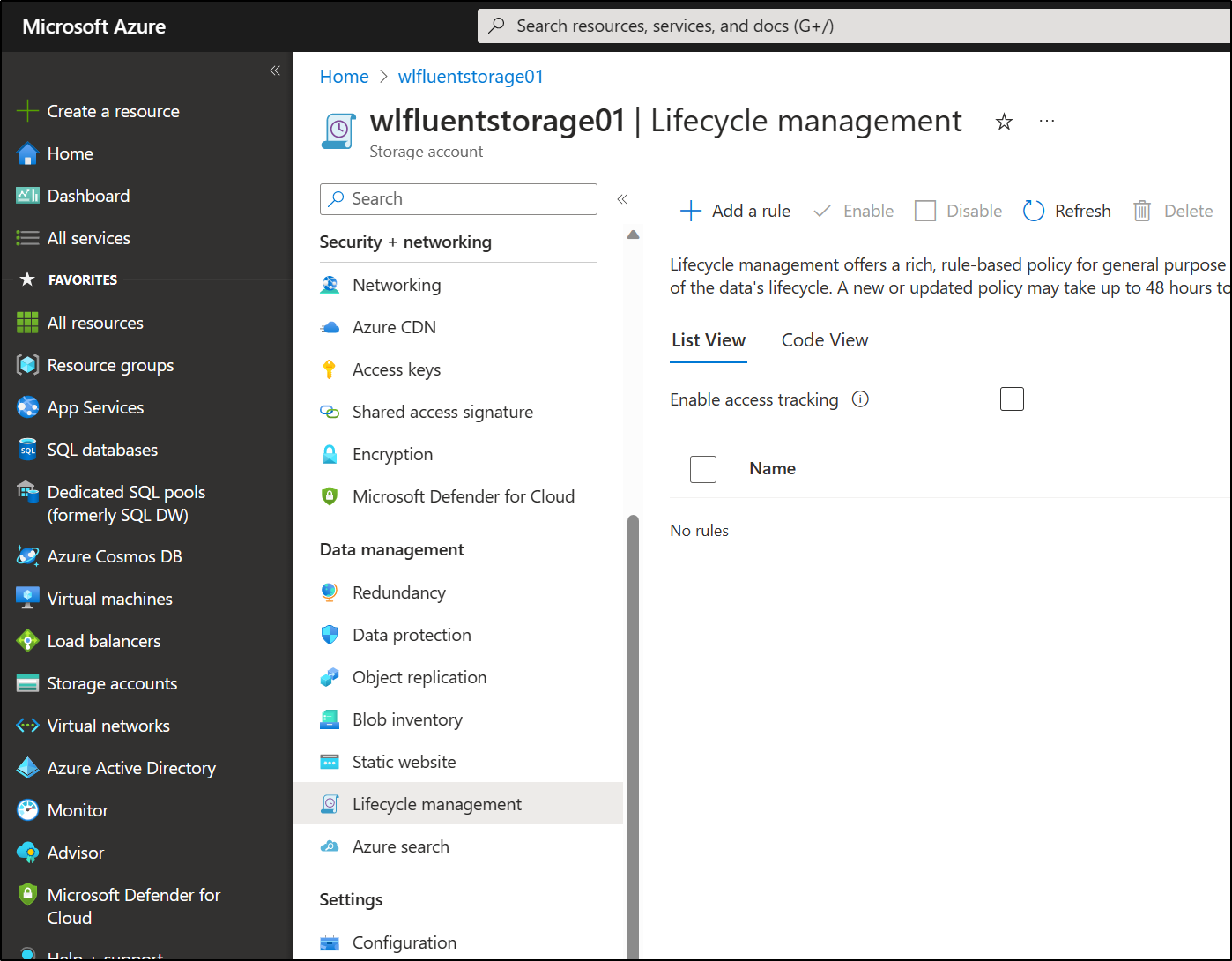

Lifecycle Policies

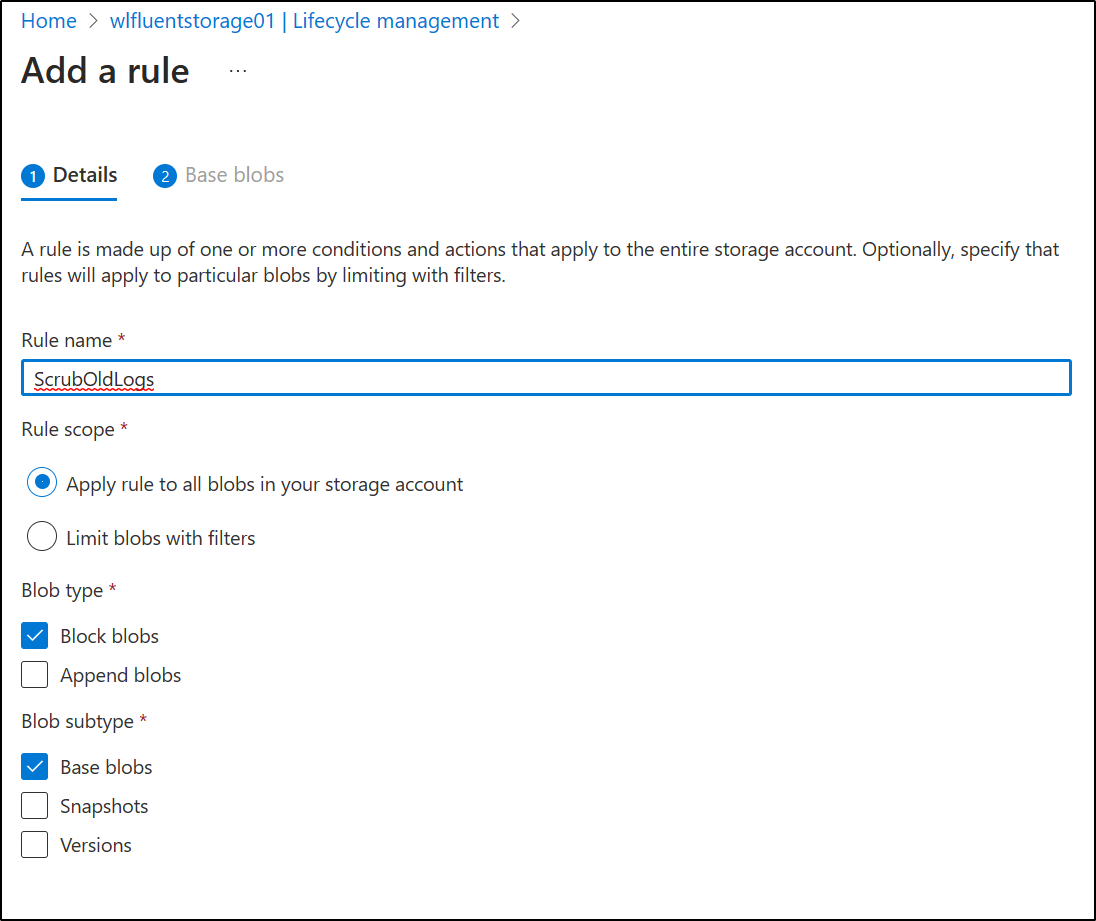

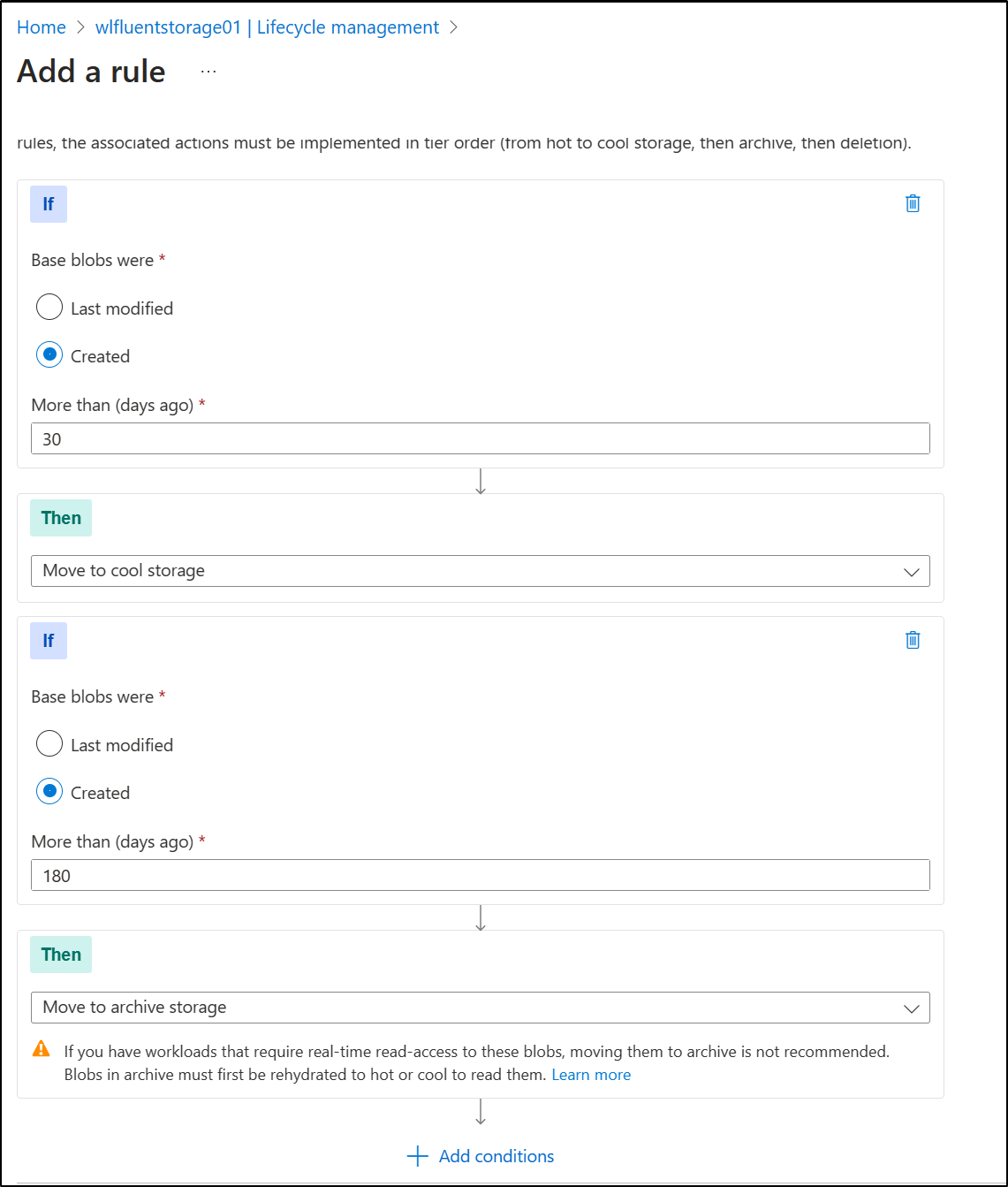

One thing you should always do for a storage account holding logs is set a lifecycle management policy.

Give it a name.

At a minimum, we can create a rule that moves blobs to the Cool tier and then to Archive.

Or just delete them after a week.
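The same kind of policy can be created from the CLI with az storage account management-policy create. A sketch under stated assumptions: the rule name, the k8sstorage/ prefix and the 30/90/365 day thresholds are example values, not what the screenshots use. Tiering to Cool or Archive only applies to block blobs (append blobs support just the delete action), and Cool and Archive carry 30- and 180-day early deletion periods, so the thresholds are spaced to respect them. For the delete-after-a-week variant, keep only the delete action with 7 days.

```shell
#!/bin/sh
# Hypothetical helper: print a lifecycle management policy as JSON.
# $1 = prefix (container name + "/"), $2 = days until Cool,
# $3 = days until Archive, $4 = days until delete
lifecycle_policy_json() {
  cat <<EOF
{
  "rules": [
    {
      "enabled": true,
      "name": "logs-cool-archive-delete",
      "type": "Lifecycle",
      "definition": {
        "filters": {
          "blobTypes": [ "blockBlob" ],
          "prefixMatch": [ "$1" ]
        },
        "actions": {
          "baseBlob": {
            "tierToCool":    { "daysAfterModificationGreaterThan": $2 },
            "tierToArchive": { "daysAfterModificationGreaterThan": $3 },
            "delete":        { "daysAfterModificationGreaterThan": $4 }
          }
        }
      }
    }
  ]
}
EOF
}

# Usage (requires a logged-in Azure CLI):
#   lifecycle_policy_json k8sstorage/ 30 90 365 > policy.json
#   az storage account management-policy create --account-name wlfluentstorage01 \
#     --resource-group wlstorageaccrg --policy @policy.json
```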

Summary

Today we set up a fresh Azure Storage account and configured Fluentd. We touched on Loki and set up logrotate after mounting the container with BlobFuse. Finally, we set up a local Cribl Stream instance to send data and logs to Azure Storage as a Destination on a Route. We’ll explore that more later, but hopefully this gives you some starting points for using Azure Storage for log storage.