Published: Mar 21, 2023 by Isaac Johnson

Recently, I’ve needed to address questions around DNS in Kubernetes. I figured it might be worthwhile to review Kube DNS and show how we can change it for local DNS entries.

Today we’ll use a local NAS and the Azure Vote Front service as examples as we set up regex DNS rewrites and forwarding domain entries.

Azure Vote Front

I already had the Azure Vote Front service running. If you haven’t set it up, it’s a straightforward Helm deploy:

$ helm repo add azure-samples https://azure-samples.github.io/helm-charts/

"azure-samples" already exists with the same configuration, skipping

$ helm repo update

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "kuma" chart repository

...Successfully got an update from the "azure-samples" chart repository

...Successfully got an update from the "datadog" chart repository

...Successfully got an update from the "rancher-latest" chart repository

...Successfully got an update from the "newrelic" chart repository

Update Complete. ⎈Happy Helming!⎈

$ helm install azure-samples/azure-vote --generate-name

NAME: azure-vote-1636748326

LAST DEPLOYED: Fri Nov 12 14:18:46 2021

NAMESPACE: default

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

The Azure Vote application has been started on your Kubernetes cluster.

Title: Azure Vote App

Vote 1 value: Cats

Vote 2 value: Dogs

The externally accessible IP address can take a minute or so to provision. Run the following command to monitor the provisioning status. Once an External IP address has been provisioned, browse to this IP address to access the Azure Vote application.

kubectl get service -l name=azure-vote-front -w

$ kubectl get service -l name=azure-vote-front -w

```
NAME               TYPE           CLUSTER-IP     EXTERNAL-IP     PORT(S)        AGE
azure-vote-front   LoadBalancer   10.0.223.187   52.154.40.139   80:31884/TCP   71s
```

Stub-Domains in CoreDNS

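In Kubernetes DNS terms, a “stub domain” is a private domain whose queries are sent to a specific nameserver instead of the default upstream. Informally, think of it as a “fake” or “local private” domain.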
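For reference, the Kubernetes DNS documentation expresses a stub domain in CoreDNS as its own server block that forwards to a dedicated nameserver. Below is a minimal sketch that prints such a block; the function name is hypothetical, and the domain and upstream address in the example call are illustrative values (the router address in particular is an assumption, not taken from this setup).

```shell
# Print a CoreDNS server block for a stub domain: every query under the
# given domain is forwarded to one specific nameserver.
# stub_domain_block is a hypothetical helper; the example values are illustrative.
stub_domain_block() {
  domain=$1     # private zone, e.g. localservices
  upstream=$2   # nameserver that can answer for it (assumed address)
  cat <<EOF
$domain:53 {
    errors
    cache 30
    forward . $upstream
}
EOF
}

stub_domain_block localservices 192.168.1.1
```

On k3s, a block like this would need to live in a file picked up by the import /etc/coredns/custom/*.server line in the Corefile below.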

Let’s say we have a local VM we wish to address by name instead of by IP.

In my network, I have a NAS that is resolvable with WINS.

$ nslookup 192.168.1.129

129.1.168.192.in-addr.arpa name = SassyNassy.

Authoritative answers can be found from:

I could then create a CoreDNS entry so that ‘nas.localservices’ points to ‘sassynassy’.

My current CoreDNS ConfigMap lists the primary Kubernetes hosts in my node pool (the NodeHosts entry):

$ kubectl get cm coredns -n kube-system -o yaml

```yaml
apiVersion: v1
data:
  Corefile: |
    .:53 {
        errors
        health
        ready
        kubernetes cluster.local in-addr.arpa ip6.arpa {
          pods insecure
          fallthrough in-addr.arpa ip6.arpa
        }
        hosts /etc/coredns/NodeHosts {
          ttl 60
          reload 15s
          fallthrough
        }
        prometheus :9153
        forward . /etc/resolv.conf
        cache 30
        loop
        reload
        loadbalance
    }
    import /etc/coredns/custom/*.server
  NodeHosts: |
    192.168.1.12 anna-macbookair
    192.168.1.206 isaac-macbookpro
    192.168.1.159 builder-macbookpro2
kind: ConfigMap
metadata:
  annotations:
    objectset.rio.cattle.io/applied: H4sIAAAAAAAA/4yQwWrzMBCEX0Xs2fEf20nsX9BDybH02lMva2kdq1Z2g6SkBJN3L8IUCiVtbyNGOzvfzoAn90IhOmHQcKmgAIsJQc+wl0CD8wQaSr1t1PzKSilFIUiIix4JfRoXHQjtdZHTuafAlCgq488xUSi9wK2AybEFDXvhwR2e8QQFHCnh50ZkloTJCcf8lP6NTIqUyuCkNJiSp9LJP5czoLjryztTWB0uE2iYmvjFuVSFenJsHx6tFf41gvGY6Y0Eshz/9D2e0OSZfIJVvMZExwzusSf/I9SIcQQNvaG6a+r/XVdV7abBddPtsN9W66Eedi0N7aberM22zaHf6t0tcPsIAAD//8Ix+PfoAQAA
    objectset.rio.cattle.io/id: ""
    objectset.rio.cattle.io/owner-gvk: k3s.cattle.io/v1, Kind=Addon
    objectset.rio.cattle.io/owner-name: coredns
    objectset.rio.cattle.io/owner-namespace: kube-system
  creationTimestamp: "2023-03-08T12:23:23Z"
  labels:
    objectset.rio.cattle.io/hash: bce283298811743a0386ab510f2f67ef74240c57
  name: coredns
  namespace: kube-system
  resourceVersion: "726"
  uid: 025e6686-3e7d-4574-8b8b-66e363694268
```

This lets pods resolve the nodes by name:

192.168.1.12 anna-macbookair

192.168.1.206 isaac-macbookpro

192.168.1.159 builder-macbookpro2

I’ll fire up a quick interactive pod to check:

$ kubectl run my-shell --rm -i --tty --image ubuntu -- bash

root@my-shell:/# apt update && apt install -y dnsutils

root@my-shell:/# nslookup anna-macbookair

Server: 10.43.0.10

Address: 10.43.0.10#53

Name: anna-macbookair

Address: 192.168.1.12

I can see that my local DNS (via the router) resolves ‘sassynassy’, but not ‘nas.localservices’ as I would like:

root@my-shell:/# nslookup sassynassy

Server: 10.43.0.10

Address: 10.43.0.10#53

Name: sassynassy

Address: 192.168.1.129

root@my-shell:/# nslookup nas.localservices

Server: 10.43.0.10

Address: 10.43.0.10#53

** server can't find nas.localservices: NXDOMAIN

root@my-shell:/# exit

exit

Session ended, resume using 'kubectl attach my-shell -c my-shell -i -t' command when the pod is running

pod "my-shell" deleted

I usually don’t use kubectl edit, but I will this time.

$ kubectl edit cm coredns -n kube-system

I’ll add rewrite name nas.localservices sassynassy to the Corefile, right after the hosts block:

```yaml
apiVersion: v1
data:
  Corefile: |
    .:53 {
        errors
        health
        ready
        kubernetes cluster.local in-addr.arpa ip6.arpa {
          pods insecure
          fallthrough in-addr.arpa ip6.arpa
        }
        hosts /etc/coredns/NodeHosts {
          ttl 60
          reload 15s
          fallthrough
        }
        rewrite name nas.localservices sassynassy
        prometheus :9153
        forward . /etc/resolv.conf
        cache 30
        loop
        reload
        loadbalance
    }
    import /etc/coredns/custom/*.server
  NodeHosts: |
    192.168.1.12 anna-macbookair
    192.168.1.206 isaac-macbookpro
    192.168.1.159 builder-macbookpro2
kind: ConfigMap
metadata:
  annotations:
    objectset.rio.cattle.io/applied: H4sIAAAAAAAA/4yQwWrzMBCEX0Xs2fEf20nsX9BDybH02lMva2kdq1Z2g6SkBJN3L8IUCiVtbyNGOzvfzoAn90IhOmHQcKmgAIsJQc+wl0CD8wQaSr1t1PzKSilFIUiIix4JfRoXHQjtdZHTuafAlCgq488xUSi9wK2AybEFDXvhwR2e8QQFHCnh50ZkloTJCcf8lP6NTIqUyuCkNJiSp9LJP5czoLjryztTWB0uE2iYmvjFuVSFenJsHx6tFf41gvGY6Y0Eshz/9D2e0OSZfIJVvMZExwzusSf/I9SIcQQNvaG6a+r/XVdV7abBddPtsN9W66Eedi0N7aberM22zaHf6t0tcPsIAAD//8Ix+PfoAQAA
    objectset.rio.cattle.io/id: ""
    objectset.rio.cattle.io/owner-gvk: k3s.cattle.io/v1, Kind=Addon
    objectset.rio.cattle.io/owner-name: coredns
    objectset.rio.cattle.io/owner-namespace: kube-system
  creationTimestamp: "2023-03-08T12:23:23Z"
  labels:
    objectset.rio.cattle.io/hash: bce283298811743a0386ab510f2f67ef74240c57
  name: coredns
  namespace: kube-system
  resourceVersion: "726"
  uid: 025e6686-3e7d-4574-8b8b-66e363694268
```

Once I save and exit the editor, kubectl confirms the change:

$ kubectl edit cm coredns -n kube-system

configmap/coredns edited

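We can restart the CoreDNS pod cleanly with a rollout restart. Since the Corefile includes the reload plugin, CoreDNS should eventually pick up the edit on its own once the updated ConfigMap reaches the pod; restarting just makes it take effect right away.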

$ kubectl rollout restart -n kube-system deployment/coredns

Warning: spec.template.spec.nodeSelector[beta.kubernetes.io/os]: deprecated since v1.14; use "kubernetes.io/os" instead

deployment.apps/coredns restarted

Alternatively, deleting the CoreDNS pod forces the Deployment to recreate it with the new configuration:

$ kubectl delete pod `kubectl get pods -l k8s-app=kube-dns -n kube-system --output=jsonpath={.items..metadata.name}` -n kube-system

pod "coredns-7854ff5d74-47w4w" deleted
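The backtick substitution above works, but kubectl can also select the CoreDNS pod by label directly, and rollout status can block until the restarted pod is ready before you start testing. A small sketch wrapping both approaches; the function names are hypothetical, while the namespace, Deployment name and k8s-app=kube-dns label are the ones used above.

```shell
# Hypothetical helpers; namespace, deployment name and label are the
# k3s defaults seen in the commands above.
restart_coredns() {
  # Replace the pod through the Deployment, then wait for it to be ready
  kubectl -n kube-system rollout restart deployment/coredns &&
    kubectl -n kube-system rollout status deployment/coredns --timeout=120s
}

bounce_coredns() {
  # Blunt: delete the pod(s) by label and let the Deployment recreate them
  kubectl -n kube-system delete pod -l k8s-app=kube-dns
}

# Usage: restart_coredns   (or bounce_coredns)
```

Run either one after each Corefile edit; restart_coredns returns only once the new pod reports ready (or the 120s timeout expires).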

Now I’ll fire that test pod up again

$ kubectl run my-shell2 --rm -i --tty --image ubuntu -- bash

root@my-shell2:/# apt update && apt install -y dnsutils

root@my-shell2:/# nslookup sassynassy

Server: 10.43.0.10

Address: 10.43.0.10#53

Name: sassynassy

Address: 192.168.1.129

root@my-shell2:/# nslookup nas.localservices

Server: 10.43.0.10

Address: 10.43.0.10#53

Name: nas.localservices

Address: 192.168.1.129

Redirecting a DNS Zone

What if we want a whole zone?

How might we add fakedomain.com?

Let’s say we wish to redirect everything under ‘fakedomain.com’ to svc.cluster.local. This can be handy when you namespace per environment but want your pod specs to stay identical across dev/qa/prod.
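To make the per-environment idea concrete, here is a hypothetical helper that renders a rewrite block for a given namespace instead of the hardcoded default, so pods can always call service.fakedomain.com while the target namespace differs. It assumes each environment runs its own cluster (and so its own CoreDNS); the namespace names are examples, and the dots in fakedomain.com are escaped here (the config below leaves them unescaped, where "." matches any character).

```shell
# Hypothetical helper: render a rewrite stop block that maps
# <name>.fakedomain.com to <name>.<namespace>.svc.cluster.local and back.
render_env_rewrite() {
  ns=$1   # environment namespace, e.g. dev, qa, prod (example names)
  cat <<EOF
rewrite stop {
    name regex (.*)\.fakedomain\.com {1}.$ns.svc.cluster.local
    answer name (.*)\.$ns\.svc\.cluster\.local {1}.fakedomain.com
}
EOF
}

render_env_rewrite qa
```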

We’ll create the optional custom ConfigMap (coredns-custom) that CoreDNS can import:

$ cat corednscustom.yaml

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: coredns-custom
  namespace: kube-system
data:
  test.override: |
    fakedomain.com:53 {
        log
        errors
        rewrite stop {
            name regex (.*)\.fakedomain.com {1}.default.svc.cluster.local
            answer name (.*)\.default\.svc\.cluster\.local {1}.fakedomain.com
        }
        forward . /etc/resolv.conf # you can redirect this to a specific DNS server such as 10.0.0.10, but that server must be able to resolve the rewritten domain name
    }
```
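A rough way to see what the two rules in that rewrite stop block do, using sed -E as a stand-in for CoreDNS's Go regular expressions: the question name is mapped to the in-cluster Service name, and the answer is mapped back so the client gets a reply for the name it actually asked about. This is only an approximation; CoreDNS works on fully qualified names with a trailing dot (the patterns here tolerate one), and the dots in fakedomain.com are escaped.

```shell
# Approximates: name regex (.*)\.fakedomain.com {1}.default.svc.cluster.local
rewrite_query() {
  printf '%s\n' "$1" |
    sed -E 's/^(.*)\.fakedomain\.com\.?$/\1.default.svc.cluster.local/'
}

# Approximates: answer name (.*)\.default\.svc\.cluster\.local {1}.fakedomain.com
rewrite_answer() {
  printf '%s\n' "$1" |
    sed -E 's/^(.*)\.default\.svc\.cluster\.local\.?$/\1.fakedomain.com/'
}

# The client asks for azure-vote-front.fakedomain.com ...
internal=$(rewrite_query azure-vote-front.fakedomain.com.)
echo "query sent on as: $internal"
# ... and the answer is renamed back to match the original question.
echo "answer returned as: $(rewrite_answer "$internal.")"
```

Running it prints the rewritten query (azure-vote-front.default.svc.cluster.local) and the restored answer name (azure-vote-front.fakedomain.com); names outside fakedomain.com pass through unchanged.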

I’ll apply and rotate the CoreDNS pod

$ kubectl apply -f corednscustom.yaml && kubectl delete pod -n kube-system `kubectl get pods -l k8s-app=kube-dns -n kube-system --output=jsonpath={.items..metadata.name}`

configmap/coredns-custom configured

pod "coredns-c7fb4c47d-b8xqd" deleted

Let’s assume we want to route to the azure-vote-front service already running in the default namespace:

$ kubectl get svc

```
NAME                                                       TYPE           CLUSTER-IP      EXTERNAL-IP   PORT(S)             AGE
kubernetes                                                 ClusterIP      10.43.0.1       <none>        443/TCP             10d
vote-back-azure-vote-1678278477                            ClusterIP      10.43.145.250   <none>        6379/TCP            10d
azure-vote-front                                           LoadBalancer   10.43.24.222    <pending>     80:32381/TCP        10d
my-dd-release-datadog                                      ClusterIP      10.43.180.187   <none>        8125/UDP,8126/TCP   5d17h
my-dd-release-datadog-cluster-agent-admission-controller   ClusterIP      10.43.55.100    <none>        443/TCP             5d17h
my-dd-release-datadog-cluster-agent-metrics-api            ClusterIP      10.43.5.105     <none>        8443/TCP            5d17h
my-dd-release-datadog-cluster-agent                        ClusterIP      10.43.64.21     <none>        5005/TCP            5d17h
```

I’ll test again

$ kubectl run my-shell3 --rm -i --tty --image ubuntu -- bash

If you don't see a command prompt, try pressing enter.

root@my-shell3:/# apt update >/dev/null 2>&1

root@my-shell3:/# apt install -yq dnsutils iputils-ping >/dev/null 2>&1

root@my-shell3:/# nslookup azure-vote-front.fakedomain.com

Server: 10.43.0.10

Address: 10.43.0.10#53

** server can't find azure-vote-front.fakedomain.com: NXDOMAIN

root@my-shell3:/# ping azure-vote-front.fakedomain.com

ping: azure-vote-front.fakedomain.com: Name or service not known

root@my-shell3:/# ping azure-vote-front.fakedomain.com

PING azure-vote-front.fakedomain.com (52.128.23.153) 56(84) bytes of data.

^C

--- azure-vote-front.fakedomain.com ping statistics ---

3 packets transmitted, 0 received, 100% packet loss, time 2041ms

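Clearly, this is not working: the lookups fail at first, and when the name does resolve, it points at a public address (52.128.23.153) rather than the service's ClusterIP (10.43.24.222).

The CoreDNS logs show that no custom file is being imported at all: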

$ kubectl logs -n kube-system `kubectl get pods -l k8s-app=kube-dns -n kube-system --output=jsonpath={.items..metadata.name}`

[WARNING] No files matching import glob pattern: /etc/coredns/custom/*.server

.:53

[WARNING] No files matching import glob pattern: /etc/coredns/custom/*.server

[INFO] plugin/reload: Running configuration SHA512 = 6165dfb940406d783b029d3579a292d4b97d22c854773714679647540fc9147f22223de7313c0cc0a09d1a93657af38d3e025ad05ca7666d9cd4f1ce7f6d91e4

CoreDNS-1.9.1

linux/amd64, go1.17.8, 4b597f8

[WARNING] No files matching import glob pattern: /etc/coredns/custom/*.server

[WARNING] No files matching import glob pattern: /etc/coredns/custom/*.server

[WARNING] No files matching import glob pattern: /etc/coredns/custom/*.server

[WARNING] No files matching import glob pattern: /etc/coredns/custom/*.server
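A likely culprit, not verified on this cluster: the only import line in this k3s Corefile is import /etc/coredns/custom/*.server, while the key used in coredns-custom above is test.override, which that glob never matches. The sketch below assumes each key of coredns-custom is mounted as a file under /etc/coredns/custom, checks which key names the glob would pick up, and writes a hypothetical retry manifest (corednscustom-server.yaml) with the key renamed to fakedomain.server. It also forwards to the cluster DNS Service IP 10.43.0.10 from the nslookup output, as the comment in the original config suggests, on the assumption that /etc/resolv.conf inside the CoreDNS pod points at the node's upstream resolver, which can't answer for cluster.local.

```shell
# Which ConfigMap keys would "import /etc/coredns/custom/*.server" load?
# Assumption: each key of coredns-custom becomes a file in /etc/coredns/custom.
matches_import_glob() {
  case "$1" in
    *.server) return 0 ;;
    *) return 1 ;;
  esac
}

for key in test.override fakedomain.server; do
  if matches_import_glob "$key"; then
    echo "$key: imported"
  else
    echo "$key: ignored"
  fi
done

# Hypothetical retry manifest: same server block, key renamed to end in
# .server, forwarding to the cluster DNS Service IP (assumed 10.43.0.10).
cat > corednscustom-server.yaml <<'EOF'
apiVersion: v1
kind: ConfigMap
metadata:
  name: coredns-custom
  namespace: kube-system
data:
  fakedomain.server: |
    fakedomain.com:53 {
        log
        errors
        rewrite stop {
            name regex (.*)\.fakedomain\.com {1}.default.svc.cluster.local
            answer name (.*)\.default\.svc\.cluster\.local {1}.fakedomain.com
        }
        forward . 10.43.0.10
    }
EOF
```

If you try it, apply it with kubectl apply -f corednscustom-server.yaml and bounce CoreDNS as before; once a matching file exists, the "No files matching import glob pattern" warning should no longer appear.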

My tests of the custom CoreDNS ConfigMap all failed. However, moving the rewrite into the *main* CoreDNS ConfigMap (this time for fakedomain.org) did work.

That is, adding

```
rewrite stop {
    name regex (.*)\.fakedomain.org {1}.default.svc.cluster.local
    answer name (.*)\.default\.svc\.cluster\.local {1}.fakedomain.org
}
```

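to this ConfigMap (the fakedomain.db key in the dump isn’t referenced anywhere in the Corefile, so it has no effect here):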

$ kubectl get cm coredns -n kube-system -o yaml


```yaml
apiVersion: v1
data:
  Corefile: |
    .:53 {
        errors
        health
        ready
        kubernetes cluster.local in-addr.arpa ip6.arpa {
          pods insecure
          fallthrough in-addr.arpa ip6.arpa
        }
        hosts /etc/coredns/NodeHosts {
          ttl 60
          reload 15s
          fallthrough
        }
        rewrite name nas.localservices sassynassy
        rewrite stop {
          name regex (.*)\.fakedomain.org {1}.default.svc.cluster.local
          answer name (.*)\.default\.svc\.cluster\.local {1}.fakedomain.org
        }
        prometheus :9153
        forward . /etc/resolv.conf
        cache 30
        loop
        reload
        loadbalance
    }
    import /etc/coredns/custom/*.server
  NodeHosts: |
    192.168.1.12 anna-macbookair
    192.168.1.206 isaac-macbookpro
    192.168.1.159 builder-macbookpro2
  fakedomain.db: |
    ; fakedomain.org test file
    fakedomain.org. IN SOA sns.dns.icann.org. noc.dns.icann.org. 2015082541 7200 3600 1209600 3600
    fakedomain.org. IN NS b.iana-servers.net.
    fakedomain.org. IN NS a.iana-servers.net.
    fakedomain.org. IN A 127.0.0.1
    a.b.c.w.fakedomain.org. IN TXT "Not a wildcard"
    cname.fakedomain.org. IN CNAME www.example.net.
    service.fakedomain.org. IN SRV 8080 10 10 fakedomain.org.
kind: ConfigMap
metadata:
  annotations:
    objectset.rio.cattle.io/applied: H4sIAAAAAAAA/4yQwWrzMBCEX0Xs2fEf20nsX9BDybH02lMva2kdq1Z2g6SkBJN3L8IUCiVtbyNGOzvfzoAn90IhOmHQcKmgAIsJQc+wl0CD8wQaSr1t1PzKSilFIUiIix4JfRoXHQjtdZHTuafAlCgq488xUSi9wK2AybEFDXvhwR2e8QQFHCnh50ZkloTJCcf8lP6NTIqUyuCkNJiSp9LJP5czoLjryztTWB0uE2iYmvjFuVSFenJsHx6tFf41gvGY6Y0Eshz/9D2e0OSZfIJVvMZExwzusSf/I9SIcQQNvaG6a+r/XVdV7abBddPtsN9W66Eedi0N7aberM22zaHf6t0tcPsIAAD//8Ix+PfoAQAA
    objectset.rio.cattle.io/id: ""
    objectset.rio.cattle.io/owner-gvk: k3s.cattle.io/v1, Kind=Addon
    objectset.rio.cattle.io/owner-name: coredns
    objectset.rio.cattle.io/owner-namespace: kube-system
  creationTimestamp: "2023-03-08T12:23:23Z"
  labels:
    objectset.rio.cattle.io/hash: bce283298811743a0386ab510f2f67ef74240c57
  name: coredns
  namespace: kube-system
  resourceVersion: "1178072"
  uid: 025e6686-3e7d-4574-8b8b-66e363694268
```

Then I bounced the DNS pod:

$ kubectl delete pod -n kube-system `kubectl get pods -l k8s-app=kube-dns -n kube-system --output=jsonpath={.items..metadata.name}`

pod "coredns-64d765b69f-pq9c7" deleted

Then, in a test Ubuntu pod:

$ kubectl exec -it my-shell -- /bin/bash


root@my-shell:/#

root@my-shell:/# apt update >/dev/null 2>&1

root@my-shell:/# apt install -yq dnsutils iputils-ping >/dev/null 2>&1

root@my-shell:/# nslookup test.fakedomain.org

Server: 10.43.0.10

Address: 10.43.0.10#53

** server can't find test.fakedomain.org: NXDOMAIN

root@my-shell:/# nslookup azure-vote-front.fakedomain.org

Server: 10.43.0.10

Address: 10.43.0.10#53

Name: azure-vote-front.fakedomain.org

Address: 10.43.24.222

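The rewritten name resolves to the azure-vote-front ClusterIP (10.43.24.222), while test.fakedomain.org, which has no matching Service, returns NXDOMAIN as expected.

To do some further testing, I fired up a VNC pod: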

$ cat vncPodDeployment.yaml

```yaml
#### POD config (run one time)
apiVersion: apps/v1
kind: Deployment
metadata:
  name: headless-vnc
  labels:
    application: headless-vnc
spec:
  # 1 Pod should exist at all times.
  replicas: 1
  # apps/v1 Deployments require a selector matching the template labels
  selector:
    matchLabels:
      application: headless-vnc
  template:
    metadata:
      labels:
        application: headless-vnc
    spec:
      terminationGracePeriodSeconds: 5
      containers:
        - name: headless-vnc
          image: consol/rocky-xfce-vnc
          imagePullPolicy: Always
          args:
            ### make normal UI startup to connect via: oc rsh <pod-name> bash
            #- '--tail-log'
          ### checks that vnc server is up and running
          livenessProbe:
            tcpSocket:
              port: 5901
            initialDelaySeconds: 1
            timeoutSeconds: 1
          ### checks if http-vnc connection is working
          readinessProbe:
            httpGet:
              path: /
              port: 6901
              scheme: HTTP
            initialDelaySeconds: 1
            timeoutSeconds: 1
---
apiVersion: v1
kind: Service
metadata:
  labels:
    application: headless-vnc
  name: headless-vnc
spec:
  externalName: headless-vnc
  ports:
    - name: http-port-tcp
      protocol: TCP
      port: 6901
      targetPort: 6901
      nodePort: 32001
    - name: vnc-port-tcp
      protocol: TCP
      port: 5901
      targetPort: 5901
      nodePort: 32002
  selector:
    application: headless-vnc
  type: NodePort
  # Use type LoadBalancer if needed
  # type: LoadBalancer
```

$ kubectl apply -f vncPodDeployment.yaml


deployment.apps/headless-vnc created

service/headless-vnc unchanged

Then I port-forwarded the VNC port to test it:

$ kubectl port-forward svc/headless-vnc 5901:5901


Forwarding from 127.0.0.1:5901 -> 5901

Forwarding from [::1]:5901 -> 5901

Handling connection for 5901

You can now connect a VNC client to localhost:5901 (password: vncpassword).

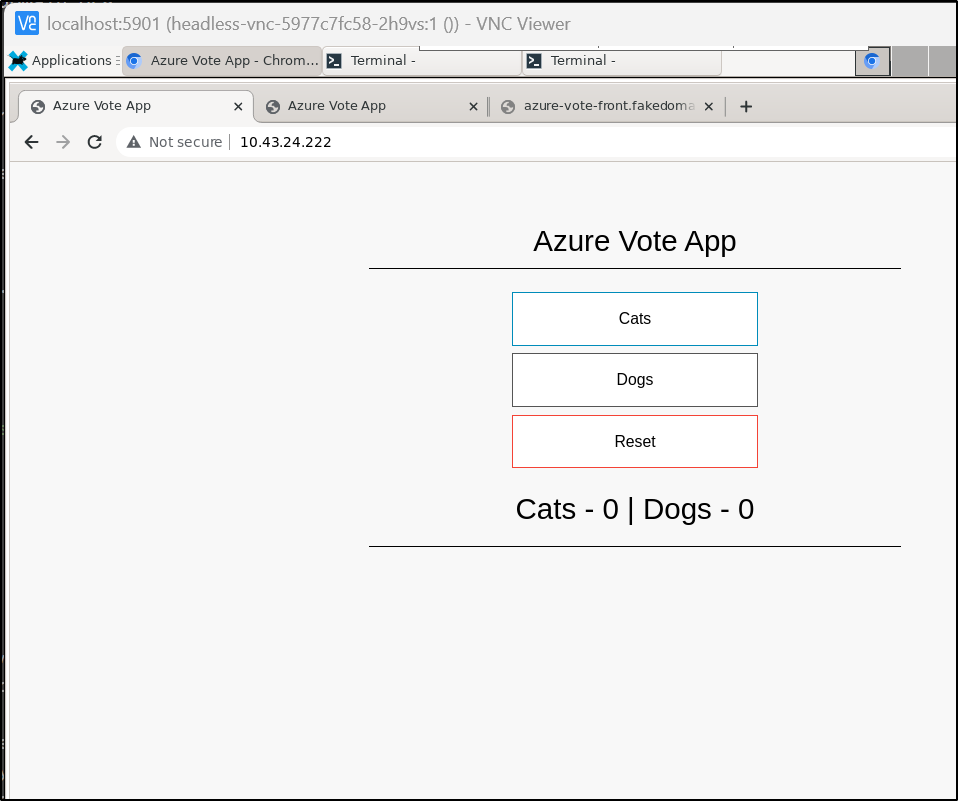

From within the VNC pod, I could reach Azure Vote Front by IP:

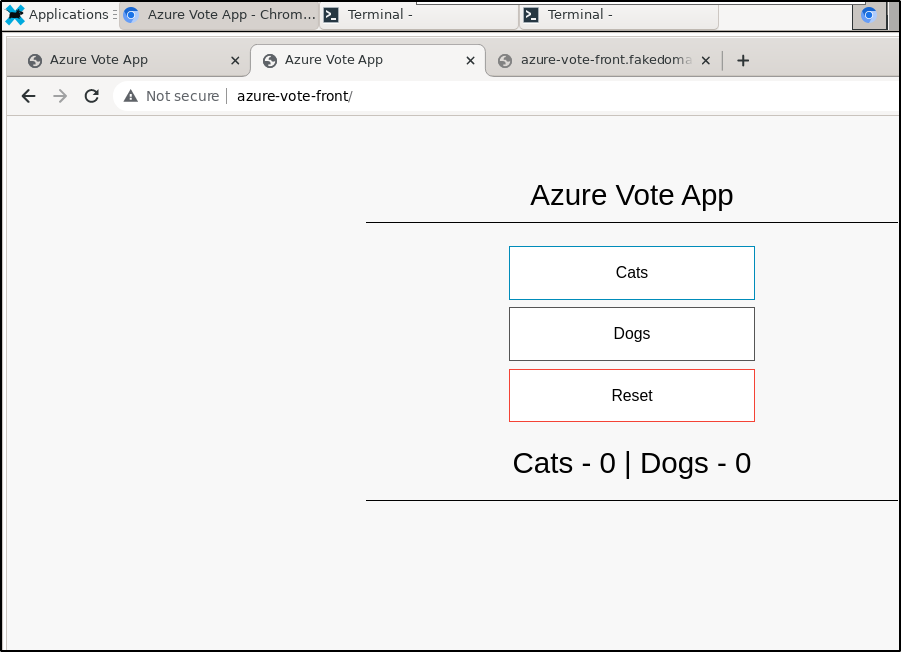

And by short name (via the pod’s resolv.conf search list, azure-vote-front expands to azure-vote-front.default.svc.cluster.local):

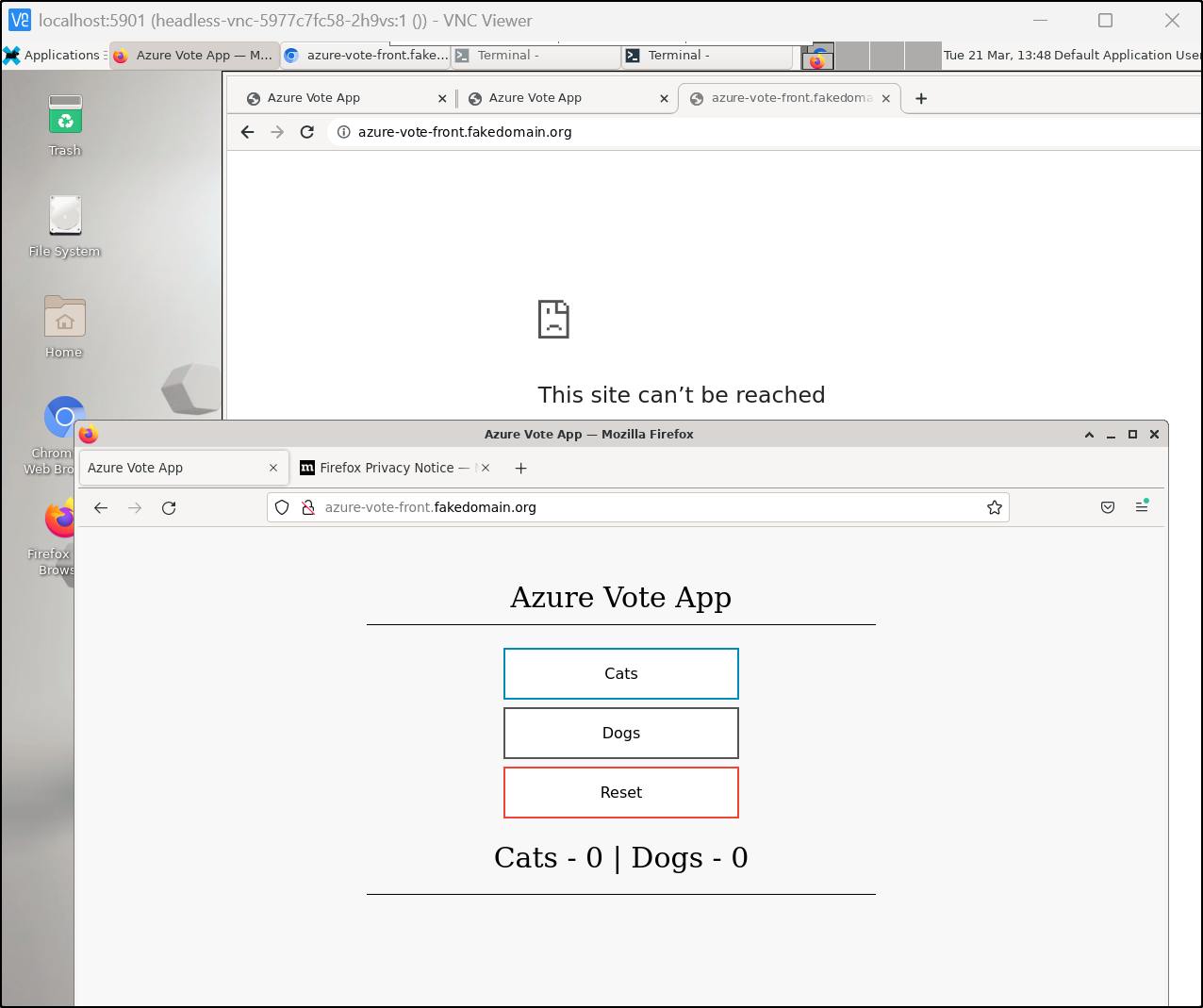

However, Chromium didn’t seem to like the remapped domain. Firefox loaded it fine, which suggests a browser-side issue rather than a DNS one.
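For reference, the short-name lookup relies on the pod’s resolv.conf search list. Below is a rough sketch of the order in which a glibc-style resolver tries candidate names, assuming the usual pod settings (search default.svc.cluster.local svc.cluster.local cluster.local, options ndots:5). Check /etc/resolv.conf in your own pod, since the node can contribute extra search domains.

```shell
# Sketch of glibc-style search-list expansion. The search domains and
# ndots value are assumed typical pod defaults, not read from a real pod.
SEARCH="default.svc.cluster.local svc.cluster.local cluster.local"
NDOTS=5

candidates() {
  name=$1
  case "$name" in
    *.) echo "$name"; return ;;   # trailing dot: already absolute, no search
  esac
  stripped=$(printf '%s' "$name" | tr -d '.')
  dots=$(( ${#name} - ${#stripped} ))
  # Names with at least NDOTS dots are tried as-is first
  if [ "$dots" -ge "$NDOTS" ]; then echo "$name"; fi
  for domain in $SEARCH; do
    echo "$name.$domain"
  done
  # Otherwise the literal name is tried last
  if [ "$dots" -lt "$NDOTS" ]; then echo "$name"; fi
}

candidates azure-vote-front
```

For azure-vote-front it lists the three search expansions first and the bare name last. With ndots:5, a two-dot name such as azure-vote-front.fakedomain.org is likewise tried with each search suffix before being tried as-is, while a name with a trailing dot skips the search list entirely.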

Summary

What we have seen so far is that, with a little work, we can give services a fake local DNS name using the rewrite plugin. The documentation suggests we can also add a custom ConfigMap for CoreDNS, but in my testing, only updates to the main ConfigMap took effect.

The main use would be deployments that reference fixed API URLs, such as “database.servicealpha” or “redis.localservices”. Depending on the environment, these custom DNS names could resolve to the right endpoints. Services already cover much of this, resolving by default as $service.$namespace.svc.cluster.local. However, there are times when a piece of middleware or a database isn’t hosted in Kubernetes.
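Here is a minimal sketch of that idea for services outside Kubernetes. Given a hypothetical per-environment list of fixed names and real hostnames, it prints exact-match rewrite name lines in the same form as the NAS example, ready to paste into that environment’s Corefile. The function name, mapping and hostnames are all made up for illustration.

```shell
# Hypothetical helper: read "<fixed name> <real hostname>" pairs on stdin and
# print one CoreDNS "rewrite name" line per pair.
# Blank lines and lines starting with # are skipped.
render_rewrites() {
  while read -r from to; do
    case "$from" in
      ''|'#'*) continue ;;
    esac
    printf 'rewrite name %s %s\n' "$from" "$to"
  done
}

# Example mapping for a "qa" environment (made-up hostnames)
render_rewrites <<'EOF'
# fixed name          real host
database.servicealpha db01.qa.example.internal
redis.localservices   redis.qa.example.internal
EOF
```

Keeping one mapping file per environment means pods keep calling the same fixed names, and only the rendered Corefile lines differ between clusters.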