Published: Jan 19, 2023 by Isaac Johnson

Back in November, I wrote about Kasa Plugs and PyKasa. In that post I covered how to use Kasa Smart Plugs with lights as webhook triggered indicators. To make the updates, I forked the Python-Kasa repo to here.

The somewhat fun yet distracting side effect has been that blog readers, as well as bots, have routinely triggered the lights. At first that was rather fun, but over time it has become annoying. Since webhooks are, by their nature, public URLs, we need a way to secure them. A common method used by many microservices is to require a KEY or APIKEY with each request.

Today we’ll implement an APIKEY and test. Additionally, we’ll tackle creating a proper helm chart. We’ll create our Github workflow and then set it to upload to both HarborCR and the public Dockerhub. We’ll put a copy of the chart in our HarborCR Public Chart repo (and share the URL). Lastly, we’ll verify everything works by testing both this GH Workflow and others.

Updating my fork

Plenty of updates have landed on master in Python-Kasa. I’ll pull those in to keep the fork up to date.

builder@DESKTOP-QADGF36:~/Workspaces/ijohnson-python-kasa$ git pull https://github.com/python-kasa/python-kasa.git master

remote: Enumerating objects: 55, done.

remote: Counting objects: 100% (55/55), done.

remote: Compressing objects: 100% (26/26), done.

remote: Total 55 (delta 37), reused 37 (delta 28), pack-reused 0

Unpacking objects: 100% (55/55), 38.29 KiB | 956.00 KiB/s, done.

From https://github.com/python-kasa/python-kasa

* branch master -> FETCH_HEAD

Merge made by the 'recursive' strategy.

.github/FUNDING.yml | 1 +

.pre-commit-config.yaml | 14 ++++++------

docs/source/smartbulb.rst | 7 ++++++

kasa/__init__.py | 4 +++-

kasa/cli.py | 47 +++++++++++++++++++++++++++++++++++++++-

kasa/discover.py | 2 +-

kasa/smartbulb.py | 95 ++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++-------------

kasa/smartdevice.py | 6 ++++--

kasa/smartdimmer.py | 10 +++++----

kasa/smartstrip.py | 10 ++++++---

kasa/tests/conftest.py | 4 ----

kasa/tests/test_bulb.py | 1 -

kasa/tests/test_cli.py | 2 +-

kasa/tests/test_dimmer.py | 2 +-

kasa/tests/test_discovery.py | 2 +-

kasa/tests/test_emeter.py | 2 +-

kasa/tests/test_lightstrip.py | 2 +-

kasa/tests/test_plug.py | 2 +-

kasa/tests/test_protocol.py | 1 -

kasa/tests/test_smartdevice.py | 2 +-

kasa/tests/test_strip.py | 2 +-

poetry.lock | 84 ++++++++++++++++++++++++++++++++++++++---------------------------------

pyproject.toml | 1 +

23 files changed, 216 insertions(+), 87 deletions(-)

create mode 100644 .github/FUNDING.yml

In that last post, I glossed over the Dockerfile build.

The Dockerfile looked as such (Note: I’ve updated the FROM to 3.8.15 from 3.8)

    FROM python:3.8.15
    #FROM python:3.9
    #FROM python:slim

    # Keeps Python from generating .pyc files in the container
    ENV PYTHONDONTWRITEBYTECODE 1

    # Turns off buffering for easier container logging
    ENV PYTHONUNBUFFERED 1

    # Install and setup poetry
    RUN pip install -U pip \
        && apt-get update \
        && apt install -y curl netcat \
        && curl -sSL https://install.python-poetry.org | python3 -

    ENV PATH="${PATH}:/root/.local/bin"

    WORKDIR /usr/src/app
    COPY . .
    RUN poetry config virtualenvs.create false \
        && poetry install --no-interaction --no-ansi

    RUN pip install Flask
    RUN pip install pipenv

    # Adding new REST App
    WORKDIR /usr/src/restapp
    COPY ./cashman-flask-project/some.sh ./cashman-flask-project/swap.sh ./cashman-flask-project/Pipfile ./cashman-flask-project/Pipfile.lock ./cashman-flask-project/bootstrap.sh ./
    COPY ./cashman-flask-project/restapi ./restapi

    # Install API dependencies
    RUN pip install pipenv --upgrade
    RUN pipenv lock
    RUN pipenv install --system --deploy

    # Start app
    EXPOSE 5000
    ENTRYPOINT ["/usr/src/restapp/bootstrap.sh"]
    #harbor.freshbrewed.science/freshbrewedprivate/kasarest:1.1.1
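The commented last line of the Dockerfile is not decoration: the Github workflow later in this post reads it with `tail -n1` and two `sed` expressions to derive the image name and the Harbor registry path. As a rough Python sketch of the same parsing (the function name `parse_image_comment` is made up for illustration; the workflow itself uses sed):

```python
import re

def parse_image_comment(last_line):
    """Split a '#registry/project/name:tag' comment into (registry_root, image_name)."""
    # Same idea as sed 's/^.*\/\(.*\):.*/\1/g': text between the last '/' and the last ':'
    image_name = re.sub(r'^.*/(.*):.*', r'\1', last_line)
    # Same idea as sed 's/^#\(.*\)\/.*/\1/g': everything after '#' up to the last '/'
    registry_root = re.sub(r'^#(.*)/.*', r'\1', last_line)
    return registry_root, image_name

line = "#harbor.freshbrewed.science/freshbrewedprivate/kasarest:1.1.1"
root, name = parse_image_comment(line)
print(root)
print(name)
```

Keeping the registry path in the Dockerfile means the workflow needs no extra configuration, at the cost of depending on that comment staying on the very last line.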

Local Dockerbuild

Before I change anything, I’ll build this Dockerfile and push the image to Dockerhub and Harbor. In the last post I neglected to push to Dockerhub (which would have been helpful for others).

builder@DESKTOP-QADGF36:~/Workspaces/ijohnson-python-kasa$ docker build -t kasarest:1.1.1 .

[+] Building 69.8s (19/19) FINISHED

=> [internal] load build definition from Dockerfile 0.0s

=> => transferring dockerfile: 1.16kB 0.0s

=> [internal] load .dockerignore 0.0s

=> => transferring context: 34B 0.0s

=> [internal] load metadata for docker.io/library/python:3.8.15 1.9s

=> [auth] library/python:pull token for registry-1.docker.io 0.0s

=> [ 1/13] FROM docker.io/library/python:3.8.15@sha256:7c5b3ec91b7e4131dead176d824ddf317e6c19fe1ae38c54ec0ae60 11.3s

=> => resolve docker.io/library/python:3.8.15@sha256:7c5b3ec91b7e4131dead176d824ddf317e6c19fe1ae38c54ec0ae60389 0.0s

=> => sha256:5c8cfbf51e6e6869f1af2a1e7067b07fd6733036a333c9d29f743b0285e26037 5.16MB / 5.16MB 2.1s

=> => sha256:7c5b3ec91b7e4131dead176d824ddf317e6c19fe1ae38c54ec0ae6038908dd18 1.86kB / 1.86kB 0.0s

=> => sha256:83614f12599b7666292ef828a916d5b9140dc465f4696163022f1cb269f23621 2.22kB / 2.22kB 0.0s

=> => sha256:aa3a609d15798d35c1484072876b7d22a316e98de6a097de33b9dade6a689cd1 10.88MB / 10.88MB 2.5s

=> => sha256:b2477bd071d65d3287eef72698cde4c9025a1817464c5acbaab24fe1d2396330 8.56kB / 8.56kB 0.0s

=> => sha256:f2f58072e9ed1aa1b0143341c5ee83815c00ce47548309fa240155067ab0e698 55.04MB / 55.04MB 1.4s

=> => extracting sha256:f2f58072e9ed1aa1b0143341c5ee83815c00ce47548309fa240155067ab0e698 1.3s

=> => sha256:094e7d9bb04ebf214ea8dc5a488995449684104ae8ad9603bf061cac0d18eb54 54.59MB / 54.59MB 2.4s

=> => sha256:2cbfd734f3824a4390fe4be45f6a11a5543bca1023e4814d72460eaebc2eef89 196.87MB / 196.87MB 5.8s

=> => sha256:22ef46bb37aa091ef97cf92cfac74f1a8d6a244ad3b3d8e30130fb8fef232a30 17.40MB / 17.40MB 6.1s

=> => sha256:aa86ac293d0fa66515f0a670445969ba98dd8d6a114a7f6aea934aaad44084d0 6.29MB / 6.29MB 3.3s

=> => extracting sha256:5c8cfbf51e6e6869f1af2a1e7067b07fd6733036a333c9d29f743b0285e26037 0.2s

=> => extracting sha256:aa3a609d15798d35c1484072876b7d22a316e98de6a097de33b9dade6a689cd1 0.2s

=> => sha256:fe904d2505cb8340337a52df05a7d5e0e0b8eea93d7b05c10f46fc4cb96dfedf 233B / 233B 3.8s

=> => extracting sha256:094e7d9bb04ebf214ea8dc5a488995449684104ae8ad9603bf061cac0d18eb54 1.5s

=> => sha256:a7f0bae21ebb5323b1761346a49e4e8e14381edbd29ea240d48f686cbdd4045d 2.89MB / 2.89MB 4.3s

=> => extracting sha256:2cbfd734f3824a4390fe4be45f6a11a5543bca1023e4814d72460eaebc2eef89 4.0s

=> => extracting sha256:aa86ac293d0fa66515f0a670445969ba98dd8d6a114a7f6aea934aaad44084d0 0.2s

=> => extracting sha256:22ef46bb37aa091ef97cf92cfac74f1a8d6a244ad3b3d8e30130fb8fef232a30 0.5s

=> => extracting sha256:fe904d2505cb8340337a52df05a7d5e0e0b8eea93d7b05c10f46fc4cb96dfedf 0.0s

=> => extracting sha256:a7f0bae21ebb5323b1761346a49e4e8e14381edbd29ea240d48f686cbdd4045d 0.2s

=> [internal] load build context 0.0s

=> => transferring context: 9.02kB 0.0s

=> [ 2/13] RUN pip install -U pip && apt-get update && apt install -y curl netcat && curl -sSL htt 25.3s

=> [ 3/13] WORKDIR /usr/src/app 0.0s

=> [ 4/13] COPY . . 0.0s

=> [ 5/13] RUN poetry config virtualenvs.create false && poetry install --no-interaction --no-ansi 15.0s

=> [ 6/13] RUN pip install Flask 2.5s

=> [ 7/13] RUN pip install pipenv 3.9s

=> [ 8/13] WORKDIR /usr/src/restapp 0.0s

=> [ 9/13] COPY ./cashman-flask-project/some.sh ./cashman-flask-project/swap.sh ./cashman-flask-project/Pipfile 0.0s

=> [10/13] COPY ./cashman-flask-project/restapi ./restapi 0.0s

=> [11/13] RUN pip install pipenv --upgrade 1.5s

=> [12/13] RUN pipenv lock 4.7s

=> [13/13] RUN pipenv install --system --deploy 2.1s

=> exporting to image 0.9s

=> => exporting layers 0.9s

=> => writing image sha256:2b132d635d7c6c86dbbdb7b3f4af82c3a8cb334ef7b4ad408980a44de184f72b 0.0s

=> => naming to docker.io/library/kasarest:1.1.1 0.0s

Now I’ll tag and push to Harbor

$ docker tag kasarest:1.1.1 harbor.freshbrewed.science/freshbrewedprivate/kasarest:1.1.1

$ docker push harbor.freshbrewed.science/freshbrewedprivate/kasarest:1.1.1

The push refers to repository [harbor.freshbrewed.science/freshbrewedprivate/kasarest]

2b15466fba1b: Pushed

3b53e8dcf622: Pushed

d43b23c7131c: Pushed

1a4317c22999: Pushed

9746097ca417: Pushed

97f9013c1d29: Pushed

77a0e6e0f356: Pushed

6e17c2aea757: Pushed

71084fd7aea2: Pushing [==================================================>] 126.3MB

71084fd7aea2: Pushed

4f98a41a322e: Pushed

bae1758cc222: Pushing [==================================================>] 132.1MB

bae1758cc222: Pushed

d3c4d115aaf3: Pushed

7607c97b6c3f: Pushed

1cad4dc57058: Pushed

4ff8844d474a: Pushing [==================================================>] 538.3MB

b77487480ddb: Pushing [==================================================>] 156.5MB

b77487480ddb: Pushed

4ff8844d474a: Pushed

870a241bfebd: Pushing [==================================================>] 129.3MB

870a241bfebd: Pushed

1.1.1: digest: sha256:0281f244ac24f1fdce614d9fb47f646f9b46eb77964c0475c1a6ab8acbef2274 size: 4734

And to Dockerhub

builder@DESKTOP-QADGF36:~/Workspaces/ijohnson-python-kasa$ docker push idjohnson/kasarest:1.1.1

The push refers to repository [docker.io/idjohnson/kasarest]

2b15466fba1b: Pushed

3b53e8dcf622: Pushed

d43b23c7131c: Pushed

1a4317c22999: Pushed

9746097ca417: Pushed

97f9013c1d29: Pushed

77a0e6e0f356: Pushed

6e17c2aea757: Pushed

71084fd7aea2: Pushed

a70cee43520e: Pushed

4f98a41a322e: Pushed

bae1758cc222: Pushed

938e6c7fe440: Mounted from library/python

d3c4d115aaf3: Mounted from library/python

7607c97b6c3f: Mounted from library/python

1cad4dc57058: Mounted from library/python

4ff8844d474a: Mounted from library/python

b77487480ddb: Mounted from library/python

cd247c0fb37b: Mounted from library/python

cfdd5c3bd77e: Mounted from library/python

870a241bfebd: Mounted from library/python

1.1.1: digest: sha256:0281f244ac24f1fdce614d9fb47f646f9b46eb77964c0475c1a6ab8acbef2274 size: 4734

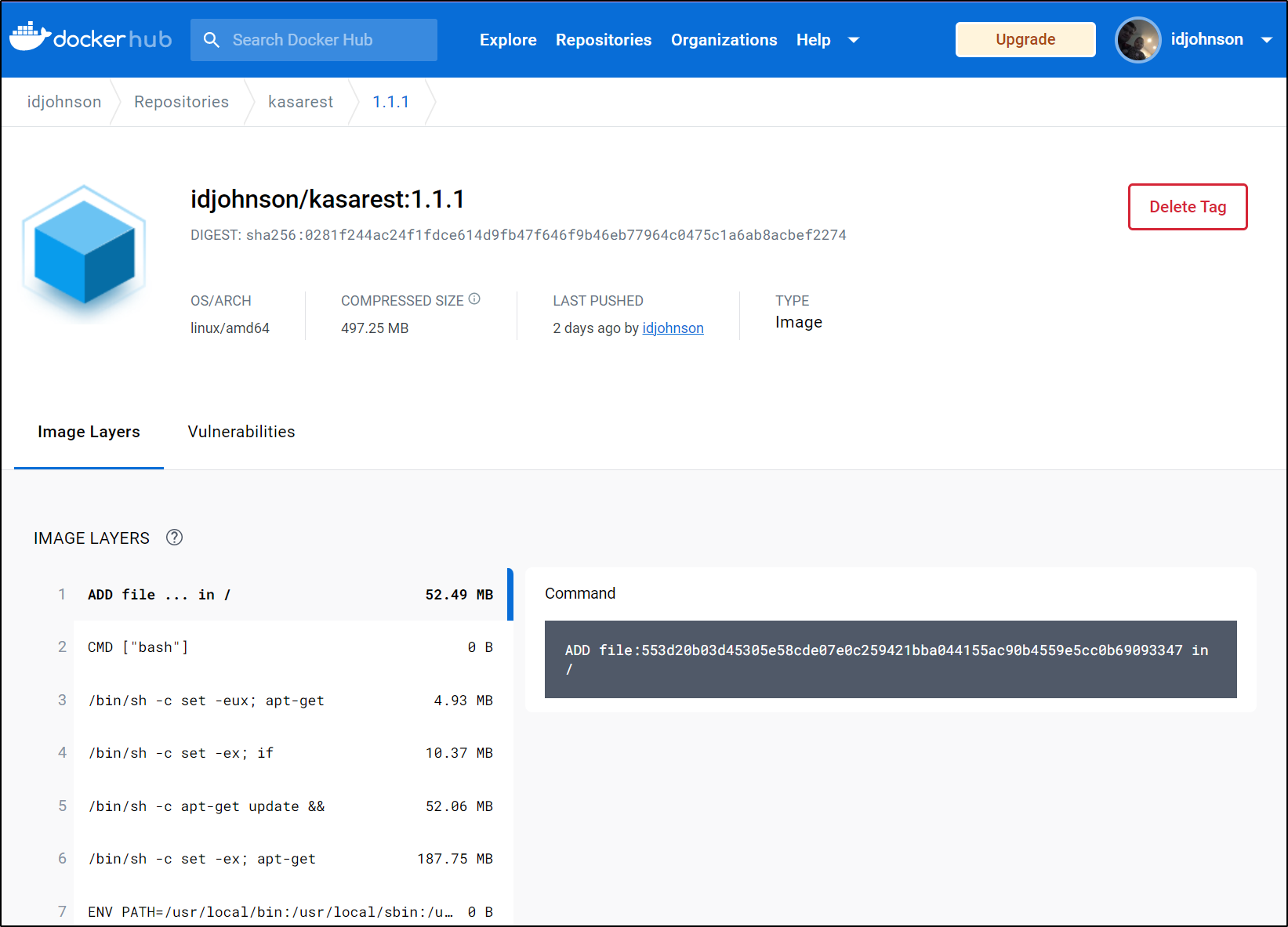

We can now see it in Dockerhub

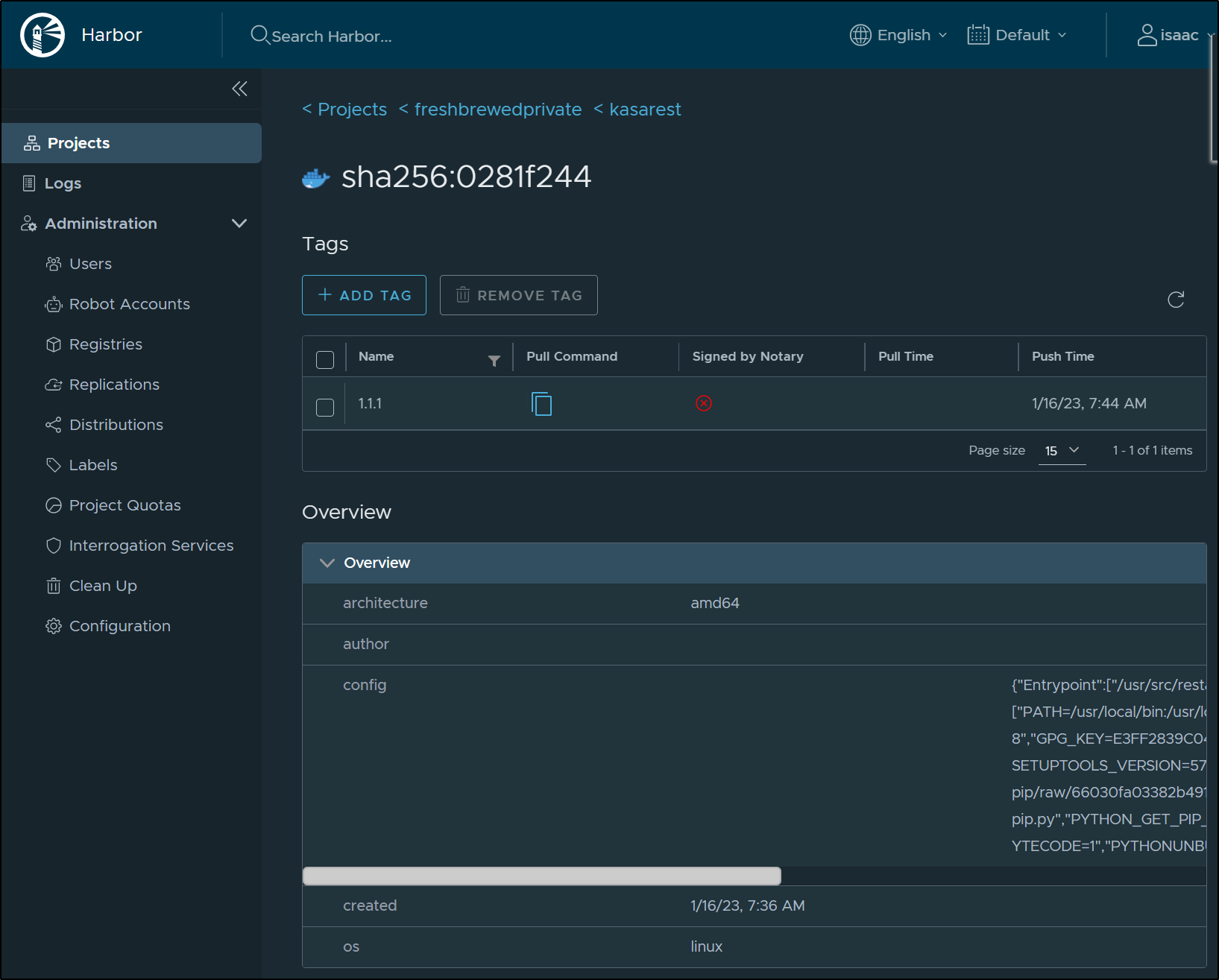

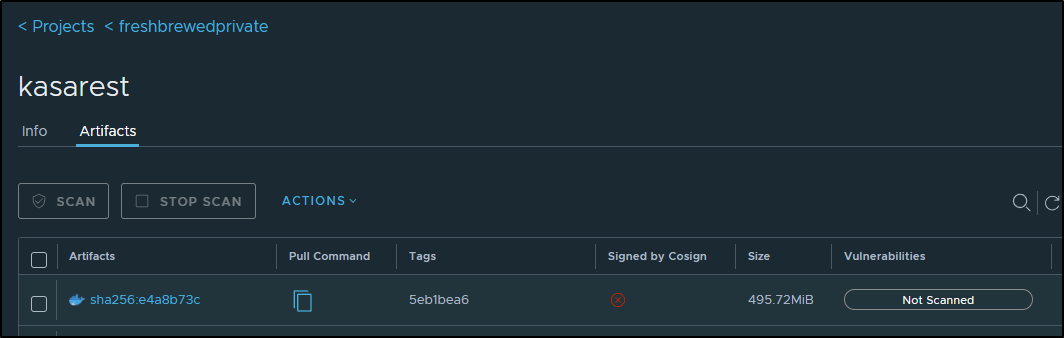

as well as in the private Harbor project

Helm Chart

We now have a properly published container. Let’s stop using bland YAML to deploy and instead set up a proper Helm chart.

Create Helm Chart

$ helm create pykasa

Creating pykasa

I’ve updated the Chart a bit, mostly around restartPolicy and ports.

Let’s give it a dry run to see if it is generating right:

builder@DESKTOP-QADGF36:~/Workspaces/ijohnson-python-kasa/pykasa$ helm install --dry-run --debug --generate-name .

install.go:173: [debug] Original chart version: ""

install.go:190: [debug] CHART PATH: /home/builder/Workspaces/ijohnson-python-kasa/pykasa

NAME: chart-1673878451

LAST DEPLOYED: Mon Jan 16 08:14:11 2023

NAMESPACE: default

STATUS: pending-install

REVISION: 1

USER-SUPPLIED VALUES:

{}

COMPUTED VALUES:

affinity: {}

autoscaling:

enabled: false

maxReplicas: 100

minReplicas: 1

targetCPUUtilizationPercentage: 80

fullnameOverride: ""

image:

pullPolicy: IfNotPresent

repository: harbor.freshbrewed.science/freshbrewedprivate/kasarest

tag: 1.1.0

imagePullSecrets:

- name: myharborreg

ingress:

annotations:

cert-manager.io/cluster-issuer: letsencrypt-prod

kubernetes.io/ingress.class: nginx

kubernetes.io/tls-acme: "true"

className: ""

enabled: true

hosts:

- host: kasarest.freshbrewed.science

paths:

- path: /

pathType: ImplementationSpecific

tls:

- hosts:

- kasarest.freshbrewed.science

secretName: kasarest-tls

nameOverride: ""

nodeSelector: {}

podAnnotations: {}

podSecurityContext: {}

replicaCount: 1

resources: {}

restartPolicy: Always

securityContext: {}

service:

port: 5000

type: ClusterIP

serviceAccount:

annotations: {}

create: true

name: ""

tolerations: []

HOOKS:

---

# Source: pykasa/templates/tests/test-connection.yaml

apiVersion: v1

kind: Pod

metadata:

name: "chart-1673878451-pykasa-test-connection"

labels:

helm.sh/chart: pykasa-0.1.0

app.kubernetes.io/name: pykasa

app.kubernetes.io/instance: chart-1673878451

app.kubernetes.io/version: "1.16.0"

app.kubernetes.io/managed-by: Helm

annotations:

"helm.sh/hook": test

spec:

containers:

- name: wget

image: busybox

command: ['wget']

args: ['chart-1673878451-pykasa:5000']

restartPolicy: Never

MANIFEST:

---

# Source: pykasa/templates/serviceaccount.yaml

apiVersion: v1

kind: ServiceAccount

metadata:

name: chart-1673878451-pykasa

labels:

helm.sh/chart: pykasa-0.1.0

app.kubernetes.io/name: pykasa

app.kubernetes.io/instance: chart-1673878451

app.kubernetes.io/version: "1.16.0"

app.kubernetes.io/managed-by: Helm

---

# Source: pykasa/templates/service.yaml

apiVersion: v1

kind: Service

metadata:

name: chart-1673878451-pykasa

labels:

helm.sh/chart: pykasa-0.1.0

app.kubernetes.io/name: pykasa

app.kubernetes.io/instance: chart-1673878451

app.kubernetes.io/version: "1.16.0"

app.kubernetes.io/managed-by: Helm

spec:

type: ClusterIP

ports:

- port: 5000

targetPort: 5000

protocol: TCP

name: http

selector:

app.kubernetes.io/name: pykasa

app.kubernetes.io/instance: chart-1673878451

---

# Source: pykasa/templates/deployment.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

name: chart-1673878451-pykasa

labels:

helm.sh/chart: pykasa-0.1.0

app.kubernetes.io/name: pykasa

app.kubernetes.io/instance: chart-1673878451

app.kubernetes.io/version: "1.16.0"

app.kubernetes.io/managed-by: Helm

spec:

replicas: 1

selector:

matchLabels:

app.kubernetes.io/name: pykasa

app.kubernetes.io/instance: chart-1673878451

template:

metadata:

labels:

app.kubernetes.io/name: pykasa

app.kubernetes.io/instance: chart-1673878451

spec:

imagePullSecrets:

- name: myharborreg

serviceAccountName: chart-1673878451-pykasa

securityContext:

{}

containers:

- name: pykasa

securityContext:

{}

image: "harbor.freshbrewed.science/freshbrewedprivate/kasarest:1.1.0"

imagePullPolicy: IfNotPresent

ports:

- name: http

containerPort: 5000

protocol: TCP

livenessProbe:

httpGet:

path: /

port: http

readinessProbe:

httpGet:

path: /

port: http

env:

- name: PORT

value: "5000"

resources:

{}

restartPolicy: "Always"

---

# Source: pykasa/templates/ingress.yaml

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

name: chart-1673878451-pykasa

labels:

helm.sh/chart: pykasa-0.1.0

app.kubernetes.io/name: pykasa

app.kubernetes.io/instance: chart-1673878451

app.kubernetes.io/version: "1.16.0"

app.kubernetes.io/managed-by: Helm

annotations:

cert-manager.io/cluster-issuer: letsencrypt-prod

kubernetes.io/ingress.class: nginx

kubernetes.io/tls-acme: "true"

spec:

tls:

- hosts:

- "kasarest.freshbrewed.science"

secretName: kasarest-tls

rules:

- host: "kasarest.freshbrewed.science"

http:

paths:

- path: /

pathType: ImplementationSpecific

backend:

service:

name: chart-1673878451-pykasa

port:

number: 5000

NOTES:

1. Get the application URL by running these commands:

https://kasarest.freshbrewed.science/

This looks good. Logically, it is the same as our last yaml

---

apiVersion: apps/v1

kind: Deployment

metadata:

labels:

app: kasarest

app.kubernetes.io/name: kasarest

name: kasarest-deployment

spec:

replicas: 1

selector:

matchLabels:

app: kasarest

strategy:

rollingUpdate:

maxSurge: 25%

maxUnavailable: 25%

type: RollingUpdate

template:

metadata:

labels:

app: kasarest

app.kubernetes.io/name: kasarest

spec:

containers:

- env:

- name: PORT

value: "5000"

image: harbor.freshbrewed.science/freshbrewedprivate/kasarest:1.1.0

name: kasarest

ports:

- containerPort: 5000

protocol: TCP

imagePullSecrets:

- name: myharborreg

restartPolicy: Always

---

apiVersion: v1

kind: Service

metadata:

labels:

app.kubernetes.io/name: kasarest

name: kasarest

spec:

internalTrafficPolicy: Cluster

ports:

- name: http

port: 5000

protocol: TCP

targetPort: 5000

selector:

app.kubernetes.io/name: kasarest

sessionAffinity: None

type: ClusterIP

---

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

annotations:

cert-manager.io/cluster-issuer: letsencrypt-prod

kubernetes.io/ingress.class: nginx

kubernetes.io/tls-acme: "true"

name: kasarest

spec:

rules:

- host: kasarest.freshbrewed.science

http:

paths:

- backend:

service:

name: kasarest

port:

number: 5000

path: /

pathType: ImplementationSpecific

tls:

- hosts:

- kasarest.freshbrewed.science

secretName: kasarest-tls

---

Just for safety, I’ll back up my existing resources, then remove the Deployment, Service and Ingress

$ kubectl get deployment kasarest-deployment -o yaml > bkup-deployment.yaml

$ kubectl get service kasarest -o yaml > bkup-service.yaml

$ kubectl get ingress kasarest -o yaml > bkup-ingress.yaml

$ kubectl delete -f ./kasadep.yaml

deployment.apps "kasarest-deployment" deleted

service "kasarest" deleted

ingress.networking.k8s.io "kasarest" deleted

$ kubectl get pods | grep kasa

kasarest-deployment-85d4cfbc94-ltcg8 1/1 Terminating 0 76d

Now I’ll install with Helm

builder@DESKTOP-QADGF36:~/Workspaces/ijohnson-python-kasa/pykasa$ helm install mykasarelease .

NAME: mykasarelease

LAST DEPLOYED: Mon Jan 16 08:24:30 2023

NAMESPACE: default

STATUS: deployed

REVISION: 1

NOTES:

1. Get the application URL by running these commands:

https://kasarest.freshbrewed.science/

I’ll then show it’s running

builder@DESKTOP-QADGF36:~/Workspaces/ijohnson-python-kasa/pykasa$ kubectl get pods | grep kasa

mykasarelease-pykasa-565d98c9f5-4vjgz 1/1 Running 0 75s

builder@DESKTOP-QADGF36:~/Workspaces/ijohnson-python-kasa/pykasa$ kubectl get svc | grep kasa

mykasarelease-pykasa ClusterIP 10.43.166.131 <none> 5000/TCP 81s

builder@DESKTOP-QADGF36:~/Workspaces/ijohnson-python-kasa/pykasa$ kubectl get ingress | grep kasa

mykasarelease-pykasa <none> kasarest.freshbrewed.science 192.168.1.214,192.168.1.38,192.168.1.57,192.168.1.77 80, 443 87s

And I can see it is working (with the last release)

I’ll then try updating the image to the tag we just created

builder@DESKTOP-QADGF36:~/Workspaces/ijohnson-python-kasa/pykasa$ helm upgrade mykasarelease --set image.tag=1.1.1 .

Release "mykasarelease" has been upgraded. Happy Helming!

NAME: mykasarelease

LAST DEPLOYED: Mon Jan 16 08:28:21 2023

NAMESPACE: default

STATUS: deployed

REVISION: 2

NOTES:

1. Get the application URL by running these commands:

https://kasarest.freshbrewed.science/

And I can see the pods cycle

$ kubectl get pods | grep kasa

mykasarelease-pykasa-565d98c9f5-4vjgz 1/1 Running 0 5m2s

mykasarelease-pykasa-59488b4f8-tbsk4 0/1 ContainerCreating 0 71s

$ kubectl get pods | grep kasa

mykasarelease-pykasa-59488b4f8-tbsk4 1/1 Running 0 2m44s

mykasarelease-pykasa-565d98c9f5-4vjgz 1/1 Terminating 0 6m35s

$ kubectl get pods | grep kasa

mykasarelease-pykasa-59488b4f8-tbsk4 1/1 Running 0 4m15s

And I can see it is working

Updating to use CM

First, I want index.py to show that it can display both a CM or Secret controlled value and one passed in via GET or POST.

    import subprocess
    import os
    from subprocess import Popen, PIPE
    from subprocess import check_output
    from flask import Flask, request, jsonify

    app = Flask(__name__)

    @app.route("/")
    def hello_world():
        return "use /on /off and /swap with ?devip=IP&apikey=APIKEY!"

    @app.route("/on", methods=["GET","POST"])
    def turn_on():
        deploykey = os.getenv('API_KEY')
        if request.method == 'GET':
            devip = request.args.get('devip','192.168.1.245')
            typen = request.args.get('type','plug')
            apikey = request.args.get('apikey','apikey')
            stdout = check_output(['./some.sh',typen,devip,'on']).decode('utf-8')
            return '''<h1>Turning On {}: {} -- {}. {}</h1>'''.format(devip, deploykey, apikey, stdout)
        if request.method == 'POST':
            devip = request.form.get('devip')
            typen = request.form.get('type','plug')
            apikey = request.form.get('apikey','apikey')
            stdout = check_output(['./some.sh',typen,devip,'on']).decode('utf-8')
            return '''<h1>Turning On {}: {} -- {}. {}</h1>'''.format(devip, deploykey, apikey, stdout)

    @app.route("/off", methods=["GET","POST"])
    def turn_off():
        deploykey = os.getenv('API_KEY')
        if request.method == 'GET':
            devip = request.args.get('devip','192.168.1.245')
            typen = request.args.get('type','plug')
            apikey = request.args.get('apikey','apikey')
            stdout = check_output(['./some.sh',typen,devip,'off']).decode('utf-8')
            return '''<h1>Turning Off {}: {} -- {}. {}</h1>'''.format(devip, deploykey, apikey, stdout)
        if request.method == 'POST':
            devip = request.form.get('devip')
            typen = request.form.get('type','plug')
            apikey = request.form.get('apikey','apikey')
            stdout = check_output(['./some.sh',typen,devip,'off']).decode('utf-8')
            return '''<h1>Turning Off {}: {} -- {}. {}</h1>'''.format(devip, deploykey, apikey, stdout)

    @app.route("/swap", methods=["GET","POST"])
    def turn_swap():
        deploykey = os.getenv('API_KEY')
        if request.method == 'GET':
            devip = request.args.get('devip','192.168.1.245')
            typen = request.args.get('type','plug')
            apikey = request.args.get('apikey','apikey')
            #return '''<h1>Swapping On/Off: {}</h1>'''.format(devip)
            stdout = check_output(['./swap.sh',typen,devip]).decode('utf-8')
            return stdout
        if request.method == 'POST':
            devip = request.form.get('devip','192.168.1.245')
            typen = request.form.get('type','plug')
            apikey = request.form.get('apikey','apikey')
            stdout = check_output(['./swap.sh',typen,devip]).decode('utf-8')
            return stdout

    @app.route("/testshell1")
    def testshell():
        session = Popen(['./some.sh'], stdout=PIPE, stderr=PIPE)
        stdout, stderr = session.communicate()
        if stderr:
            raise Exception("Error "+str(stderr))
        return stdout.decode('utf-8')

    @app.route("/testshell2")
    def testshell2():
        stdout = check_output(['./some.sh','plug','192.168.1.245','on']).decode('utf-8')
        return stdout

    @app.route("/testshell3")
    def testshell3():
        stdout = check_output(['/usr/local/bin/kasa','--host','192.168.1.245','off']).decode('utf-8')
        return stdout

    @app.route("/testshell4")
    def testshell4():
        stdout = check_output(['/usr/local/bin/kasa','--host','192.168.1.245','on']).decode('utf-8')
        return stdout

    @app.route('/health')
    def health():
        # Flask rejects a view that returns None, so send back a small body
        return "OK"

What has been added above is the “apikey” and “deploykey” variables.

I’ll add a variable to the values

apikey: 55555

For now, I’ll add the env var via the Deployment

    env:
      - name: PORT
        value: "{{ .Values.service.port }}"
      - name: API_KEY
        value: "{{ .Values.apikey }}"
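Inside the container, the value set in the chart arrives as a plain environment variable, which the Flask app reads with `os.getenv('API_KEY')`. Here is a small sketch of how that lookup could fail loudly when the variable is missing, rather than silently comparing against `None`. The helper name `load_api_key` and the error message are assumptions, not part of the repo.

```python
import os

def load_api_key(environ=os.environ):
    """Return the deployed API key, or raise if the chart did not set one."""
    key = environ.get("API_KEY", "")
    if not key:
        raise RuntimeError("API_KEY is not set; refusing to start without a key")
    return key

# Simulate what the Deployment's env block would provide
print(load_api_key({"API_KEY": "55555"}))
```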

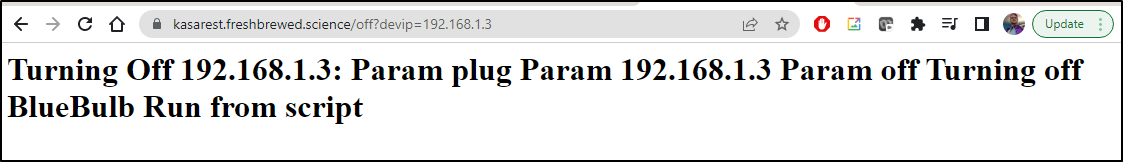

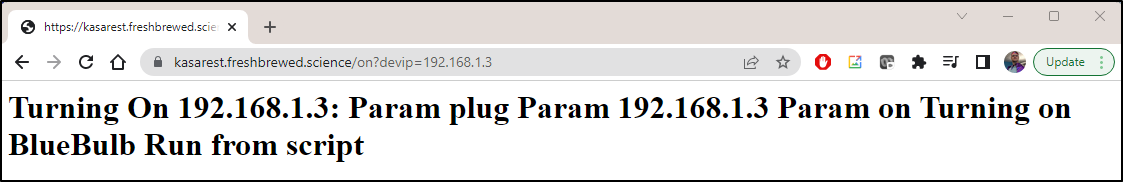

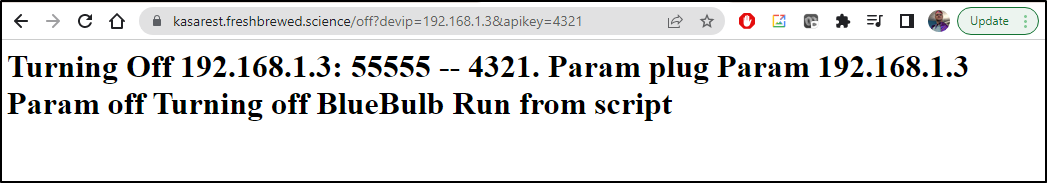

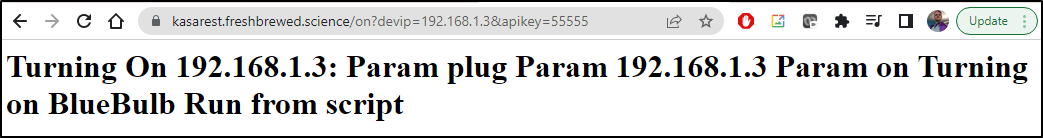

We can see it in action:

Adding an API Key Check

Now that we know we can compare a mutable variable (apikey via helm) with that passed in by the caller, we can add a check on it.

    import subprocess
    import os
    from subprocess import Popen, PIPE
    from subprocess import check_output
    from flask import Flask, request, jsonify

    app = Flask(__name__)

    @app.route("/")
    def hello_world():
        return "use /on /off and /swap with ?devip=IP&apikey=APIKEY!"

    @app.route("/on", methods=["GET","POST"])
    def turn_on():
        deploykey = os.getenv('API_KEY')
        if request.method == 'GET':
            devip = request.args.get('devip','192.168.1.245')
            typen = request.args.get('type','plug')
            apikey = request.args.get('apikey','apikey')
            stdout = check_output(['./some.sh',typen,devip,'on']).decode('utf-8')
            if apikey == deploykey:
                return '''<h1>Turning On {}: {} -=- {}. {}</h1>'''.format(devip, deploykey, apikey, stdout)
            else:
                return '''<h1>Turning On {}: {} -NE- {}. {}</h1>'''.format(devip, deploykey, apikey, stdout)
        if request.method == 'POST':
            devip = request.form.get('devip')
            typen = request.form.get('type','plug')
            apikey = request.form.get('apikey','apikey')
            stdout = check_output(['./some.sh',typen,devip,'on']).decode('utf-8')
            if apikey == deploykey:
                return '''<h1>Turning On {}: {} -=- {}. {}</h1>'''.format(devip, deploykey, apikey, stdout)
            else:
                return '''<h1>Turning On {}: {} -NE- {}. {}</h1>'''.format(devip, deploykey, apikey, stdout)

    @app.route("/off", methods=["GET","POST"])
    def turn_off():
        deploykey = os.getenv('API_KEY')
        if request.method == 'GET':
            devip = request.args.get('devip','192.168.1.245')
            typen = request.args.get('type','plug')
            apikey = request.args.get('apikey','apikey')
            stdout = check_output(['./some.sh',typen,devip,'off']).decode('utf-8')
            if apikey == deploykey:
                return '''<h1>Turning Off {}: {} -=- {}. {}</h1>'''.format(devip, deploykey, apikey, stdout)
            else:
                return '''<h1>Turning Off {}: {} -NE- {}. {}</h1>'''.format(devip, deploykey, apikey, stdout)
        if request.method == 'POST':
            devip = request.form.get('devip')
            typen = request.form.get('type','plug')
            apikey = request.form.get('apikey','apikey')
            stdout = check_output(['./some.sh',typen,devip,'off']).decode('utf-8')
            if apikey == deploykey:
                return '''<h1>Turning Off {}: {} -=- {}. {}</h1>'''.format(devip, deploykey, apikey, stdout)
            else:
                return '''<h1>Turning Off {}: {} -NE- {}. {}</h1>'''.format(devip, deploykey, apikey, stdout)

    @app.route("/swap", methods=["GET","POST"])
    def turn_swap():
        deploykey = os.getenv('API_KEY')
        if request.method == 'GET':
            devip = request.args.get('devip','192.168.1.245')
            typen = request.args.get('type','plug')
            apikey = request.args.get('apikey','apikey')
            #return '''<h1>Swapping On/Off: {}</h1>'''.format(devip)
            stdout = check_output(['./swap.sh',typen,devip]).decode('utf-8')
            return stdout
        if request.method == 'POST':
            devip = request.form.get('devip','192.168.1.245')
            typen = request.form.get('type','plug')
            apikey = request.form.get('apikey','apikey')
            stdout = check_output(['./swap.sh',typen,devip]).decode('utf-8')
            return stdout

    @app.route("/testshell1")
    def testshell():
        session = Popen(['./some.sh'], stdout=PIPE, stderr=PIPE)
        stdout, stderr = session.communicate()
        if stderr:
            raise Exception("Error "+str(stderr))
        return stdout.decode('utf-8')

    @app.route("/testshell2")
    def testshell2():
        stdout = check_output(['./some.sh','plug','192.168.1.245','on']).decode('utf-8')
        return stdout

    @app.route("/testshell3")
    def testshell3():
        stdout = check_output(['/usr/local/bin/kasa','--host','192.168.1.245','off']).decode('utf-8')
        return stdout

    @app.route("/testshell4")
    def testshell4():
        stdout = check_output(['/usr/local/bin/kasa','--host','192.168.1.245','on']).decode('utf-8')
        return stdout

    @app.route('/health')
    def health():
        # Flask rejects a view that returns None, so send back a small body
        return "OK"

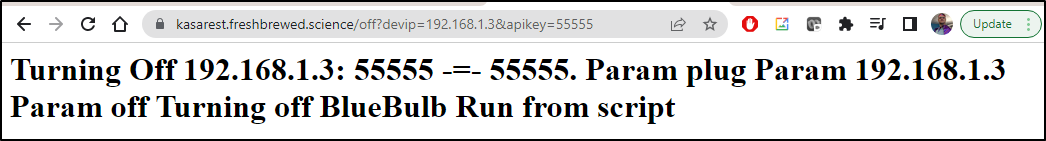

I can see a positive (match)

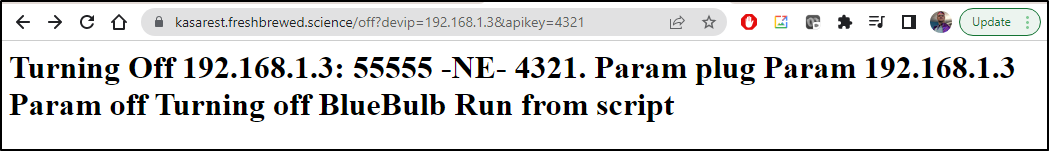

versus not-match

Now, the last change to make is to actually reject non-matching keys (and clearly not show the API key)
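A possible shape for that check is sketched below. It uses `hmac.compare_digest` from the standard library rather than `==`, which avoids leaking through timing differences how many leading characters matched. This is an illustrative variation, not the code used in the repo; the function name `key_is_valid` is hypothetical.

```python
import hmac

def key_is_valid(supplied, deployed):
    """True only when both keys are present and identical."""
    if not supplied or not deployed:
        # Fail closed: a missing API_KEY env var must never match anything
        return False
    return hmac.compare_digest(supplied.encode("utf-8"), deployed.encode("utf-8"))

print(key_is_valid("55555", "55555"))
print(key_is_valid("apikey", "55555"))
print(key_is_valid("apikey", None))
```

For a build light the timing concern is mostly academic, but the fail-closed branch matters: without it, a deployment that forgot to set the key would be comparing every request against `None`.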

Switching to secret

I’ll add a new secret.yaml to the templates in the chart

    apiVersion: v1
    kind: Secret
    metadata:
      name: {{ include "pykasa.fullname" . }}-apikey
      labels:
        {{- include "pykasa.labels" . | nindent 4 }}
    data:
      apikey: {{ .Values.apikey | b64enc }}

Then use it in the deployment.yaml

    env:
      - name: PORT
        value: "{{ .Values.service.port }}"
      - name: API_KEY
        valueFrom:
          secretKeyRef:
            name: {{ include "pykasa.fullname" . }}-apikey
            key: apikey

Note: it doesn’t like a bare number (it complains about converting from float64), so I changed the value to a string in the values

apikey: "55555"
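The reason the type matters: Kubernetes stores Secret `data` values base64-encoded, and the `b64enc` template function wants a string to encode, while an unquoted `55555` in YAML is parsed as a number. Python's `base64` module is similarly strict about its input, so it makes a convenient stand-in to illustrate the idea. This is an analogy only, not what Helm runs internally, and the wrapper below is hypothetical.

```python
import base64

def b64enc(value):
    """Rough stand-in for the template function: only accepts strings."""
    if not isinstance(value, str):
        raise TypeError("expected a string, got {}".format(type(value).__name__))
    return base64.b64encode(value.encode("utf-8")).decode("ascii")

print(b64enc("55555"))   # the quoted value encodes fine

try:
    b64enc(55555)        # the bare number is rejected
except TypeError as err:
    print("error:", err)
```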

Lastly, I want to update my index.py to actually stop work if the API key is invalid (and clearly not show the secret, as that would defeat the purpose)

    @app.route("/on", methods=["GET","POST"])
    def turn_on():
        deploykey = os.getenv('API_KEY')
        if request.method == 'GET':
            devip = request.args.get('devip','192.168.1.245')
            typen = request.args.get('type','plug')
            apikey = request.args.get('apikey','apikey')
            if apikey == deploykey:
                stdout = check_output(['./some.sh',typen,devip,'on']).decode('utf-8')
                return '''<h1>Turning On {}: {}</h1>'''.format(devip, stdout)
            else:
                return '''<h1>NOT Turning On {}: Invalid API Key {}.</h1>'''.format(devip, apikey)
        if request.method == 'POST':
            devip = request.form.get('devip')
            typen = request.form.get('type','plug')
            apikey = request.form.get('apikey','apikey')
            if apikey == deploykey:
                stdout = check_output(['./some.sh',typen,devip,'on']).decode('utf-8')
                return '''<h1>Turning On {}: {}</h1>'''.format(devip, stdout)
            else:
                return '''<h1>NOT Turning On {}: Invalid API Key {}.</h1>'''.format(devip, apikey)

    @app.route("/off", methods=["GET","POST"])
    def turn_off():
        deploykey = os.getenv('API_KEY')
        if request.method == 'GET':
            devip = request.args.get('devip','192.168.1.245')
            typen = request.args.get('type','plug')
            apikey = request.args.get('apikey','apikey')
            if apikey == deploykey:
                stdout = check_output(['./some.sh',typen,devip,'off']).decode('utf-8')
                return '''<h1>Turning Off {}: {}</h1>'''.format(devip, stdout)
            else:
                return '''<h1>NOT Turning Off {}: Invalid API Key {}.</h1>'''.format(devip, apikey)
        if request.method == 'POST':
            devip = request.form.get('devip')
            typen = request.form.get('type','plug')
            apikey = request.form.get('apikey','apikey')
            if apikey == deploykey:
                stdout = check_output(['./some.sh',typen,devip,'off']).decode('utf-8')
                return '''<h1>Turning Off {}: {}</h1>'''.format(devip, stdout)
            else:
                return '''<h1>NOT Turning Off {}: Invalid API KEY {}.</h1>'''.format(devip, apikey)

    @app.route("/swap", methods=["GET","POST"])
    def turn_swap():
        deploykey = os.getenv('API_KEY')
        if request.method == 'GET':
            devip = request.args.get('devip','192.168.1.245')
            typen = request.args.get('type','plug')
            apikey = request.args.get('apikey','apikey')
            #return '''<h1>Swapping On/Off: {}</h1>'''.format(devip)
            if apikey == deploykey:
                stdout = check_output(['./swap.sh',typen,devip]).decode('utf-8')
                return stdout
            else:
                return "INVALID API KEY. WILL NOT SWAP"
        if request.method == 'POST':
            devip = request.form.get('devip','192.168.1.245')
            typen = request.form.get('type','plug')
            apikey = request.form.get('apikey','apikey')
            if apikey == deploykey:
                stdout = check_output(['./swap.sh',typen,devip]).decode('utf-8')
                return stdout
            else:
                return "INVALID API KEY. WILL NOT SWAP"
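The GET and POST branches above differ only in where the parameters come from, so the check could be written once. Below is a framework-free sketch of that idea: a plain function takes the already-extracted parameters and returns a body plus an HTTP status, using 401 for a bad key and never running the script in that case. The names `handle_switch` and `run_script` are hypothetical, and the `run_script` callable stands in for the `check_output(['./some.sh', ...])` call.

```python
def handle_switch(params, deploykey, action, run_script):
    """Validate the key, then run the on/off script. Returns (body, status)."""
    devip = params.get("devip", "192.168.1.245")
    typen = params.get("type", "plug")
    apikey = params.get("apikey", "")
    if not deploykey or apikey != deploykey:
        # Reject before touching the device, and never echo the deployed key
        return "<h1>NOT Turning {} {}: Invalid API Key.</h1>".format(action, devip), 401
    stdout = run_script(typen, devip, action)
    return "<h1>Turning {} {}: {}</h1>".format(action, devip, stdout), 200

# Fake runner so the sketch can be exercised without a real plug
calls = []
def fake_run(typen, devip, action):
    calls.append((typen, devip, action))
    return "ok"

print(handle_switch({"devip": "192.168.1.3", "apikey": "55555"}, "55555", "on", fake_run))
print(handle_switch({"devip": "192.168.1.3", "apikey": "wrong"}, "55555", "on", fake_run))
```

In a Flask view, something like `request.values` (which combines query-string and form parameters) could be passed as `params`, and Flask accepts a `(body, status)` tuple as a return value, so callers such as curl would see a non-200 status on a bad key.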

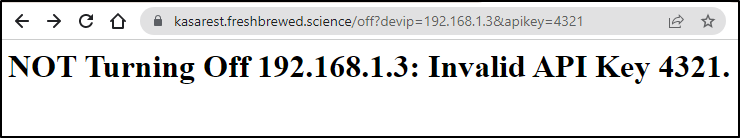

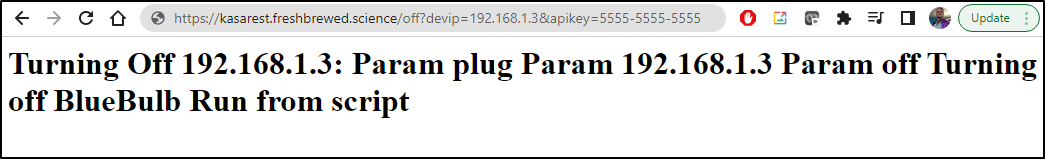

Now using the freshly built container (1.1.5), we can see it fails with the invalid code

But passes with the valid one

Updating GH Webhooks

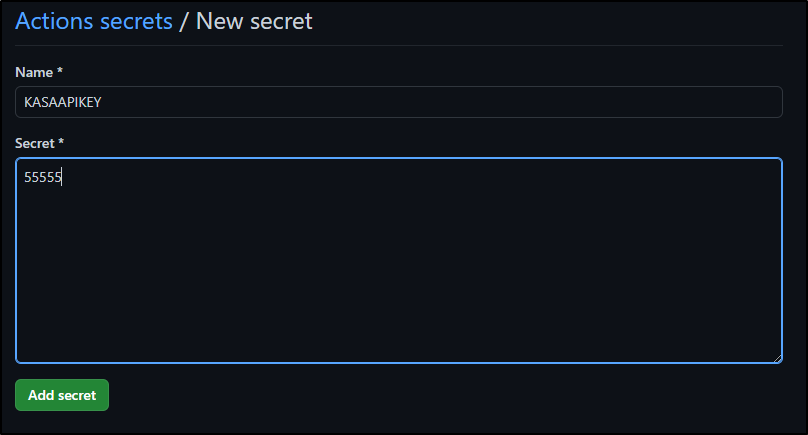

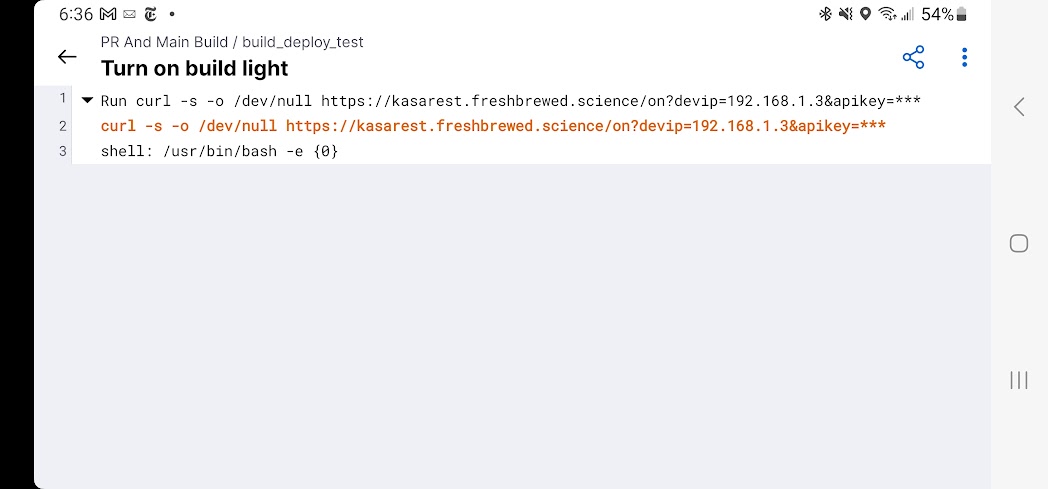

Let’s first set a secret in the Github repo that should use the build light (the one shown above, 192.168.1.3).

(Clearly I used a different value, but to illustrate the point, I’ll show 55555).

I’ll then update my GH Build to use it

- name: Turn on build light

run: |

curl -s -o /dev/null https://kasarest.freshbrewed.science/on?devip=192.168.1.3&apikey=$

One bit of funny business I found with values when testing the chart:

If you want to pass a numeric value in, you have to wrap it in escaped quotes, e.g.

$ helm upgrade mykasarelease --set image.tag=$DVER --set apikey=\"123412341234\" ./pykasa/

or put in a local values file:

    $ cat values.yaml
    image:
      tag: 1.1.5
    apikey: "123412341234"

Then use it in an upgrade

$ helm upgrade mykasarelease -f values.yaml ./pykasa/

Release "mykasarelease" has been upgraded. Happy Helming!

NAME: mykasarelease

LAST DEPLOYED: Mon Jan 16 18:12:13 2023

NAMESPACE: default

STATUS: deployed

REVISION: 11

NOTES:

1. Get the application URL by running these commands:

https://kasarest.freshbrewed.science/

Either way, that means your URL would need to wrap it: https://kasarest.freshbrewed.science/off?devip=192.168.1.3&apikey="123412341234"
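If the stored key really does contain literal quote characters, as described above, they should be percent-encoded when they go into a query string. A short sketch with Python's `urllib.parse` follows; the host and key are the example values from this post, and the helper name `build_webhook_url` is made up.

```python
from urllib.parse import urlencode

def build_webhook_url(base, action, devip, apikey):
    """Build an /on, /off or /swap URL with safely encoded parameters."""
    query = urlencode({"devip": devip, "apikey": apikey})
    return "{}/{}?{}".format(base.rstrip("/"), action, query)

url = build_webhook_url("https://kasarest.freshbrewed.science", "off",
                        "192.168.1.3", '"123412341234"')
print(url)
```

Helm also has a `--set-string` flag that forces a value passed on the command line to be treated as a string. That may be a tidier alternative to escaping quotes, though it has not been verified against this chart.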

If I enter a string however

    $ cat values.yaml
    image:
      tag: 1.1.5
    apikey: 5555-5555-5555

That will work without quotes

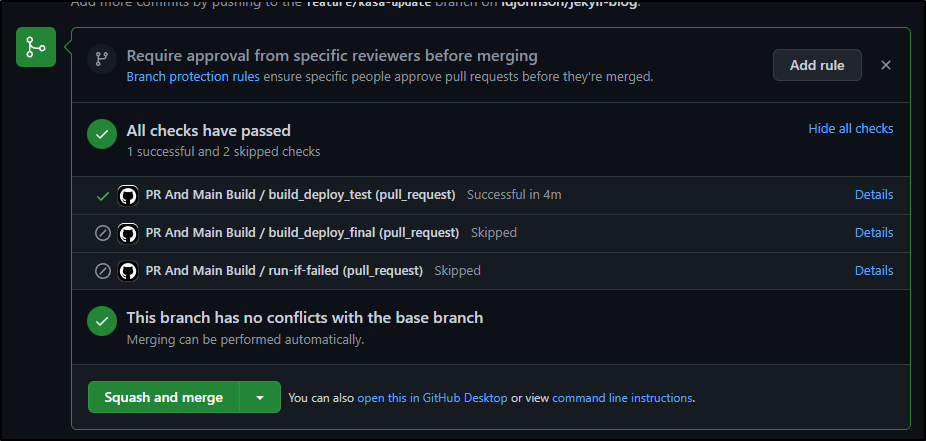

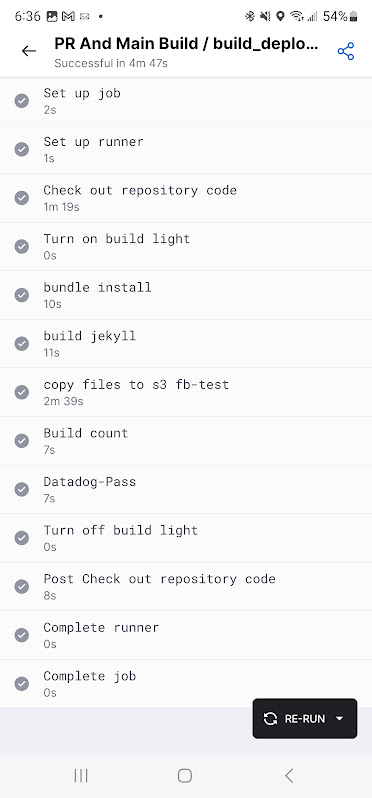

Testing Github

I’ll now test my updated Kasa microservice (using this post I’m writing).

The PR Passes

I can see from the build logs

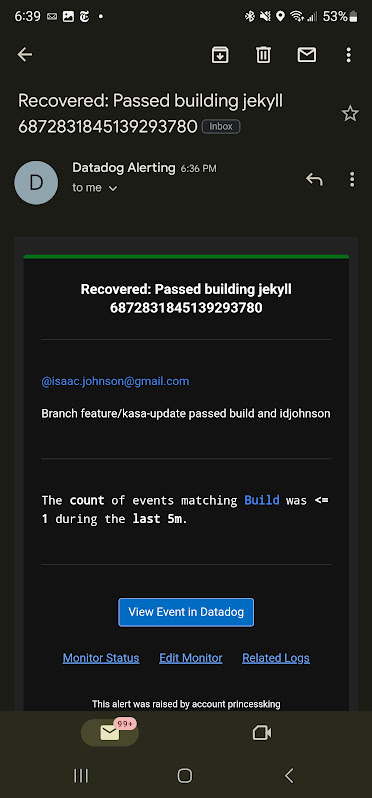

It passed the new key

While the prior build failed, this one passed and thus Datadog gave me a heads up

Adding Github Build for PyKasa Docker builds

It’s fun to build and push locally, but it rather goes against the grain of good CI/CD practice.

To automate the build and push, I created a new Github workflow

$ cat .github/workflows/docker.yml

name: Dockerbuild

on:

push:

branches:

- main

- master

pull_request:

jobs:

build_deploy_test:

runs-on: ubuntu-latest

if: ${{ (github.ref != 'refs/heads/main') && (github.ref != 'refs/heads/master') }}

steps:

- name: Check out repository code

uses: actions/checkout@v2

- name: Turn on build light

run: |

curl -s -o /dev/null "https://kasarest.freshbrewed.science/on?devip=192.168.1.3&apikey=${{ secrets.KASAAPIKEY }}"

- name: Add SHORT_SHA env property with commit short sha

run: echo "SHORT_SHA=`echo ${GITHUB_SHA} | cut -c1-8`" >> $GITHUB_ENV

- name: Build Dockerfile

run: |

export BUILDIMGNAME="`cat Dockerfile | tail -n1 | sed 's/^.*\/\(.*\):.*/\1/g'`"

export

docker build -t $BUILDIMGNAME:${SHORT_SHA} .

docker images

- name: "Harbor: Tag and Push"

run: |

export BUILDIMGNAME="`cat Dockerfile | tail -n1 | sed 's/^.*\/\(.*\):.*/\1/g'`"

export HARBORPRIVCRROOT="`cat Dockerfile | tail -n1 | sed 's/^#\(.*\)\/.*/\1/g'`"

docker tag $BUILDIMGNAME:${SHORT_SHA} $HARBORPRIVCRROOT/$BUILDIMGNAME:${SHORT_SHA}

docker images

echo $CR_PAT | docker login harbor.freshbrewed.science -u $CR_USER --password-stdin

docker push $HARBORPRIVCRROOT/$BUILDIMGNAME:${SHORT_SHA}

env: # Or as an environment variable

CR_PAT: ${{ secrets.CR_PAT }}

CR_USER: ${{ secrets.CR_USER }}

- name: Build count

uses: masci/datadog@v1

with:

api-key: ${{ secrets.DATADOG_API_KEY }}

metrics: |

- type: "count"

name: "pykasa.runs.count"

value: 1.0

host: ${{ github.repository_owner }}

tags:

- "project:${{ github.repository }}"

- "branch:${{ github.head_ref }}"

- name: Turn off build light

run: |

curl -s -o /dev/null "https://kasarest.freshbrewed.science/off?devip=192.168.1.3&apikey=${{ secrets.KASAAPIKEY }}"

build_deploy_final:

runs-on: self-hosted

if: ${{ (github.ref == 'refs/heads/main') || (github.ref == 'refs/heads/master') }}

steps:

- name: Check out repository code

uses: actions/checkout@v2

- name: Turn on build light

run: |

curl -s -o /dev/null "https://kasarest.freshbrewed.science/on?devip=192.168.1.3&apikey=${{ secrets.KASAAPIKEY }}"

- name: Build Dockerfile

run: |

export BUILDIMGTAG="`cat Dockerfile | tail -n1 | sed 's/^.*\///g'`"

docker build -t $BUILDIMGTAG .

docker images

- name: "Harbor: Tag and Push"

run: |

export BUILDIMGTAG="`cat Dockerfile | tail -n1 | sed 's/^.*\///g'`"

export FINALBUILDTAG="`cat Dockerfile | tail -n1 | sed 's/^#//g'`"

docker tag $BUILDIMGTAG $FINALBUILDTAG

docker images

echo $CR_PAT | docker login harbor.freshbrewed.science -u $CR_USER --password-stdin

docker push $FINALBUILDTAG

env: # Or as an environment variable

CR_PAT: ${{ secrets.CR_PAT }}

CR_USER: ${{ secrets.CR_USER }}

- name: "Dockerhub: Tag and Push"

run: |

export BUILDIMGTAG="`cat Dockerfile | tail -n1 | sed 's/^.*\///g'`"

export FINALVERSION="`cat Dockerfile | tail -n1 | sed 's/^.*://g'`"

docker tag $BUILDIMGTAG idjohnson/$BUILDIMGTAG

docker images

docker login -u="$DOCKER_USERNAME" -p="$DOCKER_PASSWORD"

docker push idjohnson/$BUILDIMGTAG

env: # Or as an environment variable

DOCKER_PASSWORD: ${{ secrets.DH_PAT }}

DOCKER_USERNAME: ${{ secrets.DH_LOGIN }}

- name: Build count

uses: masci/datadog@v1

with:

api-key: ${{ secrets.DATADOG_API_KEY }}

metrics: |

- type: "count"

name: "pykasa.runs.count"

value: 1.0

host: ${{ github.repository_owner }}

tags:

- "project:${{ github.repository }}"

- "branch:${{ github.head_ref }}"

- name: Turn off build light

run: |

curl -s -o /dev/null "https://kasarest.freshbrewed.science/off?devip=192.168.1.3&apikey=${{ secrets.KASAAPIKEY }}"

run-if-failed:

runs-on: ubuntu-latest

needs: [build_deploy_test, build_deploy_final]

if: always() && (needs.build_deploy_test.result == 'failure' || needs.build_deploy_final.result == 'failure')

steps:

- name: Blink and Leave On build light

run: |

curl -s -o /dev/null "https://kasarest.freshbrewed.science/off?devip=192.168.1.3&apikey=${{ secrets.KASAAPIKEY }}" && sleep 5 \

&& curl -s -o /dev/null "https://kasarest.freshbrewed.science/on?devip=192.168.1.3&apikey=${{ secrets.KASAAPIKEY }}" && sleep 5 \

&& curl -s -o /dev/null "https://kasarest.freshbrewed.science/off?devip=192.168.1.3&apikey=${{ secrets.KASAAPIKEY }}" && sleep 5 \

&& curl -s -o /dev/null "https://kasarest.freshbrewed.science/on?devip=192.168.1.3&apikey=${{ secrets.KASAAPIKEY }}"

- name: Datadog-Fail

uses: masci/datadog@v1

with:

api-key: ${{ secrets.DATADOG_API_KEY }}

events: |

- title: "Failed building pykasa"

text: "Branch ${{ github.head_ref }} failed to build"

alert_type: "error"

host: ${{ github.repository_owner }}

tags:

- "project:${{ github.repository }}"

- name: Fail count

uses: masci/datadog@v1

with:

api-key: ${{ secrets.DATADOG_API_KEY }}

metrics: |

- type: "count"

name: "pykasa.fails.count"

value: 1.0

host: ${{ github.repository_owner }}

tags:

- "project:${{ github.repository }}"

- "branch:${{ github.head_ref }}"

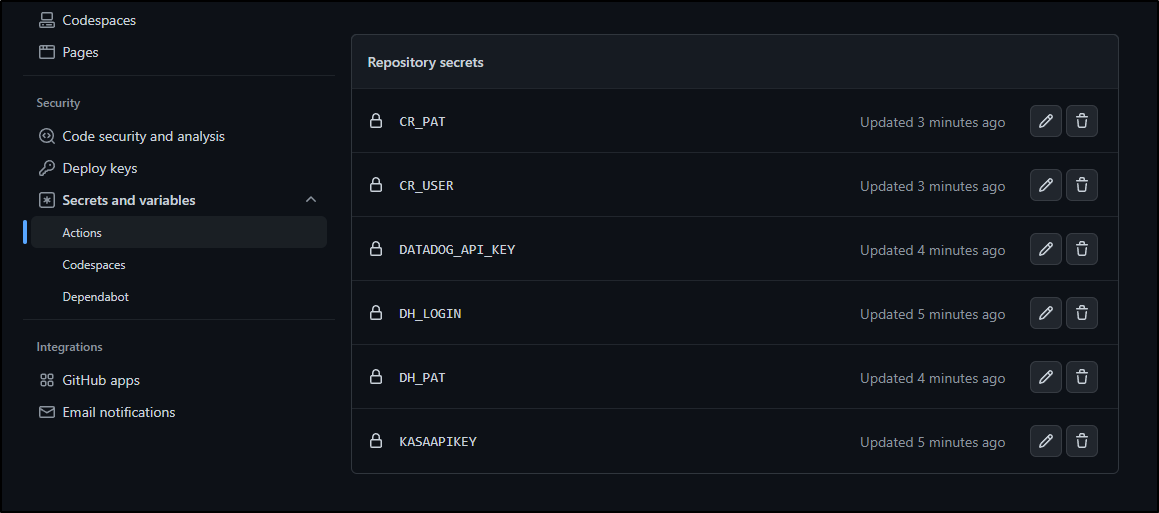
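The `sed` expressions in the workflow all read the last line of the Dockerfile, which is expected to be a comment holding the full image tag. To make it clearer what each expression extracts, here is an equivalent sketch in Python. The sample line is an assumption based on the repository and tag values shown elsewhere in this post (the real Dockerfile line is not reproduced here), and the function names are hypothetical.

```python
import re

# Assumed example of the Dockerfile's final comment line
LAST_LINE = "#harbor.freshbrewed.science/freshbrewedprivate/kasarest:1.1.5"


def parse_tag_line(line):
    """Mirror the workflow's sed expressions on the trailing comment line."""
    return {
        # sed 's/^.*\/\(.*\):.*/\1/g'  -> BUILDIMGNAME
        "image_name": re.sub(r"^.*/(.*):.*", r"\1", line),
        # sed 's/^#\(.*\)\/.*/\1/g'    -> HARBORPRIVCRROOT
        "registry_root": re.sub(r"^#(.*)/.*", r"\1", line),
        # sed 's/^.*\///g'             -> BUILDIMGTAG
        "name_and_tag": re.sub(r"^.*/", "", line),
        # sed 's/^#//g'                -> FINALBUILDTAG
        "full_tag": re.sub(r"^#", "", line),
        # sed 's/^.*://g'              -> FINALVERSION
        "version": re.sub(r"^.*:", "", line),
    }


def pr_image_ref(line, github_sha):
    """Image reference pushed for PR builds: registry root, name, short SHA."""
    parts = parse_tag_line(line)
    short_sha = github_sha[:8]  # same as `cut -c1-8`
    return parts["registry_root"] + "/" + parts["image_name"] + ":" + short_sha


for key, value in parse_tag_line(LAST_LINE).items():
    print(key, "=", value)
```

With the assumed line, PR builds would push `kasarest` tagged with the first eight characters of the commit SHA, while builds on main or master would push the `1.1.5` tag to both Harbor and Dockerhub.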

This needed a few secrets defined

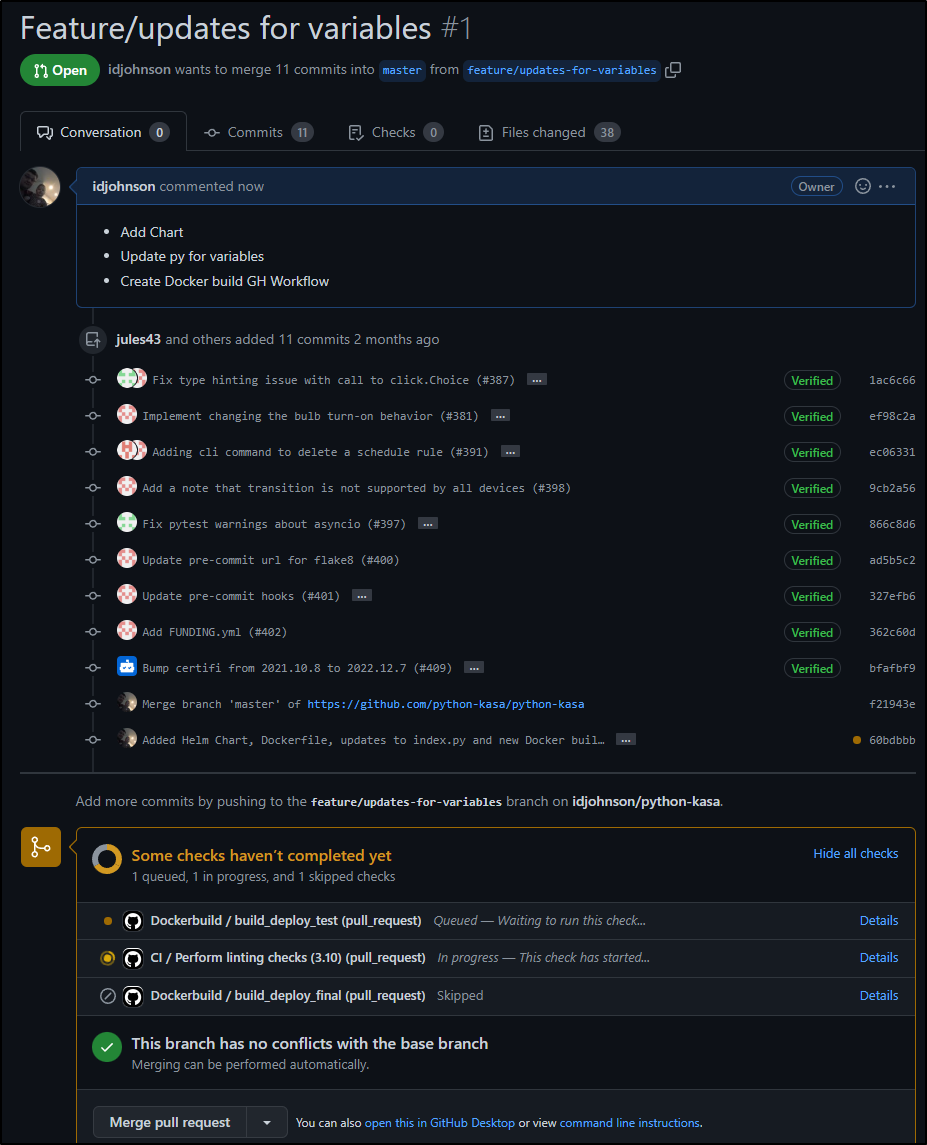

I then created a PR

I can see it pushed the short SHA tag to Harbor

Uploading a chart for sharing

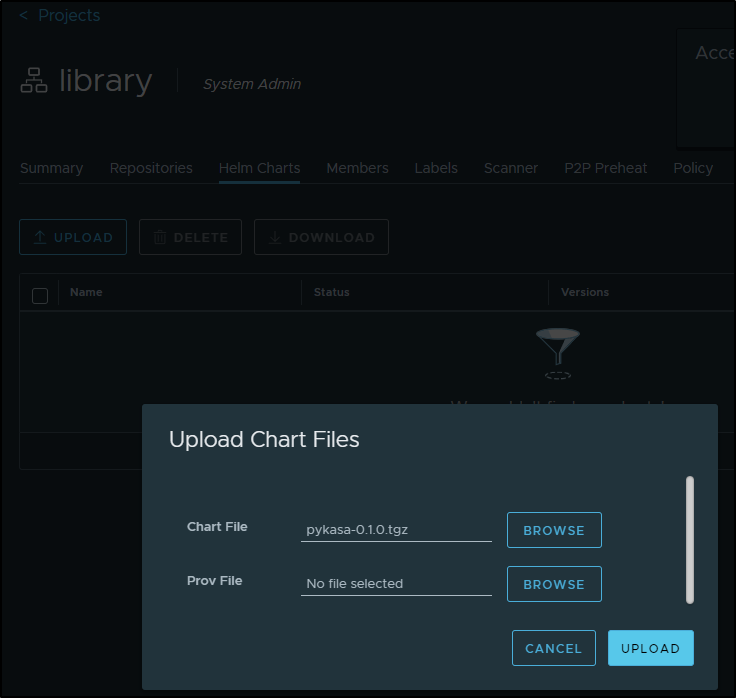

I’ll create the package and stash it in my Downloads folder in Windows. (Long term I’ll likely add another GH Workflow just for chart changes that will version it and push to Harbor)

builder@DESKTOP-QADGF36:~/Workspaces/ijohnson-python-kasa$ helm package pykasa

Successfully packaged chart and saved it to: /home/builder/Workspaces/ijohnson-python-kasa/pykasa-0.1.0.tgz

builder@DESKTOP-QADGF36:~/Workspaces/ijohnson-python-kasa$ cp pykasa-0.1.0.tgz /mnt/c/Users/isaac/Downloads/

I can then upload in the UI

We can now pull and use it (though you’ll likely want to update the values)

$ helm repo add freshbrewed https://harbor.freshbrewed.science/chartrepo/library

"freshbrewed" has been added to your repositories

$ helm repo update

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "freshbrewed" chart repository

...Successfully got an update from the "myharbor" chart repository

...Successfully got an update from the "kuma" chart repository

...Successfully got an update from the "dapr" chart repository

...Successfully got an update from the "azure-samples" chart repository

...Successfully got an update from the "novum-rgi-helm" chart repository

...Successfully got an update from the "confluentinc" chart repository

...Successfully got an update from the "adwerx" chart repository

...Successfully got an update from the "uptime-kuma" chart repository

...Successfully got an update from the "rhcharts" chart repository

...Successfully got an update from the "sonarqube" chart repository

...Successfully got an update from the "actions-runner-controller" chart repository

...Successfully got an update from the "kubecost" chart repository

...Successfully got an update from the "hashicorp" chart repository

...Successfully got an update from the "sumologic" chart repository

...Successfully got an update from the "epsagon" chart repository

...Successfully got an update from the "lifen-charts" chart repository

...Successfully got an update from the "longhorn" chart repository

...Successfully got an update from the "elastic" chart repository

...Successfully got an update from the "open-telemetry" chart repository

...Successfully got an update from the "nginx-stable" chart repository

...Successfully got an update from the "datadog" chart repository

...Successfully got an update from the "argo-cd" chart repository

...Successfully got an update from the "harbor" chart repository

...Successfully got an update from the "rancher-latest" chart repository

...Successfully got an update from the "incubator" chart repository

...Successfully got an update from the "crossplane-stable" chart repository

...Successfully got an update from the "newrelic" chart repository

...Successfully got an update from the "gitlab" chart repository

...Successfully got an update from the "rook-release" chart repository

...Successfully got an update from the "bitnami" chart repository

Update Complete. ⎈Happy Helming!⎈

Then search to show the charts there

$ helm search repo freshbrewed

NAME CHART VERSION APP VERSION DESCRIPTION

freshbrewed/pykasa 0.1.0 1.16.0 A Helm chart for PyKasa

If you use it, make sure to update some of the values

$ helm show values freshbrewed/pykasa | head -n20

# Default values for pykasa.

# This is a YAML-formatted file.

# Declare variables to be passed into your templates.

replicaCount: 1

image:

repository: harbor.freshbrewed.science/freshbrewedprivate/kasarest

pullPolicy: IfNotPresent

# Overrides the image tag whose default is the chart appVersion.

tag: 1.1.0

apikey: "55555"

imagePullSecrets: [

name: myharborreg

]

nameOverride: ""

fullnameOverride: ""

restartPolicy: Always

For instance, to use the public build image at Dockerhub, you could use:

$ helm install --dry-run --debug --set image.repository=idjohnson/kasarest --set image.tag=1.1.5 --set imagePullSecrets=null freshbrewed/pykasa --generate-name

install.go:173: [debug] Original chart version: ""

install.go:190: [debug] CHART PATH: /home/builder/.cache/helm/repository/pykasa-0.1.0.tgz

NAME: pykasa-1673960029

LAST DEPLOYED: Tue Jan 17 06:53:50 2023

NAMESPACE: default

STATUS: pending-install

REVISION: 1

USER-SUPPLIED VALUES:

image:

repository: idjohnson/kasarest

tag: 1.1.5

imagePullSecrets: null

COMPUTED VALUES:

affinity: {}

apikey: "55555"

autoscaling:

enabled: false

maxReplicas: 100

minReplicas: 1

targetCPUUtilizationPercentage: 80

fullnameOverride: ""

image:

pullPolicy: IfNotPresent

repository: idjohnson/kasarest

tag: 1.1.5

ingress:

annotations:

cert-manager.io/cluster-issuer: letsencrypt-prod

kubernetes.io/ingress.class: nginx

kubernetes.io/tls-acme: "true"

className: ""

enabled: true

hosts:

- host: kasarest.freshbrewed.science

paths:

- path: /

pathType: ImplementationSpecific

tls:

- hosts:

- kasarest.freshbrewed.science

secretName: kasarest-tls

nameOverride: ""

nodeSelector: {}

podAnnotations: {}

podSecurityContext: {}

replicaCount: 1

resources: {}

restartPolicy: Always

securityContext: {}

service:

port: 5000

type: ClusterIP

serviceAccount:

annotations: {}

create: true

name: ""

tolerations: []

HOOKS:

---

# Source: pykasa/templates/tests/test-connection.yaml

apiVersion: v1

kind: Pod

metadata:

name: "pykasa-1673960029-test-connection"

labels:

helm.sh/chart: pykasa-0.1.0

app.kubernetes.io/name: pykasa

app.kubernetes.io/instance: pykasa-1673960029

app.kubernetes.io/version: "1.16.0"

app.kubernetes.io/managed-by: Helm

annotations:

"helm.sh/hook": test

spec:

containers:

- name: wget

image: busybox

command: ['wget']

args: ['pykasa-1673960029:5000']

restartPolicy: Never

MANIFEST:

---

# Source: pykasa/templates/serviceaccount.yaml

apiVersion: v1

kind: ServiceAccount

metadata:

name: pykasa-1673960029

labels:

helm.sh/chart: pykasa-0.1.0

app.kubernetes.io/name: pykasa

app.kubernetes.io/instance: pykasa-1673960029

app.kubernetes.io/version: "1.16.0"

app.kubernetes.io/managed-by: Helm

---

# Source: pykasa/templates/secret.yaml

apiVersion: v1

kind: Secret

metadata:

name: pykasa-1673960029-apikey

labels:

helm.sh/chart: pykasa-0.1.0

app.kubernetes.io/name: pykasa

app.kubernetes.io/instance: pykasa-1673960029

app.kubernetes.io/version: "1.16.0"

app.kubernetes.io/managed-by: Helm

data:

apikey: NTU1NTU=

---

# Source: pykasa/templates/service.yaml

apiVersion: v1

kind: Service

metadata:

name: pykasa-1673960029

labels:

helm.sh/chart: pykasa-0.1.0

app.kubernetes.io/name: pykasa

app.kubernetes.io/instance: pykasa-1673960029

app.kubernetes.io/version: "1.16.0"

app.kubernetes.io/managed-by: Helm

spec:

type: ClusterIP

ports:

- port: 5000

targetPort: 5000

protocol: TCP

name: http

selector:

app.kubernetes.io/name: pykasa

app.kubernetes.io/instance: pykasa-1673960029

---

# Source: pykasa/templates/deployment.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

name: pykasa-1673960029

labels:

helm.sh/chart: pykasa-0.1.0

app.kubernetes.io/name: pykasa

app.kubernetes.io/instance: pykasa-1673960029

app.kubernetes.io/version: "1.16.0"

app.kubernetes.io/managed-by: Helm

spec:

replicas: 1

selector:

matchLabels:

app.kubernetes.io/name: pykasa

app.kubernetes.io/instance: pykasa-1673960029

template:

metadata:

labels:

app.kubernetes.io/name: pykasa

app.kubernetes.io/instance: pykasa-1673960029

spec:

serviceAccountName: pykasa-1673960029

securityContext:

{}

containers:

- name: pykasa

securityContext:

{}

image: "idjohnson/kasarest:1.1.5"

imagePullPolicy: IfNotPresent

ports:

- name: http

containerPort: 5000

protocol: TCP

livenessProbe:

httpGet:

path: /

port: http

readinessProbe:

httpGet:

path: /

port: http

env:

- name: PORT

value: "5000"

- name: API_KEY

valueFrom:

secretKeyRef:

name: pykasa-1673960029-apikey

key: apikey

resources:

{}

restartPolicy: "Always"

---

# Source: pykasa/templates/ingress.yaml

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

name: pykasa-1673960029

labels:

helm.sh/chart: pykasa-0.1.0

app.kubernetes.io/name: pykasa

app.kubernetes.io/instance: pykasa-1673960029

app.kubernetes.io/version: "1.16.0"

app.kubernetes.io/managed-by: Helm

annotations:

cert-manager.io/cluster-issuer: letsencrypt-prod

kubernetes.io/ingress.class: nginx

kubernetes.io/tls-acme: "true"

spec:

tls:

- hosts:

- "kasarest.freshbrewed.science"

secretName: kasarest-tls

rules:

- host: "kasarest.freshbrewed.science"

http:

paths:

- path: /

pathType: ImplementationSpecific

backend:

service:

name: pykasa-1673960029

port:

number: 5000

NOTES:

1. Get the application URL by running these commands:

https://kasarest.freshbrewed.science/
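Note that the Secret in the dry-run output above stores the key base64 encoded (`NTU1NTU=`), which is encoding rather than encryption. A quick way to confirm which key a rendered chart will use is to decode it. This is just a sketch with the Python standard library, and the helper name is my own.

```python
import base64


def decode_secret_value(encoded):
    """Decode a base64 value as it appears under `data:` in a Kubernetes Secret."""
    return base64.b64decode(encoded).decode("utf-8")


# The value from the rendered pykasa Secret above
print(decode_secret_value("NTU1NTU="))  # 55555
```

Since that is the chart's default `apikey`, it is worth overriding it with your own value before installing for real.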

Summary

In this post we expanded on our first post by adding a proper Github workflow to build the Dockerfile and push the resulting image. We updated the Python code to check for an API key, which it compares against a new environment variable. We migrated the YAML Deployment to a proper Helm chart that sets the API key as a Kubernetes secret. Lastly, we tested the full flow in multiple Github workflows.

Later, I’ll want to automatically build the chart and store it and perhaps find a way to contribute all this back to the original project.