Published: Oct 31, 2022 by Isaac Johnson

Harbor has been my preferred on-prem container registry, but time and time again its database crashes.

builder@DESKTOP-QADGF36:~/Workspaces/harborUpgrde$ kubectl logs harbor-registry-database-0

Defaulted container "database" out of: database, data-migrator (init), data-permissions-ensurer (init)

no need to upgrade postgres, launch it.

2022-10-31 11:06:16.270 UTC [1] FATAL: lock file "postmaster.pid" is empty

2022-10-31 11:06:16.270 UTC [1] HINT: Either another server is starting, or the lock file is the remnant of a previous server startup crash.

And in every case it’s the Postgres database pod (database-0) that seems to be the root cause.

builder@DESKTOP-QADGF36:~/Workspaces/harborUpgrde$ kubectl get pods | grep harbor

harbor-registry-exporter-57d748f59b-vn28p 1/1 Running 9 (95d ago) 95d

harbor-registry-notary-signer-65f7ff9b8c-mtkn7 1/1 Running 9 (95d ago) 95d

harbor-registry-chartmuseum-7dc9c77d77-6724s 1/1 Running 0 13m

harbor-registry-portal-85cf959f8b-dz8qn 1/1 Running 0 13m

harbor-registry-redis-0 1/1 Running 0 13m

harbor-registry-registry-6696ddf75b-j2ls2 2/2 Running 0 13m

harbor-registry-trivy-0 1/1 Running 0 13m

harbor-registry-notary-signer-5d645f86cb-rr8vr 0/1 CrashLoopBackOff 6 (4m34s ago) 13m

harbor-registry-notary-server-7c454f96cd-rd2pj 0/1 CrashLoopBackOff 6 (4m26s ago) 13m

harbor-registry-database-0 0/1 CrashLoopBackOff 102 (3m43s ago) 8h

harbor-registry-notary-server-865c5d5764-jvpzs 0/1 CrashLoopBackOff 5078 (2m40s ago) 95d

harbor-registry-jobservice-6c49886d5d-x7gxq 0/1 Running 2710 (6m49s ago) 95d

harbor-registry-exporter-697449c9d9-vt458 0/1 CrashLoopBackOff 6 (68s ago) 13m

harbor-registry-core-7cbb9856dd-j2959 0/1 CrashLoopBackOff 6 (46s ago) 13m

harbor-registry-core-5c6f8bdd47-g2wrr 0/1 Running 4449 (5m33s ago) 95d

harbor-registry-jobservice-7759c9dfc-bpjdp 0/1 Running 7 (5m20s ago) 13m

Whether it fills the PVC (which may not actually expand, even though volume expansion is enabled)

builder@DESKTOP-QADGF36:~/Workspaces/harborUpgrde$ kubectl get pvc | grep harbor

harbor-registry-chartmuseum Bound pvc-4666b2a7-6fc1-40c4-80a4-e0887a76ad82 5Gi RWO nfs 97d

harbor-registry-jobservice Bound pvc-6cf34907-9c4b-4c67-aecb-6fda45b6691a 1Gi RWO nfs 97d

harbor-registry-registry Bound pvc-180fe352-8ae1-4866-9d66-5c76a645c53a 5Gi RWO nfs 97d

data-harbor-registry-redis-0 Bound pvc-521479b2-7692-4b44-b38e-43db54b45bdd 1Gi RWO nfs 97d

database-data-harbor-registry-database-0 Bound pvc-61309726-0219-4405-aefe-14aa05434595 1Gi RWO nfs 97d

data-harbor-registry-trivy-0 Bound pvc-127e6d04-b0d5-435f-939a-34d4cb4c54d5 5Gi RWO nfs 97d

harbor-registry-jobservice-scandata Bound pvc-0d393f5b-d3d6-480e-a562-f12a642b1913 1Gi RWO managed-nfs-storage 14m

or it simply cannot handle crashes (like machine reboots), the database has become the single point of failure in my system.

Today we’ll both upgrade to the latest Harbor 2.0 and move to a non-containerized Postgres database.

Setting up a Postgres DB

My first thought was to use my NAS, but sadly it’s too old. It only supports MariaDB (MySQL).

My next thought was to use some Pis that I have sitting around. They have plenty of disk and frankly they never seem to go down. But they are pretty slow and depend on a microSD card, so it seems I could easily take them out too.

I decided to use my Kubernetes master.

isaac@isaac-MacBookAir:~$ sudo apt update

[sudo] password for isaac:

Hit:1 http://us.archive.ubuntu.com/ubuntu focal InRelease

Hit:2 http://us.archive.ubuntu.com/ubuntu focal-updates InRelease

Hit:3 http://us.archive.ubuntu.com/ubuntu focal-backports InRelease

Hit:4 https://download.docker.com/linux/ubuntu focal InRelease

Get:5 http://security.ubuntu.com/ubuntu focal-security InRelease [114 kB]

Get:6 http://security.ubuntu.com/ubuntu focal-security/main amd64 DEP-11 Metadata [40.7 kB]

Get:7 http://security.ubuntu.com/ubuntu focal-security/universe amd64 DEP-11 Metadata [92.9 kB]

Get:8 http://security.ubuntu.com/ubuntu focal-security/multiverse amd64 DEP-11 Metadata [2,464 B]

Fetched 250 kB in 1s (170 kB/s)

Reading package lists... Done

Building dependency tree

Reading state information... Done

45 packages can be upgraded. Run 'apt list --upgradable' to see them.

Then install postgres

isaac@isaac-MacBookAir:~$ sudo apt install postgresql postgresql-contrib

Reading package lists... Done

Building dependency tree

Reading state information... Done

The following packages were automatically installed and are no longer required:

libfprint-2-tod1 shim

Use 'sudo apt autoremove' to remove them.

The following additional packages will be installed:

libllvm10 libpq5 postgresql-12 postgresql-client-12 postgresql-client-common postgresql-common sysstat

Suggested packages:

postgresql-doc postgresql-doc-12 libjson-perl isag

The following NEW packages will be installed:

libllvm10 libpq5 postgresql postgresql-12 postgresql-client-12 postgresql-client-common postgresql-common postgresql-contrib sysstat

0 upgraded, 9 newly installed, 0 to remove and 45 not upgraded.

Need to get 30.6 MB of archives.

After this operation, 121 MB of additional disk space will be used.

Do you want to continue? [Y/n] y

Get:1 http://us.archive.ubuntu.com/ubuntu focal/main amd64 libllvm10 amd64 1:10.0.0-4ubuntu1 [15.3 MB]

Get:2 http://us.archive.ubuntu.com/ubuntu focal-updates/main amd64 libpq5 amd64 12.12-0ubuntu0.20.04.1 [117 kB]

Get:3 http://us.archive.ubuntu.com/ubuntu focal-updates/main amd64 postgresql-client-common all 214ubuntu0.1 [28.2 kB]

Get:4 http://us.archive.ubuntu.com/ubuntu focal-updates/main amd64 postgresql-client-12 amd64 12.12-0ubuntu0.20.04.1 [1,051 kB]

Get:5 http://us.archive.ubuntu.com/ubuntu focal-updates/main amd64 postgresql-common all 214ubuntu0.1 [169 kB]

Get:6 http://us.archive.ubuntu.com/ubuntu focal-updates/main amd64 postgresql-12 amd64 12.12-0ubuntu0.20.04.1 [13.5 MB]

...

Processing triggers for systemd (245.4-4ubuntu3.17) ...

Processing triggers for man-db (2.9.1-1) ...

Processing triggers for libc-bin (2.31-0ubuntu9.9) ...

Now I can start the service

isaac@isaac-MacBookAir:~$ sudo systemctl start postgresql.service

And verify it set up the basic databases

postgres@isaac-MacBookAir:~$ psql

psql (12.12 (Ubuntu 12.12-0ubuntu0.20.04.1))

Type "help" for help.

postgres=# \l

List of databases

Name | Owner | Encoding | Collate | Ctype | Access privileges

-----------+----------+----------+-------------+-------------+-----------------------

postgres | postgres | UTF8 | en_US.UTF-8 | en_US.UTF-8 |

template0 | postgres | UTF8 | en_US.UTF-8 | en_US.UTF-8 | =c/postgres +

| | | | | postgres=CTc/postgres

template1 | postgres | UTF8 | en_US.UTF-8 | en_US.UTF-8 | =c/postgres +

| | | | | postgres=CTc/postgres

(3 rows)

postgres=# \q

I need to make a harbor user

isaac@isaac-MacBookAir:~$ sudo -u postgres createuser --interactive

Enter name of role to add: harbor

Shall the new role be a superuser? (y/n) y

isaac@isaac-MacBookAir:~$ sudo -u postgres psql

psql (12.12 (Ubuntu 12.12-0ubuntu0.20.04.1))

Type "help" for help.

postgres=# \password harbor

Enter new password for user "harbor":

Enter it again:
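For repeat installs, the two interactive steps above could be collapsed into a single SQL statement. The following is only a sketch, not what was run in this post: `harbor_role_sql` and the `HARBOR_DB_PASSWORD` variable are hypothetical names, and it mirrors the superuser choice made above.

```shell
#!/usr/bin/env bash
# Sketch: emit a CREATE ROLE statement roughly equivalent to the interactive
# createuser + \password steps. harbor_role_sql is a made-up helper name.
harbor_role_sql() {
  local role="$1" password="$2" q="'"
  # Double any single quotes so the password is a valid SQL string literal.
  local escaped="${password//$q/$q$q}"
  printf "CREATE ROLE %s WITH LOGIN SUPERUSER PASSWORD '%s';\n" "$role" "$escaped"
}

# Example (assumes HARBOR_DB_PASSWORD is exported in the current shell):
#   harbor_role_sql harbor "$HARBOR_DB_PASSWORD" | sudo -u postgres psql
```

Piping the statement into psql keeps the password out of the psql command line, though it will still fail if the role already exists.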

We need to ensure we are listening on the network (so it works outside of localhost)

isaac@isaac-MacBookAir:~$ sudo vi /etc/postgresql/12/main/postgresql.conf

isaac@isaac-MacBookAir:~$ cat /etc/postgresql/12/main/postgresql.conf | grep ^listen

listen_addresses = '*' # what IP address(es) to listen on;

isaac@isaac-MacBookAir:~$ sudo vi /etc/postgresql/12/main/pg_hba.conf

isaac@isaac-MacBookAir:~$ sudo cat /etc/postgresql/12/main/pg_hba.conf | grep trust

# METHOD can be "trust", "reject", "md5", "password", "scram-sha-256",

host all all 0.0.0.0/0 trust

isaac@isaac-MacBookAir:~$ sudo systemctl restart postgresql.service
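The `trust` rule above accepts any IPv4 client that can reach port 5432, as any role, without a password. A tighter rule could look like the sketch below. The assumptions: the `192.168.1.0/24` range is inferred from the node addresses that appear in this post, `hba_line` is a hypothetical helper, and `md5` is picked because it works with the default password hashing in PostgreSQL 12. Depending on the CNI, pods may reach the database from a different source range, so treat the CIDR as a placeholder.

```shell
#!/usr/bin/env bash
# Sketch: print a pg_hba.conf rule that limits one role to one network
# and requires a password. hba_line is a made-up helper name.
hba_line() {
  local user="$1" cidr="$2" method="$3"
  # Fields: TYPE DATABASE USER ADDRESS METHOD
  printf 'host all %s %s %s\n' "$user" "$cidr" "$method"
}

# Example: print the rule, then put it in pg_hba.conf in place of the
# "0.0.0.0/0 trust" line and restart postgresql.service.
#   hba_line harbor 192.168.1.0/24 md5
```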

Now I can test from a different machine

builder@DESKTOP-QADGF36:~/Workspaces/python-kasa$ sudo apt install postgresql-client-common

builder@DESKTOP-QADGF36:~/Workspaces/python-kasa$ sudo apt install postgresql-client-12

builder@DESKTOP-QADGF36:~/Workspaces/python-kasa$ psql -h 192.168.1.77 -p 5432 -U harbor postgres

psql (12.12 (Ubuntu 12.12-0ubuntu0.20.04.1))

SSL connection (protocol: TLSv1.3, cipher: TLS_AES_256_GCM_SHA384, bits: 256, compression: off)

Type "help" for help.

postgres=#
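The same reachability test can be scripted with `pg_isready`, which ships with the Postgres client packages. This is a sketch under assumptions: `wait_for_postgres` is a hypothetical helper, and the attempt count and 2 second pause are arbitrary.

```shell
#!/usr/bin/env bash
# Sketch: poll a Postgres server until it accepts connections.
# wait_for_postgres is a made-up helper name.
wait_for_postgres() {
  local host="$1" port="$2" attempts="${3:-10}"
  local i
  for ((i = 1; i <= attempts; i++)); do
    # pg_isready exits 0 once the server is accepting connections.
    if pg_isready -h "$host" -p "$port" -t 3 >/dev/null 2>&1; then
      echo "ready"
      return 0
    fi
    # Pause between attempts, but not after the last one.
    if ((i < attempts)); then
      sleep 2
    fi
  done
  echo "not ready"
  return 1
}

# Example:
#   wait_for_postgres 192.168.1.77 5432 && echo "safe to run helm install"
```

Note that `pg_isready` only checks that the server answers; it does not validate the harbor credentials, so the psql login above is still the more complete test.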

The first time through, the install failed over and over.

I later realized that you need to create not only the user but also the initial databases (though the installer will add the appropriate tables)

isaac@isaac-MacBookAir:~$ sudo -u postgres psql

[sudo] password for isaac:

psql (12.12 (Ubuntu 12.12-0ubuntu0.20.04.1))

Type "help" for help.

postgres=# CREATE DATABASE registry;

CREATE DATABASE

postgres=# CREATE DATABASE notary_signer;

CREATE DATABASE

postgres=# CREATE DATABASE notary_server;

CREATE DATABASE

postgres=# grant all privileges on database registry to harbor;

GRANT

postgres=# grant all privileges on database notary_signer to harbor;

GRANT

postgres=# grant all privileges on database notary_server to harbor;

GRANT

postgres=# exit
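The database setup above can also be scripted so it is not forgotten on the next rebuild. A minimal sketch, assuming a hypothetical helper `harbor_db_sql`. It sets the owner at creation time rather than issuing `GRANT ALL PRIVILEGES` afterwards, which is a swap from what was run above, and it is not idempotent: `CREATE DATABASE` fails if the database already exists.

```shell
#!/usr/bin/env bash
# Sketch: emit one CREATE DATABASE statement per database name.
# harbor_db_sql is a made-up helper name.
harbor_db_sql() {
  local owner="$1"
  shift
  local db
  for db in "$@"; do
    printf 'CREATE DATABASE %s OWNER %s;\n' "$db" "$owner"
  done
}

# Example, using the three database names from the session above:
#   harbor_db_sql harbor registry notary_signer notary_server | sudo -u postgres psql
```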

Now I can install with Helm (assuming the previous release has been uninstalled)

builder@DESKTOP-QADGF36:~/Workspaces/harborUpgrde$ cat values.yaml

expose:
  ingress:
    annotations:
      cert-manager.io/cluster-issuer: letsencrypt-production
    className: nginx
    hosts:
      core: harbor.freshbrewed.science
      notary: notary.freshbrewed.science
  tls:
    certSource: secret
    secret:
      notarySecretName: notary.freshbrewed.science-cert
      secretName: harbor.freshbrewed.science-cert
  type: ingress
database:
  type: external
  external:
    host: 192.168.1.77
    port: 5432
    username: harbor
    password: asdfasdfasdfasdf
externalURL: https://harbor.freshbrewed.science
harborAdminPassword: asdfasdfasdfasdf==
metrics:
  enabled: true
notary:
  enabled: true
secretKey: asdfasdfasdf

builder@DESKTOP-QADGF36:~/Workspaces/harborUpgrde$ helm install -f values.yaml harbor-registry2 ./harbor

NAME: harbor-registry2

LAST DEPLOYED: Mon Oct 31 06:56:28 2022

NAMESPACE: default

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

Please wait for several minutes for Harbor deployment to complete.

Then you should be able to visit the Harbor portal at https://harbor.freshbrewed.science

For more details, please visit https://github.com/goharbor/harbor

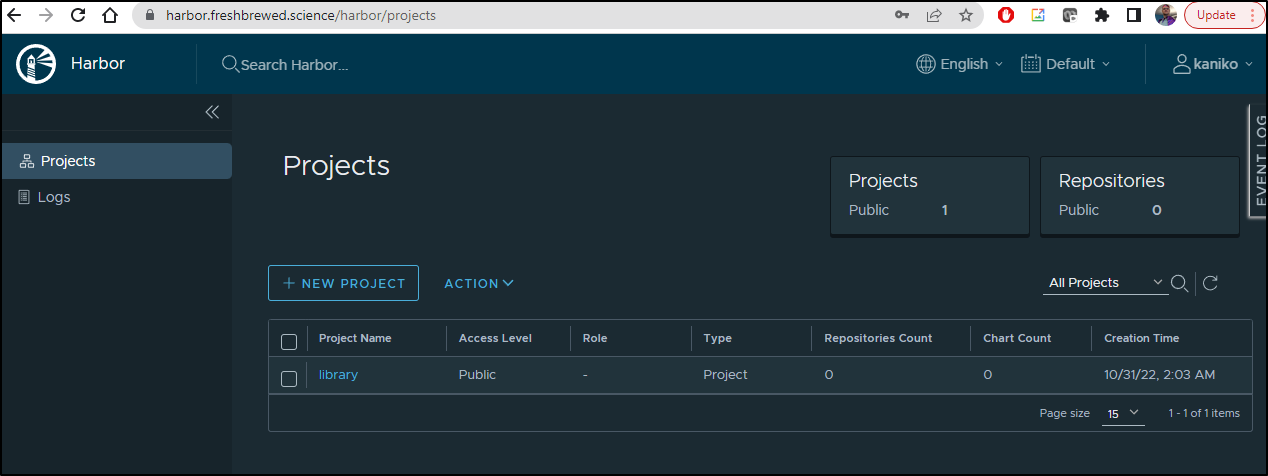

I did have to rotate some pods to get them to kick in, but soon I was back in action.

I started to push some existing container images back when I hit that old ingress issue: NGINX rejects the larger layers with a 413.

builder@DESKTOP-QADGF36:~/Workspaces/python-kasa$ docker push harbor.freshbrewed.science/freshbrewedprivate/pythonfunction

Using default tag: latest

The push refers to repository [harbor.freshbrewed.science/freshbrewedprivate/pythonfunction]

9283b229a025: Pushing [==================================================>] 25.83MB

526119217a7a: Pushing [==================================================>] 92.16kB

4e86cf43e3a8: Preparing

95557c4c07af: Pushing [=======> ] 1.708MB/12.05MB

873602908422: Pushing [==================================================>] 4.608kB

e4abe883350c: Waiting

95a02847aa85: Waiting

b45078e74ec9: Waiting

error parsing HTTP 413 response body: invalid character '<' looking for beginning of value: "<html>\r\n<head><title>413 Request Entity Too Large</title></head>\r\n<body>\r\n<center><h1>413 Request Entity Too Large</h1></center>\r\n<hr><center>nginx/1.21.6</center>\r\n</body>\r\n</html>\r\n"

That is a quick fix

builder@DESKTOP-QADGF36:~/Workspaces/python-kasa$ kubectl get ingress | grep harbor

harbor-registry2-ingress-notary nginx notary.freshbrewed.science 192.168.1.214,192.168.1.38,192.168.1.57,192.168.1.77 80, 443 15m

harbor-registry2-ingress nginx harbor.freshbrewed.science 192.168.1.214,192.168.1.38,192.168.1.57,192.168.1.77 80, 443 15m

builder@DESKTOP-QADGF36:~/Workspaces/python-kasa$ kubectl get ingress harbor-registry2-ingress -o yaml > harbor-registry2-ingress.yaml

builder@DESKTOP-QADGF36:~/Workspaces/python-kasa$ kubectl get ingress harbor-registry2-ingress -o yaml > harbor-registry2-ingress.yaml.bak

builder@DESKTOP-QADGF36:~/Workspaces/python-kasa$ vi harbor-registry2-ingress.yaml

builder@DESKTOP-QADGF36:~/Workspaces/python-kasa$ diff harbor-registry2-ingress.yaml harbor-registry2-ingress.yaml.bak

12,16d11

< nginx.ingress.kubernetes.io/proxy-read-timeout: "600"

< nginx.ingress.kubernetes.io/proxy-send-timeout: "600"

< nginx.org/client-max-body-size: "0"

< nginx.org/proxy-connect-timeout: "600"

< nginx.org/proxy-read-timeout: "600"

builder@DESKTOP-QADGF36:~/Workspaces/python-kasa$ kubectl apply -f harbor-registry2-ingress.yaml

Warning: resource ingresses/harbor-registry2-ingress is missing the kubectl.kubernetes.io/last-applied-configuration annotation which is required by kubectl apply. kubectl apply should only be used on resources created declaratively by either kubectl create --save-config or kubectl apply. The missing annotation will be patched automatically.

ingress.networking.k8s.io/harbor-registry2-ingress configured
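The same annotations could be set without the export, edit and apply round trip by using `kubectl annotate`. This is a sketch, not what was run above: `annotate_harbor_ingress` is a hypothetical helper, and the annotation keys and values are copied from the diff.

```shell
#!/usr/bin/env bash
# Sketch: apply the five annotations from the diff directly to an ingress.
# annotate_harbor_ingress is a made-up helper name.
annotate_harbor_ingress() {
  local ingress="$1"
  # --overwrite lets the command be re-run if a key is already present.
  kubectl annotate ingress "$ingress" --overwrite \
    nginx.ingress.kubernetes.io/proxy-read-timeout=600 \
    nginx.ingress.kubernetes.io/proxy-send-timeout=600 \
    nginx.org/client-max-body-size=0 \
    nginx.org/proxy-connect-timeout=600 \
    nginx.org/proxy-read-timeout=600
}

# Example:
#   annotate_harbor_ingress harbor-registry2-ingress
```

Another option would be to list these keys under `expose.ingress.annotations` in values.yaml, next to the cert-manager annotation, so they are part of what Helm deploys.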

Now I can push the few containers I know to be missing

builder@DESKTOP-QADGF36:~/Workspaces/python-kasa$ docker push harbor.freshbrewed.science/freshbrewedprivate/pythonfunction

Using default tag: latest

The push refers to repository [harbor.freshbrewed.science/freshbrewedprivate/pythonfunction]

9283b229a025: Pushed

526119217a7a: Layer already exists

4e86cf43e3a8: Pushed

95557c4c07af: Pushed

873602908422: Layer already exists

e4abe883350c: Pushed

95a02847aa85: Pushed

b45078e74ec9: Pushed

latest: digest: sha256:04ce848927589424cc8f972298745f8e11f94e3299ab2e31f8d9ccd78e4f0f41 size: 1996

builder@DESKTOP-QADGF36:~/Workspaces/python-kasa$ docker push harbor.freshbrewed.science/freshbrewedprivate/azfunc01:v0.0.2

The push refers to repository [harbor.freshbrewed.science/freshbrewedprivate/azfunc01]

d72f566cf0dd: Pushed

b2000a874981: Pushed

...

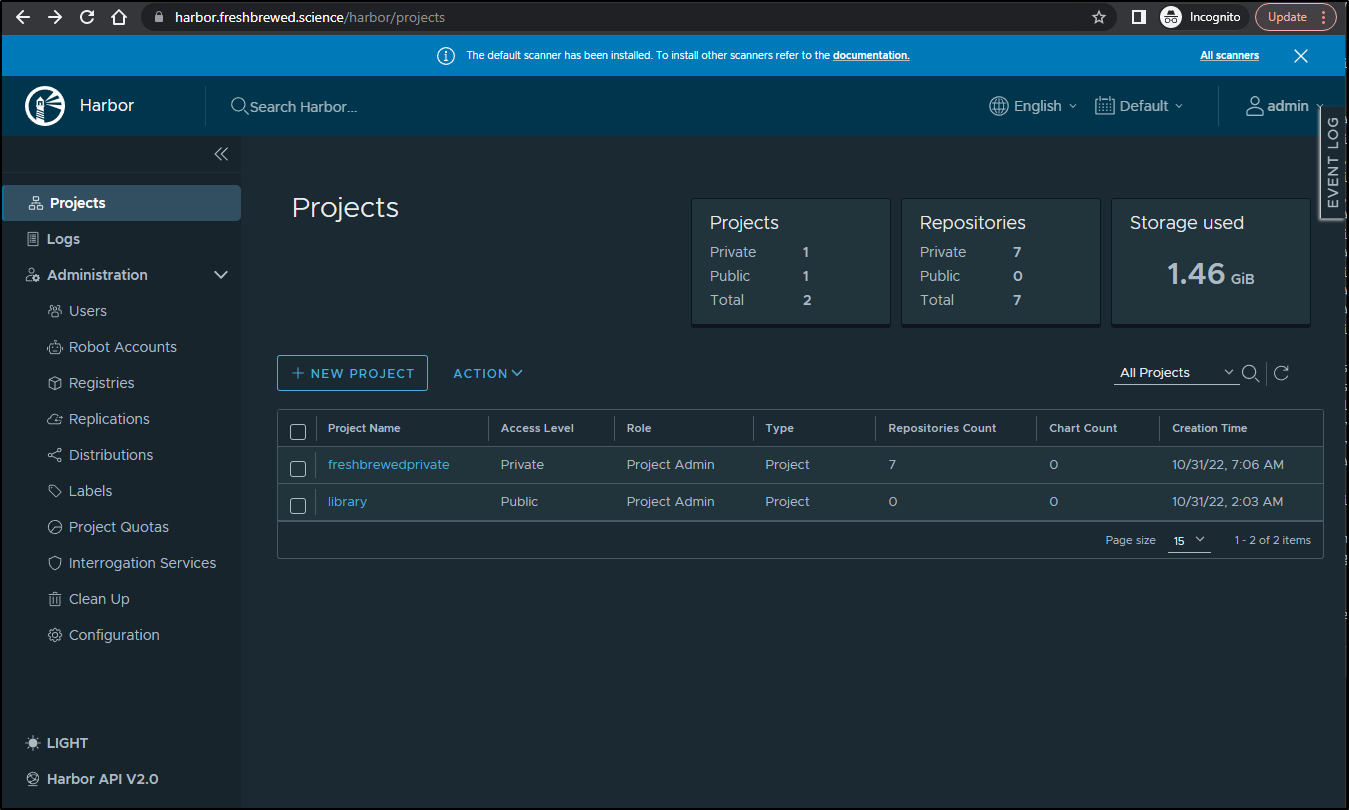

Once I pushed a few images I knew I might need, I logged in to see if harbor was still performant.

Now, not only are my harbor pods all running without issue, I can also see the failing crfunction is back in action

harbor-registry2-chartmuseum-7577686667-pkbqp 1/1 Running 0 35m

harbor-registry2-trivy-0 1/1 Running 0 35m

harbor-registry2-portal-7878768b86-r9qgw 1/1 Running 0 35m

harbor-registry2-redis-0 1/1 Running 0 35m

harbor-registry2-registry-78fd5b8f56-fgnn7 2/2 Running 0 35m

harbor-registry2-notary-signer-845658c5bc-zzjdh 1/1 Running 4 (33m ago) 35m

harbor-registry2-notary-server-6b4b47bb86-wlpj8 1/1 Running 4 (33m ago) 35m

harbor-registry2-core-6bd7984ffb-ffzff 1/1 Running 0 30m

harbor-registry2-exporter-648f957c7c-zs2cw 1/1 Running 0 30m

harbor-registry2-jobservice-57bfcc8bc8-zcsm7 1/1 Running 0 29m

python-crfunction-7d44797b8b-9m5gm 2/2 Running 227 (20m ago) 25d

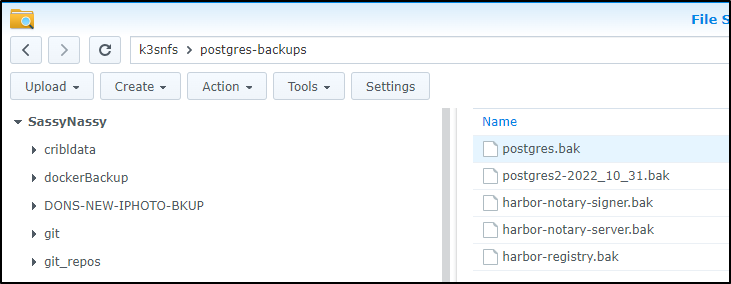

Backups

This time, I want some DR in the mix.

I’ll create a backups folder in a standard NFS mount

isaac@isaac-MacBookAir:~$ mkdir /mnt/nfs/k3snfs/postgres-backups

isaac@isaac-MacBookAir:~$ sudo su - postgres

postgres@isaac-MacBookAir:~$ pg_dump -U postgres postgres > /mnt/nfs/k3snfs/postgres-backups/postgres.bak

postgres@isaac-MacBookAir:~$ pg_dump -U postgres registry > /mnt/nfs/k3snfs/postgres-backups/harbor-registry.bak

postgres@isaac-MacBookAir:~$ pg_dump -U postgres notary_signer > /mnt/nfs/k3snfs/postgres-backups/harbor-notary-signer.bak

postgres@isaac-MacBookAir:~$ pg_dump -U postgres notary_server > /mnt/nfs/k3snfs/postgres-backups/harbor-notary-server.bak

postgres@isaac-MacBookAir:~$ ls -lh /mnt/nfs/k3snfs/postgres-backups

total 160K

-rwxrwxrwx 1 1024 users 5.7K Oct 31 07:42 harbor-notary-server.bak

-rwxrwxrwx 1 1024 users 3.6K Oct 31 07:42 harbor-notary-signer.bak

-rwxrwxrwx 1 1024 users 143K Oct 31 07:41 harbor-registry.bak

-rwxrwxrwx 1 1024 users 555 Oct 31 07:41 postgres.bak
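A dump is only useful if it can be restored. Because these are plain SQL dumps (the default `pg_dump` format), they can be replayed through `psql`. The sketch below assumes the target database already exists and is empty; `restore_dump` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: replay a plain-format pg_dump file into an existing database.
# restore_dump is a made-up helper name.
restore_dump() {
  local db="$1" dump="$2"
  if [ ! -r "$dump" ]; then
    echo "cannot read $dump" >&2
    return 1
  fi
  # ON_ERROR_STOP makes psql stop at the first failing statement
  # instead of continuing past it.
  psql -U postgres -v ON_ERROR_STOP=1 -d "$db" -f "$dump"
}

# Example (run as the postgres user):
#   restore_dump registry /mnt/nfs/k3snfs/postgres-backups/harbor-registry.bak
```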

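I’ll use crontab -e to add the nightly jobs. The date command is kept in a variable because an unescaped % in a crontab command is treated as a newline; each job then runs it through $(...)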

CURRENT_DATE=date +%Y_%m_%d

0 0 * * * pg_dump -U postgres postgres > /mnt/nfs/k3snfs/postgres-backups/postgres-$(${CURRENT_DATE}).bak

5 0 * * * pg_dump -U postgres registry > /mnt/nfs/k3snfs/postgres-backups/harbor-registry-$(${CURRENT_DATE}).bak

10 0 * * * pg_dump -U postgres notary_signer > /mnt/nfs/k3snfs/postgres-backups/harbor-notary-signer-$(${CURRENT_DATE}).bak

20 0 * * * pg_dump -U postgres notary_server > /mnt/nfs/k3snfs/postgres-backups/harbor-notary-server-$(${CURRENT_DATE}).bak

This will now create backup files with a date, e.g.

postgres@isaac-MacBookAir:~$ ls -ltra /mnt/nfs/k3snfs/postgres-backups/ | tail -n1

-rwxrwxrwx 1 1024 users 555 Oct 31 07:52 postgres-2022_10_31.bak
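Dated dumps will accumulate on the NFS share indefinitely. One way to cap that is a small pruning step, sketched here under assumptions: `backup_path` and `prune_backups` are hypothetical helpers, the 14 day retention is arbitrary, and GNU `find` is assumed (as on the Ubuntu host used here).

```shell
#!/usr/bin/env bash
# Sketch: build dated backup paths and delete old dumps.
# backup_path and prune_backups are made-up helper names.

# Print <dir>/<name>-YYYY_MM_DD.bak, matching the naming used by the crontab.
backup_path() {
  local dir="$1" name="$2"
  printf '%s/%s-%s.bak\n' "$dir" "$name" "$(date +%Y_%m_%d)"
}

# Delete *.bak files directly inside <dir> that were last modified
# more than <days> days ago. Subdirectories are left alone.
prune_backups() {
  local dir="$1" days="$2"
  find "$dir" -maxdepth 1 -type f -name '*.bak' -mtime +"$days" -delete
}

# Example (run as the postgres user):
#   pg_dump -U postgres registry > "$(backup_path /mnt/nfs/k3snfs/postgres-backups harbor-registry)"
#   prune_backups /mnt/nfs/k3snfs/postgres-backups 14
```

If these helpers live in a script file, a single crontab line can call it, and the % characters in the date format need no special handling there.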

And I can check and see them in the NAS

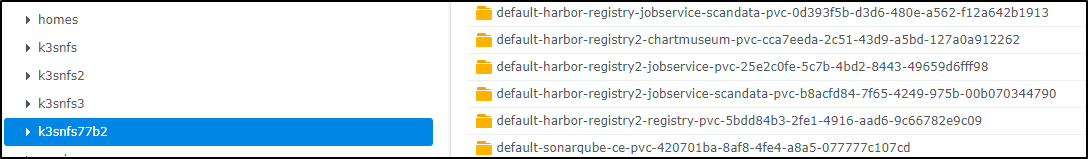

I can also see the PVCs are actually mounted to the NAS via the managed-nfs-storage storage class

builder@DESKTOP-QADGF36:~/Workspaces/python-kasa$ kubectl get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

nfs cluster.local/nfs-server-provisioner-1658802767 Delete Immediate true 97d

managed-nfs-storage (default) fuseim.pri/ifs Delete Immediate true 96d

local-path rancher.io/local-path Delete WaitForFirstConsumer false 97d

We can see we previously used the less stable ‘nfs’ class

builder@DESKTOP-QADGF36:~/Workspaces/python-kasa$ kubectl get pvc | grep harbor

harbor-registry-chartmuseum Bound pvc-4666b2a7-6fc1-40c4-80a4-e0887a76ad82 5Gi RWO nfs 97d

harbor-registry-jobservice Bound pvc-6cf34907-9c4b-4c67-aecb-6fda45b6691a 1Gi RWO nfs 97d

harbor-registry-registry Bound pvc-180fe352-8ae1-4866-9d66-5c76a645c53a 5Gi RWO nfs 97d

data-harbor-registry-redis-0 Bound pvc-521479b2-7692-4b44-b38e-43db54b45bdd 1Gi RWO nfs 97d

database-data-harbor-registry-database-0 Bound pvc-61309726-0219-4405-aefe-14aa05434595 1Gi RWO nfs 97d

data-harbor-registry-trivy-0 Bound pvc-127e6d04-b0d5-435f-939a-34d4cb4c54d5 5Gi RWO nfs 97d

harbor-registry-jobservice-scandata Bound pvc-0d393f5b-d3d6-480e-a562-f12a642b1913 1Gi RWO managed-nfs-storage 123m

harbor-registry2-registry Bound pvc-5bdd84b3-2fe1-4916-aad6-9c66782e9c09 5Gi RWO managed-nfs-storage 62m

harbor-registry2-jobservice-scandata Bound pvc-b8acfd84-7f65-4249-975b-00b070344790 1Gi RWO managed-nfs-storage 62m

harbor-registry2-chartmuseum Bound pvc-cca7eeda-2c51-43d9-a5bd-127a0a912262 5Gi RWO managed-nfs-storage 62m

harbor-registry2-jobservice Bound pvc-25e2c0fe-5c7b-4bd2-8443-49659d6fff98 1Gi RWO managed-nfs-storage 62m

data-harbor-registry2-trivy-0 Bound pvc-2bae3c2a-876d-4aa3-82d1-ee25d2b9df2a 5Gi RWO managed-nfs-storage 62m

data-harbor-registry2-redis-0 Bound pvc-69a7d5f3-02fa-40c2-8bac-d4aee6fe8181 1Gi RWO managed-nfs-storage 62m

Lastly, just as a sanity check, I looked at the NAS to ensure I saw the mounted PVCs

Summary

It was actually in preparation for a blog post later this week that I noticed Harbor was down, yet again. Invariably, it comes down to database issues. This time I moved the database out of the cluster to a Postgres instance on the Kubernetes master and moved the PVCs to a better-supported NFS storage class (managed-nfs-storage, the fuseim.pri/ifs provisioner).

Lastly, I decided to add a touch of DR by writing daily Postgres dumps to NFS.