Published: Aug 16, 2022 by Isaac Johnson

I’ve had a few questions come up recently about how Istio and Vault play together. We know that Consul and Vault, both HashiCorp products, work quite well together, but can we blend the commercially supported Anthos Service Mesh with HashiCorp Vault? Will they both add sidecars?

We’ll dig in with Google Kubernetes Engine and try using GKE Autopilot (which abstracts away node pool management). We’ll add Anthos Service Mesh (Google Cloud's supported commercial Istio) and lastly add HashiCorp Vault to our cluster, both with and without sidecar injection.

Set up the cluster and services in Google Cloud

First, I need to log in to gcloud:

$ gcloud auth login

Your browser has been opened to visit:

...

You are now logged in as [isaac.johnson@gmail.com].

Your current project is [gkedemo01]. You can change this setting by running:

$ gcloud config set project PROJECT_ID

To take a quick anonymous survey, run:

$ gcloud survey

Then I should find the project ID for my Anthos project

$ gcloud projects list --filter=anthos --limit 5

PROJECT_ID NAME PROJECT_NUMBER

myanthosproject2 myAnthosProject2 511842454269

I’ll need to enable the Service Mesh and Anthos APIs (if I haven’t already):

$ export PROJECTID=myanthosproject2

$ gcloud services enable mesh.googleapis.com --project=$PROJECTID

Operation "operations/acat.p2-511842454269-5f80b86a-307c-4d3c-bff9-bcb5d0893020" finished successfully.

$ gcloud services enable anthos.googleapis.com --project=$PROJECTID

Operation "operations/acat.p2-511842454269-ef2fd078-6eba-42aa-b1a8-af161ea1c13d" finished successfully.
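ASM expects the cluster's mesh_id label to be proj- followed by the project number. You don't have to copy that number from the projects list by hand. A tiny helper can build the label instead. This is only a sketch, and mesh_id_label is a hypothetical name of my own, not part of gcloud:

```shell
# Hypothetical helper (not part of gcloud): build the ASM mesh_id label,
# which takes the form mesh_id=proj-<PROJECT_NUMBER>.
mesh_id_label() {
  project_number="$1"
  # GCP project numbers are purely numeric; reject anything else early.
  case "$project_number" in
    ''|*[!0-9]*)
      echo "mesh_id_label: expected a numeric project number, got '$project_number'" >&2
      return 1
      ;;
  esac
  printf 'mesh_id=proj-%s\n' "$project_number"
}
```

You can look the number up with gcloud projects describe "$PROJECTID" --format="value(projectNumber)". For this project, mesh_id_label "$PROJECTNUM" then prints mesh_id=proj-511842454269.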

Now we can create the Autopilot cluster:

$ export PROJECTNUM=511842454269

$ gcloud container --project $PROJECTID clusters create-auto "freshbrewed-autopilot-cluster-1" --region "us-central1" --release-channel "regular" --network "projects/$PROJECTID/global/networks/default" --subnetwork "projects/$PROJECTID/regions/us-central1/subnetworks/default" --cluster-ipv4-cidr "/17" --services-ipv4-cidr "/22" --labels mesh_id=proj-$PROJECTNUM

The first time I ran it, I got an error:

ERROR: (gcloud.container.clusters.create-auto) unrecognized arguments:

--labels

mesh_id=proj-511842454269

To search the help text of gcloud commands, run:

gcloud help -- SEARCH_TERMS

This led me to believe my gcloud was out of date:

$ gcloud version

Google Cloud SDK 367.0.0

alpha 2021.12.10

app-engine-go 1.9.72

app-engine-python 1.9.98

app-engine-python-extras 1.9.96

beta 2021.12.10

bq 2.0.72

cloud-build-local 0.5.2

core 2021.12.10

gsutil 5.5

pubsub-emulator 0.6.0

Upgrading should be simple

$ gcloud components update

To help improve the quality of this product, we collect anonymized usage data and anonymized stacktraces when crashes are encountered;

additional information is available at <https://cloud.google.com/sdk/usage-statistics>. This data is handled in accordance with our privacy

policy <https://cloud.google.com/terms/cloud-privacy-notice>. You may choose to opt in this collection now (by choosing 'Y' at the below

prompt), or at any time in the future by running the following command:

gcloud config set disable_usage_reporting false

Do you want to opt-in (y/N)? y

Beginning update. This process may take several minutes.

ERROR: (gcloud.components.update)

You cannot perform this action because the Cloud SDK component manager

is disabled for this installation. You can run the following command

to achieve the same result for this installation:

sudo apt-get update && sudo apt-get --only-upgrade install kubectl google-cloud-sdk-app-engine-java google-cloud-sdk-pubsub-emulator google-cloud-sdk-config-connector google-cloud-sdk-spanner-emulator google-cloud-sdk-kpt google-cloud-sdk google-cloud-sdk-terraform-validator google-cloud-sdk-app-engine-go google-cloud-sdk-skaffold google-cloud-sdk-kubectl-oidc google-cloud-sdk-cbt google-cloud-sdk-firestore-emulator google-cloud-sdk-gke-gcloud-auth-plugin google-cloud-sdk-app-engine-grpc google-cloud-sdk-minikube google-cloud-sdk-anthos-auth google-cloud-sdk-datalab google-cloud-sdk-app-engine-python google-cloud-sdk-bigtable-emulator google-cloud-sdk-cloud-build-local google-cloud-sdk-local-extract google-cloud-sdk-datastore-emulator google-cloud-sdk-app-engine-python-extras

However, the component manager is disabled for this installation, so we have to use apt instead and run the update and upgrade as suggested:

$ sudo apt-get update && sudo apt-get --only-upgrade install kubectl google-cloud-sdk-app-engine-java google-cloud-sdk-pubsub-emulator google-cloud-sdk-config-connector google-cloud-sdk-spanner-emulator google-cloud-sdk-kpt google-cloud-sdk google-cloud-sdk-terraform-validator google-cloud-sdk-app-engine-go google-cloud-sdk-skaffold google-cloud-sdk-kubectl-oidc google-cloud-sdk-cbt google-cloud-sdk-firestore-emulator google-cloud-sdk-gke-gcloud-auth-plugin google-cloud-sdk-app-engine-grpc google-cloud-sdk-minikube google-cloud-sdk-anthos-auth google-cloud-sdk-datalab google-cloud-sdk-app-engine-python google-cloud-sdk-bigtable-emulator google-cloud-sdk-cloud-build-local google-cloud-sdk-local-extract google-cloud-sdk-datastore-emulator google-cloud-sdk-app-engine-python-extras

[sudo] password for builder:

Hit:1 http://archive.ubuntu.com/ubuntu focal InRelease

Get:2 https://apt.releases.hashicorp.com focal InRelease [16.3 kB]

Get:3 http://archive.ubuntu.com/ubuntu focal-updates InRelease [114 kB]

Get:4 https://packages.microsoft.com/repos/azure-cli focal InRelease [10.4 kB]

Get:5 https://packages.microsoft.com/repos/microsoft-ubuntu-focal-prod focal InRelease [10.5 kB]

Get:6 https://apt.releases.hashicorp.com focal/main amd64 Packages [62.7 kB]

Get:7 http://security.ubuntu.com/ubuntu focal-security InRelease [114 kB]

Get:8 https://packages.microsoft.com/debian/11/prod bullseye InRelease [10.5 kB]

Get:9 http://archive.ubuntu.com/ubuntu focal-backports InRelease [108 kB]

Get:10 https://packages.cloud.google.com/apt cloud-sdk InRelease [6751 B]

Get:11 https://packages.microsoft.com/repos/azure-cli focal/main amd64 Packages [9297 B]

Get:12 https://packages.microsoft.com/repos/microsoft-ubuntu-focal-prod focal/main amd64 Packages [185 kB]

Get:13 http://archive.ubuntu.com/ubuntu focal-updates/main amd64 Packages [2036 kB]

Get:14 https://packages.cloud.google.com/apt cloud-sdk/main amd64 Packages [306 kB]

Get:15 https://packages.microsoft.com/debian/11/prod bullseye/main armhf Packages [16.2 kB]

Get:16 https://packages.microsoft.com/debian/11/prod bullseye/main arm64 Packages [14.7 kB]

Get:17 https://packages.microsoft.com/debian/11/prod bullseye/main amd64 Packages [75.9 kB]

Get:18 http://archive.ubuntu.com/ubuntu focal-updates/main Translation-en [364 kB]

Get:19 http://archive.ubuntu.com/ubuntu focal-updates/main amd64 c-n-f Metadata [15.6 kB]

Get:20 http://archive.ubuntu.com/ubuntu focal-updates/restricted amd64 Packages [1222 kB]

Get:21 http://archive.ubuntu.com/ubuntu focal-updates/restricted Translation-en [174 kB]

Get:22 http://archive.ubuntu.com/ubuntu focal-updates/restricted amd64 c-n-f Metadata [584 B]

Get:23 http://archive.ubuntu.com/ubuntu focal-updates/universe amd64 Packages [936 kB]

Get:24 http://archive.ubuntu.com/ubuntu focal-updates/universe Translation-en [210 kB]

Get:25 http://archive.ubuntu.com/ubuntu focal-updates/universe amd64 c-n-f Metadata [21.0 kB]

Get:26 http://archive.ubuntu.com/ubuntu focal-updates/multiverse amd64 Packages [24.4 kB]

Get:27 http://archive.ubuntu.com/ubuntu focal-updates/multiverse amd64 c-n-f Metadata [588 B]

Get:28 http://archive.ubuntu.com/ubuntu focal-backports/main amd64 Packages [45.6 kB]

Get:29 http://archive.ubuntu.com/ubuntu focal-backports/main Translation-en [16.3 kB]

Get:30 http://archive.ubuntu.com/ubuntu focal-backports/main amd64 c-n-f Metadata [1420 B]

Get:31 http://archive.ubuntu.com/ubuntu focal-backports/universe amd64 Packages [24.0 kB]

Get:32 http://archive.ubuntu.com/ubuntu focal-backports/universe Translation-en [16.0 kB]

Get:33 http://security.ubuntu.com/ubuntu focal-security/main amd64 Packages [1672 kB]

Get:34 http://archive.ubuntu.com/ubuntu focal-backports/universe amd64 c-n-f Metadata [860 B]

Get:35 http://security.ubuntu.com/ubuntu focal-security/main Translation-en [282 kB]

Get:36 http://security.ubuntu.com/ubuntu focal-security/main amd64 c-n-f Metadata [10.8 kB]

Get:37 http://security.ubuntu.com/ubuntu focal-security/restricted amd64 Packages [1138 kB]

Get:38 http://security.ubuntu.com/ubuntu focal-security/restricted Translation-en [162 kB]

Get:39 http://security.ubuntu.com/ubuntu focal-security/restricted amd64 c-n-f Metadata [588 B]

Get:40 http://security.ubuntu.com/ubuntu focal-security/universe amd64 Packages [714 kB]

Get:41 http://security.ubuntu.com/ubuntu focal-security/universe Translation-en [130 kB]

Get:42 http://security.ubuntu.com/ubuntu focal-security/universe amd64 c-n-f Metadata [14.7 kB]

Get:43 http://security.ubuntu.com/ubuntu focal-security/multiverse amd64 Packages [22.2 kB]

Get:44 http://security.ubuntu.com/ubuntu focal-security/multiverse amd64 c-n-f Metadata [508 B]

Fetched 10.3 MB in 2s (5707 kB/s)

Reading package lists... Done

Reading package lists... Done

Building dependency tree

Reading state information... Done

Skipping google-cloud-sdk-anthos-auth, it is not installed and only upgrades are requested.

Skipping google-cloud-sdk-app-engine-grpc, it is not installed and only upgrades are requested.

Skipping google-cloud-sdk-app-engine-java, it is not installed and only upgrades are requested.

Skipping google-cloud-sdk-bigtable-emulator, it is not installed and only upgrades are requested.

Skipping google-cloud-sdk-cbt, it is not installed and only upgrades are requested.

Skipping google-cloud-sdk-config-connector, it is not installed and only upgrades are requested.

Skipping google-cloud-sdk-datalab, it is not installed and only upgrades are requested.

Skipping google-cloud-sdk-datastore-emulator, it is not installed and only upgrades are requested.

Skipping google-cloud-sdk-firestore-emulator, it is not installed and only upgrades are requested.

Skipping google-cloud-sdk-gke-gcloud-auth-plugin, it is not installed and only upgrades are requested.

Skipping google-cloud-sdk-kpt, it is not installed and only upgrades are requested.

Skipping google-cloud-sdk-kubectl-oidc, it is not installed and only upgrades are requested.

Skipping google-cloud-sdk-local-extract, it is not installed and only upgrades are requested.

Skipping google-cloud-sdk-minikube, it is not installed and only upgrades are requested.

Skipping google-cloud-sdk-skaffold, it is not installed and only upgrades are requested.

Skipping google-cloud-sdk-spanner-emulator, it is not installed and only upgrades are requested.

Skipping google-cloud-sdk-terraform-validator, it is not installed and only upgrades are requested.

Skipping kubectl, it is not installed and only upgrades are requested.

The following packages were automatically installed and are no longer required:

liblttng-ust-ctl4 liblttng-ust0 python3-crcmod

Use 'sudo apt autoremove' to remove them.

Suggested packages:

google-cloud-sdk-app-engine-java google-cloud-sdk-bigtable-emulator google-cloud-sdk-datastore-emulator kubectl

The following packages will be upgraded:

google-cloud-sdk google-cloud-sdk-app-engine-go google-cloud-sdk-app-engine-python google-cloud-sdk-app-engine-python-extras

google-cloud-sdk-cloud-build-local google-cloud-sdk-pubsub-emulator

6 upgraded, 0 newly installed, 0 to remove and 231 not upgraded.

Need to get 221 MB of archives.

After this operation, 244 MB of additional disk space will be used.

Do you want to continue? [Y/n] Y

Get:1 https://packages.cloud.google.com/apt cloud-sdk/main amd64 google-cloud-sdk all 397.0.0-0 [134 MB]

Get:2 https://packages.cloud.google.com/apt cloud-sdk/main amd64 google-cloud-sdk-app-engine-python all 397.0.0-0 [5522 kB]

Get:3 https://packages.cloud.google.com/apt cloud-sdk/main amd64 google-cloud-sdk-app-engine-go amd64 397.0.0-0 [1883 kB]

Get:4 https://packages.cloud.google.com/apt cloud-sdk/main amd64 google-cloud-sdk-app-engine-python-extras all 397.0.0-0 [15.0 MB]

Get:5 https://packages.cloud.google.com/apt cloud-sdk/main amd64 google-cloud-sdk-cloud-build-local amd64 397.0.0-0 [2720 kB]

Get:6 https://packages.cloud.google.com/apt cloud-sdk/main amd64 google-cloud-sdk-pubsub-emulator all 397.0.0-0 [61.6 MB]

Fetched 221 MB in 5s (42.8 MB/s)

(Reading database ... 220787 files and directories currently installed.)

Preparing to unpack .../0-google-cloud-sdk_397.0.0-0_all.deb ...

Unpacking google-cloud-sdk (397.0.0-0) over (367.0.0-0) ...

Preparing to unpack .../1-google-cloud-sdk-app-engine-python_397.0.0-0_all.deb ...

Unpacking google-cloud-sdk-app-engine-python (397.0.0-0) over (367.0.0-0) ...

Preparing to unpack .../2-google-cloud-sdk-app-engine-go_397.0.0-0_amd64.deb ...

Unpacking google-cloud-sdk-app-engine-go (397.0.0-0) over (367.0.0-0) ...

Preparing to unpack .../3-google-cloud-sdk-app-engine-python-extras_397.0.0-0_all.deb ...

Unpacking google-cloud-sdk-app-engine-python-extras (397.0.0-0) over (367.0.0-0) ...

Preparing to unpack .../4-google-cloud-sdk-cloud-build-local_397.0.0-0_amd64.deb ...

Unpacking google-cloud-sdk-cloud-build-local (397.0.0-0) over (367.0.0-0) ...

Preparing to unpack .../5-google-cloud-sdk-pubsub-emulator_397.0.0-0_all.deb ...

Unpacking google-cloud-sdk-pubsub-emulator (397.0.0-0) over (367.0.0-0) ...

Setting up google-cloud-sdk (397.0.0-0) ...

Setting up google-cloud-sdk-app-engine-python (397.0.0-0) ...

Setting up google-cloud-sdk-cloud-build-local (397.0.0-0) ...

Setting up google-cloud-sdk-pubsub-emulator (397.0.0-0) ...

Processing triggers for man-db (2.9.1-1) ...

Processing triggers for google-cloud-sdk (397.0.0-0) ...

Setting up google-cloud-sdk-app-engine-python-extras (397.0.0-0) ...

Setting up google-cloud-sdk-app-engine-go (397.0.0-0) ...

Processing triggers for google-cloud-sdk (397.0.0-0) ...

With the update done, we can check the versions again:

$ gcloud version

Google Cloud SDK 397.0.0

alpha 2022.08.05

app-engine-go 1.9.72

app-engine-python 1.9.100

app-engine-python-extras 1.9.96

beta 2022.08.05

bq 2.0.75

bundled-python3-unix 3.9.12

core 2022.08.05

gcloud-crc32c 1.0.0

gsutil 5.11

pubsub-emulator 0.7.0

However, even with the latest version, the --labels parameter just doesn’t exist on the command line for clusters create-auto:

$ gcloud container --project $PROJECTID clusters create-auto "freshbrewed-autopilot-cluster-1" --region "us-central1" --release-channel "regular" --network "projects/$PROJECTID/global/networks/default" --subnetwork "projects/$PROJECTID/regions/us-central1/subnetworks/default" --cluster-ipv4-cidr "/17" --services-ipv4-cidr "/22" --labels mesh_id=proj-$PROJECTNUM

ERROR: (gcloud.container.clusters.create-auto) unrecognized arguments:

--labels

mesh_id=proj-511842454269

To search the help text of gcloud commands, run:

gcloud help -- SEARCH_TERMS

$ gcloud container --project "myanthosproject2" clusters create-auto "freshbrewed-autopilot-cluster-1" --region "us-central1" --release-channel "regular" --network "projects/myanthosproject2/global/networks/default" --subnetwork "projects/myanthosproject2/regions/us-central1/subnetworks/default" --cluster-ipv4-cidr "/17" --services-ipv4-cidr "/22" --labels="mesh_id=proj-511842454269"

ERROR: (gcloud.container.clusters.create-auto) unrecognized arguments: --labels=mesh_id=proj-511842454269

To search the help text of gcloud commands, run:

gcloud help -- SEARCH_TERMS

$ gcloud container --project "myanthosproject2" clusters create-auto "freshbrewed-autopilot-cluster-1" --region "us-central1" --release-channel "regular" --network "projects/myanthosproject2/global/networks/default" --subnetwork "projects/myanthosproject2/regions/us-central1/subnetworks/default" --cluster-ipv4-cidr "/17" --services-ipv4-cidr "/22" --labels=mesh_id=proj-511842454269

ERROR: (gcloud.container.clusters.create-auto) unrecognized arguments: --labels=mesh_id=proj-511842454269

To search the help text of gcloud commands, run:

gcloud help -- SEARCH_TERMS

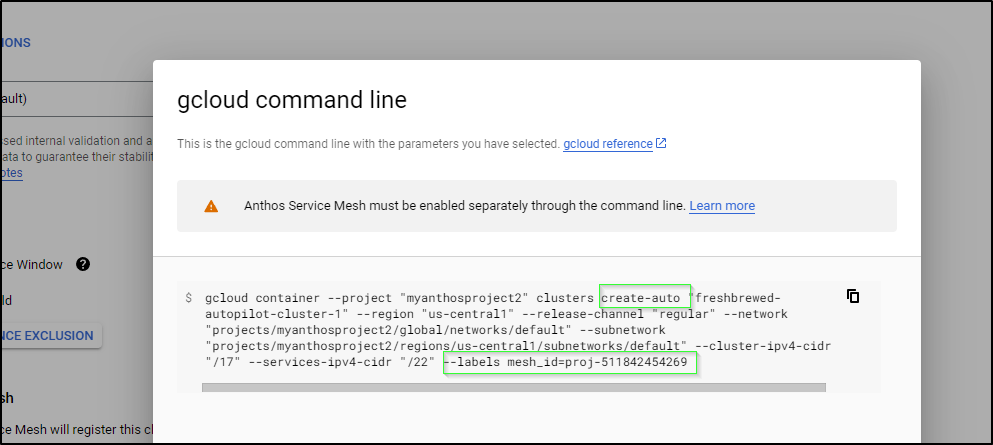

This is even though the Google Cloud web UI tells me it should work.

Note: --labels does work with clusters create (which creates a Standard cluster), however. I guess I’ll have to use the console until they update the gcloud CLI.

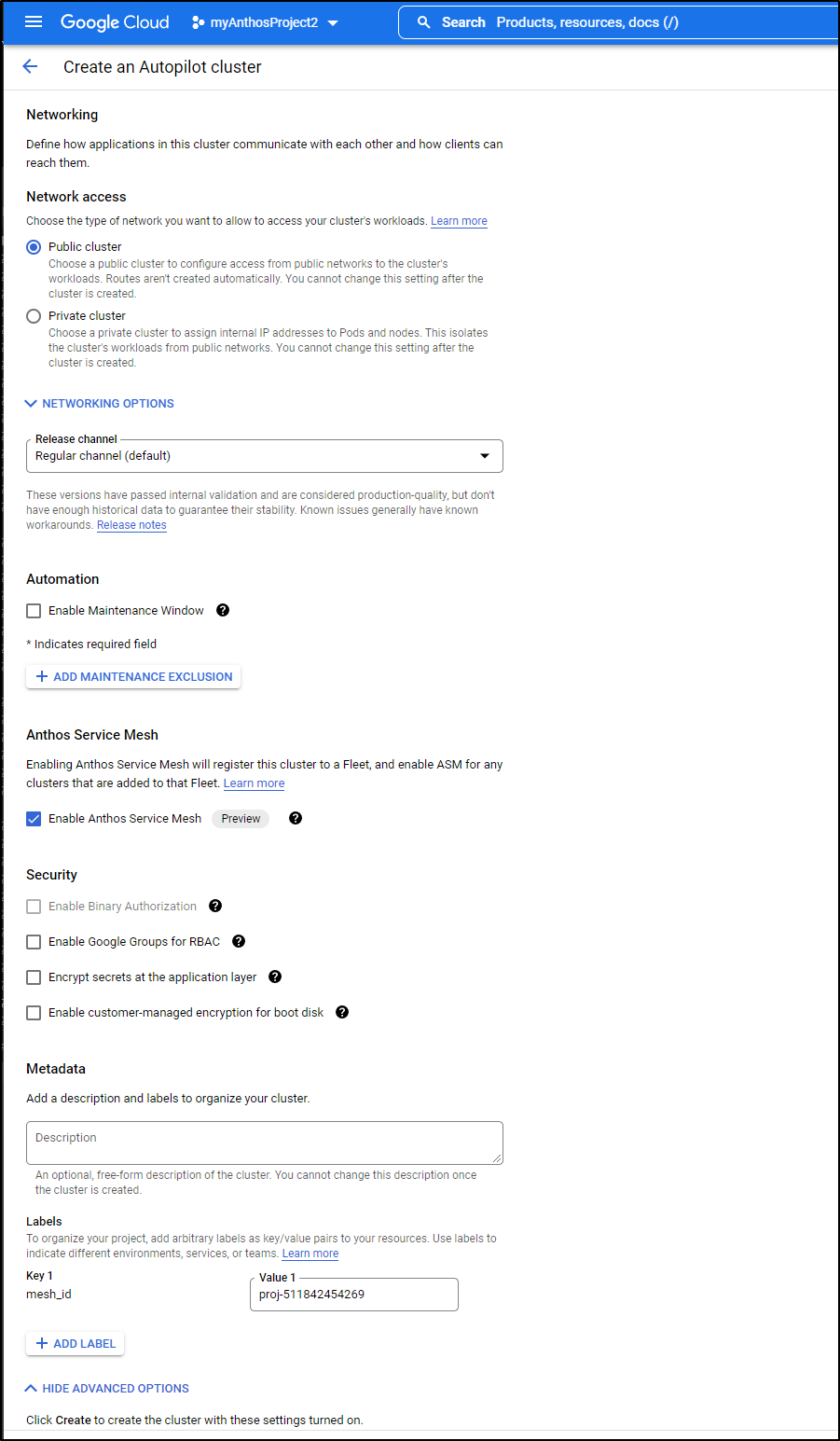

So I went to the Google Cloud Console and created the cluster with the web UI.

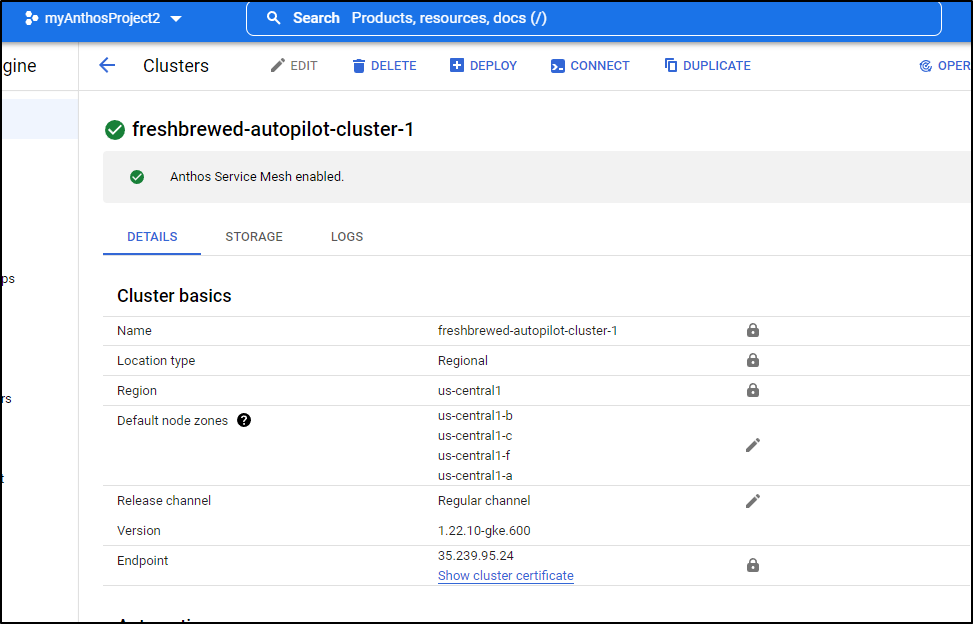

When done, I could see the cluster was running. Once it was created, I could log in:

$ gcloud container clusters get-credentials freshbrewed-autopilot-cluster-1 --region us-central1 --project $PROJECTID

Fetching cluster endpoint and auth data.

CRITICAL: ACTION REQUIRED: gke-gcloud-auth-plugin, which is needed for continued use of kubectl, was not found or is not executable. Install gke-gcloud-auth-plugin for use with kubectl by following https://cloud.google.com/blog/products/containers-kubernetes/kubectl-auth-changes-in-gke

kubeconfig entry generated for freshbrewed-autopilot-cluster-1.

And I could see that Istio was indeed running:

$ kubectl get pods --all-namespaces | grep istio

kube-system istio-cni-node-4v48d 2/2 Running 0 2m21s

kube-system istio-cni-node-57v6m 2/2 Running 0 3m15s

kube-system istio-cni-node-d4t6n 2/2 Running 0 3m15s
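You can also check this without eyeballing the grep output. A rough check counts how many istio-cni-node pods are fully ready. This sketch assumes the default kubectl get pods --all-namespaces column layout, and count_ready_pods is a hypothetical helper, not a kubectl feature:

```shell
# Hypothetical helper: count pods whose NAME matches a pattern, whose STATUS
# is Running, and whose READY column is fully ready (e.g. 2/2).
# Reads `kubectl get pods --all-namespaces` output on stdin; the column order
# NAMESPACE NAME READY STATUS RESTARTS AGE is assumed.
count_ready_pods() {
  pattern="$1"
  awk -v pat="$pattern" '
    $1 == "NAMESPACE" { next }               # skip the header row
    $2 ~ pat && $4 == "Running" {
      split($3, ready, "/")                  # "2/2" -> ready[1]=2, ready[2]=2
      if (ready[1] == ready[2]) n++
    }
    END { print n + 0 }
  '
}
```

Piping kubectl get pods --all-namespaces into count_ready_pods istio-cni-node should print 3 for the cluster above.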

Sample App

Let’s test Istio (Anthos Service Mesh, ASM) by launching a sample app

$ kubectl apply -f https://raw.githubusercontent.com/GoogleCloudPlatform/istio-samples/d8666bb6f72b63fa2f27c7001ce196394494a585/sample-apps/helloserver/server/server.yaml

deployment.apps/helloserver created

service/hellosvc created

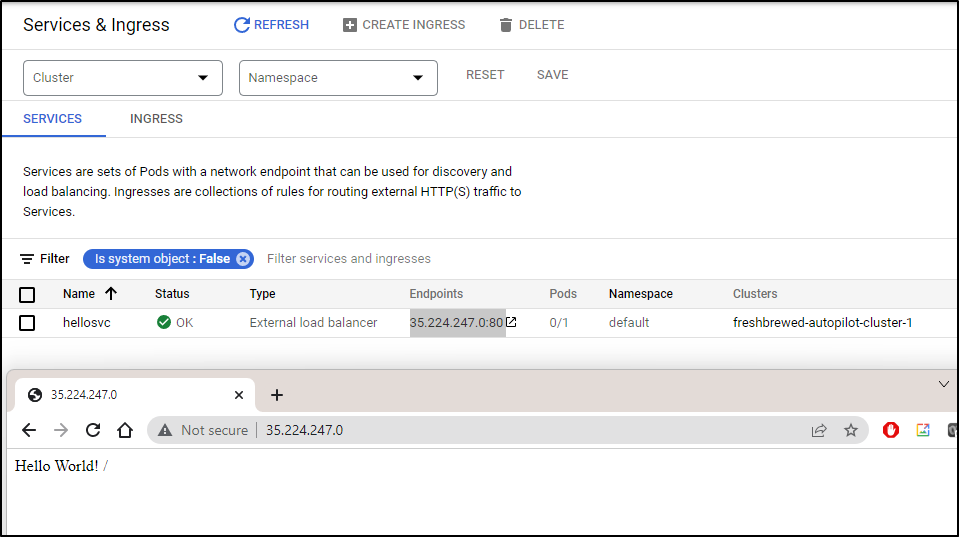

I can see it created a service

$ kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

hellosvc LoadBalancer 10.21.3.133 35.224.247.0 80:30972/TCP 2m33s

kubernetes ClusterIP 10.21.0.1 <none> 443/TCP 17m

And I can see it is running

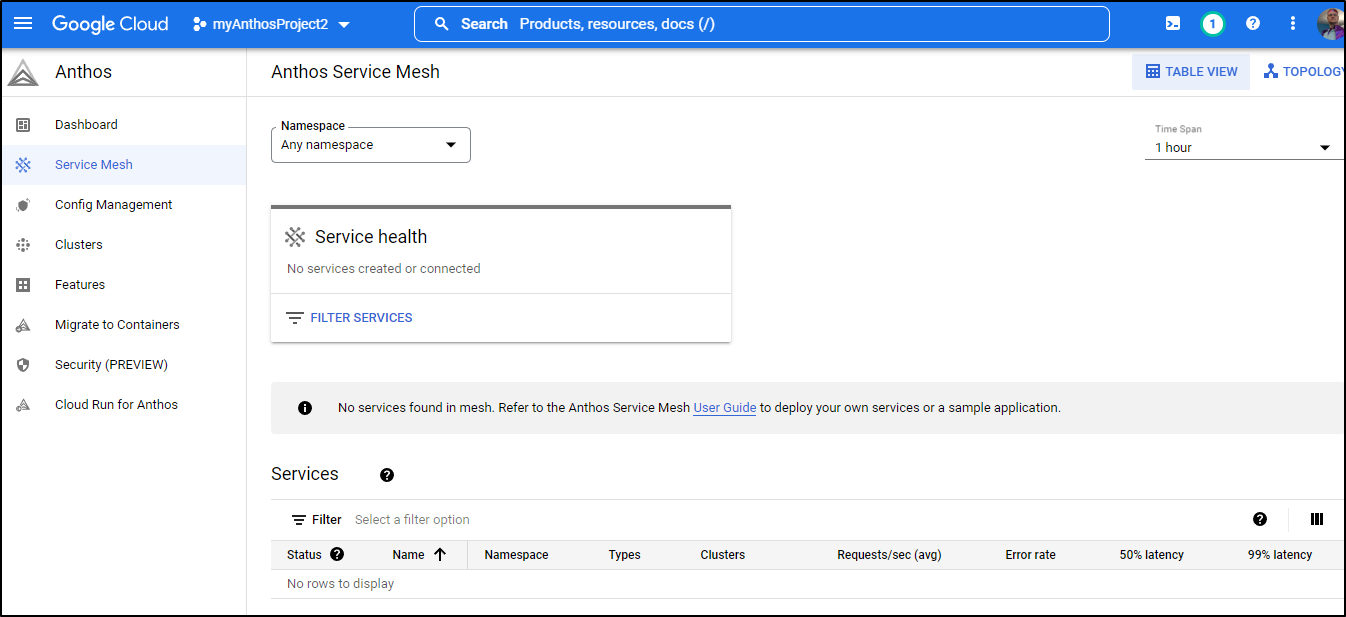

However, nothing is listed in ASM yet.

We need to add the ASM managed revision label (istio.io/rev=asm-managed) to the namespace and remove the “istio-injection=enabled” label if it exists:

$ kubectl label namespace default istio-injection- istio.io/rev=asm-managed --overwrite

label "istio-injection" not found.

namespace/default labeled

We can then rotate (delete) the pod so that its replacement gets the ASM sidecar injected:

$ kubectl get pods

NAME READY STATUS RESTARTS AGE

helloserver-64f6d5d74b-btgzr 1/1 Running 0 8m30s

$ kubectl delete pod helloserver-64f6d5d74b-btgzr

pod "helloserver-64f6d5d74b-btgzr" deleted

$ kubectl get pods

NAME READY STATUS RESTARTS AGE

helloserver-64f6d5d74b-6ld69 0/2 Init:0/1 0 8s

$ kubectl get pods

NAME READY STATUS RESTARTS AGE

helloserver-64f6d5d74b-6ld69 2/2 Running 0 57s

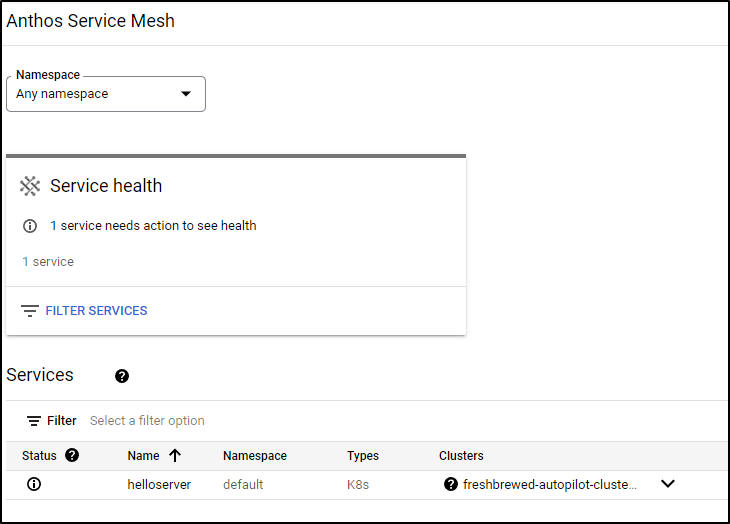

We can now see it listed in Anthos Service Mesh
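The 2/2 READY count is a good hint that the sidecar was injected. A more direct check is to list the pod's container names with kubectl's jsonpath output and look for istio-proxy, the name Istio (and ASM) gives the injected proxy container. Here is a minimal sketch, with has_container as a hypothetical helper:

```shell
# Hypothetical helper: succeed if a pod has a container with the given name.
# Reads space-separated container names on stdin, as printed by:
#   kubectl get pod POD -o jsonpath='{.spec.containers[*].name}'
has_container() {
  wanted="$1"
  # Put one container name per line, then look for an exact, literal match.
  tr ' ' '\n' | grep -qxF -- "$wanted"
}
```

For example: kubectl get pod helloserver-64f6d5d74b-6ld69 -o jsonpath='{.spec.containers[*].name}' | has_container istio-proxy && echo injected. Instead of deleting pods by hand, you can also run kubectl rollout restart deployment/helloserver. It recreates all of the deployment's pods so they pick up the sidecar.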

Installing Vault

Next, let’s add the Helm repo and update

$ helm repo add hashicorp https://helm.releases.hashicorp.com

"hashicorp" has been added to your repositories

$ helm repo update

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "myharbor" chart repository

...Successfully got an update from the "hashicorp" chart repository

...Successfully got an update from the "confluentinc" chart repository

...Successfully got an update from the "kuma" chart repository

...Successfully got an update from the "azure-samples" chart repository

...Successfully got an update from the "actions-runner-controller" chart repository

...Successfully got an update from the "rhcharts" chart repository

...Successfully got an update from the "adwerx" chart repository

...Successfully got an update from the "sonarqube" chart repository

...Successfully got an update from the "uptime-kuma" chart repository

...Successfully got an update from the "dapr" chart repository

...Successfully got an update from the "novum-rgi-helm" chart repository

...Successfully got an update from the "kubecost" chart repository

...Successfully got an update from the "lifen-charts" chart repository

...Successfully got an update from the "epsagon" chart repository

...Successfully got an update from the "sumologic" chart repository

...Successfully got an update from the "harbor" chart repository

...Successfully got an update from the "nginx-stable" chart repository

...Successfully got an update from the "crossplane-stable" chart repository

...Successfully got an update from the "argo-cd" chart repository

...Successfully got an update from the "datadog" chart repository

...Successfully got an update from the "rancher-latest" chart repository

...Successfully got an update from the "incubator" chart repository

...Successfully got an update from the "gitlab" chart repository

...Successfully got an update from the "newrelic" chart repository

...Successfully got an update from the "bitnami" chart repository

Update Complete. ⎈Happy Helming!⎈

Then install Vault with helm

$ helm install vault hashicorp/vault

W0816 06:47:56.144942 20905 warnings.go:70] Autopilot set default resource requests for Deployment default/vault-agent-injector, as resource requests were not specified. See http://g.co/gke/autopilot-defaults.

W0816 06:47:56.314607 20905 warnings.go:70] Autopilot set default resource requests for StatefulSet default/vault, as resource requests were not specified. See http://g.co/gke/autopilot-defaults.

W0816 06:47:56.562105 20905 warnings.go:70] AdmissionWebhookController: mutated namespaceselector of the webhooks to enforce GKE Autopilot policies.

NAME: vault

LAST DEPLOYED: Tue Aug 16 06:47:50 2022

NAMESPACE: default

STATUS: deployed

REVISION: 1

NOTES:

Thank you for installing HashiCorp Vault!

Now that you have deployed Vault, you should look over the docs on using

Vault with Kubernetes available here:

https://www.vaultproject.io/docs/

Your release is named vault. To learn more about the release, try:

$ helm status vault

$ helm get manifest vault

We chose Autopilot so that Google manages the nodes. However, there is a cost to this: when launched, my pods sat in Pending:

$ kubectl get pods

NAME READY STATUS RESTARTS AGE

helloserver-64f6d5d74b-6ld69 2/2 Running 0 16m

vault-0 0/2 Pending 0 89s

vault-agent-injector-674bc999bb-9hjkc 0/2 Pending 0 89s

Describing vault-0 (kubectl describe pod vault-0) showed that it had triggered a scale-up event:

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Warning FailedScheduling 74s gke.io/optimize-utilization-scheduler 0/3 nodes are available: 3 Insufficient cpu, 3 Insufficient memory.

Normal TriggeredScaleUp 61s cluster-autoscaler pod triggered scale-up: [{https://www.googleapis.com/compute/v1/projects/myanthosproject2/zones/us-central1-b/instanceGroups/gk3-freshbrewed-autopilo-nap-u5fcr0qm-262a9d55-grp 0->1 (max: 1000)} {https://www.googleapis.com/compute/v1/projects/myanthosproject2/zones/us-central1-f/instanceGroups/gk3-freshbrewed-autopilo-nap-u5fcr0qm-27c1613e-grp 0->1 (max: 1000)}]

Warning FailedScheduling <invalid> gke.io/optimize-utilization-scheduler 0/5 nodes are available: 2 node(s) had taint {node.kubernetes.io/not-ready: }, that the pod didn't tolerate, 3 Insufficient cpu, 3 Insufficient memory.

After a few moments, new nodes were added and we could see the pods running:

$ kubectl get pods

NAME READY STATUS RESTARTS AGE

helloserver-64f6d5d74b-6ld69 2/2 Running 0 17m

vault-0 1/2 Running 0 28s

vault-agent-injector-674bc999bb-mk9bw 2/2 Running 0 33s

While Vault is indeed running, it is waiting on us to initialize it

$ kubectl exec vault-0 -- vault status

Key Value

--- -----

Seal Type shamir

Initialized false

Sealed true

Total Shares 0

Threshold 0

Unseal Progress 0/0

Unseal Nonce n/a

Version 1.11.2

Build Date 2022-07-29T09:48:47Z

Storage Type file

HA Enabled false

command terminated with exit code 2
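The exit code of 2 is expected here: vault status returns 0 when unsealed, 1 on error and 2 when sealed. For scripting, a small sketch can pull a single field out of that table. vault_status_field is hypothetical, and it assumes field values contain no spaces, which is true for the output above. vault status -format=json is another route if jq is available.

```shell
# Hypothetical helper: print the value of one field (e.g. "Initialized",
# "Sealed", "Seal Type") from `vault status` output read on stdin.
# Field names may contain spaces, so the value is taken as the last column.
# Returns non-zero if the field is not present.
vault_status_field() {
  field="$1"
  awk -v f="$field" '
    index($0, f " ") == 1 { print $NF; found = 1; exit }
    END { if (!found) exit 1 }
  '
}
```

For example, kubectl exec vault-0 -- vault status | vault_status_field Sealed would print true for the output above.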

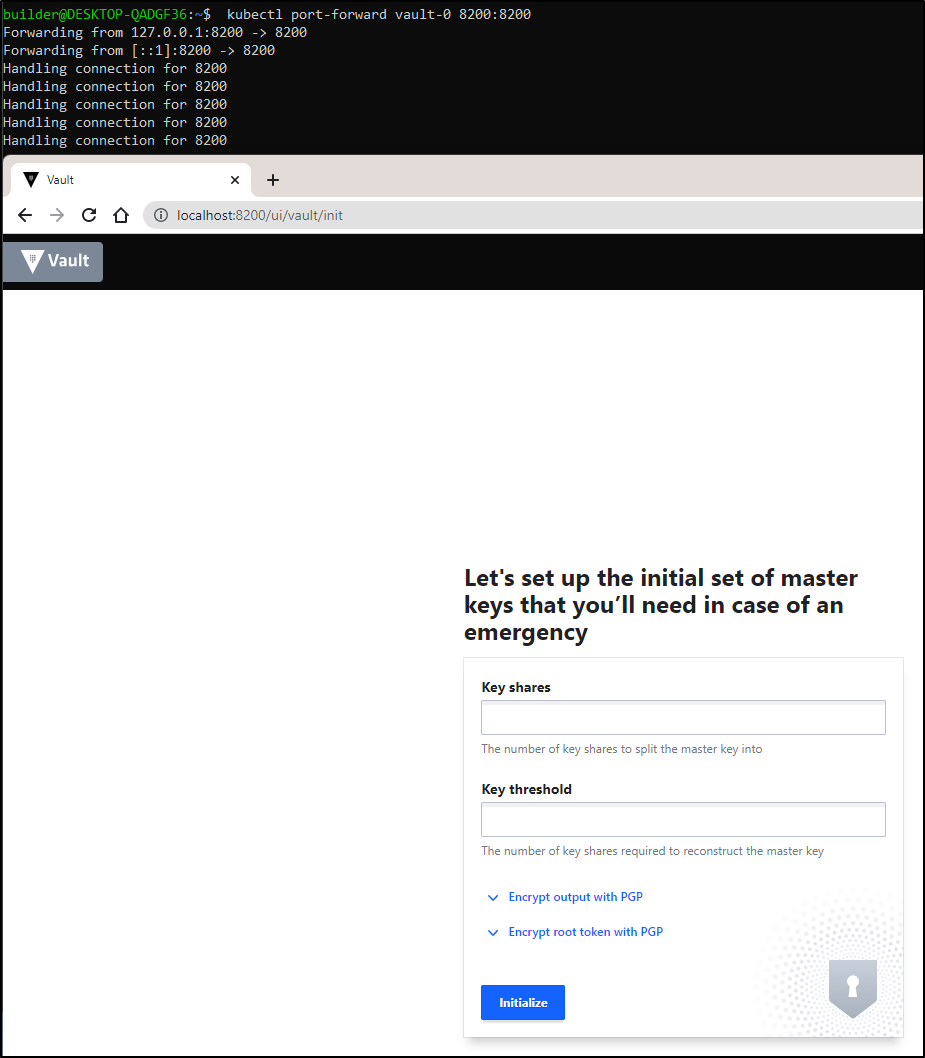

We can port-forward to Vault and start the initialization process in the web UI:

$ kubectl port-forward vault-0 8200:8200

I usually use 3 key shares with a key threshold of 2.

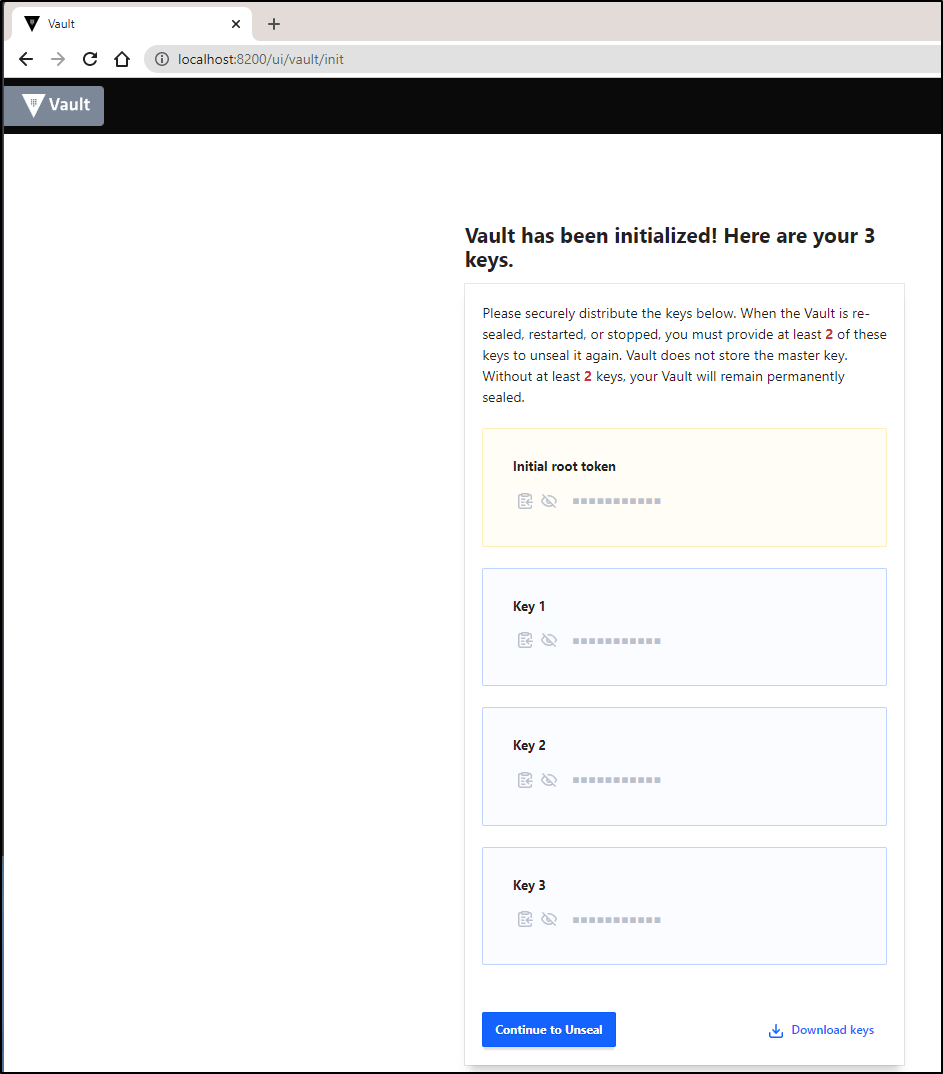

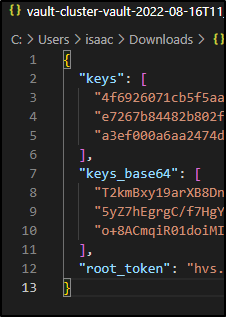

This will then prompt us to save the tokens and keys

You can download the JSON

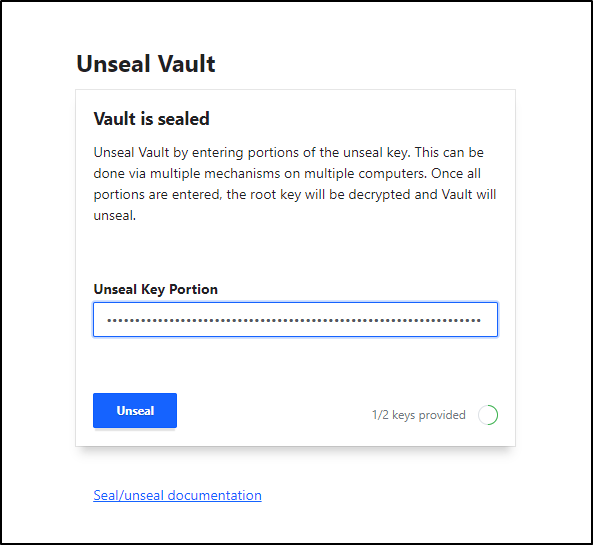

We then continue on to unseal Vault using the keys

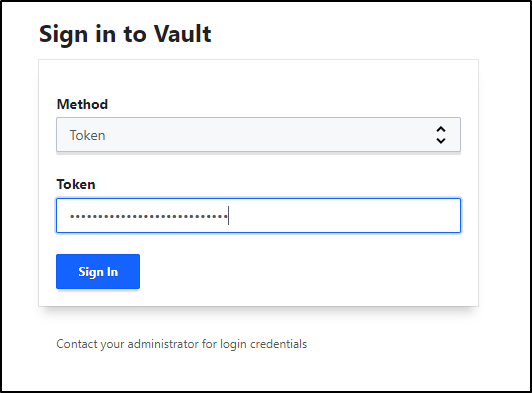

Then we can log in with the root token

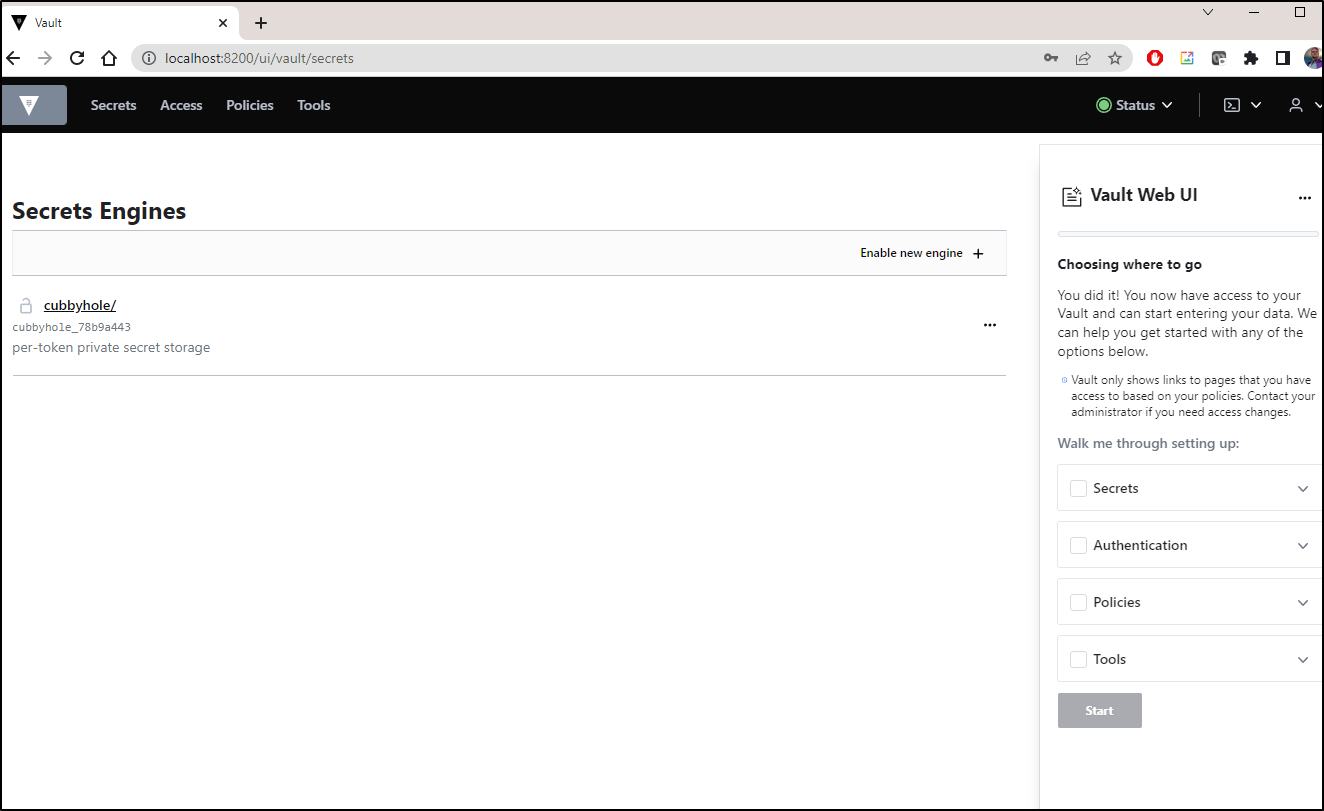

which brings us to the full Vault UI
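The same initialization can also be done from the CLI inside the pod with vault operator init -key-shares=3 -key-threshold=2. Here is a sketch that builds that command with a couple of basic sanity checks. vault_init_cmd is a hypothetical helper, and Vault enforces its own rules regardless:

```shell
# Hypothetical helper: print the CLI equivalent of the UI init step.
# Only basic checks are done here; Vault validates the values itself.
vault_init_cmd() {
  shares="$1"
  threshold="$2"
  for n in "$shares" "$threshold"; do
    case "$n" in
      ''|*[!0-9]*)
        echo "vault_init_cmd: shares and threshold must be numbers" >&2
        return 1
        ;;
    esac
  done
  # The threshold can never exceed the number of key shares.
  if [ "$threshold" -lt 1 ] || [ "$threshold" -gt "$shares" ]; then
    echo "vault_init_cmd: threshold must be between 1 and the number of shares" >&2
    return 1
  fi
  printf 'vault operator init -key-shares=%s -key-threshold=%s\n' "$shares" "$threshold"
}
```

Running kubectl exec -it vault-0 -- $(vault_init_cmd 3 2) prints the unseal keys and root token to the terminal, so treat that output as carefully as the downloaded JSON. After that, run vault operator unseal once per key until the threshold is met.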

Enable KV secrets engine

One of the most commonly used secrets engines in Vault is Key/Value (KV). We can enable it in the UI or from the command line; let’s use the command line this time:

$ kubectl exec -it vault-0 -- /bin/sh

/ $ vault login

Token (will be hidden):

Success! You are now authenticated. The token information displayed below

is already stored in the token helper. You do NOT need to run "vault login"

again. Future Vault requests will automatically use this token.

Key Value

--- -----

token hvs.**********************

token_accessor GugLWcBLsdwkLT77u3VDGidr

token_duration ∞

token_renewable false

token_policies ["root"]

identity_policies []

policies ["root"]

/ $ vault secrets enable -path=secret kv-v2

Success! Enabled the kv-v2 secrets engine at: secret/

/ $

We can then add a key/value entry:

$ vault kv put secret/webapp/config username="myusername" password="justapassword"

====== Secret Path ======

secret/data/webapp/config

======= Metadata =======

Key Value

--- -----

created_time 2022-08-16T11:59:47.377338499Z

custom_metadata <nil>

deletion_time n/a

destroyed false

version 1
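Note the Secret Path in that output. With the kv-v2 engine, vault kv put secret/webapp/config actually writes to the API path secret/data/webapp/config, and that data path is what ACL policies have to reference. Here is a sketch of the mapping, assuming a single-segment mount such as secret (kv2_data_path is hypothetical):

```shell
# Hypothetical helper: map a KV v2 logical path (as used with `vault kv`)
# to the API path that ACL policies must reference: MOUNT/data/PATH.
# Assumes the mount is a single path segment (e.g. "secret").
kv2_data_path() {
  path="${1#/}"          # tolerate a leading slash
  mount="${path%%/*}"    # first segment is the mount, e.g. "secret"
  rest="${path#*/}"      # remainder, e.g. "webapp/config"
  if [ "$mount" = "$path" ] || [ -z "$rest" ]; then
    echo "kv2_data_path: expected MOUNT/PATH, got '$1'" >&2
    return 1
  fi
  printf '%s/data/%s\n' "$mount" "$rest"
}
```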

Add Kubernetes Auth Method

To let Kubernetes pods authenticate to Vault automatically, we first need to enable the Kubernetes auth method:

/ $ vault auth enable kubernetes

Success! Enabled kubernetes auth method at: kubernetes/

/ $ echo $KUBERNETES_PORT_443_TCP_ADDR

10.21.0.1

/ $ vault write auth/kubernetes/config token_reviewer_jwt="$(cat /var/run/secrets/kubernetes.io/serviceaccount/token)" kubernetes_host="https://$KUBERNETES_PORT_443_TCP_ADDR:443" kubernetes_ca_cert=@/var/run/secrets/kubernetes.io/serviceaccount/ca.crt

Success! Data written to: auth/kubernetes/config
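KUBERNETES_PORT_443_TCP_ADDR works. However, every pod also gets KUBERNETES_SERVICE_HOST and KUBERNETES_SERVICE_PORT, the variables the Kubernetes client libraries themselves use for in-cluster configuration. Here is a sketch that builds the kubernetes_host value from those (k8s_api_url is a hypothetical helper):

```shell
# Hypothetical helper: build the API server URL from the standard
# in-pod environment variables. Port defaults to 443 if unset.
k8s_api_url() {
  if [ -z "${KUBERNETES_SERVICE_HOST:-}" ]; then
    echo "k8s_api_url: KUBERNETES_SERVICE_HOST is not set (not inside a pod?)" >&2
    return 1
  fi
  host="$KUBERNETES_SERVICE_HOST"
  case "$host" in
    *:*) host="[$host]" ;;  # IPv6 literals need brackets in a URL
  esac
  printf 'https://%s:%s\n' "$host" "${KUBERNETES_SERVICE_PORT:-443}"
}
```

Inside vault-0 this would be used as kubernetes_host="$(k8s_api_url)" in the vault write auth/kubernetes/config command.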

Then create a read policy

/ $ vault policy write webapp - <<EOF

> path "secret/data/webapp/config" {

> capabilities = ["read"]

> }

> EOF

Success! Uploaded policy: webapp
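The same read-only policy can be generated for any path, which is handy when scripting several apps. Here is a sketch (read_policy_hcl is hypothetical) whose output matches the heredoc above and can be piped to vault policy write NAME -:

```shell
# Hypothetical helper: emit a read-only Vault ACL policy for a single path.
read_policy_hcl() {
  path="$1"
  if [ -z "$path" ]; then
    echo "read_policy_hcl: a policy path is required" >&2
    return 1
  fi
  printf 'path "%s" {\n  capabilities = ["read"]\n}\n' "$path"
}
```

For example: read_policy_hcl secret/data/webapp/config | vault policy write webapp -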

And apply it to a role

/ $ vault write auth/kubernetes/role/webapp bound_service_account_names=vault bound_service_account_namespaces=default policies=webapp ttl=24h

Success! Data written to: auth/kubernetes/role/webapp

Testing

Let’s create a sample app deployment that reads the username/password secret we created from Vault. What lets this pod authenticate to Vault is its service account, vault, which we bound to the webapp role above; the app label itself plays no part in that.

$ cat deployment.yaml

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: webapp
  labels:
    app: webapp
spec:
  replicas: 1
  selector:
    matchLabels:
      app: webapp
  template:
    metadata:
      labels:
        app: webapp
    spec:
      serviceAccountName: vault
      containers:
      - name: app
        image: burtlo/exampleapp-ruby:k8s
        imagePullPolicy: Always
        env:
        - name: VAULT_ADDR
          value: "http://vault:8200"
        - name: JWT_PATH
          value: "/var/run/secrets/kubernetes.io/serviceaccount/token"
        - name: SERVICE_PORT
          value: "8080"
---
apiVersion: v1
kind: Service
metadata:
  name: webappsvc
spec:
  ports:
  - name: http
    port: 80
    targetPort: 8080
  selector:
    app: webapp
  type: LoadBalancer
```

$ kubectl apply -f deployment.yaml

Warning: Autopilot set default resource requests for Deployment default/webapp, as resource requests were not specified. See http://g.co/gke/autopilot-defaults.

deployment.apps/webapp created

service/webappsvc created

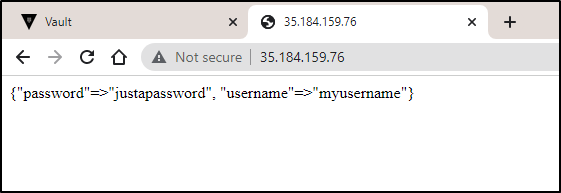

Now let’s test

$ kubectl get svc webappsvc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

webappsvc LoadBalancer 10.21.0.52 35.184.159.76 80:30500/TCP 36s

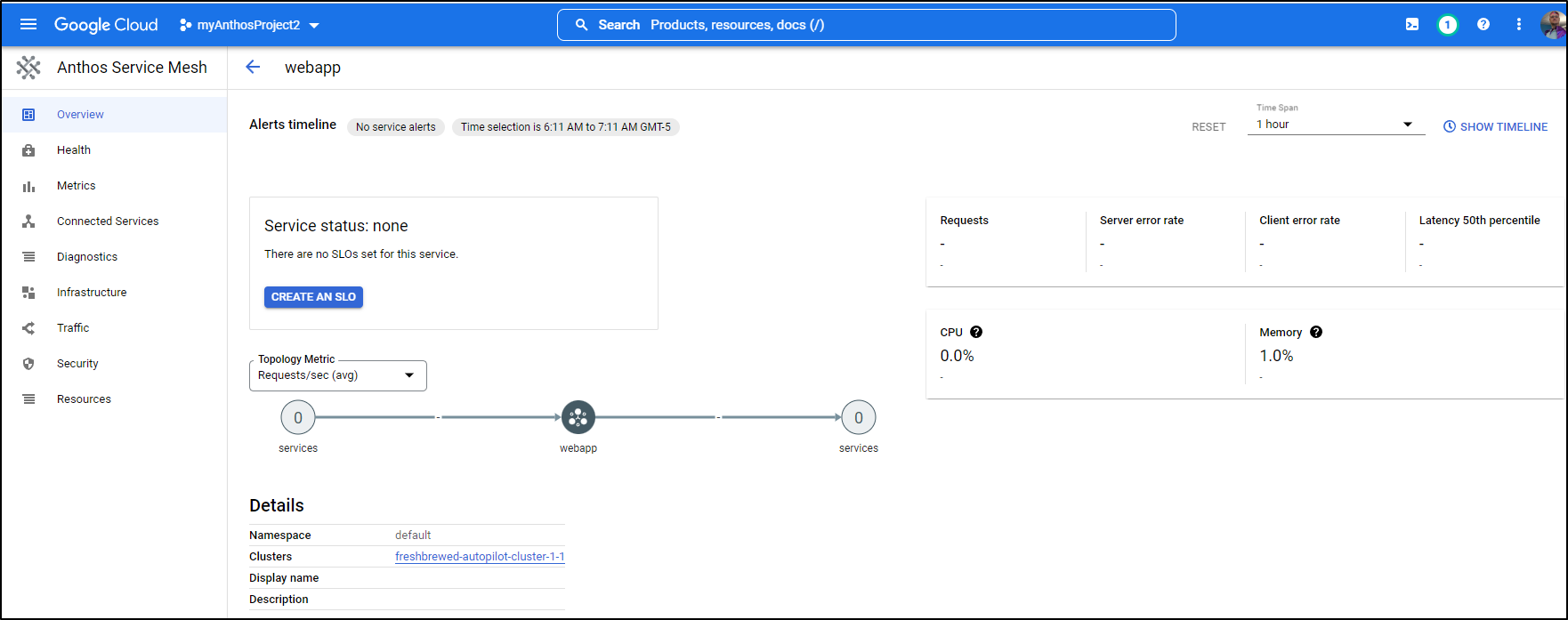

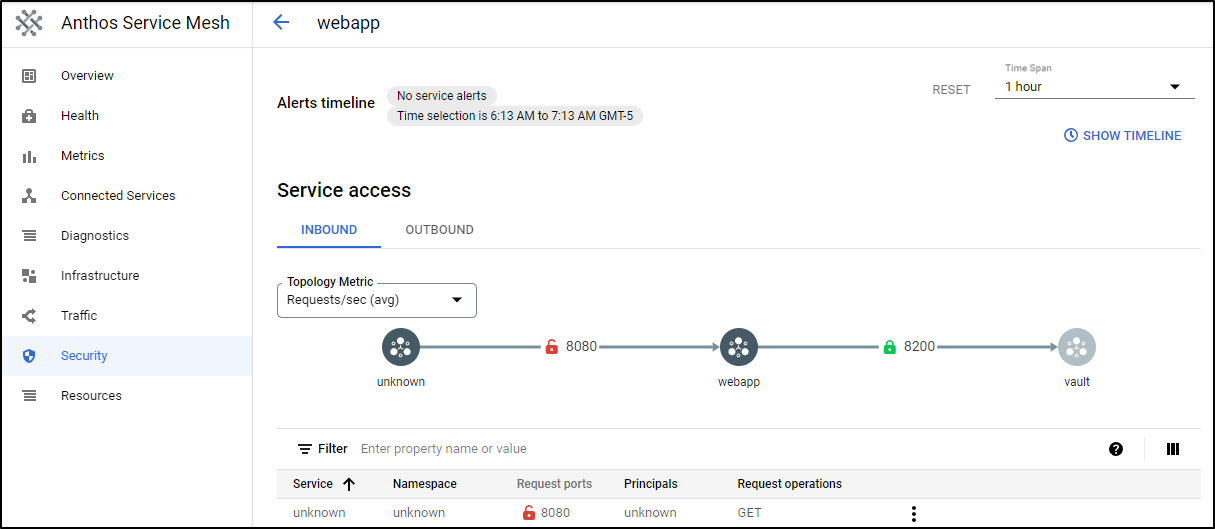

At the same time, we can see that the webapp deployment is indeed being handled by ASM

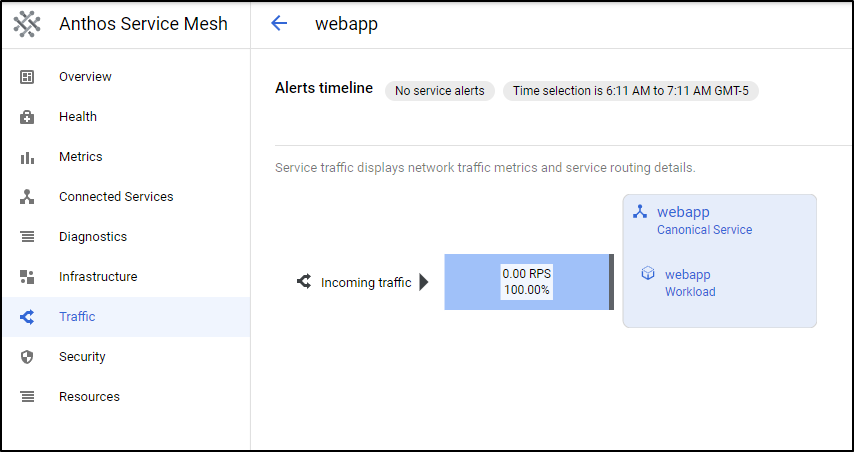

We can see it in the Traffic pane

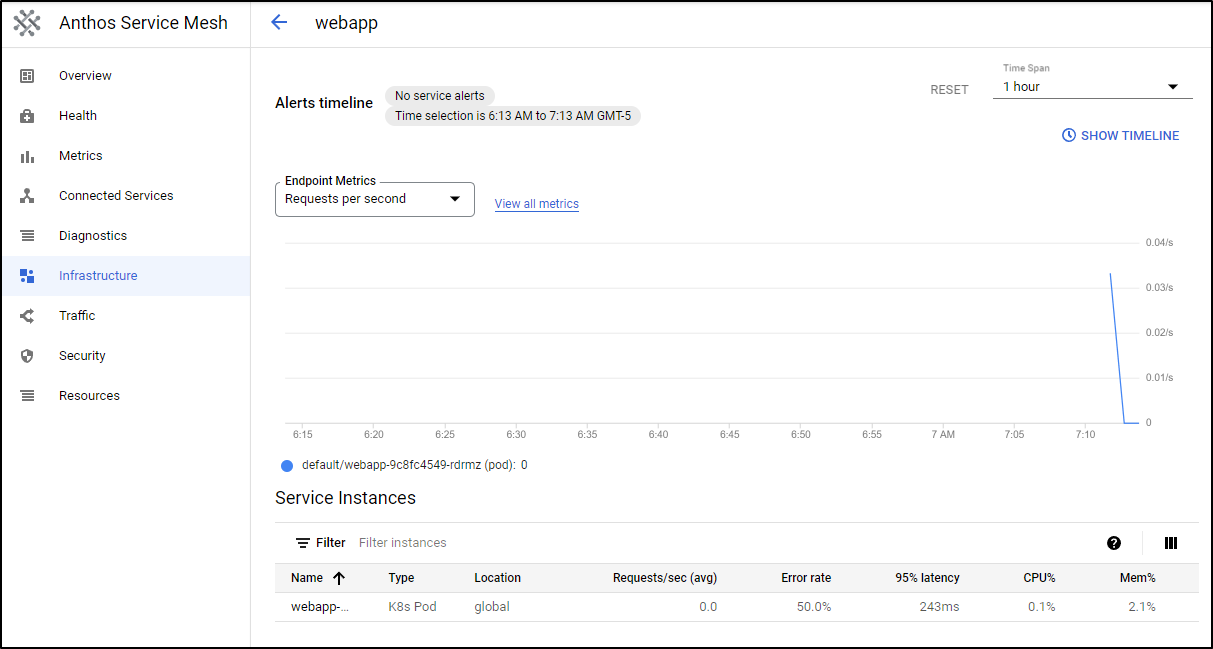

And details in the Infrastructure pane

In fact, we can verify that mTLS encryption is happening via the Security pane, which shows the traffic between webapp and vault.

Using Istio VirtualService

The APIs keep changing, so I find it best to just ask my cluster which APIs it has:

$ kubectl api-resources | grep istio

wasmplugins extensions.istio.io/v1alpha1 true WasmPlugin

istiooperators iop,io install.istio.io/v1alpha1 true IstioOperator

destinationrules dr networking.istio.io/v1beta1 true DestinationRule

envoyfilters networking.istio.io/v1alpha3 true EnvoyFilter

gateways gw networking.istio.io/v1beta1 true Gateway

proxyconfigs networking.istio.io/v1beta1 true ProxyConfig

serviceentries se networking.istio.io/v1beta1 true ServiceEntry

sidecars networking.istio.io/v1beta1 true Sidecar

virtualservices vs networking.istio.io/v1beta1 true VirtualService

workloadentries we networking.istio.io/v1beta1 true WorkloadEntry

workloadgroups wg networking.istio.io/v1alpha3 true WorkloadGroup

authorizationpolicies security.istio.io/v1beta1 true AuthorizationPolicy

peerauthentications pa security.istio.io/v1beta1 true PeerAuthentication

requestauthentications ra security.istio.io/v1beta1 true RequestAuthentication

telemetries telemetry telemetry.istio.io/v1alpha1 true Telemetry

We can then create a VirtualService

$ cat virtualservice.yaml

```yaml
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: webap-route
spec:
  hosts:
  - webapp2.svc.cluster.local
  http:
  - name: "webapp-v2-routes"
    match:
    - uri:
        prefix: "/"
    route:
    - destination:
        host: webapp.svc.cluster.local
```

$ kubectl apply -f virtualservice.yaml

virtualservice.networking.istio.io/webap-route created

$ kubectl get virtualservice

NAME GATEWAYS HOSTS AGE

webap-route ["webapp2.svc.cluster.local"] 45s

Until we have a working Gateway, however, it’s not too useful
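It is also worth double-checking the hosts in that VirtualService. Kubernetes Services resolve as service.namespace.svc.cluster.local. The destination webapp.svc.cluster.local is missing the namespace, and it uses the Deployment name rather than the Service name (webappsvc). So webappsvc.default.svc.cluster.local is likely what was intended. Here is a sketch of building the name. svc_fqdn is hypothetical, and cluster.local is the usual default cluster domain but is an assumption here:

```shell
# Hypothetical helper: build a Service's in-cluster DNS name,
# <service>.<namespace>.svc.<cluster-domain>.
# Namespace defaults to "default"; domain defaults to "cluster.local" (assumed).
svc_fqdn() {
  service="$1"
  namespace="${2:-default}"
  domain="${3:-cluster.local}"
  if [ -z "$service" ]; then
    echo "svc_fqdn: a service name is required" >&2
    return 1
  fi
  printf '%s.%s.svc.%s\n' "$service" "$namespace" "$domain"
}
```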

Vault Auto-inject

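To have the Vault agent injector automatically render a secrets file, we need to add annotations. The injector acts on pods, so these belong under the pod template (spec.template.metadata.annotations). My first attempt below added them to the Deployment's own metadata instead.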

$ kubectl get deployment webapp -o yaml > deployment.yaml

$ kubectl get deployment webapp -o yaml > deployment.yaml.bak

$ vi deployment.yaml

$ diff -c deployment.yaml.bak deployment.yaml

*** deployment.yaml.bak 2022-08-16 07:31:10.963487005 -0500

--- deployment.yaml 2022-08-16 07:33:44.140439006 -0500

***************

*** 2,7 ****

--- 2,9 ----

kind: Deployment

metadata:

annotations:

+ vault.hashicorp.com/agent-inject-secret-username.txt: secret/data/webapp/config

+ vault.hashicorp.com/role: 'webapp2'

+ vault.hashicorp.com/agent-inject: "true"

autopilot.gke.io/resource-adjustment: '{"input":{"containers":[{"name":"app"}]},"output":{"containers":[{"limits":{"cpu":"500m","ephemeral-storage":"1Gi","memory":"2Gi"},"requests":{"cpu":"500m","ephemeral-storage":"1Gi","memory":"2Gi"},"name":"app"}]},"modified":true}'

deployment.kubernetes.io/revision: "1"

kubectl.kubernetes.io/last-applied-configuration: |

--- 2,7 ----

$ kubectl apply -f deployment.yaml

deployment.apps/webapp configured

Now let’s test

$ kubectl get pods

NAME READY STATUS RESTARTS AGE

helloserver-64f6d5d74b-6ld69 2/2 Running 0 62m

vault-0 2/2 Running 0 44m

vault-agent-injector-674bc999bb-mk9bw 2/2 Running 0 44m

webapp-9c8fc4549-rdrmz 2/2 Running 0 28m

$ kubectl delete pod webapp-9c8fc4549-rdrmz

pod "webapp-9c8fc4549-rdrmz" deleted

Debugging

I tried a few more things, like creating a bespoke service account:

$ kubectl create sa webapp

serviceaccount/webapp created

and adding it to a new role

/ $ vault write auth/kubernetes/role/webapp2 bound_service_account_names=webapp bound_service_account_namespaces=default policies=webapp ttl=24h

Success! Data written to: auth/kubernetes/role/webapp2

But nothing seemed to work until I closely followed the guide and patched the deployment after launch:

$ cat patch-secrets.yaml

```yaml
spec:
  template:
    metadata:
      annotations:
        vault.hashicorp.com/agent-inject: 'true'
        vault.hashicorp.com/role: 'webapp2'
        vault.hashicorp.com/agent-inject-secret-user-config.txt: 'secret/data/webapp/config'
```

$ kubectl patch deployment webapp --patch "$(cat patch-secrets.yaml)"

deployment.apps/webapp patched

While I could see it trying to run the init container, the pod would not get past the Init stage:

$ kubectl get pods

NAME READY STATUS RESTARTS AGE

helloserver-64f6d5d74b-6ld69 2/2 Running 0 88m

vault-0 2/2 Running 0 71m

vault-agent-injector-674bc999bb-2dhq9 2/2 Running 0 8m10s

webapp-5f68f9758d-sbrkl 0/3 Init:1/2 0 99s

webapp-686d589867-6jxcf 2/2 Running 0 2m22s

I tried many paths (recreating the service account, changing roles), but the Vault agent kept failing to authenticate:

$ kubectl logs webapp-695d6fdc84-qtf6p vault-agent-init | tail -n 4

2022-08-16T17:50:58.926Z [INFO] auth.handler: authenticating

2022-08-16T17:50:58.935Z [ERROR] auth.handler: error authenticating: error="Put \"http://vault.default.svc:8200/v1/auth/kubernetes/login\": dial tcp 10.21.2.12:8200: connect: connection refused" backoff=4m41.48s

2022-08-16T17:55:40.417Z [INFO] auth.handler: authenticating

2022-08-16T17:55:40.428Z [ERROR] auth.handler: error authenticating: error="Put \"http://vault.default.svc:8200/v1/auth/kubernetes/login\": dial tcp 10.21.2.12:8200: connect: connection refused" backoff=4m55.91s
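A detail worth noticing in these logs: the failure is a TCP-level "connection refused", not a permission-denied response from Vault. That usually means the login request never reached Vault at all, so changing service accounts or roles could not have fixed it. The hypothetical helper below is a sketch that assumes a grep supporting -o and -E (GNU and BSD grep both do); it pulls just that network-level error out of the log noise:

```shell
# Hypothetical helper: read vault-agent-init log lines on stdin and print each
# distinct network-level failure ("dial tcp <ip>:<port>: connect: ...") once.
vault_dial_errors() {
  # [a-z ]+ stops at the closing quote that follows the error text.
  grep -oE 'dial tcp [0-9.]+:[0-9]+: connect: [a-z ]+' | sort -u
}
```

Usage: $ kubectl logs webapp-695d6fdc84-qtf6p vault-agent-init | vault_dial_errors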

That’s when I found this article about Istio (ASM) and Vault not working when used in tandem.

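I followed a suggestion to exclude Vault's port, 8200, from Istio's outbound traffic capture. The most likely reason this matters is ordering: Istio redirects the pod's outbound traffic through the Envoy sidecar, but the istio-proxy container only starts after all init containers have finished, so vault-agent-init was being redirected to a proxy that was not listening yet. The traffic.sidecar.istio.io/excludeOutboundPorts annotation tells Istio not to redirect that port: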

$ cat patch-secrets.yaml

spec:
  template:
    metadata:
      annotations:
        vault.hashicorp.com/agent-inject: 'true'
        vault.hashicorp.com/role: 'internal-app'
        vault.hashicorp.com/agent-inject-secret-user-config.txt: 'secret/data/webapp/config'
        traffic.sidecar.istio.io/excludeOutboundPorts: "8200"

$ kubectl patch deployment webapp --patch "$(cat patch-secrets.yaml)"

deployment.apps/webapp patched
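Istio documents traffic.sidecar.istio.io/excludeOutboundPorts as a comma-separated list of outbound ports that should not be redirected to Envoy. To confirm that a running pod actually carries 8200 in that list, here is a small POSIX sh sketch; port_excluded is a hypothetical helper, not part of kubectl or Istio:

```shell
# Hypothetical helper: succeed (exit 0) if port $1 appears in the
# comma-separated list $2, e.g. the value of the
# traffic.sidecar.istio.io/excludeOutboundPorts annotation.
port_excluded() {
  # Normalise "9000, 8200" to " 9000 8200 " and look for " $1 " as a whole
  # word, so 8200 does not falsely match 82000.
  case " $(printf '%s' "$2" | tr ',' ' ' | tr -s ' ') " in
    *" $1 "*) return 0 ;;
    *) return 1 ;;
  esac
}
```

Usage (note that during a rollout items[0] may still be the old pod): $ port_excluded 8200 "$(kubectl get pod -l app=webapp -o jsonpath='{.items[0].metadata.annotations.traffic\.sidecar\.istio\.io/excludeOutboundPorts}')" && echo "8200 is excluded"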

Then I made sure the deployment was using the “internal-app” service account:

$ cat deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  annotations:
    autopilot.gke.io/resource-adjustment: '{"input":{"containers":[{"name":"app"}]},"output":{"containers":[{"limits":{"cpu":"500m","ephemeral-storage":"1Gi","memory":"2Gi"},"requests":{"cpu":"500m","ephemeral-storage":"1Gi","memory":"2Gi"},"name":"app"}]},"modified":true}'
    deployment.kubernetes.io/revision: "5"
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"apps/v1","kind":"Deployment","metadata":{"annotations":{"autopilot.gke.io/resource-adjustment":"{\"input\":{\"containers\":[{\"name\":\"app\"}]},\"output\":{\"containers\":[{\"limits\":{\"cpu\":\"500m\",\"ephemeral-storage\":\"1Gi\",\"memory\":\"2Gi\"},\"requests\":{\"cpu\":\"500m\",\"ephemeral-storage\":\"1Gi\",\"memory\":\"2Gi\"},\"name\":\"app\"}]},\"modified\":true}","deployment.kubernetes.io/revision":"3","vault.hashicorp.com/agent-inject":"true","vault.hashicorp.com/agent-inject-secret-username.txt":"secret/data/webapp/config","vault.hashicorp.com/role":"internal-app"},"creationTimestamp":"2022-08-16T12:04:56Z","generation":8,"labels":{"app":"webapp"},"name":"webapp","namespace":"default","resourceVersion":"175838","uid":"4674ae91-6990-4078-bbed-44c8d9f3ec45"},"spec":{"progressDeadlineSeconds":600,"replicas":1,"revisionHistoryLimit":10,"selector":{"matchLabels":{"app":"webapp"}},"strategy":{"rollingUpdate":{"maxSurge":"25%","maxUnavailable":"25%"},"type":"RollingUpdate"},"template":{"metadata":{"annotations":{"vault.hashicorp.com/agent-inject":"true","vault.hashicorp.com/agent-inject-secret-user-config.txt":"secret/data/webapp/config","vault.hashicorp.com/role":"internal-app"},"creationTimestamp":null,"labels":{"app":"webapp"}},"spec":{"containers":[{"env":[{"name":"VAULT_ADDR","value":"http://vault:8200"},{"name":"JWT_PATH","value":"/var/run/secrets/kubernetes.io/serviceaccount/token"},{"name":"SERVICE_PORT","value":"8080"}],"image":"burtlo/exampleapp-ruby:k8s","imagePullPolicy":"Always","name":"app","resources":{"limits":{"cpu":"500m","ephemeral-storage":"1Gi","memory":"2Gi"},"requests":{"cpu":"500m","ephemeral-storage":"1Gi","memory":"2Gi"}},"securityContext":{"capabilities":{"drop":["NET_RAW"]}},"terminationMessagePath":"/dev/termination-log","terminationMessagePolicy":"File"}],"dnsPolicy":"ClusterFirst","restartPolicy":"Always","schedulerName":"default-scheduler","securityContext":{"seccompProfile":{"type":"RuntimeDefault"}},"serviceAccount":"webapp","serviceAccountName":"webapp","terminationGracePeriodSeconds":30}}},"status":{"availableReplicas":1,"conditions":[{"lastTransitionTime":"2022-08-16T12:51:44Z","lastUpdateTime":"2022-08-16T12:51:44Z","message":"Deployment has minimum availability.","reason":"MinimumReplicasAvailable","status":"True","type":"Available"},{"lastTransitionTime":"2022-08-16T14:29:52Z","lastUpdateTime":"2022-08-16T14:29:52Z","message":"ReplicaSet \"webapp-5f68f9758d\" has timed out progressing.","reason":"ProgressDeadlineExceeded","status":"False","type":"Progressing"}],"observedGeneration":8,"readyReplicas":1,"replicas":2,"unavailableReplicas":1,"updatedReplicas":1}}
    vault.hashicorp.com/agent-inject: "true"
    vault.hashicorp.com/agent-inject-secret-username.txt: secret/data/webapp/config
    vault.hashicorp.com/role: internal-app
  creationTimestamp: "2022-08-16T12:04:56Z"
  generation: 10
  labels:
    app: webapp
  name: webapp
  namespace: default
  resourceVersion: "383401"
  uid: 4674ae91-6990-4078-bbed-44c8d9f3ec45
spec:
  progressDeadlineSeconds: 600
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      app: webapp
  strategy:
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%
    type: RollingUpdate
  template:
    metadata:
      annotations:
        traffic.sidecar.istio.io/excludeOutboundPorts: "8200"
        vault.hashicorp.com/agent-inject: "true"
        vault.hashicorp.com/agent-inject-secret-user-config.txt: secret/data/webapp/config
        vault.hashicorp.com/role: internal-app
      creationTimestamp: null
      labels:
        app: webapp
    spec:
      containers:
      - env:
        - name: VAULT_ADDR
          value: http://vault:8200
        - name: JWT_PATH
          value: /var/run/secrets/kubernetes.io/serviceaccount/token
        - name: SERVICE_PORT
          value: "8080"
        image: burtlo/exampleapp-ruby:k8s
        imagePullPolicy: Always
        name: app
        resources:
          limits:
            cpu: 500m
            ephemeral-storage: 1Gi
            memory: 2Gi
          requests:
            cpu: 500m
            ephemeral-storage: 1Gi
            memory: 2Gi
        securityContext:
          capabilities:
            drop:
            - NET_RAW
        terminationMessagePath: /dev/termination-log
        terminationMessagePolicy: File
      dnsPolicy: ClusterFirst
      restartPolicy: Always
      schedulerName: default-scheduler
      securityContext:
        seccompProfile:
          type: RuntimeDefault
      serviceAccount: internal-app
      serviceAccountName: internal-app
      terminationGracePeriodSeconds: 30
status:
  availableReplicas: 1
  conditions:
  - lastTransitionTime: "2022-08-16T12:51:44Z"
    lastUpdateTime: "2022-08-16T12:51:44Z"
    message: Deployment has minimum availability.
    reason: MinimumReplicasAvailable
    status: "True"
    type: Available
  - lastTransitionTime: "2022-08-16T18:00:18Z"
    lastUpdateTime: "2022-08-16T18:00:19Z"
    message: ReplicaSet "webapp-f9f557f7f" is progressing.
    reason: ReplicaSetUpdated
    status: "True"
    type: Progressing
  observedGeneration: 10
  readyReplicas: 1
  replicas: 2
  unavailableReplicas: 1
  updatedReplicas: 1

And with that, the pod was finally unblocked:

$ kubectl get pods

NAME READY STATUS RESTARTS AGE

helloserver-64f6d5d74b-6ld69 2/2 Running 0 9h

vault-0 2/2 Running 0 9h

vault-agent-injector-674bc999bb-kb58z 2/2 Running 0 6h26m

webapp-7db5b4d86c-42ngl 3/3 Running 0 173m

Using kubectl, we can verify that the secret is available as a mounted file (written by the Vault agent that the injector added to the pod):

$ kubectl exec `kubectl get pod -l app=webapp -o jsonpath="{.items[0].metadata.name}"` --container app -- ls /vault/secrets/

user-config.txt

$ kubectl exec `kubectl get pod -l app=webapp -o jsonpath="{.items[0].metadata.name}"` --container app -- cat /vault/secrets/user-config.txt

data: map[password:justapassword username:myusername]

metadata: map[created_time:2022-08-16T11:59:47.377338499Z custom_metadata:<nil> deletion_time: destroyed:false version:1]
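The file holds Go's default rendering of the secret (data: map[...]) because no custom template was configured. The injector supports a vault.hashicorp.com/agent-inject-template-<file> annotation for rendering a cleaner format; short of that, the hypothetical helper below is a quick POSIX sh sketch that turns the default output into key=value lines, assuming values contain no spaces and keys contain no colons:

```shell
# Hypothetical helper: convert the agent's default Go-map rendering, e.g.
#   data: map[password:justapassword username:myusername]
# into one key=value line per field. The "metadata: map[...]" line is ignored
# because only lines starting with "data:" are matched.
vault_kv_fields() {
  # 1) keep only the inside of data: map[...]
  # 2) split fields on spaces
  # 3) turn the first ':' of each field into '='
  sed -n 's/^data: map\[\(.*\)]$/\1/p' | tr ' ' '\n' | sed 's/:/=/'
}
```

Usage: $ kubectl exec $(kubectl get pod -l app=webapp -o jsonpath="{.items[0].metadata.name}") --container app -- cat /vault/secrets/user-config.txt | vault_kv_fields

For the secret above this prints password=justapassword and username=myusername on separate lines.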

Cleanup

If we are done exploring Vault and ASM, we can clean up the cluster we created:

$ gcloud container clusters list --project $PROJECTID

NAME LOCATION MASTER_VERSION MASTER_IP MACHINE_TYPE NODE_VERSION NUM_NODES STATUS

freshbrewed-autopilot-cluster-1 us-central1 1.22.10-gke.600 35.239.95.24 e2-medium 1.22.10-gke.600 6 RUNNING

$ gcloud container clusters delete --project $PROJECTID --region us-central1 freshbrewed-autopilot-cluster-1

The following clusters will be deleted.

- [freshbrewed-autopilot-cluster-1] in [us-central1]

Do you want to continue (Y/n)? y

Deleting cluster freshbrewed-autopilot-cluster-1...⠏

Summary

We created a new GKE Autopilot cluster using the command line after enabling the required APIs. Once launched, we installed a sample app and verified Istio (ASM) was working.

Lastly, we added Hashi Vault with Helm. We created a secret, verified that a sample Ruby app could fetch it, and then set up (and debugged) Vault sidecar injection so it works alongside Istio.

I would like to dig deeper into Istio gateways with TLS and, if I were to keep the cluster, set up Vault auto-unseal with GCP KMS.