Published: Aug 9, 2022 by Isaac Johnson

In part 2, let’s try to marry Loft.sh and Codefresh. We will try using Loft both as an Argo endpoint for the Codefresh hosted runtime and as a hybrid runtime.

I want to be upfront: this ended up being mostly a failure. I will show some things working in this writeup; however, my ultimate goal of tying Codefresh.io to Loft.sh simply did not pan out.

Using Loft.sh

The first thing to point out is that Loft can detect new releases and update itself. You can also choose to upgrade it via Helm. You can check for upgrades in the upgrade area of the admin UI (e.g. https://loft.freshbrewed.science/admin/upgrade).

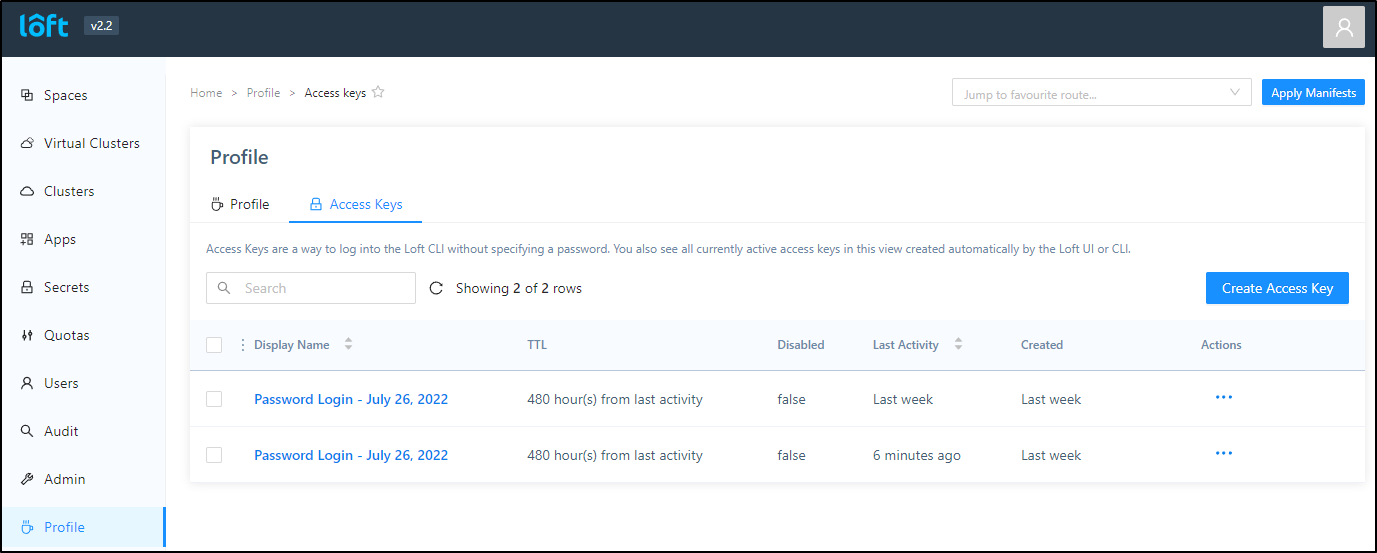

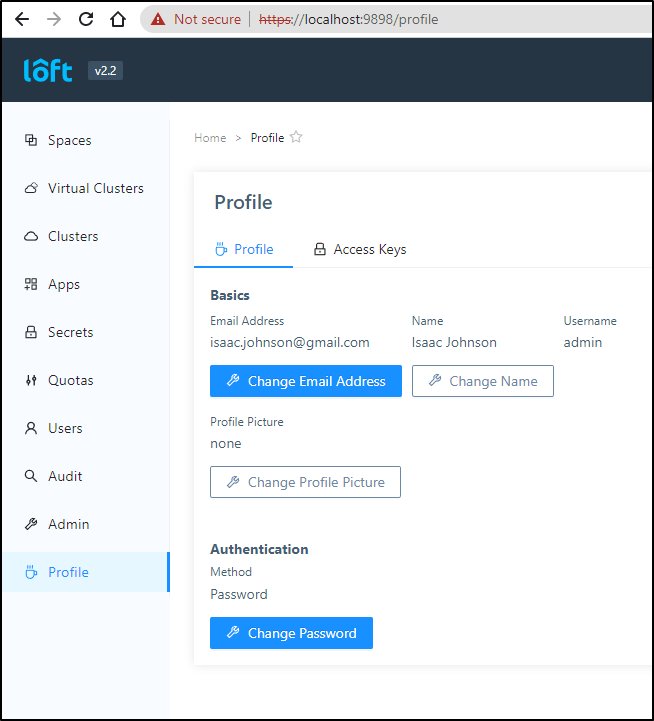
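If you prefer the Helm route, the upgrade boils down to a `helm upgrade` against the Loft chart. Below is a minimal Python sketch that assembles that command line. The release name, chart, namespace and repo (`loft`, `loft`, `loft`, `https://charts.loft.sh/`) are assumptions copied from the command `loft start` prints later in this post, and `loft_helm_upgrade_args` is a hypothetical helper, not part of any Loft tooling.

```python
import shlex
from typing import List, Optional


def loft_helm_upgrade_args(version: Optional[str] = None,
                           kube_context: Optional[str] = None) -> List[str]:
    """Build a `helm upgrade` command line for the Loft chart (assumed names)."""
    args = [
        "helm", "upgrade", "loft", "loft",
        "--install",
        "--reuse-values",            # keep the values set by the previous install
        "--create-namespace",
        "--namespace", "loft",
        "--repo", "https://charts.loft.sh/",
    ]
    if version:
        args += ["--version", version]          # pin a chart version instead of latest
    if kube_context:
        args += ["--kube-context", kube_context]
    return args


if __name__ == "__main__":
    # Print a copy-pasteable command; pass the list to subprocess.run() to execute it.
    print(shlex.join(loft_helm_upgrade_args()))
```

`--reuse-values` matters here: without it, Helm would fall back to the chart defaults and drop settings such as the admin email from the original install.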

Let’s create a virtual cluster using the Loft command line this time. First, I need to create an access key on my profile page (e.g. https://loft.freshbrewed.science/profile/access-keys).

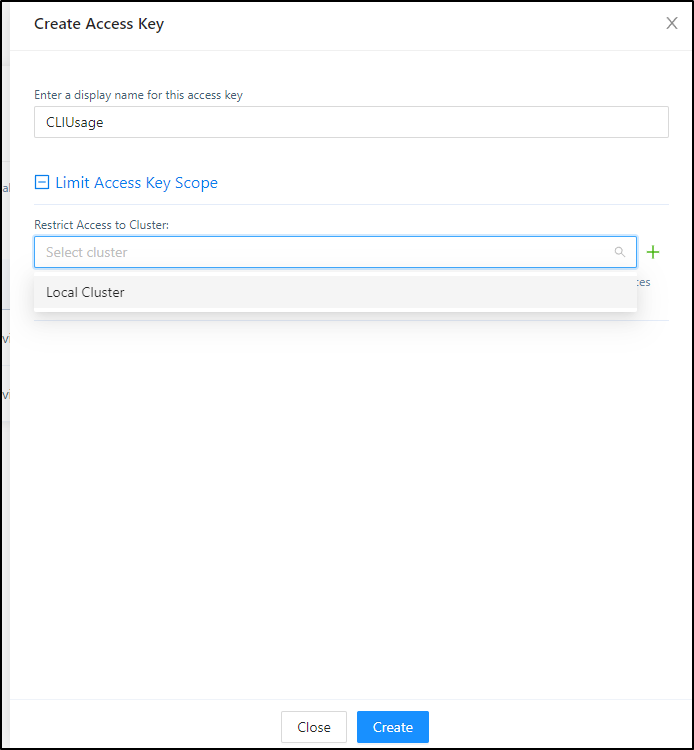

When creating the access key, we could limit its scope to a given cluster (but we won’t, as we will use this key to create vClusters).

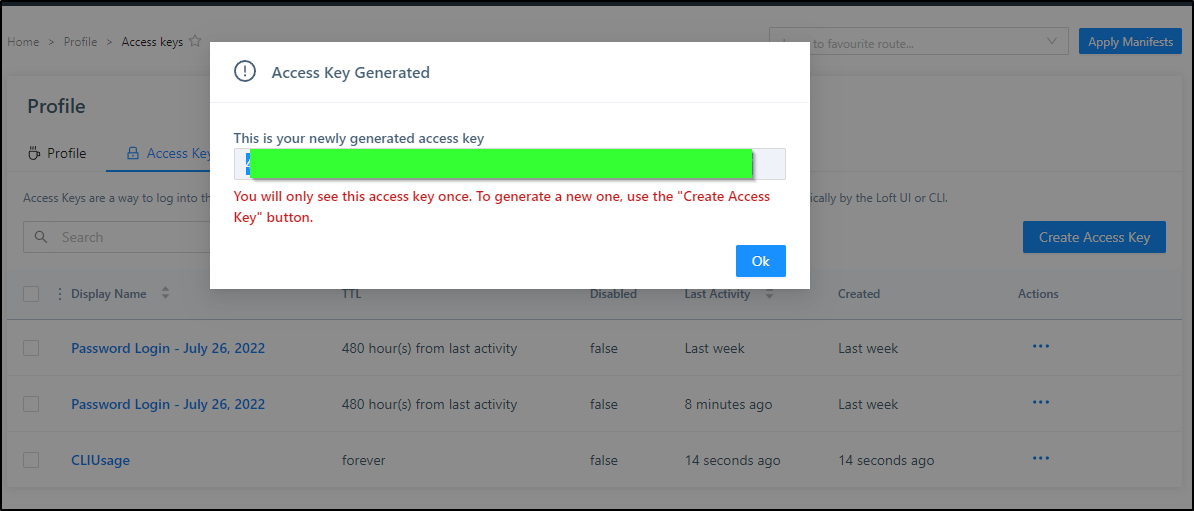

We can then save that key somewhere safe.

When logging in with it, the CLI also noted that it was out of date:

```
$ loft login https://loft.freshbrewed.science --access-key=asdfsadfsadfsasadfasdfasdfasdfasdfsadfasdf
[warn] There is a newer version of Loft: v2.2.2. Run `loft upgrade` to upgrade to the newest version.
[done] √ Successfully logged into Loft instance https://loft.freshbrewed.science
```

Similarly, I could upgrade the Loft CLI rather easily:

```
builder@DESKTOP-QADGF36:~$ sudo loft upgrade
[sudo] password for builder:
[done] √ Successfully updated to version 2.2.2
[info] Release note:
### Changes
- **cli**: add `--use-existing` flag to `loft create space`
- **cli**: Fixed an issue where `loft generate admin-kube-config` wasn't working in v1.24 clusters correctly
- **ui**: vcluster advanced options can now be modified in bulk.
- **chart**: add an option for `audit.persistence.accessmodes`
- **api**: Fixed an issue where using OIDC without clientSecret was not possible.
- **api**: Fixed a potential panic in internal port-forwarder
- **api**: Added a new annotation `loft.sh/non-deletable` to prevent space & virtual cluster deletion
- **api**: Use agent endpoints instead of agent service for ingress wakeup
```

In my case, I would like to create a vCluster for Codefresh.io work

```
$ loft create vcluster codefresh-work --cluster loft-cluster --description "codefresh-work" --display-name "Codefresh Working Cluster" --print
[info] Waiting for task to start...
[info] Executing Virtual Cluster Creation Task Create Virtual Cluster codefresh-work...
[done] √ Successfully created space vcluster-codefresh-work-homfu
[fatal] create virtual cluster: Internal error occurred: failed calling webhook "storage.agent.loft.sh": failed to call webhook: Post "https://loft-agent-webhook.loft.svc:443/storage?timeout=10s": EOF
[done] √ Successfully created the virtual cluster codefresh-work in cluster loft-cluster and space vcluster-codefresh-work-homfu
apiVersion: v1
clusters:
- cluster:
    server: https://loft.freshbrewed.science/kubernetes/virtualcluster/loft-cluster/vcluster-codefresh-work-homfu/codefresh-work
  name: loft-vcluster_codefresh-work_vcluster-codefresh-work-homfu_loft-cluster
contexts:
- context:
    cluster: loft-vcluster_codefresh-work_vcluster-codefresh-work-homfu_loft-cluster
    user: loft-vcluster_codefresh-work_vcluster-codefresh-work-homfu_loft-cluster
  name: loft-vcluster_codefresh-work_vcluster-codefresh-work-homfu_loft-cluster
current-context: loft-vcluster_codefresh-work_vcluster-codefresh-work-homfu_loft-cluster
kind: Config
preferences: {}
users:
- name: loft-vcluster_codefresh-work_vcluster-codefresh-work-homfu_loft-cluster
  user:
    exec:
      apiVersion: client.authentication.k8s.io/v1beta1
      args:
      - token
      - --silent
      - --config
      - /home/builder/.loft/config.json
      command: /usr/local/bin/loft
      env: null
      provideClusterInfo: false
[done] √ Successfully updated kube context to use virtual cluster codefresh-work in space vcluster-codefresh-work-homfu and cluster loft-cluster
```

Because of an error in my storage classes (the `[fatal]` storage webhook error above), I ended up using the web interface to accomplish the same task, creating a vCluster named codefresh-working-2. As such, I then needed to switch my kube context to it:

```
builder@DESKTOP-QADGF36:~$ loft use vcluster codefresh-working-2 --cluster loft-cluster --space vcluster-codefresh-working-2-p5gby
[done] √ Successfully updated kube context to use space vcluster-codefresh-working-2-p5gby in cluster loft-cluster
builder@DESKTOP-QADGF36:~$ kubectx
docker-desktop
ext77
ext81
loft-vcluster_codefresh-working-2_vcluster-codefresh-working-2-p5gby_loft-cluster
mac77
mac81
```

We can see that we are in the virtual cluster

```
builder@DESKTOP-QADGF36:~$ kubectl get nodes
NAME               STATUS   ROLES    AGE     VERSION
isaac-macbookair   Ready    <none>   9m36s   v1.23.7
builder@DESKTOP-QADGF36:~$ kubectl get ns
NAME              STATUS   AGE
default           Active   10m
kube-node-lease   Active   10m
kube-public       Active   10m
kube-system       Active   10m
```

Adding to Codefresh

As I was on a fresh WSL instance, I used Homebrew to install the Codefresh CLI (`cf`, from the `cf2` formula):

```
$ brew tap codefresh-io/cli && brew install cf2
Running `brew update --auto-update`...
==> Auto-updated Homebrew!
Updated 2 taps (homebrew/core and derailed/k9s).
==> New Formulae
aws2-wrap docker-buildx jupp nmrpflash prql-compiler treefmt
berkeley-db@5 dooit libbpf ocl-icd prr ttdl
bfgminer dunamai libeatmydata onlykey-agent python-build tuntox
c2rust dura licensor open62541 qsv uthash
cargo-bundle felinks manifest-tool openvi railway vile
cargo-depgraph gaze meek page sgn vulkan-loader
cargo-nextest git-codereview mesheryctl pax sgr x86_64-linux-gnu-binutils
cargo-udeps git-delete-merged-branches metalang99 pint smap xdg-ninja
chain-bench glib-utils mkp224o pipe-rename snowflake
circumflex gum mle pixiewps spr
cpp-httplib helix mprocs pkcs11-tools tea
dart-sdk hyx mypaint-brushes pocl tere
datatype99 interface99 neovide protobuf@3 tlsx
You have 25 outdated formulae installed.
You can upgrade them with brew upgrade
or list them with brew outdated.
==> Tapping codefresh-io/cli
Cloning into '/home/linuxbrew/.linuxbrew/Homebrew/Library/Taps/codefresh-io/homebrew-cli'...
remote: Enumerating objects: 3032, done.
remote: Counting objects: 100% (341/341), done.
remote: Compressing objects: 100% (295/295), done.
remote: Total 3032 (delta 108), reused 182 (delta 45), pack-reused 2691
Receiving objects: 100% (3032/3032), 343.28 KiB | 2.91 MiB/s, done.
Resolving deltas: 100% (752/752), done.
Tapped 2 formulae (15 files, 495.0KB).
==> Downloading https://ghcr.io/v2/homebrew/core/go/manifests/1.18.5
######################################################################## 100.0%
==> Downloading https://ghcr.io/v2/homebrew/core/go/blobs/sha256:dff2b1ca994651bf3a9141a8cbc64e5ab347ac20324f064d27128022a38355b3
==> Downloading from https://pkg-containers.githubusercontent.com/ghcr1/blobs/sha256:dff2b1ca994651bf3a9141a8cbc64e5ab347ac20324f064d27128022a38355b3?se=2022-08-03T12%3A10%3A00Z&sig=1Gc%2FJCmLd
######################################################################## 100.0%
==> Cloning https://github.com/codefresh-io/cli-v2.git
Cloning into '/home/builder/.cache/Homebrew/cf2--git'...
==> Checking out tag v0.0.455
HEAD is now at b21cbf4 bump app-proxy (#510)
==> Installing cf2 from codefresh-io/cli
==> Installing dependencies for codefresh-io/cli/cf2: go
==> Installing codefresh-io/cli/cf2 dependency: go
==> Pouring go--1.18.5.x86_64_linux.bottle.tar.gz
🍺 /home/linuxbrew/.linuxbrew/Cellar/go/1.18.5: 11,988 files, 560.8MB
==> Installing codefresh-io/cli/cf2
==> make cli-package DEV_MODE=false
🍺 /home/linuxbrew/.linuxbrew/Cellar/cf2/0.0.455: 6 files, 119.4MB, built in 45 seconds
==> `brew cleanup` has not been run in the last 30 days, running now...
Disable this behaviour by setting HOMEBREW_NO_INSTALL_CLEANUP.
Hide these hints with HOMEBREW_NO_ENV_HINTS (see `man brew`).
Removing: /home/builder/.cache/Homebrew/kubernetes-cli--1.24.2... (13.4MB)
Removing: /home/builder/.cache/Homebrew/kustomize--4.5.5... (6.9MB)
Removing: /home/builder/.cache/Homebrew/libidn2--2.3.2... (253.7KB)
Removing: /home/builder/.cache/Homebrew/libnghttp2--1.47.0... (227.2KB)
Removing: /home/builder/.cache/Homebrew/tfenv--2.2.3... (24.7KB)
Removing: /home/builder/.cache/Homebrew/kn_bottle_manifest--1.3.1... (6.2KB)
Removing: /home/builder/.cache/Homebrew/go_mod_cache... (43,157 files, 729.7MB)
Removing: /home/builder/.cache/Homebrew/go_cache... (6,400 files, 825.4MB)
```

Then I needed to log in:

```
$ cf config create-context codefresh --api-key asdfasdfasdfasdfasdfasdfasdfasdfasdfasdfasdfasdf
INFO New context created: "codefresh"
INFO Switched to context: codefresh
```

Lastly, I added the cluster:

```
$ cf cluster add codefresh-hosted
loft-vcluster_codefresh-working-2_vcluster-codefresh-working-2-p5gby_loft-cluster
INFO Building "add-cluster" manifests
serviceaccount/argocd-manager-1659528328473 created
clusterrole.rbac.authorization.k8s.io/argocd-manager-role-1659528328473 created
clusterrolebinding.rbac.authorization.k8s.io/argocd-manager-role-binding-1659528328473 created
configmap/csdp-add-cluster-cm-1659528328473 created
secret/csdp-add-cluster-secret-1659528328473 created
job.batch/csdp-add-cluster-job-1659528328473 created
WARNING Attempt #1/1 failed:
=====
ServiceAccount: argocd-manager-1659528328473
Ingress URL: https://mr-62e3d9f588d8af3a8b8581d3-d6dd0e5.cf-cd.com
Context Name: loft-vcluster-codefresh-working-2-vcluster-codefresh-working
Server: https://loft.freshbrewed.science/kubernetes/virtualcluster/loft-cluster/vcluster-codefresh-working-2-p5gby/codefresh-working-2
Found ServiceAccount secret argocd-manager-1659528328473-token-hmwjt
Cluster "loft.freshbrewed.science/kubernetes/virtualcluster/loft-cluster/vcluster-codefresh-working-2-p5gby/codefresh-working-2" set.
User "argocd-manager-1659528328473" set.
Context "loft-vcluster-codefresh-working-2-vcluster-codefresh-working" created.
STATUS_CODE: 500
{"statusCode":500,"message":"Failed adding cluster \"loft-vcluster-codefresh-working-2-vcluster-codefresh-working\", error: Internal Server Error"}
error creating cluster in runtime
=====
cluster loft-vcluster-codefresh-working-2-vcluster-codefresh-working was added successfully to runtime codefresh-hosted
```

Hmm… that failed, even though the last line claims the cluster was added successfully.

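Let’s ensure we have a proper ingress setup. I installed the NGINX Ingress Controller from its Helm chart, then added the default-class annotation to its IngressClass: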

```
builder@DESKTOP-QADGF36:~/Workspaces/kubernetes-ingress/deployments/helm-chart$ helm install nginx-ingress-release .
NAME: nginx-ingress-release
LAST DEPLOYED: Wed Aug 3 07:22:33 2022
NAMESPACE: default
STATUS: deployed
REVISION: 1
TEST SUITE: None
NOTES:
The NGINX Ingress Controller has been installed.
builder@DESKTOP-QADGF36:~/Workspaces/kubernetes-ingress/deployments/helm-chart$ kubectl get ingressclass -o yaml > ingress.class.yaml
builder@DESKTOP-QADGF36:~/Workspaces/kubernetes-ingress/deployments/helm-chart$ vi ingress.class.yaml
builder@DESKTOP-QADGF36:~/Workspaces/kubernetes-ingress/deployments/helm-chart$ kubectl get ingressclass -o yaml > ingress.class.yaml.bak
builder@DESKTOP-QADGF36:~/Workspaces/kubernetes-ingress/deployments/helm-chart$ diff ingress.class.yaml ingress.class.yaml.bak
9d8
< ingressclass.kubernetes.io/is-default-class: "true"
builder@DESKTOP-QADGF36:~/Workspaces/kubernetes-ingress/deployments/helm-chart$ kubectl apply -f ingress.class.yaml
Warning: resource ingressclasses/nginx is missing the kubectl.kubernetes.io/last-applied-configuration annotation which is required by kubectl apply. kubectl apply should only be used on resources created declaratively by either kubectl create --save-config or kubectl apply. The missing annotation will be patched automatically.
ingressclass.networking.k8s.io/nginx configured
```
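Editing the IngressClass in `vi` works, but the same change can be made non-interactively with `kubectl patch ingressclass nginx --type merge -p '<patch>'`, which also avoids the `last-applied-configuration` warning from `kubectl apply`. A minimal Python sketch that builds that JSON merge patch; `default_ingressclass_patch` is a hypothetical helper, and only the annotation key comes from the diff above.

```python
import json

# Annotation Kubernetes uses to mark the cluster-wide default IngressClass.
DEFAULT_CLASS_ANNOTATION = "ingressclass.kubernetes.io/is-default-class"


def default_ingressclass_patch(is_default: bool = True) -> str:
    """Return a JSON merge patch that sets (or clears) the default-class annotation.

    Annotation values must be strings, hence "true"/"false" rather than JSON booleans.
    """
    patch = {
        "metadata": {
            "annotations": {DEFAULT_CLASS_ANNOTATION: str(is_default).lower()}
        }
    }
    return json.dumps(patch)


if __name__ == "__main__":
    # Paste the output into: kubectl patch ingressclass nginx --type merge -p '<output>'
    print(default_ingressclass_patch())
```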

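Sadly, in my Loft system, new LoadBalancer services cannot get external IPs, which is a problem. The host cluster’s own ingress service has addresses, but the one synced from the vCluster stays `<pending>`: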

```
NAMESPACE                            NAME                                                              TYPE           CLUSTER-IP      EXTERNAL-IP                                             PORT(S)                      AGE
default                              nginx-ingress-release-nginx-ingress                               LoadBalancer   10.43.17.245    192.168.1.214,192.168.1.38,192.168.1.57,192.168.1.77    80:32547/TCP,443:31994/TCP   8d
vcluster-codefresh-working-2-p5gby   nginx-ingress-release-nginx-ingress-x-default-x-code-dbad7aa412   LoadBalancer   10.43.255.233   <pending>                                               80:32354/TCP,443:31169/TCP   7m50s
vcluster-codefresh-working-2-p5gby   codefresh-working-2-node-builder-hp-elitebook-850-g2              ClusterIP      10.43.0.221     <none>                                                  10250/TCP
```
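To spot this condition without eyeballing the table, here is a minimal Python sketch that scans plain-text `kubectl get svc -A` output for LoadBalancer services still waiting on an external IP. `pending_load_balancers` is a hypothetical helper; it assumes the default column layout shown above (no spaces inside any cell), and parsing `kubectl get svc -A -o json` would be sturdier for anything beyond a quick check.

```python
from typing import List, Tuple


def pending_load_balancers(svc_output: str) -> List[Tuple[str, str]]:
    """Return (namespace, name) for LoadBalancer services whose EXTERNAL-IP is <pending>.

    Expects the plain-text table printed by `kubectl get svc -A`, header included.
    """
    lines = [line for line in svc_output.splitlines() if line.strip()]
    header = lines[0].split()
    ns_i, name_i = header.index("NAMESPACE"), header.index("NAME")
    type_i, ext_i = header.index("TYPE"), header.index("EXTERNAL-IP")

    pending = []
    for line in lines[1:]:
        cols = line.split()
        if len(cols) <= ext_i:
            continue  # truncated row; skip rather than guess
        if cols[type_i] == "LoadBalancer" and cols[ext_i] == "<pending>":
            pending.append((cols[ns_i], cols[name_i]))
    return pending
```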

Loft and AKS

I’ll pivot from my on-prem cluster and use Azure Kubernetes Service (AKS), which eliminates the issues with LoadBalancers.

First, I’ll create a resource group for this work

```
$ az group create -n aksloftrg --location centralus
{
  "id": "/subscriptions/8defc61d-asdf-asdf-sadf-cb9f91289a61/resourceGroups/aksloftrg",
  "location": "centralus",
  "managedBy": null,
  "name": "aksloftrg",
  "properties": {
    "provisioningState": "Succeeded"
  },
  "tags": null,
  "type": "Microsoft.Resources/resourceGroups"
}
```

I need some service principal (SP) credentials set locally for creating the AKS cluster:

```
$ az ad sp create-for-rbac --name idjaks45sp.DOMAIN.onmicrosoft.com --skip-assignment --output json > mysp.json
WARNING: Found an existing application instance of "b2049cf3-asdf-asdf-asfd-24305989b5a5". We will patch it
WARNING: The output includes credentials that you must protect. Be sure that you do not include these credentials in your code or check the credentials into your source control. For more information, see https://aka.ms/azadsp-cli
WARNING: 'name' property in the output is deprecated and will be removed in the future. Use 'appId' instead.
$ export SP_PASS=`cat mysp.json | jq -r .password`
$ export SP_ID=`cat mysp.json | jq -r .appId`
```

Then I can create the cluster

```
$ az aks create -n idjaksloft1 -g aksloftrg --location centralus --node-count 3 --enable-cluster-autoscaler --min-count 2 --max-count 4 --generate-ssh-keys --network-plugin azure --network-policy azure --service-principal $SP_ID --client-secret $SP_PASS
```

Verification

```
$ az aks get-credentials -n idjaksloft1 -g aksloftrg --admin
/home/builder/.kube/config has permissions "700".
It should be readable and writable only by its owner.
Merged "idjaksloft1-admin" as current context in /home/builder/.kube/config
$ kubectl get nodes
NAME                                STATUS   ROLES   AGE   VERSION
aks-nodepool1-30313772-vmss000000   Ready    agent   72s   v1.22.11
aks-nodepool1-30313772-vmss000001   Ready    agent   71s   v1.22.11
aks-nodepool1-30313772-vmss000002   Ready    agent   90s   v1.22.11
```

Next, I’ll install Loft into the AKS cluster:

```
$ loft start
? Seems like you try to use 'loft start' with a different kubernetes context than before. Please choose which kubernetes context you want to use
idjaksloft1-admin
[info] Welcome to Loft!
[info] This installer will help you configure and deploy Loft.
? Enter your email address to create the login for your admin user isaac.johnson@gmail.com
[info] Executing command: helm upgrade loft loft --install --reuse-values --create-namespace --repository-config='' --kube-context idjaksloft1-admin --namespace loft --repo https://charts.loft.sh/ --set admin.email=isaac.johnson@gmail.com --set admin.password=da6e68ae-asdf-asdf-asdf-e2ae2edb5382 --reuse-values
[done] √ Loft has been deployed to your cluster!
[done] √ Loft pod successfully started
[info] Starting port-forwarding to the Loft pod
[info] Waiting until loft is reachable at https://localhost:9898

########################## LOGIN ############################

Username: admin
Password: da6e68ae-asdf-asdf-asdf-e2ae2edb5382 # Change via UI or via: loft reset password

Login via UI: https://localhost:9898
Login via CLI: loft login --insecure https://localhost:9898

!!! You must accept the untrusted certificate in your browser !!!

#################################################################

Loft was successfully installed and port-forwarding has been started.
If you stop this command, run 'loft start' again to restart port-forwarding.

Thanks for using Loft!
```

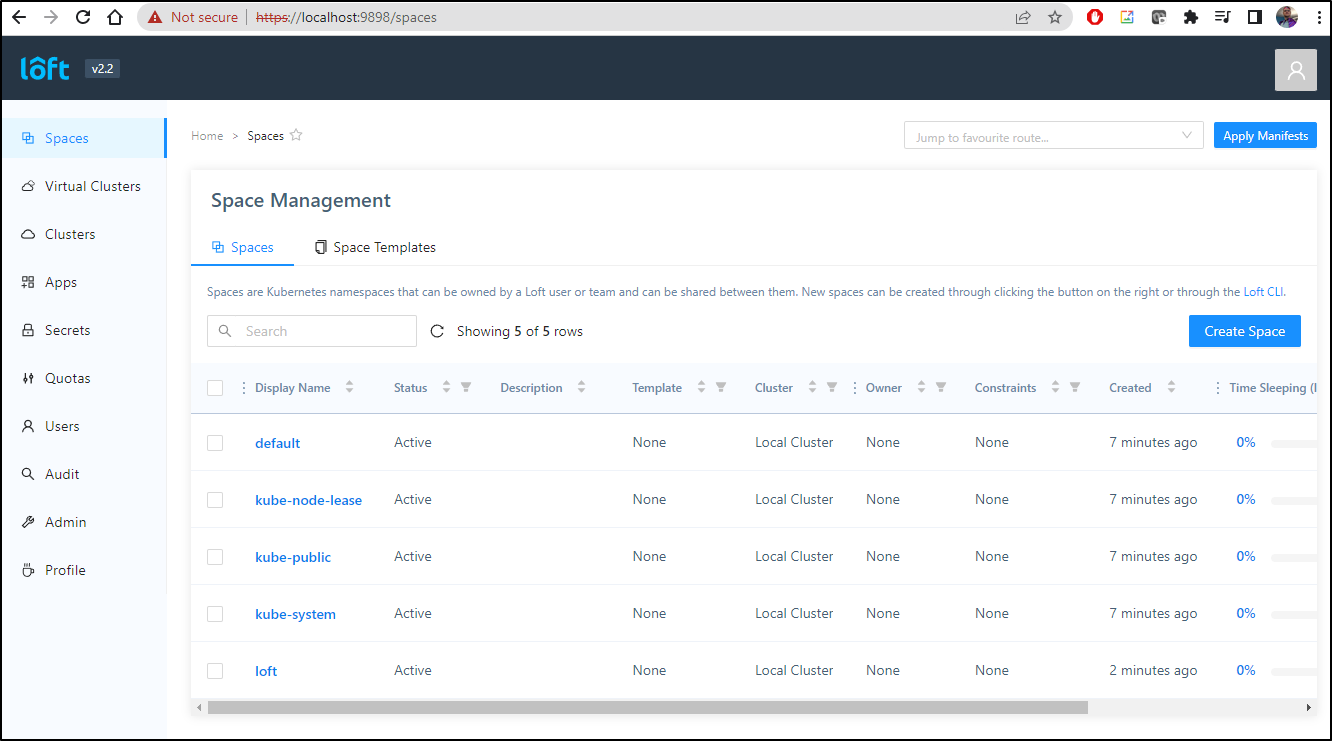

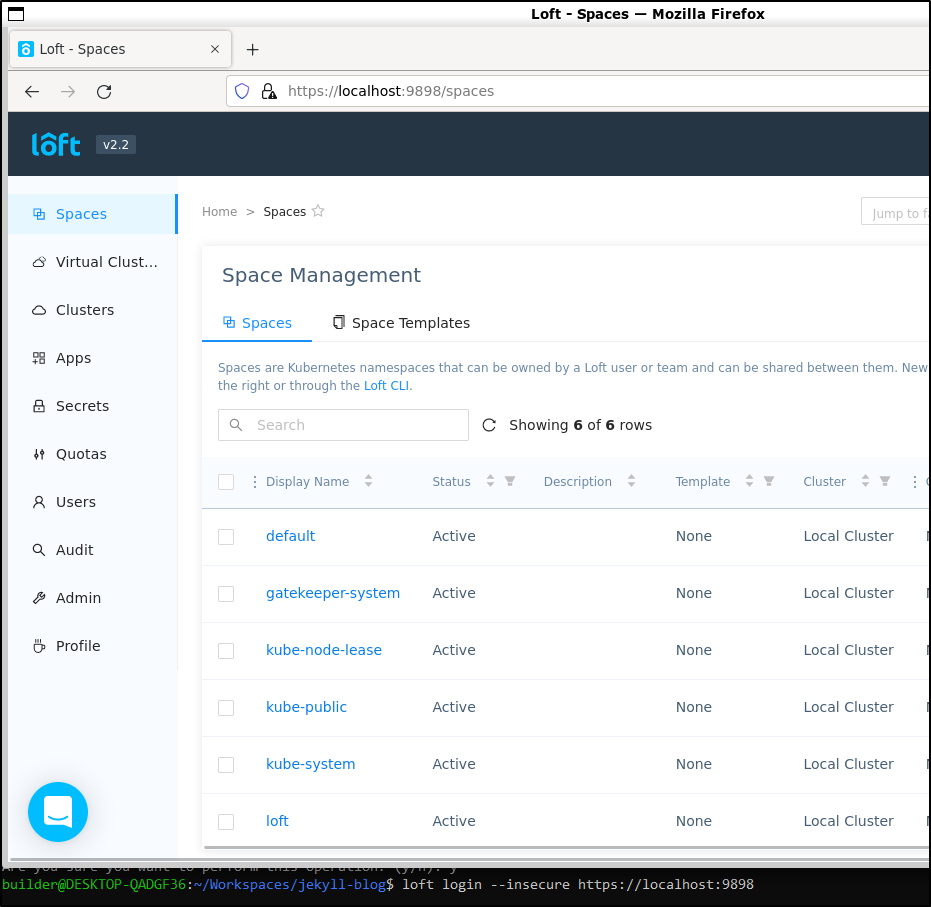

I now have Loft running in AKS, reachable locally through the port-forward at https://localhost:9898.

It’s always worth resetting the admin password as your first step

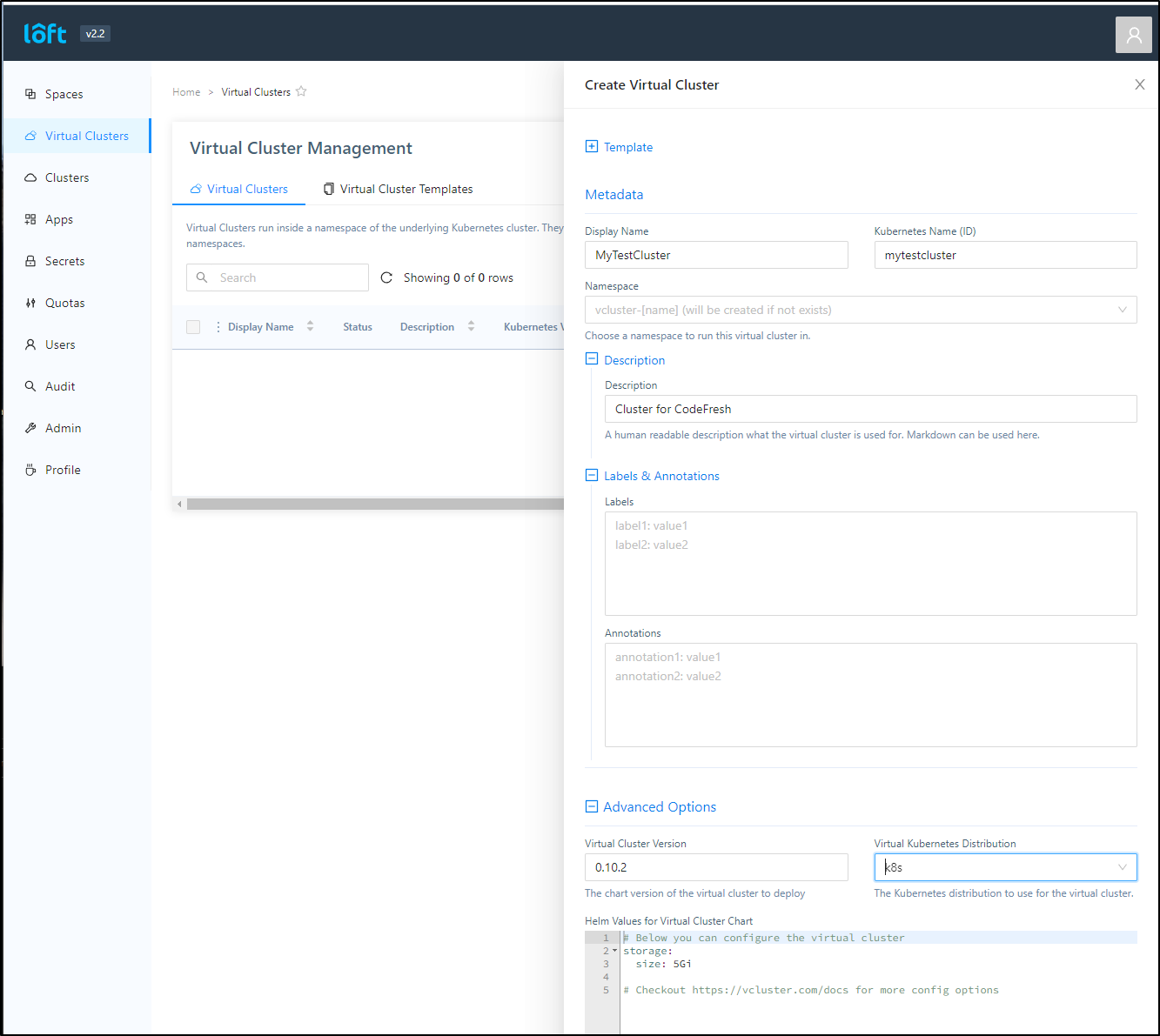

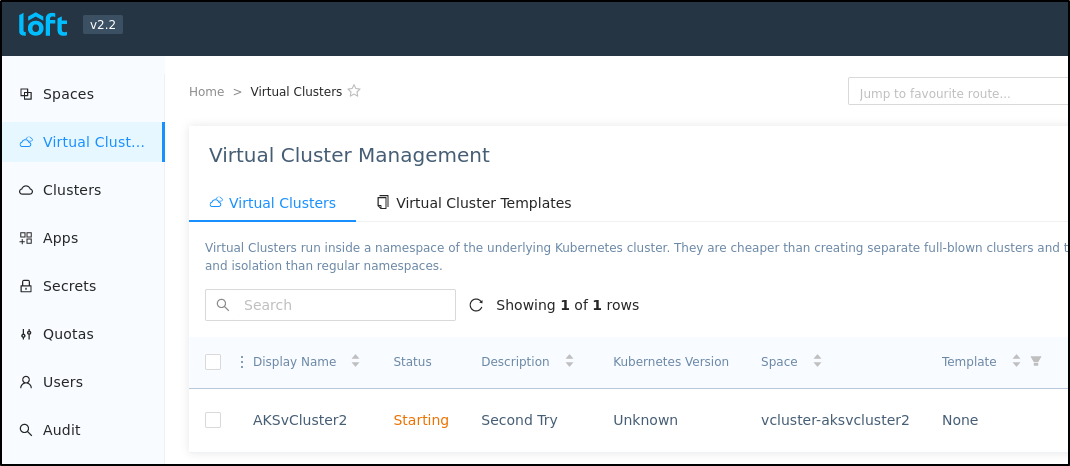

Next, I’ll create a new vCluster (making sure to choose k8s as the template)

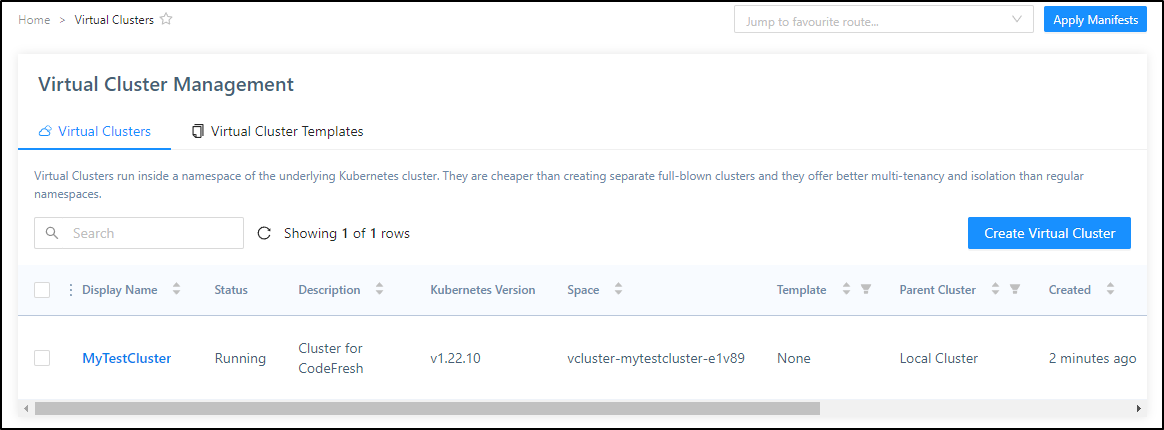

Once we see that the cluster is running, we can connect to it locally:

```
$ loft connect vcluster -n vcluster-mytestcluster-e1v89 &
[1] 28066
$ [info] Found vcluster pod mytestcluster-9f55f97db-6dj87 in namespace vcluster-mytestcluster-e1v89
[done] √ Virtual cluster kube config written to: ./kubeconfig.yaml. You can access the cluster via `kubectl --kubeconfig ./kubeconfig.yaml get namespaces`
[info] Starting port forwarding on port 8443:8443
Forwarding from 127.0.0.1:8443 -> 8443
Forwarding from [::1]:8443 -> 8443

$ kubectl --kubeconfig ./kubeconfig.yaml get namespaces
Handling connection for 8443
NAME              STATUS   AGE
default           Active   5m28s
kube-node-lease   Active   5m29s
kube-public       Active   5m29s
kube-system       Active   5m29s
```

Let’s say you want to change your API key. You’ll need to delete the existing `codefresh` CLI context before you can create it again with the new key:

```
$ cf config delete-context codefresh
WARNING delete context is set as current context, specify a new current context with 'cf config use-context'
INFO Deleted context: codefresh
$ cf config create-context codefresh --api-key adsfasdfsasadfasdf.asdfasfasfasfasfasfsafasdf
INFO New context created: "codefresh"
INFO Switched to context: codefresh
```

We can now add our AKS cluster to the Codefresh hosted runtime (`codefresh-hosted`):

```
$ cf cluster add codefresh-hosted
idjaksloft1-admin
INFO Building "add-cluster" manifests
serviceaccount/argocd-manager-1659700744226 created
clusterrole.rbac.authorization.k8s.io/argocd-manager-role-1659700744226 created
clusterrolebinding.rbac.authorization.k8s.io/argocd-manager-role-binding-1659700744226 created
configmap/csdp-add-cluster-cm-1659700744226 created
secret/csdp-add-cluster-secret-1659700744226 created
job.batch/csdp-add-cluster-job-1659700744226 created
INFO Attempt #1/1 succeeded:
=====
ServiceAccount: argocd-manager-1659700744226
Ingress URL: https://mr-62e3d9f588d8af3a8b8581d3-d6dd0e5.cf-cd.com
Context Name: idjaksloft1-admin
Server: https://idjaksloft-aksloftrg-8defc6-aab57dc3.hcp.centralus.azmk8s.io:443
Found ServiceAccount secret argocd-manager-1659700744226-token-66c97
Cluster "idjaksloft-aksloftrg-8defc6-aab57dc3.hcp.centralus.azmk8s.io:443" set.
User "argocd-manager-1659700744226" set.
Context "idjaksloft1-admin" created.
STATUS_CODE: 201
deleting token secret csdp-add-cluster-secret-1659700744226
secret "csdp-add-cluster-secret-1659700744226" deleted
=====
cluster idjaksloft1-admin was added successfully to runtime codefresh-hosted
builder@DESKTOP-QADGF36:~$
```

We should be able to add our Loft vCluster in much the same way.

To avoid confusion, I’ll back up the AKS kubeconfig and move the vCluster kubeconfig into place:

```
builder@DESKTOP-QADGF36:~$ cp ~/.kube/config ~/.kube/aks-config
builder@DESKTOP-QADGF36:~$ cp /home/builder/Workspaces/jekyll-blog/kubeconfig.yaml ~/.kube/config
builder@DESKTOP-QADGF36:~$ kubectl get nodes
Handling connection for 8443
NAME                                STATUS   ROLES    AGE   VERSION
aks-nodepool1-30313772-vmss000001   Ready    <none>   37m   v1.22.10
```

When I tried this with my vCluster, I got the same error as I did on my on-prem k3s cluster:

```
$ cf cluster add codefresh-hosted
kubernetes-admin@kubernetes
INFO Building "add-cluster" manifests
Handling connection for 8443
serviceaccount/argocd-manager-1659700986941 created
clusterrole.rbac.authorization.k8s.io/argocd-manager-role-1659700986941 created
clusterrolebinding.rbac.authorization.k8s.io/argocd-manager-role-binding-1659700986941 created
configmap/csdp-add-cluster-cm-1659700986941 created
secret/csdp-add-cluster-secret-1659700986941 created
job.batch/csdp-add-cluster-job-1659700986941 created
WARNING Attempt #1/1 failed:
=====
ServiceAccount: argocd-manager-1659700986941
Ingress URL: https://mr-62e3d9f588d8af3a8b8581d3-d6dd0e5.cf-cd.com
Context Name: kubernetes-admin-kubernetes
Server: https://localhost:8443
Found ServiceAccount secret argocd-manager-1659700986941-token-xz46k
Cluster "localhost:8443" set.
User "argocd-manager-1659700986941" set.
Context "kubernetes-admin-kubernetes" created.
STATUS_CODE: 500
{"statusCode":500,"message":"Failed adding cluster \"kubernetes-admin-kubernetes\", error: Internal Server Error"}
error creating cluster in runtime
=====
cluster kubernetes-admin-kubernetes was added successfully to runtime codefresh-hosted
```

The reason is that this vCluster is not exposed externally. To use a cluster as an Argo endpoint, Codefresh.io essentially onboards your kubeconfig, including its server address. If that address is `https://localhost:8443` (as it is here, via the port-forward), the hosted runtime has no way to reach it.
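A quick pre-flight check can catch this case before running `cf cluster add`. The sketch below (the helper name `server_is_local` is hypothetical) flags kubeconfig server URLs that point at `localhost`, loopback, or private IP ranges. It does not resolve DNS names, so it would not have flagged the earlier on-prem attempt, whose server was a public Loft URL; it only explains this particular failure.

```python
import ipaddress
from urllib.parse import urlparse


def server_is_local(server_url: str) -> bool:
    """True if a kubeconfig `server:` URL points at loopback or a private address.

    Such addresses are only reachable from your own machine or network, so a
    SaaS control plane cannot connect to them. DNS names other than "localhost"
    are not resolved and return False.
    """
    host = urlparse(server_url).hostname or ""
    if host == "localhost":
        return True
    try:
        ip = ipaddress.ip_address(host)
    except ValueError:
        return False  # a DNS name; reachability depends on where it resolves
    return ip.is_loopback or ip.is_private or ip.is_link_local


if __name__ == "__main__":
    print(server_is_local("https://localhost:8443"))  # True
```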

Hybrid Runtime

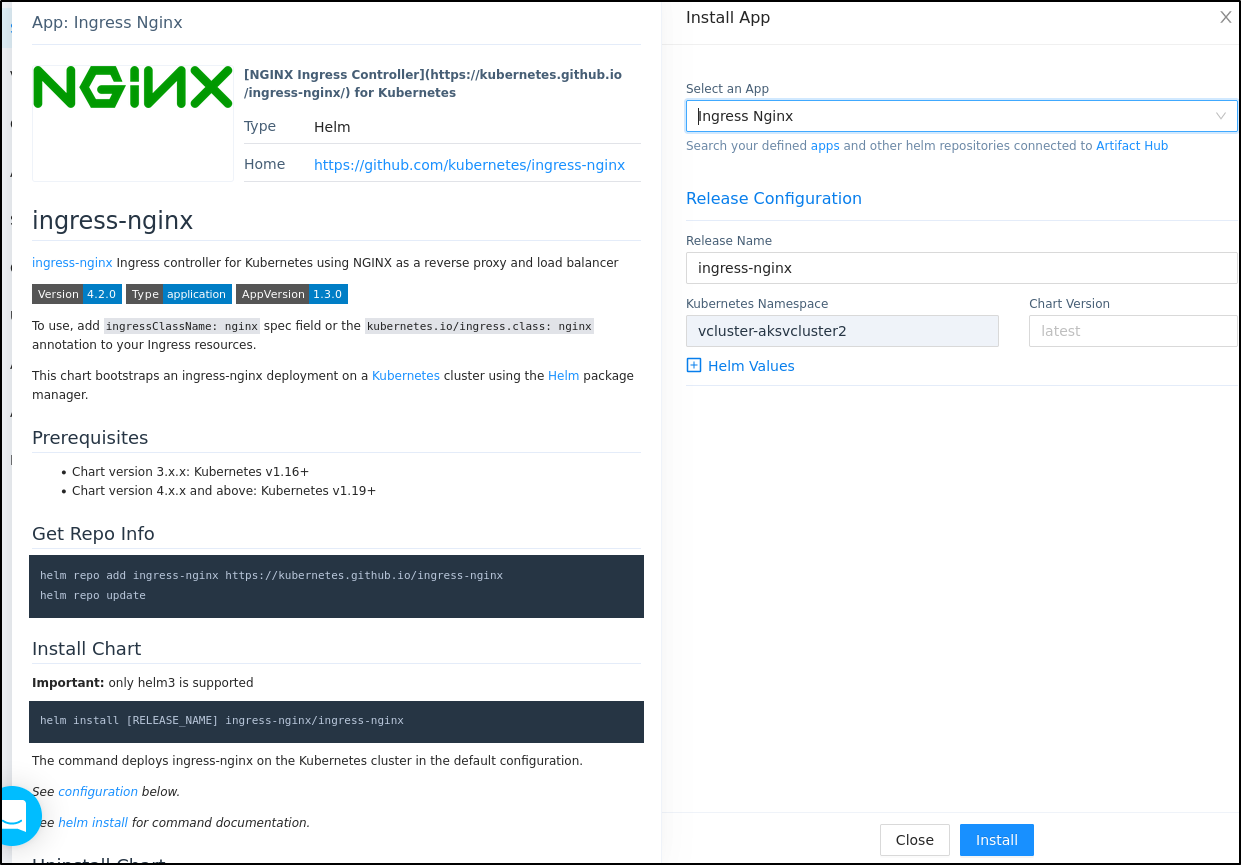

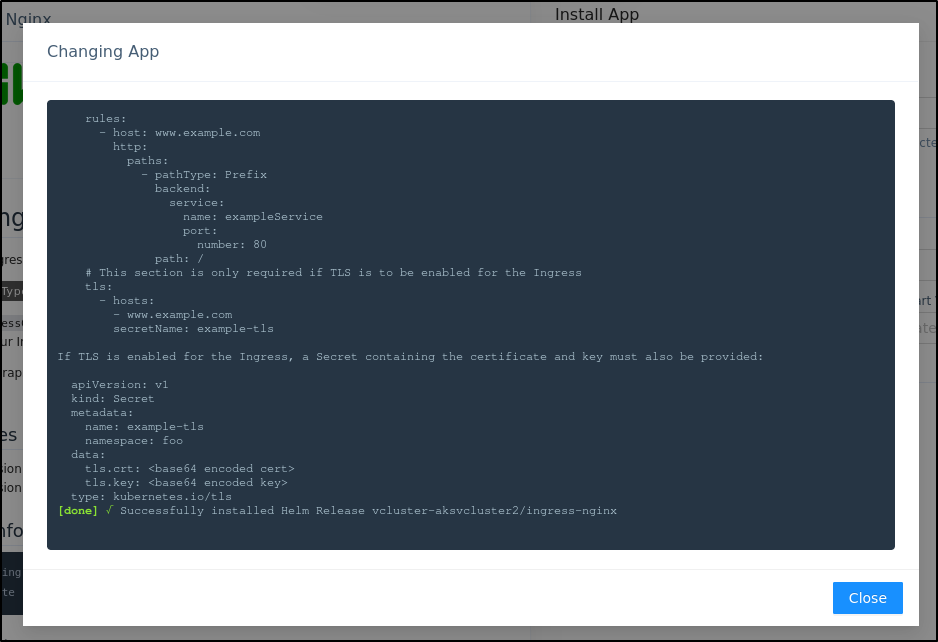

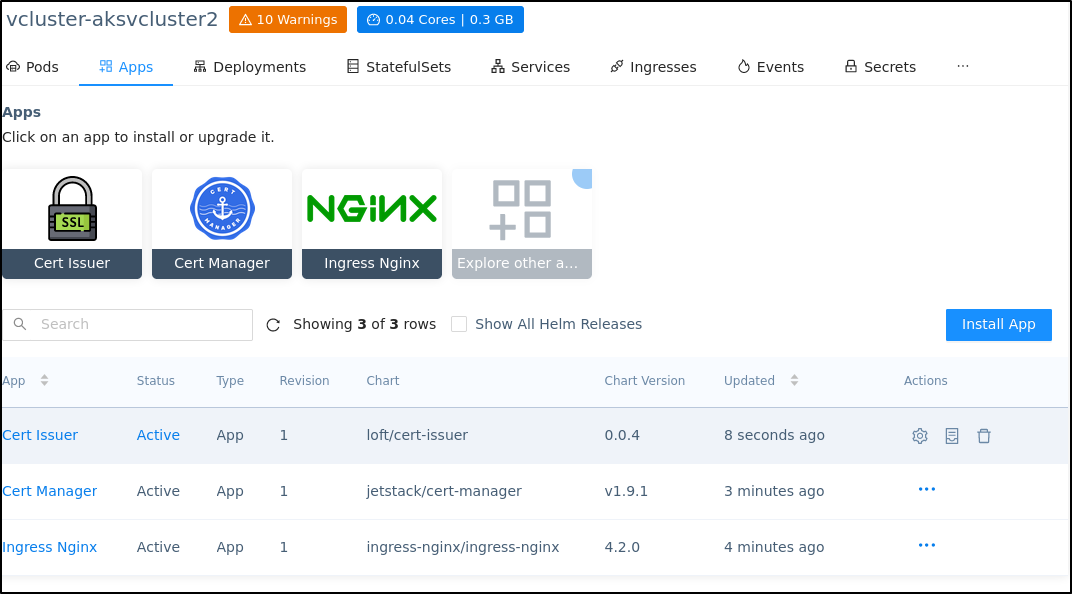

To install the hybrid runtime, we first need to add an ingress controller to the vCluster (again using the NGINX Helm chart):

```
$ helm install my-release .
Handling connection for 8443
NAME: my-release
LAST DEPLOYED: Fri Aug 5 07:15:18 2022
NAMESPACE: default
STATUS: deployed
REVISION: 1
TEST SUITE: None
NOTES:
The NGINX Ingress Controller has been installed.
```

Then I patched the IngressClass to make it the default:

```
$ kubectl get ingressclass
Handling connection for 8443
NAME    CONTROLLER                     PARAMETERS   AGE
nginx   nginx.org/ingress-controller   <none>       81s
$ kubectl get ingressclass nginx -o yaml > ingress.class.yaml
$ kubectl get ingressclass nginx -o yaml > ingress.class.yaml.bak
$ vi ingress.class.yaml
$ diff ingress.class.yaml ingress.class.yaml.bak
7d6
< ingressclass.kubernetes.io/is-default-class: "true"
$ kubectl apply -f ingress.class.yaml
Handling connection for 8443
Warning: resource ingressclasses/nginx is missing the kubectl.kubernetes.io/last-applied-configuration annotation which is required by kubectl apply. kubectl apply should only be used on resources created declaratively by either kubectl create --save-config or kubectl apply. The missing annotation will be patched automatically.
ingressclass.networking.k8s.io/nginx configured
```

Next, I installed cert-manager so I could create cluster issuers:

```
$ kubectl apply -f https://github.com/cert-manager/cert-manager/releases/download/v1.8.0/cert-manager.yaml
```

Then I configured it with my Route53 credentials secret and the Let’s Encrypt ClusterIssuers:

```
$ kubectl apply -f prod-route53-credentials-secret.yaml
Handling connection for 8443
secret/prod-route53-credentials-secret created
$ cat cm-issuer.yml
apiVersion: cert-manager.io/v1
kind: ClusterIssuer
metadata:
  name: letsencrypt-staging
spec:
  acme:
    email: isaac.johnson@gmail.com
    server: https://acme-staging-v02.api.letsencrypt.org/directory
    privateKeySecretRef:
      name: letsencrypt-staging
    solvers:
    - http01:
        ingress:
          class: nginx
---
apiVersion: cert-manager.io/v1
kind: ClusterIssuer
metadata:
  name: letsencrypt-prod-old
spec:
  acme:
    server: https://acme-v02.api.letsencrypt.org/directory
    email: isaac.johnson@gmail.com
    privateKeySecretRef:
      name: letsencrypt-prod
    solvers:
    - http01:
        ingress:
          class: nginx
---
$ kubectl apply -f cm-issuer.yml
Handling connection for 8443
clusterissuer.cert-manager.io/letsencrypt-staging created
clusterissuer.cert-manager.io/letsencrypt-prod-old created
clusterissuer.cert-manager.io/letsencrypt-prod created
```

Issuing a certificate will need a DNS A record for the HTTPS hostname. First, we check the external IP Azure gave us:

```
$ kubectl get svc
Handling connection for 8443
NAME                       TYPE           CLUSTER-IP    EXTERNAL-IP     PORT(S)                      AGE
kubernetes                 ClusterIP      10.0.51.190   <none>          443/TCP                      67m
my-release-nginx-ingress   LoadBalancer   10.0.31.235   52.185.102.85   80:32189/TCP,443:30087/TCP   17m
```

Then we use it in the A record:

```
$ cat r53-aksdemo.json
{
  "Comment": "CREATE aksdemo2 fb.s A record ",
  "Changes": [
    {
      "Action": "CREATE",
      "ResourceRecordSet": {
        "Name": "aksdemo2.freshbrewed.science",
        "Type": "A",
        "TTL": 300,
        "ResourceRecords": [
          {
            "Value": "52.185.102.85"
          }
        ]
      }
    }
  ]
}
$ aws route53 change-resource-record-sets --hosted-zone-id Z39E8QFU0F9PZP --change-batch file://r53-aksdemo.json
{
  "ChangeInfo": {
    "Id": "/change/C0612256KQT00WF0BWSQ",
    "Status": "PENDING",
    "SubmittedAt": "2022-08-05T12:33:58.605Z",
    "Comment": "CREATE aksdemo2 fb.s A record "
  }
}
```
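Rather than hand-writing the change batch, it can be generated. Below is a minimal Python sketch, assuming the same single-A-record shape as `r53-aksdemo.json` above; `a_record_change_batch` is a hypothetical helper. Route53 also accepts `UPSERT`, which is handy when re-running a demo because `CREATE` fails if the record already exists.

```python
import json


def a_record_change_batch(name: str, ip: str, ttl: int = 300,
                          action: str = "CREATE", comment: str = "") -> dict:
    """Build a Route53 ChangeBatch containing a single A record change."""
    if action not in ("CREATE", "UPSERT", "DELETE"):
        raise ValueError(f"unsupported action: {action}")
    return {
        "Comment": comment or f"{action} {name} A record",
        "Changes": [{
            "Action": action,
            "ResourceRecordSet": {
                "Name": name,
                "Type": "A",
                "TTL": ttl,
                "ResourceRecords": [{"Value": ip}],
            },
        }],
    }


if __name__ == "__main__":
    # Redirect this to r53-aksdemo.json and pass it via --change-batch file://r53-aksdemo.json
    batch = a_record_change_batch("aksdemo2.freshbrewed.science", "52.185.102.85")
    print(json.dumps(batch, indent=2))
```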

Next, we need a certificate:

```
$ cat cert-aksdemo.yaml
apiVersion: cert-manager.io/v1
kind: Certificate
metadata:
  name: aksdemo2-cert
  namespace: default
spec:
  dnsNames:
  - aksdemo2.freshbrewed.science
  issuerRef:
    group: cert-manager.io
    kind: ClusterIssuer
    name: letsencrypt-prod
  secretName: aksdemo2-cert-tls
$ kubectl apply -f cert-aksdemo.yaml
Handling connection for 8443
certificate.cert-manager.io/aksdemo2-cert created
```

After the ACME challenges complete, we can see that the certificate is ready:

```
$ kubectl get cert
Handling connection for 8443
NAME            READY   SECRET              AGE
aksdemo-cert    False   aksdemo-cert-tls    10m
aksdemo2-cert   True    aksdemo2-cert-tls   2m20s
```

We can pull the `tls.crt` and `tls.key` values from that secret (aksdemo2-cert-tls) and use them as the default TLS certificate for NGINX:

```
$ helm upgrade my-release --set controller.defaultTLS.cert=LS0.....0K --set controller.defaultTLS.key=LS0.......= .
Handling connection for 8443
Release "my-release" has been upgraded. Happy Helming!
NAME: my-release
LAST DEPLOYED: Fri Aug 5 07:49:14 2022
NAMESPACE: default
STATUS: deployed
REVISION: 2
TEST SUITE: None
NOTES:
The NGINX Ingress Controller has been installed.
```

And verify it works. I was pretty sure the Helm upgrade would not remove my default IngressClass annotation, but it was worth checking to be sure:

```
$ kubectl get ingressclass nginx -o yaml | grep is-default | head -n1
Handling connection for 8443
ingressclass.kubernetes.io/is-default-class: "true"
```

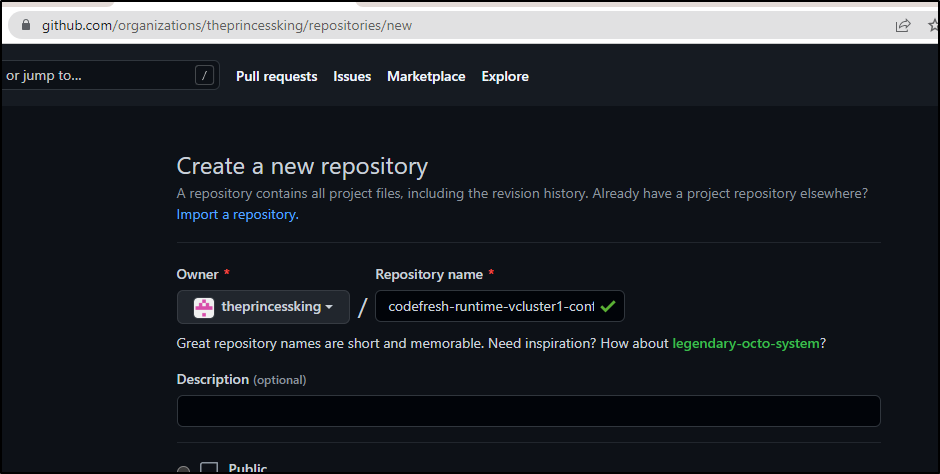
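The long `LS0...` values passed to `helm upgrade` earlier come straight from the secret: Kubernetes stores Secret `.data` fields base64-encoded, and `LS0` is how a PEM header (`-----BEGIN`) begins once base64-encoded. Below is a minimal Python sketch that turns `kubectl get secret aksdemo2-cert-tls -o json` output into the same `--set` flags; `default_tls_set_args` is a hypothetical helper, and the `controller.defaultTLS.cert`/`controller.defaultTLS.key` value names are taken from the command used in this post.

```python
import base64
import json
from typing import List


def default_tls_set_args(secret_json: str) -> List[str]:
    """Turn `kubectl get secret <name> -o json` output into helm --set flags.

    Secret .data values are already base64-encoded, so they are passed through as-is.
    """
    data = json.loads(secret_json)["data"]
    cert, key = data["tls.crt"], data["tls.key"]
    # Sanity check: both values should decode to PEM blocks.
    for value in (cert, key):
        if not base64.b64decode(value).startswith(b"-----BEGIN"):
            raise ValueError("secret value is not a base64-encoded PEM block")
    return [
        "--set", f"controller.defaultTLS.cert={cert}",
        "--set", f"controller.defaultTLS.key={key}",
    ]
```

Keep in mind that passing a private key on the command line leaves it in your shell history.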

Next, I need to create a GitHub repo in which to store the runtime config. With that and my GitHub API token handy, I can begin the Codefresh runtime install:

```
$ cf runtime install
Runtime name: codefresh
kubernetes-admin@kubernetes
INFO Retrieving ingress class info from your cluster...
Handling connection for 8443
INFO Using ingress class: nginx
WARNING You are using the NGINX enterprise edition (nginx.org/ingress-controller) as your ingress controller. To successfully install the runtime, configure all required settings, as described in : https://codefresh.io/csdp-docs/docs/runtime/requirements/
INFO Retrieving ingress controller info from your cluster...
✔ Ingress host: https://aksdemo2.freshbrewed.science
INFO Using ingress host: https://aksdemo2.freshbrewed.science
✔ Repository URL: https://github.com/theprincessking/codefresh-runtime-vcluster1-config
✔ Runtime git api token: ****************************************
INFO Personal git token was not provided. Using runtime git token to register user: "ijohnson". You may replace your personal git token at any time from the UI in the user settings
Yes (default)

Summary
Codefresh context: codefresh
Kube context: kubernetes-admin@kubernetes
Runtime name: codefresh
Repository URL: https://github.com/theprincessking/codefresh-runtime-vcluster1-config
Ingress host: https://aksdemo2.freshbrewed.science
Ingress class: nginx
Installing demo resources: true

Yes
Handling connection for 8443
INFO Running network test...
INFO Network test finished successfully
INFO Installing runtime "codefresh" version=0.0.461
namespace/codefresh created
customresourcedefinition.apiextensions.k8s.io/applications.argoproj.io created
customresourcedefinition.apiextensions.k8s.io/applicationsets.argoproj.io created
customresourcedefinition.apiextensions.k8s.io/appprojects.argoproj.io created
serviceaccount/argocd-application-controller created
serviceaccount/argocd-applicationset-controller created
serviceaccount/argocd-dex-server created
serviceaccount/argocd-redis created
serviceaccount/argocd-server created
role.rbac.authorization.k8s.io/argocd-application-controller created
role.rbac.authorization.k8s.io/argocd-applicationset-controller created
role.rbac.authorization.k8s.io/argocd-dex-server created
role.rbac.authorization.k8s.io/argocd-server created
role.rbac.authorization.k8s.io/codefresh-config-reader created
clusterrole.rbac.authorization.k8s.io/argocd-application-controller created
clusterrole.rbac.authorization.k8s.io/argocd-server created
rolebinding.rbac.authorization.k8s.io/argocd-application-controller created
rolebinding.rbac.authorization.k8s.io/argocd-applicationset-controller created
rolebinding.rbac.authorization.k8s.io/argocd-dex-server created
rolebinding.rbac.authorization.k8s.io/argocd-redis created
rolebinding.rbac.authorization.k8s.io/argocd-server created
rolebinding.rbac.authorization.k8s.io/codefresh-config-reader created
clusterrolebinding.rbac.authorization.k8s.io/argocd-application-controller created
clusterrolebinding.rbac.authorization.k8s.io/argocd-server created
configmap/argocd-cm created
configmap/argocd-cmd-params-cm created
configmap/argocd-gpg-keys-cm created
configmap/argocd-rbac-cm created
configmap/argocd-ssh-known-hosts-cm created
configmap/argocd-tls-certs-cm created
secret/argocd-secret created
service/argocd-applicationset-controller created
service/argocd-dex-server created
service/argocd-metrics created
service/argocd-redis created
service/argocd-repo-server created
service/argocd-server created
service/argocd-server-metrics created
deployment.apps/argocd-applicationset-controller created
deployment.apps/argocd-dex-server created
deployment.apps/argocd-redis created
deployment.apps/argocd-repo-server created
deployment.apps/argocd-server created
statefulset.apps/argocd-application-controller created
networkpolicy.networking.k8s.io/argocd-application-controller-network-policy created
networkpolicy.networking.k8s.io/argocd-dex-server-network-policy created
networkpolicy.networking.k8s.io/argocd-redis-network-policy created
networkpolicy.networking.k8s.io/argocd-repo-server-network-policy created
networkpolicy.networking.k8s.io/argocd-server-network-policy created
secret/autopilot-secret created
waiting for argo-cd to be ready...
```

After Argo CD was ready, I saw the installer continue:

```
application.argoproj.io/autopilot-bootstrap created
Handling connection for 8443
'admin:login' logged in successfully
Context 'autopilot' updated
INFO Pushing runtime definition to the installation repo
Handling connection for 8443
secret/codefresh-token created
secret/argocd-token created
INFO Creating component "events"
INFO Creating component "rollouts"
INFO Creating component "workflows"
INFO Creating component "app-proxy"
INFO Creating component "sealed-secrets"
INFO Pushing Master Ingress Manifest
INFO Pushing Argo Workflows ingress manifests
INFO Pushing App-Proxy ingress manifests
```

It continued on to the components…

```
INFO Pushing Event Reporter manifests
INFO Pushing Codefresh Workflow-Reporter manifests
INFO Pushing Codefresh Rollout-Reporter manifests
INFO Pushing demo pipelines to the new git-source repo
INFO Successfully created git-source: "default-git-source"
WARNING --provider not specified, assuming provider from url: github
Enumerating objects: 3423, done.
Counting objects: 100% (3423/3423), done.
Compressing objects: 100% (1524/1524), done.
Total 3423 (delta 2195), reused 2799 (delta 1701), pack-reused 0
INFO Successfully created git-source: "marketplace-git-source"
INFO Waiting for the runtime installation to complete...
COMPONENT           HEALTH STATUS   SYNC STATUS   VERSION                                                           ERRORS
✔ argo-cd           HEALTHY         SYNCED        quay.io/codefresh/argocd:v2.3.4-cap-CR-13082-improve-validation
✔ rollout-reporter  HEALTHY         SYNCED        quay.io/codefresh/argo-events:v1.7.1-cap-CR-13091
✔ workflow-reporter HEALTHY         SYNCED        quay.io/codefresh/argo-events:v1.7.1-cap-CR-13091
✔ events-reporter   HEALTHY         SYNCED        quay.io/codefresh/argo-events:v1.7.1-cap-CR-13091
↻ sealed-secrets    N/A             N/A           N/A
↻ app-proxy         N/A             N/A           N/A
↻ workflows         N/A             N/A           N/A
↻ rollouts          N/A             N/A           N/A
↻ events            N/A             N/A           N/A
```

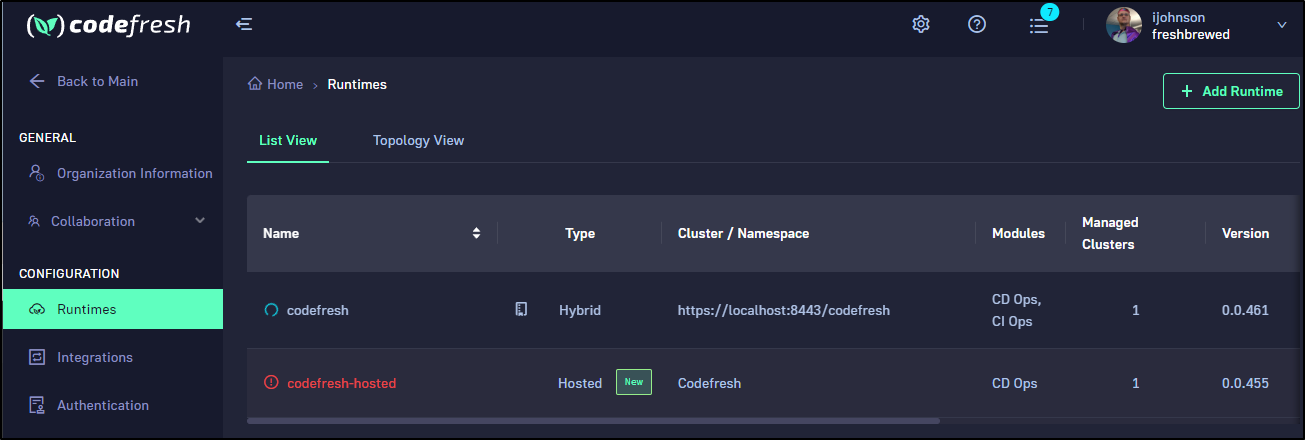

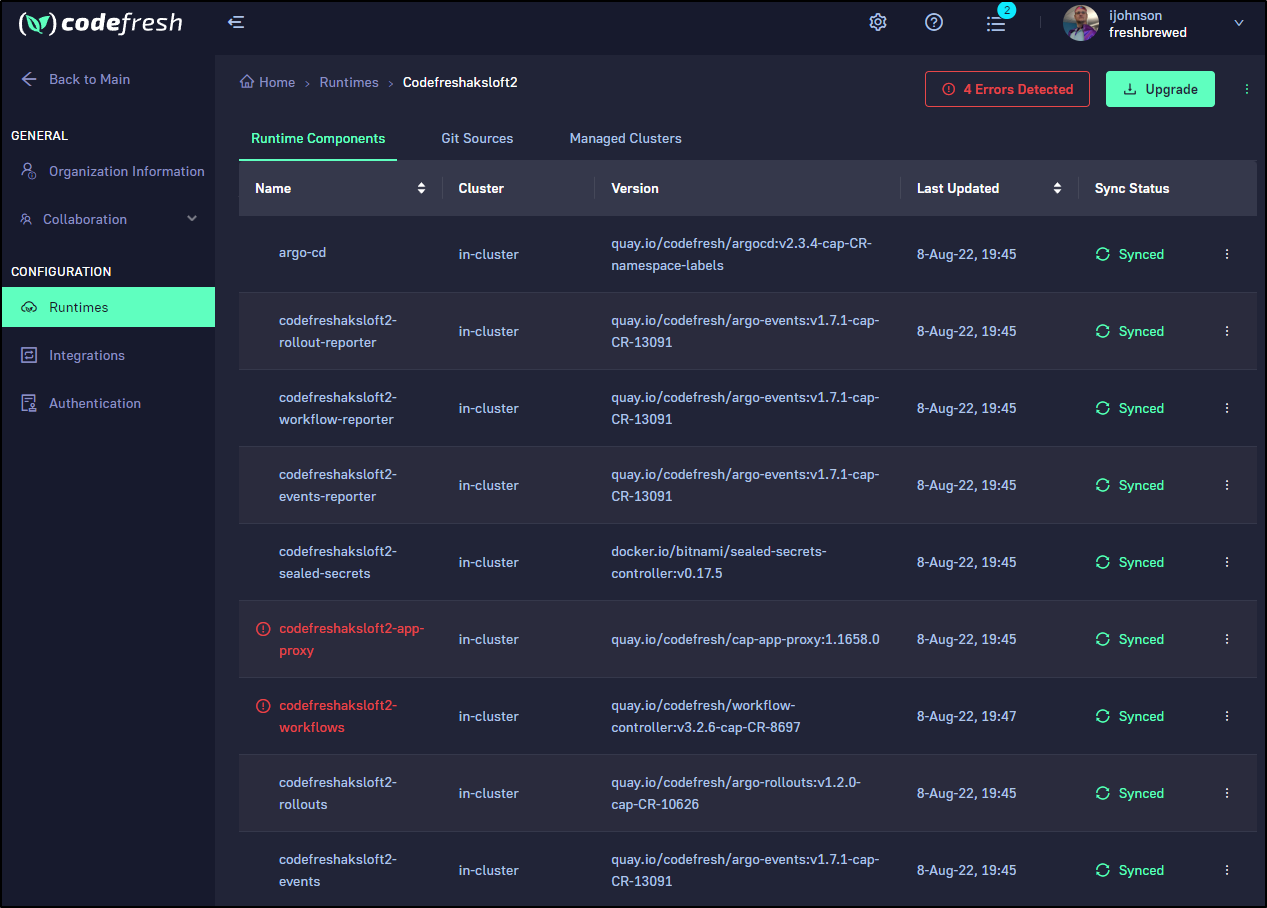

At that time, I could see Codefresh was installing the runtime in the Runtimes section of the portal

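In my case, the app-proxy never became healthy (it stayed PROGRESSING), the runtime sync failed, and the installer eventually timed out waiting for the default git integration: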

INFO Waiting for the runtime installation to complete...

COMPONENT HEALTH STATUS SYNC STATUS VERSION ERRORS

✔ argo-cd HEALTHY SYNCED quay.io/codefresh/argocd:v2.3.4-cap-CR-13082-improve-validation

✔ rollout-reporter HEALTHY SYNCED quay.io/codefresh/argo-events:v1.7.1-cap-CR-13091

✔ workflow-reporter HEALTHY SYNCED quay.io/codefresh/argo-events:v1.7.1-cap-CR-13091

✔ events-reporter HEALTHY SYNCED quay.io/codefresh/argo-events:v1.7.1-cap-CR-13091

✔ sealed-secrets HEALTHY SYNCED docker.io/bitnami/sealed-secrets-controller:v0.17.5

↻ app-proxy PROGRESSING SYNCED quay.io/codefresh/cap-app-proxy:1.1652.1

↻ workflows PROGRESSING SYNCED quay.io/codefresh/workflow-controller:v3.2.6-cap-CR-8697

✔ rollouts HEALTHY SYNCED quay.io/codefresh/argo-rollouts:v1.2.0-cap-CR-10626

✔ events HEALTHY SYNCED quay.io/codefresh/argo-events:v1.7.1-cap-CR-13091

Checking Account Git Provider -> Success

Downloading runtime definition -> Success

Pre run installation checks -> Success

Runtime installation phase started -> Success

Downloading runtime definition -> Success

Getting kube server address -> Success

Creating runtime on platform -> Success

Bootstrapping repository -> Success

Creating Project -> Success

Creating/Updating codefresh-cm -> Success

Applying secrets to cluster -> Success

Creating components -> Success

Installing components -> Success

Creating git source "default-git-source" -> Success

Creating marketplace-git-source -> Success

Wait for runtime sync -> Failed

Creating a default git integration -> Failed

Creating a default git integration -> Failed

Creating a default git integration -> Failed

Creating a default git integration -> Failed

Creating a default git integration -> Failed

Creating a default git integration -> Failed

FATAL timed out while waiting for git integration to be created
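When the installer gives up with only a "Wait for runtime sync -> Failed", it helps to ask Argo CD directly which Applications are stuck. The sketch below is a minimal, hypothetical helper: it assumes the runtime's Argo CD Applications live in the runtime namespace ("codefresh" for this first attempt) and that kubectl is pointed at the vcluster. It reads each Application's health and sync status and prints any that are not both Healthy and Synced.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper names): list Argo CD Applications that are not
# both Healthy and Synced. Assumes the Applications live in the runtime
# namespace and kubectl targets the vcluster.

# Reads "name<TAB>health<TAB>sync" lines on stdin, prints the offending rows.
filter_unhealthy() {
  awk -F '\t' '$2 != "Healthy" || $3 != "Synced" { print $1 ": " $2 "/" $3 }'
}

list_unhealthy_apps() {
  local ns="${1:-codefresh}"
  kubectl get applications.argoproj.io -n "$ns" \
    -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.status.health.status}{"\t"}{.status.sync.status}{"\n"}{end}' \
    | filter_unhealthy
}

# Usage:
#   list_unhealthy_apps codefresh
```

Application names in the output may not match the component names in the installer's table exactly; the point is to find which ones are still Progressing.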

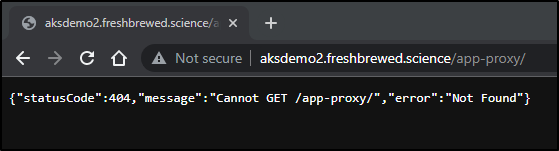

I could see that some of the Ingresses were working by checking them directly:

$ kubectl get ingress -n codefresh

Handling connection for 8443

NAME CLASS HOSTS ADDRESS PORTS AGE

cdp-default-git-source nginx aksdemo2.freshbrewed.science 80 3h12m

codefresh-cap-app-proxy nginx aksdemo2.freshbrewed.science 80 3h12m

codefresh-master nginx aksdemo2.freshbrewed.science 80 3h12m

codefresh-workflows-ingress nginx aksdemo2.freshbrewed.science 80 3h12m

I checked the path on one of them:

$ kubectl get ingress -n codefresh codefresh-cap-app-proxy -o yaml

Handling connection for 8443

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"networking.k8s.io/v1","kind":"Ingress","metadata":{"annotations":{"nginx.org/mergeable-ingress-type":"minion"},"creationTimestamp":null,"labels":{"app.kubernetes.io/instance":"codefresh-app-proxy"},"name":"codefresh-cap-app-proxy","namespace":"codefresh"},"spec":{"ingressClassName":"nginx","rules":[{"host":"aksdemo2.freshbrewed.science","http":{"paths":[{"backend":{"service":{"name":"cap-app-proxy","port":{"number":3017}}},"path":"/app-proxy/","pathType":"Prefix"}]}}]},"status":{"loadBalancer":{}}}
    nginx.org/mergeable-ingress-type: minion
  creationTimestamp: "2022-08-05T12:58:40Z"
  generation: 1
  labels:
    app.kubernetes.io/instance: codefresh-app-proxy
  name: codefresh-cap-app-proxy
  namespace: codefresh
  resourceVersion: "3197"
  uid: 60aaba2c-fad8-4a9b-b97b-4cd72935beaf
spec:
  ingressClassName: nginx
  rules:
  - host: aksdemo2.freshbrewed.science
    http:
      paths:
      - backend:
          service:
            name: cap-app-proxy
            port:
              number: 3017
        path: /app-proxy/
        pathType: Prefix
status:
  loadBalancer: {}

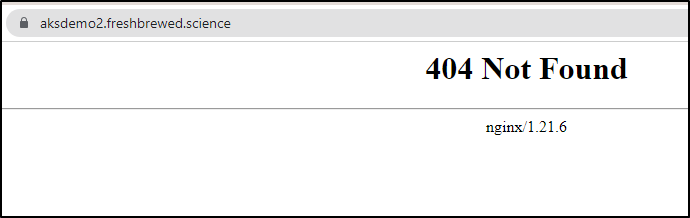

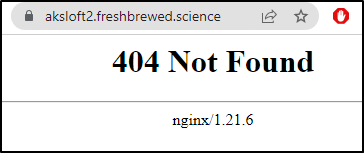

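and saw that we got a response payload (it may say 404, but the body is JSON, so it is clearly being served by a microservice).

Interestingly, I found one service had no ClusterIP (None). In hindsight, that is what a headless Service looks like, and the eventbus pods run as a StatefulSet, so this is most likely by design rather than the failure itself.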
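The "404, but JSON" check can be made a little more systematic. This is a minimal sketch with hypothetical helper names: it asks curl for the status code and Content-Type (curl's %{http_code} and %{content_type} write-out variables) and guesses who answered. It assumes the ingress controller's own 404 page is HTML, which is typical but not guaranteed.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper names): tell a backend reply apart from the
# ingress controller's own error page, assuming the latter is served as HTML.

# $1 = HTTP status code, $2 = Content-Type header value (may be empty)
classify_reply() {
  case "$2" in
    *json*) echo "$1 from backend (JSON body)" ;;
    *html*) echo "$1 likely from the ingress controller (HTML body)" ;;
    *)      echo "$1 with content type '${2:-none}'" ;;
  esac
}

probe() {
  local url="$1" reply
  # Prints e.g. "404 application/json; charset=utf-8"
  reply=$(curl -s -o /dev/null -w '%{http_code} %{content_type}' "$url")
  # Status is everything before the first space, content type everything after.
  classify_reply "${reply%% *}" "${reply#* }"
}

# Usage (path taken from the Ingress above):
#   probe https://aksdemo2.freshbrewed.science/app-proxy/
```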

$ kubectl get svc -n codefresh

Handling connection for 8443

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

argo-rollouts-metrics ClusterIP 10.0.222.126 <none> 8090/TCP 3h14m

argo-server ClusterIP 10.0.137.96 <none> 2746/TCP 3h14m

argocd-applicationset-controller ClusterIP 10.0.19.126 <none> 7000/TCP 3h16m

argocd-dex-server ClusterIP 10.0.162.125 <none> 5556/TCP,5557/TCP,5558/TCP 3h16m

argocd-metrics ClusterIP 10.0.150.209 <none> 8082/TCP 3h16m

argocd-redis ClusterIP 10.0.108.184 <none> 6379/TCP 3h16m

argocd-repo-server ClusterIP 10.0.68.215 <none> 8081/TCP,8084/TCP 3h16m

argocd-server ClusterIP 10.0.155.53 <none> 80/TCP,443/TCP 3h16m

argocd-server-metrics ClusterIP 10.0.108.215 <none> 8083/TCP 3h16m

cap-app-proxy ClusterIP 10.0.150.66 <none> 3017/TCP 3h15m

eventbus-codefresh-eventbus-stan-svc ClusterIP None <none> 4222/TCP,6222/TCP,8222/TCP 3h15m

events-webhook ClusterIP 10.0.166.78 <none> 443/TCP 3h15m

push-github-eventsource-svc ClusterIP 10.0.167.95 <none> 80/TCP 3h14m

sealed-secrets-controller ClusterIP 10.0.245.178 <none> 8080/TCP 3h15m

workflow-controller-metrics ClusterIP 10.0.135.162 <none> 9090/TCP 3h14m
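A Service with spec.clusterIP set to None is headless: instead of a virtual IP it publishes DNS records that resolve to the individual pods, which is the usual pairing for a StatefulSet such as the eventbus-codefresh-eventbus-stan-N pods. A minimal sketch (hypothetical helper names, namespace assumed to be the runtime namespace) for listing headless Services so they are not mistaken for broken ones:

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper names): list headless Services, i.e. those whose
# spec.clusterIP is "None", in a namespace.

# Reads "name<TAB>clusterIP" lines on stdin, prints names of headless Services.
filter_headless() {
  awk -F '\t' '$2 == "None" { print $1 }'
}

list_headless_services() {
  local ns="${1:-codefresh}"
  kubectl get svc -n "$ns" \
    -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.spec.clusterIP}{"\n"}{end}' \
    | filter_headless
}

# Usage:
#   list_headless_services codefresh
```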

I tried re-creating it (export, delete, re-apply):

$ kubectl get svc eventbus-codefresh-eventbus-stan-svc -n codefresh -o yaml > eventbus-codefresh-eventbus-stan-svc.yaml && kubectl delete svc eventbus-codefresh-eventbus-stan-svc -n codefresh && sleep 5 && kubectl apply -f eventbus-codefresh-eventbus-stan-svc.yaml -n codefresh && echo

Handling connection for 8443

Handling connection for 8443

service "eventbus-codefresh-eventbus-stan-svc" deleted

Handling connection for 8443

Warning: resource services/eventbus-codefresh-eventbus-stan-svc is missing the kubectl.kubernetes.io/last-applied-configuration annotation which is required by kubectl apply. kubectl apply should only be used on resources created declaratively by either kubectl create --save-config or kubectl apply. The missing annotation will be patched automatically.

The Service "eventbus-codefresh-eventbus-stan-svc" is invalid: metadata.uid: Invalid value: "7bc861b2-e44a-466f-8cf2-26c30cc1fec1": field is immutable

# remove the uid and status, then try again

$ vi eventbus-codefresh-eventbus-stan-svc.yaml

$ kubectl apply -f eventbus-codefresh-eventbus-stan-svc.yaml -n codefresh

Handling connection for 8443

Warning: resource services/eventbus-codefresh-eventbus-stan-svc is missing the kubectl.kubernetes.io/last-applied-configuration annotation which is required by kubectl apply. kubectl apply should only be used on resources created declaratively by either kubectl create --save-config or kubectl apply. The missing annotation will be patched automatically.

service/eventbus-codefresh-eventbus-stan-svc configured
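The first apply failed because the export still carried server-set fields such as metadata.uid. The "missing last-applied-configuration annotation" warning also suggests the Service already existed again by then, most likely re-created by its owning eventbus controller during the sleep. Rather than hand-editing with vi, the server-populated fields can be filtered out. This is a minimal, line-based sketch (hypothetical helper name) that assumes kubectl's default YAML layout; it is not a real YAML parser.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper name): strip server-populated fields from a
# "kubectl get -o yaml" export so the file can be re-applied. Assumes kubectl's
# default layout: two-space indentation, metadata fields one level deep, and a
# top-level status block. Line-based filtering, not a YAML parser.
strip_server_fields() {
  awk '
    # Any top-level key ends a block we were skipping.
    /^[^ ]/ { skip = 0 }
    # Drop the whole top-level status block.
    /^status:/ { skip = 1; next }
    # Drop server-managed metadata fields (exactly two spaces of indent).
    /^  (uid|resourceVersion|creationTimestamp|selfLink):/ { next }
    !skip { print }
  '
}

# Usage:
#   kubectl get svc eventbus-codefresh-eventbus-stan-svc -n codefresh -o yaml \
#     | strip_server_fields > eventbus-codefresh-eventbus-stan-svc.yaml
```

Note that if the owning controller re-creates the Service on its own, re-applying it mostly just patches that new copy, which matches the "configured" result above.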

It didn’t help. From the hosting cluster, I could see errors updating the EndpointSlices:

$ kubectl describe svc eventbus-codefresh-eventbus-stan-svc-x-codefresh-x-m-795b21bc95 -n vcluster-mytestcluster-e1v89

Name: eventbus-codefresh-eventbus-stan-svc-x-codefresh-x-m-795b21bc95

Namespace: vcluster-mytestcluster-e1v89

Labels: vcluster.loft.sh/label-mytestcluster-x-174ab45136=codefresh-eventbus

vcluster.loft.sh/label-mytestcluster-x-1a5e497a2b=yes

vcluster.loft.sh/label-mytestcluster-x-9039c53507=codefresh-events

vcluster.loft.sh/label-mytestcluster-x-c1472135b1=eventbus-controller

vcluster.loft.sh/label-mytestcluster-x-ffc8d858a4=codefresh-eventbus

vcluster.loft.sh/managed-by=mytestcluster

vcluster.loft.sh/namespace=codefresh

Annotations: resource-spec-hash: 2904700820

vcluster.loft.sh/managed-annotations:

kubectl.kubernetes.io/last-applied-configuration

resource-spec-hash

vcluster.loft.sh/object-name: eventbus-codefresh-eventbus-stan-svc

vcluster.loft.sh/object-namespace: codefresh

Selector: vcluster.loft.sh/label-mytestcluster-x-174ab45136=codefresh-eventbus,vcluster.loft.sh/label-mytestcluster-x-c1472135b1=eventbus-controller,vcluster.loft.sh/label-mytestcluster-x-ffc8d858a4=codefresh-eventbus,vcluster.loft.sh/managed-by=mytestcluster,vcluster.loft.sh/namespace=codefresh

Type: ClusterIP

IP Family Policy: SingleStack

IP Families: IPv4

IP: None

IPs: None

Port: tcp-client 4222/TCP

TargetPort: 4222/TCP

Endpoints: 10.224.0.42:4222,10.224.0.61:4222,10.224.0.85:4222

Port: cluster 6222/TCP

TargetPort: 6222/TCP

Endpoints: 10.224.0.42:6222,10.224.0.61:6222,10.224.0.85:6222

Port: monitor 8222/TCP

TargetPort: 8222/TCP

Endpoints: 10.224.0.42:8222,10.224.0.61:8222,10.224.0.85:8222

Session Affinity: None

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Warning FailedToUpdateEndpointSlices 3m7s endpoint-slice-controller Error updating Endpoint Slices for Service vcluster-mytestcluster-e1v89/eventbus-codefresh-eventbus-stan-svc-x-codefresh-x-m-795b21bc95: failed to delete eventbus-codefresh-eventbus-stan-svc-x-codefresh-x-m-795b2dgp8x EndpointSlice for Service vcluster-mytestcluster-e1v89/eventbus-codefresh-eventbus-stan-svc-x-codefresh-x-m-795b21bc95: endpointslices.discovery.k8s.io "eventbus-codefresh-eventbus-stan-svc-x-codefresh-x-m-795b2dgp8x" not found

I could also see that it did indeed get endpoint IPs; somehow they just weren’t making it back to Loft:

$ kubectl get endpointslice --all-namespaces | grep eventbus-codefresh

vcluster-mytestcluster-e1v89 eventbus-codefresh-eventbus-stan-svc-x-codefresh-x-m-795b2ttcnc IPv4 8222,6222,4222 10.224.0.42,10.224.0.61,10.224.0.85 5m15s

I tried one more time, having removed the prior runtime:

$ cf runtime install

✔ Runtime name: codefresh4

kubernetes-admin@kubernetes

INFO Retrieving ingress class info from your cluster...

Handling connection for 8443

INFO Using ingress class: nginx

WARNING You are using the NGINX enterprise edition (nginx.org/ingress-controller) as your ingress controller. To successfully install the runtime, configure all required settings, as described in : https://codefresh.io/csdp-docs/docs/runtime/requirements/

INFO Retrieving ingress controller info from your cluster...

✔ Ingress host: https://aksdemo2.freshbrewed.science

INFO Using ingress host: https://aksdemo2.freshbrewed.science

Repository URL: https://github.com/theprincessking/codefresh-runtime-vcluster1-config

✔ Runtime git api token: ****************************************

INFO Personal git token was not provided. Using runtime git token to register user: "ijohnson". You may replace your personal git token at any time from the UI in the user settings

Yes (default)

Summary

Codefresh context: codefresh

Kube context: kubernetes-admin@kubernetes

Runtime name: codefresh4

Repository URL: https://github.com/theprincessking/codefresh-runtime-vcluster1-config

Ingress host: https://aksdemo2.freshbrewed.science

Ingress class: nginx

Installing demo resources: true

Yes

INFO Running network test...

INFO Network test finished successfully

INFO Installing runtime "codefresh4" version=0.0.461

namespace/codefresh4 created

customresourcedefinition.apiextensions.k8s.io/applications.argoproj.io unchanged

customresourcedefinition.apiextensions.k8s.io/applicationsets.argoproj.io configured

customresourcedefinition.apiextensions.k8s.io/appprojects.argoproj.io unchanged

serviceaccount/argocd-application-controller created

serviceaccount/argocd-applicationset-controller created

serviceaccount/argocd-dex-server created

serviceaccount/argocd-redis created

serviceaccount/argocd-server created

role.rbac.authorization.k8s.io/argocd-application-controller created

role.rbac.authorization.k8s.io/argocd-applicationset-controller created

role.rbac.authorization.k8s.io/argocd-dex-server created

role.rbac.authorization.k8s.io/argocd-server created

role.rbac.authorization.k8s.io/codefresh-config-reader created

clusterrole.rbac.authorization.k8s.io/argocd-application-controller configured

clusterrole.rbac.authorization.k8s.io/argocd-server configured

rolebinding.rbac.authorization.k8s.io/argocd-application-controller created

rolebinding.rbac.authorization.k8s.io/argocd-applicationset-controller created

rolebinding.rbac.authorization.k8s.io/argocd-dex-server created

rolebinding.rbac.authorization.k8s.io/argocd-redis created

rolebinding.rbac.authorization.k8s.io/argocd-server created

rolebinding.rbac.authorization.k8s.io/codefresh-config-reader created

clusterrolebinding.rbac.authorization.k8s.io/argocd-application-controller configured

clusterrolebinding.rbac.authorization.k8s.io/argocd-server configured

configmap/argocd-cm created

configmap/argocd-cmd-params-cm created

configmap/argocd-gpg-keys-cm created

configmap/argocd-rbac-cm created

configmap/argocd-ssh-known-hosts-cm created

configmap/argocd-tls-certs-cm created

secret/argocd-secret created

service/argocd-applicationset-controller created

service/argocd-dex-server created

service/argocd-metrics created

service/argocd-redis created

service/argocd-repo-server created

service/argocd-server created

service/argocd-server-metrics created

deployment.apps/argocd-applicationset-controller created

deployment.apps/argocd-dex-server created

deployment.apps/argocd-redis created

deployment.apps/argocd-repo-server created

deployment.apps/argocd-server created

statefulset.apps/argocd-application-controller created

networkpolicy.networking.k8s.io/argocd-application-controller-network-policy created

networkpolicy.networking.k8s.io/argocd-dex-server-network-policy created

networkpolicy.networking.k8s.io/argocd-redis-network-policy created

networkpolicy.networking.k8s.io/argocd-repo-server-network-policy created

networkpolicy.networking.k8s.io/argocd-server-network-policy created

secret/autopilot-secret created

application.argoproj.io/autopilot-bootstrap created

Handling connection for 8443

'admin:login' logged in successfully

Context 'autopilot' updated

INFO Pushing runtime definition to the installation repo

Handling connection for 8443

secret/codefresh-token created

secret/argocd-token created

INFO Creating component "events"

INFO Creating component "rollouts"

INFO Creating component "workflows"

INFO Creating component "app-proxy"

INFO Creating component "sealed-secrets"

INFO Pushing Master Ingress Manifest

INFO Pushing Argo Workflows ingress manifests

INFO Pushing App-Proxy ingress manifests

INFO Pushing Event Reporter manifests

INFO Pushing Codefresh Workflow-Reporter manifests

INFO Pushing Codefresh Rollout-Reporter manifests

INFO Pushing demo pipelines to the new git-source repo

INFO Successfully created git-source: "default-git-source"

WARNING --provider not specified, assuming provider from url: github

Enumerating objects: 3423, done.

Counting objects: 100% (3423/3423), done.

Compressing objects: 100% (1515/1515), done.

Total 3423 (delta 2204), reused 2804 (delta 1710), pack-reused 0

INFO Successfully created git-source: "marketplace-git-source"

INFO Waiting for the runtime installation to complete...

COMPONENT HEALTH STATUS SYNC STATUS VERSION ERRORS

✔ argo-cd HEALTHY SYNCED quay.io/codefresh/argocd:v2.3.4-cap-CR-13082-improve-validation

✔ rollout-reporter HEALTHY SYNCED quay.io/codefresh/argo-events:v1.7.1-cap-CR-13091

✔ workflow-reporter HEALTHY SYNCED quay.io/codefresh/argo-events:v1.7.1-cap-CR-13091

✔ events-reporter HEALTHY SYNCED quay.io/codefresh/argo-events:v1.7.1-cap-CR-13091

✔ sealed-secrets HEALTHY SYNCED docker.io/bitnami/sealed-secrets-controller:v0.17.5

↻ app-proxy PROGRESSING SYNCED quay.io/codefresh/cap-app-proxy:1.1652.1

↻ workflows PROGRESSING SYNCED quay.io/codefresh/workflow-controller:v3.2.6-cap-CR-8697

✔ rollouts HEALTHY SYNCED quay.io/codefresh/argo-rollouts:v1.2.0-cap-CR-10626

✔ events HEALTHY SYNCED quay.io/codefresh/argo-events:v1.7.1-cap-CR-13091

Checking Account Git Provider -> Success

Downloading runtime definition -> Success

Pre run installation checks -> Success

Runtime installation phase started -> Success

Downloading runtime definition -> Success

Getting kube server address -> Success

Creating runtime on platform -> Success

Bootstrapping repository -> Success

Creating Project -> Success

Creating/Updating codefresh-cm -> Success

Applying secrets to cluster -> Success

Creating components -> Success

Installing components -> Success

Creating git source "default-git-source" -> Success

Creating marketplace-git-source -> Success

Wait for runtime sync -> Failed

Creating a default git integration -> Failed

Creating a default git integration -> Failed

Creating a default git integration -> Failed

Creating a default git integration -> Failed

Creating a default git integration -> Failed

Creating a default git integration -> Failed

FATAL timed out while waiting for git integration to be created

And yet again, it was just as stuck:

builder@DESKTOP-QADGF36:~/Workspaces/kubernetes-ingress/deployments/helm-chart$ kubectl get pods --all-namespaces

Handling connection for 8443

NAMESPACE NAME READY STATUS RESTARTS AGE

cert-manager cert-manager-b4d6fd99b-r899t 1/1 Running 0 6h49m

cert-manager cert-manager-cainjector-74bfccdfdf-v5cv5 1/1 Running 0 6h49m

cert-manager cert-manager-webhook-65b766b5f8-pb7kx 1/1 Running 0 6h49m

codefresh4 argo-rollouts-7cf4477c85-n78zq 1/1 Running 0 110m

codefresh4 argo-server-bd49d69b5-29qt9 1/1 Running 0 110m

codefresh4 argocd-application-controller-0 1/1 Running 0 111m

codefresh4 argocd-applicationset-controller-866b88c5cb-nnpds 1/1 Running 0 111m

codefresh4 argocd-dex-server-6bc9f9d895-9kl45 1/1 Running 0 111m

codefresh4 argocd-redis-b9974b4fb-99tsp 1/1 Running 0 111m

codefresh4 argocd-repo-server-5f69789bdc-tf4q4 1/1 Running 0 111m

codefresh4 argocd-server-5cbb746c87-shdmk 1/1 Running 0 111m

codefresh4 calendar-eventsource-fbfs5-6b64f9d86d-4js56 1/1 Running 0 110m

codefresh4 cap-app-proxy-55db568c45-qk6wc 1/1 Running 0 110m

codefresh4 controller-manager-5649d7fb8b-fcxbb 1/1 Running 0 110m

codefresh4 cron-57zg6 0/2 Completed 0 19m

codefresh4 cron-64smp 0/2 Completed 0 79m

codefresh4 cron-mrn9v 0/2 Completed 0 49m

codefresh4 cron-sensor-pb9qz-5589d75849-s789z 1/1 Running 0 110m

codefresh4 eventbus-codefresh-eventbus-stan-0 2/2 Running 0 110m

codefresh4 eventbus-codefresh-eventbus-stan-1 2/2 Running 0 110m

codefresh4 eventbus-codefresh-eventbus-stan-2 2/2 Running 0 110m

codefresh4 events-reporter-eventsource-dsdpm-74d95bffd8-fq6qj 1/1 Running 0 110m

codefresh4 events-reporter-sensor-slpfg-f5c7cb77c-bb5qf 1/1 Running 0 110m

codefresh4 events-webhook-7ffbc8c785-hcr84 1/1 Running 0 110m

codefresh4 push-github-eventsource-h8xw5-9c65f65bc-xm8b5 1/1 Running 0 110m

codefresh4 push-github-sensor-xnt9z-668cd85d6b-57hhn 1/1 Running 0 110m

codefresh4 rollout-reporter-eventsource-jn4n4-55985d645f-gwfg2 1/1 Running 0 110m

codefresh4 rollout-reporter-sensor-mdfg6-5b685fd499-ndbp4 1/1 Running 0 110m

codefresh4 sealed-secrets-controller-74db676b65-zzg6f 1/1 Running 0 110m

codefresh4 workflow-controller-84959dd7f6-8x4q7 1/1 Running 0 110m

codefresh4 workflow-reporter-eventsource-gmzr2-787d4d86c5-5ddps 1/1 Running 0 110m

codefresh4 workflow-reporter-sensor-vmjt5-77974579f9-c9cvr 1/1 Running 0 110m

default my-release-nginx-ingress-79876ddffc-fdvv5 1/1 Running 0 6h55m

kube-system coredns-86d5cb86f5-4xd4v 1/1 Running 0 7h45m

builder@DESKTOP-QADGF36:~/Workspaces/kubernetes-ingress/deployments/helm-chart$ kubectl get svc --all-namespaces

Handling connection for 8443

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

cert-manager cert-manager ClusterIP 10.0.211.128 <none> 9402/TCP 6h49m

cert-manager cert-manager-webhook ClusterIP 10.0.123.41 <none> 443/TCP 6h49m

codefresh4 argo-rollouts-metrics ClusterIP 10.0.142.59 <none> 8090/TCP 110m

codefresh4 argo-server ClusterIP 10.0.78.39 <none> 2746/TCP 110m

codefresh4 argocd-applicationset-controller ClusterIP 10.0.58.251 <none> 7000/TCP 111m

codefresh4 argocd-dex-server ClusterIP 10.0.214.42 <none> 5556/TCP,5557/TCP,5558/TCP 111m

codefresh4 argocd-metrics ClusterIP 10.0.35.39 <none> 8082/TCP 111m

codefresh4 argocd-redis ClusterIP 10.0.89.68 <none> 6379/TCP 111m

codefresh4 argocd-repo-server ClusterIP 10.0.186.196 <none> 8081/TCP,8084/TCP 111m

codefresh4 argocd-server ClusterIP 10.0.61.178 <none> 80/TCP,443/TCP 111m

codefresh4 argocd-server-metrics ClusterIP 10.0.136.219 <none> 8083/TCP 111m

codefresh4 cap-app-proxy ClusterIP 10.0.35.123 <none> 3017/TCP 110m

codefresh4 eventbus-codefresh-eventbus-stan-svc ClusterIP None <none> 4222/TCP,6222/TCP,8222/TCP 110m

codefresh4 events-webhook ClusterIP 10.0.88.85 <none> 443/TCP 111m

codefresh4 push-github-eventsource-svc ClusterIP 10.0.220.155 <none> 80/TCP 110m

codefresh4 sealed-secrets-controller ClusterIP 10.0.152.109 <none> 8080/TCP 110m

codefresh4 workflow-controller-metrics ClusterIP 10.0.146.164 <none> 9090/TCP 110m

default kubernetes ClusterIP 10.0.51.190 <none> 443/TCP 7h45m

default my-release-nginx-ingress LoadBalancer 10.0.31.235 52.185.102.85 80:32189/TCP,443:30087/TCP 6h55m

kube-system kube-dns ClusterIP 10.0.207.93 <none> 53/UDP,53/TCP,9153/TCP 7h45m

We can see the cluster has its nodes available:

$ kubectl get nodes

Handling connection for 8443

NAME STATUS ROLES AGE VERSION

aks-nodepool1-30313772-vmss000001 Ready <none> 7h45m v1.22.10

aks-nodepool1-30313772-vmss000002 Ready <none> 111m v1.22.10

aks-nodepool1-30313772-vmss000004 Ready <none> 109m v1.22.10

Moving back to the hosting (master) cluster:

$ kubectl get svc --all-namespaces

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

default kubernetes ClusterIP 10.0.0.1 <none> 443/TCP 7h58m

gatekeeper-system gatekeeper-webhook-service ClusterIP 10.0.67.143 <none> 443/TCP 7h46m

kube-system azure-policy-webhook-service ClusterIP 10.0.93.105 <none> 443/TCP 7h46m

kube-system kube-dns ClusterIP 10.0.0.10 <none> 53/UDP,53/TCP 7h58m

kube-system metrics-server ClusterIP 10.0.12.51 <none> 443/TCP 7h58m

kube-system npm-metrics-cluster-service ClusterIP 10.0.169.5 <none> 9000/TCP 7h58m

loft loft ClusterIP 10.0.222.31 <none> 80/TCP,443/TCP 7h53m

loft loft-agent ClusterIP 10.0.157.72 <none> 80/TCP,443/TCP,9090/TCP 7h53m

loft loft-agent-apiservice ClusterIP 10.0.35.171 <none> 443/TCP 7h53m

loft loft-agent-webhook ClusterIP 10.0.145.209 <none> 443/TCP 7h53m

vcluster-mytestcluster-e1v89 argo-rollouts-metrics-x-codefresh4-x-mytestcluster ClusterIP 10.0.142.59 <none> 8090/TCP 112m

vcluster-mytestcluster-e1v89 argo-server-x-codefresh4-x-mytestcluster ClusterIP 10.0.78.39 <none> 2746/TCP 112m

vcluster-mytestcluster-e1v89 argocd-applicationset-controller-x-codefresh4-x-mytestcluster ClusterIP 10.0.58.251 <none> 7000/TCP 113m

vcluster-mytestcluster-e1v89 argocd-dex-server-x-codefresh4-x-mytestcluster ClusterIP 10.0.214.42 <none> 5556/TCP,5557/TCP,5558/TCP 113m

vcluster-mytestcluster-e1v89 argocd-metrics-x-codefresh4-x-mytestcluster ClusterIP 10.0.35.39 <none> 8082/TCP 113m

vcluster-mytestcluster-e1v89 argocd-redis-x-codefresh4-x-mytestcluster ClusterIP 10.0.89.68 <none> 6379/TCP 113m

vcluster-mytestcluster-e1v89 argocd-repo-server-x-codefresh4-x-mytestcluster ClusterIP 10.0.186.196 <none> 8081/TCP,8084/TCP 113m

vcluster-mytestcluster-e1v89 argocd-server-metrics-x-codefresh4-x-mytestcluster ClusterIP 10.0.136.219 <none> 8083/TCP 113m

vcluster-mytestcluster-e1v89 argocd-server-x-codefresh4-x-mytestcluster ClusterIP 10.0.61.178 <none> 80/TCP,443/TCP 113m

vcluster-mytestcluster-e1v89 cap-app-proxy-x-codefresh4-x-mytestcluster ClusterIP 10.0.35.123 <none> 3017/TCP 112m

vcluster-mytestcluster-e1v89 cert-manager-webhook-x-cert-manager-x-mytestcluster ClusterIP 10.0.123.41 <none> 443/TCP 6h51m

vcluster-mytestcluster-e1v89 cert-manager-x-cert-manager-x-mytestcluster ClusterIP 10.0.211.128 <none> 9402/TCP 6h51m

vcluster-mytestcluster-e1v89 eventbus-codefresh-eventbus-stan-svc-x-codefresh4-x--4952ce3e05 ClusterIP None <none> 4222/TCP,6222/TCP,8222/TCP 112m

vcluster-mytestcluster-e1v89 events-webhook-x-codefresh4-x-mytestcluster ClusterIP 10.0.88.85 <none> 443/TCP 112m

vcluster-mytestcluster-e1v89 kube-dns-x-kube-system-x-mytestcluster ClusterIP 10.0.207.93 <none> 53/UDP,53/TCP,9153/TCP 7h47m

vcluster-mytestcluster-e1v89 my-release-nginx-ingress-x-default-x-mytestcluster LoadBalancer 10.0.31.235 52.185.102.85 80:32189/TCP,443:30087/TCP 6h57m

vcluster-mytestcluster-e1v89 mytestcluster ClusterIP 10.0.51.190 <none> 443/TCP 7h48m

vcluster-mytestcluster-e1v89 mytestcluster-api ClusterIP 10.0.15.167 <none> 443/TCP 7h48m

vcluster-mytestcluster-e1v89 mytestcluster-etcd ClusterIP 10.0.2.198 <none> 2379/TCP,2380/TCP 7h48m

vcluster-mytestcluster-e1v89 mytestcluster-etcd-headless ClusterIP None <none> 2379/TCP,2380/TCP 7h48m

vcluster-mytestcluster-e1v89 mytestcluster-node-aks-nodepool1-30313772-vmss000001 ClusterIP 10.0.205.132 <none> 10250/TCP 7h47m

vcluster-mytestcluster-e1v89 mytestcluster-node-aks-nodepool1-30313772-vmss000002 ClusterIP 10.0.183.227 <none> 10250/TCP 112m

vcluster-mytestcluster-e1v89 mytestcluster-node-aks-nodepool1-30313772-vmss000004 ClusterIP 10.0.96.117 <none> 10250/TCP 110m

vcluster-mytestcluster-e1v89 push-github-eventsource-svc-x-codefresh4-x-mytestcluster ClusterIP 10.0.220.155 <none> 80/TCP 112m

vcluster-mytestcluster-e1v89 sealed-secrets-controller-x-codefresh4-x-mytestcluster ClusterIP 10.0.152.109 <none> 8080/TCP 112m

vcluster-mytestcluster-e1v89 workflow-controller-metrics-x-codefresh4-x-mytestcluster ClusterIP 10.0.146.164 <none> 9090/TCP 112m
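The host-cluster listing shows how vcluster renames the Services it syncs: name-x-namespace-x-vcluster (for example argo-server-x-codefresh4-x-mytestcluster). Names that would exceed 63 characters appear to be shortened with a hash suffix instead, as with eventbus-codefresh-eventbus-stan-svc-x-codefresh4-x--4952ce3e05. The sketch below (hypothetical helper name) predicts the host name for the simple case and only flags the long case; it does not try to reproduce vcluster's hashing.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper name): predict the host-cluster name vcluster
# gives a synced Service, following the <name>-x-<namespace>-x-<vcluster>
# pattern seen above. Names over 63 characters are shortened by vcluster with
# a hash suffix, which this sketch does not reproduce; it just fails instead.
vcluster_host_name() {
  local name="$1" ns="$2" vc="$3"
  local full="${name}-x-${ns}-x-${vc}"
  if [ "${#full}" -gt 63 ]; then
    echo "name too long (${#full} chars); expect a truncated, hashed name" >&2
    return 1
  fi
  printf '%s\n' "$full"
}

# Usage: find the host-side copy of a vcluster Service
#   kubectl get svc -n vcluster-mytestcluster-e1v89 \
#     "$(vcluster_host_name argo-server codefresh4 mytestcluster)"
```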

AKS Hybrid

I then tried installing the runtime directly in AKS (not in a Loft vcluster), first with HTTPS and then with HTTP.

Create a DNS name

First, get the external IP for the Nginx service

$ kubectl get svc -n ingress-basic

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

ingress-nginx-controller LoadBalancer 10.0.33.97 20.80.65.123 80:30410/TCP,443:30184/TCP 8m23s

ingress-nginx-controller-admission ClusterIP 10.0.118.178 <none> 443/TCP 8m23s

Then create an A Record

$ cat r53-aksdemo4.json

{
  "Comment": "CREATE aksdemo4 fb.s A record ",
  "Changes": [
    {
      "Action": "CREATE",
      "ResourceRecordSet": {
        "Name": "aksdemo4.freshbrewed.science",
        "Type": "A",
        "TTL": 300,
        "ResourceRecords": [
          {
            "Value": "20.80.65.123"
          }
        ]
      }
    }
  ]
}

$ aws route53 change-resource-record-sets --hosted-zone-id Z39E8QFU0F9PZP --change-batch file://r53-aksdemo4.json

{

"ChangeInfo": {

"Id": "/change/C1043297SVAQ561NPF4H",

"Status": "PENDING",

"SubmittedAt": "2022-08-08T01:02:02.994Z",

"Comment": "CREATE aksdemo4 fb.s A record "

}

}
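If the ingress IP changes and the record has to be redone, a small generator avoids hand-editing the JSON. This minimal sketch (hypothetical helper name) renders the same kind of change batch; it defaults to UPSERT, which Route 53 treats as create-or-update, whereas the CREATE used above fails if the record already exists. The hosted zone ID and names in the usage comment come from this post.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper name): render a Route 53 change batch for a
# single A record. UPSERT (the default here) creates or overwrites the record;
# CREATE fails if it already exists.
r53_a_record_batch() {
  local name="$1" ip="$2" ttl="${3:-300}" action="${4:-UPSERT}"
  cat <<EOF
{
  "Comment": "${action} ${name} A record",
  "Changes": [
    {
      "Action": "${action}",
      "ResourceRecordSet": {
        "Name": "${name}",
        "Type": "A",
        "TTL": ${ttl},
        "ResourceRecords": [ { "Value": "${ip}" } ]
      }
    }
  ]
}
EOF
}

# Usage:
#   ip=$(kubectl get svc ingress-nginx-controller -n ingress-basic \
#     -o jsonpath='{.status.loadBalancer.ingress[0].ip}')
#   r53_a_record_batch aksdemo4.freshbrewed.science "$ip" > r53-aksdemo4.json
#   aws route53 change-resource-record-sets --hosted-zone-id Z39E8QFU0F9PZP \
#     --change-batch file://r53-aksdemo4.json
```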

Next, I installed cert-manager

$ kubectl apply -f https://github.com/cert-manager/cert-manager/releases/download/v1.8.0/cert-manager.yaml

Then I configured it, starting with the Route 53 credentials secret

$ kubectl apply -f prod-route53-credentials-secret.yaml

secret/prod-route53-credentials-secret created
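The issuer itself is not shown here, so as a hypothetical illustration only: a Let's Encrypt ClusterIssuer that solves DNS-01 challenges through Route 53 could reference that secret like this. The issuer name, email, region, access key ID and the secret's key name (secret-access-key) are placeholders; the hosted zone ID is the one used above. For a ClusterIssuer, cert-manager looks up referenced Secrets in its cluster resource namespace, which is cert-manager by default.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical values): render a Let's Encrypt ClusterIssuer that uses
# Route 53 for DNS-01 challenges. Issuer name, region and the Secret key name
# "secret-access-key" are placeholders, not values from this setup.
render_cluster_issuer() {
  local email="$1" access_key_id="$2"
  cat <<EOF
apiVersion: cert-manager.io/v1
kind: ClusterIssuer
metadata:
  name: letsencrypt-prod
spec:
  acme:
    server: https://acme-v02.api.letsencrypt.org/directory
    email: ${email}
    privateKeySecretRef:
      name: letsencrypt-prod
    solvers:
    - dns01:
        route53:
          region: us-east-1
          hostedZoneID: Z39E8QFU0F9PZP
          accessKeyID: ${access_key_id}
          secretAccessKeySecretRef:
            name: prod-route53-credentials-secret
            key: secret-access-key
EOF
}

# Usage (hypothetical values):
#   render_cluster_issuer me@example.com AKIAEXAMPLE | kubectl apply -f -
```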

We then take the secret holding the certificate and use it in the helm upgrade
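The contents of t.yaml are not shown. As a hypothetical sketch only: one common way to hand ingress-nginx a cert-manager-issued certificate is a Certificate resource plus the controller's --default-ssl-certificate flag (namespace/secret), passed through the chart's controller.extraArgs. The secret name aksdemo4-tls and issuer letsencrypt-prod are made up for illustration.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical names): request a certificate for the ingress host and
# point ingress-nginx's default TLS certificate at the resulting Secret.

render_certificate() {
  local host="$1" secret="$2" issuer="$3"
  cat <<EOF
apiVersion: cert-manager.io/v1
kind: Certificate
metadata:
  name: ${secret}
  namespace: ingress-basic
spec:
  secretName: ${secret}
  dnsNames:
  - ${host}
  issuerRef:
    name: ${issuer}
    kind: ClusterIssuer
EOF
}

render_nginx_values() {
  # ingress-nginx's --default-ssl-certificate flag takes <namespace>/<secret>.
  local secret="$1"
  cat <<EOF
controller:
  extraArgs:
    default-ssl-certificate: "ingress-basic/${secret}"
EOF
}

# Usage (hypothetical):
#   render_certificate aksdemo4.freshbrewed.science aksdemo4-tls letsencrypt-prod \
#     | kubectl apply -f -
#   render_nginx_values aksdemo4-tls > t.yaml
```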

$ helm upgrade ingress-nginx -n ingress-basic -f t.yaml ingress-nginx/ingress-nginx

Release "ingress-nginx" has been upgraded. Happy Helming!

NAME: ingress-nginx

LAST DEPLOYED: Sun Aug 7 22:00:17 2022

NAMESPACE: ingress-basic

STATUS: deployed

REVISION: 2

TEST SUITE: None

NOTES:

The ingress-nginx controller has been installed.

It may take a few minutes for the LoadBalancer IP to be available.

You can watch the status by running 'kubectl --namespace ingress-basic get services -o wide -w ingress-nginx-controller'

An example Ingress that makes use of the controller:

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: example
  namespace: foo
spec:
  ingressClassName: nginx
  rules:
    - host: www.example.com
      http:
        paths:
          - pathType: Prefix
            backend:
              service:
                name: exampleService
                port:
                  number: 80
            path: /
  # This section is only required if TLS is to be enabled for the Ingress
  tls:
    - hosts:
      - www.example.com
      secretName: example-tls

If TLS is enabled for the Ingress, a Secret containing the certificate and key must also be provided:

apiVersion: v1
kind: Secret
metadata:
  name: example-tls
  namespace: foo
data:
  tls.crt: <base64 encoded cert>
  tls.key: <base64 encoded key>
type: kubernetes.io/tls
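It is not clear from its output exactly what check the cf CLI runs behind "Could not resolve the URL for ingress host", but curl's exit code is a quick way to see whether an HTTPS host fails on DNS, connectivity or certificate trust. A minimal sketch with hypothetical helper names; the exit codes are curl's documented ones (60 covers both an untrusted issuer, such as a self-signed default certificate, and a hostname mismatch).

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper names): translate a few documented curl exit
# codes into a hint about why an https ingress host is rejected.
describe_curl_exit() {
  case "$1" in
    0)  echo "ok: TLS handshake and certificate verification succeeded" ;;
    6)  echo "could not resolve host (DNS)" ;;
    7)  echo "could not connect (nothing listening or blocked)" ;;
    28) echo "timed out" ;;
    35) echo "TLS handshake failed" ;;
    60) echo "certificate not trusted (self-signed default cert or hostname mismatch)" ;;
    *)  echo "curl exit code $1" ;;
  esac
}

check_https_host() {
  local host="$1" rc=0
  # Verification is on by default; a failure sets curl's exit code.
  curl -sS -o /dev/null --max-time 10 "https://${host}/" || rc=$?
  describe_curl_exit "$rc"
}

# Usage:
#   check_https_host aksdemo4.freshbrewed.science
```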

It still did not satisfy the HTTPS certificate check. Trying with HTTP, however, worked just fine:

$ cf runtime install

Runtime name: codefresh5

idjaksloft1-admin

INFO Retrieving ingress class info from your cluster...

INFO Using ingress class: nginx

INFO Retrieving ingress controller info from your cluster...

Ingress host: https://aksdemo4.freshbrewed.science

Could not resolve the URL for ingress host; enter a valid URL

INFO Retrieving ingress controller info from your cluster...

Ingress host: https://aksdemo4.freshbrewed.science/

Could not resolve the URL for ingress host; enter a valid URL

INFO Retrieving ingress controller info from your cluster...

✔ Ingress host: http://aksdemo4.freshbrewed.science

INFO Using ingress host: http://aksdemo4.freshbrewed.science

Repository URL: https://github.com/theprincessking/codefresh-runtime-aksdemo5-config

Runtime git api token: ****************************************

INFO Personal git token was not provided. Using runtime git token to register user: "ijohnson". You may replace your personal git token at any time from the UI in the user settings

Yes (default)

Summary

Ingress class: nginx

Installing demo resources: true

Codefresh context: codefresh

Kube context: idjaksloft1-admin

Runtime name: codefresh5

Repository URL: https://github.com/theprincessking/codefresh-runtime-aksdemo5-config

Ingress host: http://aksdemo4.freshbrewed.science

Yes

INFO Running network test...

INFO Network test finished successfully

INFO Installing runtime "codefresh5" version=0.0.461

namespace/codefresh5 created

customresourcedefinition.apiextensions.k8s.io/applications.argoproj.io created

customresourcedefinition.apiextensions.k8s.io/applicationsets.argoproj.io created

customresourcedefinition.apiextensions.k8s.io/appprojects.argoproj.io created

serviceaccount/argocd-application-controller created

serviceaccount/argocd-applicationset-controller created

serviceaccount/argocd-dex-server created

serviceaccount/argocd-redis created

serviceaccount/argocd-server created

role.rbac.authorization.k8s.io/argocd-application-controller created

role.rbac.authorization.k8s.io/argocd-applicationset-controller created

role.rbac.authorization.k8s.io/argocd-dex-server created

role.rbac.authorization.k8s.io/argocd-server created

role.rbac.authorization.k8s.io/codefresh-config-reader created

clusterrole.rbac.authorization.k8s.io/argocd-application-controller created

clusterrole.rbac.authorization.k8s.io/argocd-server created

rolebinding.rbac.authorization.k8s.io/argocd-application-controller created

rolebinding.rbac.authorization.k8s.io/argocd-applicationset-controller created

rolebinding.rbac.authorization.k8s.io/argocd-dex-server created

rolebinding.rbac.authorization.k8s.io/argocd-redis created

rolebinding.rbac.authorization.k8s.io/argocd-server created

rolebinding.rbac.authorization.k8s.io/codefresh-config-reader created

clusterrolebinding.rbac.authorization.k8s.io/argocd-application-controller created

clusterrolebinding.rbac.authorization.k8s.io/argocd-server created

configmap/argocd-cm created

configmap/argocd-cmd-params-cm created

configmap/argocd-gpg-keys-cm created

configmap/argocd-rbac-cm created

configmap/argocd-ssh-known-hosts-cm created

configmap/argocd-tls-certs-cm created

secret/argocd-secret created

service/argocd-applicationset-controller created

service/argocd-dex-server created

service/argocd-metrics created

service/argocd-redis created

service/argocd-repo-server created

service/argocd-server created

service/argocd-server-metrics created

deployment.apps/argocd-applicationset-controller created

deployment.apps/argocd-dex-server created

deployment.apps/argocd-redis created

deployment.apps/argocd-repo-server created

deployment.apps/argocd-server created

statefulset.apps/argocd-application-controller created

networkpolicy.networking.k8s.io/argocd-application-controller-network-policy created

networkpolicy.networking.k8s.io/argocd-dex-server-network-policy created

networkpolicy.networking.k8s.io/argocd-redis-network-policy created

networkpolicy.networking.k8s.io/argocd-repo-server-network-policy created

networkpolicy.networking.k8s.io/argocd-server-network-policy created

secret/autopilot-secret created

application.argoproj.io/autopilot-bootstrap created

'admin:login' logged in successfully

Context 'autopilot' updated

INFO Pushing runtime definition to the installation repo

secret/codefresh-token created

secret/argocd-token created

INFO Creating component "events"

INFO Creating component "rollouts"

INFO Creating component "workflows"

INFO Creating component "app-proxy"

INFO Creating component "sealed-secrets"

INFO Pushing Argo Workflows ingress manifests

INFO Pushing App-Proxy ingress manifests

INFO Pushing Event Reporter manifests

INFO Pushing Codefresh Workflow-Reporter manifests

INFO Pushing Codefresh Rollout-Reporter manifests

INFO Pushing demo pipelines to the new git-source repo

INFO Successfully created git-source: "default-git-source"

WARNING --provider not specified, assuming provider from url: github

Enumerating objects: 3423, done.

Counting objects: 100% (3423/3423), done.

Compressing objects: 100% (1517/1517), done.

Total 3423 (delta 2205), reused 2801 (delta 1708), pack-reused 0

INFO Successfully created git-source: "marketplace-git-source"

INFO Waiting for the runtime installation to complete...

COMPONENT HEALTH STATUS SYNC STATUS VERSION ERRORS

✔ argo-cd HEALTHY SYNCED quay.io/codefresh/argocd:v2.3.4-cap-CR-13082-improve-validation

✔ rollout-reporter HEALTHY SYNCED quay.io/codefresh/argo-events:v1.7.1-cap-CR-13091

✔ workflow-reporter HEALTHY SYNCED quay.io/codefresh/argo-events:v1.7.1-cap-CR-13091

✔ events-reporter HEALTHY SYNCED quay.io/codefresh/argo-events:v1.7.1-cap-CR-13091

✔ sealed-secrets HEALTHY SYNCED docker.io/bitnami/sealed-secrets-controller:v0.17.5

✔ app-proxy HEALTHY SYNCED quay.io/codefresh/cap-app-proxy:1.1652.1

✔ workflows HEALTHY SYNCED quay.io/codefresh/workflow-controller:v3.2.6-cap-CR-8697

✔ rollouts HEALTHY SYNCED quay.io/codefresh/argo-rollouts:v1.2.0-cap-CR-10626

✔ events HEALTHY SYNCED quay.io/codefresh/argo-events:v1.7.1-cap-CR-13091

INFO created git integration: default

INFO Added default git integration

INFO registered to git integration: default

Checking Account Git Provider -> Success

Downloading runtime definition -> Success

Pre run installation checks -> Success

Runtime installation phase started -> Success

Downloading runtime definition -> Success

Getting kube server address -> Success

Creating runtime on platform -> Success

Bootstrapping repository -> Success

Creating Project -> Success

Creating/Updating codefresh-cm -> Success

Applying secrets to cluster -> Success

Creating components -> Success

Installing components -> Success

Creating git source "default-git-source" -> Success

Creating marketplace-git-source -> Success

Wait for runtime sync -> Success

Creating a default git integration -> Success

Registering user to the default git integration -> Success

Runtime "codefresh5" installed successfully
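To double-check the component table above without relying on the cf CLI, plain kubectl against the runtime namespace should show much the same picture. This is a minimal sketch. It assumes the namespace matches the runtime name (as `cf runtime list` shows for codefresh5) and that the components are backed by Argo CD Application resources, which the `application.argoproj.io` lines in the install log suggest. The function name is my own hypothetical choice:

```shell
# Hypothetical helper: cross-check a Codefresh runtime's health with plain kubectl.
# Assumption: the runtime namespace matches the runtime name (codefresh5 above)
# and the Argo CD Application CRD is installed in the cluster.
check_runtime_health() {
  local ns="${1:-codefresh5}"
  # Argo CD Applications expose sync and health status columns
  kubectl get applications.argoproj.io --namespace "$ns"
  # Pods backing the components (argo-cd, events, rollouts, workflows, app-proxy, ...)
  kubectl get pods --namespace "$ns"
}
```

Running `check_runtime_health codefresh5` should list the Applications with their sync and health status, followed by the pods in that namespace.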

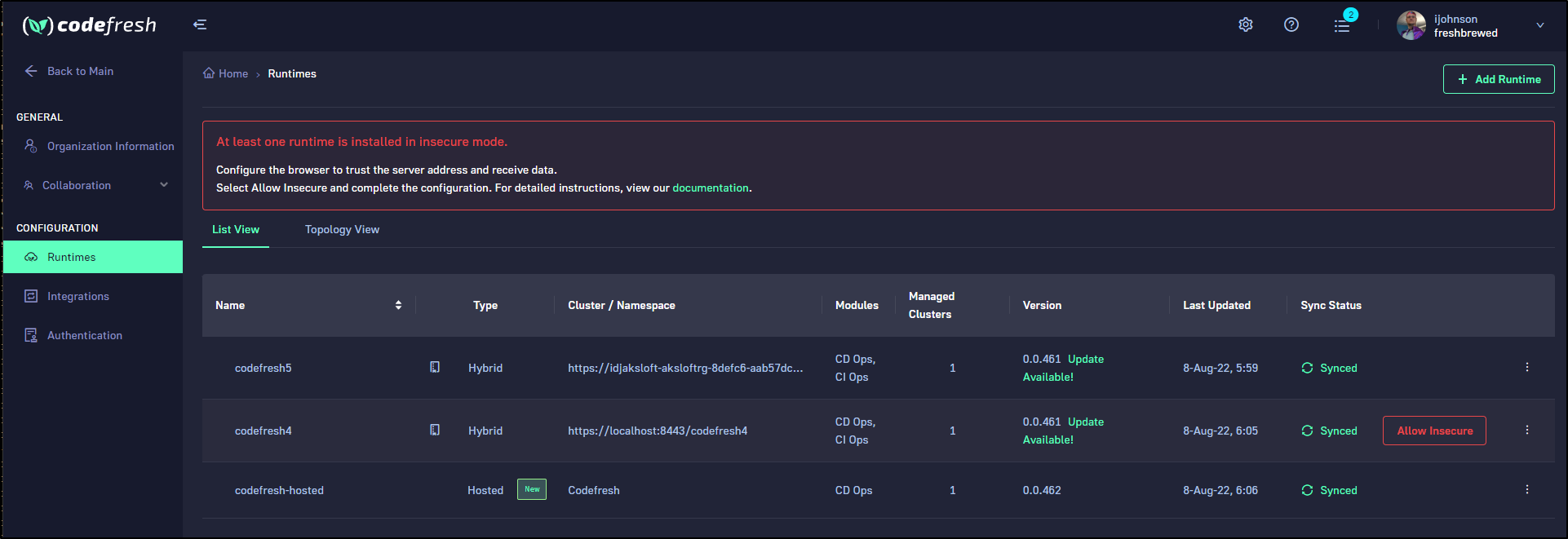

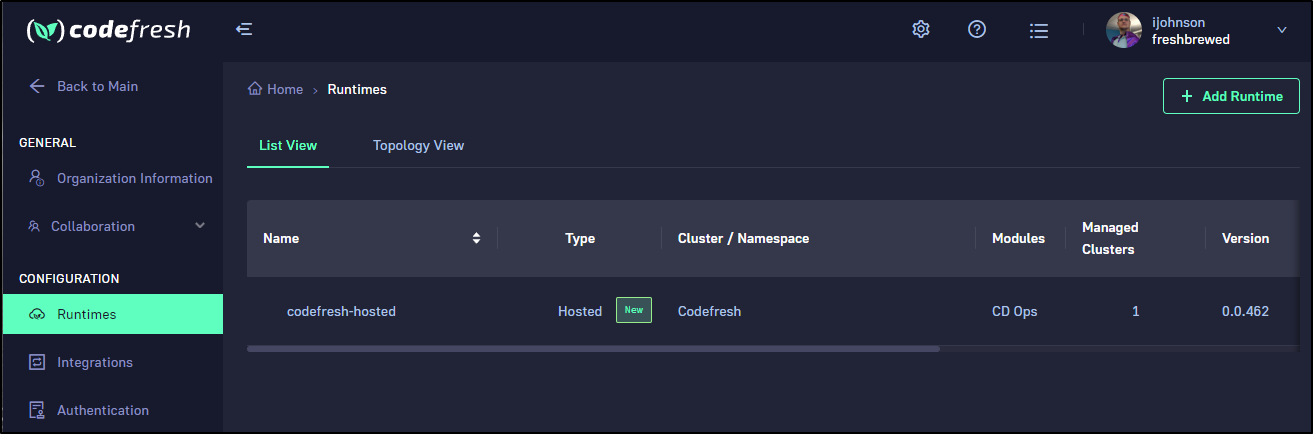

I should add that when I checked several days later, the Loft instance was fine. I could also see the "insecure" note regarding my HTTP install.

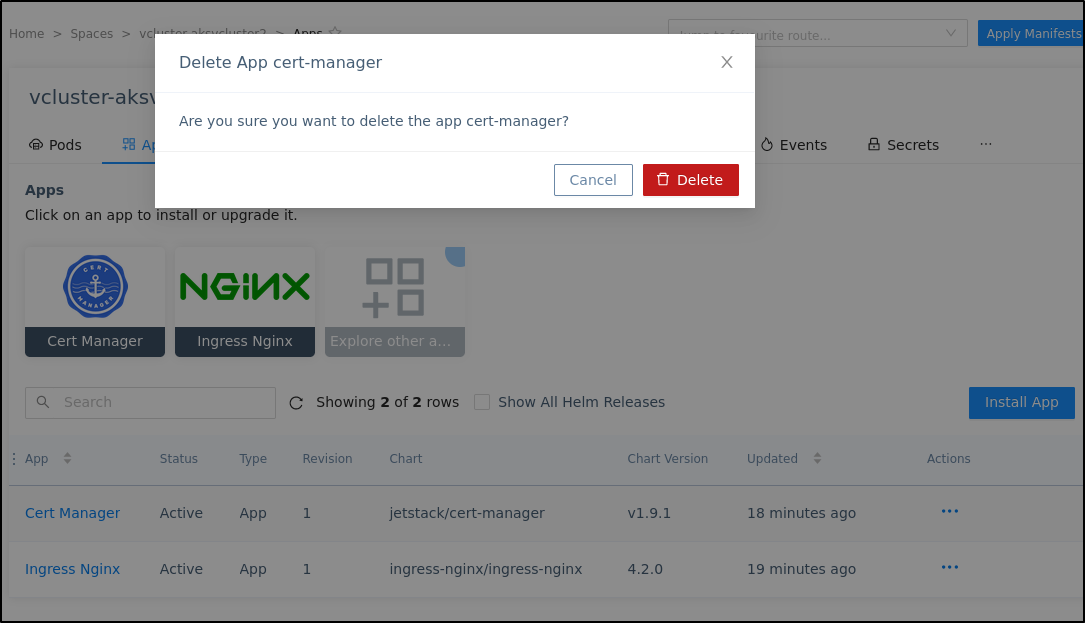

Odd Results with Loft

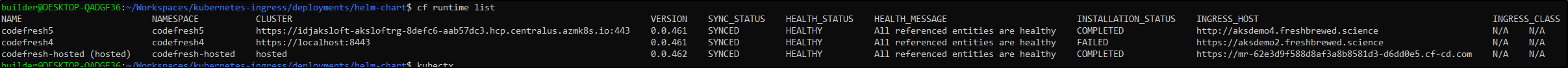

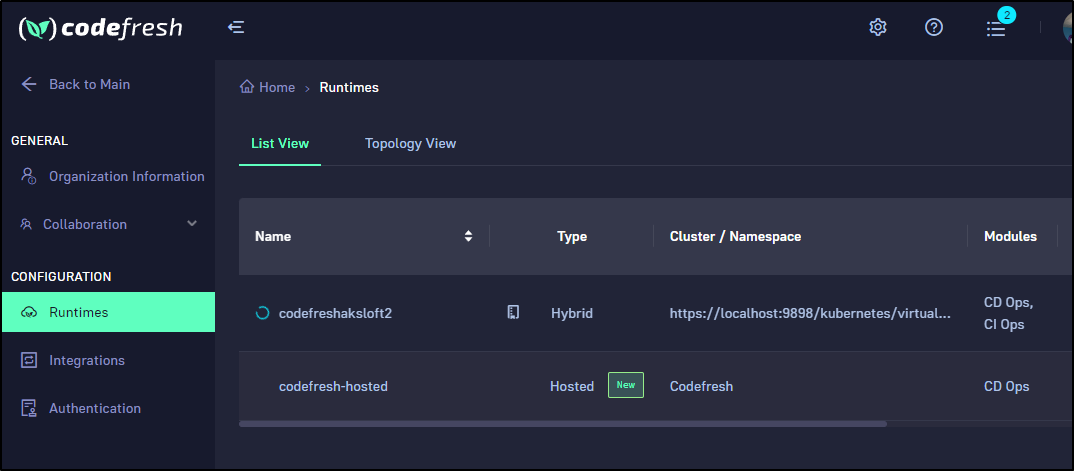

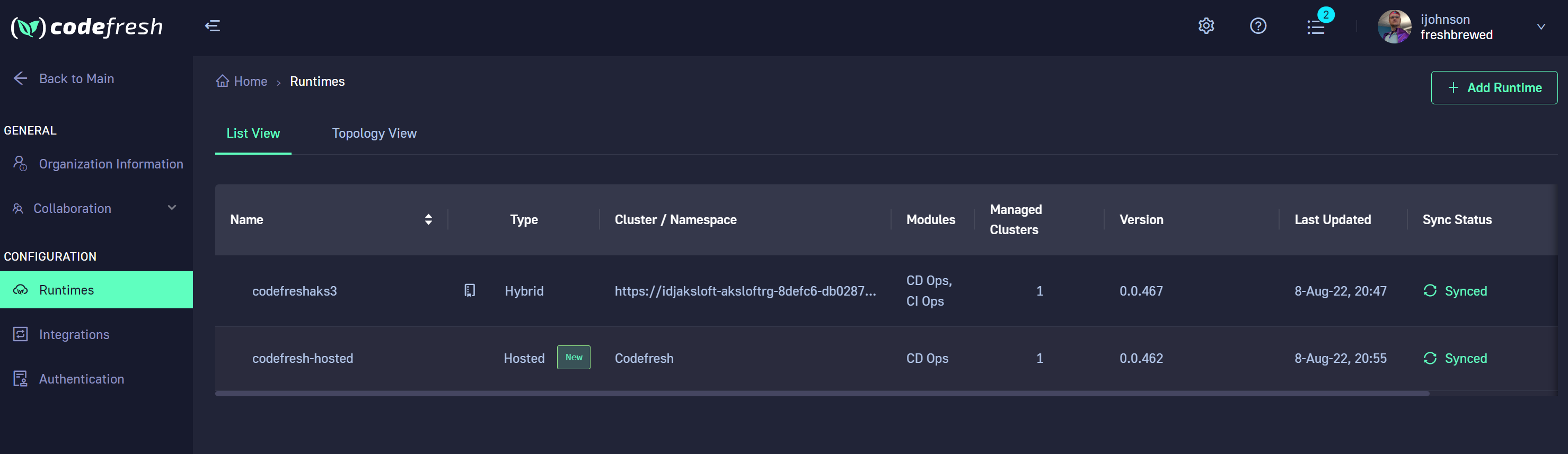

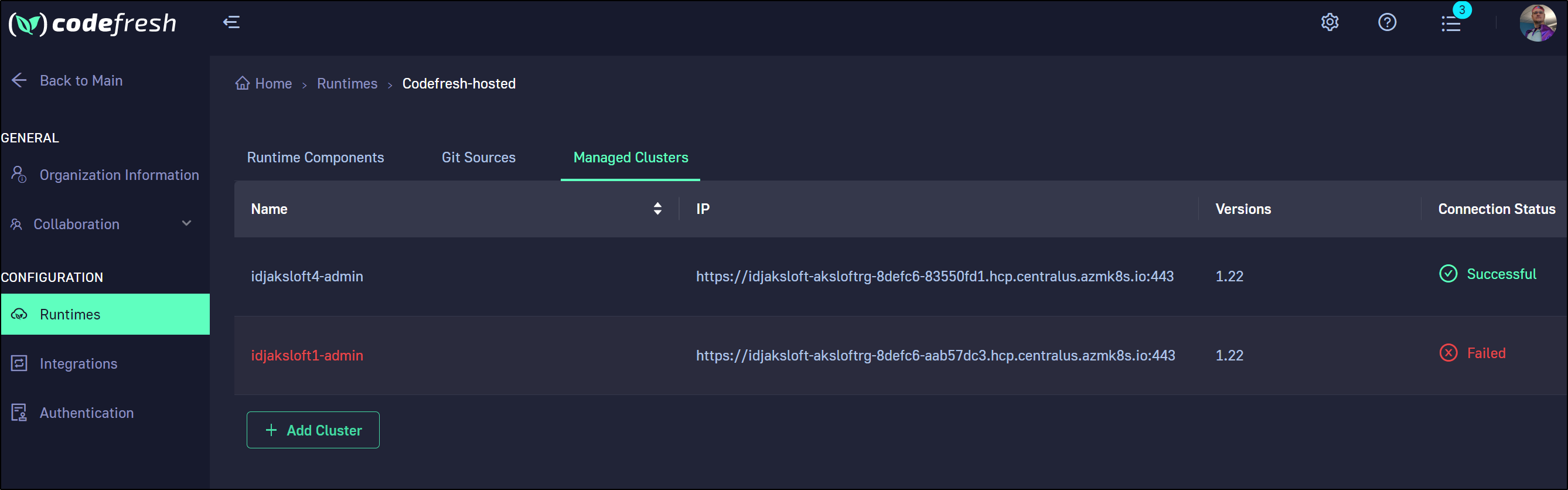

We can now see the runtimes.

One thing I found interesting: when connected to the master AKS cluster that hosts Loft (which hosts the runtime), the Codefresh CLI could see all of the runtimes, including the one in the Loft vCluster.

$ cf runtime list

NAME NAMESPACE CLUSTER VERSION SYNC_STATUS HEALTH_STATUS HEALTH_MESSAGE INSTALLATION_STATUS INGRESS_HOST INGRESS_CLASS

codefresh5 codefresh5 https://idjaksloft-aksloftrg-8defc6-aab57dc3.hcp.centralus.azmk8s.io:443 0.0.461 SYNCED HEALTHY All referenced entities are healthy COMPLETED http://aksdemo4.freshbrewed.science N/A N/A

codefresh4 codefresh4 https://localhost:8443 0.0.461 SYNCED HEALTHY All referenced entities are healthy FAILED https://aksdemo2.freshbrewed.science N/A N/A

codefresh-hosted (hosted) codefresh-hosted hosted 0.0.462 SYNCED HEALTHY All referenced entities are healthy COMPLETED https://mr-62e3d9f588d8af3a8b8581d3-d6dd0e5.cf-cd.com N/A N/A

The confusing part is that I know I had just installed it on the master cluster with aksdemo4, and I used HTTP because HTTPS had failed. Yet the "good" instance is listed on the Loft vCluster with the settings of the failed one. And on the "master" we see localhost:8443, which would have been the Loft vCluster using aksdemo2. It's as if the names were swapped, or the Ingress on Loft picked up the wrong deployment on the master.
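One way to untangle this is to compare what each kube context actually points at with the CLUSTER and INGRESS_HOST columns above. Here is a minimal sketch using only standard kubectl commands. The helper name is hypothetical, and it assumes your kubeconfig holds both the AKS admin context and the Loft vCluster context:

```shell
# Hypothetical helper: show which API server each kube context targets and which
# ingress hosts exist per namespace, to compare against `cf runtime list`.
show_runtime_placement() {
  # Current context (the cf CLI appears to use this as its default)
  echo "current context: $(kubectl config current-context)"

  # Context name -> cluster name, then cluster name -> API server URL
  kubectl config view -o jsonpath='{range .contexts[*]}{.name}{"\t"}{.context.cluster}{"\n"}{end}'
  kubectl config view -o jsonpath='{range .clusters[*]}{.name}{"\t"}{.cluster.server}{"\n"}{end}'

  # Ingresses in all namespaces: which host (e.g. aksdemo2 or aksdemo4) routes to which runtime namespace
  kubectl get ingress --all-namespaces
}
```

Run it once per context (switching with `kubectl config use-context <name>`) and compare the API server URLs and ingress hosts against the runtime list.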

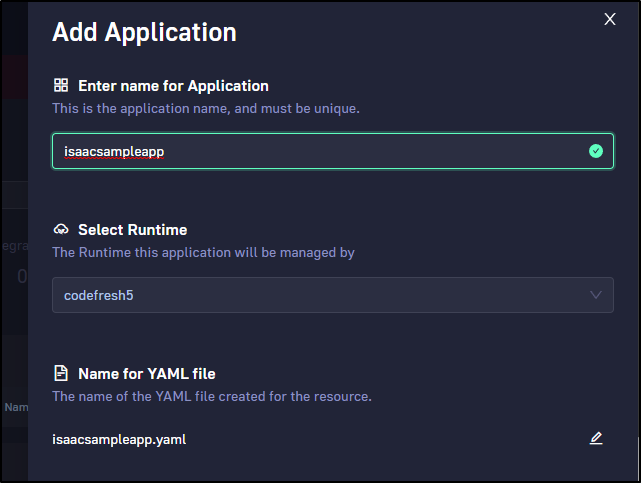

Let’s try using codefresh5 and see where it goes.

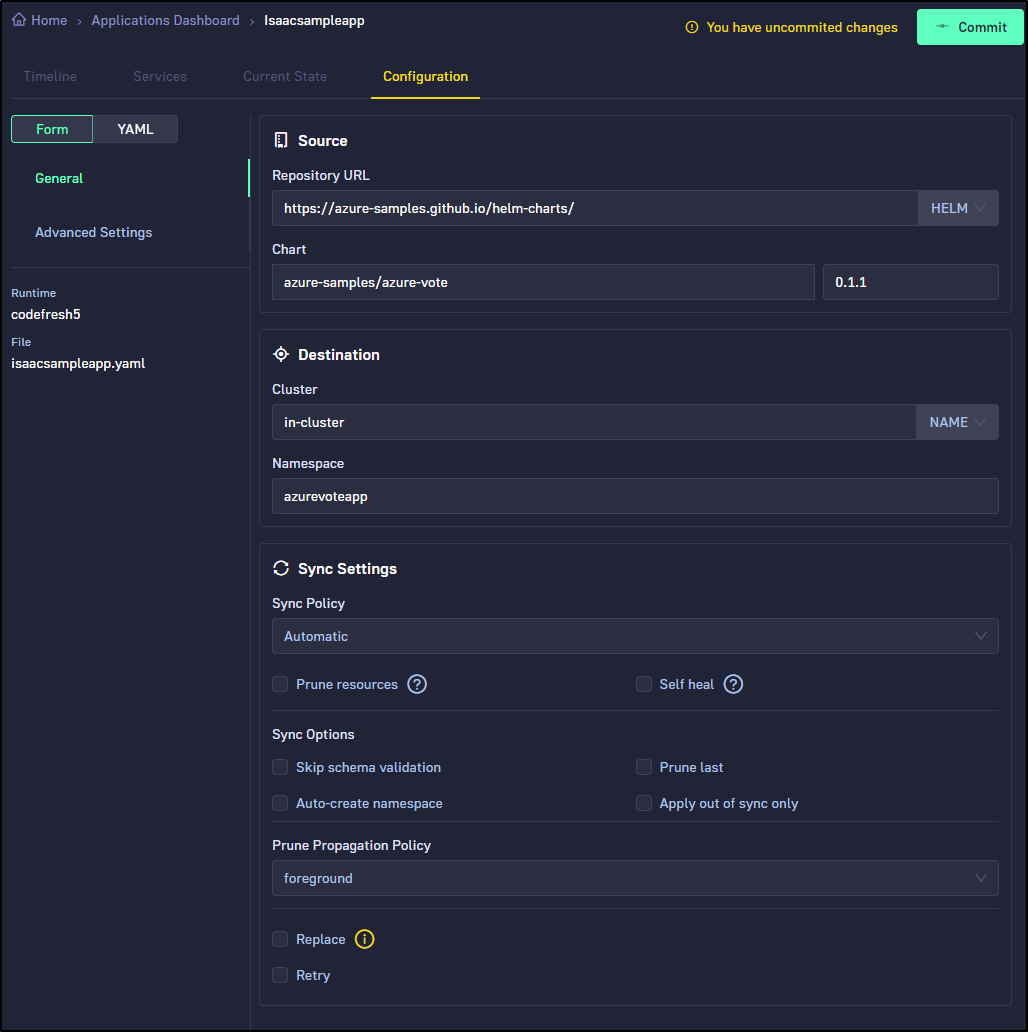

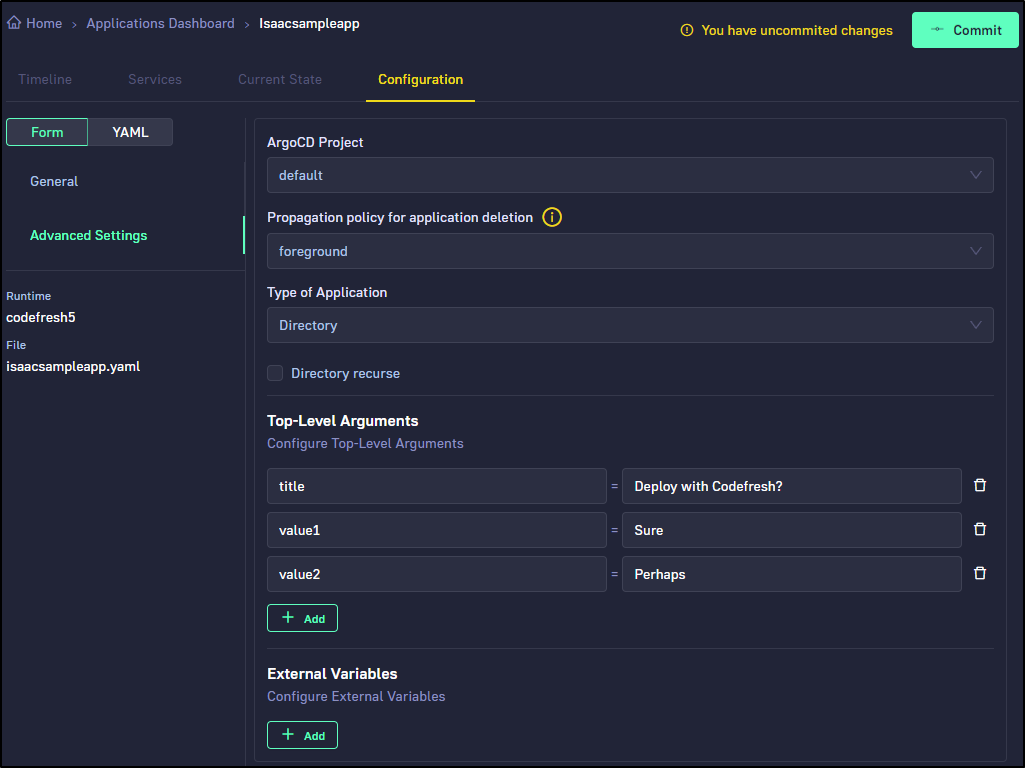

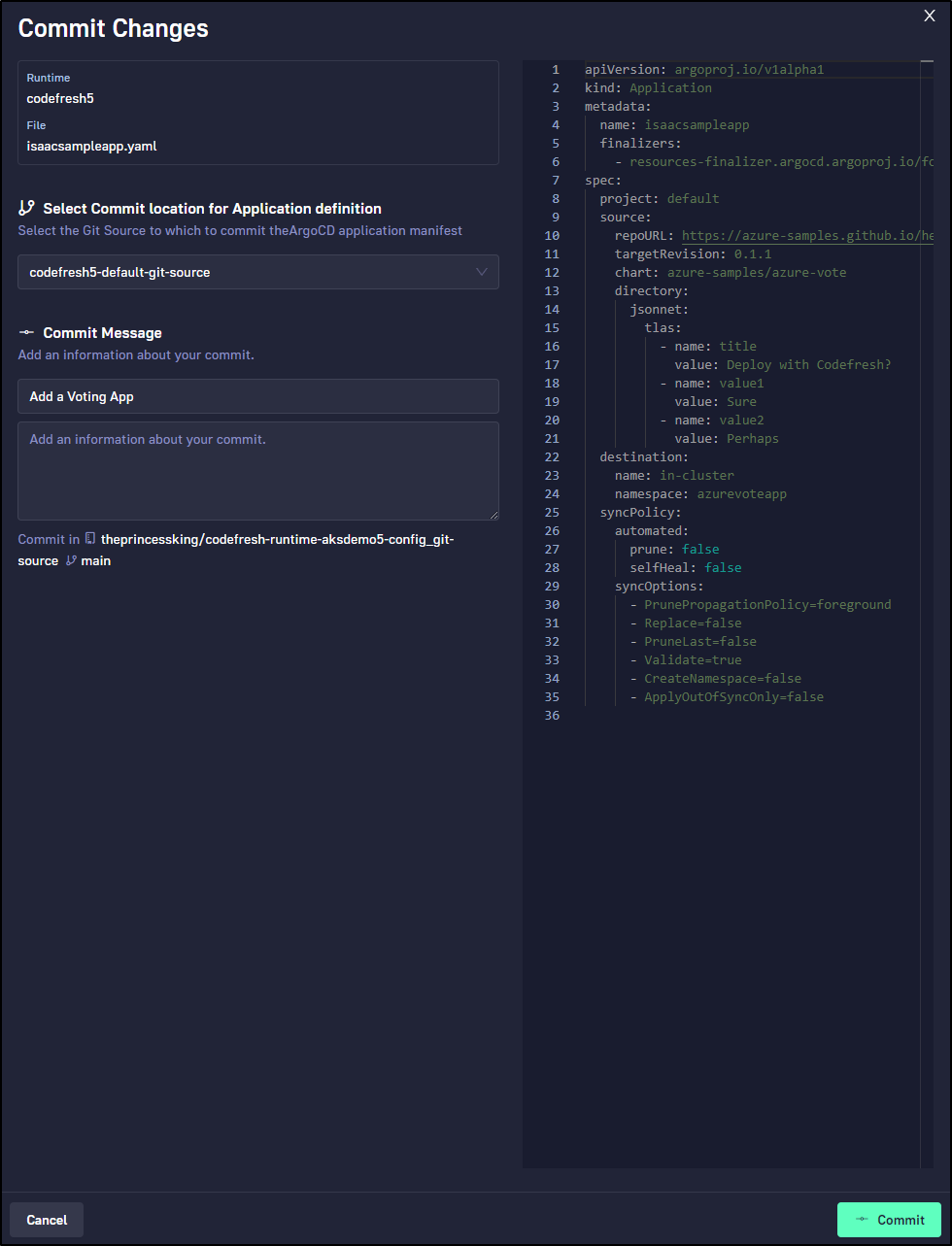

I’ll try the Azure sample voting app and give it some fun settings under Advanced.

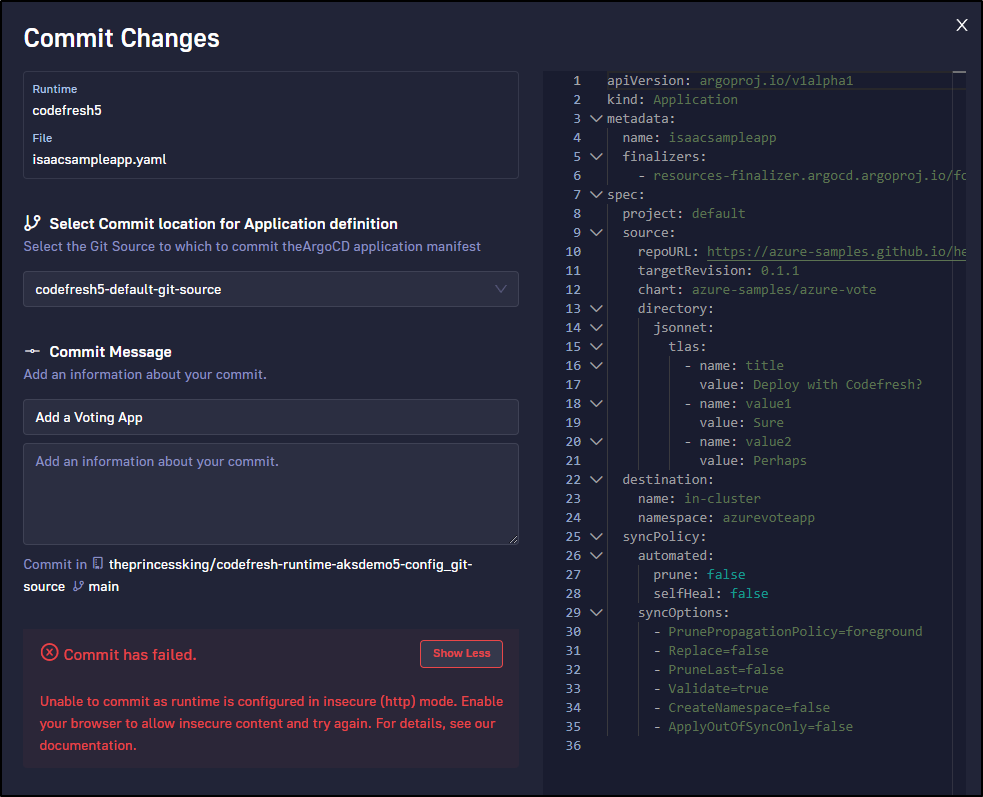

I go to commit, and it fails.

It seems that even IF you use HTTP mode, it won’t save the Git file over HTTP.

Start over

At this point, I have low confidence that the various installs on this cluster are cohesive. They seem confused all over.

Scrub and Retry

At this point, I’m ready to just start over…

Because the uninstall will also clear out the Git repo that stores the runtime configs, you’ll need to have your GitHub token handy.

$ cf runtime uninstall codefresh5

idjaksloft1-admin

Runtime git api token: ****************************************

Summary

Runtime name: codefresh5

Kube context: idjaksloft1-admin

Repository URL: https://github.com/theprincessking/codefresh-runtime-aksdemo5-config

✔ Runtime git api token: ****************************************

Yes

INFO Uninstalling runtime "codefresh5" - this process may take a few minutes...

INFO Removed git integration: default

INFO Skipped removing runtime from ISC repo. No git integrations

WARNING --provider not specified, assuming provider from url: github

COMPONENT STATUS

↻ argo-cd Healthy

↻ app-proxy Healthy

↻ events Healthy

↻ events-reporter Healthy

↻ rollout-reporter Healthy

↻ rollouts Healthy

↻ sealed-secrets Healthy

↻ workflow-reporter Healthy

↻ workflows Healthy

waiting for 'autopilot-bootstrap' to be finish syncing..

After a few minutes:

COMPONENT STATUS

✔ argo-cd Deleted

✔ app-proxy Deleted

✔ events Deleted

✔ events-reporter Deleted

✔ rollout-reporter Deleted

✔ rollouts Deleted

✔ sealed-secrets Deleted

✔ workflow-reporter Deleted

✔ workflows Deleted

application.argoproj.io "autopilot-bootstrap" deleted

secret "autopilot-secret" deleted

secret "codefresh-token" deleted

No resources found

secret "argocd-secret" deleted

service "argocd-applicationset-controller" deleted

service "argocd-dex-server" deleted

service "argocd-metrics" deleted

service "argocd-redis" deleted

service "argocd-repo-server" deleted

service "argocd-server" deleted

service "argocd-server-metrics" deleted

deployment.apps "argocd-applicationset-controller" deleted

deployment.apps "argocd-dex-server" deleted

deployment.apps "argocd-redis" deleted

deployment.apps "argocd-repo-server" deleted

deployment.apps "argocd-server" deleted

statefulset.apps "argocd-application-controller" deleted

configmap "argocd-cm" deleted

configmap "argocd-cmd-params-cm" deleted

configmap "argocd-gpg-keys-cm" deleted

configmap "argocd-rbac-cm" deleted

configmap "argocd-ssh-known-hosts-cm" deleted

configmap "argocd-tls-certs-cm" deleted

serviceaccount "argocd-application-controller" deleted

serviceaccount "argocd-applicationset-controller" deleted

serviceaccount "argocd-dex-server" deleted

serviceaccount "argocd-redis" deleted

serviceaccount "argocd-server" deleted

networkpolicy.networking.k8s.io "argocd-application-controller-network-policy" deleted

networkpolicy.networking.k8s.io "argocd-dex-server-network-policy" deleted

networkpolicy.networking.k8s.io "argocd-redis-network-policy" deleted

networkpolicy.networking.k8s.io "argocd-repo-server-network-policy" deleted

networkpolicy.networking.k8s.io "argocd-server-network-policy" deleted

rolebinding.rbac.authorization.k8s.io "argocd-application-controller" deleted

rolebinding.rbac.authorization.k8s.io "argocd-applicationset-controller" deleted

rolebinding.rbac.authorization.k8s.io "argocd-dex-server" deleted

rolebinding.rbac.authorization.k8s.io "argocd-redis" deleted

rolebinding.rbac.authorization.k8s.io "argocd-server" deleted

rolebinding.rbac.authorization.k8s.io "codefresh-config-reader" deleted

role.rbac.authorization.k8s.io "argocd-application-controller" deleted

role.rbac.authorization.k8s.io "argocd-applicationset-controller" deleted

role.rbac.authorization.k8s.io "argocd-dex-server" deleted

role.rbac.authorization.k8s.io "argocd-server" deleted

role.rbac.authorization.k8s.io "codefresh-config-reader" deleted

secret "argocd-token" deleted

deployment.apps "argocd-dex-server" deleted

deployment.apps "argocd-redis" deleted

deployment.apps "argocd-repo-server" deleted

deployment.apps "argocd-server" deleted

No resources found

No resources found

INFO Deleting runtime 'codefresh5' from platform

INFO Deleting runtime "codefresh5" from the platform

INFO Successfully deleted runtime "codefresh5" from the platform

Checking if runtime exists -> Success

Removing git integrations -> Success

Removing runtime ISC -> Success

Uninstalling repo -> Success

Deleting runtime from platform -> Success

Done uninstalling runtime "codefresh5" -> Success

However, I got stuck on codefresh4:

builder@DESKTOP-QADGF36:~/Workspaces/kubernetes-ingress/deployments/helm-chart$ cf runtime uninstall codefresh4

idjaksloft1-admin

FATAL pre run error: runtime 'codefresh4' does not exist on context 'idjaksloft1-admin'. Make sure you are providing the right kube context or use --force to bypass this check

builder@DESKTOP-QADGF36:~/Workspaces/kubernetes-ingress/deployments/helm-chart$ cf runtime uninstall codefresh4

loft-vcluster_codefresh-working-2_vcluster-codefresh-working-2-p5gby_loft-cluster

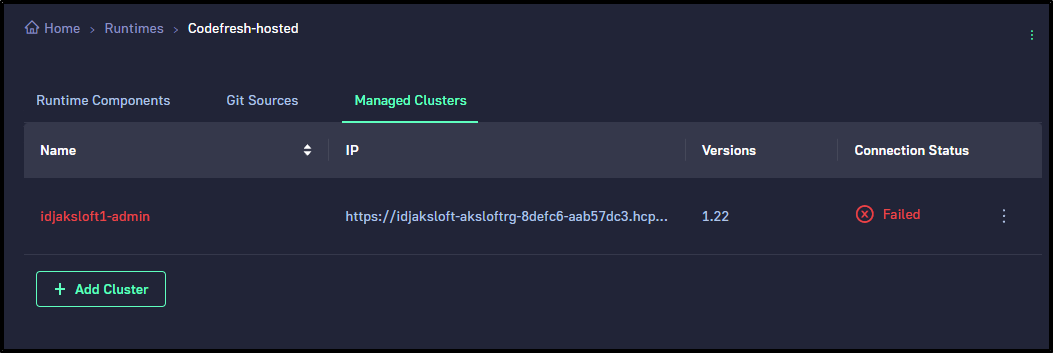

FATAL pre run error: runtime 'codefresh4' does not exist on context 'loft-vcluster_codefresh-working-2_vcluster-codefresh-working-2-p5gby_loft-cluster'. Make sure you are providing the right kube context or use --force to bypass this check
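The pre-run check ties a runtime to a specific kube context, so it helps to know which host cluster a context really lands on. The Loft context above appears to follow a loft-vcluster_&lt;vcluster&gt;_&lt;namespace&gt;_&lt;host cluster&gt; pattern. The bash sketch below assumes that pattern (inferred from this one example, not from Loft documentation) and uses a hypothetical function name:

```shell
# Hypothetical helper: split a kube context name into its parts.
# Assumed Loft vCluster pattern (inferred from the context above):
#   loft-vcluster_<vcluster>_<namespace>_<host cluster>
describe_kube_context() {
  local ctx="$1"
  case "$ctx" in
    loft-vcluster_*)
      local rest="${ctx#loft-vcluster_}"
      local vcluster namespace host
      # Split on underscores; any extra underscores end up in "host"
      IFS='_' read -r vcluster namespace host <<< "$rest"
      echo "vcluster=$vcluster namespace=$namespace host=$host"
      ;;
    *)
      # Anything else is treated as a direct cluster context (e.g. idjaksloft1-admin)
      echo "direct=$ctx"
      ;;
  esac
}
```

`kubectl config get-contexts` lists every context name you could feed it. If the runtime really is gone from every context, the error message itself suggests `--force` to bypass the check.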