Published: Apr 19, 2022 by Isaac Johnson

We’ve explored Anthos and ArgoCD for GitOps but have yet to dig into FluxCD. FluxCD is a CNCF incubating project that provides GitOps for application deployment (CD) and progressive delivery (PD). We will dig into using FluxCD with Syncier SecurityTower and OPA Gatekeeper.

We’ll look at FluxCD with AKS and on-prem, as well as how to update and set up notifications.

Set up a fresh AKS cluster

I’d like to start with a fresh cluster this time.

Let’s create our new AKS cluster.

First, set your subscription and create a resource group:

builder@DESKTOP-72D2D9T:~$ az account set --subscription Pay-As-You-Go

builder@DESKTOP-72D2D9T:~$ az group create -n idjdwt3rg --location centralus

{

"id": "/subscriptions/d955c0ba-13dc-44cf-a29a-8fed74cbb22d/resourceGroups/idjdwt3rg",

"location": "centralus",

"managedBy": null,

"name": "idjdwt3rg",

"properties": {

"provisioningState": "Succeeded"

},

"tags": null,

"type": "Microsoft.Resources/resourceGroups"

}

We might need a service principal (SP), so I’ll create one for now (note: I did not end up using it):

builder@DESKTOP-72D2D9T:~$ az ad sp create-for-rbac -n idjdwt3 --skip-assignment --output json > my_sp.json

WARNING: The underlying Active Directory Graph API will be replaced by Microsoft Graph API in Azure CLI 2.37.0. Please carefully review all breaking changes introduced during this migration: https://docs.microsoft.com/cli/azure/microsoft-graph-migration

WARNING: Option '--skip-assignment' has been deprecated and will be removed in a future release.

WARNING: The output includes credentials that you must protect. Be sure that you do not include these credentials in your code or check the credentials into your source control. For more information, see https://aka.ms/azadsp-cli

builder@DESKTOP-72D2D9T:~$ export SP_PASS=`cat my_sp.json | jq -r .password`

builder@DESKTOP-72D2D9T:~$ export SP_ID=`cat my_sp.json | jq -r .appId`

Now we can create our AKS cluster:

$ az aks create --name idjdwt3aks -g idjdwt3rg --node-count 3 --enable-addons monitoring --generate-ssh-keys

AAD role propagation done[############################################] 100.0000%

{

"aadProfile": null,

"addonProfiles": {

"omsagent": {

"config": {

"logAnalyticsWorkspaceResourceID": "/subscriptions/d955c0ba-13dc-44cf-a29a-8fed74cbb22d/resourcegroups/defaultresourcegroup-cus/providers/microsoft.operationalinsights/workspaces/defaultworkspace-d955c0ba-13dc-44cf-a29a-8fed74cbb22d-cus",

"useAADAuth": "False"

},

"enabled": true,

"identity": {

"clientId": "275f4675-4bde-4a0a-8251-1f6a05e67a2b",

"objectId": "4700c606-c6a2-4dc7-829c-6f31270bdddb",

"resourceId": "/subscriptions/d955c0ba-13dc-44cf-a29a-8fed74cbb22d/resourcegroups/MC_idjdwt3rg_idjdwt3aks_centralus/providers/Microsoft.ManagedIdentity/userAssignedIdentities/omsagent-idjdwt3aks"

}

}

},

"agentPoolProfiles": [

{

"availabilityZones": null,

"count": 3,

"creationData": null,

"enableAutoScaling": false,

"enableEncryptionAtHost": false,

"enableFips": false,

"enableNodePublicIp": false,

"enableUltraSsd": false,

"gpuInstanceProfile": null,

"kubeletConfig": null,

"kubeletDiskType": "OS",

"linuxOsConfig": null,

"maxCount": null,

"maxPods": 110,

"minCount": null,

"mode": "System",

"name": "nodepool1",

"nodeImageVersion": "AKSUbuntu-1804gen2containerd-2022.03.29",

"nodeLabels": null,

"nodePublicIpPrefixId": null,

"nodeTaints": null,

"orchestratorVersion": "1.21.9",

"osDiskSizeGb": 128,

"osDiskType": "Managed",

"osSku": "Ubuntu",

"osType": "Linux",

"podSubnetId": null,

"powerState": {

"code": "Running"

},

"provisioningState": "Succeeded",

"proximityPlacementGroupId": null,

"scaleDownMode": null,

"scaleSetEvictionPolicy": null,

"scaleSetPriority": null,

"spotMaxPrice": null,

"tags": null,

"type": "VirtualMachineScaleSets",

"upgradeSettings": null,

"vmSize": "Standard_DS2_v2",

"vnetSubnetId": null,

"workloadRuntime": null

}

],

"apiServerAccessProfile": null,

"autoScalerProfile": null,

"autoUpgradeProfile": null,

"azurePortalFqdn": "idjdwt3aks-idjdwt3rg-d955c0-93b90aa1.portal.hcp.centralus.azmk8s.io",

"disableLocalAccounts": false,

"diskEncryptionSetId": null,

"dnsPrefix": "idjdwt3aks-idjdwt3rg-d955c0",

"enablePodSecurityPolicy": null,

"enableRbac": true,

"extendedLocation": null,

"fqdn": "idjdwt3aks-idjdwt3rg-d955c0-93b90aa1.hcp.centralus.azmk8s.io",

"fqdnSubdomain": null,

"httpProxyConfig": null,

"id": "/subscriptions/d955c0ba-13dc-44cf-a29a-8fed74cbb22d/resourcegroups/idjdwt3rg/providers/Microsoft.ContainerService/managedClusters/idjdwt3aks",

"identity": {

"principalId": "0beda73c-99f6-4eda-b3fd-c01be9b1f1ce",

"tenantId": "28c575f6-ade1-4838-8e7c-7e6d1ba0eb4a",

"type": "SystemAssigned",

"userAssignedIdentities": null

},

"identityProfile": {

"kubeletidentity": {

"clientId": "b1a5a5aa-2ed1-41a6-8f04-6fb9ef73c210",

"objectId": "eb379a3d-7276-4da3-bdae-7eb131cc09c4",

"resourceId": "/subscriptions/d955c0ba-13dc-44cf-a29a-8fed74cbb22d/resourcegroups/MC_idjdwt3rg_idjdwt3aks_centralus/providers/Microsoft.ManagedIdentity/userAssignedIdentities/idjdwt3aks-agentpool"

}

},

"kubernetesVersion": "1.21.9",

"linuxProfile": {

"adminUsername": "azureuser",

"ssh": {

"publicKeys": [

{

"keyData": "ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQC5d8Z+di/B8HkhHGqXWxo3HE20JOB67//K01m7ToeAYhPjYvNwr0f95HLgZAPD0PJwIRd1u1bMx1PqnOxwX0SxIoCPZQVxXsuxiKM4nr08k6RnFTKcztuRb8Di5xLC/Q9Eer33+Q+NV2GWzoBm5oamy+k9Zs6SA+ncShnoBSM6fAxFiQ+iilBLU9e+NfWhaZL5BAeC6bYa+daGkVevKpbKmMYimfeGFhHYNSZikeEfRRGvbnw8L7YiH1n0EQuum6R73OMYmKU70cbQWvaLakkxyH926LMFPk1oz2JxbiZspex3UQYLfe4hcKMY+Lp66g1pQsKqu4dMXhoDN764UJV9"

}

]

}

},

"location": "centralus",

"maxAgentPools": 100,

"name": "idjdwt3aks",

"networkProfile": {

"dnsServiceIp": "10.0.0.10",

"dockerBridgeCidr": "172.17.0.1/16",

"ipFamilies": [

"IPv4"

],

"loadBalancerProfile": {

"allocatedOutboundPorts": null,

"effectiveOutboundIPs": [

{

"id": "/subscriptions/d955c0ba-13dc-44cf-a29a-8fed74cbb22d/resourceGroups/MC_idjdwt3rg_idjdwt3aks_centralus/providers/Microsoft.Network/publicIPAddresses/57d9f012-34b9-4968-8de8-a476973c8c4b",

"resourceGroup": "MC_idjdwt3rg_idjdwt3aks_centralus"

}

],

"enableMultipleStandardLoadBalancers": null,

"idleTimeoutInMinutes": null,

"managedOutboundIPs": {

"count": 1,

"countIpv6": null

},

"outboundIPs": null,

"outboundIpPrefixes": null

},

"loadBalancerSku": "Standard",

"natGatewayProfile": null,

"networkMode": null,

"networkPlugin": "kubenet",

"networkPolicy": null,

"outboundType": "loadBalancer",

"podCidr": "10.244.0.0/16",

"podCidrs": [

"10.244.0.0/16"

],

"serviceCidr": "10.0.0.0/16",

"serviceCidrs": [

"10.0.0.0/16"

]

},

"nodeResourceGroup": "MC_idjdwt3rg_idjdwt3aks_centralus",

"podIdentityProfile": null,

"powerState": {

"code": "Running"

},

"privateFqdn": null,

"privateLinkResources": null,

"provisioningState": "Succeeded",

"publicNetworkAccess": null,

"resourceGroup": "idjdwt3rg",

"securityProfile": {

"azureDefender": null

},

"servicePrincipalProfile": {

"clientId": "msi",

"secret": null

},

"sku": {

"name": "Basic",

"tier": "Free"

},

"systemData": null,

"tags": null,

"type": "Microsoft.ContainerService/ManagedClusters",

"windowsProfile": null

}

Then we can validate:

builder@DESKTOP-72D2D9T:~$ (rm -f ~/.kube/config || true) && az aks get-credentials -n idjdwt3aks -g idjdwt3rg --admin

Merged "idjdwt3aks-admin" as current context in /home/builder/.kube/config

builder@DESKTOP-72D2D9T:~$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

aks-nodepool1-30243974-vmss000000 Ready agent 5m4s v1.21.9

aks-nodepool1-30243974-vmss000001 Ready agent 4m54s v1.21.9

aks-nodepool1-30243974-vmss000002 Ready agent 4m45s v1.21.9
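A note on the validation step above: `rm -f ~/.kube/config` throws away every other context in that file. A gentler sketch, assuming the same resource names, merges the admin credentials with `--overwrite-existing` (which replaces a same-named entry instead of erroring) and then blocks until all nodes report Ready. The helper names and the 300s default timeout are my own choices, not anything AKS requires.

```shell
# Sketch: fetch AKS admin credentials without deleting ~/.kube/config,
# then wait until every node reports Ready.
# Names default to the ones used in this post; override via env vars.
AKS_NAME="${AKS_NAME:-idjdwt3aks}"
AKS_RG="${AKS_RG:-idjdwt3rg}"

get_aks_creds() {
  # --overwrite-existing replaces an existing kubeconfig entry of the same name
  az aks get-credentials -n "$AKS_NAME" -g "$AKS_RG" --admin --overwrite-existing
}

wait_for_nodes() {
  # Blocks until all nodes have condition Ready, or fails after the timeout
  kubectl wait --for=condition=Ready nodes --all --timeout="${1:-300s}"
}

# Usage:
#   get_aks_creds && wait_for_nodes && kubectl get nodes
```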

Set up Flux

Now let’s install the Flux CLI (and enable bash completion):

builder@DESKTOP-72D2D9T:~$ curl -s https://fluxcd.io/install.sh | sudo bash

[sudo] password for builder:

[INFO] Downloading metadata https://api.github.com/repos/fluxcd/flux2/releases/latest

[INFO] Using 0.28.5 as release

[INFO] Downloading hash https://github.com/fluxcd/flux2/releases/download/v0.28.5/flux_0.28.5_checksums.txt

[INFO] Downloading binary https://github.com/fluxcd/flux2/releases/download/v0.28.5/flux_0.28.5_linux_amd64.tar.gz

[INFO] Verifying binary download

[INFO] Installing flux to /usr/local/bin/flux

$ . <(flux completion bash)

$ echo ". <(flux completion bash)" >> ~/.bashrc
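Before bootstrapping, it can be worth confirming that the CLI is on the PATH and that the cluster meets Flux's prerequisites. `flux check --pre` is the Flux CLI's pre-install check; the wrapper function around it is just an illustrative helper:

```shell
# Sketch: sanity-check the Flux CLI and the target cluster before bootstrap.
flux_preflight() {
  if ! command -v flux >/dev/null 2>&1; then
    echo "flux CLI not found on PATH" >&2
    return 1
  fi
  # Print the installed CLI version (0.28.5 in this walkthrough)
  flux --version
  # Checks the cluster against Flux prerequisites before anything is installed
  flux check --pre
}

# Usage:
#   flux_preflight && echo "ready to bootstrap"
```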

I’ll set some environment variables for my GitHub user ID and repo, which the bootstrap command uses:

builder@DESKTOP-72D2D9T:~$ export GITHUB_USER=idjohnson

builder@DESKTOP-72D2D9T:~$ export GITHUB_REPO=dockerWithTests2

Now, with a GitHub personal access token (PAT) handy, we can bootstrap Flux. (Alternatively, export the token as the GITHUB_TOKEN environment variable and Flux will use it instead of prompting.)

builder@DESKTOP-72D2D9T:~/Workspaces/dockerWithTests2$ flux bootstrap github --owner=$GITHUB_USER --repository=$GITHUB_REPO --branch=main --path=./k8s --personal

Please enter your GitHub personal access token (PAT):

► connecting to github.com

► cloning branch "main" from Git repository "https://github.com/idjohnson/dockerWithTests2.git"

✔ cloned repository

► generating component manifests

✔ generated component manifests

✔ committed sync manifests to "main" ("7f9fde283551a7adb3e9718e01b6301df7fb2922")

► pushing component manifests to "https://github.com/idjohnson/dockerWithTests2.git"

✔ installed components

✔ reconciled components

► determining if source secret "flux-system/flux-system" exists

► generating source secret

✔ public key: ecdsa-sha2-nistp384 AAAAE2VjZHNhLXNoYTItbmlzdHAzODQAAAAIbmlzdHAzODQAAABhBAlFeItRIAobjGM4Oxp8NIFPQukBDl3hymLuyv4RBML75X0/Syr5y/RSzPX9dehpqwsuDw6byf+7ZqGNho8VZrdzq4vrVYxQO8PC6wmFyGx1pf9EiB/Ng3+vaxHXbDf6PQ==

✔ configured deploy key "flux-system-main-flux-system-./k8s" for "https://github.com/idjohnson/dockerWithTests2"

► applying source secret "flux-system/flux-system"

✔ reconciled source secret

► generating sync manifests

✔ generated sync manifests

✔ committed sync manifests to "main" ("da3e66c81aea87976c088c83211f37f85eba69cb")

► pushing sync manifests to "https://github.com/idjohnson/dockerWithTests2.git"

► applying sync manifests

✔ reconciled sync configuration

◎ waiting for Kustomization "flux-system/flux-system" to be reconciled

✔ Kustomization reconciled successfully

► confirming components are healthy

✔ helm-controller: deployment ready

✔ kustomize-controller: deployment ready

✔ notification-controller: deployment ready

✔ source-controller: deployment ready

✔ all components are healthy
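For a repeatable or CI-driven run, the PAT prompt can be avoided because `flux bootstrap github` picks the token up from an exported `GITHUB_TOKEN`. A minimal sketch that fails fast when any of the three variables is missing, reusing the exact bootstrap flags from above (`require_env` and `bootstrap_flux` are hypothetical helper names):

```shell
# Sketch: non-interactive Flux bootstrap with a fail-fast env check.
require_env() {
  # Returns non-zero and reports each variable that is unset or empty
  local missing=0 name value
  for name in "$@"; do
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      echo "missing required env var: $name" >&2
      missing=1
    fi
  done
  return "$missing"
}

bootstrap_flux() {
  require_env GITHUB_TOKEN GITHUB_USER GITHUB_REPO || return 1
  # Same flags as the interactive run; the PAT comes from GITHUB_TOKEN
  flux bootstrap github \
    --owner="$GITHUB_USER" \
    --repository="$GITHUB_REPO" \
    --branch=main \
    --path=./k8s \
    --personal
}

# Usage:
#   export GITHUB_TOKEN=<your PAT> GITHUB_USER=idjohnson GITHUB_REPO=dockerWithTests2
#   bootstrap_flux
```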

SecurityTower

Now let’s use SecurityTower to apply policies to our cluster:

builder@DESKTOP-72D2D9T:~/Workspaces/dockerWithTests2$ mkdir -p .securitytower

builder@DESKTOP-72D2D9T:~/Workspaces/dockerWithTests2/.securitytower$ vi cluster.yaml

builder@DESKTOP-72D2D9T:~/Workspaces/dockerWithTests2/.securitytower$ cat cluster.yaml

apiVersion: securitytower.io/v1alpha1
kind: Cluster
metadata:
  name: dockerWithTests2
spec:
  policies:
    path: /policies
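If you would rather not open an editor, the same cluster.yaml can be written with a heredoc. A small sketch; the function name is hypothetical and the content is copied from the file above:

```shell
# Sketch: write .securitytower/cluster.yaml without an editor.
# Content mirrors the cluster.yaml shown above.
write_securitytower_cluster() {
  local repo_root="${1:-.}"
  mkdir -p "$repo_root/.securitytower"
  cat > "$repo_root/.securitytower/cluster.yaml" <<'EOF'
apiVersion: securitytower.io/v1alpha1
kind: Cluster
metadata:
  name: dockerWithTests2
spec:
  policies:
    path: /policies
EOF
}

# Usage (from the repo root):
#   write_securitytower_cluster . && cat .securitytower/cluster.yaml
```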

builder@DESKTOP-72D2D9T:~/Workspaces/dockerWithTests2$ flux create kustomization policies --source=flux-system --path="./policies" --prune=true --validation=none --interval=1m --export > ./k8s/policies.yaml

We now have the following files to commit:

builder@DESKTOP-72D2D9T:~/Workspaces/dockerWithTests2$ cat .securitytower/cluster.yaml

apiVersion: securitytower.io/v1alpha1
kind: Cluster
metadata:
  name: dockerWithTests2
spec:
  policies:
    path: /policies

builder@DESKTOP-72D2D9T:~/Workspaces/dockerWithTests2$ cat k8s/policies.yaml

Flag --validation has been deprecated, this arg is no longer used, all resources are validated using server-side apply dry-run

---
apiVersion: kustomize.toolkit.fluxcd.io/v1beta2
kind: Kustomization
metadata:
  name: policies
  namespace: flux-system
spec:
  interval: 1m0s
  path: ./policies
  prune: true
  sourceRef:
    kind: GitRepository
    name: flux-system

Oops, did you catch that? The deprecation warning for the --validation flag was written to STDOUT, so it ended up in policies.yaml. Let’s fix that first by dropping the flag:
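One way to catch this class of mistake before it reaches Git is to verify that the exported file contains only YAML. A rough heuristic sketch (the helper name and both checks are my own, keyed to the warning text seen above), meant to run right after the `--export` redirect:

```shell
# Sketch: reject an exported manifest if CLI chatter leaked into it.
# Heuristic: the first non-blank line must be '---' or start with 'apiVersion:',
# and no line may start with a "Flag --" deprecation warning.
check_exported_manifest() {
  local file="$1" first
  first="$(grep -v '^[[:space:]]*$' "$file" | head -n 1)"
  case "$first" in
    ---|apiVersion:*) ;;
    *)
      echo "unexpected first line in $file: $first" >&2
      return 1
      ;;
  esac
  if grep -q '^Flag --' "$file"; then
    echo "$file contains a CLI flag warning" >&2
    return 1
  fi
}

# Usage:
#   flux create kustomization policies --source=flux-system --path="./policies" \
#     --prune=true --interval=1m --export > ./k8s/policies.yaml \
#     && check_exported_manifest ./k8s/policies.yaml
```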

builder@DESKTOP-72D2D9T:~/Workspaces/dockerWithTests2$ flux create kustomization policies --source=flux-system --path="./policies" --prune=true --interval=1m --export > ./k8s/policies.yaml

builder@DESKTOP-72D2D9T:~/Workspaces/dockerWithTests2$ cat k8s/policies.yaml

---
apiVersion: kustomize.toolkit.fluxcd.io/v1beta2
kind: Kustomization
metadata:
  name: policies
  namespace: flux-system
spec:
  interval: 1m0s
  path: ./policies
  prune: true
  sourceRef:
    kind: GitRepository
    name: flux-system

Add and push

$ git add -A

$ git commit -m "Sync and Policies"

$ git push

Note: at this point Flux had already pushed its own commits to my repo, so I had to pull first to bring in the new Flux-created YAMLs in ./k8s.
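Because `flux bootstrap` pushed its own commits to main (see the bootstrap output above), a local push can be rejected until those commits are pulled. A sketch of the pull and push, followed by an immediate reconcile so you do not have to wait out the one-minute interval. `flux reconcile kustomization ... --with-source` and `flux get kustomizations` are standard Flux CLI commands; the wrapper is illustrative:

```shell
# Sketch: bring in Flux's commits, push ours, then force a reconcile.
sync_and_reconcile() {
  git pull --rebase || return 1
  git push || return 1
  # --with-source refreshes the GitRepository before applying the Kustomization
  flux reconcile kustomization flux-system --with-source
  # Shows Ready status and last applied revision for each Kustomization
  flux get kustomizations
}
```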

OPA Gatekeeper

We can now set up OPA Gatekeeper. I checked the releases for the latest version (3.7) and used that in the URL:

builder@DESKTOP-72D2D9T:~/Workspaces/dockerWithTests2$ kubectl apply -f https://raw.githubusercontent.com/open-policy-agent/gatekeeper/release-3.7/deploy/gatekeeper.yaml

namespace/gatekeeper-system created

resourcequota/gatekeeper-critical-pods created

customresourcedefinition.apiextensions.k8s.io/assign.mutations.gatekeeper.sh created

customresourcedefinition.apiextensions.k8s.io/assignmetadata.mutations.gatekeeper.sh created

customresourcedefinition.apiextensions.k8s.io/configs.config.gatekeeper.sh created

customresourcedefinition.apiextensions.k8s.io/constraintpodstatuses.status.gatekeeper.sh created

customresourcedefinition.apiextensions.k8s.io/constrainttemplatepodstatuses.status.gatekeeper.sh created

customresourcedefinition.apiextensions.k8s.io/constrainttemplates.templates.gatekeeper.sh created

customresourcedefinition.apiextensions.k8s.io/modifyset.mutations.gatekeeper.sh created

customresourcedefinition.apiextensions.k8s.io/mutatorpodstatuses.status.gatekeeper.sh created

customresourcedefinition.apiextensions.k8s.io/providers.externaldata.gatekeeper.sh created

serviceaccount/gatekeeper-admin created

Warning: policy/v1beta1 PodSecurityPolicy is deprecated in v1.21+, unavailable in v1.25+

podsecuritypolicy.policy/gatekeeper-admin created

role.rbac.authorization.k8s.io/gatekeeper-manager-role created

clusterrole.rbac.authorization.k8s.io/gatekeeper-manager-role created

rolebinding.rbac.authorization.k8s.io/gatekeeper-manager-rolebinding created

clusterrolebinding.rbac.authorization.k8s.io/gatekeeper-manager-rolebinding created

secret/gatekeeper-webhook-server-cert created

service/gatekeeper-webhook-service created

deployment.apps/gatekeeper-audit created

deployment.apps/gatekeeper-controller-manager created

Warning: policy/v1beta1 PodDisruptionBudget is deprecated in v1.21+, unavailable in v1.25+; use policy/v1 PodDisruptionBudget

poddisruptionbudget.policy/gatekeeper-controller-manager created

mutatingwebhookconfiguration.admissionregistration.k8s.io/gatekeeper-mutating-webhook-configuration created

validatingwebhookconfiguration.admissionregistration.k8s.io/gatekeeper-validating-webhook-configuration created
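To make the Gatekeeper version easy to bump later, it can live in one variable, and the install can wait for both deployments to finish rolling out. A sketch, assuming the deployment names from the apply output above; the 180s timeout and the helper names are arbitrary:

```shell
# Sketch: pin the Gatekeeper release in one place and wait for its rollout.
GATEKEEPER_VERSION="${GATEKEEPER_VERSION:-3.7}"

gatekeeper_manifest_url() {
  echo "https://raw.githubusercontent.com/open-policy-agent/gatekeeper/release-${1:-$GATEKEEPER_VERSION}/deploy/gatekeeper.yaml"
}

install_gatekeeper() {
  kubectl apply -f "$(gatekeeper_manifest_url "$1")" || return 1
  # Deployment names taken from the apply output above
  for d in gatekeeper-controller-manager gatekeeper-audit; do
    kubectl -n gatekeeper-system rollout status "deployment/$d" --timeout=180s || return 1
  done
}

# Usage:
#   install_gatekeeper        # uses GATEKEEPER_VERSION (3.7)
```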

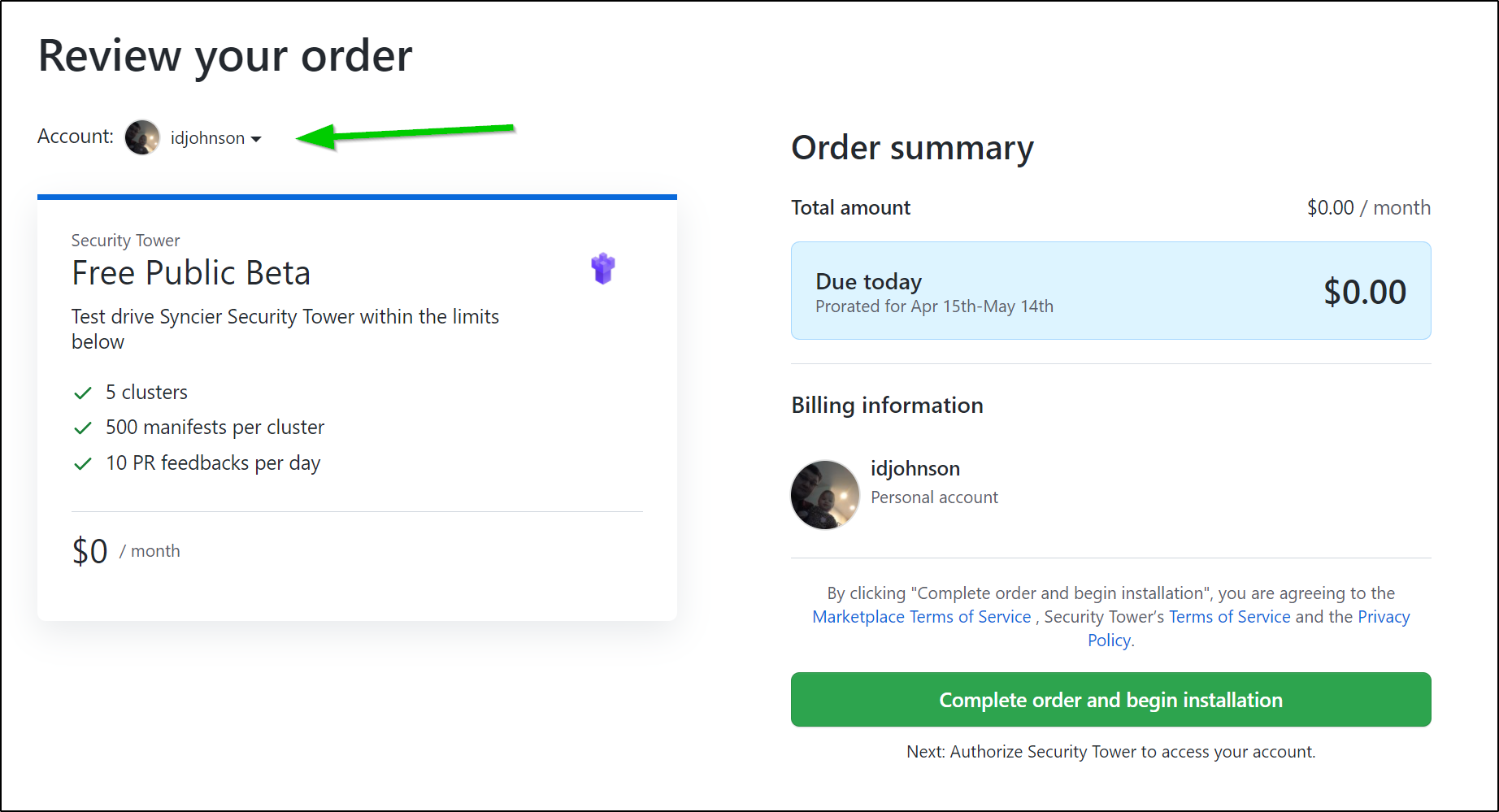

SecurityTower is a GitHub Marketplace app that is (for now) free. Let’s add it: https://github.com/marketplace/security-tower

Make sure you use the right GitHub account. I have separate GitHub identities for work, and I nearly installed it into a non-personal org.

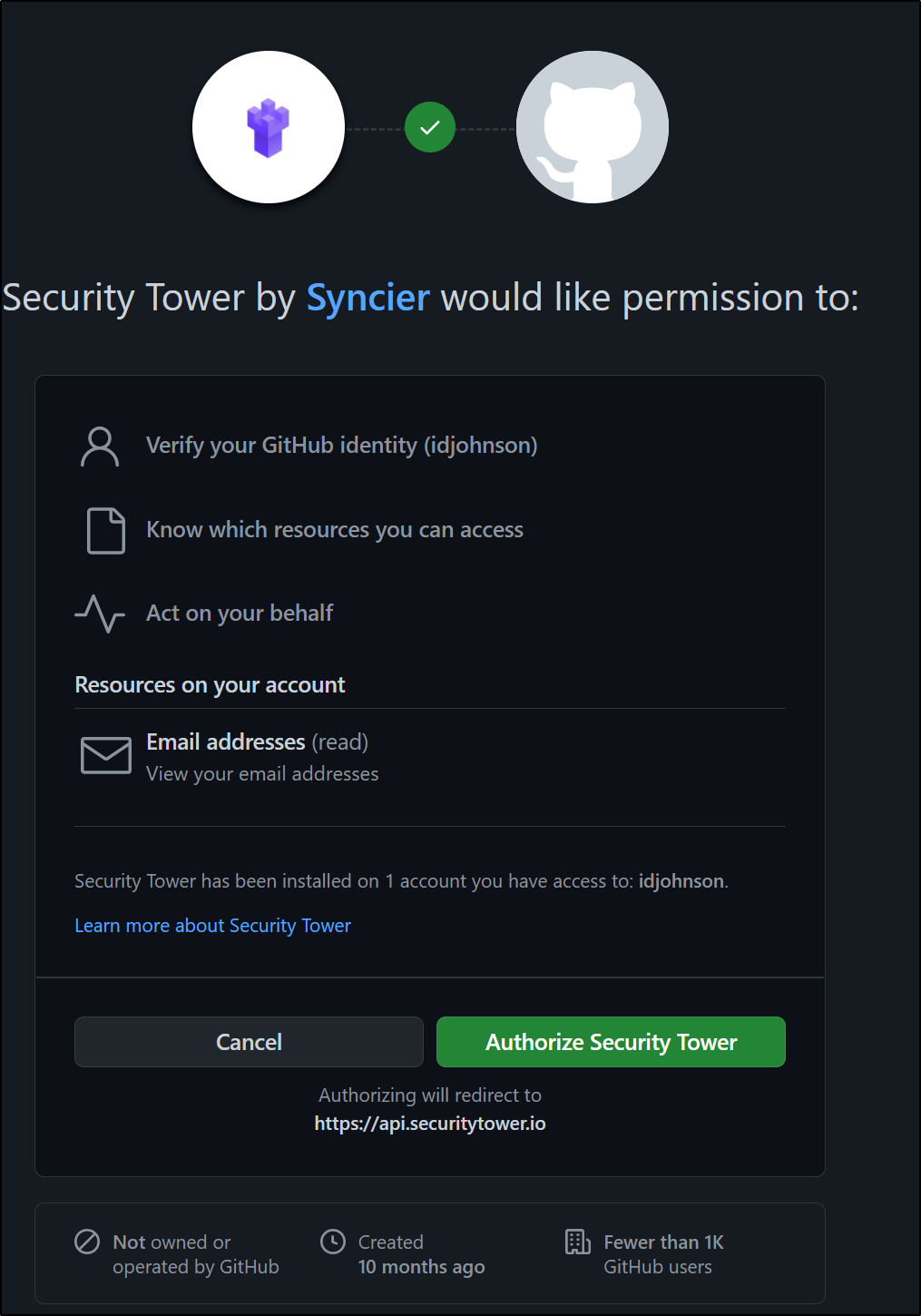

We have to choose which repos to include and then grant permissions:

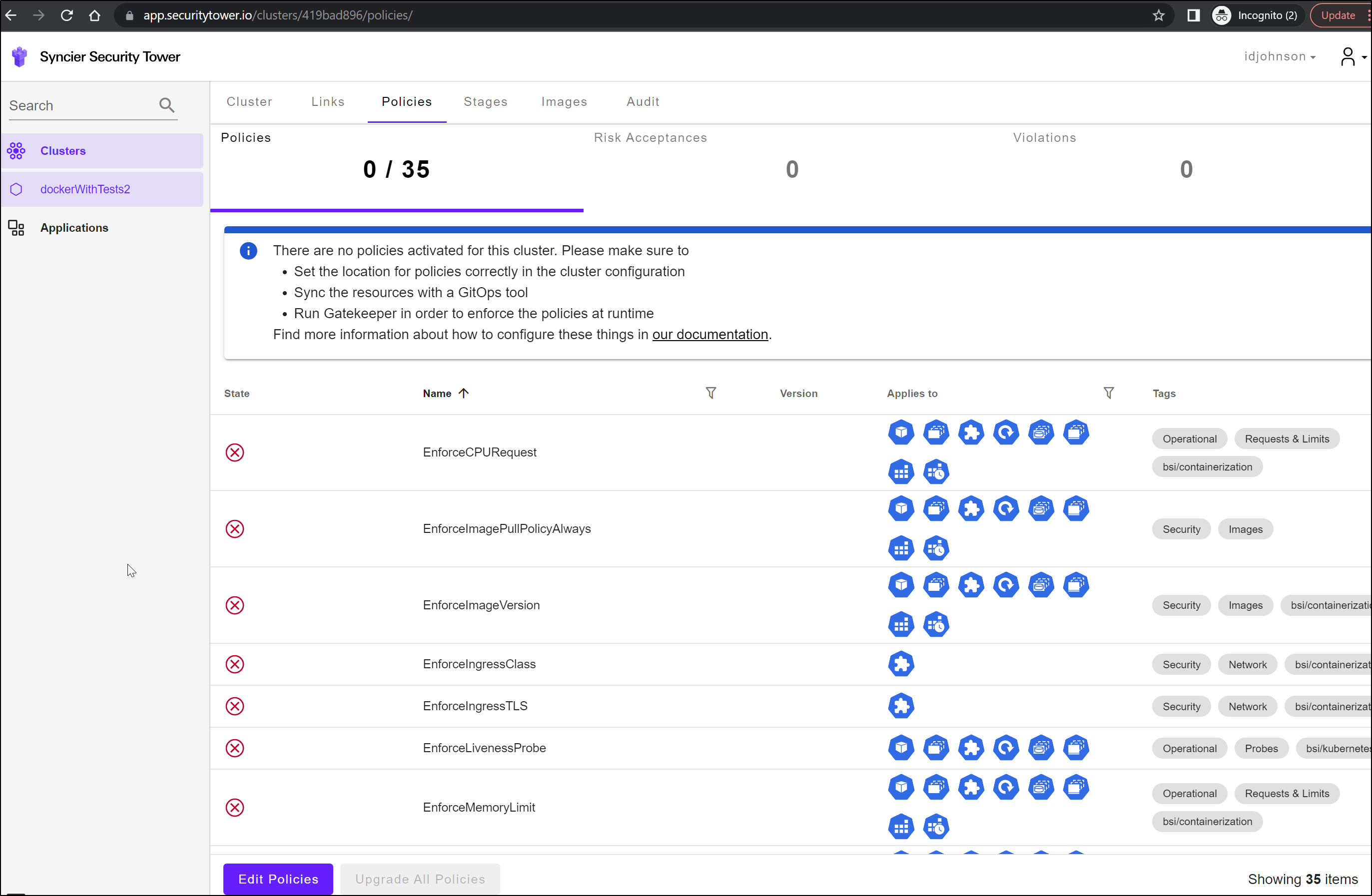

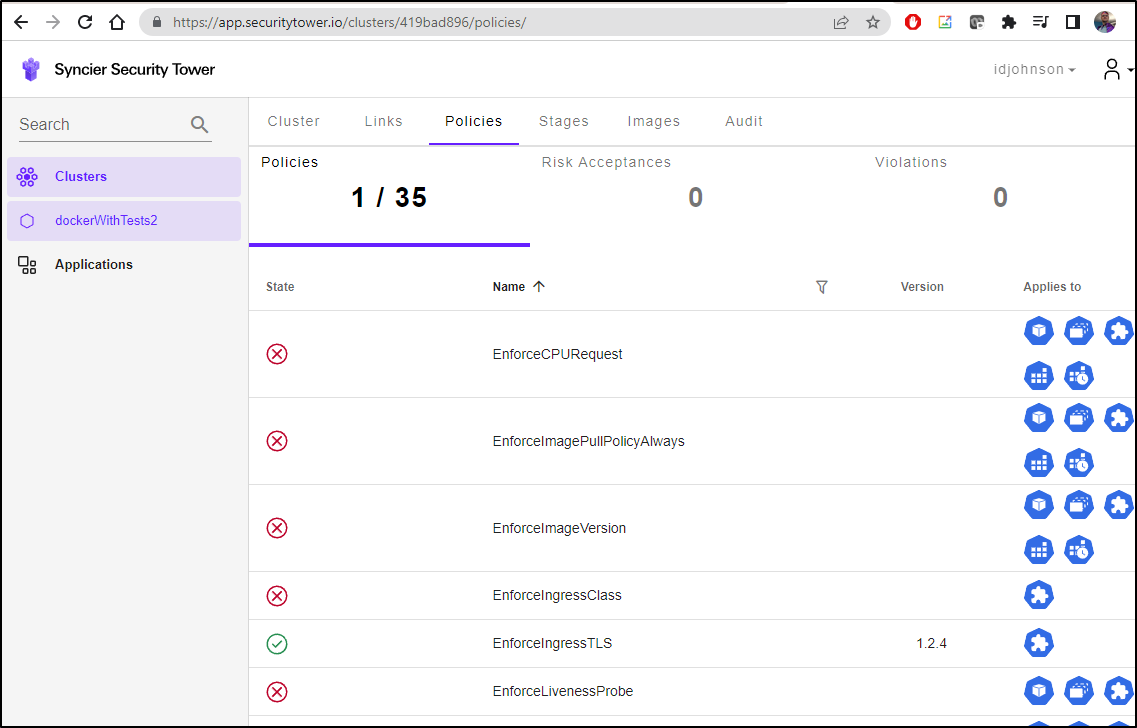

We can now see the Syncier dashboard.

If we select our repo, we can see that no policies are currently enforced, but 35 are available to enable:

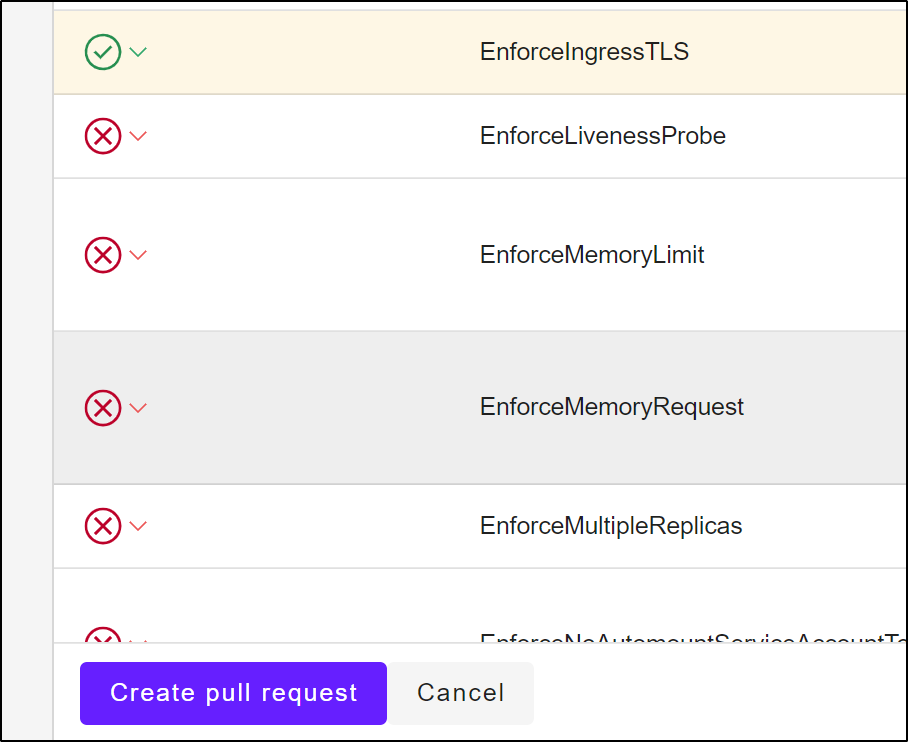

Enabling Syncier Security Tower Policy

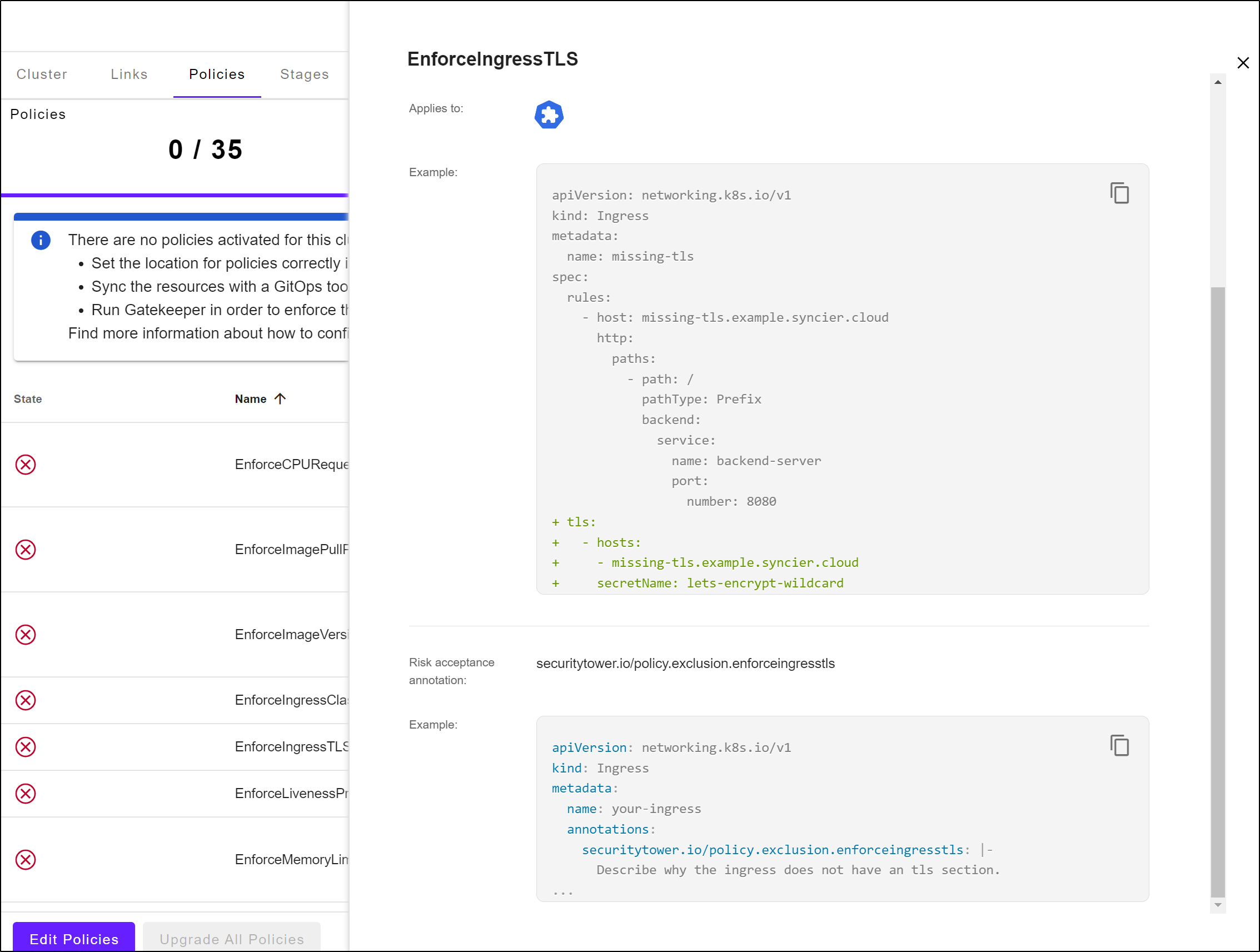

Say, for instance, we wanted to enact one, such as enforcing TLS on ingresses. We could click the EnforceIngressTLS policy to see an example of a violating resource, as well as how to skip the check with an exclusion annotation (which explains why it should be skipped).

To activate a policy, we use the Syncier app:

Once we marked Active at least one policy, we can create a Pull Request

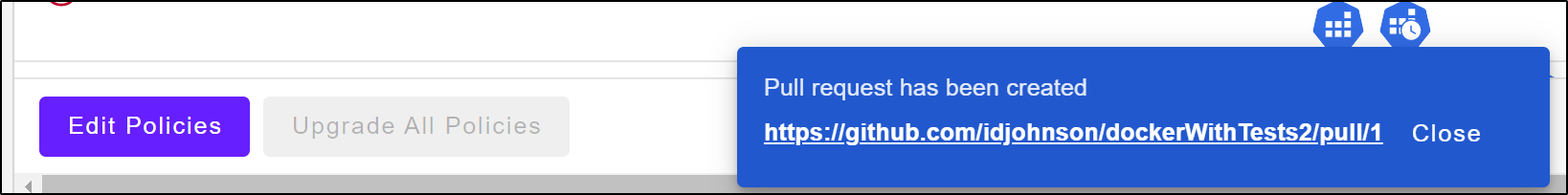

This creates a PR on our behalf

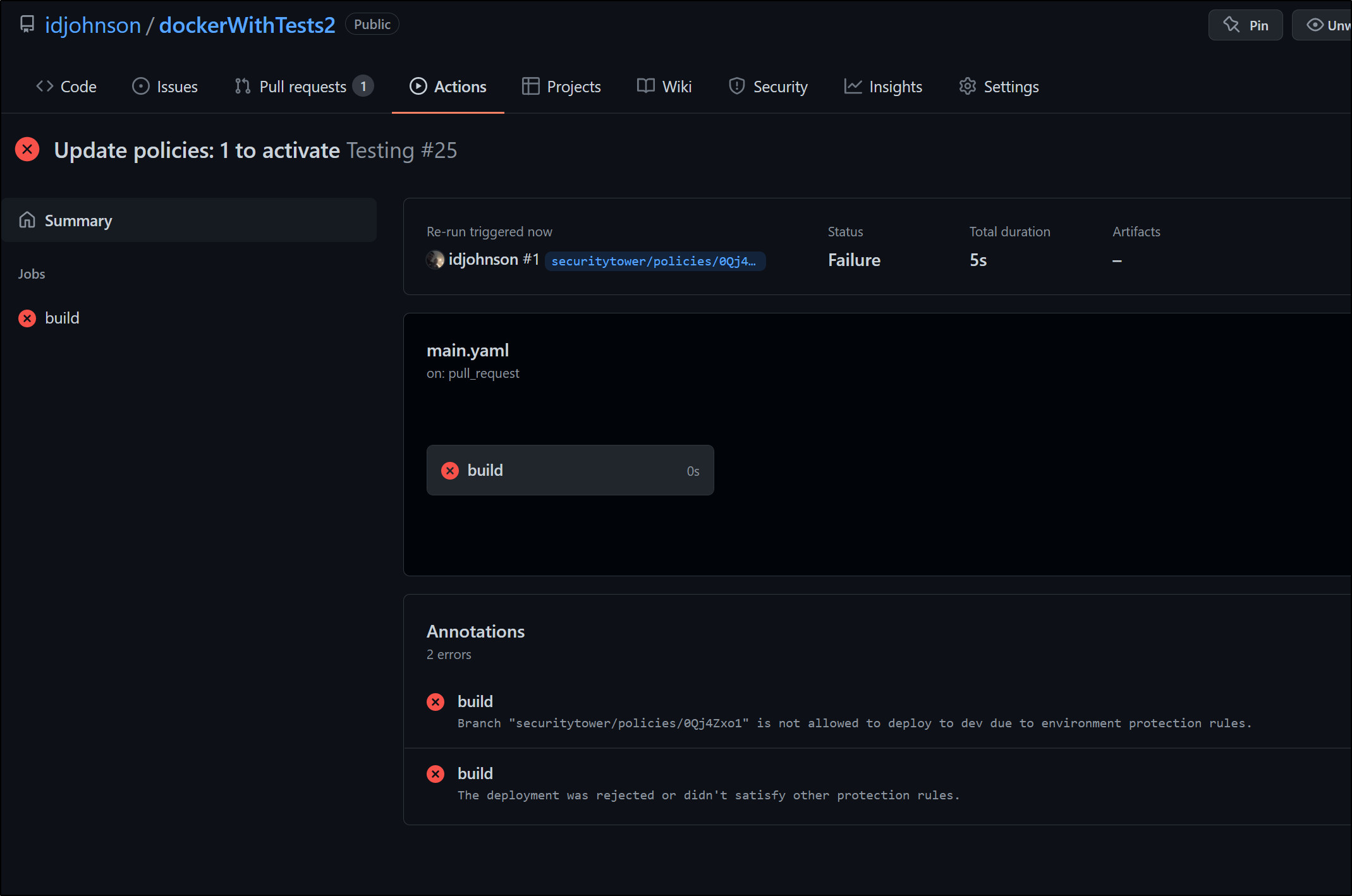

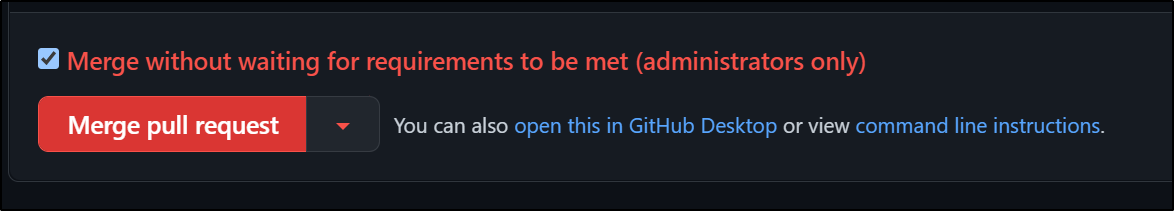

My branch was blocked by security policies so PR build failed

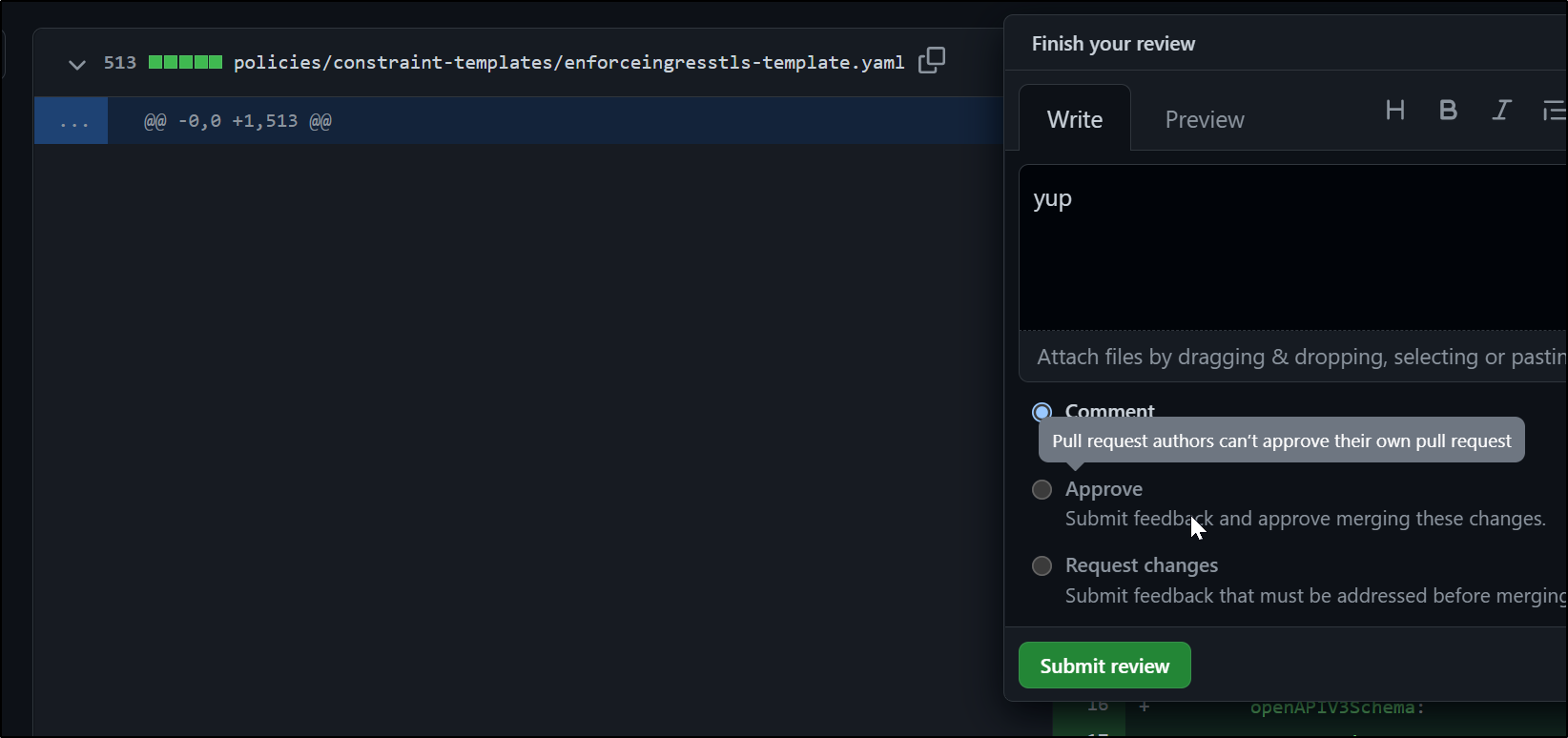

and I’m locked out of self-approve

I’ll skip checks and assume all will be well

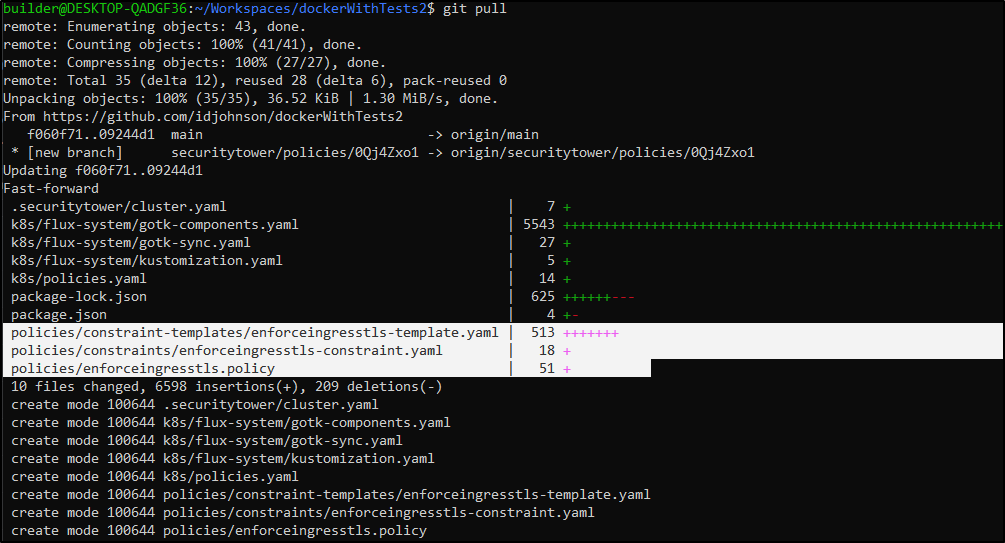

The policy should now be in effect. Here is the SecurityTower policy YAML saved into our repo and applied by Flux:

$ cat policies/enforceingresstls.policy

apiVersion: securitytower.io/v1alpha2

kind: Policy

metadata:

name: EnforceIngressTLS

spec:

version: "1.2.4"

description: |

Enforces that every ingress uses TLS encryption.

This prevents accidentally creating insecure, unencrypted entry points to your cluster.

---

Note that this policy is part of the following security standards:

- `bsi/containerization`: BSI IT-Grundschutz "Containerisierung": Section: SYS.1.6.A21

kind:

- Security

- Network

- bsi/containerization

example: |

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

name: missing-tls

spec:

rules:

- host: missing-tls.example.syncier.cloud

http:

paths:

- path: /

pathType: Prefix

backend:

service:

name: backend-server

port:

number: 8080

+ tls:

+ - hosts:

+ - missing-tls.example.syncier.cloud

+ secretName: lets-encrypt-wildcard

riskAcceptance:

annotationName: securitytower.io/policy.exclusion.enforceingresstls

example: |

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

name: your-ingress

annotations:

securitytower.io/policy.exclusion.enforceingresstls: |-

Describe why the ingress does not have an tls section.

...
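The `riskAcceptance` section above is the escape hatch: an Ingress carrying the `securitytower.io/policy.exclusion.enforceingresstls` annotation, with a justification as its value, is skipped by this policy. A sketch that prints such a manifest; the Ingress name, host, backend, and justification text are all hypothetical placeholders:

```shell
# Sketch: print an Ingress that opts out of EnforceIngressTLS.
# Name, host, backend and justification are hypothetical placeholders.
excluded_ingress_manifest() {
  local name="${1:-internal-only}"
  cat <<EOF
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ${name}
  annotations:
    securitytower.io/policy.exclusion.enforceingresstls: |-
      Internal-only endpoint behind a private load balancer; TLS is terminated upstream.
spec:
  rules:
    - host: ${name}.example.internal
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: backend-server
                port:
                  number: 8080
EOF
}
```

In this GitOps setup the output would normally be committed to the repo for Flux to apply, rather than piped into `kubectl apply -f -`.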

The content is somewhat long, but here is the Gatekeeper ConstraintTemplate (the Rego the admission webhook evaluates) that SecurityTower added to the repo:

$ cat policies/constraint-templates/enforceingresstls-template.yaml

apiVersion: templates.gatekeeper.sh/v1beta1

kind: ConstraintTemplate

metadata:

creationTimestamp: null

name: enforceingresstls

annotations:

argocd.argoproj.io/sync-wave: "-200"

argocd.argoproj.io/sync-options: Prune=false

argocd.argoproj.io/compare-options: IgnoreExtraneous

spec:

crd:

spec:

names:

kind: EnforceIngressTLS

validation:

openAPIV3Schema:

properties:

excludedNamePatterns:

items:

type: string

type: array

targets:

- libs:

- |-

package lib.common.kubernetes

default is_gatekeeper = false

container_types := {"containers", "initContainers"}

is_gatekeeper {

has_field(input, "review")

has_field(input.review, "object")

}

review = input.review {

input.review

not input.request

}

else = input.request {

input.request

not input.review

}

else = {"object": input, "oldObject": null, "operation": "CREATE"} {

not input.request

not input.review

}

resource = sprintf("%s/%s (%s)", [review.object.kind, review.object.metadata.name, review.object.metadata.namespace]) {

review.object.kind

review.object.metadata.name

review.object.metadata.namespace

}

else = sprintf("%s/%s", [review.object.kind, review.object.metadata.name]) {

review.object.kind

review.object.metadata.name

}

else = review.object.kind {

review.object.kind

}

else = "Unknown" {

true

}

objectName = sprintf("%s", [review.object.metadata.name]) {

review.object.metadata.name

}

else = "Unknown" {

true

}

objectApiVersion = sprintf("%s", [review.object.apiVersion]) {

review.object.apiVersion

}

else = "Unknown" {

true

}

objectKind = sprintf("%s", [review.object.kind]) {

review.object.kind

}

else = "Unknown" {

true

}

has_field(object, field) {

_ = object[field]

}

inputParams = input.parameters {

input.parameters

}

else = data.inventory.parameters {

data.inventory.parameters

}

else = set() {

true

}

inputObject = {

"review": input.review,

"parameters": inputParams,

} {

input.review

not input.request

}

else = {"review": input.request, "parameters": inputParams} {

input.request

not input.review

}

else = {"review": {"object": input, "oldObject": null, "operation": "CREATE"}, "parameters": inputParams} {

not input.request

not input.review

}

objectNamespace = inputObject.review.object.metadata.namespace {

inputObject.review.object.metadata

inputObject.review.object.metadata.namespace

}

else = data.inventory.conftestnamespace {

data.inventory.conftestnamespace

}

else = "default" {

true

}

- |-

package lib.common.messages

import data.lib.common.kubernetes

import data.lib.core.podHelper

printReasonWithObject(policyName, reason, resource, property, containerName, containerType, validValues) = result {

title := sprintf("%v violated: %v\n\n", [policyName, reason])

container := getContainerName(containerName)

fullField := getFullField(resource, property, containerType)

vv := getValidValues(validValues)

object := sprintf("object:\n apiVersion: %v\n kind: %v\n metadata:\n name: %v\n namespace: %v\n", [kubernetes.objectApiVersion, kubernetes.objectKind, kubernetes.objectName, kubernetes.objectNamespace])

jsonPath := getFullXPath(property, containerName, containerType)

result := {"msg": concat("", [title, container, fullField, vv, "\n", object, jsonPath])}

}

getValidValues(validValues) = result {

validValues != ""

result := sprintf("validValues: %v\n", [validValues])

}

else = "" {

true

}

getContainerName(containerName) = result {

containerName != ""

result := sprintf("containerName: %v\n", [containerName])

}

else = "" {

true

}

getFullField(resource, property, containerType) = result {

resource != ""

property != ""

result := sprintf("field: %v.%v\n", [resource, property])

}

else = result {

property != ""

containerType != ""

podHelper.storage.specPath != ""

result := sprintf("field: %v.%v.%v\n", [podHelper.storage.specPath, containerType, property])

}

else = result {

containerType != ""

podHelper.storage.specPath != ""

result := sprintf("field: %v.%v\n", [podHelper.storage.specPath, containerType])

}

else = result {

property != ""

podHelper.storage.specPath != ""

result := sprintf("field: %v.%v\n", [podHelper.storage.specPath, property])

}

else = result {

property != ""

result := sprintf("field: %v\n", [property])

}

else = "" {

true

}

getFullXPath(property, containerName, containerType) = result {

property != ""

containerName != ""

containerType != ""

podHelper.storage.jsonPath != ""

result := sprintf("JSONPath: %v.%v[?(@.name == \"%v\")].%v\n", [podHelper.storage.jsonPath, containerType, containerName, property])

}

else = result {

containerName != ""

containerType != ""

podHelper.storage.specPath != ""

result := sprintf("JSONPath: %v.%v[?(@.name == \"%v\")]\n", [podHelper.storage.jsonPath, containerType, containerName])

}

else = result {

property != ""

property != "spec.replicas"

not startswith(property, "metadata")

podHelper.storage.jsonPath != ""

result := sprintf("JSONPath: %v.%v\n", [podHelper.storage.jsonPath, property])

}

else = result {

property != ""

result := sprintf("JSONPath: .%v\n", [property])

}

else = "" {

true

}

- |-

package lib.core.podHelper

import data.lib.common.kubernetes

storage = result {

validKinds := ["ReplicaSet", "ReplicationController", "Deployment", "StatefulSet", "DaemonSet", "Job"]

any([good | good := kubernetes.review.object.kind == validKinds[_]])

spec := object.get(kubernetes.review.object, "spec", {})

template := object.get(spec, "template", {})

result := {

"objectSpec": object.get(template, "spec", {}),

"objectMetadata": object.get(template, "metadata", {}),

"rootMetadata": object.get(kubernetes.review.object, "metadata", {}),

"specPath": sprintf("%v.spec.template.spec", [lower(kubernetes.review.object.kind)]),

"jsonPath": ".spec.template.spec",

"metadataPath": sprintf("%v.spec.template.metadata", [lower(kubernetes.review.object.kind)]),

}

}

else = result {

kubernetes.review.object.kind == "Pod"

result := {

"objectSpec": object.get(kubernetes.review.object, "spec", {}),

"objectMetadata": object.get(kubernetes.review.object, "metadata", {}),

"rootMetadata": object.get(kubernetes.review.object, "metadata", {}),

"specPath": sprintf("%v.spec", [lower(kubernetes.review.object.kind)]),

"jsonPath": ".spec",

"metadataPath": sprintf("%v.metadata", [lower(kubernetes.review.object.kind)]),

}

}

else = result {

kubernetes.review.object.kind == "CronJob"

spec := object.get(kubernetes.review.object, "spec", {})

jobTemplate := object.get(spec, "jobTemplate", {})

jtSpec := object.get(jobTemplate, "spec", {})

jtsTemplate := object.get(jtSpec, "template", {})

result := {

"objectSpec": object.get(jtsTemplate, "spec", {}),

"objectMetadata": object.get(jtsTemplate, "metadata", {}),

"rootMetadata": object.get(kubernetes.review.object, "metadata", {}),

"specPath": sprintf("%v.spec.jobtemplate.spec.template.spec", [lower(kubernetes.review.object.kind)]),

"jsonPath": ".spec.jobtemplate.spec.template.spec",

"metadataPath": sprintf("%v.spec.jobtemplate.spec.template.metadata", [lower(kubernetes.review.object.kind)]),

}

}

- |-

package lib.common.riskacceptance

import data.lib.common.kubernetes

import data.lib.core.pod

exclusionAnnotationsPrefixes = [

"securitytower.io/policy.exclusion.",

"phylake.io/policy.exclusion.",

"cloud.syncier.com/policy.exclusion.",

]

isValidExclusionAnnotation(annotation) {

count([it |

it := exclusionAnnotationsPrefixes[_]

startswith(annotation, it)

]) > 0

}

else = false {

true

}

isExclusionAnnotationForConstraint(annotation, constraintName) {

count([it |

it := exclusionAnnotationsPrefixes[_]

exclusionAnnotation := concat("", [it, constraintName])

annotation == exclusionAnnotation

]) > 0

}

else = false {

true

}

getPolicyExclusionAnnotations(object) = exclusionAnnotations {

annotations := object.metadata.annotations

not is_null(annotations)

exclusionAnnotations := [i |

annotations[i]

isValidExclusionAnnotation(i)

]

}

else = [] {

true

}

getPolicyExclusionAnnotationsOnOwners(object) = exclusionAnnotations {

parents := [owner |

reference := object.metadata.ownerReferences[_]

owner := pod.getOwnerFor(reference, object.metadata.namespace)

not is_null(owner)

]

grandParents := [owner |

metadata := parents[_].metadata

reference := metadata.ownerReferences[_]

owner := pod.getOwnerFor(reference, metadata.namespace)

not is_null(owner)

]

owners := array.concat(parents, grandParents)

exclusionAnnotations := [annotation |

owners[_].metadata.annotations[annotation]

isValidExclusionAnnotation(annotation)

]

}

getPolicyExclusionAnnotationsAtTemplateLevel(object) = exclusionAnnotations {

kubernetes.has_field(object, "spec")

kubernetes.has_field(object.spec, "template")

kubernetes.has_field(object.spec.template, "metadata")

kubernetes.has_field(object.spec.template.metadata, "annotations")

annotations := object.spec.template.metadata.annotations

not is_null(annotations)

exclusionAnnotations := [i |

annotations[i]

isValidExclusionAnnotation(i)

]

}

else = [] {

true

}

getPolicyExclusionAnnotationsAtNamespaceLevel(object) = exclusionAnnotations {

annotations := data.inventory.cluster.v1.Namespace[kubernetes.objectNamespace].metadata.annotations

not is_null(annotations)

exclusionAnnotations := [i |

annotations[i]

isValidExclusionAnnotation(i)

]

}

else = [] {

true

}

thereIsExclusionAnnotationForConstraint(constraintName) {

not kubernetes.inputParams.ignoreRiskAcceptances

exclusionAnnotationsObjectLevel := getPolicyExclusionAnnotations(kubernetes.review.object)

exclusionAnnotationsTemplateLevel := getPolicyExclusionAnnotationsAtTemplateLevel(kubernetes.review.object)

exclusionAnnotationsNamespaceLevel := getPolicyExclusionAnnotationsAtNamespaceLevel(kubernetes.review.object)

exclusionAnnotationsOwners := getPolicyExclusionAnnotationsOnOwners(kubernetes.review.object)

exclusionAnnotations := array.concat(exclusionAnnotationsObjectLevel, array.concat(exclusionAnnotationsTemplateLevel, array.concat(exclusionAnnotationsNamespaceLevel, exclusionAnnotationsOwners)))

count([it |

it := exclusionAnnotations[_]

isExclusionAnnotationForConstraint(it, constraintName)

]) > 0

}

else = false {

true

}

thereIsNoExclusionAnnotationForConstraint(constraintName) {

not thereIsExclusionAnnotationForConstraint(constraintName)

}

else = false {

true

}

thereIsExclusionAnnotationForConstraintAtTemplateLevel(constraintName) {

exclusionAnnotations := getPolicyExclusionAnnotationsAtTemplateLevel(kubernetes.review.object)

count([it |

it := exclusionAnnotations[_]

isExclusionAnnotationForConstraint(it, constraintName)

]) > 0

}

else = false {

true

}

thereIsNoExclusionAnnotationForConstraintAtTemplateLevel(constraintName) {

not thereIsExclusionAnnotationForConstraintAtTemplateLevel(constraintName)

}

- |-

package lib.core.pod

import data.lib.common.kubernetes

getOwnerFor(reference, namespace) = owner {

is_string(namespace)

count(namespace) > 0

owner := data.inventory.namespace[namespace][reference.apiVersion][reference.kind][reference.name]

not is_null(owner)

}

else = owner {

owner := data.inventory.cluster[reference.apiVersion][reference.kind][reference.name]

not is_null(owner)

}

else = null {

true

}

isRequiresOwnerReferenceCheck(object) {

validKinds := ["Pod", "ReplicaSet", "Job"]

_ = any([good | good := object.kind == validKinds[_]])

}

validOwnerReference {

isRequiresOwnerReferenceCheck(kubernetes.review.object)

metadata := kubernetes.review.object.metadata

owners := [owner | reference := metadata.ownerReferences[_]; owner := getOwnerFor(reference, metadata.namespace); not is_null(owner)]

trace(json.marshal({"owners": owners, "references": kubernetes.review.object.metadata.ownerReferences}))

count(owners) == count(metadata.ownerReferences)

}

rego: |-

package enforceingresstls

import data.lib.common.kubernetes

import data.lib.common.messages

import data.lib.common.riskacceptance

excludedNamePatterns = {name | name := kubernetes.inputParams.excludedNamePatterns[_]} {

kubernetes.inputParams.excludedNamePatterns

}

else = ["cm-acme-http-solver-*"] {

true

}

violation[msg] {

kubernetes.review.object.kind == "Ingress"

riskacceptance.thereIsNoExclusionAnnotationForConstraint("enforceingresstls")

objectNameMatchNotFound(excludedNamePatterns)

not kubernetes.review.object.spec.tls

msg := messages.printReasonWithObject("EnforceIngressTLS", "Should have TLS enabled", "ingress", "spec.tls", "", "", "")

}

violation[msg] {

kubernetes.review.object.kind == "Ingress"

riskacceptance.thereIsNoExclusionAnnotationForConstraint("enforceingresstls")

objectNameMatchNotFound(excludedNamePatterns)

entry := kubernetes.review.object.spec.tls[_]

not entry.secretName

msg := messages.printReasonWithObject("EnforceIngressTLS", "Should have secretName within TLS config", "ingress", "spec.tls.secretName", "", "", "")

}

regexMatchesResultList(stringToCheck, patternsList) = matchVerificationList {

matchVerificationList := [{"pattern": pattern, "match": match} |

pattern := patternsList[_]

match := re_match(pattern, stringToCheck)

]

}

objectNameMatchFound(patternsList) {

objName := kubernetes.review.object.metadata.name

nameToPatternMatchList := regexMatchesResultList(objName, patternsList)

any([patternMatch |

it := nameToPatternMatchList[_]

patternMatch = it.match

])

}

objectNameMatchNotFound(patternsList) {

not objectNameMatchFound(patternsList)

}

target: admission.k8s.gatekeeper.sh

status: {}
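To check that the constraint is really enforced, a server-side dry run sends an object through the admission webhooks (Gatekeeper's included) without persisting it. A sketch that reuses the policy's own `missing-tls` example and looks for the `EnforceIngressTLS violated` text that `printReasonWithObject` builds above; it assumes Flux has already synced the policy to the cluster and that the `default` namespace is an acceptable place to test:

```shell
# Sketch: expect Gatekeeper to reject an Ingress that has no spec.tls.
# --dry-run=server runs admission webhooks but never persists the object.

missing_tls_ingress() {
  # Same shape as the policy's own "missing-tls" example
  cat <<'EOF'
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: missing-tls
spec:
  rules:
    - host: missing-tls.example.syncier.cloud
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: backend-server
                port:
                  number: 8080
EOF
}

expect_tls_violation() {
  local ns="${1:-default}" out
  out="$(missing_tls_ingress | kubectl apply --dry-run=server -n "$ns" -f - 2>&1)"
  case "$out" in
    *"EnforceIngressTLS violated"*)
      echo "denied by EnforceIngressTLS as expected"
      ;;
    *)
      echo "unexpected result: $out" >&2
      return 1
      ;;
  esac
}
```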

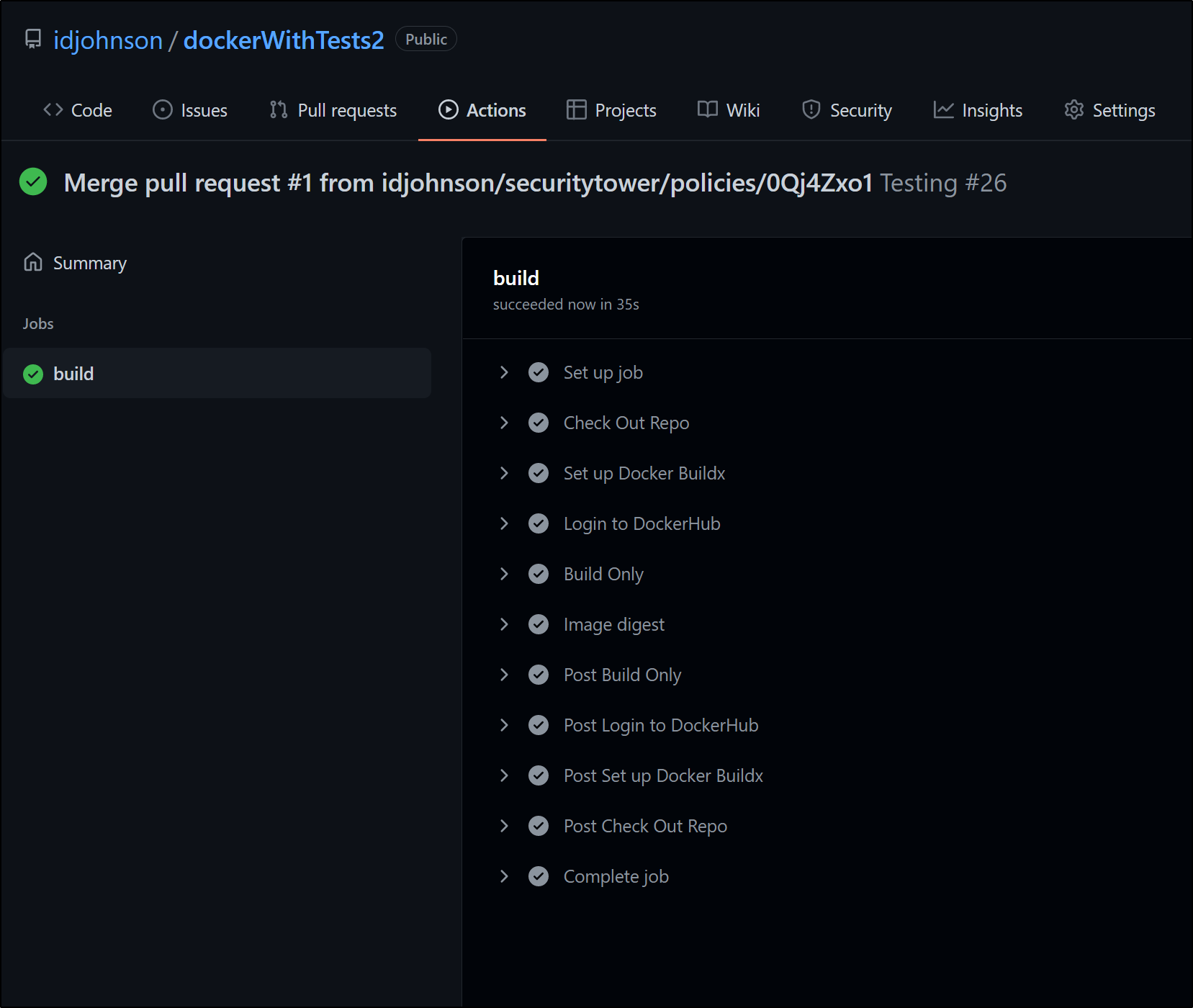

The GitHub build did build the image to validate and test it. However, we’ll need to configure an application to see it show up in audits.

We can see our cluster is trying to launch the image:

builder@DESKTOP-72D2D9T:~/Workspaces/dockerWithTests2$ kubectl get pods -n test

NAME READY STATUS RESTARTS AGE

my-nginx-74577b7877-56lzx 0/1 ImagePullBackOff 0 50m

my-nginx-74577b7877-zxhkz 0/1 ImagePullBackOff 0 50m

builder@DESKTOP-72D2D9T:~/Workspaces/dockerWithTests2$ kubectl get deployments -n test

NAME READY UP-TO-DATE AVAILABLE AGE

my-nginx 0/2 2 0 50m

builder@DESKTOP-72D2D9T:~/Workspaces/dockerWithTests2$ kubectl describe pod my-nginx-74577b7877-zxhkz -n test | tail -n10

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 50m default-scheduler Successfully assigned test/my-nginx-74577b7877-zxhkz to aks-nodepool1-30243974-vmss000001

Normal Pulling 49m (x4 over 50m) kubelet Pulling image "idjacrdemo02.azurecr.io/dockerwithtests:devlatest"

Warning Failed 49m (x4 over 50m) kubelet Failed to pull image "idjacrdemo02.azurecr.io/dockerwithtests:devlatest": rpc error: code = Unknown desc = failed to pull and unpack image "idjacrdemo02.azurecr.io/dockerwithtests:devlatest": failed to resolve reference "idjacrdemo02.azurecr.io/dockerwithtests:devlatest": failed to authorize: failed to fetch anonymous token: unexpected status: 401 Unauthorized

Warning Failed 49m (x4 over 50m) kubelet Error: ErrImagePull

Warning Failed 49m (x6 over 50m) kubelet Error: ImagePullBackOff

Normal BackOff 40s (x219 over 50m) kubelet Back-off pulling image "idjacrdemo02.azurecr.io/dockerwithtests:devlatest"

However, this ACR is in an entirely different subscription, so I would need to add imagePullSecrets to reach it.

Clean up

I’ll delete the cluster, then the resource group:

builder@DESKTOP-72D2D9T:~/Workspaces/dockerWithTests2$ az aks delete -n idjdwt3aks -g idjdwt3rg

Are you sure you want to perform this operation? (y/n): y

| Running ..

Adding to new Clusters

Create a fresh AKS cluster. This time I’ll use an entirely different subscription:

builder@DESKTOP-QADGF36:~/Workspaces/jekyll-blog$ az group create -n idjtest415rg --location eastus

{

"id": "/subscriptions/8defc61d-657a-453d-a6ff-cb9f91289a61/resourceGroups/idjtest415rg",

"location": "eastus",

"managedBy": null,

"name": "idjtest415rg",

"properties": {

"provisioningState": "Succeeded"

},

"tags": null,

"type": "Microsoft.Resources/resourceGroups"

}

builder@DESKTOP-QADGF36:~/Workspaces/jekyll-blog$ az aks create --name idjtest415aks -g idjtest415rg --node-count 3 --enable-addons monitoring --generate-ssh-keys --subscription 8defc61d-657a-453d-a6ff-cb9f91289a61

| Running ..

Validate

builder@DESKTOP-QADGF36:~/Workspaces/jekyll-blog$ (rm -f ~/.kube/config || true) && az aks get-credentials -n idjtest415aks -g idjtest415rg --admin

Merged "idjtest415aks-admin" as current context in /home/builder/.kube/config

builder@DESKTOP-QADGF36:~/Workspaces/jekyll-blog$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

aks-nodepool1-31566938-vmss000000 Ready agent 3m58s v1.22.6

aks-nodepool1-31566938-vmss000001 Ready agent 4m46s v1.22.6

aks-nodepool1-31566938-vmss000002 Ready agent 3m51s v1.22.6

Since I am on a fresh host, I’ll install the Flux CLI:

builder@DESKTOP-QADGF36:~/Workspaces/jekyll-blog$ curl -s https://fluxcd.io/install.sh | sudo bash

[sudo] password for builder:

[INFO] Downloading metadata https://api.github.com/repos/fluxcd/flux2/releases/latest

[INFO] Using 0.28.5 as release

[INFO] Downloading hash https://github.com/fluxcd/flux2/releases/download/v0.28.5/flux_0.28.5_checksums.txt

[INFO] Downloading binary https://github.com/fluxcd/flux2/releases/download/v0.28.5/flux_0.28.5_linux_amd64.tar.gz

[INFO] Verifying binary download

[INFO] Installing flux to /usr/local/bin/flux

builder@DESKTOP-QADGF36:~/Workspaces/jekyll-blog$ . <(flux completion bash)

builder@DESKTOP-QADGF36:~/Workspaces/jekyll-blog$ echo ". <(flux completion bash)" >> ~/.bashrc

This time I’ll use environment variables instead of typing in my GitHub token:

builder@DESKTOP-QADGF36:~/Workspaces/jekyll-blog$ export GITHUB_USER=idjohnson

builder@DESKTOP-QADGF36:~/Workspaces/jekyll-blog$ export GITHUB_REPO=dockerWithTests2

builder@DESKTOP-QADGF36:~/Workspaces/jekyll-blog$ export GITHUB_TOKEN=ghp_asdfasdfasdfasdfasdfasdfasdfasdfasdf

builder@DESKTOP-QADGF36:~/Workspaces/jekyll-blog$ cd ../dockerWithTests2/

builder@DESKTOP-QADGF36:~/Workspaces/dockerWithTests2$ flux bootstrap github --owner=$GITHUB_USER --repository=$GITHUB_REPO --branch=main --path=./k8s --personal

► connecting to github.com

► cloning branch "main" from Git repository "https://github.com/idjohnson/dockerWithTests2.git"

✔ cloned repository

► generating component manifests

✔ generated component manifests

✔ component manifests are up to date

► installing components in "flux-system" namespace

✔ installed components

✔ reconciled components

► determining if source secret "flux-system/flux-system" exists

► generating source secret

✔ public key: ecdsa-sha2-nistp384 AAAAE2VjZHNhLXNoYTItbmlzdHAzODQAAAAIbmlzdHAzODQAAABhBBJsNugQGQUktw2LgMK7eY5nY2L83DvWqV37irwcYkHJoBAGQbbwIw6s+TG/OeLGZd62SunJgsen0uzBxMlyMOgGOOE+U5MxnhlyquSJoBvtPCN9YBvWEB8VtTwUFz2uHA==

✔ configured deploy key "flux-system-main-flux-system-./k8s" for "https://github.com/idjohnson/dockerWithTests2"

► applying source secret "flux-system/flux-system"

✔ reconciled source secret

► generating sync manifests

✔ generated sync manifests

✔ sync manifests are up to date

► applying sync manifests

✔ reconciled sync configuration

◎ waiting for Kustomization "flux-system/flux-system" to be reconciled

✔ Kustomization reconciled successfully

► confirming components are healthy

✔ helm-controller: deployment ready

✔ kustomize-controller: deployment ready

✔ notification-controller: deployment ready

✔ source-controller: deployment ready

✔ all components are healthy
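Exporting GITHUB_TOKEN inline, as above, leaves the token in shell history. Here is a small sketch, assuming bash. It reads the token without echoing it and fails fast if any variable the bootstrap command relies on is unset. The function names `require_env` and `read_github_token` are illustrative, not part of Flux.

```shell
#!/usr/bin/env bash
# Fail fast if any named environment variable is unset or empty.
require_env() {
  local var missing=0
  for var in "$@"; do
    if [[ -z "${!var:-}" ]]; then   # indirect expansion: value of the variable named $var
      echo "missing required variable: $var" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Prompt for the token without echoing it, so it never lands in ~/.bash_history.
read_github_token() {
  read -rsp "GitHub token: " GITHUB_TOKEN && echo
  export GITHUB_TOKEN
}
```

Usage would be `read_github_token`, then `require_env GITHUB_USER GITHUB_REPO GITHUB_TOKEN && flux bootstrap github --owner=$GITHUB_USER --repository=$GITHUB_REPO --branch=main --path=./k8s --personal`.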

Lastly, let’s add OPA Gatekeeper

builder@DESKTOP-QADGF36:~/Workspaces/dockerWithTests2$ kubectl apply -f https://raw.githubusercontent.com/open-policy-agent/gatekeeper/release-3.7/deploy/gatekeeper.yaml

namespace/gatekeeper-system configured

resourcequota/gatekeeper-critical-pods created

customresourcedefinition.apiextensions.k8s.io/assign.mutations.gatekeeper.sh created

customresourcedefinition.apiextensions.k8s.io/assignmetadata.mutations.gatekeeper.sh created

customresourcedefinition.apiextensions.k8s.io/configs.config.gatekeeper.sh configured

customresourcedefinition.apiextensions.k8s.io/constraintpodstatuses.status.gatekeeper.sh configured

customresourcedefinition.apiextensions.k8s.io/constrainttemplatepodstatuses.status.gatekeeper.sh configured

customresourcedefinition.apiextensions.k8s.io/constrainttemplates.templates.gatekeeper.sh configured

customresourcedefinition.apiextensions.k8s.io/modifyset.mutations.gatekeeper.sh created

customresourcedefinition.apiextensions.k8s.io/mutatorpodstatuses.status.gatekeeper.sh created

customresourcedefinition.apiextensions.k8s.io/providers.externaldata.gatekeeper.sh created

serviceaccount/gatekeeper-admin configured

Warning: policy/v1beta1 PodSecurityPolicy is deprecated in v1.21+, unavailable in v1.25+

podsecuritypolicy.policy/gatekeeper-admin created

role.rbac.authorization.k8s.io/gatekeeper-manager-role configured

clusterrole.rbac.authorization.k8s.io/gatekeeper-manager-role configured

rolebinding.rbac.authorization.k8s.io/gatekeeper-manager-rolebinding configured

clusterrolebinding.rbac.authorization.k8s.io/gatekeeper-manager-rolebinding configured

secret/gatekeeper-webhook-server-cert configured

service/gatekeeper-webhook-service configured

Warning: spec.template.metadata.annotations[container.seccomp.security.alpha.kubernetes.io/manager]: deprecated since v1.19; use the "seccompProfile" field instead

deployment.apps/gatekeeper-audit configured

deployment.apps/gatekeeper-controller-manager created

Warning: policy/v1beta1 PodDisruptionBudget is deprecated in v1.21+, unavailable in v1.25+; use policy/v1 PodDisruptionBudget

poddisruptionbudget.policy/gatekeeper-controller-manager created

mutatingwebhookconfiguration.admissionregistration.k8s.io/gatekeeper-mutating-webhook-configuration created

validatingwebhookconfiguration.admissionregistration.k8s.io/gatekeeper-validating-webhook-configuration configured

Without me doing anything more, Flux has pulled in the configuration and created the namespace, deployment and LoadBalancer service:

builder@DESKTOP-QADGF36:~/Workspaces/dockerWithTests2$ kubectl get ns

NAME STATUS AGE

default Active 12m

flux-system Active 99s

gatekeeper-system Active 2m20s

kube-node-lease Active 12m

kube-public Active 12m

kube-system Active 12m

test Active 89s

builder@DESKTOP-QADGF36:~/Workspaces/dockerWithTests2$ kubectl get deployments -n test

NAME READY UP-TO-DATE AVAILABLE AGE

my-nginx 0/2 2 0 98s

builder@DESKTOP-QADGF36:~/Workspaces/dockerWithTests2$ kubectl get svc -n test

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

nginx-run-svc LoadBalancer 10.0.149.29 20.121.173.169 80:31861/TCP 2m7s

If I look at the error:

builder@DESKTOP-QADGF36:~/Workspaces/dockerWithTests2$ kubectl describe pod my-nginx-74577b7877-h9ghs -n test | tail -n20

Volumes:

kube-api-access-btfvm:

Type: Projected (a volume that contains injected data from multiple sources)

TokenExpirationSeconds: 3607

ConfigMapName: kube-root-ca.crt

ConfigMapOptional: <nil>

DownwardAPI: true

QoS Class: BestEffort

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 3m23s default-scheduler Successfully assigned test/my-nginx-74577b7877-h9ghs to aks-nodepool1-31566938-vmss000002

Normal Pulling 110s (x4 over 3m23s) kubelet Pulling image "idjacrdemo02.azurecr.io/dockerwithtests:devlatest"

Warning Failed 110s (x4 over 3m22s) kubelet Failed to pull image "idjacrdemo02.azurecr.io/dockerwithtests:devlatest": rpc error: code = Unknown desc = failed to pull and unpack image "idjacrdemo02.azurecr.io/dockerwithtests:devlatest": failed to resolve reference "idjacrdemo02.azurecr.io/dockerwithtests:devlatest": failed to authorize: failed to fetch anonymous token: unexpected status: 401 Unauthorized

Warning Failed 110s (x4 over 3m22s) kubelet Error: ErrImagePull

Warning Failed 94s (x6 over 3m21s) kubelet Error: ImagePullBackOff

Normal BackOff 79s (x7 over 3m21s) kubelet Back-off pulling image "idjacrdemo02.azurecr.io/dockerwithtests:devlatest"

We see it’s due to AKS lacking the credentials to pull from ACR.

Since we are in Azure, we can just “attach” the ACR to the cluster and avoid having to create and set imagePullSecrets:

builder@DESKTOP-QADGF36:~/Workspaces/dockerWithTests2$ az aks update -n idjtest415aks -g idjtest415rg --attach-acr idjacrdemo02

AAD role propagation done[############################################] 100.0000%

{

"aadProfile": null,

"addonProfiles": {

"azurepolicy": {

"config": null,

"enabled": true,

"identity": {

"clientId": "56906f9a-324b-46b4-912d-24d2bd5825ff",

"objectId": "08220100-8da3-4e43-959e-0632a994b974",

"resourceId": "/subscriptions/8defc61d-657a-453d-a6ff-cb9f91289a61/resourcegroups/MC_idjtest415rg_idjtest415aks_eastus/providers/Microsoft.ManagedIdentity/userAssignedIdentities/azurepolicy-idjtest415aks"

}

},

"omsagent": {

"config": {

"logAnalyticsWorkspaceResourceID": "/subscriptions/8defc61d-657a-453d-a6ff-cb9f91289a61/resourcegroups/defaultresourcegroup-eus/providers/microsoft.operationalinsights/workspaces/defaultworkspace-8defc61d-657a-453d-a6ff-cb9f91289a61-eus"

},

"enabled": true,

"identity": {

"clientId": "dfeaa143-7b33-4c8f-8dc9-990830945eec",

"objectId": "ec73fed7-a73e-47fd-80a1-18848e780a3b",

"resourceId": "/subscriptions/8defc61d-657a-453d-a6ff-cb9f91289a61/resourcegroups/MC_idjtest415rg_idjtest415aks_eastus/providers/Microsoft.ManagedIdentity/userAssignedIdentities/omsagent-idjtest415aks"

}

}

},

"agentPoolProfiles": [

{

"availabilityZones": null,

"count": 3,

"enableAutoScaling": false,

"enableEncryptionAtHost": false,

"enableFips": false,

"enableNodePublicIp": false,

"enableUltraSsd": false,

"gpuInstanceProfile": null,

"kubeletConfig": null,

"kubeletDiskType": "OS",

"linuxOsConfig": null,

"maxCount": null,

"maxPods": 110,

"minCount": null,

"mode": "System",

"name": "nodepool1",

"nodeImageVersion": "AKSUbuntu-1804gen2containerd-2022.04.05",

"nodeLabels": null,

"nodePublicIpPrefixId": null,

"nodeTaints": null,

"orchestratorVersion": "1.22.6",

"osDiskSizeGb": 128,

"osDiskType": "Managed",

"osSku": "Ubuntu",

"osType": "Linux",

"podSubnetId": null,

"powerState": {

"code": "Running"

},

"provisioningState": "Succeeded",

"proximityPlacementGroupId": null,

"scaleDownMode": null,

"scaleSetEvictionPolicy": null,

"scaleSetPriority": null,

"spotMaxPrice": null,

"tags": null,

"type": "VirtualMachineScaleSets",

"upgradeSettings": null,

"vmSize": "Standard_DS2_v2",

"vnetSubnetId": null

}

],

"apiServerAccessProfile": null,

"autoScalerProfile": null,

"autoUpgradeProfile": null,

"azurePortalFqdn": "idjtest415-idjtest415rg-8defc6-557d8768.portal.hcp.eastus.azmk8s.io",

"disableLocalAccounts": false,

"diskEncryptionSetId": null,

"dnsPrefix": "idjtest415-idjtest415rg-8defc6",

"enablePodSecurityPolicy": null,

"enableRbac": true,

"extendedLocation": null,

"fqdn": "idjtest415-idjtest415rg-8defc6-557d8768.hcp.eastus.azmk8s.io",

"fqdnSubdomain": null,

"httpProxyConfig": null,

"id": "/subscriptions/8defc61d-657a-453d-a6ff-cb9f91289a61/resourcegroups/idjtest415rg/providers/Microsoft.ContainerService/managedClusters/idjtest415aks",

"identity": {

"principalId": "6f4cf82b-74fc-4670-9e40-a4200b06f00d",

"tenantId": "15d19784-ad58-4a57-a66f-ad1c0f826a45",

"type": "SystemAssigned",

"userAssignedIdentities": null

},

"identityProfile": {

"kubeletidentity": {

"clientId": "29844358-1e40-446d-97a1-202a61d3a972",

"objectId": "f19415a1-efd3-450b-bf75-06c2d680f370",

"resourceId": "/subscriptions/8defc61d-657a-453d-a6ff-cb9f91289a61/resourcegroups/MC_idjtest415rg_idjtest415aks_eastus/providers/Microsoft.ManagedIdentity/userAssignedIdentities/idjtest415aks-agentpool"

}

},

"kubernetesVersion": "1.22.6",

"linuxProfile": {

"adminUsername": "azureuser",

"ssh": {

"publicKeys": [

{

"keyData": "ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQCztCsq2pg/AFf8t6d6LobwssgaXLVNnzn4G0e7M13J9t2o41deZjLQaRTLYyqGhmwp114GpJnac08F4Ln87CbIu2jbQCC2y89bE4k2a9226VJDbZhidPyvEiEyquUpKvvJ9QwUYeQlz3rYLQ3f8gDvO4iFKNla2s8E1gpYv6VxN7e7OX+FJmJ4dY2ydPxQ6RoxOLxWx6IDk9ysDK8MoSIUoD9nvD/PqlWBZLXBqqlO6OadGku3q2naNvafDM5F2p4ixCsJV5fQxPWxqZy0CbyXzs1easL4lQHjK2NwqN8AK6pC1ywItDc1fUpJZEEPOJQLShI+dqqqjUptwJUPq87h"

}

]

}

},

"location": "eastus",

"maxAgentPools": 100,

"name": "idjtest415aks",

"networkProfile": {

"dnsServiceIp": "10.0.0.10",

"dockerBridgeCidr": "172.17.0.1/16",

"loadBalancerProfile": {

"allocatedOutboundPorts": null,

"effectiveOutboundIPs": [

{

"id": "/subscriptions/8defc61d-657a-453d-a6ff-cb9f91289a61/resourceGroups/MC_idjtest415rg_idjtest415aks_eastus/providers/Microsoft.Network/publicIPAddresses/fc493367-6cf8-4a09-a57b-97fbf21fd979",

"resourceGroup": "MC_idjtest415rg_idjtest415aks_eastus"

}

],

"idleTimeoutInMinutes": null,

"managedOutboundIPs": {

"count": 1

},

"outboundIPs": null,

"outboundIpPrefixes": null

},

"loadBalancerSku": "Standard",

"natGatewayProfile": null,

"networkMode": null,

"networkPlugin": "kubenet",

"networkPolicy": null,

"outboundType": "loadBalancer",

"podCidr": "10.244.0.0/16",

"serviceCidr": "10.0.0.0/16"

},

"nodeResourceGroup": "MC_idjtest415rg_idjtest415aks_eastus",

"podIdentityProfile": null,

"powerState": {

"code": "Running"

},

"privateFqdn": null,

"privateLinkResources": null,

"provisioningState": "Succeeded",

"resourceGroup": "idjtest415rg",

"securityProfile": {

"azureDefender": null

},

"servicePrincipalProfile": {

"clientId": "msi",

"secret": null

},

"sku": {

"name": "Basic",

"tier": "Free"

},

"tags": null,

"type": "Microsoft.ContainerService/ManagedClusters",

"windowsProfile": null

}

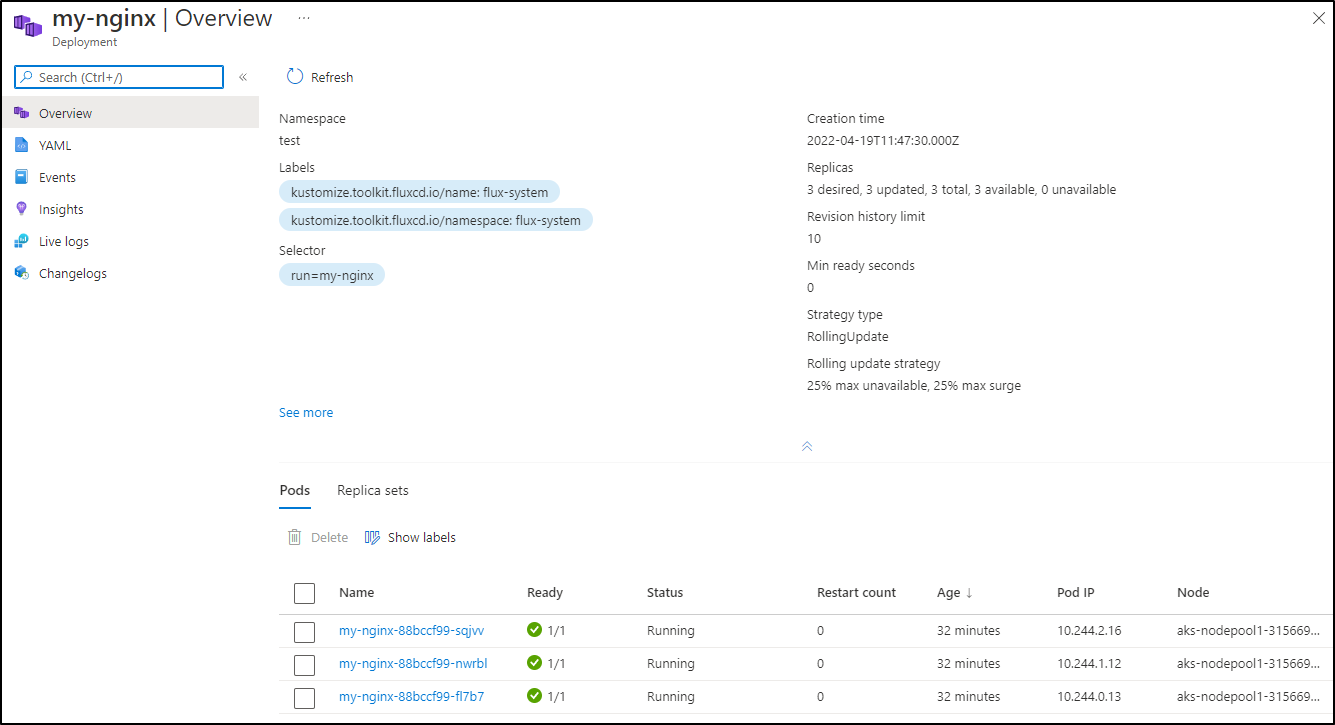

Within a few minutes, it is able to start pulling images:

builder@DESKTOP-QADGF36:~/Workspaces/dockerWithTests2$ kubectl get pods -n test

NAME READY STATUS RESTARTS AGE

my-nginx-74577b7877-h9ghs 0/1 ImagePullBackOff 0 11m

my-nginx-74577b7877-s8hcs 0/1 ImagePullBackOff 0 11m

builder@DESKTOP-QADGF36:~/Workspaces/dockerWithTests2$ kubectl get pods -n test

NAME READY STATUS RESTARTS AGE

my-nginx-74577b7877-h9ghs 0/1 ImagePullBackOff 0 13m

my-nginx-74577b7877-s8hcs 1/1 Running 0 13m
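You can summarize this with a small helper instead of re-running `kubectl get pods` and scanning the output. This sketch assumes the default column layout shown above (NAME READY STATUS RESTARTS AGE, with the header row present). It counts pods whose READY column is not n/n or whose STATUS is not Running. The name `count_unready_pods` is illustrative.

```shell
#!/usr/bin/env bash
# Reads `kubectl get pods` output on stdin and prints the number of pods that
# are not fully ready. Expects the header row and the default columns.
count_unready_pods() {
  awk 'NR > 1 && NF >= 3 {
         split($2, r, "/")            # READY is "<ready>/<total>"
         if (r[1] != r[2] || $3 != "Running") n++
       }
       END { print n + 0 }'
}
```

Usage: `kubectl get pods -n test | count_unready_pods`. If a pod lingers in ImagePullBackOff after the ACR is attached, `kubectl rollout restart deployment/my-nginx -n test` replaces the pods instead of waiting out the back-off timer.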

We can head back to https://app.securitytower.io/ to see our clusters. I had hoped to find a nice login or link page, but had to use my browser history to find the URL back into Syncier.

Let’s create a SecurityTower Application with two stages (and two namespaces). We need to define the YAML in the .securitytower folder and commit it to source control (not apply it to Kubernetes directly).

builder@DESKTOP-QADGF36:~/Workspaces/dockerWithTests2/.securitytower$ cat stAppDefinition.yaml

apiVersion: securitytower.io/v1alpha1

kind: Application

metadata:

name: nginx-test

spec:

stages:

- name: nginx-app-production

resources:

repository: https://github.com/idjohnson/dockerWithTests2

revision: main

path: k8s

targetNamespace: example-app-1

previousStage: example-app-staging

- name: nginx-app-staging

resources:

repository: https://github.com/idjohnson/dockerWithTests2

revision: main

path: k8s

targetNamespace: example-app-2

builder@DESKTOP-QADGF36:~/Workspaces/dockerWithTests2/.securitytower$ git add stAppDefinition.yaml

builder@DESKTOP-QADGF36:~/Workspaces/dockerWithTests2/.securitytower$ git commit -m "create ST Application"

[main 0c9b2a3] create ST Application

1 file changed, 19 insertions(+)

create mode 100644 .securitytower/stAppDefinition.yaml

builder@DESKTOP-QADGF36:~/Workspaces/dockerWithTests2/.securitytower$ git push

Enumerating objects: 6, done.

Counting objects: 100% (6/6), done.

Delta compression using up to 16 threads

Compressing objects: 100% (4/4), done.

Writing objects: 100% (4/4), 580 bytes | 580.00 KiB/s, done.

Total 4 (delta 1), reused 0 (delta 0)

remote: Resolving deltas: 100% (1/1), completed with 1 local object.

To https://github.com/idjohnson/dockerWithTests2.git

09244d1..0c9b2a3 main -> main

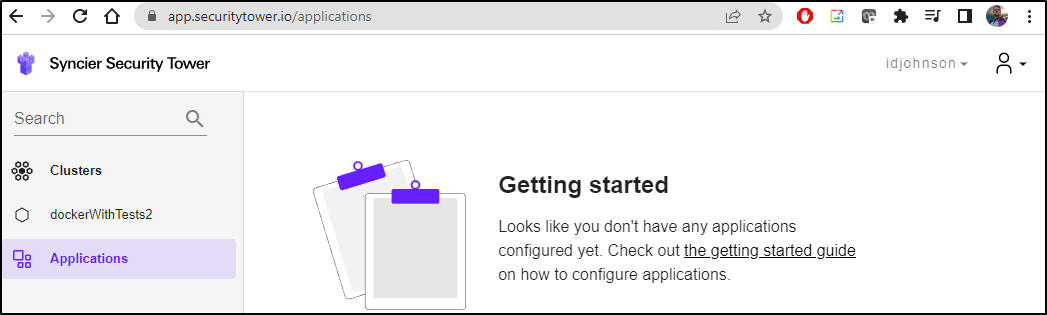

I waited a while, but no Applications showed up in SecurityTower.

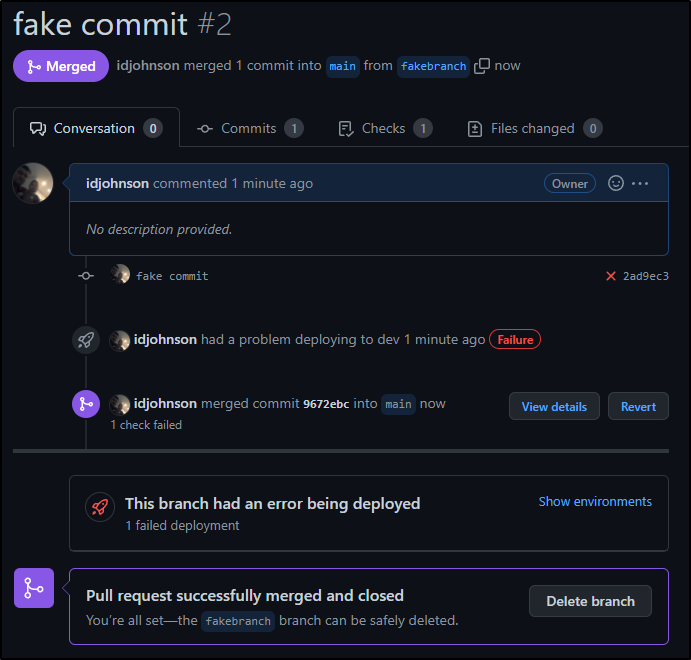
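One detail in stAppDefinition.yaml is worth double-checking. `previousStage` on the production stage points at `example-app-staging`, but the stages are named `nginx-app-production` and `nginx-app-staging`. It isn't clear whether this is why no Applications appeared, but a dangling stage reference is cheap to catch. The following is a rough, line-based sketch, not a YAML parser. It assumes each `- name:` and `previousStage:` sits on its own line, as in this file. The function name `check_previous_stages` is illustrative.

```shell
#!/usr/bin/env bash
# Prints any previousStage value that does not match a stage "- name:" in FILE.
# Returns 1 if a dangling reference is found. Line-based, not a YAML parser.
check_previous_stages() {
  local file="$1" stages ref status=0
  # Stage names are list items ("- name: ..."); metadata.name has no leading "- "
  stages="$(sed -n 's/^[[:space:]]*- name:[[:space:]]*\([^[:space:]]*\).*/\1/p' "$file")"
  while read -r ref; do
    if [[ -z "$ref" ]]; then continue; fi
    if ! grep -qxF -- "$ref" <<< "$stages"; then
      echo "previousStage '$ref' does not match any stage name" >&2
      status=1
    fi
  done < <(sed -n 's/^[[:space:]]*previousStage:[[:space:]]*\([^[:space:]]*\).*/\1/p' "$file")
  return "$status"
}
```

Usage: `check_previous_stages .securitytower/stAppDefinition.yaml`.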

I thought perhaps I could trigger things with a PR

builder@DESKTOP-QADGF36:~/Workspaces/dockerWithTests2$ git checkout -b fakebranch

Switched to a new branch 'fakebranch'

builder@DESKTOP-QADGF36:~/Workspaces/dockerWithTests2$ git commit --allow-empty -m "fake commit"

[fakebranch 2ad9ec3] fake commit

builder@DESKTOP-QADGF36:~/Workspaces/dockerWithTests2$ git push

fatal: The current branch fakebranch has no upstream branch.

To push the current branch and set the remote as upstream, use

git push --set-upstream origin fakebranch

builder@DESKTOP-QADGF36:~/Workspaces/dockerWithTests2$ darf

git push --set-upstream origin fakebranch [enter/↑/↓/ctrl+c]

Enumerating objects: 1, done.

Counting objects: 100% (1/1), done.

Writing objects: 100% (1/1), 186 bytes | 186.00 KiB/s, done.

Total 1 (delta 0), reused 0 (delta 0)

remote:

remote: Create a pull request for 'fakebranch' on GitHub by visiting:

remote: https://github.com/idjohnson/dockerWithTests2/pull/new/fakebranch

remote:

To https://github.com/idjohnson/dockerWithTests2.git

* [new branch] fakebranch -> fakebranch

Branch 'fakebranch' set up to track remote branch 'fakebranch' from 'origin'.

Perhaps SecurityTower isn’t connected? Next, I tried renaming the cluster to see if that change would show up:

builder@DESKTOP-QADGF36:~/Workspaces/dockerWithTests2/.securitytower$ git diff

diff --git a/.securitytower/cluster.yaml b/.securitytower/cluster.yaml

index a85fa83..c422964 100644

--- a/.securitytower/cluster.yaml

+++ b/.securitytower/cluster.yaml

@@ -1,7 +1,7 @@

apiVersion: securitytower.io/v1alpha1

kind: Cluster

metadata:

- name: dockerWithTests2

+ name: dockerWithTests3

spec:

policies:

path: /policies

builder@DESKTOP-QADGF36:~/Workspaces/dockerWithTests2/.securitytower$ git add cluster.yaml

builder@DESKTOP-QADGF36:~/Workspaces/dockerWithTests2/.securitytower$ git commit -m "force new name"

[main 187e808] force new name

1 file changed, 1 insertion(+), 1 deletion(-)

builder@DESKTOP-QADGF36:~/Workspaces/dockerWithTests2/.securitytower$ git push

Enumerating objects: 7, done.

Counting objects: 100% (7/7), done.

Delta compression using up to 16 threads

Compressing objects: 100% (4/4), done.

Writing objects: 100% (4/4), 383 bytes | 383.00 KiB/s, done.

Total 4 (delta 2), reused 0 (delta 0)

remote: Resolving deltas: 100% (2/2), completed with 2 local objects.

To https://github.com/idjohnson/dockerWithTests2.git

9672ebc..187e808 main -> main

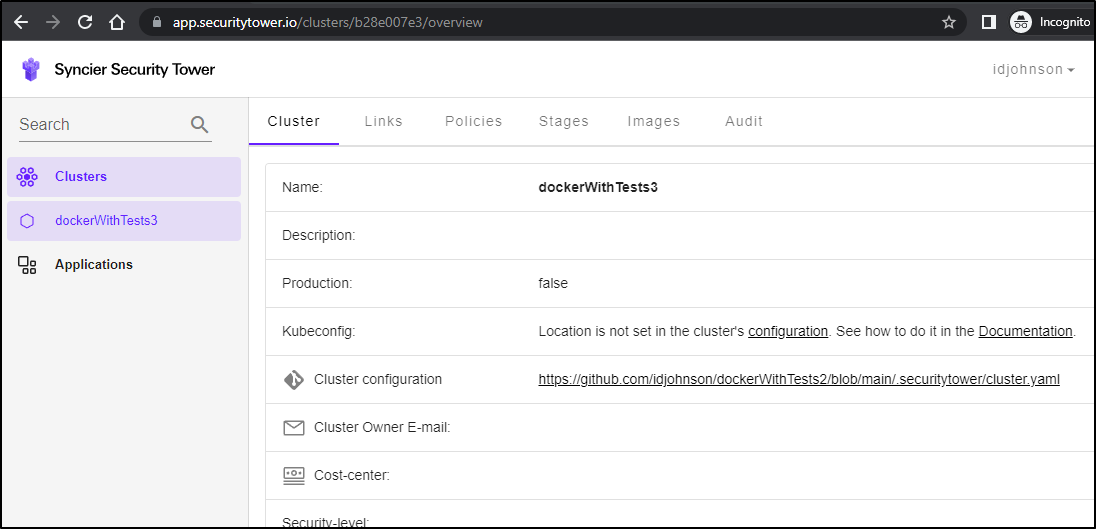

This really should have updated in the web app; however, I see nothing reflected (even after logging in again from an incognito window to make sure nothing was cached).

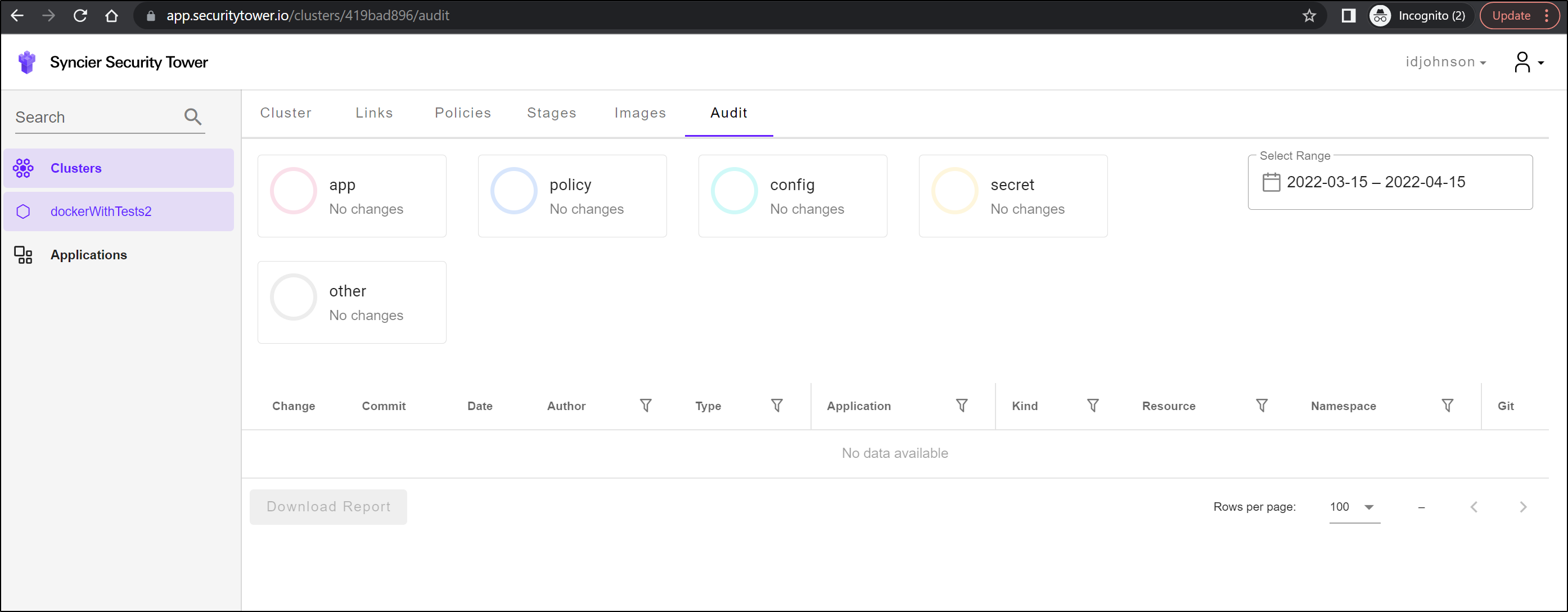

And still no Applications.

About an hour later, I did see the cluster name update, but still no Applications.

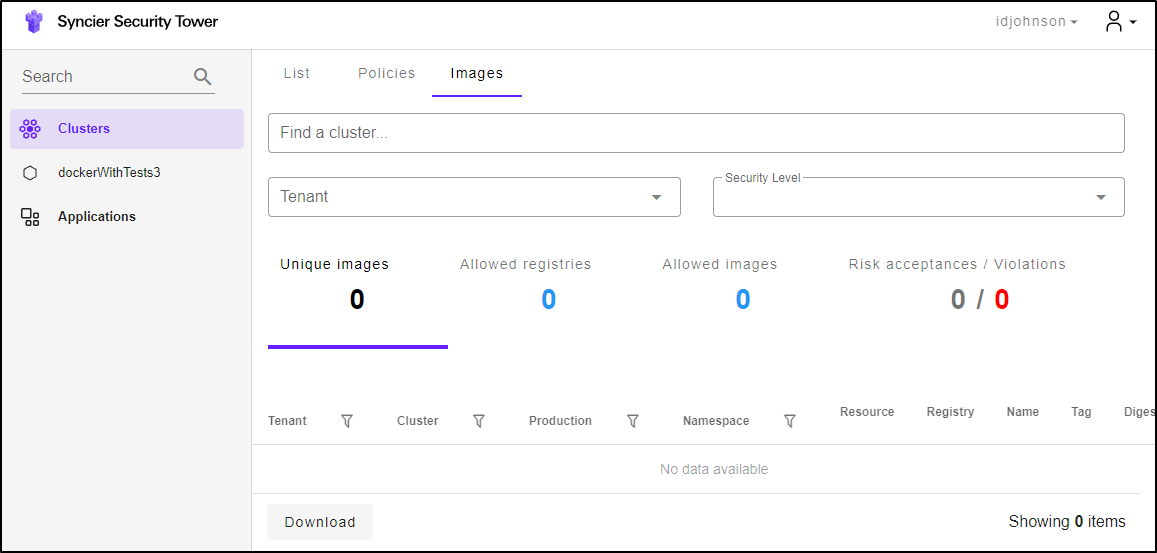

Across all my testing, I never saw Images update either.

On-Prem K3s

I’m a bit nervous about adding Flux to my on-prem cluster. I’ve had some less-than-stellar results with OPA and Anthos, hence my trepidation. That said, Flux does have uninstall instructions.

builder@DESKTOP-QADGF36:~/Workspaces/dockerWithTests2$ flux bootstrap github --owner=$GITHUB_USER --repository=$GITHUB_REPO --branch=main --path=./k8s --personal

I0419 07:34:04.556226 2986 request.go:665] Waited for 1.005782012s due to client-side throttling, not priority and fairness, request: GET:https://192.168.1.77:6443/apis/compute.azure.crossplane.io/v1alpha3?timeout=32s

► connecting to github.com

► cloning branch "main" from Git repository "https://github.com/idjohnson/dockerWithTests2.git"

✔ cloned repository

► generating component manifests

✔ generated component manifests

✔ component manifests are up to date

► installing components in "flux-system" namespace

✔ installed components

✔ reconciled components

► determining if source secret "flux-system/flux-system" exists

► generating source secret

✔ public key: ecdsa-sha2-nistp384 AAAAE2VjZHNhLXNoYTItbmlzdHAzODQAAAAIbmlzdHAzODQAAABhBK54WSkK6WXNUx9gKddmCVTgn5e85ly3F3GqSMbl2X2Ha5EndWtJy9EgmNtzqENxjY8igvuJeqhT9MKI3bWwrvWRW6g/nyrEOOEYyREB7StdT71KSzVLtGMlRuSCuAs2Pg==

✔ configured deploy key "flux-system-main-flux-system-./k8s" for "https://github.com/idjohnson/dockerWithTests2"

► applying source secret "flux-system/flux-system"

✔ reconciled source secret

I0419 07:34:18.341087 2986 request.go:665] Waited for 1.013815547s due to client-side throttling, not priority and fairness, request: GET:https://192.168.1.77:6443/apis/dapr.io/v2alpha1?timeout=32s

► generating sync manifests

✔ generated sync manifests

✔ sync manifests are up to date

► applying sync manifests

✔ reconciled sync configuration

◎ waiting for Kustomization "flux-system/flux-system" to be reconciled

✔ Kustomization reconciled successfully

I0419 07:34:47.514443 2986 request.go:665] Waited for 1.003914953s due to client-side throttling, not priority and fairness, request: GET:https://192.168.1.77:6443/apis/storage.k8s.io/v1?timeout=32s

► confirming components are healthy

✔ helm-controller: deployment ready

✔ kustomize-controller: deployment ready

✔ notification-controller: deployment ready

✔ source-controller: deployment ready

✔ all components are healthy

We can see it immediately set things up on my cluster. My load balancer situation is a bit funny, so we can see it try to spew a slew of Nginx LB pods across my node pool (which is expected). I can also see the same image pull error:

builder@DESKTOP-QADGF36:~/Workspaces/dockerWithTests2$ kubectl get ns | tail -n 3

c-t69nl Terminating 165d

flux-system Active 69s

test Active 36s

builder@DESKTOP-QADGF36:~/Workspaces/dockerWithTests2$ kubectl get deployments -n test

NAME READY UP-TO-DATE AVAILABLE AGE

my-nginx 0/2 2 0 42s

builder@DESKTOP-QADGF36:~/Workspaces/dockerWithTests2$ kubectl get pods -n test

NAME READY STATUS RESTARTS AGE

svclb-nginx-run-svc-xqjp9 0/1 Pending 0 49s

svclb-nginx-run-svc-jgmbs 0/1 Pending 0 49s

svclb-nginx-run-svc-whnlc 0/1 Pending 0 49s

svclb-nginx-run-svc-5hqqc 0/1 Pending 0 49s

svclb-nginx-run-svc-s5sd8 0/1 Pending 0 49s

svclb-nginx-run-svc-njlh7 0/1 Pending 0 49s

svclb-nginx-run-svc-mg8l5 0/1 Pending 0 49s

my-nginx-66bc7bbdf9-f6np9 0/1 ImagePullBackOff 0 49s

my-nginx-66bc7bbdf9-dpwwl 0/1 ErrImagePull 0 49s

builder@DESKTOP-QADGF36:~/Workspaces/dockerWithTests2$ kubectl describe pod my-nginx-66bc7bbdf9-dpwwl -n test | tail -n15

DownwardAPI: true

QoS Class: BestEffort

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 75s default-scheduler Successfully assigned test/my-nginx-66bc7bbdf9-dpwwl to isaac-macbookpro

Warning FailedMount 74s kubelet MountVolume.SetUp failed for volume "kube-api-access-gl592" : failed to sync configmap cache: timed out waiting for the condition

Normal Pulling 20s (x3 over 67s) kubelet Pulling image "idjacrdemo02.azurecr.io/dockerwithtests:devlatest"

Warning Failed 19s (x3 over 60s) kubelet Failed to pull image "idjacrdemo02.azurecr.io/dockerwithtests:devlatest": rpc error: code = Unknown desc = failed to pull and unpack image "idjacrdemo02.azurecr.io/dockerwithtests:devlatest": failed to resolve reference "idjacrdemo02.azurecr.io/dockerwithtests:devlatest": failed to authorize: failed to fetch anonymous token: unexpected status: 401 Unauthorized

Warning Failed 19s (x3 over 60s) kubelet Error: ErrImagePull

Normal BackOff 6s (x3 over 60s) kubelet Back-off pulling image "idjacrdemo02.azurecr.io/dockerwithtests:devlatest"

Warning Failed 6s (x3 over 60s) kubelet Error: ImagePullBackOff

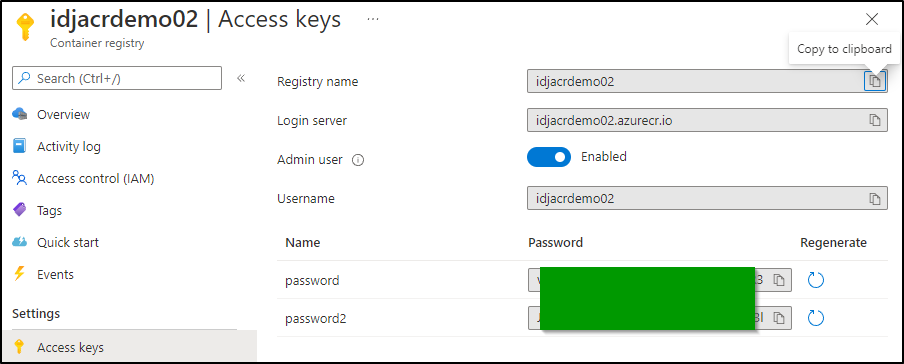

I cannot “attach” ACR here like I can with AKS. However, I can add an imagePullSecret.

Get the username, password and login server URI.

I don’t want to check this into GitHub as a base64-encoded secret, so I’ll create it directly in the namespace Flux created:

$ kubectl create secret docker-registry regcred --docker-server="https://idjacrdemo02.azurecr.io/v1/" --docker-username="idjacrdemo02" --docker-password="vasdfasfdasfdasdfsadfasdfasdf3" --docker-email="isaac.johnson@gmail.com" -n test

secret/regcred created
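Pasting the password on the command line also leaves it in shell history. Here is a sketch of an alternative. It assumes the registry’s admin user is enabled, which the username/password pair above implies. It also assumes `az` is pointed (for example via `az account set`) at the subscription that owns the registry. The sketch reads the values with `az`, renders the Secret client-side, and applies it, so re-running it updates the Secret instead of failing because it already exists. The function name is illustrative, and the sketch uses the registry’s `loginServer` value for `--docker-server`.

```shell
#!/usr/bin/env bash
# Create (or update) a docker-registry Secret from ACR admin credentials
# without typing the password. Assumes: admin user enabled, az logged in to the
# registry's subscription, kubectl pointed at the target cluster.
create_acr_pull_secret() {
  local acr="$1" namespace="$2" secret="${3:-regcred}"
  local server user pass
  server="$(az acr show -n "$acr" --query loginServer -o tsv)" || return 1
  user="$(az acr credential show -n "$acr" --query username -o tsv)" || return 1
  pass="$(az acr credential show -n "$acr" --query 'passwords[0].value' -o tsv)" || return 1
  # Render the Secret locally, then apply it so re-runs update rather than fail
  kubectl create secret docker-registry "$secret" \
    --docker-server="$server" \
    --docker-username="$user" \
    --docker-password="$pass" \
    -n "$namespace" --dry-run=client -o yaml | kubectl apply -f -
}
```

Usage: `create_acr_pull_secret idjacrdemo02 test`.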

Now let’s reference the secret in the deployment and push the change:

builder@DESKTOP-QADGF36:~/Workspaces/dockerWithTests2$ vi k8s/deployment.yaml

builder@DESKTOP-QADGF36:~/Workspaces/dockerWithTests2$ git diff

diff --git a/k8s/deployment.yaml b/k8s/deployment.yaml

index fbf2787..00d5686 100644

--- a/k8s/deployment.yaml

+++ b/k8s/deployment.yaml

@@ -20,6 +20,8 @@ spec:

labels:

run: my-nginx

spec:

+ imagePullSecrets:

+ - name: regcred

containers:

- name: my-nginx

image: nginx

builder@DESKTOP-QADGF36:~/Workspaces/dockerWithTests2$ git add k8s/deployment.yaml

builder@DESKTOP-QADGF36:~/Workspaces/dockerWithTests2$ git commit -m "add image pull secret"

[main d9b183c] add image pull secret

1 file changed, 2 insertions(+)

builder@DESKTOP-QADGF36:~/Workspaces/dockerWithTests2$ git push

Enumerating objects: 7, done.

Counting objects: 100% (7/7), done.

Delta compression using up to 16 threads

Compressing objects: 100% (4/4), done.

Writing objects: 100% (4/4), 396 bytes | 396.00 KiB/s, done.

Total 4 (delta 3), reused 0 (delta 0)

remote: Resolving deltas: 100% (3/3), completed with 3 local objects.

To https://github.com/idjohnson/dockerWithTests2.git

187e808..d9b183c main -> main

We can now see Flux roll out the change and the pods start pulling images:

builder@DESKTOP-QADGF36:~/Workspaces/dockerWithTests2$ kubectl get deployments -n test

NAME READY UP-TO-DATE AVAILABLE AGE

my-nginx 0/2 2 0 10m

NAME READY UP-TO-DATE AVAILABLE AGE

my-nginx 0/2 1 0 11m

builder@DESKTOP-QADGF36:~/Workspaces/dockerWithTests2$ kubectl get deployments -n test

NAME READY UP-TO-DATE AVAILABLE AGE

my-nginx 1/2 2 1 12m
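Instead of waiting for Flux’s next sync interval and polling `kubectl get deployments`, you can trigger the sync and wait on it explicitly. This sketch uses `flux reconcile kustomization` with `--with-source`, which refreshes the GitRepository first, followed by `kubectl rollout status`. The defaults match this example’s deployment and namespace, and the function name is illustrative.

```shell
#!/usr/bin/env bash
# Ask Flux to fetch the latest commit and apply it now, then block until the
# deployment finishes rolling out (or the timeout expires).
sync_and_wait() {
  local deploy="${1:-my-nginx}" ns="${2:-test}" timeout="${3:-5m}"
  flux reconcile kustomization flux-system --with-source || return 1
  kubectl rollout status "deployment/$deploy" -n "$ns" --timeout="$timeout"
}
```

Running `sync_and_wait` right after `git push` returns non-zero if the rollout doesn’t complete within five minutes.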

Cleanup

Now comes the time to see whether Flux goes gentle into that good night or rages, rages against the dying of the light.

First, I’ll use --dry-run to see what it plans to do:

builder@DESKTOP-QADGF36:~/Workspaces/dockerWithTests2$ flux uninstall --namespace=flux-system --dry-run

I0419 07:52:11.664068 3632 request.go:665] Waited for 1.006776444s due to client-side throttling, not priority and fairness, request: GET:https://192.168.1.77:6443/apis/policy/v1?timeout=32s

► deleting components in flux-system namespace

✔ Deployment/flux-system/kustomize-controller deleted (dry run)

✔ Deployment/flux-system/notification-controller deleted (dry run)

✔ Deployment/flux-system/helm-controller deleted (dry run)

✔ Deployment/flux-system/source-controller deleted (dry run)

✔ Service/flux-system/notification-controller deleted (dry run)

✔ Service/flux-system/source-controller deleted (dry run)

✔ Service/flux-system/webhook-receiver deleted (dry run)

✔ NetworkPolicy/flux-system/allow-egress deleted (dry run)

✔ NetworkPolicy/flux-system/allow-scraping deleted (dry run)

✔ NetworkPolicy/flux-system/allow-webhooks deleted (dry run)

✔ ServiceAccount/flux-system/helm-controller deleted (dry run)

✔ ServiceAccount/flux-system/kustomize-controller deleted (dry run)

✔ ServiceAccount/flux-system/notification-controller deleted (dry run)

✔ ServiceAccount/flux-system/source-controller deleted (dry run)

✔ ClusterRole/crd-controller-flux-system deleted (dry run)

✔ ClusterRoleBinding/cluster-reconciler-flux-system deleted (dry run)

✔ ClusterRoleBinding/crd-controller-flux-system deleted (dry run)

► deleting toolkit.fluxcd.io finalizers in all namespaces

✔ GitRepository/flux-system/flux-system finalizers deleted (dry run)

✔ Kustomization/flux-system/flux-system finalizers deleted (dry run)

► deleting toolkit.fluxcd.io custom resource definitions

✔ CustomResourceDefinition/alerts.notification.toolkit.fluxcd.io deleted (dry run)

✔ CustomResourceDefinition/buckets.source.toolkit.fluxcd.io deleted (dry run)

✔ CustomResourceDefinition/gitrepositories.source.toolkit.fluxcd.io deleted (dry run)

✔ CustomResourceDefinition/helmcharts.source.toolkit.fluxcd.io deleted (dry run)

✔ CustomResourceDefinition/helmreleases.helm.toolkit.fluxcd.io deleted (dry run)

✔ CustomResourceDefinition/helmrepositories.source.toolkit.fluxcd.io deleted (dry run)

✔ CustomResourceDefinition/kustomizations.kustomize.toolkit.fluxcd.io deleted (dry run)

✔ CustomResourceDefinition/providers.notification.toolkit.fluxcd.io deleted (dry run)

✔ CustomResourceDefinition/receivers.notification.toolkit.fluxcd.io deleted (dry run)

✔ Namespace/flux-system deleted (dry run)

✔ uninstall finished

It required a confirmation, but it did commit seppuku:

builder@DESKTOP-QADGF36:~/Workspaces/dockerWithTests2$ flux uninstall --namespace=flux-system

? Are you sure you want to delete Flux and its custom resource definitions? [y/N] y█

I0419 07:53:04.784568 3664 request.go:665] Waited for 1.001675627s due to client-side throttling, not priority and fairness, request: GET:https://192.168.1.77:6443/apis/monitoring.coreos.com/v1?timeout=32s

► deleting components in flux-system namespace

✔ Deployment/flux-system/kustomize-controller deleted

✔ Deployment/flux-system/notification-controller deleted

✔ Deployment/flux-system/helm-controller deleted

✔ Deployment/flux-system/source-controller deleted

✔ Service/flux-system/notification-controller deleted

✔ Service/flux-system/source-controller deleted

✔ Service/flux-system/webhook-receiver deleted

✔ NetworkPolicy/flux-system/allow-egress deleted

✔ NetworkPolicy/flux-system/allow-scraping deleted

✔ NetworkPolicy/flux-system/allow-webhooks deleted

✔ ServiceAccount/flux-system/helm-controller deleted

✔ ServiceAccount/flux-system/kustomize-controller deleted

✔ ServiceAccount/flux-system/notification-controller deleted

✔ ServiceAccount/flux-system/source-controller deleted

✔ ClusterRole/crd-controller-flux-system deleted

✔ ClusterRoleBinding/cluster-reconciler-flux-system deleted

✔ ClusterRoleBinding/crd-controller-flux-system deleted

► deleting toolkit.fluxcd.io finalizers in all namespaces

✔ GitRepository/flux-system/flux-system finalizers deleted

✔ Kustomization/flux-system/flux-system finalizers deleted

► deleting toolkit.fluxcd.io custom resource definitions

✔ CustomResourceDefinition/alerts.notification.toolkit.fluxcd.io deleted

✔ CustomResourceDefinition/buckets.source.toolkit.fluxcd.io deleted

✔ CustomResourceDefinition/gitrepositories.source.toolkit.fluxcd.io deleted

✔ CustomResourceDefinition/helmcharts.source.toolkit.fluxcd.io deleted

✔ CustomResourceDefinition/helmreleases.helm.toolkit.fluxcd.io deleted

✔ CustomResourceDefinition/helmrepositories.source.toolkit.fluxcd.io deleted

✔ CustomResourceDefinition/kustomizations.kustomize.toolkit.fluxcd.io deleted

✔ CustomResourceDefinition/providers.notification.toolkit.fluxcd.io deleted

✔ CustomResourceDefinition/receivers.notification.toolkit.fluxcd.io deleted

✔ Namespace/flux-system deleted

✔ uninstall finished
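The y/N prompt above gets in the way when scripting a teardown. `flux uninstall` has a `--silent` flag that deletes without asking for confirmation. The sketch below wraps it. The function name `flux_uninstall_unattended` and the `FLUX` override variable are hypothetical conveniences, not part of the Flux CLI.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper: uninstall Flux without the interactive y/N prompt.
# $1 = namespace Flux was installed into (defaults to flux-system).
# FLUX can be overridden (e.g. FLUX=echo) to preview the command without running it.
flux_uninstall_unattended() {
  local ns="${1:-flux-system}"
  "${FLUX:-flux}" uninstall --namespace="$ns" --silent
}

# Usage: preview with a dry run first, then remove for real:
#   flux uninstall --namespace=flux-system --dry-run
#   flux_uninstall_unattended flux-system
```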

We can see the flux-system namespace terminating now:

builder@DESKTOP-QADGF36:~/Workspaces/dockerWithTests2$ kubectl get ns | tail -n2

test Active 20m

flux-system Terminating 20m

That said, uninstalling Flux does not remove the test namespace it deployed, so I need to delete it manually (if I want it gone):

builder@DESKTOP-QADGF36:~/Workspaces/dockerWithTests2$ kubectl delete ns test

namespace "test" deleted
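Namespace deletion is asynchronous, so `kubectl get ns` can show `Terminating` for a while. The sketch below reads `kubectl get ns` output on stdin and prints any namespaces still in that state. It assumes bash and awk, and the function name `terminating_namespaces` is hypothetical. If you only need to block until one namespace is gone, `kubectl wait --for=delete` is the simpler tool.

```shell
#!/usr/bin/env bash
# Hypothetical helper: list namespaces whose STATUS column is "Terminating".
# Input: `kubectl get ns` output on stdin. The header row is skipped naturally
# because its second column reads "STATUS", not "Terminating".
terminating_namespaces() {
  awk '$2 == "Terminating" { print $1 }'
}

# Usage against a live cluster:
#   kubectl get ns | terminating_namespaces
# Or block until a specific namespace has been removed:
#   kubectl wait --for=delete namespace/flux-system --timeout=120s
```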

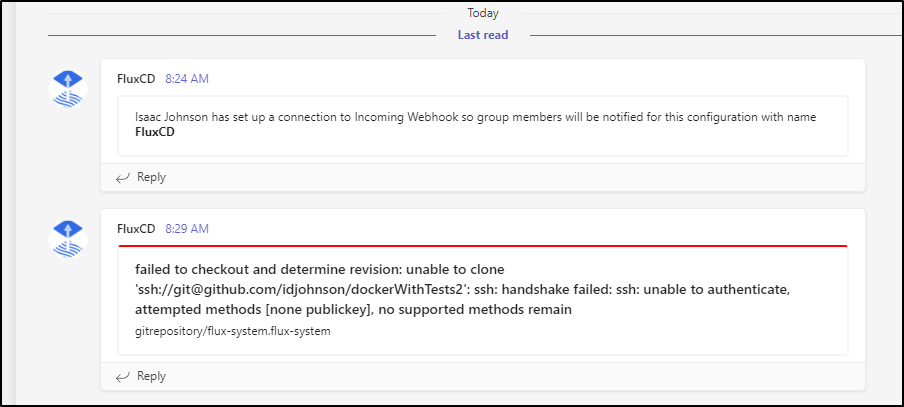

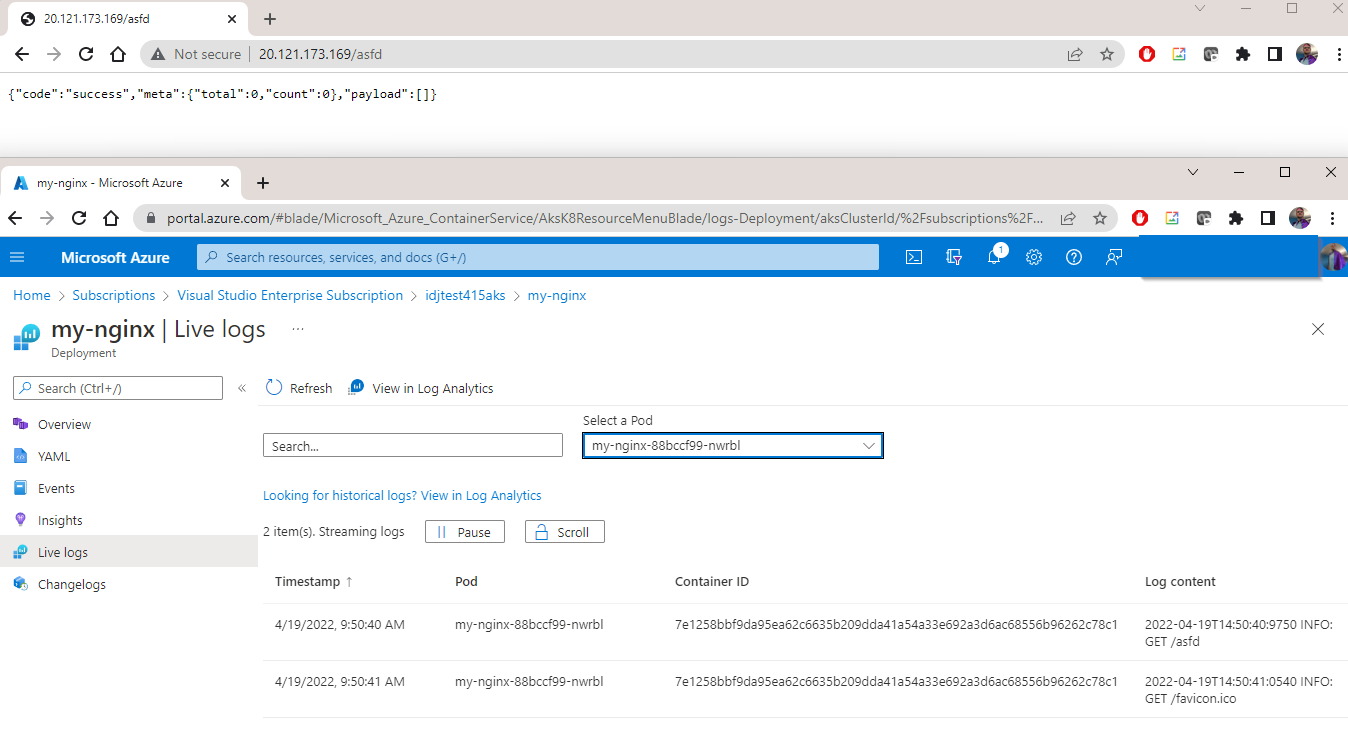

Notifications

Create a new Teams incoming webhook, which gives a URL like https://princessking.webhook.office.com/webhookb2/ccef6213asdfasdfasdfasdfasdfasdfasdfasdfasdfasdfsadfasdfasdf/IncomingWebhook/dddad878ed1d47edab490bed88fb83ec/sadfsadfsadfsadfsadfsadfasd4

We can now use it in a Flux notification Provider, paired with an Alert:

builder@DESKTOP-QADGF36:~/Workspaces/dockerWithTests2$ cat msteams.yaml

apiVersion: notification.toolkit.fluxcd.io/v1beta1
kind: Provider
metadata:
  name: msteams
  namespace: flux-system
spec:
  type: msteams
  address: https://princessking.webhook.office.com/webhookb2/ccef6213asdfasdfasdfasdfasdfasdfasdfasdfasdfasdfsadfasdfasdf/IncomingWebhook/dddad878ed1d47edab490bed88fb83ec/sadfsadfsadfsadfsadfsadfasd4
---
apiVersion: notification.toolkit.fluxcd.io/v1beta1
kind: Alert
metadata:
  name: msteams
  namespace: flux-system
spec:
  providerRef:
    name: msteams
  eventSeverity: info
  eventSources:
    - kind: Bucket
      name: '*'
    - kind: GitRepository
      name: '*'
    - kind: Kustomization
      name: '*'
    - kind: HelmRelease
      name: '*'
    - kind: HelmChart
      name: '*'
    - kind: HelmRepository
      name: '*'
    - kind: ImageRepository
      name: '*'
    - kind: ImagePolicy
      name: '*'
    - kind: ImageUpdateAutomation
      name: '*'

Apply

builder@DESKTOP-QADGF36:~/Workspaces/dockerWithTests2$ kubectl apply -f msteams.yaml

provider.notification.toolkit.fluxcd.io/msteams created

alert.notification.toolkit.fluxcd.io/msteams created
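The webhook URL is effectively a credential, and the manifest above embeds it in plain text in `spec.address`. A Flux Provider can instead read the address from a Kubernetes Secret via `spec.secretRef`, with the URL stored under the key `address`. The sketch below prints such a Provider manifest. The function name `msteams_provider_manifest`, the Secret name `msteams-url`, and the `TEAMS_WEBHOOK_URL` variable are all hypothetical placeholders.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a Provider manifest that reads the Teams webhook
# URL from a Secret instead of embedding it in spec.address.
# $1 = Secret name (defaults to the hypothetical "msteams-url"); that Secret
# must hold the webhook URL under the key "address".
msteams_provider_manifest() {
  local secret_name="${1:-msteams-url}"
  cat <<EOF
apiVersion: notification.toolkit.fluxcd.io/v1beta1
kind: Provider
metadata:
  name: msteams
  namespace: flux-system
spec:
  type: msteams
  secretRef:
    name: ${secret_name}
EOF
}

# Usage (export TEAMS_WEBHOOK_URL first; it is a placeholder):
#   kubectl create secret generic msteams-url -n flux-system \
#     --from-literal=address="$TEAMS_WEBHOOK_URL"
#   msteams_provider_manifest msteams-url | kubectl apply -f -
```

The Alert itself does not change, because it still references the Provider by `providerRef.name: msteams`.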

Now, if we change the replica count in the Deployment, that doesn’t trigger an alert:

builder@DESKTOP-QADGF36:~/Workspaces/dockerWithTests2$ vi k8s/deployment.yaml

builder@DESKTOP-QADGF36:~/Workspaces/dockerWithTests2$ git diff

diff --git a/k8s/deployment.yaml b/k8s/deployment.yaml
index 00d5686..936c2fb 100644
--- a/k8s/deployment.yaml
+++ b/k8s/deployment.yaml
@@ -14,7 +14,7 @@ spec:
   selector:
     matchLabels:
       run: my-nginx
-  replicas: 2
+  replicas: 3
   template:
     metadata:
       labels:

builder@DESKTOP-QADGF36:~/Workspaces/dockerWithTests2$ git add k8s/

builder@DESKTOP-QADGF36:~/Workspaces/dockerWithTests2$ git commit -m "change deployment to 3"

[main 4b4b277] change deployment to 3

1 file changed, 1 insertion(+), 1 deletion(-)

builder@DESKTOP-QADGF36:~/Workspaces/dockerWithTests2$ git push

Enumerating objects: 7, done.

Counting objects: 100% (7/7), done.

Delta compression using up to 16 threads

Compressing objects: 100% (4/4), done.

Writing objects: 100% (4/4), 362 bytes | 362.00 KiB/s, done.

Total 4 (delta 3), reused 0 (delta 0)

remote: Resolving deltas: 100% (3/3), completed with 3 local objects.

To https://github.com/idjohnson/dockerWithTests2.git

8275901..4b4b277 main -> main

However, if we change the image repository in k8s/kustomization.yaml, that should alert us:

builder@DESKTOP-QADGF36:~/Workspaces/dockerWithTests2$ git add k8s/kustomization.yaml

builder@DESKTOP-QADGF36:~/Workspaces/dockerWithTests2$ git commit -m " change kustomization to trigger alert"

[main daecc51] change kustomization to trigger alert

1 file changed, 1 insertion(+), 1 deletion(-)

builder@DESKTOP-QADGF36:~/Workspaces/dockerWithTests2$ git push

Enumerating objects: 7, done.

Counting objects: 100% (7/7), done.

Delta compression using up to 16 threads

Compressing objects: 100% (4/4), done.

Writing objects: 100% (4/4), 376 bytes | 376.00 KiB/s, done.

Total 4 (delta 3), reused 0 (delta 0)

remote: Resolving deltas: 100% (3/3), completed with 3 local objects.

To https://github.com/idjohnson/dockerWithTests2.git

4b4b277..daecc51 main -> main

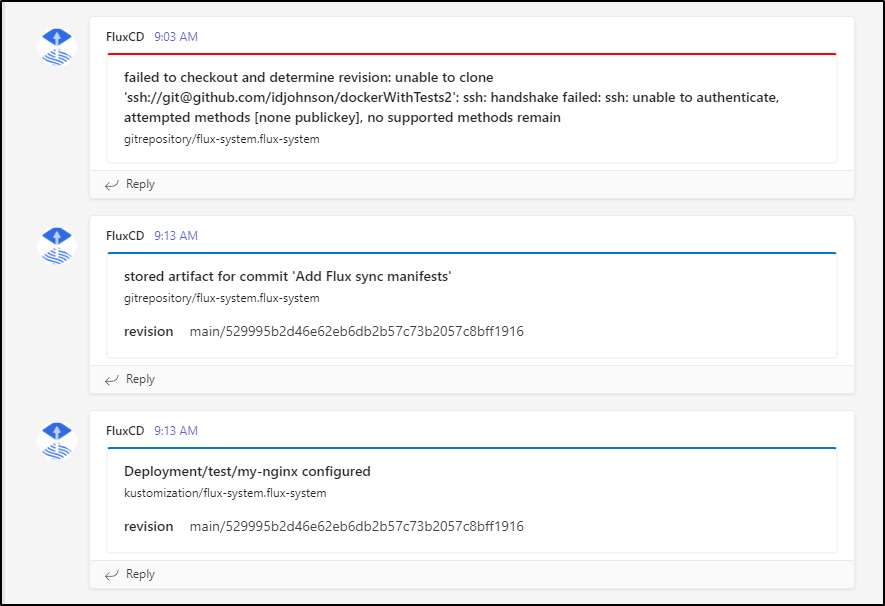

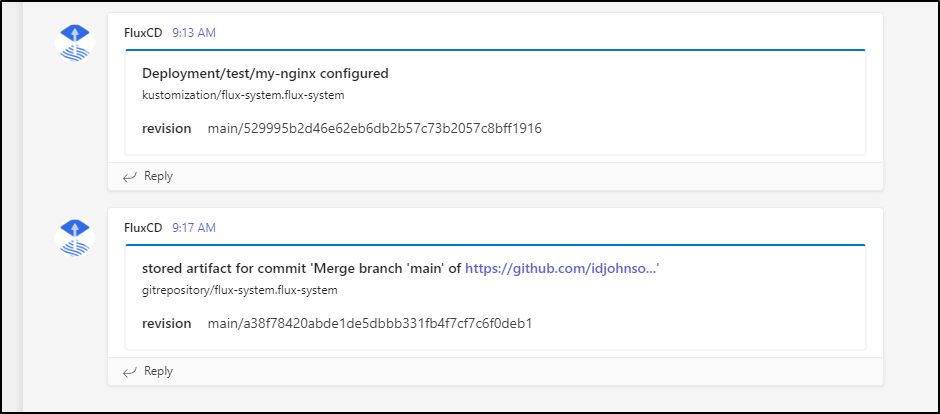

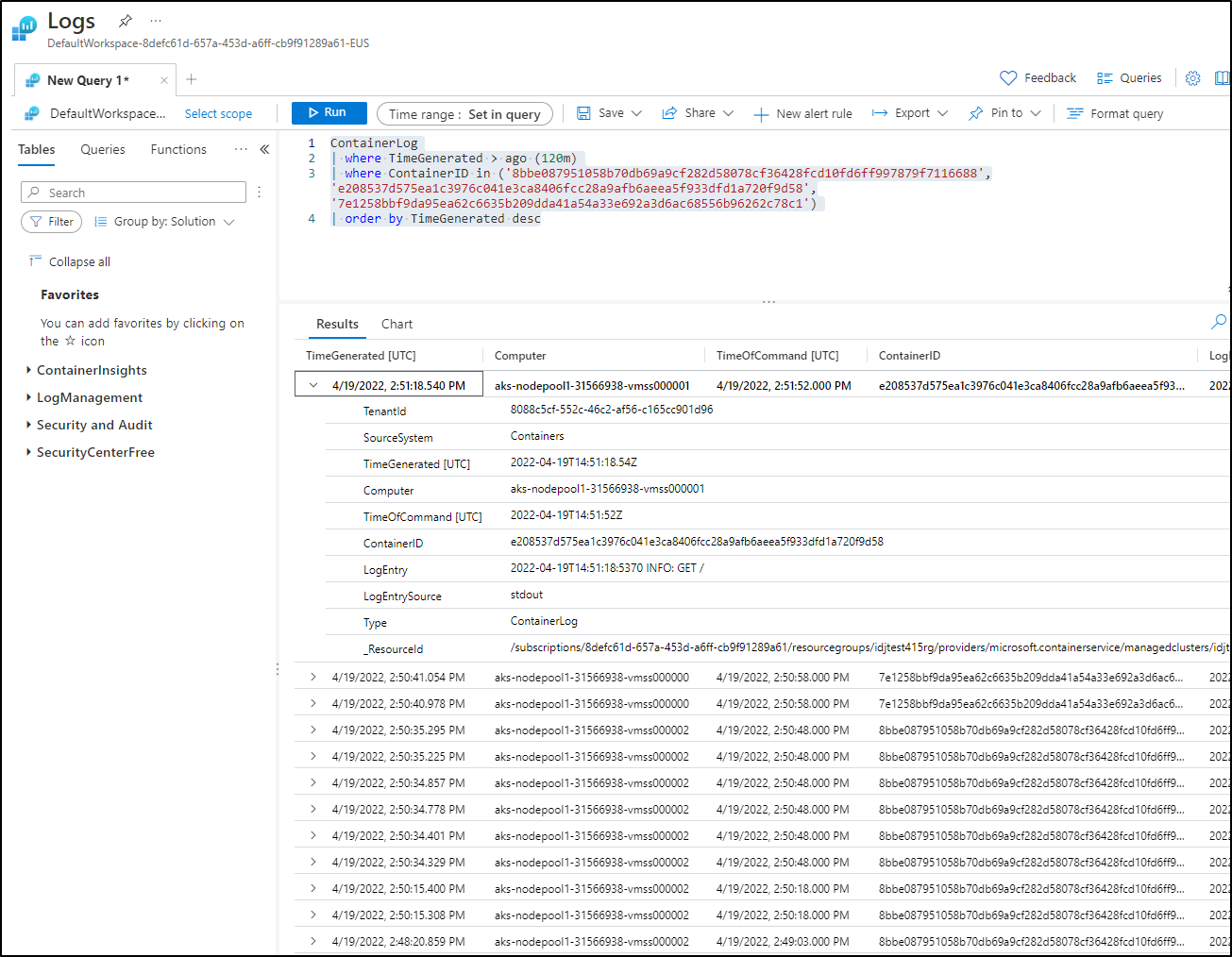
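After each push, Flux only notices the new commit on its next sync interval. To test alerts faster, you can ask Flux to fetch the source and reconcile immediately with `flux reconcile kustomization ... --with-source`. The sketch below wraps that command. The function name `flux_sync_now` and the `FLUX` override variable are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: fetch the latest commit and reconcile a Kustomization now,
# instead of waiting for the next sync interval.
# $1 = Kustomization name (defaults to flux-system, as created by bootstrap).
# FLUX can be overridden (e.g. FLUX=echo) to preview the command.
flux_sync_now() {
  local kustomization="${1:-flux-system}"
  "${FLUX:-flux}" reconcile kustomization "$kustomization" --with-source
}

# Usage right after a git push:
#   flux_sync_now
#   kubectl get events -n flux-system --field-selector involvedObject.kind=Kustomization
```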

The alerts also surface any errors Flux runs into.

GitHub SSH vs HTTPS

This showed me that we had neglected to add --token-auth to our bootstrap parameters.

This meant Flux applied the local path (./k8s) the first time, but did not pick up changes after that. Correcting:

builder@DESKTOP-QADGF36:~/Workspaces/dockerWithTests2$ flux bootstrap github --owner=$GITHUB_USER --repository=$GITHUB_REPO --branch=main --path=k8s --personal --token-auth

► connecting to github.com

► cloning branch "main" from Git repository "https://github.com/idjohnson/dockerWithTests2.git"

✔ cloned repository

► generating component manifests

✔ generated component manifests

✔ component manifests are up to date

✔ reconciled components

► determining if source secret "flux-system/flux-system" exists

► generating source secret

► applying source secret "flux-system/flux-system"

✔ reconciled source secret

► generating sync manifests

✔ generated sync manifests

✔ committed sync manifests to "main" ("529995b2d46e62eb6db2b57c73b2057c8bff1916")

► pushing sync manifests to "https://github.com/idjohnson/dockerWithTests2.git"

► applying sync manifests

✔ reconciled sync configuration

◎ waiting for Kustomization "flux-system/flux-system" to be reconciled

✔ Kustomization reconciled successfully

► confirming components are healthy

✔ helm-controller: deployment ready

✔ kustomize-controller: deployment ready

✔ notification-controller: deployment ready

✔ source-controller: deployment ready

✔ all components are healthy

This time it reconciled and reported all components as healthy.
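Without `--token-auth`, `flux bootstrap github` sets up an SSH deploy key and an `ssh://` URL. With it, the personal access token is used over HTTPS. One way to confirm which mode the cluster ended up in is to inspect the URL on the `flux-system` GitRepository. The sketch below classifies a Git URL by scheme. The function name `git_url_scheme` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: classify a Git remote URL as https, ssh, or unknown.
git_url_scheme() {
  case "$1" in
    https://*)        echo https ;;
    ssh://*|git@*)    echo ssh ;;
    *)                echo unknown ;;
  esac
}

# Usage against the cluster (expect "https" after bootstrapping with --token-auth):
#   git_url_scheme "$(kubectl get gitrepository flux-system -n flux-system -o jsonpath='{.spec.url}')"
```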

However, we can see the newest pod won't start because we (intentionally) mangled the ACR image path in the kustomization:

builder@DESKTOP-QADGF36:~/Workspaces/dockerWithTests2$ kubectl get pods -n test

NAME READY STATUS RESTARTS AGE

my-nginx-74577b7877-746ns 1/1 Running 0 104s

my-nginx-74577b7877-h9ghs 1/1 Running 0 147m

my-nginx-74577b7877-s8hcs 1/1 Running 0 147m

my-nginx-d9bf587f5-fs92n 0/1 ErrImagePull 0 104s

builder@DESKTOP-QADGF36:~/Workspaces/dockerWithTests2$ kubectl describe pod my-nginx-d9bf587f5-fs92n -n test | tail -n15

ConfigMapOptional: <nil>

DownwardAPI: true

QoS Class: BestEffort

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 116s default-scheduler Successfully assigned test/my-nginx-d9bf587f5-fs92n to aks-nodepool1-31566938-vmss000002

Normal Pulling 32s (x4 over 116s) kubelet Pulling image "idjacrdemo02.azurecr.io/asdfasdfdockerwithtests:devlatest"

Warning Failed 32s (x4 over 115s) kubelet Failed to pull image "idjacrdemo02.azurecr.io/asdfasdfdockerwithtests:devlatest": [rpc error: code = NotFound desc = failed to pull and unpack image "idjacrdemo02.azurecr.io/asdfasdfdockerwithtests:devlatest": failed to resolve reference "idjacrdemo02.azurecr.io/asdfasdfdockerwithtests:devlatest": idjacrdemo02.azurecr.io/asdfasdfdockerwithtests:devlatest: not found, rpc error: code = Unknown desc = failed to pull and unpack image "idjacrdemo02.azurecr.io/asdfasdfdockerwithtests:devlatest": failed to resolve reference "idjacrdemo02.azurecr.io/asdfasdfdockerwithtests:devlatest": failed to authorize: failed to fetch anonymous token: unexpected status: 401 Unauthorized]

Warning Failed 32s (x4 over 115s) kubelet Error: ErrImagePull
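When a bad image reference like this slips through, it can help to list just the failing pods rather than scanning the whole `kubectl get pods` output. The sketch below reads single-namespace `kubectl get pods` output on stdin and prints pods whose STATUS is `ErrImagePull` or `ImagePullBackOff`. The function name `image_pull_failures` is hypothetical. It assumes the default column layout, where STATUS is the third column, so it will not work on `-A` output, which adds a NAMESPACE column.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print pods stuck pulling their image.
# Input: `kubectl get pods -n <ns>` output on stdin (STATUS is column 3).
image_pull_failures() {
  awk '$3 == "ErrImagePull" || $3 == "ImagePullBackOff" { print $1 }'
}

# Usage:
#   kubectl get pods -n test | image_pull_failures
#   kubectl get events -n test --field-selector reason=Failed
#   flux get kustomizations
```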