Published: Mar 29, 2022 by Isaac Johnson

Google Cloud’s Cloud Run is a fully managed, container-based function environment introduced around three years ago. It allows developers to build containerized applications with Cloud Build and then scale them to meet demand.

While I’ve experimented with App Engine Standard and Flex before, I had not pursued Cloud Run. Based on blogs and what I could tell, Cloud Run is effectively a managed KNative minus the GKE. Cloud Functions, Google’s other serverless offering, is similar to Cloud Run but handles only one request at a time per instance.

GCP’s plurality of serverless offerings, as well as a suspicion that Cloud Run and KNative were more than just similar, prompted me to deep dive into this GCP offering and see how it stacks up.

GCP Serverless Offerings

Let’s start by breaking down the offerings (to date).

App Engine Standard:

- Language-specific sandbox

- No writing to disk; CPU/memory limits

- No background processes or VPN

- Access GCP services via standard APIs

- Scales from 0 to many (custom autoscaling algorithm)

- No health checks

- Single zone (on failure, moves to a new zone)

- Fast deployments

App Engine Flex:

- Runs in Docker containers on Compute Engine VMs

- Supports any language Docker can run

- Because it’s hosted on a VM, more CPU/memory is available

- No built-in GCP APIs; use client libraries instead

- Scales from 1 to many using the Compute Engine autoscaler

- Health checks

- Uses regional managed instance groups spanning multiple zones

- Slower startup when an instance must be added: start/deployment takes minutes, not seconds

Cloud Run:

- Uses the same deployment format as KNative on K8s

- Uses an OCI image (Docker image)

- The KNative Service API contract (similar to a K8s Deployment object) provides the same env vars, limits, etc. to each instance

- Scales from 0 to many

- Can now have “warm instances” via an annotation

- Unlike KNative:

  - No envFrom (cannot use ConfigMaps/Secrets, though Secret Manager support is in preview)

  - No liveness/readiness probes or imagePullPolicy

- Like KNative:

  - Uses Kubernetes (though with Cloud Run, Google manages the Kubernetes for you)
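As far as I know, the “warm instances” annotation is `autoscaling.knative.dev/minScale` on the revision template (the same setting `gcloud run deploy --min-instances` controls). A small sketch, using a hypothetical `withMinInstances` helper, that sets it on a Service object you have already exported and parsed into plain JavaScript:

```javascript
// Hypothetical helper: return a copy of a parsed Cloud Run/Knative Service
// object with a minimum number of warm instances set via annotation.
const MIN_SCALE = 'autoscaling.knative.dev/minScale';

function withMinInstances(service, count) {
  if (!Number.isInteger(count) || count < 0) {
    throw new RangeError(`count must be a non-negative integer, got ${count}`);
  }
  // Deep copy so the caller's object is not mutated.
  const copy = JSON.parse(JSON.stringify(service));
  copy.spec = copy.spec || {};
  copy.spec.template = copy.spec.template || {};
  const meta = (copy.spec.template.metadata = copy.spec.template.metadata || {});
  meta.annotations = meta.annotations || {};
  // Kubernetes annotation values are strings, not numbers.
  meta.annotations[MIN_SCALE] = String(count);
  return copy;
}

const svc = { apiVersion: 'serving.knative.dev/v1', kind: 'Service', metadata: { name: 'idj-cloudrun' } };
console.log(withMinInstances(svc, 1).spec.template.metadata.annotations);
// { 'autoscaling.knative.dev/minScale': '1' }
```

Keeping even one instance warm trades away part of the “scales to zero” cost benefit in exchange for fewer cold starts.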

Cloud Functions:

- Introduced in 2017.

- Supports specific languages; triggered via HTTP or CloudEvents

- Cloud Functions handles packaging of the code

- “Cloud Function only handles one request at a time for each instance, it would be suitable only if the use case is a single-purpose workload.” (source)

- To expand on that: each instance of a Cloud Function operates independently of the others, and each event triggers only one Cloud Function (think Lambdas triggered by CloudWatch).

- Supports NodeJS, Python and Go, among others (see the table below)

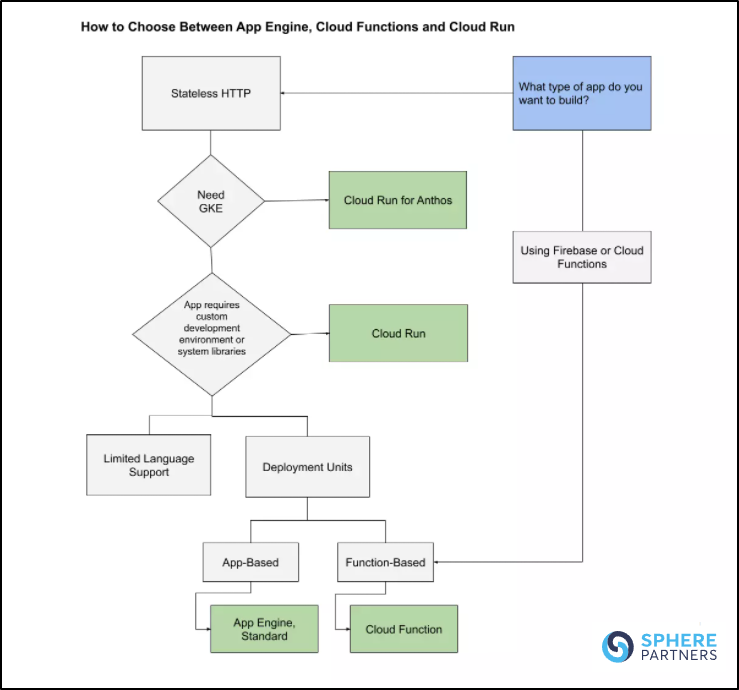

Sphere had this breakdown (I’m not sure I entirely agree with it, but it’s a good starting point):

Let’s look at some key features:

Supports specific languages only:

- App Engine Standard

- Cloud Functions

Supports any container language:

- App Engine Flex

- KNative

- Cloud Run

Scales down to zero:

- All but App Engine Flex, which scales down to one

  - This means you will be paying for a persistently running VM

Timeouts:

- Cloud Run: Up to 15m

- Cloud Functions: Up to 9m

- App Engine: 1m
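To apply those limits quickly, here is a small sketch (the `platformsFor` helper is hypothetical) that filters the offerings by how long a single request needs to run, using the numbers listed above:

```javascript
// Maximum request timeouts in minutes, taken from the list above
// (circa March 2022; check current quotas before relying on them).
const MAX_TIMEOUT_MINUTES = {
  'App Engine': 1,
  'Cloud Functions': 9,
  'Cloud Run': 15,
};

// Return the offerings whose maximum timeout can cover a request of this length.
function platformsFor(requestMinutes) {
  return Object.entries(MAX_TIMEOUT_MINUTES)
    .filter(([, maxMinutes]) => requestMinutes <= maxMinutes)
    .map(([name]) => name);
}

console.log(platformsFor(5)); // [ 'Cloud Functions', 'Cloud Run' ]
console.log(platformsFor(20)); // []
```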

| | App Engine Flex | App Engine Standard | Cloud Functions | Cloud Run | Cloud Run for Anthos | KNative |
|---|---|---|---|---|---|---|
| Deployment Type | App | App | Function | Container | Container | Container |
| Scaling | 1+ | 0+ | 0 - 1000 | 0 - 1000 | 0+ | 0+ |
| Languages | Any | Java, Go, PHP, Python, NodeJS, Ruby | NodeJS, Python, Go, Java, .NET, Ruby, PHP | Any | Any | Any |
| VPC Networking | Yes | Yes | Yes | Yes | Yes | Yes |
| Quotas/Limits | 32MB request, no limit on response, 10k deployments a day | 10MB request, 32MB response, 10k deployments a day | 32MB; responses 10MB (streaming), 32MB (non-streaming) | 32MB request, 32MB response | 32MB request, 32MB response | None |
| Custom Domains | Yes | Yes | No | Yes (max 50 domain mappings) | Yes | Yes |
| HTTPS/gRPC | No | No | No | No | Yes | Yes |
| Timeout (min) | 1 | 1 | 9 | 15 | 15 (up to 24h on 0.16.0.gke.1) | 15* |

\* There are reports that using the “beta” version can exceed the 900s limit, up to 3600s (60m). I will test this.

Setup

Cloud Run is about as simple as they come to use, provided you have sufficient permissions.

I’ll create a directory and switch to Node 14:

```
builder@DESKTOP-QADGF36:~/Workspaces$ mkdir idj-cloudrun
builder@DESKTOP-QADGF36:~/Workspaces$ cd idj-cloudrun/
builder@DESKTOP-QADGF36:~/Workspaces/idj-cloudrun$ nvm list
->     v10.22.1
       v14.18.1
default -> 10.22.1 (-> v10.22.1)
iojs -> N/A (default)
unstable -> N/A (default)
node -> stable (-> v14.18.1) (default)
stable -> 14.18 (-> v14.18.1) (default)
lts/* -> lts/gallium (-> N/A)
lts/argon -> v4.9.1 (-> N/A)
lts/boron -> v6.17.1 (-> N/A)
lts/carbon -> v8.17.0 (-> N/A)
lts/dubnium -> v10.24.1 (-> N/A)
lts/erbium -> v12.22.7 (-> N/A)
lts/fermium -> v14.18.1
lts/gallium -> v16.13.0 (-> N/A)
builder@DESKTOP-QADGF36:~/Workspaces/idj-cloudrun$ nvm use 14.18.1
Now using node v14.18.1 (npm v6.14.15)
```

Then I’ll run npm init to set up the basic package.json:

```
builder@DESKTOP-QADGF36:~/Workspaces/idj-cloudrun$ npm init
This utility will walk you through creating a package.json file.
It only covers the most common items, and tries to guess sensible defaults.

See `npm help init` for definitive documentation on these fields
and exactly what they do.

Use `npm install <pkg>` afterwards to install a package and
save it as a dependency in the package.json file.

Press ^C at any time to quit.
package name: (idj-cloudrun)
version: (1.0.0)
description: A quick Express App for Hello World Demo
entry point: (index.js)
test command: echo howdy
git repository:
keywords: GCP
author: Isaac Johnson
license: (ISC) MIT
About to write to /home/builder/Workspaces/idj-cloudrun/package.json:

{
  "name": "idj-cloudrun",
  "version": "1.0.0",
  "description": "A quick Express App for Hello World Demo",
  "main": "index.js",
  "scripts": {
    "test": "echo howdy"
  },
  "keywords": [
    "GCP"
  ],
  "author": "Isaac Johnson",
  "license": "MIT"
}

Is this OK? (yes) yes
```

Add Express:

```
$ npm install -save express
npm notice created a lockfile as package-lock.json. You should commit this file.
npm WARN idj-cloudrun@1.0.0 No repository field.

+ express@4.17.3
added 50 packages from 37 contributors and audited 50 packages in 2.18s

2 packages are looking for funding
  run `npm fund` for details

found 0 vulnerabilities
```

This makes the package.json:

```
$ cat package.json
{
  "name": "idj-cloudrun",
  "version": "1.0.0",
  "description": "A quick Express App for Hello World Demo",
  "main": "index.js",
  "scripts": {
    "test": "echo howdy"
  },
  "keywords": [
    "GCP"
  ],
  "author": "Isaac Johnson",
  "license": "MIT",
  "dependencies": {
    "express": "^4.17.3"
  }
}
```

Let’s whip up a quick Express hello world app in index.js:

```javascript
const express = require('express')
const app = express()
const port = parseInt(process.env.PORT) || 3000;
const procName = process.env.NAME || 'local';

app.get('/', (req, res) => {
  res.send(`Hello World! - from ${procName}`)
})

app.listen(port, () => {
  console.log(`Example app listening on port ${port}`)
})

// will need for tests later
module.exports = app;
```
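The `process.env.PORT` line matters more than it looks: Cloud Run tells the container which port to listen on through the `PORT` environment variable (8080 in the deploy error further down), and the container must bind to it. With `parseInt(process.env.PORT) || 3000`, a malformed value silently becomes 3000. A slightly stricter variant, sketched as a hypothetical `resolvePort` helper, fails loudly instead:

```javascript
// Hypothetical helper: read the port Cloud Run injects via $PORT,
// falling back to 3000 for local runs (the same default index.js uses).
function resolvePort(env, fallback = 3000) {
  const raw = env.PORT;
  if (raw === undefined || raw === '') {
    return fallback;
  }
  // Accept only plain decimal digits; parseInt('8080abc') would quietly return 8080.
  if (!/^\d+$/.test(raw)) {
    throw new Error(`PORT is not a number: ${raw}`);
  }
  const port = Number(raw);
  if (port < 1 || port > 65535) {
    throw new Error(`PORT out of range: ${raw}`);
  }
  return port;
}

// In index.js this would replace the parseInt line:
//   const port = resolvePort(process.env);
console.log(resolvePort({})); // 3000
console.log(resolvePort({ PORT: '8080' })); // 8080
```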

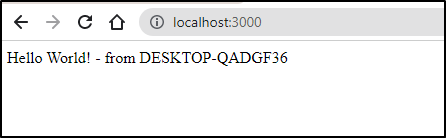

Now we can run and test it:

```
$ node index.js
Example app listening on port 3000
```

I’ll create a quick .gitignore file with gitignore.io.

At this point, I want to create a git repo out of my local changes before we go further.

```
builder@DESKTOP-QADGF36:~/Workspaces/idj-cloudrun$ vi .gitignore
builder@DESKTOP-QADGF36:~/Workspaces/idj-cloudrun$ git init
Initialized empty Git repository in /home/builder/Workspaces/idj-cloudrun/.git/
builder@DESKTOP-QADGF36:~/Workspaces/idj-cloudrun$ git checkout -b main
Switched to a new branch 'main'
builder@DESKTOP-QADGF36:~/Workspaces/idj-cloudrun$ git add .gitignore
builder@DESKTOP-QADGF36:~/Workspaces/idj-cloudrun$ git commit -m "first"
[main (root-commit) 029031f] first
 1 file changed, 161 insertions(+)
 create mode 100644 .gitignore
builder@DESKTOP-QADGF36:~/Workspaces/idj-cloudrun$ git add -A
builder@DESKTOP-QADGF36:~/Workspaces/idj-cloudrun$ git commit -m "initial app"
[main d060e20] initial app
 3 files changed, 407 insertions(+)
 create mode 100644 index.js
 create mode 100644 package-lock.json
 create mode 100644 package.json
builder@DESKTOP-QADGF36:~/Workspaces/idj-cloudrun$ git status
On branch main
nothing to commit, working tree clean
```

Deploying to Cloud Run

First, we need a project to use, set as the default:

```
$ gcloud projects create cloudfunctiondemo01
Create in progress for [https://cloudresourcemanager.googleapis.com/v1/projects/cloudfunctiondemo01].
Waiting for [operations/cp.9088372756946002962] to finish...done.
Enabling service [cloudapis.googleapis.com] on project [cloudfunctiondemo01]...
Operation "operations/acat.p2-156544963444-62789253-7ae6-4679-8a5a-ab8dad95f48f" finished successfully.

$ gcloud config set project cloudfunctiondemo01
Updated property [core/project].
```

Then you can try to deploy; however, you’ll be met with the “you need a billing account” nastygram:

```
$ gcloud run deploy
Deploying from source. To deploy a container use [--image]. See https://cloud.google.com/run/docs/deploying-source-code for more details.
Source code location (/home/builder/Workspaces/idj-cloudrun):
Next time, use `gcloud run deploy --source .` to deploy the current directory.

Service name (idj-cloudrun):
API [run.googleapis.com] not enabled on project [156544963444]. Would you like to enable and retry (this will take a few minutes)? (y/N)? y

Enabling service [run.googleapis.com] on project [156544963444]...
ERROR: (gcloud.run.deploy) FAILED_PRECONDITION: Billing account for project '156544963444' is not found. Billing must be enabled for activation of service(s) 'run.googleapis.com,containerregistry.googleapis.com' to proceed.
Help Token: Ae-hA1PkEPIe9W5KlWQct1V9qzSyANCiZ4wpK-6diKGmG0A9UXYeBTlnMRXgcfh_tIB8J13qYL-cdN4JhdbPAoO-m-GHWpMMSzuf_qJEsIs5ptBD
- '@type': type.googleapis.com/google.rpc.PreconditionFailure
  violations:
  - subject: ?error_code=390001&project=156544963444&services=run.googleapis.com&services=containerregistry.googleapis.com
    type: googleapis.com/billing-enabled
- '@type': type.googleapis.com/google.rpc.ErrorInfo
  domain: serviceusage.googleapis.com/billing-enabled
  metadata:
    project: '156544963444'
    services: run.googleapis.com,containerregistry.googleapis.com
  reason: UREQ_PROJECT_BILLING_NOT_FOUND
```

You can try linking via the alpha command:

```
builder@DESKTOP-QADGF36:~/Workspaces/idj-cloudrun$ gcloud alpha billing accounts list
ACCOUNT_ID            NAME                OPEN  MASTER_ACCOUNT_ID
01F9A1-E4183F-271849  My Billing Account  True
builder@DESKTOP-QADGF36:~/Workspaces/idj-cloudrun$ gcloud alpha billing accounts projects link cloudfunctiondemo01 --billing-account=01F9A1-E4183F-271849
WARNING: The `gcloud <alpha|beta> billing accounts projects` groups have been moved to
`gcloud beta billing projects`. Please use the new, shorter commands instead.
ERROR: (gcloud.alpha.billing.accounts.projects.link) FAILED_PRECONDITION: Precondition check failed.
- '@type': type.googleapis.com/google.rpc.QuotaFailure
  violations:
  - description: 'Cloud billing quota exceeded: https://support.google.com/code/contact/billing_quota_increase'
    subject: billingAccounts/01F9A1-E4183F-271849
```

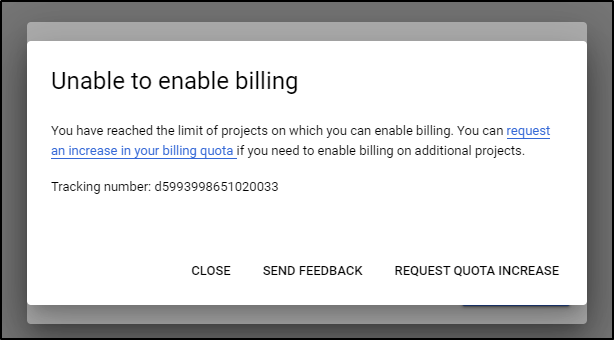

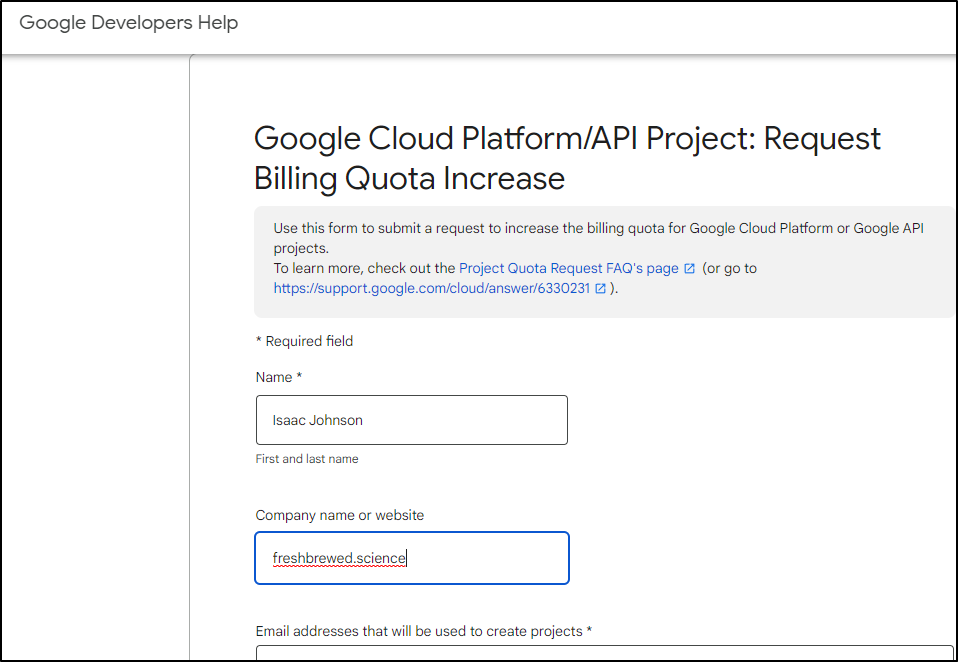

Inevitably, I ended up using the UI (but BOO Google, BOO!).

Then I got the same error in the UI:

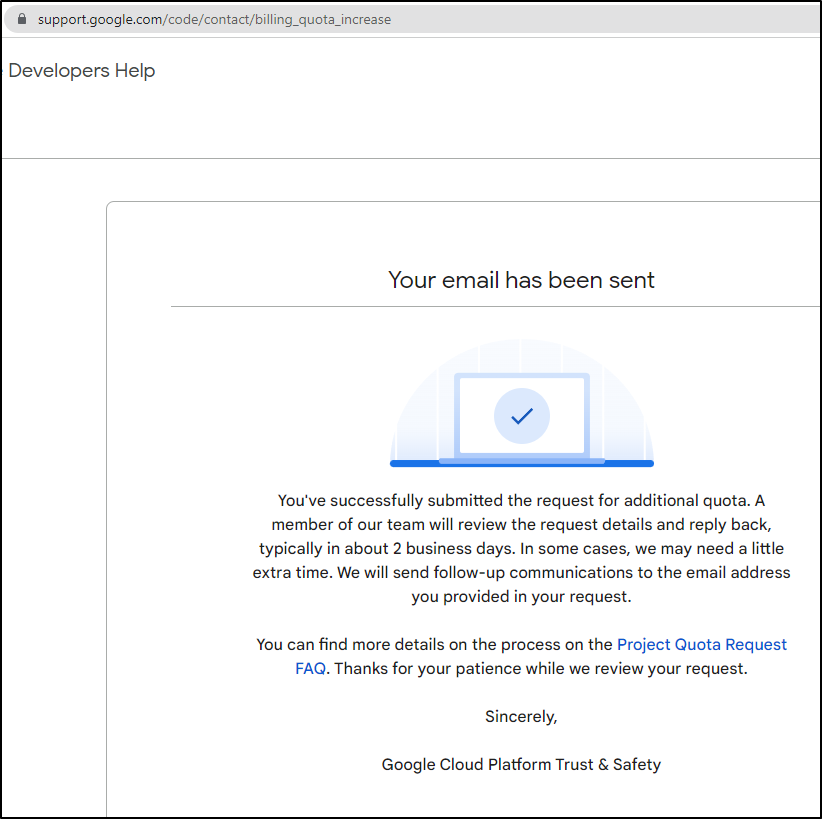

I actually had to fill out a damn form.

And I was told I have to wait up to 2 business days.

Perhaps I use projects wrong. I see them as the closest approximation to Azure Resource Groups: I want to create a top-level container to store work, then purge it when done to avoid incurring charges.

That said, I can just use a different, existing project for now, but I’ll have to track what this creates so I can scrub it when done.

```
$ gcloud run deploy
Deploying from source. To deploy a container use [--image]. See https://cloud.google.com/run/docs/deploying-source-code for more details.
Source code location (/home/builder/Workspaces/idj-cloudrun):
Next time, use `gcloud run deploy --source .` to deploy the current directory.

Service name (idj-cloudrun):
API [run.googleapis.com] not enabled on project [658532986431]. Would you like to enable and retry (this will take a few minutes)? (y/N)? y

Enabling service [run.googleapis.com] on project [658532986431]...
Operation "operations/acf.p2-658532986431-97377e6d-8371-414a-9c3b-281080a5766a" finished successfully.
Please specify a region:
 [1] asia-east1
 [2] asia-east2
 [3] asia-northeast1
 [4] asia-northeast2
 [5] asia-northeast3
 [6] asia-south1
 [7] asia-south2
 [8] asia-southeast1
 [9] asia-southeast2
 [10] australia-southeast1
 [11] australia-southeast2
 [12] europe-central2
 [13] europe-north1
 [14] europe-west1
 [15] europe-west2
 [16] europe-west3
 [17] europe-west4
 [18] europe-west6
 [19] northamerica-northeast1
 [20] northamerica-northeast2
 [21] southamerica-east1
 [22] southamerica-west1
 [23] us-central1
 [24] us-east1
 [25] us-east4
 [26] us-west1
 [27] us-west2
 [28] us-west3
 [29] us-west4
 [30] cancel
Please enter your numeric choice: 23

To make this the default region, run `gcloud config set run/region us-central1`.

API [artifactregistry.googleapis.com] not enabled on project [658532986431]. Would you like to enable and retry (this will take a few minutes)? (y/N)? y

Enabling service [artifactregistry.googleapis.com] on project [658532986431]...
Operation "operations/acat.p2-658532986431-ce7a7956-bd54-4cfb-9310-b6cc7de9804e" finished successfully.
Deploying from source requires an Artifact Registry Docker repository to store built containers. A repository named [cloud-run-source-deploy] in region [us-central1] will be created.

Do you want to continue (Y/n)? y

This command is equivalent to running `gcloud builds submit --pack image=[IMAGE] /home/builder/Workspaces/idj-cloudrun` and `gcloud run deploy idj-cloudrun --image [IMAGE]`

Allow unauthenticated invocations to [idj-cloudrun] (y/N)? y

Building using Buildpacks and deploying container to Cloud Run service [idj-cloudrun] in project [gkedemo01] region [us-central1]
X Building and deploying new service... Cloud Run error: The user-provided container failed to start and listen on the port defined provided by the PORT=8080 environment variable. Logs for this revision might contain more information.
  ✓ Creating Container Repository...
  ✓ Uploading sources...
  . Routing traffic...
  ✓ Setting IAM Policy...
Deployment failed
ERROR: (gcloud.run.deploy) Cloud Run error: The user-provided container failed to start and listen on the port defined provided by the PORT=8080 environment variable. Logs for this revision might contain more information.

Logs URL: https://console.cloud.google.com/logs/viewer?project=gkedemo01&resource=cloud_run_revision/service_name/idj-cloudrun/revision_name/idj-cloudrun-00001-dew&advancedFilter=resource.type%3D%22cloud_run_revision%22%0Aresource.labels.service_name%3D%22idj-cloudrun%22%0Aresource.labels.revision_name%3D%22idj-cloudrun-00001-dew%22
For more troubleshooting guidance, see https://cloud.google.com/run/docs/troubleshooting#container-failed-to-start
```

Indeed, I had neglected to create an npm start script, so I added one:

```
$ git diff
diff --git a/package.json b/package.json
index f63be76..85e578a 100644
--- a/package.json
+++ b/package.json
@@ -4,6 +4,7 @@
   "description": "A quick Express App for Hello World Demo",
   "main": "index.js",
   "scripts": {
+    "start": "node index.js",
     "test": "echo howdy"
   },
   "keywords": [
```

and tried again…

```
$ gcloud run deploy
Deploying from source. To deploy a container use [--image]. See https://cloud.google.com/run/docs/deploying-source-code for more details.
Source code location (/home/builder/Workspaces/idj-cloudrun):
Next time, use `gcloud run deploy --source .` to deploy the current directory.

Service name (idj-cloudrun):
Please specify a region:
 [1] asia-east1
 [2] asia-east2
 [3] asia-northeast1
 [4] asia-northeast2
 [5] asia-northeast3
 [6] asia-south1
 [7] asia-south2
 [8] asia-southeast1
 [9] asia-southeast2
 [10] australia-southeast1
 [11] australia-southeast2
 [12] europe-central2
 [13] europe-north1
 [14] europe-west1
 [15] europe-west2
 [16] europe-west3
 [17] europe-west4
 [18] europe-west6
 [19] northamerica-northeast1
 [20] northamerica-northeast2
 [21] southamerica-east1
 [22] southamerica-west1
 [23] us-central1
 [24] us-east1
 [25] us-east4
 [26] us-west1
 [27] us-west2
 [28] us-west3
 [29] us-west4
 [30] cancel
Please enter your numeric choice: 23

To make this the default region, run `gcloud config set run/region us-central1`.

This command is equivalent to running `gcloud builds submit --pack image=[IMAGE] /home/builder/Workspaces/idj-cloudrun` and `gcloud run deploy idj-cloudrun --image [IMAGE]`

Building using Buildpacks and deploying container to Cloud Run service [idj-cloudrun] in project [gkedemo01] region [us-central1]
✓ Building and deploying... Done.
  ✓ Uploading sources...
  ✓ Building Container... Logs are available at [https://console.cloud.google.com/cloud-build/builds/95910183-8843-437f-983f-a499f3f51f62?project=658532986431].
  ✓ Creating Revision...
  ✓ Routing traffic...
Done.
Service [idj-cloudrun] revision [idj-cloudrun-00002-vev] has been deployed and is serving 100 percent of traffic.
```
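Why adding the start script fixed the deploy: as I understand it, the Buildpacks build launches a Node.js app with `npm start` (check the Google Cloud buildpacks docs for your version), and without a `start` script npm only falls back to `node server.js`, which this project doesn’t have. Nothing was listening on 8080, hence the earlier error. A hypothetical pre-deploy check (`startCommand` is not part of any tool) that reports what `npm start` would run:

```javascript
// Hypothetical pre-deploy check: what would `npm start` run for this package?
// npm's documented default: with no "start" script, it runs `node server.js`
// if server.js exists at the package root; otherwise `npm start` fails.
function startCommand(pkg, rootFiles) {
  const scripts = pkg.scripts || {};
  if (typeof scripts.start === 'string' && scripts.start.trim() !== '') {
    return scripts.start;
  }
  if (rootFiles.includes('server.js')) {
    return 'node server.js';
  }
  return null; // nothing to start: the container will never listen on $PORT
}

// The package.json before and after the fix above:
const before = { scripts: { test: 'echo howdy' } };
const after = { scripts: { start: 'node index.js', test: 'echo howdy' } };
console.log(startCommand(before, ['index.js', 'package.json'])); // null
console.log(startCommand(after, ['index.js', 'package.json'])); // node index.js
```

Against a real checkout you would feed it `JSON.parse(fs.readFileSync('package.json', 'utf8'))` and `fs.readdirSync('.')`.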

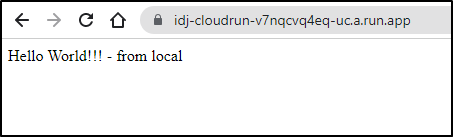

Service URL: https://idj-cloudrun-v7nqcvq4eq-uc.a.run.app

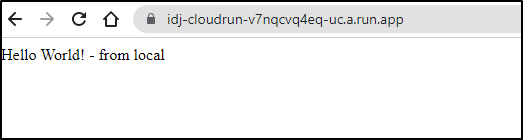

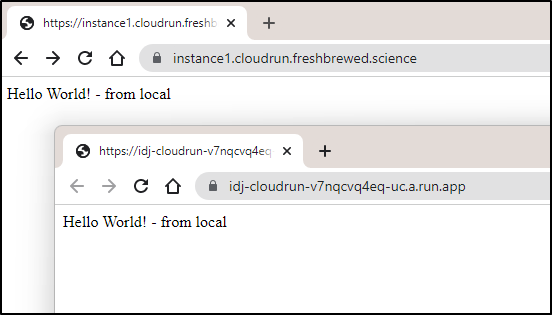

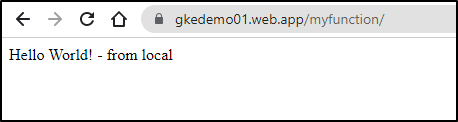

And we see it running just fine

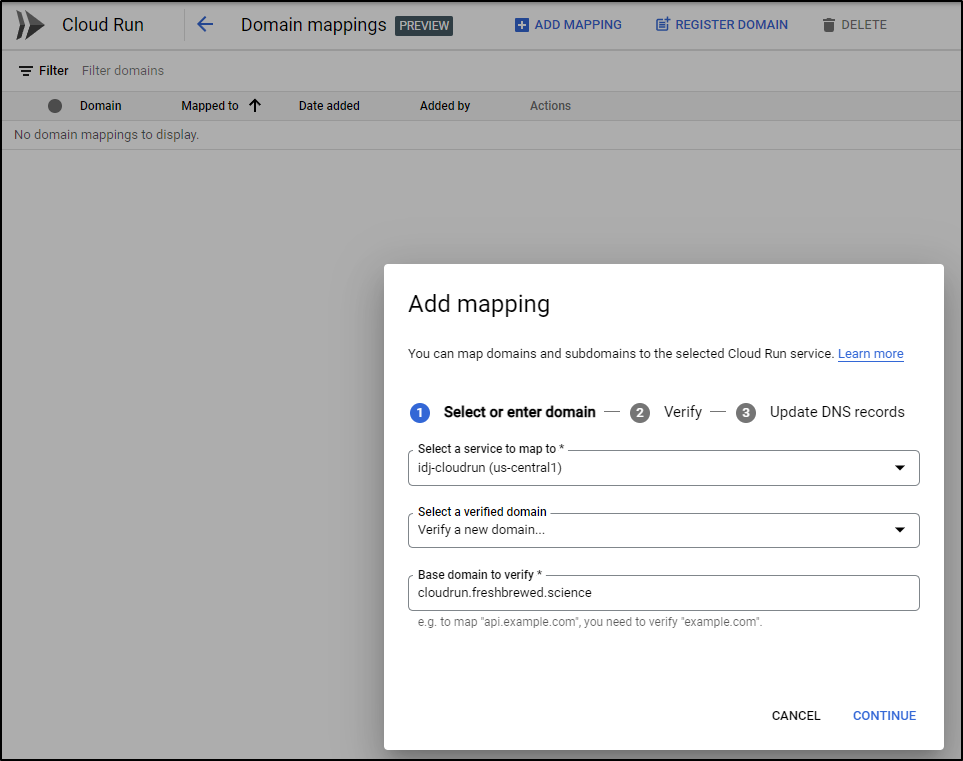

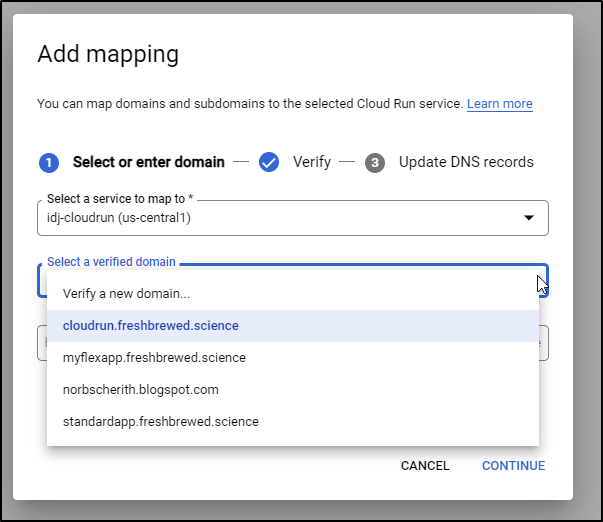

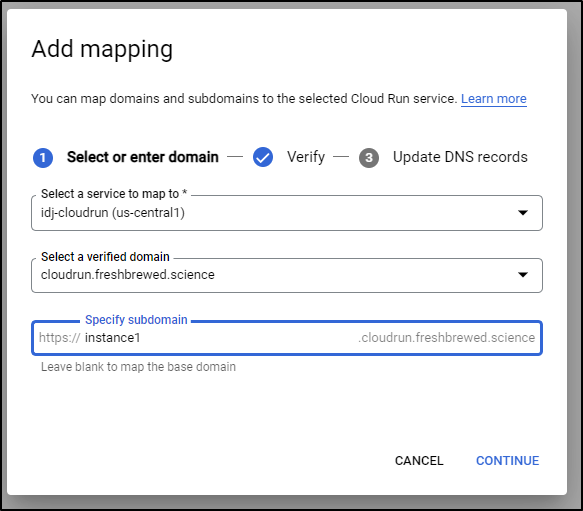

We can add Domain Mappings via the cloud console:

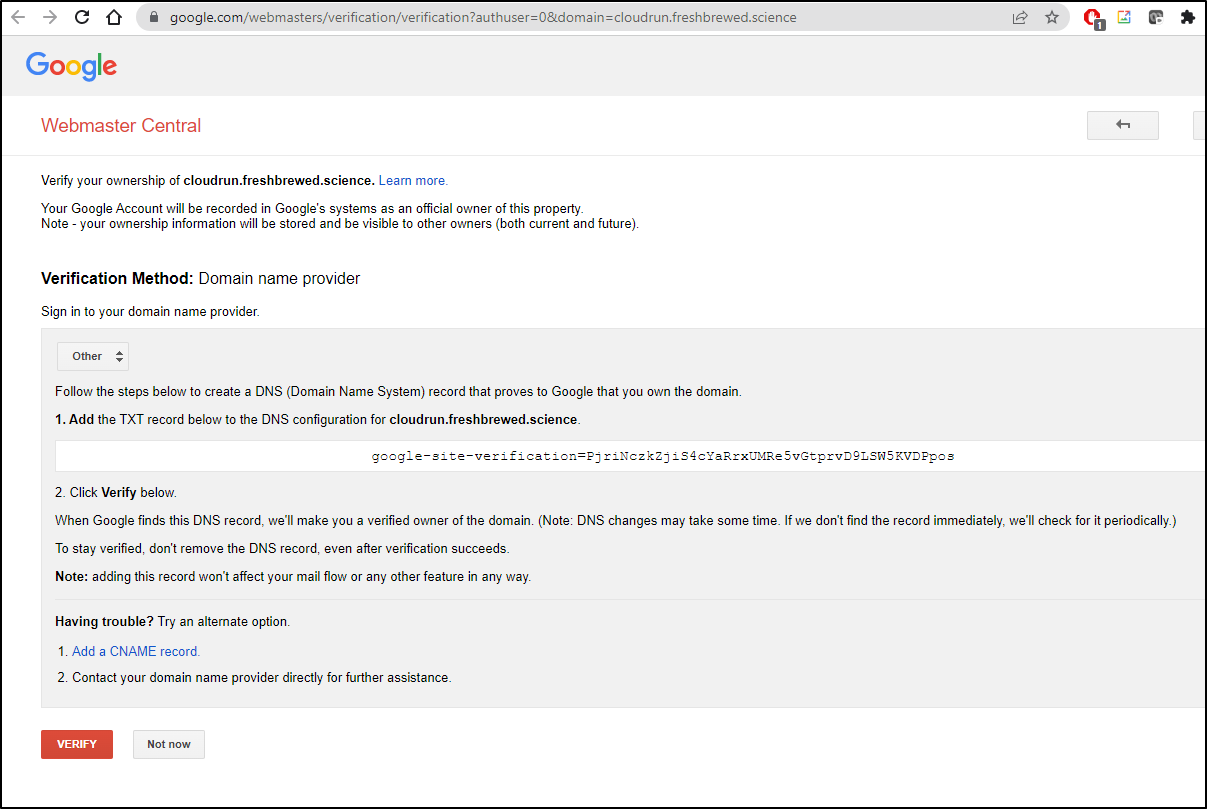

New domains need to be verified.

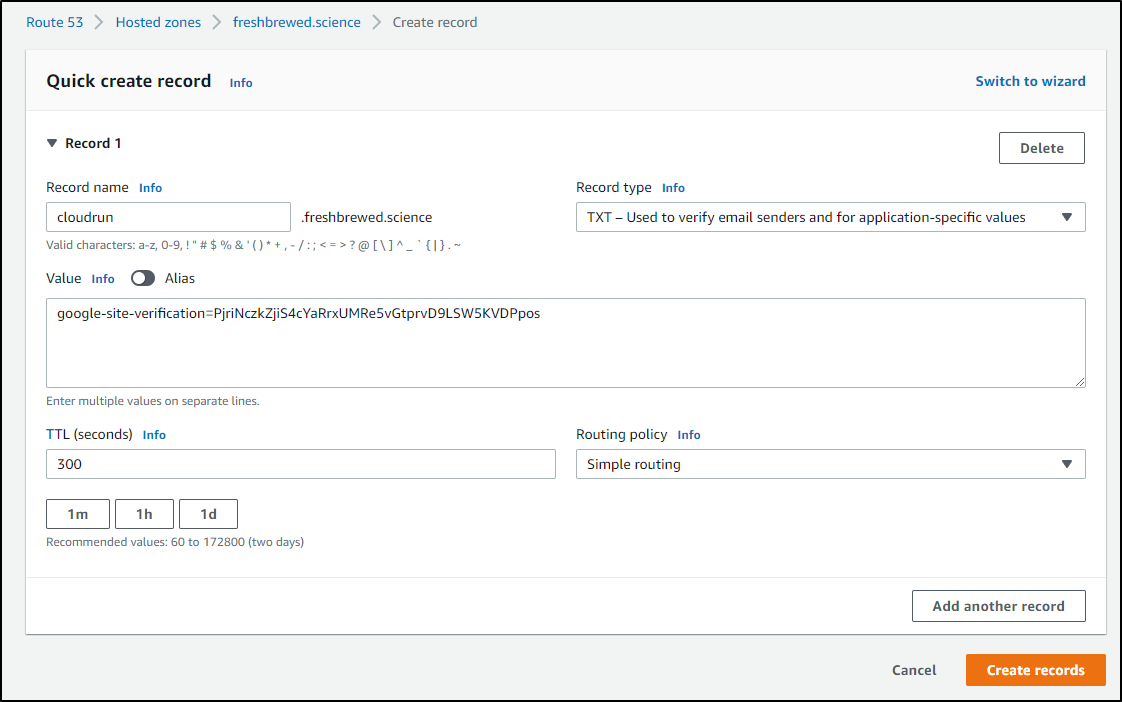

which we can easily do in the AWS console or from the command line:

```
$ cat r53-cname-verify.json
{
  "Comment": "CREATE webmaster verify record",
  "Changes": [
    {
      "Action": "CREATE",
      "ResourceRecordSet": {
        "Name": "cloudrun.freshbrewed.science",
        "Type": "TXT",
        "TTL": 300,
        "ResourceRecords": [
          {
            "Value": "\"google-site-verification=PjriNczkZjiS4cYaRrxUMRe5vGtprvD9LSW5KVDPpos\""
          }
        ]
      }
    }
  ]
}

$ aws route53 change-resource-record-sets --hosted-zone-id Z39E8QFU0F9PZP --change-batch file://r53-cname-verify.json
{
    "ChangeInfo": {
        "Id": "/change/C06119771DSR6NPU2RQZZ",
        "Status": "PENDING",
        "SubmittedAt": "2022-03-22T21:05:31.727Z",
        "Comment": "CREATE webmaster verify record"
    }
}
```
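One detail in that file is easy to get wrong by hand: Route 53 expects a TXT value to be wrapped in literal double quotes, hence the escaped `\"` inside the JSON string. A small sketch that generates the same change batch (the `txtChangeBatch` name and the `verify.json` output file are hypothetical):

```javascript
const fs = require('fs');

// Build a Route 53 change batch that creates one TXT record.
// Route 53 wants each TXT value wrapped in literal double quotes.
function txtChangeBatch(name, text, ttl = 300) {
  return {
    Comment: 'CREATE webmaster verify record',
    Changes: [
      {
        Action: 'CREATE',
        ResourceRecordSet: {
          Name: name,
          Type: 'TXT',
          TTL: ttl,
          ResourceRecords: [{ Value: `"${text}"` }],
        },
      },
    ],
  };
}

// Only write a file when both arguments are given, e.g.
//   node txt-batch.js cloudrun.freshbrewed.science google-site-verification=<token>
// then: aws route53 change-resource-record-sets --hosted-zone-id <zone> --change-batch file://verify.json
if (process.argv.length >= 4) {
  const batch = txtChangeBatch(process.argv[2], process.argv[3]);
  fs.writeFileSync('verify.json', JSON.stringify(batch, null, 2) + '\n');
}
```

`JSON.stringify` takes care of escaping the inner quotes, which is the part most likely to break when editing the JSON by hand.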

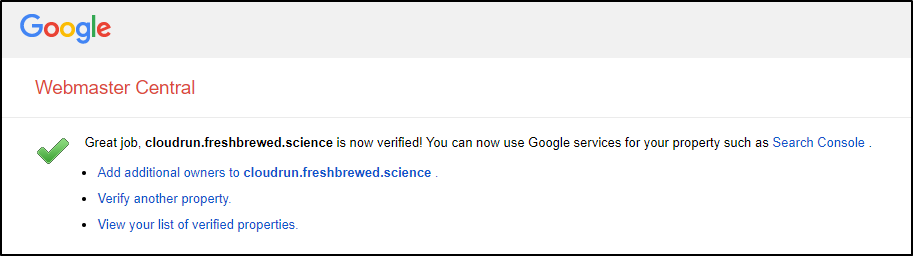

Now when we click verify on the “Webmaster Central” GCP site, it works

It’s now listed as a “verified” domain

And we can then assign a subdomain to this cloud run

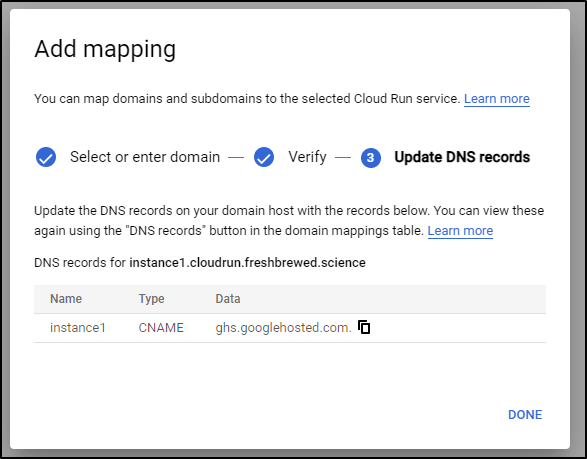

Lastly, I have to point the DNS over to GCP on this

which again, easy to do using the AWS CLI

```
$ cat r53-gcp-cr-instanc1.json
{
  "Comment": "CREATE instance1.cloudrun fb.s CNAME record ",
  "Changes": [
    {
      "Action": "CREATE",
      "ResourceRecordSet": {
        "Name": "instance1.cloudrun.freshbrewed.science",
        "Type": "CNAME",
        "TTL": 300,
        "ResourceRecords": [
          {
            "Value": "ghs.googlehosted.com"
          }
        ]
      }
    }
  ]
}

$ aws route53 change-resource-record-sets --hosted-zone-id Z39E8QFU0F9PZP --change-batch file://r53-gcp-cr-instanc1.json
{
    "ChangeInfo": {
        "Id": "/change/C07084463AYLPHTLIUYHD",
        "Status": "PENDING",
        "SubmittedAt": "2022-03-22T21:11:08.710Z",
        "Comment": "CREATE instance1.cloudrun fb.s CNAME record "
    }
}
```

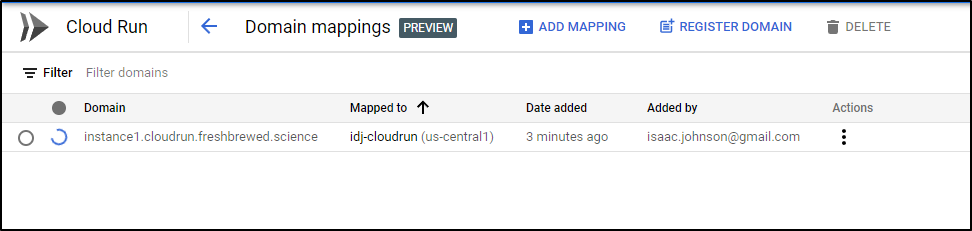

As with App Engine, it takes a moment to verify the domain and create/apply SSL certs, and I now have two SSL endpoints I can use for the Cloud Run service.

Note: it took up to an hour for the new DNS entry to actually work. Until then, I got errors:

```
builder@DESKTOP-QADGF36:~/Workspaces/idj-cloudrun$ wget https://instance1.cloudrun.freshbrewed.science/
--2022-03-22 16:35:24--  https://instance1.cloudrun.freshbrewed.science/
Resolving instance1.cloudrun.freshbrewed.science (instance1.cloudrun.freshbrewed.science)... 142.250.190.147, 2607:f8b0:4009:804::2013
Connecting to instance1.cloudrun.freshbrewed.science (instance1.cloudrun.freshbrewed.science)|142.250.190.147|:443... connected.
Unable to establish SSL connection.
builder@DESKTOP-QADGF36:~/Workspaces/idj-cloudrun$ curl -k https://instance1.cloudrun.freshbrewed.science/
curl: (35) OpenSSL SSL_connect: SSL_ERROR_SYSCALL in connection to instance1.cloudrun.freshbrewed.science:443
```
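While waiting, a quick way to check the DNS half from Node is to resolve the CNAME and compare it with `ghs.googlehosted.com`. Note that in the output above the name already resolved to a Google IP while SSL still failed, so a passing check only means DNS is done; the managed certificate may still be provisioning. A sketch (the `check-dns.js` file name and both helper names are hypothetical):

```javascript
const dns = require('dns').promises;

const GOOGLE_HOSTED = 'ghs.googlehosted.com';

// Pure check, kept separate so it can be tested without network access.
function pointsAtGoogle(cnameRecords) {
  return cnameRecords.some(
    (record) => record.toLowerCase().replace(/\.$/, '') === GOOGLE_HOSTED
  );
}

async function checkDomain(hostname) {
  try {
    const records = await dns.resolveCname(hostname);
    return { hostname, records, ok: pointsAtGoogle(records) };
  } catch (err) {
    // ENODATA / ENOTFOUND usually mean the record has not propagated yet.
    return { hostname, records: [], ok: false, error: err.code };
  }
}

// Only touch the network when a hostname is passed, e.g.
//   node check-dns.js instance1.cloudrun.freshbrewed.science
if (process.argv[2]) {
  checkDomain(process.argv[2]).then((result) => console.log(result));
}
```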

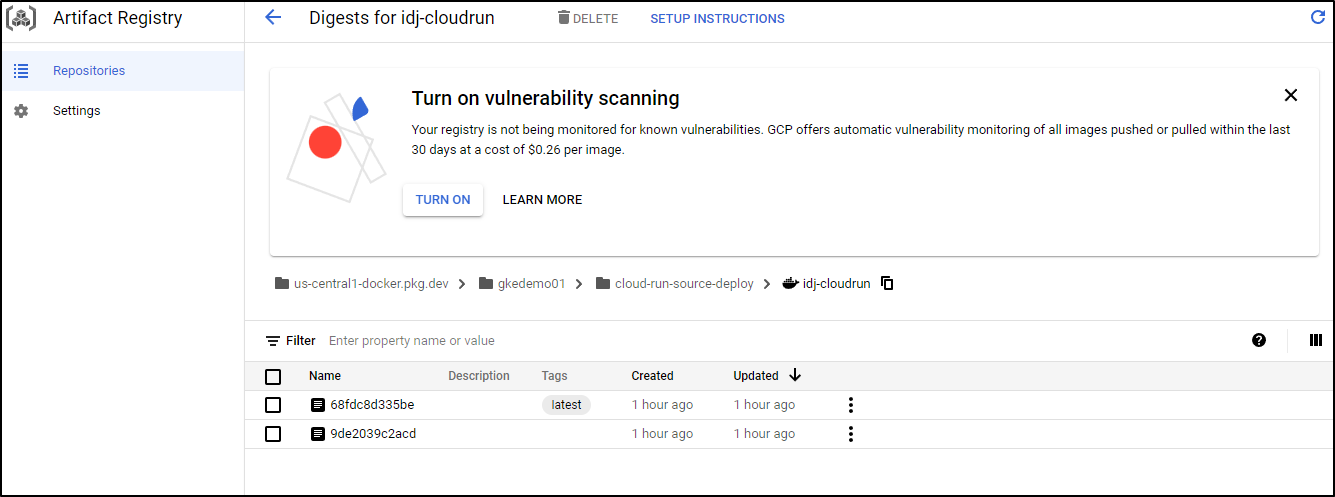

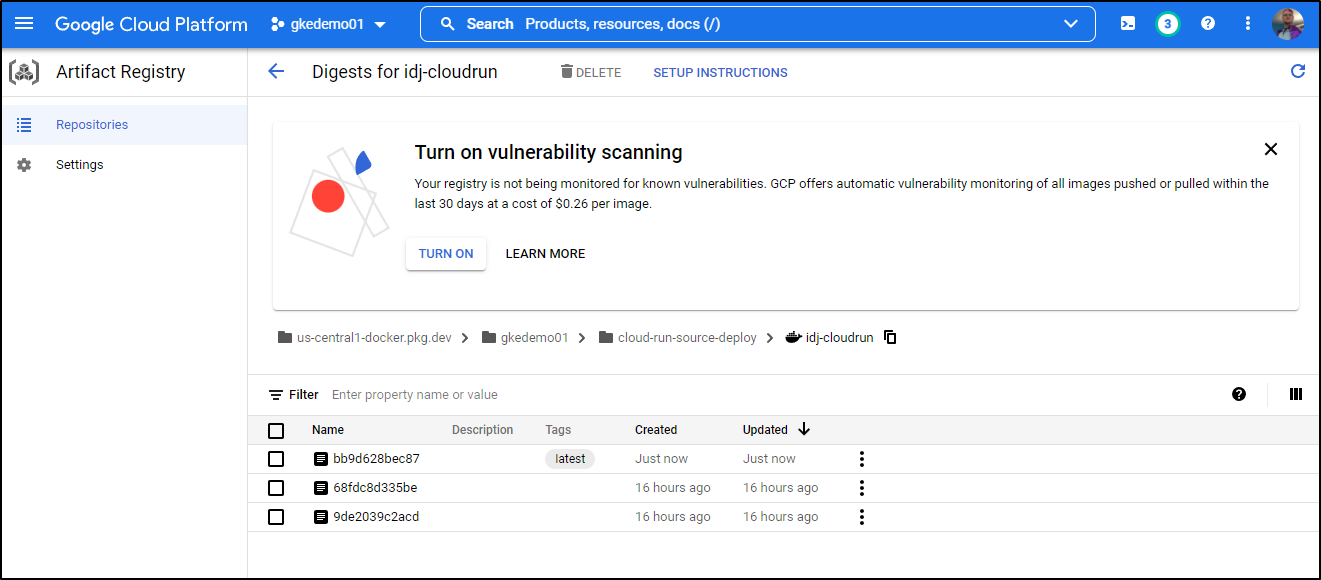

The containers themselves are stored in Artifact Registry (the “new” GCR):

Up to 500MB of storage per month is free. Our image is just 86MB, so we won’t exceed the free tier with this.

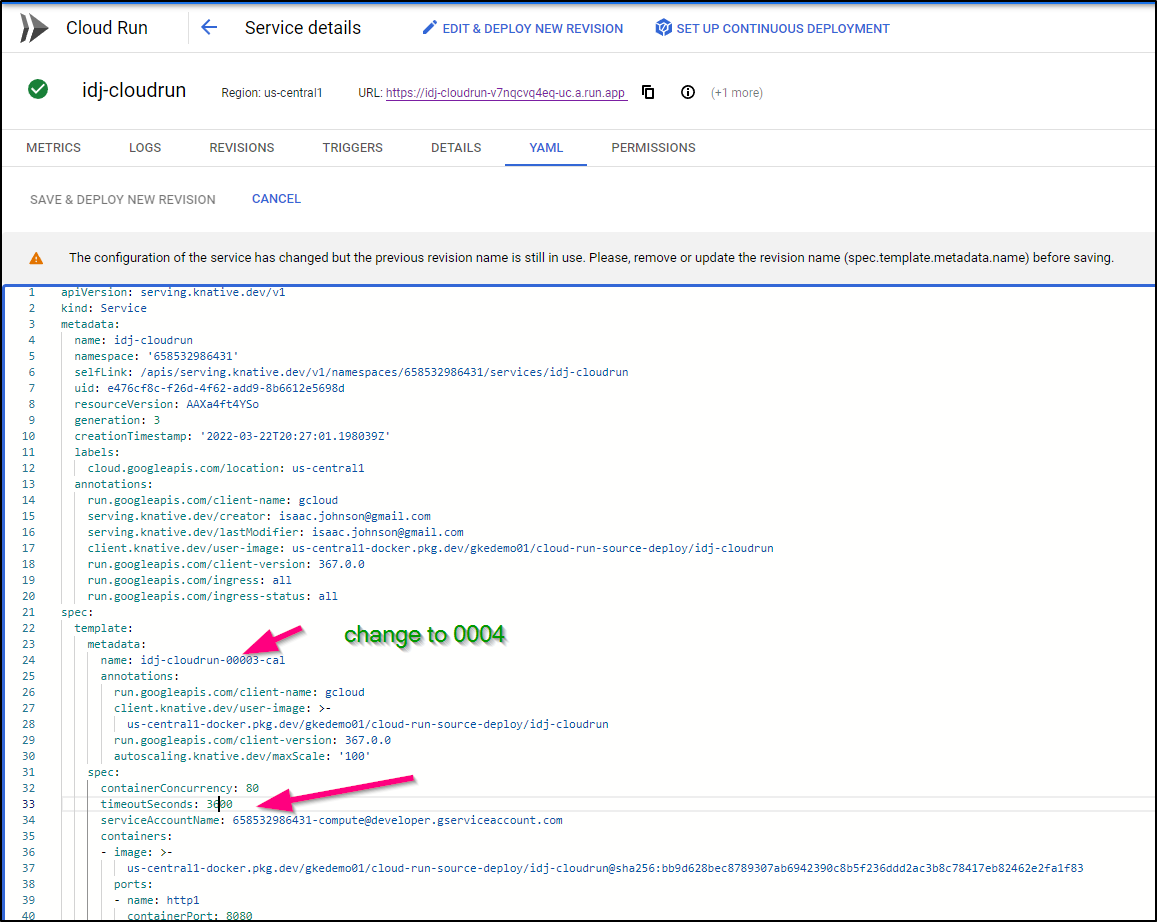

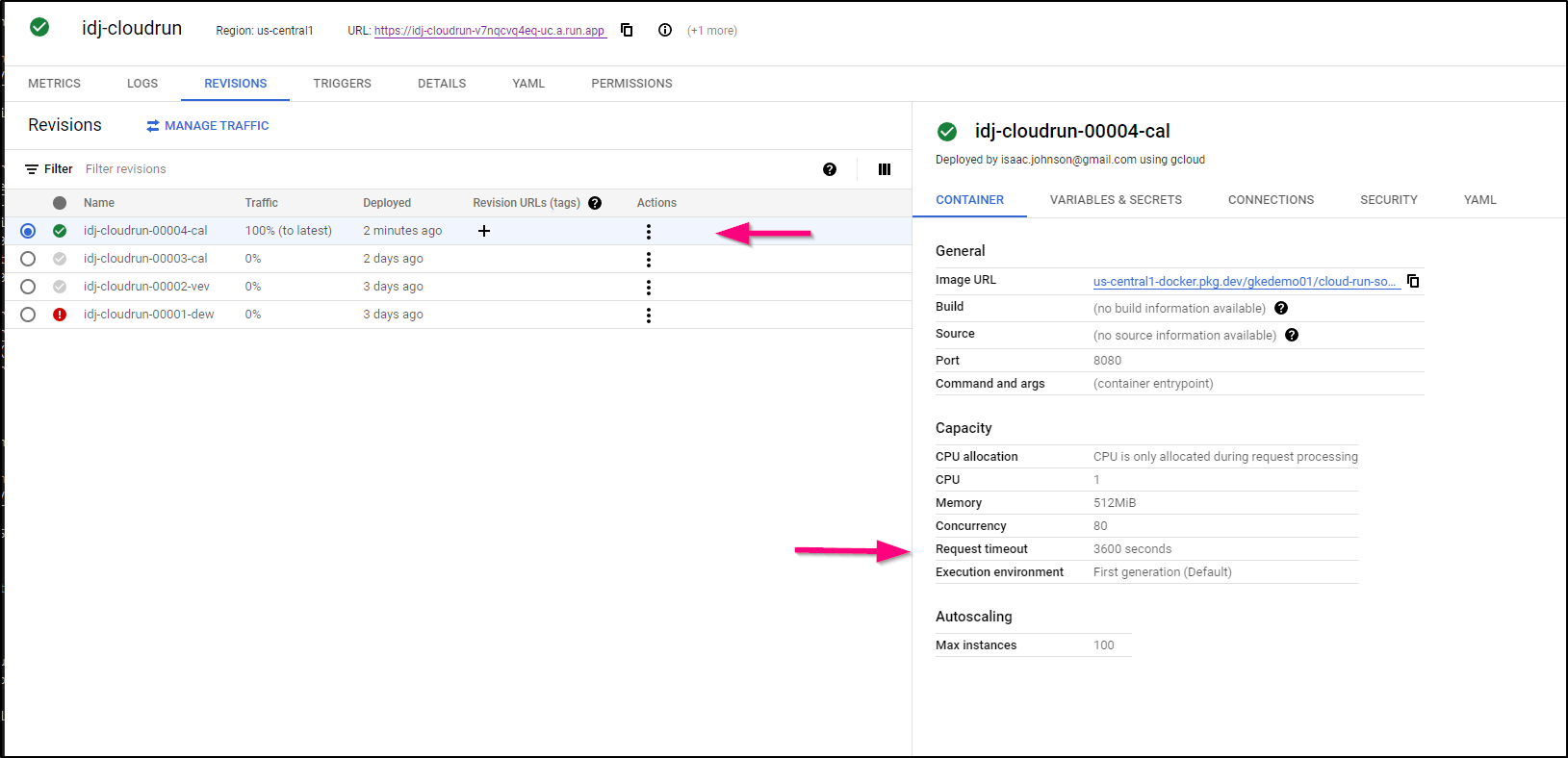

Setting longer timeouts

We can edit the Knative Serving YAML directly to raise the timeout beyond the default of 300s.

In Cloud Run, go to the YAML section and edit spec.template.spec.timeoutSeconds. You’ll also need to change spec.template.metadata.name to a unique value (I just incremented the number).

This increases the timeout, as we see below.
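If you’d rather script that edit than make it in the console, here is a sketch that applies the same two changes to a Service object you have already exported and parsed (for example from `gcloud run services describe idj-cloudrun --format export` plus a YAML parser, written back with `gcloud run services replace`). `withTimeout` and `bumpRevisionName` are hypothetical helpers; the revision-name pattern is inferred from the names seen in this post (`idj-cloudrun-00001-dew`, `idj-cloudrun-00002-vev`), and the 3600s ceiling is an assumption based on the table footnote.

```javascript
// Hypothetical helpers mirroring the manual YAML edit on a parsed Service object.
const MAX_TIMEOUT_SECONDS = 3600; // assumption: the 60m ceiling from the table footnote

// Bump the zero-padded counter in a revision name such as "idj-cloudrun-00002-vev".
function bumpRevisionName(name) {
  const match = /-(\d+)-([^-]+)$/.exec(name);
  if (!match) {
    throw new Error(`unexpected revision name: ${name}`);
  }
  const next = String(Number(match[1]) + 1).padStart(match[1].length, '0');
  return `${name.slice(0, match.index)}-${next}-${match[2]}`;
}

// Expects an exported Service that already has spec.template.spec.
function withTimeout(service, seconds) {
  if (!Number.isInteger(seconds) || seconds < 1 || seconds > MAX_TIMEOUT_SECONDS) {
    throw new RangeError(`timeoutSeconds must be 1..${MAX_TIMEOUT_SECONDS}, got ${seconds}`);
  }
  const copy = JSON.parse(JSON.stringify(service)); // leave the input untouched
  const template = copy.spec.template;
  template.spec.timeoutSeconds = seconds;
  // A pinned revision name must be unique, so bump it as done by hand above.
  if (template.metadata && template.metadata.name) {
    template.metadata.name = bumpRevisionName(template.metadata.name);
  }
  return copy;
}

const exported = {
  apiVersion: 'serving.knative.dev/v1',
  kind: 'Service',
  metadata: { name: 'idj-cloudrun' },
  spec: { template: { metadata: { name: 'idj-cloudrun-00002-vev' }, spec: { timeoutSeconds: 300 } } },
};
const updated = withTimeout(exported, 900);
console.log(updated.spec.template.metadata.name, updated.spec.template.spec.timeoutSeconds);
// idj-cloudrun-00003-vev 900
```

`gcloud run services update` also accepts a `--timeout` flag if you’d rather skip the YAML altogether.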

Logs and Metrics

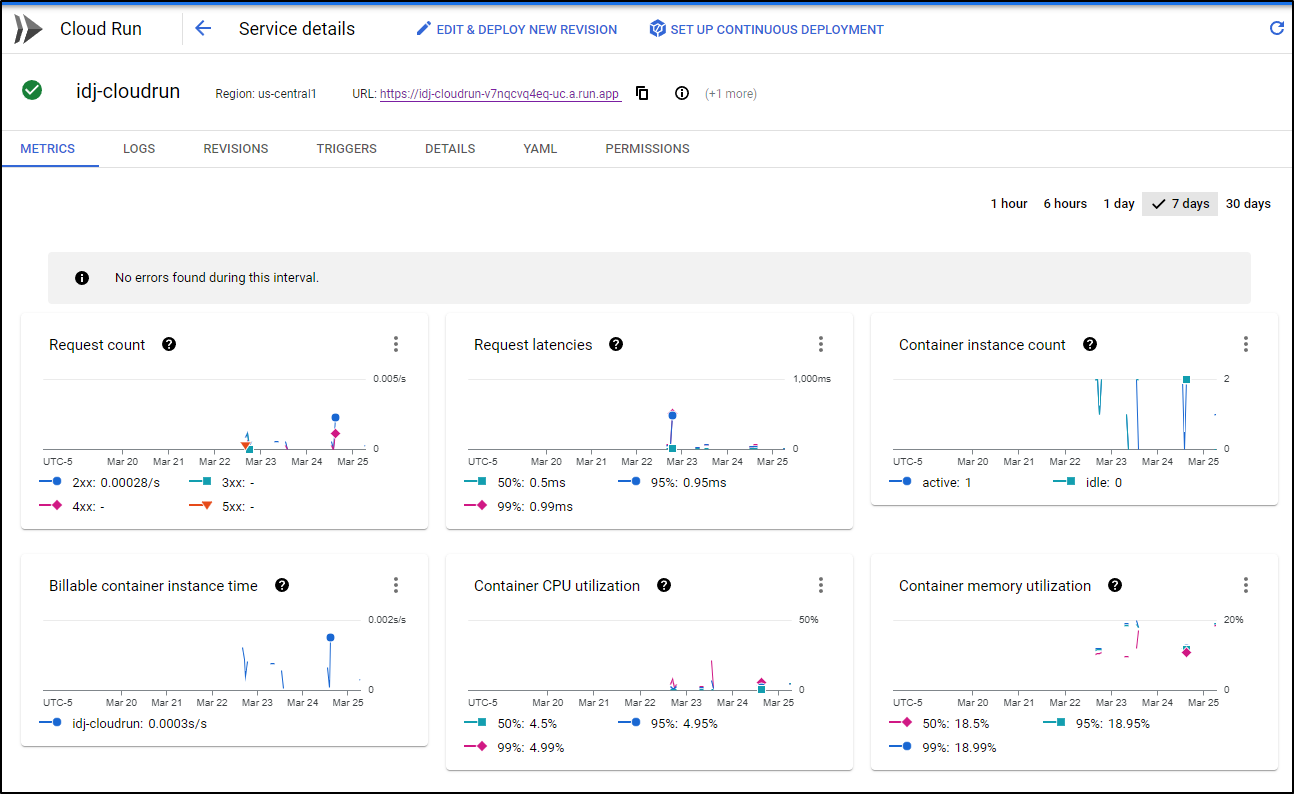

Cloud Run can provide useful metrics and graphs of usage. Click on the Metrics tab for details.

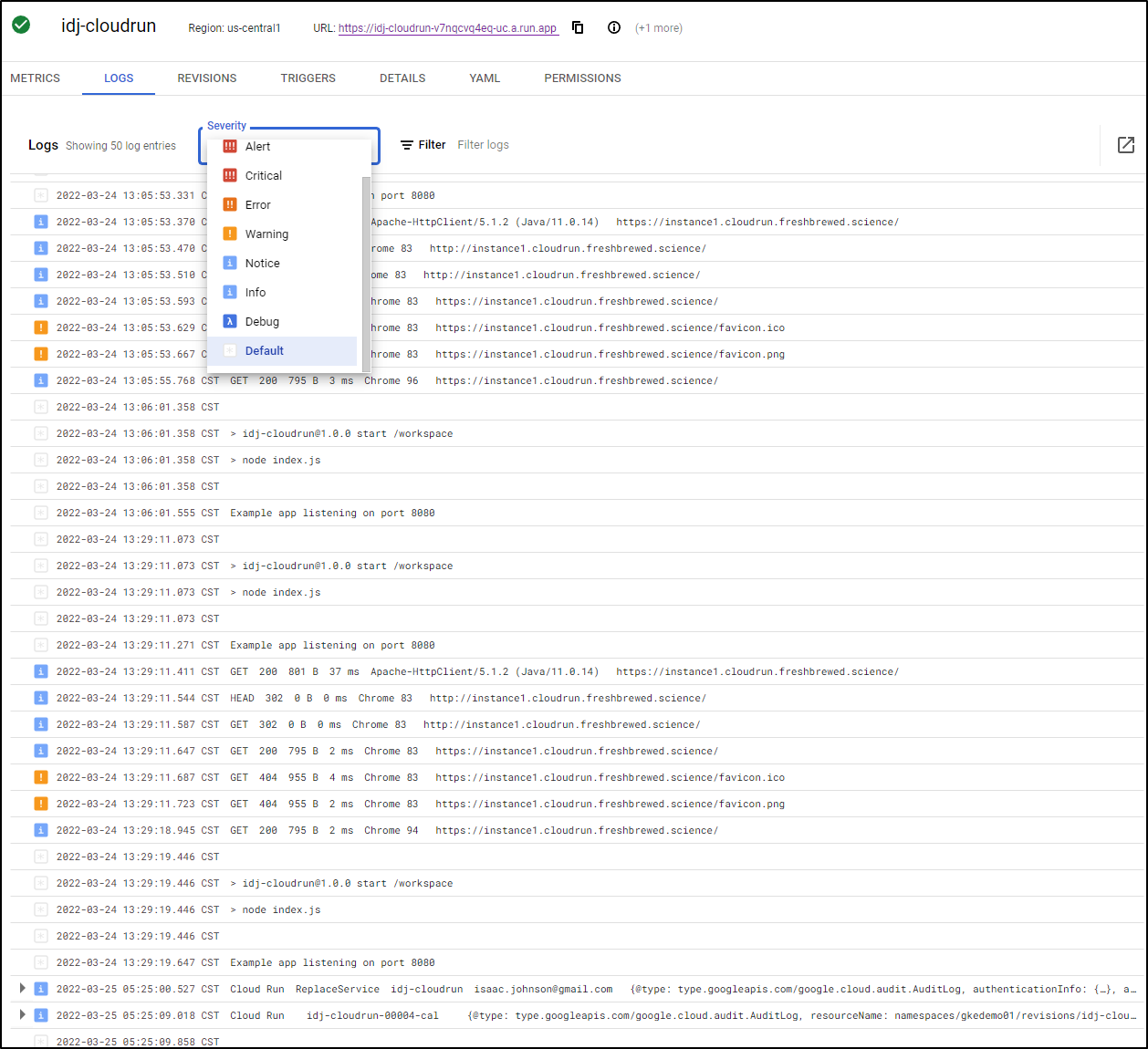

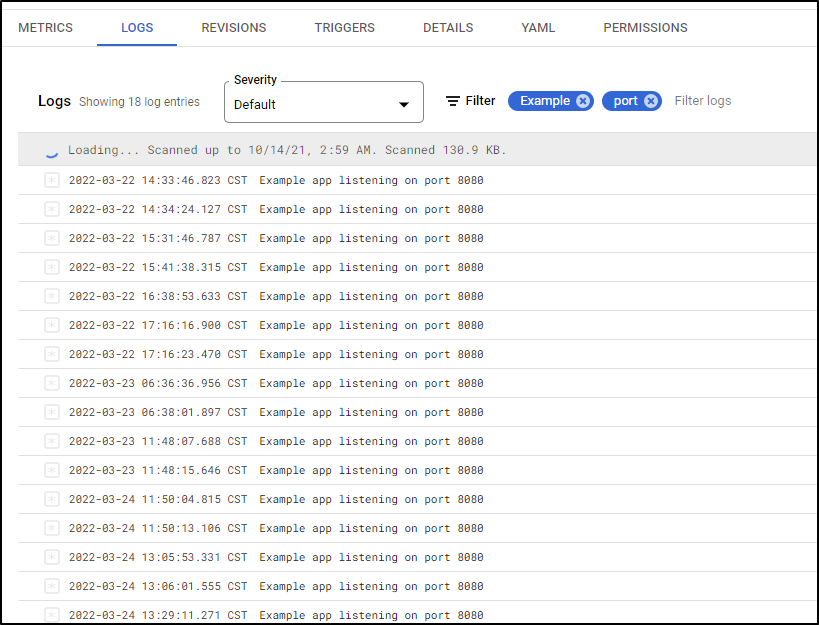

Under Logs, we see logs that we can filter by severity and/or keywords.

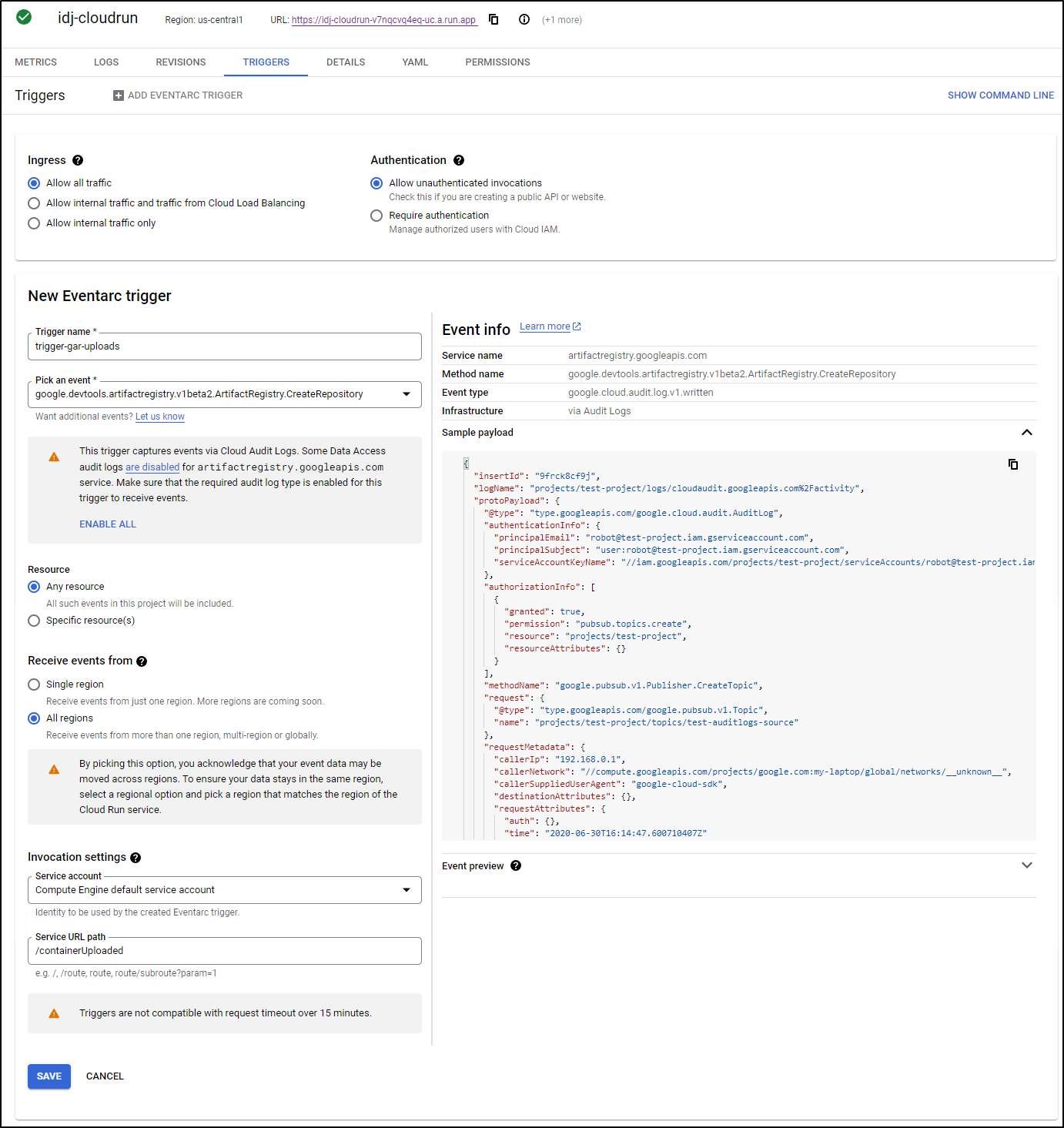

Triggers

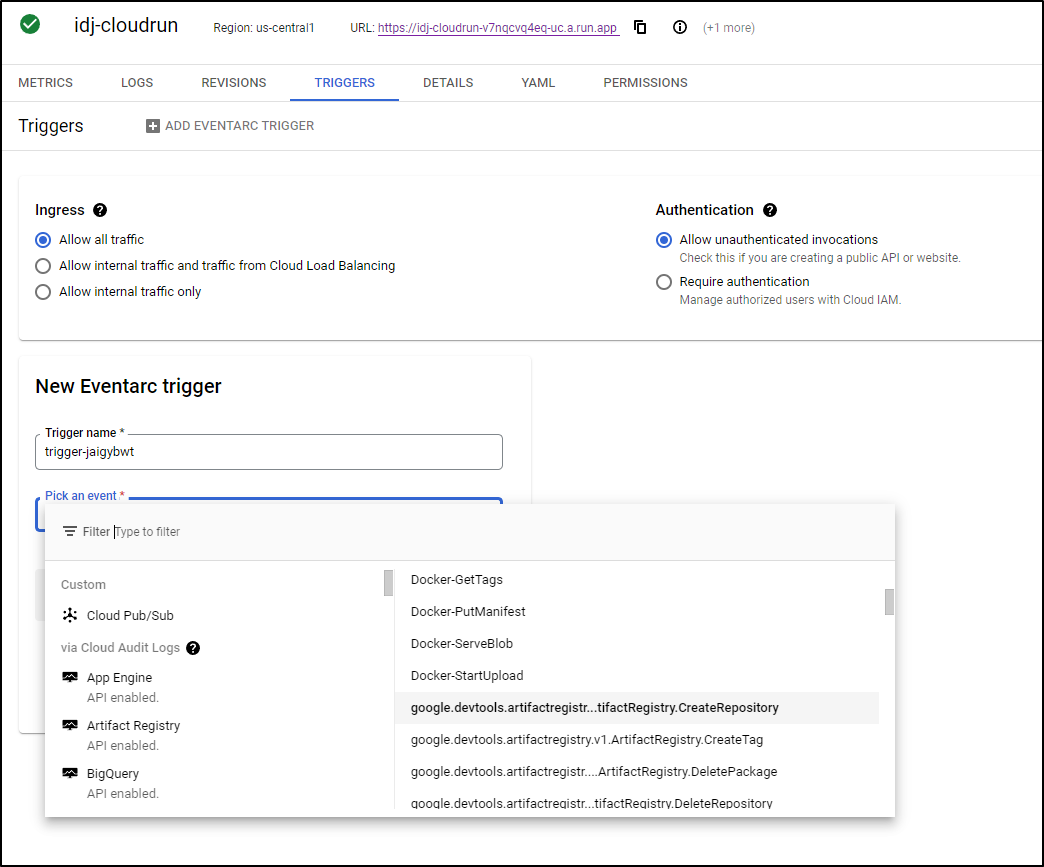

We can trigger on an HTTP webhook, as demonstrated already, but we can trigger on events as well. Under Triggers, we can limit who can invoke the service and whether callers must authenticate. Additionally, if we enable the Eventarc API, we can trigger on events such as a GCR/GAR repository creation (e.g. someone uploaded a new container).

For example, this should trigger a call to an endpoint of “containerUploaded” when repositories are created in GAR.
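Eventarc delivers events to a Cloud Run service as HTTP POST requests in CloudEvents format; my understanding is that the event attributes arrive as `ce-*` headers (CloudEvents “binary” mode). A minimal sketch of a receiver for the `containerUploaded` endpoint, using only Node’s `http` module so it runs without Express. The header handling, the `/containerUploaded` path matching, and the 204 acknowledgement are assumptions to check against the Eventarc docs:

```javascript
const http = require('http');

// Pull the core CloudEvents attributes out of the (lower-cased) request headers.
function parseCloudEvent(headers) {
  const required = ['ce-id', 'ce-source', 'ce-specversion', 'ce-type'];
  const missing = required.filter((name) => !headers[name]);
  if (missing.length > 0) {
    return { ok: false, missing };
  }
  return {
    ok: true,
    id: headers['ce-id'],
    source: headers['ce-source'],
    type: headers['ce-type'],
    subject: headers['ce-subject'] || null,
  };
}

function handler(req, res) {
  req.resume(); // the body isn't needed for this sketch, so drain and discard it
  if (req.method !== 'POST' || req.url !== '/containerUploaded') {
    res.statusCode = 404;
    res.end();
    return;
  }
  const event = parseCloudEvent(req.headers);
  if (!event.ok) {
    res.statusCode = 400;
    res.end(`missing headers: ${event.missing.join(', ')}`);
    return;
  }
  console.log(`received ${event.type} from ${event.source}, subject=${event.subject}`);
  res.statusCode = 204; // any 2xx is assumed to acknowledge the delivery
  res.end();
}

// Start listening only when asked: `node receiver.js --serve`.
if (process.argv.includes('--serve')) {
  const port = Number(process.env.PORT) || 8080;
  http.createServer(handler).listen(port, () => console.log(`listening on ${port}`));
}
```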

Cloud Functions

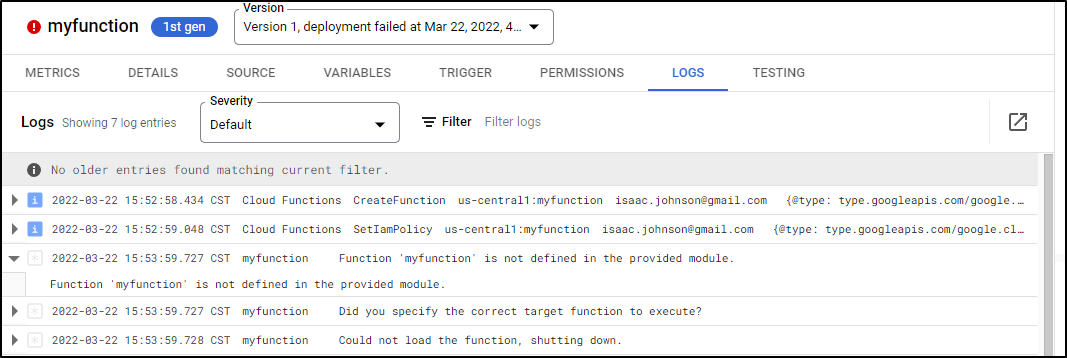

Let’s try to deploy the same Cloud Run NodeJS app as a Cloud Function instead:

```
$ gcloud functions deploy myfunction --runtime nodejs14 --trigger-http
API [cloudfunctions.googleapis.com] not enabled on project [658532986431]. Would you like to enable and retry (this will take a few minutes)? (y/N)? y

Enabling service [cloudfunctions.googleapis.com] on project [658532986431]...
Operation "operations/acf.p2-658532986431-bc560d0d-f673-47dd-a404-bbc2d4d72c19" finished successfully.
Created .gcloudignore file. See `gcloud topic gcloudignore` for details.
Allow unauthenticated invocations of new function [myfunction]? (y/N)? y

Deploying function (may take a while - up to 2 minutes)...⠹
For Cloud Build Logs, visit: https://console.cloud.google.com/cloud-build/builds;region=us-central1/9679c7b4-54a2-4bce-abae-eea7641f7f7a?project=658532986431
Deploying function (may take a while - up to 2 minutes)...failed.
ERROR: (gcloud.functions.deploy) OperationError: code=3, message=Function failed on loading user code. This is likely due to a bug in the user code. Error message: Error: please examine your function logs to see the error cause: https://cloud.google.com/functions/docs/monitoring/logging#viewing_logs. Additional troubleshooting documentation can be found at https://cloud.google.com/functions/docs/troubleshooting#logging. Please visit https://cloud.google.com/functions/docs/troubleshooting for in-depth troubleshooting documentation.
```

Ahh... Cloud Functions needs an entry point (unless the exported function has the same name as the function). Since I clearly don’t export “myfunction”, it fails.

One last issue was how I exported the module:

```
builder@DESKTOP-QADGF36:~/Workspaces/idj-cloudrun$ git diff index.js
diff --git a/index.js b/index.js
index 6de6dfd..a41ebdb 100644
--- a/index.js
+++ b/index.js
@@ -13,4 +13,6 @@ app.listen(port, () => {
 })

 // will need for tests later
-module.exports = app;
+module.exports = {
+  app
+};
```

Now it deploys:

```
$ gcloud functions deploy myfunction --runtime nodejs14 --trigger-http --entry-point app
Deploying function (may take a while - up to 2 minutes)...⠹
For Cloud Build Logs, visit: https://console.cloud.google.com/cloud-build/builds;region=us-central1/8f96147a-1037-4147-a20a-98c44098839b?project=658532986431
Deploying function (may take a while - up to 2 minutes)...done.
availableMemoryMb: 256
buildId: 8f96147a-1037-4147-a20a-98c44098839b
buildName: projects/658532986431/locations/us-central1/builds/8f96147a-1037-4147-a20a-98c44098839b
dockerRegistry: CONTAINER_REGISTRY
entryPoint: app
httpsTrigger:
  securityLevel: SECURE_OPTIONAL
  url: https://us-central1-gkedemo01.cloudfunctions.net/myfunction
ingressSettings: ALLOW_ALL
labels:
  deployment-tool: cli-gcloud
name: projects/gkedemo01/locations/us-central1/functions/myfunction
runtime: nodejs14
serviceAccountEmail: gkedemo01@appspot.gserviceaccount.com
sourceUploadUrl: https://storage.googleapis.com/gcf-upload-us-central1-48ea1257-fd05-46ce-94ca-7d216873183d/0fb07d4a-7401-46db-bdc9-10b0512622f5.zip
status: ACTIVE
timeout: 60s
updateTime: '2022-03-22T22:48:02.922Z'
versionId: '3'
```

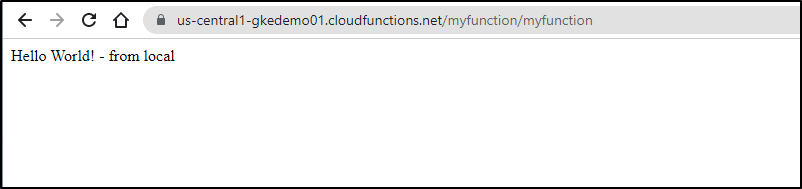

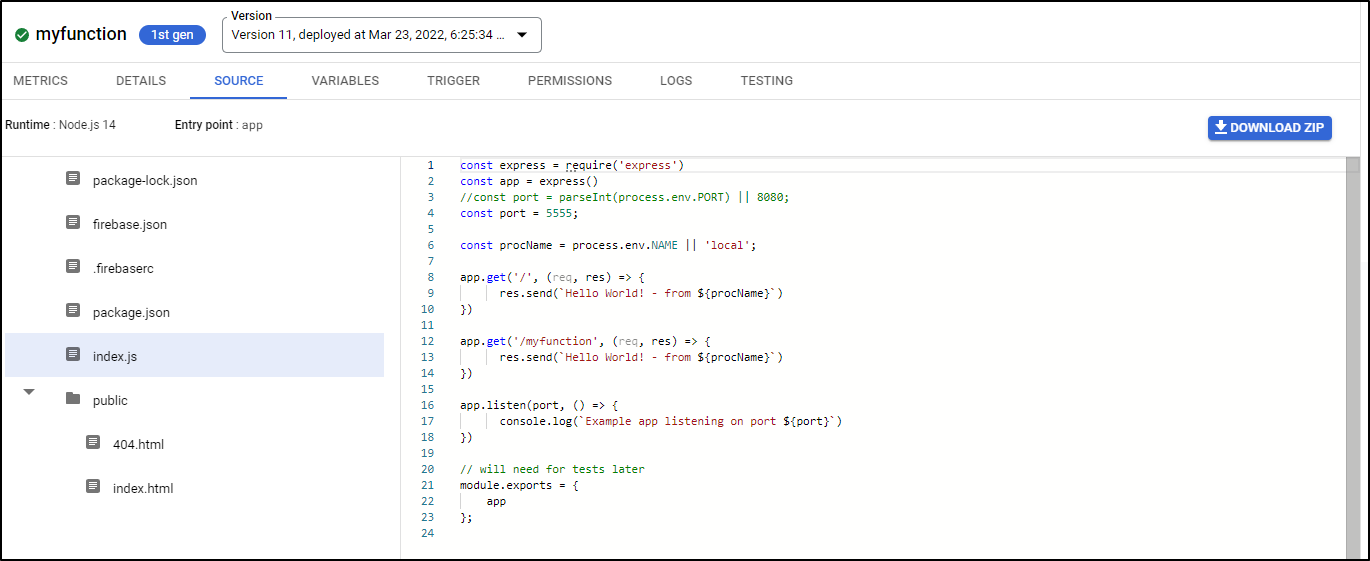

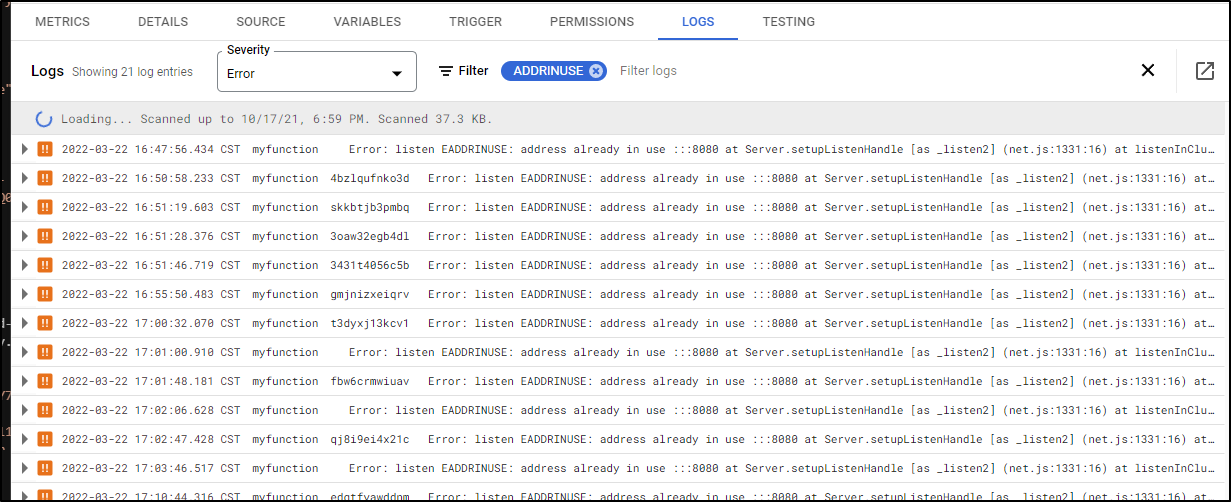

While I cannot rightly explain why it works, changing the port to a hardcoded value seems to address the Port in Use error.

I still cannot figure out how Cloud Functions is aware I’m on port 5555. Here is the current index.js:

```javascript
const express = require('express')
const app = express()
//const port = parseInt(process.env.PORT) || 8080;
const port = 5555;
const procName = process.env.NAME || 'local';

app.get('/', (req, res) => {
  res.send(`Hello World! - from ${procName}`)
})

app.get('/myfunction', (req, res) => {
  res.send(`Hello World! - from ${procName}`)
})

app.listen(port, () => {
  console.log(`Example app listening on port ${port}`)
})

// will need for tests later
module.exports = {
  app
};
```

Then deploy:

```
$ gcloud functions deploy myfunction --runtime nodejs14 --trigger-http --entry-point app
Deploying function (may take a while - up to 2 minutes)...⠹
For Cloud Build Logs, visit: https://console.cloud.google.com/cloud-build/builds;region=us-central1/2ea2c5b0-6acb-4e0a-bd1e-e1e6013c7906?project=658532986431
Deploying function (may take a while - up to 2 minutes)...done.
availableMemoryMb: 256
buildId: 2ea2c5b0-6acb-4e0a-bd1e-e1e6013c7906
buildName: projects/658532986431/locations/us-central1/builds/2ea2c5b0-6acb-4e0a-bd1e-e1e6013c7906
dockerRegistry: CONTAINER_REGISTRY
entryPoint: app
httpsTrigger:
  securityLevel: SECURE_OPTIONAL
  url: https://us-central1-gkedemo01.cloudfunctions.net/myfunction
ingressSettings: ALLOW_ALL
labels:
  deployment-tool: cli-gcloud
name: projects/gkedemo01/locations/us-central1/functions/myfunction
runtime: nodejs14
serviceAccountEmail: gkedemo01@appspot.gserviceaccount.com
sourceUploadUrl: https://storage.googleapis.com/gcf-upload-us-central1-48ea1257-fd05-46ce-94ca-7d216873183d/bc7e3256-11a3-4949-9415-077c08802c31.zip
status: ACTIVE
timeout: 60s
updateTime: '2022-03-23T00:01:28.619Z'
versionId: '10'
```

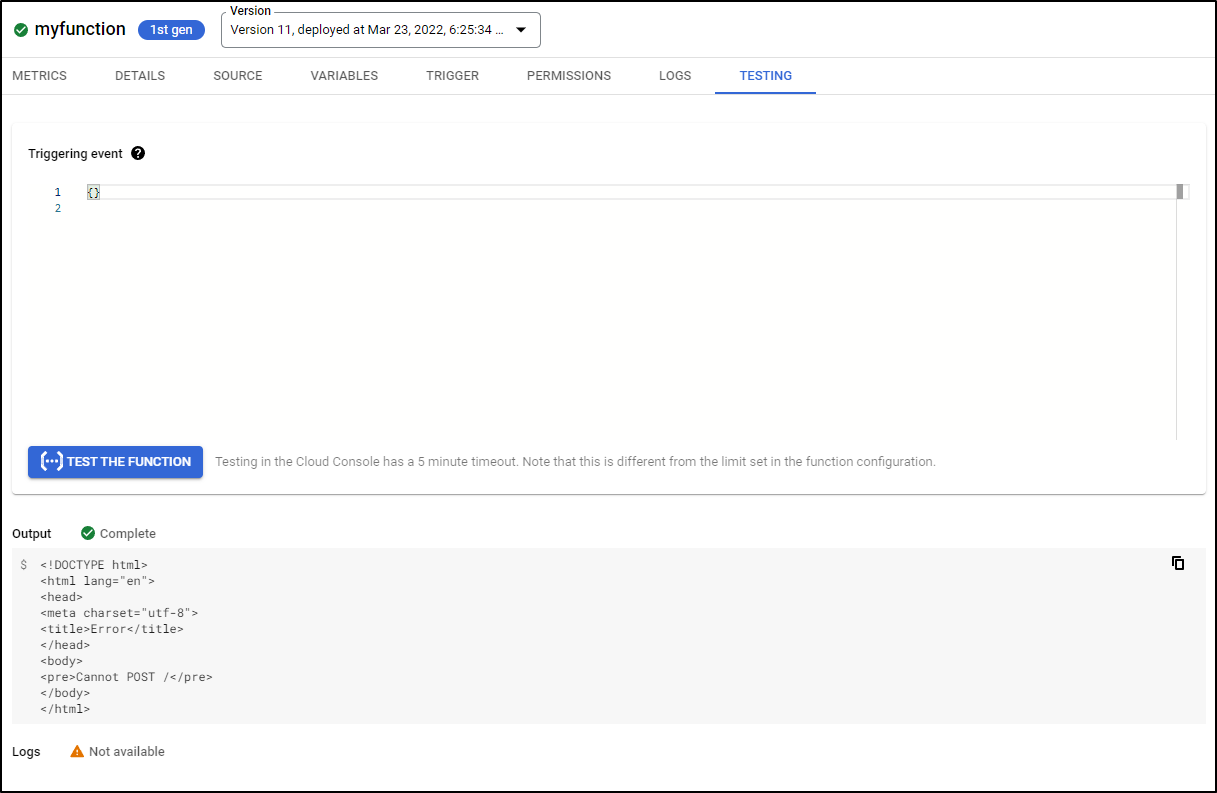

And test:

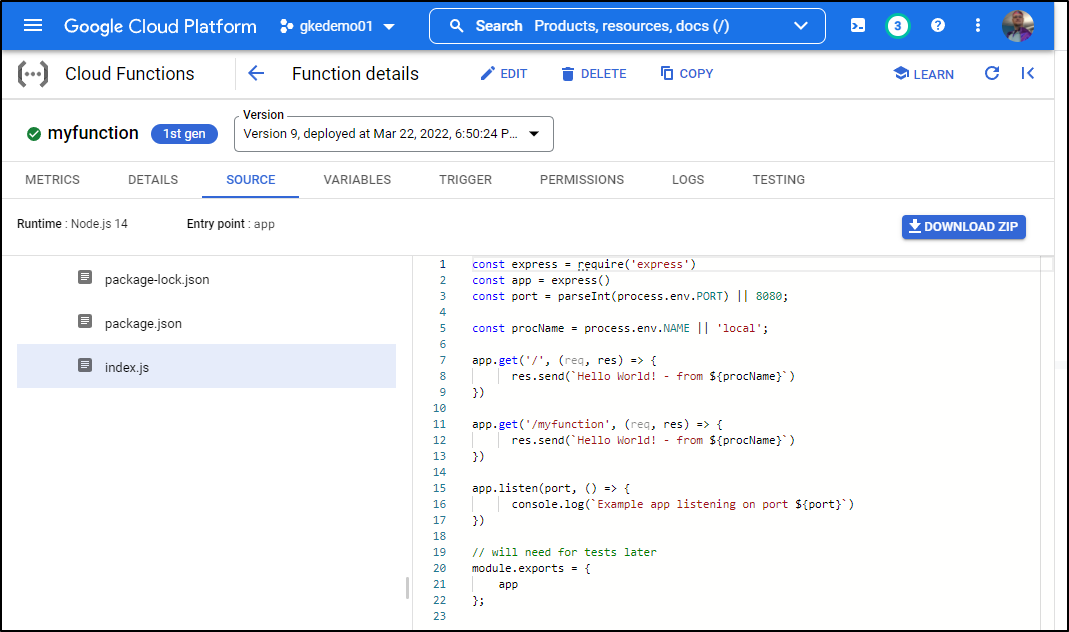

Because we used a Cloud Function, we can see the code behind the function in the Cloud Console:

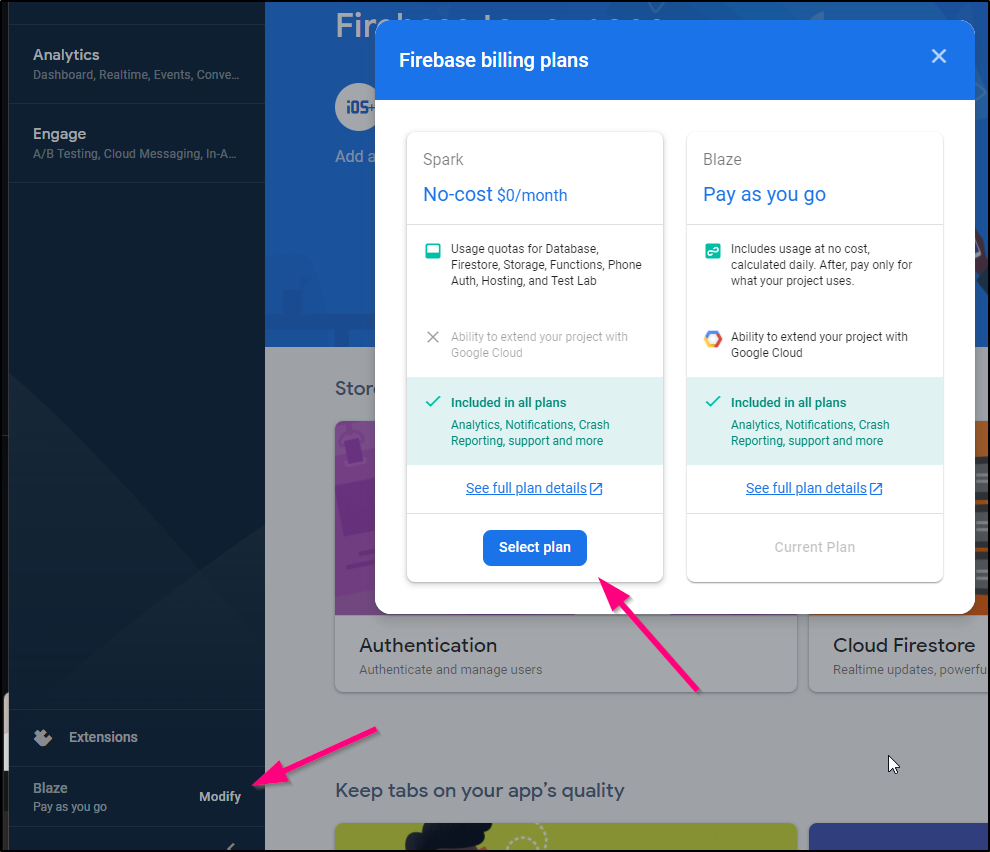

I cannot natively add a custom DNS name to a Cloud Function, but write-ups suggest using Firebase to accomplish that. Firebase should be free on the Spark tier for smaller functions.

Let’s set that up:

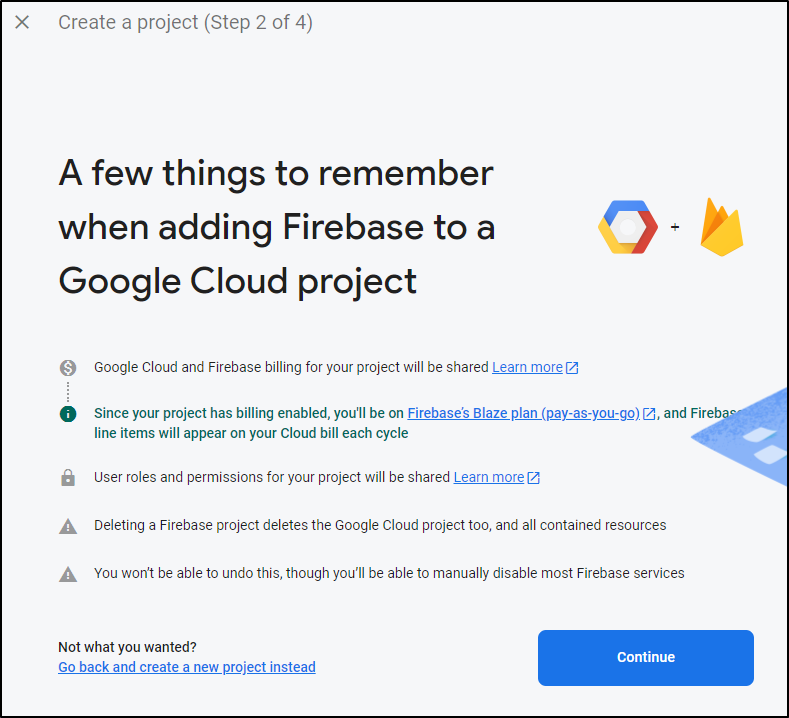

First, we create a Firebase project in the Firebase console and select our project.

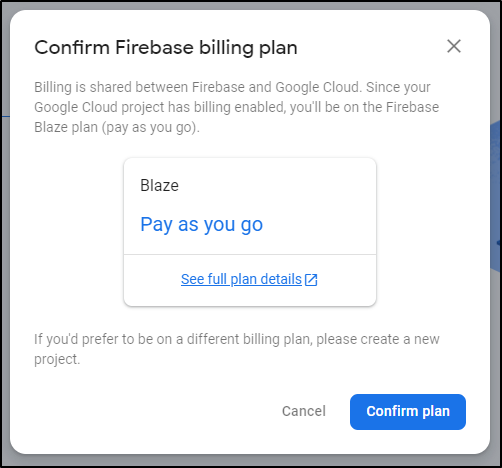

We’ll need to confirm the billing plan.

And since billing is enabled, it assumes we want the paid (Blaze) plan rather than the free tier.

Note: you can move back to the free Spark plan after creation.

I’ll leave the defaults for the rest and let it finish creating.

```
$ nvm use 14.18.1
Now using node v14.18.1 (npm v6.14.15)
$ npm install -g firebase-tools
```

Log in to Firebase:

```
$ firebase login
i  Firebase optionally collects CLI usage and error reporting information to help improve our products. Data is collected in accordance with Google's privacy policy (https://policies.google.com/privacy) and is not used to identify you.

? Allow Firebase to collect CLI usage and error reporting information? Yes
i  To change your data collection preference at any time, run `firebase logout` and log in again.

Visit this URL on this device to log in:
https://accounts.google.com/o/oauth2/auth?client_id=563584335869-fgrhgmd47bqnekij5i8b5pr03ho849e6.apps.googleusercontent.com&scope=email%20openid%20https%3A%2F%2Fwww.googleapis.com%2Fauth%2Fcloudplatformprojects.readonly%20https%3A%2F%2Fwww.googleapis.com%2Fauth%2Ffirebase%20https%3A%2F%2Fwww.googleapis.com%2Fauth%2Fcloud-platform&response_type=code&state=579114489&redirect_uri=http%3A%2F%2Flocalhost%3A9005

Waiting for authentication...

✔  Success! Logged in as isaac.johnson@gmail.com
```

Next, we run firebase init and pick Hosting (hit the spacebar to select, then Enter):

```
$ firebase init

You're about to initialize a Firebase project in this directory:

  /home/builder/Workspaces/idj-function

? Which Firebase features do you want to set up for this directory? Press Space to select features, then Enter to confirm your choices. Hosting: Configure files for Firebase Hosting and (optionally) set up GitHub Action deploys

=== Project Setup

First, let's associate this project directory with a Firebase project.
You can create multiple project aliases by running firebase use --add,
but for now we'll just set up a default project.

? Please select an option: Use an existing project
? Select a default Firebase project for this directory: gkedemo01 (gkedemo01)
i  Using project gkedemo01 (gkedemo01)

=== Hosting Setup

Your public directory is the folder (relative to your project directory) that
will contain Hosting assets to be uploaded with firebase deploy. If you
have a build process for your assets, use your build's output directory.

? What do you want to use as your public directory? public
? Configure as a single-page app (rewrite all urls to /index.html)? No
? Set up automatic builds and deploys with GitHub? No
✔  Wrote public/404.html
✔  Wrote public/index.html

i  Writing configuration info to firebase.json...
i  Writing project information to .firebaserc...
i  Writing gitignore file to .gitignore...

✔  Firebase initialization complete!
```

We will add a rewrite block to the generated firebase.json

builder@DESKTOP-QADGF36:~/Workspaces/idj-function$ cat firebase.json

{

"hosting": {

"public": "public",

"ignore": [

"firebase.json",

"**/.*",

"**/node_modules/**"

]

}

}

builder@DESKTOP-QADGF36:~/Workspaces/idj-function$ vi firebase.json

builder@DESKTOP-QADGF36:~/Workspaces/idj-function$ cat firebase.json

{

"hosting": {

"public": "public",

"ignore": [

"firebase.json",

"**/.*",

"**/node_modules/**"

],

"rewrites": [

{

"source": "myfunction",

"function": "myfunction"

}

]

}

}
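If you would rather script this edit than make it in vi, here is a minimal Python sketch. The helper names are hypothetical, and it writes the source as /myfunction with a leading slash, which is the glob form the Firebase Hosting documentation examples use; adjust it if your routing differs.

```python
import json
from pathlib import Path


def add_function_rewrite(config: dict, source: str, function: str) -> dict:
    """Add a Hosting rewrite that sends `source` to a Cloud Function.

    Mutates and returns `config`. An identical rule is only added once,
    so running this repeatedly is harmless.
    """
    hosting = config.setdefault("hosting", {})
    rewrites = hosting.setdefault("rewrites", [])
    rule = {"source": source, "function": function}
    if rule not in rewrites:
        rewrites.append(rule)
    return config


def update_firebase_json(path: str, source: str, function: str) -> None:
    """Read firebase.json, add the rewrite, and write it back pretty-printed."""
    file = Path(path)
    config = json.loads(file.read_text())
    add_function_rewrite(config, source, function)
    file.write_text(json.dumps(config, indent=2) + "\n")
```

For example, update_firebase_json("firebase.json", "/myfunction", "myfunction") run from the project directory adds the rule; running it again leaves a single rewrite in place.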

Now we deploy to Firebase:

$ firebase deploy

=== Deploying to 'gkedemo01'...

i deploying hosting

i hosting[gkedemo01]: beginning deploy...

i hosting[gkedemo01]: found 2 files in public

✔ hosting[gkedemo01]: file upload complete

i hosting[gkedemo01]: finalizing version...

✔ hosting[gkedemo01]: version finalized

i hosting[gkedemo01]: releasing new version...

✔ hosting[gkedemo01]: release complete

✔ Deploy complete!

Project Console: https://console.firebase.google.com/project/gkedemo01/overview

Hosting URL: https://gkedemo01.web.app

We can now see it running under a Firebase URL:

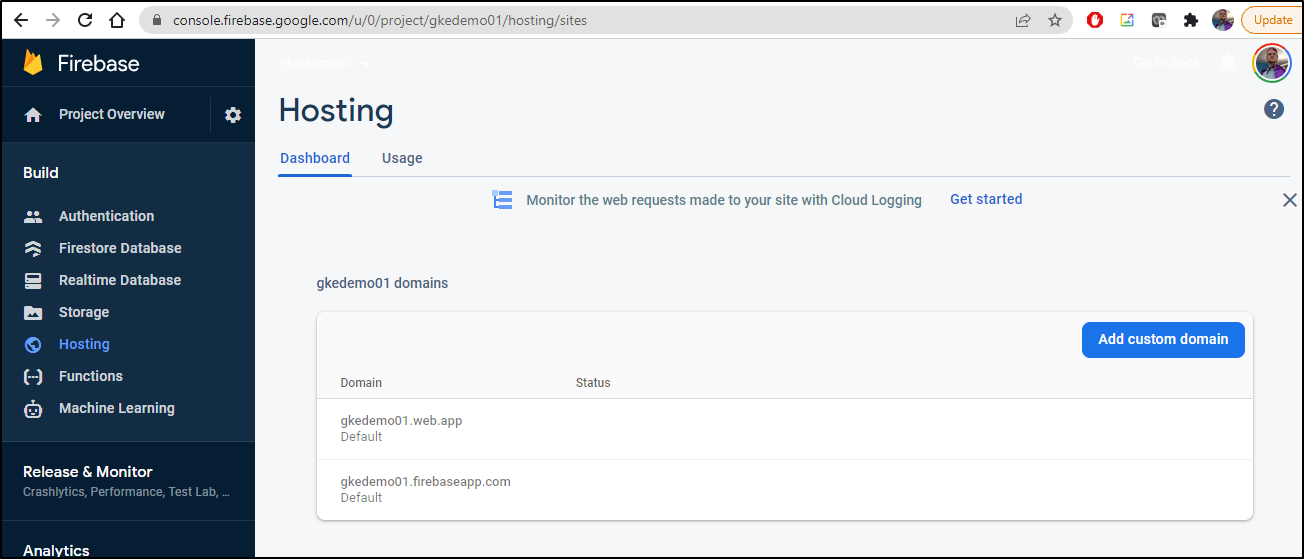

The flow to add a custom domain is similar to the one in Cloud Run:

Under Hosting, select “Add custom domain”

Type in a Name

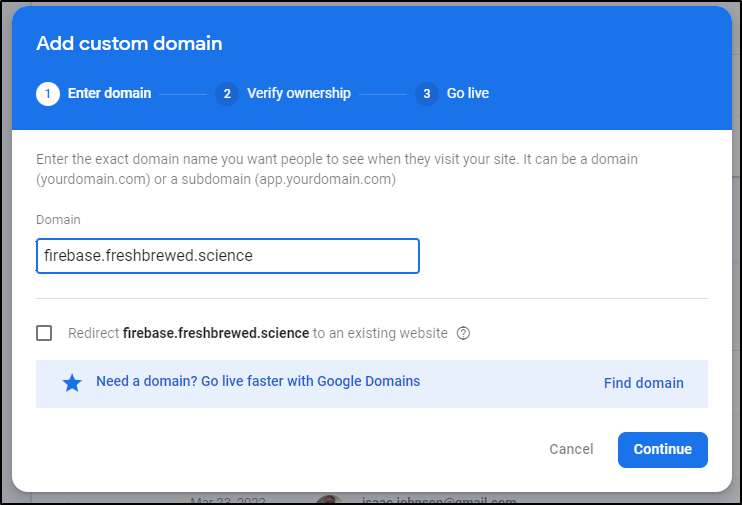

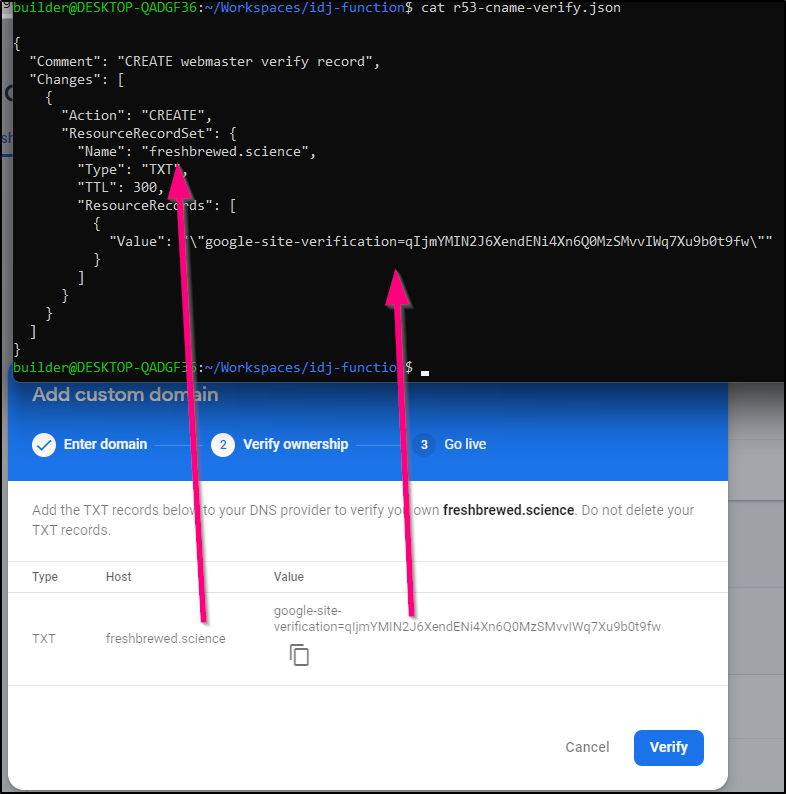

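Next, handle the TXT verification record (this should be familiar from earlier).

As before, create a Route 53 change-batch JSON file.

In my case, I had already verified this domain with another provider, so a TXT record already existed at the apex and the AWS CLI complained: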

$ aws route53 change-resource-record-sets --hosted-zone-id Z39E8QFU0F9PZP --change-batch file://r53-cname-verify.json

An error occurred (InvalidChangeBatch) when calling the ChangeResourceRecordSets operation: [Tried to create resource record set [name='freshbrewed.science.', type='TXT'] but it already exists]

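Instead, we can use UPSERT, which creates the record set if it is missing and updates it if it exists. Be aware that UPSERT replaces all of the record set's existing values, so include in ResourceRecords any older TXT values (such as another provider's verification string) that you still need: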

$ cat r53-cname-verify.json

{

"Comment": "UPSERT webmaster verify record",

"Changes": [

{

"Action": "UPSERT",

"ResourceRecordSet": {

"Name": "freshbrewed.science",

"Type": "TXT",

"TTL": 300,

"ResourceRecords": [

{

"Value": "\"google-site-verification=qIjmYMIN2J6XendENi4Xn6Q0MzSMvvIWq7Xu9b0t9fw\""

}

]

}

}

]

}

$ aws route53 change-resource-record-sets --hosted-zone-id Z39E8QFU0F9PZP --change-batch file://r53-cname-verify.json

{

"ChangeInfo": {

"Id": "/change/C0832003N5X4Y7HT8GO5",

"Status": "PENDING",

"SubmittedAt": "2022-03-23T11:41:40.513Z",

"Comment": "UPSERT webmaster verify record"

}

}
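Because UPSERT overwrites the whole record set, it can help to generate the change batch from the complete list of values you want published. Below is a minimal Python sketch; the function names are hypothetical, and any "old token" you pass is a placeholder for whatever aws route53 list-resource-record-sets shows is currently published.

```python
import json
from typing import List


def txt_upsert_batch(name: str, values: List[str], ttl: int = 300,
                     comment: str = "UPSERT webmaster verify record") -> dict:
    """Build a Route 53 change batch that UPSERTs a complete TXT record set.

    UPSERT replaces every value currently in the record set, so `values`
    must contain all TXT strings the name should keep, not just the new one.
    """
    if not values:
        raise ValueError("a TXT record set needs at least one value")
    for value in values:
        # Keep the sketch simple: refuse strings that would need escaping.
        if '"' in value or "\\" in value:
            raise ValueError(f"value needs escaping: {value!r}")
    return {
        "Comment": comment,
        "Changes": [{
            "Action": "UPSERT",
            "ResourceRecordSet": {
                "Name": name,
                "Type": "TXT",
                "TTL": ttl,
                # Route 53 expects each TXT string wrapped in double quotes.
                "ResourceRecords": [{"Value": f'"{v}"'} for v in values],
            },
        }],
    }


def write_batch(path: str, batch: dict) -> None:
    """Save the batch for use with --change-batch file://<path>."""
    with open(path, "w") as fh:
        json.dump(batch, fh, indent=2)
```

For example, write_batch("r53-cname-verify.json", txt_upsert_batch("freshbrewed.science", [existing_token, "google-site-verification=qIjmYMIN2J6XendENi4Xn6Q0MzSMvvIWq7Xu9b0t9fw"])), where existing_token is a hypothetical variable holding the value you want to keep.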

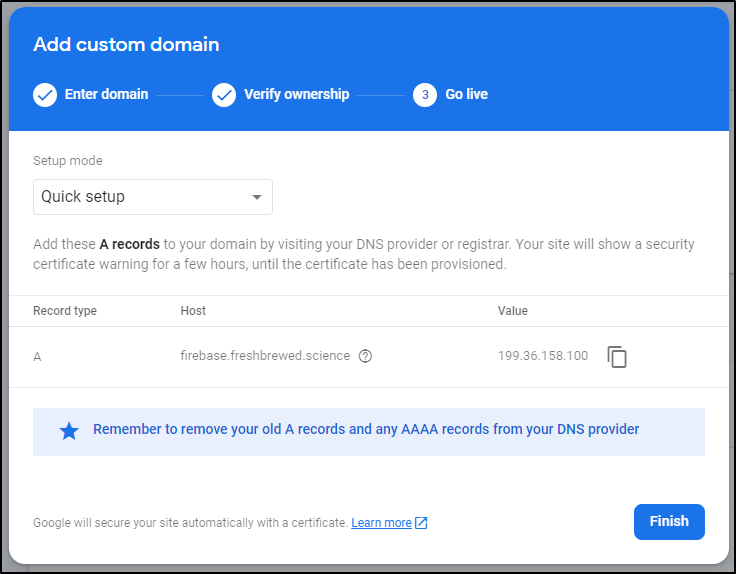

Click Verify in the Firebase console, and Firebase should then give you an A record to point at Firebase Hosting.

That is easy to create, again with the AWS CLI:

$ cat r53-a-create.json

{

"Comment": "CREATE firebase fb.s A record ",

"Changes": [

{

"Action": "CREATE",

"ResourceRecordSet": {

"Name": "firebase.freshbrewed.science",

"Type": "A",

"TTL": 300,

"ResourceRecords": [

{

"Value": "199.36.158.100"

}

]

}

}

]

}

$ aws route53 change-resource-record-sets --hosted-zone-id Z39E8QFU0F9PZP --change-batch file://r53-a-create.json

{

"ChangeInfo": {

"Id": "/change/C08372991HUJHQM3XPDZ6",

"Status": "PENDING",

"SubmittedAt": "2022-03-23T11:44:35.919Z",

"Comment": "CREATE firebase fb.s A record "

}

}

Note that the Firebase console warns it can take a few hours to provision the SSL certificate:

Add these A records to your domain by visiting your DNS provider or registrar. Your site will show a security certificate warning for a few hours, until the certificate has been provisioned.

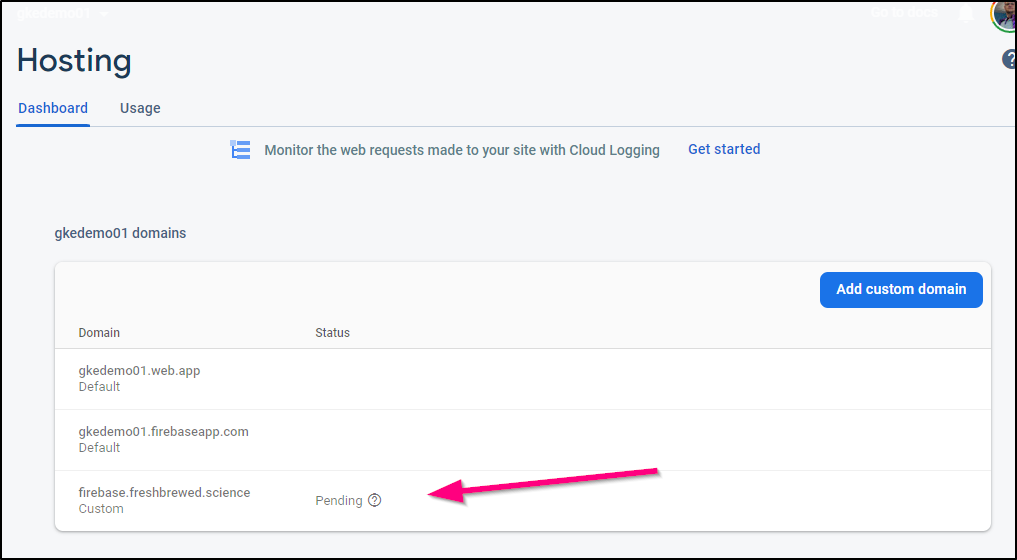

We should see our new URL listed

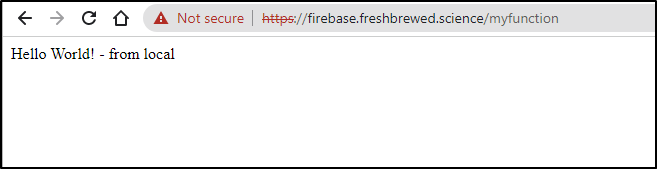

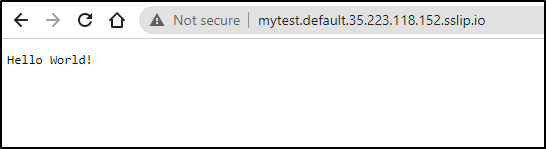

For a demo, I’m okay with the invalid SSL certificate for now (it should be provisioned within the next hour or so), and we can already see the site works:

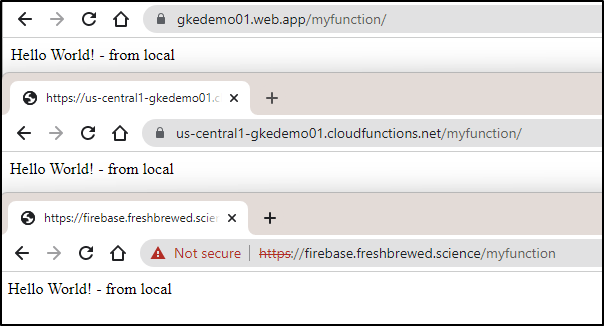

This means we are now serving our Cloud Function in three ways:

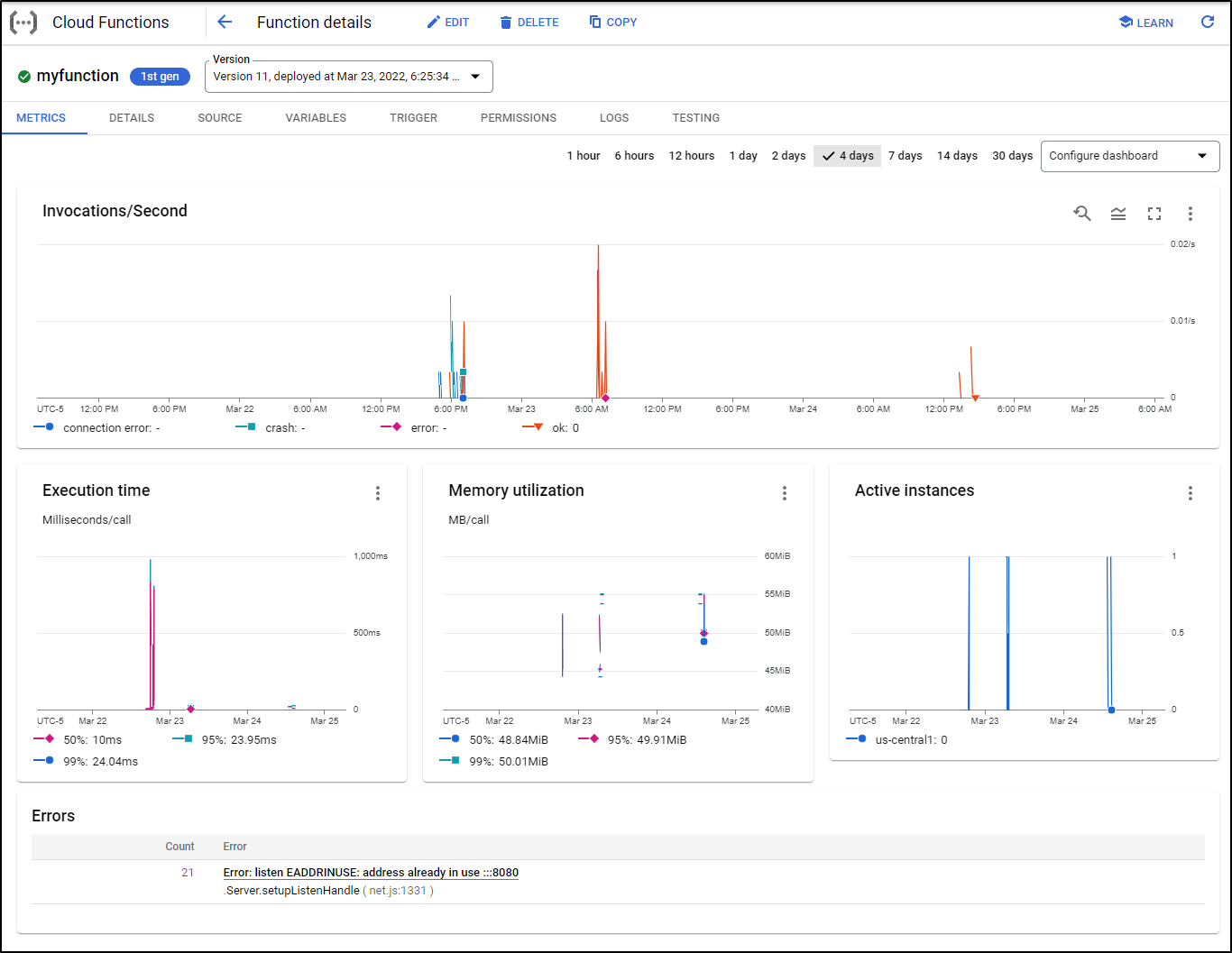

Metrics

As with Cloud Run, we can see basic usage metrics under the Metrics tab.

Source

Unique to Cloud Functions is the ability to view the source behind the function, which we can see in the Source tab:

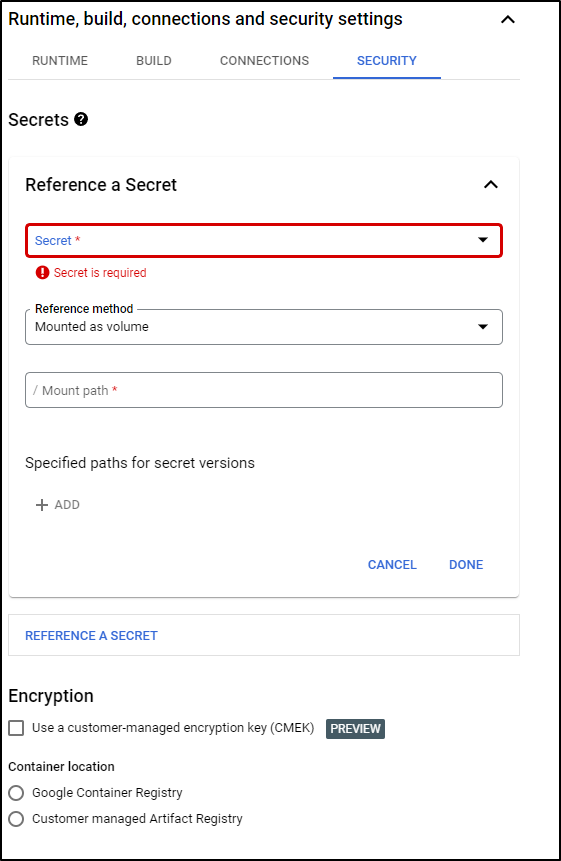

Variables and Secrets

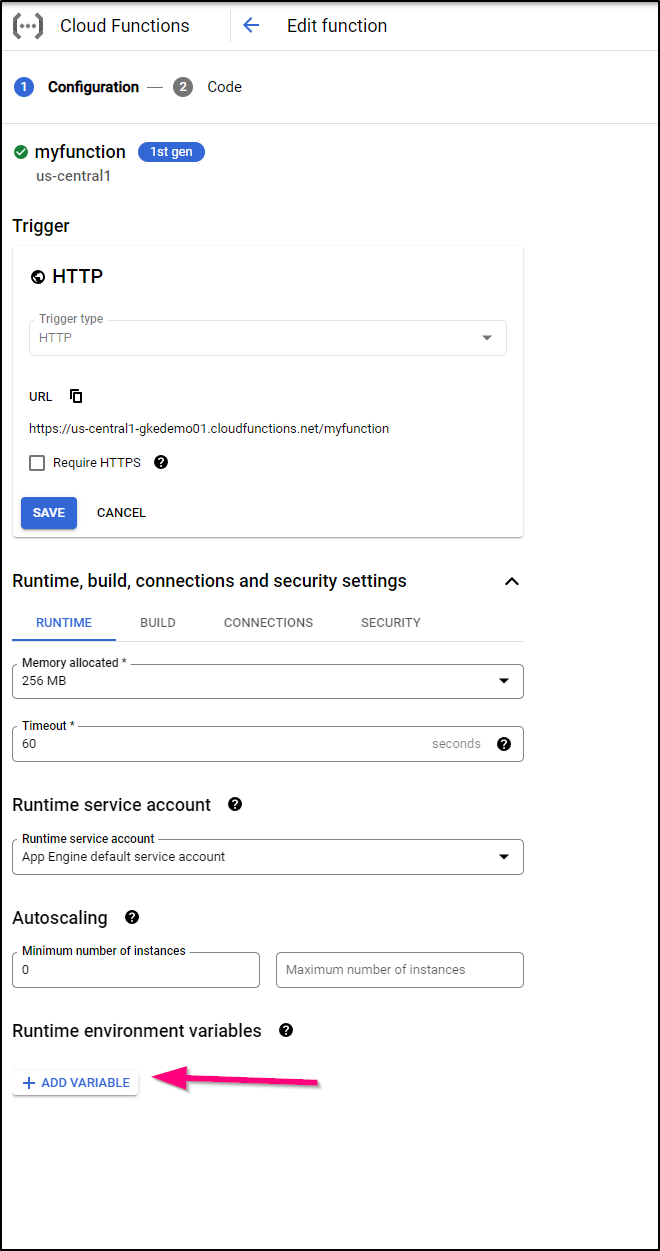

I can view the Variables and Secrets on the Variables tab.

That said, if I edit the function in the console, I can add runtime variables or secrets under the build settings:

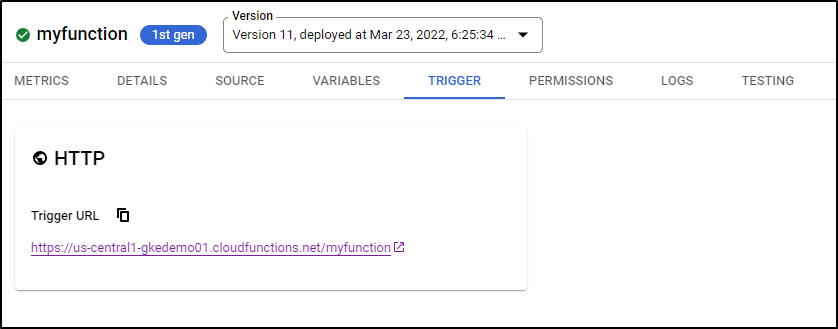

Triggers

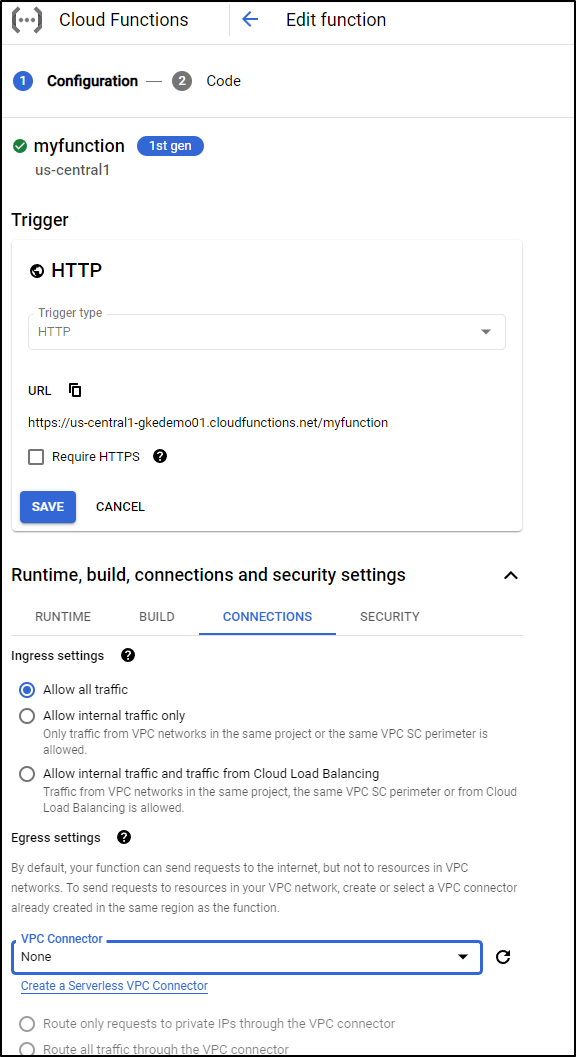

I can view the current trigger here. Again, if I edit the function, I can require HTTPS and/or connect it to a VPC (private network).

Logs

As before, the Logs tab shows the current logs, which we can optionally filter by severity and keywords.

Testing

Also unique to Cloud Functions, we can test the function directly by sending it JSON payloads. Our function is just a basic Express app that handles GET requests, but if we wanted to validate JSON payloads POSTed to our function, we could do so here.

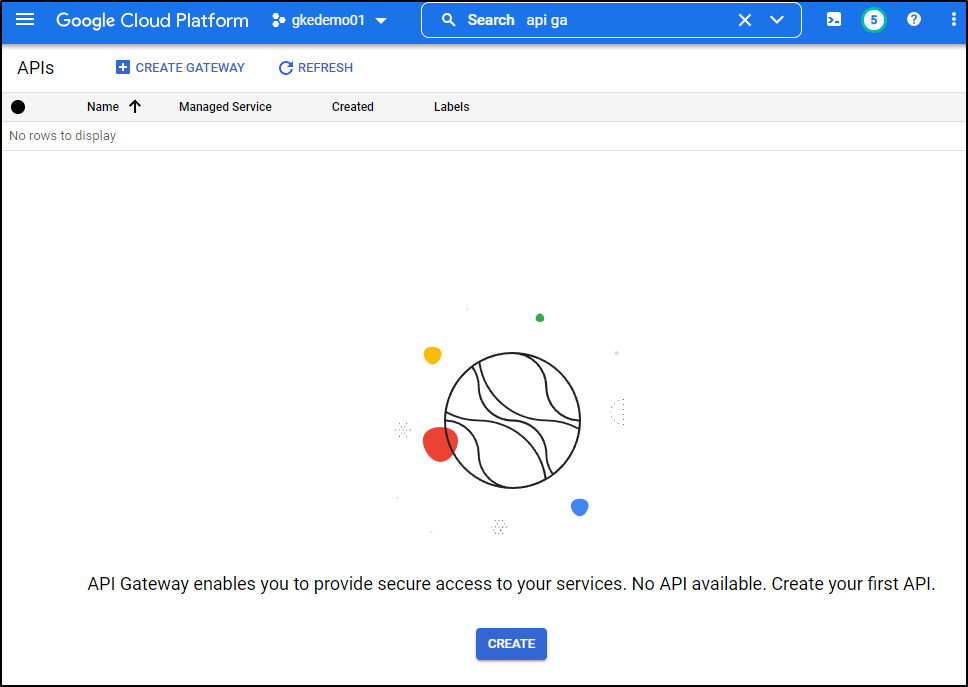

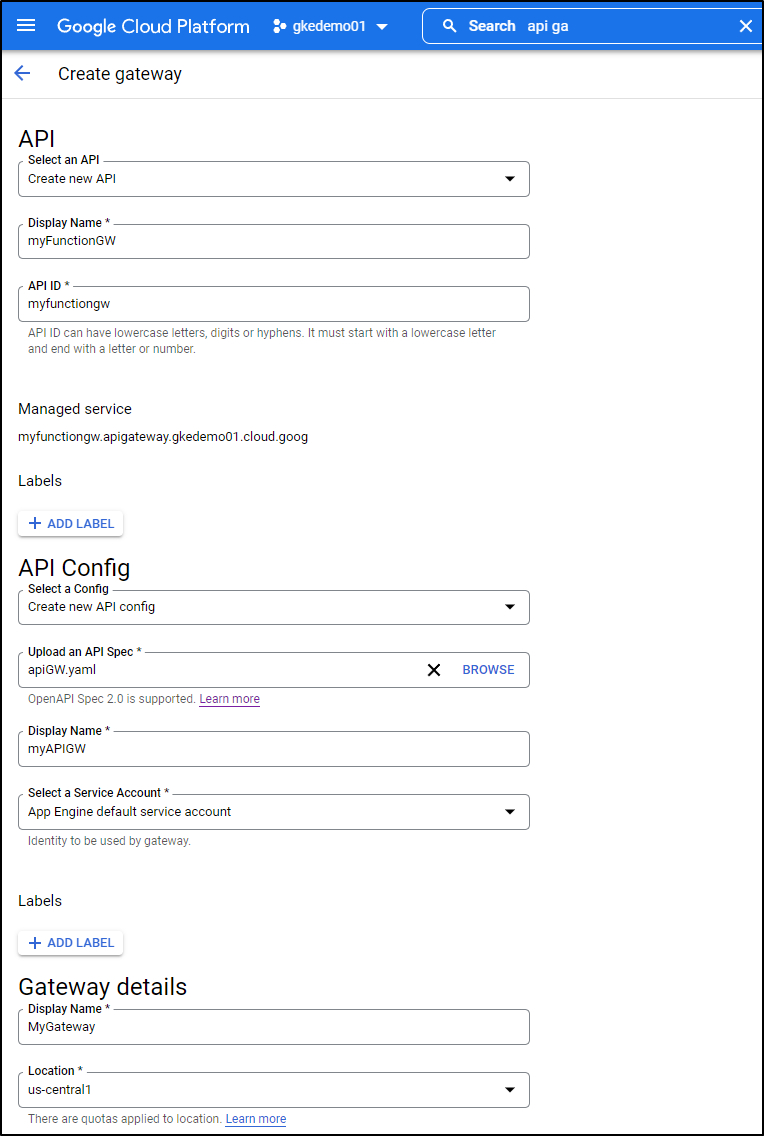

API GW

Another suggestion sent to me was to use API Gateway to route traffic to Functions. Let’s set that up as well.

First, you’ll need to enable the API Gateway API and the Service Control API. You can then create the API Gateway instance.

This uses an OpenAPI (Swagger 2.0) spec, which needs an x-google-backend entry to tell the gateway where to route traffic:

$ cat /mnt/c/Users/isaac/Downloads/apiGW.yaml
swagger: "2.0"
info:
  title: myfunctiongw
  description: "Get Hello World"
  version: "1.0.0"
schemes:
  - "https"
paths:
  "/myfunction":
    get:
      description: "Get Hello World"
      operationId: "myfunction"
      x-google-backend:
        address: https://us-central1-gkedemo01.cloudfunctions.net/myfunction
      responses:
        200:
          description: "Success."
          schema:
            type: string
        400:
          description: "Wrong endpoint"
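Since an operation without x-google-backend has nowhere to route, a quick check before uploading can be handy. This Python sketch assumes the spec has already been loaded into a dict (for example with PyYAML's yaml.safe_load, left out here to stay dependency-free). It treats a top-level x-google-backend as covering every operation, which is how I understand the API Gateway docs to describe it; the function name is hypothetical.

```python
from typing import List

# Operation keys allowed under a Swagger 2.0 path item.
HTTP_METHODS = {"get", "put", "post", "delete", "options", "head", "patch"}


def operations_missing_backend(spec: dict) -> List[str]:
    """Return "METHOD /path" for every operation with no x-google-backend.

    A top-level x-google-backend is treated as covering all operations.
    """
    if "x-google-backend" in spec:
        return []
    missing = []
    for path, item in spec.get("paths", {}).items():
        for method, operation in item.items():
            if method in HTTP_METHODS and "x-google-backend" not in operation:
                missing.append(f"{method.upper()} {path}")
    return missing
```

An empty list means every operation has a backend; anything else names the operations the gateway could not route.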

We upload that file during the creation step:

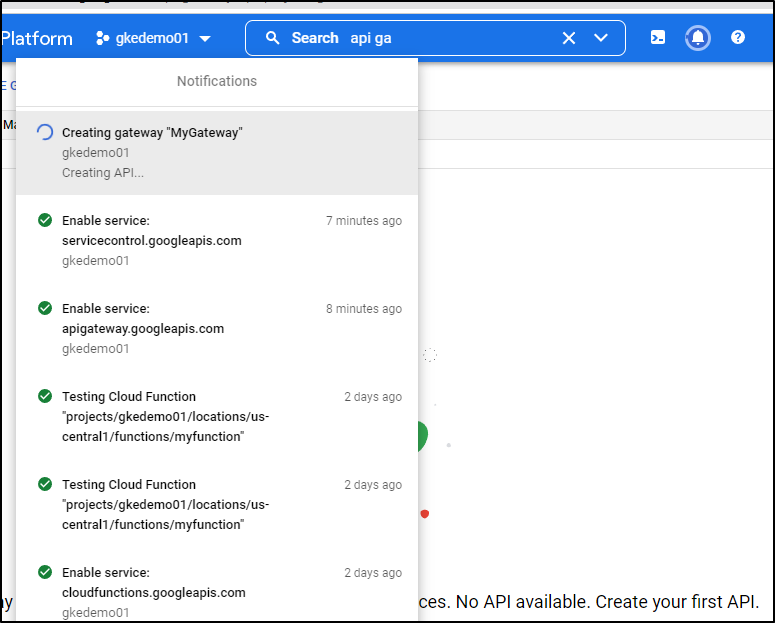

This starts the creation process:

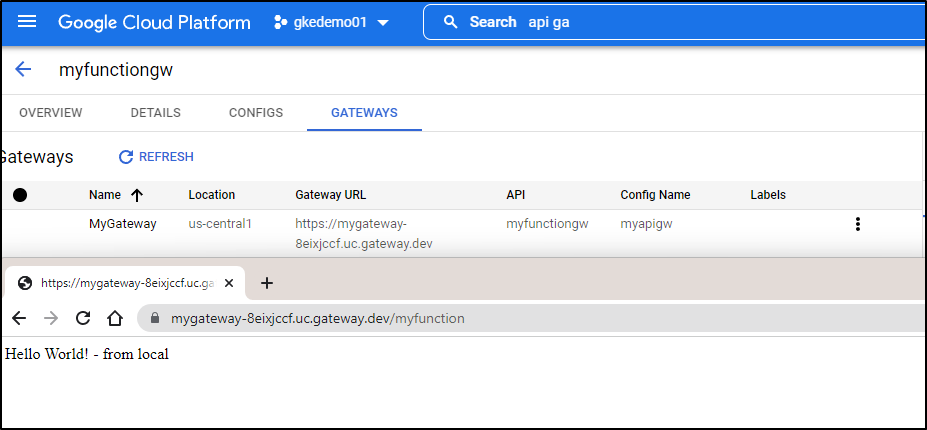

Once created, I can see the Gateway URL in the Gateways section.

I can use that Gateway URL to reach the function: https://mygateway-8eixjccf.uc.gateway.dev/myfunction

It is possible to put an HTTP(S) load balancer in front of API Gateway (see: https://cloud.google.com/api-gateway/docs/gateway-serverless-neg). However, that feature is in beta and adds far too much complexity just to reach a function (forwarding rule to URL map to backend service to serverless NEG to API Gateway to Cloud Run/Function).

KNative

Now let’s set up Knative in GKE.

First, we need a K8s cluster to use:

$ gcloud container clusters create mycrtestcluster

WARNING: Starting in January 2021, clusters will use the Regular release channel by default when `--cluster-version`, `--release-channel`, `--no-enable-autoupgrade`, and `--no-enable-autorepair` flags are not specified.

WARNING: Currently VPC-native is the default mode during cluster creation for versions greater than 1.21.0-gke.1500. To create advanced routes based clusters, please pass the `--no-enable-ip-alias` flag

WARNING: Starting with version 1.18, clusters will have shielded GKE nodes by default.

WARNING: Your Pod address range (`--cluster-ipv4-cidr`) can accommodate at most 1008 node(s).

WARNING: Starting with version 1.19, newly created clusters and node-pools will have COS_CONTAINERD as the default node image when no image type is specified.

Creating cluster mycrtestcluster in us-central1-f... Cluster is being health-checked (master is healthy)...done.

Created [https://container.googleapis.com/v1/projects/gkedemo01/zones/us-central1-f/clusters/mycrtestcluster].

To inspect the contents of your cluster, go to: https://console.cloud.google.com/kubernetes/workload_/gcloud/us-central1-f/mycrtestcluster?project=gkedemo01

kubeconfig entry generated for mycrtestcluster.

NAME LOCATION MASTER_VERSION MASTER_IP MACHINE_TYPE NODE_VERSION NUM_NODES STATUS

mycrtestcluster us-central1-f 1.21.9-gke.1002 34.70.179.30 e2-medium 1.21.9-gke.1002 3 RUNNING

By default, gcloud sets up the kubeconfig entry for the new cluster (no need to run get-credentials):

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

gke-mycrtestcluster-default-pool-f70341f6-9648 Ready <none> 18m v1.21.9-gke.1002

gke-mycrtestcluster-default-pool-f70341f6-d7ds Ready <none> 18m v1.21.9-gke.1002

gke-mycrtestcluster-default-pool-f70341f6-qlpk Ready <none> 18m v1.21.9-gke.1002

First, we install the prerequisites: the Knative Serving CRDs and core components.

$ kubectl apply -f https://github.com/knative/serving/releases/download/knative-v1.3.0/serving-crds.yaml

customresourcedefinition.apiextensions.k8s.io/certificates.networking.internal.knative.dev created

customresourcedefinition.apiextensions.k8s.io/configurations.serving.knative.dev created

customresourcedefinition.apiextensions.k8s.io/clusterdomainclaims.networking.internal.knative.dev created

customresourcedefinition.apiextensions.k8s.io/domainmappings.serving.knative.dev created

customresourcedefinition.apiextensions.k8s.io/ingresses.networking.internal.knative.dev created

customresourcedefinition.apiextensions.k8s.io/metrics.autoscaling.internal.knative.dev created

customresourcedefinition.apiextensions.k8s.io/podautoscalers.autoscaling.internal.knative.dev created

customresourcedefinition.apiextensions.k8s.io/revisions.serving.knative.dev created

customresourcedefinition.apiextensions.k8s.io/routes.serving.knative.dev created

customresourcedefinition.apiextensions.k8s.io/serverlessservices.networking.internal.knative.dev created

customresourcedefinition.apiextensions.k8s.io/services.serving.knative.dev created

customresourcedefinition.apiextensions.k8s.io/images.caching.internal.knative.dev created

$ kubectl apply -f https://github.com/knative/serving/releases/download/knative-v1.3.0/serving-core.yaml

namespace/knative-serving created

clusterrole.rbac.authorization.k8s.io/knative-serving-aggregated-addressable-resolver created

clusterrole.rbac.authorization.k8s.io/knative-serving-addressable-resolver created

clusterrole.rbac.authorization.k8s.io/knative-serving-namespaced-admin created

clusterrole.rbac.authorization.k8s.io/knative-serving-namespaced-edit created

clusterrole.rbac.authorization.k8s.io/knative-serving-namespaced-view created

clusterrole.rbac.authorization.k8s.io/knative-serving-core created

clusterrole.rbac.authorization.k8s.io/knative-serving-podspecable-binding created

serviceaccount/controller created

clusterrole.rbac.authorization.k8s.io/knative-serving-admin created

clusterrolebinding.rbac.authorization.k8s.io/knative-serving-controller-admin created

clusterrolebinding.rbac.authorization.k8s.io/knative-serving-controller-addressable-resolver created

customresourcedefinition.apiextensions.k8s.io/images.caching.internal.knative.dev unchanged

customresourcedefinition.apiextensions.k8s.io/certificates.networking.internal.knative.dev unchanged

customresourcedefinition.apiextensions.k8s.io/configurations.serving.knative.dev unchanged

customresourcedefinition.apiextensions.k8s.io/clusterdomainclaims.networking.internal.knative.dev unchanged

customresourcedefinition.apiextensions.k8s.io/domainmappings.serving.knative.dev unchanged

customresourcedefinition.apiextensions.k8s.io/ingresses.networking.internal.knative.dev unchanged

customresourcedefinition.apiextensions.k8s.io/metrics.autoscaling.internal.knative.dev unchanged

customresourcedefinition.apiextensions.k8s.io/podautoscalers.autoscaling.internal.knative.dev unchanged

customresourcedefinition.apiextensions.k8s.io/revisions.serving.knative.dev unchanged

customresourcedefinition.apiextensions.k8s.io/routes.serving.knative.dev unchanged

customresourcedefinition.apiextensions.k8s.io/serverlessservices.networking.internal.knative.dev unchanged

customresourcedefinition.apiextensions.k8s.io/services.serving.knative.dev unchanged

image.caching.internal.knative.dev/queue-proxy created

configmap/config-autoscaler created

configmap/config-defaults created

configmap/config-deployment created

configmap/config-domain created

configmap/config-features created

configmap/config-gc created

configmap/config-leader-election created

configmap/config-logging created

configmap/config-network created

configmap/config-observability created

configmap/config-tracing created

horizontalpodautoscaler.autoscaling/activator created

poddisruptionbudget.policy/activator-pdb created

deployment.apps/activator created

service/activator-service created

deployment.apps/autoscaler created

service/autoscaler created

deployment.apps/controller created

service/controller created

deployment.apps/domain-mapping created

deployment.apps/domainmapping-webhook created

service/domainmapping-webhook created

horizontalpodautoscaler.autoscaling/webhook created

poddisruptionbudget.policy/webhook-pdb created

deployment.apps/webhook created

service/webhook created

validatingwebhookconfiguration.admissionregistration.k8s.io/config.webhook.serving.knative.dev created

mutatingwebhookconfiguration.admissionregistration.k8s.io/webhook.serving.knative.dev created

mutatingwebhookconfiguration.admissionregistration.k8s.io/webhook.domainmapping.serving.knative.dev created

secret/domainmapping-webhook-certs created

validatingwebhookconfiguration.admissionregistration.k8s.io/validation.webhook.domainmapping.serving.knative.dev created

validatingwebhookconfiguration.admissionregistration.k8s.io/validation.webhook.serving.knative.dev created

secret/webhook-certs created

Next, we need a networking layer. The docs cover Kourier, Istio and Contour.

We’ll use Kourier for this demo

$ kubectl apply -f https://github.com/knative/net-kourier/releases/download/knative-v1.3.0/kourier.yaml

namespace/kourier-system created

configmap/kourier-bootstrap created

configmap/config-kourier created

serviceaccount/net-kourier created

clusterrole.rbac.authorization.k8s.io/net-kourier created

clusterrolebinding.rbac.authorization.k8s.io/net-kourier created

deployment.apps/net-kourier-controller created

service/net-kourier-controller created

deployment.apps/3scale-kourier-gateway created

service/kourier created

service/kourier-internal created

Then we make Kourier the default ingress for Knative Serving:

$ kubectl patch configmap/config-network --namespace knative-serving --type merge --patch '{"data":{"ingress.class":"kourier.ingress.networking.knative.dev"}}'

configmap/config-network patched

We can see the external load balancer now serving Kourier:

$ kubectl --namespace kourier-system get service kourier

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kourier LoadBalancer 10.92.12.121 35.223.118.152 80:31594/TCP,443:32000/TCP 64s

Lastly, let’s set up DNS. We can use sslip.io for this:

$ kubectl apply -f https://github.com/knative/serving/releases/download/knative-v1.3.0/serving-default-domain.yaml

job.batch/default-domain created

service/default-domain-service created
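The default-domain job configures Knative to hand out hostnames under the ingress IP plus sslip.io, and sslip.io is a public wildcard DNS service that answers with the IP address embedded in the name. The resulting pattern, {service}.{namespace}.{ip}.sslip.io, matches the URLs kn prints later in this post. A small Python sketch of both directions, using hypothetical helpers that only handle the dotted form of sslip.io names:

```python
import ipaddress


def knative_url(service: str, namespace: str, ingress_ip: str,
                scheme: str = "http") -> str:
    """URL Knative assigns when the default domain is <ingress IP>.sslip.io."""
    ipaddress.IPv4Address(ingress_ip)  # raises ValueError if not an IPv4 address
    return f"{scheme}://{service}.{namespace}.{ingress_ip}.sslip.io"


def sslip_ip(hostname: str) -> str:
    """Recover the IPv4 address sslip.io would answer with for a dotted name."""
    labels = hostname.rstrip(".").split(".")
    if labels[-2:] != ["sslip", "io"]:
        raise ValueError(f"not an sslip.io name: {hostname}")
    # The four labels just before "sslip.io" hold the dotted IP address.
    return str(ipaddress.IPv4Address(".".join(labels[-6:-2])))
```

For example, knative_url("mytest", "default", "35.223.118.152") gives the same URL kn reports for the GKE hello world service.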

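Then we install Knative’s cert-manager integration (net-certmanager) in case we need it for TLS. Note that this is only the integration layer; cert-manager itself is a separate install: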

$ kubectl apply -f https://github.com/knative/net-certmanager/releases/download/knative-v1.3.0/release.yaml

clusterrole.rbac.authorization.k8s.io/knative-serving-certmanager created

validatingwebhookconfiguration.admissionregistration.k8s.io/config.webhook.net-certmanager.networking.internal.knative.dev created

secret/net-certmanager-webhook-certs created

configmap/config-certmanager created

deployment.apps/net-certmanager-controller created

service/net-certmanager-controller created

deployment.apps/net-certmanager-webhook created

service/net-certmanager-webhook created

Next, we want to install the Knative CLI (kn). You can use Homebrew, a container image, Go, or a downloaded binary (see the docs).

We’ll just use Homebrew on Linux:

$ brew install kn

Running `brew update --preinstall`...

==> Auto-updated Homebrew!

Updated 1 tap (homebrew/core).


==> Downloading https://ghcr.io/v2/homebrew/core/kn/manifests/1.3.1

######################################################################## 100.0%

==> Downloading https://ghcr.io/v2/homebrew/core/kn/blobs/sha256:09c1dc5c1de3e67551ad5d462411e4d793f9e78c3159b79d746609f1bab103d1

==> Downloading from https://pkg-containers.githubusercontent.com/ghcr1/blobs/sha256:09c1dc5c1de3e67551ad5d462411e4d793f9e78c3159b79d746609f1bab

######################################################################## 100.0%

==> Pouring kn--1.3.1.x86_64_linux.bottle.tar.gz

🍺 /home/linuxbrew/.linuxbrew/Cellar/kn/1.3.1: 6 files, 56MB

==> `brew cleanup` has not been run in the last 30 days, running now...

Disable this behaviour by setting HOMEBREW_NO_INSTALL_CLEANUP.

Hide these hints with HOMEBREW_NO_ENV_HINTS (see `man brew`).

Removing: /home/builder/.cache/Homebrew/Logs/k9s... (4KB)

We can use the kn binary to deploy the hello world sample:

$ kn service create mytest --image gcr.io/knative-samples/helloworld-go --port 8080 --env TARGET=World --revision-name=world

Creating service 'mytest' in namespace 'default':

0.063s The Configuration is still working to reflect the latest desired specification.

0.133s The Route is still working to reflect the latest desired specification.

0.207s Configuration "mytest" is waiting for a Revision to become ready.

22.695s ...

22.866s Ingress has not yet been reconciled.

23.011s Waiting for load balancer to be ready

23.180s Ready to serve.

Service 'mytest' created to latest revision 'mytest-world' is available at URL:

http://mytest.default.35.223.118.152.sslip.io
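The kn command above is imperative. A roughly equivalent declarative Knative Service, sketched in Python as a dict you could dump to JSON and pass to kubectl apply -f, might look like this. It is an approximation rather than kn's exact output (kn may add its own annotations), and the helper names are hypothetical. The revision name follows what kn printed: --revision-name=world became mytest-world.

```python
import json
from typing import Dict, Optional


def knative_service(name: str, image: str, port: int,
                    env: Optional[Dict[str, str]] = None,
                    revision_suffix: Optional[str] = None,
                    namespace: str = "default") -> dict:
    """Approximate the Knative Service that `kn service create` produces."""
    container = {"image": image, "ports": [{"containerPort": port}]}
    if env:
        container["env"] = [{"name": k, "value": v} for k, v in env.items()]
    template = {"spec": {"containers": [container]}}
    if revision_suffix:
        # kn printed 'mytest-world' for --revision-name=world, so prefix the service name.
        template["metadata"] = {"name": f"{name}-{revision_suffix}"}
    return {
        "apiVersion": "serving.knative.dev/v1",
        "kind": "Service",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {"template": template},
    }


def to_manifest(service: dict) -> str:
    """Serialize as JSON, which kubectl apply -f accepts as well as YAML."""
    return json.dumps(service, indent=2)
```

For example, writing to_manifest(knative_service("mytest", "gcr.io/knative-samples/helloworld-go", 8080, {"TARGET": "World"}, "world")) to mytest.json lets you keep the service definition in source control and apply it with kubectl apply -f mytest.json.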

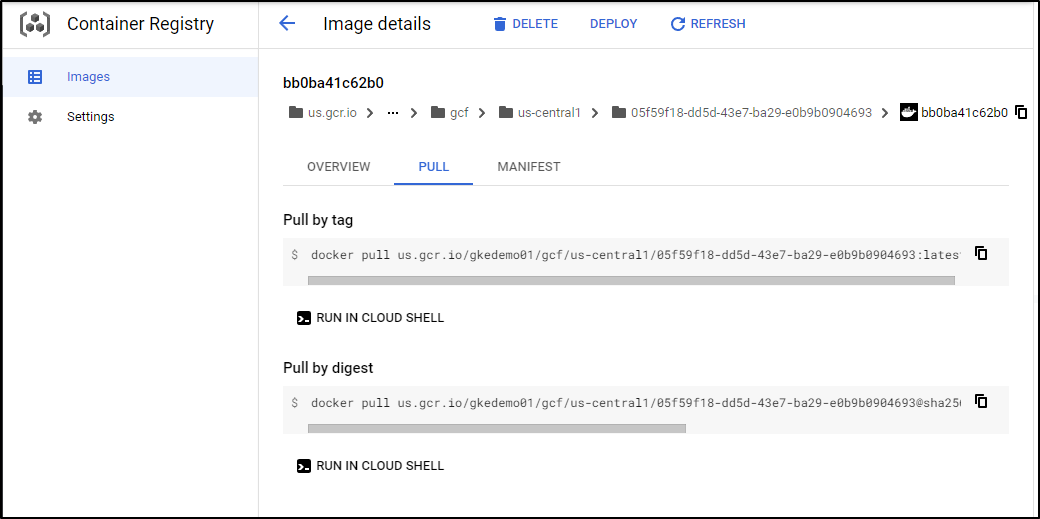

We can look up the container image that Cloud Functions built earlier in GCR in the Cloud Console.

Because our GKE cluster can pull images from our project’s Container Registry (GCR) without extra credentials, we can use that image in a Knative deploy:

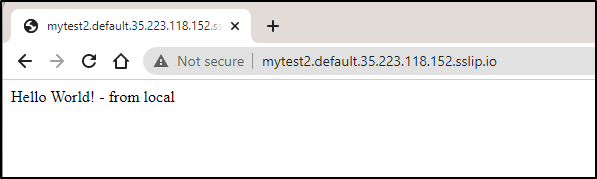

$ kn service create mytest2 --image us.gcr.io/gkedemo01/gcf/us-central1/05f59f18-dd5d-43e7-ba29-e0b9b0904693:latest --port 8080 --revision-name=world

Creating service 'mytest2' in namespace 'default':

0.071s The Configuration is still working to reflect the latest desired specification.

0.149s The Route is still working to reflect the latest desired specification.

0.772s Configuration "mytest2" is waiting for a Revision to become ready.

40.340s ...

40.469s Ingress has not yet been reconciled.

40.602s Waiting for load balancer to be ready

40.810s Ready to serve.

Service 'mytest2' created to latest revision 'mytest2-world' is available at URL:

http://mytest2.default.35.223.118.152.sslip.io

The idea of Knative, of course, is to scale to 0 when not in use. Otherwise, it would be just as easy to use a Kubernetes deployment.

We can double check scaling to zero is enabled:

$ kubectl get cm config-autoscaler -n knative-serving -o yaml | grep enable-scale-to-zero | head -n1

enable-scale-to-zero: "true"
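One caveat: the stock config-autoscaler ConfigMap carries its settings as commented documentation under an _example key, so a grep may match that text rather than an active override. Since Knative ignores _example and scale-to-zero defaults to true, the answer here is the same, but a stricter check reads the real data key. A Python sketch with a hypothetical helper, taking the parsed output of kubectl get cm config-autoscaler -n knative-serving -o json:

```python
# Knative Serving's default when enable-scale-to-zero is not set.
DEFAULT_SCALE_TO_ZERO = "true"


def scale_to_zero_enabled(configmap: dict) -> bool:
    """Read enable-scale-to-zero from a parsed config-autoscaler ConfigMap.

    Text under the `_example` key is documentation only and is ignored;
    only a real top-level data key overrides the default.
    """
    data = configmap.get("data") or {}
    value = data.get("enable-scale-to-zero", DEFAULT_SCALE_TO_ZERO)
    return value.strip().lower() == "true"
```

For example, scale_to_zero_enabled(json.loads(subprocess.check_output(["kubectl", "get", "cm", "config-autoscaler", "-n", "knative-serving", "-o", "json"]))) returns True on a stock install.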

Here we can see a service instantiated, then another, then terminating after a minute of idle:

Costs

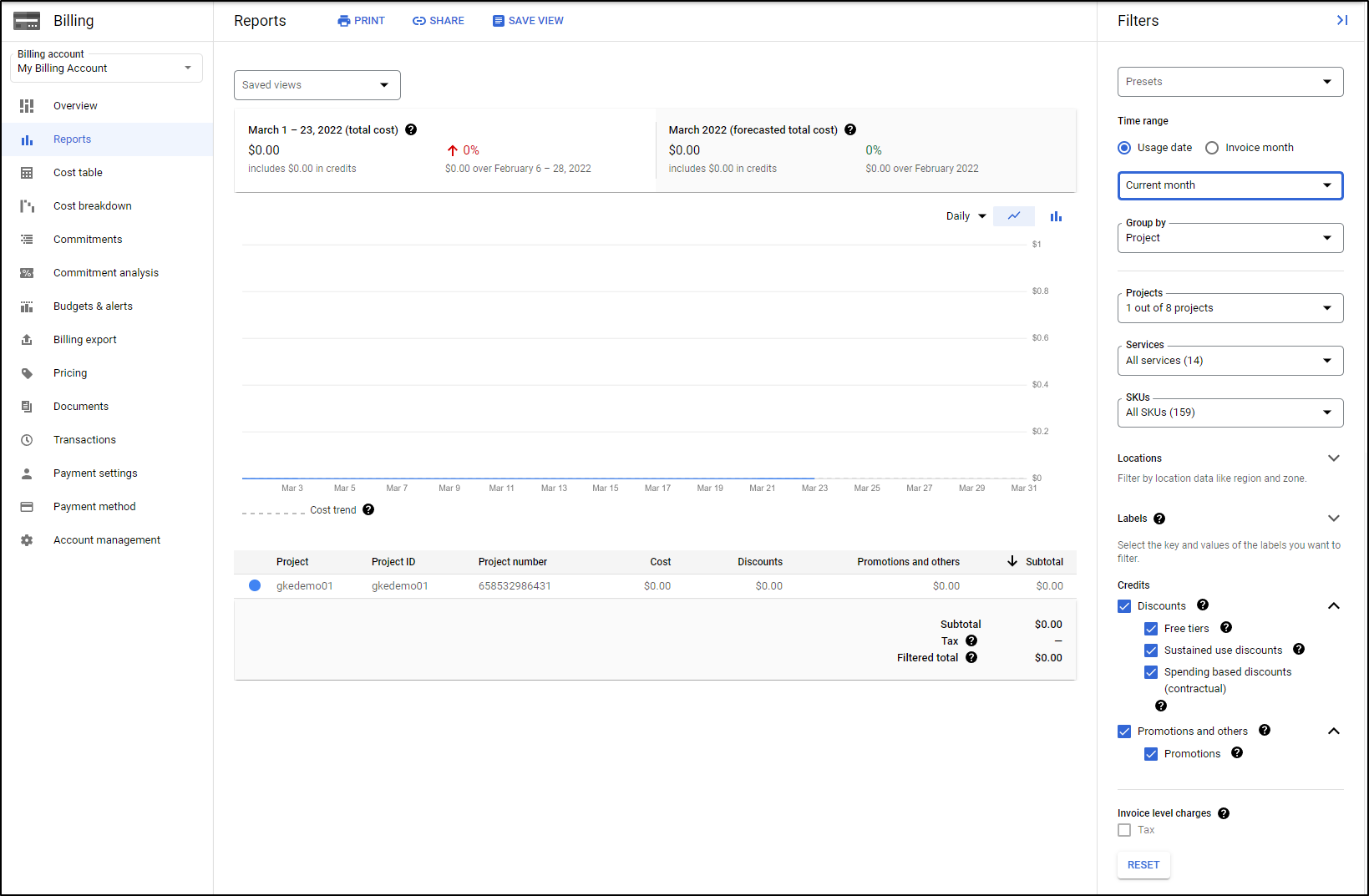

At present, with some of these services (not GKE) running for more than a day, I can say they haven’t accrued any costs:

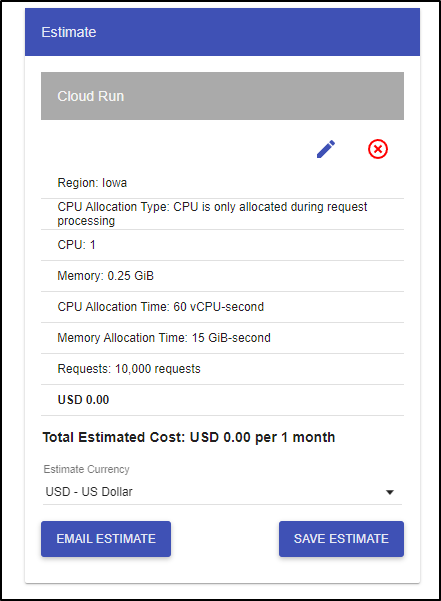

Let’s make some assumptions. A well-used function might get 10k hits a month; that seems fairly normal for something used by an enterprise app. Since this is a small function, it shouldn’t need much time per call. I estimated 2s (120ms).

If you don’t need a warm instance always running, it stays in the free tier:

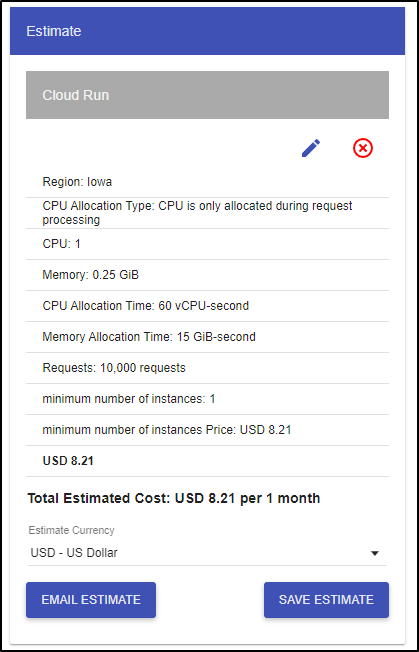
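To sanity-check the free-tier result, here is the arithmetic as a Python sketch. The free-tier limits are assumptions based on my understanding of Cloud Run's monthly free tier (please verify against the current pricing page), the 1 vCPU / 0.5 GiB instance size is also my own assumption, and the model ignores concurrency and minimum instances.

```python
from dataclasses import dataclass

# Assumed Cloud Run monthly free tier; check the current pricing page.
FREE_REQUESTS = 2_000_000
FREE_VCPU_SECONDS = 180_000
FREE_GIB_SECONDS = 360_000


@dataclass
class Workload:
    requests_per_month: int
    seconds_per_request: float
    vcpus: float = 1.0        # assumed instance size
    memory_gib: float = 0.5   # assumed instance size


def usage(w: Workload) -> dict:
    """Monthly usage if each request keeps one instance busy for its full duration.

    Ignores concurrency (which can only reduce billed time) and any
    always-on minimum ("warm") instances.
    """
    busy_seconds = w.requests_per_month * w.seconds_per_request
    return {
        "requests": w.requests_per_month,
        "vcpu_seconds": busy_seconds * w.vcpus,
        "gib_seconds": busy_seconds * w.memory_gib,
    }


def within_free_tier(w: Workload) -> bool:
    u = usage(w)
    return (u["requests"] <= FREE_REQUESTS
            and u["vcpu_seconds"] <= FREE_VCPU_SECONDS
            and u["gib_seconds"] <= FREE_GIB_SECONDS)
```

With the numbers above, usage(Workload(10_000, 2.0)) comes to 20,000 vCPU-seconds and 10,000 GiB-seconds, far below the assumed limits, so within_free_tier returns True.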

But if you need a “warm” instance always running, it will cost just over US$8/mo (today):

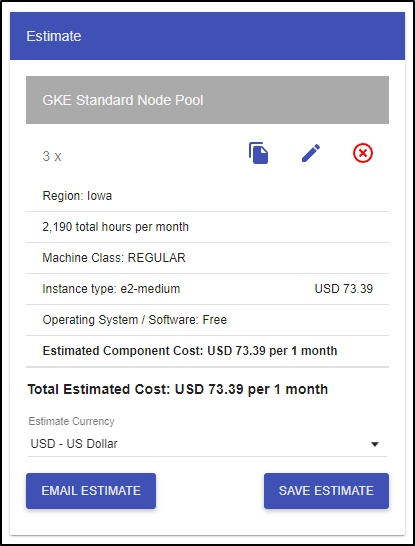

Let’s compare that with the default GKE cluster I spun up earlier. By default, it creates 3 e2-medium nodes, so just for compute and hosting, that’s US$73.39/mo (today):

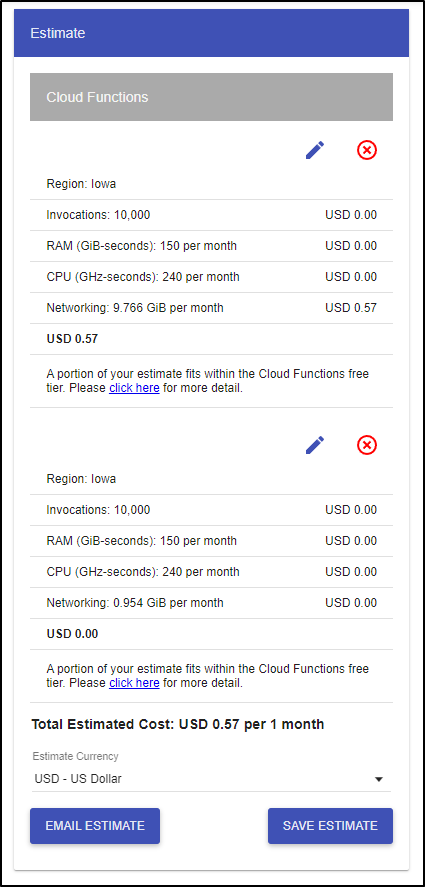

Cloud Functions, even without a “warm” instance, will cost us something, because we are charged for networking.

Here I assumed 10k invocations at 2s each and guessed 1MB of bandwidth per execution, which totaled US$0.57 a month. When I lowered that to 100KB of data, we stayed in the free tier:

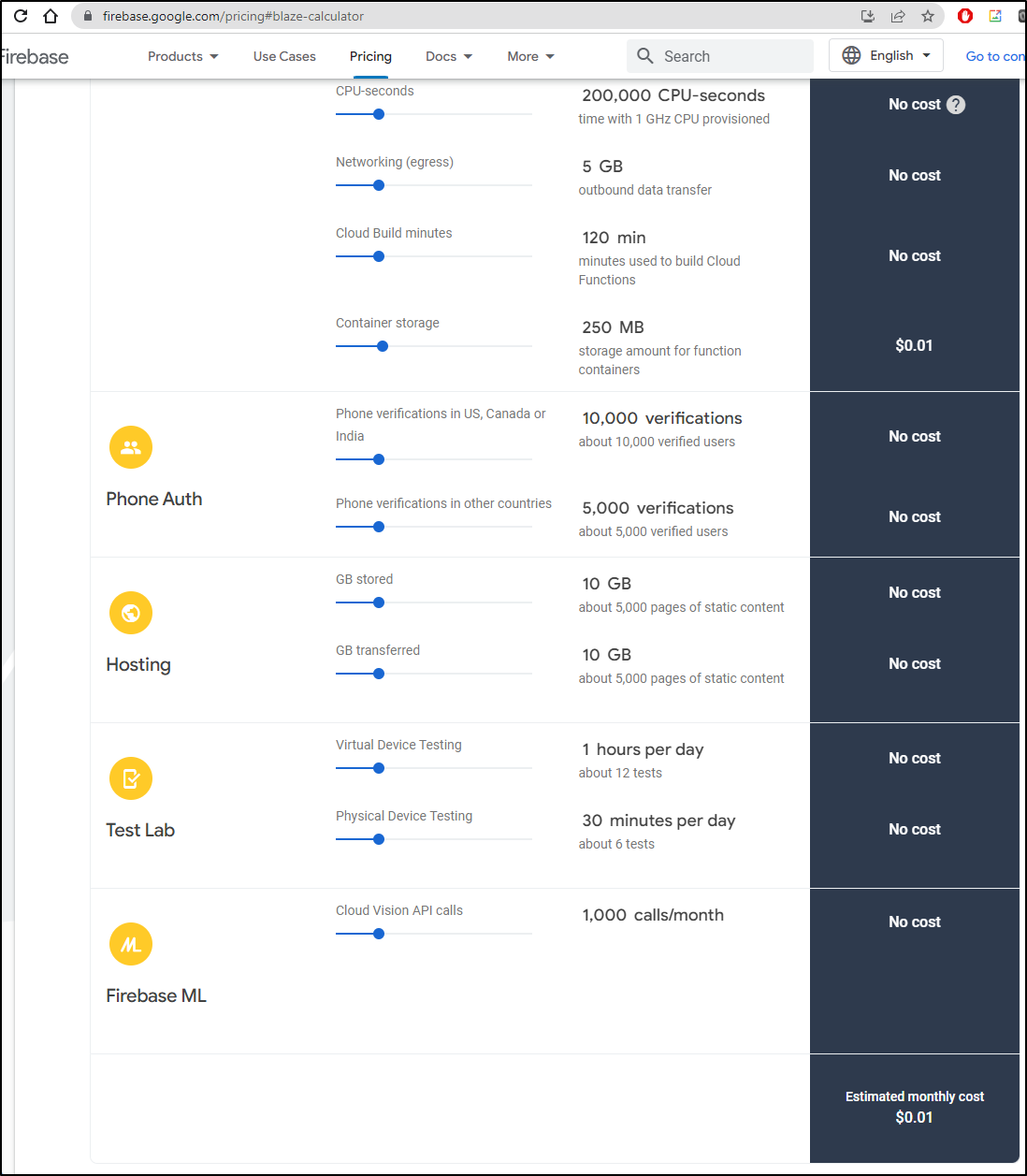
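The networking charge is simple to reproduce by hand. This Python sketch assumes 5 GiB of free monthly egress and US$0.12 per GiB beyond it, and reads 1MB as 1 MiB (1024 KiB). Those assumptions happen to reproduce the calculator's US$0.57, but they are mine, not quoted from the pricing page.

```python
# Assumed values that reproduce the calculator's US$0.57; not quoted from the pricing page.
FREE_EGRESS_GIB = 5.0   # monthly outbound data included for free (assumed)
USD_PER_GIB = 0.12      # outbound data price beyond the free amount (assumed)
KIB_PER_GIB = 1024 * 1024


def monthly_egress_cost(invocations: int, kib_per_invocation: float) -> float:
    """Outbound networking cost in USD for one month of invocations."""
    egress_gib = invocations * kib_per_invocation / KIB_PER_GIB
    billable_gib = max(0.0, egress_gib - FREE_EGRESS_GIB)
    return billable_gib * USD_PER_GIB
```

round(monthly_egress_cost(10_000, 1024), 2) returns 0.57 (about 9.77 GiB, of which 4.77 GiB is billable), while monthly_egress_cost(10_000, 100) returns 0.0 because roughly 0.95 GiB stays under the free allowance.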

Firebase pricing isn’t in the GCP pricing calculator, but the Firebase pricing calculator shows that the only part that might incur a charge is container storage. Otherwise, we stay entirely in the free tier.

Mixing Cloud Run and KNative

We can rebuild and redeploy to Cloud Run after a change. So that we could see the difference, I added some more bangs (exclamation marks) to the output.

$ gcloud run deploy

Deploying from source. To deploy a container use [--image]. See https://cloud.google.com/run/docs/deploying-source-code for more details.

Source code location (/home/builder/Workspaces/idj-cloudrun):

Next time, use `gcloud run deploy --source .` to deploy the current directory.

Service name (idj-cloudrun):

Please specify a region:

[1] asia-east1

[2] asia-east2

[3] asia-northeast1

[4] asia-northeast2

[5] asia-northeast3

[6] asia-south1

[7] asia-south2

[8] asia-southeast1

[9] asia-southeast2

[10] australia-southeast1

[11] australia-southeast2

[12] europe-central2

[13] europe-north1

[14] europe-west1

[15] europe-west2

[16] europe-west3

[17] europe-west4

[18] europe-west6

[19] northamerica-northeast1

[20] northamerica-northeast2

[21] southamerica-east1

[22] southamerica-west1

[23] us-central1

[24] us-east1

[25] us-east4

[26] us-west1

[27] us-west2

[28] us-west3

[29] us-west4

[30] cancel

Please enter your numeric choice: 23

To make this the default region, run `gcloud config set run/region us-central1`.

This command is equivalent to running `gcloud builds submit --pack image=[IMAGE] /home/builder/Workspaces/idj-cloudrun` and `gcloud run deploy idj-cloudrun --image [IMAGE]`

Building using Buildpacks and deploying container to Cloud Run service [idj-cloudrun] in project [gkedemo01] region [us-central1]

✓ Building and deploying... Done.

✓ Uploading sources...

✓ Building Container... Logs are available at [https://console.cloud.google.com/cloud-build/builds/3386cf1d-7dfb-4fb4-8d95-bace12b4d06a?project=658532986431].

✓ Creating Revision...

✓ Routing traffic...

Done.

Service [idj-cloudrun] revision [idj-cloudrun-00003-cal] has been deployed and is serving 100 percent of traffic.

Service URL: https://idj-cloudrun-v7nqcvq4eq-uc.a.run.app

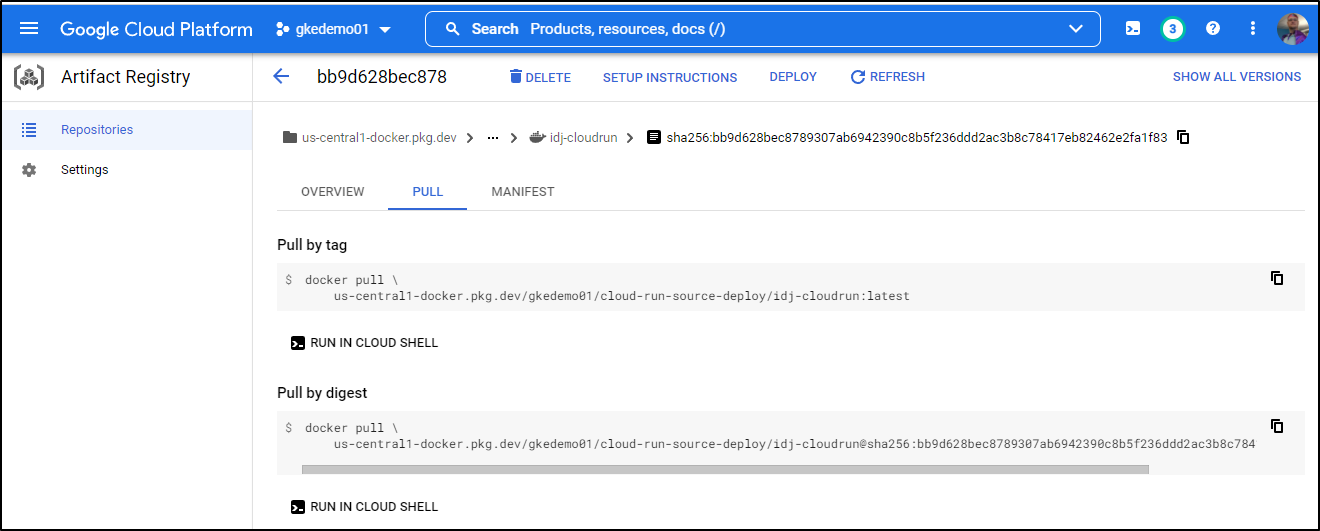

We can see that this updated the image in Artifact Registry (GAR), and we can see it running.

The Artifact Registry console shows the image pull command, which I can then use in Knative to host the same image:

$ kn service create mytest4 --image us-central1-docker.pkg.dev/gkedemo01/cloud-run-source-deploy/idj-cloudrun:latest --port 8080 --revision-name=testing

Creating service 'mytest4' in namespace 'default':

0.064s The Configuration is still working to reflect the latest desired specification.

0.117s The Route is still working to reflect the latest desired specification.

0.237s Configuration "mytest4" is waiting for a Revision to become ready.

11.915s ...

12.071s Ingress has not yet been reconciled.

12.167s Waiting for load balancer to be ready

12.336s Ready to serve.

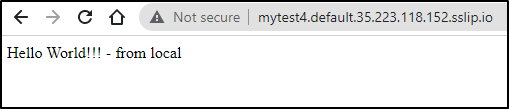

Service 'mytest4' created to latest revision 'mytest4-testing' is available at URL:

http://mytest4.default.35.223.118.152.sslip.io
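The image reference follows Artifact Registry's {region}-docker.pkg.dev/{project}/{repository}/{image}:{tag} layout, where cloud-run-source-deploy is the repository gcloud run deploy used for the source build above. Building the kn arguments as a list, as in the hypothetical helpers below, also avoids the kind of line wrapping that can split a long image path in a terminal.

```python
from typing import List, Optional


def artifact_registry_image(region: str, project: str, repository: str,
                            image: str, tag: str = "latest") -> str:
    """Docker image reference for an image in an Artifact Registry repository."""
    return f"{region}-docker.pkg.dev/{project}/{repository}/{image}:{tag}"


def kn_create_args(service: str, image: str, port: int = 8080,
                   revision_suffix: Optional[str] = None) -> List[str]:
    """Argument list for `kn service create`, suitable for subprocess.run."""
    args = ["kn", "service", "create", service,
            "--image", image, "--port", str(port)]
    if revision_suffix:
        args.append(f"--revision-name={revision_suffix}")
    return args
```

For example, subprocess.run(kn_create_args("mytest4", artifact_registry_image("us-central1", "gkedemo01", "cloud-run-source-deploy", "idj-cloudrun"), revision_suffix="testing"), check=True) mirrors the command above.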

AKS KNative and Long Timeouts

Let’s experiment with Knative in AKS and see if we can get long timeouts to work.

First we create a resource group

$ az account set --subscription Pay-As-You-Go

$ az group create -n aksKNativeRG --location centralus

{

"id": "/subscriptions/d955c0ba-13dc-44cf-a29a-8fed74cbb22d/resourceGroups/aksKNativeRG",

"location": "centralus",

"managedBy": null,

"name": "aksKNativeRG",

"properties": {

"provisioningState": "Succeeded"

},

"tags": null,

"type": "Microsoft.Resources/resourceGroups"

}

Create a service principal

$ az ad sp create-for-rbac --name "idjknsp01" --skip-assignment --output json > mysp.json

WARNING: The output includes credentials that you must protect. Be sure that you do not include these credentials in your code or check the credentials into your source control. For more information, see https://aka.ms/azadsp-cli

WARNING: 'name' property in the output is deprecated and will be removed in the future. Use 'appId' instead.

Now we can create the AKS cluster

$ export SP_PASS=`cat mysp.json | jq -r .password`

$ export SP_ID=`cat mysp.json | jq -r .appId`

$ az aks create --resource-group aksKNativeRG -n idjknaks01 --location centralus --node-count 3 --enable-cluster-autoscaler --min-count 2 --max-count 4 --generate-ssh-keys --network-plugin azure --network-policy azure --service-principal $SP_ID --client-secret $SP_PASS
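The jq and export steps, plus the long az aks create command, can also be expressed in Python. This sketch (hypothetical helper names; flags copied from the command above) reads mysp.json and builds an argument list for subprocess.run, which avoids shell line-wrapping problems and keeps the client secret out of your shell history.

```python
import json
from pathlib import Path
from typing import List, Tuple


def load_service_principal(path: str) -> Tuple[str, str]:
    """Return (appId, password) from `az ad sp create-for-rbac --output json`."""
    sp = json.loads(Path(path).read_text())
    return sp["appId"], sp["password"]


def aks_create_args(resource_group: str, name: str, location: str,
                    app_id: str, secret: str, node_count: int = 3,
                    min_count: int = 2, max_count: int = 4) -> List[str]:
    """Argument list mirroring the az aks create command used in this post."""
    return [
        "az", "aks", "create",
        "--resource-group", resource_group,
        "-n", name,
        "--location", location,
        "--node-count", str(node_count),
        "--enable-cluster-autoscaler",
        "--min-count", str(min_count),
        "--max-count", str(max_count),
        "--generate-ssh-keys",
        "--network-plugin", "azure",
        "--network-policy", "azure",
        "--service-principal", app_id,
        "--client-secret", secret,
    ]
```

For example, subprocess.run(aks_create_args("aksKNativeRG", "idjknaks01", "centralus", *load_service_principal("mysp.json")), check=True).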


Next, we get the kubeconfig and validate the cluster:

$ az aks get-credentials --resource-group aksKNativeRG -n idjknaks01 --admin

Merged "idjknaks01-admin" as current context in /home/builder/.kube/config

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

aks-nodepool1-38736499-vmss000000 Ready agent 8m15s v1.21.9

aks-nodepool1-38736499-vmss000001 Ready agent 8m12s v1.21.9

aks-nodepool1-38736499-vmss000002 Ready agent 8m19s v1.21.9

Setting up Knative in AKS (the same steps as in GKE):

$ kubectl apply -f https://github.com/knative/serving/releases/download/knative-v1.3.0/serving-crds.yaml

customresourcedefinition.apiextensions.k8s.io/certificates.networking.internal.knative.dev created

customresourcedefinition.apiextensions.k8s.io/configurations.serving.knative.dev created

customresourcedefinition.apiextensions.k8s.io/clusterdomainclaims.networking.internal.knative.dev created

customresourcedefinition.apiextensions.k8s.io/domainmappings.serving.knative.dev created

customresourcedefinition.apiextensions.k8s.io/ingresses.networking.internal.knative.dev created

customresourcedefinition.apiextensions.k8s.io/metrics.autoscaling.internal.knative.dev created

customresourcedefinition.apiextensions.k8s.io/podautoscalers.autoscaling.internal.knative.dev created

customresourcedefinition.apiextensions.k8s.io/revisions.serving.knative.dev created

customresourcedefinition.apiextensions.k8s.io/routes.serving.knative.dev created

customresourcedefinition.apiextensions.k8s.io/serverlessservices.networking.internal.knative.dev created

customresourcedefinition.apiextensions.k8s.io/services.serving.knative.dev created

customresourcedefinition.apiextensions.k8s.io/images.caching.internal.knative.dev created

$ kubectl apply -f https://github.com/knative/serving/releases/download/knative-v1.3.0/serving-core.yaml

namespace/knative-serving created

clusterrole.rbac.authorization.k8s.io/knative-serving-aggregated-addressable-resolver created

clusterrole.rbac.authorization.k8s.io/knative-serving-addressable-resolver created

clusterrole.rbac.authorization.k8s.io/knative-serving-namespaced-admin created

clusterrole.rbac.authorization.k8s.io/knative-serving-namespaced-edit created

clusterrole.rbac.authorization.k8s.io/knative-serving-namespaced-view created

clusterrole.rbac.authorization.k8s.io/knative-serving-core created

clusterrole.rbac.authorization.k8s.io/knative-serving-podspecable-binding created

serviceaccount/controller created

clusterrole.rbac.authorization.k8s.io/knative-serving-admin created

clusterrolebinding.rbac.authorization.k8s.io/knative-serving-controller-admin created

clusterrolebinding.rbac.authorization.k8s.io/knative-serving-controller-addressable-resolver created

customresourcedefinition.apiextensions.k8s.io/images.caching.internal.knative.dev unchanged

customresourcedefinition.apiextensions.k8s.io/certificates.networking.internal.knative.dev unchanged

customresourcedefinition.apiextensions.k8s.io/configurations.serving.knative.dev unchanged

customresourcedefinition.apiextensions.k8s.io/clusterdomainclaims.networking.internal.knative.dev unchanged

customresourcedefinition.apiextensions.k8s.io/domainmappings.serving.knative.dev unchanged

customresourcedefinition.apiextensions.k8s.io/ingresses.networking.internal.knative.dev unchanged

customresourcedefinition.apiextensions.k8s.io/metrics.autoscaling.internal.knative.dev unchanged

customresourcedefinition.apiextensions.k8s.io/podautoscalers.autoscaling.internal.knative.dev unchanged

customresourcedefinition.apiextensions.k8s.io/revisions.serving.knative.dev unchanged

customresourcedefinition.apiextensions.k8s.io/routes.serving.knative.dev unchanged

customresourcedefinition.apiextensions.k8s.io/serverlessservices.networking.internal.knative.dev unchanged

customresourcedefinition.apiextensions.k8s.io/services.serving.knative.dev unchanged

image.caching.internal.knative.dev/queue-proxy created

configmap/config-autoscaler created

configmap/config-defaults created

configmap/config-deployment created

configmap/config-domain created

configmap/config-features created

configmap/config-gc created

configmap/config-leader-election created

configmap/config-logging created

configmap/config-network created

configmap/config-observability created

configmap/config-tracing created

horizontalpodautoscaler.autoscaling/activator created

poddisruptionbudget.policy/activator-pdb created

deployment.apps/activator created

service/activator-service created

deployment.apps/autoscaler created

service/autoscaler created

deployment.apps/controller created

service/controller created

deployment.apps/domain-mapping created

deployment.apps/domainmapping-webhook created

service/domainmapping-webhook created

horizontalpodautoscaler.autoscaling/webhook created

poddisruptionbudget.policy/webhook-pdb created

deployment.apps/webhook created

service/webhook created

validatingwebhookconfiguration.admissionregistration.k8s.io/config.webhook.serving.knative.dev created

mutatingwebhookconfiguration.admissionregistration.k8s.io/webhook.serving.knative.dev created

mutatingwebhookconfiguration.admissionregistration.k8s.io/webhook.domainmapping.serving.knative.dev created

secret/domainmapping-webhook-certs created

validatingwebhookconfiguration.admissionregistration.k8s.io/validation.webhook.domainmapping.serving.knative.dev created

validatingwebhookconfiguration.admissionregistration.k8s.io/validation.webhook.serving.knative.dev created

secret/webhook-certs created

```bash
$ kubectl apply -f https://github.com/knative/net-kourier/releases/download/knative-v1.3.0/kourier.yaml
namespace/kourier-system created
configmap/kourier-bootstrap created
configmap/config-kourier created
serviceaccount/net-kourier created
clusterrole.rbac.authorization.k8s.io/net-kourier created
clusterrolebinding.rbac.authorization.k8s.io/net-kourier created
deployment.apps/net-kourier-controller created
service/net-kourier-controller created
deployment.apps/3scale-kourier-gateway created
service/kourier created
service/kourier-internal created

$ kubectl patch configmap/config-network --namespace knative-serving --type merge --patch '{"data":{"ingress.class":"kourier.ingress.networking.knative.dev"}}'
configmap/config-network patched

$ kubectl apply -f https://github.com/knative/serving/releases/download/knative-v1.3.0/serving-default-domain.yaml
job.batch/default-domain created
service/default-domain-service created

$ kubectl --namespace kourier-system get service kourier
NAME      TYPE           CLUSTER-IP     EXTERNAL-IP     PORT(S)                      AGE
kourier   LoadBalancer   10.0.251.211   20.84.211.192   80:32384/TCP,443:32314/TCP   24s
```
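The `serving-default-domain` job writes the Kourier load balancer's external IP into KNative's `config-domain` ConfigMap as an `sslip.io` domain. That is why the service URLs end in `20.84.211.192.sslip.io`.

If you own a domain, you could point KNative at it instead. The sketch below uses `knative.example.com`, which is a hypothetical placeholder. It would replace the generated sslip.io entry, and you would also need a wildcard DNS record for that domain pointing at the Kourier external IP.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: config-domain
  namespace: knative-serving
data:
  # Hypothetical domain; an empty value makes it the default for all services,
  # so URLs become <service>.<namespace>.knative.example.com
  knative.example.com: ""
```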

```bash
$ kubectl apply -f https://github.com/knative/net-certmanager/releases/download/knative-v1.3.0/release.yaml
clusterrole.rbac.authorization.k8s.io/knative-serving-certmanager created
validatingwebhookconfiguration.admissionregistration.k8s.io/config.webhook.net-certmanager.networking.internal.knative.dev created
secret/net-certmanager-webhook-certs created
configmap/config-certmanager created
deployment.apps/net-certmanager-controller created
service/net-certmanager-controller created
deployment.apps/net-certmanager-webhook created
service/net-certmanager-webhook created
```

Next, we’ll fire up the hello world service with kn:

```bash
$ kn service create mytest --image gcr.io/knative-samples/helloworld-go --port 8080 --env TARGET=World --revision-name=world
Creating service 'mytest' in namespace 'default':

  0.058s The Configuration is still working to reflect the latest desired specification.
  0.180s The Route is still working to reflect the latest desired specification.
  0.213s Configuration "mytest" is waiting for a Revision to become ready.
 22.225s ...
 22.290s Ingress has not yet been reconciled.
 22.446s Waiting for load balancer to be ready
 22.629s Ready to serve.

Service 'mytest' created to latest revision 'mytest-world' is available at URL:
http://mytest.default.20.84.211.192.sslip.io
```
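For comparison, here is a rough declarative equivalent of that `kn service create` call, which could be applied with `kubectl apply -f`. It assumes the flags map one-to-one:

- `--port` becomes `containerPort`.
- `--env` becomes an `env` entry.
- `--revision-name=world` becomes the template name. KNative requires that name to be prefixed with the service name, hence `mytest-world`.

```yaml
apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: mytest
  namespace: default
spec:
  template:
    metadata:
      # Revision name; must be prefixed with the Service name
      name: mytest-world
    spec:
      containers:
        - image: gcr.io/knative-samples/helloworld-go
          ports:
            - containerPort: 8080   # equivalent of --port 8080
          env:
            - name: TARGET          # equivalent of --env TARGET=World
              value: "World"
```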

Next, I tried setting a very long timeout. I expected this to work:

```bash
$ kn service create mytest2 --image gcr.io/knative-samples/helloworld-go --port 8080 --env TARGET=World --revision-name=world --wait-timeout 3600
Creating service 'mytest2' in namespace 'default':

  0.170s The Route is still working to reflect the latest desired specification.
  0.223s Configuration "mytest2" is waiting for a Revision to become ready.
  1.751s ...
  1.851s Ingress has not yet been reconciled.
  2.014s Waiting for load balancer to be ready
  2.236s Ready to serve.

Service 'mytest2' created to latest revision 'mytest2-world' is available at URL:
http://mytest2.default.20.84.211.192.sslip.io
```

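However, it clearly didn’t change the timeout. In hindsight that makes sense: `--wait-timeout` only controls how long kn waits for the service to become ready. It does not set the revision’s request timeout.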

```bash
$ kubectl get revision mytest2-world -o yaml | grep timeout
  timeoutSeconds: 300
```

So I changed the KNative defaults instead, raising both the default and the maximum revision timeout in the config-defaults ConfigMap:

```bash
$ cat tt.yml
apiVersion: v1
kind: ConfigMap
metadata:
  name: config-defaults
  namespace: knative-serving
data:
  revision-timeout-seconds: "3600" # 60 minutes (default is 300, i.e. 5 minutes)
  max-revision-timeout-seconds: "3600" # 60 minutes (default is 600, i.e. 10 minutes)

$ kubectl apply -f tt.yml
configmap/config-defaults configured
```

Now, when I create a new service:

```bash
$ kn service create mytest5 --image gcr.io/knative-samples/helloworld-go --port 8080 --env TARGET=World --revision-name=world --wait-timeout 36000
Creating service 'mytest5' in namespace 'default':

  0.069s The Configuration is still working to reflect the latest desired specification.
  0.225s The Route is still working to reflect the latest desired specification.
  0.373s Configuration "mytest5" is waiting for a Revision to become ready.
  2.291s ...
  2.388s Ingress has not yet been reconciled.
  2.548s Waiting for load balancer to be ready
  2.688s Ready to serve.

Service 'mytest5' created to latest revision 'mytest5-world' is available at URL:
http://mytest5.default.20.84.211.192.sslip.io
```

I can see the new revision was created with the one-hour timeout:

```bash
$ kubectl get revision mytest5-world -o yaml | grep -C 4 timeout
      tcpSocket:
        port: 0
    resources: {}
  enableServiceLinks: false
  timeoutSeconds: 3600
status:
  actualReplicas: 0
  conditions:
  - lastTransitionTime: "2022-03-24T19:07:44Z"
```
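With the maximum raised, an individual service can also request its own timeout through `spec.template.spec.timeoutSeconds`, rather than relying on the cluster-wide default. The sketch below uses `mytest6`, a hypothetical service name.

KNative's validating webhook should reject any value above `max-revision-timeout-seconds`. That is why the maximum had to be raised as well as the default.

```yaml
apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: mytest6   # hypothetical service name
  namespace: default
spec:
  template:
    spec:
      # Per-revision request timeout; must not exceed max-revision-timeout-seconds
      timeoutSeconds: 3600
      containers:
        - image: gcr.io/knative-samples/helloworld-go
          ports:
            - containerPort: 8080
          env:
            - name: TARGET
              value: "World"
```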

Cleanup

The largest cost, if left running, will be the GKE cluster, so let’s remove it first:

```bash
$ gcloud container clusters delete mycrtestcluster
The following clusters will be deleted.
 - [mycrtestcluster] in [us-central1-f]

Do you want to continue (Y/n)?  y

Deleting cluster mycrtestcluster...done.
Deleted [https://container.googleapis.com/v1/projects/gkedemo01/zones/us-central1-f/clusters/mycrtestcluster].
```

If you created a cluster in Azure, you can delete the AKS cluster as well:

```bash
$ az aks list -o table
Name        Location    ResourceGroup    KubernetesVersion    ProvisioningState    Fqdn
----------  ----------  ---------------  -------------------  -------------------  ---------------------------------------------------------------
idjknaks01  centralus   aksKNativeRG     1.21.9               Succeeded            idjknaks01-aksknativerg-d955c0-dd1dc9c3.hcp.centralus.azmk8s.io

$ az aks delete -n idjknaks01 -g aksKNativeRG
Are you sure you want to perform this operation? (y/n): y
\ Running ..
```

Summary

We explored Cloud Run, Cloud Functions (with and without Firebase), and KNative. KNative runs on Kubernetes and is one of a host of popular serverless frameworks for it, alongside fission.io, kubeless and one of my favourites, OpenFaaS. While I skipped setting up SSL for KNative in GKE this time, I did cover it in my prior writeup.

Much of the savings of serverless comes from the containerized service scaling to zero when not in use. If we keep instances “warm”, we are effectively paying to host one or more containers full time. Holding 10 Cloud Run instances warm for a month would pay for a small GKE cluster (3 nodes, E2 medium).

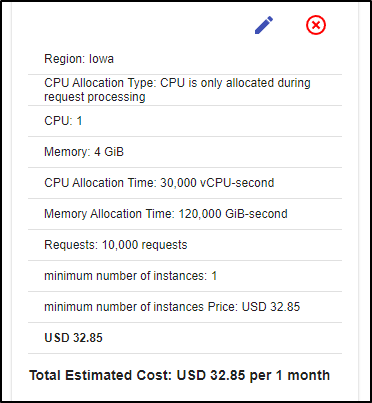

The language also plays a big part if we keep instances warm. If I used a more memory-hungry language such as Java, and wanted to keep an instance warm with 4 GB of memory allocated, we would be up to US$32 a month per instance!
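Cloud Run also accepts KNative-style service YAML, for example via `gcloud run services replace`. There, keeping an instance warm is done with the `autoscaling.knative.dev/minScale` annotation on the revision template.

Here is a sketch of the 4 GB Java case above. The service name and image are hypothetical placeholders, and each warm instance is billed at whatever memory size it is given.

```yaml
apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: my-java-service   # hypothetical service name
spec:
  template:
    metadata:
      annotations:
        # Keep one instance warm at all times (billed even when idle)
        autoscaling.knative.dev/minScale: "1"
    spec:
      containers:
        - image: gcr.io/my-project/my-java-app   # hypothetical image
          resources:
            limits:
              memory: 4Gi
```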

When deciding between KNative on GKE and Cloud Run, another consideration is concurrency.

If my functions are used infrequently, or at completely different times within the enterprise app, I can easily host a very large number of them in one cluster. But if they all spin up at the same time when the app is in use, I might have scaling issues to contend with. In that case, Cloud Run can scale faster than an autoscaling GKE cluster.
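Both platforms expose the same two knobs for this trade-off:

- `containerConcurrency` caps how many simultaneous requests one instance accepts. In KNative, 0 means no limit; 80 is Cloud Run's default.
- The `autoscaling.knative.dev/maxScale` annotation caps how many instances a burst can create.

A sketch with illustrative values only; the service name is hypothetical.

```yaml
apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: my-bursty-function   # hypothetical service name
spec:
  template:
    metadata:
      annotations:
        # Upper bound on instances during a burst (illustrative value)
        autoscaling.knative.dev/maxScale: "20"
    spec:
      # Max simultaneous requests per instance (illustrative value)
      containerConcurrency: 80
      containers:
        - image: gcr.io/knative-samples/helloworld-go
```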

Whether using Cloud Run, Functions or KNative, all of them can use a VPC Connector to reach resources in a VPC, or in networks shared with a VPC (like an on-prem network exposed over Interconnect). There is a pretty good Medium article detailing that process with GCP.
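On Cloud Run, attaching a Serverless VPC Access connector can also be expressed in the service YAML, through annotations on the revision template. This sketch assumes a connector named `my-connector` already exists in the same region. Both the connector and service names are hypothetical.

```yaml
apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: my-private-service   # hypothetical service name
spec:
  template:
    metadata:
      annotations:
        # Send outbound traffic through the (hypothetical) connector
        run.googleapis.com/vpc-access-connector: my-connector
        # Only traffic to private IP ranges uses the connector
        run.googleapis.com/vpc-access-egress: private-ranges-only
    spec:
      containers:
        - image: us-docker.pkg.dev/cloudrun/container/hello
```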

While we didn’t explore IaC, as you might expect, Cloud Run has a well-defined Terraform resource in the Google provider:

```hcl
resource "google_cloud_run_service" "default" {
  name     = "cloudrun-srv"
  location = "us-central1"

  template {
    spec {
      containers {
        image = "us-docker.pkg.dev/cloudrun/container/hello"
      }
    }
  }

  traffic {
    percent         = 100
    latest_revision = true
  }
}
```

Cloud Functions does as well:

```hcl
resource "google_storage_bucket" "bucket" {
  # Bucket names are globally unique, so choose your own
  name     = "test-bucket"
  location = "US"
}

resource "google_storage_bucket_object" "archive" {
  name   = "index.zip"
  bucket = google_storage_bucket.bucket.name
  # Placeholder path to the zipped function source
  source = "./path/to/zip/file/which/contains/code"
}

resource "google_cloudfunctions_function" "function" {
  name        = "function-test"
  description = "My function"
  runtime     = "nodejs14"

  available_memory_mb   = 128
  source_archive_bucket = google_storage_bucket.bucket.name
  source_archive_object = google_storage_bucket_object.archive.name
  trigger_http          = true
  entry_point           = "helloGET"
}

# IAM entry for all users to invoke the function
resource "google_cloudfunctions_function_iam_member" "invoker" {
  project        = google_cloudfunctions_function.function.project
  region         = google_cloudfunctions_function.function.region
  cloud_function = google_cloudfunctions_function.function.name

  role   = "roles/cloudfunctions.invoker"
  member = "allUsers"
}
```

KNative services can be defined just as easily in plain YAML:

```yaml
apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: helloworld-go
  namespace: default
spec:
  template:
    spec:
      containers:
        - image: gcr.io/knative-samples/helloworld-go
          env:
            - name: TARGET
              value: "Go Sample v1"
```

This means we could just as easily deploy with kn, raw YAML, Helm, or GitOps tooling that serves the YAML from a Git URL.