Published: Mar 16, 2022 by Isaac Johnson

Uptime Kuma is a very small yet full-featured open source tool you can use to monitor just about anything and send notifications about it. It covers much of the same ground that Nagios or Site24x7 might cover. I found out about it recently from a Techno Tim YouTube review of the tool.

Today we will set up a simple Docker instance followed by a Kubernetes-based instance with Ingress (which is live presently).

We’ll look at how we can monitor a variety of things and trigger notifications as well as invoke Azure DevOps YAML pipelines to fix problems (including a full example on monitoring and fixing a containerized service).

Getting Started

We can just launch Uptime Kuma as a Docker container on any host running Docker. Whether running as a local container or via K8s, Uptime Kuma needs a persistent volume in which to store data, so we’ll create that first, then launch the container:

$ docker volume create ut-kuma

ut-kuma

$ docker run -d --restart=always -p 3001:3001 -v ut-kuma:/app/data --name uptime-kuma louislam/uptime-kuma:1

Unable to find image 'louislam/uptime-kuma:1' locally

1: Pulling from louislam/uptime-kuma

6552179c3509: Pull complete

d4debff14640: Pull complete

fc6770e6bb3f: Pull complete

19b36dce559a: Pull complete

15fd1f9ed413: Pull complete

e755cc628214: Pull complete

5116f08af1b9: Pull complete

e4c85c587fdd: Pull complete

Digest: sha256:1a8f2564fb7514e7e10b28dedfc3fa3bae1e5cb8d5d5def9928fba626f6311aa

Status: Downloaded newer image for louislam/uptime-kuma:1

cead637ea0a2f6b0710d9e718055e495f3974dfa81608529e94cee6c90dc950a

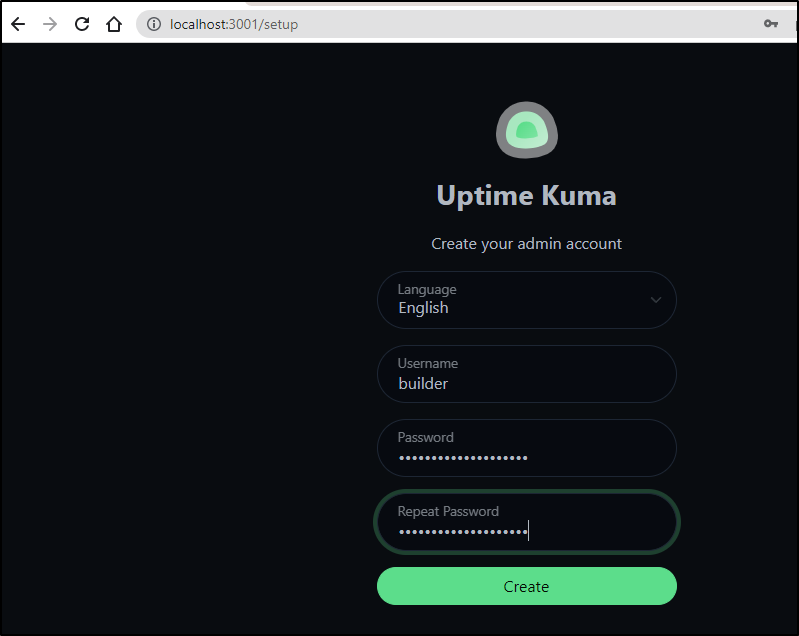

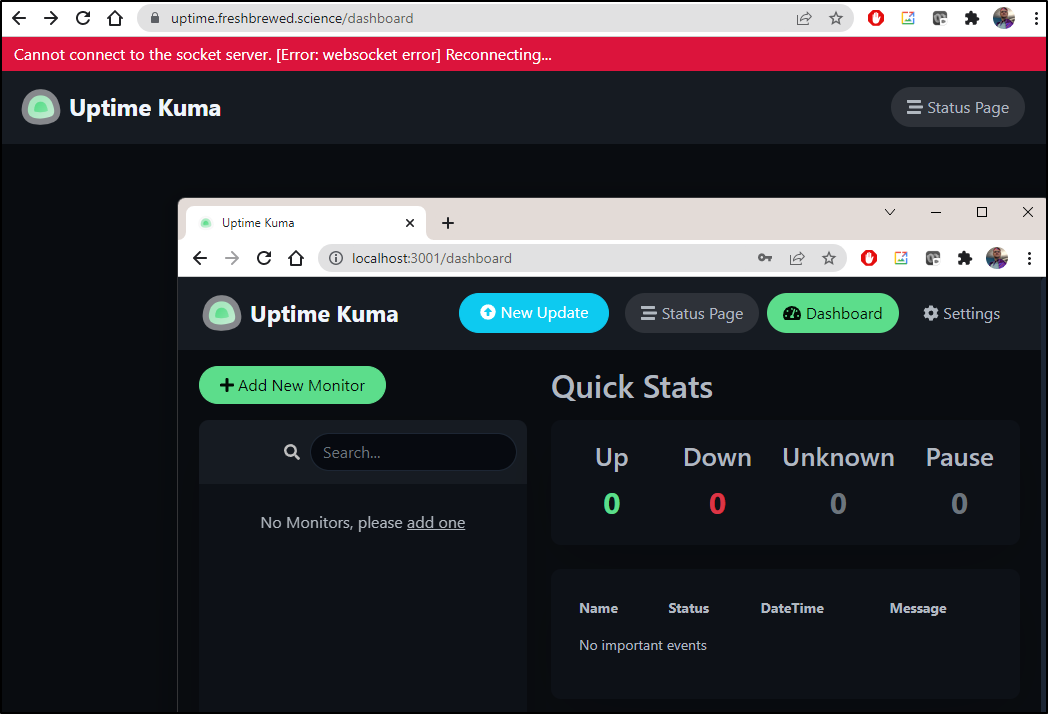

Then just navigate to http://localhost:3001 to get started:
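Before opening the browser, it can help to confirm that the container is actually answering on port 3001. The following is a minimal sketch, not part of Uptime Kuma itself: `wait_for_http` is a hypothetical helper name, and it assumes `curl` is installed on the Docker host.

```shell
# Poll a URL until it answers, or give up after a number of attempts.
# Usage: wait_for_http URL [ATTEMPTS] [DELAY_SECONDS]
# (hypothetical helper; assumes curl is available)
wait_for_http() {
  wfh_url=$1
  wfh_attempts=${2:-30}
  wfh_delay=${3:-2}
  wfh_i=1
  while [ "$wfh_i" -le "$wfh_attempts" ]; do
    # -f: treat HTTP errors (>= 400) as failure, -s: silent, -o: discard the body
    if curl -fs -o /dev/null "$wfh_url" 2>/dev/null; then
      echo "up after $wfh_i attempt(s): $wfh_url"
      return 0
    fi
    wfh_i=$((wfh_i + 1))
    sleep "$wfh_delay"
  done
  echo "gave up after $wfh_attempts attempt(s): $wfh_url" >&2
  return 1
}

# Example (not run here):
#   wait_for_http http://localhost:3001 30 2
```

The function only reports whether something answers on the URL; it does not check that the response really comes from Uptime Kuma.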

However, I would prefer to run it in my cluster, so I’ll stop the container running in local Docker:

$ docker stop uptime-kuma

uptime-kuma

Via Helm

We can use the Helm chart to install Uptime Kuma to our Kubernetes cluster.

By default, the chart uses the cluster's default storage class. In this case, I prefer that it use local-path, so I set volume.storageClassName on install.

$ helm repo add uptime-kuma https://dirsigler.github.io/uptime-kuma-helm

"uptime-kuma" has been added to your repositories

$ helm upgrade uptime-kuma uptime-kuma/uptime-kuma --install --set volume.storageClassName=local-path

Release "uptime-kuma" does not exist. Installing it now.

NAME: uptime-kuma

LAST DEPLOYED: Tue Mar 15 06:09:37 2022

NAMESPACE: default

STATUS: deployed

REVISION: 1

NOTES:

1. Get the application URL by running these commands:

export POD_NAME=$(kubectl get pods --namespace default -l "app.kubernetes.io/name=uptime-kuma,app.kubernetes.io/instance=uptime-kuma" -o jsonpath="{.items[0].metadata.name}")

export CONTAINER_PORT=$(kubectl get pod --namespace default $POD_NAME -o jsonpath="{.spec.containers[0].ports[0].containerPort}")

echo "Visit http://127.0.0.1:3001 to use your application"

kubectl --namespace default port-forward $POD_NAME 3001:$CONTAINER_PORT

Next, I’ll set up an HTTPS ingress. That starts with a Route53 A record for the new hostname:

$ cat r53-uptimekuma.json

{
  "Comment": "CREATE uptime fb.s A record ",
  "Changes": [
    {
      "Action": "CREATE",
      "ResourceRecordSet": {
        "Name": "uptime.freshbrewed.science",
        "Type": "A",
        "TTL": 300,
        "ResourceRecords": [
          {
            "Value": "73.242.50.46"
          }
        ]
      }
    }
  ]
}

$ aws route53 change-resource-record-sets --hosted-zone-id Z39E8QFU0F9PZP --change-batch file://r53-uptimekuma.json

{

"ChangeInfo": {

"Id": "/change/C06275451OV1OY90YPZXP",

"Status": "PENDING",

"SubmittedAt": "2022-03-15T11:12:08.929Z",

"Comment": "CREATE uptime fb.s A record "

}

}
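If you create records like this often, the change batch can be generated rather than hand-edited. This is a sketch under assumptions: `make_a_record_batch` is a hypothetical helper name, and the hostname, IP address and zone ID in the usage comment are placeholders, not values from my setup.

```shell
# Print a Route53 change batch that creates a single A record.
# Usage: make_a_record_batch FQDN IPV4 [TTL]
# (hypothetical helper; TTL defaults to 300 as in the file above)
make_a_record_batch() {
  cat <<EOF
{
  "Comment": "CREATE $1 A record",
  "Changes": [
    {
      "Action": "CREATE",
      "ResourceRecordSet": {
        "Name": "$1",
        "Type": "A",
        "TTL": ${3:-300},
        "ResourceRecords": [
          { "Value": "$2" }
        ]
      }
    }
  ]
}
EOF
}

# Example (not run here; ZONEID is a placeholder):
#   make_a_record_batch uptime.example.com 203.0.113.10 > r53-uptime.json
#   aws route53 change-resource-record-sets --hosted-zone-id ZONEID \
#     --change-batch file://r53-uptime.json
```

The function does no validation of its arguments, so it is worth eyeballing the generated file before submitting it.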

Then I just need to make an Ingress.

First, I double-check the service and port:

$ kubectl get svc | grep uptime

uptime-kuma ClusterIP 10.43.66.55 <none> 3001/TCP 3m25s
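If you want the port in a script rather than by eye, it can be pulled out of that tabular output. This is only a sketch: `svc_port` is a hypothetical helper name, and it assumes the default column layout of `kubectl get svc` (ports in the fifth column). Asking kubectl for the field directly with a jsonpath query would likely be more robust.

```shell
# Read `kubectl get svc` rows on stdin and print the first service port
# of the named service.
# Usage: kubectl get svc | svc_port NAME
# (hypothetical helper; assumes PORT(S) is the fifth column)
svc_port() {
  awk -v name="$1" '
    $1 == name {
      split($5, proto, "/")      # "3001/TCP"     -> "3001"
      split(proto[1], port, ":") # "80:30080"     -> "80"
      print port[1]
      exit
    }'
}

# Example (not run here):
#   kubectl get svc | svc_port uptime-kuma
```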

Then I create the Ingress:

$ cat uptimeIngress.yml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    kubernetes.io/tls-acme: "true"
    kubernetes.io/ingress.class: nginx
    cert-manager.io/cluster-issuer: "letsencrypt-prod"
  name: uptime-with-auth
  namespace: default
spec:
  tls:
  - hosts:
    - uptime.freshbrewed.science
    secretName: uptime-tls
  rules:
  - host: uptime.freshbrewed.science
    http:
      paths:
      - backend:
          service:
            name: uptime-kuma
            port:
              number: 3001
        path: /
        pathType: ImplementationSpecific

$ kubectl apply -f uptimeIngress.yml

ingress.networking.k8s.io/uptime-with-auth created

I actually had to deal with some tech debt here worth mentioning.

K8s upgrade drama… NGinx, Ingress and Cert Manager

After upgrading to k3s 1.23, my NGINX ingress controller kept running for a while. However, the first time its pod was rescheduled, it began to crash: the controller was simply too old for the new Kubernetes version.

$ kubectl describe pod my-release-ingress-nginx-controller-7978f85c6f-7wrdh | tail -n15

QoS Class: Burstable

Node-Selectors: kubernetes.io/os=linux

Tolerations: node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 16m default-scheduler Successfully assigned default/my-release-ingress-nginx-controller-7978f85c6f-7wrdh to builder-macbookpro2

Normal Killing 15m kubelet Container controller failed liveness probe, will be restarted

Normal Pulled 15m (x2 over 16m) kubelet Container image "k8s.gcr.io/ingress-nginx/controller:v0.46.0@sha256:52f0058bed0a17ab0fb35628ba97e8d52b5d32299fbc03cc0f6c7b9ff036b61a" already present on machine

Normal Created 15m (x2 over 16m) kubelet Created container controller

Normal Started 15m (x2 over 16m) kubelet Started container controller

Warning Unhealthy 14m (x10 over 16m) kubelet Readiness probe failed: HTTP probe failed with statuscode: 500

Warning Unhealthy 11m (x22 over 16m) kubelet Liveness probe failed: HTTP probe failed with statuscode: 500

Warning BackOff 83s (x30 over 9m24s) kubelet Back-off restarting failed container

$ kubectl logs my-release-ingress-nginx-controller-7978f85c6f-7wrdh

-------------------------------------------------------------------------------

NGINX Ingress controller

Release: v0.46.0

Build: 6348dde672588d5495f70ec77257c230dc8da134

Repository: https://github.com/kubernetes/ingress-nginx

nginx version: nginx/1.19.6

-------------------------------------------------------------------------------

I0315 12:00:19.708272 8 flags.go:208] "Watching for Ingress" class="nginx"

W0315 12:00:19.708360 8 flags.go:213] Ingresses with an empty class will also be processed by this Ingress controller

W0315 12:00:19.708975 8 client_config.go:614] Neither --kubeconfig nor --master was specified. Using the inClusterConfig. This might not work.

I0315 12:00:19.709556 8 main.go:241] "Creating API client" host="https://10.43.0.1:443"

I0315 12:00:19.737796 8 main.go:285] "Running in Kubernetes cluster" major="1" minor="23" git="v1.23.3+k3s1" state="clean" commit="5fb370e53e0014dc96183b8ecb2c25a61e891e76" platform="linux/amd64"

I0315 12:00:20.256253 8 main.go:105] "SSL fake certificate created" file="/etc/ingress-controller/ssl/default-fake-certificate.pem"

I0315 12:00:20.259050 8 main.go:115] "Enabling new Ingress features available since Kubernetes v1.18"

W0315 12:00:20.261344 8 main.go:127] No IngressClass resource with name nginx found. Only annotation will be used.

I0315 12:00:20.296563 8 ssl.go:532] "loading tls certificate" path="/usr/local/certificates/cert" key="/usr/local/certificates/key"

I0315 12:00:20.342338 8 nginx.go:254] "Starting NGINX Ingress controller"

I0315 12:00:20.391067 8 event.go:282] Event(v1.ObjectReference{Kind:"ConfigMap", Namespace:"default", Name:"my-release-ingress-nginx-controller", UID:"499ca3ce-1cdc-429a-afa6-ffae625c13e4", APIVersion:"v1", ResourceVersion:"40849360", FieldPath:""}): type: 'Normal' reason: 'CREATE' ConfigMap default/my-release-ingress-nginx-controller

E0315 12:00:21.449475 8 reflector.go:138] k8s.io/client-go@v0.20.2/tools/cache/reflector.go:167: Failed to watch *v1beta1.Ingress: failed to list *v1beta1.Ingress: the server could not find the requested resource

E0315 12:00:22.950695 8 reflector.go:138] k8s.io/client-go@v0.20.2/tools/cache/reflector.go:167: Failed to watch *v1beta1.Ingress: failed to list *v1beta1.Ingress: the server could not find the requested resource

E0315 12:00:25.976583 8 reflector.go:138] k8s.io/client-go@v0.20.2/tools/cache/reflector.go:167: Failed to watch *v1beta1.Ingress: failed to list *v1beta1.Ingress: the server could not find the requested resource

E0315 12:00:30.181191 8 reflector.go:138] k8s.io/client-go@v0.20.2/tools/cache/reflector.go:167: Failed to watch *v1beta1.Ingress: failed to list *v1beta1.Ingress: the server could not find the requested resource

E0315 12:00:39.600209 8 reflector.go:138] k8s.io/client-go@v0.20.2/tools/cache/reflector.go:167: Failed to watch *v1beta1.Ingress: failed to list *v1beta1.Ingress: the server could not find the requested resource

E0315 12:01:00.280096 8 reflector.go:138] k8s.io/client-go@v0.20.2/tools/cache/reflector.go:167: Failed to watch *v1beta1.Ingress: failed to list *v1beta1.Ingress: the server could not find the requested resource

I0315 12:01:15.596574 8 main.go:187] "Received SIGTERM, shutting down"

I0315 12:01:15.596603 8 nginx.go:372] "Shutting down controller queues"

E0315 12:01:15.597208 8 store.go:178] timed out waiting for caches to sync

I0315 12:01:15.597278 8 nginx.go:296] "Starting NGINX process"

I0315 12:01:15.597672 8 leaderelection.go:243] attempting to acquire leader lease default/ingress-controller-leader-nginx...

I0315 12:01:15.598106 8 queue.go:78] "queue has been shutdown, failed to enqueue" key="&ObjectMeta{Name:initial-sync,GenerateName:,Namespace:,SelfLink:,UID:,ResourceVersion:,Generation:0,CreationTimestamp:0001-01-01 00:00:00 +0000 UTC,DeletionTimestamp:<nil>,DeletionGracePeriodSeconds:nil,Labels:map[string]string{},Annotations:map[string]string{},OwnerReferences:[]OwnerReference{},Finalizers:[],ClusterName:,ManagedFields:[]ManagedFieldsEntry{},}"

I0315 12:01:15.598140 8 nginx.go:316] "Starting validation webhook" address=":8443" certPath="/usr/local/certificates/cert" keyPath="/usr/local/certificates/key"

I0315 12:01:15.609689 8 status.go:123] "leaving status update for next leader"

I0315 12:01:15.609722 8 nginx.go:380] "Stopping admission controller"

I0315 12:01:15.609793 8 nginx.go:388] "Stopping NGINX process"

E0315 12:01:15.610273 8 nginx.go:319] "Error listening for TLS connections" err="http: Server closed"

I0315 12:01:15.613205 8 status.go:84] "New leader elected" identity="my-release-ingress-nginx-controller-7978f85c6f-26nf6"

2022/03/15 12:01:15 [notice] 43#43: signal process started

I0315 12:01:16.616622 8 nginx.go:401] "NGINX process has stopped"

I0315 12:01:16.616673 8 main.go:195] "Handled quit, awaiting Pod deletion"

I0315 12:01:26.617108 8 main.go:198] "Exiting" code=0
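In a log this noisy, the repeated "failed to list" lines are the ones that matter. A small filter can summarise them. This is a sketch: `removed_api_errors` is a hypothetical helper name, and it assumes the error wording shown in the log above.

```shell
# Read controller logs on stdin and print each API kind the controller
# failed to list, followed by how many times it failed.
# (hypothetical helper; relies on the "failed to list *<kind>" wording above)
removed_api_errors() {
  grep -o 'failed to list \*[A-Za-z0-9.]*' |
    sort |
    uniq -c |
    awk '{ print $5 ": " $1 }'
}

# Example (not run here):
#   kubectl logs my-release-ingress-nginx-controller-7978f85c6f-7wrdh | removed_api_errors
```

For the log above this would report the v1beta1 Ingress kind, which is the API version the old controller still depends on.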

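The controller (v0.46.0) keeps trying to watch v1beta1 Ingress resources, an API version that was removed in Kubernetes 1.22. I needed to bite the bullet and upgrade NGINX.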

One key note about upgrading NGINX Ingress with Helm is that Helm does not upgrade CRDs, so they have to be applied separately.

So let’s add the Nginx stable repo and pull down the latest chart locally:

$ helm repo add nginx-stable https://helm.nginx.com/stable

"nginx-stable" has been added to your repositories

$ helm repo update

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "nginx-stable" chart repository

...Successfully got an update from the "uptime-kuma" chart repository

...Successfully got an update from the "kuma" chart repository

...Successfully got an update from the "dapr" chart repository

...Successfully got an update from the "azure-samples" chart repository

...Successfully got an update from the "sumologic" chart repository

...Successfully got an update from the "datadog" chart repository

...Successfully got an update from the "kubecost" chart repository

...Successfully got an update from the "rancher-latest" chart repository

...Successfully got an update from the "harbor" chart repository

...Successfully got an update from the "incubator" chart repository

...Successfully got an update from the "newrelic" chart repository

...Successfully got an update from the "bitnami" chart repository

...Unable to get an update from the "myharbor" chart repository (https://harbor.freshbrewed.science/chartrepo/library):

Get "https://harbor.freshbrewed.science/chartrepo/library/index.yaml": dial tcp 73.242.50.46:443: connect: connection timed out

Update Complete. ⎈Happy Helming!⎈

With NGINX down, my own Harbor chart repo timed out, which is to be expected.

Next, we’ll make a local folder and pull down the chart:

builder@DESKTOP-QADGF36:~/Workspaces$ mkdir nginx-fixing

builder@DESKTOP-QADGF36:~/Workspaces$ cd nginx-fixing/

builder@DESKTOP-QADGF36:~/Workspaces/nginx-fixing$ helm pull nginx-stable/nginx-ingress --untar

Per the docs, upgrading the CRDs might throw an error, which can be ignored.

builder@DESKTOP-QADGF36:~/Workspaces/nginx-fixing$ ls

nginx-ingress

builder@DESKTOP-QADGF36:~/Workspaces/nginx-fixing$ cd nginx-ingress/

builder@DESKTOP-QADGF36:~/Workspaces/nginx-fixing/nginx-ingress$ ls

Chart.yaml README.md crds templates values-icp.yaml values-plus.yaml values.yaml

builder@DESKTOP-QADGF36:~/Workspaces/nginx-fixing/nginx-ingress$ kubectl apply -f crds/

Warning: resource customresourcedefinitions/aplogconfs.appprotect.f5.com is missing the kubectl.kubernetes.io/last-applied-configuration annotation which is required by kubectl apply. kubectl apply should only be used on resources created declaratively by either kubectl create --save-config or kubectl apply. The missing annotation will be patched automatically.

customresourcedefinition.apiextensions.k8s.io/aplogconfs.appprotect.f5.com configured

Warning: resource customresourcedefinitions/appolicies.appprotect.f5.com is missing the kubectl.kubernetes.io/last-applied-configuration annotation which is required by kubectl apply. kubectl apply should only be used on resources created declaratively by either kubectl create --save-config or kubectl apply. The missing annotation will be patched automatically.

customresourcedefinition.apiextensions.k8s.io/appolicies.appprotect.f5.com configured

customresourcedefinition.apiextensions.k8s.io/apusersigs.appprotect.f5.com created

customresourcedefinition.apiextensions.k8s.io/apdoslogconfs.appprotectdos.f5.com created

customresourcedefinition.apiextensions.k8s.io/apdospolicies.appprotectdos.f5.com created

customresourcedefinition.apiextensions.k8s.io/dosprotectedresources.appprotectdos.f5.com created

Warning: resource customresourcedefinitions/globalconfigurations.k8s.nginx.org is missing the kubectl.kubernetes.io/last-applied-configuration annotation which is required by kubectl apply. kubectl apply should only be used on resources created declaratively by either kubectl create --save-config or kubectl apply. The missing annotation will be patched automatically.

customresourcedefinition.apiextensions.k8s.io/globalconfigurations.k8s.nginx.org configured

Warning: resource customresourcedefinitions/policies.k8s.nginx.org is missing the kubectl.kubernetes.io/last-applied-configuration annotation which is required by kubectl apply. kubectl apply should only be used on resources created declaratively by either kubectl create --save-config or kubectl apply. The missing annotation will be patched automatically.

customresourcedefinition.apiextensions.k8s.io/policies.k8s.nginx.org configured

Warning: resource customresourcedefinitions/transportservers.k8s.nginx.org is missing the kubectl.kubernetes.io/last-applied-configuration annotation which is required by kubectl apply. kubectl apply should only be used on resources created declaratively by either kubectl create --save-config or kubectl apply. The missing annotation will be patched automatically.

customresourcedefinition.apiextensions.k8s.io/transportservers.k8s.nginx.org configured

Warning: resource customresourcedefinitions/virtualserverroutes.k8s.nginx.org is missing the kubectl.kubernetes.io/last-applied-configuration annotation which is required by kubectl apply. kubectl apply should only be used on resources created declaratively by either kubectl create --save-config or kubectl apply. The missing annotation will be patched automatically.

customresourcedefinition.apiextensions.k8s.io/virtualserverroutes.k8s.nginx.org configured

Warning: resource customresourcedefinitions/virtualservers.k8s.nginx.org is missing the kubectl.kubernetes.io/last-applied-configuration annotation which is required by kubectl apply. kubectl apply should only be used on resources created declaratively by either kubectl create --save-config or kubectl apply. The missing annotation will be patched automatically.

customresourcedefinition.apiextensions.k8s.io/virtualservers.k8s.nginx.org configured

Perhaps I’m just paranoid, but I do like to set aside my current values just in case the upgrade goes poorly.

$ helm list | grep my-release

my-release default 3 2021-05-29 21:30:58.8689736 -0500 CDT deployed ingress-nginx-3.31.0 0.46.0

builder@DESKTOP-QADGF36:~/Workspaces/nginx-fixing$ helm get values my-release

USER-SUPPLIED VALUES:

USER-SUPPLIED VALUES: null

controller:
  defaultTLS:
    secret: default/myk8s.tpk.best-cert
  enableCustomResources: true
  enableTLSPassthrough: true
  logLevel: 3
  nginxDebug: true
  setAsDefaultIngress: true
  useIngressClassOnly: false
  watchNamespace: default

builder@DESKTOP-QADGF36:~/Workspaces/nginx-fixing$ helm get values --all my-release

COMPUTED VALUES:

USER-SUPPLIED VALUES: null

controller:

addHeaders: {}

admissionWebhooks:

annotations: {}

certificate: /usr/local/certificates/cert

enabled: true

existingPsp: ""

failurePolicy: Fail

key: /usr/local/certificates/key

namespaceSelector: {}

objectSelector: {}

patch:

enabled: true

image:

pullPolicy: IfNotPresent

repository: docker.io/jettech/kube-webhook-certgen

tag: v1.5.1

nodeSelector: {}

podAnnotations: {}

priorityClassName: ""

runAsUser: 2000

tolerations: []

port: 8443

service:

annotations: {}

externalIPs: []

loadBalancerSourceRanges: []

servicePort: 443

type: ClusterIP

affinity: {}

annotations: {}

autoscaling:

enabled: false

maxReplicas: 11

minReplicas: 1

targetCPUUtilizationPercentage: 50

targetMemoryUtilizationPercentage: 50

autoscalingTemplate: []

config: {}

configAnnotations: {}

configMapNamespace: ""

containerName: controller

containerPort:

http: 80

https: 443

customTemplate:

configMapKey: ""

configMapName: ""

defaultTLS:

secret: default/myk8s.tpk.best-cert

dnsConfig: {}

dnsPolicy: ClusterFirst

electionID: ingress-controller-leader

enableCustomResources: true

enableMimalloc: true

enableTLSPassthrough: true

existingPsp: ""

extraArgs: {}

extraContainers: []

extraEnvs: []

extraInitContainers: []

extraVolumeMounts: []

extraVolumes: []

healthCheckPath: /healthz

hostNetwork: false

hostPort:

enabled: false

ports:

http: 80

https: 443

image:

allowPrivilegeEscalation: true

digest: sha256:52f0058bed0a17ab0fb35628ba97e8d52b5d32299fbc03cc0f6c7b9ff036b61a

pullPolicy: IfNotPresent

repository: k8s.gcr.io/ingress-nginx/controller

runAsUser: 101

tag: v0.46.0

ingressClass: nginx

keda:

apiVersion: keda.sh/v1alpha1

behavior: {}

cooldownPeriod: 300

enabled: false

maxReplicas: 11

minReplicas: 1

pollingInterval: 30

restoreToOriginalReplicaCount: false

scaledObject:

annotations: {}

triggers: []

kind: Deployment

labels: {}

lifecycle:

preStop:

exec:

command:

- /wait-shutdown

livenessProbe:

failureThreshold: 5

httpGet:

path: /healthz

port: 10254

scheme: HTTP

initialDelaySeconds: 10

periodSeconds: 10

successThreshold: 1

timeoutSeconds: 1

logLevel: 3

maxmindLicenseKey: ""

metrics:

enabled: false

port: 10254

prometheusRule:

additionalLabels: {}

enabled: false

rules: []

service:

annotations: {}

externalIPs: []

loadBalancerSourceRanges: []

servicePort: 10254

type: ClusterIP

serviceMonitor:

additionalLabels: {}

enabled: false

metricRelabelings: []

namespace: ""

namespaceSelector: {}

scrapeInterval: 30s

targetLabels: []

minAvailable: 1

minReadySeconds: 0

name: controller

nginxDebug: true

nodeSelector:

kubernetes.io/os: linux

podAnnotations: {}

podLabels: {}

podSecurityContext: {}

priorityClassName: ""

proxySetHeaders: {}

publishService:

enabled: true

pathOverride: ""

readinessProbe:

failureThreshold: 3

httpGet:

path: /healthz

port: 10254

scheme: HTTP

initialDelaySeconds: 10

periodSeconds: 10

successThreshold: 1

timeoutSeconds: 1

replicaCount: 1

reportNodeInternalIp: false

resources:

requests:

cpu: 100m

memory: 90Mi

scope:

enabled: false

namespace: ""

service:

annotations: {}

enableHttp: true

enableHttps: true

enabled: true

externalIPs: []

internal:

annotations: {}

enabled: false

loadBalancerSourceRanges: []

labels: {}

loadBalancerSourceRanges: []

nodePorts:

http: ""

https: ""

tcp: {}

udp: {}

ports:

http: 80

https: 443

targetPorts:

http: http

https: https

type: LoadBalancer

setAsDefaultIngress: true

sysctls: {}

tcp:

annotations: {}

configMapNamespace: ""

terminationGracePeriodSeconds: 300

tolerations: []

topologySpreadConstraints: []

udp:

annotations: {}

configMapNamespace: ""

updateStrategy: {}

useIngressClassOnly: false

watchNamespace: default

defaultBackend:

affinity: {}

autoscaling:

enabled: false

maxReplicas: 2

minReplicas: 1

targetCPUUtilizationPercentage: 50

targetMemoryUtilizationPercentage: 50

enabled: false

existingPsp: ""

extraArgs: {}

extraEnvs: []

extraVolumeMounts: []

extraVolumes: []

image:

allowPrivilegeEscalation: false

pullPolicy: IfNotPresent

readOnlyRootFilesystem: true

repository: k8s.gcr.io/defaultbackend-amd64

runAsNonRoot: true

runAsUser: 65534

tag: "1.5"

livenessProbe:

failureThreshold: 3

initialDelaySeconds: 30

periodSeconds: 10

successThreshold: 1

timeoutSeconds: 5

minAvailable: 1

name: defaultbackend

nodeSelector: {}

podAnnotations: {}

podLabels: {}

podSecurityContext: {}

port: 8080

priorityClassName: ""

readinessProbe:

failureThreshold: 6

initialDelaySeconds: 0

periodSeconds: 5

successThreshold: 1

timeoutSeconds: 5

replicaCount: 1

resources: {}

service:

annotations: {}

externalIPs: []

loadBalancerSourceRanges: []

servicePort: 80

type: ClusterIP

serviceAccount:

automountServiceAccountToken: true

create: true

name: ""

tolerations: []

dhParam: null

imagePullSecrets: []

podSecurityPolicy:

enabled: false

rbac:

create: true

scope: false

revisionHistoryLimit: 10

serviceAccount:

automountServiceAccountToken: true

create: true

name: ""

tcp: {}

udp: {}
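Rather than leaving the values in terminal scrollback, they can be written to files before the upgrade. This is a sketch: `backup_release_values` is a hypothetical helper name, and it assumes the release lives in the current namespace.

```shell
# Save the user-supplied and the computed values of a Helm release.
# Usage: backup_release_values RELEASE [DIR]
# (hypothetical helper; assumes the release is in the current namespace)
backup_release_values() {
  brv_dir=${2:-.}
  mkdir -p "$brv_dir" || return 1
  # User-supplied values only
  helm get values "$1" -o yaml > "$brv_dir/$1-user-values.yaml" || return 1
  # Computed values, including chart defaults
  helm get values "$1" --all -o yaml > "$brv_dir/$1-all-values.yaml" || return 1
  echo "saved values for $1 in $brv_dir"
}

# Example (not run here):
#   backup_release_values my-release ./backup
```

If the upgrade goes poorly, the user-values file is the one to feed back in with `helm upgrade -f`.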

Now we upgrade, then verify the values are what I expect:

builder@DESKTOP-QADGF36:~/Workspaces/nginx-fixing/nginx-ingress$ helm upgrade my-release nginx-stable/nginx-ingress

Release "my-release" has been upgraded. Happy Helming!

NAME: my-release

LAST DEPLOYED: Tue Mar 15 07:12:10 2022

NAMESPACE: default

STATUS: deployed

REVISION: 4

TEST SUITE: None

NOTES:

The NGINX Ingress Controller has been installed.

builder@DESKTOP-QADGF36:~/Workspaces/nginx-fixing/nginx-ingress$ helm get values my-release

USER-SUPPLIED VALUES:

USER-SUPPLIED VALUES: null

controller:
  defaultTLS:
    secret: default/myk8s.tpk.best-cert
  enableCustomResources: true
  enableTLSPassthrough: true
  logLevel: 3
  nginxDebug: true
  setAsDefaultIngress: true
  useIngressClassOnly: false
  watchNamespace: default

And my sanity check is to see the Harbor page

While this fixed the NGINX Ingress, cert-manager was also failing now that the v1beta1 APIs were gone:

$ kubectl logs cert-manager-cainjector-6d59c8d4f7-djfhk -n cert-manager

I0315 12:22:58.314538 1 start.go:89] "starting" version="v1.0.4" revision="4d870e49b43960fad974487a262395e65da1373e"

I0315 12:22:59.363883 1 request.go:645] Throttling request took 1.012659825s, request: GET:https://10.43.0.1:443/apis/autoscaling/v1?timeout=32s

I0315 12:23:00.365540 1 request.go:645] Throttling request took 2.009080234s, request: GET:https://10.43.0.1:443/apis/networking.k8s.io/v1?timeout=32s

I0315 12:23:01.412298 1 request.go:645] Throttling request took 3.054298259s, request: GET:https://10.43.0.1:443/apis/provisioning.cattle.io/v1?timeout=32s

I0315 12:23:02.862150 1 request.go:645] Throttling request took 1.041138263s, request: GET:https://10.43.0.1:443/apis/storage.k8s.io/v1?timeout=32s

I0315 12:23:03.862222 1 request.go:645] Throttling request took 2.040969542s, request: GET:https://10.43.0.1:443/apis/clusterregistry.k8s.io/v1alpha1?timeout=32s

I0315 12:23:04.865286 1 request.go:645] Throttling request took 3.042038228s, request: GET:https://10.43.0.1:443/apis/configsync.gke.io/v1beta1?timeout=32s

I0315 12:23:05.912213 1 request.go:645] Throttling request took 4.090501202s, request: GET:https://10.43.0.1:443/apis/azure.crossplane.io/v1alpha3?timeout=32s

I0315 12:23:07.761975 1 request.go:645] Throttling request took 1.044102899s, request: GET:https://10.43.0.1:443/apis/apiextensions.crossplane.io/v1alpha1?timeout=32s

I0315 12:23:08.764767 1 request.go:645] Throttling request took 2.043825771s, request: GET:https://10.43.0.1:443/apis/hub.gke.io/v1?timeout=32s

I0315 12:23:09.811271 1 request.go:645] Throttling request took 3.092643007s, request: GET:https://10.43.0.1:443/apis/cluster.x-k8s.io/v1alpha3?timeout=32s

I0315 12:23:10.814472 1 request.go:645] Throttling request took 4.092975359s, request: GET:https://10.43.0.1:443/apis/provisioning.cattle.io/v1?timeout=32s

I0315 12:23:12.660962 1 request.go:645] Throttling request took 1.043867803s, request: GET:https://10.43.0.1:443/apis/cert-manager.io/v1alpha3?timeout=32s

I0315 12:23:13.664095 1 request.go:645] Throttling request took 2.044348776s, request: GET:https://10.43.0.1:443/apis/acme.cert-manager.io/v1alpha3?timeout=32s

I0315 12:23:14.664128 1 request.go:645] Throttling request took 3.044149055s, request: GET:https://10.43.0.1:443/apis/management.cattle.io/v3?timeout=32s

I0315 12:23:15.665109 1 request.go:645] Throttling request took 4.045011453s, request: GET:https://10.43.0.1:443/apis/hub.gke.io/v1?timeout=32s

E0315 12:23:16.419977 1 start.go:158] cert-manager/ca-injector "msg"="error registering core-only controllers" "error"="no matches for kind \"MutatingWebhookConfiguration\" in version \"admissionregistration.k8s.io/v1beta1\""

We can see that the cluster now serves only v1 of the admissionregistration API:

$ kubectl api-versions | grep admissionregistration

admissionregistration.k8s.io/v1
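The same check can be scripted for any API group/version a workload depends on, which is handy to run before a cluster upgrade. This is a sketch: `has_api_version` is a hypothetical helper name, and it expects the output of `kubectl api-versions` on stdin.

```shell
# Succeed if the exact group/version appears in the list on stdin.
# Usage: kubectl api-versions | has_api_version GROUP/VERSION
# (hypothetical helper; -x matches whole lines, -F disables regex)
has_api_version() {
  grep -qxF "$1"
}

# Example (not run here):
#   if ! kubectl api-versions | has_api_version admissionregistration.k8s.io/v1beta1; then
#     echo "v1beta1 admission webhooks are not served by this cluster"
#   fi
```

Matching whole lines matters here, because a plain substring match for `v1` would also match `v1beta1`.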

We should be able to apply a newer cert-manager release to solve this:

$ kubectl apply -f https://github.com/jetstack/cert-manager/releases/download/v1.4.0/cert-manager.yaml

customresourcedefinition.apiextensions.k8s.io/certificaterequests.cert-manager.io configured

customresourcedefinition.apiextensions.k8s.io/certificates.cert-manager.io configured

customresourcedefinition.apiextensions.k8s.io/challenges.acme.cert-manager.io configured

customresourcedefinition.apiextensions.k8s.io/clusterissuers.cert-manager.io configured

customresourcedefinition.apiextensions.k8s.io/issuers.cert-manager.io configured

customresourcedefinition.apiextensions.k8s.io/orders.acme.cert-manager.io configured

namespace/cert-manager unchanged

serviceaccount/cert-manager-cainjector configured

serviceaccount/cert-manager configured

serviceaccount/cert-manager-webhook configured

clusterrole.rbac.authorization.k8s.io/cert-manager-cainjector configured

clusterrole.rbac.authorization.k8s.io/cert-manager-controller-issuers configured

clusterrole.rbac.authorization.k8s.io/cert-manager-controller-clusterissuers configured

clusterrole.rbac.authorization.k8s.io/cert-manager-controller-certificates configured

clusterrole.rbac.authorization.k8s.io/cert-manager-controller-orders configured

clusterrole.rbac.authorization.k8s.io/cert-manager-controller-challenges configured

clusterrole.rbac.authorization.k8s.io/cert-manager-controller-ingress-shim configured

clusterrole.rbac.authorization.k8s.io/cert-manager-view configured

clusterrole.rbac.authorization.k8s.io/cert-manager-edit configured

clusterrole.rbac.authorization.k8s.io/cert-manager-controller-approve:cert-manager-io created

clusterrole.rbac.authorization.k8s.io/cert-manager-controller-certificatesigningrequests created

clusterrole.rbac.authorization.k8s.io/cert-manager-webhook:subjectaccessreviews created

clusterrolebinding.rbac.authorization.k8s.io/cert-manager-cainjector configured

clusterrolebinding.rbac.authorization.k8s.io/cert-manager-controller-issuers configured

clusterrolebinding.rbac.authorization.k8s.io/cert-manager-controller-clusterissuers configured

clusterrolebinding.rbac.authorization.k8s.io/cert-manager-controller-certificates configured

clusterrolebinding.rbac.authorization.k8s.io/cert-manager-controller-orders configured

clusterrolebinding.rbac.authorization.k8s.io/cert-manager-controller-challenges configured

clusterrolebinding.rbac.authorization.k8s.io/cert-manager-controller-ingress-shim configured

clusterrolebinding.rbac.authorization.k8s.io/cert-manager-controller-approve:cert-manager-io created

clusterrolebinding.rbac.authorization.k8s.io/cert-manager-controller-certificatesigningrequests created

clusterrolebinding.rbac.authorization.k8s.io/cert-manager-webhook:subjectaccessreviews created

role.rbac.authorization.k8s.io/cert-manager-cainjector:leaderelection configured

role.rbac.authorization.k8s.io/cert-manager:leaderelection configured

role.rbac.authorization.k8s.io/cert-manager-webhook:dynamic-serving configured

rolebinding.rbac.authorization.k8s.io/cert-manager-cainjector:leaderelection configured

rolebinding.rbac.authorization.k8s.io/cert-manager:leaderelection configured

rolebinding.rbac.authorization.k8s.io/cert-manager-webhook:dynamic-serving configured

deployment.apps/cert-manager-cainjector configured

deployment.apps/cert-manager configured

deployment.apps/cert-manager-webhook configured

mutatingwebhookconfiguration.admissionregistration.k8s.io/cert-manager-webhook configured

validatingwebhookconfiguration.admissionregistration.k8s.io/cert-manager-webhook configured

Error from server (Invalid): error when applying patch:

{"metadata":{"annotations":{"kubectl.kubernetes.io/last-applied-configuration":"{\"apiVersion\":\"v1\",\"kind\":\"Service\",\"metadata\":{\"annotations\":{},\"labels\":{\"app\":\"cert-manager\",\"app.kubernetes.io/component\":\"controller\",\"app.kubernetes.io/instance\":\"cert-manager\",\"app.kubernetes.io/managed-by\":\"Helm\",\"app.kubernetes.io/name\":\"cert-manager\",\"helm.sh/chart\":\"cert-manager-v1.4.0\"},\"name\":\"cert-manager\",\"namespace\":\"cert-manager\"},\"spec\":{\"ports\":[{\"port\":9402,\"protocol\":\"TCP\",\"targetPort\":9402}],\"selector\":{\"app.kubernetes.io/component\":\"controller\",\"app.kubernetes.io/instance\":\"cert-manager\",\"app.kubernetes.io/name\":\"cert-manager\"},\"type\":\"ClusterIP\"}}\n"},"labels":{"app.kubernetes.io/managed-by":"Helm","helm.sh/chart":"cert-manager-v1.4.0"}}}

to:

Resource: "/v1, Resource=services", GroupVersionKind: "/v1, Kind=Service"

Name: "cert-manager", Namespace: "cert-manager"

for: "https://github.com/jetstack/cert-manager/releases/download/v1.4.0/cert-manager.yaml": Service "cert-manager" is invalid: spec.clusterIPs: Required value

Error from server (Invalid): error when applying patch:

{"metadata":{"annotations":{"kubectl.kubernetes.io/last-applied-configuration":"{\"apiVersion\":\"v1\",\"kind\":\"Service\",\"metadata\":{\"annotations\":{},\"labels\":{\"app\":\"webhook\",\"app.kubernetes.io/component\":\"webhook\",\"app.kubernetes.io/instance\":\"cert-manager\",\"app.kubernetes.io/managed-by\":\"Helm\",\"app.kubernetes.io/name\":\"webhook\",\"helm.sh/chart\":\"cert-manager-v1.4.0\"},\"name\":\"cert-manager-webhook\",\"namespace\":\"cert-manager\"},\"spec\":{\"ports\":[{\"name\":\"https\",\"port\":443,\"targetPort\":10250}],\"selector\":{\"app.kubernetes.io/component\":\"webhook\",\"app.kubernetes.io/instance\":\"cert-manager\",\"app.kubernetes.io/name\":\"webhook\"},\"type\":\"ClusterIP\"}}\n"},"labels":{"app.kubernetes.io/managed-by":"Helm","helm.sh/chart":"cert-manager-v1.4.0"}}}

to:

Resource: "/v1, Resource=services", GroupVersionKind: "/v1, Kind=Service"

Name: "cert-manager-webhook", Namespace: "cert-manager"

for: "https://github.com/jetstack/cert-manager/releases/download/v1.4.0/cert-manager.yaml": Service "cert-manager-webhook" is invalid: spec.clusterIPs: Required value

The errors came from two stuck Services (cert-manager and cert-manager-webhook), which I had to delete manually before applying the manifest again.

builder@DESKTOP-QADGF36:~/Workspaces/nginx-fixing$ kubectl delete service cert-manager -n cert-manager

service "cert-manager" deleted

builder@DESKTOP-QADGF36:~/Workspaces/nginx-fixing$ kubectl delete service cert-manager-webhook -n cert-manager

service "cert-manager-webhook" deleted

builder@DESKTOP-QADGF36:~/Workspaces/nginx-fixing$ kubectl apply -f https://github.com/jetstack/cert-manager/releases/download/v1.4.0/cert-manager.yaml

customresourcedefinition.apiextensions.k8s.io/certificaterequests.cert-manager.io configured

customresourcedefinition.apiextensions.k8s.io/certificates.cert-manager.io configured

customresourcedefinition.apiextensions.k8s.io/challenges.acme.cert-manager.io configured

customresourcedefinition.apiextensions.k8s.io/clusterissuers.cert-manager.io configured

customresourcedefinition.apiextensions.k8s.io/issuers.cert-manager.io configured

customresourcedefinition.apiextensions.k8s.io/orders.acme.cert-manager.io configured

namespace/cert-manager unchanged

serviceaccount/cert-manager-cainjector unchanged

serviceaccount/cert-manager unchanged

serviceaccount/cert-manager-webhook unchanged

clusterrole.rbac.authorization.k8s.io/cert-manager-cainjector unchanged

clusterrole.rbac.authorization.k8s.io/cert-manager-controller-issuers unchanged

clusterrole.rbac.authorization.k8s.io/cert-manager-controller-clusterissuers unchanged

clusterrole.rbac.authorization.k8s.io/cert-manager-controller-certificates unchanged

clusterrole.rbac.authorization.k8s.io/cert-manager-controller-orders unchanged

clusterrole.rbac.authorization.k8s.io/cert-manager-controller-challenges unchanged

clusterrole.rbac.authorization.k8s.io/cert-manager-controller-ingress-shim unchanged

clusterrole.rbac.authorization.k8s.io/cert-manager-view unchanged

clusterrole.rbac.authorization.k8s.io/cert-manager-edit unchanged

clusterrole.rbac.authorization.k8s.io/cert-manager-controller-approve:cert-manager-io unchanged

clusterrole.rbac.authorization.k8s.io/cert-manager-controller-certificatesigningrequests unchanged

clusterrole.rbac.authorization.k8s.io/cert-manager-webhook:subjectaccessreviews unchanged

clusterrolebinding.rbac.authorization.k8s.io/cert-manager-cainjector unchanged

clusterrolebinding.rbac.authorization.k8s.io/cert-manager-controller-issuers unchanged

clusterrolebinding.rbac.authorization.k8s.io/cert-manager-controller-clusterissuers unchanged

clusterrolebinding.rbac.authorization.k8s.io/cert-manager-controller-certificates unchanged

clusterrolebinding.rbac.authorization.k8s.io/cert-manager-controller-orders unchanged

clusterrolebinding.rbac.authorization.k8s.io/cert-manager-controller-challenges unchanged

clusterrolebinding.rbac.authorization.k8s.io/cert-manager-controller-ingress-shim unchanged

clusterrolebinding.rbac.authorization.k8s.io/cert-manager-controller-approve:cert-manager-io unchanged

clusterrolebinding.rbac.authorization.k8s.io/cert-manager-controller-certificatesigningrequests unchanged

clusterrolebinding.rbac.authorization.k8s.io/cert-manager-webhook:subjectaccessreviews configured

role.rbac.authorization.k8s.io/cert-manager-cainjector:leaderelection unchanged

role.rbac.authorization.k8s.io/cert-manager:leaderelection unchanged

role.rbac.authorization.k8s.io/cert-manager-webhook:dynamic-serving unchanged

rolebinding.rbac.authorization.k8s.io/cert-manager-cainjector:leaderelection unchanged

rolebinding.rbac.authorization.k8s.io/cert-manager:leaderelection configured

rolebinding.rbac.authorization.k8s.io/cert-manager-webhook:dynamic-serving configured

service/cert-manager created

service/cert-manager-webhook created

deployment.apps/cert-manager-cainjector unchanged

deployment.apps/cert-manager unchanged

deployment.apps/cert-manager-webhook unchanged

mutatingwebhookconfiguration.admissionregistration.k8s.io/cert-manager-webhook configured

validatingwebhookconfiguration.admissionregistration.k8s.io/cert-manager-webhook configured

Also, if you want to be explicit, download and install the CRDs for that release first:

$ wget https://github.com/jetstack/cert-manager/releases/download/v1.4.0/cert-manager.crds.yaml

--2022-03-15 07:35:52-- https://github.com/jetstack/cert-manager/releases/download/v1.4.0/cert-manager.crds.yaml

Resolving github.com (github.com)... 140.82.112.4

Connecting to github.com (github.com)|140.82.112.4|:443... connected.

HTTP request sent, awaiting response... 301 Moved Permanently

Location: https://github.com/cert-manager/cert-manager/releases/download/v1.4.0/cert-manager.crds.yaml [following]

--2022-03-15 07:35:52-- https://github.com/cert-manager/cert-manager/releases/download/v1.4.0/cert-manager.crds.yaml

Reusing existing connection to github.com:443.

HTTP request sent, awaiting response... 302 Found

Location: https://objects.githubusercontent.com/github-production-release-asset-2e65be/92313258/ba7c2a80-cde0-11eb-9b2d-5aa10a3df6cd?X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Credential=AKIAIWNJYAX4CSVEH53A%2F20220315%2Fus-east-1%2Fs3%2Faws4_request&X-Amz-Date=20220315T123559Z&X-Amz-Expires=300&X-Amz-Signature=a39d25795ccd20fc0003536aa5b978ce91b4231a53c053eda0d7c5cbcb811675&X-Amz-SignedHeaders=host&actor_id=0&key_id=0&repo_id=92313258&response-content-disposition=attachment%3B%20filename%3Dcert-manager.crds.yaml&response-content-type=application%2Foctet-stream [following]

--2022-03-15 07:35:53-- https://objects.githubusercontent.com/github-production-release-asset-2e65be/92313258/ba7c2a80-cde0-11eb-9b2d-5aa10a3df6cd?X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Credential=AKIAIWNJYAX4CSVEH53A%2F20220315%2Fus-east-1%2Fs3%2Faws4_request&X-Amz-Date=20220315T123559Z&X-Amz-Expires=300&X-Amz-Signature=a39d25795ccd20fc0003536aa5b978ce91b4231a53c053eda0d7c5cbcb811675&X-Amz-SignedHeaders=host&actor_id=0&key_id=0&repo_id=92313258&response-content-disposition=attachment%3B%20filename%3Dcert-manager.crds.yaml&response-content-type=application%2Foctet-stream

Resolving objects.githubusercontent.com (objects.githubusercontent.com)... 185.199.108.133, 185.199.109.133, 185.199.110.133, ...

Connecting to objects.githubusercontent.com (objects.githubusercontent.com)|185.199.108.133|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 1923834 (1.8M) [application/octet-stream]

Saving to: ‘cert-manager.crds.yaml’

cert-manager.crds.yaml 100%[==============================================================================================================================================>] 1.83M 7.55MB/s in 0.2s

2022-03-15 07:35:53 (7.55 MB/s) - ‘cert-manager.crds.yaml’ saved [1923834/1923834]

$ kubectl apply -f cert-manager.crds.yaml

customresourcedefinition.apiextensions.k8s.io/certificaterequests.cert-manager.io configured

customresourcedefinition.apiextensions.k8s.io/certificates.cert-manager.io configured

customresourcedefinition.apiextensions.k8s.io/challenges.acme.cert-manager.io configured

customresourcedefinition.apiextensions.k8s.io/clusterissuers.cert-manager.io configured

customresourcedefinition.apiextensions.k8s.io/issuers.cert-manager.io configured

customresourcedefinition.apiextensions.k8s.io/orders.acme.cert-manager.io configured

My webhook for Cert Manager was just not firing, so I tried manually requesting a cert:

$ cat test.yml

apiVersion: cert-manager.io/v1

kind: Certificate

metadata:

  name: uptime-fb-science

  namespace: default

spec:

  commonName: uptime.freshbrewed.science

  dnsNames:

  - uptime.freshbrewed.science

  issuerRef:

    kind: ClusterIssuer

    name: letsencrypt-prod

  secretName: uptime.freshbrewed.science-cert

$ kubectl apply -f test.yml

certificate.cert-manager.io/uptime-fb-science created

$ kubectl get cert

NAME READY SECRET AGE

..snip..

uptime-fb-science False uptime.freshbrewed.science-cert 5s

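The logs showed that my cert-manager was still too old for my Kubernetes 1.23 cluster: it keeps trying to watch v1beta1 Ingress resources, an API version that was removed in Kubernetes 1.22.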

$ kubectl logs cert-manager-798f8bb594-56z4s -n cert-manager | tail -n20

E0315 12:40:58.273475 1 reflector.go:138] external/io_k8s_client_go/tools/cache/reflector.go:167: Failed to watch *v1beta1.Ingress: failed to list *v1beta1.Ingress: the server could not find the requested resource

E0315 12:41:55.902908 1 reflector.go:138] external/io_k8s_client_go/tools/cache/reflector.go:167: Failed to watch *v1beta1.Ingress: failed to list *v1beta1.Ingress: the server could not find the requested resource

E0315 12:42:46.889687 1 reflector.go:138] external/io_k8s_client_go/tools/cache/reflector.go:167: Failed to watch *v1beta1.Ingress: failed to list *v1beta1.Ingress: the server could not find the requested resource

E0315 12:43:31.953112 1 reflector.go:138] external/io_k8s_client_go/tools/cache/reflector.go:167: Failed to watch *v1beta1.Ingress: failed to list *v1beta1.Ingress: the server could not find the requested resource

E0315 12:44:08.803410 1 reflector.go:138] external/io_k8s_client_go/tools/cache/reflector.go:167: Failed to watch *v1beta1.Ingress: failed to list *v1beta1.Ingress: the server could not find the requested resource

E0315 12:45:01.667084 1 reflector.go:138] external/io_k8s_client_go/tools/cache/reflector.go:167: Failed to watch *v1beta1.Ingress: failed to list *v1beta1.Ingress: the server could not find the requested resource

E0315 12:45:42.456213 1 reflector.go:138] external/io_k8s_client_go/tools/cache/reflector.go:167: Failed to watch *v1beta1.Ingress: failed to list *v1beta1.Ingress: the server could not find the requested resource

I0315 12:45:44.293030 1 trigger_controller.go:189] cert-manager/controller/certificates-trigger "msg"="Certificate must be re-issued" "key"="default/uptime-fb-science" "message"="Issuing certificate as Secret does not exist" "reason"="DoesNotExist"

I0315 12:45:44.293042 1 conditions.go:182] Setting lastTransitionTime for Certificate "uptime-fb-science" condition "Ready" to 2022-03-15 12:45:44.293030983 +0000 UTC m=+1195.800044437

I0315 12:45:44.293083 1 conditions.go:182] Setting lastTransitionTime for Certificate "uptime-fb-science" condition "Issuing" to 2022-03-15 12:45:44.293073828 +0000 UTC m=+1195.800087293

I0315 12:45:44.367138 1 controller.go:162] cert-manager/controller/certificates-readiness "msg"="re-queuing item due to optimistic locking on resource" "key"="default/uptime-fb-science" "error"="Operation cannot be fulfilled on certificates.cert-manager.io \"uptime-fb-science\": the object has been modified; please apply your changes to the latest version and try again"

I0315 12:45:44.372288 1 conditions.go:182] Setting lastTransitionTime for Certificate "uptime-fb-science" condition "Ready" to 2022-03-15 12:45:44.372273962 +0000 UTC m=+1195.879287462

I0315 12:45:45.375323 1 controller.go:162] cert-manager/controller/certificates-key-manager "msg"="re-queuing item due to optimistic locking on resource" "key"="default/uptime-fb-science" "error"="Operation cannot be fulfilled on certificates.cert-manager.io \"uptime-fb-science\": the object has been modified; please apply your changes to the latest version and try again"

I0315 12:45:45.510993 1 conditions.go:242] Setting lastTransitionTime for CertificateRequest "uptime-fb-science-b7dsf" condition "Approved" to 2022-03-15 12:45:45.51096944 +0000 UTC m=+1197.017982953

I0315 12:45:45.860678 1 conditions.go:242] Setting lastTransitionTime for CertificateRequest "uptime-fb-science-b7dsf" condition "Ready" to 2022-03-15 12:45:45.86065169 +0000 UTC m=+1197.367665135

E0315 12:46:36.679773 1 reflector.go:138] external/io_k8s_client_go/tools/cache/reflector.go:167: Failed to watch *v1beta1.Ingress: failed to list *v1beta1.Ingress: the server could not find the requested resource

E0315 12:47:17.700104 1 reflector.go:138] external/io_k8s_client_go/tools/cache/reflector.go:167: Failed to watch *v1beta1.Ingress: failed to list *v1beta1.Ingress: the server could not find the requested resource

E0315 12:48:15.919178 1 reflector.go:138] external/io_k8s_client_go/tools/cache/reflector.go:167: Failed to watch *v1beta1.Ingress: failed to list *v1beta1.Ingress: the server could not find the requested resource

E0315 12:48:59.191742 1 reflector.go:138] external/io_k8s_client_go/tools/cache/reflector.go:167: Failed to watch *v1beta1.Ingress: failed to list *v1beta1.Ingress: the server could not find the requested resource

E0315 12:49:37.679836 1 reflector.go:138] external/io_k8s_client_go/tools/cache/reflector.go:167: Failed to watch *v1beta1.Ingress: failed to list *v1beta1.Ingress: the server could not find the requested resource
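A quick way to confirm this kind of mismatch is to ask the API server which group/versions it still serves. The following is a minimal sketch, assuming `kubectl` points at the cluster in question; the helper name `has_api_version` is hypothetical.

```shell
#!/usr/bin/env bash
# has_api_version (hypothetical helper): reads a list of API group/versions
# on stdin, one per line (the format printed by `kubectl api-versions`),
# and succeeds only if the exact group/version given as $1 is present.
has_api_version() {
  grep -Fxq -- "$1"
}

# Usage against a live cluster (not executed here):
#   kubectl api-versions | has_api_version networking.k8s.io/v1beta1 \
#     || echo "v1beta1 Ingress is not served; cert-manager must use networking.k8s.io/v1"
```

If the check fails for `networking.k8s.io/v1beta1` but passes for `networking.k8s.io/v1`, any controller still watching the beta API will log errors like the ones above.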

We’ll move to the latest (v1.7.1 as of this writing):

$ kubectl apply -f https://github.com/jetstack/cert-manager/releases/download/v1.7.1/cert-manager.yaml

customresourcedefinition.apiextensions.k8s.io/certificaterequests.cert-manager.io configured

customresourcedefinition.apiextensions.k8s.io/certificates.cert-manager.io configured

customresourcedefinition.apiextensions.k8s.io/challenges.acme.cert-manager.io configured

customresourcedefinition.apiextensions.k8s.io/clusterissuers.cert-manager.io configured

customresourcedefinition.apiextensions.k8s.io/issuers.cert-manager.io configured

customresourcedefinition.apiextensions.k8s.io/orders.acme.cert-manager.io configured

namespace/cert-manager unchanged

serviceaccount/cert-manager-cainjector configured

serviceaccount/cert-manager configured

serviceaccount/cert-manager-webhook configured

configmap/cert-manager-webhook created

clusterrole.rbac.authorization.k8s.io/cert-manager-cainjector configured

clusterrole.rbac.authorization.k8s.io/cert-manager-controller-issuers configured

clusterrole.rbac.authorization.k8s.io/cert-manager-controller-clusterissuers configured

clusterrole.rbac.authorization.k8s.io/cert-manager-controller-certificates configured

clusterrole.rbac.authorization.k8s.io/cert-manager-controller-orders configured

clusterrole.rbac.authorization.k8s.io/cert-manager-controller-challenges configured

clusterrole.rbac.authorization.k8s.io/cert-manager-controller-ingress-shim configured

clusterrole.rbac.authorization.k8s.io/cert-manager-view configured

clusterrole.rbac.authorization.k8s.io/cert-manager-edit configured

clusterrole.rbac.authorization.k8s.io/cert-manager-controller-approve:cert-manager-io configured

clusterrole.rbac.authorization.k8s.io/cert-manager-controller-certificatesigningrequests configured

clusterrole.rbac.authorization.k8s.io/cert-manager-webhook:subjectaccessreviews configured

clusterrolebinding.rbac.authorization.k8s.io/cert-manager-cainjector configured

clusterrolebinding.rbac.authorization.k8s.io/cert-manager-controller-issuers configured

clusterrolebinding.rbac.authorization.k8s.io/cert-manager-controller-clusterissuers configured

clusterrolebinding.rbac.authorization.k8s.io/cert-manager-controller-certificates configured

clusterrolebinding.rbac.authorization.k8s.io/cert-manager-controller-orders configured

clusterrolebinding.rbac.authorization.k8s.io/cert-manager-controller-challenges configured

clusterrolebinding.rbac.authorization.k8s.io/cert-manager-controller-ingress-shim configured

clusterrolebinding.rbac.authorization.k8s.io/cert-manager-controller-approve:cert-manager-io configured

clusterrolebinding.rbac.authorization.k8s.io/cert-manager-controller-certificatesigningrequests configured

clusterrolebinding.rbac.authorization.k8s.io/cert-manager-webhook:subjectaccessreviews configured

role.rbac.authorization.k8s.io/cert-manager-cainjector:leaderelection configured

role.rbac.authorization.k8s.io/cert-manager:leaderelection configured

role.rbac.authorization.k8s.io/cert-manager-webhook:dynamic-serving configured

rolebinding.rbac.authorization.k8s.io/cert-manager-cainjector:leaderelection configured

rolebinding.rbac.authorization.k8s.io/cert-manager:leaderelection configured

rolebinding.rbac.authorization.k8s.io/cert-manager-webhook:dynamic-serving configured

service/cert-manager configured

service/cert-manager-webhook configured

deployment.apps/cert-manager-cainjector configured

deployment.apps/cert-manager configured

deployment.apps/cert-manager-webhook configured

mutatingwebhookconfiguration.admissionregistration.k8s.io/cert-manager-webhook configured

validatingwebhookconfiguration.admissionregistration.k8s.io/cert-manager-webhook configured

$ kubectl get pods -n cert-manager

NAME READY STATUS RESTARTS AGE

cert-manager-5b6d4f8d44-tkkhh 1/1 Running 0 32s

cert-manager-cainjector-747cfdfd87-fxwbf 1/1 Running 0 32s

cert-manager-webhook-67cb765ff6-rkh9p 1/1 Running 0 32s

cert-manager-webhook-69579b9ccd-pf2vb 1/1 Terminating 0 26m

This time it worked, once I deleted and re-created the Certificate:

$ kubectl get cert | tail -n3

core-fb-science True core.freshbrewed.science-cert 314d

myk8s-tpk-best False myk8s.tpk.best-cert 346d

uptime-fb-science False uptime.freshbrewed.science-cert 6m54s

$ kubectl delete -f test.yml

certificate.cert-manager.io "uptime-fb-science" deleted

$ kubectl apply -f test.yml

certificate.cert-manager.io/uptime-fb-science created

builder@DESKTOP-QADGF36:~/Workspaces/nginx-fixing$ kubectl get cert | grep uptime

uptime-fb-science False uptime.freshbrewed.science-cert 7s

$ kubectl get cert | grep uptime

uptime-fb-science True uptime.freshbrewed.science-cert 26s

# and for good measure, re-create the Ingress

builder@DESKTOP-QADGF36:~/Workspaces/jekyll-blog$ kubectl delete -f uptimeIngress.yml

ingress.networking.k8s.io "uptime" deleted

builder@DESKTOP-QADGF36:~/Workspaces/jekyll-blog$ kubectl apply -f uptimeIngress.yml

ingress.networking.k8s.io/uptime created
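Rather than re-running `kubectl get cert` until READY flips to True, the wait can be scripted with `kubectl wait`. This is only a sketch: the wrapper name `wait_for_cert` and its defaults (namespace `default`, timeout `120s`) are my own assumptions.

```shell
#!/usr/bin/env bash
# wait_for_cert (hypothetical wrapper): block until the named cert-manager
# Certificate reports condition Ready, or until the timeout expires.
#   $1 = certificate name, $2 = namespace (default: default),
#   $3 = timeout (default: 120s)
wait_for_cert() {
  local name="$1" namespace="${2:-default}" timeout="${3:-120s}"
  kubectl wait --for=condition=Ready "certificate/${name}" \
    --namespace "${namespace}" --timeout="${timeout}"
}

# Example (not executed here):
#   wait_for_cert uptime-fb-science default 180s
```

The command exits non-zero on timeout, so it can gate a later step such as re-creating the Ingress.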

The uptime cert is now good and Nginx is running, but the Uptime Kuma pod itself still has issues:

$ kubectl get pods | grep uptime

uptime-kuma-77cccfc9cc-8hs5z 0/1 CrashLoopBackOff 17 (3m20s ago) 42m

$ kubectl logs uptime-kuma-77cccfc9cc-8hs5z

==> Performing startup jobs and maintenance tasks

==> Starting application with user 0 group 0

Welcome to Uptime Kuma

Your Node.js version: 14

Node Env: production

Importing Node libraries

Importing 3rd-party libraries

Importing this project modules

Prepare Notification Providers

Version: 1.11.4

Creating express and socket.io instance

Server Type: HTTP

Data Dir: ./data/

Connecting to the Database

SQLite config:

[ { journal_mode: 'wal' } ]

[ { cache_size: -12000 } ]

SQLite Version: 3.36.0

Connected

Your database version: 10

Latest database version: 10

Database patch not needed

Database Patch 2.0 Process

Load JWT secret from database.

No user, need setup

Adding route

Adding socket handler

Init the server

Trace: RangeError [ERR_SOCKET_BAD_PORT]: options.port should be >= 0 and < 65536. Received NaN.

at new NodeError (internal/errors.js:322:7)

at validatePort (internal/validators.js:216:11)

at Server.listen (net.js:1457:5)

at /app/server/server.js:1347:12 {

code: 'ERR_SOCKET_BAD_PORT'

}

at process.<anonymous> (/app/server/server.js:1553:13)

at process.emit (events.js:400:28)

at processPromiseRejections (internal/process/promises.js:245:33)

at processTicksAndRejections (internal/process/task_queues.js:96:32)

If you keep encountering errors, please report to https://github.com/louislam/uptime-kuma/issues

Shutdown requested

Called signal: SIGTERM

Stopping all monitors

Trace: Error [ERR_SERVER_NOT_RUNNING]: Server is not running.

at new NodeError (internal/errors.js:322:7)

at Server.close (net.js:1624:12)

at Object.onceWrapper (events.js:519:28)

at Server.emit (events.js:412:35)

at emitCloseNT (net.js:1677:8)

at processTicksAndRejections (internal/process/task_queues.js:81:21) {

code: 'ERR_SERVER_NOT_RUNNING'

}

at process.<anonymous> (/app/server/server.js:1553:13)

at process.emit (events.js:400:28)

at processPromiseRejections (internal/process/promises.js:245:33)

at processTicksAndRejections (internal/process/task_queues.js:96:32)

If you keep encountering errors, please report to https://github.com/louislam/uptime-kuma/issues

Closing the database

SQLite closed

Graceful shutdown successful!

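It seems their Helm chart is actually in error: the ERR_SOCKET_BAD_PORT trace shows the server trying to listen on NaN, and the fix is to set the port explicitly with an env var.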

$ cat values.yml

podEnv:

  - name: "UPTIME_KUMA_PORT"

    value: "3001"

$ helm upgrade uptime-kuma uptime-kuma/uptime-kuma --install --set volume.storageClassName=local-path -f values.yml

Release "uptime-kuma" has been upgraded. Happy Helming!

NAME: uptime-kuma

LAST DEPLOYED: Tue Mar 15 08:12:09 2022

NAMESPACE: default

STATUS: deployed

REVISION: 3

NOTES:

1. Get the application URL by running these commands:

export POD_NAME=$(kubectl get pods --namespace default -l "app.kubernetes.io/name=uptime-kuma,app.kubernetes.io/instance=uptime-kuma" -o jsonpath="{.items[0].metadata.name}")

export CONTAINER_PORT=$(kubectl get pod --namespace default $POD_NAME -o jsonpath="{.spec.containers[0].ports[0].containerPort}")

echo "Visit http://127.0.0.1:3001 to use your application"

kubectl --namespace default port-forward $POD_NAME 3001:$CONTAINER_PORT

$ kubectl logs uptime-kuma-86f75d496b-sgw2s

==> Performing startup jobs and maintenance tasks

==> Starting application with user 0 group 0

Welcome to Uptime Kuma

Your Node.js version: 14

Node Env: production

Importing Node libraries

Importing 3rd-party libraries

Importing this project modules

Prepare Notification Providers

Version: 1.11.4

Creating express and socket.io instance

Server Type: HTTP

Data Dir: ./data/

Copying Database

Connecting to the Database

SQLite config:

[ { journal_mode: 'wal' } ]

[ { cache_size: -12000 } ]

SQLite Version: 3.36.0

Connected

Your database version: 0

Latest database version: 10

Database patch is needed

Backing up the database

Patching ./db/patch1.sql

Patched ./db/patch1.sql

Patching ./db/patch2.sql

Patched ./db/patch2.sql

Patching ./db/patch3.sql

Patched ./db/patch3.sql

Patching ./db/patch4.sql

Patched ./db/patch4.sql

Patching ./db/patch5.sql

Patched ./db/patch5.sql

Patching ./db/patch6.sql

Patched ./db/patch6.sql

Patching ./db/patch7.sql

Patched ./db/patch7.sql

Patching ./db/patch8.sql

Patched ./db/patch8.sql

Patching ./db/patch9.sql

Patched ./db/patch9.sql

Patching ./db/patch10.sql

Patched ./db/patch10.sql

Database Patch 2.0 Process

patch-setting-value-type.sql is not patched

patch-setting-value-type.sql is patching

patch-setting-value-type.sql was patched successfully

patch-improve-performance.sql is not patched

patch-improve-performance.sql is patching

patch-improve-performance.sql was patched successfully

patch-2fa.sql is not patched

patch-2fa.sql is patching

patch-2fa.sql was patched successfully

patch-add-retry-interval-monitor.sql is not patched

patch-add-retry-interval-monitor.sql is patching

patch-add-retry-interval-monitor.sql was patched successfully

patch-incident-table.sql is not patched

patch-incident-table.sql is patching

patch-incident-table.sql was patched successfully

patch-group-table.sql is not patched

patch-group-table.sql is patching

patch-group-table.sql was patched successfully

patch-monitor-push_token.sql is not patched

patch-monitor-push_token.sql is patching

patch-monitor-push_token.sql was patched successfully

patch-http-monitor-method-body-and-headers.sql is not patched

patch-http-monitor-method-body-and-headers.sql is patching

patch-http-monitor-method-body-and-headers.sql was patched successfully

patch-2fa-invalidate-used-token.sql is not patched

patch-2fa-invalidate-used-token.sql is patching

patch-2fa-invalidate-used-token.sql was patched successfully

patch-notification_sent_history.sql is not patched

patch-notification_sent_history.sql is patching

patch-notification_sent_history.sql was patched successfully

patch-monitor-basic-auth.sql is not patched

patch-monitor-basic-auth.sql is patching

patch-monitor-basic-auth.sql was patched successfully

Database Patched Successfully

JWT secret is not found, generate one.

Stored JWT secret into database

No user, need setup

Adding route

Adding socket handler

Init the server

Listening on 3001
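The earlier trace complained that the port "should be >= 0 and < 65536" and received NaN. As a sanity check before putting a value into values.yml, the same range test can be mirrored in a few lines of shell. This is a sketch only; `is_valid_port` is a hypothetical helper, and the `tcp://...` string in the usage note is an illustrative non-numeric value, not something taken from the logs.

```shell
#!/usr/bin/env bash
# is_valid_port (hypothetical helper): succeed only if $1 is a plain
# decimal number in the range 0..65535, the range named in the Node.js error.
is_valid_port() {
  case "$1" in
    '' | *[!0-9]*) return 1 ;;   # empty, or contains a non-digit character
  esac
  # Cap the length first so very long digit strings never reach the
  # integer comparison.
  [ "${#1}" -le 5 ] && [ "$1" -lt 65536 ]
}

# Example:
#   is_valid_port 3001 && echo "ok"
#   is_valid_port "tcp://10.0.0.1:3001" || echo "not a port number"
```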

Let’s do the initial setup locally:

builder@DESKTOP-QADGF36:~/Workspaces/jekyll-blog$ export POD_NAME=$(kubectl get pods --namespace default -l "app.kubernetes.io/name=uptime-kuma,app.kubernetes.io/instance=uptime-kuma" -o jsonpath="{.items[0].metadata.name}")

builder@DESKTOP-QADGF36:~/Workspaces/jekyll-blog$ export CONTAINER_PORT=$(kubectl get pod --namespace default $POD_NAME -o jsonpath="{.spec.containers[0].ports[0].containerPort}")

builder@DESKTOP-QADGF36:~/Workspaces/jekyll-blog$ kubectl --namespace default port-forward $POD_NAME 3001:$CONTAINER_PORT

Forwarding from 127.0.0.1:3001 -> 3001

Forwarding from [::1]:3001 -> 3001

Handling connection for 3001

Handling connection for 3001

Handling connection for 3001

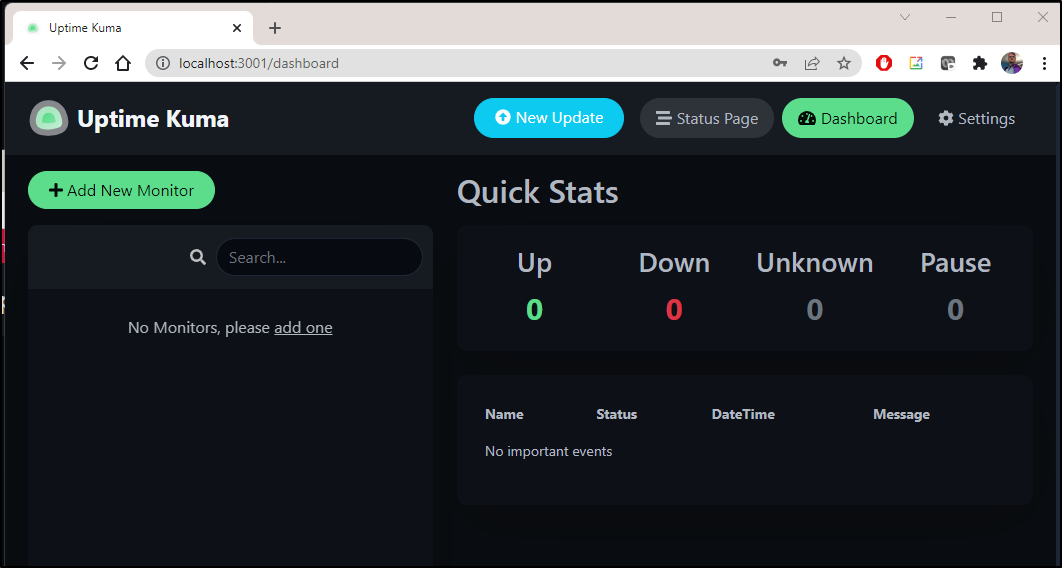

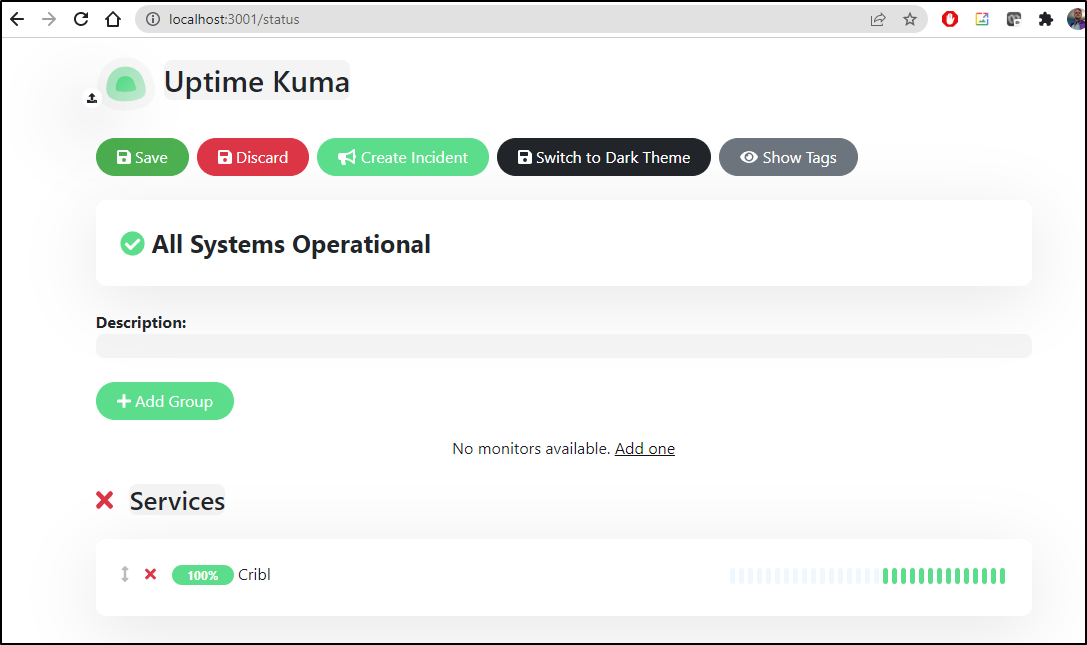

Once the admin user is created, we can see our dashboard

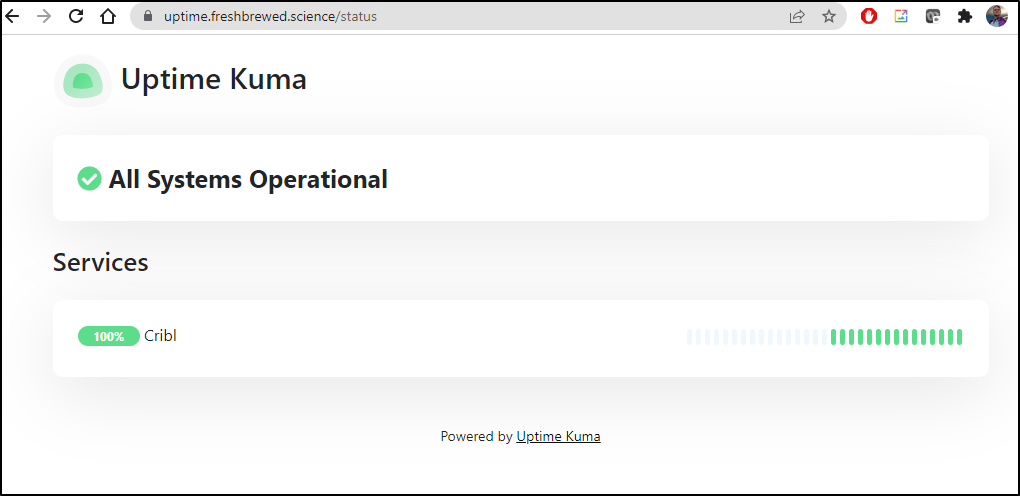

I should point out that at this point, the front end service is live, albeit with no content

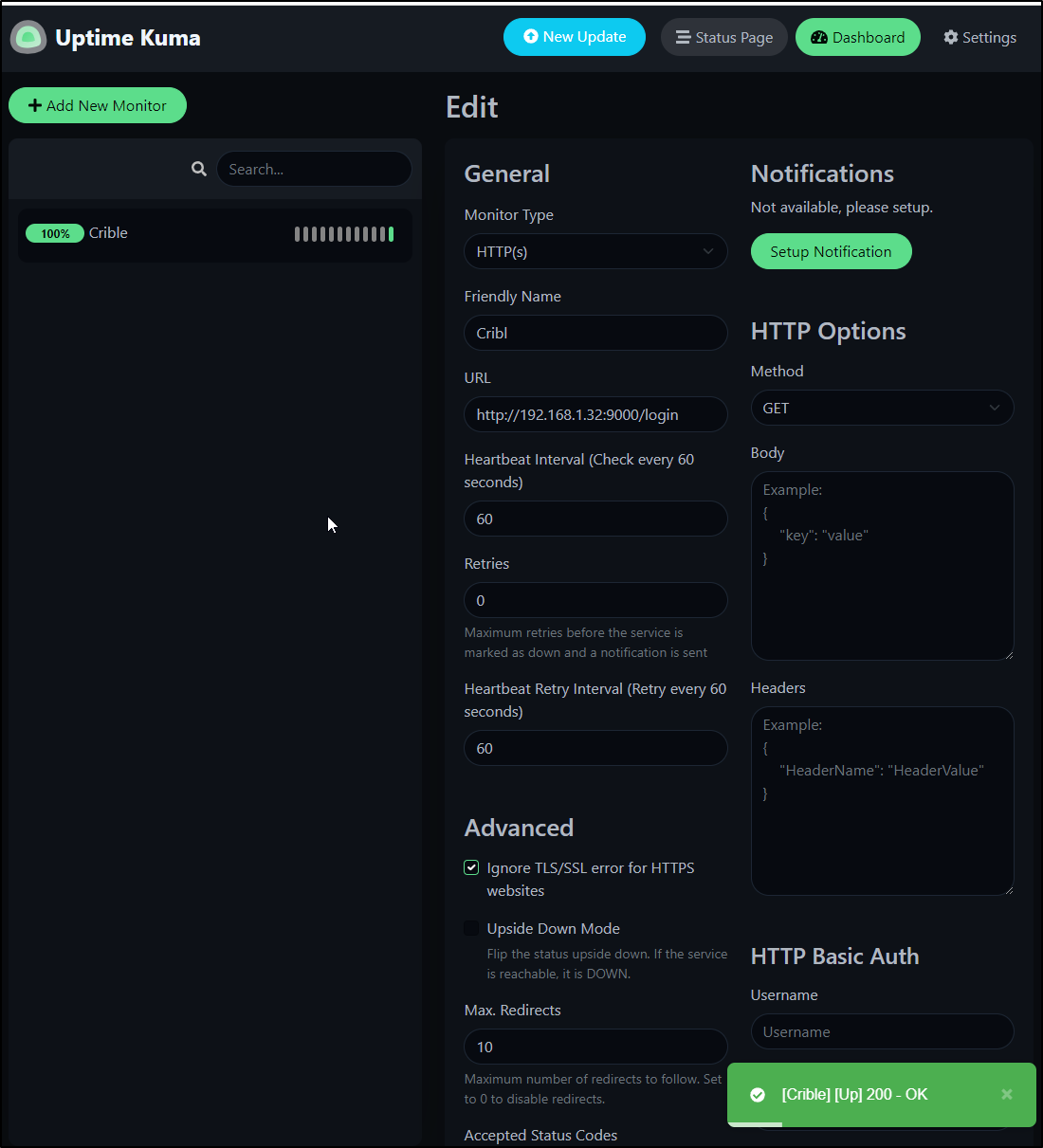

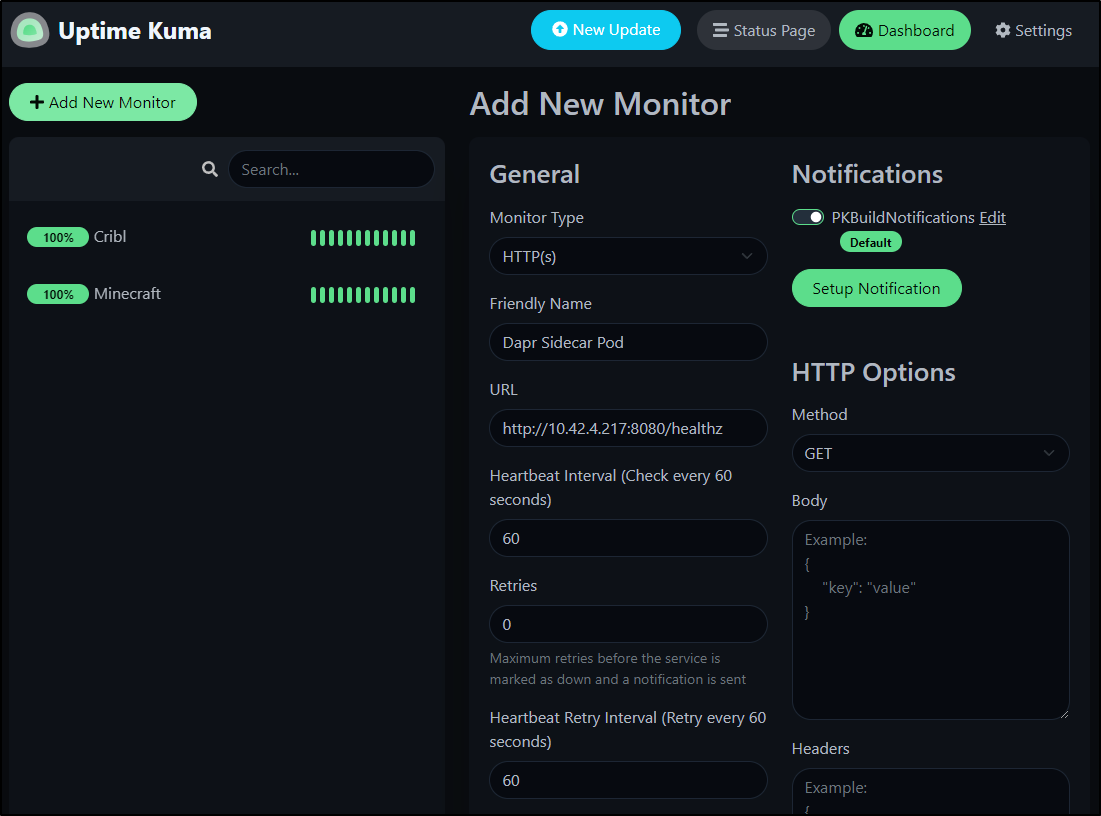

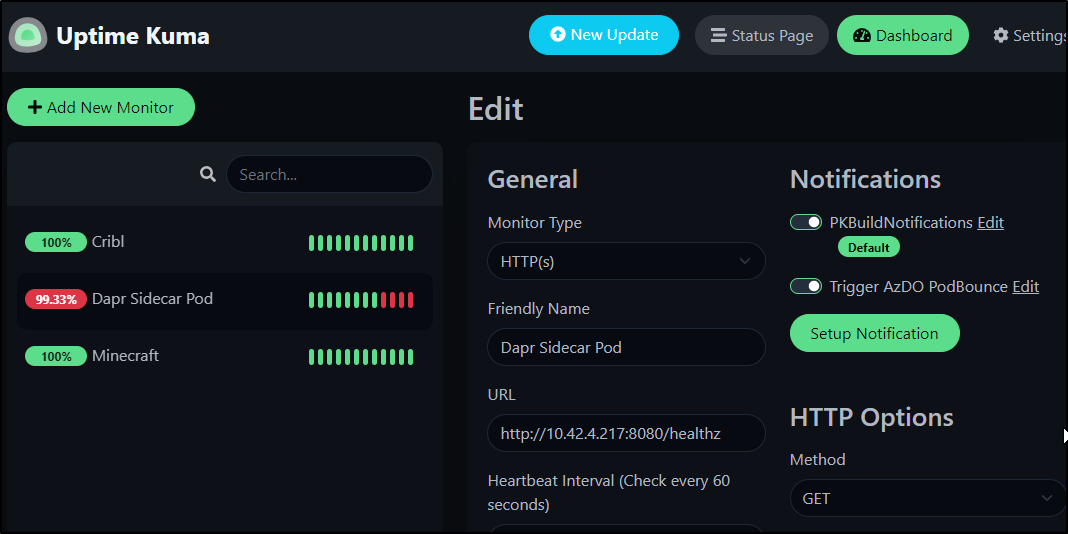

Simple HTTP monitor

Let’s add a simple HTTP monitor. For instance, I may want to monitor my Cribl Logmonitor instance.

We can add an alert

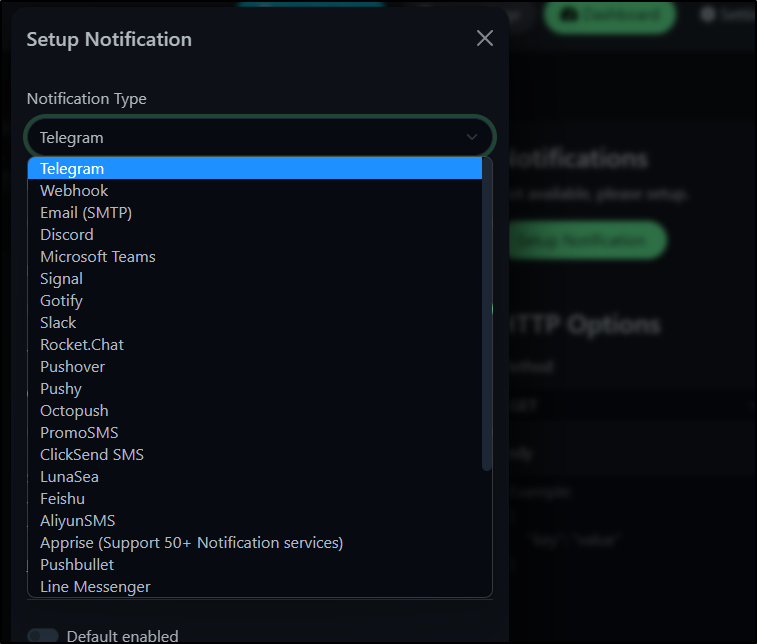

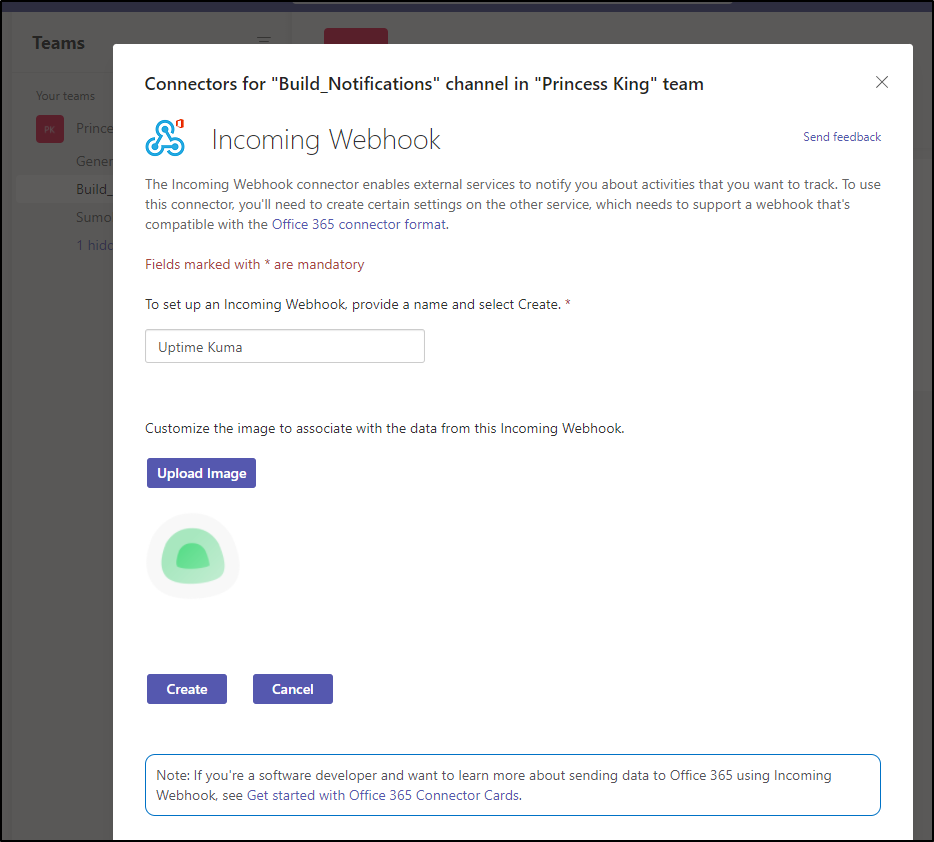

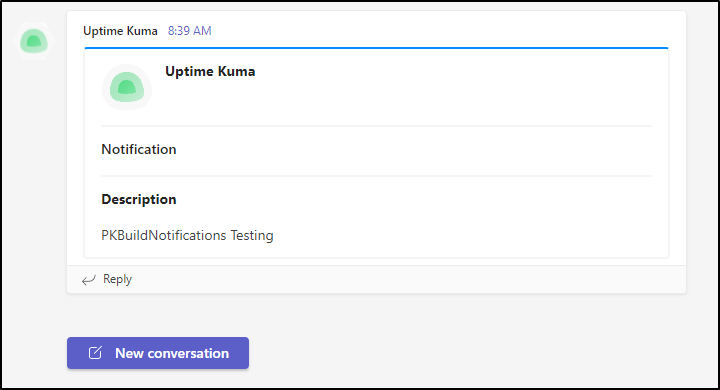

For instance, we can make a Teams Webhook

Which we can now use in a new Notification

Click test to see how it looks

We can share this monitor on the status page as well.

On the status page, we can add a group, then pick Cribl under its monitors to expose it.

Now when I save it, I can see the status

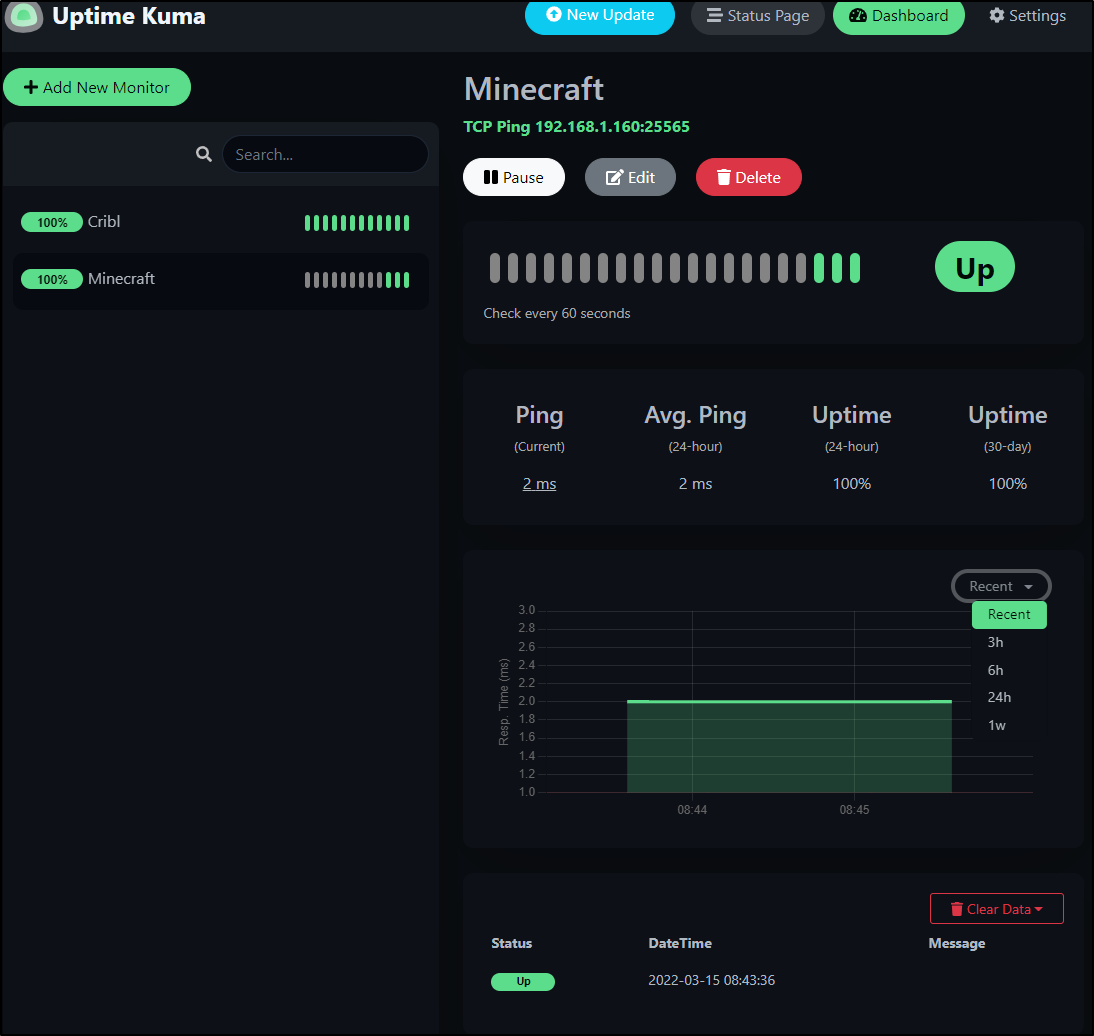

TCP monitors

We can monitor non-HTTP hosts as well.

Perhaps I wish to monitor my local FTB (Minecraft) server. The Java server, by default, runs on port 25565.

We can set up a monitor for this, but disable the PK Teams alert.

I can now see the host is responding and look at data for different time windows

Probe on Liveness

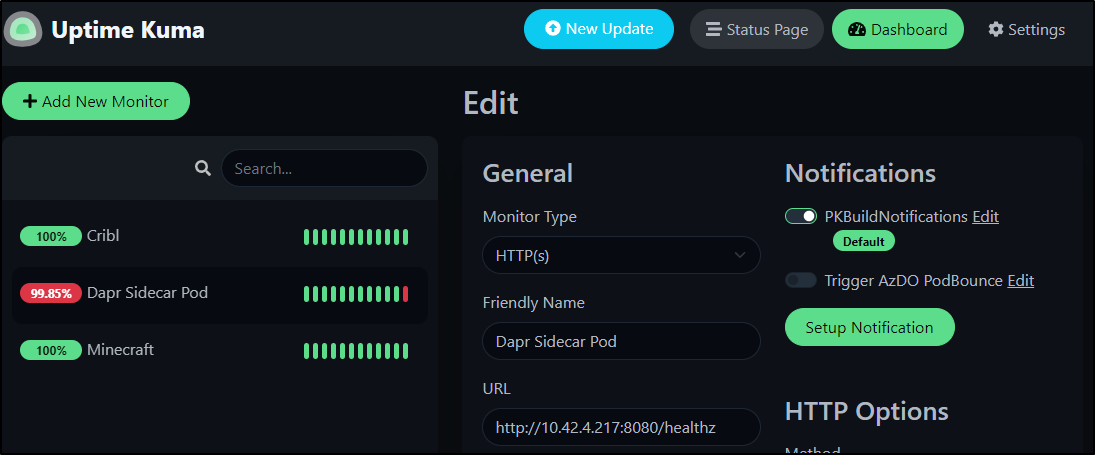

Another check many pods and services offer is a Liveness probe. This is a low cost (to the service) endpoint that just shows if a service is running.

For instance, looking at the Dapr Sidecar Injector:

$ kubectl describe pod dapr-sidecar-injector-5765dc95d9-7lpgm | grep Liveness

Liveness: http-get http://:8080/healthz delay=3s timeout=1s period=3s #success=1 #failure=5

$ kubectl describe pod dapr-sidecar-injector-5765dc95d9-7lpgm | grep IP | head -n1

IP: 10.42.4.217

This is easy to set up as a quick health check, and after letting it run for a day we start to see some basic health metrics such as ping time.

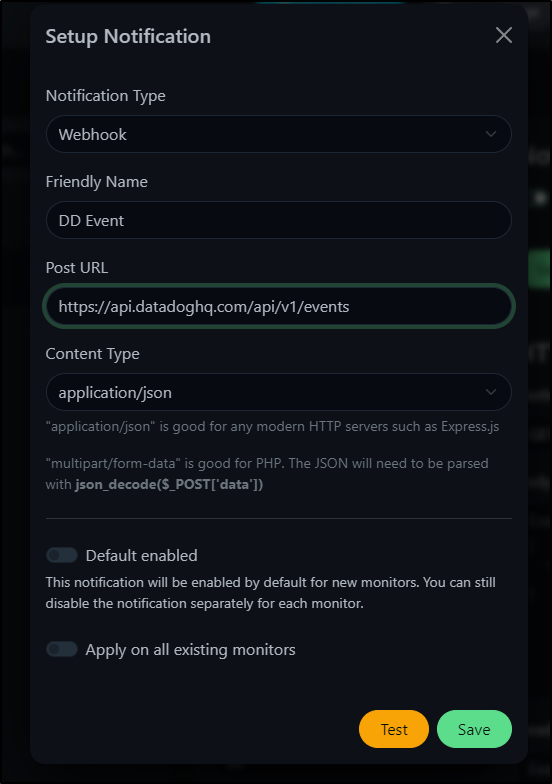
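Under the hood, a check like this boils down to an HTTP GET with a timeout, recording the status code and the response time. The sketch below does the same with `curl`; the helper name `check_health` and the 5 second timeout are my own assumptions, and a pod IP such as 10.42.4.217 is only reachable from inside the cluster network.

```shell
#!/usr/bin/env bash
# check_health (hypothetical helper): GET the URL in $1, print
# "<http_code> <seconds>", and succeed only on a 2xx response.
check_health() {
  local url="$1" out code
  # -o /dev/null discards the body; -w prints status code and total time.
  out="$(curl -s -o /dev/null --max-time 5 \
    -w '%{http_code} %{time_total}' "$url")" || true
  code="${out%% *}"
  printf '%s\n' "$out"
  case "$code" in
    2??) return 0 ;;
    *)   return 1 ;;
  esac
}

# Example, run from a host or pod that can reach the pod network:
#   check_health http://10.42.4.217:8080/healthz
```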

Notifications

I was hoping to use the Webhook notification to push events to Datadog.

However, the Datadog REST endpoint requires the API key to be passed as a header (DD-API-KEY), which the Webhook notification did not let me set, e.g.

$ curl -X POST https://api.datadoghq.com/api/v1/events -H 'Content-Type: application/json' -H "DD-API-KEY: 1234123412341234123412341234" -d '{"title": "Uptime Kuma Event", "text": "K8s Pod Down", "tags": [ "host:myk3s" ]}'

{"status":"ok","event":{"id":6428455170932464368,"id_str":"6428455170932464368","title":"Uptime Kuma Event","text":"K8s Pod Down","date_happened":1647428652,"handle":null,"priority":null,"related_event_id":null,"tags":["host:myk3s"],"url":"https://app.datadoghq.com/event/event?id=6428455170932464368"}}

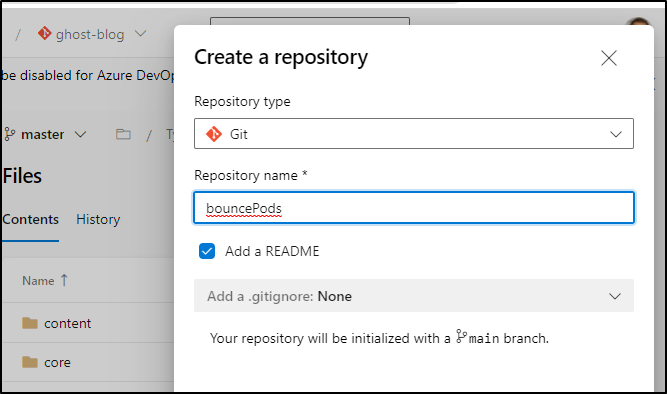

AzDO

One thing we can do is use a pipeline tool, such as Azure DevOps, to respond to issues Uptime Kuma identifies.

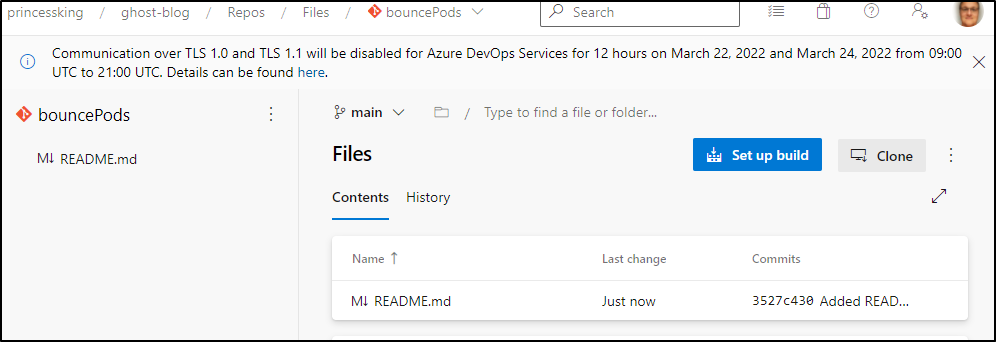

First, let’s create a repo to hold some work. This could be in Azure Repos or GitHub. For ease, I’ll use a local repo.

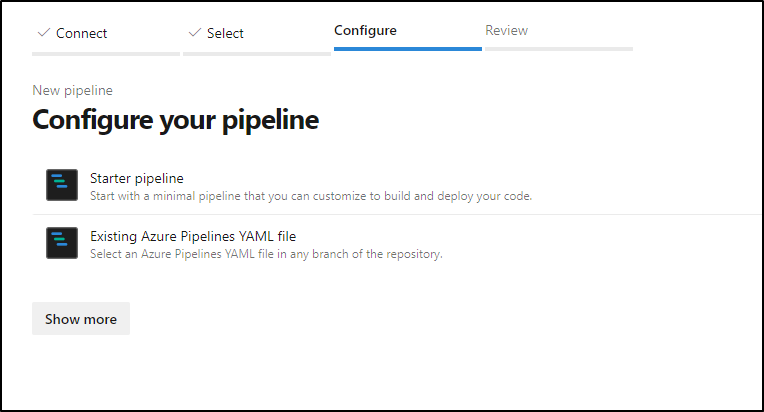

Next, click “Setup a Build” to create the pipeline file and choose the starter pipeline.

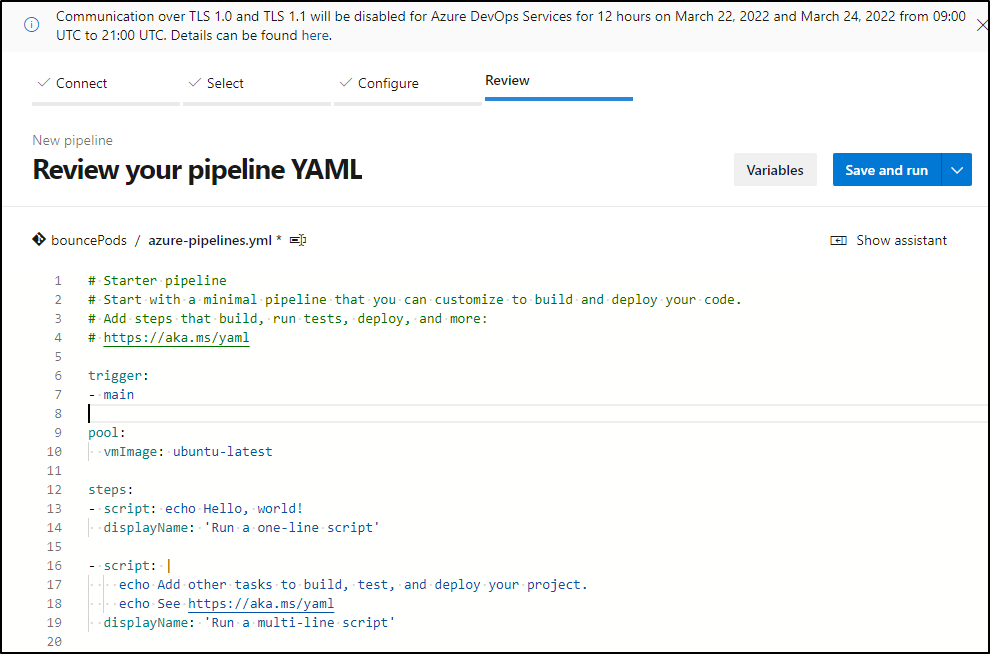

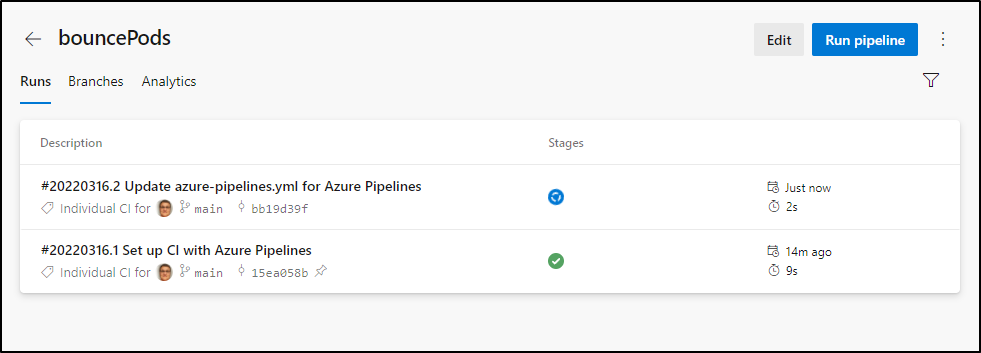

Then we can save and run it just to see that it works.

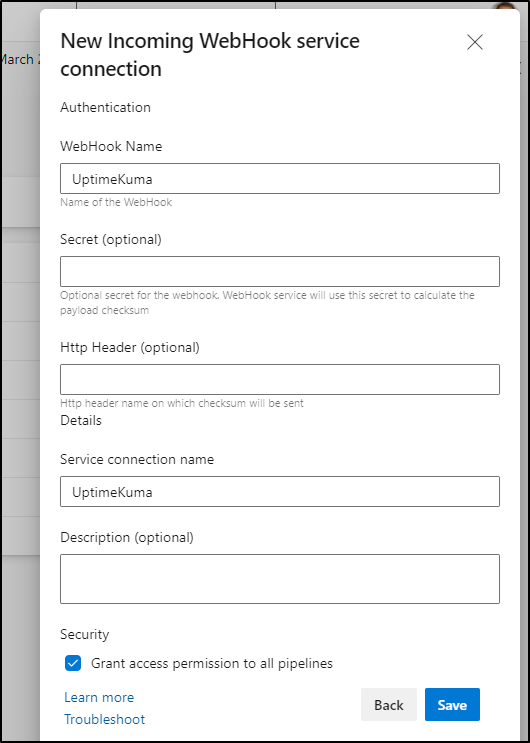

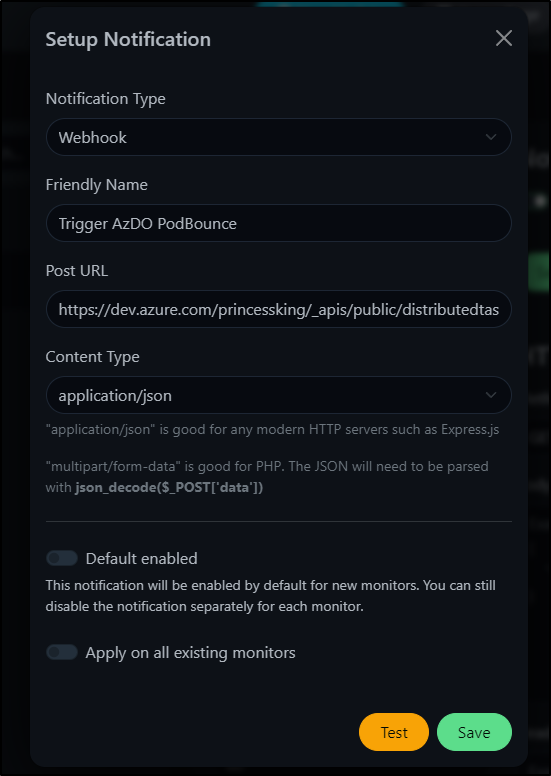

Next, let’s make a webhook to invoke this pipeline. I often mix up service connections and service hooks; this one is a service connection, found under Pipelines. Click create and choose “Incoming Webhook”:

We’ll name it UptimeKuma and grant access. You may want to restrict this later, depending on your AzDO org. Since this is a private tenant, I can safely grant access to all pipelines.

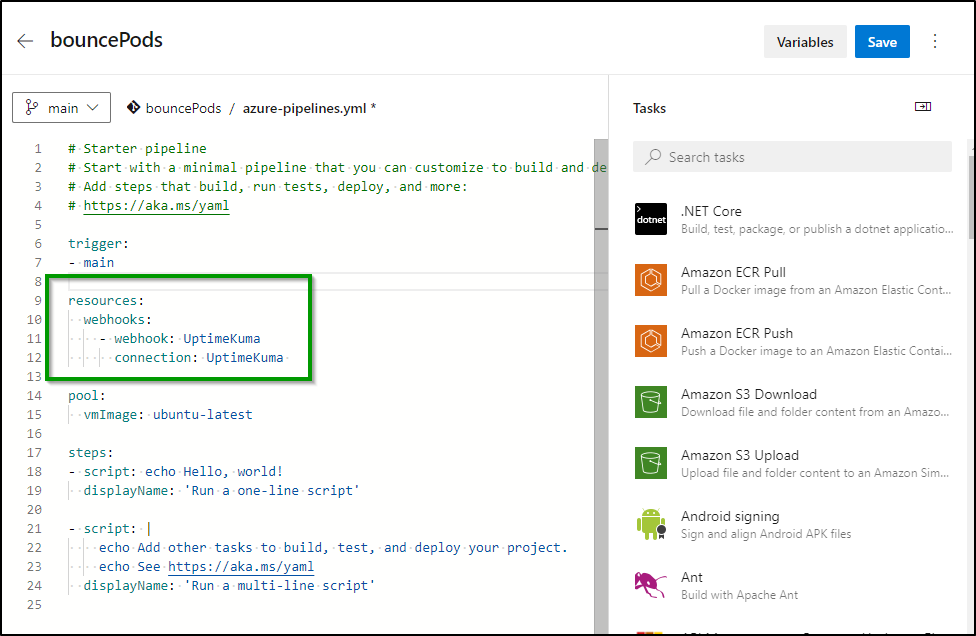

We can now refer to that Webhook and Connection (I make them the same for ease, but you can use different names for the connection and webhook name if you desire).

Because we changed main, that will trigger an invocation.

We can now use that Webhook, which works just fine with the Uptime Kuma Webhook notification:

https://dev.azure.com/princessking/_apis/public/distributedtask/webhooks/UptimeKuma?api-version=6.0-preview

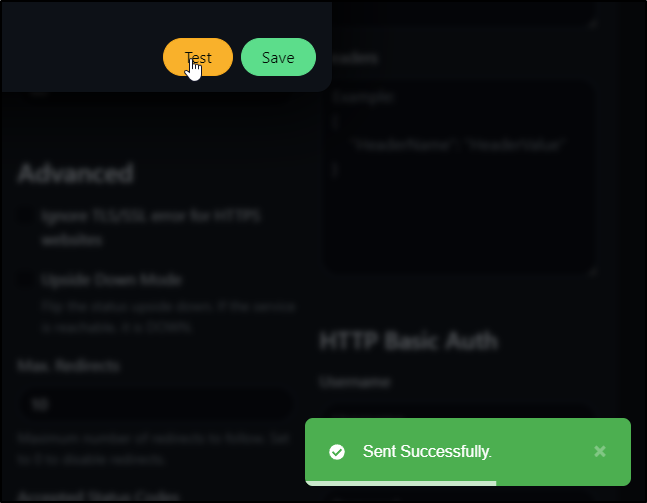

We can verify by clicking test

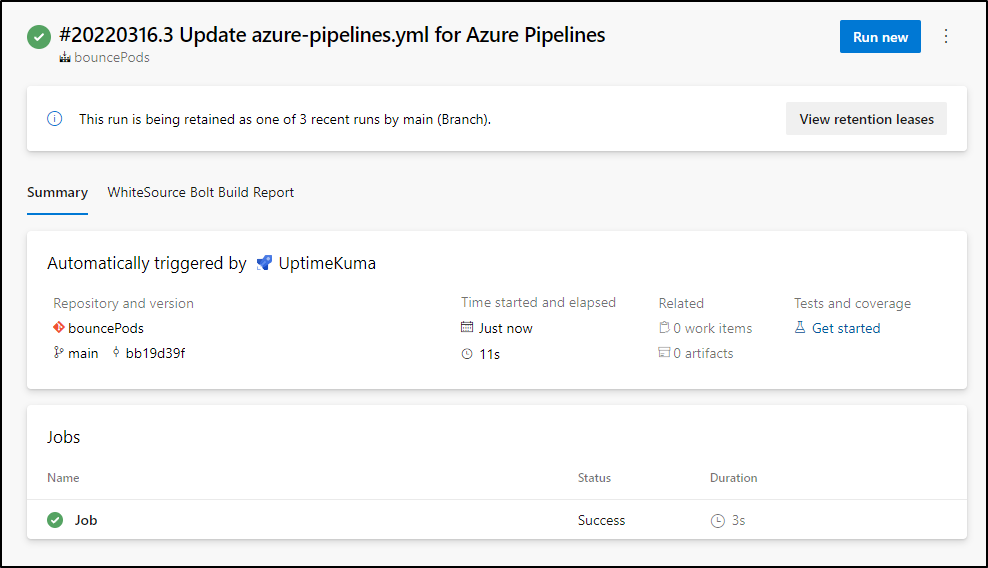

and seeing a build invoke

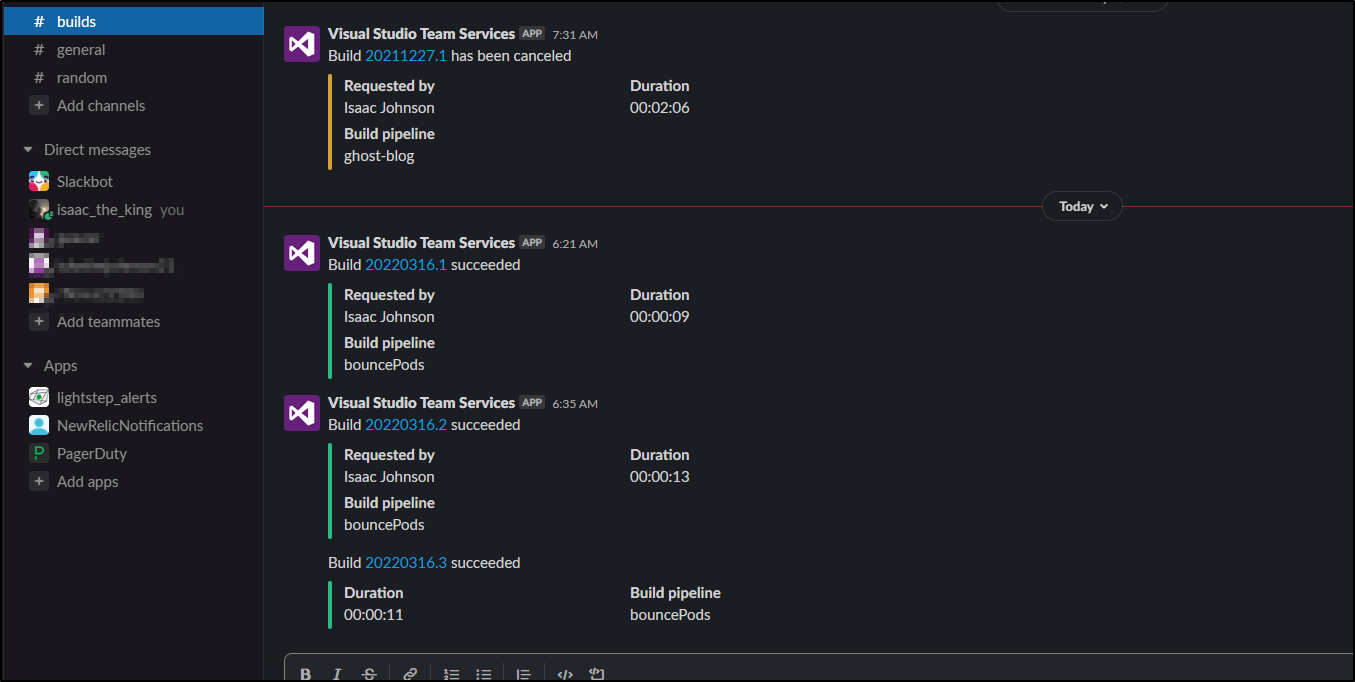
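The same endpoint can also be exercised from a terminal, which is handy when debugging without touching Uptime Kuma. A minimal sketch, assuming the incoming webhook accepts an unauthenticated JSON POST as configured above; the helper name `trigger_webhook` and the default payload are hypothetical.

```shell
#!/usr/bin/env bash
# trigger_webhook (hypothetical helper): POST a JSON payload to an Azure
# DevOps incoming webhook.
#   $1 = AzDO organization, $2 = webhook name, $3 = JSON payload (optional)
trigger_webhook() {
  local org="$1" webhook="$2" payload="$3"
  # Fall back to a small placeholder body when none is given.
  [ -n "$payload" ] || payload='{"msg":"manual test"}'
  curl -sS -X POST \
    -H 'Content-Type: application/json' \
    -d "$payload" \
    "https://dev.azure.com/${org}/_apis/public/distributedtask/webhooks/${webhook}?api-version=6.0-preview"
}

# Example (not executed here):
#   trigger_webhook princessking UptimeKuma '{"msg":"test from curl"}'
```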

Because I have AzDO tied to Slack, Datadog, etc., I can see build outputs in my chat windows.

Instead of stopping here with a demo, let’s complete the work: we can actually make this function as a mitigation system.

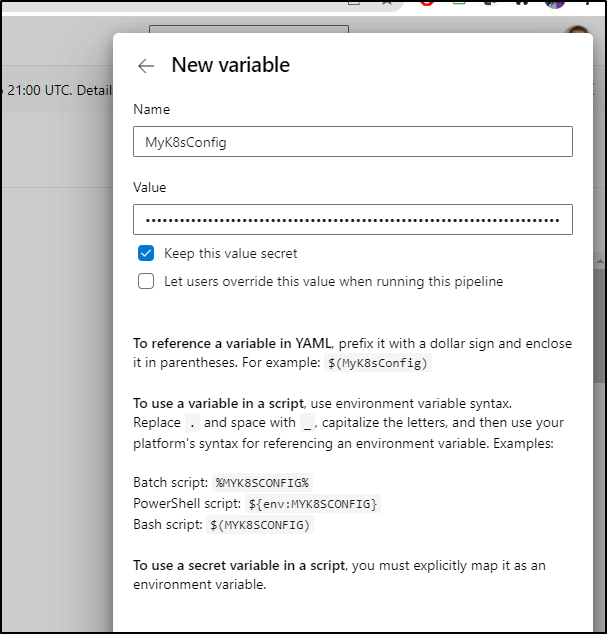

The quickest way is just to set our k8s external config as a base64’ed secret on the pipeline

$ cat ~/.kube/config | base64 -w 0

and save that to a secret pipeline variable (the pipeline refers to it as MyK8sConfig)
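The `-w 0` flag matters here because it keeps the encoded value on a single line, which is much easier to paste into a secret variable. Before trusting the secret, it is worth checking that the encoded value decodes back to the same file. A small sketch, assuming GNU coreutils `base64`; the helper names are hypothetical.

```shell
#!/usr/bin/env bash
# encode_kubeconfig (hypothetical helper): print the file in $1 as a
# single-line base64 string (GNU base64; -w 0 disables line wrapping).
encode_kubeconfig() {
  base64 -w 0 < "$1"
}

# decode_kubeconfig (hypothetical helper): decode the base64 string in $1
# into the file named by $2, as the pipeline later does for ~/.kube/config.
decode_kubeconfig() {
  printf '%s' "$1" | base64 --decode > "$2"
}

# Example round trip (not executed here):
#   encoded="$(encode_kubeconfig ~/.kube/config)"
#   decode_kubeconfig "$encoded" /tmp/config.check
#   cmp ~/.kube/config /tmp/config.check && echo "round trip ok"
```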

Then change the pipeline to install kubectl, decode the k8s config, and bounce the pod by label:

trigger:

- main

resources:

  webhooks:

  - webhook: UptimeKuma

    connection: UptimeKuma

pool:

  vmImage: ubuntu-latest

steps:

- task: KubectlInstaller@0

  displayName: 'Install Kubectl'

  inputs:

    kubectlVersion: 'latest'

- script: |

    set -x

    mkdir -p ~/.kube

    echo $(MyK8sConfig) | base64 --decode > ~/.kube/config

  displayName: 'Set Kubeconfig'

- script: |

    kubectl delete pod `kubectl get pod -l app.kubernetes.io/component=sidecar-injector -o jsonpath="{.items[0].metadata.name}"`

  displayName: 'Bounce the Pod'

Note: yes, you could use a Kubernetes service connection. You could also use a containerized Azure DevOps private agent that does not require a kube config. However, this method requires neither a private pool nor complicated interactions with the Kubernetes CLI Azure DevOps extension, which is why I went this direction.

Before we save, we can see the injector pod is 22d old

$ kubectl get pod -l app.kubernetes.io/component=sidecar-injector

NAME READY STATUS RESTARTS AGE

dapr-sidecar-injector-5765dc95d9-7lpgm 1/1 Running 0 22d

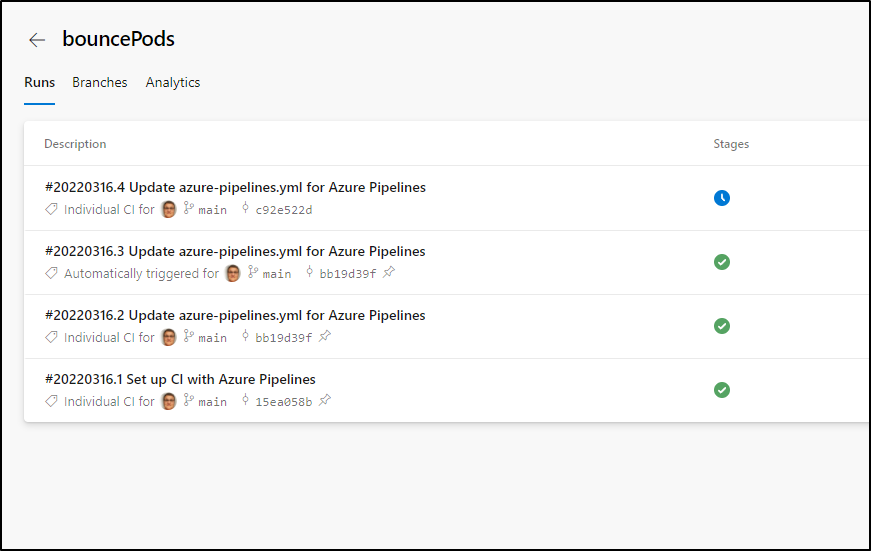

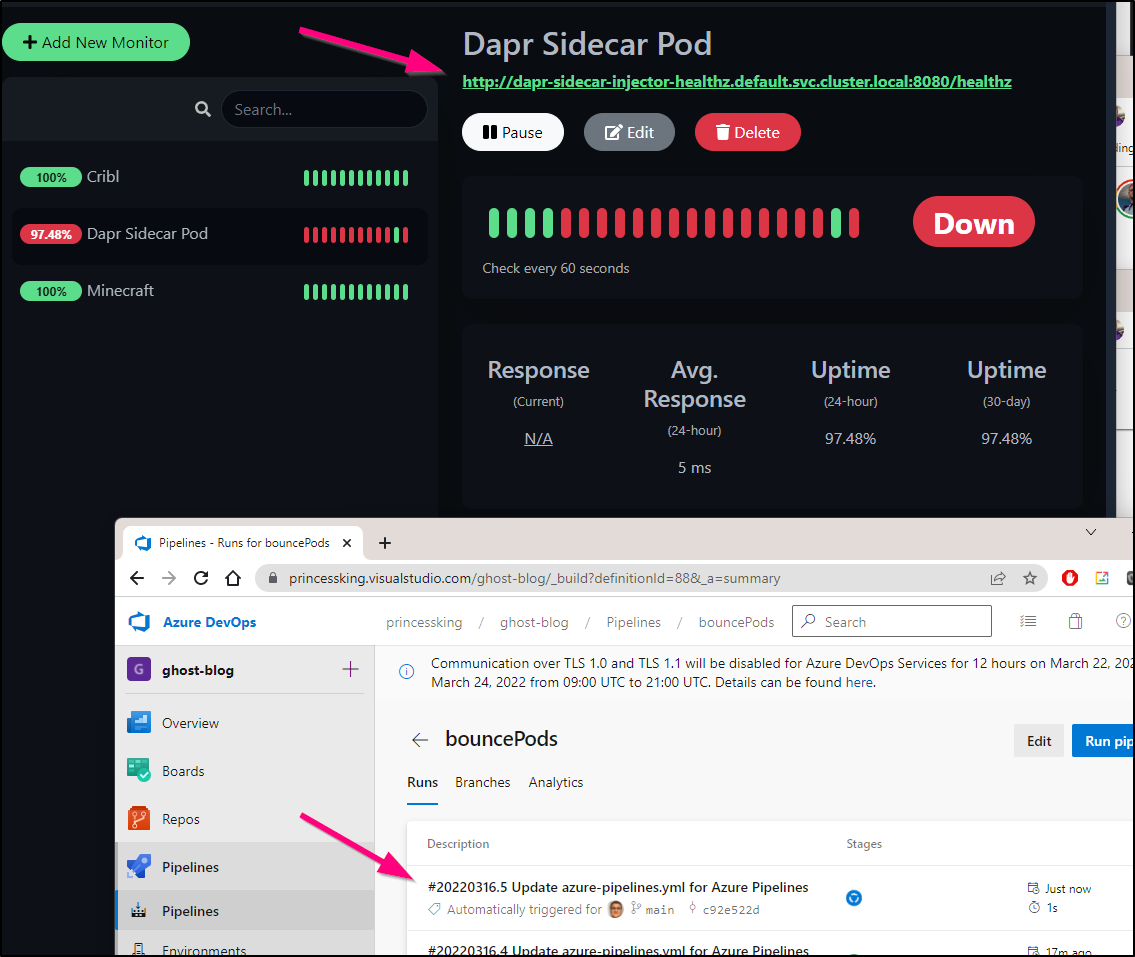

Saving invokes a run, and I can then see it bounce the pod:

$ kubectl get pod -l app.kubernetes.io/component=sidecar-injector

NAME READY STATUS RESTARTS AGE

dapr-sidecar-injector-5765dc95d9-7lpgm 1/1 Running 0 22d

$ kubectl get pod -l app.kubernetes.io/component=sidecar-injector

NAME READY STATUS RESTARTS AGE

$ kubectl get pod -l app.kubernetes.io/component=sidecar-injector

NAME READY STATUS RESTARTS AGE

dapr-sidecar-injector-5765dc95d9-hblrc 1/1 Running 0 30s

Luckily I did not enable the notification in Uptime Kuma just yet, otherwise we could potentially have had an infinite loop: the pipeline would bounce the pod, Uptime Kuma would detect it’s down, and that would trigger a new bounce.

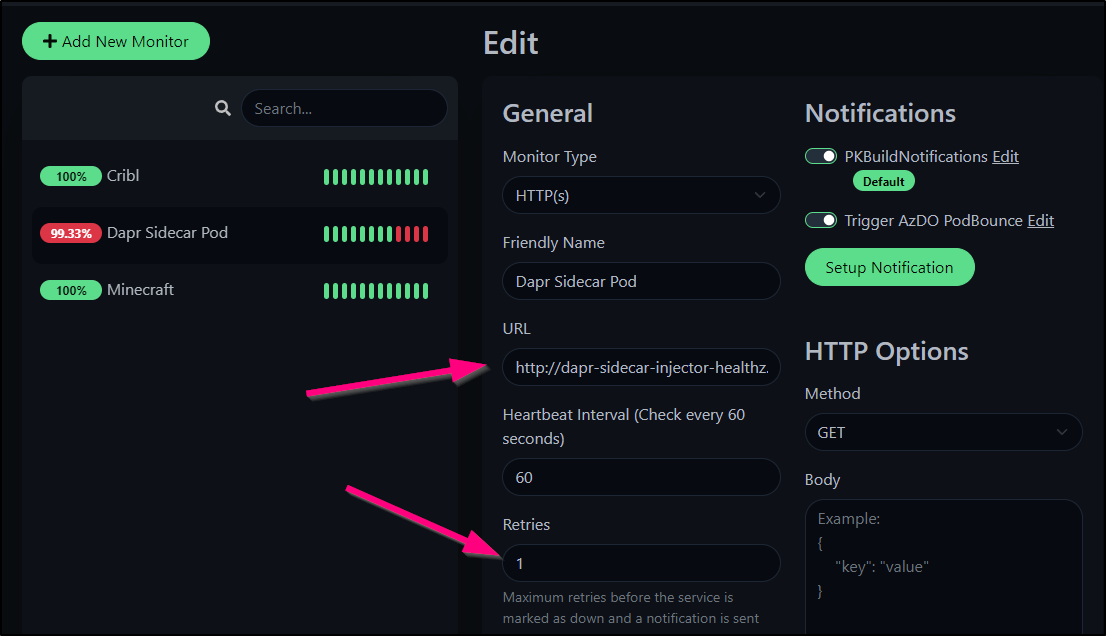
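One way to guard against such a loop is to skip the bounce when the pod was started only recently. This is a sketch of that idea rather than what the pipeline above does; `should_bounce` and the 300 second cooldown are hypothetical.

```shell
#!/usr/bin/env bash
# should_bounce (hypothetical helper): succeed only if the pod has been
# running for at least the cooldown period.
#   $1 = pod start time (epoch seconds), $2 = current time (epoch seconds),
#   $3 = cooldown in seconds (default: 300)
should_bounce() {
  local started="$1" now="$2" cooldown="${3:-300}"
  [ $(( now - started )) -ge "$cooldown" ]
}

# In the pipeline script this could look like (not executed here; assumes
# GNU date, which can parse the RFC 3339 timestamp Kubernetes reports):
#   start="$(kubectl get pod -l app.kubernetes.io/component=sidecar-injector \
#            -o jsonpath='{.items[0].status.startTime}')"
#   if should_bounce "$(date -d "$start" +%s)" "$(date +%s)" 300; then
#     kubectl delete pod -l app.kubernetes.io/component=sidecar-injector
#   fi
```

With a guard like this, a down notification that arrives while the replacement pod is still starting does not trigger another delete.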

This actually highlights a design issue. Pods change IPs when rotated. So we really cannot fixate on the IP.

While Pods themselves do not expose DNS, services do. There is a Dapr Injector Service presently, but it’s just for HTTPS traffic.

Let’s create a quick HealthZ service so we can easily reach 8080 on http.

builder@DESKTOP-QADGF36:~/Workspaces/nginx-fixing$ cat myDaprSvc.yml

apiVersion: v1

kind: Service

metadata:

  name: dapr-sidecar-injector-healthz

  namespace: default

spec:

  internalTrafficPolicy: Cluster

  ipFamilies:

  - IPv4

  ipFamilyPolicy: SingleStack

  ports:

  - name: http

    port: 8080

    protocol: TCP

    targetPort: 8080

  selector:

    app: dapr-sidecar-injector

  sessionAffinity: None

  type: ClusterIP

builder@DESKTOP-QADGF36:~/Workspaces/nginx-fixing$ kubectl apply -f myDaprSvc.yml

service/dapr-sidecar-injector-healthz created

We can now use http://dapr-sidecar-injector-healthz.default.svc.cluster.local:8080/healthz in the Uptime Kuma check which will direct to the current pod.
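That hostname follows the usual `<service>.<namespace>.svc.<cluster domain>` pattern. The sketch below builds such a URL and shows, in comments, one way to test it from a throwaway pod; `svc_url` is a hypothetical helper and `cluster.local` is assumed to be the cluster domain (the common default).

```shell
#!/usr/bin/env bash
# svc_url (hypothetical helper): build the in-cluster HTTP URL for a Service.
#   $1 = service name, $2 = namespace, $3 = port, $4 = path (default: /)
# Assumes the cluster domain is cluster.local.
svc_url() {
  local name="$1" namespace="$2" port="$3" path="${4:-/}"
  printf 'http://%s.%s.svc.cluster.local:%s%s\n' \
    "$name" "$namespace" "$port" "$path"
}

# Example check from inside the cluster (not executed here; uses the public
# curlimages/curl image and a temporary pod that is removed afterwards):
#   url="$(svc_url dapr-sidecar-injector-healthz default 8080 /healthz)"
#   kubectl run curl-test --rm -i --restart=Never \
#     --image=curlimages/curl --command -- curl -s "$url"
```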

We’ll also set retry to 1 just in case there is a delay in pod rotation

We now see the down detector trigger a pipeline run

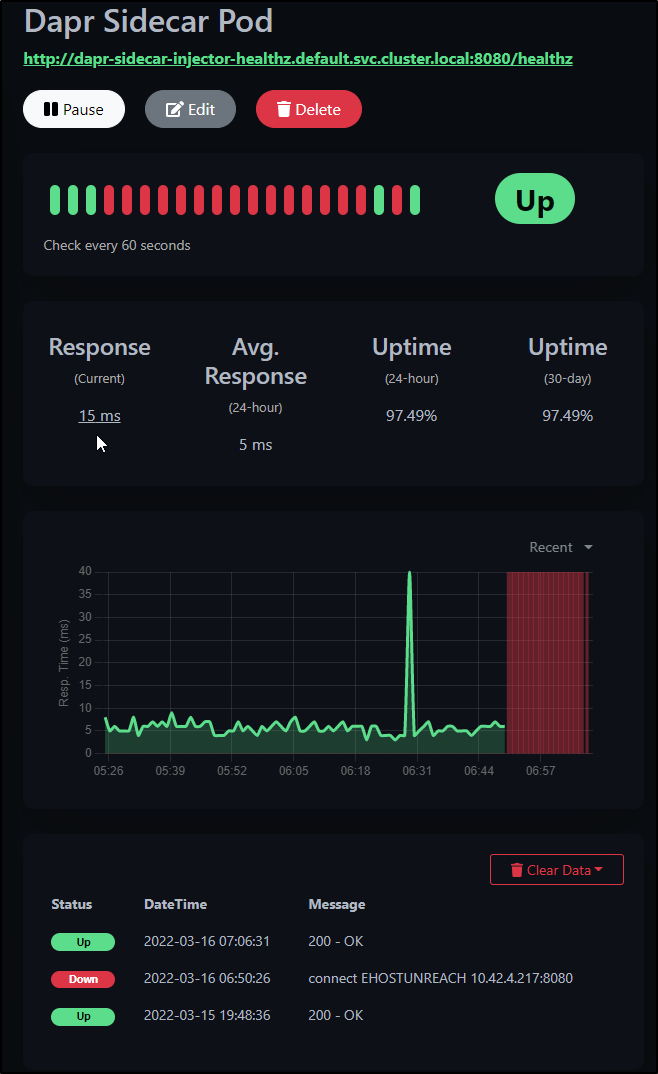

The pod rotates

builder@DESKTOP-QADGF36:~/Workspaces/nginx-fixing$ kubectl get pod -l app.kubernetes.io/component=sidecar-injector

NAME READY STATUS RESTARTS AGE

dapr-sidecar-injector-5765dc95d9-hblrc 1/1 Running 0 15m

builder@DESKTOP-QADGF36:~/Workspaces/nginx-fixing$ kubectl get pod -l app.kubernetes.io/component=sidecar-injector

NAME READY STATUS RESTARTS AGE

dapr-sidecar-injector-5765dc95d9-tlm4x 1/1 Running 0 34s

and the monitor shows as up once again.

Summary

Today we set up Uptime Kuma in our Kubernetes cluster and configured it to monitor a local non-containerized app (Cribl), a TCP-based server (Minecraft), and lastly a containerized service inside the same cluster Uptime Kuma was running in.

We dug into Notifications including Teams and invoking Azure DevOps via Webhooks. Lastly, in the webhook integration with AzDO we built out a full service check with remediation.

Uptime Kuma is a fantastic app I will certainly continue to use to monitor systems. I look forward to seeing what the author does with it (including possibly productizing it in the future).