Published: Dec 21, 2021 by Isaac Johnson

Rundeck is a popular runbook automation suite. PagerDuty acquired it last year to fold it into their larger monitoring and automation offerings.

Today we will explore running Rundeck inside of Kubernetes. As we work through the chart and its usage, we will touch on the various configuration options and ask how supportable the ‘free’ version really is. How robust an offering is the open source Rundeck? Can we use it as a drop-in replacement for Ansible? What exactly is the tie-in with PagerDuty?

We will set up a containerized Rundeck instance in Kubernetes via the Helm chart (albeit deprecated). We’ll configure ingress with HTTPS and basic auth, then set up both hello-world jobs and more interesting Kubernetes jobs. For Kubernetes, we’ll use GKE with the goal of having Rundeck launch a Kubernetes Job object. To wrap up, we’ll explore next steps and integration with both PagerDuty and Datadog.

History

Rundeck came about during the boom of desired state tooling around 2010. The company was founded in 2010 (some say 2013) by Damon Edwards, Alex Honor, and Greg Schueler, who wanted to automate server provisioning and enable continuous delivery across what they saw as “islands” of disconnected tools.

The company grew to about 100 commercial deployments, priced (at least in 2014) at $5k/server/year with $2.5k for each additional. They had a $3M seed round in 2017 before being sold to PagerDuty for $95.5M in 2020. From a sizing perspective, Rundeck had around 50 employees at the time. Whatever the pricing is today, they are careful to hide pricing details for the paid tiers.

Architecture

Rundeck has a very similar architecture to Ansible. We have the Rundeck server that hosts Projects. Projects have Jobs and these run on Nodes. Fundamentally it is a Java based App that stores configuration information in a relational database. The commercial product of Rundeck Enterprise adds the concept of a Rundeck Cluster for High Availability.

Rundeck also has a community open-source edition and a containerized version of the platform.

Setup

We can use the Signup URL to get the binaries.

However, we can directly just pull the docker container:

$ docker pull rundeck/rundeck:3.4.6

3.4.6: Pulling from rundeck/rundeck

92dc2a97ff99: Pull complete

be13a9d27eb8: Pull complete

c8299583700a: Pull complete

9e6bddf44d59: Pull complete

e4213de99ee1: Pull complete

70f2ef2fdcfc: Pull complete

9df1c8c1f3a7: Pull complete

ccce9ff83667: Pull complete

696431486e49: Pull complete

c5689cfd19ce: Pull complete

305abb35873c: Pull complete

007492d6e95c: Pull complete

80be92206cfb: Pull complete

Digest: sha256:62a453aaa9759a407042ff3798d775ea4940fe03c1630c93fa10927e30d227bd

Status: Downloaded newer image for rundeck/rundeck:3.4.6

docker.io/rundeck/rundeck:3.4.6

We could just use the docker container and launch it on port 4440:

$ sudo docker run -p 4440:4440 -e EXTERNAL_SERVER_URL=http://MY.HOSTNAME.COM:4440 --name rundeck -t rundeck/rundeck:latest

However there is an incubator (now deprecated) rundeck chart we can use to run natively in Kubernetes:

$ helm repo add incubator https://charts.helm.sh/incubator

"incubator" has been added to your repositories

$ helm install rundeckrelease incubator/rundeck --set image.tag=3.4.6

WARNING: This chart is deprecated

NAME: rundeckrelease

LAST DEPLOYED: Sun Dec 12 11:24:35 2021

NAMESPACE: default

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

1. Get the application URL by running these commands:

export POD_NAME=$(kubectl get pods --namespace default -l "app.kubernetes.io/name=rundeck,app.kubernetes.io/instance=rundeckrelease" -o jsonpath="{.items[0].metadata.name}")

echo "Visit http://127.0.0.1:4440 to use your application"

kubectl port-forward $POD_NAME --namespace default 4440:4440

We can get the pod name and port-forward to access our pod

$ export POD_NAME=$(kubectl get pods --namespace default -l "app.kubernetes.io/name=rundeck,app.kubernetes.io/instance=rundeckrelease" -o jsonpath="{.items[0].metadata.name}")

$ kubectl port-forward $POD_NAME --namespace default 4440:4440

Forwarding from 127.0.0.1:4440 -> 4440

Forwarding from [::1]:4440 -> 4440

Handling connection for 4440

The pod has two containers and serves traffic on port 4440. Access it (when port-forwarding) via http://localhost:4440

Using Rundeck

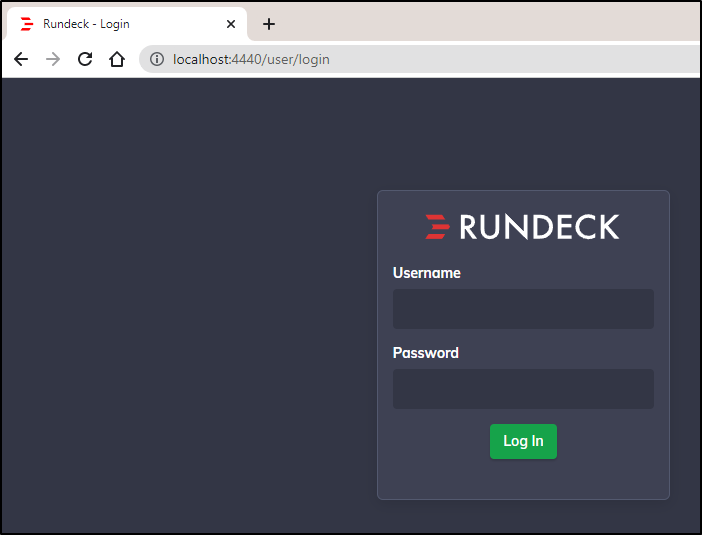

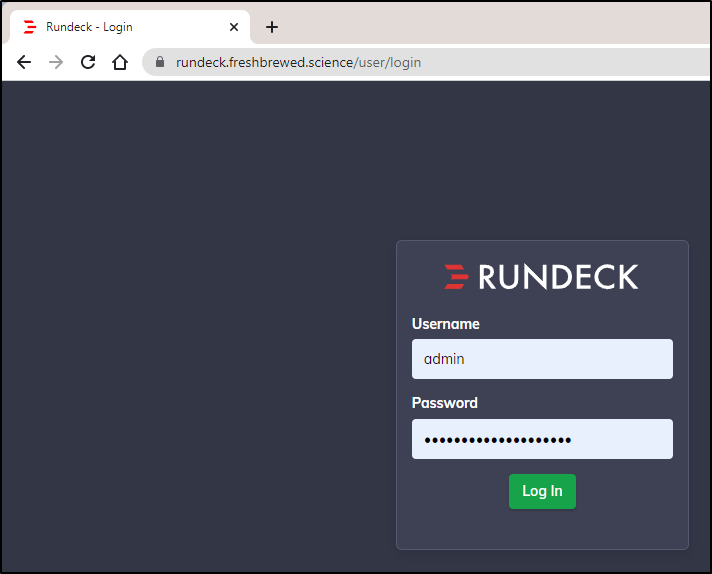

Login with the default admin:admin user:pass (configurable in helm chart)

Note, there is a weird redirect error I kept getting. If you also get that, go directly to the home URL: http://localhost:4440/menu/home

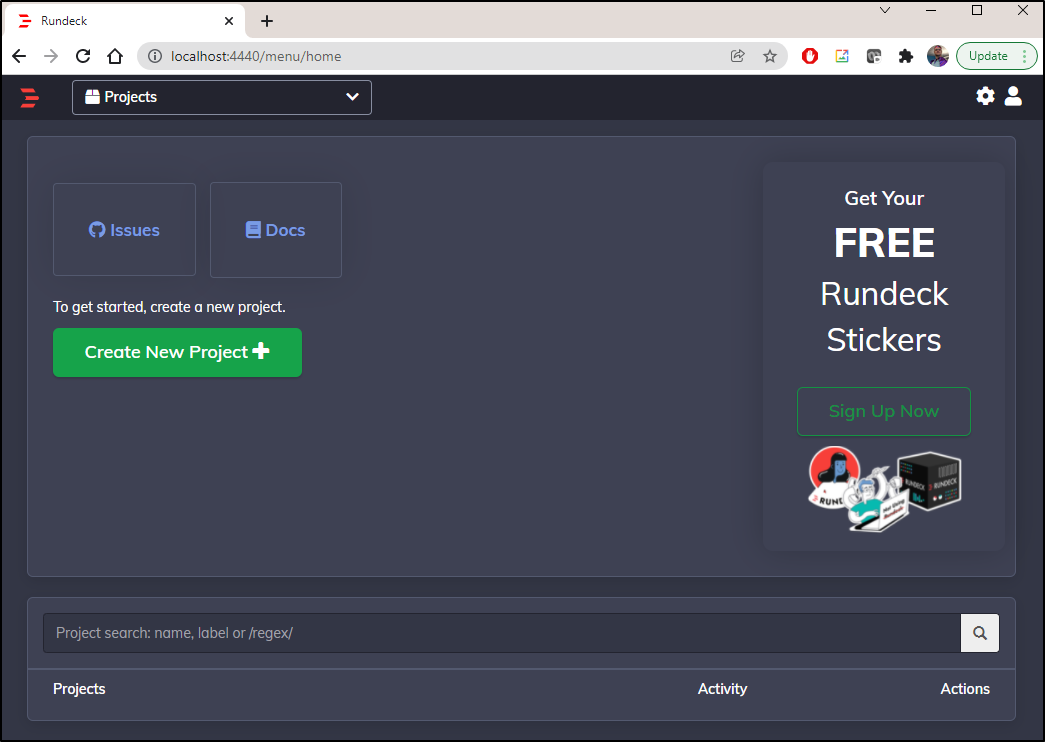

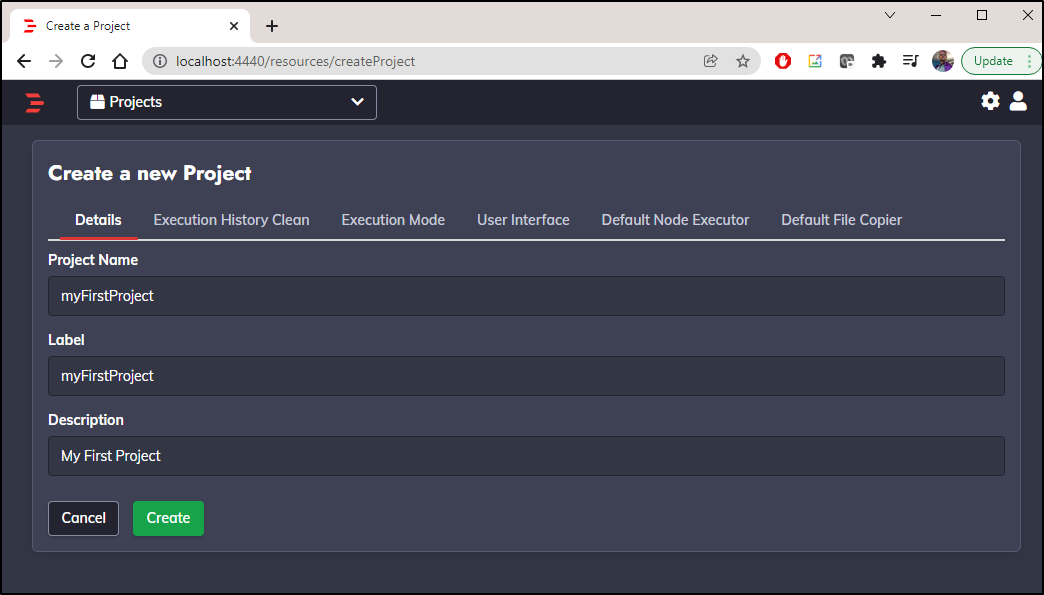

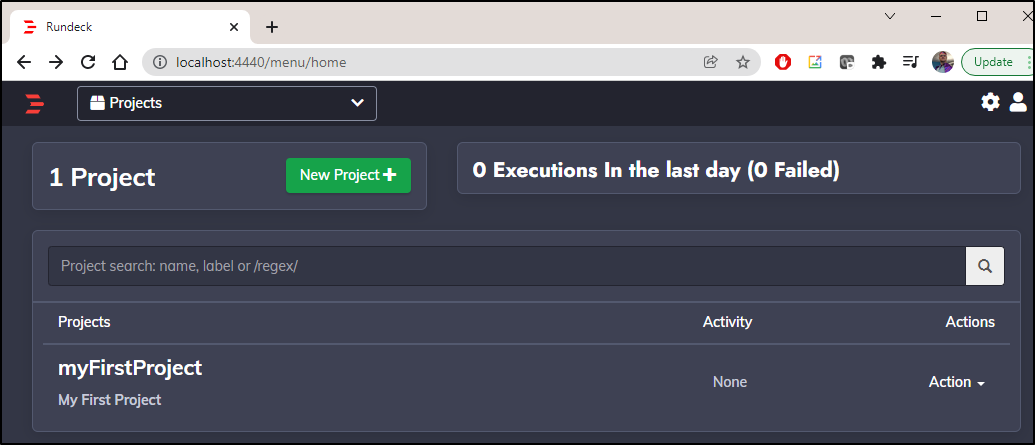

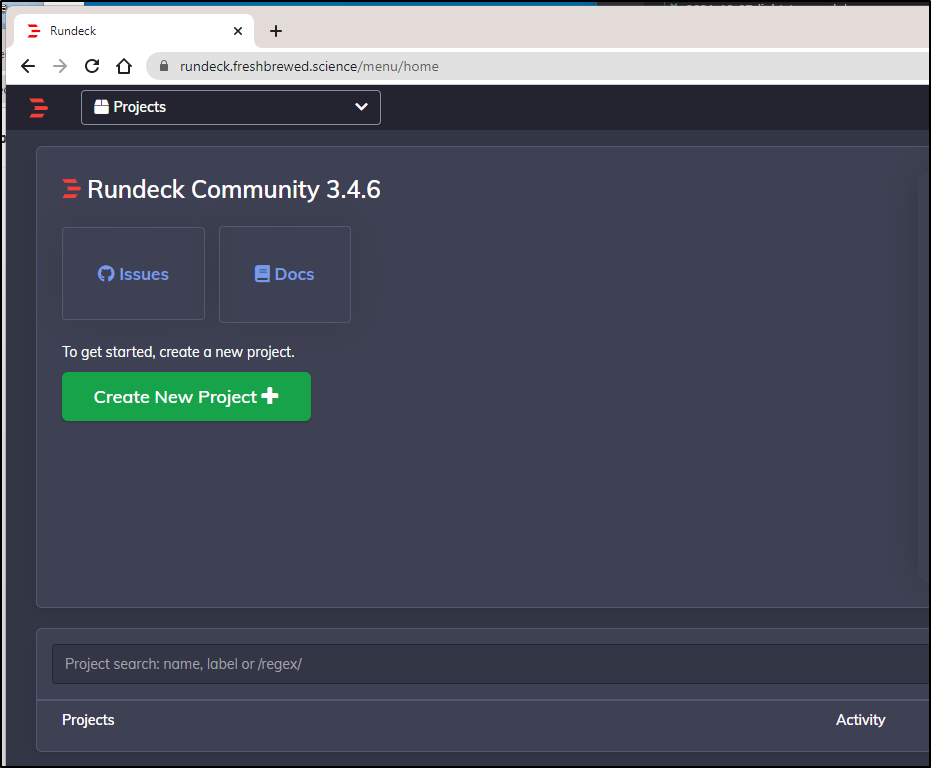

Creating a Project

Create a new Project

We click create and we can manually go back to home to view the project: http://localhost:4440/menu/home

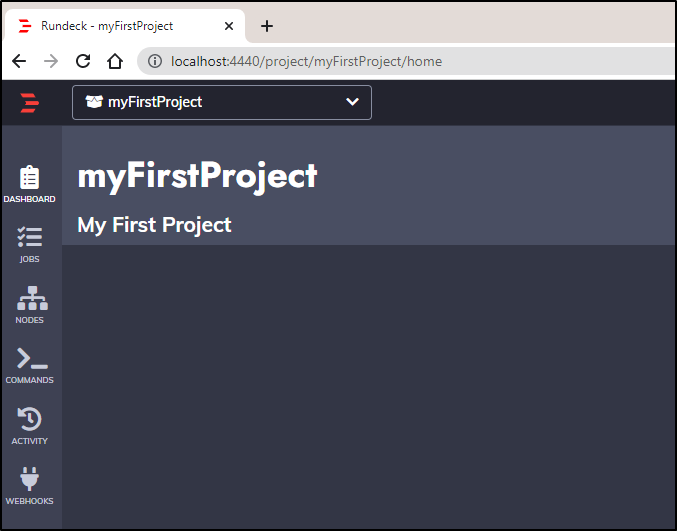

You can also use the URL with the project name to access it: http://localhost:4440/project/myFirstProject/home

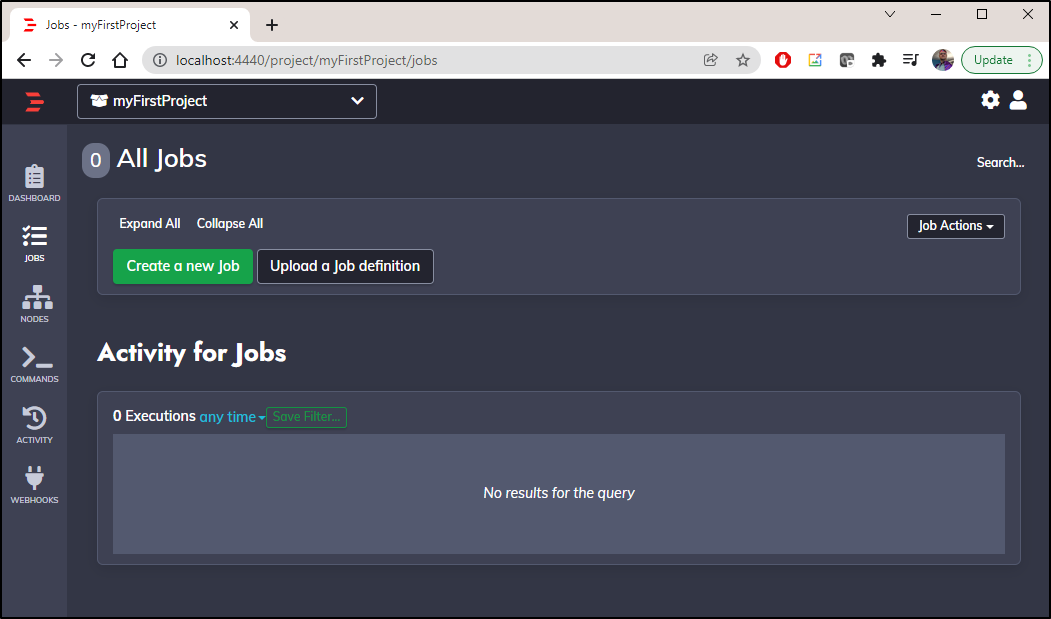

Next we want to create a job under jobs: http://localhost:4440/project/myFirstProject/jobs

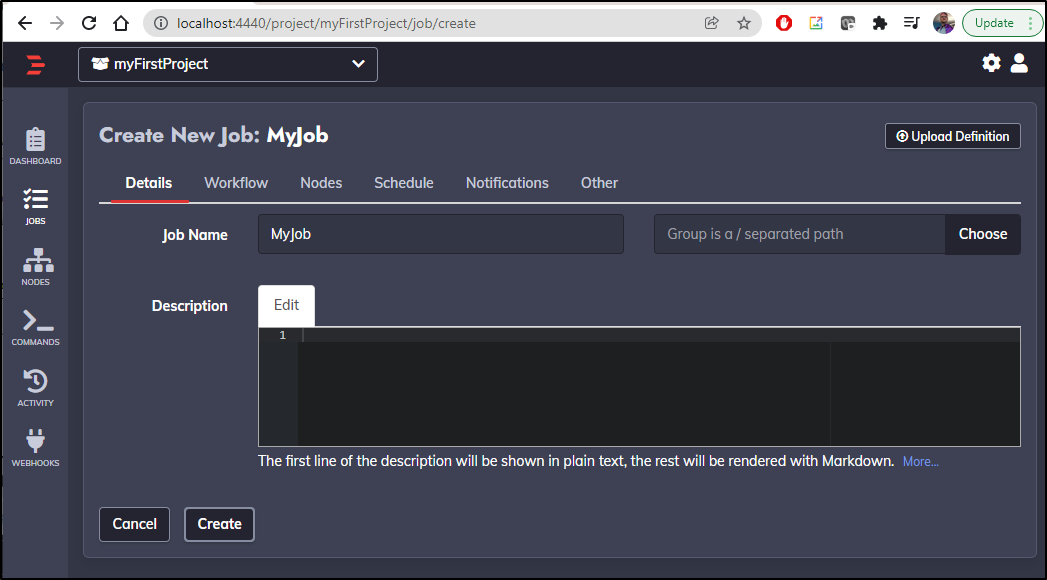

Give it a name and optional description:

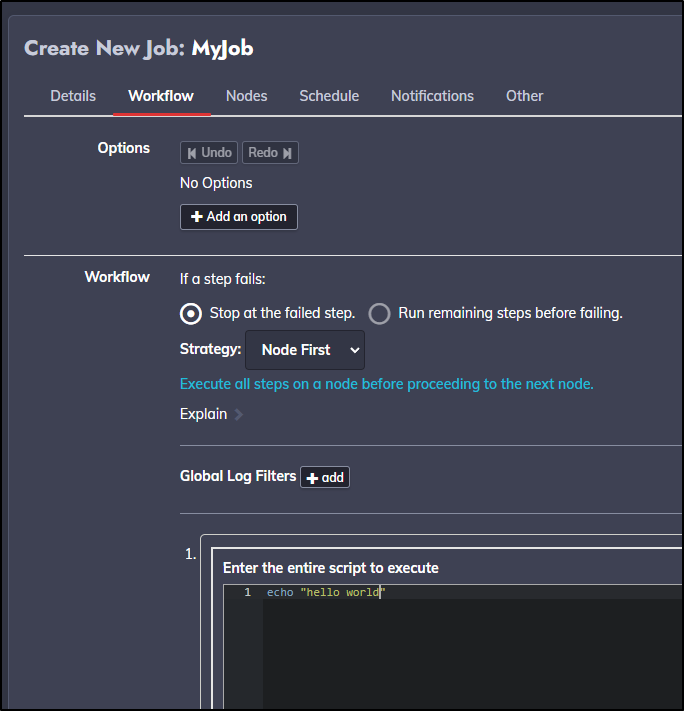

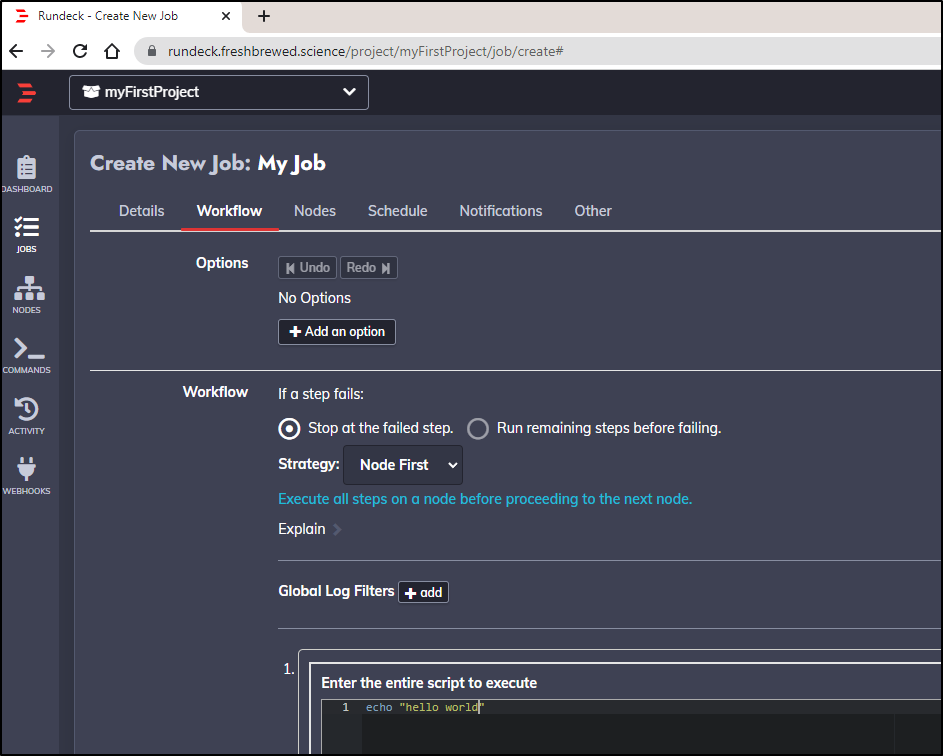

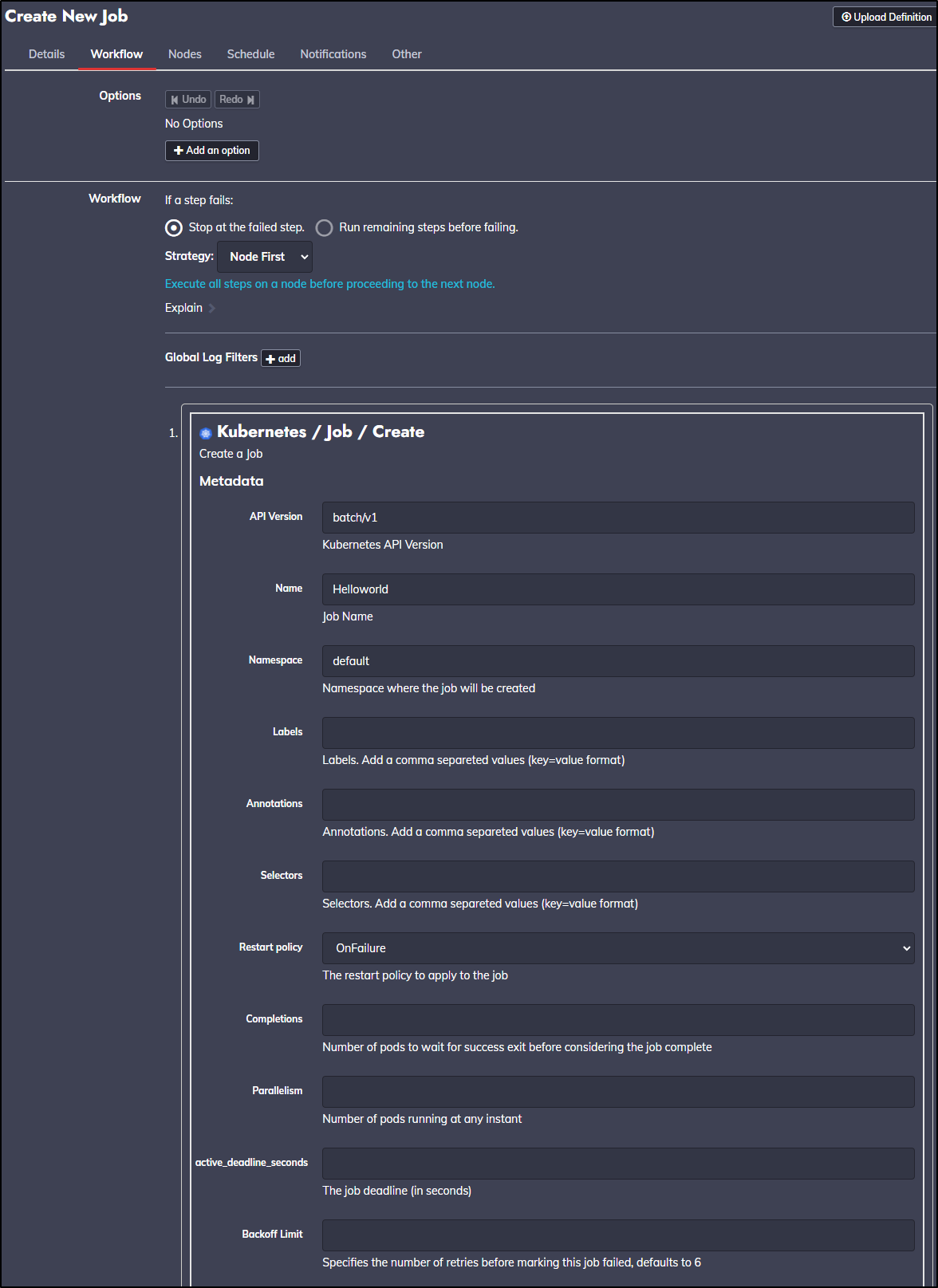

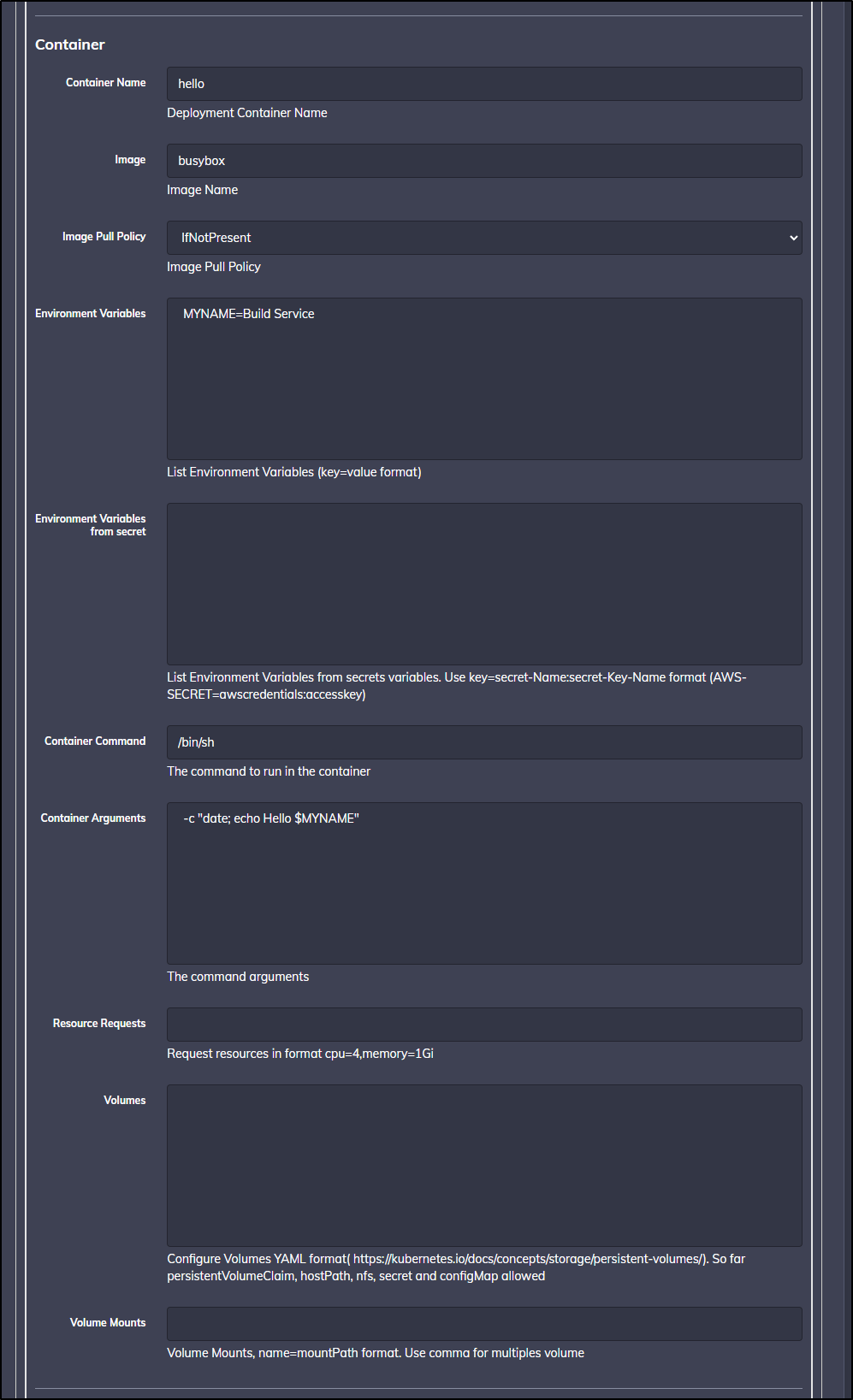

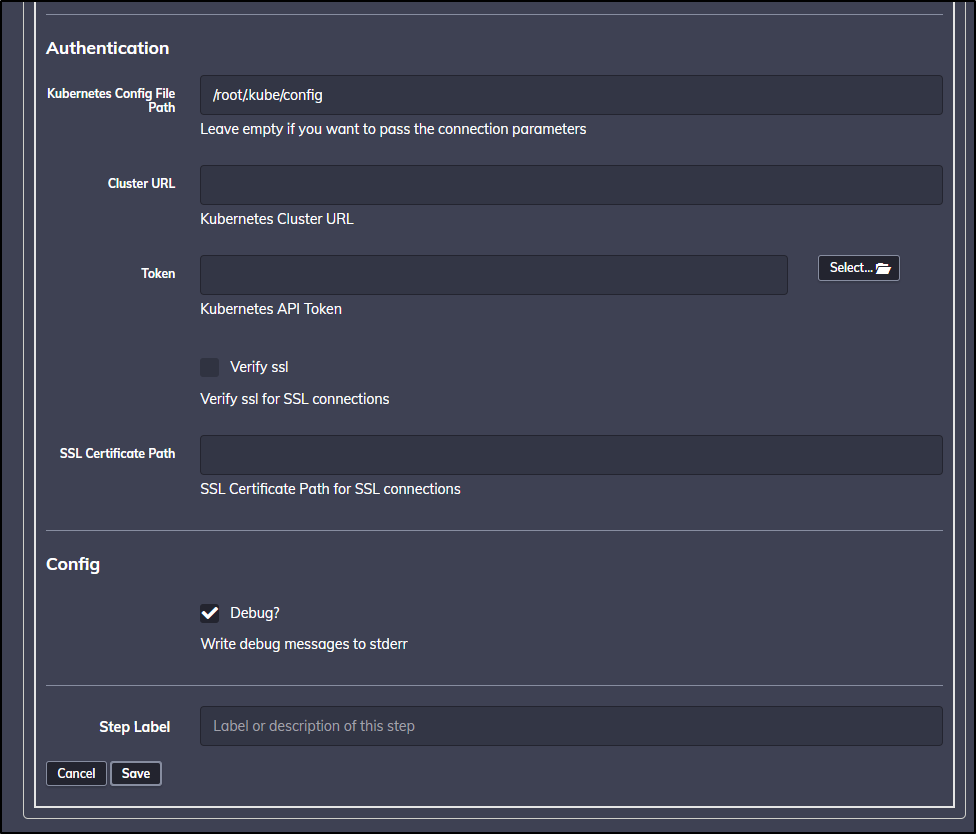

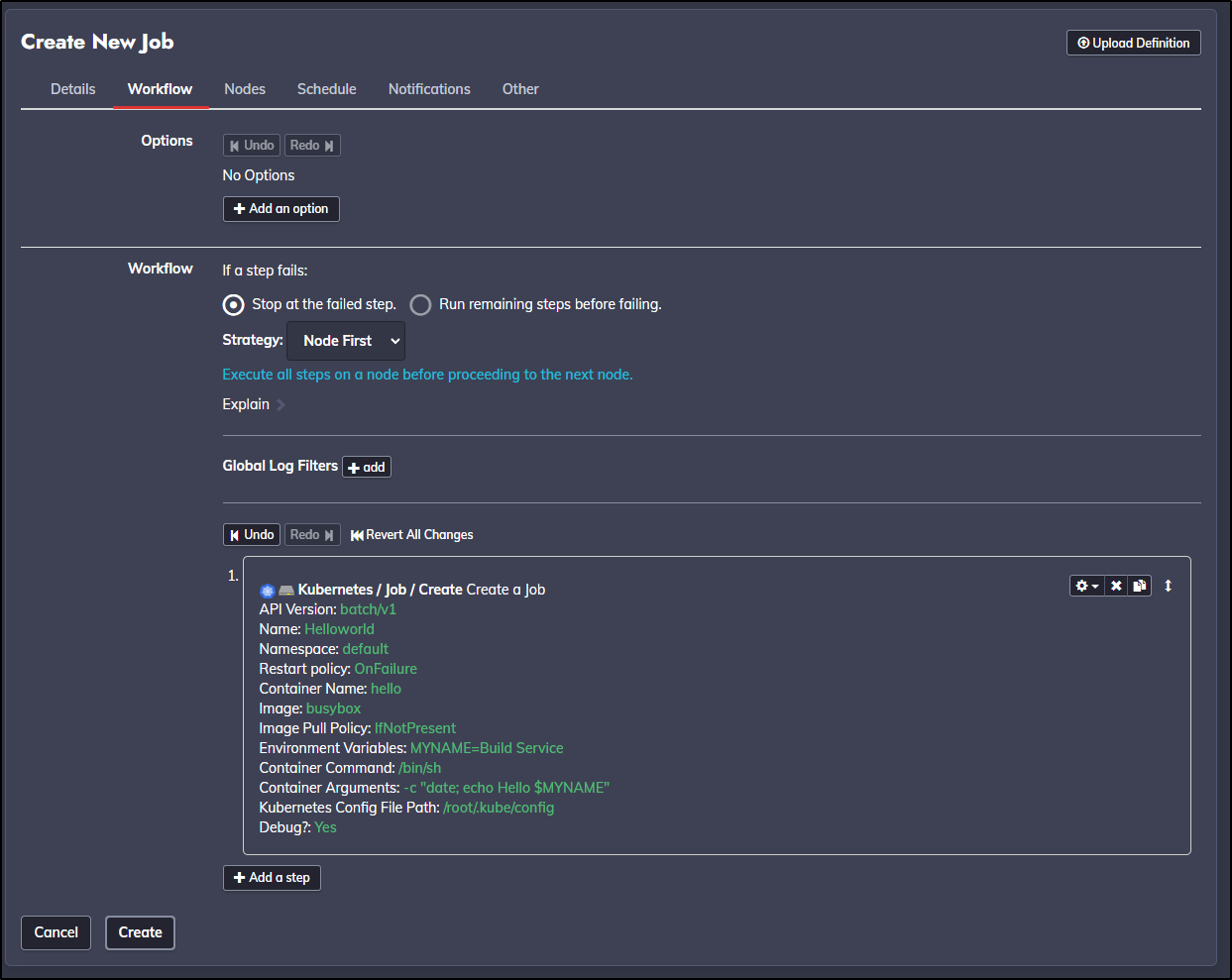

In the Workflow pane we define the steps of our job. This will also be where we can leverage any plugin steps.

Exposing the Rundeck service

At this point, rather than continuing to port-forward to the Rundeck pod, it would make much more sense to expose it as a service. The helm chart does allow setup of service and ingress. But as I would like to apply tighter controls (TLS and basic auth), I’ll expose this manually.

We can see we have a service already defined:

$ kubectl get svc rundeckrelease

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

rundeckrelease ClusterIP 10.43.217.99 <none> 80/TCP 4d19h

We will need a basic auth secret to use:

$ sudo apt install apache2-utils

[sudo] password for builder:

Sorry, try again.

[sudo] password for builder:

Reading package lists... Done

Building dependency tree

Reading state information... Done

apache2-utils is already the newest version (2.4.41-4ubuntu3.8).

The following packages were automatically installed and are no longer required:

liblttng-ust-ctl4 liblttng-ust0

Use 'sudo apt autoremove' to remove them.

0 upgraded, 0 newly installed, 0 to remove and 0 not upgraded.

$ htpasswd -c auth builder

New password:

Re-type new password:

Adding password for user builder

$ kubectl create secret generic rd-basic-auth --from-file=auth

secret/rd-basic-auth created

This creates a secret whose auth key stores ‘builder:(hashed password)’.

For example, for a fake foo/asdfasdf user:

$ kubectl get secret rd-basic-auth -o yaml

apiVersion: v1

data:

auth: Zm9vOiRhcHIxJHpBRWhsL3JaJFZrUUtSaC92ZDUzdEFCYkk5MjB0WTAK

kind: Secret

metadata:

creationTimestamp: "2021-12-17T12:46:20Z"

name: rd-basic-auth

namespace: default

resourceVersion: "140102220"

uid: c257742d-86a6-4b92-a822-e2d79c7d93c7

type: Opaque

$ echo Zm9vOiRhcHIxJHpBRWhsL3JaJFZrUUtSaC92ZDUzdEFCYkk5MjB0WTAK | base64 --decode

foo:$apr1$zAEhl/rZ$VkQKRh/vd53tABbI920tY0
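To check a secret like this without copying the base64 string by hand, a small helper can decode the auth entry and print only the user name. This is a minimal sketch; `auth_user` is a hypothetical helper name, not part of kubectl or htpasswd.

```shell
# auth_user BASE64_AUTH
# Decode an htpasswd-style "user:hash" entry and print only the user name.
auth_user() {
  printf '%s' "$1" | base64 --decode | cut -d: -f1
}

# Against the live secret (assumes kubectl access to the cluster):
#   auth_user "$(kubectl get secret rd-basic-auth -o jsonpath='{.data.auth}')"
```

Printing only the user name keeps the password hash out of terminal scrollback while still confirming that the secret holds the expected account.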

We will want to secure with TLS. To do so, I’ll need to add an A record to point a DNS name to our ingress IP.

Usually I step through the AWS UI or Azure DNS UI. Let’s use the AWS CLI for this step.

We need to create a record JSON:

$ cat r53-rundeck.json

{
  "Comment": "CREATE rundeck fb.s A record ",
  "Changes": [
    {
      "Action": "CREATE",
      "ResourceRecordSet": {
        "Name": "rundeck.freshbrewed.science",
        "Type": "A",
        "TTL": 300,
        "ResourceRecords": [
          {
            "Value": "73.242.50.46"
          }
        ]
      }
    }
  ]
}
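If you create records like this often, the change batch can be generated rather than hand-edited. The sketch below prints the same JSON shape for any name and address; `make_a_record` is a hypothetical helper, and the host name and IP in the usage line are placeholders.

```shell
# make_a_record NAME IPV4 [TTL]
# Print a Route 53 change batch that creates a single A record.
make_a_record() {
  name=$1
  ip=$2
  ttl=${3:-300}
  cat <<EOF
{
  "Comment": "CREATE $name A record",
  "Changes": [
    {
      "Action": "CREATE",
      "ResourceRecordSet": {
        "Name": "$name",
        "Type": "A",
        "TTL": $ttl,
        "ResourceRecords": [
          { "Value": "$ip" }
        ]
      }
    }
  ]
}
EOF
}

# Usage (placeholder values):
#   make_a_record rundeck.example.com 203.0.113.10 > r53-rundeck.json
```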

You’ll need the hosted zone ID. A quick and easy way is to ask the CLI again…

$ aws route53 list-hosted-zones-by-name | head -n5

{

"HostedZones": [

{

"Id": "/hostedzone/Z39E8QFU0F9PZP",

"Name": "freshbrewed.science.",

So we can insert our record now:

$ aws route53 change-resource-record-sets --hosted-zone-id Z39E8QFU0F9PZP --change-batch file://r53-rundeck.json

{

"ChangeInfo": {

"Id": "/change/C05454421L249Z1ZOG3LH",

"Status": "PENDING",

"SubmittedAt": "2021-12-17T13:17:25.102Z",

"Comment": "CREATE rundeck fb.s A record "

}

}

Next we have to create an Ingress object. This is similar to how we did it in the past albeit with a proper V1 (not v1beta1) Networking definition.

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    nginx.ingress.kubernetes.io/auth-realm: Authentication Required - RunDeck
    nginx.ingress.kubernetes.io/auth-secret: rd-basic-auth
    nginx.ingress.kubernetes.io/auth-type: basic
    kubernetes.io/tls-acme: "true"
    kubernetes.io/ingress.class: nginx
    cert-manager.io/cluster-issuer: "letsencrypt-prod"
  name: rundeck-with-auth
  namespace: default
spec:
  tls:
  - hosts:
    - rundeck.freshbrewed.science
    secretName: rd-tls
  rules:
  - host: rundeck.freshbrewed.science
    http:
      paths:
      - backend:
          service:
            name: rundeckrelease
            port:
              number: 80
        path: /
        pathType: ImplementationSpecific

We can then apply it:

$ kubectl apply -f test-Ingress.yaml

ingress.networking.k8s.io/rundeck-with-auth created

Then check to see when the cert is created:

$ kubectl get cert rd-tls

NAME READY SECRET AGE

rd-tls False rd-tls 24s

$ kubectl get cert rd-tls

NAME READY SECRET AGE

rd-tls True rd-tls 29s
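Rather than re-running the get command by hand, the check can be polled. The following is a rough sketch: `retry` and `cert_ready` are hypothetical helper names, and the readiness test assumes the cert-manager Certificate exposes a `Ready` condition in its status, as the READY column above suggests.

```shell
# retry MAX_ATTEMPTS DELAY_SECONDS COMMAND [ARGS...]
# Run COMMAND until it succeeds; give up after MAX_ATTEMPTS tries.
retry() {
  max=$1
  delay=$2
  shift 2
  attempt=1
  until "$@"; do
    if [ "$attempt" -ge "$max" ]; then
      return 1
    fi
    attempt=$((attempt + 1))
    sleep "$delay"
  done
  return 0
}

# cert_ready NAME
# Succeeds once the Certificate reports Ready=True.
cert_ready() {
  status=$(kubectl get certificate "$1" \
    -o jsonpath='{.status.conditions[?(@.type=="Ready")].status}')
  [ "$status" = "True" ]
}

# Usage: poll every 5 seconds, up to 30 times.
#   retry 30 5 cert_ready rd-tls
```

`kubectl wait --for=condition=Ready certificate/rd-tls --timeout=150s` should give a similar result in one command; the explicit loop is shown because the same `retry` helper works for any check.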

Verification

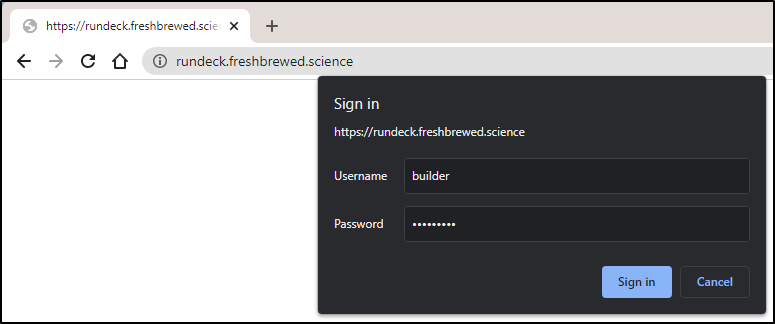

Now we can verify the URL https://rundeck.freshbrewed.science

Even with basic auth, we will still need to login to Rundeck:
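The ingress layer can also be checked from the command line. This is a minimal sketch, assuming curl is installed; `http_status` is a hypothetical helper and `CURL` is only there so the binary can be swapped out.

```shell
# http_status URL [USER:PASSWORD]
# Print only the HTTP status code returned for URL.
http_status() {
  url=$1
  creds=${2:-}
  if [ -n "$creds" ]; then
    "${CURL:-curl}" --silent --output /dev/null --write-out '%{http_code}' \
      --user "$creds" "$url"
  else
    "${CURL:-curl}" --silent --output /dev/null --write-out '%{http_code}' "$url"
  fi
}

# Usage:
#   http_status https://rundeck.freshbrewed.science/
#   http_status https://rundeck.freshbrewed.science/ builder:PASSWORD
```

Without credentials the NGINX basic-auth annotation should answer with a 401. With valid credentials the request reaches Rundeck, so any other status (often a redirect to the Rundeck login page) indicates the ingress auth passed.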

Since I’m still seeing bad redirects, let’s sort that out real quick:

current values:

$ helm get values rundeckrelease

USER-SUPPLIED VALUES:

image:

tag: 3.4.6

Let’s change those. We’ll fix the URL and add a PVC. I’m using local-path storage for now, but you can use whatever storage class works for your cluster (check with kubectl get sc). I added the JVM option following this thread, where it was reported to help with login redirects (it seems to fix Firefox, but not Chrome).

$ cat rd-values.yaml

image:
  repository: rundeck/rundeck
  tag: 3.4.8
persistence:
  claim:
    accessMode: ReadWriteOnce
    create: true
    size: 10G
    storageClass: local-path
  enabled: true
rundeck:
  adminUser: admin:admin1,user,admin,architect,deploy,build
  env:
    RUNDECK_GRAILS_URL: https://rundeck.freshbrewed.science
    RUNDECK_LOGGING_STRATEGY: CONSOLE
    RUNDECK_JVM: " -Drundeck.jetty.connector.forwarded=true"
    RUNDECK_SERVER_FORWARDED: "true"

$ helm upgrade rundeckrelease incubator/rundeck -f rd-values.yaml

WARNING: This chart is deprecated

Release "rundeckrelease" has been upgraded. Happy Helming!

NAME: rundeckrelease

LAST DEPLOYED: Fri Dec 17 07:37:48 2021

NAMESPACE: default

STATUS: deployed

REVISION: 2

TEST SUITE: None

NOTES:

1. Get the application URL by running these commands:

export POD_NAME=$(kubectl get pods --namespace default -l "app.kubernetes.io/name=rundeck,app.kubernetes.io/instance=rundeckrelease" -o jsonpath="{.items[0].metadata.name}")

echo "Visit http://127.0.0.1:4440 to use your application"

kubectl port-forward $POD_NAME --namespace default 4440:4440

I have a PVC now, but I’ll need to recreate the project as I did prior to the upgrade.

I repeated the same steps from earlier, so we are now caught up to where we were before changing the Ingress.

We can save it at the Workflow step to have a Job that we can run.

Verification

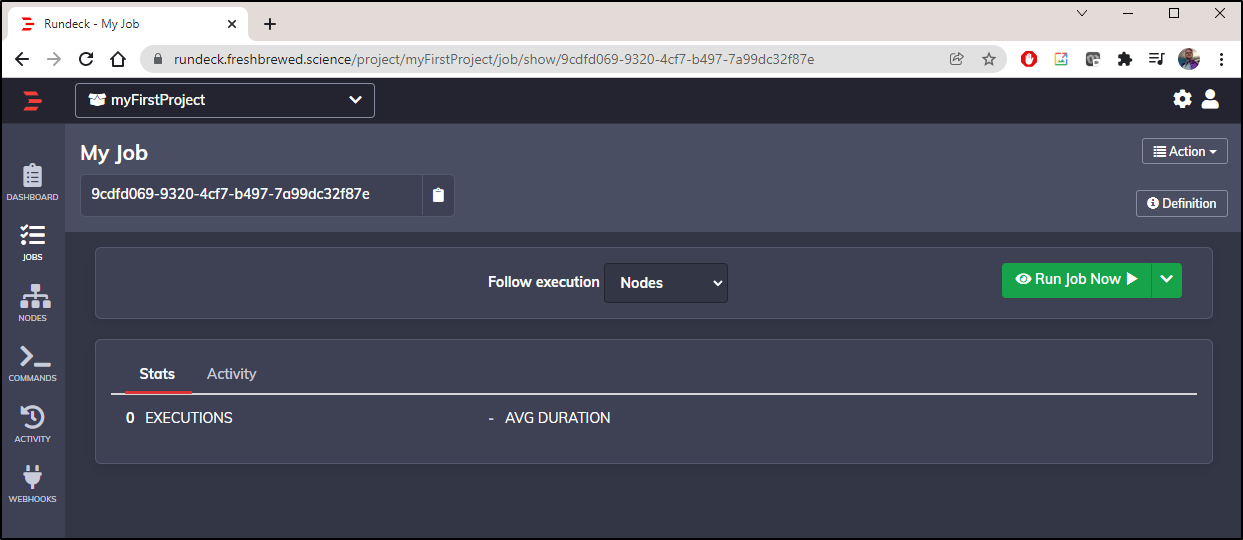

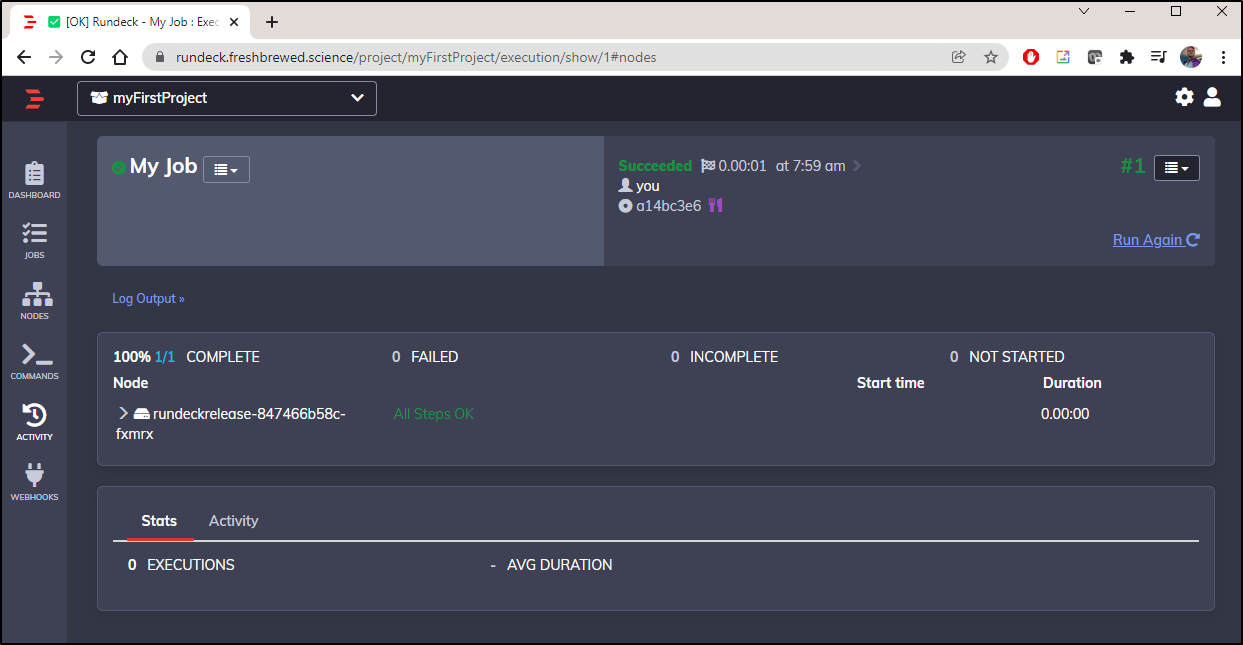

To manually test, click “Run Job Now” to execute it.

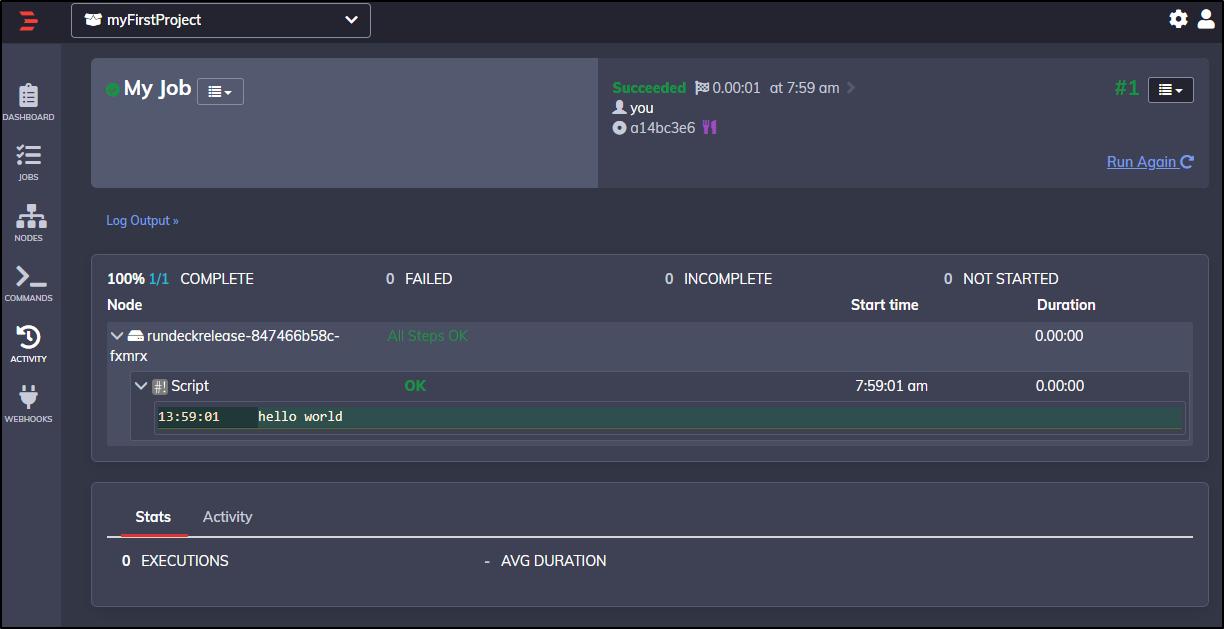

We can expand the step to see the results (just saying “hello world”)

Doing something useful

Let’s create a Kubernetes cluster with which to use RunDeck.

I’ll be using GCP this time on a fresh WSL.

$ sudo apt-get install apt-transport-https ca-certificates gnupg

$ echo "deb [signed-by=/usr/share/keyrings/cloud.google.gpg] https://packages.cloud.google.com/apt cloud-sdk main" | sudo tee -a /etc/apt/sources.list.d/google-cloud-sdk.list

deb [signed-by=/usr/share/keyrings/cloud.google.gpg] https://packages.cloud.google.com/apt cloud-sdk main

$ curl https://packages.cloud.google.com/apt/doc/apt-key.gpg | sudo apt-key --keyring /usr/share/keyrings/cloud.google.gpg add -

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 2537 100 2537 0 0 13282 0 --:--:-- --:--:-- --:--:-- 13282

OK

$ sudo apt-get update && sudo apt-get install google-cloud-sdk

Get:1 http://security.ubuntu.com/ubuntu focal-security InRelease [114 kB]

Hit:2 https://apt.releases.hashicorp.com focal InRelease

Hit:3 https://packages.microsoft.com/repos/azure-cli focal InRelease

Get:4 https://packages.cloud.google.com/apt cloud-sdk InRelease [6739 B]

Get:5 https://packages.microsoft.com/repos/microsoft-ubuntu-focal-prod focal InRelease [10.5 kB]

Get:6 http://security.ubuntu.com/ubuntu focal-security/main amd64 Packages [1069 kB]

Hit:7 https://packages.microsoft.com/ubuntu/21.04/prod hirsute InRelease

Get:8 https://packages.cloud.google.com/apt cloud-sdk/main amd64 Packages [203 kB]

Get:9 https://packages.microsoft.com/repos/microsoft-ubuntu-focal-prod focal/main amd64 Packages [121 kB]

Hit:10 http://archive.ubuntu.com/ubuntu focal InRelease

Get:11 http://security.ubuntu.com/ubuntu focal-security/main amd64 c-n-f Metadata [9096 B]

Get:12 http://security.ubuntu.com/ubuntu focal-security/universe amd64 Packages [668 kB]

Get:13 http://security.ubuntu.com/ubuntu focal-security/universe Translation-en [112 kB]

Get:14 http://security.ubuntu.com/ubuntu focal-security/universe amd64 c-n-f Metadata [13.0 kB]

Get:15 http://archive.ubuntu.com/ubuntu focal-updates InRelease [114 kB]

Get:16 http://archive.ubuntu.com/ubuntu focal-backports InRelease [108 kB]

Get:17 http://archive.ubuntu.com/ubuntu focal-updates/main amd64 Packages [1400 kB]

Get:18 http://archive.ubuntu.com/ubuntu focal-updates/main Translation-en [283 kB]

Get:19 http://archive.ubuntu.com/ubuntu focal-updates/main amd64 c-n-f Metadata [14.6 kB]

Get:20 http://archive.ubuntu.com/ubuntu focal-updates/restricted amd64 Packages [616 kB]

Get:21 http://archive.ubuntu.com/ubuntu focal-updates/restricted Translation-en [88.1 kB]

Get:22 http://archive.ubuntu.com/ubuntu focal-updates/universe amd64 Packages [884 kB]

Get:23 http://archive.ubuntu.com/ubuntu focal-updates/universe Translation-en [193 kB]

Get:24 http://archive.ubuntu.com/ubuntu focal-updates/universe amd64 c-n-f Metadata [20.0 kB]

Fetched 6046 kB in 5s (1291 kB/s)

Reading package lists... Done

Reading package lists... Done

Building dependency tree

Reading state information... Done

The following packages were automatically installed and are no longer required:

liblttng-ust-ctl4 liblttng-ust0

Use 'sudo apt autoremove' to remove them.

The following additional packages will be installed:

python3-crcmod

Suggested packages:

google-cloud-sdk-app-engine-java google-cloud-sdk-app-engine-python google-cloud-sdk-pubsub-emulator google-cloud-sdk-bigtable-emulator

google-cloud-sdk-datastore-emulator kubectl

The following NEW packages will be installed:

google-cloud-sdk python3-crcmod

0 upgraded, 2 newly installed, 0 to remove and 0 not upgraded.

Need to get 76.8 MB of archives.

After this operation, 490 MB of additional disk space will be used.

Do you want to continue? [Y/n] y

Get:1 https://packages.cloud.google.com/apt cloud-sdk/main amd64 google-cloud-sdk all 367.0.0-0 [76.8 MB]

Get:2 http://archive.ubuntu.com/ubuntu focal/universe amd64 python3-crcmod amd64 1.7+dfsg-2build2 [18.8 kB]

Fetched 76.8 MB in 2s (46.1 MB/s)

Selecting previously unselected package python3-crcmod.

(Reading database ... 135686 files and directories currently installed.)

Preparing to unpack .../python3-crcmod_1.7+dfsg-2build2_amd64.deb ...

Unpacking python3-crcmod (1.7+dfsg-2build2) ...

Selecting previously unselected package google-cloud-sdk.

Preparing to unpack .../google-cloud-sdk_367.0.0-0_all.deb ...

Unpacking google-cloud-sdk (367.0.0-0) ...

Setting up python3-crcmod (1.7+dfsg-2build2) ...

Setting up google-cloud-sdk (367.0.0-0) ...

Processing triggers for man-db (2.9.1-1) ...

We can see other optional packages to install from the docs.

Next we need to login:

$ gcloud init

Welcome! This command will take you through the configuration of gcloud.

Your current configuration has been set to: [default]

You can skip diagnostics next time by using the following flag:

gcloud init --skip-diagnostics

Network diagnostic detects and fixes local network connection issues.

Checking network connection...done.

Reachability Check passed.

Network diagnostic passed (1/1 checks passed).

You must log in to continue. Would you like to log in (Y/n)? y

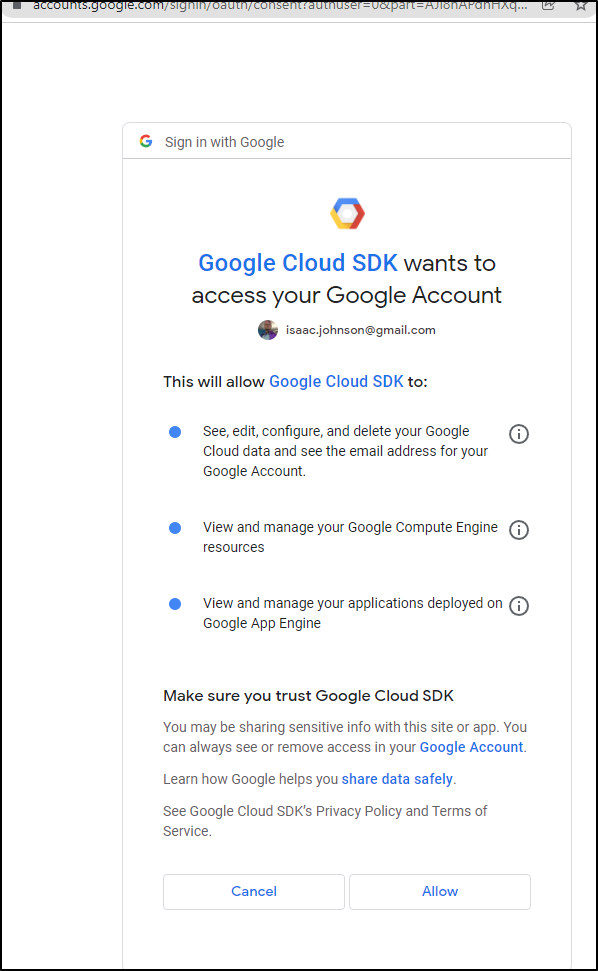

Your browser has been opened to visit:

https://accounts.google.com/o/oauth2/auth?response_type=c

.....

Which pops up the Auth for Cloud SDK

Back in the CLI we can now pick a project or create new:

You are logged in as: [isaac.johnson@gmail.com].

Pick cloud project to use:

[1] api-project-799042244963

[2] careful-compass-241122

[3] harborvm

[4] pebbletaskapp

[5] Create a new project

Please enter numeric choice or text value (must exactly match list item): 5

Enter a Project ID. Note that a Project ID CANNOT be changed later.

Project IDs must be 6-30 characters (lowercase ASCII, digits, or

hyphens) in length and start with a lowercase letter. gkedemo01

Waiting for [operations/cp.8799527528933944533] to finish...done.

Your current project has been set to: [gkedemo01].

Not setting default zone/region (this feature makes it easier to use

[gcloud compute] by setting an appropriate default value for the

--zone and --region flag).

See https://cloud.google.com/compute/docs/gcloud-compute section on how to set

default compute region and zone manually. If you would like [gcloud init] to be

able to do this for you the next time you run it, make sure the

Compute Engine API is enabled for your project on the

https://console.developers.google.com/apis page.

Created a default .boto configuration file at [/home/builder/.boto]. See this file and

[https://cloud.google.com/storage/docs/gsutil/commands/config] for more

information about configuring Google Cloud Storage.

Your Google Cloud SDK is configured and ready to use!

* Commands that require authentication will use isaac.johnson@gmail.com by default

* Commands will reference project `gkedemo01` by default

Run `gcloud help config` to learn how to change individual settings

This gcloud configuration is called [default]. You can create additional configurations if you work with multiple accounts and/or projects.

Run `gcloud topic configurations` to learn more.

Some things to try next:

* Run `gcloud --help` to see the Cloud Platform services you can interact with. And run `gcloud help COMMAND` to get help on any gcloud command.

* Run `gcloud topic --help` to learn about advanced features of the SDK like arg files and output formatting

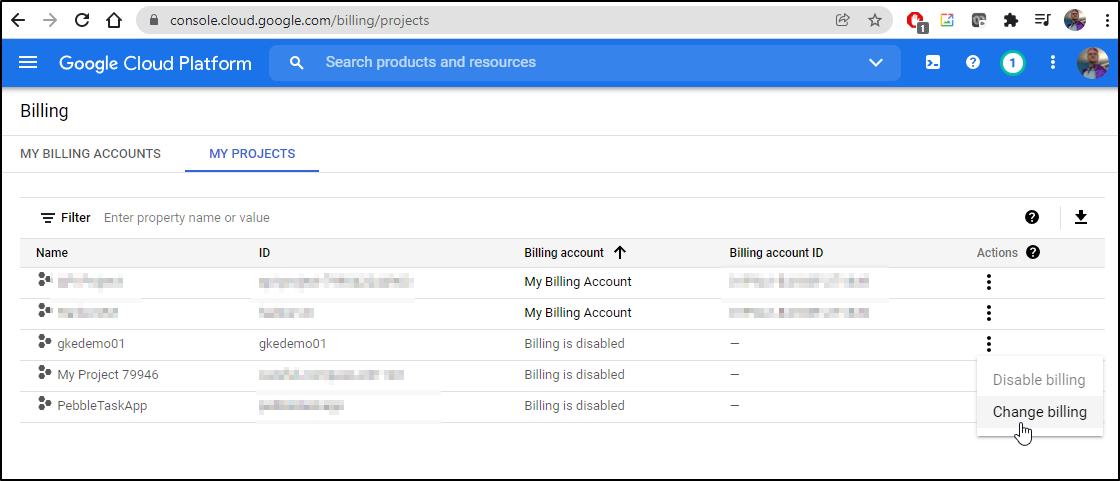

Now we have a new project (gkedemo01) we can create our cluster. But only after we enable container APIs on this project (Google limits the APIs available in a project by default). Beyond that, while we created a project, we did not sort out billing.

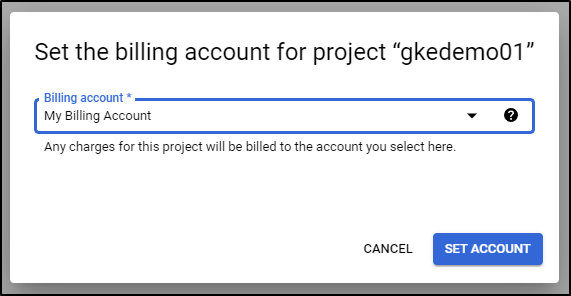

There are some Alpha level APIs to link billing, but we’ll just use the UI for now:

Then pick a billing account

Now that is enabled, I can enable Container services (kubernetes) in my project

$ gcloud services enable container.googleapis.com

Operation "operations/acf.p2-658532986431-0b9653ab-f2be-4986-8ed2-0845f5da5a6d" finished successfully.

note: this step took a few minutes with no output

Now we can create our cluster:

$ gcloud container clusters create --machine-type n1-standard-2 --num-nodes 2 --zone us-central1-a --cluster-version latest gkedemo01

WARNING: Currently VPC-native is the default mode during cluster creation for versions greater than 1.21.0-gke.1500. To create advanced routes based clusters, please pass the `--no-enable-ip-alias` flag

WARNING: Starting with version 1.18, clusters will have shielded GKE nodes by default.

WARNING: Your Pod address range (`--cluster-ipv4-cidr`) can accommodate at most 1008 node(s).

WARNING: Starting with version 1.19, newly created clusters and node-pools will have COS_CONTAINERD as the default node image when no image type is specified.

Creating cluster gkedemo01 in us-central1-a...done.

Created [https://container.googleapis.com/v1/projects/gkedemo01/zones/us-central1-a/clusters/gkedemo01].

To inspect the contents of your cluster, go to: https://console.cloud.google.com/kubernetes/workload_/gcloud/us-central1-a/gkedemo01?project=gkedemo01

kubeconfig entry generated for gkedemo01.

NAME LOCATION MASTER_VERSION MASTER_IP MACHINE_TYPE NODE_VERSION NUM_NODES STATUS

gkedemo01 us-central1-a 1.21.6-gke.1500 34.71.188.188 n1-standard-2 1.21.6-gke.1500 2 RUNNING

I should note, if you didn’t enable APIs, you’d see an error like:

ERROR: (gcloud.container.clusters.create) ResponseError: code=400, message=Failed precondition when calling the ServiceConsumerManager: tenantmanager::185014: Consumer 658532986431 should enable service:container.googleapis.com before generating a service account.

com.google.api.tenant.error.TenantManagerException: Consumer 658532986431 should enable service:container.googleapis.com before generating a service account.

And if billing is not setup:

ERROR: (gcloud.services.enable) PERMISSION_DENIED: Not found or permission denied for service(s): billing.resourceAssociations.list.

Help Token: Ae-hA1NRsadfasdfasdfasdfasdfasdfasdfasdfasdfasdfasdfasdfasdfooRnoLcGN15zbWtLGI8STiETrM6lt_035ZFyeTs8PGIi9

- '@type': type.googleapis.com/google.rpc.PreconditionFailure

violations:

- subject: ?error_code=220002&services=billing.resourceAssociations.list

type: googleapis.com

- '@type': type.googleapis.com/google.rpc.ErrorInfo

domain: serviceusage.googleapis.com

metadata:

services: billing.resourceAssociations.list

reason: SERVICE_CONFIG_NOT_FOUND_OR_PERMISSION_DENIED

In creating the cluster, gcloud updated our kubeconfig with the entry we need to access:

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

gke-gkedemo01-default-pool-3160d14e-kn8b Ready <none> 41m v1.21.6-gke.1500

gke-gkedemo01-default-pool-3160d14e-t11d Ready <none> 41m v1.21.6-gke.1500

Using Kubernetes in Rundeck

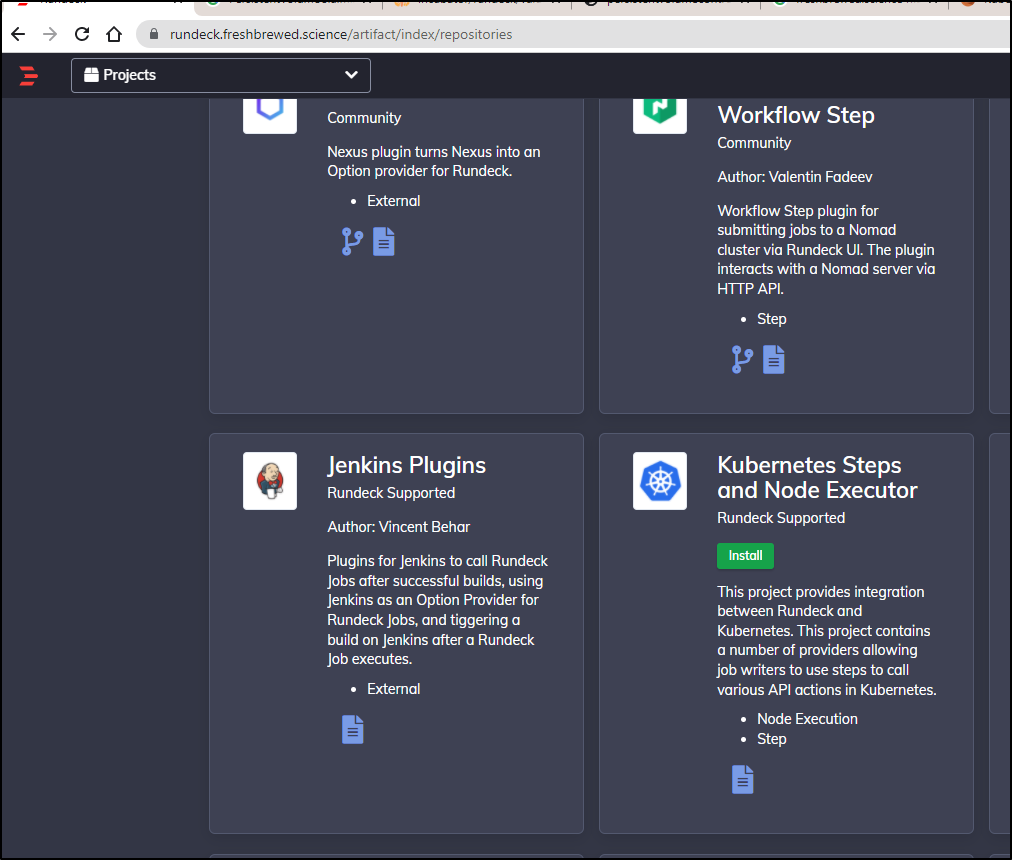

First, let’s go to the plugins area and install the Kubernetes Steps and Node Executor.

Note: While we will explore this plugin, its dependencies on python and python related libraries did not work on this default Rundeck node. I’ll explore how I tested, but in the end, I moved back to direct kubectl

For the moment, I’m going to copy the kubeconfig from my local WSL into the Rundeck container to test:

# curl -LO "https://dl.k8s.io/release/$(curl -L -s https://dl.k8s.io/release/stable.txt)/bin/linux/amd64/kubectl"

# chmod 755 ./kubectl

# mv ./kubectl /usr/bin

# mkdir ~/.kube

# cat t.o | base64 --decode > /root/.kube/config

# kubectl get nodes

NAME STATUS ROLES AGE VERSION

gke-gkedemo01-default-pool-3160d14e-kn8b Ready <none> 69m v1.21.6-gke.1500

gke-gkedemo01-default-pool-3160d14e-t11d Ready <none> 69m v1.21.6-gke.1500
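The steps above decode a file named t.o, but how it was produced is not shown. One way to move a kubeconfig between shells as a single pasteable line is sketched below; `encode_file` and `decode_to` are hypothetical helpers, and this is an assumption about the workflow rather than the exact commands used.

```shell
# encode_file PATH
# Print PATH as one base64 line that is easy to paste into another shell.
encode_file() {
  base64 < "$1" | tr -d '\n'
}

# decode_to ENCODED DEST
# Write the decoded bytes to DEST, readable only by the owner.
decode_to() {
  ( umask 077; printf '%s' "$1" | base64 --decode > "$2" )
}

# Usage:
#   encode_file ~/.kube/config          # on the workstation
#   decode_to 'PASTED_TEXT' ~/.kube/config   # inside the container
```

The restrictive umask matters here because the kubeconfig carries cluster credentials.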

Here we can create a new job:

We see the details when saved:

I had to add Python manually. Clearly this would be lost if the pod rotates.

$ kubectl exec -it rundeckrelease-847466b58c-fxmrx -c rundeck /bin/bash

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

rundeck@rundeckrelease-847466b58c-fxmrx:~$ passwd

Enter new UNIX password:

Retype new UNIX password:

passwd: password updated successfully

rundeck@rundeckrelease-847466b58c-fxmrx:~$ sudo echo hi

[sudo] password for rundeck:

hi

rundeck@rundeckrelease-847466b58c-fxmrx:~$ sudo apt update

Get:1 http://mirrors.ubuntu.com/mirrors.txt Mirrorlist [3335 B]

Get:3 http://mirror.us-midwest-1.nexcess.net/ubuntu bionic-updates InRelease [88.7 kB]

Get:2 http://mirrors.lug.mtu.edu/ubuntu bionic InRelease [242 kB]

Ign:4 https://mirror.jacksontechnical.com/ubuntu bionic-backports InRelease

Get:5 http://mirrors.vcea.wsu.edu/ubuntu bionic-security InRelease [88.7 kB]

Get:6 http://mirror.us-midwest-1.nexcess.net/ubuntu bionic-updates/main amd64 Packages [2898 kB]

Get:7 http://mirrors.lug.mtu.edu/ubuntu bionic/multiverse amd64 Packages [186 kB]

Get:8 http://mirrors.lug.mtu.edu/ubuntu bionic/universe amd64 Packages [11.3 MB]

Get:9 http://mirrors.vcea.wsu.edu/ubuntu bionic-security/restricted amd64 Packages [691 kB]

Get:10 http://mirrors.vcea.wsu.edu/ubuntu bionic-security/multiverse amd64 Packages [26.8 kB]

Get:11 http://mirrors.vcea.wsu.edu/ubuntu bionic-security/universe amd64 Packages [1452 kB]

Get:12 http://mirrors.vcea.wsu.edu/ubuntu bionic-security/main amd64 Packages [2461 kB]

Get:4 http://mirror.math.princeton.edu/pub/ubuntu bionic-backports InRelease [74.6 kB]

Get:13 http://mirror.math.princeton.edu/pub/ubuntu bionic-backports/main amd64 Packages [11.6 kB]

Get:14 http://mirror.math.princeton.edu/pub/ubuntu bionic-backports/universe amd64 Packages [12.6 kB]

Get:15 http://mirrors.lug.mtu.edu/ubuntu bionic/main amd64 Packages [1344 kB]

Get:16 http://mirrors.lug.mtu.edu/ubuntu bionic/restricted amd64 Packages [13.5 kB]

Get:17 http://mirror.us-midwest-1.nexcess.net/ubuntu bionic-updates/multiverse amd64 Packages [34.4 kB]

Get:18 http://mirror.us-midwest-1.nexcess.net/ubuntu bionic-updates/universe amd64 Packages [2230 kB]

Get:19 http://mirror.us-midwest-1.nexcess.net/ubuntu bionic-updates/restricted amd64 Packages [725 kB]

Fetched 23.9 MB in 4s (5764 kB/s)

Reading package lists... Done

Building dependency tree

Reading state information... Done

36 packages can be upgraded. Run 'apt list --upgradable' to see them.

The Kubernetes plugin also needs pip and the yaml and kubernetes Python modules:

$ sudo apt install python3.8

$ sudo apt install python3-pip

$ sudo pip3 install pyyaml kubernetes

I fought and fought…

I could not get this plugin to actually work:

/usr/lib/python3/dist-packages/Crypto/Random/Fortuna/FortunaGenerator.py:28: SyntaxWarning: "is" with a literal. Did you mean "=="?

if sys.version_info[0] is 2 and sys.version_info[1] is 1:

/usr/lib/python3/dist-packages/Crypto/Random/Fortuna/FortunaGenerator.py:28: SyntaxWarning: "is" with a literal. Did you mean "=="?

if sys.version_info[0] is 2 and sys.version_info[1] is 1:

Traceback (most recent call last):

File "/home/rundeck/libext/cache/kubernetes-plugin-1.0.12/job-create.py", line 5, in <module>

import common

File "/home/rundeck/libext/cache/kubernetes-plugin-1.0.12/common.py", line 13, in <module>

from kubernetes.stream import stream

ModuleNotFoundError: No module named 'kubernetes.stream'

Failed: NonZeroResultCode: Script result code was: 1

I managed to get Python running, but not the libraries required:

Be aware that there are two containers in the pod, nginx and rundeck. The rundeck container is the default Node.

Using Kubectl directly

Moving on to installing gcloud and running ‘init’ on the rundeck container:

sudo apt-get install apt-transport-https ca-certificates gnupg
sudo apt-get update && sudo apt-get install google-cloud-sdk
echo "deb https://packages.cloud.google.com/apt cloud-sdk main" | sudo tee -a /etc/apt/sources.list.d/google-cloud-sdk.list
curl https://packages.cloud.google.com/apt/doc/apt-key.gpg | sudo apt-key --keyring /usr/share/keyrings/cloud.google.gpg add -
sudo apt-get update && sudo apt-get install google-cloud-sdk
curl https://packages.cloud.google.com/apt/doc/apt-key.gpg | sudo apt-key add -
sudo apt-get update && sudo apt-get install google-cloud-sdk
ls /etc/apt/sources.list.d/google-cloud-sdk.list
vi /etc/apt/sources.list.d/google-cloud-sdk.list
sudo vi /etc/apt/sources.list.d/google-cloud-sdk.list
sudo apt-get update && sudo apt-get install google-cloud-sdk
kubectl get nodes --kubeconfig=/home/rundeck/server/data/KUBECONFIG
gcloud init
kubectl get nodes --kubeconfig=/home/rundeck/server/data/KUBECONFIG

I could then access the nodes while in the rundeck container. You’ll note that I put the kubeconfig in the PVC volume.

$ kubectl get nodes --kubeconfig=/home/rundeck/server/data/KUBECONFIG

NAME STATUS ROLES AGE VERSION

gke-gkedemo01-default-pool-3160d14e-kn8b Ready <none> 117m v1.21.6-gke.1500

gke-gkedemo01-default-pool-3160d14e-t11d Ready <none> 117m v1.21.6-gke.1500

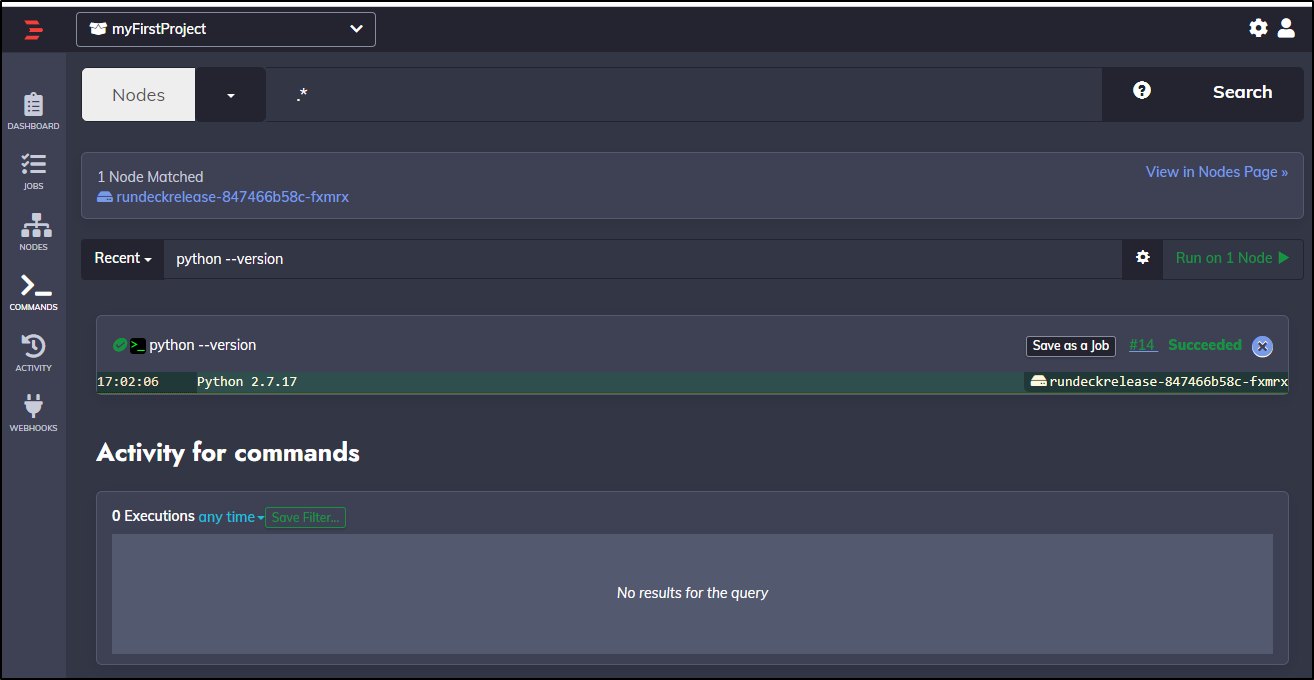

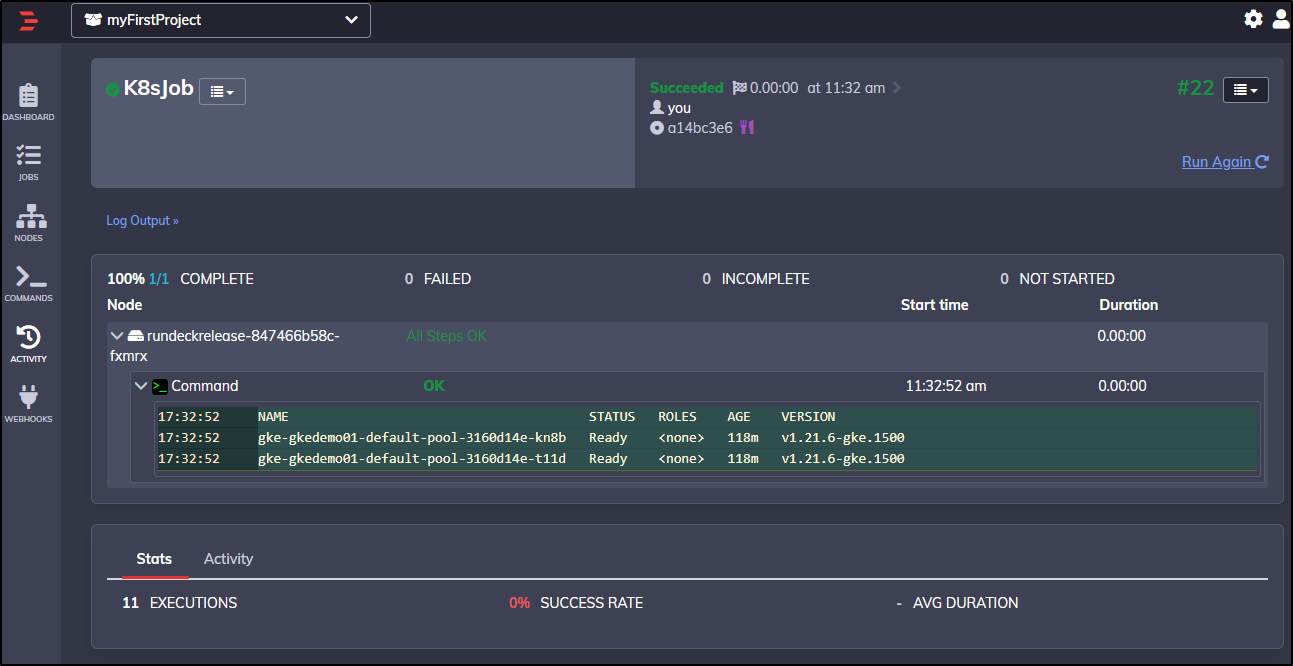

Executing the same step in the Rundeck Job:

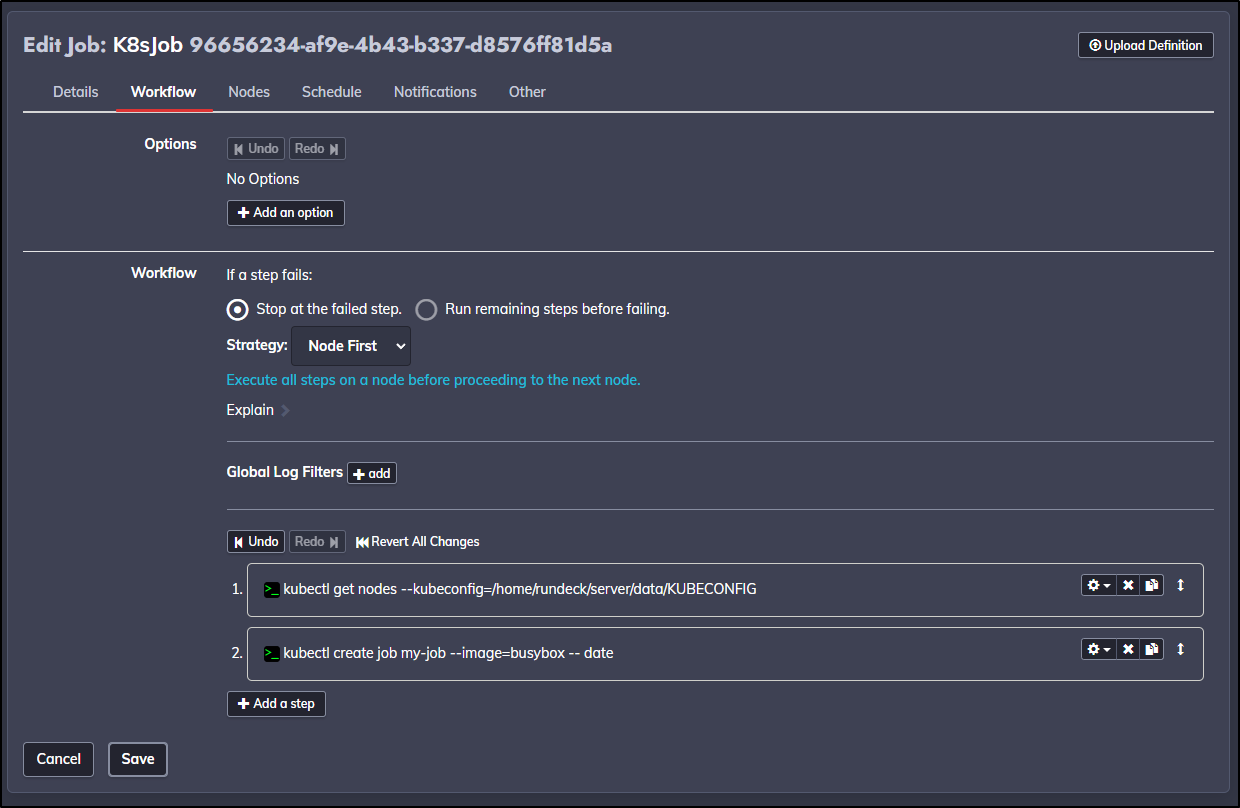

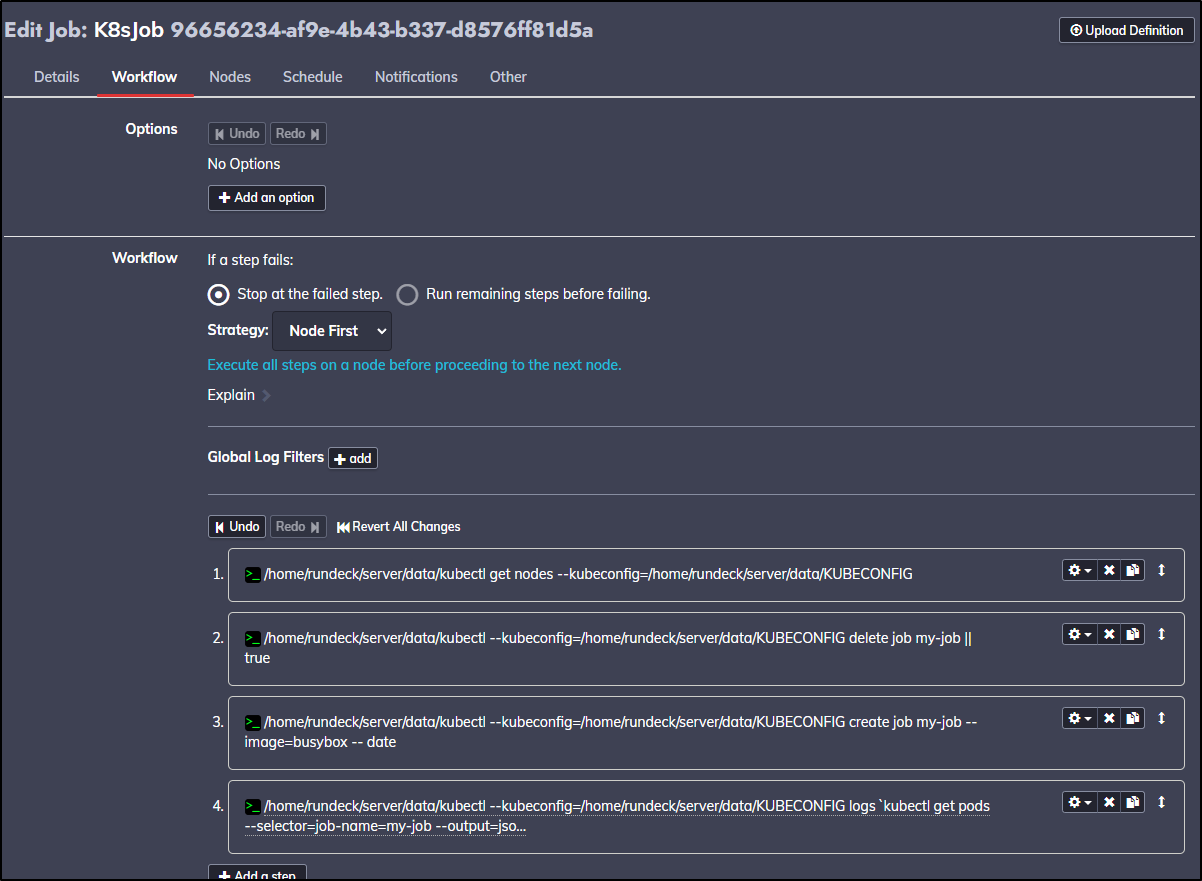

Let’s have our job in Rundeck actually run a Kubernetes Job

kubectl create job my-job --image=busybox -- date

At first I worked on the RBAC roles when I saw the error

error: failed to create job: jobs.batch is forbidden: User "system:serviceaccount:default:rundeckrelease" cannot create resource "jobs" in API group "batch" in the namespace "default"

But what I had neglected to notice was that I had not added the “--kubeconfig” option to the step.

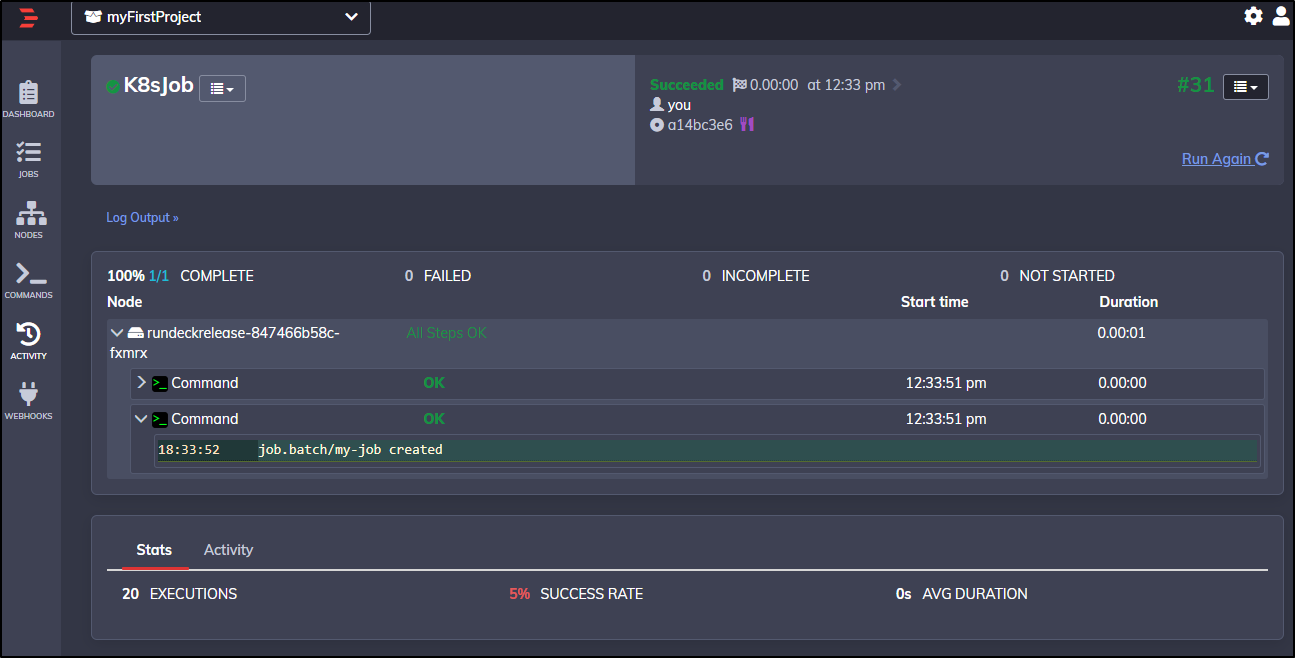

Once corrected:

kubectl --kubeconfig=/home/rundeck/server/data/KUBECONFIG create job my-job --image=busybox -- date

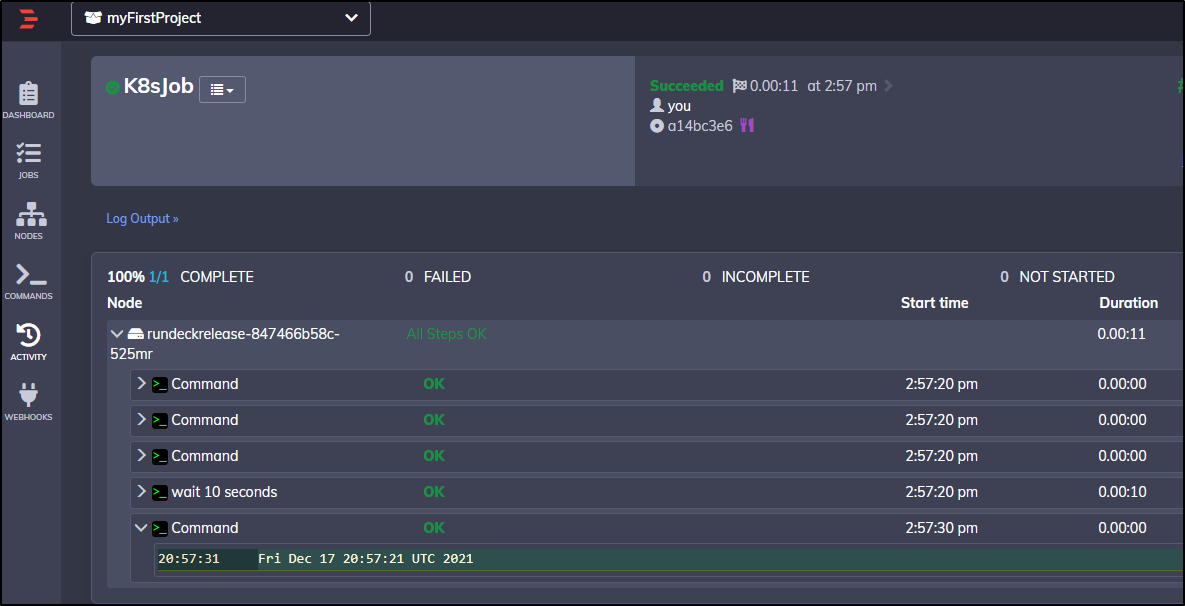

It ran great:

And we can see the work it did via our command line in WSL:

$ kubectl get jobs

NAME COMPLETIONS DURATION AGE

my-job 1/1 4s 11s

$ kubectl logs `kubectl get pods --selector=job-name=my-job --output=jsonpath='{.items[*].metadata.name}'`

Fri Dec 17 18:33:55 UTC 2021

One consequence of this approach is that, because we shoe-horned kubectl into /usr/bin manually, a pod rotation will lose it.

One simple fix is to copy kubectl into our PVC mount (data) where we also put the kubeconfig.

$ kubectl exec -it rundeckrelease-847466b58c-fxmrx -c rundeck -- /bin/bash

rundeck@rundeckrelease-847466b58c-fxmrx:~$ cp /usr/bin/kubectl /home/rundeck/server/data/kubectl

rundeck@rundeckrelease-847466b58c-fxmrx:~$ exit

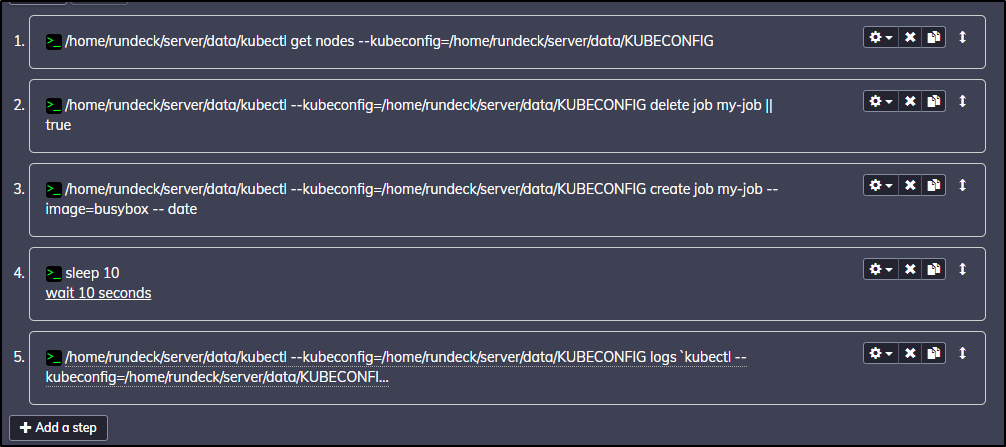

then update the steps to use it:

(the last line is)

/home/rundeck/server/data/kubectl --kubeconfig=/home/rundeck/server/data/KUBECONFIG logs `kubectl --kubeconfig=/home/rundeck/server/data/KUBECONFIG get pods --selector=job-name=my-job --output=jsonpath='{.items[*].metadata.name}'`

I got an error:

Error from server (BadRequest): container "my-job" in pod "my-job-bsrr4" is waiting to start: ContainerCreating

Result: 1

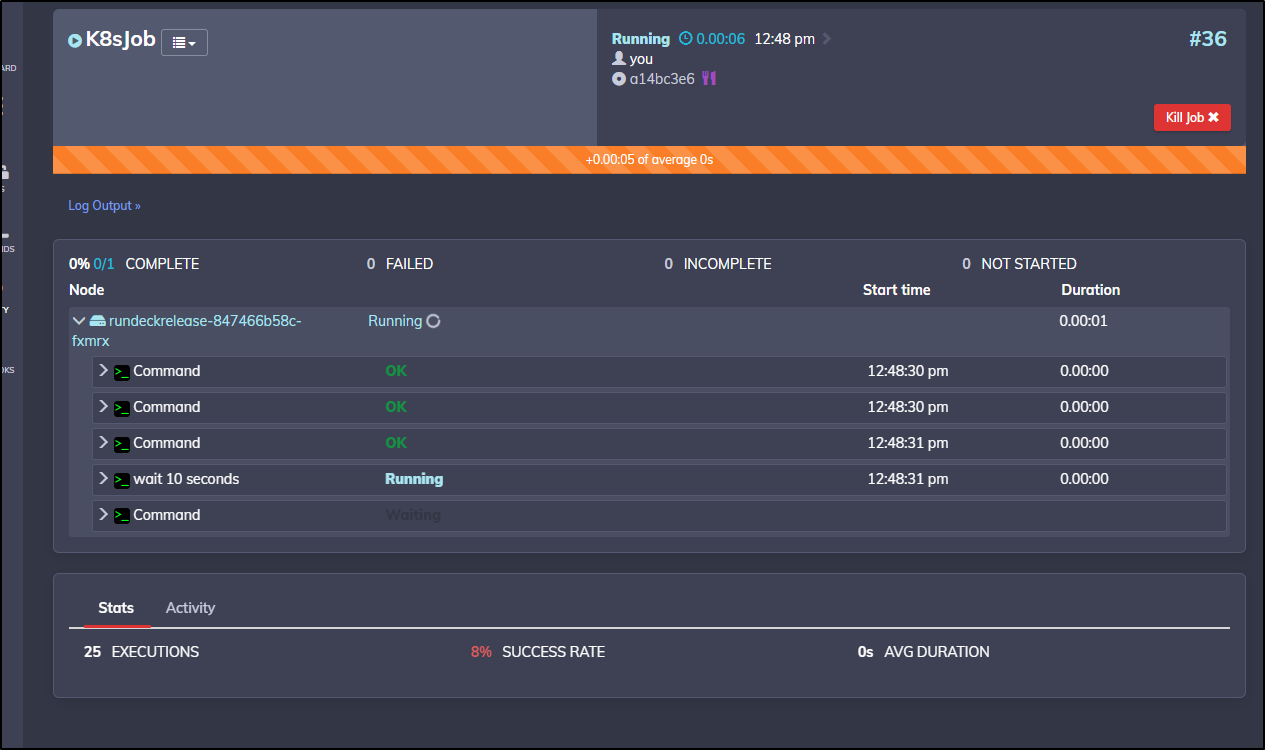

So I realized I should add a sleep in there, which worked to stall for a moment, and we can see our output.

If you want to repeat the exact steps, here is the YAML job definition:

- defaultTab: nodes
  description: ''
  executionEnabled: true
  id: 96656234-af9e-4b43-b337-d8576ff81d5a
  loglevel: INFO
  name: K8sJob
  nodeFilterEditable: false
  plugins:
    ExecutionLifecycle: null
  scheduleEnabled: true
  sequence:
    commands:
    - exec: /home/rundeck/server/data/kubectl get nodes --kubeconfig=/home/rundeck/server/data/KUBECONFIG
    - exec: /home/rundeck/server/data/kubectl --kubeconfig=/home/rundeck/server/data/KUBECONFIG
        delete job my-job || true
    - exec: /home/rundeck/server/data/kubectl --kubeconfig=/home/rundeck/server/data/KUBECONFIG
        create job my-job --image=busybox -- date
    - description: wait 10 seconds
      exec: sleep 10
    - exec: /home/rundeck/server/data/kubectl --kubeconfig=/home/rundeck/server/data/KUBECONFIG
        logs `/home/rundeck/server/data/kubectl --kubeconfig=/home/rundeck/server/data/KUBECONFIG
        get pods --selector=job-name=my-job --output=jsonpath='{.items[*].metadata.name}'`
    keepgoing: false
    strategy: node-first
  uuid: 96656234-af9e-4b43-b337-d8576ff81d5a
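The fixed ten-second sleep in the job definition above works, but it is a guess about how long the container takes to start. A possible alternative is to let kubectl block until the Job reports completion. The sketch below is untested against this cluster; `run_job_and_show_logs` is a hypothetical helper, and the two default paths are simply the ones used earlier on the PVC.

```shell
# Paths match the job definition above; override them for other setups.
KUBECTL=${KUBECTL:-/home/rundeck/server/data/kubectl}
KUBECONFIG_PATH=${KUBECONFIG_PATH:-/home/rundeck/server/data/KUBECONFIG}

# run_job_and_show_logs NAME IMAGE COMMAND [ARGS...]
# Recreate the Job, wait up to 60s for it to complete, then print its logs.
run_job_and_show_logs() {
  name=$1
  image=$2
  shift 2
  "$KUBECTL" --kubeconfig="$KUBECONFIG_PATH" delete job "$name" --ignore-not-found
  "$KUBECTL" --kubeconfig="$KUBECONFIG_PATH" create job "$name" --image="$image" -- "$@"
  "$KUBECTL" --kubeconfig="$KUBECONFIG_PATH" wait --for=condition=complete \
    "job/$name" --timeout=60s || return 1
  "$KUBECTL" --kubeconfig="$KUBECONFIG_PATH" logs "job/$name"
}

# Usage:
#   run_job_and_show_logs my-job busybox date
```

Addressing the logs as `job/my-job` also avoids the nested `get pods` lookup, and `--ignore-not-found` replaces the `|| true` guard on the delete step.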

Now even with the KUBECONFIG and kubectl moved to a PVC, the config for GKE requires a user logged in to the gcloud CLI. Clearly this would not survive a pod rotation.

In fact we can test this:

$ kubectl get pods | grep rundeck

rundeckrelease-847466b58c-fxmrx 2/2 Running 0 6h57m

$ kubectl delete pod rundeckrelease-847466b58c-fxmrx

pod "rundeckrelease-847466b58c-fxmrx" deleted

$ kubectl get pods --all-namespaces| grep rundeck

default rundeckrelease-847466b58c-525mr 2/2 Running 0 107s

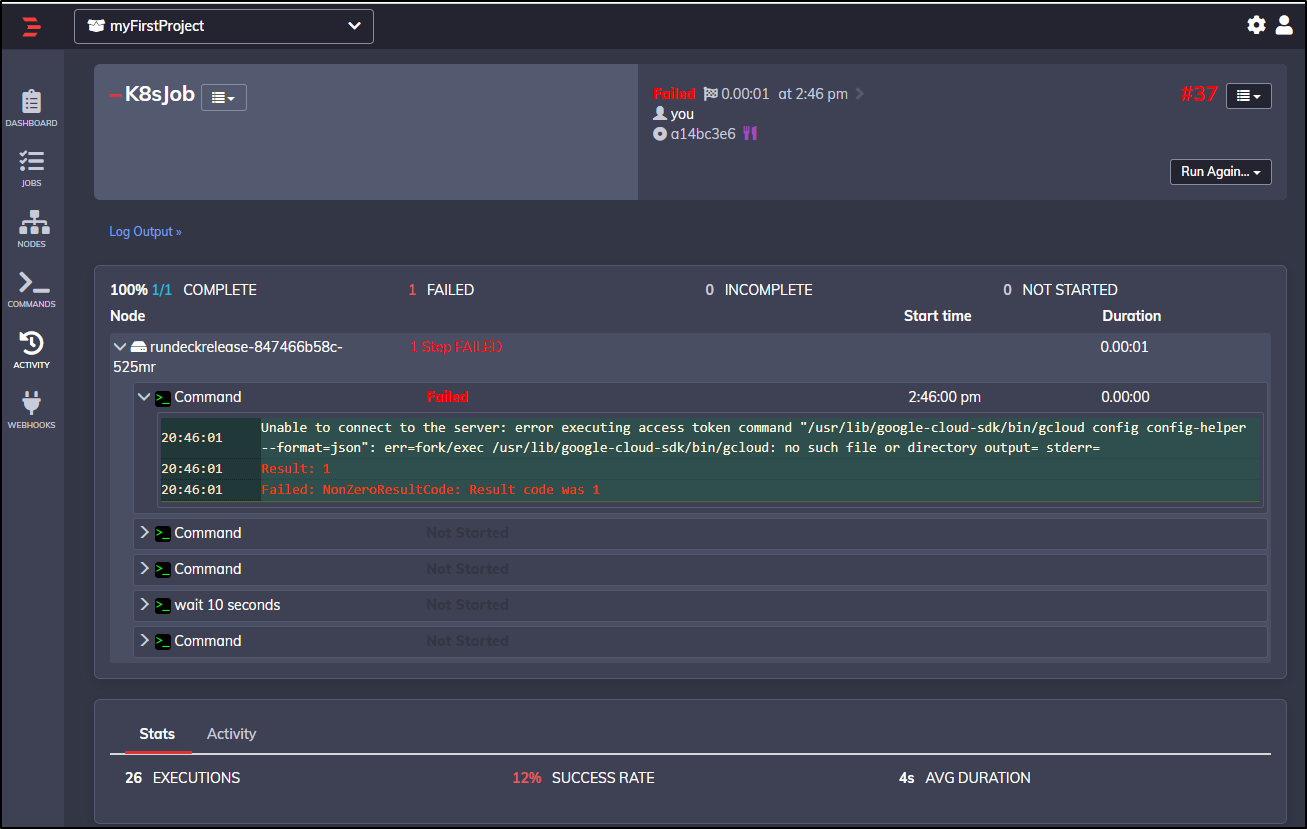

And re-running it now fails:

We could mitigate this by creating a service account token and pairing it with the cluster URL to build a usable kubeconfig (akin to how we add GKE to Azure DevOps).
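As a minimal sketch of that idea, a token-based kubeconfig can be generated from three values: the API server URL, the base64-encoded cluster CA, and a token. The `write_kubeconfig` helper and the `gke` / `rundeck` entry names are invented for illustration, and how the token is issued (and what RBAC it carries) is left out entirely.

```shell
# Sketch only: emit a kubeconfig that authenticates with a bearer token
# rather than the gcloud login. All names below are illustrative.

# write_kubeconfig SERVER_URL CA_DATA_BASE64 TOKEN   (hypothetical helper)
write_kubeconfig() {
  cat <<EOF
apiVersion: v1
kind: Config
clusters:
- name: gke
  cluster:
    server: $1
    certificate-authority-data: $2
users:
- name: rundeck
  user:
    token: $3
contexts:
- name: rundeck@gke
  context:
    cluster: gke
    user: rundeck
current-context: rundeck@gke
EOF
}
```

Redirecting the output to `/home/rundeck/server/data/KUBECONFIG` would keep the file on the PVC, so in principle it should survive a pod rotation without needing gcloud in the container.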

Webhooks

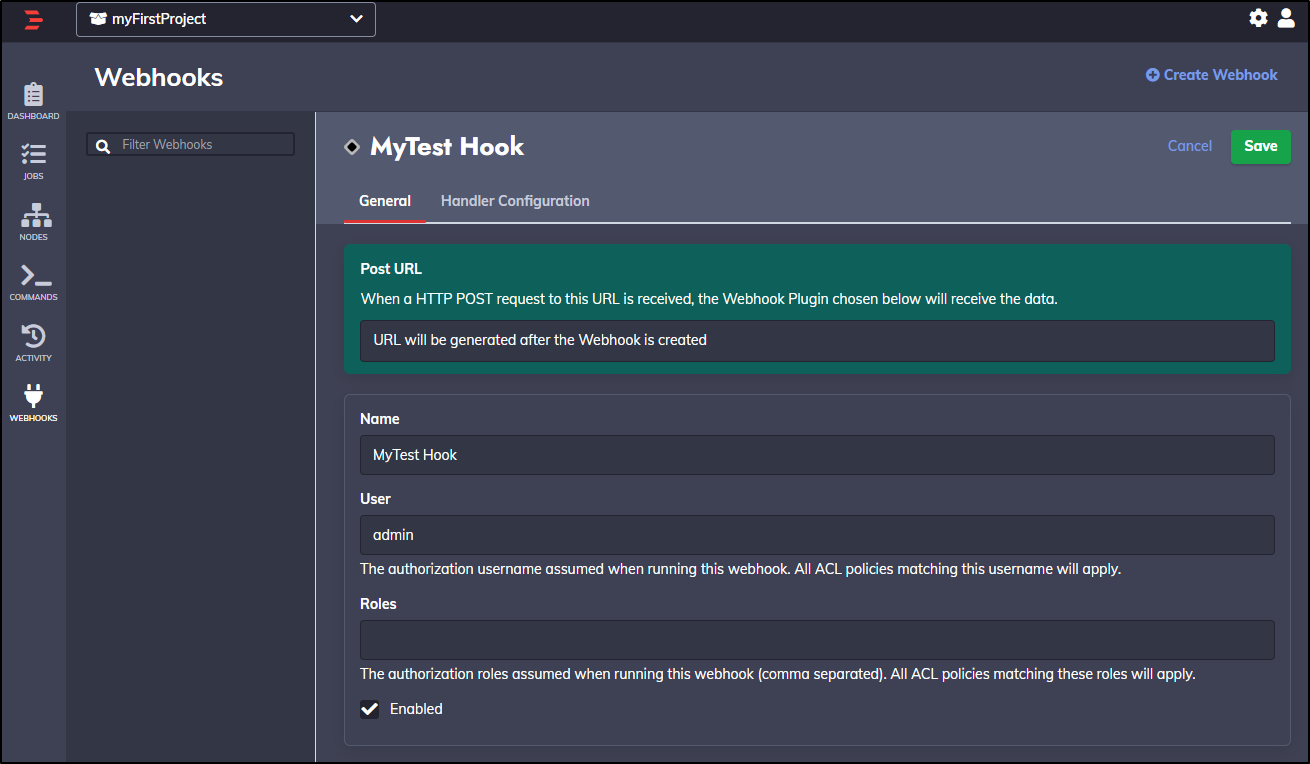

Webhooks allow us to invoke Rundeck jobs from external systems like PagerDuty, Datadog, New Relic, and many more. Anything that can call a URL with a payload can trigger a Rundeck job.

We can easily create a webhook in the Webhooks menu.

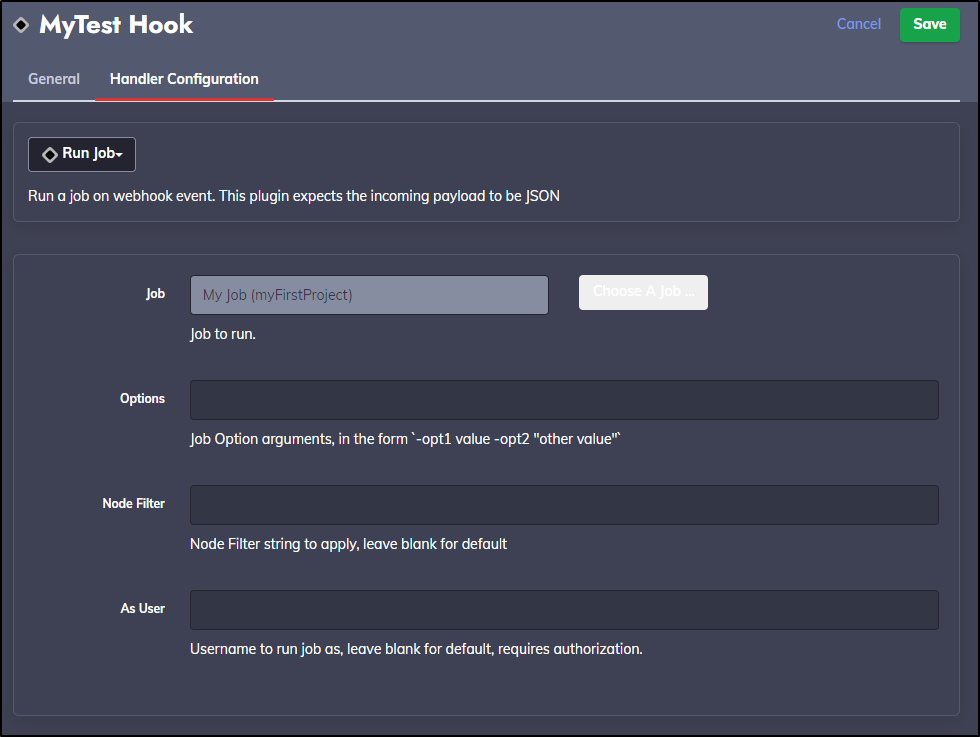

We can add additional options or Node filters

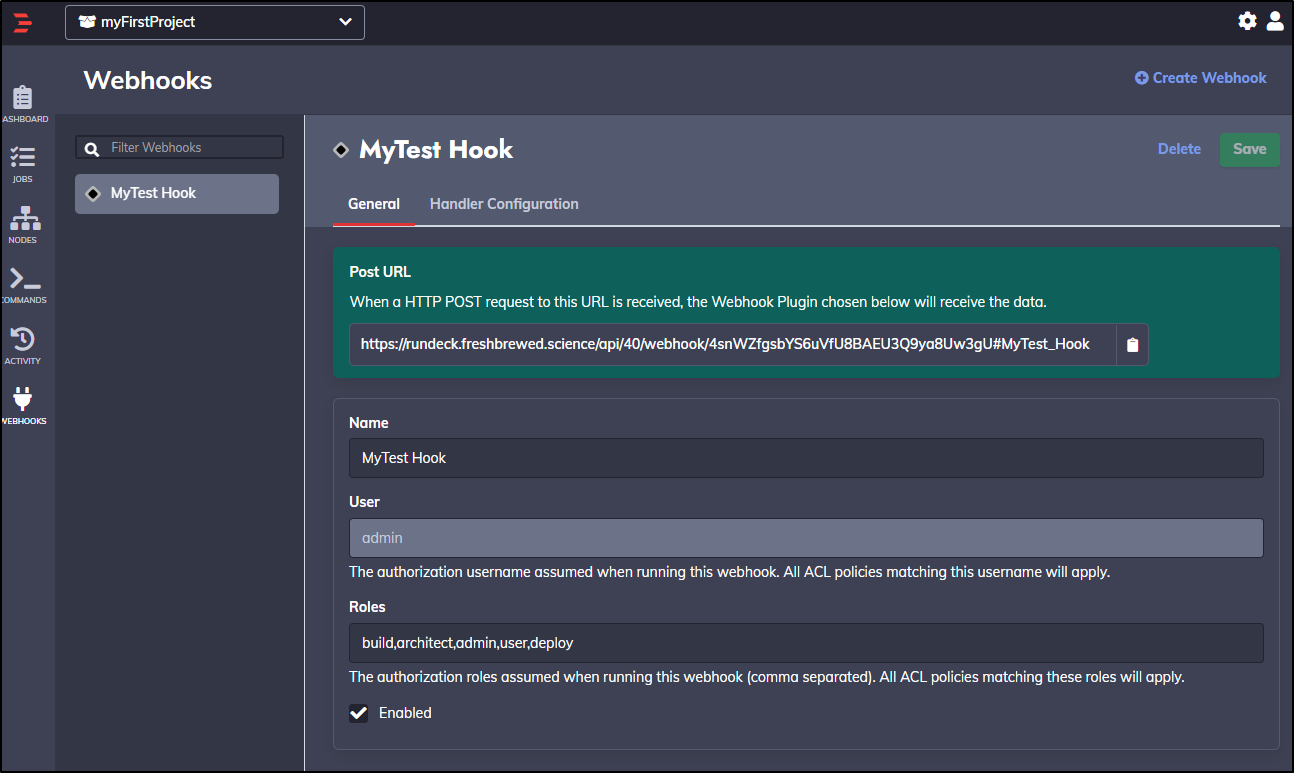

And when saving it, we get a URL

We can pass our BASIC auth user through to the webhook to invoke it:

$ curl -u builder:************ --request POST https://rundeck.freshbrewed.science/api/40/webhook/4snWZfgsbYS6uVfU8BAEU3Q9ya8Uw3gU#MyTest_Hook

{"jobId":"9cdfd069-9320-4cf7-b497-7a99dc32f87e","executionId":"41"}
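If a calling script needs to follow up on the execution it just started, the ID can be pulled out of that response. This is a small sketch that assumes the compact JSON shape shown above (no whitespace around the colon); `extract_execution_id` is a made-up helper name, and a real JSON parser such as `jq` would be more robust where it is available.

```shell
# Sketch only: read the webhook's JSON response on stdin and print the
# value of "executionId". Assumes compact JSON as in the response above.
extract_execution_id() {
  sed -n 's/.*"executionId":"\([^"]*\)".*/\1/p'
}
```

Piping the curl call above through it (with `--silent` added so progress output stays out of the way) would print `41` for the response shown.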

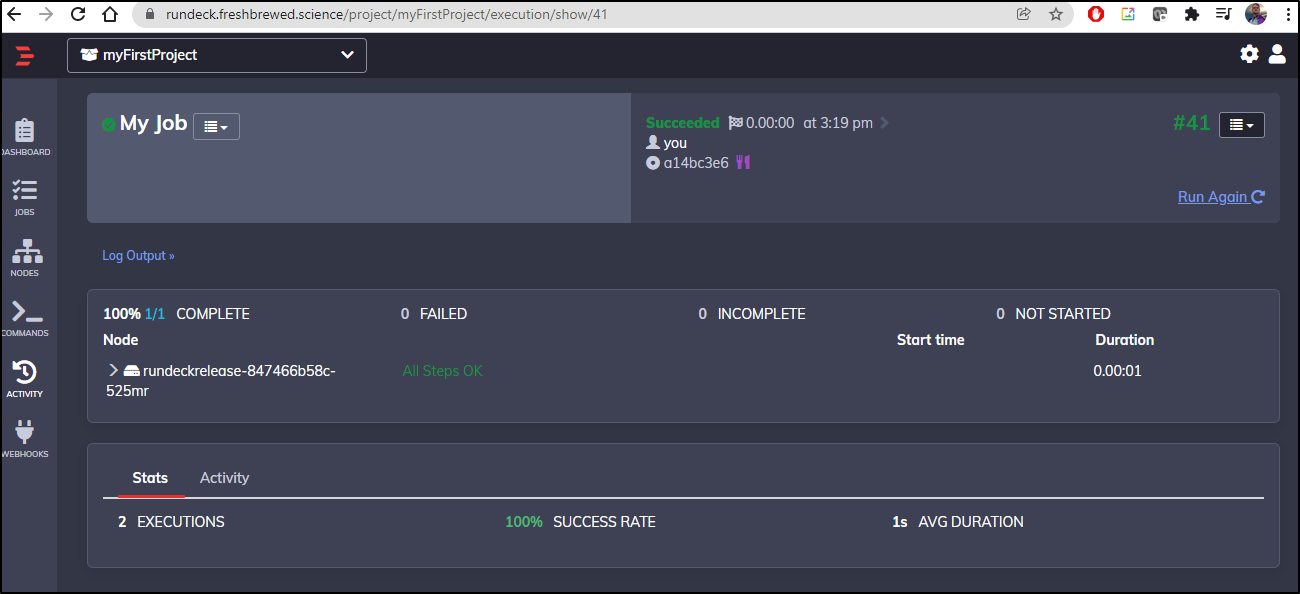

Verification

We can see indeed it was run:

The point of all this, and the reason PagerDuty acquired Rundeck, is to integrate with PagerDuty.

But as long as that is a feature locked out of the free tier, I won’t be able to test it:

Datadog Integration

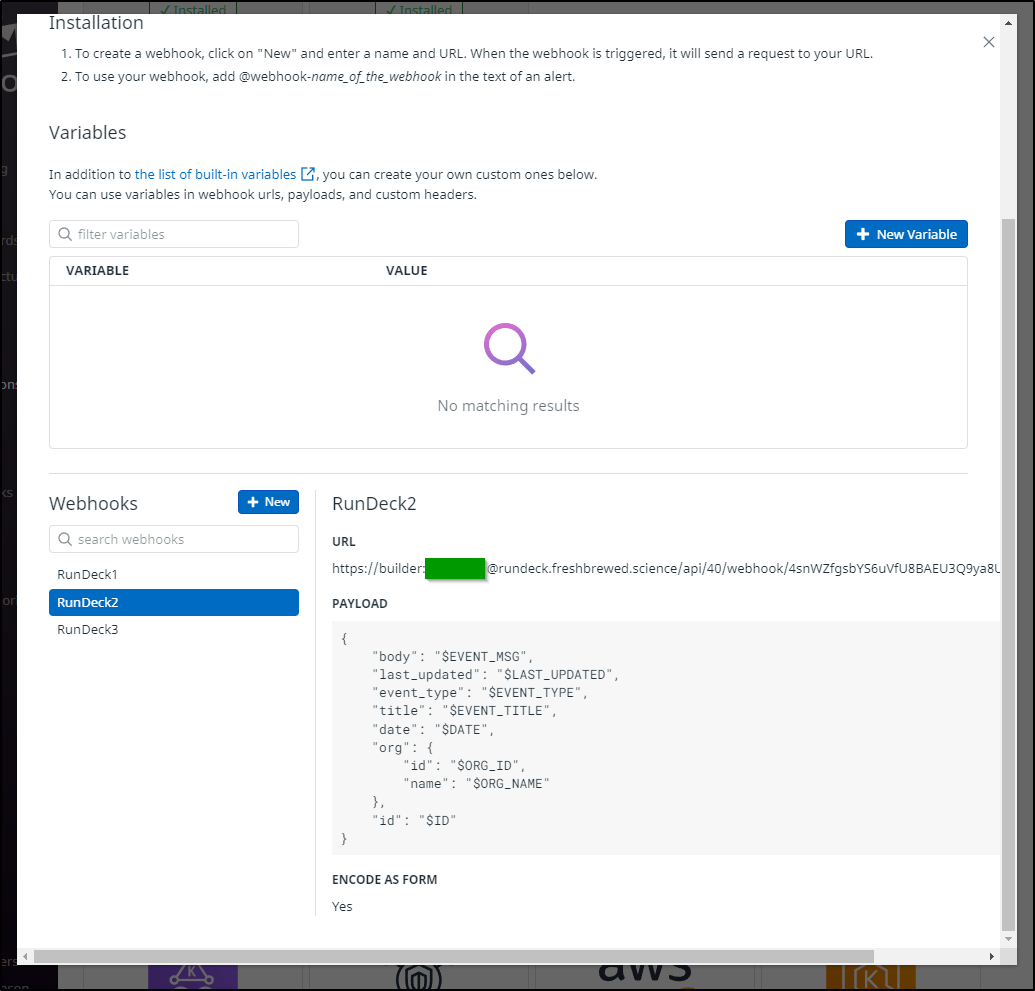

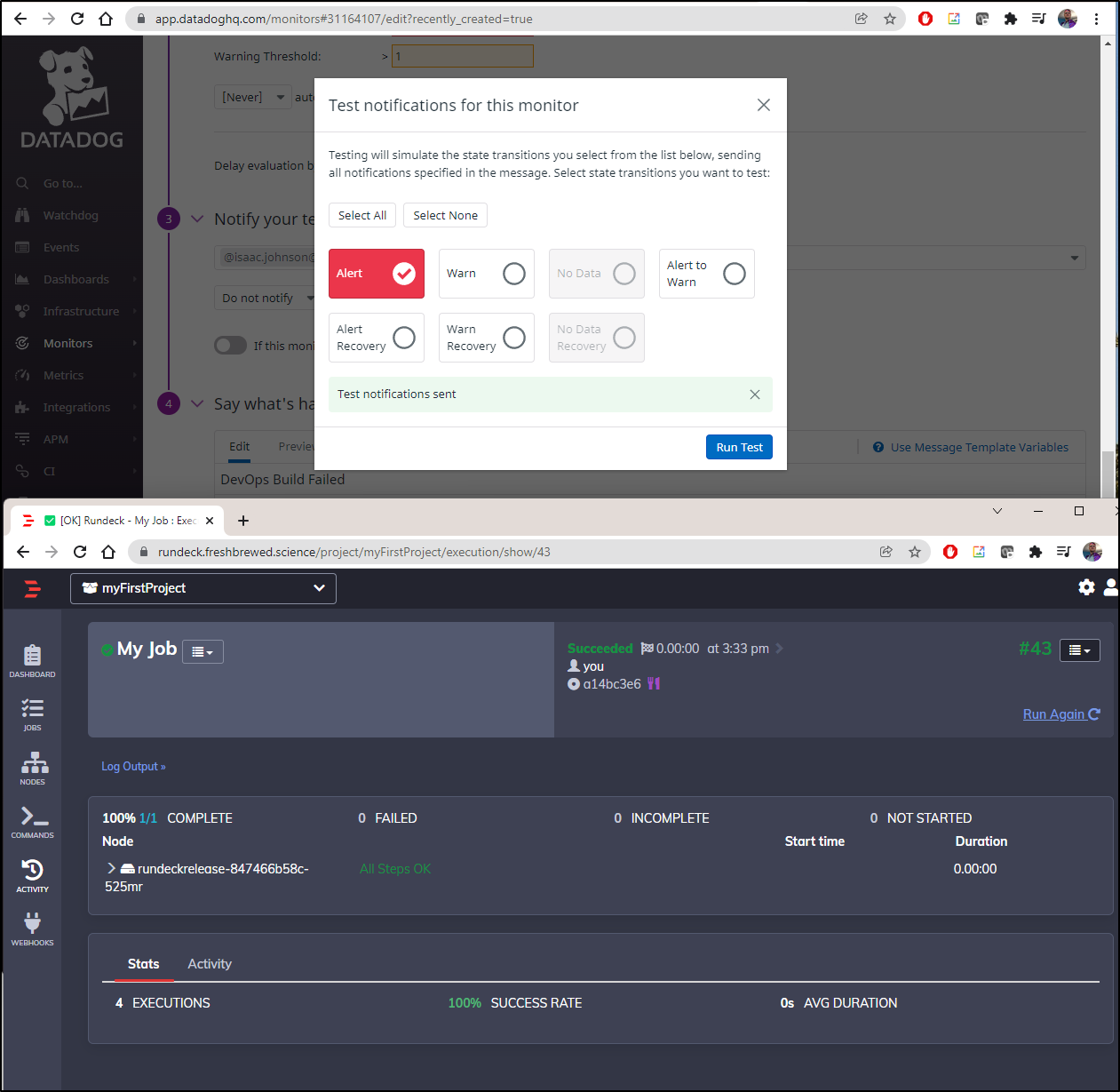

However, there are two straightforward ways I can tie into Datadog (which was my signal to PagerDuty in the first place).

Let’s try using the webhook.

In Datadog, add a webhook with the basic auth user:pass in the URL and tick the checkbox to “submit as a form”.

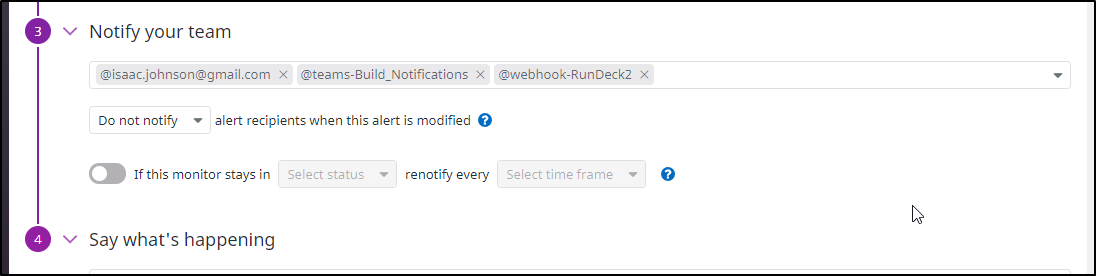

Then we can add it to a monitor as a recipient.

Finally, we can “test notification” and see that it does invoke the webhook on the Rundeck instance running in our local cluster.

Cleanup

We can remove our GKE cluster to avoid incurring extra charges.

This is easy to do with the gcloud CLI:

$ gcloud container clusters delete gkedemo01 --zone us-central1-a

The following clusters will be deleted.

- [gkedemo01] in [us-central1-a]

Do you want to continue (Y/n)? y

Deleting cluster gkedemo01...done.

Deleted [https://container.googleapis.com/v1/projects/gkedemo01/zones/us-central1-a/clusters/gkedemo01].

Rundeck On Kubernetes No Longer Supported?

We can see the deprecated chart here, and while there is a containerized deployment model, there is no supported k8s deployment on the installation page. Searching turned up some threads on Reddit that generally suggest Rundeck is not really designed to be run on Kubernetes.

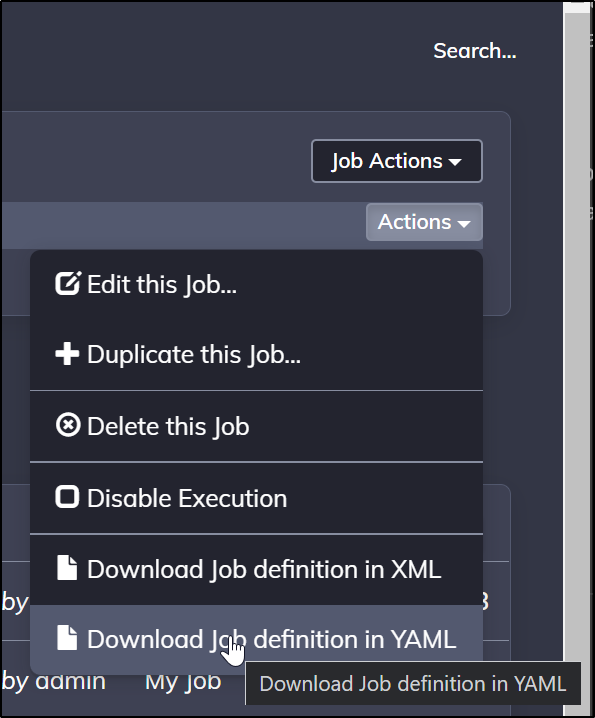

Revision Control

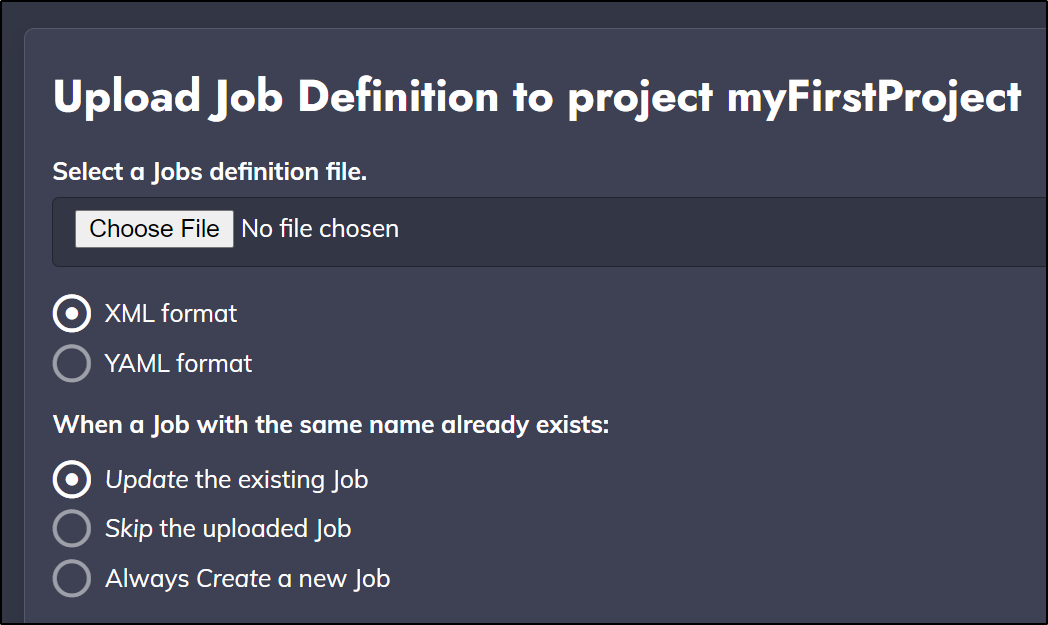

We can save a job definition from a project via the UI using either XML or YAML.

We could then check this file into revision control for versioning or searching. Later we could use it as a basis to restore a job with issues or create a new job with similar functionality.

To use a YAML or XML template, we just need to upload it via the UI.
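Outside the UI, a job file kept in revision control could also be pushed back through the Rundeck API. The sketch below assumes the job import endpoint (`/project/[PROJECT]/jobs/import`) and token authentication via the `X-Rundeck-Auth-Token` header; the `import_job` helper, the project name, and the token are placeholders, and an instance sitting behind basic auth at the ingress (like the one in this post) would additionally need `-u user:pass`.

```shell
# Sketch only: upload a YAML job definition through the Rundeck API.
# CURL can be overridden, e.g. to add options or to stub it for a dry run.
CURL="${CURL:-curl}"

# import_job BASE_URL PROJECT API_TOKEN FILE   (hypothetical helper)
import_job() {
  "$CURL" --silent --fail --request POST \
    --header "X-Rundeck-Auth-Token: $3" \
    --header "Content-Type: application/yaml" \
    --data-binary "@$4" \
    "$1/api/40/project/$2/jobs/import?fileformat=yaml&dupeOption=update"
}
```

A call would look like `import_job https://rundeck.freshbrewed.science MyProject "$RD_TOKEN" K8sJob.yaml`, where `MyProject` and `RD_TOKEN` are stand-ins. The `dupeOption=update` parameter is meant to overwrite an existing job with the same identity rather than create a duplicate.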

Next steps

We did a basic demo of running a containerized workload in GKE as a batch Job. It was simple, but it proved the idea.

The authentication required to directly engage with the cluster will continue to challenge us. For this reason, as well as security implications, it is likely not the right way to go about orchestrating in K8s.

We have tooling to expose endpoints in a Kubernetes cluster far more easily.

We could use KEDA to expose an endpoint that executes an Azure Function running inside our cluster (GKE or otherwise). There are myriad other serverless solutions for triggering containerized workloads as well, such as OpenFaaS, Kubeless, Knative, or fission.io, to name just a few.

We could also use Dapr and pubsub to post to a topic or use a GCP App Engine Function.

Any of these approaches effectively reduces the traffic to a plain curl command with a payload and an optional token. Arguably this is the better way to invoke utility workloads, and it scales far better than direct Kubernetes interactions.

For instance, I can easily handle a break-glass scenario with a self-hosted function by merely rotating the token or disabling the ingress. Furthermore, if my Rundeck orchestrator comes from a known range, I can simply block traffic to my k8s ingress from outside that CIDR range.

Another approach would be to use an “Admin” node that is authed already and take the python and login steps away from the Rundeck containerized node.

We could also use something like GitOps via Flux, Argo, or Arc to update YAML in a location that is immediately applied to the working cluster. Or we could possibly use something like Crossplane to update a Job by deploying YAML in our on-prem cluster (allowing Crossplane to handle the GCP/GKE authentication).

Summary

Rundeck is a handy orchestration tool that has a pretty solid open-source option. It took a bit of manipulation to run it properly and as the chart deploys one runner pod, there is the chance it could be down. I believe the idea is to use the Enterprise product for HA scenarios.

I really like the built-in webhook. Because it is just a simple webhook, it is easy to tie to tools like Datadog or other monitoring suites, which would allow us to build self-healing setups. For instance, if a server is detected to have pegged CPU, restart it; if Kubernetes nodes cannot schedule, horizontally scale the cluster.

We showed how we can revision the jobs manually but the question remains, what is the value add of this tool?

If I wanted a jobspec with revision controls and history, I might consider Ansible Tower, which pulls from git branches (Projects) into jobs to run (Templates). A lot of teams that lean towards the Dev in DevOps might prefer to use AzDO Azure Pipelines, which we can trigger with webhooks just the same. There exist patterns for Jenkins and GitHub as well.

That is to say, if we are comfortable writing and building code in a robust CI/CD system, why add yet another system for applying infrastructure changes? It does not make sense to have tool sprawl.

The only answer I can think of is for teams in tightly restricted environments who cannot use SaaS offerings like GitHub, GitLab, CircleCI, or Azure DevOps. Perhaps for high-security situations with air-gapped environments a tool like Rundeck makes sense, in that it is very standalone.

Lastly, the refusal to share pricing is a big drawback to me. I contacted Sales but after a short call they said they could not share any type of price with me directly.

Similarly, I recall that in HashiCorp’s journey prices were negotiated for Terraform Enterprise and Consul Enterprise. For the Enterprise line, they still are. However, you can do a bit of searching and find some idea of prices. To that end, they do have a mid-tier and Marketplace option (in Azure and AWS), so you can see prices for some commercial plans, at least at that “Teams” level.

I bring up HashiCorp’s story to say that just because prices are behind an NDA, it doesn’t mean we shouldn’t look into purchasing a suite. However, when they are, it greatly impairs our ability to sell it internally. When we cannot give those who hold the purse strings pricing guidelines, it often kills the initiative where it stands.