Published: Dec 7, 2021 by Isaac Johnson

In our first post we explored the usage of Github actions and how we might use our own Github runners. The workflow that we created was just a simple hello-world example flow. This time let’s dig a bit deeper and implement a real working workflow with the github runners we’ve already launched.

We will create the flow that implements what we did with the Azure Pipelines in building Jekyll and then publishing it to a static site to host. We will take a look at PR builds and then other optimizations.

Updating the Github Runner with Tools

We already addressed adding some tools to the Github runner in the last post. Now we will add some additional things that will speed up build and deploys.

I knew I would need the AWS CLI (at least until I move hosting). I figured it would be easiest to just add it to the runner image.

Basically, I just added the awscli to the last apt install step:

$ cat Dockerfile

FROM summerwind/actions-runner:latest

RUN sudo apt update -y \
  && umask 0002 \
  && sudo apt install -y ca-certificates curl apt-transport-https lsb-release gnupg

# Install MS Key
RUN curl -sL https://packages.microsoft.com/keys/microsoft.asc | gpg --dearmor | sudo tee /etc/apt/trusted.gpg.d/microsoft.gpg > /dev/null

# Add MS Apt repo
RUN umask 0002 && echo "deb [arch=amd64] https://packages.microsoft.com/repos/azure-cli/ focal main" | sudo tee /etc/apt/sources.list.d/azure-cli.list

# Install Azure CLI
RUN sudo apt update -y \
  && umask 0002 \
  && sudo apt install -y azure-cli awscli

RUN sudo rm -rf /var/lib/apt/lists/*

Then we just build it:

$ docker build -t myrunner .

[+] Building 120.6s (10/10) FINISHED

=> [internal] load build definition from Dockerfile 0.0s

=> => transferring dockerfile: 682B 0.0s

=> [internal] load .dockerignore 0.0s

=> => transferring context: 2B 0.0s

=> [internal] load metadata for docker.io/summerwind/actions-runner:latest 10.9s

=> [1/6] FROM docker.io/summerwind/actions-runner:latest@sha256:d08b7acb0f31c37ee50383a8a37164bb7401e1f88a7e7cff879934bf2f8c675a 0.0s

=> CACHED [2/6] RUN sudo apt update -y && umask 0002 && sudo apt install -y ca-certificates curl apt-transport-https lsb-release gnupg 0.0s

=> CACHED [3/6] RUN curl -sL https://packages.microsoft.com/keys/microsoft.asc | gpg --dearmor | sudo tee /etc/apt/trusted.gpg.d/microsoft.gpg > /dev/null 0.0s

=> CACHED [4/6] RUN umask 0002 && echo "deb [arch=amd64] https://packages.microsoft.com/repos/azure-cli/ focal main" | sudo tee /etc/apt/sources.list.d/azure-cli.li 0.0s

=> [5/6] RUN sudo apt update -y && umask 0002 && sudo apt install -y azure-cli awscli 100.6s

=> [6/6] RUN sudo rm -rf /var/lib/apt/lists/* 0.6s

=> exporting to image 8.5s

=> => exporting layers 8.5s

=> => writing image sha256:16fb58a29b01e9b07ac2610fdfbf9ff3eb820026c1230e1214f7f34b49e7b506 0.0s

=> => naming to docker.io/library/myrunner 0.0s

Use 'docker scan' to run Snyk tests against images to find vulnerabilities and learn how to fix them

I quickly verified the AWS CLI is present (aws version is not a valid subcommand, so the usage text below is the CLI itself responding):

$ docker run --rm 16fb58a29b01e9b07ac2610fdfbf9ff3eb820026c1230e1214f7f34b49e7b506 aws version

usage: aws [options] <command> <subcommand> [<subcommand> ...] [parameters]

To see help text, you can run:

aws help

aws <command> help

aws <command> <subcommand> help

aws: error: argument command: Invalid choice, valid choices are:

accessanalyzer | acm

acm-pca | alexaforbusiness

amplify | apigateway

apigatewaymanagementapi | apigatewayv2

appconfig | application-autoscaling

application-insights | appmesh

appstream | appsync

athena | autoscaling

autoscaling-plans | backup

batch | budgets

ce | chime

cloud9 | clouddirectory

cloudformation | cloudfront

cloudhsm | cloudhsmv2

cloudsearch | cloudsearchdomain

cloudtrail | cloudwatch

codebuild | codecommit

codeguru-reviewer | codeguruprofiler

codepipeline | codestar

codestar-connections | codestar-notifications

cognito-identity | cognito-idp

cognito-sync | comprehend

comprehendmedical | compute-optimizer

connect | connectparticipant

cur | dataexchange

datapipeline | datasync

dax | detective

devicefarm | directconnect

discovery | dlm

dms | docdb

ds | dynamodb

dynamodbstreams | ebs

ec2 | ec2-instance-connect

ecr | ecs

efs | eks

elastic-inference | elasticache

elasticbeanstalk | elastictranscoder

elb | elbv2

emr | es

events | firehose

fms | forecast

forecastquery | frauddetector

fsx | gamelift

glacier | globalaccelerator

glue | greengrass

groundstation | guardduty

health | iam

imagebuilder | importexport

inspector | iot

iot-data | iot-jobs-data

iot1click-devices | iot1click-projects

iotanalytics | iotevents

iotevents-data | iotsecuretunneling

iotsitewise | iotthingsgraph

kafka | kendra

kinesis | kinesis-video-archived-media

kinesis-video-media | kinesis-video-signaling

kinesisanalytics | kinesisanalyticsv2

kinesisvideo | kms

lakeformation | lambda

lex-models | lex-runtime

license-manager | lightsail

logs | machinelearning

macie | macie2

managedblockchain | marketplace-catalog

marketplace-entitlement | marketplacecommerceanalytics

mediaconnect | mediaconvert

medialive | mediapackage

mediapackage-vod | mediastore

mediastore-data | mediatailor

meteringmarketplace | mgh

migrationhub-config | mobile

mq | mturk

neptune | networkmanager

opsworks | opsworkscm

organizations | outposts

personalize | personalize-events

personalize-runtime | pi

pinpoint | pinpoint-email

pinpoint-sms-voice | polly

pricing | qldb

qldb-session | quicksight

ram | rds

rds-data | redshift

rekognition | resource-groups

resourcegroupstaggingapi | robomaker

route53 | route53domains

route53resolver | s3control

sagemaker | sagemaker-a2i-runtime

sagemaker-runtime | savingsplans

schemas | sdb

secretsmanager | securityhub

serverlessrepo | service-quotas

servicecatalog | servicediscovery

ses | sesv2

shield | signer

sms | sms-voice

snowball | sns

sqs | ssm

sso | sso-oidc

stepfunctions | storagegateway

sts | support

swf | synthetics

textract | transcribe

transfer | translate

waf | waf-regional

wafv2 | workdocs

worklink | workmail

workmailmessageflow | workspaces

xray | s3api

s3 | configure

deploy | configservice

opsworks-cm | runtime.sagemaker

history | help
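For a check that fails loudly when a tool is missing, a small helper along these lines could be run inside the image. This is only a sketch: check_tools is a hypothetical name, not something shipped in the runner image, and aws --version (the flag form) is what prints the CLI version.

```shell
# check_tools: hypothetical helper, not part of the runner image.
# Prints "ok: <tool>" for each tool found on PATH and returns non-zero
# if any of the named tools is missing.
check_tools() {
  missing=0
  for tool in "$@"; do
    if command -v "$tool" >/dev/null 2>&1; then
      echo "ok: $tool"
    else
      echo "missing: $tool" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Example (inside the runner container):
#   check_tools aws az && aws --version
```

Because the function returns non-zero on a missing tool, it could also serve as a cheap smoke test after each image build.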

Then I tagged and pushed it:

$ docker tag myrunner:latest harbor.freshbrewed.science/freshbrewedprivate/myghrunner:1.1.0

$ docker push harbor.freshbrewed.science/freshbrewedprivate/myghrunner:1.1.0

The push refers to repository [harbor.freshbrewed.science/freshbrewedprivate/myghrunner]

25fc3ad0e309: Pushed

0b8d08aa170a: Pushed

184673581df5: Pushed

a2bc486f2681: Pushed

0f07ea155abc: Pushed

20d95e7ac997: Pushed

2836d1a6237d: Pushed

f8b8f8823205: Pushed

558ee99a354e: Pushed

43c083a7fb2b: Pushed

f01c7f0339cf: Pushed

db8cd6a1db59: Pushed

9f54eef41275: Layer already exists

1.1.0: digest: sha256:37edf935b9aa0c5e2911f8cd557841d82a65aa9ecf3f9824a0bbb291e11a2f25 size: 3045

Next I updated my runner to use this 1.1.0 image:

$ cat addMyRunner.yml

apiVersion: actions.summerwind.dev/v1alpha1
kind: RunnerDeployment
metadata:
  name: my-jekyllrunner-deployment
spec:
  template:
    spec:
      repository: idjohnson/jekyll-blog
      image: harbor.freshbrewed.science/freshbrewedprivate/myghrunner:1.1.0
      imagePullSecrets:
      - name: myharborreg
      dockerEnabled: true
      labels:
      - my-jekyllrunner-deployment
---
apiVersion: actions.summerwind.dev/v1alpha1
kind: HorizontalRunnerAutoscaler
metadata:
  name: my-jekyllrunner-deployment-autoscaler
spec:
  # Runners in the targeted RunnerDeployment won't be scaled down for 5 minutes instead of the default 10 minutes now
  scaleDownDelaySecondsAfterScaleOut: 300
  scaleTargetRef:
    name: my-jekyllrunner-deployment
  minReplicas: 1
  maxReplicas: 5
  metrics:
  - type: PercentageRunnersBusy
    scaleUpThreshold: '0.75'
    scaleDownThreshold: '0.25'
    scaleUpFactor: '2'
    scaleDownFactor: '0.5'

$ kubectl apply -f addMyRunner.yml

runnerdeployment.actions.summerwind.dev/my-jekyllrunner-deployment configured

horizontalrunnerautoscaler.actions.summerwind.dev/my-jekyllrunner-deployment-autoscaler unchanged
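To get a feel for what those thresholds and factors mean, here is a rough sketch of the arithmetic. It assumes the controller multiplies the current replica count by the factor (rounding up when scaling out, truncating when scaling in) and then clamps the result to minReplicas/maxReplicas. That is my reading of the settings rather than something checked against the controller source, and desired_replicas is a hypothetical helper.

```shell
# desired_replicas: hypothetical sketch of PercentageRunnersBusy scaling.
#   $1 = current number of runners, $2 = number of busy runners
# ASSUMPTION: scale out = ceil(current * scaleUpFactor),
#             scale in  = truncate(current * scaleDownFactor),
#             then clamp to [minReplicas, maxReplicas].
desired_replicas() {
  awk -v cur="$1" -v busy="$2" 'BEGIN {
    up_t = 0.75; down_t = 0.25      # scaleUpThreshold / scaleDownThreshold
    up_f = 2;    down_f = 0.5       # scaleUpFactor / scaleDownFactor
    min = 1;     max = 5            # minReplicas / maxReplicas
    frac = busy / cur
    want = cur
    if (frac >= up_t) {
      want = cur * up_f
      if (want > int(want)) want = int(want) + 1   # round up
    } else if (frac < down_t) {
      want = int(cur * down_f)                     # truncate
    }
    if (want < min) want = min
    if (want > max) want = max
    printf "%d\n", want
  }'
}

desired_replicas 1 1   # one runner, busy: scale out
desired_replicas 4 4   # would double to 8, clamped to maxReplicas
desired_replicas 4 0   # idle: scale in
```

Under those assumptions the three calls print 2, 5 and 2 respectively.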

Now that I have the AWS CLI installed, I want to authenticate to it. There are a few ways to handle this: I could use a Dapr sidecar secrets injector, bake the secrets into the image, use Github secrets, or use a SP to jump out to AKV.

I decided to try, for a simple pass, to use a standard K8s secret.

$ cat addMyRunner.yml

---
apiVersion: v1
kind: Secret
metadata:
  name: awsjekyll
type: Opaque
data:
  USER_NAME: QUtOT1RBUkVBTEFNQVpPTktFWVlP
  PASSWORD: Tm90IGEgcmVhbCBrZXksIGJ1dCB0aGFua3MgZm9yIGNoZWNraW5nLiBGZWVsIGZyZWUgdG8gZW1haWwgbWUgYXQgaXNhYWNAZnJlc2hicmV3ZWQuc2NpZW5jZSB0byBzYXkgeW91IGFjdHVhbGx5IGNoZWNrZWQgOikK
---
apiVersion: actions.summerwind.dev/v1alpha1
kind: RunnerDeployment
metadata:
  name: my-jekyllrunner-deployment
spec:
  template:
    spec:
      repository: idjohnson/jekyll-blog
      image: harbor.freshbrewed.science/freshbrewedprivate/myghrunner:1.1.0
      imagePullSecrets:
      - name: myharborreg
      imagePullPolicy: IfNotPresent
      dockerEnabled: true
      env:
      - name: AWS_DEFAULT_REGION
        value: "us-east-1"
      - name: AWS_ACCESS_KEY_ID
        valueFrom:
          secretKeyRef:
            name: awsjekyll
            key: USER_NAME
      - name: AWS_SECRET_ACCESS_KEY
        valueFrom:
          secretKeyRef:
            name: awsjekyll
            key: PASSWORD
      labels:
      - my-jekyllrunner-deployment
---
apiVersion: actions.summerwind.dev/v1alpha1
kind: HorizontalRunnerAutoscaler
metadata:
  name: my-jekyllrunner-deployment-autoscaler
spec:
  # Runners in the targeted RunnerDeployment won't be scaled down for 5 minutes instead of the default 10 minutes now
  scaleDownDelaySecondsAfterScaleOut: 300
  scaleTargetRef:
    name: my-jekyllrunner-deployment
  minReplicas: 1
  maxReplicas: 5
  metrics:
  - type: PercentageRunnersBusy
    scaleUpThreshold: '0.75'
    scaleDownThreshold: '0.25'
    scaleUpFactor: '2'
    scaleDownFactor: '0.5'
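The values under data: in a Secret have to be base64-encoded. A minimal sketch of producing them follows; encode_secret_value is a made-up helper name for illustration. Using printf '%s' rather than echo avoids encoding a trailing newline into the credential.

```shell
# encode_secret_value: hypothetical helper for filling in Secret "data:" fields.
# printf '%s' emits the value without a trailing newline; tr strips the
# line wrapping some base64 implementations add for long inputs.
encode_secret_value() {
  printf '%s' "$1" | base64 | tr -d '\n'
}

# The placeholder access key used in the Secret above
encode_secret_value 'AKNOTAREALAMAZONKEYYO'; echo
# -> QUtOT1RBUkVBTEFNQVpPTktFWVlP

# kubectl can also do the encoding itself:
#   kubectl create secret generic awsjekyll \
#     --from-literal=USER_NAME=... --from-literal=PASSWORD=...
```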

I also took care of setting the imagePullPolicy to IfNotPresent. Since I version my images (and avoid using ‘latest’), it will save the time to pull a large image when I scale out.

Testing:

$ kubectl apply -f addMyRunner.yml

secret/awsjekyll created

runnerdeployment.actions.summerwind.dev/my-jekyllrunner-deployment configured

horizontalrunnerautoscaler.actions.summerwind.dev/my-jekyllrunner-deployment-autoscaler unchanged

$ kubectl exec -it my-jekyllrunner-deployment-q7cch-wj7fp -- /bin/bash

Defaulted container "runner" out of: runner, docker

runner@my-jekyllrunner-deployment-q7cch-wj7fp:/$ aws s3 ls

2019-12-07 14:55:21 crwoodburytest

2020-07-02 15:47:24 datadog-forwarderstack-15ltem-forwarderzipsbucket-16v9v4vsv27pm

2021-06-25 01:12:02 feedback-fb-science

2021-09-02 11:59:40 freshbrewed-ghost

2020-04-14 16:06:12 freshbrewed-test

... snip
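Rather than exec-ing in and listing buckets by hand, the presence of the injected variables could also be asserted without echoing their values. A sketch, with require_env as a hypothetical helper:

```shell
# require_env: hypothetical helper. Returns non-zero if any of the named
# environment variables is unset or empty; never prints the values.
require_env() {
  rc=0
  for name in "$@"; do
    # Indirect lookup of the variable whose name is stored in $name
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      echo "unset or empty: $name" >&2
      rc=1
    fi
  done
  return "$rc"
}

# Example (inside the runner container):
#   require_env AWS_DEFAULT_REGION AWS_ACCESS_KEY_ID AWS_SECRET_ACCESS_KEY && aws s3 ls
```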

I should add that I tried to use Dapr following Dapr instructions:

---
apiVersion: actions.summerwind.dev/v1alpha1
kind: RunnerDeployment
metadata:
  name: my-jekyllrunner-deployment
  labels:
    app: github-runner
spec:
  selector:
    matchLabels:
      app: github-runner
  template:
    metadata:
      annotations:
        dapr.io/app-id: github-runner
        dapr.io/app-port: "8080"
        dapr.io/config: appconfig
        dapr.io/enabled: "true"
      creationTimestamp: null
      labels:
        app: github-runner
    spec:
      repository: idjohnson/jekyll-blog
      image: harbor.freshbrewed.science/freshbrewedprivate/myghrunner:1.1.1
      imagePullSecrets:
      - name: myharborreg
      imagePullPolicy: IfNotPresent
      dockerEnabled: true
      env:
      - name: AWS_DEFAULT_REGION
        value: "us-east-1"
      - name: AWS_ACCESS_KEY_ID
        valueFrom:
          secretKeyRef:
            name: awsjekyll
            key: USER_NAME
      - name: AWS_SECRET_ACCESS_KEY
        valueFrom:
          secretKeyRef:
            name: awsjekyll
            key: PASSWORD
      labels:
      - my-jekyllrunner-deployment

but even after upgrading Dapr on my cluster and trying a few times, it just would not add the Dapr sidecar.

I think it is because the Dapr Kubernetes operator only works on “Deployments” and not on “RunnerDeployments”.

It would have been nice to use the Dapr secrets components to pull AWS creds from AKV dynamically.

Adding Ruby

Since the AWS CLI was easy, I could save more time by adding in Ruby and the Ruby Bundler to the image.

$ cat Dockerfile

FROM summerwind/actions-runner:latest

RUN sudo apt update -y \
  && umask 0002 \
  && sudo apt install -y ca-certificates curl apt-transport-https lsb-release gnupg

# Install MS Key
RUN curl -sL https://packages.microsoft.com/keys/microsoft.asc | gpg --dearmor | sudo tee /etc/apt/trusted.gpg.d/microsoft.gpg > /dev/null

# Add MS Apt repo
RUN umask 0002 && echo "deb [arch=amd64] https://packages.microsoft.com/repos/azure-cli/ focal main" | sudo tee /etc/apt/sources.list.d/azure-cli.list

# Install Azure CLI
RUN sudo apt update -y \
  && umask 0002 \
  && sudo apt install -y azure-cli awscli ruby-full

RUN sudo rm -rf /var/lib/apt/lists/*

Next I build the container and tag it:

$ docker build -t myrunner:1.1.1 .

[+] Building 10.8s (10/10) FINISHED

=> [internal] load build definition from Dockerfile 0.0s

=> => transferring dockerfile: 38B 0.0s

=> [internal] load .dockerignore 0.0s

=> => transferring context: 2B 0.0s

=> [internal] load metadata for docker.io/summerwind/actions-runner:latest 10.8s

=> [1/6] FROM docker.io/summerwind/actions-runner:latest@sha256:d08b7acb0f31c37ee50383a8a37164bb7401e1f88a7e7cff879934bf2f8c675a 0.0s

=> CACHED [2/6] RUN sudo apt update -y && umask 0002 && sudo apt install -y ca-certificates curl apt-transport-https lsb-release gnupg 0.0s

=> CACHED [3/6] RUN curl -sL https://packages.microsoft.com/keys/microsoft.asc | gpg --dearmor | sudo tee /etc/apt/trusted.gpg.d/microsoft.gpg > /dev/null 0.0s

=> CACHED [4/6] RUN umask 0002 && echo "deb [arch=amd64] https://packages.microsoft.com/repos/azure-cli/ focal main" | sudo tee /etc/apt/sources.list.d/azure-cli.list 0.0s

=> CACHED [5/6] RUN sudo apt update -y && umask 0002 && sudo apt install -y azure-cli awscli ruby-full 0.0s

=> CACHED [6/6] RUN sudo rm -rf /var/lib/apt/lists/* 0.0s

=> exporting to image 0.0s

=> => exporting layers 0.0s

=> => writing image sha256:670715c8c9a2c0f7abc47dc63024fd51018bb17cf1adcf34d1c9ba9d63791d96 0.0s

=> => naming to docker.io/library/myrunner:1.1.1 0.0s

Use 'docker scan' to run Snyk tests against images to find vulnerabilities and learn how to fix them

$ docker tag myrunner:1.1.1 harbor.freshbrewed.science/freshbrewedprivate/myghrunner:1.1.1

Lastly, I push the image and update the runner to use it:

$ docker push harbor.freshbrewed.science/freshbrewedprivate/myghrunner:1.1.1

The push refers to repository [harbor.freshbrewed.science/freshbrewedprivate/myghrunner]

4b1fb136b1b8: Pushed

975f586b68e5: Pushed

184673581df5: Layer already exists

a2bc486f2681: Layer already exists

0f07ea155abc: Layer already exists

20d95e7ac997: Layer already exists

2836d1a6237d: Layer already exists

f8b8f8823205: Layer already exists

558ee99a354e: Layer already exists

43c083a7fb2b: Layer already exists

f01c7f0339cf: Layer already exists

db8cd6a1db59: Layer already exists

9f54eef41275: Layer already exists

1.1.1: digest: sha256:f1b03ab2c186af4153165b7bfbe3c4a5d928259693504bdfea206322b00af298 size: 3045

$ cat addMyRunner.yml | grep image:

image: harbor.freshbrewed.science/freshbrewedprivate/myghrunner:1.1.1

$ kubectl apply -f addMyRunner.yml

secret/awsjekyll unchanged

runnerdeployment.actions.summerwind.dev/my-jekyllrunner-deployment configured

horizontalrunnerautoscaler.actions.summerwind.dev/my-jekyllrunner-deployment-autoscaler unchanged

Making it live

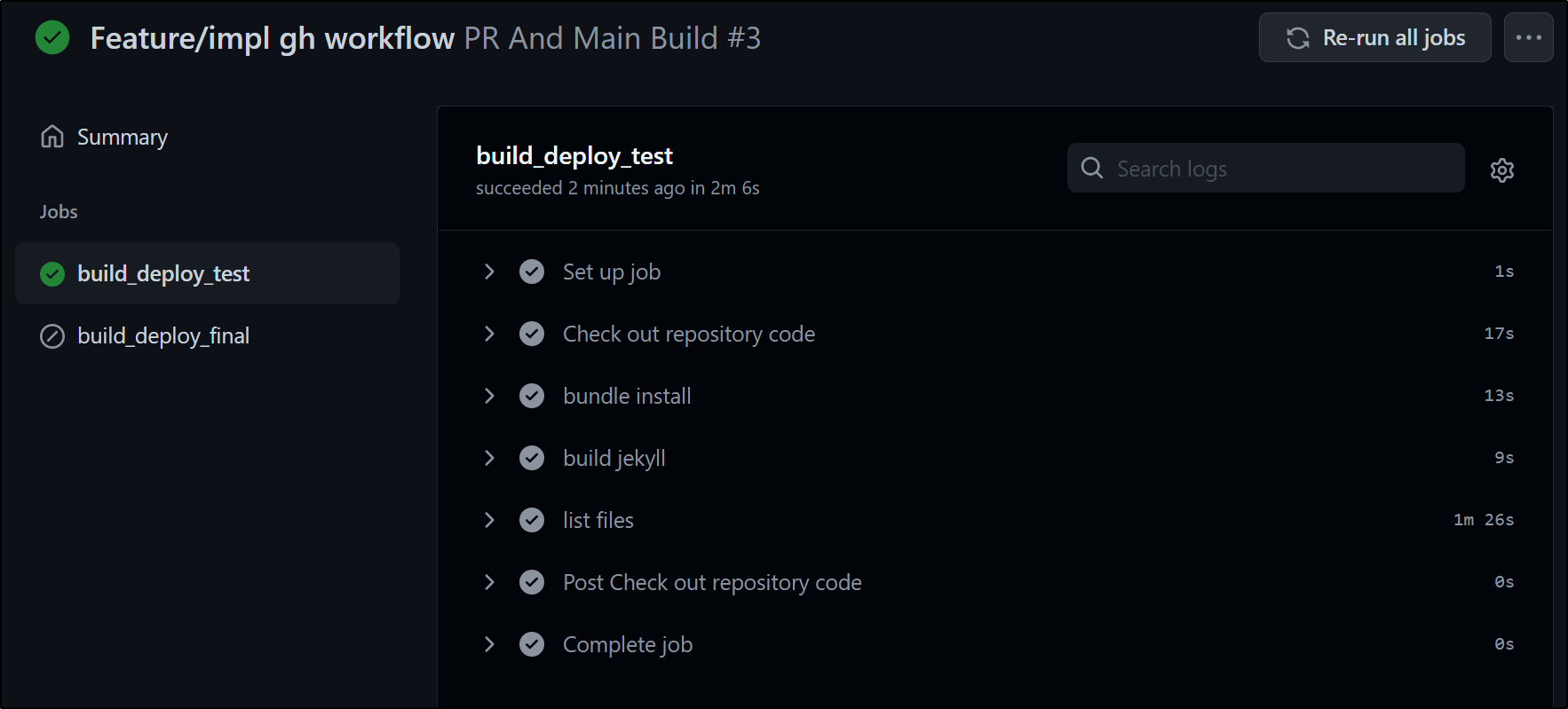

Satisfied that the demo flow is working, next let’s use it in a real flow.

$ cat .github/workflows/pr-final.yml

name: PR And Main Build
on:
  push:
    branches:
    - main
  pull_request:
jobs:
  build_deploy_test:
    runs-on: self-hosted
    if: github.ref != 'refs/heads/main'
    steps:
    - name: Check out repository code
      uses: actions/checkout@v2
    - name: bundle install
      run: |
        gem install jekyll bundler
        bundle install --retry=3 --jobs=4
    - name: build jekyll
      run: |
        bundle exec jekyll build
    - name: list files
      run: |
        aws s3 cp --recursive ./_site s3://freshbrewed-test --acl public-read
  build_deploy_final:
    runs-on: self-hosted
    if: github.ref == 'refs/heads/main'
    steps:
    - name: Check out repository code
      uses: actions/checkout@v2
    - name: bundle install
      run: |
        gem install jekyll bundler
        bundle install --retry=3 --jobs=4
    - name: build jekyll
      run: |
        bundle exec jekyll build
    - name: copy files to final
      run: |
        aws s3 cp --recursive ./_site s3://freshbrewed.science --acl public-read
    - name: cloudfront invalidation
      run: |
        aws cloudfront create-invalidation --distribution-id E3U2HCN2ZRTBZN --paths "/index.html"
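The two jobs differ only in the bucket they copy to (plus the CloudFront invalidation on main). As a sketch of how that choice could be folded into a single script step instead, here is a hypothetical target_bucket helper keyed on the ref. GITHUB_REF is the variable GitHub sets for the ref that triggered the run. This is an alternative arrangement, not what the workflow above does.

```shell
# target_bucket: hypothetical helper mirroring the two "if:" conditions.
# main goes to the live bucket; everything else (PRs) goes to the test bucket.
target_bucket() {
  case "$1" in
    refs/heads/main) echo "s3://freshbrewed.science" ;;
    *)               echo "s3://freshbrewed-test" ;;
  esac
}

# Example use inside a workflow "run:" step:
#   aws s3 cp --recursive ./_site "$(target_bucket "$GITHUB_REF")" --acl public-read
```

Keeping two separate jobs, as the workflow does, has the advantage that the live deploy shows up as its own job in the run history.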

How does this compare to Azure DevOps?

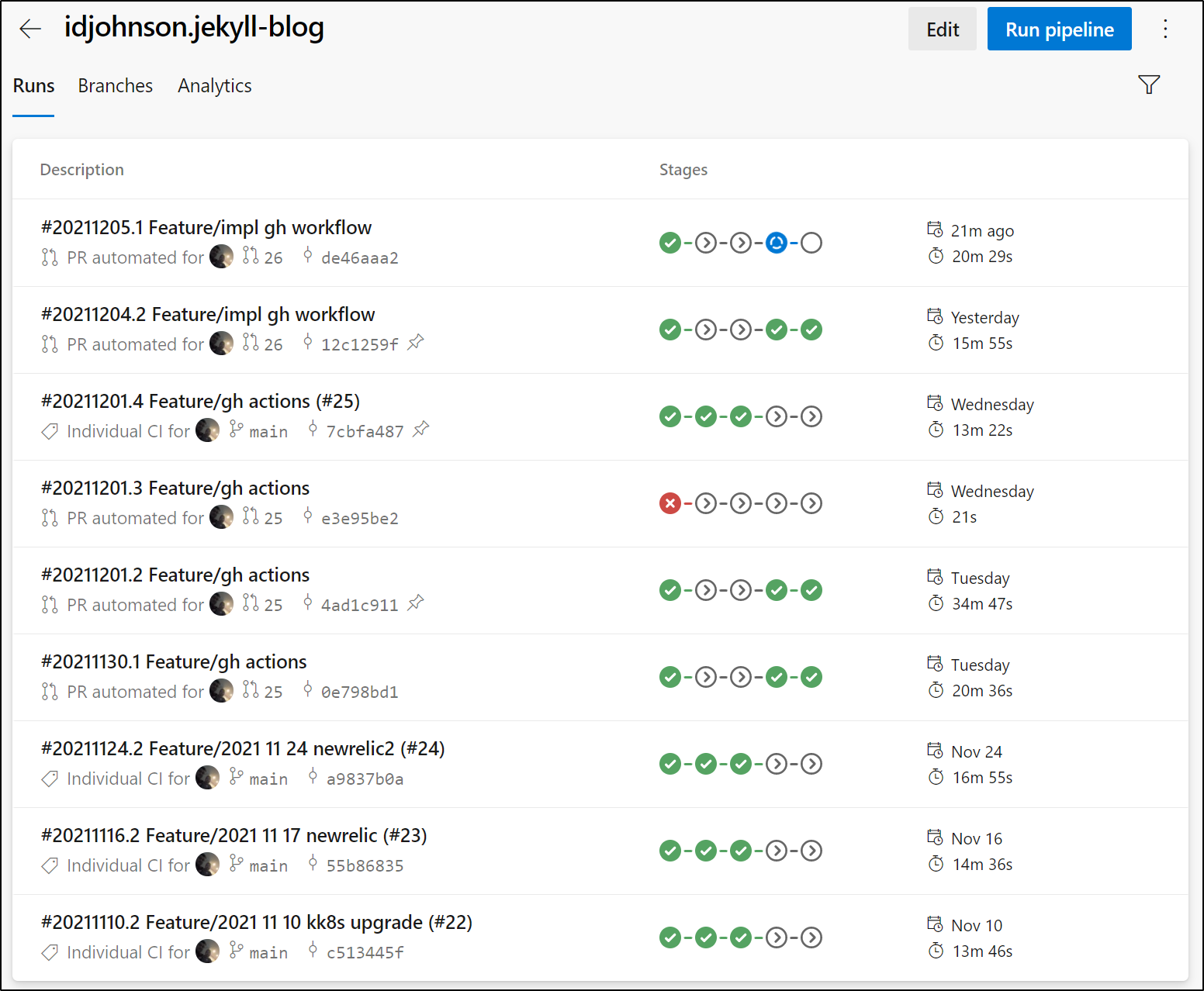

We can see that the test branch builds are 15m to 30m, so clearly the performance from hosted agents to AWS uploads varies. And our final releases average 14m:

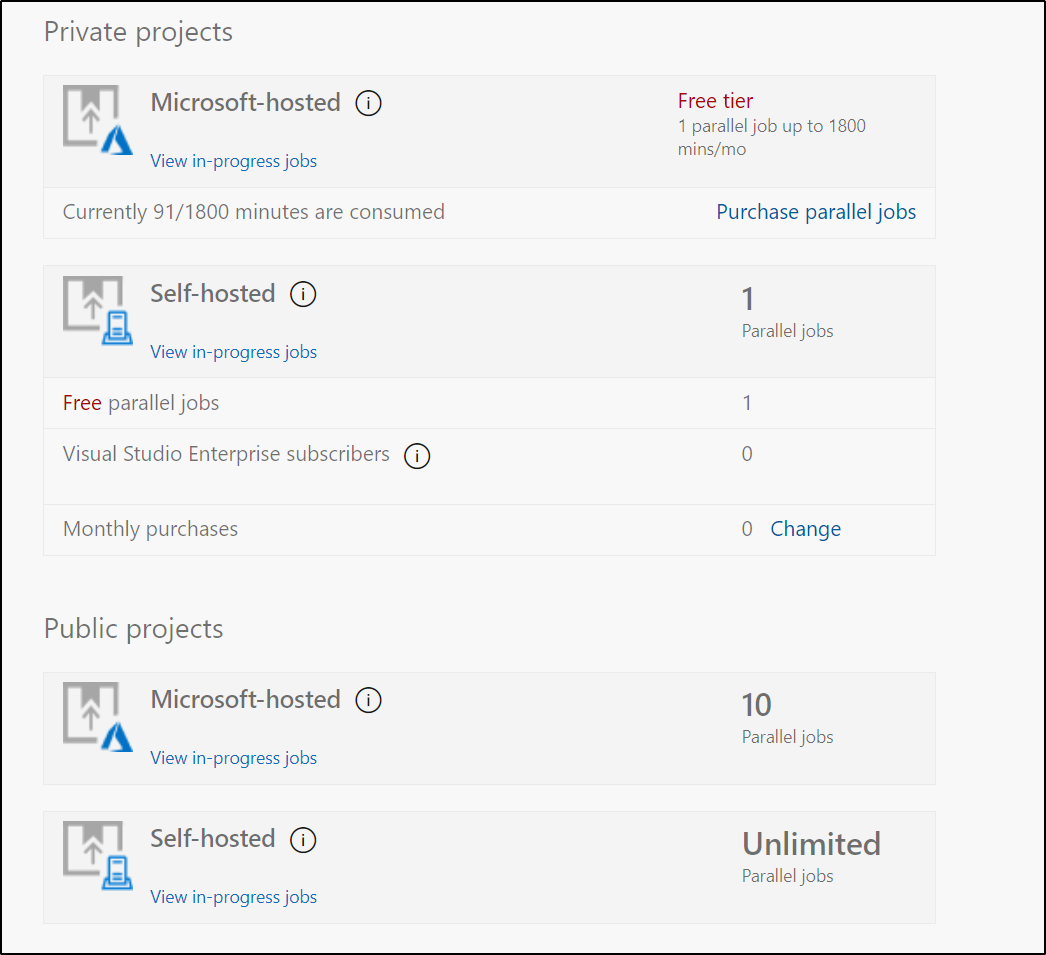

Now arguably we could improve the Azure Pipelines builds by leveraging our own private runners - but we are limited when using just the one in the free tier:

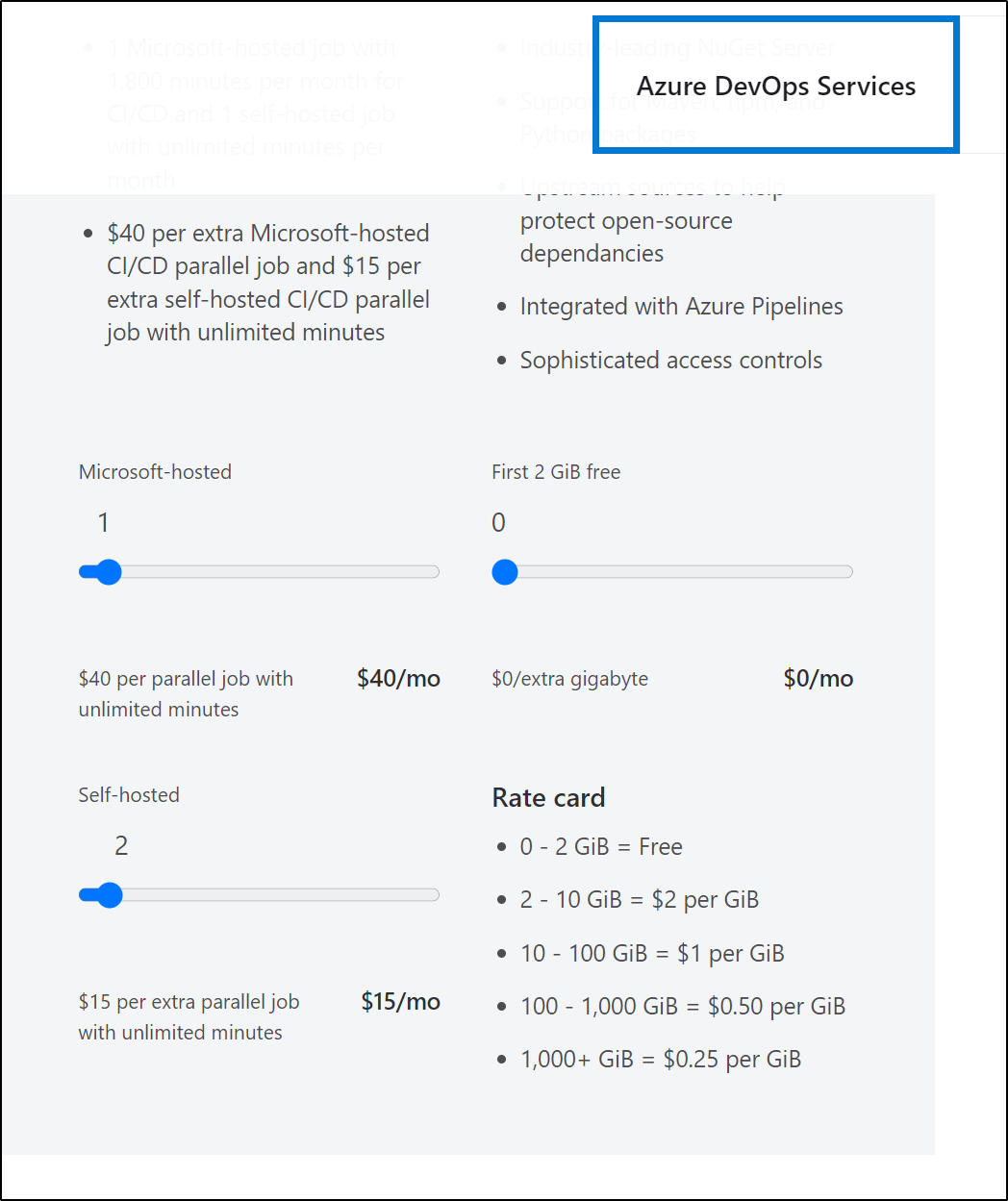

And it would cost $15/mo for each additional private agent (ones we host ourselves).

My understanding of Github pricing, by contrast, is that Self-Hosted runners are free for Private repositories:

The Runner Image

We covered how we used the runner image. However, up to this point we have built the GH Container Image locally with the docker client.

For the purpose of good DR (what if my cluster died and I had no backup of harbor?), can we build from a github workflow and push it to our private container registry?

First, to allow us to have some tracking, I’ll add a line at the end that lists the current image with location and version:

$ cat ghRunnerImage/Dockerfile

FROM summerwind/actions-runner:latest

RUN sudo apt update -y \
  && umask 0002 \
  && sudo apt install -y ca-certificates curl apt-transport-https lsb-release gnupg

# Install MS Key
RUN curl -sL https://packages.microsoft.com/keys/microsoft.asc | gpg --dearmor | sudo tee /etc/apt/trusted.gpg.d/microsoft.gpg > /dev/null

# Add MS Apt repo
RUN umask 0002 && echo "deb [arch=amd64] https://packages.microsoft.com/repos/azure-cli/ focal main" | sudo tee /etc/apt/sources.list.d/azure-cli.list

# Install Azure CLI
RUN sudo apt update -y \
  && umask 0002 \
  && sudo apt install -y azure-cli awscli ruby-full

RUN sudo chown runner /usr/local/bin
RUN sudo chmod 777 /var/lib/gems/2.7.0
RUN sudo chown runner /var/lib/gems/2.7.0

# save time per build
RUN umask 0002 \
  && gem install jekyll bundler

RUN sudo rm -rf /var/lib/apt/lists/*

#harbor.freshbrewed.science/freshbrewedprivate/myghrunner:1.1.4

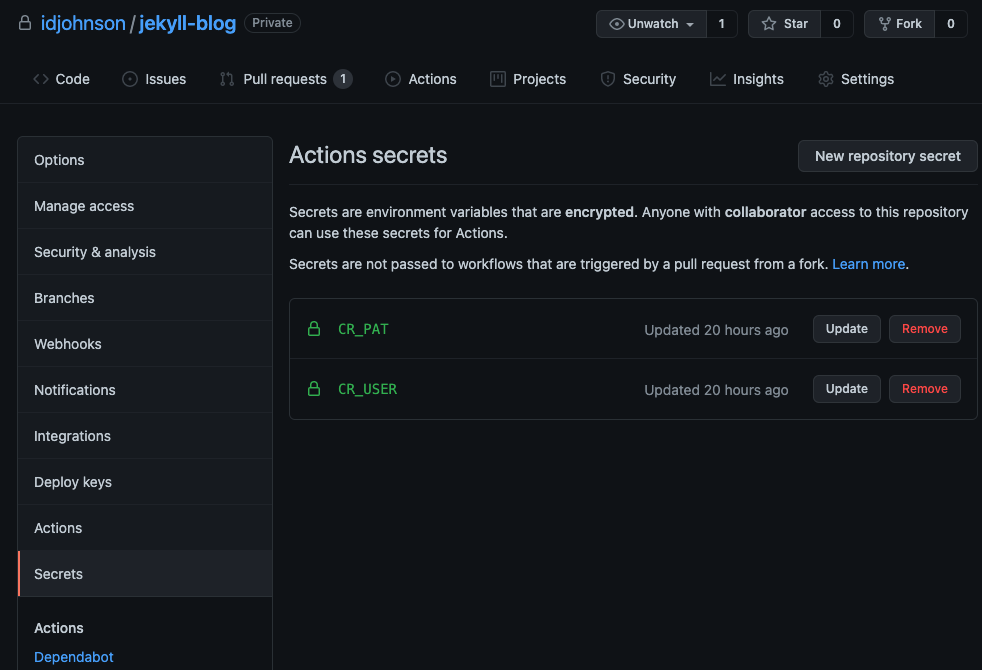

Then we need to save our credentials somewhere (as this is DR, we’ll want to use a Github provided agent to build):

These two secrets will be passed into the build job (but not external PRs)

Lastly, we just need to create a flow triggered only off of Dockerfile updates

$ cat .github/workflows/github-actions.yml

name: GitHub Actions Demo
on:
  push:
    paths:
    - "**/Dockerfile"
jobs:
  HostedActions:
    runs-on: ubuntu-latest
    steps:
    - name: Check out repository code
      uses: actions/checkout@v2
    - name: Build Dockerfile
      run: |
        export BUILDIMGTAG="`cat ghRunnerImage/Dockerfile | tail -n1 | sed 's/^.*\///g'`"
        docker build -t $BUILDIMGTAG ghRunnerImage
        docker images
    - name: Tag and Push
      run: |
        export BUILDIMGTAG="`cat ghRunnerImage/Dockerfile | tail -n1 | sed 's/^.*\///g'`"
        export FINALBUILDTAG="`cat ghRunnerImage/Dockerfile | tail -n1 | sed 's/^#//g'`"
        docker tag $BUILDIMGTAG $FINALBUILDTAG
        docker images
        echo $CR_PAT | docker login harbor.freshbrewed.science -u $CR_USER --password-stdin
        docker push $FINALBUILDTAG
      env: # Or as an environment variable
        CR_PAT: $
        CR_USER: $
    - run: echo "🍏 This job's status is $."
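To make the tag parsing in that workflow concrete, here is the same tail/sed pipeline run against a stand-in Dockerfile written to a temporary directory (the two-line file is only a fixture for the demonstration). The first sed strips everything up to the last slash to get a local tag; the second only strips the leading # to get the full registry reference.

```shell
# Write a stand-in Dockerfile whose LAST line carries the image reference,
# matching the convention used in ghRunnerImage/Dockerfile.
tmpdir="$(mktemp -d)"
printf '%s\n' \
  'FROM summerwind/actions-runner:latest' \
  '#harbor.freshbrewed.science/freshbrewedprivate/myghrunner:1.1.4' \
  > "$tmpdir/Dockerfile"

# Same expressions as the workflow, using $(...) instead of backticks
BUILDIMGTAG="$(tail -n1 "$tmpdir/Dockerfile" | sed 's/^.*\///g')"
FINALBUILDTAG="$(tail -n1 "$tmpdir/Dockerfile" | sed 's/^#//g')"

echo "local tag:  $BUILDIMGTAG"
echo "remote tag: $FINALBUILDTAG"
# local tag:  myghrunner:1.1.4
# remote tag: harbor.freshbrewed.science/freshbrewedprivate/myghrunner:1.1.4

rm -rf "$tmpdir"
```

One consequence of this convention is that the tag comment has to stay the very last line of the Dockerfile, with no trailing blank line after it, or tail -n1 will pick up the wrong text.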

Testing

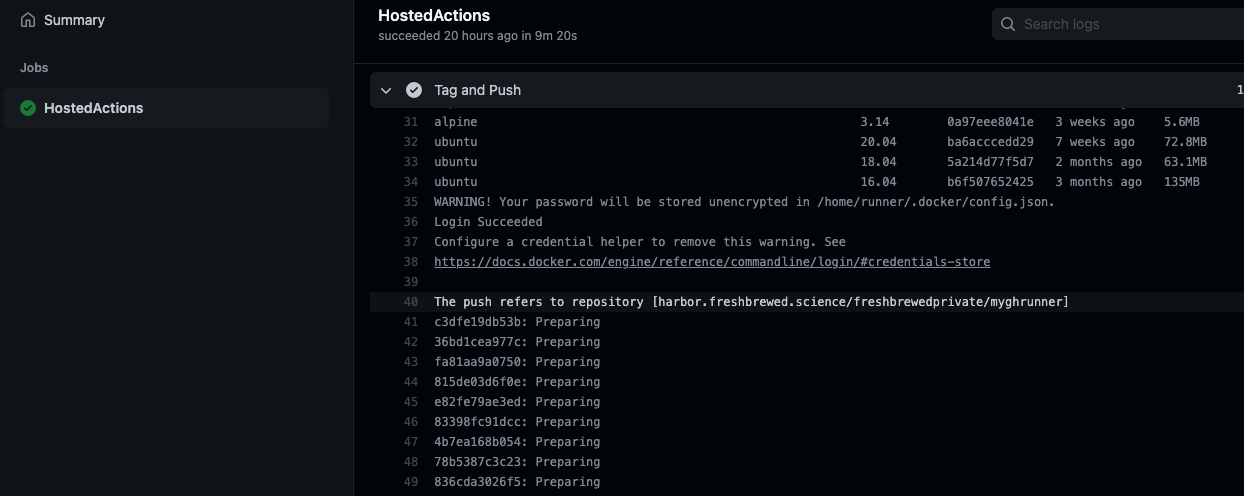

When we push a Dockerfile change, we can see it push to our Harbor registry:

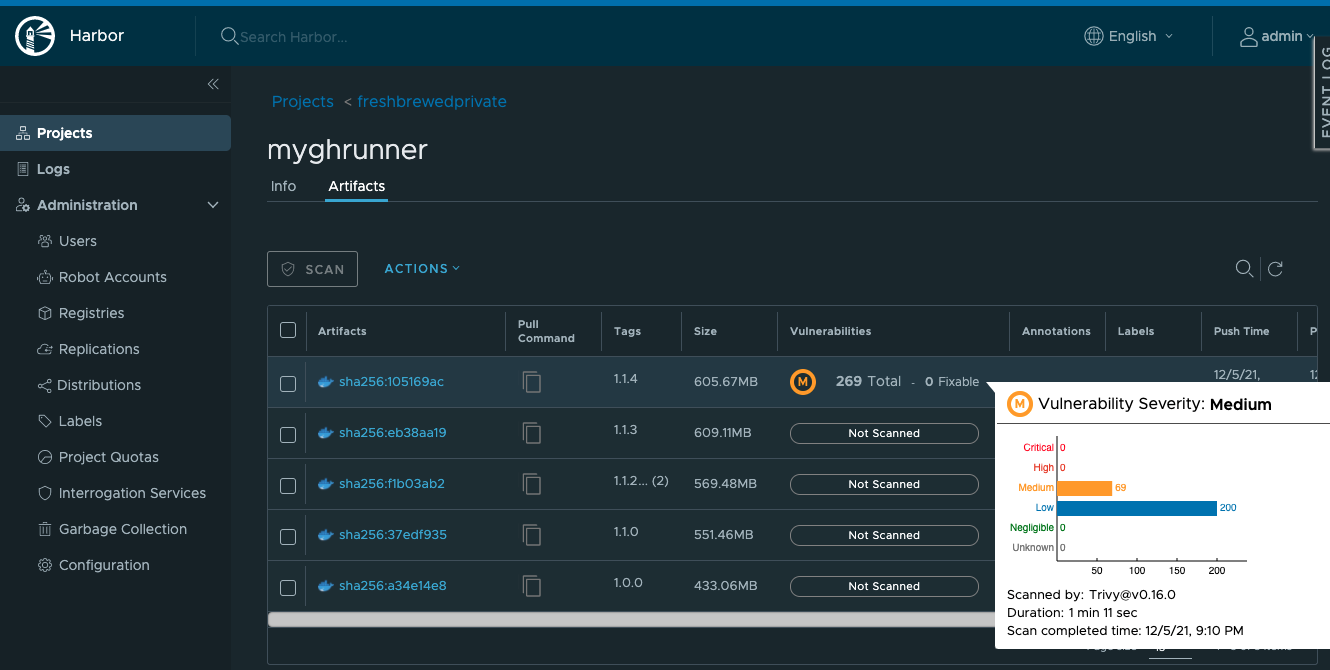

In Harbor, not only do I see my image, I can also easily run a security scan on the built image.

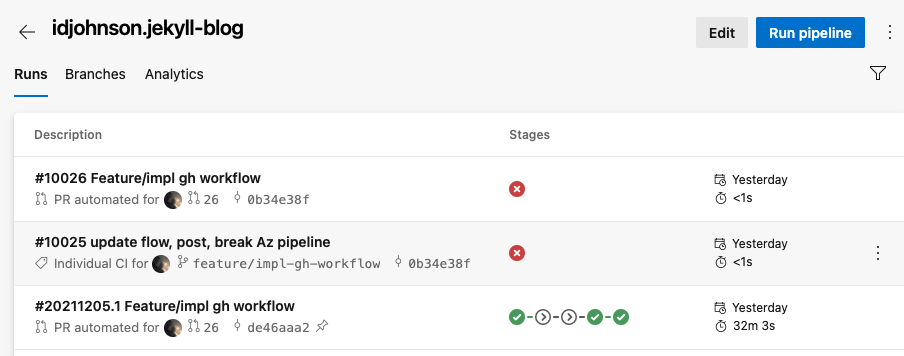

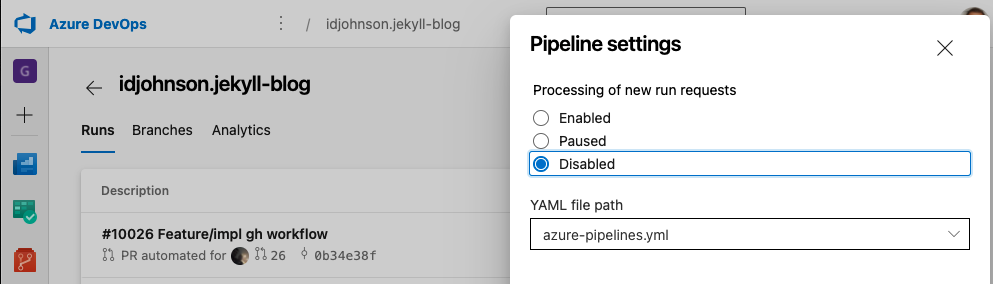

Disabling AzDO

One more bit of house-keeping is to disable the AzDO build job.

I had mangled the azure-pipelines.yaml earlier in order to disable it.

While we could remove it, probably the most straightforward approach is to just disable the pipeline:

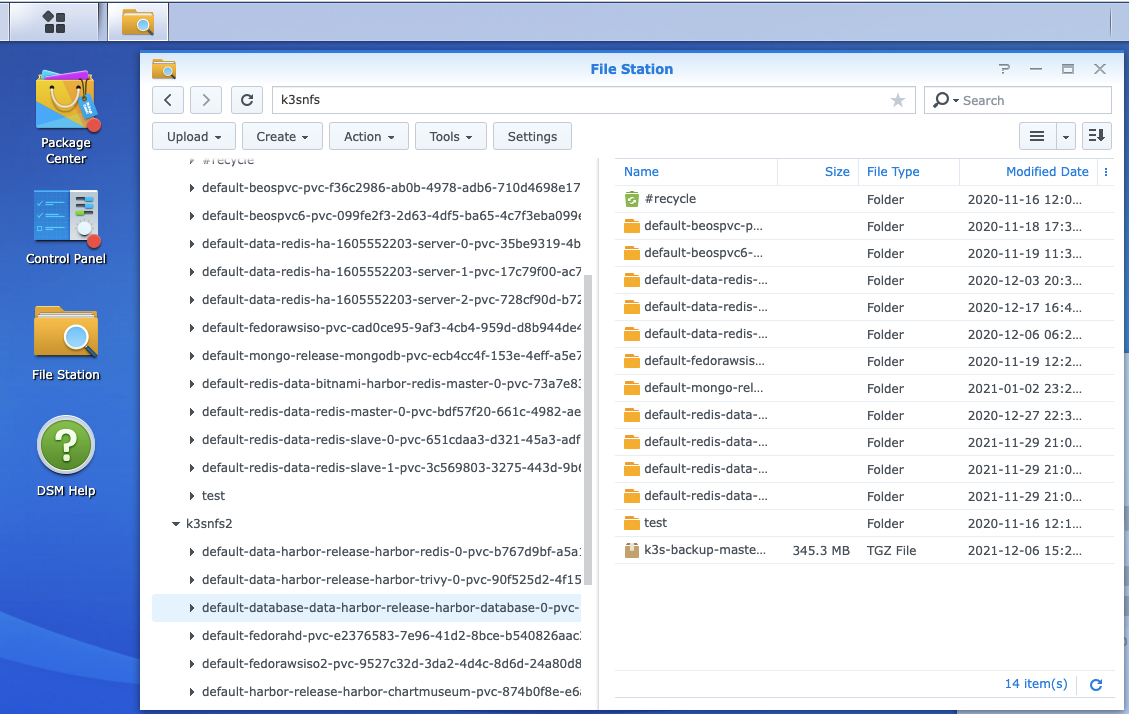

Making my K3s more DR

Because I’m using an out of the box k3s, it has one master node. That is my big single point of failure. I’m using commodity hardware (a stack of retired laptops).

So let’s first do a simple backup.

If you are using the embedded etcd datastore, you can use k3s etcd-snapshot save to back up to your db/snapshots dir. With an external MySQL or PSQL datastore, you would back up the database itself with its own tooling.

Since I’m using the embedded SQLite, I don’t have the snapshot option.

I need to stop my master node, something I rarely like to do. So let’s do a quick pass.

Luckily I already mounted my NFS share for my NFS based PVCs, so I need not figure out a place to cp or sftp the backup.

$ sudo su - root

root@isaac-MacBookAir:~# service k3s stop

root@isaac-MacBookAir:~# tar -czvf /tmp/k3s-backup-master.tgz /var/lib/rancher/k3s/server

root@isaac-MacBookAir:~# service k3s start

root@isaac-MacBookAir:~# cp /tmp/k3s-backup-master.tgz /mnt/nfs/k3snfs/k3s-backup-master-`date +"%Y%m%d"`.tgz

And we can see that on the NAS now:

Clearly you can save your bits in many many places. Perhaps you keep a rolling latest in Dropbox, S3 or Azure File storage.

To restore, I would need to pull the k3s binary down for 1.21.5+k3s1 from the k3s release page, then extract the tgz back into /var/lib/rancher/k3s/server and start k3s again.

The workers should rejoin using the same token… though I’m not eager to try the DR steps just yet.
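If this became a regular chore, the tar step could be wrapped in a small function. The following is a sketch under a few assumptions: backup_server_dir is a hypothetical name, it writes the dated archive straight to the destination directory rather than via /tmp, and it uses tar -C so the archive holds the relative path server/... instead of the full /var/lib/... path.

```shell
# backup_server_dir: hypothetical helper.
#   $1 = directory to archive (e.g. /var/lib/rancher/k3s/server)
#   $2 = destination directory (e.g. the NFS mount)
# Prints the path of the archive it wrote.
backup_server_dir() {
  src="$1"
  dest="$2"
  out="$dest/k3s-backup-master-$(date +%Y%m%d).tgz"
  # -C makes paths inside the archive relative to the parent of $src
  tar -czf "$out" -C "$(dirname "$src")" "$(basename "$src")" || return 1
  echo "$out"
}

# Example, run as root on the master node:
#   service k3s stop
#   backup_server_dir /var/lib/rancher/k3s/server /mnt/nfs/k3snfs
#   service k3s start
#
# A matching restore would extract relative to the parent directory:
#   tar -xzf <archive> -C /var/lib/rancher/k3s
```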

Summary

Switching from Azure Pipelines to Github Workflows was actually rather painless. Let’s consider three key facets in comparing AzDO and Github Actions: Pricing, Performance and Process.

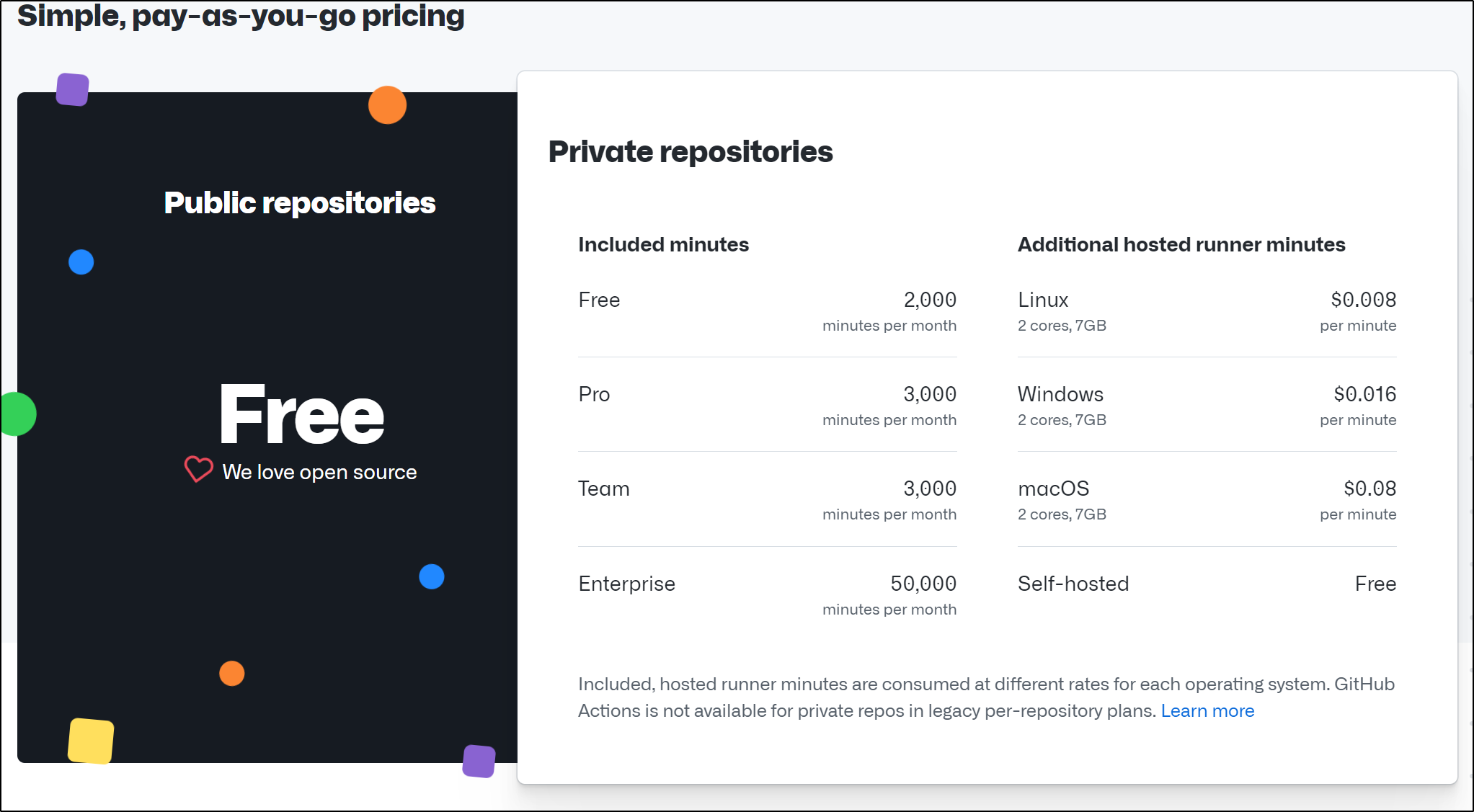

Comparing the free tier offering for private projects

The limits on time are similar: 1800 minutes (30 hours) for Azure Pipelines hosted agents with 1 hour stage limits, and 2000 minutes (with no stage limits) for Github Actions. While Azure Pipelines limits me to 1 private agent per tenant (each additional is $15/mo), Github Actions does not have any such limitation.

This is a huge benefit for GH Actions as I can easily spin up many parallel agents in my own cluster and only lean on Github/Microsoft provided agents when I need a larger class instance (as I did for building the Runner agent container image itself).

Performance Improvements

In using a purely containerized solution with the SummerWind RunnerDeployment model, it was easy to bake in all my tooling. This drastically reduced my build times from an average of 15 minutes to about 5 minutes.

Because I set the imagePullPolicy to re-use the already fetched container, the speeds improved even more giving me 2 minute full builds.

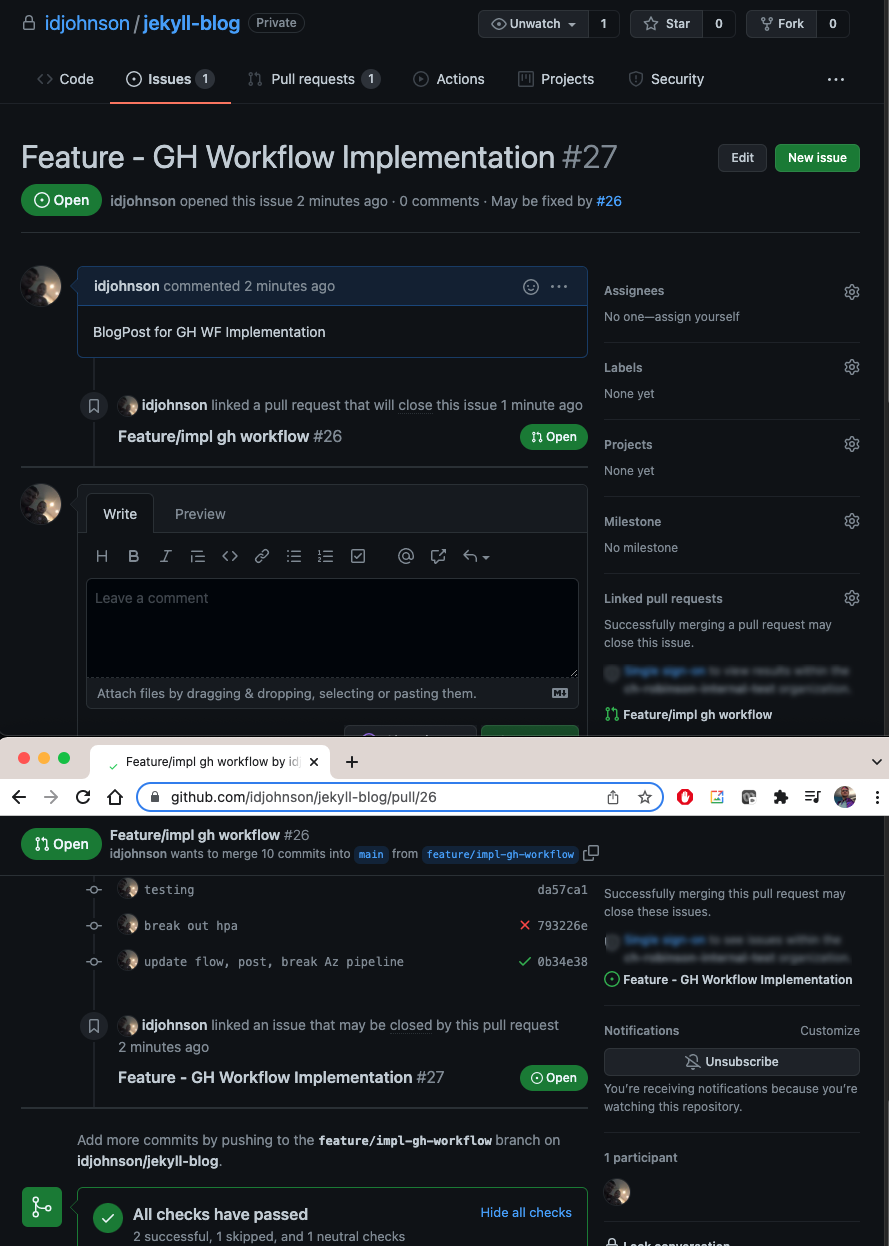

Work Items, where AzDO Shines

There are consequences in a real-world scenario in abandoning AzDO. Github workflows are great, but we blatantly ignored integration with Work Items. We can relate a PR to a Github Issue using keywords but it isn’t quite the same as linking a Work Item and an Azure Repo PR.

The GH PR to Issue linking:

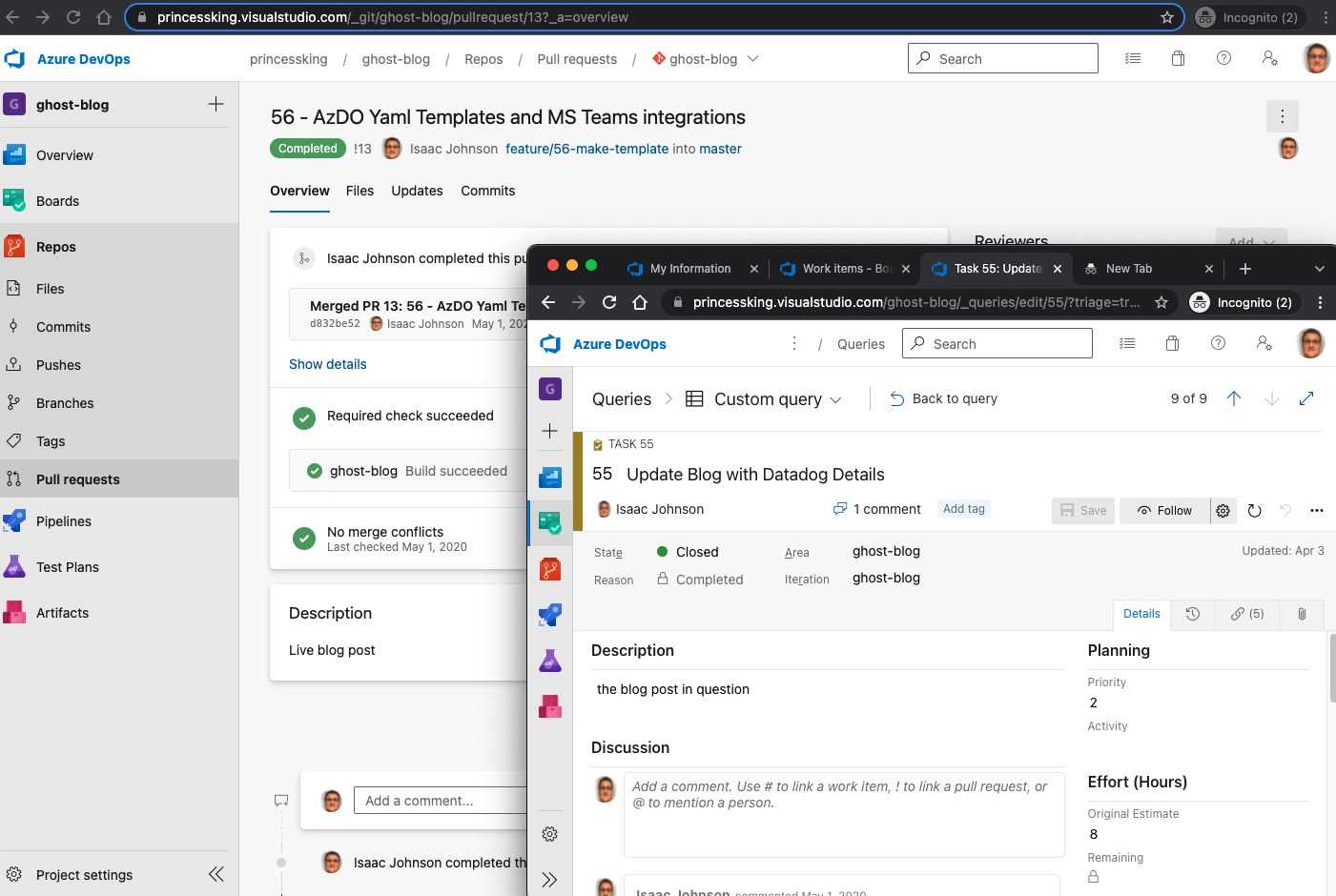

And just part of an AzDO Issue to PR to build flow

Conclusion

Where we go from here is hard to say. Everyone I’ve spoken with thus far agrees that Microsoft is clearly moving in the direction of Github Actions over Azure Pipelines. However, how that will manifest is yet to be seen. I, personally, have a hard time letting go of Azure Boards and the Work Item flow.

I’m also seeing a push in AzDO out of a free developer offering into an Enterprise one. As of this morning, I was informed I would lose auditing features unless I moved my Org into AAD (and AAD might not be free).

Then again, I had to mask the Github screenshots since my Github identity is co-mingled with my Corporate identity and I like having a totally separate AzDO organization with my own PAT for Azure Repos. When I use Github.com it is sometimes for personal and sometimes for work which makes it hard to keep the wall separate between the two.