Published: Nov 10, 2021 by Isaac Johnson

One of the scarier operations you must perform is upgrading a Kubernetes cluster. While new versions often bring better stability and new functionality, they can also deprecate APIs on which you may depend.

Knowing how to handle upgrades is key to maintaining an operational production cluster. Today we’ll work through upgrades in several ways:

- K3s directly with the System Upgrade Controller

- K3s in Terraform (one way working, one not)

- Azure Kubernetes Service (AKS)

- Minikube (with Hyper-V)

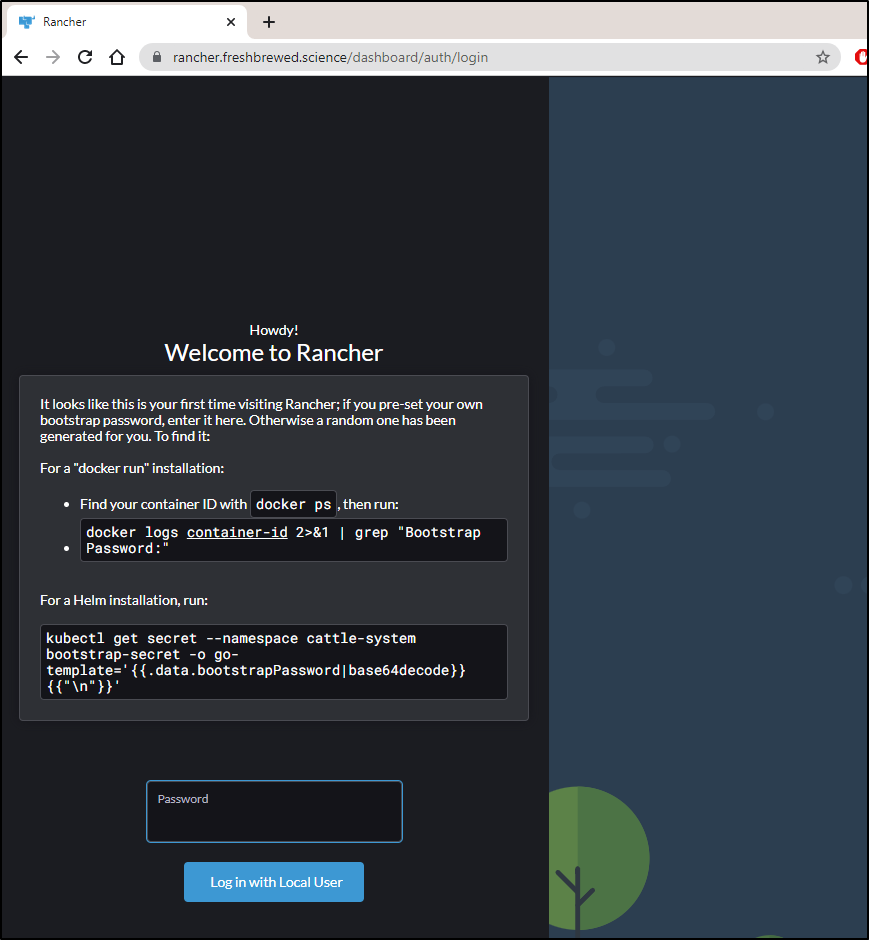

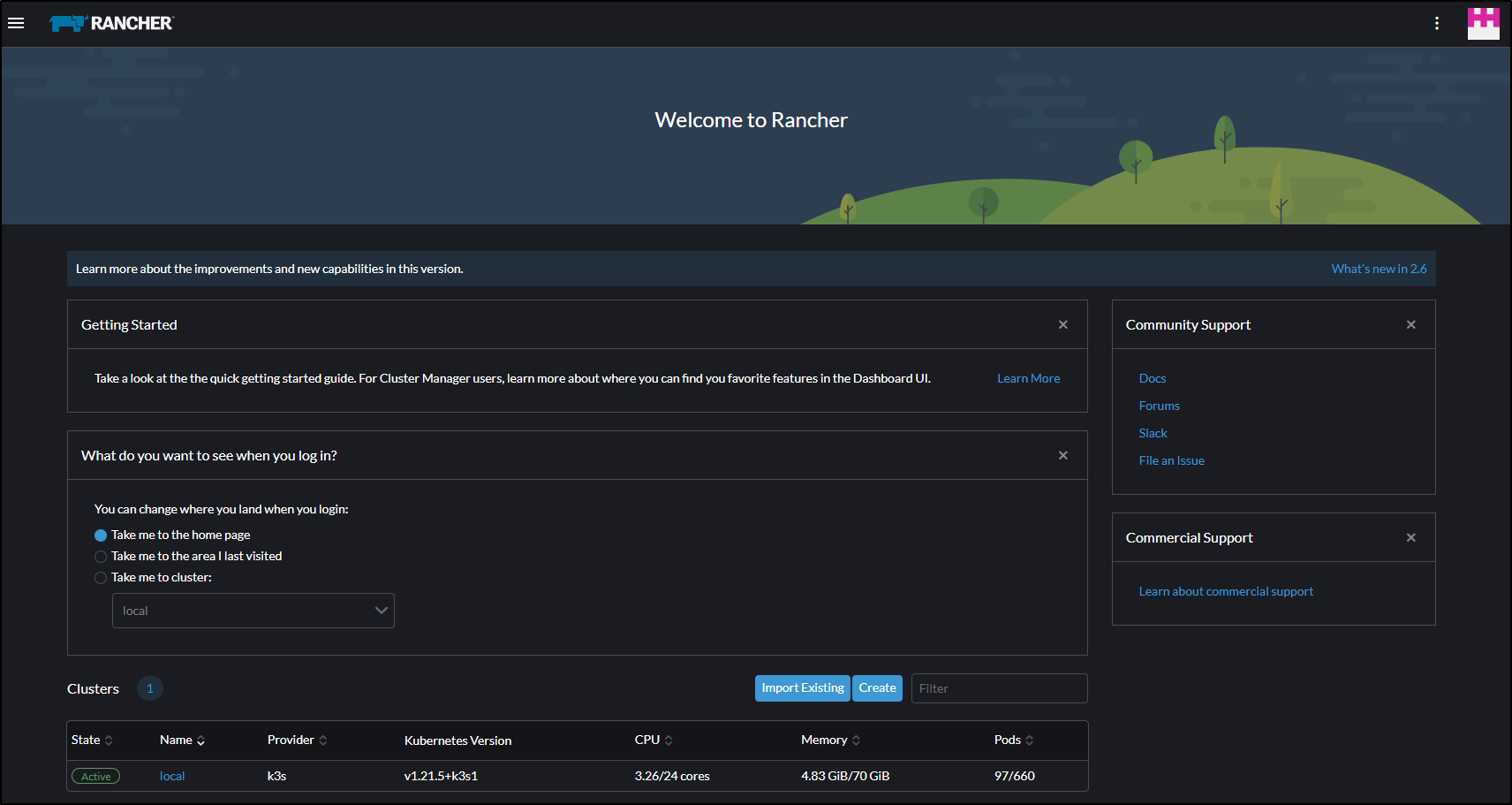

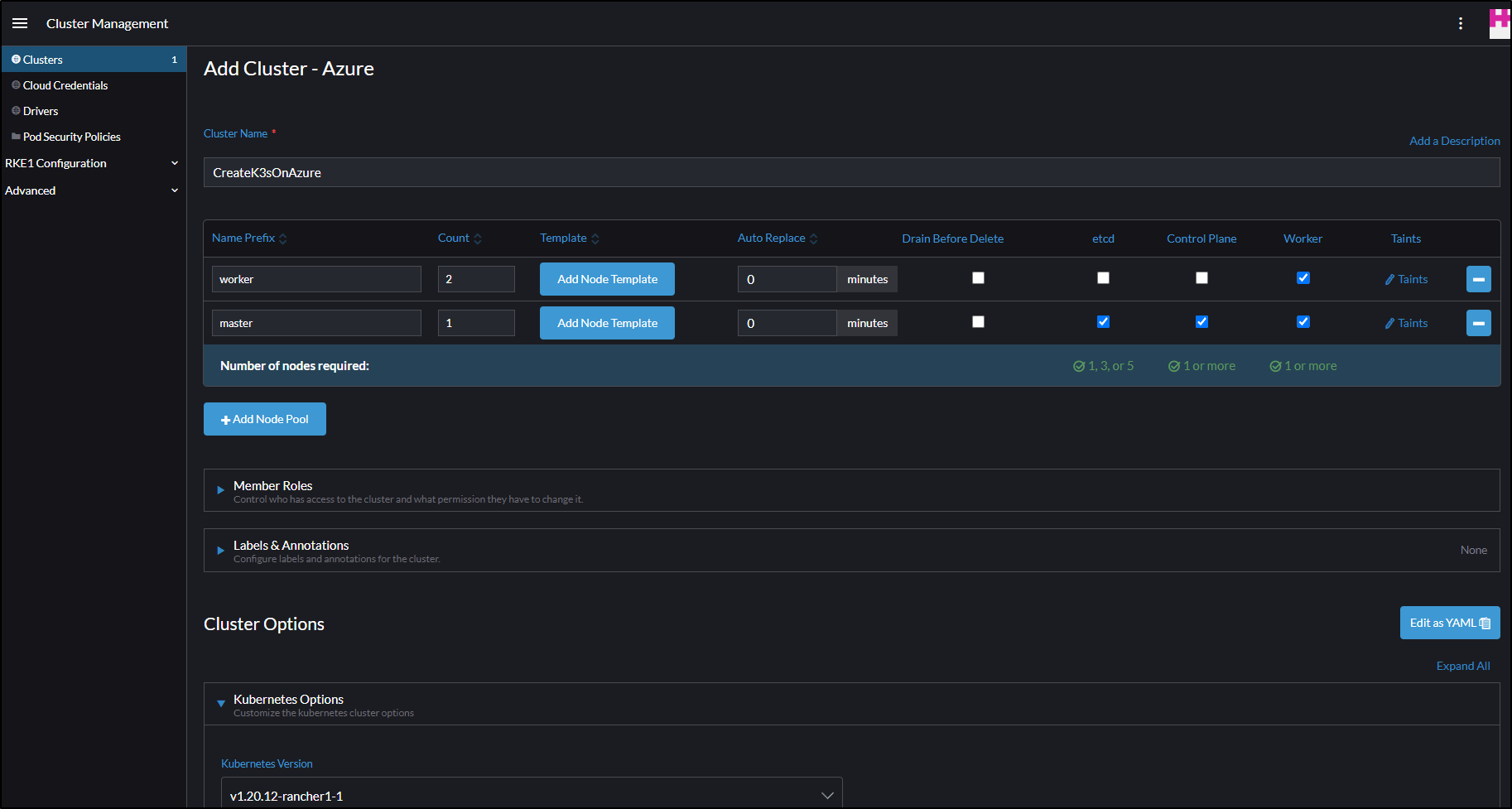

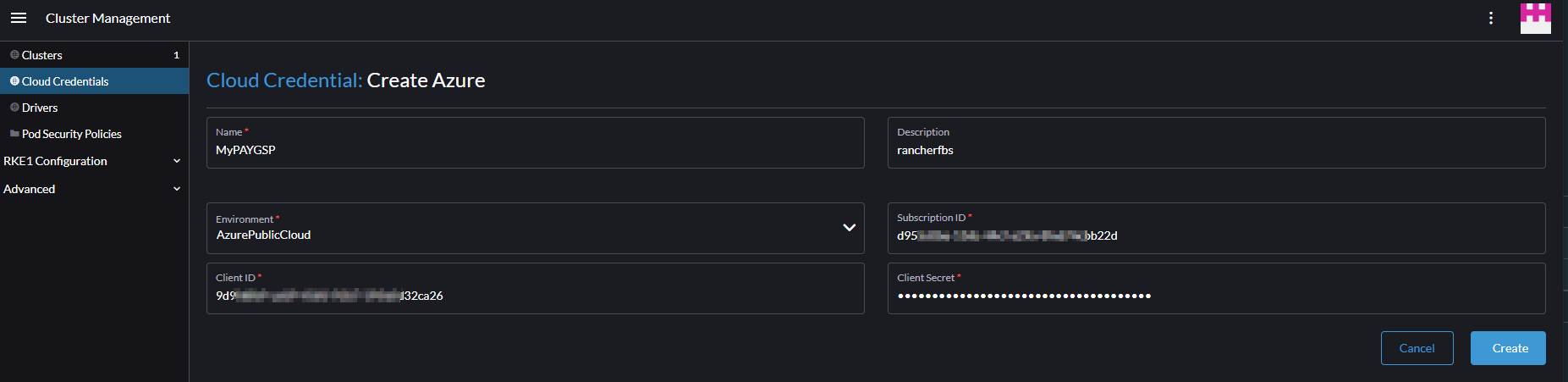

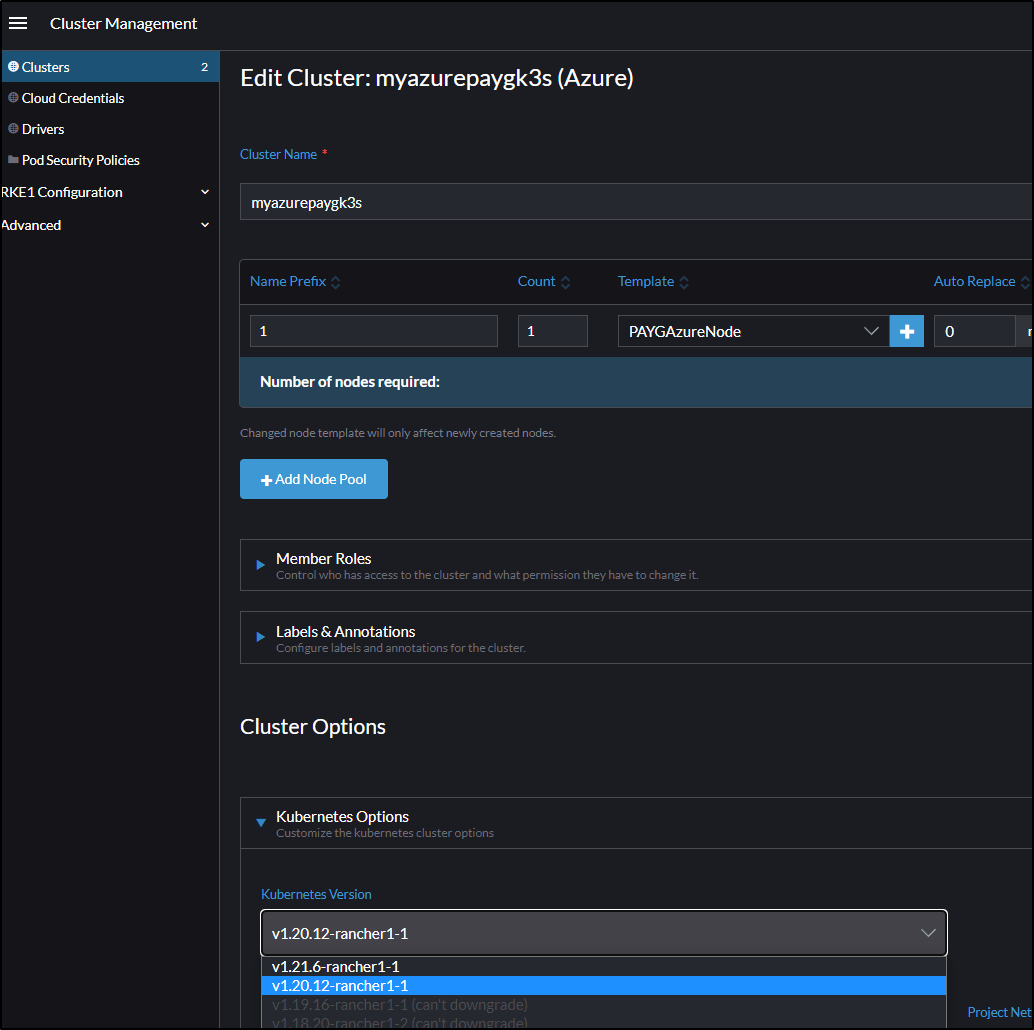

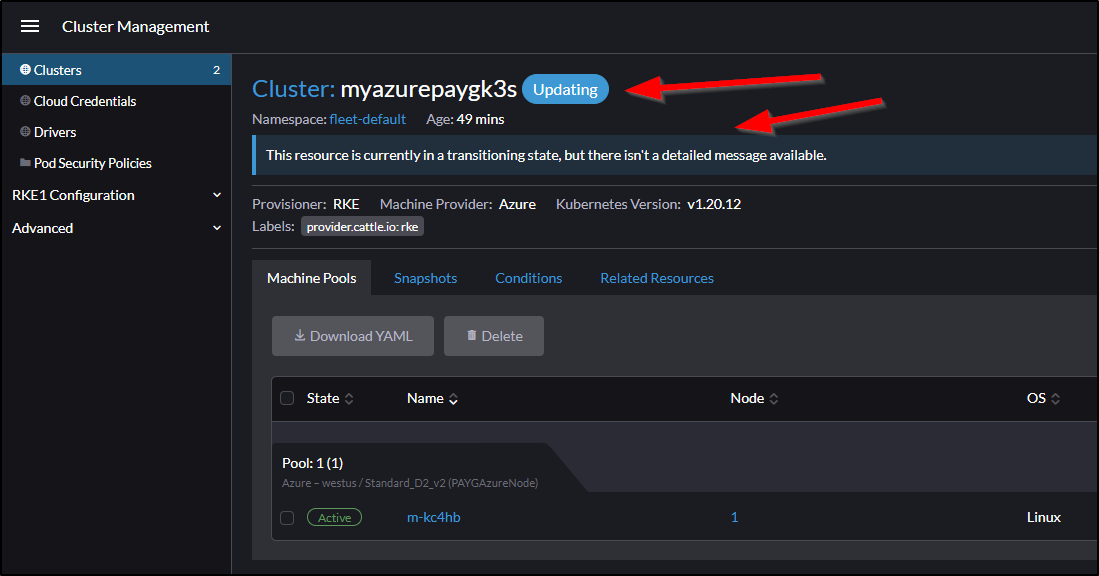

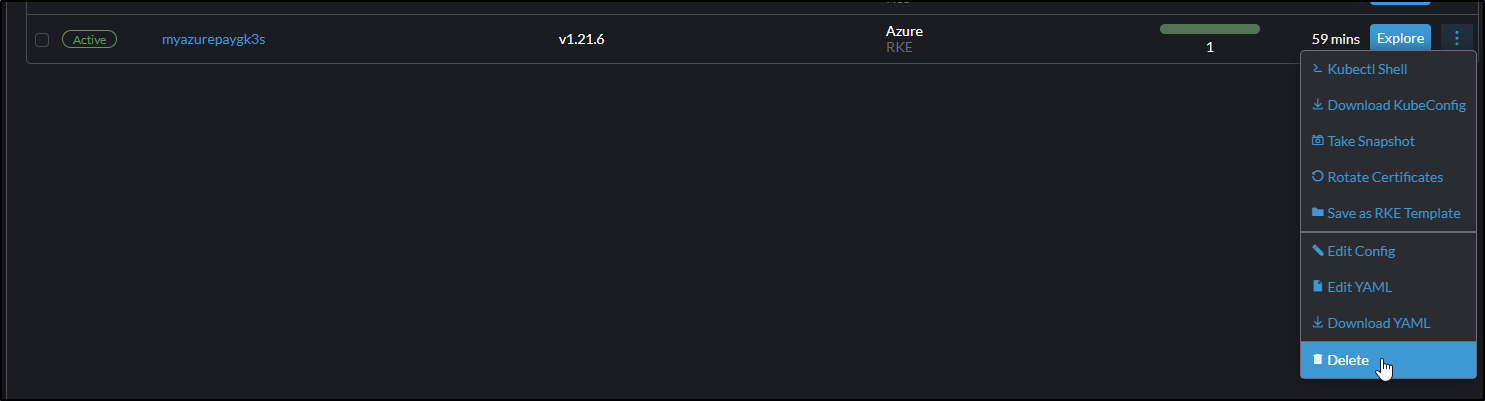

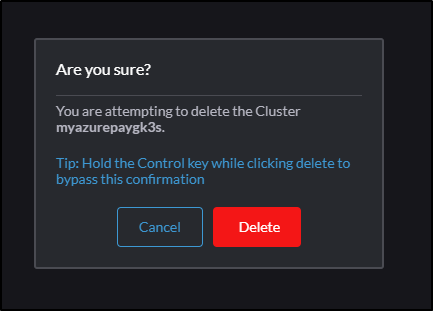

- Rancher to install and upgrade k3s in Azure

Upgrading K3s

The first upgrade is on my on-prem production cluster, which runs quite a lot of workloads. This k3s cluster is hosted on several physical machines networked together.

First, we need to set up the System Upgrade Controller on the cluster:

$ kubectl apply -f https://raw.githubusercontent.com/rancher/system-upgrade-controller/v0.8.0/manifests/system-upgrade-controller.yaml

Next, label the master node and every node we want to upgrade. The master node label should already exist, but the k3s-upgrade label will not.

# kubectl label node isaac-macbookair node-role.kubernetes.io/master=true

$ kubectl label node anna-macbookair isaac-macbookpro isaac-macbookair hp-hp-elitebook-850-g2 builder-hp-elitebook-850-g1 k3s-upgrade=true

Next, apply the upgrade plan:

$ cat UpgradePlan.yml
---
apiVersion: upgrade.cattle.io/v1
kind: Plan
metadata:
  name: k3s-server
  namespace: system-upgrade
  labels:
    k3s-upgrade: server
spec:
  concurrency: 1
  version: v1.21.5+k3s1
  nodeSelector:
    matchExpressions:
      - {key: k3s-upgrade, operator: Exists}
      - {key: k3s-upgrade, operator: NotIn, values: ["disabled", "false"]}
      - {key: k3s.io/hostname, operator: Exists}
      - {key: k3os.io/mode, operator: DoesNotExist}
      - {key: node-role.kubernetes.io/master, operator: In, values: ["true"]}
  serviceAccountName: system-upgrade
  cordon: true
  drain:
    force: true
  upgrade:
    image: rancher/k3s-upgrade
---
apiVersion: upgrade.cattle.io/v1
kind: Plan
metadata:
  name: k3s-agent
  namespace: system-upgrade
  labels:
    k3s-upgrade: agent
spec:
  concurrency: 3 # in general, this should be the number of workers - 1
  version: v1.21.5+k3s1
  nodeSelector:
    matchExpressions:
      - {key: k3s-upgrade, operator: Exists}
      - {key: k3s-upgrade, operator: NotIn, values: ["disabled", "false"]}
      - {key: k3s.io/hostname, operator: Exists}
      - {key: k3os.io/mode, operator: DoesNotExist}
      - {key: node-role.kubernetes.io/master, operator: NotIn, values: ["true"]}
  serviceAccountName: system-upgrade
  prepare:
    # Since v0.5.0-m1 SUC will use the resolved version of the plan for the tag on the prepare container.
    # image: rancher/k3s-upgrade:v1.17.4-k3s1
    image: rancher/k3s-upgrade
    args: ["prepare", "k3s-server"]
  drain:
    force: true
    skipWaitForDeleteTimeout: 60 # set this to prevent upgrades from hanging on small clusters since k8s v1.18
  upgrade:
    image: rancher/k3s-upgrade

$ kubectl apply -f UpgradePlan.yml

This should upgrade us from v1.19.5+k3s2 to v1.21.5+k3s1.
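The agent plan's comment suggests a concurrency of roughly the number of workers minus one. As a minimal sketch, assuming the default `kubectl get nodes` column layout (NAME, STATUS, ROLES, AGE, VERSION), the hypothetical helper below counts the nodes whose ROLES column does not mention `master` and prints that count minus one, never going below 1:

```shell
# Hypothetical helper (not part of k3s or the System Upgrade Controller):
# suggest a k3s-agent Plan concurrency of (workers - 1), with a floor of 1.
# Reads `kubectl get nodes` output on stdin; a header row is skipped, and a
# node counts as a worker when its ROLES column (field 3) lacks "master".
suggest_agent_concurrency() {
  awk '
    NR == 1 && $1 == "NAME" { next }
    $3 !~ /master/ { workers++ }
    END {
      c = workers - 1
      if (c < 1) c = 1
      print c
    }'
}
```

For example, `kubectl get nodes | suggest_agent_concurrency` on a cluster with one master and four workers (like the five nodes labeled above) prints `3`, the value used in the agent plan.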

Note: This is my oldest cluster; some nodes had been running for nearly a year. It hosts a lot, from Dapr to Datadog monitors to full-stack apps like Harbor. I worried the upgrade had failed because I saw no status updates, and after a few hours I assumed it was a no-go.

However, when I checked back after about 4 hours, it had migrated the nodes, and by the 5-hour mark the cluster was live again.

When the migration is complete, we see the jobs disappear:

$ kubectl get jobs -n system-upgrade

No resources found in system-upgrade namespace.

You may need to rotate pods so they pick up changes. In my case, because everything restarts, a stuck Redis master blocked the Redis slaves, which in turn blocked the Dapr sidecars (Dapr uses Redis for state management and message queues).

perl-subscriber-57778545dc-5qp99 1/2 CrashLoopBackOff 16 58m

node-subscriber-5c75bfc99d-h57s8 1/2 CrashLoopBackOff 18 52m

daprtweeter-deployment-68b49d6856-w9fnw 1/2 CrashLoopBackOff 18 58m

python-subscriber-7d7694f594-nr6dn 1/2 CrashLoopBackOff 16 52m

dapr-workflows-host-85d9d74777-h7tpk 1/2 CrashLoopBackOff 16 52m

echoapp-c56bfd446-94zzg 1/2 CrashLoopBackOff 17 52m

react-form-79c7989844-gpgzg 1/2 CrashLoopBackOff 15 52m

redis-master-0 1/1 Running 0 3m38s

redis-slave-0 1/1 Running 0 2m50s

redis-slave-1 1/1 Running 0 2m29s

nfs-client-provisioner-d54c468c4-r2969 1/1 Running 0 106s

nodeeventwatcher-deployment-6dddc4858c-b685p 0/2 ContainerCreating 0 93s

nodesqswatcher-deployment-67b65f645f-c5xhl 2/2 Running 0 38s

builder@DESKTOP-72D2D9T:~$ kubectl delete pod perl-subscriber-57778545dc-5qp99 && kubectl delete pod daprtweeter-deployment-68b49d6856-w9fnw

pod "perl-subscriber-57778545dc-5qp99" deleted

pod "daprtweeter-deployment-68b49d6856-w9fnw" deleted
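Deleting pods by hand works for a couple of stragglers. To restart every crash-looping pod in the current namespace at once, a minimal sketch (a hypothetical helper, assuming the default `kubectl get pods` layout with STATUS as the third column) filters the listing down to pod names:

```shell
# Hypothetical helper: print the names of pods whose STATUS column
# (field 3 of `kubectl get pods` output) is CrashLoopBackOff.
# The header row, if present, is skipped.
crashlooping_pods() {
  awk '
    NR == 1 && $1 == "NAME" { next }
    $3 == "CrashLoopBackOff" { print $1 }'
}
```

Then `kubectl get pods | crashlooping_pods | xargs -r kubectl delete pod` deletes them, and their Deployments or StatefulSets recreate the pods. `xargs -r` (do nothing on empty input) is a GNU extension, and the helper does not handle `--all-namespaces` output, which adds a NAMESPACE column.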

Now we see they are all up or coming up:

$ kubectl get pods

NAME READY STATUS RESTARTS AGE

svclb-my-release-ingress-nginx-controller-dgmgt 2/2 Running 2 150d

svclb-react-form-hkw8j 1/1 Running 1 193d

svclb-react-form-btvf7 1/1 Running 2 20d

datadogrelease-g5kqq 3/3 Running 0 6d13h

svclb-my-release-ingress-nginx-controller-2kbgb 0/2 Pending 0 76m

svclb-react-form-tbcvj 0/1 Pending 0 76m

svclb-my-release-ingress-nginx-controller-hw92x 0/2 Pending 0 76m

svclb-my-release-ingress-nginx-controller-z4qwg 0/2 Pending 0 76m

svclb-react-form-g486p 0/1 Pending 0 76m

vote-front-azure-vote-1608995981-588948447b-2cv6f 1/1 Running 0 75m

dapr-sidecar-injector-56b8954855-48dmd 1/1 Running 0 75m

dapr-placement-server-0 1/1 Running 0 75m

svclb-my-release-ingress-nginx-controller-hbtjx 2/2 Running 0 75m

svclb-react-form-cc9lg 1/1 Running 0 75m

otel-collector-67f645b9b7-w4flh 1/1 Running 0 75m

kubewatch-5d466cffc8-tf579 1/1 Running 0 60m

dapr-dashboard-6ff6f44778-tlssf 1/1 Running 0 60m

dapr-sentry-958fdd984-ts7tk 1/1 Running 0 60m

harbor-registry-harbor-notary-server-779c6bddd5-6rb94 1/1 Running 0 60m

vote-back-azure-vote-1608995981-5df9f78fd8-7vhk6 1/1 Running 0 60m

harbor-registry-harbor-exporter-655dd658bb-79nrj 1/1 Running 0 60m

datadogrelease-lzkqn 3/3 Running 0 4h3m

datadogrelease-p8zmz 3/3 Running 0 6d13h

cm-acme-http-solver-t94vj 1/1 Running 0 59m

harbor-registry-harbor-redis-0 1/1 Running 0 75m

harbor-registry-harbor-registry-86dbbfd48f-6gbmx 2/2 Running 0 75m

datadogrelease-s45zf 3/3 Running 3 5h32m

harbor-registry-harbor-core-7b4594d78d-rdfgw 1/1 Running 1 60m

harbor-registry-harbor-jobservice-95968c6d9-lgs6q 1/1 Running 0 75m

busybox-6c446876c6-7pzsx 1/1 Running 0 55m

docker-registry-6d9dc74c67-9h2k2 1/1 Running 0 54m

harbor-registry-harbor-portal-76bdcc7969-cnc8r 1/1 Running 0 54m

dapr-operator-7867c79bf9-5h2dv 1/1 Running 0 54m

mongo-x86-release-mongodb-7cb86d48f8-ks2f6 1/1 Running 0 54m

my-release-ingress-nginx-controller-7978f85c6f-467hr 1/1 Running 0 55m

datadogrelease-kube-state-metrics-6b5956746b-nm6kh 1/1 Running 0 54m

perl-debugger-5967f99ff6-lbtd2 1/1 Running 0 75m

datadogrelease-cluster-agent-6cf556df59-r56kd 1/1 Running 0 54m

datadogrelease-glxn2 3/3 Running 0 6d13h

harbor-registry-harbor-chartmuseum-559bd98f8f-42h4n 1/1 Running 0 55m

harbor-registry-harbor-trivy-0 1/1 Running 0 52m

harbor-registry-harbor-database-0 1/1 Running 0 52m

harbor-registry-harbor-notary-signer-c97648889-mnw7v 1/1 Running 6 54m

redis-master-0 1/1 Running 0 6m26s

redis-slave-0 1/1 Running 0 5m38s

redis-slave-1 1/1 Running 0 5m17s

nfs-client-provisioner-d54c468c4-r2969 1/1 Running 0 4m34s

nodesqswatcher-deployment-67b65f645f-c5xhl 2/2 Running 0 3m26s

nodeeventwatcher-deployment-6dddc4858c-b685p 2/2 Running 0 4m21s

node-subscriber-5c75bfc99d-h57s8 2/2 Running 19 54m

dapr-workflows-host-85d9d74777-h7tpk 2/2 Running 17 55m

python-subscriber-7d7694f594-nr6dn 2/2 Running 17 54m

perl-subscriber-57778545dc-tztsq 2/2 Running 0 108s

echoapp-c56bfd446-94zzg 2/2 Running 18 54m

react-form-79c7989844-gpgzg 2/2 Running 16 55m

daprtweeter-deployment-68b49d6856-k7tml 2/2 Running 0 67s

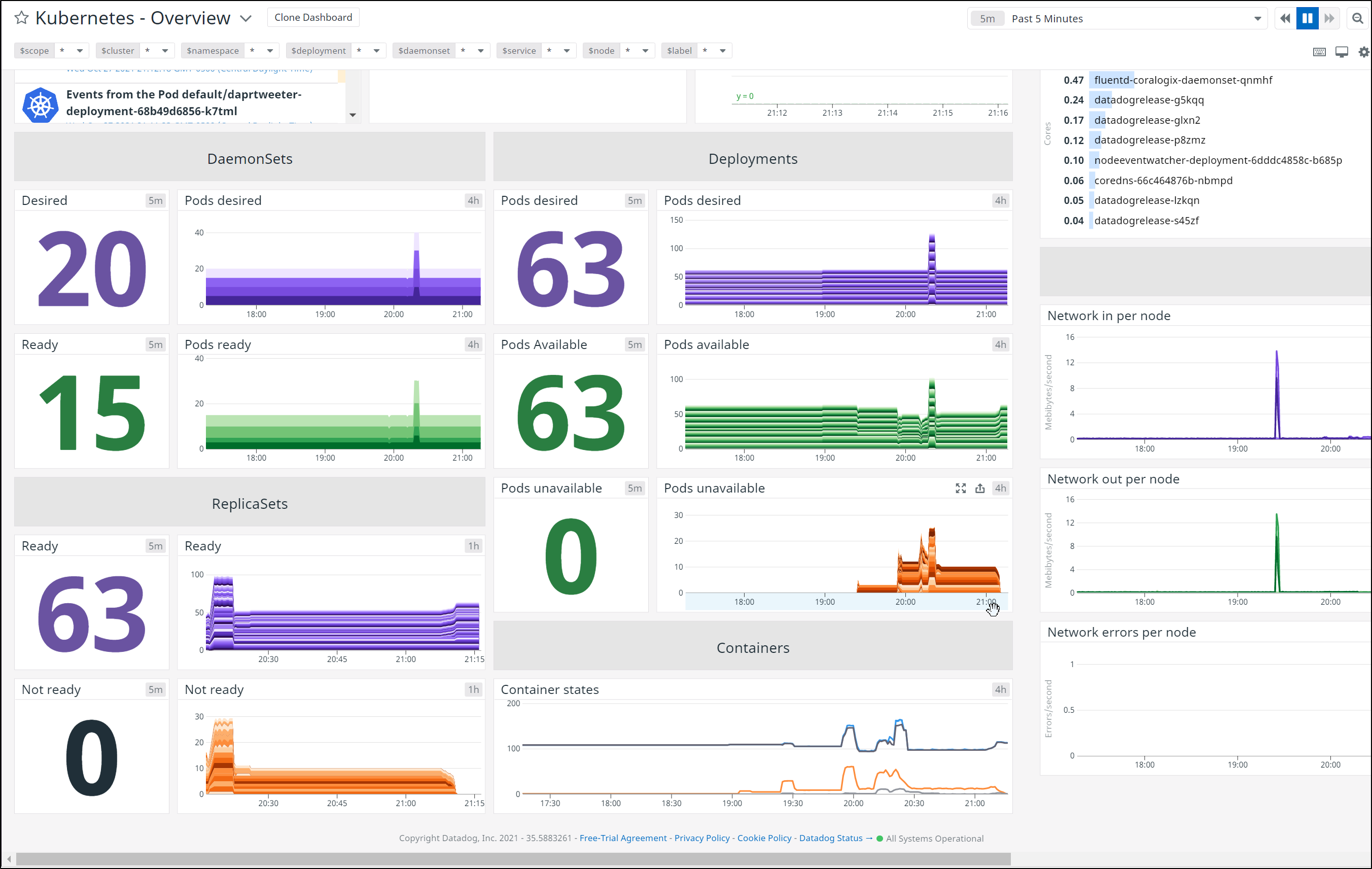

We can see results in the Datadog Kubernetes Dashboard:

Another example: a one-node K3s cluster

We can use the Upgrade Controller method on very small k3s clusters as well.

Here we have a very simple computer running a one-node k3s cluster:

builder@builder-HP-EliteBook-745-G5:~$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

builder-hp-elitebook-745-g5 Ready control-plane,master 45h v1.21.5+k3s1

builder@builder-HP-EliteBook-745-G5:~$ kubectl get pods

No resources found in default namespace.

builder@builder-HP-EliteBook-745-G5:~$ kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

traefik-5dd496474-8bbnv 1/1 Running 0 45h

metrics-server-86cbb8457f-qgq9w 1/1 Running 0 45h

local-path-provisioner-5ff76fc89d-j748k 1/1 Running 0 45h

svclb-traefik-58bb8 2/2 Running 0 45h

coredns-7448499f4d-szkpl 1/1 Running 0 45h

helm-install-traefik-t8ntj 0/1 Completed 0 45h

First, we'll look up the latest System Upgrade Controller release:

$ curl -s "https://api.github.com/repos/rancher/system-upgrade-controller/releases/latest" | awk -F '"' '/tag_name/{print $4}'

v0.8.0

Then apply:

$ kubectl apply -f https://raw.githubusercontent.com/rancher/system-upgrade-controller/v0.8.0/manifests/system-upgrade-controller.yaml

namespace/system-upgrade unchanged

serviceaccount/system-upgrade unchanged

clusterrolebinding.rbac.authorization.k8s.io/system-upgrade unchanged

configmap/default-controller-env unchanged

deployment.apps/system-upgrade-controller unchanged

Since we had already upgraded this cluster once, nothing changed.
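The release lookup and the install can be chained. A minimal sketch with two hypothetical helper functions: one pulls `tag_name` out of the GitHub API response using the same awk approach as the one-liner above (assuming the API's pretty-printed, one-key-per-line JSON), and the other builds the manifest URL in the same format as the `kubectl apply` command:

```shell
# Hypothetical helper: read a GitHub "latest release" JSON document on stdin
# and print the value of the first "tag_name" key. Splitting on '"' makes
# the value field 4 of a line like:   "tag_name": "v0.8.0",
latest_tag_from_json() {
  awk -F '"' '/"tag_name"/ { print $4; exit }'
}

# Hypothetical helper: build the System Upgrade Controller manifest URL
# for a given release tag, following the URL pattern used above.
suc_manifest_url() {
  printf 'https://raw.githubusercontent.com/rancher/system-upgrade-controller/%s/manifests/system-upgrade-controller.yaml\n' "$1"
}
```

Usage: `tag=$(curl -s https://api.github.com/repos/rancher/system-upgrade-controller/releases/latest | latest_tag_from_json)` followed by `kubectl apply -f "$(suc_manifest_url "$tag")"`.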

We can check the k3s releases page for the latest available version: https://github.com/k3s-io/k3s/releases

At the time of writing, v1.22.3-rc1+k3s1 had been published just the day before.

Since we still had the UpgradePlan.yaml file from last time, we can simply sed in the new version:

builder@builder-HP-EliteBook-745-G5:~$ sed -i 's/v1.21.5+k3s1/v1.22.3-rc1+k3s1/g' UpgradePlan.yaml

builder@builder-HP-EliteBook-745-G5:~$ cat UpgradePlan.yaml
---
apiVersion: upgrade.cattle.io/v1
kind: Plan
metadata:
  name: k3s-server
  namespace: system-upgrade
  labels:
    k3s-upgrade: server
spec:
  concurrency: 1
  version: v1.22.3-rc1+k3s1
  nodeSelector:
    matchExpressions:
      - {key: k3s-upgrade, operator: Exists}
      - {key: k3s-upgrade, operator: NotIn, values: ["disabled", "false"]}
      - {key: k3s.io/hostname, operator: Exists}
      - {key: k3os.io/mode, operator: DoesNotExist}
      - {key: node-role.kubernetes.io/master, operator: In, values: ["true"]}
  serviceAccountName: system-upgrade
  cordon: true
  # drain:
  #   force: true
  upgrade:
    image: rancher/k3s-upgrade
---
apiVersion: upgrade.cattle.io/v1
kind: Plan
metadata:
  name: k3s-agent
  namespace: system-upgrade
  labels:
    k3s-upgrade: agent
spec:
  concurrency: 2 # in general, this should be the number of workers - 1
  version: v1.22.3-rc1+k3s1
  nodeSelector:
    matchExpressions:
      - {key: k3s-upgrade, operator: Exists}
      - {key: k3s-upgrade, operator: NotIn, values: ["disabled", "false"]}
      - {key: k3s.io/hostname, operator: Exists}
      - {key: k3os.io/mode, operator: DoesNotExist}
      - {key: node-role.kubernetes.io/master, operator: NotIn, values: ["true"]}
  serviceAccountName: system-upgrade
  prepare:
    # Since v0.5.0-m1 SUC will use the resolved version of the plan for the tag on the prepare container.
    # image: rancher/k3s-upgrade:v1.17.4-k3s1
    image: rancher/k3s-upgrade
    args: ["prepare", "k3s-server"]
  drain:
    force: true
    skipWaitForDeleteTimeout: 60 # set this to prevent upgrades from hanging on small clusters since k8s v1.18
  upgrade:
    image: rancher/k3s-upgrade
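One caveat with the sed approach: if the old version string isn't in the file, `sed -i` still succeeds silently and the plan never changes. A minimal sketch of a safer hypothetical helper, assuming GNU sed (for `-i` without a suffix argument), checks for the old version first and escapes the dots so they match literally:

```shell
# Hypothetical helper: replace one k3s version with another in a Plan file.
# Returns 1 instead of silently doing nothing when the old version is absent.
# Assumes GNU sed for `-i`, and version strings without '/' or '&'.
bump_plan_version() (
  file=$1 old=$2 new=$3
  if ! grep -qF -- "$old" "$file"; then
    echo "version $old not found in $file" >&2
    exit 1
  fi
  # Escape '.' so it matches literally; '+' is already literal in sed's
  # basic regular expressions.
  old_re=$(printf '%s\n' "$old" | sed 's/[.]/\\./g')
  sed -i "s/${old_re}/${new}/g" "$file"
)
```

Usage: `bump_plan_version UpgradePlan.yaml v1.21.5+k3s1 v1.22.3-rc1+k3s1 && kubectl apply -f UpgradePlan.yaml`.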

Because we're interested in how the upgrade handles deprecations, let's look at the Major Themes and Major Changes in 1.22: https://kubernetes.io/blog/2021/08/04/kubernetes-1-22-release-announcement/

Notably:

A number of deprecated beta APIs have been removed in 1.22 in favor of the GA version of those same APIs. All existing objects can be interacted with via stable APIs. This removal includes beta versions of the Ingress, IngressClass, Lease, APIService, ValidatingWebhookConfiguration, MutatingWebhookConfiguration, CustomResourceDefinition, TokenReview, SubjectAccessReview, and CertificateSigningRequest APIs.
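Before upgrading, it can help to search your own manifests for the API versions listed above. A rough sketch of a hypothetical pre-upgrade check: the group list comes from the release note above, plus `extensions/v1beta1`, whose Ingress was also removed in 1.22. It only matches unquoted `apiVersion:` lines and is not an exhaustive deprecation check:

```shell
# Hypothetical pre-upgrade check: list manifest lines (file:line:text) that
# use a beta API group/version removed in Kubernetes 1.22, per the release
# note above. Accepts files and/or directories (searched recursively).
# Exit status follows grep: 0 if any match was found, 1 if none.
removed_in_1_22() {
  grep -rnHE '^[[:space:]]*(-[[:space:]]+)?apiVersion:[[:space:]]*(extensions|networking\.k8s\.io|coordination\.k8s\.io|apiregistration\.k8s\.io|admissionregistration\.k8s\.io|apiextensions\.k8s\.io|authentication\.k8s\.io|authorization\.k8s\.io|certificates\.k8s\.io)/v1beta1[[:space:]]*$' "$@"
}
```

For example, `removed_in_1_22 testIngress.yaml` would flag the `networking.k8s.io/v1beta1` Ingress used below. As the rest of this post shows, objects already stored in the cluster are served at the newer version after the upgrade; it is manifests you re-apply later that would fail.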

How Upgrades handle Deprecations

To see how the upgrade handles this, let's test one of those changes: the removal of the v1beta1 Ingress API.

First, we can install the NGINX Ingress Controller. This is optional, since k3s ships with Traefik as its default ingress controller:

builder@builder-HP-EliteBook-745-G5:~$ helm repo add nginx-stable https://helm.nginx.com/stable

"nginx-stable" has been added to your repositories

builder@builder-HP-EliteBook-745-G5:~$ helm repo update

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "nginx-stable" chart repository

Update Complete. ⎈Happy Helming!⎈

builder@builder-HP-EliteBook-745-G5:~$ export KUBECONFIG=/etc/rancher/k3s/k3s.yaml

builder@builder-HP-EliteBook-745-G5:~$ helm install my-release nginx-stable/nginx-ingress

WARNING: Kubernetes configuration file is group-readable. This is insecure. Location: /etc/rancher/k3s/k3s.yaml

WARNING: Kubernetes configuration file is world-readable. This is insecure. Location: /etc/rancher/k3s/k3s.yaml

NAME: my-release

LAST DEPLOYED: Fri Oct 29 16:45:53 2021

NAMESPACE: default

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

The NGINX Ingress Controller has been installed.

As mentioned, we don't need it yet, since Traefik is already handling our ingress. Note that the NGINX LoadBalancer service is still waiting for an external IP:

$ kubectl get svc --all-namespaces

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

default kubernetes ClusterIP 10.43.0.1 <none> 443/TCP 2d1h

kube-system kube-dns ClusterIP 10.43.0.10 <none> 53/UDP,53/TCP,9153/TCP 2d1h

kube-system metrics-server ClusterIP 10.43.106.155 <none> 443/TCP 2d1h

kube-system traefik-prometheus ClusterIP 10.43.97.166 <none> 9100/TCP 2d1h

kube-system traefik LoadBalancer 10.43.222.161 192.168.1.159 80:31400/TCP,443:31099/TCP 2d1h

default my-release-nginx-ingress LoadBalancer 10.43.141.8 <pending> 80:31933/TCP,443:32383/TCP 3h18m

default nginx-service ClusterIP 10.43.174.8 <none> 80/TCP 3m9s

Next, let’s deploy a basic Nginx container. This is just a simple webserver.

$ cat deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  selector:
    matchLabels:
      app: nginx
  replicas: 2 # tells deployment to run 2 pods matching the template
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.14.2
        ports:
        - containerPort: 80

$ kubectl create -f https://k8s.io/examples/application/deployment.yaml

deployment.apps/nginx-deployment created

To expose the pods via an Ingress, we need a Service that selects them:

$ cat service.yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx-service
spec:
  selector:
    app: nginx
  ports:
    - protocol: TCP
      port: 80
      targetPort: 80

$ kubectl apply -f service.yaml

service/nginx-service created

Lastly, let’s apply the Ingress:

$ cat testIngress.yaml
---
apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: ingress-v1betatest
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /
spec:
  rules:
  - http:
      paths:
      - path: /path1
        backend:
          serviceName: nginx-service
          servicePort: 80

$ kubectl apply -f testIngress.yaml

$ kubectl get ingress

NAME CLASS HOSTS ADDRESS PORTS AGE

ingress-v1betatest <none> * 192.168.1.159 80 6s

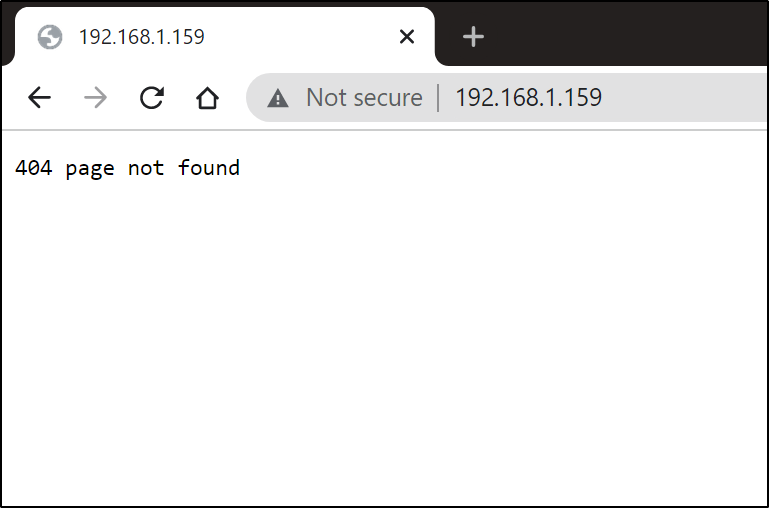

We can see Traefik respond, then a basic NGINX response using /path1.

After applying the upgrade plan, we can first check that the node version has not changed yet:

NAME STATUS ROLES AGE VERSION

builder-hp-elitebook-745-g5 Ready control-plane,master 2d1h v1.21.5+k3s1

and that the pods are still running:

builder@builder-HP-EliteBook-745-G5:~$ kubectl get pods

NAME READY STATUS RESTARTS AGE

svclb-my-release-nginx-ingress-h6thm 0/2 Pending 0 3h22m

my-release-nginx-ingress-865865d656-gtkzq 1/1 Running 0 3h22m

nginx-deployment-66b6c48dd5-pcfbg 1/1 Running 0 3h16m

nginx-deployment-66b6c48dd5-bfqb2 1/1 Running 0 3h16m

builder@builder-HP-EliteBook-745-G5:~$ kubectl get jobs --all-namespaces

NAMESPACE NAME COMPLETIONS DURATION AGE

kube-system helm-install-traefik 1/1 19s 2d1h

system-upgrade apply-k3s-server-on-builder-hp-elitebook-745-g5-with-1510-6488b 0/1 32s 32s

We can check the status of the upgrade job using describe:

builder@builder-HP-EliteBook-745-G5:~$ kubectl describe jobs apply-k3s-server-on-builder-hp-elitebook-745-g5-with-1510-6488b -n system-upgrade

Name: apply-k3s-server-on-builder-hp-elitebook-745-g5-with-1510-6488b

Namespace: system-upgrade

Selector: controller-uid=c79b144d-9a55-44cc-bda5-1528363514c8

Labels: objectset.rio.cattle.io/hash=9f2ac612f7e407418de10c3f7b09695f3acb1dfa

plan.upgrade.cattle.io/k3s-server=151067306dc1d8a648f906540282f652b2e40f94a4d7a0f0d13b3ebc

upgrade.cattle.io/controller=system-upgrade-controller

upgrade.cattle.io/node=builder-hp-elitebook-745-g5

upgrade.cattle.io/plan=k3s-server

upgrade.cattle.io/version=v1.22.3-rc1-k3s1

Annotations: objectset.rio.cattle.io/applied:

H4sIAAAAAAAA/+xXUW/iRhD+K9U828QGQsBSH2jgeujuCDpyrU6nKFrvjmHLetfdHZOgyP+9WkM4kxCSa58qnSIFbO98M9/MfMz4AXIkJhgxSB5AsxwhAVYUahOuOi50aNdoQ6PDtJ...

objectset.rio.cattle.io/id: system-upgrade-controller

objectset.rio.cattle.io/owner-gvk: upgrade.cattle.io/v1, Kind=Plan

objectset.rio.cattle.io/owner-name: k3s-server

objectset.rio.cattle.io/owner-namespace: system-upgrade

upgrade.cattle.io/ttl-seconds-after-finished: 900

Parallelism: 1

Completions: 1

Start Time: Fri, 29 Oct 2021 20:07:53 -0500

Active Deadline Seconds: 900s

Pods Statuses: 0 Running / 0 Succeeded / 0 Failed

Pod Template:

Labels: controller-uid=c79b144d-9a55-44cc-bda5-1528363514c8

job-name=apply-k3s-server-on-builder-hp-elitebook-745-g5-with-1510-6488b

plan.upgrade.cattle.io/k3s-server=151067306dc1d8a648f906540282f652b2e40f94a4d7a0f0d13b3ebc

upgrade.cattle.io/controller=system-upgrade-controller

upgrade.cattle.io/node=builder-hp-elitebook-745-g5

upgrade.cattle.io/plan=k3s-server

upgrade.cattle.io/version=v1.22.3-rc1-k3s1

Service Account: system-upgrade

Init Containers:

cordon:

Image: rancher/kubectl:v1.18.20

Port: <none>

Host Port: <none>

Args:

cordon

builder-hp-elitebook-745-g5

Environment:

SYSTEM_UPGRADE_NODE_NAME: (v1:spec.nodeName)

SYSTEM_UPGRADE_POD_NAME: (v1:metadata.name)

SYSTEM_UPGRADE_POD_UID: (v1:metadata.uid)

SYSTEM_UPGRADE_PLAN_NAME: k3s-server

SYSTEM_UPGRADE_PLAN_LATEST_HASH: 151067306dc1d8a648f906540282f652b2e40f94a4d7a0f0d13b3ebc

SYSTEM_UPGRADE_PLAN_LATEST_VERSION: v1.22.3-rc1-k3s1

Mounts:

/host from host-root (rw)

/run/system-upgrade/pod from pod-info (ro)

Containers:

upgrade:

Image: rancher/k3s-upgrade:v1.22.3-rc1-k3s1

Port: <none>

Host Port: <none>

Environment:

SYSTEM_UPGRADE_NODE_NAME: (v1:spec.nodeName)

SYSTEM_UPGRADE_POD_NAME: (v1:metadata.name)

SYSTEM_UPGRADE_POD_UID: (v1:metadata.uid)

SYSTEM_UPGRADE_PLAN_NAME: k3s-server

SYSTEM_UPGRADE_PLAN_LATEST_HASH: 151067306dc1d8a648f906540282f652b2e40f94a4d7a0f0d13b3ebc

SYSTEM_UPGRADE_PLAN_LATEST_VERSION: v1.22.3-rc1-k3s1

Mounts:

/host from host-root (rw)

/run/system-upgrade/pod from pod-info (ro)

Volumes:

host-root:

Type: HostPath (bare host directory volume)

Path: /

HostPathType: Directory

pod-info:

Type: DownwardAPI (a volume populated by information about the pod)

Items:

metadata.labels -> labels

metadata.annotations -> annotations

Events: <none>
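Note that the plan's version `v1.22.3-rc1+k3s1` appears on the Job as the image tag `v1.22.3-rc1-k3s1`: container image tags may not contain `+`, so the `+` becomes `-`. To confirm an upgrade image exists before applying a plan (useful for a brand-new RC), a small sketch of that mapping follows; it is a hypothetical helper that mirrors what the describe output shows, not the controller's actual code:

```shell
# Hypothetical helper: map a k3s version (which contains '+') to the
# rancher/k3s-upgrade image tag seen in the Job above, where '+' is '-'.
k3s_image_tag() {
  printf '%s\n' "$1" | tr '+' '-'
}
```

For example, `docker pull "rancher/k3s-upgrade:$(k3s_image_tag v1.22.3-rc1+k3s1)"` fails fast if no such tag has been published.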

builder@builder-HP-EliteBook-745-G5:~$ kubectl get jobs apply-k3s-server-on-builder-hp-elitebook-745-g5-with-1510-6488b -n system-upgrade

NAME COMPLETIONS DURATION AGE

apply-k3s-server-on-builder-hp-elitebook-745-g5-with-1510-6488b 0/1 93s 93s

builder@builder-HP-EliteBook-745-G5:~$ kubectl get jobs apply-k3s-server-on-builder-hp-elitebook-745-g5-with-1510-6488b -n system-upgrade -o yaml

apiVersion: batch/v1

kind: Job

metadata:

annotations:

objectset.rio.cattle.io/applied: H4sIAAAAAAAA/+xXUW/iRhD+K9U828QGQsBSH2jgeujuCDpyrU6nKFrvjmHLetfdHZOgyP+9WkM4kxCSa58qnSIFbO98M9/MfMz4AXIkJhgxSB5AsxwhAVYUahOuOi50aNdoQ6PDtJRKoA2XRYhKEqbGrMKL7nm4OA/vJC3D+DyOwl63308hqIFcwbhHcxtHmIdlsbBMIATALTKSRl/LHB2xvIBEl0oFoFiKyvlATPoXcnJILStNizMihS1pzpbMLSGBQdZmvBe3swvsRhfduC8wjngnu0ijQW9wnnUYT2ORMQigUEy3dr4bQN/JQQI+9N5FJ+oJHos+63X72SDqnXejdr+d9c7baRu7UTbosq64YFEWibiTdjDlEMBzZG40WaNUjXzIPWw8O2aqjfAJO5Hqo2aeIiTQoHTs1Bqtk8YfXMetdrvVCS2PfZFjqAJgWhuqq3Iy/1K8QuolQ3On0YaL9QqSY7HFwS8fpBa/zjyV11B2XXrA93WLF9vxeThEKnTIjRYuZBmhDTOppVuiZz+IIqiqAFyB3KeKcZJrHCETSmqcb80gGURRACnjK5NlH2UuCZLBIADCvFCM0Fs2lfcGTfzs5Dd0cqMwa6PKHB0k3/Y/bEvjKLTGEAT19xmjZZ3a+hPOIADaFP7kSFrkZOzGQ+7tCyNCqTMDAQhzp++YFcPZxCNIwnzraoe1q1sAmUQlPmPmT9Xft0731W/tTtZ+dsZNNb4BoXm8qm6q6iYAqSVdGk1MarQHSeDGCuNVJnO28Dcs03yJ9mxVpshJJeu4Ffdb7QgCYHbhbb/bnKroTQCo101X86/z6/Gn2y+z3z8PR+Pb6ZX/N/w0hgDWTJX4zpp8z+oIQ1/Klu+lqcermqV4Aj27Gv0Q8j53+i3IXyajHwYupTiN+3E4PQj5sPFfMfw4vB7Pr2/fD+fvG/b/Wv5v8/bH+PN8cjVtOHwuwJsALDpTWu6l91AFOx1+MqWmF8WY+6e7DJ75B3BcdRaZuNJqAwnZEp/Y2VKfHf5MnRVG1CHVrT4rlZoZJfkGEhiqO7Zx9UN+VCbfB8QznXTco4PkWQJ+auCnBv5nGgjAIS+tpI0fGHhP9ULCCpZKJUnWHIAJ4QfB5XB2O/86v/3t6uoabqoACivXUuHC70Y+nmrHnpilvaMpbme50G5/71KVjtC+k9bRn5KW742jKVIdjV1LjkPOPbPpNgHP1rbl9vydsavHTNQjfTJqXk5ml4+XLPNbHG3qFx0jcNi4tvh3KS2KUWmlXsz5EkWppF5MFtrsb4/vkZdUbx5bhDmqekm4Rrsb/jkjvhzfFxad2+7S3x5ghZ6vn65WI6GrX2SMo7q0AZgCLSPjl6yJfuyreuqeHLaV//MFMGKoSf4XNt8etuvKI596OX2ZyQt72ykmDVH7sAMgUxhlFpsPp3KzJUhGedgnQfj8tw7tSu22VFmqniR2fC8d+U7HLENOviXNLi97N6R2y/swq/vyceMfRJHfKolRWau5+icAAP//fDy55DQPAAA

objectset.rio.cattle.io/id: system-upgrade-controller

objectset.rio.cattle.io/owner-gvk: upgrade.cattle.io/v1, Kind=Plan

objectset.rio.cattle.io/owner-name: k3s-server

objectset.rio.cattle.io/owner-namespace: system-upgrade

upgrade.cattle.io/ttl-seconds-after-finished: "900"

creationTimestamp: "2021-10-30T01:07:53Z"

labels:

objectset.rio.cattle.io/hash: 9f2ac612f7e407418de10c3f7b09695f3acb1dfa

plan.upgrade.cattle.io/k3s-server: 151067306dc1d8a648f906540282f652b2e40f94a4d7a0f0d13b3ebc

upgrade.cattle.io/controller: system-upgrade-controller

upgrade.cattle.io/node: builder-hp-elitebook-745-g5

upgrade.cattle.io/plan: k3s-server

upgrade.cattle.io/version: v1.22.3-rc1-k3s1

name: apply-k3s-server-on-builder-hp-elitebook-745-g5-with-1510-6488b

namespace: system-upgrade

resourceVersion: "127447"

uid: c79b144d-9a55-44cc-bda5-1528363514c8

spec:

activeDeadlineSeconds: 900

backoffLimit: 99

completions: 1

parallelism: 1

selector:

matchLabels:

controller-uid: c79b144d-9a55-44cc-bda5-1528363514c8

template:

metadata:

creationTimestamp: null

labels:

controller-uid: c79b144d-9a55-44cc-bda5-1528363514c8

job-name: apply-k3s-server-on-builder-hp-elitebook-745-g5-with-1510-6488b

plan.upgrade.cattle.io/k3s-server: 151067306dc1d8a648f906540282f652b2e40f94a4d7a0f0d13b3ebc

upgrade.cattle.io/controller: system-upgrade-controller

upgrade.cattle.io/node: builder-hp-elitebook-745-g5

upgrade.cattle.io/plan: k3s-server

upgrade.cattle.io/version: v1.22.3-rc1-k3s1

spec:

affinity:

nodeAffinity:

requiredDuringSchedulingIgnoredDuringExecution:

nodeSelectorTerms:

- matchExpressions:

- key: kubernetes.io/hostname

operator: In

values:

- builder-hp-elitebook-745-g5

podAntiAffinity:

requiredDuringSchedulingIgnoredDuringExecution:

- labelSelector:

matchExpressions:

- key: upgrade.cattle.io/plan

operator: In

values:

- k3s-server

topologyKey: kubernetes.io/hostname

containers:

- env:

- name: SYSTEM_UPGRADE_NODE_NAME

valueFrom:

fieldRef:

apiVersion: v1

fieldPath: spec.nodeName

- name: SYSTEM_UPGRADE_POD_NAME

valueFrom:

fieldRef:

apiVersion: v1

fieldPath: metadata.name

- name: SYSTEM_UPGRADE_POD_UID

valueFrom:

fieldRef:

apiVersion: v1

fieldPath: metadata.uid

- name: SYSTEM_UPGRADE_PLAN_NAME

value: k3s-server

- name: SYSTEM_UPGRADE_PLAN_LATEST_HASH

value: 151067306dc1d8a648f906540282f652b2e40f94a4d7a0f0d13b3ebc

- name: SYSTEM_UPGRADE_PLAN_LATEST_VERSION

value: v1.22.3-rc1-k3s1

image: rancher/k3s-upgrade:v1.22.3-rc1-k3s1

imagePullPolicy: Always

name: upgrade

resources: {}

securityContext:

capabilities:

add:

- CAP_SYS_BOOT

privileged: true

terminationMessagePath: /dev/termination-log

terminationMessagePolicy: File

volumeMounts:

- mountPath: /host

name: host-root

- mountPath: /run/system-upgrade/pod

name: pod-info

readOnly: true

dnsPolicy: ClusterFirstWithHostNet

hostIPC: true

hostNetwork: true

hostPID: true

initContainers:

- args:

- cordon

- builder-hp-elitebook-745-g5

env:

- name: SYSTEM_UPGRADE_NODE_NAME

valueFrom:

fieldRef:

apiVersion: v1

fieldPath: spec.nodeName

- name: SYSTEM_UPGRADE_POD_NAME

valueFrom:

fieldRef:

apiVersion: v1

fieldPath: metadata.name

- name: SYSTEM_UPGRADE_POD_UID

valueFrom:

fieldRef:

apiVersion: v1

fieldPath: metadata.uid

- name: SYSTEM_UPGRADE_PLAN_NAME

value: k3s-server

- name: SYSTEM_UPGRADE_PLAN_LATEST_HASH

value: 151067306dc1d8a648f906540282f652b2e40f94a4d7a0f0d13b3ebc

- name: SYSTEM_UPGRADE_PLAN_LATEST_VERSION

value: v1.22.3-rc1-k3s1

image: rancher/kubectl:v1.18.20

imagePullPolicy: Always

name: cordon

resources: {}

terminationMessagePath: /dev/termination-log

terminationMessagePolicy: File

volumeMounts:

- mountPath: /host

name: host-root

- mountPath: /run/system-upgrade/pod

name: pod-info

readOnly: true

restartPolicy: Never

schedulerName: default-scheduler

securityContext: {}

serviceAccount: system-upgrade

serviceAccountName: system-upgrade

terminationGracePeriodSeconds: 30

tolerations:

- effect: NoSchedule

key: node.kubernetes.io/unschedulable

operator: Exists

volumes:

- hostPath:

path: /

type: Directory

name: host-root

- downwardAPI:

defaultMode: 420

items:

- fieldRef:

apiVersion: v1

fieldPath: metadata.labels

path: labels

- fieldRef:

apiVersion: v1

fieldPath: metadata.annotations

path: annotations

name: pod-info

ttlSecondsAfterFinished: 900

status:

startTime: "2021-10-30T01:07:53Z"

As the process runs, we can keep checking the status of the job and the node:

builder@builder-HP-EliteBook-745-G5:~$ kubectl get jobs apply-k3s-server-on-builder-hp-elitebook-745-g5-with-1510-6488b -n system-upgrade

NAME COMPLETIONS DURATION AGE

apply-k3s-server-on-builder-hp-elitebook-745-g5-with-1510-6488b 0/1 105s 105s

builder@builder-HP-EliteBook-745-G5:~$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

builder-hp-elitebook-745-g5 Ready control-plane,master 2d1h v1.21.5+k3s1

builder@builder-HP-EliteBook-745-G5:~$ kubectl get jobs --all-namespaces

NAMESPACE NAME COMPLETIONS DURATION AGE

kube-system helm-install-traefik 1/1 19s 2d1h

system-upgrade apply-k3s-server-on-builder-hp-elitebook-745-g5-with-1510-6488b 0/1 4m55s 4m55s

NAMESPACE NAME COMPLETIONS DURATION AGE

kube-system helm-install-traefik 1/1 19s 2d1h

system-upgrade apply-k3s-server-on-builder-hp-elitebook-745-g5-with-1510-6488b 0/1 9m45s 9m45s

builder@builder-HP-EliteBook-745-G5:~$ kubectl get jobs --all-namespaces

NAMESPACE NAME COMPLETIONS DURATION AGE

kube-system helm-install-traefik 1/1 19s 2d1h

system-upgrade apply-k3s-server-on-builder-hp-elitebook-745-g5-with-1510-6488b 0/1 22m 22m

builder@builder-HP-EliteBook-745-G5:~$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

builder-hp-elitebook-745-g5 Ready control-plane,master 2d1h v1.21.5+k3s1
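Rather than re-running these commands by hand (the larger cluster earlier took hours), the checks could be wrapped in a polling loop. A minimal sketch, using a hypothetical `wait_for` helper that re-runs any command until it succeeds or a timeout in seconds expires (it assumes `date +%s`, which GNU and BSD date both support):

```shell
# Hypothetical helper: wait_for LIMIT_SECONDS INTERVAL_SECONDS COMMAND [ARGS...]
# Re-runs COMMAND every INTERVAL seconds until it exits 0 (returns 0) or
# LIMIT seconds have elapsed (prints a message to stderr and returns 1).
wait_for() (
  limit=$1 interval=$2
  shift 2
  deadline=$(( $(date +%s) + limit ))
  until "$@"; do
    if [ "$(date +%s)" -ge "$deadline" ]; then
      echo "timed out after ${limit}s waiting for: $*" >&2
      exit 1
    fi
    sleep "$interval"
  done
)
```

For this one-node cluster, `wait_for 3600 30 sh -c 'kubectl get nodes --no-headers | grep -qF v1.22.3-rc1+k3s1'` would return once the node reports the new version, retrying through transient failures such as the permission error below.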

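I knew something had finally happened when my kubectl commands started being rejected. Presumably the upgraded k3s server had rewritten /etc/rancher/k3s/k3s.yaml with its default, more restrictive permissions: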

builder@builder-HP-EliteBook-745-G5:~$ kubectl logs system-upgrade-controller-85b58fbc5f-b6764 -n system-upgrade

WARN[0000] Unable to read /etc/rancher/k3s/k3s.yaml, please start server with --write-kubeconfig-mode to modify kube config permissions

error: error loading config file "/etc/rancher/k3s/k3s.yaml": open /etc/rancher/k3s/k3s.yaml: permission denied

I quickly fixed the permissions and tried again:

builder@builder-HP-EliteBook-745-G5:~$ sudo chmod a+rx /etc/rancher/k3s

[sudo] password for builder:

builder@builder-HP-EliteBook-745-G5:~$ sudo chmod a+r /etc/rancher/k3s/k3s.yaml
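Note that `chmod a+r` leaves the kubeconfig, which holds the cluster's admin credentials, readable by every local user; that is exactly what Helm warned about earlier. The k3s warning itself points at the server's `--write-kubeconfig-mode` option for setting the mode persistently. A minimal sketch of a check for whether a file is group- or world-readable (a hypothetical helper, assuming GNU coreutils `stat -c`):

```shell
# Hypothetical helper: succeed (exit 0) if the file at $1 is readable by its
# group or by others, fail (exit 1) if not, and return 2 if stat fails.
kubeconfig_is_exposed() (
  mode=$(stat -c '%a' "$1") || exit 2
  go=${mode#"${mode%??}"}   # last two octal digits: group, other
  group=${go%?}
  other=${go#?}
  # The read permission bit is 4 in each octal digit.
  [ $(( $group & 4 )) -ne 0 ] || [ $(( $other & 4 )) -ne 0 ]
)
```

Usage: `kubeconfig_is_exposed /etc/rancher/k3s/k3s.yaml && echo 'kubeconfig is group/world-readable'`.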

After that, the job showed as completed:

builder@builder-HP-EliteBook-745-G5:~$ kubectl get jobs --all-namespaces

NAMESPACE NAME COMPLETIONS DURATION AGE

system-upgrade apply-k3s-server-on-builder-hp-elitebook-745-g5-with-1510-6488b 1/1 36s 3m23s

kube-system helm-install-traefik 1/1 22s 3m

Our node is now running the new version:

builder@builder-HP-EliteBook-745-G5:~$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

builder-hp-elitebook-745-g5 Ready control-plane,master 2d2h v1.22.3-rc1+k3s1
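On a multi-node cluster it is easier to ask which nodes are not yet on the target version. A minimal sketch (a hypothetical helper, assuming the default `kubectl get nodes` columns, with VERSION fifth) that prints any lagging nodes and exits non-zero while some remain:

```shell
# Hypothetical helper: read `kubectl get nodes` output on stdin and print
# "NAME VERSION" for each node whose VERSION (field 5) differs from $1.
# Exit status is 1 while any node lags behind, 0 once all match.
nodes_not_at_version() {
  awk -v want="$1" '
    NR == 1 && $1 == "NAME" { next }
    $5 != want { print $1, $5; behind++ }
    END { if (behind) exit 1 }'
}
```

For example, `kubectl get nodes | nodes_not_at_version v1.22.3-rc1+k3s1` prints nothing and succeeds once every node is upgraded.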

I did see some errors in the system-upgrade-controller logs:

$ kubectl logs system-upgrade-controller-85b58fbc5f-b6764 -n system-upgrade | tail -n10

E1030 01:38:27.032464 1 reflector.go:178] k8s.io/client-go@v1.18.0-k3s.1/tools/cache/reflector.go:125: Failed to list *v1.Plan: an error on the server ("apiserver not ready") has prevented the request from succeeding (get plans.meta.k8s.io)

I1030 01:38:27.033843 1 trace.go:116] Trace[1499268105]: "Reflector ListAndWatch" name:k8s.io/client-go@v1.18.0-k3s.1/tools/cache/reflector.go:125 (started: 2021-10-30 01:38:12.029042049 +0000 UTC m=+179464.469007321) (total time: 15.004765082s):

Trace[1499268105]: [15.004765082s] [15.004765082s] END

E1030 01:38:27.033866 1 reflector.go:178] k8s.io/client-go@v1.18.0-k3s.1/tools/cache/reflector.go:125: Failed to list *v1.Job: an error on the server ("apiserver not ready") has prevented the request from succeeding (get jobs.meta.k8s.io)

I1030 01:38:27.033878 1 trace.go:116] Trace[406771844]: "Reflector ListAndWatch" name:k8s.io/client-go@v1.18.0-k3s.1/tools/cache/reflector.go:125 (started: 2021-10-30 01:38:12.031850557 +0000 UTC m=+179464.471815669) (total time: 15.001994391s):

Trace[406771844]: [15.001994391s] [15.001994391s] END

E1030 01:38:27.033895 1 reflector.go:178] k8s.io/client-go@v1.18.0-k3s.1/tools/cache/reflector.go:125: Failed to list *v1.Node: an error on the server ("apiserver not ready") has prevented the request from succeeding (get nodes.meta.k8s.io)

I1030 01:38:27.034970 1 trace.go:116] Trace[196119233]: "Reflector ListAndWatch" name:k8s.io/client-go@v1.18.0-k3s.1/tools/cache/reflector.go:125 (started: 2021-10-30 01:38:12.027816345 +0000 UTC m=+179464.467781708) (total time: 15.007104388s):

Trace[196119233]: [15.007104388s] [15.007104388s] END

E1030 01:38:27.034999 1 reflector.go:178] k8s.io/client-go@v1.18.0-k3s.1/tools/cache/reflector.go:125: Failed to list *v1.Secret: an error on the server ("apiserver not ready") has prevented the request from succeeding (get secrets.meta.k8s.io)

These "apiserver not ready" errors most likely date from the window when the API server was restarting, and they did not seem to affect the upgrade itself.

Checking the ingress:

$ kubectl get ingress

NAME CLASS HOSTS ADDRESS PORTS AGE

ingress-v1betatest <none> * 192.168.1.159 80 41m

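And we see it is now served as networking.k8s.io/v1 rather than v1beta1, while the last-applied-configuration annotation still records the original v1beta1 manifest: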

$ kubectl get ingress -o yaml

apiVersion: v1
items:
- apiVersion: networking.k8s.io/v1
  kind: Ingress
  metadata:
    annotations:
      kubectl.kubernetes.io/last-applied-configuration: |
        {"apiVersion":"networking.k8s.io/v1beta1","kind":"Ingress","metadata":{"annotations":{},"name":"ingress-v1betatest","namespace":"default"},"spec":{"rules":[{"http":{"paths":[{"backend":{"serviceName":"nginx-service","servicePort":80},"path":"/path1"}]}}]}}
    creationTimestamp: "2021-10-30T01:01:05Z"
    generation: 1
    name: ingress-v1betatest
    namespace: default
    resourceVersion: "127095"
    uid: f0b7c8a3-b23d-45ca-9500-b748d1ec596d
  spec:
    rules:
    - http:
        paths:
        - backend:
            service:
              name: nginx-service
              port:
                number: 80
          path: /path1
          pathType: ImplementationSpecific
  status:
    loadBalancer:
      ingress:
      - ip: 192.168.1.159
kind: List
metadata:
  resourceVersion: ""
  selfLink: ""

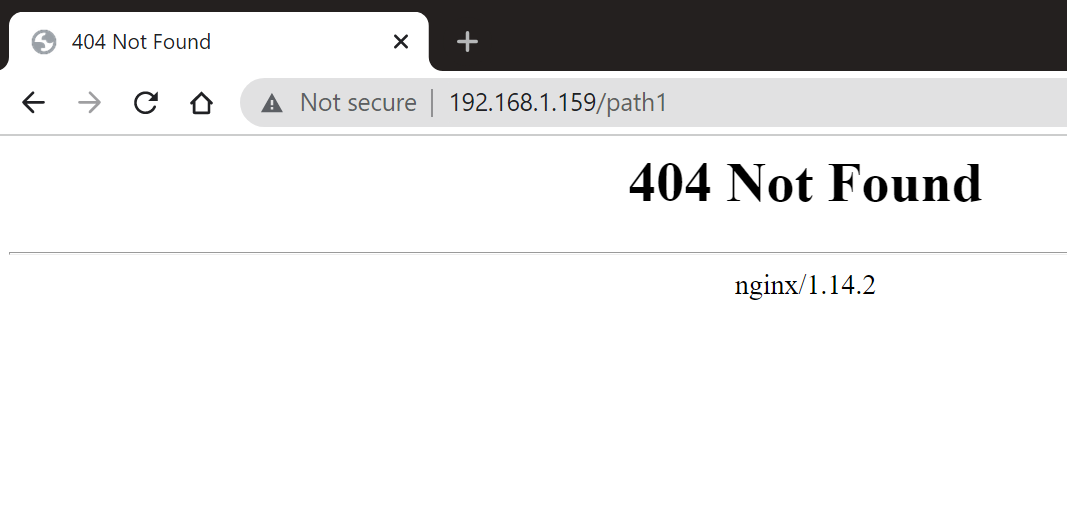

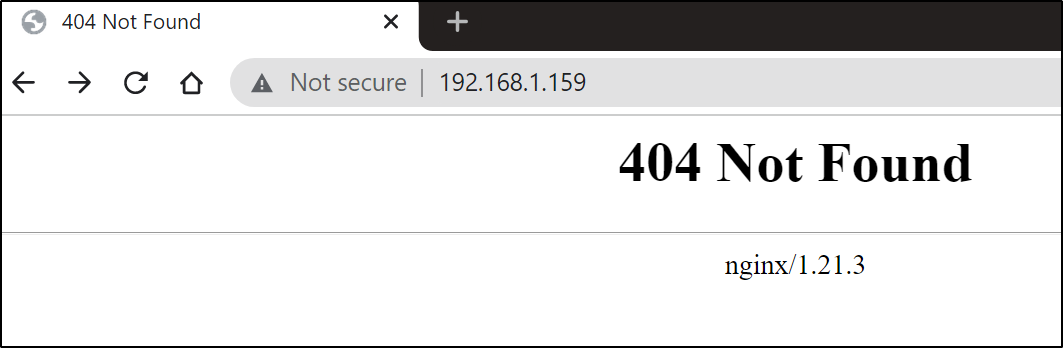

Interestingly, when I checked the web server, NGINX had taken over as the ingress controller: Traefik's LoadBalancer service is now pending, while the NGINX one holds 192.168.1.159:

```
$ kubectl get svc --all-namespaces
NAMESPACE     NAME                       TYPE           CLUSTER-IP      EXTERNAL-IP     PORT(S)                      AGE
default       kubernetes                 ClusterIP      10.43.0.1       <none>          443/TCP                      2d2h
kube-system   traefik-prometheus         ClusterIP      10.43.97.166    <none>          9100/TCP                     2d2h
default       nginx-service              ClusterIP      10.43.174.8     <none>          80/TCP                       43m
kube-system   kube-dns                   ClusterIP      10.43.0.10      <none>          53/UDP,53/TCP,9153/TCP       2d2h
kube-system   metrics-server             ClusterIP      10.43.106.155   <none>          443/TCP                      2d2h
kube-system   traefik                    LoadBalancer   10.43.222.161   <pending>       80:31400/TCP,443:31099/TCP   2d2h
default       my-release-nginx-ingress   LoadBalancer   10.43.141.8     192.168.1.159   80:31933/TCP,443:32383/TCP   3h58m
```

But because we never set an ingress class on our Ingress (the CLASS column above shows <none>), it does not actually care which controller serves it.
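An Ingress with neither spec.ingressClassName nor the older kubernetes.io/ingress.class annotation can be claimed by whichever controller is configured to serve unclassed Ingresses. To find such Ingresses before an upgrade swaps controllers, you could feed the items from `kubectl get ingress --all-namespaces -o json` into a small filter like this sketch. The second sample Ingress and its class name nginx are hypothetical.

```python
def ingress_class(ingress):
    """Return the class an Ingress asks for, or None if it leaves the choice open.

    Checks spec.ingressClassName (networking.k8s.io/v1) first, then the older
    kubernetes.io/ingress.class annotation.
    """
    spec_class = (ingress.get("spec") or {}).get("ingressClassName")
    if spec_class:
        return spec_class
    annotations = (ingress.get("metadata") or {}).get("annotations") or {}
    return annotations.get("kubernetes.io/ingress.class")


def unclassed_ingresses(items):
    """List namespace/name for every Ingress with no class pinned."""
    return [
        f'{i["metadata"].get("namespace", "default")}/{i["metadata"]["name"]}'
        for i in items
        if ingress_class(i) is None
    ]


ITEMS = [
    # The Ingress from this post: no ingressClassName and no class annotation.
    {"metadata": {"name": "ingress-v1betatest", "namespace": "default", "annotations": {}},
     "spec": {"rules": []}},
    # Hypothetical Ingress pinned to a class named "nginx".
    {"metadata": {"name": "pinned-example", "namespace": "default"},
     "spec": {"ingressClassName": "nginx", "rules": []}},
]

if __name__ == "__main__":
    print(unclassed_ingresses(ITEMS))
```

Pinning spec.ingressClassName on production Ingresses makes a controller swap like the one above an explicit decision rather than a side effect.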

K3s in Terraform (not working)

I tried multiple runs with Terraform and the k3s module to provision on-prem hardware:

$ terraform plan -out tf.plan

module.k3s.random_password.k3s_cluster_secret: Refreshing state... [id=none]

module.k3s.tls_private_key.master_user[0]: Refreshing state... [id=06c54c3b737e0a8aff52f77584937576fe06a469]

module.k3s.tls_private_key.kubernetes_ca[2]: Refreshing state... [id=652d60b5f4d92d1f75f731b792f7dc853b5376ea]

module.k3s.tls_private_key.kubernetes_ca[0]: Refreshing state... [id=9439143ccc493e939f6f9d0cc4e88a737aad8e8b]

module.k3s.tls_private_key.kubernetes_ca[1]: Refreshing state... [id=1f4059d9261c921cdef8e3deefbb22af035e2d20]

module.k3s.tls_cert_request.master_user[0]: Refreshing state... [id=d4db109d0cd07ea192541cc36ec61cdbd4cae6ca]

module.k3s.tls_self_signed_cert.kubernetes_ca_certs["2"]: Refreshing state... [id=168650678377305405053929084634561712060]

module.k3s.tls_self_signed_cert.kubernetes_ca_certs["1"]: Refreshing state... [id=166863271464135357871740700137491090258]

module.k3s.tls_self_signed_cert.kubernetes_ca_certs["0"]: Refreshing state... [id=236627667009521719126057669130413543445]

module.k3s.tls_locally_signed_cert.master_user[0]: Refreshing state... [id=147110137514903271286127930568225412108]

module.k3s.null_resource.k8s_ca_certificates_install[3]: Refreshing state... [id=822643167695070475]

module.k3s.null_resource.k8s_ca_certificates_install[5]: Refreshing state... [id=7180920018107439379]

module.k3s.null_resource.k8s_ca_certificates_install[2]: Refreshing state... [id=6746882777759482513]

module.k3s.null_resource.k8s_ca_certificates_install[0]: Refreshing state... [id=6465958346775660162]

module.k3s.null_resource.k8s_ca_certificates_install[1]: Refreshing state... [id=751239155582439824]

module.k3s.null_resource.k8s_ca_certificates_install[4]: Refreshing state... [id=2521296445112125164]

Terraform used the selected providers to generate the following execution plan. Resource actions are indicated with the following symbols:

+ create

- destroy

Terraform will perform the following actions:

# module.k3s.null_resource.k8s_ca_certificates_install[0] will be destroyed

- resource "null_resource" "k8s_ca_certificates_install" {

- id = "6465958346775660162" -> null

}

# module.k3s.null_resource.k8s_ca_certificates_install[1] will be destroyed

- resource "null_resource" "k8s_ca_certificates_install" {

- id = "751239155582439824" -> null

}

# module.k3s.null_resource.k8s_ca_certificates_install[2] will be destroyed

- resource "null_resource" "k8s_ca_certificates_install" {

- id = "6746882777759482513" -> null

}

# module.k3s.null_resource.k8s_ca_certificates_install[3] will be destroyed

- resource "null_resource" "k8s_ca_certificates_install" {

- id = "822643167695070475" -> null

}

# module.k3s.null_resource.k8s_ca_certificates_install[4] will be destroyed

- resource "null_resource" "k8s_ca_certificates_install" {

- id = "2521296445112125164" -> null

}

# module.k3s.null_resource.k8s_ca_certificates_install[5] will be destroyed

- resource "null_resource" "k8s_ca_certificates_install" {

- id = "7180920018107439379" -> null

}

# module.k3s.null_resource.kubernetes_ready will be created

+ resource "null_resource" "kubernetes_ready" {

+ id = (known after apply)

}

# module.k3s.null_resource.servers_drain["server-one"] will be created

+ resource "null_resource" "servers_drain" {

+ id = (known after apply)

+ triggers = {

+ "connection_json" = "eyJhZ2VudCI6bnVsbCwiYWdlbnRfaWRlbnRpdHkiOm51bGwsImJhc3Rpb25fY2VydGlmaWNhdGUiOm51bGwsImJhc3Rpb25faG9zdCI6bnVsbCwiYmFzdGlvbl9ob3N0X2tleSI6bnVsbCwiYmFzdGlvbl9wYXNzd29yZCI6bnVsbCwiYmFzdGlvbl9wb3J0IjpudWxsLCJiYXN0aW9uX3ByaXZhdGVfa2V5IjpudWxsLCJiYXN0aW9uX3VzZXIiOm51bGwsImNhY2VydCI6bnVsbCwiY2VydGlmaWNhdGUiOm51bGwsImhvc3QiOiIxOTIuMTY4LjEuMTU5IiwiaG9zdF9rZXkiOiIvaG9tZS9idWlsZGVyLy5zc2gvaWRfcnNhLnB1YiIsImh0dHBzIjpudWxsLCJpbnNlY3VyZSI6bnVsbCwicGFzc3dvcmQiOm51bGwsInBvcnQiOm51bGwsInByaXZhdGVfa2V5IjpudWxsLCJzY3JpcHRfcGF0aCI6bnVsbCwidGltZW91dCI6bnVsbCwidHlwZSI6InNzaCIsInVzZV9udGxtIjpudWxsLCJ1c2VyIjoiYnVpbGRlciJ9"

+ "drain_timeout" = "30s"

+ "server_name" = "server-one"

}

}

# module.k3s.null_resource.servers_install["server-one"] will be created

+ resource "null_resource" "servers_install" {

+ id = (known after apply)

+ triggers = {

+ "on_immutable_changes" = "2dc596d87cd024ad552e7c4ba7dcb41aeb3fd6ea"

+ "on_new_version" = "v1.0.0"

}

}

# module.k3s.null_resource.servers_label["server-one|node.kubernetes.io/type"] will be created

+ resource "null_resource" "servers_label" {

+ id = (known after apply)

+ triggers = {

+ "connection_json" = "eyJhZ2VudCI6bnVsbCwiYWdlbnRfaWRlbnRpdHkiOm51bGwsImJhc3Rpb25fY2VydGlmaWNhdGUiOm51bGwsImJhc3Rpb25faG9zdCI6bnVsbCwiYmFzdGlvbl9ob3N0X2tleSI6bnVsbCwiYmFzdGlvbl9wYXNzd29yZCI6bnVsbCwiYmFzdGlvbl9wb3J0IjpudWxsLCJiYXN0aW9uX3ByaXZhdGVfa2V5IjpudWxsLCJiYXN0aW9uX3VzZXIiOm51bGwsImNhY2VydCI6bnVsbCwiY2VydGlmaWNhdGUiOm51bGwsImhvc3QiOiIxOTIuMTY4LjEuMTU5IiwiaG9zdF9rZXkiOiIvaG9tZS9idWlsZGVyLy5zc2gvaWRfcnNhLnB1YiIsImh0dHBzIjpudWxsLCJpbnNlY3VyZSI6bnVsbCwicGFzc3dvcmQiOm51bGwsInBvcnQiOm51bGwsInByaXZhdGVfa2V5IjpudWxsLCJzY3JpcHRfcGF0aCI6bnVsbCwidGltZW91dCI6bnVsbCwidHlwZSI6InNzaCIsInVzZV9udGxtIjpudWxsLCJ1c2VyIjoiYnVpbGRlciJ9"

+ "label_name" = "node.kubernetes.io/type"

+ "on_value_changes" = "master"

+ "server_name" = "server-one"

}

}

# module.k3s.tls_cert_request.master_user[0] will be destroyed

- resource "tls_cert_request" "master_user" {

- cert_request_pem = <<-EOT

-----BEGIN CERTIFICATE REQUEST-----

MIIBJjCBrgIBADAvMRcwFQYDVQQKEw5zeXN0ZW06bWFzdGVyczEUMBIGA1UEAxML

bWFzdGVyLXVzZXIwdjAQBgcqhkjOPQIBBgUrgQQAIgNiAASnI5ylVELX3yF6jlas

SSchEM8khh6vaxP/GIkCga0MS1MzT+LV4FKPxKjX9tN5OR5F/wrJ7Ggl2p+c8+ZD

Uj8BgTWNUsdfcRgPTiGkLqAt6rZTulvL2ACeFqO/1xAEZBmgADAKBggqhkjOPQQD

AwNnADBkAjBAAD+vleMn2iNwbsvPdLYzoIvqLxYDvj7lUHUnsjVY6WMQdHxYpKaA

UBEn4KAiqHACMG/OX0JVaLCkCi4b3kTDX+hRR/aupV4PJQKOtZTZn8GtI2YGOLQY

rpW7lmgS+y4KJQ==

-----END CERTIFICATE REQUEST-----

EOT -> null

- id = "d4db109d0cd07ea192541cc36ec61cdbd4cae6ca" -> null

- key_algorithm = "ECDSA" -> null

- private_key_pem = (sensitive value)

- subject {

- common_name = "master-user" -> null

- organization = "system:masters" -> null

- street_address = [] -> null

}

}

# module.k3s.tls_locally_signed_cert.master_user[0] will be destroyed

- resource "tls_locally_signed_cert" "master_user" {

- allowed_uses = [

- "key_encipherment",

- "digital_signature",

- "client_auth",

] -> null

- ca_cert_pem = "52fea89ecedbd5cef88d97f5120e226fb2026695" -> null

- ca_key_algorithm = "ECDSA" -> null

- ca_private_key_pem = (sensitive value)

- cert_pem = <<-EOT

-----BEGIN CERTIFICATE-----

MIIB4TCCAWegAwIBAgIQbqxhw/SB0wl2fKytLxVUDDAKBggqhkjOPQQDAzAfMR0w

GwYDVQQDExRrdWJlcm5ldGVzLWNsaWVudC1jYTAgFw0yMTEwMzExNDMzMzdaGA8y

MTIxMTEwMTE0MzMzN1owLzEXMBUGA1UEChMOc3lzdGVtOm1hc3RlcnMxFDASBgNV

BAMTC21hc3Rlci11c2VyMHYwEAYHKoZIzj0CAQYFK4EEACIDYgAEpyOcpVRC198h

eo5WrEknIRDPJIYer2sT/xiJAoGtDEtTM0/i1eBSj8So1/bTeTkeRf8KyexoJdqf

nPPmQ1I/AYE1jVLHX3EYD04hpC6gLeq2U7pby9gAnhajv9cQBGQZo1YwVDAOBgNV

HQ8BAf8EBAMCBaAwEwYDVR0lBAwwCgYIKwYBBQUHAwIwDAYDVR0TAQH/BAIwADAf

BgNVHSMEGDAWgBRh1LWdfpqAFlMbYsJ/fA1hRga2TjAKBggqhkjOPQQDAwNoADBl

AjBlK2+CErGdtb/gUqq2REXfEnwrAbvcxBjuRSdNNtmzBDaafeHVpu1WiCYIoijL

Z4cCMQDicw18wxRgoyiTKDOsOLFFKbqlKsdOvGSmZA3N8kRMyMpNIzREPyrtbB3O

ubBeXFk=

-----END CERTIFICATE-----

EOT -> null

- cert_request_pem = "7e813333339796598fb6b4dada1867c115a30bbe" -> null

- early_renewal_hours = 0 -> null

- id = "147110137514903271286127930568225412108" -> null

- ready_for_renewal = false -> null

- validity_end_time = "2121-11-01T09:33:37.859226051-05:00" -> null

- validity_period_hours = 876600 -> null

- validity_start_time = "2021-10-31T09:33:37.859226051-05:00" -> null

}

# module.k3s.tls_private_key.kubernetes_ca[0] will be destroyed

- resource "tls_private_key" "kubernetes_ca" {

- algorithm = "ECDSA" -> null

- ecdsa_curve = "P384" -> null

- id = "9439143ccc493e939f6f9d0cc4e88a737aad8e8b" -> null

- private_key_pem = (sensitive value)

- public_key_fingerprint_md5 = "e5:63:b5:47:6a:17:d4:89:6b:f7:ea:fc:a4:40:af:83" -> null

- public_key_openssh = <<-EOT

ecdsa-sha2-nistp384 AAAAE2VjZHNhLXNoYTItbmlzdHAzODQAAAAIbmlzdHAzODQAAABhBKb/WSaldfNKwco2BxXLXFWSVaPcXf5RDrg43MRmKhT/+eWoqH85f64SEnnBZ4iFqCV/ArVSnOho6eT0wSuCIUlPSrVhwemi1JE4Y6Ay9VBDBxslTycnaBPtBTuPFX8gKw==

EOT -> null

- public_key_pem = <<-EOT

-----BEGIN PUBLIC KEY-----

MHYwEAYHKoZIzj0CAQYFK4EEACIDYgAEpv9ZJqV180rByjYHFctcVZJVo9xd/lEO

uDjcxGYqFP/55aiofzl/rhISecFniIWoJX8CtVKc6Gjp5PTBK4IhSU9KtWHB6aLU

kThjoDL1UEMHGyVPJydoE+0FO48VfyAr

-----END PUBLIC KEY-----

EOT -> null

- rsa_bits = 2048 -> null

}

# module.k3s.tls_private_key.kubernetes_ca[1] will be destroyed

- resource "tls_private_key" "kubernetes_ca" {

- algorithm = "ECDSA" -> null

- ecdsa_curve = "P384" -> null

- id = "1f4059d9261c921cdef8e3deefbb22af035e2d20" -> null

- private_key_pem = (sensitive value)

- public_key_fingerprint_md5 = "e6:d9:74:01:9d:1d:6b:1d:fe:f7:ff:58:3e:79:77:40" -> null

- public_key_openssh = <<-EOT

ecdsa-sha2-nistp384 AAAAE2VjZHNhLXNoYTItbmlzdHAzODQAAAAIbmlzdHAzODQAAABhBENV3UV35Ul5T9OsGlT2MWajQxV6TtAus3HuLgHXR6jsCMfXip1dUSRAI3+uOBpPkJusOkBv9xIr02HqYMGGUGl4sNaSS2oNhDwxXjAQO4D3LSRC42KXorXjZz6jA60QPQ==

EOT -> null

- public_key_pem = <<-EOT

-----BEGIN PUBLIC KEY-----

MHYwEAYHKoZIzj0CAQYFK4EEACIDYgAEQ1XdRXflSXlP06waVPYxZqNDFXpO0C6z

ce4uAddHqOwIx9eKnV1RJEAjf644Gk+Qm6w6QG/3EivTYepgwYZQaXiw1pJLag2E

PDFeMBA7gPctJELjYpeiteNnPqMDrRA9

-----END PUBLIC KEY-----

EOT -> null

- rsa_bits = 2048 -> null

}

# module.k3s.tls_private_key.kubernetes_ca[2] will be destroyed

- resource "tls_private_key" "kubernetes_ca" {

- algorithm = "ECDSA" -> null

- ecdsa_curve = "P384" -> null

- id = "652d60b5f4d92d1f75f731b792f7dc853b5376ea" -> null

- private_key_pem = (sensitive value)

- public_key_fingerprint_md5 = "93:1e:1f:4a:9a:ba:50:17:b6:41:b3:78:4d:d3:08:9f" -> null

- public_key_openssh = <<-EOT

ecdsa-sha2-nistp384 AAAAE2VjZHNhLXNoYTItbmlzdHAzODQAAAAIbmlzdHAzODQAAABhBBKbFrYPGeiZ8rwV95lqL7ZPakccjGATcW3db4QE+ABt4XYPJ0QQtnOd6rJfV9Cyhmk0Sf7IzuNKTS58ybcsvTTIXDWbhZj+S2Scrl4dy6c83cCnIBKEGq6Z+vPwif4NZg==

EOT -> null

- public_key_pem = <<-EOT

-----BEGIN PUBLIC KEY-----

MHYwEAYHKoZIzj0CAQYFK4EEACIDYgAEEpsWtg8Z6JnyvBX3mWovtk9qRxyMYBNx

bd1vhAT4AG3hdg8nRBC2c53qsl9X0LKGaTRJ/sjO40pNLnzJtyy9NMhcNZuFmP5L

ZJyuXh3LpzzdwKcgEoQarpn68/CJ/g1m

-----END PUBLIC KEY-----

EOT -> null

- rsa_bits = 2048 -> null

}

# module.k3s.tls_private_key.master_user[0] will be destroyed

- resource "tls_private_key" "master_user" {

- algorithm = "ECDSA" -> null

- ecdsa_curve = "P384" -> null

- id = "06c54c3b737e0a8aff52f77584937576fe06a469" -> null

- private_key_pem = (sensitive value)

- public_key_fingerprint_md5 = "8b:9e:c5:8e:f2:82:2c:61:5c:7e:db:1a:f3:70:b2:9d" -> null

- public_key_openssh = <<-EOT

ecdsa-sha2-nistp384 AAAAE2VjZHNhLXNoYTItbmlzdHAzODQAAAAIbmlzdHAzODQAAABhBKcjnKVUQtffIXqOVqxJJyEQzySGHq9rE/8YiQKBrQxLUzNP4tXgUo/EqNf203k5HkX/CsnsaCXan5zz5kNSPwGBNY1Sx19xGA9OIaQuoC3qtlO6W8vYAJ4Wo7/XEARkGQ==

EOT -> null

- public_key_pem = <<-EOT

-----BEGIN PUBLIC KEY-----

MHYwEAYHKoZIzj0CAQYFK4EEACIDYgAEpyOcpVRC198heo5WrEknIRDPJIYer2sT

/xiJAoGtDEtTM0/i1eBSj8So1/bTeTkeRf8KyexoJdqfnPPmQ1I/AYE1jVLHX3EY

D04hpC6gLeq2U7pby9gAnhajv9cQBGQZ

-----END PUBLIC KEY-----

EOT -> null

- rsa_bits = 2048 -> null

}

# module.k3s.tls_self_signed_cert.kubernetes_ca_certs["0"] will be destroyed

- resource "tls_self_signed_cert" "kubernetes_ca_certs" {

- allowed_uses = [

- "critical",

- "digitalSignature",

- "keyEncipherment",

- "keyCertSign",

] -> null

- cert_pem = <<-EOT

-----BEGIN CERTIFICATE-----

MIIBrjCCATSgAwIBAgIRALIE1LmPOgiw+vnsQPhmEBUwCgYIKoZIzj0EAwMwHzEd

MBsGA1UEAxMUa3ViZXJuZXRlcy1jbGllbnQtY2EwIBcNMjExMDMxMTQzMzM3WhgP

MjEyMTExMDExNDMzMzdaMB8xHTAbBgNVBAMTFGt1YmVybmV0ZXMtY2xpZW50LWNh

MHYwEAYHKoZIzj0CAQYFK4EEACIDYgAEpv9ZJqV180rByjYHFctcVZJVo9xd/lEO

uDjcxGYqFP/55aiofzl/rhISecFniIWoJX8CtVKc6Gjp5PTBK4IhSU9KtWHB6aLU

kThjoDL1UEMHGyVPJydoE+0FO48VfyArozIwMDAPBgNVHRMBAf8EBTADAQH/MB0G

A1UdDgQWBBRh1LWdfpqAFlMbYsJ/fA1hRga2TjAKBggqhkjOPQQDAwNoADBlAjBR

NlzxHcDlRGapH5gqbHN9Jh5S8gdyCJNWT48HMdzI7UCcNBY9DNIkvboQonCz0KUC

MQCFYmdNKQt3cCrvIxgKvrEX1tvV3eH7gtDxvUEZpz3Dc4e+QpU63rqCGSgVk5ZD

EWw=

-----END CERTIFICATE-----

EOT -> null

- early_renewal_hours = 0 -> null

- id = "236627667009521719126057669130413543445" -> null

- is_ca_certificate = true -> null

- key_algorithm = "ECDSA" -> null

- private_key_pem = (sensitive value)

- ready_for_renewal = false -> null

- validity_end_time = "2121-11-01T09:33:37.823487581-05:00" -> null

- validity_period_hours = 876600 -> null

- validity_start_time = "2021-10-31T09:33:37.823487581-05:00" -> null

- subject {

- common_name = "kubernetes-client-ca" -> null

- street_address = [] -> null

}

}

# module.k3s.tls_self_signed_cert.kubernetes_ca_certs["1"] will be destroyed

- resource "tls_self_signed_cert" "kubernetes_ca_certs" {

- allowed_uses = [

- "critical",

- "digitalSignature",

- "keyEncipherment",

- "keyCertSign",

] -> null

- cert_pem = <<-EOT

-----BEGIN CERTIFICATE-----

MIIBrjCCATOgAwIBAgIQfYiydgFhoN/ErdbL5ZlLUjAKBggqhkjOPQQDAzAfMR0w

GwYDVQQDExRrdWJlcm5ldGVzLXNlcnZlci1jYTAgFw0yMTEwMzExNDMzMzdaGA8y

MTIxMTEwMTE0MzMzN1owHzEdMBsGA1UEAxMUa3ViZXJuZXRlcy1zZXJ2ZXItY2Ew

djAQBgcqhkjOPQIBBgUrgQQAIgNiAARDVd1Fd+VJeU/TrBpU9jFmo0MVek7QLrNx

7i4B10eo7AjH14qdXVEkQCN/rjgaT5CbrDpAb/cSK9Nh6mDBhlBpeLDWkktqDYQ8

MV4wEDuA9y0kQuNil6K142c+owOtED2jMjAwMA8GA1UdEwEB/wQFMAMBAf8wHQYD

VR0OBBYEFB7ICCTNAZfXnY6CLvYEUiG0djyBMAoGCCqGSM49BAMDA2kAMGYCMQDk

w7HDSRvlI7VdzvSbqmISAgsJiX97oxor6cBGqwPP9uqQNQuc7Hz9zHVQveFs2FIC

MQCPQjF2BQNvCqzQtOpD+k6BemLod+PQ1kHzkbFxMkRQLxBn4ltyTrnQN7IL2L15

bCs=

-----END CERTIFICATE-----

EOT -> null

- early_renewal_hours = 0 -> null

- id = "166863271464135357871740700137491090258" -> null

- is_ca_certificate = true -> null

- key_algorithm = "ECDSA" -> null

- private_key_pem = (sensitive value)

- ready_for_renewal = false -> null

- validity_end_time = "2121-11-01T09:33:37.822034333-05:00" -> null

- validity_period_hours = 876600 -> null

- validity_start_time = "2021-10-31T09:33:37.822034333-05:00" -> null

- subject {

- common_name = "kubernetes-server-ca" -> null

- street_address = [] -> null

}

}

# module.k3s.tls_self_signed_cert.kubernetes_ca_certs["2"] will be destroyed

- resource "tls_self_signed_cert" "kubernetes_ca_certs" {

- allowed_uses = [

- "critical",

- "digitalSignature",

- "keyEncipherment",

- "keyCertSign",

] -> null

- cert_pem = <<-EOT

-----BEGIN CERTIFICATE-----

MIIBxDCCAUugAwIBAgIQfuDwbPlHJPbeKSfgTScPvDAKBggqhkjOPQQDAzArMSkw

JwYDVQQDEyBrdWJlcm5ldGVzLXJlcXVlc3QtaGVhZGVyLWtleS1jYTAgFw0yMTEw

MzExNDMzMzdaGA8yMTIxMTEwMTE0MzMzN1owKzEpMCcGA1UEAxMga3ViZXJuZXRl

cy1yZXF1ZXN0LWhlYWRlci1rZXktY2EwdjAQBgcqhkjOPQIBBgUrgQQAIgNiAAQS

mxa2DxnomfK8FfeZai+2T2pHHIxgE3Ft3W+EBPgAbeF2DydEELZzneqyX1fQsoZp

NEn+yM7jSk0ufMm3LL00yFw1m4WY/ktknK5eHcunPN3ApyAShBqumfrz8In+DWaj

MjAwMA8GA1UdEwEB/wQFMAMBAf8wHQYDVR0OBBYEFMCbZjGuN3oyiY/zpQ9l8yB1

QmiBMAoGCCqGSM49BAMDA2cAMGQCMBZBw39j+hSIjh3Pxntr9iPeL5+CU9BYsK1L

5KUd4478ZDr4wIO7lCkuZFS6Gg5YNwIwZrNBq6NzJmYAShmPZeRCFcmOMy+XInsZ

ZcA7XyKDKH9yUIHrJ++zr8nEPPyUd8bI

-----END CERTIFICATE-----

EOT -> null

- early_renewal_hours = 0 -> null

- id = "168650678377305405053929084634561712060" -> null

- is_ca_certificate = true -> null

- key_algorithm = "ECDSA" -> null

- private_key_pem = (sensitive value)

- ready_for_renewal = false -> null

- validity_end_time = "2121-11-01T09:33:37.824259526-05:00" -> null

- validity_period_hours = 876600 -> null

- validity_start_time = "2021-10-31T09:33:37.824259526-05:00" -> null

- subject {

- common_name = "kubernetes-request-header-key-ca" -> null

- street_address = [] -> null

}

}

Plan: 4 to add, 0 to change, 15 to destroy.

─────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────

Saved the plan to: tf.plan

To perform exactly these actions, run the following command to apply:

terraform apply "tf.plan"
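That plan would destroy every CA key and certificate the module had generated (15 to destroy) while adding only 4 resources. Before applying a plan like this, it helps to list the destructive actions explicitly. `terraform show -json tf.plan` renders a saved plan as JSON, with a resource_changes array whose change.actions list what will happen to each resource. The sketch below groups addresses by action and flags TLS key material. SAMPLE_PLAN is a hand-trimmed excerpt using addresses from the plan above, not real output, and KEY_MATERIAL_TYPES is my own selection.

```python
import json
from collections import defaultdict

# Hand-trimmed excerpt in the shape of `terraform show -json tf.plan` output.
SAMPLE_PLAN = {
    "resource_changes": [
        {"address": "module.k3s.random_password.k3s_cluster_secret", "change": {"actions": ["no-op"]}},
        {"address": "module.k3s.tls_private_key.kubernetes_ca[0]", "change": {"actions": ["delete"]}},
        {"address": 'module.k3s.tls_self_signed_cert.kubernetes_ca_certs["0"]', "change": {"actions": ["delete"]}},
        {"address": "module.k3s.null_resource.k8s_ca_certificates_install[0]", "change": {"actions": ["delete"]}},
        {"address": "module.k3s.null_resource.kubernetes_ready", "change": {"actions": ["create"]}},
        {"address": 'module.k3s.null_resource.servers_install["server-one"]', "change": {"actions": ["create"]}},
    ]
}

# Resource types whose destruction means new keys/CA certificates would be generated.
KEY_MATERIAL_TYPES = ("tls_private_key", "tls_self_signed_cert", "tls_locally_signed_cert", "tls_cert_request")


def load_plan(path):
    """Load a plan saved with: terraform show -json tf.plan > plan.json"""
    with open(path) as f:
        return json.load(f)


def summarize_plan(plan):
    """Group resource addresses by planned action: create, update, delete or replace."""
    summary = defaultdict(list)
    for rc in plan.get("resource_changes", []):
        actions = rc["change"]["actions"]
        if actions in (["no-op"], ["read"]):
            continue  # nothing will change for this resource
        if sorted(actions) == ["create", "delete"]:
            kind = "replace"  # Terraform lists a replacement as two actions
        else:
            kind = actions[0]
        summary[kind].append(rc["address"])
    return dict(summary)


def destroyed_key_material(summary):
    """Addresses of TLS keys/certs that the plan would delete or replace."""
    doomed = summary.get("delete", []) + summary.get("replace", [])
    return [a for a in doomed if any(f".{t}." in a for t in KEY_MATERIAL_TYPES)]


if __name__ == "__main__":
    s = summarize_plan(SAMPLE_PLAN)  # or summarize_plan(load_plan("plan.json"))
    for kind, addrs in s.items():
        print(f"{kind}: {len(addrs)}")
    print("key material destroyed:", destroyed_key_material(s))
```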

builder@DESKTOP-QADGF36:~/Workspaces/tfk3s$ terraform apply "tf.plan"

module.k3s.null_resource.k8s_ca_certificates_install[4]: Destroying... [id=2521296445112125164]

module.k3s.null_resource.k8s_ca_certificates_install[2]: Destroying... [id=6746882777759482513]

module.k3s.null_resource.k8s_ca_certificates_install[1]: Destroying... [id=751239155582439824]

module.k3s.null_resource.k8s_ca_certificates_install[3]: Destroying... [id=822643167695070475]

module.k3s.null_resource.k8s_ca_certificates_install[5]: Destroying... [id=7180920018107439379]

module.k3s.null_resource.k8s_ca_certificates_install[2]: Destruction complete after 0s

module.k3s.null_resource.k8s_ca_certificates_install[3]: Destruction complete after 0s

module.k3s.null_resource.k8s_ca_certificates_install[0]: Destroying... [id=6465958346775660162]

module.k3s.tls_locally_signed_cert.master_user[0]: Destroying... [id=147110137514903271286127930568225412108]

module.k3s.null_resource.k8s_ca_certificates_install[1]: Destruction complete after 0s

module.k3s.null_resource.k8s_ca_certificates_install[5]: Destruction complete after 0s

module.k3s.null_resource.k8s_ca_certificates_install[4]: Destruction complete after 0s

module.k3s.tls_locally_signed_cert.master_user[0]: Destruction complete after 0s

module.k3s.null_resource.k8s_ca_certificates_install[0]: Destruction complete after 0s

module.k3s.tls_cert_request.master_user[0]: Destroying... [id=d4db109d0cd07ea192541cc36ec61cdbd4cae6ca]

module.k3s.tls_self_signed_cert.kubernetes_ca_certs["1"]: Destroying... [id=166863271464135357871740700137491090258]

module.k3s.tls_self_signed_cert.kubernetes_ca_certs["0"]: Destroying... [id=236627667009521719126057669130413543445]

module.k3s.tls_self_signed_cert.kubernetes_ca_certs["2"]: Destroying... [id=168650678377305405053929084634561712060]

module.k3s.tls_cert_request.master_user[0]: Destruction complete after 0s

module.k3s.null_resource.servers_install["server-one"]: Creating...

module.k3s.tls_self_signed_cert.kubernetes_ca_certs["1"]: Destruction complete after 0s

module.k3s.tls_self_signed_cert.kubernetes_ca_certs["0"]: Destruction complete after 0s

module.k3s.tls_self_signed_cert.kubernetes_ca_certs["2"]: Destruction complete after 0s

module.k3s.tls_private_key.master_user[0]: Destroying... [id=06c54c3b737e0a8aff52f77584937576fe06a469]

module.k3s.tls_private_key.master_user[0]: Destruction complete after 0s

module.k3s.tls_private_key.kubernetes_ca[0]: Destroying... [id=9439143ccc493e939f6f9d0cc4e88a737aad8e8b]

module.k3s.tls_private_key.kubernetes_ca[0]: Destruction complete after 0s

module.k3s.tls_private_key.kubernetes_ca[2]: Destroying... [id=652d60b5f4d92d1f75f731b792f7dc853b5376ea]

module.k3s.tls_private_key.kubernetes_ca[1]: Destroying... [id=1f4059d9261c921cdef8e3deefbb22af035e2d20]

module.k3s.null_resource.servers_install["server-one"]: Provisioning with 'file'...

module.k3s.tls_private_key.kubernetes_ca[2]: Destruction complete after 0s

module.k3s.tls_private_key.kubernetes_ca[1]: Destruction complete after 0s

╷

│ Error: file provisioner error

│

│ with module.k3s.null_resource.servers_install["server-one"],

│ on .terraform/modules/k3s/server_nodes.tf line 200, in resource "null_resource" "servers_install":

│ 200: provisioner "file" {

│

│ knownhosts: /tmp/tf-known_hosts825875476:1: knownhosts: missing key type pattern

╵
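As far as I can tell, this error comes from the Go knownhosts package: when a connection sets host_key, Terraform writes the host and host_key into a temporary known_hosts file (the /tmp/tf-known_hosts… path in the message). Base64-decoding the connection_json trigger from the first plan shows host_key set to "/home/builder/.ssh/id_rsa.pub", a file path, whereas host_key expects the remote host's public key itself, in the form "<key-type> <base64-key>" (one option is to fetch it with ssh-keyscan). A path is a single word, so nothing is left after the key-type field. The sketch below is a simplified Python re-creation of that field check, not the actual Go code, and terraform_style_line is a hypothetical approximation of the line Terraform writes.

```python
# Marker words that may precede the host pattern in a known_hosts line.
MARKERS = ("@cert-authority", "@revoked")


def check_known_hosts_line(line):
    """Simplified re-creation of the field checks a known_hosts parser performs.

    Expected layout: [marker] <host-pattern> <key-type> <base64-key> [comment]
    Returns None when the required fields are present, else an error string
    worded like the knownhosts error Terraform surfaced.
    """
    words = line.split()
    if words and words[0] in MARKERS:
        words = words[1:]
    if len(words) < 2:
        return "knownhosts: missing host pattern"
    if len(words) < 3:
        return "knownhosts: missing key type pattern"
    return None


def terraform_style_line(host, host_key):
    """Hypothetical approximation of the known_hosts line built from host + host_key."""
    return f"@cert-authority {host} {host_key}"


if __name__ == "__main__":
    # host_key as decoded from the first plan's connection_json: a file path.
    bad = terraform_style_line("192.168.1.159", "/home/builder/.ssh/id_rsa.pub")
    # Placeholder in the expected "<type> <base64>" form (not a real key).
    good = terraform_style_line("192.168.1.159", "ssh-ed25519 AAAAC3NzaC1lZDI1NTE5AAAAIPLACEHOLDER")
    print(check_known_hosts_line(bad))   # knownhosts: missing key type pattern
    print(check_known_hosts_line(good))  # None
```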

Then I tried again:

builder@DESKTOP-QADGF36:~/Workspaces/tfk3s$ terraform plan -out tf.plan

module.k3s.random_password.k3s_cluster_secret: Refreshing state... [id=none]

module.k3s.null_resource.servers_install["server-one"]: Refreshing state... [id=1316345895109891785]

Terraform used the selected providers to generate the following execution plan. Resource actions are indicated with the following symbols:

+ create

-/+ destroy and then create replacement

Terraform will perform the following actions:

# module.k3s.null_resource.kubernetes_ready will be created

+ resource "null_resource" "kubernetes_ready" {

+ id = (known after apply)

}

# module.k3s.null_resource.servers_drain["server-one"] will be created

+ resource "null_resource" "servers_drain" {

+ id = (known after apply)

+ triggers = {

+ "connection_json" = "eyJhZ2VudCI6bnVsbCwiYWdlbnRfaWRlbnRpdHkiOm51bGwsImJhc3Rpb25fY2VydGlmaWNhdGUiOm51bGwsImJhc3Rpb25faG9zdCI6bnVsbCwiYmFzdGlvbl9ob3N0X2tleSI6bnVsbCwiYmFzdGlvbl9wYXNzd29yZCI6bnVsbCwiYmFzdGlvbl9wb3J0IjpudWxsLCJiYXN0aW9uX3ByaXZhdGVfa2V5IjpudWxsLCJiYXN0aW9uX3VzZXIiOm51bGwsImNhY2VydCI6bnVsbCwiY2VydGlmaWNhdGUiOm51bGwsImhvc3QiOiIxOTIuMTY4LjEuMTU5IiwiaG9zdF9rZXkiOm51bGwsImh0dHBzIjpudWxsLCJpbnNlY3VyZSI6bnVsbCwicGFzc3dvcmQiOm51bGwsInBvcnQiOm51bGwsInByaXZhdGVfa2V5IjpudWxsLCJzY3JpcHRfcGF0aCI6bnVsbCwidGltZW91dCI6bnVsbCwidHlwZSI6InNzaCIsInVzZV9udGxtIjpudWxsLCJ1c2VyIjoiYnVpbGRlciJ9"

+ "drain_timeout" = "30s"

+ "server_name" = "server-one"

}

}

# module.k3s.null_resource.servers_install["server-one"] is tainted, so must be replaced

-/+ resource "null_resource" "servers_install" {

~ id = "1316345895109891785" -> (known after apply)

# (1 unchanged attribute hidden)

}

# module.k3s.null_resource.servers_label["server-one|node.kubernetes.io/type"] will be created

+ resource "null_resource" "servers_label" {

+ id = (known after apply)

+ triggers = {

+ "connection_json" = "eyJhZ2VudCI6bnVsbCwiYWdlbnRfaWRlbnRpdHkiOm51bGwsImJhc3Rpb25fY2VydGlmaWNhdGUiOm51bGwsImJhc3Rpb25faG9zdCI6bnVsbCwiYmFzdGlvbl9ob3N0X2tleSI6bnVsbCwiYmFzdGlvbl9wYXNzd29yZCI6bnVsbCwiYmFzdGlvbl9wb3J0IjpudWxsLCJiYXN0aW9uX3ByaXZhdGVfa2V5IjpudWxsLCJiYXN0aW9uX3VzZXIiOm51bGwsImNhY2VydCI6bnVsbCwiY2VydGlmaWNhdGUiOm51bGwsImhvc3QiOiIxOTIuMTY4LjEuMTU5IiwiaG9zdF9rZXkiOm51bGwsImh0dHBzIjpudWxsLCJpbnNlY3VyZSI6bnVsbCwicGFzc3dvcmQiOm51bGwsInBvcnQiOm51bGwsInByaXZhdGVfa2V5IjpudWxsLCJzY3JpcHRfcGF0aCI6bnVsbCwidGltZW91dCI6bnVsbCwidHlwZSI6InNzaCIsInVzZV9udGxtIjpudWxsLCJ1c2VyIjoiYnVpbGRlciJ9"

+ "label_name" = "node.kubernetes.io/type"

+ "on_value_changes" = "master"

+ "server_name" = "server-one"

}

}

Plan: 4 to add, 0 to change, 1 to destroy.

─────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────

Saved the plan to: tf.plan

To perform exactly these actions, run the following command to apply:

terraform apply "tf.plan"

builder@DESKTOP-QADGF36:~/Workspaces/tfk3s$ terraform apply "tf.plan"

module.k3s.null_resource.servers_install["server-one"]: Destroying... [id=1316345895109891785]

module.k3s.null_resource.servers_install["server-one"]: Destruction complete after 0s

module.k3s.null_resource.servers_install["server-one"]: Creating...

module.k3s.null_resource.servers_install["server-one"]: Provisioning with 'file'...

module.k3s.null_resource.servers_install["server-one"]: Still creating... [10s elapsed]

module.k3s.null_resource.servers_install["server-one"]: Still creating... [20s elapsed]

module.k3s.null_resource.servers_install["server-one"]: Still creating... [30s elapsed]

module.k3s.null_resource.servers_install["server-one"]: Still creating... [40s elapsed]

module.k3s.null_resource.servers_install["server-one"]: Still creating... [50s elapsed]

module.k3s.null_resource.servers_install["server-one"]: Still creating... [1m0s elapsed]

module.k3s.null_resource.servers_install["server-one"]: Still creating... [1m10s elapsed]

module.k3s.null_resource.servers_install["server-one"]: Still creating... [1m20s elapsed]

module.k3s.null_resource.servers_install["server-one"]: Still creating... [1m30s elapsed]

module.k3s.null_resource.servers_install["server-one"]: Still creating... [1m40s elapsed]

module.k3s.null_resource.servers_install["server-one"]: Still creating... [1m50s elapsed]

module.k3s.null_resource.servers_install["server-one"]: Still creating... [2m0s elapsed]

module.k3s.null_resource.servers_install["server-one"]: Still creating... [2m10s elapsed]

module.k3s.null_resource.servers_install["server-one"]: Still creating... [2m20s elapsed]

module.k3s.null_resource.servers_install["server-one"]: Still creating... [2m30s elapsed]

module.k3s.null_resource.servers_install["server-one"]: Still creating... [2m40s elapsed]

module.k3s.null_resource.servers_install["server-one"]: Still creating... [2m50s elapsed]

module.k3s.null_resource.servers_install["server-one"]: Still creating... [3m0s elapsed]

module.k3s.null_resource.servers_install["server-one"]: Still creating... [3m10s elapsed]

module.k3s.null_resource.servers_install["server-one"]: Still creating... [3m20s elapsed]

module.k3s.null_resource.servers_install["server-one"]: Still creating... [3m30s elapsed]

module.k3s.null_resource.servers_install["server-one"]: Still creating... [3m40s elapsed]

module.k3s.null_resource.servers_install["server-one"]: Still creating... [3m50s elapsed]

module.k3s.null_resource.servers_install["server-one"]: Still creating... [4m0s elapsed]

module.k3s.null_resource.servers_install["server-one"]: Still creating... [4m10s elapsed]

module.k3s.null_resource.servers_install["server-one"]: Still creating... [4m20s elapsed]

module.k3s.null_resource.servers_install["server-one"]: Still creating... [4m30s elapsed]

module.k3s.null_resource.servers_install["server-one"]: Still creating... [4m40s elapsed]

module.k3s.null_resource.servers_install["server-one"]: Still creating... [4m50s elapsed]

╷

│ Error: file provisioner error

│

│ with module.k3s.null_resource.servers_install["server-one"],

│ on .terraform/modules/k3s/server_nodes.tf line 200, in resource "null_resource" "servers_install":

│ 200: provisioner "file" {

│

│ timeout - last error: SSH authentication failed (builder@192.168.1.159:22): ssh: handshake failed: ssh: unable to authenticate, attempted methods [none], no supported methods

│ remain

╵

But nothing would provision directly on my local Ubuntu hosts with Terraform. I assumed it needed the same passwordless root SSH permissions as my Kubespray/kubeadm/Ansible setups, but that did not seem to suffice.
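The second failure differs from the first: "attempted methods [none]" means the SSH client offered no credentials at all. Decoding the connection_json trigger from the second plan points the same way. host_key is now null, which is why the known_hosts error is gone, but password and private_key are null too, and the user is builder rather than root. The sketch below decodes that trigger and lists which credential fields are set. AUTH_FIELDS is my own selection of Terraform's SSH connection arguments, and reading null as "not configured" is an assumption. Terraform may still try an ssh-agent when agent is unset, but the empty methods list suggests none was reachable from this shell.

```python
import base64
import json

# connection_json trigger from servers_drain["server-one"] in the second plan, copied verbatim.
CONNECTION_JSON_B64 = "eyJhZ2VudCI6bnVsbCwiYWdlbnRfaWRlbnRpdHkiOm51bGwsImJhc3Rpb25fY2VydGlmaWNhdGUiOm51bGwsImJhc3Rpb25faG9zdCI6bnVsbCwiYmFzdGlvbl9ob3N0X2tleSI6bnVsbCwiYmFzdGlvbl9wYXNzd29yZCI6bnVsbCwiYmFzdGlvbl9wb3J0IjpudWxsLCJiYXN0aW9uX3ByaXZhdGVfa2V5IjpudWxsLCJiYXN0aW9uX3VzZXIiOm51bGwsImNhY2VydCI6bnVsbCwiY2VydGlmaWNhdGUiOm51bGwsImhvc3QiOiIxOTIuMTY4LjEuMTU5IiwiaG9zdF9rZXkiOm51bGwsImh0dHBzIjpudWxsLCJpbnNlY3VyZSI6bnVsbCwicGFzc3dvcmQiOm51bGwsInBvcnQiOm51bGwsInByaXZhdGVfa2V5IjpudWxsLCJzY3JpcHRfcGF0aCI6bnVsbCwidGltZW91dCI6bnVsbCwidHlwZSI6InNzaCIsInVzZV9udGxtIjpudWxsLCJ1c2VyIjoiYnVpbGRlciJ9"

# Terraform SSH connection arguments that can supply credentials (my selection).
AUTH_FIELDS = ("password", "private_key", "certificate", "agent", "agent_identity")


def decode_connection(b64):
    """Decode a base64 connection_json trigger into a dict."""
    padded = b64 + "=" * (-len(b64) % 4)  # tolerate stripped '=' padding
    return json.loads(base64.b64decode(padded))


def configured_auth(conn):
    """Return only the credential-related fields that are non-null."""
    return {k: conn[k] for k in AUTH_FIELDS if conn.get(k) is not None}


if __name__ == "__main__":
    conn = decode_connection(CONNECTION_JSON_B64)
    print(conn["type"], conn["user"], conn["host"], conn["host_key"])  # ssh builder 192.168.1.159 None
    print(configured_auth(conn) or "no credentials configured")
```

If this reading is right, passing a private_key (or making an agent available) in the module's connection settings would be the next thing to try.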

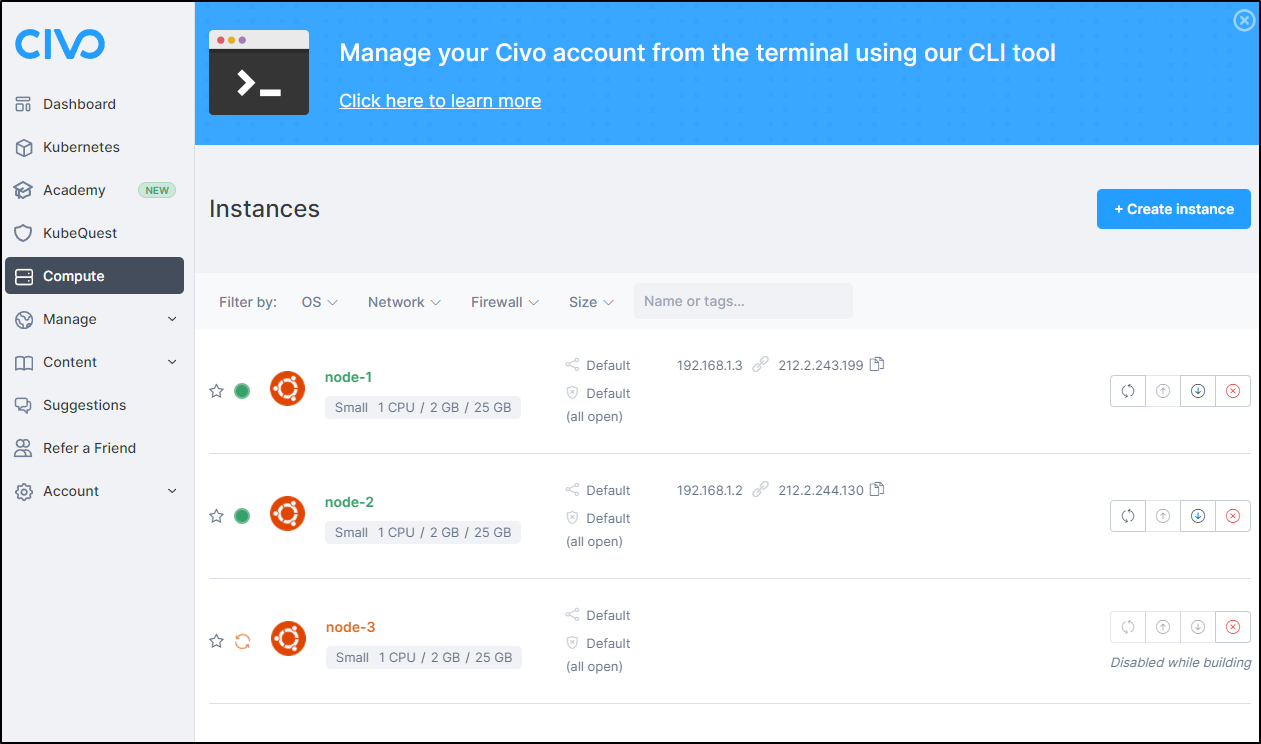

CIVO Terraform and K3s

Using Terraform for K3s is doable. There are a few community Terraform modules one can use; the one I had the most success with, and the one I'll cover here, is the k3s module on GitHub: https://github.com/xunleii/terraform-module-k3s.

builder@DESKTOP-QADGF36:~/Workspaces/terraform-module-k3s/examples/civo-k3s$ terraform apply -var-file="tf.vars"

module.k3s.random_password.k3s_cluster_secret: Refreshing state... [id=none]

module.k3s.tls_private_key.kubernetes_ca[2]: Refreshing state... [id=80ca4a9bebd3fa1437efef27571e4927e873a146]

module.k3s.tls_private_key.master_user[0]: Refreshing state... [id=56e5b1cf3fb10387213633492993251aed8a2c5c]

module.k3s.tls_private_key.kubernetes_ca[0]: Refreshing state... [id=ac1d6302a69b6a95ceaa0ab3513a0befc52eae1b]

module.k3s.tls_private_key.kubernetes_ca[1]: Refreshing state... [id=fb31ae6ed4b0cda025947155d636f41acc97932a]

module.k3s.tls_cert_request.master_user[0]: Refreshing state... [id=5457fd7b6ff35fb465293f5ac48cf51f73f604da]

module.k3s.tls_self_signed_cert.kubernetes_ca_certs["0"]: Refreshing state... [id=179381637326519421633541131708977756020]

module.k3s.tls_self_signed_cert.kubernetes_ca_certs["1"]: Refreshing state... [id=162007174841491717249967010213281523901]

module.k3s.tls_self_signed_cert.kubernetes_ca_certs["2"]: Refreshing state... [id=240239317385907192679446816919634150104]

module.k3s.tls_locally_signed_cert.master_user[0]: Refreshing state... [id=269975477633753601419630313929603900630]

civo_instance.node_instances[1]: Refreshing state... [id=27cf0c45-25d9-47a8-abda-e0616fa68d9f]

Note: Objects have changed outside of Terraform

Terraform detected the following changes made outside of Terraform since the last "terraform apply":

# module.k3s.tls_self_signed_cert.kubernetes_ca_certs["2"] has been changed

~ resource "tls_self_signed_cert" "kubernetes_ca_certs" {

id = "240239317385907192679446816919634150104"

# (10 unchanged attributes hidden)

~ subject {

+ street_address = []

# (1 unchanged attribute hidden)

}

}

# module.k3s.tls_self_signed_cert.kubernetes_ca_certs["0"] has been changed

~ resource "tls_self_signed_cert" "kubernetes_ca_certs" {

id = "179381637326519421633541131708977756020"

# (10 unchanged attributes hidden)

~ subject {

+ street_address = []

# (1 unchanged attribute hidden)

}

}

# module.k3s.tls_self_signed_cert.kubernetes_ca_certs["1"] has been changed

~ resource "tls_self_signed_cert" "kubernetes_ca_certs" {

id = "162007174841491717249967010213281523901"

# (10 unchanged attributes hidden)

~ subject {

+ street_address = []

# (1 unchanged attribute hidden)

}

}

# module.k3s.tls_cert_request.master_user[0] has been changed

~ resource "tls_cert_request" "master_user" {

id = "5457fd7b6ff35fb465293f5ac48cf51f73f604da"

# (3 unchanged attributes hidden)

~ subject {

+ street_address = []

# (2 unchanged attributes hidden)

}

}

# civo_instance.node_instances[1] has been changed

~ resource "civo_instance" "node_instances" {

id = "27cf0c45-25d9-47a8-abda-e0616fa68d9f"

+ tags = []

# (17 unchanged attributes hidden)

}

Unless you have made equivalent changes to your configuration, or ignored the relevant attributes using ignore_changes, the following plan

may include actions to undo or respond to these changes.

───────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────

Terraform used the selected providers to generate the following execution plan. Resource actions are indicated with the following symbols:
  + create

Terraform will perform the following actions:

  # civo_instance.node_instances[0] will be created
  + resource "civo_instance" "node_instances" {
      + cpu_cores          = (known after apply)
      + created_at         = (known after apply)
      + disk_gb            = (known after apply)
      + disk_image         = (known after apply)
      + firewall_id        = (known after apply)
      + hostname           = "node-1"
      + id                 = (known after apply)
      + initial_password   = (sensitive value)
      + initial_user       = "civo"
      + network_id         = (known after apply)
      + private_ip         = (known after apply)
      + public_ip          = (known after apply)
      + public_ip_required = "create"
      + ram_mb             = (known after apply)
      + size               = "g3.small"
      + source_id          = (known after apply)
      + source_type        = (known after apply)
      + status             = (known after apply)
      + template           = "8eb48e20-e5db-49fe-9cdf-cc8f381c61c6"
    }

  # civo_instance.node_instances[2] will be created
  + resource "civo_instance" "node_instances" {
      + cpu_cores          = (known after apply)
      + created_at         = (known after apply)
      + disk_gb            = (known after apply)
      + disk_image         = (known after apply)
      + firewall_id        = (known after apply)
      + hostname           = "node-3"
      + id                 = (known after apply)
      + initial_password   = (sensitive value)
      + initial_user       = "civo"
      + network_id         = (known after apply)
      + private_ip         = (known after apply)
      + public_ip          = (known after apply)
      + public_ip_required = "create"
      + ram_mb             = (known after apply)
      + size               = "g3.small"
      + source_id          = (known after apply)
      + source_type        = (known after apply)
      + status             = (known after apply)
      + template           = "8eb48e20-e5db-49fe-9cdf-cc8f381c61c6"
    }

  # module.k3s.null_resource.k8s_ca_certificates_install[0] will be created
  + resource "null_resource" "k8s_ca_certificates_install" {
      + id = (known after apply)
    }

  # module.k3s.null_resource.k8s_ca_certificates_install[1] will be created
  + resource "null_resource" "k8s_ca_certificates_install" {
      + id = (known after apply)
    }

  # module.k3s.null_resource.k8s_ca_certificates_install[2] will be created
  + resource "null_resource" "k8s_ca_certificates_install" {
      + id = (known after apply)
    }

  # module.k3s.null_resource.k8s_ca_certificates_install[3] will be created
  + resource "null_resource" "k8s_ca_certificates_install" {
      + id = (known after apply)
    }

  # module.k3s.null_resource.k8s_ca_certificates_install[4] will be created
  + resource "null_resource" "k8s_ca_certificates_install" {
      + id = (known after apply)
    }

  # module.k3s.null_resource.k8s_ca_certificates_install[5] will be created
  + resource "null_resource" "k8s_ca_certificates_install" {
      + id = (known after apply)
    }

  # module.k3s.null_resource.kubernetes_ready will be created
  + resource "null_resource" "kubernetes_ready" {
      + id = (known after apply)
    }

  # module.k3s.null_resource.servers_drain["node-1"] will be created
  + resource "null_resource" "servers_drain" {
      + id       = (known after apply)
      + triggers = (known after apply)
    }

  # module.k3s.null_resource.servers_drain["node-2"] will be created
  + resource "null_resource" "servers_drain" {
      + id       = (known after apply)
      + triggers = (known after apply)
    }

  # module.k3s.null_resource.servers_drain["node-3"] will be created
  + resource "null_resource" "servers_drain" {
      + id       = (known after apply)
      + triggers = (known after apply)
    }

  # module.k3s.null_resource.servers_install["node-1"] will be created
  + resource "null_resource" "servers_install" {
      + id       = (known after apply)
      + triggers = (known after apply)
    }

  # module.k3s.null_resource.servers_install["node-2"] will be created
  + resource "null_resource" "servers_install" {
      + id       = (known after apply)
      + triggers = (known after apply)
    }

  # module.k3s.null_resource.servers_install["node-3"] will be created
  + resource "null_resource" "servers_install" {
      + id       = (known after apply)
      + triggers = (known after apply)
    }

  # module.k3s.null_resource.servers_label["node-1|node.kubernetes.io/type"] will be created
  + resource "null_resource" "servers_label" {
      + id       = (known after apply)
      + triggers = (known after apply)
    }

  # module.k3s.null_resource.servers_label["node-2|node.kubernetes.io/type"] will be created
  + resource "null_resource" "servers_label" {
      + id       = (known after apply)
      + triggers = (known after apply)
    }

  # module.k3s.null_resource.servers_label["node-3|node.kubernetes.io/type"] will be created
  + resource "null_resource" "servers_label" {
      + id       = (known after apply)
      + triggers = (known after apply)
    }

Plan: 18 to add, 0 to change, 0 to destroy.

Changes to Outputs:
  + kube_config = (sensitive value)

╷
│ Warning: Deprecated Resource
│
│   with data.civo_template.ubuntu,
│   on main.tf line 19, in data "civo_template" "ubuntu":
│   19: data "civo_template" "ubuntu" {
│
│ "civo_template" data source is deprecated. Moving forward, please use "civo_disk_image" data source.
│
│ (and one more similar warning elsewhere)
╵

╷
│ Warning: Attribute is deprecated
│
│   with civo_instance.node_instances,
│   on main.tf line 42, in resource "civo_instance" "node_instances":
│   42:   template = element(data.civo_template.ubuntu.templates, 0).id
│
│ "template" attribute is deprecated. Moving forward, please use "disk_image" attribute.
╵

╷
│ Warning: Deprecated Attribute
│
│   with civo_instance.node_instances[0],
│   on main.tf line 42, in resource "civo_instance" "node_instances":
│   42:   template = element(data.civo_template.ubuntu.templates, 0).id
│
│ "template" attribute is deprecated. Moving forward, please use "disk_image" attribute.
│
│ (and 2 more similar warnings elsewhere)
╵

Do you want to perform these actions?
  Terraform will perform the actions described above.
  Only 'yes' will be accepted to approve.

  Enter a value: yes

civo_instance.node_instances[0]: Creating...
civo_instance.node_instances[2]: Creating...
civo_instance.node_instances[2]: Still creating... [10s elapsed]
civo_instance.node_instances[0]: Still creating... [10s elapsed]
civo_instance.node_instances[0]: Still creating... [20s elapsed]
civo_instance.node_instances[2]: Still creating... [20s elapsed]
civo_instance.node_instances[2]: Still creating... [30s elapsed]
civo_instance.node_instances[0]: Still creating... [30s elapsed]
civo_instance.node_instances[0]: Still creating... [40s elapsed]
civo_instance.node_instances[2]: Still creating... [40s elapsed]
civo_instance.node_instances[0]: Still creating... [50s elapsed]
civo_instance.node_instances[2]: Still creating... [50s elapsed]
civo_instance.node_instances[2]: Still creating... [1m0s elapsed]
civo_instance.node_instances[0]: Still creating... [1m0s elapsed]
civo_instance.node_instances[0]: Still creating... [1m10s elapsed]
civo_instance.node_instances[2]: Still creating... [1m10s elapsed]
civo_instance.node_instances[0]: Creation complete after 1m12s [id=078aeab9-89da-46db-995b-d20fc2a026e7]
civo_instance.node_instances[2]: Creation complete after 1m16s [id=7666cd7d-48ad-4703-9253-481d6aa9e7a5]
module.k3s.null_resource.k8s_ca_certificates_install[5]: Creating...
module.k3s.null_resource.k8s_ca_certificates_install[4]: Creating...
module.k3s.null_resource.k8s_ca_certificates_install[3]: Creating...
module.k3s.null_resource.k8s_ca_certificates_install[0]: Creating...
module.k3s.null_resource.k8s_ca_certificates_install[2]: Creating...
module.k3s.null_resource.k8s_ca_certificates_install[1]: Creating...
module.k3s.null_resource.k8s_ca_certificates_install[5]: Provisioning with 'remote-exec'...
module.k3s.null_resource.k8s_ca_certificates_install[1]: Provisioning with 'remote-exec'...
module.k3s.null_resource.k8s_ca_certificates_install[3]: Provisioning with 'remote-exec'...
module.k3s.null_resource.k8s_ca_certificates_install[2]: Provisioning with 'remote-exec'...
module.k3s.null_resource.k8s_ca_certificates_install[0]: Provisioning with 'remote-exec'...
module.k3s.null_resource.k8s_ca_certificates_install[4]: Provisioning with 'remote-exec'...
module.k3s.null_resource.k8s_ca_certificates_install[5] (remote-exec): Connecting to remote host via SSH...
module.k3s.null_resource.k8s_ca_certificates_install[5] (remote-exec):   Host: 212.2.243.199
module.k3s.null_resource.k8s_ca_certificates_install[5] (remote-exec):   User: root
module.k3s.null_resource.k8s_ca_certificates_install[5] (remote-exec):   Password: true
module.k3s.null_resource.k8s_ca_certificates_install[5] (remote-exec):   Private key: false
module.k3s.null_resource.k8s_ca_certificates_install[5] (remote-exec):   Certificate: false
module.k3s.null_resource.k8s_ca_certificates_install[5] (remote-exec):   SSH Agent: false
module.k3s.null_resource.k8s_ca_certificates_install[5] (remote-exec):   Checking Host Key: false
module.k3s.null_resource.k8s_ca_certificates_install[5] (remote-exec):   Target Platform: unix
module.k3s.null_resource.k8s_ca_certificates_install[3] (remote-exec): Connecting to remote host via SSH...
module.k3s.null_resource.k8s_ca_certificates_install[3] (remote-exec):   Host: 212.2.243.199
module.k3s.null_resource.k8s_ca_certificates_install[3] (remote-exec):   User: root
module.k3s.null_resource.k8s_ca_certificates_install[3] (remote-exec):   Password: true
module.k3s.null_resource.k8s_ca_certificates_install[3] (remote-exec):   Private key: false
module.k3s.null_resource.k8s_ca_certificates_install[3] (remote-exec):   Certificate: false
module.k3s.null_resource.k8s_ca_certificates_install[3] (remote-exec):   SSH Agent: false
module.k3s.null_resource.k8s_ca_certificates_install[3] (remote-exec):   Checking Host Key: false
module.k3s.null_resource.k8s_ca_certificates_install[3] (remote-exec):   Target Platform: unix
module.k3s.null_resource.k8s_ca_certificates_install[2] (remote-exec): (output suppressed due to sensitive value in config)
module.k3s.null_resource.k8s_ca_certificates_install[0] (remote-exec): (output suppressed due to sensitive value in config)
module.k3s.null_resource.k8s_ca_certificates_install[4] (remote-exec): (output suppressed due to sensitive value in config)
module.k3s.null_resource.k8s_ca_certificates_install[1] (remote-exec): Connecting to remote host via SSH...
module.k3s.null_resource.k8s_ca_certificates_install[1] (remote-exec):   Host: 212.2.243.199
module.k3s.null_resource.k8s_ca_certificates_install[1] (remote-exec):   User: root
module.k3s.null_resource.k8s_ca_certificates_install[1] (remote-exec):   Password: true
module.k3s.null_resource.k8s_ca_certificates_install[1] (remote-exec):   Private key: false
module.k3s.null_resource.k8s_ca_certificates_install[1] (remote-exec):   Certificate: false
module.k3s.null_resource.k8s_ca_certificates_install[1] (remote-exec):   SSH Agent: false