Published: Oct 20, 2021 by Isaac Johnson

Crossplane.io takes the principles of Infrastructure as Code (IaC) and marries them with the Kubernetes concepts of Custom Resource Definitions (CRDs) and of defining multi-platform objects as YAML.

With tools like Terraform, we define our infrastructure in a declarative configuration language (HCL). We run init and often “plan” to see what would be created. Then we run an apply that creates or changes the infrastructure and saves the resulting state in a tfstate file.

With Crossplane, we define our infrastructure in plain YAML, like any other Kubernetes object. When we submit a request, the API server by default only validates it against the schema in the CRD. A provider then acts on it, using a ProviderConfig that exposes our credentials, to actually effect the change.

In this regard, with proper RBAC rules, we could allow our development teams to create infrastructure without exposing our elevated service principal or identity.

Let’s dig into Crossplane and see how easy it is to work with Azure infrastructure.

Setup

Create a namespace to hold Crossplane and, later, our provider credentials:

$ kubectl create namespace crossplane-system

namespace/crossplane-system created

Add the Helm repo and update:

$ helm repo add crossplane-stable https://charts.crossplane.io/stable

"crossplane-stable" already exists with the same configuration, skipping

$ helm repo update

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "ingress-nginx" chart repository

...Successfully got an update from the "jenkins" chart repository

...Successfully got an update from the "nginx-stable" chart repository

...Successfully got an update from the "kedacore" chart repository

...Successfully got an update from the "crossplane-stable" chart repository

...Successfully got an update from the "jetstack" chart repository

...Successfully got an update from the "datawire" chart repository

...Successfully got an update from the "bitnami" chart repository

Update Complete. ⎈Happy Helming!⎈

Then install the Crossplane chart into the namespace we created:

$ helm install crossplane --namespace crossplane-system crossplane-stable/crossplane

NAME: crossplane

LAST DEPLOYED: Sat Oct 16 09:21:29 2021

NAMESPACE: crossplane-system

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

Release: crossplane

Chart Name: crossplane

Chart Description: Crossplane is an open source Kubernetes add-on that enables platform teams to assemble infrastructure from multiple vendors, and expose higher level self-service APIs for application teams to consume.

Chart Version: 1.4.1

Chart Application Version: 1.4.1

Kube Version: v1.22.2

Install CLI

Next we need to install the Crossplane CLI (if you have not already):

$ curl -sL https://raw.githubusercontent.com/crossplane/crossplane/master/install.sh | sh

kubectl plugin downloaded successfully! Run the following commands to finish installing it:

$ sudo mv kubectl-crossplane /usr/local/bin

$ kubectl crossplane --help

Visit https://crossplane.io to get started. 🚀

Have a nice day! 👋

$ sudo mv kubectl-crossplane /usr/local/bin

[sudo] password for builder:

$ kubectl crossplane --help

Usage: kubectl crossplane <command>

A command line tool for interacting with Crossplane.

Flags:

-h, --help Show context-sensitive help.

-v, --version Print version and quit.

--verbose Print verbose logging statements.

Commands:

build configuration

Build a Configuration package.

build provider

Build a Provider package.

install configuration <package> [<name>]

Install a Configuration package.

install provider <package> [<name>]

Install a Provider package.

update configuration <name> <tag>

Update a Configuration package.

update provider <name> <tag>

Update a Provider package.

push configuration <tag>

Push a Configuration package.

push provider <tag>

Push a Provider package.

Run "kubectl crossplane <command> --help" for more information on a command.

We want the latest release, which at the time of writing was v1.4.1. Releases come out often, so check the releases page for the current version.

$ kubectl crossplane install configuration registry.upbound.io/xp/getting-started-with-azure:v1.4.1

configuration.pkg.crossplane.io/xp-getting-started-with-azure created

The guide says to wait for the package(s) to become healthy before moving on.

We can check for pkgs across all namespaces:

$ kubectl get pkg --all-namespaces

NAME INSTALLED HEALTHY PACKAGE AGE

provider.pkg.crossplane.io/crossplane-provider-azure True True crossplane/provider-azure:v0.17.0 3m36s

NAME INSTALLED HEALTHY PACKAGE AGE

configuration.pkg.crossplane.io/xp-getting-started-with-azure True True registry.upbound.io/xp/getting-started-with-azure:v1.4.1 3m40s
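Rather than eyeballing those columns, we can script the check. The sketch below assumes the column order shown above (NAME, INSTALLED, HEALTHY, PACKAGE, AGE), and `all_pkgs_healthy` is a hypothetical name. Crossplane packages also carry a Healthy condition, so `kubectl wait providers.pkg.crossplane.io --all --for=condition=Healthy --timeout=5m` should work as well (untested here).

```bash
# Sketch: succeed only if every package row in `kubectl get pkg` output
# reports INSTALLED=True and HEALTHY=True.
# Assumes the column order shown above: NAME INSTALLED HEALTHY PACKAGE AGE.
all_pkgs_healthy() {
  awk '
    $1 == "NAME" || NF == 0 { next }            # skip header and blank lines
    { rows++; if ($2 != "True" || $3 != "True") bad++ }
    END { if (rows > 0 && bad == 0) exit 0; exit 1 }
  '
}

# Usage:
#   kubectl get pkg | all_pkgs_healthy && echo "all packages healthy"
```

The function fails on empty input, so a typo in the resource name does not count as "healthy".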

Next we want to create a Service Principal that has Owner privileges:

$ az ad sp create-for-rbac --sdk-auth --role Owner > "creds.json"

WARNING: Creating 'Owner' role assignment under scope '/subscriptions/d955c0ba-13dc-44cf-a29a-8fed74cbb22d'

WARNING: Retrying role assignment creation: 1/36

WARNING: Retrying role assignment creation: 2/36

WARNING: Retrying role assignment creation: 3/36

WARNING: Retrying role assignment creation: 4/36

WARNING: Retrying role assignment creation: 5/36

WARNING: Retrying role assignment creation: 6/36

WARNING: Retrying role assignment creation: 7/36

WARNING: The output includes credentials that you must protect. Be sure that you do not include these credentials in your code or check the credentials into your source control. For more information, see https://aka.ms/azadsp-cli

We can pull the SP Client ID from the file:

$ AZURE_CLIENT_ID=$(jq -r ".clientId" < "./creds.json")

$ echo $AZURE_CLIENT_ID

e9a01d7f-e45a-4c68-af4f-84d0d86dc212

We need to set some well-known API GUIDs for the next part:

RW_ALL_APPS=1cda74f2-2616-4834-b122-5cb1b07f8a59

RW_DIR_DATA=78c8a3c8-a07e-4b9e-af1b-b5ccab50a175

AAD_GRAPH_API=00000002-0000-0000-c000-000000000000

Now we need to grant the permissions:

$ az ad app permission add --id "${AZURE_CLIENT_ID}" --api ${AAD_GRAPH_API} --api-permissions ${RW_ALL_APPS}=Role ${RW_DIR_DATA}=Role

Invoking "az ad app permission grant --id e9a01d7f-e45a-4c68-af4f-84d0d86dc212 --api 00000002-0000-0000-c000-000000000000" is needed to make the change effective

$ az ad app permission grant --id "${AZURE_CLIENT_ID}" --api ${AAD_GRAPH_API} --expires never > /dev/null

$ az ad app permission admin-consent --id "${AZURE_CLIENT_ID}"

Next we create a secret with our credentials in the crossplane-system namespace. At this point, the keys to the kingdom sit in that namespace as a plaintext (merely base64-encoded) secret. It’s worth reviewing your RBAC roles at this point if you wish to protect your Azure resources:

$ kubectl create secret generic azure-creds -n crossplane-system --from-file=creds=./creds.json

secret/azure-creds created

Next we create the ProviderConfig that uses the azure-creds secret (holding creds.json):

$ kubectl apply -f https://raw.githubusercontent.com/crossplane/crossplane/release-1.4/docs/snippets/configure/azure/providerconfig.yaml

providerconfig.azure.crossplane.io/default created

Creating a PostgreSQL database

A simple first test is to create a PostgreSQL database, which we can do rather easily:

$ cat testdb.yaml
apiVersion: database.example.org/v1alpha1
kind: PostgreSQLInstance
metadata:
  name: my-db
  namespace: default
spec:
  parameters:
    storageGB: 20
  compositionSelector:
    matchLabels:
      provider: azure
  writeConnectionSecretToRef:
    name: db-conn

Then apply it and check on its creation:

$ kubectl apply -f testdb.yaml

postgresqlinstance.database.example.org/my-db created

$ kubectl get postgresqlinstance my-db

NAME READY CONNECTION-SECRET AGE

my-db False db-conn 10s

$ kubectl get postgresqlinstance my-db

NAME READY CONNECTION-SECRET AGE

my-db True db-conn 2m2s
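Instead of re-running `kubectl get` until READY flips to True, we can poll the Ready condition directly. The sketch below assumes the claim exposes a Ready condition in status.conditions, which is what the READY column reflects. `wait_until_ready` is a hypothetical helper. The built-in `kubectl wait --for=condition=Ready postgresqlinstance/my-db --timeout=10m` is a simpler alternative.

```bash
# Sketch: poll a Crossplane resource until its Ready condition is "True".
# Usage: wait_until_ready <kind/name> [timeout_seconds] [interval_seconds]
wait_until_ready() {
  local resource="$1" timeout="${2:-600}" interval="${3:-10}" waited=0 status
  while (( waited < timeout )); do
    # Print only the status of the condition whose type is Ready.
    status=$(kubectl get "$resource" \
      -o jsonpath='{.status.conditions[?(@.type=="Ready")].status}' 2>/dev/null)
    if [[ "$status" == "True" ]]; then
      echo "$resource is Ready"
      return 0
    fi
    sleep "$interval"
    waited=$(( waited + interval ))
  done
  echo "timed out after ${timeout}s waiting for $resource" >&2
  return 1
}

# Usage:
#   wait_until_ready postgresqlinstance/my-db 600 15
```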

Since we asked for the connection details to be written to a secret (db-conn), we can see the details for connecting to the PostgreSQL server:

$ kubectl get secrets db-conn -o yaml
apiVersion: v1
data:
  endpoint: bXktZGItbHY5NTItdm5jbXYucG9zdGdyZXMuZGF0YWJhc2UuYXp1cmUuY29t
  password: aG5WTzNsRXVQZXRwa1ZzSmFVclVsVmlSRXZi
  port: NTQzMg==
  username: bXlhZG1pbkBteS1kYi1sdjk1Mi12bmNtdg==

The values are base64-encoded, so we can decode them, for example the endpoint:

$ echo bXktZGItbHY5NTItdm5jbXYucG9zdGdyZXMuZGF0YWJhc2UuYXp1cmUuY29t | base64 --decode

my-db-lv952-vncmv.postgres.database.azure.com

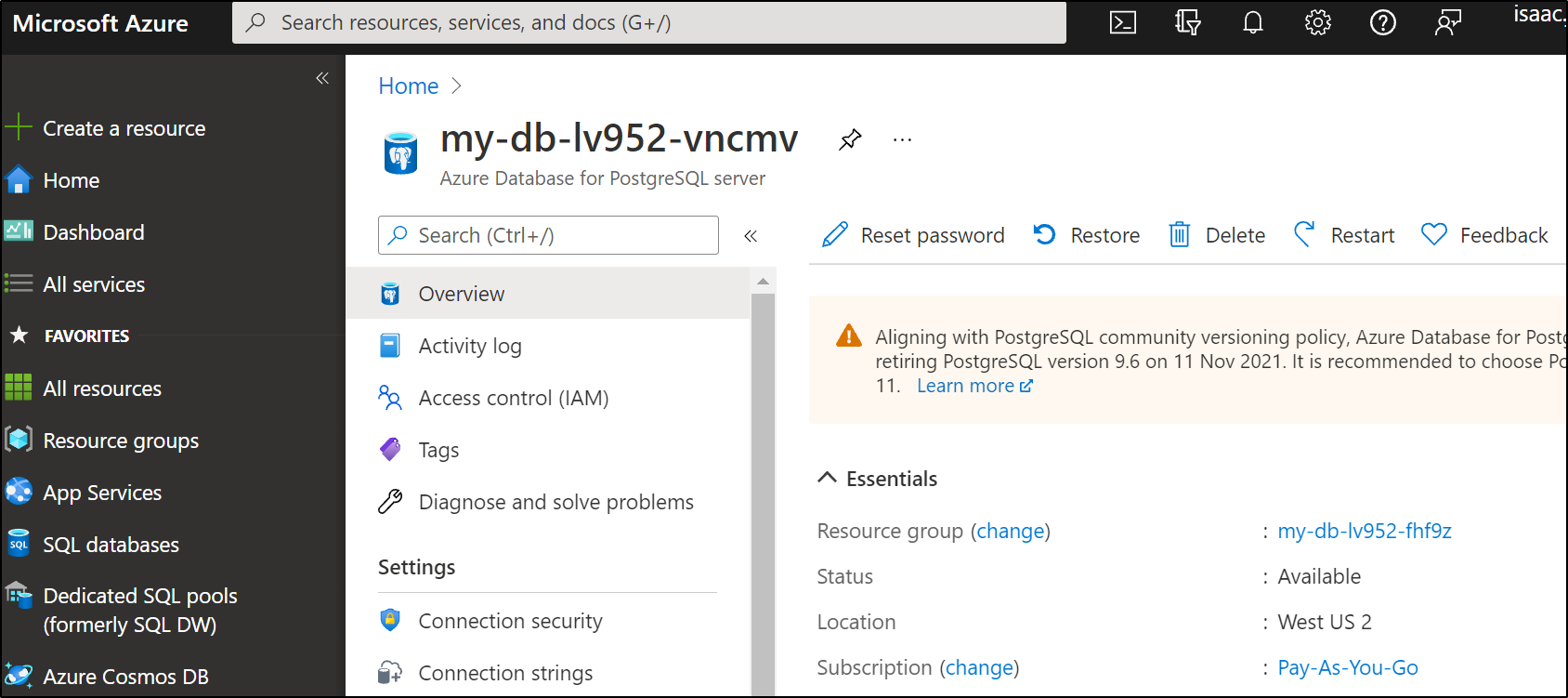

This lines up to what we see in the Azure Portal:
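For a single value, `kubectl get secret db-conn -o jsonpath='{.data.endpoint}' | base64 --decode` does the job. To decode every field at once, the sketch below reads the `-o yaml` output. It assumes kubectl's usual two-space indentation under `data:`, and `decode_secret_data` is a hypothetical name.

```bash
# Sketch: decode every "key: base64value" pair under the top-level data: key
# of `kubectl get secret ... -o yaml` output, printing key=decoded-value.
decode_secret_data() {
  local in_data=0 line
  while IFS= read -r line; do
    if [[ "$line" == "data:" ]]; then in_data=1; continue; fi
    # Any other top-level key (no indentation) ends the data: section.
    if (( in_data )) && [[ "$line" =~ ^[^[:space:]] ]]; then in_data=0; fi
    if (( in_data )) && [[ "$line" =~ ^[[:space:]]+([^:]+):[[:space:]]*(.*)$ ]]; then
      printf '%s=%s\n' "${BASH_REMATCH[1]}" \
        "$(printf '%s' "${BASH_REMATCH[2]}" | base64 --decode)"
    fi
  done
}

# Usage:
#   kubectl get secret db-conn -o yaml | decode_secret_data
```

Keep in mind this prints the password in clear text to your terminal.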

Cleanup

We can remove it just as easily

$ kubectl delete postgresqlinstance my-db

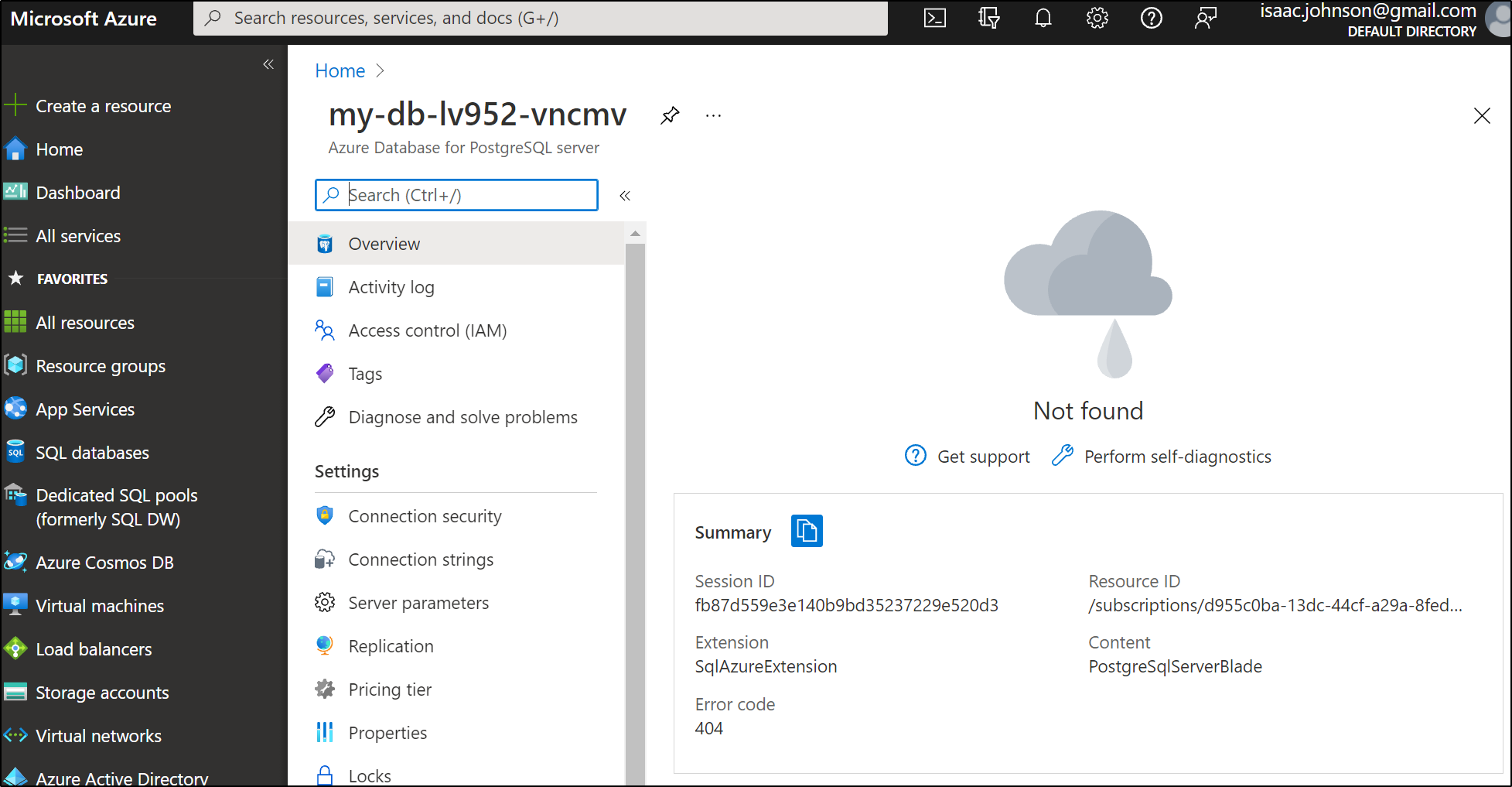

postgresqlinstance.database.example.org "my-db" deleted

And a reload of the portal shows it’s already removed:

Resource Groups

Let’s look at one more thing we can create with the latest Crossplane install: resource groups. Apply the YAML:

$ cat network4.yaml
apiVersion: azure.crossplane.io/v1alpha3
kind: ResourceGroup
metadata:
  name: sqlserverpostgresql-rg
spec:
  location: West US 2

$ kubectl apply -f network4.yaml

resourcegroup.azure.crossplane.io/sqlserverpostgresql-rg created

And we can see the group was created:

$ az group list -o tsv | grep postgres

/subscriptions/d955c0ba-13dc-44cf-a29a-8fed74cbb22d/resourceGroups/sqlserverpostgresql-rg westus2 None sqlserverpostgresql-rg None Microsoft.Resources/resourceGroups

Removing it is just as easy:

$ kubectl delete -f network4.yaml

resourcegroup.azure.crossplane.io "sqlserverpostgresql-rg" deleted

$ az group list -o tsv | grep postgres

$
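Note how the display name “West US 2” shows up as westus2 in the listing. Below are two small helper sketches with hypothetical names. The first mimics that normalization (lowercase, spaces removed). That rule is an assumption that holds for the regions in this post; `az account list-locations -o table` shows the real mapping. The second uses `az group exists`, which prints true or false, instead of grepping the whole group list.

```bash
# Sketch: "West US 2" -> "westus2" (assumed rule: drop spaces, lowercase).
normalize_location() {
  printf '%s\n' "$1" | tr -d ' ' | tr '[:upper:]' '[:lower:]'
}

# Sketch: exact-name existence check for a resource group.
rg_exists() {
  [[ "$(az group exists --name "$1")" == "true" ]]
}

# Usage:
#   rg_exists sqlserverpostgresql-rg && echo "still there"
```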

AKS

Before we move on to create a Kubernetes cluster with Crossplane, I want to check on some VM sizes. I cannot recall whether the current version of Standard DS2 is v2 or v3, so I can check with the Azure CLI:

$ az vm list-sizes --location "Central US" -o tsv | grep DS2

8 7168 Standard_DS2 2 1047552 14336

8 7168 Standard_DS2_v2_Promo 2 1047552 14336

8 7168 Standard_DS2_v2 2 1047552 14336

You can get the full list (I’ll just show three) using the table output:

$ az vm list-sizes --location "Central US" -o table | head -n5

MaxDataDiskCount MemoryInMb Name NumberOfCores OsDiskSizeInMb ResourceDiskSizeInMb

------------------ ------------ ---------------------- --------------- ---------------- ----------------------

1 768 Standard_A0 1 1047552 20480

2 1792 Standard_A1 1 1047552 71680

4 3584 Standard_A2 2 1047552 138240
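The grep above also matched the _Promo size. A filter on the Name column gives an exact match instead. The sketch below assumes the tsv column order seen in the table header (MaxDataDiskCount, MemoryInMb, Name, NumberOfCores, OsDiskSizeInMb, ResourceDiskSizeInMb), and `vm_sizes_matching` is a hypothetical name. A JMESPath query such as `az vm list-sizes --location "Central US" --query "[?name=='Standard_DS2_v2']" -o table` should do the same inside the CLI.

```bash
# Sketch: print name, cores and memory for sizes whose Name matches a regex,
# reading `az vm list-sizes -o tsv` output on stdin.
vm_sizes_matching() {
  awk -v pat="$1" '$3 ~ pat { printf "%s cores=%s memoryMb=%s\n", $3, $4, $2 }'
}

# Usage:
#   az vm list-sizes --location "Central US" -o tsv | vm_sizes_matching '^Standard_DS2_v2$'
```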

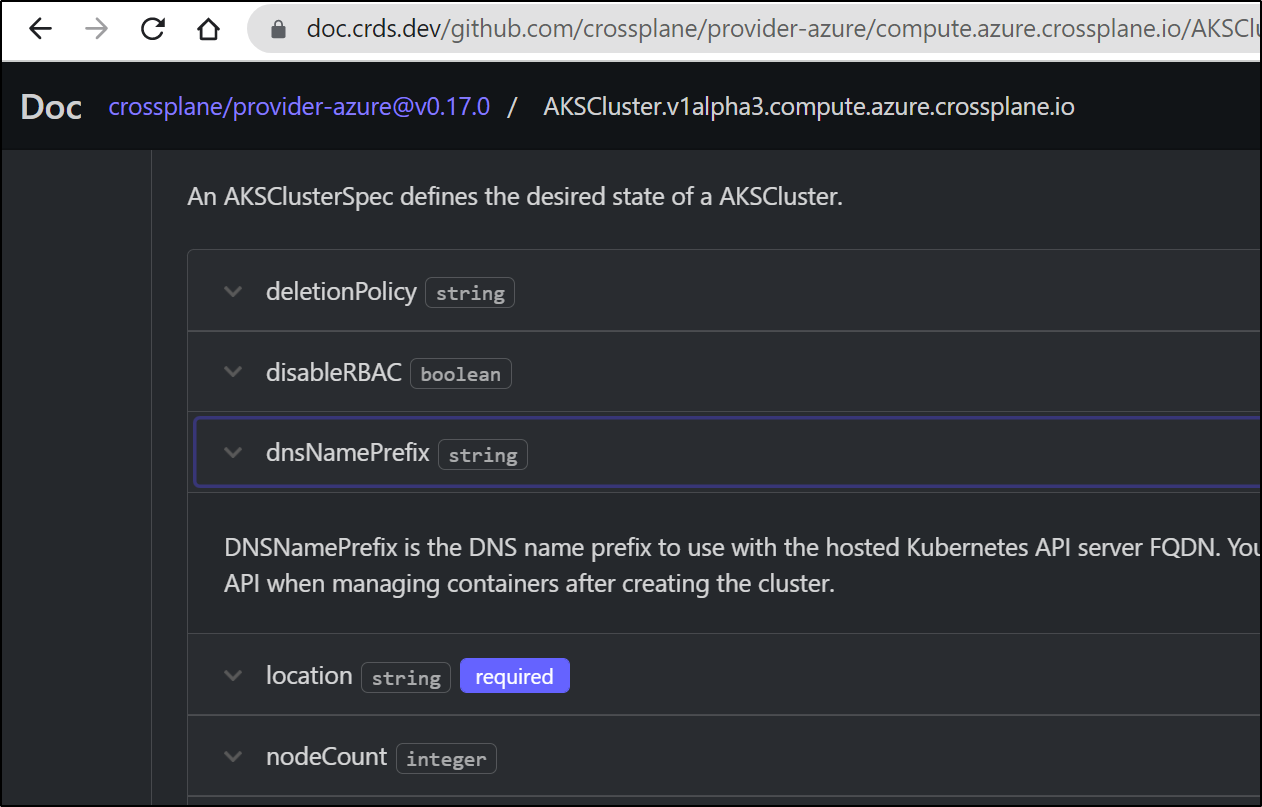

At present, Crossplane gives us far fewer options than Terraform. If we compare the Crossplane AKSCluster resource to the Terraform AzureRM one, we can see many more options on the Terraform side.

Crossplane does have docs that describe its resources. For instance, we can look up the details of ResourceGroup.

Let’s create a Resource Group and Cluster with Crossplane:

$ cat crossplane-aks.yaml
apiVersion: azure.crossplane.io/v1alpha3
kind: ResourceGroup
metadata:
  name: aksviacp-rg
spec:
  location: Central US
---
apiVersion: compute.azure.crossplane.io/v1alpha3
kind: AKSCluster
metadata:
  name: idjaks01
spec:
  location: Central US
  version: 1.21.1
  nodeVMSize: Standard_DS2_v2
  nodeCount: 2
  resourceGroupName: aksviacp-rg

$ kubectl apply -f crossplane-aks.yaml

resourcegroup.azure.crossplane.io/aksviacp-rg created

akscluster.compute.azure.crossplane.io/idjaks01 created

We can check on the status of our objects:

$ kubectl get ResourceGroup aksviacp-rg

NAME READY SYNCED

aksviacp-rg True True

$ kubectl get AKSCluster

NAME READY SYNCED ENDPOINT LOCATION AGE

idjaks01 False False Central US 82s

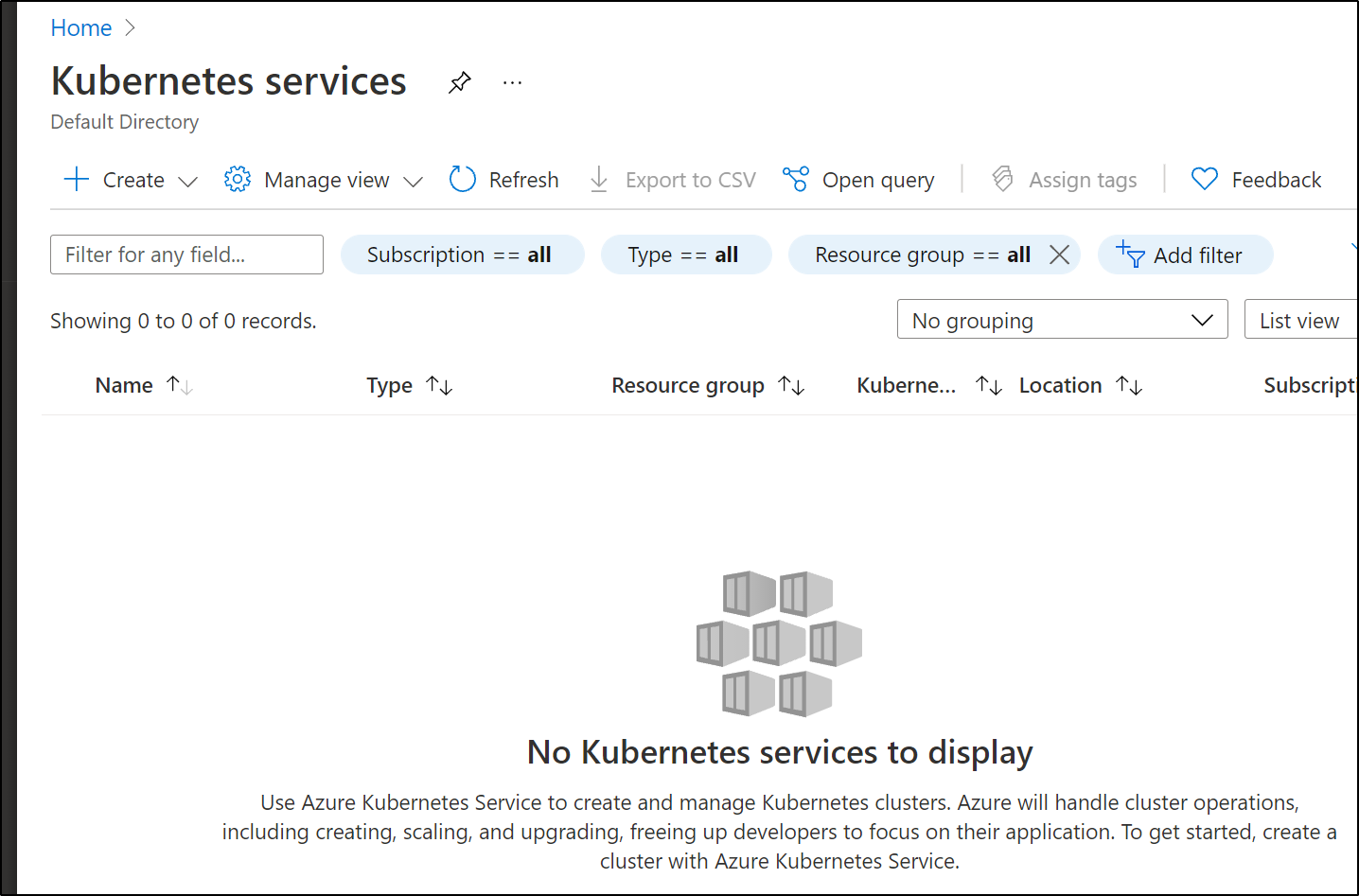

I waited a while and even checked the portal:

I then did a describe on the resource:

$ kubectl describe AKSCluster idjaks01 | tail -n 5

Type: Synced

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Warning CannotCreateExternalResource 63s (x8 over 3m27s) managed/akscluster.compute.azure.crossplane.io cannot create AKSCluster: containerservice.ManagedClustersClient#CreateOrUpdate: Failure sending request: StatusCode=400 -- Original Error: Code="InvalidParameter" Message="The value of parameter dnsPrefix is invalid. Error details: DNS prefix '' is invalid. DNS prefix must contain between 1 and 54 characters. Please see https://aka.ms/aks-naming-rules for more details.. Please see https://aka.ms/aks-naming-rules for more details." Target="dnsPrefix"

It would appear the DNS prefix is now required, even though it is not marked as such in the docs.
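The API only rejects a bad prefix after the request reaches Azure, so a local pre-check can save a failed reconcile loop. The sketch below enforces the 1 to 54 character limit from the error message. The character rule (letters, digits and hyphens, starting and ending with a letter or digit) is my assumption, so confirm it against https://aka.ms/aks-naming-rules. The function name is hypothetical.

```bash
# Sketch: pre-check an AKS dnsNamePrefix before applying.
# From the error above: length must be 1 to 54 characters.
# Assumed: only letters, digits and hyphens; starts and ends with a letter or digit.
valid_dns_prefix() {
  local p="$1"
  (( ${#p} >= 1 && ${#p} <= 54 )) || return 1
  [[ "$p" =~ ^[A-Za-z0-9]([A-Za-z0-9-]*[A-Za-z0-9])?$ ]]
}

# Usage:
#   valid_dns_prefix idjviacpaks01 && echo "looks valid"
```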

Let us add a dnsNamePrefix to the end of the spec and apply:

$ cat crossplane-aks.yaml
apiVersion: azure.crossplane.io/v1alpha3
kind: ResourceGroup
metadata:
  name: aksviacp-rg
spec:
  location: Central US
---
apiVersion: compute.azure.crossplane.io/v1alpha3
kind: AKSCluster
metadata:
  name: idjaks01
spec:
  location: Central US
  version: 1.21.1
  nodeVMSize: Standard_DS2_v2
  nodeCount: 2
  resourceGroupName: aksviacp-rg
  dnsNamePrefix: idjviacpaks01

$ kubectl apply -f crossplane-aks.yaml

resourcegroup.azure.crossplane.io/aksviacp-rg unchanged

akscluster.compute.azure.crossplane.io/idjaks01 configured

This is similar to running terraform apply against existing infrastructure. Because Crossplane builds on Kubernetes, kubectl reports that the Resource Group is unchanged (so it won’t touch it), but that the AKS cluster object was configured, i.e. updated.

Checking status now gives us some confusing information:

$ kubectl describe AKSCluster idjaks01 | tail -n 2

Warning CannotCreateExternalResource 85s (x2 over 89s) managed/akscluster.compute.azure.crossplane.io (combined from similar events): cannot create AKSCluster: containerservice.ManagedClustersClient#CreateOrUpdate: Failure sending request: StatusCode=400 -- Original Error: Code="BadRequest" Message="The credentials in ServicePrincipalProfile were invalid. Please see https://aka.ms/aks-sp-help for more details. (Details: adal: Refresh request failed. Status Code = '401'. Response body: {\"error\":\"invalid_client\",\"error_description\":\"AADSTS7000215: Invalid client secret is provided.\\r\\nTrace ID: 20e03423-d652-4476-bf8c-cf378acbcf00\\r\\nCorrelation ID: b7e31a51-c1b1-4e36-b297-46599a7f31e3\\r\\nTimestamp: 2021-10-17 12:23:10Z\",\"error_codes\":[7000215],\"timestamp\":\"2021-10-17 12:23:10Z\",\"trace_id\":\"20e03423-d652-4476-bf8c-cf378acbcf00\",\"correlation_id\":\"b7e31a51-c1b1-4e36-b297-46599a7f31e3\",\"error_uri\":\"https://login.microsoftonline.com/error?code=7000215\"} Endpoint https://login.microsoftonline.com/28c575f6-ade1-4838-8e7c-7e6d1ba0eb4a/oauth2/token?api-version=1.0)"

Normal CreatedExternalResource 74s managed/akscluster.compute.azure.crossplane.io Successfully requested creation of external resource

At first, we might think we need to fix something. But the warning is older than the Normal CreatedExternalResource event that follows it. A quick check of the status shows the cluster is Synced, just not Ready yet:

$ kubectl get AKSCluster

NAME READY SYNCED ENDPOINT LOCATION AGE

idjaks01 False True idjviacpaks01-153263b4.hcp.centralus.azmk8s.io Central US 9m35s
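SYNCED and READY are separate Crossplane conditions. As I understand them, SYNCED reports whether the provider’s last reconcile against Azure succeeded, and READY reports whether the external resource is available. The sketch below turns the two columns into a plain-language status (`describe_state` is a hypothetical name). READY True with SYNCED False is possible too: the existing resource is fine, but the latest change could not be applied.

```bash
# Sketch: interpret the READY and SYNCED columns of a Crossplane managed resource.
describe_state() {
  local ready="$1" synced="$2"
  if [[ "$synced" != "True" ]]; then
    echo "reconcile failing: check events with kubectl describe"
  elif [[ "$ready" == "True" ]]; then
    echo "ready"
  else
    echo "requested and synced, still provisioning"
  fi
}

# Usage (columns 2 and 3 of `kubectl get akscluster` are READY and SYNCED):
#   kubectl get akscluster idjaks01 --no-headers | awk '{print $2, $3}' |
#     { read -r r s; describe_state "$r" "$s"; }
```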

Verificaton

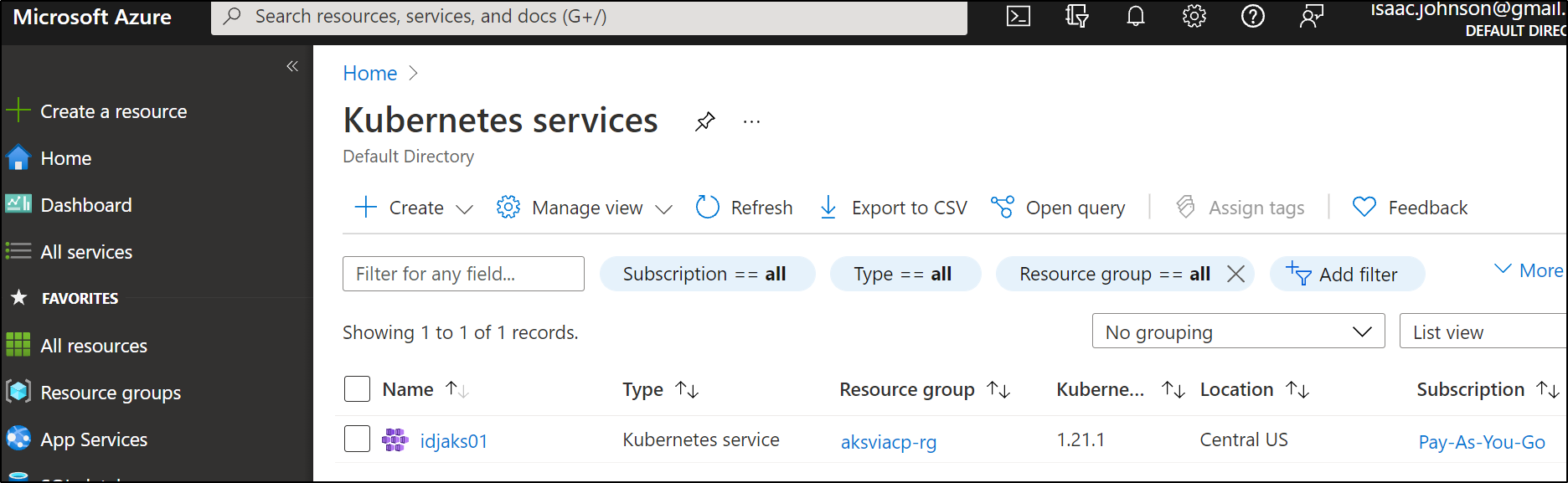

We can double check that indeed a cluster was created via the Azure Portal:

And if we wait a bit we can see Crossplane lists it as ready:

$ kubectl get AKSCluster

NAME READY SYNCED ENDPOINT LOCATION AGE

idjaks01 True True idjviacpaks01-153263b4.hcp.centralus.azmk8s.io Central US 21m

What if we want to capture the SP ID used in the cluster or the connection properties?

We can use the optional writeConnectionSecretToRef and writeServicePrincipalTo fields to save the outputs to secrets.

*Note: as we will see below, the writeSP parameter does not work at time of writing*

Like before we can just update the YAML and apply:

$ cat crossplane-aks.yaml
apiVersion: azure.crossplane.io/v1alpha3
kind: ResourceGroup
metadata:
  name: aksviacp-rg
spec:
  location: Central US
---
apiVersion: compute.azure.crossplane.io/v1alpha3
kind: AKSCluster
metadata:
  name: idjaks01
spec:
  location: Central US
  version: 1.21.1
  nodeVMSize: Standard_DS2_v2
  nodeCount: 2
  resourceGroupName: aksviacp-rg
  dnsNamePrefix: idjviacpaks01
  writeConnectionSecretToRef:
    name: idjviacpaksconnstr
    namespace: default
  writeServicePrincipalTo:
    name: idjviacpakssp
    namespace: default

The last section could be either writeServicePrincipalTo or writeServicePrincipalSecretTo. However, neither worked, and the validation error below reports the field as unknown in the v1alpha3 AKSCluster spec (perhaps the SP fields need to be used from the start). I also tried both at once:

  writeServicePrincipalTo:
    name: idjviacpakssp
  writeServicePrincipalSecretTo:
    name: idjviacpakssp2

I tried with validation first, then skipped it:

$ kubectl apply -f crossplane-aks.yaml

resourcegroup.azure.crossplane.io/aksviacp-rg unchanged

error: error validating "crossplane-aks.yaml": error validating data: ValidationError(AKSCluster.spec): unknown field "writeServicePrincipalTo" in io.crossplane.azure.compute.v1alpha3.AKSCluster.spec; if you choose to ignore these errors, turn validation off with --validate=false

$ kubectl apply -f crossplane-aks.yaml --validate=false

resourcegroup.azure.crossplane.io/aksviacp-rg unchanged

akscluster.compute.azure.crossplane.io/idjaks01 configured

Only the connection secret settings made it through:

$ kubectl get secrets --all-namespaces |grep idjvia

default idjviacpaksconnstr connection.crossplane.io/v1alpha1 5 2m48s

We can see the fields in the secret:

$ kubectl get secret idjviacpaksconnstr -o json | jq '.data | keys[]'

"clientCert"

"clientKey"

"clusterCA"

"endpoint"

"kubeconfig"

We can now get the kubeconfig in order to do some work with the new AKS cluster:

$ kubectl get secret idjviacpaksconnstr -o json | jq -r '.data.kubeconfig' | base64 --decode > ~/.kube/idjaks01config

$ kubectl get nodes --kubeconfig=/home/builder/.kube/idjaks01config

NAME STATUS ROLES AGE VERSION

aks-agentpool-27615710-0 Ready agent 23m v1.21.1

aks-agentpool-27615710-1 Ready agent 23m v1.21.1
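The same extraction works without jq if we ask kubectl for just the kubeconfig field via jsonpath. The file embeds a client key, so this sketch also creates it with owner-only permissions. The function name is hypothetical.

```bash
# Sketch: write the kubeconfig from a Crossplane connection secret to a file
# only the current user can read, using kubectl's jsonpath output instead of jq.
# Usage: extract_kubeconfig <secret> <output-file> [namespace]
extract_kubeconfig() {
  local secret="$1" out="$2" ns="${3:-default}"
  (
    umask 077    # new files get mode 600; the subshell keeps this change local
    kubectl get secret "$secret" -n "$ns" -o jsonpath='{.data.kubeconfig}' \
      | base64 --decode > "$out"
  )
}

# Usage:
#   extract_kubeconfig idjviacpaksconnstr "$HOME/.kube/idjaks01config"
#   kubectl get nodes --kubeconfig="$HOME/.kube/idjaks01config"
```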

As a sanity check we can even see the instance type matches what we passed:

$ kubectl describe node aks-agentpool-27615710-0 --kubeconfig=/home/builder/.kube/idjaks01config | grep instance-type | head -n1

beta.kubernetes.io/instance-type=Standard_DS2_v2

Changes

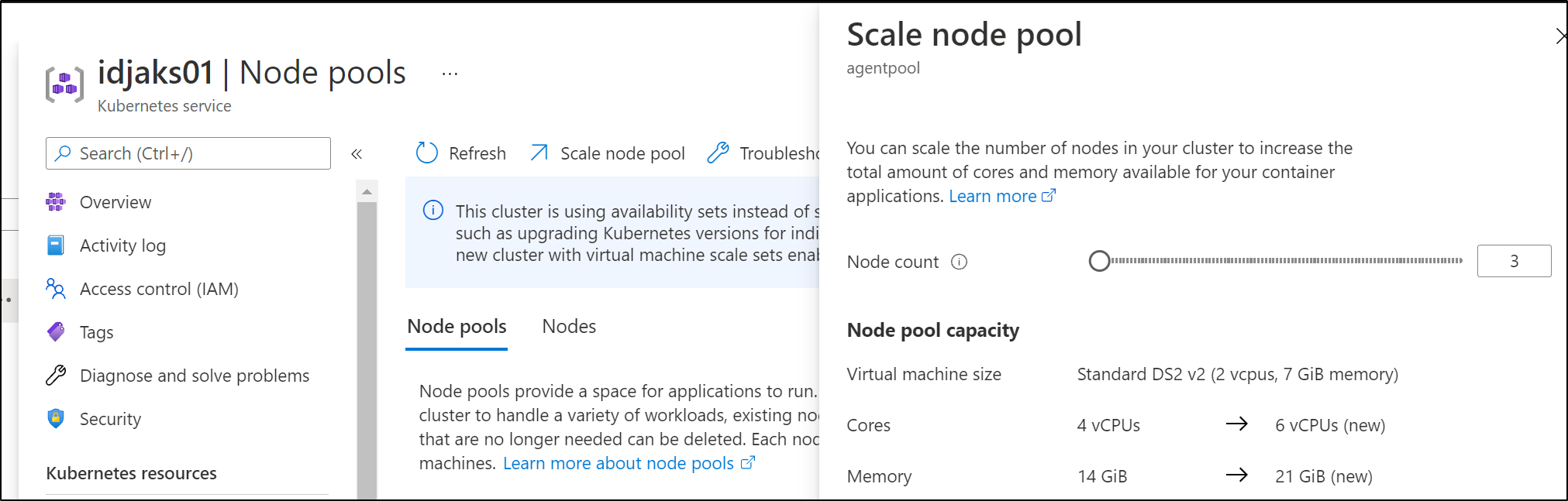

Now what happens if we change the cluster outside of crossplane?

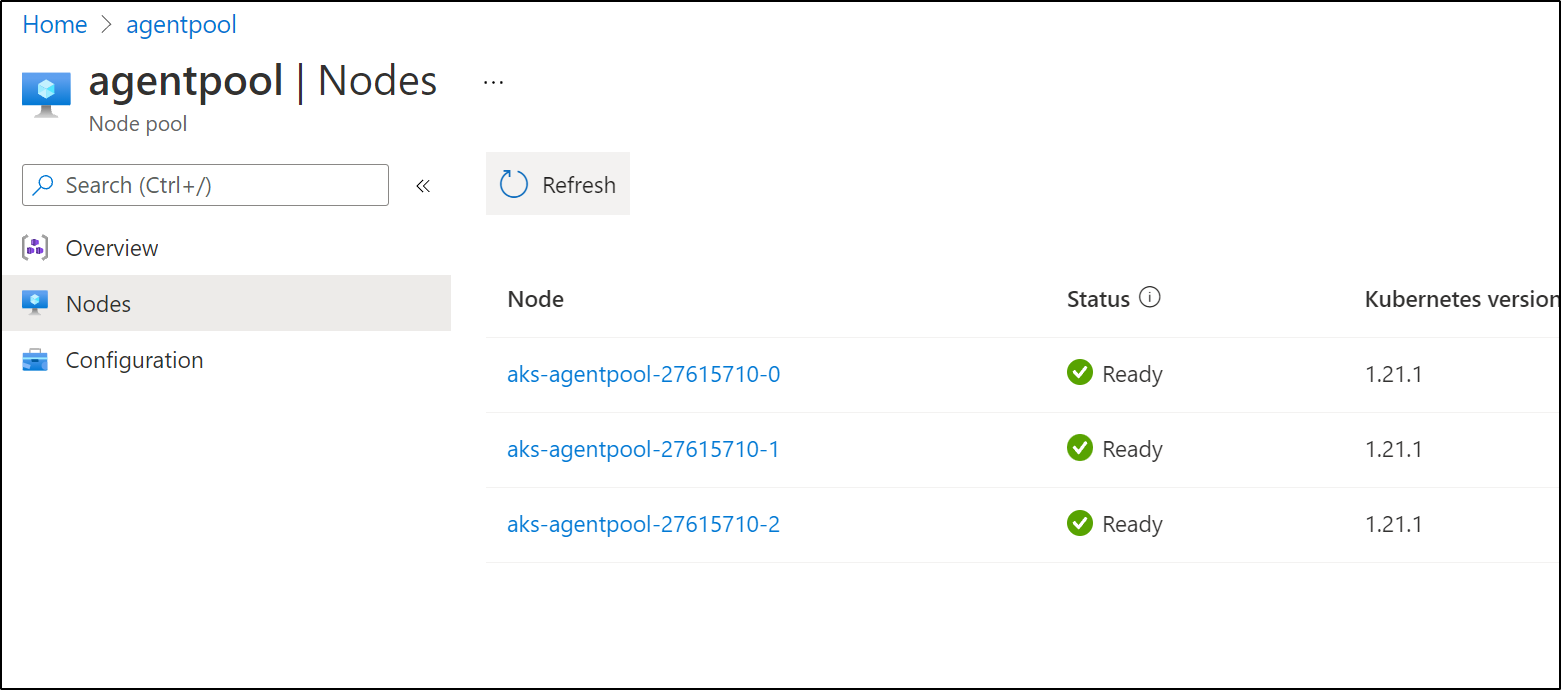

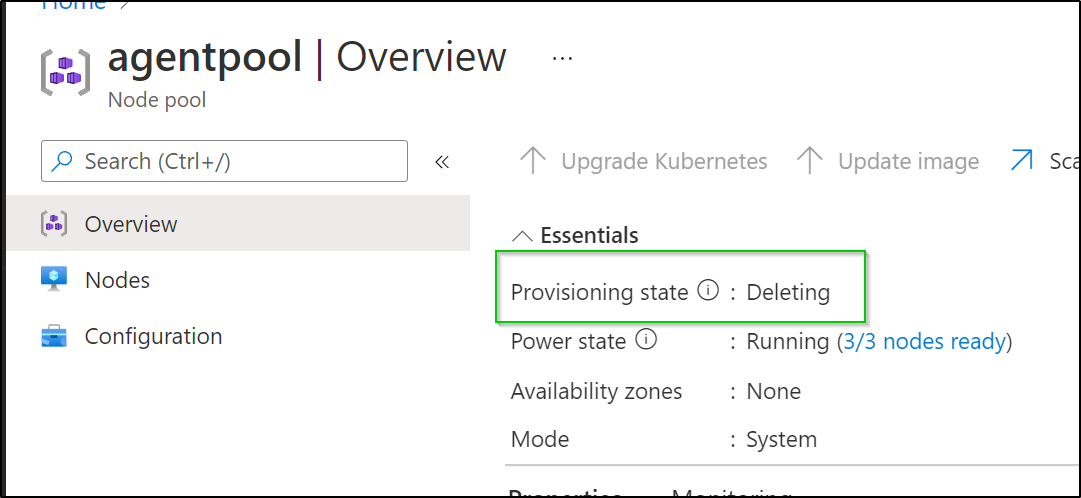

Here we went and scaled the cluster out to 3 nodes.

$ kubectl get nodes --kubeconfig=/home/builder/.kube/idjaks01config

NAME STATUS ROLES AGE VERSION

aks-agentpool-27615710-0 Ready agent 42m v1.21.1

aks-agentpool-27615710-1 Ready agent 42m v1.21.1

$ kubectl get nodes --kubeconfig=/home/builder/.kube/idjaks01config

NAME STATUS ROLES AGE VERSION

aks-agentpool-27615710-0 Ready agent 43m v1.21.1

aks-agentpool-27615710-1 Ready agent 43m v1.21.1

aks-agentpool-27615710-2 Ready agent 27s v1.21.1
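The Crossplane spec still says nodeCount: 2, while the cluster now has three nodes. The sketch below spots that kind of drift from the command line by comparing the spec’s nodeCount with the node list. The function name is hypothetical, and it counts every non-header row as a node.

```bash
# Sketch: compare a desired node count with `kubectl get nodes` output on stdin.
node_drift() {
  local desired="$1" actual
  actual=$(awk '$1 != "NAME" && NF > 0 { n++ } END { print n + 0 }')
  if (( actual == desired )); then
    echo "in sync: $actual nodes"
  else
    echo "drift: spec nodeCount=$desired, cluster has $actual nodes"
    return 1
  fi
}

# Usage:
#   desired=$(kubectl get akscluster idjaks01 -o jsonpath='{.spec.nodeCount}')
#   kubectl get nodes --kubeconfig="$HOME/.kube/idjaks01config" | node_drift "$desired"
```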

Let us change something in the Crossplane spec and see what Crossplane does. Will it tear down the extra node?

First let us see what versions of AKS are available for our region:

$ az aks get-versions --location centralus -o table

KubernetesVersion Upgrades

------------------- -----------------------

1.22.1(preview) None available

1.21.2 1.22.1(preview)

1.21.1 1.21.2, 1.22.1(preview)

1.20.9 1.21.1, 1.21.2

1.20.7 1.20.9, 1.21.1, 1.21.2

1.19.13 1.20.7, 1.20.9

1.19.11 1.19.13, 1.20.7, 1.20.9
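The Upgrades column lists the versions each release can move to. Note that 1.22.1 only appears there as a preview. The sketch below checks a path against this table and prints yes, preview or no. It assumes the whitespace-separated table layout shown above, and `upgrade_path` is a hypothetical name.

```bash
# Sketch: read `az aks get-versions -o table` output on stdin and report whether
# <target> is listed as an upgrade for <current>.
upgrade_path() {
  awk -v cur="$1" -v tgt="$2" '
    $1 == cur {
      for (i = 2; i <= NF; i++) {
        v = $i
        gsub(/,/, "", v)                        # drop the list separators
        if (v == tgt) result = "yes"
        else if (v == (tgt "(preview)") && result != "yes") result = "preview"
      }
    }
    END { if (result == "") result = "no"; print result }
  '
}

# Usage:
#   az aks get-versions --location centralus -o table | upgrade_path 1.21.1 1.22.1
```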

Since we used 1.21.1, let’s upgrade to 1.22.1.

I have intentionally left the other fields alone, including the node count:

$ cat crossplane-aks.yaml
apiVersion: azure.crossplane.io/v1alpha3
kind: ResourceGroup
metadata:
  name: aksviacp-rg
spec:
  location: Central US
---
apiVersion: compute.azure.crossplane.io/v1alpha3
kind: AKSCluster
metadata:
  name: idjaks01
spec:
  location: Central US
  version: 1.22.1
  nodeVMSize: Standard_DS2_v2
  nodeCount: 2
  resourceGroupName: aksviacp-rg
  dnsNamePrefix: idjviacpaks01
  writeConnectionSecretToRef:
    name: idjviacpaksconnstr
    namespace: default

I did take out the SP secret fields since they didn’t work. Let’s apply it:

$ kubectl apply -f crossplane-aks.yaml

resourcegroup.azure.crossplane.io/aksviacp-rg unchanged

akscluster.compute.azure.crossplane.io/idjaks01 configured

Crossplane status shows it’s ready:

$ kubectl get AKSCluster

NAME READY SYNCED ENDPOINT LOCATION AGE

idjaks01 True True idjviacpaks01-153263b4.hcp.centralus.azmk8s.io Central US 64m

I saw nothing in the logs or describe that showed a change was being applied.

I went ahead and tried just a patch release update to 1.21.2 (perhaps the ‘preview’ 1.22.1 isn’t available to me):

$ cat crossplane-aks.yaml
apiVersion: azure.crossplane.io/v1alpha3
kind: ResourceGroup
metadata:
  name: aksviacp-rg
spec:
  location: Central US
---
apiVersion: compute.azure.crossplane.io/v1alpha3
kind: AKSCluster
metadata:
  name: idjaks01
spec:
  location: Central US
  version: 1.21.2
  nodeVMSize: Standard_DS2_v2
  nodeCount: 2
  resourceGroupName: aksviacp-rg
  dnsNamePrefix: idjviacpaks01
  writeConnectionSecretToRef:
    name: idjviacpaksconnstr
    namespace: default

$ kubectl apply -f crossplane-aks.yaml

resourcegroup.azure.crossplane.io/aksviacp-rg unchanged

akscluster.compute.azure.crossplane.io/idjaks01 configured

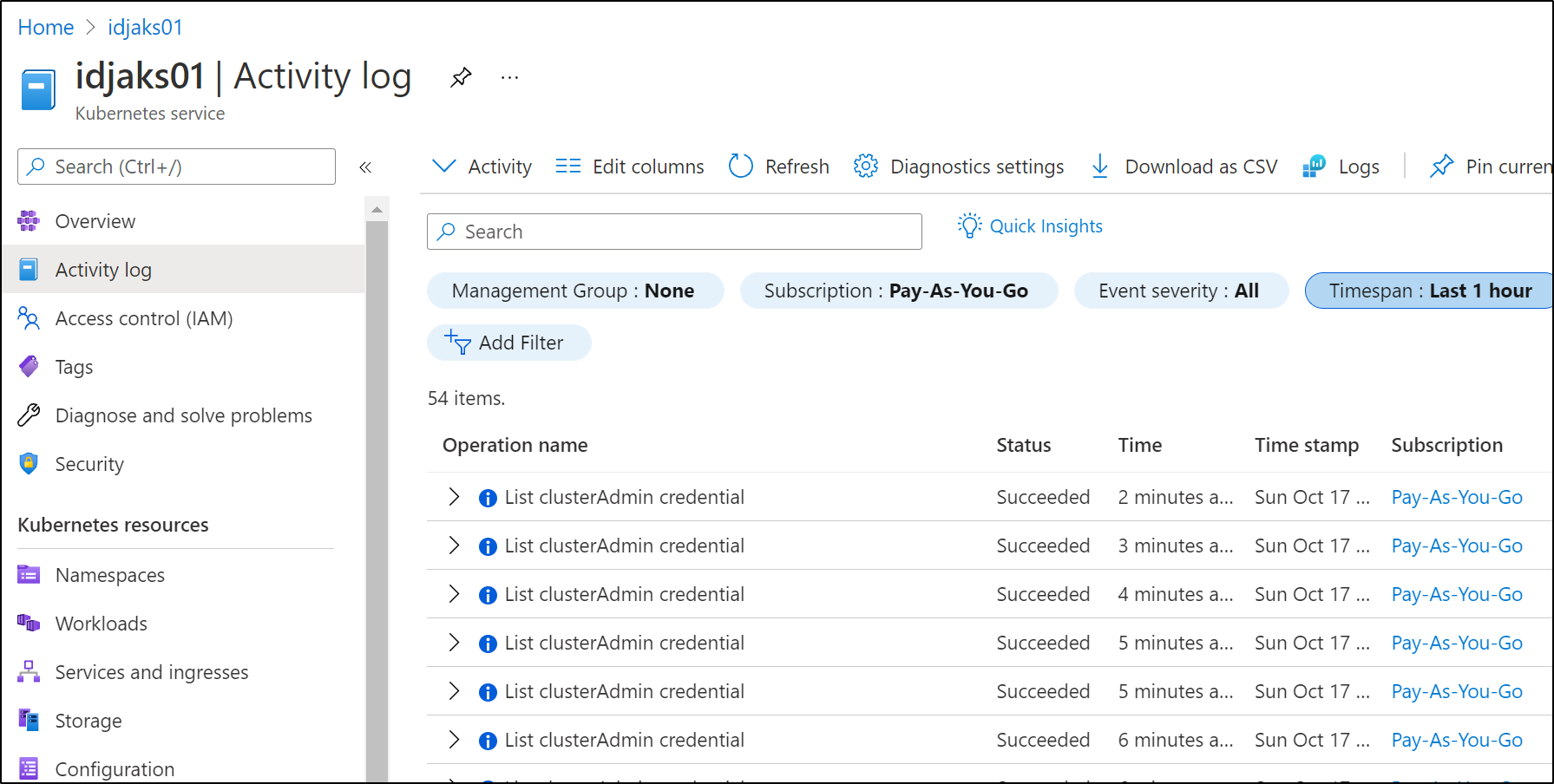

And again, i see no evidence of a change being applied:

Giving it an hour, again, I see no evidence of change:

$ kubectl get nodes --kubeconfig=/home/builder/.kube/idjaks01config

NAME STATUS ROLES AGE VERSION

aks-agentpool-27615710-0 Ready agent 123m v1.21.1

aks-agentpool-27615710-1 Ready agent 123m v1.21.1

aks-agentpool-27615710-2 Ready agent 80m v1.21.1

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

minikube Ready control-plane,master 25h v1.22.2

Let’s try one more change: upping the node count to 4.

$ cat crossplane-aks.yaml
apiVersion: azure.crossplane.io/v1alpha3
kind: ResourceGroup
metadata:
  name: aksviacp-rg
spec:
  location: Central US
---
apiVersion: compute.azure.crossplane.io/v1alpha3
kind: AKSCluster
metadata:
  name: idjaks01
spec:
  location: Central US
  version: 1.21.2
  nodeVMSize: Standard_DS2_v2
  nodeCount: 4
  resourceGroupName: aksviacp-rg
  dnsNamePrefix: idjviacpaks01
  writeConnectionSecretToRef:
    name: idjviacpaksconnstr
    namespace: default

$ kubectl apply -f crossplane-aks.yaml

resourcegroup.azure.crossplane.io/aksviacp-rg unchanged

akscluster.compute.azure.crossplane.io/idjaks01 configured

I see an error:

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Warning CannotConnectToProvider 56m managed/akscluster.compute.azure.crossplane.io cannot refresh service principal token: adal: Failed to execute the refresh request. Error = 'Post "https://login.microsoftonline.com/28c575f6-ade1-4838-8e7c-7e6d1ba0eb4a/oauth2/token?api-version=1.0": read tcp 172.17.0.5:46178->40.126.28.18:443: read: network is unreachable'

I changed the YAML yet again to set the provider explicitly (even though the default seemed to work for create):

$ cat crossplane-aks.yaml
apiVersion: azure.crossplane.io/v1alpha3
kind: ResourceGroup
metadata:
  name: aksviacp-rg
spec:
  location: Central US
---
apiVersion: compute.azure.crossplane.io/v1alpha3
kind: AKSCluster
metadata:
  name: idjaks01
spec:
  location: Central US
  version: 1.21.2
  nodeVMSize: Standard_DS2_v2
  nodeCount: 4
  resourceGroupName: aksviacp-rg
  dnsNamePrefix: idjviacpaks01
  writeConnectionSecretToRef:
    name: idjviacpaksconnstr
    namespace: default
  providerRef:
    name: default

$ kubectl apply -f crossplane-aks.yaml

resourcegroup.azure.crossplane.io/aksviacp-rg unchanged

akscluster.compute.azure.crossplane.io/idjaks01 configured

This time it seems to have been accepted (no errors):

$ kubectl describe AKSCluster idjaks01

Name: idjaks01

Namespace:

Labels: <none>

Annotations: crossplane.io/external-name: idjaks01

API Version: compute.azure.crossplane.io/v1alpha3

Kind: AKSCluster

Metadata:

Creation Timestamp: 2021-10-17T12:15:37Z

Finalizers:

finalizer.managedresource.crossplane.io

Generation: 8

Managed Fields:

API Version: compute.azure.crossplane.io/v1alpha3

Fields Type: FieldsV1

fieldsV1:

f:metadata:

f:annotations:

f:crossplane.io/external-name:

f:finalizers:

.:

v:"finalizer.managedresource.crossplane.io":

Manager: crossplane-azure-provider

Operation: Update

Time: 2021-10-17T12:15:45Z

API Version: compute.azure.crossplane.io/v1alpha3

Fields Type: FieldsV1

fieldsV1:

f:status:

.:

f:conditions:

f:endpoint:

f:providerID:

f:state:

Manager: crossplane-azure-provider

Operation: Update

Subresource: status

Time: 2021-10-17T12:23:22Z

API Version: compute.azure.crossplane.io/v1alpha3

Fields Type: FieldsV1

fieldsV1:

f:metadata:

f:annotations:

.:

f:kubectl.kubernetes.io/last-applied-configuration:

f:spec:

.:

f:deletionPolicy:

f:dnsNamePrefix:

f:location:

f:nodeCount:

f:nodeVMSize:

f:providerConfigRef:

.:

f:name:

f:providerRef:

.:

f:name:

f:resourceGroupName:

f:version:

f:writeConnectionSecretToRef:

.:

f:name:

f:namespace:

Manager: kubectl-client-side-apply

Operation: Update

Time: 2021-10-17T14:44:02Z

Resource Version: 119316

UID: f4b1db55-fef4-46a1-8bbc-f557d217765e

Spec:

Deletion Policy: Delete

Dns Name Prefix: idjviacpaks01

Location: Central US

Node Count: 4

Node VM Size: Standard_DS2_v2

Provider Config Ref:

Name: default

Provider Ref:

Name: default

Resource Group Name: aksviacp-rg

Version: 1.21.2

Write Connection Secret To Ref:

Name: idjviacpaksconnstr

Namespace: default

Status:

Conditions:

Last Transition Time: 2021-10-17T12:33:54Z

Reason: Available

Status: True

Type: Ready

Last Transition Time: 2021-10-17T12:23:21Z

Reason: ReconcileSuccess

Status: True

Type: Synced

Endpoint: idjviacpaks01-153263b4.hcp.centralus.azmk8s.io

Provider ID: /subscriptions/d955c0ba-13dc-44cf-a29a-8fed74cbb22d/resourcegroups/aksviacp-rg/providers/Microsoft.ContainerService/managedClusters/idjaks01

State: Succeeded

Events: <none>

The spec shows the right values:

$ kubectl describe AKSCluster idjaks01 | head -n83 | tail -n15

Spec:

Deletion Policy: Delete

Dns Name Prefix: idjviacpaks01

Location: Central US

Node Count: 4

Node VM Size: Standard_DS2_v2

Provider Config Ref:

Name: default

Provider Ref:

Name: default

Resource Group Name: aksviacp-rg

Version: 1.21.2

Write Connection Secret To Ref:

Name: idjviacpaksconnstr

Namespace: default

However, even after some time, I again saw no changes applied to the cluster in the portal.

Cleanup

We can remove all the resources:

$ kubectl delete -f crossplane-aks.yaml

resourcegroup.azure.crossplane.io "aksviacp-rg" deleted

akscluster.compute.azure.crossplane.io "idjaks01" deleted

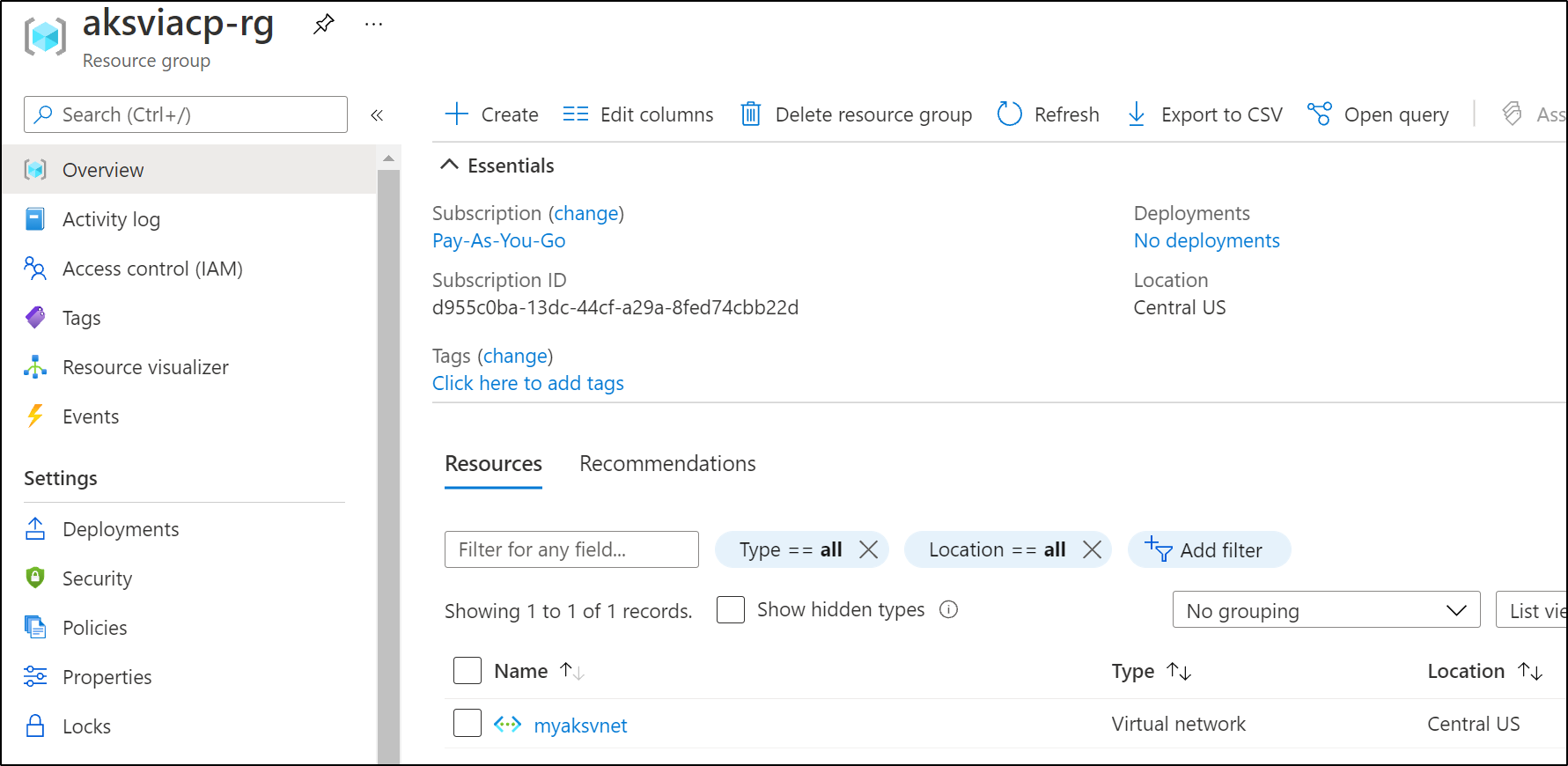

And we can see that reflected right away in the Aure Portal

I wanted to create a VM, but there are still outstanding PRs to implement this, such as https://github.com/crossplane/provider-azure/pull/270

So, for now, the best we can do toward a VM is create a resource group, a VNet and subnets:

$ cat crossplane-vnet.yaml
apiVersion: azure.crossplane.io/v1alpha3
kind: ResourceGroup
metadata:
  name: aksviacp-rg
spec:
  location: Central US
  providerRef:
    name: default
---
apiVersion: network.azure.crossplane.io/v1alpha3
kind: VirtualNetwork
metadata:
  name: myaksvnet
spec:
  location: Central US
  providerRef:
    name: default
  properties:
    addressSpace:
      addressPrefixes: ['192.168.1.0/24']
    enableDdosProtection: false
    enableVmProtection: false
  deletionPolicy: Delete
  resourceGroupName: aksviacp-rg
  tags:
    mytag: mytagvalue
---
apiVersion: network.azure.crossplane.io/v1alpha3
kind: Subnet
metadata:
  name: myaksvnetsubnet1
spec:
  providerRef:
    name: default
  properties:
    addressPrefix: '192.168.1.0/28'
  virtualNetworkName: myaksvnet
  resourceGroupName: aksviacp-rg
  deletionPolicy: Delete
  writeConnectionSecretToRef:
    name: idjsubnetsecret
    namespace: default
---
apiVersion: network.azure.crossplane.io/v1alpha3
kind: Subnet
metadata:
  name: myaksvnetsubnet2
spec:
  providerRef:
    name: default
  properties:
    addressPrefix: '192.168.1.16/28'
  virtualNetworkName: myaksvnet
  resourceGroupName: aksviacp-rg
  deletionPolicy: Delete
  writeConnectionSecretToRef:
    name: idjsubnetsecret
    namespace: default

$ kubectl apply -f crossplane-vnet.yaml

resourcegroup.azure.crossplane.io/aksviacp-rg unchanged

virtualnetwork.network.azure.crossplane.io/myaksvnet configured

subnet.network.azure.crossplane.io/myaksvnetsubnet1 created

subnet.network.azure.crossplane.io/myaksvnetsubnet2 created
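The two subnets take consecutive /28 blocks out of the 192.168.1.0/24 VNet. Each /28 holds 16 addresses, so they start at .0 and .16. When adding more, a small helper can compute the next block. The sketch below assumes the base network is aligned and that blocks are handed out in order, and `nth_subnet`, `ip_to_int` and `int_to_ip` are hypothetical names.

```bash
# Sketch: compute the Nth block of a given prefix length inside a base network.
ip_to_int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

int_to_ip() {
  local n="$1"
  echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}

# Usage: nth_subnet <base-cidr> <prefix-length> <index starting at 0>
nth_subnet() {
  local base="${1%/*}" base_len="${1#*/}" len="$2" n="$3" size start
  size=$(( 1 << (32 - len) ))                   # addresses per block
  # Refuse blocks larger than the base network or past its end.
  if (( len < base_len || n < 0 || (n + 1) * size > (1 << (32 - base_len)) )); then
    return 1
  fi
  start=$(( $(ip_to_int "$base") + n * size ))
  echo "$(int_to_ip "$start")/$len"
}

# Usage:
#   nth_subnet 192.168.1.0/24 28 2    # third /28 in the VNet
```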

Verification

We can see the Vnet listed in the RG:

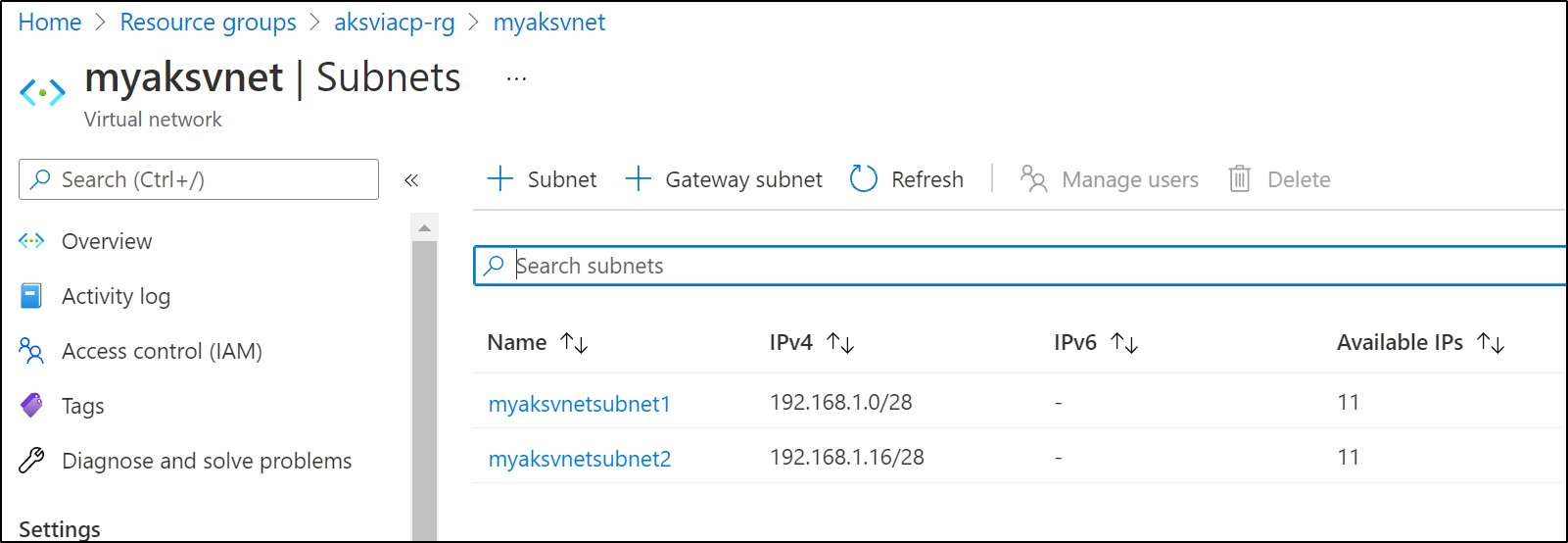

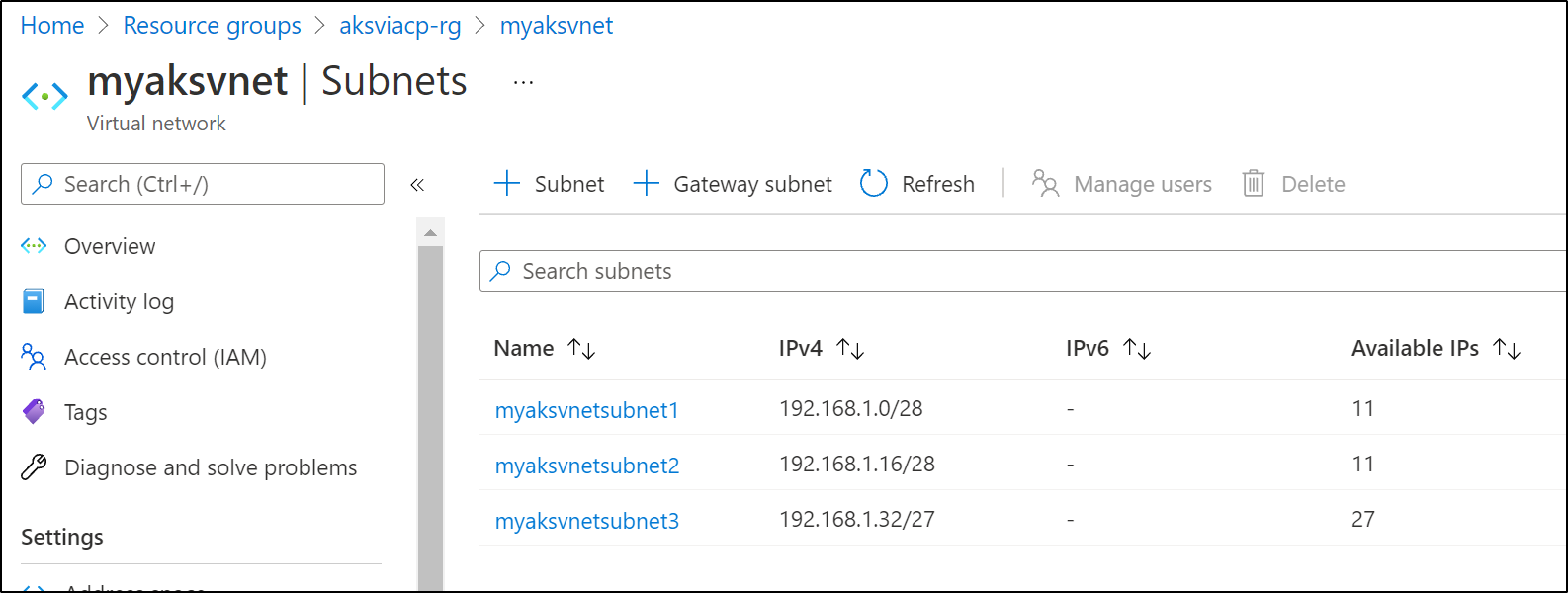

and we see the subnets were created as well:

If we want to add a subnet, we can just add a new block:

$ cat crossplane-vnet.yaml | tail -n 16
---
apiVersion: network.azure.crossplane.io/v1alpha3
kind: Subnet
metadata:
  name: myaksvnetsubnet3
spec:
  providerRef:
    name: default
  properties:
    addressPrefix: '192.168.1.32/28'
  virtualNetworkName: myaksvnet
  resourceGroupName: aksviacp-rg
  deletionPolicy: Delete
  writeConnectionSecretToRef:
    name: idjsubnetsecret
    namespace: default

$ kubectl apply -f crossplane-vnet.yaml

resourcegroup.azure.crossplane.io/aksviacp-rg unchanged

virtualnetwork.network.azure.crossplane.io/myaksvnet unchanged

subnet.network.azure.crossplane.io/myaksvnetsubnet1 unchanged

subnet.network.azure.crossplane.io/myaksvnetsubnet2 unchanged

subnet.network.azure.crossplane.io/myaksvnetsubnet3 created

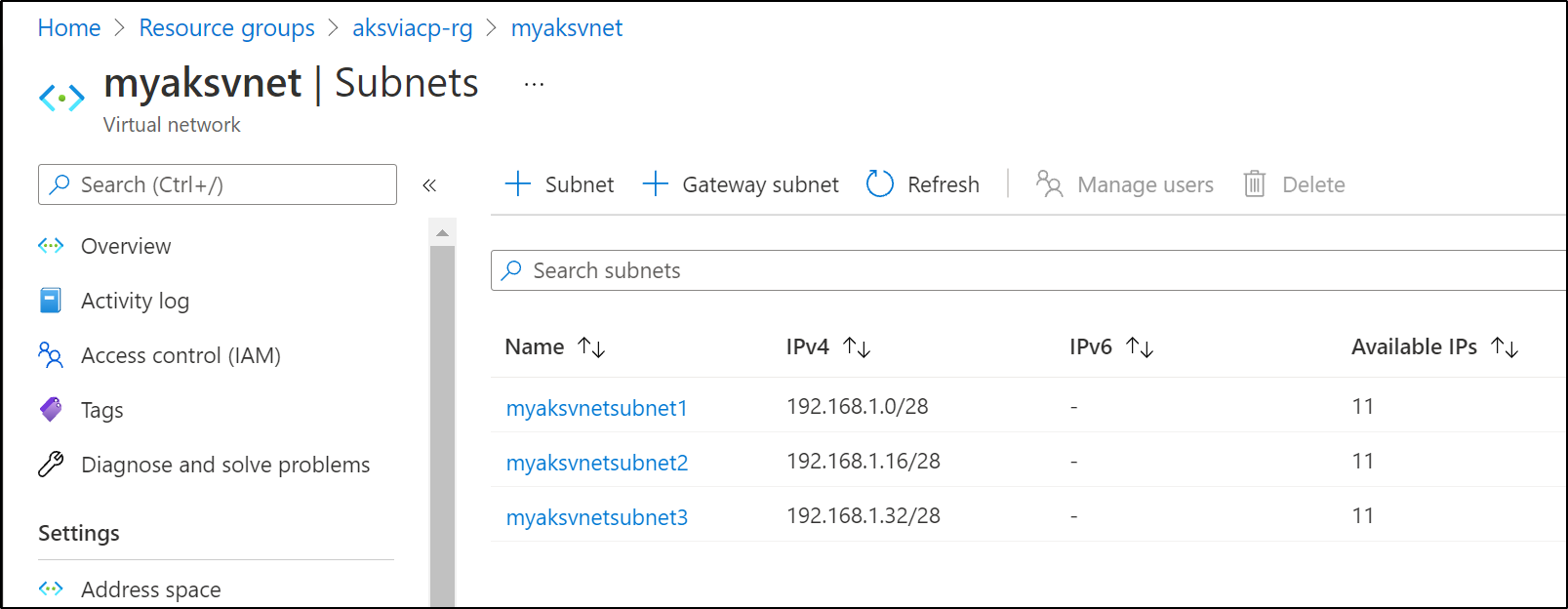

and we can see it immediately reflected in the portal:

Applying a change

Given what happened with AKS, I expected a change here to have no effect either. Let’s widen the third subnet from a /28 to a /27:

$ cat crossplane-vnet.yaml | tail -n 16
---
apiVersion: network.azure.crossplane.io/v1alpha3
kind: Subnet
metadata:
  name: myaksvnetsubnet3
spec:
  providerRef:
    name: default
  properties:
    addressPrefix: '192.168.1.32/27'
  virtualNetworkName: myaksvnet
  resourceGroupName: aksviacp-rg
  deletionPolicy: Delete
  writeConnectionSecretToRef:
    name: idjsubnetsecret
    namespace: default

$ kubectl apply -f crossplane-vnet.yaml

resourcegroup.azure.crossplane.io/aksviacp-rg unchanged

virtualnetwork.network.azure.crossplane.io/myaksvnet unchanged

subnet.network.azure.crossplane.io/myaksvnetsubnet1 unchanged

subnet.network.azure.crossplane.io/myaksvnetsubnet2 unchanged

subnet.network.azure.crossplane.io/myaksvnetsubnet3 configured

However, I was pleasantly surprised to see the subnet updated without issue.

We can also verify that erroneous values are caught. If we try to set a subnet outside the range of the VNet, we should see errors:

$ cat crossplane-vnet.yaml | tail -n 16
---
apiVersion: network.azure.crossplane.io/v1alpha3
kind: Subnet
metadata:
  name: myaksvnetsubnet3
spec:
  providerRef:
    name: default
  properties:
    addressPrefix: '192.168.2.32/27'
  virtualNetworkName: myaksvnet
  resourceGroupName: aksviacp-rg
  deletionPolicy: Delete
  writeConnectionSecretToRef:
    name: idjsubnetsecret
    namespace: default

$ kubectl apply -f crossplane-vnet.yaml

resourcegroup.azure.crossplane.io/aksviacp-rg unchanged

virtualnetwork.network.azure.crossplane.io/myaksvnet unchanged

subnet.network.azure.crossplane.io/myaksvnetsubnet1 unchanged

subnet.network.azure.crossplane.io/myaksvnetsubnet2 unchanged

subnet.network.azure.crossplane.io/myaksvnetsubnet3 configured

The subnet remains unchanged in Azure. The resource now shows SYNCED False, and the describe output shows the errors:

$ kubectl get subnet

NAME READY SYNCED STATE AGE

myaksvnetsubnet1 True True Succeeded 17m

myaksvnetsubnet2 True True Succeeded 17m

myaksvnetsubnet3 True False Succeeded 9m52s

$ kubectl describe subnet myaksvnetsubnet3 | tail -n6

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal CreatedExternalResource 8m41s managed/subnet.network.azure.crossplane.io Successfully requested creation of external resource

Normal UpdatedExternalResource 89s (x12 over 8m41s) managed/subnet.network.azure.crossplane.io Successfully requested update of external resource

Warning CannotUpdateExternalResource 24s (x6 over 57s) managed/subnet.network.azure.crossplane.io cannot update Subnet: network.SubnetsClient#CreateOrUpdate: Failure sending request: StatusCode=400 -- Original Error: Code="NetcfgInvalidSubnet" Message="Subnet 'myaksvnetsubnet3' is not valid in virtual network 'myaksvnet'." Details=[]
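Azure rejects the update with NetcfgInvalidSubnet because 192.168.2.32/27 lies outside the VNet’s 192.168.1.0/24 address space. A pre-apply check could catch this locally. A subnet fits if its prefix length is at least the VNet’s and both addresses agree under the VNet’s mask. The helper names below are hypothetical, and the sketch does not check for overlap with the other subnets.

```bash
# Sketch: succeed if <subnet-cidr> lies inside <vnet-cidr>.
ip_to_int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

cidr_within() {
  local sub_ip="${1%/*}" sub_len="${1#*/}" net_ip="${2%/*}" net_len="${2#*/}" mask
  # A subnet can only fit if its prefix is at least as long as the VNet's.
  (( sub_len >= net_len )) || return 1
  mask=$(( (0xFFFFFFFF << (32 - net_len)) & 0xFFFFFFFF ))
  (( ($(ip_to_int "$sub_ip") & mask) == ($(ip_to_int "$net_ip") & mask) ))
}

# Usage:
#   cidr_within 192.168.2.32/27 192.168.1.0/24 || echo "outside the VNet"
```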

If we fix it by setting it back to 192.168.1.32/28, it quickly applies and syncs:

$ kubectl apply -f crossplane-vnet.yaml

resourcegroup.azure.crossplane.io/aksviacp-rg unchanged

virtualnetwork.network.azure.crossplane.io/myaksvnet unchanged

subnet.network.azure.crossplane.io/myaksvnetsubnet1 unchanged

subnet.network.azure.crossplane.io/myaksvnetsubnet2 unchanged

subnet.network.azure.crossplane.io/myaksvnetsubnet3 configured

$ kubectl get subnet

NAME READY SYNCED STATE AGE

myaksvnetsubnet1 True True Succeeded 18m

myaksvnetsubnet2 True True Succeeded 18m

myaksvnetsubnet3 True True Succeeded 10m

Cleanup

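Since we used Minikube, it’s easy to blast away the in-cluster copy of our credentials by simply deleting the cluster (minikube delete). The Owner service principal itself, and the local creds.json, still exist until you remove them, for example with az ad app delete --id "${AZURE_CLIENT_ID}".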

Summary

The initial problems I had with Crossplane, and still have, are the frequent changes to the API. Many guides refer to creating a “stack”, which is now a “package”, and even then the install syntax differs. In some cases I could use Helm and a kubectl command to set up the Azure connection again, and other times it simply refused.

The AKSCluster docs for Azure showed some fields as optional that turned out to be required. Googling, which usually works for other tools, often left me without examples.

While things like a Redis cache and PostgreSQL instances are available, common things like a Virtual Machine are still in the works. The lack of objects and the fluctuating API lead me to believe this is “one to watch”, but perhaps not one to rely on just yet.

I will use it, however, for some flows. The ability to create networking quickly is rather handy. I could see a flow that creates an AKS cluster, gets the admin credentials and then tests a chart before tearing it down.
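That flow could look something like the sketch below. It assumes the manifest defines an AKSCluster that writes its connection secret, as earlier in this post. The function name, the smoke-test release name and the timeouts are hypothetical. The Crossplane resources are deleted whether or not the chart installs cleanly.

```bash
# Sketch: create an AKS cluster via Crossplane, install a chart on it, tear it down.
# Usage: ephemeral_chart_test <manifest> <akscluster-name> <connection-secret> <chart>
ephemeral_chart_test() {
  local manifest="$1" cluster="$2" secret="$3" chart="$4" rc=0 kubeconfig
  kubeconfig=$(mktemp)
  if kubectl apply -f "$manifest" &&
     kubectl wait --for=condition=Ready "akscluster/$cluster" --timeout=30m &&
     kubectl get secret "$secret" -o jsonpath='{.data.kubeconfig}' \
       | base64 --decode > "$kubeconfig" &&
     helm install smoke-test "$chart" --kubeconfig "$kubeconfig" --wait --timeout 10m
  then
    echo "chart test passed"
  else
    echo "chart test failed" >&2
    rc=1
  fi
  # Always clean up the Crossplane resources and the local kubeconfig.
  kubectl delete -f "$manifest"
  rm -f "$kubeconfig"
  return "$rc"
}

# Usage:
#   ephemeral_chart_test crossplane-aks.yaml idjaks01 idjviacpaksconnstr ./mychart
```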