Published: Oct 13, 2021 by Isaac Johnson

In our last article, we focused on Hyper-V (PC) and Hyperkit (Mac). These are the clearest and easiest paths for using Minikube to solve our Docker needs. However, for those without Windows 10 Pro (required for Hyper-V) or with Hyperkit issues on a Mac, there is another option: VirtualBox.

VirtualBox is the Sun product that, oddly, Oracle has yet to close-source. I cannot fathom why they haven’t killed it like Hudson or Java yet, but regardless, they still offer it free under the GPL. It offers a unique solution that works on both PCs and Macs and can help sort out issues with VPNs such as Cisco AnyConnect.

The Problem

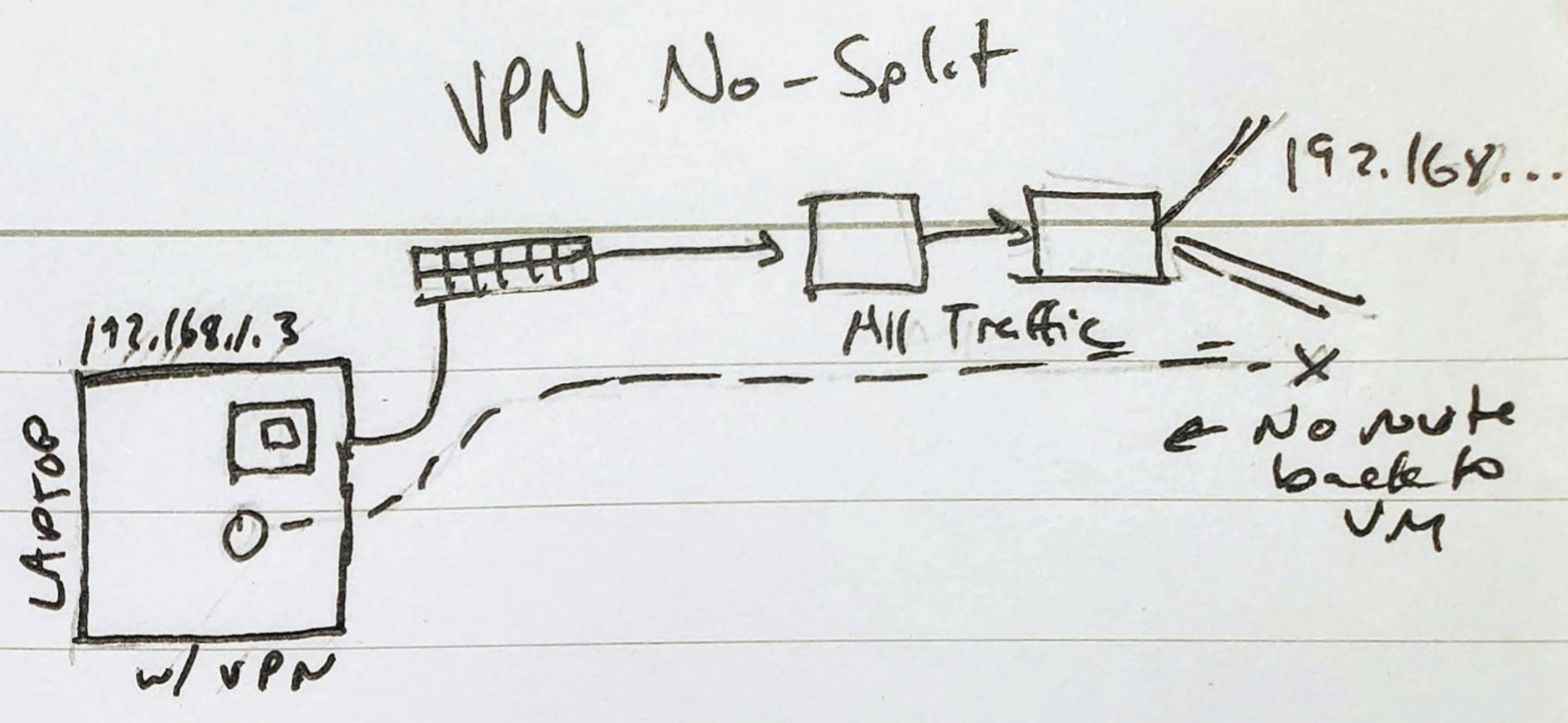

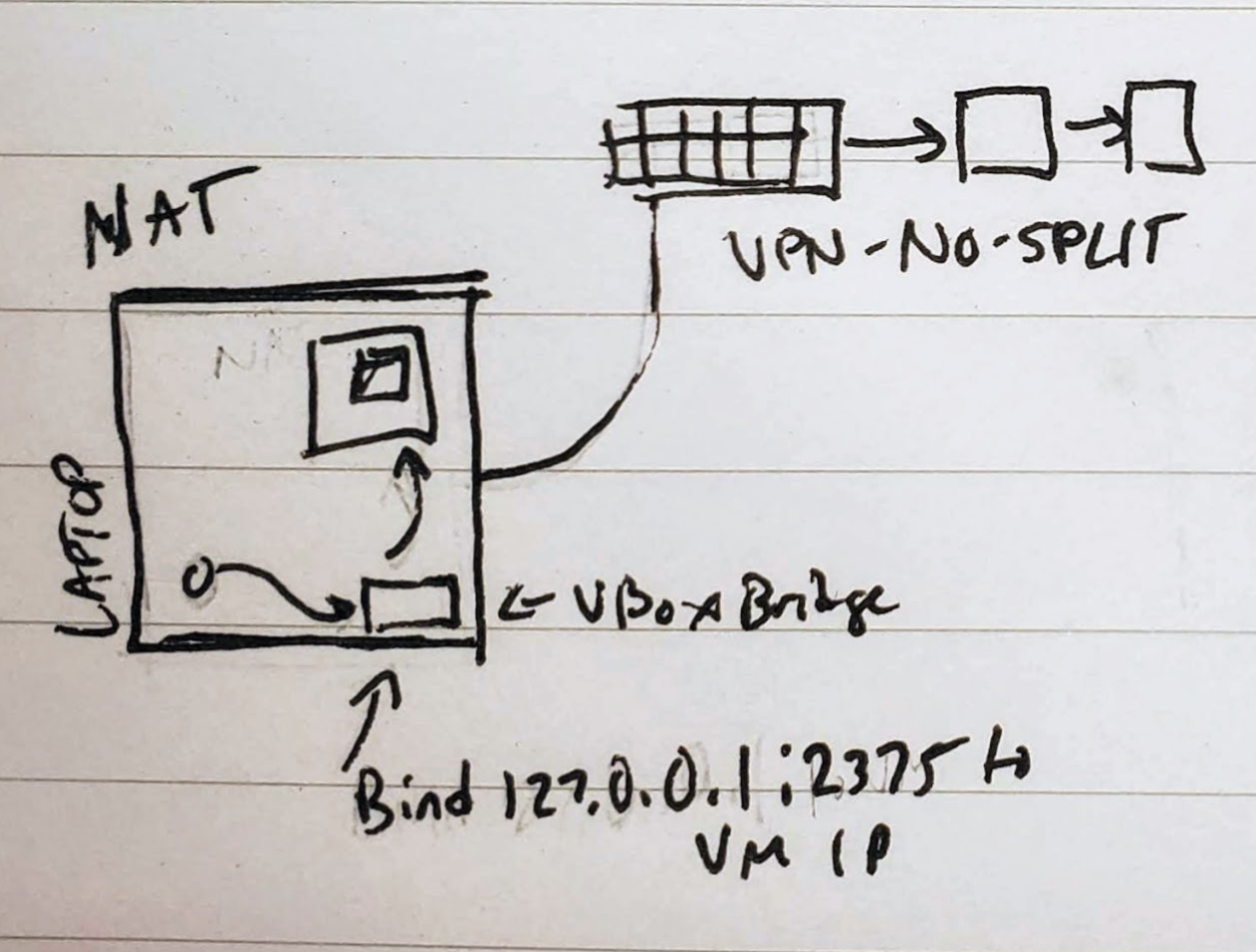

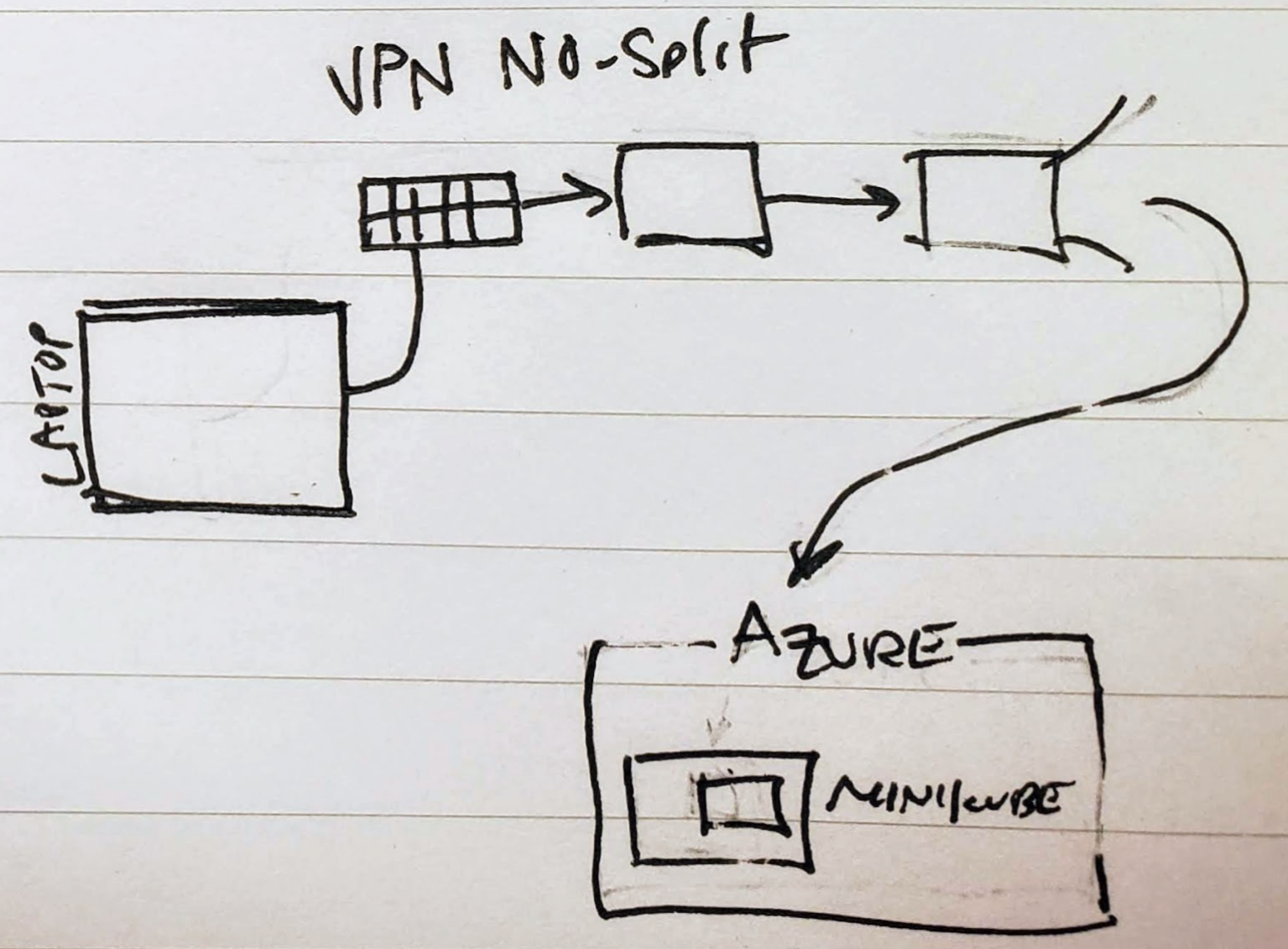

When we are on a normal network, traffic is easily routed back to our bridged minikube instance because both are on the same subnet.

The issue I had was that once I enabled a corporate VPN, I could no longer route traffic to Hyperkit. A forced-tunnel VPN sends ALL traffic outside of localhost through an encrypted channel to an endpoint secured within the corporate network. The fact that our address spaces overlap compounds things: there is no route back to the Hyper-V/Hyperkit instance.

I first tried a few simple steps…

Hyperkit simply does not allow one to set the CIDRs for the instance. One can set noProxy entries in the Docker client's config JSON, but that still doesn’t help:

    $ cat ~/.docker/config.json
    {
      "proxies": {
        "default": {
          "noProxy": "192.168.2.1/24,127.0.0.0/8"
        }
      }
    }

With the VPN enabled, that did not work. I even tried restarting with NO_PROXY set:

$ export NO_PROXY=localhost,127.0.0.1,10.96.0.0/12,192.168.99.0/24,192.168.39.0/24,192.168.64.0/24

$ minikube start --driver=hyperkit

😄 minikube v1.23.2 on Darwin 11.6

▪ MINIKUBE_ACTIVE_DOCKERD=minikube

✨ Using the hyperkit driver based on existing profile

👍 Starting control plane node minikube in cluster minikube

🔄 Restarting existing hyperkit VM for "minikube" ...

^C

And with it also set in the JSON file:

$ minikube start --driver=hyperkit

😄 minikube v1.23.2 on Darwin 11.6

▪ MINIKUBE_ACTIVE_DOCKERD=minikube

✨ Using the hyperkit driver based on user configuration

👍 Starting control plane node minikube in cluster minikube

🔥 Creating hyperkit VM (CPUs=2, Memory=4000MB, Disk=20000MB) ...

🌐 Found network options:

▪ NO_PROXY=localhost,127.0.0.1,10.96.0.0/12,192.168.99.0/24,192.168.39.0/24,192.168.64.0/24

❗ This VM is having trouble accessing https://k8s.gcr.io

💡 To pull new external images, you may need to configure a proxy: https://minikube.sigs.k8s.io/docs/reference/networking/proxy/

🐳 Preparing Kubernetes v1.22.2 on Docker 20.10.8 ...

▪ env NO_PROXY=localhost,127.0.0.1,10.96.0.0/12,192.168.99.0/24,192.168.39.0/24,192.168.64.0/24

▪ Generating certificates and keys ...

▪ Booting up control plane ...

▪ Configuring RBAC rules ...

🔎 Verifying Kubernetes components...

▪ Using image gcr.io/k8s-minikube/storage-provisioner:v5

🌟 Enabled addons: storage-provisioner, default-storageclass

🏄 Done! kubectl is now configured to use "minikube" cluster and "default" namespace by default

But still no luck. The fact was that my corporate VPN used Class C routing (192.168.0.0/16), and so did my home network. Hyperkit was going to use a 192.168 address whether I liked it or not.
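The collision is easy to confirm with a little arithmetic. Two ranges overlap exactly when their network bits match under the shorter of the two prefixes. Below is a minimal POSIX shell sketch of that check; the function names are my own and do not come from any tool. Judging from the NO_PROXY list and the 192.168.64.2 Docker host in my old profile, Hyperkit was using 192.168.64.0/24. The 172.16.1.0/24 and 10.254.254.0/24 ranges are the alternatives that come up with VirtualBox (the /24 on the latter is an assumption).

```shell
# Dotted-quad IPv4 address -> 32-bit integer (octet ranges are not checked).
ip_to_int() {
  local IFS=.
  set -- $1                            # split "192.168.64.0" into four octets
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Succeed (exit 0) when two CIDR ranges share any address: compare their
# network bits under the shorter of the two prefix lengths.
cidrs_overlap() {
  local a_ip="${1%/*}" a_len="${1#*/}"
  local b_ip="${2%/*}" b_len="${2#*/}"
  local len="$a_len"
  if [ "$b_len" -lt "$len" ]; then len="$b_len"; fi
  local mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  local a b
  a=$(ip_to_int "$a_ip")
  b=$(ip_to_int "$b_ip")
  [ $(( a & mask )) -eq $(( b & mask )) ]
}

for net in 192.168.64.0/24 172.16.1.0/24 10.254.254.0/24; do
  if cidrs_overlap 192.168.0.0/16 "$net"; then
    echo "$net collides with 192.168.0.0/16"
  else
    echo "$net is clear of 192.168.0.0/16"
  fi
done
# 192.168.64.0/24 collides with 192.168.0.0/16
# 172.16.1.0/24 is clear of 192.168.0.0/16
# 10.254.254.0/24 is clear of 192.168.0.0/16
```

This only shows that the address spaces collide. It does not model what the VPN client does with the traffic.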

Install VirtualBox

We can download VirtualBox from https://www.virtualbox.org/

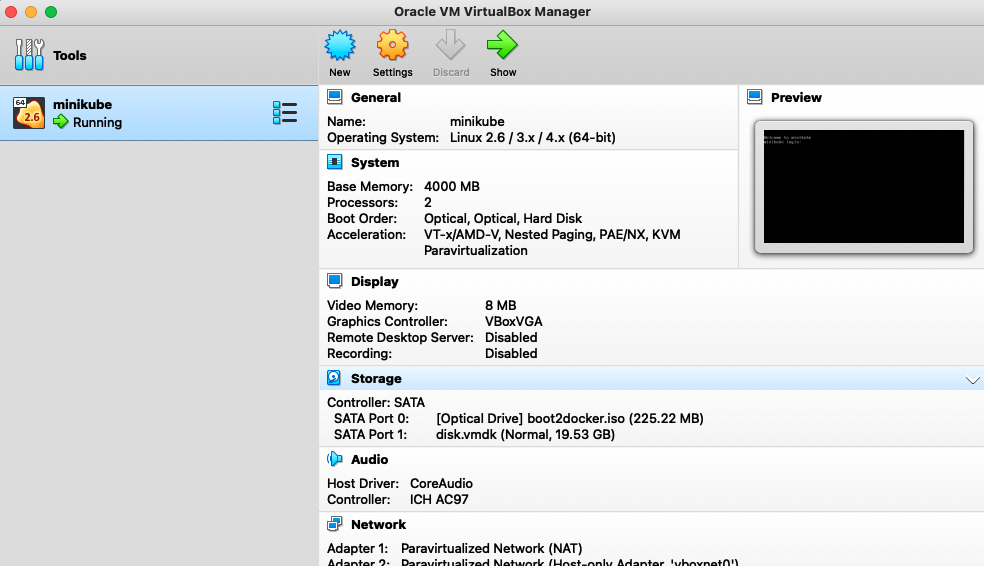

Once installed, we can see it running, albeit without any VPN enabled. You can install the extension pack for USB and KVM, but for our use, that is not required.

Next, we need to delete any existing minikube cluster:

$ minikube delete

🔥 Deleting "minikube" in hyperkit ...

💀 Removed all traces of the "minikube" cluster.

Trying with HOST CIDR parameter

From the minikube start options, we can see that when running with VirtualBox, we can set the host-only CIDR.

Since my collisions are coming from the Class C range 192.168.0.0/16, we can use 172.16.1.0/24 from the Class B private range instead:

$ minikube start --driver=virtualbox --host-only-cidr 172.16.1.1

😄 minikube v1.23.2 on Darwin 11.6

▪ MINIKUBE_ACTIVE_DOCKERD=minikube

✨ Using the virtualbox driver based on existing profile

👍 Starting control plane node minikube in cluster minikube

🔄 Restarting existing virtualbox VM for "minikube" ...

🤦 StartHost failed, but will try again: driver start: Error setting up host only network on machine start: host-only cidr must be specified with a host address, not a network address

🔄 Restarting existing virtualbox VM for "minikube" ...

😿 Failed to start virtualbox VM. Running "minikube delete" may fix it: driver start: Error setting up host only network on machine start: host-only cidr must be specified with a host address, not a network address

❌ Exiting due to GUEST_PROVISION: Failed to start host: driver start: Error setting up host only network on machine start: host-only cidr must be specified with a host address, not a network address

╭───────────────────────────────────────────────────────────────────────────────────────────╮

│ │

│ 😿 If the above advice does not help, please let us know: │

│ 👉 https://github.com/kubernetes/minikube/issues/new/choose │

│ │

│ Please run `minikube logs --file=logs.txt` and attach logs.txt to the GitHub issue. │

│ │

╰───────────────────────────────────────────────────────────────────────────────────────────╯
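My reading of that error is that the flag wants a host address plus a prefix length, such as 172.16.1.1/24. A network address like 172.16.1.0/24 or a bare IP would be rejected. I did not confirm this in the driver source. Here is a POSIX shell sketch of that check; the function names are hypothetical:

```shell
# Dotted-quad IPv4 address -> 32-bit integer (octet ranges are not checked).
ip_to_int() {
  local IFS=.
  set -- $1
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Succeed when $1 is ADDRESS/PREFIX and ADDRESS is a host address rather than the
# network address of its own prefix (a network address has all host bits zero).
valid_host_only_cidr() {
  case "$1" in
    */*) ;;
    *) return 1 ;;                    # no prefix length at all
  esac
  local ip="${1%/*}" len="${1#*/}"
  case "$ip" in
    *.*.*.*) ;;
    *) return 1 ;;                    # not a dotted quad
  esac
  case "$len" in
    ''|*[!0-9]*) return 1 ;;          # prefix must be a plain number
  esac
  [ "$len" -le 32 ] || return 1
  local n mask
  n=$(ip_to_int "$ip")
  mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  [ $(( n & mask )) -ne "$n" ]        # host bits must not all be zero
}

for c in 172.16.1.1/24 172.16.1.0/24 172.16.1.1; do
  if valid_host_only_cidr "$c"; then echo "$c: ok"; else echo "$c: rejected"; fi
done
```

Passing a well-formed value is no guarantee the VPN problem goes away, as the next paragraph explains.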

Other guides I read suggested that --host-only-cidr is flaky and would likely fail once the VPN was up, so let’s try without it.

Trying with default Networking

Let’s try using defaults for VBox:

$ minikube start --driver=virtualbox

😄 minikube v1.23.2 on Darwin 11.6

▪ MINIKUBE_ACTIVE_DOCKERD=minikube

✨ Using the virtualbox driver based on existing profile

👍 Starting control plane node minikube in cluster minikube

🏃 Updating the running virtualbox "minikube" VM ...

🐳 Preparing Kubernetes v1.22.2 on Docker 20.10.8 ...

╭───────────────────────────────────────────────────────────────────────────────────────────────────╮

│ │

│ You have selected "virtualbox" driver, but there are better options ! │

│ For better performance and support consider using a different driver: │

│ - hyperkit │

│ │

│ To turn off this warning run: │

│ │

│ $ minikube config set WantVirtualBoxDriverWarning false │

│ │

│ │

│ To learn more about on minikube drivers checkout https://minikube.sigs.k8s.io/docs/drivers/ │

│ To see benchmarks checkout https://minikube.sigs.k8s.io/docs/benchmarks/cpuusage/ │

│ │

╰───────────────────────────────────────────────────────────────────────────────────────────────────╯

🔎 Verifying Kubernetes components...

▪ Using image gcr.io/k8s-minikube/storage-provisioner:v5

🌟 Enabled addons: storage-provisioner, default-storageclass

🏄 Done! kubectl is now configured to use "minikube" cluster and "default" namespace by default

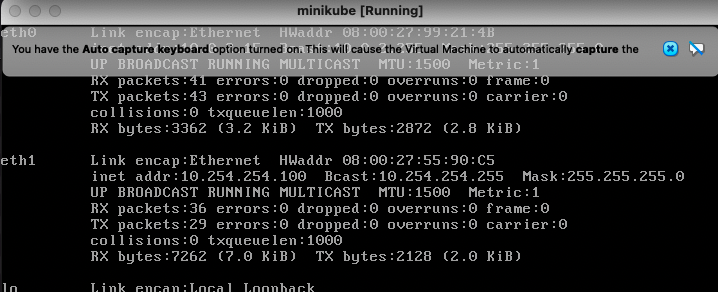

We can now check our kubeconfig and set the IP accordingly:

$ cat ~/.kube/config | grep server

server: https://10.254.254.100:8443

$ DOCKER_HOST="tcp://10.254.254.100:8443"

(See if you can spot my error below; skip ahead to find out what it is.)

However, even though the former certs path worked for Hyperkit, I now got errors:

$ docker ps

error during connect: Get "https://10.254.254.100:8443/v1.24/containers/json": x509: certificate signed by unknown authority

I built a new local folder (~/certs) and tried moving all the .crt and .pem files into it, a place Docker would see them (this had worked for the Pis before), but that still failed.

When I copied the CA cert to a .pem file (which was a guess), I got a forbidden error:

$ cp /Users/johnisa/.minikube/ca.crt ~/certs/ca.pem

$ docker ps

Error response from daemon: forbidden: User "system:anonymous" cannot get path "/v1.24/containers/json"

Then I basically chmod 777’d my cluster by giving anonymous access to everything:

    $ cat t.yaml
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      name: anonymous-admin-access
    rules:
    - apiGroups:
      - '*'
      resources:
      - '*'
      verbs:
      - '*'
    - nonResourceURLs:
      - '*'
      verbs:
      - '*'
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: anonymous-admin-access
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: anonymous-admin-access
    subjects:
    - kind: User
      name: system:anonymous
      namespace: default

$ kubectl apply -f t.yaml

clusterrole.rbac.authorization.k8s.io/anonymous-admin-access created

clusterrolebinding.rbac.authorization.k8s.io/anonymous-admin-access created

But this didn’t work either:

$ docker ps

Error response from daemon:

$ docker images

Error response from daemon:

This is when I stopped, pulled my head out of my ass, and tried the command I should have started with:

$ minikube docker-env

export DOCKER_TLS_VERIFY="1"

export DOCKER_HOST="tcp://10.254.254.100:2376"

export DOCKER_CERT_PATH="/Users/johnisa/.minikube/certs"

export MINIKUBE_ACTIVE_DOCKERD="minikube"

# To point your shell to minikube's docker-daemon, run:

# eval $(minikube -p minikube docker-env)

(Did you catch that? I was using the Kubernetes HTTPS port, 8443, instead of the Docker TCP port, 2376.)

I set those and was fine:

$ export DOCKER_TLS_VERIFY="1"

$ export DOCKER_HOST="tcp://10.254.254.100:2376"

$ export DOCKER_CERT_PATH="/Users/johnisa/.minikube/certs"

$ export MINIKUBE_ACTIVE_DOCKERD="minikube"

$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

2a40ac67a5d8 6e38f40d628d "/storage-provisioner" 17 minutes ago Up 17 minutes k8s_storage-provisioner_storage-provisioner_kube-system_a45d8124-8fa7-43ab-8487-77d02ed75965_1

51611891db0f 8d147537fb7d "/coredns -conf /etc…" 18 minutes ago Up 18 minutes k8s_coredns_coredns-78fcd69978-fz788_kube-system_d0de942c-c9b7-4ab8-a34c-3a654cef97bc_0

cfee91d5aa7c 873127efbc8a "/usr/local/bin/kube…" 18 minutes ago Up 18 minutes k8s_kube-proxy_kube-proxy-xdpwr_kube-system_15bee0da-1c4f-46cb-9d0d-1b69338a40d2_0

3b0b6530b968 k8s.gcr.io/pause:3.5 "/pause" 18 minutes ago Up 18 minutes k8s_POD_coredns-78fcd69978-fz788_kube-system_d0de942c-c9b7-4ab8-a34c-3a654cef97bc_0

730e5abec93d k8s.gcr.io/pause:3.5 "/pause" 18 minutes ago Up 18 minutes k8s_POD_kube-proxy-xdpwr_kube-system_15bee0da-1c4f-46cb-9d0d-1b69338a40d2_0

195ea9c5c4c5 k8s.gcr.io/pause:3.5 "/pause" 18 minutes ago Up 18 minutes k8s_POD_storage-provisioner_kube-system_a45d8124-8fa7-43ab-8487-77d02ed75965_0

ace8de002016 004811815584 "etcd --advertise-cl…" 18 minutes ago Up 18 minutes k8s_etcd_etcd-minikube_kube-system_aab17095f2d764d27d8f40847ab6431a_0

95aba37ad937 b51ddc1014b0 "kube-scheduler --au…" 18 minutes ago Up 18 minutes k8s_kube-scheduler_kube-scheduler-minikube_kube-system_97bca4cd66281ad2157a6a68956c4fa5_0

08f56526edd2 5425bcbd23c5 "kube-controller-man…" 18 minutes ago Up 18 minutes k8s_kube-controller-manager_kube-controller-manager-minikube_kube-system_20326866d8a92c47afe958577e1a8179_0

8dafb9cadbdc e64579b7d886 "kube-apiserver --ad…" 18 minutes ago Up 18 minutes k8s_kube-apiserver_kube-apiserver-minikube_kube-system_3c077a71725cc7a253d9f030f1cc7b47_0

92fd952a4f1f k8s.gcr.io/pause:3.5 "/pause" 18 minutes ago Up 18 minutes k8s_POD_kube-controller-manager-minikube_kube-system_20326866d8a92c47afe958577e1a8179_0

aa04f1a1ee8f k8s.gcr.io/pause:3.5 "/pause" 18 minutes ago Up 18 minutes k8s_POD_kube-apiserver-minikube_kube-system_3c077a71725cc7a253d9f030f1cc7b47_0

1377757ae542 k8s.gcr.io/pause:3.5 "/pause" 18 minutes ago Up 18 minutes k8s_POD_etcd-minikube_kube-system_aab17095f2d764d27d8f40847ab6431a_0

85a484d19a91 k8s.gcr.io/pause:3.5 "/pause" 18 minutes ago Up 18 minutes k8s_POD_kube-scheduler-minikube_kube-system_97bca4cd66281ad2157a6a68956c4fa5_0

I removed the global admin anon access and tried again (to verify that had no bearing):

$ kubectl delete -f t.yaml

clusterrole.rbac.authorization.k8s.io "anonymous-admin-access" deleted

clusterrolebinding.rbac.authorization.k8s.io "anonymous-admin-access" deleted

$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

2a40ac67a5d8 6e38f40d628d "/storage-provisioner" 19 minutes ago Up 19 minutes k8s_storage-provisioner_storage-provisioner_kube-system_a45d8124-8fa7-43ab-8487-77d02ed75965_1

51611891db0f 8d147537fb7d "/coredns -conf /etc…" 20 minutes ago Up 20 minutes k8s_coredns_coredns-78fcd69978-fz788_kube-system_d0de942c-c9b7-4ab8-a34c-3a654cef97bc_0

cfee91d5aa7c 873127efbc8a "/usr/local/bin/kube…" 20 minutes ago Up 20 minutes k8s_kube-proxy_kube-proxy-xdpwr_kube-system_15bee0da-1c4f-46cb-9d0d-1b69338a40d2_0

3b0b6530b968 k8s.gcr.io/pause:3.5 "/pause" 20 minutes ago Up 20 minutes k8s_POD_coredns-78fcd69978-fz788_kube-system_d0de942c-c9b7-4ab8-a34c-3a654cef97bc_0

730e5abec93d k8s.gcr.io/pause:3.5 "/pause" 20 minutes ago Up 20 minutes k8s_POD_kube-proxy-xdpwr_kube-system_15bee0da-1c4f-46cb-9d0d-1b69338a40d2_0

195ea9c5c4c5 k8s.gcr.io/pause:3.5 "/pause" 20 minutes ago Up 20 minutes k8s_POD_storage-provisioner_kube-system_a45d8124-8fa7-43ab-8487-77d02ed75965_0

ace8de002016 004811815584 "etcd --advertise-cl…" 20 minutes ago Up 20 minutes k8s_etcd_etcd-minikube_kube-system_aab17095f2d764d27d8f40847ab6431a_0

95aba37ad937 b51ddc1014b0 "kube-scheduler --au…" 20 minutes ago Up 20 minutes k8s_kube-scheduler_kube-scheduler-minikube_kube-system_97bca4cd66281ad2157a6a68956c4fa5_0

08f56526edd2 5425bcbd23c5 "kube-controller-man…" 20 minutes ago Up 20 minutes k8s_kube-controller-manager_kube-controller-manager-minikube_kube-system_20326866d8a92c47afe958577e1a8179_0

8dafb9cadbdc e64579b7d886 "kube-apiserver --ad…" 20 minutes ago Up 20 minutes k8s_kube-apiserver_kube-apiserver-minikube_kube-system_3c077a71725cc7a253d9f030f1cc7b47_0

92fd952a4f1f k8s.gcr.io/pause:3.5 "/pause" 20 minutes ago Up 20 minutes k8s_POD_kube-controller-manager-minikube_kube-system_20326866d8a92c47afe958577e1a8179_0

aa04f1a1ee8f k8s.gcr.io/pause:3.5 "/pause" 20 minutes ago Up 20 minutes k8s_POD_kube-apiserver-minikube_kube-system_3c077a71725cc7a253d9f030f1cc7b47_0

1377757ae542 k8s.gcr.io/pause:3.5 "/pause" 20 minutes ago Up 20 minutes k8s_POD_etcd-minikube_kube-system_aab17095f2d764d27d8f40847ab6431a_0

85a484d19a91 k8s.gcr.io/pause:3.5 "/pause" 20 minutes ago Up 20 minutes k8s_POD_kube-scheduler-minikube_kube-system_97bca4cd66281ad2157a6a68956c4fa5_0

The root of the issue was that I was pointing at the Kubernetes API port, 8443, instead of the Docker endpoint on 2376.
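Here is a small POSIX shell sketch of a guard against that mistake. The names docker_host_from_server and check_docker_host are hypothetical helpers of my own. The only assumption built in is the one minikube docker-env reported above: dockerd listens with TLS on 2376, while 8443 belongs to the Kubernetes API server.

```shell
# Map a kubeconfig API server URL to the Docker daemon endpoint on the same VM.
docker_host_from_server() {
  local hostport="${1#*://}"         # drop the scheme: 10.254.254.100:8443
  hostport="${hostport%%/*}"         # drop any path
  echo "tcp://${hostport%:*}:2376"   # swap the port:   tcp://10.254.254.100:2376
}

# Fail loudly if a DOCKER_HOST value (default: the current one) targets 8443.
check_docker_host() {
  case "${1:-${DOCKER_HOST:-}}" in
    *:8443)
      echo "DOCKER_HOST uses 8443 (Kubernetes API server); dockerd listens on 2376" >&2
      return 1 ;;
  esac
}

docker_host_from_server https://10.254.254.100:8443
```

To feed it the current context's server, kubectl config view --minify -o jsonpath='{.clusters[0].cluster.server}' prints the URL. That said, eval $(minikube -p minikube docker-env) remains the better source, because it also sets DOCKER_CERT_PATH and DOCKER_TLS_VERIFY.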

There were still issues, however, once the VPN was enabled:

$ docker ps

Cannot connect to the Docker daemon at tcp://10.254.254.100:2376. Is the docker daemon running?

VBox Port Mappings to Solve VPN issues

From this guide, Cuong Dong-Si points out that we can use VirtualBox NAT port-forwarding rules to send traffic from 127.0.0.1 on the host into the minikube VM.

This is done as follows:

$ VBoxManage controlvm minikube natpf1 k8s-apiserver,tcp,127.0.0.1,8443,,8443

$ VBoxManage controlvm minikube natpf1 k8s-dashboard,tcp,127.0.0.1,30000,,30000

$ VBoxManage controlvm minikube natpf1 jenkins,tcp,127.0.0.1,30080,,30080

$ VBoxManage controlvm minikube natpf1 docker,tcp,127.0.0.1,2376,,2376

$ kubectl config set-cluster minikube-vpn --server=https://127.0.0.1:8443 --insecure-skip-tls-verify

Cluster "minikube-vpn" set.

$ kubectl config set-context minikube-vpn --cluster=minikube-vpn --user=minikube

Context "minikube-vpn" created.
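The four rules differ only in name and port, so they are easy to generate. This sketch (the function name is mine) only prints the VBoxManage commands, using the same natpf1 syntax as above: name, protocol, host IP, host port, guest IP, guest port. That way you can review them before anything runs.

```shell
# Print (not run) VBoxManage NAT port-forward commands for a VM.
# Usage: natpf_commands VM [name:port ...]
# With no rules given, it emits the four forwards used above. Each one maps
# 127.0.0.1:<port> on the host to the same port in the guest on NAT adapter 1.
natpf_commands() {
  [ "$#" -ge 1 ] || return 1
  local vm="$1"
  shift
  if [ "$#" -eq 0 ]; then
    set -- k8s-apiserver:8443 k8s-dashboard:30000 jenkins:30080 docker:2376
  fi
  local rule
  for rule in "$@"; do
    printf 'VBoxManage controlvm %s natpf1 %s,tcp,127.0.0.1,%s,,%s\n' \
      "$vm" "${rule%%:*}" "${rule##*:}" "${rule##*:}"
  done
}

natpf_commands minikube
```

Once the output looks right, natpf_commands minikube | sh applies it. The guest IP field is left empty, as in the original commands. As I understand it, that lets VirtualBox forward to whatever address the guest has on that NAT adapter.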

Now when I switch to 127.0.0.1, it works!

Even with the VPN on, I can hit my containers:

$ export DOCKER_HOST="tcp://127.0.0.1:2376"

$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

2a40ac67a5d8 6e38f40d628d "/storage-provisioner" 41 minutes ago Up 41 minutes k8s_storage-provisioner_storage-provisioner_kube-system_a45d8124-8fa7-43ab-8487-77d02ed75965_1

51611891db0f 8d147537fb7d "/coredns -conf /etc…" 42 minutes ago Up 42 minutes k8s_coredns_coredns-78fcd69978-fz788_kube-system_d0de942c-c9b7-4ab8-a34c-3a654cef97bc_0

cfee91d5aa7c 873127efbc8a "/usr/local/bin/kube…" 42 minutes ago Up 42 minutes k8s_kube-proxy_kube-proxy-xdpwr_kube-system_15bee0da-1c4f-46cb-9d0d-1b69338a40d2_0

3b0b6530b968 k8s.gcr.io/pause:3.5 "/pause" 42 minutes ago Up 42 minutes k8s_POD_coredns-78fcd69978-fz788_kube-system_d0de942c-c9b7-4ab8-a34c-3a654cef97bc_0

730e5abec93d k8s.gcr.io/pause:3.5 "/pause" 42 minutes ago Up 42 minutes k8s_POD_kube-proxy-xdpwr_kube-system_15bee0da-1c4f-46cb-9d0d-1b69338a40d2_0

195ea9c5c4c5 k8s.gcr.io/pause:3.5 "/pause" 42 minutes ago Up 42 minutes k8s_POD_storage-provisioner_kube-system_a45d8124-8fa7-43ab-8487-77d02ed75965_0

ace8de002016 004811815584 "etcd --advertise-cl…" 42 minutes ago Up 42 minutes k8s_etcd_etcd-minikube_kube-system_aab17095f2d764d27d8f40847ab6431a_0

95aba37ad937 b51ddc1014b0 "kube-scheduler --au…" 42 minutes ago Up 42 minutes k8s_kube-scheduler_kube-scheduler-minikube_kube-system_97bca4cd66281ad2157a6a68956c4fa5_0

08f56526edd2 5425bcbd23c5 "kube-controller-man…" 42 minutes ago Up 42 minutes k8s_kube-controller-manager_kube-controller-manager-minikube_kube-system_20326866d8a92c47afe958577e1a8179_0

8dafb9cadbdc e64579b7d886 "kube-apiserver --ad…" 42 minutes ago Up 42 minutes k8s_kube-apiserver_kube-apiserver-minikube_kube-system_3c077a71725cc7a253d9f030f1cc7b47_0

92fd952a4f1f k8s.gcr.io/pause:3.5 "/pause" 42 minutes ago Up 42 minutes k8s_POD_kube-controller-manager-minikube_kube-system_20326866d8a92c47afe958577e1a8179_0

aa04f1a1ee8f k8s.gcr.io/pause:3.5 "/pause" 42 minutes ago Up 42 minutes k8s_POD_kube-apiserver-minikube_kube-system_3c077a71725cc7a253d9f030f1cc7b47_0

1377757ae542 k8s.gcr.io/pause:3.5 "/pause" 42 minutes ago Up 42 minutes k8s_POD_etcd-minikube_kube-system_aab17095f2d764d27d8f40847ab6431a_0

85a484d19a91 k8s.gcr.io/pause:3.5 "/pause" 42 minutes ago Up 42 minutes k8s_POD_kube-scheduler-minikube_kube-system_97bca4cd66281ad2157a6a68956c4fa5_0

When I’m on the VPN and use the default context, we can’t get to the minikube VM:

$ kubectl config use-context minikube

Switched to context "minikube".

$ kubectl get nodes

Unable to connect to the server: dial tcp 10.254.254.100:8443: i/o timeout

But if I switch to the VPN context, we are good to go:

$ kubectl config use-context minikube-vpn

Switched to context "minikube-vpn".

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

minikube Ready control-plane,master 49m v1.22.2

Restarting the laptop

Hyperkit did a great job on restart. But will VirtualBox?

After the restart, we saw that the VM’s startup had been aborted, and starting it headless still didn’t work.
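The VM state can also be checked from the command line. VBoxManage showvminfo minikube --machinereadable prints key="value" lines, including one such as VMState="running". The sketch below pulls that value out of text on stdin, so it can be tried without VirtualBox installed; the function name is my own. It only reports the state and does not fix the networking problem that follows.

```shell
# Print the VMState value from `VBoxManage showvminfo <vm> --machinereadable`
# output read on stdin; fail if no VMState line is present.
vm_state() {
  local line
  while IFS= read -r line; do
    case "$line" in
      VMState=*)                     # does not match VMStateChangeTime=...
        line=${line#VMState=}
        line=${line#\"}
        line=${line%\"}
        printf '%s\n' "$line"
        return 0 ;;
    esac
  done
  return 1
}

# Offline demo with sample text; prints: aborted
printf 'name="minikube"\nVMState="aborted"\n' | vm_state
```

Against the real VM, [ "$(VBoxManage showvminfo minikube --machinereadable | vm_state)" = running ] || VBoxManage startvm minikube --type headless would start it headless if it isn't running. As noted, that alone was not enough here.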

We can log in to the VM with docker/tcuser and see that the IP is the same:

But still no go:

$ minikube docker-env

export DOCKER_TLS_VERIFY="1"

export DOCKER_HOST="tcp://10.254.254.100:2376"

export DOCKER_CERT_PATH="/Users/johnisa/.minikube/certs"

export MINIKUBE_ACTIVE_DOCKERD="minikube"

# To point your shell to minikube's docker-daemon, run:

# eval $(minikube -p minikube docker-env)

$ eval $(minikube -p minikube docker-env)

$ docker ps

Cannot connect to the Docker daemon at tcp://10.254.254.100:2376. Is the docker daemon running?

$ cat ~/.kube/config | grep server

server: https://10.254.254.100:8443

server: https://127.0.0.1:8443

$ kubectl get nodes

The connection to the server 10.254.254.100:8443 was refused - did you specify the right host or port?

I saw something similar with Hyperkit, where just running minikube start seemed to kick it into gear. The same worked here:

$ minikube start

😄 minikube v1.23.2 on Darwin 11.6

▪ MINIKUBE_ACTIVE_DOCKERD=minikube

✨ Using the virtualbox driver based on existing profile

👍 Starting control plane node minikube in cluster minikube

🏃 Updating the running virtualbox "minikube" VM ...

🐳 Preparing Kubernetes v1.22.2 on Docker 20.10.8 ...

╭───────────────────────────────────────────────────────────────────────────────────────────────────╮

│ │

│ You have selected "virtualbox" driver, but there are better options ! │

│ For better performance and support consider using a different driver: │

│ - hyperkit │

│ │

│ To turn off this warning run: │

│ │

│ $ minikube config set WantVirtualBoxDriverWarning false │

│ │

│ │

│ To learn more about on minikube drivers checkout https://minikube.sigs.k8s.io/docs/drivers/ │

│ To see benchmarks checkout https://minikube.sigs.k8s.io/docs/benchmarks/cpuusage/ │

│ │

╰───────────────────────────────────────────────────────────────────────────────────────────────────╯

🔎 Verifying Kubernetes components...

▪ Using image gcr.io/k8s-minikube/storage-provisioner:v5

🌟 Enabled addons: storage-provisioner, default-storageclass

🏄 Done! kubectl is now configured to use "minikube" cluster and "default" namespace by default

And now, testing it:

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

minikube Ready control-plane,master 62m v1.22.2

$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

deca47e5badb 8d147537fb7d "/coredns -conf /etc…" 21 seconds ago Up 17 seconds k8s_coredns_coredns-78fcd69978-fz788_kube-system_d0de942c-c9b7-4ab8-a34c-3a654cef97bc_1

9122a188762c 6e38f40d628d "/storage-provisioner" 21 seconds ago Up 17 seconds k8s_storage-provisioner_storage-provisioner_kube-system_a45d8124-8fa7-43ab-8487-77d02ed75965_2

9150e01977c0 873127efbc8a "/usr/local/bin/kube…" 21 seconds ago Up 17 seconds k8s_kube-proxy_kube-proxy-xdpwr_kube-system_15bee0da-1c4f-46cb-9d0d-1b69338a40d2_1

907b9f7815c2 k8s.gcr.io/pause:3.5 "/pause" 21 seconds ago Up 18 seconds k8s_POD_kube-proxy-xdpwr_kube-system_15bee0da-1c4f-46cb-9d0d-1b69338a40d2_1

83a54e3aa21a k8s.gcr.io/pause:3.5 "/pause" 22 seconds ago Up 18 seconds k8s_POD_coredns-78fcd69978-fz788_kube-system_d0de942c-c9b7-4ab8-a34c-3a654cef97bc_1

195e55c02bd3 k8s.gcr.io/pause:3.5 "/pause" 22 seconds ago Up 18 seconds k8s_POD_storage-provisioner_kube-system_a45d8124-8fa7-43ab-8487-77d02ed75965_1

2c6f5c6e2ed0 004811815584 "etcd --advertise-cl…" 29 seconds ago Up 25 seconds k8s_etcd_etcd-minikube_kube-system_aab17095f2d764d27d8f40847ab6431a_1

0b95416e80b3 b51ddc1014b0 "kube-scheduler --au…" 29 seconds ago Up 25 seconds k8s_kube-scheduler_kube-scheduler-minikube_kube-system_97bca4cd66281ad2157a6a68956c4fa5_1

8390d499be62 5425bcbd23c5 "kube-controller-man…" 29 seconds ago Up 25 seconds k8s_kube-controller-manager_kube-controller-manager-minikube_kube-system_20326866d8a92c47afe958577e1a8179_1

c817b3881a73 e64579b7d886 "kube-apiserver --ad…" 29 seconds ago Up 25 seconds k8s_kube-apiserver_kube-apiserver-minikube_kube-system_3c077a71725cc7a253d9f030f1cc7b47_1

f066d4ece5f3 k8s.gcr.io/pause:3.5 "/pause" 30 seconds ago Up 26 seconds k8s_POD_kube-apiserver-minikube_kube-system_3c077a71725cc7a253d9f030f1cc7b47_1

97e35c28bade k8s.gcr.io/pause:3.5 "/pause" 30 seconds ago Up 26 seconds k8s_POD_etcd-minikube_kube-system_aab17095f2d764d27d8f40847ab6431a_1

b1f85f2aa8cb k8s.gcr.io/pause:3.5 "/pause" 30 seconds ago Up 26 seconds k8s_POD_kube-scheduler-minikube_kube-system_97bca4cd66281ad2157a6a68956c4fa5_1

6f6bc2b973df k8s.gcr.io/pause:3.5 "/pause" 30 seconds ago Up 26 seconds k8s_POD_kube-controller-manager-minikube_kube-system_20326866d8a92c47afe958577e1a8179_1

And with the VPN on, we can test docker:

$ export DOCKER_HOST="tcp://127.0.0.1:2376"

$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

b0f365ae5b5c 6e38f40d628d "/storage-provisioner" 2 minutes ago Up 2 minutes k8s_storage-provisioner_storage-provisioner_kube-system_a45d8124-8fa7-43ab-8487-77d02ed75965_3

deca47e5badb 8d147537fb7d "/coredns -conf /etc…" 2 minutes ago Up 2 minutes k8s_coredns_coredns-78fcd69978-fz788_kube-system_d0de942c-c9b7-4ab8-a34c-3a654cef97bc_1

9150e01977c0 873127efbc8a "/usr/local/bin/kube…" 2 minutes ago Up 2 minutes k8s_kube-proxy_kube-proxy-xdpwr_kube-system_15bee0da-1c4f-46cb-9d0d-1b69338a40d2_1

907b9f7815c2 k8s.gcr.io/pause:3.5 "/pause" 2 minutes ago Up 2 minutes k8s_POD_kube-proxy-xdpwr_kube-system_15bee0da-1c4f-46cb-9d0d-1b69338a40d2_1

83a54e3aa21a k8s.gcr.io/pause:3.5 "/pause" 2 minutes ago Up 2 minutes k8s_POD_coredns-78fcd69978-fz788_kube-system_d0de942c-c9b7-4ab8-a34c-3a654cef97bc_1

195e55c02bd3 k8s.gcr.io/pause:3.5 "/pause" 2 minutes ago Up 2 minutes k8s_POD_storage-provisioner_kube-system_a45d8124-8fa7-43ab-8487-77d02ed75965_1

2c6f5c6e2ed0 004811815584 "etcd --advertise-cl…" 2 minutes ago Up 2 minutes k8s_etcd_etcd-minikube_kube-system_aab17095f2d764d27d8f40847ab6431a_1

0b95416e80b3 b51ddc1014b0 "kube-scheduler --au…" 2 minutes ago Up 2 minutes k8s_kube-scheduler_kube-scheduler-minikube_kube-system_97bca4cd66281ad2157a6a68956c4fa5_1

8390d499be62 5425bcbd23c5 "kube-controller-man…" 2 minutes ago Up 2 minutes k8s_kube-controller-manager_kube-controller-manager-minikube_kube-system_20326866d8a92c47afe958577e1a8179_1

c817b3881a73 e64579b7d886 "kube-apiserver --ad…" 2 minutes ago Up 2 minutes k8s_kube-apiserver_kube-apiserver-minikube_kube-system_3c077a71725cc7a253d9f030f1cc7b47_1

f066d4ece5f3 k8s.gcr.io/pause:3.5 "/pause" 3 minutes ago Up 2 minutes k8s_POD_kube-apiserver-minikube_kube-system_3c077a71725cc7a253d9f030f1cc7b47_1

97e35c28bade k8s.gcr.io/pause:3.5 "/pause" 3 minutes ago Up 2 minutes k8s_POD_etcd-minikube_kube-system_aab17095f2d764d27d8f40847ab6431a_1

b1f85f2aa8cb k8s.gcr.io/pause:3.5 "/pause" 3 minutes ago Up 2 minutes k8s_POD_kube-scheduler-minikube_kube-system_97bca4cd66281ad2157a6a68956c4fa5_1

6f6bc2b973df k8s.gcr.io/pause:3.5 "/pause" 3 minutes ago Up 2 minutes k8s_POD_kube-controller-manager-minikube_kube-system_20326866d8a92c47afe958577e1a8179_1

and k8s:

$ kubectl config use-context minikube-vpn

Switched to context "minikube-vpn".

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

minikube Ready control-plane,master 65m v1.22.2

Lastly, let’s update our ~/.bash_profile for future shell invocations:

$ cat ~/.bash_profile

# export DOCKER_TLS_VERIFY=1

# export DOCKER_HOST=tcp://192.168.64.2:2376

# export MINIKUBE_ACTIVE_DOCKERD=minikube

# export DOCKER_CERT_PATH=/Users/johnisa/.minikube/

# minikube start --driver=hyperkit

# Let Minikube handle it

eval $(minikube -p minikube docker-env)

If you are using the new zsh that Apple has shoved down our throats, the same lines should work in ~/.zshrc.

I will admit that in the end, I reverted to setting the hardcoded values, because the minikube invocation took too long and at times failed when I launched a terminal while the VPN was engaged:

# Let Minikube handle it

# eval $(minikube -p minikube docker-env)

export DOCKER_CERT_PATH="/Users/johnisa/.minikube/certs"

export DOCKER_HOST="tcp://127.0.0.1:2376"

export DOCKER_TLS_VERIFY="1"

export MINIKUBE_ACTIVE_DOCKERD="minikube"
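A possible middle ground, sketched here as an assumption rather than what I ended up using, is to cache the output of minikube docker-env in a file. Refresh it by hand while the cluster is reachable, and have the profile source it. The cache file name and function name are my own choices. The function keeps dockerd’s port but rewrites the host to the 127.0.0.1 NAT forward set up earlier.

```shell
# For ~/.bash_profile (or ~/.zshrc). Refresh the cache by hand when needed:
#   minikube -p minikube docker-env > ~/.minikube-docker-env
load_minikube_docker_env() {
  local cache="${1:-$HOME/.minikube-docker-env}"
  [ -r "$cache" ] || return 1
  . "$cache"                         # plain export NAME="value" lines plus comments
  [ -n "${DOCKER_HOST:-}" ] || return 1
  # Keep the daemon port, but go through the localhost forward so it works on the VPN.
  export DOCKER_HOST="tcp://127.0.0.1:${DOCKER_HOST##*:}"
}

load_minikube_docker_env || true     # a missing cache leaves the environment alone
```

This avoids calling minikube on every new shell. The trade-off is a stale cache if the VM is recreated with new certificates.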

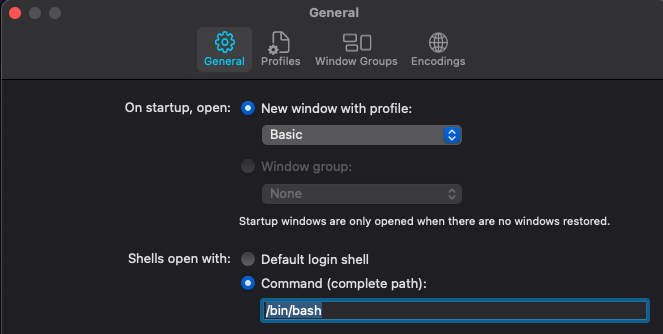

Using BASH

One of the first things I do now on Macs is set the shell back to BASH.

And for everything else (like VS Code):

$ chsh -s /bin/bash

Changing shell for johnisa.

But this is because I have gray hair and like BASH, VI and Brew for installing.

I’m just a simple caveman, your world scares and confuses me. I look at this ZSH and say ‘what is this crazy shell’ or whatever…

Azure VM

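Many DevOps engineers have Visual Studio Enterprise (VSE, aka MSDN) subscriptions that include some free monthly Azure credit.

Even if you do not have VSE, there are free and low-cost Azure instance classes. If you wish to do this on AWS, you may be able to get by with t3.micro EC2 instances, though minikube asks for 2 CPUs and 2 GB of RAM, and a t3.micro has only 1 GiB.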

Let’s set our default subscription to VSE:

$ az account list -o table | grep Visual | sed 's/AzureCloud.*Enabled/AzureCloud ***masked*** Enabled/g'

Visual Studio Enterprise Subscription AzureCloud ***masked*** Enabled True

$ az account set --subscription "Visual Studio Enterprise Subscription"

Create a resource group to host the VM and VNet:

$ az group create -n idjminikubevmcentralrg --location centralus

{

"id": "/subscriptions/a6ee78d7-b138-46c0-9b23-6f8558e730b0/resourceGroups/idjminikubevmcentralrg",

"location": "centralus",

"managedBy": null,

"name": "idjminikubevmcentralrg",

"properties": {

"provisioningState": "Succeeded"

},

"tags": null,

"type": "Microsoft.Resources/resourceGroups"

}

We can get a full list of VM sizes for a region:

$ az vm list-sizes --location "centralus" -o table

MaxDataDiskCount MemoryInMb Name NumberOfCores OsDiskSizeInMb ResourceDiskSizeInMb

------------------ ------------ ---------------------- --------------- ---------------- ----------------------

1 768 Standard_A0 1 1047552 20480

2 1792 Standard_A1 1 1047552 71680

4 3584 Standard_A2 2 1047552 138240

8 7168 Standard_A3 4 1047552 291840

4 14336 Standard_A5 2 1047552 138240

16 14336 Standard_A4 8 1047552 619520

8 28672 Standard_A6 4 1047552 291840

16 57344 Standard_A7 8 1047552 619520

1 768 Basic_A0 1 1047552 20480

2 1792 Basic_A1 1 1047552 40960

4 3584 Basic_A2 2 1047552 61440

8 7168 Basic_A3 4 1047552 122880

16 14336 Basic_A4 8 1047552 245760

4 3584 Standard_D1_v2 1 1047552 51200

8 7168 Standard_D2_v2 2 1047552 102400

16 14336 Standard_D3_v2 4 1047552 204800

32 28672 Standard_D4_v2 8 1047552 409600

64 57344 Standard_D5_v2 16 1047552 819200

8 14336 Standard_D11_v2 2 1047552 102400

16 28672 Standard_D12_v2 4 1047552 204800

32 57344 Standard_D13_v2 8 1047552 409600

64 114688 Standard_D14_v2 16 1047552 819200

64 143360 Standard_D15_v2 20 1047552 1024000

8 7168 Standard_D2_v2_Promo 2 1047552 102400

16 14336 Standard_D3_v2_Promo 4 1047552 204800

32 28672 Standard_D4_v2_Promo 8 1047552 409600

64 57344 Standard_D5_v2_Promo 16 1047552 819200

8 14336 Standard_D11_v2_Promo 2 1047552 102400

16 28672 Standard_D12_v2_Promo 4 1047552 204800

32 57344 Standard_D13_v2_Promo 8 1047552 409600

64 114688 Standard_D14_v2_Promo 16 1047552 819200

4 2048 Standard_F1 1 1047552 16384

8 4096 Standard_F2 2 1047552 32768

16 8192 Standard_F4 4 1047552 65536

32 16384 Standard_F8 8 1047552 131072

64 32768 Standard_F16 16 1047552 262144

2 2048 Standard_A1_v2 1 1047552 10240

4 16384 Standard_A2m_v2 2 1047552 20480

4 4096 Standard_A2_v2 2 1047552 20480

8 32768 Standard_A4m_v2 4 1047552 40960

8 8192 Standard_A4_v2 4 1047552 40960

16 65536 Standard_A8m_v2 8 1047552 81920

16 16384 Standard_A8_v2 8 1047552 81920

4 3584 Standard_DS1 1 1047552 7168

8 7168 Standard_DS2 2 1047552 14336

16 14336 Standard_DS3 4 1047552 28672

32 28672 Standard_DS4 8 1047552 57344

8 14336 Standard_DS11 2 1047552 28672

16 28672 Standard_DS12 4 1047552 57344

32 57344 Standard_DS13 8 1047552 114688

64 114688 Standard_DS14 16 1047552 229376

4 8192 Standard_D2_v3 2 1047552 51200

8 16384 Standard_D4_v3 4 1047552 102400

16 32768 Standard_D8_v3 8 1047552 204800

32 65536 Standard_D16_v3 16 1047552 409600

32 131072 Standard_D32_v3 32 1047552 819200

64 1024000 Standard_M64 64 1047552 8192000

64 1792000 Standard_M64m 64 1047552 8192000

64 2048000 Standard_M128 128 1047552 16384000

64 3891200 Standard_M128m 128 1047552 16384000

8 224000 Standard_M8-2ms 8 1047552 256000

8 224000 Standard_M8-4ms 8 1047552 256000

8 224000 Standard_M8ms 8 1047552 256000

16 448000 Standard_M16-4ms 16 1047552 512000

16 448000 Standard_M16-8ms 16 1047552 512000

16 448000 Standard_M16ms 16 1047552 512000

32 896000 Standard_M32-8ms 32 1047552 1024000

32 896000 Standard_M32-16ms 32 1047552 1024000

32 262144 Standard_M32ls 32 1047552 1024000

32 896000 Standard_M32ms 32 1047552 1024000

32 196608 Standard_M32ts 32 1047552 1024000

64 1792000 Standard_M64-16ms 64 1047552 2048000

64 1792000 Standard_M64-32ms 64 1047552 2048000

64 524288 Standard_M64ls 64 1047552 2048000

64 1792000 Standard_M64ms 64 1047552 2048000

64 1024000 Standard_M64s 64 1047552 2048000

64 3891200 Standard_M128-32ms 128 1047552 4096000

64 3891200 Standard_M128-64ms 128 1047552 4096000

64 3891200 Standard_M128ms 128 1047552 4096000

64 2048000 Standard_M128s 128 1047552 4096000

32 896000 Standard_M32ms_v2 32 1047552 0

64 1835008 Standard_M64ms_v2 64 1047552 0

64 1048576 Standard_M64s_v2 64 1047552 0

64 3985408 Standard_M128ms_v2 128 1047552 0

64 2097152 Standard_M128s_v2 128 1047552 0

64 4194304 Standard_M192ims_v2 192 1047552 0

64 2097152 Standard_M192is_v2 192 1047552 0

32 896000 Standard_M32dms_v2 32 1047552 1048576

64 1835008 Standard_M64dms_v2 64 1047552 2097152

64 1048576 Standard_M64ds_v2 64 1047552 2097152

64 3985408 Standard_M128dms_v2 128 1047552 4194304

64 2097152 Standard_M128ds_v2 128 1047552 4194304

64 4194304 Standard_M192idms_v2 192 1047552 4194304

64 2097152 Standard_M192ids_v2 192 1047552 4194304

2 512 Standard_B1ls 1 1047552 4096

2 2048 Standard_B1ms 1 1047552 4096

2 1024 Standard_B1s 1 1047552 4096

4 8192 Standard_B2ms 2 1047552 16384

4 4096 Standard_B2s 2 1047552 8192

8 16384 Standard_B4ms 4 1047552 32768

16 32768 Standard_B8ms 8 1047552 65536

16 49152 Standard_B12ms 12 1047552 98304

32 65536 Standard_B16ms 16 1047552 131072

32 81920 Standard_B20ms 20 1047552 163840

8 7168 Standard_DS2_v2_Promo 2 1047552 14336

16 14336 Standard_DS3_v2_Promo 4 1047552 28672

32 28672 Standard_DS4_v2_Promo 8 1047552 57344

64 57344 Standard_DS5_v2_Promo 16 1047552 114688

8 14336 Standard_DS11_v2_Promo 2 1047552 28672

16 28672 Standard_DS12_v2_Promo 4 1047552 57344

32 57344 Standard_DS13_v2_Promo 8 1047552 114688

64 114688 Standard_DS14_v2_Promo 16 1047552 229376

32 196608 Standard_D48_v3 48 1047552 1228800

32 262144 Standard_D64_v3 64 1047552 1638400

4 16384 Standard_E2_v3 2 1047552 51200

8 32768 Standard_E4_v3 4 1047552 102400

16 65536 Standard_E8_v3 8 1047552 204800

32 131072 Standard_E16_v3 16 1047552 409600

32 163840 Standard_E20_v3 20 1047552 512000

32 262144 Standard_E32_v3 32 1047552 819200

4 3584 Standard_D1 1 1047552 51200

8 7168 Standard_D2 2 1047552 102400

16 14336 Standard_D3 4 1047552 204800

32 28672 Standard_D4 8 1047552 409600

8 14336 Standard_D11 2 1047552 102400

16 28672 Standard_D12 4 1047552 204800

32 57344 Standard_D13 8 1047552 409600

64 114688 Standard_D14 16 1047552 819200

4 3584 Standard_DS1_v2 1 1047552 7168

8 7168 Standard_DS2_v2 2 1047552 14336

16 14336 Standard_DS3_v2 4 1047552 28672

32 28672 Standard_DS4_v2 8 1047552 57344

64 57344 Standard_DS5_v2 16 1047552 114688

8 14336 Standard_DS11-1_v2 2 1047552 28672

8 14336 Standard_DS11_v2 2 1047552 28672

16 28672 Standard_DS12-1_v2 4 1047552 57344

16 28672 Standard_DS12-2_v2 4 1047552 57344

16 28672 Standard_DS12_v2 4 1047552 57344

32 57344 Standard_DS13-2_v2 8 1047552 114688

32 57344 Standard_DS13-4_v2 8 1047552 114688

32 57344 Standard_DS13_v2 8 1047552 114688

64 114688 Standard_DS14-4_v2 16 1047552 229376

64 114688 Standard_DS14-8_v2 16 1047552 229376

64 114688 Standard_DS14_v2 16 1047552 229376

64 143360 Standard_DS15_v2 20 1047552 286720

4 2048 Standard_F1s 1 1047552 4096

8 4096 Standard_F2s 2 1047552 8192

16 8192 Standard_F4s 4 1047552 16384

32 16384 Standard_F8s 8 1047552 32768

64 32768 Standard_F16s 16 1047552 65536

4 8192 Standard_D2s_v3 2 1047552 16384

8 16384 Standard_D4s_v3 4 1047552 32768

16 32768 Standard_D8s_v3 8 1047552 65536

32 65536 Standard_D16s_v3 16 1047552 131072

32 131072 Standard_D32s_v3 32 1047552 262144

32 196608 Standard_D48s_v3 48 1047552 393216

32 262144 Standard_D64s_v3 64 1047552 524288

4 16384 Standard_E2s_v3 2 1047552 32768

8 32768 Standard_E4-2s_v3 4 1047552 65536

8 32768 Standard_E4s_v3 4 1047552 65536

16 65536 Standard_E8-2s_v3 8 1047552 131072

16 65536 Standard_E8-4s_v3 8 1047552 131072

16 65536 Standard_E8s_v3 8 1047552 131072

32 131072 Standard_E16-4s_v3 16 1047552 262144

32 131072 Standard_E16-8s_v3 16 1047552 262144

32 131072 Standard_E16s_v3 16 1047552 262144

32 163840 Standard_E20s_v3 20 1047552 327680

32 262144 Standard_E32-8s_v3 32 1047552 524288

32 262144 Standard_E32-16s_v3 32 1047552 524288

32 262144 Standard_E32s_v3 32 1047552 524288

4 16384 Standard_E2_v4 2 1047552 0

8 32768 Standard_E4_v4 4 1047552 0

16 65536 Standard_E8_v4 8 1047552 0

32 131072 Standard_E16_v4 16 1047552 0

32 163840 Standard_E20_v4 20 1047552 0

32 262144 Standard_E32_v4 32 1047552 0

4 16384 Standard_E2d_v4 2 1047552 76800

8 32768 Standard_E4d_v4 4 1047552 153600

16 65536 Standard_E8d_v4 8 1047552 307200

32 131072 Standard_E16d_v4 16 1047552 614400

32 163840 Standard_E20d_v4 20 1047552 768000

32 262144 Standard_E32d_v4 32 1047552 1228800

4 16384 Standard_E2s_v4 2 1047552 0

8 32768 Standard_E4-2s_v4 4 1047552 0

8 32768 Standard_E4s_v4 4 1047552 0

16 65536 Standard_E8-2s_v4 8 1047552 0

16 65536 Standard_E8-4s_v4 8 1047552 0

16 65536 Standard_E8s_v4 8 1047552 0

32 131072 Standard_E16-4s_v4 16 1047552 0

32 131072 Standard_E16-8s_v4 16 1047552 0

32 131072 Standard_E16s_v4 16 1047552 0

32 163840 Standard_E20s_v4 20 1047552 0

32 262144 Standard_E32-8s_v4 32 1047552 0

32 262144 Standard_E32-16s_v4 32 1047552 0

32 262144 Standard_E32s_v4 32 1047552 0

4 16384 Standard_E2ds_v4 2 1047552 76800

8 32768 Standard_E4-2ds_v4 4 1047552 153600

8 32768 Standard_E4ds_v4 4 1047552 153600

16 65536 Standard_E8-2ds_v4 8 1047552 307200

16 65536 Standard_E8-4ds_v4 8 1047552 307200

16 65536 Standard_E8ds_v4 8 1047552 307200

32 131072 Standard_E16-4ds_v4 16 1047552 614400

32 131072 Standard_E16-8ds_v4 16 1047552 614400

32 131072 Standard_E16ds_v4 16 1047552 614400

32 163840 Standard_E20ds_v4 20 1047552 768000

32 262144 Standard_E32-8ds_v4 32 1047552 1228800

32 262144 Standard_E32-16ds_v4 32 1047552 1228800

32 262144 Standard_E32ds_v4 32 1047552 1228800

4 8192 Standard_D2d_v4 2 1047552 76800

8 16384 Standard_D4d_v4 4 1047552 153600

16 32768 Standard_D8d_v4 8 1047552 307200

32 65536 Standard_D16d_v4 16 1047552 614400

32 131072 Standard_D32d_v4 32 1047552 1228800

32 196608 Standard_D48d_v4 48 1047552 1843200

32 262144 Standard_D64d_v4 64 1047552 2457600

4 8192 Standard_D2_v4 2 1047552 0

8 16384 Standard_D4_v4 4 1047552 0

16 32768 Standard_D8_v4 8 1047552 0

32 65536 Standard_D16_v4 16 1047552 0

32 131072 Standard_D32_v4 32 1047552 0

32 196608 Standard_D48_v4 48 1047552 0

32 262144 Standard_D64_v4 64 1047552 0

4 8192 Standard_D2ds_v4 2 1047552 76800

8 16384 Standard_D4ds_v4 4 1047552 153600

16 32768 Standard_D8ds_v4 8 1047552 307200

32 65536 Standard_D16ds_v4 16 1047552 614400

32 131072 Standard_D32ds_v4 32 1047552 1228800

32 196608 Standard_D48ds_v4 48 1047552 1843200

32 262144 Standard_D64ds_v4 64 1047552 2457600

4 8192 Standard_D2s_v4 2 1047552 0

8 16384 Standard_D4s_v4 4 1047552 0

16 32768 Standard_D8s_v4 8 1047552 0

32 65536 Standard_D16s_v4 16 1047552 0

32 131072 Standard_D32s_v4 32 1047552 0

32 196608 Standard_D48s_v4 48 1047552 0

32 262144 Standard_D64s_v4 64 1047552 0

4 4096 Standard_F2s_v2 2 1047552 16384

8 8192 Standard_F4s_v2 4 1047552 32768

16 16384 Standard_F8s_v2 8 1047552 65536

32 32768 Standard_F16s_v2 16 1047552 131072

32 65536 Standard_F32s_v2 32 1047552 262144

32 98304 Standard_F48s_v2 48 1047552 393216

32 131072 Standard_F64s_v2 64 1047552 524288

32 147456 Standard_F72s_v2 72 1047552 589824

32 393216 Standard_E48_v3 48 1047552 1228800

32 442368 Standard_E64_v3 64 1047552 1638400

32 393216 Standard_E48s_v3 48 1047552 786432

32 442368 Standard_E64-16s_v3 64 1047552 884736

32 442368 Standard_E64-32s_v3 64 1047552 884736

32 442368 Standard_E64s_v3 64 1047552 884736

32 393216 Standard_E48_v4 48 1047552 0

32 516096 Standard_E64_v4 64 1047552 0

32 393216 Standard_E48d_v4 48 1047552 1843200

32 516096 Standard_E64d_v4 64 1047552 2457600

32 393216 Standard_E48s_v4 48 1047552 0

32 516096 Standard_E64-16s_v4 64 1047552 0

32 516096 Standard_E64-32s_v4 64 1047552 0

32 516096 Standard_E64s_v4 64 1047552 0

32 516096 Standard_E80is_v4 80 1047552 0

32 393216 Standard_E48ds_v4 48 1047552 1843200

32 516096 Standard_E64-16ds_v4 64 1047552 2457600

32 516096 Standard_E64-32ds_v4 64 1047552 2457600

32 516096 Standard_E64ds_v4 64 1047552 2457600

32 516096 Standard_E80ids_v4 80 1047552 4362240

32 442368 Standard_E64i_v3 64 1047552 1638400

32 442368 Standard_E64is_v3 64 1047552 884736

4 8192 Standard_D2a_v4 2 1047552 51200

8 16384 Standard_D4a_v4 4 1047552 102400

16 32768 Standard_D8a_v4 8 1047552 204800

32 65536 Standard_D16a_v4 16 1047552 409600

32 131072 Standard_D32a_v4 32 1047552 819200

32 196608 Standard_D48a_v4 48 1047552 1228800

32 262144 Standard_D64a_v4 64 1047552 1638400

32 393216 Standard_D96a_v4 96 1047552 2457600

4 8192 Standard_D2as_v4 2 1047552 16384

8 16384 Standard_D4as_v4 4 1047552 32768

16 32768 Standard_D8as_v4 8 1047552 65536

32 65536 Standard_D16as_v4 16 1047552 131072

32 131072 Standard_D32as_v4 32 1047552 262144

32 196608 Standard_D48as_v4 48 1047552 393216

32 262144 Standard_D64as_v4 64 1047552 524288

32 393216 Standard_D96as_v4 96 1047552 786432

4 16384 Standard_E2a_v4 2 1047552 51200

8 32768 Standard_E4a_v4 4 1047552 102400

16 65536 Standard_E8a_v4 8 1047552 204800

32 131072 Standard_E16a_v4 16 1047552 409600

32 163840 Standard_E20a_v4 20 1047552 512000

32 262144 Standard_E32a_v4 32 1047552 819200

32 393216 Standard_E48a_v4 48 1047552 1228800

32 524288 Standard_E64a_v4 64 1047552 1638400

32 688128 Standard_E96a_v4 96 1047552 2457600

4 16384 Standard_E2as_v4 2 1047552 32768

8 32768 Standard_E4-2as_v4 4 1047552 65536

8 32768 Standard_E4as_v4 4 1047552 65536

16 65536 Standard_E8-2as_v4 8 1047552 131072

16 65536 Standard_E8-4as_v4 8 1047552 131072

16 65536 Standard_E8as_v4 8 1047552 131072

32 131072 Standard_E16-4as_v4 16 1047552 262144

32 131072 Standard_E16-8as_v4 16 1047552 262144

32 131072 Standard_E16as_v4 16 1047552 262144

32 163840 Standard_E20as_v4 20 1047552 327680

32 262144 Standard_E32-8as_v4 32 1047552 524288

32 262144 Standard_E32-16as_v4 32 1047552 524288

32 262144 Standard_E32as_v4 32 1047552 524288

32 393216 Standard_E48as_v4 48 1047552 786432

32 524288 Standard_E64-16as_v4 64 1047552 884736

32 524288 Standard_E64-32as_v4 64 1047552 884736

32 524288 Standard_E64as_v4 64 1047552 884736

32 688128 Standard_E96-24as_v4 96 1047552 1376256

32 688128 Standard_E96-48as_v4 96 1047552 1376256

32 688128 Standard_E96as_v4 96 1047552 1376256

16 65536 Standard_L8s_v2 8 1047552 81920

32 131072 Standard_L16s_v2 16 1047552 163840

32 262144 Standard_L32s_v2 32 1047552 327680

32 393216 Standard_L48s_v2 48 1047552 491520

32 524288 Standard_L64s_v2 64 1047552 655360

32 655360 Standard_L80s_v2 80 1047552 819200

64 5836800 Standard_M208ms_v2 208 1047552 4194304

64 2918400 Standard_M208s_v2 208 1047552 4194304

64 5836800 Standard_M416-208s_v2 416 1047552 8388608

64 5836800 Standard_M416s_v2 416 1047552 8388608

64 11673600 Standard_M416-208ms_v2 416 1047552 8388608

64 11673600 Standard_M416ms_v2 416 1047552 8388608

12 114688 Standard_NC6s_v3 6 1047552 344064

24 229376 Standard_NC12s_v3 12 1047552 688128

32 458752 Standard_NC24rs_v3 24 1047552 1376256

32 458752 Standard_NC24s_v3 24 1047552 1376256

We really just need one that has 2 vCPUs and reasonable memory.

I think we can get by with a Standard_A2 or Standard_A3. We’ll try the A2 to start.
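Rather than scanning the full list by eye, you can filter the table output. This is a minimal sketch. It assumes the rows come from az vm list-sizes --location centralus -o table, with the column order seen above: MaxDataDiskCount, MemoryInMb, Name, NumberOfCores, OsDiskSizeInMb, ResourceDiskSizeInMb. pick_sizes is a hypothetical helper, not part of the Azure CLI:

```shell
#!/usr/bin/env bash
# pick_sizes CORES MIN_MEM_MB
# Reads `az vm list-sizes -o table` rows on stdin and prints "Name MemoryInMb"
# for sizes with exactly CORES vCPUs and at least MIN_MEM_MB of memory.
# ASSUMED column order: MaxDataDiskCount MemoryInMb Name NumberOfCores OsDiskSizeInMb ResourceDiskSizeInMb
pick_sizes() {
  awk -v c="$1" -v m="$2" '
    # Header and dashed separator rows are skipped: only a numeric core count matches.
    NF == 6 && $4 ~ /^[0-9]+$/ && $4 + 0 == c + 0 && $2 + 0 >= m + 0 { print $3, $2 }
  '
}

# Example (needs the Azure CLI and a logged-in session):
#   az vm list-sizes --location centralus -o table | pick_sizes 2 3000
# A JSON/JMESPath query avoids relying on column order:
#   az vm list-sizes --location centralus --query '[?numberOfCores==`2`].name' -o tsv
```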

$ az vm create -g idjminikubevmcentralrg -n idjminikubevm --image UbuntuLTS --admin-username azureuser --generate-ssh-keys --size Standard_A2

SSH key files '/Users/johnisa/.ssh/id_rsa' and '/Users/johnisa/.ssh/id_rsa.pub' have been generated under ~/.ssh to allow SSH access to the VM. If using machines without permanent storage, back up your keys to a safe location.

It is recommended to use parameter "--public-ip-sku Standard" to create new VM with Standard public IP. Please note that the default public IP used for VM creation will be changed from Basic to Standard in the future.

{

"fqdns": "",

"id": "/subscriptions/a6ee78d7-b138-46c0-9b23-6f8558e730b0/resourceGroups/idjminikubevmcentralrg/providers/Microsoft.Compute/virtualMachines/idjminikubevm",

"location": "centralus",

"macAddress": "00-0D-3A-43-76-DE",

"powerState": "VM running",

"privateIpAddress": "10.0.0.4",

"publicIpAddress": "52.176.165.101",

"resourceGroup": "idjminikubevmcentralrg",

"zones": ""

}
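To avoid copying the address by hand, you can pull it out of the create output. This is a small sketch that assumes the JSON layout shown above. public_ip_from_json is a hypothetical helper, and it uses sed rather than jq so it needs no extra dependency:

```shell
#!/usr/bin/env bash
# public_ip_from_json: read the JSON printed by `az vm create` on stdin and
# print the value of its "publicIpAddress" field (prints nothing if absent).
public_ip_from_json() {
  sed -n 's/.*"publicIpAddress": *"\([^"]*\)".*/\1/p'
}

# Example: save the create output, then reuse the address for SSH.
#   az vm create ... > vm.json
#   VM_IP="$(public_ip_from_json < vm.json)"
#   ssh "azureuser@${VM_IP}"
```

The Azure CLI can also return the value directly with its JMESPath --query option. You can add --query publicIpAddress -o tsv to az vm create. For an existing VM, use az vm show -d -g idjminikubevmcentralrg -n idjminikubevm --query publicIps -o tsv.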

We can use the public IP address shown above to log in. Because --generate-ssh-keys placed our local public key on the VM, no password is needed from this host:

$ ssh azureuser@52.176.165.101

The authenticity of host '52.176.165.101 (52.176.165.101)' can't be established.

ECDSA key fingerprint is SHA256:cUsVQEUsFnLNozMSdm3pxU76kWZrrhrOstTi+Dqi0MU.

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

Warning: Permanently added '52.176.165.101' (ECDSA) to the list of known hosts.

Welcome to Ubuntu 18.04.6 LTS (GNU/Linux 5.4.0-1059-azure x86_64)

* Documentation: https://help.ubuntu.com

* Management: https://landscape.canonical.com

* Support: https://ubuntu.com/advantage

System information as of Mon Oct 11 15:46:59 UTC 2021

System load: 0.95 Processes: 131

Usage of /: 4.6% of 28.90GB Users logged in: 0

Memory usage: 5% IP address for eth0: 10.0.0.4

Swap usage: 0%

0 updates can be applied immediately.

The programs included with the Ubuntu system are free software;

the exact distribution terms for each program are described in the

individual files in /usr/share/doc/*/copyright.

Ubuntu comes with ABSOLUTELY NO WARRANTY, to the extent permitted by

applicable law.

To run a command as administrator (user "root"), use "sudo <command>".

See "man sudo_root" for details.

azureuser@idjminikubevm:~$

We can add kubectl, which we will use to test the cluster later:

azureuser@idjminikubevm:~$ curl -LO https://storage.googleapis.com/kubernetes-release/release/`curl -s https://storage.googleapis.com/kubernetes-release/release/stable.txt`/bin/linux/amd64/kubectl

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 44.7M 100 44.7M 0 0 81.9M 0 --:--:-- --:--:-- --:--:-- 81.9M

azureuser@idjminikubevm:~$ chmod u+x kubectl && mv ./kubectl /usr/local/bin/

mv: cannot move './kubectl' to '/usr/local/bin/kubectl': Permission denied

azureuser@idjminikubevm:~$ chmod u+x kubectl && sudo mv ./kubectl /usr/local/bin/

azureuser@idjminikubevm:~$ kubectl version

Client Version: version.Info{Major:"1", Minor:"22", GitVersion:"v1.22.2", GitCommit:"8b5a19147530eaac9476b0ab82980b4088bbc1b2", GitTreeState:"clean", BuildDate:"2021-09-15T21:38:50Z", GoVersion:"go1.16.8", Compiler:"gc", Platform:"linux/amd64"}

The connection to the server localhost:8080 was refused - did you specify the right host or port?
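The download above trusts whatever comes back. Below is a sketch of a checksum-verified install. It follows the pattern in the Kubernetes install docs: dl.k8s.io release URLs with a published .sha256 file, checked by sha256sum. kubectl_url and verify_sha256 are hypothetical helper names:

```shell
#!/usr/bin/env bash
# kubectl_url VERSION: print the linux/amd64 kubectl download URL for a release tag.
kubectl_url() {
  printf 'https://dl.k8s.io/release/%s/bin/linux/amd64/kubectl\n' "$1"
}

# verify_sha256 FILE DIGEST: succeed only if FILE's SHA-256 equals DIGEST (hex).
verify_sha256() {
  printf '%s  %s\n' "$2" "$1" | sha256sum --check --status
}

# On the VM (needs network access):
#   ver="$(curl -Ls https://dl.k8s.io/release/stable.txt)"
#   curl -LO "$(kubectl_url "$ver")"
#   curl -LO "$(kubectl_url "$ver").sha256"
#   verify_sha256 kubectl "$(cat kubectl.sha256)" \
#     && sudo install -o root -g root -m 0755 kubectl /usr/local/bin/kubectl
```

The install command sets the owner and mode in one step, replacing the separate chmod and sudo mv used above.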

Next, install Docker (the docker.io package provides both the daemon and the CLI):

azureuser@idjminikubevm:~$ sudo apt update && sudo apt install docker.io -y

Hit:1 http://azure.archive.ubuntu.com/ubuntu bionic InRelease

Get:2 http://azure.archive.ubuntu.com/ubuntu bionic-updates InRelease [88.7 kB]

Get:3 http://azure.archive.ubuntu.com/ubuntu bionic-backports InRelease [74.6 kB]

Get:4 http://security.ubuntu.com/ubuntu bionic-security InRelease [88.7 kB]

Get:5 http://azure.archive.ubuntu.com/ubuntu bionic/universe amd64 Packages [8570 kB]

Get:6 http://azure.archive.ubuntu.com/ubuntu bionic/universe Translation-en [4941 kB]

Get:7 http://azure.archive.ubuntu.com/ubuntu bionic/multiverse amd64 Packages [151 kB]

Get:8 http://azure.archive.ubuntu.com/ubuntu bionic/multiverse Translation-en [108 kB]

Get:9 http://azure.archive.ubuntu.com/ubuntu bionic-updates/main amd64 Packages [2249 kB]

Get:10 http://azure.archive.ubuntu.com/ubuntu bionic-updates/main Translation-en [438 kB]

Get:11 http://azure.archive.ubuntu.com/ubuntu bionic-updates/restricted amd64 Packages [492 kB]

Get:12 http://azure.archive.ubuntu.com/ubuntu bionic-updates/restricted Translation-en [66.7 kB]

Get:13 http://azure.archive.ubuntu.com/ubuntu bionic-updates/universe amd64 Packages [1756 kB]

Get:14 http://azure.archive.ubuntu.com/ubuntu bionic-updates/universe Translation-en [377 kB]

Get:15 http://azure.archive.ubuntu.com/ubuntu bionic-updates/multiverse amd64 Packages [27.3 kB]

Get:16 http://azure.archive.ubuntu.com/ubuntu bionic-updates/multiverse Translation-en [6808 B]

Get:17 http://azure.archive.ubuntu.com/ubuntu bionic-backports/main amd64 Packages [10.0 kB]

Get:18 http://azure.archive.ubuntu.com/ubuntu bionic-backports/main Translation-en [4764 B]

Get:19 http://azure.archive.ubuntu.com/ubuntu bionic-backports/universe amd64 Packages [10.3 kB]

Get:20 http://azure.archive.ubuntu.com/ubuntu bionic-backports/universe Translation-en [4588 B]

Get:21 http://security.ubuntu.com/ubuntu bionic-security/main amd64 Packages [1904 kB]

Get:22 http://security.ubuntu.com/ubuntu bionic-security/main Translation-en [345 kB]

Get:23 http://security.ubuntu.com/ubuntu bionic-security/restricted amd64 Packages [468 kB]

Get:24 http://security.ubuntu.com/ubuntu bionic-security/restricted Translation-en [63.0 kB]

Get:25 http://security.ubuntu.com/ubuntu bionic-security/universe amd64 Packages [1141 kB]

Get:26 http://security.ubuntu.com/ubuntu bionic-security/universe Translation-en [261 kB]

Get:27 http://security.ubuntu.com/ubuntu bionic-security/multiverse amd64 Packages [20.9 kB]

Get:28 http://security.ubuntu.com/ubuntu bionic-security/multiverse Translation-en [4732 B]

Fetched 23.7 MB in 11s (2176 kB/s)

Reading package lists... Done

Building dependency tree

Reading state information... Done

8 packages can be upgraded. Run 'apt list --upgradable' to see them.

Reading package lists... Done

Building dependency tree

Reading state information... Done

The following package was automatically installed and is no longer required:

linux-headers-4.15.0-159

Use 'sudo apt autoremove' to remove it.

The following additional packages will be installed:

bridge-utils containerd pigz runc ubuntu-fan

Suggested packages:

ifupdown aufs-tools cgroupfs-mount | cgroup-lite debootstrap docker-doc rinse zfs-fuse | zfsutils

The following NEW packages will be installed:

bridge-utils containerd docker.io pigz runc ubuntu-fan

0 upgraded, 6 newly installed, 0 to remove and 8 not upgraded.

Need to get 74.0 MB of archives.

After this operation, 359 MB of additional disk space will be used.

Get:1 http://azure.archive.ubuntu.com/ubuntu bionic/universe amd64 pigz amd64 2.4-1 [57.4 kB]

Get:2 http://azure.archive.ubuntu.com/ubuntu bionic/main amd64 bridge-utils amd64 1.5-15ubuntu1 [30.1 kB]

Get:3 http://azure.archive.ubuntu.com/ubuntu bionic-updates/universe amd64 runc amd64 1.0.0~rc95-0ubuntu1~18.04.2 [4087 kB]

Get:4 http://azure.archive.ubuntu.com/ubuntu bionic-updates/universe amd64 containerd amd64 1.5.2-0ubuntu1~18.04.3 [32.8 MB]

Get:5 http://azure.archive.ubuntu.com/ubuntu bionic-updates/universe amd64 docker.io amd64 20.10.7-0ubuntu1~18.04.2 [36.9 MB]

Get:6 http://azure.archive.ubuntu.com/ubuntu bionic/main amd64 ubuntu-fan all 0.12.10 [34.7 kB]

Fetched 74.0 MB in 3s (23.4 MB/s)

Preconfiguring packages ...

Selecting previously unselected package pigz.

(Reading database ... 76952 files and directories currently installed.)

Preparing to unpack .../0-pigz_2.4-1_amd64.deb ...

Unpacking pigz (2.4-1) ...

Selecting previously unselected package bridge-utils.

Preparing to unpack .../1-bridge-utils_1.5-15ubuntu1_amd64.deb ...

Unpacking bridge-utils (1.5-15ubuntu1) ...

Selecting previously unselected package runc.

Preparing to unpack .../2-runc_1.0.0~rc95-0ubuntu1~18.04.2_amd64.deb ...

Unpacking runc (1.0.0~rc95-0ubuntu1~18.04.2) ...

Selecting previously unselected package containerd.

Preparing to unpack .../3-containerd_1.5.2-0ubuntu1~18.04.3_amd64.deb ...

Unpacking containerd (1.5.2-0ubuntu1~18.04.3) ...

Selecting previously unselected package docker.io.

Preparing to unpack .../4-docker.io_20.10.7-0ubuntu1~18.04.2_amd64.deb ...

Unpacking docker.io (20.10.7-0ubuntu1~18.04.2) ...

Selecting previously unselected package ubuntu-fan.

Preparing to unpack .../5-ubuntu-fan_0.12.10_all.deb ...

Unpacking ubuntu-fan (0.12.10) ...

Setting up runc (1.0.0~rc95-0ubuntu1~18.04.2) ...

Setting up containerd (1.5.2-0ubuntu1~18.04.3) ...

Created symlink /etc/systemd/system/multi-user.target.wants/containerd.service → /lib/systemd/system/containerd.service.

Setting up bridge-utils (1.5-15ubuntu1) ...

Setting up ubuntu-fan (0.12.10) ...

Created symlink /etc/systemd/system/multi-user.target.wants/ubuntu-fan.service → /lib/systemd/system/ubuntu-fan.service.

Setting up pigz (2.4-1) ...

Setting up docker.io (20.10.7-0ubuntu1~18.04.2) ...

Adding group `docker' (GID 116) ...

Done.

Created symlink /etc/systemd/system/multi-user.target.wants/docker.service → /lib/systemd/system/docker.service.

Created symlink /etc/systemd/system/sockets.target.wants/docker.socket → /lib/systemd/system/docker.socket.

Processing triggers for systemd (237-3ubuntu10.52) ...

Processing triggers for man-db (2.8.3-2ubuntu0.1) ...

Processing triggers for ureadahead (0.100.0-21) ...

Download the minikube binary and move it to /usr/local/bin:

azureuser@idjminikubevm:~$ curl -Lo minikube https://storage.googleapis.com/minikube/releases/latest/minikube-linux-amd64 && chmod +x minikube && sudo mv minikube /usr/local/bin/

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 65.8M 100 65.8M 0 0 92.4M 0 --:--:-- --:--:-- --:--:-- 92.3M

azureuser@idjminikubevm:~$ minikube version

minikube version: v1.23.2

commit: 0a0ad764652082477c00d51d2475284b5d39ceed

Recent Kubernetes versions require one additional package, conntrack, when run with minikube's none driver:

azureuser@idjminikubevm:~$ sudo apt-get install -y conntrack

Reading package lists... Done

Building dependency tree

Reading state information... Done

The following package was automatically installed and is no longer required:

linux-headers-4.15.0-159

Use 'sudo apt autoremove' to remove it.

The following NEW packages will be installed:

conntrack

0 upgraded, 1 newly installed, 0 to remove and 8 not upgraded.

Need to get 30.6 kB of archives.

After this operation, 104 kB of additional disk space will be used.

Get:1 http://azure.archive.ubuntu.com/ubuntu bionic/main amd64 conntrack amd64 1:1.4.4+snapshot20161117-6ubuntu2 [30.6 kB]

Fetched 30.6 kB in 0s (1292 kB/s)

Selecting previously unselected package conntrack.

(Reading database ... 77275 files and directories currently installed.)

Preparing to unpack .../conntrack_1%3a1.4.4+snapshot20161117-6ubuntu2_amd64.deb ...

Unpacking conntrack (1:1.4.4+snapshot20161117-6ubuntu2) ...

Setting up conntrack (1:1.4.4+snapshot20161117-6ubuntu2) ...

Processing triggers for man-db (2.8.3-2ubuntu0.1) ...
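Because the none driver runs Kubernetes directly on the VM, every host tool has to be present there. Below is a quick pre-flight check, sketched with the POSIX command -v builtin. missing_cmds is a hypothetical helper:

```shell
#!/usr/bin/env bash
# missing_cmds CMD...: print each command that is not found on PATH;
# return status 1 if any are missing, 0 if all are present.
missing_cmds() {
  local rc=0
  for cmd in "$@"; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      echo "$cmd"
      rc=1
    fi
  done
  return "$rc"
}

# Before `sudo minikube start --vm-driver=none`:
#   missing_cmds docker conntrack kubectl minikube || echo "install the tools listed above first"
```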

We can now launch minikube:

azureuser@idjminikubevm:~$ sudo minikube start --vm-driver=none

😄 minikube v1.23.2 on Ubuntu 18.04

✨ Using the none driver based on user configuration

👍 Starting control plane node minikube in cluster minikube

🤹 Running on localhost (CPUs=2, Memory=3432MB, Disk=29598MB) ...

ℹ️ OS release is Ubuntu 18.04.6 LTS

🐳 Preparing Kubernetes v1.22.2 on Docker 20.10.7 ...

▪ kubelet.resolv-conf=/run/systemd/resolve/resolv.conf

> kubelet.sha256: 64 B / 64 B [--------------------------] 100.00% ? p/s 0s

> kubeadm.sha256: 64 B / 64 B [--------------------------] 100.00% ? p/s 0s

> kubectl.sha256: 64 B / 64 B [--------------------------] 100.00% ? p/s 0s

> kubeadm: 43.71 MiB / 43.71 MiB [-----------] 100.00% 118.26 MiB p/s 600ms

> kubectl: 44.73 MiB / 44.73 MiB [-----------] 100.00% 118.41 MiB p/s 600ms

> kubelet: 146.25 MiB / 146.25 MiB [-----------] 100.00% 97.43 MiB p/s 1.7s

▪ Generating certificates and keys ...

▪ Booting up control plane ...

▪ Configuring RBAC rules ...

🤹 Configuring local host environment ...

❗ The 'none' driver is designed for experts who need to integrate with an existing VM

💡 Most users should use the newer 'docker' driver instead, which does not require root!

📘 For more information, see: https://minikube.sigs.k8s.io/docs/reference/drivers/none/

❗ kubectl and minikube configuration will be stored in /home/azureuser

❗ To use kubectl or minikube commands as your own user, you may need to relocate them. For example, to overwrite your own settings, run:

▪ sudo mv /home/azureuser/.kube /home/azureuser/.minikube $HOME

▪ sudo chown -R $USER $HOME/.kube $HOME/.minikube

💡 This can also be done automatically by setting the env var CHANGE_MINIKUBE_NONE_USER=true

🔎 Verifying Kubernetes components...

▪ Using image gcr.io/k8s-minikube/storage-provisioner:v5

🌟 Enabled addons: default-storageclass, storage-provisioner

🏄 Done! kubectl is now configured to use "minikube" cluster and "default" namespace by default

Now we can take ownership of the generated config files and verify that we can reach the node:

azureuser@idjminikubevm:~$ sudo chown -R azureuser /home/azureuser/

azureuser@idjminikubevm:~$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

idjminikubevm Ready control-plane,master 3m2s v1.22.2

azureuser@idjminikubevm:~$
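When scripting this, it helps to wait until the node reports Ready instead of checking by hand. This is a minimal sketch that parses the kubectl get nodes table shown above, assuming STATUS is the second column. all_nodes_ready is a hypothetical helper. A status such as Ready,SchedulingDisabled is deliberately treated as not ready:

```shell
#!/usr/bin/env bash
# all_nodes_ready: read `kubectl get nodes` output on stdin and succeed only if
# there is at least one node row and every node's STATUS is exactly "Ready".
all_nodes_ready() {
  awk 'NR > 1 { n++; if ($2 != "Ready") bad++ } END { exit !(n > 0 && bad == 0) }'
}

# Poll for up to about 2 minutes after `minikube start`:
#   for i in $(seq 1 24); do
#     kubectl get nodes | all_nodes_ready && break
#     sleep 5
#   done
```

kubectl wait --for=condition=Ready nodes --all --timeout=120s does the same thing natively. It is probably the better choice once kubectl is configured.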

azureuser@idjminikubevm:~$ exit

logout

Connection to 52.176.165.101 closed.

chrmacjohnisa:jekyll-blog johnisa$ ssh azureuser@52.176.165.101

Welcome to Ubuntu 18.04.6 LTS (GNU/Linux 5.4.0-1059-azure x86_64)

* Documentation: https://help.ubuntu.com

* Management: https://landscape.canonical.com

* Support: https://ubuntu.com/advantage

System information as of Mon Oct 11 16:49:57 UTC 2021

System load: 0.38 Processes: 150

Usage of /: 11.3% of 28.90GB Users logged in: 0

Memory usage: 30% IP address for eth0: 10.0.0.4

Swap usage: 0% IP address for docker0: 172.17.0.1

8 updates can be applied immediately.

4 of these updates are standard security updates.

To see these additional updates run: apt list --upgradable

New release '20.04.3 LTS' available.

Run 'do-release-upgrade' to upgrade to it.

Last login: Mon Oct 11 16:48:43 2021 from 73.242.50.46

azureuser@idjminikubevm:~$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

3eacdf72d1d0 gcr.io/k8s-minikube/storage-provisioner "/storage-provisioner" 53 minutes ago Up 53 minutes k8s_storage-provisioner_storage-provisioner_kube-system_e6ca041f-1f8b-46d8-9a2d-7934a7b26ccd_0

a0eea32f13a2 8d147537fb7d "/coredns -conf /etc…" 53 minutes ago Up 53 minutes k8s_coredns_coredns-78fcd69978-gw9sx_kube-system_4126709a-41fc-4805-a3b8-59ef2b9b345a_0

d55f1247e143 873127efbc8a "/usr/local/bin/kube…" 53 minutes ago Up 53 minutes k8s_kube-proxy_kube-proxy-qh2qj_kube-system_4617746e-139e-413d-8d22-e3e8653fa8a4_0

edb02933dd39 k8s.gcr.io/pause:3.5 "/pause" 53 minutes ago Up 53 minutes k8s_POD_coredns-78fcd69978-gw9sx_kube-system_4126709a-41fc-4805-a3b8-59ef2b9b345a_0

fc94eb3a7cdf k8s.gcr.io/pause:3.5 "/pause" 53 minutes ago Up 53 minutes k8s_POD_storage-provisioner_kube-system_e6ca041f-1f8b-46d8-9a2d-7934a7b26ccd_0

191e2e2934a8 k8s.gcr.io/pause:3.5 "/pause" 53 minutes ago Up 53 minutes k8s_POD_kube-proxy-qh2qj_kube-system_4617746e-139e-413d-8d22-e3e8653fa8a4_0

cfcf633a2ae0 e64579b7d886 "kube-apiserver --ad…" 54 minutes ago Up 54 minutes k8s_kube-apiserver_kube-apiserver-idjminikubevm_kube-system_44fe3a9baea12744afd0e09c3fbb1057_0

dbb3c4a3a317 5425bcbd23c5 "kube-controller-man…" 54 minutes ago Up 54 minutes k8s_kube-controller-manager_kube-controller-manager-idjminikubevm_kube-system_334646c985a466f223d54a14df34ef51_0

79bc5796323c b51ddc1014b0 "kube-scheduler --au…" 54 minutes ago Up 54 minutes k8s_kube-scheduler_kube-scheduler-idjminikubevm_kube-system_2747485889abc7eeed54bb95f2b349b3_0

27f907d7f0ba 004811815584 "etcd --advertise-cl…" 54 minutes ago Up 54 minutes k8s_etcd_etcd-idjminikubevm_kube-system_b341a882fa14563d230338d7bb9a79e5_0

81a76efebc5c k8s.gcr.io/pause:3.5 "/pause" 54 minutes ago Up 54 minutes k8s_POD_etcd-idjminikubevm_kube-system_b341a882fa14563d230338d7bb9a79e5_0

cb7b55b649c5 k8s.gcr.io/pause:3.5 "/pause" 54 minutes ago Up 54 minutes k8s_POD_kube-scheduler-idjminikubevm_kube-system_2747485889abc7eeed54bb95f2b349b3_0

bc7cae7d6aaa k8s.gcr.io/pause:3.5 "/pause" 54 minutes ago Up 54 minutes k8s_POD_kube-controller-manager-idjminikubevm_kube-system_334646c985a466f223d54a14df34ef51_0

4cd1a0a7cd69 k8s.gcr.io/pause:3.5 "/pause" 54 minutes ago Up 54 minutes k8s_POD_kube-apiserver-idjminikubevm_kube-system_44fe3a9baea12744afd0e09c3fbb1057_0

For Docker commands, make sure to add azureuser to the docker group (the change applies to new login sessions):

azureuser@idjminikubevm:~$ sudo groupadd docker

groupadd: group 'docker' already exists

azureuser@idjminikubevm:~$ sudo usermod -aG docker $USER
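usermod only changes the group database. An existing SSH session keeps its old groups until you log out and back in (or run newgrp docker). Below is a small sketch to confirm the change landed, using id -nG. in_group is a hypothetical helper:

```shell
#!/usr/bin/env bash
# in_group USER GROUP: succeed if USER is listed as a member of GROUP.
# With a user name argument, `id -nG` consults the group database, so this
# reflects usermod immediately, even before the user logs in again.
in_group() {
  id -nG "$1" | tr ' ' '\n' | grep -qx "$2"
}

# After `sudo usermod -aG docker $USER`:
#   in_group "$USER" docker && echo "docker group OK; reconnect SSH if 'docker ps' still fails"
```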

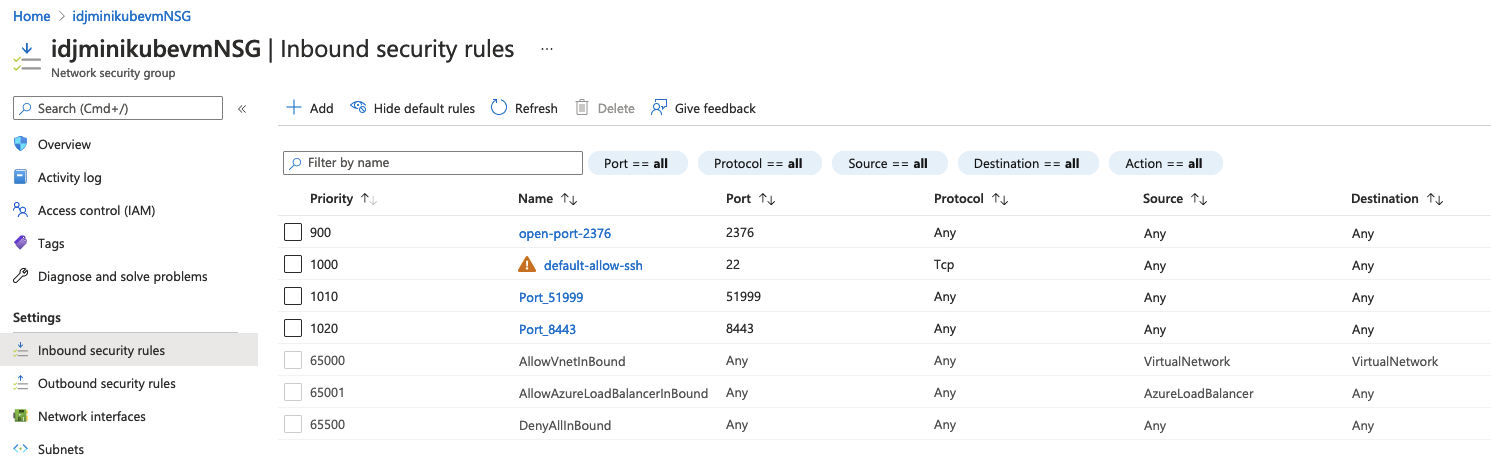

To access the VM remotely, we'll need to open some ports:

$ az vm open-port -g idjminikubevmcentralrg -n idjminikubevm --port 30000 --port 30080 --port 8443 --port 2376

{

"defaultSecurityRules": [

{

"access": "Allow",

...
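One possible explanation for the open-port trouble, not verified against this exact CLI version: az vm open-port takes a single --port value, so repeating the flag probably keeps only the last port (2376). The command's documentation describes a comma-separated list instead. Its --priority option sets the NSG rule priority, which must be unique per rule. Below is a sketch. join_ports is a hypothetical helper, and 1010 is an arbitrary free priority:

```shell
#!/usr/bin/env bash
# join_ports PORT...: join ports into the comma-separated form described for
# `az vm open-port --port` (e.g. 30000,30080,8443,2376).
join_ports() {
  (IFS=,; printf '%s\n' "$*")   # subshell keeps the IFS change local
}

# One rule covering all four ports (resource names from this post):
#   az vm open-port -g idjminikubevmcentralrg -n idjminikubevm \
#     --port "$(join_ports 30000 30080 8443 2376)" --priority 1010
```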

docker ps

Cannot connect to the Docker daemon at tcp://52.176.165.101:2376. Is the docker daemon running?

The issue here is that neither Kubernetes nor Docker is exposed as a service on a port. Sadly, we also cannot use the KVM2 driver, as the Standard_A2 doesn't expose CPU virtualization extensions:

azureuser@idjminikubevm:~$ sudo apt install cpu-checker

Reading package lists... Done

Building dependency tree

Reading state information... Done

The following package was automatically installed and is no longer required:

linux-headers-4.15.0-159

Use 'sudo apt autoremove' to remove it.

The following additional packages will be installed:

msr-tools

The following NEW packages will be installed:

cpu-checker msr-tools

0 upgraded, 2 newly installed, 0 to remove and 8 not upgraded.

Need to get 16.6 kB of archives.

After this operation, 62.5 kB of additional disk space will be used.

Do you want to continue? [Y/n] y

Get:1 http://azure.archive.ubuntu.com/ubuntu bionic/main amd64 msr-tools amd64 1.3-2build1 [9760 B]

Get:2 http://azure.archive.ubuntu.com/ubuntu bionic/main amd64 cpu-checker amd64 0.7-0ubuntu7 [6862 B]

Fetched 16.6 kB in 0s (658 kB/s)

Selecting previously unselected package msr-tools.

(Reading database ... 77283 files and directories currently installed.)

Preparing to unpack .../msr-tools_1.3-2build1_amd64.deb ...

Unpacking msr-tools (1.3-2build1) ...

Selecting previously unselected package cpu-checker.

Preparing to unpack .../cpu-checker_0.7-0ubuntu7_amd64.deb ...

Unpacking cpu-checker (0.7-0ubuntu7) ...

Setting up msr-tools (1.3-2build1) ...

Setting up cpu-checker (0.7-0ubuntu7) ...

Processing triggers for man-db (2.8.3-2ubuntu0.1) ...

azureuser@idjminikubevm:~$ kvm-ok

INFO: Your CPU does not support KVM extensions

INFO: For more detailed results, you should run this as root

HINT: sudo /usr/sbin/kvm-ok
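kvm-ok is a convenience wrapper. The underlying question is whether the CPU advertises Intel VT-x (vmx) or AMD-V (svm) to the guest. Below is a sketch of that check without root, assuming the standard /proc/cpuinfo flags line. has_virt_flags is a hypothetical helper, and unlike kvm-ok it does not also check for /dev/kvm:

```shell
#!/usr/bin/env bash
# has_virt_flags [CPUINFO]: succeed if the cpuinfo file (default /proc/cpuinfo)
# contains the whole-word flag vmx (Intel VT-x) or svm (AMD-V).
has_virt_flags() {
  grep -Eqw 'vmx|svm' "${1:-/proc/cpuinfo}"
}

# On the VM:
#   has_virt_flags && echo "vmx/svm present" || echo "no vmx/svm flags"
```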

While we cannot reach the Docker endpoint, at least we can access minikube. One thing I found in testing was that, even with ufw disabled, the open-port commands I had used did not seem to stick. In the end, I went to the Azure Portal and updated the network security group (NSG) myself.

Then I could reach the host:

$ cat ~/.kube/config

apiVersion: v1
clusters:
- cluster:
    certificate-authority: /Users/johnisa/az-vm-minikube-certs/ca.crt
    extensions:
    - extension:
        last-update: Mon, 11 Oct 2021 16:59:29 UTC
        provider: minikube.sigs.k8s.io
        version: v1.23.2
      name: cluster_info
    server: https://52.176.165.101:8443
  name: minikube
contexts:
- context:
    cluster: minikube
    extensions:
    - extension:
        last-update: Mon, 11 Oct 2021 16:59:29 UTC
        provider: minikube.sigs.k8s.io
        version: v1.23.2
      name: context_info
    namespace: default
    user: minikube
  name: minikube
current-context: minikube
kind: Config
preferences: {}
users:
- name: minikube
  user:
    client-certificate: /Users/johnisa/az-vm-minikube-certs/client.crt
    client-key: /Users/johnisa/az-vm-minikube-certs/client.key

$ kubectl get nodes --insecure-skip-tls-verify

NAME STATUS ROLES AGE VERSION

idjminikubevm Ready control-plane,master 31m v1.22.2

Note: I did copy the certs down locally to /Users/johnisa/az-vm-minikube-certs, but because the API server certificate was generated for the private IP 10.0.0.4 only, it did not help. Ultimately, I just skipped verification with --insecure-skip-tls-verify.
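An alternative to skipping TLS verification, which I did not try here: minikube start accepts --apiserver-ips to add extra IP addresses to the generated API server certificate, so the public IP could be included. This sketch assumes a fresh cluster, so that the certificates are regenerated. minikube_public_start and its DRY_RUN switch are hypothetical:

```shell
#!/usr/bin/env bash
# minikube_public_start PUBLIC_IP: start minikube with the none driver and add
# PUBLIC_IP to the API server certificate via --apiserver-ips.
# Set DRY_RUN=1 to print the command instead of running it.
minikube_public_start() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo sudo minikube start --driver=none "--apiserver-ips=$1"
  else
    sudo minikube start --driver=none "--apiserver-ips=$1"
  fi
}

# Untested here; an existing cluster likely needs `sudo minikube delete` first
# so the certificates are regenerated:
#   minikube_public_start 52.176.165.101
```

With the public IP in the certificate, the copied ca.crt referenced in ~/.kube/config should validate. You could then drop --insecure-skip-tls-verify.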

We can, of course, use this with Docker-in-Docker:

$ cat did.yaml

apiVersion: v1
kind: Pod
metadata:
  name: dind
spec:
  containers:
  - name: docker-cmds
    image: docker:1.12.6
    command: ['docker', 'run', '-p', '80:80', 'httpd:latest']
    resources:
      requests:
        cpu: 10m
        memory: 256Mi
    env:
    - name: DOCKER_HOST
      value: tcp://localhost:2375
  - name: dind-daemon
    image: docker:1.12.6-dind
    resources:
      requests:
        cpu: 20m
        memory: 512Mi
    securityContext:
      privileged: true
    volumeMounts:
    - name: docker-graph-storage
      mountPath: /var/lib/docker
  volumes:
  - name: docker-graph-storage
    emptyDir: {}

$ kubectl apply -f did.yaml --insecure-skip-tls-verify

pod/dind created

$ kubectl get pods --insecure-skip-tls-verify

NAME READY STATUS RESTARTS AGE

dind 0/2 ContainerCreating 0 14s

$ kubectl get pods --insecure-skip-tls-verify

NAME READY STATUS RESTARTS AGE

dind 2/2 Running 1 (7s ago) 18s

$ kubectl --insecure-skip-tls-verify exec -it dind -- /bin/sh

Defaulted container "docker-cmds" out of: docker-cmds, dind-daemon

/ # docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

e64b28581f4b httpd:latest "httpd-foreground" 8 seconds ago Up 1 seconds 0.0.0.0:80->80/tcp berserk_bell

/ #

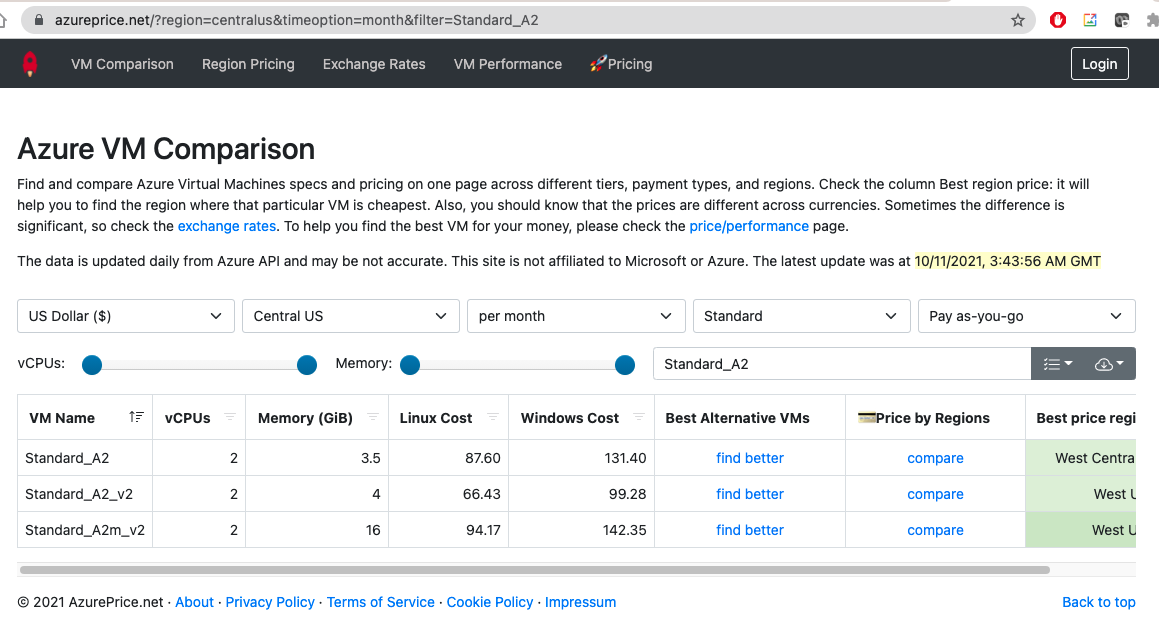

If we power this off when it's not in use, or let it burn through free credits, this is cost-effective. However, left running full time as a general-purpose host, it will, at time of writing, cost us US$87.60/mo.
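The monthly figure is easier to reason about as an hourly rate. Assuming Azure's usual 730-hour pricing month, US$87.60/mo works out to US$0.12/hour, so powering the VM only during working hours changes the picture considerably. Here is a rough calculator; the 8 hours x 22 workdays schedule is just an example, and you should check current pricing for your VM size.

```shell
#!/usr/bin/env bash
# Rough VM cost estimate. Assumption: the monthly price is an hourly rate
# billed over a 730-hour month (the convention Azure pricing uses).
HOURS_PER_MONTH=730

# hourly_rate MONTHLY: hourly price implied by an always-on monthly price.
hourly_rate() {
  awk -v m="$1" -v h="$HOURS_PER_MONTH" 'BEGIN { printf "%.2f\n", m / h }'
}

# monthly_cost RATE HOURS: cost of running at RATE per hour for HOURS hours.
monthly_cost() {
  awk -v r="$1" -v h="$2" 'BEGIN { printf "%.2f\n", r * h }'
}

rate=$(hourly_rate 87.60)
monthly_cost "$rate" "$HOURS_PER_MONTH"   # always on
monthly_cost "$rate" $((8 * 22))          # 8h/day, 22 workdays
```

Under those assumptions, the always-on case reproduces the US$87.60 figure, while the workday-only schedule comes to about US$21.12/mo (compute only; disks and public IPs are billed separately).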

Cleanup

If you wish to NOT incur further costs in Azure, you can delete everything we created by deleting its resource group:

$ az group delete

the following arguments are required: --name/-n/--resource-group/-g

TRY THIS:

az group delete --resource-group MyResourceGroup

Delete a resource group.

az group list --query "[?location=='westus']"

List all resource groups located in the West US region.

https://docs.microsoft.com/en-US/cli/azure/group#az_group_delete

Read more about the command in reference docs

$ az group delete -g idjminikubevmcentralrg

Are you sure you want to perform this operation? (y/n): y
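For scripted cleanup, az group delete also accepts --yes to skip the confirmation prompt and --no-wait to return without waiting for the deletion to finish. Below is a minimal sketch using the resource group from above. The run and delete_rg helpers are hypothetical, and DRY_RUN=1 prints the command instead of executing it.

```shell
#!/usr/bin/env bash
# Sketch: non-interactive resource group cleanup. Helper names are hypothetical.

# run: execute a command, or only print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# delete_rg NAME: delete the resource group without prompting and
# return immediately; Azure keeps deleting in the background.
delete_rg() {
  run az group delete --name "$1" --yes --no-wait
}

# Preview first; drop DRY_RUN=1 to actually delete.
DRY_RUN=1 delete_rg idjminikubevmcentralrg
```

Because --no-wait returns early, az group exists --name idjminikubevmcentralrg is a simple way to confirm the group is eventually gone (it should print false once deletion completes).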

Summary

While using Hyperkit, we encountered VPN issues that required an alternate backend. We used VirtualBox on macOS (Big Sur, fwiw) to create a Minikube instance that could operate on a different CIDR than our VPN used. Beyond that, to sort out VPN forced-tunneling issues, we leveraged VirtualBox port mappings to redirect 127.0.0.1 for Docker and Kubernetes to our instance. Lastly, we restarted the box to sort out what it takes to restart minikube after a power cycle.

I try to stay positive about all things, but I think you can understand Oracle and their modus operandi from this USENIX talk from around 2010. And having lived near their HQ for a brief period in 2009, I formed some opinions from firsthand encounters. So if I express concern over the long-term viability of VirtualBox, it comes from that place.

There are other options for backends. The list of current backends can be found here:

Linux

- Docker - container-based (preferred)

- KVM2 - VM-based (preferred)

- VirtualBox - VM

- None - bare-metal

- Podman - container (experimental)

- SSH - remote ssh

macOS

- Docker - VM + Container (preferred)

- Hyperkit - VM

- VirtualBox - VM

- Parallels - VM

- VMware - VM

- SSH - remote ssh

Windows

- Hyper-V - VM (preferred)

- Docker - VM + Container (preferred)

- VirtualBox - VM

- VMware - VM

- SSH - remote ssh
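Whichever backend you settle on, you can make it minikube's default so you don't have to pass --driver on every start. Below is a sketch using minikube's config set driver subcommand, shown with VirtualBox (what I'm using behind the VPN). The run and use_driver helpers are hypothetical, and DRY_RUN=1 only prints the commands.

```shell
#!/usr/bin/env bash
# Sketch: persist a default minikube driver, then start the cluster.
# Helper names are hypothetical.

# run: execute a command, or only print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# use_driver NAME: store NAME as the default driver, then start minikube.
use_driver() {
  run minikube config set driver "$1"
  run minikube start
}

DRY_RUN=1 use_driver virtualbox
```

Note that an existing cluster created with a different driver (e.g. hyperkit) typically has to be removed with minikube delete before minikube will start it with the new one.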

VMware is between US$150-200 per seat for Fusion (or Workstation). Parallels is presently between US$80-100 for Parallels Desktop. Even our jaunt into Azure showed the utility box costing over US$80/mo.

Most of these commercial offerings make the US$6-8/month cost for commercial Docker Desktop seem reasonable. For the time being, I’ll be using VBox, as it works with the VPN and so far hasn’t fallen down.

Recommendations

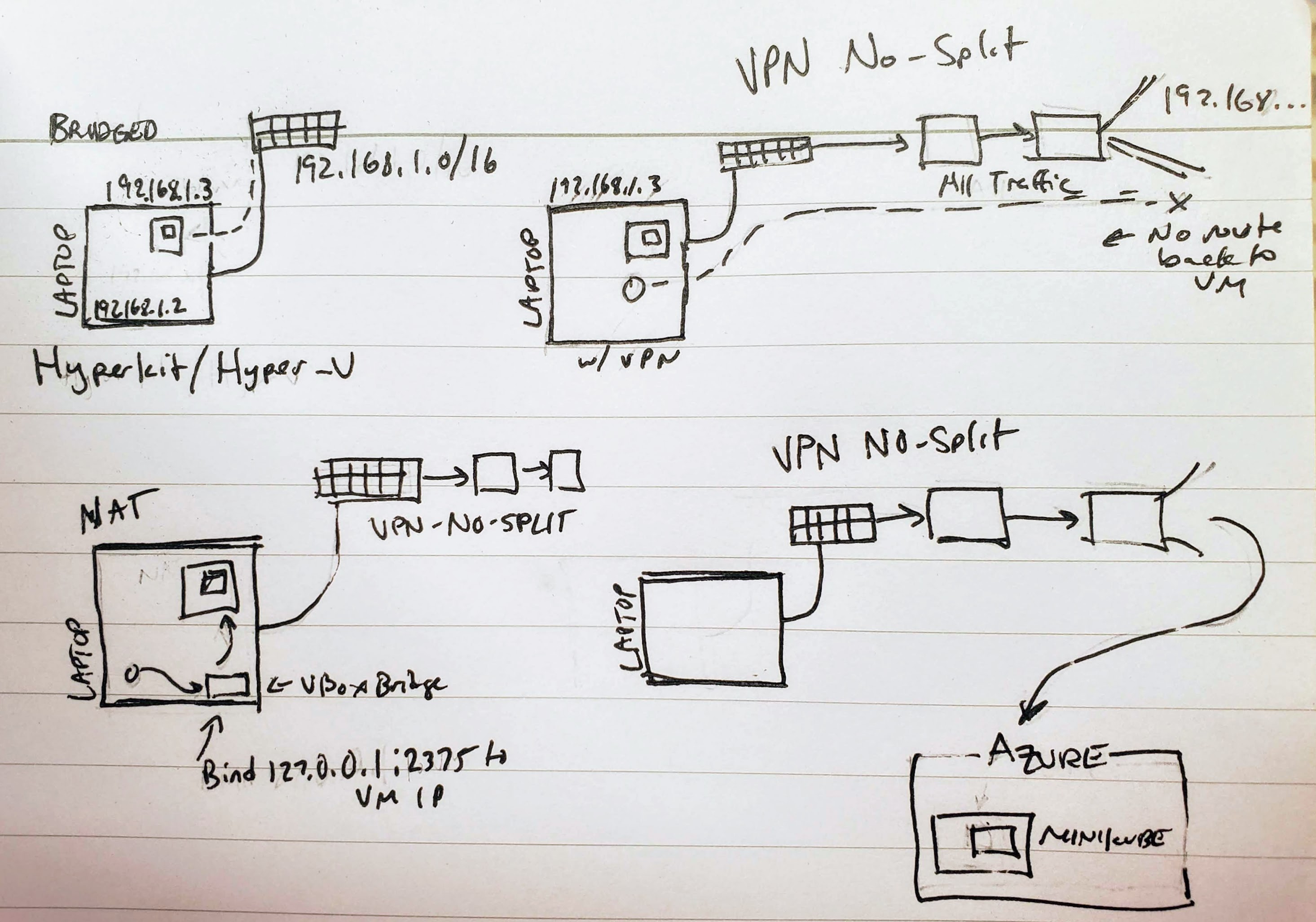

Let’s review our options for a moment, keeping in mind that Docker Desktop operates very much like the VBox with Binding option (lower left):

I reviewed these articles with a trusted EA I admire, and his question was rather straightforward: “So, what is the right option?”

First, if you are an OSS developer or very small shop, stick with Docker Desktop. It works well and you are within the license entitlements for free usage (for now).

If you are a larger company (more than 250 employees) and you have laptops that have to power on and off a lot, or switch between VPNs, you might as well just pay Docker.

If, however, you have persistent desktops that developers use (I recall an enterprise I was at recently that had such always-on ‘one box wonders’), then use minikube. Likewise, if you have a low tolerance for cost, use minikube.

Stop Trying to Make Laptops a Mini Cloud…

Lastly, there is some real value in teaching developers to use Kubernetes for development. AKS can provide access to on-prem resources (through either ExpressRoute or VPNs) as well as private namespaces. These can tie into AAD, so your standard developer setup ends up being a laptop of meager size plus a namespace in a cluster, with the ability to just az aks get-credentials .... and go. This can greatly lower the barrier to entry for developers to just use AKS for their work.
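Here is a sketch of that "get credentials and go" flow: fetch the cluster's credentials with az aks get-credentials, then make the developer's namespace the default for the current kubectl context. The resource group, cluster and namespace names are hypothetical, as are the helpers; DRY_RUN=1 only prints the commands. With an AAD-integrated cluster, expect an interactive login on first use.

```shell
#!/usr/bin/env bash
# Sketch: point a laptop's kubectl at a developer namespace in an AKS cluster.
# Resource group, cluster and namespace names are hypothetical.

# run: execute a command, or only print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# aks_dev_login RESOURCE_GROUP CLUSTER NAMESPACE
aks_dev_login() {
  # Merge the cluster's credentials into ~/.kube/config.
  run az aks get-credentials --resource-group "$1" --name "$2"
  # Make NAMESPACE the default for the now-current context.
  run kubectl config set-context --current --namespace="$3"
}

DRY_RUN=1 aks_dev_login my-dev-rg my-shared-aks dev-alice
```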

And if you live in an AWS world, there are federated credentials (every shop I’ve been in over the last 8 years has had some kind of cloud portal to facilitate this), Amazon WorkSpaces for hosted compute, and EKS, which isn’t as bad as it used to be. Azure has DevTest Labs for similar general-purpose ‘claimable’ hosts akin to WorkSpaces.

In summary, if you are comfortable using the major cloud providers, then dammit, use the major cloud providers. But if you aren’t and/or have to deal with non-persistent networks, or a less technical user base, you might as well fork over the dough for Docker Desktop.