Published: Aug 24, 2021 by Isaac Johnson

I’ve been using Ghost to blog for some time, but recently I was turned on to Jekyll for blogging with markdown. More to the point, Jekyll is the static site generator behind GitHub Pages. We can use it, plus a custom DNS entry, to make a free, scalable blog, documentation portal or website.

Getting Started

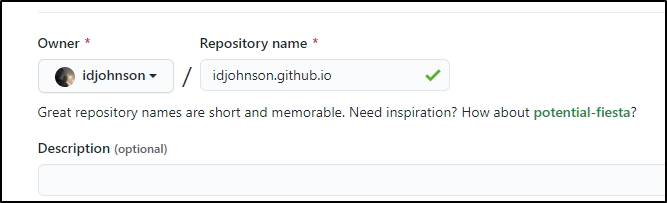

First, let’s create a root-level repo for our blog. Its name needs to be our org or user ID followed by ‘.github.io’.

In my case, idjohnson:

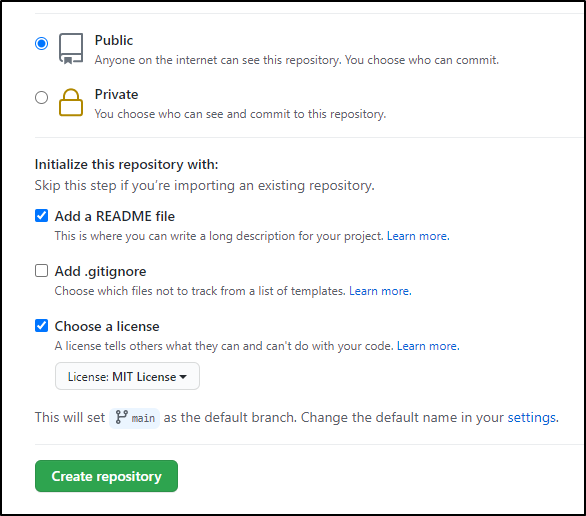
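The naming rule is simple enough to express in a couple of lines. Here is a minimal Python sketch; the helper names are hypothetical, and it assumes GitHub's usual case-insensitive treatment of account names.

```python
def pages_repo_name(owner: str) -> str:
    """Name GitHub Pages expects for a user or organization site repo."""
    # Account names are case-insensitive on GitHub, so normalize to lower case.
    return f"{owner.lower()}.github.io"


def is_user_site_repo(owner: str, repo: str) -> bool:
    """True if `repo` is the root-level Pages repo for `owner`."""
    return repo.lower() == pages_repo_name(owner)


print(pages_repo_name("idjohnson"))                           # idjohnson.github.io
print(is_user_site_repo("idjohnson", "idjohnson.github.io"))  # True
print(is_user_site_repo("idjohnson", "blog"))                 # False
```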

You’ll also want to set it to public if you want people to be able to see it.

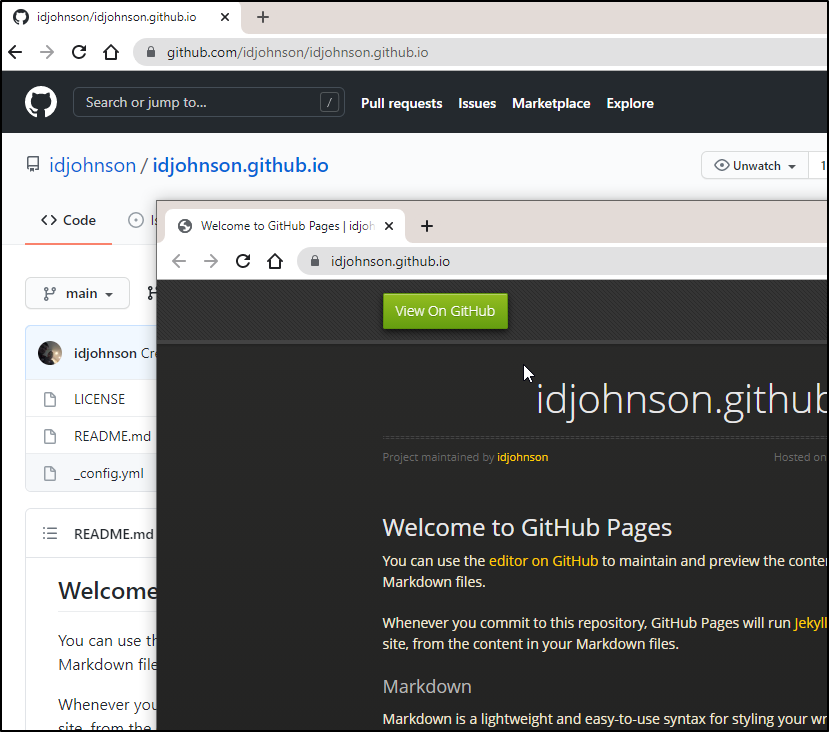

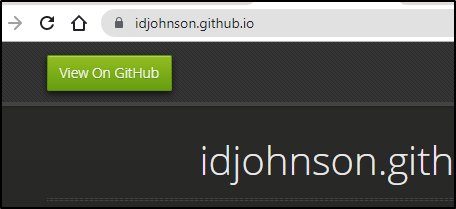

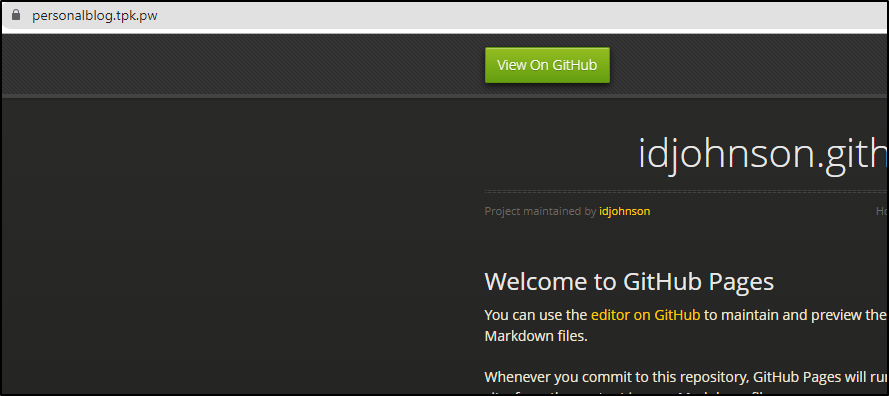

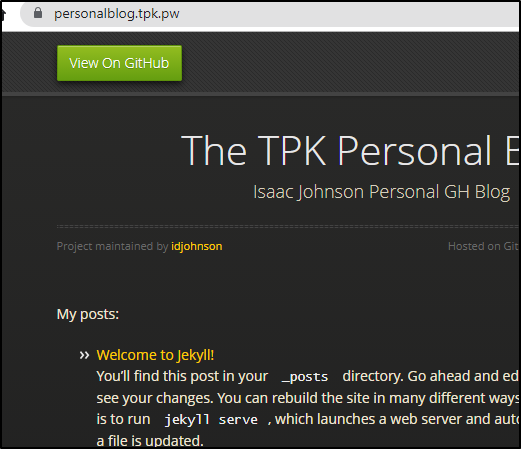

At this point we are technically done with the MVP. A very basic GitHub Pages site now exists:

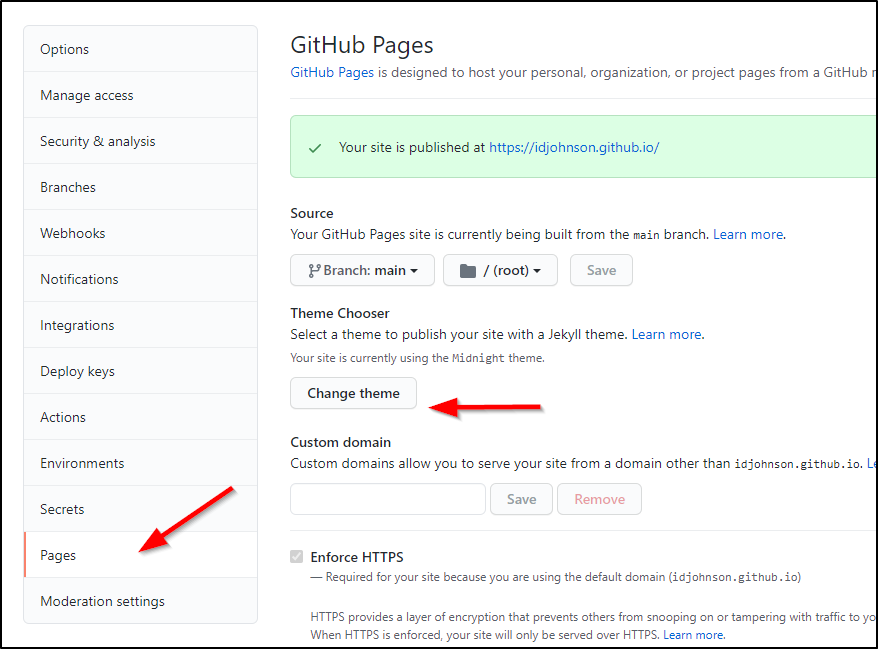

Themes

Under Settings, we can pick from a handful of standard themes. Here I chose the “Midnight” theme,

and when I saved it with a bland README.md, GitHub rebuilt and republished the site:

Custom Domains

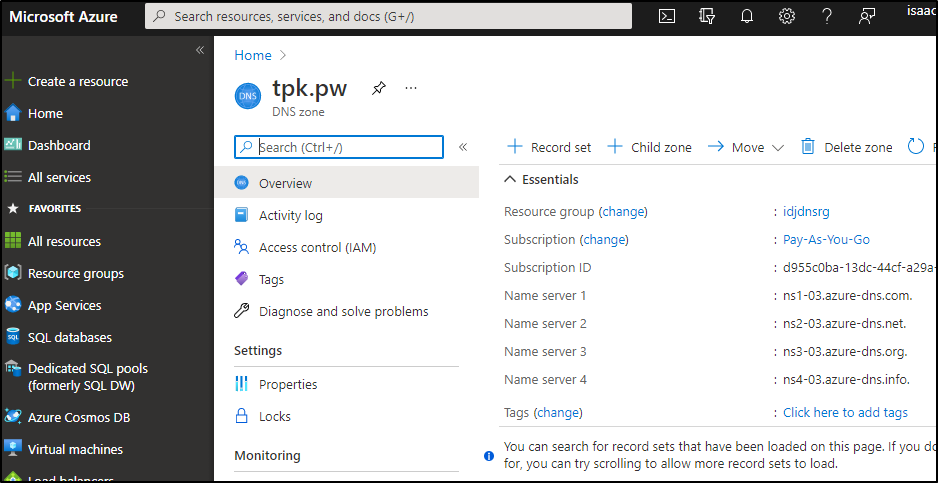

What about a custom domain? Maybe something with freshbrewed.science or tpk.pw.

Let’s hop over to Azure DNS and add a record in the tpk.pw zone:

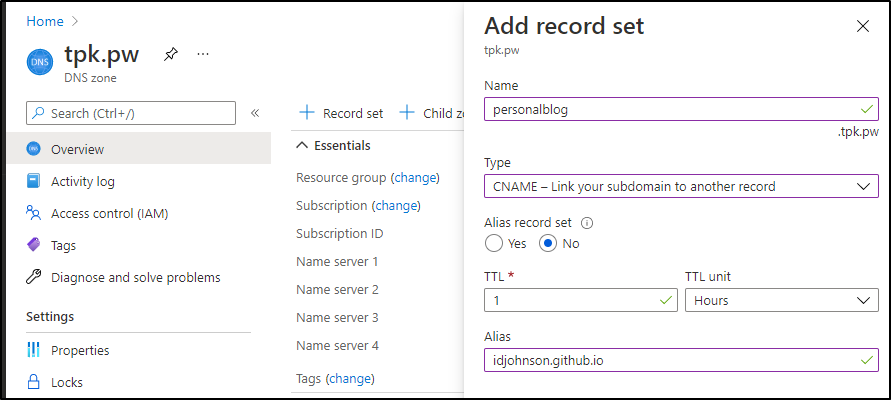

Here we’ll use the host name personalblog (personalblog.tpk.pw). Just point it, with a CNAME record, at the existing github.io host name (idjohnson.github.io).

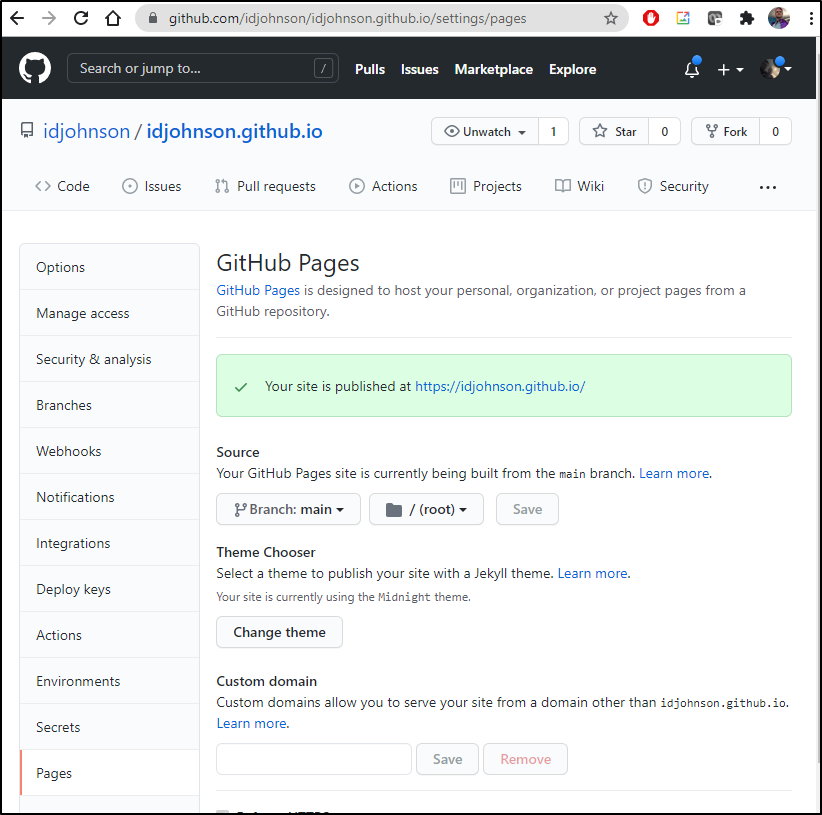

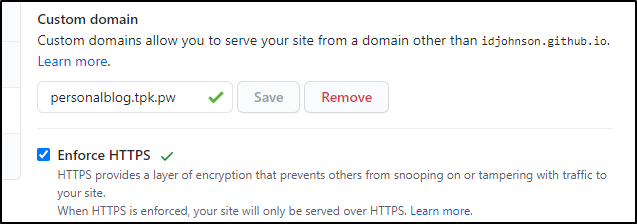

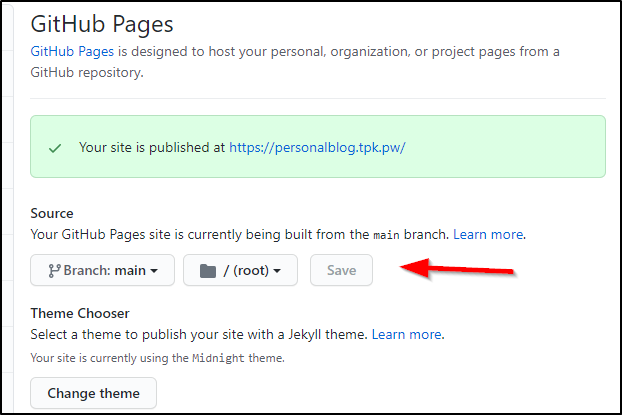
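To double-check the record once it's in place, you can look up the target (for example with `dig personalblog.tpk.pw CNAME`) and compare it to the expected host. Here is a minimal Python sketch of that comparison; the helper names are hypothetical, and it deliberately does no network lookup itself.

```python
def normalize_host(name: str) -> str:
    """Lower-case a DNS name and drop a trailing root dot (DNS names are case-insensitive)."""
    return name.strip().rstrip(".").lower()


def cname_targets_pages(cname_target: str, owner: str) -> bool:
    """True if a CNAME target is <owner>.github.io, ignoring case and a trailing dot."""
    return normalize_host(cname_target) == f"{owner.lower()}.github.io"


print(cname_targets_pages("idjohnson.github.io.", "idjohnson"))  # True
print(cname_targets_pages("idjohnson.github.com", "idjohnson"))  # False
```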

Now let’s go back to the GitHub settings for the blog repo:

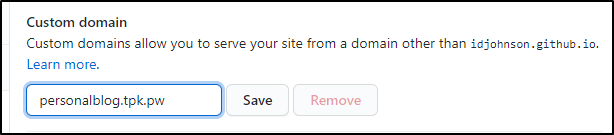

Let’s enter the custom domain and save:

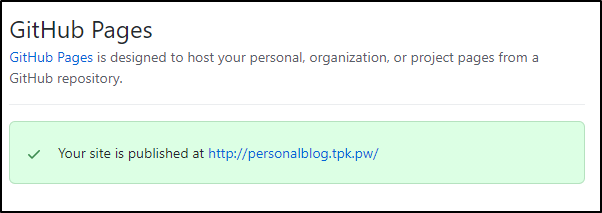

We then see the green “published” box update with the new URL:

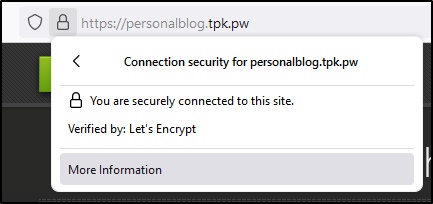

Sadly, for now this is an HTTP-only site:

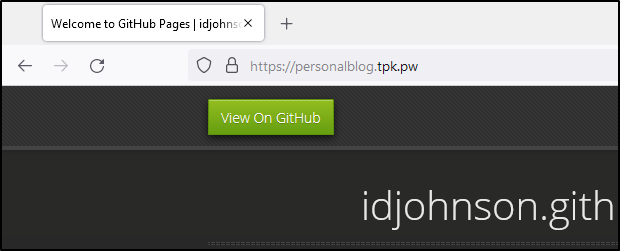

You can browse to https, but users will get the whole “this cert is bullcrap” warning from Chrome, which says, in effect: yes, it’s TLS encrypted, but with an illegitimate self-signed cert (or a cert that doesn’t match the name). [Note: as we will see in a minute, this is temporary.]

The former github.io URL still works, too.

If you wish to enforce HTTPS (HTTP requests get redirected to HTTPS), check ‘Enforce HTTPS’.

I noticed that, once I had given GitHub a moment to verify the DNS (green check), it created a TLS cert for me (thank you, GitHub).

It looks like GitHub uses Let’s Encrypt (LE) for that, much as I would myself:

I certainly did not generate that TLS cert.

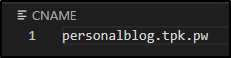
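If you'd rather confirm the issuer from a script than from the browser's padlock, Python's standard `ssl` module can fetch the certificate. A minimal sketch; the function names are hypothetical.

```python
import socket
import ssl
from typing import Optional


def fetch_peer_cert(host: str, port: int = 443, timeout: float = 5.0) -> dict:
    """Connect over TLS (with normal verification) and return the server certificate."""
    context = ssl.create_default_context()
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert()


def issuer_field(cert: dict, field: str = "organizationName") -> Optional[str]:
    """Pick one attribute out of the issuer in getpeercert()'s dict format."""
    # cert["issuer"] is a tuple of RDNs; each RDN is a tuple of (key, value) pairs.
    for rdn in cert.get("issuer", ()):
        for key, value in rdn:
            if key == field:
                return value
    return None
```

Usage (needs network access): `issuer_field(fetch_peer_cert("personalblog.tpk.pw"))` returns the issuing CA's organization name. Because `create_default_context()` verifies the certificate, running this before GitHub issues the cert should raise `ssl.SSLCertVerificationError`, the scripted equivalent of the browser warning.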

We also see that DNS name referenced in two places in our repo.

The first is the _config.yml:

title: The TPK Personal Blog
email: isaac.johson@gmail.com
description: >- # this means to ignore newlines until "baseurl:"
  Isaac Johnson Personal GH Blog
url: "https://personalblog.tpk.pw" # the base hostname & protocol for your site, e.g. http://example.com
twitter_username: nulubez
github_username: idjohnson

The second is a root-level CNAME file:

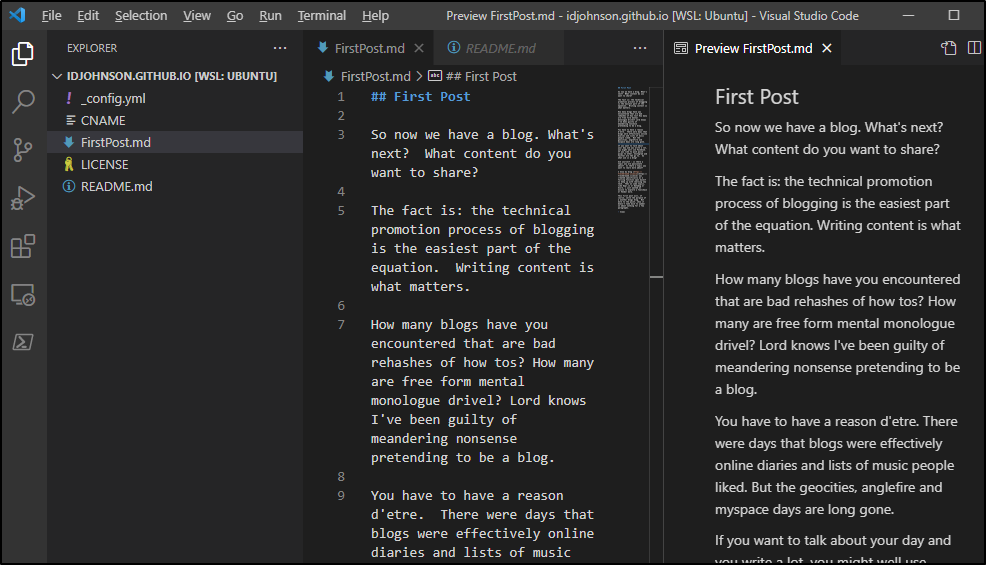
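Since the domain now lives in two files, a small consistency check can catch them drifting apart. Here is a minimal Python sketch, assuming you pass in the CNAME file's contents and the `url` value from _config.yml as strings (reading and YAML-parsing the files is left out); the helper name is hypothetical.

```python
from urllib.parse import urlparse


def cname_matches_config(cname_file_text: str, config_url: str) -> bool:
    """Check that the CNAME file's domain matches the host in _config.yml's `url`."""
    lines = cname_file_text.strip().splitlines()
    if not lines:
        return False
    # The CNAME file holds one domain name (no scheme, no path).
    domain = lines[0].strip().rstrip(".").lower()
    host = (urlparse(config_url).hostname or "").lower()
    return domain == host


print(cname_matches_config("personalblog.tpk.pw\n", "https://personalblog.tpk.pw"))  # True
```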

Updating the blog

Say we wish to make a post. How hard is it?

Let’s check out a branch and fire up VS Code:

$ ls

CNAME LICENSE README.md _config.yml

$ code .

$ git branch

* main

$ git checkout -b feature/firstpost

Switched to a new branch 'feature/firstpost'

Then we can add the file, commit it and push it:

~/Workspaces/idjohnson.github.io$ git add FirstPost.md

~/Workspaces/idjohnson.github.io$ git commit -m "My first post"

[feature/firstpost c246d28] My first post

1 file changed, 19 insertions(+)

create mode 100644 FirstPost.md

~/Workspaces/idjohnson.github.io$ git push

fatal: The current branch feature/firstpost has no upstream branch.

To push the current branch and set the remote as upstream, use

git push --set-upstream origin feature/firstpost

~/Workspaces/idjohnson.github.io$ darf

git push --set-upstream origin feature/firstpost [enter/↑/↓/ctrl+c]

Enumerating objects: 4, done.

Counting objects: 100% (4/4), done.

Delta compression using up to 8 threads

Compressing objects: 100% (3/3), done.

Writing objects: 100% (3/3), 1.01 KiB | 1.01 MiB/s, done.

Total 3 (delta 1), reused 0 (delta 0)

remote: Resolving deltas: 100% (1/1), completed with 1 local object.

remote:

remote: Create a pull request for 'feature/firstpost' on GitHub by visiting:

remote: https://github.com/idjohnson/idjohnson.github.io/pull/new/feature/firstpost

remote:

To https://github.com/idjohnson/idjohnson.github.io.git

* [new branch] feature/firstpost -> feature/firstpost

Branch 'feature/firstpost' set up to track remote branch 'feature/firstpost' from 'origin'.

Nothing appears on our blog at first, because Pages is set to publish from a single branch (in our case “main”):

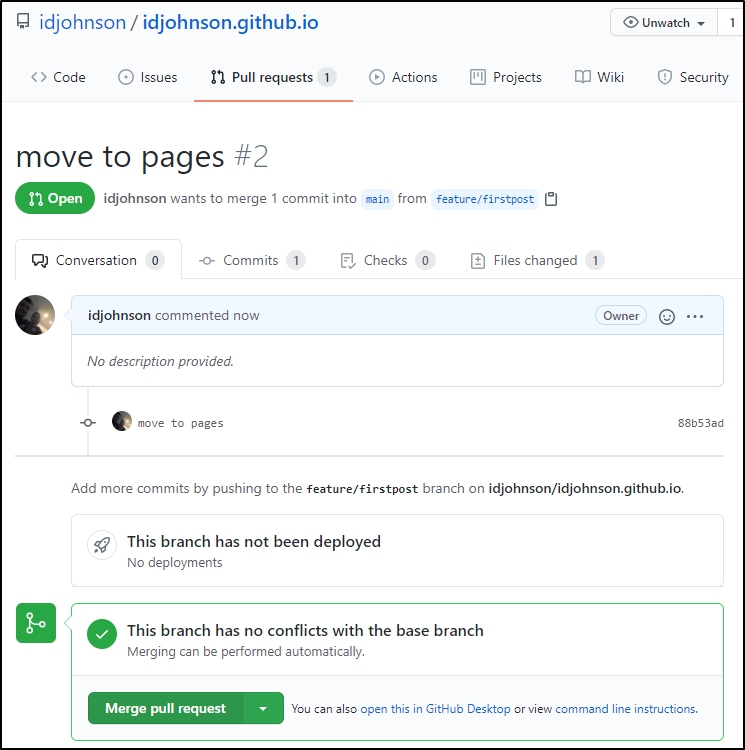

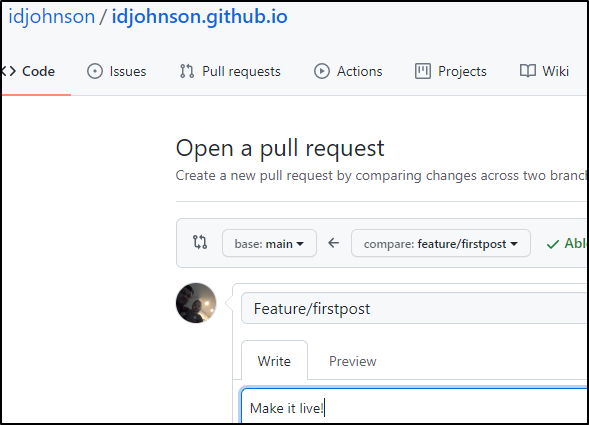

So let’s open a PR back to main.

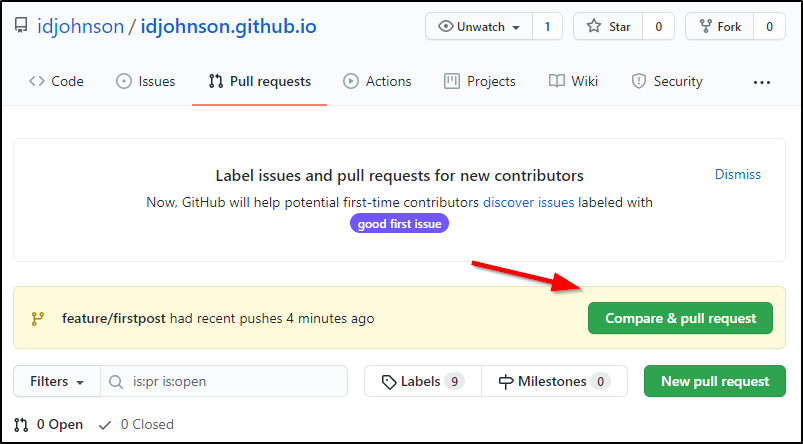

We can go to the Pull requests page, where GitHub prompts us to create a PR:

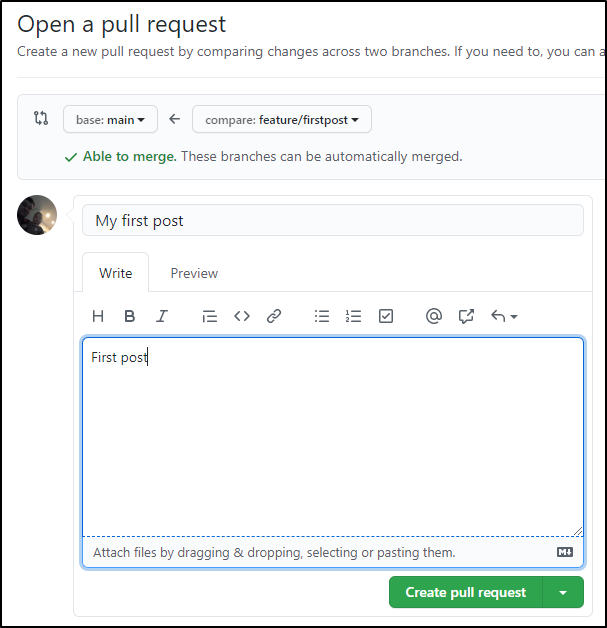

Create the PR

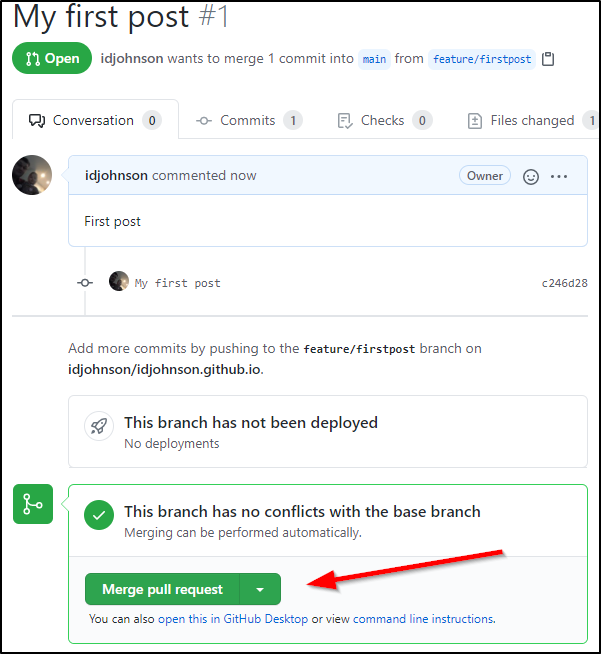

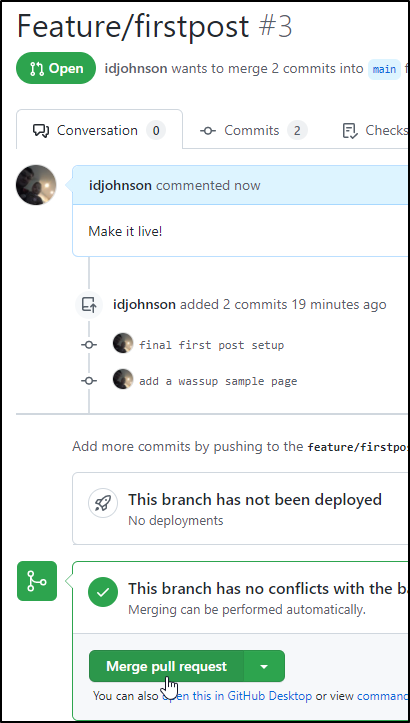

Then if there are no conflicts we can merge right away:

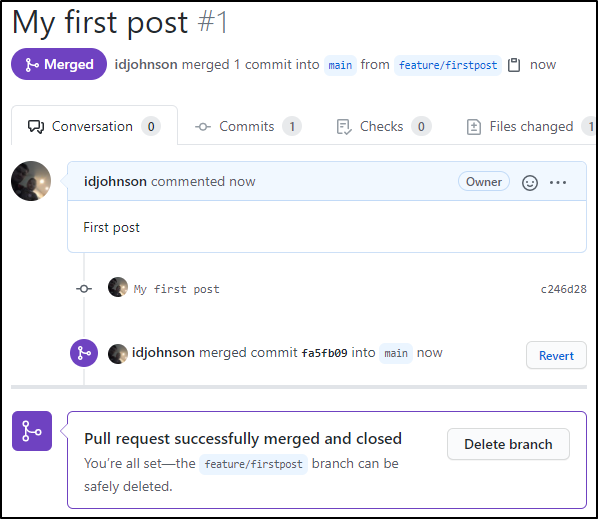

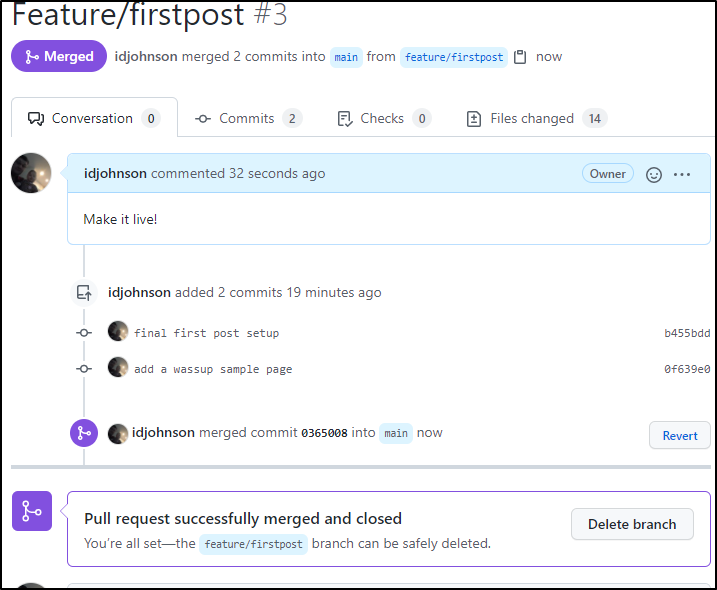

which marks it as merged and closed:

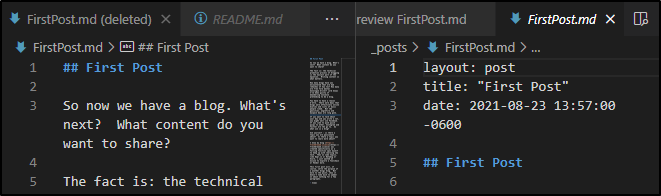

I then realized I had not organized it correctly.

I moved the post into a _posts folder and added a header (front matter) to the markdown:

Note: I later realized the missing part was the “---” delimiter line before line 1 and after line 3.

Then created and merged a PR
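The rule that bit me can be sketched in Python: the YAML only counts as front matter when the file's first line is `---` and a later `---` closes it. This is a simplified illustration with a hypothetical helper name, not Jekyll's own parser (which is a little more lenient).

```python
from typing import Optional, Tuple


def split_front_matter(text: str) -> Tuple[Optional[str], str]:
    """Split a Jekyll-style file into (front_matter, body).

    Simplified rule: the very first line must be '---' and a later line must be
    '---' as well. If either delimiter is missing, returns (None, text) and the
    whole file is treated as plain content.
    """
    lines = text.splitlines(keepends=True)
    if not lines or lines[0].rstrip() != "---":
        return None, text
    for i in range(1, len(lines)):
        if lines[i].rstrip() == "---":
            return "".join(lines[1:i]), "".join(lines[i + 1:])
    return None, text  # opening delimiter but no closing one


post = '---\nlayout: post\ntitle: "First Post"\n---\n## First Post\n'
front, body = split_front_matter(post)
print(front.splitlines())  # ['layout: post', 'title: "First Post"']
print(split_front_matter("layout: post\n## First Post\n")[0])  # None
```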

Testing locally

Install Jekyll if you have not already: https://jekyllrb.com/docs/installation/

Getting started with Jekyll locally really is just a few steps (see the Jekyll docs):

$ gem install jekyll bundler

# Create a new Jekyll site at ./blog.

$ jekyll new blog

# cd to blog and build

$ cd blog && bundle exec jekyll serve

# now serving on localhost:4000

We will be looking at the completed repo, which is public here:

https://github.com/idjohnson/idjohnson.github.io

You’ll want to run bundle install (and bundle update, to pick up newer gem versions) to install the site’s gem dependencies before serving it locally:

~/Workspaces/idjohnson.github.io$ bundle update

Fetching gem metadata from https://rubygems.org/...........

Resolving dependencies...

Using concurrent-ruby 1.1.9

Using minitest 5.14.4

Using thread_safe 0.3.6

Using zeitwerk 2.4.2

Using public_suffix 4.0.6

Using bundler 2.2.26

Using unf_ext 0.0.7.7

Using eventmachine 1.2.7

Using ffi 1.15.3

Using faraday-em_http 1.0.0

Using faraday-excon 1.1.0

Using faraday-httpclient 1.0.1

Using faraday-net_http_persistent 1.2.0

Using faraday-patron 1.0.0

Using faraday-rack 1.0.0

Using multipart-post 2.1.1

Using forwardable-extended 2.6.0

Using gemoji 3.0.1

Using rb-fsevent 0.11.0

Using rexml 3.2.5

Using liquid 4.0.3

Using mercenary 0.3.6

Using rouge 3.26.0

Using safe_yaml 1.0.5

Using racc 1.5.2

Using jekyll-paginate 1.1.0

Using rubyzip 2.3.2

Using jekyll-swiss 1.0.0

Using unicode-display_width 1.7.0

Using i18n 0.9.5

Using unf 0.1.4

Using tzinfo 1.2.9

Using ethon 0.14.0

Using rb-inotify 0.10.1

Using addressable 2.8.0

Using pathutil 0.16.2

Using kramdown 2.3.1

Using nokogiri 1.12.3 (x86_64-linux)

Using ruby-enum 0.9.0

Using faraday-em_synchrony 1.0.0

Using terminal-table 1.8.0

Using simpleidn 0.2.1

Using typhoeus 1.4.0

Using sass-listen 4.0.0

Using listen 3.7.0

Using kramdown-parser-gfm 1.1.0

Using commonmarker 0.17.13

Using dnsruby 1.61.7

Using sass 3.7.4

Using jekyll-watch 2.2.1

Using jekyll-sass-converter 1.5.2

Using execjs 2.8.1

Using faraday-net_http 1.0.1

Using activesupport 6.0.4.1

Using colorator 1.1.0

Using html-pipeline 2.14.0

Using http_parser.rb 0.6.0

Using ruby2_keywords 0.0.5

Using em-websocket 0.5.2

Using coffee-script-source 1.11.1

Using jekyll 3.9.0

Using coffee-script 2.4.1

Using jekyll-avatar 0.7.0

Using jekyll-redirect-from 0.16.0

Using jekyll-relative-links 0.6.1

Using jekyll-remote-theme 0.4.3

Using jekyll-seo-tag 2.7.1

Using jekyll-sitemap 1.4.0

Using jekyll-titles-from-headings 0.5.3

Using jemoji 0.12.0

Using jekyll-coffeescript 1.1.1

Using jekyll-theme-architect 0.2.0

Using jekyll-theme-cayman 0.2.0

Using jekyll-theme-dinky 0.2.0

Using jekyll-theme-hacker 0.2.0

Using jekyll-theme-leap-day 0.2.0

Using jekyll-theme-merlot 0.2.0

Using jekyll-theme-midnight 0.2.0

Using jekyll-theme-minimal 0.2.0

Using jekyll-theme-modernist 0.2.0

Using jekyll-theme-slate 0.2.0

Using jekyll-theme-tactile 0.2.0

Using jekyll-theme-time-machine 0.2.0

Using faraday 1.7.0

Using jekyll-commonmark 1.3.1

Using jekyll-default-layout 0.1.4

Using jekyll-commonmark-ghpages 0.1.6

Using jekyll-mentions 1.6.0

Using jekyll-optional-front-matter 0.3.2

Using jekyll-readme-index 0.3.0

Using sawyer 0.8.2

Using jekyll-feed 0.15.1

Using octokit 4.21.0

Using minima 2.5.1

Using jekyll-gist 1.5.0

Using jekyll-github-metadata 2.13.0

Fetching github-pages-health-check 1.17.7 (was 1.17.2)

Using jekyll-theme-primer 0.6.0

Installing github-pages-health-check 1.17.7 (was 1.17.2)

Fetching github-pages 219 (was 218)

Installing github-pages 219 (was 218)

Bundle updated!

~/Workspaces/idjohnson.github.io$

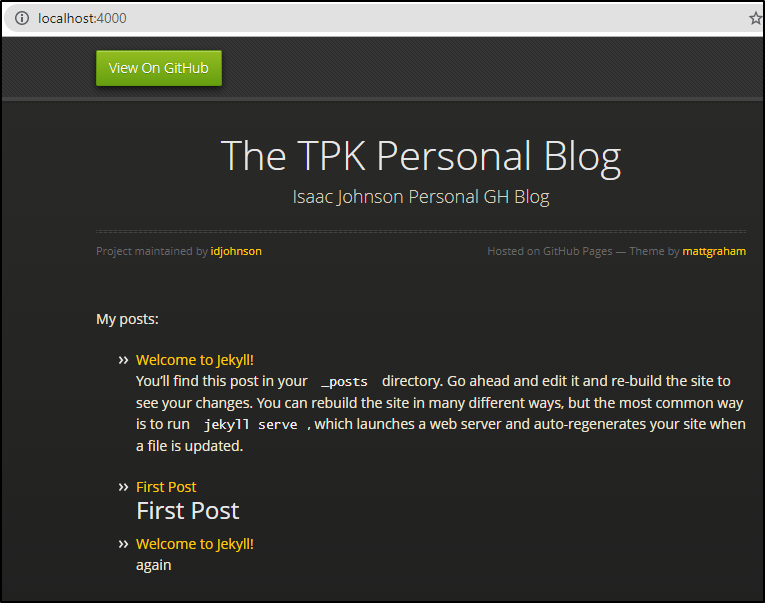

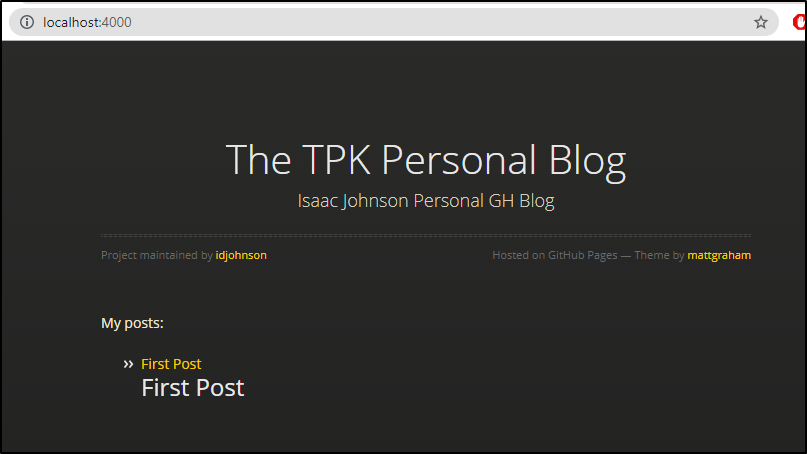

Next, to serve this locally, use bundle exec jekyll serve:

~/Workspaces/idjohnson.github.io$ bundle exec jekyll serve

Configuration file: /home/builder/Workspaces/idjohnson.github.io/_config.yml

Source: /home/builder/Workspaces/idjohnson.github.io

Destination: /home/builder/Workspaces/idjohnson.github.io/_site

Incremental build: disabled. Enable with --incremental

Generating...

Remote Theme: Using theme pages-themes/midnight

Jekyll Feed: Generating feed for posts

GitHub Metadata: No GitHub API authentication could be found. Some fields may be missing or have incorrect data.

done in 1.619 seconds.

/home/builder/gems/gems/pathutil-0.16.2/lib/pathutil.rb:502: warning: Using the last argument as keyword parameters is deprecated

Auto-regeneration may not work on some Windows versions.

Please see: https://github.com/Microsoft/BashOnWindows/issues/216

If it does not work, please upgrade Bash on Windows or run Jekyll with --no-watch.

Auto-regeneration: enabled for '/home/builder/Workspaces/idjohnson.github.io'

Server address: http://127.0.0.1:4000

Server running... press ctrl-c to stop.

[2021-08-25 13:52:31] ERROR `/favicon.ico' not found.

The key thing to note is that posts are picked up automatically from _posts directories. Note: the leading underscore matters there.

We can see that this matches the repo:

https://github.com/idjohnson/idjohnson.github.io/blob/feature/firstpost/_posts/2021-08-23-firstpost.md

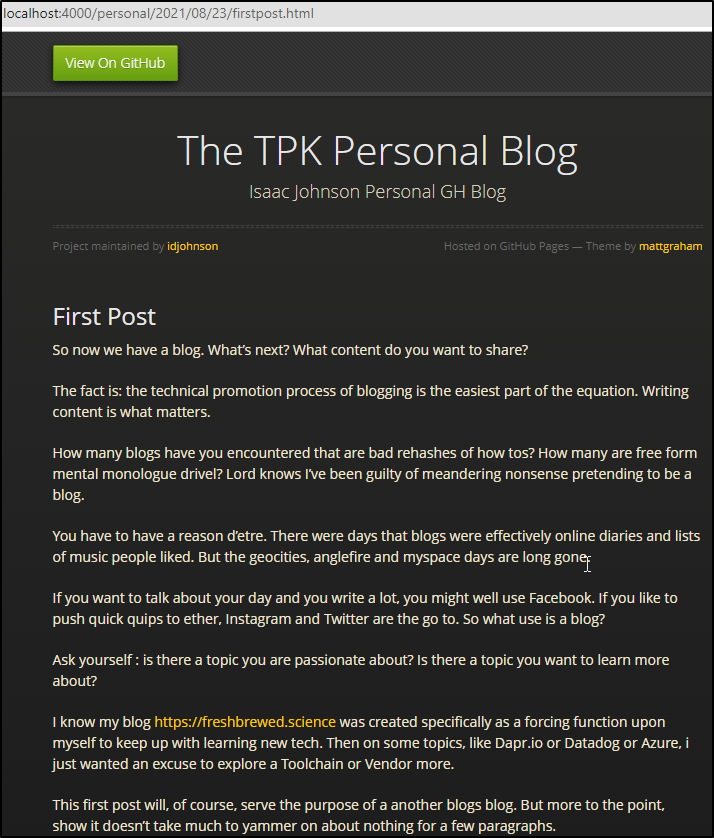

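The other important point is that the markdown file name really must be prefixed with a date (YYYY-MM-DD-). Also, the markdown itself needs a YAML front matter block at the top *WITH* the --- delimiters. Categories are basically tags (though, unlike tags, they also become part of the post’s default URL).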

---

layout: post

title: "First Post"

date: 2021-08-23 11:10:43 -0500

categories: personal

---

## First Post

So now we have a blog. What's next? What content do you want to share?

…
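Both rules are easy to check before pushing. Here is a minimal Python sketch, with hypothetical helper names, of a simplified filename check plus the URL the post would get under Jekyll's default date-style permalink (assuming no `permalink:` override in _config.yml). It's an illustration, not Jekyll's own parser, which accepts a few more variations.

```python
import re
from datetime import date
from typing import List, Optional, Tuple

# Simplified form of the YYYY-MM-DD-title.ext rule for files in _posts.
POST_NAME = re.compile(r"^(\d{4})-(\d{2})-(\d{2})-(.+)\.(md|markdown)$")


def parse_post_filename(name: str) -> Optional[Tuple[date, str]]:
    """Return (date, slug) for a dated post filename, or None if it doesn't qualify."""
    m = POST_NAME.match(name)
    if not m:
        return None
    year, month, day, slug, _ext = m.groups()
    try:
        return date(int(year), int(month), int(day)), slug
    except ValueError:  # e.g. 2021-02-30
        return None


def default_post_url(name: str, categories: List[str]) -> Optional[str]:
    """URL in the default 'date' style: /:categories/:year/:month/:day/:title.html"""
    parsed = parse_post_filename(name)
    if parsed is None:
        return None
    d, slug = parsed
    parts = categories + [f"{d:%Y}", f"{d:%m}", f"{d:%d}", f"{slug}.html"]
    return "/" + "/".join(parts)


print(default_post_url("2021-08-23-firstpost.md", ["personal"]))
# /personal/2021/08/23/firstpost.html
print(parse_post_filename("FirstPost.md"))  # None
```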

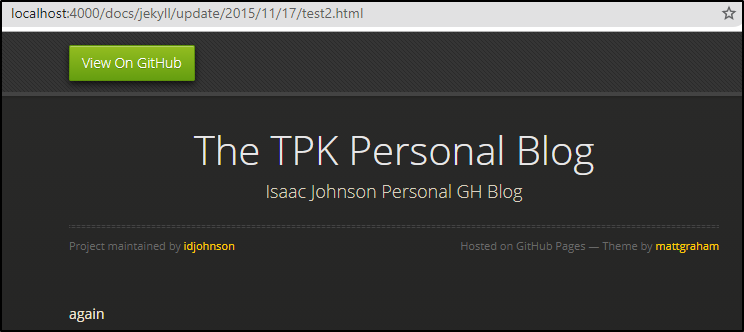

The other thing you’ll note is that we can serve posts from more than one _posts folder.

Here I have a “/docs” folder with its own post, https://github.com/idjohnson/idjohnson.github.io/blob/feature/firstpost/docs/_posts/2021-08-23-test2.md, which is served just fine,

and, of course, the other two live in the root _posts folder:

https://github.com/idjohnson/idjohnson.github.io/tree/feature/firstpost/_posts

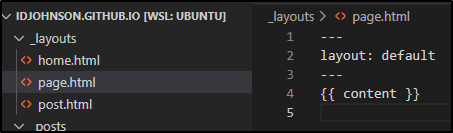

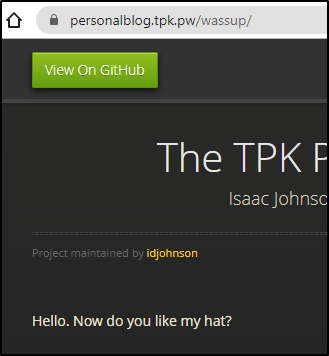

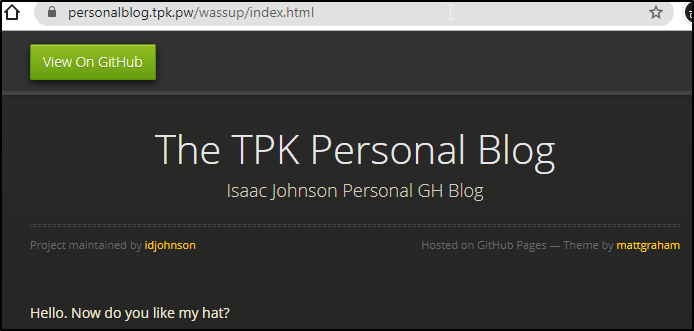

Adding pages

Say that I wanted to add a page.

First, it’s important that, *if* you move off the default theme, you create a file in _layouts for each layout type your content uses, e.g. page:

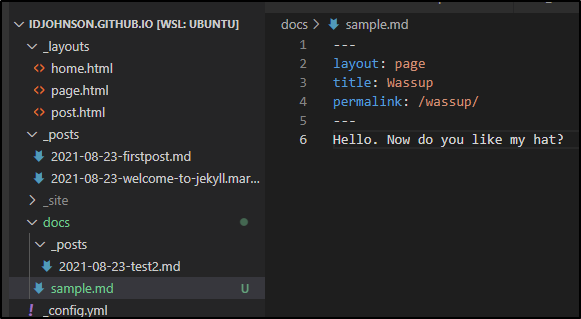

Then we can create a page. Here I will add it directly under docs:

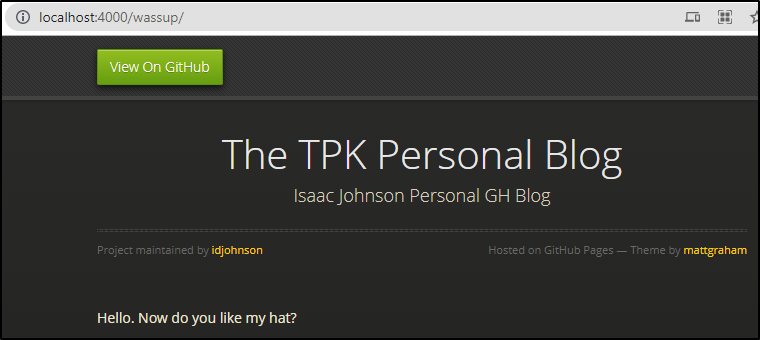

and that permalink is now served as http://localhost:4000/wassup/
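Because that permalink ends in a slash, Jekyll writes the page out as an index.html inside a wassup directory under _site, and the server maps /wassup/ to it. Here is a minimal Python sketch of that mapping; `output_path` is a hypothetical helper, and it ignores other permalink placeholders.

```python
from pathlib import PurePosixPath


def output_path(permalink: str, site_dir: str = "_site") -> str:
    """Map a page permalink to the file Jekyll writes under _site.

    A permalink ending in '/' becomes <dir>/index.html; anything else is used as-is.
    """
    rel = permalink.lstrip("/")
    if permalink.endswith("/"):
        rel += "index.html"
    return str(PurePosixPath(site_dir) / rel)


print(output_path("/wassup/"))  # _site/wassup/index.html
```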

We can now commit it and push it to GitHub:

~/Workspaces/idjohnson.github.io$ git status

On branch feature/firstpost

Your branch is up to date with 'origin/feature/firstpost'.

Untracked files:

(use "git add <file>..." to include in what will be committed)

docs/sample.md

nothing added to commit but untracked files present (use "git add" to track)

~/Workspaces/idjohnson.github.io$ git add docs/

~/Workspaces/idjohnson.github.io$ git commit -m "add a wassup sample page"

[feature/firstpost 0f639e0] add a wassup sample page

1 file changed, 6 insertions(+)

create mode 100644 docs/sample.md

~/Workspaces/idjohnson.github.io$ git push

Enumerating objects: 6, done.

Counting objects: 100% (6/6), done.

Delta compression using up to 8 threads

Compressing objects: 100% (4/4), done.

Writing objects: 100% (4/4), 435 bytes | 435.00 KiB/s, done.

Total 4 (delta 1), reused 0 (delta 0)

remote: Resolving deltas: 100% (1/1), completed with 1 local object.

To https://github.com/idjohnson/idjohnson.github.io.git

b455bdd..0f639e0 feature/firstpost -> feature/firstpost

However, when I look, I of course don’t see it live.

This is because Pages doesn’t serve from the feature/firstpost branch; we need to merge to main to make it live.

We just need to make a PR:

Then merge it to make it live

Completed.

After a minute or so (hey, it’s free), we see the Pages build ran and our blog is rendered and live.

This includes pages.

As an aside, in some browsers I needed to add ‘index.html’ to the path.

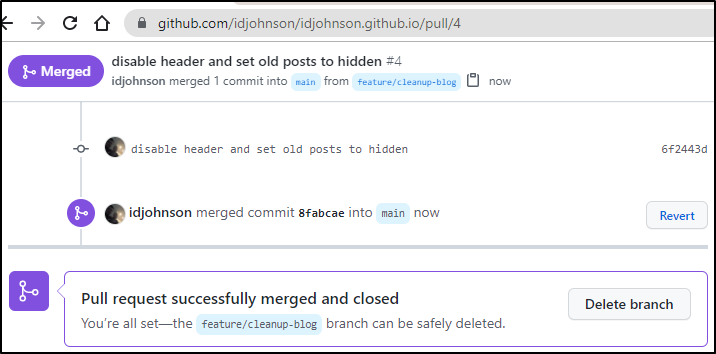

Cleaning up

Now that we have a sample blog, having a bunch of “hello world” posts won’t be of much use.

I’ll first create a new branch off main (now that it’s merged, it should have more content):

~/Workspaces/idjohnson.github.io$ git checkout main

Switched to branch 'main'

Your branch is up to date with 'origin/main'.

~/Workspaces/idjohnson.github.io$ git pull

remote: Enumerating objects: 2, done.

remote: Counting objects: 100% (2/2), done.

remote: Compressing objects: 100% (2/2), done.

remote: Total 2 (delta 0), reused 0 (delta 0), pack-reused 0

Unpacking objects: 100% (2/2), 1.20 KiB | 1.20 MiB/s, done.

From https://github.com/idjohnson/idjohnson.github.io

fa5fb09..0365008 main -> origin/main

Updating fa5fb09..0365008

Fast-forward

.gitignore | 5 ++

404.html | 25 ++++++

Gemfile | 32 ++++++++

Gemfile.lock | 282 +++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

_config.yml | 55 ++++++++++++-

_layouts/home.html | 4 +

_layouts/page.html | 4 +

_layouts/post.html | 4 +

FirstPost.md => _posts/2021-08-23-firstpost.md | 9 ++-

_posts/2021-08-23-welcome-to-jekyll.markdown | 29 +++++++

about.markdown | 18 +++++

docs/_posts/2021-08-23-test2.md | 7 ++

docs/sample.md | 6 ++

index.markdown | 15 ++++

14 files changed, 493 insertions(+), 2 deletions(-)

create mode 100644 .gitignore

create mode 100644 404.html

create mode 100644 Gemfile

create mode 100644 Gemfile.lock

create mode 100644 _layouts/home.html

create mode 100644 _layouts/page.html

create mode 100644 _layouts/post.html

rename FirstPost.md => _posts/2021-08-23-firstpost.md (92%)

create mode 100644 _posts/2021-08-23-welcome-to-jekyll.markdown

create mode 100644 about.markdown

create mode 100644 docs/_posts/2021-08-23-test2.md

create mode 100644 docs/sample.md

create mode 100644 index.markdown

~/Workspaces/idjohnson.github.io$ git checkout -b feature/cleanup-blog

Switched to a new branch 'feature/cleanup-blog'

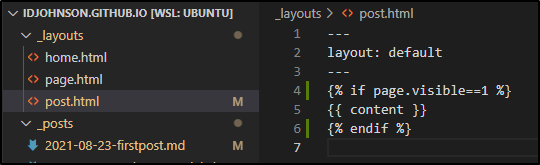

First, in /index.markdown, let’s create an IF block on a new front matter field we will call ‘visible’:

---

# Feel free to add content and custom Front Matter to this file.

# To modify the layout, see https://jekyllrb.com/docs/themes/#overriding-theme-defaults

layout: home

---

My posts:

<ul>

</ul>

Next, in our posts, set the first post (firstpost) to visible:

_posts/2021-08-23-firstpost.md:

---

layout: post

title: "First Post"

date: 2021-08-23 11:10:43 -0500

categories: personal

visible: 1

---

## First Post

and the rest use “visible: 0”
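The logic of that IF block amounts to a simple filter. Here is a minimal Python sketch of it, assuming each post's front matter has already been parsed into a dict; `visible_posts` is a hypothetical name, and this illustrates the idea rather than reproducing the Liquid template.

```python
from typing import Dict, List


def visible_posts(posts: List[Dict]) -> List[Dict]:
    """Keep only posts whose front matter has visible: 1.

    A post with no `visible` key is dropped too, just as a Liquid comparison
    like `post.visible == 1` is false for a missing value.
    """
    return [p for p in posts if p.get("visible") == 1]


posts = [
    {"path": "_posts/2021-08-23-firstpost.md", "visible": 1},
    {"path": "_posts/2021-08-23-welcome-to-jekyll.markdown", "visible": 0},
    {"path": "docs/_posts/2021-08-23-test2.md", "visible": 0},
]
print([p["path"] for p in visible_posts(posts)])  # ['_posts/2021-08-23-firstpost.md']
```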

Now serve locally:

But say you want to go further than that. You could add a line in post.html to also mask it (though note that there the variable is under “page”, not “post”):

---

layout: default

---

For instance, maybe I just want to highlight that draft posts are just that: drafts.

---

layout: default

---

<h1><font color=red> **DRAFT POST: MAY BE INCOMPLETE** </font></h1>

Published: Aug 18, 2021 by Isaac Johnson

Kuma, a Cloud Native Computing Foundation (CNCF) sponsored service mesh, is the open-source (OSS) core of Kong Mesh. From the mTLS docs we also see it is one of the implementers of SPIFFE, the universal identity framework.

Kuma, meaning “bear” in Japanese, is the only Envoy-based service mesh sponsored thus far by the foundation. Kong open-sourced it back in September 2019 and later donated it to the CNCF.

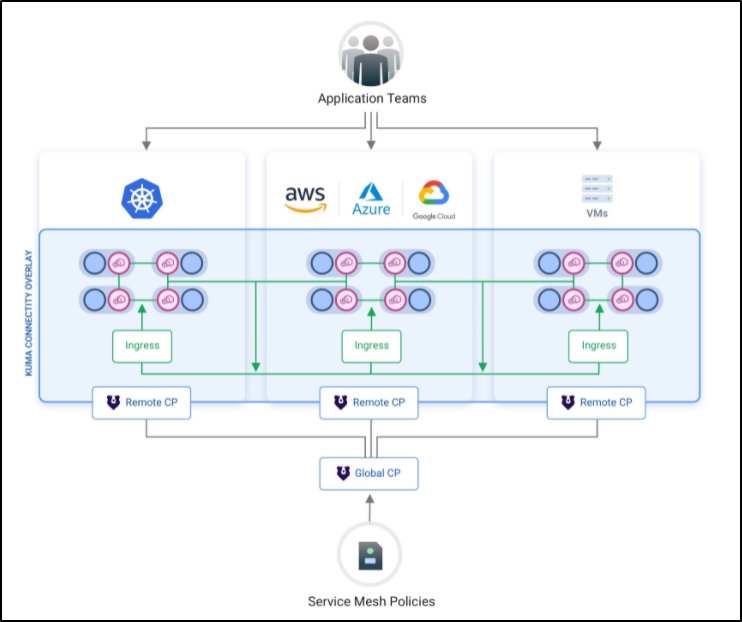

Kuma is a multi-zone mesh: separate zone control planes can come together under a global control plane:

You can get a higher-level overview from the docs. As there is quite a lot to cover with Kuma, let’s get started with a demo app and explore metrics, logging and tracing (and see how we can integrate with other systems like Datadog Logging and APM).

Getting Started

There are a few ways you can install Kuma.

Docker version

You can just run the docker container:

docker run \

-p 5681:5681 \

docker.io/kumahq/kuma-cp:1.2.3 run

where 1.2.3 was (at the time of writing) the latest image tag listed here: https://hub.docker.com/r/kumahq/kuma-cp/tags?page=1&ordering=last_updated

builder@DESKTOP-QADGF36:~$ docker run -p 5681:5681 docker.io/kumahq/kuma-cp:1.2.3 run

Unable to find image 'kumahq/kuma-cp:1.2.3' locally

1.2.3: Pulling from kumahq/kuma-cp

5843afab3874: Pull complete

877216791a32: Pull complete

c9950e6539e5: Pull complete

9577f971b34d: Pull complete

a336c81dba13: Pull complete

827f56217717: Pull complete

e6101ec954c6: Pull complete

Digest: sha256:0b79d03966c181d1e2871e0a40b71a376dc954e15f6611eea819b6a8b7e19d11

Status: Downloaded newer image for kumahq/kuma-cp:1.2.3

2021-08-17T11:49:19.036Z INFO Skipping reading config from file

2021-08-17T11:49:19.036Z INFO bootstrap.auto-configure directory /home/kuma-cp/.kuma will be used as a working directory, it could be changed using KUMA_GENERAL_WORK_DIR environment variable

2021-08-17T11:49:19.133Z INFO bootstrap.auto-configure TLS certificate autogenerated. Autogenerated certificates are not synchronized between CP instances. It is only valid if the data plane proxy connects to the CP by one of the following address 3543ad70ba7f, localhost, 127.0.0.1, 172.17.0.2. It is recommended to generate your own certificate based on yours trusted CA. You can also generate your own self-signed certificates using 'kumactl generate tls-certificate --type=server --cp-hostname=<hostname>' and configure them using KUMA_GENERAL_TLS_CERT_FILE and KUMA_GENERAL_TLS_KEY_FILE {"crtFile": "/home/kuma-cp/.kuma/kuma-cp.crt", "keyFile": "/home/kuma-cp/.kuma/kuma-cp.key"}

2021-08-17T11:49:19.135Z INFO kuma-cp.run Current config {"apiServer":{"auth":{"allowFromLocalhost":true,"clientCertsDir":""},"corsAllowedDomains":[".*"],"http":{"enabled":true,"interface":"0.0.0.0","port":5681},"https":{"enabled":true,"interface":"0.0.0.0","port":5682,"tlsCertFile":"/home/kuma-cp/.kuma/kuma-cp.crt","tlsKeyFile":"/home/kuma-cp/.kuma/kuma-cp.key"},"readOnly":false},"bootstrapServer":{"apiVersion":"v3","params":{"adminAccessLogPath":"/dev/null","adminAddress":"127.0.0.1","adminPort":0,"xdsConnectTimeout":"1s","xdsHost":"","xdsPort":5678}},"defaults":{"skipMeshCreation":false},"diagnostics":{"debugEndpoints":false,"serverPort":5680},"dnsServer":{"CIDR":"240.0.0.0/4","domain":"mesh","port":5653},"dpServer":{"auth":{"type":"dpToken"},"hds":{"checkDefaults":{"healthyThreshold":1,"interval":"1s","noTrafficInterval":"1s","timeout":"2s","unhealthyThreshold":1},"enabled":true,"interval":"5s","refreshInterval":"10s"},"port":5678,"tlsCertFile":"/home/kuma-cp/.kuma/kuma-cp.crt","tlsKeyFile":"/home/kuma-cp/.kuma/kuma-cp.key"},"environment":"universal","general":{"dnsCacheTTL":"10s","tlsCertFile":"/home/kuma-cp/.kuma/kuma-cp.crt","tlsKeyFile":"/home/kuma-cp/.kuma/kuma-cp.key","workDir":"/home/kuma-cp/.kuma"},"guiServer":{"apiServerUrl":""},"metrics":{"dataplane":{"enabled":true,"subscriptionLimit":10},"mesh":{"maxResyncTimeout":"20s","minResyncTimeout":"1s"},"zone":{"enabled":true,"subscriptionLimit":10}},"mode":"standalone","monitoringAssignmentServer":{"apiVersions":["v1alpha1","v1"],"assignmentRefreshInterval":"1s","defaultFetchTimeout":"30s","grpcPort":0,"port":5676},"multizone":{"global":{"kds":{"grpcPort":5685,"maxMsgSize":10485760,"refreshInterval":"1s","tlsCertFile":"/home/kuma-cp/.kuma/kuma-cp.crt","tlsKeyFile":"/home/kuma-cp/.kuma/kuma-cp.key","zoneInsightFlushInterval":"10s"}},"zone":{"kds":{"maxMsgSize":10485760,"refreshInterval":"1s","rootCaFile":""}}},"reports":{"enabled":true},"runtime":{"kubernetes":{"admissionServer":{"address":"","certDir":"","port":5443},"controlPlaneServiceName":"kuma-control-plane","injector":{"builtinDNS":{"enabled":true,"port":15053},"caCertFile":"","cniEnabled":false,"exceptions":{"labels":{"openshift.io/build.name":"*","openshift.io/deployer-pod-for.name":"*"}},"initContainer":{"image":"kuma/kuma-init:latest"},"sidecarContainer":{"adminPort":9901,"drainTime":"30s","envVars":{},"gid":5678,"image":"kuma/kuma-dp:latest","livenessProbe":{"failureThreshold":12,"initialDelaySeconds":60,"periodSeconds":5,"timeoutSeconds":3},"readinessProbe":{"failureThreshold":12,"initialDelaySeconds":1,"periodSeconds":5,"successThreshold":1,"timeoutSeconds":3},"redirectPortInbound":15006,"redirectPortInboundV6":15010,"redirectPortOutbound":15001,"resources":{"limits":{"cpu":"1000m","memory":"512Mi"},"requests":{"cpu":"50m","memory":"64Mi"}},"uid":5678},"sidecarTraffic":{"excludeInboundPorts":[],"excludeOutboundPorts":[]},"virtualProbesEnabled":true,"virtualProbesPort":9000},"marshalingCacheExpirationTime":"5m0s"},"universal":{"dataplaneCleanupAge":"72h0m0s"}},"sdsServer":{"dataplaneConfigurationRefreshInterval":"1s"},"store":{"cache":{"enabled":true,"expirationTime":"1s"},"kubernetes":{"systemNamespace":"kuma-system"},"postgres":{"connectionTimeout":5,"dbName":"kuma","host":"127.0.0.1","maxIdleConnections":0,"maxOpenConnections":0,"maxReconnectInterval":"1m0s","minReconnectInterval":"10s","password":" *****","port":15432,"tls":{"caPath":"","certPath":"","keyPath":"","mode":"disable"},"user":"kuma"},"type":"memory","upsert":{"conflictRetryBaseBackoff":"100ms","conflictRetryMaxTimes":5}},"xdsServer":{"dataplaneConfigurationRefreshInterval":"1s","dataplaneStatusFlushInterval":"10s","nackBackoff":"5s"}}

2021-08-17T11:49:19.135Z INFO kuma-cp.run Running in mode `standalone`

2021-08-17T11:49:19.135Z INFO mads-server MADS v1alpha1 is enabled

2021-08-17T11:49:19.135Z INFO mads-server MADS v1 is enabled

2021-08-17T11:49:19.198Z INFO xds-server registering Aggregated Discovery Service V3 in Dataplane Server

2021-08-17T11:49:19.198Z INFO bootstrap registering Bootstrap in Dataplane Server

2021-08-17T11:49:19.198Z INFO sds-server registering Secret Discovery Service V3 in Dataplane Server

2021-08-17T11:49:19.198Z INFO hds-server registering Health Discovery Service in Dataplane Server

2021-08-17T11:49:19.202Z INFO kuma-cp.run starting Control Plane {"version": "1.2.3"}

2021-08-17T11:49:19.202Z INFO dp-server starting {"interface": "0.0.0.0", "port": 5678, "tls": true}

2021-08-17T11:49:19.202Z INFO dns-vips-synchronizer starting the DNS VIPs Synchronizer

2021-08-17T11:49:19.202Z INFO bootstrap leader acquired

2021-08-17T11:49:19.202Z INFO metrics.store-counter starting the resource counter

2021-08-17T11:49:19.202Z INFO dns-server starting {"address": "0.0.0.0:5653"}

2021-08-17T11:49:19.202Z INFO xds-server.diagnostics starting {"interface": "0.0.0.0", "port": 5680}

2021-08-17T11:49:19.202Z INFO mesh-insight-resyncer starting resilient component ...

2021-08-17T11:49:19.202Z INFO dns-vips-allocator starting the DNS VIPs allocator

2021-08-17T11:49:19.202Z INFO garbage-collector started

2021-08-17T11:49:19.203Z INFO clusterID creating cluster ID {"clusterID": "1fa50603-9d7c-4b1e-b82c-9e75a45f4323"}

2021-08-17T11:49:19.203Z INFO vip-outbounds-reconciler starting the VIP outbounds reconciler

2021-08-17T11:49:19.203Z INFO defaults trying to create default Mesh

2021-08-17T11:49:19.203Z INFO defaults.mesh ensuring default resources for Mesh exist {"mesh": "default"}

2021-08-17T11:49:19.203Z INFO defaults.mesh default TrafficPermission created {"mesh": "default", "name": "allow-all-default"}

2021-08-17T11:49:19.203Z INFO api-server starting {"interface": "0.0.0.0", "port": 5681}

2021-08-17T11:49:19.203Z INFO api-server starting {"interface": "0.0.0.0", "port": 5682, "tls": true}

2021-08-17T11:49:19.203Z INFO defaults.mesh default TrafficRoute created {"mesh": "default", "name": "route-all-default"}

2021-08-17T11:49:19.203Z INFO mads-server starting {"interface": "0.0.0.0", "port": 5676}

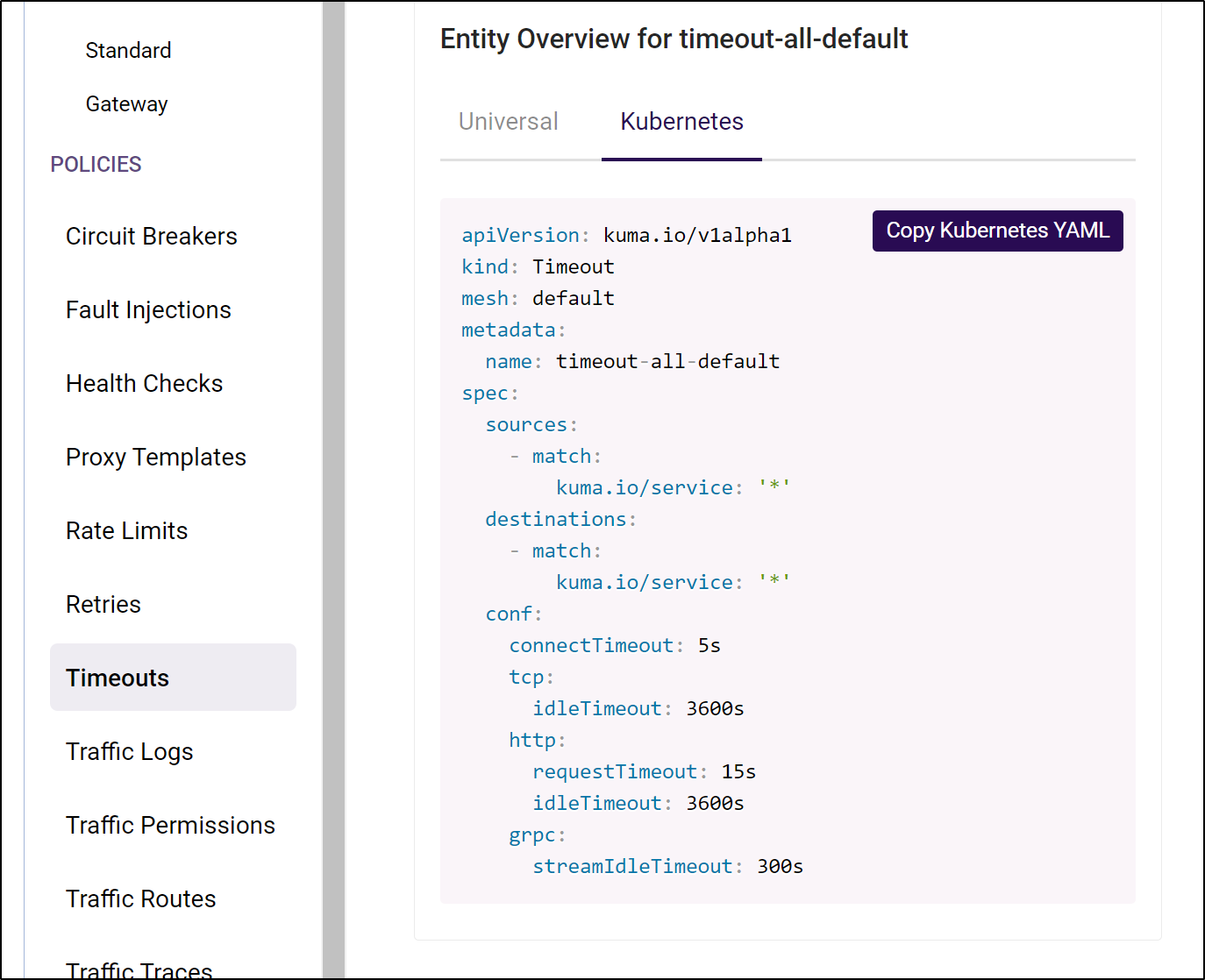

2021-08-17T11:49:19.204Z INFO defaults.mesh default Timeout created {"mesh": "default", "name": "timeout-all-default"}

2021-08-17T11:49:19.204Z INFO defaults.mesh default CircuitBreaker created {"mesh": "default", "name": "circuit-breaker-all-default"}

2021-08-17T11:49:19.204Z INFO defaults.mesh default Retry created {"mesh": "default", "name": "retry-all-default"}

2021-08-17T11:49:19.334Z INFO defaults.mesh default Signing Key created {"mesh": "default", "name": "dataplane-token-signing-key-default"}

2021-08-17T11:49:19.518Z INFO defaults.mesh default Signing Key created {"mesh": "default", "name": "envoy-admin-client-token-signing-key-default"}

2021-08-17T11:49:19.518Z INFO defaults default Mesh created

2021-08-17T11:49:19.603Z INFO defaults trying to create a Zone Ingress signing key

2021-08-17T11:49:19.603Z INFO defaults Zone Ingress signing key created

2021-08-17T11:49:20.202Z INFO clusterID setting cluster ID {"clusterID": "1fa50603-9d7c-4b1e-b82c-9e75a45f4323"}

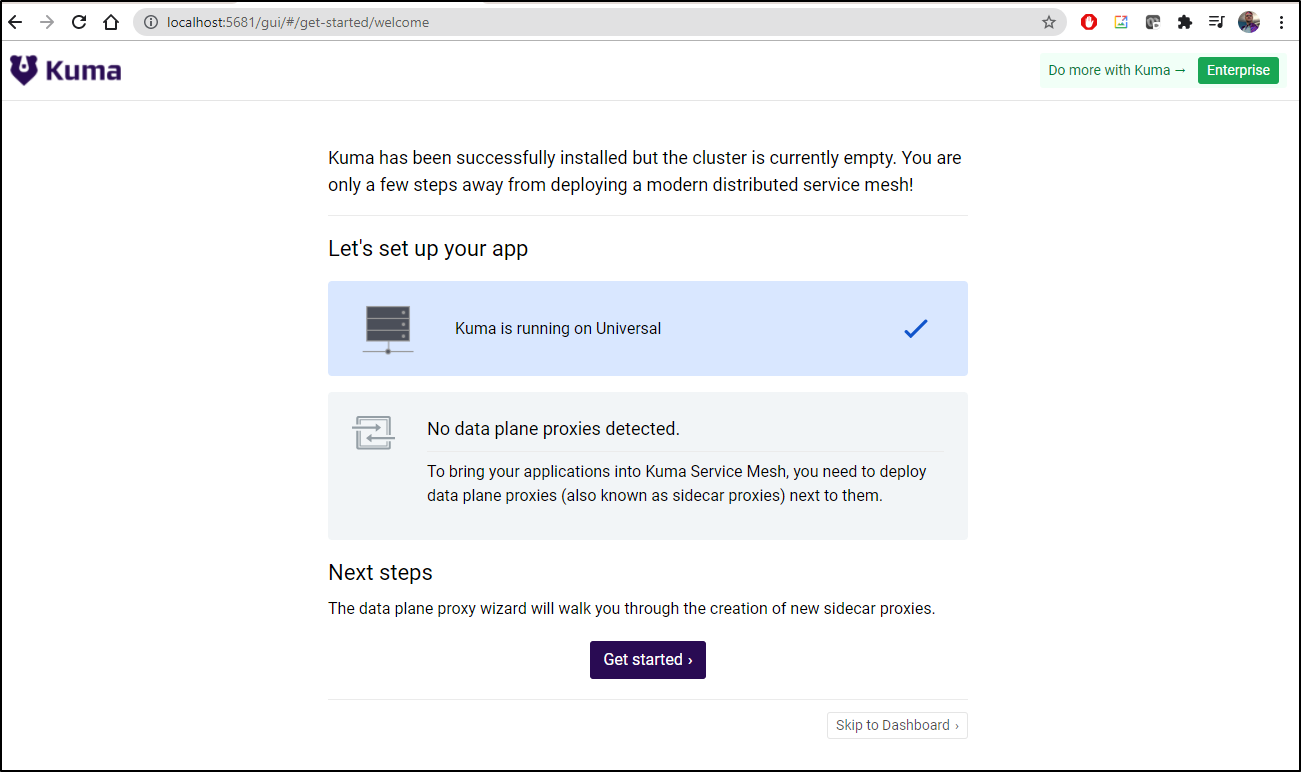

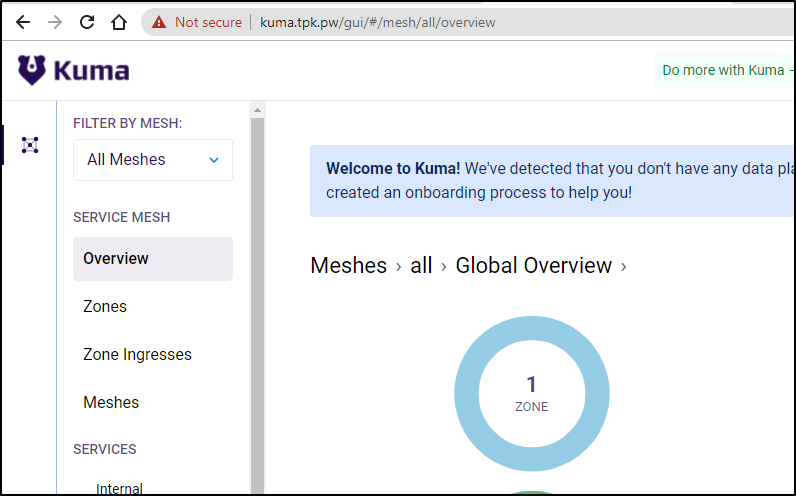

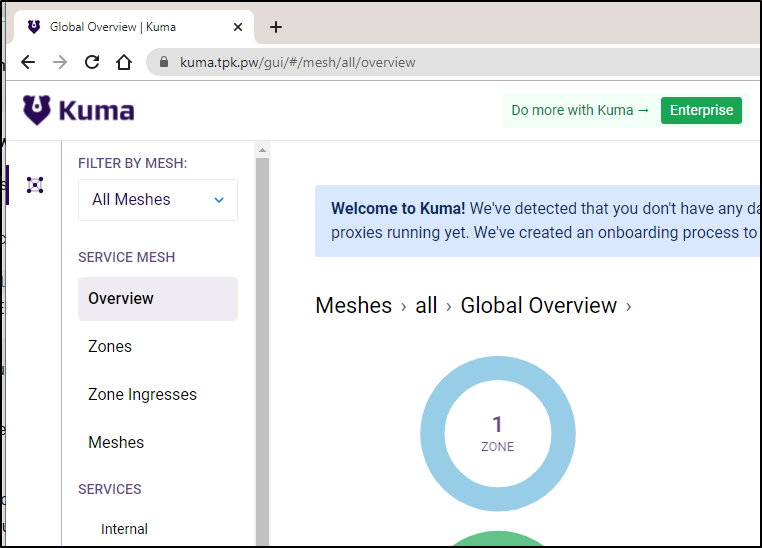

Then we can navigate to http://localhost:5681/gui

This will bring us to a dashboard where we can see our meshes as well as set a new one up:

Realistically, we wouldn’t run Kuma as a standalone Docker container, so let’s switch to Helm on Kubernetes.

Helm install to Kubernetes

We will follow the Kuma docs’ Helm instructions. First we need to add the Helm repo.

Since I just started with a fresh WSL instance, I need to install Helm 3:

builder@DESKTOP-QADGF36:~$ curl -fsSL -o get_helm.sh https://raw.githubusercontent.com/helm/helm/master/scripts/get-helm-3

builder@DESKTOP-QADGF36:~$ chmod 700 get_helm.sh

builder@DESKTOP-QADGF36:~$ ./get_helm.sh

Downloading https://get.helm.sh/helm-v3.6.3-linux-amd64.tar.gz

Verifying checksum... Done.

Preparing to install helm into /usr/local/bin

[sudo] password for builder:

helm installed into /usr/local/bin/helm

Now add the Helm repo:

builder@DESKTOP-QADGF36:~$ helm repo add kuma https://kumahq.github.io/charts

"kuma" has been added to your repositories

Let’s double-check that we are on the right k8s cluster (my home cluster):

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

anna-macbookair Ready <none> 121d v1.19.5+k3s2

isaac-macbookpro Ready <none> 233d v1.19.5+k3s2

isaac-macbookair Ready master 233d v1.19.5+k3s2

Thanks to --create-namespace, Helm creates the kuma-system namespace and installs Kuma in one step:

$ helm install --create-namespace --namespace kuma-system kuma kuma/kuma

NAME: kuma

LAST DEPLOYED: Tue Aug 17 06:56:23 2021

NAMESPACE: kuma-system

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

The Kuma Control Plane has been installed!

You can access the control-plane via either the GUI, kubectl, the HTTP API, or the kumactl CLI

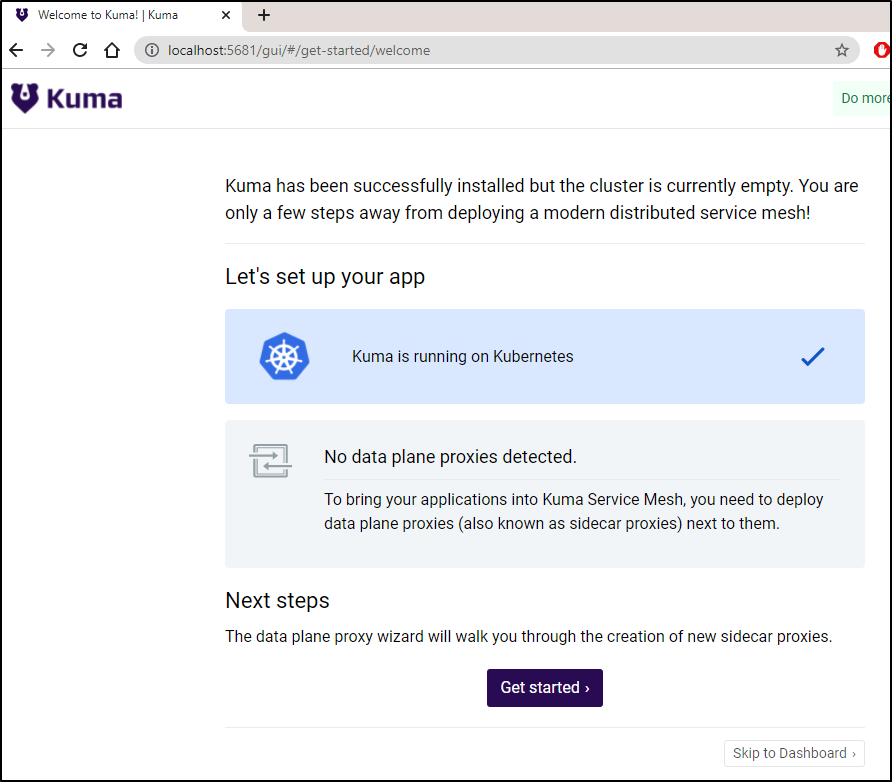

We can now see the Kuma Control Plane service is up and running:

$ kubectl get svc -n kuma-system

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kuma-control-plane ClusterIP 10.43.145.6 <none> 5681/TCP,5682/TCP,443/TCP,5676/TCP,5678/TCP,5653/UDP 11m

And we can port-forward to it to get started:

$ kubectl port-forward svc/kuma-control-plane -n kuma-system 5681:5681

Forwarding from 127.0.0.1:5681 -> 5681

Forwarding from [::1]:5681 -> 5681
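Before opening the GUI, you can confirm the forwarded port actually answers. Here is a minimal Python sketch using only the standard library; `port_open` is a hypothetical helper, and it only checks that a TCP connection succeeds, not that Kuma itself is healthy.

```python
import socket


def port_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


# With the port-forward above running, the Kuma API/GUI port should answer.
print(port_open("127.0.0.1", 5681))
```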

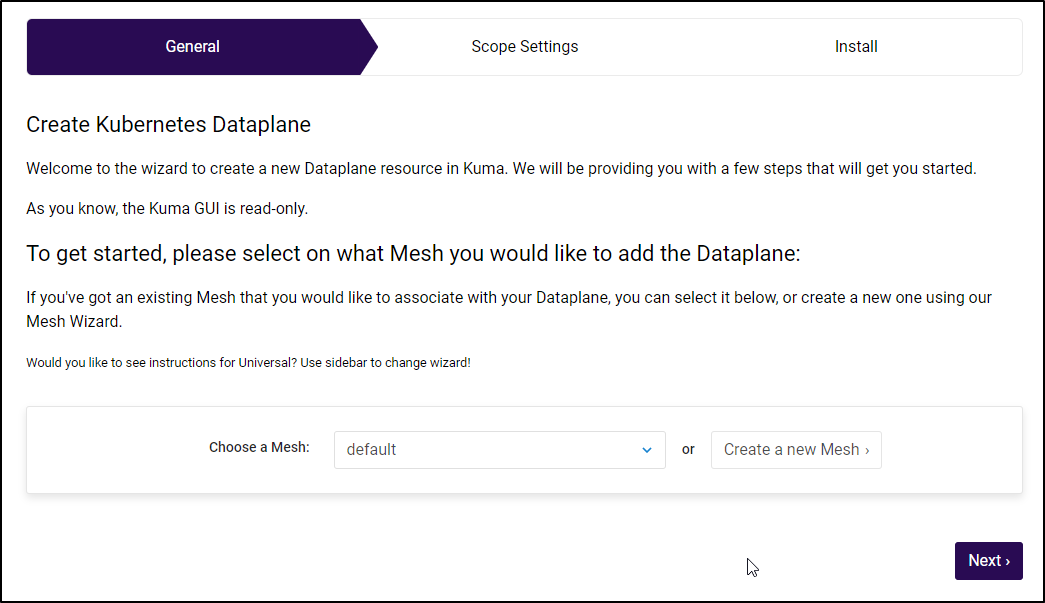

For now we will use the default mesh:

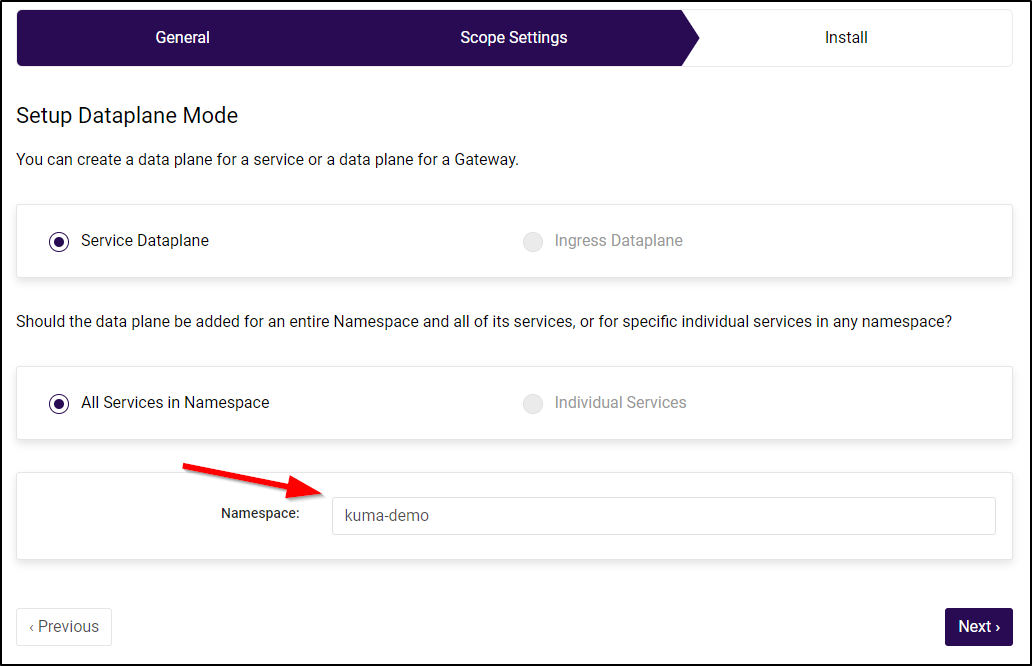

By default, it wants to use the ‘default’ namespace. However, I will limit it this time to the “kuma-demo” namespace.

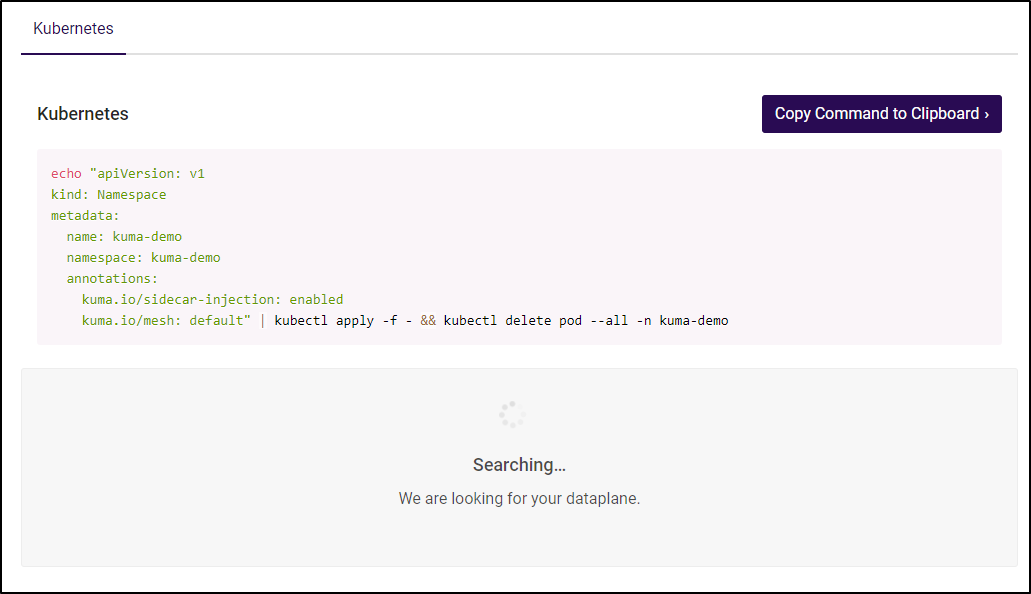

Then it would like us to add the namespace annotation:

echo "apiVersion: v1
kind: Namespace
metadata:
  name: kuma-demo
  namespace: kuma-demo
  annotations:
    kuma.io/sidecar-injection: enabled
    kuma.io/mesh: default" | kubectl apply -f - && kubectl delete pod --all -n kuma-demo

Here we can pause and first install the Kuma demo app:

builder@DESKTOP-QADGF36:~$ kubectl apply -f https://bit.ly/demokuma

namespace/kuma-demo created

deployment.apps/postgres-master created

service/postgres created

deployment.apps/redis-master created

service/redis created

service/backend created

deployment.apps/kuma-demo-backend-v0 created

deployment.apps/kuma-demo-backend-v1 created

deployment.apps/kuma-demo-backend-v2 created

service/frontend created

deployment.apps/kuma-demo-app created

builder@DESKTOP-QADGF36:~$ kubectl get pods -n kuma-demo

NAME READY STATUS RESTARTS AGE

redis-master-55fd8f6f54-qgn4s 0/2 Init:0/1 0 7s

kuma-demo-app-6787b4f7f5-7f9b4 0/2 Init:0/1 0 7s

kuma-demo-backend-v0-56db47c579-q4gjh 0/2 Init:0/1 0 7s

postgres-master-645bc44fd-wrnlm 0/2 Init:0/1 0 7s

Then apply the namespace annotation. Here, “default” refers to the mesh name, not a namespace:

builder@DESKTOP-QADGF36:~$ echo "apiVersion: v1
> kind: Namespace
> metadata:
>   name: kuma-demo
>   namespace: kuma-demo
>   annotations:
>     kuma.io/sidecar-injection: enabled
>     kuma.io/mesh: default" | kubectl apply -f - && kubectl delete pod --all -n kuma-demo

namespace/kuma-demo configured

pod "kuma-demo-backend-v0-56db47c579-q4gjh" deleted

pod "postgres-master-645bc44fd-wrnlm" deleted

pod "kuma-demo-app-6787b4f7f5-7f9b4" deleted

pod "redis-master-55fd8f6f54-qgn4s" deleted

We apply the annotation and then bounce the pods because sidecar injection happens when a pod is created; deleting the pods lets their Deployments recreate them with the sidecar injected.

builder@DESKTOP-QADGF36:~$ kubectl get pods -n kuma-demo

NAME READY STATUS RESTARTS AGE

redis-master-55fd8f6f54-kx5tp 2/2 Running 0 74s

postgres-master-645bc44fd-fdkx4 2/2 Running 0 74s

kuma-demo-app-6787b4f7f5-f75r4 0/2 PodInitializing 0 74s

kuma-demo-backend-v0-56db47c579-qcdn9 0/2 PodInitializing 0 74s

It’s subtle, but compare this with the first get pods: those pods were still at Init:0/1, while these show 2/2 or 0/2 in the READY column, that is, each pod has 2 containers (the app and the sidecar).

builder@DESKTOP-QADGF36:~$ kubectl get pods -n kuma-demo

NAME READY STATUS RESTARTS AGE

redis-master-55fd8f6f54-kx5tp 2/2 Running 0 2m17s

postgres-master-645bc44fd-fdkx4 2/2 Running 0 2m17s

kuma-demo-backend-v0-56db47c579-qcdn9 2/2 Running 0 2m17s

kuma-demo-app-6787b4f7f5-f75r4 2/2 Running 0 2m17s
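If you'd rather check this in a script than by eye, here is a small Python sketch that parses the READY column of `kubectl get pods` output. It assumes the default columns, and the helper names are hypothetical; `kubectl get pods -o json` would be the sturdier input in practice.

```python
from typing import List, Tuple


def parse_ready(get_pods_output: str) -> List[Tuple[str, int, int]]:
    """Parse `kubectl get pods` text into (name, ready, total) tuples.

    Assumes the default columns (NAME READY STATUS RESTARTS AGE) and a header line.
    """
    rows = []
    for line in get_pods_output.strip().splitlines()[1:]:  # skip the header
        fields = line.split()
        if len(fields) < 2 or "/" not in fields[1]:
            continue  # blank or unexpected line
        ready, total = fields[1].split("/")
        rows.append((fields[0], int(ready), int(total)))
    return rows


def all_meshed(get_pods_output: str, containers: int = 2) -> bool:
    """True if every pod lists `containers` containers (app + sidecar) and all are ready."""
    rows = parse_ready(get_pods_output)
    return bool(rows) and all(ready == total == containers for _, ready, total in rows)


out = """NAME READY STATUS RESTARTS AGE
redis-master-55fd8f6f54-kx5tp 2/2 Running 0 2m17s
kuma-demo-app-6787b4f7f5-f75r4 2/2 Running 0 2m17s"""
print(all_meshed(out))  # True
```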

Issues with Wizard

I tried many things at this point to get the wizard to find my mesh, but it always hung. One thing I tried was defining a Dataplane by hand:

builder@DESKTOP-QADGF36:~$ cat kuma-dp1.yaml
apiVersion: 'kuma.io/v1alpha1'
kind: Dataplane
mesh: default
metadata:
  name: dp-echo-1
  annotations:
    kuma.io/sidecar-injection: enabled
    kuma.io/mesh: default
networking:
  address: 10.0.0.1
  inbound:
  - port: 10000
    servicePort: 9000
    tags:
      kuma.io/service: echo

Then applying it:

builder@DESKTOP-QADGF36:~$ kubectl apply -f kuma-dp1.yaml -n kuma-demo --validate=false

Error from server (InternalError): error when creating "kuma-dp1.yaml": Internal error occurred: failed calling webhook "validator.kuma-admission.kuma.io": Post "https://kuma-control-plane.kuma-system.svc:443/validate-kuma-io-v1alpha1?timeout=10s": EOF

builder@DESKTOP-QADGF36:~$ kubectl apply -f kuma-dp1.yaml -n kuma-system --validate=false

Error from server (InternalError): error when creating "kuma-dp1.yaml": Internal error occurred: failed calling webhook "validator.kuma-admission.kuma.io": Post "https://kuma-control-plane.kuma-system.svc:443/validate-kuma-io-v1alpha1?timeout=10s": EOF

builder@DESKTOP-QADGF36:~$ kubectl apply -f kuma-dp1.yaml --validate=false

Error from server (InternalError): error when creating "kuma-dp1.yaml": Internal error occurred: failed calling webhook "validator.kuma-admission.kuma.io": Post "https://kuma-control-plane.kuma-system.svc:443/validate-kuma-io-v1alpha1?timeout=10s": EOF

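Note that `--validate=false` only turns off kubectl’s client-side schema validation. It does not bypass the server-side admission webhook, and that webhook call is what fails here.

That said, I _do_ see that Dataplanes were created in kuma-demo (these are the ones Kuma generated for the injected demo pods):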

```
builder@DESKTOP-QADGF36:~$ kubectl get dataplane --all-namespaces
NAMESPACE NAME AGE
kuma-demo redis-master-55fd8f6f54-kx5tp 13m
kuma-demo postgres-master-645bc44fd-fdkx4 13m
kuma-demo kuma-demo-backend-v0-56db47c579-qcdn9 13m
kuma-demo kuma-demo-app-6787b4f7f5-f75r4 13m
```

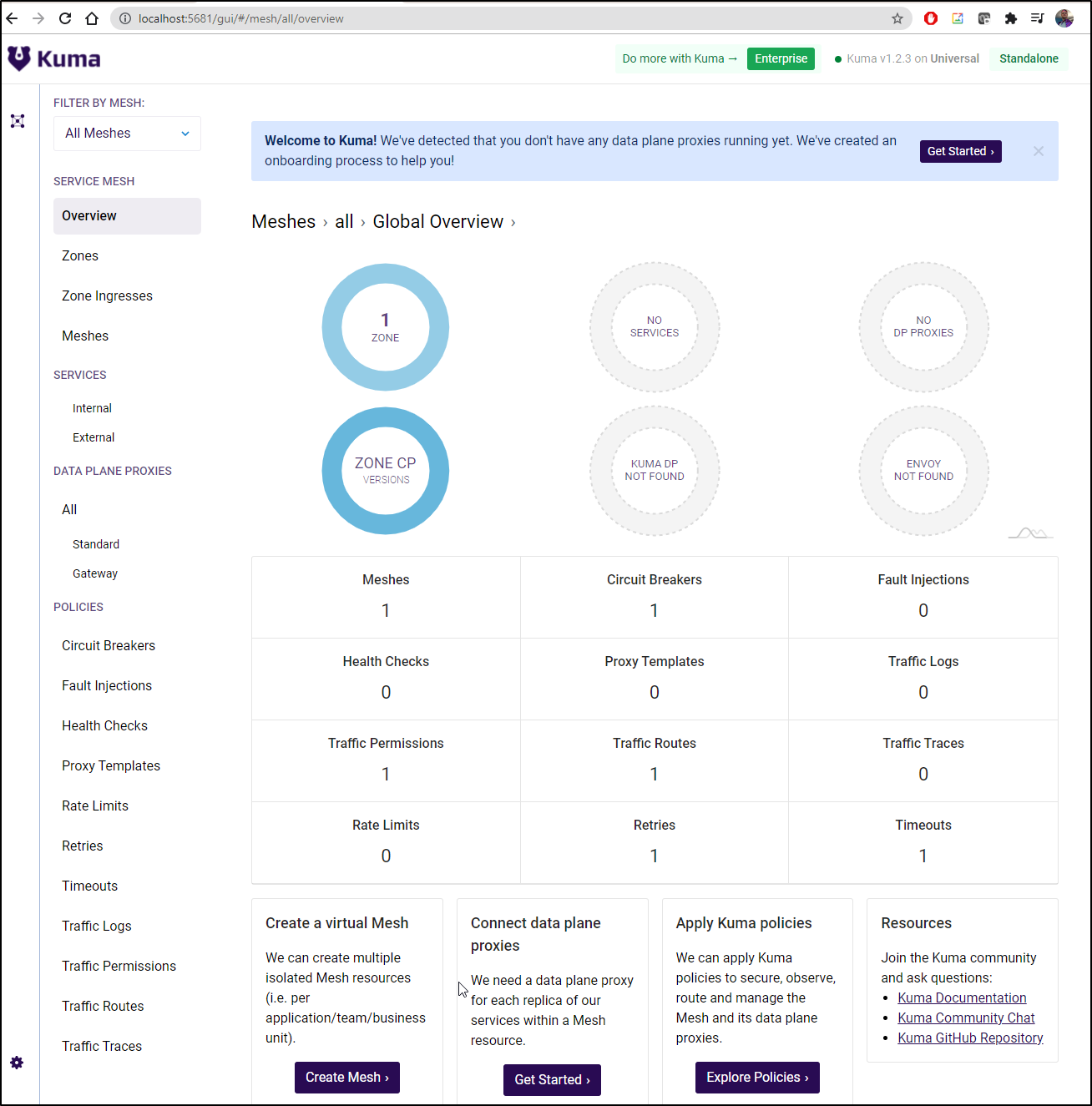

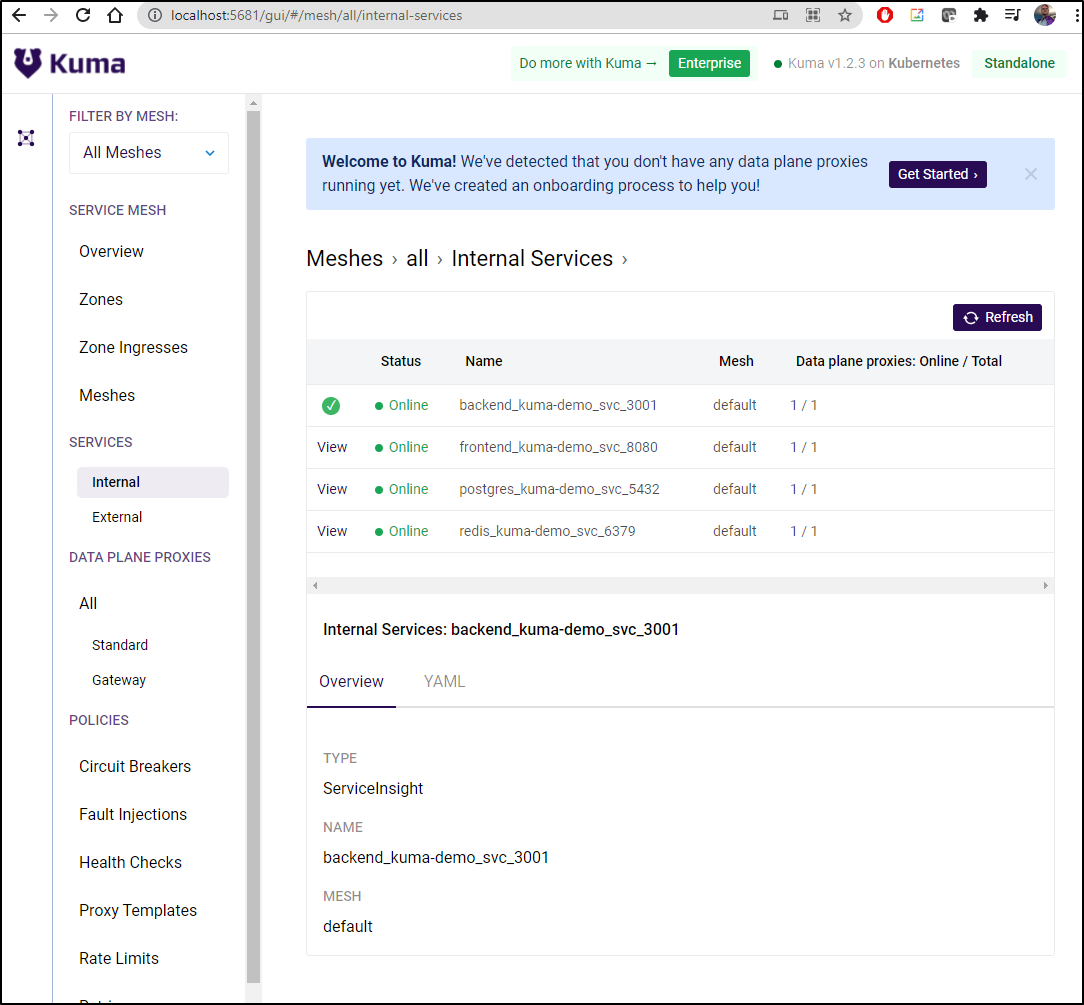

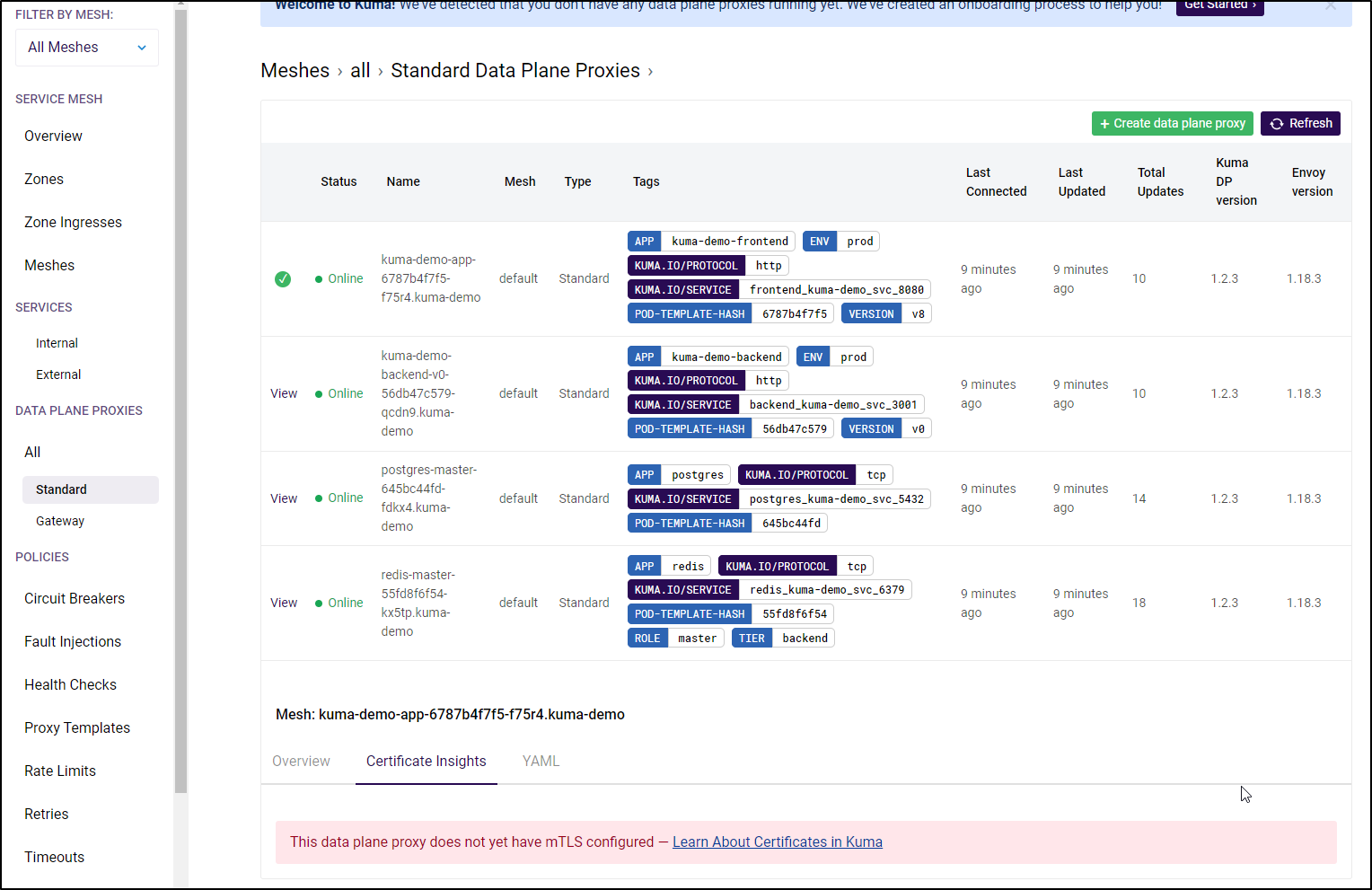

Moving on to Kuma Dashboard

Deciding the wizard was just a bit hung, I moved on to the GUI dashboard and found my mesh was just fine:

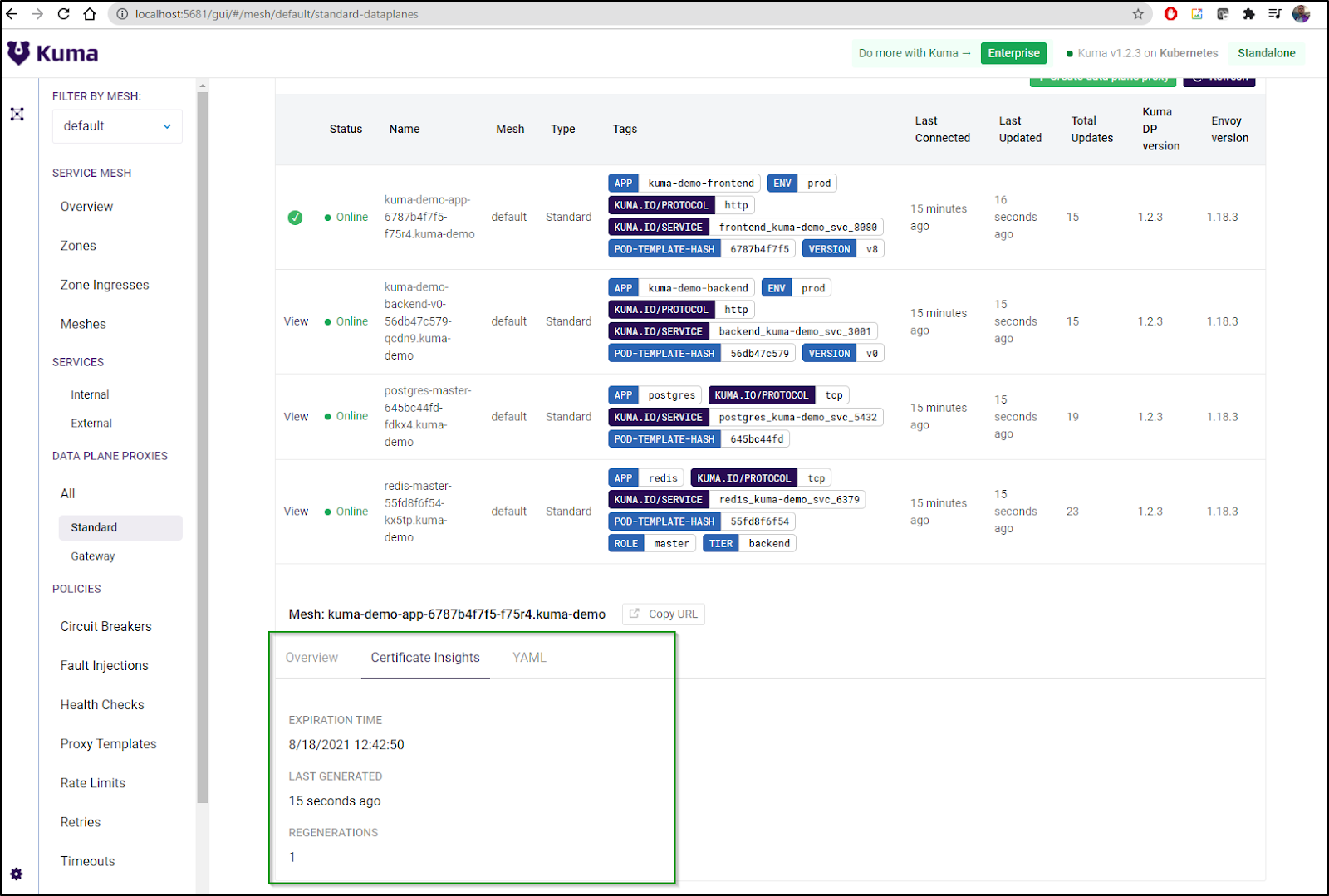

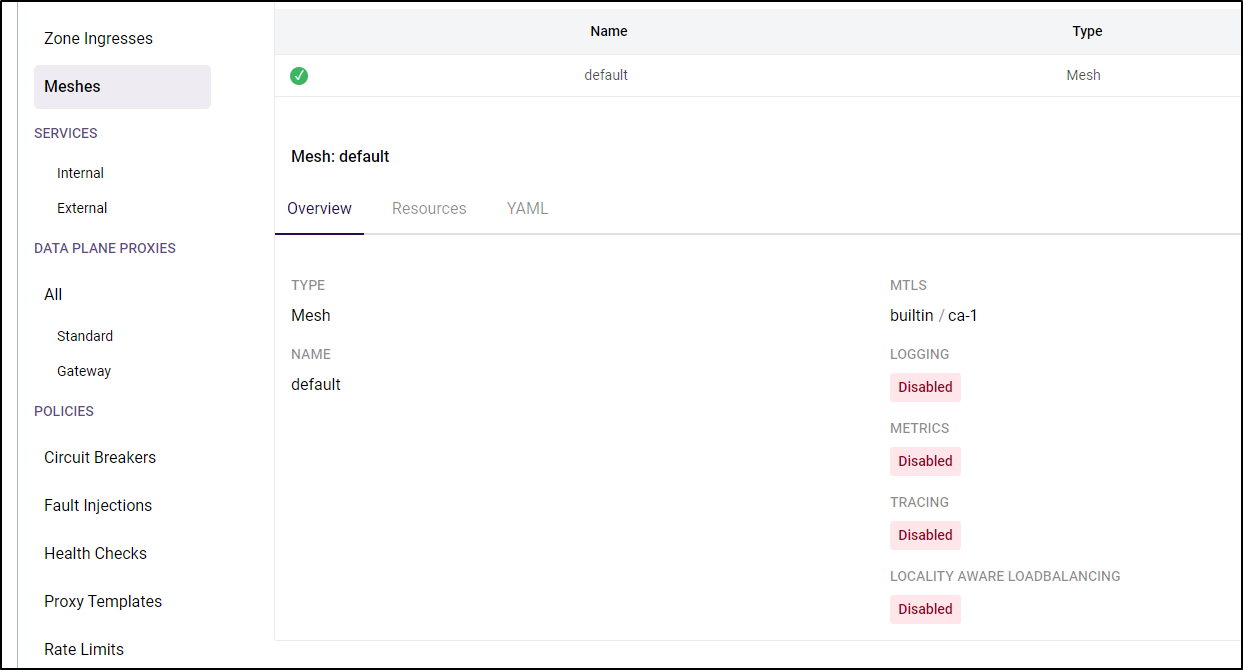

One thing we notice right away is that mTLS is not enabled by default.

We can enable it using the builtin CA backend (named ca-1 here):

```
builder@DESKTOP-QADGF36:~$ cat enableMtls.yaml
apiVersion: kuma.io/v1alpha1
kind: Mesh
metadata:
  name: default
spec:
  mtls:
    enabledBackend: ca-1
    backends:
    - name: ca-1
      type: builtin
builder@DESKTOP-QADGF36:~$ kubectl apply -f enableMtls.yaml
Warning: resource meshes/default is missing the kubectl.kubernetes.io/last-applied-configuration annotation which is required by kubectl apply. kubectl apply should only be used on resources created declaratively by either kubectl create --save-config or kubectl apply. The missing annotation will be patched automatically.
mesh.kuma.io/default configured
```

Now when we refresh the Kuma dashboard, we see mTLS is enabled:

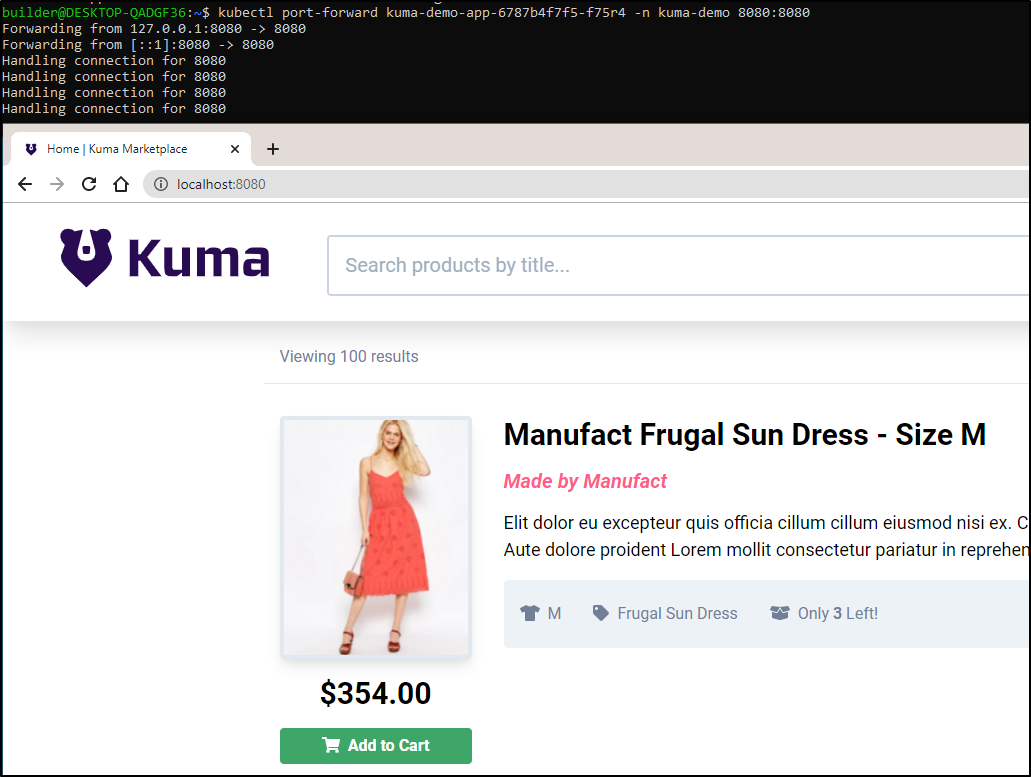

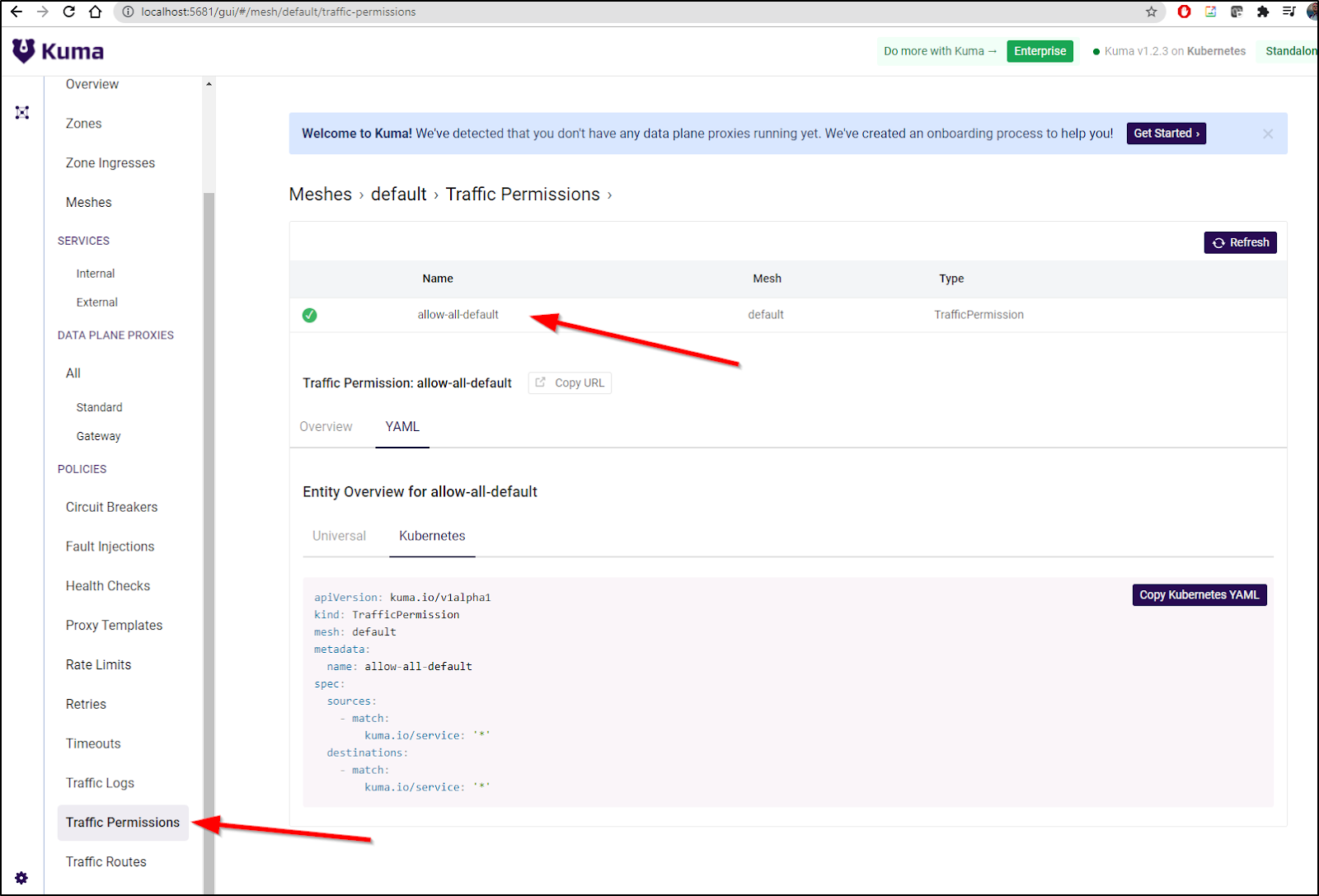

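With mTLS enabled, Kuma only allows traffic between services that a TrafficPermission explicitly permits, so one must be in place for the demo app to be reachable.

By default, the mesh already has an allow-all permission (which is why the app still works above):

We can see the same from the command line with kubectl: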

```
builder@DESKTOP-QADGF36:~$ kubectl get trafficpermission
NAME AGE
allow-all-default 44m
builder@DESKTOP-QADGF36:~$ kubectl get trafficpermission allow-all-default -o yaml
apiVersion: kuma.io/v1alpha1
kind: TrafficPermission
mesh: default
metadata:
  creationTimestamp: "2021-08-17T12:02:50Z"
  generation: 1
  name: allow-all-default
  ownerReferences:
  - apiVersion: kuma.io/v1alpha1
    kind: Mesh
    name: default
    uid: 9273129b-08e9-4b01-a84c-6980de9bfc37
  resourceVersion: "67307822"
  selfLink: /apis/kuma.io/v1alpha1/trafficpermissions/allow-all-default
  uid: 4a45b64c-9c6a-4c06-afe3-d61a8e9c954b
spec:
  destinations:
  - match:
      kuma.io/service: '*'
  sources:
  - match:
      kuma.io/service: '*'
```

Let’s save it and then delete it:

```
builder@DESKTOP-QADGF36:~$ kubectl get trafficpermission allow-all-default -o yaml > all_traffic.yaml
builder@DESKTOP-QADGF36:~$ kubectl delete trafficpermission allow-all-default
trafficpermission.kuma.io "allow-all-default" deleted
```

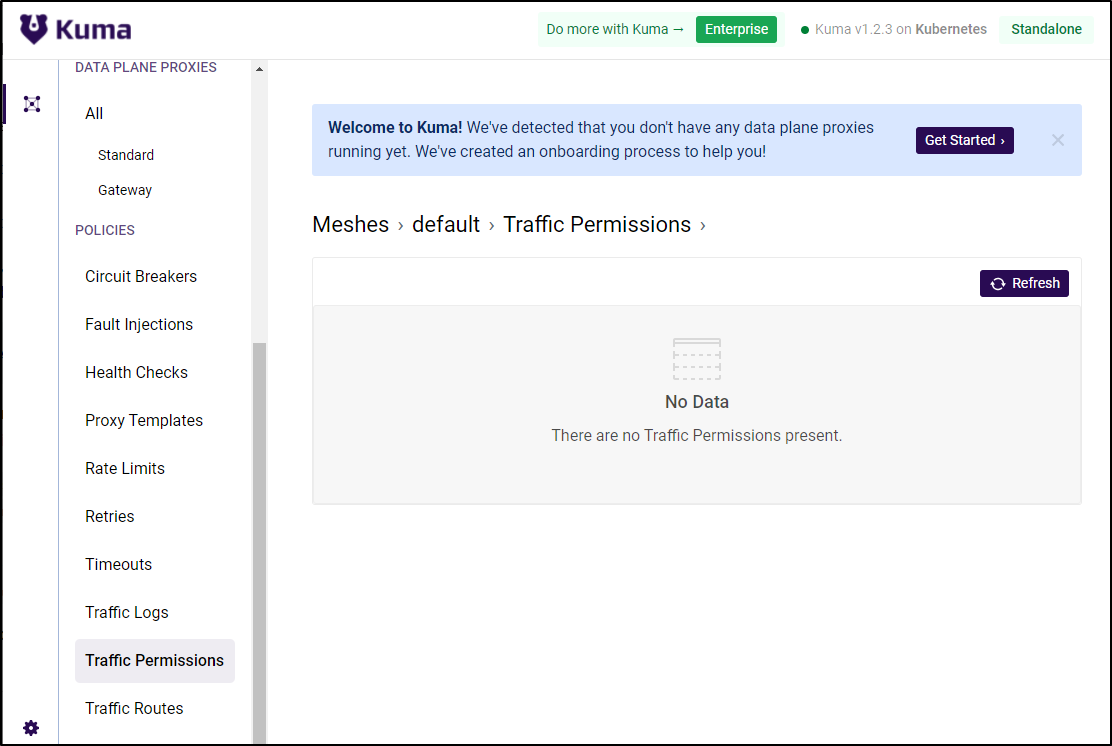

Refreshing the page shows it’s now gone:

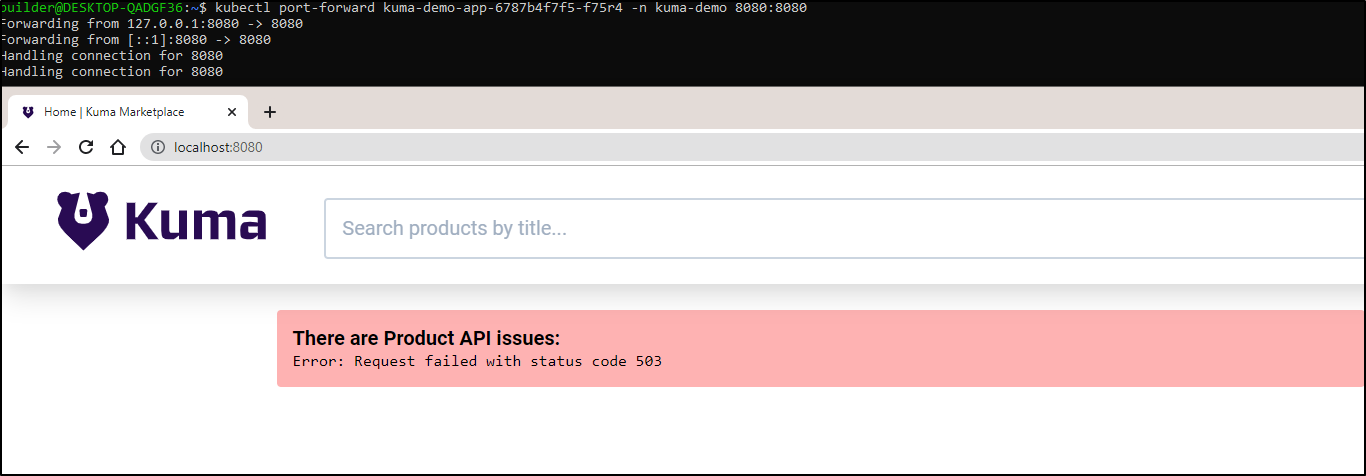

And now we see evidence that the mesh is blocking communication between the pods:

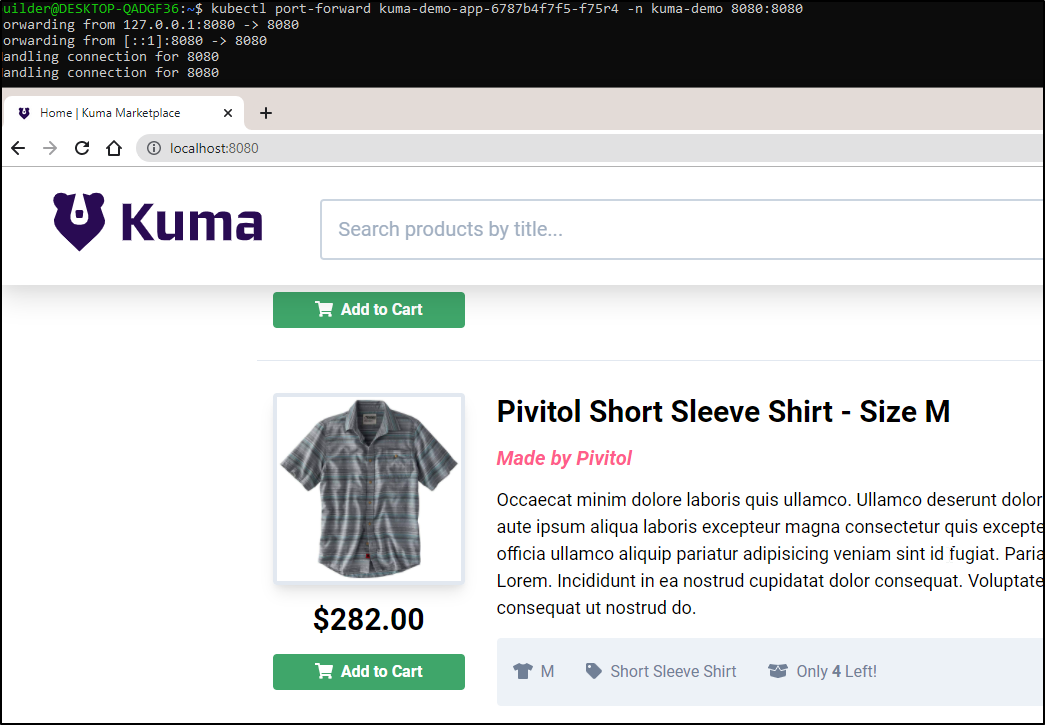

We can just put back the one we deleted:

```
builder@DESKTOP-QADGF36:~$ cat all_traffic.yaml
apiVersion: kuma.io/v1alpha1
kind: TrafficPermission
mesh: default
metadata:
  creationTimestamp: "2021-08-17T12:02:50Z"
  generation: 1
  name: allow-all-default
  ownerReferences:
  - apiVersion: kuma.io/v1alpha1
    kind: Mesh
    name: default
    uid: 9273129b-08e9-4b01-a84c-6980de9bfc37
  resourceVersion: "67307822"
  selfLink: /apis/kuma.io/v1alpha1/trafficpermissions/allow-all-default
  uid: 4a45b64c-9c6a-4c06-afe3-d61a8e9c954b
spec:
  destinations:
  - match:
      kuma.io/service: '*'
  sources:
  - match:
      kuma.io/service: '*'
builder@DESKTOP-QADGF36:~$ kubectl apply -f ./all_traffic.yaml
trafficpermission.kuma.io/allow-all-default created
```

And the app is back online.
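The allow-all rule is convenient, but a TrafficPermission can also be scoped to specific services. Here is a minimal sketch of a hypothetical `traffic_permission` shell helper that prints such a manifest in the same kuma.io/v1alpha1 format as above. The service tags in the usage comment are assumptions based on Kuma's `<service>_<namespace>_svc_<port>` naming on Kubernetes (the frontend port in particular is a guess). Check the kuma.io/service tags on your Dataplanes before applying anything like this.

```shell
# Hypothetical helper: print a Kuma TrafficPermission (kuma.io/v1alpha1, as used
# above) that lets one service call another.
# Usage: traffic_permission <name> <source-service-tag> <destination-service-tag>
traffic_permission() {
  cat <<EOF
apiVersion: kuma.io/v1alpha1
kind: TrafficPermission
mesh: default
metadata:
  name: $1
spec:
  sources:
    - match:
        kuma.io/service: '$2'
  destinations:
    - match:
        kuma.io/service: '$3'
EOF
}

# Example (assumed service tags, not run here):
#   traffic_permission frontend-to-backend \
#     frontend_kuma-demo_svc_8080 backend_kuma-demo_svc_3001 | kubectl apply -f -
```

Once allow-all-default is gone, every pair of services that needs to talk (for example, the backend to postgres and redis) needs its own permission.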

If you like, you can follow the guide to see traffic metrics. However, that mostly amounts to installing Prometheus and Grafana, which we will cover more later.

Features of Kuma

One of the nice features of Kuma is how it handles health probes. Since the kubelet cannot speak mTLS, Kuma serves probes from a non-mTLS listener and adds probe definitions to the pod spec automatically when it injects the sidecar.

For instance, the kuma-demo2 copy of the app, which is NOT managed by Kuma, has no livenessProbe defined at all:

```
$ kubectl get pods kuma-demo2-app-6cb59848bf-q6hpk -n kuma-demo2 -o yaml
apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: "2021-08-17T13:35:32Z"
  generateName: kuma-demo2-app-6cb59848bf-
  labels:
    app: kuma-demo2-frontend
    env: prod
    pod-template-hash: 6cb59848bf
    version: v8
  name: kuma-demo2-app-6cb59848bf-q6hpk
  namespace: kuma-demo2
  ownerReferences:
  - apiVersion: apps/v1
    blockOwnerDeletion: true
    controller: true
    kind: ReplicaSet
    name: kuma-demo2-app-6cb59848bf
    uid: e277a7da-a524-48d5-9a7e-9996a0c22280
  resourceVersion: "67335217"
  selfLink: /api/v1/namespaces/kuma-demo2/pods/kuma-demo2-app-6cb59848bf-q6hpk
  uid: b9f73eed-4a4d-4bc5-844c-da6168bf08df
spec:
  containers:
  - args:
    - -P
    - http://backend:3001
    image: kvn0218/kuma-demo-fe:latest
    imagePullPolicy: IfNotPresent
    name: kuma-fe
    resources: {}
    terminationMessagePath: /dev/termination-log
    terminationMessagePolicy: File
    volumeMounts:
    - mountPath: /var/run/secrets/kubernetes.io/serviceaccount
      name: default-token-jppfz
      readOnly: true
  dnsPolicy: ClusterFirst
  enableServiceLinks: true
  nodeName: anna-macbookair
  preemptionPolicy: PreemptLowerPriority
  priority: 0
  restartPolicy: Always
  schedulerName: default-scheduler
  securityContext: {}
  serviceAccount: default
  serviceAccountName: default
  terminationGracePeriodSeconds: 30
  tolerations:
  - effect: NoExecute
    key: node.kubernetes.io/not-ready
    operator: Exists
    tolerationSeconds: 300
  - effect: NoExecute
    key: node.kubernetes.io/unreachable
    operator: Exists
    tolerationSeconds: 300
  volumes:
  - name: default-token-jppfz
    secret:
      defaultMode: 420
      secretName: default-token-jppfz
status:
  conditions:
  - lastProbeTime: null
    lastTransitionTime: "2021-08-17T13:35:32Z"
    status: "True"
    type: Initialized
  - lastProbeTime: null
    lastTransitionTime: "2021-08-17T13:35:34Z"
    status: "True"
    type: Ready
  - lastProbeTime: null
    lastTransitionTime: "2021-08-17T13:35:34Z"
    status: "True"
    type: ContainersReady
  - lastProbeTime: null
    lastTransitionTime: "2021-08-17T13:35:32Z"
    status: "True"
    type: PodScheduled
  containerStatuses:
  - containerID: containerd://0c4df86fdb188bf28e2a05debc16cb08348fa205fff28d711e850851d7b5ac3d
    image: docker.io/kvn0218/kuma-demo-fe:latest
    imageID: docker.io/kvn0218/kuma-demo-fe@sha256:e72c87263cc19bc7ea9d04978f6601d9adab01e9fc5a642219c206f154f9eb48
    lastState: {}
    name: kuma-fe
    ready: true
    restartCount: 0
    started: true
    state:
      running:
        startedAt: "2021-08-17T13:35:33Z"
  hostIP: 192.168.1.12
  phase: Running
  podIP: 10.42.2.195
  podIPs:
  - ip: 10.42.2.195
  qosClass: BestEffort
  startTime: "2021-08-17T13:35:32Z"
```

But if I look at the same app as managed by Kuma in kuma-demo:

```
$ kubectl get pods kuma-demo-app-6787b4f7f5-f75r4 -n kuma-demo -o yaml
apiVersion: v1
kind: Pod
metadata:
  annotations:
    kuma.io/builtindns: enabled
    kuma.io/builtindnsport: "15053"
    kuma.io/mesh: default
    kuma.io/sidecar-injected: "true"
    kuma.io/sidecar-uid: "5678"
    kuma.io/transparent-proxying: enabled
    kuma.io/transparent-proxying-inbound-port: "15006"
    kuma.io/transparent-proxying-inbound-v6-port: "15010"
    kuma.io/transparent-proxying-outbound-port: "15001"
    kuma.io/virtual-probes: enabled
    kuma.io/virtual-probes-port: "9000"
  creationTimestamp: "2021-08-17T12:19:07Z"
  generateName: kuma-demo-app-6787b4f7f5-
  labels:
    app: kuma-demo-frontend
    env: prod
    pod-template-hash: 6787b4f7f5
    version: v8
  name: kuma-demo-app-6787b4f7f5-f75r4
  namespace: kuma-demo
  ownerReferences:
  - apiVersion: apps/v1
    blockOwnerDeletion: true
    controller: true
    kind: ReplicaSet
    name: kuma-demo-app-6787b4f7f5
    uid: 7699f429-f36b-4aa8-a1fe-e3295cee8256
  resourceVersion: "67313681"
  selfLink: /api/v1/namespaces/kuma-demo/pods/kuma-demo-app-6787b4f7f5-f75r4
  uid: ff77c875-3960-4b7b-bd34-76b09281e833
spec:
  containers:
  - args:
    - -P
    - http://backend:3001
    image: kvn0218/kuma-demo-fe:latest
    imagePullPolicy: IfNotPresent
    name: kuma-fe
    resources: {}
    terminationMessagePath: /dev/termination-log
    terminationMessagePolicy: File
    volumeMounts:
    - mountPath: /var/run/secrets/kubernetes.io/serviceaccount
      name: default-token-84s7w
      readOnly: true
  - args:
    - run
    - --log-level=info
    env:
    - name: POD_NAME
      valueFrom:
        fieldRef:
          apiVersion: v1
          fieldPath: metadata.name
    - name: POD_NAMESPACE
      valueFrom:
        fieldRef:
          apiVersion: v1
          fieldPath: metadata.namespace
    - name: INSTANCE_IP
      valueFrom:
        fieldRef:
          apiVersion: v1
          fieldPath: status.podIP
    - name: KUMA_CONTROL_PLANE_CA_CERT
      value: |
        -----BEGIN CERTIFICATE-----
        MIIDDzCCAfeasdfasdfasdfasdfasdfasdfsFADAS
        MRAwDgYDVQQasdfasdfsadfasdfasdfgxNTExNTYy
        M1owEjEQMA4GA1UEAxMHa3VtYS1jYTCCASIwDQYJKoZIhvcNAQEBBQADggEPADCC
        AQoCggEBANCsl8PjpUPZoAeokwqlQEZdsRcSy3PHE7SVH6Uy2bo0lGa+rxA9w8Z2
        1K0mBOCznUnfoPmj6nt4gG89FH+j+ToXMN6R86ttt4fAxOVlS1dwPgn6UtWnbkM6
        FH+yJlSLNGO6wVhMT0emS5CPdxohodyHmpcgR7vtY2HPgfV99erwFJFADA0LjmaD
        6oOytbJwNXLag4KrPJuxVIVHWLzWabIH22Wulb6w9SWNo7WBSIFBqtzt6ke5nsFW
        7hfd6V0E7MuROzEOvVDyePVVIV+0QTuTPECjNEBZXI9jH2rfkTGVT1uadhE+ViGZ
        Yk2eHGBOa/AkcX0Q4QoazxXGnlOFWvkCAwEAAaNhMF8wDgYDVR0PAQH/BAQDAgKk
        MB0GA1UdJQQWMBQGCCsGAQUFBwMBBggrBgEFBQcDAjAPBgNVHRMBAf8EBTADAQH/
        MB0GA1UdDgQWBBTVBqGSnAIl6j9mdJQYJxz7R7GzUDANBgkqhkiG9w0BAQsFAAOC
        AQEASvTywVe6UmR6coNHCCEasgAGqgIN3wHkKa/qBUiIBKXM3ZGMcG6v9GgcvjFk
        D1Bt4rUlYrFZjHU+wXbhp6ddo3E9+Mk9bbn6tTfg/Yui1w+y6L4+S2GdGd6d1Ra2
        6qFKFOGqQF3whc17/zDZPYCoSiYrLe2uMiJ0bllHLVeTBkng7YkoANQRiMm4c7po
        Z7R18FKpuebKVlIgLv7QHBtHfvFuhhJvyZTq6qhzQGdsGCpF8l/URWmg4hV0zCbb
        vKa8we4hi3kKJosZqrzxSly62Ed9spV31mq/kPvy29+SfaR+ahU3JYhF6BkFQPOx
        xCgMvUPc1RU4BWdWivdooUJOZA==
        -----END CERTIFICATE-----
    - name: KUMA_CONTROL_PLANE_URL
      value: https://kuma-control-plane.kuma-system:5678
    - name: KUMA_DATAPLANE_ADMIN_PORT
      value: "9901"
    - name: KUMA_DATAPLANE_DRAIN_TIME
      value: 30s
    - name: KUMA_DATAPLANE_MESH
      value: default
    - name: KUMA_DATAPLANE_NAME
      value: $(POD_NAME).$(POD_NAMESPACE)
    - name: KUMA_DATAPLANE_RUNTIME_TOKEN_PATH
      value: /var/run/secrets/kubernetes.io/serviceaccount/token
    - name: KUMA_DNS_CORE_DNS_BINARY_PATH
      value: coredns
    - name: KUMA_DNS_CORE_DNS_EMPTY_PORT
      value: "15054"
    - name: KUMA_DNS_CORE_DNS_PORT
      value: "15053"
    - name: KUMA_DNS_ENABLED
      value: "true"
    - name: KUMA_DNS_ENVOY_DNS_PORT
      value: "15055"
    image: docker.io/kumahq/kuma-dp:1.2.3
    imagePullPolicy: IfNotPresent
    livenessProbe:
      failureThreshold: 12
      httpGet:
        path: /ready
        port: 9901
        scheme: HTTP
      initialDelaySeconds: 60
      periodSeconds: 5
      successThreshold: 1
      timeoutSeconds: 3
    name: kuma-sidecar
    readinessProbe:
      failureThreshold: 12
      httpGet:
        path: /ready
        port: 9901
        scheme: HTTP
      initialDelaySeconds: 1
      periodSeconds: 5
      successThreshold: 1
      timeoutSeconds: 3
    resources:
      limits:
        cpu: "1"
        memory: 512Mi
      requests:
        cpu: 50m
        memory: 64Mi
    securityContext:
      runAsGroup: 5678
      runAsUser: 5678
    terminationMessagePath: /dev/termination-log
    terminationMessagePolicy: File
    volumeMounts:
    - mountPath: /var/run/secrets/kubernetes.io/serviceaccount
      name: default-token-84s7w
      readOnly: true
  dnsPolicy: ClusterFirst
  enableServiceLinks: true
  initContainers:
  - args:
    - --redirect-outbound-port
    - "15001"
    - --redirect-inbound=true
    - --redirect-inbound-port
    - "15006"
    - --redirect-inbound-port-v6
    - "15010"
    - --kuma-dp-uid
    - "5678"
    - --exclude-inbound-ports
    - ""
    - --exclude-outbound-ports
    - ""
    - --verbose
    - --skip-resolv-conf
    - --redirect-all-dns-traffic
    - --redirect-dns-port
    - "15053"
    command:
    - /usr/bin/kumactl
    - install
    - transparent-proxy
    image: docker.io/kumahq/kuma-init:1.2.3
    imagePullPolicy: IfNotPresent
    name: kuma-init
    resources:
      limits:
        cpu: 100m
        memory: 50M
      requests:
        cpu: 10m
        memory: 10M
    securityContext:
      capabilities:
        add:
        - NET_ADMIN
      runAsGroup: 0
      runAsUser: 0
    terminationMessagePath: /dev/termination-log
    terminationMessagePolicy: File
    volumeMounts:
    - mountPath: /var/run/secrets/kubernetes.io/serviceaccount
      name: default-token-84s7w
      readOnly: true
  nodeName: isaac-macbookpro
  preemptionPolicy: PreemptLowerPriority
  priority: 0
  restartPolicy: Always
  schedulerName: default-scheduler
  securityContext: {}
  serviceAccount: default
  serviceAccountName: default
  terminationGracePeriodSeconds: 30
  tolerations:
  - effect: NoExecute
    key: node.kubernetes.io/not-ready
    operator: Exists
    tolerationSeconds: 300
  - effect: NoExecute
    key: node.kubernetes.io/unreachable
    operator: Exists
    tolerationSeconds: 300
  volumes:
  - name: default-token-84s7w
    secret:
      defaultMode: 420
      secretName: default-token-84s7w
status:
  conditions:
  - lastProbeTime: null
    lastTransitionTime: "2021-08-17T12:19:31Z"
    status: "True"
    type: Initialized
  - lastProbeTime: null
    lastTransitionTime: "2021-08-17T12:21:16Z"
    status: "True"
    type: Ready
  - lastProbeTime: null
    lastTransitionTime: "2021-08-17T12:21:16Z"
    status: "True"
    type: ContainersReady
  - lastProbeTime: null
    lastTransitionTime: "2021-08-17T12:19:07Z"
    status: "True"
    type: PodScheduled
  containerStatuses:
  - containerID: containerd://dc78658979cee5fb71a2c61492c94c42dbce7f24301c61ca30844e122627a9b3
    image: docker.io/kvn0218/kuma-demo-fe:latest
    imageID: docker.io/kvn0218/kuma-demo-fe@sha256:e72c87263cc19bc7ea9d04978f6601d9adab01e9fc5a642219c206f154f9eb48
    lastState: {}
    name: kuma-fe
    ready: true
    restartCount: 0
    started: true
    state:
      running:
        startedAt: "2021-08-17T12:20:29Z"
  - containerID: containerd://1143f43b62f790199e16afa0a07660481fb7d14b423f891d5698bd463b9ca0e0
    image: docker.io/kumahq/kuma-dp:1.2.3
    imageID: docker.io/kumahq/kuma-dp@sha256:2dcf3feaeaa87db3e6e614057ce92b34e804658abd23d44c6d102e59a7f3f088
    lastState: {}
    name: kuma-sidecar
    ready: true
    restartCount: 0
    started: true
    state:
      running:
        startedAt: "2021-08-17T12:21:13Z"
  hostIP: 192.168.1.205
  initContainerStatuses:
  - containerID: containerd://027452e7ee6e91000381715ab24635bdf14fe97f816408fc1bdc57c6e771b933
    image: docker.io/kumahq/kuma-init:1.2.3
    imageID: docker.io/kumahq/kuma-init@sha256:d62dac9fd095c0c4675816d9a60b88d1cd74405627be7d5baae0d6dbd87b369a
    lastState: {}
    name: kuma-init
    ready: true
    restartCount: 0
    state:
      terminated:
        containerID: containerd://027452e7ee6e91000381715ab24635bdf14fe97f816408fc1bdc57c6e771b933
        exitCode: 0
        finishedAt: "2021-08-17T12:19:29Z"
        reason: Completed
        startedAt: "2021-08-17T12:19:28Z"
  phase: Running
  podIP: 10.42.1.182
  podIPs:
  - ip: 10.42.1.182
  qosClass: Burstable
  startTime: "2021-08-17T12:19:09Z"
```

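Here is the block Kuma added. These probes belong to the injected kuma-sidecar container and hit the `/ready` endpoint on the dataplane admin port (9901, per KUMA_DATAPLANE_ADMIN_PORT), so they are handled by Kuma rather than by the app itself. The `kuma.io/virtual-probes` annotations also indicate that probes defined on the app container would be rerouted through Kuma’s non-mTLS virtual probe port (9000):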

```
livenessProbe:
  failureThreshold: 12
  httpGet:
    path: /ready
    port: 9901
    scheme: HTTP
  initialDelaySeconds: 60
  periodSeconds: 5
  successThreshold: 1
  timeoutSeconds: 3
name: kuma-sidecar
readinessProbe:
  failureThreshold: 12
  httpGet:
    path: /ready
    port: 9901
    scheme: HTTP
  initialDelaySeconds: 1
  periodSeconds: 5
  successThreshold: 1
  timeoutSeconds: 3
```

You can read more about this in the Kuma documentation.

Datadog

While Prometheus and Grafana are fine for some, I generally prefer the metrics and log aggregation of Datadog.

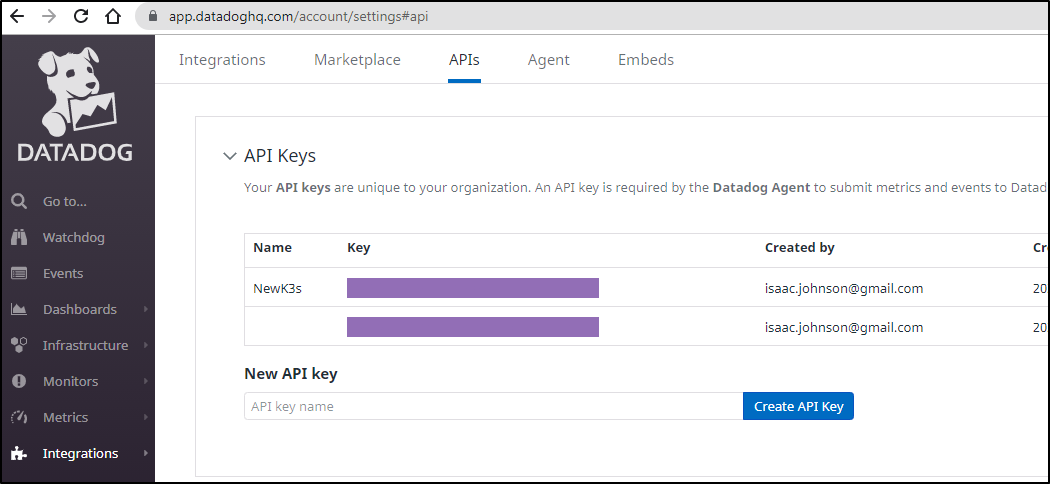

Since I rotated my API key at some point in the last 5 months, this cluster’s agents are still using the old one. It’s time to fix that and send data in for this cluster:

```
builder@DESKTOP-QADGF36:~$ helm list | grep datadog
datadogrelease default 2 2021-03-01 07:16:57.3327545 -0600 CST deployed datadog-2.9.5
$ helm get values datadogrelease
USER-SUPPLIED VALUES:
USER-SUPPLIED VALUES: null
clusterAgent:
  enabled: true
  metricsProvider:
    enabled: true
datadog:
  apiKeyExistingSecret: dd-secret
  appKey: 60a70omyappkeymyappkeymyappkeyf9984
  logs:
    containerCollectAll: true
    enabled: true
```

The API key is stored in dd-secret.

We can find our key values either in the integration wizard or under API Keys in Datadog.

There are a few ways to edit secrets (including `kubectl edit secrets dd-secret`), but I’ll just base64-encode the key directly and edit/apply the file (note the `tr -d` to remove the newline that echo adds):

```
builder@DESKTOP-QADGF36:~$ kubectl get secret dd-secret -o yaml > dd-secret.yaml
builder@DESKTOP-QADGF36:~$ echo ******putrealkeyhere******** | tr -d '\n' | base64
asdfasdfasdfasdfasdfsadfasdfasdfasdf=
builder@DESKTOP-QADGF36:~$ vi dd-secret.yaml
builder@DESKTOP-QADGF36:~$ kubectl apply -f dd-secret.yaml
Warning: resource secrets/dd-secret is missing the kubectl.kubernetes.io/last-applied-configuration annotation which is required by kubectl apply. kubectl apply should only be used on resources created declaratively by either kubectl create --save-config or kubectl apply. The missing annotation will be patched automatically.
secret/dd-secret configured
```
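The `tr -d '\n'` step is needed because echo appends a newline, and that newline would otherwise be encoded into the key. Here is a minimal sketch of an equivalent hypothetical helper that uses printf, which never adds one. The kubectl alternative in the comment assumes the key inside dd-secret is named api-key; check the existing secret before relying on that.

```shell
# Hypothetical helper: base64-encode a value for a Secret's data: field.
# printf '%s' writes the value without a trailing newline (unlike echo), so
# nothing extra gets encoded; tr strips the line wrapping GNU base64 adds to
# long outputs.
secret_b64() {
  printf '%s' "$1" | base64 | tr -d '\n'
}

# Alternative that skips manual base64 entirely (not run here), assuming the
# key inside dd-secret is named api-key:
#   kubectl create secret generic dd-secret --from-literal=api-key="$DD_API_KEY" \
#     --dry-run=client -o yaml | kubectl apply -f -
```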

The Datadog pods are spread over 3 labels:

```
builder@DESKTOP-QADGF36:~$ kubectl get pods -l app=datadogrelease
NAME READY STATUS RESTARTS AGE
datadogrelease-jhw4z 1/2 Running 7 168d
datadogrelease-wg7ks 1/2 Running 4 121d
datadogrelease-ws2jf 1/2 Running 5 168d
builder@DESKTOP-QADGF36:~$ kubectl get pods -l app=datadogrelease-cluster-agent
NAME READY STATUS RESTARTS AGE
datadogrelease-cluster-agent-86b57cc4c7-wvdbl 1/1 Running 0 22d
builder@DESKTOP-QADGF36:~$ kubectl get pods -l app.kubernetes.io/instance=datadogrelease
NAME READY STATUS RESTARTS AGE
datadogrelease-kube-state-metrics-5c6f76f766-n5jd6 1/1 Running 0 28d
```
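Those 1/2 READY counts on the agent pods are worth catching early. The sketch below is a hypothetical awk filter that flags any pod whose ready count is below its container count in `kubectl get pods` output. It assumes the default column layout; with --all-namespaces, NAME and READY shift one column to the right.

```shell
# Hypothetical helper: read `kubectl get pods` table output on stdin and print
# the NAME and READY columns of pods with fewer ready containers than total.
not_ready() {
  awk 'NR > 1 {
    split($2, r, "/")
    if (r[1] + 0 < r[2] + 0) print $1, $2
  }'
}

# Example usage against the cluster (not run here):
#   kubectl get pods -l app=datadogrelease | not_ready
```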

We can delete the whole bunch so they restart and pick up the fresh key:

```
$ kubectl delete pods -l app=datadogrelease-cluster-agent && kubectl delete pods -l app.kubernetes.io/instance=datadogrelease && kubectl delete pods -l app=datadogrelease
pod "datadogrelease-cluster-agent-86b57cc4c7-wvdbl" deleted
pod "datadogrelease-kube-state-metrics-5c6f76f766-n5jd6" deleted
pod "datadogrelease-jhw4z" deleted
pod "datadogrelease-wg7ks" deleted
pod "datadogrelease-ws2jf" deleted
builder@DESKTOP-QADGF36:~$ kubectl get pods | grep datadog
datadogrelease-kube-state-metrics-5c6f76f766-kztrt 1/1 Running 0 52s
datadogrelease-cluster-agent-86b57cc4c7-w7fms 1/1 Running 0 65s
datadogrelease-nk8zl 2/2 Running 0 39s
datadogrelease-hthkf 1/2 Running 0 27s
datadogrelease-gw9tq 2/2 Running 0 39s
```

We can see details from the cluster agent itself:

```
$ kubectl exec -it datadogrelease-cluster-agent-86b57cc4c7-w7fms -- datadog-cluster-agent status
Getting the status from the agent.
2021-08-17 13:24:34 UTC | CLUSTER | WARN | (pkg/util/log/log.go:541 in func1) | Agent configuration relax permissions constraint on the secret backend cmd, Group can read and exec
===============================
Datadog Cluster Agent (v1.10.0)
===============================

Status date: 2021-08-17 13:24:34.861176 UTC
Agent start: 2021-08-17 13:09:54.946022 UTC
Pid: 1
Go Version: go1.14.12
Build arch: amd64
Agent flavor: cluster_agent
Check Runners: 4
Log Level: INFO

Paths
=====
Config File: /etc/datadog-agent/datadog-cluster.yaml
conf.d: /etc/datadog-agent/conf.d

Clocks
======
System UTC time: 2021-08-17 13:24:34.861176 UTC
```

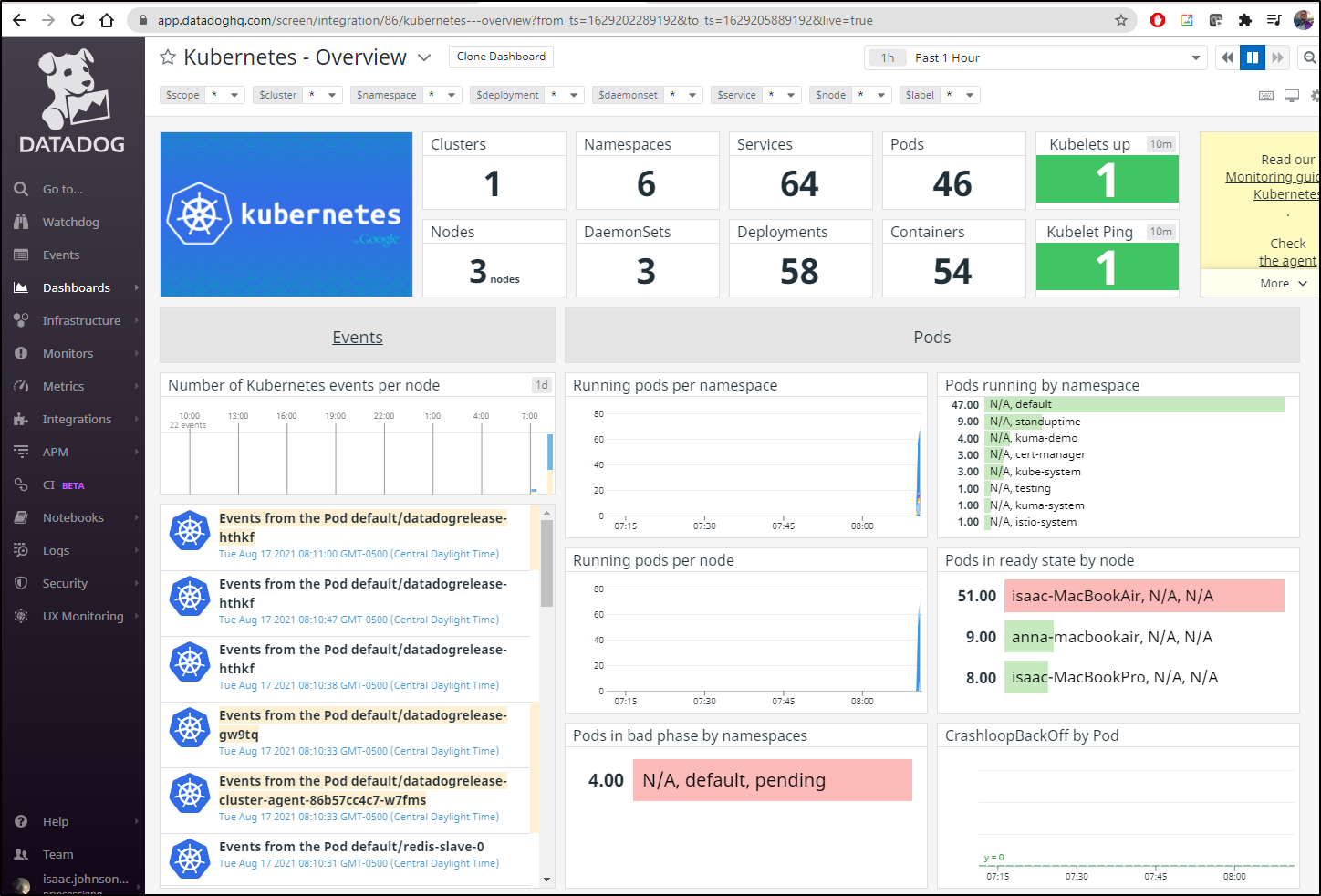

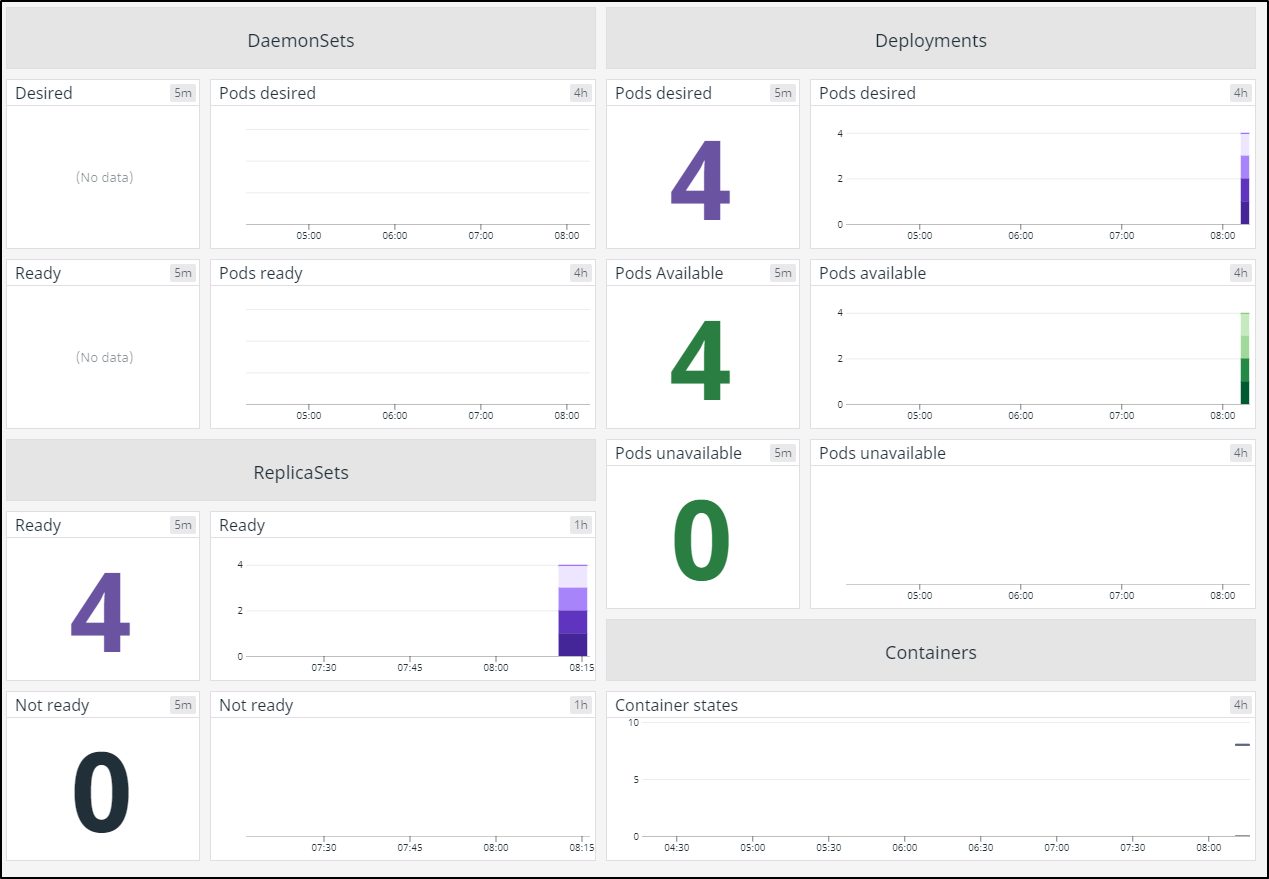

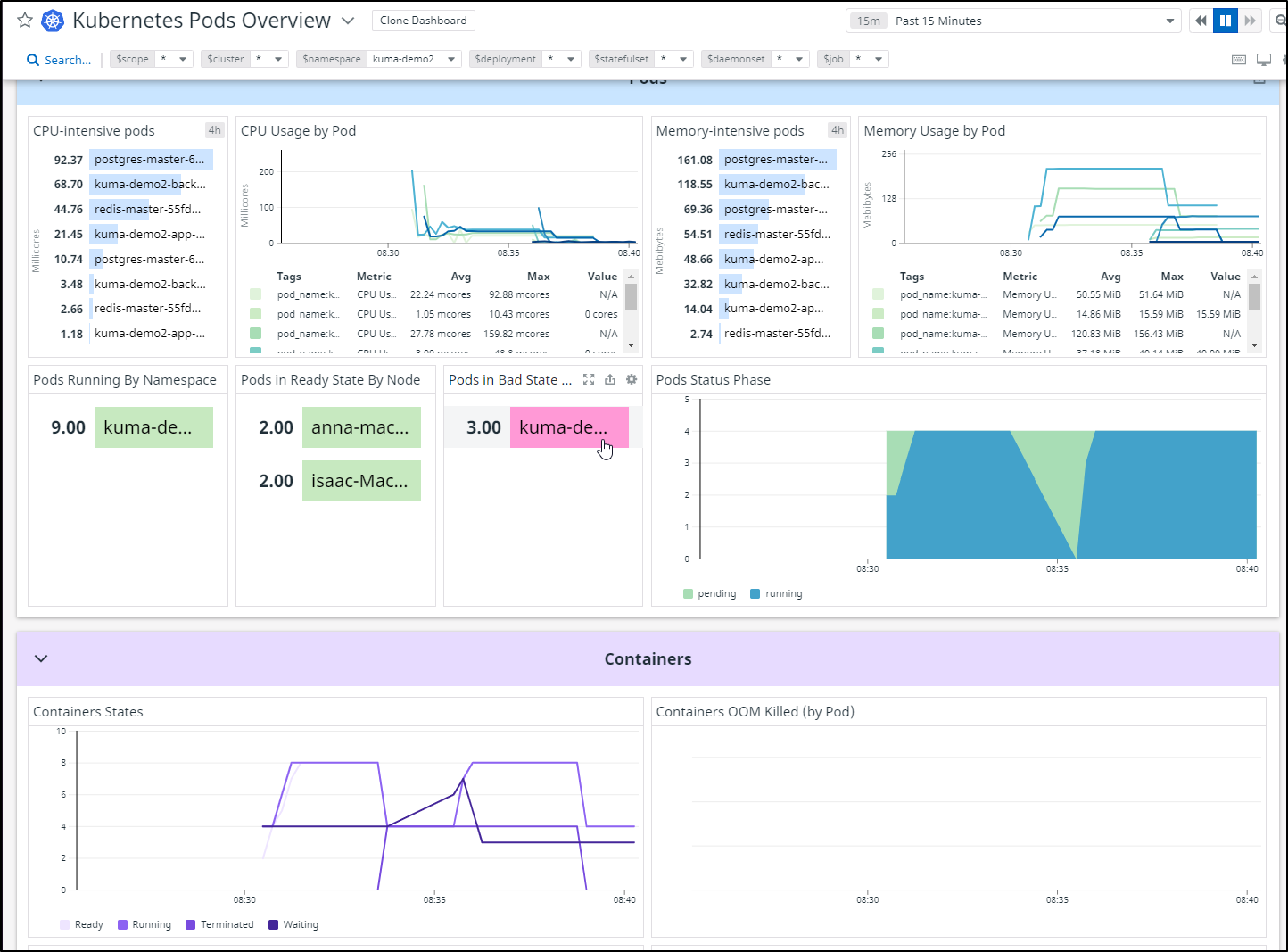

And we can see our Kubernetes dashboard is back up:

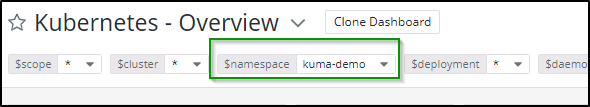

Scoping to kuma-demo, we can see we are able to detect pods by node:

This is hard to see in a blog post, but here is the overall dashboard.

Essentially, we just use the power of Datadog labels to scope our K8s dashboard to just the kuma-demo namespace:

In the upper right, I set the timeframe to 5 minutes since I had just corrected the keys.

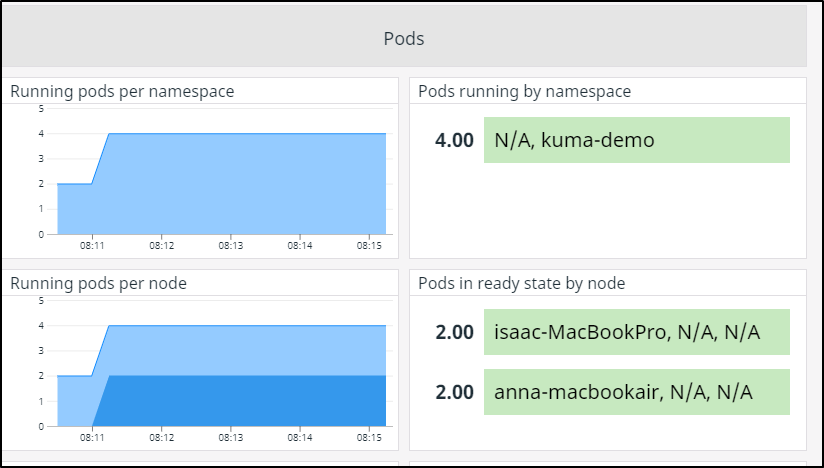

I immediately checked where the workloads were running (since the MacBook Pro is quite old and my least performant node):

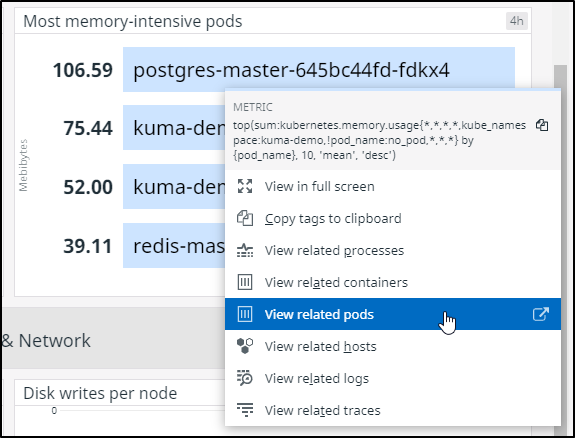

My second check was to see the CPU and Memory intensive pods. Every now and then I see a real outlier that requires attention:

Lastly, the bottom-left panes are all about the deployments: is anything down, and did anything fail to start? Here we would immediately see unavailable and unscheduled pods.

From our dashboard, we can check out the pods themselves:

However, this namespace is not giving us pods or logs presently:

I suspected this was because of the service mesh.

Let’s verify that hypothesis by creating a “kuma-demo2” copy of the demo, first with and then without the service mesh:

```
$ wget https://bit.ly/demokuma
--2021-08-17 08:27:39-- https://bit.ly/demokuma
Resolving bit.ly (bit.ly)... 67.199.248.10, 67.199.248.11
Connecting to bit.ly (bit.ly)|67.199.248.10|:443... connected.
HTTP request sent, awaiting response... 301 Moved Permanently
Location: https://raw.githubusercontent.com/Kong/kuma-demo/master/kubernetes/kuma-demo-aio.yaml [following]
--2021-08-17 08:27:39-- https://raw.githubusercontent.com/Kong/kuma-demo/master/kubernetes/kuma-demo-aio.yaml
Resolving raw.githubusercontent.com (raw.githubusercontent.com)... 185.199.108.133, 185.199.110.133, 185.199.111.133, ...
Connecting to raw.githubusercontent.com (raw.githubusercontent.com)|185.199.108.133|:443... connected.
HTTP request sent, awaiting response... 200 OK
Length: 4905 (4.8K) [text/plain]
Saving to: ‘demokuma’

demokuma 100%[================================================================================================================================================================================================================================================>] 4.79K --.-KB/s in 0s

2021-08-17 08:27:39 (104 MB/s) - ‘demokuma’ saved [4905/4905]

builder@DESKTOP-QADGF36:~$ sed -i 's/name: kuma-demo/name: kuma-demo2/g' demokuma
builder@DESKTOP-QADGF36:~$ sed -i 's/namespace: kuma-demo/namespace: kuma-demo2/g' demokuma
builder@DESKTOP-QADGF36:~$ sed -i 's/app: kuma-demo/app: kuma-demo2/g' demokuma
$ kubectl apply -f demokuma
namespace/kuma-demo2 created
deployment.apps/postgres-master created
service/postgres created
deployment.apps/redis-master created
service/redis created
service/backend created
deployment.apps/kuma-demo2-backend-v0 created
deployment.apps/kuma-demo2-backend-v1 created
deployment.apps/kuma-demo2-backend-v2 created
service/frontend created
deployment.apps/kuma-demo2-app created
```
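The three `sed -i` calls edit demokuma in place, so running them a second time would produce names like kuma-demo22. Below is a sketch of a hypothetical helper that applies the same three substitutions in one sed call and writes to stdout instead, leaving the downloaded file untouched.

```shell
# Hypothetical helper: the same three renames as the sed -i commands above,
# applied in one pass from stdin to stdout so the source file is never modified.
rename_demo() {
  sed -e 's/name: kuma-demo/name: kuma-demo2/g' \
      -e 's/namespace: kuma-demo/namespace: kuma-demo2/g' \
      -e 's/app: kuma-demo/app: kuma-demo2/g'
}

# Example (not run here):
#   wget -qO- https://bit.ly/demokuma | rename_demo > demokuma2.yaml
```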

Verify it’s running:

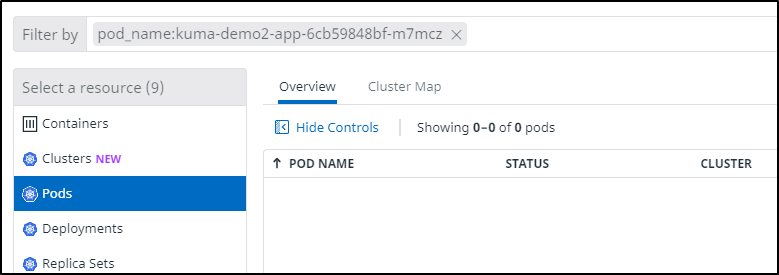

```
$ kubectl get pods -n kuma-demo2
NAME READY STATUS RESTARTS AGE
kuma-demo2-app-6cb59848bf-m7mcz 2/2 Running 0 64s
postgres-master-645bc44fd-cgkvf 2/2 Running 0 67s
kuma-demo2-backend-v0-5c6fc995c-62g7c 2/2 Running 0 64s
redis-master-55fd8f6f54-b9n2c 2/2 Running 0 67s
```

We still cannot view the pods:

Now disable the Kuma injection by just removing the annotation at the top:

```
builder@DESKTOP-QADGF36:~$ diff demokuma demokuma.bak
5a6,7
>   annotations:
>     kuma.io/sidecar-injection: enabled
```

```
$ kubectl apply -f demokuma
namespace/kuma-demo2 created
deployment.apps/postgres-master created
service/postgres created
deployment.apps/redis-master created
service/redis created
service/backend created
deployment.apps/kuma-demo2-backend-v0 created
deployment.apps/kuma-demo2-backend-v1 created
deployment.apps/kuma-demo2-backend-v2 created
service/frontend created
deployment.apps/kuma-demo2-app created
```

Verification

```
$ kubectl get pods -n kuma-demo2
NAME READY STATUS RESTARTS AGE
redis-master-55fd8f6f54-tgft9 1/1 Running 0 30s
kuma-demo2-app-6cb59848bf-q6hpk 1/1 Running 0 30s
kuma-demo2-backend-v0-5c6fc995c-9ljqd 1/1 Running 0 30s
postgres-master-645bc44fd-qcv9r 1/1 Running 0 30s
```

And in fact, I was wrong. It was just a timing issue with log ingestion.

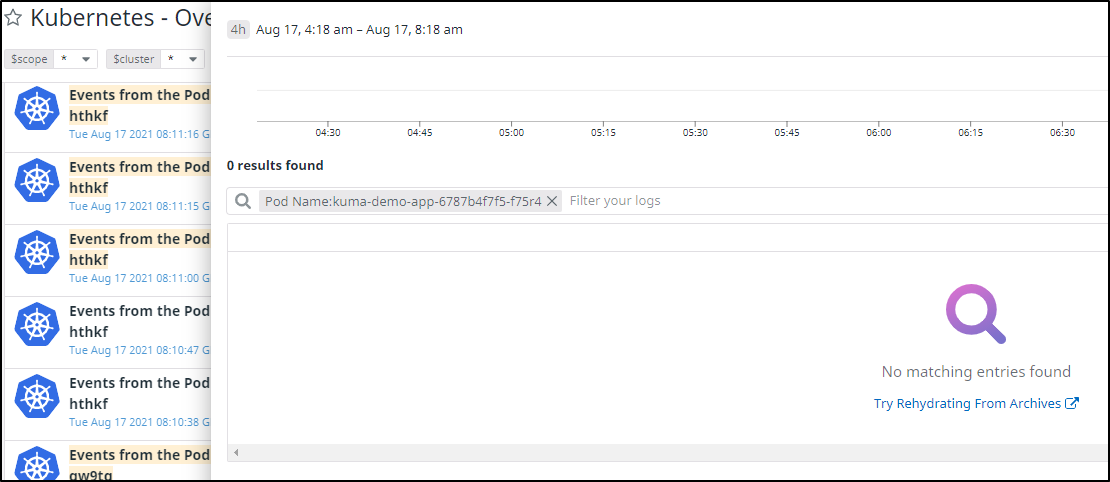

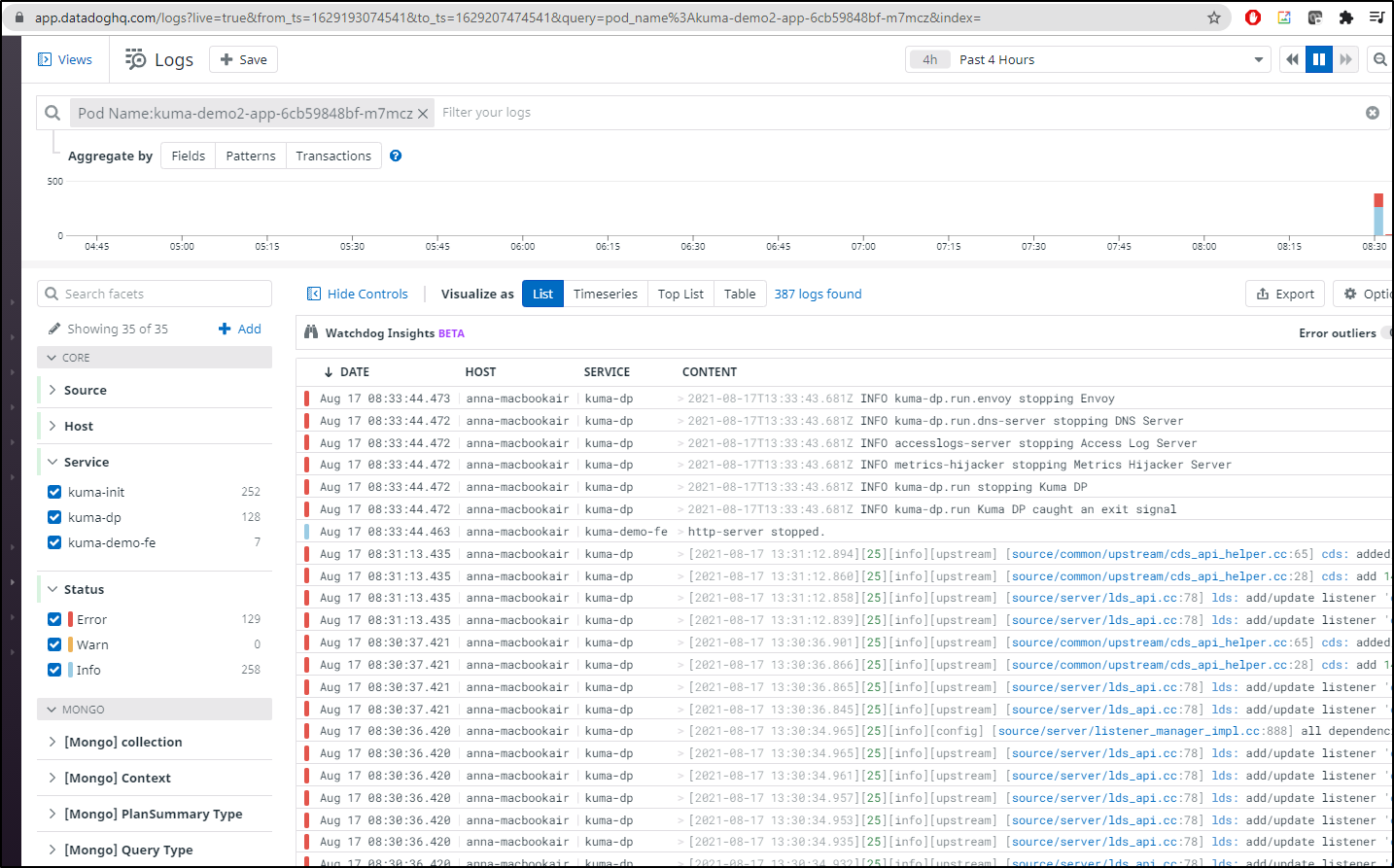

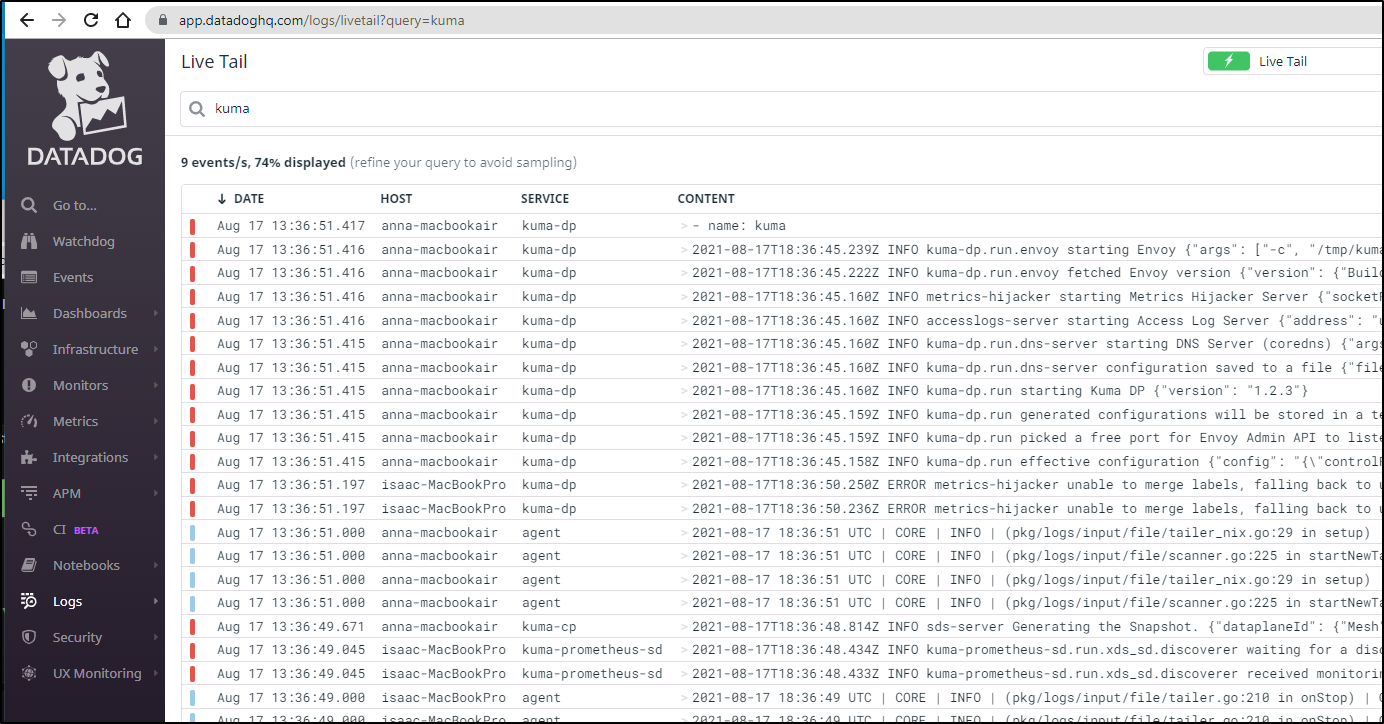

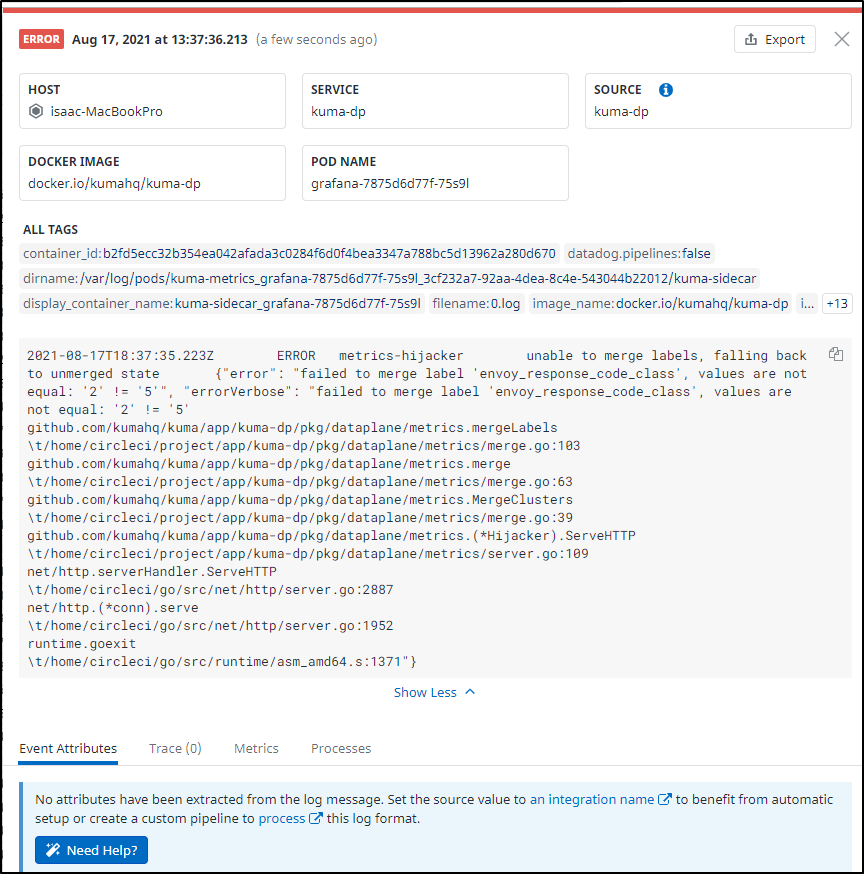

Circling back, I found the logs for the mesh-enabled containers.

Recall the deployment with Kuma enabled:

```
$ kubectl get pods -n kuma-demo2
NAME READY STATUS RESTARTS AGE
kuma-demo2-app-6cb59848bf-m7mcz 2/2 Running 0 64s
postgres-master-645bc44fd-cgkvf 2/2 Running 0 67s
kuma-demo2-backend-v0-5c6fc995c-62g7c 2/2 Running 0 64s
redis-master-55fd8f6f54-b9n2c 2/2 Running 0 67s
```

I found them by checking for Pods in bad states in the Kubernetes Pods dashboard in Datadog.

From active pods, we can see we had sidecars but they were removed:

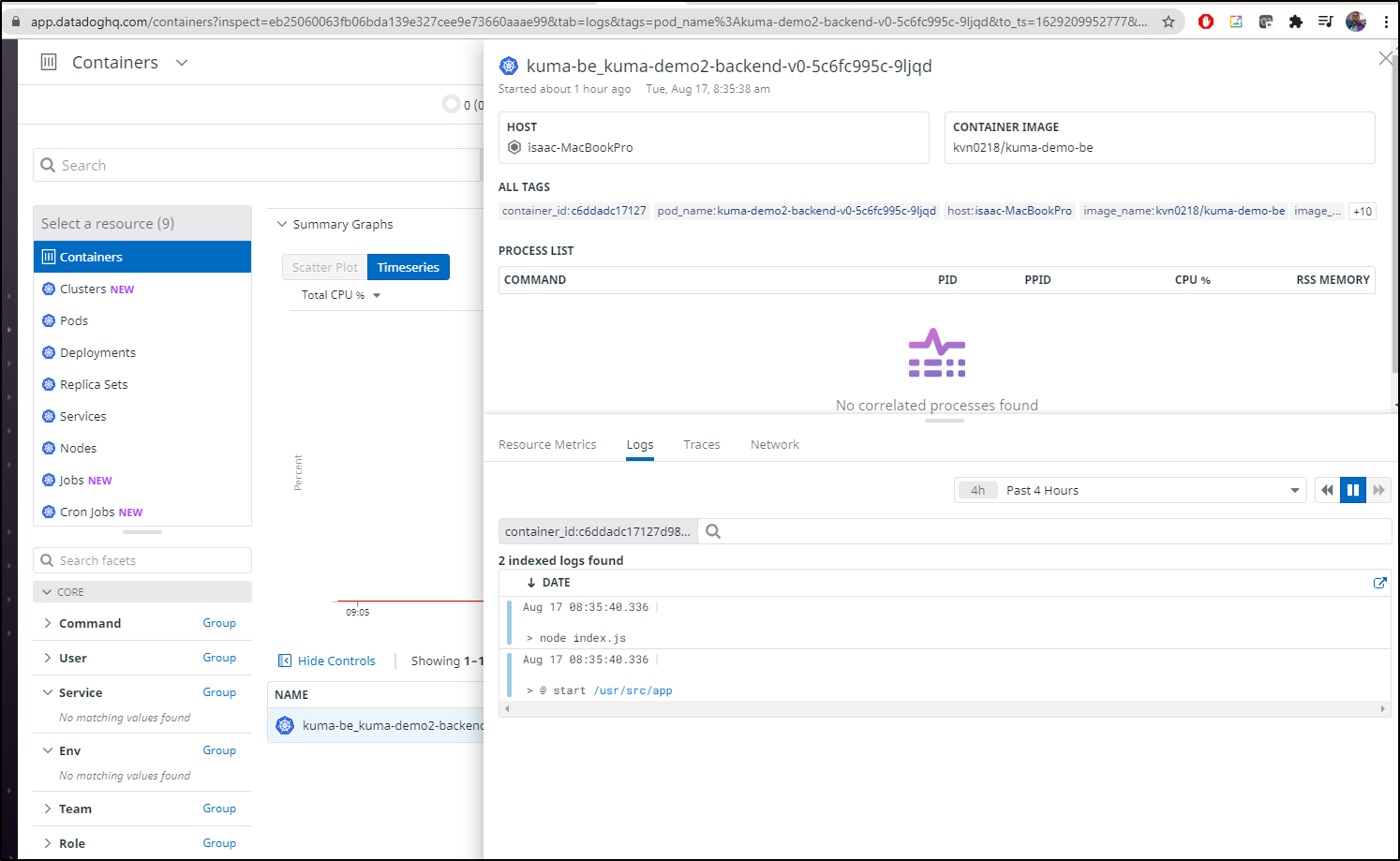

We can also view logs for pods that have logs to show:

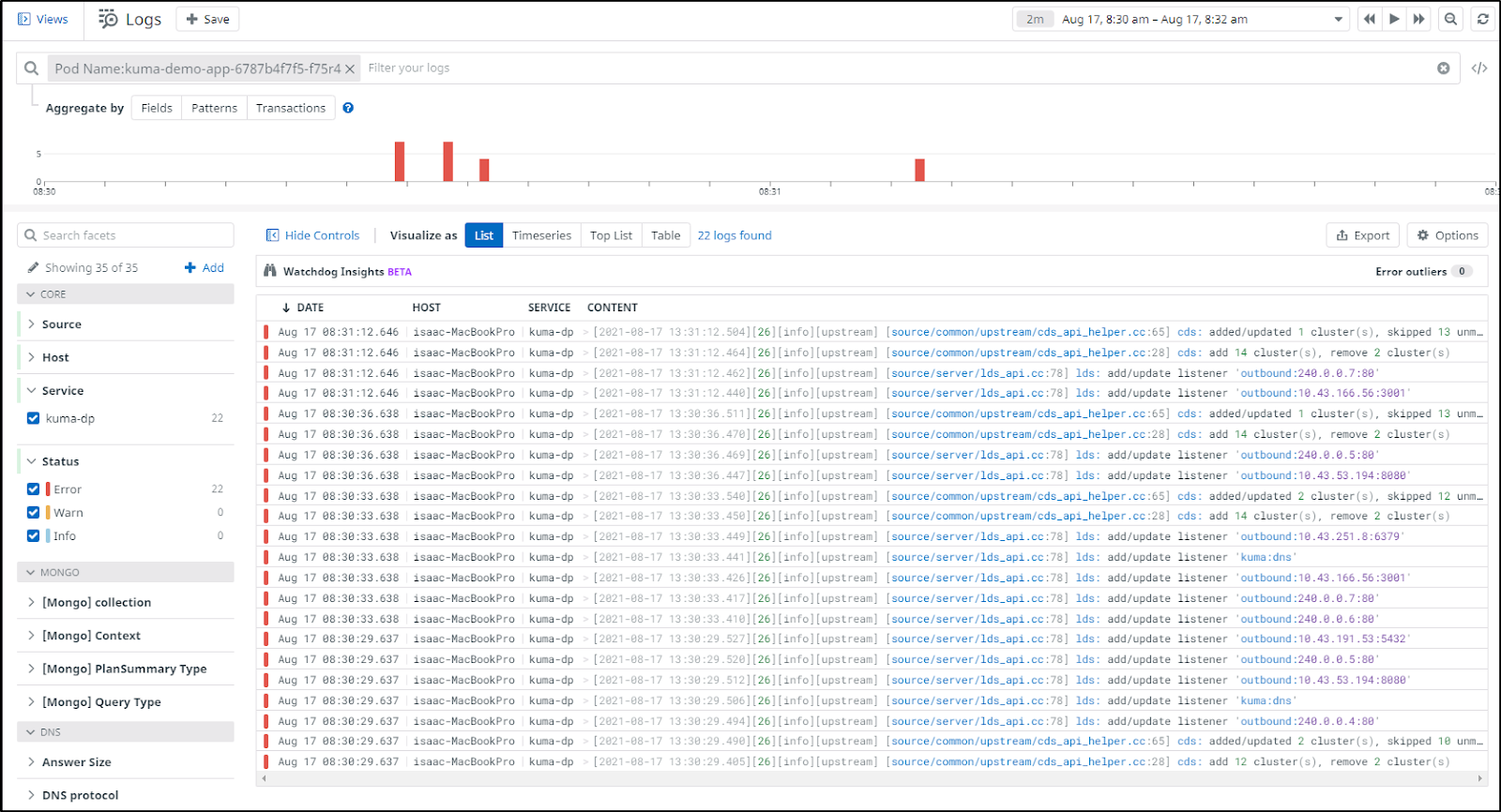

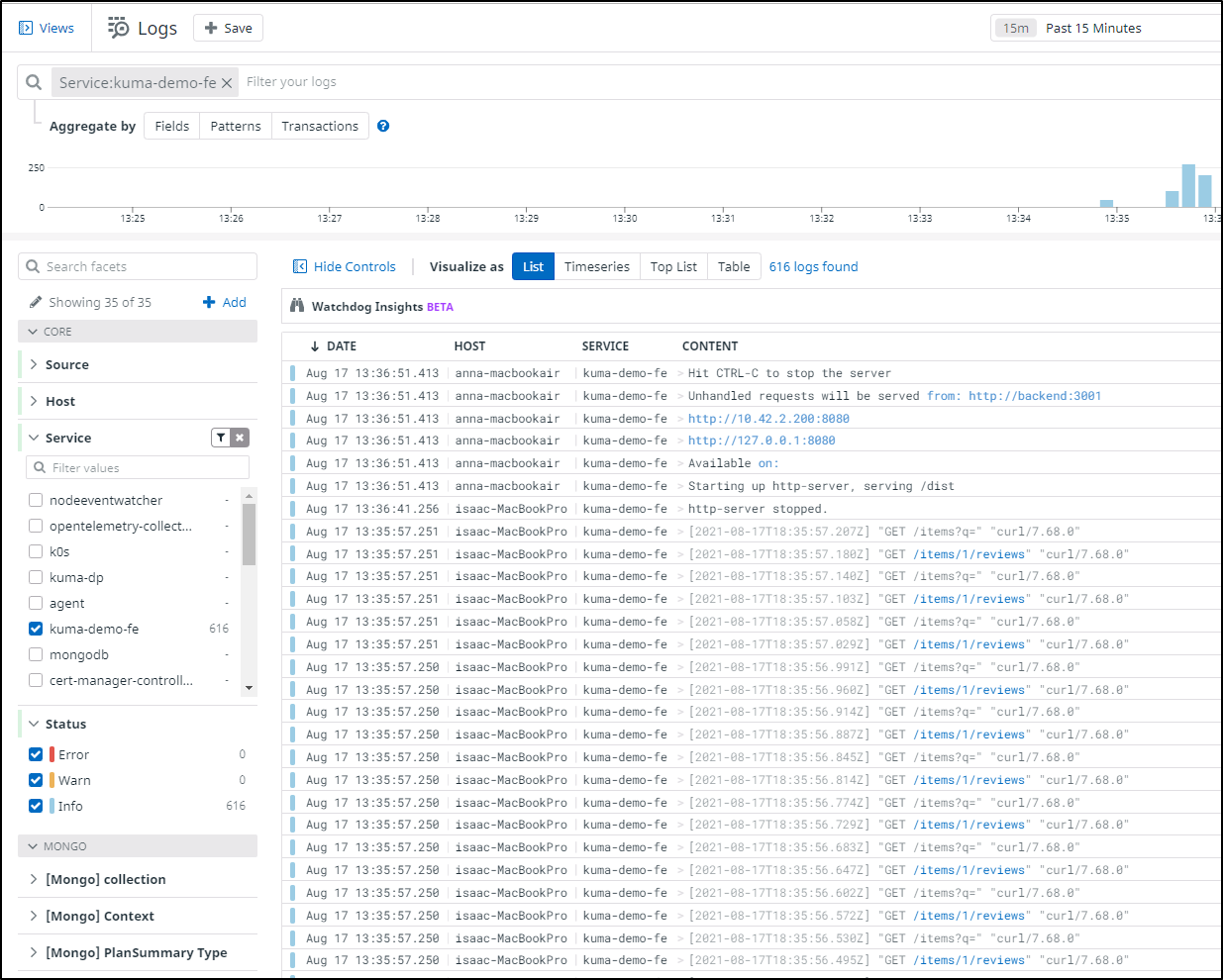

And while that is for the kuma-demo2 app not managed by Kuma, we can also see logs for the one that is:

Adding Ingress

I’m going to show how to do this since having to use kubectl port-forward forever isn’t really ideal.

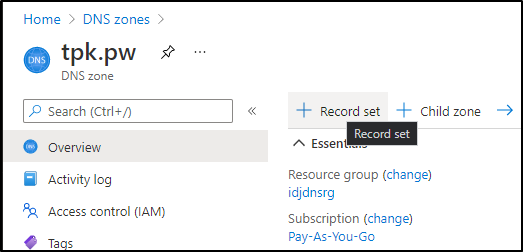

Let’s first create a new entry in our Azure DNS for Kuma.

Usually we create a recordset in the portal:

But let’s use the CLI this time:

```
builder@DESKTOP-QADGF36:~$ az account set --subscription "Pay-As-You-Go"
builder@DESKTOP-QADGF36:~$ az network dns record-set a add-record --resource-group idjdnsrg --zone-name tpk.pw --record-set-name kuma --ipv4-address 73.242.50.46
{
  "aRecords": [
    {
      "ipv4Address": "73.242.50.46"
    }
  ],
  "etag": "94ef2117-3c5a-4960-994c-ec4763fe37e5",
  "fqdn": "kuma.tpk.pw.",
  "id": "/subscriptions/d955c0ba-13dc-44cf-a29a-8fed74cbb22d/resourceGroups/idjdnsrg/providers/Microsoft.Network/dnszones/tpk.pw/A/kuma",
  "metadata": null,
  "name": "kuma",
  "provisioningState": "Succeeded",
  "resourceGroup": "idjdnsrg",
  "targetResource": {
    "id": null
  },
  "ttl": 3600,
  "type": "Microsoft.Network/dnszones/A"
}
```

Next, we need a valid certificate, so we create a cert-manager Certificate request:

```
$ cat kuma.cert.yaml
apiVersion: cert-manager.io/v1
kind: Certificate
metadata:
  name: kuma-tpk-pw
  namespace: default
spec:
  commonName: kuma.tpk.pw
  dnsNames:
  - kuma.tpk.pw
  issuerRef:
    kind: ClusterIssuer
    name: letsencrypt-prod
  secretName: kuma.tpk.pw-cert
builder@DESKTOP-QADGF36:~$ kubectl apply -f kuma.cert.yaml
certificate.cert-manager.io/kuma-tpk-pw created
builder@DESKTOP-QADGF36:~$ kubectl get cert kuma-tpk-pw
NAME READY SECRET AGE
kuma-tpk-pw False kuma.tpk.pw-cert 10s
builder@DESKTOP-QADGF36:~$ kubectl get cert kuma-tpk-pw
NAME READY SECRET AGE
kuma-tpk-pw True kuma.tpk.pw-cert 35s
```

which creates our valid TLS cert:

```
$ kubectl get secret | grep kuma
kuma.tpk.pw-cert kubernetes.io/tls 2 30s
```
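The Kuma control plane service lives in kuma-system, and an Ingress can only reference TLS secrets in its own namespace. One option is therefore to request the Certificate in kuma-system in the first place. Below is a sketch of a hypothetical helper that prints the same Certificate as above with the namespace as a parameter. Deriving the secret name from the host (`<host>-cert`) is an assumption that matches the naming used here.

```shell
# Hypothetical helper: print a cert-manager Certificate like the one above, with
# the namespace as a parameter so the TLS secret lands next to the Ingress.
# Usage: certificate <name> <namespace> <dns-name> <cluster-issuer>
certificate() {
  cat <<EOF
apiVersion: cert-manager.io/v1
kind: Certificate
metadata:
  name: $1
  namespace: $2
spec:
  commonName: $3
  dnsNames:
    - $3
  issuerRef:
    kind: ClusterIssuer
    name: $4
  secretName: $3-cert
EOF
}

# Example (not run here):
#   certificate kuma-tpk-pw kuma-system kuma.tpk.pw letsencrypt-prod | kubectl apply -f -
```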

Now we can easily create an ingress in the kuma-system namespace:

```
$ cat kuma.ingress.yaml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  annotations:
    kubernetes.io/ingress.class: nginx
  name: kuma-tpkpw-ingress
  namespace: kuma-system
spec:
  rules:
  - host: kuma.tpk.pw
    http:
      paths:
      - backend:
          serviceName: kuma-control-plane
          servicePort: 5681
        path: /
        pathType: ImplementationSpecific
  tls:
  - hosts:
    - kuma.tpk.pw
    secretName: kuma.tpk.pw-cert
```

And apply it:

```
$ kubectl apply -f kuma.ingress.yaml
Warning: extensions/v1beta1 Ingress is deprecated in v1.14+, unavailable in v1.22+; use networking.k8s.io/v1 Ingress
ingress.extensions/kuma-tpkpw-ingress created
```
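That warning matters, because extensions/v1beta1 Ingress is gone in Kubernetes 1.22. Below is a sketch of a hypothetical helper that prints the equivalent networking.k8s.io/v1 manifest. In v1, the backend becomes service.name/service.port.number and pathType is required. This assumes an ingress-nginx release new enough to serve v1 Ingresses.

```shell
# Hypothetical helper: print a networking.k8s.io/v1 Ingress equivalent to the
# extensions/v1beta1 one above.
# Usage: ingress_v1 <name> <namespace> <host> <service> <port> <tls-secret>
ingress_v1() {
  cat <<EOF
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: $1
  namespace: $2
  annotations:
    kubernetes.io/ingress.class: nginx
spec:
  rules:
    - host: $3
      http:
        paths:
          - path: /
            pathType: ImplementationSpecific
            backend:
              service:
                name: $4
                port:
                  number: $5
  tls:
    - hosts:
        - $3
      secretName: $6
EOF
}

# Example (not run here):
#   ingress_v1 kuma-tpkpw-ingress kuma-system kuma.tpk.pw kuma-control-plane 5681 \
#     kuma.tpk.pw-cert | kubectl apply -f -
```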

However, the secret is in the default namespace, not kuma-system, so we see a certificate error:

Let’s copy that secret over:

```
$ kubectl get secret kuma.tpk.pw-cert -o yaml > kuma.crt.yaml
$ sed -i 's/namespace: default/namespace: kuma-system/g' kuma.crt.yaml
$ kubectl apply -f kuma.crt.yaml
secret/kuma.tpk.pw-cert created
$ kubectl get secrets -n kuma-system | grep cert
kuma-tls-cert kubernetes.io/tls 3 3h19m
default.ca-builtin-cert-ca-1 system.kuma.io/secret 1 156m
kuma.tpk.pw-cert kubernetes.io/tls 2 13s
```
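The same get/edit/apply pattern was used above to restore the TrafficPermission, and it drags along server-populated fields (uid, resourceVersion, creationTimestamp and so on). Below is a sketch of a hypothetical awk filter that drops those fields from `kubectl get -o yaml` output and rewrites the namespace in one pass. It assumes kubectl's usual two-space indentation with list items at the same level as their parent key.

```shell
# Hypothetical helper: read `kubectl get -o yaml` output on stdin, drop
# server-populated metadata fields, and set metadata.namespace to $1.
retarget_namespace() {
  awk -v ns="$1" '
    # A top-level key (no indentation) starts a new section.
    /^[^ ]/ { in_meta = ($0 == "metadata:"); skip = 0 }
    # A direct child of metadata: decide whether to drop it and its sub-lines.
    in_meta && /^  [^ -]/ {
      skip = ($0 ~ /^  (uid|resourceVersion|creationTimestamp|selfLink|generation|ownerReferences|managedFields):/)
    }
    # Rewrite the namespace instead of copying it.
    in_meta && /^  namespace:/ { print "  namespace: " ns; next }
    !skip { print }
  '
}

# Example (not run here):
#   kubectl get secret kuma.tpk.pw-cert -o yaml | retarget_namespace kuma-system | kubectl apply -f -
```

Keep in mind that a copied secret is a snapshot. cert-manager renews the secret named in its Certificate, not copies in other namespaces.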

I find it best to just delete and re-add the ingress to get nginx to find the cert:

```
$ kubectl delete -f kuma.ingress.yaml
Warning: extensions/v1beta1 Ingress is deprecated in v1.14+, unavailable in v1.22+; use networking.k8s.io/v1 Ingress
ingress.extensions "kuma-tpkpw-ingress" deleted
$ kubectl apply -f kuma.ingress.yaml
Warning: extensions/v1beta1 Ingress is deprecated in v1.14+, unavailable in v1.22+; use networking.k8s.io/v1 Ingress
ingress.extensions/kuma-tpkpw-ingress created
```

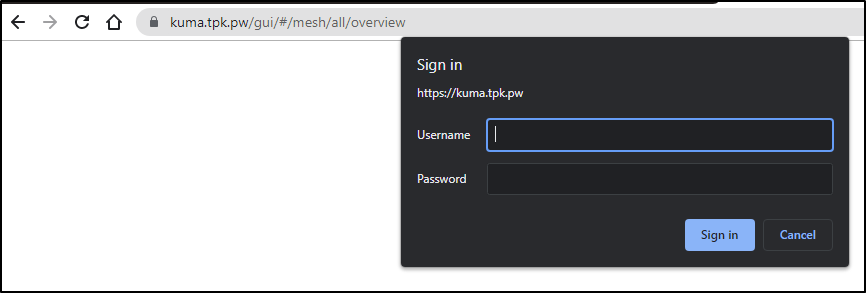

And if I wanted anyone in the world to view and update my mesh, I suppose I could leave it wide open.

Since Kuma does not integrate with any federated IdP for AuthN/AuthZ out of the box, let’s at the very least set up basic auth.

I’ll create a quick “foo” user with a password locally and set a k8s secret in the kuma-system namespace with it:

```
builder@DESKTOP-QADGF36:~$ sudo apt install apache2-utils
[sudo] password for builder:
Reading package lists... Done
Building dependency tree
Reading state information... Done
The following additional packages will be installed:
libapr1 libaprutil1
The following NEW packages will be installed:
apache2-utils libapr1 libaprutil1
0 upgraded, 3 newly installed, 0 to remove and 33 not upgraded.
Need to get 260 kB of archives.
After this operation, 969 kB of additional disk space will be used.
Do you want to continue? [Y/n] y
Get:1 http://archive.ubuntu.com/ubuntu focal/main amd64 libapr1 amd64 1.6.5-1ubuntu1 [91.4 kB]
Get:2 http://archive.ubuntu.com/ubuntu focal/main amd64 libaprutil1 amd64 1.6.1-4ubuntu2 [84.7 kB]
Get:3 http://archive.ubuntu.com/ubuntu focal-updates/main amd64 apache2-utils amd64 2.4.41-4ubuntu3.4 [84.0 kB]
Fetched 260 kB in 1s (279 kB/s)
Selecting previously unselected package libapr1:amd64.
(Reading database ... 97707 files and directories currently installed.)
Preparing to unpack .../libapr1_1.6.5-1ubuntu1_amd64.deb ...
Unpacking libapr1:amd64 (1.6.5-1ubuntu1) ...
Selecting previously unselected package libaprutil1:amd64.
Preparing to unpack .../libaprutil1_1.6.1-4ubuntu2_amd64.deb ...
Unpacking libaprutil1:amd64 (1.6.1-4ubuntu2) ...
Selecting previously unselected package apache2-utils.
Preparing to unpack .../apache2-utils_2.4.41-4ubuntu3.4_amd64.deb ...
Unpacking apache2-utils (2.4.41-4ubuntu3.4) ...
Setting up libapr1:amd64 (1.6.5-1ubuntu1) ...
Setting up libaprutil1:amd64 (1.6.1-4ubuntu2) ...
Setting up apache2-utils (2.4.41-4ubuntu3.4) ...
Processing triggers for man-db (2.9.1-1) ...
Processing triggers for libc-bin (2.31-0ubuntu9.2) ...
builder@DESKTOP-QADGF36:~$ sudo htpasswd -c auth foo
New password:
Re-type new password:
Adding password for user foo
builder@DESKTOP-QADGF36:~$ kubectl create secret generic basic-auth --from-file auth -n kuma-system
secret/basic-auth created
```
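If installing apache2-utils isn't an option, `openssl passwd -apr1` produces an Apache MD5 (`$apr1$`) hash, which nginx's basic auth also accepts. Below is a minimal sketch of a hypothetical helper built on that; it assumes openssl is installed locally. The output file should still be named auth, since ingress-nginx reads the `auth` key from the secret by default.

```shell
# Hypothetical helper: print one htpasswd-style line "user:hash" using an
# Apache MD5 ($apr1$) hash generated by openssl.
# Note: the password is passed as an argument, so it can end up in shell
# history and be visible in the process list while openssl runs.
htpasswd_line() {
  printf '%s:%s\n' "$1" "$(openssl passwd -apr1 "$2")"
}

# Example (not run here):
#   htpasswd_line foo 'changeme' > auth
#   kubectl create secret generic basic-auth --from-file=auth -n kuma-system
```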

We will add the auth settings to the ingress annotations to use it:

```
builder@DESKTOP-QADGF36:~$ kubectl get ingress kuma-tpkpw-ingress -n kuma-system -o yaml > kuma.ingress.yaml.2.old
Warning: extensions/v1beta1 Ingress is deprecated in v1.14+, unavailable in v1.22+; use networking.k8s.io/v1 Ingress
builder@DESKTOP-QADGF36:~$ kubectl get ingress kuma-tpkpw-ingress -n kuma-system -o yaml > kuma.ingress.yaml.2
Warning: extensions/v1beta1 Ingress is deprecated in v1.14+, unavailable in v1.22+; use networking.k8s.io/v1 Ingress
builder@DESKTOP-QADGF36:~$ vi kuma.ingress.yaml.2
```

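Now update it for basic auth. Note the argument order in the diff: the edited file comes first, so the new annotation lines are shown with a `-` prefix: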

```
$ diff -c kuma.ingress.yaml.2 kuma.ingress.yaml.2.old
*** kuma.ingress.yaml.2 2021-08-17 10:26:00.395545999 -0500
--- kuma.ingress.yaml.2.old 2021-08-17 10:25:01.765545196 -0500
***************
*** 5,13 ****
      kubectl.kubernetes.io/last-applied-configuration: |
        {"apiVersion":"extensions/v1beta1","kind":"Ingress","metadata":{"annotations":{"kubernetes.io/ingress.class":"nginx"},"name":"kuma-tpkpw-ingress","namespace":"kuma-system"},"spec":{"rules":[{"host":"kuma.tpk.pw","http":{"paths":[{"backend":{"serviceName":"kuma-control-plane","servicePort":5681},"path":"/","pathType":"ImplementationSpecific"}]}}],"tls":[{"hosts":["kuma.tpk.pw"],"secretName":"kuma.tpk.pw-cert"}]}}
      kubernetes.io/ingress.class: nginx
-     nginx.ingress.kubernetes.io/auth-type: basic
-     nginx.ingress.kubernetes.io/auth-secret: basic-auth
-     nginx.ingress.kubernetes.io/auth-realm: 'Authentication Required - foo'
    creationTimestamp: "2021-08-17T15:20:05Z"
    generation: 1
    name: kuma-tpkpw-ingress
--- 5,10 ----
$ kubectl apply -f kuma.ingress.yaml.2
Warning: extensions/v1beta1 Ingress is deprecated in v1.14+, unavailable in v1.22+; use networking.k8s.io/v1 Ingress
ingress.extensions/kuma-tpkpw-ingress configured
```

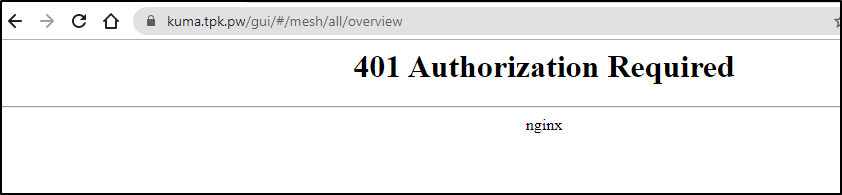

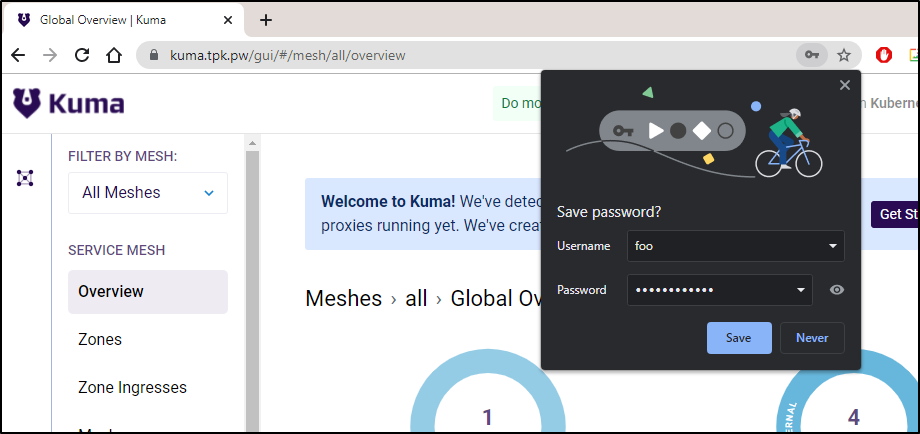

Now a reload will at least require basic auth over HTTPS:

And with bad logins:

And on success:

Metrics

For this, we will need to install kumactl:

$ wget https://download.konghq.com/mesh-alpine/kuma-1.2.3-ubuntu-amd64.tar.gz

--2021-08-17 10:46:50-- https://download.konghq.com/mesh-alpine/kuma-1.2.3-ubuntu-amd64.tar.gz

Resolving download.konghq.com (download.konghq.com)... 3.20.242.208, 3.19.0.58, 18.220.94.64

Connecting to download.konghq.com (download.konghq.com)|3.20.242.208|:443... connected.

HTTP request sent, awaiting response... 302 Found

Location: https://kong-cloud-01-prod-us-east-2-kong-packages-origin.s3.amazonaws.com/pulp3-media/artifact/b8/db75682439f60399661963f6cc3f77df0ed5ceaf4b1d27efbdec86fdaa3acc?response-content-disposition=attachment%3Bx-pulp-artifact-path%3Dmesh-alpine__kuma-1.2.3-ubuntu-amd64.tar.gz%3Bfilename%3Dkuma-1.2.3-ubuntu-amd64.tar.gz&X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Credential=ASIAQUIYLSLOCZOEMY4R%2F20210817%2Fus-east-2%2Fs3%2Faws4_request&X-Amz-Date=20210817T154656Z&X-Amz-Expires=604800&X-Amz-SignedHeaders=host&X-Amz-Security-Token=FwoGZXIvYXdzEGEaDCEW3u%2B%2FXK%2FdUOsghCKKBHEqwA3VC1HNHaLrqcJOJRAKqXdyt84rfTDu8DaPDl3wSwP7CgabyiZaUMwclJMklUsyO1PWlU%2BtMA4bBt%2FLVSahhjXMMYrPb8wlx61%2FLhcEW3PSw64zDEVkm9rKKuEAFU6GDmAOlstRtC%2FmFzVM50gauTempZy9%2BOL4X6I3TPXXUlvMyVaqJ4k5vGGXQFT9VlxB7dlXm9TRotUFThFYzIl0lRtlnfAnAiSfDYfvFlx1fMS21m3ymYYy00srfYC5hmXO5SDRXQEeYziyFIFo7AY6MloZDQRPdpa83%2FKiavRILsak55B4EBh%2FTmcqQybzgWbXw00NExQL87x%2FDzM6qGzczvpPA3e%2Bn%2FSHupwzZnlg3%2B0k0gl2Hi44QSnp2au88uSa1iaL08T4EImgBE7IxRAU0mCw%2BkpYbqGLNptOA6qFb5WpuKlDbXQNdQcHfT7nQSQpZEBO1amE4%2BFhJkFzGgpakoujKiG5j7YssUVdEnrl8iWd5MYEFxKpuM3DOm6C4TOHyr211xwDFtcImOZHNEPTjdQlBGjo9yQMyG7NvjeMj8Opi8MVd5A5eyStO4LFrPKNiMDAGhSK%2BFd%2FpsY7rw0%2FLxslu%2FlPdvsssF0UNf5vWxBxHiz3YpZQtujDe9DzCfYhZe%2BInwHAEvFfjPDtY6oOfQlaZYrZ90mnipC0%2FkM2WZKsd4BniMFZ4CjdpO%2BIBjIq5aJL%2BammL6YZwG5VeYwfQkf%2BWaH1ijKZgJPAwm11Q%2BP%2B0MoxMmUPZbn1&X-Amz-Signature=b9d3098074915edf4c4cb9fcead98bd4ef7379bad4ef3220eeee1e6645a90e89 [following]

--2021-08-17 10:46:50-- https://kong-cloud-01-prod-us-east-2-kong-packages-origin.s3.amazonaws.com/pulp3-media/artifact/b8/db75682439f60399661963f6cc3f77df0ed5ceaf4b1d27efbdec86fdaa3acc?response-content-disposition=attachment%3Bx-pulp-artifact-path%3Dmesh-alpine__kuma-1.2.3-ubuntu-amd64.tar.gz%3Bfilename%3Dkuma-1.2.3-ubuntu-amd64.tar.gz&X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Credential=ASIAQUIYLSLOCZOEMY4R%2F20210817%2Fus-east-2%2Fs3%2Faws4_request&X-Amz-Date=20210817T154656Z&X-Amz-Expires=604800&X-Amz-SignedHeaders=host&X-Amz-Security-Token=FwoGZXIvYXdzEGEaDCEW3u%2B%2FXK%2FdUOsghCKKBHEqwA3VC1HNHaLrqcJOJRAKqXdyt84rfTDu8DaPDl3wSwP7CgabyiZaUMwclJMklUsyO1PWlU%2BtMA4bBt%2FLVSahhjXMMYrPb8wlx61%2FLhcEW3PSw64zDEVkm9rKKuEAFU6GDmAOlstRtC%2FmFzVM50gauTempZy9%2BOL4X6I3TPXXUlvMyVaqJ4k5vGGXQFT9VlxB7dlXm9TRotUFThFYzIl0lRtlnfAnAiSfDYfvFlx1fMS21m3ymYYy00srfYC5hmXO5SDRXQEeYziyFIFo7AY6MloZDQRPdpa83%2FKiavRILsak55B4EBh%2FTmcqQybzgWbXw00NExQL87x%2FDzM6qGzczvpPA3e%2Bn%2FSHupwzZnlg3%2B0k0gl2Hi44QSnp2au88uSa1iaL08T4EImgBE7IxRAU0mCw%2BkpYbqGLNptOA6qFb5WpuKlDbXQNdQcHfT7nQSQpZEBO1amE4%2BFhJkFzGgpakoujKiG5j7YssUVdEnrl8iWd5MYEFxKpuM3DOm6C4TOHyr211xwDFtcImOZHNEPTjdQlBGjo9yQMyG7NvjeMj8Opi8MVd5A5eyStO4LFrPKNiMDAGhSK%2BFd%2FpsY7rw0%2FLxslu%2FlPdvsssF0UNf5vWxBxHiz3YpZQtujDe9DzCfYhZe%2BInwHAEvFfjPDtY6oOfQlaZYrZ90mnipC0%2FkM2WZKsd4BniMFZ4CjdpO%2BIBjIq5aJL%2BammL6YZwG5VeYwfQkf%2BWaH1ijKZgJPAwm11Q%2BP%2B0MoxMmUPZbn1&X-Amz-Signature=b9d3098074915edf4c4cb9fcead98bd4ef7379bad4ef3220eeee1e6645a90e89

Resolving kong-cloud-01-prod-us-east-2-kong-packages-origin.s3.amazonaws.com (kong-cloud-01-prod-us-east-2-kong-packages-origin.s3.amazonaws.com)... 52.219.106.228

Connecting to kong-cloud-01-prod-us-east-2-kong-packages-origin.s3.amazonaws.com (kong-cloud-01-prod-us-east-2-kong-packages-origin.s3.amazonaws.com)|52.219.106.228|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 78202846 (75M) [application/x-tar]

Saving to: ‘kuma-1.2.3-ubuntu-amd64.tar.gz’

kuma-1.2.3-ubuntu-amd64.tar.gz 100%[====================================================================================================================================================================================================================================================>] 74.58M 80.6MB/s in 0.9s

2021-08-17 10:46:51 (80.6 MB/s) - ‘kuma-1.2.3-ubuntu-amd64.tar.gz’ saved [78202846/78202846]

```
builder@DESKTOP-QADGF36:~$ tar -xzvf kuma-1.2.3-ubuntu-amd64.tar.gz
./
./kuma-1.2.3/
./kuma-1.2.3/README
./kuma-1.2.3/NOTICE
./kuma-1.2.3/install_missing_crds.sh
./kuma-1.2.3/NOTICE-kumactl
./kuma-1.2.3/LICENSE
./kuma-1.2.3/conf/
./kuma-1.2.3/conf/kuma-cp.conf.yml
./kuma-1.2.3/bin/
./kuma-1.2.3/bin/kuma-dp
./kuma-1.2.3/bin/kuma-prometheus-sd
./kuma-1.2.3/bin/kuma-cp
./kuma-1.2.3/bin/envoy
./kuma-1.2.3/bin/kumactl
./kuma-1.2.3/bin/coredns
```
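If you expect to repeat this on other machines, it can help to pin the version in one place so the URL, tarball and directory names stay in sync. A sketch under those assumptions: the variable names and the install_kumactl function are hypothetical, the URL pattern is the one used above, and only the ubuntu-amd64 build is covered.

```shell
#!/usr/bin/env bash
# Pin the Kuma version once; everything else is derived from it.
KUMA_VERSION="1.2.3"
KUMA_TARBALL="kuma-${KUMA_VERSION}-ubuntu-amd64.tar.gz"
KUMA_URL="https://download.konghq.com/mesh-alpine/${KUMA_TARBALL}"

# install_kumactl (hypothetical): download and unpack the release into the
# current directory, then put its bin/ on PATH for this shell session.
# Nothing is downloaded until the function is actually called.
install_kumactl() {
  wget -q "$KUMA_URL" &&
    tar -xzf "$KUMA_TARBALL" &&
    export PATH="${PWD}/kuma-${KUMA_VERSION}/bin:${PATH}"
}
```

Afterwards, `install_kumactl && kumactl version` should run the freshly unpacked binary without the ./kuma-1.2.3/bin/ prefix.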

Then install metrics:

```
$ ./kuma-1.2.3/bin/kumactl install metrics | kubectl apply -f -
namespace/kuma-metrics created
podsecuritypolicy.policy/grafana created
serviceaccount/prometheus-alertmanager created
serviceaccount/prometheus-kube-state-metrics created
serviceaccount/prometheus-node-exporter created
serviceaccount/prometheus-pushgateway created
serviceaccount/prometheus-server created
serviceaccount/grafana created
configmap/grafana created
configmap/prometheus-alertmanager created
configmap/provisioning-datasource created
configmap/provisioning-dashboards created
configmap/prometheus-server created
configmap/provisioning-dashboards-0 created
configmap/provisioning-dashboards-1 created
configmap/provisioning-dashboards-2 created
configmap/provisioning-dashboards-3 created
configmap/provisioning-dashboards-4 created
persistentvolumeclaim/prometheus-alertmanager created
persistentvolumeclaim/prometheus-server created
clusterrole.rbac.authorization.k8s.io/grafana-clusterrole created
Warning: rbac.authorization.k8s.io/v1beta1 ClusterRole is deprecated in v1.17+, unavailable in v1.22+; use rbac.authorization.k8s.io/v1 ClusterRole
clusterrole.rbac.authorization.k8s.io/prometheus-alertmanager created
clusterrole.rbac.authorization.k8s.io/prometheus-kube-state-metrics created
clusterrole.rbac.authorization.k8s.io/prometheus-pushgateway created
clusterrole.rbac.authorization.k8s.io/prometheus-server created
clusterrolebinding.rbac.authorization.k8s.io/grafana-clusterrolebinding created
Warning: rbac.authorization.k8s.io/v1beta1 ClusterRoleBinding is deprecated in v1.17+, unavailable in v1.22+; use rbac.authorization.k8s.io/v1 ClusterRoleBinding
clusterrolebinding.rbac.authorization.k8s.io/prometheus-alertmanager created
clusterrolebinding.rbac.authorization.k8s.io/prometheus-kube-state-metrics created
clusterrolebinding.rbac.authorization.k8s.io/prometheus-pushgateway created
clusterrolebinding.rbac.authorization.k8s.io/prometheus-server created
Warning: rbac.authorization.k8s.io/v1beta1 Role is deprecated in v1.17+, unavailable in v1.22+; use rbac.authorization.k8s.io/v1 Role
role.rbac.authorization.k8s.io/grafana created
Warning: rbac.authorization.k8s.io/v1beta1 RoleBinding is deprecated in v1.17+, unavailable in v1.22+; use rbac.authorization.k8s.io/v1 RoleBinding
rolebinding.rbac.authorization.k8s.io/grafana created
service/grafana created
service/prometheus-alertmanager created
service/prometheus-kube-state-metrics created
service/prometheus-node-exporter created
service/prometheus-pushgateway created
service/prometheus-server created
daemonset.apps/prometheus-node-exporter created
deployment.apps/grafana created
deployment.apps/prometheus-alertmanager created
deployment.apps/prometheus-kube-state-metrics created
deployment.apps/prometheus-pushgateway created
deployment.apps/prometheus-server created
```

Verification

```
$ kubectl get pods -n kuma-metrics
NAME                                             READY   STATUS              RESTARTS   AGE
prometheus-server-67974d9cdc-2gxw8               0/4     Pending             0          21s
prometheus-node-exporter-n7r2w                   0/1     ContainerCreating   0          22s
prometheus-node-exporter-td8vp                   1/1     Running             0          22s
prometheus-node-exporter-444n4                   1/1     Running             0          22s
grafana-7875d6d77f-75s9l                         0/2     Init:0/1            0          22s
prometheus-kube-state-metrics-5ddc7b5dfd-4k7bb   0/2     PodInitializing     0          22s
prometheus-alertmanager-9955cbc54-scp8g          2/3     Running             0          22s
prometheus-pushgateway-5df994c7ff-bzg5v          2/2     Running             0          21s
```
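Several pods are still Pending or initializing at this point. Rather than re-running the command by eye, `kubectl wait --for=condition=Ready pods --all -n kuma-metrics --timeout=300s` blocks until they are all ready. If you just want a quick list of stragglers, a small filter over the READY column also works. This is a sketch: the function name not_ready_pods is hypothetical, and it assumes the default `kubectl get pods` column layout (NAME first, READY second).

```shell
#!/usr/bin/env bash
# not_ready_pods (hypothetical): read `kubectl get pods` output on stdin and
# print the name of every pod whose READY column is not n/n (for example 0/4
# or 2/3). The header row is skipped. Pods of completed Jobs would also be
# listed, since they report 0/1.
not_ready_pods() {
  awk 'NR > 1 { split($2, r, "/"); if (r[1] != r[2]) print $1 }'
}

# Usage:
#   kubectl get pods -n kuma-metrics | not_ready_pods
```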

Once the pods are running, we can enable metrics by updating the default mesh definition:

```
builder@DESKTOP-QADGF36:~$ kubectl get mesh default -o yaml > my.default.mesh.yaml
builder@DESKTOP-QADGF36:~$ kubectl get mesh default -o yaml > my.default.mesh.yaml.bak
builder@DESKTOP-QADGF36:~$ vi my.default.mesh.yaml
builder@DESKTOP-QADGF36:~$ diff -c my.default.mesh.yaml my.default.mesh.yaml.bak
*** my.default.mesh.yaml 2021-08-17 13:00:14.745672717 -0500
--- my.default.mesh.yaml.bak 2021-08-17 12:59:46.235672327 -0500
***************
*** 16,23 ****
      - name: ca-1
        type: builtin
      enabledBackend: ca-1
-   metrics:
-     enabledBackend: prometheus-1
-     backends:
-     - name: prometheus-1
-       type: prometheus
--- 16,18 ----
builder@DESKTOP-QADGF36:~$ kubectl apply -f my.default.mesh.yaml
mesh.kuma.io/default configured
```
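Put together, the spec of the edited default mesh amounts to roughly the following. This is a sketch reconstructed from the diff rather than the full file: server-managed metadata (annotations, resourceVersion and so on) is omitted, and the prometheus-1 backend relies on Kuma's defaults because no conf block is given.

```yaml
# Sketch: default Mesh with builtin mTLS (ca-1) and Prometheus metrics
# (prometheus-1) enabled. Backend names are taken from the diff above.
apiVersion: kuma.io/v1alpha1
kind: Mesh
metadata:
  name: default
spec:
  mtls:
    enabledBackend: ca-1
    backends:
    - name: ca-1
      type: builtin
  metrics:
    enabledBackend: prometheus-1
    backends:
    - name: prometheus-1
      type: prometheus
```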

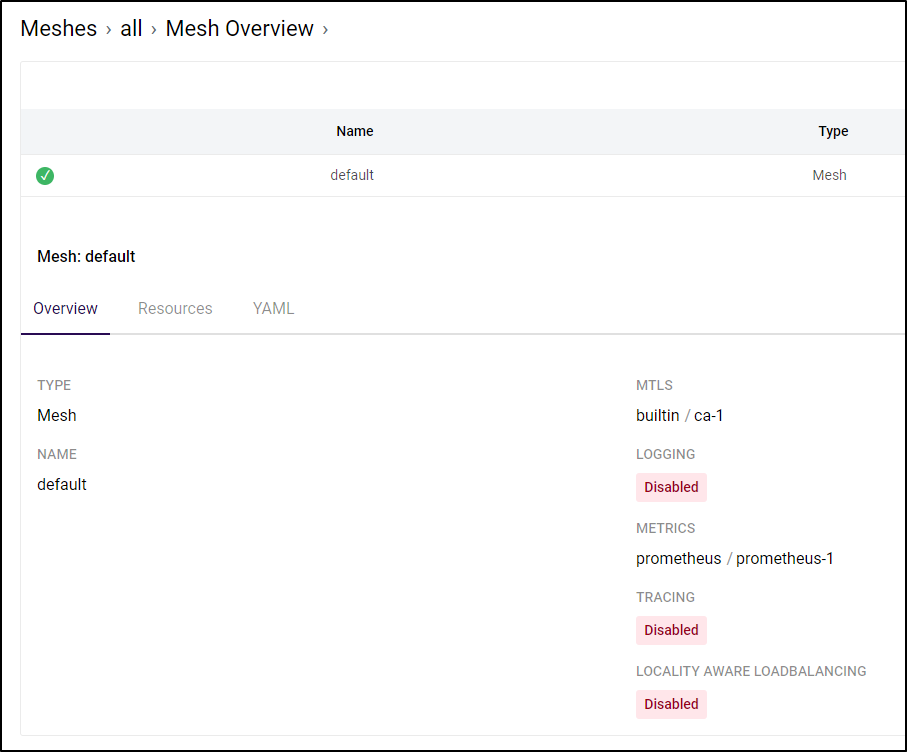

This switches the default mesh from having no metrics backend to using the prometheus-1 backend.

To generate a lot of traffic fast, we can port forward, then slam the service with curl commands:

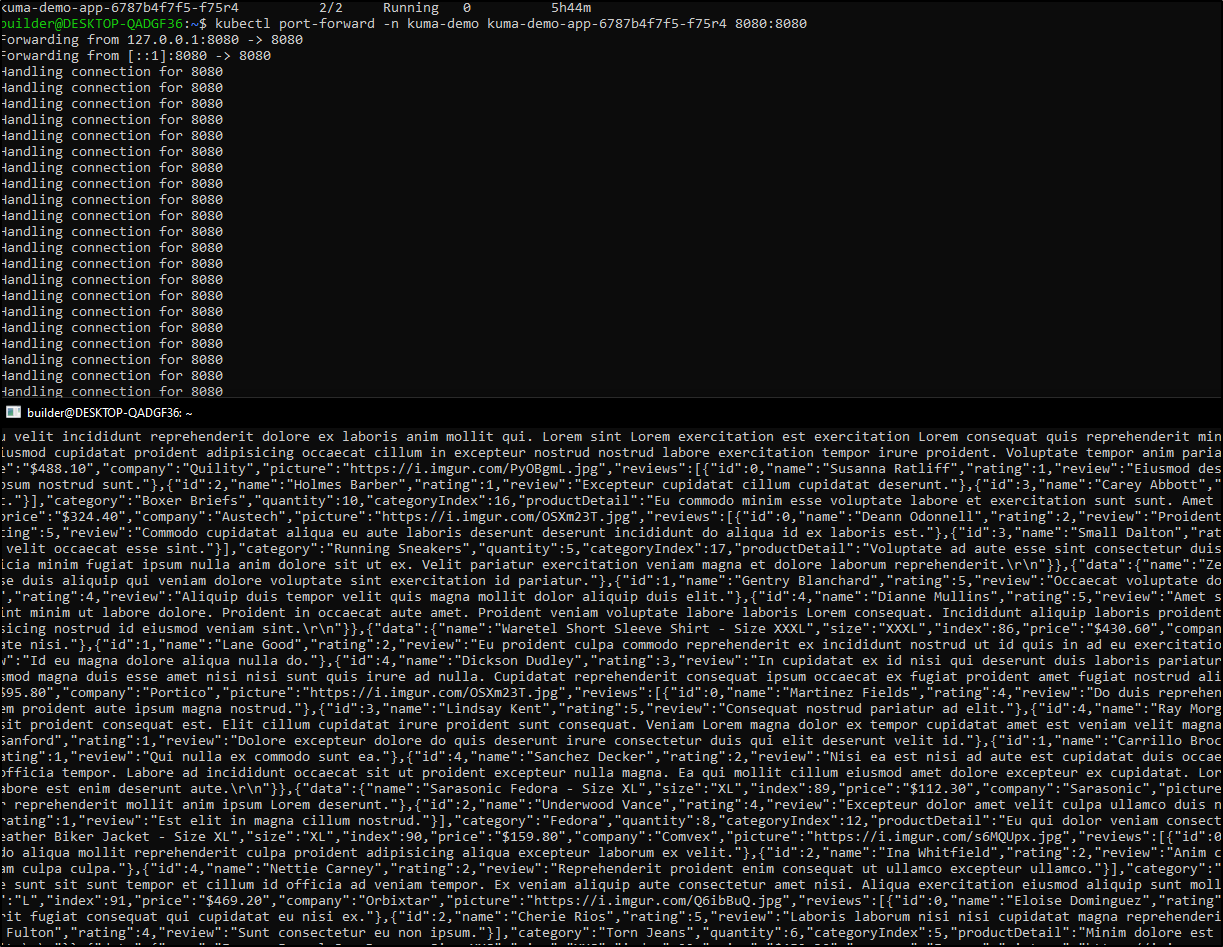

```
kubectl port-forward -n kuma-demo kuma-demo-app-6787b4f7f5-f75r4 8080:8080 &
while true; do curl "http://127.0.0.1:8080/items?q="; curl http://127.0.0.1:8080/items/1/reviews; done
```
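The loop above runs until you kill it. A bounded variant makes it easier to send a known amount of load and see whether the requests actually succeed. This is a sketch: generate_traffic is a hypothetical helper, and the two paths are the demo app endpoints used above.

```shell
#!/usr/bin/env bash
# generate_traffic (hypothetical): a bounded version of the endless curl loop.
#   $1 base URL of the port-forwarded demo app (default http://127.0.0.1:8080)
#   $2 number of iterations (default 100); each iteration makes two requests
# Prints one HTTP status code per request; 000 means no response at all.
generate_traffic() {
  local base="${1:-http://127.0.0.1:8080}" count="${2:-100}" i=0
  while [ "$i" -lt "$count" ]; do
    curl -s -o /dev/null --max-time 5 -w '%{http_code}\n' "${base}/items?q="
    curl -s -o /dev/null --max-time 5 -w '%{http_code}\n' "${base}/items/1/reviews"
    i=$((i + 1))
  done
  # Failed requests show up as 000 codes in the output, not in the exit status.
  return 0
}
```

Piping the output through `sort | uniq -c`, as in `generate_traffic http://127.0.0.1:8080 500 | sort | uniq -c`, summarizes how many requests returned each status code.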

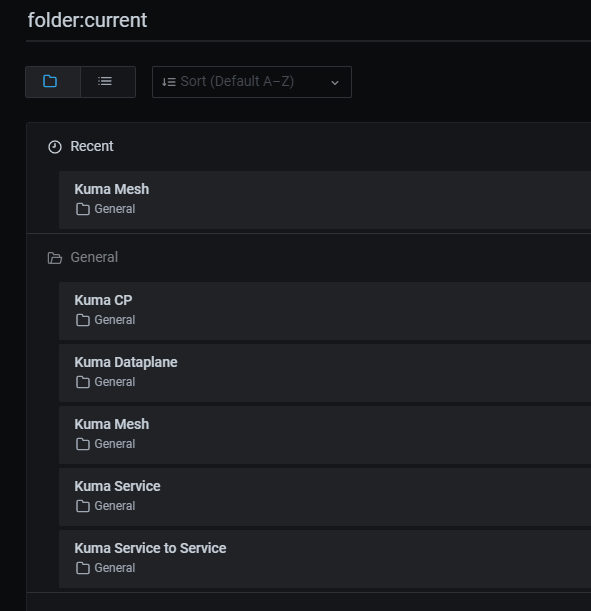

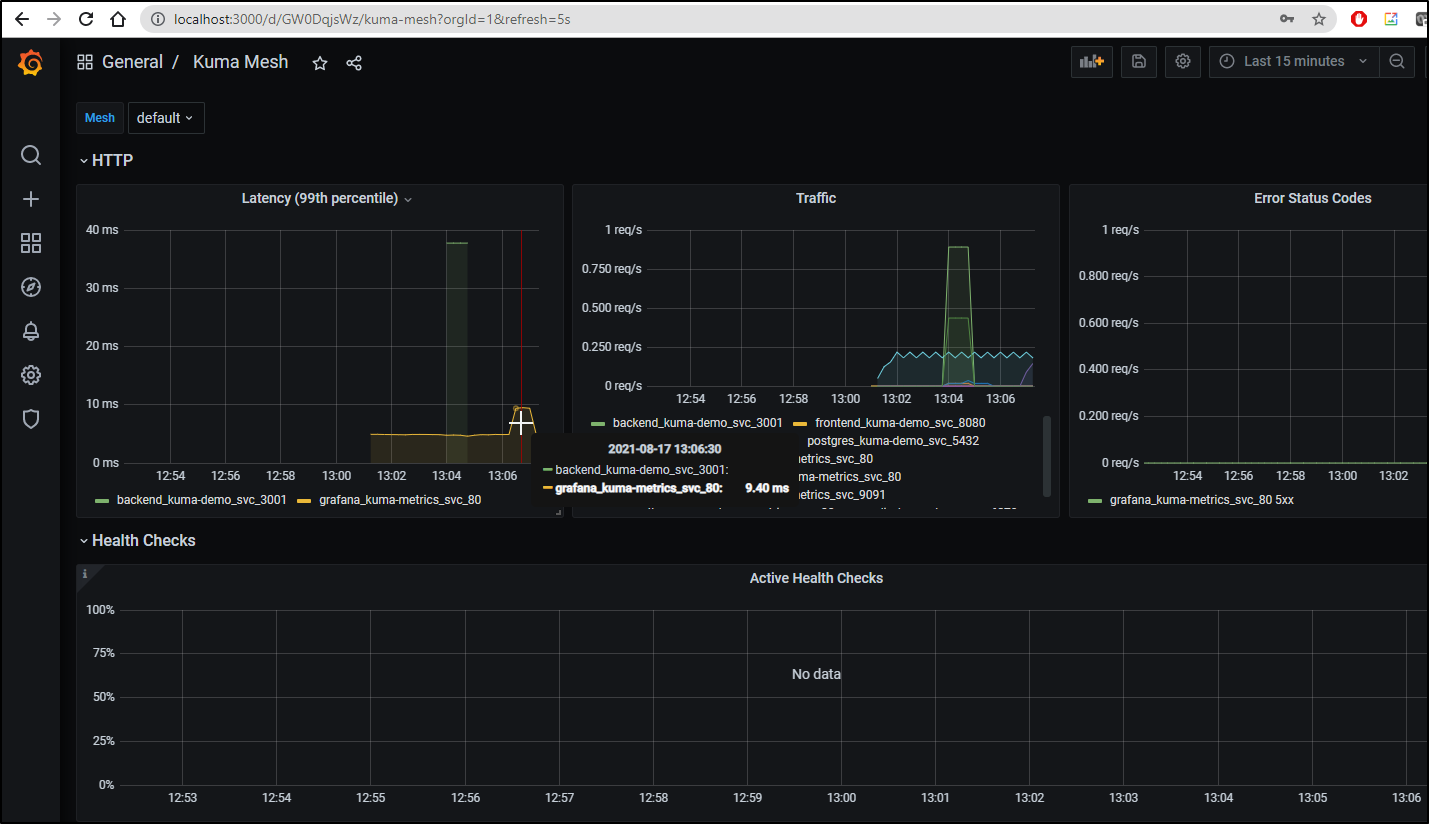

We can now hop over to Grafana, where some Kuma dashboards are already set up:

```
builder@DESKTOP-QADGF36:~$ kubectl port-forward svc/grafana -n kuma-metrics 3000:80
Forwarding from 127.0.0.1:3000 -> 3000
Forwarding from [::1]:3000 -> 3000
Handling connection for 3000
Handling connection for 3000
Handling connection for 3000
Handling connection for 3000
Handling connection for 3000
Handling connection for 3000
```
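Since port-forward takes a moment to come up, a small polling helper avoids opening the browser too early. The sketch below is hypothetical (wait_for_http is not part of any tool), and it assumes Grafana's /api/health endpoint, which answers without logging in.

```shell
#!/usr/bin/env bash
# wait_for_http (hypothetical): poll a URL until it answers with a non-error
# status (curl -f treats HTTP >= 400 as a failure) or the tries run out.
#   $1 URL to probe
#   $2 maximum number of tries, one second apart (default 30)
# Returns 0 as soon as the URL responds successfully, 1 if it never does.
wait_for_http() {
  local url="$1" tries="${2:-30}" i=1
  while [ "$i" -le "$tries" ]; do
    if curl -fs -o /dev/null --max-time 2 "$url"; then
      return 0
    fi
    sleep 1
    i=$((i + 1))
  done
  return 1
}

# Usage:
#   kubectl port-forward svc/grafana -n kuma-metrics 3000:80 &
#   wait_for_http http://127.0.0.1:3000/api/health && echo "Grafana is reachable"
```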

For instance, the Mesh and Dataplane dashboards:

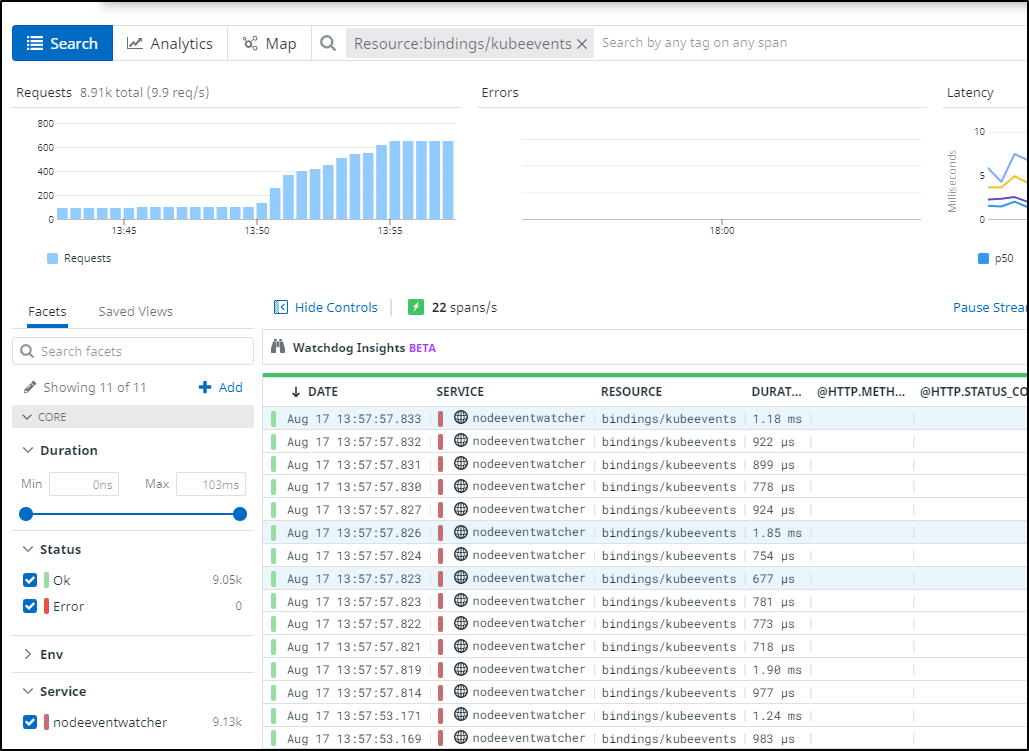

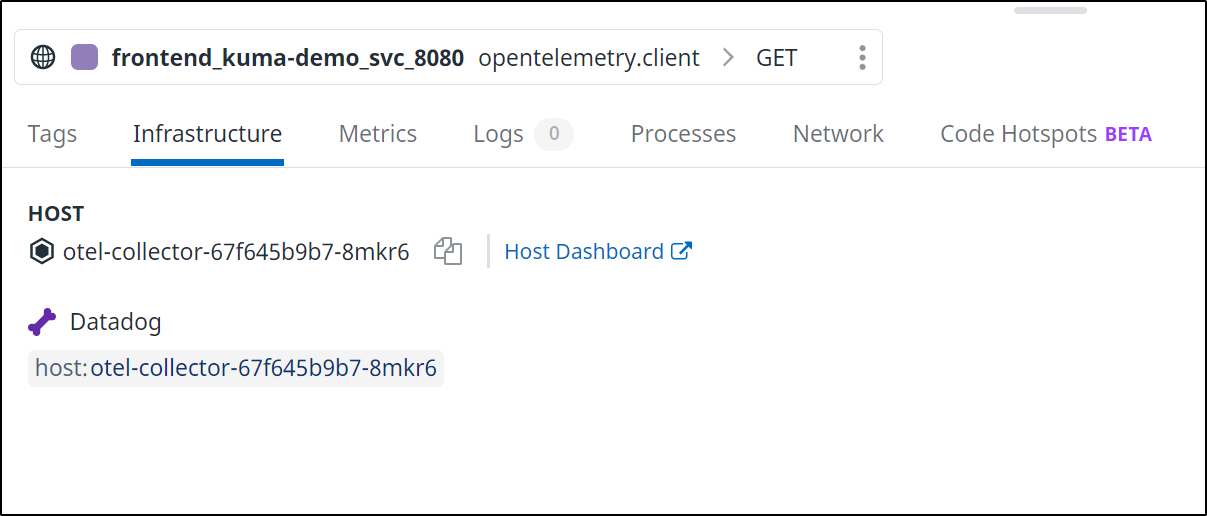

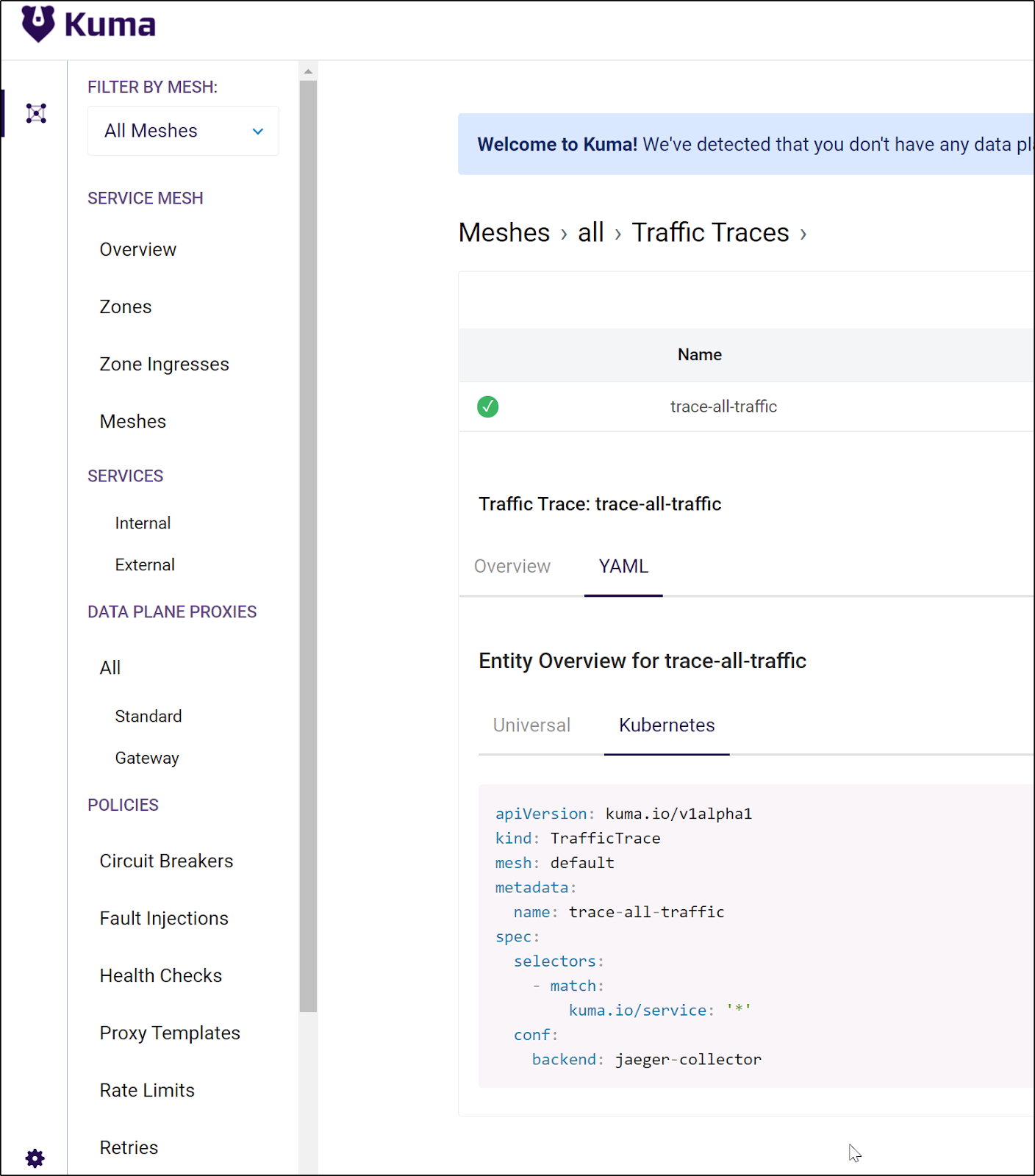

Datadog Tracing

The first path we will try is exposing Datadog's built-in tracing service from the cluster agent.

Note: I struggled with the built-in APM via the Cluster Agent, as we'll see below, but I did succeed using the Jaeger backend with OpenTelemetry to Datadog. Skip ahead to see the working solution.

$ cat dd.svc.yaml

apiVersion: v1

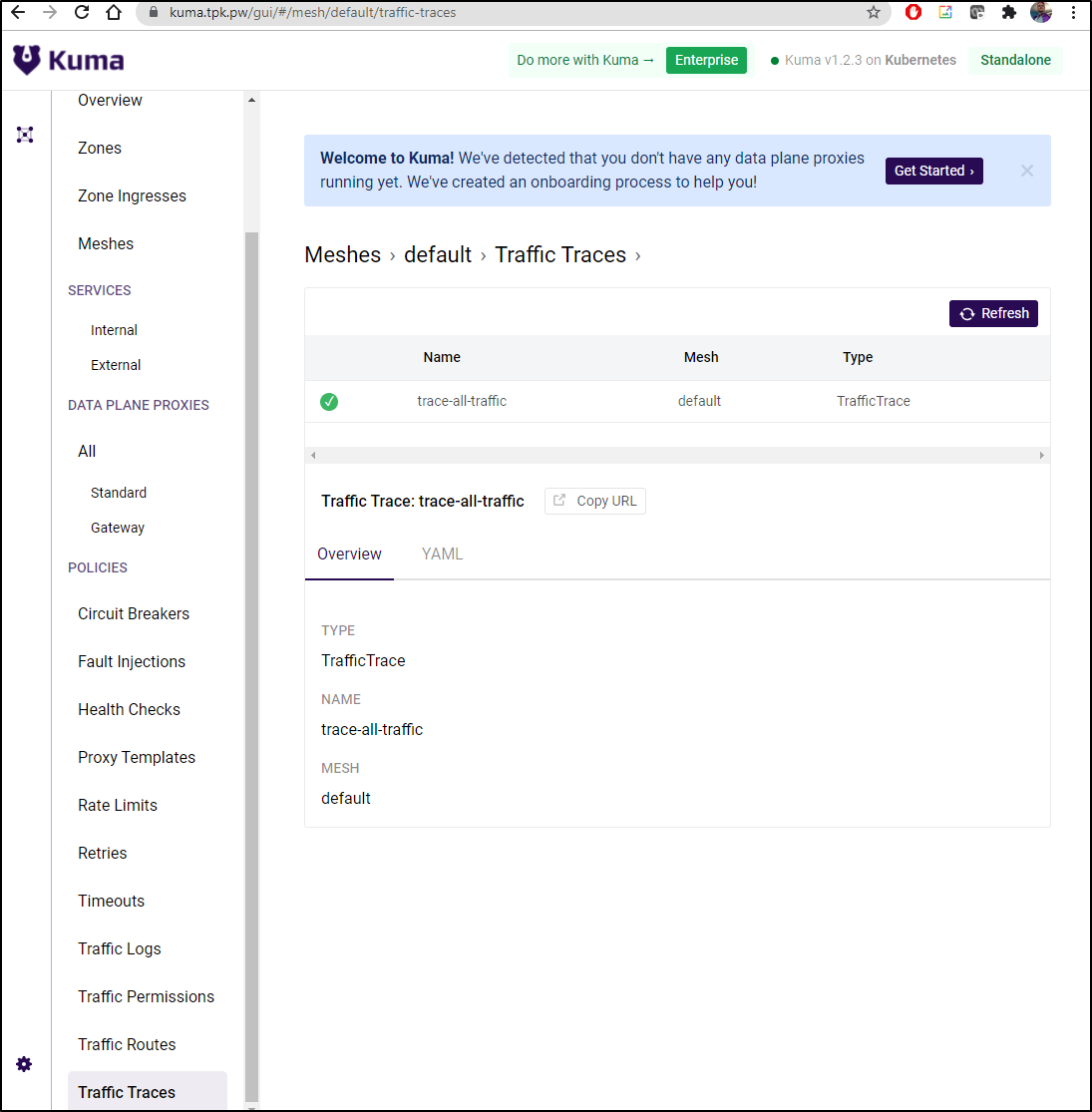

kind: Service