Published: Jun 3, 2021 by Isaac Johnson

One of Dapr’s features is “middleware pipelines”, which can automate federated login to external identity providers. We can use these pipelines to configure API auth with OAuth using providers like Facebook, GitHub, AAD and several others.

Let’s implement a GitHub OAuth2 middleware pipeline in Dapr. Along the way we will also show how to tie into OpenTelemetry APM metrics sent to Datadog, and lastly take a side step to show how to purge Redis streams when Dapr Pubsub has issues (e.g. stuck messages in your queue).

Github OAuth

Let’s create a GitHub OAuth app together. The two pieces we’ll need to know off the bat are the Authorization URL and the Token URL:

Auth URL: https://github.com/login/oauth/authorize

Token URL: https://github.com/login/oauth/access_token
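To make the role of the Authorization URL concrete, here is a minimal sketch of how the first leg of the authorization code grant is typically assembled. The parameter names (`client_id`, `redirect_uri`, `scope`, `state`) follow GitHub's OAuth documentation; the function name `buildAuthorizeUrl` and all values passed to it are hypothetical placeholders. Dapr's middleware does this work for us, so this is only an illustration.

```javascript
// Sketch: build the URL a browser is sent to at the start of the
// authorization code grant. Values used below are placeholders.
const AUTH_URL = 'https://github.com/login/oauth/authorize';

function buildAuthorizeUrl({ clientId, redirectUri, scopes, state }) {
  const url = new URL(AUTH_URL);
  url.searchParams.set('client_id', clientId);
  url.searchParams.set('redirect_uri', redirectUri);
  // GitHub expects scopes as a space-delimited list.
  url.searchParams.set('scope', scopes.join(' '));
  // "state" is an unguessable value echoed back to guard against CSRF.
  url.searchParams.set('state', state);
  return url.toString();
}

const example = buildAuthorizeUrl({
  clientId: 'my-client-id',            // hypothetical
  redirectUri: 'https://example.com/', // hypothetical
  scopes: ['user:email'],
  state: 'random-state-value',         // hypothetical
});
console.log(example);
```

After the user approves, GitHub redirects back with a temporary code, which is then exchanged at the Token URL for an access token.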

Let’s start to build the Auth Code Grant component:

apiVersion: dapr.io/v1alpha1
kind: Component
metadata:
  name: oauth2
  namespace: default
spec:
  type: middleware.http.oauth2
  version: v1
  metadata:
  - name: clientId
    value: "<your client ID>"
  - name: clientSecret
    value: "<your client secret>"
  - name: scopes
    value: "<comma-separated scope names>"
  - name: authURL
    value: "https://github.com/login/oauth/authorize"
  - name: tokenURL
    value: "https://github.com/login/oauth/access_token"
  - name: redirectURL
    value: "<redirect URL>"
  - name: authHeaderName
    value: "<header name under which the secret token is saved>"
    # forceHTTPS:
    # This key is used to set HTTPS schema on redirect to your API method
    # after Dapr successfully received Access Token from Identity Provider.
    # By default, Dapr will use HTTP on this redirect.
  - name: forceHTTPS
    value: "<set to true if you invoke an API method through Dapr from https origin>"

Getting OAuth Settings from Github

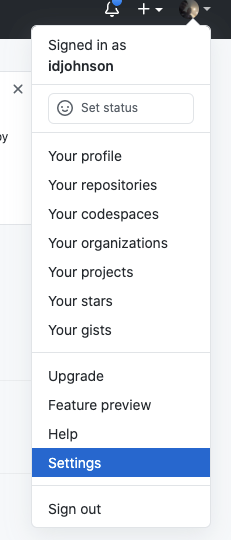

Pick Settings

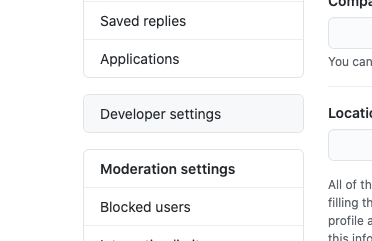

Then go to Developer Settings

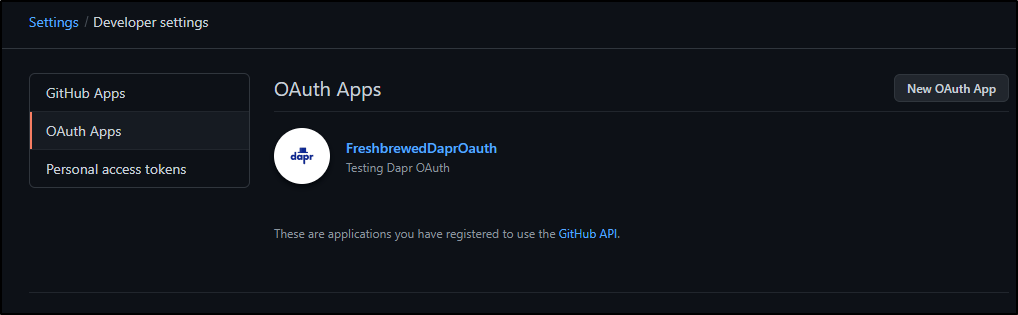

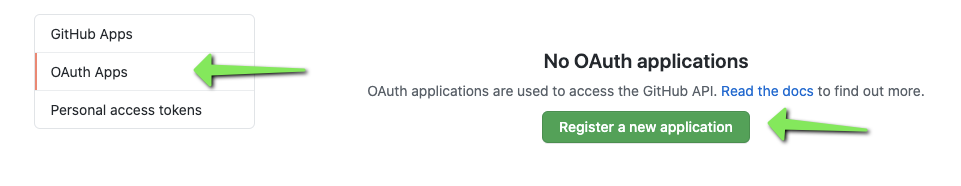

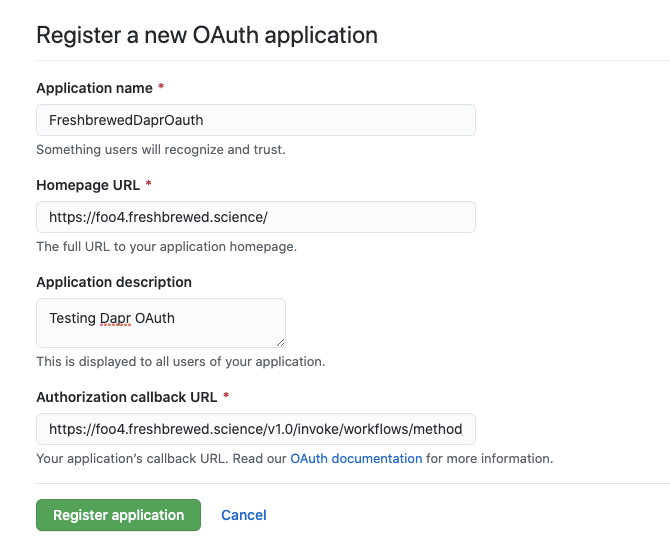

Next choose OAuth Apps and click Register a New Application

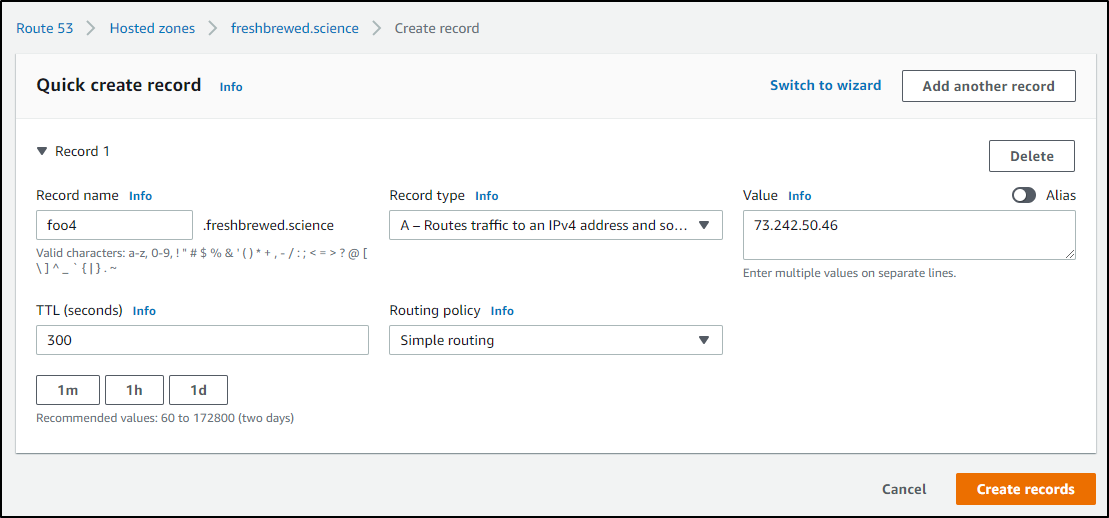

The next part might be different from your endpoints. For us, we plan to create some DNS entries for this app.

The homepage URL is mostly just used as a link for the app, but the callback URL in OAuth2 is where the user is redirected after a successful login, with the authorization details included.

For us, we’ll create

homepage : https://foo4.freshbrewed.science/

redirect : https://foo4.freshbrewed.science/v1.0/invoke/workflows/method/workflow1

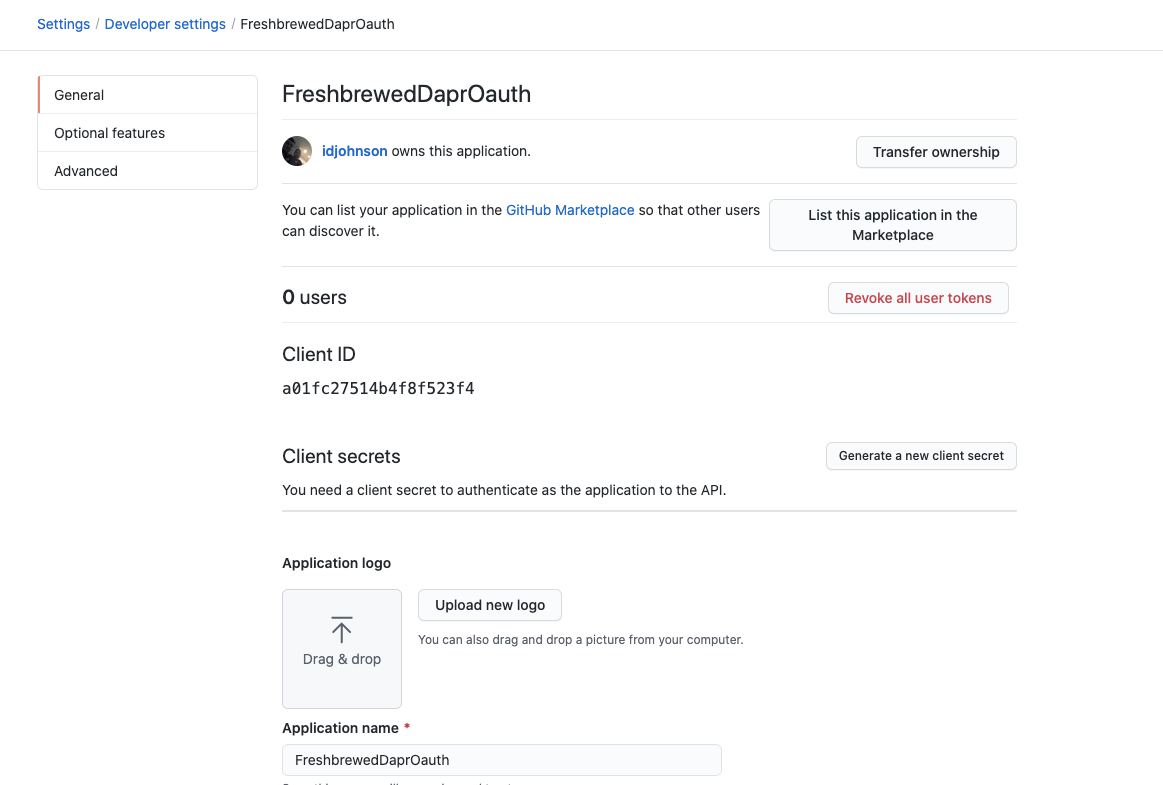

When we register, we then get a page with some details:

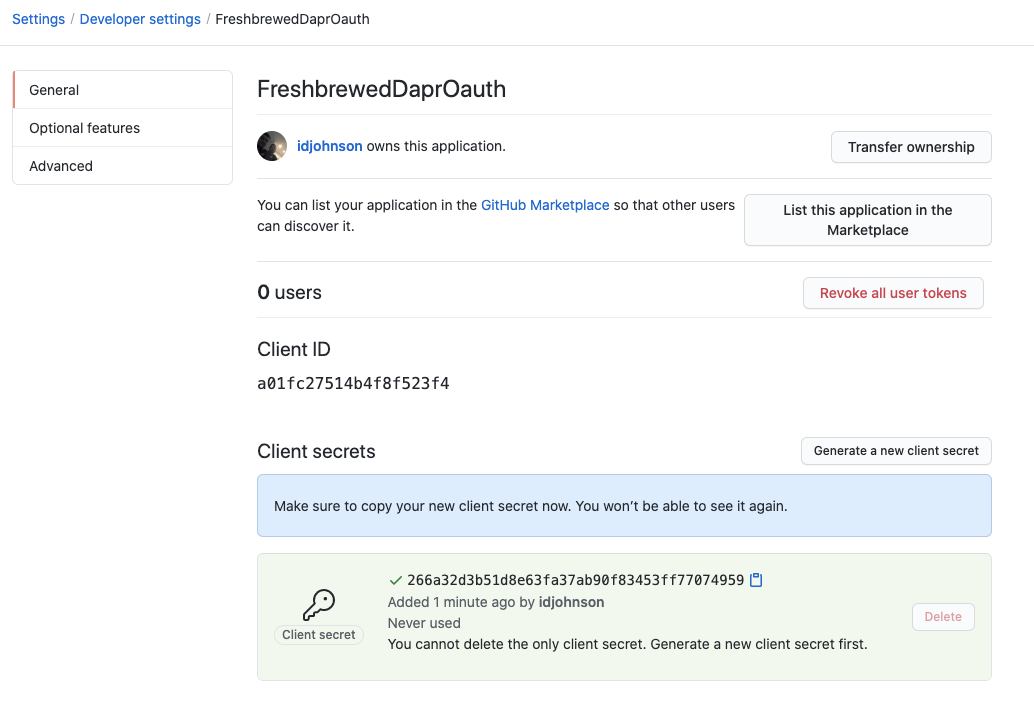

Click Generate a new Client Secret. It is shown just once, so be sure to write it down.

We now have:

Client ID: a01fc27514b4f8f523f4

Client Secret: 266a32d3b51d8e63fa37ab90f83453ff77074959

homepage URL: https://foo4.freshbrewed.science/

callback URL: https://foo4.freshbrewed.science/v1.0/invoke/workflows/method/workflow1

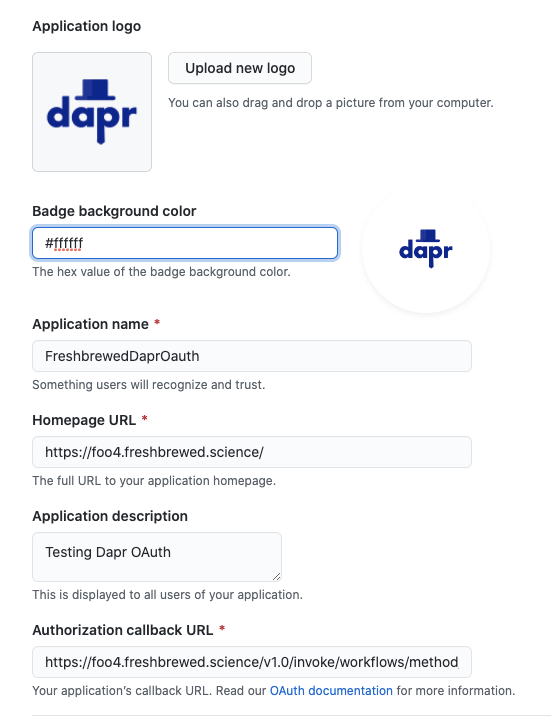

In fact, just to make it look nice, let’s add a custom logo

Now back to our Dapr config

If we omit scopes, GitHub will default to an empty list. If we wanted, we could use something like ‘user’ or ‘repo’ (see details). We may want user details to populate in our app, so let’s set that.

(We could use “user:email” as the scope if we wanted to be more specific.)

apiVersion: dapr.io/v1alpha1
kind: Component
metadata:
  name: oauth2
  namespace: default
spec:
  type: middleware.http.oauth2
  version: v1
  metadata:
  - name: clientId
    value: "a01fc27514b4f8f523f4"
  - name: clientSecret
    value: "266a32d3b51d8e63fa37ab90f83453ff77074959"
  - name: scopes
    value: "user"
  - name: authURL
    value: "https://github.com/login/oauth/authorize"
  - name: tokenURL
    value: "https://github.com/login/oauth/access_token"
  - name: redirectURL
    value: "https://foo4.freshbrewed.science/v1.0/invoke/workflows/method/workflow1"
  - name: authHeaderName
    value: "<header name under which the secret token is saved>"
    # forceHTTPS:
    # This key is used to set HTTPS schema on redirect to your API method
    # after Dapr successfully received Access Token from Identity Provider.
    # By default, Dapr will use HTTP on this redirect.
  - name: forceHTTPS
    value: "true"

We will need to create the Middleware pipeline that uses this

apiVersion: dapr.io/v1alpha1
kind: Configuration
metadata:
  name: pipeline
  namespace: default
spec:
  httpPipeline:
    handlers:
    - name: oauth2
      type: middleware.http.oauth2

And lastly, the Client Credentials Grant component. We get the token URL and determine the auth style from the GitHub Authorizing OAuth docs. I’m adding “state” to the endpointParamsQuery based on the section of that page titled “1. Request a user’s GitHub identity”.

apiVersion: dapr.io/v1alpha1
kind: Component
metadata:
  name: myComponent
spec:
  type: middleware.http.oauth2clientcredentials
  version: v1
  metadata:
  - name: clientId
    value: "a01fc27514b4f8f523f4"
  - name: clientSecret
    value: "266a32d3b51d8e63fa37ab90f83453ff77074959"
  - name: scopes
    value: "user"
  - name: tokenURL
    value: "https://github.com/login/oauth/access_token"
  - name: headerName
    value: "<header name under which the secret token is saved>"
  - name: endpointParamsQuery
    value: "state=asdfasdf"
    # authStyle:
    # "0" means to auto-detect which authentication
    # style the provider wants by trying both ways and caching
    # the successful way for the future.
    # "1" sends the "client_id" and "client_secret"
    # in the POST body as application/x-www-form-urlencoded parameters.
    # "2" sends the client_id and client_password
    # using HTTP Basic Authorization. This is an optional style
    # described in the OAuth2 RFC 6749 section 2.3.1.
  - name: authStyle
    value: "1"
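The authStyle comments in the component are easier to follow with the two explicit styles side by side. The following is a rough sketch, not Dapr's actual implementation: `buildTokenRequest` is a hypothetical helper that only assembles the headers and body a client credentials request would carry, and the credentials in the usage lines are made up.

```javascript
// Sketch of authStyle "1" and "2". Returns the headers and body for a
// POST to the token URL; nothing is sent over the network.
function buildTokenRequest({ clientId, clientSecret, scopes, authStyle }) {
  const body = new URLSearchParams({ grant_type: 'client_credentials' });
  if (scopes.length > 0) body.set('scope', scopes.join(' '));
  const headers = { 'Content-Type': 'application/x-www-form-urlencoded' };

  if (authStyle === '1') {
    // Style "1": credentials travel in the form-encoded POST body.
    body.set('client_id', clientId);
    body.set('client_secret', clientSecret);
  } else if (authStyle === '2') {
    // Style "2": credentials travel in an HTTP Basic Authorization header
    // (RFC 6749 section 2.3.1). encodeURIComponent approximates the
    // form-encoding the RFC asks for before base64.
    const pair = `${encodeURIComponent(clientId)}:${encodeURIComponent(clientSecret)}`;
    headers.Authorization = 'Basic ' + Buffer.from(pair, 'utf8').toString('base64');
  } else {
    throw new Error('this sketch only covers authStyle "1" and "2"');
  }
  return { headers, body: body.toString() };
}

// Hypothetical credentials, for illustration only.
const inBody = buildTokenRequest({ clientId: 'abc', clientSecret: 's3cret', scopes: ['user'], authStyle: '1' });
const inHeader = buildTokenRequest({ clientId: 'abc', clientSecret: 's3cret', scopes: ['user'], authStyle: '2' });
console.log(inBody.body);
console.log(inHeader.headers.Authorization);
```

Style "0" would simply try one of these, fall back to the other, and remember which one the provider accepted.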

We need to create our DNS Entry next. We can pop over to our NS provider to add an A/AAAA record.

Then back on our cluster, let’s create a new cert for foo4

apiVersion: cert-manager.io/v1
kind: Certificate
metadata:
  generation: 1
  name: foo4-fb-science
  namespace: default
spec:
  commonName: foo4.freshbrewed.science
  dnsNames:
  - foo4.freshbrewed.science
  issuerRef:
    kind: ClusterIssuer
    name: letsencrypt-prod
  secretName: foo4.freshbrewed.science-cert

Create and check status

$ kubectl apply -f foo4.cert.yaml

certificate.cert-manager.io/foo4-fb-science created

Here we see the cert and the resulting secret were created

$ kubectl get cert | grep foo4

foo4-fb-science True foo4.freshbrewed.science-cert 33s

$ kubectl get secrets | grep foo4

foo4.freshbrewed.science-cert kubernetes.io/tls 2 5m40s

Next, we need to create our oauth2 component. To start with, let’s just redirect to the app

$ cat deploy/myoauth.yaml

apiVersion: dapr.io/v1alpha1
kind: Component
metadata:
  name: oauth2
  namespace: default
spec:
  type: middleware.http.oauth2
  version: v1
  metadata:
  - name: clientId
    value: "a01fc27514b4f8f523f4"
  - name: clientSecret
    value: "266a32d3b51d8e63fa37ab90f83453ff77074959"
  - name: scopes
    value: "user"
  - name: authURL
    value: "https://github.com/login/oauth/authorize"
  - name: tokenURL
    value: "https://github.com/login/oauth/access_token"
  - name: redirectURL
    value: "https://foo4.freshbrewed.science/"
  - name: authHeaderName
    value: "authorization"
    # forceHTTPS:
    # This key is used to set HTTPS schema on redirect to your API method
    # after Dapr successfully received Access Token from Identity Provider.
    # By default, Dapr will use HTTP on this redirect.
  - name: forceHTTPS
    value: "true"

$ kubectl apply -f deploy/myoauth.yaml

component.dapr.io/oauth2 created

Next we will use the quickstart pipeline configuration (which just adds a tracing block)

$ cat deploy/pipeline.yaml

apiVersion: dapr.io/v1alpha1
kind: Configuration
metadata:
  name: pipeline
spec:
  tracing:
    samplingRate: "1"
    stdout: true
    zipkin:
      endpointAddress: "http://zipkin.default.svc.cluster.local:9411/api/v2/spans"
  httpPipeline:
    handlers:
    - type: middleware.http.oauth2
      name: oauth2

and then deploy….

$ kubectl apply -f deploy/pipeline.yaml

error: error validating "deploy/pipeline.yaml": error validating data: ValidationError(Configuration.spec.tracing): unknown field "stdout" in io.dapr.v1alpha1.Configuration.spec.tracing; if you choose to ignore these errors, turn validation off with --validate=false

The pipeline in the quickstart sets a stdout field under tracing, which must have been deprecated and removed (see docs). So we will use

$ cat deploy/pipeline.yaml

apiVersion: dapr.io/v1alpha1
kind: Configuration
metadata:
  name: pipeline
spec:
  tracing:
    samplingRate: "1"
    zipkin:
      endpointAddress: "http://zipkin.default.svc.cluster.local:9411/api/v2/spans"
  httpPipeline:
    handlers:
    - type: middleware.http.oauth2
      name: oauth2

and apply it

$ kubectl apply -f deploy/pipeline.yaml

configuration.dapr.io/pipeline created

We will use the provided echoapp for now

$ cat deploy/echoapp.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: echoapp
  labels:
    app: echo
spec:
  replicas: 1
  selector:
    matchLabels:
      app: echo
  template:
    metadata:
      labels:
        app: echo
      annotations:
        dapr.io/enabled: "true"
        dapr.io/app-id: "echoapp"
        dapr.io/app-port: "3000"
        dapr.io/config: "pipeline"
    spec:
      containers:
      - name: echo
        image: dapriosamples/middleware-echoapp:latest
        ports:
        - containerPort: 3000
        imagePullPolicy: Always

$ kubectl apply -f deploy/echoapp.yaml

deployment.apps/echoapp created

However we need to change the ingress file from

$ cat deploy/ingress.yaml

apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  annotations:
    kubernetes.io/ingress.class: nginx
  name: echo-ingress
spec:
  rules:
  - http:
      paths:
      - backend:
          serviceName: echoapp-dapr
          servicePort: 80
        path: /

to

$ cat deploy/myingress.yaml

apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  annotations:
    kubernetes.io/ingress.class: nginx
  name: foo4-echo-ingress
spec:
  rules:
  - host: foo4.freshbrewed.science
    http:
      paths:
      - backend:
          serviceName: echoapp-dapr
          servicePort: 80
        path: /
  tls:
  - hosts:
    - foo4.freshbrewed.science
    secretName: foo4.freshbrewed.science-cert

and let’s apply it

$ kubectl apply -f deploy/myingress.yaml

Warning: extensions/v1beta1 Ingress is deprecated in v1.14+, unavailable in v1.22+; use networking.k8s.io/v1 Ingress

ingress.extensions/foo4-echo-ingress created

Testing

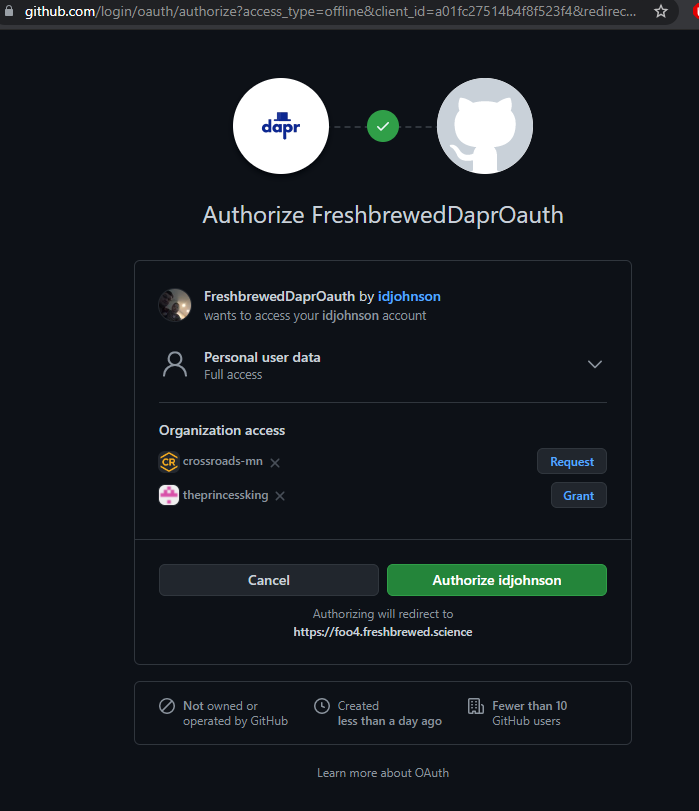

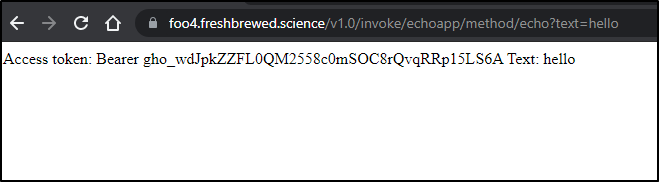

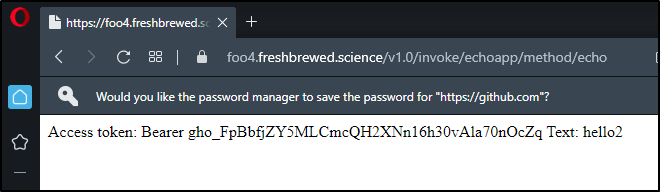

Next let’s test. https://foo4.freshbrewed.science/v1.0/invoke/echoapp/method/echo?text=hello

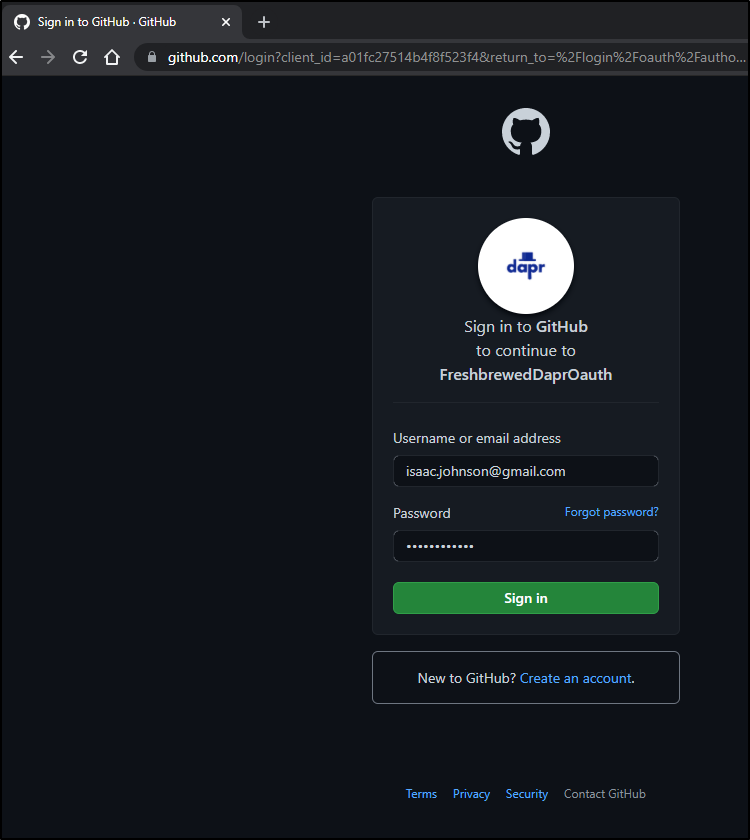

I’m immediately redirected to

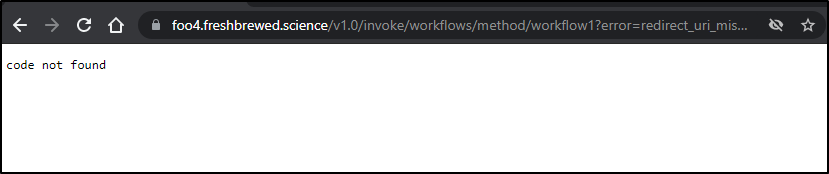

and that results in ..

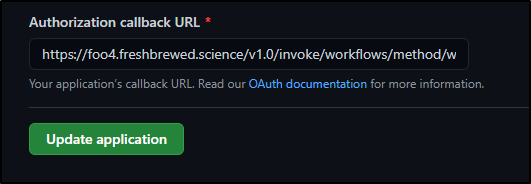

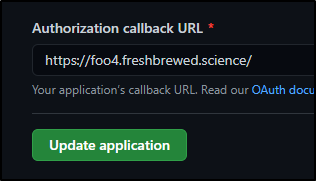

Ah.. here we set up the GitHub app with the assumption that we were going to redirect to a workflow app… we should fix that.

Hop back into Github and the OAuth app

and update the Authorization callback URL

to just https://foo4.freshbrewed.science
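One plausible reading of that first failure is a mismatch between the redirectURL in our Dapr component and the callback registered in GitHub. As a minimal sketch, assuming GitHub's documented rule that a requested redirect must share the callback URL's host and port and point at the callback path or a subdirectory of it, the check looks roughly like this. The function name `redirectAllowed` is hypothetical, and the host comparison is simplified to an exact match.

```javascript
// Sketch of the redirect rule: host and port must match the registered
// callback URL, and the path must sit at or below the callback path.
function redirectAllowed(registeredCallback, redirectUri) {
  const cb = new URL(registeredCallback);
  const ru = new URL(redirectUri);
  // URL.host includes the port when it is not the scheme default.
  if (cb.host !== ru.host) return false;
  // Compare on whole path segments so "/app" does not match "/application".
  const base = cb.pathname.endsWith('/') ? cb.pathname : cb.pathname + '/';
  const path = ru.pathname.endsWith('/') ? ru.pathname : ru.pathname + '/';
  return path.startsWith(base);
}

// Original registration: callback is deeper than the component's redirectURL.
const beforeFix = redirectAllowed(
  'https://foo4.freshbrewed.science/v1.0/invoke/workflows/method/workflow1',
  'https://foo4.freshbrewed.science/'
);
// After updating the callback to the site root.
const afterFix = redirectAllowed(
  'https://foo4.freshbrewed.science',
  'https://foo4.freshbrewed.science/'
);
console.log({ beforeFix, afterFix });
```

Under those assumptions the original registration rejects our redirectURL, while the updated callback accepts it.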

This time, hitting foo4 gives me

but we get an error

but then checking the URL in question works just fine

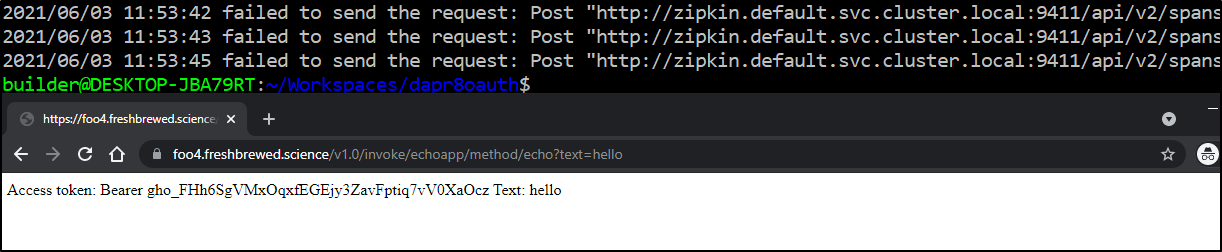

I checked on logs and noticed a slight issue

$ kubectl logs echoapp-c56bfd446-gfrrt daprd | tail -n 10

2021/06/03 11:49:17 failed to send the request: Post "http://zipkin.default.svc.cluster.local:9411/api/v2/spans": dial tcp: lookup zipkin.default.svc.cluster.local on 10.43.0.10:53: no such host

2021/06/03 11:49:18 failed to send the request: Post "http://zipkin.default.svc.cluster.local:9411/api/v2/spans": dial tcp: lookup zipkin.default.svc.cluster.local on 10.43.0.10:53: no such host

2021/06/03 11:49:19 failed to send the request: Post "http://zipkin.default.svc.cluster.local:9411/api/v2/spans": dial tcp: lookup zipkin.default.svc.cluster.local on 10.43.0.10:53: no such host

2021/06/03 11:49:20 failed to send the request: Post "http://zipkin.default.svc.cluster.local:9411/api/v2/spans": dial tcp: lookup zipkin.default.svc.cluster.local on 10.43.0.10:53: no such host

2021/06/03 11:49:21 failed to send the request: Post "http://zipkin.default.svc.cluster.local:9411/api/v2/spans": dial tcp: lookup zipkin.default.svc.cluster.local on 10.43.0.10:53: no such host

2021/06/03 11:49:23 failed to send the request: Post "http://zipkin.default.svc.cluster.local:9411/api/v2/spans": dial tcp: lookup zipkin.default.svc.cluster.local on 10.43.0.10:53: no such host

2021/06/03 11:49:24 failed to send the request: Post "http://zipkin.default.svc.cluster.local:9411/api/v2/spans": dial tcp: lookup zipkin.default.svc.cluster.local on 10.43.0.10:53: no such host

2021/06/03 11:49:25 failed to send the request: Post "http://zipkin.default.svc.cluster.local:9411/api/v2/spans": dial tcp: lookup zipkin.default.svc.cluster.local on 10.43.0.10:53: no such host

2021/06/03 11:49:26 failed to send the request: Post "http://zipkin.default.svc.cluster.local:9411/api/v2/spans": dial tcp: lookup zipkin.default.svc.cluster.local on 10.43.0.10:53: no such host

2021/06/03 11:49:27 failed to send the request: Post "http://zipkin.default.svc.cluster.local:9411/api/v2/spans": dial tcp: lookup zipkin.default.svc.cluster.local on 10.43.0.10:53: no such host

This is because the quickstart config points at a zipkin service that does not exist in our cluster; we route our OpenTelemetry traces to Datadog instead. This is a pretty easy fix.

Fixing Open Telemetry Tracing

Let’s lookup our zipkin endpoint from our other app config

$ kubectl get configuration

NAME AGE

daprsystem 61d

appconfig 48d

pipeline 15m

$ kubectl get configuration appconfig -o yaml | tail -n 10

  resourceVersion: "26038212"
  selfLink: /apis/dapr.io/v1alpha1/namespaces/default/configurations/appconfig
  uid: e775c1d5-7d36-45d6-a8c1-c7c9332b50b1
spec:
  metric:
    enabled: true
  tracing:
    samplingRate: "1"
    zipkin:
      endpointAddress: http://otel-collector.default.svc.cluster.local:9411/api/v2/spans

We can also see that service in our services

$ kubectl get svc | grep otel

otel-collector ClusterIP 10.43.111.31 <none> 9411/TCP 48d

If you don’t recall the name you gave your service, you can just find the one providing 9411 traffic

$ kubectl get svc | grep 9411

otel-collector ClusterIP 10.43.111.31 <none> 9411/TCP 48d

We will update deploy/pipeline.yaml and apply

$ cat deploy/pipeline.yaml

apiVersion: dapr.io/v1alpha1
kind: Configuration
metadata:
  name: pipeline
spec:
  tracing:
    samplingRate: "1"
    zipkin:
      endpointAddress: "http://otel-collector.default.svc.cluster.local:9411/api/v2/spans"
  httpPipeline:
    handlers:
    - type: middleware.http.oauth2
      name: oauth2

$ kubectl apply -f deploy/pipeline.yaml

configuration.dapr.io/pipeline configured

Retrying with an incognito window prompts for GitHub login again and we redirect properly this time. I don’t see new error logs in daprd, so we can assume it is working

I can see the pod is responding just fine

$ kubectl logs echoapp-c56bfd446-gfrrt echo

Node App listening on port 3000!

Echoing: hello

Echoing: hello

Echoing: hello

Since we haven’t instrumented the app, we don’t see traces just yet

In order to actually get some traces, we need to add “metric:enabled” to the pipeline config

$ cat deploy/pipeline.yaml

apiVersion: dapr.io/v1alpha1
kind: Configuration
metadata:
  name: pipeline
spec:
  metric:
    enabled: true
  tracing:
    samplingRate: "1"
    zipkin:
      endpointAddress: "http://otel-collector.default.svc.cluster.local:9411/api/v2/spans"
  httpPipeline:
    handlers:
    - type: middleware.http.oauth2
      name: oauth2

$ kubectl apply -f deploy/pipeline.yaml

configuration.dapr.io/pipeline configured

Let’s try with another browser to trigger the middleware pipeline again

$ kubectl logs echoapp-c56bfd446-gfrrt echo | tail -n1

Echoing: hello2

The collector logs come up empty…

$ kubectl logs otel-collector-67f645b9b7-ftvhg | grep pipeline

$ kubectl logs otel-collector-67f645b9b7-ftvhg | grep echo

Perhaps the lack of traces comes from the pod not recycling after update.

Let’s do that.

$ kubectl delete pod echoapp-c56bfd446-gfrrt

pod "echoapp-c56bfd446-gfrrt" deleted

$ kubectl get pods -l app=echo

NAME READY STATUS RESTARTS AGE

echoapp-c56bfd446-m8t9x 2/2 Running 0 54s

I hit the URL again

https://foo4.freshbrewed.science/v1.0/invoke/echoapp/method/echo?text=hello2

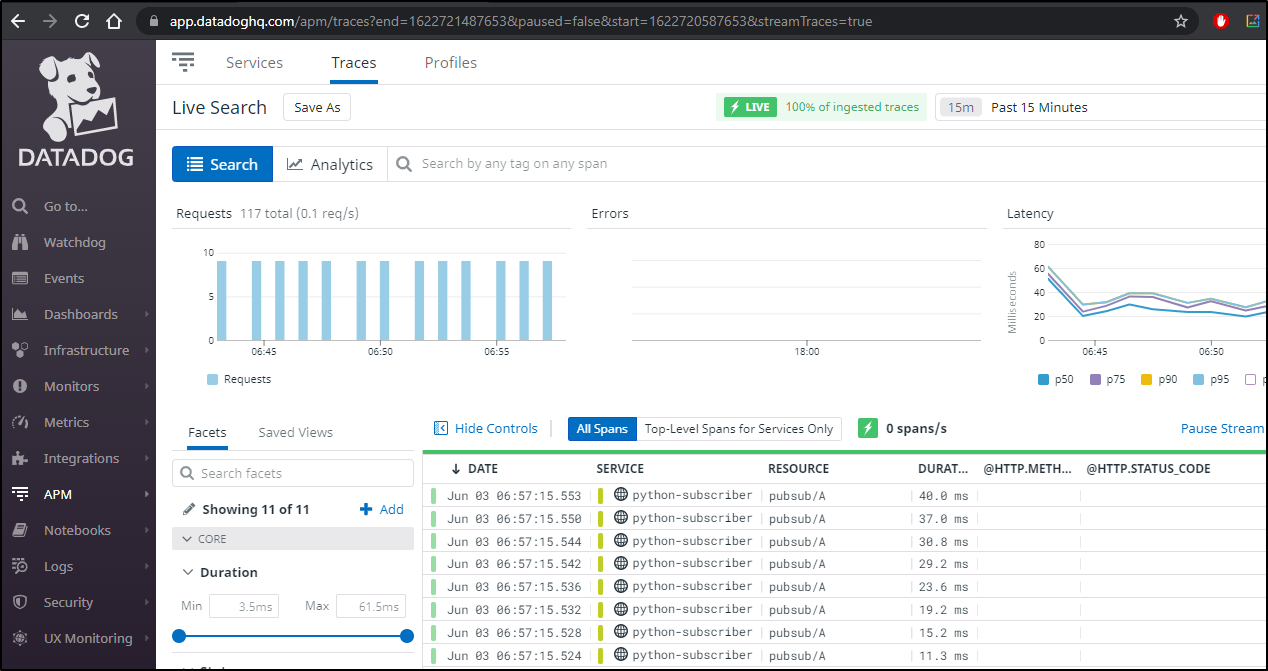

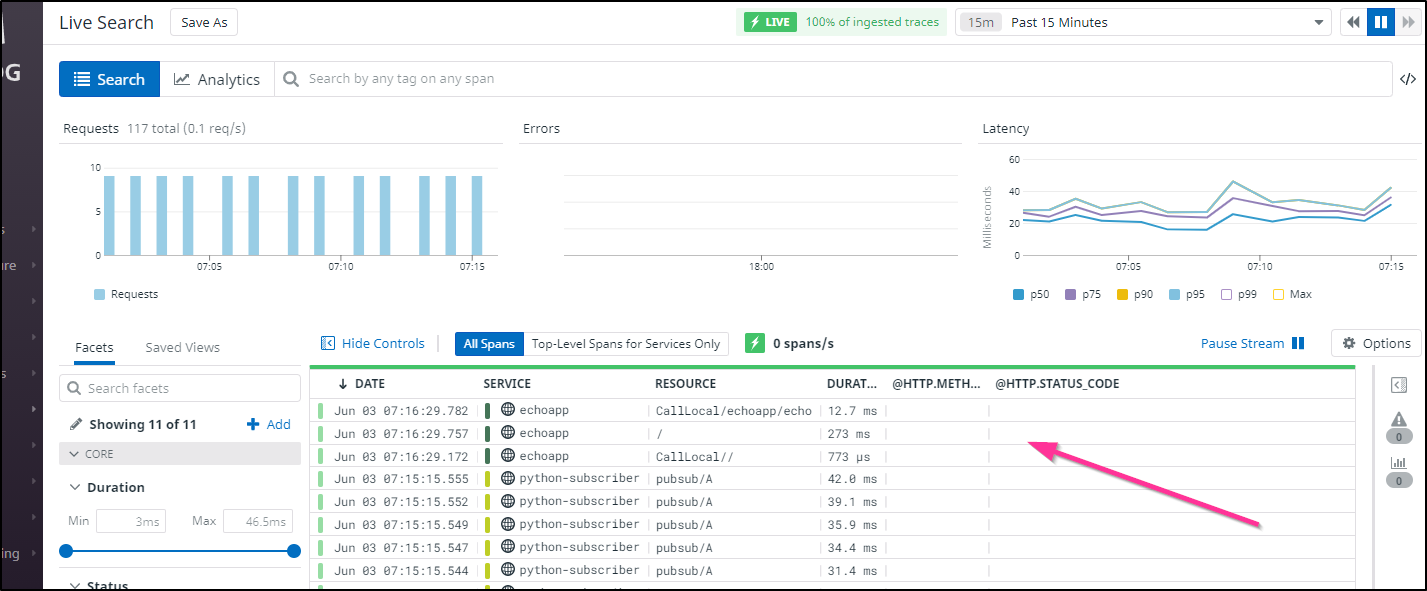

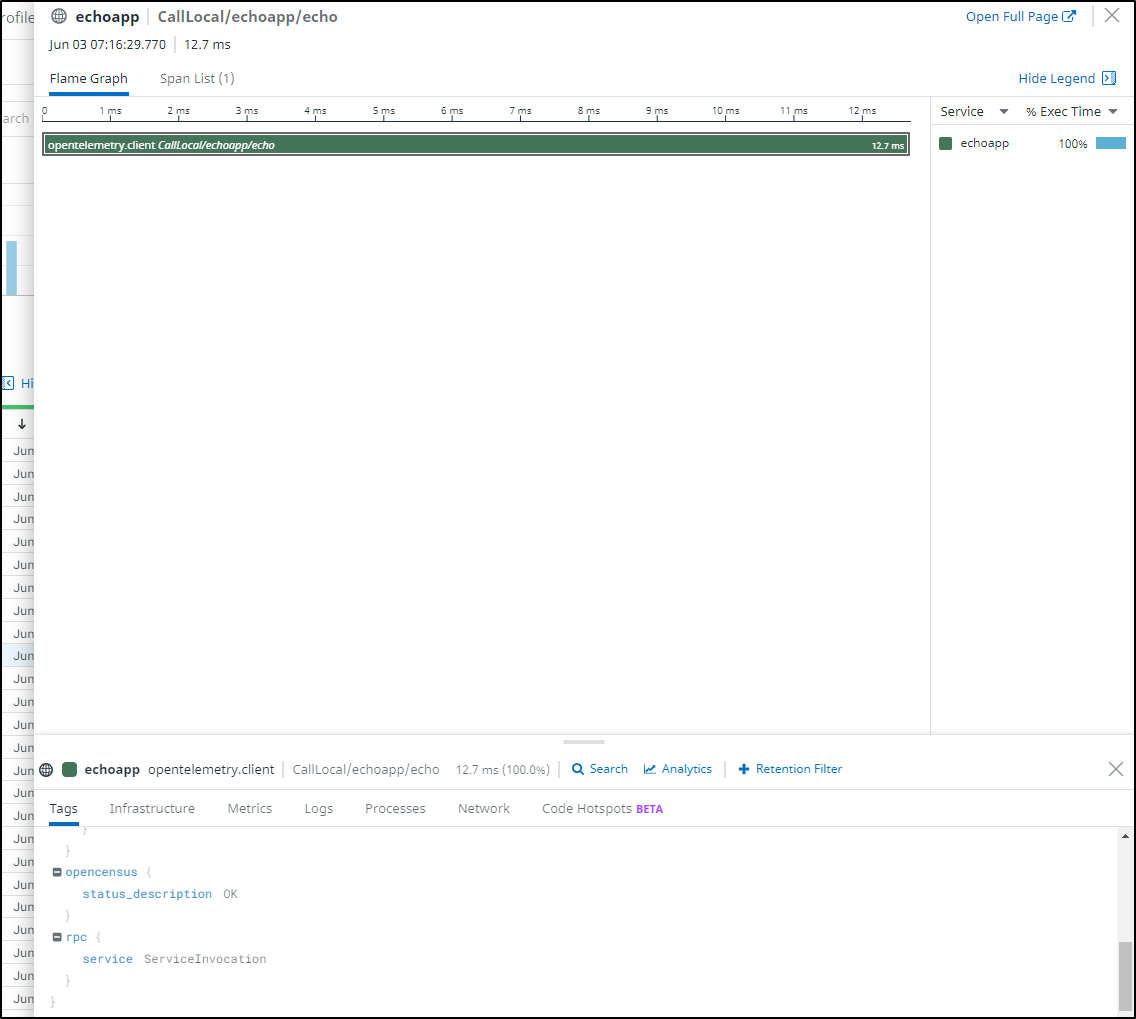

And this time I saw Traces!

We don’t see many details, after all, it’s just a simple echo app, but we can explore the trace

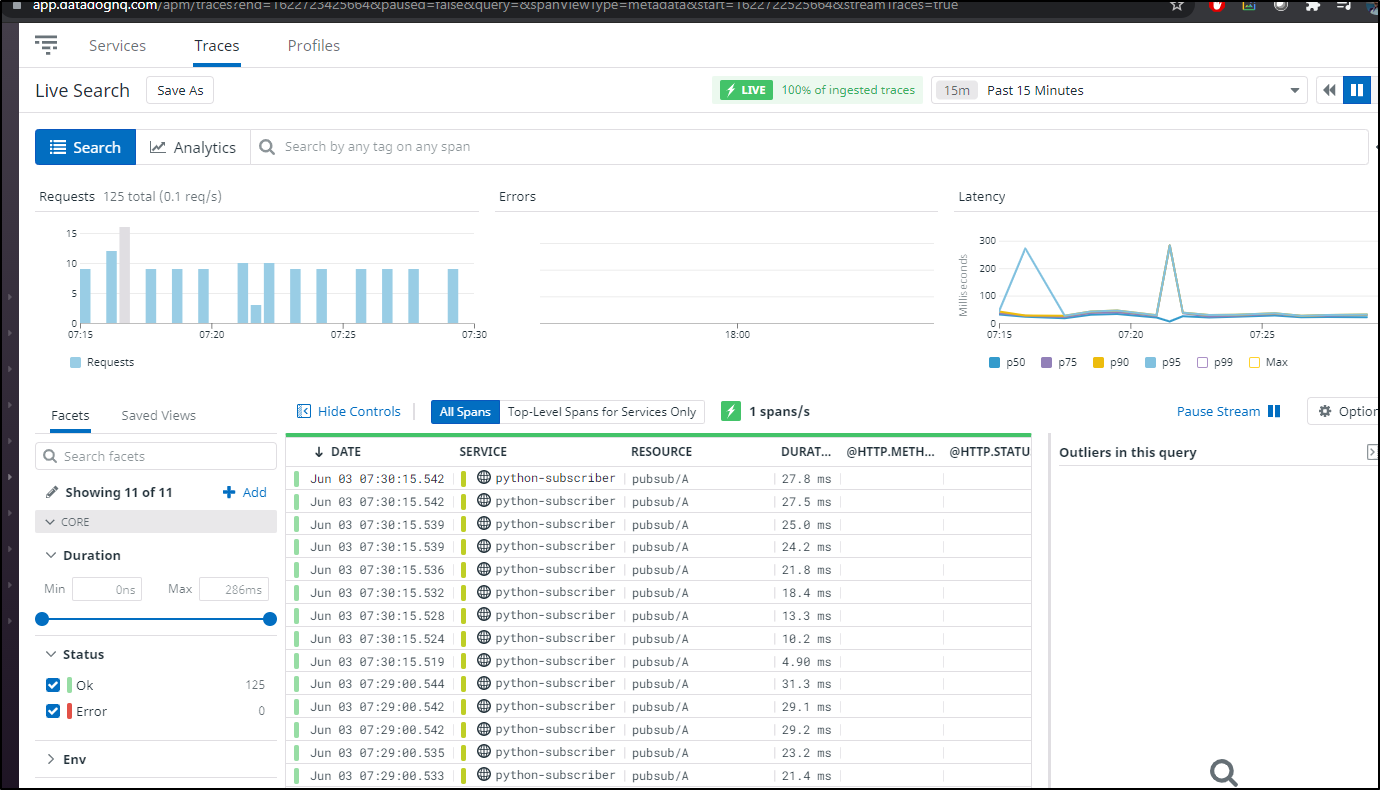

Fixing Pubsub issues

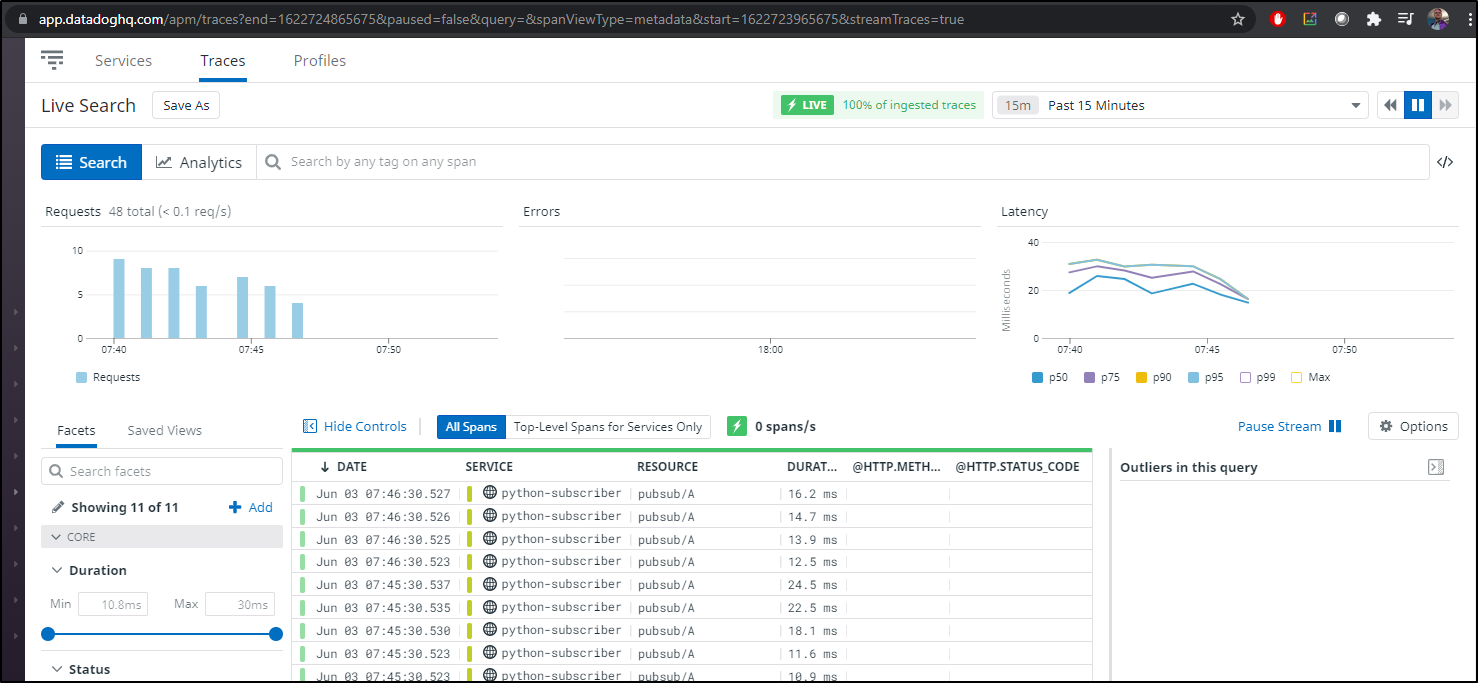

Let’s pause a second and fix a problem. We can see the python-subscriber is going a bit nuts on pubsub/A

This makes me think we likely shoved some bad values into the Redis stream that Dapr is using for pubsub.

We can log in to Redis. While I’m sure my bash-fu is a bit lousy, here is my quick way to base64-decode the password

$ kubectl get secret redis -o yaml | grep redis-password: | head -n1 | sed "s/^.*: //" | base64 --decode

bm9wZSwgbm90IHJlYWwK
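For readers who prefer not to chain grep and sed, the decode step itself is small. This is a minimal sketch, assuming the encoded value has already been copied out of the Secret's `data` field; `decodeSecretValue` is a hypothetical helper name. As it happens, the placeholder string shown above is itself valid base64, so it makes a handy test input.

```javascript
// Kubernetes stores Secret values base64-encoded under .data;
// this decodes a single value back to text.
function decodeSecretValue(encoded) {
  return Buffer.from(encoded, 'base64').toString('utf8');
}

// JSON.stringify makes the trailing newline visible.
console.log(JSON.stringify(decodeSecretValue('bm9wZSwgbm90IHJlYWwK')));
// prints "nope, not real\n"
```

A trailing newline like that one is worth trimming before using the value as a password.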

I can then login and check my streams

$ kubectl exec -it redis-master-0 -- redis-cli -a bm9wZSwgbm90IHJlYWwK

Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.

127.0.0.1:6379> KEYS ?

1) "C"

2) "A"

3) "B"

I can see the type is a stream (not a plain string value)

127.0.0.1:6379> type A

stream

and then see its present length

127.0.0.1:6379> xlen A

(integer) 43

127.0.0.1:6379> xlen B

(integer) 15

127.0.0.1:6379> xlen C

(integer) 14

we can do a full stream range check

127.0.0.1:6379> XRANGE A - +

1) 1) "1617372490883-0"

2) 1) "data"

…snip...

43) 1) "1622467490946-0"

2) 1) "data"

2) "{\"datacontenttype\":\"application/json\",\"source\":\"react-form\",\"type\":\"com.dapr.event.sent\",\"data\":{\"messageType\":\"A\",\"message\":\"From Pipeline\"},\"id\":\"8c2df069-1b57-41d2-9ec4-09187b9b2521\",\"specversion\":\"1.0\",\"topic\":\"A\",\"pubsubname\":\"pubsub\",\"traceid\":\"00-e16d17f8db5d66222405677930951124-5fc2aa7ae5a5dd74-01\"}"

(I could print the whole stream, but it’s pretty clear it’s full of some nonsense)
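When hunting for bad entries, it can help to check whether each entry's `data` field is at least parseable. Below is a small sketch using the last entry from the XRANGE output above as input; `summarizeEntry` is a hypothetical helper, and the field names it reads (`topic`, `source`, `data`) are simply the ones visible in that entry.

```javascript
// The "data" field of entry 1622467490946-0, copied from the XRANGE output.
const rawData = '{"datacontenttype":"application/json","source":"react-form","type":"com.dapr.event.sent","data":{"messageType":"A","message":"From Pipeline"},"id":"8c2df069-1b57-41d2-9ec4-09187b9b2521","specversion":"1.0","topic":"A","pubsubname":"pubsub","traceid":"00-e16d17f8db5d66222405677930951124-5fc2aa7ae5a5dd74-01"}';

// Parse one stream entry and report whether it looks like a usable event.
function summarizeEntry(id, data) {
  let event;
  try {
    event = JSON.parse(data);
  } catch (err) {
    return { id, valid: false, reason: err.message };
  }
  return { id, valid: true, topic: event.topic, source: event.source, payload: event.data };
}

console.log(summarizeEntry('1622467490946-0', rawData));
console.log(summarizeEntry('hypothetical-bad-entry', '{not json'));
```

An entry that fails to parse here is a reasonable suspect for whatever the subscriber keeps choking on.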

We can also see there are 9 pending queued messages trying to get to python

127.0.0.1:6379> XINFO GROUPS A

1) 1) "name"

2) "node-subscriber"

3) "consumers"

4) (integer) 1

5) "pending"

6) (integer) 0

7) "last-delivered-id"

8) "1622467490946-0"

2) 1) "name"

2) "perl-subscriber"

3) "consumers"

4) (integer) 1

5) "pending"

6) (integer) 0

7) "last-delivered-id"

8) "1622467490946-0"

3) 1) "name"

2) "python-subscriber"

3) "consumers"

4) (integer) 1

5) "pending"

6) (integer) 9

7) "last-delivered-id"

8) "1622467490946-0"

127.0.0.1:6379> xpending A python-subscriber

1) (integer) 9

2) "1622420782029-0"

3) "1622421355904-0"

4) 1) 1) "python-subscriber"

2) "9"
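The two IDs in the XPENDING summary are the oldest and newest pending entries. Redis stream IDs have the form `<milliseconds>-<sequence>`, so they can be turned back into timestamps. A minimal sketch (`parseStreamId` is a hypothetical helper name):

```javascript
// Split a Redis stream ID into its millisecond timestamp and sequence
// number, and render the timestamp as an ISO 8601 string (UTC).
function parseStreamId(id) {
  const [ms, seq] = id.split('-').map(Number);
  return { ms, seq, time: new Date(ms).toISOString() };
}

const oldest = parseStreamId('1622420782029-0');
const newest = parseStreamId('1622421355904-0');
console.log(oldest.time); // 2021-05-31T00:26:22.029Z
console.log(newest.time); // 2021-05-31T00:35:55.904Z
```

So the nine stuck messages were all published within roughly a ten minute window.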

We can now acknowledge (XACK) the pending entries for python-subscriber, which clears them from that group’s pending list. I did a few at a time, then did the rest…

127.0.0.1:6379> xpending A python-subscriber - + 6

1) 1) "1622421348106-0"

2) "python-subscriber"

3) (integer) 44633

4) (integer) 4214

2) 1) "1622421348112-0"

2) "python-subscriber"

3) (integer) 44633

4) (integer) 4214

3) 1) "1622421348120-0"

2) "python-subscriber"

3) (integer) 44633

4) (integer) 4214

4) 1) "1622421355882-0"

2) "python-subscriber"

3) (integer) 44633

4) (integer) 4214

5) 1) "1622421355887-0"

2) "python-subscriber"

3) (integer) 44633

4) (integer) 4214

127.0.0.1:6379>

127.0.0.1:6379> xack A python-subscriber 1622421348106-0 1622421348112-0 1622421348120-0 1622421355882-0 1622421355887-0

(integer) 5

127.0.0.1:6379> xpending A python-subscriber - + 6

(empty array)
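Typing the IDs by hand gets tedious with longer pending lists. As a sketch of how the XACK line could be generated, the helper below takes entries in the shape the extended XPENDING form returns (ID, consumer, idle milliseconds, delivery count) and emits a command for those redelivered at least a given number of times. `buildXack` and the threshold of 100 are hypothetical; the sample rows are copied from the output above.

```javascript
// Build an XACK command for pending entries whose delivery count has
// reached minDeliveries. Returns null when nothing qualifies.
function buildXack(stream, group, pending, minDeliveries) {
  const ids = pending
    .filter(([, , , deliveries]) => deliveries >= minDeliveries)
    .map(([id]) => id);
  return ids.length === 0 ? null : ['XACK', stream, group, ...ids].join(' ');
}

// Rows: [id, consumer, idleMs, deliveryCount]
const pendingRows = [
  ['1622421348106-0', 'python-subscriber', 44633, 4214],
  ['1622421348112-0', 'python-subscriber', 44633, 4214],
];
const xackCommand = buildXack('A', 'python-subscriber', pendingRows, 100);
console.log(xackCommand);
```

The resulting line can be pasted into redis-cli. Keep in mind that XACK only drops entries from the group's pending list; the messages themselves stay in the stream.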

We can now see the pending entries for python-subscriber are cleared out

127.0.0.1:6379> xinfo groups A

1) 1) "name"

2) "node-subscriber"

3) "consumers"

4) (integer) 1

5) "pending"

6) (integer) 0

7) "last-delivered-id"

8) "1622467490946-0"

2) 1) "name"

2) "perl-subscriber"

3) "consumers"

4) (integer) 1

5) "pending"

6) (integer) 0

7) "last-delivered-id"

8) "1622467490946-0"

3) 1) "name"

2) "python-subscriber"

3) "consumers"

4) (integer) 1

5) "pending"

6) (integer) 0

7) "last-delivered-id"

8) "1622467490946-0"

Validation

We can watch Datadog and see the traces trail off…

Next steps

We have a basic echo app :

// ------------------------------------------------------------
// Copyright (c) Microsoft Corporation.
// Licensed under the MIT License.
// ------------------------------------------------------------

const express = require('express');
const bodyParser = require('body-parser');

const app = express();
app.use(bodyParser.json());

const daprPort = process.env.DAPR_HTTP_PORT || 3500;
const port = 3000;

app.get('/echo', (req, res) => {
    var text = req.query.text;
    console.log("Echoing: " + text);
    res.send("Access token: " + req.headers["authorization"] + " Text: " + text);
});

app.listen(port, () => console.log(`Node App listening on port ${port}!`));

As we have the authorization token and since our oauth2 component asked for the user scope (which includes user:email)

- name: scopes
  value: "user"

we should be able to pull the email address back.. but we’ll save that for next time…
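As a preview of what that might look like, here is a rough sketch, not something tested against this deployment. It assumes Node 18+ (for the global `fetch`), that the `authorization` header holds either a bare token or a value like `Bearer <token>`, and that the token is allowed to call GitHub's `GET /user/emails` endpoint. The helper names are hypothetical.

```javascript
// Strip an optional token-type prefix such as "Bearer " from the header.
function extractToken(headerValue) {
  const parts = String(headerValue || '').trim().split(/\s+/);
  return parts[parts.length - 1];
}

// Pick the primary, verified address from a /user/emails response body.
function pickPrimaryEmail(emails) {
  const hit = emails.find((e) => e.primary && e.verified);
  return hit ? hit.email : null;
}

// Not invoked here: it needs a real token and network access.
async function fetchPrimaryEmail(headerValue) {
  const res = await fetch('https://api.github.com/user/emails', {
    headers: {
      Authorization: `Bearer ${extractToken(headerValue)}`,
      Accept: 'application/vnd.github+json',
      'User-Agent': 'echoapp-sketch', // GitHub's API expects a User-Agent
    },
  });
  if (!res.ok) throw new Error(`GitHub API returned ${res.status}`);
  return pickPrimaryEmail(await res.json());
}

// Offline check with made-up data shaped like the API response.
const sampleEmails = [
  { email: 'old@example.com', primary: false, verified: true },
  { email: 'me@example.com', primary: true, verified: true },
];
console.log(pickPrimaryEmail(sampleEmails));
```

In the echo app, `fetchPrimaryEmail(req.headers["authorization"])` could be awaited inside the `/echo` handler and the result added to the response.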

Summary

We examined using Middleware Pipelines with GitHub to authenticate against an external IdP using Dapr (instead of coding an OAuth flow ourselves). We used a simple echoapp and verified we could tie it into OpenTelemetry for tracing in Datadog.

Additionally, noting noisy errors in our python subscriber via Datadog APM, we used Redis CLI in Kubernetes to find and clear out a problematic Pubsub stream.

The nice aspect of this is that while coding OAuth in Express or Spring Boot is straightforward, coding OAuth flows in every app and every language that might need it would cause complications and lots of extra work.

Here we have a single pipeline that can be leveraged with a single auth flow, and moreover we could easily change it with just a Dapr annotation. By having multiple pipelines, it would be easy to support multiple OAuth flows. Our app code would just assume it gets a proper header with token details.

Additionally, rotating our secret, something one should do periodically, is just a matter of updating the oauth component (and, as we saw with the pipeline config, possibly recycling the pod so the sidecar picks up the change).

$ kubectl get component oauth2 -o yaml > oauth_dapr.yaml

$ vi oauth_dapr.yaml

$ kubectl apply -f oauth_dapr.yaml

component.dapr.io/oauth2 configured