Published: Jan 20, 2021 by Isaac Johnson

HashiCorp has had the Terraform Cloud offering for about a year now. It’s been something I have really wanted to try since HC 2019. Interest was so high at that time that there was a wait-list, but now it’s fairly easy to sign up. What is Terraform Cloud, and how does it compare to remote state in cloud storage (such as the azurerm backend, which I often use)? Let’s dig in and find out.

Setup

First we need to create an account.

Go to terraform.io/cloud to sign up

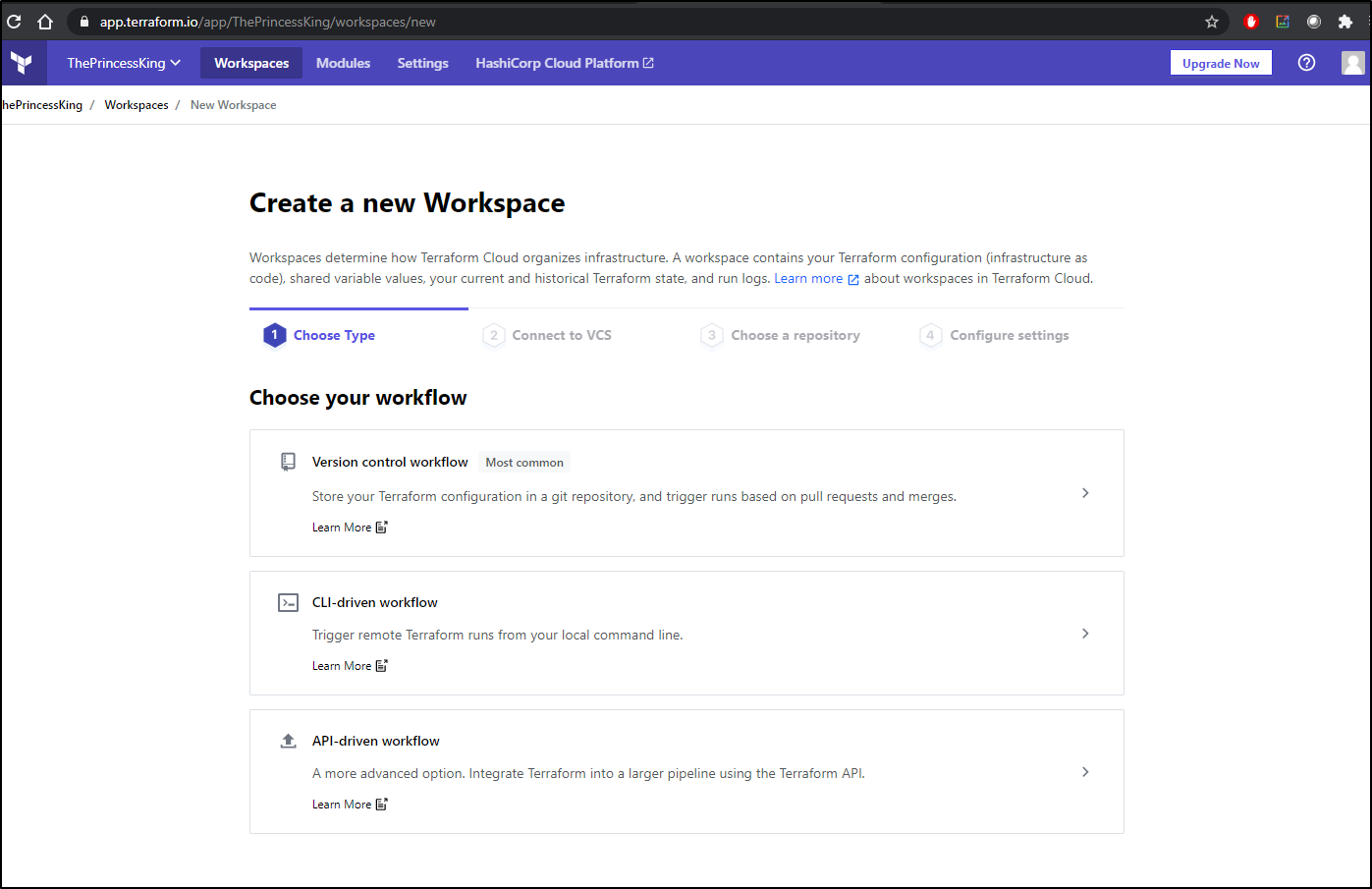

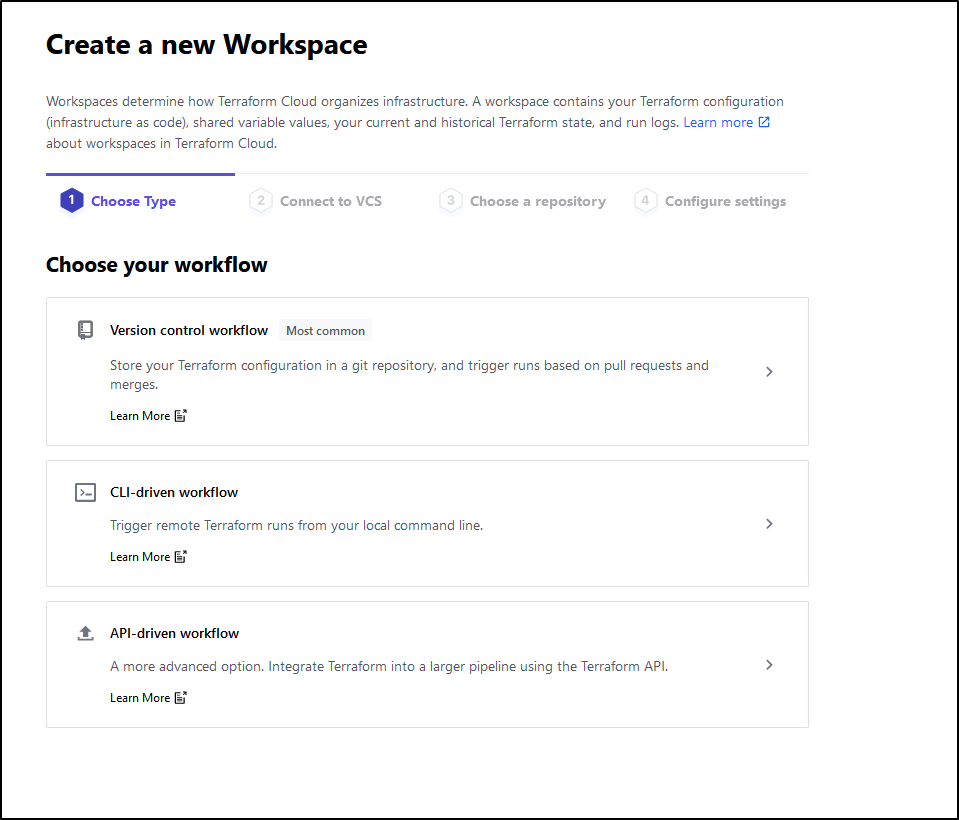

Once we confirm our email and create an organization, we can move onto creating a new Workspace:

VCS Integration

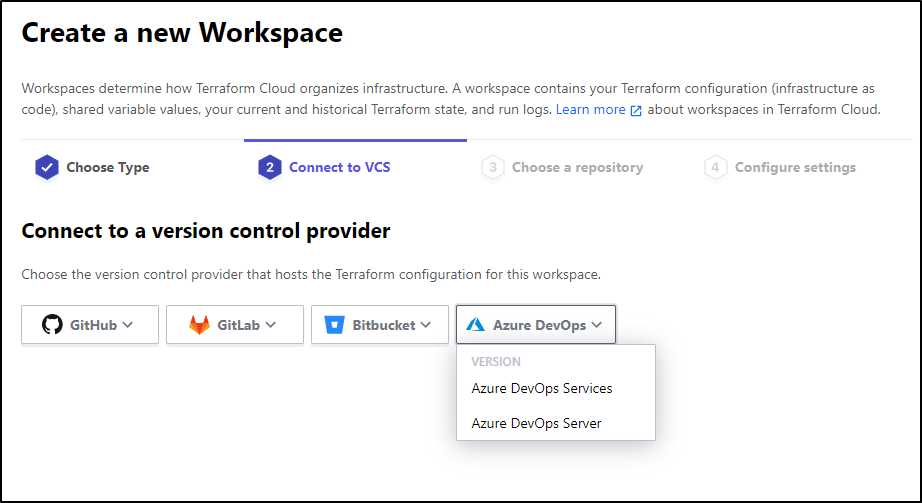

Let’s start with VCS integration.

_Note: I will show all the ways I tried to get VCS to work, none of which were successful. However, I did succeed with the API flow, so skip ahead for what worked, or enjoy the ride of trying to get the **** thing to work in my AzDO._

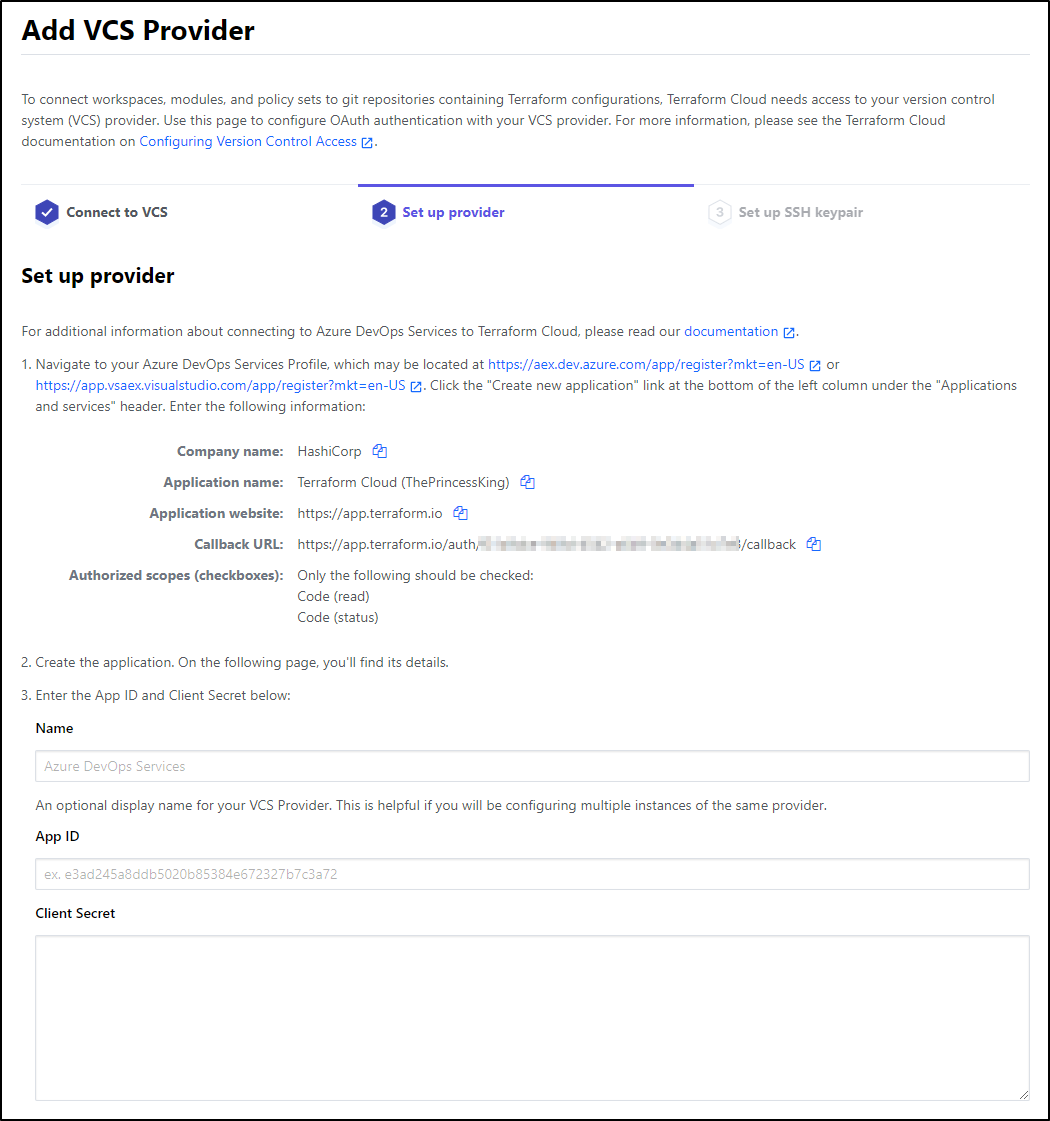

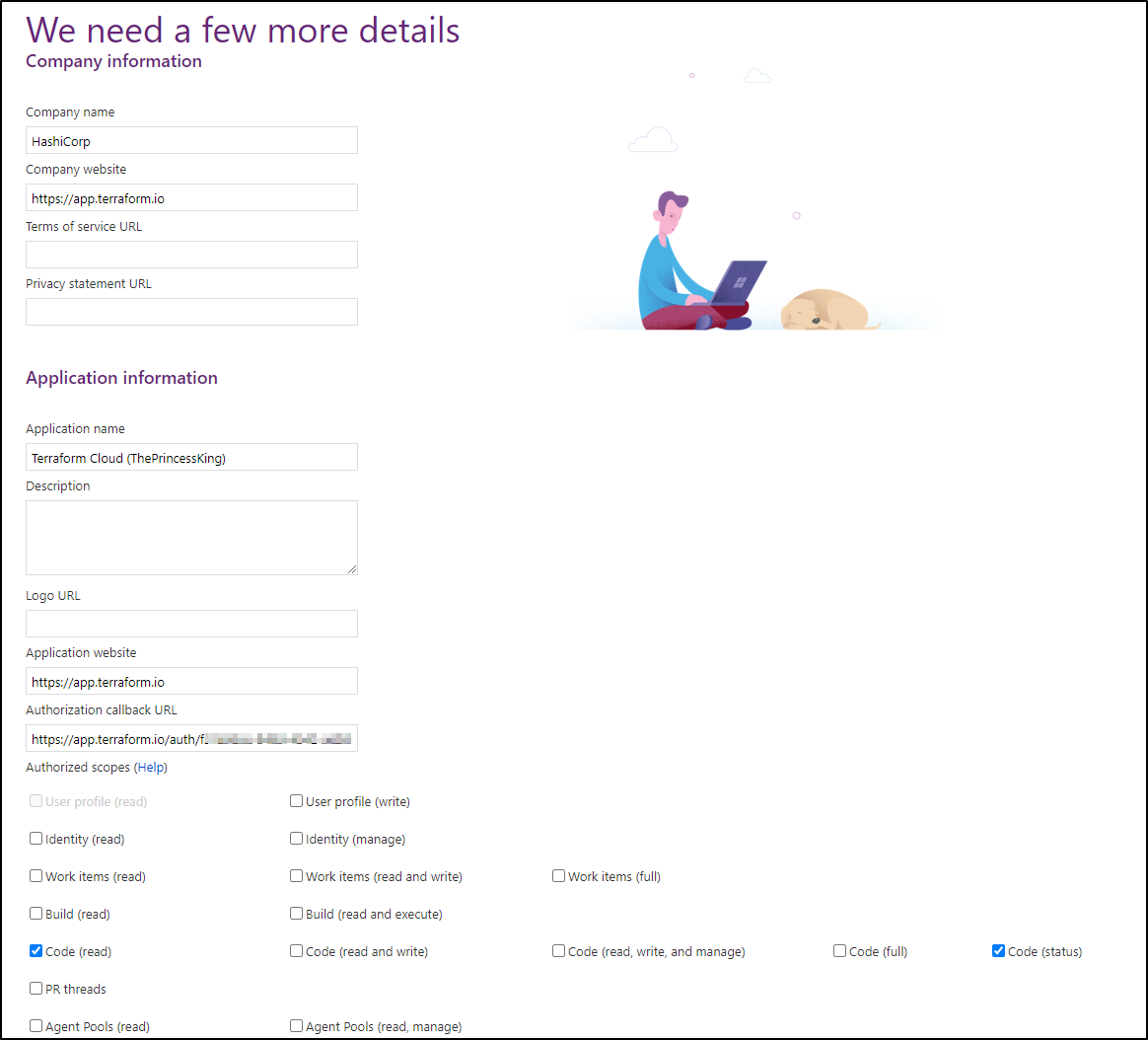

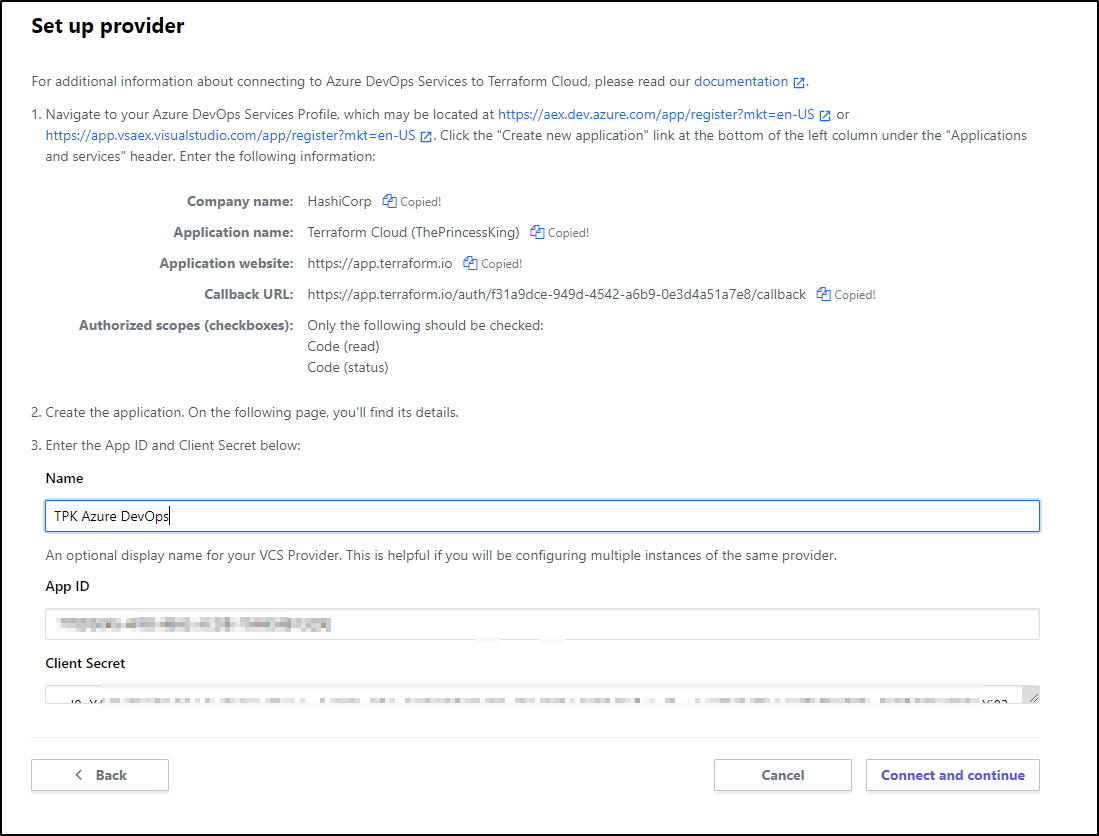

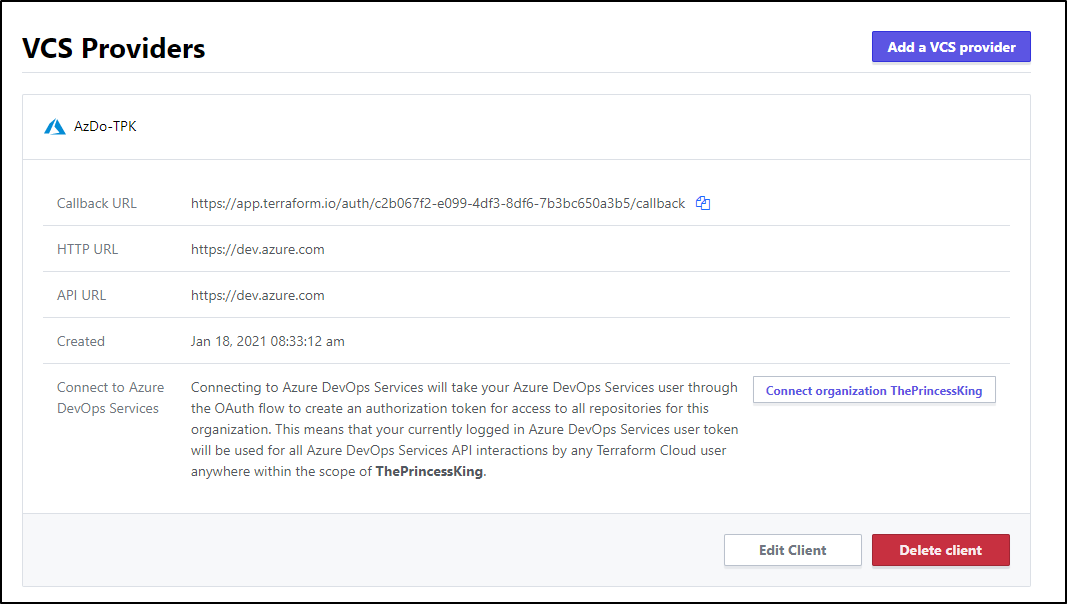

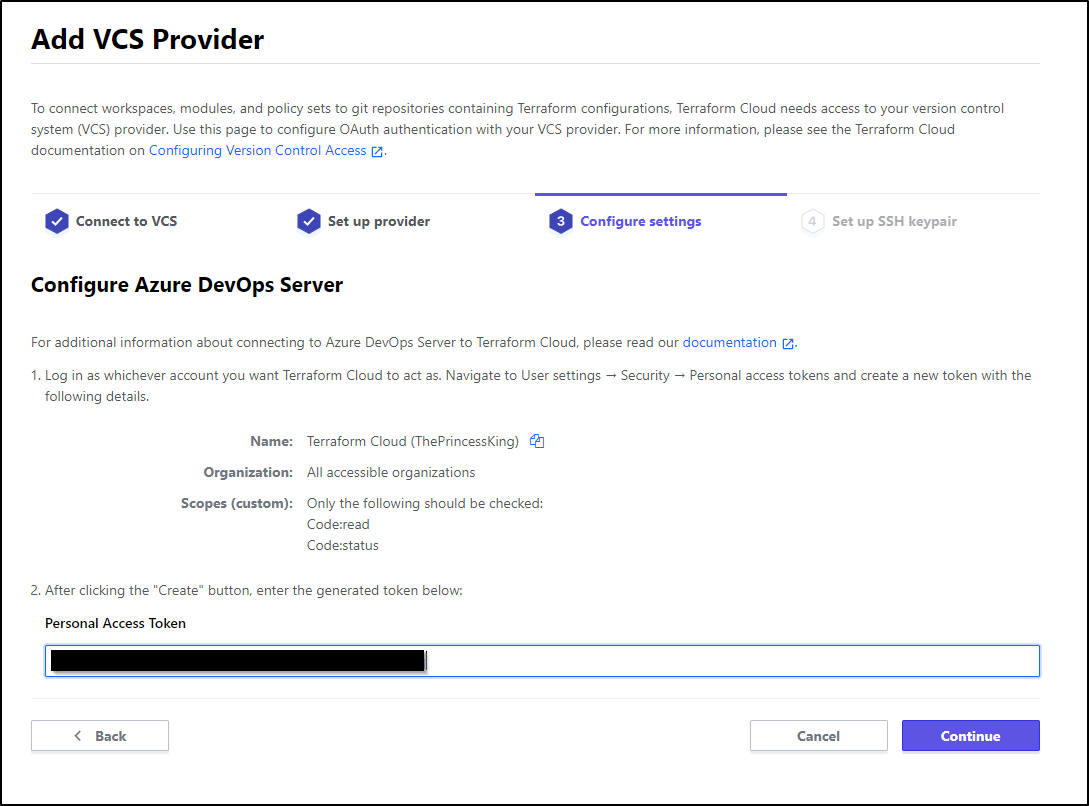

Next we set up the service integration by following the link https://aex.dev.azure.com/app/register?mkt=en-US

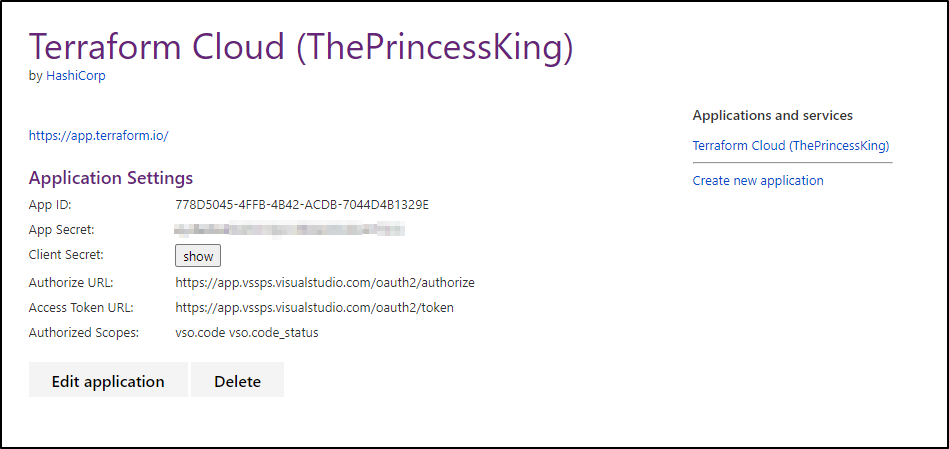

Creating the app gives us back the app ID and secret.

Now come back and copy those values over into TF Cloud.

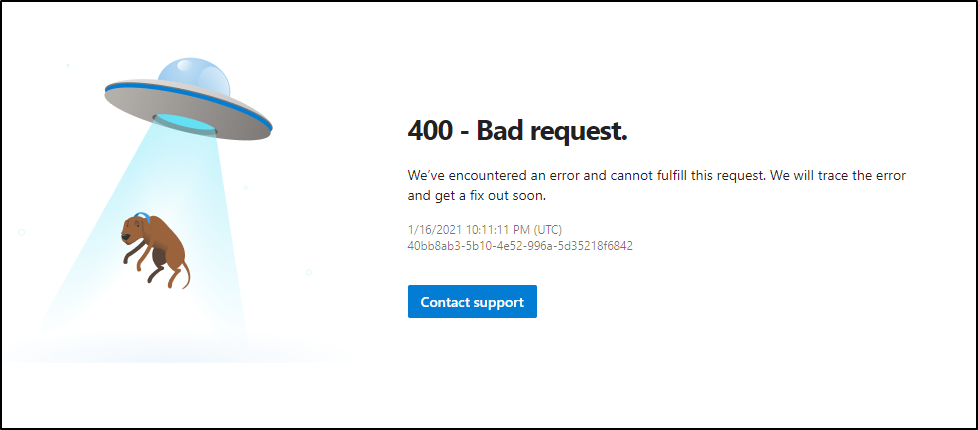

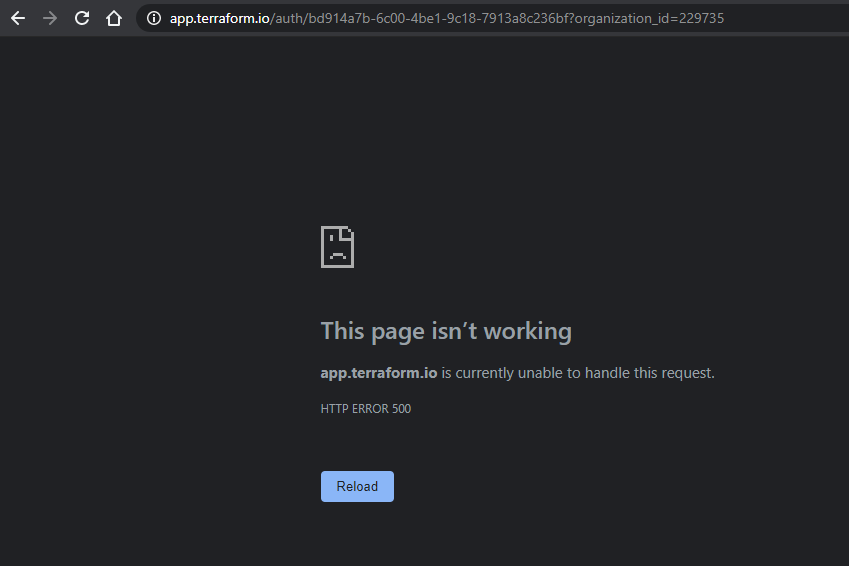

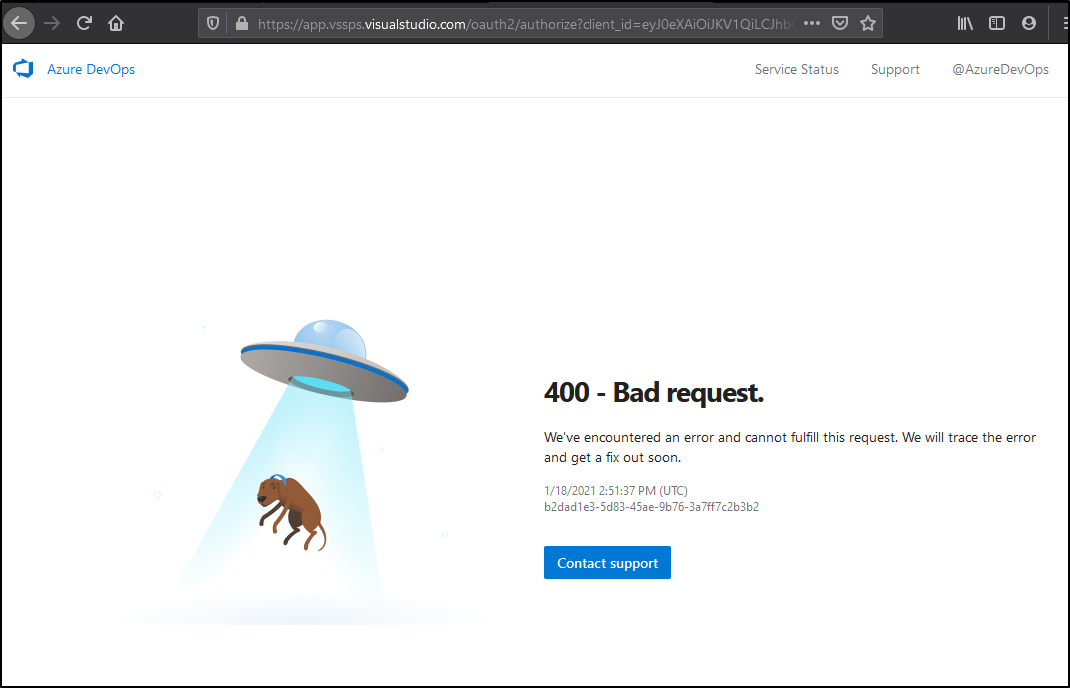

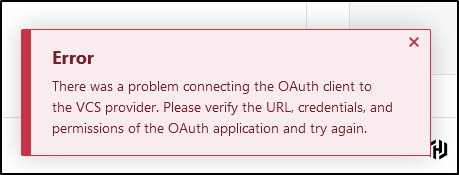

I made several more attempts at the VCS integration, including one from Firefox, and none of them worked.

API Driven

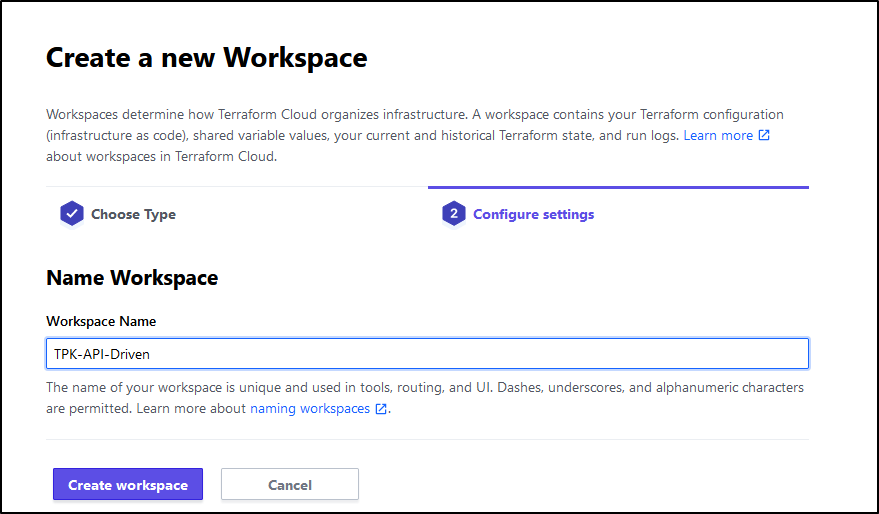

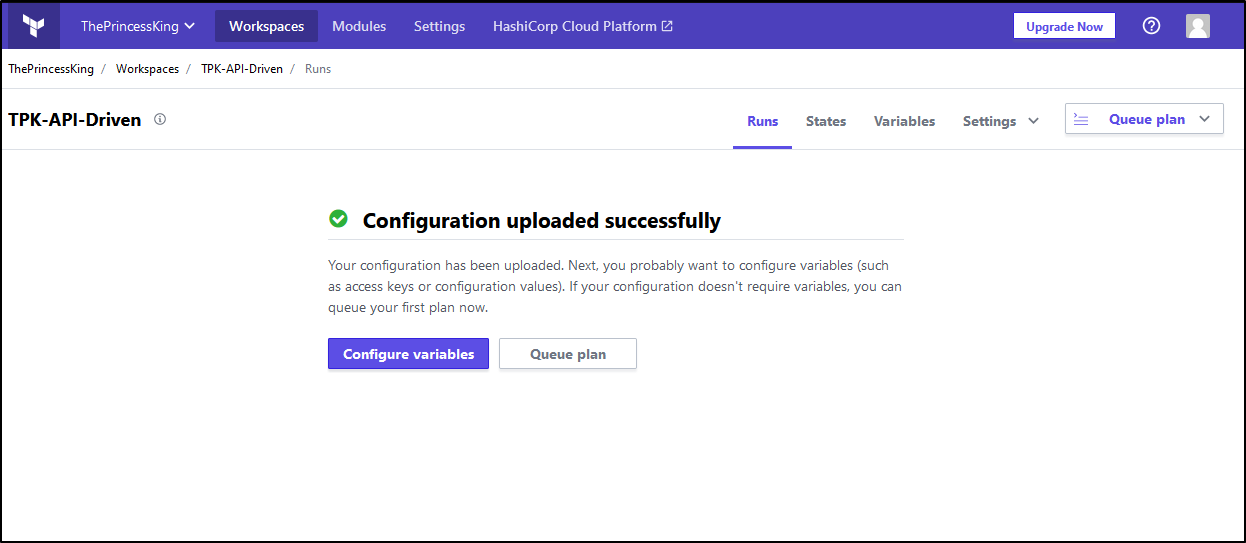

This time we will use the API-driven workflow.

Give it a Workspace name.

Next we need to set up a remote backend block.

Let’s first do this locally, then move to AzDO.

I’ll follow the guide here https://learn.hashicorp.com/tutorials/terraform/install-cli to set up Terraform in my WSL Bash shell:

builder@DESKTOP-JBA79RT:~/Workspaces$ mkdir tfCloudProject

builder@DESKTOP-JBA79RT:~/Workspaces$ cd tfCloudProject/

builder@DESKTOP-JBA79RT:~/Workspaces/tfCloudProject$ curl -fsSL https://apt.releases.hashicorp.com/gpg | sudo apt-key add -

[sudo] password for builder:

OK

builder@DESKTOP-JBA79RT:~/Workspaces/tfCloudProject$ sudo apt-add-repository "deb [arch=amd64] https://apt.releases.hashicorp.com $(lsb_release -cs) main"

Get:1 https://apt.releases.hashicorp.com bionic InRelease [4421 B]

Get:2 https://apt.releases.hashicorp.com bionic/main amd64 Packages [17.5 kB]

Get:3 https://packages.microsoft.com/repos/azure-cli bionic InRelease [3964 B]

Get:4 https://packages.microsoft.com/ubuntu/18.04/prod bionic InRelease [4003 B]

Hit:5 http://archive.ubuntu.com/ubuntu bionic InRelease

Get:6 https://packages.microsoft.com/repos/azure-cli bionic/main amd64 Packages [12.2 kB]

Get:7 http://security.ubuntu.com/ubuntu bionic-security InRelease [88.7 kB]

Get:8 https://packages.microsoft.com/ubuntu/18.04/prod bionic/main amd64 Packages [155 kB]

Get:9 http://archive.ubuntu.com/ubuntu bionic-updates InRelease [88.7 kB]

Get:10 http://archive.ubuntu.com/ubuntu bionic-backports InRelease [74.6 kB]

Get:11 http://security.ubuntu.com/ubuntu bionic-security/main amd64 Packages [1506 kB]

Get:12 http://archive.ubuntu.com/ubuntu bionic-updates/main amd64 Packages [1843 kB]

Get:13 http://security.ubuntu.com/ubuntu bionic-security/main Translation-en [292 kB]

Get:14 http://security.ubuntu.com/ubuntu bionic-security/restricted amd64 Packages [219 kB]

Get:15 http://archive.ubuntu.com/ubuntu bionic-updates/main Translation-en [384 kB]

Get:16 http://security.ubuntu.com/ubuntu bionic-security/restricted Translation-en [28.8 kB]

Get:17 http://archive.ubuntu.com/ubuntu bionic-updates/restricted amd64 Packages [237 kB]

Get:18 http://security.ubuntu.com/ubuntu bionic-security/universe amd64 Packages [1098 kB]

Get:19 http://archive.ubuntu.com/ubuntu bionic-updates/restricted Translation-en [31.8 kB]

Get:20 http://security.ubuntu.com/ubuntu bionic-security/universe Translation-en [245 kB]

Get:21 http://archive.ubuntu.com/ubuntu bionic-updates/universe amd64 Packages [1704 kB]

Get:22 http://security.ubuntu.com/ubuntu bionic-security/multiverse amd64 Packages [12.5 kB]

Get:23 http://archive.ubuntu.com/ubuntu bionic-updates/universe Translation-en [359 kB]

Get:24 http://security.ubuntu.com/ubuntu bionic-security/multiverse Translation-en [2644 B]

Get:25 http://archive.ubuntu.com/ubuntu bionic-updates/multiverse amd64 Packages [31.7 kB]

Get:26 http://archive.ubuntu.com/ubuntu bionic-updates/multiverse Translation-en [6696 B]

Fetched 8449 kB in 3s (3005 kB/s)

Reading package lists... Done

builder@DESKTOP-JBA79RT:~/Workspaces/tfCloudProject$ sudo apt-get update && sudo apt-get install terraform

Hit:1 https://apt.releases.hashicorp.com bionic InRelease

Hit:2 http://archive.ubuntu.com/ubuntu bionic InRelease

Hit:3 http://security.ubuntu.com/ubuntu bionic-security InRelease

Hit:4 https://packages.microsoft.com/repos/azure-cli bionic InRelease

Hit:5 https://packages.microsoft.com/ubuntu/18.04/prod bionic InRelease

Hit:6 http://archive.ubuntu.com/ubuntu bionic-updates InRelease

Hit:7 http://archive.ubuntu.com/ubuntu bionic-backports InRelease

Reading package lists... Done

Reading package lists... Done

Building dependency tree

Reading state information... Done

The following NEW packages will be installed:

terraform

0 upgraded, 1 newly installed, 0 to remove and 187 not upgraded.

Need to get 33.5 MB of archives.

After this operation, 81.7 MB of additional disk space will be used.

Get:1 https://apt.releases.hashicorp.com bionic/main amd64 terraform amd64 0.14.4 [33.5 MB]

Fetched 33.5 MB in 1s (45.8 MB/s)

Selecting previously unselected package terraform.

(Reading database ... 110837 files and directories currently installed.)

Preparing to unpack .../terraform_0.14.4_amd64.deb ...

Unpacking terraform (0.14.4) ...

Setting up terraform (0.14.4) ...

builder@DESKTOP-JBA79RT:~/Workspaces/tfCloudProject$ terraform -version

Terraform v0.14.4

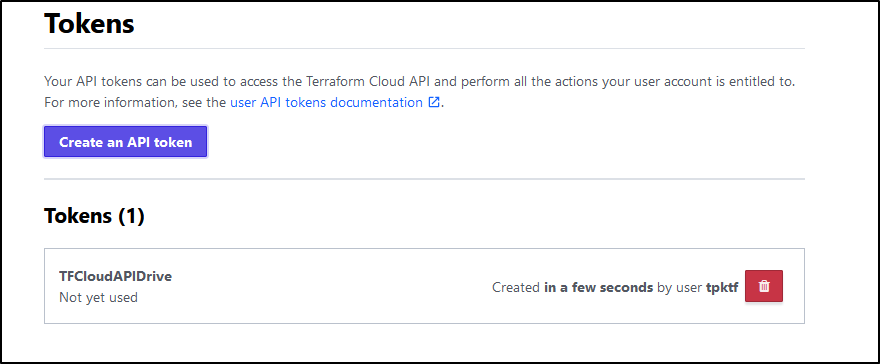

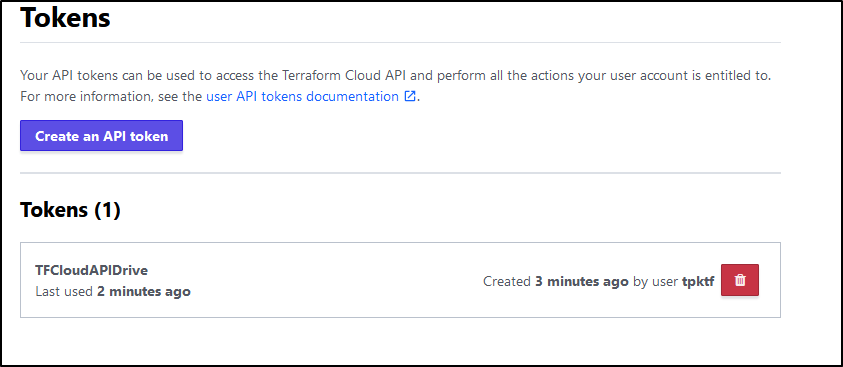

Next, we need our TF Cloud token. We can get this from the Tokens section of our settings page.

Next let’s run terraform login. Note that the first prompt echoes what you type and the second (the token) does not.

$ terraform login

Terraform will request an API token for app.terraform.io using your browser.

If login is successful, Terraform will store the token in plain text in

the following file for use by subsequent commands:

/home/builder/.terraform.d/credentials.tfrc.json

Do you want to proceed?

Only 'yes' will be accepted to confirm.

Enter a value: yes

---------------------------------------------------------------------------------

Open the following URL to access the tokens page for app.terraform.io:

https://app.terraform.io/app/settings/tokens?source=terraform-login

---------------------------------------------------------------------------------

Generate a token using your browser, and copy-paste it into this prompt.

Terraform will store the token in plain text in the following file

for use by subsequent commands:

/home/builder/.terraform.d/credentials.tfrc.json

Token for app.terraform.io:

Enter a value:

Retrieved token for user tpktf

---------------------------------------------------------------------------------

Success! Terraform has obtained and saved an API token.

The new API token will be used for any future Terraform command that must make

authenticated requests to app.terraform.io.

I can now see my token cached in the credentials file:

$ cat /home/builder/.terraform.d/credentials.tfrc.json

{

"credentials": {

"app.terraform.io": {

"token": "1bf53bdf78f844cfnotmyrealtoken1bf53bdf78f844cf9f974fb26734c3b5"

}

}

}

We also see this reflected in the tokens page (that it was used):

Next we can create our backend config file and init the directory:

builder@DESKTOP-JBA79RT:~/Workspaces/tfCloudProject$ vi backend.hcl

builder@DESKTOP-JBA79RT:~/Workspaces/tfCloudProject$ cat backend.hcl

# backend.hcl

workspaces { name = "TPK-API-Driven" }

hostname = "app.terraform.io"

organization = "ThePrincessKing"

builder@DESKTOP-JBA79RT:~/Workspaces/tfCloudProject$ terraform init -backend-config=backend.hcl

Terraform initialized in an empty directory!

The directory has no Terraform configuration files. You may begin working

with Terraform immediately by creating Terraform configuration files.
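Once this moves into a pipeline, the partial backend config shown above could be generated rather than hand-edited. The following is a minimal sketch under that assumption; `write_backend_hcl` and its argument order are hypothetical names of my own, and only the organization and workspace values come from this post.

```shell
#!/bin/sh
# Sketch: write a partial backend config for the Terraform "remote" backend.
# write_backend_hcl is a hypothetical helper, not part of Terraform.
write_backend_hcl() {
  org="$1"                 # Terraform Cloud organization
  workspace="$2"           # workspace name
  out="${3:-backend.hcl}"  # output file, defaults to backend.hcl
  cat > "$out" <<EOF
# backend.hcl
workspaces { name = "$workspace" }
hostname = "app.terraform.io"
organization = "$org"
EOF
}

# Write into a scratch directory so nothing in the project is overwritten.
demo_dir="$(mktemp -d)"
write_backend_hcl "ThePrincessKing" "TPK-API-Driven" "$demo_dir/backend.hcl"
cat "$demo_dir/backend.hcl"
# Then, from the project directory: terraform init -backend-config=backend.hcl
```

Note that the file only takes effect once the configuration contains a `backend "remote" {}` block; in an empty directory, as in the run above, init has nothing to apply it to.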

Let’s create a storage account

The old way, which certainly works, is to just embed your SP ID and Secret…

terraform {

required_version = ">= 0.13"

backend "azurerm" {}

}

provider "azurerm" {

subscription_id = "d955c0ba-13dc-44cf-a29a-8fed74cbb22d"

client_id = "MYSPID"

client_secret = "MYSPSECRET"

tenant_id = "28c575f6-ade1-4838-8e7c-7e6d1ba0eb4a"

}

resource "azurerm_resource_group" "testrg" {

name = "tpkTestStorageRG"

location = "centralus"

}

resource "azurerm_storage_account" "testsa" {

name = "tpkTestStorageAccount"

resource_group_name = "${azurerm_resource_group.testrg.name}"

location = "centralus"

account_tier = "Standard"

account_replication_type = "LRS"

tags = {

environment = "dev"

}

}

resource "azurerm_storage_share" "testshare" {

name = "tpkTestStorageContainer"

resource_group_name = "${azurerm_resource_group.testrg.name}"

storage_account_name = "${azurerm_storage_account.testsa.name}"

# in Gb

quota = 30

}

However, we can now use env vars for most of this to avoid even the chance of checking secrets in…

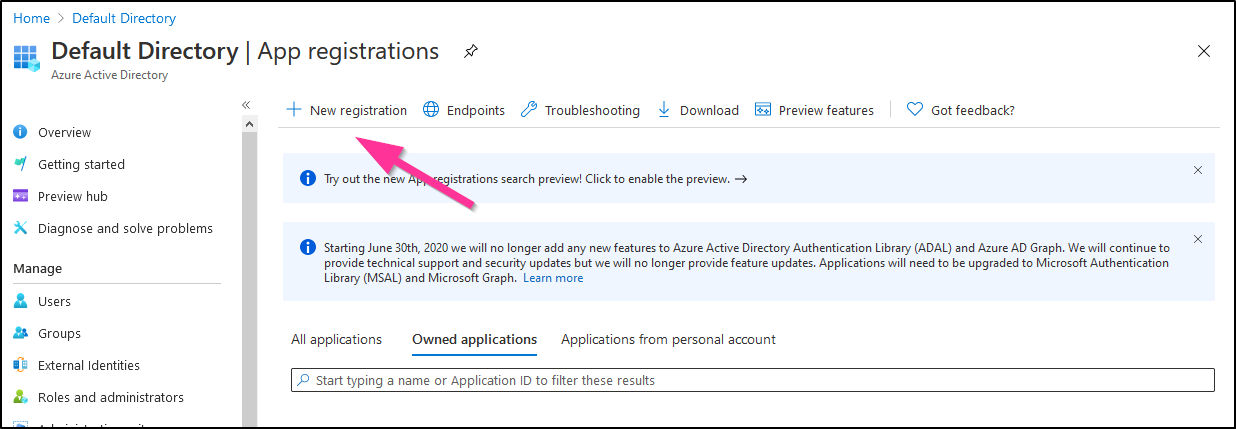

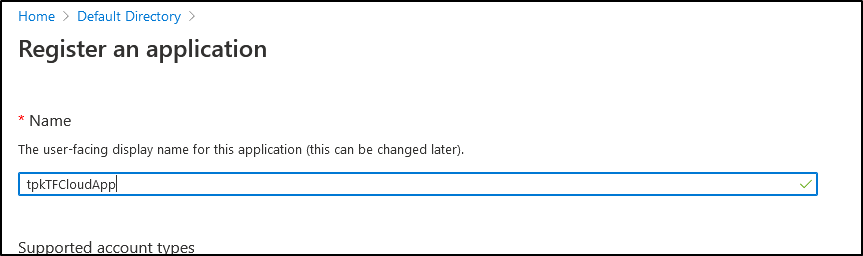

For completeness, let’s create a new App ID:

Give it a name

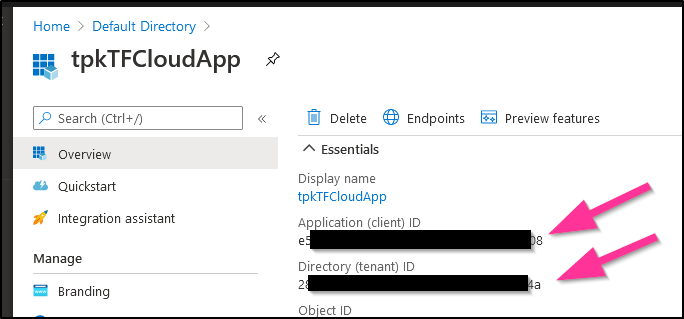

We can get the App ID and Tenant ID from the next page

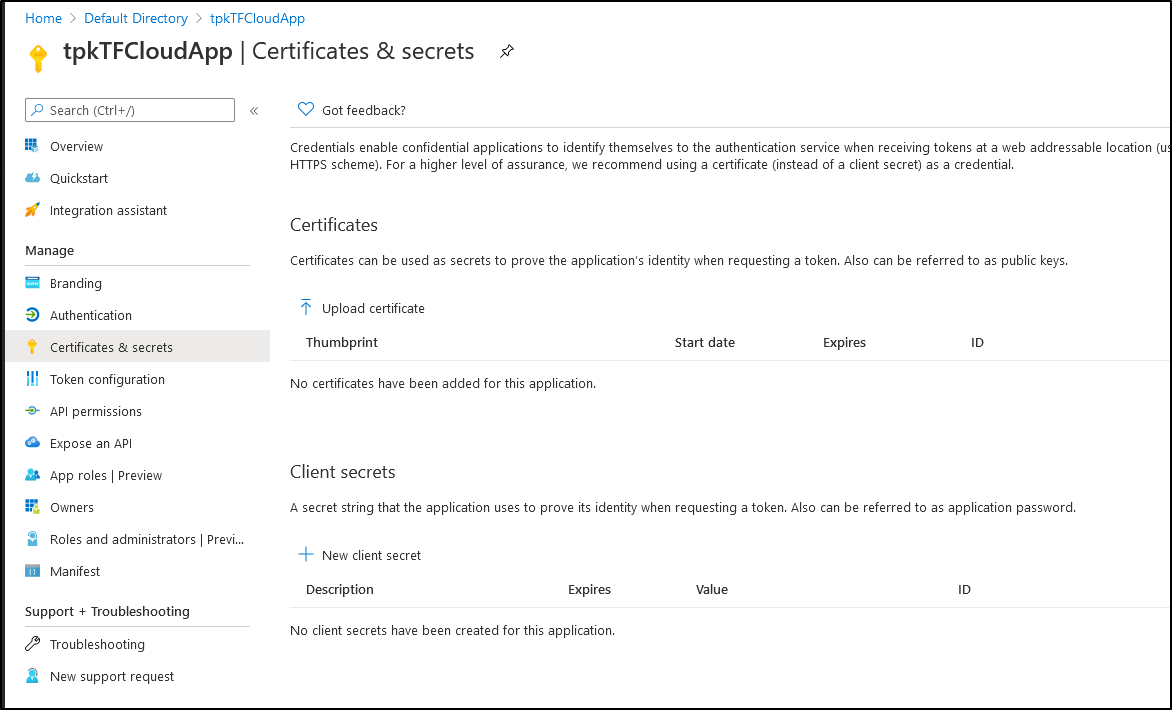

Next we need to create a new secret, which we can do under Certificates & secrets:

We will now use those values in env vars:

builder@DESKTOP-JBA79RT:~/Workspaces/tfCloudProject$ export ARM_CLIENT_ID=e97e47a3-527f-4ef0-98d0-e3c6d24f49fa

builder@DESKTOP-JBA79RT:~/Workspaces/tfCloudProject$ export ARM_TENANT_ID=e97e47a3-527f-4ef0-98d0-e3c6d24f49fa

builder@DESKTOP-JBA79RT:~/Workspaces/tfCloudProject$ export ARM_CLIENT_SECRET=e97e47a3-527f-4ef0-98d0-e3c6d24f49fa
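Before running Terraform locally, it can help to confirm the variables are actually exported. Here is a small sketch of such a check; `check_arm_env` is a hypothetical helper name, and including `ARM_SUBSCRIPTION_ID` is my assumption, since it is not among the three exports above even though the azurerm provider also reads it.

```shell
#!/bin/sh
# Sketch: fail fast if any azurerm service principal variable is missing.
# check_arm_env is a hypothetical helper name.
check_arm_env() {
  missing=""
  for name in ARM_CLIENT_ID ARM_CLIENT_SECRET ARM_TENANT_ID ARM_SUBSCRIPTION_ID; do
    # printenv only sees exported variables, which is what terraform sees too.
    if [ -z "$(printenv "$name")" ]; then
      missing="$missing $name"
    fi
  done
  if [ -n "$missing" ]; then
    echo "missing:$missing" >&2
    return 1
  fi
  echo "all ARM_* variables are set"
}

check_arm_env || echo "export the variables listed above before running terraform"
```

The check only covers the local shell. It says nothing about what a remote run in TF Cloud can see.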

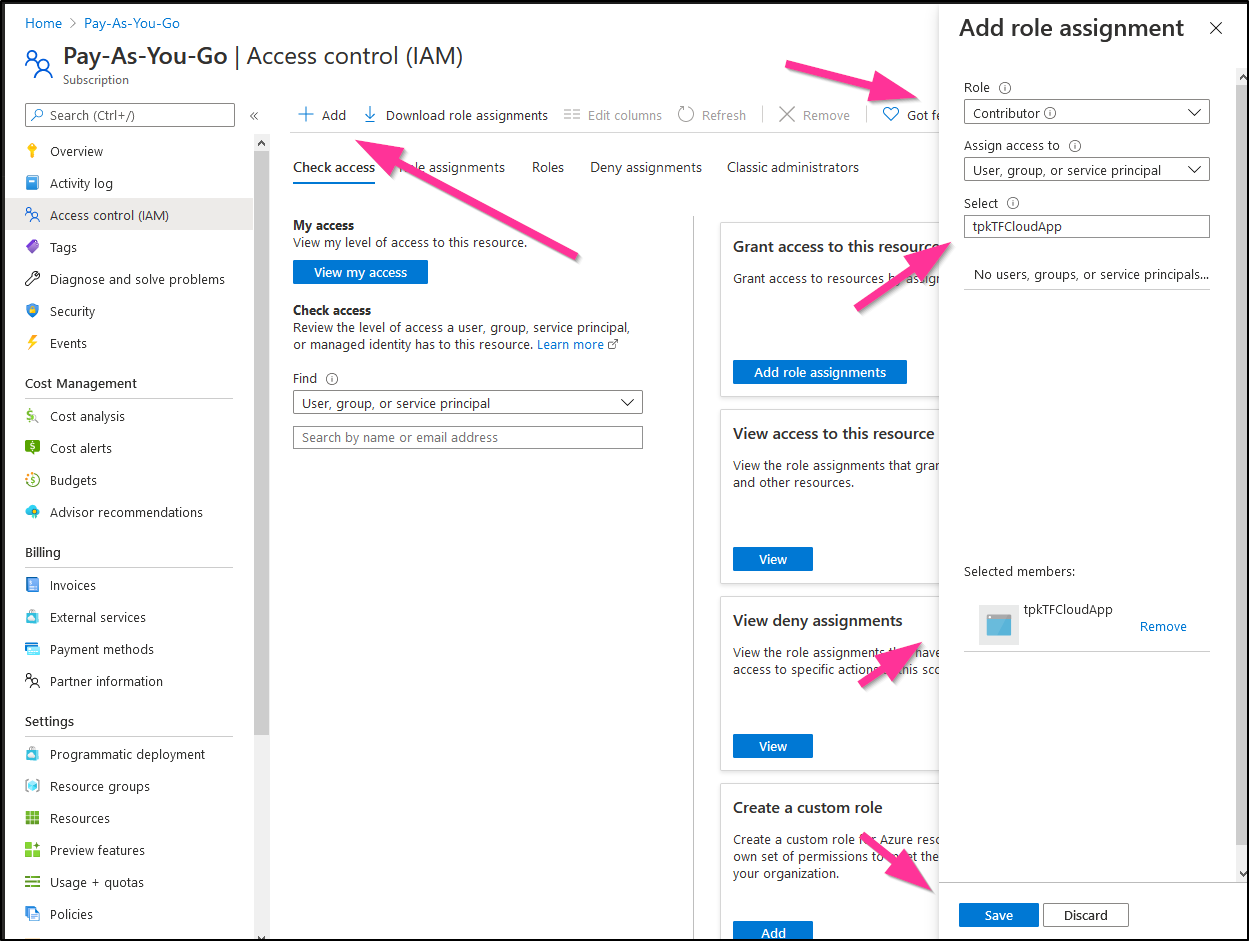

Lastly, by default, our new App Registration has no permissions. We need to add it as a Contributor on our subscription so it can create things.

You can do that in subscriptions:
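The same role assignment can probably be done from the Azure CLI instead of the portal. The sketch below only builds and prints the command so it can be reviewed first; `contributor_cmd` is a hypothetical helper, both GUIDs are placeholders, and scoping the role to the whole subscription is an assumption that mirrors what the portal step does.

```shell
#!/bin/sh
# Sketch: build (and only print) the az command that grants Contributor.
# contributor_cmd is a hypothetical helper; both IDs below are placeholders.
contributor_cmd() {
  app_id="$1"           # Application (client) ID of the App Registration
  subscription_id="$2"  # subscription the service principal should manage
  printf 'az role assignment create --assignee %s --role Contributor --scope /subscriptions/%s\n' \
    "$app_id" "$subscription_id"
}

# Print for review; run the printed command yourself after az login.
contributor_cmd "00000000-0000-0000-0000-000000000000" "11111111-1111-1111-1111-111111111111"
```

A narrower scope, such as a single resource group, would be a tighter choice if the service principal does not need to create resource groups itself.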

At this point it’s time to try out our Terraform.

We create a backend.hcl:

$ cat backend.hcl

# backend.hcl

workspaces { name = "TPK-API-Driven" }

hostname = "app.terraform.io"

organization = "ThePrincessKing"

Then create the main.tf

terraform {

required_version = ">= 0.13"

backend "remote" {}

}

provider "azurerm" {

features {}

}

resource "azurerm_resource_group" "testrg" {

name = "tpkTestStorageRG"

location = "centralus"

}

resource "azurerm_storage_account" "testsa" {

name = "tpkTestStorageAccount"

resource_group_name = "${azurerm_resource_group.testrg.name}"

location = "centralus"

account_tier = "Standard"

account_replication_type = "LRS"

tags = {

environment = "dev"

}

}

resource "azurerm_storage_share" "testshare" {

name = "tpkTestStorageContainer"

resource_group_name = "${azurerm_resource_group.testrg.name}"

storage_account_name = "${azurerm_storage_account.testsa.name}"

# in Gb

quota = 30

}

One of the key differences in using TF Cloud vs remote state management in an Azure blob store is the backend line.

A common pattern I use is to have main.tf include:

terraform {

required_version = ">= 0.11"

backend "azurerm" {}

}

And a backend TF Vars:

cat > backend.tfvars <<'EOL'

storage_account_name = "$(accountName)"

container_name = "terraform-state"

key = "$(storageName).terraform.tfstate"

access_key = "$(AZFSKEY)"

EOL

Where AZFSKEY comes from the pre-created storage account:

steps:
- task: AzureCLI@1
  displayName: 'Azure CLI - key azure storage keys'
  inputs:
    azureSubscription: 'Pay-As-You-Go Subscription'
    scriptLocation: inlineScript
    inlineScript: 'az storage account keys list --account-name $(accountName) --resource-group $(accountRG) -o json > keys.json'
    addSpnToEnvironment: true

- bash: |
    #!/bin/bash
    set +x
    export tval=`cat keys.json | grep '"value": "' | sed 's/.*"\([^"]*\)"/\1/' | tail -n1`
    echo "##vso[task.setvariable variable=AZFSKEY]$tval" > t.o
    set -x
    cat ./t.o
  displayName: 'set AZFSKEY'

But here we are using TF Cloud.

When we init, we see:

$ terraform init -backend-config=backend.hcl

Initializing the backend...

Successfully configured the backend "remote"! Terraform will automatically

use this backend unless the backend configuration changes.

Initializing provider plugins...

- Finding latest version of hashicorp/azurerm...

- Installing hashicorp/azurerm v2.43.0...

- Installed hashicorp/azurerm v2.43.0 (signed by HashiCorp)

Terraform has created a lock file .terraform.lock.hcl to record the provider

selections it made above. Include this file in your version control repository

so that Terraform can guarantee to make the same selections by default when

you run "terraform init" in the future.

Warning: Interpolation-only expressions are deprecated

on main.tf line 21, in resource "azurerm_storage_account" "testsa":

21: resource_group_name = "${azurerm_resource_group.testrg.name}"

Terraform 0.11 and earlier required all non-constant expressions to be

provided via interpolation syntax, but this pattern is now deprecated. To

silence this warning, remove the "${ sequence from the start and the }"

sequence from the end of this expression, leaving just the inner expression.

Template interpolation syntax is still used to construct strings from

expressions when the template includes multiple interpolation sequences or a

mixture of literal strings and interpolations. This deprecation applies only

to templates that consist entirely of a single interpolation sequence.

(and 2 more similar warnings elsewhere)

Terraform has been successfully initialized!

You may now begin working with Terraform. Try running "terraform plan" to see

any changes that are required for your infrastructure. All Terraform commands

should now work.

If you ever set or change modules or backend configuration for Terraform,

rerun this command to reinitialize your working directory. If you forget, other

commands will detect it and remind you to do so if necessary.

We can now do a Terraform plan

$ terraform plan

Running plan in the remote backend. Output will stream here. Pressing Ctrl-C

will stop streaming the logs, but will not stop the plan running remotely.

Preparing the remote plan...

To view this run in a browser, visit:

https://app.terraform.io/app/ThePrincessKing/TPK-API-Driven/runs/run-YUiJQzfXLQCCHxp2

Waiting for the plan to start...

Terraform v0.14.4

Configuring remote state backend...

Initializing Terraform configuration...

Warning: Interpolation-only expressions are deprecated

on main.tf line 19, in resource "azurerm_storage_account" "testsa":

19: resource_group_name = "${azurerm_resource_group.testrg.name}"

Terraform 0.11 and earlier required all non-constant expressions to be

provided via interpolation syntax, but this pattern is now deprecated. To

silence this warning, remove the "${ sequence from the start and the }"

sequence from the end of this expression, leaving just the inner expression.

Template interpolation syntax is still used to construct strings from

expressions when the template includes multiple interpolation sequences or a

mixture of literal strings and interpolations. This deprecation applies only

to templates that consist entirely of a single interpolation sequence.

(and 2 more similar warnings elsewhere)

Error: name ("tpkTestStorageAccount") can only consist of lowercase letters and numbers, and must be between 3 and 24 characters long

on main.tf line 17, in resource "azurerm_storage_account" "testsa":

17: resource "azurerm_storage_account" "testsa" {

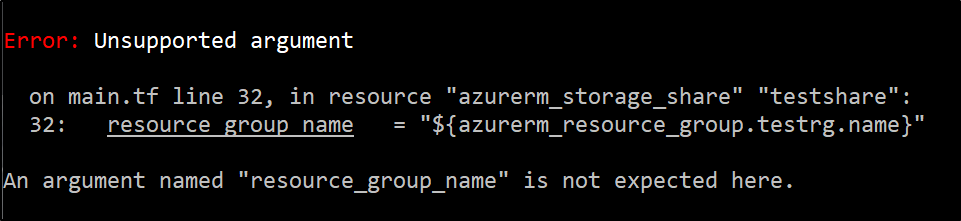

Error: Unsupported argument

on main.tf line 32, in resource "azurerm_storage_share" "testshare":

32: resource_group_name = "${azurerm_resource_group.testrg.name}"

An argument named "resource_group_name" is not expected here.

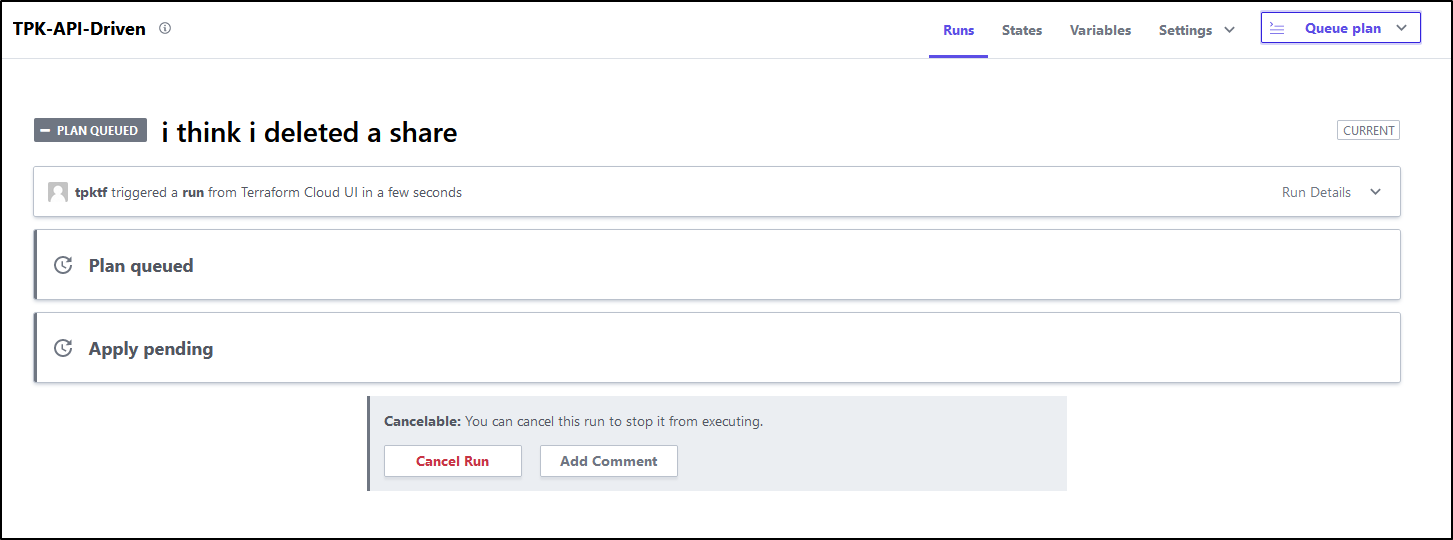

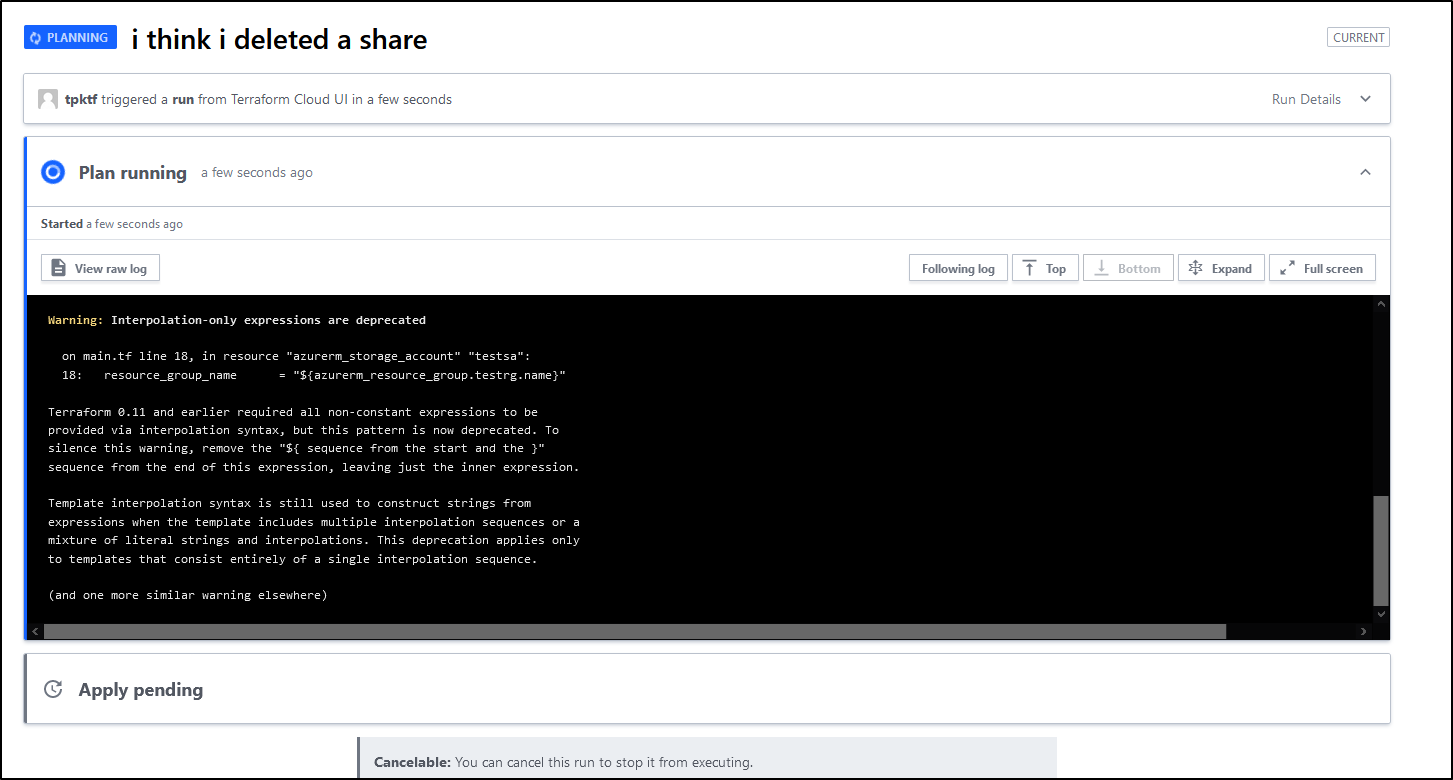

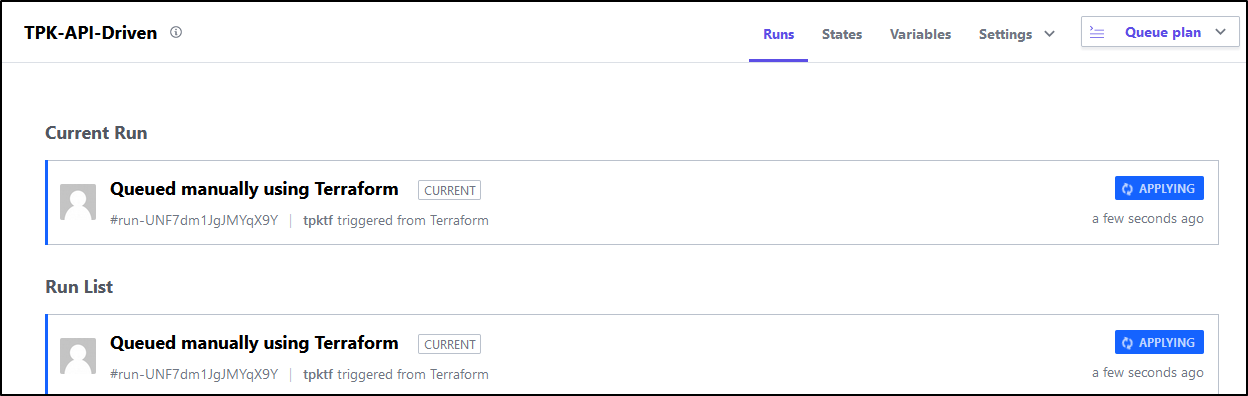

When I run this, I also see the TF Cloud website change on its own:

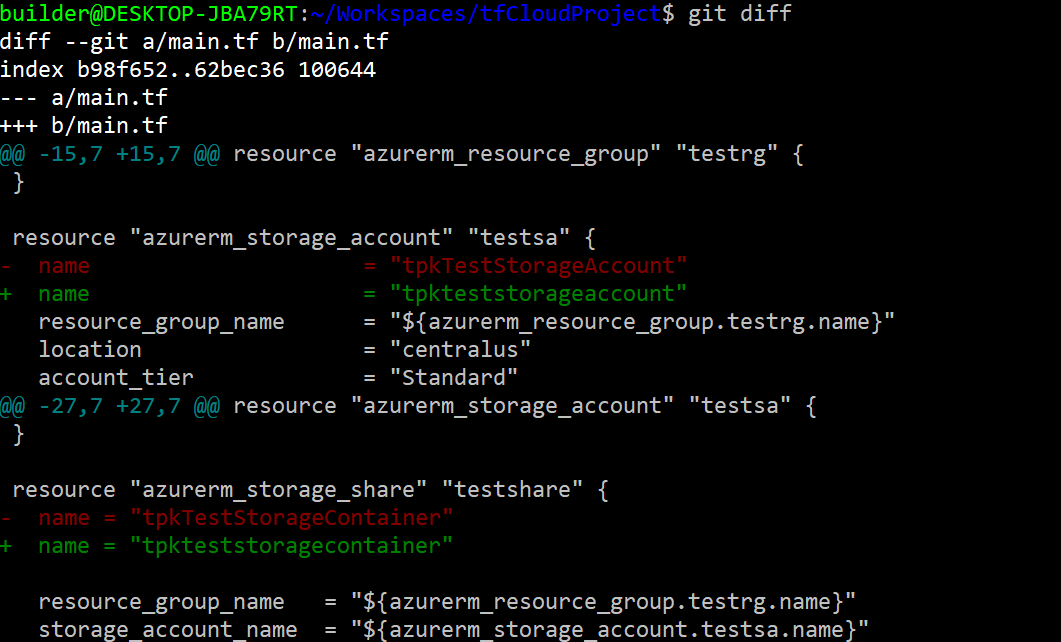

Let’s correct the problems so far. A storage account name must be 3 to 24 characters of lowercase letters and numbers; tpkTestStorageAccount is within the length limit (21 characters) but needs its casing fixed.
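The naming rule from the error message is easy to check before a plan ever runs. A minimal sketch, where `valid_storage_account_name` is a hypothetical helper and the regular expression encodes only the rule quoted in the error (lowercase letters and numbers, 3 to 24 characters):

```shell
#!/bin/sh
# Sketch: check a name against the rule from the error message:
# lowercase letters and numbers only, 3 to 24 characters.
# valid_storage_account_name is a hypothetical helper.
valid_storage_account_name() {
  # LC_ALL=C keeps [a-z] from matching uppercase letters in some locales.
  printf '%s' "$1" | LC_ALL=C grep -Eq '^[a-z0-9]{3,24}$'
}

for name in tpkTestStorageAccount tpkteststorageaccount; do
  if valid_storage_account_name "$name"; then
    echo "$name: ok"
  else
    echo "$name: invalid"
  fi
done
```

This does not check global uniqueness, which Azure also requires for storage account names.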

Before I go any further, let’s create a repo out of the folder we are in:

builder@DESKTOP-JBA79RT:~/Workspaces/tfCloudProject$ git init

Initialized empty Git repository in /home/builder/Workspaces/tfCloudProject/.git/

builder@DESKTOP-JBA79RT:~/Workspaces/tfCloudProject$ git checkout -b main

Switched to a new branch 'main'

builder@DESKTOP-JBA79RT:~/Workspaces/tfCloudProject$ git add main.tf

builder@DESKTOP-JBA79RT:~/Workspaces/tfCloudProject$ git commit -m "init"

[main (root-commit) 78b3473] init

1 file changed, 37 insertions(+)

create mode 100644 main.tf

Now make the changes:

$ git diff

diff --git a/main.tf b/main.tf

index b98f652..62bec36 100644

--- a/main.tf

+++ b/main.tf

@@ -15,7 +15,7 @@ resource "azurerm_resource_group" "testrg" {

}

resource "azurerm_storage_account" "testsa" {

- name = "tpkTestStorageAccount"

+ name = "tpkteststorageaccount"

resource_group_name = "${azurerm_resource_group.testrg.name}"

location = "centralus"

account_tier = "Standard"

@@ -27,7 +27,7 @@ resource "azurerm_storage_account" "testsa" {

}

resource "azurerm_storage_share" "testshare" {

- name = "tpkTestStorageContainer"

+ name = "tpkteststoragecontainer"

resource_group_name = "${azurerm_resource_group.testrg.name}"

storage_account_name = "${azurerm_storage_account.testsa.name}"

Looks like we have one error left, the unsupported resource_group_name argument:

I’ll remove line 32, as it’s redundant anyhow (the share gets its resource group from the storage account, so the argument doesn’t belong on the share, although it worked in TF 0.11).

Next we have the nuance of where the Azure Details will come from:

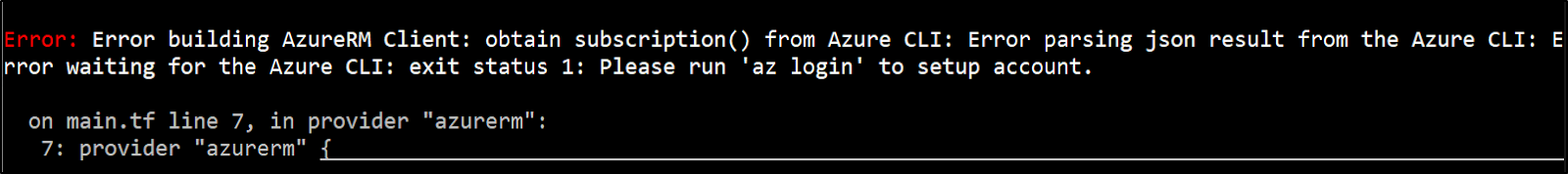

Error: Error building AzureRM Client: obtain subscription() from Azure CLI: Error parsing json result from the Azure CLI: Error waiting for the Azure CLI: exit status 1: Please run 'az login' to setup account.

on main.tf line 7, in provider "azurerm":

7: provider "azurerm" {

I fought the Azure subscription issue for a while before realizing what the errors meant:

Error: Error building AzureRM Client: obtain subscription() from Azure CLI: Error parsing json result from the Azure CLI: Error waiting for the Azure CLI: exit status 1: Please run 'az login' to setup account

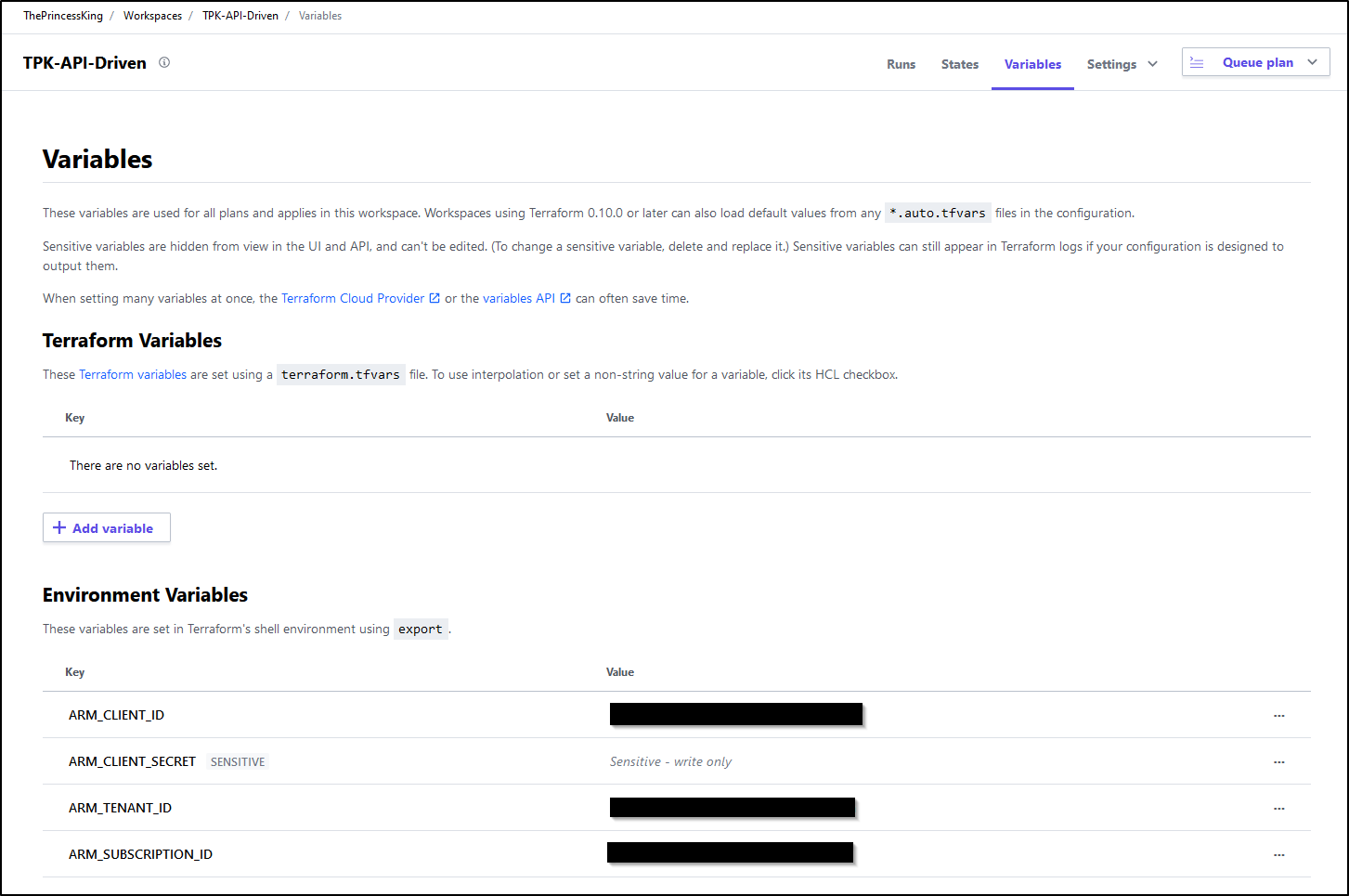

These were because, while I had set the Azure RM environment variables locally, they also needed to be set in the Workspace in Terraform Cloud:

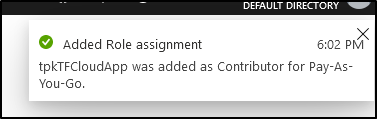

My final error was mostly due to not adding the SP as a Contributor which was quickly rectified:

So a question you might have is “why do the Azure RM vars need to be set in the workspace?” Simply put, the plan and apply run remotely in Terraform Cloud rather than on my machine (note the “Running plan in the remote backend” line in the output), so all the parts needed to do the work, in this case creating storage accounts in my subscription, have to persist there. If they didn’t exist as workspace vars and secrets, they would likely be stored in the state file, which has security ramifications.
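The workspace variables can also be created through the Terraform Cloud API instead of the UI. What follows is a hedged sketch that builds the request body for one environment variable; `tfc_env_var_payload` is a hypothetical helper, `TFC_TOKEN` and `WORKSPACE_ID` in the commented usage are names I made up for values you would supply, and the endpoint and attribute names reflect my understanding of the workspace variables API rather than anything shown in this post.

```shell
#!/bin/sh
# Sketch: build the JSON:API payload for a workspace environment variable.
# tfc_env_var_payload is a hypothetical helper. Values are inserted verbatim,
# so this only suits values without quotes or backslashes (GUID-like IDs).
tfc_env_var_payload() {
  key="$1"
  value="$2"
  sensitive="${3:-false}"  # pass "true" for secrets such as ARM_CLIENT_SECRET
  printf '{"data":{"type":"vars","attributes":{"key":"%s","value":"%s","category":"env","hcl":false,"sensitive":%s}}}\n' \
    "$key" "$value" "$sensitive"
}

tfc_env_var_payload ARM_TENANT_ID "00000000-0000-0000-0000-000000000000"

# Assumed usage, with TFC_TOKEN and WORKSPACE_ID (ws-...) supplied by you:
# tfc_env_var_payload ARM_CLIENT_SECRET "$ARM_CLIENT_SECRET" true |
#   curl -s -X POST \
#     -H "Authorization: Bearer $TFC_TOKEN" \
#     -H "Content-Type: application/vnd.api+json" \
#     -d @- "https://app.terraform.io/api/v2/workspaces/$WORKSPACE_ID/vars"
```

Marking the secret as sensitive keeps it write-only in the workspace, which fits the goal of not leaving credentials lying around in readable form.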

This time, the run succeeded:

$ terraform plan

Running plan in the remote backend. Output will stream here. Pressing Ctrl-C

will stop streaming the logs, but will not stop the plan running remotely.

Preparing the remote plan...

To view this run in a browser, visit:

https://app.terraform.io/app/ThePrincessKing/TPK-API-Driven/runs/run-7ZHRasS6MoPLN4sY

Waiting for the plan to start...

Terraform v0.14.4

Configuring remote state backend...

Initializing Terraform configuration...

An execution plan has been generated and is shown below.

Resource actions are indicated with the following symbols:

+ create

Terraform will perform the following actions:

# azurerm_resource_group.testrg will be created

+ resource "azurerm_resource_group" "testrg" {

+ id = (known after apply)

+ location = "centralus"

+ name = "tpkTestStorageRG"

}

# azurerm_storage_account.testsa will be created

+ resource "azurerm_storage_account" "testsa" {

+ access_tier = (known after apply)

+ account_kind = "StorageV2"

+ account_replication_type = "LRS"

+ account_tier = "Standard"

+ allow_blob_public_access = false

+ enable_https_traffic_only = true

+ id = (known after apply)

+ is_hns_enabled = false

+ large_file_share_enabled = (known after apply)

+ location = "centralus"

+ min_tls_version = "TLS1_0"

+ name = "tpkteststorageaccount"

+ primary_access_key = (sensitive value)

+ primary_blob_connection_string = (sensitive value)

+ primary_blob_endpoint = (known after apply)

+ primary_blob_host = (known after apply)

+ primary_connection_string = (sensitive value)

+ primary_dfs_endpoint = (known after apply)

+ primary_dfs_host = (known after apply)

+ primary_file_endpoint = (known after apply)

+ primary_file_host = (known after apply)

+ primary_location = (known after apply)

+ primary_queue_endpoint = (known after apply)

+ primary_queue_host = (known after apply)

+ primary_table_endpoint = (known after apply)

+ primary_table_host = (known after apply)

+ primary_web_endpoint = (known after apply)

+ primary_web_host = (known after apply)

+ resource_group_name = "tpkTestStorageRG"

+ secondary_access_key = (sensitive value)

+ secondary_blob_connection_string = (sensitive value)

+ secondary_blob_endpoint = (known after apply)

+ secondary_blob_host = (known after apply)

+ secondary_connection_string = (sensitive value)

+ secondary_dfs_endpoint = (known after apply)

+ secondary_dfs_host = (known after apply)

+ secondary_file_endpoint = (known after apply)

+ secondary_file_host = (known after apply)

+ secondary_location = (known after apply)

+ secondary_queue_endpoint = (known after apply)

+ secondary_queue_host = (known after apply)

+ secondary_table_endpoint = (known after apply)

+ secondary_table_host = (known after apply)

+ secondary_web_endpoint = (known after apply)

+ secondary_web_host = (known after apply)

+ tags = {

+ "environment" = "dev"

}

+ blob_properties {

+ cors_rule {

+ allowed_headers = (known after apply)

+ allowed_methods = (known after apply)

+ allowed_origins = (known after apply)

+ exposed_headers = (known after apply)

+ max_age_in_seconds = (known after apply)

}

+ delete_retention_policy {

+ days = (known after apply)

}

}

+ identity {

+ principal_id = (known after apply)

+ tenant_id = (known after apply)

+ type = (known after apply)

}

+ network_rules {

+ bypass = (known after apply)

+ default_action = (known after apply)

+ ip_rules = (known after apply)

+ virtual_network_subnet_ids = (known after apply)

}

+ queue_properties {

+ cors_rule {

+ allowed_headers = (known after apply)

+ allowed_methods = (known after apply)

+ allowed_origins = (known after apply)

+ exposed_headers = (known after apply)

+ max_age_in_seconds = (known after apply)

}

+ hour_metrics {

+ enabled = (known after apply)

+ include_apis = (known after apply)

+ retention_policy_days = (known after apply)

+ version = (known after apply)

}

+ logging {

+ delete = (known after apply)

+ read = (known after apply)

+ retention_policy_days = (known after apply)

+ version = (known after apply)

+ write = (known after apply)

}

+ minute_metrics {

+ enabled = (known after apply)

+ include_apis = (known after apply)

+ retention_policy_days = (known after apply)

+ version = (known after apply)

}

}

}

# azurerm_storage_share.testshare will be created

+ resource "azurerm_storage_share" "testshare" {

+ id = (known after apply)

+ metadata = (known after apply)

+ name = "tpkteststoragecontainer"

+ quota = 30

+ resource_manager_id = (known after apply)

+ storage_account_name = "tpkteststorageaccount"

+ url = (known after apply)

}

Plan: 3 to add, 0 to change, 0 to destroy.

Warning: Interpolation-only expressions are deprecated

on main.tf line 18, in resource "azurerm_storage_account" "testsa":

18: resource_group_name = "${azurerm_resource_group.testrg.name}"

Terraform 0.11 and earlier required all non-constant expressions to be

provided via interpolation syntax, but this pattern is now deprecated. To

silence this warning, remove the "${ sequence from the start and the }"

sequence from the end of this expression, leaving just the inner expression.

Template interpolation syntax is still used to construct strings from

expressions when the template includes multiple interpolation sequences or a

mixture of literal strings and interpolations. This deprecation applies only

to templates that consist entirely of a single interpolation sequence.

(and one more similar warning elsewhere)
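The interpolation-only warnings in the output above go away once the `"${...}"` wrappers are removed, as the warning text itself suggests. A rough sketch of doing that mechanically with sed follows; `strip_interpolation` is a hypothetical helper, and the pattern deliberately leaves alone any string that mixes literals with interpolation or contains more than one sequence.

```shell
#!/bin/sh
# Sketch: unwrap strings that consist of exactly one "${...}" sequence.
# strip_interpolation is a hypothetical helper; it reads HCL on stdin.
strip_interpolation() {
  sed -E 's/"\$\{([^}"]+)\}"/\1/g'
}

# The first line is rewritten; the second mixes two sequences and is left alone.
printf '%s\n' \
  'resource_group_name = "${azurerm_resource_group.testrg.name}"' \
  'label = "${var.prefix}-${var.suffix}"' |
  strip_interpolation
```

For a real file, something like `strip_interpolation < main.tf > main.tf.new` followed by a diff would let you review the change before replacing anything.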

Apply Terraform

Now is the time to actually create things with a TF Apply

$ terraform apply

Running apply in the remote backend. Output will stream here. Pressing Ctrl-C

will cancel the remote apply if it's still pending. If the apply started it

will stop streaming the logs, but will not stop the apply running remotely.

Preparing the remote apply...

To view this run in a browser, visit:

https://app.terraform.io/app/ThePrincessKing/TPK-API-Driven/runs/run-e6F3WvKrXSWxakyE

Waiting for the plan to start...

Terraform v0.14.4

Configuring remote state backend...

Initializing Terraform configuration...

An execution plan has been generated and is shown below.

Resource actions are indicated with the following symbols:

+ create

Terraform will perform the following actions:

# azurerm_resource_group.testrg will be created

+ resource "azurerm_resource_group" "testrg" {

+ id = (known after apply)

+ location = "centralus"

+ name = "tpkTestStorageRG"

}

# azurerm_storage_account.testsa will be created

+ resource "azurerm_storage_account" "testsa" {

+ access_tier = (known after apply)

+ account_kind = "StorageV2"

+ account_replication_type = "LRS"

+ account_tier = "Standard"

+ allow_blob_public_access = false

+ enable_https_traffic_only = true

+ id = (known after apply)

+ is_hns_enabled = false

+ large_file_share_enabled = (known after apply)

+ location = "centralus"

+ min_tls_version = "TLS1_0"

+ name = "tpkteststorageaccount"

+ primary_access_key = (sensitive value)

+ primary_blob_connection_string = (sensitive value)

+ primary_blob_endpoint = (known after apply)

+ primary_blob_host = (known after apply)

+ primary_connection_string = (sensitive value)

+ primary_dfs_endpoint = (known after apply)

+ primary_dfs_host = (known after apply)

+ primary_file_endpoint = (known after apply)

+ primary_file_host = (known after apply)

+ primary_location = (known after apply)

+ primary_queue_endpoint = (known after apply)

+ primary_queue_host = (known after apply)

+ primary_table_endpoint = (known after apply)

+ primary_table_host = (known after apply)

+ primary_web_endpoint = (known after apply)

+ primary_web_host = (known after apply)

+ resource_group_name = "tpkTestStorageRG"

+ secondary_access_key = (sensitive value)

+ secondary_blob_connection_string = (sensitive value)

+ secondary_blob_endpoint = (known after apply)

+ secondary_blob_host = (known after apply)

+ secondary_connection_string = (sensitive value)

+ secondary_dfs_endpoint = (known after apply)

+ secondary_dfs_host = (known after apply)

+ secondary_file_endpoint = (known after apply)

+ secondary_file_host = (known after apply)

+ secondary_location = (known after apply)

+ secondary_queue_endpoint = (known after apply)

+ secondary_queue_host = (known after apply)

+ secondary_table_endpoint = (known after apply)

+ secondary_table_host = (known after apply)

+ secondary_web_endpoint = (known after apply)

+ secondary_web_host = (known after apply)

+ tags = {

+ "environment" = "dev"

}

+ blob_properties {

+ cors_rule {

+ allowed_headers = (known after apply)

+ allowed_methods = (known after apply)

+ allowed_origins = (known after apply)

+ exposed_headers = (known after apply)

+ max_age_in_seconds = (known after apply)

}

+ delete_retention_policy {

+ days = (known after apply)

}

}

+ identity {

+ principal_id = (known after apply)

+ tenant_id = (known after apply)

+ type = (known after apply)

}

+ network_rules {

+ bypass = (known after apply)

+ default_action = (known after apply)

+ ip_rules = (known after apply)

+ virtual_network_subnet_ids = (known after apply)

}

+ queue_properties {

+ cors_rule {

+ allowed_headers = (known after apply)

+ allowed_methods = (known after apply)

+ allowed_origins = (known after apply)

+ exposed_headers = (known after apply)

+ max_age_in_seconds = (known after apply)

}

+ hour_metrics {

+ enabled = (known after apply)

+ include_apis = (known after apply)

+ retention_policy_days = (known after apply)

+ version = (known after apply)

}

+ logging {

+ delete = (known after apply)

+ read = (known after apply)

+ retention_policy_days = (known after apply)

+ version = (known after apply)

+ write = (known after apply)

}

+ minute_metrics {

+ enabled = (known after apply)

+ include_apis = (known after apply)

+ retention_policy_days = (known after apply)

+ version = (known after apply)

}

}

}

# azurerm_storage_share.testshare will be created

+ resource "azurerm_storage_share" "testshare" {

+ id = (known after apply)

+ metadata = (known after apply)

+ name = "tpkteststoragecontainer"

+ quota = 30

+ resource_manager_id = (known after apply)

+ storage_account_name = "tpkteststorageaccount"

+ url = (known after apply)

}

Plan: 3 to add, 0 to change, 0 to destroy.

Warning: Interpolation-only expressions are deprecated

on main.tf line 18, in resource "azurerm_storage_account" "testsa":

18: resource_group_name = "${azurerm_resource_group.testrg.name}"

Terraform 0.11 and earlier required all non-constant expressions to be

provided via interpolation syntax, but this pattern is now deprecated. To

silence this warning, remove the "${ sequence from the start and the }"

sequence from the end of this expression, leaving just the inner expression.

Template interpolation syntax is still used to construct strings from

expressions when the template includes multiple interpolation sequences or a

mixture of literal strings and interpolations. This deprecation applies only

to templates that consist entirely of a single interpolation sequence.

(and one more similar warning elsewhere)

Do you want to perform these actions in workspace "TPK-API-Driven"?

Terraform will perform the actions described above.

Only 'yes' will be accepted to approve.

Enter a value: yes

azurerm_resource_group.testrg: Creation complete after 1s [id=/subscriptions/d955c0ba-13dc-44cf-a29a-8fed74cbb22d/resourceGroups/tpkTestStorageRG]

azurerm_storage_account.testsa: Creating...

azurerm_storage_account.testsa: Still creating... [10s elapsed]

azurerm_storage_account.testsa: Creation complete after 20s [id=/subscriptions/d955c0ba-13dc-44cf-a29a-8fed74cbb22d/resourceGroups/tpkTestStorageRG/providers/Microsoft.Storage/storageAccounts/tpkteststorageaccount]

azurerm_storage_share.testshare: Creating...

azurerm_storage_share.testshare: Creation complete after 0s [id=https://tpkteststorageaccount.file.core.windows.net/tpkteststoragecontainer]

Warning: Interpolation-only expressions are deprecated

on main.tf line 18, in resource "azurerm_storage_account" "testsa":

18: resource_group_name = "${azurerm_resource_group.testrg.name}"

Terraform 0.11 and earlier required all non-constant expressions to be

provided via interpolation syntax, but this pattern is now deprecated. To

silence this warning, remove the "${ sequence from the start and the }"

sequence from the end of this expression, leaving just the inner expression.

Template interpolation syntax is still used to construct strings from

expressions when the template includes multiple interpolation sequences or a

mixture of literal strings and interpolations. This deprecation applies only

to templates that consist entirely of a single interpolation sequence.

(and one more similar warning elsewhere)

Apply complete! Resources: 3 added, 0 changed, 0 destroyed.
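The deprecation warning in the output above asks us to drop the "${ ... }" wrapper when a value is nothing but a single interpolation. Here is a minimal sketch of doing that with sed. The function name and the demo file are my own illustration (not part of the project), and the pattern only handles simple one-line assignments, so review the result before committing it.

```shell
# Demo input: a throwaway file, not the real main.tf from this post.
demo_dir="$(mktemp -d)"
cat > "$demo_dir/main.tf" <<'EOF'
resource_group_name = "${azurerm_resource_group.testrg.name}"
name                = "${var.prefix}storage"
EOF

# Rewrite only values that consist of exactly one "${...}" sequence.
# Strings that mix literal text and interpolation are left untouched.
strip_interpolation_only() {
  sed -E 's/= "\$\{([^}"]+)\}"[[:space:]]*$/= \1/' "$1"
}

# Print the rewritten file to stdout for review.
strip_interpolation_only "$demo_dir/main.tf"
```

The first demo line loses its wrapper, while the second keeps its quotes because it mixes an interpolation with the literal text "storage".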

Verification

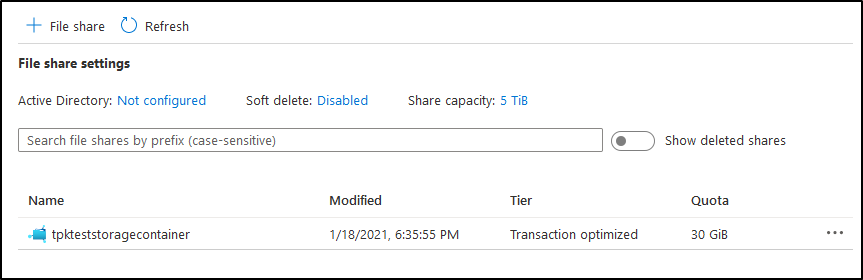

Let’s see if it created our stuff.

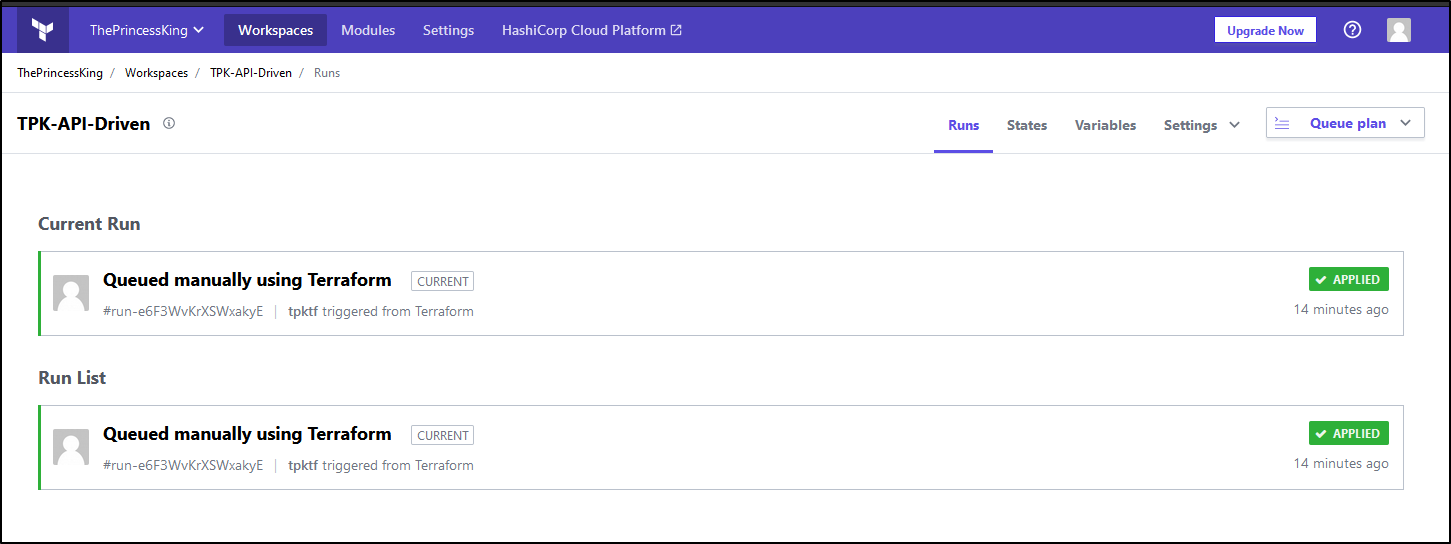

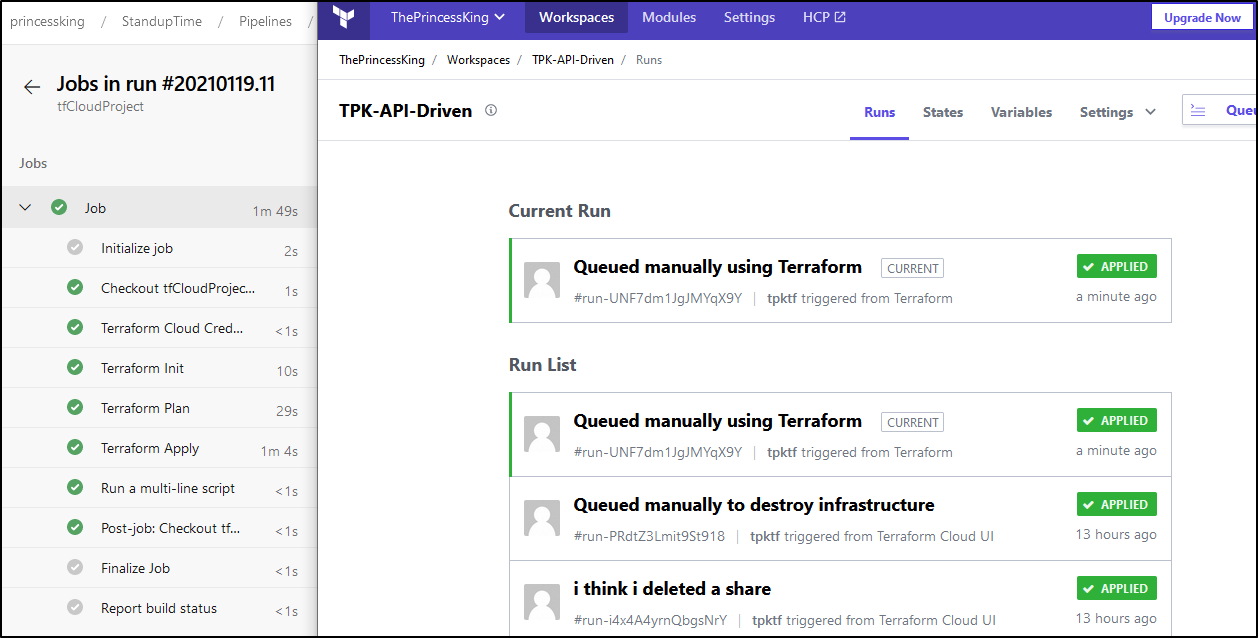

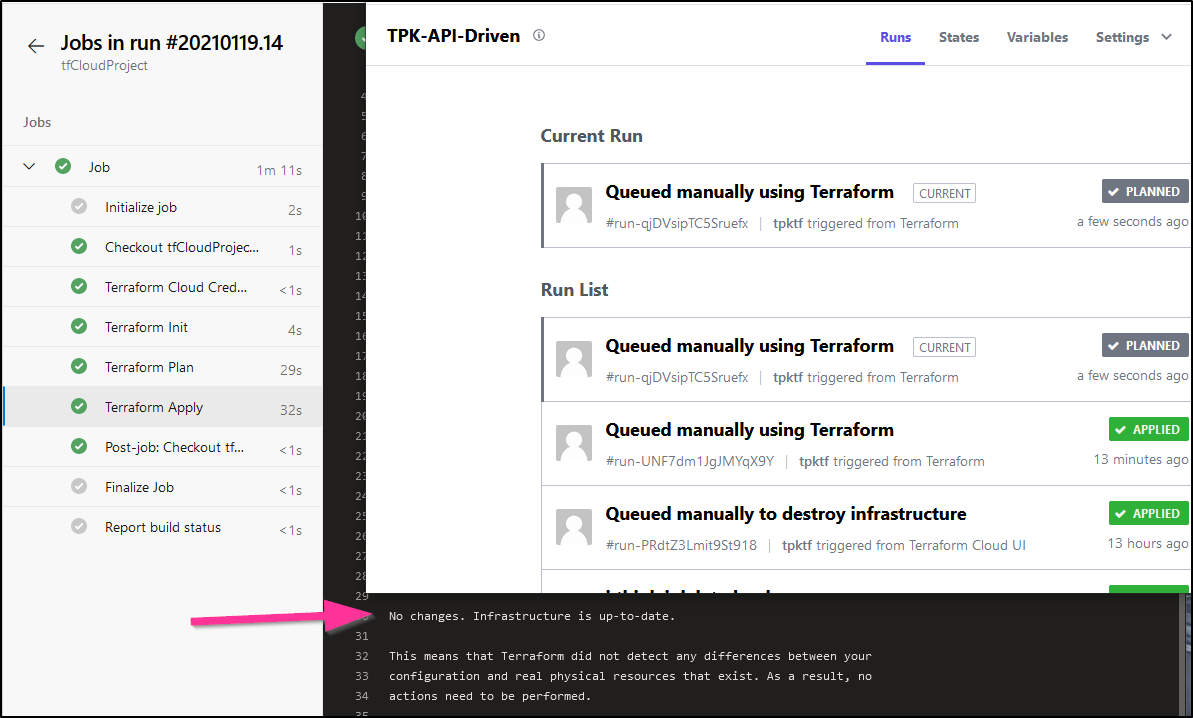

First, I can see the runs in the Workspace for Terraform Cloud:

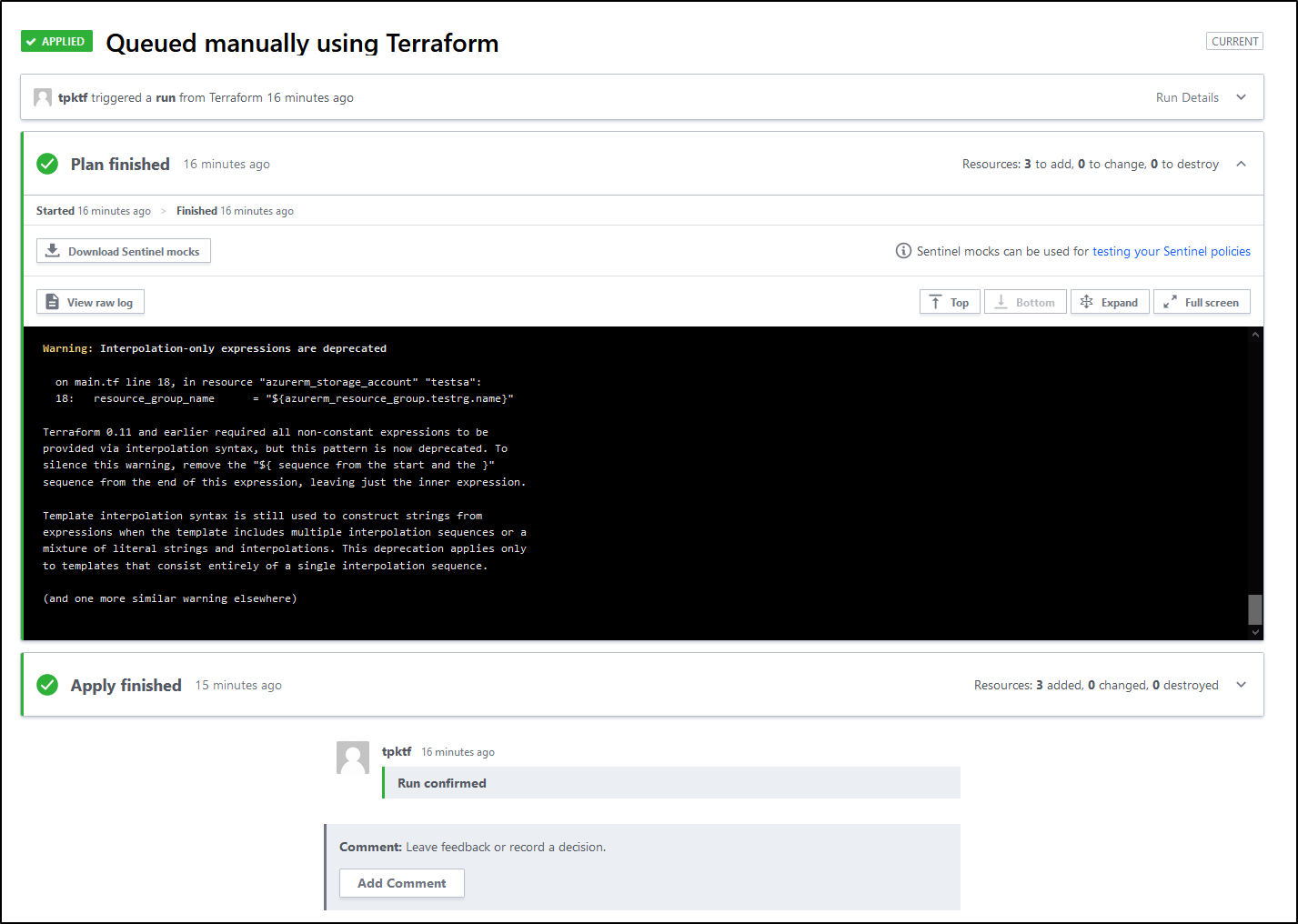

I can view the run to see details:

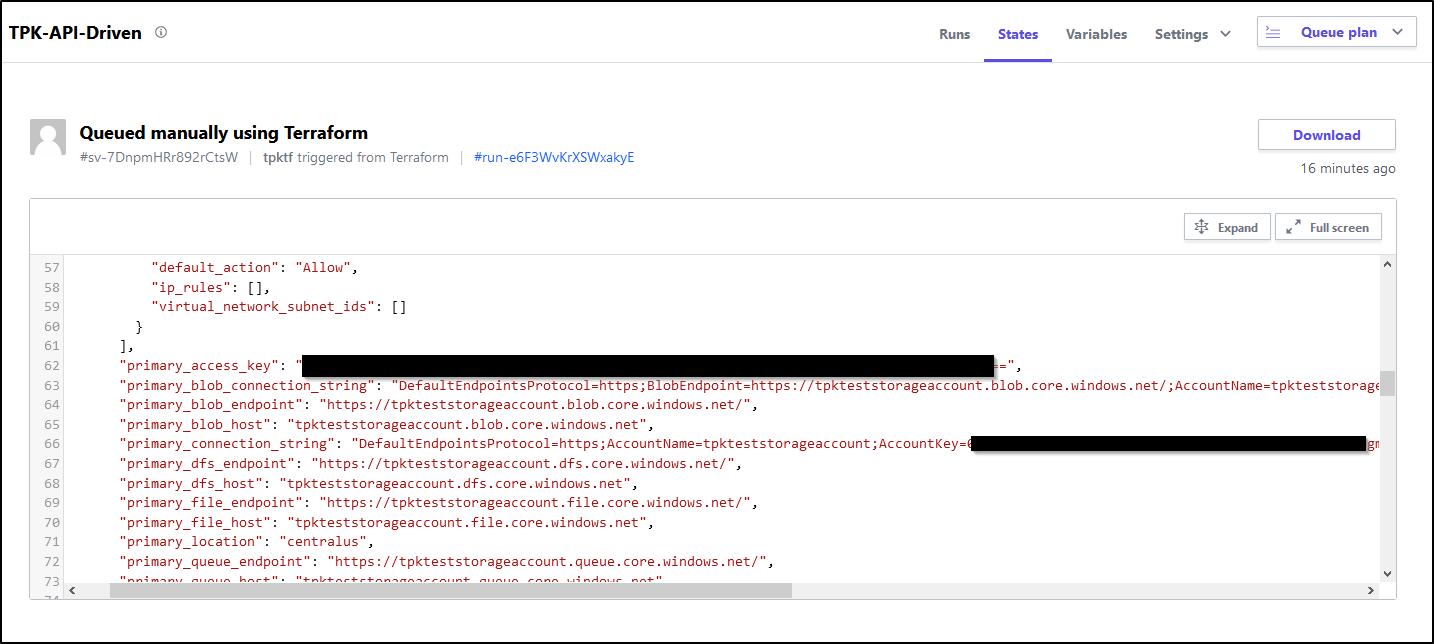

Something to keep in mind, especially if sharing the account or logs, is that some sensitive data is stored in the output. Looking just at the state output, I can see the primary access key and ID:

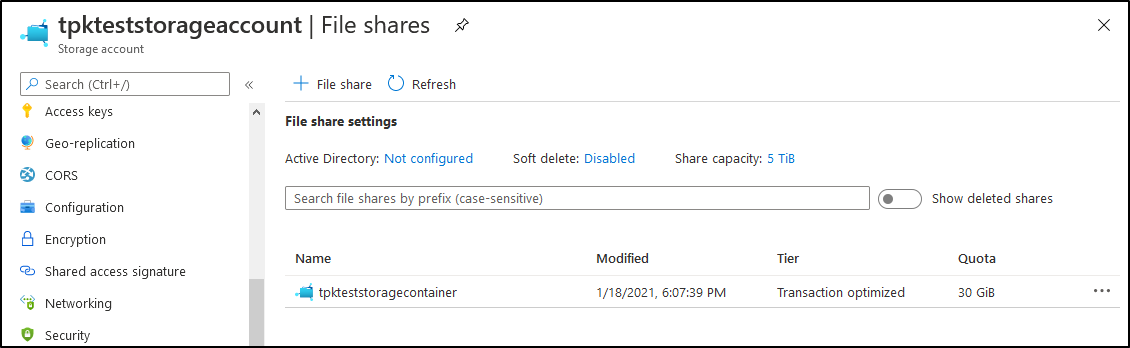

We can view the storage account in the portal:

But we can also see the details from the command line:

$ az storage account list -g tpkTestStorageRg -o table

AccessTier    AllowBlobPublicAccess    CreationTime                      EnableHttpsTrafficOnly    IsHnsEnabled    Kind       Location    MinimumTlsVersion    Name                   PrimaryLocation    ProvisioningState    ResourceGroup     StatusOfPrimary

------------  -----------------------  --------------------------------  ------------------------  --------------  ---------  ----------  -------------------  ---------------------  -----------------  -------------------  ----------------  -----------------

Hot           False                    2021-01-19T00:07:19.804528+00:00  True                      False           StorageV2  centralus   TLS1_0               tpkteststorageaccount  centralus          Succeeded            tpkTestStorageRG  available

Using TF Cloud to restore things…

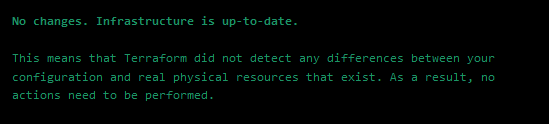

If nothing has changed, a plan will say as much:

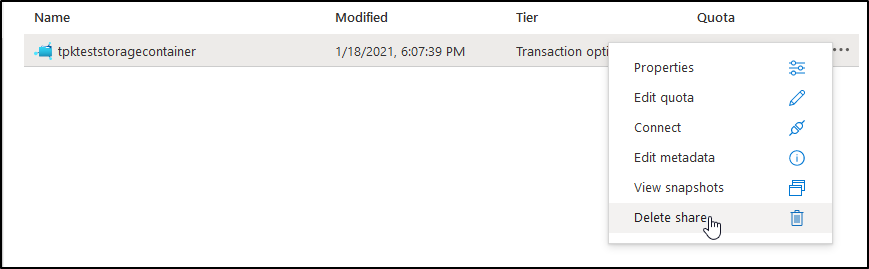

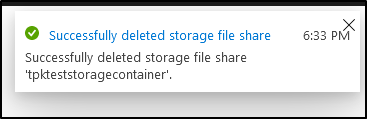

Say we tweaked things, such as changing the quota or deleting the share we created:

Confirm and delete

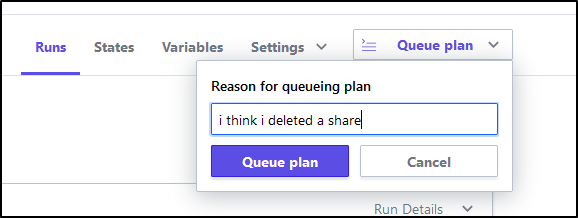

Now let’s queue a plan:

It queues

Then runs

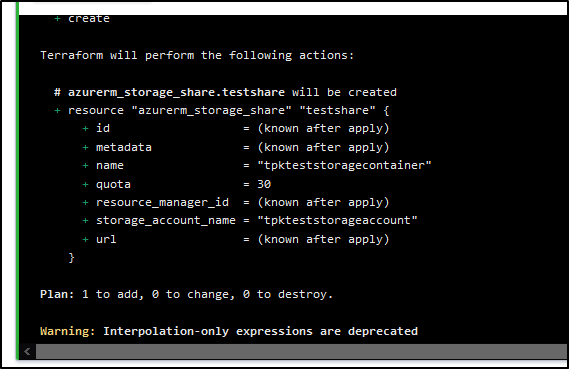

And this time identifies something to create

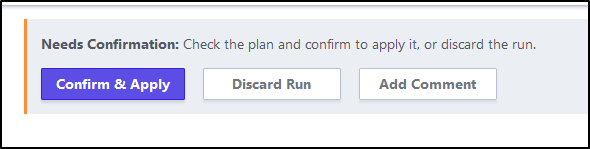

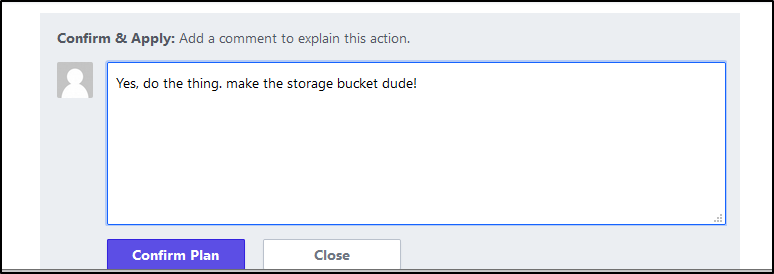

Just confirm and apply:

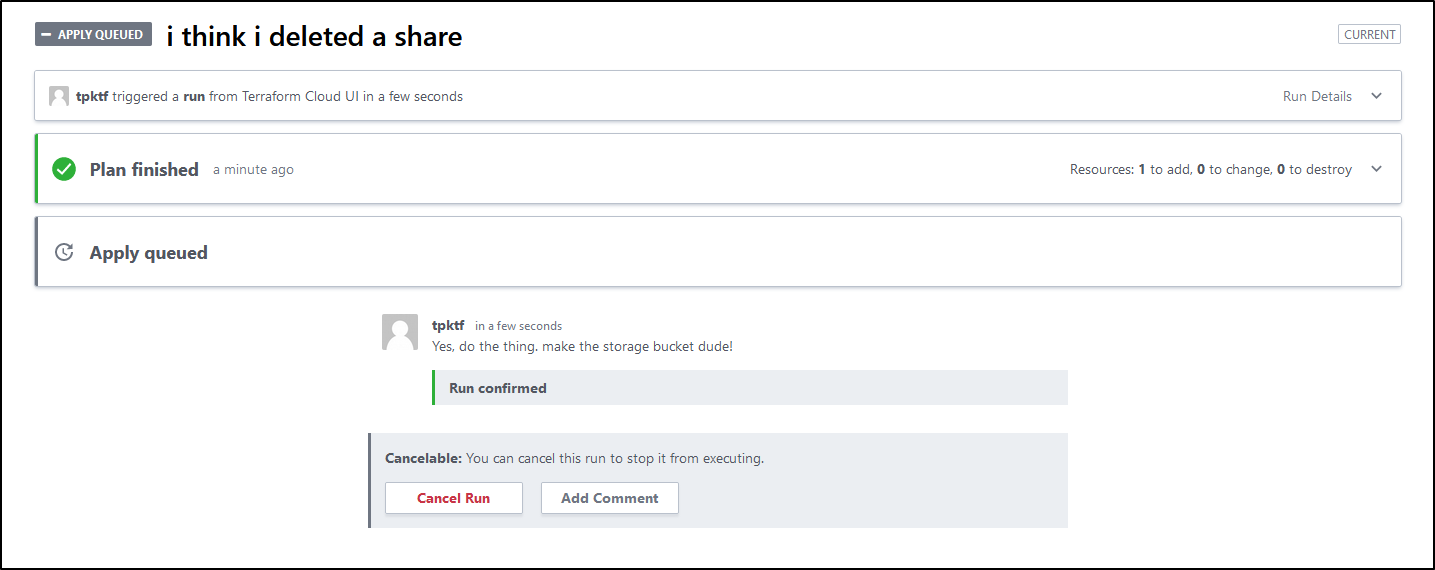

This time it queues an apply:

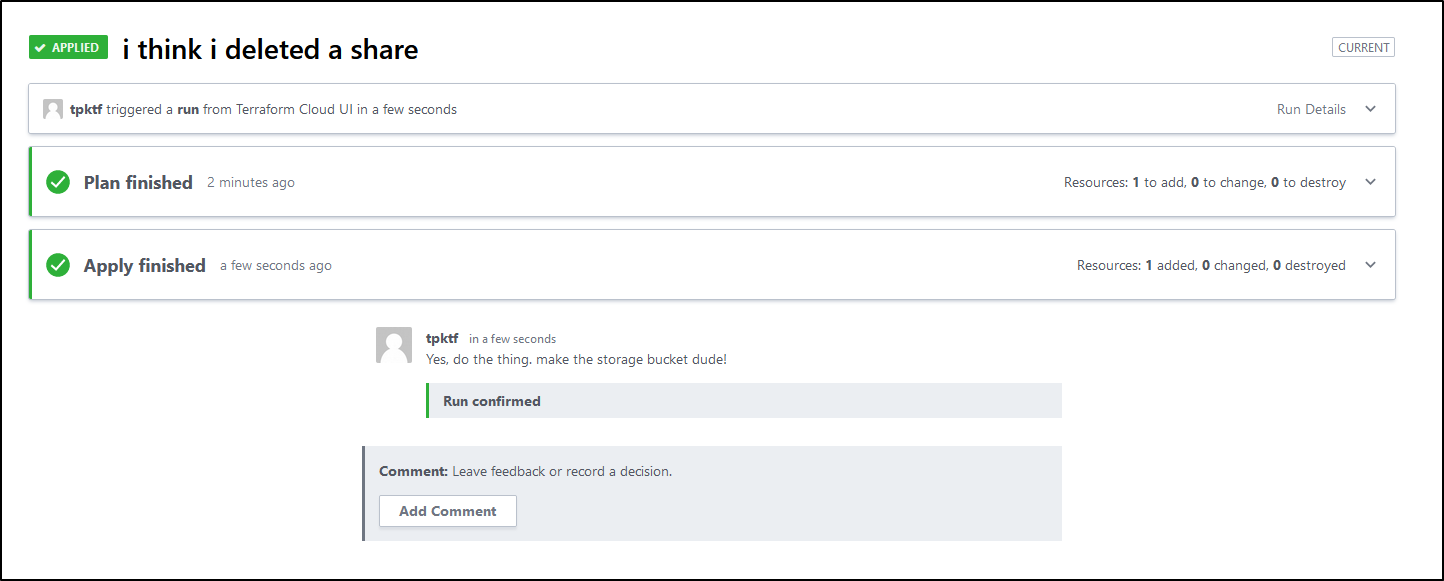

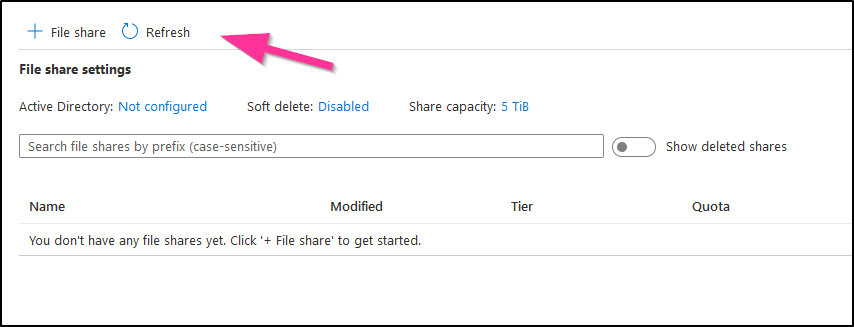

And we can see it indeed applied and created the share again:

Cleanup

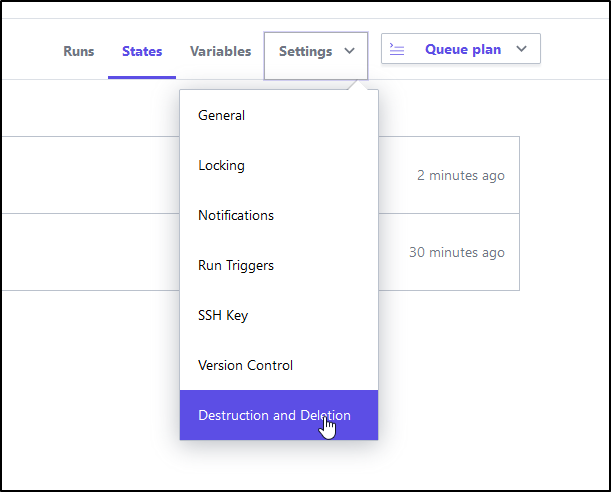

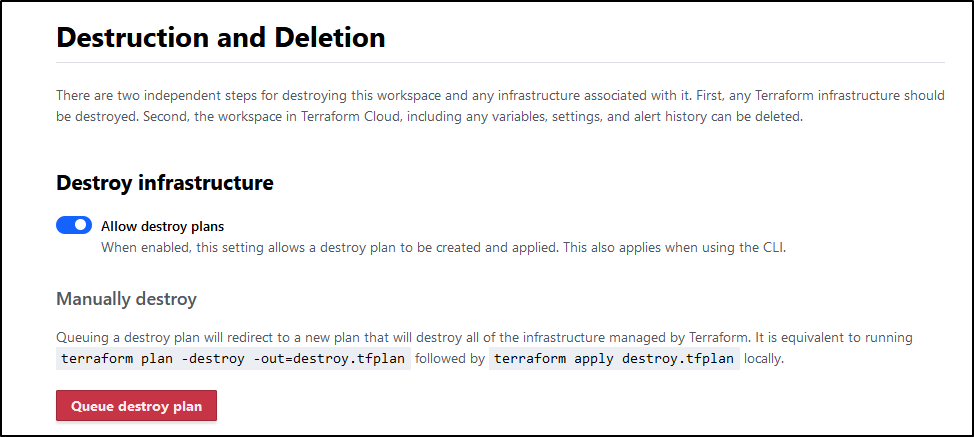

Next we should try removing all the things via Terraform Cloud as well:

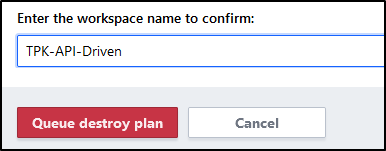

Let’s queue a destroy plan:

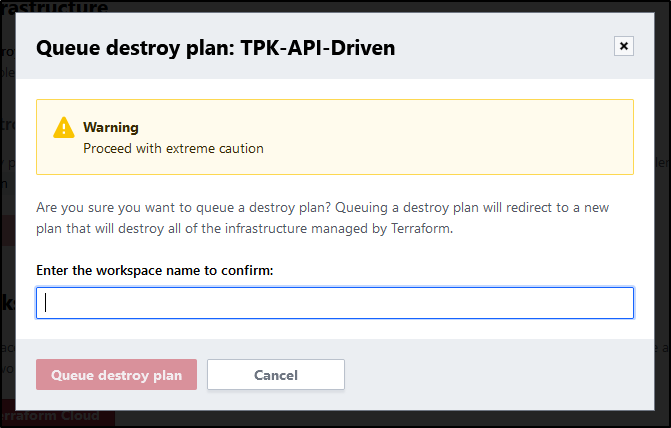

It cautions us against this:

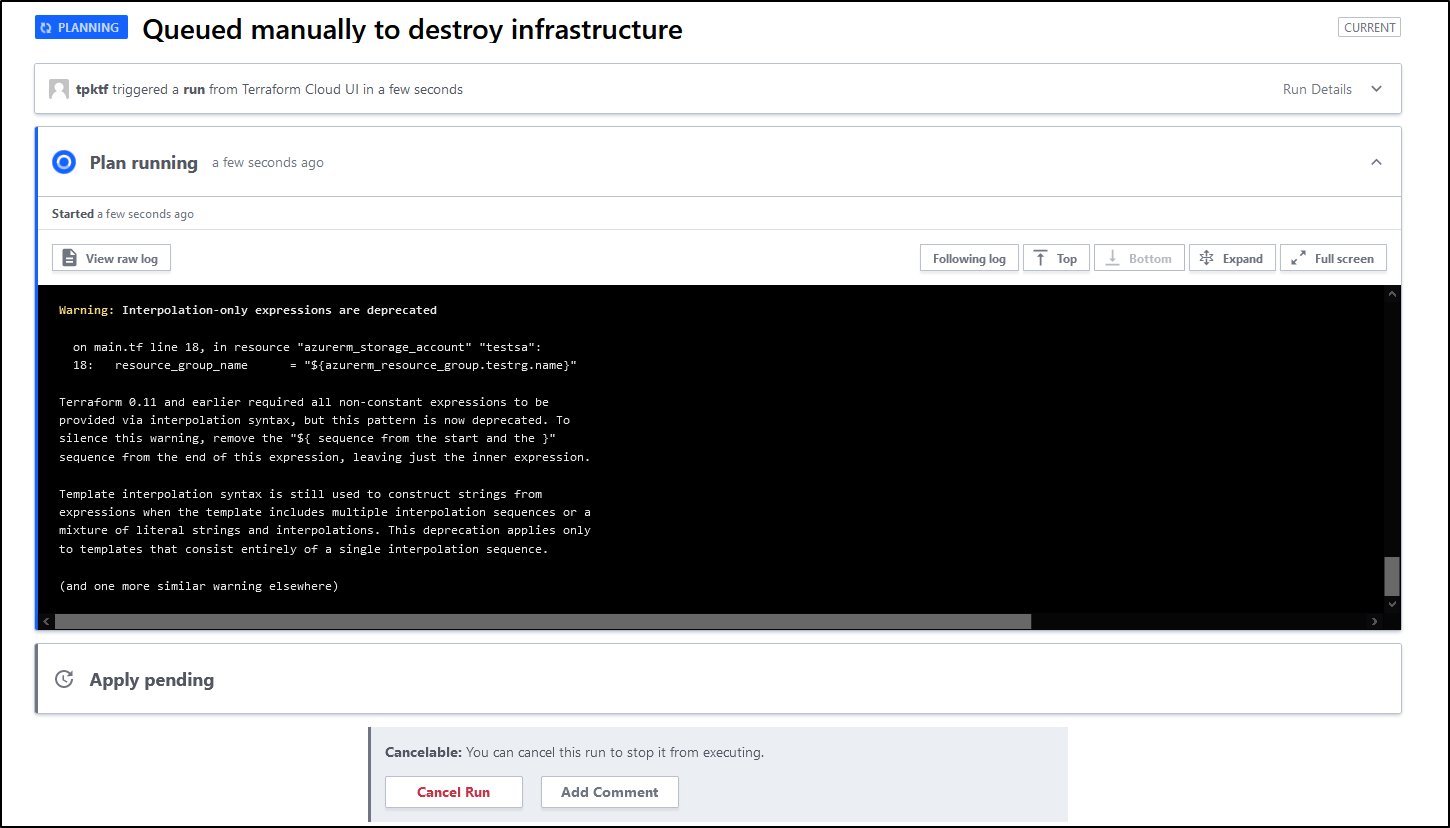

This will queue a destroy plan:

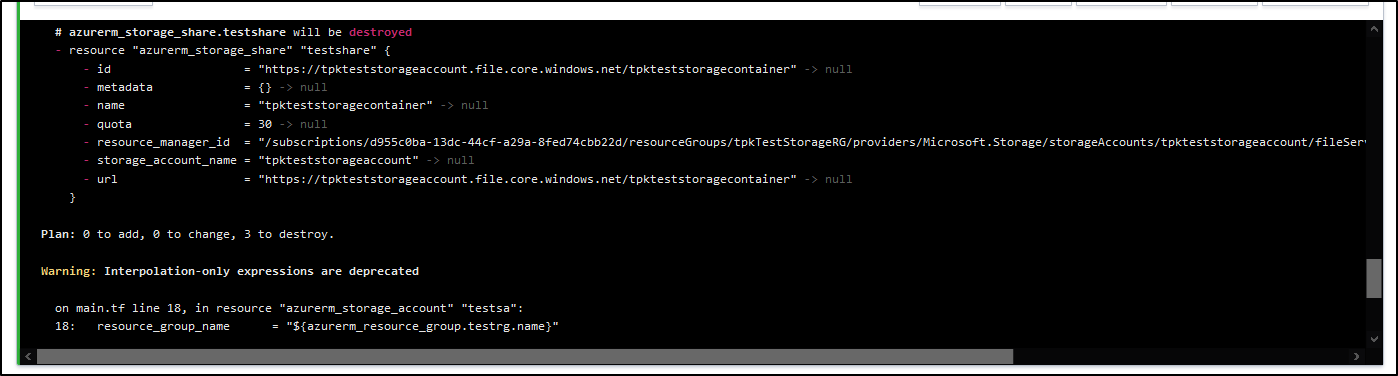

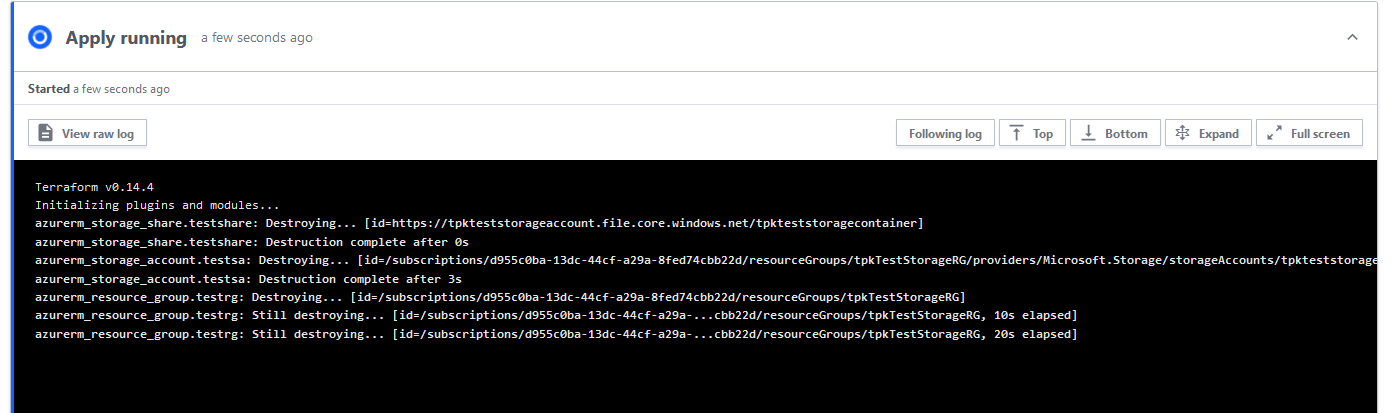

Before we confirm and apply, we can verify from the log that it is deleting only the three things we created: the Resource Group, the Storage Account and the Share:

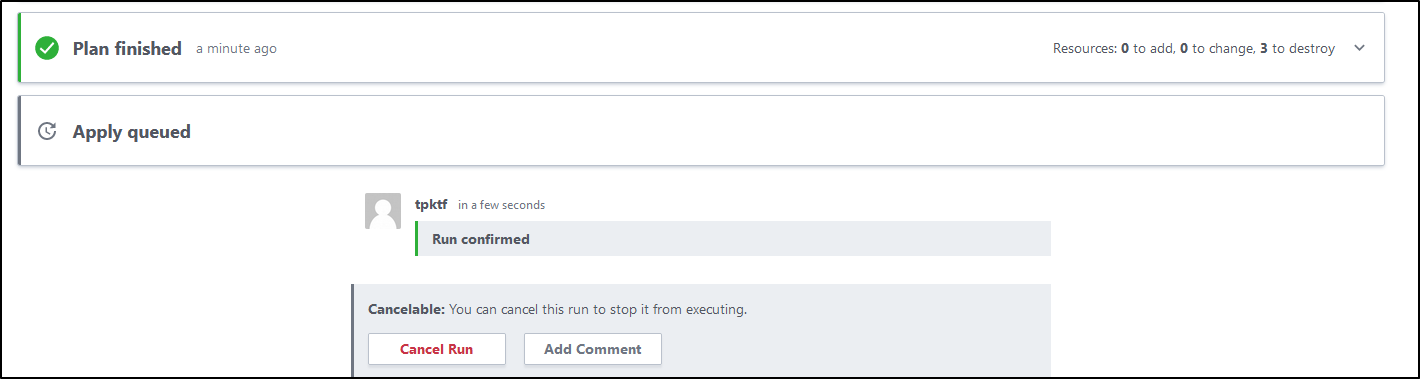

We can queue it up

And watch its progress

I can see it’s now absent in the Azure portal and can also confirm it via the command line:

$ az storage account list -o table | grep tpkteststorageaccount

$ az storage account list -g tpkTestStorageRg -o table

Resource group 'tpkTestStorageRg' could not be found.
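If we want the same cleanup check in a script rather than by eye, a small wrapper around az group exists (which prints true or false) might look like this. The function name is my own, and this is only a sketch that assumes an already logged-in az CLI.

```shell
# Returns success (exit 0) when the named resource group no longer exists.
# Relies on `az group exists`, which prints "true" or "false".
resource_group_is_gone() {
  local name="$1"
  [ "$(az group exists --name "$name")" = "false" ]
}

# Usage (needs a logged-in az CLI, so it is left commented out here):
# resource_group_is_gone tpkTestStorageRG && echo "cleanup confirmed"
```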

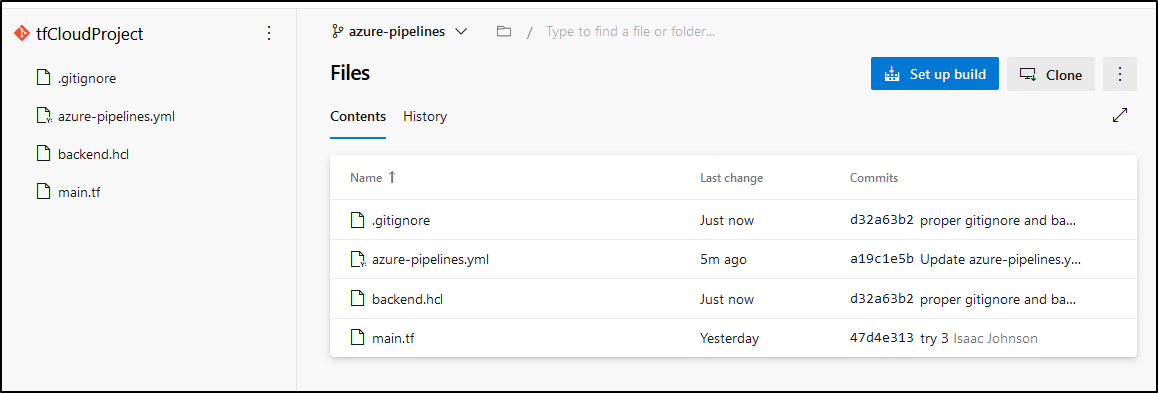

Integration with AzDO

Let’s try using the API approach to integrate with Azure DevOps

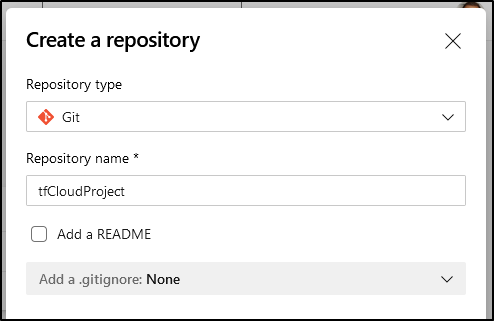

We’ll create a new repo

And upload what we have thus far:

builder@DESKTOP-JBA79RT:~/Workspaces/tfCloudProject$ git remote add origin https://princessking.visualstudio.com/StandupTime/_git/tfCloudProject

builder@DESKTOP-JBA79RT:~/Workspaces/tfCloudProject$ git push -u origin --all

Counting objects: 6, done.

Delta compression using up to 8 threads.

Compressing objects: 100% (4/4), done.

Writing objects: 100% (6/6), 795 bytes | 795.00 KiB/s, done.

Total 6 (delta 1), reused 0 (delta 0)

remote: Analyzing objects... (6/6) (5 ms)

remote: Storing packfile... done (86 ms)

remote: Storing index... done (40 ms)

remote: We noticed you're using an older version of Git. For the best experience, upgrade to a newer version.

To https://princessking.visualstudio.com/StandupTime/_git/tfCloudProject

* [new branch] main -> main

Branch 'main' set up to track remote branch 'main' from 'origin'.

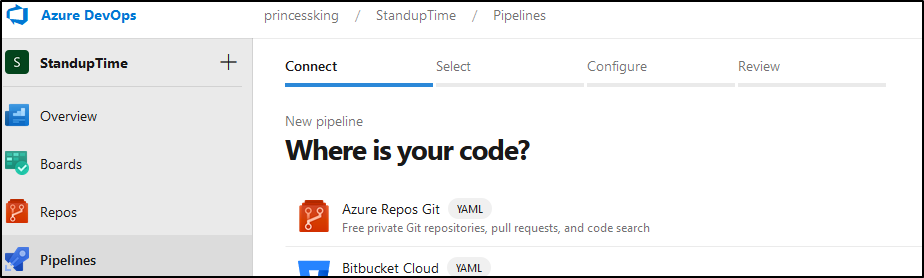

Next, we’ll create a YAML pipeline:

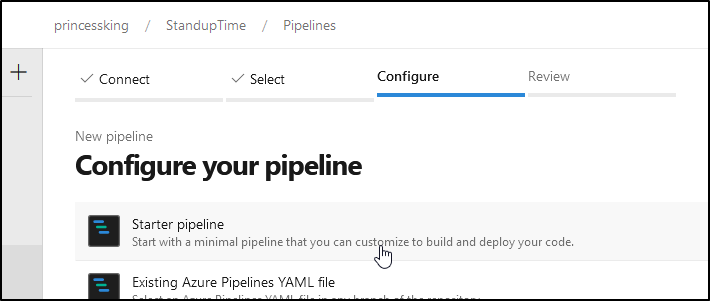

We can start with a starter pipeline

This just gives us the basic:

# Starter pipeline

# Start with a minimal pipeline that you can customize to build and deploy your code.

# Add steps that build, run tests, deploy, and more:

# https://aka.ms/yaml

trigger:

- main

pool:

  vmImage: 'ubuntu-latest'

steps:

- script: echo Hello, world!

  displayName: 'Run a one-line script'

- script: |

    echo Add other tasks to build, test, and deploy your project.

    echo See https://aka.ms/yaml

  displayName: 'Run a multi-line script'

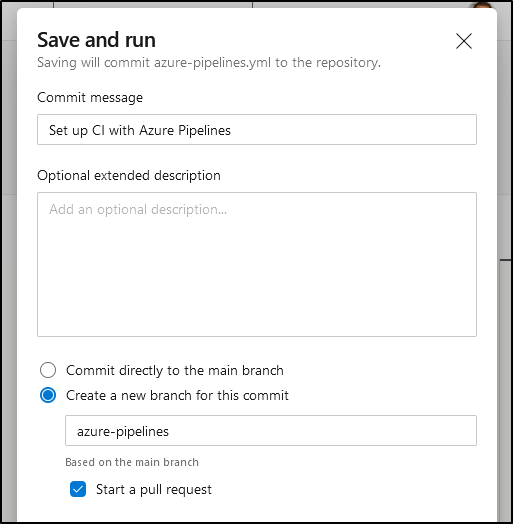

We can save and run (to a new branch):

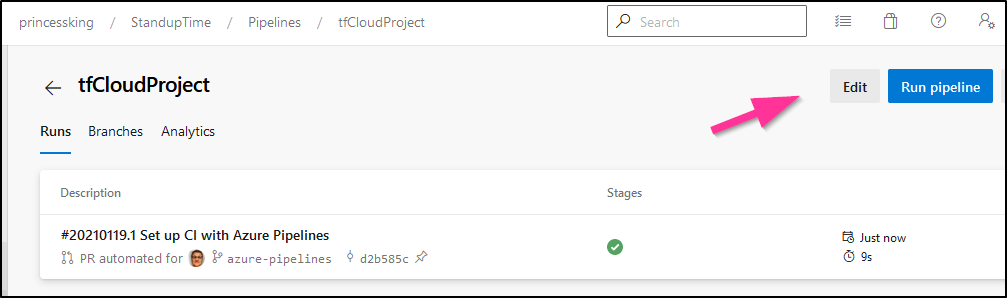

The hello world job should run clean, but let’s immediately start to edit our pipeline. We’ll do this together so you can see how to work with YAML pipelines using the wizard.

Choose Edit from the pipeline menu

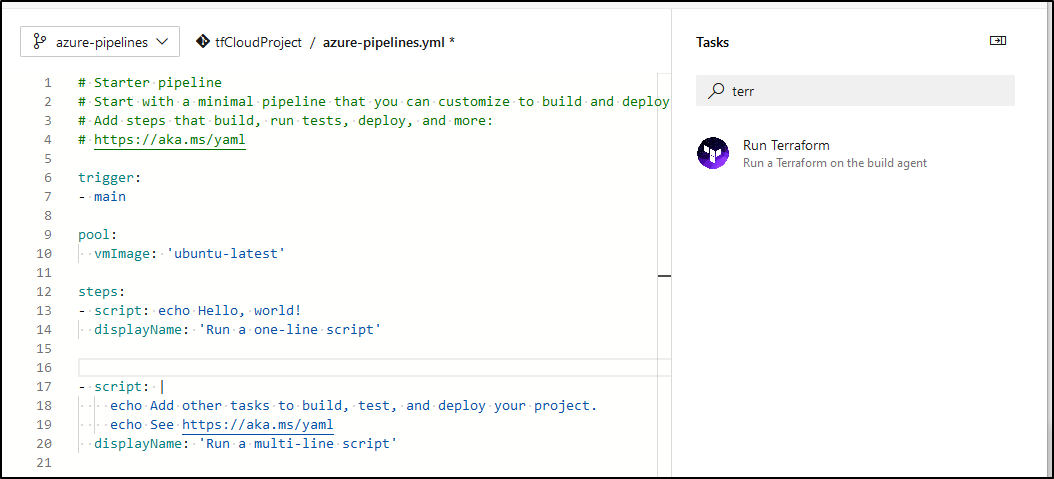

We can search for Terraform in the right-hand wizard:

Tip: I often need to refer to the predefined variables page so it’s worth keeping bookmarked.

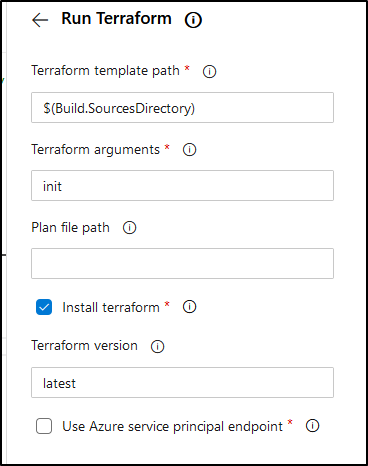

When we click add, it should add a block like this:

- task: Terraform@2

  inputs:

    TemplatePath: '$(Build.SourcesDirectory)'

    Arguments: 'init'

    InstallTerraform: true

    UseAzureSub: false

Our first pipeline run complains that this is a Windows-only task.

I removed it and realized that Terraform is already on the Ubuntu agent, so we likely don’t need to complicate things with a custom task. Changing to a shell step that runs "which terraform" and "terraform --version" showed:

2021-01-19T12:41:20.8052913Z /usr/local/bin/terraform

2021-01-19T12:41:22.2354599Z Terraform v0.14.4

2021-01-19T12:41:22.2372962Z ##[section]Finishing: Run a multi-line script
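Since we are now relying on whatever the hosted agent happens to ship, it can be worth failing fast when the binary is missing. A minimal sketch of such a guard follows. The require_tool helper is a name I made up for illustration, not something Azure DevOps provides.

```shell
# Fail fast when a required tool is missing from the agent's PATH.
require_tool() {
  if ! command -v "$1" >/dev/null 2>&1; then
    echo "required tool not found on PATH: $1" >&2
    return 1
  fi
}

# Usage in a pipeline script step (commented out so the sketch runs anywhere):
# require_tool terraform && terraform --version
```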

Let’s add a .gitignore file and our backend.hcl to the azure-pipelines branch
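I did not capture the exact contents of my .gitignore, so treat the entries below as an assumption about what is reasonable for a Terraform repo rather than what was actually committed. The sketch writes the file to a temp directory so it won’t touch a real checkout.

```shell
# Written to a temp dir here so the sketch does not touch a real repo;
# in practice the file would live at the repository root.
repo_dir="$(mktemp -d)"
cat > "$repo_dir/.gitignore" <<'EOF'
# Provider plugins and backend metadata created by terraform init
.terraform/
# Local state, should any ever be written outside Terraform Cloud
*.tfstate
*.tfstate.backup
# CLI credentials file (the pipeline later generates one named .tfcreds)
.tfcreds
EOF
cat "$repo_dir/.gitignore"
```

Note that backend.hcl is deliberately not ignored, since the pipeline needs it for terraform init.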

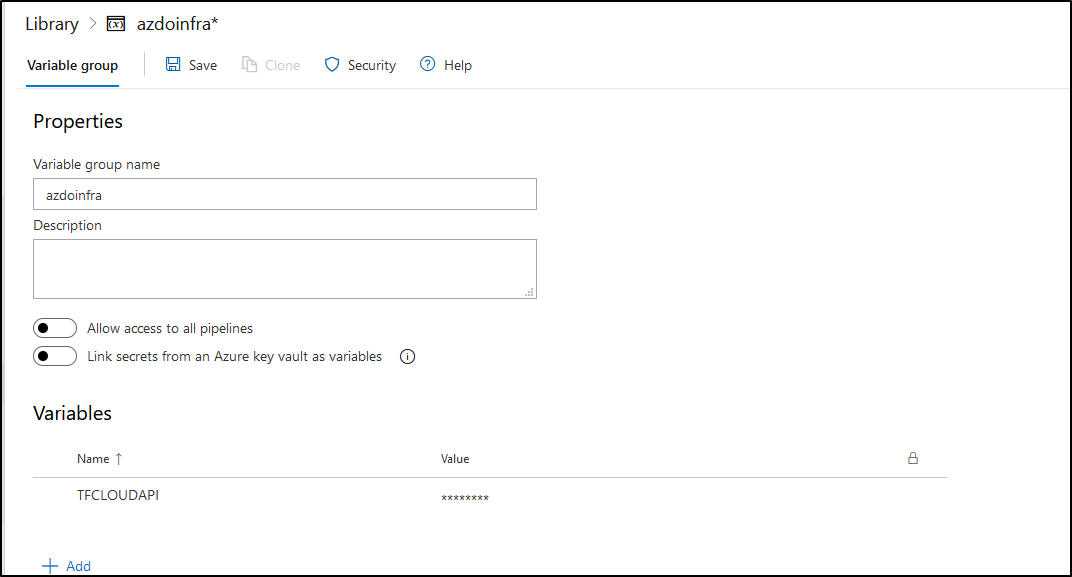

We need to save our API token to a secret store

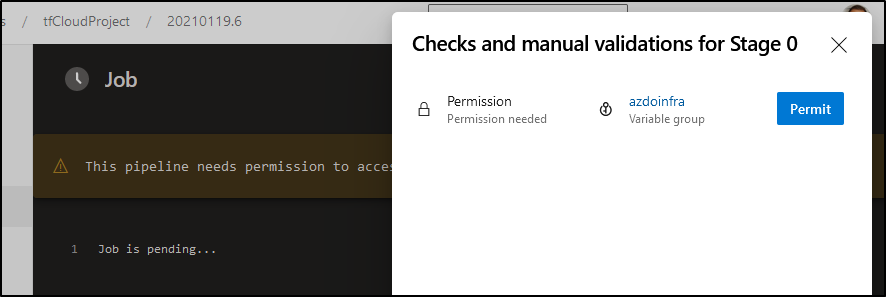

The first time we use this pipeline, we may encounter:

We approve it. This is necessary so that others (in this public project) can’t use our variable group in pipelines without our knowledge, as an admin must approve.

Pipeline Setup

Our Azure-pipelines.yaml will look like this:

# Starter pipeline

# Start with a minimal pipeline that you can customize to build and deploy your code.

# Add steps that build, run tests, deploy, and more:

# https://aka.ms/yaml

trigger:

- main

variables:

- group: azdoinfra

pool:

  vmImage: 'ubuntu-latest'

steps:

- script: |

    set +x

    cat > $(Build.SourcesDirectory)/.tfcreds <<'EOL'

    {

      "credentials": {

        "app.terraform.io": {

          "token": "TFCLOUDPAPI"

        }

      }

    }

    EOL

    # inline above should have worked, but not setting var inline

    sed -i "s/TFCLOUDPAPI/$(TFCLOUDAPI)/g" $(Build.SourcesDirectory)/.tfcreds

  displayName: 'Terraform Cloud Credentials'

- script: |

    export TF_CLI_CONFIG_FILE="$(Build.SourcesDirectory)/.tfcreds"

    terraform init -backend-config=backend.hcl

  displayName: 'Terraform Init'

- script: |

    export TF_CLI_CONFIG_FILE="$(Build.SourcesDirectory)/.tfcreds"

    terraform plan -no-color

  displayName: 'Terraform Plan'

- script: |

    export TF_CLI_CONFIG_FILE="$(Build.SourcesDirectory)/.tfcreds"

    terraform apply -no-color -auto-approve

  displayName: 'Terraform Apply'
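The credentials step above writes a placeholder and then patches it with sed. As an alternative sketch, the file can be written in one go from a shell variable using an unquoted heredoc. The function name and the TFC_TOKEN variable are hypothetical, and I have not run this variant in the pipeline itself.

```shell
# TFC_TOKEN is a hypothetical variable name; the pipeline above stores
# the token in a variable called TFCLOUDAPI.
write_tf_creds() {
  local target="$1" token="$2"
  # Restrict permissions before the token touches disk.
  ( umask 077
    cat > "$target" <<EOF
{
  "credentials": {
    "app.terraform.io": {
      "token": "${token}"
    }
  }
}
EOF
  )
}

# Demo with a placeholder value, not a real token.
creds_file="$(mktemp)"
write_tf_creds "$creds_file" "${TFC_TOKEN:-example-token}"
export TF_CLI_CONFIG_FILE="$creds_file"
```

Writing the value directly avoids sed tripping over a token that happens to contain a slash. In Azure Pipelines, secret variables generally have to be mapped into a script step explicitly through its env block before they show up as shell variables, so that mapping would be needed for this approach.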

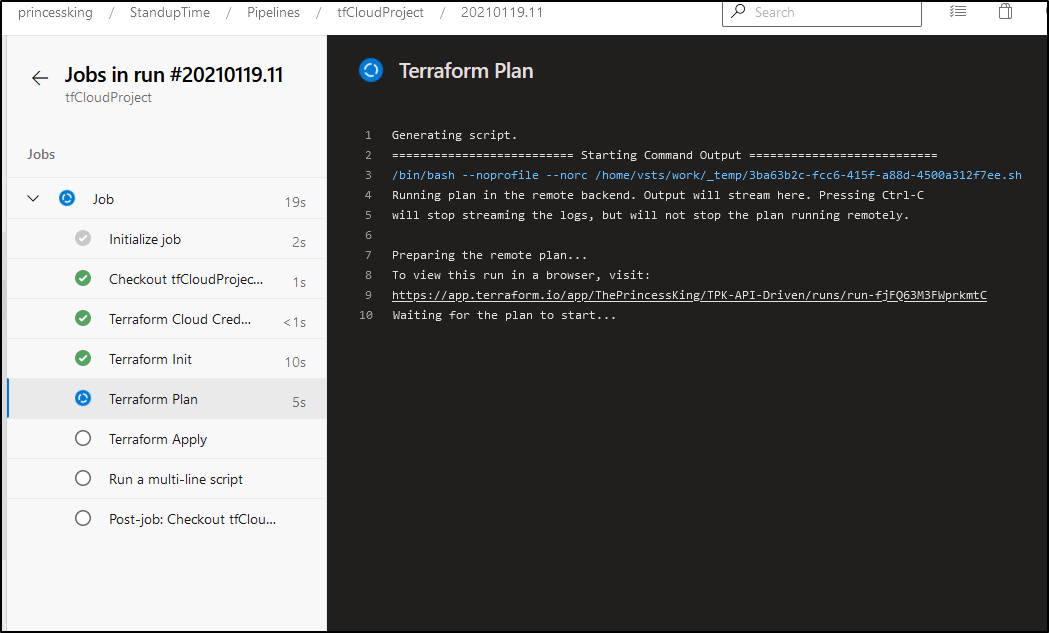

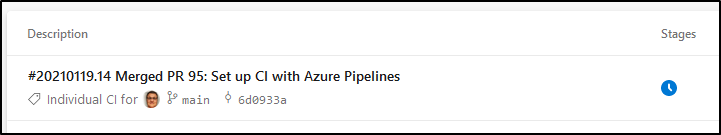

When run we should see it apply

While it is running, we should see the same run reflected in TF Cloud, which holds our state (as well as our credentials for the Azure cloud we are modifying)

When complete we should see a successful run in both Azure DevOps and Terraform Cloud:

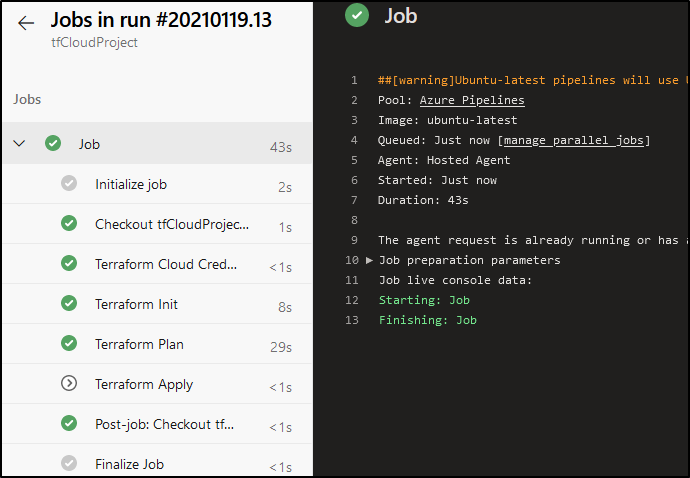

One last bit of polish - don’t actually apply in the PR or feature branches. We only want to affect things after merging.

Change the last step to add a condition:

- script: |

    export TF_CLI_CONFIG_FILE="$(Build.SourcesDirectory)/.tfcreds"

    terraform apply -no-color -auto-approve

  displayName: 'Terraform Apply'

  condition: and(succeeded(), eq(variables['Build.SourceBranch'], 'refs/heads/main'))
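The same gate can also be expressed inside a script, which is handy if one step does both plan and apply. This is a rough sketch that assumes the agent exposes Build.SourceBranch to scripts as the BUILD_SOURCEBRANCH environment variable. The should_apply name is mine.

```shell
# Azure Pipelines exposes Build.SourceBranch to scripts as BUILD_SOURCEBRANCH.
should_apply() {
  [ "${BUILD_SOURCEBRANCH:-}" = "refs/heads/main" ]
}

if should_apply; then
  echo "on main: would run terraform apply"
else
  echo "not on main: skipping apply"
fi
```

I still prefer the YAML condition, since a skipped step shows up clearly in the pipeline UI instead of being hidden in a log.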

We can fire our azure-pipelines branch to ensure it skips apply:

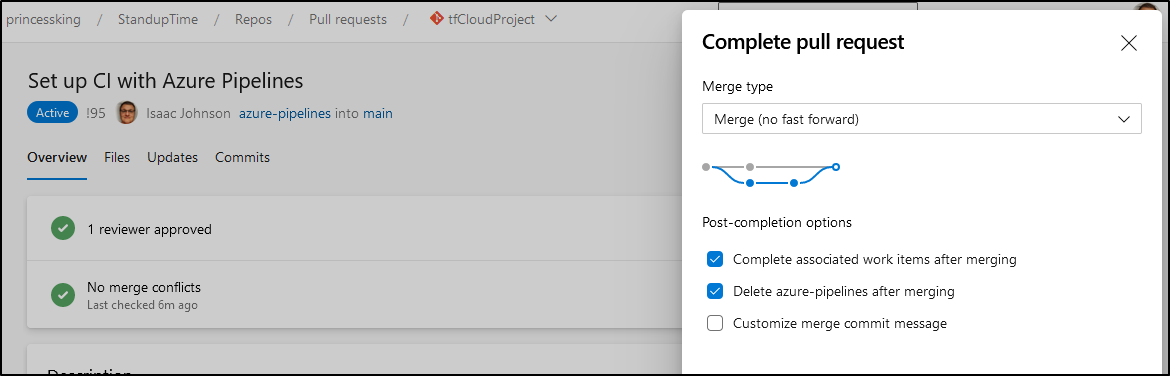

And once merged, we see it does apply (however, nothing is created since we applied earlier)

Which triggers a run

And we can see nothing needed creating in the apply:

Summary

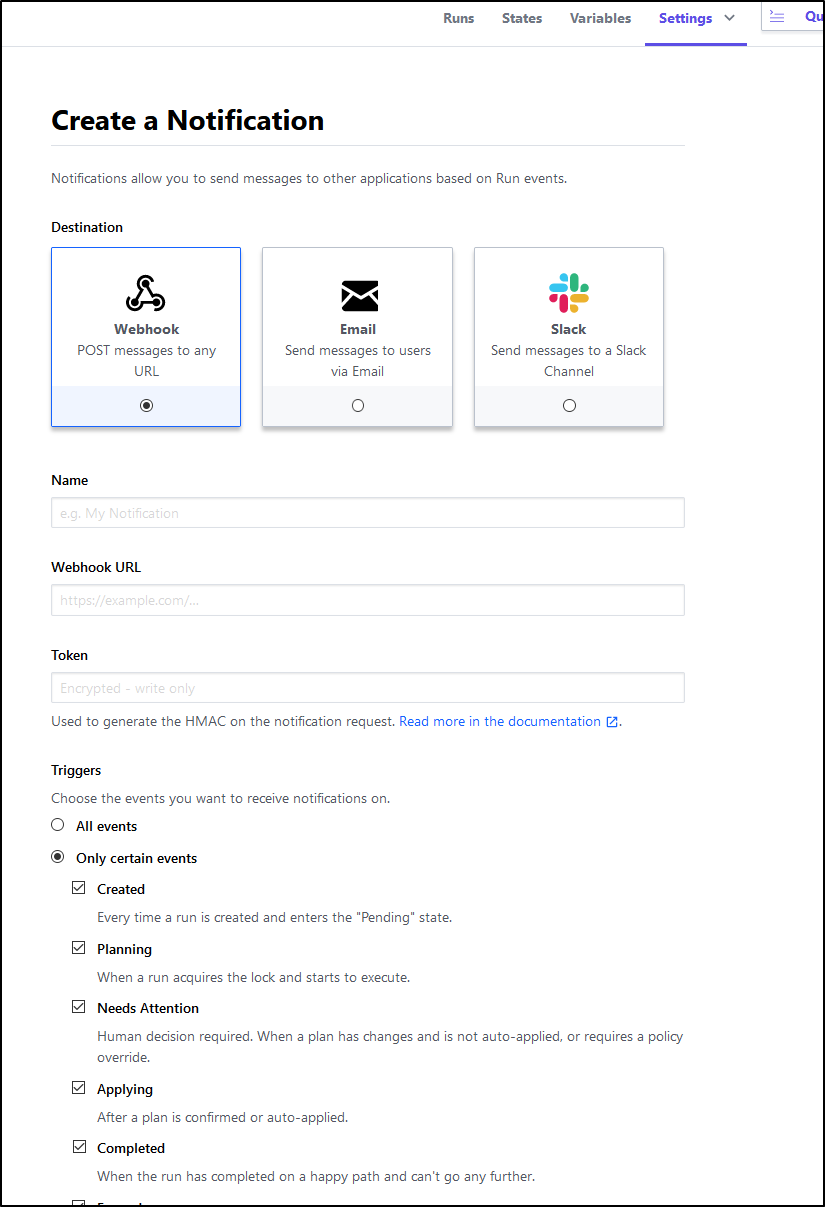

We only scratched the surface with what we can do with Terraform Cloud. There is a whole notifications area for sending notifications on events:

We can start to leverage Sentinel for policy enforcement and, while it didn’t work for us at the start, we should be able to use Version Control driven flows that don’t require Azure Pipelines to orchestrate (however, I will always be a fan of well-formed Pull Request flows, so I would likely still use the API method).

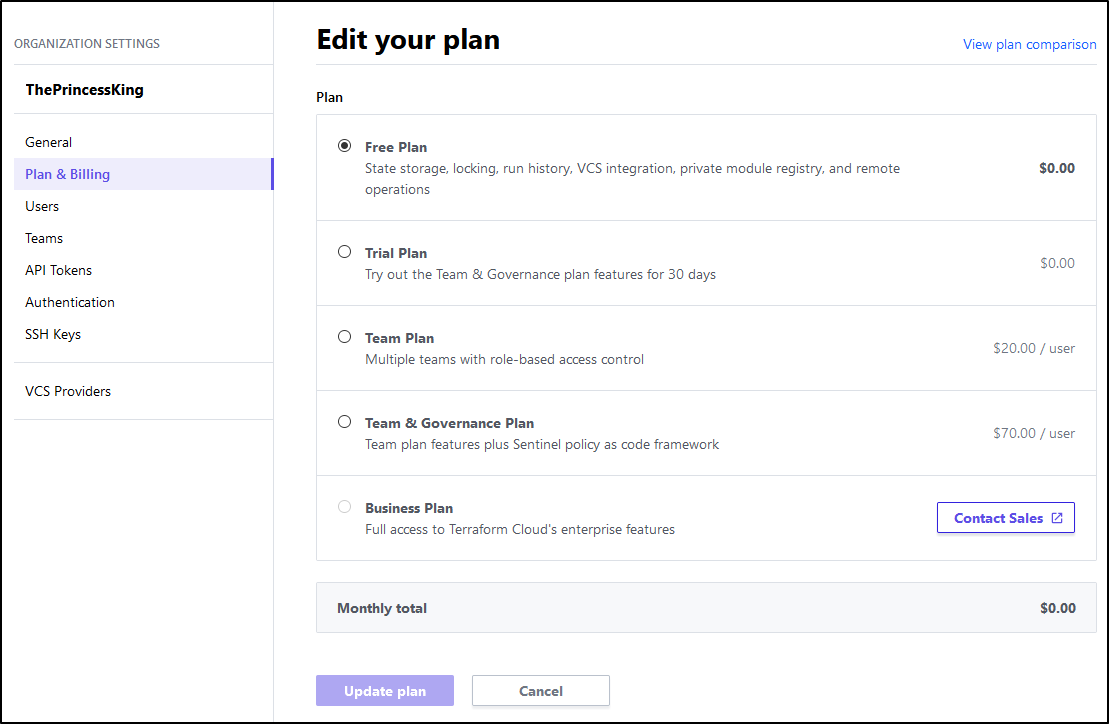

Let’s be fair - Hashicorp is giving us a real gift with Terraform Cloud. It’s a free version of some of the best features of Terraform Enterprise. I’m sure they offer it both to help us learn how to engage with cloud state storage and to tease us with the features of the enterprise offering.

And to that end, they’ve now priced the Enterprise offering in a really compelling way. Instead of all or nothing, an organization can choose how far to go:

So let us assume we are building out a new organization and would like to have some basic RBAC (to ensure Intern Joe doesn’t blast the k8s cluster by accident) or to administer our Ops users (so Grumpy Pete doesn’t go to a new gig with our API tokens). We can start with Teams at $20/user and then graduate to full governance at $70/user. And for companies that want unlimited users, they can move to Terraform Enterprise.

Am I a bit of a Hashi fan? Yes, yes I am. They continue to both innovate and release products with compelling free open-source offerings. I truly think this is the way:

- make your product easy to use

- give a compelling free option

- offer graduated commercial products with pricing that scales

- embrace the open-source community.

Companies that do this will thrive. Those that try to hide behind powerpoints and glossy demo recordings will have a real hard time. I don’t know how many times what could be a really good product has died in the room when the question of “do you have a demo environment?” or “free offering” is met with “we need NDAs and a signed PO first…”.