Published: Oct 6, 2020 by Isaac Johnson

Let’s keep going with what we started last week. This week I’ll show how to format secrets for various database engines, how to expose Vault with a public IP and load balancer, and lastly, how to connect multiple Kubernetes clusters to an auto-unsealing Vault in AKS.

Getting started

You can look at last week’s post to spin up the cluster. Otherwise, let’s just log back in to our existing cluster:

$ az account set --subscription aabbccdd-aabb-ccdd-eeff-aabbccddeeff

$ az aks list -o table

Name        Location    ResourceGroup    KubernetesVersion    ProvisioningState    Fqdn
----------  ----------  ---------------  -------------------  -------------------  ---------------------------------------------------------------
ijvaultaks  centralus   ijvaultk8srg     1.17.11              Succeeded            ijvaultaks-ijvaultk8srg-70b42e-e2c32296.hcp.centralus.azmk8s.io

And a sanity that we are in the right kubernetes cluster:

$ kubectl get nodes

NAME                                STATUS   ROLES   AGE     VERSION
aks-nodepool1-84770334-vmss000000   Ready    agent   3d11h   v1.17.11
aks-nodepool1-84770334-vmss000001   Ready    agent   3d11h   v1.17.11

$ kubectl get deployments

NAME                   READY   UP-TO-DATE   AVAILABLE   AGE
vault-agent-injector   1/1     1            1           3d11h
webapp                 1/1     1            1           2d15h

Creating a DB User/Pass

Following HashiCorp’s tutorials, let’s log in and create a database secret and policy:

$ kubectl exec -it vault-0 -- /bin/sh

/ $ vault login

Token (will be hidden):

Success! You are now authenticated. The token information displayed below
is already stored in the token helper. You do NOT need to run "vault login"
again. Future Vault requests will automatically use this token.

Key                  Value
---                  -----
token                s.AghmoNp4pAQekX7ADcM3NID9
token_accessor       yzz0shnNSMnXIK1mQ7b4JogB
token_duration       ∞
token_renewable      false
token_policies       ["root"]
identity_policies    []
policies             ["root"]

Check that we haven’t already set the database secret:

/ $ vault kv get internal/database/config

Error making API request.

URL: GET http://127.0.0.1:8200/v1/sys/internal/ui/mounts/internal/database/config

Code: 403. Errors:

* preflight capability check returned 403, please ensure client's policies grant access to path "internal/database/config/"

Enable the kv-v2 secrets engine at the internal/ path:

/ $ vault secrets enable -path=internal kv-v2

Success! Enabled the kv-v2 secrets engine at: internal/

Let’s set a user and password secret:

/ $ vault kv put internal/database/config username="db-readonly-username" password="db-secret-password"

Key              Value
---              -----
created_time     2020-10-05T11:22:49.6524387Z
deletion_time    n/a
destroyed        false
version          1

And now we should be able to get it back:

/ $ vault kv get internal/database/config

====== Metadata ======
Key              Value
---              -----
created_time     2020-10-05T11:22:49.6524387Z
deletion_time    n/a
destroyed        false
version          1

====== Data ======
Key         Value
---         -----
password    db-secret-password
username    db-readonly-username

Next, double-check the Kubernetes auth method. If you followed last week’s guide, it is already enabled, so this command should return an error:

/ $ vault auth enable kubernetes

Error enabling kubernetes auth: Error making API request.

URL: POST http://127.0.0.1:8200/v1/sys/auth/kubernetes

Code: 400. Errors:

* path is already in use at kubernetes/

If it isn’t enabled yet, enable it and then write the auth config (we’ll skip this since it’s already set):

/ $ vault auth enable kubernetes
Success! Enabled kubernetes auth method at: kubernetes/

/ $ vault write auth/kubernetes/config \
    token_reviewer_jwt="$(cat /var/run/secrets/kubernetes.io/serviceaccount/token)" \
    kubernetes_host="https://$KUBERNETES_PORT_443_TCP_ADDR:443" \
    kubernetes_ca_cert=@/var/run/secrets/kubernetes.io/serviceaccount/ca.crt
Success! Data written to: auth/kubernetes/config

Next, we’ll create an internal-app policy that grants read access to that secret:

/ $ vault policy write internal-app - <<EOF
> path "internal/data/database/config" {
>   capabilities = ["read"]
> }
> EOF
Success! Uploaded policy: internal-app
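A detail worth calling out: the secret was written with vault kv put internal/database/config, but the policy grants read on internal/data/database/config. With the KV version 2 engine, the HTTP API path (and therefore the policy path) inserts data/ after the mount, while the vault kv commands hide that prefix. The sketch below shows the mapping in plain shell; kv2_data_path is a hypothetical helper, and it assumes the mount is a single path segment (a nested mount such as team/kv would need the mount name passed separately).

```shell
#!/bin/sh
# Hypothetical helper: map a KV v2 logical path (what `vault kv get/put` take)
# to the API path used in policies, which inserts "data/" after the mount.
# Assumes the mount is the first path segment, e.g. "internal" or "secret".
kv2_data_path() {
  case "$1" in
    */*) ;;
    *) echo "expected <mount>/<path>, got: $1" >&2; return 1 ;;
  esac
  kv_mount=${1%%/*}   # first segment, e.g. "internal"
  kv_rest=${1#*/}     # everything after it, e.g. "database/config"
  printf '%s/data/%s\n' "$kv_mount" "$kv_rest"
}

kv2_data_path internal/database/config   # internal/data/database/config
kv2_data_path secret/devwebapp/config    # secret/data/devwebapp/config
```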

Lastly, create a role that binds the internal-app service account in the default namespace to that policy:

/ $ vault write auth/kubernetes/role/internal-app \
>     bound_service_account_names=internal-app \
>     bound_service_account_namespaces=default \
>     policies=internal-app \
>     ttl=24h
Success! Data written to: auth/kubernetes/role/internal-app

Now exit the pod and create the service account. First, verify it doesn’t already exist:

$ kubectl get sa

NAME                   SECRETS   AGE
default                1         3d11h
vault                  1         3d11h
vault-agent-injector   1         3d11h

Then create the internal-app sa:

$ kubectl create sa internal-app

serviceaccount/internal-app created

Next, let’s launch the tutorial’s sample org chart app:

$ cat dep-orgchart.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: orgchart
  labels:
    app: orgchart
spec:
  selector:
    matchLabels:
      app: orgchart
  replicas: 1
  template:
    metadata:
      annotations:
      labels:
        app: orgchart
    spec:
      serviceAccountName: internal-app
      containers:
        - name: orgchart
          image: jweissig/app:0.0.1

$ kubectl apply -f dep-orgchart.yaml

deployment.apps/orgchart created

We can check that it’s running:

$ kubectl get pods -l app=orgchart

NAME                        READY   STATUS    RESTARTS   AGE
orgchart-666df5cf59-82z56   1/1     Running   0          40s

Now let’s see if it has any secrets:

$ kubectl exec `kubectl get pod -l app=orgchart -o jsonpath="{.items[0].metadata.name}"` --container orgchart -- ls /vault/secrets

ls: /vault/secrets: No such file or directory

command terminated with exit code 1

None exist, so now we can use annotations to inject them into the pod:

$ cat patch-secrets.yaml
spec:
  template:
    metadata:
      annotations:
        vault.hashicorp.com/agent-inject: "true"
        vault.hashicorp.com/role: "internal-app"
        vault.hashicorp.com/agent-inject-secret-database-config.txt: "internal/data/database/config"

$ kubectl patch deployment orgchart --patch "$(cat patch-secrets.yaml)"

deployment.apps/orgchart patched

We can see they’ve been applied:

$ kubectl get deployments orgchart -o yaml | head -n 37 | tail -n 10
    type: RollingUpdate
  template:
    metadata:
      annotations:
        vault.hashicorp.com/agent-inject: "true"
        vault.hashicorp.com/agent-inject-secret-database-config.txt: internal/data/database/config
        vault.hashicorp.com/role: internal-app
      creationTimestamp: null
      labels:
        app: orgchart

Let’s check our pods again:

$ kubectl get pods -l app=orgchart

NAME                        READY   STATUS    RESTARTS   AGE
orgchart-7447d696df-zp9fd   2/2     Running   0          3m11s

The new pod name, and the 2/2 ready count (the app container plus the injected Vault Agent sidecar), tell us this pod was launched after the annotations were applied. Now let’s see whether we can get secrets, and indeed we can:

$ kubectl exec `kubectl get pod -l app=orgchart -o jsonpath="{.items[0].metadata.name}"` --container orgchart -- ls /vault/secrets

database-config.txt

$ kubectl exec `kubectl get pod -l app=orgchart -o jsonpath="{.items[0].metadata.name}"` --container orgchart -- cat /vault/secrets/database-config.txt

data: map[password:db-secret-password username:db-readonly-username]

metadata: map[created_time:2020-10-05T11:22:49.6524387Z deletion_time: destroyed:false version:1]
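The file name comes straight from the annotation key: whatever follows agent-inject-secret- becomes the file name under /vault/secrets, the injector’s default mount (the secret-volume-path annotation changes that directory). The shell sketch below only illustrates that naming rule; secret_file_for is a hypothetical helper, not something the injector provides.

```shell
#!/bin/sh
# Hypothetical helper illustrating the injector's file-naming rule:
# the suffix after "agent-inject-secret-" becomes the file name, placed
# under /vault/secrets unless another directory is passed as $2.
secret_file_for() {
  inject_prefix="vault.hashicorp.com/agent-inject-secret-"
  case "$1" in
    "$inject_prefix"*) ;;
    *) echo "not an agent-inject-secret annotation: $1" >&2; return 1 ;;
  esac
  secret_dir=${2:-/vault/secrets}
  # Strip a trailing slash from the directory, then append the file name.
  printf '%s/%s\n' "${secret_dir%/}" "${1#"$inject_prefix"}"
}

secret_file_for vault.hashicorp.com/agent-inject-secret-database-config.txt
# /vault/secrets/database-config.txt
secret_file_for vault.hashicorp.com/agent-inject-secret-database-java-config.txt /tmp/
# /tmp/database-java-config.txt
```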

Templates

But what if we need to format those secrets so our app can understand them? We’ll deviate from the HashiCorp tutorial a bit to show some common patterns: SQL Server connection strings for Java and .NET.

Each template is an agent-inject-template-<name> annotation, paired with an agent-inject-secret-<name> annotation that sets the output file name:

$ cat patch-secrets.new.yaml
spec:
  template:
    metadata:
      annotations:
        vault.hashicorp.com/agent-inject: "true"
        vault.hashicorp.com/agent-inject-status: "update"
        vault.hashicorp.com/role: "internal-app"
        vault.hashicorp.com/agent-inject-secret-database-config.txt: "internal/data/database/config"
        vault.hashicorp.com/agent-inject-secret-database-dotnet-config.txt: "internal/data/database/config"
        vault.hashicorp.com/agent-inject-template-database-dotnet-config.txt: |
          {{- with secret "internal/data/database/config" -}}
          Server=sqlserver.ad.domain.com;Database=mysqldatabase;User Id={{ .Data.data.username }};Password={{ .Data.data.password }}
          {{- end -}}
        vault.hashicorp.com/agent-inject-secret-database-java-config.txt: "internal/data/database/config"
        vault.hashicorp.com/agent-inject-template-database-java-config.txt: |
          {{- with secret "internal/data/database/config" -}}
          jdbc:sqlserver://sqlserver.ad.domain.com;databaseName=mysqldatabase;userName={{ .Data.data.username }};password={{ .Data.data.password }}
          {{- end -}}
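Before patching, it can help to preview what those templates should render. This sketch fills in the same .NET and JDBC formats from a username and password with printf; the server and database names are the placeholder values from the templates above, and render_dotnet_conn and render_jdbc_conn are hypothetical helper names. It only mimics the output; Vault Agent does the real rendering inside the pod.

```shell
#!/bin/sh
# Hypothetical preview helpers that mimic the Vault Agent templates,
# using the placeholder server/database names from the annotations.
DB_SERVER="sqlserver.ad.domain.com"
DB_NAME="mysqldatabase"

# $1 = username, $2 = password; no trailing newline, like the templates.
render_dotnet_conn() {
  printf 'Server=%s;Database=%s;User Id=%s;Password=%s' \
    "$DB_SERVER" "$DB_NAME" "$1" "$2"
}

# $1 = username, $2 = password; no trailing newline, like the templates.
render_jdbc_conn() {
  printf 'jdbc:sqlserver://%s;databaseName=%s;userName=%s;password=%s' \
    "$DB_SERVER" "$DB_NAME" "$1" "$2"
}

render_dotnet_conn db-readonly-username db-secret-password; echo
render_jdbc_conn db-readonly-username db-secret-password; echo
```

The printf calls leave off the trailing newline on purpose: the {{- and -}} markers trim the surrounding whitespace, so the rendered files end without one, which is also why the shell prompt runs into the output when the .NET file is printed with cat later on.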

Then patch:

$ kubectl patch deployment orgchart --patch "$(cat patch-secrets.new.yaml)"

deployment.apps/orgchart patched

Lastly, we can check that it did indeed create usable connection strings in the pod’s filesystem (find prints each file’s numbered contents followed by its path):

$ kubectl exec `kubectl get pod -l app=orgchart -o jsonpath="{.items[0].metadata.name}"` --container orgchart -- find /vault/secrets -type f -exec cat -n {} \; -print

1 data: map[password:db-secret-password username:db-readonly-username]

2 metadata: map[created_time:2020-10-05T11:22:49.6524387Z deletion_time: destroyed:false version:1]

/vault/secrets/database-config.txt

1 jdbc:sqlserver://sqlserver.ad.domain.com;databaseName=mysqldatabase;userName=db-readonly-username;password=db-secret-password

/vault/secrets/database-java-config.txt

1 Server=sqlserver.ad.domain.com;Database=mysqldatabase;User Id=db-readonly-username;Password=db-secret-password

/vault/secrets/database-dotnet-config.txt

Interactive Shell

How might that look on an interactive shell?

Launch an Ubuntu pod:

$ kubectl run my-shell -i --tty --image ubuntu -- bash

kubectl run --generator=deployment/apps.v1 is DEPRECATED and will be removed in a future version. Use kubectl run --generator=run-pod/v1 or kubectl create instead.

If you don't see a command prompt, try pressing enter.

root@my-shell-7d5c87b77c-bnfmz:/# ls /vault/secrets

ls: cannot access '/vault/secrets': No such file or directory

root@my-shell-7d5c87b77c-bnfmz:/# exit

exit

Session ended, resume using 'kubectl attach my-shell-7d5c87b77c-bnfmz -c my-shell -i -t' command when the pod is running

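Nothing is mounted yet, so let’s add the annotations. This time we’ll also change the mount point to one that works for Ubuntu (/tmp) using the secret-volume-path annotation: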

$ cat patch-secrets.new.yaml
spec:
  template:
    metadata:
      annotations:
        vault.hashicorp.com/agent-inject: "true"
        vault.hashicorp.com/agent-inject-status: "update"
        vault.hashicorp.com/role: "internal-app"
        vault.hashicorp.com/secret-volume-path: "/tmp/"
        vault.hashicorp.com/agent-inject-secret-database-dotnet-config.txt: "internal/data/database/config"
        vault.hashicorp.com/agent-inject-template-database-dotnet-config.txt: |
          {{- with secret "internal/data/database/config" -}}
          Server=sqlserver.ad.domain.com;Database=mysqldatabase;User Id={{ .Data.data.username }};Password={{ .Data.data.password }}
          {{- end -}}
        vault.hashicorp.com/agent-inject-secret-database-java-config.txt: "internal/data/database/config"
        vault.hashicorp.com/agent-inject-template-database-java-config.txt: |
          {{- with secret "internal/data/database/config" -}}
          jdbc:sqlserver://sqlserver.ad.domain.com;databaseName=mysqldatabase;userName={{ .Data.data.username }};password={{ .Data.data.password }}
          {{- end -}}

And patch the deployment:

$ kubectl patch deployment my-shell --patch "$(cat patch-secrets.new.yaml)"

deployment.apps/my-shell patched

However, you will notice the new pod gets stuck in its init phase:

$ kubectl get pods

NAME                                   READY   STATUS     RESTARTS   AGE
my-shell-6cf47859b7-jmvms              0/2     Init:0/1   0          110s
my-shell-7d5c87b77c-7d4wb              1/1     Running    0          3m5s
orgchart-b8ff4d77-krw8b                2/2     Running    0          16m
vault-0                                1/1     Running    0          2d14h
vault-agent-injector-bdbf7b844-kqbnp   1/1     Running    0          3d12h
webapp-66bd6d455d-6rnfm                1/1     Running    0          2d16h

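That’s because Vault doesn’t know who this pod is. The pod runs as the default service account, but the internal-app role only accepts the internal-app service account, so the Vault Agent init container can’t log in. We need to set the service account the Ubuntu pod runs as in order for the Vault injector to mount the secrets: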

$ kubectl get deployments my-shell -o yaml > my-shell.yaml

I then added these lines to the pod template’s spec, next to containers (serviceAccount is a deprecated alias for serviceAccountName, so the second line alone would do):

serviceAccount: internal-app

serviceAccountName: internal-app

The full yaml:

$ cat my-shell.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  annotations:
    deployment.kubernetes.io/revision: "3"
    vault.hashicorp.com/agent-inject: "true"
    vault.hashicorp.com/agent-inject-secret-database-config.txt: internal/data/database/config
    vault.hashicorp.com/role: internal-app
  creationTimestamp: "2020-10-05T12:01:45Z"
  generation: 4
  labels:
    run: my-shell
  name: my-shell
  namespace: default
  resourceVersion: "729218"
  selfLink: /apis/apps/v1/namespaces/default/deployments/my-shell
  uid: f0f3ce43-dca7-408b-aa48-2cae55c37071
spec:
  progressDeadlineSeconds: 600
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      run: my-shell
  strategy:
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%
    type: RollingUpdate
  template:
    metadata:
      annotations:
        vault.hashicorp.com/agent-inject: "true"
        vault.hashicorp.com/agent-inject-secret-database-dotnet-config.txt: internal/data/database/config
        vault.hashicorp.com/agent-inject-secret-database-java-config.txt: internal/data/database/config
        vault.hashicorp.com/agent-inject-status: update
        vault.hashicorp.com/agent-inject-template-database-dotnet-config.txt: "{{-
          with secret \"internal/data/database/config\" -}} \nServer=sqlserver.ad.domain.com;Database=mysqldatabase;User
          Id={{ .Data.data.username }};Password={{ .Data.data.password }}\n{{- end
          -}}\n"
        vault.hashicorp.com/agent-inject-template-database-java-config.txt: |-
          {{- with secret "internal/data/database/config" -}}
          jdbc:sqlserver://sqlserver.ad.domain.com;databaseName=mysqldatabase;userName={{ .Data.data.username }};password={{ .Data.data.password }}
          {{- end -}}
        vault.hashicorp.com/role: internal-app
        vault.hashicorp.com/secret-volume-path: /tmp/
      creationTimestamp: null
      labels:
        run: my-shell
    spec:
      containers:
      - args:
        - bash
        image: ubuntu
        imagePullPolicy: Always
        name: my-shell
        resources: {}
        stdin: true
        terminationMessagePath: /dev/termination-log
        terminationMessagePolicy: File
        tty: true
      serviceAccount: internal-app
      serviceAccountName: internal-app
      dnsPolicy: ClusterFirst
      restartPolicy: Always
      schedulerName: default-scheduler
      securityContext: {}
      terminationGracePeriodSeconds: 30
status:
  availableReplicas: 1
  conditions:
  - lastTransitionTime: "2020-10-05T12:08:20Z"
    lastUpdateTime: "2020-10-05T12:08:20Z"
    message: Deployment has minimum availability.
    reason: MinimumReplicasAvailable
    status: "True"
    type: Available
  - lastTransitionTime: "2020-10-05T12:01:45Z"
    lastUpdateTime: "2020-10-05T12:12:20Z"
    message: ReplicaSet "my-shell-84c788bb66" is progressing.
    reason: ReplicaSetUpdated
    status: "True"
    type: Progressing
  observedGeneration: 4
  readyReplicas: 1
  replicas: 2
  unavailableReplicas: 1
  updatedReplicas: 1

Apply it, and we can see the new pod launch:

$ kubectl apply -f my-shell.yaml

$ kubectl get pods | head -n2

NAME                        READY   STATUS    RESTARTS   AGE
my-shell-7fdd4d5bc8-bttrv   2/2     Running   0          5m25s

And we can verify we can see the contents:

$ kubectl exec -it my-shell-7fdd4d5bc8-bttrv -- /bin/bash

Defaulting container name to my-shell.

Use 'kubectl describe pod/my-shell-7fdd4d5bc8-bttrv -n default' to see all of the containers in this pod.

root@my-shell-7fdd4d5bc8-bttrv:/# ls /tmp

database-dotnet-config.txt database-java-config.txt

root@my-shell-7fdd4d5bc8-bttrv:/# cat /tmp/database-dotnet-config.txt

Server=sqlserver.ad.domain.com;Database=mysqldatabase;User Id=db-readonly-username;Password=db-secret-passwordroot@my-shell-7fdd4d5bc8-bttrv:/#

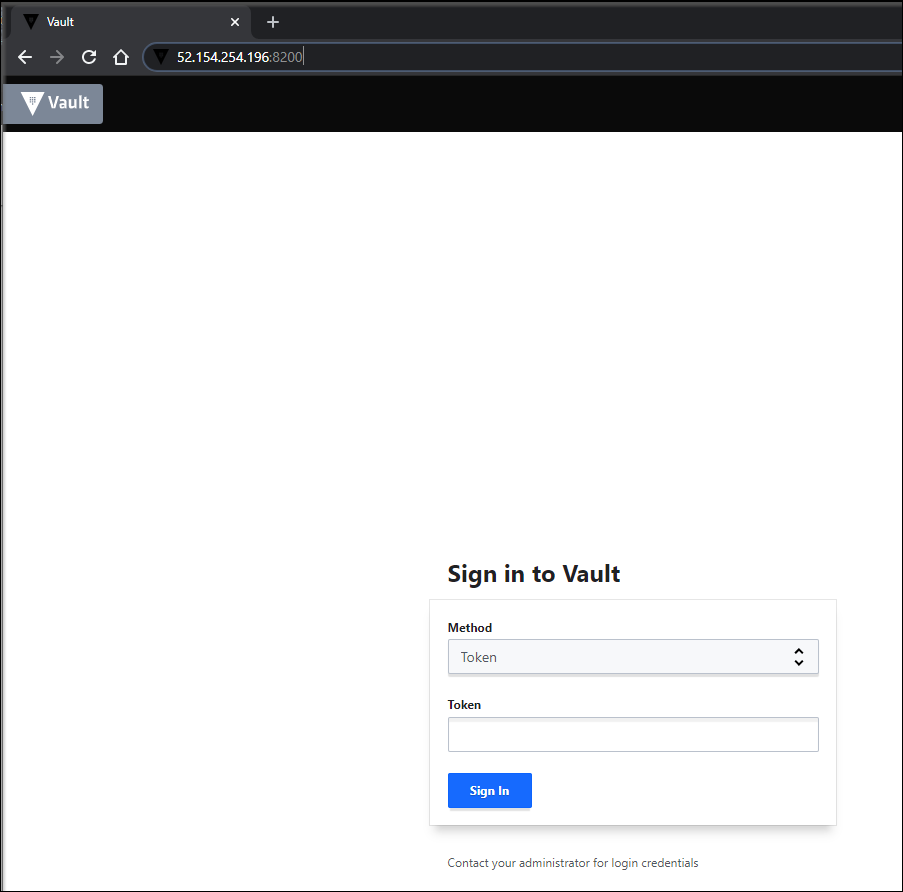

Exposing Vault on Public IP with LoadBalancer

You may wish to expose Vault to the outside world, or at least outside of your cluster.

To do so, expose the service with a LoadBalancer:

$ kubectl expose svc vault --port=8200 --target-port=8200 --name=vault2 --type=LoadBalancer

service/vault2 exposed

$ kubectl get svc

NAME                       TYPE           CLUSTER-IP     EXTERNAL-IP      PORT(S)             AGE
kubernetes                 ClusterIP      10.0.0.1       <none>           443/TCP             3d13h
vault                      ClusterIP      10.0.198.124   <none>           8200/TCP,8201/TCP   3d12h
vault-agent-injector-svc   ClusterIP      10.0.169.79    <none>           443/TCP             3d12h
vault-internal             ClusterIP      None           <none>           8200/TCP,8201/TCP   3d12h
vault2                     LoadBalancer   10.0.19.192    52.154.254.196   8200:30615/TCP      6s

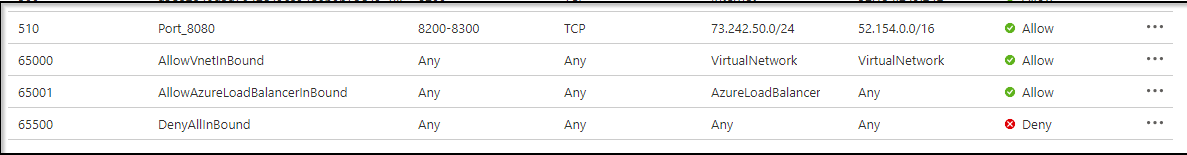

However, this exposes Vault to the whole internet, which most of the time is a pretty bad idea. So let’s restrict access via the Azure network security group (NSG) applied to the VNet.

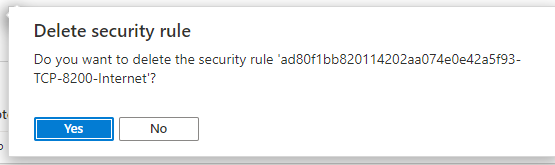

Log into the Azure portal, find the NSG in the resource group for the cluster, and delete the inbound “Internet” (everywhere) rule:

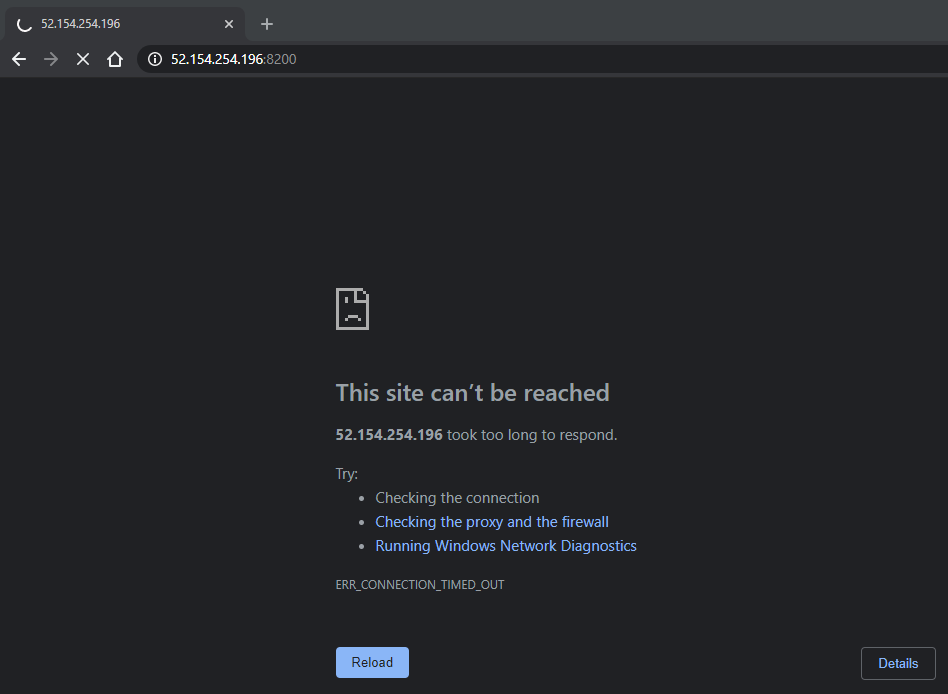

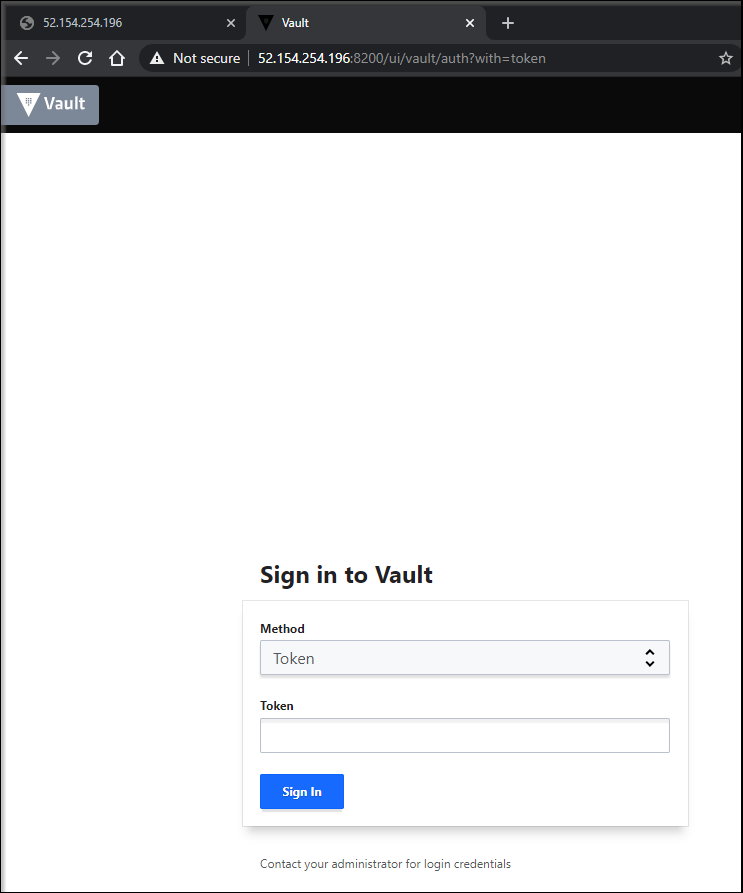

We can now reload the browser and see we cannot access that UI anymore:

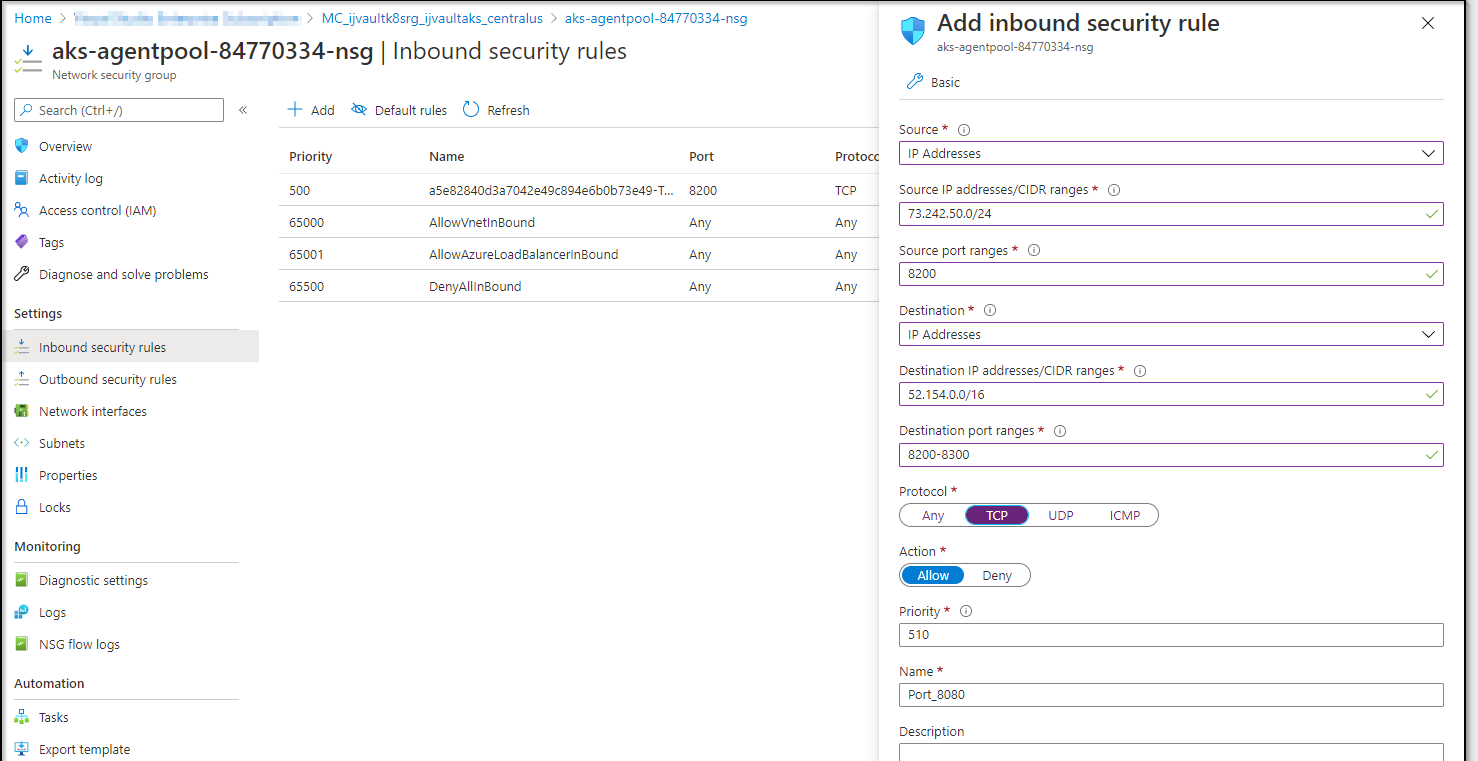

Now I’ll go to Inbound Security Rules and add a new one. I’ll use whatismyip.com or similar to determine my public IP address, and to be a bit more open, I’ll allow a range around it.

I did set the source port to “*”, since I was already filtering on source IP and destination.

And now I can reach Vault again.
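To open a range rather than a single address, the NSG rule’s source needs a CIDR block. This sketch turns an IPv4 address (such as the one whatismyip.com reports) into the /24 network that contains it; the /24 width is only an assumption, so widen or narrow it to suit, and ipv4_to_cidr24 is a hypothetical helper name. 203.0.113.57 is a documentation address, not a real client.

```shell
#!/bin/sh
# Hypothetical helper: print the /24 network containing an IPv4 address,
# e.g. to use as the source range of an NSG inbound rule.
ipv4_to_cidr24() {
  # Split the address on dots into positional parameters.
  old_ifs=$IFS
  IFS=.
  set -f          # no globbing while splitting untrusted input
  set -- $1
  set +f
  IFS=$old_ifs
  if [ "$#" -ne 4 ]; then
    echo "invalid IPv4 address" >&2; return 1
  fi
  for octet in "$@"; do
    # Each octet: 1 to 3 digits, value 0-255.
    case $octet in
      ''|*[!0-9]*|????*) echo "invalid IPv4 address" >&2; return 1 ;;
    esac
    if [ "$octet" -gt 255 ]; then
      echo "invalid IPv4 address" >&2; return 1
    fi
  done
  printf '%s.%s.%s.0/24\n' "$1" "$2" "$3"
}

ipv4_to_cidr24 203.0.113.57   # 203.0.113.0/24
```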

This means we could point pods in an external Kubernetes cluster at this Vault.

e.g.

$ cat <<EOF | kubectl apply -f -
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: devwebapp
  labels:
    app: devwebapp
spec:
  replicas: 1
  selector:
    matchLabels:
      app: devwebapp
  template:
    metadata:
      labels:
        app: devwebapp
    spec:
      serviceAccountName: internal-app
      containers:
        - name: app
          image: burtlo/devwebapp-ruby:k8s
          imagePullPolicy: Always
          env:
            - name: VAULT_ADDR
              value: "http://52.154.254.196:8200"
EOF

Or we could create a Service, backed by a manually defined Endpoints object, in the external cluster for much the same purpose:

$ cat <<EOF | kubectl apply -f -
---
apiVersion: v1
kind: Service
metadata:
  name: external-vault
  namespace: default
spec:
  ports:
    - protocol: TCP
      port: 8200
---
apiVersion: v1
kind: Endpoints
metadata:
  name: external-vault
subsets:
  - addresses:
      - ip: 52.154.254.196
    ports:
      - port: 8200
EOF

Lastly, we could install Vault with Helm in another cluster, pointing its injector at this Vault:

helm install vault hashicorp/vault \
    --set "injector.externalVaultAddr=http://52.154.254.196:8200"

In fact, let’s do that next.

Adding another external K8s

Next, let’s try connecting an outside cluster to this Vault with the Vault injector.

Let’s create a fresh AKS cluster:

$ az aks create --resource-group ijvaultk8srg --name ijvaultaks2 --location centralus --node-count 3 --enable-cluster-autoscaler --min-count 2 --max-count 4 --generate-ssh-keys --network-plugin azure --network-policy azure --service-principal $SP_ID --client-secret $SP_PASS

Get credentials:

$ az aks list -o table
Name         Location    ResourceGroup    KubernetesVersion    ProvisioningState    Fqdn
-----------  ----------  ---------------  -------------------  -------------------  ---------------------------------------------------------------
ijvaultaks   centralus   ijvaultk8srg     1.17.11              Succeeded            ijvaultaks-ijvaultk8srg-70b42e-e2c32296.hcp.centralus.azmk8s.io
ijvaultaks2  centralus   ijvaultk8srg     1.17.11              Succeeded            ijvaultaks-ijvaultk8srg-70b42e-1ff86476.hcp.centralus.azmk8s.io

$ az aks get-credentials -n ijvaultaks2 -g ijvaultk8srg --admin

Merged "ijvaultaks2-admin" as current context in /home/builder/.kube/config

Double-check that the system:auth-delegator ClusterRole exists (we’ll need it in a moment):

$ kubectl get clusterroles --all-namespaces | grep auth

system:auth-delegator 12m

We want to create the following: a vault-auth service account, a token secret for it, and a ClusterRoleBinding granting it system:auth-delegator, which lets Vault use that token to call the Kubernetes TokenReview API:

---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: vault-auth
---
apiVersion: v1
kind: Secret
metadata:
  name: vault-auth
  annotations:
    kubernetes.io/service-account.name: vault-auth
type: kubernetes.io/service-account-token
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  name: role-tokenreview-binding
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:auth-delegator
subjects:
  - kind: ServiceAccount
    name: vault-auth
    namespace: default

We can apply it inline:

$ cat <<EOF | kubectl create -f -
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: vault-auth
---
apiVersion: v1
kind: Secret
metadata:
  name: vault-auth
  annotations:
    kubernetes.io/service-account.name: vault-auth
type: kubernetes.io/service-account-token
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  name: role-tokenreview-binding
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:auth-delegator
subjects:
  - kind: ServiceAccount
    name: vault-auth
    namespace: default
EOF

serviceaccount/vault-auth created

secret/vault-auth created

clusterrolebinding.rbac.authorization.k8s.io/role-tokenreview-binding created

Next we need the token. Since I had some difficulties with this in LKE, I’ll show you the values to avoid any confusion:

$ kubectl get secret vault-auth -o yaml

apiVersion: v1

data:

ca.crt: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUV5VENDQXJHZ0F3SUJBZ0lRT3UxaTZjR1UxU2tac01sVHZjeGplREFOQmdrcWhraUc5dzBCQVFzRkFEQU4KTVFzd0NRWURWUVFERXdKallUQWdGdzB5TURFd01EWXhNVEU0TkRsYUdBOHlNRFV3TVRBd05qRXhNamcwT1ZvdwpEVEVMTUFrR0ExVUVBeE1DWTJFd2dnSWlNQTBHQ1NxR1NJYjNEUUVCQVFVQUE0SUNEd0F3Z2dJS0FvSUNBUURWCjZqbUI3YWJ1VEZzR0VIUG1ZdHhEU1czbmVvUVVtVGRiRXczWGFHcmxlV280cjFmTDVSaW12WkJLL2MxelBKMTcKRGo4QkJ2RGNobGZHaEpEcVgxL0g1TVRmeWcwQUNKSnVlcy9uQ2tmU0o5eWRwb012c25zT3Z0VlJKZktsTDZWegp5UFE4SjVFdnhMY0RVRjg0ZDNzUXRCa3FiMzVMdWR2UENlQTFKam5jait3ejV1RXhTV1MyQk5US0xIV0I2YlB1CjFJMkJVQVhvbm5SZTVIczBoQ2ZFZ0pwZDgzUSsvd0lzS1AvbWNNaEJONUNsbWpmSk1Ib3YvWkhtUXBQcnY4TkMKd2dJTThqYlVPRWFpd3NqWXF0NDBTNDY1OGxmSnEya0tiMk9kSXNQclN4ZmdXeVh0TVh4c29wZHViRDBObW9KTgpNdWdUZHhWMkpxU1diRTR2YUJaMnluUzZycTh3NXduT2ZwSi9EZE9Rc1doa1R5TlluVkxzSHpjY0orWDQvRnZ1ClNWd01VL2NTYndKUFppSmwxeGE1OFk1UHA4ZHNlNnF3azluOWUvdWRRN3d0cDc0Qk9qQ2JaZzVXdnN1WGVWS1EKK0ZTaWNNR0pkWTN0c3k3dnN2ZEI1Q1JpTEJwR1Z1eENuR1l2Q1JPV2l5RjZpWmt1RW9tRjVYVTBTNWpwTk5tMgpiT0VsTGQ0aXBLMHVNbnVONldQVTMwTEY4cVFLR3hMOWdJSERaaXFnZlg3b1cvNGw2ZzNBdi85L3pIak5CekxFCjg2S3JPRUVmZzI2cXZEQjEwTUliMUJxQjgvSkhyRVBReGY1MSszaXZnSHlqdTJnclY5Y3pmbWp3Tlk0NTE4amUKbmdoY0duZGtLd2xqTm1oSi9INjlCSy92WmxGbWVHWGs3TjlIV1g1V0t3SURBUUFCb3lNd0lUQU9CZ05WSFE4QgpBZjhFQkFNQ0FxUXdEd1lEVlIwVEFRSC9CQVV3QXdFQi96QU5CZ2txaGtpRzl3MEJBUXNGQUFPQ0FnRUF6OTIxCnhiRTZCKytGZ1dDTGVpbldYQjg0dHdjNUNidG1mazIwWWwvWWRUWDlFMjFFSlNPckFBc3VFbDNTWHQxTEVFbFAKc3J1VzJsdXkzclltdENDa3lxZEFNSW9QYmh0dTBwa01jcGZhUWNNQU0vME9QVS9FWkhzRHZQbnlLWW1ZZTB2Ugo1Y3NHS3F6N3ZIM2lDZFBndUdQSkJyMmJtVHp2RElFYTBOZEpxSDhYVWluTFAwT2JvbGQvdnJtazlXWUxwQW9kCnoyVVhSNWtNV1BXNWI1WmNSR1gzT2twOU5EL2c0SzZPYWJVK1dOdVcrNWtRVVRKYlpsVmx2U1RlWVNSRUFIY2oKV0ZkYVBCaFdpVXRsZHVjb0tQSmhBNXNqcTJibmlhMnB2dW9YQVRISEozU2p4TE5aQ0FjZ1BZc2h6Nm8wVUNpYQp3aUwxQSt2MWFIL25DRm5UYlIydUJnZWl5Uzl1WGh4UzkyYVJoS09ibWkrK25JU1B3b2J4OUpIZWk2UWZralZVCkV3TVo1R1BkWG5mOHdpYUpEdFdRTEJIZkRvcUtDejJReDZIVDV3T3BxWFVZMis1ZEdPS3Z3T1hsSlQ1REU0bVIKSkpBUnNiMytodHk1L3A2OGlpck9MV1RPbTZYdjZ2SWJVZmNE
NlBvTGI4L2c5QWJTM0NPUzBKV1ltWmRPdzFQZworN2U5dCt4R0lZYTNtRVF6MklrYyt4MHFab1NRbllxYXVVaU1rd1dqSmdMRVhJdTJPOXhsaUJMaDduMzJ1emFjCmFlbEZmTlZMUjBmbGthbFduNVkvczhzSVl1L0lpRTFLWGcvSkw5WTNNS0lmZHplTThTT25maHU3MFg4MU9NZHEKeTZGWjZYZ2tISVhMWXZrNVJ2TWZRbFpxQ21zLzVjRmVWc3lGd1RvPQotLS0tLUVORCBDRVJUSUZJQ0FURS0tLS0tCg==

namespace: ZGVmYXVsdA==

token: ZXlKaGJHY2lPaUpTVXpJMU5pSXNJbXRwWkNJNklteFhZa1pSYzJkeVEzaGFPVFJJUW1kTU9YaG5jVUpWVmxsTU5VRXpOWEJCUkVOclRGWklVRFozUTFraWZRLmV5SnBjM01pT2lKcmRXSmxjbTVsZEdWekwzTmxjblpwWTJWaFkyTnZkVzUwSWl3aWEzVmlaWEp1WlhSbGN5NXBieTl6WlhKMmFXTmxZV05qYjNWdWRDOXVZVzFsYzNCaFkyVWlPaUprWldaaGRXeDBJaXdpYTNWaVpYSnVaWFJsY3k1cGJ5OXpaWEoyYVdObFlXTmpiM1Z1ZEM5elpXTnlaWFF1Ym1GdFpTSTZJblpoZFd4MExXRjFkR2dpTENKcmRXSmxjbTVsZEdWekxtbHZMM05sY25acFkyVmhZMk52ZFc1MEwzTmxjblpwWTJVdFlXTmpiM1Z1ZEM1dVlXMWxJam9pZG1GMWJIUXRZWFYwYUNJc0ltdDFZbVZ5Ym1WMFpYTXVhVzh2YzJWeWRtbGpaV0ZqWTI5MWJuUXZjMlZ5ZG1salpTMWhZMk52ZFc1MExuVnBaQ0k2SWpRME5URXlObVEzTFRrMllXUXRORGcwT0MxaE9XVmhMVEJrT0dGbFpUVTRNRGxrWXlJc0luTjFZaUk2SW5ONWMzUmxiVHB6WlhKMmFXTmxZV05qYjNWdWREcGtaV1poZFd4ME9uWmhkV3gwTFdGMWRHZ2lmUS5DbGE4NTNtTk50Y1hHN1RyTDB0ejRwS1Q1ekQyWEVnQ09YUFJyVHV5MFBjRmJaX3ZkM25vcjk2bkE0dF91X2pRYW4ySW1zYmduTVNRSmNFLTVpcmJ4b2Jtc3BodnYwWFdfcXMwZm5aLWlKQTVOd3NaMi1jWjN4bHBZdDQ1UlUtb3o4Yk4yTUNWdVZ6S0ZQTHhQZWV0M1gxZlQ1RUdkckpvd3QwendUZjNDdWVrU3ZPUVZYa2FaTXJIM0pBQjB1RURWWXFRb05aRlJpODhHYVl5RjdQZHhuVHY2TTFmeDNJRWlMc1FQM0h4OVFtSVBNOWFFWHZrQ1g1NklIbHpUdnprQ19WMl9UaEFmb01xODhWZXNGbGxqZVBtUElOem1lRkNEUDA3S2xEX3N2Q3RwZUxpcGdXMzc4RjZZUTF0OGpVekl4YU10NTBSZEZ1eWtrVUpjdHctcjN4QXFSeUdkblBxRG1vdldfX1preXJYemwtelBpSHhKcjhsSzdBNkVXNS1iS1RUTEJidi1hejNYOUZzZTNhTGtrakRUdVkyTVdlNWZZcEMyV3J6SFJ6dFMtaFNJX05jUnM1MXF1ajhWd1lsUXlWMDYyaXBaSXdHdFNheERQdW9EaXRzSHg3RkhyeXJPei1wQWVMbS1WN0ZaV3JjdGR3b0xaeXJfY3g5R2I1U3kzVHFvWmFBeEVJV2szR3Znd2g3M3cyZmc3ck5JdmZCOFhJYnhadl9UZlV6WEhIZGgyVFVkODBnVkRWT1NDa3EtakJFMFJCMDYtVDRPWEwxLWN1MFU4bDhXcXdmbDI1ZmVaWTczbjVPZWl4dzU2cTU2eEFxdTdDSlp2czhSVnA2aHhmWU1UN1piSzdjYUtCX3E3SFp1WEljS3pQTzQySWwxZ3YxeWZqcU00RQ==

kind: Secret

metadata:

annotations:

kubernetes.io/service-account.name: vault-auth

kubernetes.io/service-account.uid: 445126d7-96ad-4848-a9ea-0d8aee5809dc

creationTimestamp: "2020-10-06T11:43:57Z"

name: vault-auth

namespace: default

resourceVersion: "2913"

selfLink: /api/v1/namespaces/default/secrets/vault-auth

uid: 1b8dd7e1-7372-48ea-b1ae-522e422a1e6b

type: kubernetes.io/service-account-token

$ kubectl get secret vault-auth -o go-template='{{ .data.token }}' | base64 --decode

eyJhbGciOiJSUzI1NiIsImtpZCI6ImxXYkZRc2dyQ3haOTRIQmdMOXhncUJVVllMNUEzNXBBRENrTFZIUDZ3Q1kifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJkZWZhdWx0Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZWNyZXQubmFtZSI6InZhdWx0LWF1dGgiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoidmF1bHQtYXV0aCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6IjQ0NTEyNmQ3LTk2YWQtNDg0OC1hOWVhLTBkOGFlZTU4MDlkYyIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDpkZWZhdWx0OnZhdWx0LWF1dGgifQ.Cla853mNNtcXG7TrL0tz4pKT5zD2XEgCOXPRrTuy0PcFbZ_vd3nor96nA4t_u_jQan2ImsbgnMSQJcE-5irbxobmsphvv0XW_qs0fnZ-iJA5NwsZ2-cZ3xlpYt45RU-oz8bN2MCVuVzKFPLxPeet3X1fT5EGdrJowt0zwTf3CuekSvOQVXkaZMrH3JAB0uEDVYqQoNZFRi88GaYyF7PdxnTv6M1fx3IEiLsQP3Hx9QmIPM9aEXvkCX56IHlzTvzkC_V2_ThAfoMq88VesFlljePmPINzmeFCDP07KlD_svCtpeLipgW378F6YQ1t8jUzIxaMt50RdFuykkUJctw-r3xAqRyGdnPqDmovW__ZkyrXzl-zPiHxJr8lK7A6EW5-bKTTLBbv-az3X9Fse3aLkkjDTuY2MWe5fYpC2WrzHRztS-hSI_NcRs51quj8VwYlQyV062ipZIwGtSaxDPuoDitsHx7FHryrOz-pAeLm-V7FZWrctdwoLZyr_cx9Gb5Sy3TqoZaAxEIWk3Gvgwh73w2fg7rNIvfB8XIbxZv_TfUzXHHdh2TUd80gVDVOSCkq-jBE0RB06-T4OXL1-cu0U8l8Wqwfl25feZY73n5Oeixw56q56xAqu7CJZvs8RVp6hxfYMT7ZbK7caKB_q7HZuXIcKzPO42Il1gv1yfjqM4E

(Note: there is no newline at the end of that.)
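That token is a JWT: three base64url segments separated by dots. Decoding the middle (payload) segment is a quick way to confirm you grabbed the right service account’s token; its sub claim should read system:serviceaccount:default:vault-auth. This is a minimal sketch; jwt_payload is a hypothetical helper name, it assumes a base64 that accepts -d (GNU coreutils and BusyBox do), and it does not verify the signature.

```shell
#!/bin/sh
# Hypothetical helper: print the decoded payload (middle segment) of a JWT.
# Does NOT verify the signature; for inspection only.
jwt_payload() {
  # Second dot-separated segment is the payload.
  jwt_seg=$(printf '%s' "$1" | cut -d. -f2)
  # base64url -> standard base64 alphabet.
  jwt_seg=$(printf '%s' "$jwt_seg" | tr '_-' '/+')
  # Restore the '=' padding that base64url omits.
  case $(( ${#jwt_seg} % 4 )) in
    2) jwt_seg="${jwt_seg}==" ;;
    3) jwt_seg="${jwt_seg}=" ;;
  esac
  printf '%s' "$jwt_seg" | base64 -d
}

# Example (needs access to the cluster):
# jwt_payload "$(kubectl get secret vault-auth -o go-template='{{ .data.token }}' | base64 --decode)"
```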

$ export TOKEN_REVEW_JWT=`kubectl get secret vault-auth -o go-template='{{ .data.token }}' | base64 --decode`

$ export | grep JWT

declare -x TOKEN_REVEW_JWT="eyJhbGciOiJSUzI1NiIsImtpZCI6ImxXYkZRc2dyQ3haOTRIQmdMOXhncUJVVllMNUEzNXBBRENrTFZIUDZ3Q1kifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJkZWZhdWx0Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZWNyZXQubmFtZSI6InZhdWx0LWF1dGgiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoidmF1bHQtYXV0aCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6IjQ0NTEyNmQ3LTk2YWQtNDg0OC1hOWVhLTBkOGFlZTU4MDlkYyIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDpkZWZhdWx0OnZhdWx0LWF1dGgifQ.Cla853mNNtcXG7TrL0tz4pKT5zD2XEgCOXPRrTuy0PcFbZ_vd3nor96nA4t_u_jQan2ImsbgnMSQJcE-5irbxobmsphvv0XW_qs0fnZ-iJA5NwsZ2-cZ3xlpYt45RU-oz8bN2MCVuVzKFPLxPeet3X1fT5EGdrJowt0zwTf3CuekSvOQVXkaZMrH3JAB0uEDVYqQoNZFRi88GaYyF7PdxnTv6M1fx3IEiLsQP3Hx9QmIPM9aEXvkCX56IHlzTvzkC_V2_ThAfoMq88VesFlljePmPINzmeFCDP07KlD_svCtpeLipgW378F6YQ1t8jUzIxaMt50RdFuykkUJctw-r3xAqRyGdnPqDmovW__ZkyrXzl-zPiHxJr8lK7A6EW5-bKTTLBbv-az3X9Fse3aLkkjDTuY2MWe5fYpC2WrzHRztS-hSI_NcRs51quj8VwYlQyV062ipZIwGtSaxDPuoDitsHx7FHryrOz-pAeLm-V7FZWrctdwoLZyr_cx9Gb5Sy3TqoZaAxEIWk3Gvgwh73w2fg7rNIvfB8XIbxZv_TfUzXHHdh2TUd80gVDVOSCkq-jBE0RB06-T4OXL1-cu0U8l8Wqwfl25feZY73n5Oeixw56q56xAqu7CJZvs8RVp6hxfYMT7ZbK7caKB_q7HZuXIcKzPO42Il1gv1yfjqM4E"

$ export KUBE_CA_CERT=`kubectl config view --raw --minify --flatten -o jsonpath='{.clusters[].cluster.certificate-authority-data}' | base64 --decode`

And you’ll see this is the decoded, multi-line PEM version of the CA cert:

$ export | head -n 34 | tail -n 29

declare -x KUBE_CA_CERT="-----BEGIN CERTIFICATE-----

MIIEyTCCArGgAwIBAgIQOu1i6cGU1SkZsMlTvcxjeDANBgkqhkiG9w0BAQsFADAN

MQswCQYDVQQDEwJjYTAgFw0yMDEwMDYxMTE4NDlaGA8yMDUwMTAwNjExMjg0OVow

DTELMAkGA1UEAxMCY2EwggIiMA0GCSqGSIb3DQEBAQUAA4ICDwAwggIKAoICAQDV

6jmB7abuTFsGEHPmYtxDSW3neoQUmTdbEw3XaGrleWo4r1fL5RimvZBK/c1zPJ17

Dj8BBvDchlfGhJDqX1/H5MTfyg0ACJJues/nCkfSJ9ydpoMvsnsOvtVRJfKlL6Vz

yPQ8J5EvxLcDUF84d3sQtBkqb35LudvPCeA1Jjncj+wz5uExSWS2BNTKLHWB6bPu

1I2BUAXonnRe5Hs0hCfEgJpd83Q+/wIsKP/mcMhBN5ClmjfJMHov/ZHmQpPrv8NC

wgIM8jbUOEaiwsjYqt40S4658lfJq2kKb2OdIsPrSxfgWyXtMXxsopdubD0NmoJN

MugTdxV2JqSWbE4vaBZ2ynS6rq8w5wnOfpJ/DdOQsWhkTyNYnVLsHzccJ+X4/Fvu

SVwMU/cSbwJPZiJl1xa58Y5Pp8dse6qwk9n9e/udQ7wtp74BOjCbZg5WvsuXeVKQ

+FSicMGJdY3tsy7vsvdB5CRiLBpGVuxCnGYvCROWiyF6iZkuEomF5XU0S5jpNNm2

bOElLd4ipK0uMnuN6WPU30LF8qQKGxL9gIHDZiqgfX7oW/4l6g3Av/9/zHjNBzLE

86KrOEEfg26qvDB10MIb1BqB8/JHrEPQxf51+3ivgHyju2grV9czfmjwNY4518je

nghcGndkKwljNmhJ/H69BK/vZlFmeGXk7N9HWX5WKwIDAQABoyMwITAOBgNVHQ8B

Af8EBAMCAqQwDwYDVR0TAQH/BAUwAwEB/zANBgkqhkiG9w0BAQsFAAOCAgEAz921

xbE6B++FgWCLeinWXB84twc5Cbtmfk20Yl/YdTX9E21EJSOrAAsuEl3SXt1LEElP

sruW2luy3rYmtCCkyqdAMIoPbhtu0pkMcpfaQcMAM/0OPU/EZHsDvPnyKYmYe0vR

5csGKqz7vH3iCdPguGPJBr2bmTzvDIEa0NdJqH8XUinLP0Obold/vrmk9WYLpAod

z2UXR5kMWPW5b5ZcRGX3Okp9ND/g4K6OabU+WNuW+5kQUTJbZlVlvSTeYSREAHcj

WFdaPBhWiUtlducoKPJhA5sjq2bnia2pvuoXATHHJ3SjxLNZCAcgPYshz6o0UCia

wiL1A+v1aH/nCFnTbR2uBgeiyS9uXhxS92aRhKObmi++nISPwobx9JHei6QfkjVU

EwMZ5GPdXnf8wiaJDtWQLBHfDoqKCz2Qx6HT5wOpqXUY2+5dGOKvwOXlJT5DE4mR

JJARsb3+hty5/p68iirOLWTOm6Xv6vIbUfcD6PoLb8/g9AbS3COS0JWYmZdOw1Pg

+7e9t+xGIYa3mEQz2Ikc+x0qZoSQnYqauUiMkwWjJgLEXIu2O9xliBLh7n32uzac

aelFfNVLR0flkalWn5Y/s8sIYu/IiE1KXg/JL9Y3MKIfdzeM8SOnfhu70X81OMdq

y6FZ6XgkHIXLYvk5RvMfQlZqCms/5cFeVsyFwTo=

-----END CERTIFICATE-----"

declare -x LANG="C.UTF-8"
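Because this CA cert gets pasted by hand into the Vault pod shortly, a quick check that it still has its PEM armor lines can save some debugging. looks_like_pem_cert is a hypothetical helper; it only checks the BEGIN and END lines, not that the certificate actually parses (openssl x509 -noout -in <file> would do that, where openssl is available).

```shell
#!/bin/sh
# Hypothetical helper: succeed if $1 starts with a PEM certificate BEGIN
# line and ends with the matching END line (ignoring trailing blank lines).
looks_like_pem_cert() {
  pem_first=$(printf '%s\n' "$1" | head -n 1)
  pem_last=$(printf '%s\n' "$1" | sed '/^$/d' | tail -n 1)
  [ "$pem_first" = "-----BEGIN CERTIFICATE-----" ] &&
    [ "$pem_last" = "-----END CERTIFICATE-----" ]
}

# Example: looks_like_pem_cert "$KUBE_CA_CERT" && echo "CA cert looks intact"
```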

Lastly, set our host:

$ export KUBE_HOST=`kubectl config view --raw --minify --flatten -o jsonpath='{.clusters[].cluster.server}'`

$ export | grep KUBE_HOST

declare -x KUBE_HOST="https://ijvaultaks-ijvaultk8srg-70b42e-1ff86476.hcp.centralus.azmk8s.io:443"

Now we need to set these on the Vault server.

I’ll head back to the first cluster. You can use az aks get-credentials, but I often keep a local copy of each cluster’s config to make switching easier:

$ cp ~/.kube/vse-vault1 ~/.kube/config

Exec into the Vault server and set up the values there. I pasted each value into a file with vi, then used tr to strip the trailing newline that vi leaves in the JWT file.

$ kubectl exec -it vault-0 -- /bin/sh

/ $ vim /tmp/KUBE_CA_CERT

/bin/sh: vim: not found

/ $ vi /tmp/KUBE_CA_CERT

/ $ vi /tmp/TOKEN_REVIEW_JWT

/ $ cat /tmp/TOKEN_REVIEW_JWT | tr -d '\n' > /tmp/TOKEN_REVIEW_JWT2

/ $ diff /tmp/TOKEN_REVIEW_JWT /tmp/TOKEN_REVIEW_JWT2

--- /tmp/TOKEN_REVIEW_JWT

+++ /tmp/TOKEN_REVIEW_JWT2

@@ -1 +1 @@

-eyJhbGciOiJSUzI1NiIsImtpZCI6ImxXYkZRc2dyQ3haOTRIQmdMOXhncUJVVllMNUEzNXBBRENrTFZIUDZ3Q1kifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJkZWZhdWx0Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZWNyZXQubmFtZSI6InZhdWx0LWF1dGgiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoidmF1bHQtYXV0aCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6IjQ0NTEyNmQ3LTk2YWQtNDg0OC1hOWVhLTBkOGFlZTU4MDlkYyIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDpkZWZhdWx0OnZhdWx0LWF1dGgifQ.Cla853mNNtcXG7TrL0tz4pKT5zD2XEgCOXPRrTuy0PcFbZ_vd3nor96nA4t_u_jQan2ImsbgnMSQJcE-5irbxobmsphvv0XW_qs0fnZ-iJA5NwsZ2-cZ3xlpYt45RU-oz8bN2MCVuVzKFPLxPeet3X1fT5EGdrJowt0zwTf3CuekSvOQVXkaZMrH3JAB0uEDVYqQoNZFRi88GaYyF7PdxnTv6M1fx3IEiLsQP3Hx9QmIPM9aEXvkCX56IHlzTvzkC_V2_ThAfoMq88VesFlljePmPINzmeFCDP07KlD_svCtpeLipgW378F6YQ1t8jUzIxaMt50RdFuykkUJctw-r3xAqRyGdnPqDmovW__ZkyrXzl-zPiHxJr8lK7A6EW5-bKTTLBbv-az3X9Fse3aLkkjDTuY2MWe5fYpC2WrzHRztS-hSI_NcRs51quj8VwYlQyV062ipZIwGtSaxDPuoDitsHx7FHryrOz-pAeLm-V7FZWrctdwoLZyr_cx9Gb5Sy3TqoZaAxEIWk3Gvgwh73w2fg7rNIvfB8XIbxZv_TfUzXHHdh2TUd80gVDVOSCkq-jBE0RB06-T4OXL1-cu0U8l8Wqwfl25feZY73n5Oeixw56q56xAqu7CJZvs8RVp6hxfYMT7ZbK7caKB_q7HZuXIcKzPO42Il1gv1yfjqM4E

+eyJhbGciOiJSUzI1NiIsImtpZCI6ImxXYkZRc2dyQ3haOTRIQmdMOXhncUJVVllMNUEzNXBBRENrTFZIUDZ3Q1kifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJkZWZhdWx0Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZWNyZXQubmFtZSI6InZhdWx0LWF1dGgiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoidmF1bHQtYXV0aCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6IjQ0NTEyNmQ3LTk2YWQtNDg0OC1hOWVhLTBkOGFlZTU4MDlkYyIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDpkZWZhdWx0OnZhdWx0LWF1dGgifQ.Cla853mNNtcXG7TrL0tz4pKT5zD2XEgCOXPRrTuy0PcFbZ_vd3nor96nA4t_u_jQan2ImsbgnMSQJcE-5irbxobmsphvv0XW_qs0fnZ-iJA5NwsZ2-cZ3xlpYt45RU-oz8bN2MCVuVzKFPLxPeet3X1fT5EGdrJowt0zwTf3CuekSvOQVXkaZMrH3JAB0uEDVYqQoNZFRi88GaYyF7PdxnTv6M1fx3IEiLsQP3Hx9QmIPM9aEXvkCX56IHlzTvzkC_V2_ThAfoMq88VesFlljePmPINzmeFCDP07KlD_svCtpeLipgW378F6YQ1t8jUzIxaMt50RdFuykkUJctw-r3xAqRyGdnPqDmovW__ZkyrXzl-zPiHxJr8lK7A6EW5-bKTTLBbv-az3X9Fse3aLkkjDTuY2MWe5fYpC2WrzHRztS-hSI_NcRs51quj8VwYlQyV062ipZIwGtSaxDPuoDitsHx7FHryrOz-pAeLm-V7FZWrctdwoLZyr_cx9Gb5Sy3TqoZaAxEIWk3Gvgwh73w2fg7rNIvfB8XIbxZv_TfUzXHHdh2TUd80gVDVOSCkq-jBE0RB06-T4OXL1-cu0U8l8Wqwfl25feZY73n5Oeixw56q56xAqu7CJZvs8RVp6hxfYMT7ZbK7caKB_q7HZuXIcKzPO42Il1gv1yfjqM4E

\ No newline at end of file

Next, set the environment variables:

/ $ export KUBE_HOST=https://ijvaultaks-ijvaultk8srg-70b42e-1ff86476.hcp.centralus.azmk8s.io:443

/ $ export TOKEN_REVIEW_JWT=`cat /tmp/TOKEN_REVIEW_JWT2`

/ $ export KUBE_CA_CERT=`cat /tmp/KUBE_CA_CERT`
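Before writing the config, it can be worth confirming that the reviewer token belongs to the service account you expect. A JWT's middle segment is base64url-encoded JSON, so a small sketch like the following prints its claims. It assumes a POSIX shell with tr, cut and a base64 that supports -d (GNU coreutils and busybox both do); the function names are hypothetical.

```shell
# Decode a base64url string (as used in JWTs) to plain text.
b64url_decode() {
  # base64url uses '-' and '_' instead of '+' and '/', and drops '=' padding.
  _s=$(printf '%s' "$1" | tr '_-' '/+')
  # Restore the padding that base64 -d expects.
  case $(( ${#_s} % 4 )) in
    2) _s="${_s}==" ;;
    3) _s="${_s}=" ;;
  esac
  printf '%s' "$_s" | base64 -d
}

# Print the payload (second dot-separated segment) of a JWT.
jwt_payload() {
  b64url_decode "$(printf '%s' "$1" | cut -d. -f2)"
}

# Usage: inspect the reviewer token, if it has been exported.
if [ -n "${TOKEN_REVIEW_JWT:-}" ]; then
  jwt_payload "$TOKEN_REVIEW_JWT"
  echo
fi
```

For the token shown above, the sub claim decodes to system:serviceaccount:default:vault-auth, i.e. the vault-auth service account in the default namespace of the second cluster.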

Enable the Kubernetes auth method at a new path. A separate path is necessary because the default “kubernetes” path is already in use by the cluster that hosts Vault.

/ $ vault auth enable --path="aks2" kubernetes

Success! Enabled kubernetes auth method at: aks2/

/ $ vault write auth/aks2/config token_reviewer_jwt="$TOKEN_REVIEW_JWT" kubernetes_host="$KUBE_HOST" kubernetes_ca_cert="$KUBE_CA_CERT"

Next, we add a policy:

/ $ vault policy write devwebapp - <<EOF
> path "secret/data/devwebapp/config" {
>   capabilities = ["read"]
> }
> EOF

Success! Uploaded policy: devwebapp

Next, we set the secret:

/ $ vault kv put secret/devwebapp/config username='howdy' password='salasanaapina'

Key Value

--- -----

created_time 2020-10-06T12:04:48.71500306Z

deletion_time n/a

destroyed false

version 1

/ $ vault read -format json secret/data/devwebapp/config

{
  "request_id": "ebae5365-646c-1a66-dc6c-267a3ad7afea",
  "lease_id": "",
  "lease_duration": 0,
  "renewable": false,
  "data": {
    "data": {
      "password": "salasanaapina",
      "username": "howdy"
    },
    "metadata": {
      "created_time": "2020-10-06T12:04:48.71500306Z",
      "deletion_time": "",
      "destroyed": false,
      "version": 1
    }
  },
  "warnings": null
}
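Notice that vault kv put used secret/devwebapp/config, while the policy and vault read use secret/data/devwebapp/config. The secret/ mount is a KV version 2 engine (the versioned metadata in the output shows this), and KV v2 serves a secret's data under a data/ prefix placed right after the mount. A small hypothetical helper makes the mapping explicit; it assumes you already know where the engine is mounted (here, secret/).

```shell
# Map a KV v2 key (as used with `vault kv put/get`) to the API path that
# policies and `vault read` refer to: <mount>/data/<key>.
kv2_data_path() {
  _mount=${1%/}   # drop a trailing slash from the mount, e.g. "secret/"
  _key=${2#/}     # drop a leading slash from the key
  printf '%s/data/%s\n' "$_mount" "$_key"
}

kv2_data_path secret devwebapp/config   # prints secret/data/devwebapp/config
```

This is why a policy written against secret/devwebapp/config alone would not grant read access to this KV v2 secret.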

Then we write a role to that auth endpoint:

/ $ vault write auth/aks2/role/devweb-app \
>     bound_service_account_names=internal-app \
>     bound_service_account_namespaces=default \
>     policies=devwebapp \
>     ttl=24h

Success! Data written to: auth/aks2/role/devweb-app
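As a rough illustration of what this role enforces: Vault validates the pod's service account token with the Kubernetes TokenReview API (using the reviewer JWT configured above) and checks the account's name and namespace against the role's bound lists. The hypothetical role_accepts function below mimics only that name/namespace comparison, using the same comma-separated values passed to vault write; it ignores wildcards and everything else Vault checks.

```shell
# Illustration only: these lists copy the values given to the devweb-app role.
BOUND_SA_NAMES="internal-app"
BOUND_SA_NAMESPACES="default"

# $1 is a subject of the form system:serviceaccount:<namespace>:<name>,
# i.e. the "sub" claim of a Kubernetes service account token.
role_accepts() {
  _ns=$(printf '%s' "$1" | cut -d: -f3)
  _name=$(printf '%s' "$1" | cut -d: -f4)
  # Wrap the lists in commas so only whole entries match.
  case ",$BOUND_SA_NAMESPACES," in *",$_ns,"*) ;; *) return 1 ;; esac
  case ",$BOUND_SA_NAMES," in *",$_name,"*) ;; *) return 1 ;; esac
  return 0
}

role_accepts system:serviceaccount:default:internal-app && echo accepted
role_accepts system:serviceaccount:default:vault-auth || echo rejected
```

In other words, only pods running as internal-app in the default namespace can log in with this role, which is why that service account has to exist on the second cluster.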

Now we head back to our AKS2 cluster:

$ az aks list -o table

Name         Location    ResourceGroup    KubernetesVersion    ProvisioningState    Fqdn
-----------  ----------  ---------------  -------------------  -------------------  ---------------------------------------------------------------
ijvaultaks   centralus   ijvaultk8srg     1.17.11              Succeeded            ijvaultaks-ijvaultk8srg-70b42e-e2c32296.hcp.centralus.azmk8s.io
ijvaultaks2  centralus   ijvaultk8srg     1.17.11              Succeeded            ijvaultaks-ijvaultk8srg-70b42e-1ff86476.hcp.centralus.azmk8s.io

$ rm -f ~/.kube/config && az aks get-credentials -n ijvaultaks2 -g ijvaultk8srg --admin

Merged "ijvaultaks2-admin" as current context in /home/builder/.kube/config

Next, install the Vault Helm chart, pointing the injector at the external IP of the Vault server in the other cluster:

$ helm install vault hashicorp/vault --set "injector.externalVaultAddr=http://52.154.254.196:8200"

NAME: vault

LAST DEPLOYED: Tue Oct 6 07:09:18 2020

NAMESPACE: default

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

Thank you for installing HashiCorp Vault!

Now that you have deployed Vault, you should look over the docs on using

Vault with Kubernetes available here:

https://www.vaultproject.io/docs/

Your release is named vault. To learn more about the release, try:

$ helm status vault

$ helm get vault

Next, as before, we create an internal-app service account and launch a deployment:

$ cat <<EOF | kubectl apply -f -
> apiVersion: v1
> kind: ServiceAccount
> metadata:
>   name: internal-app
> EOF

serviceaccount/internal-app created

$ vi devwebapp.yaml

$ cat devwebapp.yaml

---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: devwebapp
  labels:
    app: devwebapp
spec:
  replicas: 1
  selector:
    matchLabels:
      app: devwebapp
  template:
    metadata:
      labels:
        app: devwebapp
    spec:
      serviceAccountName: internal-app
      containers:
        - name: app
          image: burtlo/devwebapp-ruby:k8s
          imagePullPolicy: Always
          env:
            - name: VAULT_ADDR
              value: "http://52.154.254.196:8200"

$ kubectl apply -f devwebapp.yaml

deployment.apps/devwebapp created

Next, we patch the deployment with the annotations the Vault agent injector needs:

$ cat patch-secrets.yaml

spec:
  template:
    metadata:
      annotations:
        vault.hashicorp.com/agent-inject: "true"
        vault.hashicorp.com/role: "devweb-app"
        vault.hashicorp.com/agent-inject-secret-credentials.txt: "secret/data/devwebapp/config"

$ kubectl patch deployment devwebapp --patch "$(cat patch-secrets.yaml)"

deployment.apps/devwebapp patched

Again, we get errors. This time it’s about the role. Why is the agent still authenticating against /auth/kubernetes?

$ kubectl logs devwebapp-b7df56946-2p4jj vault-agent-init

==> Vault agent started! Log data will stream in below:

==> Vault agent configuration:

Cgo: disabled

Log Level: info

Version: Vault v1.5.2

2020-10-06T12:16:35.549Z [INFO] sink.file: creating file sink

2020-10-06T12:16:35.549Z [INFO] sink.file: file sink configured: path=/home/vault/.vault-token mode=-rw-r-----

2020-10-06T12:16:35.549Z [INFO] auth.handler: starting auth handler

2020-10-06T12:16:35.549Z [INFO] auth.handler: authenticating

Version Sha: 685fdfa60d607bca069c09d2d52b6958a7a2febd

2020-10-06T12:16:35.550Z [INFO] template.server: starting template server

2020/10/06 12:16:35.550320 [INFO] (runner) creating new runner (dry: false, once: false)

2020-10-06T12:16:35.550Z [INFO] sink.server: starting sink server

2020/10/06 12:16:35.550903 [INFO] (runner) creating watcher

2020-10-06T12:16:35.553Z [ERROR] auth.handler: error authenticating: error="Error making API request.

URL: PUT http://52.154.254.196:8200/v1/auth/kubernetes/login

Code: 400. Errors:

* invalid role name "devweb-app"" backoff=2.084195088

2020-10-06T12:16:37.637Z [INFO] auth.handler: authenticating

2020-10-06T12:16:37.641Z [ERROR] auth.handler: error authenticating: error="Error making API request.

This is where I found the issue. The injector supports a lot of configuration annotations, and looking through them I found the problem: the default auth path is “auth/kubernetes”. That is fine in a single cluster, but not when multiple clusters each need their own Kubernetes auth mount.
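The agent builds its login URL from the Vault address and the auth path, so the failing request above went to /v1/auth/kubernetes/login: the mount configured for the cluster that hosts Vault, which has no devweb-app role. A hypothetical helper that only builds that string shows the difference the auth path makes.

```shell
# Build the Kubernetes auth login URL for a Vault address and auth path.
# Falls back to "auth/kubernetes", the injector's default, when no path is given.
vault_login_url() {
  _addr=${1%/}               # drop a trailing slash from the address
  _path=${2:-auth/kubernetes}
  _path=${_path#/}           # drop leading/trailing slashes from the path
  _path=${_path%/}
  printf '%s/v1/%s/login\n' "$_addr" "$_path"
}

vault_login_url http://52.154.254.196:8200             # .../v1/auth/kubernetes/login
vault_login_url http://52.154.254.196:8200 auth/aks2   # .../v1/auth/aks2/login
```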

I changed the patch to add an “auth-path” annotation:

$ cat patch-secrets.yaml

spec:
  template:
    metadata:
      annotations:
        vault.hashicorp.com/agent-inject: "true"
        vault.hashicorp.com/auth-path: "auth/aks2"
        vault.hashicorp.com/role: "devweb-app"
        vault.hashicorp.com/agent-inject-secret-credentials.txt: "secret/data/devwebapp/config"

Then apply the updated patch:

$ kubectl patch deployment devwebapp --patch "$(cat patch-secrets.yaml)"

deployment.apps/devwebapp patched

We can now see it running:

$ kubectl get pods

NAME                                   READY   STATUS        RESTARTS   AGE
devwebapp-6b9bbcf5b6-4gxjs             0/1     Terminating   0          8m25s
devwebapp-867d67856c-grw7k             2/2     Running       0          6s
devwebapp-b7df56946-2p4jj              0/2     Terminating   0          5m2s
vault-agent-injector-8697d97cf-q5nsl   1/1     Running       0          12m

And if we check the app container in the running pod, we can see the credentials were successfully brought over:

$ kubectl exec devwebapp-867d67856c-grw7k -c app -- cat /vault/secrets/credentials.txt

data: map[password:salasanaapina username:howdy]

metadata: map[created_time:2020-10-06T12:04:48.71500306Z deletion_time: destroyed:false version:1]
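The rendered file above is the raw Go map the agent writes by default. As with the database connection strings earlier in this post, an agent-inject-template annotation can format it. Below is a sketch that writes such a patch, assuming a username:password layout is what the app wants (that layout is an arbitrary example). The quoted 'EOF' keeps the shell from touching the template syntax.

```shell
# Write a patch that adds a template for credentials.txt.
cat > patch-template.yaml <<'EOF'
spec:
  template:
    metadata:
      annotations:
        vault.hashicorp.com/agent-inject-template-credentials.txt: |
          {{- with secret "secret/data/devwebapp/config" -}}
          {{ .Data.data.username }}:{{ .Data.data.password }}
          {{- end }}
EOF

# Then apply it the same way as the earlier patches:
# kubectl patch deployment devwebapp --patch "$(cat patch-template.yaml)"
```

KV v2 nests the secret's fields under .Data.data, matching the nested "data" object in the vault read output earlier.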

Cleanup

You can easily clean up your clusters from the command line:

$ az aks delete --resource-group ijvaultk8srg --name ijvaultaks2

Are you sure you want to perform this operation? (y/n): y

- Running ..

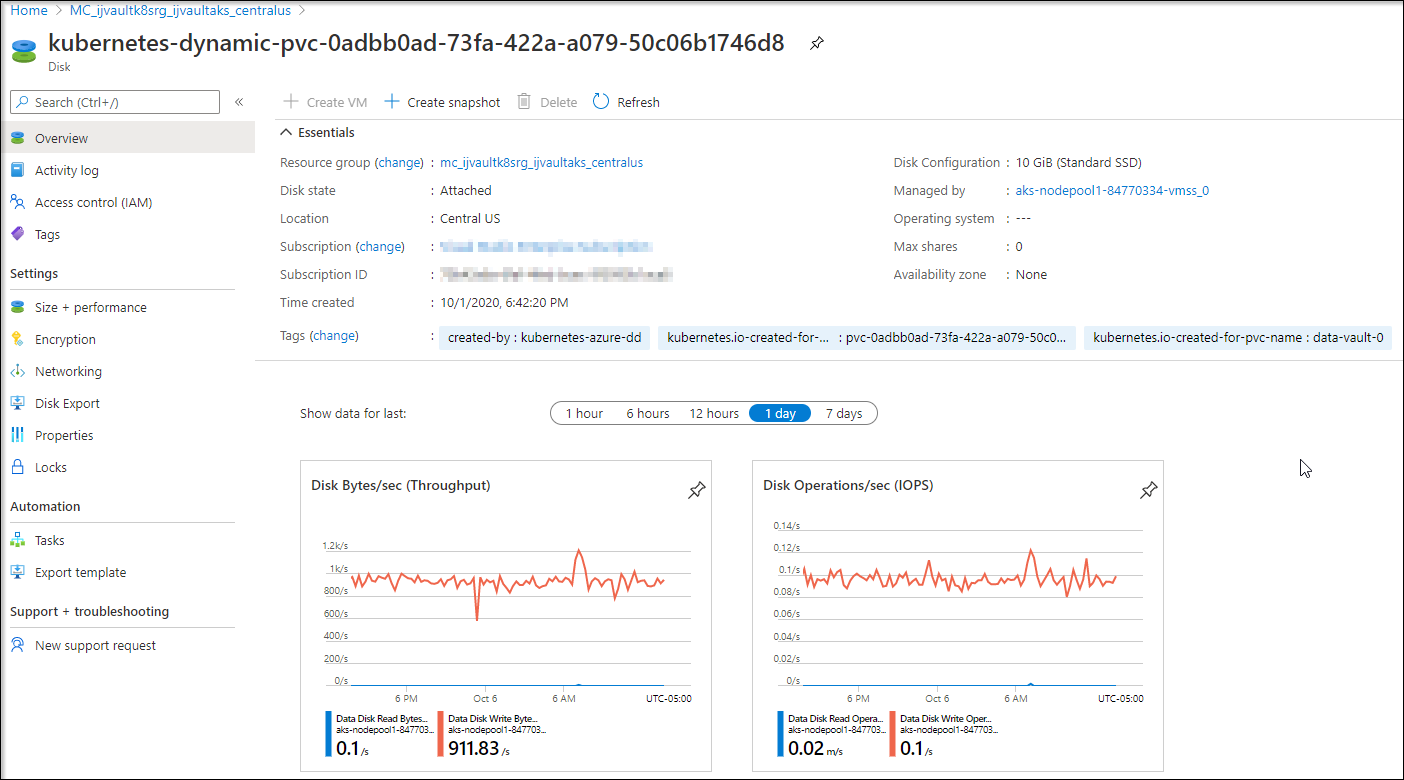

Storage of Secrets

While discussing this with a colleague, the question came up: “Where does Vault actually store the secrets?”

Vault, in this case, uses a Persistent Volume Claim:

$ kubectl get pvc

NAME           STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
data-vault-0   Bound    pvc-0adbb0ad-73fa-422a-a079-50c06b1746d8   10Gi       RWO            default        4d19h

This uses the cluster’s default storage class (on AKS, Azure Disk by default).

You can change the default in AKS. Note that this only applies to PVCs created afterwards; the existing data-vault-0 claim stays on its original class:

$ kubectl patch storageclass azurefile-premium -p '{"metadata": {"annotations":{"storageclass.kubernetes.io/is-default-class":"true"}}}'

storageclass.storage.k8s.io/azurefile-premium patched

$ kubectl patch storageclass default -p '{"metadata": {"annotations":{"storageclass.beta.kubernetes.io/is-default-class":"false", "kubectl.kubernetes.io/last-applied-configuration": "{\"allowVolumeExpansion\":true,\"apiVersion\":\"storage.k8s.io/v1beta1\",\"kind\":\"StorageClass\",\"metadata\":{\"annotations\":{\"storageclass.beta.kubernetes.io/is-default-class\":\"false\"},\"labels\":{\"kubernetes.io/cluster-service\":\"true\"},\"name\":\"default\"},\"parameters\":{\"cachingmode\":\"ReadOnly\",\"kind\":\"Managed\",\"storageaccounttype\":\"StandardSSD_LRS\"},\"provisioner\":\"kubernetes.io/azure-disk\"}"}}}'

storageclass.storage.k8s.io/default patched

$ kubectl get sc

NAME                          PROVISIONER                RECLAIMPOLICY   VOLUMEBINDINGMODE   ALLOWVOLUMEEXPANSION   AGE
azurefile                     kubernetes.io/azure-file   Delete          Immediate           true                   4d19h
azurefile-premium (default)   kubernetes.io/azure-file   Delete          Immediate           true                   4d19h
default                       kubernetes.io/azure-disk   Delete          Immediate           true                   4d19h
managed-premium               kubernetes.io/azure-disk   Delete          Immediate           true                   4d19h
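Alternatively, rather than changing the cluster-wide default, the storage class of Vault’s own data volume can be pinned at install time through the hashicorp/vault chart’s server.dataStorage values. A sketch, assuming a fresh install (the existing PVC is not migrated) and that you merge this with whatever other values you already pass; managed-premium is only an example taken from the listing above.

```shell
# Write a values file that pins the storage class for Vault's data PVC.
cat > vault-storage-values.yaml <<'EOF'
server:
  dataStorage:
    enabled: true
    size: 10Gi
    storageClass: managed-premium
EOF

# Then, on a fresh install:
# helm install vault hashicorp/vault -f vault-storage-values.yaml
```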

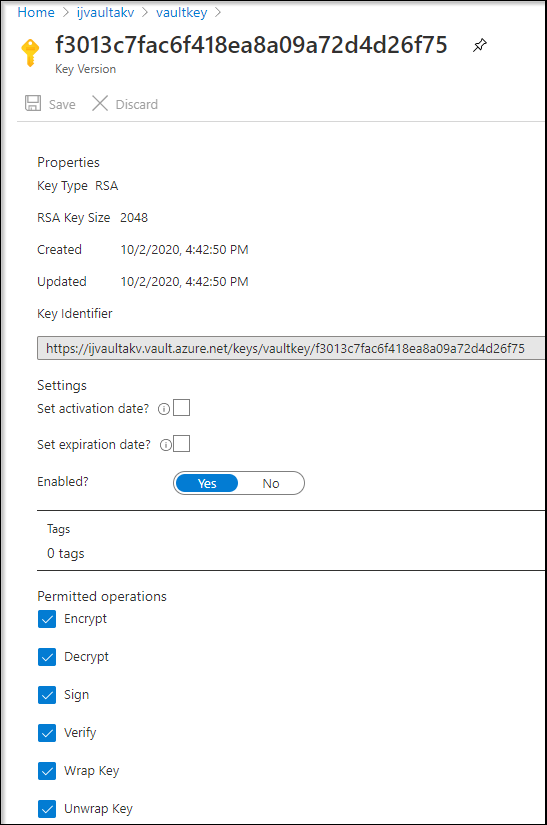

Because we used auto-unseal instead of Shamir unseal keys, Vault will use its key in Azure Key Vault (AKV) to unseal itself after a reboot.

Summary

In this post we explored Vault in Kubernetes a little more. We created a database user and password and exposed it to a deployment. We leveraged the “template” feature to format the string for a variety of common database engines and showed how to mount secrets in an interactive Ubuntu shell. Finally, we exposed Vault with a public IP, restricted access with an NSG, and connected a second Kubernetes cluster to our primary Vault server. After some debugging, we figured out how to launch a deployment there that retrieves secrets from an external, containerized Vault server.

This shows us the power of the open source Vault agent and more ways we can use it in a Kubernetes environment. Ideally, Vault uses Consul as its mesh network, and in a proper commercial instance we would not expose Vault directly (instead relying on a Consul cluster to bridge clouds). Still, this shows how we can get started using an auto-unsealing, containerized HashiCorp Vault in Kubernetes.