Published: Aug 3, 2020 by Isaac Johnson

Recently the topic came up of how to procure valid SSL certificates for a colleague’s project. I knew I had used an earlier version of cert-manager in a post about self-hosted container registries, but I wondered whether the same tooling could be used to quickly get valid certs in a manual fashion. I was also interested in trying out the cert-manager krew plugin, and this seemed like a nice chance to do so. While automatic provisioning is easy, here we’ll address manually generating a valid cert and downloading it for use.

Setup

I wanted to do this all in Windows for a change, so I used a Lenovo Ideapad Flex 14 (and not WSL).

Update Azure CLI

I found that choco install was only offering version 2.2.0, and I knew the Azure CLI was up to at least version 2.9, so I went to the Azure CLI site to download the MSI.

C:\WINDOWS\system32>choco install azure-cli

Chocolatey v0.10.15

Installing the following packages:

azure-cli

By installing you accept licenses for the packages.

azure-cli v2.2.0 already installed.

Use --force to reinstall, specify a version to install, or try upgrade.

Chocolatey installed 0/1 packages.

See the log for details (C:\ProgramData\chocolatey\logs\chocolatey.log).

Warnings:

- azure-cli - azure-cli v2.2.0 already installed.

Use --force to reinstall, specify a version to install, or try upgrade.

After the MSI install

C:\WINDOWS\system32>az version

{

"azure-cli": "2.9.1",

"azure-cli-command-modules-nspkg": "2.0.3",

"azure-cli-core": "2.9.1",

"azure-cli-nspkg": "3.0.4",

"azure-cli-telemetry": "1.0.4",

"extensions": {}

}
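If you want to script the "is my CLI new enough" check rather than eyeball it, a minimal sketch in Python could parse the JSON that az version prints. The minimum of 2.9.0 used here is only an illustrative threshold taken from this walkthrough, not an official requirement.

```python
import json


def parse_version(text: str) -> tuple:
    """Turn '2.9.1' into (2, 9, 1) so versions compare numerically, not as strings."""
    return tuple(int(part) for part in text.split("."))


def cli_is_at_least(az_version_json: str, minimum: str) -> bool:
    """Compare the "azure-cli" field of `az version` output against a minimum."""
    installed = json.loads(az_version_json)["azure-cli"]
    return parse_version(installed) >= parse_version(minimum)


# Trimmed copy of the `az version` output shown above.
SAMPLE = '{"azure-cli": "2.9.1", "azure-cli-core": "2.9.1", "extensions": {}}'
print(cli_is_at_least(SAMPLE, "2.9.0"))
```

Comparing tuples of integers avoids the string-comparison trap where "2.10.0" would sort before "2.9.1".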

Once we have a current az cli, we can install the latest kubectl:

C:\WINDOWS\system32>az aks install-cli

Downloading client to "C:\Users\isaac\.azure-kubectl\kubectl.exe" from "https://storage.googleapis.com/kubernetes-release/release/v1.18.6/bin/windows/amd64/kubectl.exe"

C:\WINDOWS\system32>set PATH=C:\Users\isaac\.azure-kubectl\;%PATH%

C:\WINDOWS\system32>kubectl version

Client Version: version.Info{Major:"1", Minor:"18", GitVersion:"v1.18.6", GitCommit:"dff82dc0de47299ab66c83c626e08b245ab19037", GitTreeState:"clean", BuildDate:"2020-07-15T16:58:53Z", GoVersion:"go1.13.9", Compiler:"gc", Platform:"windows/amd64"}

Unable to connect to the server: dial tcp 192.168.1.225:6443: connectex: No connection could be made because the target machine actively refused it.

Next we need to install the krew plugin manager (you’ll want to install from an administrator command prompt). You can download the binary and manifest (krew.yaml) from the releases page (https://github.com/kubernetes-sigs/krew/releases).

C:\Users\isaac\Downloads>dir krew*

Volume in drive C is Windows-SSD

Volume Serial Number is 9024-7E16

Directory of C:\Users\isaac\Downloads

08/01/2020 04:10 PM 11,927,552 krew.exe

08/01/2020 04:10 PM 2,193 krew.yaml

2 File(s) 11,929,745 bytes

0 Dir(s) 79,373,946,880 bytes free

C:\WINDOWS\system32>cd C:\Users\isaac\Downloads

C:\Users\isaac\Downloads>krew install --manifest=krew.yaml

WARNING: To be able to run kubectl plugins, you need to add the

"%USERPROFILE%\.krew\bin" directory to your PATH environment variable

and restart your shell.

Installing plugin: krew

Installed plugin: krew

\

| Use this plugin:

| kubectl krew

| Documentation:

| https://sigs.k8s.io/krew

| Caveats:

| \

| | krew is now installed! To start using kubectl plugins, you need to add

| | krew's installation directory to your PATH:

| |

| | * macOS/Linux:

| | - Add the following to your ~/.bashrc or ~/.zshrc:

| | export PATH="${KREW_ROOT:-$HOME/.krew}/bin:$PATH"

| | - Restart your shell.

| |

| | * Windows: Add %USERPROFILE%\.krew\bin to your PATH environment variable

| |

| | To list krew commands and to get help, run:

| | $ kubectl krew

| | For a full list of available plugins, run:

| | $ kubectl krew search

| |

| | You can find documentation at https://sigs.k8s.io/krew.

| /

/

C:\Users\isaac\Downloads>set PATH=%USERPROFILE%\.krew\bin;%PATH%

Next we need to update krew’s plugin index and see which plugins are available:

C:\Users\isaac\Downloads>kubectl krew update

Updated the local copy of plugin index.

C:\Users\isaac\Downloads>kubectl krew search

NAME DESCRIPTION INSTALLED

access-matrix Show an RBAC access matrix for server resources no

advise-psp Suggests PodSecurityPolicies for cluster. unavailable on windows

apparmor-manager Manage AppArmor profiles for cluster. unavailable on windows

auth-proxy Authentication proxy to a pod or service no

bulk-action Do bulk actions on Kubernetes resources. unavailable on windows

ca-cert Print the PEM CA certificate of the current clu... unavailable on windows

capture Triggers a Sysdig capture to troubleshoot the r... unavailable on windows

cert-manager Manage cert-manager resources inside your cluster no

change-ns View or change the current namespace via kubectl. no

cilium Easily interact with Cilium agents. no

cluster-group Exec commands across a group of contexts. unavailable on windows

config-cleanup Automatically clean up your kubeconfig no

cssh SSH into Kubernetes nodes unavailable on windows

ctx Switch between contexts in your kubeconfig unavailable on windows

custom-cols A "kubectl get" replacement with customizable c... unavailable on windows

datadog Manage the Datadog Operator no

debug Attach ephemeral debug container to running pod no

debug-shell Create pod with interactive kube-shell. unavailable on windows

deprecations Checks for deprecated objects in a cluster no

df-pv Show disk usage (like unix df) for persistent v... no

doctor Scans your cluster and reports anomalies. no

duck List custom resources with ducktype support no

eksporter Export resources and removes a pre-defined set ... no

emit-event Emit Kubernetes Events for the requested object unavailable on windows

evict-pod Evicts the given pod unavailable on windows

example Prints out example manifest YAMLs no

exec-as Like kubectl exec, but offers a `user` flag to ... unavailable on windows

exec-cronjob Run a CronJob immediately as Job unavailable on windows

fields Grep resources hierarchy by field name no

fleet Shows config and resources of a fleet of clusters no

fuzzy Fuzzy and partial string search for kubectl no

gadget Gadgets for debugging and introspecting apps unavailable on windows

get-all Like `kubectl get all` but _really_ everything no

gke-credentials Fetch credentials for GKE clusters unavailable on windows

gopass Imports secrets from gopass no

grep Filter Kubernetes resources by matching their n... no

gs Handle custom resources with Giant Swarm unavailable on windows

iexec Interactive selection tool for `kubectl exec` unavailable on windows

images Show container images used in the cluster. no

ingress-nginx Interact with ingress-nginx no

ipick A kubectl wrapper for interactive resource sele... no

konfig Merge, split or import kubeconfig files no

krew Package manager for kubectl plugins. yes

kubesec-scan Scan Kubernetes resources with kubesec.io. no

kudo Declaratively build, install, and run operators... unavailable on windows

kuttl Declaratively run and test operators unavailable on windows

kyverno Kyverno is a policy engine for kubernetes no

match-name Match names of pods and other API objects no

modify-secret modify secret with implicit base64 translations unavailable on windows

mtail Tail logs from multiple pods matching label sel... unavailable on windows

neat Remove clutter from Kubernetes manifests to mak... unavailable on windows

net-forward Proxy to arbitrary TCP services on a cluster ne... unavailable on windows

node-admin List nodes and run privileged pod with chroot unavailable on windows

node-restart Restart cluster nodes sequentially and gracefully unavailable on windows

node-shell Spawn a root shell on a node via kubectl unavailable on windows

np-viewer Network Policies rules viewer no

ns Switch between Kubernetes namespaces unavailable on windows

oidc-login Log in to the OpenID Connect provider no

open-svc Open the Kubernetes URL(s) for the specified se... no

oulogin Login to a cluster via OpenUnison no

outdated Finds outdated container images running in a cl... no

passman Store kubeconfig credentials in keychains or pa... no

pod-dive Shows a pod's workload tree and info inside a node no

pod-logs Display a list of pods to get logs from unavailable on windows

pod-shell Display a list of pods to execute a shell in unavailable on windows

podevents Show events for pods no

popeye Scans your clusters for potential resource issues no

preflight Executes application preflight tests in a cluster no

profefe Gather and manage pprof profiles from running pods unavailable on windows

prompt Prompts for user confirmation when executing co... unavailable on windows

prune-unused Prune unused resources unavailable on windows

psp-util Manage Pod Security Policy(PSP) and the related... no

rbac-lookup Reverse lookup for RBAC unavailable on windows

rbac-view A tool to visualize your RBAC permissions. no

resource-capacity Provides an overview of resource requests, limi... unavailable on windows

resource-snapshot Prints a snapshot of nodes, pods and HPAs resou... no

restart Restarts a pod with the given name unavailable on windows

rm-standalone-pods Remove all pods without owner references unavailable on windows

rolesum Summarize RBAC roles for subjects no

roll Rolling restart of all persistent pods in a nam... unavailable on windows

schemahero Declarative database schema migrations via YAML no

score Kubernetes static code analysis. unavailable on windows

service-tree Status for ingresses, services, and their backends no

sick-pods Find and debug Pods that are "Not Ready" no

snap Delete half of the pods in a namespace or cluster unavailable on windows

sniff Start a remote packet capture on pods using tcp... no

sort-manifests Sort manifest files in a proper order by Kind unavailable on windows

split-yaml Split YAML output into one file per resource. no

spy pod debugging tool for kubernetes clusters with... unavailable on windows

sql Query the cluster via pseudo-SQL unavailable on windows

ssh-jump A kubectl plugin to SSH into Kubernetes nodes u... unavailable on windows

sshd Run SSH server in a Pod unavailable on windows

ssm-secret Import/export secrets from/to AWS SSM param store no

starboard Toolkit for finding risks in kubernetes resources no

status Show status details of a given resource. no

sudo Run Kubernetes commands impersonated as group s... unavailable on windows

support-bundle Creates support bundles for off-cluster analysis no

tail Stream logs from multiple pods and containers u... unavailable on windows

tap Interactively proxy Kubernetes Services with ease no

tmux-exec An exec multiplexer using Tmux unavailable on windows

topology Explore region topology for nodes or pods no

trace bpftrace programs in a cluster no

tree Show a tree of object hierarchies through owner... no

unused-volumes List unused PVCs no

view-allocations List allocations per resources, nodes, pods. unavailable on windows

view-secret Decode Kubernetes secrets no

view-serviceaccount-kubeconfig Show a kubeconfig setting to access the apiserv... no

view-utilization Shows cluster cpu and memory utilization unavailable on windows

virt Control KubeVirt virtual machines using virtctl no

warp Sync and execute local files in Pod unavailable on windows

who-can Shows who has RBAC permissions to access Kubern... no

whoami Show the subject that's currently authenticated... unavailable on windows
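That list is long, and many entries are unavailable on Windows. As a rough sketch, assuming you have captured the text output of kubectl krew search into a string, a few lines of Python can pick out the plugins that can actually be installed on this machine. The parsing relies only on the last column of each row, as seen in the output above.

```python
def installable_plugins(search_output: str) -> list:
    """Return names of plugins whose INSTALLED column reads "no"."""
    names = []
    for line in search_output.splitlines():
        fields = line.split()
        # Skip blank lines and the NAME/DESCRIPTION/INSTALLED header row.
        if not fields or fields[0] == "NAME":
            continue
        # Rows ending in "windows" are unavailable and rows ending in "yes"
        # are already installed, so only rows ending in "no" are candidates.
        if fields[-1] == "no":
            names.append(fields[0])
    return names


# Three rows copied from the search output above.
SAMPLE = """NAME DESCRIPTION INSTALLED
cert-manager Manage cert-manager resources inside your cluster no
ctx Switch between contexts in your kubeconfig unavailable on windows
krew Package manager for kubectl plugins. yes
"""
print(installable_plugins(SAMPLE))
```

Any name it returns can then be installed with kubectl krew install followed by the plugin name.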

Azure Kubernetes Service (AKS)

If you don’t have a running cluster to use, you can set up a new one pretty easily. Log in to Azure and set the subscription (if you have more than one).

C:\Users\isaac\Downloads>az login

C:\Users\isaac\Downloads>az account set --subscription 70abcdef-1234-1234-1234-987654321

I plan to use an existing resource group (idjaksdemo); however, you may need to create a resource group first (az group create).

C:\Users\isaac\Downloads>az aks create --resource-group idjaksdemo --name idjaksdemo01 --node-count 3 --network-plugin azure --generate-ssh-keys

{

"aadProfile": null,

"addonProfiles": {

"KubeDashboard": {

"config": null,

"enabled": true,

"identity": null

}

},

"agentPoolProfiles": [

{

"availabilityZones": null,

"count": 3,

"enableAutoScaling": null,

"enableNodePublicIp": false,

"maxCount": null,

"maxPods": 30,

"minCount": null,

"mode": "System",

"name": "nodepool1",

"nodeLabels": {},

"nodeTaints": null,

"orchestratorVersion": "1.16.10",

"osDiskSizeGb": 128,

"osType": "Linux",

"provisioningState": "Succeeded",

"scaleSetEvictionPolicy": null,

"scaleSetPriority": null,

"spotMaxPrice": null,

"tags": null,

"type": "VirtualMachineScaleSets",

"vmSize": "Standard_DS2_v2",

"vnetSubnetId": null

}

],

"apiServerAccessProfile": null,

"autoScalerProfile": null,

"diskEncryptionSetId": null,

"dnsPrefix": "idjaksdemo-idjaksdemo-70b42e",

"enablePodSecurityPolicy": null,

"enableRbac": true,

"fqdn": "idjaksdemo-idjaksdemo-70b42e-b43cba45.hcp.eastus.azmk8s.io",

"id": "/subscriptions/70abcdef-1234-1234-1234-987654321/resourcegroups/idjaksdemo/providers/Microsoft.ContainerService/managedClusters/idjaksdemo01",

"identity": null,

"identityProfile": null,

"kubernetesVersion": "1.16.10",

"linuxProfile": {

"adminUsername": "azureuser",

"ssh": {

"publicKeys": [

{

"keyData": "ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQDihWpPWuhb/J/hB3D3jPVoUCG4gjaWknbnZOVgOKszV5UTgpAB2BnvOfMZMjWr43M/bb9tmBxCCw6Mw0m8phuaskHP6rrsCHKBQw7CXAmDkKgH8+6ul3MYhyiEC4tjuWQXd17oWo7RGbA/EE5hIJzx5fDBbe2qjPdVJKFUq8TEwzdHCT4LRDGjCUaeu1qhxmJszCsQaAJqH7T1ah8HvnM+x++pux0MXIMu3p7Ay098lYuO9RxHvcXW1IH5RrUV+cgWZcW2JZSnIiRn1KXfyUvZf/fpLK9nUvnSYW+Q2WPdJVhLUXQ6OWk2D6Z3M0Mv1r839g4V62gryBj3hKtHGkwj isaac@DESKTOP-2SQ9NQM\n"

}

]

}

},

"location": "eastus",

"maxAgentPools": 10,

"name": "idjaksdemo01",

"networkProfile": {

"dnsServiceIp": "10.0.0.10",

"dockerBridgeCidr": "172.17.0.1/16",

"loadBalancerProfile": {

"allocatedOutboundPorts": null,

"effectiveOutboundIps": [

{

"id": "/subscriptions/70abcdef-1234-1234-1234-987654321/resourceGroups/MC_idjaksdemo_idjaksdemo01_eastus/providers/Microsoft.Network/publicIPAddresses/3e126549-b950-4625-9e80-1d785014be23",

"resourceGroup": "MC_idjaksdemo_idjaksdemo01_eastus"

}

],

"idleTimeoutInMinutes": null,

"managedOutboundIps": {

"count": 1

},

"outboundIpPrefixes": null,

"outboundIps": null

},

"loadBalancerSku": "Standard",

"networkMode": null,

"networkPlugin": "azure",

"networkPolicy": null,

"outboundType": "loadBalancer",

"podCidr": null,

"serviceCidr": "10.0.0.0/16"

},

"nodeResourceGroup": "MC_idjaksdemo_idjaksdemo01_eastus",

"privateFqdn": null,

"provisioningState": "Succeeded",

"resourceGroup": "idjaksdemo",

"servicePrincipalProfile": {

"clientId": "8ab1be70-ca21-455c-97a3-3c9d8515ead2",

"secret": null

},

"sku": {

"name": "Basic",

"tier": "Free"

},

"tags": null,

"type": "Microsoft.ContainerService/ManagedClusters",

"windowsProfile": {

"adminPassword": null,

"adminUsername": "azureuser"

}

}

Helm

We need Helm next. If you need to install it, get Helm 3 from https://github.com/helm/helm/releases

C:\Users\isaac\Downloads>"C:\Program Files\helm3\helm.exe" version

version.BuildInfo{Version:"v3.3.0-rc.2", GitCommit:"8a4aeec08d67a7b84472007529e8097ec3742105", GitTreeState:"dirty", GoVersion:"go1.14.6"}

To tie it all together, we need to get the kubeconfig for kubectl to use.

C:\Users\isaac\Downloads>az aks list -o table

Name Location ResourceGroup KubernetesVersion ProvisioningState Fqdn

------------ ---------- --------------- ------------------- ------------------- ----------------------------------------------------------

idjaksdemo01 eastus idjaksdemo 1.16.10 Succeeded idjaksdemo-idjaksdemo-70b42e-b43cba45.hcp.eastus.azmk8s.io

C:\Users\isaac\Downloads>az aks get-credentials -n idjaksdemo01 -g idjaksdemo --admin

Merged "idjaksdemo01-admin" as current context in C:\Users\isaac\.kube\config

C:\Users\isaac\Downloads>kubectl get nodes

NAME STATUS ROLES AGE VERSION

aks-nodepool1-34730799-vmss000000 Ready agent 3h19m v1.16.10

aks-nodepool1-34730799-vmss000001 Ready agent 3h19m v1.16.10

aks-nodepool1-34730799-vmss000002 Ready agent 3h19m v1.16.10

Installing cert-manager

Now that we have kubectl, Helm, and a working Kubernetes cluster, let’s install cert-manager following their guide (https://cert-manager.io/docs/installation/kubernetes/).

First, let’s check to see if cert-manager is already installed:

C:\Users\isaac\Downloads>kubectl get pods --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system azure-cni-networkmonitor-7nhmb 1/1 Running 0 3h20m

kube-system azure-cni-networkmonitor-f94pn 1/1 Running 0 3h20m

kube-system azure-cni-networkmonitor-r99tj 1/1 Running 0 3h20m

kube-system azure-ip-masq-agent-g425b 1/1 Running 0 3h20m

kube-system azure-ip-masq-agent-ggf5h 1/1 Running 0 3h20m

kube-system azure-ip-masq-agent-lrqp6 1/1 Running 0 3h20m

kube-system coredns-869cb84759-cdq8r 1/1 Running 0 3h20m

kube-system coredns-869cb84759-g9vpm 1/1 Running 0 3h20m

kube-system coredns-autoscaler-5b867494f-psz7w 1/1 Running 0 3h20m

kube-system dashboard-metrics-scraper-566c858889-9dt86 1/1 Running 0 3h20m

kube-system kube-proxy-gwzw7 1/1 Running 0 3h20m

kube-system kube-proxy-qkh2b 1/1 Running 0 3h20m

kube-system kube-proxy-tlxd7 1/1 Running 0 3h20m

kube-system kubernetes-dashboard-7f7d6bbd7f-sznqp 1/1 Running 0 3h20m

kube-system metrics-server-6cd7558856-vtkf4 1/1 Running 0 3h20m

kube-system tunnelfront-788b587d5d-cbt8t 2/2 Running 0 3h20m

Create the namespace and add the helm repository that contains the current chart:

C:\Users\isaac\Downloads>kubectl create namespace cert-manager

namespace/cert-manager created

C:\Users\isaac\Downloads>helm repo add jetstack https://charts.jetstack.io

"jetstack" has been added to your repositories

Next, since we are running 1.16, we’ll need to use kubectl to install the Custom Resource Definitions (CRDs):

C:\Users\isaac\Downloads>kubectl apply --validate=false -f https://github.com/jetstack/cert-manager/releases/download/v0.16.0/cert-manager.crds.yaml

customresourcedefinition.apiextensions.k8s.io/certificaterequests.cert-manager.io created

customresourcedefinition.apiextensions.k8s.io/certificates.cert-manager.io created

customresourcedefinition.apiextensions.k8s.io/challenges.acme.cert-manager.io created

customresourcedefinition.apiextensions.k8s.io/clusterissuers.cert-manager.io created

customresourcedefinition.apiextensions.k8s.io/issuers.cert-manager.io created

customresourcedefinition.apiextensions.k8s.io/orders.acme.cert-manager.io created

Next we should install cert-manager:

C:\Users\isaac\Downloads>helm install cert-manager jetstack/cert-manager --namespace cert-manager --version v0.16.0

NAME: cert-manager

LAST DEPLOYED: Sat Aug 1 20:09:51 2020

NAMESPACE: cert-manager

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

cert-manager has been deployed successfully!

In order to begin issuing certificates, you will need to set up a ClusterIssuer

or Issuer resource (for example, by creating a 'letsencrypt-staging' issuer).

More information on the different types of issuers and how to configure them

can be found in our documentation:

https://cert-manager.io/docs/configuration/

For information on how to configure cert-manager to automatically provision

Certificates for Ingress resources, take a look at the `ingress-shim`

documentation:

https://cert-manager.io/docs/usage/ingress/

Verify it’s running:

C:\Users\isaac\Downloads>kubectl get pods --namespace cert-manager

NAME READY STATUS RESTARTS AGE

cert-manager-69779b98cd-bf2ll 1/1 Running 0 40m

cert-manager-cainjector-7c4c4bbbb9-7jj77 1/1 Running 0 40m

cert-manager-webhook-6496b996cb-fhz8g 1/1 Running 0 40m
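The same check can be automated. This is a minimal sketch, assuming you feed it the output of kubectl get pods --namespace cert-manager -o json; it reports any pod that is not Running with all of its containers ready. The function name is my own, not part of any tool.

```python
import json


def unready_pods(pod_list_json: str) -> list:
    """Return names of pods that are not Running with every container ready."""
    unready = []
    for pod in json.loads(pod_list_json).get("items", []):
        status = pod.get("status", {})
        containers = status.get("containerStatuses") or []
        # A pod with no reported containers yet is treated as not ready.
        all_ready = bool(containers) and all(c.get("ready") for c in containers)
        if status.get("phase") != "Running" or not all_ready:
            unready.append(pod["metadata"]["name"])
    return unready
```

An empty list means the controller, cainjector, and webhook pods are all up, which matters because the webhook must be reachable before Issuer and Certificate resources can be applied.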

Next, let’s just verify the basics with a self-signed cert

You can use notepad to create test-resources.yaml

apiVersion: v1
kind: Namespace
metadata:
  name: cert-manager-test
---
apiVersion: cert-manager.io/v1alpha2
kind: Issuer
metadata:
  name: test-selfsigned
  namespace: cert-manager-test
spec:
  selfSigned: {}
---
apiVersion: cert-manager.io/v1alpha2
kind: Certificate
metadata:
  name: selfsigned-cert
  namespace: cert-manager-test
spec:
  dnsNames:
    - example.com
  secretName: selfsigned-cert-tls
  issuerRef:
    name: test-selfsigned

Then apply it

C:\Users\isaac\Downloads>kubectl apply -f test-resources.yaml

namespace/cert-manager-test created

issuer.cert-manager.io/test-selfsigned created

certificate.cert-manager.io/selfsigned-cert created

We can now see the self-signed cert in the namespace:

C:\Users\isaac\Downloads>kubectl describe certificate -n cert-manager-test

Name: selfsigned-cert

Namespace: cert-manager-test

Labels: <none>

Annotations:

API Version: cert-manager.io/v1beta1

Kind: Certificate

Metadata:

Creation Timestamp: 2020-08-02T01:55:16Z

Generation: 1

Resource Version: 25986

Self Link: /apis/cert-manager.io/v1beta1/namespaces/cert-manager-test/certificates/selfsigned-cert

UID: 9d239d5b-0279-44f2-9f25-a5776d082a29

Spec:

Dns Names:

example.com

Issuer Ref:

Name: test-selfsigned

Secret Name: selfsigned-cert-tls

Status:

Conditions:

Last Transition Time: 2020-08-02T01:55:17Z

Message: Certificate is up to date and has not expired

Reason: Ready

Status: True

Type: Ready

Not After: 2020-10-31T01:55:17Z

Not Before: 2020-08-02T01:55:17Z

Renewal Time: 2020-10-01T01:55:17Z

Revision: 1

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Issuing 4m33s cert-manager Issuing certificate as Secret does not exist

Normal Generated 4m32s cert-manager Stored new private key in temporary Secret resource "selfsigned-cert-4gq2r"

Normal Requested 4m32s cert-manager Created new CertificateRequest resource "selfsigned-cert-zhhvq"

Normal Issuing 4m32s cert-manager The certificate has been successfully issued
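The Not Before, Not After, and Renewal Time fields in the status tell you how long the cert lives and when cert-manager plans to renew it. A small Python sketch (the helper names are mine) makes the arithmetic explicit using the timestamps from the output above.

```python
from datetime import datetime, timezone

# Timestamp layout used in the cert-manager status fields shown above.
TIMESTAMP_FORMAT = "%Y-%m-%dT%H:%M:%SZ"


def parse_ts(value: str) -> datetime:
    """Parse a UTC timestamp such as 2020-10-31T01:55:17Z."""
    return datetime.strptime(value, TIMESTAMP_FORMAT).replace(tzinfo=timezone.utc)


def cert_windows(not_before: str, not_after: str, renewal_time: str) -> dict:
    """Return the certificate lifetime and the renewal lead time, in whole days."""
    start, end, renew = parse_ts(not_before), parse_ts(not_after), parse_ts(renewal_time)
    return {
        "lifetime_days": (end - start).days,
        "renew_days_before_expiry": (end - renew).days,
    }


# Values copied from the describe output above.
print(cert_windows("2020-08-02T01:55:17Z", "2020-10-31T01:55:17Z", "2020-10-01T01:55:17Z"))
```

For this cert that works out to a 90-day lifetime with renewal scheduled 30 days before expiry.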

We can also see the CertificateRequest that was created in the namespace:

C:\Users\isaac\Downloads>kubectl get CertificateRequest -n cert-manager-test

NAME READY AGE

selfsigned-cert-zhhvq True 7m3s

C:\Users\isaac\Downloads>kubectl get CertificateRequest selfsigned-cert-zhhvq -n cert-manager-test -o yaml

apiVersion: cert-manager.io/v1beta1

kind: CertificateRequest

metadata:

annotations:

cert-manager.io/certificate-name: selfsigned-cert

cert-manager.io/certificate-revision: "1"

cert-manager.io/private-key-secret-name: selfsigned-cert-4gq2r

kubectl.kubernetes.io/last-applied-configuration: |

{"apiVersion":"cert-manager.io/v1alpha2","kind":"Certificate","metadata":{"annotations":{},"name":"selfsigned-cert","namespace":"cert-manager-test"},"spec":{"dnsNames":["example.com"],"issuerRef":{"name":"test-selfsigned"},"secretName":"selfsigned-cert-tls"}}

creationTimestamp: "2020-08-02T01:55:17Z"

generateName: selfsigned-cert-

generation: 1

name: selfsigned-cert-zhhvq

namespace: cert-manager-test

ownerReferences:

- apiVersion: cert-manager.io/v1alpha2

blockOwnerDeletion: true

controller: true

kind: Certificate

name: selfsigned-cert

uid: 9d239d5b-0279-44f2-9f25-a5776d082a29

resourceVersion: "25978"

selfLink: /apis/cert-manager.io/v1beta1/namespaces/cert-manager-test/certificaterequests/selfsigned-cert-zhhvq

uid: 92ccd754-74c6-40da-b3ff-429e6bd5fa47

spec:

issuerRef:

name: test-selfsigned

request: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURSBSRVFVRVNULS0tLS0KTUlJQ2JqQ0NBVllDQVFBd0FEQ0NBU0l3RFFZSktvWklodmNOQVFFQkJRQURnZ0VQQURDQ0FRb0NnZ0VCQU9YSAovdkpWQzQ2SXpCRlBScmZnWUREKzJaWkYxbGREY1NydnVBNVNGdkJhdGpuak40Y3p2M2JNb2xoaXBmRHJ0SVhICmx2TE4vNUUxM1BweVArNlplUHRtMzBvZ2hwZndRZUJjbkhmYlhKWmNHTGlKV0oyWU1zVVk0eVQ0NERnZ1k1VGUKZmpFY2h3OUJrVGdGZXFvQlZ3K2xFa3hvM3l4L0pYT2hndmovSzJoOUUwRVEvcFJkSUZxWDRUZW04MUtzY25haAp4VW9sdXpKZ1hKNDNUQlNNbGlLT0hzblhxbjBpR2wrZ2NoWmV3NGZHNUErZ0JsNFY2eG5YL3lSOWs0TUx3bjNjCnNSV1QyYzFqZlovYk03ZWs0dFVoc0xZQk8wYXhmcXR3b2FIM1k3bEc0dTZWNzYzdG1laU5JTE9ZT3ZWblk0ckcKTklQRDF3bHhVYVlyQmpzRWVNa0NBd0VBQWFBcE1DY0dDU3FHU0liM0RRRUpEakVhTUJnd0ZnWURWUjBSQkE4dwpEWUlMWlhoaGJYQnNaUzVqYjIwd0RRWUpLb1pJaHZjTkFRRUxCUUFEZ2dFQkFLVEwxaFNKUkFoVjA5TGs0SzdLCmJuaDA3WDJwbHpDRmJrZWJVUHU4UWdLL1VvM2gvVnRaOE5OT1BUdTdPcTFtVDNOL3B2THRhbnBkWUlKejMzVzQKenN4U3B3bXJsZ01nNUlKT1dxRlBFd1pzSlhDSDFURHp4U3B6TWg1OGxJSGljVFQ0OVdzcTk3U2t0d0JmdFU0TQpZTHVneXdOMVJLMUk2YVZlTklZZnp5RlRjZlZxaEdUV1dkSVEzQzE0VzlzWHBTeTcrSlVUNnZYOXAybmFIQlJoCmRwQTBUbmNsQ1E5Z044cEhVTVVpdkh5NFBWUVNxNFZlcHd4V3Bsb2xIdVhwR0hTVzVTU3Fpc3pCc0RVNk5waWYKNkRxMWVqbUltUzFiMWpIOWdtMDFIcE14YjBJS2RoMEZPdmc2R3locUIzVDJHTDRvT2ZJQmZkYktSMkpPVUVJVAordTg9Ci0tLS0tRU5EIENFUlRJRklDQVRFIFJFUVVFU1QtLS0tLQo=

status:

ca: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUN4akNDQWE2Z0F3SUJBZ0lSQVBzYnVVc3UvTEFmVXduK1hPT3pUc0l3RFFZSktvWklodmNOQVFFTEJRQXcKQURBZUZ3MHlNREE0TURJd01UVTFNVGRhRncweU1ERXdNekV3TVRVMU1UZGFNQUF3Z2dFaU1BMEdDU3FHU0liMwpEUUVCQVFVQUE0SUJEd0F3Z2dFS0FvSUJBUURseC83eVZRdU9pTXdSVDBhMzRHQXcvdG1XUmRaWFEzRXE3N2dPClVoYndXclk1NHplSE03OTJ6S0pZWXFYdzY3U0Z4NWJ5emYrUk5kejZjai91bVhqN1p0OUtJSWFYOEVIZ1hKeDMKMjF5V1hCaTRpVmlkbURMRkdPTWsrT0E0SUdPVTNuNHhISWNQUVpFNEJYcXFBVmNQcFJKTWFOOHNmeVZ6b1lMNAoveXRvZlJOQkVQNlVYU0JhbCtFM3B2TlNySEoyb2NWS0pic3lZRnllTjB3VWpKWWlqaDdKMTZwOUlocGZvSElXClhzT0h4dVFQb0FaZUZlc1oxLzhrZlpPREM4SjkzTEVWazluTlkzMmYyek8zcE9MVkliQzJBVHRHc1g2cmNLR2gKOTJPNVJ1THVsZSt0N1pub2pTQ3ptRHIxWjJPS3hqU0R3OWNKY1ZHbUt3WTdCSGpKQWdNQkFBR2pPekE1TUE0RwpBMVVkRHdFQi93UUVBd0lGb0RBTUJnTlZIUk1CQWY4RUFqQUFNQmtHQTFVZEVRRUIvd1FQTUEyQ0MyVjRZVzF3CmJHVXVZMjl0TUEwR0NTcUdTSWIzRFFFQkN3VUFBNElCQVFDcWxVcWVWRnJDUStycGpycVJaaUdXVmhXZ1h6WEYKZUh6WGdZUG5pazZWclpCeUduamRKTmd0L0hRWUtBUkFMdFowZ3NnQWV3VWRQVUpQYU9TVmNpUmt0ZzdVTGdRLwpUeDZ6eXpoYlp5Y1dtWlp0T2xmZFZ3aFc1cUhsTThoUzAzWkpDdUxnd0YwUGtPbW0xTHpZYWhPdkhxRkxVRkFzCkNRdXdZQjFiYVB0RnlDelJRRlFvVkpDT29nOW05WTJyY1UzOFlnb21zeEUrcXNMU29qQjBGbU9hODRtNGhWNWcKLzNOMWw5WXBwY0RtT2JqU2hDajlHTEtuMEdWYkUrQitwRjVGM0ZLcWNNamFiMXhXYmNDQktEZjF2a1dWUnNrUApEd1l4aUViVHZtd3hvMmh5clBURDlOUmdUVWxyZmRwbjRPalMzTmRpdURITUJNMG84dXZabkg4YgotLS0tLUVORCBDRVJUSUZJQ0FURS0tLS0tCg==

certificate: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUN4akNDQWE2Z0F3SUJBZ0lSQVBzYnVVc3UvTEFmVXduK1hPT3pUc0l3RFFZSktvWklodmNOQVFFTEJRQXcKQURBZUZ3MHlNREE0TURJd01UVTFNVGRhRncweU1ERXdNekV3TVRVMU1UZGFNQUF3Z2dFaU1BMEdDU3FHU0liMwpEUUVCQVFVQUE0SUJEd0F3Z2dFS0FvSUJBUURseC83eVZRdU9pTXdSVDBhMzRHQXcvdG1XUmRaWFEzRXE3N2dPClVoYndXclk1NHplSE03OTJ6S0pZWXFYdzY3U0Z4NWJ5emYrUk5kejZjai91bVhqN1p0OUtJSWFYOEVIZ1hKeDMKMjF5V1hCaTRpVmlkbURMRkdPTWsrT0E0SUdPVTNuNHhISWNQUVpFNEJYcXFBVmNQcFJKTWFOOHNmeVZ6b1lMNAoveXRvZlJOQkVQNlVYU0JhbCtFM3B2TlNySEoyb2NWS0pic3lZRnllTjB3VWpKWWlqaDdKMTZwOUlocGZvSElXClhzT0h4dVFQb0FaZUZlc1oxLzhrZlpPREM4SjkzTEVWazluTlkzMmYyek8zcE9MVkliQzJBVHRHc1g2cmNLR2gKOTJPNVJ1THVsZSt0N1pub2pTQ3ptRHIxWjJPS3hqU0R3OWNKY1ZHbUt3WTdCSGpKQWdNQkFBR2pPekE1TUE0RwpBMVVkRHdFQi93UUVBd0lGb0RBTUJnTlZIUk1CQWY4RUFqQUFNQmtHQTFVZEVRRUIvd1FQTUEyQ0MyVjRZVzF3CmJHVXVZMjl0TUEwR0NTcUdTSWIzRFFFQkN3VUFBNElCQVFDcWxVcWVWRnJDUStycGpycVJaaUdXVmhXZ1h6WEYKZUh6WGdZUG5pazZWclpCeUduamRKTmd0L0hRWUtBUkFMdFowZ3NnQWV3VWRQVUpQYU9TVmNpUmt0ZzdVTGdRLwpUeDZ6eXpoYlp5Y1dtWlp0T2xmZFZ3aFc1cUhsTThoUzAzWkpDdUxnd0YwUGtPbW0xTHpZYWhPdkhxRkxVRkFzCkNRdXdZQjFiYVB0RnlDelJRRlFvVkpDT29nOW05WTJyY1UzOFlnb21zeEUrcXNMU29qQjBGbU9hODRtNGhWNWcKLzNOMWw5WXBwY0RtT2JqU2hDajlHTEtuMEdWYkUrQitwRjVGM0ZLcWNNamFiMXhXYmNDQktEZjF2a1dWUnNrUApEd1l4aUViVHZtd3hvMmh5clBURDlOUmdUVWxyZmRwbjRPalMzTmRpdURITUJNMG84dXZabkg4YgotLS0tLUVORCBDRVJUSUZJQ0FURS0tLS0tCg==

conditions:

- lastTransitionTime: "2020-08-02T01:55:17Z"

message: Certificate fetched from issuer successfully

reason: Issued

status: "True"

type: Ready
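The request, ca, and certificate fields above are base64-encoded PEM blocks. As a quick sanity check, this Python sketch (a helper of my own, not part of cert-manager) decodes such a field and reports which kind of PEM object it holds.

```python
import base64
import re


def pem_label(b64_field: str) -> str:
    """Decode a base64 field and return the label from its PEM BEGIN line."""
    pem = base64.b64decode(b64_field).decode("ascii")
    match = re.match(r"-----BEGIN ([A-Z ]+)-----", pem)
    if match is None:
        raise ValueError("decoded value does not start with a PEM header")
    return match.group(1)
```

Passing the spec.request value should yield CERTIFICATE REQUEST, while status.certificate and status.ca should yield CERTIFICATE. For a self-signed issuer the last two fields are identical, as they are in the output above.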

Lastly, we can clean up and remove that self-signed cert:

C:\Users\isaac\Downloads>kubectl delete -f test-resources.yaml

namespace "cert-manager-test" deleted

issuer.cert-manager.io "test-selfsigned" deleted

certificate.cert-manager.io "selfsigned-cert" deleted

Create LetsEncrypt Issuers

Let’s create Staging and Production issuers

The staging issuer:

apiVersion: cert-manager.io/v1alpha2
kind: Issuer
metadata:
  name: letsencrypt-staging
  namespace: default
spec:
  acme:
    # You must replace this email address with your own.
    # Let's Encrypt will use this to contact you about expiring
    # certificates, and issues related to your account.
    email: isaac.johnson@gmail.com
    server: https://acme-staging-v02.api.letsencrypt.org/directory
    privateKeySecretRef:
      # Secret resource used to store the account's private key.
      name: letsencrypt-account-key
    # Add a single challenge solver, DNS01 using Google Cloud DNS
    solvers:
    - dns01:
        cnameStrategy: Follow
        clouddns:
          project: enscluster
          serviceAccountSecretRef:
            name: clouddns-dns01-solver-svc-acct
            key: key.json

And production

apiVersion: cert-manager.io/v1alpha2
kind: ClusterIssuer
metadata:
  name: letsencrypt-prod
  namespace: default
spec:
  acme:
    # The ACME server URL
    server: https://acme-v02.api.letsencrypt.org/directory
    # Email address used for ACME registration
    email: isaac.johnson@gmail.com
    # Name of a secret used to store the ACME account private key
    privateKeySecretRef:
      name: letsencrypt-prod
    # Enable the HTTP-01 challenge provider
    solvers:
    - http01:
        ingress:
          class: nginx
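The staging and production issuers differ mainly in the ACME server URL. If you would rather generate them than hand-edit two files, here is a hedged sketch in Python that builds an HTTP-01 ClusterIssuer as a dict and prints it as JSON, which kubectl apply -f also accepts. Note this is a simplification of the files above: it makes both environments ClusterIssuers with the nginx HTTP-01 solver, and the function name and the example email are placeholders of my own.

```python
import json

# The two Let's Encrypt ACME directory URLs used in the manifests above.
LETSENCRYPT_SERVERS = {
    "staging": "https://acme-staging-v02.api.letsencrypt.org/directory",
    "prod": "https://acme-v02.api.letsencrypt.org/directory",
}


def build_cluster_issuer(environment: str, email: str, ingress_class: str = "nginx") -> dict:
    """Build an HTTP-01 ClusterIssuer manifest for 'staging' or 'prod'."""
    name = "letsencrypt-" + environment
    return {
        "apiVersion": "cert-manager.io/v1alpha2",
        "kind": "ClusterIssuer",
        # ClusterIssuers are cluster-scoped, so no namespace is set here.
        "metadata": {"name": name},
        "spec": {
            "acme": {
                "server": LETSENCRYPT_SERVERS[environment],
                "email": email,
                "privateKeySecretRef": {"name": name},
                "solvers": [{"http01": {"ingress": {"class": ingress_class}}}],
            }
        },
    }


# Placeholder email; replace it with your own before applying.
print(json.dumps(build_cluster_issuer("staging", "you@example.com"), indent=2))
```

Testing against staging first is worthwhile because the production endpoint enforces much stricter rate limits.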

Before we continue, we’ll need an nginx ingress controller to receive traffic

C:\Users\isaac\Downloads>helm repo add nginx-stable https://helm.nginx.com/stable

"nginx-stable" has been added to your repositories

C:\Users\isaac\Downloads>helm repo update

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "nginx-stable" chart repository

...Successfully got an update from the "jetstack" chart repository

...Successfully got an update from the "stable" chart repository

Update Complete. ⎈ Happy Helming!⎈

C:\Users\isaac\Downloads> helm install my-release nginx-stable/nginx-ingress

NAME: my-release

LAST DEPLOYED: Sat Aug 1 21:41:11 2020

NAMESPACE: default

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

The NGINX Ingress Controller has been installed.

Take note of the chart and release (nginx-stable/nginx-ingress and “my-release”). We’ll come back to that later.

We now need to get the external IP to use in our DNS record

C:\Users\isaac\Downloads>kubectl get svc --all-namespaces

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

cert-manager cert-manager ClusterIP 10.0.207.7 <none> 9402/TCP 92m

cert-manager cert-manager-webhook ClusterIP 10.0.22.45 <none> 443/TCP 92m

default kubernetes ClusterIP 10.0.0.1 <none> 443/TCP 5h4m

default my-release-nginx-ingress LoadBalancer 10.0.120.83 52.190.47.225 80:32385/TCP,443:31855/TCP 63s

kube-system dashboard-metrics-scraper ClusterIP 10.0.231.231 <none> 8000/TCP 5h3m

kube-system kube-dns ClusterIP 10.0.0.10 <none> 53/UDP,53/TCP 5h3m

kube-system kubernetes-dashboard ClusterIP 10.0.161.1 <none> 443/TCP 5h3m

kube-system metrics-server ClusterIP 10.0.59.71 <none> 443/TCP 5h3m
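Rather than scanning the table for the one row with an EXTERNAL-IP, you could pull it out programmatically. This sketch assumes the input is the output of kubectl get svc --all-namespaces -o json and returns the public addresses of every LoadBalancer service; the function name is hypothetical.

```python
import json


def load_balancer_ips(service_list_json: str) -> dict:
    """Map each LoadBalancer service name to its list of external IPs."""
    ips = {}
    for svc in json.loads(service_list_json).get("items", []):
        if svc.get("spec", {}).get("type") != "LoadBalancer":
            continue
        # status.loadBalancer.ingress is empty while the IP is still pending.
        ingress = svc.get("status", {}).get("loadBalancer", {}).get("ingress") or []
        addresses = [entry["ip"] for entry in ingress if "ip" in entry]
        if addresses:
            ips[svc["metadata"]["name"]] = addresses
    return ips
```

For the cluster above, the expected result would be a single entry for my-release-nginx-ingress pointing at 52.190.47.225.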

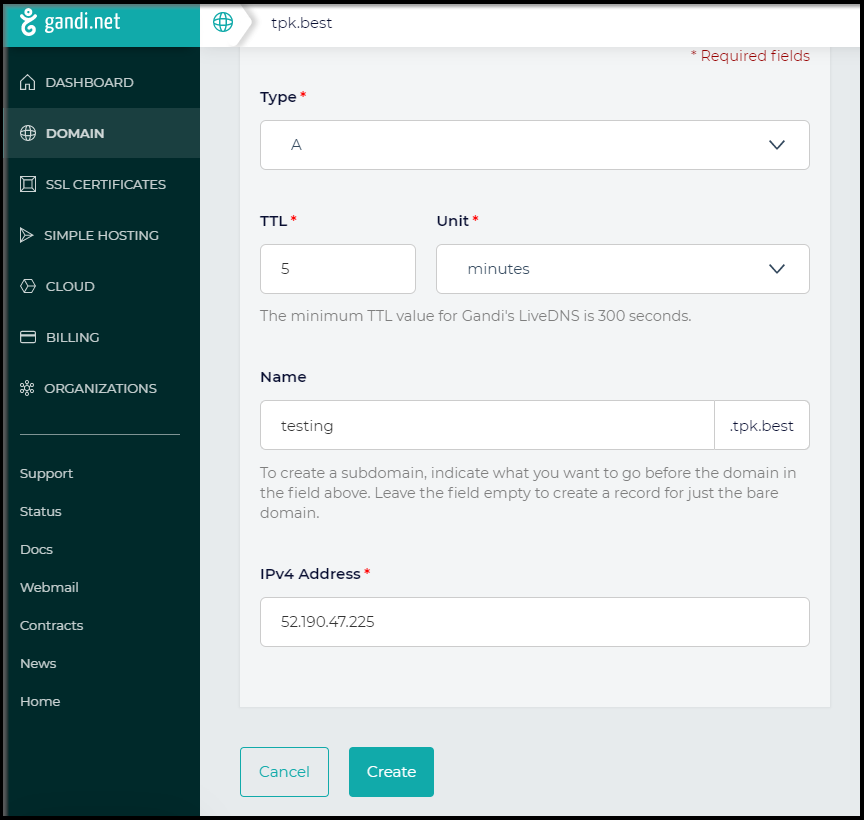

Create an A DNS Record

Let’s create an A record to test with: point testing.tpk.best at 52.190.47.225 (the EXTERNAL-IP we see above).
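The HTTP-01 challenge only succeeds once the hostname resolves to the ingress controller, so it can save time to confirm the DNS record has propagated before requesting a certificate. A minimal Python sketch, using only the standard library, might look like this. The resolve parameter is an addition of mine so the lookup can be swapped out in tests.

```python
import socket


def a_record_matches(hostname: str, expected_ip: str, resolve=socket.gethostbyname_ex) -> bool:
    """Return True if the hostname currently resolves to the expected IPv4 address."""
    try:
        # gethostbyname_ex returns (canonical name, alias list, IPv4 address list).
        _name, _aliases, addresses = resolve(hostname)
    except OSError:
        # Covers socket.gaierror and socket.herror, i.e. the name does not resolve yet.
        return False
    return expected_ip in addresses
```

Here you would call a_record_matches("testing.tpk.best", "52.190.47.225") and wait until it returns True before applying the Certificate.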

We can now apply the issuers yaml to install them into the cluster:

C:\Users\isaac\Downloads>kubectl apply -f issuer-prod.yaml

clusterissuer.cert-manager.io/letsencrypt-prod created

C:\Users\isaac\Downloads>kubectl apply -f issuer-staging.yaml

issuer.cert-manager.io/letsencrypt-staging created

Getting a certificate

Let’s create a certificate manually, for instance testing-tpk-best.yml

apiVersion: cert-manager.io/v1alpha2
kind: Certificate
metadata:
  name: testing-tpk-best
  namespace: default
spec:
  secretName: testing.tpk.best-cert
  issuerRef:
    name: letsencrypt-prod
    kind: ClusterIssuer
  commonName: testing.tpk.best
  dnsNames:
  - testing.tpk.best
  acme:
    config:
    - http01:
        ingressClass: nginx
      domains:
      - testing.tpk.best

Then apply it:

C:\Users\isaac\Downloads>kubectl apply -f testing-tpk-best.yml --validate=false

certificate.cert-manager.io/testing-tpk-best created

We can now verify it was created:

C:\Users\isaac\Downloads>kubectl get certificate

NAME READY SECRET AGE

testing-tpk-best True testing.tpk.best-cert 86m

C:\Users\isaac\Downloads>kubectl get certificate testing-tpk-best -o yaml

apiVersion: cert-manager.io/v1beta1
kind: Certificate
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"cert-manager.io/v1alpha2","kind":"Certificate","metadata":{"annotations":{},"name":"testing-tpk-best","namespace":"default"},"spec":{"acme":{"config":[{"domains":["testing.tpk.best"],"http01":{"ingressClass":"nginx"}}]},"commonName":"testing.tpk.best","dnsNames":["testing.tpk.best"],"issuerRef":{"kind":"ClusterIssuer","name":"letsencrypt-prod"},"secretName":"testing.tpk.best-cert"}}
  creationTimestamp: "2020-08-02T14:38:37Z"
  generation: 1
  name: testing-tpk-best
  namespace: default
  resourceVersion: "146491"
  selfLink: /apis/cert-manager.io/v1beta1/namespaces/default/certificates/testing-tpk-best
  uid: 2510f98c-2dbd-45ea-92e9-891763e37b74
spec:
  commonName: testing.tpk.best
  dnsNames:
  - testing.tpk.best
  issuerRef:
    kind: ClusterIssuer
    name: letsencrypt-prod
  secretName: testing.tpk.best-cert
status:
  conditions:
  - lastTransitionTime: "2020-08-02T14:39:03Z"
    message: Certificate is up to date and has not expired
    reason: Ready
    status: "True"
    type: Ready
  notAfter: "2020-10-31T13:39:02Z"
  notBefore: "2020-08-02T13:39:02Z"
  renewalTime: "2020-10-01T13:39:02Z"
  revision: 1
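The three timestamps in the status are worth a second look: they describe a 90-day certificate with renewal scheduled 30 days before it expires. A small sketch to check that arithmetic follows. The 30-day window is inferred from the values above rather than read from any cert-manager configuration, and the helper names are mine.

```python
from datetime import datetime, timedelta, timezone


def parse_k8s_time(value):
    """Parse a UTC timestamp in the form Kubernetes prints, e.g. 2020-10-31T13:39:02Z."""
    return datetime.strptime(value, "%Y-%m-%dT%H:%M:%SZ").replace(tzinfo=timezone.utc)


def lifetime_days(not_before, not_after):
    """Whole days between the start and end of the certificate's validity."""
    return (parse_k8s_time(not_after) - parse_k8s_time(not_before)).days


def expected_renewal(not_after, renew_before=timedelta(days=30)):
    """Renewal time if the certificate is renewed renew_before ahead of expiry (assumed window)."""
    return parse_k8s_time(not_after) - renew_before
```

With the values above, `lifetime_days("2020-08-02T13:39:02Z", "2020-10-31T13:39:02Z")` gives 90, and `expected_renewal("2020-10-31T13:39:02Z")` lands on the reported renewalTime of 2020-10-01T13:39:02Z.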

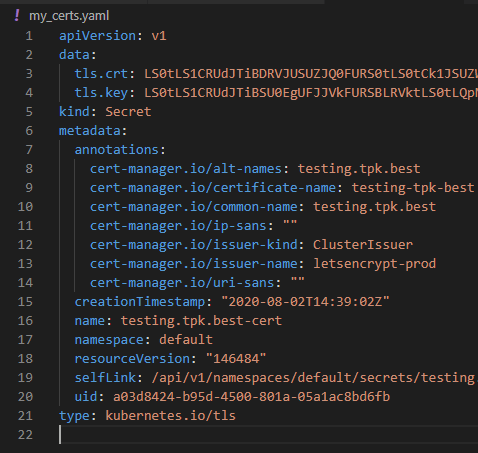

Next, pull the certificate and key out of the matching secret in the same namespace:

kubectl get secrets testing.tpk.best-cert -o yaml > my_certs.yaml

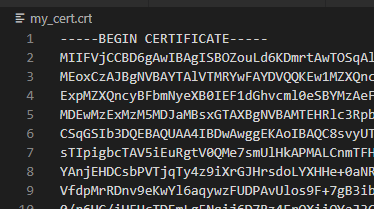

If we take the base64 value in tls.crt above and put it into a text file, we can run that through certutil to get back the actual cert value:

C:\Users\isaac\Downloads>certutil -decode my_crt_b64.txt my_cert.crt

Input Length = 4744

Output Length = 3558

CertUtil: -decode command completed successfully.

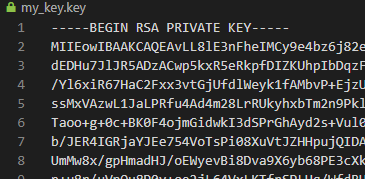

We can do the same with the key:

C:\Users\isaac\Downloads>certutil -decode my_key_b64.txt my_key.key

Input Length = 2236

Output Length = 1675

CertUtil: -decode command completed successfully.
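The copy-and-paste into text files can be skipped if you prefer. Below is a rough equivalent of the two certutil calls in Python, assuming the secret was exported with `-o json` instead of `-o yaml` (so no YAML parser is needed) into a file I am calling `my_certs.json`. The function names and default output paths are illustrative only.

```python
import base64
import json


def decode_tls_secret(secret_json):
    """Return (cert_bytes, key_bytes) decoded from a TLS secret in JSON form."""
    data = json.loads(secret_json)["data"]
    return base64.b64decode(data["tls.crt"]), base64.b64decode(data["tls.key"])


def write_tls_files(secret_json, cert_path="my_cert.crt", key_path="my_key.key"):
    """Write the decoded cert and key to disk and return their sizes in bytes."""
    cert, key = decode_tls_secret(secret_json)
    with open(cert_path, "wb") as cert_file:
        cert_file.write(cert)
    with open(key_path, "wb") as key_file:
        key_file.write(key)
    return len(cert), len(key)

# Usage sketch (my_certs.json is a hypothetical file name):
#   kubectl get secret testing.tpk.best-cert -o json > my_certs.json
#   write_tls_files(open("my_certs.json").read())
```

The sizes certutil reported are a handy sanity check: base64 expands data by 4/3, and 4744 × 3/4 is exactly the 3558 bytes it wrote for the certificate.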

Using it

We can now use those values either directly or by referencing the secret in a variety of ways. One quick way to test it is to patch the Helm release of our ingress controller.

Up until now our HTTPS traffic on the Nginx ingress was being served with a self-signed cert. While this does encrypt traffic, the browser recognizes that it’s an invalid cert and lets us know. Depending on your security settings, some browsers will block self-signed https traffic (443).

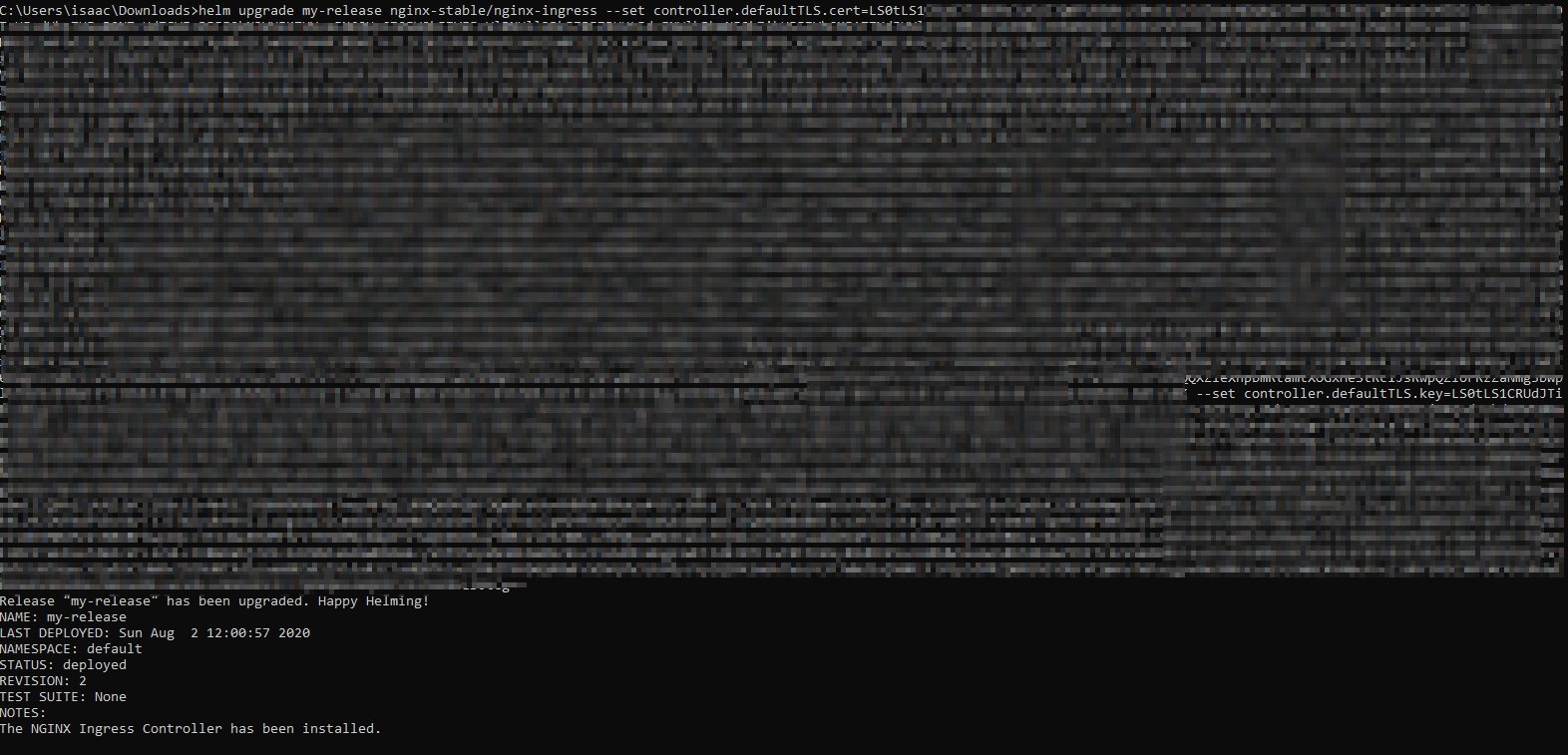

Let’s take those values we saw in the secret and apply them to the nginx release we applied at the start:

C:\Users\isaac\Downloads>helm upgrade my-release nginx-stable/nginx-ingress --set controller.defaultTLS.cert=LS0 **********K --set controller.defaultTLS.key=LS0******** ==

Release "my-release" has been upgraded. Happy Helming!

NAME: my-release

LAST DEPLOYED: Sun Aug 2 12:00:57 2020

NAMESPACE: default

STATUS: deployed

REVISION: 2

TEST SUITE: None

NOTES:

The NGINX Ingress Controller has been installed.

Clearly I masked those, but the values will be rather sizable when you do this yourself:

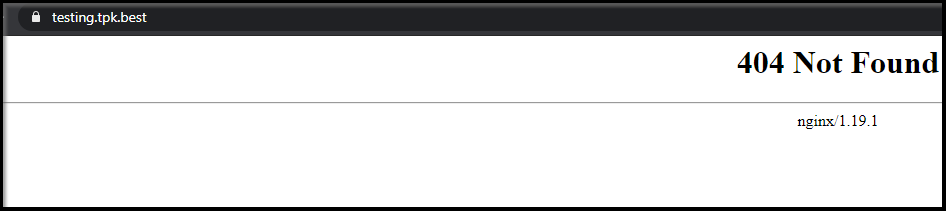
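Since the values are that large, pasting them by hand is error-prone. One option is to build the helm command straight from the secret. This is a sketch under the same assumptions as the command above (release `my-release`, chart `nginx-stable/nginx-ingress`, and the two `controller.defaultTLS` values); the function name is hypothetical, and it expects the secret exported as JSON.

```python
import json


def helm_upgrade_args(secret_json, release="my-release", chart="nginx-stable/nginx-ingress"):
    """Build the helm upgrade argument list from a TLS secret in JSON form.

    The secret's data fields are already base64 encoded, which is the form
    passed on the command line in the post, so they are used unchanged.
    """
    data = json.loads(secret_json)["data"]
    return [
        "helm", "upgrade", release, chart,
        "--set", "controller.defaultTLS.cert=" + data["tls.crt"],
        "--set", "controller.defaultTLS.key=" + data["tls.key"],
    ]

# To actually run it:
#   import subprocess
#   subprocess.run(helm_upgrade_args(open("my_certs.json").read()), check=True)
```

Passing the arguments as a list avoids any shell quoting issues with the long values.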

Now when we reload the site at our DNS name, a valid certificate is served.

This is how we did it manually, but we can have annotations handle this work for us.

Automatic Certificates

We can refer to this guide and see that one could just launch an Nginx ingress controller and reference the letsencrypt-prod issuer in an Ingress annotation to have Kubernetes do it for us:

apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: echo-ingress
  annotations:
    kubernetes.io/ingress.class: "nginx"
    cert-manager.io/cluster-issuer: "letsencrypt-prod"
spec:
  tls:
  - hosts:
    - echo1.example.com
    - echo2.example.com
    secretName: echo-tls
  rules:
  - host: echo1.example.com
    http:
      paths:
      - backend:
          serviceName: echo1
          servicePort: 80
  - host: echo2.example.com
    http:
      paths:
      - backend:
          serviceName: echo2
          servicePort: 80
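The pattern in that Ingress is regular enough to template: one rule per host, all hosts listed once under `tls`, and the issuer named in an annotation. A minimal sketch that builds the same structure from a host-to-service mapping follows. The function name and its parameters are my own, and it targets the same networking.k8s.io/v1beta1 fields as the example above.

```python
def tls_ingress(name, hosts_to_services, secret_name, issuer="letsencrypt-prod"):
    """Build an Ingress dict; hosts_to_services maps hostname -> (service name, port)."""
    rules = [
        {
            "host": host,
            "http": {"paths": [{"backend": {"serviceName": svc, "servicePort": port}}]},
        }
        for host, (svc, port) in hosts_to_services.items()
    ]
    return {
        "apiVersion": "networking.k8s.io/v1beta1",
        "kind": "Ingress",
        "metadata": {
            "name": name,
            "annotations": {
                "kubernetes.io/ingress.class": "nginx",
                # This annotation is what asks cert-manager to issue the cert.
                "cert-manager.io/cluster-issuer": issuer,
            },
        },
        "spec": {
            # Every host is covered by the single secret cert-manager will create.
            "tls": [{"hosts": list(hosts_to_services), "secretName": secret_name}],
            "rules": rules,
        },
    }
```

Dumping the result with `json.dumps` gives a file that `kubectl apply -f` can consume in place of the YAML.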

A similar guide for Joomla or Ghost uses the provider in much the same way:

helm install ghost bitnami/ghost --set ghostHost=blog.DOMAIN,serviceType=ClusterIP

with an ingress definition:

apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: joomla-ingress
  annotations:
    kubernetes.io/ingress.class: nginx
    kubernetes.io/tls-acme: "true"
    cert-manager.io/cluster-issuer: "letsencrypt-prod"
spec:
  rules:
  - host: DOMAIN
    http:
      paths:
      - backend:
          serviceName: joomla
          servicePort: http
        path: /
  - host: blog.DOMAIN
    http:
      paths:
      - backend:
          serviceName: ghost
          servicePort: 80
        path: /
  tls:
  - secretName: joomla-tls-cert
    hosts:
    - DOMAIN
    - blog.DOMAIN

Krew : Cert-Manager

We can use a newer cert-manager utility as provided via Krew:

C:\Users\isaac\Downloads>kubectl krew install cert-manager

Updated the local copy of plugin index.

Installing plugin: cert-manager

Installed plugin: cert-manager

\

| Use this plugin:

| kubectl cert-manager

| Documentation:

| https://github.com/jetstack/cert-manager

/

WARNING: You installed plugin "cert-manager" from the krew-index plugin repository.

These plugins are not audited for security by the Krew maintainers.

Run them at your own risk.

Presently, we can use this to see the status of the certs:

C:\Users\isaac\Downloads>kubectl cert-manager status certificate testing-tpk-best

Name: testing-tpk-best

Namespace: default

Conditions:

Ready: True, Reason: Ready, Message: Certificate is up to date and has not expired

DNS Names:

- testing.tpk.best

Events: <none>

Issuer:

Name: letsencrypt-prod

Kind: ClusterIssuer

Secret Name: testing.tpk.best-cert

Not Before: 2020-08-02T08:39:02-05:00

Not After: 2020-10-31T08:39:02-05:00

Renewal Time: 2020-10-01T08:39:02-05:00

No CertificateRequest found for this Certificate

The other really nice feature of the cert-manager plugin is the ability to manually renew a certificate ahead of its expiry.

For instance, we can look at the one we requested earlier:

C:\Users\isaac\Downloads>kubectl cert-manager status certificate testing-tpk-best

Name: testing-tpk-best

Namespace: default

Conditions:

Ready: True, Reason: Ready, Message: Certificate is up to date and has not expired

DNS Names:

- testing.tpk.best

Events: <none>

Issuer:

Name: letsencrypt-prod

Kind: ClusterIssuer

Secret Name: testing.tpk.best-cert

Not Before: 2020-08-02T08:39:02-05:00

Not After: 2020-10-31T08:39:02-05:00

Renewal Time: 2020-10-01T08:39:02-05:00

No CertificateRequest found for this Certificate

Then trigger a manual renewal now:

C:\Users\isaac\Downloads>kubectl cert-manager renew testing-tpk-best

Manually triggered issuance of Certificate default/testing-tpk-best

And lastly, verify that it did indeed renew it:

C:\Users\isaac\Downloads>kubectl cert-manager status certificate testing-tpk-best

Name: testing-tpk-best

Namespace: default

Conditions:

Ready: True, Reason: Ready, Message: Certificate is up to date and has not expired

DNS Names:

- testing.tpk.best

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Reused 28s cert-manager Reusing private key stored in existing Secret resource "testing.tpk.best-cert"

Normal Requested 27s cert-manager Created new CertificateRequest resource "testing-tpk-best-z7jlp"

Normal Issuing 24s (x2 over 6h48m) cert-manager The certificate has been successfully issued

Issuer:

Name: letsencrypt-prod

Kind: ClusterIssuer

Secret Name: testing.tpk.best-cert

Not Before: 2020-08-02T15:27:12-05:00

Not After: 2020-10-31T15:27:12-05:00

Renewal Time: 2020-10-01T15:27:12-05:00

No CertificateRequest found for this Certificate

Granted, it’s advanced by just a few hours - but then I only requested the certificate this morning.
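To put a number on "a few hours", we can diff the Not Before values from the two status outputs. This is just a sketch with a helper name of my own choosing; the timestamps are copied from the output above.

```python
from datetime import datetime


def reissue_gap(old_not_before, new_not_before):
    """Return how much later the renewed certificate's validity window starts."""
    return datetime.fromisoformat(new_not_before) - datetime.fromisoformat(old_not_before)


gap = reissue_gap("2020-08-02T08:39:02-05:00", "2020-08-02T15:27:12-05:00")
print(gap)  # 6:48:10
```

That lines up with the "x2 over 6h48m" shown in the Events table, and the Not After and Renewal Time values move forward by the same amount.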

Cleanup:

We need to first delete the cluster:

C:\Users\isaac\Downloads>az aks list -o table

Name Location ResourceGroup KubernetesVersion ProvisioningState Fqdn

------------ ---------- --------------- ------------------- ------------------- ----------------------------------------------------------

idjaksdemo01 eastus idjaksdemo 1.16.10 Succeeded idjaksdemo-idjaksdemo-70b42e-b43cba45.hcp.eastus.azmk8s.io

C:\Users\isaac\Downloads>az aks delete -n idjaksdemo01 -g idjaksdemo

Are you sure you want to perform this operation? (y/n): y

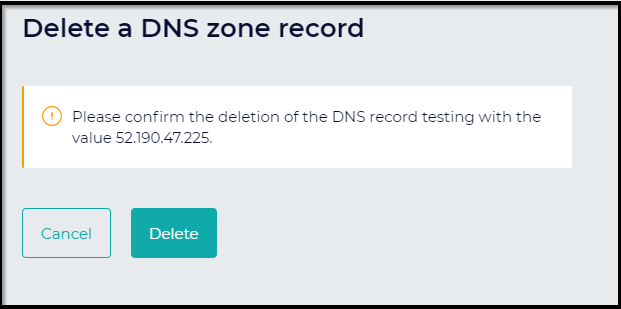

Then we should remove the DNS entry as well.

Summary

Using Kubernetes and cert-manager we can easily create valid SSL certificates, both automatically and manually. We tested the validity of the manually created certificate by applying it to the Nginx ingress controller. Lastly, we took a look at a couple of nice features of the cert-manager krew plugin for kubectl, namely status and renew.