Published: Jul 29, 2020 by Isaac Johnson

While I cannot recall how I came across krew, it’s been in the back of my mind to check it out. I was aware that it contained a solid library of plugins, especially around role-based access control (RBAC). I was curious: how easy is it to install krew and krew plugins? Would there be any that could help us manage RBAC rules and usage? I know in production clusters I’ve spent a fair amount of time cranking out commands to look up roles, clusterroles, rolebindings and clusterrolebindings across a myriad of namespaces to debug access issues.

Installing Krew

We can follow their guide here for Mac/Linux and Windows.

For macOS I just needed to create a bash script and run it:

$ cat ./get_krew.sh

(

set -x; cd "$(mktemp -d)" &&

curl -fsSLO "https://github.com/kubernetes-sigs/krew/releases/latest/download/krew.{tar.gz,yaml}" &&

tar zxvf krew.tar.gz &&

KREW=./krew-"$(uname | tr '[:upper:]' '[:lower:]')_amd64" &&

"$KREW" install --manifest=krew.yaml --archive=krew.tar.gz &&

"$KREW" update

)

$ chmod 755 ./get_krew.sh

$ ./get_krew.sh

+++ mktemp -d

++ cd /var/folders/dp/wgg0qtcs2lv7j0vwx4fnrgq80000gp/T/tmp.dHz6yeLY

++ curl -fsSLO 'https://github.com/kubernetes-sigs/krew/releases/latest/download/krew.{tar.gz,yaml}'

++ tar zxvf krew.tar.gz

x ./LICENSE

x ./krew-darwin_amd64

x ./krew-linux_amd64

x ./krew-linux_arm

x ./krew-windows_amd64.exe

+++ uname

+++ tr '[:upper:]' '[:lower:]'

++ KREW=./krew-darwin_amd64

++ ./krew-darwin_amd64 install --manifest=krew.yaml --archive=krew.tar.gz

Installing plugin: krew

Installed plugin: krew

\

| Use this plugin:

| kubectl krew

| Documentation:

| https://sigs.k8s.io/krew

| Caveats:

| \

| | krew is now installed! To start using kubectl plugins, you need to add

| | krew's installation directory to your PATH:

| |

| | * macOS/Linux:

| | - Add the following to your ~/.bashrc or ~/.zshrc:

| | export PATH="${KREW_ROOT:-$HOME/.krew}/bin:$PATH"

| | - Restart your shell.

| |

| | * Windows: Add %USERPROFILE%\.krew\bin to your PATH environment variable

| |

| | To list krew commands and to get help, run:

| | $ kubectl krew

| | For a full list of available plugins, run:

| | $ kubectl krew search

| |

| | You can find documentation at https://sigs.k8s.io/krew.

| /

/

++ ./krew-darwin_amd64 update

WARNING: To be able to run kubectl plugins, you need to add

the following to your ~/.bash_profile or ~/.bashrc:

export PATH="${PATH}:${HOME}/.krew/bin"

and restart your shell.
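The install script above hardcodes the `_amd64` suffix, which matched my Mac. As a minimal sketch of how the binary name could be derived for other machines, something like the following might work. `krew_binary_name` is a hypothetical helper of mine, not part of krew, and the `arm64` mapping is an assumption (the archive listed above only ships `darwin_amd64`, `linux_amd64`, `linux_arm` and `windows_amd64.exe`).

```shell
# Sketch: derive the krew binary name from OS and CPU architecture.
# krew_binary_name is a hypothetical helper, not part of krew itself.
krew_binary_name() {
  local os arch
  # Arguments allow overriding for testing; default to the current machine.
  os="$(printf '%s' "${1:-$(uname -s)}" | tr '[:upper:]' '[:lower:]')"
  arch="${2:-$(uname -m)}"
  case "$arch" in
    x86_64|amd64)  arch="amd64" ;;
    aarch64|arm64) arch="arm64" ;;   # assumption: not in the archive shown above
    arm*)          arch="arm" ;;
  esac
  printf 'krew-%s_%s\n' "$os" "$arch"
}
```

In the install script this could replace the `KREW=` line with `KREW=./"$(krew_binary_name)"`, provided the archive actually contains a binary with that name.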

Verify it works:

$ export PATH="${PATH}:${HOME}/.krew/bin"

$ kubectl krew

krew is the kubectl plugin manager.

You can invoke krew through kubectl: "kubectl krew [command]..."

Usage:

krew [command]

Available Commands:

help Help about any command

info Show information about a kubectl plugin

install Install kubectl plugins

list List installed kubectl plugins

search Discover kubectl plugins

uninstall Uninstall plugins

update Update the local copy of the plugin index

upgrade Upgrade installed plugins to newer versions

version Show krew version and diagnostics

Flags:

-h, --help help for krew

-v, --v Level number for the log level verbosity

Use "krew [command] --help" for more information about a command.
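Since the export only lasts for the current shell, it eventually has to go into a shell profile. A small sketch of a guard that avoids stacking the same directory onto PATH every time the profile is sourced could look like this. `prepend_path_once` is a hypothetical helper name of mine; the `KREW_ROOT` fallback is taken from the installer's caveat text above.

```shell
# Sketch: add a directory to PATH only if it is not already there.
# prepend_path_once is a hypothetical helper name.
prepend_path_once() {
  case ":${PATH}:" in
    *":$1:"*) ;;                  # already present, do nothing
    *) PATH="$1:${PATH}" ;;       # otherwise put it at the front
  esac
}

# KREW_ROOT falls back to ~/.krew, as in the installer's caveat text.
prepend_path_once "${KREW_ROOT:-$HOME/.krew}/bin"
export PATH
```

Sourcing this twice leaves PATH unchanged the second time.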

Update and show some plugins:

$ kubectl krew update

Updated the local copy of plugin index.

$ kubectl krew search

NAME DESCRIPTION INSTALLED

access-matrix Show an RBAC access matrix for server resources no

advise-psp Suggests PodSecurityPolicies for cluster. no

apparmor-manager Manage AppArmor profiles for cluster. no

auth-proxy Authentication proxy to a pod or service no

bulk-action Do bulk actions on Kubernetes resources. no

ca-cert Print the PEM CA certificate of the current clu... no

capture Triggers a Sysdig capture to troubleshoot the r... no

cert-manager Manage cert-manager resources inside your cluster no

change-ns View or change the current namespace via kubectl. no

cilium Easily interact with Cilium agents. no

cluster-group Exec commands across a group of contexts. no

config-cleanup Automatically clean up your kubeconfig no

cssh SSH into Kubernetes nodes no

ctx Switch between contexts in your kubeconfig no

custom-cols A "kubectl get" replacement with customizable c... no

datadog Manage the Datadog Operator no

debug Attach ephemeral debug container to running pod no

debug-shell Create pod with interactive kube-shell. no

deprecations Checks for deprecated objects in a cluster no

df-pv Show disk usage (like unix df) for persistent v... no

doctor Scans your cluster and reports anomalies. no

duck List custom resources with ducktype support no

eksporter Export resources and removes a pre-defined set ... no

emit-event Emit Kubernetes Events for the requested object no

evict-pod Evicts the given pod no

example Prints out example manifest YAMLs no

exec-as Like kubectl exec, but offers a `user` flag to ... no

exec-cronjob Run a CronJob immediately as Job no

fields Grep resources hierarchy by field name no

fleet Shows config and resources of a fleet of clusters no

fuzzy Fuzzy and partial string search for kubectl no

gadget Gadgets for debugging and introspecting apps no

get-all Like `kubectl get all` but _really_ everything no

gke-credentials Fetch credentials for GKE clusters no

gopass Imports secrets from gopass no

grep Filter Kubernetes resources by matching their n... no

gs Handle custom resources with Giant Swarm no

iexec Interactive selection tool for `kubectl exec` no

images Show container images used in the cluster. no

ingress-nginx Interact with ingress-nginx no

ipick A kubectl wrapper for interactive resource sele... no

konfig Merge, split or import kubeconfig files no

krew Package manager for kubectl plugins. yes

kubesec-scan Scan Kubernetes resources with kubesec.io. no

kudo Declaratively build, install, and run operators... no

kuttl Declaratively run and test operators no

kyverno Kyverno is a policy engine for kubernetes no

match-name Match names of pods and other API objects no

modify-secret modify secret with implicit base64 translations no

mtail Tail logs from multiple pods matching label sel... no

neat Remove clutter from Kubernetes manifests to mak... no

net-forward Proxy to arbitrary TCP services on a cluster ne... unavailable on darwin

node-admin List nodes and run privileged pod with chroot no

node-restart Restart cluster nodes sequentially and gracefully no

node-shell Spawn a root shell on a node via kubectl no

np-viewer Network Policies rules viewer no

ns Switch between Kubernetes namespaces no

oidc-login Log in to the OpenID Connect provider no

open-svc Open the Kubernetes URL(s) for the specified se... no

oulogin Login to a cluster via OpenUnison no

outdated Finds outdated container images running in a cl... no

passman Store kubeconfig credentials in keychains or pa... no

pod-dive Shows a pod's workload tree and info inside a node no

pod-logs Display a list of pods to get logs from no

pod-shell Display a list of pods to execute a shell in no

podevents Show events for pods no

popeye Scans your clusters for potential resource issues no

preflight Executes application preflight tests in a cluster no

profefe Gather and manage pprof profiles from running pods no

prompt Prompts for user confirmation when executing co... no

prune-unused Prune unused resources no

psp-util Manage Pod Security Policy(PSP) and the related... no

rbac-lookup Reverse lookup for RBAC no

rbac-view A tool to visualize your RBAC permissions. no

resource-capacity Provides an overview of resource requests, limi... no

resource-snapshot Prints a snapshot of nodes, pods and HPAs resou... no

restart Restarts a pod with the given name no

rm-standalone-pods Remove all pods without owner references no

rolesum Summarize RBAC roles for subjects no

roll Rolling restart of all persistent pods in a nam... no

schemahero Declarative database schema migrations via YAML no

score Kubernetes static code analysis. no

service-tree Status for ingresses, services, and their backends no

sick-pods Find and debug Pods that are "Not Ready" no

snap Delete half of the pods in a namespace or cluster no

sniff Start a remote packet capture on pods using tcp... no

sort-manifests Sort manifest files in a proper order by Kind no

split-yaml Split YAML output into one file per resource. no

spy pod debugging tool for kubernetes clusters with... no

sql Query the cluster via pseudo-SQL unavailable on darwin

ssh-jump A kubectl plugin to SSH into Kubernetes nodes u... no

sshd Run SSH server in a Pod no

ssm-secret Import/export secrets from/to AWS SSM param store no

starboard Toolkit for finding risks in kubernetes resources no

status Show status details of a given resource. no

sudo Run Kubernetes commands impersonated as group s... no

support-bundle Creates support bundles for off-cluster analysis no

tail Stream logs from multiple pods and containers u... no

tap Interactively proxy Kubernetes Services with ease no

tmux-exec An exec multiplexer using Tmux no

topology Explore region topology for nodes or pods no

trace bpftrace programs in a cluster no

tree Show a tree of object hierarchies through owner... no

unused-volumes List unused PVCs no

view-allocations List allocations per resources, nodes, pods. no

view-secret Decode Kubernetes secrets no

view-serviceaccount-kubeconfig Show a kubeconfig setting to access the apiserv... no

view-utilization Shows cluster cpu and memory utilization no

virt Control KubeVirt virtual machines using virtctl no

warp Sync and execute local files in Pod no

who-can Shows who has RBAC permissions to access Kubern... no

whoami Show the subject that's currently authenticated... no

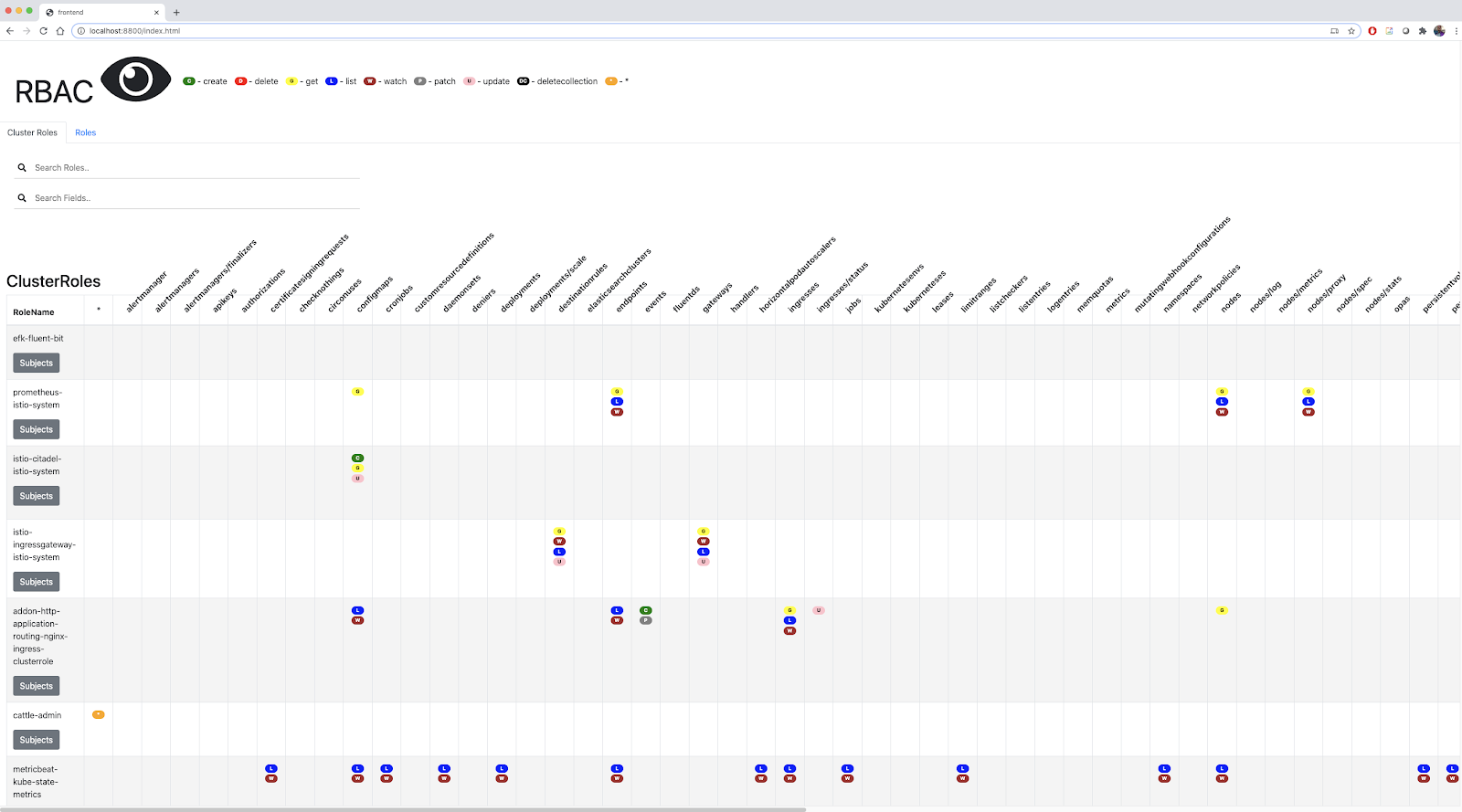

The one that caught my eye was rbac-view. It looked like there were actually a few krew plugins that would be quite helpful.
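With a listing this long, it can be easier to filter than to scroll. Here is a rough sketch that reads the search output on stdin and prints the names of plugins that mention a keyword. `plugins_matching` is a hypothetical helper name, and it assumes the first column is the plugin name and the first row is the header, as in the listing above.

```shell
# Sketch: print the names of plugins whose name or description mentions a keyword.
# Reads `kubectl krew search` style output on stdin; plugins_matching is a
# hypothetical helper name.
plugins_matching() {
  # Skip the header row, match case-insensitively, print the first column.
  awk -v kw="$1" 'NR > 1 && index(tolower($0), tolower(kw)) { print $1 }'
}

# Usage (requires krew):
#   kubectl krew search | plugins_matching rbac
```

`kubectl krew search` also accepts a keyword directly, so this is mostly useful when you want just the names for scripting.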

RBAC-View

rbac-view is “A tool to visualize your RBAC permissions.”

Installing

$ kubectl krew install rbac-view

Updated the local copy of plugin index.

Installing plugin: rbac-view

Installed plugin: rbac-view

\

| Use this plugin:

| kubectl rbac-view

| Documentation:

| https://github.com/jasonrichardsmith/rbac-view

| Caveats:

| \

| | Run "kubectl rbac-view" to open a browser with an html view of your permissions.

| /

/

WARNING: You installed plugin "rbac-view" from the krew-index plugin repository.

These plugins are not audited for security by the Krew maintainers.

Run them at your own risk.

When we launch it, we can get a local service on port 8800 that lets us see and search for roles.

$ kubectl rbac-view

INFO[0000] Getting K8s client

INFO[0000] serving RBAC View and http://localhost:8800

INFO[0027] Building full matrix for json

INFO[0027] Building Matrix for Roles

INFO[0027] Retrieving RoleBindings

INFO[0027] Building Matrix for ClusterRoles

INFO[0027] Retrieving ClusterRoleBindings

INFO[0028] Retrieving RoleBindings for Namespace azdo-agents

INFO[0028] Retrieving RoleBindings for Namespace kube-system

INFO[0028] Retrieving RoleBindings for Namespace default

INFO[0028] Retrieving RoleBindings for Namespace logging

INFO[0028] Retrieving RoleBindings for Namespace cattle-system

INFO[0028] Retrieving RoleBindings for Namespace istio-system

INFO[0028] Retrieving RoleBindings for Namespace kube-public

INFO[0028] Retrieved 0 RoleBindings for Namespace azdo-agents

INFO[0028] Retrieved 0 RoleBindings for Namespace kube-public

INFO[0028] Retrieved 0 RoleBindings for Namespace cattle-system

INFO[0028] Retrieved 0 RoleBindings for Namespace default

INFO[0028] Retrieved 2 RoleBindings for Namespace logging

...

That was for an older AKS cluster. Let’s look at a newer EKS cluster I have that has RBAC enabled.

For instance, I can look up a role, like the one used by ES, to verify it indeed doesn’t have elevated permissions.

However, we can use another plugin to look at a specific role.

RBAC Lookup

Rbac-lookup is used to “Easily find roles and cluster roles attached to any user, service account, or group name in your Kubernetes cluster.”

$ kubectl krew install rbac-lookup

Updated the local copy of plugin index.

Installing plugin: rbac-lookup

Installed plugin: rbac-lookup

\

| Use this plugin:

| kubectl rbac-lookup

| Documentation:

| https://github.com/reactiveops/rbac-lookup

/

WARNING: You installed plugin "rbac-lookup" from the krew-index plugin repository.

These plugins are not audited for security by the Krew maintainers.

Run them at your own risk.

We can look at the GH page for usage.

However, I found it didn’t include everything. For instance, I wanted to see the bindings on the elasticsearch-master role but saw none.

$ kubectl rbac-lookup ro --output wide --kind serviceaccount

SUBJECT SCOPE ROLE SOURCE

ServiceAccount/attachdetach-controller cluster-wide ClusterRole/system:controller:attachdetach-controller ClusterRoleBinding/system:controller:attachdetach-controller

ServiceAccount/certificate-controller cluster-wide ClusterRole/system:controller:certificate-controller ClusterRoleBinding/system:controller:certificate-controller

ServiceAccount/cloud-provider kube-system Role/system:controller:cloud-provider RoleBinding/system:controller:cloud-provider

ServiceAccount/clusterrole-aggregation-controller cluster-wide ClusterRole/system:controller:clusterrole-aggregation-controller ClusterRoleBinding/system:controller:clusterrole-aggregation-controller

ServiceAccount/cronjob-controller cluster-wide ClusterRole/system:controller:cronjob-controller ClusterRoleBinding/system:controller:cronjob-controller

ServiceAccount/daemon-set-controller cluster-wide ClusterRole/system:controller:daemon-set-controller ClusterRoleBinding/system:controller:daemon-set-controller

ServiceAccount/deployment-controller cluster-wide ClusterRole/system:controller:deployment-controller ClusterRoleBinding/system:controller:deployment-controller

ServiceAccount/disruption-controller cluster-wide ClusterRole/system:controller:disruption-controller ClusterRoleBinding/system:controller:disruption-controller

ServiceAccount/endpoint-controller cluster-wide ClusterRole/system:controller:endpoint-controller ClusterRoleBinding/system:controller:endpoint-controller

ServiceAccount/expand-controller cluster-wide ClusterRole/system:controller:expand-controller ClusterRoleBinding/system:controller:expand-controller

ServiceAccount/job-controller cluster-wide ClusterRole/system:controller:job-controller ClusterRoleBinding/system:controller:job-controller

ServiceAccount/kube-controller-manager kube-system Role/system::leader-locking-kube-controller-manager RoleBinding/system::leader-locking-kube-controller-manager

ServiceAccount/kube-proxy cluster-wide ClusterRole/system:node-proxier ClusterRoleBinding/eks:kube-proxy

ServiceAccount/namespace-controller cluster-wide ClusterRole/system:controller:namespace-controller ClusterRoleBinding/system:controller:namespace-controller

ServiceAccount/node-controller cluster-wide ClusterRole/system:controller:node-controller ClusterRoleBinding/system:controller:node-controller

ServiceAccount/pv-protection-controller cluster-wide ClusterRole/system:controller:pv-protection-controller ClusterRoleBinding/system:controller:pv-protection-controller

ServiceAccount/pvc-protection-controller cluster-wide ClusterRole/system:controller:pvc-protection-controller ClusterRoleBinding/system:controller:pvc-protection-controller

ServiceAccount/replicaset-controller cluster-wide ClusterRole/system:controller:replicaset-controller ClusterRoleBinding/system:controller:replicaset-controller

ServiceAccount/replication-controller cluster-wide ClusterRole/system:controller:replication-controller ClusterRoleBinding/system:controller:replication-controller

ServiceAccount/resourcequota-controller cluster-wide ClusterRole/system:controller:resourcequota-controller ClusterRoleBinding/system:controller:resourcequota-controller

ServiceAccount/route-controller cluster-wide ClusterRole/system:controller:route-controller ClusterRoleBinding/system:controller:route-controller

ServiceAccount/service-account-controller cluster-wide ClusterRole/system:controller:service-account-controller ClusterRoleBinding/system:controller:service-account-controller

ServiceAccount/service-controller cluster-wide ClusterRole/system:controller:service-controller ClusterRoleBinding/system:controller:service-controller

ServiceAccount/statefulset-controller cluster-wide ClusterRole/system:controller:statefulset-controller ClusterRoleBinding/system:controller:statefulset-controller

ServiceAccount/ttl-controller cluster-wide ClusterRole/system:controller:ttl-controller ClusterRoleBinding/system:controller:ttl-controller

This could just mean that nothing is bound to that role yet. However, I verified that there is indeed a rolebinding for elasticsearch-master in logging:

$ kubectl get rolebinding elasticsearch-master -n logging -o yaml | tail -n8

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: Role

name: elasticsearch-master

subjects:

- kind: ServiceAccount

name: elasticsearch-master

namespace: logging
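Counting lines with `tail -n8` works until a binding gains another subject. As a sketch, the same two sections can be pulled out by key name instead. `binding_summary` is a hypothetical helper of mine, and it assumes the usual `kubectl -o yaml` layout where `roleRef:` and `subjects:` are top-level keys and nested lines are indented.

```shell
# Sketch: print a binding's roleRef and subjects sections from YAML on stdin,
# without depending on how many lines the manifest has.
# binding_summary is a hypothetical helper name.
binding_summary() {
  awk '
    # A line that starts in column one (and is not a list item or comment)
    # opens a new top-level key; keep printing only for the two we want.
    /^[^ \t#-]/ { keep = ($0 ~ /^(roleRef|subjects):/) }
    keep
  '
}

# Usage (requires cluster access):
#   kubectl get rolebinding elasticsearch-master -n logging -o yaml | binding_summary
```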

Let’s also look at Access-Matrix.

Access-Matrix

Install access-matrix:

$ kubectl krew install access-matrix

Updated the local copy of plugin index.

Installing plugin: access-matrix

Installed plugin: access-matrix

\

| Use this plugin:

| kubectl access-matrix

| Documentation:

| https://github.com/corneliusweig/rakkess

| Caveats:

| \

| | Usage:

| | kubectl access-matrix

| | kubectl access-matrix for pods

| /

/

WARNING: You installed plugin "access-matrix" from the krew-index plugin repository.

These plugins are not audited for security by the Krew maintainers.

Run them at your own risk.

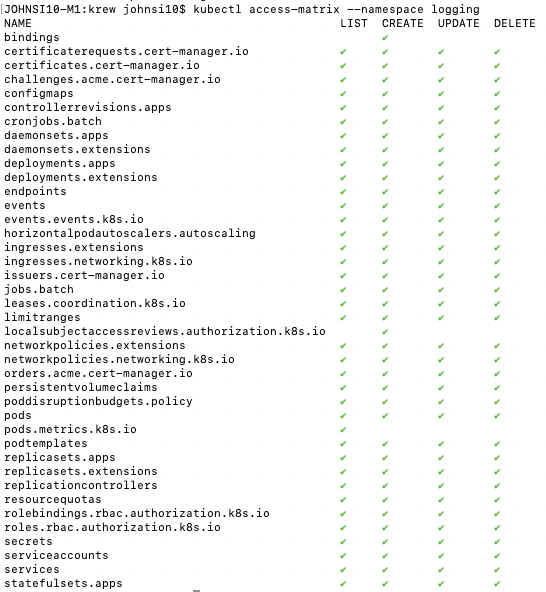

Here we can see the level of access _your user_ has on namespaces. For instance, I have very expansive powers on the logging namespace:

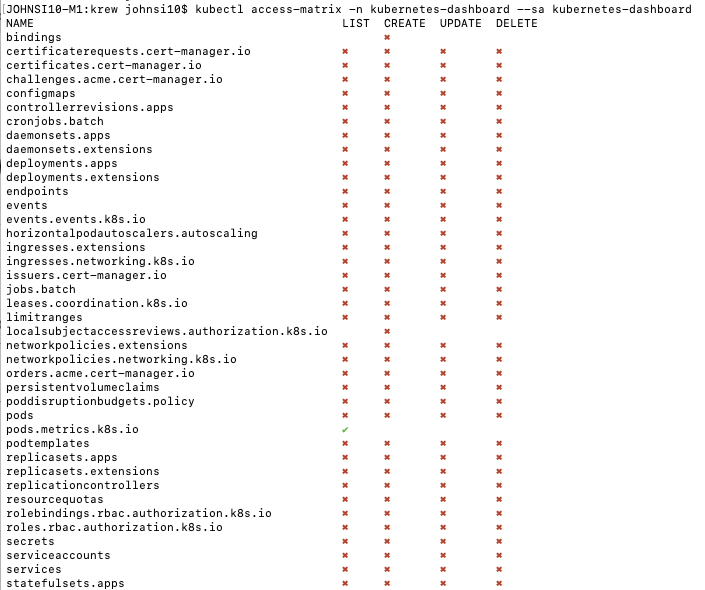

But then we can verify that another SA in logging does not (which matches what we saw from rbac-view):

Let’s look at an example of something that does have privs.

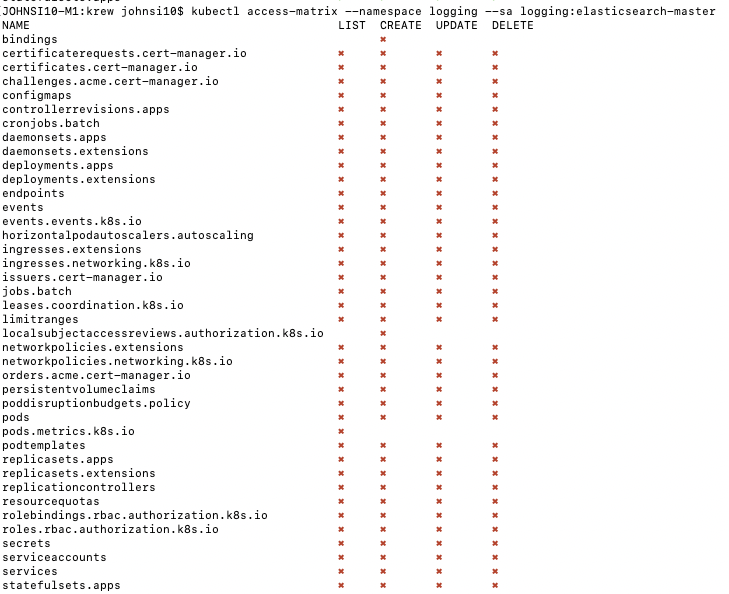

Here we see the ClusterRole of cert-manager-cainjector has a few privs:

Clicking subjects shows the binding to “ServiceAccount - cert-manager-cainjector”

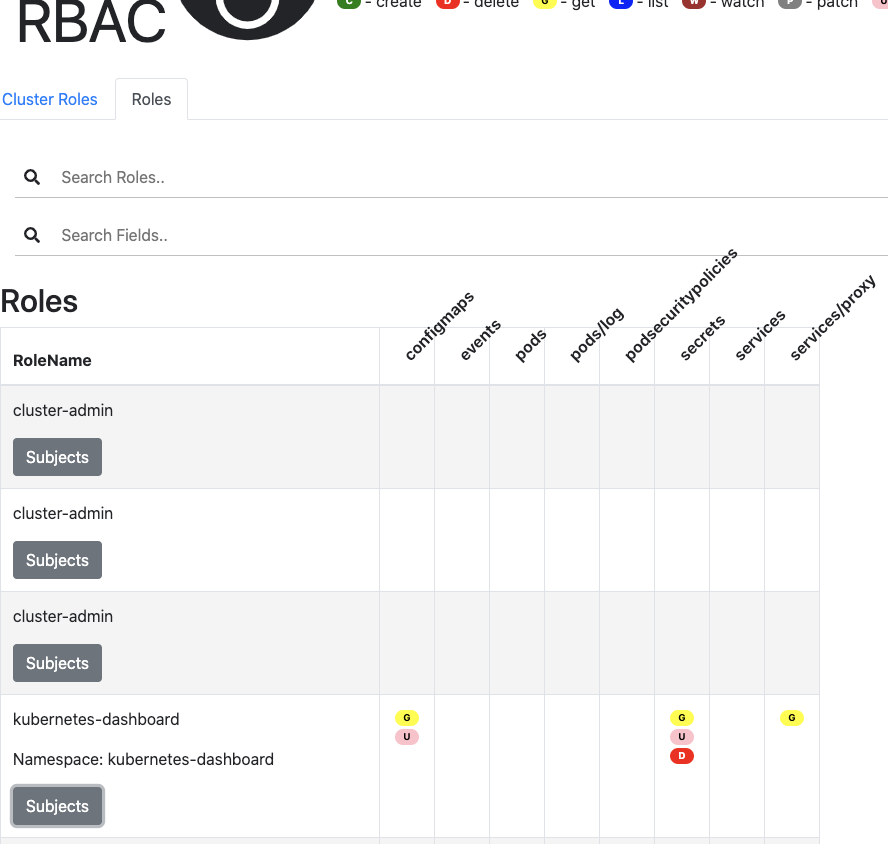

Another example might be the kubernetes-dashboard Role which ties to “ServiceAccount - kubernetes-dashboard”

The issue I see is that looking this up indicates kubernetes-dashboard has no secrets power in its namespace:

However, I can verify a rolebinding:

$ kubectl get rolebinding -n kubernetes-dashboard -o yaml | tail -n 12 | head -n 8

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: Role

name: kubernetes-dashboard

subjects:

- kind: ServiceAccount

name: kubernetes-dashboard

namespace: kubernetes-dashboard

And that role shows secrets powers:

$ kubectl get role kubernetes-dashboard -n kubernetes-dashboard -o yaml | head -n 27 | tail -n 6

resources:

- secrets

verbs:

- get

- update

- delete

Which indicates the rbac-view is right. Let’s circle back and try with AKS. Sometimes I find EKS is a bit wonky.

Testing with AKS

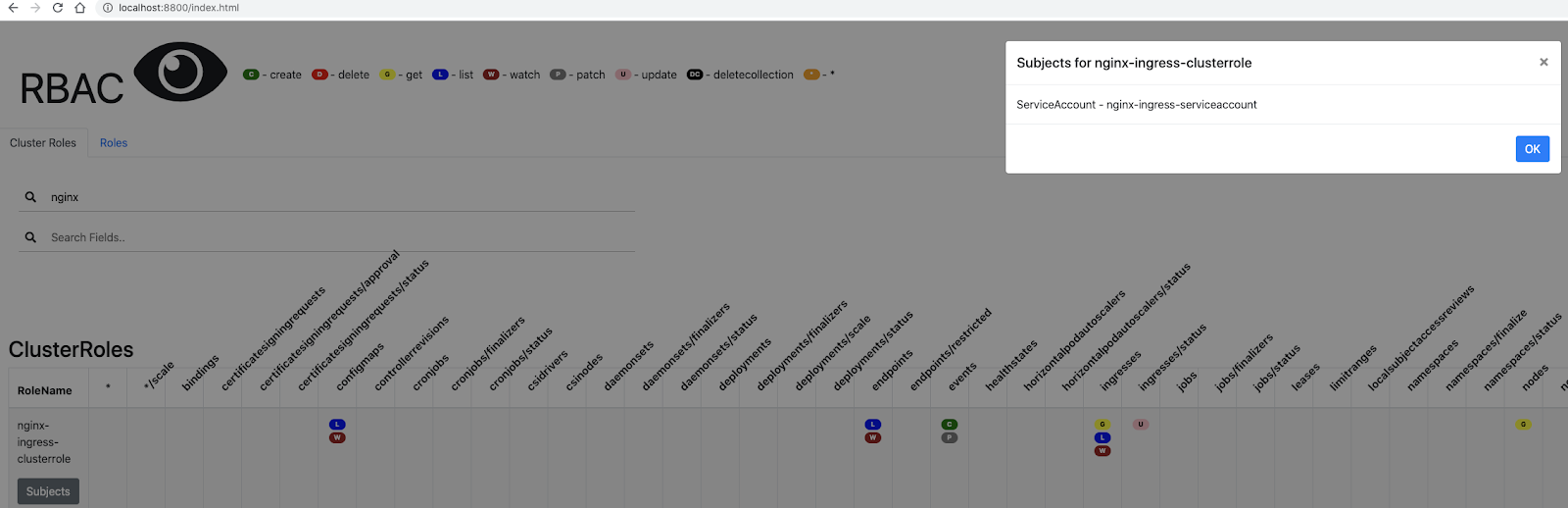

Let’s check out Nginx in our cluster:

$ kubectl rbac-view

INFO[0000] Getting K8s client

INFO[0000] serving RBAC View and http://localhost:8800

INFO[0005] Building full matrix for json

INFO[0005] Building Matrix for Roles

INFO[0005] Retrieving RoleBindings

INFO[0005] Building Matrix for ClusterRoles

INFO[0005] Retrieving ClusterRoleBindings

INFO[0006] Retrieving RoleBindings for Namespace logging

INFO[0006] Retrieving RoleBindings for Namespace default

INFO[0006] Retrieving RoleBindings for Namespace kube-system

INFO[0006] Retrieving RoleBindings for Namespace automation

…

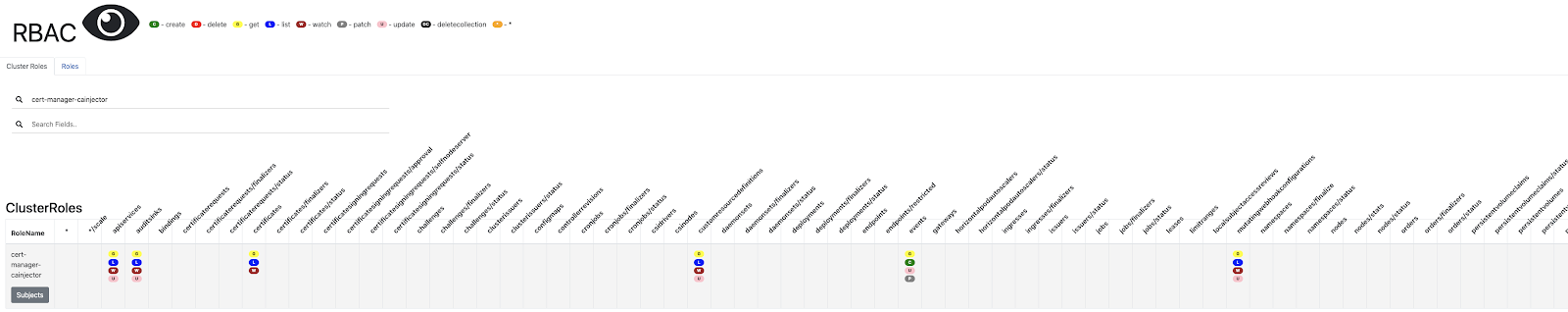

Here we see the cluster role of nginx-ingress-clusterrole which is bound to nginx-ingress-serviceaccount.

Let’s determine to what this binding should have access:

$ kubectl get clusterrolebinding nginx-ingress-clusterrole-nisa-binding -n nginx-ingress-clusterrole -o yaml | tail -n 8

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: nginx-ingress-clusterrole

subjects:

- kind: ServiceAccount

name: nginx-ingress-serviceaccount

namespace: shareddev

And we can see the verbs it can use:

$ kubectl get clusterrole nginx-ingress-clusterrole -o yaml | tail -n 49

rules:

- apiGroups:

- ""

resources:

- configmaps

- endpoints

- nodes

- pods

- secrets

verbs:

- list

- watch

- apiGroups:

- ""

resources:

- nodes

verbs:

- get

- apiGroups:

- ""

resources:

- services

verbs:

- get

- list

- watch

- apiGroups:

- ""

resources:

- events

verbs:

- create

- patch

- apiGroups:

- extensions

- networking.k8s.io

resources:

- ingresses

verbs:

- get

- list

- watch

- apiGroups:

- extensions

- networking.k8s.io

resources:

- ingresses/status

verbs:

- update

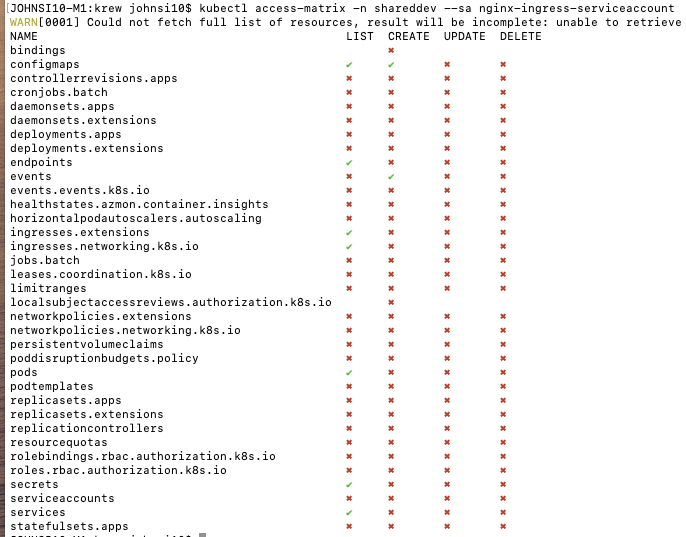

In the shareddev namespace, we should see evidence that the service account can act on the above verbs and resources.

This looks right:

We can also look up bindings with rbac-lookup:

$ kubectl rbac-lookup nginx-ingress-serviceaccount

SUBJECT SCOPE ROLE

nginx-ingress-serviceaccount shareddev Role/nginx-ingress-role

nginx-ingress-serviceaccount cluster-wide ClusterRole/nginx-ingress-clusterrole

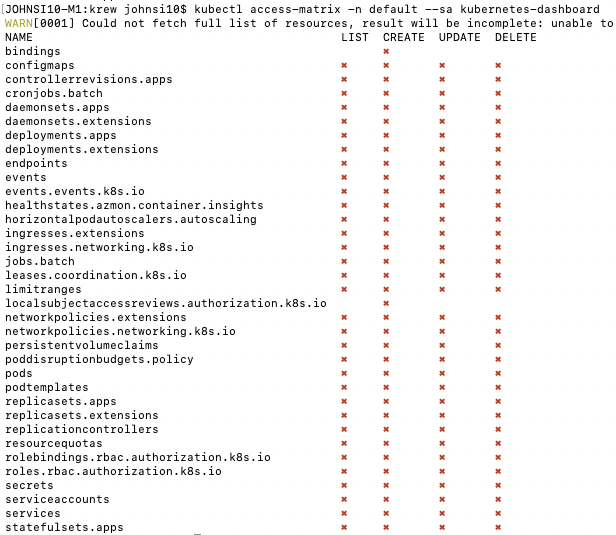

And as a sanity check, we can verify no access on the default namespace:

$ kubectl access-matrix -n default --sa nginx-ingress-serviceaccount -o ascii-table

WARN[0001] Could not fetch full list of resources, result will be incomplete: unable to retrieve the complete list of server APIs: metrics.k8s.io/v1beta1: the server is currently unable to handle the request

NAME LIST CREATE UPDATE DELETE

bindings n/a no n/a n/a

configmaps no no no no

controllerrevisions.apps no no no no

cronjobs.batch no no no no

daemonsets.apps no no no no

daemonsets.extensions no no no no

deployments.apps no no no no

deployments.extensions no no no no

endpoints no no no no

events no no no no

events.events.k8s.io no no no no

healthstates.azmon.container.insights no no no no

horizontalpodautoscalers.autoscaling no no no no

ingresses.extensions no no no no

ingresses.networking.k8s.io no no no no

jobs.batch no no no no

leases.coordination.k8s.io no no no no

limitranges no no no no

localsubjectaccessreviews.authorization.k8s.io n/a no n/a n/a

networkpolicies.extensions no no no no

networkpolicies.networking.k8s.io no no no no

persistentvolumeclaims no no no no

poddisruptionbudgets.policy no no no no

pods no no no no

podtemplates no no no no

replicasets.apps no no no no

replicasets.extensions no no no no

replicationcontrollers no no no no

resourcequotas no no no no

rolebindings.rbac.authorization.k8s.io no no no no

roles.rbac.authorization.k8s.io no no no no

secrets no no no no

serviceaccounts no no no no

services no no no no

statefulsets.apps no no no no
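Tables like this get long, and most rows are all "no". A small sketch that keeps only the header and the rows granting at least one verb might help when scanning. `granted_rows` is a hypothetical helper name, and it assumes the `-o ascii-table` layout shown above, with literal `yes` and `no` cells.

```shell
# Sketch: keep only the rows of an access-matrix ASCII table that grant at
# least one verb. granted_rows is a hypothetical helper name.
granted_rows() {
  awk '
    $1 == "NAME" { print; next }    # keep the header row
    /^WARN/      { next }           # skip log lines if stderr was merged in
    { for (i = 2; i <= NF; i++) if ($i == "yes") { print; next } }
  '
}

# Usage (requires the access-matrix plugin):
#   kubectl access-matrix -n default --sa nginx-ingress-serviceaccount -o ascii-table | granted_rows
```

If only the header comes back, nothing was granted in that namespace.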

I also see the provided dashboard as the role kubernetes-dashboard-minimal in kube-system, bound to the SA “kubernetes-dashboard”.

And here we can see that kubernetes-dashboard indeed has a lot of power in kube-system, but none in, say, default:

$ kubectl access-matrix -n kube-system --sa kubernetes-dashboard -o ascii-table

WARN[0001] Could not fetch full list of resources, result will be incomplete: unable to retrieve the complete list of server APIs: metrics.k8s.io/v1beta1: the server is currently unable to handle the request

NAME LIST CREATE UPDATE DELETE

bindings n/a yes n/a n/a

configmaps yes yes yes yes

controllerrevisions.apps yes yes yes yes

cronjobs.batch yes yes yes yes

daemonsets.apps yes yes yes yes

daemonsets.extensions yes yes yes yes

deployments.apps yes yes yes yes

deployments.extensions yes yes yes yes

endpoints yes yes yes yes

events yes yes yes yes

events.events.k8s.io yes yes yes yes

healthstates.azmon.container.insights yes yes yes yes

horizontalpodautoscalers.autoscaling yes yes yes yes

ingresses.extensions yes yes yes yes

ingresses.networking.k8s.io yes yes yes yes

jobs.batch yes yes yes yes

leases.coordination.k8s.io yes yes yes yes

limitranges yes yes yes yes

localsubjectaccessreviews.authorization.k8s.io n/a yes n/a n/a

networkpolicies.extensions yes yes yes yes

networkpolicies.networking.k8s.io yes yes yes yes

persistentvolumeclaims yes yes yes yes

poddisruptionbudgets.policy yes yes yes yes

pods yes yes yes yes

podtemplates yes yes yes yes

replicasets.apps yes yes yes yes

replicasets.extensions yes yes yes yes

replicationcontrollers yes yes yes yes

resourcequotas yes yes yes yes

rolebindings.rbac.authorization.k8s.io yes yes yes yes

roles.rbac.authorization.k8s.io yes yes yes yes

secrets yes yes yes yes

serviceaccounts yes yes yes yes

services yes yes yes yes

statefulsets.apps yes yes yes yes

But checking the default namespace shows none:

$ kubectl access-matrix -n default --sa kubernetes-dashboard -o ascii-table

WARN[0001] Could not fetch full list of resources, result will be incomplete: unable to retrieve the complete list of server APIs: metrics.k8s.io/v1beta1: the server is currently unable to handle the request

NAME LIST CREATE UPDATE DELETE

bindings n/a no n/a n/a

configmaps no no no no

controllerrevisions.apps no no no no

cronjobs.batch no no no no

daemonsets.apps no no no no

daemonsets.extensions no no no no

deployments.apps no no no no

deployments.extensions no no no no

endpoints no no no no

events no no no no

events.events.k8s.io no no no no

healthstates.azmon.container.insights no no no no

horizontalpodautoscalers.autoscaling no no no no

ingresses.extensions no no no no

ingresses.networking.k8s.io no no no no

jobs.batch no no no no

leases.coordination.k8s.io no no no no

limitranges no no no no

localsubjectaccessreviews.authorization.k8s.io n/a no n/a n/a

networkpolicies.extensions no no no no

networkpolicies.networking.k8s.io no no no no

persistentvolumeclaims no no no no

poddisruptionbudgets.policy no no no no

pods no no no no

podtemplates no no no no

replicasets.apps no no no no

replicasets.extensions no no no no

replicationcontrollers no no no no

resourcequotas no no no no

rolebindings.rbac.authorization.k8s.io no no no no

roles.rbac.authorization.k8s.io no no no no

secrets no no no no

serviceaccounts no no no no

services no no no no

statefulsets.apps no no no no

Which graphically:

From our rbac-lookup, we actually see that the k8s dashboard is bound to both a role and clusterrole which could cause confusion:

$ kubectl rbac-lookup kubernetes-dashboard

SUBJECT SCOPE ROLE

kubernetes-dashboard kube-system Role/kubernetes-dashboard-minimal

kubernetes-dashboard cluster-wide ClusterRole/cluster-admin

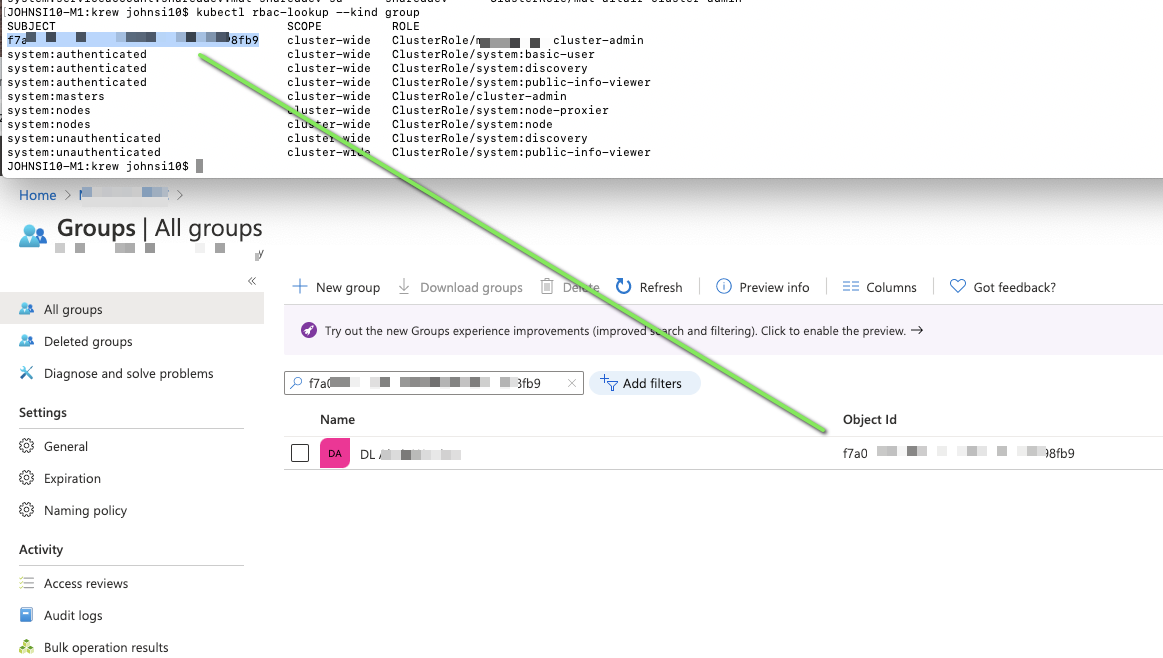

Actually, I should point out that because we are in one of my preferred cloud providers here, we have an RBAC enabled cluster that allows federation into AAD.

This means we can look up groups with --kind group and see the OID of the group (which we can look up in Azure Active Directory):

This is also confirmed in the rbac-view app:

More Clouds! LKE

Let’s use another one of my favourite providers, Linode, and see how well the plugins work there.

If your linode-cli is out of date, you can use pip3 to upgrade:

$ pip3 install linode-cli --upgrade

Collecting linode-cli

My token had expired, so you might need to create a PAT in the settings page.

You can add it here:

$ linode-cli configure

Welcome to the Linode CLI. This will walk you through some

initial setup.

First, we need a Personal Access Token. To get one, please visit

https://cloud.linode.com/profile/tokens and click

"Add a Personal Access Token". The CLI needs access to everything

on your account to work correctly.

Personal Access Token: ....

Here I see no clusters:

$ linode-cli lke clusters-list

┌────┬───────┬────────┐

│ id │ label │ region │

└────┴───────┴────────┘

LKE, now that it’s released, is really different from what I remember of the LKE Alpha. I’ll definitely need to revisit later. However, in summary:

First, get K8s versions

$ linode-cli lke versions-list

┌──────┐

│ id │

├──────┤

│ 1.17 │

│ 1.15 │

│ 1.16 │

└──────┘

Then we can spin up a cluster:

$ linode-cli lke cluster-create --node_pools.type g6-standard-2 --node_pools.count 2 --k8s_version 1.16 --label myNewCluster

┌──────┬──────────────┬────────────┐

│ id │ label │ region │

├──────┼──────────────┼────────────┤

│ 8323 │ myNewCluster │ us-central │

└──────┴──────────────┴────────────┘

But indeed, the kubeconfig view is just as wonky as before. I reviewed my old blog post and used similar commands to get the config:

$ linode-cli lke kubeconfig-view 8323 | tail -n2 | head -n1 | sed 's/.\{8\}$//' | sed 's/^.\{8\}//' | base64 --decode > ~/.kube/config

$ kubectl get ns

NAME STATUS AGE

default Active 4m31s

kube-node-lease Active 4m32s

kube-public Active 4m32s

kube-system Active 4m32s
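The tail/head/sed pipeline above depends on the exact table layout and column padding. As a rough alternative sketch, the base64 payload can be picked out by pattern instead. `extract_kubeconfig` is a hypothetical helper of mine, and it assumes the kubeconfig is the only run of 16 or more base64 characters in the output and that it sits on a single line.

```shell
# Sketch: pull the base64 kubeconfig out of the table on stdin and decode it.
# extract_kubeconfig is a hypothetical helper; it assumes the payload is the
# only run of 16 or more base64 characters in the output.
extract_kubeconfig() {
  grep -oE '[A-Za-z0-9+/]{16,}={0,2}' | head -n 1 | base64 --decode
}

# Usage (requires linode-cli; 8323 is the cluster id from above):
#   linode-cli lke kubeconfig-view 8323 | extract_kubeconfig > ~/.kube/lke-config
#   export KUBECONFIG=~/.kube/lke-config
```

Writing to a separate file and pointing KUBECONFIG at it avoids clobbering an existing ~/.kube/config, which the redirect in my command above would do.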

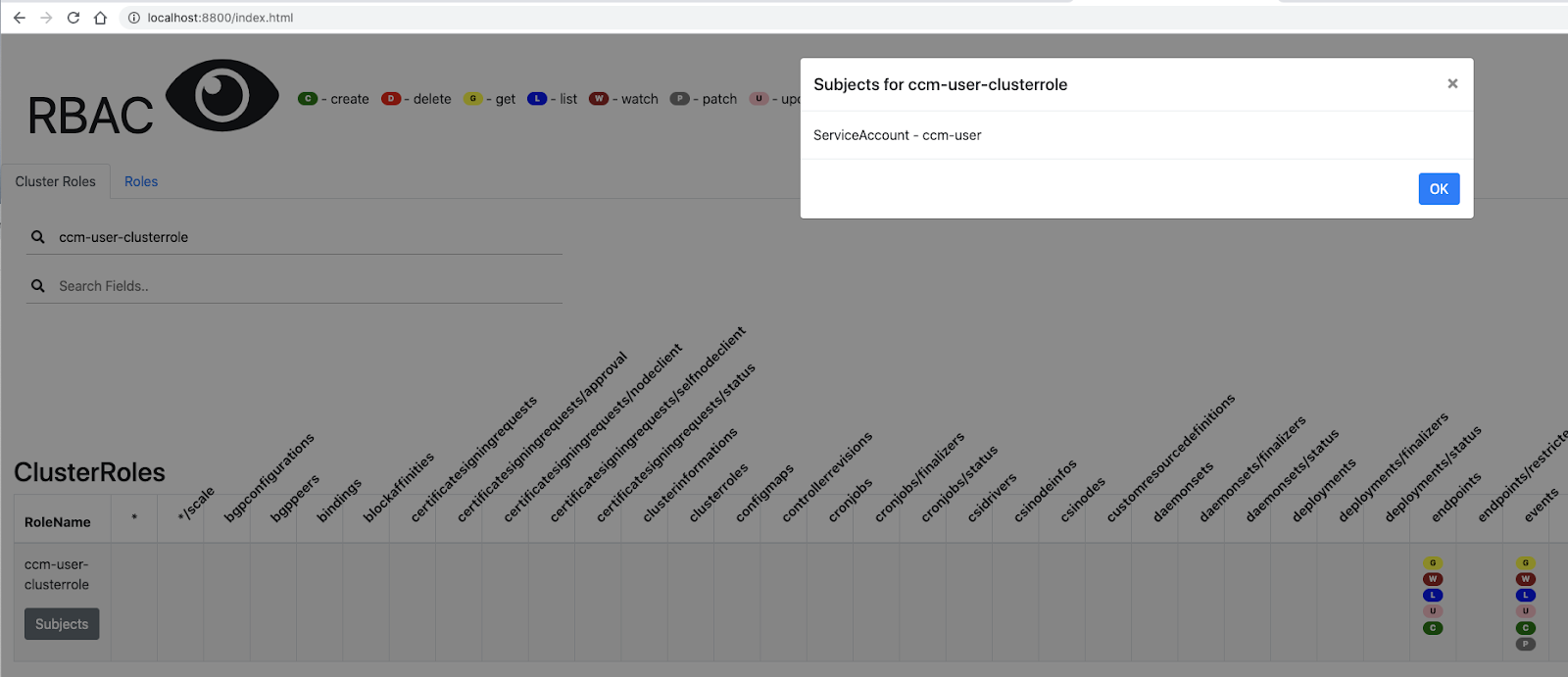

In a fresh cluster, there isn’t much to see but we can check the default roles:

Here we see a service account “ccm-user”.

Let’s now use what we learned to figure out what that sa can see.

We can verify the bindings are to the clusterwide ccm-user-clusterrole:

$ kubectl rbac-lookup ccm-user

SUBJECT SCOPE ROLE

ccm-user cluster-wide ClusterRole/ccm-user-clusterrole

That role is pretty expansive:

$ kubectl get clusterrole ccm-user-clusterrole -o yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

annotations:

kubectl.kubernetes.io/last-applied-configuration: |

{"apiVersion":"rbac.authorization.k8s.io/v1","kind":"ClusterRole","metadata":{"annotations":{"lke.linode.com/caplke-version":"v1.16.13-001"},"name":"ccm-user-clusterrole"},"rules":[{"apiGroups":[""],"resources":["endpoints"],"verbs":["get","watch","list","update","create"]},{"apiGroups":[""],"resources":["nodes"],"verbs":["get","watch","list","update","delete"]},{"apiGroups":[""],"resources":["nodes/status"],"verbs":["get","watch","list","update","delete","patch"]},{"apiGroups":[""],"resources":["events"],"verbs":["get","watch","list","update","create","patch"]},{"apiGroups":[""],"resources":["persistentvolumes"],"verbs":["get","watch","list","update"]},{"apiGroups":[""],"resources":["secrets"],"verbs":["get"]},{"apiGroups":[""],"resources":["services"],"verbs":["get","watch","list"]},{"apiGroups":[""],"resources":["services/status"],"verbs":["get","watch","list","update"]}]}

lke.linode.com/caplke-version: v1.16.13-001

creationTimestamp: "2020-07-29T12:57:27Z"

name: ccm-user-clusterrole

resourceVersion: "354"

selfLink: /apis/rbac.authorization.k8s.io/v1/clusterroles/ccm-user-clusterrole

uid: ff2c6596-e0fb-438d-9196-743899951500

rules:

- apiGroups:

- ""

resources:

- endpoints

verbs:

- get

- watch

- list

- update

- create

- apiGroups:

- ""

resources:

- nodes

verbs:

- get

- watch

- list

- update

- delete

- apiGroups:

- ""

resources:

- nodes/status

verbs:

- get

- watch

- list

- update

- delete

- patch

- apiGroups:

- ""

resources:

- events

verbs:

- get

- watch

- list

- update

- create

- patch

- apiGroups:

- ""

resources:

- persistentvolumes

verbs:

- get

- watch

- list

- update

- apiGroups:

- ""

resources:

- secrets

verbs:

- get

- apiGroups:

- ""

resources:

- services

verbs:

- get

- watch

- list

- apiGroups:

- ""

resources:

- services/status

verbs:

- get

- watch

- list

- update

And if we look up the binding, we can see its subject is the ccm-user service account in kube-system:

$ kubectl get clusterrolebindings ccm-user-clusterrolebinding -o yaml | tail -n 8

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: ccm-user-clusterrole

subjects:

- kind: ServiceAccount

name: ccm-user

namespace: kube-system
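A service account is identified by its namespace plus its name, so it can be worth cross-checking with kubectl's built-in `auth can-i` while impersonating the fully qualified account. This is a minimal sketch; `sa_user` and `sa_can` are hypothetical helper names of mine, and the call needs impersonation rights on the cluster.

```shell
# Sketch: build the impersonation username for a service account and ask
# kubectl whether it may perform an action. Both helper names are hypothetical.
sa_user() {
  # $1 = namespace the service account lives in, $2 = service account name
  printf 'system:serviceaccount:%s:%s\n' "$1" "$2"
}

sa_can() {
  # $1 = SA namespace, $2 = SA name, $3 = namespace to check, rest = verb and resource
  local user target
  user="$(sa_user "$1" "$2")"
  target="$3"
  shift 3
  kubectl auth can-i "$@" --as "$user" -n "$target"
}

# Usage (requires cluster access and impersonation rights):
#   sa_can kube-system ccm-user default list endpoints
```

Keeping the account's own namespace separate from the namespace being checked makes it explicit which identity is being tested.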

So verifying against _default_ shows, as we expect, no permissions

$ kubectl access-matrix -n default --sa ccm-user -o ascii-table

NAME LIST CREATE UPDATE DELETE

bindings n/a no n/a n/a

configmaps no no no no

controllerrevisions.apps no no no no

cronjobs.batch no no no no

daemonsets.apps no no no no

deployments.apps no no no no

endpoints no no no no

events no no no no

events.events.k8s.io no no no no

horizontalpodautoscalers.autoscaling no no no no

ingresses.extensions no no no no

ingresses.networking.k8s.io no no no no

jobs.batch no no no no

leases.coordination.k8s.io no no no no

limitranges no no no no

localsubjectaccessreviews.authorization.k8s.io n/a no n/a n/a

networkpolicies.crd.projectcalico.org no no no no

networkpolicies.networking.k8s.io no no no no

networksets.crd.projectcalico.org no no no no

persistentvolumeclaims no no no no

poddisruptionbudgets.policy no no no no

pods no no no no

podtemplates no no no no

replicasets.apps no no no no

replicationcontrollers no no no no

resourcequotas no no no no

rolebindings.rbac.authorization.k8s.io no no no no

roles.rbac.authorization.k8s.io no no no no

secrets no no no no

serviceaccounts no no no no

services no no no no

statefulsets.apps no no no no

But against kube-system, we should see access to at least endpoints:

$ kubectl access-matrix -n kube-system --sa ccm-user -o ascii-table

NAME LIST CREATE UPDATE DELETE

bindings n/a no n/a n/a

configmaps no no no no

controllerrevisions.apps no no no no

cronjobs.batch no no no no

daemonsets.apps no no no no

deployments.apps no no no no

endpoints yes yes yes no

events yes yes yes no

events.events.k8s.io no no no no

horizontalpodautoscalers.autoscaling no no no no

ingresses.extensions no no no no

ingresses.networking.k8s.io no no no no

jobs.batch no no no no

leases.coordination.k8s.io no no no no

limitranges no no no no

localsubjectaccessreviews.authorization.k8s.io n/a no n/a n/a

networkpolicies.crd.projectcalico.org no no no no

networkpolicies.networking.k8s.io no no no no

networksets.crd.projectcalico.org no no no no

persistentvolumeclaims no no no no

poddisruptionbudgets.policy no no no no

pods no no no no

podtemplates no no no no

replicasets.apps no no no no

replicationcontrollers no no no no

resourcequotas no no no no

rolebindings.rbac.authorization.k8s.io no no no no

roles.rbac.authorization.k8s.io no no no no

secrets no no no no

serviceaccounts no no no no

services yes no no no

statefulsets.apps no no no no
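With this many rows, the handful of "yes" entries are easy to miss. A small sketch, assuming the ascii-table output keeps the whitespace-separated layout shown above, filters the matrix down to the resources where at least one verb is allowed. The sample rows are copied from the kube-system output so the snippet runs without a cluster.

```shell
# Sample rows copied from the kube-system matrix above
matrix='NAME LIST CREATE UPDATE DELETE
configmaps no no no no
endpoints yes yes yes no
events yes yes yes no
secrets no no no no
services yes no no no'

# Skip the header, then print the resource name as soon as any verb
# column (fields 2..NF) is exactly "yes"
granted=$(printf '%s\n' "$matrix" |
  awk 'NR > 1 { for (i = 2; i <= NF; i++) if ($i == "yes") { print $1; next } }')
echo "$granted"
```

Against a live cluster, the same awk filter could be placed after a pipe from the kubectl access-matrix command used above instead of the sample variable.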

Cleanup:

$ linode-cli lke clusters-list

┌──────┬──────────────┬────────────┐
│ id   │ label        │ region     │
├──────┼──────────────┼────────────┤
│ 8323 │ myNewCluster │ us-central │
└──────┴──────────────┴────────────┘

$ linode-cli lke cluster-delete 8323

I can confirm this cleaned up everything (I used to sometimes have to clean up NodeBalancers after the fact; that seems to have been fixed post-alpha).

Summary

We only scratched the surface of plugins. For instance, there is “images”, which makes it really easy to see the images used in the whole cluster or just a namespace:

$ kubectl images -n logging

[Summary]: 1 namespaces, 10 pods, 15 containers and 5 different images

+------------------------------------------------+-------------------------+-----------------------------------------------------+

| PodName | ContainerName | ContainerImage |

+------------------------------------------------+-------------------------+-----------------------------------------------------+

| elasticsearch-master-0 | elasticsearch | docker.elastic.co/elasticsearch/elasticsearch:7.5.1 |

+ +-------------------------+ +

| | (init) configure-sysctl | |

+------------------------------------------------+-------------------------+ +

| elasticsearch-master-1 | elasticsearch | |

+ +-------------------------+ +

| | (init) configure-sysctl | |

+------------------------------------------------+-------------------------+ +

| elasticsearch-master-2 | elasticsearch | |

+ +-------------------------+ +

| | (init) configure-sysctl | |

+------------------------------------------------+-------------------------+ +

| elasticsearch-master-3 | elasticsearch | |

+ +-------------------------+ +

| | (init) configure-sysctl | |

+------------------------------------------------+-------------------------+ +

| elasticsearch-master-4 | elasticsearch | |

+ +-------------------------+ +

| | (init) configure-sysctl | |

+------------------------------------------------+-------------------------+-----------------------------------------------------+

| kibana-kibana-f874ccc6-5hmxm | kibana | docker.elastic.co/kibana/kibana:7.5.1 |

+------------------------------------------------+-------------------------+-----------------------------------------------------+

| logstash-logstash-0 | logstash | docker.elastic.co/logstash/logstash:7.5.1 |

+------------------------------------------------+-------------------------+-----------------------------------------------------+

| metricbeat-kube-state-metrics-69487cddcf-lv57b | kube-state-metrics | quay.io/coreos/kube-state-metrics:v1.8.0 |

+------------------------------------------------+-------------------------+-----------------------------------------------------+

| metricbeat-metricbeat-metrics-6954ff987f-hdt26 | metricbeat | docker.elastic.co/beats/metricbeat:7.5.1 |

+------------------------------------------------+ + +

| metricbeat-metricbeat-metrics-7b85664dfb-4kqkv | | |

+------------------------------------------------+-------------------------+-----------------------------------------------------+

We can now see the images used by default in LKE’s 1.16 cluster (likely matches k3s):

$ kubectl images --all-namespaces

[Summary]: 1 namespaces, 10 pods, 23 containers and 11 different images

+------------------------------------------+-------------------------+----------------------------------------------+

| PodName | ContainerName | ContainerImage |

+------------------------------------------+-------------------------+----------------------------------------------+

| calico-kube-controllers-77c869668f-6n7pj | calico-kube-controllers | calico/kube-controllers:v3.9.2 |

+------------------------------------------+-------------------------+----------------------------------------------+

| calico-node-cs4mz | calico-node | calico/node:v3.9.2 |

+ +-------------------------+----------------------------------------------+

| | (init) upgrade-ipam | calico/cni:v3.9.2 |

+ +-------------------------+ +

| | (init) install-cni | |

+ +-------------------------+----------------------------------------------+

| | (init) flexvol-driver | calico/pod2daemon-flexvol:v3.9.2 |

+------------------------------------------+-------------------------+----------------------------------------------+

| calico-node-x4fms | calico-node | calico/node:v3.9.2 |

+ +-------------------------+----------------------------------------------+

| | (init) upgrade-ipam | calico/cni:v3.9.2 |

+ +-------------------------+ +

| | (init) install-cni | |

+ +-------------------------+----------------------------------------------+

| | (init) flexvol-driver | calico/pod2daemon-flexvol:v3.9.2 |

+------------------------------------------+-------------------------+----------------------------------------------+

| coredns-5644d7b6d9-4mk6f | coredns | k8s.gcr.io/coredns:1.6.2 |

+------------------------------------------+ + +

| coredns-5644d7b6d9-xlswr | | |

+------------------------------------------+-------------------------+----------------------------------------------+

| csi-linode-controller-0 | csi-provisioner | linode/csi-provisioner:v1.0.0 |

+ +-------------------------+----------------------------------------------+

| | csi-attacher | linode/csi-attacher:v1.0.0 |

+ +-------------------------+----------------------------------------------+

| | linode-csi-plugin | linode/linode-blockstorage-csi-driver:v0.1.4 |

+ +-------------------------+----------------------------------------------+

| | (init) init | bitnami/kubectl:1.16.3-debian-10-r36 |

+------------------------------------------+-------------------------+----------------------------------------------+

| csi-linode-node-lmr6w | driver-registrar | linode/driver-registrar:v1.0-canary |

+ +-------------------------+----------------------------------------------+

| | csi-linode-plugin | linode/linode-blockstorage-csi-driver:v0.1.4 |

+ +-------------------------+----------------------------------------------+

| | (init) init | bitnami/kubectl:1.16.3-debian-10-r36 |

+------------------------------------------+-------------------------+----------------------------------------------+

| csi-linode-node-rldbp | driver-registrar | linode/driver-registrar:v1.0-canary |

+ +-------------------------+----------------------------------------------+

| | csi-linode-plugin | linode/linode-blockstorage-csi-driver:v0.1.4 |

+ +-------------------------+----------------------------------------------+

| | (init) init | bitnami/kubectl:1.16.3-debian-10-r36 |

+------------------------------------------+-------------------------+----------------------------------------------+

| kube-proxy-d2zrx | kube-proxy | k8s.gcr.io/kube-proxy:v1.16.13 |

+------------------------------------------+ + +

| kube-proxy-g5kcr | | |

+------------------------------------------+-------------------------+----------------------------------------------+
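If all you want from a table like this is the list of distinct images, a rough sketch, assuming the pipe-delimited layout shown above, pulls out the third column and de-duplicates it. The sample rows are taken from the output above so the snippet runs on its own.

```shell
# Sample rows taken from the table above (border lines omitted)
table='| PodName | ContainerName | ContainerImage |
| coredns-5644d7b6d9-4mk6f | coredns | k8s.gcr.io/coredns:1.6.2 |
| | (init) install-cni | |
| kube-proxy-d2zrx | kube-proxy | k8s.gcr.io/kube-proxy:v1.16.13 |
| calico-node-cs4mz | calico-node | calico/node:v3.9.2 |
| calico-node-x4fms | calico-node | calico/node:v3.9.2 |'

# With "|" as the separator the image is field 4; trim the padding,
# drop the merged (empty) cells, and keep each image once
images=$(printf '%s\n' "$table" |
  awk -F'|' 'NR > 1 { gsub(/^ +| +$/, "", $4); if ($4 != "") print $4 }' |
  sort -u)
echo "$images"
```

The border rows that start with "+" contain no "|" separators, so their fourth field is empty and the same filter would skip them in the full output.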

Datadog has one to upgrade cluster agents or to find which agent is monitoring a given pod:

$ kubectl datadog agent find -n kube-system kubernetes-dashboard-9f5bf9974-n67tg

Error: no agent pod found. Label selector used: agent.datadoghq.com/component=agent

While not all of them work perfectly, as is the nature of OSS, krew is a great way to expand the functionality of kubectl easily. The RBAC plugins we tested were useful with AKS and LKE, but access-matrix and rbac-lookup had trouble on the EKS instance I was using (I have had some issues with that instance, so I will chalk it up to user error on my part).

I plan to keep katching up on krew kontinually for kubernetes kreation and konnectivity #dadjokes, as the plugins are a great set of free OSS tooling.