Published: May 1, 2020 by Isaac Johnson

Now that we have YAML pipelines, one powerful feature we’ll want to leverage is templates. Templates are the YAML equivalent of Task Groups: you can take common sets of functionality and move them into separate files. This enables code reuse and can make pipelines more manageable.

The other thing we want to do is tackle Teams. Microsoft Teams is free (either as an individual or bundled in your organization’s O365 subscription). I will dig into both ways of integrating MS Teams into your pipelines.

Teams

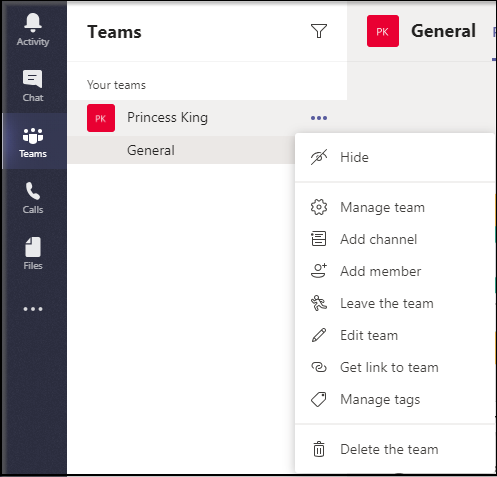

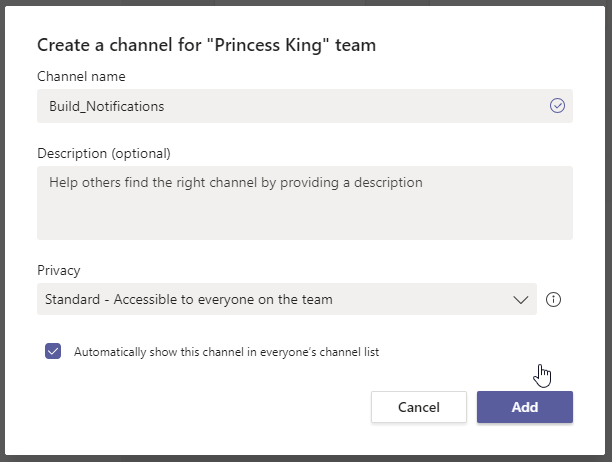

You’ll want to bring up Teams and go to your “Team”. By default, you’ll have a “General” channel. What we want to do is add a Build_Notifications channel:

Next we are going to show how to add notifications two ways.

Connectors

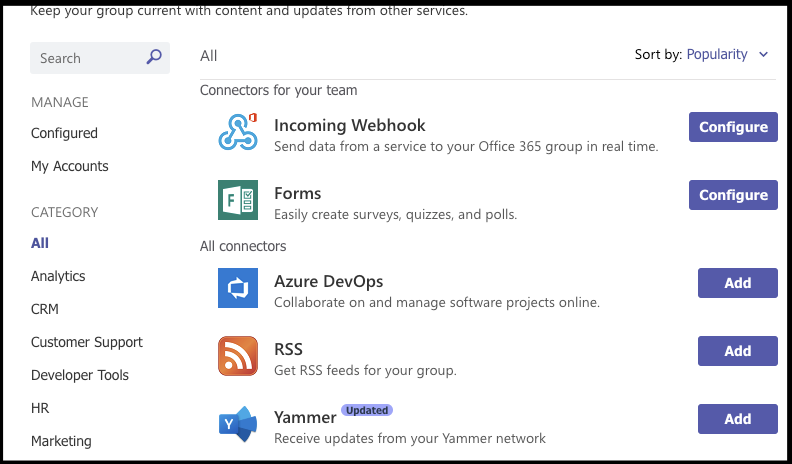

The first is through connectors:

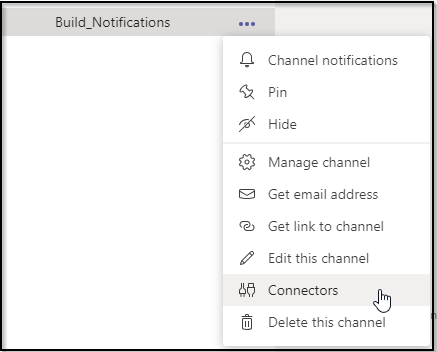

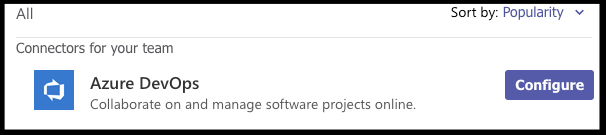

If you have an Office365 subscription, you’ll see Azure DevOps as a connector:

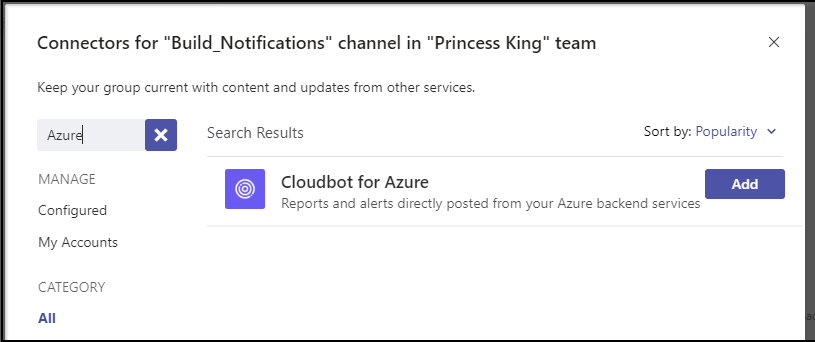

If you do not, Azure DevOps won’t be listed:

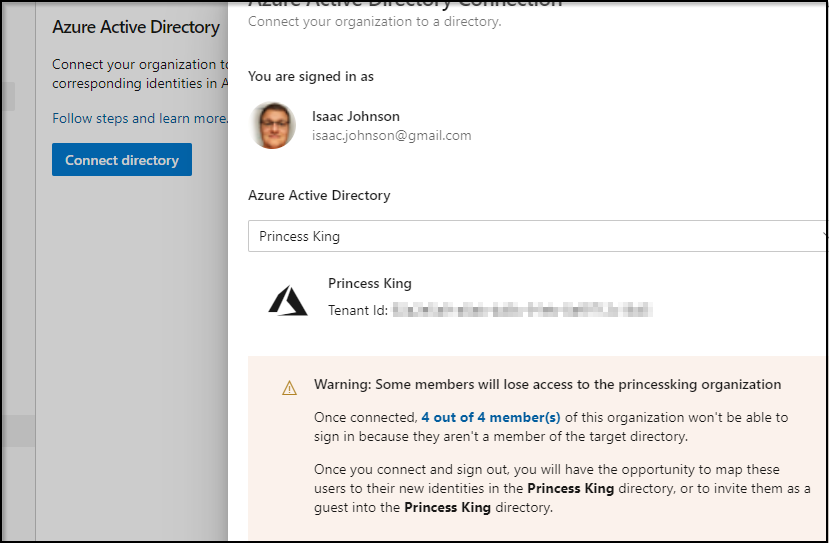

We could go through the steps of marrying our AzDO with AAD, but I shared my AzDO outside my organization and I don’t want to yank access for the non-AD members:

However, I am a member in a different Teams/AzDO so I can take you through the integration as one might experience in an O365/AAD environment.

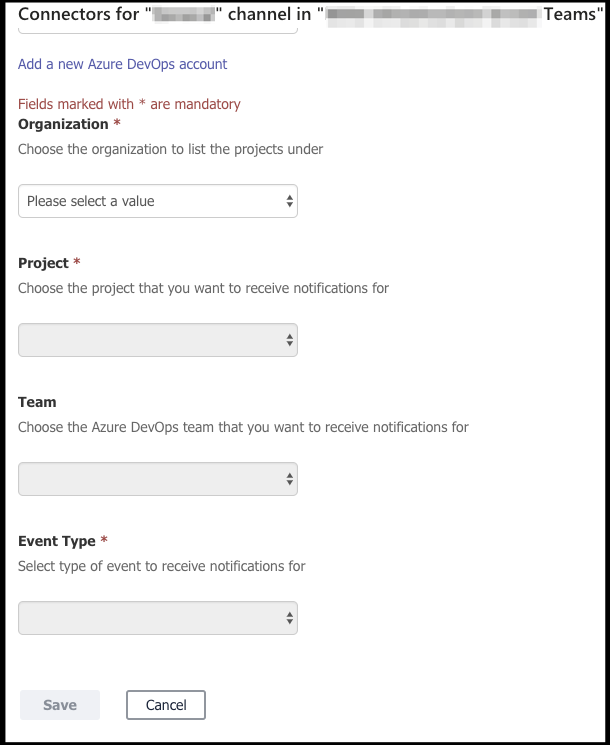

There is a nuance though. Configure will always launch a new wizard:

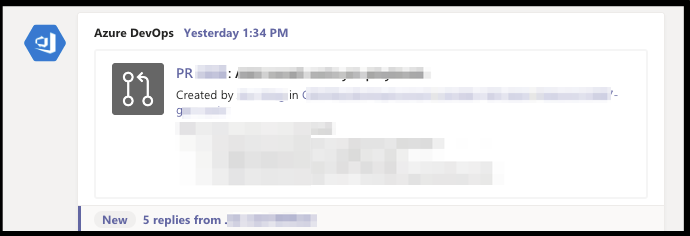

Despite being blank above, I know the integration is working as I see PR notifications just fine:

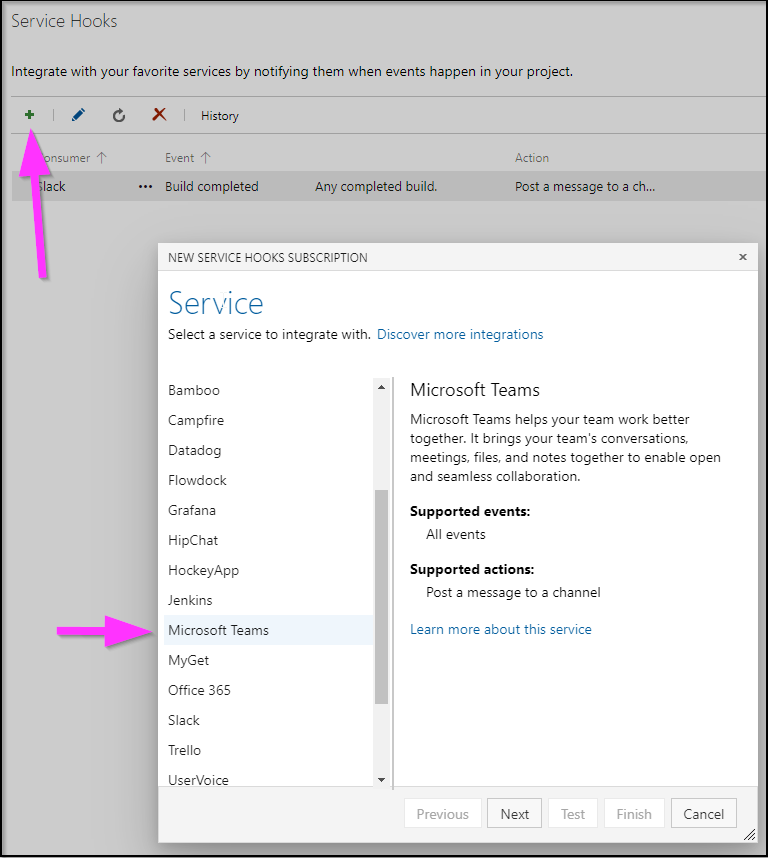

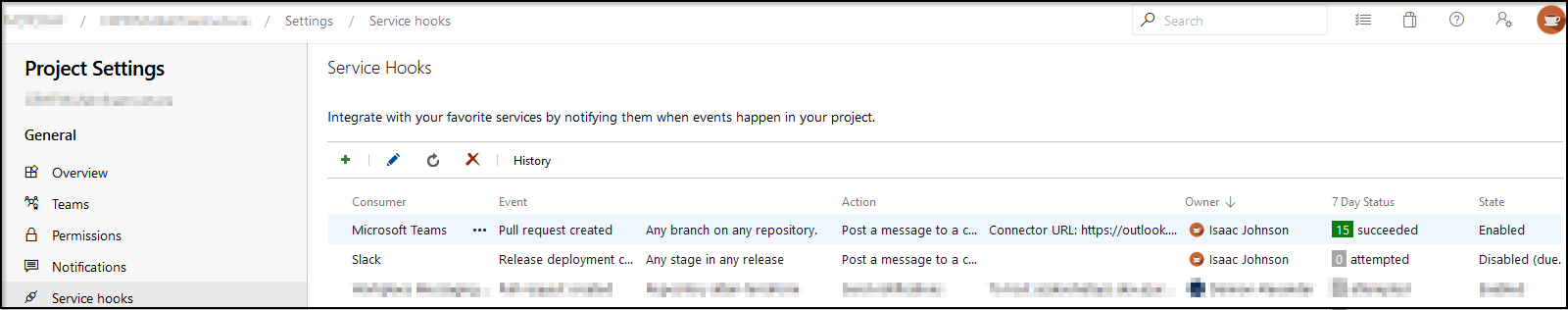

If we wish to see the connector that was created, we will find the Teams integration actually under Project Settings / Service Hooks in AzDO:

So can we add the Service Hook to Teams from the AzDO side if we are blocked on the Teams side?

While so far the answer is no, I can take you through what I tried:

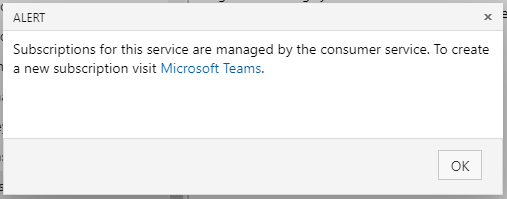

First, in service hooks, click the “+” and navigate to Microsoft Teams, and choose Next.. and…

Nuts. But it does make sense.

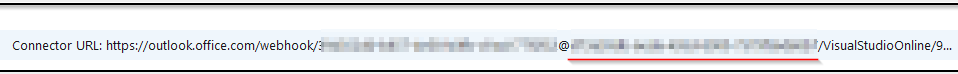

If I dig into the webhook URL, the part underlined in red actually matches my AAD tenant:

Teams Notifications with Web Hooks

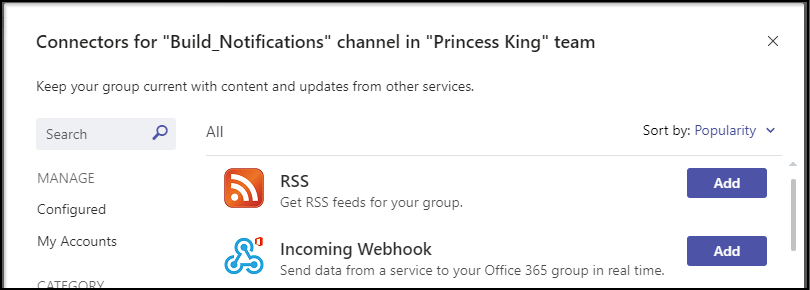

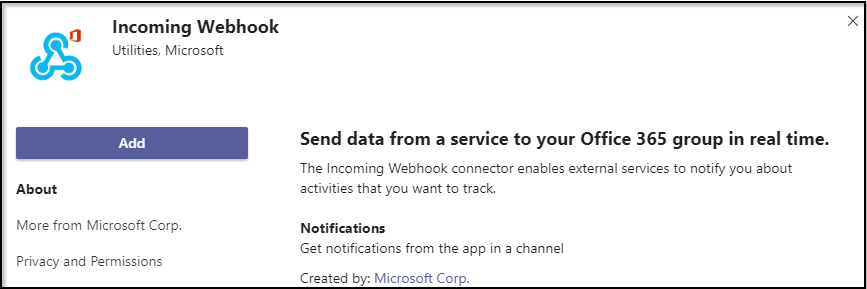

Another way we can do this is by using incoming webhooks in Teams.

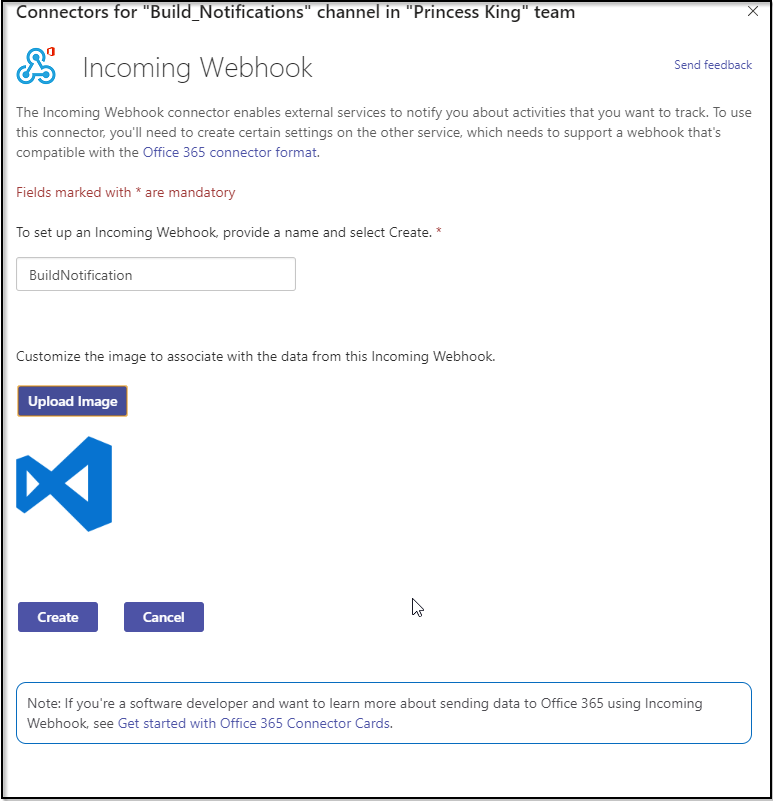

Click Add

Next, we’ll add an image (any icon works) and give it a name:

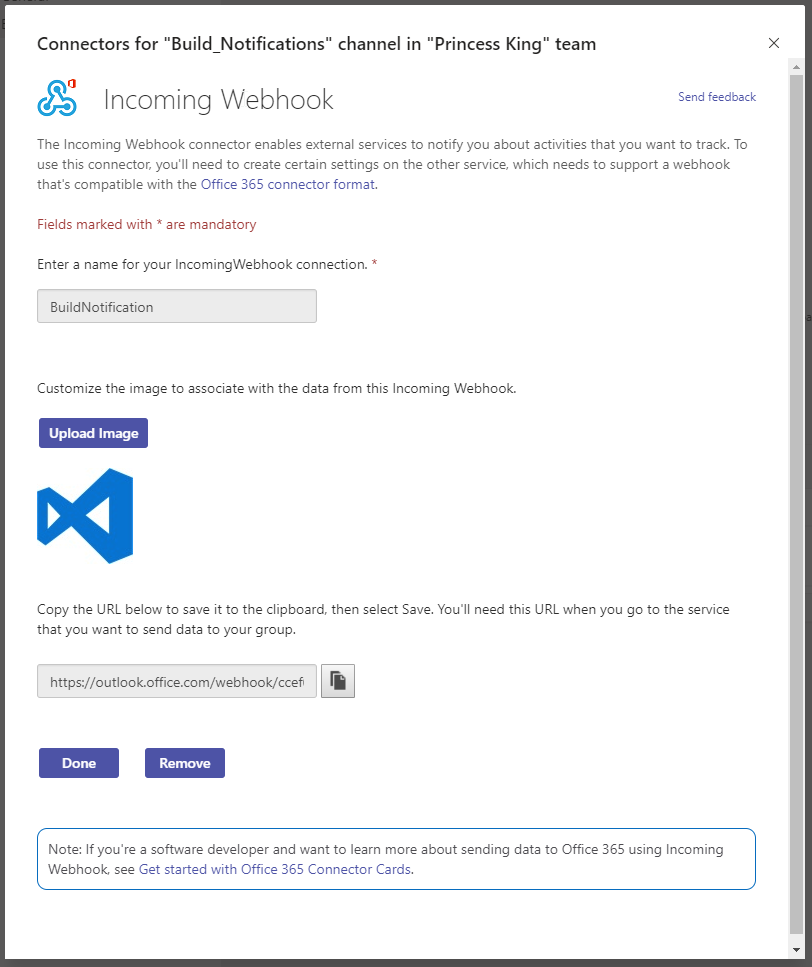

When we click Create, we’ll get a URL, and then we can click Done.

That URL looks like:

https://outlook.office.com/webhook/782a3e08-de5f-437c-98ec-6b52458908a2@782a3e08-de5f-437c-98ec-6b52458908a2/IncomingWebhook/24cb02989ad246fba5d6e19ceade1fad/782a3e08-de5f-437c-98ec-6b52458908a2

That URL has no authentication of its own: anyone who has it can post to the channel, so it’s worth protecting.
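One way to keep the URL out of the repository is to hand it to the script through an environment variable. The following is a minimal sketch, not a definitive implementation: the variable name `TEAMS_WEBHOOK_URL` and the function names `require_webhook_url` and `post_card` are assumptions made up for this example.

```shell
#!/bin/bash
# Sketch: read the Teams webhook URL from the environment instead of
# hard-coding it. TEAMS_WEBHOOK_URL is a hypothetical name chosen for
# this example; it is not defined by Azure DevOps or Teams.

# Succeeds only if the webhook URL is set and starts with https://.
require_webhook_url() {
  case "${TEAMS_WEBHOOK_URL:-}" in
    https://*) return 0 ;;
    *) echo "TEAMS_WEBHOOK_URL is not set to an https URL" >&2; return 1 ;;
  esac
}

# Posts the JSON file given as $1 to the webhook. --fail makes curl
# return non-zero on HTTP errors so the calling step fails visibly.
post_card() {
  require_webhook_url || return 1
  curl --silent --show-error --fail \
    -X POST -H "Content-Type: application/json" \
    -d @"$1" "$TEAMS_WEBHOOK_URL"
}
```

In a pipeline, the value could come from a secret variable. Secret variables are not exposed to scripts automatically, so the step would need to map it explicitly in its `env:` block before calling `post_card post.json`.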

Next we’ll need a “card”, which is how we craft our message. To fashion a card, you can use this MessageCard playground:

Let’s try an example:

    builder@DESKTOP-JBA79RT:~$ cat post.json
    {
      "@type": "MessageCard",
      "@context": "https://schema.org/extensions",
      "summary": "1 new build message",
      "themeColor": "0078D7",
      "sections": [
        {
          "activityImage": "https://ca.slack-edge.com/T4AQPQN8M-U4AL2JFC3-g45c8854734a-48",
          "activityTitle": "Isaac Johnson",
          "activitySubtitle": "2 hours ago",
          "facts": [
            {
              "name": "Keywords:",
              "value": "AzDO Ghost-Blog Pipeline"
            },
            {
              "name": "Group:",
              "value": "Build 123456.1 Succeeded"
            }
          ],
          "text": "Build 123456.1 has completed. Freshbrewed.com is updated. The world now has just that much more joy in it.\n<br/>\n Peace and love.",
          "potentialAction": [
            {
              "@type": "OpenUri",
              "name": "View conversation"
            }
          ]
        }
      ]
    }
    builder@DESKTOP-JBA79RT:~$ curl -X POST -H "Content-Type: application/json" -d @post.json https://outlook.office.com/webhook/782a3e08-de5f-437c-98ec-6b52458908a2@782a3e08-de5f-437c-98ec-6b52458908a2/IncomingWebhook/24cb02989ad246fba5d6e19ceade1fad/782a3e08-de5f-437c-98ec-6b52458908a2
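Hand-written JSON like this breaks as soon as a value contains a double quote, a backslash, or a newline, which is easy to hit once fields come from commit messages. The sketch below shows one way to escape values in plain bash before placing them in a card. The helper names `json_escape` and `make_card` are hypothetical, and the card it prints is deliberately smaller than the one above.

```shell
#!/bin/bash
# Escape a string for use inside a JSON string literal.
# Handles backslash, double quote, newline, carriage return and tab;
# other control characters are not handled in this sketch.
json_escape() {
  local s=$1
  s=${s//\\/\\\\}      # backslash first, so later escapes are not doubled
  s=${s//\"/\\\"}      # double quote
  s=${s//$'\n'/\\n}    # newline
  s=${s//$'\r'/\\r}    # carriage return
  s=${s//$'\t'/\\t}    # tab
  printf '%s' "$s"
}

# Print a minimal MessageCard with an escaped summary ($1) and text ($2).
make_card() {
  printf '{"@type":"MessageCard","@context":"https://schema.org/extensions","summary":"%s","text":"%s"}\n' \
    "$(json_escape "$1")" "$(json_escape "$2")"
}
```

For example, `make_card "1 new build message" "$commit_message" > post.json` would produce a file that can be sent with the same curl command, assuming `commit_message` holds the text to display.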

Now let’s add that into our Azure-Pipelines yaml:

    - stage: build_notification
      jobs:
      - job: release
        pool:
          vmImage: 'ubuntu-latest'
        steps:
        - bash: |
            #!/bin/bash
            set -x
            umask 0002

            cat > ./post.json <<'endmsg'
            {
              "@type": "MessageCard",
              "@context": "https://schema.org/extensions",
              "summary": "1 new build message",
              "themeColor": "0078D7",
              "sections": [
                {
                  "activityImage": "https://ca.slack-edge.com/T4AQPQN8M-U4AL2JFC3-g45c8854734a-48",
                  "activityTitle": "$(Build.SourceVersionAuthor)",
                  "activitySubtitle": "$(Build.SourceVersionMessage) - $(Build.SourceBranchName) - $(Build.SourceVersion)",
                  "facts": [
                    {
                      "name": "Keywords:",
                      "value": "$(System.DefinitionName)"
                    },
                    {
                      "name": "Group:",
                      "value": "$(Build.BuildNumber)"
                    }
                  ],
                  "text": "Build $(Build.BuildNumber) has completed. Pipeline started at $(System.PipelineStartTime). Freshbrewed.com is updated. The world now has just that much more joy in it.\n<br/>\n Peace and love.",
                  "potentialAction": [
                    {
                      "@type": "OpenUri",
                      "name": "View conversation"
                    }
                  ]
                }
              ]
            }
            endmsg

            curl -X POST -H "Content-Type: application/json" -d @post.json https://outlook.office.com/webhook/782a3e08-de5f-437c-98ec-6b52458908a2@782a3e08-de5f-437c-98ec-6b52458908a2/IncomingWebhook/24cb02989ad246fba5d6e19ceade1fad/782a3e08-de5f-437c-98ec-6b52458908a2
          displayName: 'Bash Script'

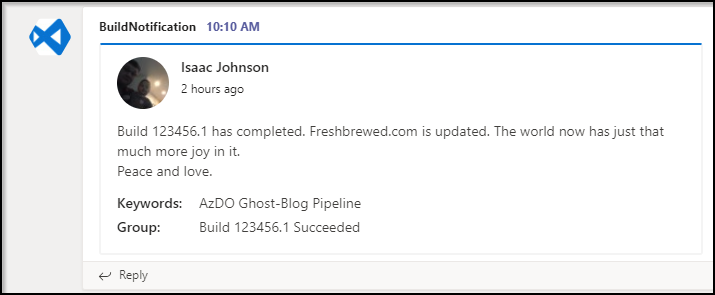

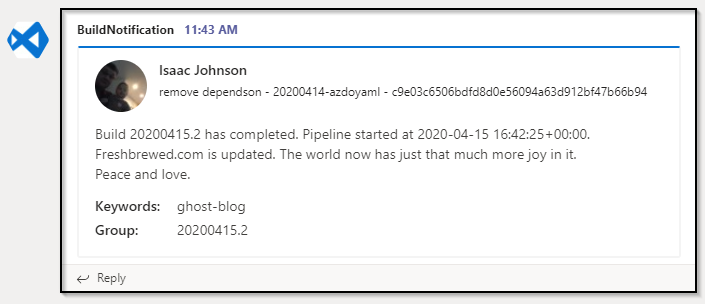

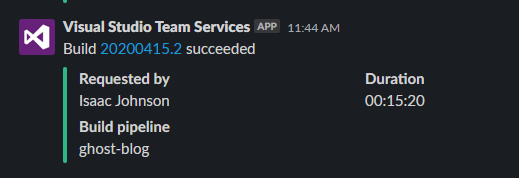

That should now call the Teams REST API when our build completes:

Which produced:

And note, our REST call for Slack is still in our Service Hooks:

I should also point out that calling Slack or Teams can be done via a Maven pom.xml as well. I have some golang projects today that use mvn-golang-wrapper from com.igormaznitsa. There is a notification profile I use on those builds to update Slack directly:

    <profile>
      <id>slack</id>
      <build>
        <plugins>
          <plugin>
            <groupId>org.codehaus.mojo</groupId>
            <artifactId>exec-maven-plugin</artifactId>
            <version>1.5.0</version>
            <executions>
              <execution>
                <phase>package</phase>
                <goals>
                  <goal>exec</goal>
                </goals>
              </execution>
            </executions>
            <configuration>
              <executable>wget</executable>
              <!-- optional -->
              <arguments>
                <argument>https://slack.com/api/chat.postMessage?token=${slacktoken}&amp;channel=%23builds&amp;text=Just%20completed%20a%20new%20build:%20${env.SYSTEM_TEAMPROJECT}%20${env.BUILD_DEFINITIONNAME}%20build%20${env.BUILD_BUILDNUMBER}%20${env.AGENT_JOBSTATUS}%20of%20branch%20${env.BUILD_SOURCEBRANCHNAME}%20(${env.BUILD_SOURCEVERSION}).%20get%20audl-go-client-${env.BUILD_BUILDNUMBER}.zip%20from%20https://drive.google.com/open?id=0B_fxXZ0azjzTLWZTcGFtNGIzMG8&amp;icon_url=http%3A%2F%2F68.media.tumblr.com%2F69189dc4f6f5eb98477f9e72cc6c6a81%2Ftumblr_inline_nl3y29qlqD1rllijo.png&amp;pretty=1</argument>
                <!-- argument>"https://slack.com/api/chat.postMessage?token=xoxp-123123123-12312312312-123123123123-123asd123asd123asd123asd&channel=%23builds&text=just%20a%20test3&icon_url=http%3A%2F%2F68.media.tumblr.com%2F69189dc4f6f5eb98477f9e72cc6c6a81%2Ftumblr_inline_nl3y29qlqD1rllijo.png&pretty=1"</argument -->
              </arguments>
            </configuration>
          </plugin>
        </plugins>
      </build>
    </profile>
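The Slack call above packs the whole message into a query string, which is why spaces and `#` show up as `%20` and `%23`. If the text were built in a script rather than typed by hand, a small percent-encoding helper could do that work. This is a sketch under the assumption of ASCII input; the function name `urlencode` is made up for this example.

```shell
#!/bin/bash
# Percent-encode a string for use in a URL query parameter.
# Unreserved characters (RFC 3986) pass through; every other character
# is written as %XX. Assumes single-byte (ASCII) input for simplicity.
urlencode() {
  local LC_ALL=C
  local s=$1 out="" c i
  for (( i = 0; i < ${#s}; i++ )); do
    c=${s:i:1}
    case "$c" in
      [a-zA-Z0-9.~_-]) out+=$c ;;
      *) printf -v c '%%%02X' "'$c"; out+=$c ;;   # "'x" yields the character code
    esac
  done
  printf '%s' "$out"
}
```

For instance, `urlencode '#builds'` prints `%23builds`, matching the channel value used in the profile.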

Using YAML Templates

The next step with YAML pipelines is to start leveraging template files, the equivalent of task groups.

Template files are how we create reusable subroutines - bundled common functionality that can be used by more than one pipeline and managed separately.

Let’s take for instance my release sections:

    - stage: release_prod
      dependsOn: build
      condition: and(succeeded(), eq(variables['Build.SourceBranch'], 'refs/heads/master'))
      jobs:
      - job: release
        pool:
          vmImage: 'ubuntu-latest'
        steps:
        - task: DownloadBuildArtifacts@0
          inputs:
            buildType: "current"
            downloadType: "single"
            artifactName: "drop"
            downloadPath: "_drop"
        - task: ExtractFiles@1
          displayName: 'Extract files '
          inputs:
            archiveFilePatterns: '**/drop/*.zip'
            destinationFolder: '$(System.DefaultWorkingDirectory)/out'
        - bash: |
            #!/bin/bash
            export
            set -x
            export DEBIAN_FRONTEND=noninteractive
            sudo apt-get -yq install tree
            cd ..
            pwd
            tree .
          displayName: 'Bash Script Debug'
        - task: AmazonWebServices.aws-vsts-tools.S3Upload.S3Upload@1
          displayName: 'S3 Upload: freshbrewed.science html'
          inputs:
            awsCredentials: freshbrewed
            regionName: 'us-east-1'
            bucketName: freshbrewed.science
            sourceFolder: '$(System.DefaultWorkingDirectory)/out/localhost_2368'
            globExpressions: '**/*.html'
            filesAcl: 'public-read'
        - task: AmazonWebServices.aws-vsts-tools.S3Upload.S3Upload@1
          displayName: 'S3 Upload: freshbrewed.science rest'
          inputs:
            awsCredentials: freshbrewed
            regionName: 'us-east-1'
            bucketName: freshbrewed.science
            sourceFolder: '$(System.DefaultWorkingDirectory)/out/localhost_2368'
            filesAcl: 'public-read'

    - stage: release_test
      dependsOn: build
      condition: and(succeeded(), ne(variables['Build.SourceBranch'], 'refs/heads/master'))
      jobs:
      - job: release
        pool:
          vmImage: 'ubuntu-latest'
        steps:
        - task: DownloadBuildArtifacts@0
          inputs:
            buildType: "current"
            downloadType: "single"
            artifactName: "drop"
            downloadPath: "_drop"
        - task: ExtractFiles@1
          displayName: 'Extract files '
          inputs:
            archiveFilePatterns: '**/drop/*.zip'
            destinationFolder: '$(System.DefaultWorkingDirectory)/out'
        - bash: |
            #!/bin/bash
            export
            set -x
            export DEBIAN_FRONTEND=noninteractive
            sudo apt-get -yq install tree
            cd ..
            pwd
            tree .
          displayName: 'Bash Script Debug'
        - task: AmazonWebServices.aws-vsts-tools.S3Upload.S3Upload@1
          displayName: 'S3 Upload: freshbrewed.science rest'
          inputs:
            awsCredentials: freshbrewed
            regionName: 'us-east-1'
            bucketName: freshbrewed-test
            sourceFolder: '$(System.DefaultWorkingDirectory)/out/localhost_2368'
            filesAcl: 'public-read'

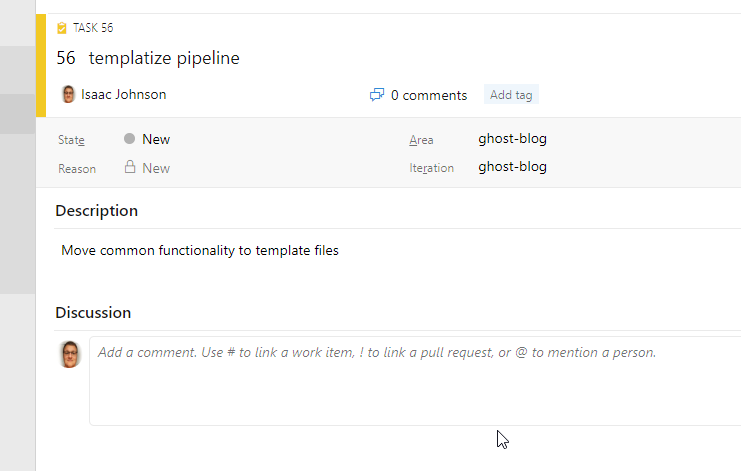

You’ll notice that they are similar; really just the bucket names are different. We could bundle those into templates. First, let’s create a task:

Next we can make a branch and create the files we need:

builder@DESKTOP-JBA79RT:~/Workspaces/ghost-blog$ git checkout -b feature/56-make-template

The first thing we’ll do is to create a templates folder and create files for uploading to AWS and Notifications as these are the parts we want to re-use right away.

templates/aws-release.yaml:

    jobs:
    - job:
      pool:
        vmImage: 'ubuntu-latest'
      steps:
      - task: DownloadBuildArtifacts@0
        inputs:
          buildType: "current"
          downloadType: "single"
          artifactName: "drop"
          downloadPath: "_drop"
      - task: ExtractFiles@1
        displayName: 'Extract files '
        inputs:
          archiveFilePatterns: '**/drop/*.zip'
          destinationFolder: '$(System.DefaultWorkingDirectory)/out'
      - bash: |
          #!/bin/bash
          export
          set -x
          export DEBIAN_FRONTEND=noninteractive
          sudo apt-get -yq install tree
          cd ..
          pwd
          tree .
        displayName: 'Bash Script Debug'
      - task: AmazonWebServices.aws-vsts-tools.S3Upload.S3Upload@1
        displayName: 'S3 Upload: ${{ parameters.awsBucket }} html'
        inputs:
          awsCredentials: ${{ parameters.awsCreds }}
          regionName: 'us-east-1'
          bucketName: ${{ parameters.awsBucket }}
          sourceFolder: '$(System.DefaultWorkingDirectory)/out/localhost_2368'
          globExpressions: '**/*.html'
          filesAcl: 'public-read'
      - task: AmazonWebServices.aws-vsts-tools.S3Upload.S3Upload@1
        displayName: 'S3 Upload: ${{ parameters.awsBucket }} rest'
        inputs:
          awsCredentials: ${{ parameters.awsCreds }}
          regionName: 'us-east-1'
          bucketName: ${{ parameters.awsBucket }}
          sourceFolder: '$(System.DefaultWorkingDirectory)/out/localhost_2368'
          filesAcl: 'public-read'

templates/notifications.yaml:

    jobs:
    - job:
      pool:
        vmImage: 'ubuntu-latest'
      steps:
      - bash: |
          #!/bin/bash
          set -x
          umask 0002

          cat > ./post.json <<'endmsg'
          {
            "@type": "MessageCard",
            "@context": "https://schema.org/extensions",
            "summary": "1 new build message",
            "themeColor": "0078D7",
            "sections": [
              {
                "activityImage": "https://ca.slack-edge.com/T4AQPQN8M-U4AL2JFC3-g45c8854734a-48",
                "activityTitle": "$(Build.SourceVersionAuthor)",
                "activitySubtitle": "$(Build.SourceVersionMessage) - $(Build.SourceBranchName) - $(Build.SourceVersion)",
                "facts": [
                  {
                    "name": "Keywords:",
                    "value": "$(System.DefinitionName)"
                  },
                  {
                    "name": "Group:",
                    "value": "$(Build.BuildNumber)"
                  }
                ],
                "text": "Build $(Build.BuildNumber) has completed. Pipeline started at $(System.PipelineStartTime). ${{ parameters.awsBucket }} bucket updated and ${{ parameters.siteUrl }} is live. The world now has just that much more joy in it.\n<br/>\n Peace and love.",
                "potentialAction": [
                  {
                    "@type": "OpenUri",
                    "name": "View conversation"
                  }
                ]
              }
            ]
          }
          endmsg

          curl -X POST -H "Content-Type: application/json" -d @post.json https://outlook.office.com/webhook/ad403e91-2f21-4c3c-92e0-02d7dce003ff@ad403e91-2f21-4c3c-92e0-02d7dce003ff/IncomingWebhook/ad403e91-2f21-4c3c-92e0-02d7dce003ff/ad403e91-2f21-4c3c-92e0-02d7dce003ff
        displayName: 'Bash Script'

Now we can change the bottom of our YAML file to use those files:

    trigger:
      branches:
        include:
        - master
        - develop
        - feature/*

    pool:
      vmImage: 'ubuntu-latest'

    stages:
    - stage: build
      jobs:
      - job: start_n_sync
        displayName: start_n_sync
        continueOnError: false
        steps:
        - task: DownloadPipelineArtifact@2
          displayName: 'Download Pipeline Artifact'
          inputs:
            buildType: specific
            project: 'f83a3d41-3696-4b1a-9658-88cecf68a96b'
            definition: 12
            buildVersionToDownload: specific
            pipelineId: 484
            artifactName: freshbrewedsync
            targetPath: '$(Pipeline.Workspace)/s/freshbrewed5b'
        - task: NodeTool@0
          displayName: 'Use Node 8.16.2'
          inputs:
            versionSpec: '8.16.2'
        - task: Npm@1
          displayName: 'npm run setup'
          inputs:
            command: custom
            verbose: false
            customCommand: 'run setup'
        - task: Npm@1
          displayName: 'npm install'
          inputs:
            command: custom
            verbose: false
            customCommand: 'install --production'
        - bash: |
            #!/bin/bash
            set -x
            npm start &
            wget http://localhost:2368
            which httrack
            export DEBIAN_FRONTEND=noninteractive
            sudo apt-get -yq install httrack tree curl || true
            wget http://localhost:2368
            which httrack
            httrack http://localhost:2368 -c16 -O "./freshbrewed5b" --quiet -%s
          displayName: 'install httrack and sync'
          timeoutInMinutes: 120
        - task: PublishBuildArtifacts@1
          displayName: 'Publish artifacts: sync'
          inputs:
            PathtoPublish: '$(Build.SourcesDirectory)/freshbrewed5b'
            ArtifactName: freshbrewedsync
        - task: Bash@3
          displayName: 'run static fix script'
          inputs:
            targetType: filePath
            filePath: './static_fix.sh'
            arguments: freshbrewed5b
        - task: ArchiveFiles@2
          displayName: 'Archive files'
          inputs:
            rootFolderOrFile: '$(Build.SourcesDirectory)/freshbrewed5b'
            includeRootFolder: false
        - task: PublishBuildArtifacts@1
          displayName: 'Publish artifacts: drop'

    - stage: release_prod
      dependsOn: build
      condition: and(succeeded(), eq(variables['Build.SourceBranch'], 'refs/heads/master'))
      jobs:
      - template: templates/aws-release.yaml
        parameters:
          awsCreds: freshbrewed
          awsBucket: freshbrewed.science

    - stage: build_notification_prod
      dependsOn: release_prod
      jobs:
      - template: templates/notifications.yaml
        parameters:
          siteUrl: https://freshbrewed.com
          awsBucket: freshbrewed.com

    - stage: release_test
      dependsOn: build
      condition: and(succeeded(), ne(variables['Build.SourceBranch'], 'refs/heads/master'))
      jobs:
      - template: templates/aws-release.yaml
        parameters:
          awsCreds: freshbrewed
          awsBucket: freshbrewed-test

    - stage: build_notification_test
      dependsOn: release_test
      jobs:
      - template: templates/notifications.yaml
        parameters:
          siteUrl: http://freshbrewed-test.s3-website-us-east-1.amazonaws.com/
          awsBucket: freshbrewed-test

You’ll also notice the addition of dependsOn in the notification stages, so each one follows its own release stage and the pipeline forks properly.
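The fork itself comes from the two stage conditions: `refs/heads/master` goes to production and every other branch goes to test. As a rough illustration of that same decision outside of YAML, here is a sketch in bash. The function name `target_bucket` is hypothetical, and the bucket names are the ones passed to the release template above.

```shell
#!/bin/bash
# Mirror of the stage conditions: refs/heads/master maps to the
# production bucket, any other ref maps to the test bucket.
target_bucket() {
  if [ "$1" = "refs/heads/master" ]; then
    echo "freshbrewed.science"
  else
    echo "freshbrewed-test"
  fi
}
```

Inside a pipeline script this could be called as `target_bucket "$BUILD_SOURCEBRANCH"`, since Azure Pipelines exposes `Build.SourceBranch` to scripts under that environment variable name.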

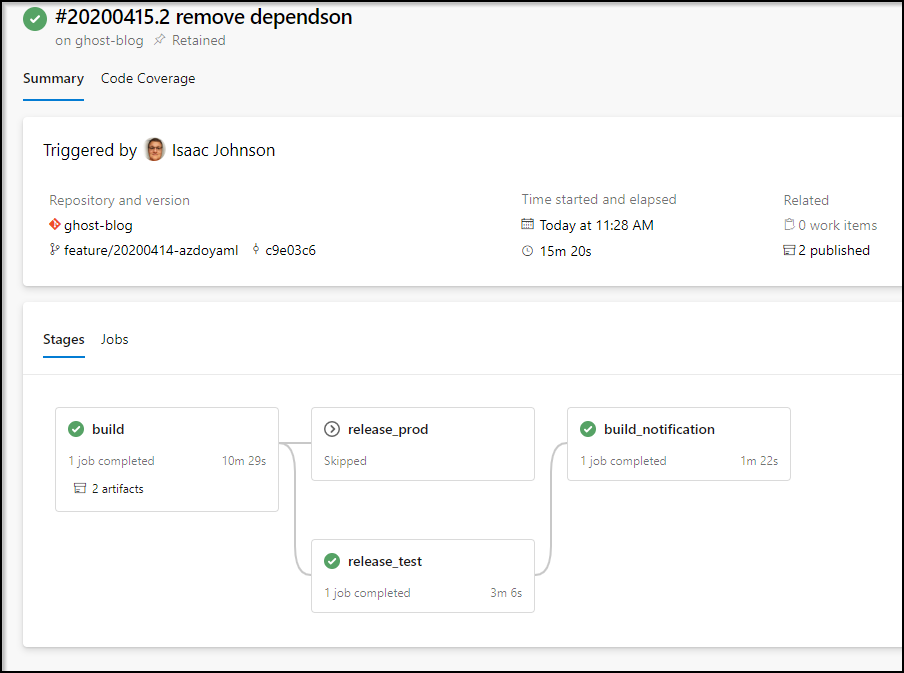

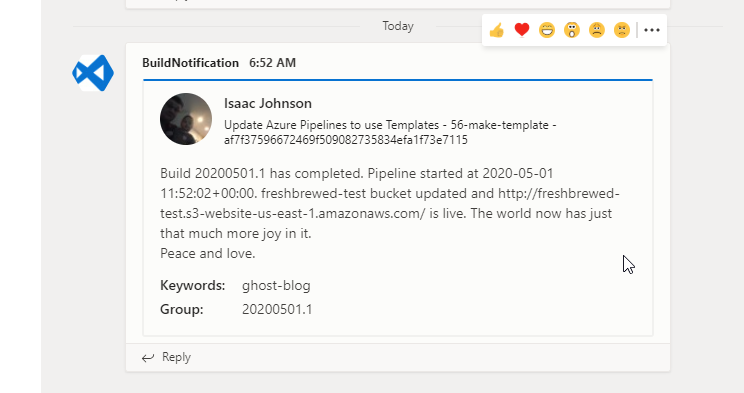

Now let’s test:

    builder@DESKTOP-JBA79RT:~/Workspaces/ghost-blog$ git commit -m "Update Azure Pipelines to use Templates"
    [feature/56-make-template af7f3759] Update Azure Pipelines to use Templates
     3 files changed, 217 insertions(+), 224 deletions(-)
     rewrite azure-pipelines.yml (65%)
     create mode 100644 templates/aws-release.yaml
     create mode 100644 templates/notifications.yaml
    builder@DESKTOP-JBA79RT:~/Workspaces/ghost-blog$ git push --set-upstream origin feature/56-make-template
    Counting objects: 8, done.
    Delta compression using up to 8 threads.
    Compressing objects: 100% (8/8), done.
    Writing objects: 100% (8/8), 1.58 KiB | 1.58 MiB/s, done.
    Total 8 (delta 5), reused 0 (delta 0)
    remote: Analyzing objects... (8/8) (1699 ms)
    remote: Storing packfile... done (215 ms)
    remote: Storing index... done (107 ms)
    remote: We noticed you're using an older version of Git. For the best experience, upgrade to a newer version.
    To https://princessking.visualstudio.com/ghost-blog/_git/ghost-blog
     * [new branch]      feature/56-make-template -> feature/56-make-template
    Branch 'feature/56-make-template' set up to track remote branch 'feature/56-make-template' from 'origin'.

We can immediately see our push is working:

Summary

Teams is making significant headway against others like Slack today (as most of us are home during the COVID-19 crisis). Integrating automated build notifications is a great way to keep your development team up to date without having to rely on emails.

YAML templates are quite powerful. In fact, there is a lot more we can do with templates (see full documentation here), such as referring to another Git repo and tag:

    resources:
      repositories:
      - repository: templates
        name: PrincessKing/CommonTemplates
        endpoint: myServiceConnection # AzDO service connection

    jobs:
    - template: common.yml@templates

The idea that we can use non-public templates across organizations is a very practical way to scale Azure DevOps.