Published: Apr 14, 2020 by Isaac Johnson

Let’s talk about why I spent $40 this month because of DevOps debt. That’s right, even yours truly can kick the can on some processes for a while and eventually that bit me in the tuchus.

What it isn’t

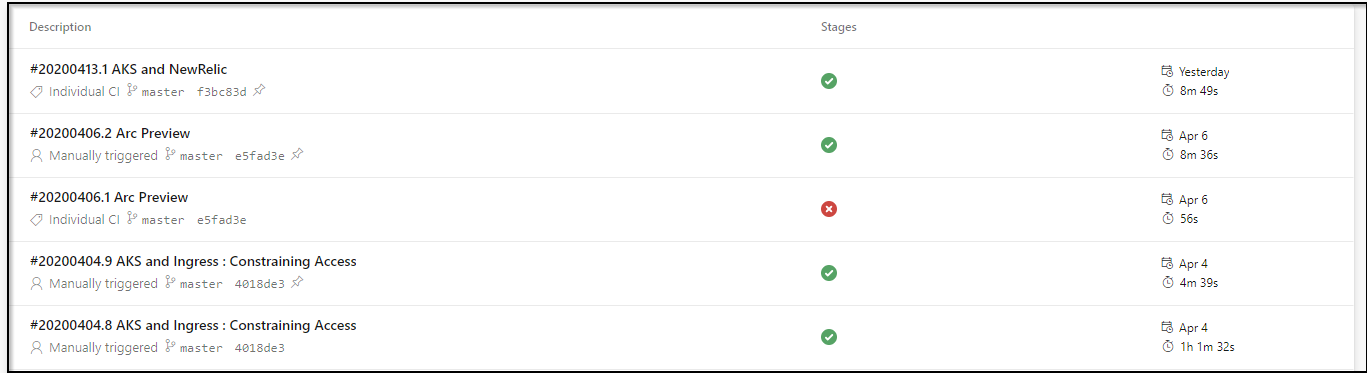

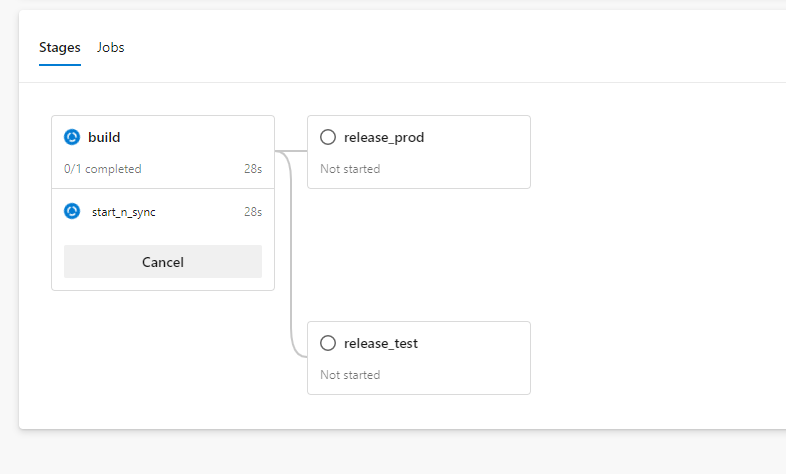

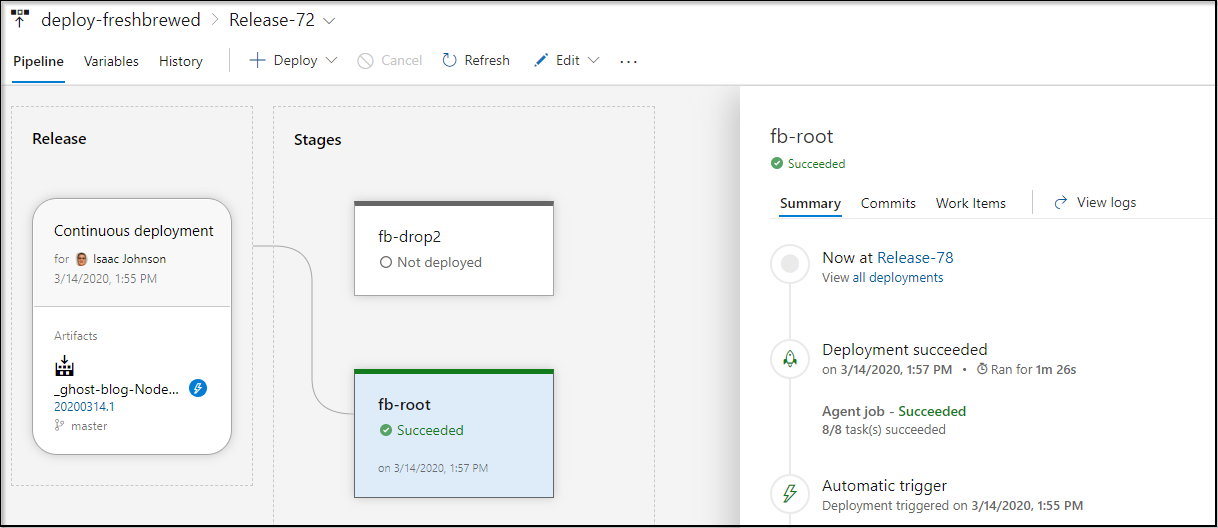

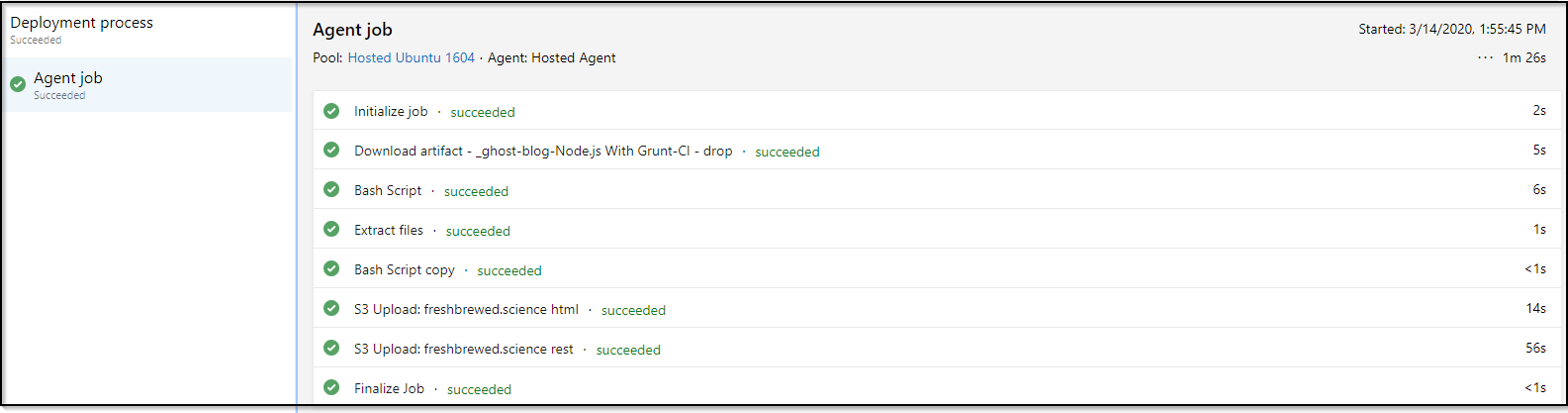

First, let’s cover what it isn’t: the releases. The releases have been cake, taking between 1 and 2 minutes.

All they really have to do is unpack a zip of a website rendered and upload it to AWS.

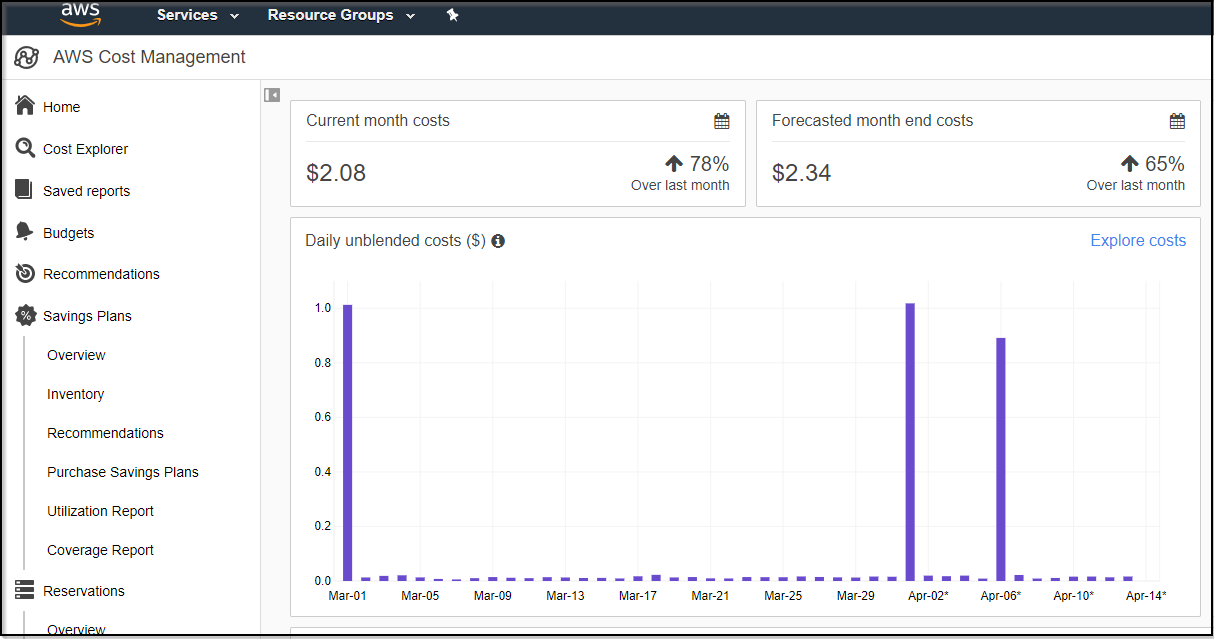

And yes, while I really do put a lot of love out there for Azure and Linode, the fact is, to host a fast, global website with HTTPS and proper DNS, I do use AWS. Seriously, can you beat these costs to host the volume of content this blog has?

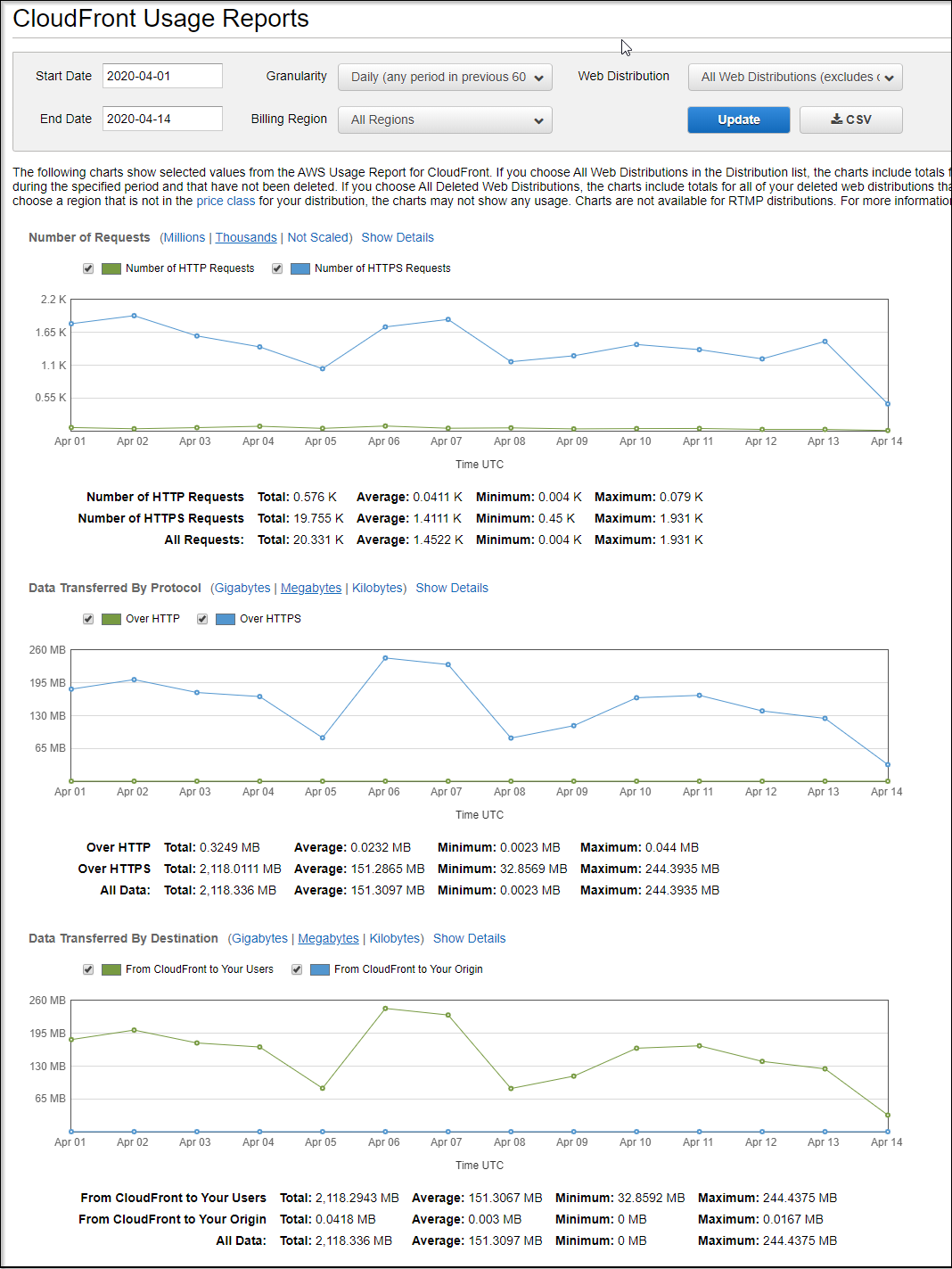

And lest you think this site gets little traffic since I don’t monetize, I’ll gladly share some details on traffic.

This is just for the first half of the month:

That’s roughly 2GB of data and 20k requests for half of April - for $2. Find me similar hosting elsewhere…

Okay, enough of that. As I said, the problem was not my release pipelines.

So where did I tuck debt under the rug?

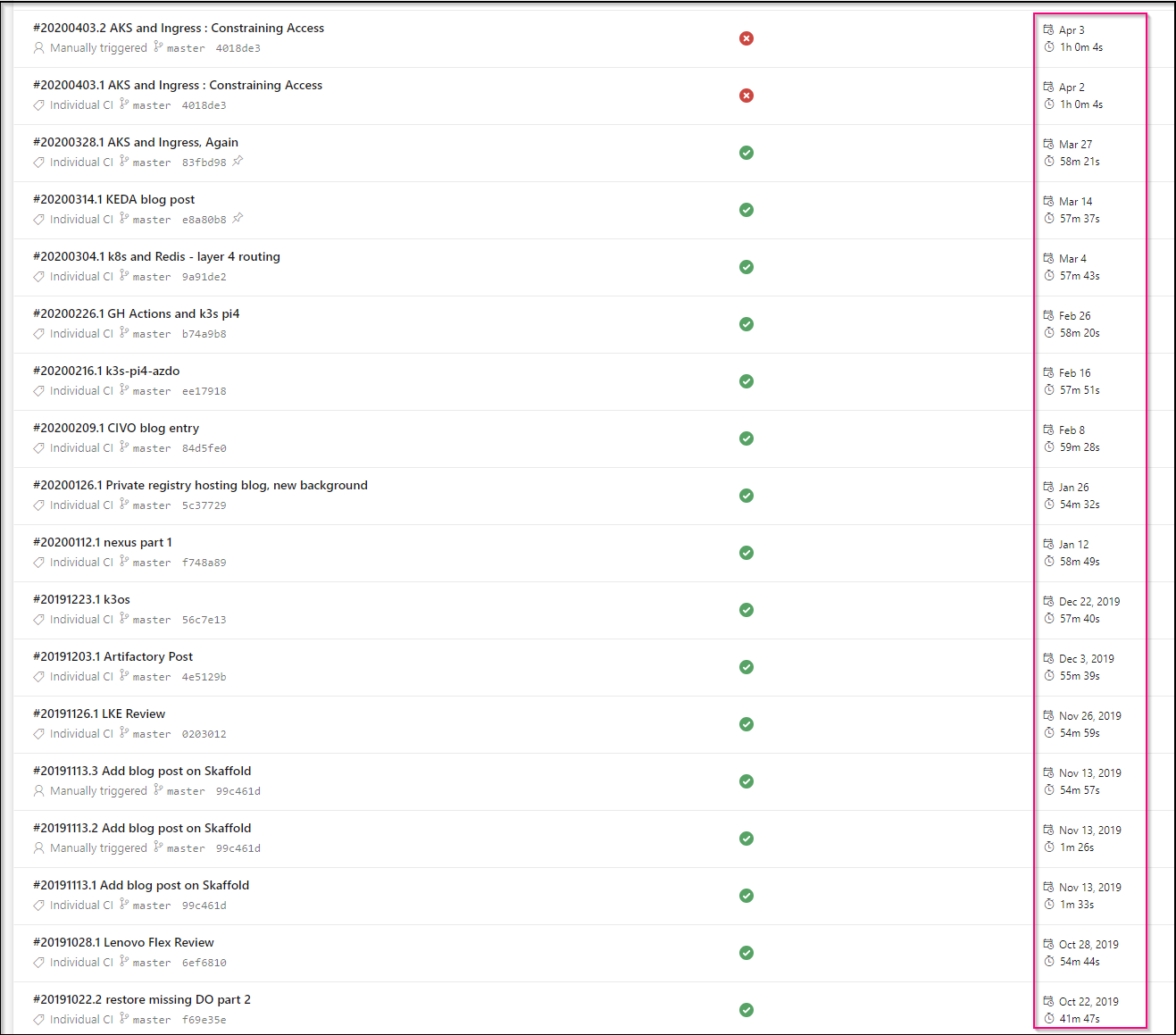

The CI pipeline. The fact is Azure DevOps gives you a set number of free hours of pipeline compute each month, but with a couple of teeny-weeny, itsy-bitsy caveats. The one that snuck up on me, slowly but surely, was the time limit.

Each push of my blog was calling httrack to fully sync the website. Back when I had a few articles, that took just a few minutes. But now, with around 68 articles linked off the main page, each with lengthy writeups and images, that just hasn’t scaled.
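One way to catch this kind of creep early is to compare how long a build took against the job time limit. This is only a minimal sketch: the `budget_status` name, the 80% warning threshold, and the 60-minute limit used in the usage note are assumptions for illustration, not values taken from the pipeline.

```shell
# budget_status ELAPSED_SECONDS LIMIT_MINUTES
# Prints "ok", "warn" or "over" for a build that took ELAPSED_SECONDS.
budget_status() (
  elapsed=$1
  limit=$(( $2 * 60 ))
  warn_at=$(( limit * 80 / 100 ))   # assumed threshold: warn at 80% of the limit
  if [ "$elapsed" -ge "$limit" ]; then
    echo over
  elif [ "$elapsed" -ge "$warn_at" ]; then
    echo warn
  else
    echo ok
  fi
)
```

With an assumed 60-minute limit, `budget_status 3000 60` reports `warn` well before the build starts failing outright.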

So I had one of three choices to make.

- Redesign the website and archive old content

- Pay Microsoft for a pipeline agent ($40/mo/agent)

- Fix the pipeline

At first, I planned on the last option, but with COVID and Easter, I just wanted the blog out, so I paid for the agent. Also, I like my layout and I like having all this content easy to find.

Once I was WFH’ed like the rest with a bunch of PTO to use up, I took the time to fix the thing.

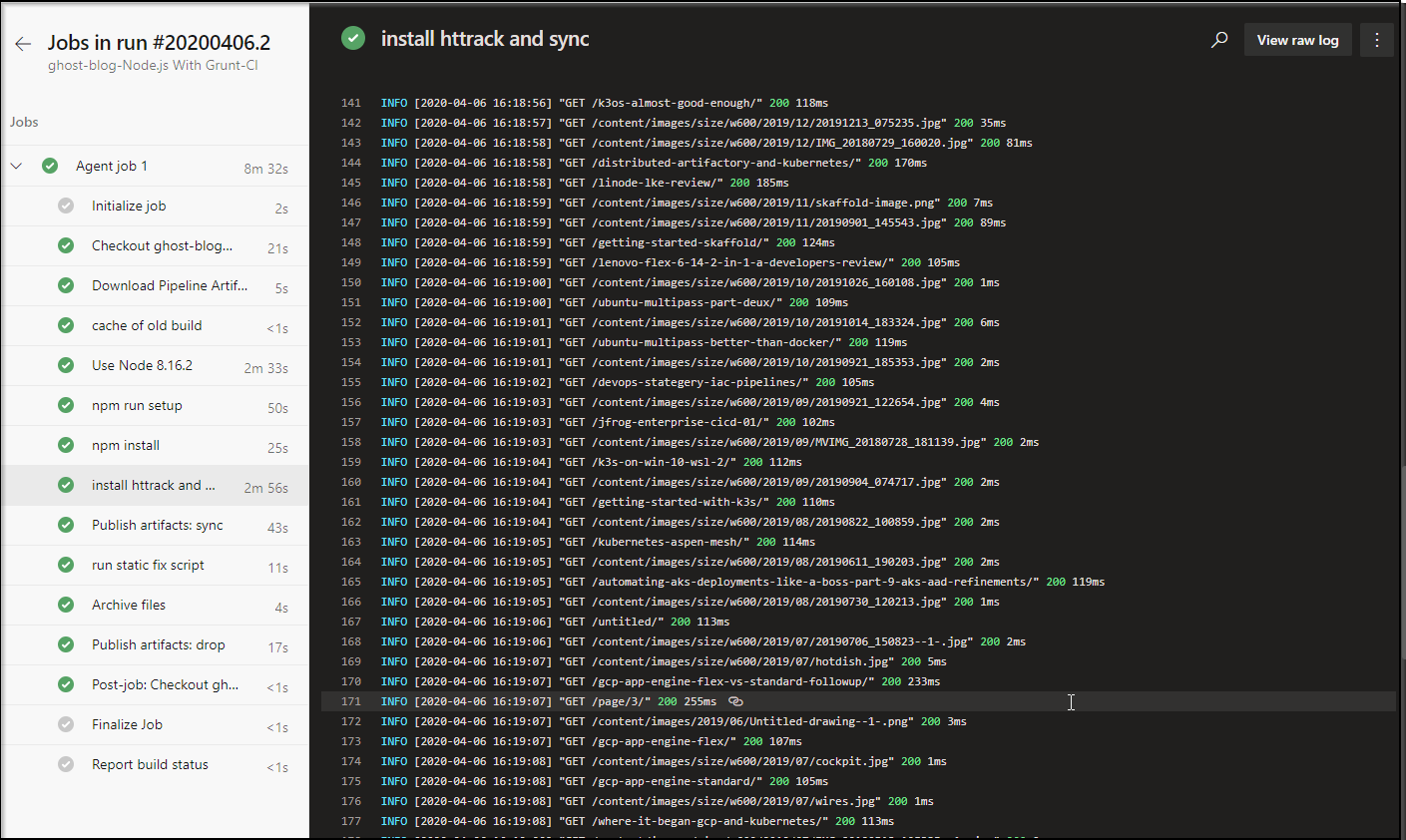

As you can see, with a few httrack tricks (and I had to flesh them out with other pipelines), I managed to reduce the time from 1h 1m 32s to 4m 39s.
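To put those two durations side by side, here is a small sketch that converts them to seconds and works out the saving. The `to_seconds` helper is a made-up name for illustration; only the two durations come from the builds above.

```shell
# to_seconds "1h 1m 32s": convert an h/m/s duration string to seconds.
to_seconds() (
  total=0
  for part in $1; do
    case "$part" in
      *h) total=$(( total + ${part%h} * 3600 )) ;;
      *m) total=$(( total + ${part%m} * 60 )) ;;
      *s) total=$(( total + ${part%s} )) ;;
    esac
  done
  echo "$total"
)

before=$(to_seconds "1h 1m 32s")   # 3692 seconds
after=$(to_seconds "4m 39s")       # 279 seconds
echo "saved $(( (before - after) * 100 / before ))% of the build time"
```

That works out to 3692 seconds before and 279 seconds after, so the build now takes under a tenth of the time it used to.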

But how, you may ask?

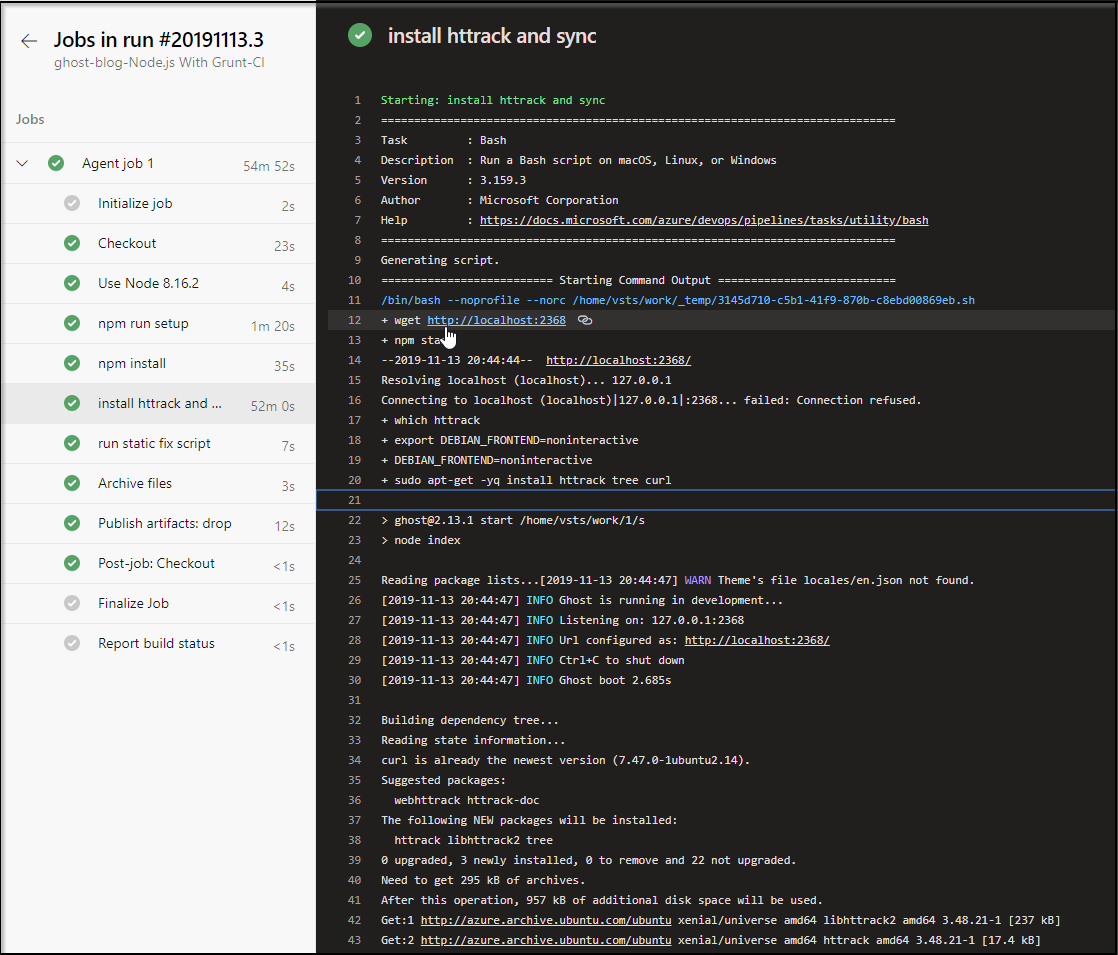

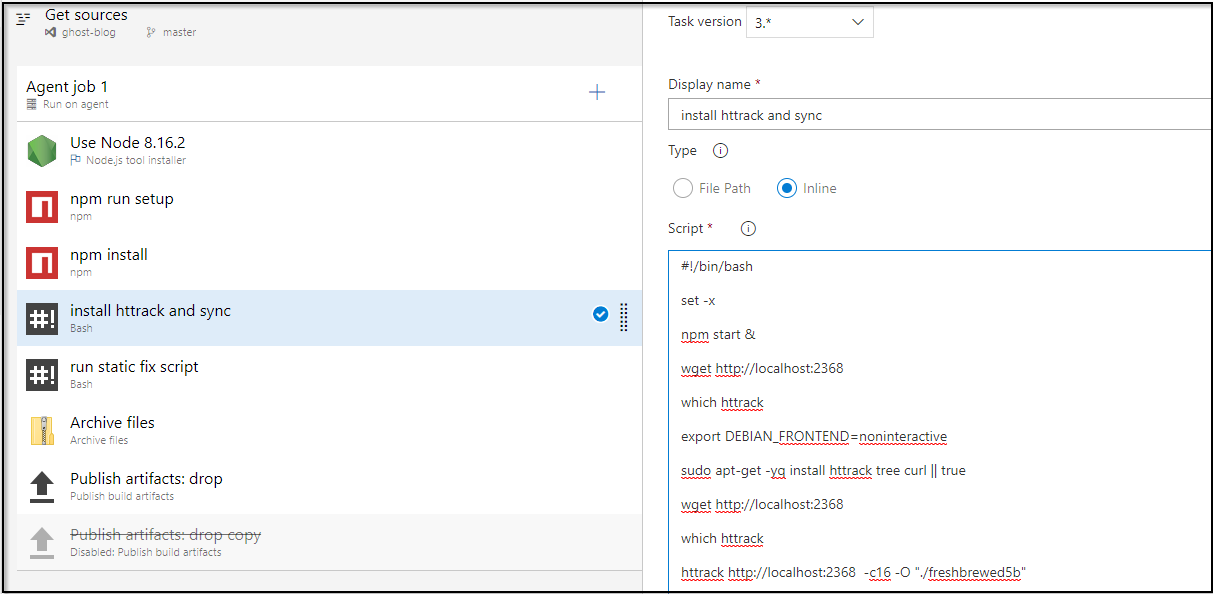

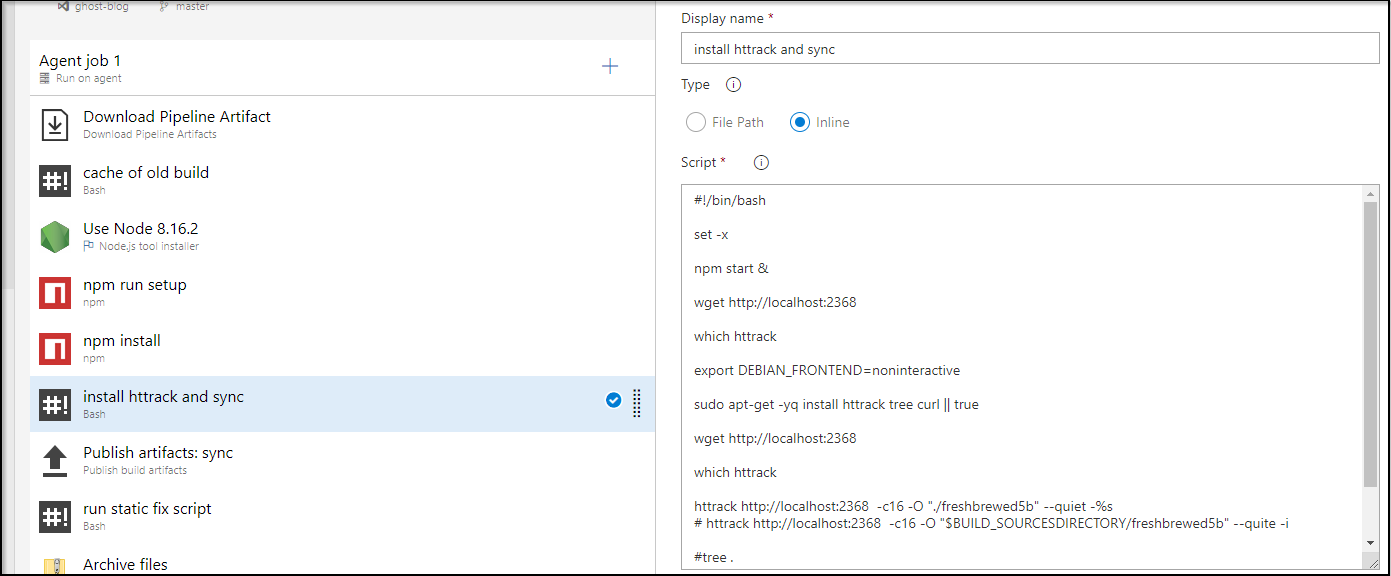

First, let’s look at my old steps:

One issue here is that we started Ghost (npm start &) and then synced everything to a new folder (freshbrewed5b). Then, as a post step, we crawled that folder and changed http://localhost:2368 to https://freshbrewed.com in the “run static fix script” step.

This meant that with every single build, I slowly used a web crawler to sync and sync and sync everything over and over, even though I rarely updated the older blog writeups.
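The contents of static_fix.sh are not shown in this post, so the following is only a guess at the general shape of such a step: a hypothetical `rewrite_base_url` helper that swaps one base URL for another in every HTML file under a folder.

```shell
# rewrite_base_url DIR FROM TO
# Replace FROM with TO in every .html file under DIR.
# FROM is treated as a basic regular expression and '|' is the sed delimiter,
# so values containing regex metacharacters or '|' would need escaping first.
rewrite_base_url() (
  dir=$1 from=$2 to=$3
  find "$dir" -type f -name '*.html' | while IFS= read -r file; do
    sed "s|$from|$to|g" "$file" > "$file.tmp" && mv "$file.tmp" "$file"
  done
)
```

A call such as `rewrite_base_url freshbrewed5b http://localhost:2368 https://freshbrewed.com` touches every page, which is exactly why a rewritten folder is a poor starting point for the next crawl.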

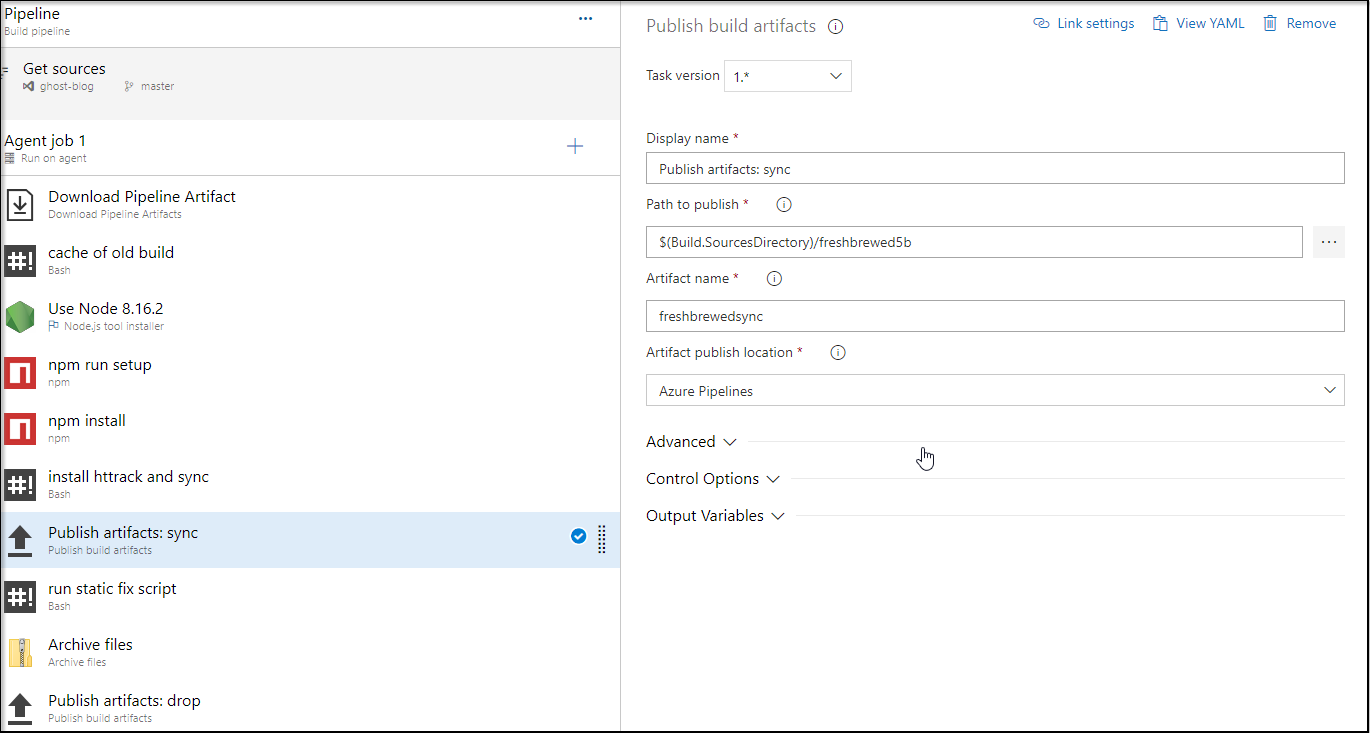

The first step I realized I needed was to archive a sync. I would need to do this before I crawled and modified every page for the URL.

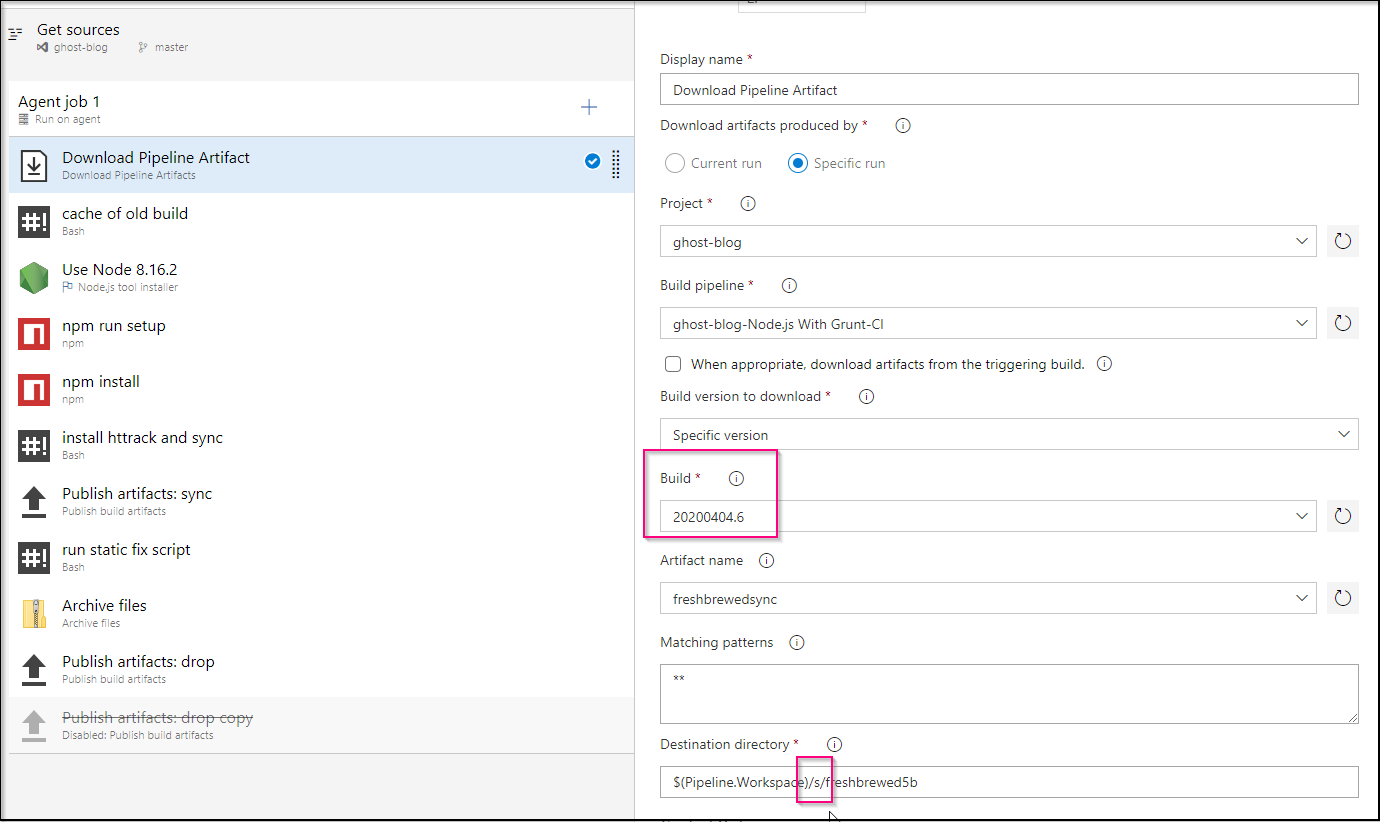

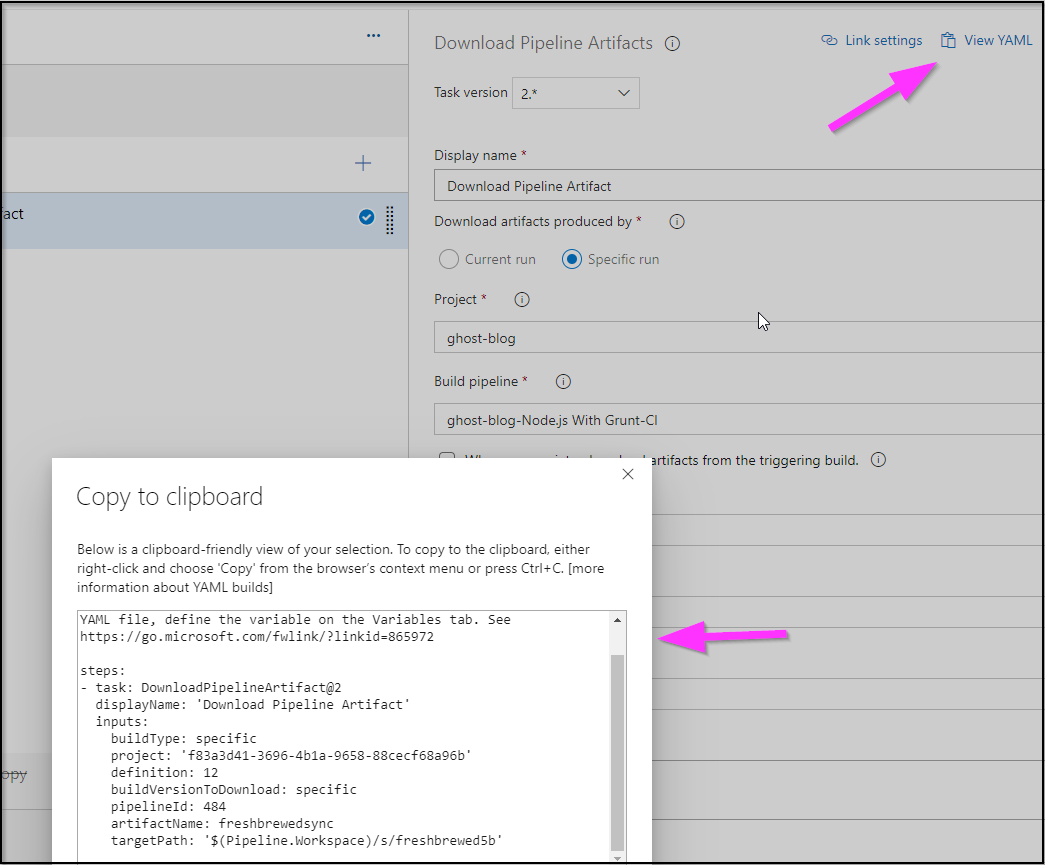

The next step would be to download it at the start of the next build. The part that honestly threw me for a loop was the “Pipeline.Workspace” variable. VSTS variables have changed over time as Microsoft moves to a more unified pipeline approach. We used to talk about source and artifact dirs. I assumed this was the source dir and was mistaken: it’s the root of both, so I needed the “/s/” in there.
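Inside a bash step, pipeline variables show up as environment variables, uppercased with the dots turned into underscores (Pipeline.Workspace becomes PIPELINE_WORKSPACE). A minimal sketch of resolving the sync folder that way follows; the `sync_target` name is hypothetical, and the fallback assumes the default checkout where sources land in the “s” folder.

```shell
# sync_target: print where the previous sync should be unpacked.
# Prefers BUILD_SOURCESDIRECTORY and falls back to "$PIPELINE_WORKSPACE/s",
# which assumes the default single-repository checkout location.
sync_target() {
  echo "${BUILD_SOURCESDIRECTORY:-$PIPELINE_WORKSPACE/s}/freshbrewed5b"
}
```

Echoing `$(sync_target)` early in the build is a cheap way to confirm the download step and the httrack step agree on the folder.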

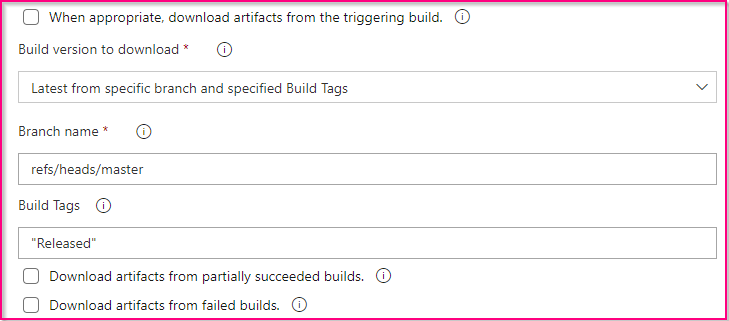

You will see above an area I’ll need to optimize later: I’ll want to change the fixed source build to the last released build. This means I’ll need to add some tagging and change the above step (Build version to download) from “Specific version” to a specific branch and build tags.

The step I’ll gloss over is the “cache of old build” which is some bash debug I used to try and figure out where the heck my files were going and how they were laid out.

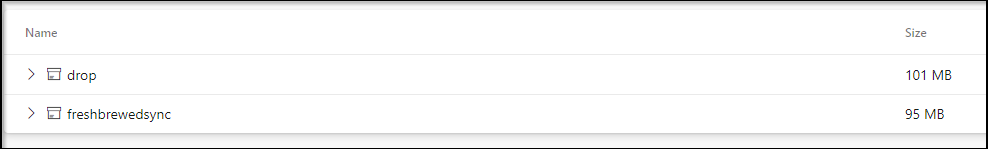

And if you want an idea of how big a sync we are speaking about, the website as a whole is about 100 MB.
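A quick check along these lines reports how many files a sync folder holds and how much space it takes. It is a sketch with a hypothetical `sync_stats` name, not the debug step from the pipeline.

```shell
# sync_stats DIR: print the file count and total size (in KB) of a sync folder.
sync_stats() (
  dir=$1
  files=$(find "$dir" -type f | wc -l | tr -d ' ')
  size_kb=$(du -sk "$dir" | cut -f1)
  echo "files=$files size_kb=$size_kb"
)
```

Running `sync_stats freshbrewed5b` before and after the crawl shows at a glance whether the old sync really was restored.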

The final change was to work out some optimizations on httrack:

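We can look more carefully at those steps:

    #!/bin/bash
    set -x
    # start Ghost in the background
    npm start &
    wget http://localhost:2368
    which httrack
    # install httrack (and debug tools) without prompts
    export DEBIAN_FRONTEND=noninteractive
    sudo apt-get -yq install httrack tree curl || true
    # check again that Ghost is serving pages and httrack is on the PATH
    wget http://localhost:2368
    which httrack
    # -%s (--updatehack) limits re-transfers when updating the existing sync
    httrack http://localhost:2368 -c16 -O "./freshbrewed5b" --quiet -%s
    # httrack http://localhost:2368 -c16 -O "$BUILD_SOURCESDIRECTORY/freshbrewed5b" --quite -i
    #tree .
    cd freshbrewed5b/localhost_2368
    pwd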

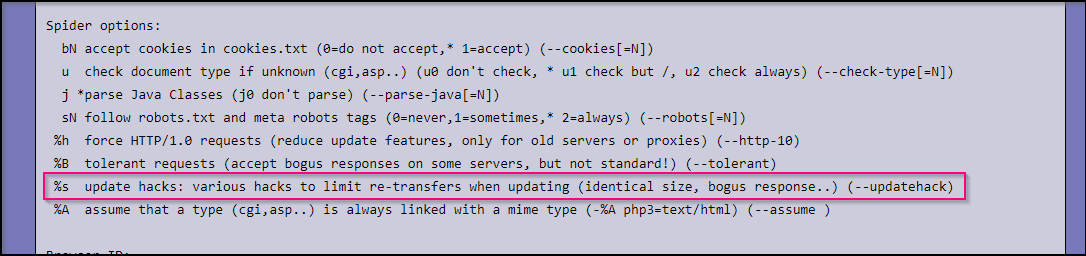

So what is really going on here? It’s that “-%s” flag combined with pointing at a sync folder pre-populated with an old sync.

-%s is the short form of “--updatehack”. We can see all the options for httrack here: https://www.httrack.com/html/fcguide.html

So to summarize, what we changed was:

- Save a full sync of the site

- Use that sync as a basis in subsequent pipelines

- Tune the options for httrack, the website syncer, to avoid re-downloading things (for us, that means images)
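Since the speed-up depends on the old sync actually being in place, a guard like the one below can make that visible in the build log. httrack keeps its state in an hts-cache folder inside the output directory; the function names here are hypothetical and this is a sketch rather than part of the pipeline.

```shell
# has_previous_sync DIR: succeed if DIR holds an httrack cache from an earlier run.
has_previous_sync() {
  [ -d "$1/hts-cache" ]
}

# sync_mode DIR: describe what the next httrack run against DIR will be.
sync_mode() {
  if has_previous_sync "$1"; then
    echo "incremental"
  else
    echo "full"
  fi
}
```

Adding `echo "sync mode: $(sync_mode ./freshbrewed5b)"` just before the httrack line makes a silently missing artifact show up as “full” instead of as a mysteriously slow build.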

We can see the results both in the times and bash output:

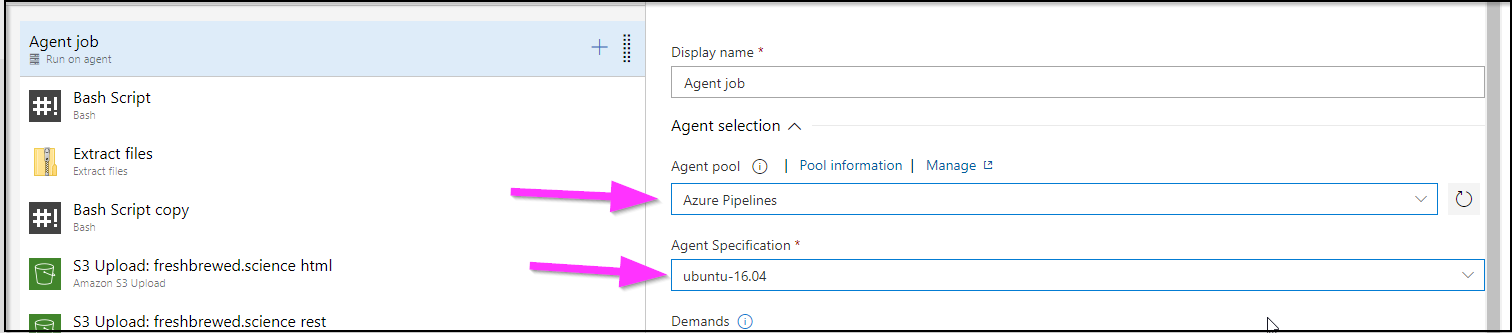

Since we are fixing pipelines, let’s tackle some Release Pipeline debt while we are at it.

Release Pipelines

First let’s fix some Agent issues. Sometimes Azure DevOps deprecates agents or, in our case, slightly changes how it structures the pools.

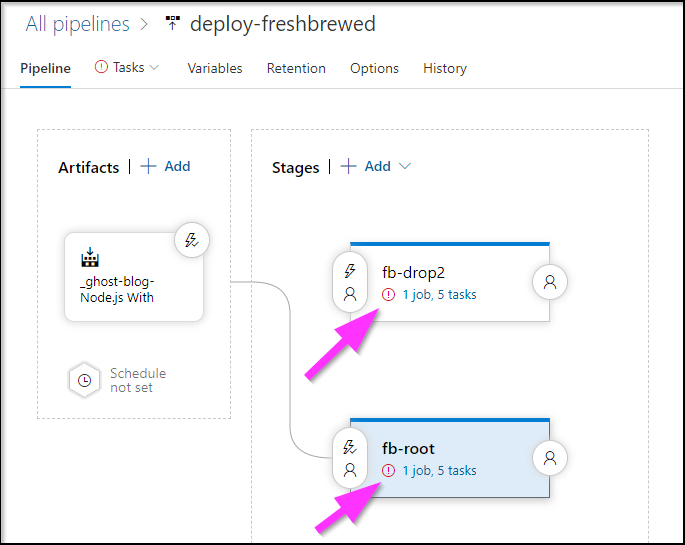

The little red bangs tell us that Azure DevOps needs a bit of TLC:

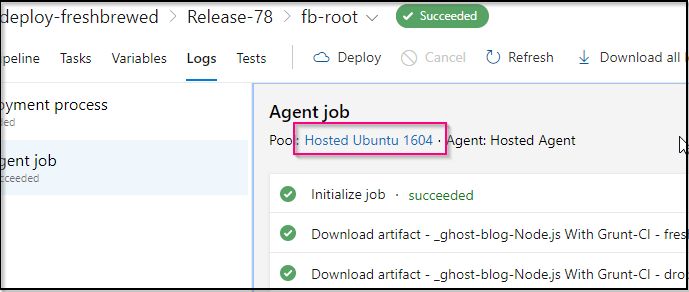

The first thing I’ll do is a quick check of the last run and see what agent was used.

We see that we’ve been using an older Ubuntu Xenial LTS instance.

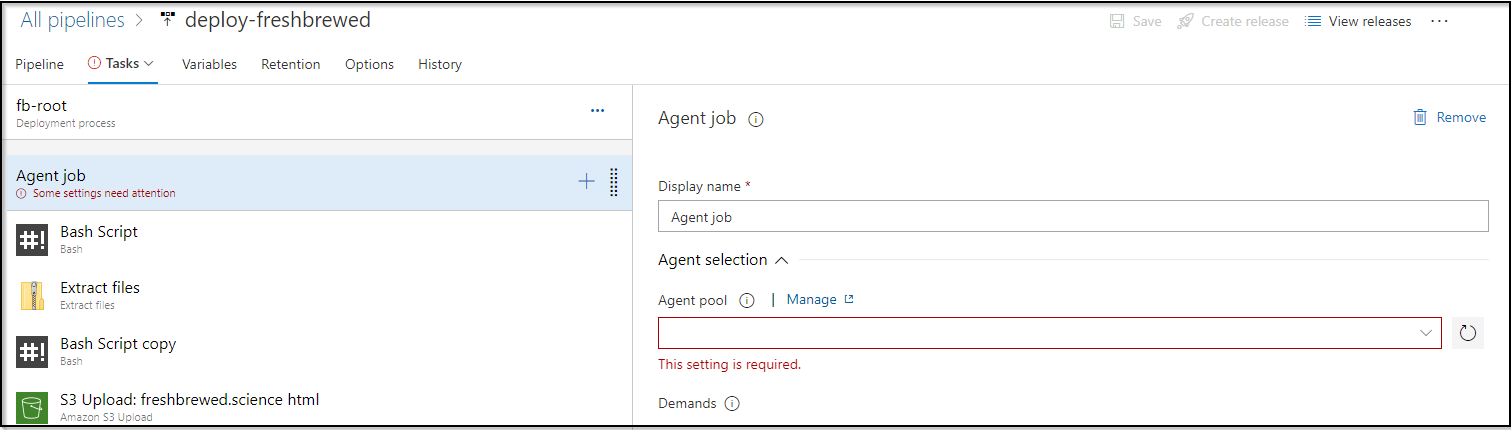

Now, back in my release pipeline, I’ll find that “settings that need attention” and change the pool:

Here we just need to pick the Ubuntu 16.04 agent specification in the Azure Pipelines agent pool.

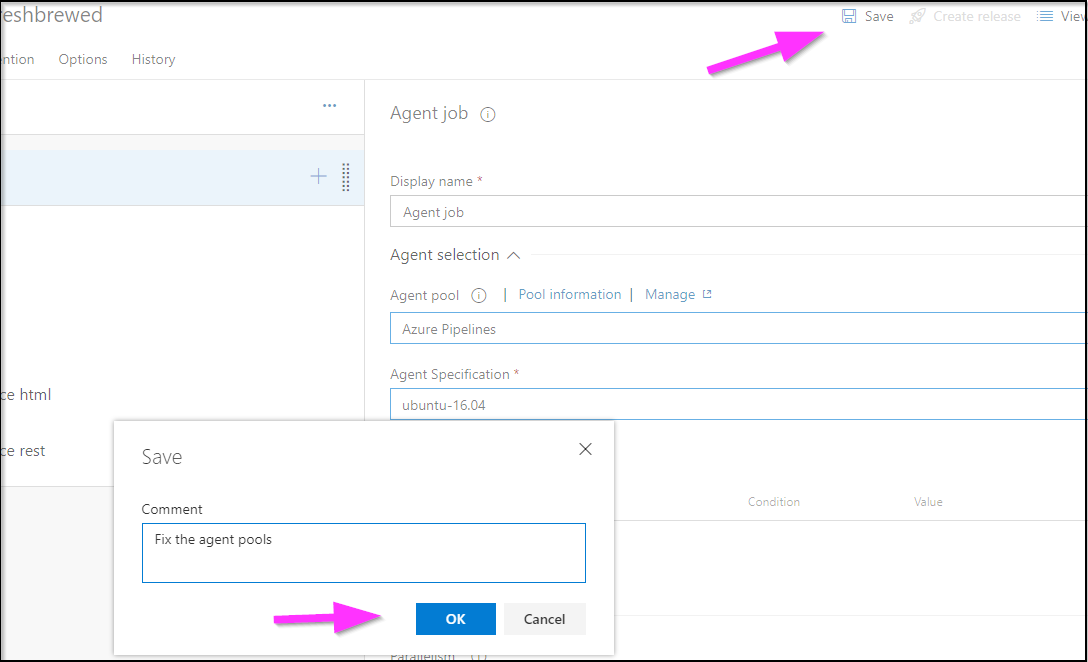

When done, we’ll just save and make a comment (for the pipeline history):

And now our pipeline graphical view is happy again:

Switching to YAML

We will now do something I swore I wouldn’t - move our pipelines to YAML.

We’ll need to go through our steps and view the YAML on each step:

So what does this translate to when done?

    # Node.js
    # Build a general Node.js project with npm.
    # Add steps that analyze code, save build artifacts, deploy, and more:
    # https://docs.microsoft.com/azure/devops/pipelines/languages/javascript
    trigger:
    - master
    pool:
      vmImage: 'ubuntu-latest'
    steps:
    - task: DownloadPipelineArtifact@2
      displayName: 'Download Pipeline Artifact'
      inputs:
        buildType: specific
        project: 'f83a3d41-3696-4b1a-9658-88cecf68a96b'
        definition: 12
        buildVersionToDownload: specific
        pipelineId: 484
        artifactName: freshbrewedsync
        targetPath: '$(Pipeline.Workspace)/s/freshbrewed5b'
    - task: NodeTool@0
      displayName: 'Use Node 8.16.2'
      inputs:
        versionSpec: '8.16.2'
    - task: Npm@1
      displayName: 'npm run setup'
      inputs:
        command: custom
        verbose: false
        customCommand: 'run setup'
    - task: Npm@1
      displayName: 'npm install'
      inputs:
        command: custom
        verbose: false
        customCommand: 'install --production'
    - bash: |
        #!/bin/bash
        set -x
        npm start &
        wget http://localhost:2368
        which httrack
        export DEBIAN_FRONTEND=noninteractive
        sudo apt-get -yq install httrack tree curl || true
        wget http://localhost:2368
        which httrack
        httrack http://localhost:2368 -c16 -O "./freshbrewed5b" --quiet -%s
      displayName: 'install httrack and sync'
      timeoutInMinutes: 120
    - task: PublishBuildArtifacts@1
      displayName: 'Publish artifacts: sync'
      inputs:
        PathtoPublish: '$(Build.SourcesDirectory)/freshbrewed5b'
        ArtifactName: freshbrewedsync
    - task: Bash@3
      displayName: 'run static fix script'
      inputs:
        targetType: filePath
        filePath: './static_fix.sh'
        arguments: freshbrewed5b
    - task: ArchiveFiles@2
      displayName: 'Archive files'
      inputs:
        rootFolderOrFile: '$(Build.SourcesDirectory)/freshbrewed5b'
        includeRootFolder: false
    - task: PublishBuildArtifacts@1
      displayName: 'Publish artifacts: drop'

It’s a pretty simple azure-pipelines.yaml file, and it built without issue:

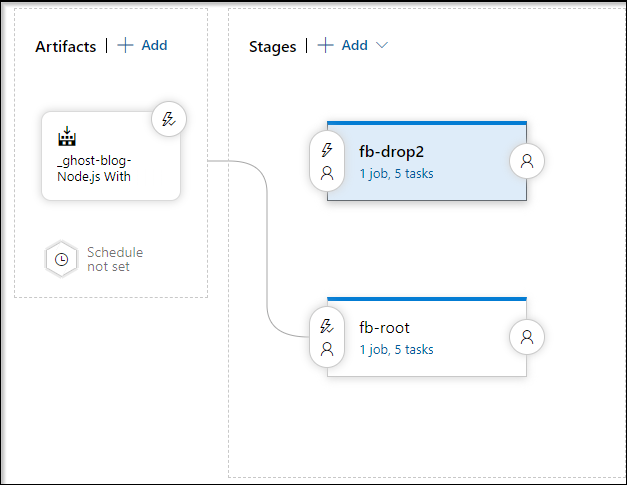

But what if we want to really make this complete and handle Build and Release in a single pipeline? It would also be nice if we could support branches, not “master only” (I’m a fan of gitflow).

Something that might look like this:

We can do this with multi-stage pipelines.

    trigger:
      branches:
        include:
        - master
        - develop
        - feature/*
    pool:
      vmImage: 'ubuntu-latest'
    stages:
    - stage: build
      jobs:
      - job: start_n_sync
        displayName: start_n_sync
        continueOnError: false
        steps:
        - task: DownloadPipelineArtifact@2
          displayName: 'Download Pipeline Artifact'
          inputs:
            buildType: specific
            project: 'f83a3d41-3696-4b1a-9658-88cecf68a96b'
            definition: 12
            buildVersionToDownload: specific
            pipelineId: 484
            artifactName: freshbrewedsync
            targetPath: '$(Pipeline.Workspace)/s/freshbrewed5b'
        - task: NodeTool@0
          displayName: 'Use Node 8.16.2'
          inputs:
            versionSpec: '8.16.2'
        - task: Npm@1
          displayName: 'npm run setup'
          inputs:
            command: custom
            verbose: false
            customCommand: 'run setup'
        - task: Npm@1
          displayName: 'npm install'
          inputs:
            command: custom
            verbose: false
            customCommand: 'install --production'
        - bash: |
            #!/bin/bash
            set -x
            npm start &
            wget http://localhost:2368
            which httrack
            export DEBIAN_FRONTEND=noninteractive
            sudo apt-get -yq install httrack tree curl || true
            wget http://localhost:2368
            which httrack
            httrack http://localhost:2368 -c16 -O "./freshbrewed5b" --quiet -%s
          displayName: 'install httrack and sync'
          timeoutInMinutes: 120
        - task: PublishBuildArtifacts@1
          displayName: 'Publish artifacts: sync'
          inputs:
            PathtoPublish: '$(Build.SourcesDirectory)/freshbrewed5b'
            ArtifactName: freshbrewedsync
        - task: Bash@3
          displayName: 'run static fix script'
          inputs:
            targetType: filePath
            filePath: './static_fix.sh'
            arguments: freshbrewed5b
        - task: ArchiveFiles@2
          displayName: 'Archive files'
          inputs:
            rootFolderOrFile: '$(Build.SourcesDirectory)/freshbrewed5b'
            includeRootFolder: false
        - task: PublishBuildArtifacts@1
          displayName: 'Publish artifacts: drop'
    - stage: release_prod
      dependsOn: build
      condition: and(succeeded(), eq(variables['Build.SourceBranch'], 'refs/heads/master'))
      jobs:
      - job: release
        pool:
          vmImage: 'ubuntu-latest'
        steps:
        - task: DownloadBuildArtifacts@0
          inputs:
            buildType: "current"
            downloadType: "single"
            artifactName: "drop"
            downloadPath: "_drop"
        - task: ExtractFiles@1
          displayName: 'Extract files '
          inputs:
            archiveFilePatterns: '**/drop/*.zip'
            destinationFolder: '$(System.DefaultWorkingDirectory)/out'
        - bash: |
            #!/bin/bash
            export
            set -x
            export DEBIAN_FRONTEND=noninteractive
            sudo apt-get -yq install tree
            cd ..
            pwd
            tree .
          displayName: 'Bash Script Debug'
        - task: AmazonWebServices.aws-vsts-tools.S3Upload.S3Upload@1
          displayName: 'S3 Upload: freshbrewed.science html'
          inputs:
            awsCredentials: freshbrewed
            regionName: 'us-east-1'
            bucketName: freshbrewed.science
            sourceFolder: '$(System.DefaultWorkingDirectory)/out/localhost_2368'
            globExpressions: '**/*.html'
            filesAcl: 'public-read'
        - task: AmazonWebServices.aws-vsts-tools.S3Upload.S3Upload@1
          displayName: 'S3 Upload: freshbrewed.science rest'
          inputs:
            awsCredentials: freshbrewed
            regionName: 'us-east-1'
            bucketName: freshbrewed.science
            sourceFolder: '$(System.DefaultWorkingDirectory)/out/localhost_2368'
            filesAcl: 'public-read'
    - stage: release_test
      dependsOn: build
      condition: and(succeeded(), ne(variables['Build.SourceBranch'], 'refs/heads/master'))
      jobs:
      - job: release
        pool:
          vmImage: 'ubuntu-latest'
        steps:
        - task: DownloadBuildArtifacts@0
          inputs:
            buildType: "current"
            downloadType: "single"
            artifactName: "drop"
            downloadPath: "_drop"
        - task: ExtractFiles@1
          displayName: 'Extract files '
          inputs:
            archiveFilePatterns: '**/drop/*.zip'
            destinationFolder: '$(System.DefaultWorkingDirectory)/out'
        - bash: |
            #!/bin/bash
            export
            set -x
            export DEBIAN_FRONTEND=noninteractive
            sudo apt-get -yq install tree
            cd ..
            pwd
            tree .
          displayName: 'Bash Script Debug'
        - task: AmazonWebServices.aws-vsts-tools.S3Upload.S3Upload@1
          displayName: 'S3 Upload: freshbrewed.science rest'
          inputs:
            awsCredentials: freshbrewed
            regionName: 'us-east-1'
            bucketName: freshbrewed-test
            sourceFolder: '$(System.DefaultWorkingDirectory)/out/localhost_2368'
            filesAcl: 'public-read'

    trigger:
      branches:
        include:
        - develop
        - feature/*

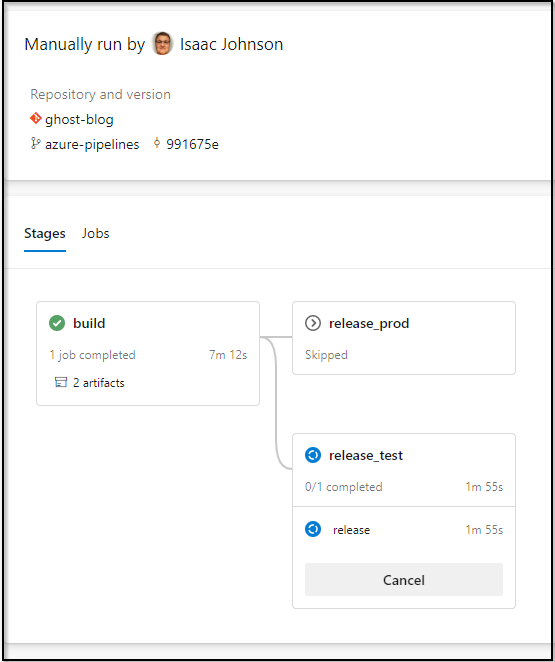

So what we’ve done is created three stages…

    stages:
    - stage: build
      jobs:
      - job: start_n_sync
        displayName: start_n_sync
        continueOnError: false
        ...
    - stage: release_prod
      dependsOn: build
      condition: and(succeeded(), eq(variables['Build.SourceBranch'], 'refs/heads/master'))
      ...
    - stage: release_test
      dependsOn: build
      condition: and(succeeded(), ne(variables['Build.SourceBranch'], 'refs/heads/master'))

While it’s technically a “fan out” pipeline, the release_prod and release_test stages are logically mutually exclusive: either the branch is master or it is not.
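The branch logic can be mirrored in a few lines of shell, which is handy for reasoning about which stage a given push will hit. This is a sketch only: the function names are hypothetical, and the shell glob is a stand-in for how Azure DevOps matches “feature/*”, not a reimplementation of it.

```shell
# should_trigger BRANCH: succeed if a push to BRANCH matches the trigger list.
should_trigger() {
  case "$1" in
    master|develop|feature/*) return 0 ;;
    *) return 1 ;;
  esac
}

# release_stage REF: print which release stage runs for a full ref name.
release_stage() {
  if [ "$1" = "refs/heads/master" ]; then
    echo release_prod
  else
    echo release_test
  fi
}
```

Because `release_stage` has only two outcomes, every triggered branch lands in exactly one release stage, never both.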

We can see this behaviour in action by manually triggering a pipeline:

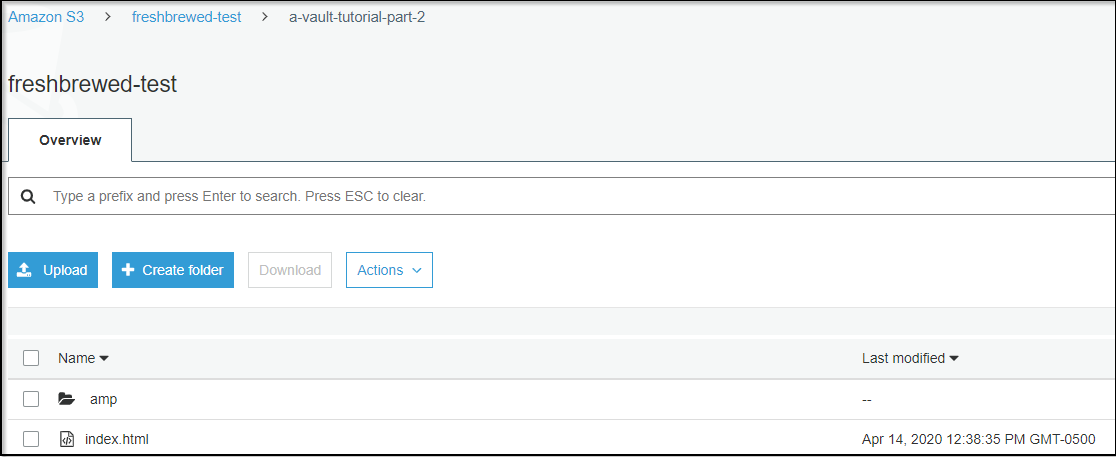

I also, as denoted in the YAML above, created a “test” site to receive content:

With a public ACL and index file, this means the “pre-validation” site shows up here: http://freshbrewed-test.s3-website-us-east-1.amazonaws.com/
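For reference, that address follows the S3 static website endpoint pattern of bucket name plus region. A tiny sketch, with a hypothetical `s3_website_url` helper, that builds the dash-style form shown above:

```shell
# s3_website_url BUCKET REGION
# Build the S3 static-website endpoint in the dash-style form used above.
# Some regions use "s3-website.REGION" (with a dot) instead; check the AWS docs.
s3_website_url() {
  echo "http://$1.s3-website-$2.amazonaws.com/"
}
```

Calling `s3_website_url freshbrewed-test us-east-1` reproduces the test site address.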

I might re-organize later, but this proves out the point: I can combine pipelines to make a live one and let the build definition live in code.

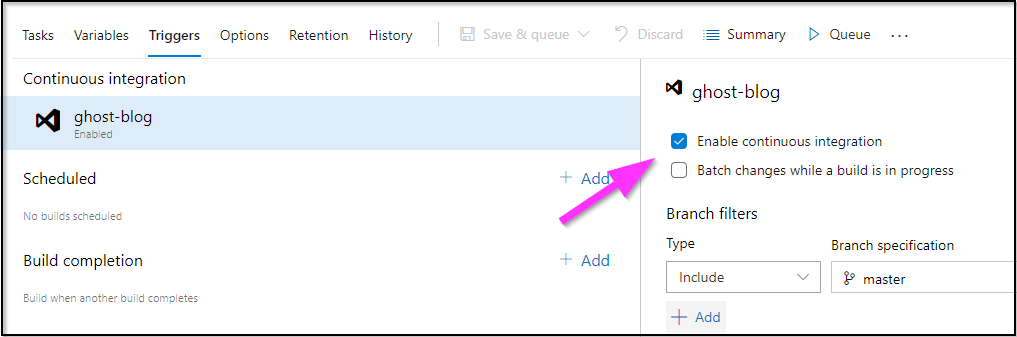

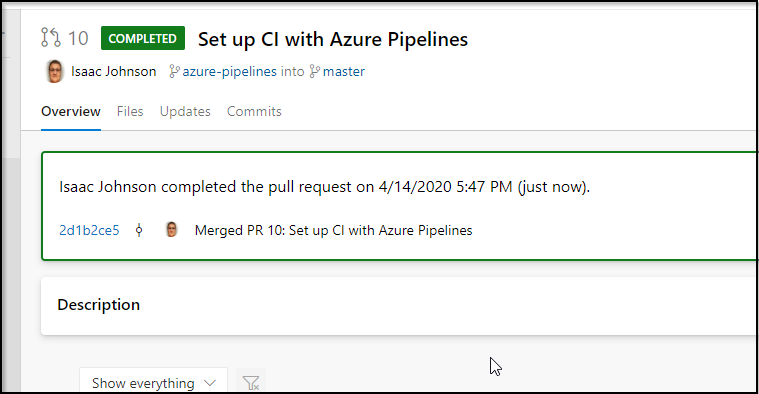

I can now complete my PR to make the change live (after disabling the old jobs’ triggers):

Note: once completed:

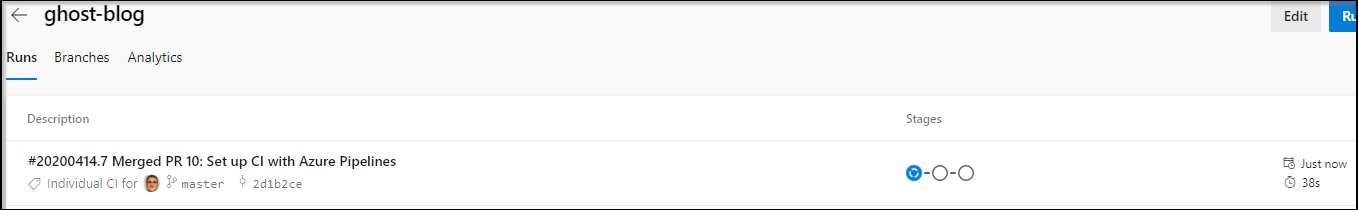

This will of course trigger a build/release:

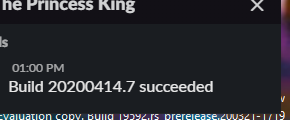

The run includes our Slack notifications.

Summary

Ignoring your problems doesn’t make them go away. DevOps pipelines are not Ronco machines - you don’t ‘set it and forget it’. They require a certain amount of TLC and hydration. In my case, I let some debt build until I had to pay to work through it.

Additionally, as much as we love the graphical tools Azure DevOps has to create pipelines, the world has moved to YAML. And if we want to stay on top of the latest, we need to move as well. It has allowed us to merge two pipelines into one and made it easier to share the steps in our pipeline with others.