Published: Apr 3, 2020 by Isaac Johnson

In my last missive I detailed AKS and Ingress using NGinx Ingress Controllers. For public-facing services this can be sufficient. However, many businesses require tighter controls, whether for canary-style deployments, hybrid-cloud mixed systems or geofencing using CIDR blocks.

While we have a public IP that can be reached globally, here are two methods we can explore to restrict traffic.

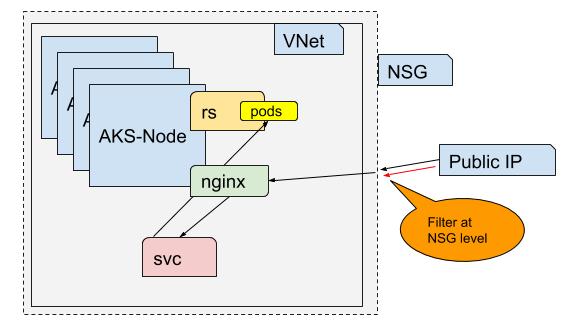

Network Security Groups

One way is to restrict traffic at the Network Security Group (NSG) level. This maintains the perimeter around the VNet that holds our cluster’s VMs. We can see that rules have been added for us that allow all of the internet to access our NGinx.

As a result, I can reach the site from anywhere.

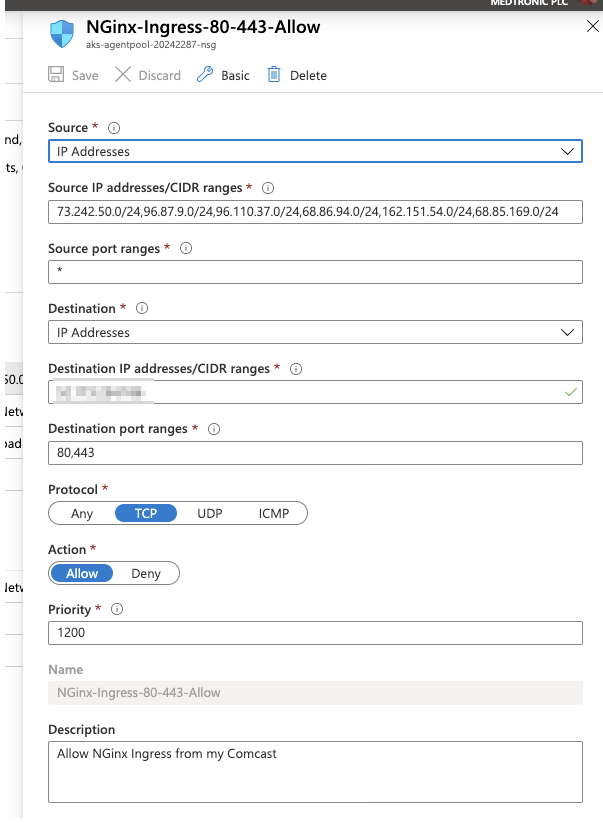

Let’s head to the Inbound rules and first add a rule.

We are going to give it a priority number above 500 but below the default rules in the 65000 range, then remove the old ones created by Nginx…

Which means, this:

becomes:

One thing I discovered in testing is that allowing only my external-facing IP address was not enough. I used traceroute to find the hops from Comcast and added CIDR rules for all of them.

This now means my network range can see the site, but others would get an unreachable page:

From my AKS cluster, I now see:

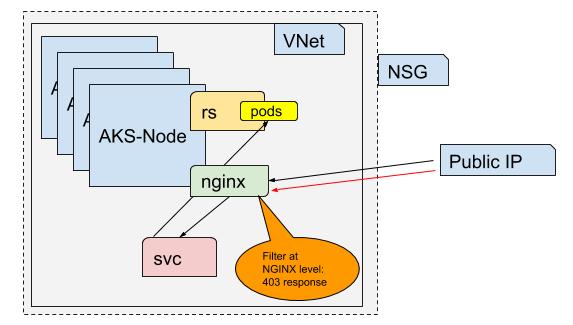
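The portal steps above could also be scripted. Here is a minimal sketch using the Azure CLI's `az network nsg rule create`. The resource group, NSG name, rule name, priority 600 and the `203.0.113.0/24` range are all placeholders I have assumed for illustration, not values from this cluster.

```shell
#!/bin/sh
# Sketch: allow one CIDR block to reach ports 80/443 through the NSG.
# NSG_RESOURCE_GROUP and NSG_NAME are placeholders (assumptions); look up
# the real values for the resource group that holds your cluster's NSG.
NSG_RESOURCE_GROUP="${NSG_RESOURCE_GROUP:-my-node-resource-group}"
NSG_NAME="${NSG_NAME:-my-aks-nsg}"

# allow_cidr RULE_NAME PRIORITY CIDR
# Creates an inbound TCP allow rule for the given source range.
allow_cidr() {
  az network nsg rule create \
    --resource-group "$NSG_RESOURCE_GROUP" \
    --nsg-name "$NSG_NAME" \
    --name "$1" \
    --priority "$2" \
    --direction Inbound \
    --access Allow \
    --protocol Tcp \
    --source-address-prefixes "$3" \
    --destination-port-ranges 80 443
}

# Example call (203.0.113.0/24 is a documentation range, not a real network):
# allow_cidr allow-home-http 600 203.0.113.0/24
```

Calling `allow_cidr` once per hop range would mirror the manual rules described above. The broad allow rules still have to be removed separately for the restriction to take effect.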

Restricting via NGinx

Perhaps you want to set controls on the NGinx ingress itself instead of the NSG. In other words, you want to allow traffic to hit the ingress but reject anything outside our allowed ranges. This can be controlled by annotations on the ingress definition, which can be parameterized and updated via pipelines, allowing our CI/CD systems to control it. This could be particularly useful for slow rollouts (canary deployments) or for allowing limited private access. The disadvantage is that your NGinx can get hammered from anywhere, so you are more exposed from a security standpoint.
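To make the pipeline idea concrete, here is a minimal sketch of how a CI/CD step might set the allowed ranges on an ingress that already exists. It uses `kubectl annotate` with the `nginx.ingress.kubernetes.io/whitelist-source-range` annotation. The function name, the `my-ingress` name and the `ALLOWED_CIDRS` variable are hypothetical.

```shell
#!/bin/sh
# Sketch: set the allowed source ranges on an existing Ingress from a pipeline.
# The ingress name, namespace and CIDR list are supplied by the caller.

# set_allowed_ranges INGRESS NAMESPACE CIDRS
# CIDRS is a comma-separated list, e.g. "203.0.113.0/24,198.51.100.7/32".
set_allowed_ranges() {
  kubectl annotate ingress "$1" \
    --namespace "$2" \
    --overwrite \
    "nginx.ingress.kubernetes.io/whitelist-source-range=$3"
}

# Example call, with ALLOWED_CIDRS as a hypothetical pipeline variable:
# set_allowed_ranges my-ingress shareddev "$ALLOWED_CIDRS"
```

Because `--overwrite` replaces any existing value, re-running the step with a wider list is one way to open a rollout up gradually.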

Let’s install our Helloworld example:

$ helm version

version.BuildInfo{Version:"v3.0.2", GitCommit:"19e47ee3283ae98139d98460de796c1be1e3975f", GitTreeState:"clean", GoVersion:"go1.13.5"}

$ helm repo add azure-samples https://azure-samples.github.io/helm-charts/

"azure-samples" has been added to your repositories

$ helm repo update

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "azure-samples" chart repository

...Successfully got an update from the "nginx-stable" chart repository

...Successfully got an update from the "banzaicloud-stable" chart repository

...Successfully got an update from the "bitnami" chart repository

Update Complete. ⎈ Happy Helming!⎈

$ helm install aks-helloworld azure-samples/aks-helloworld --namespace shareddev

NAME: aks-helloworld

LAST DEPLOYED: Thu Apr 2 10:49:46 2020

NAMESPACE: shareddev

STATUS: deployed

REVISION: 1

TEST SUITE: None

We can list our services there and add an ingress:

$ kubectl get svc -n shareddev

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

aks-helloworld ClusterIP 10.0.200.75 <none> 80/TCP 21s

ingress-nginx LoadBalancer 10.0.19.138 xxx.xxx.xxx.xxx 80:30466/TCP,443:30090/TCP 24h

$ vi helloworld-ingress.yaml

$ kubectl apply -f helloworld-ingress.yaml

error: error validating "helloworld-ingress.yaml": error validating data: ValidationError(Ingress.spec.rules[0].http.paths[0]): unknown field "pathType" in io.k8s.api.networking.v1beta1.HTTPIngressPath; if you choose to ignore these errors, turn validation off with --validate=false

$ kubectl apply -f helloworld-ingress.yaml --validate=false

ingress.networking.k8s.io/aks-helloworld created

We can now restrict at the controller level.

Let’s look at the Nginx configmap:

$ kubectl get cm nginx-configuration -n shareddev

NAME DATA AGE

nginx-configuration 0 24h

$ kubectl get cm nginx-configuration -n shareddev -o yaml

apiVersion: v1
kind: ConfigMap
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"v1","kind":"ConfigMap","metadata":{"annotations":{},"labels":{"app.kubernetes.io/name":"ingress-nginx","app.kubernetes.io/part-of":"ingress-nginx"},"name":"nginx-configuration","namespace":"shareddev"}}
  creationTimestamp: "2020-04-01T15:29:50Z"
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
  name: nginx-configuration
  namespace: shareddev
  resourceVersion: "177523"
  selfLink: /api/v1/namespaces/shareddev/configmaps/nginx-configuration
  uid: a0c5ef9f-742d-11ea-a4a0-bac2a8381a43

Here we have no settings. Let’s add a data block to lock down access.

$ kubectl edit cm nginx-configuration -n shareddev

configmap/nginx-configuration edited

$ kubectl get cm nginx-configuration -n shareddev -o yaml

apiVersion: v1
data:
  whitelist-source-range: 10.2.2.2/24
kind: ConfigMap
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"v1","kind":"ConfigMap","metadata":{"annotations":{},"labels":{"app.kubernetes.io/name":"ingress-nginx","app.kubernetes.io/part-of":"ingress-nginx"},"name":"nginx-configuration","namespace":"shareddev"}}
  creationTimestamp: "2020-04-01T15:29:50Z"
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
  name: nginx-configuration
  namespace: shareddev
  resourceVersion: "177827"
  selfLink: /api/v1/namespaces/shareddev/configmaps/nginx-configuration
  uid: a0c5ef9f-742d-11ea-a4a0-bac2a8381a43

Note that I added a data block with a whitelist-source-range. I used 10.2.2.2 knowing it won’t match any of my systems.
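To see which client addresses a range like this would admit, here is a small sketch of standard CIDR matching in plain shell arithmetic. It assumes the controller masks off the host bits in the usual way, so that `10.2.2.2/24` covers the same addresses as `10.2.2.0/24`. The helper names `ip_to_int` and `in_cidr` are my own.

```shell
#!/bin/sh
# Sketch of standard CIDR matching for IPv4 (helper names are hypothetical).

# ip_to_int A.B.C.D -> prints the address as a single 32-bit integer
ip_to_int() {
  # Split the dotted quad on "." and fold the four octets together.
  old_ifs=$IFS
  IFS=.
  set -- $1
  IFS=$old_ifs
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# in_cidr IP CIDR -> exit status 0 if IP falls inside CIDR, 1 otherwise
in_cidr() {
  ip=$(ip_to_int "$1")
  net=$(ip_to_int "${2%/*}")   # part before the slash
  bits=${2#*/}                 # prefix length after the slash
  # Build the netmask, keeping only the low 32 bits.
  mask=$(( (4294967295 << (32 - $bits)) & 4294967295 ))
  # Compare the network portions; host bits on either side are ignored.
  [ $(( ip & mask )) -eq $(( net & mask )) ]
}

# Example calls:
# in_cidr 10.2.2.200 10.2.2.2/24 && echo "allowed"
# in_cidr 10.2.3.1   10.2.2.2/24 || echo "blocked"
```

Under that assumption, anything from 10.2.2.0 through 10.2.2.255 matches, and every other source is rejected.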

We can now see it’s immediately blocked.
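The interactive `kubectl edit` used above is awkward to automate. A non-interactive alternative, sketched here with `kubectl patch` and a JSON merge patch, could add or remove the same key. The function names are my own, and the configmap name and namespace are simply the ones from this walkthrough.

```shell
#!/bin/sh
# Sketch: manage the controller-wide whitelist without an interactive editor.
CM_NAME="nginx-configuration"
CM_NAMESPACE="shareddev"

# set_global_whitelist CIDRS
# Adds or replaces data.whitelist-source-range in the configmap.
set_global_whitelist() {
  kubectl patch configmap "$CM_NAME" -n "$CM_NAMESPACE" --type merge \
    -p "{\"data\":{\"whitelist-source-range\":\"$1\"}}"
}

# clear_global_whitelist
# A null value in a JSON merge patch deletes the key.
clear_global_whitelist() {
  kubectl patch configmap "$CM_NAME" -n "$CM_NAMESPACE" --type merge \
    -p '{"data":{"whitelist-source-range":null}}'
}

# Example calls:
# set_global_whitelist 10.2.2.2/24
# clear_global_whitelist
```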

But what if we want to enable access for some but not all?

Let’s restore our configmap (kubectl edit configmap nginx-configuration -n shareddev) and remove the data block:

$ kubectl get cm nginx-configuration -n shareddev -o yaml

apiVersion: v1
kind: ConfigMap
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"v1","kind":"ConfigMap","metadata":{"annotations":{},"labels":{"app.kubernetes.io/name":"ingress-nginx","app.kubernetes.io/part-of":"ingress-nginx"},"name":"nginx-configuration","namespace":"shareddev"}}
  creationTimestamp: "2020-04-01T15:29:50Z"
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
  name: nginx-configuration
  namespace: shareddev
  resourceVersion: "178232"
  selfLink: /api/v1/namespaces/shareddev/configmaps/nginx-configuration
  uid: a0c5ef9f-742d-11ea-a4a0-bac2a8381a43

Let’s make two endpoints: one that is open, and one with an annotation that blocks access:

$ cat helloworld-ingress.yaml

apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: aks-helloworld
  namespace: shareddev
  annotations:
    nginx.ingress.kubernetes.io/ssl-redirect: "false"
    nginx.ingress.kubernetes.io/rewrite-target: /
spec:
  rules:
  - http:
      paths:
      - path: /mysimplehelloworld
        pathType: Prefix
        backend:
          serviceName: aks-helloworld
          servicePort: 80
---
apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: aks-helloworld-blocked
  namespace: shareddev
  annotations:
    nginx.ingress.kubernetes.io/ssl-redirect: "false"
    nginx.ingress.kubernetes.io/rewrite-target: /
    nginx.ingress.kubernetes.io/whitelist-source-range: '10.2.2.0/24'
spec:
  rules:
  - http:
      paths:
      - path: /mysimplehelloworldb
        pathType: Prefix
        backend:
          serviceName: aks-helloworld
          servicePort: 80

$ kubectl apply -f helloworld-ingress.yaml --validate=false

ingress.networking.k8s.io/aks-helloworld configured

ingress.networking.k8s.io/aks-helloworld-blocked created

We can now see that the “b” (blocked) endpoint is blocked by Nginx but the other remains open:
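The same check can be made from a terminal. This is a minimal sketch using `curl` to print only the HTTP status for each path. The function names are my own, and the base URL has to be replaced with the ingress controller's EXTERNAL-IP. From a client outside the allowed range I would expect 200 for the open path and typically 403 for the blocked one.

```shell
#!/bin/sh
# Sketch: compare the HTTP status of the open and the blocked endpoint.

# http_status URL -> prints only the HTTP status code of a GET request
http_status() {
  curl -s -o /dev/null -w '%{http_code}' "$1"
}

# check_paths BASE_URL -> prints one status line per ingress path
check_paths() {
  printf 'open:    %s\n' "$(http_status "$1/mysimplehelloworld")"
  printf 'blocked: %s\n' "$(http_status "$1/mysimplehelloworldb")"
}

# Example call; substitute the ingress controller's EXTERNAL-IP:
# check_paths http://xxx.xxx.xxx.xxx
```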

Summary

When it comes to controlling access to the resources in our cluster, we have illustrated two ways to constrain access. The first uses cloud-native perimeter security techniques that would apply to any cloud compute. This is the stricter approach, but it does require access to modify Network Security Groups. The other technique uses Kubernetes-native annotations to selectively limit and enable access to specific ingress pathways.