There is one HashiCorp tool I really haven't dived into much, and that is Nomad. Nomad has been HashiCorp's product for deploying workloads, containerized and otherwise, for a while, and some large companies orchestrate remarkably large workloads with it. As a Kubernetes-centric blog, I've tended to avoid diving into non-k8s solutions. However, there are some compelling reasons to consider Nomad and, as we'll see below, even ways to leverage it from Azure DevOps and Kubernetes.

Getting Started

Let’s set up Nomad and tie it into an Azure DevOps pipeline in the most basic way to see how it works.

I’m going to use an arm64 Raspberry Pi running Ubuntu 20.04 (focal) for this demo:

```
ubuntu@ubuntu:~$ curl -fsSL https://apt.releases.hashicorp.com/gpg | sudo apt-key add -
OK
ubuntu@ubuntu:~$ sudo apt-add-repository "deb [arch=amd64] https://apt.releases.hashicorp.com $(lsb_release -cs) main"
Get:1 https://apt.releases.hashicorp.com focal InRelease [4419 B]
Hit:2 http://ports.ubuntu.com/ubuntu-ports focal InRelease
Get:3 http://ports.ubuntu.com/ubuntu-ports focal-updates InRelease [114 kB]
Get:4 https://apt.releases.hashicorp.com focal/main amd64 Packages [18.1 kB]
Get:5 http://ports.ubuntu.com/ubuntu-ports focal-backports InRelease [101 kB]
Get:6 http://ports.ubuntu.com/ubuntu-ports focal-security InRelease [109 kB]
Get:7 http://ports.ubuntu.com/ubuntu-ports focal-updates/main arm64 Packages [642 kB]
Get:8 http://ports.ubuntu.com/ubuntu-ports focal-updates/main Translation-en [192 kB]
Get:9 http://ports.ubuntu.com/ubuntu-ports focal-updates/main arm64 c-n-f Metadata [11.6 kB]
Get:10 http://ports.ubuntu.com/ubuntu-ports focal-updates/restricted arm64 Packages [2292 B]
Get:11 http://ports.ubuntu.com/ubuntu-ports focal-updates/restricted Translation-en [21.3 kB]
Get:12 http://ports.ubuntu.com/ubuntu-ports focal-updates/universe arm64 Packages [687 kB]
Get:13 http://ports.ubuntu.com/ubuntu-ports focal-updates/universe Translation-en [152 kB]
Get:14 http://ports.ubuntu.com/ubuntu-ports focal-updates/universe arm64 c-n-f Metadata [13.9 kB]
Get:15 http://ports.ubuntu.com/ubuntu-ports focal-updates/multiverse arm64 Packages [4068 B]
Get:16 http://ports.ubuntu.com/ubuntu-ports focal-updates/multiverse Translation-en [5076 B]
Get:17 http://ports.ubuntu.com/ubuntu-ports focal-updates/multiverse arm64 c-n-f Metadata [228 B]
Get:18 http://ports.ubuntu.com/ubuntu-ports focal-backports/universe arm64 Packages [4028 B]
Get:19 http://ports.ubuntu.com/ubuntu-ports focal-backports/universe arm64 c-n-f Metadata [224 B]
Get:20 http://ports.ubuntu.com/ubuntu-ports focal-security/main arm64 Packages [344 kB]
Get:21 http://ports.ubuntu.com/ubuntu-ports focal-security/main Translation-en [105 kB]
Get:22 http://ports.ubuntu.com/ubuntu-ports focal-security/main arm64 c-n-f Metadata [6048 B]
Get:23 http://ports.ubuntu.com/ubuntu-ports focal-security/restricted arm64 Packages [2092 B]
Get:24 http://ports.ubuntu.com/ubuntu-ports focal-security/restricted Translation-en [17.6 kB]
Get:25 http://ports.ubuntu.com/ubuntu-ports focal-security/universe arm64 Packages [485 kB]
Get:26 http://ports.ubuntu.com/ubuntu-ports focal-security/universe Translation-en [74.5 kB]
Get:27 http://ports.ubuntu.com/ubuntu-ports focal-security/universe arm64 c-n-f Metadata [8164 B]
Get:28 http://ports.ubuntu.com/ubuntu-ports focal-security/multiverse arm64 Packages [1000 B]
Get:29 http://ports.ubuntu.com/ubuntu-ports focal-security/multiverse Translation-en [2876 B]
Fetched 3129 kB in 5s (668 kB/s)
Reading package lists... Done
ubuntu@ubuntu:~$ sudo apt-get update && sudo apt-get install nomad
Hit:1 https://apt.releases.hashicorp.com focal InRelease
Hit:2 http://ports.ubuntu.com/ubuntu-ports focal InRelease
Hit:3 http://ports.ubuntu.com/ubuntu-ports focal-updates InRelease
Hit:4 http://ports.ubuntu.com/ubuntu-ports focal-backports InRelease
Hit:5 http://ports.ubuntu.com/ubuntu-ports focal-security InRelease
Reading package lists... Done
Reading package lists... Done
Building dependency tree
Reading state information... Done
The following additional packages will be installed:
  pipexec
Suggested packages:
  consul default-jre qemu-kvm | qemu-system-x86 rkt
The following NEW packages will be installed:
  nomad pipexec
0 upgraded, 2 newly installed, 0 to remove and 62 not upgraded.
Need to get 9793 kB of archives.
After this operation, 51.6 MB of additional disk space will be used.
Do you want to continue? [Y/n] y
Get:1 http://ports.ubuntu.com/ubuntu-ports focal/universe arm64 pipexec arm64 2.5.5-2 [17.2 kB]
Get:2 http://ports.ubuntu.com/ubuntu-ports focal/universe arm64 nomad arm64 0.8.7+dfsg1-1ubuntu1 [9776 kB]
Fetched 9793 kB in 2s (5786 kB/s)
Selecting previously unselected package pipexec.
(Reading database ... 132767 files and directories currently installed.)
Preparing to unpack .../pipexec_2.5.5-2_arm64.deb ...
Unpacking pipexec (2.5.5-2) ...
Selecting previously unselected package nomad.
Preparing to unpack .../nomad_0.8.7+dfsg1-1ubuntu1_arm64.deb ...
Unpacking nomad (0.8.7+dfsg1-1ubuntu1) ...
Setting up pipexec (2.5.5-2) ...
Setting up nomad (0.8.7+dfsg1-1ubuntu1) ...
Processing triggers for man-db (2.9.1-1) ...
Processing triggers for systemd (245.4-4ubuntu3.3) ...
```

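Note what actually got installed: because the repository line pins `[arch=amd64]`, apt on this arm64 Pi ignored HashiCorp's packages and installed Ubuntu's own, much older `0.8.7+dfsg1` package from the universe archive (see the `Get:2` line above). That version will matter later. For now, let’s verify the binary works: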

```
ubuntu@ubuntu:~$ nomad
Usage: nomad [-version] [-help] [-autocomplete-(un)install] <command> [args]

Common commands:
    run            Run a new job or update an existing job
    stop           Stop a running job
    status         Display the status output for a resource
    alloc          Interact with allocations
    job            Interact with jobs
    node           Interact with nodes
    agent          Runs a Nomad agent

Other commands:
    acl            Interact with ACL policies and tokens
    agent-info     Display status information about the local agent
    deployment     Interact with deployments
    eval           Interact with evaluations
    namespace      Interact with namespaces
    operator       Provides cluster-level tools for Nomad operators
    quota          Interact with quotas
    sentinel       Interact with Sentinel policies
    server         Interact with servers
    ui             Open the Nomad Web UI
    version        Prints the Nomad version
```
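If you would rather run HashiCorp's current build on the Pi than Ubuntu's, the repository line has to name the machine's own architecture instead of hard-coding `amd64`. Below is a minimal sketch, assuming HashiCorp's repository publishes a package for your architecture (HashiCorp's current install instructions derive it with `dpkg --print-architecture`). The function names `hashicorp_repo_line` and `add_hashicorp_repo` are my own, hypothetical helpers.

```bash
#!/usr/bin/env bash
# Sketch: add the HashiCorp apt repository for this machine's own CPU
# architecture instead of hard-coding amd64. Helper names are hypothetical.

# Print the apt source line for a Debian architecture and Ubuntu codename.
hashicorp_repo_line() {
  local arch="$1" codename="$2"
  printf 'deb [arch=%s] https://apt.releases.hashicorp.com %s main\n' \
    "$arch" "$codename"
}

# Add the repository using the local architecture and codename, then show
# which repository apt would install nomad from.
add_hashicorp_repo() {
  local line
  line="$(hashicorp_repo_line "$(dpkg --print-architecture)" "$(lsb_release -cs)")"
  sudo apt-add-repository "$line"
  sudo apt-get update
  apt-cache policy nomad
}
```

After running `add_hashicorp_repo`, check that `apt-cache policy nomad` lists `apt.releases.hashicorp.com` as the source of the candidate version before running `sudo apt-get install nomad`.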

Now that we have the binary, we can start an agent in dev mode, which runs a throwaway combined server and client (note `Client: true` and `Server: true` below):

```
ubuntu@ubuntu:~$ sudo nomad agent -dev
==> No configuration files loaded
==> Starting Nomad agent...
==> Nomad agent configuration:

    Advertise Addrs: HTTP: 127.0.0.1:4646; RPC: 127.0.0.1:4647; Serf: 127.0.0.1:4648
         Bind Addrs: HTTP: 127.0.0.1:4646; RPC: 127.0.0.1:4647; Serf: 127.0.0.1:4648
             Client: true
          Log Level: DEBUG
             Region: global (DC: dc1)
             Server: true
            Version: 0.8.7

==> Nomad agent started! Log data will stream in below:

    2021/01/29 17:02:32 [INFO] raft: Initial configuration (index=1): [{Suffrage:Voter ID:127.0.0.1:4647 Address:127.0.0.1:4647}]
    2021/01/29 17:02:32 [INFO] raft: Node at 127.0.0.1:4647 [Follower] entering Follower state (Leader: "")
    2021/01/29 17:02:32 [INFO] serf: EventMemberJoin: ubuntu.global 127.0.0.1
    …
    2021/01/29 17:02:38.802941 [INFO] client: node registration complete
    2021/01/29 17:03:37.827362 [DEBUG] driver.docker: using client connection initialized from environment
    2021/01/29 17:03:38.840934 [DEBUG] client: state changed, updating node and re-registering.
    2021/01/29 17:03:38.845617 [INFO] client: node registration complete
```

Press Ctrl-C to stop the dev agent.

Let’s now set up autocomplete and a data directory:

```
ubuntu@ubuntu:~$ nomad -autocomplete-install
ubuntu@ubuntu:~$ complete -C /usr/bin/nomad nomad
ubuntu@ubuntu:~$ sudo mkdir -p /opt/nomad
```

Of course, we want this to run as a service, so let's set up systemd:

```
ubuntu@ubuntu:~$ sudo vi /etc/systemd/system/nomad.service
ubuntu@ubuntu:~$ sudo cat /etc/systemd/system/nomad.service
[Unit]
Description=Nomad
Documentation=https://www.nomadproject.io/docs
Wants=network-online.target
After=network-online.target

[Service]
ExecReload=/bin/kill -HUP $MAINPID
ExecStart=/usr/local/bin/nomad agent -config /etc/nomad.d
KillMode=process
KillSignal=SIGINT
LimitNOFILE=infinity
LimitNPROC=infinity
Restart=on-failure
RestartSec=2
StartLimitBurst=3
StartLimitIntervalSec=10
TasksMax=infinity

[Install]
WantedBy=multi-user.target
```

Next, set up the configuration files:

```
ubuntu@ubuntu:~$ sudo mkdir -p /etc/nomad.d
ubuntu@ubuntu:~$ sudo chmod 700 /etc/nomad.d
ubuntu@ubuntu:~$ sudo vi /etc/nomad.d/nomad.hcl
ubuntu@ubuntu:~$ sudo cat /etc/nomad.d/nomad.hcl
datacenter = "dc1"
data_dir = "/opt/nomad"
ubuntu@ubuntu:~$ sudo vi /etc/nomad.d/server.hcl
ubuntu@ubuntu:~$ sudo cat /etc/nomad.d/server.hcl
server {
  enabled = true
  bootstrap_expect = 3
}
```

If we want this server to also be a client, we can add that too:

```
ubuntu@ubuntu:~$ sudo vi /etc/nomad.d/client.hcl
ubuntu@ubuntu:~$ sudo cat /etc/nomad.d/client.hcl
client {
  enabled = true
}
```

Now enable it and try to start it:

```
ubuntu@ubuntu:~$ sudo systemctl enable nomad
Synchronizing state of nomad.service with SysV service script with /lib/systemd/systemd-sysv-install.
Executing: /lib/systemd/systemd-sysv-install enable nomad
Created symlink /etc/systemd/system/multi-user.target.wants/nomad.service → /etc/systemd/system/nomad.service.
ubuntu@ubuntu:~$ sudo systemctl start nomad
ubuntu@ubuntu:~$ sudo systemctl status nomad
● nomad.service - Nomad
     Loaded: loaded (/etc/systemd/system/nomad.service; enabled; vendor preset: enabled)
     Active: failed (Result: exit-code) since Fri 2021-01-29 17:16:52 UTC; 593ms ago
       Docs: https://www.nomadproject.io/docs
    Process: 3380201 ExecStart=/usr/local/bin/nomad agent -config /etc/nomad.d (code=exited, status=203/EXEC)
   Main PID: 3380201 (code=exited, status=203/EXEC)

Jan 29 17:16:52 ubuntu systemd[1]: nomad.service: Scheduled restart job, restart counter is at 3.
Jan 29 17:16:52 ubuntu systemd[1]: Stopped Nomad.
Jan 29 17:16:52 ubuntu systemd[1]: nomad.service: Start request repeated too quickly.
Jan 29 17:16:52 ubuntu systemd[1]: nomad.service: Failed with result 'exit-code'.
Jan 29 17:16:52 ubuntu systemd[1]: Failed to start Nomad.
```

This failed because the package installed the binary to `/usr/bin`, not `/usr/local/bin`; `status=203/EXEC` is systemd reporting that it could not execute the `ExecStart` path. This is an easy fix:

```
ubuntu@ubuntu:~$ sudo vi /etc/systemd/system/nomad.service
ubuntu@ubuntu:~$ cat /etc/systemd/system/nomad.service | grep Exec
ExecReload=/bin/kill -HUP $MAINPID
ExecStart=/usr/bin/nomad agent -config /etc/nomad.d
ubuntu@ubuntu:~$ sudo systemctl enable nomad
Synchronizing state of nomad.service with SysV service script with /lib/systemd/systemd-sysv-install.
Executing: /lib/systemd/systemd-sysv-install enable nomad
ubuntu@ubuntu:~$ sudo systemctl start nomad
ubuntu@ubuntu:~$ sudo systemctl status nomad
● nomad.service - Nomad
     Loaded: loaded (/etc/systemd/system/nomad.service; enabled; vendor preset: enabled)
     Active: active (running) since Fri 2021-01-29 17:19:28 UTC; 5s ago
       Docs: https://www.nomadproject.io/docs
   Main PID: 3380344 (nomad)
      Tasks: 13
     CGroup: /system.slice/nomad.service
             └─3380344 /usr/bin/nomad agent -config /etc/nomad.d

Jan 29 17:19:32 ubuntu nomad[3380344]: 2021/01/29 17:19:28 [INFO] raft: Node at 192.168.1.208:4647 [Follower] entering Follower state (Leader: "")
Jan 29 17:19:32 ubuntu nomad[3380344]: 2021/01/29 17:19:28 [INFO] serf: EventMemberJoin: ubuntu.global 192.168.1.208
Jan 29 17:19:32 ubuntu nomad[3380344]: 2021/01/29 17:19:28.302638 [INFO] nomad: starting 4 scheduling worker(s) for [system service batch _core]
Jan 29 17:19:32 ubuntu nomad[3380344]: 2021/01/29 17:19:28.303323 [INFO] client: using state directory /opt/nomad/client
Jan 29 17:19:32 ubuntu nomad[3380344]: 2021/01/29 17:19:28.304583 [INFO] nomad: adding server ubuntu.global (Addr: 192.168.1.208:4647) (DC: dc1)
Jan 29 17:19:32 ubuntu nomad[3380344]: 2021/01/29 17:19:28.306459 [ERR] consul: error looking up Nomad servers: server.nomad: unable to query Consul datacenters: Get http:/>
Jan 29 17:19:32 ubuntu nomad[3380344]: 2021/01/29 17:19:28.316411 [INFO] client: using alloc directory /opt/nomad/alloc
Jan 29 17:19:32 ubuntu nomad[3380344]: 2021/01/29 17:19:28.328845 [INFO] fingerprint.cgroups: cgroups are available
Jan 29 17:19:32 ubuntu nomad[3380344]: 2021/01/29 17:19:29 [WARN] raft: no known peers, aborting election
Jan 29 17:19:32 ubuntu nomad[3380344]: 2021/01/29 17:19:32.400040 [INFO] client: Node ID "dcf6fd03-9cc8-1b20-de7f-25e936fa04eb"
```
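Rather than hard-coding the binary's location in the unit file, `ExecStart` can be derived from wherever `nomad` actually sits on `PATH`. A minimal sketch, assuming the unit lives at `/etc/systemd/system/nomad.service` as above; `nomad_execstart_line` and `fix_nomad_unit` are hypothetical helper names.

```bash
#!/usr/bin/env bash
# Sketch: point nomad.service at the nomad binary that is actually on PATH.
# Helper names are hypothetical.

# Print an ExecStart line for a given nomad binary and config directory.
nomad_execstart_line() {
  local bin="$1" config_dir="${2:-/etc/nomad.d}"
  printf 'ExecStart=%s agent -config %s\n' "$bin" "$config_dir"
}

# Rewrite ExecStart in the unit file, reload systemd, and restart Nomad.
fix_nomad_unit() {
  local unit="${1:-/etc/systemd/system/nomad.service}"
  local bin
  bin="$(command -v nomad)" || { echo "nomad not found on PATH" >&2; return 1; }
  # '|' as the sed delimiter avoids clashing with the '/' in paths.
  sudo sed -i "s|^ExecStart=.*|$(nomad_execstart_line "$bin")|" "$unit"
  sudo systemctl daemon-reload
  sudo systemctl restart nomad
}
```

`fix_nomad_unit` rewrites the `ExecStart=` line in place, reloads systemd so it picks up the edited unit, and restarts the service.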

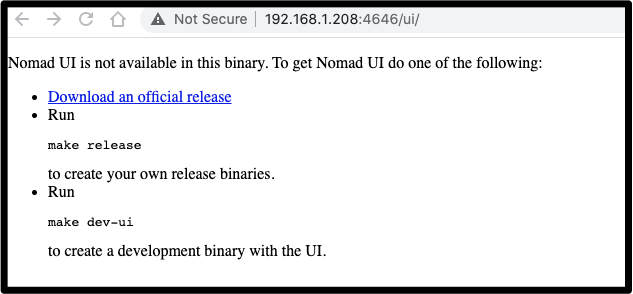

Our web UI is not enabled (and I'm not looking to custom-compile for arm64 at the moment).

Instead, we can download Nomad to a local machine and talk to the server from there:

```
$ ~/Downloads/nomad server members -address=http://192.168.1.208:4646
Name           Address        Port  Status  Leader  Protocol  Build  Datacenter  Region
ubuntu.global  192.168.1.208  4648  alive   false   2         0.8.7  dc1         global

Error determining leaders: 1 error occurred:
    * Region "global": Unexpected response code: 500 (No cluster leader)
```

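We can fix this. `bootstrap_expect = 3` tells the server to wait until three servers have joined before electing a leader, but this is a single-node, non-HA install. On the Pi, set it to `1` and advertise the Pi's LAN address explicitly: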

```
$ sudo cat /etc/nomad.d/server.hcl
server {
  enabled = true
  bootstrap_expect = 1
}

advertise {
  http = "192.168.1.208"
  rpc = "192.168.1.208"
  serf = "192.168.1.208"
}
```
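For repeatability, the three configuration files for this single-node server-plus-client setup can be generated in one go. A minimal sketch; `write_nomad_config` is a hypothetical helper, `dc1` and `/opt/nomad` mirror the values above, and writing into `/etc/nomad.d` needs root.

```bash
#!/usr/bin/env bash
# Sketch: write nomad.hcl, server.hcl and client.hcl for a single node that
# is both server and client. write_nomad_config is a hypothetical helper.
# Usage: write_nomad_config DIR ADVERTISE_IP [BOOTSTRAP_EXPECT]
write_nomad_config() {
  local dir="$1" ip="$2" expect="${3:-1}"
  mkdir -p "$dir"
  chmod 700 "$dir"

  cat > "$dir/nomad.hcl" <<EOF
datacenter = "dc1"
data_dir = "/opt/nomad"
EOF

  cat > "$dir/server.hcl" <<EOF
server {
  enabled = true
  # 1 for a single server; use 3 or 5 for an HA cluster
  bootstrap_expect = ${expect}
}

advertise {
  http = "${ip}"
  rpc = "${ip}"
  serf = "${ip}"
}
EOF

  cat > "$dir/client.hcl" <<EOF
client {
  enabled = true
}
EOF
}
```

From a root shell, `write_nomad_config /etc/nomad.d 192.168.1.208` reproduces the files shown here; passing `3` as the third argument restores the HA-style `bootstrap_expect` once there really are three servers.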

Then restart

```
ubuntu@ubuntu:~$ sudo systemctl stop nomad
ubuntu@ubuntu:~$ sudo systemctl start nomad
ubuntu@ubuntu:~$ sudo systemctl status nomad
● nomad.service - Nomad
     Loaded: loaded (/etc/systemd/system/nomad.service; enabled; vendor preset: enabled)
     Active: active (running) since Fri 2021-01-29 17:29:40 UTC; 6s ago
       Docs: https://www.nomadproject.io/docs
   Main PID: 3380732 (nomad)
      Tasks: 13
     CGroup: /system.slice/nomad.service
             └─3380732 /usr/bin/nomad agent -config /etc/nomad.d

Jan 29 17:29:44 ubuntu nomad[3380732]: 2021/01/29 17:29:40.883214 [INFO] client: using state directory /opt/nomad/client
Jan 29 17:29:44 ubuntu nomad[3380732]: 2021/01/29 17:29:40.883441 [INFO] client: using alloc directory /opt/nomad/alloc
Jan 29 17:29:44 ubuntu nomad[3380732]: 2021/01/29 17:29:40.895131 [INFO] fingerprint.cgroups: cgroups are available
Jan 29 17:29:44 ubuntu nomad[3380732]: 2021/01/29 17:29:42 [WARN] raft: Heartbeat timeout from "" reached, starting election
Jan 29 17:29:44 ubuntu nomad[3380732]: 2021/01/29 17:29:42 [INFO] raft: Node at 192.168.1.208:4647 [Candidate] entering Candidate state in term 2
Jan 29 17:29:44 ubuntu nomad[3380732]: 2021/01/29 17:29:42 [INFO] raft: Election won. Tally: 1
Jan 29 17:29:44 ubuntu nomad[3380732]: 2021/01/29 17:29:42 [INFO] raft: Node at 192.168.1.208:4647 [Leader] entering Leader state
Jan 29 17:29:44 ubuntu nomad[3380732]: 2021/01/29 17:29:42.165969 [INFO] nomad: cluster leadership acquired
Jan 29 17:29:44 ubuntu nomad[3380732]: 2021/01/29 17:29:44.968717 [INFO] client: Node ID "dcf6fd03-9cc8-1b20-de7f-25e936fa04eb"
Jan 29 17:29:45 ubuntu nomad[3380732]: 2021/01/29 17:29:45.007045 [INFO] client: node registration complete
```

Now my local binary can reach it:

```
$ ~/Downloads/nomad server members -address=http://192.168.1.208:4646
Name           Address        Port  Status  Leader  Protocol  Build  Datacenter  Region
ubuntu.global  192.168.1.208  4648  alive   true    2         0.8.7  dc1         global
```
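`nomad server members` is one way to see the leader; the HTTP API exposes the same information at `/v1/status/leader`, which is handy from scripts. A minimal sketch, assuming `curl` is installed and `NOMAD_ADDR` points at the server (it falls back to Nomad's default `http://127.0.0.1:4646`); `parse_leader` and `nomad_leader` are hypothetical helper names.

```bash
#!/usr/bin/env bash
# Sketch: ask the Nomad HTTP API for the current leader.
# GET /v1/status/leader returns a JSON string such as "192.168.1.208:4647".

# Strip the JSON quotes and any newline from the response body.
parse_leader() {
  tr -d '"\n'
}

# Print the leader address; return non-zero if the request fails or the
# body is empty (for example when the server answers 500, no cluster leader).
nomad_leader() {
  local addr="${NOMAD_ADDR:-http://127.0.0.1:4646}"
  local leader
  leader="$(curl -fsS "$addr/v1/status/leader" | parse_leader)"
  [ -n "$leader" ] || { echo "no cluster leader at $addr" >&2; return 1; }
  printf '%s\n' "$leader"
}
```

With `NOMAD_ADDR=http://192.168.1.208:4646`, `nomad_leader` should print the leader's RPC address (the raft log above shows `192.168.1.208:4647`); with the earlier `bootstrap_expect = 3` it would fail instead, matching the `No cluster leader` error.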

Let’s create a quick test job

```
$ ~/Downloads/nomad job init
Example job file written to example.nomad
$ cat example.nomad
# There can only be a single job definition per file. This job is named
# "example" so it will create a job with the ID and Name "example".

# The "job" stanza is the top-most configuration option in the job
# specification. A job is a declarative specification of tasks that Nomad
# should run. Jobs have a globally unique name, one or many task groups, which
# are themselves collections of one or many tasks.
#
# For more information and examples on the "job" stanza, please see
# the online documentation at:
#
#     https://www.nomadproject.io/docs/job-specification/job
#
job "example" {
  # The "region" parameter specifies the region in which to execute the job.
  # If omitted, this inherits the default region name of "global".
  # region = "global"
  #
  # The "datacenters" parameter specifies the list of datacenters which should
  # be considered when placing this task. This must be provided.
  datacenters = ["dc1"]

  # The "type" parameter controls the type of job, which impacts the scheduler's
  # decision on placement. This configuration is optional and defaults to
  # "service". For a full list of job types and their differences, please see
  # the online documentation.
  #
  # For more information, please see the online documentation at:
  #
  #     https://www.nomadproject.io/docs/schedulers
  #
  type = "service"

  # The "constraint" stanza defines additional constraints for placing this job,
  # in addition to any resource or driver constraints. This stanza may be placed
  # at the "job", "group", or "task" level, and supports variable interpolation.
  #
  # For more information and examples on the "constraint" stanza, please see
  # the online documentation at:
  #
  #     https://www.nomadproject.io/docs/job-specification/constraint
  #
  # constraint {
  #   attribute = "${attr.kernel.name}"
  #   value = "linux"
  # }

  # The "update" stanza specifies the update strategy of task groups. The update
  # strategy is used to control things like rolling upgrades, canaries, and
  # blue/green deployments. If omitted, no update strategy is enforced. The
  # "update" stanza may be placed at the job or task group. When placed at the
  # job, it applies to all groups within the job. When placed at both the job and
  # group level, the stanzas are merged with the group's taking precedence.
  #
  # For more information and examples on the "update" stanza, please see
  # the online documentation at:
  #
  #     https://www.nomadproject.io/docs/job-specification/update
  #
  update {
    # The "max_parallel" parameter specifies the maximum number of updates to
    # perform in parallel. In this case, this specifies to update a single task
    # at a time.
    max_parallel = 1

    # The "min_healthy_time" parameter specifies the minimum time the allocation
    # must be in the healthy state before it is marked as healthy and unblocks
    # further allocations from being updated.
    min_healthy_time = "10s"

    # The "healthy_deadline" parameter specifies the deadline in which the
    # allocation must be marked as healthy after which the allocation is
    # automatically transitioned to unhealthy. Transitioning to unhealthy will
    # fail the deployment and potentially roll back the job if "auto_revert" is
    # set to true.
    healthy_deadline = "3m"

    # The "progress_deadline" parameter specifies the deadline in which an
    # allocation must be marked as healthy. The deadline begins when the first
    # allocation for the deployment is created and is reset whenever an allocation
    # as part of the deployment transitions to a healthy state. If no allocation
    # transitions to the healthy state before the progress deadline, the
    # deployment is marked as failed.
    progress_deadline = "10m"

    # The "auto_revert" parameter specifies if the job should auto-revert to the
    # last stable job on deployment failure. A job is marked as stable if all the
    # allocations as part of its deployment were marked healthy.
    auto_revert = false

    # The "canary" parameter specifies that changes to the job that would result
    # in destructive updates should create the specified number of canaries
    # without stopping any previous allocations. Once the operator determines the
    # canaries are healthy, they can be promoted which unblocks a rolling update
    # of the remaining allocations at a rate of "max_parallel".
    #
    # Further, setting "canary" equal to the count of the task group allows
    # blue/green deployments. When the job is updated, a full set of the new
    # version is deployed and upon promotion the old version is stopped.
    canary = 0
  }

  # The migrate stanza specifies the group's strategy for migrating off of
  # draining nodes. If omitted, a default migration strategy is applied.
  #
  # For more information on the "migrate" stanza, please see
  # the online documentation at:
  #
  #     https://www.nomadproject.io/docs/job-specification/migrate
  #
  migrate {
    # Specifies the number of task groups that can be migrated at the same
    # time. This number must be less than the total count for the group as
    # (count - max_parallel) will be left running during migrations.
    max_parallel = 1

    # Specifies the mechanism in which allocations health is determined. The
    # potential values are "checks" or "task_states".
    health_check = "checks"

    # Specifies the minimum time the allocation must be in the healthy state
    # before it is marked as healthy and unblocks further allocations from being
    # migrated. This is specified using a label suffix like "30s" or "15m".
    min_healthy_time = "10s"

    # Specifies the deadline in which the allocation must be marked as healthy
    # after which the allocation is automatically transitioned to unhealthy. This
    # is specified using a label suffix like "2m" or "1h".
    healthy_deadline = "5m"
  }

  # The "group" stanza defines a series of tasks that should be co-located on
  # the same Nomad client. Any task within a group will be placed on the same
  # client.
  #
  # For more information and examples on the "group" stanza, please see
  # the online documentation at:
  #
  #     https://www.nomadproject.io/docs/job-specification/group
  #
  group "cache" {
    # The "count" parameter specifies the number of the task groups that should
    # be running under this group. This value must be non-negative and defaults
    # to 1.
    count = 1

    # The "network" stanza specifies the network configuration for the allocation
    # including requesting port bindings.
    #
    # For more information and examples on the "network" stanza, please see
    # the online documentation at:
    #
    #     https://www.nomadproject.io/docs/job-specification/network
    #
    network {
      port "db" {
        to = 6379
      }
    }

    # The "service" stanza instructs Nomad to register this task as a service
    # in the service discovery engine, which is currently Consul. This will
    # make the service addressable after Nomad has placed it on a host and
    # port.
    #
    # For more information and examples on the "service" stanza, please see
    # the online documentation at:
    #
    #     https://www.nomadproject.io/docs/job-specification/service
    #
    service {
      name = "redis-cache"
      tags = ["global", "cache"]
      port = "db"

      # The "check" stanza instructs Nomad to create a Consul health check for
      # this service. A sample check is provided here for your convenience;
      # uncomment it to enable it. The "check" stanza is documented in the
      # "service" stanza documentation.

      # check {
      #   name = "alive"
      #   type = "tcp"
      #   interval = "10s"
      #   timeout = "2s"
      # }
    }

    # The "restart" stanza configures a group's behavior on task failure. If
    # left unspecified, a default restart policy is used based on the job type.
    #
    # For more information and examples on the "restart" stanza, please see
    # the online documentation at:
    #
    #     https://www.nomadproject.io/docs/job-specification/restart
    #
    restart {
      # The number of attempts to run the job within the specified interval.
      attempts = 2
      interval = "30m"

      # The "delay" parameter specifies the duration to wait before restarting
      # a task after it has failed.
      delay = "15s"

      # The "mode" parameter controls what happens when a task has restarted
      # "attempts" times within the interval. "delay" mode delays the next
      # restart until the next interval. "fail" mode does not restart the task
      # if "attempts" has been hit within the interval.
      mode = "fail"
    }

    # The "ephemeral_disk" stanza instructs Nomad to utilize an ephemeral disk
    # instead of a hard disk requirement. Clients using this stanza should
    # not specify disk requirements in the resources stanza of the task. All
    # tasks in this group will share the same ephemeral disk.
    #
    # For more information and examples on the "ephemeral_disk" stanza, please
    # see the online documentation at:
    #
    #     https://www.nomadproject.io/docs/job-specification/ephemeral_disk
    #
    ephemeral_disk {
      # When sticky is true and the task group is updated, the scheduler
      # will prefer to place the updated allocation on the same node and
      # will migrate the data. This is useful for tasks that store data
      # that should persist across allocation updates.
      # sticky = true
      #
      # Setting migrate to true results in the allocation directory of a
      # sticky allocation directory to be migrated.
      # migrate = true
      #
      # The "size" parameter specifies the size in MB of shared ephemeral disk
      # between tasks in the group.
      size = 300
    }

    # The "affinity" stanza enables operators to express placement preferences
    # based on node attributes or metadata.
    #
    # For more information and examples on the "affinity" stanza, please
    # see the online documentation at:
    #
    #     https://www.nomadproject.io/docs/job-specification/affinity
    #
    # affinity {
    #   attribute specifies the name of a node attribute or metadata
    #   attribute = "${node.datacenter}"
    #
    #   value specifies the desired attribute value. In this example Nomad
    #   will prefer placement in the "us-west1" datacenter.
    #   value = "us-west1"
    #
    #   weight can be used to indicate relative preference
    #   when the job has more than one affinity. It defaults to 50 if not set.
    #   weight = 100
    # }

    # The "spread" stanza allows operators to increase the failure tolerance of
    # their applications by specifying a node attribute that allocations
    # should be spread over.
    #
    # For more information and examples on the "spread" stanza, please
    # see the online documentation at:
    #
    #     https://www.nomadproject.io/docs/job-specification/spread
    #
    # spread {
    #   attribute specifies the name of a node attribute or metadata
    #   attribute = "${node.datacenter}"
    #
    #   targets can be used to define desired percentages of allocations
    #   for each targeted attribute value.
    #
    #   target "us-east1" {
    #     percent = 60
    #   }
    #   target "us-west1" {
    #     percent = 40
    #   }
    # }

    # The "task" stanza creates an individual unit of work, such as a Docker
    # container, web application, or batch processing.
    #
    # For more information and examples on the "task" stanza, please see
    # the online documentation at:
    #
    #     https://www.nomadproject.io/docs/job-specification/task
    #
    task "redis" {
      # The "driver" parameter specifies the task driver that should be used to
      # run the task.
      driver = "docker"

      # The "config" stanza specifies the driver configuration, which is passed
      # directly to the driver to start the task. The details of configurations
      # are specific to each driver, so please see specific driver
      # documentation for more information.
      config {
        image = "redis:3.2"
        ports = ["db"]
      }

      # The "artifact" stanza instructs Nomad to download an artifact from a
      # remote source prior to starting the task. This provides a convenient
      # mechanism for downloading configuration files or data needed to run the
      # task. It is possible to specify the "artifact" stanza multiple times to
      # download multiple artifacts.
      #
      # For more information and examples on the "artifact" stanza, please see
      # the online documentation at:
      #
      #     https://www.nomadproject.io/docs/job-specification/artifact
      #
      # artifact {
      #   source = "http://foo.com/artifact.tar.gz"
      #   options {
      #     checksum = "md5:c4aa853ad2215426eb7d70a21922e794"
      #   }
      # }

      # The "logs" stanza instructs the Nomad client on how many log files and
      # the maximum size of those logs files to retain. Logging is enabled by
      # default, but the "logs" stanza allows for finer-grained control over
      # the log rotation and storage configuration.
      #
      # For more information and examples on the "logs" stanza, please see
      # the online documentation at:
      #
      #     https://www.nomadproject.io/docs/job-specification/logs
      #
      # logs {
      #   max_files = 10
      #   max_file_size = 15
      # }

      # The "resources" stanza describes the requirements a task needs to
      # execute. Resource requirements include memory, cpu, and more.
      # This ensures the task will execute on a machine that contains enough
      # resource capacity.
      #
      # For more information and examples on the "resources" stanza, please see
      # the online documentation at:
      #
      #     https://www.nomadproject.io/docs/job-specification/resources
      #
      resources {
        cpu = 500 # 500 MHz
        memory = 256 # 256MB
      }

      # The "template" stanza instructs Nomad to manage a template, such as
      # a configuration file or script. This template can optionally pull data
      # from Consul or Vault to populate runtime configuration data.
      #
      # For more information and examples on the "template" stanza, please see
      # the online documentation at:
      #
      #     https://www.nomadproject.io/docs/job-specification/template
      #
      # template {
      #   data = "---\nkey: {{ key \"service/my-key\" }}"
      #   destination = "local/file.yml"
      #   change_mode = "signal"
      #   change_signal = "SIGHUP"
      # }

      # The "template" stanza can also be used to create environment variables
      # for tasks that prefer those to config files. The task will be restarted
      # when data pulled from Consul or Vault changes.
      #
      # template {
      #   data = "KEY={{ key \"service/my-key\" }}"
      #   destination = "local/file.env"
      #   env = true
      # }

      # The "vault" stanza instructs the Nomad client to acquire a token from
      # a HashiCorp Vault server. The Nomad servers must be configured and
      # authorized to communicate with Vault. By default, Nomad will inject
      # The token into the job via an environment variable and make the token
      # available to the "template" stanza. The Nomad client handles the renewal
      # and revocation of the Vault token.
      #
      # For more information and examples on the "vault" stanza, please see
      # the online documentation at:
      #
      #     https://www.nomadproject.io/docs/job-specification/vault
      #
      # vault {
      #   policies = ["cdn", "frontend"]
      #   change_mode = "signal"
      #   change_signal = "SIGHUP"
      # }

      # Controls the timeout between signalling a task it will be killed
      # and killing the task. If not set a default is used.
      # kill_timeout = "20s"
    }
  }
}
```

Testing it

```
$ export NOMAD_ADDR="http://192.168.1.208:4646"
$ ~/Downloads/nomad job run example.nomad
Error submitting job: Unexpected response code: 500 (1 error occurred:
    * group "cache" -> task "redis" -> config: 1 error occurred:
    * "ports" is an invalid field

)
```
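The error comes from the server, not the Redis image: my local CLI is evidently newer than the 0.8.7 server on the Pi, and the `example.nomad` it generated uses the Docker driver's `ports` option, which this server does not recognize. A quick version check before submitting jobs catches that kind of mismatch. A minimal sketch, assuming a `sort` that supports `-V` (as in GNU coreutils) and the `Nomad vX.Y.Z` first line that `nomad version` prints; all function names are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: warn when the local Nomad CLI is newer than the server.
# Helper names are hypothetical.

# Succeed if version $1 sorts strictly before version $2.
version_lt() {
  [ "$1" != "$2" ] &&
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n1)" = "$1" ]
}

# Extract e.g. "1.0.2" from "Nomad v1.0.2 (<commit>)".
cli_version() {
  nomad version | head -n1 | sed -E 's/^Nomad v([0-9][^ ]*).*/\1/'
}

# Warn (and return 1) if the CLI is newer than the server.
warn_if_cli_newer() {
  local cli="$1" server="$2"
  if version_lt "$server" "$cli"; then
    echo "warning: CLI $cli is newer than server $server;" \
      "generated job files may use fields the server rejects" >&2
    return 1
  fi
}
```

Here `warn_if_cli_newer "$(cli_version)" 0.8.7`, with `0.8.7` taken from the Build column of `nomad server members`, would flag the mismatch before `nomad job run` does.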

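Instead of Redis in a container, let’s just use a hello-world example written in the older job syntax that the 0.8.7 server understands (the port is declared in the task's `resources` block):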

```
$ cat http-echo.nomad
job "http-echo" {
  datacenters = ["dc1"]

  group "echo" {
    count = 1

    task "server" {
      driver = "docker"

      config {
        image = "hashicorp/http-echo:latest"
        args = [
          "-listen", ":8080",
          "-text", "Hello and welcome to 127.0.0.1 running on port 8080",
        ]
      }

      resources {
        network {
          mbits = 10
          port "http" {
            static = 8080
          }
        }
      }
    }
  }
}
```

And run it:

```
$ ~/Downloads/nomad job run http-echo.nomad
==> Monitoring evaluation "9d1e1088"
    Evaluation triggered by job "http-echo"
==> Monitoring evaluation "9d1e1088"
    Allocation "f44b65a0" created: node "dcf6fd03", group "echo"
    Evaluation status changed: "pending" -> "complete"
==> Evaluation "9d1e1088" finished with status "complete"
```

The run printed an evaluation ID (not a job ID), and `nomad status` accepts that ID as well, so I can use it to check the status:

```
$ ~/Downloads/nomad status 9d1e1088
ID                 = 9d1e1088
Create Time        = <none>
Modify Time        = <none>
Status             = complete
Status Description = complete
Type               = service
TriggeredBy        = job-register
Job ID             = http-echo
Priority           = 50
Placement Failures = false
```

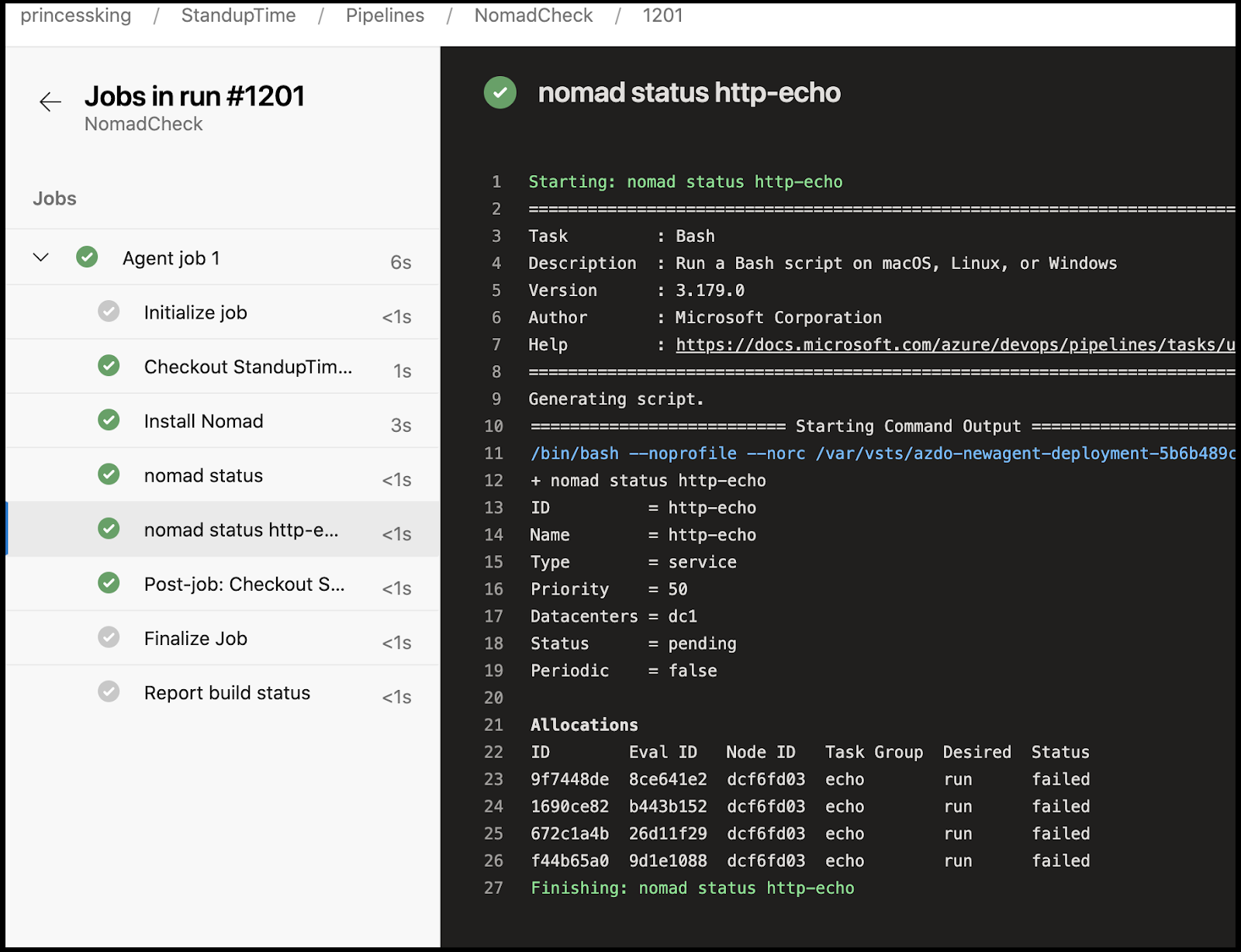

Using with AzDO

Presently, my non-HA server is only reachable on my local network. However, since I have a containerized Azure DevOps agent running in an on-prem cluster, I can use that agent to interact with the Nomad server (and client).

I just need a few steps to run on the on-prem agent:

```yaml
jobs:
- job: Job_1
  displayName: Agent job 1
  pool:
    name: Kubernetes-Agent-Pool
  steps:
  - checkout: self
  - task: Bash@3
    displayName: Install Nomad
    inputs:
      targetType: inline
      script: |
        curl -fsSL https://apt.releases.hashicorp.com/gpg | apt-key add -
        apt-add-repository "deb [arch=amd64] https://apt.releases.hashicorp.com $(lsb_release -cs) main"
        apt-get update
        apt-get install -y nomad
  - task: Bash@3
    displayName: nomad status
    env:
      NOMAD_ADDR: http://192.168.1.208:4646
    inputs:
      targetType: inline
      script: |
        set -x
        nomad status
  - task: Bash@3
    displayName: nomad status http-echo
    condition: succeededOrFailed()
    env:
      NOMAD_ADDR: http://192.168.1.208:4646
    inputs:
      targetType: inline
      script: |
        set -x
        nomad status http-echo
```

That can also be written more compactly with the `bash` step shortcut:

```yaml
steps:
- bash: |
    curl -fsSL https://apt.releases.hashicorp.com/gpg | apt-key add -
    apt-add-repository "deb [arch=amd64] https://apt.releases.hashicorp.com $(lsb_release -cs) main"
    apt-get update
    apt-get install -y nomad
  displayName: 'Install Nomad'

- bash: |
    set -x
    nomad status
  displayName: 'nomad status'
  env:
    NOMAD_ADDR: http://192.168.1.208:4646

- bash: |
    set -x
    nomad status http-echo
  displayName: 'nomad status http-echo'
  condition: succeededOrFailed()
  env:
    NOMAD_ADDR: http://192.168.1.208:4646
```

Testing shows it works just dandy.
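One refinement: `apt-get install -y nomad` pulls whatever is newest in HashiCorp's repository, which can be far ahead of the 0.8.7 server. A sketch that instead pins the agent's CLI to the server's version by downloading the release zip from releases.hashicorp.com; it assumes `curl` and `unzip` are available in the agent image, and the function names are hypothetical. A production pipeline should also verify the download against the release's published SHA256SUMS file.

```bash
#!/usr/bin/env bash
# Sketch: install a specific Nomad CLI version from releases.hashicorp.com.
# Helper names are hypothetical; checksum verification is omitted here.

# Build the release zip URL, e.g. .../nomad/0.8.7/nomad_0.8.7_linux_amd64.zip
nomad_zip_url() {
  local version="$1" os="${2:-linux}" arch="${3:-amd64}"
  printf 'https://releases.hashicorp.com/nomad/%s/nomad_%s_%s_%s.zip\n' \
    "$version" "$version" "$os" "$arch"
}

# Download and unpack the binary into a directory (default: ./bin).
install_nomad_cli() {
  local version="$1" dest="${2:-$PWD/bin}"
  local tmp
  tmp="$(mktemp -d)"
  if ! curl -fsSL -o "$tmp/nomad.zip" "$(nomad_zip_url "$version")"; then
    rm -rf "$tmp"
    return 1
  fi
  mkdir -p "$dest"
  unzip -o -q "$tmp/nomad.zip" -d "$dest"
  rm -rf "$tmp"
  "$dest/nomad" version
}
```

In the `Install Nomad` step, `install_nomad_cli 0.8.7` would place the binary in `./bin`, and the later steps can call `./bin/nomad status` with `NOMAD_ADDR` set as before.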

Next steps

Some guides show that Nomad can run in a container (https://github.com/multani/docker-nomad), so it could also be deployed as an agent into Kubernetes.

There is also a project that exposes a Nomad server as a Virtual Kubelet provider (https://github.com/virtual-kubelet/nomad).

Lastly, Kelsey Hightower has a walkthrough for running Nomad (agents) on Kubernetes (albeit on Google Cloud): https://github.com/kelseyhightower/nomad-on-kubernetes

You can also follow the learn.hashicorp.com getting-started track for Nomad: https://learn.hashicorp.com/collections/nomad/get-started

Summary

Why do this? What is the actual purpose of having a Nomad server when the focus has been Kubernetes? The reason is simple: not everything in this world is a container. There are things like a non-containerized Redis or a MySQL instance. It could be as simple as setting up and exposing an NFS share from a local NAS. The point is that tools like Nomad (and Chef, Puppet, Salt, etc.) give us deployment targets for non-containerized workloads.

We also benefit from the Nomad binary being quite light, which makes it easy to run _in_ a container and use from our Azure DevOps agent. The Nomad server is equally light: in a non-HA setup, it runs on a tiny Raspberry Pi hanging off an Ethernet cable next to my desk.