A few weeks ago, a coworker of mine posted this Medium article about KEDA and Kafka to our Slack. I wasn’t familiar with Kubernetes-based Event Driven Autoscaling (KEDA) and wanted to find out more. It’s an official CNCF project that aims to provide event-driven (do a thing, trigger a thing) autoscaling. That led me to explore some of their samples.

Let’s learn more by following their Hello World with Azure Functions guide. This guide will require the Azure CLI as well as Azure Functions Core Tools.

Setup

We are going to need a cluster for this. Any Linux-based cluster would do, but since we’re working with Azure Functions, we might as well create a quick AKS cluster.

First, create a resource group:

builder@DESKTOP-2SQ9NQM:/mnt/c/WINDOWS/system32$ az group create --name idjaksdemo --location eastus

{

"id": "/subscriptions/70b42e6a-6faf-4fed-bcec-9f3995b1aca8/resourceGroups/idjaksdemo",

"location": "eastus",

"managedBy": null,

"name": "idjaksdemo",

"properties": {

"provisioningState": "Succeeded"

},

"tags": null,

"type": "Microsoft.Resources/resourceGroups"

}

Next, we’ll need a service principal for our cluster to use.

builder@DESKTOP-2SQ9NQM:/mnt/c/WINDOWS/system32$ az ad sp create-for-rbac --name idjaksdemosp --skip-assignment true

Changing "idjaksdemosp" to a valid URI of "http://idjaksdemosp", which is the required format used for service principal names

{

"appId": "ca06a4a1-9b27-4be9-9869-e4279a2c3ea9",

"displayName": "idjaksdemosp",

"name": "http://idjaksdemosp",

"password": "07150222-f20e-4d95-9f38-6f1fc8c33a7a",

"tenant": "d73a39db-6eda-495d-8000-7579f56d68b7"

}
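Pasting the appId and password into the cluster command works, but it leaves the secret in your shell history. The following is a minimal shell sketch that captures both values with the CLI’s --query and -o tsv options instead, reusing the names from this walkthrough. create_sp_vars, create_cluster, SP_APP_ID and SP_PASSWORD are hypothetical names, not part of any tool.

```shell
# Create the service principal and keep its appId/password in shell variables
# instead of pasting the secret into later commands. Names are illustrative.
create_sp_vars() {
  local sp_out
  # --query keeps only the two fields; -o tsv prints them without JSON quoting.
  sp_out=$(az ad sp create-for-rbac --name "$1" --skip-assignment true \
    --query "[appId, password]" -o tsv) || return 1
  # Split on whitespace, so tab- or newline-separated output both work.
  # Globbing is disabled while splitting in case the secret contains * or ?.
  set -f
  set -- $sp_out
  set +f
  SP_APP_ID=${1-}
  SP_PASSWORD=${2-}
  # Succeed only if both values were captured.
  [ -n "$SP_APP_ID" ] && [ -n "$SP_PASSWORD" ]
}

# Create the AKS cluster with the captured credentials. --enable-rbac is left
# out because the CLI reports it as deprecated (RBAC is the default).
create_cluster() {
  local rg="$1" cluster="$2"
  az aks create -g "$rg" --name "$cluster" \
    --service-principal "$SP_APP_ID" --client-secret "$SP_PASSWORD" \
    --generate-ssh-keys
}
```

Usage would be create_sp_vars idjaksdemosp && create_cluster idjaksdemo idjaksdemo01, which only runs the cluster creation if both values came back.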

Lastly, let’s create that cluster (passing in the service principal and resource group).

builder@DESKTOP-2SQ9NQM:/mnt/c/WINDOWS/system32$ az aks create -g idjaksdemo --name idjaksdemo01 --enable-rbac --service-principal ca06a4a1-9b27-4be9-9869-e4279a2c3ea9 --client-secret "07150222-f20e-4d95-9f38-6f1fc8c33a7a" --generate-ssh-keys

Argument 'enable_rbac' has been deprecated and will be removed in a future release. Use '--disable-rbac' instead.

{

"aadProfile": null,

"addonProfiles": null,

"agentPoolProfiles": [

{

"availabilityZones": null,

"count": 3,

"enableAutoScaling": null,

"enableNodePublicIp": null,

"maxCount": null,

"maxPods": 110,

"minCount": null,

"name": "nodepool1",

"nodeLabels": null,

"nodeTaints": null,

"orchestratorVersion": "1.14.8",

"osDiskSizeGb": 100,

"osType": "Linux",

"provisioningState": "Succeeded",

"scaleSetEvictionPolicy": null,

"scaleSetPriority": null,

"tags": null,

"type": "VirtualMachineScaleSets",

"vmSize": "Standard_DS2_v2",

"vnetSubnetId": null

}

],

"apiServerAccessProfile": null,

"dnsPrefix": "idjaksdemo-idjaksdemo-70b42e",

"enablePodSecurityPolicy": null,

"enableRbac": true,

"fqdn": "idjaksdemo-idjaksdemo-70b42e-23f077ed.hcp.eastus.azmk8s.io",

"id": "/subscriptions/70b42e6a-6faf-4fed-bcec-9f3995b1aca8/resourcegroups/idjaksdemo/providers/Microsoft.ContainerService/managedClusters/idjaksdemo01",

"identity": null,

"identityProfile": null,

"kubernetesVersion": "1.14.8",

"linuxProfile": {

"adminUsername": "azureuser",

"ssh": {

"publicKeys": [

{

"keyData": "ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQDHZ3iOnMMLkiltuikXSjqudfCHmQvIjBGMOuGk6wedwG8Xai3uv0M/X3Z2LS6Ac8tComKEKg7Zje2KFBnvBJvU5JqkTwNHnmp682tXf15EYgn4tB7MDz5DUARpcUXJbYfUg8yPUDveYHw8PEm1n+1MvLJN0ftvdORG5CQQEl/m7jErbJJQI70xg7C8/HG5GmJpIQjDl7UVsJANKab/2/bbUlG1Sqp4cQ/LwxKxQ6/QK/HVauxDkudoTkFLqukLWVjHvNZD37MC/wygSsEVYF+yrkNJySlNbMk4ZNmMwva1yLX8Shhr8G4wWe8QI9Ska8B0keSIu8fzRWxXAv2gB3xB"

}

]

}

},

"location": "eastus",

"maxAgentPools": 10,

"name": "idjaksdemo01",

"networkProfile": {

"dnsServiceIp": "10.0.0.10",

"dockerBridgeCidr": "172.17.0.1/16",

"loadBalancerProfile": {

"allocatedOutboundPorts": null,

"effectiveOutboundIps": [

{

"id": "/subscriptions/70b42e6a-6faf-4fed-bcec-9f3995b1aca8/resourceGroups/MC_idjaksdemo_idjaksdemo01_eastus/providers/Microsoft.Network/publicIPAddresses/9ad9ab6c-165d-4e6e-8752-246a284183f7",

"resourceGroup": "MC_idjaksdemo_idjaksdemo01_eastus"

}

],

"idleTimeoutInMinutes": null,

"managedOutboundIps": {

"count": 1

},

"outboundIpPrefixes": null,

"outboundIps": null

},

"loadBalancerSku": "Standard",

"networkPlugin": "kubenet",

"networkPolicy": null,

"outboundType": "loadBalancer",

"podCidr": "10.244.0.0/16",

"serviceCidr": "10.0.0.0/16"

},

"nodeResourceGroup": "MC_idjaksdemo_idjaksdemo01_eastus",

"privateFqdn": null,

"provisioningState": "Succeeded",

"resourceGroup": "idjaksdemo",

"servicePrincipalProfile": {

"clientId": "ca06a4a1-9b27-4be9-9869-e4279a2c3ea9",

"secret": null

},

"tags": null,

"type": "Microsoft.ContainerService/ManagedClusters",

"windowsProfile": null

}

Now that the cluster is running, let’s get the admin credentials, then create a keda namespace and install KEDA into it with Helm.

builder@DESKTOP-2SQ9NQM:/mnt/c/WINDOWS/system32$ az aks get-credentials -n idjaksdemo01 -g idjaksdemo --admin

Merged "idjaksdemo01-admin" as current context in /home/builder/.kube/config

builder@DESKTOP-2SQ9NQM:/mnt/c/WINDOWS/system32$ kubectl create namespace keda

namespace/keda created

builder@DESKTOP-2SQ9NQM:/mnt/c/WINDOWS/system32$ helm repo add kedacore https://kedacore.github.io/charts

"kedacore" has been added to your repositories


builder@DESKTOP-2SQ9NQM:/mnt/c/WINDOWS/system32$ helm repo update

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "kedacore" chart repository

...Successfully got an update from the "banzaicloud-stable" chart repository

...Successfully got an update from the "stable" chart repository

Update Complete. ⎈ Happy Helming!⎈

builder@DESKTOP-2SQ9NQM:/mnt/c/WINDOWS/system32$ helm install keda kedacore/keda --namespace keda

manifest_sorter.go:175: info: skipping unknown hook: "crd-install"

manifest_sorter.go:175: info: skipping unknown hook: "crd-install"

NAME: keda

LAST DEPLOYED: Thu Mar 12 21:15:11 2020

NAMESPACE: keda

STATUS: deployed

REVISION: 1

TEST SUITE: None
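Helm reporting STATUS: deployed only means the manifests were applied, not that the pods are healthy (the ARM attempt later in this post reports the same status while its operator pods crash-loop). A small shell sketch that waits for the KEDA deployments to become available, assuming the keda namespace used here; wait_for_keda is a hypothetical helper and the 180-second timeout is an arbitrary choice. Passing --wait to helm install is another option.

```shell
# Wait until every Deployment in the KEDA namespace reports the Available
# condition, or give up after the timeout (an arbitrary default).
wait_for_keda() {
  local ns="${1:-keda}" timeout="${2:-180s}"
  kubectl wait --for=condition=Available deployment --all \
    --namespace "$ns" --timeout="$timeout"
}
```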

Azure Function

Let’s follow that guide (https://github.com/kedacore/sample-hello-world-azure-functions) to create a local Node function that we can then deploy. It uses Azure Storage Queues, a managed (PaaS) queue service, as the event source where the Medium article used Kafka.

Quick side note: the install below ran under Node 8, but I later switched to a newer Node (nvm use 10.17.0) after running into problems with Node 8, which I keep around for SQLite issues in some other projects.

builder@DESKTOP-2SQ9NQM:~/Workspaces/hello-keda$ npm install -g azure-functions-core-tools@3 --unsafe-perm true

/home/builder/.nvm/versions/node/v8.10.0/bin/func -> /home/builder/.nvm/versions/node/v8.10.0/lib/node_modules/azure-functions-core-tools/lib/main.js

/home/builder/.nvm/versions/node/v8.10.0/bin/azurefunctions -> /home/builder/.nvm/versions/node/v8.10.0/lib/node_modules/azure-functions-core-tools/lib/main.js

/home/builder/.nvm/versions/node/v8.10.0/bin/azfun -> /home/builder/.nvm/versions/node/v8.10.0/lib/node_modules/azure-functions-core-tools/lib/main.js

> azure-functions-core-tools@3.0.2245 postinstall /home/builder/.nvm/versions/node/v8.10.0/lib/node_modules/azure-functions-core-tools

> node lib/install.js

attempting to GET "https://functionscdn.azureedge.net/public/3.0.2245/Azure.Functions.Cli.linux-x64.3.0.2245.zip"

[==================] Downloading Azure Functions Core Tools

Telemetry

---------

The Azure Functions Core tools collect usage data in order to help us improve your experience.

The data is anonymous and doesn't include any user specific or personal information. The data is collected by Microsoft.

You can opt-out of telemetry by setting the FUNCTIONS_CORE_TOOLS_TELEMETRY_OPTOUT environment variable to '1' or 'true' using your favorite shell.

+ azure-functions-core-tools@3.0.2245

added 53 packages in 106.967s


Next, let’s create a new Docker-based function project. func init scaffolds the project files (package.json, host.json, local.settings.json, a Dockerfile and more) into the current directory.

builder@DESKTOP-2SQ9NQM:~/Workspaces/hello-keda$ func init . --docker

Select a number for worker runtime:

1. dotnet

2. node

3. python

4. powershell

Choose option: 2

node

Select a number for language:

1. javascript

2. typescript

Choose option: 1

javascript

Writing package.json

Writing .gitignore

Writing host.json

Writing local.settings.json

Writing /home/builder/Workspaces/hello-keda/.vscode/extensions.json

Writing Dockerfile

Writing .dockerignore

Now let’s add a function to the project, choosing the Azure Queue Storage trigger template.

builder@DESKTOP-2SQ9NQM:~/Workspaces/hello-keda$ func new

Select a number for template:

1. Azure Blob Storage trigger

2. Azure Cosmos DB trigger

3. Durable Functions activity

4. Durable Functions HTTP starter

5. Durable Functions orchestrator

6. Azure Event Grid trigger

7. Azure Event Hub trigger

8. HTTP trigger

9. IoT Hub (Event Hub)

10. Azure Queue Storage trigger

11. SendGrid

12. Azure Service Bus Queue trigger

13. Azure Service Bus Topic trigger

14. SignalR negotiate HTTP trigger

15. Timer trigger

Choose option: 10

Azure Queue Storage trigger

Function name: [QueueTrigger]

Writing /home/builder/Workspaces/hello-keda/QueueTrigger/index.js

Writing /home/builder/Workspaces/hello-keda/QueueTrigger/readme.md

Writing /home/builder/Workspaces/hello-keda/QueueTrigger/function.json

The function "QueueTrigger" was created successfully from the "Azure Queue Storage trigger" template.

The queue trigger needs a storage account. Since we have the CLI handy, let’s create one (in its own resource group) here.

builder@DESKTOP-2SQ9NQM:~/Workspaces/hello-keda$ az group create -l westus -n hello-keda

{

"id": "/subscriptions/70b42e6a-6faf-4fed-bcec-9f3995b1aca8/resourceGroups/hello-keda",

"location": "westus",

"managedBy": null,

"name": "hello-keda",

"properties": {

"provisioningState": "Succeeded"

},

"tags": null,

"type": "Microsoft.Resources/resourceGroups"

}

builder@DESKTOP-2SQ9NQM:~/Workspaces/hello-keda$ az storage account create --sku Standard_LRS --location westus -g hello-keda -n kedastoragefunc

{

"accessTier": "Hot",

"azureFilesIdentityBasedAuthentication": null,

"blobRestoreStatus": null,

"creationTime": "2020-03-13T02:30:47.628604+00:00",

"customDomain": null,

"enableHttpsTrafficOnly": true,

"encryption": {

"keySource": "Microsoft.Storage",

"keyVaultProperties": null,

"services": {

"blob": {

"enabled": true,

"keyType": "Account",

"lastEnabledTime": "2020-03-13T02:30:47.691077+00:00"

},

"file": {

"enabled": true,

"keyType": "Account",

"lastEnabledTime": "2020-03-13T02:30:47.691077+00:00"

},

"queue": null,

"table": null

}

},

"failoverInProgress": null,

"geoReplicationStats": null,

"id": "/subscriptions/70b42e6a-6faf-4fed-bcec-9f3995b1aca8/resourceGroups/hello-keda/providers/Microsoft.Storage/storageAccounts/kedastoragefunc",

"identity": null,

"isHnsEnabled": null,

"kind": "StorageV2",

"largeFileSharesState": null,

"lastGeoFailoverTime": null,

"location": "westus",

"name": "kedastoragefunc",

"networkRuleSet": {

"bypass": "AzureServices",

"defaultAction": "Allow",

"ipRules": [],

"virtualNetworkRules": []

},

"primaryEndpoints": {

"blob": "https://kedastoragefunc.blob.core.windows.net/",

"dfs": "https://kedastoragefunc.dfs.core.windows.net/",

"file": "https://kedastoragefunc.file.core.windows.net/",

"internetEndpoints": null,

"microsoftEndpoints": null,

"queue": "https://kedastoragefunc.queue.core.windows.net/",

"table": "https://kedastoragefunc.table.core.windows.net/",

"web": "https://kedastoragefunc.z22.web.core.windows.net/"

},

"primaryLocation": "westus",

"privateEndpointConnections": [],

"provisioningState": "Succeeded",

"resourceGroup": "hello-keda",

"routingPreference": null,

"secondaryEndpoints": null,

"secondaryLocation": null,

"sku": {

"name": "Standard_LRS",

"tier": "Standard"

},

"statusOfPrimary": "available",

"statusOfSecondary": null,

"tags": {},

"type": "Microsoft.Storage/storageAccounts"

}

We can now set a local variable to the connection string for the storage account we just created:

CONNECTION_STRING=$(az storage account show-connection-string --name kedastoragefunc --query connectionString)

Next, use it to create a storage queue:

builder@DESKTOP-2SQ9NQM:~/Workspaces/hello-keda$ az storage queue create -n js-queue-items --connection-string $CONNECTION_STRING

{

"created": true

}

One catch is that the connection string comes back wrapped in double quotes (the CLI’s default JSON output). Let’s quickly make a copy of the variable without them.

builder@DESKTOP-2SQ9NQM:~/Workspaces/hello-keda$ CONN_STR=`echo $CONNECTION_STRING | sed s#\"##g`

Next, since I plan to use it as the replacement text in a sed expression, I need to escape the characters sed treats specially there.

builder@DESKTOP-2SQ9NQM:~/Workspaces/hello-keda$ repl=$(sed -e 's/[&\\/]/\\&/g; s/$/\\/' -e '$s/\\$//' <<<"$CONN_STR")

Now I can insert it into local.settings.json:

builder@DESKTOP-2SQ9NQM:~/Workspaces/hello-keda$ sed -i "s/{AzureWebJobsStorage}/$repl/" local.settings.json

builder@DESKTOP-2SQ9NQM:~/Workspaces/hello-keda$ cat local.settings.json

{

"IsEncrypted": false,

"Values": {

"FUNCTIONS_WORKER_RUNTIME": "node",

"AzureWebJobsStorage": "DefaultEndpointsProtocol=https;EndpointSuffix=core.windows.net;AccountName=kedastoragefunc;AccountKey=thd3asdfasdfasdfasdfasdfasdfasdfasdfasdfasdfasdfasdfasdfasdfasdfasdfasdfasdfasdfasdfasdafdsfasdfuA=="

}

}
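The quote-stripping, escaping and substitution steps above can be collected into one shell sketch (GNU sed assumed for sed -i). strip_quotes, sed_escape_replacement and fill_setting are hypothetical helper names, and fill_setting assumes the {AzureWebJobsStorage} placeholder that the sed command above replaced. Because a connection string is a single line, the escape only handles backslash, ampersand and the / delimiter; the multi-line handling in the command above isn’t needed here. Alternatively, adding -o tsv to az storage account show-connection-string returns the value without quotes, which makes strip_quotes unnecessary.

```shell
# Remove every double quote (only needed when the value came from -o json).
strip_quotes() {
  printf '%s' "$1" | tr -d '"'
}

# Escape the characters that are special in a sed replacement when '/' is
# the delimiter: '/', '&' and '\'. Newlines are not handled.
sed_escape_replacement() {
  printf '%s' "$1" | sed -e 's/[\/&\\]/\\&/g'
}

# Substitute the {AzureWebJobsStorage} placeholder in a settings file in place
# (GNU sed -i syntax).
fill_setting() {
  local file="$1" value="$2" escaped
  escaped=$(sed_escape_replacement "$(strip_quotes "$value")")
  sed -i "s/{AzureWebJobsStorage}/${escaped}/" "$file"
}
```

With the variables from this walkthrough, that would be fill_setting local.settings.json "$CONNECTION_STRING".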

Next, we need to set the connection setting in function.json so the binding knows to use AzureWebJobsStorage.

builder@DESKTOP-2SQ9NQM:~/Workspaces/hello-keda$ sed -i 's/"connection": ""/"connection": "AzureWebJobsStorage"/g' QueueTrigger/function.json

builder@DESKTOP-2SQ9NQM:~/Workspaces/hello-keda$ cat QueueTrigger/function.json

{

"bindings": [

{

"name": "myQueueItem",

"type": "queueTrigger",

"direction": "in",

"queueName": "js-queue-items",

"connection": "AzureWebJobsStorage"

}

]

}

Lastly, verify the version in host.json:

builder@DESKTOP-2SQ9NQM:~/Workspaces/hello-keda$ cat host.json

{

"version": "2.0",

"extensionBundle": {

"id": "Microsoft.Azure.Functions.ExtensionBundle",

"version": "[1.*, 2.0.0)"

}

}

Testing

Then start the function with “func start”:

builder@DESKTOP-2SQ9NQM:~/Workspaces/hello-keda$ func start


Azure Functions Core Tools (3.0.2245 Commit hash:

...

We then head to the queue in the portal and insert a message.

When we click Add, we should see it reflected in the interactive console:

[03/13/2020 23:31:21] Job host started

Hosting environment: Production

Content root path: /home/builder/Workspaces/hello-keda

Now listening on: http://0.0.0.0:7071

Application started. Press Ctrl+C to shut down.

[03/13/2020 23:31:21] Worker 05f811c8-607d-4f3f-82a5-007a003fdf53 connecting on 127.0.0.1:46769

[03/13/2020 23:31:27] Host lock lease acquired by instance ID '00000000000000000000000055878859'.

[03/13/2020 23:31:39] Executing 'Functions.QueueTrigger' (Reason='New queue message detected on 'js-queue-items'.', Id=d12a44e0-7bd0-49a0-8304-360e68160a0d)

[03/13/2020 23:31:39] Trigger Details: MessageId: 1843d31c-ff3d-4736-b369-b242fe65e3ae, DequeueCount: 1, InsertionTime: 03/13/2020 23:31:36 +00:00

[03/13/2020 23:31:40] JavaScript queue trigger function processed work item Hello FreshBrewed!

[03/13/2020 23:31:40] Executed 'Functions.QueueTrigger' (Succeeded, Id=d12a44e0-7bd0-49a0-8304-360e68160a0d)
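Instead of the portal, a test message can also be queued from the CLI. This is a sketch under an assumption: that the queue trigger expects Base64-encoded message bodies (the portal offers a Base64 encoding option for this reason). send_test_message is a hypothetical helper; the queue name and text mirror the run above. If the function logs the Base64 text rather than the decoded message, your CLI version already encodes messages and the manual encoding step can be dropped.

```shell
# Put a test message on the queue from the CLI instead of the portal.
# Assumes the queue trigger expects Base64-encoded message bodies.
send_test_message() {
  local conn queue text encoded
  conn=$1
  queue=${2:-js-queue-items}
  text=${3:-'Hello FreshBrewed!'}
  # tr strips any line wrapping that base64 may add to long messages.
  encoded=$(printf '%s' "$text" | base64 | tr -d '\n')
  az storage message put --queue-name "$queue" --content "$encoded" \
    --connection-string "$conn"
}
```

For example: send_test_message "$CONN_STR".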

At this point, we have KEDA running in the cluster and have verified that the function works locally. We can now deploy the function to the cluster and let KEDA scale it.

Installing into Kubernetes

Now we can tie this all together. First, let’s double check KEDA is installed correctly in the cluster:

builder@DESKTOP-2SQ9NQM:~/Workspaces/hello-keda$ kubectl get customresourcedefinition

NAME CREATED AT

scaledobjects.keda.k8s.io 2020-03-13T02:15:05Z

triggerauthentications.keda.k8s.io 2020-03-13T02:15:05Z

Let’s build and deploy

builder@DESKTOP-2SQ9NQM:~/Workspaces/hello-keda$ func kubernetes deploy --name hello-keda --registry idjohnson

Running 'docker build -t idjohnson/hello-keda /home/builder/Workspaces/hello-keda'..done

Error running docker build -t idjohnson/hello-keda /home/builder/Workspaces/hello-keda.

output:

Cannot connect to the Docker daemon at unix:///var/run/docker.sock. Is the docker daemon running?

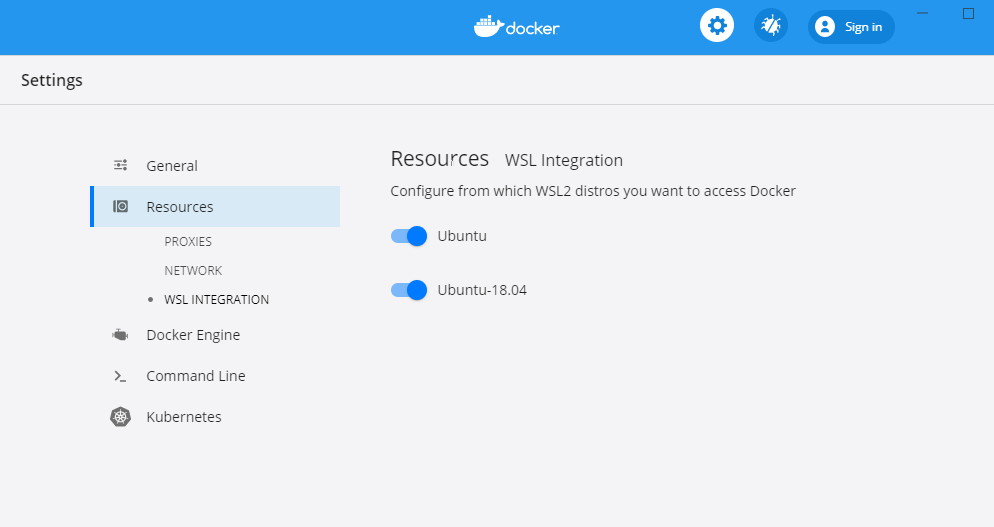

The build initially failed because Docker wasn’t exposed to WSL. I just needed to expose the daemon on port 2375 without TLS (a Docker Desktop setting) and then add WSL support.

Eventually I got my Ubuntu instances in WSL using Docker on my Windows 10 laptop. If you are on a Mac, this won’t apply to you.
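With Docker Desktop and WSL 1, the usual way to point the docker CLI inside WSL at the exposed daemon is the DOCKER_HOST environment variable. A minimal shell sketch, assuming the port 2375 setting described above is enabled; ensure_docker_host is a hypothetical helper that appends the export to ~/.bashrc only once. That endpoint has no TLS or authentication, so only use it on a machine you trust.

```shell
# Append a DOCKER_HOST export to a shell rc file, but only once.
# Assumes Docker Desktop exposes its daemon on tcp://localhost:2375 (no TLS).
ensure_docker_host() {
  local rc="${1:-$HOME/.bashrc}" line='export DOCKER_HOST=tcp://localhost:2375'
  # -x matches the whole line, -F treats it as a fixed string.
  grep -qxF "$line" "$rc" 2>/dev/null || printf '%s\n' "$line" >> "$rc"
}
```

After running ensure_docker_host, open a new shell (or source ~/.bashrc) and run docker info to confirm the CLI can reach the daemon.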

builder@DESKTOP-2SQ9NQM:~/Workspaces/hello-keda$ docker login

Login with your Docker ID to push and pull images from Docker Hub. If you don't have a Docker ID, head over to https://hub.docker.com to create one.

Username: idjohnson

Password:

WARNING! Your password will be stored unencrypted in /home/builder/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

Before we move on, there is an error in the generated Dockerfile: it’s missing npm.

We just need to add apt install -y npm to the RUN step.

That is,

RUN cd /home/site/wwwroot && \

npm install

becomes

RUN cd /home/site/wwwroot && \

apt install -y npm && \

npm install

builder@DESKTOP-2SQ9NQM:~/Workspaces/hello-keda$ cat Dockerfile

# To enable ssh & remote debugging on app service change the base image to the one below

# FROM mcr.microsoft.com/azure-functions/node:3.0-appservice

FROM mcr.microsoft.com/azure-functions/node:3.0

ENV AzureWebJobsScriptRoot=/home/site/wwwroot \

AzureFunctionsJobHost__Logging__Console__IsEnabled=true

COPY . /home/site/wwwroot

RUN cd /home/site/wwwroot && \

apt install -y npm && \

npm install

Now deploy it into our cluster. This step builds the image, pushes it to our registry (Docker Hub), and then deploys it to the Kubernetes cluster.

builder@DESKTOP-2SQ9NQM:~/Workspaces/hello-keda$ func kubernetes deploy --name hello-keda --namespace keda --registry idjohnson

Running 'docker build -t idjohnson/hello-keda /home/builder/Workspaces/hello-keda'.......................................................................................done

Running 'docker push idjohnson/hello-keda'...............................................................................................................................................................done

secret/hello-keda created

deployment.apps/hello-keda created

scaledobject.keda.k8s.io/hello-keda created

Validation:

Get the deployments…

builder@DESKTOP-2SQ9NQM:~/Workspaces/hello-keda$ kubectl get deploy -n keda

NAME READY UP-TO-DATE AVAILABLE AGE

hello-keda 0/0 0 0 10m

keda 1/1 1 1 24m

keda-operator 1/1 1 1 24h

keda-operator-metrics-apiserver 1/1 1 1 24h

Note that hello-keda has 0 pods: with the queue empty, KEDA keeps it scaled to zero. Now let’s watch the pods.

builder@DESKTOP-2SQ9NQM:~/Workspaces/hello-keda$ kubectl get pods -n keda -w

NAME READY STATUS RESTARTS AGE

keda-65cfff5bd8-fb5mq 2/2 Running 0 26m

keda-operator-6ddb57c944-2gs76 1/1 Running 0 24h

keda-operator-metrics-apiserver-76f5cbb769-mr58q 1/1 Running 0 24h

Send another message via the portal.

Within a minute we should see a pod spin up.

Checking again, I see the queue has been emptied and the pod is running:

builder@DESKTOP-2SQ9NQM:~/Workspaces/hello-keda$ kubectl get pods -n keda

NAME READY STATUS RESTARTS AGE

hello-keda-55b6ccd77f-p6cdc 1/1 Running 0 116s

keda-65cfff5bd8-fb5mq 2/2 Running 0 29m

keda-operator-6ddb57c944-2gs76 1/1 Running 0 24h

keda-operator-metrics-apiserver-76f5cbb769-mr58q 1/1 Running 0 24h
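Rather than re-running kubectl get pods, a shell sketch can poll the deployment until KEDA scales it above zero. wait_for_scale_up is a hypothetical helper; the 120-second timeout and 5-second interval are illustrative values, not KEDA settings. It relies on a Deployment’s .status.replicas field being omitted when the deployment is at zero, which is why an empty result is treated as 0.

```shell
# Poll a Deployment until KEDA has scaled it above zero replicas, or time out.
wait_for_scale_up() {
  local name="$1" ns="${2:-keda}" timeout="${3:-120}" interval="${4:-5}"
  local waited=0 replicas
  while [ "$waited" -lt "$timeout" ]; do
    # .status.replicas is absent at zero replicas, so default it to 0 below.
    replicas=$(kubectl get deployment "$name" -n "$ns" -o jsonpath='{.status.replicas}')
    if [ "${replicas:-0}" -gt 0 ]; then
      echo "$name scaled to $replicas replica(s) after ${waited}s"
      return 0
    fi
    sleep "$interval"
    waited=$((waited + interval))
  done
  echo "$name still at zero replicas after ${timeout}s" >&2
  return 1
}
```

Start it with wait_for_scale_up hello-keda keda just before sending the message.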

Cleanup

builder@DESKTOP-2SQ9NQM:~/Workspaces/hello-keda$ kubectl delete deploy -n keda hello-keda

deployment.extensions "hello-keda" deleted

builder@DESKTOP-2SQ9NQM:~/Workspaces/hello-keda$ kubectl delete ScaledObject -n keda hello-keda

scaledobject.keda.k8s.io "hello-keda" deleted

builder@DESKTOP-2SQ9NQM:~/Workspaces/hello-keda$ kubectl delete Secret -n keda hello-keda

secret "hello-keda" deleted
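Since func kubernetes deploy created exactly three objects named hello-keda (a secret, a deployment and a scaled object, per its output above), the three deletes can also be done in one kubectl call, because kubectl delete accepts comma-separated resource types. A sketch with a hypothetical helper name:

```shell
# Delete the Deployment, ScaledObject and Secret that share one name.
delete_function_resources() {
  local name="$1" ns="${2:-keda}"
  kubectl delete deployment,scaledobject,secret "$name" -n "$ns"
}
```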

builder@DESKTOP-2SQ9NQM:~/Workspaces/hello-keda$ az aks list -o table

Name Location ResourceGroup KubernetesVersion ProvisioningState Fqdn

------------ ---------- --------------- ------------------- ------------------- ----------------------------------------------------------

idjaksdemo01 eastus idjaksdemo 1.14.8 Succeeded idjaksdemo-idjaksdemo-70b42e-23f077ed.hcp.eastus.azmk8s.io

builder@DESKTOP-2SQ9NQM:~/Workspaces/hello-keda$ az aks delete -n idjaksdemo01 -g idjaksdemo

Are you sure you want to perform this operation? (y/n): Y

- Running ..

But before we delete the storage account, how might this look on a Raspberry Pi 4?

Attempting to run KEDA on ARM

With kubectl now pointed at my k3s cluster on the Pi, reinstall KEDA following https://keda.sh/deploy/:

builder@DESKTOP-2SQ9NQM:~/Workspaces/hello-keda$ helm repo add kedacore https://kedacore.github.io/charts

"kedacore" has been added to your repositories

builder@DESKTOP-2SQ9NQM:~/Workspaces/hello-keda$ helm repo update

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "kedacore" chart repository

...Successfully got an update from the "banzaicloud-stable" chart repository

...Successfully got an update from the "stable" chart repository

Update Complete. ⎈ Happy Helming!⎈

builder@DESKTOP-2SQ9NQM:~/Workspaces/hello-keda$ kubectl create namespace keda

namespace/keda created

builder@DESKTOP-2SQ9NQM:~/Workspaces/hello-keda$ helm install keda kedacore/keda --namespace keda

manifest_sorter.go:175: info: skipping unknown hook: "crd-install"

manifest_sorter.go:175: info: skipping unknown hook: "crd-install"

NAME: keda

LAST DEPLOYED: Fri Mar 13 22:22:27 2020

NAMESPACE: keda

STATUS: deployed

REVISION: 1

TEST SUITE: None

Verification:

builder@DESKTOP-2SQ9NQM:~/Workspaces/hello-keda$ kubectl get customresourcedefinition

NAME CREATED AT

listenerconfigs.k3s.cattle.io 2019-08-25T01:42:42Z

addons.k3s.cattle.io 2019-08-25T01:42:42Z

helmcharts.helm.cattle.io 2019-08-25T01:42:42Z

triggerauthentications.keda.k8s.io 2020-03-14T03:22:06Z

scaledobjects.keda.k8s.io 2020-03-14T03:22:04Z

Now deploy:

builder@DESKTOP-2SQ9NQM:~/Workspaces/hello-keda$ func kubernetes deploy --name hello-keda --registry idjohnson

Running 'docker build -t idjohnson/hello-keda /home/builder/Workspaces/hello-keda'.........................................................done

Running 'docker push idjohnson/hello-keda'..................................................done

secret/hello-keda created

deployment.apps/hello-keda created

scaledobject.keda.k8s.io/hello-keda created

However, the KEDA images aren’t built for ARM, so the operator pods crash:

builder@DESKTOP-2SQ9NQM:~/Workspaces/hello-keda$ kubectl get pods -n keda

NAME READY STATUS RESTARTS AGE

keda-operator-dbfbd6bdb-49cg7 0/1 CrashLoopBackOff 6 8m28s

keda-operator-metrics-apiserver-8678f8c5d9-gl6cj 0/1 CrashLoopBackOff 6 8m28s

builder@DESKTOP-2SQ9NQM:~/Workspaces/hello-keda$ kubectl logs -n keda keda-operator-dbfbd6bdb-49cg7

standard_init_linux.go:211: exec user process caused "exec format error"

There are really two issues we would need to tackle to get this running on an ARM-based cluster. The first is to build KEDA for ARM, since the images the Helm chart deploys (as we see above) are built for x86.
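An "exec format error" means the container’s binary was built for a different CPU architecture than the node running it. A shell sketch to catch this before deploying, comparing each node’s reported architecture with that of a locally available image; check_arch is a hypothetical helper, and it only compares the architecture field (such as arm, arm64 or amd64), ignoring variants like v7.

```shell
# Compare the CPU architecture of every cluster node with a local image's.
check_arch() {
  local image="$1" node_arch image_arch arch
  # Architecture reported by each kubelet, e.g. "arm", "arm64" or "amd64".
  node_arch=$(kubectl get nodes -o jsonpath='{.items[*].status.nodeInfo.architecture}')
  # Architecture recorded in the image's configuration.
  image_arch=$(docker image inspect --format '{{.Architecture}}' "$image")
  [ -n "$node_arch" ] && [ -n "$image_arch" ] || return 1
  echo "nodes: $node_arch / image: $image_arch"
  for arch in $node_arch; do   # unquoted on purpose: one word per node
    if [ "$arch" != "$image_arch" ]; then
      echo "mismatch: $image is $image_arch but a node is $arch" >&2
      return 1
    fi
  done
}
```

For the function image built earlier, that would be check_arch idjohnson/hello-keda.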

The second is to build our function container for ARM. This is doable by using a different base image tag: while there is (at present) no 3.0-arm32v7 tag, there is a 2.0-arm32v7 tag: https://hub.docker.com/_/microsoft-azure-functions-base

Finding tags isn’t easy, though: mcr.microsoft.com doesn't support browsing (neither ‘docker images ls’ nor the npm app docker-browse works with it), and the website doesn't list tags: https://docs.microsoft.com/en-us/azure/azure-functions/functions-versions

I did find an “arm” tag for the KEDA core image (but not for the metrics apiserver).

However, while the image pulls, the container doesn't actually start. Here is the change to the operator manifest:

--- a/deploy/12-operator.yaml

+++ b/deploy/12-operator.yaml

@@ -23,7 +23,7 @@ spec:

serviceAccountName: keda-operator

containers:

- name: keda-operator

- image: docker.io/kedacore/keda:1.3.0

+ image: docker.io/kedacore/keda:arm

command:

- keda

args:

Error from pod:

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled <unknown> default-scheduler Successfully assigned keda/keda-operator-8996c6b48-54x7k to raspberrypi

Normal Pulled 41m (x4 over 42m) kubelet, raspberrypi Successfully pulled image "docker.io/kedacore/keda:arm"

Normal Created 41m (x4 over 42m) kubelet, raspberrypi Created container keda-operator

Warning Failed 41m (x4 over 42m) kubelet, raspberrypi Error: failed to create containerd task: OCI runtime create failed: container_linux.go:346: starting container process caused "exec: \"keda\": executable file not found in $PATH": unknown

Normal Pulling 40m (x5 over 42m) kubelet, raspberrypi Pulling image "docker.io/kedacore/keda:arm"

Warning BackOff 2m51s (x183 over 42m) kubelet, raspberrypi Back-off restarting failed container
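Once the ARM experiment is over, the storage account (in the hello-keda resource group), the idjaksdemo resource group and the service principal are still around. A shell sketch to remove them, assuming the names used in this post; cleanup_all is a hypothetical helper. Deleting a resource group deletes everything inside it, so double-check the names first.

```shell
# Delete both resource groups and the service principal created earlier.
cleanup_all() {
  local sp_app_id="$1" aks_rg="${2:-idjaksdemo}" storage_rg="${3:-hello-keda}"
  [ -n "$sp_app_id" ] || { echo "usage: cleanup_all <sp-app-id> [aks-rg] [storage-rg]" >&2; return 1; }
  # --yes skips the confirmation prompt; --no-wait returns before deletion finishes.
  az group delete --name "$aks_rg" --yes --no-wait
  az group delete --name "$storage_rg" --yes --no-wait
  # The service principal lives in Azure AD, not in a resource group.
  az ad sp delete --id "$sp_app_id"
}
```

Pass it the appId printed when the service principal was created.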

Summary

KEDA paired with Functions is a great way to develop Azure Functions locally and run them without hosting them in the Azure Functions service. We should be able to use this with any Kubernetes cluster, not just AKS (at least on x86, given the ARM results above). I could easily see this as a great way to take Azure Functions written for Azure and bring them to EKS or GKE when one needs to support multiple clouds, without rewriting them as Google Cloud Functions or AWS Lambdas.