We've discussed AKV and Hashi Vault, but one simple pattern for secrets storage and distribution is encrypted file storage. While not as elegant, it can be more than sufficient, fast, and readily available for the many cases that just need the KISS method.

Storing in AWS S3

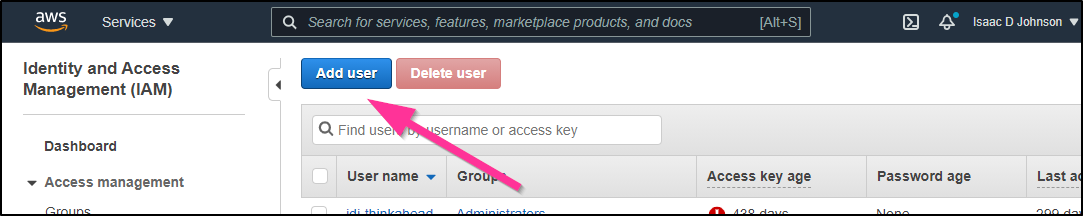

First, if you haven't already, set up an AWS IAM user.

Create a new user in IAM in the AWS Console

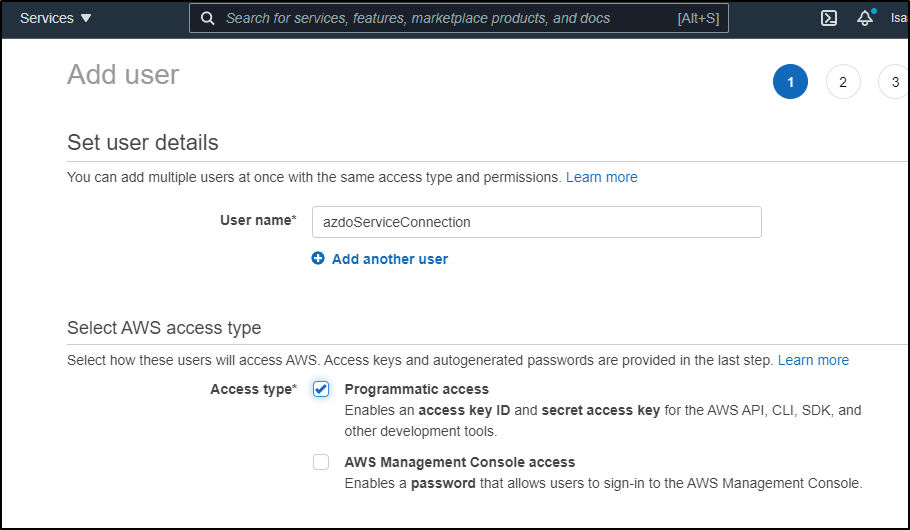

Type in a username and select programmatic access only

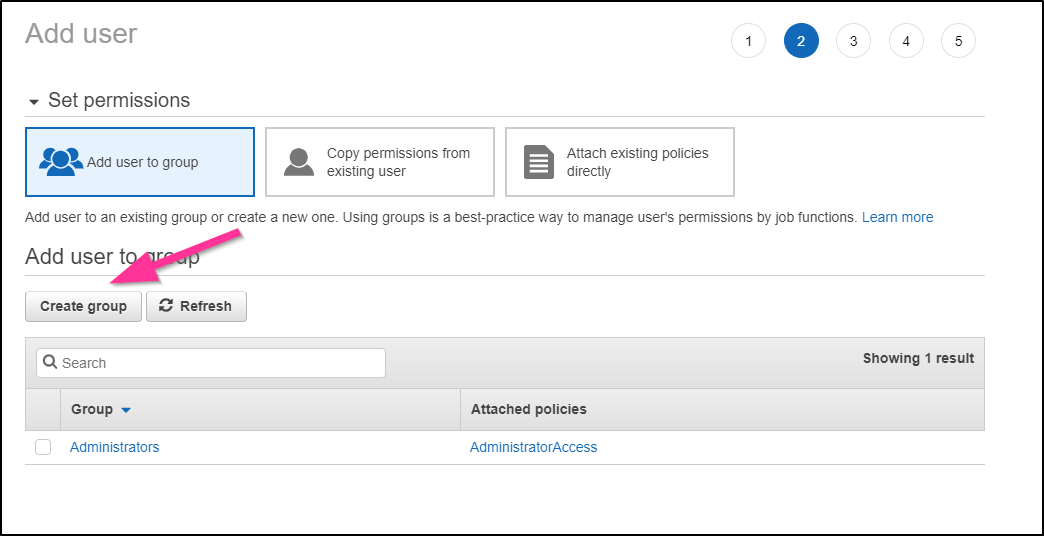

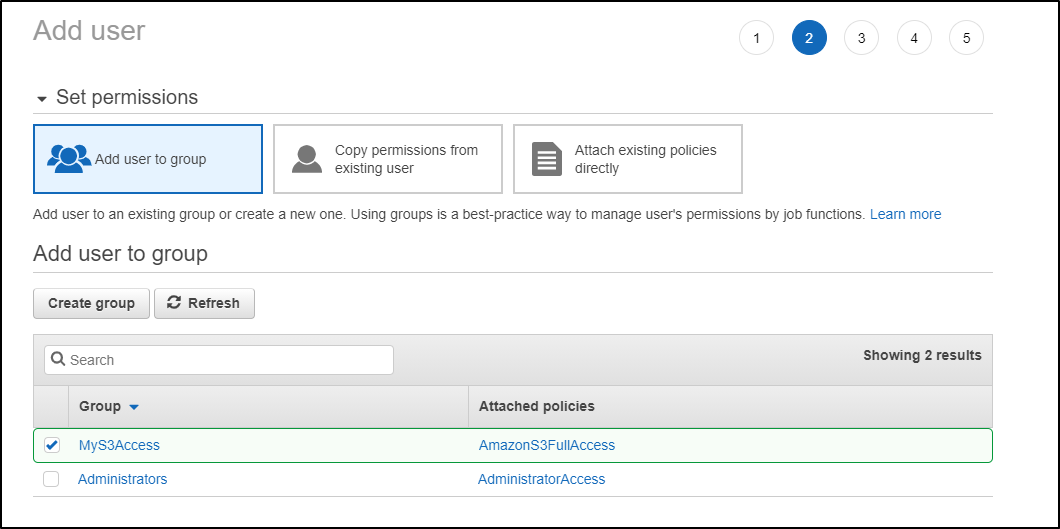

Next we should create a group (we don't really want this user in the administrators group)

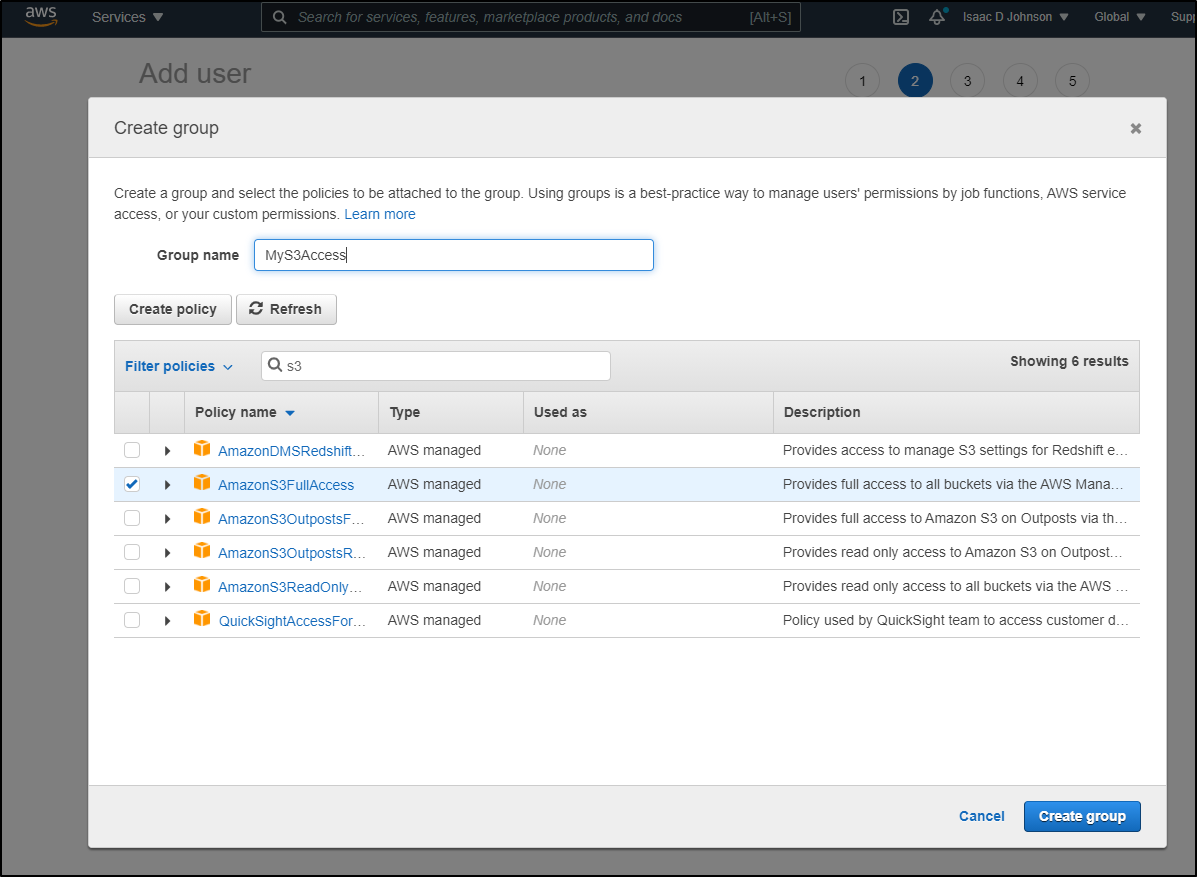

And we can set S3 Access only

After we select the group, we can come back to the create wizard

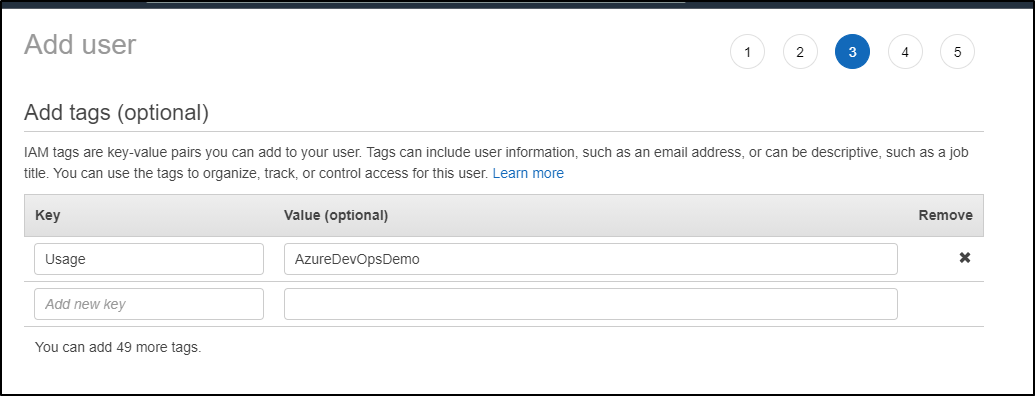

And set any tags

Finally, create the identity

When created, grab the access key ID and secret access key

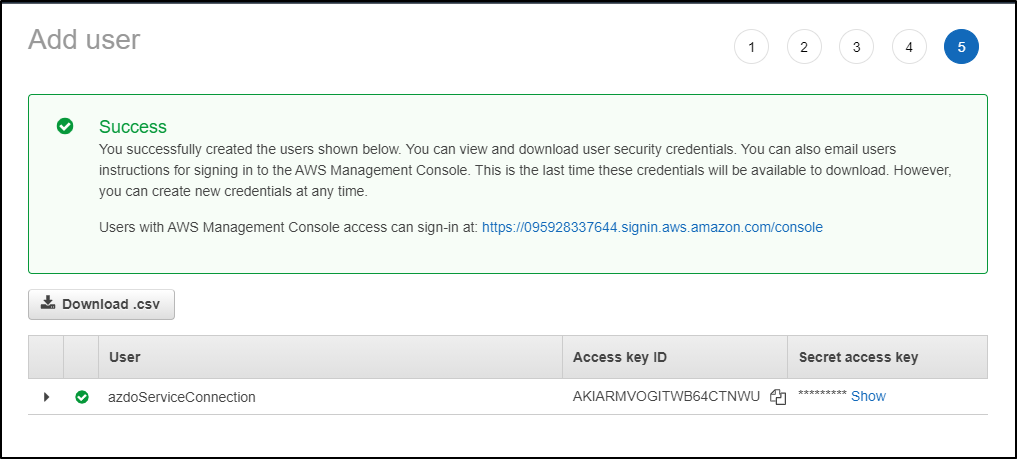

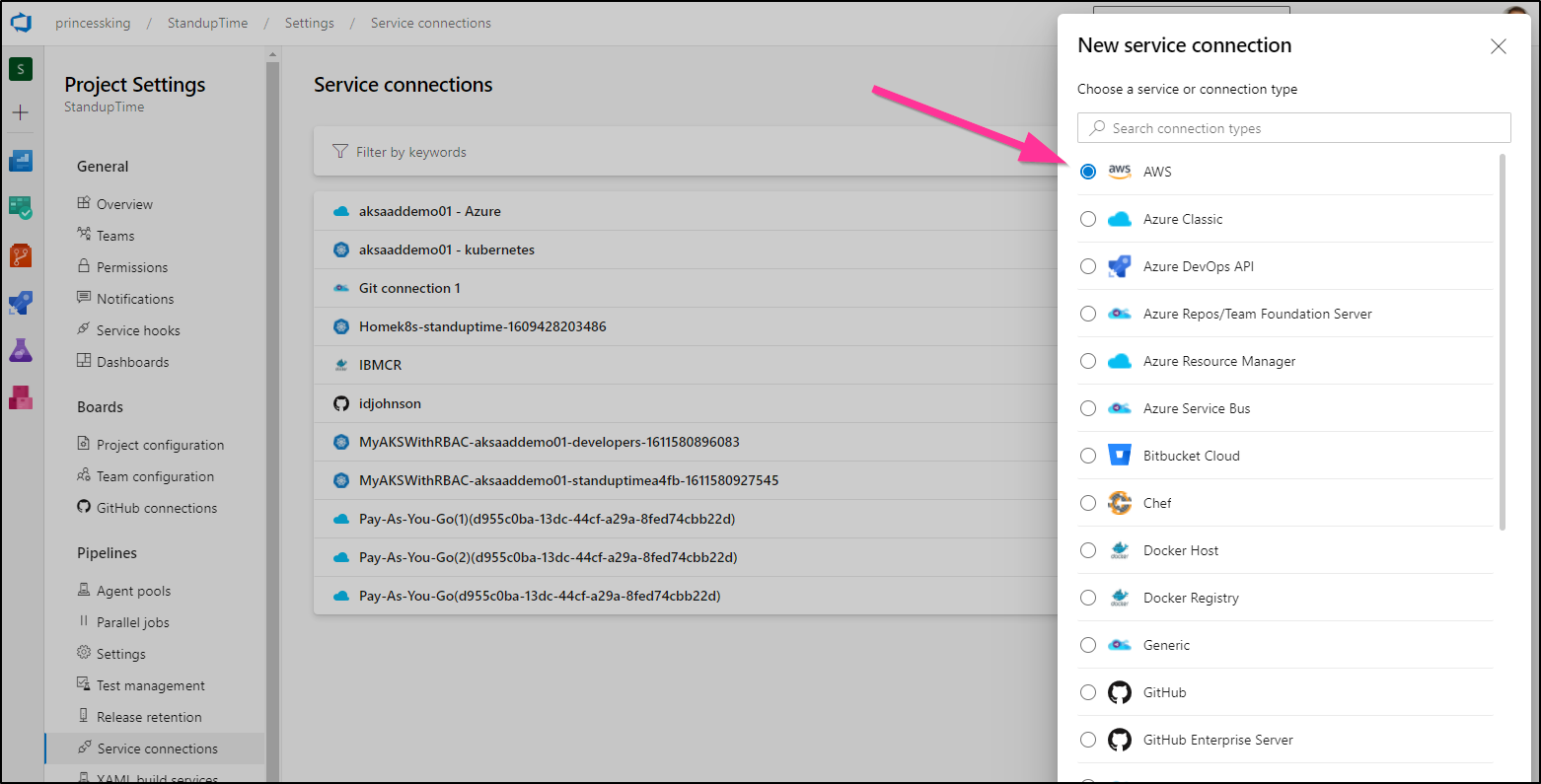
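If you would rather script the IAM setup than click through the console, the steps above could be sketched with the AWS CLI roughly as follows. This is a minimal sketch, not what I ran for this post: the function name and the user and group names are hypothetical, and I'm assuming the AWS-managed `AmazonS3FullAccess` policy as a stand-in for the "S3 access only" permission picked in the wizard.

```shell
# Hypothetical helper: create an S3-only group, a user in it, and an access key.
# Usage: create_pipeline_user USER GROUP
create_pipeline_user() {
  local user="$1"
  local group="$2"
  # Assumption: the AWS-managed AmazonS3FullAccess policy stands in for
  # the "S3 access only" permission chosen in the console.
  local policy_arn="arn:aws:iam::aws:policy/AmazonS3FullAccess"

  aws iam create-group --group-name "$group" || return 1
  aws iam attach-group-policy --group-name "$group" --policy-arn "$policy_arn" || return 1
  aws iam create-user --user-name "$user" || return 1
  aws iam add-user-to-group --group-name "$group" --user-name "$user" || return 1
  # Prints the AccessKeyId and SecretAccessKey; the secret is only shown once.
  aws iam create-access-key --user-name "$user"
}
```

Calling `create_pipeline_user azdo-s3-user s3-only-group` (both names made up) would print the key pair as JSON, which is what the service connection needs later.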

Back in Azure DevOps

Create a new AWS Service Connection

The details refer to the access key ID and secret access key we created in the first section

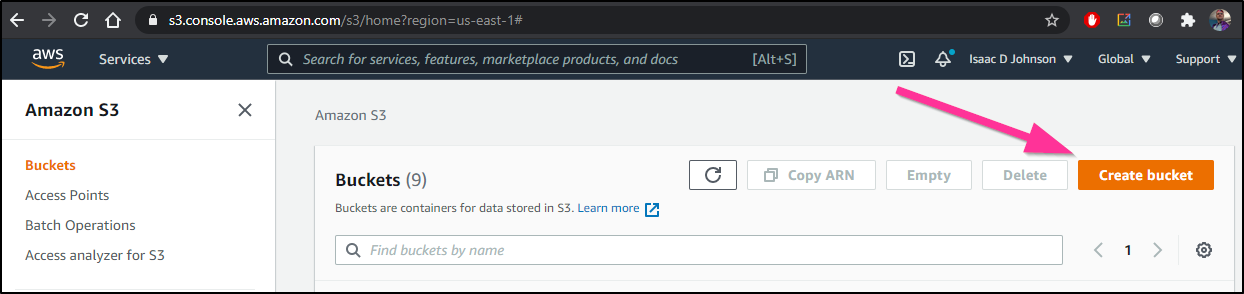

Create a bucket

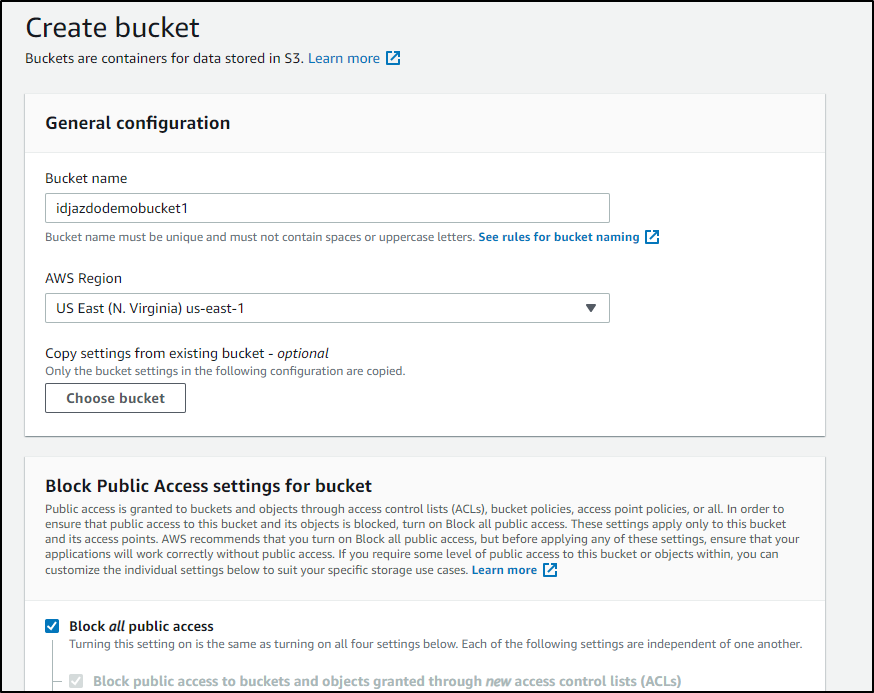

Now in S3, let’s make a bucket with encryption…

When we create the bucket, let’s set a few things.

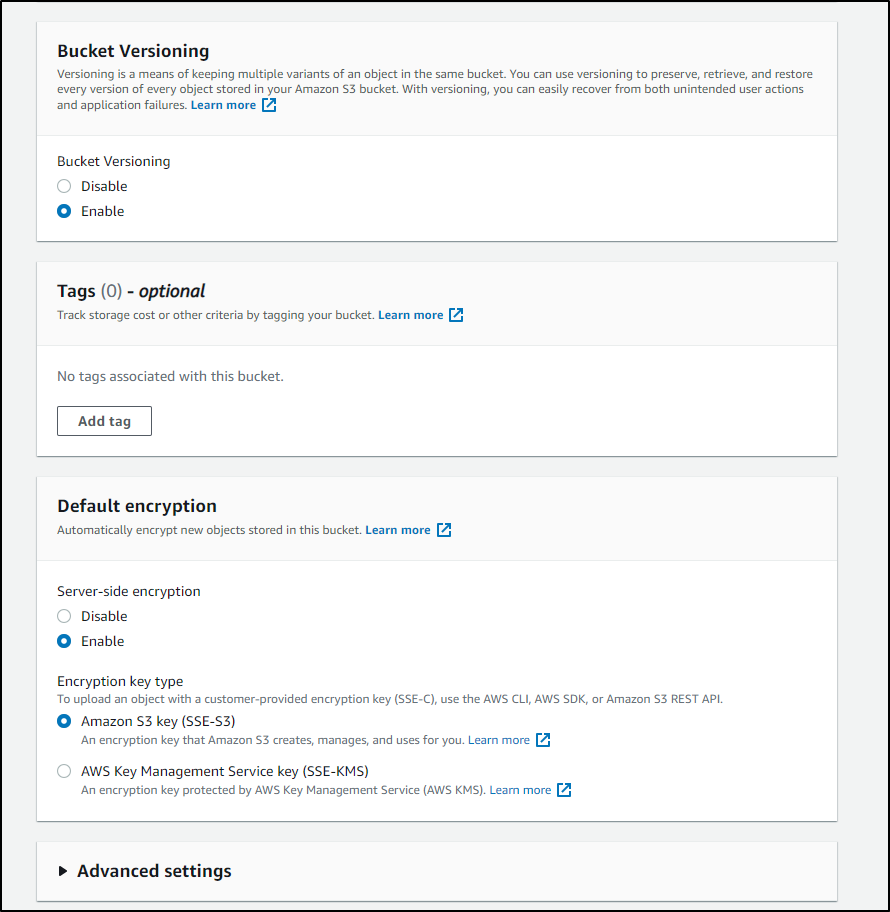

Then enable versioning and encryption

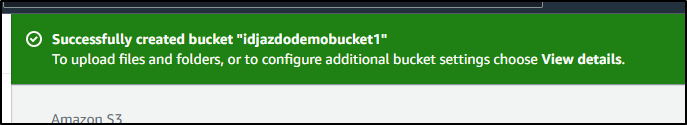

After we create, we should see confirmation
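The same bucket setup could be done from the AWS CLI. Here is a rough sketch under a couple of assumptions: the function name is hypothetical, and I'm assuming SSE-S3 (`AES256`) default encryption since the choice of encryption type isn't spelled out above. Any other settings from the create screen are left out.

```shell
# Hypothetical helper: create a bucket with versioning and default encryption.
# Usage: create_secure_bucket BUCKET [REGION]
create_secure_bucket() {
  local bucket="$1"
  local region="${2:-us-east-1}"

  if [ "$region" = "us-east-1" ]; then
    # us-east-1 does not take a LocationConstraint.
    aws s3api create-bucket --bucket "$bucket" --region "$region" || return 1
  else
    aws s3api create-bucket --bucket "$bucket" --region "$region" \
      --create-bucket-configuration "LocationConstraint=$region" || return 1
  fi

  # Keep prior versions of every object.
  aws s3api put-bucket-versioning --bucket "$bucket" \
    --versioning-configuration Status=Enabled || return 1

  # Assumption: SSE-S3 (AES256) as the default encryption for new objects.
  aws s3api put-bucket-encryption --bucket "$bucket" \
    --server-side-encryption-configuration \
    '{"Rules":[{"ApplyServerSideEncryptionByDefault":{"SSEAlgorithm":"AES256"}}]}'
}
```

For the bucket used in this post, that would be `create_secure_bucket idjazdodemobucket1 us-east-1`.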

In Azure DevOps

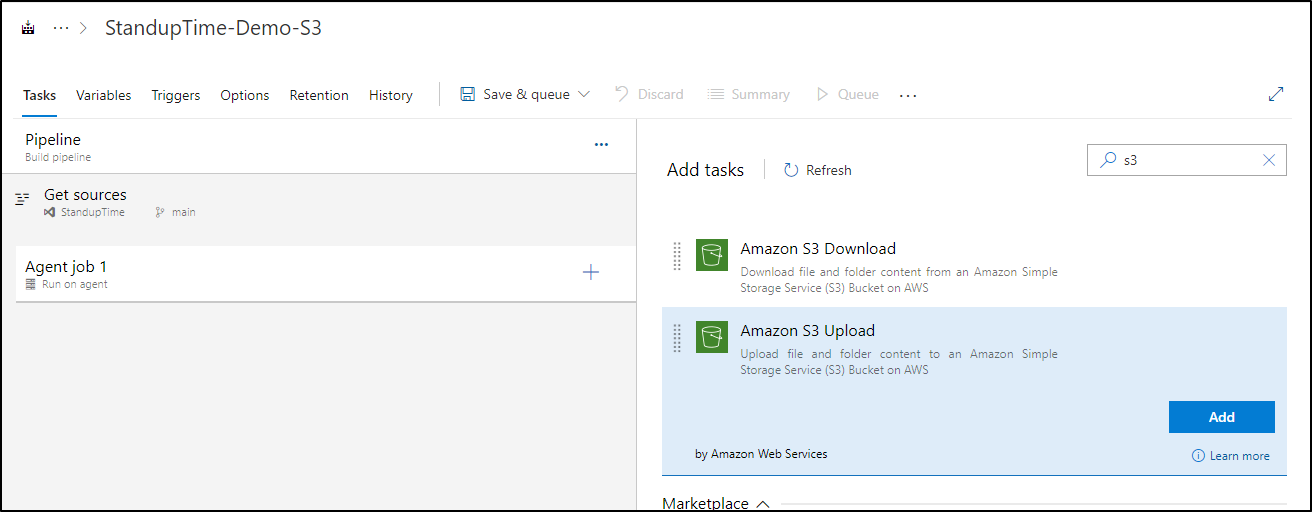

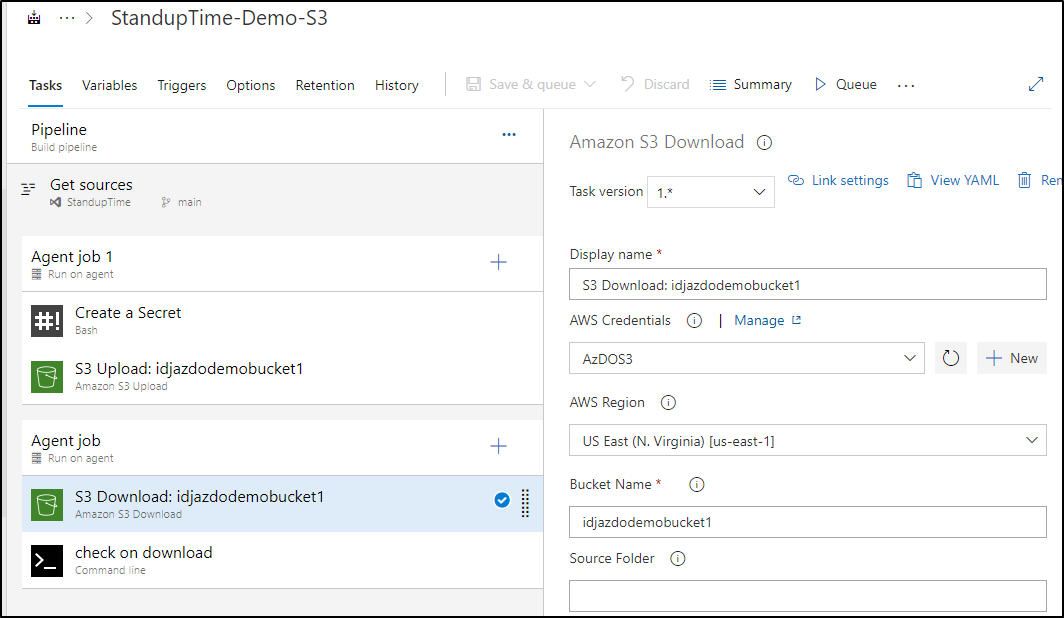

We can then create a ClassicUI pipeline and add the s3 task:

In a YAML pipeline, the task should look like:

    steps:
    - task: AmazonWebServices.aws-vsts-tools.S3Upload.S3Upload@1
      displayName: 'S3 Upload: idjazdodemobucket1'
      inputs:
        awsCredentials: AzDOS3
        regionName: 'us-east-1'
        bucketName: idjazdodemobucket1
        sourceFolder: tmp

with the following download in a different stage/agent:

    steps:
    - task: AmazonWebServices.aws-vsts-tools.S3Download.S3Download@1
      displayName: 'S3 Download: idjazdodemobucket1'
      inputs:
        awsCredentials: AzDOS3
        regionName: 'us-east-1'
        bucketName: idjazdodemobucket1
        targetFolder: '$(Build.ArtifactStagingDirectory)'

In ClassicUI, that looks like:

Verification

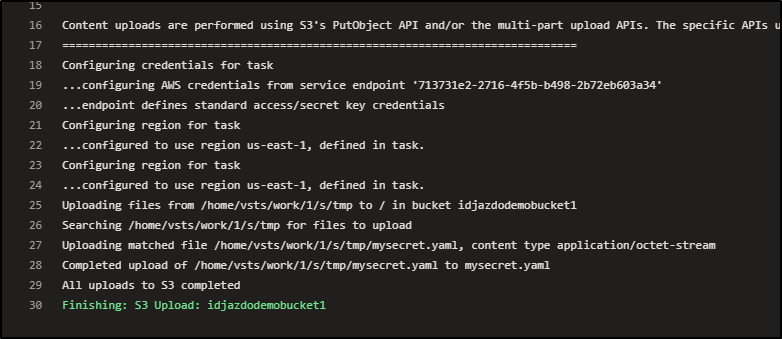

In testing, we can see the data uploaded:

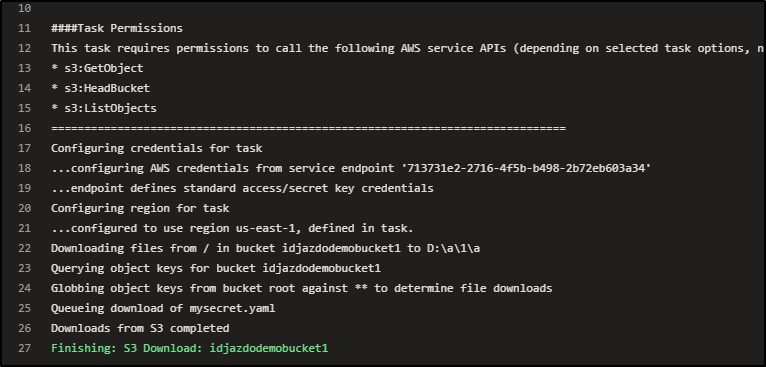

And subsequently downloaded (this time on a Windows host):
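On an agent without the AWS toolkit tasks installed, the same upload and download could be approximated with the plain AWS CLI. This is only a sketch of the idea: the function names are hypothetical, and it assumes credentials are already available to the CLI (for example via environment variables).

```shell
# Hypothetical helpers mirroring the S3Upload / S3Download tasks.

# Usage: s3_upload_dir LOCAL_DIR BUCKET
s3_upload_dir() {
  aws s3 cp "$1" "s3://$2/" --recursive
}

# Usage: s3_download_bucket BUCKET LOCAL_DIR
s3_download_bucket() {
  aws s3 cp "s3://$1/" "$2" --recursive
}

# Usage: s3_list_bucket BUCKET  (quick check of what landed in the bucket)
s3_list_bucket() {
  aws s3 ls "s3://$1" --recursive
}
```

In one stage that might be `s3_upload_dir tmp idjazdodemobucket1`, and in a later one `s3_download_bucket idjazdodemobucket1 "$BUILD_ARTIFACTSTAGINGDIRECTORY"`.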

Azure Blob Storage

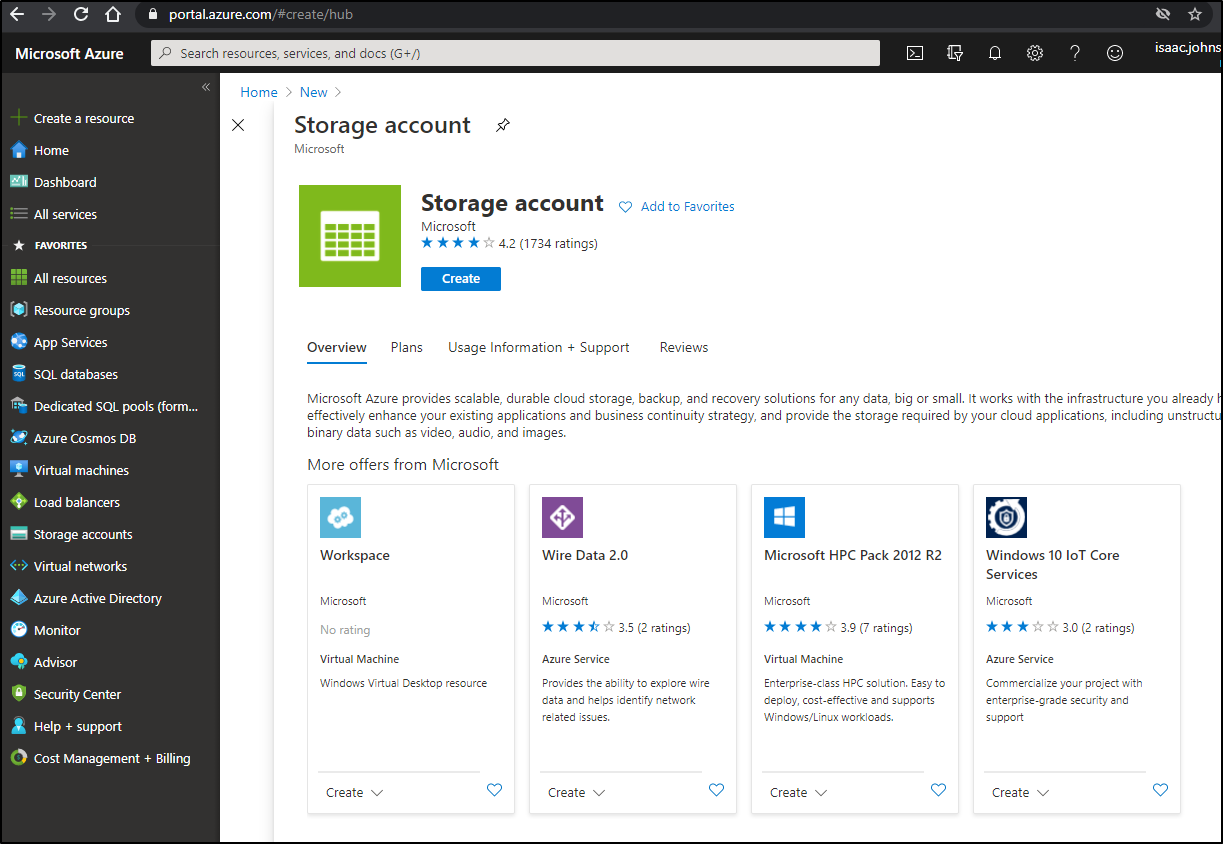

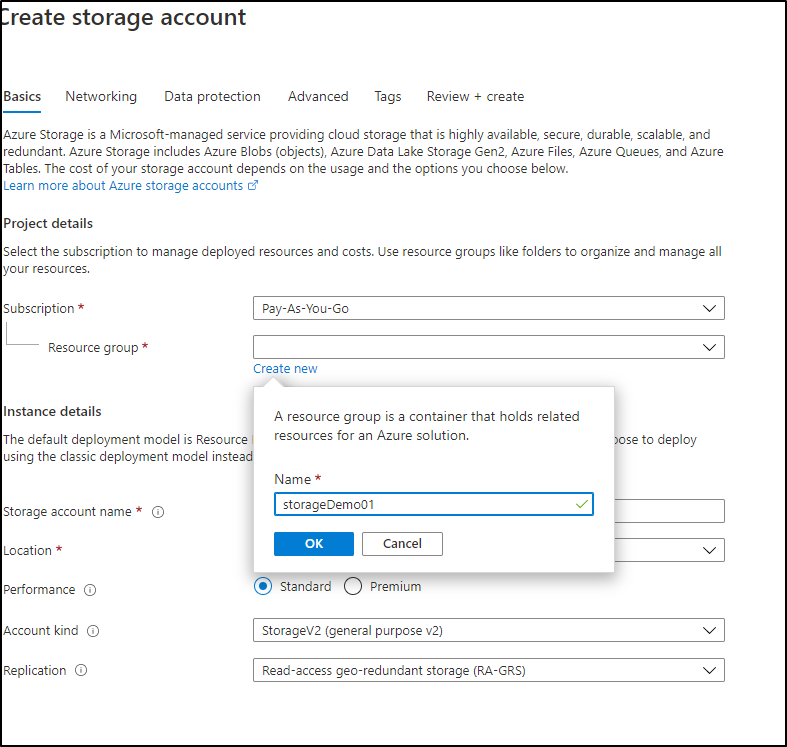

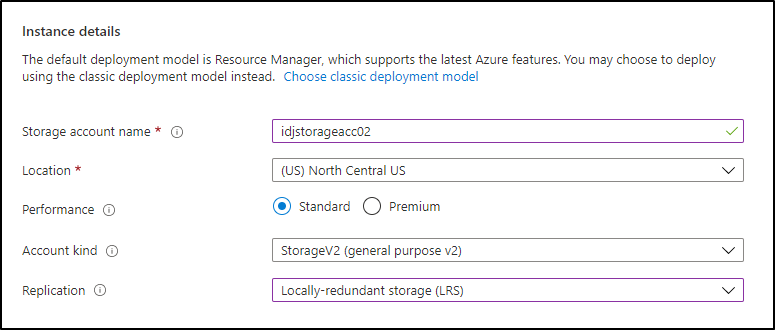

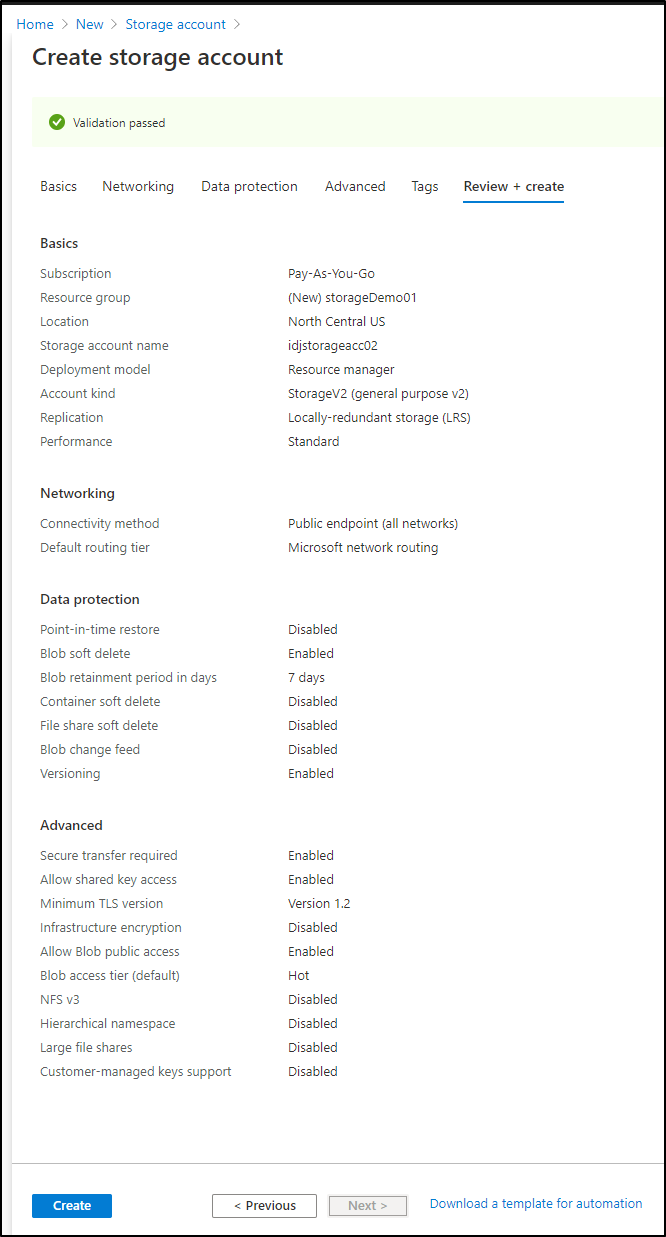

Create a new storage account

Blob store created

Storage account

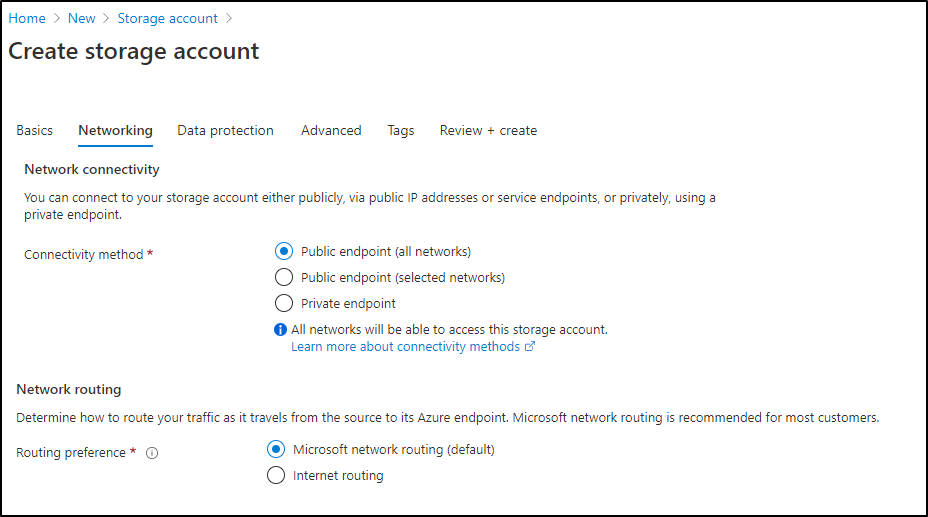

Then set networking. I’m leaving it public, but setting the routing preference to Microsoft network routing.

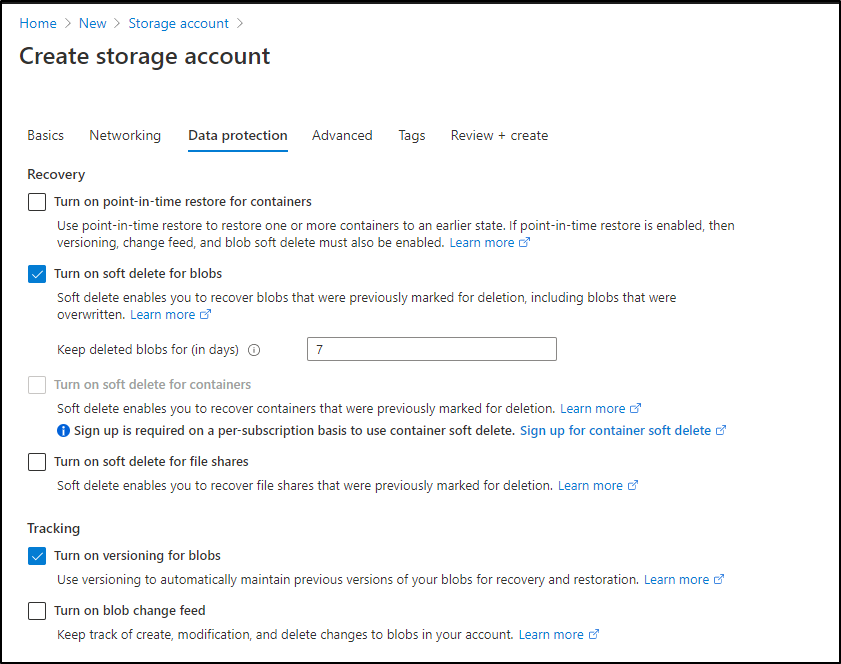

Then set versioning on blobs

Then create

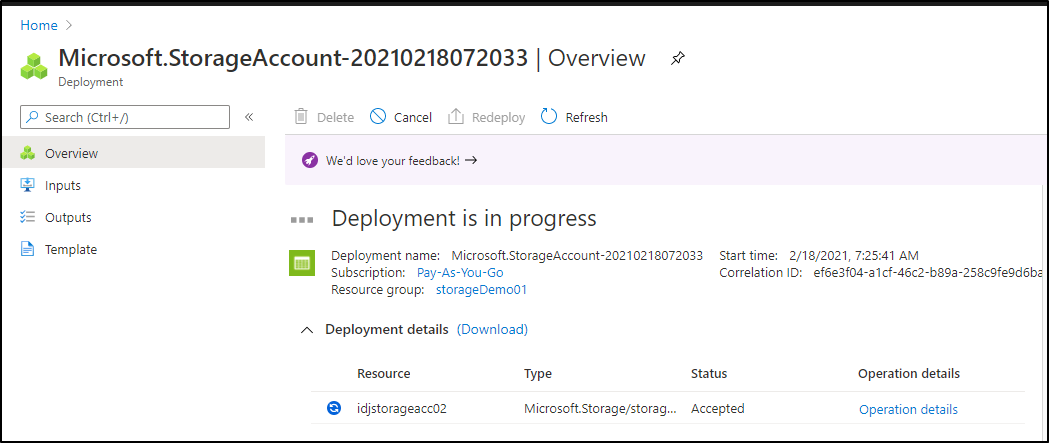

Once created:

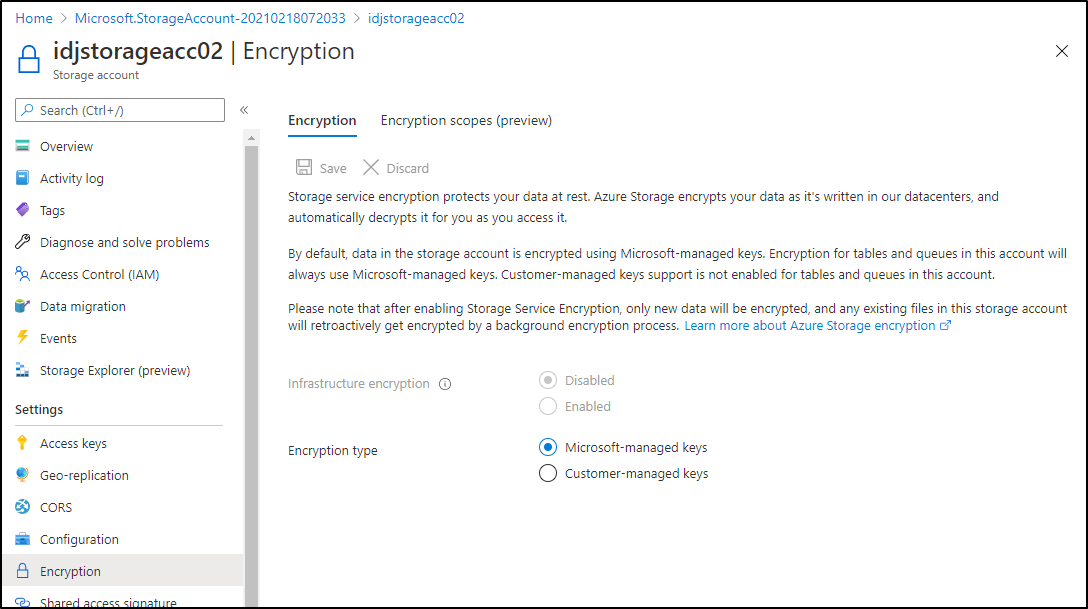

Verify encryption is enabled

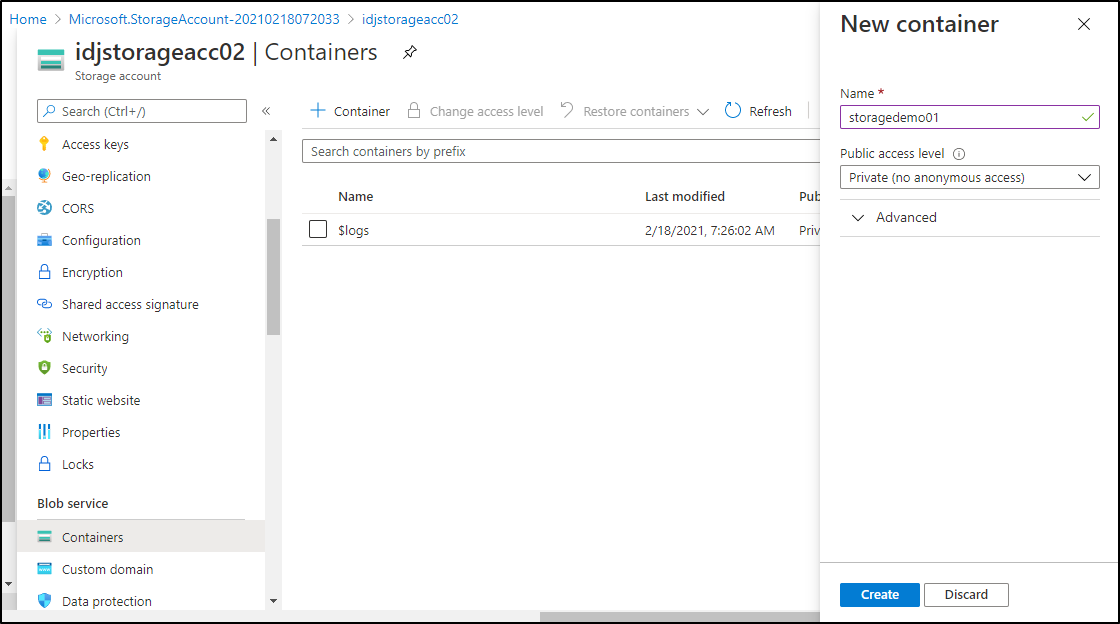

Now we can create a blob container

And we can see it listed here

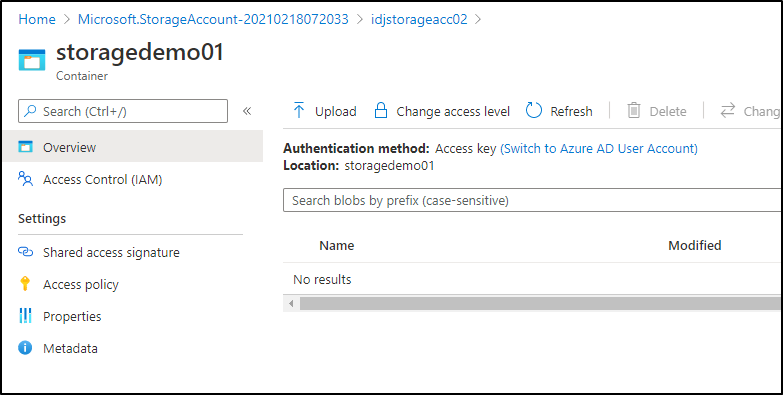
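For those who prefer the command line over the portal, the storage account steps above might look something like this with the Azure CLI. Treat it as a sketch: the function name, the resource group argument, and the `eastus` default are my assumptions, and the networking and routing choices from the portal are not reproduced here.

```shell
# Hypothetical helper: storage account with blob versioning plus one container.
# Usage: create_blob_store RESOURCE_GROUP ACCOUNT CONTAINER [LOCATION]
create_blob_store() {
  local rg="$1"
  local account="$2"
  local container="$3"
  local location="${4:-eastus}"

  # LRS general-purpose v2 account.
  az storage account create --name "$account" --resource-group "$rg" \
    --location "$location" --sku Standard_LRS --kind StorageV2 || return 1

  # Turn on blob versioning.
  az storage account blob-service-properties update --account-name "$account" \
    --resource-group "$rg" --enable-versioning true || return 1

  az storage container create --name "$container" --account-name "$account" || return 1

  # Should report that blob encryption is enabled.
  az storage account show --name "$account" --resource-group "$rg" \
    --query "encryption.services.blob.enabled" --output json
}
```

With the names used in this post, that would be `create_blob_store <your-resource-group> idjstorageacc02 storagedemo01`.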

In Azure DevOps

We can now use pipeline steps to access it

    steps:
    - task: AzureCLI@2
      displayName: 'Azure CLI'
      inputs:
        azureSubscription: 'Pay-As-You-Go(d955c0ba-13dc-aaaa-aaaa-8fed74cbb22d)'
        scriptType: bash
        scriptLocation: inlineScript
        inlineScript: 'az storage blob upload-batch -d storagedemo01 --account-name idjstorageacc02 -s ./tmp'

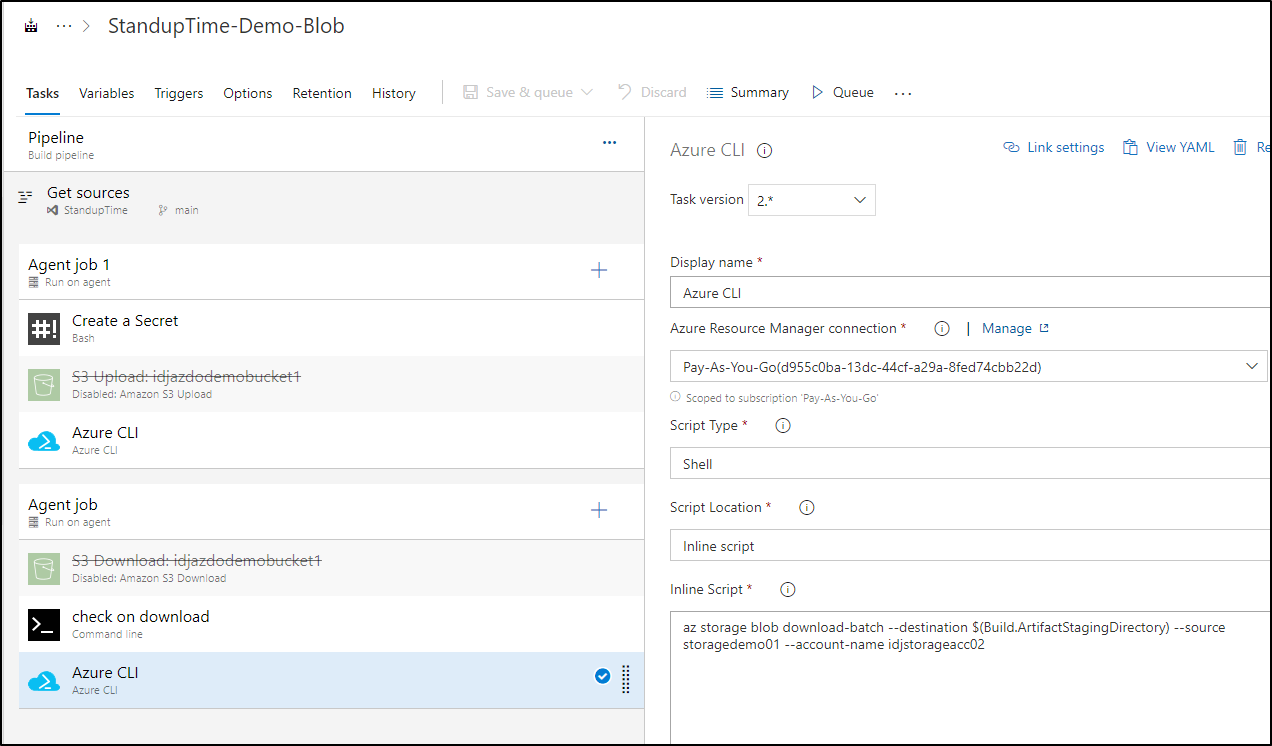

And then download as

    steps:
    - task: AzureCLI@2
      displayName: 'Azure CLI '
      inputs:
        azureSubscription: 'Pay-As-You-Go(d955c0ba-13dc-aaaa-aaaa-8fed74cbb22d)'
        scriptType: bash
        scriptLocation: inlineScript
        inlineScript: 'az storage blob download-batch --destination $(Build.ArtifactStagingDirectory) --source storagedemo01 --account-name idjstorageacc02'

And as a ClassicUI pipeline

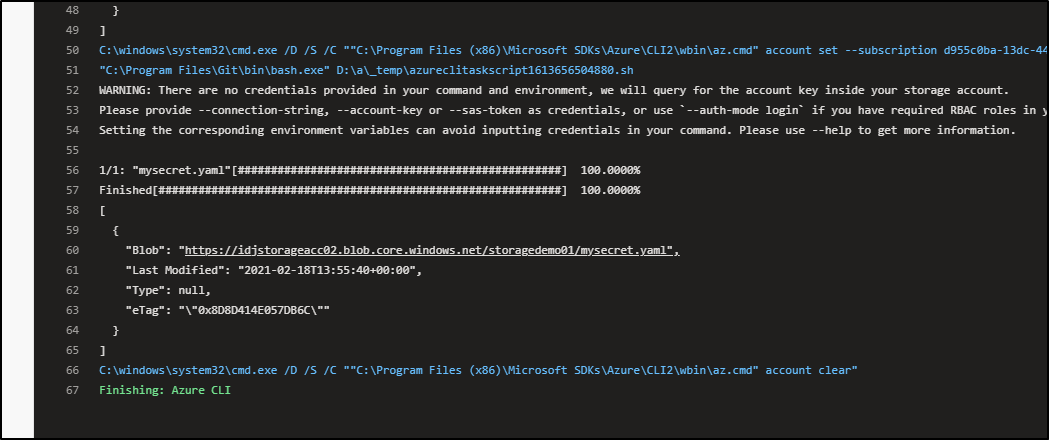

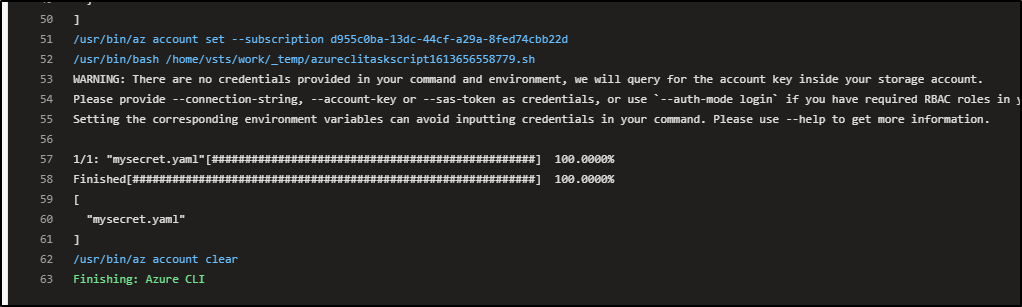

When run, we can see it uploaded

And then downloaded later on a different host
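If you want the pipeline itself to confirm the upload rather than eyeballing the logs, a small check along these lines could follow the upload step. It is a sketch only: `verify_upload` is a hypothetical helper, and it only looks at files in the top level of the folder.

```shell
# Hypothetical helper: confirm each top-level file in DIR exists as a blob.
# Usage: verify_upload DIR CONTAINER ACCOUNT
# Prints "missing: NAME" for each absent blob; returns 1 if any are missing.
verify_upload() {
  local dir="$1"
  local container="$2"
  local account="$3"
  local missing=0
  local f name found

  for f in "$dir"/*; do
    [ -f "$f" ] || continue
    name=$(basename "$f")
    # With --query exists and JSON output this prints true or false.
    found=$(az storage blob exists --container-name "$container" \
      --name "$name" --account-name "$account" --query exists --output json)
    if [ "$found" != "true" ]; then
      echo "missing: $name"
      missing=1
    fi
  done
  return "$missing"
}
```

Run as `verify_upload ./tmp storagedemo01 idjstorageacc02`, a non-zero exit would fail the step.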

Summary

I showed two easy ways to use encrypted storage in AWS and Azure to store some data in one stage and retrieve it in a completely different one. While I didn't dig into passing and parsing key/value pairs, one could use the format of their choosing to accomplish that. I often use JSON with jq to set and retrieve values, e.g.

    $ echo '[{"keyName":"whatever","keyValue":"somevalue"}]' > t.json
    $ cat t.json
    [{"keyName":"whatever","keyValue":"somevalue"}]
    $ cat t.json | jq -r ".[] | .keyValue"
    somevalue

Storing less than a GB of JSON data in an LRS account in US-East would likely run me 3 cents. If I added GRS redundancy, I would up that to about 7 cents. AWS S3 is similarly priced.
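Going back to the jq round trip for a moment: with more than one key in the file, you would want to select by `keyName` rather than print every `keyValue`. One way to wrap that up is sketched below. The `secret_set` and `secret_get` names are hypothetical; the file layout is the same `keyName`/`keyValue` array used in the example.

```shell
# Hypothetical helper: add or replace KEY in the JSON array stored in FILE.
# Usage: secret_set FILE KEY VALUE
secret_set() {
  local file="$1"
  local key="$2"
  local value="$3"
  local tmp

  # Start from an empty array if the file does not exist yet.
  [ -f "$file" ] || echo '[]' > "$file"
  tmp=$(mktemp) || return 1
  # Drop any existing entry for the key, then append the new one.
  jq -c --arg k "$key" --arg v "$value" \
    'map(select(.keyName != $k)) + [{keyName: $k, keyValue: $v}]' \
    "$file" > "$tmp" && mv "$tmp" "$file"
}

# Hypothetical helper: print the value stored for KEY (nothing if absent).
# Usage: secret_get FILE KEY
secret_get() {
  jq -r --arg k "$2" '.[] | select(.keyName == $k) | .keyValue' "$1"
}
```

So `secret_set t.json whatever somevalue` followed by `secret_get t.json whatever` gives back `somevalue`, and the file is what gets uploaded to the bucket or container.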

While not a replacement for AKV or SSM, using Blob and S3 provides a nice KISS method when you just need a reliable and fast way to store some configurations and/or secrets used by various pipelines.