Published: Jun 18, 2024 by Isaac Johnson

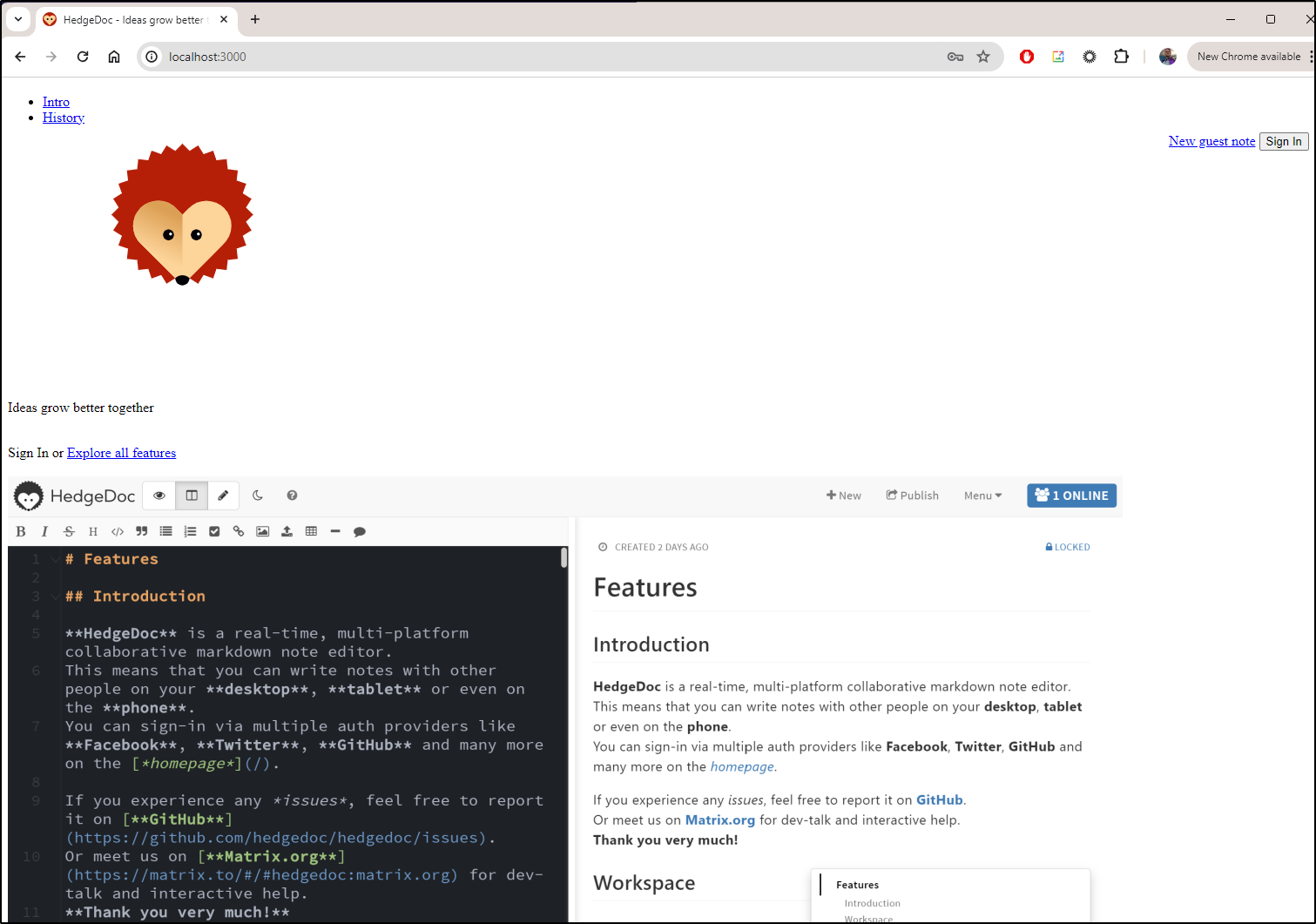

I love new documentation tools, especially when they are self-hosted and open-source. Today, we’ll look at HedgeDoc, which came out of CodiMD, which itself came from HackMD.

The history of HedgeDoc is kind of interesting, enough so that they built a Git-style commit-flow diagram to explain the project’s forks and renames. Essentially, it started in 2015, and the HedgeDoc fork got its name in 2020.

HedgeDoc

HedgeDoc came up in my feed from a post on David Augustat’s blog where he showed how to launch it with Docker Compose.

version: '3'
services:
  database:
    image: postgres:alpine
    environment:
      - POSTGRES_USER=hedgedoc
      - POSTGRES_PASSWORD=password
      - POSTGRES_DB=hedgedoc
    volumes:
      - database:/var/lib/postgresql/data
    restart: unless-stopped
  app:
    image: quay.io/hedgedoc/hedgedoc:latest
    environment:
      - CMD_DB_URL=postgres://hedgedoc:password@database:5432/hedgedoc
      - CMD_DOMAIN=192.168.178.103 # change this
      - CMD_PORT=3000
      - CMD_URL_ADDPORT=true
      - NODE_ENV=production
      - CMD_ALLOW_ANONYMOUS=false
      - CMD_ALLOW_ANONYMOUS_EDITS=true
      - CMD_DEFAULT_PERMISSION=private
      - CMD_ALLOW_EMAIL_REGISTER=false

I’ll convert this to Kubernetes manifests, tackling the database first, then the app.

$ cat db.manifest.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: postgres-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      app: postgres
  template:
    metadata:
      labels:
        app: postgres
    spec:
      containers:
      - name: postgres
        image: postgres:13
        env:
        - name: POSTGRES_DB
          value: hedgedoc
        - name: POSTGRES_USER
          value: hedgedoc
        - name: POSTGRES_PASSWORD
          value: ThisIsNotMyPassword1234
        ports:
        - containerPort: 5432
        volumeMounts:
        - name: postgres-data
          mountPath: /var/lib/postgresql/data
      volumes:
      - name: postgres-data
        persistentVolumeClaim:
          claimName: postgres-pvc
---
apiVersion: v1
kind: Service
metadata:
  name: postgres-service
spec:
  selector:
    app: postgres
  ports:
  - protocol: TCP
    port: 5432
    targetPort: 5432
  type: ClusterIP
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: postgres-pvc
spec:
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
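The manifest above keeps the database password inline, which is fine for a demo. As a minimal sketch of an alternative, the password could live in a Kubernetes Secret and be referenced from the container. The Secret name `postgres-credentials` is hypothetical and not part of my actual setup; the second document is only a fragment of the container spec, not a complete object.

```yaml
# Hypothetical Secret holding the database password (name is an assumption)
apiVersion: v1
kind: Secret
metadata:
  name: postgres-credentials
type: Opaque
stringData:
  POSTGRES_PASSWORD: ThisIsNotMyPassword1234
---
# Fragment only: replaces the inline POSTGRES_PASSWORD entry
# under spec.template.spec.containers[0] of postgres-deployment
env:
- name: POSTGRES_PASSWORD
  valueFrom:
    secretKeyRef:
      name: postgres-credentials
      key: POSTGRES_PASSWORD
```

The same pattern would apply to `CMD_DB_URL` in the app, since that URL embeds the password as well.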

Then I applied it

$ kubectl create ns hedgedoc

$ kubectl apply -f db.manifest.yaml -n hedgedoc

deployment.apps/postgres-deployment created

service/postgres-service created

persistentvolumeclaim/postgres-pvc created

For some odd reason, the pod was stuck in Pending waiting for the PVC, and the PVC was stuck in Pending waiting for its first consumer.

$ kubectl get pvc -n hedgedoc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

postgres-pvc Pending local-path 46s
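The PVC half of that is expected behaviour if `local-path` is the storage class bundled with k3s (an assumption on my part, based on the STORAGECLASS column above). That class delays binding until a pod actually gets scheduled, so the PVC showing Pending at first is normal; the pod staying Pending is the odd part. A sketch of what that StorageClass typically looks like:

```yaml
# Typical definition of the k3s-bundled local-path class.
# Assumption: my cluster uses the stock provisioner with default settings.
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: local-path
provisioner: rancher.io/local-path
reclaimPolicy: Delete
# The PVC stays Pending until a pod that uses it is scheduled
volumeBindingMode: WaitForFirstConsumer
```

Running `kubectl get storageclass local-path -o yaml` shows what a given cluster really uses.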

I deleted the deployment and re-applied and all was well

$ kubectl get pods -n hedgedoc

NAME READY STATUS RESTARTS AGE

postgres-deployment-6bbd578855-hhsvn 1/1 Running 0 3m23s

$ kubectl get svc -n hedgedoc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

postgres-service ClusterIP 10.43.141.34 <none> 5432/TCP 6m29s

For this first pass, we’ll just expose it on port 3000 with IP 127.0.0.1. This should work for a `kubectl port-forward` test

$ cat hedgedoc.app.manifest.yaml

---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: hedgedoc-uploads
spec:
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: hedgedoc
spec:
  replicas: 1
  selector:
    matchLabels:
      app: hedgedoc
  template:
    metadata:
      labels:
        app: hedgedoc
    spec:
      containers:
      - name: hedgedoc
        image: quay.io/hedgedoc/hedgedoc:latest
        ports:
        - containerPort: 3000
        env:
        - name: CMD_DB_URL
          value: "postgres://hedgedoc:ThisIsNotMyPassword1234@postgres-service:5432/hedgedoc"
        - name: CMD_DOMAIN
          value: "127.0.0.1"
        - name: CMD_PORT
          value: "3000"
        - name: CMD_URL_ADDPORT
          value: "true"
        - name: NODE_ENV
          value: "production"
        - name: CMD_ALLOW_ANONYMOUS
          value: "true"
        - name: CMD_ALLOW_ANONYMOUS_EDITS
          value: "true"
        - name: CMD_ALLOW_GRAVATAR
          value: "true"
        volumeMounts:
        - name: hedgedoc-uploads
          mountPath: /hedgedoc/public/uploads
      volumes:
      - name: hedgedoc-uploads
        persistentVolumeClaim:
          claimName: hedgedoc-uploads
---
apiVersion: v1
kind: Service
metadata:
  name: hedgedoc-service
spec:
  selector:
    app: hedgedoc
  ports:
  - protocol: TCP
    port: 80
    targetPort: 3000
  type: ClusterIP

I can then apply

$ kubectl apply -f ./hedgedoc.app.manifest.yaml -n hedgedoc

persistentvolumeclaim/hedgedoc-uploads created

deployment.apps/hedgedoc created

service/hedgedoc-service created

I see the pods running

$ kubectl get pods -n hedgedoc

NAME READY STATUS RESTARTS AGE

postgres-deployment-6bbd578855-hhsvn 1/1 Running 0 17m

hedgedoc-6b6479979f-gxsj7 1/1 Running 0 96s

And the logs look good

$ kubectl logs hedgedoc-6b6479979f-gxsj7 -n hedgedoc | tail -n10

2024-06-02T14:00:59.271Z info: == 20200321153000-fix-account-deletion: migrating =======

2024-06-02T14:00:59.272Z info: Cleaning up notes that should already have been removed. Sorry.

2024-06-02T14:00:59.353Z info: == 20200321153000-fix-account-deletion: migrated (0.082s)

2024-06-02T14:00:59.362Z info: == 20220901102800-convert-history-to-longtext: migrating =======

2024-06-02T14:00:59.386Z info: == 20220901102800-convert-history-to-longtext: migrated (0.024s)

2024-06-02T14:00:59.387Z info: All migrations performed successfully

2024-06-02T14:00:59.408Z info: HTTP Server listening at 0.0.0.0:3000

I’ll port-forward to the service

$ kubectl port-forward -n hedgedoc svc/hedgedoc-service 3000:80

Forwarding from 127.0.0.1:3000 -> 3000

Forwarding from [::1]:3000 -> 3000

Handling connection for 3000

Handling connection for 3000

Handling connection for 3000

Handling connection for 3000

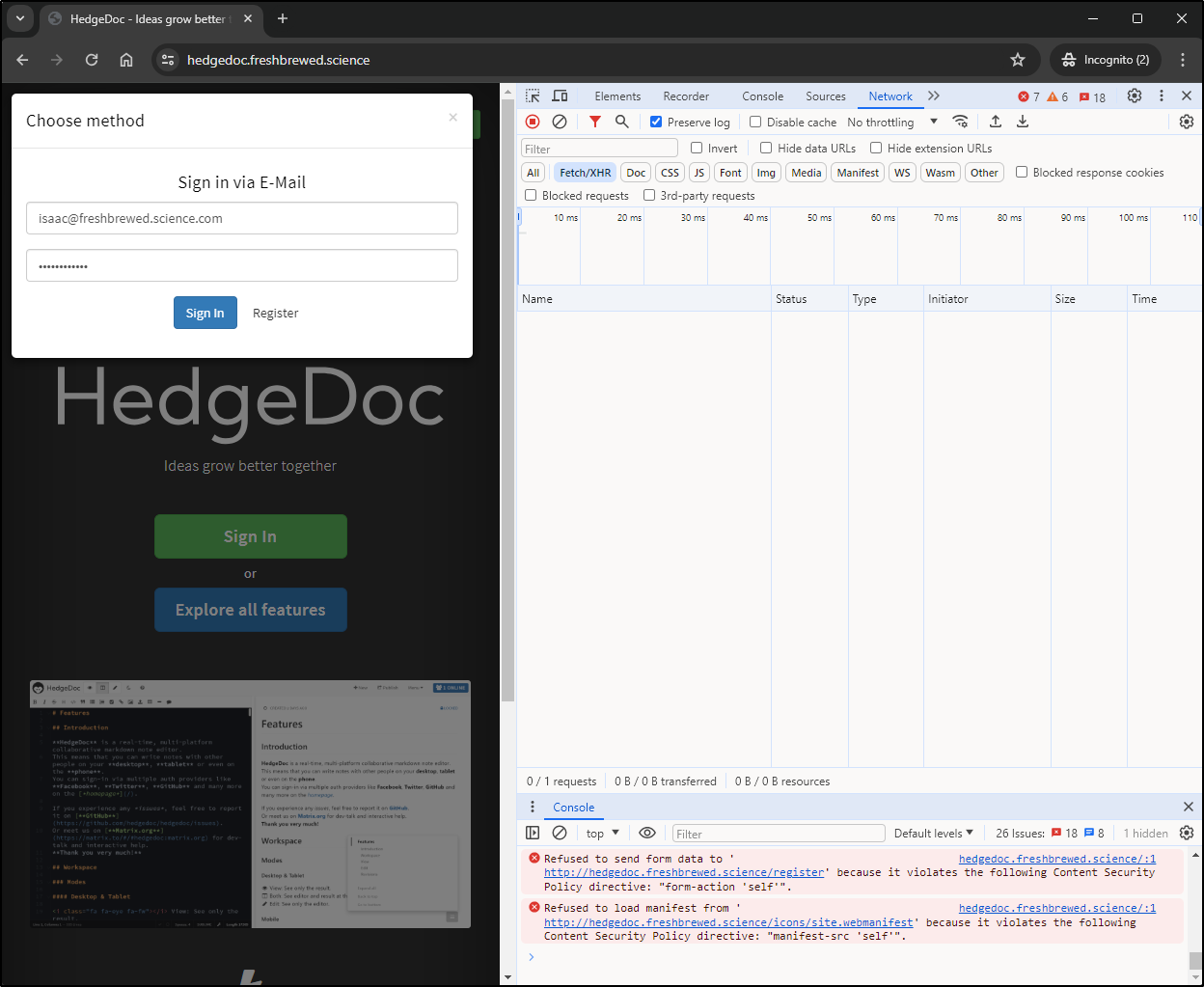

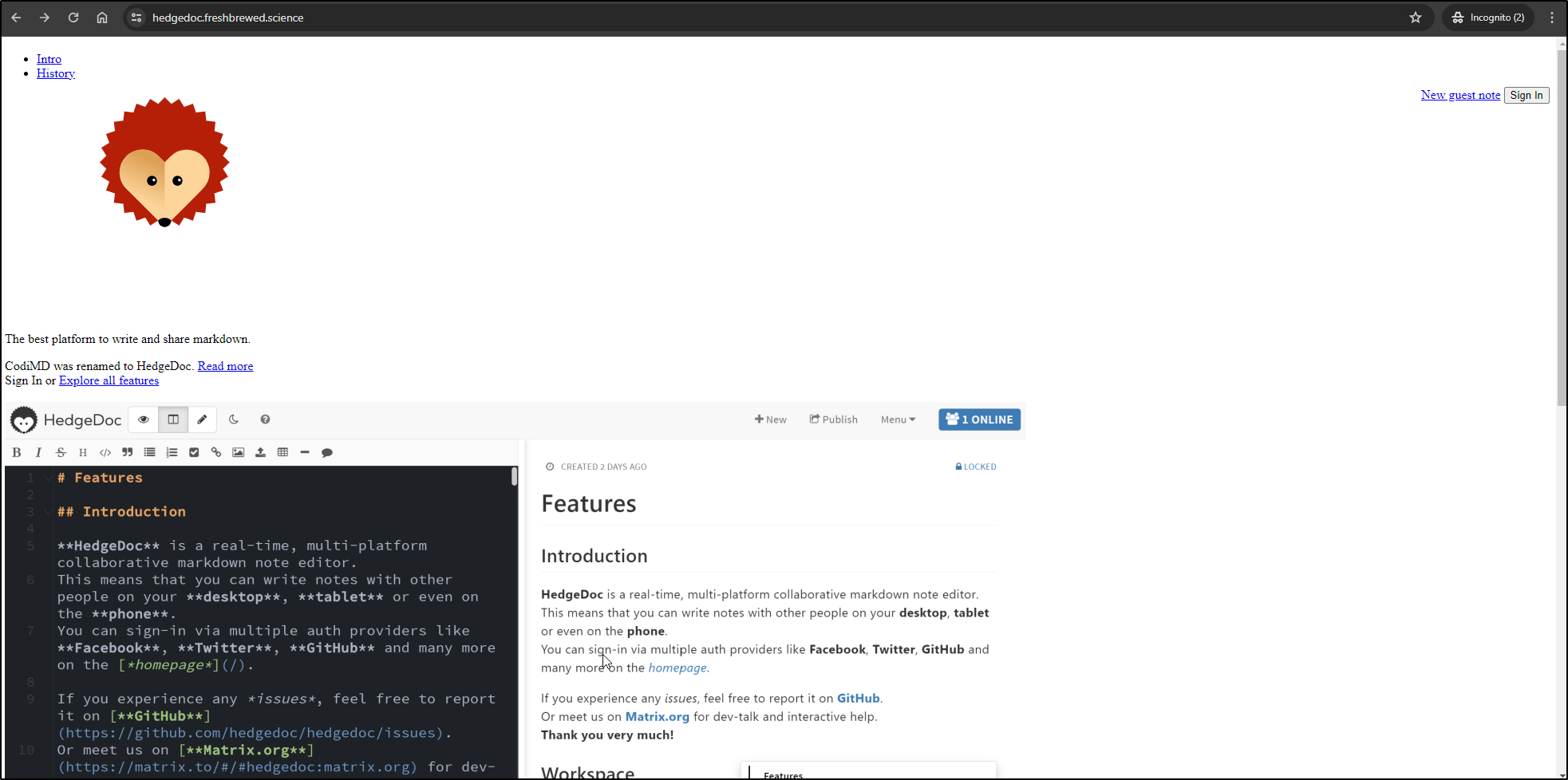

and can see it running

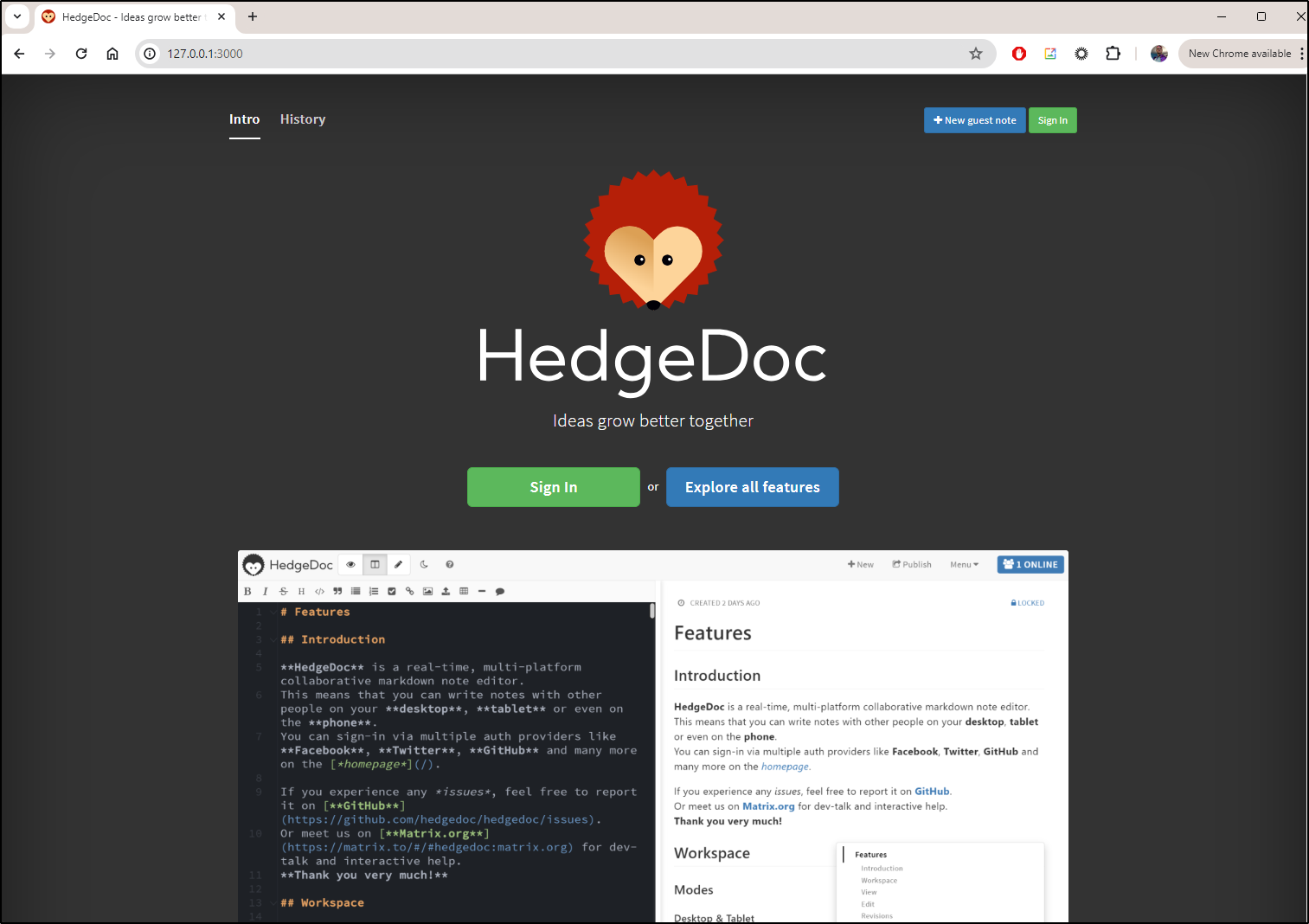

I noticed if I use ‘127.0.0.1’ instead of ‘localhost’ it looks a lot better

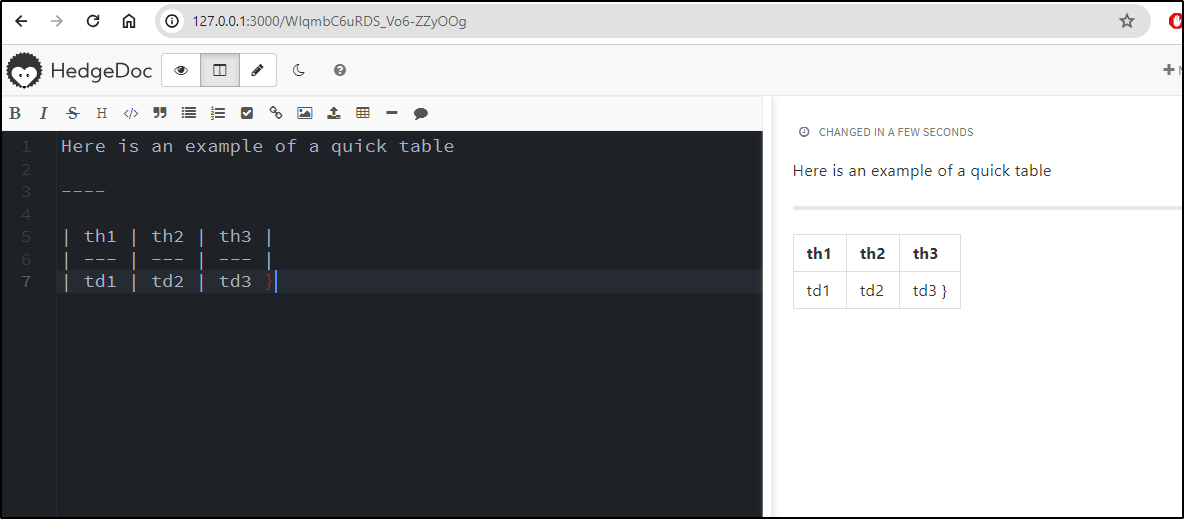

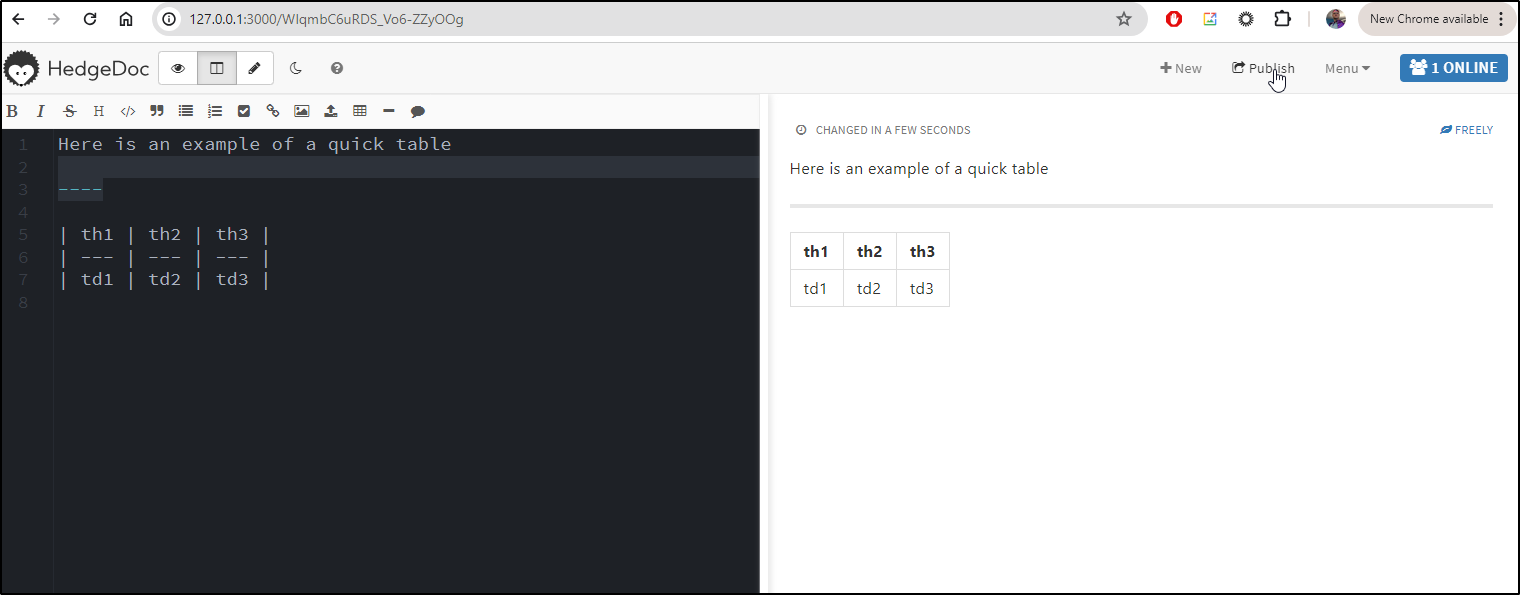

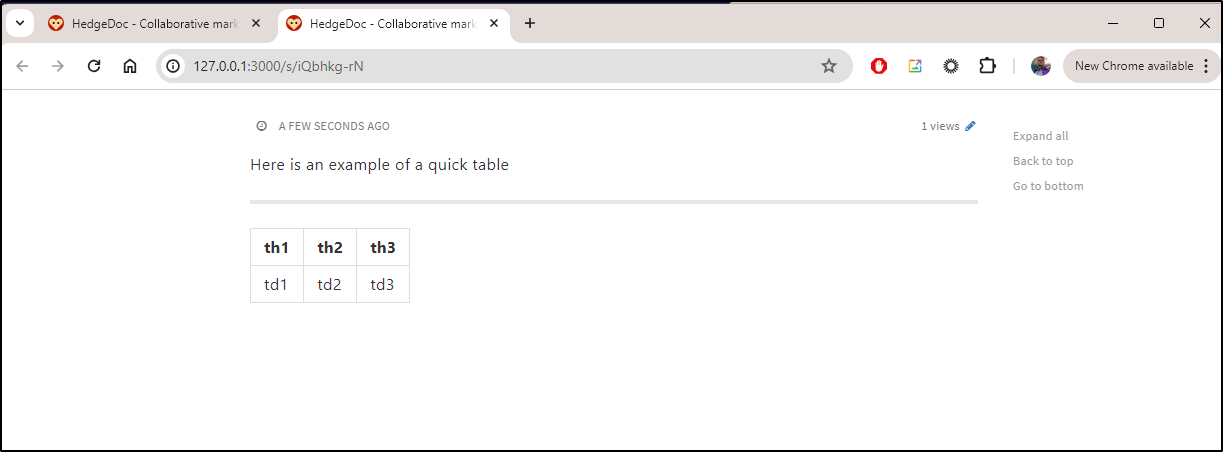

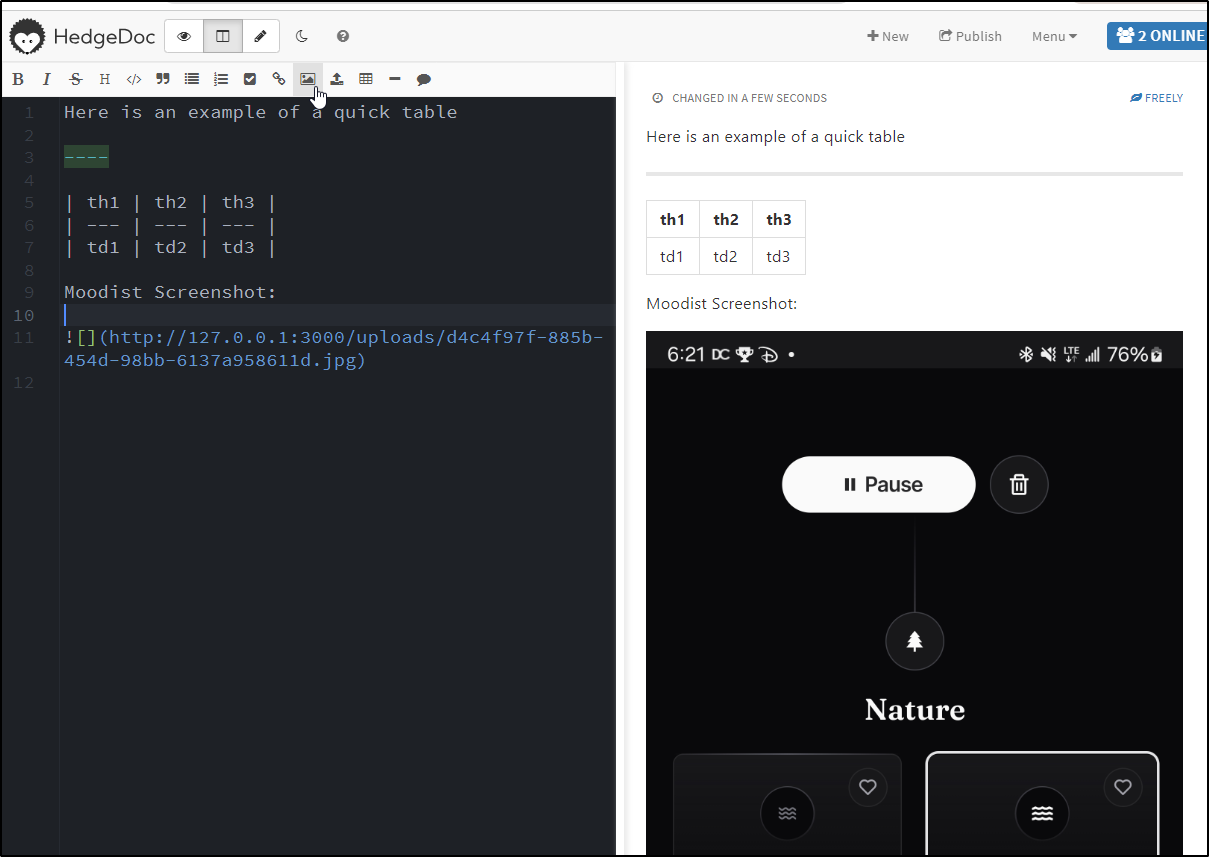

I decided to create a “Guest Note” and noticed the markdown did some nice syntax checking.

I can then publish the note

I now have a nice markdown gist I could share with others

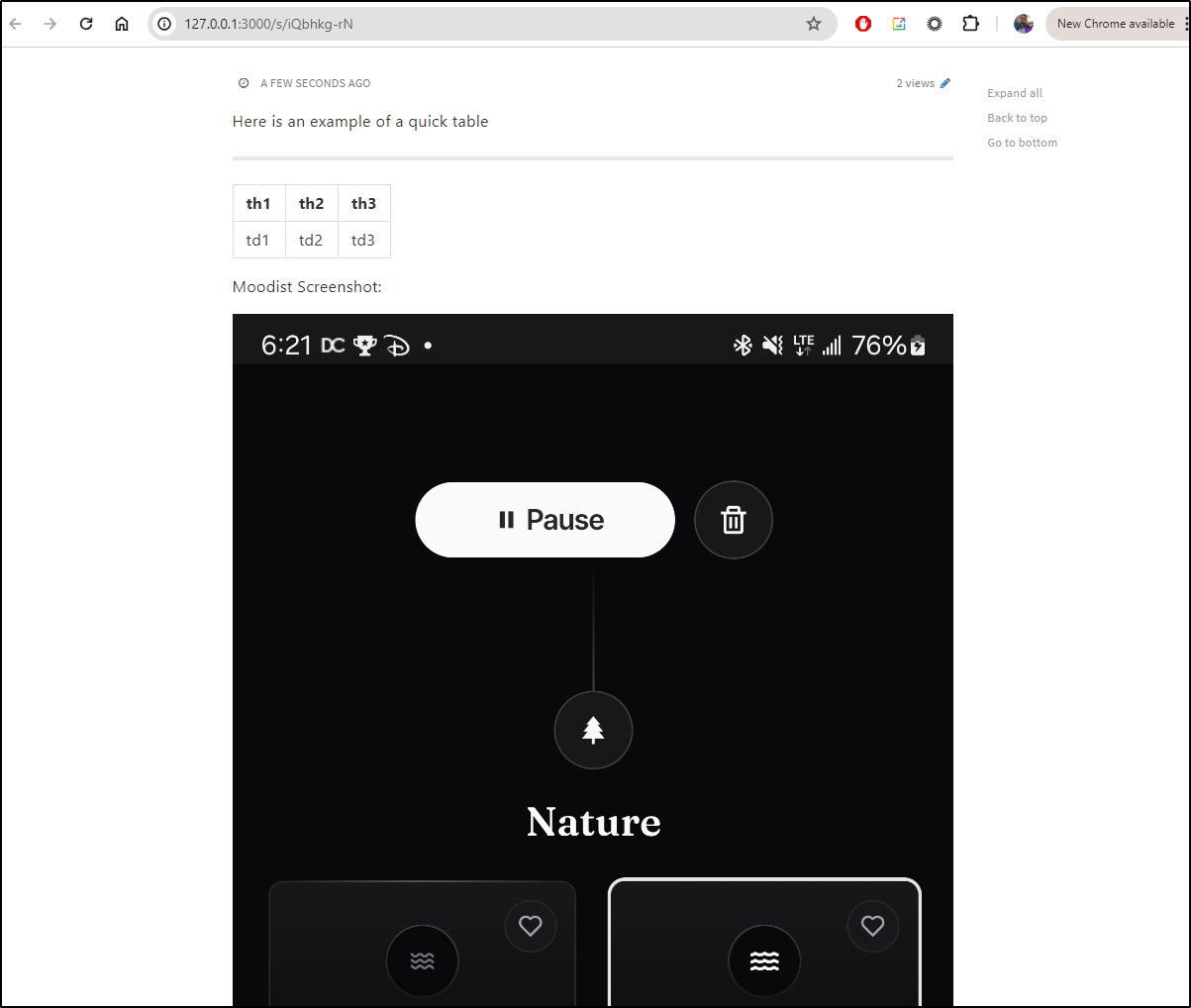

There is an embed image link, but also an image upload option as well. I tried it with a local screenshot from Moodist

which looks pretty good

Production cluster

I think I want to keep using this app so I’ll move on to the production cluster.

I need an A Record in my AWS HostedZone first

$ cat r53-hedgedoc.json

{
  "Comment": "CREATE hedgedoc fb.s A record ",
  "Changes": [
    {
      "Action": "CREATE",
      "ResourceRecordSet": {
        "Name": "hedgedoc.freshbrewed.science",
        "Type": "A",
        "TTL": 300,
        "ResourceRecords": [
          {
            "Value": "75.73.224.240"
          }
        ]
      }
    }
  ]
}

$ aws route53 change-resource-record-sets --hosted-zone-id Z39E8QFU0F9PZP --change-batch file://r53-hedgedoc.json

{
  "ChangeInfo": {
    "Id": "/change/C1025982YWG96NVYKNEJ",
    "Status": "PENDING",
    "SubmittedAt": "2024-06-02T14:16:21.174000+00:00",
    "Comment": "CREATE hedgedoc fb.s A record "
  }
}

Since we are in a new cluster, let’s create the Database and App again

$ kubectl create ns hedgedoc

namespace/hedgedoc created

$ kubectl apply -f ./db.manifest.yaml -n hedgedoc

deployment.apps/postgres-deployment created

service/postgres-service created

persistentvolumeclaim/postgres-pvc created

I’ll check that the DB is running

$ kubectl get pods -n hedgedoc

NAME READY STATUS RESTARTS AGE

postgres-deployment-7c5cff478f-lslzc 1/1 Running 0 23s

Then make the app

$ kubectl apply -f ./hedgedoc.app.manifest.yaml -n hedgedoc

persistentvolumeclaim/hedgedoc-uploads created

deployment.apps/hedgedoc created

service/hedgedoc-service created

I see it is running

$ kubectl get pods -n hedgedoc

NAME READY STATUS RESTARTS AGE

postgres-deployment-7c5cff478f-lslzc 1/1 Running 0 71s

hedgedoc-74867d74f5-x48kn 1/1 Running 0 22s

However it dawned on me that we likely need to give this the real name in the config:

... snip ...

        - name: CMD_DOMAIN
          value: "hedgedoc.freshbrewed.science"
        - name: CMD_PORT
          value: "3000"
        - name: CMD_URL_ADDPORT
          value: "false"

... snip ...

Apply

$ kubectl apply -f ./hedgedoc.app.manifest.yaml -n hedgedoc

persistentvolumeclaim/hedgedoc-uploads unchanged

deployment.apps/hedgedoc configured

service/hedgedoc-service unchanged

$ kubectl get pods -n hedgedoc

NAME READY STATUS RESTARTS AGE

postgres-deployment-7c5cff478f-lslzc 1/1 Running 0 3m1s

hedgedoc-645f7c65b9-grqnt 1/1 Running 0 15s

I can now apply the ingress

$ cat ./hedgedoc.ingress.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    cert-manager.io/cluster-issuer: letsencrypt-prod
    ingress.kubernetes.io/ssl-redirect: "true"
    kubernetes.io/ingress.class: nginx
    kubernetes.io/tls-acme: "true"
    nginx.ingress.kubernetes.io/ssl-redirect: "true"
    nginx.org/websocket-services: hedgedoc-service
  name: hedgedoc
spec:
  rules:
  - host: hedgedoc.freshbrewed.science
    http:
      paths:
      - backend:
          service:
            name: hedgedoc-service
            port:
              number: 80
        path: /
        pathType: ImplementationSpecific
  tls:
  - hosts:
    - hedgedoc.freshbrewed.science
    secretName: hedgedoc-tls

$ kubectl apply -f ./hedgedoc.ingress.yaml -n hedgedoc

ingress.networking.k8s.io/hedgedoc created
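As an aside, the `kubernetes.io/ingress.class` annotation is deprecated in favour of `spec.ingressClassName`, and the `nginx.org/*` annotations are read by the NGINX Inc. controller rather than the community ingress-nginx one. A trimmed-down sketch of the same Ingress, assuming the community ingress-nginx controller and an IngressClass named `nginx` (both assumptions; I did not apply this variant):

```yaml
# Sketch only: same routing as above, with the class set in the spec.
# Assumes an IngressClass called "nginx" exists in the cluster.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: hedgedoc
  annotations:
    cert-manager.io/cluster-issuer: letsencrypt-prod
    nginx.ingress.kubernetes.io/ssl-redirect: "true"
spec:
  ingressClassName: nginx
  rules:
  - host: hedgedoc.freshbrewed.science
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: hedgedoc-service
            port:
              number: 80
  tls:
  - hosts:
    - hedgedoc.freshbrewed.science
    secretName: hedgedoc-tls
```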

I then wait until I see the cert satisfied

$ kubectl get cert -n hedgedoc

NAME READY SECRET AGE

hedgedoc-tls False hedgedoc-tls 38s

$ kubectl get cert -n hedgedoc

NAME READY SECRET AGE

hedgedoc-tls True hedgedoc-tls 2m7s

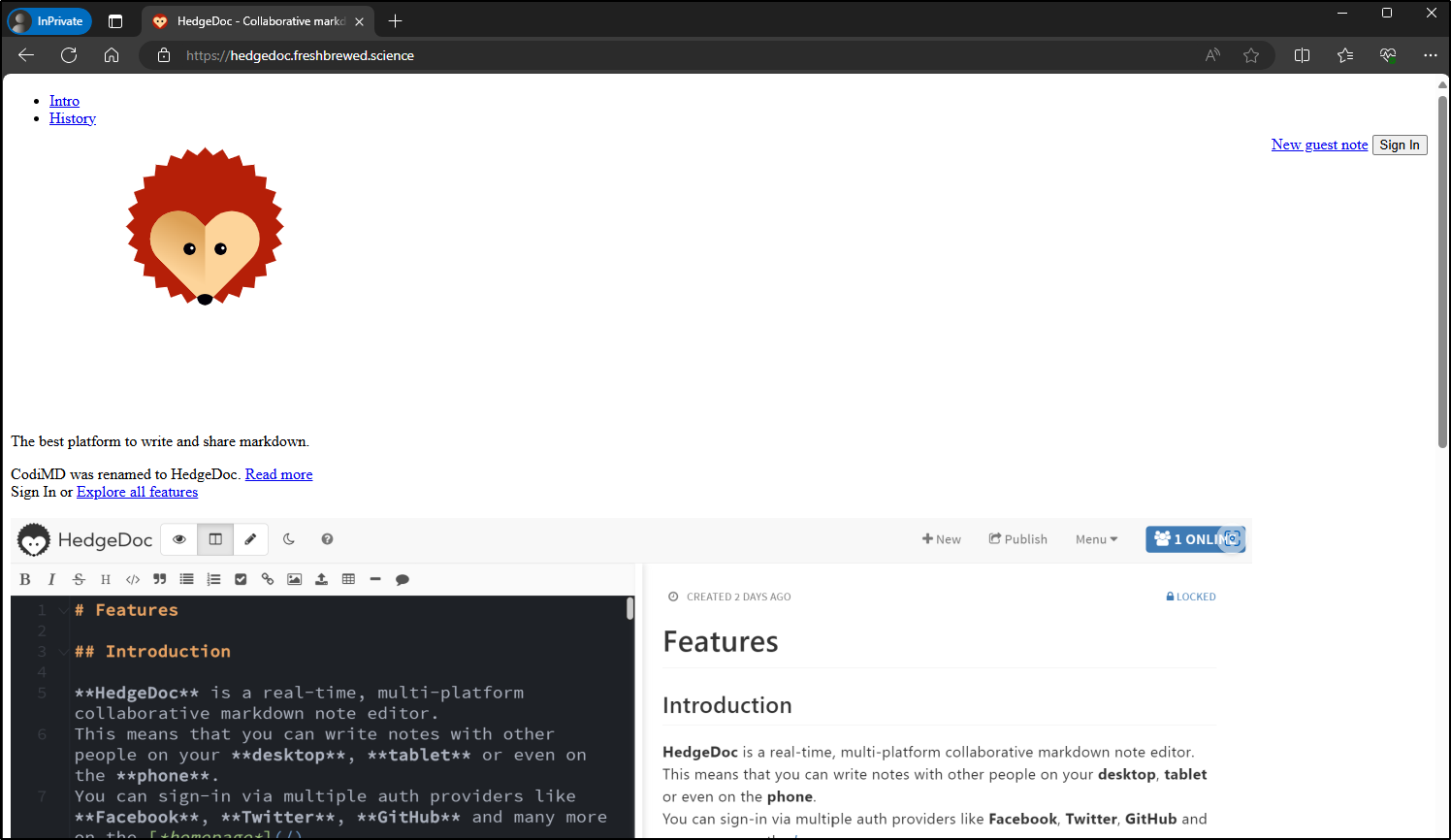

I now have a fully working instance in my production k8s

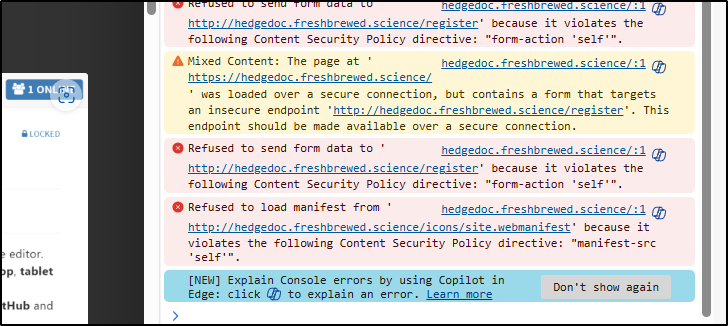

Though for some reason register does not work for me:

Note: spoiler alert - I will solve this later by looking at an absent SSL setting

I’m going to try explicitly enabling email and registration, though the default should be true

... snip ...

        - name: CMD_ALLOW_EMAIL_REGISTER
          value: "true"
        - name: CMD_ALLOW_EMAIL
          value: "true"

... snip ...

$ kubectl apply -f ./hedgedoc.app.manifest.yaml -n hedgedoc

persistentvolumeclaim/hedgedoc-uploads unchanged

deployment.apps/hedgedoc configured

service/hedgedoc-service unchanged

I looked at DevTools and could see a lot of CORS violations. It seems a pretty strict CSP is tripping us up

I tried explicitly adding the origin

... snip ...

        - name: CMD_DB_URL
          value: "postgres://hedgedoc:ThisIsNotMyPassword1234@postgres-service:5432/hedgedoc"
        - name: CMD_DOMAIN
          value: "hedgedoc.freshbrewed.science"
        - name: CMD_ALLOW_ORIGIN
          value: "['localhost'], ['hedgedoc.org'],['hedgedoc.freshbrewed.science']"

... snip ...
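For what it is worth, my reading of the HedgeDoc configuration docs is that the environment variable form of this setting takes a plain comma-separated list, while the bracketed array style belongs to `config.json`. A sketch of that form, untested here and worth checking against the docs for your version:

```yaml
# Fragment only: env entry for the hedgedoc container.
# Assumption: CMD_ALLOW_ORIGIN is parsed as a comma-separated list of hosts.
- name: CMD_ALLOW_ORIGIN
  value: "localhost,hedgedoc.org,hedgedoc.freshbrewed.science"
```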

I even tried to disable it:

... snip ...

        - name: CMD_CSP_ENABLE
          value: "false"

... snip ...

But I’m still stuck on the CSP for the form

Community chart

Let’s pivot and use the Community chart. Maybe I’ll have more success there.

$ helm repo add nicholaswilde https://nicholaswilde.github.io/helm-charts/

"nicholaswilde" has been added to your repositories

$ helm repo update

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "nicholaswilde" chart repository

...Successfully got an update from the "bitnami" chart repository

Update Complete. ⎈Happy Helming!⎈

I can leave the database alone, but need to remove my app as they have deployment name collisions

$ kubectl delete -f ./hedgedoc.app.manifest.yaml -n hedgedoc

persistentvolumeclaim "hedgedoc-uploads" deleted

deployment.apps "hedgedoc" deleted

service "hedgedoc-service" deleted

I installed:

$ helm install hedgedoc nicholaswilde/hedgedoc -n hedgedoc

NAME: hedgedoc

LAST DEPLOYED: Sun Jun 2 10:16:48 2024

NAMESPACE: hedgedoc

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

Get the application URL by running these commands:

export POD_NAME=$(kubectl get pods --namespace hedgedoc -l "app.kubernetes.io/name=hedgedoc,app.kubernetes.io/instance=hedgedoc" -o jsonpath="{.items[0].metadata.name}")

echo "Visit http://127.0.0.1:8080 to use your application"

kubectl port-forward $POD_NAME 8080:3000

The helm chart uses a service just called hedgedoc on port 3000:

$ kubectl get svc -n hedgedoc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

postgres-service ClusterIP 10.43.25.13 <none> 5432/TCP 60m

hedgedoc ClusterIP 10.43.54.168 <none> 3000/TCP 52s

So I’ll need to fix that in the ingress

$ cat hedgedoc.ingress.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    cert-manager.io/cluster-issuer: letsencrypt-prod
    ingress.kubernetes.io/ssl-redirect: "true"
    kubernetes.io/ingress.class: nginx
    kubernetes.io/tls-acme: "true"
    nginx.ingress.kubernetes.io/ssl-redirect: "true"
    nginx.org/websocket-services: hedgedoc-service
  name: hedgedoc
spec:
  rules:
  - host: hedgedoc.freshbrewed.science
    http:
      paths:
      - backend:
          service:
            name: hedgedoc
            port:
              number: 3000
        path: /
        pathType: ImplementationSpecific
  tls:
  - hosts:
    - hedgedoc.freshbrewed.science
    secretName: hedgedoc-tls

$ kubectl apply -f ./hedgedoc.ingress.yaml -n hedgedoc

ingress.networking.k8s.io/hedgedoc configured

That was significantly worse.

It wouldn’t route.

I then created a patch service that matched the selectors

apiVersion: v1
kind: Service
metadata:
  name: hedgedoc-service
spec:
  selector:
    app.kubernetes.io/instance: hedgedoc
    app.kubernetes.io/name: hedgedoc
  ports:
  - protocol: TCP
    port: 80
    targetPort: 3000
  type: ClusterIP

That would at least route, but the forms were entirely broken

There was nothing in the helm values that looked to let me set the URL.

I did note the image used linuxserver instead of hedgedoc

image:
  pullPolicy: IfNotPresent
  repository: ghcr.io/linuxserver/hedgedoc
  tag: version-1.9.0

Perhaps that release will work better with my YAML?

For now, I’ll remove this helm attempt

$ helm delete hedgedoc -n hedgedoc

release "hedgedoc" uninstalled

And my hacked service

$ kubectl delete svc/hedgedoc-service -n hedgedoc

service "hedgedoc-service" deleted

Trying linuxserver image in my app manifest

I updated the manifest to use the linuxserver image and tried again

$ kubectl apply -f ./hedgedoc.app.manifest.yaml -n hedgedoc

persistentvolumeclaim/hedgedoc-uploads created

deployment.apps/hedgedoc created

service/hedgedoc-service created

Still no go

In the end, the problem was not setting the SSL param

        - name: CMD_PROTOCOL_USESSL
          value: "true"

This meant my manifest was:

---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: hedgedoc-uploads
spec:
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: hedgedoc
spec:
  replicas: 1
  selector:
    matchLabels:
      app: hedgedoc
  template:
    metadata:
      labels:
        app: hedgedoc
    spec:
      containers:
      - name: hedgedoc
        image: quay.io/hedgedoc/hedgedoc:latest
        ports:
        - containerPort: 3000
        env:
        - name: CMD_DB_URL
          value: "postgres://hedgedoc:ThisIsNotMyPassword1234@postgres-service:5432/hedgedoc"
        - name: CMD_DOMAIN
          value: "hedgedoc.freshbrewed.science"
        - name: CMD_ALLOW_ORIGIN
          value: "['hedgedoc.freshbrewed.science'], ['localhost'], ['hedgedoc.org']"
        - name: CMD_PORT
          value: "3000"
        - name: CMD_URL_ADDPORT
          value: "false"
        - name: CMD_PROTOCOL_USESSL
          value: "true"
        - name: NODE_ENV
          value: "production"
        - name: CMD_ALLOW_ANONYMOUS
          value: "true"
        - name: CMD_ALLOW_ANONYMOUS_EDITS
          value: "true"
        - name: CMD_ALLOW_GRAVATAR
          value: "true"
        - name: CMD_ALLOW_EMAIL_REGISTER
          value: "true"
        - name: CMD_ALLOW_EMAIL
          value: "true"
        volumeMounts:
        - name: hedgedoc-uploads
          mountPath: /hedgedoc/public/uploads
      volumes:
      - name: hedgedoc-uploads
        persistentVolumeClaim:
          claimName: hedgedoc-uploads
---
apiVersion: v1
kind: Service
metadata:
  name: hedgedoc-service
spec:
  selector:
    app: hedgedoc
  ports:
  - protocol: TCP
    port: 80
    targetPort: 3000
  type: ClusterIP

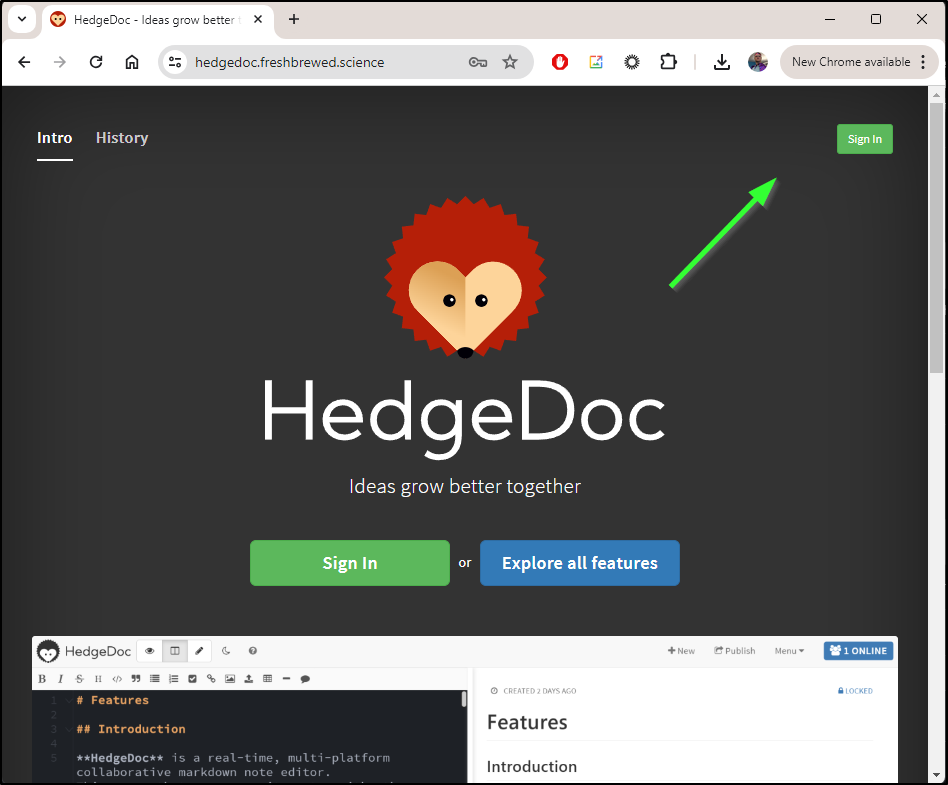

As you can see, login works:

Note: I realized only much much later that just reading the reverse proxy doc would have clued me in on that variable much sooner. RTFM, Mr. Johnson!

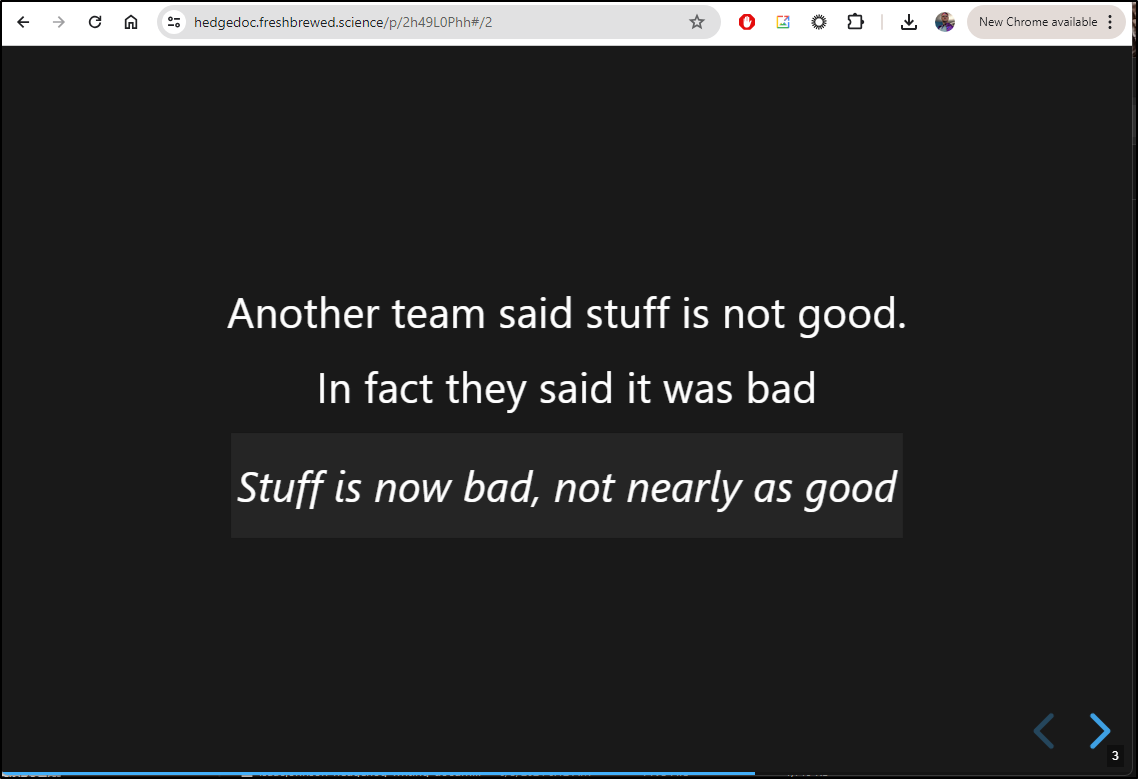

Presentations

In building out an example of linking, I already realized Hedgedoc can solve our needs for basic presentations

Here you can see me building links then figuring out presentations and making a very ridiculous short one

Graphviz

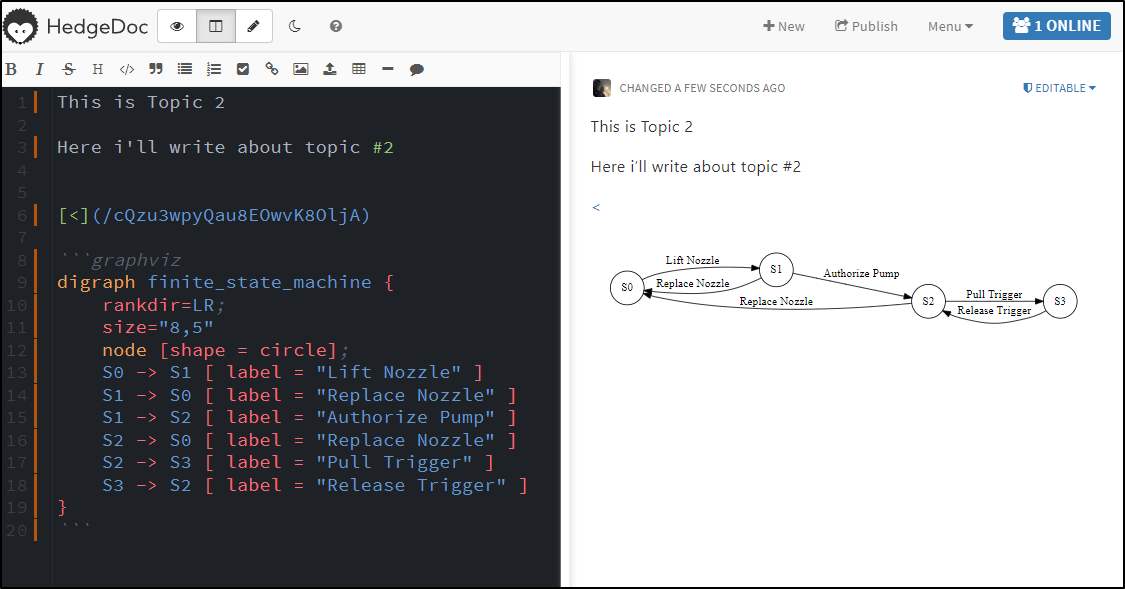

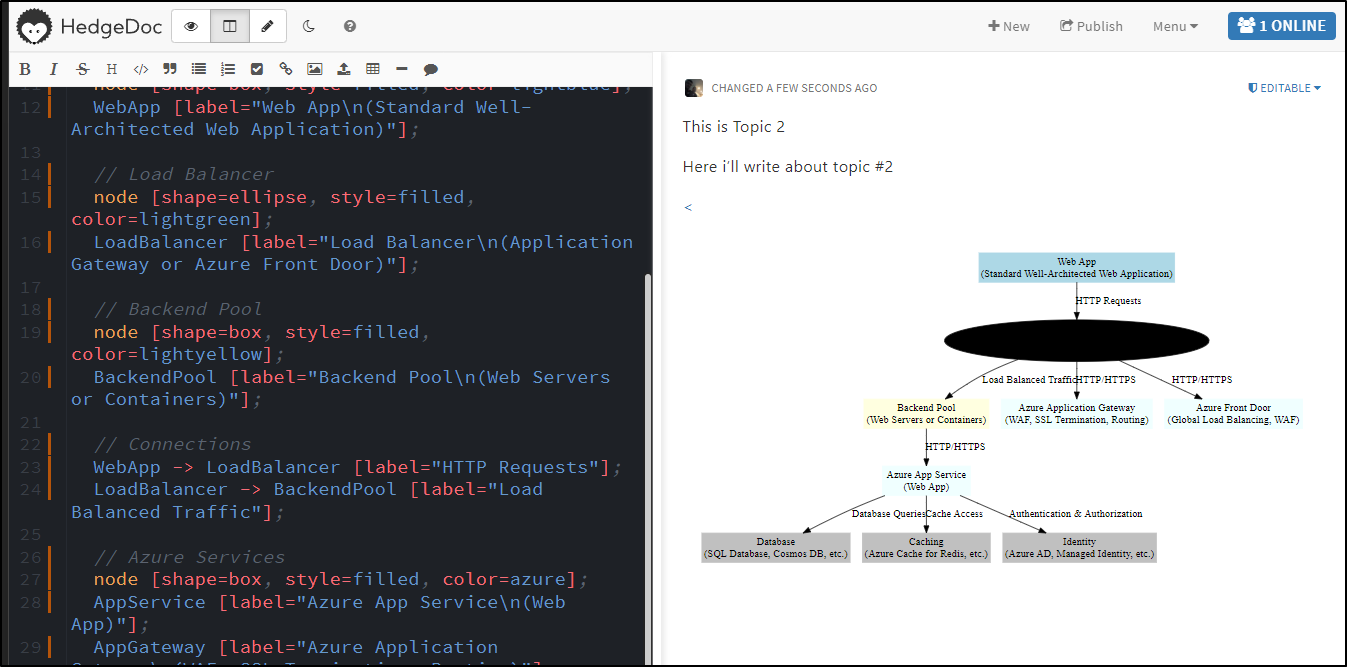

A lot of Markdown systems have leaned into MermaidJS, however Hedgedoc chose to incorporate Graphviz.

Here is an example (Note, I used markdown with Graphviz to show Jekyll doesn’t support it):

digraph finite_state_machine {

rankdir=LR;

size="8,5"

node [shape = circle];

S0 -> S1 [ label = "Lift Nozzle" ]

S1 -> S0 [ label = "Replace Nozzle" ]

S1 -> S2 [ label = "Authorize Pump" ]

S2 -> S0 [ label = "Replace Nozzle" ]

S2 -> S3 [ label = "Pull Trigger" ]

S3 -> S2 [ label = "Release Trigger" ]

}

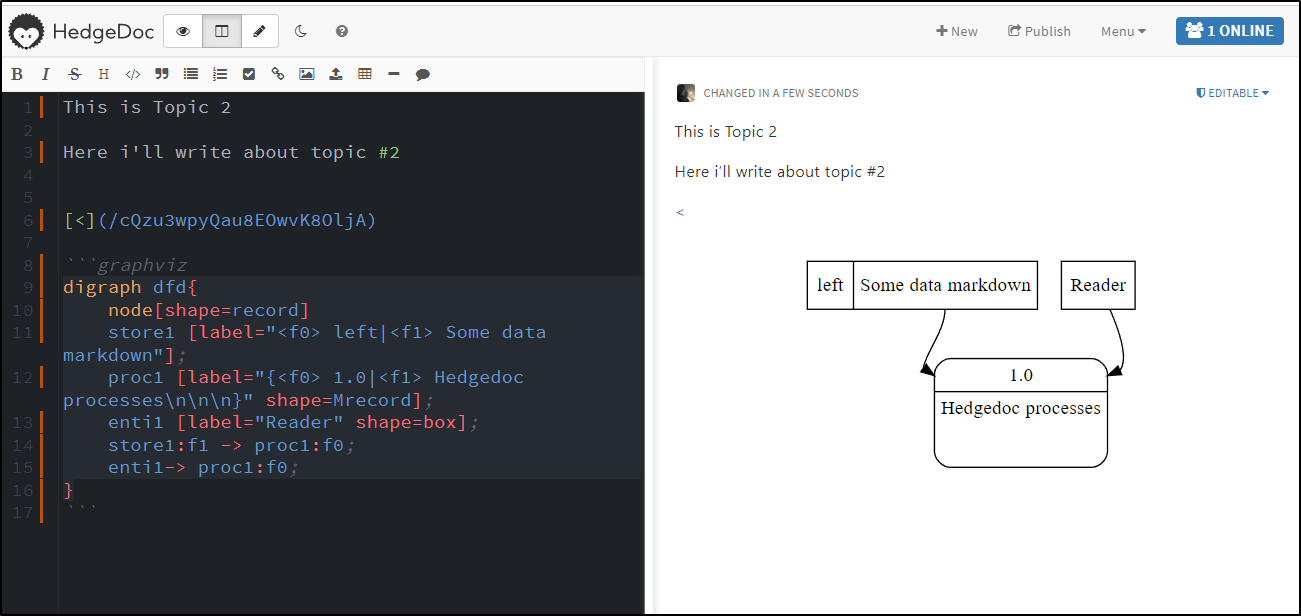

and a DFD example

digraph dfd{

node[shape=record]

store1 [label="<f0> left|<f1> Some data markdown"];

proc1 [label="{<f0> 1.0|<f1> Hedgedoc processes\n\n\n}" shape=Mrecord];

enti1 [label="Reader" shape=box];

store1:f1 -> proc1:f0;

enti1-> proc1:f0;

}

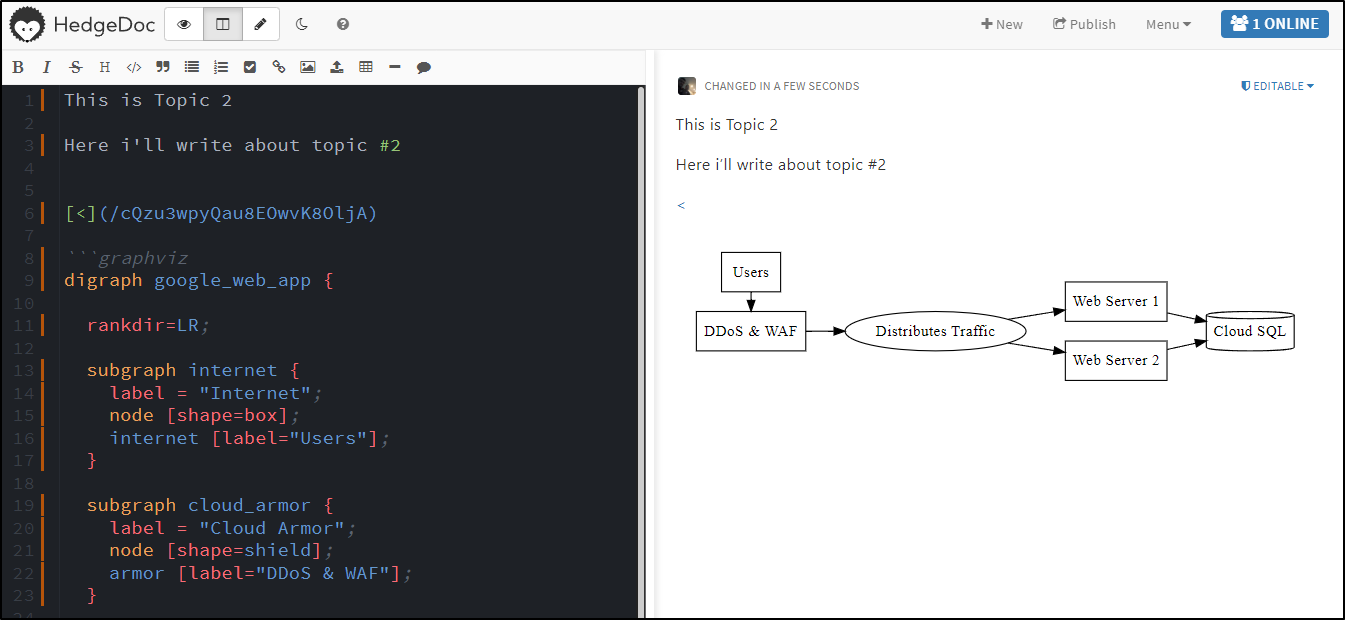

Playing with the AIs

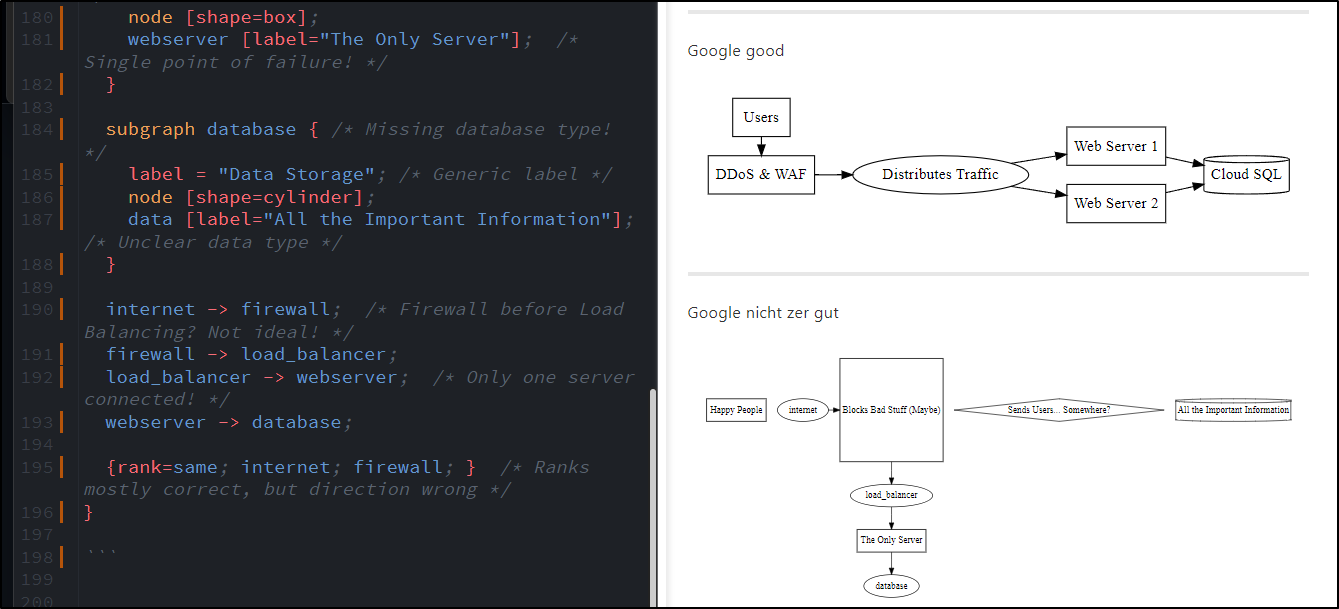

Now I’ll ask Gemini from Google to give me a well-architected WebApp running in Google Cloud using Graphviz syntax:

show me the graphviz code to generate a standard well architected web application with load balancing in Google Cloud

digraph google_web_app {

rankdir=LR;

subgraph internet {

label = "Internet";

node [shape=box];

internet [label="Users"];

}

subgraph cloud_armor {

label = "Cloud Armor";

node [shape=shield];

armor [label="DDoS & WAF"];

}

subgraph load_balancing {

label = "Load Balancing";

node [shape=ellipse];

lb [label="Distributes Traffic"];

}

subgraph web_servers {

label = "Web Servers";

node [shape=box];

web1 [label="Web Server 1"];

web2 [label="Web Server 2" ];

... // Add more web servers as needed

}

subgraph database {

label = "Database";

node [shape=cylinder];

db [label="Cloud SQL"];

}

internet -> armor;

armor -> lb;

lb -> web1;

lb -> web2;

web1 -> db;

web2 -> db;

{rank=same; internet; armor; }

{rank=same; lb; }

{rank=same; web1; web2; }

}

The comment initially threw me off (line 28, the “Add more” needs to be removed)

But this is Google’s output:

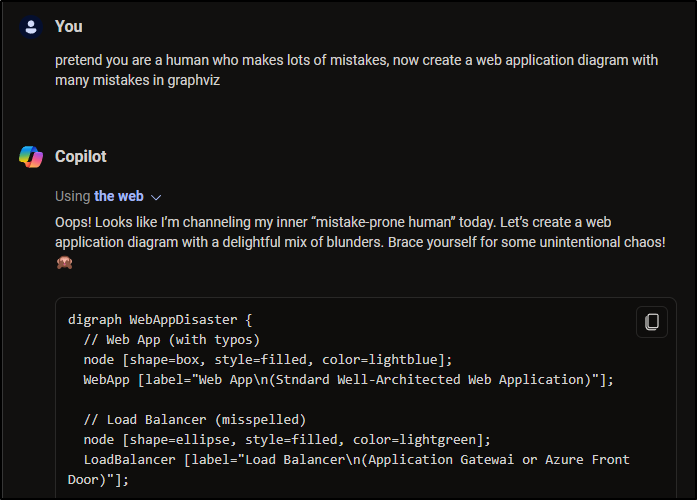

I gave a similar question to Copilot

show me the graphviz code to generate a standard well architected web application with load balancing in azure

It gave me

digraph AzureWebAppArchitecture {

// Web Application

node [shape=box, style=filled, color=lightblue];

WebApp [label="Web App\n(Standard Well-Architected Web Application)"];

// Load Balancer

node [shape=ellipse, style=filled, color=lightgreen];

LoadBalancer [label="Load Balancer\n(Application Gateway or Azure Front Door)"];

// Backend Pool

node [shape=box, style=filled, color=lightyellow];

BackendPool [label="Backend Pool\n(Web Servers or Containers)"];

// Connections

WebApp -> LoadBalancer [label="HTTP Requests"];

LoadBalancer -> BackendPool [label="Load Balanced Traffic"];

// Azure Services

node [shape=box, style=filled, color=azure];

AppService [label="Azure App Service\n(Web App)"];

AppGateway [label="Azure Application Gateway\n(WAF, SSL Termination, Routing)"];

AzureFrontDoor [label="Azure Front Door\n(Global Load Balancing, WAF)"];

// Connections to Services

LoadBalancer -> AppGateway [label="HTTP/HTTPS"];

LoadBalancer -> AzureFrontDoor [label="HTTP/HTTPS"];

BackendPool -> AppService [label="HTTP/HTTPS"];

// Additional Components (not shown in detail)

node [shape=box, style=filled, color=gray];

Database [label="Database\n(SQL Database, Cosmos DB, etc.)"];

Caching [label="Caching\n(Azure Cache for Redis, etc.)"];

Identity [label="Identity\n(Azure AD, Managed Identity, etc.)"];

// Connections to Additional Components

AppService -> Database [label="Database Queries"];

AppService -> Caching [label="Cache Access"];

AppService -> Identity [label="Authentication & Authorization"];

}

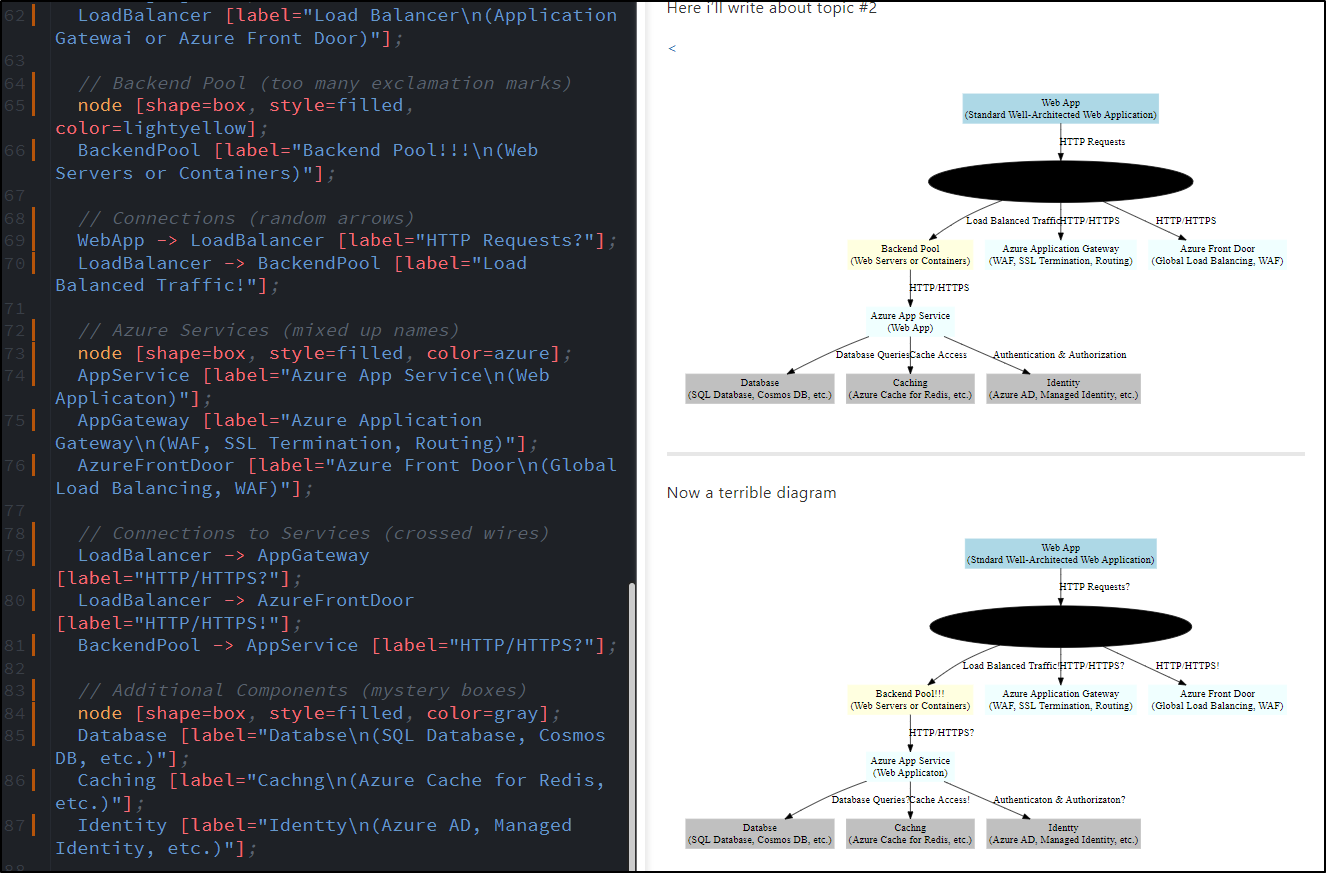

Which is much prettier (in a web 1.0 kind of way)

And these can be part of presentations as one might expect

I tried to get the AI to give me Chaos:

But it just gave me back the same diagram, but with question marks and exclamation points

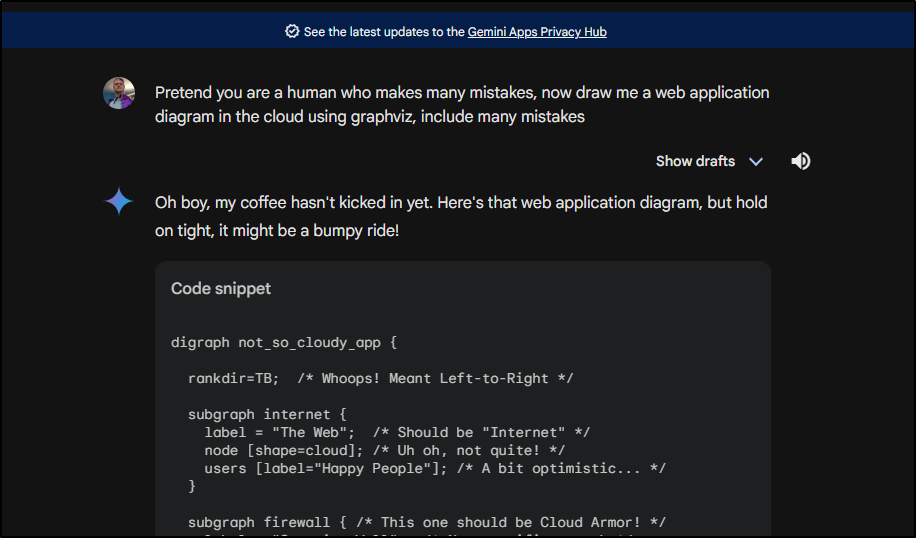

As we can see, they put a bit of humour in Gemini as well:

The output:

digraph not_so_cloudy_app {

rankdir=TB; /* Whoops! Meant Left-to-Right */

subgraph internet {

label = "The Web"; /* Should be "Internet" */

node [shape=cloud]; /* Uh oh, not quite! */

users [label="Happy People"]; /* A bit optimistic... */

}

subgraph firewall { /* This one should be Cloud Armor! */

label = "Security Wall"; /* Not specific enough */

node [shape=square];

firewall [label="Blocks Bad Stuff (Maybe)"]; /* Unreliable protection? */

}

subgraph load_balancer {

label = "Traffic Director"; /* Not the best term... */

node [shape=diamond]; /* A less common shape choice */

router [label="Sends Users... Somewhere?"]; /* Vague functionality */

}

subgraph web_servers { /* Missing redundancy! */

label = "Server Farm"; /* Not very specific */

node [shape=box];

webserver [label="The Only Server"]; /* Single point of failure! */

}

subgraph database { /* Missing database type! */

label = "Data Storage"; /* Generic label */

node [shape=cylinder];

data [label="All the Important Information"]; /* Unclear data type */

}

internet -> firewall; /* Firewall before Load Balancing? Not ideal! */

firewall -> load_balancer;

load_balancer -> webserver; /* Only one server connected! */

webserver -> database;

{rank=same; internet; firewall; } /* Ranks mostly correct, but direction wrong */

}

To be frank, Google Gemini takes the W on this task:

Here is a quick presentation of Azure Good/Bad and Google Good/Bad

Google Auth

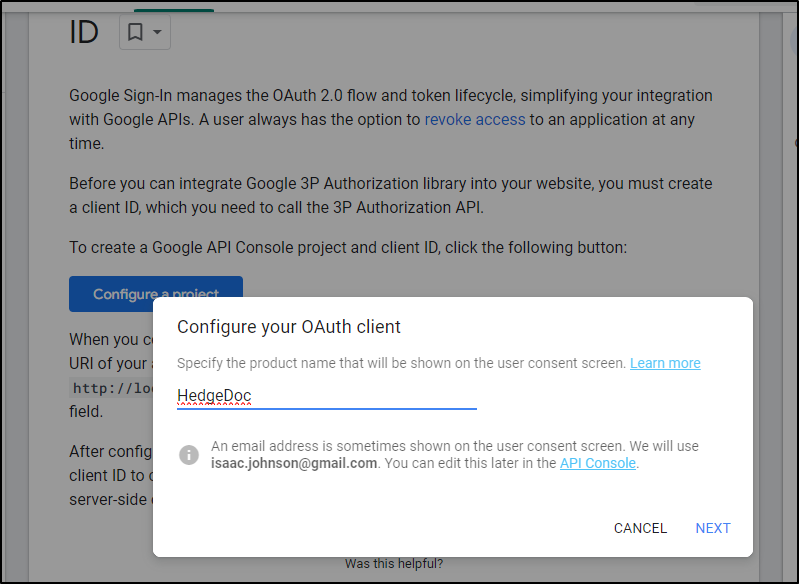

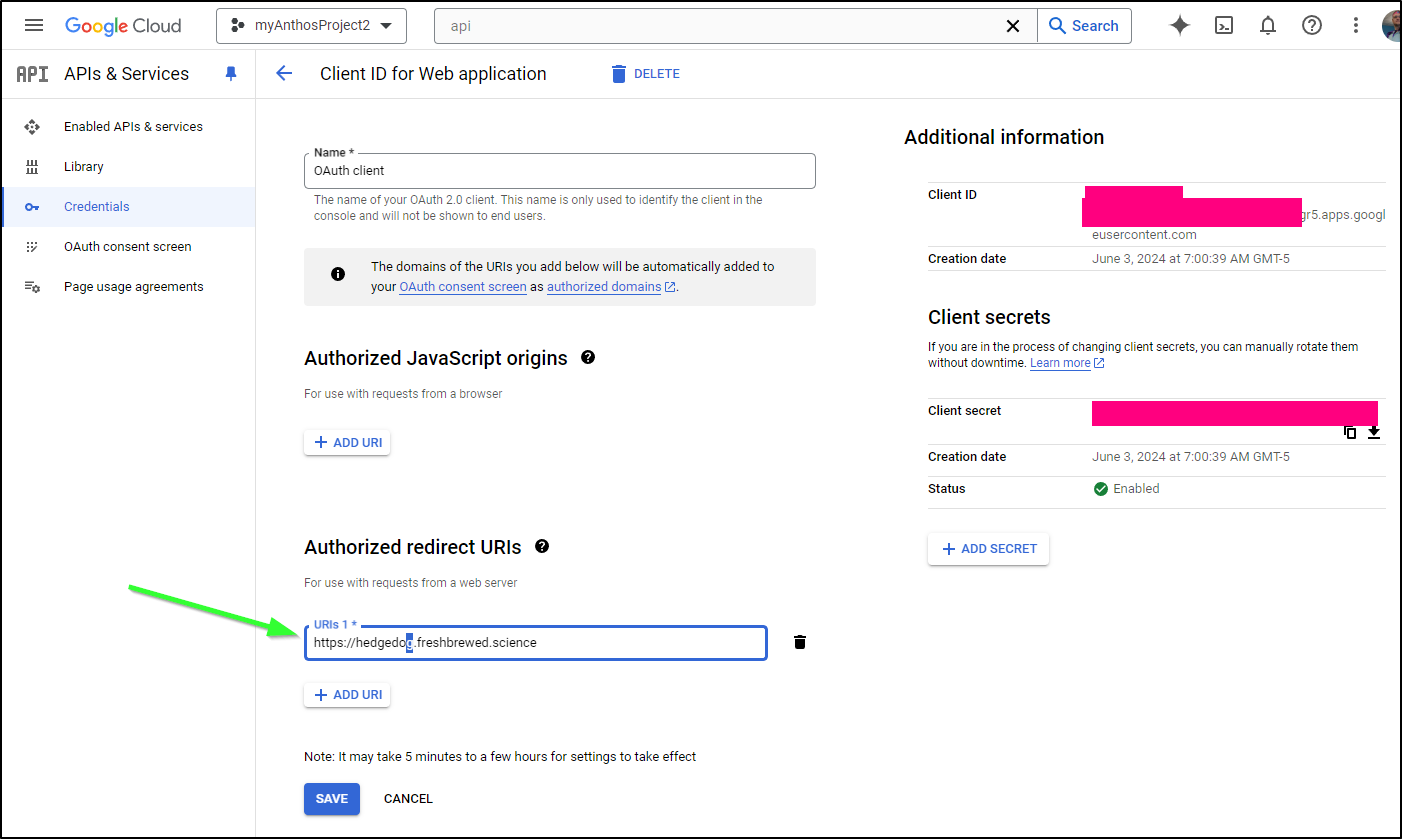

To get Google Auth going, I’ll need to create a Google API Console Project and Client ID

I’ll give it a name

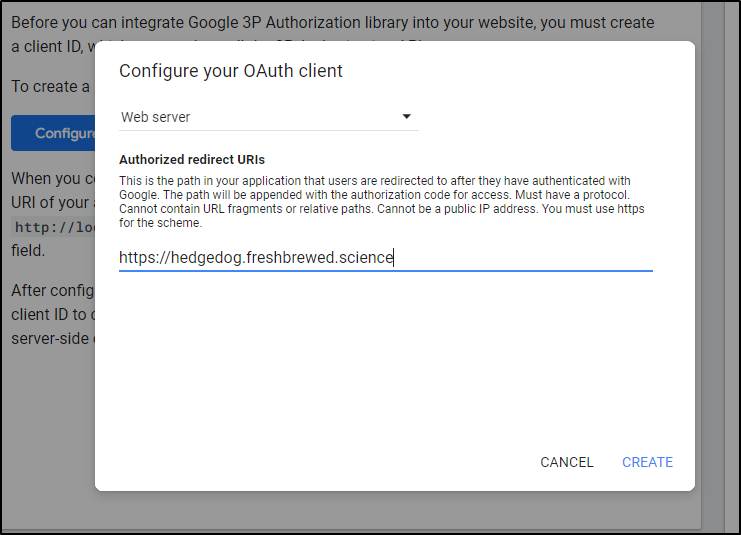

A callback URL (best guess here)

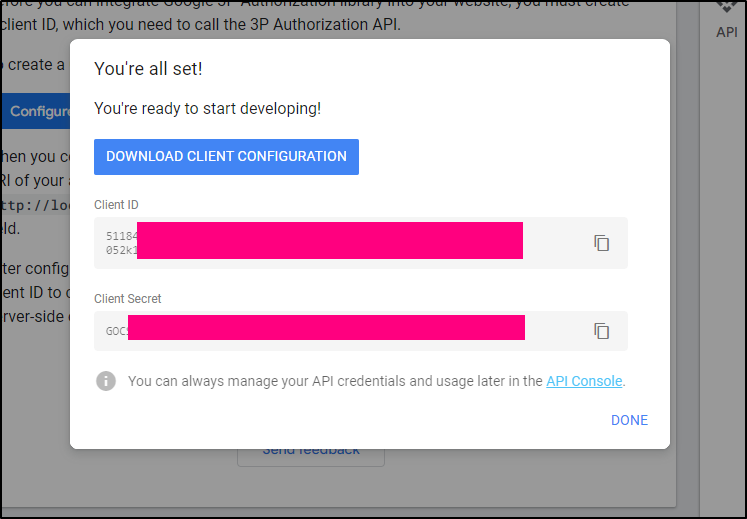

Then it will give me my Client ID and secret

I’ll then edit my deployment to add the Client ID and Client Secret

$ kubectl edit deployment hedgedoc -n hedgedoc

... snip ...

      containers:
      - env:
        - name: CMD_DB_URL
          value: postgres://hedgedoc:ThisIsNotMyPassword1234@postgres-service:5432/hedgedoc
        - name: CMD_DOMAIN
          value: hedgedoc.freshbrewed.science
        - name: CMD_ALLOW_ORIGIN
          value: '[''hedgedoc.freshbrewed.science''], [''localhost''], [''hedgedoc.org'']'
        - name: CMD_PORT
          value: "3000"
        - name: CMD_URL_ADDPORT
          value: "false"
        - name: CMD_PROTOCOL_USESSL
          value: "true"
        - name: NODE_ENV
          value: production
        - name: CMD_ALLOW_ANONYMOUS
          value: "true"
        - name: CMD_ALLOW_ANONYMOUS_EDITS
          value: "true"
        - name: CMD_ALLOW_GRAVATAR
          value: "true"
        - name: CMD_ALLOW_EMAIL_REGISTER
          value: "true"
        - name: CMD_ALLOW_EMAIL
          value: "true"
        - name: CMD_GOOGLE_CLIENTSECRET
          value: "GOxxxxxxxxxxxxxxxxxxxxxxxxxxxxxK"
        - name: CMD_GOOGLE_CLIENTID
          value: "51xxxxxxxxxxxxxxxxxxxxxxxxxxxxxx5.apps.googleusercontent.com"

... snip ...

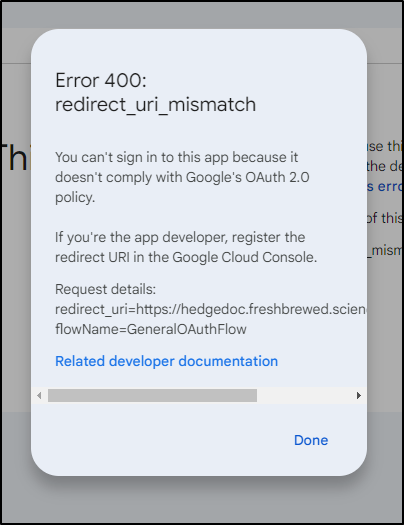

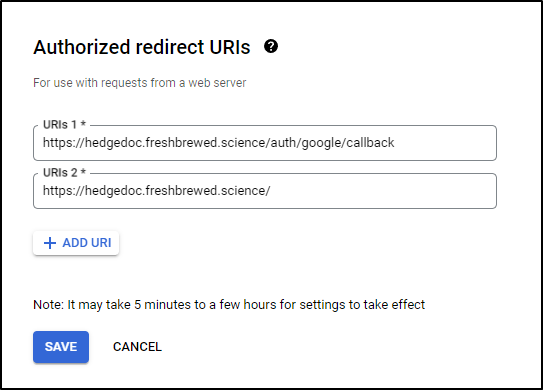

I quickly noted the callback should have been

https://hedgedoc.freshbrewed.science/auth/google/callback

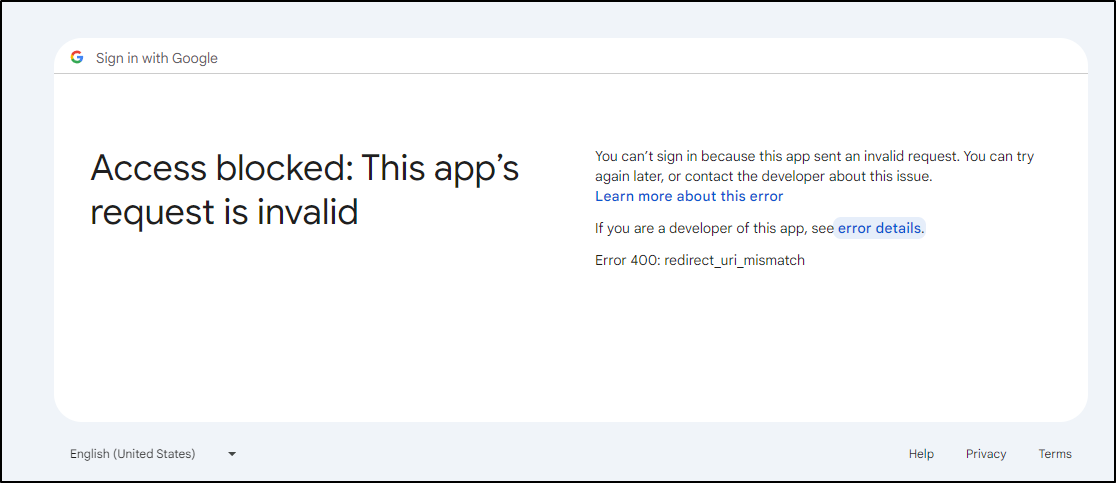

The error being

I found it in the “APIs & Services” area. I realized I had even typoed the URL

I’ll just make both URLs valid

Now you can see the full flow is working!

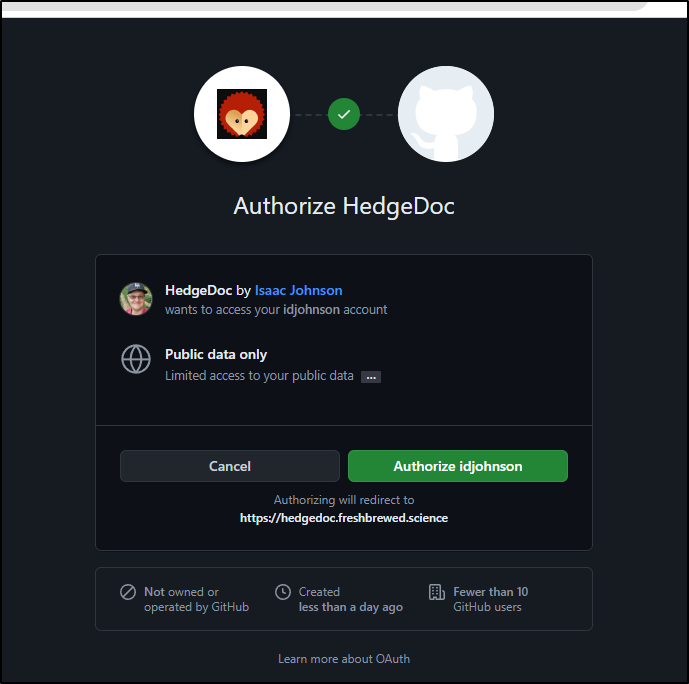

Let’s add Github as well. We can follow the guide

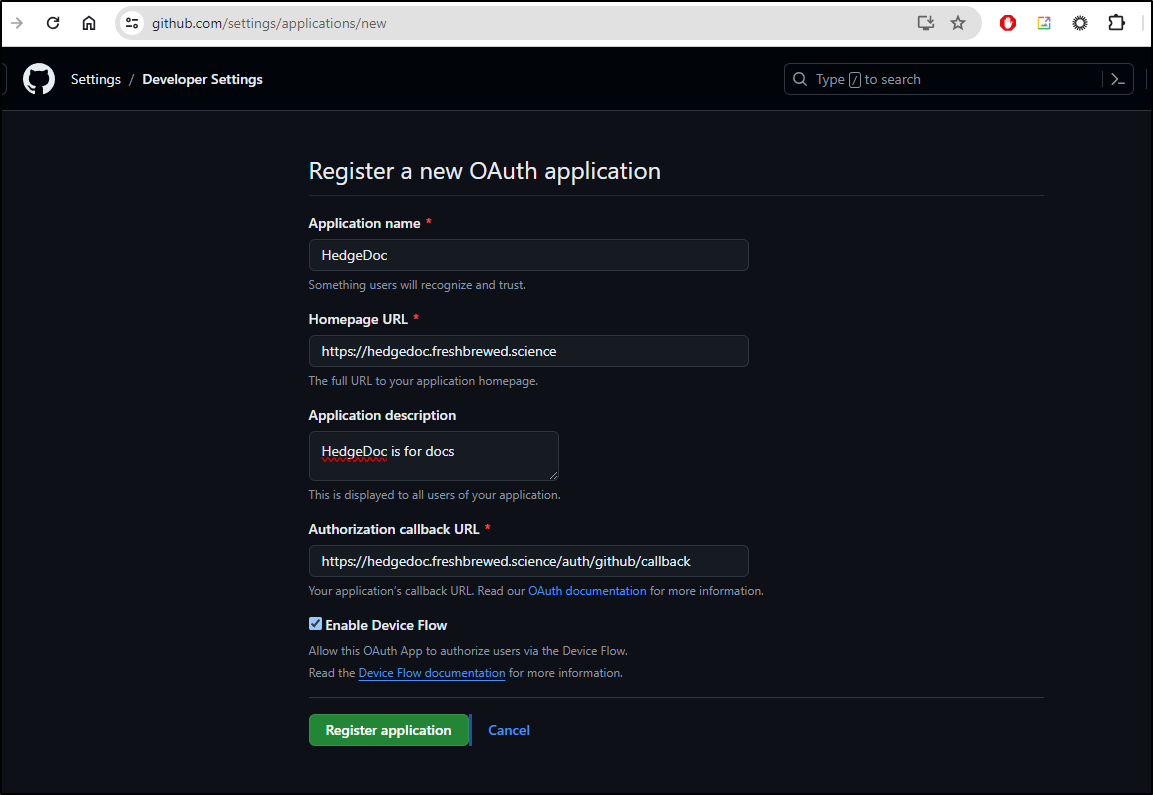

I’ll add a new Application in Github and use the following details. Note the callback is ‘auth/github/callback’

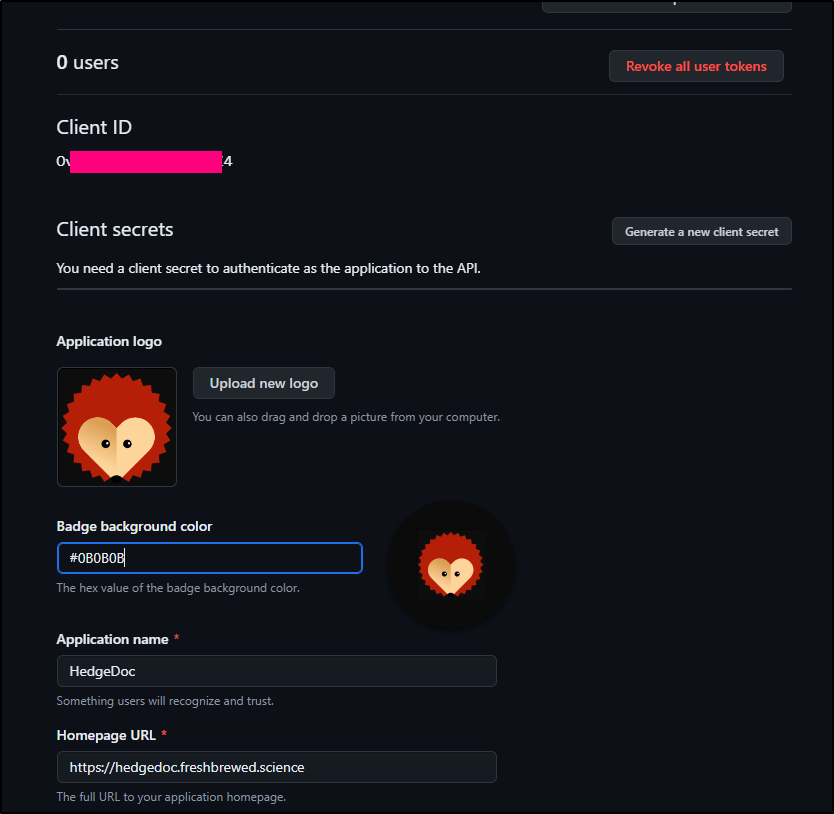

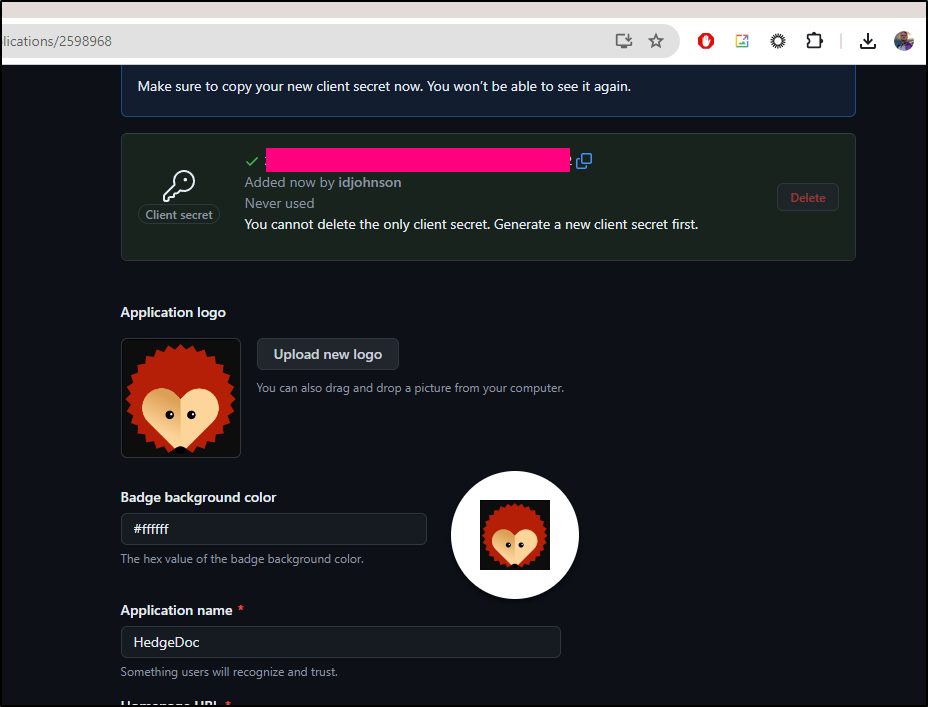

I get a client ID right away. I can now tweak the logo picture, then click “Generate a new client secret”

I had to authenticate with a Passkey before it would give me my Client Secret

I then added them to the deployment as well

... snip ...

        - name: CMD_GOOGLE_CLIENTSECRET
          value: GxxxxxxxxxxxxxxxxxxxK
        - name: CMD_GOOGLE_CLIENTID
          value: 5xxxxxxxxxxxxxxxxxxx5.apps.googleusercontent.com
        - name: CMD_GITHUB_CLIENTID
          value: "Oxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx4"
        - name: CMD_GITHUB_CLIENTSECRET
          value: "2xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx2"

... snip ...
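Editing the deployment in place leaves the OAuth secrets sitting in the Deployment spec. A minimal sketch of an alternative, assuming a hypothetical Secret named `hedgedoc-oauth` whose keys match the environment variable names, pulled in with `envFrom`. The placeholder values are not real credentials, and the second document is a fragment of the container spec rather than a full object.

```yaml
# Hypothetical Secret; key names mirror the env vars used above
apiVersion: v1
kind: Secret
metadata:
  name: hedgedoc-oauth
type: Opaque
stringData:
  CMD_GOOGLE_CLIENTID: "<google-client-id>"
  CMD_GOOGLE_CLIENTSECRET: "<google-client-secret>"
  CMD_GITHUB_CLIENTID: "<github-client-id>"
  CMD_GITHUB_CLIENTSECRET: "<github-client-secret>"
---
# Fragment only: goes alongside env: in the hedgedoc container spec.
# Every key in the Secret becomes an environment variable.
envFrom:
- secretRef:
    name: hedgedoc-oauth
```

With this in place, the four inline `CMD_GOOGLE_*` and `CMD_GITHUB_*` entries would be removed from `env`.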

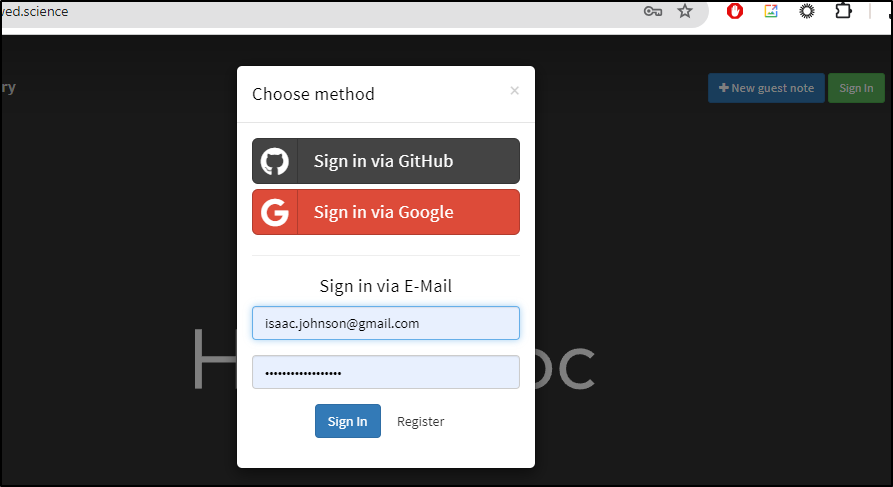

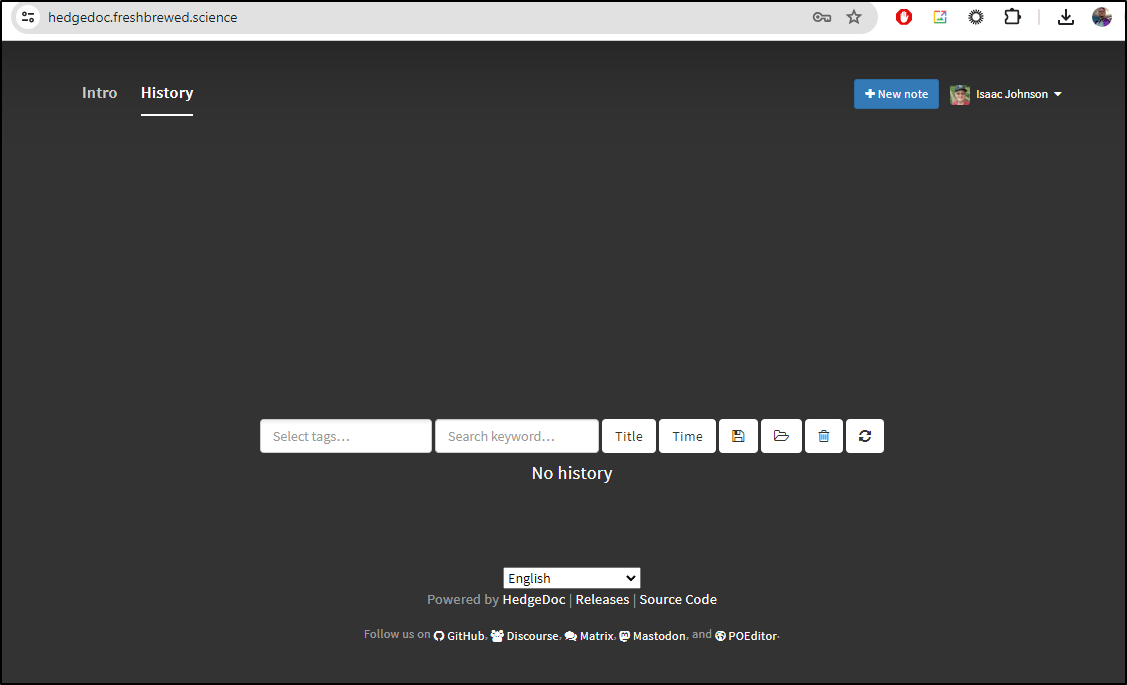

I can now sign-in with either Google or Github

Github will prompt for permissions

Now I’m logged in via Github!

There are many more, including Dropbox, Facebook, Gitlab, LDAP, Mattermost, OAuth2, SAML and X, as documented here.

Disable anonymous

I love giving things away for free, and I’ll let others sign in with Github and Google, but this could be abused if I leave anonymous access on.

I’ll leave in email (which could, of course, be garbage as I’m not validating it), but disable just straight anonymous edits

$ kubectl edit deployment hedgedoc -n hedgedoc

... snip ...

        - name: CMD_ALLOW_ANONYMOUS
          value: "false"
        - name: CMD_ALLOW_ANONYMOUS_EDITS
          value: "false"
        - name: CMD_ALLOW_GRAVATAR
          value: "true"
        - name: CMD_ALLOW_EMAIL_REGISTER
          value: "true"
        - name: CMD_ALLOW_EMAIL
          value: "true"

... snip ...

We now just have “sign in”

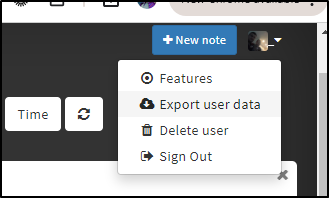

Export

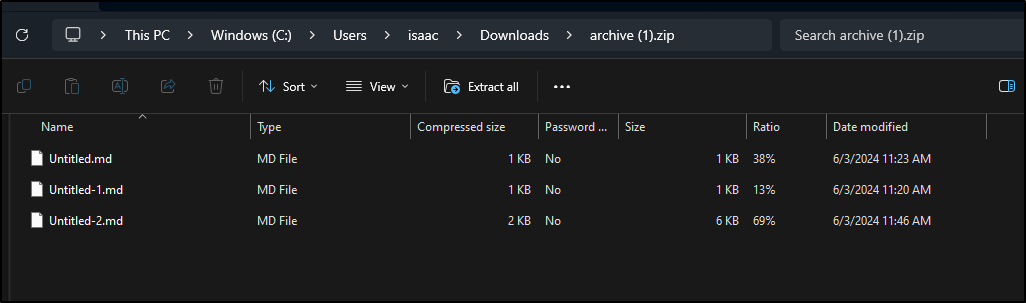

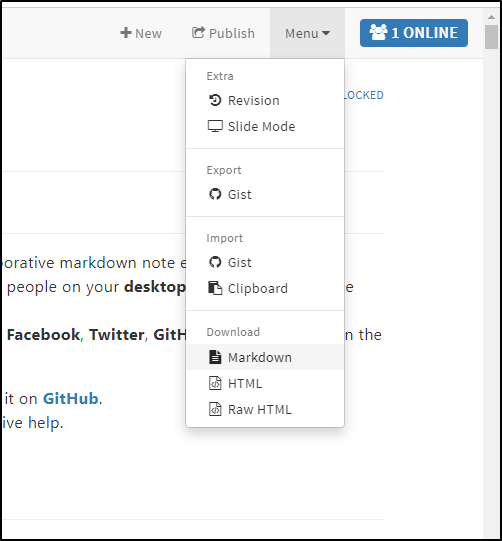

We can export our User’s data at any point from the drop down menu

Which then gives us all the markdown files

One can also download just a given page as Markdown or HTML

They used to have PDF export, but removed it because of some security issues with the implementation. They are suggesting it could come back as soon as version 4.2.

Summary

We covered a lot today including creating a working HedgeDoc using Kubernetes YAML Manifests and sorting out login issues. We learned about Graphviz diagrams and creating Presentations in Hedgedoc. We enjoyed taking a moment to engage with Copilot and Gemini on Graphviz Diagrams. We moved on to adding federated auth via Github and Google and then disabling Anonymous for security. Lastly, we looked at data export.

Overall, I think this is a great tool. I had full intentions of just doing a quick breeze-over while looking at a few different tools, but I really just got stuck in. I plan to try this out in a professional setting as well since I can lock down Google Auth to a specific domain which would comply with InfoSec guidelines in my place of business.
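For the domain lock-down, my understanding of the HedgeDoc configuration docs is that Google login accepts a hosted-domain setting. A sketch of what that might look like, where `example.com` is a placeholder and the variable name should be confirmed against the docs for your version, since I have not tested it here:

```yaml
# Fragment only: extra env entry for the hedgedoc container.
# Assumption: CMD_GOOGLE_HOSTEDDOMAIN limits Google sign-in to one hosted domain.
- name: CMD_GOOGLE_HOSTEDDOMAIN
  value: "example.com"
```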